Abstract

Considering recent developments in gene manipulation methods for honey bees, establishing simple and robust assay systems that can analyze behavioral components in detail in the laboratory is important for the rise of behavioral genetics in the honey bee. We focused on the antennal movements of the honey bee and developed an experimental system for analyzing the antennal responses (ARs) of the honey bee using DeepLabCut, a markerless posture-tracking tool based on deep learning. Tracking antennal movements with DeepLabCut during the presentation of vertical (downward and upward) motion stimuli successfully detected the direction-specific ARs in the transverse plane reported in previous studies, in which bees tilted their antennae in the direction opposite to the motion stimuli. In addition, we found that honey bees also exhibited direction-specific ARs in the coronal plane in response to horizontal (forward and backward) motion stimuli. Furthermore, an investigation of the developmental maturation of honey bee ARs showed that ARs to motion stimuli were not detected in bees immediately after emergence but became detectable through post-emergence development in an experience-independent manner. Finally, unsupervised clustering analysis of multidimensional data derived from the DeepLabCut tracking data classified antennal movements into different clusters, suggesting that data-driven behavioral classification can be applied to AR paradigms. In summary, our results revealed, for the first time, direction-specific ARs in the coronal plane to horizontal motion stimuli and the developmental maturation of ARs, and suggest the efficacy of data-driven analysis for behavioral classification in behavioral studies of the honey bee.



Introduction

Genome sequencing and comprehensive gene expression analyses have been conducted on many insect species (Ellegren 2014; Oppenheim et al. 2015). Combined with the establishment of gene manipulation methods (Mello and Conte 2004; Adli 2018), the molecular and neural bases of insect behavior have been elucidated in various non-model insect species other than Drosophila (Sun et al. 2017; Mansourian et al. 2019; Walton et al. 2020). The European honey bee (Apis mellifera) is a well-known social insect whose social behavior has been studied extensively for many years (Frisch et al. 1967; Winston 1987; Seeley 1995). In addition, genome editing and transgenic technologies have been established and applied to molecular- and neuro-ethological analyses in honey bees (Schulte et al. 2014; Kohno et al. 2016; Otte et al. 2018; Kohno and Kubo 2018, 2019; Roth et al. 2019; Carcaud et al. 2023), although the molecular and neural bases underlying honey bee behaviors remain largely unresolved.

Most innate behaviors of honey bees, such as brood care, the division of labor among workers, and the waggle dance, have been described through observations and behavioral experiments in the field (Frisch et al. 1967; Seeley 1995), but genetically modified honey bees must be confined to laboratory conditions due to legal restrictions. To date, one simple and robust behavioral paradigm, olfactory conditioning of the proboscis extension reflex (PER), has been used extensively to analyze the abilities and mechanisms of learning and memory of honey bees in the laboratory (Kuwabara 1957; Giurfa and Sandoz 2012; Eisenhardt 2014). Some previous studies have developed original devices for analyzing bee behaviors and sophisticated psychological experimental paradigms (Giurfa et al. 2001; Kirkerud et al. 2013, 2017; Schultheiss et al. 2017; Howard et al. 2018, 2019; Marchal et al. 2019; Nouvian and Galizia 2019; Geng et al. 2022), but these have not necessarily been widely adopted because they are highly specialized or require skilled, careful handling of bees. Against this background, developing a variety of robust (highly reproducible), simple, and versatile laboratory behavioral systems beyond the olfactory PER associative learning paradigm is important for the rise of honey bee behavioral genetics.

We focused on the antennal response (AR) of honey bees as one of these behavioral experimental systems. Insect antennae are essential for various sensory modalities, such as olfaction, gustation, and mechanoreception, and are used to sense the external world (Vogt and Riddiford 1981; Staudacher et al. 2005; Hallem et al. 2006). Various insect species move their antennae in response to odorants, visual stimuli, and mechanical stimuli (Honegger 1981; Staudacher et al. 2005; Mamiya et al. 2011; Natesan et al. 2019). In honey bees, ARs to motion, odor, and mechanical stimuli, as well as learning-dependent changes in AR to odors, have been reported (Suzuki 1975; Erber et al. 1993; Erber and Kloppenburg 1995; Cholé et al. 2015; Gascue et al. 2022). In addition, antennal contact is essential for maintaining society through nestmate recognition and pheromone reception in eusocial insects, including honey bees (Ozaki et al. 2005; Sharma et al. 2015; Gomez Ramirez et al. 2023). To date, multi-animal tracking studies in eusocial insects using individual identification tags have revealed developmental changes in social behaviors and in the responses of individuals to different social circumstances, inferred from individual positions inside the nest and inter-individual interactions (Mersch et al. 2013; Crall et al. 2015, 2018; Wario et al. 2015; Ai et al. 2017; Stroeymeyt et al. 2018; Liberti et al. 2022). However, it is generally difficult to determine from colony-level observations which behavioral components change and affect individual responses. Therefore, measuring and analyzing antennal movements in honey bees is expected to improve our understanding of the behavioral components that influence not only environmental recognition but also social behaviors.

In previous studies, the movements of insect antennae were measured by manually determining their position and angle in each video frame or by using phototransistors that register the movement of the antennae (Erber and Schildberger 1980; Erber et al. 1993; Erber and Kloppenburg 1995; Okada and Toh 2004). More recently, advances in video analysis have yielded methods for automatically tracking the movement of honey bee body parts, such as tracking colored marks at the tips of the antennae or separating the outline of the antennae from the background by image processing (Cholé et al. 2015, 2022; Khurana and Sane 2016). In addition, DeepLabCut (Mathis et al. 2018; Nath et al. 2019), a markerless posture-tracking tool based on deep learning, has been widely used in research fields such as ecology and neuroscience (Mathis and Mathis 2020) and is becoming recognized as a powerful tool: it requires no special devices or expertise, is noninvasive, and is robust when analyzing videos with complex backgrounds. DeepLabCut has also recently been applied to honey bees and to bumble bees, close relatives of honey bees, to analyze the AR to odors with positive or negative valence (Gascue et al. 2022) and 3D antennal movements in response to odor stimuli (Claverie et al. 2023), yielding more accurate behavioral descriptions than traditional PER protocols, which output only a binary response.

This study aimed to enhance the experimental system for analyzing the ARs of honey bees using DeepLabCut. We focused on the AR to motion stimuli reported in previous studies, in which bees tilted their antennae in the direction opposite to upward and downward motion stimuli in the transverse plane (Erber et al. 1993; Erber and Kloppenburg 1995), and confirmed that this AR was successfully detected by tracking antennal movements with DeepLabCut. In addition, we found that honey bees also exhibit ARs in the coronal plane to forward and backward motion stimuli. An investigation of the developmental maturation of honey bee ARs showed that ARs to motion stimuli were not detectable in bees immediately after emergence but matured through post-emergence development in an experience-independent manner. Furthermore, unsupervised clustering analysis of multidimensional data derived from the DeepLabCut tracking data classified antennal movements into different clusters, suggesting the efficacy of data-driven analysis for the behavioral classification of honey bees.

Materials and Methods

Animals

European honey bee (Apis mellifera) colonies were purchased from Kumagaya Beekeeping Company (Saitama, Japan) and Okinawa Kariyushi apiary (Okinawa, Japan) and maintained at the University of Tokyo (Tokyo, Japan). Workers flying outside the hive were collected and used for the experiments examining ARs to motion stimuli and for the ‘flying (F)’ group in the experiment examining the developmental maturation of AR. To test whether ARs are acquired through each individual’s flight experience or through developmental maturation after emergence, we collected dozens of newly emerged workers, identified by their fuzzy appearance (Winston 1987), from the hives and divided them into four groups: a ‘newly emerged (NE)’ group, whose AR was analyzed on the day of collection; a ‘colony-reared (Cr)’ group, which was returned to the colony without any treatment; a ‘colony-reared with one wing removed (CrWR)’ group, which had one wing removed and was returned to the colony; and an ‘incubator-reared (Ir)’ group, which was maintained in an acrylic cage (95 × 55 × 110 mm) with a piece of honeycomb and approximately 30 workers captured from the hive and kept in an incubator at 34 °C with honey and water provided ad libitum. Bees in the different groups were marked on the thorax with different colors using paint markers (POSCA, Japan). After rearing in the hive or incubator for ten days, bees in the Cr, CrWR, and Ir groups were collected from the hive or acrylic cage and used in the experiment.

Recording Antennal Movement During Presenting Motion Stimuli to Bees

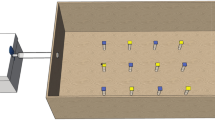

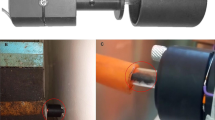

The collected workers were anesthetized on ice and fixed in a P-1000 pipette tip with masking tape. After recovering from anesthesia, bees were fed 4 µL of 30% (w/v) sucrose solution. Black and white vertical stripes 6 mm wide were displayed on two LED monitors (BenQ, GW2283; 60 Hz refresh rate) placed facing each other at a 30° angle, and motion stimuli were presented to a bee positioned between the monitors by moving these stripes at 24 mm s−1 using PowerPoint animation (Fig. 1). Bees were positioned vertically when vertical motion stimuli were presented and horizontally when horizontal motion stimuli were presented. After familiarization for at least 3 min, the two vertical (or the two horizontal) motion stimuli were each presented twice in alternation, with an interstimulus interval of approximately 10 s (Fig. 1a). The heads of the bees were recorded at 30 fps (frames per second) with a resolution of 1024 × 768 pixels by a Raspberry Pi camera module (Kumantech) set above the bees during the experiment. To capture the entire antenna, the camera was tilted at 20° when the bees were set horizontally (Fig. 1d).
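As a quick check on the stimulus and sampling parameters, the arithmetic can be sketched in Python. The sketch assumes that "6 mm wide" refers to each individual black or white stripe, so that one black-plus-white spatial period is 12 mm; the text does not state this explicitly, nor does it give the bee-to-monitor distance that would be needed to convert these values into angular velocity. The 10 s stimulus duration and the 25 bees per experiment are taken from the figure captions; the variable and function names are hypothetical.

```python
# Back-of-envelope numbers for the motion stimulus and video sampling.
# Assumption: each black and each white stripe is 6 mm wide, so one
# spatial period (black + white) is 12 mm.

STRIPE_WIDTH_MM = 6.0   # width of one stripe (from the Methods)
SPEED_MM_PER_S = 24.0   # stripe speed (from the Methods)
MONITOR_HZ = 60         # monitor refresh rate (from the Methods)
CAMERA_FPS = 30         # camera frame rate (from the Methods)

spatial_period_mm = 2 * STRIPE_WIDTH_MM                # assumed black + white period
temporal_freq_hz = SPEED_MM_PER_S / spatial_period_mm  # stripe cycles passing a fixed point per second
step_per_refresh_mm = SPEED_MM_PER_S / MONITOR_HZ     # on-screen displacement per monitor refresh


def frames_per_stimulus(n_bees, presentations, seconds, fps=CAMERA_FPS):
    """Number of video frames pooled per stimulus across all bees."""
    return n_bees * presentations * seconds * fps


print(temporal_freq_hz)                  # 2.0
print(step_per_refresh_mm)               # 0.4
print(frames_per_stimulus(25, 2, 10))    # 15000
```

The last value reproduces the "15,000 frames per stimulus" stated in the Fig. 2 and Fig. 3 captions (25 bees × 2 presentations × 10 s × 30 fps).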

Overview of the experimental setup for the presentation of motion stimuli to a fixed bee. a Time course of the experiments. Blue boxes indicate the periods during the presentation of upward (U1 and U2) or backward (B1 and B2) motion stimuli, and magenta boxes indicate the periods during the presentation of downward (D1 and D2) or forward (F1 and F2) motion stimuli. b Photograph of the experimental setup. c Schematic diagram of the experimental setup viewed from the top, showing the positions of the monitors and the bee. d Schematic diagrams of the side view of the experimental setup in the experiments presenting vertical (left) or horizontal (right) motion stimuli

Automatic Tracking of Antennal Movement Using DeepLabCut

Antennal movements were tracked using the DeepLabCut software (DeepLabCut GUI v2.2.2) (Mathis et al. 2018; Nath et al. 2019). For each of the vertical and horizontal motion presentation experiments, a training dataset was created from 200 frames extracted from videos of 10 individuals (20 frames per individual). The following eight points were manually labeled on each extracted frame: the base, middle (the joint between the scape and flagellum), and tip of each antenna; the central ocellus; and the middle of the ventral clypeus (vertical motion presentation experiment) or the middle of the posterior part of the head (horizontal motion presentation experiment). We trained the networks on these data with default parameter settings (ResNet50, 500,000 iterations) and evaluated the trained networks by checking the labeled frames. Frames with low-likelihood labels were extracted for additional training, and after the mislabeled points were manually corrected, the networks were retrained with the same settings as in the first training. The resulting networks were used to track antennal movements in each video.

Data Analysis

Data analyses were performed using R version 4.1.0. R package ‘dlcpr’ was used to load the tracking data for each stimulus for each individual into tidy data. Tracked points with a low likelihood (< 0.90) were linearly interpolated from the points in the previous and following frames using the ‘imputeTS’ package. The angle of each antenna was calculated as the angle of the line connecting the base and tip of each antenna from the centerline of the head connecting the central ocellus and the ventral center (for the vertical motion presentation experiment) or the posterior center (for the horizontal motion presentation experiment). For each antenna, the density plot and the arrow of the average angle were drawn using all the frames for each stimulus using the ‘circular’ package. One-way analysis of variance (ANOVA) and post hoc Tukey’s honest significant difference (HSD) tests were performed using the average antennal angle of both antennae of each individual during the presentation of each stimulus. A Kruskal-Wallis test and post-hoc Steel-Dwass test were used to compare the direction-specific antennal response (DAR). The angular range of antennae of each individual was calculated by subtracting the minimum angle from the maximum angle of antennae using the tracking data for the spontaneous antennal movements. The moving distance of spontaneously moving antennae was calculated using the tracking data for the tip of both antennae of each individual. One-way ANOVA and post hoc Tukey’s HSD tests were performed using the angular range and moving distance of each group. Unsupervised clustering was performed using data obtained by tracking the full-length video of each individual in the vertical motion presentation experiment. 
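The two per-frame steps described above, low-likelihood interpolation and the antennal angle, can be illustrated with a minimal Python sketch. It is not the authors' R code: `numpy.interp` stands in for the 'imputeTS' interpolation, and the function names, the (n_frames, 2) array layout, and the direction chosen for the head centerline are assumptions. The angle is taken as the unsigned angle between the base-to-tip line and the centerline, which keeps values in 0 to 180°.

```python
import numpy as np

LIKELIHOOD_CUTOFF = 0.90  # threshold stated in the Methods


def interpolate_low_likelihood(coord, likelihood, cutoff=LIKELIHOOD_CUTOFF):
    """Linearly interpolate one coordinate series (x or y of one body part)
    over frames whose DeepLabCut likelihood is below `cutoff`.
    Frames before the first or after the last reliable frame are held at the
    nearest reliable value (numpy.interp's edge behavior)."""
    coord = np.asarray(coord, dtype=float)
    reliable = np.asarray(likelihood, dtype=float) >= cutoff
    if not reliable.any():
        raise ValueError("no frame passes the likelihood cutoff")
    frames = np.arange(len(coord))
    return np.interp(frames, frames[reliable], coord[reliable])


def antenna_angle_deg(base, tip, head_from, head_to):
    """Unsigned angle in degrees (0-180) between the antenna line base->tip
    and the head centerline head_from->head_to, computed per frame.
    Every argument is an (n_frames, 2) array of x, y coordinates."""
    antenna = np.asarray(tip, float) - np.asarray(base, float)
    midline = np.asarray(head_to, float) - np.asarray(head_from, float)
    cross = antenna[:, 0] * midline[:, 1] - antenna[:, 1] * midline[:, 0]
    dot = (antenna * midline).sum(axis=1)
    return np.degrees(np.arctan2(np.abs(cross), dot))


# Hypothetical example: frame 1 of x is unreliable and gets interpolated.
x = interpolate_low_likelihood([0.0, 99.0, 2.0], [0.99, 0.30, 0.95])
print(x)  # [0. 1. 2.]
```

Whether the centerline should point from the central ocellus toward the ventral (or posterior) center or the reverse only changes every angle to 180° minus its value; the text does not specify the convention, so `head_from` and `head_to` are left to the caller.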
To standardize each frame, all tracking points were translated such that the dorsal center was at the origin and rotated such that the ventral center overlapped with the y-axis, followed by scaling to make the distance from the dorsal center to the ventral center constant. For every 10 frames (0.33 s), the maximum, minimum, mean, and standard deviation of the x- and y-coordinates were calculated for eight points: the base, middle, and tip of each antenna and the centroid of these three points for each antenna. The resulting data points, with a total of 64 dimensions, were used to determine the appropriate number of clusters by calculating the gap statistic (Tibshirani et al. 2001) and were clustered into eight clusters using Hartigan–Wong’s k-means algorithm. Clustering results were visualized using a uniform manifold approximation and projection (UMAP). The corresponding stimulus (spontaneous, downward, or upward) in each frame was manually annotated.
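The frame standardization and windowed feature extraction can be sketched as follows. Several details are assumptions not stated in the text: the ventral center is rotated onto the positive y-axis, the dorsal-to-ventral distance is scaled to 1, incomplete trailing windows are dropped, the six antennal points arrive in a fixed order, and the standard deviation uses the n - 1 denominator of R's `sd()`. With 4 statistics × 8 points × 2 coordinates, each window yields the 64-dimensional vector described above.

```python
import numpy as np


def standardize(points, dorsal, ventral):
    """Translate, rotate, and scale each frame so that the dorsal center is at
    the origin, the ventral center lies on the positive y-axis, and the
    dorsal-ventral distance is 1.
    points: (n_frames, n_points, 2); dorsal, ventral: (n_frames, 2)."""
    points, dorsal, ventral = (np.asarray(a, dtype=float) for a in (points, dorsal, ventral))
    p = points - dorsal[:, None, :]
    v = ventral - dorsal
    theta = np.pi / 2 - np.arctan2(v[:, 1], v[:, 0])  # rotation that brings v onto +y
    cos, sin = np.cos(theta)[:, None], np.sin(theta)[:, None]
    x = p[..., 0] * cos - p[..., 1] * sin
    y = p[..., 0] * sin + p[..., 1] * cos
    scale = np.linalg.norm(v, axis=1)[:, None]
    return np.stack([x / scale, y / scale], axis=-1)


def window_features(std_pts, window=10):
    """One 64-D feature vector per non-overlapping window of `window` frames.
    std_pts: (n_frames, 6, 2), ordered left base, middle, tip, then right
    base, middle, tip (this ordering is an assumption)."""
    left_c = std_pts[:, 0:3].mean(axis=1, keepdims=True)      # left-antenna centroid
    right_c = std_pts[:, 3:6].mean(axis=1, keepdims=True)     # right-antenna centroid
    pts = np.concatenate([std_pts, left_c, right_c], axis=1)  # (n_frames, 8, 2)
    n_win = pts.shape[0] // window                            # trailing partial window dropped
    w = pts[: n_win * window].reshape(n_win, window, 8, 2)
    stats = [w.max(axis=1), w.min(axis=1), w.mean(axis=1), w.std(axis=1, ddof=1)]
    return np.stack(stats, axis=1).reshape(n_win, -1)         # 4 stats x 8 points x 2 coords = 64
```

In use, the six antennal points of every frame would first go through `standardize` (with the central ocellus as `dorsal` and the ventral center as `ventral`), and the result would be passed to `window_features`.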

Results

Antennal Response to Motion Stimuli

First, we examined whether the ARs to upward and downward motion stimuli in the transverse plane reported in previous studies (Erber et al. 1993; Erber and Kloppenburg 1995) could be detected in our experimental system by automated tracking using DeepLabCut. Vertical motion stimuli were presented to a fixed worker between the monitors (Fig. 1c, d, and 2a). The movements of the left and right antennae were tracked using DeepLabCut during the presentation of each stimulus, and the angles of the antennae from the centerline of the head in the transverse plane were calculated (Fig. 2b, Movie 1). The angular distribution of all frames during the presentation of upward or downward motion stimuli showed a tendency to move the antennae in the direction opposite to the motion stimuli (Fig. 2c). The angular distribution of spontaneous antennal movement was rather similar to that during the downward motion stimulus; however, the average angle of all frames of spontaneous movement lay between the average angles during upward and downward motion presentation (Fig. 2c). Furthermore, we calculated the average angle of both antennae of each bee for each stimulus and found significant differences between stimuli: approximately 90°, 60°, and 100° for spontaneous movement and for responses to upward and downward motion stimuli, respectively (Fig. 2d). These results are consistent with those of previous studies (Erber et al. 1993; Erber and Kloppenburg 1995), confirming the applicability of our automated tracking system.

ARs in the transverse plane to vertical motion stimuli. a Schematic diagram of the experimental setup to present the vertical motion stimuli displayed on the monitors to a fixed honey bee. A bee was fixed in a plastic tip (light blue) with masking tape (yellow). Magenta and blue arrows indicate downward and upward motions, respectively. b One frame of the video after processing using DeepLabCut (left) and the schematic diagram of angles of the left (θL) and right (θR) antennae (right) in the transverse plane. c Density plots of θL and θR for all frames (15,000 frames per stimulus; 25 individuals, 10 s × 2 for each stimulus, recording at 30 fps) of spontaneous movements or during the presentation of the upward or downward motion stimulus. The angles of arrows in the circles indicate the average antennal angles of all frames for each stimulus, and their lengths indicate the lengths of the summed unit vectors of the antennal angles for all frames divided by the number of frames. d Comparison of ARs among stimuli. Each dot represents the average angle of both antennae for each individual while each motion stimulus was presented. n = 25 for each stimulus. *** : p < 0.001, ** : p < 0.01 by Tukey’s HSD test
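The arrows in the density plots summarize each angular distribution by its circular mean direction and by the length of the average unit vector (the mean resultant length). A small Python sketch of that computation follows; the function name is hypothetical, and we assume, without having checked the authors' code, that it matches what the 'circular' package reports for these summaries.

```python
import numpy as np


def circular_summary(angles_deg):
    """Circular mean direction (degrees, 0-360) and mean resultant length
    (0-1) of a set of angles: the direction and length of the average of
    the unit vectors pointing at each angle."""
    rad = np.radians(np.asarray(angles_deg, dtype=float))
    c, s = np.cos(rad).mean(), np.sin(rad).mean()
    mean_dir = float(np.degrees(np.arctan2(s, c)) % 360)
    resultant = float(np.hypot(c, s))
    return mean_dir, resultant


# Two frames at 80 and 100 degrees: mean direction about 90,
# resultant length cos(10 deg), about 0.985
print(circular_summary([80, 100]))
```

A length close to 1 indicates that the antennal angles cluster tightly around the mean, whereas a shorter arrow indicates a broader distribution.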

A previous study also examined the ARs to horizontal motion stimuli by measuring the antennal angles in the transverse plane and reported that the antennal angles in response to horizontal motion stimuli were smaller than those during spontaneous movements (Erber et al. 1993). Although the antennal angles in the transverse plane did not differ between forward and backward motion stimuli in that study, we hypothesized that bees would exhibit direction-specific ARs to horizontal motion stimuli if the antennal angles were measured in the coronal plane, which is parallel to the direction of horizontal motion. To test this possibility, we next analyzed the AR to backward and forward motion stimuli by measuring the antennal angles in the coronal plane. A worker fixed in a plastic tip was placed horizontally between the monitors to present horizontal motion stimuli (Fig. 1c, d, and 3a). Antennal movements were tracked as in the experiment on AR to vertical motion stimuli, and the angles of the antennae from the centerline in the coronal plane were calculated (Fig. 3b, Movie 2). As with vertical motion stimuli, the angular distribution of all video frames during the presentation of each stimulus showed a tendency to move the antennae in the direction opposite to the motion stimuli, and the average angles of spontaneously moving antennae lay between those during the presentation of each stimulus (Fig. 3c). The average angles of both antennae of each bee also differed significantly between stimuli: approximately 65°, 45°, and 70° for spontaneous movement and for responses to backward and forward motion stimuli, respectively (Fig. 3d). The difference in average angles between stimuli was smaller in the coronal plane than in the transverse plane because of the narrower range of antennal movement in the coronal plane.
These results clearly indicated that workers respond to external motion stimuli by moving their antennae in the direction opposite to the motion in at least four directions: upward, downward, backward, and forward.

ARs in the coronal plane to horizontal motion stimuli. a Schematic diagram of the experimental setup to present the horizontal motion stimuli displayed on the monitors to a fixed honey bee. A bee was fixed in a plastic tip (light blue) with masking tape (yellow). Magenta and blue arrows indicate forward and backward motions, respectively. b One frame of the video after processing using DeepLabCut (left) and the schematic diagram of angles of the left (θL) and right (θR) antennae (right) in the coronal plane. c Density plots of θL and θR for all frames (15,000 frames per stimulus; 25 individuals, 10 s × 2 for each stimulus, recording at 30 fps) of spontaneous movements or during the presentation of the backward or forward motion stimulus. The angles of arrows in the circles indicate the average antennal angles of all frames for each stimulus, and their lengths indicate the lengths of the summed unit vectors of the antennal angles for all frames divided by the number of frames. d Comparison of antennal responses among stimuli. Each dot represents the average angle of both antennae for each individual while each motion stimulus was presented. n = 25 for each stimulus. *** : p < 0.001, * : p < 0.05 by Tukey’s HSD test

Developmental Maturation of AR After Emergence

A previous study reported that newly emerged workers exhibit limited ARs to some odorants and pheromones compared with older workers, suggesting that these ARs are acquired during adult life (Cholé et al. 2022). We therefore compared the AR to vertical motion stimuli between newly emerged (NE) and flying (F) workers to investigate whether ARs to visual stimuli also mature after emergence. We calculated the direction-specific antennal response (DAR), defined as the difference between the average antennal angles of each bee during the upward and downward motion stimuli (Fig. 4a) (Erber et al. 1993; Erber and Kloppenburg 1995), and compared it between groups. F had a significantly larger DAR than NE, whose DAR was almost zero (Fig. 4b). In NE, the angular distributions of all frames and the average angles were similar across stimuli (Fig. S1a, b), in contrast to F (Fig. S1c, d).
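The DAR definition reduces to a difference of two means per bee. In this sketch, the sign convention (downward minus upward) is an assumption, chosen so that the approximate group means in Fig. 2d (about 100° and 60°) give a positive DAR; plain arithmetic means are adequate here only because the antennal angles stay far from the 0°/360° wrap-around. The function name and example values are hypothetical.

```python
import numpy as np


def dar(angles_up, angles_down):
    """Direction-specific antennal response of one bee, in degrees:
    mean antennal angle during downward motion minus mean angle during
    upward motion. Each argument pools per-frame angles of both antennae
    over both presentations of that stimulus."""
    return float(np.mean(angles_down) - np.mean(angles_up))


# Hypothetical bee whose averages match the approximate group means in Fig. 2d
print(dar(angles_up=[58.0, 62.0], angles_down=[98.0, 102.0]))  # 40.0
```

A bee that holds its antennae at the same angle regardless of stimulus direction, as described for the NE group, would give a DAR near zero.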

Development of AR to vertical motion stimuli. a Schematic diagram of DAR. The difference between the mean angles of antennae during the presentation of upward and downward motions is defined as DAR. b Comparison of DARs of bees in the groups analyzed at different developmental stages (NE, F) or with different treatments (Cr, CrWR, Ir). * : p < 0.05 by Steel-Dwass test. c, d Comparison of the angular ranges (c) and the moving distances (d) of antennae of bees in different groups. ** : p < 0.01, * : p < 0.05 by Tukey’s HSD test. n = 6, 6, 6, 6, 7 for NE, F, Cr, CrWR, Ir, respectively. NE: newly emerged, F: flying, Cr: colony-reared, CrWR: colony-reared with one wing removed, Ir: incubator-reared

Because the visual motion presented to the compound eye is opposite to the direction in which a bee moves under natural conditions, moving the antennae in the direction opposite to the motion stimuli displayed on the monitors in this experimental system suggests that bees orient their antennae to perceive sensory information from the direction in which they are moving, for example, to recognize objects around the landing site (Evangelista et al. 2010). Therefore, the difference in DAR between the NE and F groups suggests that AR to motion stimuli is acquired either through each individual’s flight experience or through developmental maturation after emergence. To distinguish between these possibilities, we divided newly emerged workers collected from a hive into three groups and reared them under different conditions: (1) bees returned to the hive from which they were collected without treatment (colony-reared [Cr]), (2) bees returned to the same hive with two wings on one side removed (colony-reared with wings removed [CrWR]), and (3) bees reared in a small acrylic cage containing approximately 30 adult workers in an incubator (incubator-reared [Ir]). Bees in the CrWR and Ir groups could not fly and therefore could not acquire AR through flight experience. The ARs to motion stimuli of bees in the Cr, CrWR, and Ir groups were analyzed 10 days after the treatments; because workers have been reported to make their first orientation flights as early as 4 days of age (Winston 1987), bees in the Cr group were considered to have flight experience. The DARs of bees in the Cr, CrWR, and Ir groups were significantly different from that of the NE group, whereas they were comparable to that of the F group and did not differ significantly from each other (Fig. 4b). In addition, in the Cr, CrWR, and Ir groups, the average antennal angle differed depending on the stimulus presented (Fig. S1e-j).

To examine whether the smaller DAR in the NE group could be explained simply by lower antennal mobility, for example due to underdeveloped antennal muscles, we compared the angular ranges and moving distances of antennae among groups during spontaneous antennal movements (10 s × 2). Both the angular range and the moving distance of antennae in the NE group tended to be smaller than those in the other groups (Fig. 4c, d). In particular, the angular ranges in the Cr and Ir groups and the moving distances in the F, Cr, and CrWR groups were significantly larger than those in the NE group. However, the angular range of antennae in the NE group was approximately 130° (Fig. 4c), larger than the DAR of any group (all less than 80°) (Fig. 4b). Therefore, the smaller DAR in the NE group cannot be explained solely by differences in antennal mobility. These results indicate that AR to motion develops with age in a flight experience-independent manner, suggesting that this response is an innate honey bee behavior.
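The two mobility measures can be sketched as below. The angular range follows the Methods definition (maximum minus minimum angle), which is in degrees and can therefore be compared directly with DAR as in the argument above. For the moving distance the text does not give a formula, so summing the Euclidean step lengths of the antennal tip between consecutive frames (in pixels) is an assumption, as is adding the two tips' distances to obtain a per-bee value. Function names are hypothetical.

```python
import numpy as np


def angular_range(angles_deg):
    """Maximum minus minimum antennal angle (degrees) over a recording."""
    a = np.asarray(angles_deg, dtype=float)
    return float(a.max() - a.min())


def moving_distance(tip_xy):
    """Path length of one antennal tip: the sum of Euclidean distances between
    consecutive frames. tip_xy is an (n_frames, 2) array, in pixels unless
    the coordinates were rescaled beforehand."""
    steps = np.diff(np.asarray(tip_xy, dtype=float), axis=0)
    return float(np.linalg.norm(steps, axis=1).sum())


# Hypothetical values
print(angular_range([40.0, 170.0, 95.0]))         # 130.0
print(moving_distance([[0, 0], [3, 4], [3, 4]]))  # 5.0
```

Because the moving distance is in image units, comparisons between groups assume a similar camera-to-head distance across recordings.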

Classification of AR by Unsupervised Clustering

Animal tracking data can be used for unsupervised behavioral classification (Fujimori et al. 2020; Huang et al. 2021; Segalin et al. 2021). We therefore examined whether data-driven analysis could classify the tracking data of antennal movements. Antennal movements were tracked using DeepLabCut for all video frames of 25 individuals in the vertical motion presentation experiment. After the tracking points of each frame were standardized (see Methods for details), the maximum, minimum, mean, and standard deviation of the x- and y-coordinates were calculated every 10 frames (0.33 s) for eight points: the base, middle, and tip of each antenna and the centroid of these three points for each antenna. The resulting 64-dimensional data points were divided into eight clusters, the appropriate number of clusters estimated by the gap statistic (Tibshirani et al. 2001), and visualized using uniform manifold approximation and projection (UMAP) (Fig. 5a). While data points for spontaneous movements were distributed almost uniformly on the UMAP, those during the presentation of upward and downward motion stimuli were distributed nonuniformly, with gradients in opposite directions (Fig. 5b). The proportion of data points for spontaneous movements was almost uniform across clusters (approximately 50% in each cluster), whereas the proportions for the downward and upward motion stimuli varied across clusters (Fig. 5c). Because each video contained frames from more than 10 s before the first stimulus presentation to a few seconds after the last stimulus, the number of data points corresponding to spontaneous movements was greater than the combined number of data points during the upward and downward motion stimuli. We also examined how the data points corresponding to spontaneous movements or to the upward and downward motion stimuli were distributed among the eight clusters.
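For readers unfamiliar with the gap statistic, the sketch below compares the log within-cluster sum of squares of the data with its average over uniform reference data drawn in the data's bounding box, and picks the smallest k with Gap(k) >= Gap(k+1) - s(k+1), one of the selection rules of Tibshirani et al. (2001). It swaps in Lloyd's k-means with k-means++ seeding for the Hartigan–Wong algorithm used in the study, and the numbers of restarts and reference sets are arbitrary choices; function names are hypothetical.

```python
import numpy as np


def kmeans(X, k, n_init=5, n_iter=100, rng=None):
    """Lloyd's k-means with k-means++ seeding and several restarts.
    Returns (labels, total within-cluster sum of squares) of the best run.
    Stand-in for R's Hartigan-Wong k-means, which is not reproduced here."""
    rng = np.random.default_rng(rng)
    X = np.asarray(X, dtype=float)
    best_labels, best_wss = None, np.inf
    for _ in range(n_init):
        # k-means++ seeding: each new center is drawn with probability
        # proportional to its squared distance from the nearest chosen center
        centers = [X[rng.integers(len(X))]]
        for _ in range(1, k):
            d2 = ((X[:, None, :] - np.array(centers)[None]) ** 2).sum(-1).min(axis=1)
            p = d2 / d2.sum() if d2.sum() > 0 else None
            centers.append(X[rng.choice(len(X), p=p)])
        centers = np.array(centers)
        for _ in range(n_iter):
            labels = ((X[:, None, :] - centers[None]) ** 2).sum(-1).argmin(axis=1)
            new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                            for j in range(k)])
            if np.allclose(new, centers):
                break
            centers = new
        labels = ((X[:, None, :] - centers[None]) ** 2).sum(-1).argmin(axis=1)
        wss = float(((X - centers[labels]) ** 2).sum())
        if wss < best_wss:
            best_labels, best_wss = labels, wss
    return best_labels, best_wss


def gap_statistic(X, k_max=10, n_ref=10, seed=0):
    """Gap(k) = mean_b log W*_kb - log W_k, with uniform reference data in the
    bounding box of X. Returns (ks, gap, s, k_hat)."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    lo, hi = X.min(axis=0), X.max(axis=0)
    ks = np.arange(1, k_max + 1)
    gap, s = np.empty(k_max), np.empty(k_max)
    for i, k in enumerate(ks):
        log_w = np.log(kmeans(X, k, rng=rng)[1])
        ref = [np.log(kmeans(rng.uniform(lo, hi, size=X.shape), k, rng=rng)[1])
               for _ in range(n_ref)]
        gap[i] = np.mean(ref) - log_w
        s[i] = np.std(ref) * np.sqrt(1 + 1 / n_ref)  # sd_k * sqrt(1 + 1/B)
    # smallest k such that Gap(k) >= Gap(k+1) - s(k+1); fall back to k_max
    k_hat = next((int(k) for i, k in enumerate(ks[:-1]) if gap[i] >= gap[i + 1] - s[i + 1]),
                 int(ks[-1]))
    return ks, gap, s, k_hat
```

On the real data, `X` would be the matrix of 64-dimensional window features, one row per 10-frame window.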
The data points for spontaneous movements were classified fairly uniformly into all clusters, with a slight tendency toward Cluster 3 and away from Cluster 2 (Fig. 5d). The data points during the upward motion stimulus were classified prominently into Clusters 2 and 4 and less frequently into Cluster 3 (Fig. 5e). The data points during the downward motion stimulus were classified more frequently into Cluster 3 and less frequently into Clusters 2 and 4, the opposite tendency to that during the upward motion stimulus (Fig. 5f). All clusters included data points from at least 23 of the 25 individuals, and no cluster contained a high percentage of data points from any particular individual (Fig. S2), suggesting that the biases described above were not due to variation among individuals, such as in the AR itself, the angle at which bees were fixed in the experimental setup, or the distance from the bee’s head to the monitors.
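The two kinds of proportions reported for Fig. 5 differ only in which margin of the cluster-by-stimulus contingency table is normalized: the per-cluster proportions (Fig. 5c) divide by cluster totals, whereas the per-stimulus pie charts (Fig. 5d-f) divide by stimulus totals. A small Python sketch with hypothetical labels:

```python
import numpy as np


def proportion_tables(clusters, stimuli):
    """clusters, stimuli: one label per data point.
    Returns (within_cluster, within_stimulus):
      within_cluster[c][s]  = fraction of cluster c's points recorded under stimulus s
      within_stimulus[s][c] = fraction of stimulus s's points assigned to cluster c"""
    clusters, stimuli = np.asarray(clusters), np.asarray(stimuli)
    cs, ss = np.unique(clusters), np.unique(stimuli)
    # contingency table: rows = clusters, columns = stimuli
    counts = np.array([[np.sum((clusters == c) & (stimuli == s)) for s in ss] for c in cs],
                      dtype=float)
    by_cluster = counts / counts.sum(axis=1, keepdims=True)   # each row sums to 1
    by_stimulus = counts / counts.sum(axis=0, keepdims=True)  # each column sums to 1
    within_cluster = {c: dict(zip(ss.tolist(), row.tolist()))
                      for c, row in zip(cs.tolist(), by_cluster)}
    within_stimulus = {s: dict(zip(cs.tolist(), col.tolist()))
                       for s, col in zip(ss.tolist(), by_stimulus.T)}
    return within_cluster, within_stimulus


# Hypothetical labels for six data points
wc, ws = proportion_tables([1, 1, 2, 2, 2, 3],
                           ["spontaneous", "upward", "spontaneous", "downward", "downward", "upward"])
print(ws["upward"])  # {1: 0.5, 2: 0.0, 3: 0.5}
```

Because spontaneous data points outnumber the stimulus-period points, the per-cluster proportions are dominated by spontaneous movement, which is why the per-stimulus view is the more direct way to see the biases described above.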

Unsupervised clustering of antennal movements. a UMAP visualization of clusters of data points processed from tracking data of all individuals for every 10 frames. b Distribution of data points corresponding to spontaneous movements (gray) or during the presentation of upward motion (blue) and downward motion (magenta) on the UMAP. c The proportion of data points corresponding to each stimulus in each cluster. Gray indicates the proportion of spontaneous movements, whereas blue and magenta indicate those of upward and downward motion stimuli, respectively. d-f Pie charts of the proportions of data points classified into each cluster among all data points corresponding to spontaneous movements (d), upward motion stimulus (e), and downward motion stimulus (f). g, h Boxplots of the left (g) and right (h) antennal angles for each cluster. The antennal angles of each data point were calculated from the averages of the x- and y-coordinates of the tip of each antenna

Since the distribution of data points across clusters and the average antennal angle of each individual varied depending on the presented motion stimulus (Fig. 2c, d, and 5b-f), we measured the antennal angles for each cluster to examine the properties that characterize each cluster. Because inspection of the frames corresponding to each cluster showed that bees often moved their left and right antennae independently, we calculated the angle of each antenna separately. The antennal angles varied among clusters; for example, the angles of both antennae tended to be large in Cluster 3 and small in Clusters 2 and 4, and the difference between the left and right antennal angles in Clusters 1 and 6 was large compared with the other clusters (Fig. 5g, h). As expected from the distribution of data points (Fig. 5c, e, f) and the average angles (Fig. 2d) during the upward and downward motion stimuli, data points in Cluster 3 had large angles for both antennae, whereas those in Clusters 2 and 4 had small angles. These results suggest that data-driven analysis of data points processed from tracking data can classify antennal movements based on their characteristics.

Discussion

We investigated ARs to motion stimuli in honey bees using DeepLabCut, a deep-learning-based video analysis tool that has recently been used to analyze ARs to odor stimuli in honey bees and bumble bees (Gascue et al. 2022; Claverie et al. 2023). Our video analysis successfully detected ARs in honey bees: as reported in previous studies (Erber et al. 1993; Erber and Kloppenburg 1995), bees moved their antennae in the transverse plane in the direction opposite to vertical motion stimuli. In addition, when antennal angles were measured in the coronal plane, honey bees exhibited direction-specific ARs to horizontal motion stimuli, again moving their antennae in the direction opposite to the motion. However, compared with previous studies (Erber et al. 1993; Erber and Kloppenburg 1995), the antennal angles and DAR during the presentation of upward and downward motion stimuli tended to be larger in the present study. This might be due to differences in the experimental setup, such as the width of the stripes used to present motion stimuli, the distance between the monitors and the bees, and the speed of the motion stimuli. It could also reflect differences in the measurement devices: previous studies used only four phototransistors to measure the angle of each antenna and therefore could not resolve angles as finely as motion tracking with DeepLabCut in this study. Nevertheless, the tendency to move the antennae in the direction opposite to the motion stimulus is consistent between the previous and present studies, indicating that AR to motion is a robust behavioral property. Future studies using 3D tracking of antennal movements, which has already been applied to other bee species (Claverie et al. 2023), will provide a more comprehensive understanding of ARs to motion stimuli in the honey bee.

AR to motion has been observed in flying insect species other than the honey bee, such as fruit flies and hawk moths, and is thought to play an important role in sensory perception during flight, in combination with ARs to other sensory information (Mamiya et al. 2011; Krishnan and Sane 2014). Although we examined AR only to motion at a constant speed (a visual stimulus) in the present study, previous studies have examined how insects control their antennal position during exposure to airflow (a physical stimulus) or to airflow and visual stimuli together (Khurana and Sane 2016; Natesan et al. 2019). Considering that a recent study used DeepLabCut to examine ARs to odors with positive or negative valence in honey bees (Gascue et al. 2022), the experimental setup and DeepLabCut-based automated tracking used in this study could readily be extended to examine ARs to multimodal sensory information by simultaneously presenting motion (visual stimulus), airflow (physical stimulus), and odor (olfactory stimulus). Such experiments will deepen our understanding of how insects control their antennal movements to acquire appropriate sensory feedback in nature.

In honey bees, direction-specific AR to motion stimuli has been reported to be affected by the administration of serotonin and octopamine into the optic lobes, the primary visual centers of the insect brain. Serotonin affected AR when injected into the lobula, medulla, or lamina, whereas octopamine did so only when injected into the lobula, suggesting a particularly important role of the lobula in AR to motion stimuli (Erber and Kloppenburg 1995). In Drosophila, some neurons projecting to the lobula plate respond specifically to one of four directions of motion (upward, downward, forward, and backward) (Fischbach and Dittrich 1989). Notably, the motor neurons that control antennal movement in the honey bee extend dendrites into the dorsal lobe of the antennal lobe, where projections from the lobula terminate (Maronde 1991; Kloppenburg 1995). Because this study revealed ARs to each of the four motion directions (upward, downward, forward, and backward), AR may arise from direction-specific neuronal input from the lobula to the motor neurons that regulate antennal movements. This study also revealed that ARs to motion stimuli are not experience-dependent but are acquired through individual development after emergence. Because workers engage in in-hive tasks for a certain period after emergence (Winston 1987; Seeley 1995) and do not need to respond to visual stimuli during that time, the inability of newly emerged workers to respond to motion stimuli is not expected to substantially affect their survival. Since previous immunohistochemical studies have revealed the presence of serotonin, the serotonin receptor 5-HT1A, and octopamine in the honey bee lobula (Schürmann and Klemm 1984; Sinakevitch et al. 2005; Thamm et al. 2010), investigating how their expression patterns change after emergence, in relation to the development of AR, could help elucidate the molecular and neural mechanisms of AR to motion stimuli.

Finally, data-driven analysis classified the data points processed from the DeepLabCut tracking data into clusters with different antennal movement characteristics. In contrast to the frame-by-frame analysis of antennal angle, in which the responses of the left and right antennae were averaged so that differences between them could not be detected (Fig. 2), data-driven analysis successfully classified data points with different left and right antennal movements into separate clusters, indicating the efficacy of data-driven analysis that does not rely on a priori hypotheses. Honey bees use their antennae not only to perceive sensory cues from the environment but also to interact with nestmates (Winston 1987). Therefore, tracking antennal movements during inter-individual interactions, followed by data-driven clustering, may help reveal how nestmate recognition and interactions between individuals engaged in different tasks are reflected in antennal movements. Recently, data-driven behavioral classification has been used to explore behavior-related brain regions in combination with neural activity mapping, or to analyze the phenotypes of individuals whose gene functions or neuronal activities are manipulated (Huang et al. 2021; Markowitz et al. 2023). Since genome editing and transgenesis have recently been applied in the honey bee (Kohno and Kubo 2018; Roth et al. 2019; Değirmenci et al. 2020; Chen et al. 2021; Nie et al. 2021; Wang et al. 2021; Carcaud et al. 2023; Cheng et al. 2023), data-driven behavioral analysis is expected to contribute in the near future to elucidating the molecular and neural bases of honey bee social behaviors, which remain largely unknown.
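Why averaging the two antennae can hide asymmetric movements, while clustering on separate left and right features does not, can be illustrated with a toy example. The sketch below does not reproduce the authors' pipeline (UMAP embedding and clustering, described in the Methods). It swaps in plain k-means (Lloyd's algorithm) with an assumed, fixed k = 4 and deterministic farthest-first seeding, applied to hypothetical (left angle, right angle) pairs. The two asymmetric groups, one antenna raised and the other lowered, have identical mean angles, yet they land in different clusters.

```python
from statistics import mean

Vec = list[float]  # Python 3.9+


def sq_dist(a: Vec, b: Vec) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_first_init(points: list[Vec], k: int) -> list[Vec]:
    """Deterministic seeding: start at the first point, then repeatedly add
    the point farthest from every centre chosen so far."""
    centres = [list(points[0])]
    while len(centres) < k:
        far = max(points, key=lambda p: min(sq_dist(p, c) for c in centres))
        centres.append(list(far))
    return centres


def kmeans(points: list[Vec], k: int, max_iter: int = 100) -> list[int]:
    """Lloyd's algorithm; returns one cluster label per point."""
    centres = farthest_first_init(points, k)
    labels: list[int] = []
    for _ in range(max_iter):
        # Assign each point to its nearest centre.
        new_labels = [min(range(k), key=lambda j: sq_dist(p, centres[j])) for p in points]
        if new_labels == labels:  # assignments stable: converged
            break
        labels = new_labels
        # Move each centre to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centres[j] = [mean(col) for col in zip(*members)]
    return labels


# Hypothetical (left_angle, right_angle) data points in degrees.
both_up = [[60, 60], [62, 58], [58, 61]]
both_down = [[10, 10], [12, 9], [9, 11]]
left_up = [[60, 10], [58, 12], [61, 9]]
right_up = [[10, 60], [12, 58], [9, 61]]
points = both_up + both_down + left_up + right_up

labels = kmeans(points, k=4)
# Averaging the two antennae makes both asymmetric groups look identical (35 degrees).
averaged = [mean(p) for p in points]
```

Here k is fixed by assumption. A real analysis would still need a principled way to choose the number of clusters, for example the gap statistic of Tibshirani et al. (2001), which appears in the reference list.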

Data Availability

All relevant data are within the paper and its Supplementary files.

References

Adli M (2018) The CRISPR tool kit for genome editing and beyond. Nat Commun. https://doi.org/10.1038/s41467-018-04252-2

Ai H, Kobayashi Y, Matake T et al (2017) Development of honeybee waggle dance and its differences between recruits and scouts. bioRxiv. https://doi.org/10.1101/179408

Carcaud JI, Otte M, Grünewald B et al (2023) Multisite imaging of neural activity using a genetically encoded calcium sensor in the honey bee. PLoS Biol 21:e3001984. https://doi.org/10.1371/JOURNAL.PBIO.3001984

Chen Z, Traniello IM, Rana S et al (2021) Neurodevelopmental and transcriptomic effects of CRISPR/Cas9-induced somatic orco mutation in honey bees. J Neurogenet. https://doi.org/10.1080/01677063.2021.1887173

Cheng FP, Hu XF, Pan LX et al (2023) Transcriptome changes of Apis mellifera female embryos with fem gene knockout by CRISPR/Cas9. Int J Biol Macromol 229:260–267. https://doi.org/10.1016/J.IJBIOMAC.2022.12.229

Cholé H, Junca P, Sandoz JC (2015) Appetitive but not aversive olfactory conditioning modifies antennal movements in honeybees. Learn Mem 22:604–616. https://doi.org/10.1101/LM.038448.115

Cholé H, Merlin A, Henderson N et al (2022) Antenna movements as a function of odorants’ biological value in honeybees (Apis mellifera L). Sci Rep 12:11674. https://doi.org/10.1038/s41598-022-14354-z

Claverie N, Buvat P, Casas J (2023) Active sensing in bees through antennal movements is independent of odor molecule. Integr Comp Biol 63:315–331. https://doi.org/10.1093/ICB/ICAD010

Crall JD, Gravish N, Mountcastle AM et al (2018) Spatial fidelity of workers predicts collective response to disturbance in a social insect. Nat Commun 9:1201. https://doi.org/10.1038/s41467-018-03561-w

Crall JD, Gravish N, Mountcastle AM, Combes SA (2015) BEEtag: a low-cost, image-based tracking system for the study of animal behavior and locomotion. PLoS ONE 10:e0136487. https://doi.org/10.1371/journal.pone.0136487

Değirmenci L, Geiger D, Rogé Ferreira FL et al (2020) CRISPR/Cas 9-mediated mutations as a new tool for studying taste in honeybees. Chem Senses 45:655–666. https://doi.org/10.1093/chemse/bjaa063

Eisenhardt D (2014) Molecular mechanisms underlying formation of long-term reward memories and extinction memories in the honeybee (Apis mellifera). Learn Mem 21:534–542. https://doi.org/10.1101/LM.033118.113

Ellegren H (2014) Genome sequencing and population genomics in non-model organisms. Trends Ecol Evol 29:51–63. https://doi.org/10.1016/J.TREE.2013.09.008

Erber J, Kloppenburg P (1995) The modulatory effects of serotonin and octopamine in the visual system of the honey bee (Apis mellifera L.) - I. behavioral analysis of the motion-sensitive antennal reflex. J Comp Physiol A 176:111–118. https://doi.org/10.1007/BF00197757

Erber J, Pribbenow B, Bauer A, Kloppenburg P (1993) Antennal reflexes in the honeybee: tools for studying the nervous system. Apidologie 24:283–296. https://doi.org/10.1051/APIDO:19930308

Erber J, Schildberger K (1980) Conditioning of an antennal reflex to visual stimuli in bees (Apis mellifera L). J Comp Physiol A 135:217–225. https://doi.org/10.1007/BF00657249

Evangelista C, Kraft R, Dacke M et al (2010) The moment before touchdown: landing manoeuvres of the honeybee Apis mellifera. J Exp Biol 213:262–270. https://doi.org/10.1242/JEB.037465

Fischbach KF, Dittrich APM (1989) The optic lobe of Drosophila melanogaster. I. A Golgi analysis of wild-type structure. Cell Tissue Res 258:441–475. https://doi.org/10.1007/BF00218858

Frisch KV, Wenner AM, Johnson DL (1967) Honeybees: do they use direction and distance information provided by their dancers? Science 158:1072–1077. https://doi.org/10.1126/science.158.3804.1072

Fujimori S, Ishikawa T, Watanabe H (2020) Animal behavior classification using DeepLabCut. In: 2020 IEEE 9th Glob Conf Consum Electron (GCCE), pp 254–257. https://doi.org/10.1109/GCCE50665.2020.9291715

Gascue F, Marachlian E, Azcueta M et al (2022) Antennal movements can be used as behavioral readout of odor valence in honey bees. IBRO Neurosci Reports 12:323–332. https://doi.org/10.1016/J.IBNEUR.2022.04.005

Geng H, Lafon G, Avarguès-Weber A et al (2022) Visual learning in a virtual reality environment upregulates immediate early gene expression in the mushroom bodies of honey bees. Commun Biol 5:130. https://doi.org/10.1038/s42003-022-03075-8

Giurfa M, Sandoz J-C (2012) Invertebrate learning and memory: fifty years of olfactory conditioning of the proboscis extension response in honeybees. Learn Mem 19:54–66. https://doi.org/10.1101/LM.024711.111

Giurfa M, Zhang S, Jenett A et al (2001) The concepts of ‘sameness’ and ‘difference’ in an insect. Nature 410:930–933. https://doi.org/10.1038/35073582

Gomez Ramirez WC, Thomas NKT, Muktar IJ, Riabinina O (2023) The neuroecology of olfaction in bees. Curr Opin Insect Sci 101018. https://doi.org/10.1016/J.COIS.2023.101018

Hallem EA, Dahanukar A, Carlson JR (2006) Insect odor and taste receptors. Annu Rev Entomol 51:113–135. https://doi.org/10.1146/ANNUREV.ENTO.51.051705.113646

Honegger HW (1981) A preliminary note on a new optomotor response in crickets: antennal tracking of moving targets. J Comp Physiol A 142:419–421. https://doi.org/10.1007/BF00605454

Howard SR, Avarguès-Weber A, Garcia JE et al (2018) Numerical ordering of zero in honey bees. Science 360:1124–1126. https://doi.org/10.1126/science.aar4975

Howard SR, Avarguès-Weber A, Garcia JE et al (2019) Numerical cognition in honeybees enables addition and subtraction. Sci Adv 5:eaav0961. https://doi.org/10.1126/sciadv.aav0961

Huang K, Han Y, Chen K et al (2021) A hierarchical 3D-motion learning framework for animal spontaneous behavior mapping. Nat Commun 12:2784. https://doi.org/10.1038/s41467-021-22970-y

Khurana TR, Sane SP (2016) Airflow and optic flow mediate antennal positioning in flying honeybees. Elife 5:e14449. https://doi.org/10.7554/eLife.14449

Kirkerud NH, Schlegel U, Galizia CG (2017) Aversive learning of colored lights in walking honeybees. Front Behav Neurosci 11:94. https://doi.org/10.3389/fnbeh.2017.00094

Kirkerud NH, Wehmann H-N, Galizia CG, Gustav D (2013) APIS—a novel approach for conditioning honey bees. Front Behav Neurosci 7:29. https://doi.org/10.3389/fnbeh.2013.00029

Kloppenburg P (1995) Anatomy of the antennal motoneurons in the brain of the honeybee (Apis mellifera). J Comp Neurol 363:333–343. https://doi.org/10.1002/CNE.903630213

Kohno H, Kubo T (2018) mKast is dispensable for normal development and sexual maturation of the male European honeybee. Sci Rep 8:11877. https://doi.org/10.1038/s41598-018-30380-2

Kohno H, Kubo T (2019) Genetics in the honey bee: achievements and prospects toward the functional analysis of molecular and neural mechanisms underlying social behaviors. Insects 10:348. https://doi.org/10.3390/insects10100348

Kohno H, Suenami S, Takeuchi H et al (2016) Production of knockout mutants by CRISPR/Cas9 in the European honeybee, Apis mellifera L. Zoolog Sci 33:505–512. https://doi.org/10.2108/zs160043

Krishnan A, Sane SP (2014) Visual feedback influences antennal positioning in flying hawk moths. J Exp Biol 217:908–917. https://doi.org/10.1242/jeb.094276

Kuwabara M (1957) Bildung des bedingten Reflexes von Pavlovs Typus bei der Honigbiene, Apis mellifica. J Fac Sci Hokkaido Univ Ser VI Zool 13:458–464

Liberti J, Kay T, Quinn A et al (2022) The gut microbiota affects the social network of honeybees. Nat Ecol Evol 6:1471–1479. https://doi.org/10.1038/s41559-022-01840-w

Mamiya A, Straw AD, Tómasson E, Dickinson MH (2011) Active and passive antennal movements during visually guided steering in flying Drosophila. J Neurosci 31:6900–6914. https://doi.org/10.1523/JNEUROSCI.0498-11.2011

Mansourian S, Fandino RA, Riabinina O (2019) Progress in the use of genetic methods to study insect behavior outside Drosophila. Curr Opin Insect Sci 36:45–56. https://doi.org/10.1016/J.COIS.2019.08.001

Marchal P, Villar ME, Geng H et al (2019) Inhibitory learning of phototaxis by honeybees in a passive-avoidance task. Learn Mem 26:412–423. https://doi.org/10.1101/lm.050120.119

Markowitz JE, Gillis WF, Jay M et al (2023) Spontaneous behaviour is structured by reinforcement without explicit reward. Nature 614:108–117. https://doi.org/10.1038/s41586-022-05611-2

Maronde U (1991) Common projection areas of antennal and visual pathways in the honeybee brain, Apis mellifera. J Comp Neurol 309:328–340. https://doi.org/10.1002/CNE.903090304

Mathis A, Mamidanna P, Cury KM et al (2018) DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat Neurosci 21:1281–1289. https://doi.org/10.1038/s41593-018-0209-y

Mathis MW, Mathis A (2020) Deep learning tools for the measurement of animal behavior in neuroscience. Curr Opin Neurobiol 60:1–11. https://doi.org/10.1016/J.CONB.2019.10.008

Mello CC, Conte D (2004) Revealing the world of RNA interference. Nature 431:338–342. https://doi.org/10.1038/nature02872

Mersch DP, Crespi A, Keller L (2013) Tracking individuals shows spatial fidelity is a key regulator of ant social organization. Science 340:1090–1093. https://doi.org/10.1126/science.1234316

Natesan D, Saxena N, Ekeberg Ö, Sane SP (2019) Tuneable reflexes control antennal positioning in flying hawkmoths. Nat Commun 10:5593. https://doi.org/10.1038/s41467-019-13595-3

Nath T, Mathis A, Chen AC et al (2019) Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nat Protoc 14:2152–2176. https://doi.org/10.1038/s41596-019-0176-0

Nie H-Y, Liang L-Q, Li Q-F et al (2021) CRISPR/Cas9 mediated knockout of Amyellow-y gene results in melanization defect of the cuticle in adult Apis mellifera. J Insect Physiol 132:104264. https://doi.org/10.1016/j.jinsphys.2021.104264

Nouvian M, Galizia CG (2019) Aversive training of honey bees in an automated Y-maze. Front Physiol 10:678. https://doi.org/10.3389/fphys.2019.00678

Okada J, Toh Y (2004) Spatio-temporal patterns of antennal movements in the searching cockroach. J Exp Biol 207:3693–3706. https://doi.org/10.1242/JEB.01201

Oppenheim SJ, Baker RH, Simon S, Desalle R (2015) We can’t all be supermodels: the value of comparative transcriptomics to the study of non-model insects. Insect Mol Biol 24:139–154

Otte M, Netschitailo O, Kaftanoglu O et al (2018) Improving genetic transformation rates in honeybees. Sci Rep 8:16534. https://doi.org/10.1038/s41598-018-34724-w

Ozaki M, Wada-Katsumata A, Fujikawa K et al (2005) Ant nestmate and non-nestmate discrimination by a chemosensory sensillum. Science 309:311–314. https://doi.org/10.1126/science.1105244

Roth A, Vleurinck C, Netschitailo O et al (2019) A genetic switch for worker nutrition-mediated traits in honeybees. PLoS Biol 17:e3000171. https://doi.org/10.1371/journal.pbio.3000171

Schulte C, Theilenberg E, Muller-Borg M et al (2014) Highly efficient integration and expression of piggyBac-derived cassettes in the honeybee (Apis mellifera). Proc Natl Acad Sci 111:9003–9008. https://doi.org/10.1073/pnas.1402341111

Schultheiss P, Buatois A, Avarguès-Weber A, Giurfa M (2017) Using virtual reality to study visual performances of honeybees. Curr Opin Insect Sci 24:43–50. https://doi.org/10.1016/j.cois.2017.08.003

Schürmann FW, Klemm N (1984) Serotonin-immunoreactive neurons in the brain of the honeybee. J Comp Neurol 225:570–580. https://doi.org/10.1002/CNE.902250407

Seeley TD (1995) The wisdom of the hive: the social physiology of honey bee colonies. Harvard University Press, Cambridge

Segalin C, Williams J, Karigo T et al (2021) The mouse action recognition system (MARS) software pipeline for automated analysis of social behaviors in mice. Elife 10:e63720. https://doi.org/10.7554/ELIFE.63720

Sharma KR, Enzmann BL, Schmidt Y et al (2015) Cuticular hydrocarbon pheromones for social behavior and their coding in the ant antenna. Cell Rep 12:1261–1271. https://doi.org/10.1016/J.CELREP.2015.07.031

Sinakevitch I, Niwa M, Strausfeld NJ (2005) Octopamine-like immunoreactivity in the honey bee and cockroach: comparable organization in the brain and subesophageal ganglion. J Comp Neurol 488:233–254. https://doi.org/10.1002/CNE.20572

Staudacher EM, Gebhardt M, Dürr V (2005) Antennal movements and mechanoreception: neurobiology of active tactile sensors. Adv Insect Physiol 32:49–205. https://doi.org/10.1016/S0065-2806(05)32002-9

Stroeymeyt N, Grasse AV, Crespi A et al (2018) Social network plasticity decreases disease transmission in a eusocial insect. Science 362:941–945. https://doi.org/10.1126/science.aat4793

Sun D, Guo Z, Liu Y, Zhang Y (2017) Progress and prospects of CRISPR/Cas systems in insects and other arthropods. Front Physiol 8:608. https://doi.org/10.3389/fphys.2017.00608

Suzuki H (1975) Antennal movements induced by odour and central projection of the antennal neurones in the honey-bee. J Insect Physiol 21:831–847. https://doi.org/10.1016/0022-1910(75)90012-8

Thamm M, Balfanz S, Scheiner R et al (2010) Characterization of the 5-HT1A receptor of the honeybee (Apis mellifera) and involvement of serotonin in phototactic behavior. Cell Mol Life Sci 67:2467–2479. https://doi.org/10.1007/s00018-010-0350-6

Tibshirani R, Walther G, Hastie T (2001) Estimating the number of clusters in a data set via the gap statistic. J R Stat Soc Ser B (Statistical Methodol) 63:411–423. https://doi.org/10.1111/1467-9868.00293

Vogt RG, Riddiford LM (1981) Pheromone binding and inactivation by moth antennae. Nature 293:161–163. https://doi.org/10.1038/293161a0

Walton A, Sheehan MJ, Toth AL (2020) Going wild for functional genomics: RNA interference as a tool to study gene-behavior associations in diverse species and ecological contexts. Horm Behav 124:104774. https://doi.org/10.1016/J.YHBEH.2020.104774

Wang X, Lin Y, Liang L et al (2021) Transcriptional profiles of diploid mutant Apis mellifera embryos after knockout of csd by CRISPR/Cas9. Insects 12:704. https://doi.org/10.3390/INSECTS12080704

Wario F, Wild B, Couvillon MJ et al (2015) Automatic methods for long-term tracking and the detection and decoding of communication dances in honeybees. Front Ecol Evol 3:103. https://doi.org/10.3389/fevo.2015.00103

Winston ML (1987) The biology of the honey bee. Harvard University Press, Cambridge

Funding

Open Access funding provided by The University of Tokyo. This work was supported by Japan Society for the Promotion of Science (JSPS) KAKENHI Grant Number 20K15839 (Grant-in-Aid for Early-Career Scientists).

Author information


Contributions

The study was conceived and designed by Hiroki Kohno. All experiments were performed by Hiroki Kohno. Data analyses were performed by Hiroki Kohno and Shuichi Kamata. The first draft of the manuscript was written by Hiroki Kohno and all authors reviewed, edited, and approved the manuscript.


Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information


ESM 1

(PDF 496 KB)

(MP4 9.93 MB)

(MP4 8.43 MB)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kohno, H., Kamata, S. & Kubo, T. Analysis of Antennal Responses to Motion Stimuli in the Honey Bee by Automated Tracking Using DeepLabCut. J Insect Behav 36, 332–346 (2023). https://doi.org/10.1007/s10905-023-09845-4
