Abstract

Global optimization problems with limited structure (e.g., an objective function lacking convexity or differentiability) arise in many fields. One approach to solving these problems is to model the evolution of a probability density function over the solution space, similar to the Fokker–Planck equation for diffusions, such that at each time instant, additional weight is given to better solutions. We propose an addition to the class of model-based methods, cumulative weighting optimization (CWO), whose general version can be proven convergent to an optimal solution and stable under disturbances (e.g., floating point inaccuracy). These properties encourage us to design a class of CWO algorithms for solving global optimization problems. Beyond the general convergence and stability analysis, we prove that with some additional assumptions the Monte Carlo version of the CWO algorithm is also convergent and stable. Interestingly, the well-known cross-entropy method is a CWO algorithm.

1 Introduction

Many questions in engineering and science can be formulated as the optimization of an objective function. When the objective function is differentiable, its derivative has an explicit form, and it has only a small, finite number of local extrema, the problem is highly tractable: the first-order necessary condition generates a set of candidate solutions, the greatest of which is an optimal solution. On the other hand, problems whose objective functions lack such structural information can be challenging to solve analytically. Approaches developed to solve these problems numerically can be divided into two categories: deterministic and random search. Random search is further divided into instance-based (e.g., simulated annealing, genetic algorithm, tabu search, nested partitions, generalized hill climbing, and evolutionary programming) and model-based algorithms [e.g., annealing-adaptive search (AAS), cross-entropy (CE), model reference adaptive search (MRAS), and estimation of distribution algorithms (EDAs)]. For the interested reader, Hu et al. [1] have a recent survey paper on model-based methods, which also contains references to the instance-based methods mentioned in this paragraph.

The cumulative weighting optimization (CWO) method extends the class of model-based methods by introducing an alternative weight-update equation. The weight-update equation is important for model-based methods, as it decides the search direction for the next time step. Our equation is inspired by Cumulative Prospect Theory (CPT) and has an intuitive connection with the risk-sensitive nature of the human decision making process. When the new equation can be solved analytically, its solution can be proven to converge to solutions of the optimization problem. Interestingly, the well-known cross-entropy method is a special case of the CWO method. We also provide a convergence result when an analytical solution cannot be obtained and the problem requires an approximate solution. The approximate version, later referred to as the Monte Carlo version, first projects the underlying distribution onto a family of distributions that are easy to sample from and then samples from the projected distribution. The techniques used in the convergence analysis of the Monte Carlo version follow the work of Hu et al. [2] with two major differences: the class of functions considered and the mean vector equation.

Moving from theory to implementation, this paper proceeds as follows: Section 2 presents the problem statement. Section 3 introduces the concept of probability weighting functions. In Sect. 4, rigorous convergence and stability analyses of both the general and Monte Carlo versions of the CWO method are presented. Section 4 also provides a separate analysis for the case when the optimizers are isolated and the family of distributions used has continuous densities, because this case requires additional assumptions. Finally, Sect. 5 describes a few CWO numerical algorithms and tabulates their simulation results.

2 Problem

In many engineering applications, we seek a best solution based on an objective function. For example, in the well known traveling salesman problem (TSP), we are looking for the cheapest route that visits all cities and terminates at the starting point. Problems of this nature can be formulated as the following optimization problem:

\[x^{*}\in \arg \max _{x\in \mathbf {X}}H\left( x\right) ,\qquad (1)\]

where \(x^{*}\) is an optimal solution to the problem and \(\mathbf {X}\) is a compact subset of \(\mathbf {R}^{n}\) (the n-dimensional real space). \(H:\mathbf {X}\mapsto \mathbf {R}\), the objective function, is a bounded deterministic measurable function possibly with multiple local extrema. The set of optimizers for Eq. (1) is denoted by \(\mathbf {X}^{*}:=\left\{ x^{*}\in \mathbf {X}|H\left( x\right) \le H\left( x^{*}\right) ,\;\forall x\in \mathbf {X}\right\} \). The following assumption holds throughout this paper.

Assumption 1

There exists a global optimal solution to Eq. (1), i.e., \(\mathbf {X}^{*}\) is nonempty.

In practice, this assumption is true for many optimization problems. For example, the assumption holds trivially when H is continuous, because \(\mathbf {X}\) is compact. In general, the objective function lacks properties such as convexity and differentiability. Let the set of non-negative reals be denoted by \(\mathbf {R}^{+}.\) As is common in many situations, a measurable strictly increasing fitness function, \(\phi :\mathbf {R}\mapsto \mathbf {R}^{+},\) is introduced to reformulate Eq. (1) as: \(x^{*}\in \arg \max _{x\in \mathbf {X}}\phi \left( H\left( x\right) \right) .\) A similar problem statement modified by a fitness function can be found in Hu et al. [1].

Remark 1

Since the reformulated problem guarantees that the range of the new fitness-objective function [i.e., \(\phi \left( H(\cdot )\right) \)] is non-negative, and since it has the same optimizers as the original problem, we only need to consider the case when H is non-negative in Eq. (1), i.e., \(H:\mathbf {X}\mapsto \mathbf {R}^{+}.\)

3 Probability weighting functions

Probability weighting functions have many applications in science and engineering. Kahneman and Tversky [3] proposed the original Prospect Theory (PT) in the 1970s, which has probabilistic weighting as one of its main features. They were unsatisfied with PT due to its violation of stochastic dominance, and thus suggested CPT in the 1990s [4]. CPT improves PT by re-weighting outcome cumulative distribution functions (CDFs) instead of outcome probability density functions (PDFs). An example of weighting functions used by CPT is \(w\left( p\right) :=\frac{p^{\gamma }}{\left( p^{\gamma }+\left( 1-p\right) ^{\gamma }\right) ^{1/\gamma }},\;\gamma \in \left( 0,1\right) ,\, p\in \left[ 0,1\right] ,\) which can be applied to a CDF or a complementary CDF. The definition of a weighting function is presented below.

Definition 1

A weighting function, \(w:[0,1]\mapsto [0,1]\), is a monotonically non-decreasing and Lipschitz continuous function with \(w(0)=0\) and \(w(1)=1\).

There are a few well-known weighting functions: 1) a simple polynomial weighting function has the form: \(w\left( p\right) =1-\left( 1-p\right) ^{b},\ b>1;\) 2) a more complicated weighting function involving exponentials has the form: \(w(p)=\frac{\text {e}^{cp}-1}{\text {e}^{c}-1},\) where \(c<0\). Other parametric weighting functions can be found in [5].

In later sections, weighting functions are used in models to update a PDF over the solution space; hence, we are interested in weighting functions with the additional property of optimal-seeking; this is important for guaranteeing CWO’s convergence to optimality.

Definition 2

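A weighting function, \(w:[0,1]\mapsto [0,1]\), is optimal-seeking if

\[w\left( \alpha x+\left( 1-\alpha \right) y\right) >\alpha w\left( x\right) +\left( 1-\alpha \right) w\left( y\right) ,\quad \forall x,y\in \left[ 0,1\right] ,\ x\ne y,\ \forall \alpha \in \left( 0,1\right) .\]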

Proposition 1

An optimal-seeking weighting function satisfies the inequality \(w(p)>p,\;\forall p\in \left( 0,1\right) .\)

Proof

If we let \(x=1\) and \(y=0\), we have

\[w\left( \alpha \right) >\alpha w\left( 1\right) +\left( 1-\alpha \right) w\left( 0\right) ,\quad \forall \alpha \in \left( 0,1\right) ,\]

by Definition 2. Setting \(\alpha =p\) and using \(w\left( 1\right) =1\) and \(w\left( 0\right) =0\) yields \(w(p)>p\). \(\square \)

Optimal-seeking is called risk-seeking in fields that model risk-sensitivity. In this paper, we only consider optimal-seeking weighting functions.
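As a numerical illustration, the two parametric weighting functions listed earlier in this section can be spot-checked against the endpoint conditions of Definition 1 and the inequality of Proposition 1. The sketch below is only a grid check, not a proof; the parameter values `b=2.0` and `c=-2.0` and the helper name `dominates_identity` are assumptions chosen for illustration.

```python
import math

def w_poly(p, b=2.0):
    """Polynomial weighting function w(p) = 1 - (1 - p)^b with b > 1."""
    return 1.0 - (1.0 - p) ** b

def w_exp(p, c=-2.0):
    """Exponential weighting function w(p) = (e^{cp} - 1) / (e^c - 1) with c < 0."""
    return math.expm1(c * p) / math.expm1(c)

def dominates_identity(w, grid_size=999):
    """Spot-check w(p) > p on an evenly spaced grid strictly inside (0, 1)."""
    points = (k / (grid_size + 1) for k in range(1, grid_size + 1))
    return all(w(p) > p for p in points)

for name, w in (("polynomial", w_poly), ("exponential", w_exp)):
    # Endpoint values should be 0 and 1; the last entry reports the grid check.
    print(name, abs(w(0.0)), w(1.0), dominates_identity(w))
```

Both functions are concave on the unit interval for the chosen parameters, which is why they lie above the diagonal.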

Assumption 2

w is an optimal-seeking weighting function.

In its historical application, an optimal-seeking weighting function places more weight on highly rewarding outcomes. In particular, it is used to overweight the probabilities of unlikely events and underweight the probabilities of highly likely events. In our context, the optimal-seeking property of the weighting function is used to place more weight on higher ranked or more desirable outcomes. In the example below, we apply an optimal-seeking weighting function to a complementary CDF.

Example 1

A die is rolled and the player receives a payoff that is equivalent to the outcome of the roll. For example, if the player rolled a 1, then he/she is given a $1 reward. The expected payoffs for both the risk-neutral and optimal-seeking cases are calculated below assuming the die is fair. The outcome of the roll is a random variable denoted by R.

The risk-neutral expected payoff is calculated as: \(E\left[ R\right] =\sum _{n=1}^{6}P\left( R\ge n\right) =\sum _{n=1}^{6}\frac{7-n}{6}=\frac{21}{6}=3.5,\) where \(P\left( R\ge n\right) =1-F\left( n-1\right) \) is the complementary CDF and \(F\) is the CDF of R. Using the weighting function \(w\left( p\right) =1-\left( 1-p\right) ^{2},\) the corresponding optimal-seeking re-weighted expected payoff is: \(E^{w}\left[ R\right] =\sum _{n=1}^{6}w\left( P\left( R\ge n\right) \right) = \sum _{n=1}^{6}w\big (\frac{7-n}{6}\big )= \sum _{n=1}^{6}\Big [1-\big (1-\frac{7-n}{6}\big )^{2}\Big ]=\frac{161}{36}\approx 4.4722.\)
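The two payoffs in this example can be reproduced with exact rational arithmetic. The sketch below sums the tail probabilities \(P(R\ge n)\) of a fair six-sided die, once undistorted and once distorted by \(w(p)=1-(1-p)^{2}\); the variable names are illustrative only.

```python
from fractions import Fraction

def w(p):
    """Weighting function from Example 1: w(p) = 1 - (1 - p)^2."""
    return 1 - (1 - p) ** 2

faces = range(1, 7)
# Tail probabilities P(R >= n) for a fair six-sided die.
tail = [Fraction(7 - n, 6) for n in faces]

# Summing the tail probabilities gives the ordinary expectation E[R].
risk_neutral = sum(tail)
# Distorting each tail probability by w gives the re-weighted payoff.
reweighted = sum(w(p) for p in tail)

print(risk_neutral, reweighted, float(reweighted))
```

Using `Fraction` avoids floating point rounding, so the results can be compared directly with the fractions in the example.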

Remark 2

The reader should observe that the optimal-seeking re-weighted expected payoff is greater than the risk-neutral expected payoff. This observation will be key in proving the convergence of the CWO method.

4 Convergence and stability analysis

Convergence and stability are two desirable properties for any global optimization method. In particular, we would like to provide a theoretical guarantee that the CWO method will converge to an optimal solution and remain there under “reasonable” disturbances. These properties will be proven true for both the general and Monte Carlo versions of the CWO method in this section, with each version explained in detail in a subsection. We treat the case of isolated optimizers separately in this section, because it requires additional assumptions and a slight modification of the standard approach.

4.1 General theory

The best way to gain some intuition for the CWO method is to understand the finite solution space case. To make the idea concrete, let \(\mathbf {X}\) in Eq. (1) be the set \(\left\{ 1,\dots ,N\right\} .\) In this case, Assumption 1 is trivially satisfied and Assumption 2 is always true in our analysis. Let \({\mathbb {P}}_{x}\) denote the set of probability mass functions (PMFs) over \(\mathbf {X},\) and the set of PMFs exclusively supported on optimal solutions is denoted by \({\mathbb {P}}_{\mathbf {X}^{*}}:=\left\{ P\in {\mathbb {P}}_{x}|\sum _{x\in \mathbf {X}^{*}}P\left( x\right) =1\right\} .\) Since any element of \({\mathbb {P}}_{\mathbf {X}^{*}}\) has positive weight assigned to optimal solutions and zero weight assigned to non-optimal solutions, it follows that finding an element of \({\mathbb {P}}_{\mathbf {X}^{*}}\) solves Eq. (1).

As alluded to earlier, finite solution space model-based methods iterate on a PMF so that it will eventually concentrate its mass on optimal solutions. Let \(P_{t}\) denote a PMF over \(\mathbf {X}\) at time t, i.e., \(P_{t}\in {\mathbb {P}}_{x};\) then solving Eq. (1) is equivalent to finding an algorithm that can update \(P_{t}\) iteratively such that \(P_{t}\in {\mathbb {P}}_{\mathbf {X}^{*}},\;\forall t>\tau \in \left( 0,\infty \right) .\) If \(P_{0}\) has a positive mass on at least one of the optimal solutions, i.e., \(P_{0}\left( \mathbf {X}^{*}\right) >0,\) then one way of ensuring \(P_{t}\) eventually reaches \({\mathbb {P}}_{\mathbf {X}^{*}}\) is by using an optimal-seeking weighting function. The idea can be better demonstrated by introducing a step size variable \(\varDelta \). Let the set-valued map \(M:\mathbf {X}\mapsto 2^{\mathbf {X}}\) return all elements in the solution space with the same outcome, i.e., \(M\left( x\right) :=\left\{ \xi \in \mathbf {X}|H\left( \xi \right) =H\left( x\right) \right\} ;\) then \(P_{t}\) can be updated according to the following equation:

where \(\sum _{\xi \in M\left( x\right) }\beta _{t}(\xi )=1,\;\forall x\in \mathbf {X},\) and w is an optimal-seeking weighting function. In Eq. (2), the difference between the first w distorted term and the second w distorted term is the set \(\left\{ \xi \in \mathbf {X}|H\left( \xi \right) =H\left( x\right) \right\} .\) In the finite solution space case, we can verify that \(P_{t+\varDelta }\) is indeed a probability measure by summing over \(\mathbf {X}\), i.e., \(\sum _{x\in \mathbf {X}}P_{t+\varDelta }\left( x\right) \), and checking that the sum is 1. Since for each \(x\in \mathbf {X}\), the negative term in Eq. (2) cancels out with a positive term in the summation except for \(w\left( 1\right) =1\), it is verified that \(\sum _{x\in \mathbf {X}}P_{t+\varDelta }\left( x\right) =1\) and \(P_{t+\varDelta }\) is a probability measure.

When \(\varDelta =1\), we obtain a simpler equation evolving on \(t\in \left\{ 0,1,2,\dots \right\} \):

where time t can be treated as the iteration count.
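To make the unit-step iteration concrete, the following sketch runs one plausible form of the update on a four-point solution space. The displayed update equations are not reproduced in this text, so the form used here is an assumption: \(P_{t+1}(x)=\beta _{t}(x)\left[ w\left( P_{t}(H\ge H(x))\right) -w\left( P_{t}(H>H(x))\right) \right] \), with \(\beta _{t}\) taken to be uniform on each level set \(M(x)\). This form was chosen because it matches the description above (the two distorted terms differ by the set \(M(x)\), and the sum over \(\mathbf {X}\) telescopes to \(w(1)=1\)). The function name `cwo_step` and the objective values are hypothetical.

```python
def w(p, b=2.0):
    """Polynomial optimal-seeking weighting function w(p) = 1 - (1 - p)^b, b > 1."""
    return 1.0 - (1.0 - p) ** b

def cwo_step(P, H):
    """One assumed unit-step update of a PMF P (dict x -> mass) for objective H (dict x -> value)."""
    new_P = {}
    for x in P:
        level = [xi for xi in P if H[xi] == H[x]]            # M(x): points tied with x
        at_least = sum(P[xi] for xi in P if H[xi] >= H[x])   # mass on outcomes >= H(x)
        above = sum(P[xi] for xi in P if H[xi] > H[x])       # mass on outcomes > H(x)
        beta = 1.0 / len(level)                              # assumed: uniform beta_t on M(x)
        new_P[x] = beta * (w(at_least) - w(above))
    return new_P

H = {1: 0.0, 2: 1.0, 3: 2.0, 4: 2.0}   # hypothetical objective; optimizers are 3 and 4
P = {x: 0.25 for x in H}               # uniform initial PMF
for t in range(10):
    P = cwo_step(P, H)
    # Columns: iteration, total mass (should stay 1), mass on the optimizers.
    print(t + 1, round(sum(P.values()), 12), round(P[3] + P[4], 6))
```

Under this assumed form, the mass on the optimizers after one step is \(w\) applied to the previous mass, so it grows whenever it lies strictly between 0 and 1.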

Similar to the observation in Remark 2, it will be shown later in the paper that

where the equality is achieved only when \(P_{t}\in {\mathbb {P}}_{\mathbf {X}^{*}}\) and H is treated as a random variable. The following example illustrates in continuous-time the point that \(E_{t}\left[ H\right] \) is strictly increasing in t unless \(P_{t}\) reaches \({\mathbb {P}}_{\mathbf {X}^{*}}.\)

Example 2

Let \(\mathbf {X}\) be the finite solution space \(\left\{ 1,2,3,4\right\} \) and pick any optimal-seeking probability weighting function w. We assume that \(H(4)=H(3)>H(2)>H(1)\ge 0\). The continuous-time analogue of Eq. (2) for this example is written as

From Proposition 1, we know that \(w(p)>p,\;\forall p\in (0,1),\) which implies \(\frac{\text {d}P_{t}\left( 3\right) }{\text {d}t}+\frac{\text {d}P_{t}\left( 4\right) }{\text {d}t}>0,\;\forall P_{t}\left( 3\right) +P_{t}\left( 4\right) \in \left( 0,1\right) \) and \(\frac{\text {d}P_{t}\left( 3\right) }{\text {d}t}+\frac{\text {d}P_{t}\left( 4\right) }{\text {d}t}=0,\ \text {if }P_{t}\left( 3\right) +P_{t}\left( 4\right) \in \left\{ 0,1\right\} .\)

The weight on the optimal solutions for this example (i.e., the mass on \(\mathbf {X}^{*}=\{3,4\}\)) monotonically increases and approaches 1, so \(P_{t}\) asymptotically approaches the set \({\mathbb {P}}_{\mathbf {X}^{*}}.\) Since the weight on the optimal solutions is increasing and the total weight has to sum to one, the weight on the non-optimal solutions approaches zero, i.e., \(\lim _{t\rightarrow \infty }\left( P_{t}\left( 1\right) +P_{t}\left( 2\right) \right) =0.\)

Remark 3

Since the weight on optimal solutions monotonically increases and approaches 1, it follows that the corresponding re-weighted expected payoff is monotonically increasing in t until optimality.

Thus, the CWO method updates \(P_{t}\) iteratively, so that \(P_{t}\) approaches \({\mathbb {P}}_{\mathbf {X}^{*}}\) asymptotically. The limit of \(P_{t}\) as \(t\rightarrow \infty \) only has weight on optimal solutions; hence, optimal solutions can be inferred from it.
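The monotone growth of the optimal mass can also be illustrated numerically. The sketch below assumes that the mass \(p_{t}=P_{t}(\mathbf {X}^{*})\) obeys \(\dot{p}_{t}=w(p_{t})-p_{t}\), which is consistent with the sign conditions stated in Example 2 but is an assumption here, since the displayed equation is not reproduced in this text. The forward-Euler scheme, the step size `dt`, and the starting value are likewise illustrative choices.

```python
def w(p, b=2.0):
    """Polynomial optimal-seeking weighting function w(p) = 1 - (1 - p)^b, b > 1."""
    return 1.0 - (1.0 - p) ** b

def integrate_optimal_mass(p0, dt=0.01, steps=2000):
    """Forward-Euler integration of the assumed ODE dp/dt = w(p) - p."""
    path = [p0]
    p = p0
    for _ in range(steps):
        p = p + dt * (w(p) - p)   # the drift is positive whenever 0 < p < 1
        path.append(p)
    return path

path = integrate_optimal_mass(p0=0.1)
# Initial, midpoint, and final mass on the optimal set.
print(path[0], path[len(path) // 2], path[-1])
```

Starting values of exactly 0 or 1 are stationary under this equation, which mirrors the requirement that the initial measure place positive mass on the optimal set.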

The finite solution space case offers the most intuition; however, to apply the CWO method to a wide variety of problems, we will need to work with more general solution spaces. In the rest of this section, Eq. (1) is solved given that \(\mathbf {X}\) is a compact subset of a finite-dimensional vector space, i.e., \(\mathbf {R}^{n}\). Similar to the finite solution space case, the set of probability measures defined on the Borel measurable space \(\left( \mathbf {X},{\mathscr {B}}\left( \mathbf {X}\right) \right) \) is denoted by \({\mathbb {P}}_{x},\) which has the Prohorov topology. See [6] and [7] for technical details on the Prohorov topology. While the following assumption is not strictly required for the CWO method, it is used in the analysis for ease of notation.

Assumption 3

w is differentiable and has a bounded first derivative, which is denoted by \(w'\).

In general, boundedness of the sub-gradient should be sufficient for the application of the CWO method.

At each time t, the push-forward measure of \(P_{t}\) through H in Eq. (1) is denoted by

where \(H^{-1}\left( B\right) \) is the preimage of B under H, and \({\mathscr {B}}\left( \mathbf {R}^{+}\right) \) denotes the Borel \(\sigma \)-algebra for \(\mathbf {R}^{+}.\) For the justification of using \(\mathbf {R}^{+}\) in Eq. (5), see Remark 1. Furthermore, H can be treated as a random variable from \(\left( \mathbf {X},{\mathscr {B}}\left( \mathbf {X}\right) \right) \) to \(\left( \mathbf {R}^{+},{\mathscr {B}}\left( \mathbf {R}^{+}\right) \right) \).
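For a finite solution space, the push-forward construction reduces to summing the mass of all points that share an outcome. The sketch below illustrates this and also evaluates a tail mass of the form \(P^{H}\left( \left[ y,\infty \right) \right) \); the PMF, the objective, and the function names are hypothetical and serve only to illustrate the definition \(P^{H}(B)=P\left( H^{-1}(B)\right) \).

```python
from collections import defaultdict

def push_forward(P, H):
    """Push a PMF P (dict x -> mass) through H: the mass at y is P(H^{-1}({y}))."""
    PH = defaultdict(float)
    for x, mass in P.items():
        PH[H(x)] += mass
    return dict(PH)

def tail_mass(PH, y):
    """Mass that the push-forward measure places on outcomes of at least y."""
    return sum(mass for value, mass in PH.items() if value >= y)

P = {1: 0.1, 2: 0.2, 3: 0.3, 4: 0.4}   # hypothetical PMF on X = {1, 2, 3, 4}

def H(x):
    """Hypothetical non-negative objective with H(3) = H(4)."""
    return float(min(x, 3))

PH = push_forward(P, H)
print(PH, tail_mass(PH, 3.0))
```

Points 3 and 4 share the same outcome, so their masses are merged into a single atom of the push-forward measure.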

For any \(B\in {\mathscr {B}}\left( \mathbf {R}^{+}\right) \) and \(A\in {\mathscr {B}}\left( \mathbf {X}\right) \), the continuous-time generalization of Eq. (2) to the solution space \(\mathbf {X}\) is

where \(\int _{M\left( x\right) }\beta _{t}\left( d\xi \right) =1,\ M\left( x\right) :=\left\{ \xi \in \mathbf {X}|H\left( \xi \right) =H\left( x\right) \right\} ,\ \forall x\in \mathbf {X};\) i.e., \(\beta _{t}\) can be viewed as a probability measure supported on all \(\xi \in \mathbf {X}\) with the same \(H\left( x\right) \) value. Here, \(\dot{P}_{t}^{H}\left( B\right) \) is interpreted as the time derivative of \(P_{t}^{H}\left( B\right) \). Note that Eq. (7) says that given \(\beta _{t},\) \(P_{t}\) can be determined from \(P_{t}^{H},\) which is a solution of Eq. (6). Special attention is paid to the equation governing the optimal solutions:

where \(y^{*}=\max _{x\in \mathbf {X}}H\left( x\right) .\)

Wang [8] proposes an alternative set of evolution equations, also nonlinear Fokker–Planck equations [9, 10], motivated by evolutionary game theory. As the reader will see later, we reach the same convergence results as Wang et al. [11] with a modified approach.

Similar to the finite solution space case, the set of probability measures exclusively supported on optimal solutions is denoted by \({\mathbb {P}}_{\mathbf {X}^{*}}:=\left\{ P\in {\mathbb {P}}_{x}|P\left( \mathbf {X}^{*}\right) =1\right\} ,\) where \(\mathbf {X}^{*}\) denotes the set of optimal solutions, i.e., \(\mathbf {X}^{*}:=\left\{ x^{*}\in \mathbf {X}|H(x)\le H(x^{*}),\ \forall x\in \mathbf {X}\right\} .\) The reader is reminded that obtaining an element of \({\mathbb {P}}_{\mathbf {X}^{*}}\) is equivalent to solving the optimization problem stated in Eq. (1). The goal is to prove that Eqs. (6–7) update \(P_{t}\) such that \(P_{t}\) approaches \({\mathbb {P}}_{\mathbf {X}^{*}}\) asymptotically. The first step is to prove the existence and uniqueness of a solution for Eq. (6).

Theorem 1

For each \(P_{0}\in {\mathbb {P}}_{x}\) and its corresponding push-forward measure \(P_{0}^{H}\), the ordinary differential equation (6) has a unique solution for \(t\in \mathbf {R}^{+}\).

Proof

The outline of our proof follows [12] and [13]. The total variation norm on a \(\sigma \)-finite signed measure P over \(\left( \mathbf {R}^{+},{\mathscr {B}}\left( \mathbf {R}^{+}\right) \right) \) at time t is denoted by:

where the \(\sup \) is taken over all measurable functions \(g:\mathbf {R}^{+}\rightarrow \mathbf {R}\) and

We simplify our notations by introducing the following shorthand:

where \(B\in {\mathscr {B}}\left( \mathbf {R}^{+}\right) \). Since \(P_{t}^{H}\) is a probability measure on \(\left( \mathbf {R}^{+},{\mathscr {B}}\left( \mathbf {R}^{+}\right) \right) \), we have

where K is the Lipschitz constant for w. The inequality above proves the boundedness of \({\mathscr {C}}\left( P_{t}^{H}\right) \).

Next, we need to prove that the right hand side of Eq. (6) is Lipschitz continuous. Letting \(P_{t}^{H}\) and \(Q_{t}^{H}\) denote two probability measures defined on \(\left( \mathbf {R}^{+},{\mathscr {B}}\left( \mathbf {R}^{+}\right) \right) \), we know from the definition of the norm that

Furthermore, we have

By substituting Eq. (10) into Eq. (8), we have

Hence, the right hand side of Eq. (6) is Lipschitz continuous in \(P_{t}^{H}\) with the constant \(K+1.\) Using [14, Corollary 3.9], we conclude that Eq. (6) with an initial measure \(P_{0}^{H}\) has a unique solution. \(\square \)

Next, \(P_{t}^{H}\) is proved to be a probability measure over \(\left( \mathbf {R}^{+},{\mathscr {B}}\left( \mathbf {R}^{+}\right) \right) \) for any t.

Lemma 1

Given that \(P_{0}^{H}\) is a probability measure, then a solution \(P_{t}^{H}\) of Eq. (6) at each time \(t>0\) is a probability measure, i.e.,

where \(\left\{ B_{i}\right\} \) is any countable collection of pairwise disjoint elements of \({\mathscr {B}}\left( \mathbf {R}^{+}\right) \).

Proof

If we can prove that \(\dot{P}_{t}^{H}\left( \mathbf {R}^{+}\right) =0\) and \(\dot{P}_{t}^{H}\left( \cup _{i}B_{i}\right) =\sum _{i}\dot{P}_{t}^{H}\left( B_{i}\right) \), then we have obtained our desired result. Using Eq. (6), the fact that \(\int _{0}^{1}w'\left( s\right) ds=w\left( 1\right) -w\left( 0\right) =1,\) \(w'\) is bounded, and the dominated convergence theorem, it is straightforward to prove this assertion. \(\square \)

The next lemma is needed in the proof of Theorem 5, which shows that \(E_{t}\left[ H\right] \) is monotonically non-decreasing in t [cf. Remark 2 and Eq. (4)].

Lemma 2

Given an optimal-seeking weighting function, w, there exists a \(\tilde{y}\in \mathbf {R}^{+}\) such that

can be decomposed into the sum of its non-negative and negative parts, i.e., Eq. (11) equals

Proof

We constructively find \(\tilde{y}.\) Since w is a monotonically non-decreasing function, it satisfies

Furthermore, since w is also optimal-seeking, we have

Because \(w\left( 0\right) =0\) and \(w\left( 1\right) =1\) by definition, we have \(w'\left( P_{t}^{H}\left( \left[ y,\infty \right) \right) \right) >1,\) for some \(y\in \mathbf {R}^{+}\). From Eq. (12), we know if \(y_{2}\) satisfies the above inequality, then so does \(y_{1}\ge y_{2}\in \mathbf {R}^{+}.\) Hence, we can conclude that \(\tilde{y}\) is the smallest such y. \(\square \)

The theorems below present a blueprint to obtain an element of \({\mathbb {P}}_{\mathbf {X}^{*}}\) utilizing the solution \(P_{t}\) of Eqs. (6–7). To accomplish this goal, the initial point set in Theorem 1 is restricted to measures \(P_{0}\) that allow \(P_{t}\) to approach \({\mathbb {P}}_{\mathbf {X}^{*}},\) i.e., \(\lim _{t\rightarrow \infty }P_{t}\in {\mathbb {P}}_{\mathbf {X}^{*}}.\) The following definition helps us to present this idea succinctly.

Definition 3

The set of all optimal initial solution probability measures is denoted by:

The corresponding set of H push-forward optimal probability measures over \(\left( \mathbf {R}^{+},{\mathscr {B}}\left( \mathbf {R}^{+}\right) \right) \) is denoted by \({\mathbb {I}}_{H^{*}}:=\left\{ P\circ H^{-1}|P\in {\mathbb {I}}_{\mathbf {X}^{*}}\right\} .\)

Definition 3 is essential, as a requirement on the initial condition of the system (i.e., \(P_{0}\in {\mathbb {I}}_{\mathbf {X}^{*}}\)), for the convergence and stability of the system. This condition can be too stringent when the optimizers are isolated and \({\mathbb {I}}_{\mathbf {X}^{*}}\) is restricted to measures with continuous densities, since no positive measure can be placed on isolated points. In the next section, we will address this issue specifically by modifying the definition of \({\mathbb {I}}_{\mathbf {X}^{*}}\) and adding an extra assumption on the objective function H. The next theorem proves \(P_{t}^{H}\left( \max _{x\in \mathbf {X}}H\left( x\right) \right) \), the probability measure on optimal solutions, will converge to 1 as \(t\rightarrow \infty .\) On the other hand, the probability measure on non-optimal solutions will approach zero as \(t\rightarrow \infty .\)

Theorem 2

If \(P_{t}^{H}\) is a solution of Eq. (6) with initial points in \({\mathbb {I}}_{H^{*}}\) (i.e., \(P_{0}^{H}\in {\mathbb {I}}_{H^{*}}\)) and \(y^{*}\,=\,\max _{x\in \mathbf {X}}H\left( x\right) ,\) then the following claims hold:

1. \(P_{t}^{H}\left( y^{*}\right) \) is a monotonically non-decreasing function of t that converges to 1 as \(t\rightarrow \infty \);

2. \(P_{t}^{H}\left( \mathbf {R}^{+}\backslash y^{*}\right) \rightarrow 0\) as \(t\rightarrow \infty \).

Proof

We recall that the following equation is true:

From Proposition 1, we obtain the fact that \(w\left( P_{t}^{H}\left( \left[ y^{*},\infty \right) \right) \right) >P_{t}^{H}\left( \left[ y^{*},\infty \right) \right) .\) From the two equations above, we conclude that

Since \(P_{0}^{H}\in {\mathbb {I}}_{H^{*}}\), the first claim is obvious. The second claim follows from the first claim.

\(\square \)

The next theorem connects the properties of \(P_{t}^{H}\) with those of \(P_{t}\) as \(t\rightarrow \infty .\) This is an important step for understanding the evolution of Eqs. (6–7).

Theorem 3

If \(P_{0}\in {\mathbb {I}}_{\mathbf {X}^{*}}\) and \(P_{t}\) is a solution of Eqs. (6–7), then the following claims hold: 1) \(\lim _{t\rightarrow \infty }P_{t}\left( \mathbf {X}^{*}\right) =1\); 2) \(\lim _{t\rightarrow \infty }P_{t}\left( \mathbf {X}\backslash \mathbf {X}^{*}\right) =0\).

Proof

Since we know

the first claim is proved by writing down the following equation and using Theorem 2:

The second claim follows from the first claim. \(\square \)

We are interested in finding the limit points of Eqs. (6–7). Ideally, these limit points should be elements in \({\mathbb {P}}_{\mathbf {X}^{*}}.\) In order to guarantee this, we restrict the initial points to be elements of \({\mathbb {I}}_{\mathbf {X}^{*}}.\) To facilitate our discussion, we introduce the following definition.

Definition 4

A limit set of Eqs. (6–7) from an initial set \(\mathcal {I}\) is denoted by

where \(P_{t}\) is a solution of Eqs. (6–7) and \(\lim _{k\rightarrow \infty }t_{k}=+\infty .\) The useful limit set \(\mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \) is characterized by the theorem below.

Theorem 4

The limit set of Eqs. (6–7) starting from any initial point \(P_{0}\in {\mathbb {I}}_{\mathbf {X}^{*}}\) is \({\mathbb {P}}_{\mathbf {X}^{*}},\) i.e.,

Proof

The proof is done in two parts: first by proving \(\mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \supset {\mathbb {P}}_{\mathbf {X}^{*}},\) then by proving \(\mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \subset {\mathbb {P}}_{\mathbf {X}^{*}}.\) The first inclusion can be proved by taking an element \(P\in {\mathbb {P}}_{\mathbf {X}^{*}}.\) By the definition of \(\mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \) (i.e., the limit set of Eqs. (6–7) starting from \({\mathbb {I}}_{\mathbf {X}^{*}}\)), we conclude that \(P\in \mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \).

The second claim is proved by contradiction. We assume there is an element \(Q_{\infty }\in \mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] ,\) but not in \({\mathbb {P}}_{\mathbf {X}^{*}}\), which implies

The first equality is due to the fact that \(Q_{\infty }\in \mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \). The second equality, which follows from the dominated convergence theorem, together with Eqs. (6–7) implies \(\lim _{t\rightarrow \infty }P_{t}^{H}\left( \mathbf {R}^{+}\backslash y^{*}\right) >0,\) which contradicts the second claim of Theorem 2 stating \(\lim _{t\rightarrow \infty }P_{t}^{H}\left( \mathbf {R}^{+}\backslash y^{*}\right) =0\). \(\square \)

The following theorem shows the monotonically increasing nature of \(E_{t}\left[ H\right] \), which will be useful later in proving the stability of Eqs. (6–7).

Theorem 5

Let \(P_{t}^{H}\) be a solution for Eq. (6) with its initial point in \({\mathbb {I}}_{H^{*}},\) then the following claims are true:

1. \(E_{t}\left[ H\right] \) is monotonically non-decreasing in \(t\in \mathbf {R}^{+}\);

2. if \(P_{\tau }\notin \mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] ,\) then \(E_{\tau }\left[ H\right] \) is strictly increasing in \(\tau \in \mathbf {R}^{+}\).

Proof

We start our proof by differentiating the expected value:

The swapping of integration and differentiation in the last equation is allowed due to the dominated convergence theorem. The \(\tilde{y}\) variable is used to decompose the expected outcome function into non-negative and negative parts (cf. Lemma 2). The last line of the inequality is true because \(P_{t}^{H}\) is a probability measure (cf. Lemma 1). The first claim is proved.

The second claim is proved by contradiction. Assume \(P_{\tau }\notin \mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \), i.e., \(P_{\tau }\) is not a limit point, and \(\frac{d}{d\tau }E_{\tau }\left[ H\right] =0,\) i.e., \(E_{\tau }\left[ H\right] \) is not increasing. Along with Theorem 2, the equality above implies that H is equal to a constant \(C=\max _{x\in \mathbf {X}}H\left( x\right) \). This implies that \(P_{\tau }^{H}\) is a Dirac measure concentrated at C, which means \(P_{\tau }\in \mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \) (cf. Theorem 4). \(\square \)

At this point, we have demonstrated the convergence to optimality property of our method. We now explore the stability property of our method. The metric function d in the following definitions is the Prohorov metric found in the appendix of [6, p. 170].

Definition 5

Let \({\mathcal {L}}\) be a subset of \({\mathbb {P}}_{x}\). For a point \(P\in {\mathbb {P}}_{x}\), we define the distance between P and \({\mathcal {L}}\) as

\({\mathcal {L}}\) is called Lyapunov stable, with respect to a sequence of measures \(\left\{ P_{t}\right\} \), if for all \(\epsilon >0\), there exists a \(\delta >0\) such that

\({\mathcal {L}}\) is called asymptotically stable, with respect to a sequence of measures \(\left\{ P_{t}\right\} \), if \({\mathcal {L}}\) is Lyapunov stable, and there exists a \(\delta >0\) such that

as \(t\rightarrow \infty \).

The next theorem is the main result of this section.

Theorem 6

\(\mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \) is a compact set and it is asymptotically stable.

Proof

We need to first prove that \(\mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \) is a compact set. Since from Theorem 4, we have \(\mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] ={\mathbb {P}}_{\mathbf {X}^{*}},\) we can instead prove that

is compact. It is easy to see that \({\mathbb {P}}_{\mathbf {X}^{*}}\) is tight, since \(\mathbf {X}^{*}\) is pre-compact (i.e., \(\mathbf {X}^{*}\subset \mathbf {X}\) and \(\mathbf {X}\) is compact). Furthermore, we can prove it is a closed set by contradiction. Assume there exists a sequence \(\left\{ P_{n}\right\} \subset {\mathbb {P}}_{\mathbf {X}^{*}}\) such that \(P_{n}\rightarrow \hat{P}\notin {\mathbb {P}}_{\mathbf {X}^{*}}\). This implies \(\exists N\) such that \(\forall n>N,\) we have \(P_{n}\left( \mathbf {X}^{*}\right) <1,\) and \(P_{n}\left( \mathbf {X}\backslash \mathbf {X}^{*}\right) >0\), which implies

This contradicts the second claim of Theorem 2; hence, \({\mathbb {P}}_{\mathbf {X}^{*}}=\mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \) is a compact set.

We pick the Lyapunov function

where \(V\left( P_{t}\right) >0,\) for all \(P_{t}\in {\mathbb {P}}_{x}\backslash {\mathbb {P}}_{\mathbf {X}^{*}}\), and \(V\left( P_{t}\right) =0,\) for \(P_{t}\in {\mathbb {P}}_{\mathbf {X}^{*}}=\mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \). From Theorem 5 we have \(\dot{V}\left( P_{t}\right) <0\) for all \(t>0\) if \(P_{t}\notin {\mathbb {P}}_{\mathbf {X}^{*}}\). Furthermore, we know that \(\dot{V}\left( P_{t}\right) =0,\ \forall t>0,\) if \(P_{t}\in {\mathbb {P}}_{\mathbf {X}^{*}}\). Using \(V\left( P_{t}\right) \) as the Lyapunov function, and the fact that \({\mathbb {P}}_{\mathbf {X}^{*}}=\mathbb {L}\left[ {\mathbb {I}}_{\mathbf {X}^{*}}\right] \) is a compact set, we can appeal to a generalized version of Lyapunov’s theorem (see [15, Chapter 5]). The desired conclusion is reached. \(\square \)

The use of a Lyapunov function for proving the asymptotic stability of the limit set can be found previously in Wang’s dissertation [8].

4.2 Isolated optimizers

In Sect. 4.1, we require that our initial distribution \(P_{0}\) belong to \({\mathbb {I}}_{\mathbf {X}^{*}},\) which requires \(P_{0}\left( \mathbf {X}^{*}\right) >0\). This is reasonable in many cases, for example (1) if the solution space is large but finite, or (2) if the optimizers are not isolated. However, when the optimizers are isolated and \({\mathbb {I}}_{\mathbf {X}^{*}}\) is restricted to measures with continuous densities, the condition \(P_{0}\left( \mathbf {X}^{*}\right) >0\) cannot be satisfied, because such a measure assigns zero probability to any single point. For example, when minimizing \(H\left( x\right) =x^{2}\), it is convenient to start with a Gaussian distribution due to its simple form. Since Gaussian measures have continuous densities, it is impossible to satisfy the condition \(P_{0}\left( \mathbf {X}^{*}\right) >0\). Thus, we need to slightly modify the definition of \({\mathbb {I}}_{\mathbf {X}^{*}}\) and the statements of the theorems in the previous section. The modifications lead to the conclusion that the weight update system converges to measures that place all their weight in ball neighborhoods of the optimizers. Specifically, we relax the positive measure requirement so that it is satisfied as long as the measure is positive for any neighborhood of at least one element in \(\mathbf {X}^{*}.\) In other words, as long as the initial measure has not excluded all neighborhoods of all optimizers, it should be included in the admissible initial probability measure set \(\tilde{{\mathbb {I}}}_{\mathbf {X}^{*}},\) which is defined below.

Definition 6

The set of all optimal initial solution probability measures is denoted by:

The corresponding set of H push-forward optimal probability measures over \(\left( \mathbf {R}^{+},{\mathscr {B}}\left( \mathbf {R}^{+}\right) \right) \) is denoted by \(\tilde{{\mathbb {I}}}{}_{H^{*}}:=\left\{ P\circ H^{-1}|P\in \tilde{{\mathbb {I}}}_{\mathbf {X}^{*}}\right\} .\)

An example can illustrate the reasonableness of \(\tilde{{\mathbb {I}}}_{\mathbf {X}^{*}}\) for the isolated optimizers case. If we would like to minimize the function \(H\left( x\right) =x^{2},\) which has a single isolated optimizer at \(x=0,\) then any Gaussian distribution can satisfy the requirement imposed by \(\tilde{{\mathbb {I}}}_{\mathbf {X}^{*}},\) since for any \(\delta \)-neighborhood around \(x=0\), the measure under \(P_{0}\) is non-zero. After we redefined the admissible initial distributions, there needs to be an additional continuity assumption on the objective function H.
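The point of the example can be checked numerically. The sketch below is only an illustration: the helper name `gaussian_ball_mass` and the chosen mean and standard deviation are assumptions, not part of the method. It evaluates the mass a one-dimensional Gaussian \(P_{0}\) assigns to the \(\delta \)-neighborhood of the optimizer \(x=0\).

```python
import math

def gaussian_ball_mass(delta, mean=0.0, std=1.0, center=0.0):
    """Mass that a 1-D Gaussian assigns to (center - delta, center + delta)."""
    def cdf(x):
        # Gaussian CDF written with the error function from the standard library.
        return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))
    return cdf(center + delta) - cdf(center - delta)

# The mass shrinks with delta but stays positive for every delta > 0,
# while the single point x = 0 (delta = 0) carries no mass at all.
for delta in (1.0, 1e-2, 1e-4, 0.0):
    print(delta, gaussian_ball_mass(delta, mean=3.0, std=10.0))
```

Even a Gaussian centered away from the optimizer places positive mass on every neighborhood of it, which is all that \(\tilde{{\mathbb {I}}}_{\mathbf {X}^{*}}\) asks for.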

Assumption 4

H, the objective function, is continuous at all \(x^{*}\in \mathbf {X}^{*}\).

Assumption 4 is needed here so that the neighborhoods around elements in \(\mathbf {X}^{*}\) have values close to the optimal objective value \(y^{*}\). In the rest of this section, we will list the modified version of the theorems analogous to the theorems in the previous section eliminating any redundancy.

Theorem 7

If \(P_{t}^{H}\) is a solution of Eq. (6) with initial points in \(\tilde{{\mathbb {I}}}{}_{H^{*}}\) (i.e., \(P_{0}^{H}\in \tilde{{\mathbb {I}}}{}_{H^{*}}\)) and \(y^{*}\,=\,\max _{x\in \mathbf {X}}H\left( x\right) ,\) then the following claims hold for an arbitrary \(\epsilon >0\):

1. \(P_{t}^{H}\left( \left[ y^{*}-\epsilon ,\infty \right) \right) \) is a monotonically non-decreasing function of t that converges to 1 as \(t\rightarrow \infty \);

2. \(P_{t}^{H}\left( \mathbf {R}^{+}\backslash \left[ y^{*}-\epsilon ,\infty \right) \right) \rightarrow 0\) as \(t\rightarrow \infty \).

Proof

Using Assumption 4, we know for any \(\epsilon >0\), there exists a \(\tilde{\delta }>0\) such that

As a consequence, we know that \(P_{0}^{H}\in \tilde{{\mathbb {I}}}{}_{H^{*}}\), which is induced by \(P_{0}\in \tilde{{\mathbb {I}}}_{\mathbf {X}^{*}}\) that has non-zero probability over the \(\tilde{\delta }\)-neighborhood of at least one optimizer \(x^{*}\in \mathbf {X}^{*}\), has non-zero probability over the \(\epsilon \)-neighborhood of \(y^{*}.\) The rest follows from the same proof technique as in Theorem 2.

For the following theorems, we introduce the notation:

The next theorem is needed to characterize the limiting behavior of Eqs. (6–7).

Theorem 8

If \(P_{0}\in {\mathbb {I}}_{\mathbf {X}^{*}}\) and \(P_{t}\) is a solution of Eqs. (6–7), then the following claims hold for any \(\delta >0\): (1) \(\lim _{t\rightarrow \infty }P_{t}\left( \mathbf {X}^{\delta ,*}\right) =1\); (2) \(\lim _{t\rightarrow \infty }P_{t}\left( \mathbf {X}\backslash \mathbf {X}^{\delta ,*}\right) =0\).

Proof

Using the fact that the objective function is continuous around the optimizers (i.e., Assumption 4), we know that there exists a corresponding \(\delta \) for each \(\epsilon .\) Furthermore, since \(\epsilon \) is arbitrary in Theorem 7 and we can always shrink \(\delta \) by shrinking \(\epsilon \) around the isolated optimizers, \(\delta \) can be made arbitrarily small as well.

The next theorem states that if we start from \(\tilde{{\mathbb {I}}}_{\mathbf {X}^{*}}\), the limit set will place all the weight in the neighborhoods of the optimizers.

Theorem 9

The limit set of Eqs. (6–7) starting from any initial point \(P_{0}\in \tilde{{\mathbb {I}}}_{\mathbf {X}^{*}}\) is \({\mathbb {P}}_{\tilde{\mathbf {X}}^{*}}\), i.e.,

Proof

See the proof for Theorem 4 and replace Theorem 2 with Theorem 7.

The next theorem is needed in our final conclusion stated in Theorem 11.

Theorem 10

Let \(P_{t}^{H}\) be a solution for Eq. (6) with its initial point in \(\tilde{{\mathbb {I}}}_{\mathbf {X}^{*}},\) then the following claims are true:

1. \(E_{t}\left[ H\right] \) is monotonically non-decreasing in \(t\in \mathbf {R}^{+}\);

2. if \(P_{\tau }\notin \mathbb {L}\left[ \tilde{{\mathbb {I}}}_{\mathbf {X}^{*}}\right] ,\) then \(E_{\tau }\left[ H\right] \) is strictly increasing in \(\tau \in \mathbf {R}^{+}\).

Proof

See the proof for Theorem 5.

Finally, by using Theorems 7 and 10 and applying a Lyapunov function, we have our desired conclusion.

Theorem 11

\(\mathbb {L}\left[ \tilde{{\mathbb {I}}}_{\mathbf {X}^{*}}\right] \) is a compact set and it is asymptotically stable.

Proof

See the proof for Theorem 6 and replace Theorems 2 and 5 with Theorems 7 and 10.

In other words, the modified theorems state that if Eqs. (6–7) start with a probability measure that places some weight on an arbitrarily small neighborhood of at least one optimizer, then the system will converge to an element in \(\mathbb {L}\left[ \tilde{{\mathbb {I}}}_{\mathbf {X}^{*}}\right] \), the set of probability measures that only has weight on arbitrarily small neighborhoods of the optimizers. Furthermore, \(\mathbb {L}\left[ \tilde{{\mathbb {I}}}_{\mathbf {X}^{*}}\right] \) is asymptotically stable.

4.3 Monte Carlo version

In Sect. 4.1, we have demonstrated that when the solution space is a subset of \(\mathbf {R}^{n}\), the CWO method exhibits convergence and stability properties that are desirable for any optimization method. The theorems are proven under the assumption that the probability measure can be modeled perfectly; they do not cover the convergence of the CWO method’s Monte Carlo version (cf. Algorithm 1), which is important in practice. The analysis techniques applied and the convergence result proved in this section are significantly different from those of the general version. The difference is caused by the two layers of approximation used for efficient simulation: projection and sampling. In addition, we are able to apply the analysis techniques in [2] to Eq. (16), which is a more general version of the equations considered previously for model-based methods.

In practice, we often assume the existence of a PDF \(p_{t}\) for \(P_{t}\) such that

where \(\mu \) is the Lebesgue or discrete measure on \(\mathbf {X}.\) For brevity, we use the following notations: \(\xi ^{\ge }\left( H\left( x\right) \right) :=\left\{ \xi \in \mathbf {X}|H(\xi )\ge H\left( x\right) \right\} ,\) \(\xi ^{>}\left( H\left( x\right) \right) :=\left\{ \xi \in \mathbf {X}|H(\xi )>H\left( x\right) \right\} ,\) and \(\xi ^{=}\left( H\left( x\right) \right) :=\left\{ \xi \in \mathbf {X}|H(\xi )=H\left( x\right) \right\} .\) In this section, we restrict the \(\beta _{t}\) function to be of the form:

Then, in discrete time (i.e., \(t\in \left\{ 0,1,2,\dots \right\} \)), Eqs. (6–7) can be rewritten more simply as:

where

is introduced to highlight its dependency on \(H\left( x\right) \) and \(p_{t}\). It is common to let \(\beta \left( x,p_{t}\right) =\frac{p_{t}\left( x\right) }{P_{t}\left( \xi ^{=}\left( H\left( x\right) \right) \right) }\), thus \(\sigma \left( x,p_{t}\right) =1\) and Eq. (14) becomes

Note that in the previous section, we allow \(\beta \) to vary with time t; however, in this section, we require a more explicit structure on \(\beta \), namely it depends on x and \(p_{t}\). To recap, the relevant assumptions for this section are Assumptions 1, 2, and 3. Furthermore, we will use t to denote time and k to denote the iteration count going forward.

While Eq. (14) brings us a step closer to an implementable algorithm, we are still faced with a major challenge—given a \(p_{0},\) an initial point, it is not clear that we can solve Eq. (14), a nonlinear Fokker-Planck equation, analytically. Hence, solving Eq. (14) numerically is a natural alternative. In solving Eq. (14), most numerical methods need to sample from the PDF \(p_{t},\) which can be difficult at times. One common approach to circumvent this difficulty is to project \(p_{t}\) onto a family of easy-to-sample-from parameterized density functions denoted by \(\mathbb {F}:=\left\{ f_{\theta }|\theta \in \varvec{\Theta }\right\} \), via the Kullback–Leibler (KL) divergence:

where \(\theta \in {\varvec{\Theta }}\) and \(f_{\theta }\in \mathbb {F}\). The projection is done by minimizing the KL divergence, i.e.,

so that the reference PDF \(p_{k}\) is updated through its surrogate PDF \(f_{\theta }\). We adopt the notations \(P_{\theta }\left( \cdot \right) \) and \(E_{\theta }\left[ \cdot \right] \) for the probability measure and expectation with respect to the surrogate PDF \(f_{\theta }.\) On the other hand, \(P_{k}\left( \cdot \right) \) and \(E_{k}\left[ \cdot \right] \) denote the probability measure and expectation of the reference PDF at the k-th iteration. With a slight abuse of notation, we write X for both \(X_{k}\) and \(X_{\theta },\) the random variables with the PDFs \(p_{k}\) and \(f_{\theta }\), respectively.
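For a Gaussian surrogate family, the projection reduces to moment matching. Under the usual convention \(D\left( p,f_{\theta }\right) =E_{p}\left[ \ln \left( p/f_{\theta }\right) \right] \), minimizing over \(\theta \) is the same as maximizing \(E_{p}\left[ \ln f_{\theta }\left( X\right) \right] \), and the maximizer matches the mean and variance of p. The sketch below assumes the reference PDF is represented by weighted samples; the function name `project_onto_gaussian` is hypothetical.

```python
import numpy as np

def project_onto_gaussian(samples, weights):
    """KL projection of a weighted sample onto the 1-D Gaussian family.

    Returns the mean and variance that maximize the weighted log-likelihood,
    i.e., the first two moments of the weighted empirical distribution.
    """
    samples = np.asarray(samples, dtype=float)
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()              # normalize to a probability vector
    mean = np.sum(weights * samples)
    var = np.sum(weights * (samples - mean) ** 2)
    return mean, var

# Heavier weights on the samples near 1.0 pull the projected mean toward 1.0.
mean, var = project_onto_gaussian([0.0, 1.0, 1.2, 3.0], [0.1, 0.4, 0.4, 0.1])
print(mean, var)
```

Sampling from the projected Gaussian is trivial, which is the reason for projecting in the first place.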

The natural exponential families (NEFs), in many applications, can be used as the surrogate parameterized family of PDFs. They are convenient to use in implementations and can lead to a closed analytical solution in our analysis. Their definition from [2, Definition 2.1] is presented below.

Definition 7

A parameterized family \(\mathbb {F}:=\left\{ f_{\theta }|\theta \in \varvec{\Theta }\subseteq \mathbf {R}^{d}\right\} \) on \(\mathbf {X}\) is called a natural exponential family (NEF) if there exist continuous mappings \(\varGamma :\mathbf {R}^{n}\mapsto \mathbf {R}^{d}\) and \(K:\mathbf {R}^{d}\mapsto \mathbf {R}\) such that \(f_{\theta }\left( x\right) =\exp \left( \theta ^{T}\varGamma \left( x\right) -K\left( \theta \right) \right) ,\) where \(\varvec{\Theta }:=\left\{ \theta \in \mathbf {R}^{d}|\left| K\left( \theta \right) \right| <\infty \right\} \) is the natural parameter space and \(K\left( \theta \right) =\ln \int _{\mathbf {X}}\exp \left( \theta ^{T}\varGamma \left( x\right) \right) \mu \left( \text {d}x\right) .\)

Remark 4

Let the interior of \(\varvec{\Theta }\) be denoted by \(\mathring{\varvec{\Theta }}.\) In this section, we use the following properties of the family from [16]: (1) \(K\left( \theta \right) \) is strictly convex on \(\mathring{\varvec{\Theta }};\) (2) the Jacobian for \(K\left( \theta \right) \) is \(E_{\theta }\left[ \varGamma \left( X\right) \right] \), i.e., \(\nabla K\left( \theta \right) =E_{\theta }\left[ \varGamma \left( X\right) \right] ;\) (3) the Hessian matrix for \(K\left( \theta \right) \) is \(\mathrm {Cov_{\theta }\left[ \varGamma \left( X\right) \right] },\) where \(\mathrm {Cov_{\theta }\left[ \cdot \right] }\) is the covariance with respect to \(f_{\theta }.\) Therefore, the Jacobian for the parameterized mean vector function, \(m\left( \theta \right) :=E_{\theta }\left[ \varGamma \left( X\right) \right] ,\) is strictly positive definite and invertible. This fact implies, along with the inverse function theorem, that \(m\left( \theta \right) \) is also invertible. Since \(m\left( \theta \right) \) is invertible, we can iterate our algorithm on \(m\left( \theta \right) \) instead of \(\theta ,\) and recover \(\theta \) as needed via the inverse function \(m^{-1}\left( \cdot \right) .\)
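As a concrete instance of these properties, consider the Bernoulli family on \(\left\{ 0,1\right\} \) with \(\varGamma \left( x\right) =x\) and the counting measure, for which \(K\left( \theta \right) =\ln \left( 1+e^{\theta }\right) \). This family is chosen here only for illustration. The sketch checks numerically that \(\nabla K\left( \theta \right) =m\left( \theta \right) \) and that \(m^{-1}\) recovers \(\theta \).

```python
import math

def K(theta):
    """Log-partition function of the Bernoulli NEF with Gamma(x) = x."""
    return math.log(1.0 + math.exp(theta))

def m(theta):
    """Mean vector function m(theta) = E_theta[Gamma(X)] (the logistic function)."""
    return 1.0 / (1.0 + math.exp(-theta))

def m_inv(eta):
    """Inverse of m on (0, 1): recovers the natural parameter from the mean."""
    return math.log(eta / (1.0 - eta))

theta = 0.8
h = 1e-6
finite_diff = (K(theta + h) - K(theta - h)) / (2.0 * h)   # numerical dK/dtheta
print(finite_diff, m(theta))      # the two values agree up to discretization error
print(m_inv(m(theta)))            # recovers theta up to round-off
```

Iterating on \(\eta =m\left( \theta \right) \) and mapping back through `m_inv` only when a density is needed mirrors the remark above.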

In the existing work using NEFs for global optimization, the model follows the form:

where \(S:\mathbf {R}^{+}\mapsto \mathbf {R}^{+}\) is an increasing, possibly iteration-varying function. Examples include: (1) proportional selection [17–19], where

(2) Boltzmann distribution [20], where

and \(\left\{ T_{k}\right\} \) is a sequence of predetermined parameters; (3) importance sampling [21], where

In the CWO method, looking at Eq. (14), our model has the form:

There are two major differences between our model [cf. Eq. (16)] and previous models [cf. Eq. (15)]. Firstly, the S function in our model, a mapping from \(\left( \mathbf {X},\mathbf {R}^{+},{\mathbb {P}}_{x}\right) \) to \(\mathbf {R}^{+}\), takes two additional parameters x and \(p_{k}\). Secondly, our model ensures that \(E_{p_{k}}\left[ S\left( X,H\left( X\right) ,p_{k}\right) \right] =1;\) hence, there is no need for normalization. It is also interesting to note that, for a fixed \(p_{t}\) and x, \(S\left( x,\cdot ,p_{t}\right) \) is an increasing function. Acknowledging these differences, we will prove that the Monte Carlo version of Eq. (14) converges to the internal chain recurrent set of an ordinary differential equation. The definition of internal chain recurrent sets will be introduced later along with the corresponding ordinary differential equation in our analysis. The development of the theorems below runs in parallel with the work of Hu et al. [2], with the major difference in the structure of the S function as discussed in this paragraph. The main idea is to apply techniques from the stochastic approximation literature to model-based methods.

With \(p_{k+1}\) defined by Eq. (16) and \(f_{\theta _{k}}\) the surrogate PDF at the k-th iteration, the smoothed reference PDF is denoted by:

The smoothing factor \(\alpha _{k}\) is a design parameter that can be used to limit the effect of \(p_{k+1}\) on \(\tilde{p}_{k+1}.\)
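A minimal sketch of how the smoothing factor acts on the mean parameters, assuming the smoothed reference PDF is the convex mixture \(\tilde{p}_{k+1}=\alpha _{k}p_{k+1}+\left( 1-\alpha _{k}\right) f_{\theta _{k}}\). Because expectations are linear in the density, the mean of \(\varGamma \left( X\right) \) under this mixture is the same convex combination of the two means. The function name is hypothetical.

```python
import numpy as np

def smoothed_mean_update(eta_k, target_mean, alpha_k):
    """Mean of Gamma(X) under alpha_k * p_{k+1} + (1 - alpha_k) * f_{theta_k}.

    eta_k       : current mean parameter m(theta_k)
    target_mean : E_{p_{k+1}}[Gamma(X)], the mean under the new reference PDF
    alpha_k     : smoothing factor in [0, 1] (assumed to weight the new PDF)
    """
    eta_k = np.asarray(eta_k, dtype=float)
    target_mean = np.asarray(target_mean, dtype=float)
    return alpha_k * target_mean + (1.0 - alpha_k) * eta_k

# With a fixed target, repeated smoothing approaches the target geometrically.
eta = np.array([0.0, 0.0])
for _ in range(5):
    eta = smoothed_mean_update(eta, [1.0, 2.0], alpha_k=0.7)
print(eta)
```

A small \(\alpha _{k}\) keeps the update close to the previous surrogate, while \(\alpha _{k}=1\) discards it entirely.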

Lemma 3

Assume that \(f_{\theta _{k+1}}\) is the member of a natural exponential family \(\mathbb {F}\) with parameter space \(\varvec{\Theta }\) that minimizes the KL divergence between \(\tilde{p}_{k+1}\) and \(\mathbb {F}\), i.e., \(\theta _{k+1}\in \arg \min _{\theta \in \varvec{\Theta }}D\left( \tilde{p}_{k+1},f_{\theta }\right) \), and that \(\theta _{k+1}\in \mathring{\varvec{\Theta }},\) the interior of \(\varvec{\Theta },\) for all k. Then

where \(m:\mathbf {R}^{d}\mapsto \mathbf {R}^{d}\) is the mean vector function (cf. Remark 4).

Proof

Using the argument made for proving Lemma 2 of [19] and the assumption that \(\theta _{k+1}\in \mathring{\varvec{\Theta }},\) we can prove that \(E_{\theta _{k+1}}\left[ \varGamma \left( X\right) \right] =E_{\tilde{p}_{k+1}}\left[ \varGamma \left( X\right) \right] ,\) where by definition \(m\left( \theta _{k+1}\right) :=E_{\theta _{k+1}}\left[ \varGamma \left( X\right) \right] \) (cf. Remark 4). The rest of the proof uses the same argument as in Lemma 2.1 of [2]. Using the fact that \(E_{\theta _{k+1}}\left[ \varGamma \left( X\right) \right] =E_{\tilde{p}_{k+1}}\left[ \varGamma \left( X\right) \right] ,\) along with Eq. (17), it follows that

which can be rewritten as

where the last equality is obtained from the definitions of KL divergence and NEFs. \(\square \)

So far, Eq. (18) provides us a way to update \(\theta _{k}\) via \(p_{k+1}.\) Of course, in reality we do not know \(p_{k+1};\) hence, we denote the estimator for \(p_{k+1}\) by \(\hat{p}_{k+1},\) which has the form: \(\hat{p}_{k+1}\left( x\right) :=S\left( x,H\left( x\right) ,f_{\theta _{k}}\right) f_{\theta _{k}}\left( x\right) .\) By substituting \(\hat{p}_{k+1}\) into Eq. (18), we have

where the interchange of the derivative and the integral above is justified by the dominated convergence theorem. The intuition from the equation above is that the algorithm will move in the direction that will increase \(E_{\theta }\left[ S\left( X,H\left( X\right) ,f_{\theta _{k}}\right) \right] .\)

In the CWO method, the S mapping has the specific form [cf. Eq. (14)]:

hence, its estimated form is:

The mean vector function below, which differs from that of Proposition 3.1 in [2], describes the dynamics for the mean vector function in the CWO method:

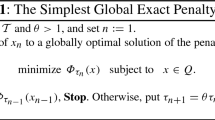

By replacing the mean vector function in Algorithm 2 from [2] with Eq. (19) (cf. Algorithm 1), we can prove the convergence of Algorithm 1.

Finally, we can prove that \(\eta _{k}\) in Eq. (20) will converge to the internally chain recurrent sets (cf. Definition 8) of an ODE:

where \(L\left( \eta \right) :=\nabla _{\theta }\left. \ln E_{\theta }\left[ \sigma \left( X,m^{-1}\left( \eta \right) \right) W\left( H\left( X\right) ,m^{-1}\left( \eta \right) \right) \right] \right| _{\theta =m^{-1}\left( \eta \right) }\) is the \(\left( 1-\rho \right) \)-quantile of H under \(f_{m^{-1}\left( \eta \right) }\).

Definition 8

Given an initial condition \(\eta \left( 0\right) =y,\) let \(\eta _{y}\left( t\right) \) be the solution to Eq. (21). A point x is said to be chain recurrent if for any \(\delta >0\) and \(T>0,\) there exist an integer \(k\ge 1,\) points \(y_{0},\dots ,y_{k}\) with \(y_{k}=x,\) and time instances \(t_{0},\dots ,t_{k-1}\) such that \(t_{i}\ge T,\) \(\left\| x-y_{0}\right\| \le \delta ,\) and \(\left\| \eta _{y_{i}}\left( t_{i}\right) -y_{i+1}\right\| \le \delta \) for \(i=0,\dots ,k-1.\) A compact invariant set \(\mathcal {A}\) (i.e., for any \(y\in \mathcal {A},\) the trajectory \(\eta _{y}\left( t\right) \) satisfies \(\eta _{y}\left( t\right) \subset \mathcal {A},\;\forall t\in \mathbf {R}^{+}\)) is said to be internally chain recurrent if every point \(x\in \mathcal {A}\) is chain recurrent.

The following theorem proves the convergence and stability of the Monte Carlo Algorithm 1.

Theorem 12

Assume that \(L\left( \eta \right) \) is continuous with a unique integral curve and the following assumptions hold:

1. The parameter \(\hat{\theta }_{k+1}\) computed at step 4 satisfies \(\hat{\theta }_{k+1}\in \mathring{\varvec{\Theta }},\;\forall k\);

2. The gain \(\left\{ \alpha _{k}\right\} \) satisfies \(\alpha _{k}>0,\;\forall k,\,\alpha _{k}\rightarrow 0\) as \(k\rightarrow \infty ,\) and \(\sum _{k=0}^{\infty }\alpha _{k}=\infty .\) \(\limsup _{k\rightarrow \infty }\left( \frac{\lambda _{k}}{k^{-\lambda }}\right) <\infty \) for some constant \(\lambda \ge 0.\) Furthermore, there exists a \(\beta >\max \left\{ 0,1-2\lambda \right\} \) such that

$$\begin{aligned} \limsup _{k\rightarrow \infty }\left( \frac{N_{k}}{k^{\beta }}\right) =\limsup _{k\rightarrow \infty }\left( \frac{k^{\beta }}{N_{k}}\right) . \end{aligned}$$

3. For a given \(\rho \in \left( 0,1\right) \) and a distribution family \(\mathbb {F}\), the \(\left( 1-\rho \right) \)-quantile of \(\left\{ H\left( X\right) ,\, X\sim f_{\theta }\left( x\right) \right\} \) is unique for each \(\theta \in \varvec{\Theta }.\)

Then, the sequence \(\left\{ \eta _{k}\right\} \) generated by Eq. (20) converges to a compact connected internally chain recurrent set of Eq. (21) w.p.1. Furthermore, if the internally chain recurrent sets of Eq. (21) are isolated equilibrium points, then w.p.1 \(\left\{ \eta _{k}\right\} \) converges to a unique equilibrium point.

Proof

See Theorem 3.1 in [2].
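To make the second condition of Theorem 12 concrete, the sketch below gives one gain sequence and one sample-size schedule of the required type. The constants are illustrative assumptions and are not values used in this paper: \(\alpha _{k}=\left( k+1\right) ^{-0.6}\) is positive, vanishes, and is not summable, and \(N_{k}\) grows like \(k^{\beta }\) with \(\beta =1.1\), which exceeds \(\max \left\{ 0,1-2\lambda \right\} \) for every \(\lambda \ge 0\).

```python
import math

def gain(k, a=1.0, exponent=0.6):
    """alpha_k = a / (k + 1)^exponent; non-summable whenever exponent <= 1."""
    return a / (k + 1) ** exponent

def sample_size(k, n0=100, beta=1.1):
    """N_k = n0 + ceil(k^beta), so N_k / k^beta and k^beta / N_k stay bounded."""
    return n0 + math.ceil(k ** beta)

# Partial sums of the gains keep growing although the gains themselves vanish.
partial_sum = sum(gain(k) for k in range(10_000))
print(gain(0), gain(9_999), partial_sum)
print(sample_size(10), sample_size(1_000))
```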

We have thus far shown that the Monte Carlo version of the CWO method converges to the internally chain recurrent set of Eq. (21), an invariant set. Since Eq. (20) will remain in the invariant set upon entrance, we have proved that Algorithm 1 is asymptotically stable. The major difference between the general and Monte Carlo convergence is that in the general case we can characterize the limiting behavior more precisely, i.e., having all the weight on optimal solutions. However, since the Monte Carlo version is an approximation of the general version, it can converge to a set that also contains distributions whose support is not limited to optimal solutions. The precise nature of the internally chain recurrent set of Eq. (21) depends on the projection used and hence requires additional analysis for each problem. The chain recurrent set is closed and invariant; it contains all equilibrium points and any point that can return to itself through a sequence of steps, each of which follows the system dynamics and then jumps to a nearby state (e.g., periodic orbits). The existence of these non-optimal recurrent points can only be confirmed by plotting the vector field of Eq. (21). Knowing that in the worst case scenario the system will converge to the chain recurrent set is helpful.

5 Numerical algorithms

In this section, we present a few numerical algorithms based on the CWO method. These algorithms attempt to find an optimal solution iteratively. Each iteration consists of 5 stages: generation, quantile-update, parameter-update, weight-update, and projection. The generation, quantile-update and projection stages remain the same for all variations of the generic algorithm (i.e., Algorithm 2, where arrows are used as indentation markers). The weight-update and projection steps, along with the equation in step 2 of Algorithm 2, correspond to step 4 in Algorithm 1. The additional uniform random variable is included in step 2 of Algorithm 2 to ensure all solutions are considered. We propose several approaches for constructing the weight-update stage. These algorithms build on the theoretical results using the same types of modifications as are found in CE and MRAS (see [11, 19, 22]).

There are seven parameters in Algorithm 2: \(\rho _{0}\) is the initial percentile threshold; \(\rho _\mathrm{min}\) is the minimum allowed percentile threshold; \(N_{0}\) is the initial sample size; \(\frac{\epsilon }{2}\) is the minimal improvement required of the threshold \(\gamma _{k},\) where \(\gamma _{k}\) is the value corresponding to the threshold \(\rho _{k};\) \(\zeta \) is the growth factor for the sample size; \(\varsigma \) is introduced to ensure all solutions are considered; and \(\alpha \) controls how much information the algorithm should retain from the last iteration. In general, \(N_{0}\) should be at least in the hundreds; increasing this parameter tends to improve the final solution. From condition 2 of Theorem 12, we know that the number of samples, \(N_{k},\) grows with the iteration count. In practice, we found that the decision to increase the sample size can be made when the value found at the current threshold \(\rho _{k}\) does not improve \(\gamma _{k-1}\) by at least \(\frac{\epsilon }{2}.\) For CWO algorithms, \(\rho _{0}\) controls the trade-off between speed and optimality. The lower \(\rho _{0}\) is, the faster the algorithm converges to a solution; however, that solution might be far from optimal. Similar considerations go into picking \(\rho _\mathrm{min}\). We introduce the \(\epsilon \) parameter to ensure that the threshold \(\gamma \) calculated at the \((1-\rho )\) percentile improves at each iteration. If an improvement does not happen, then the algorithm is forced to either (1) reduce the threshold \(\rho _{k}\) until a sample better than \(\gamma \) is found, or (2) increase the sample size. In general, small \(\rho \) values should be avoided in early iterations of the algorithm; hence, \(\rho _{0}\) should not be so low that the algorithm eliminates, too early, many suboptimal solutions that are near optimal solutions.
\(\zeta \) determines how fast the sample size grows when a satisfactory \(\rho _{k}\) update cannot happen. How fast the sample size actually grows also depends on \(\rho _{k}\) and \(\epsilon \). Finally, \(\varsigma \) is introduced so that there is a non-zero probability over the neighborhood of any element of the solution space, satisfying the requirement imposed by \({\mathbb {I}}_{\mathbf {X}^{*}}\). \(\varsigma \) is generally on the order of 0.01. In addition to the parameters listed above, we also need to decide the family of distributions, \(g_{\theta }\), we employ for each of the optimization problems in this section. The decision is discussed in detail in the respective sections.
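The interplay between \(\rho _{k}\), \(\epsilon \), and \(\zeta \) described above can be sketched as follows for a maximization problem. This is one reading of the description, not a transcription of Algorithm 2: the helper name `update_threshold` and the geometric `shrink` factor used to lower \(\rho \) are assumptions made for the illustration.

```python
import math
import numpy as np

def update_threshold(values, rho_k, rho_min, gamma_prev, eps, n_k, zeta, shrink=0.9):
    """One quantile-update step (hypothetical helper, maximization).

    Returns (rho, gamma, n_next). If some rho in [rho_min, rho_k] raises the
    threshold by at least eps / 2, it is accepted and the sample size is kept.
    Otherwise the old threshold is kept and the sample size grows by zeta.
    """
    values = np.asarray(values, dtype=float)
    rho = rho_k
    while rho >= rho_min:
        gamma = float(np.quantile(values, 1.0 - rho))   # (1 - rho)-quantile of H
        if gamma >= gamma_prev + eps / 2.0:
            return rho, gamma, n_k                      # sufficient improvement
        rho *= shrink                                   # look further into the tail
    return rho_k, gamma_prev, math.ceil(zeta * n_k)     # no improvement: more samples

values = np.arange(100.0)   # stand-in for H evaluated on 100 samples
print(update_threshold(values, 0.1, 0.01, gamma_prev=50.0, eps=1.0, n_k=100, zeta=2.0))
print(update_threshold(values, 0.1, 0.01, gamma_prev=200.0, eps=1.0, n_k=100, zeta=2.0))
```

The first call accepts the current \(\rho _{k}\); the second finds no admissible threshold and doubles the sample size instead.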

In our examples, Eq. (3) has a specific form:

where \(\beta \left( x,p_{k}\right) =\frac{p_{k}\left( x\right) }{\sum _{\xi \in \mathbf {X}:H\left( \xi \right) =H\left( x\right) }p_{k}\left( \xi \right) }\), and \(p_{k+1}\) is the PMF at iteration \(k+1,\) and \(w:[0,1]\rightarrow [0,1]\) is the probability weighting function. Let \(F_{k}^{H}\left( \cdot \right) \) be the continuous cumulative distribution function for the outcome random variable \(H\left( X\right) ,\) then Eq. (22) can be written more compactly as:

Algorithm 2 is the generic CWO algorithm using Eq. (22) as the weight updating equation. Later, two algorithms with different ways of updating the density function are described. Although both weight-update methods will use Eq. (22), they differ in their assignment of the sample weights. The performance of the algorithms is measured using asymmetric traveling salesman problems and continuous test functions, which we will introduce in the section below.
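The following sketch illustrates the cumulative weighting idea on a finite set of samples. It assumes that the update assigns each sample the increment of w across that sample's cumulative probability interval, with samples sorted from best to worst and no ties; the function name is hypothetical, and the weighting function \(w\left( p\right) =1-\left( 1-p\right) ^{2}\) is the one used in the ATSP experiments of this paper.

```python
import numpy as np

def cumulative_weights(initial_weights, w):
    """Re-weight samples sorted best-first by increments of w.

    initial_weights : current (unnormalized) weights of the sorted samples
    w               : probability weighting function on [0, 1], w(0)=0, w(1)=1
    """
    q = np.asarray(initial_weights, dtype=float)
    q = q / q.sum()
    upper = np.cumsum(q)                          # mass of samples at least as good
    upper[-1] = 1.0                               # guard against round-off
    lower = np.concatenate(([0.0], upper[:-1]))   # mass of strictly better samples
    return w(upper) - w(lower)

w = lambda p: 1.0 - (1.0 - p) ** 2
new_weights = cumulative_weights(np.ones(4), w)
print(new_weights, new_weights.sum())
```

With four equally weighted samples, the new weights are 0.4375, 0.3125, 0.1875, and 0.0625. They sum to \(w\left( 1\right) -w\left( 0\right) =1\) without any normalization, and a concave w shifts weight toward the better samples.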

5.1 Combinatorial optimization: ATSP

We apply variations of Algorithm 2 to several asymmetric traveling salesman problems (ATSPs). They are taken from the website http://www.iwr.uni-heidelberg.de/groups/comopt/software/TSPLIB95. We follow a similar approach as in Hu et al. [19], which is outlined below. The reader is reminded here that Algorithm 2 is designed for maximization problems, whereas we are searching for the minimum distances of ATSPs. The goal in each ATSP problem is to find the minimum length of a tour that connects \(N_{cities}\) cities with the same starting and ending cities. For an ATSP, we are given an \(N_{cities}\)-by-\(N_{cities}\) distance matrix D, whose (i, j)th element \(D_{i,j}\) represents the distance from city i to city j. The problem can be mathematically stated as:

where \(x:=\left\{ x_{1},x_{2},\dots ,x_{N_{cities}},x_{1}\right\} \) is an admissible tour and \(\mathbf {X}\) is the set of all admissible tours.
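As a small illustration of the objective, the sketch below evaluates the length of a closed tour from a distance matrix. The three-city matrix is made up for the example, cities are indexed from 0, and the return leg to the starting city is implied rather than stored.

```python
import numpy as np

def tour_length(D, tour):
    """Total length of a closed tour; `tour` lists each city exactly once."""
    tour = np.asarray(tour)
    successor = np.roll(tour, -1)      # next city on the tour, wrapping to the start
    return float(D[tour, successor].sum())

# Asymmetric distances: D[i, j] need not equal D[j, i].
D = np.array([[0, 2, 9],
              [1, 0, 6],
              [7, 3, 0]])
print(tour_length(D, [0, 1, 2]))   # 2 + 6 + 7
print(tour_length(D, [0, 2, 1]))   # 9 + 3 + 1
```

The two tours visit the same cities in opposite directions and have different lengths, which is what distinguishes the asymmetric problem from the symmetric one.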

We use the same approach suggested by Rubinstein [22], and De Boer et al. [23] for solving these problems. Each distance matrix D is given an initial state probability transition matrix, whose (i, j)th element specifies the probability of transitioning from city i to city j. At each iteration of the algorithm, there are two important steps: (1) generate random admissible tours according to the probability transition matrix and evaluate the performance of each sampled tour; (2) update the probability transition matrix based on the tours generated from step 1. We denote the set of tours generated at the kth iteration by \(\left\{ x_{k}^{i}\right\} ,\) where \(i\in \left\{ 1,\dots ,N_{k}\right\} \). Without loss of generality, we will assume the samples are sorted according to their values (i.e., \(H\left( x_{k}^{i}\right) <H\left( x_{k}^{j}\right) \) if and only if \(i<j\)).
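One common way to generate an admissible tour from a transition matrix is to mask the cities already visited and renormalize the current row before each draw. The sketch below is an illustration under that assumption; the function name and the fallback for rows with no remaining mass are hypothetical choices.

```python
import numpy as np

def sample_tour(P, rng, start=0):
    """Draw one admissible tour from transition matrix P by masking visited cities."""
    n = P.shape[0]
    tour = [start]
    visited = np.zeros(n, dtype=bool)
    visited[start] = True
    for _ in range(n - 1):
        probs = np.where(visited, 0.0, P[tour[-1]])   # forbid revisiting a city
        total = probs.sum()
        if total <= 0.0:
            # No mass left on unvisited cities: fall back to a uniform choice.
            probs = (~visited).astype(float)
            total = probs.sum()
        nxt = int(rng.choice(n, p=probs / total))
        tour.append(nxt)
        visited[nxt] = True
    return tour

n_cities = 5
P = np.full((n_cities, n_cities), 1.0 / (n_cities - 1))
np.fill_diagonal(P, 0.0)                               # uniform initial transitions
rng = np.random.default_rng(0)
tours = [sample_tour(P, rng) for _ in range(3)]
print(tours)
```

Every generated tour is a permutation of the cities that starts at `start`, so it can be scored directly with the tour length objective.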

A detailed discussion of the admissible tour generation process can be found in de Boer et al. [23]. The CWO algorithm differs from other algorithms in how it updates its transition matrix. At the kth iteration of CWO, the probability density function, \(p_{k}\left( \cdot ,\theta _{k}\right) \), parametrized by the transition matrix \(\theta _{k}\) is given by the equation below:

where \(\mathbf {X}_{i,j}\left( l\right) \) is the set of all tours in \(\mathbf {X}\) such that the lth transition is from city i to city j. We can show that the new transition matrix is updated (i.e., stage 6 of Algorithm 2) as:

where we denote the updated density by \(p_{k+1}^{w}\left( \cdot \right) \) and \(\{x_{k+1}^{i}\}\) is generated from \(p_{k}(\cdot ,\theta _{k})\) (i.e., a density function that is parameterized by \(\theta _{k}\)). The superscript w is used to emphasize the dependence of the updated probability mass function on the probability weighting function w. The construction of \(p_{k+1}^{w}\left( \cdot \right) \) depends on the specific weight-update method.
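A sketch of a weighted transition-matrix update of this kind is given below. It assumes that each entry \(\left( i,j\right) \) accumulates the weight of every sampled tour that moves from city i to city j, and that the result is blended with the previous matrix using \(\alpha \) as the weight on the new estimate. Both the function name and this use of \(\alpha \) are assumptions for the illustration.

```python
import numpy as np

def update_transition_matrix(tours, weights, theta_prev, alpha):
    """Weighted transition frequencies, smoothed with the previous matrix."""
    n = theta_prev.shape[0]
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()
    freq = np.zeros((n, n))
    for tour, wt in zip(tours, weights):
        # Pair each city with its successor, including the return leg.
        for i, j in zip(tour, tour[1:] + tour[:1]):
            freq[i, j] += wt
    return alpha * freq + (1.0 - alpha) * theta_prev

theta_prev = np.full((3, 3), 1.0 / 3.0)
theta_next = update_transition_matrix([[0, 1, 2], [0, 2, 1]], [3.0, 1.0],
                                      theta_prev, alpha=0.7)
print(theta_next)
print(theta_next.sum(axis=1))
```

Because every tour leaves each city exactly once, each row of the weighted frequencies sums to one, so the updated matrix stays row-stochastic without renormalization.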

5.1.1 Weight-update methods

In this section, we present several different methods of obtaining \(p_{k+1}^{w}\left( \cdot \right) \) from a collection of samples \(\{x_{k}^{i}\}\) at the kth step. The first method we introduce is called tilted weight update.

Tilted weight update (CWO_T)

The tilted weight-update method is described in Algorithm 3. The key idea behind this variation is that we assign the initial weights of the samples according to their outcome values: the smaller the value, the more initial weight it gets (see stage 2 in Algorithm 3).

We ran 30 independent experiments for seven ATSPs. In those experiments, we used the probability weighting function: \(w\left( p\right) :=1-\left( 1-p\right) ^{2}.\) The trials were run using Algorithm 2 with the parameters \(\rho _{0}=\rho _\mathrm{min}=0.6,\) \(N_{0}=1000,\) \(\epsilon =1\), \(\zeta =2\), \(\varsigma =0.02,\) \(\alpha =0.7\) and the weight-update scheme in Algorithm 3. The results are summarized in Table 1. \(N_{cities}\) is the number of cities for each problem; \(N_{Total}\) is the average number of total samples until the solutions stop changing; \(H_{best}\) is the best known solution; \(H_{*}\) is the worst algorithm solution from the repeated runs; \(H^{*}\) is the best algorithm solution from the repeated runs; \(\delta _{*}\) and \(\delta ^{*}\) are the percentage deviation of the worst and best algorithm solutions from the best known solution, respectively; \(\delta \) is the average percentage deviation of the algorithm solutions from the best known solution. The important thing to note about the algorithm is its dependence on the actual outcome values. In the next weight-update method, this dependence is eliminated; instead, we weight the samples uniformly.

Uniform weight update (CWO_U)

Tilting assigns the initial weights of the samples \(\{x_{k}^{i}\}\) using their values. Uniform weight updating differs from tilting by assuming a uniform distribution over the samples. Another major difference from the above approach is that we no longer only consider elite samples. Instead, we use a carefully chosen probability weighting function that smoothly re-weights the samples. More specifically in stage 5 of Algorithm 2, we assume a uniform initial density and use the weighting function

where \(\sigma \) is the optimal-seeking factor and \(\rho \) is the quantile threshold. \(\sigma \) and \(\rho \) are treated as variables that parameterize the weighting function, whereas p is the argument of the parameterized weighting function. Using Eq. (23), we modify the generic CWO algorithm by altering the way the sample weights are updated. The algorithm has a strong connection with the traditional cross-entropy method, which is explained below.

Fig. 1 Derivatives of the weighting function and numerical results. (a) Derivatives of Eq. (23) as \(\sigma \) increases. (b) CE versus CWO_U sorted trial runs

We remind the reader that the density update equation for cross-entropy is

where an indicator function is used to select the elite samples based on a \(\rho \)-dependent threshold \(\gamma \). In fact, the cross-entropy equation is just the limiting case, as \(\sigma \rightarrow \infty \), of the CWO_U algorithm. As we increase the optimal-seeking factor, the derivative of Eq. (23), with \(\rho \) fixed at 0.1, approaches a step function [i.e., Eq. (24)] whose discontinuity occurs at \(p=\rho =0.1\) (see Fig. 1a). Table 2 contains the results from running 20 trials of the CWO_U and CE algorithms with the parameters \(\varDelta =0.01,\) \(\rho _{0}=0.1,\) \(\rho _\mathrm{min}=0.001,\) \(N_{0}=1000,\) \(\epsilon =0\), \(\zeta =1\), \(\varsigma =0.01\), and \(\alpha =0.7\). Note that the additional parameter \(\varDelta \) controls how the weighting function changes between iterations: in each iteration, the \(\sigma \) parameter in Eq. (23) is increased by \(\varDelta \).
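The relationship between smooth re-weighting and elite selection can be illustrated with a small sketch. It assumes rank-dependent weights in the style of cumulative prospect theory: with a uniform initial density over \(N\) samples ranked best-first, the sample of rank \(i\) receives the increment \(w(i/N)-w((i-1)/N)\). This may differ in detail from stage 5 of Algorithm 2, and the sketch does not implement Eq. (23). It uses only the quadratic weighting function \(w(p)=1-(1-p)^{2}\) from the tilting experiments and a hypothetical piecewise-linear function \(w(p)=\min (p/\rho ,1)\), whose derivative is a step function with its jump at \(p=\rho \).

```python
def cumulative_weights(n, w):
    """Weights for n samples ranked best-first under a uniform density.

    Assumed scheme: the sample of rank i (1-based) receives the increment
    of the weighting function w over its slice of cumulative probability.
    """
    return [w(i / n) - w((i - 1) / n) for i in range(1, n + 1)]


def w_quadratic(p):
    """Smooth concave weighting function w(p) = 1 - (1 - p)^2."""
    return 1.0 - (1.0 - p) ** 2


def make_w_elite(rho):
    """Hypothetical weighting function whose derivative is a step function.

    w(p) = min(p / rho, 1): slope 1/rho below the quantile rho, flat above.
    """
    def w(p):
        return min(p / rho, 1.0)
    return w


if __name__ == "__main__":
    # Smooth case: every sample keeps a positive weight, decreasing in rank.
    print(cumulative_weights(10, w_quadratic))
    # Step-derivative case: only the top rho fraction gets (equal) weight.
    print(cumulative_weights(10, make_w_elite(0.2)))
```

Under this assumed scheme, the step-derivative function gives equal weight to the top \(\rho N\) samples and zero weight to the rest, which is the elite-sample selection of cross-entropy, whereas the smooth function keeps every sample in play with a weight that shrinks as its rank worsens. In both cases the weights sum to \(w(1)-w(0)=1\).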

We plot the sorted minimum tour distances obtained from the 20 trials of the CE and CWO_U algorithms in Fig. 1b. Compared with the standard cross-entropy method, our approach does better at every percentile. Here, the \(\frac{19}{20}\)th percentile contains the lowest tour distance obtained among the 20 trials, the \(\frac{18}{20}\)th percentile contains the second lowest, and so on.
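The comparison "better at every percentile" amounts to comparing the two sets of trial results rank by rank after sorting. A minimal sketch follows, assuming a minimization problem and equal numbers of trials; the function name is hypothetical.

```python
def better_at_every_rank(results_a, results_b):
    """Return True if algorithm A is at least as good as B at every rank.

    Both result lists are sorted in ascending order (minimization), and
    the k-th smallest value of A is compared with the k-th smallest of B.
    """
    if len(results_a) != len(results_b):
        raise ValueError("both algorithms need the same number of trials")
    return all(a <= b for a, b in zip(sorted(results_a), sorted(results_b)))
```

This is a stronger statement than a comparison of averages: a single trial in which A's sorted value exceeds B's at the same rank makes the check fail.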

5.2 Continuous problems

We further tested the CWO uniform weight update scheme on the continuous case, comparing CWO_U against the CE method by minimizing two continuous test functions with many local minima and isolated optimizers: 1) Forrester: \(H_{1}(x)=\left( 6x-2\right) ^{2}\sin \left( 12x-4\right) ,\) \(0\le x\le 1;\) 2) Shekel: \(H_{2}(x)=-\sum _{j=1}^{5}\left( \sum _{i=1}^{4}\left( x_{i}-A_{ij}\right) ^{2}+B_{j}\right) ^{-1},\) \(0\le x_{i}\le 10,\) where \(A_{1}=A_{3}=\left[ 4,1,8,6,3\right] ,\ A_{2}=A_{4}=\left[ 4,1,8,6,7\right] ,\) and \(A_{i}\) represents the ith row of the matrix A. Furthermore, \(B=\left[ 0.1,0.2,0.2,0.4,0.4\right] .\) Table 3 contains the results of our 20 trial runs for each scenario, using the parameters \(\varDelta =0.1,\) \(\rho _{0}=\rho _\mathrm{min}=0.1,\) \(N_{0}=100,\) \(\epsilon =0\), \(\zeta =1\), \(\varsigma =0\), and \(\alpha =0.7.\) We employed independent Gaussian distributions with zero mean and standard deviation of 10 as the initial distributions in all dimensions for all runs.
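For readers who wish to reproduce the test problems, the two objective functions can be written down directly from the definitions above. This is a minimal sketch using only the Python standard library; the function names `forrester` and `shekel` are our own labels, and the code covers only the objectives, not the CWO_U or CE algorithms.

```python
import math


def forrester(x):
    """H_1(x) = (6x - 2)^2 * sin(12x - 4), defined on 0 <= x <= 1."""
    return (6.0 * x - 2.0) ** 2 * math.sin(12.0 * x - 4.0)


# Rows A_1..A_4 of the matrix A and the vector B, as given in the text.
A = [
    [4, 1, 8, 6, 3],  # A_1
    [4, 1, 8, 6, 7],  # A_2
    [4, 1, 8, 6, 3],  # A_3 = A_1
    [4, 1, 8, 6, 7],  # A_4 = A_2
]
B = [0.1, 0.2, 0.2, 0.4, 0.4]


def shekel(x):
    """H_2(x) = -sum_j 1 / (sum_i (x_i - A_ij)^2 + B_j), 0 <= x_i <= 10.

    x is a sequence of four coordinates.
    """
    total = 0.0
    for j in range(5):
        dist_sq = sum((x[i] - A[i][j]) ** 2 for i in range(4))
        total += 1.0 / (dist_sq + B[j])
    return -total
```

As a sanity check, `forrester(0.7572)` evaluates to about \(-6.02\) and `shekel([4, 4, 4, 4])` to about \(-10.15\), close to the commonly quoted global minima of these two functions.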

6 Conclusion

In the first part of this paper, we proved the convergence and stability of both the theoretical and Monte Carlo versions of the CWO-based method. The proofs provide a rigorous mathematical foundation for the two practical algorithms we proposed in the numerical examples section. These two algorithms are variations of the generic CWO algorithm described in Algorithm 2. The two variations, CWO_T and CWO_U, differ in how they update their probability density functions over the solution space in each iteration. The first approach, CWO_T, weights the samples according to their outcome values. CWO_U, on the other hand, weights the samples uniformly. We benchmarked the performance of the CWO_T algorithm and summarized the results in Table 1. Although the numerical values are quite satisfactory, we wanted to see whether we could improve on them. This effort led to the development of the second approach, CWO_U, which we consider the preferred implementation of the CWO-based algorithm. Perhaps the most surprising fact is that by not taking into account the outcome values of the samples, we are able to achieve better performance. Even more interesting is the fact that the standard cross-entropy approach is just a limiting case of the CWO_U approach. Comparing the numerical results of CWO_U with those of CE, we believe our algorithm is better at obtaining an optimal solution (see Fig. 1b). Of course, the improvement in performance comes at the expense of increased computational cost.

References

Hu, J., Wang, Y., Zhou, E., Fu, M.C., Marcus, S.I.: A survey of some model-based methods for global optimization. In: Hernández-Hernández, D., Minjárez-Sosa, J.A. (eds.) Optimization, Control, and Applications of Stochastic Systems, pp. 157–179. Birkhäuser, Boston (2012)

Hu, J., Hu, P., Chang, H.S.: A stochastic approximation framework for a class of randomized optimization algorithms. IEEE Trans. Autom. Control 57, 165–178 (2012)

Kahneman, D., Tversky, A.: Prospect Theory: An Analysis of Decision Under Risk. National Emergency Training Center, Emmitsburg (1979)

Tversky, A., Kahneman, D.: Advances in prospect theory: cumulative representation of uncertainty. J. Risk Uncertain 5(4), 297–323 (1992)

Diecidue, E., Schmidt, U., Zank, H.: Parametric weighting functions. J. Econ. Theory 144, 1102–1118 (2009)

Borkar, V.S.: Topics in Controlled Markov Chains. CRC Press, Boca Raton (1991)

Billingsley, P.: Convergence of Probability Measures, 2nd edn. Wiley-Interscience, New York (1999)

Wang, Y.: Simulation-Based Methods for Stochastic Control and Global Optimization. PhD thesis, University of Maryland - College Park (2011)

Frank, T.D.: Nonlinear Fokker–Planck Equations—Fundamentals and Applications. Springer, Berlin (2005)

Kolokoltsov, V.N.: Nonlinear Markov Processes and Kinetic Equations. Cambridge University Press, Cambridge (2010)

Wang, Y., Fu, M.C., Marcus, S.I.: Model-based evolutionary optimization. In: Proceedings of the Winter Simulation Conference, WSC’10, pp. 1199–1210, Winter Simulation Conference (2010)

Oechssler, J., Riedel, F.: Evolutionary dynamics on infinite strategy spaces. Econ. Theory 17(1), 141–162 (2001)

Hofbauer, J., Oechssler, J., Riedel, F.: Brown-von Neumann–Nash dynamics: the continuous strategy case. Games Econ. Behav. 65, 406–429 (2009)

Zeidler, E.: Nonlinear Functional Analysis and Its Applications: Part 2 B: Nonlinear Monotone Operators. Springer, Berlin (1989)

Bhatia, N.P., Szegö, G.P.: Stability Theory of Dynamical Systems. Springer, Berlin (1970)

Morris, C.N.: Natural exponential families with quadratic variance functions. Ann. Stat. 10, 65–80 (1982)

Mühlenbein, H., Paaß, G.: From recombination of genes to the estimation of distributions I. Binary parameters. In: Voigt, H.-M., Ebeling, W., Rechenberg, I., Schwefel, H.-P. (eds.) Parallel Problem Solving from Nature IV. Lecture Notes in Computer Science, pp. 178–187. Springer, Berlin (1996)

Wolpert, D.H.: Finding bounded rational equilibria part I: iterative focusing. In: Proceedings of the International Society of Dynamic Games Conference (2004)

Hu, J., Fu, M.C., Marcus, S.I.: A model reference adaptive search method for global optimization. Oper. Res. 55, 549–568 (2007)

Zabinsky, Z.B.: Stochastic Adaptive Search for Global Optimization, vol. 72. Springer, Berlin (2003)

Rubinstein, R.Y., Kroese, D.P.: The Cross-Entropy Method: A Unified Approach to Combinatorial Optimization, Monte-Carlo Simulation, and Machine Learning. Springer, New York (2004)

Rubinstein, R.Y.: Combinatorial optimization, cross-entropy, ants and rare events. In: Uryasev, S., Pardalos, P.M. (eds.) Stochastic Optimization: Algorithms and Applications, pp. 304–358. Kluwer, Dordrecht (2001)

de Boer, P.-T., Kroese, D.P., Mannor, S., Rubinstein, R.Y.: A tutorial on the cross-entropy method. Ann. Oper. Res. 134, 19–67 (2005)

Acknowledgments

This work was supported in part by the National Science Foundation (NSF) under Grants CNS-0926194, CMMI-0856256, CMMI-1362303, CNS-1446665 and CCF-0926194, and by the Air Force Office of Scientific Research (AFOSR) under Grant FA9550-15-10050. A preliminary and shorter version of this paper was presented at the 2013 Winter Simulation Conference.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Lin, K., Marcus, S.I. Cumulative weighting optimization. J Glob Optim 65, 487–512 (2016). https://doi.org/10.1007/s10898-015-0374-4

DOI: https://doi.org/10.1007/s10898-015-0374-4