Abstract

We study rates of mixing for small random perturbations of one-dimensional Lorenz maps. Using a random tower construction, we prove that, for Hölder observables, the random system admits exponential rates of quenched correlation decay.


1 Introduction

In 1963, Lorenz [17] introduced the following system of equations

$$ \dot x = 10(y - x), \qquad \dot y = 28x - y - xz, \qquad \dot z = xy - \tfrac{8}{3}z \tag{1} $$

as a simplified model for atmospheric convection. Numerical simulations performed by Lorenz showed that the above system exhibits sensitive dependence on initial conditions and has a non-periodic “strange” attractor. Since then, (1) has become a basic example of a chaotic deterministic system that is notoriously difficult to analyze. We refer to [4] for a thorough account of this topic.

It is now well known that a useful technique to analyze the statistical properties of such a flow, and of any nearby flow in the C2-topology, is to study the dynamics of the flow restricted to a Poincaré section via a well-defined Poincaré map [5]. Such a Poincaré map admits an invariant stable foliation; moreover, it is strictly uniformly contracting along stable leaves [5]. Therefore, the dynamics of the Poincaré map can be understood by quotienting along stable leaves, i.e., by studying the dynamics of its one-dimensional quotient map. This technique has been employed to obtain statistical properties of Lorenz flows [15] and to prove that these statistical properties are stable under deterministic perturbations of the flow [3, 7, 8]. This illustrates the importance of understanding the statistical properties of one-dimensional Lorenz maps.

One-dimensional Lorenz maps have been thoroughly studied in the literature. In [12], which is the main inspiration for this paper, exponential decay of correlations is proved for a deterministic Lorenz-like map. In [8], the authors examine a perturbed family of Lorenz-like maps with differing singularity points and show statistical stability. Another example is [2], which examines a family of perturbed contracting Lorenz-like maps, in contrast to the expanding case. The authors show that the set of points that, at a given time, have not yet achieved exponential growth of the derivative or slow recurrence to the critical point decays exponentially, which in turn implies further statistical properties.

In this paper, we study rates of mixing for small random perturbations of such one-dimensional maps. We use a random tower construction to prove that for Hölder observables the random system admits exponential rates of quenched correlation decay. The paper is organized as follows. In Section 2 we present the setup and introduce the random system under consideration. Section 2 also includes the main result of the paper, Theorem 2.2. Section 3 includes the proof of Theorem 2.2. The Appendix contains a version of the abstract random tower result of [9, 14], which is used to deduce Theorem 2.2.

2 Setup

2.1 Our Unperturbed System

We assume that the following conditions hold.

- (A1) T0 : I → I, \(I=[-\frac 12, \frac 12]\), is C1 on I ∖{0} with a singularity at 0 and one-sided limits T0(0+) < 0 and T0(0−) > 0. Furthermore, T0 is uniformly expanding on I ∖{0}, i.e., there are constants \(\tilde C>0\) and ℓ > 0 such that \(D{T_{0}^{n}}(x)> \tilde {C} e^{n \ell }\) for all n ≥ 1 whenever \(x\notin \bigcup _{j=0}^{n-1}({T_{0}^{j}})^{-1}(0)\);
- (A2) There exist C > 1 and \(0<\lambda _{0}<\frac 12\) such that, in a one-sided neighborhood of 0,
  $$ C^{-1}|x|^{\lambda_{0}-1}\le |DT_{0}(x)|\le C|x|^{\lambda_{0}-1}. \tag{2} $$
  Moreover, for any α ∈ (0, 1), 1/DT0 is α-Hölder on \([-\frac 12, 0)\) and \((0, \frac 12]\) with α-Hölder constant K = K(α);
- (A3) T0 is transitive (for the construction, we use that the preimages of 0 are dense in I).

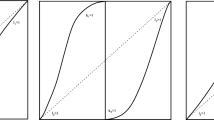

See Fig. 1 for a depiction of the graph of T0. Notice that (A2) implies that for all x, y ∈ I we have

where \(K^{\prime } = C^{2} K\). Furthermore, if 1 − λ0 ≤ α, then DT0 is locally Hölder, in the sense that for all x, y ∈ I we have

Definition 2.1

Let {Tε : I → I}ε> 0 be a family of maps. For some fixed α ∈ (0,1), we say Tε is C1+α on I ∖{0} if Tε is C1 on I ∖{0} and \(\frac {1}{DT_{\varepsilon }}\) is α-Hölder on I ∖{0}. Furthermore, we say {Tε : I → I}ε> 0 is a continuous family of C1+α(I ∖{0}) maps if

and

2.2 Our Perturbed System

Let T0 : I → I be the map introduced in Section 2.1. Notice that T0 is C1+α on I ∖{0} for any α ∈ (0, 1) according to the definition in the previous subsection. Fix some α ∈ [1 − λ0,1). Define \(\mathcal A(\varepsilon )\) to be a C1+α continuous family of maps containing T0, in the sense of Definition 2.1. Thus, there exists a sufficiently small interval \([\lambda _{0}, \bar \lambda ] \subset (0, \frac 12)\), \(\lambda _{0}<\bar \lambda \) with \(T_{\lambda }\in \mathcal A(\varepsilon )\) for any \(\lambda \in [\lambda _{0}, \bar \lambda ]\). Note that for \(\lambda \in (\lambda _{0}, \bar \lambda ]\), the map Tλ satisfies the order of singularity condition (A2) with respect to λ instead of λ0. Additionally, note that for any \(\lambda \in [\lambda _{0}, \bar \lambda ]\) we have

Let P be the normalized Lebesgue measure on \([\lambda _{0}, \bar \lambda ]\). Let \({\Omega }=[\lambda _{0}, \bar \lambda ]^{\mathbb {Z}}\), \(\mathbb {P}=P^{\mathbb {Z}}\) and σ : Ω →Ω be the left shift map, i.e., \(\omega ^{\prime }=\sigma (\omega )\) if and only if \(\omega ^{\prime }_{i}=\omega _{i+1}\) for all \(i\in \mathbb {Z}\). Then σ is an invertible map that preserves \(\mathbb {P}\). Notice that ω denotes a bi-infinite sequence of parameter values from \([\lambda _{0}, \bar \lambda ]\), i.e.,

$$ \omega = (\dots, \omega_{-1}, \omega_{0}, \omega_{1}, \dots), \qquad \omega_{i}\in[\lambda_{0},\bar\lambda] \text{ for all } i\in\mathbb{Z}. $$

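We express the random dynamics of our system in terms of the skew product

$$ S:\Omega\times I\to\Omega\times I, \qquad S(\omega, x) = (\sigma\omega, T_{\omega_{0}}(x)). $$

Iterates of S are defined naturally as

$$ S^{n}(\omega, x) = (\sigma^{n}\omega, T^{n}_{\omega}(x)), \qquad T^{n}_{\omega} = T_{\omega_{n-1}}\circ\cdots\circ T_{\omega_{1}}\circ T_{\omega_{0}}. $$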

To simplify the notation, we denote \(T_{\omega } = T_{\omega _{0}}\). We assume uniform expansion on random orbits: for every ω ∈ Ω,

$$ DT^{n}_{\omega}(x) \ge \tilde C e^{n\ell} \quad \text{for all } n \ge 1 \tag{6} $$

whenever \(x\notin \bigcup _{j=0}^{n-1}(T_{\omega }^{j})^{-1}(0)\).
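As an illustration of the random compositions \(T^{n}_{\omega}\) and of an expansion bound of the form (6), here is a minimal Python sketch built on a hypothetical toy family that is not taken from this paper: for x > 0, \(T_\lambda(x) = -\tfrac12 + \tfrac14(2x)^{\lambda} + \tfrac32 x\), extended as an odd map. It has \(T_\lambda(0^+)=-\tfrac12<0\) and \(T_\lambda(0^-)=\tfrac12>0\), its derivative is comparable to \(|x|^{\lambda-1}\) near 0 as in (2), and \(DT_\lambda\ge 3/2\) everywhere, so a bound like (6) holds with \(\tilde C=1\) and \(\ell=\log(3/2)\). We have not checked transitivity (A3) for it. The names `toy_map`, `toy_derivative`, `random_orbit` and the parameter window [0.2, 0.3] are assumptions made only for this illustration.

```python
import math
import random

def toy_map(lam, x):
    """Hypothetical Lorenz-like map T_lam on I = [-1/2, 1/2] (illustration only).

    For x > 0: T(x) = -1/2 + (2x)**lam / 4 + 3x/2, so T(0+) = -1/2 < 0 and T(1/2) = 1/2;
    for x < 0 the map is odd, T(x) = -T(-x), so T(0-) = 1/2 > 0.
    """
    if x == 0.0:
        raise ValueError("0 is the singularity")
    u = abs(x)
    y = -0.5 + (2 * u) ** lam / 4 + 1.5 * u
    return y if x > 0 else -y

def toy_derivative(lam, x):
    """DT_lam(x) = lam * 2**lam * |x|**(lam - 1) / 4 + 3/2 >= 3/2; blows up like |x|**(lam - 1)."""
    u = abs(x)
    return lam * 2 ** lam * u ** (lam - 1) / 4 + 1.5

def random_orbit(omega, x, n):
    """Return (T^n_omega(x), log DT^n_omega(x)) with T^n_omega = T_{omega_{n-1}} o ... o T_{omega_0}."""
    log_deriv = 0.0
    for i in range(n):
        lam = omega[i]                                  # the map applied at step i is T_{omega_i}
        log_deriv += math.log(toy_derivative(lam, x))   # chain rule, in log form
        x = toy_map(lam, x)
    return x, log_deriv

rng = random.Random(0)
lam0, lam_bar = 0.2, 0.3                                # hypothetical [lambda_0, bar lambda]
omega = [rng.uniform(lam0, lam_bar) for _ in range(40)] # omega_0, ..., omega_39 drawn from P
y, log_d = random_orbit(omega, 0.123, 40)
```

Sampling each \(\omega_i\) uniformly mirrors the choice \(\mathbb P = P^{\mathbb Z}\); only the finitely many entries \(\omega_0,\dots,\omega_{n-1}\) actually used by \(T^{n}_{\omega}\) are generated.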

Here we are looking at the quenched statistical properties of S, i.e., we study statistical properties of the system generated by the compositions \(T^{n}_{\omega }\) on I for almost every ω ∈ Ω, which we refer to as {Tω} without confusion, since the underlying driving process \(({\Omega }, \sigma , \mathbb {P})\) is fixed. We call a family of Borel probability measures {μω}ω∈ Ω on I equivariant if ω↦μω is measurable and

$$ (T_{\omega})_{*}\mu_{\omega} = \mu_{\sigma\omega} \quad \text{for } \mathbb{P}\text{-almost every } \omega\in\Omega. $$

For fixed η ∈ (0,1), let \(\mathcal {C}^{\eta }(I)\) denote the set of η-Hölder functions on I, and let \(L^{\infty }(I)\) denote the set of bounded functions on I. The following theorem is the main result of this paper.

Theorem 2.2

The random system {Tω} admits a unique equivariant family of absolutely continuous probability measures {μω}. Moreover, there exists a constant b > 0 such that for every \(\varphi \in \mathcal {C}^{\eta }(I)\) and \(\psi \in L^{\infty }(I)\), we have

and

for some constant Cφ, ψ > 0 which only depends on φ and ψ and is uniform for all ω ∈ Ω.

3 Proof of Theorem 2.2

3.1 Strategy of the Proof

We prove Theorem 2.2 by showing that, for every ω ∈ Ω, the random system {Tω} admits a Random Young Tower structure [9, 14] with exponential decay for the tail of the return times. Uniform (in ω) tail rates then lead to uniform (in ω) exponential decay of correlations. For convenience, we include the random tower theorem of [9, 14] in the Appendix, which we will refer to in the proof of Theorem 2.2. Similar to the work of [12, 13] in the deterministic setting, to construct a random tower we first construct an auxiliary stopping time called the escaping time \(E:J\to \mathbb {N}\) on subintervals of I. Roughly speaking, E is the time at which the image of a small interval \(J^{\prime }\subset J\) reaches a fixed length δ > 0 while, at the same time, \(T_{\omega }^{E}(J^{\prime })\) does not intersect a fixed neighborhood Δ0 of the singularity 0. We show that \(T^{E}_{\omega }\) has good distortion bounds and that |{E > n}| decays exponentially fast as n goes to infinity. The next step is to construct a full return partition \(\mathcal {Q}^{\omega }\) of some small neighborhood \({\Delta }^{\ast }\subset {\Delta }_{0}\) of the singularity. Once this is obtained, we induce a random Gibbs-Markov map Fω in the sense of Definition 1.2.1 of [14]. This means we need to show that \(F_{\omega }: {\Delta }^{*}\to {\Delta }^{*}\), defined as \(F_{\omega }(x) = T_{\omega }^{\tau _{\omega }(x)}(x)\), where \(\tau _{\omega }:{\Delta }^{*}\to \mathbb N\) is a measurable return time function, has the following properties:

- τω|Q is constant for every \(Q\in \mathcal {Q}^{\omega }\) and every ω ∈ Ω;
- \(F_{\omega }|Q:Q \to {\Delta }^{\ast } \) is a uniformly expanding diffeomorphism with bounded distortion for every \(Q\in \mathcal {Q}^{\omega }\) and every ω ∈ Ω;
- \(|\{\tau_{\omega} > n\}| \le B e^{-bn}\) for some constants B > 0, b ∈ (0,1);
- there exist \(N_{0}\in \mathbb {N}\) and two sequences \(\{t_{i}\in \mathbb {N}, i=1,2, \dots , N_{0}\}\), \(\{\varepsilon _{i}>0, i=1, \dots , N_{0}\}\) such that g.c.d.{ti} = 1 and |{x ∈ Δ∗ ∣ τω(x) = ti}| > εi for almost every ω ∈ Ω;
- on sets {τω = n}, τω only depends on the first n elements of ω, i.e., \(\omega _{0}, \dots , \omega _{n-1}\).

3.2 Escape Time and Partition

Let us choose two sufficiently large constants r0, r∗ > 0 satisfying r0 < r∗. Define \(\delta ^{*}=e^{-r_{\ast }}\) and \(\delta =e^{-r_{0}}\). Moreover, let Δ0 = (−δ, δ) and Δ∗ = (−δ∗, δ∗). Consider an exponential partition \(\mathcal {P}=\{I_{r}\}_{r\in \mathbb {Z}}\) of Δ0 as in [10], where

and for |r| < r0 we set Ir = ∅. Furthermore, fix \(\vartheta =\left [\frac 1\alpha \right ]+1\), where α is the same as in Section 2.2 and [·] denotes the integer part. We divide every Ir into \(r^{\vartheta }\) equal parts. Let Ir, m denote one of the small intervals, \(m=1, \dots , r^{\vartheta }\). We use the usual notation \(x_{\omega ,i}=T^{i}_{\omega }(x)\), \(J_{\omega , i}=T^{i}_{\omega }(J)\) for i ≥ 0, x ∈ I and any interval J ⊂ I.
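The display defining the exponential partition is missing from this copy. The following Python sketch assumes \(I_r = (e^{-r-1}, e^{-r}]\) for \(r \ge r_0\) and \(I_{-r} = -I_r\), which is consistent with the constant \(c_{r_i}\) used in Section 3.3; it builds the pieces \(I_{r,m}\) and locates the piece containing a given point. The function names are hypothetical, and the treatment of endpoints only affects a null set.

```python
import math

def exponential_partition(r0, alpha, r_max):
    """Pieces I_{r,m} on the positive side of Delta_0 = (-e^{-r0}, e^{-r0}).

    ASSUMPTION: I_r = (e^{-r-1}, e^{-r}] for r >= r0 (and I_{-r} = -I_r), each cut into
    r**theta equal pieces, theta = floor(1/alpha) + 1.  Only r0 <= r <= r_max is built.
    """
    theta = math.floor(1 / alpha) + 1
    pieces = {}
    for r in range(r0, r_max + 1):
        lo, hi = math.exp(-r - 1), math.exp(-r)
        n = r ** theta
        width = (hi - lo) / n
        for m in range(1, n + 1):
            pieces[(r, m)] = (lo + (m - 1) * width, lo + m * width)
    return theta, pieces

def locate(x, r0, theta):
    """Signed (r, m) with x in I_{r,m}; None if x is 0 or lies outside Delta_0."""
    u = abs(x)
    if u == 0 or u >= math.exp(-r0):
        return None
    r = math.floor(-math.log(u))              # u lies in (e^{-r-1}, e^{-r}]
    lo, hi = math.exp(-r - 1), math.exp(-r)
    n = r ** theta
    m = min(n, max(1, math.ceil((u - lo) / ((hi - lo) / n))))
    return (r if x > 0 else -r), m

theta, pieces = exponential_partition(r0=3, alpha=0.6, r_max=6)
```

With α = 0.6 this gives ϑ = 2, so each \(I_r\) is split into \(r^2\) pieces.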

Let J0 ⊂ I be an interval such that either J0 ∩Δ0 = ∅ and |J0| ≥ δ/5, or J0 = Δ0. We wish to construct an escape partition \(\mathcal {P}^{\omega }(J_{0})\) of J0, together with a stopping time function \(E_{\omega }: J_{0}\to \mathbb {N}\), which we call the escape time, that has the following properties for every ω ∈ Ω:

- Eω|J is constant on each \(J \in \mathcal {P}^{\omega }(J_{0})\);
- \(T_{\omega }^{E_{\omega }(J)}(J) \cap {\Delta }_{0} = \emptyset \) for each \(J \in \mathcal {P}^{\omega }(J_{0})\);
- \(|T_{\omega }^{E_{\omega }(J)}(J)| \ge \delta \) for all \(J \in \mathcal {P}^{\omega }(J_{0})\);
- for every \(J\in \mathcal {P}^{\omega }(J_{0})\) and for every time j ≤ Eω(J) such that \(T_{\omega }^{j}(J)\cap {\Delta }_{0} \neq \emptyset \), \(T_{\omega }^{j}(J)\) does not intersect more than three adjacent intervals of the form Ir, m, |r| ≥ r0, \(m=1,\dots , r^{\vartheta }\).

We have the following proposition.

Proposition 3.1

Let δ > 0 be our previously chosen constant, and consider J0 ⊂ I as described above. For every ω ∈ Ω there exists a countable partition \(\mathcal {P}^{\omega }(J_{0})\) and an escape time \(E_{\omega }:J_{0}\to \mathbb {N}\) that satisfies the properties mentioned above. Furthermore, there exist constants Cδ > 0, γ > 0 such that for any given time \(n \in \mathbb {N}\) we have

We prove the proposition above in a series of lemmas. It is important to keep distortion under control, which means that we have to keep track of visits of the orbits near the singularity. To this end, we use a chopping algorithm following [10].

Let us fix ω ∈ Ω and k ≥ 1, and suppose that the elements \(J^{*} \in \mathcal {P}^{\omega }(J_{0})\) satisfying Eω(J∗) < k have already been constructed. Let J ⊂ J0 be an interval in the complement of the union of these elements. If \(T^{k}_{\omega }(J)\cap {\Delta }_{0}=\emptyset \), we call k a free iterate for J. We now consider the following cases, depending on the position and length of \(J_{\omega , k}=T^{k}_{\omega }(J)\), \(k\in \mathbb {N}\).

- Non-chopping intervals: Suppose that |Jω, k| < δ, Δ0 ∩ Jω, k ≠ ∅ and Jω, k does not intersect more than three adjacent intervals Ir, m. Then k is called an inessential return time for J. We set the return depth as \(r_{\omega }=\min \limits \{|r|: J_{\omega , k} \cap I_{r} \neq \emptyset \}\). Notice that rω depends only on \(\omega _{0}, \dots , \omega _{k-1}\).
- Chopping times: Suppose that |Jω, k| < δ, Δ0 ∩ Jω, k ≠ ∅ and Jω, k intersects more than three adjacent intervals Ir, m. Then k is called an essential return time for J. In this case we chop J into pieces Jω, r, j ⊂ J such that
  $$ I_{r, j}\subset T^{k}_{\omega}(J_{\omega, r, j})\subset \hat I_{r, j}, $$
  where \(\hat I_{r, j}\) is the union of Ir, j and its two neighbors. In this case, we say that Jω, r, j has the associated return depth \(r_{\omega }=\min \limits \{|r|: T^{k}_{\omega }(J_{\omega , r, j}) \cap I_{r} \neq \emptyset \}\), and we refer to J as the ancestor of Jω, r, j.
- Escape times: Suppose that k is the first free iterate for J such that |Jω, k| ≥ δ. We then set Eω(J) = k and add J to \(\mathcal {P}^{\omega }(J_{0})\), and we call Jω, k an escape interval. Notice that the partition depends on ω.
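To make the case distinction concrete, here is a hedged Python sketch that classifies a single time k from the endpoints of \(J_{\omega,k}=(a,b)\). It assumes the partition \(I_r=(e^{-r-1},e^{-r}]\) (the defining display is missing from this copy) and counts how many pieces \(I_{r,m}\) the interval meets; in the algorithm, \(E_\omega(J)\) is the first k labelled `escape`. Returns of length at least δ are not covered by the three cases above, so the sketch treats them as chopping times; that choice, like all names here, is an assumption.

```python
import math

def _piece(u, r0, theta):
    """(r, m) with 0 < u <= e^{-r0} in I_{r,m}, under the ASSUMED I_r = (e^{-r-1}, e^{-r}]."""
    r = max(r0, math.floor(-math.log(u)))
    lo, hi = math.exp(-r - 1), math.exp(-r)
    n = r ** theta
    return r, min(n, max(1, math.ceil((u - lo) * n / (hi - lo))))

def count_pieces(a, b, r0, theta):
    """Number of pieces I_{r,m} met by (a, b), 0 < a < b; only the part inside Delta_0 counts."""
    delta = math.exp(-r0)
    if a >= delta:
        return 0
    ra, ma = _piece(a, r0, theta)                # deepest piece (closest to 0)
    rb, mb = _piece(min(b, delta), r0, theta)    # shallowest piece
    if ra == rb:
        return mb - ma + 1
    middle = sum(r ** theta for r in range(rb + 1, ra))
    return (ra ** theta - ma + 1) + middle + mb

def classify(a, b, r0, theta):
    """Classify the time k at which J_{omega,k} = (a, b).

    Returns (kind, depth): kind is 'free', 'escape', 'inessential' or 'essential', and
    depth = min{|r| : J_{omega,k} meets I_r} for returns, None otherwise.
    """
    if a <= 0 <= b:
        raise ValueError("this sketch assumes J_{omega,k} stays off the singularity")
    if b < 0:
        a, b = -b, -a                            # odd symmetry: I_{-r,m} = -I_{r,m}
    delta = math.exp(-r0)
    if a >= delta:                               # free iterate: J misses Delta_0
        return ("escape" if b - a >= delta else "free"), None
    depth = _piece(min(b, delta), r0, theta)[0]
    if b - a < delta and count_pieces(a, b, r0, theta) <= 3:
        return "inessential", depth
    # ASSUMPTION: a return with |J| >= delta is not covered by the text; treated as chopping.
    return "essential", depth
```

For example, with r0 = 3 and ϑ = 2, a short interval inside a single piece of \(I_3\) is an inessential return of depth 3, while (0.019, 0.049) meets all nine pieces of \(I_3\) and is an essential return.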

The proof of Proposition 3.1 closely follows the approach of [12]. Suppose that \(J\in \mathcal {P}^{\omega }(J_{0})\), and suppose that J had s returns before escaping. Let us denote ri as the return depth of the i th return of J. Note that ri will depend on ω, but for ease of notation this is not denoted explicitly. We define the total return depth as \(R_{\omega }(J)={\sum }_{i=1}^{s} r_{i}\).

Lemma 3.2

There exists \(\hat \lambda < \frac {1}{2} \) depending on δ such that for any \(J\in \mathcal {P}^{\omega }(J_{0})\)

Proof

Suppose that Rω(J) ≠ 0 and that J has s returns before escaping. Let d0 be the number of free iterates before the first return, let d1, ..., ds− 1 be the numbers of free iterates between successive returns, and let ds be the number of free iterates after the last return and before escaping. Then \(E_{\omega }(J)=d_{0}+1+ d_{1} + 1 + {\dots } + d_{s} +1\). We have

for some ξ ∈ J. Notice that by using the chain rule we obtain the following:

where \(\nu _{i} = d_{0} + 1 + {\dots } + d_{i-1} + 1\) is the i th return time for \(i = 1, \dots , s\) and ν0 = 0. Notice that for every \(i=0, \dots , s\), every x ∈ I and every ω ∈ Ω, we have \(DT^{d_{i}}_{\omega }(x)\ge \tilde Ce^{\ell d_{i}}\) by the expansion property (6). Likewise, since \(T_{\omega }^{\nu _{i}}(J)\) is a return, and since we can write \(|T_{\omega }^{\nu _{i}}(x)| = e^{-r_{x}}\) for some rx > ri, we have \(DT_{\sigma ^{\nu _{i}}(\omega )}(T_{\omega }^{\nu _{i}}(x))\ge C^{-1} e^{(1-\bar \lambda )r_{x}} \ge C^{-1} e^{(1-\bar \lambda )r_{i}}\) for all x ∈ J by the order of singularity condition (2), applied with \(\lambda_{\sigma^{\nu_{i}}\omega}\le\bar\lambda\) in place of λ0. Using these two inequalities, we have

where \(\hat C =\min \limits \{C^{-1}, \tilde C^{(s+1)/s}\}\).

Recall that ri ≥ r0, where \(e^{-r_{0}}=\delta \). Thus Rω ≥ sr0, and since \(\log \hat C<0\) (because \(\hat C\le C^{-1}<1\)), this implies \(1-\bar \lambda +\frac {s}{R_{\omega }}\log \hat C\ge 1-\bar \lambda +\frac {\log \hat C}{\log \delta ^{-1}}\). Assume we have chosen δ small enough such that \(\hat \lambda =\bar \lambda -\frac {\log \hat C}{\log \delta ^{-1}}<\frac {1}{2}\). Thus, we have

which finishes the proof. □
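The phrase “δ small enough” can be made explicit. Because \((s+1)/s\le 2\) for s ≥ 1, one has \(\hat C\ge \hat C_{*}:=\min\{C^{-1},\tilde C^{2}\}\), a bound uniform in s; and since \(\log\delta^{-1}=r_0\), the requirement \(\hat\lambda<\tfrac12\) holds as soon as \(r_0 > -\log\hat C_{*}/(\tfrac12-\bar\lambda)\). A minimal Python sketch of this arithmetic (the function names and sample constants are hypothetical):

```python
import math

def lambda_hat_upper(lam_bar, C, C_tilde, r0):
    """Upper bound lam_bar - log(C_hat_*) / r0 for lambda_hat, with delta = e^{-r0}.

    C_hat_* = min(1/C, C_tilde**2) <= C_hat for every s >= 1, so this bound is uniform in s.
    """
    c_hat = min(1.0 / C, C_tilde ** 2)
    return lam_bar - math.log(c_hat) / r0

def smallest_r0(lam_bar, C, C_tilde):
    """Smallest integer r0 with lambda_hat_upper < 1/2 (requires lam_bar < 1/2 and C > 1)."""
    c_hat = min(1.0 / C, C_tilde ** 2)
    return math.floor(-math.log(c_hat) / (0.5 - lam_bar)) + 1
```

For instance, with \(\bar\lambda=0.3\), C = 2 and \(\tilde C=0.9\), the sketch gives r0 = 4, i.e. \(\delta=e^{-4}\).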

The above lemma allows us to prove the following exponential estimate.

Lemma 3.3

Let J0 ⊂ I be as above, and for each ω ∈ Ω let \(R_{\omega }:J_{0}\to \mathbb {N}\) be the total return depth, i.e., the sum of the return depths. Then we have

Proof

Let \(\mathcal N_{k}\) denote the set of all sequences of return depths \((r_{1}, \dots , r_{s})\) with s ≥ 1 such that \(r_{1}+{\dots } + r_{s}=k\). Then from [11, Lemma 3.4], we know that for sufficiently large k we have

Furthermore, for any given sequence of return depths \((r_{1}, \dots , r_{s})\), there can be at most two escaping intervals with that sequence. Thus, combining these facts with Lemma 3.2, we have

where in the last inequality we have used |J0|≥ δ/5. □
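The bound from [11, Lemma 3.4] on the size of \(\mathcal N_k\) is not reproduced in this copy. As a sanity check of what is being counted, the following Python sketch computes \(\#\mathcal N_k\) exactly by dynamic programming, assuming every return depth satisfies \(r_i\ge r_0\). It does not prove the cited bound; it only shows that the count shrinks as \(r_0\) grows, which is why a large r0 keeps the combinatorial factor small. The function name is hypothetical.

```python
def count_depth_sequences(k, r0):
    """#N_k: number of sequences (r_1, ..., r_s), s >= 1, with every r_i >= r0 and sum k."""
    # ways[j] = number of such sequences summing to j (the empty sequence counts for j = 0)
    ways = [0] * (k + 1)
    ways[0] = 1
    for j in range(1, k + 1):
        # the last depth r ranges over r0, ..., j; the prefix sums to j - r
        ways[j] = sum(ways[j - r] for r in range(r0, j + 1))
    return ways[k] if k > 0 else 0
```

For r0 = 2 the counts for k = 2, ..., 6 are the Fibonacci numbers 1, 1, 2, 3, 5, which gives a quick check of the recursion.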

The following lemma relates the total return depth and the escape time.

Lemma 3.4

Let \(J\in \mathcal {P}^{\omega }(J_{0})\) and let \(\mathcal R = (r_{1}, \dots , r_{s})\) be its associated sequence of return depths. Furthermore, let \(R_{\omega }(J)={\sum }_{i=1}^{s} r_{i} \). Then we have

$$ E_{\omega}(J) \le \frac{2+\ell}{\ell}R_{\omega}(J) + 1. $$

Proof

Since intervals are not chopped at inessential return times, we distinguish between essential and inessential return times. Let \(R_{\omega }(J)=R^{e}_{\omega }(J)+R^{ie}_{\omega }(J)\) be the corresponding splitting into essential and inessential total return depths, respectively. Furthermore, let \(\mathcal {K}^{e}= \{{\nu _{1}^{e}} < ... <{\nu _{q}^{e}} \}\) be the ordered set of essential return times, and define \(\mathcal {D}^{e}(J)={d_{0}^{e}}+{\dots } + {d_{q}^{e}}\), where \({d_{0}^{e}}\) is the number of free iterates before the first essential return, each \({d_{i}^{e}} \), \(i=1,\dots,q-1\), is the number of free iterates between consecutive essential return times, and \({d_{q}^{e}}\) is the number of free iterates after the last essential return and before the escape time. Likewise, let \(\mathcal {K}= \{\nu _{1} < ... <\nu _{s} \}\) and \(\mathcal {D}(J)=d_{0}+{\dots } +d_{s}\), where the νi’s and di’s are as in the proof of Lemma 3.2. Notice that if \(d_{i_{j}}\) is the number of free iterates between \(\nu _{j+1}^{e}\) and the previous return (essential or inessential) before \(\nu _{j+1}^{e}\), for some \(i_{j} = 1, \dots , s-1\), then \({d_{j}^{e}} \ge d_{i_{j}} \) for all \(j=0, \dots , q-1\). Since every essential return is a return, \(\mathcal K^{e}\subset\mathcal K\), and thus q ≤ s. Moreover, \(\mathcal {D}^{e}(J)=\mathcal {D}(J)\), since both sums count all free iterates of J before its escape.

Recall from the definition of chopping times that an interval \(\tilde J \supset J\) is called the ancestor of J if J is obtained by chopping \(\tilde J\) at an essential return time. In fact, we can write a nested sequence of intervals \(J = J^{(q)} \subset J^{(q-1)} \subset {\dots } \subset J^{(1)} \subset J^{(0)}\) such that J(0) is our starting interval and J(i) is obtained by chopping J(i− 1) at the i th essential return time. We call J(i) the ancestor of J of order i.

Now, let J(i− 1) be the ancestor of J of order i − 1 which is obtained at the essential return time \(\nu _{i-1}^{e}\). We claim that

where \({r_{i}^{e}}\) is the return depth of the i th essential return. Indeed, the first inequality holds by the definition of an essential return. For the second inequality, note that the ratio \(|I_{r+1}|/|I_{r}|\) is a constant independent of r, so the bound follows by summing a geometric series.
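For concreteness, assume (the defining display of the exponential partition is missing from this copy) that \(I_r = (e^{-r-1}, e^{-r})\) for \(r \ge r_0\). Then the two facts used in the second inequality read:

$$ \frac{|I_{r+1}|}{|I_{r}|} = \frac{e^{-r-1}-e^{-r-2}}{e^{-r}-e^{-r-1}} = e^{-1}, \qquad \sum_{r' \ge r} |I_{r'}| = (1-e^{-1})\sum_{j \ge 0} e^{-r-j} = \frac{(1-e^{-1})\,e^{-r}}{1-e^{-1}} = e^{-r}. $$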

Let ρ0 be the number of inessential returns before the first essential return, let ρi denote the number of inessential returns between \({\nu _{i}^{e}}\) and \(\nu _{i+1}^{e}\) for \(i=1, \dots , q- 1\), and let ρq denote the number of inessential returns after the last essential return and before the escape time. Now, using the above inequality we have

for \(i=1, \dots , q\), where we set \(\nu _{q+1}^{e}= E_{\omega }(J)\) and use \(e^{\ell \rho _{i}} \ge 1\). If r0 is sufficiently large, then \(\frac {e^{(1-\bar \lambda ){r_{i}^{e}}}}{{{r_{i}^{e}}}^{\vartheta - 1}} \ge 1\). If \(\tilde C C^{-1} < 1\), we choose r0 even larger so that this factor also compensates for \(\tilde C C^{-1}\); if \(\tilde C C^{-1} \ge 1\), these terms can simply be dropped. Thus, we obtain \(1 \ge e^{\ell {d^{e}_{i}} - {r_{i}^{e}}},\) and taking logarithms we obtain \(0 \ge \ell {d_{i}^{e}} - {r_{i}^{e}}\), that is, \({d_{i}^{e}} \le \frac {{r_{i}^{e}}}{\ell }\) for \(i=1, \dots , q\). Similarly, for \({d_{0}^{e}}\) we know that

Using a similar argument to the previous one above, we obtain \({d_{0}^{e}} \le \frac {{r_{1}^{e}}}{\ell }\).

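Note that there are (s − q) inessential returns and (q + 1) iterates at which an essential return or the escape happens. Thus, using \(\mathcal {D}^{e}(J)=\mathcal {D}(J)\), the bounds on the \(d^{e}_{i}\) above, and the facts that Rω(J) ≥ s and \(R_{\omega }^{e} (J) \le R_{\omega }(J)\), we obtain

$$ E_{\omega}(J) = \mathcal{D}^{e}(J) + (s-q) + (q+1) \le \frac{r^{e}_{1} + \sum_{i=1}^{q} r^{e}_{i}}{\ell} + s + 1 \le \frac{2}{\ell}R^{e}_{\omega}(J) + R_{\omega}(J) + 1 \le \frac{2+\ell}{\ell}R_{\omega}(J) + 1. $$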
□
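In the proof of Lemma 3.4, r0 is taken large enough that \(e^{(1-\bar\lambda)r}/r^{\vartheta-1}\ge 1\) for all \(r\ge r_0\), and larger still when \(\tilde C C^{-1}<1\). Assuming the two requirements enter the (missing) display as a product, so that the single condition \(\tilde C C^{-1} e^{(1-\bar\lambda)r}/r^{\vartheta-1}\ge 1\) is what is needed, the following Python sketch finds an admissible r0 (not necessarily the smallest one). The names and sample constants are hypothetical.

```python
import math

def factor_ok(r, lam_bar, theta, C, C_tilde):
    """True if C_tilde / C * e^{(1 - lam_bar) r} / r^(theta - 1) >= 1 (checked in log form)."""
    return (1 - lam_bar) * r - (theta - 1) * math.log(r) + math.log(C_tilde / C) >= 0

def threshold_r0(lam_bar, theta, C, C_tilde):
    """An integer r0 such that the factor is >= 1 for every r >= r0.

    g(r) = (1 - lam_bar) r - (theta - 1) log r + log(C_tilde / C) is increasing for
    r >= (theta - 1) / (1 - lam_bar), so the first r past that point with g(r) >= 0 works.
    """
    r = max(1, math.ceil((theta - 1) / (1 - lam_bar)))
    while not factor_ok(r, lam_bar, theta, C, C_tilde):
        r += 1
    return r
```

Working with logarithms avoids overflow of \(e^{(1-\bar\lambda)r}\) for large r.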

Finally, we are ready to prove Proposition 3.1.

Proof of Proposition 3.1

Let us fix some \(n \in \mathbb {N}\). By Lemma 3.4, for every J in \(\mathcal {P}^{\omega }(J_{0})\) such that n ≤ Eω(J), we have \(n\le \frac {2+ \ell }{\ell }R_{\omega }(J) + 1\). Thus, \(R_{\omega }(J)\ge \frac {(n-1)\ell }{2+\ell }\). Combining this with Lemma 3.3, we have that

Notice that δ > 0 must be fixed, since the constant \(C_{\delta }=\mathcal O(\delta ^{-1})\) depends on δ.

Thus, \(\mathcal {P}^{\omega }(J_{0})\) defines a partition of J0 and every element of the partition is assigned an escape time. Notice that the way we constructed the escape time immediately implies that for every \(J\in \mathcal {P}^{\omega }(J_{0})\) and time j ≤ Eω(J) such that \(T_{\omega }^{j}(J)\cap {\Delta }_{0}\neq \emptyset \), the image \(T_{\omega }^{j}(J)\) does not intersect more than three adjacent intervals of the form Ir, m, |r|≥ r0, \(m=1,\dots , r^{\vartheta }\). □

3.3 Bounded Distortion

In this subsection we prove that \(T^{n}_{\omega }\) has bounded distortion on every interval J such that \(T_{\omega }^{k}(J)\) does not intersect more than three adjacent intervals Ir, m for all 1 ≤ k ≤ n. Therefore, the escape map in Proposition 3.1 has bounded distortion.

We prove the following lemma:

Lemma 3.5

For some fixed ω ∈ Ω, consider an interval J ⊂ I such that |J| < δ and for which there exists \(n_{J} \in \mathbb {N}\) such that for every 0 ≤ k ≤ nJ − 1 either Jω, k ∩Δ0 = ∅ or Jω, k is contained in at most three intervals Ir, m. There exists a constant \(\mathcal {D} = \mathcal {D}(\delta )\) (independent of ω) such that for every ω and for every such J described above, we have

Proof

Let ω ∈ Ω. If we set \(x_{\omega , i} = T_{\omega }^{i}(x)\), then by using the chain rule and \(\log (1+x)\le x\) for x ≥ 0 we obtain

One can show that

where \(\lambda _{\sigma ^{i} \omega } \in [\lambda _{0}, \bar \lambda ]\) is the order of singularity associated with the map \(T_{\sigma ^{i} \omega }\). Furthermore, we know \(|DT_{\sigma ^{i} \omega }(y_{\omega , i})| \geq C^{-1} |y_{\omega , i}|^{\lambda _{\sigma ^{i} \omega } - 1}\), and we also know that \(\frac {1}{|x|^{1 - \lambda }} \le \frac {1}{|x|^{\alpha }}\) for any x ∈ I because α ≥ 1 − λ for any \(\lambda \in [\lambda _{0}, \bar \lambda ]\). Thus, combining these and setting κ = KC, we obtain

Let J = [x, y] ⊂ I, and let \(\mathcal {K}= \{\nu _{1} < \nu _{2} < ... < \nu _{i} < ... <\nu _{p} \}\) be the ordered set of all returns under the dynamics of \(T_{\omega }^{k}\) on J from k = 0 to k = nJ − 1. Since we have assumed that there are no essential returns at or before nJ − 1, all of these returns are inessential. The length of \(J_{\omega , \nu _{i}}=T_{\omega }^{\nu _{i} }(J)\) is largest when it is contained in one interval of the form \(I_{r_{i}, m}\) and two of the form \(I_{(r_{i} -1), m}\); thus

$$ |J_{\omega, \nu_{i}}| \le c_{r_{i}} \frac{e^{-r_{i}}}{r_{i}^{\vartheta}}, $$

with \(c_{r_{i}}= \frac {r_{i}^{\vartheta }}{(r_{i} -1)^{\vartheta }} \big (2e - 1 - e^{-1} \big )\).
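A quick numerical check of the constant \(c_{r}\), assuming \(I_r=(e^{-r-1},e^{-r}]\) cut into \(r^{\vartheta}\) equal pieces (the defining display of the partition is missing from this copy): one piece of \(I_{r}\) plus two pieces of \(I_{r-1}\) has length at most \(c_r e^{-r}/r^{\vartheta}\), with slack exactly \((1-e^{-1})e^{-r}\big((r-1)^{-\vartheta}-r^{-\vartheta}\big)\). The Python sketch below uses hypothetical helper names.

```python
import math

def piece_length(r, theta):
    """|I_{r,m}| for the ASSUMED partition I_r = (e^{-r-1}, e^{-r}] cut into r**theta pieces."""
    return (math.exp(-r) - math.exp(-r - 1)) / r ** theta

def c(r, theta):
    """c_r = r^theta / (r - 1)^theta * (2e - 1 - e^{-1}), as defined in the text."""
    return (r / (r - 1)) ** theta * (2 * math.e - 1 - math.exp(-1))

def bound_holds(r, theta):
    """Check |I_{r,m}| + 2 |I_{r-1,m}| <= c_r e^{-r} / r^theta."""
    worst = piece_length(r, theta) + 2 * piece_length(r - 1, theta)
    return worst <= c(r, theta) * math.exp(-r) / r ** theta
```

Since \(c_r \le 2^{\vartheta}(2e-1-e^{-1})\) for r ≥ 2, these constants are uniformly bounded in r.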

Let us examine time k. There are two possibilities: k ≤ νp + 1 or νp + 1 < k ≤ nJ − 1. We will start with the case that k ≤ νp + 1. Let νs(k) denote the last return time before k, and let us split the sum in (9) accordingly:

where the first sum runs over all times up to νs(k) excluding return times, the second sum runs over the times after νs(k), and the third sum runs over the return times.

For the first sum, we use the fact that our expansion condition implies that \(|J_{\omega , \nu _{s(k)-i}}| \leq \tilde {C}^{-1} e^{-i\ell }|J_{\omega , \nu _{s(k)}}|\) and that \(|x_{\omega , \nu _{s(k)} - i}|\geq \delta \) for i = 1,..., νs(k), \((\nu _{s(k)} - i) \notin \mathcal {K}\) to show that

Thus, we have

with \(C_{0}= \tilde {C}^{-\alpha } \) and \(C_{1}= \Big (\frac {e^{-r_{s(k)}}}{\delta } \Big )^{\alpha }\). We note that D1 is independent of both k and δ.

For the second sum, we also have that |xω, i| ≥ δ and \(|J_{\omega , i}| \leq \tilde {C}^{-1} e^{-\ell (k - 1 - i)}|J_{\omega , k-1}|\) for i = νs(k) + 1, …, k − 1. Thus

Finally, for the third sum, we define the set \(\mathcal {K}_{r}= \{\nu _{\eta _{1}} < \nu _{\eta _{2}} < ... < \nu _{\eta _{i}} < ... <\nu _{\eta _{q}} \}\) such that for the associated return depths we have \(r_{\eta _{i}}=r\) for all \(\nu _{\eta _{i}} \in \mathcal {K}_{r}\). Clearly

Let M(r) denote the maximum value in \(\mathcal {K}_{r}\). Then we have \(|J_{\omega ,i}| \leq \tilde {C}^{-1} e^{-\ell (M(r) - i)}|J_{\omega ,M(r)}|\) and \(|J_{\omega ,M(r)}|< c_{r}\frac {e^{-r}}{r^{\vartheta }}\). Additionally, since \(i \in \mathcal {K}_{r}\) implies e−r ≤ |xω, i|, we have

Thus, we have

with \(C_{2}= C_{0} c_{r}^{\alpha } \frac {1}{1 - e^{-\ell \alpha }}\). Thus,

This concludes the part of the proof for k ≤ νp + 1.

If instead νp + 1 < k, the argument above does not apply: after νp the bound |Jω, i| < δ need no longer hold, since the iterates Jω, i no longer intersect Δ0. Instead, we use that Jω, i does not intersect Δ0, so |Jω, i| < 1 − δ, which gives the following estimate:

where D2(δ) is a constant depending only on δ. Thus, we set the distortion constant to be \(\mathcal {D}(\delta ) = \kappa (D_{1} + D_{2}(\delta ) + D_{3})\). □

To summarize the last two subsections: for a chosen δ > 0, we have constructed an escape partition and an escape time function Eω whose tails decay exponentially. Furthermore, since |J| < δ for every \(J\in \mathcal {P}^{\omega }(J_{0})\), the bounded distortion result above holds on each \(J\in \mathcal {P}^{\omega }(J_{0})\).

3.4 Full Return Partition

Below we construct the full return partition of some neighborhood of 0. For this we use the following lemma:

Lemma 3.6

Let δ > 0 be our previously chosen constant. Then there exist some sufficiently small δ∗ > 0 and some \(t^{\ast }\in \mathbb {N}\) such that for every ω ∈ Ω and for every interval J with |J| ≥ δ and J ∩Δ0 = ∅ there exists \(\tilde J \subset J\) with the following properties:

- (i) There exists t < t∗ such that \(T_{\omega }^{t}: \tilde J \to {\Delta }^{*}=(-\delta ^{\ast }, \delta ^{\ast })\) is a diffeomorphism;
- (ii) both components of \(J \backslash \tilde J\) have size greater than δ/5;
- (iii) \(|\tilde J| \ge \beta |J|\), where β is a uniform constant.

Proof

By assumption (A3) of the unperturbed map, we know that \(\bigcup _{n\in \mathbb {N}}T_{0}^{-n}(0)\) is dense in I. Since all the maps in \(\mathcal {A}(\varepsilon )\) are close to T0, for any small constant \(\bar \delta >0\) there exists an iterate \(t_{\bar \delta }\in \mathbb {N}\) such that \(\bigcup _{n\le t_{\bar \delta }}(T_{\omega }^{n})^{-1}(0)\) is \(\bar \delta \)-dense in I and uniformly bounded away from 0 for all ω. Let us fix some \(0<\bar \delta < \delta /5\), and thereby we also fix some \(t_{\bar \delta }\). Then the following holds:

- (a) the subinterval in the middle of J with length \(\bar \delta \) contains a point x∗ which is a preimage of 0, i.e., there exists some \(t \le t_{\bar \delta }\) such that \(T_{\omega }^{t}(x_{*}) = 0\);
- (b) there exists δ∗ sufficiently small such that any connected component of \((T^{n}_{\omega })^{-1}({\Delta }^{\ast })\) has length less than \(\bar \delta \) for any \(n \le t_{\bar \delta }\), where Δ∗ = (−δ∗, δ∗), and is uniformly bounded away from 0 for all ω ∈ Ω;
- (c) the distance from x∗ to the boundary of J is larger than δ/5.

We let \(\tilde J=J \cap (T^{t}_{\omega })^{-1}({\Delta }^{\ast })\). Then item (i) follows automatically. Likewise, item (ii) follows from (c). For item (iii), we know that \(T_{\omega }^{t} (\tilde J) = {\Delta }^{*}\) and \(T_{\omega }^{t} (J) = {\Delta }_{1}\), where Δ1 is just some larger interval containing Δ∗. We also know by using the chain rule that

where \(\xi _{1} \in \tilde J\), ξ2 ∈ J. Thus,

To show that this ratio has a lower bound β, we need to show that \(DT_{\omega }^{t}(\xi _{1})\) has an upper bound. Recall that |J| ≥ δ, that Tω is expanding, and that both components of \(J \backslash \tilde J\) have size greater than δ/5. For t = 1, the required bound is automatic since J ∩Δ0 = ∅. For \(1< t \le t_{\bar \delta }\), if \(T_{\omega }^{i} (J) \cap {\Delta }_{0} \neq \emptyset \) for some i < t, then |ξ1| ≥ δ/5, and thus \(DT_{\omega }^{t}(\xi _{1})\) has an upper bound. This completes the proof. □

Since each escape interval has length at least δ, the above lemma implies that each escape interval contains a subinterval which maps bijectively onto Δ∗ within a uniformly bounded number of iterates. We use this property repeatedly in order to construct a full return partition \(\mathcal {Q}^{\omega }({\Delta }^{\ast })\) of Δ∗ = (−δ∗, δ∗), i.e., for every ω ∈ Ω we construct a countable partition \(\mathcal {Q}^{\omega }({\Delta }^{\ast })\) such that for every \(J\in \mathcal {Q}^{\omega }({\Delta }^{\ast })\) there exists an associated return time \( \tau _{\omega } (J)\in \mathbb {N}\) such that \(T_{\omega }^{\tau _{\omega }(J)}:J\to {\Delta }^{\ast }\) is a diffeomorphism.

To this end, we start with an exponential partition of Δ∗ of the form \(\mathcal {P} ({\Delta }^{*}) = \{ I_{r,m} \}_{|r| \ge r_{\ast }}\), where each Ir, m is defined as in the beginning of Section 3.2. We construct an escape partition on each Ir, m, which naturally induces a partition on Δ∗, denoted by \(\mathcal {P}_{\omega , 1}({\Delta }^{\ast })\). Thus, every \(J\in \mathcal {P}_{{\omega , 1}}({\Delta }^{\ast })\) satisfies J ⊂ Ir, m for some r and m, and has an associated escape time Eω(J). Lemma 3.6 implies that each \(J\in \mathcal {P}_{{\omega ,1}} ({\Delta }^{*})\) contains a subinterval \(\tilde J\) that maps diffeomorphically to Δ∗ under some number of iterates bounded above by Eω(J) + t∗. To construct the next partition \(\mathcal {P}_{{\omega ,2}}\), we divide J into \(\tilde J\) and the components of \(J \backslash \tilde J\). To each of these we assign the first escape time Eω,1 = Eω(J), and we assign to the returning subinterval \(\tilde J\) the return time \(\tau _{\omega }(\tilde J) = E_{\omega , 1}(\tilde J) + t(\tilde J)\), where \(t(\tilde J)\) is the number of iterates such that \(T_{\sigma ^{E_{\omega , 1}}\omega }^{t(\tilde J)}(T_{\omega }^{E_{\omega , 1}} (\tilde J)) = {\Delta }^{*}\). We place \(\tilde J\) in \(\mathcal {P}_{{\omega , 2}}\), and it will remain unchanged in all subsequent \(\mathcal {P}_{{\omega , k}}\)’s.

Next, we apply the escape partition algorithm to the connected components of \(T_{\omega }^{E_{\omega ,1}}(J \backslash \tilde J)\). This is possible since Lemma 3.6 guarantees that these components will be of size greater than δ/5. Let JNR denote one of the non-returning components of \(J \backslash \tilde J\), and let K ⊂ JNR be the preimage of a subinterval that is obtained after applying the escape partition algorithm to \(T_{\omega }^{E_{\omega ,1}}(J_{NR})\). We place K in \(\mathcal {P}_{{\omega , 2}}\), and again by Lemma 3.6 there exists \(\tilde K \subset K\) that also maps diffeomorphically to Δ∗ after a bounded number of iterates. We divide K into \(\tilde K\) and the components of \(K \backslash \tilde K\), and to each of these we assign the second escape time \(E_{\omega , 2} = E_{\sigma ^{E_{\omega , 1}}\omega } (T_{\omega }^{E_{\omega , 1}} K) + E_{\omega , 1}\), and to \(\tilde K\) we assign the return time \(\tau _{\omega }(\tilde K)= E_{\omega , 2}(\tilde K) + t(\tilde K)\).

We then repeat this process ad infinitum, by 1) taking each non-returning \(L \in \mathcal {P}_{{\omega , k}}\) (for some k); 2) chopping L into its returning component and non-returning components; 3) assigning to each of these the k th escape time \(E_{\omega , k} = E_{\sigma ^{E_{\omega , k-1}} \omega }(T_{\omega }^{E_{\omega , k-1}}(L)) + E_{\omega , k-1}\); 4) assigning to the returning component \(\tilde L\) the return time \(\tau _{\omega }(\tilde L)=E_{\omega , k}(\tilde L) + t(\tilde L) \) and placing \(\tilde L\) in \(\mathcal {P}_{{\omega , k+1}}\); and 5) performing the escape partition on the remaining non-returning components and placing the resulting subintervals in \(\mathcal {P}_{{\omega , k+1}}\).

Note that, for i ≤ k, the i-th escape time of a non-returning interval coincides with the escape time of its i-th ancestor.
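The bookkeeping in steps 1)–5) can be mimicked on a toy model. The Python sketch below is not the geometric construction of the paper, and all names in it (`Piece`, `build_toy_partition`) are hypothetical. Intervals are replaced by abstract pieces that carry only a mass. Each non-returning piece splits into a fixed number of children as a stand-in for the escape partition, and a proportion β of each child is declared returning, as Lemma 3.6 guarantees. The rules for the escape-time increment and for the lag t ≤ t∗ are invented and read only the noise symbol at the previous escape time or the current one. Under these assumptions the sketch shows the recursion \(E_{\omega,k} = (\text{increment}) + E_{\omega,k-1}\), the return time \(\tau_\omega = E_{\omega,k} + t\), and the fact that τω only reads entries of ω before time τω, which is the kind of property Remark 3.7 asks for.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Piece:
    mass: float                        # toy stand-in for the length |J|
    escape_time: int                   # cumulative escape time E_{omega,k}(J)
    path: Tuple[int, ...]              # child index chosen at each stage (labels J)
    return_time: Optional[int] = None  # tau_omega(J) once J has returned


def build_toy_partition(omega: Sequence[int], beta: float, t_star: int,
                        n_children: int, stages: int) -> Tuple[List[Piece], List[Piece]]:
    """Run `stages` rounds of the toy escape/return bookkeeping.

    Returns (returned pieces, pieces still non-returning after the last stage).
    `omega` must have length at least 3 * stages + 1 so that every index read exists.
    """
    returned: List[Piece] = []
    active = [Piece(1.0, 0, ())]
    for _ in range(stages):
        next_active: List[Piece] = []
        for piece in active:
            # Hypothetical escape rule: the increment depends only on the noise symbol
            # read at the previous escape time E_{omega,k-1} and on the child index.
            symbol = omega[piece.escape_time]
            for c in range(n_children):
                e_k = piece.escape_time + 1 + (symbol + c) % 3
                child_mass = piece.mass / n_children
                # Returning part: proportion beta, reaching Delta^* after t in [1, t_star]
                # further iterates; t is read from omega[E_{omega,k}] (hypothetical rule).
                t = 1 + omega[e_k] % t_star
                returned.append(Piece(beta * child_mass, e_k, piece.path + (c,), e_k + t))
                # Non-returning remainder: kept for the next stage (step 5).
                next_active.append(Piece((1 - beta) * child_mass, e_k, piece.path + (c,)))
        active = next_active
    return returned, active
```

For instance, with a random ω of length 100 over five symbols, β = 0.3, t∗ = 4, two children and five stages, the returned and still-active masses add up to 1, every returned piece satisfies E < τ ≤ E + t∗, and changing ω at indices ≥ τ of a returned piece leaves its return time unchanged.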

Finally, using the above we define the full partition as

i.e., the set of all possible intersections of all \(\mathcal {P}_{{\omega , k}}\)’s. Below we will show that \(\mathcal {Q}^{\omega }\) defines a full-measure partition of Δ∗, and we set τω(J) = Eω,k(J) + t(J) for every \(J\in \mathcal {Q}^{\omega }\), where k is the number of escapes of J before it returns.

Remark 3.7

Note that, when constructing \(\mathcal {P}_{{\omega , k}}\) in the above algorithm, the choice of \(\tilde J \subset J\) mapping diffeomorphically onto Δ∗ is not necessarily unique. However, we require that if τω(J) = k for some \(J \in \mathcal {Q}^{\omega }\), then τω depends only on the first k − 1 entries of ω. Furthermore, we require that if ω and \(\omega ^{\prime }\) share their first k − 1 entries, then \(\tau _{\omega }(J) = k^{\prime } \le k\) for some \(J \in \mathcal {Q}^{\omega }\) if and only if \(\tau _{\omega '}(J) = k^{\prime }\). To ensure this, we choose the same \(\tilde J \subset J\) for all ω sharing their first k − 1 entries when defining \(\tau _{\omega }(\tilde J) \le k\).

The following lemmas will be useful.

Lemma 3.8

Let \(\tilde J \subset J \in \mathcal {P}_{{\omega , i}}\) be a non-returning subinterval of J that has undergone its i-th escape. Then we have

where \(C_{3} = e^{\mathcal {D}} \cdot C_{\delta }\) is uniform in ω.

Proof

Since \(J \in \mathcal {P}_{{\omega , i}}\), we know that \(T_{\omega }^{j} (J)\) is contained in at most three intervals of the form \(I_{r,m}\) for \(j \le E_{\omega ,i}\), so we can apply the bounded distortion Lemma 3.5. We use the following consequence of bounded distortion: if Tω has bounded distortion with distortion constant \(\mathcal {D}\), then for any intervals A ⊂ I and B ⊂ I we have

where \(k \leq {\min \limits } \{n_{A}, n_{B} \}\). Here \(n_{A} \in \mathbb {N}\) is such that, for every 0 ≤ k ≤ nA − 1, either \(T_{\omega }^{k}(A)\) does not intersect Δ0 or \(T_{\omega }^{k}(A)\) is contained in at most three intervals of the form \(I_{r,m}\); \(n_{B}\) is defined analogously for B. Indeed, since \(T_{\omega }^{k}\) is differentiable on A and on B, the mean value theorem gives ξ1 ∈ A and ξ2 ∈ B such that

Thus,

and rearranging this we obtain inequality (17). Using this, we can write

□
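For completeness, the mean value theorem step leading to (17) can be written out as follows. This is a sketch that assumes, as in the text, that k ≤ min{nA, nB}, that \(T_{\omega }^{k}\) is a diffeomorphism on A and on B, and that Lemma 3.5 bounds the ratio of the derivatives at any two such points by \(e^{\mathcal {D}}\). It is meant to clarify the argument, not to reproduce the original displayed equations.

$$
\begin{aligned}
|T_{\omega}^{k}(A)| &= |(T_{\omega}^{k})'(\xi_{1})|\,|A|, \qquad |T_{\omega}^{k}(B)| = |(T_{\omega}^{k})'(\xi_{2})|\,|B|,\\
\frac{|A|}{|B|} &= \frac{|(T_{\omega}^{k})'(\xi_{2})|}{|(T_{\omega}^{k})'(\xi_{1})|}\cdot\frac{|T_{\omega}^{k}(A)|}{|T_{\omega}^{k}(B)|} \le e^{\mathcal{D}}\,\frac{|T_{\omega}^{k}(A)|}{|T_{\omega}^{k}(B)|}.
\end{aligned}
$$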

An important corollary of this is the following:

Corollary 3.9

We denote by \(Q_{\omega }^{(n)}(E_{\omega ,1}, ..., E_{\omega ,i })\) the set of all \(J \in \mathcal {Q}^{\omega }\) whose escape times satisfy Eω,1 < Eω,2 < ... < Eω,i < n and whose (i + 1)-th escape time occurs after time n. Then we have

Proof

For each \(J \in Q_{\omega }^{(n)} (E_{\omega ,1}, ..., E_{\omega ,i })\) there exists a sequence of ancestors \(J \subset J^{(i)} \subset J^{(i-1)} \subset \dots \subset J^{(2)} \subset J^{(1)} \subset J^{(0)} = I_{r,m}\). Note that we can write

where we set \(E_{\omega ,0}(J^{(0)}) = 0\). Let us define \(M_{j} = \{J \subset J^{(j)} : E_{\omega , j+1}(J) \ge E_{\omega , j}(J^{(j)}) + (E_{\omega , j+1}(J) - E_{\omega , j}(J^{(j)}))\}\), and then apply (16) recursively: we have

and thus by recursion we have

□

Furthermore, we use the following lemma, whose proof can be found in [11, Lemma 3.4]:

Lemma 3.10

Let η ∈ (0,1) and \(\mathcal R_{k, q} = \{(n_{1}, n_{2}, ..., n_{q}) : n_{i} \geq 1 \text { for all } i = 1,..., q, \ {\sum }_{i=1}^{q} n_{i} = k \}\). Then for q ≤ ηk there exists a positive function \(\hat \eta (\eta )\) such that \(\hat \eta \to 0\) as η → 0, and

3.5 The Tail of the Return Times

To establish mixing rates, we need decay rates for the tails of the return times. By construction, these tails depend on the number of escape times that occur before the return. We have the following lemma:

Lemma 3.11

Fix ω ∈ Ω and let \((n_{1}, n_{2}, \dots , n_{i})\) be the sequence of escape times of an interval \(J \in \mathcal {Q}^{\omega }\) before time n ≥ 1 such that \({\sum }_{j=1}^{i} n_{j} = n.\) Then there exist constants B, b > 0 that are uniform in ω such that

Proof

Let us define the following:

We decompose Q(n) into the following sums:

where ζ ∈ (0,1) is a threshold proportion of the total time n: the first sum collects the intervals with few escapes (fewer than ζn) and the second those with many escapes (more than ζn). For the many escapes, we have

where we recall that β, defined in Lemma 3.6, is the uniform lower bound on the proportion of an escape interval occupied by its returning subinterval. Furthermore, for large enough i we have

where \(\gamma _{\beta } = \zeta \log \big ((1 - \beta )^{-1}\big )\) and C4 = 2/β.

For the intervals that have few escapes, we have

with \(C_{5} = {\sum }_{i < \zeta n} {C_{3}^{i}}\). This constant grows exponentially in n when C3 > 1, so to compensate we choose ζ sufficiently small. Combining this with the inequality for many escapes, we have

for positive constants B and b, provided ζ is chosen small enough. □
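Two ingredients of the proof above can be checked numerically. The Python sketch below first verifies on small cases that \(\#\mathcal R_{k,q} = \binom{k-1}{q-1}\). It then checks that, for η ≤ 1/2, the binary entropy H(η) = −η log η − (1 − η) log(1 − η) is one admissible choice of \(\hat \eta (\eta )\) in Lemma 3.10, since \(\binom{k-1}{q-1} \le \binom{k}{q} \le e^{kH(q/k)}\). This need not be the function used in [11]. Finally, it illustrates how ζ is balanced in Lemma 3.11. The displayed estimates are not reproduced here, so for this last step we assume, hypothetically, that the few-escape sum decays at least like \(e^{-(r - \zeta \log C_{3} - H(\zeta ))n}\) up to a polynomial factor, for some rate r > 0 coming from Corollary 3.9. We also assume that the many-escape sum decays like \(e^{-\gamma _{\beta } n}\). The functions `tail_exponent` and `best_zeta` and the values r = 0.5, C3 = 3, β = 0.2 are illustrative assumptions.

```python
import math


def count_compositions(k: int, q: int) -> int:
    """#R_{k,q}: tuples (n_1, ..., n_q) with all n_i >= 1 and n_1 + ... + n_q = k."""
    if q < 1 or k < q:
        return 0
    return math.comb(k - 1, q - 1)  # stars and bars: q - 1 cuts among k - 1 gaps


def count_by_enumeration(k: int, q: int) -> int:
    """Direct recursive count, used only to cross-check the closed form."""
    if q == 0:
        return 1 if k == 0 else 0
    return sum(count_by_enumeration(k - n, q - 1) for n in range(1, k + 1))


def entropy(eta: float) -> float:
    """Binary entropy in nats, with H(0) = H(1) = 0."""
    if eta <= 0.0 or eta >= 1.0:
        return 0.0
    return -eta * math.log(eta) - (1.0 - eta) * math.log(1.0 - eta)


def tail_exponent(zeta: float, rate: float, c3: float, beta: float) -> float:
    """Candidate exponent b under the assumed few/many-escape bounds."""
    few = rate - zeta * math.log(c3) - entropy(zeta)  # fewer than zeta*n escapes
    many = zeta * math.log(1.0 / (1.0 - beta))        # gamma_beta: more than zeta*n escapes
    return min(few, many)


def best_zeta(rate: float, c3: float, beta: float, grid: int = 1000):
    """Scan zeta over (0, 1/2] and return (b, zeta) with the largest candidate b."""
    return max((tail_exponent(i / grid, rate, c3, beta), i / grid)
               for i in range(1, grid // 2 + 1))
```

With these values, `best_zeta(0.5, 3.0, 0.2)` returns a positive rate at a small ζ, whereas ζ = 1/2 gives a negative exponent. This is why ζ has to be taken small.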

Thus, for every ω ∈ Ω, τω(x) is defined and finite for a.e. x ∈ Δ∗. We emphasize that the return-time tails decay exponentially at a rate independent of ω. Furthermore, for every ω ∈ Ω, if \(J \in \mathcal Q^{\omega }\) has escape times \((n_{1}, n_{2}, \dots , n_{i})\) before returning, then its return time satisfies \(\tau _{\omega }(J) \le E_{\omega ,i}(J) + t_{\ast } = n_{1} + \dots + n_{i} + t_{\ast }\), where t∗ is the constant from Lemma 3.6, which is independent of ω.

3.6 Gibbs-Markov

Let Δ∗, \(\mathcal {Q}^{\omega }\) and τω be as in Section 3.4. By definition, \(T_{\omega }^{\tau _{\omega }}:{\Delta }^{\ast }\to {\Delta }^{\ast }\) has the fiber-wise Markov property: every \(J\in \mathcal {Q}^{\omega }\) is mapped diffeomorphically onto \(\sigma ^{\tau _{\omega }(J)}\omega \times {\Delta }^{\ast } \). Notice that if τω(J) = k, then τω depends only on the first k − 1 components of ω. This implies that if τω(x) = k and \(\omega _{i}^{\prime }=\omega _{i}\) for 0 ≤ i ≤ k − 1, then \(\tau _{\omega }(x)=\tau _{\omega ^{\prime }}(x)\), i.e., τω(x) is a stopping time. The uniform expansion is immediate in our case, and the tails of the return times were estimated in Section 3.5. It remains to show bounded distortion and aperiodicity, which we address below.

Set \(F_{\omega }(x)=T_{\omega }^{\tau _{\omega }(x)}(x)\) for (ω, x) ∈ Ω × Δ∗, and define \(\mathcal {Q} = \big \{ \{\omega \} \times J | \omega \in {\Omega }, J \in \mathcal {Q}^{\omega } \big \}.\) Note that \(F_{\omega }^{2} (x) = F_{\sigma ^{\tau _{\omega }(x)}\omega } \circ F_{\omega } (x)\), and if we set \(\ell = {{\tau _{\sigma ^{\tau _{\omega }(x)}\omega }(F_{\omega }(x)) + \tau _{\omega }(x)}}\), then \(F_{\omega }^{3} (x) = F_{\sigma ^{\ell } \omega } \circ F_{\sigma ^{\tau _{\omega }(x)}\omega } \circ F_{\omega } (x)\), and so on.
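The fiber bookkeeping in \(F_{\omega }^{2}\) and \(F_{\omega }^{3}\) can be illustrated with a toy random map. The Python sketch below is only a stand-in for the Lorenz maps of the paper and rests on invented choices. The fiber map at \(\sigma ^{n}\omega \) is the hypothetical doubling map x ↦ 2x + ωn mod 1. The base Δ∗ is taken to be [0, 1/2). The first return time to the base replaces the return time τω of the construction. Each application of the induced map advances the fiber index by the current return time, so after two steps the fiber index is \(\ell = \tau _{\sigma ^{\tau _{\omega }(x)}\omega }(F_{\omega }(x)) + \tau _{\omega }(x)\). The return time only reads ω0, …, ωτ−1, which is the stopping-time property. The names `fiber_map`, `induced_map` and `induced_orbit` are our own.

```python
import math
from fractions import Fraction
from typing import Callable, List, Tuple

Noise = Callable[[int], Fraction]  # n -> omega_n (hypothetical noise sequence)


def fiber_map(omega_n: Fraction, x: Fraction) -> Fraction:
    """Toy fiber map T_{sigma^n omega}(x) = 2x + omega_n mod 1 (exact arithmetic)."""
    y = 2 * x + omega_n
    return y - math.floor(y)


def induced_map(omega: Noise, n: int, x: Fraction, max_time: int = 1000) -> Tuple[int, Fraction]:
    """Return (tau, F(x)) on the fiber sigma^n omega, tau = first return to [0, 1/2)."""
    y = x
    for k in range(1, max_time + 1):
        y = fiber_map(omega(n + k - 1), y)
        if y < Fraction(1, 2):
            return k, y
    raise RuntimeError("no return to the base within max_time")


def induced_orbit(omega: Noise, x: Fraction, steps: int) -> List[Tuple[int, Fraction]]:
    """Iterate F_omega, F_{sigma^tau omega}, ...; record (fiber index, point)."""
    n, orbit = 0, []
    for _ in range(steps):
        tau, x = induced_map(omega, n, x)
        n += tau  # the next induced map acts on the fiber sigma^n omega
        orbit.append((n, x))
    return orbit
```

For instance, with ωn = ((7n² + 3n) mod 5)/40 and x = 1/3, the first two returns take 2 and 3 iterates, so the second induced step ends on the fiber \(\sigma ^{5}\omega \), in line with the formula for ℓ.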

As usual, we introduce a separation time for x, y ∈ Δ∗ by setting

Lemma 3.12

There exist constants \(\tilde D\) and \(\hat \beta \in (0, 1)\) such that for all ω ∈ Ω, for all \(J\in \mathcal {Q}^{\omega }\), and for all x, y ∈ J

Proof

Let us define

Then for every \(x, y\in J_{n}\in \mathcal {Q}_{n}^{\omega }\), \(F^{i}_{\omega }(x)\) and \(F^{i}_{\omega }(y)\) stay in the same element of \(\mathcal {Q}\) for \(i=1, 2, \dots , n-1\). Furthermore, \(F^{n}_{\omega }(J_{n})={\Delta }^{\ast }\). Define diam\((\mathcal {Q}^{\omega })=\sup \{|J|: J\in \mathcal {Q}^{\omega }\}\). We show that there exists \(\bar \kappa \in (0, 1)\) such that, for all ω ∈ Ω and \(J_{n}\in \mathcal {Q}^{\omega }_{n}\), the following holds:

We prove this inequality by induction. For n = 1, note that \(\mathcal {Q}^{\omega }_{1}=\mathcal {Q}^{\omega }\), and proceed as follows: fix ω ∈ Ω and let \(J_{2}\in \mathcal {Q}^{\omega }_{2}\) be such that \(J_{2}\subset J_{1}\in \mathcal {Q}^{\omega }_{1}\). Since \(F_{\omega } J_{1}={\Delta }^{\ast }\) and \(F_{\omega } J_{2}\in \mathcal {Q}_{1}^{\omega }\), we have

Thus, we obtain \(|J_{2}| \le (1-\bar \kappa )|J_{1}|\). Iterating the process we obtain (25).

Consider x, y ∈ J1 with n = sω(x, y) and \(F_{\omega }(x), F_{\omega }(y)\in J_{n}\in \mathcal {Q}^{\omega }_{n}\) and suppose that \( J_{n}\subset J\in \mathcal {Q}^{\omega }\). By (17) and Lemma 3.5 we have \(\frac {|T_{\omega }^{k}(J_{n})|}{|T_{\omega }^{k}(J_{1})|}\le e^{\mathcal {D}} \frac {|F_{\omega }(J_{n})|}{|F_{\omega }(J_{1})|}\) for all k ≤ n and ω ∈ Ω. Thus proceeding as in the proof of Lemma 3.5 we have

The sum on the right-hand side can be bounded by a constant \(\mathcal {D}\) as in the proof of Lemma 3.5. Therefore, using (25) and \(\text {diam}(\mathcal {Q}^{\omega })\le 2\delta ^{\ast }\) we have

for suitable constants \(\tilde D\) and \(\hat \beta \). □
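The geometric shrinking in (25) and the bound \(|x-y| \le \kappa ^{-s_{\omega }(x,y)}\) used in the proof of Theorem 2.2 can be seen on a toy example. The Python sketch below assumes, purely for illustration, that the induced map on the n-th fiber along the orbit is the full-branch map x ↦ kn x mod 1 with random integer slopes kn ∈ {2, 3}. Its partition consists of the intervals [j/kn, (j + 1)/kn). The separation time counts how many steps x and y stay in the same partition element. The common cylinder then has length \(\prod _{n<s} k_{n}^{-1} \le 2^{-s}\), so |x − y| ≤ 2^{−s}, i.e., the bound holds with κ = 2. The function name `separation_time` is our own.

```python
import math
from fractions import Fraction
from typing import Sequence


def separation_time(x: Fraction, y: Fraction, slopes: Sequence[int]) -> int:
    """Steps for which x and y stay in the same partition element of the toy maps.

    Toy assumption: on the n-th fiber along the orbit the induced map is
    x -> slopes[n] * x mod 1, whose partition is {[j/k, (j+1)/k)} with k = slopes[n].
    """
    for n, k in enumerate(slopes):
        bx, by = math.floor(k * x), math.floor(k * y)  # branch containing each point
        if bx != by:
            return n  # first step at which x and y lie in different elements
        x, y = k * x - bx, k * y - by  # apply the full-branch map to both points
    return len(slopes)  # not separated within the available horizon
```

For example, `separation_time(Fraction(1, 4), Fraction(3, 8), [2] * 10)` returns 2. Both points start in [0, 1/2), their images both lie in [1/2, 1), and at the next step they fall into different halves. Accordingly, |x − y| = 1/8 ≤ 2^{−2}.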

3.7 Aperiodicity

Finally, we address aperiodicity, i.e., we show that there exist \(N_{0}\in \mathbb {N}\) and two finite sequences \(\{t_{i}\in \mathbb {N}, i=1,2, \dots , N_{0}\}\), \(\{\varepsilon _{i}>0, i=1, \dots , N_{0}\}\) such that g.c.d.{ti} = 1 and |{x ∈ Δ∗ ∣ τω(x) = ti}| > εi for almost every ω ∈ Ω. To show this, we recall that the original Lorenz system and all sufficiently close systems are mixing [18]. Thus, we can proceed as in [1, Remark 3.14]. Since the unperturbed map admits a unique absolutely continuous invariant probability measure, this measure can be lifted to the induced map over Δ∗ constructed by the algorithm of the previous two subsections. Moreover, the lifted measure is invariant and mixing for the tower map. Therefore, there exist a partition \(\mathcal {Q}^{0}\) of Δ∗ and a return time \(\tau ^{0}:{\Delta }^{\ast }\to \mathbb {N}\) such that, writing \({\tau _{i}^{0}}=\tau ^{0}(Q_{i})\) for \(Q_{i}\in \mathcal {Q}^{0}\), we have g.c.d.\(\{{\tau _{i}^{0}}\}_{i=1}^{N_{0}}=1\) for some N0 > 1. Now, by shrinking ε if necessary, we can ensure that the first N0 elements of the partition \(\mathcal {Q}^{\omega }\) satisfy \(|Q_{i}^{\omega }\cap Q_{i}|\ge |Q_{i}|/2\) with \(\tau _{\omega }(Q_{i}^{\omega })={\tau _{i}^{0}}\) for \(i=1, {\dots } N_{0}\) and for all ω ∈ Ω. Thus, we may take εi = |Qi|/2. Notice that only finitely many domains are defined in this way. Therefore, the tail estimates, distortion bounds, etc. are not affected, and we can stop at some ε0 > 0, which proves aperiodicity.
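To see why the condition g.c.d.{ti} = 1 matters, the Python sketch below lists the times n ≤ N at which a point of the base can be back in the base after a concatenation of returns with prescribed return times. This is a simplified deterministic picture and not part of the argument above. With return times {2, 3} (g.c.d. 1), every time n ≥ 2 is reachable. With {4, 6} (g.c.d. 2), only even times are reachable, so the corresponding tower would be periodic rather than mixing. The function names are our own.

```python
from functools import reduce
from math import gcd
from typing import List, Sequence


def gcd_of_return_times(times: Sequence[int]) -> int:
    """g.c.d. of a finite list of return times t_1, ..., t_N."""
    return reduce(gcd, times)


def reachable_times(times: Sequence[int], horizon: int) -> List[int]:
    """Times n in [1, horizon] that can be written as a sum of elements of `times`."""
    reachable = [False] * (horizon + 1)
    reachable[0] = True  # zero returns
    for n in range(1, horizon + 1):
        reachable[n] = any(t <= n and reachable[n - t] for t in times)
    return [n for n in range(1, horizon + 1) if reachable[n]]
```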

We can now give the proof of Theorem 2.2 by applying the results of [9] and [14]:

Proof

(Proof of Theorem 2.2) Above we have shown that conditions (C2)–(C6) of the Appendix hold, and condition (C1) holds by construction. Using this and Theorem 4.1 in the Appendix, we are now ready to prove Theorem 2.2. We begin by defining the tower projection πω : Δω → I for almost every ω ∈ Ω as \(\pi _{\omega } (x, \ell ) = T_{\sigma ^{-\ell }\omega }^{\ell } (x)\). Note that \(\pi _{\sigma \omega } \circ \hat F_{\omega } = T_{\omega } \circ \pi _{\omega } \), where \(\hat F_{\omega }\) is the tower map defined in the Appendix. Then μω = (πω)∗νω is an equivariant family of measures for {Tω}, and its absolute continuity follows from the non-singularity of the maps Tω. Next, we “lift” the observables \(\varphi \in L^{\infty }(X)\) and \(\psi \in C^{\eta }(X)\) to the tower by setting \(\bar {\varphi }_{\omega } = \varphi \circ \pi _{\omega }\) and \(\bar {\psi }_{\omega } = \psi \circ \pi _{\omega }\). Using the definition of μω, we have

where dνω = hω ⋅ dm. Similarly, we have

and

Thus, we have

This means that if we can show that \(\bar {\varphi }_{\omega } \in {\mathscr{L}}_{\infty }^{K_{\omega }}\) and \(\bar \psi _{\omega } h_{\omega } \in \mathcal {F}_{\gamma }^{K_{\omega }}\), then we can apply Theorem 4.1 in the Appendix and thereby obtain exponential decay of correlations for the original dynamics. The first condition holds trivially since \(\varphi \in L^{\infty }(X)\). For the second condition, since Fω is uniformly expanding, i.e., |(Tω)′| ≥ κ > 1, we have \(|x-y| \le {(\frac {1}{\kappa })}^{s_{\omega }(x, y)}\). Hence, for any (x, ℓ), (y, ℓ) ∈ Δω we have the following inequality:

where ∥⋅∥η is the Hölder norm with exponent η ∈ (0,1).

Let us define \(\gamma =\frac {1}{\kappa }\), and consider the separation time on the tower, \(\hat s_{\omega } : {\Delta }_{\omega } \times {\Delta }_{\omega } \to \mathbb {Z}_{+}\), defined in the Appendix. Notice that \(\hat s_{\omega }((x, \ell ), (y, \ell )) = s_{\omega }(x,y)\). Thus, inequality (28) implies

This completes the proof. □
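The identity \(\pi _{\sigma \omega } \circ \hat F_{\omega } = T_{\omega } \circ \pi _{\omega }\) used above can be checked on a toy tower. In the Python sketch below, the fiber \(\sigma ^{n}\omega \) is encoded by the integer n, and the hypothetical fiber map is x ↦ 2x + ωn mod 1. The base is [0, 1/2), and the first return time to the base stands in for τ. A point (x, ℓ) of the tower over \(\sigma ^{n}\omega \) has x in the base over \(\sigma ^{n-\ell }\omega \). The tower map climbs one level or, at the top, applies \(T^{\tau }\) and drops back to level 0. This is our reading of the tower map of the Appendix, whose display is not reproduced here. The projection is \(\pi (x,\ell ) = T^{\ell }_{\sigma ^{-\ell }\omega }(x)\). All function names are our own.

```python
import math
from fractions import Fraction
from typing import Tuple

Point = Tuple[Fraction, int]  # (x, level) in the tower over the fiber sigma^n omega


def noise(n: int) -> Fraction:
    """Hypothetical noise omega_n, defined for all integers n (two-sided shift)."""
    return Fraction((7 * n * n + 3 * n) % 5, 40)


def fiber_map(n: int, x: Fraction) -> Fraction:
    """Toy T_{sigma^n omega}(x) = 2x + omega_n mod 1."""
    y = 2 * x + noise(n)
    return y - math.floor(y)


def iterate(n: int, k: int, x: Fraction) -> Fraction:
    """T^k_{sigma^n omega} = T_{sigma^{n+k-1} omega} o ... o T_{sigma^n omega}."""
    for j in range(k):
        x = fiber_map(n + j, x)
    return x


def tau(n: int, x: Fraction, max_time: int = 1000) -> int:
    """Stand-in return time: first k >= 1 with T^k_{sigma^n omega}(x) in [0, 1/2)."""
    y = x
    for k in range(1, max_time + 1):
        y = fiber_map(n + k - 1, y)
        if y < Fraction(1, 2):
            return k
    raise RuntimeError("no return to the base within max_time")


def tower_map(n: int, point: Point) -> Point:
    """Toy tower map on the fiber sigma^n omega, landing over sigma^{n+1} omega."""
    x, level = point
    base_fiber = n - level
    t = tau(base_fiber, x)
    if level + 1 < t:
        return (x, level + 1)                  # climb one level
    return (iterate(base_fiber, t, x), 0)      # return to the base


def projection(n: int, point: Point) -> Fraction:
    """pi_{sigma^n omega}(x, level) = T^level_{sigma^{n-level} omega}(x)."""
    x, level = point
    return iterate(n - level, level, x)
```

Climbing one level or returning to the base both commute with the projection, because \(T^{\ell +1}_{\sigma ^{-\ell }\omega } = T_{\omega } \circ T^{\ell }_{\sigma ^{-\ell }\omega }\).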

Notes

In principle, one needs to distinguish between different fibers and consider Tω : I × {ω} → I × {σω}. The inducing domain in the fiber over ω is then \({\Delta }_{\omega }^{\ast }= {\Delta }^{\ast }\), and the induced map is \(T_{\omega }^{\tau (\omega , k)}: J \times \{\omega \}\to {\Delta }^{\ast }_{\sigma ^{\tau (\omega , k)}\omega }\). This extension causes no difficulty, since all the estimates are uniform in ω.

References

Alves JF, Bahsoun W, Ruziboev M. Almost sure rates of mixing for partially hyperbolic attractors. arXiv:1904.12844.

Alves JF, Soufi M. Statistical stability and limit laws for Rovella maps. Nonlinearity 2012;25:3527–3552.

Alves JF, Soufi M. Statistical stability of geometric Lorenz attractors. Fund Math 2014;224(3):219–231.

Araújo V, Pacífico MJ. Three-dimensional flows. Ergebnisse der Mathematik und ihrer Grenzgebiete. 3. Folge. A Series of Modern Surveys in Mathematics, Vol. 53. Berlin: Springer; 2010.

Araújo V, Pacífico MJ, Pujals ER, Viana M. Singular-hyperbolic attractors are chaotic. Trans Amer Math Soc 2009;361(5):2431–2485.

Bahsoun W, Bose C, Ruziboev M. Quenched decay of correlations for slowly mixing systems. Trans Amer Math Soc 2019;372(9):6547–6587.

Bahsoun W, Melbourne I, Ruziboev M. Variance continuity for Lorenz flows. Ann Henri Poincaré 2020;21:1873–1892.

Bahsoun W, Ruziboev M. On the statistical stability of Lorenz attractors with a \(C^{1+\alpha }\) stable foliation. Ergodic Theory Dynam Systems 2019;39(12):3169–3184.

Baladi V, Benedicks M, Maume-Deschamps V. Almost sure rates of mixing for i.i.d. unimodal maps. Ann Sci École Norm Sup (4) 2002;35(1):77–126.

Benedicks M, Carleson L. The dynamics of the Hénon map. Ann Math (2) 1991;133(1):73–169.

Bruin H, Luzzatto S, van Strien S. Decay of correlations in one-dimensional dynamics. Ann Sci École Norm Sup (4) 2003;36(4):621–646.

Díaz-Ordaz K. Decay of correlations for non-Hölder observables for one-dimensional expanding Lorenz-like maps. Discrete Contin Dyn Syst Series A 2006;15(1):159–176.

Díaz-Ordaz K, Holland M, Luzzatto S. Statistical properties of one-dimensional maps with critical points and singularities. Stoch Dyn 2006;6(4):423–458.

Du Z. On mixing rates for random perturbations. PhD thesis, National University of Singapore; 2015.

Holland M, Melbourne I. Central limit theorems and invariance principles for Lorenz attractors. J Lond Math Soc (2) 2007;76(2):345–364.

Li X, Vilarinho H. Almost sure mixing rates for non-uniformly expanding maps. Stoch Dyn 2018;18(4):1850027.

Lorenz EN. Deterministic nonperiodic flow. J Atmos Sci 1963;20:130–141.

Luzzatto S, Melbourne I, Paccaut F. The Lorenz attractor is mixing. Comm Math Phys 2005;260(2):393–401.

Acknowledgments

I would like to thank Marks Ruziboev for helpful discussions throughout this work. I would also like to thank the anonymous referees, whose comments improved the presentation of the paper.

Appendix. Abstract random towers with exponential tails

This appendix is based on the work and results of [9, 14]. See also the related work of [6, 16] on random towers. Using the notation and definitions in Section 3, we can introduce a random tower for almost every ω as follows:

We can also define the tower map \(\hat F_{\omega } : {\Delta }_{\omega } \to {\Delta }_{\sigma \omega }\) as

Notice that this allows us to construct a partition on the random tower as

Let us define the separation time on the tower as

Assume:

(C1) Return and separation time: the return time function τω can be extended to the whole tower as \(\tau _{\omega }: {\Delta }_{\omega } \to \mathbb {Z}_{+}\) with τω constant on each \(J \in \mathcal {Z}_{\omega }\), and there exists a positive integer p0 such that τω ≥ p0. Furthermore, if (x, ℓ) and (y, ℓ) are both in the same partition element \(J \in \mathcal {Z}_{\omega }\), then \(\hat s_{\omega }((x,0), (y,0)) \ge \ell \), and for every \((x, 0), (y,0) \in J \in \mathcal {Z}_{\omega }\) we have

$$ \hat s_{\omega}((x,0), (y,0))= \tau_{\omega}(x, 0) + \hat s_{\sigma^{\tau_{\omega}}\omega}(\hat F_{\omega}^{\tau_{\omega} (x,0)}(x,0), \hat F_{\omega}^{\tau_{\omega} (y,0)}(y,0)). $$

(C2) Markov property: for each \(J \in \mathcal {Q}^{\omega } ({\Delta }^{*})\) the map \(\hat F_{\omega }^{\tau _{\omega }} |J : J \to {\Delta }^{*}\) is bijective, and both \(\hat F_{\omega }^{\tau _{\omega }} |J\) and its inverse are non-singular.

(C3) Bounded distortion: there exist constants 0 < γ < 1 and \(\mathcal {D}> 0\) such that for all \(J \in \mathcal {Z}_{\omega }\) and all (x, ℓ), (y, ℓ) = x, y ∈ J

$$ \Big| \frac{J\hat F_{\omega}^{\tau_{\omega}}(x)}{J\hat F_{\omega}^{\tau_{\omega}}(y)} - 1 \Big| \le \mathcal{D} \gamma^{\hat s_{\sigma^{\tau_{\omega}}\omega}(\hat F_{\omega}^{\tau_{\omega}}(x,0), \hat F^{\tau_{\omega}}_{\omega}(y,0))}, $$

where \(J\hat F_{\omega }^{\tau _{\omega }}\) denotes the Jacobian of \(\hat F_{\omega }^{\tau _{\omega }}\).

(C4) Weak forward expansion: the diameters of the partitions \(\bigvee ^{n}_{j=0} (\hat F_{\omega }^{j})^{-1} \mathcal {Z}_{\sigma ^{j} \omega }\) tend to zero as \(n \to \infty \).

(C5) Return time asymptotics: there exist constants B, b > 0 and a full-measure subset Ω1 ⊂ Ω such that for every ω ∈ Ω1 we have

$$ m(\{ x \in {\Delta}^{*} | \tau_{\omega} > n \}) \le Be^{-bn}. $$

We also have, for almost every ω ∈ Ω,

$$ m({\Delta}_{\omega}) = \sum\limits_{\ell \in \mathbb{Z}_{+}} m(\{\tau_{\sigma^{-\ell}\omega} > \ell \}) < \infty, $$

which gives the existence of a family of finite equivariant sample measures, and, for almost every ω ∈ Ω,

$$ \lim_{\ell_{0} \to \infty} \sum\limits_{\ell \ge \ell_{0}} m({\Delta}_{\sigma^{\ell_{0}} \omega, \ell}) = 0. $$

(C6) Aperiodicity: there exist N0 ≥ 1, a full-measure subset Ω2 ⊂ Ω and a set \(\{ t_{i} \in \mathbb {Z}_{+} : i = 1,2,...,N_{0} \}\) with g.c.d.{ti} = 1, together with constants εi > 0, such that for every ω ∈ Ω2 and every i ∈ {1,..., N0} we have \(m(\{x \in {\Delta }^{*} \mid \tau _{\omega }(x) = t_{i}\}) > \varepsilon _{i}\).
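The two summability requirements in (C5) follow from the uniform tail bound. The derivation below is a sketch resting on two assumptions. First, as the display for m(Δω) indicates, the ℓ-th level of the tower over a fiber ω′ has measure \(m(\{\tau _{\sigma ^{-\ell }\omega '} > \ell \})\). Second, ω is such that \(\sigma ^{j}\omega \in \Omega _{1}\) for all integers j. This holds for almost every ω when σ preserves the probability on Ω, as a countable intersection of full-measure sets.

$$
\begin{aligned}
m(\Delta_{\omega}) &= \sum_{\ell \in \mathbb{Z}_{+}} m(\{\tau_{\sigma^{-\ell}\omega} > \ell\}) \le \sum_{\ell \ge 0} B e^{-b\ell} = \frac{B}{1 - e^{-b}} < \infty,\\
\sum_{\ell \ge \ell_{0}} m(\Delta_{\sigma^{\ell_{0}}\omega, \ell}) &= \sum_{\ell \ge \ell_{0}} m(\{\tau_{\sigma^{\ell_{0}-\ell}\omega} > \ell\}) \le \frac{B e^{-b\ell_{0}}}{1 - e^{-b}} \xrightarrow[\ell_{0}\to\infty]{} 0.
\end{aligned}
$$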

Let us also define the following function spaces:

Let \(K: {\Omega } \to \mathbb {R}_{+}\), ω ↦ Kω, be a random variable which satisfies \(\inf _{\Omega } K_{\omega } > 0\) and

where u, v > 0. We then define the spaces

We assign to \({\mathscr{L}}^{K_{\omega }}_{\infty }\) and \(\mathcal {F}_{\gamma }^{K_{\omega }}\) the norms \(|| \varphi ||_{{\mathscr{L}}_{\infty }} = \inf C_{\varphi }^{\prime }\) and \(||\varphi ||_{\mathcal {F}} = {\max \limits } \{ \inf C_{\varphi _{\omega }}^{\prime }, \inf C_{\varphi _{\omega }}^{\prime \prime }\}\), respectively, which make them Banach spaces. We can now apply the following theorem from [9, 14]:

Theorem 4.1

Let \(\hat F_{\omega }\) satisfy (C1)–(C6), and let Kω satisfy the above condition. Then for almost every ω ∈ Ω there exists an absolutely continuous \(\hat F_{\omega }\)-equivariant probability measure νω = hωm on Δω, satisfying \((\hat F_{\omega })_{*} \nu _{\omega } = \nu _{\sigma \omega }\), with \(h_{\omega } \in \mathcal {F}_{\gamma }^{+}\). Furthermore, there exists a full-measure subset Ω2 ⊂Ω such that for every ω ∈ Ω2, \(\varphi _{\omega } \in {\mathscr{L}}_{\infty }^{K_{\omega }}\) and \(\psi _{\omega } \in \mathcal {F}_{\gamma }^{K_{\omega }}\) there exists a constant Cφ, ψ such that for all n we have

and

In general, a constant Cω depending on ω appears in front of the right-hand sides of both (32) and (33), because one often has to wait an ω-dependent time before the return times exhibit exponential tails. Since the tails in (C5) are uniform, in the sense that there is no waiting time and the exponential tail bound holds immediately, Cω can be replaced by the uniform constant B > 0, which we absorb into Cφ,ψ.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Larkin, A. Quenched Decay of Correlations for One-dimensional Random Lorenz Maps. J Dyn Control Syst 29, 185–207 (2023). https://doi.org/10.1007/s10883-021-09583-w
