Abstract

Previous researchers have demonstrated that using stimuli identified via daily brief preference assessments may produce more responding under concurrent-schedule arrangements than using stimuli identified via lengthy, pre-treatment preference assessments (DeLeon et al., 2001). To date, this has not been evaluated within the context of skill acquisition. Thus, the extent to which conducting daily brief preference assessments affects the rate of skill acquisition during discrete trial instruction (DTI) remains unknown. The purpose of this study was to evaluate how frequent, pre-session preference assessments influence the rate of skill acquisition, relative to an initial preference assessment, during DTI sessions for three children with autism spectrum disorder (ASD). Two of the three children acquired the targeted skills faster in the frequent preference assessment condition. The third participant showed no difference in the rate of skill acquisition.

Researchers have shown that methods of varying reinforcers can enhance task performance in individuals with intellectual and developmental disabilities (IDD). Some methods include stimulus variation (e.g., Egel, 1981; Becraft & Rolider, 2015); within-session reinforcer choice (e.g., Graff & Libby, 1999; Keyl-Austin et al., 2012); differential reinforcer quality (e.g., Cariveau & La Cruz Montilla, 2022); and frequent, brief preference assessments conducted prior to training sessions (e.g., DeLeon et al., 2001). Behavioral interventions that incorporate choice-making opportunities may be favored over interventions that do not, as choice permits learners to contribute to their habilitation and may promote increased interest, support, or personal investment in the intervention. One way to provide choice for participants in behavioral interventions is to arrange stimulus conditions that allow learners to determine the consequences of their behavior (e.g., stimulus preference assessments).

Generally, research on preference assessment indicates a correspondence between highly ranked stimuli and performance on a variety of measures (e.g., response allocation, DeLeon et al., 2001; response output, Call et al., 2012, DeLeon et al., 2009; response rate, Lee et al., 2010). That is, results of prior research suggest that higher preference stimuli typically function as more effective reinforcers than do lower preference stimuli. For example, DeLeon et al. (2009) assessed the extent to which high-, moderate-, and low-preference stimuli supported different amounts of work when used as reinforcers under a progressive-ratio preparation. Results of DeLeon et al. showed that, for 3 out of 4 participants, the higher ranked item produced higher break points than the lower ranked items. Similarly, Lee et al. (2010) evaluated the correspondence between preference for, and reinforcing efficacy of, six edibles for two participants with developmental disabilities. Lee et al. found high levels of correspondence between preference rank and performance, particularly for high-preference stimuli.

Applied behavior analysis researchers have reported on a variety of systematic preference assessment formats to directly and empirically assess participant preference. Thus, researchers and clinicians have a variety of methods from which to choose, depending on their learner’s specific skill set and educational needs. Two commonly used trial-based preference assessments are the paired stimulus (PS; Fisher et al., 1992) format and the multiple stimulus without replacement (MSWO; DeLeon & Iwata, 1996) format. In the PS format, participants are presented items in pairs and instructed to select one of the available items. Selections produce access to the item, followed by a new trial. Each item is paired with every other item, and a preference hierarchy based on selection percentages is constructed. Despite its many benefits, one potential limitation of the PS format is that it can be time-consuming (e.g., Hagopian et al., 2004). Thus, clinicians are unlikely to conduct frequent PS assessments to detect shifts in preference that may occur over time. By contrast, the MSWO is a trial-based preference assessment format in which participants are presented with an array of all potential choices and instructed to select one at a time. The logic of the MSWO is that the order of selection corresponds with preference; thus, the most highly preferred item would be selected first. An MSWO also produces a preference hierarchy, but it can typically be completed more quickly than a PS assessment (e.g., Hagopian et al., 2004).

Several studies have reported that preferences can shift across time and experience (e.g., Hanley et al., 2006; MacNaul et al., 2021; Zhou et al., 2001). It may, therefore, be beneficial to conduct frequent preference assessments to ensure that reinforcers delivered during instructional or therapeutic activities remain effective. Otherwise, shifts in preference for the reinforcers used in therapy sessions may go undetected. Graff and Karsten (2012) found that the main barrier to conducting frequent preference assessments reported by behavior analysts was lack of time. However, in the same survey, Graff and Karsten reported that certified behavior analysts (BCBAs and BCaBAs) were more likely to conduct PS assessments (70.4% of respondents endorsing use of the assessment) than MSWO assessments (20.4% of respondents endorsing use of the assessment). Given that the MSWO is a shorter assessment that also produces a preference hierarchy, there might be clinical advantages to conducting frequent MSWOs rather than frequent PS assessments. Shorter assessments that remain effective presumably leave more time for learning trials and may allow more frequent assessment of learner preference. Therefore, conducting frequent MSWOs might be a viable clinical solution for assessing daily preferences and identifying shifts in preference.

DeLeon et al. (2001) conducted an initial PS preference assessment to establish a preference hierarchy. Then, prior to daily work sessions, the authors conducted a brief MSWO preference assessment to gauge which stimulus was most preferred that day. When the most highly preferred item that day differed from the highest preference item in the initial PS preference assessment, a concurrent-schedule reinforcer assessment session was conducted to evaluate relative reinforcer strength between the two stimuli. Four out of 5 participants generally allocated more responding towards the option selected via that day’s MSWO assessment, suggesting that frequent preference assessment can enhance performance by accommodating momentary fluctuations in stimulus preference.

The results of DeLeon et al. (2001) suggested that fluctuations in preference can indeed affect performance. However, DeLeon et al. demonstrated this effect using relative response allocation among mastered targets reinforced under a concurrent schedule. With a few exceptions (e.g., Egel, 1981), similar studies have gauged reinforcer efficacy using response rates under different reinforcement schedules (e.g., progressive ratio) for previously mastered or simple arbitrary tasks (e.g., microswitch presses; Egel, 1980; Fisher et al., 1997; Call et al., 2012). For example, Call et al. (2012) evaluated correspondence between preference assessment results (PS and MSWO) and reinforcer efficacy using a progressive-ratio schedule. DeLeon et al. (2009), however, suggested that neither concurrent-schedule arrangements nor response rate as a dependent measure can fully characterize the applied utility of preference assessment methods. Measures of response rate on simple or previously mastered tasks may be subject to ceiling effects. That is, as long as a consequent event (varied or not) is moderately potent, the person may respond as quickly as possible to maximize gains. Concurrent arrangements may be more sensitive to relative reinforcement effects (Fisher & Mazur, 1997), but they do not mirror typical teaching arrangements. Preference assessment methods, and methods to enhance reinforcement effects more generally, are ostensibly developed to enhance performance. In behavioral intervention for individuals with intellectual and developmental disabilities, enhanced performance often takes the form of learning previously unlearned responses. As such, the benefits of these methods should be evaluated using measures of acquisition (i.e., independent correct responses) as the dependent variable, rather than rate or relative allocation measures.
Carr, Nicholson, and Higbee (2000) evaluated the extent to which high-, medium-, and low-preference stimuli functioned as reinforcers for a low-rate, clinically relevant response (e.g., motor imitation, vocal imitation) and found that high-preference stimuli identified via a brief MSWO produced more correct responses than medium- and low-preference stimuli. However, (a) the target response was the same across all conditions, and (b) the comparison was made in the context of a brief reinforcer assessment. Thus, the extent to which these results inform broader matters of skill acquisition may be limited.

To date, no study has evaluated whether stimuli identified via frequent, brief pre-session preference assessments produce more efficient learning than stimuli identified via an initial, lengthy pre-treatment preference assessment in the context of skill acquisition, which typically involves novel targets that are prompted by a therapist or experimenter and reinforced under a single schedule. Thus, the purpose of this study was to evaluate how stimuli identified via frequent pre-session preference assessments (i.e., MSWOs) influence the rate of skill acquisition relative to stimuli identified via an initial preference assessment (i.e., PS) during discrete trial instruction (DTI) sessions for children with autism spectrum disorder (ASD).

Method

Participants and Setting

Three children diagnosed with ASD participated in this study. Participants were nominated by the clinical staff at their respective educational or clinical placements. An on-site study team member described the purpose of the experiment to each participant’s caregiver, and caregivers signed informed consent documents before experimental procedures began. Matt was a seven-year-old Hispanic male who communicated using six- to eight-word phrases, responded to multiple-step instructions, and emitted multiple tact and intraverbal responses. However, he had difficulty responding independently during reciprocal conversations of more than two verbal exchanges with peers or adults. Matt received 15 h of in-home behavioral intervention and 30 min of speech therapy per week. He also received occupational therapy and additional speech therapy at school. Matt attended a special education classroom in a public school in Florida. Matt’s sessions were conducted in the living room of his home. The open room contained a small table with two small chairs, some toys, a couch, a TV, behavioral intervention session work materials, and materials used for this study.

Julian was a four-year-old Caucasian male who communicated using one- to two-word phrases, responded to one-step instructions, emitted multiple tacts, and had a limited intraverbal repertoire. Julian received 20 h of center-based behavioral intervention per week and 90 min of speech and occupational therapy every day in a developmental pediatric center. Julian’s sessions were conducted in a classroom at a developmental pediatric center in New York. The classroom contained a small table with two small chairs, shelving with instructional materials, toys, miscellaneous classroom items, and materials used for this study.

Joey was a five-year-old Hispanic male who communicated using one-word phrases, responded to simple one-step instructions, emitted a limited number of tacts, and displayed a very limited intraverbal repertoire. Joey was initially referred for behavioral intervention for the assessment and treatment of problem behavior (i.e., aggression). Problem behavior was at near zero levels when Joey participated in this study. Joey attended a special education classroom in a public elementary school. All of Joey’s sessions were conducted in the same room as his behavioral intervention sessions within his school. The room contained a table, two chairs, miscellaneous school items, and materials used for this study.

Response Measurement, Interobserver Agreement, and Procedural Fidelity

Trained graduate students served as observers and collected data using paper and pencil. During the PS and MSWO preference assessments, an approach response was defined as reaching for a stimulus within 5 s of a stimulus presentation. During the DTI sessions, target responses varied based on individual participant clinical programming at the start of the study. Tasks consisted of answering “WH” questions for Matt, receptive discrimination of motor tasks for Julian, and receptive identification of shapes for Joey. Independent correct responses were defined as answering the “WH” question with the predetermined vocal correct response within 5 s of the presentation of the question for Matt, engaging in the correct motor task within 5 s of the presentation of the instruction for Julian, and pointing to the correct target picture from an array of three pictures within 5 s of the presentation of the instruction for Joey. Prompted correct responses were defined as answering the “WH” question with the predetermined vocal correct response following a vocal prompt (i.e., echoic) for Matt, and engaging in the correct motor task or pointing to the correct target picture following a physical prompt for Julian and Joey, respectively. Incorrect responses were defined as the participant engaging in a response other than the target response following the prompt, or not responding. Performance data were summarized as the percentage of trials in which the participants emitted an independent correct response. See Table 1 for specific targets for each participant in each condition.

We assessed interobserver agreement (IOA) by comparing two data collectors’ recorded responses during PS assessments, MSWO assessments, and DTI sessions. An agreement during the PS and MSWO assessments was defined as both observers recording the same selection on a trial. An agreement during the DTI sessions was defined as both observers recording the same response (independent correct, prompted correct, or incorrect) on a trial. Interobserver agreement was calculated by dividing the number of agreements by the number of agreements plus disagreements and multiplying by 100 to yield a percentage. Interobserver agreement was calculated for 37% of Matt’s sessions with a mean of 98% (range, 83–100%), 38% of Julian’s sessions with a mean of 100%, and 40% of Joey’s sessions with a mean of 99% (range, 97–100%).
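The trial-by-trial agreement formula can be sketched in a few lines of Python. This is a minimal illustration, not the scoring tool used in the study; the function name and the outcome labels (“independent”, “prompted”, “incorrect”) are hypothetical stand-ins for whatever each observer records on a trial (a DTI outcome, or the item selected on a PS or MSWO trial).

```python
from typing import Sequence


def trial_by_trial_ioa(observer_a: Sequence[str], observer_b: Sequence[str]) -> float:
    """Agreements / (agreements + disagreements) * 100, compared trial by trial.

    Each element is one trial's recorded outcome (hypothetical labels such as
    "independent", "prompted", "incorrect", or the item selected on a PS/MSWO trial).
    """
    if len(observer_a) != len(observer_b):
        raise ValueError("both observers must score the same trials")
    if not observer_a:
        raise ValueError("no trials to compare")
    # A trial is an agreement only if both observers recorded the same outcome.
    agreements = sum(a == b for a, b in zip(observer_a, observer_b))
    disagreements = len(observer_a) - agreements
    return 100 * agreements / (agreements + disagreements)
```

For example, an eight-trial DTI session on which the observers disagreed on one trial yields 7 / 8 × 100 = 87.5% agreement.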

We assessed procedural fidelity by evaluating the therapists’ delivery of the correct stimulus as a consequence for correct responding in each experimental condition of the treatment phase. That is, we calculated the percentage of treatment sessions during which the therapist delivered (a) the top-ranked stimulus identified in the PS preference assessment in the initial assessment condition and (b) the top-ranked stimulus identified in the MSWO conducted before each frequent assessment condition session. Procedural fidelity was calculated by dividing the number of treatment sessions during which the correct stimulus was delivered by the total number of treatment sessions conducted in each condition (i.e., initial assessment condition, frequent assessment condition) and multiplying by 100. Procedural fidelity was assessed for 100% of Matt’s treatment sessions, 100% of Julian’s treatment sessions, and 57% of Joey’s treatment sessions; mean procedural fidelity was 100% for all participants. Additionally, we collected procedural fidelity data on DTI components for Joey (40% of sessions) and Matt (32% of sessions) during teaching sessions. We evaluated a global measure of DTI components, including securing attention, delivering a concise instruction, and issuing a controlling prompt. Mean procedural fidelity for DTI components was 96.5% (range, 93–100%) and 92% (range, 89–100%) for Joey and Matt, respectively. Procedural fidelity of DTI components was scored after the sessions from video recordings. We did not obtain consent to video record Julian’s sessions; therefore, this measure was not obtained for Julian.

Pre-Experimental Procedures

Paired Stimulus (PS)

We conducted paired stimulus preference assessments using procedures described by Fisher et al. (1992). All stimuli included in the preference assessments were nominated by the caregivers and clinical team. For Matt, we conducted two separate five-item PS assessments, one with tangible items and one with edible items. Additionally, we conducted a combined preference assessment with seven items: the three highest ranked stimuli from each of the tangible and edible PS assessments plus an attention card. The attention card was exchanged for therapist attention in the form of positive statements (e.g., “This is so fun!”) and tickles. Before including the attention card, we conducted training sessions to teach Matt to exchange the card for therapist attention. This interaction was included based on feedback from Matt’s clinical team that social reinforcers in the form of brief verbal and physical interaction were often rotated in as reinforcers in his clinical teaching contexts. Thus, we wanted to assess the relative preference for attention compared to tangible and food reinforcers. For Julian and Joey, we conducted one PS assessment consisting of eight and six tangible items, respectively (neither Julian’s nor Joey’s programming included edible reinforcers prior to the start of the study).

Items were evaluated in each PS assessment by pairing every item once with every other item and asking the participant to choose one. Item selection resulted in 30-s access to the item (for tangible items and attention) or delivery of one edible item. The next trial began after the edible item was consumed or the 30-s access period ended. Following each assessment, selection percentages were calculated by dividing the number of times a stimulus was chosen by the number of times it was available. Stimuli in each category were then rank ordered according to selection percentage (e.g., the highest selection percentage was ranked first, the second highest was ranked second, etc.). The most highly preferred item identified in the combined (Matt) or tangible (Julian and Joey) PS preference assessment was then delivered contingent on correct responses during the initial assessment condition of the treatment evaluation.
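The PS scoring rule can be illustrated with a short Python sketch. It assumes each trial is recorded as a hypothetical (left item, right item, chosen item) tuple; the function names are illustrative, and leaving tied items in the order they were first presented is an assumption rather than a rule stated in the study. Pairing every item once with every other item yields n(n − 1)/2 trials, so a seven-item combined assessment involves 21 trials, an eight-item assessment 28, and a six-item assessment 15.

```python
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def ps_trial_pairs(items: Sequence[str]) -> List[Tuple[str, str]]:
    """Every item paired once with every other item: n * (n - 1) / 2 trials."""
    return list(combinations(items, 2))


def ps_selection_percentages(
    trials: Sequence[Tuple[str, str, str]]
) -> Tuple[Dict[str, float], List[str]]:
    """trials: hypothetical (left_item, right_item, chosen_item) records.

    Selection % = times chosen / times available * 100; items are then
    ranked from highest to lowest selection percentage.
    """
    selected: Dict[str, int] = {}
    available: Dict[str, int] = {}
    for left, right, chosen in trials:
        if chosen not in (left, right):
            raise ValueError(f"{chosen!r} was not presented on this trial")
        for item in (left, right):
            available[item] = available.get(item, 0) + 1
            selected.setdefault(item, 0)
        selected[chosen] += 1
    percentages = {item: 100 * selected[item] / available[item] for item in available}
    # sorted() is stable, so ties keep first-presented order (an assumption).
    ranking = sorted(percentages, key=percentages.get, reverse=True)
    return percentages, ranking
```

In this sketch the top item of `ranking` would play the role of the single reinforcer used throughout the initial assessment condition.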

Multiple Stimulus without Replacement (MSWO)

We conducted brief MSWOs prior to each session in the frequent assessment condition following procedures similar to those of Carr et al. (2000). Each pre-session assessment consisted of three administrations of the MSWO format. Stimuli in the MSWOs were the same as those in the combined (Matt) or tangible (Julian and Joey) PS preference assessments and were nominated by the participants’ caregivers and clinical team. Thus, there were seven stimuli in Matt’s MSWOs, eight in Julian’s, and six in Joey’s. At the start of the assessment, all stimuli were placed in front of the participant, and the participant was instructed to choose one. Item selection resulted in 30-s access to the item (for tangible items and attention) or delivery of the edible item. After an item was selected, it was removed from the array, and the remaining items were rotated and re-presented simultaneously in a rearranged order. This process continued until each item had been selected or 30 s elapsed without a choice. The process was then repeated two more times. Following the third administration, each stimulus was ranked according to its selection percentage, calculated by dividing the number of times the item was selected by the number of trials on which it was available across the three administrations and multiplying by 100. The item with the highest selection percentage that day was delivered contingent on correct responses during the frequent assessment condition of the treatment evaluation. For Julian (all sessions) and for Matt (starting at session 11), we conducted abbreviated MSWOs (i.e., first three trials only) because of problem behavior during later selections and/or consistent refusal to make selections after the third item.
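The pooled MSWO selection percentage can be sketched as follows. This is an illustration under stated assumptions, not the study’s scoring procedure: each administration is recorded as a hypothetical ordered list of selections, an item counts as available on every trial conducted while it remained in the array, and a trial that ends without a choice is not counted. Under these assumptions the same function also covers the abbreviated three-trial MSWOs, because items never selected are simply counted as available on all three trials.

```python
from typing import Dict, List, Sequence, Tuple


def mswo_selection_percentages(
    items: Sequence[str], administrations: Sequence[Sequence[str]]
) -> Tuple[Dict[str, float], List[str]]:
    """Pool several MSWO administrations and rank items by selection percentage.

    items: every stimulus placed in the initial array.
    administrations: one list per MSWO giving items in the order selected; a
        list may stop early (abbreviated MSWO or no choice within 30 s).
    """
    selected = {item: 0 for item in items}
    available = {item: 0 for item in items}
    for order in administrations:
        remaining = list(items)  # full array at the start of each administration
        for choice in order:
            if choice not in remaining:
                raise ValueError(f"{choice!r} was not in the array on this trial")
            for item in remaining:  # every item still in the array was available
                available[item] += 1
            selected[choice] += 1
            remaining.remove(choice)  # "without replacement"
    percentages = {
        item: 100 * selected[item] / available[item] if available[item] else 0.0
        for item in items
    }
    # Highest percentage first; ties keep the input order (an assumption).
    ranking = sorted(items, key=lambda item: percentages[item], reverse=True)
    return percentages, ranking
```

For instance, if a hypothetical item is chosen first, second, and first across three administrations, it was selected 3 times over 1 + 2 + 1 = 4 available trials, giving 75%.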

Research Design

We used an adapted alternating treatments design (Cariveau et al., 2021) with an initial baseline phase to evaluate the effects of the independent variable on the percentage of trials with correct independent responses during skill acquisition. This design is frequently used in research that assesses the impact of reinforcement parameters (e.g., choice, magnitude, delay) on the rate of acquisition (see Weinsztok et al., 2022 for a recent review).

Experimental Procedures

Teaching procedures varied slightly across participants depending on their current clinical arrangements. Prompting procedures, number of trials per session, error correction, and mastery criteria were based on individual participant clinical programming. That is, we adopted the teaching procedures that each participant’s behavior analysts were using for other clinical programs at the start of the study. Additionally, targets were (a) informed by caregiver input and (b) guided by each participant’s clinical behavior analysis team based on current intervention goals. For all participants, we used a logical analysis to equate targets across conditions by controlling features of the antecedent stimuli and the target responses. For example, we equated targets based on stimulus characteristics (e.g., all geometric shapes), response characteristics (e.g., all vocal responses), and task difficulty (e.g., answering one WH question with the same number of vocal responses). Following recommendations by Cariveau et al. (2021), we used targets that were novel to all participants, clinically relevant as determined by the clinical team, and required the same number of vocal responses (Matt) or motor responses (Julian and Joey). In addition, we used targets within the same class (e.g., daily activities for Matt, body parts for Julian, shapes for Joey). Two to four sessions were conducted per day, on one to five days per week. The time between sessions conducted on the same day varied across participants but generally ranged from 5 to 30 min. When the frequent assessment identified the same top-ranked item as the initial assessment, the participant received the same item in both conditions, even if the sessions occurred on the same day. For Matt, sessions consisted of eight trials (i.e., four WH questions, each presented twice). During Matt’s treatment sessions, prompting consisted of a most-to-least prompting sequence combined with a time delay.
That is, during the initial sessions, the experimenter presented the “WH” question (e.g., “Who drives you to school?”) and immediately (i.e., 0-s delay) provided the correct response (e.g., “mom”) as a full echoic prompt. Following three consecutive trials with correct responding to the full echoic prompt, the therapist continued to present the correct answer immediately (i.e., 0-s delay) but provided a partial echoic prompt (e.g., “m”). All prompted correct responses during the 0-s time delay were reinforced. Following three consecutive trials with correct responding to the partial echoic prompt, the therapist increased the time delay to 5 s and implemented differential reinforcement. That is, when the trial started, the experimenter presented the “WH” question (e.g., “Who drives you to school?”) and then waited 5 s before providing the partial echoic prompt. Only independent correct responses resulted in access to reinforcement when the 5-s delay was in place. Matt’s mastery criterion consisted of two consecutive sessions at 100% independent correct responses. For Julian and Joey, in addition to following current clinical arrangements, we followed recommendations by Grow and LeBlanc (2013) and Green (2001) for teaching receptive conditional discriminations. Sessions for these participants consisted of nine trials (i.e., three receptive discrimination targets, each presented three times). For Joey, target cards were presented in an array of three, and the correct response depended on the therapist’s instruction on each trial. All target cards were equal in size and color. Targets were presented semi-randomly and in a counterbalanced order (each instruction was presented three times in each nine-trial session). For Julian and Joey, prompting consisted of a full physical prompt and a time delay.
During the initial treatment sessions, the experimenter provided the instruction (e.g., “touch octagon”) and, after 2 s, physically guided the participant to emit the correct response (e.g., touching the card with the picture of the octagon). After one session per condition at the 2-s delay, the delay was increased to 4 s and remained there. During the 4-s time delay, differential reinforcement was in effect: only correct independent responses produced the programmed reinforcer. Julian’s and Joey’s mastery criterion consisted of three consecutive sessions at or above 89% independent correct responses. If participants emitted an incorrect response at any point during treatment, a simple error correction procedure was implemented. Error correction consisted of re-presenting the trial and providing a full echoic prompt (Matt) or full physical prompt (Julian and Joey) at a 0-s delay. No reinforcement was provided during error correction trials.
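The two mastery criteria can be expressed as a check on consecutive sessions. This Python sketch is hypothetical and works on counts of independent correct trials rather than percentages. The count-based form matters for Julian and Joey: with nine trials per session, 8/9 is 88.9%, so a literal “at or above 89%” test on unrounded percentages would pass only at 9/9. The sketch therefore assumes the criterion means at least 8 of 9 trials (88.9%, reported as 89%).

```python
from typing import Optional, Sequence


def first_mastery_session(
    correct_counts: Sequence[int], min_correct: int, consecutive: int
) -> Optional[int]:
    """Return the 1-based session at which mastery was first met, or None.

    correct_counts: independent correct trials per session, in session order.
    min_correct: per-session count needed to meet the criterion.
    consecutive: number of consecutive qualifying sessions required.
    """
    run = 0
    for session, correct in enumerate(correct_counts, start=1):
        # Any session below criterion resets the consecutive-session run.
        run = run + 1 if correct >= min_correct else 0
        if run >= consecutive:
            return session
    return None


# Matt: 8 trials per session; two consecutive sessions at 100% (8 of 8).
MATT_RULE = {"min_correct": 8, "consecutive": 2}
# Julian and Joey: 9 trials per session; assumed to mean at least 8 of 9
# correct (8/9 = 88.9%, reported as 89%) on three consecutive sessions.
JULIAN_JOEY_RULE = {"min_correct": 8, "consecutive": 3}
```

For example, `first_mastery_session([2, 5, 8, 7, 8, 8], **MATT_RULE)` returns 6, because the run of 8-of-8 sessions that began at session 3 is broken at session 4.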

Baseline

During baseline, no prompting or programmed tangible reinforcers were provided for any response. Brief praise was provided by the experimenter if the participants emitted a correct independent response (e.g., “that’s right,” “nice job”).

Treatment Comparison

Correct responses resulted in 30-s access to stimuli on a fixed-ratio (FR) 1 schedule during both conditions according to the differential reinforcement criteria described above. During the initial assessment condition, the most selected stimulus from the combined paired-stimulus preference assessment was used as the reinforcer for correct responding for Matt. For Julian and Joey, the highest ranked item in the tangible paired-stimulus preference assessment was used as the reinforcer. The reinforcer for this condition did not change across sessions.

During the frequent assessment condition, an MSWO was conducted before each session, and the highest ranked stimulus from that assessment was used as the reinforcer for correct responding during that session. The initial and frequent assessment conditions were conducted in pairs whose order varied across sessions during the treatment evaluation. Even when the top-ranked MSWO item was the same as the item used in the initial assessment condition, we still conducted the frequent assessment session using that item as the reinforcer. This differed from the procedure used by DeLeon et al. (2001), who conducted reinforcer assessment sessions only when the top-ranked MSWO item differed from the top-ranked item in the initial PS assessment. We did this to control for exposure to the teaching targets in each condition and to more closely approximate how an MSWO condition might be used during DTI.
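The reinforcer assignment rule for the two conditions, and the tally of frequent-condition sessions in which the day’s MSWO item differed from the PS item (the sessions marked with gray circles in Figure 1), can be sketched as follows. The `Session` record, the function names, and the item names are hypothetical; the sketch only mirrors the rule described above, under which a frequent assessment session uses the day’s top MSWO item even when it matches the PS item.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Session:
    number: int
    condition: str                  # "initial" or "frequent"
    mswo_top: Optional[str] = None  # day's top MSWO item (frequent condition only)


def reinforcer_for(session: Session, ps_top: str) -> str:
    """Initial condition: fixed top PS item. Frequent condition: day's top MSWO item."""
    if session.condition == "initial":
        return ps_top
    if session.condition != "frequent":
        raise ValueError(f"unknown condition {session.condition!r}")
    if session.mswo_top is None:
        raise ValueError(f"session {session.number} has no MSWO result")
    # Used even when it matches ps_top; the session is conducted either way.
    return session.mswo_top


def sessions_with_different_item(sessions: List[Session], ps_top: str) -> List[int]:
    """Frequent-condition session numbers whose MSWO item differed from the PS item."""
    return [
        s.number
        for s in sessions
        if s.condition == "frequent" and reinforcer_for(s, ps_top) != ps_top
    ]
```

Dividing the length of the returned list by the number of frequent-condition sessions gives proportions of the kind reported in the Results (e.g., 8 of 15 sessions for Matt).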

Results

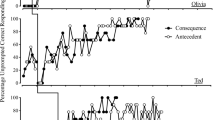

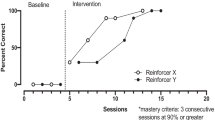

Figure 1 displays the results of the treatment comparison. Data for Matt are depicted in the top panel, data for Julian in the middle panel, and data for Joey in the bottom panel. During baseline, all participants emitted zero to low levels of correct independent responses. Following the implementation of treatment, acquisition of targets occurred faster for Matt and Julian in the frequent assessment condition. Note that Matt’s performance plateaued in the initial assessment condition from sessions 28 to 39. Thus, at session 40 (indicated by an asterisk in the figure), the reinforcer in the initial assessment condition was switched from the PS item to the MSWO item, and correct independent responding increased to 80% for two consecutive sessions. However, he did not meet the formal mastery criterion in this condition. Unfortunately, Matt was discontinued from the study because of a change in his ABA therapy placement. For Joey, there was no difference in the rate of acquisition across conditions. Figure 1 also displays the sessions (indicated by gray circles) in which the participant selected a different stimulus in the frequent assessment condition from the stimulus used in the initial assessment condition.

Percentage of trials with correct independent responses across initial (triangles) and frequent (circles) assessment conditions. Gray circles indicate sessions in which the participant selected a different stimulus in the MSWO assessment than what was selected in the PS assessment. Asterisk denotes change in reinforcer from PS to MSWO in initial assessment condition

Figure 2 displays the rank order of items during the PS assessment and consecutive MSWO administrations for Matt, Julian, and Joey. Recall that for Julian, and for Matt starting at session 11, an abbreviated MSWO was conducted. Overall, Matt selected a stimulus different from the one used in the initial assessment condition in 8 out of 15 sessions of the frequent assessment condition. Julian selected a different stimulus in 5 out of 9 sessions of the frequent assessment condition, and Joey did so in 5 out of 7 sessions. Although each participant often selected a different item in the frequent assessment condition relative to the item from the initial assessment condition, preferences were relatively stable for the high-ranking items for Matt and Julian. For Joey, the initial assessment item was selected last during the first three MSWO administrations. Over time, Joey began to select this item earlier in the assessment and selected it first or second during the last two MSWO assessments.

Discussion

In this study, we evaluated the extent to which frequent (i.e., pre-session MSWO) and lengthier initial (i.e., PS) preference assessments influenced the rate of skill acquisition of novel targets during DTI for individuals diagnosed with ASD. The results may shed some light on the optimal timing and frequency of preference assessments for clients in skill acquisition programs. Although limited to a small sample, our preliminary results suggest that conducting daily MSWOs may improve the efficiency of skill acquisition programs for some individuals. Frequent preference assessments may be particularly important for individuals whose preferences shift frequently between sessions and may help produce faster learning of targets. This may be an especially important consideration for individuals whose preferences shift across different classes of reinforcers. For example, Matt, the participant for whom we included both food and leisure items, often alternated between food and leisure items as the top-ranked MSWO item and was the participant for whom frequent preference assessment appeared to have the greatest impact on learning. The extent to which frequent assessment accelerates skill acquisition may therefore be related to fluctuations in preference (i.e., it may be most useful for individuals whose preferences change frequently). For individuals with stable preferences, on the other hand, more frequent preference assessments might be warranted only if there are other clinical indicators (e.g., slowed learning relative to the previous rate of acquisition, rejection of the programmed reinforcer). These recommendations are consistent with those of Call et al. (2012), who suggested that frequent MSWOs are an efficient and effective approach to identifying reinforcers.

Two other notable findings emerged from the consecutive MSWO results. First, for Matt, the item that ranked fifth in the initial PS preference assessment was also a low-preference item during the first MSWO assessments. As sessions progressed, Matt began to select this item earlier in the hierarchy, eventually choosing it first. In fact, responding stalled until we switched the reinforcement contingency from PS to MSWO for this condition. Second, for Joey, the top-ranked item from the PS preference assessment was selected last during the first three MSWO assessments. Across MSWO administrations, Joey began selecting that item sooner, ultimately selecting it first during the last MSWO session. Additionally, the item selected first during the first two MSWO sessions had been selected last during the PS assessment, resulting in the rank-order correlation changes reported above. It is unclear what variables contributed to Joey’s responding during the MSWO, but this may be an example of the “save the best for last” phenomenon (e.g., Borrero et al., 2022). Our results suggest that repeated exposure to the MSWO assessment and subsequent DTI sessions may have strengthened the selection contingency (i.e., the stimulus chosen first is used in upcoming instructional trials). Future researchers could expose participants to procedures that link the results of preference assessments to programmed reinforcement contingencies in practice, to assess the extent to which such linking affects responding during preference assessments. Joey was also the only participant for whom there was no difference in the rate of skill acquisition. This combination of findings raises the question of whether Joey’s performance during DTI would have more closely resembled that of the other participants had he understood from the beginning that the first item chosen in the MSWO would be used as a reinforcer in the DTI sessions.
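A rank-order correlation quantifies how closely two item hierarchies agree. As an illustration only, the Python sketch below assumes Spearman's rho for rankings without ties, computed with the standard formula rho = 1 − 6Σd² / (n(n² − 1)); the text does not specify which rank-order statistic was used. The five items (A to E) and their ranks are hypothetical, loosely patterned on Joey's early and late MSWO results, and are not the study's data.

```python
def spearman_rho(ranks_a: dict, ranks_b: dict) -> float:
    """Spearman's rho for two rankings of the same items, assuming no tied ranks."""
    if set(ranks_a) != set(ranks_b):
        raise ValueError("both rankings must cover the same items")
    n = len(ranks_a)
    if n < 2:
        raise ValueError("need at least two items")
    # Sum of squared rank differences, item by item.
    d_squared = sum((ranks_a[item] - ranks_b[item]) ** 2 for item in ranks_a)
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Hypothetical ranks (1 = most preferred) for five items.
ps_ranks = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}
# Early MSWO: the PS top item (A) is chosen last and the PS bottom item (E) first.
early_mswo = {"A": 5, "B": 2, "C": 3, "D": 4, "E": 1}
# Late MSWO: the PS top item is chosen first again.
late_mswo = {"A": 1, "B": 3, "C": 2, "D": 4, "E": 5}

print(round(spearman_rho(ps_ranks, early_mswo), 2))
print(round(spearman_rho(ps_ranks, late_mswo), 2))
```

With these made-up ranks, the early MSWO correlates negatively with the PS hierarchy (rho = −0.6) and the late MSWO correlates positively (rho = 0.9), which is the kind of shift a rank-order correlation captures.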

Several limitations of the study should be considered. First, although we followed some of the recommendations by Cariveau et al. (2021) for identifying and arranging targets across sets (e.g., novel stimuli, clinically significant, same number of vocal or motor responses, same class of activities), our targets were selected by the participants’ caregivers and clinical behavior analysts. In some cases, the targets included stimuli that rhymed or sounded similar (e.g., for Joey) or required similar responses (e.g., for Julian, gross motor skills that involved the hands), which could have reduced the disparity among the antecedent verbal stimuli or the required responses and thereby impacted acquisition (Halbur et al., 2021). Additionally, during baseline, Julian consistently engaged in a chain of responses that included raising and waving his hands, which resulted in 33% correct responding in most sessions. Second, we used an adapted alternating treatments design with an initial baseline. Although this design is often used in skill acquisition research, future researchers should consider more rigorous experimental designs that include within-subject replication. Third, we did not account for time in assessment across the two conditions. One consideration for clinicians seeking to adopt more frequent preference assessment is the amount of time spent in assessment that could otherwise be allocated to instruction. In this study, we conducted more than one MSWO in accordance with procedures described by Carr et al. (2000). Previous researchers have shown high correlations across MSWO administrations (Conine et al., 2021), particularly following a second presentation (Verriden & Roscoe, 2016). Future researchers could therefore examine shorter variations of frequent MSWO assessments for practitioners to use. These variations may include (a) assessments with only one session (e.g., DeLeon et al., 2001), (b) assessments with fewer items, or (c) one-trial assessments conducted prior to skill acquisition sessions.
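To illustrate how several MSWO administrations, or truncated ones such as the one-trial variant in (c), can be combined into a single hierarchy, the Python sketch below scores items by selection percentage: the number of times an item was chosen divided by the number of trials on which it was available. This scoring is commonly used with MSWO assessments (e.g., DeLeon & Iwata, 1996), but we assume it here for illustration only; it is not necessarily the scoring used in this study or by Carr et al. (2000). The item names, selection orders, and function names are hypothetical.

```python
def selection_percentages(items, administrations):
    """Score MSWO administrations by selection percentage.

    items: every stimulus placed in the array at the start of each administration.
    administrations: for each administration, the items in the order they were
    chosen. Chosen items are removed (without replacement); an order shorter
    than `items` represents an abbreviated or one-trial MSWO.
    """
    selected = {item: 0 for item in items}
    available = {item: 0 for item in items}
    for order in administrations:
        remaining = list(items)
        for choice in order:
            if choice not in remaining:
                raise ValueError(f"{choice!r} was not in the array on this trial")
            # Every item still in the array was available on this trial.
            for item in remaining:
                available[item] += 1
            selected[choice] += 1
            remaining.remove(choice)
    return {
        item: 100 * selected[item] / available[item]
        for item in items
        if available[item] > 0
    }


def rank_items(percentages):
    """Order items from highest to lowest selection percentage (ties keep input order)."""
    return sorted(percentages, key=percentages.get, reverse=True)


ITEMS = ["chips", "tablet", "bubbles", "puzzle"]

# Three complete (hypothetical) administrations.
full = selection_percentages(ITEMS, [
    ["tablet", "chips", "puzzle", "bubbles"],
    ["chips", "tablet", "bubbles", "puzzle"],
    ["tablet", "chips", "bubbles", "puzzle"],
])
print(rank_items(full))

# A single one-trial administration: only the first choice is recorded.
one_trial = selection_percentages(ITEMS, [["chips"]])
print(one_trial)
```

With these hypothetical data, the first call prints `['tablet', 'chips', 'bubbles', 'puzzle']`. The one-trial administration identifies only the top item (chips at 100%, all others at 0%), which is all that is needed when only the first-chosen item will be delivered as the reinforcer.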

Despite these limitations, the results of this preliminary study may have important implications for practitioners and applied researchers. Clinically, practitioners may consider incorporating brief MSWOs into their sessions to quickly assess fluctuations in preference while preserving session time, thereby improving the efficiency of skill acquisition programs. Because skill acquisition programs often involve a variety of components (e.g., prompting, error correction), the mere fact that the learner is acquiring new skills may create the illusion that a strong reinforcement contingency is in place. However, practitioners should not conclude that formal preference assessments are unnecessary simply because learning is occurring; learning might be faster if a more potent reinforcer were available. For example, Karsten and Carr (2009) demonstrated that although praise alone was an effective reinforcer for two children with ASD, praise plus a highly preferred edible item produced more responding on a progressive-ratio reinforcer assessment. Additionally, providing a choice of reinforcers may seem like an attractive solution to preference shifts in learners. However, the effect of choice on the efficiency of skill acquisition programs has not been well investigated, and recent research in this area has demonstrated that choice does not always result in faster acquisition (e.g., Gureghian et al., 2019; Northgrave et al., 2019). As a general rule, including choice-making opportunities may be considered best practice. However, determining which items to present in a choice context to maximize the efficiency of teaching procedures remains an empirical question and should be the focus of any choice preparation. Future researchers could examine the effects of choosing among known high-preference items versus experimenter-derived items (e.g., treasure box, “grab bag”) on the rate of skill acquisition.

In a recent survey of practicing behavior analysts, Morris et al. (2023) found that practitioners reported frequently changing reinforcers within a session. Among the reasons reported were (a) [participant] not responding, (b) [participant] not attending, and (c) problem behavior, which were endorsed by 62%, 77%, and 57% of respondents, respectively. Although responsiveness to fluctuations in the establishing operation (EO) is an important clinical skill, changing the programmed reinforcer following problem behavior or periods of disengagement may inadvertently reinforce those response patterns. To the extent that informally troubleshooting reinforcer selection during an instructional session takes time away from instruction, it may be more efficient to assess item preference frequently during a session. Therapeutic programs that assess participant preference before signs of disengagement appear may be one way to increase choice opportunities and the autonomy of participants in behavioral interventions.

Our preliminary results suggest that including brief, pre-session preference assessments may have improved the efficiency of discrete trial instruction, although the effect was not observed for every participant and, in some cases, the difference amounted to only a few sessions. Although the differences across conditions may seem small at this scale (e.g., 4 sessions for Julian), such savings compounded across many tasks over the course of 2–3 years of early intervention could add up to substantial instructional time. To further improve the efficiency of DTI for individuals with ASD, applied researchers could examine pre-treatment assessments that identify participants whose responding is sensitive to changes in reinforcer efficacy over time. In other words, are there participant characteristics or behavioral repertoires that suggest the need for more frequent preference assessments, and if so, could a pre-treatment assessment correctly identify those participants? Practitioners could consider conducting brief, one-trial MSWOs prior to learning blocks (either daily or at multiple points in a session) to formally assess preference and capitalize on fluctuating EOs that may occur throughout a teaching session. Finally, from a social validity perspective, embedding frequent, brief preference assessments into teaching sessions would provide participants in behavioral interventions with more choice-making opportunities and autonomy related to their instructional arrangement.
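The compounding argument is simple multiplication, sketched below in Python. Only the 4-session difference for Julian comes from this study; the number of target sets per year, the years of intervention, and the minutes per session are hypothetical placeholders, and the sketch optimistically assumes the same per-set savings would recur for every target set. It also ignores the time spent conducting pre-session MSWOs, which, as noted in the limitations, would offset part of any savings.

```python
def cumulative_savings(sessions_saved_per_set: int, sets_per_year: int,
                       years: int, minutes_per_session: int) -> tuple[int, int]:
    """Return (sessions saved, instructional minutes saved) over the whole period.

    Assumes a constant per-set saving; every argument other than the per-set
    saving is a hypothetical input supplied by the reader.
    """
    sessions = sessions_saved_per_set * sets_per_year * years
    return sessions, sessions * minutes_per_session


# 4 sessions per target set (Julian); the remaining values are hypothetical.
sessions, minutes = cumulative_savings(4, sets_per_year=20, years=2, minutes_per_session=10)
print(f"{sessions} sessions, about {minutes / 60:.0f} hours")
```

Under these placeholder values the sketch prints `160 sessions, about 27 hours`; different caseload assumptions would of course change the total.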

References

Becraft, J. L., & Rolider, N. U. (2015). Reinforcer variation in a token economy. Behavioral Interventions, 30(2), 157–165. https://doi.org/10.1002/bin.1401.

Borrero, J. C., Rosenblum, A. K., Castillo, M. I., Spann, M. W., & Borrero, C. S. W. (2022). Do children who exhibit food selectivity prefer to save the best (bite) for last? Behavioral Interventions, 37(2), 529–544. https://doi.org/10.1002/bin.1845.

Call, N. A., Trosclair-Lasserre, N. M., Findley, A. J., Reavis, A. R., & Shillingsburg, M. A. (2012). Correspondence between single versus daily preference assessment outcomes and reinforcer efficacy under progressive‐ratio schedules. Journal of Applied Behavior Analysis, 45(4), 763–777. https://doi.org/10.1901/jaba.2012.45-763.

Cariveau, T., & La Cruz Montilla, A. (2022). Effects of the onset of differential reinforcer quality on skill acquisition. Behavior Modification, 46(4), 732–754. https://doi.org/10.1177/0145445520988142.

Cariveau, T., Batchelder, S., Ball, S., & La Cruz Montilla, A. (2021). Review of methods to equate target sets in the adapted alternating treatments design. Behavior Modification, 45(5), 695–714. https://doi.org/10.1177/0145445520903049.

Carr, J. E., Nicolson, A. C., & Higbee, T. S. (2000). Evaluation of a brief multiple-stimulus preference assessment in a naturalistic context. Journal of Applied Behavior Analysis, 33(3), 353–357. https://doi.org/10.1901/jaba.2000.33-353.

Conine, D. E., Morris, S. L., Kronfli, F. R., Slanzi, C. M., Petronelli, A. K., Kalick, L., & Vollmer, T. R. (2021). Comparing the results of one-session, two-session, and three-session MSWO preference assessments. Journal of Applied Behavior Analysis, 54(2), 700–712. https://doi.org/10.1002/jaba.808.

DeLeon, I. G., & Iwata, B. A. (1996). Evaluation of a multiple-stimulus presentation format for assessing reinforcer preferences. Journal of Applied Behavior Analysis, 29(4), 519–533. https://doi.org/10.1901/jaba.1996.29-519.

DeLeon, I. G., Fisher, W. W., Rodriguez-Catter, V., Maglieri, K., Herman, K., & Marhefka, J. (2001). Examining the relative reinforcement effects of stimuli identified through pretreatment and daily brief assessments. Journal of Applied Behavior Analysis, 34(4), 463–473. https://doi.org/10.1901/jaba.2001.34-463.

DeLeon, I. G., Frank, M. A., Gregory, M. K., & Allman, M. J. (2009). On the correspondence between preference assessment outcomes and progressive-ratio schedule assessments of stimulus value. Journal of Applied Behavior Analysis, 42(3), 729–733. https://doi.org/10.1901/jaba.2009.42-729.

Egel, A. L. (1980). The effects of constant vs varied reinforcer presentation on responding by autistic children. Journal of Experimental Child Psychology, 30(3), 455–463. https://doi.org/10.1016/0022-0965(80)90050-8.

Egel, A. L. (1981). Reinforcer variation: Implications for motivating developmentally disabled children. Journal of Applied Behavior Analysis, 14(3), 345–350. https://doi.org/10.1901/jaba.1981.14-345.

Fisher, W. W., & Mazur, J. E. (1997). Basic and applied research on choice responding. Journal of Applied Behavior Analysis, 30(3), 387–410. https://doi.org/10.1901/jaba.1997.30-387.

Fisher, W., Piazza, C. C., Bowman, L. G., Hagopian, L. P., Owens, J. C., & Slevin, I. (1992). A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis, 25(2), 491–498. https://doi.org/10.1901/jaba.1992.25-491.

Graff, R. B., & Karsten, A. M. (2012). Assessing preferences of individuals with developmental disabilities: A survey of current practices. Behavior Analysis in Practice, 5(2), 37–48. https://doi.org/10.1007/BF03391822.

Graff, R. B., & Libby, M. E. (1999). A comparison of presession and within-session reinforcement choice. Journal of Applied Behavior Analysis, 32(2), 161–173. https://doi.org/10.1901/jaba.1999.32-161.

Green, G. (2001). Behavior analytic instruction for learners with autism: Advances in stimulus control technology. Focus on Autism and Other Developmental Disorders, 16(2), 72–85. https://doi.org/10.1177/108835760101600203.

Grow, L., & LeBlanc, L. (2013). Teaching receptive language skills: Recommendations for instructors. Behavior Analysis in Practice, 6, 56–75. https://doi.org/10.1007/BF03391791.

Gureghian, D. L., Vladescu, J. C., Gashi, R., & Campanaro, A. (2019). Reinforcer choice as an antecedent versus consequence during skill acquisition. Behavior Analysis in Practice, 13, 462–466. https://doi.org/10.1007/s40617-019-00356-3.

Hagopian, L. P., Long, E. L., & Rush, K. S. (2004). Preference assessment procedures for individuals with developmental disabilities. Behavior Modification, 28(5), 668–677. https://doi.org/10.1177/0145445503259836.

Halbur, M., Kodak, T., Williams, X. A., Reidy, J., & Halbur, C. (2021). Comparison of sounds and words as sample stimuli for discrimination training. Journal of Applied Behavior Analysis, 54(3), 1126–1138. https://doi.org/10.1002/jaba.830.

Hanley, G. P., Iwata, B. A., & Roscoe, E. M. (2006). Some determinants of changes in preference over time. Journal of Applied Behavior Analysis, 39(2), 189–202. https://doi.org/10.1901/jaba.2006.163-04.

Karsten, A. M., & Carr, J. E. (2009). The effects of differential reinforcement of unprompted responding on the skill acquisition of children with autism. Journal of Applied Behavior Analysis, 42(2), 327–334. https://doi.org/10.1901/jaba.2009.42-327.

Keyl-Austin, A. A., Samaha, A. L., Bloom, S. E., & Boyle, M. A. (2012). Effects of preference and reinforcer variation on within‐session patterns of responding. Journal of Applied Behavior Analysis, 45(3), 637–641. https://doi.org/10.1901/jaba.2012.45-637.

Lee, M. S., Yu, C. T., Martin, T. L., & Martin, G. L. (2010). On the relation between reinforcer efficacy and preference. Journal of Applied Behavior Analysis, 43(1), 95–100. https://doi.org/10.1901/jaba.2010.43-95.

MacNaul, H., Cividini-Motta, C., Wilson, S., & Di Paola, H. (2021). A systematic review of research on stability of preference assessment outcomes across repeated administrations. Behavioral Interventions, 36, 962–983. https://doi.org/10.1002/bin.1797.

Morris, S. L., Conine, D. E., Slanzi, C. M., Kronfli, F. R., & Etchison, H. M. (2023). A survey of why and how clinicians change reinforcers during teaching sessions. Behavior Analysis in Practice. https://doi.org/10.1007/s40617-023-00847-4.

Northgrave, J., Vladescu, J. C., DeBar, R. M., Toussaint, K. A., & Schnell, L. K. (2019). Reinforcer choice on skill acquisition for children with autism spectrum disorder: A systematic replication. Behavior Analysis in Practice, 12, 401–406. https://doi.org/10.1007/s40617-018-0246-8.

Verriden, A. L., & Roscoe, E. M. (2016). A comparison of preference assessment methods. Journal of Applied Behavior Analysis, 49(2), 265–285. https://doi.org/10.1002/jaba.302.

Weinsztok, S. C., Goldman, K. J., & DeLeon, I. G. (2023). Assessing parameters of reinforcement on efficiency of acquisition: A systematic review. Behavior Analysis in Practice, 16, 76–92. https://doi.org/10.1007/s40617-022-00715-7.

Zhou, L., Iwata, B. A., Goff, G. A., & Shore, B. A. (2001). Longitudinal analysis of leisure-item preferences. Journal of Applied Behavior Analysis, 34, 179–184. https://doi.org/10.1901/jaba.2001.34-179.

Ethics declarations

Ethical Approval

All procedures performed in the current study were in accordance with the ethical standards of the institution and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from the caregivers of the participants included in the study.

Conflict of Interest

The authors declare no potential conflict of interest.

Additional information

Data for this paper were collected by the 3rd, 4th, and 5th authors in partial fulfillment of the requirements for the Master of Arts degree in Professional Behavior Analysis from the Florida Institute of Technology.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

León, Y., Campos, C., Baratz, S. et al. Effects of Initial versus Frequent Preference Assessments on Skill Acquisition. J Dev Phys Disabil (2024). https://doi.org/10.1007/s10882-024-09971-7

DOI: https://doi.org/10.1007/s10882-024-09971-7