Abstract

Synchronization is a widespread phenomenon in the brain. Despite numerous studies, the specific parameter configurations of the synaptic network structure and learning rules needed to achieve robust and enduring synchronization in neurons driven by spike-timing-dependent plasticity (STDP) and temporal networks subject to homeostatic structural plasticity (HSP) rules remain unclear. Here, we bridge this gap by determining the configurations required to achieve high and stable degrees of complete synchronization (CS) and phase synchronization (PS) in time-varying small-world and random neural networks driven by STDP and HSP. In particular, we found that decreasing P (which enhances the strengthening effect of STDP on the average synaptic weight) and increasing F (which speeds up the swapping rate of synapses between neurons) always lead to higher and more stable degrees of CS and PS in small-world and random networks, provided that the network parameters such as the synaptic time delay \(\tau _c\), the average degree \(\langle k \rangle\), and the rewiring probability \(\beta\) have some appropriate values. When \(\tau _c\), \(\langle k \rangle\), and \(\beta\) are not fixed at these appropriate values, the degree and stability of CS and PS may increase or decrease when F increases, depending on the network topology. We also found that the time delay \(\tau _c\) can induce intermittent CS and PS whose occurrence is independent of F. Our results could have applications in designing neuromorphic circuits for optimal information processing and transmission via synchronization phenomena.


1 Introduction

Synchronization phenomena are processes wherein many dynamical systems adjust a given property (e.g., amplitude, phase, frequency, and even membrane potential in coupled neurons) of their motion due to suitable coupling configurations. In the brain, they can emerge from the collaboration between neurons or neural networks and significantly affect all neurons and network functioning. It is well-established that synchronization of neural activity within and across brain regions promotes normal physiological functioning, such as the precise temporal coordination of processes underlying cognition, memory, and perception [1]. However, synchronization of neural activity is also well known to be responsible for some pathological behaviors such as epilepsy [2]. It has been shown that changes in the strength of the synaptic coupling and the connectivity of the neurons could lead to epileptic-like synchronization behaviors. Furthermore, changes in neural connectivity can lead to hyper-synchronized states related to epileptic seizures that occur intermittently with asynchronous states [3]. It has been demonstrated in [4] that by manipulating synaptic coupling and creating a hysteresis loop, square current pulses can induce abnormal synchronization similar to epileptic seizures. Synchronization may present various forms (see [5, 6] for a comprehensive review), and the behavior of each form of synchronization may depend on the nature of the interacting systems, the type of coupling, the distances between the interacting systems, the time delays between the components of the systems, and also the network topology.

In this paper, we focus on two common forms of synchronization for reasons given alongside their descriptions: (i) Complete synchronization (CS) is the simplest (and probably the most intuitive) form of synchronization. A system made up of, e.g., two coupled sub-systems, say \(x_1(t)\) and \(x_2(t)\), is said to be completely synchronized when there is a set of initial conditions so that the coupled systems eventually evolve identically in time (i.e., \(|x_1(t) - x_2(t)| \rightarrow 0\) as \(t \rightarrow \infty\)) [6,7,8,9]. Because of the intuitiveness and simplicity of CS, it will be one of the main phenomena investigated in this paper. (ii) Phase synchronization (PS) was introduced by Rosenblum et al. [10], Pikovsky et al. [11] and experimentally confirmed in [12]. It involves sub-system properties called phases [13] and is characterized by \(2\pi\) phase locking of two or more oscillators with uncorrelated amplitudes. It has been shown that the phase synchronization between different brain regions supports both working memory and long-term memory and facilitates neural communication by promoting neural plasticity [14], making PS a good candidate for investigation in this paper.

In recent years, extensive research (see, e.g., the reviews in [15,16,17]) has been conducted on synchronization dynamics in non-adaptive neural systems with varying degrees of complexity. In particular, in the study [18], it was discovered that excitatory and inhibitory connections between brain areas are crucial for phase and anti-phase synchronization. It was found that the phase angles of neurons in the receiving area could be influenced by unidirectional non-adaptive synapses from the sender area. When the neurons in the sender area synchronize, the variability of phase angles in the receiver area can be reduced with certain conductance values. Additionally, the study observed both phase and anti-phase synchronization in the case of non-adaptive bidirectional interactions. It has also been demonstrated in [19] that the coupling strength and the probability of connections in a random network of adaptive exponential integrate-and-fire neurons can induce spike and bursting synchronization, with bursting synchronization being more robust than spike synchronization.

Furthermore, it has been shown that axonal time delays can play crucial roles in synchronization dynamics in neural networks [20,21,22,23,24]. For example, in [23], the authors used phase oscillator and conductance-based neuron models to study synchronization and coupling between two bidirectionally coupled neurons in the presence of transmission delays and STDP, which influence emergent pairwise activity-connectivity patterns. Their results showed that depending on the range of transmission delays, the two-neuron motif could achieve in-phase/anti-phase synchronization and symmetric/asymmetric coupling. The co-evolutionary dynamics of the neuronal system and synaptic weights, governed by STDP, stabilize the motif in these states through transitions at specific transmission delays. They further showed that these transitions are sensitive to the phase response curve of the neurons but are robust to heterogeneity in transmission delays and STDP imbalance. Motivated by such rich time-delay-induced dynamical behavior in synchronization dynamics, in the current paper, we shall investigate the effects of axonal time delays on CS and PS in neural networks driven by two forms of adaptive rules.

It is essential to consider the effects of the inherently adaptive nature of neural networks on information processing via synchronization. Besides the colossal efforts to study synchronization in neuronal networks with synaptic plasticity (see, e.g., [22, 25,26,27,28,29]), it is essential to be mindful of the need to explore more dynamic scenarios in order to fully comprehend the emergence of synchronous patterns in adaptive networks. Synaptic plasticity in neural networks refers to the ability to modify the strength of synaptic couplings over time and/or the architecture of neural network topology through specific rules. Two significant mechanisms associated with adaptive rules in neural networks are spike-timing-dependent plasticity (STDP) and homeostatic structural plasticity (HSP). STDP-induced synaptic modification relies on the repeated pairing of pre- and postsynaptic membrane potentials. The degree and direction of the modification depend on the relative timing of neuron firing. Depending on the precise timing of pre- and postsynaptic spikes, the synaptic weights can either exhibit long-term depression (LTD) or long-term potentiation (LTP), which represent persistent weakening or strengthening of synapses, respectively. This concept has been extensively discussed in [30, 31].

HSP-induced synaptic modification involves altering the connectivity between neurons by creating, pruning, or swapping synaptic connections. This results in changes to the network’s architecture while maintaining its functional structure, which maximizes specific functions of interconnected groups of neurons and improves sensory processing efficiency [32]. Early evidence of structural plasticity was observed through histological studies of spine density following new sensory experiences or training [33]. Further research has shown that the micro-connectome, which describes the connectome at the level of individual synapses, undergoes rewiring [34,35,36]. While brain networks adhere to specific topologies, such as small-world and random networks [37, 38], despite their time-varying dynamics, recent studies suggest that these networks can benefit from homeostasis by increasing the efficiency of information processing [39]. Motivated by these studies, the current paper focuses on time-varying small-world and random networks adhering to their respective topologies through HSP.

Previous studies [40,41,42,43] on synchronization in adaptive neural networks have focused on either time-invariant neural networks with STDP or time-varying neural networks without STDP. Research on time-invariant neural networks has shown that good synchronization improves via LTD of the averaged synaptic weight, while bad synchronization deteriorates via LTP [42]. This effect is due to inhibitory STDP [42], which contrasts with the findings on excitatory STDP [44], where good synchronization gets better and bad synchronization gets worse via LTP and LTD, respectively. The article [45] demonstrated that STDP enhances synchronization in inhibitory networks even when there is heterogeneity. Similarly, [46] revealed that noise can facilitate synchronization in spiking neural networks driven by STDP. It was shown that the average synaptic coupling of the network increases with the noise intensity, with an optimal noise level at which the strength of the average synaptic coupling reaches its maximum in a resonance-like fashion that maximizes synchronization. The research in [40] demonstrated the crucial combined effect of the uni-directional chemical synapses and STDP on the synchronization in random neural networks. The study also revealed that synchronization increases as the connection probability of the network grows in the presence of STDP and no external input current.

However, introducing a non-zero external input current results in spiking resynchronization. The study in [47] explores the behavior of an adaptive array of phase oscillators and highlights that a specially designed adaptive law can amplify the coupling between pairs of oscillators with greater phase incoherence, leading to improved synchronization. This approach yields more realistic coupling dynamics in networks of oscillators with varying intrinsic frequencies. Additionally, adjusting the parameters of the adaptive law can accelerate synchronization. The paper also demonstrated the method’s versatility by examining nearest-neighbor ring coupling in addition to global coupling.

The research in [48,49,50] has shown that in networks with a time-varying topology but without STDP, a faster rewiring of the topology always leads to a higher degree of synchronization. However, in our current work, we challenge this notion and demonstrate that more rapid switching of synapses can actually also decrease the degree of synchronization in certain situations. The issues of synchronization phenomena in networks undergoing two adaptive processes have not received sufficient research attention. In one study, published in [51], the authors examined this problem by analyzing Kuramoto oscillator networks that undergo two adaptation processes: one that modifies coupling strengths and another that changes the network structure by pruning existing synaptic contacts and adding new ones. By comparing networks with only STDP to those with both STDP and structural plasticity, the authors assessed the effects of structural plasticity and found that it enhances the synchronized state of a network.

The current study aims to narrow the gap in the research on synchronization in time-varying neural networks driven by STDP and HSP rules in small-world and random networks. Specifically, we focus on determining the following: (i) the joint effect of the adjusting potentiation rate of the STDP rule and the characteristic rewiring frequency of the HSP rule on the degree of CS and PS; (ii) the joint effect of the synaptic time delay, the rewiring frequency of the HSP rule, and the adjusting potentiation rate of the STDP rule on the degree of CS and PS; (iii) the joint effect of the average degree of the network, the rewiring frequency of the HSP rule, and the adjusting potentiation rate of the STDP rule on the degree of CS and PS; and (iv) the joint effect of the rewiring probability of the Watts-Strogatz small-world network, the rewiring frequency of the HSP rule, and the adjusting potentiation rate of the STDP rule on the degree of CS and PS. The study employs extensive numerical simulations to investigate these issues.

Our numerical results show that the degree and stability of CS and PS are influenced by the parameters governing STDP and HSP, as well as by the network topology parameters. For instance, decreasing the STDP potentiation rate parameter (P) and increasing the HSP characteristic frequency parameter (F) lead to more stable and higher levels of CS and PS in small-world and random networks, provided that the average degree (\(\langle k \rangle\)), rewiring probability (\(\beta\)), and synaptic time delay (\(\tau _c\)) are at appropriate values. Furthermore, we found that PS can be achieved more reliably and at a higher degree than CS in both small-world and random networks. Additionally, the random network generates more stable and higher levels of CS and PS than the small-world network. Our findings on the variations in the degree of CS and PS are summarized in Table 1.

The paper is structured as follows: Sect. 2 describes the mathematical model, the STDP learning rule, and the HSP rewiring rules, which facilitate the adherence of time-varying small-world and random networks to their respective architecture. Section 3 outlines the computational methods utilized, while Sect. 4 presents and analyzes the numerical findings. Section 5 presents our conclusions.

2 Model description

2.1 Neural network model

The presence of intracellular and extracellular ions leads to the development of an electromagnetic field in biological neurons, which affects their membrane potential and, consequently, their firing modes. To incorporate these effects in a memristive neuron model, Lv et al. [52] proposed improved neuron models that include a variable for magnetic flux. The influence of this electromagnetic field is well-established [53]. Thus, in the current work, we study the joint effects of HSP and STDP on synchronization in a memristive neural network. The FitzHugh-Nagumo (FHN) model [54, 55], initially proposed to describe the spiking activity of neurons, now serves as a fundamental model for excitable systems. Its applications have expanded beyond neuroscience and biological processes [56] to include optoelectronics [57], chemical oscillators [58], and nonlinear electronic circuits [59]. Although the FHN model lacks the same level of biophysical relevance as the Hodgkin-Huxley (HH) neuron model [60], it nevertheless does capture some essential aspects of the HH model’s behavior. Moreover, the computational cost is reduced due to the lower dimensionality of the 2D FHN model compared to the 4D HH model, which is particularly advantageous when analyzing large networks. Our study considers the memristive FHN model, incorporating the memristive aspect via an additional equation as per [61]:

where the variables \(v_i\), \(w_i\), and \(\phi _i\) correspond to the voltage, slow current variable, and magnetic flux, respectively. To maintain electrophysiological relevance, the parameter a is typically set within the (0, 1) range, with 0.5 chosen for our purposes [62]. The values of \(\varepsilon\) and d are fixed at 0.025 and 1, respectively, representing a specific set of values at which the non-memristive FHN model (i.e., Eq. (1) with \(k_1=k_2=k_3=0\)) is in the quiescent state. The flux-controlled memristor term \(\rho (\phi _i)\) is modeled using \(\rho (\phi ) = \lambda +3\beta \phi ^2\), where \(\lambda\) and \(\beta\) are fixed at 0.1 and 0.02, respectively [63]. The memristor parameters \(k_1=0.5\), \(k_2=0.9\), \(k_3=1.0\), and \(\phi _{ext}=2.4\) are also fixed. With these parameter values, the model in Eq. (1) can only produce regular spiking [61], which is the regime in which we are interested.

2.2 Synapses and STDP rule

The term \(I_{i}^{syn}(t)\) in Eq. (1) represents the uni-directional excitatory chemical synapses between neurons and governs the STDP learning rule between coupled neurons. The synaptic current \(I_{i}^{syn}(t)\) of the ith neuron at time t is defined in Eq. (2):

where the synaptic connectivity matrix \(L (=\{\ell _{ij}(t)\})\) has \(\ell _{ij}(t)=1\) if neuron j is connected to neuron i and \(\ell _{ij}(t)=0\) otherwise. We model the synaptic connections as either a time-varying small-world network or a time-varying random network. Starting with a regular ring network with \(\langle k \rangle\) nearest neighbors, we use the Watts-Strogatz algorithm [64] to generate small-world and random networks with parameters \(\beta\) and \(\langle k \rangle\). Here, \(\beta \in [0,1]\) is the rewiring probability, and \(\langle k \rangle\) is the average degree connectivity (i.e., the average number of synaptic inputs per neuron), calculated as \(\langle k \rangle =\frac{1}{N} \sum _{i=1}^{N}k_i\), where \(k_i\) is the in-degree of the ith neuron (i.e., the number of synaptic inputs to neuron i), given by \(k_i=\sum _{j=1(\ne i)}^{N}\ell _{ij}(t)\). The value of \(\beta\) determines the type of network generated: a small-world network is obtained when \(0<\beta <1\), while a completely random network is obtained when \(\beta =1\). This work does not consider regular networks (\(\beta =0\)). The average degree connectivity \(\langle k \rangle\) and the rewiring probability \(\beta\) serve as control parameters for the network topology.
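The network construction described above can be illustrated with a minimal Python sketch. It is not the authors' code: it assumes a directed variant of the Watts-Strogatz procedure in which each synaptic input is rewired to a new presynaptic source with probability \(\beta\), so that every in-degree \(k_i\) stays equal to \(\langle k \rangle\). The function names `watts_strogatz_inputs` and `average_degree` are hypothetical.

```python
import numpy as np

def watts_strogatz_inputs(n, k, beta, rng):
    """Return an n x n 0/1 matrix L with L[i, j] = 1 if neuron j projects to neuron i.

    Assumption: k is even, and rewiring changes only the presynaptic source,
    so each row sum (in-degree) is preserved.
    """
    L = np.zeros((n, n), dtype=int)
    # Regular ring: each neuron receives input from its k nearest neighbours.
    for i in range(n):
        for d in range(1, k // 2 + 1):
            L[i, (i + d) % n] = 1
            L[i, (i - d) % n] = 1
    # Rewire each input with probability beta to a uniformly chosen free source.
    for i in range(n):
        for j in np.flatnonzero(L[i]):
            if rng.random() < beta:
                free = np.flatnonzero(L[i] == 0)
                free = free[free != i]  # no self-connections
                if free.size > 0:
                    L[i, j] = 0
                    L[i, rng.choice(free)] = 1
    return L

def average_degree(L):
    """<k> = (1/N) * sum_i k_i, where k_i is the in-degree (row sum) of neuron i."""
    return L.sum(axis=1).mean()

rng = np.random.default_rng(0)
L_sw = watts_strogatz_inputs(100, 10, 0.25, rng)   # small-world (0 < beta < 1)
L_rand = watts_strogatz_inputs(100, 10, 1.0, rng)  # completely random (beta = 1)
```

With this convention, `average_degree(L_sw)` and `average_degree(L_rand)` both equal the value of \(\langle k \rangle\) used for the initial ring.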

The time-dependent behavior of the open synaptic ion channels in the jth neuron is denoted by \(s_j(t)\) in Eq. (2). The rate of change of \(s_j(t)\) is determined by

Chemical synapses involve the release and diffusion of neurotransmitters across the synaptic cleft, which takes a finite amount of time. Including time delays allows for a more accurate representation of the temporal dynamics and signal transmission between neurons. Thus, we incorporate a time delay parameter, \(\tau _c\), which will be utilized to control the chemical synapses. With a time delay \(\tau _c\), the action potential of the pre-synaptic neuron j fired at the earlier time given by \(t-\tau _c\) is represented by \(v_j(t-\tau _c)\) [23, 65]. The threshold of the membrane potential, denoted by \(v_{shp}=0.05\), determines the threshold above which the pre-synaptic neuron j has an impact on post-synaptic neuron i. Additionally, the reversal potential, set at \(v_{syn} = 2.0\), ensures that all synapses are excitatory.

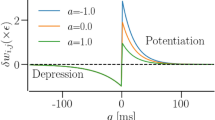

In Eq. (2), the strength of the synaptic connection between the jth pre-synaptic neuron and the ith post-synaptic neuron is denoted by \(g_{ij}(t)\). The STDP mechanism states that the synaptic strength of each synapse is updated using a nearest-spike pair-based STDP rule [66] as time t increases. There are two commonly used forms of STDP (see, e.g., [25, 67, 68] and [69,70,71] for each of the forms). In our study, the update of the synaptic coupling strength \(g_{ij}(t)\) is determined by the synaptic modification function M, which is defined based on the current value of \(g_{ij}(t)\) [69,70,71]:

where \(\Delta t=t_i -t_j\), with \(t_i\) and \(t_j\) representing the spiking times of the post-synaptic neuron i and the pre-synaptic neuron j, respectively. We determine the spike occurrence times from the instant t when a membrane potential variable crosses the threshold value of \(v_{\textrm{th}}=0.5\). It is worth noting that only the excitatory-to-excitatory synapses are modified by this learning rule [69, 72], making it an ideal learning rule for our study since all the synapses in our network are excitatory—thanks to the value of the reversal potential, \(v_{syn} = 2.0\), which ensures that all synapses are excitatory. The extent of synaptic modification is regulated by two parameters, namely the potentiation and depression rate represented by P and D, respectively. The temporal window for synaptic modification is determined by two additional parameters, \(\tau _p\) and \(\tau _d\). Experimental results [69, 73, 74] suggest that \(D\tau _d>P\tau _p\), which ensures the overall weakening of synapses. Furthermore, experimental studies show that the temporal window for synaptic weakening is roughly the same as that for synaptic strengthening [69, 75]. Hence, to be consistent with experimental results, we chose the STDP parameters such that the STDP rule in Eq. (4) is typically depression-dominated, i.e., we set \(\tau _p=\tau _d=2.0\), \(D/P=1.05\), and chose P as the control parameter of this STDP rule. In order to prevent unbounded growth, negative coupling strength, and elimination of synapses (i.e., \(g_{ij}=0\)), we set a range with the lower and upper bounds: \(g_{ij}\in [g_{min},g_{max}]=[0.001,0.5]\).
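The parameter choices above can be made concrete with a short Python sketch. The functional form used here is an assumption, not a statement of the paper's rule: it takes a commonly used exponential STDP window for M and a multiplicative update \(g_{ij} \rightarrow g_{ij} + g_{ij}M(\Delta t)\). Only the values \(\tau _p=\tau _d=2.0\), \(D/P=1.05\), and the bounds \([g_{min},g_{max}]=[0.001,0.5]\) are taken from the text; the function names are hypothetical.

```python
import math

TAU_P = TAU_D = 2.0          # temporal windows for potentiation and depression
RATIO_D_OVER_P = 1.05        # D / P, making the rule depression-dominated
G_MIN, G_MAX = 0.001, 0.5    # bounds on the synaptic strength

def modification(delta_t, p):
    """ASSUMED exponential window M(delta_t), with delta_t = t_post - t_pre."""
    d = RATIO_D_OVER_P * p
    if delta_t > 0:    # pre fires before post: potentiation
        return p * math.exp(-delta_t / TAU_P)
    if delta_t < 0:    # post fires before pre: depression
        return -d * math.exp(delta_t / TAU_D)
    return 0.0

def update_weight(g, delta_t, p):
    """ASSUMED multiplicative update, clipped to [G_MIN, G_MAX]."""
    g_new = g + g * modification(delta_t, p)
    return min(max(g_new, G_MIN), G_MAX)

def is_depression_dominated(p):
    """Check the condition D * tau_d > P * tau_p quoted in the text."""
    return RATIO_D_OVER_P * p * TAU_D > p * TAU_P
```

Under these assumptions, a spike pair with a given \(|\Delta t|\) always depresses slightly more than it potentiates, and the clipping step keeps every synapse from growing without bound or being eliminated.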

2.3 Time-varying networks and HSP rule

To investigate the impact of the time-varying nature of the network architectures on the synchronization dynamics of the coupled neurons, we consider a small-world and random structure [76,77,78,79] constructed using a Watts-Strogatz network algorithm [64]. The network’s Laplacian matrix is a zero-row-sum matrix with an average degree connectivity of \(\langle k\rangle\) and a rewiring probability \(\beta \in (0,1]\). To generate a time-varying small-world network (with \(\beta \in (0,1)\)) that adheres to its small-worldness at all times, we implement the following process during the rewiring of synapses:

- During each integration time step dt, a synapse between two distant neurons is rewired to a nearest neighbor of one of the neurons with probability \((1 - \beta )Fdt\). If the synapse is between two nearest neighbors, it is replaced by a synapse to a randomly chosen distant neuron with probability \(\beta Fdt\). A neuron i is considered a distant node to neuron j if \(|i-j |>\langle k \rangle\), where \(\langle k \rangle\) is the average degree of the original ring network used in the Watts-Strogatz algorithm.
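One way to read the small-world rewiring rule is sketched below in Python. Several details are assumptions made for illustration: the distance \(|i-j|\) is measured around the ring, the post-synaptic neuron keeps its synapse and only the presynaptic source changes, and a "nearest neighbor" is taken to be any neuron that is not distant. The function names are hypothetical.

```python
import numpy as np

def ring_distance(i, j, n):
    """Distance between neurons i and j measured around a ring of n neurons."""
    d = abs(int(i) - int(j))
    return min(d, n - d)

def hsp_step_small_world(L, k, beta, F, dt, rng):
    """One HSP rewiring step, applied in place. L[i, j] = 1 means j projects to i."""
    n = L.shape[0]
    for i, j in np.argwhere(L == 1):
        distant = ring_distance(i, j, n) > k
        # Distant synapses move back to the neighbourhood with prob. (1 - beta) F dt;
        # neighbourhood synapses move to a distant neuron with prob. beta F dt.
        prob = (1.0 - beta) * F * dt if distant else beta * F * dt
        if rng.random() >= prob:
            continue
        candidates = [m for m in range(n)
                      if m != i and L[i, m] == 0
                      and (ring_distance(i, m, n) > k) != distant]
        if candidates:
            L[i, j] = 0
            L[i, rng.choice(candidates)] = 1
    return L
```

Because each rewiring removes one input and adds one input to the same neuron, the in-degrees, and hence \(\langle k \rangle\), are unchanged by a step. With \(F=0\) the matrix is left untouched.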

To generate a time-varying random network (also generated with the Watts-Strogatz algorithm when \(\beta =1\)) that adheres to its randomness at all times, we implement the following process during the rewiring of synapses:

- During each integration time step dt, if there is a synapse between neuron i and j, it will be rewired such that neuron i (j) connects to any other neuron except for neuron j (i) with a probability of \(\big (1-\frac{\langle k\rangle }{N-1}\big )Fdt\).
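The random-network rule can be sketched in the same spirit. This Python fragment is an illustration under stated assumptions rather than the authors' implementation: the post-synaptic neuron i keeps the synapse, and the new presynaptic source is drawn uniformly from the neurons that do not already project to i. The function name `hsp_step_random` is hypothetical.

```python
import numpy as np

def hsp_step_random(L, F, dt, rng):
    """One HSP rewiring step for the random network, applied in place.

    L[i, j] = 1 means neuron j projects to neuron i.
    """
    n = L.shape[0]
    k_mean = L.sum(axis=1).mean()                 # average in-degree <k>
    prob = (1.0 - k_mean / (n - 1)) * F * dt      # rewiring probability per synapse
    for i, j in np.argwhere(L == 1):
        if rng.random() < prob:
            free = np.flatnonzero(L[i] == 0)
            free = free[free != i]                # exclude self-connection
            if free.size > 0:
                L[i, j] = 0
                L[i, rng.choice(free)] = 1
    return L
```

With \(dt=0.01\) and F up to \(1.0\times 10^2\), the product Fdt never exceeds 1, so the expression is a valid probability over the whole range of F considered.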

Note that the rewiring algorithms described above always maintain the small-worldness or randomness of the networks, even though the connectivity matrix changes over time—these are precisely the HSP rules we will use in this study. However, it is essential also to acknowledge that real neural networks may employ different rewiring processes to achieve such time-varying network structures, which may not necessarily align with the HSP rules described here. Nonetheless, for the purpose of our study, it is relevant that both small-world and random networks exhibit changing connections over time while preserving their respective small-worldness or randomness, similar to what is observed in real neural networks.

Here, we will use the characteristic rewiring frequency F as the control parameter for HSP. This parameter sets the rate at which synapses change over time, since the rewiring probabilities in each integration time step dt are proportional to Fdt. Notably, synapses in actual neural networks may change at varying rates, depending on factors such as the network’s developmental stage or environmental stimuli. Therefore, we investigate a broad range of rewiring frequencies, from 0.0 to \(1.0\times 10^2\).

3 Computational methods

As we need to quantify the degree of complete synchronization (CS) and phase synchronization (PS) of neural activity in the networks, we use the error for variable traces E for CS and the Kuramoto order parameter R for PS [80, 81], respectively given by

where \(\Psi _{k}(t)= 2\pi \ell +2\pi \frac{ t-t_k^{(\ell )}}{t_k^{(\ell +1)}-t_k^{(\ell )}}\), \(t_k^{(\ell )}\le t<t_k^{(\ell +1)}\), and \(\big \langle \cdot \big \rangle _{{t}}\) is the time average obtained over a large time interval [0, T]. In the argument of the exponential function, we have \(z = \sqrt{-1}\), and the quantity \(\Psi _{k}(t)\) approximates the phase of the kth neuron and increases linearly by \(2\pi\) from one spike to the next. We determine the spike times from the instants at which the membrane potential variable \(v_k\) crosses the threshold \(v_{\textrm{th}}=0.5\) from below. The norm of the complex exponential function is represented by \(|\cdot |\). The time at which the kth neuron fires its \(\ell\)th spike (\(\ell = 0, 1, 2,...\)) is represented by \(t_k^{(\ell )}\).
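As an illustration of how the phase definition above can be turned into a PS measure, the following Python sketch interpolates the phase linearly between consecutive spikes and then evaluates the standard Kuramoto order parameter, i.e., the time average of \(\big |\frac{1}{N}\sum _{k}e^{z\Psi _k(t)}\big |\). The function names are hypothetical, and the sketch assumes that every evaluation time lies between the first and last recorded spike of every neuron.

```python
import numpy as np

def phase(t, spikes):
    """Psi(t) = 2*pi*l + 2*pi*(t - t_l)/(t_{l+1} - t_l) for t_l <= t < t_{l+1}."""
    spikes = np.asarray(spikes, dtype=float)
    # Index l of the most recent spike at or before each time in t.
    l = np.searchsorted(spikes, t, side="right") - 1
    return 2.0 * np.pi * (l + (t - spikes[l]) / (spikes[l + 1] - spikes[l]))

def kuramoto_order_parameter(spike_trains, times):
    """Time average of |(1/N) * sum_k exp(1j * Psi_k(t))| over the given times."""
    psi = np.array([phase(times, s) for s in spike_trains])  # shape (N, len(times))
    return np.abs(np.exp(1j * psi).mean(axis=0)).mean()

# Two toy spike trains: identical firing versus firing half a period apart.
train_a = [0.0, 1.0, 2.0, 3.0, 4.0]
train_b = [0.5, 1.5, 2.5, 3.5, 4.5]
times = np.linspace(0.5, 3.9, 50)
R_in_phase = kuramoto_order_parameter([train_a, train_a], times)
R_anti_phase = kuramoto_order_parameter([train_a, train_b], times)
```

In this toy example, `R_in_phase` is 1 up to rounding, while `R_anti_phase` is essentially 0 because the two phases always differ by \(\pi\).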

CS corresponds to when all neurons follow the same trajectory and yields zero synchronization error \(E=0\). The Kuramoto order parameter R ranges from 0 to 1, corresponding to the absence of PS to complete PS (i.e., all neurons fire at precisely the same times), respectively. It is worth noting that the error E, which measures the degree of CS, uses the actual and all the values of the membrane variable \(v_{_{k}}(t)\) (including subthreshold oscillations), while the Kuramoto order parameter uses only the spike times of \(v_{_{k}}(t)\) to inform us about the synchronization of spiking times. Thus, the synchronization behavior of the neurons during CS can be very different from what happens during PS.

For N neurons, we numerically integrate Eqs. (1)–(3) with the STDP learning rule of Eq. (4) and the HSP rewiring models described above using a standard fourth-order Runge–Kutta algorithm with a time step \(dt = 0.01\) and for a total integration time of \(T=3.0\times 10^{3}\) units. The results shown in Sect. 4 below were averaged over 25 independent realizations for each set of parameter values and random initial conditions to warrant reliable statistical accuracy with respect to the small-world and random network generations and the global stability of CS and PS. For each realization, we choose random initial points \([v_k(0),w_k(0),\phi _k(0)]\) for the kth (\(k = 1,...,N\)) neuron with uniform probability distribution in the range of \(v_k(0)\in [-0.5, 1.6]\), \(w_k(0)\in [0.1,1]\), \(\phi _{k}(0)\in [2.45,3.5]\). It is worth pointing out that we have carefully excluded the transient behavior from all the calculated quantities. After an initial transient time of \(T_0=2.4\times 10^{3}\) units, we start recording the values of the variables \((v_k,w_k,\phi _k)\) and the spiking times \(t_k^{(\ell )}\) (\(\ell \in \mathbb {N}\) counts the spiking times). Furthermore, the initial weights of all excitatory synapses are normally distributed in the interval \([g_{min},g_{max}]=[0.001,0.5]\), with mean \(g_0=0.35\) and standard deviation \(\sigma _0=0.01\).
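The sampling of initial conditions and the handling of the transient can be sketched in Python as follows. The numerical values are those quoted above; the function names are hypothetical, and clipping the normally distributed weights to \([g_{min},g_{max}]\) is an assumption about how the interval constraint is enforced.

```python
import numpy as np

DT = 0.01        # integration time step
T_TOTAL = 3.0e3  # total integration time
T_TRANS = 2.4e3  # transient time discarded before recording

def sample_initial_state(n, rng):
    """Uniform initial conditions for (v_k, w_k, phi_k), k = 1..n."""
    v0 = rng.uniform(-0.5, 1.6, n)
    w0 = rng.uniform(0.1, 1.0, n)
    phi0 = rng.uniform(2.45, 3.5, n)
    return v0, w0, phi0

def sample_initial_weights(L, rng, g0=0.35, sigma0=0.01, g_min=0.001, g_max=0.5):
    """Normally distributed weights on existing synapses (ASSUMED clipping to bounds)."""
    g = np.clip(rng.normal(g0, sigma0, L.shape), g_min, g_max)
    return g * L  # zero where there is no synapse

n_steps = round(T_TOTAL / DT)              # total number of integration steps
first_recorded_step = round(T_TRANS / DT)  # recording starts after the transient
n_recorded = n_steps - first_recorded_step
```

With these values, only the last 600 time units of each realization (60,000 steps) enter the synchronization measures.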

The flow of control in the simulations is presented in Table 2 and the algorithm in Appendix. The two outermost loops in the pseudo-code are on the parameters P and F, resulting in Fig. 1. Other parameters replace the parameter in the outermost loop (i.e., P) to get results presented in the rest of the figures.

The global stability of CS and PS is analyzed using the basin stability measure B, defined as

\[B = \int _{\Omega } h(\omega )\, g(\omega )\, d\omega ,\]

where \(\Omega\) represents the set of all possible random perturbations \(\omega\) and \(h(\omega )\) equals unity if the neural network converges to synchronized states after a perturbation \(\omega\) and zero otherwise. The density of the perturbed states, represented by \(g(\omega )\), satisfies the condition \(\int _{\Omega }g(\omega )d\omega =1\).

In our computation, we integrate the system for a sufficiently large number Q of realizations. Each realization is executed with random initial conditions drawn uniformly from a prescribed region of phase space. If q is the number of initial conditions that eventually arrive at the synchronous state, then the basin stability B for the synchronous state is estimated as q/Q. Thus, B is bounded in the unit interval [0,1]. When \(B = 0\), the synchronized state is completely unstable, and the size of its basin of attraction tends to zero. When \(B = 1\), all sampled initial conditions are pulled to the synchronized state, implying a globally stable synchronized state. When \(0<B<1\), B is the probability (in the classical sense) of reaching the synchronous state from random initial conditions located in the prescribed region of the phase space. We can also interpret \(0<B<1\) as the coexistence of synchronized and desynchronized states within a given region of phase space.

As we indicated earlier, full synchronization is hardly attained in many real-world systems [82], including biological neurons, where we can have heterogeneous initial conditions and coupling strengths (which are controlled by STDP) and/or the presence of uncorrelated random perturbations. Even though their degree of synchronization could be very high (i.e., \(E\le \delta\), \(0<\delta \ll 1\) or \(R\ge \delta _0\), \(0\ll \delta _0<1\), as \(t \rightarrow \infty\)), it is hardly full (i.e., it is hard to get exactly \(E=0\) and \(R=1\), as \(t \rightarrow \infty\)). Thus, in our computations, we sample the phase space volume prescribed above and consider \(E < 10^{-1}\) and \(R>0.9\) a satisfactory precision for CS and PS, respectively. In the rest of this paper, we use the notations \(B^{E}\) and \(B^{R}\) to distinguish between the basin stability measures of CS and PS, respectively.
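The estimate \(B\approx q/Q\) with the thresholds above amounts to counting the realizations that end up synchronized. A minimal Python sketch follows; the function names and the example values of E and R are hypothetical and serve only to show the arithmetic.

```python
def basin_stability(outcomes):
    """Estimate B = q / Q from a sequence of booleans (True = synchronized)."""
    outcomes = list(outcomes)
    return sum(bool(o) for o in outcomes) / len(outcomes)

def basin_stability_cs(errors, tol=1e-1):
    """B^E: fraction of realizations with synchronization error E < tol."""
    return basin_stability(e < tol for e in errors)

def basin_stability_ps(order_params, threshold=0.9):
    """B^R: fraction of realizations with Kuramoto order parameter R > threshold."""
    return basin_stability(r > threshold for r in order_params)

# Hypothetical outcomes of Q = 4 realizations (illustrative numbers only).
E_values = [0.05, 0.20, 0.01, 0.50]
R_values = [0.95, 0.91, 0.50, 0.99]
B_E = basin_stability_cs(E_values)
B_R = basin_stability_ps(R_values)
```

For these made-up values, two of the four realizations satisfy \(E<10^{-1}\) and three satisfy \(R>0.9\), so `B_E` is 0.5 and `B_R` is 0.75.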

4 Results

The purpose of our study is to examine the impact of the HSP, which is governed by the rewiring frequency parameter F, in conjunction with (i) the STDP, which is influenced by the adjusting rate parameter P, (ii) the time delay \(\tau _c\), (iii) the average degree connectivity \(\langle k \rangle\), and (iv) the rewiring probability \(\beta\), on the degree of CS and PS in small-world and random networks. Our findings on the alterations of the degree of CS and PS are summarized in Table 1.

4.1 Combined effects of F and P

From many previous research works, it is well established that the strength of the coupling between oscillators (neurons included) is crucial for their synchronization. Essentially, if the coupling strength is zero or below a non-zero threshold, the oscillators cannot synchronize or achieve a certain degree of synchronization. Thus, for a better understanding of synchronization as a function of the STDP parameter P, which controls the modification of the synaptic coupling strengths and F, it is necessary to first investigate how the average synaptic weight G given in Eq. (7) varies with P and F.

where \(\langle \cdot \rangle _t\) is the average over time, \(g_{ij}(t)\in [0.001,0.5]\), and \(g_{ij}(t=0) \sim \mathcal {N}(0.35,\,0.01)\).

In Fig. 1a and b, we present the variation of G as a function of P and F in Watts-Strogatz small-world (\(\beta =0.25\)) and completely random (\(\beta =1\)) networks, respectively. We observe that increasing P weakens the average synaptic weight in both small-world and random networks, and at the same time, for a given value of P, increasing F has no significant effect on the average synaptic weight. One major difference between the two topologies is that the weakening of synapses after STDP is significantly stronger in the random network, with average synaptic weight reaching a value as low as \(G=0.0718\) compared to \(G=0.102\) in the small-world network.

The synapses strengthen as P decreases, yet STDP still leads to a dominant depression of the synaptic weights (as D/P increases, and G never exceeds the mean value of the initial synaptic weight distribution \(\mathcal {N}(0.35,\,0.01)\)), which is in agreement with experimental studies [73, 74]. Hence, we expect that decreasing P would favor synchronization.

The variation of G in Fig. 1 is robust, as extensive numerical simulations (not shown) indicate that G displays the same qualitative behavior with respect to P and F for other values of the synaptic time delay \(\tau _c\in [0,80]\), average degree connectivity \(\langle k \rangle \in [2,30]\), rewiring probability \(\beta \in (0,1]\), and network size \(N\in [80,120]\). Thus, even though G is not a variable of main interest in our study, it is worth pointing out that the way the dynamics of G relate to the degree of CS and PS can be inferred from its inverse and monotonic variation with P. In the next subsections, we will investigate the combined effect of F and a network parameter (\(\tau _c\), \(\langle k \rangle\), or \(\beta\)) on synchronization at the smallest value of P \((=1.0\times 10^{-6})\), i.e., at the largest value of the average synaptic strength G \((=0.35)\) in the network.

Variation of the average synaptic weight G as a function of P and F in a small-world (\(\beta =0.25\)) and b random (\(\beta =1\)) network. In both topologies, decreasing P strengthens the average synaptic weight after STDP learning, while F has no significant effect on G, especially at larger P. Parameter values: \(\langle k \rangle = 10\), \(\tau _c=0.0\), \(N=100\)

In Fig. 2a and b, we show, respectively, the time series of a few spiking neurons and the spatiotemporal pattern of all the spiking neurons in the small-world network of Fig. 1a, when the STDP parameter is relatively large, i.e., \(P=1.0\times 10^{-3}\), leading to a weak average synaptic strength \(G\approx 0.1\). In these figures, it can be seen that the neurons exhibit a poor degree of CS (see the red curve in Fig. 3b) and a poor degree of PS at early times of the time series (see the red curve in Fig. 3c) due to the weak average synaptic strength (see the red curve in Fig. 3a). Figure 2c and d display the time series of a few spiking neurons and the spatiotemporal pattern of all spiking neurons in the random network of Fig. 1b, when the STDP parameter is relatively small, i.e., \(P=1.0\times 10^{-6}\), leading to a stronger average synaptic strength \(G\approx 0.35\). In this case, the neurons exhibit a good degree of CS (see the blue curve in Fig. 3b) and a good degree of PS (see the blue curve in Fig. 3c) due to the stronger average synaptic strength (see the blue curve in Fig. 3a).

Time series of some neurons’ membrane potential in a and corresponding spatiotemporal pattern in b of a small-world network (\(\beta =0.25\)) with \(P=1.0\times 10^{-3}\) exhibiting a poor degree of CS and PS. Time series of some neurons’ membrane potential in c and corresponding spatiotemporal pattern in d of the random network (\(\beta =1\)) with \(P=1.0\times 10^{-6}\) exhibiting a good degree of CS and PS. Parameter values: \(F=100\), \(\langle k \rangle = 10\), \(\tau _c=0.0\), \(N=100\)

The red curve in Fig. 3a represents the time series of the averaged synaptic weight G of the small-world network (\(\beta =0.25\)) when the STDP parameter is relatively large, \(P=1.0\times 10^{-3}\), just as in Fig. 2a and b. In this case, we can see that G saturates at a relatively low value. This explains the poor degree of CS indicated in Fig. 2a and b and the relatively high synchronization error E represented by the red curve in Fig. 3b. Furthermore, for this same value of P \((=1.0\times 10^{-3})\), we also observe a poor degree of PS, measured by the relatively low Kuramoto order parameter R represented by the red curve in Fig. 3c.

However, towards the end of the time series in Fig. 3c, the red curve increases from a relatively low value to higher values near 1, indicating a better degree of PS. This explains why, in Fig. 2a, some neurons tend to synchronize their spiking times towards the end of the time series, leading to a higher degree of PS. Nevertheless, it is worth noting that CS is still very poor, as most neurons have synchronized only their spiking times and not the traces of their membrane potentials.

Furthermore, we observe that towards the end of the time series in Fig. 3a, there is no growth in the average synaptic strength G. Hence, the synaptic strength is not responsible for the improvement in the degree of PS towards the end of the time series in Fig. 2a and in the red curve in Fig. 3c. This behavior is explained by the fact that our oscillators (FHN neurons in Eq. (1)) are identical. Thus, with a sufficiently long transient time, identical oscillators with weak coupling can still synchronize because of the similarity of their attractors in phase space. In this case, the oscillators adjust their phases to align in a specific relationship, while their amplitudes may differ (hence the poor degree of CS). This PS occurs due to the shared properties of the oscillators, such as having identical parameter values, natural frequencies, and similar dynamical behaviors. When the coupling between the identical oscillators weakens, their interaction is not strong enough to force CS. However, some or most oscillators may occasionally achieve a state of PS where their phases become correlated, as at the end of the time series in Fig. 2a (where the last spiking times of some neurons coincide), leading to a higher degree of PS as indicated by the higher values of R towards the end of the time series in Fig. 3c.
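The distinction between aligned phases and differing amplitudes can be illustrated with the standard Kuramoto order parameter, \(R=\left| \frac{1}{N}\sum _{j}e^{i\phi _j}\right|\). The sketch below assumes that the phases \(\phi _j\) are already available (how they are extracted from the spike trains is not restated here), and all numerical values are hypothetical.

```python
import cmath
import math


def kuramoto_order_parameter(phases):
    """R = |(1/N) * sum_j exp(i * phi_j)| for a list of phases in radians."""
    mean_vector = sum(cmath.exp(1j * phi) for phi in phases) / len(phases)
    return abs(mean_vector)


# Four oscillators sharing one phase, and four spread evenly around the circle.
aligned = [0.3, 0.3, 0.3, 0.3]
spread = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]
R_aligned = kuramoto_order_parameter(aligned)  # close to 1
R_spread = kuramoto_order_parameter(spread)    # close to 0

# Equal phases but different (hypothetical) amplitudes: the phases are locked,
# yet the instantaneous traces a_j * cos(phi) still differ from each other.
amplitudes = [1.0, 0.6, 0.8, 1.2]
traces = [a * math.cos(0.3) for a in amplitudes]
trace_spread = max(traces) - min(traces)
print(R_aligned, R_spread, trace_spread)
```

The aligned set gives an order parameter near 1 even though the traces are not identical, which is the situation described above: a high degree of PS can coexist with a poor degree of CS.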

On the other hand, the blue curve in Fig. 3a represents the time series of the averaged synaptic weight G of the random network (\(\beta =1\)) when the STDP parameter is relatively small, \(P=1.0\times 10^{-6}\), just as in Fig. 2c and d. In this case, we can see that G saturates at a relatively high value. This explains the high degree of CS indicated in Fig. 2c and d and the relatively low synchronization error E represented by the blue curve in Fig. 3b. Furthermore, for this same value of P \((=1.0\times 10^{-6})\), we also observe a high degree of PS, measured by the relatively high Kuramoto order parameter R represented by the blue curve in Fig. 3c.

Time series of the average synaptic weight G in a for small-world (\(\beta =0.25\), red curve) and random (\(\beta =1.0\), blue curve) networks at different values of the STDP parameter P. Time series of the average error E of the membrane potential traces in b for small-world (\(\beta =0.25\), red curve) and random (\(\beta =1.0\), blue curve) networks at different P. Time series of the Kuramoto order parameter R in c for small-world (\(\beta =0.25\), red curve) and random (\(\beta =1.0\), blue curve) networks at different P. Parameter values: \(F=100\), \(\langle k \rangle = 10\), \(\tau _c=0.0\), \(N=100\)

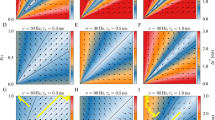

In the rest of the paper, we present the behaviors of CS and PS in the small-world and random networks as a function of each network parameter and the network rewiring frequency F, at the best STDP parameter value (i.e., \(P=1.0\times 10^{-6}\)) for both types of synchronization. In Fig. 4a and b, we depict the variations in the degree of CS and PS, respectively, as a function of P and F in a small-world network (\(\beta =0.25\)). It is evident from these two figures that decreasing the value of P (i.e., strengthening the average synaptic weights in the network after STDP, as shown in Fig. 1) enhances the degree of CS (i.e., \(E \rightarrow 0\)) and the degree of PS (i.e., \(R \rightarrow 1\)). At the same time, for any given value of P, increasing the value of F has no significant effect on the degree of CS and PS, except in the case of CS for very small values of P \((\approx 10^{-6})\), where increasing F occasionally enhances the degree of CS to almost full synchrony (\(E\approx 0\)). This implies that when the average synaptic weight is strong, a more rapidly changing small-world network can achieve larger windows of CS. Comparing the degrees of CS and PS, we observe that a relatively weaker average synaptic weight (controlled by P) is sufficient to achieve a high degree of PS (shown in light yellow), whereas CS requires a much stronger average synaptic weight to attain a high degree.

In Fig. 4c and d, we present the basin stability of CS and PS corresponding to Fig. 4a and b, respectively. Figure 4c indicates that the highest degrees of CS (i.e., the dark blue regions in Fig. 4a, with \(P\approx 10^{-6}\) and \(E<10^{-1}\)) are not globally stable (i.e., \(B^{E}<1\)) in the prescribed region of phase space. Instead, we have the co-existence of a desynchronized state and a synchronized state (the latter being more probable than the former since \(0.7<B^{E}<1\)). Furthermore, it can be observed in Fig. 4c that when \(P\approx 10^{-6}\), increasing F leads to an increase in \(B^E\), indicating that a small-world network with more rapidly switching synapses and a strong average synaptic weight after STDP yields a CS that is closer to global stability. Figure 4d indicates that the highest degree of PS achieved in Fig. 4b (light yellow regions) is globally stable (i.e., \(B^{R}\approx 1\)) for slightly lower values of P. It can also be seen that for \(10^{-6}<P<10^{-5}\), increasing F yields an increase in \(B^{R}\) from 0.6 to almost 1, indicating that, just as with CS, rapidly switching synapses increase the basin stability of PS. Moreover, comparing the basin stability of CS and PS, it is clear that PS is more stable than CS in the above-prescribed region of phase space. Qualitatively similar results (not shown) are obtained for the random network (\(\beta =1\)). In the following sections, when we refer to the optimal value of P, we specifically mean \(P=1.0\times 10^{-6}\). The results in Fig. 4 indicate that this value of P yields the highest degrees of CS and PS.

In summary, in the \(P-F\) parameter plane, decreasing P (which enhances the strengthening effect of STDP on the average synaptic weight) and increasing F (which speeds up the swapping rate of synapses between neurons) lead to a more stable and higher degree of CS and PS in both the small-world and random networks, provided that \(\tau _c\), \(\beta\), and \(\langle k \rangle\) are fixed at suitable values.

Variation in the degree of synchronization and the corresponding global stability w.r.t. P and F in a small-world network. a and c Degree of CS and the corresponding basin stability measure. b and d Degree of PS and the corresponding basin stability measure. Parameter values: \(\langle k \rangle = 10\), \(\beta = 0.25\), \(\tau _c=0.0\), \(N=100\)

4.2 Combined effect of F and \(\tau _c\) at the optimal P

In Fig. 5a and b, we present the variations in the degree of CS and PS as a function of the synaptic time delay \(\tau _c\in [0,160]\) and F at the optimal value of P in a small-world network. The results indicate that the small-world network exhibits intermittent CS and PS, irrespective of the switching frequency of synapses F. Next, we provide a mathematical explanation for the intermittent CS and PS as \(\tau _c\) increases. First, we recall that if a deterministic delay differential equation of the general form \(\dot{x}= f(x(t),x(t-\tau _c))\), where \(\tau _c\) is the time delay, possesses a solution x(t) with period \(\overline{\tau }\), then x(t) also solves \(\dot{x}= f(x(t),x(t-\tau _c-n\overline{\tau }))\) for all positive integers \(n\in \mathbb {N}\). It therefore suffices to check whether the distance between the horizontal bands of the maximum degree of CS and PS in Fig. 5a and b is comparable to the average (over the total number of neurons) interspike interval (ISI), i.e., the period of the neural activity, which is computed to be \(ISI\approx 80\). It is observed from Fig. 5a that the three deep blue horizontal bands where the network exhibits the highest degree of CS are equidistant, and the distance between consecutive bands is \(\overline{\tau }\approx 80\approx ISI\). Hence, the synchronization pattern for CS repeats itself after each waiting time \(n\overline{\tau }\), \(n = 0, 1, 2, \ldots\). This explanation also applies to the case of PS in Fig. 5b.
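The periodicity argument can be written out in one step. Assuming only that x(t) is periodic with period \(\overline{\tau }\), i.e., \(x(t+\overline{\tau })=x(t)\) for all t, the delayed argument is unchanged when the delay is shifted by an integer number of periods:

\[
x(t-\tau _c-n\overline{\tau }) = x(t-\tau _c), \qquad n\in \mathbb {N},
\]

so that

\[
f\big (x(t),\,x(t-\tau _c-n\overline{\tau })\big ) = f\big (x(t),\,x(t-\tau _c)\big ) = \dot{x}(t).
\]

A synchronized periodic solution found at a delay \(\tau _c\) is therefore also a solution at the delays \(\tau _c+n\overline{\tau }\). With \(\overline{\tau }\approx ISI\approx 80\), this is consistent with bands of high synchronization that recur with a spacing of about 80 along the \(\tau _c\) axis.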

Figure 5c and d display the basin stability measure of CS and PS presented in Fig. 5a and b, respectively. It can be observed from Fig. 5c that higher rewiring frequencies increase the basin stability of CS, especially at intermediate time delays, i.e., at \(\tau _c\approx 80\). Furthermore, we can again see that the highest degree of CS is less stable than that of PS. In the case of the random network (\(\beta =1\)), we have obtained qualitatively similar results (not shown).

Variation in the degree of synchronization and the corresponding global stability w.r.t. \(\tau _c\) and F at the optimal value of P in a small-world network. a and c Degree of CS and the corresponding basin stability measure. b and d Degree of PS and the corresponding basin stability measure. Parameter values: \(\langle k \rangle = 10\), \(\beta = 0.25\), \(\tau _c=0.0\), \(N=100\)

In summary, in the \(\tau _c-F\) parameter plane, both small-world and random networks display intermittent CS and PS as \(\tau _c\) increases, with the highest degrees of CS and PS occurring when the synaptic time delay \(\tau _c\) is a multiple of the average inter-spike interval of the networks.
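A numerical check of this statement needs only the spike times. The sketch below, with hypothetical helper names and made-up spike trains, computes the inter-spike interval averaged over neurons and measures how far a given delay lies from the nearest multiple of that interval.

```python
def mean_isi(spike_trains):
    """Average inter-spike interval, first within each neuron, then across neurons."""
    per_neuron = []
    for spikes in spike_trains:
        if len(spikes) < 2:
            continue  # a neuron with fewer than two spikes has no interval
        intervals = [b - a for a, b in zip(spikes, spikes[1:])]
        per_neuron.append(sum(intervals) / len(intervals))
    if not per_neuron:
        raise ValueError("no neuron fired at least twice")
    return sum(per_neuron) / len(per_neuron)


def distance_to_nearest_multiple(delay, period):
    """Distance from a delay to the closest integer multiple of the period."""
    remainder = delay % period
    return min(remainder, period - remainder)


# Hypothetical spike times for three neurons with a period close to 80.
trains = [[10.0, 90.0, 170.0], [12.0, 91.0, 172.0], [15.0, 96.0]]
isi = mean_isi(trains)
offsets = {tau: distance_to_nearest_multiple(tau, isi)
           for tau in (0.0, 40.0, 80.0, 160.0)}
print(isi, offsets)
```

Delays with a small offset are the ones at which, according to the summary above, the highest degrees of CS and PS would be expected, while a delay near half a period lies far from every multiple.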

4.3 Combined effect of F and \(\langle k \rangle\) at the optimal P

In Fig. 6a and b, we depict the variations in the degree of CS and PS, respectively, as a function of \(\langle k \rangle\) and F in a small-world network (\(\beta =0.25\)) at the optimal value of P indicated. The results suggest that higher values of the average degree connectivity (\(\langle k \rangle >8\) for CS and \(\langle k \rangle >5\) for PS) yield a high degree of CS and PS, irrespective of the rewiring frequency F. This behavior can be explained by the fact that with higher values of \(\langle k \rangle\), the network becomes denser, leading to more interactions between the connected neurons which facilitate their global synchronization. As the small-world network becomes sparser (\(\langle k \rangle <8\) for CS and \(\langle k \rangle <5\) for PS), the degree of both forms of synchronization decreases, especially when the synapses switch more rapidly (\(F\ge 10^{-1}\)).

In Fig. 6c and d, we present the basin stability measures of CS and PS corresponding to Fig. 6a and b, respectively. Figure 6c indicates that the highest degree of CS (\(E\lessapprox 0.1\)) obtained at higher values of \(F\ge 10^{-1}\) and \(\langle k \rangle >8\) is more stable than in the rest of the \(F-\langle k \rangle\) plane for the above-prescribed phase space region. Meanwhile, Fig. 6d indicates that (i) PS is fully stable for all values of F and average degree connectivity \(\langle k \rangle >8\), and (ii) PS is more stable than CS, since max \(B^R(=1)\) > max \(B^E(=0.8)\).

In the case of the random network (\(\beta =1.0\)) shown in Fig. 7, firstly, we observe in Fig. 7a that higher values of F \((\ge 10^{-2})\) increase the degree of CS irrespective of the value of \(\langle k \rangle\), while lower values of F \((<10^{-2})\) deteriorate the degree of CS for lower values of \(\langle k \rangle\) \((<8)\). This is in contrast with the small-world network in Fig. 6a, where lower values of F \((<10^{-2})\) enhance the degree of CS, especially at higher values of \(\langle k \rangle\) \((>8)\). In Fig. 7b, we observe that for lower \(\langle k \rangle\) \((<8)\) and lower values of F \((<1)\), the degree of PS deteriorates. But unlike the degree of PS in Fig. 6b, which decreases for \(\langle k \rangle\) \((<5)\) and F \((>1)\), the degree of PS in Fig. 7b slightly increases for these same ranges of parameter values.

Secondly, it can be seen that the degrees of CS and PS are significantly higher in the random network in Fig. 7 than in the small-world network in Fig. 6. In Fig. 7c and d, we present the basin stability measures of CS and PS corresponding to Fig. 7a and b, respectively. It is evident that CS and PS are more stable in the random network than in the small-world network depicted in Fig. 6c and d. These behaviors can be explained by the fact that in a random network, neurons interact, on average, with as many distant neighbors as nearest neighbors, while in the small-world network (with \(\beta =0.25\)), most of the neurons interact only with their nearest neighbors and relatively few distant neighbors. These fewer long-range interactions in the small-world network reduce the degree of synchronization.
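The difference in the share of distant neighbors can be made concrete with a static snapshot of the two topologies. The sketch below uses a plain Watts-Strogatz style rewiring of a ring lattice; it is an illustrative assumption and does not reproduce the time-varying HSP rewiring used in this paper. An edge is counted as long-range when the ring distance between its endpoints exceeds \(k/2\), and the function names are hypothetical.

```python
import random


def watts_strogatz_edges(n, k, beta, rng):
    """Ring of n nodes, each linked to its k nearest neighbours (k even, n > k).

    Every lattice edge is rewired with probability beta to a new target,
    avoiding self-loops and duplicate edges.
    """
    edges = set()
    for i in range(n):
        for step in range(1, k // 2 + 1):
            edges.add((i, (i + step) % n))
    for i in range(n):
        for step in range(1, k // 2 + 1):
            old = (i, (i + step) % n)
            if rng.random() < beta:
                candidates = [j for j in range(n)
                              if j != i
                              and (i, j) not in edges
                              and (j, i) not in edges]
                if candidates:
                    edges.discard(old)
                    edges.add((i, rng.choice(candidates)))
    return edges


def long_range_fraction(edges, n, k):
    """Share of edges whose endpoints are more than k/2 apart on the ring."""
    def ring_distance(i, j):
        d = abs(i - j)
        return min(d, n - d)
    far = sum(1 for i, j in edges if ring_distance(i, j) > k // 2)
    return far / len(edges)


n, k = 100, 10
fractions = {}
edge_counts = {}
for beta in (0.0, 0.25, 1.0):
    edges = watts_strogatz_edges(n, k, beta, random.Random(0))
    edge_counts[beta] = len(edges)
    fractions[beta] = long_range_fraction(edges, n, k)
print(edge_counts, fractions)
```

The number of edges is the same for every \(\beta\), so the comparison isolates how the connections are distributed between near and distant neighbors rather than how many there are.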

In summary, in the \(\langle k \rangle -F\) parameter plane, lower values of F and higher values of \(\langle k \rangle\) yield higher and more stable degrees of CS and PS in small-world networks, while higher values of F and higher values of \(\langle k \rangle\) yield higher and more stable degrees of CS and PS in the random network.

Variation in the degree of synchronization and the corresponding global stability w.r.t. \(\langle k \rangle\) and F in a small-world network with an optimal STDP parameter value P. a and c Degree of CS and the corresponding basin stability measure. b and d Degree of PS and the corresponding basin stability measure. Parameter values: \(\beta = 0.25\), \(\tau _c=3.0\), \(N=100\)

Variation in the degree of synchronization and the corresponding global stability w.r.t. \(\langle k \rangle\) and F in the completely random network with an optimal STDP parameter value P. a and c Degree of CS and the corresponding basin stability measure. b and d Degree of PS and the corresponding basin stability measure. Parameter values: \(\beta = 1.0\), \(\tau _c=3.0\), \(N=100\)

4.4 Combined effect of F and \(\beta\) at the optimal P

In Fig. 8a and b, we show the variations in the degree of CS and PS, respectively, as a function of \(\beta \in [0.05,1]\) and F at the optimal value of P indicated. It can be seen that the degrees of CS and PS are relatively low for (i) small-world networks that are built with a low rewiring probability (i.e., \(\beta <0.1\)) and have slowly switching synapses (i.e., \(F<10^{-3}\)) and (ii) almost all small-world networks with rapidly switching synapses (i.e., \(F>1\)). For the random network (i.e., when \(\beta =1\)), the degrees of CS and PS stay relatively high irrespective of F.

In Fig. 8c and d, we present the basin stability measures of CS and PS corresponding to Fig. 8a and b, respectively. It can be observed that CS is more stable for small-world networks with a higher number of random shortcuts (i.e., higher rewiring probability \(\beta >0.4\)) and intermediate rewiring frequencies (i.e., \(10^{-2}<F<10^{0}\)). For the case of a completely random network (i.e., \(\beta =1\)), we have more stable CS for a wider range of the rewiring frequency (i.e., \(10^{-2}<F<10^{2}\)). The basin stability of PS in Fig. 8d shows similar behavior. Comparing Fig. 8c and d, we see that PS is more stable than CS, both in terms of the size of the region where \(B^E\) and \(B^R\) achieve their maximum values and in terms of the actual maximum values of \(B^E\) and \(B^R\).

Variation in the degree of synchronization and the corresponding global stability w.r.t. \(\beta\) and F with an optimal STDP parameter value P. a and c Degree of CS and the corresponding basin stability measure. b and d Degree of PS and the corresponding basin stability measure. Parameter values: \(\langle k \rangle =5\), \(\tau _c=3.0\), \(N=100\)

In summary, in the \(\beta -F\) parameter plane, higher values of \(\beta \in [0.05,1]\) and intermediate values of F yield a higher and more stable degree of CS and PS (i.e., the random network yields better synchronization than small-world networks).

5 Conclusions

This paper investigated the properties of two important phenomena, complete synchronization (CS) and phase synchronization (PS), in adaptive small-world and random neural networks. These networks were driven by two adaptive rules: spike-timing-dependent plasticity (STDP) and homeostatic structural plasticity (HSP). Our study yielded valuable insights into the factors that significantly affect the degree and stability of CS and PS. We found that various parameters, including the potentiation rate parameter P for STDP, the rewiring frequency parameter F for HSP, and the network topology parameters such as synaptic time delay \(\tau _c\), average degree connectivity \(\langle k \rangle\), and rewiring probability \(\beta\), play a crucial role in shaping the dynamics and stability of CS and PS.

Our results consistently demonstrated that PS exhibits greater stability compared to CS. This observation is particularly significant because precise spike timing is known to be crucial for information processing in neural systems [83]. The greater stability of PS, as indicated by the basin stability measure, may explain why neurons rely on the precise timing of spikes to encode information rather than the trace of the voltage (represented by the actual values of the voltage v), which is used to evaluate the degree of CS through the error E.

Furthermore, recent experiments have shown that the modulation of STDP can be influenced by signaling molecules such as acetylcholine [84]. Additionally, advances in neuroscience research have made it possible to manipulate synapse control in the brain using drugs that affect neurotransmitters [85] or optical fibers to stimulate genetically engineered neurons selectively [86]. Consequently, our findings hold practical implications for optimizing neural information processing through synchronization in experimental settings and designing artificial neural circuits that enhance signal processing through synchronization.

Data availability

The simulation data supporting this study’s findings are available within the article.

References

Neustadter, E., Mathiak, K., Turetsky, B.: EEG and MEG probes of schizophrenia pathophysiology. In: The Neurobiology of Schizophrenia, pp. 213–236. Elsevier (2016)

Lehnertz, K., Bialonski, S., Horstmann, M.T., Krug, D., Rothkegel, A., Staniek, M., Wagner, T.: Synchronization phenomena in human epileptic brain networks. J. Neurosci. Methods 183(1), 42–48 (2009)

Borges, F., Gabrick, E., Protachevicz, P., Higa, G., Lameu, E., Rodriguez, P., Ferraz, M., Szezech, J., Jr., Batista, A., Kihara, A.: Intermittency properties in a temporal lobe epilepsy model. Epilepsy Behav. 139, 109072 (2023)

Protachevicz, P.R., Borges, F.S., Lameu, E.L., Ji, P., Iarosz, K.C., Kihara, A.H., Caldas, I.L., Szezech, J.D., Jr., Baptista, M.S., Macau, E.E., et al.: Bistable firing pattern in a neural network model. Front. Comput. Neurosci. 13, 19 (2019)

Boccaletti, S., Latora, V., Moreno, Y., Chavez, M., Hwang, D.U.: Complex networks: structure and dynamics. Phys. Rep. 424(4–5), 175–308 (2006)

Osipov, G.V., Kurths, J., Zhou, C.: Synchronization in Oscillatory Networks. Springer Science & Business Media (2007)

Fujisaka, H., Yamada, T.: Stability theory of synchronized motion in coupled-oscillator systems. Progress Theoret. Phys. 69(1), 32–47 (1983)

Pecora, L.M., Carroll, T.L.: Synchronization of chaotic systems. Chaos: An Interdisciplinary Journal of Nonlinear Science 25(9), 097611 (2015)

Yamakou, E.M., Inack, E.M., Moukam Kakmeni, F.: Ratcheting and energetic aspects of synchronization in coupled bursting neurons. Nonlinear Dyn. 83, 541–554 (2016)

Rosenblum, M.G., Pikovsky, A.S., Kurths, J.: Phase synchronization of chaotic oscillators. Phys. Rev. Lett. 76(11), 1804 (1996)

Pikovsky, A., Rosenblum, M., Kurths, J.: Synchronization in a population of globally coupled chaotic oscillators. Europhys. Lett. 34(3), 165 (1996)

Parlitz, U., Junge, L., Lauterborn, W., Kocarev, L.: Experimental observation of phase synchronization. Phys. Rev. E 54(2), 2115 (1996)

Pietras, B., Daffertshofer, A.: Network dynamics of coupled oscillators and phase reduction techniques. Phys. Rep. 819, 1–105 (2019)

Fell, J., Axmacher, N.: The role of phase synchronization in memory processes. Nat. Rev. Neurosci. 12(2), 105–118 (2011)

Tang, Y., Qian, F., Gao, H., Kurths, J.: Synchronization in complex networks and its application-a survey of recent advances and challenges. Annu. Rev. Control. 38(2), 184–198 (2014)

Boccaletti, S., Kurths, J., Osipov, G., Valladares, D., Zhou, C.: The synchronization of chaotic systems. Phys. Rep. 366(1–2), 1–101 (2002)

Arenas, A., Díaz-Guilera, A., Kurths, J., Moreno, Y., Zhou, C.: Synchronization in complex networks. Phys. Rep. 469(3), 93–153 (2008)

Protachevicz, P.R., Hansen, M., Iarosz, K.C., Caldas, I.L., Batista, A.M., Kurths, J.: Emergence of neuronal synchronisation in coupled areas. Front. Comput. Neurosci. 15, 663408 (2021)

Borges, F.S., Protachevicz, P.R., Lameu, E.L., Bonetti, R., Iarosz, K.C., Caldas, I.L., Baptista, M.S., Batista, A.M.: Synchronised firing patterns in a random network of adaptive exponential integrate-and-fire neuron model. Neural Netw. 90, 1–7 (2017)

Hansen, M., Protachevicz, P.R., Iarosz, K.C., Caldas, I.L., Batista, A.M., Macau, E.E.: The effect of time delay for synchronisation suppression in neuronal networks. Chaos, Solitons Fractals 164, 112690 (2022)

Khoshkhou, M., Montakhab, A.: Spike-timing-dependent plasticity with axonal delay tunes networks of Izhikevich neurons to the edge of synchronization transition with scale-free avalanches. Front. Syst. Neurosci. 13, 73 (2019)

Lameu, E.L., Macau, E.E., Borges, F., Iarosz, K.C., Caldas, I.L., Borges, R.R., Protachevicz, P., Viana, R.L., Batista, A.M.: Alterations in brain connectivity due to plasticity and synaptic delay. Eur. Phys. J.: Spec. Top. 227, 673–682 (2018)

Madadi Asl, M., Ramezani Akbarabadi, S.: Delay-dependent transitions of phase synchronization and coupling symmetry between neurons shaped by spike-timing-dependent plasticity. Cogn. Neurodyn. 17(2), 523–536 (2023)

Protachevicz, P.R., Borges, F.S., Iarosz, K.C., Baptista, M.S., Lameu, E.L., Hansen, M., Caldas, I.L., Szezech, J.D., Jr., Batista, A.M., Kurths, J.: Influence of delayed conductance on neuronal synchronization. Front. Physiol. 11, 1053 (2020)

Protachevicz, P.R., da Silva Borges, F., Batista, A.M., da Silva Baptista, M., Caldas, I.L., Macau, E.E.N., Lameu, E.L.: Plastic neural network with transmission delays promotes equivalence between function and structure. Chaos Solitons Fractals 171, 113480 (2023)

Ratas, I., Pyragas, K.: Interplay of different synchronization modes and synaptic plasticity in a system of class I neurons. Sci. Rep. 12(1), 19631 (2022)

Schmalz, J., Kumar, G.: Controlling synchronization of spiking neuronal networks by harnessing synaptic plasticity. Front. Comput. Neurosci. 13, 61 (2019)

Silveira, J.A.P., Protachevicz, P.R., Viana, R.L., Batista, A.M.: Effects of burst-timing-dependent plasticity on synchronous behaviour in neuronal network. Neurocomputing 436, 126–135 (2021)

Solís-Perales, G., Estrada, J.S.: A model for evolutionary structural plasticity and synchronization of a network of neurons. Comput. Math. Methods Med. 2021 (2021)

Gerstner, W., Kempter, R., Van Hemmen, J.L., Wagner, H.: A neuronal learning rule for sub-millisecond temporal coding. Nature 383(6595), 76–78 (1996)

Markram, H., Lübke, J., Frotscher, M., Sakmann, B.: Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275(5297), 213–215 (1997)

Shine, J.M., Bissett, P.G., Bell, P.T., Koyejo, O., Balsters, J.H., Gorgolewski, K.J., Moodie, C.A., Poldrack, R.A.: The dynamics of functional brain networks: integrated network states during cognitive task performance. Neuron 92(2), 544–554 (2016)

Greenough, W.T., Bailey, C.H.: The anatomy of a memory: convergence of results across a diversity of tests. Trends Neurosci. 11(4), 142–147 (1988)

Bennett, S.H., Kirby, A.J., Finnerty, G.T.: Rewiring the connectome: evidence and effects. Neurosci. Biobehav. Rev. 88, 51–62 (2018)

Van Ooyen, A., Butz-Ostendorf, M.: The Rewiring Brain: a Computational Approach to Structural Plasticity in the Adult Brain. Academic Press (2017)

Yamakou, M.E., Kuehn, C.: Combined effects of spike-timing-dependent plasticity and homeostatic structural plasticity on coherence resonance. Phys. Rev. E 107(4), 044302 (2023)

Hilgetag, C.C., Goulas, A.: Is the brain really a small-world network? Brain Struct. Funct. 221(4), 2361–2366 (2016)

Valencia, M., Martinerie, J., Dupont, S., Chavez, M.: Dynamic small-world behavior in functional brain networks unveiled by an event-related networks approach. Phys. Rev. E 77(5), 050905 (2008)

Butz, M., Steenbuck, I.D., van Ooyen, A.: Homeostatic structural plasticity increases the efficiency of small-world networks. Front. Synaptic Neurosci. 6, 7 (2014)

Borges, R.R., Borges, F.S., Lameu, E.L., Batista, A.M., Iarosz, K.C., Caldas, I.L., Viana, R.L., Sanjuán, M.A.: Effects of the spike timing-dependent plasticity on the synchronisation in a random Hodgkin-Huxley neuronal network. Commun. Nonlinear Sci. Numer. Simul. 34, 12–22 (2016)

Borges, R.R., Borges, F.S., Lameu, E.L., Protachevicz, P.R., Iarosz, K.C., Caldas, I.L., Viana, R.L., Macau, E.E., Baptista, M.S., Grebogi, C., et al.: Synaptic plasticity and spike synchronisation in neuronal networks. Braz. J. Phys. 47(6), 678–688 (2017)

Kim, S.Y., Lim, W.: Effect of inhibitory spike-timing-dependent plasticity on fast sparsely synchronized rhythms in a small-world neuronal network. Neural Netw. 106, 50–66 (2018)

Kim, S.Y., Lim, W.: Effect of spike-timing-dependent plasticity on stochastic burst synchronization in a scale-free neuronal network. Cogn. Neurodyn. 12(3), 315–342 (2018)

Kim, S.Y., Lim, W.: Stochastic spike synchronization in a small-world neural network with spike-timing-dependent plasticity. Neural Netw. 97, 92–106 (2018)

Talathi, S.S., Hwang, D.U., Ditto, W.L.: Spike timing dependent plasticity promotes synchrony of inhibitory networks in the presence of heterogeneity. J. Comput. Neurosci. 25(2), 262–281 (2008)

Popovych, O.V., Yanchuk, S., Tass, P.A.: Self-organized noise resistance of oscillatory neural networks with spike timing-dependent plasticity. Sci. Rep. 3(1), 1–6 (2013)

Ren, Q., Zhao, J.: Adaptive coupling and enhanced synchronization in coupled phase oscillators. Phys. Rev. E 76(1), 016207 (2007)

Faggian, M., Ginelli, F., Rosas, F., Levnajić, Z.: Synchronization in time-varying random networks with vanishing connectivity. Sci. Rep. 9(1), 1–11 (2019)

Ghosh, D., Frasca, M., Rizzo, A., Majhi, S., Rakshit, S., Alfaro-Bittner, K., Boccaletti, S.: The synchronized dynamics of time-varying networks. Phys. Rep. 949, 1–63 (2022)

Rakshit, S., Bera, B.K., Ghosh, D., Sinha, S.: Emergence of synchronization and regularity in firing patterns in time-varying neural hypernetworks. Phys. Rev. E 97(5), 052304 (2018)

Chauhan, K., Khaledi-Nasab, A., Neiman, A.B., Tass, P.A.: Dynamics of phase oscillator networks with synaptic weight and structural plasticity. Sci. Rep. 12(1), 15003 (2022)

Lv, M., Wang, C., Ren, G., Ma, J., Song, X.: Model of electrical activity in a neuron under magnetic flow effect. Nonlinear Dyn. 85(3), 1479–1490 (2016)

Lv, M., Ma, J.: Multiple modes of electrical activities in a new neuron model under electromagnetic radiation. Neurocomputing 205, 375–381 (2016)

FitzHugh, R.: Impulses and physiological states in theoretical models of nerve membrane. Biophys. J. 1(6), 445–466 (1961)

Nagumo, J., Arimoto, S., Yoshizawa, S.: An active pulse transmission line simulating nerve axon. Proc. IRE 50(10), 2061–2070 (1962)

Ciszak, M., Calvo, O., Masoller, C., Mirasso, C.R., Toral, R.: Anticipating the response of excitable systems driven by random forcing. Phys. Rev. Lett. 90(20), 204102 (2003)

Rosin, D.P., Callan, K.E., Gauthier, D.J., Schöll, E.: Pulse-train solutions and excitability in an optoelectronic oscillator. Europhys. Lett. 96(3), 34001 (2011)

Shima, S.i., Kuramoto, Y.: Rotating spiral waves with phase-randomized core in nonlocally coupled oscillators. Phys. Rev. E 69(3), 036213 (2004)

Heinrich, M., Dahms, T., Flunkert, V., Teitsworth, S.W., Schöll, E.: Symmetry-breaking transitions in networks of nonlinear circuit elements. New J. Phys. 12(11), 113030 (2010)

Hodgkin, A.L., Huxley, A.F.: A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117(4), 500–544 (1952)

Fu, Y.X., Kang, Y.M., Xie, Y.: Subcritical Hopf bifurcation and stochastic resonance of electrical activities in neuron under electromagnetic induction. Front. Comput. Neurosci. 12, 6 (2018)

Xu, B., Binczak, S., Jacquir, S., Pont, O., Yahia, H.: Parameters analysis of FitzHugh-Nagumo model for a reliable simulation. In: 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pp. 4334–4337. IEEE (2014)

Yamakou, M.E.: Chaotic synchronization of memristive neurons: Lyapunov function versus Hamilton function. Nonlinear Dyn. 101(1), 487–500 (2020)

Watts, D.J., Strogatz, S.H.: Collective dynamics of ‘small-world’ networks. Nature 393(6684), 440–442 (1998)

Yu, H., Guo, X., Wang, J., Deng, B., Wei, X.: Spike coherence and synchronization on Newman-Watts small-world neuronal networks modulated by spike-timing-dependent plasticity. Physica A 419, 307–317 (2015)

Morrison, A., Aertsen, A., Diesmann, M.: Spike-timing-dependent plasticity in balanced random networks. Neural Comput. 19(6), 1437–1467 (2007)

Lameu, E.L., Borges, F.S., Iarosz, K.C., Protachevicz, P.R., Antonopoulos, C.G., Macau, E.E., Batista, A.M.: Short-term and spike-timing-dependent plasticity facilitate the formation of modular neural networks. Commun. Nonlinear Sci. Numer. Simul. 96, 105689 (2021)

Yang, C., Santos, M.S., Protachevicz, P.R., dos Reis, P.D., Iarosz, K.C., Caldas, I.L., Batista, A.M.: Chimera states induced by spike timing-dependent plasticity in a regular neuronal network. AIP Adv. 12(10) (2022)

Song, S., Miller, K.D., Abbott, L.F.: Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat. Neurosci. 3(9), 919–926 (2000)

Xie, H., Gong, Y., Wang, B.: Spike-timing-dependent plasticity optimized coherence resonance and synchronization transitions by autaptic delay in adaptive scale-free neuronal networks. Chaos Solitons Fractals 108, 1–7 (2018)

Yu, H., Guo, X., Wang, J., Deng, B., Wei, X.: Effects of spike-time-dependent plasticity on the stochastic resonance of small-world neuronal networks. Chaos 24(3), 033125 (2014)

Li, X., Zhang, J., Small, M.: Self-organization of a neural network with heterogeneous neurons enhances coherence and stochastic resonance. Chaos 19(1) (2009)

Bi, G.Q., Poo, M.M.: Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. 18(24), 10464–10472 (1998)

Feldman, D.E., Brecht, M.: Map plasticity in somatosensory cortex. Science 310(5749), 810–815 (2005)

Zhang, L.I., Tao, H.W., Holt, C.E., Harris, W.A., Poo, M.M.: A critical window for cooperation and competition among developing retinotectal synapses. Nature 395(6697), 37–44 (1998)

Bassett, D.S., Bullmore, E.: Small-world brain networks. Neuroscientist 12(6), 512–523 (2006)

Bassett, D.S., Meyer-Lindenberg, A., Achard, S., Duke, T., Bullmore, E.: Adaptive reconfiguration of fractal small-world human brain functional networks. Proc. Natl. Acad. Sci. U.S.A. 103(51), 19518–19523 (2006)

Liao, X., Vasilakos, A.V., He, Y.: Small-world human brain networks: perspectives and challenges. Neurosci. Biobehav. Rev. 77, 286–300 (2017)

Muldoon, S.F., Bridgeford, E.W., Bassett, D.S.: Small-world propensity and weighted brain networks. Sci. Rep. 6(1), 1–13 (2016)

Bertolotti, E., Burioni, R., di Volo, M., Vezzani, A.: Synchronization and long-time memory in neural networks with inhibitory hubs and synaptic plasticity. Phys. Rev. E 95(1), 012308 (2017)

Kuramoto, Y.: Chemical turbulence. In: Chemical Oscillations, Waves, and Turbulence, pp. 111–140. Springer (1984)

Rosenblum, M.G., Pikovsky, A.S., Kurths, J.: From phase to lag synchronization in coupled chaotic oscillators. Phys. Rev. Lett. 78(22), 4193 (1997)

Pei, X., Wilkens, L., Moss, F.: Noise-mediated spike timing precision from aperiodic stimuli in an array of Hodgekin-Huxley-type neurons. Phys. Rev. Lett. 77(22), 4679 (1996)

Brzosko, Z., Mierau, S.B., Paulsen, O.: Neuromodulation of spike-timing-dependent plasticity: past, present, and future. Neuron 103(4), 563–581 (2019)

Pardridge, W.M.: Drug transport across the blood-brain barrier. J. Cereb. Blood Flow Metab. 32(11), 1959–1972 (2012)

Packer, A.M., Roska, B., Häusser, M.: Targeting neurons and photons for optogenetics. Nat. Neurosci. 16(7), 805–815 (2013)

Funding

Open Access funding enabled and organized by Projekt DEAL. MEY acknowledges support from the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation), Project No. 456989199, and from the Neuromod Institute of the Université Côte d’Azur, Sophia Antipolis, France, as well as the warm hospitality of the MathNeuro Inria Project-Team. SR acknowledges support from Ikerbasque (The Basque Foundation for Science); the Basque Government through the BERC 2022-2025 program; the Ministry of Science and Innovation through BCAM Severo Ochoa accreditation CEX2021-001142-S / MICIN / AEI / 10.13039/501100011033; and Elkartek 2023 via grant ONBODY no. KK-2023/00070.

Author information

Contributions

MEY conceptualized the study and did the numerical simulations. All authors contributed equally to the result analysis and manuscript writing. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yamakou, M.E., Desroches, M. & Rodrigues, S. Synchronization in STDP-driven memristive neural networks with time-varying topology. J Biol Phys 49, 483–507 (2023). https://doi.org/10.1007/s10867-023-09642-2

DOI: https://doi.org/10.1007/s10867-023-09642-2