Abstract

Overlap is one of the data aspects that are expected to be assessed when visually inspecting single-case experimental designs (SCED) data. A frequently used quantification of overlap is the Nonoverlap of All Pairs (NAP). The current article reviews the main strengths and challenges when using this index, as compared to other nonoverlap indices such as Tau and the Percentage of data points exceeding the median. Four challenges are reviewed: the difficulty in representing NAP graphically, the presence of a ceiling effect, the disregard of trend, and the limitations in using p-values associated with NAP. Given the importance of complementing quantitative analysis and visual inspection of graphed data, straightforward quantifications and new graphical elements for the time-series plot are proposed as options for addressing the first three challenges. The suggestions for graphical representations (representing within-phase monotonic trend and across-phases overlaps) and additional numerical summaries (quantifying the degree of separation in case of complete nonoverlap or the proportion of data points in the overlap zone) are illustrated with two multiple-baseline data sets. To make it easier to obtain the plots and quantifications, the recommendations are implemented in a freely available user-friendly website. Educational researchers can use this article to inform their use and application of NAP to meaningfully interpret this quantification in the context of SCEDs.

Single-case experimental designs (SCEDs) allow obtaining empirical evidence on the effectiveness of interventions, by studying intensively and longitudinally one or a few individuals, under different conditions, manipulated by the researcher. A basic effect is demonstrated in each comparison between a baseline and an intervention condition (i.e., A-B comparison). In the context of visual analysis, this comparison entails assessing level, trend, variability, overlap, and immediacy (Kratochwill et al., 2013; Lane & Gast, 2014; Ledford et al., 2019; Maggin et al., 2018). Furthermore, it is necessary to replicate the basic effect (Iversen, 2021; Morley, 2018; Perdices et al., 2023; What Works Clearinghouse, 2022) in different moments in time, within the same participant (e.g., in a reversal design) or across participants (e.g., in a multiple-baseline design). When the evidence of several A-B comparisons is considered together, two types of consistency are expected to be assessed (Kratochwill et al., 2013; Tanious et al., 2020): (a) the consistency of data patterns belonging to similar phases (e.g., whether the data pattern in the baselines for all the tiers in a multiple-baseline design is similar); and (b) the consistency of effects (e.g., whether the amount of change in level is similar in all tiers in a multiple-baseline design).

Aims, Scope, and Organization of the Article

Formative Versus Summative Analysis

The article deals with quantifications and plots for summative analysis (Ledford et al., 2019) or following a static approach (Johnson & Cook, 2019) rather than a more dynamic approach allowing for formative analysis. Regarding formative analysis, one recently explored option is masked visual analysis, entailing a separation between an intervention team and an analysis team (Byun et al., 2017; Ferron et al., 2017). Such an approach can be useful for addressing any potential bias in response-guided experimentation, although this may not mitigate the general criticisms about visual analysis (e.g., unclear decision rules and lack of transparency; Danov & Symons, 2008; Ottenbacher, 1990).

Focal Data Aspect

More specifically, the focus of the current article is on the data aspect overlap, as nonoverlap indices have been commonly used both in the analysis of individual SCED studies (Fingerhut et al., 2023; Tanious & Onghena, 2021) and in the meta-analysis of several SCED studies on the same topic (Jamshidi et al., 2023; Maggin et al., 2011; see also Schlosser et al., 2008). Moreover, the latest version of the What Works Clearinghouse (2022) Standards suggests using nonoverlap measures to compare different portions of the baseline data in order to detect trends (see Footnote 1), and to compare the initial baseline to a withdrawal phase (e.g., in an ABAB design) for checking whether the behavior is reversible.

Purpose

In this context (data analysis with a focus on overlap), the article is intended as a review of the strengths and limitations of one nonoverlap index, the Nonoverlap of all pairs (NAP; Parker & Vannest, 2009). It has to be kept in mind that some of these pros and cons are shared with other nonoverlap indices. For instance, in terms of pros, both NAP and the different versions (see Footnote 2) of Tau (Parker et al., 2011a, 2011b; Tarlow, 2017) do not reduce the data to a single value or mean line, whereas in terms of cons, both NAP and the percentage of data points exceeding the median (PEM; Ma, 2006) disregard trend. The focus on NAP is related to the claim by Parker et al. (2011a, 2011b) that it is among the nonoverlap indices with greatest statistical power, to its close relation to the Tau version disregarding trend and focusing only on overlap (i.e., Tau-UA vs. B), and to Pustejovsky’s (2019) finding that it is not sensitive to the number of measurements. Further reasons for choosing NAP are the advantages commented on later in the article. This review is the first aim of the article.

The second aim is to provide a proof of concept of the recommendations made for overcoming some of the main limitations of NAP, namely graphical elements added to the time-series plot and an additional quantification. The focus on graphical representations is due to visual analysis still being considered a cornerstone of SCED data analysis (DeRosa et al., 2021; Kipfmiller, 2019; Ledford et al., 2019; Maggin et al., 2018; Ninci, 2023; Wolfe et al., 2019a, 2019b) and being frequently used alone or together with simple descriptive statistics (Nikles et al., 2022; Rader et al., 2022; Tanious & Onghena, 2021). The current double focus on visualization and quantification is well-aligned with previous recommendations (Fisher et al., 2003; Harrington & Velicer, 2015; Houle, 2009) and with recent texts on representing quantifications of effect (Ferron et al., 2024; Verboon & Peters, 2020). Specifically, visual inspection can be useful as a way of validating any kind of quantification (Parker et al., 2006). For instance, the between-case standardized mean difference or design-comparable effect size (Pustejovsky et al., 2014) recommended by the What Works Clearinghouse (2022) entails assuming a specific model for the data, which may or may not be reasonable for the data at hand and can still benefit from the use of visual inspection by checking whether the model assumptions appear to be reasonable (Kratochwill et al., 2021; Maggin et al., 2022).

Out of Scope

Given that we aim to provide a document guiding SCED researchers to appropriately use NAP, by overviewing its benefits and challenges, while providing application-oriented recommendations, there is no new data collection (i.e., empirical research) or simulation carried out in the context of the current article. Complementarily, the already available literature on nonoverlap indices has illustrated how the nonoverlap indices are computed on the same data and yield potentially different results (as in Brossart et al., 2014; Chen et al., 2016; Lenz, 2013; Maggin et al., 2019; Parker et al., 2011a, 2011b; Rakap, 2015; Vannest & Ninci, 2015), has compared nonoverlap indices to the judgments by visual analysts (Barton et al., 2019; Rakap et al., 2014; Wolery et al., 2010; Wolfe et al., 2019a, 2019b) or to quantitative data analysis (Barton et al., 2019; Costello et al., 2022; Heyvaert et al., 2015; Jaksic et al., 2018; Parker & Hagan-Burke, 2007; Ross & Begeny, 2014; Solomon et al., 2015; Waddell et al., 2011), and has studied the statistical properties of nonoverlap indices under varying conditions (e.g., different series lengths, degrees of autocorrelation, or magnitude of effect) via simulation studies (e.g., Fingerhut et al., 2021; Giannakakos & Lanovaz, 2023; Manolov et al., 2010; Pustejovsky, 2019).

Regarding data aspects beyond overlap, if the researchers are interested in level, there are several alternatives (e.g., Hedges et al., 2012; Landman et al., 2024; Pustejovsky, 2018), as is also the case if the focus is trend (e.g., Ferron et al., 2009; Swaminathan et al., 2014), immediacy (e.g., Manolov & Onghena, 2022; Natesan & Hedges, 2017), variability (Levin et al., 2021), or consistency (Tanious et al., 2020, 2021). Furthermore, the interested reader can consult the reviews of data analytical options provided by Busse et al. (2015), Chen et al. (2015), Dowdy et al. (2021), Gage and Lewis (2013), and Manolov et al. (2022).

Organization of the Article

Below, we first review the strengths of NAP, as compared to indices that quantify different data aspects and also as compared to other nonoverlap indices. Afterwards, we review its limitations and subsequently make recommendations for addressing these limitations. These recommendations are briefly outlined first and later illustrated in greater detail with real data, because we consider that they are better understood in the context of actual data rather than merely in abstract terms. Finally, a discussion is provided on the contribution made, its applicability, its limitations, and the need for further research.

Strengths of NAP

Interpretability

Even outside the SCED context, overlap measures have been considered as possible effect size quantifications (Hedges & Olkin, 2016; Huberty & Lowman, 2000). NAP specifically can be understood as probability of superiority (Parker & Vannest, 2009) and has also been suggested outside the SCED context (Acion et al., 2006; Karch, 2021). The standardized mean difference (Cohen’s d), commonly used in group-design research, can also be expressed as probability of superiority when normality and homoscedasticity are met (Grissom & Kim, 2001). This is in line with the efforts to make the standardized mean difference more interpretable, by converting it to a common language effect size (McGraw & Wong, 1992; Parker & Hagan-Burke, 2007). The between-case standardized mean difference (Hedges, 2012, 2013) has been developed in the SCED context to be in the same metric as Cohen’s d, but its interpretation as probability of superiority has not been discussed, and it is unclear whether the same assumptions of normality and homogeneity of variance are required.

Referring to the different indices called Tau, unlike NAP, they are prone to confusions in terms of their interpretation. When disregarding baseline trend, Tau by Parker et al. (2011a, 2011b), referred to as Tau-UA vs. B by Tarlow (2017), performs pairwise comparisons between measurements belonging to the different phases, followed by a subtraction of the overlaps from the nonoverlaps. For instance, if in a study the aim is to increase a desirable behavior and the values obtained are 2, 4, and 7 in the baseline phase, and 5, 9, and 8 in the intervention phase, there will be \(3\times 3=9\) comparisons, of which eight are nonoverlaps (9 and 8 are greater than all three baseline measurements, and 5 is greater than 2 and 4) and one is an overlap (5 is smaller than 7). In contrast, Tau by Tarlow (2017) is Kendall’s correlation between the measurements (e.g., 2, 4, 7, 5, 9, 8) and a dummy variable representing the phase (e.g., 0, 0, 0, 1, 1, 1, for the three baseline and three intervention phase measurements respectively). This different definition logically entails that the Tau values do not have to coincide (see Footnote 3). Neither of these two indices can be interpreted as probability of superiority, whereas NAP is interpretable in such terms because it quantifies the proportion of nonoverlaps plus ties (with half a weight) out of all pairwise comparisons.
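The contrast between these quantifications can be sketched in Python for the example values 2, 4, 7 (baseline) and 5, 9, 8 (intervention). This is only an illustration and not code from the cited authors: the function names are hypothetical, and Kendall's correlation is computed here as the tau-b variant (with a correction for ties), which is an assumption about the version underlying Tau by Tarlow (2017).

```python
from itertools import product
from math import sqrt


def pairwise_counts(baseline, intervention, increase_is_improvement=True):
    """Return (nonoverlaps, overlaps, ties) over all baseline-intervention pairs."""
    sign = 1 if increase_is_improvement else -1
    pos = neg = ties = 0
    for a, b in product(baseline, intervention):
        diff = sign * (b - a)
        if diff > 0:
            pos += 1
        elif diff < 0:
            neg += 1
        else:
            ties += 1
    return pos, neg, ties


def nap(baseline, intervention, increase_is_improvement=True):
    """Proportion of nonoverlaps, with ties given half a weight."""
    pos, neg, ties = pairwise_counts(baseline, intervention, increase_is_improvement)
    return (pos + 0.5 * ties) / (pos + neg + ties)


def tau_a_vs_b(baseline, intervention, increase_is_improvement=True):
    """Nonoverlaps minus overlaps, divided by the number of comparisons."""
    pos, neg, ties = pairwise_counts(baseline, intervention, increase_is_improvement)
    return (pos - neg) / (pos + neg + ties)


def kendall_tau_b(x, y):
    """Kendall's tau-b between two equally long sequences (assumed variant)."""
    concordant = discordant = tied_x = tied_y = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[i] - x[j], y[i] - y[j]
            tied_x += dx == 0
            tied_y += dy == 0
            if dx * dy > 0:
                concordant += 1
            elif dx * dy < 0:
                discordant += 1
    n_pairs = n * (n - 1) // 2
    return (concordant - discordant) / sqrt((n_pairs - tied_x) * (n_pairs - tied_y))


baseline, intervention = [2, 4, 7], [5, 9, 8]
phase = [0] * len(baseline) + [1] * len(intervention)  # dummy variable for the phase

print(pairwise_counts(baseline, intervention))                  # (8, 1, 0)
print(round(nap(baseline, intervention), 3))                    # 0.889
print(round(tau_a_vs_b(baseline, intervention), 3))             # 0.778
print(round(kendall_tau_b(phase, baseline + intervention), 3))  # 0.602
```

Under these assumptions the two Tau values differ (0.778 versus 0.602) even though they are computed on the same six measurements, and only NAP corresponds directly to a proportion of pairwise comparisons.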

Fig. 1 Previous graphical representations related to nonoverlap indices, using data from participant Michael as gathered by Singh et al. (2007)

Regarding the way in which the Tau indices deal with trend, there are several steps involved. Tau by Parker et al. (2011a, 2011b), referred to as Tau-UA vs. B-Trend A by Tarlow (2017), first defines monotonic trend as the number of baseline phase measurements that improve previous measurements from the same phase. Afterwards, this index subtracts this tally from the number of nonoverlaps counted when comparing data from different phases (see Footnote 4). Pustejovsky (2016b, 2016c) presents several expressions for the way in which baseline trend control is performed in the context of Tau-UA vs. B–Trend A. Baseline corrected Tau by Tarlow (2017) entails an initial test of monotonic trend, quantified as the Kendall correlation between the measurement occasions (i.e., session numbers) and the baseline data points. In case a statistically significant trend is detected, a linear trend is removed, after estimating it from the baseline measurements via the Theil-Sen method, a method resistant to outliers, which defines the slope as the median of the slopes of the lines that connect all pairs of measurements (see Manolov & Vannest, 2023; Tarlow & Brossart, 2018; Vannest et al., 2012).
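The two building blocks mentioned above (a tally of within-phase improvements and the Theil-Sen slope) can be sketched as follows. This is an illustration under stated assumptions rather than the procedure of any cited author: the function names are hypothetical, the statistical test that precedes trend removal is omitted, and the intercept is left out because a constant shift does not alter the ordering of the values.

```python
from itertools import combinations
from statistics import median


def monotonic_trend_tally(values, increase_is_improvement=True):
    """Count within-phase pairs where a later value improves / worsens an earlier one."""
    sign = 1 if increase_is_improvement else -1
    improvements = deteriorations = 0
    for earlier, later in combinations(values, 2):  # pairs keep their time order
        diff = sign * (later - earlier)
        improvements += diff > 0
        deteriorations += diff < 0
    return improvements, deteriorations


def theil_sen_slope(values):
    """Median of the slopes of the lines connecting all pairs of measurements."""
    points = list(enumerate(values, start=1))  # (session number, value)
    slopes = [(y2 - y1) / (x2 - x1) for (x1, y1), (x2, y2) in combinations(points, 2)]
    return median(slopes)


def remove_baseline_trend(baseline, intervention):
    """Subtract the baseline Theil-Sen trend from both phases (intercept omitted)."""
    slope = theil_sen_slope(baseline)
    series = list(baseline) + list(intervention)
    detrended = [y - slope * t for t, y in enumerate(series, start=1)]
    return detrended[:len(baseline)], detrended[len(baseline):]


baseline, intervention = [2, 4, 7], [5, 9, 8]  # small illustrative data set
print(monotonic_trend_tally(baseline))               # (3, 0)
print(theil_sen_slope(baseline))                     # 2.5
print(remove_baseline_trend(baseline, intervention))
# ([-0.5, -1.0, -0.5], [-5.0, -3.5, -7.0])
```

With such a short and steeply improving baseline, extrapolating the linear trend places all detrended intervention values below the detrended baseline values, which shows how strongly the choice of trend control can change the picture.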

Moreover, the scientific literature includes more than one rule for deciding whether to control for trend in the context of Tau-UA vs. B–Trend A, and it is not directly clear which rule should be followed. First, Parker et al. (2011a, 2011b) suggest assessing visually whether trend is pronounced, but they also mention the need for trend to be "credible" (p. 287), "reliable" (p. 293) and "statistically significant" (p. 287). Second, Vannest and Ninci (2015) propose arbitrary cut-off points of the monotonic trend estimate (0.10 or 0.20, which do not coincide with the cutoff from Parker et al., 2011a, 2011b, set to 0.40). Third, Tarlow (2017) suggests focusing on the statistical test, while warning about the possible lack of power for short baselines, which entails that the statistical test is not appropriate for all situations. Regarding NAP, it does not take trend into account and, therefore, there is no need to decide whether and how to control for trend.

Finally, NAP as well as Tau-UA vs. B are not affected by the number of measurement occasions in the baseline and the intervention phase (Pustejovsky, 2019), something that is not the case for Tau-UA vs. B–Trend A with baseline trend correction (Parker et al., 2011a, 2011b), as shown by Pustejovsky (2016b). Also, Tau-UA vs. B–Trend A may take values outside its theoretical range between −1 and +1 (Tarlow, 2017).

In summary, while one of the limitations of NAP (to be further discussed later in the article) is disregarding trend, the way in which the different versions of Tau deal with trend may lead to confusions in terms of which version is used and regarding whether the trend controlled for is monotonic (in general) or more specifically linear (e.g., Van Norman et al., 2022).

Applicability to Ordinal Data

All nonoverlap indices, including NAP, can be computed on discrete data (counts), continuous data, and also ordinal data. The latter is true because the quantifications focus on which value is larger rather than how large the difference between values is. This makes them widely applicable in the SCED context, including for instance the use of goal attainment scales (Kiresuk & Sherman, 1968). Other data analytical procedures such as procedures based on mean differences (e.g., Hedges et al., 2012) and regression analysis (e.g., Swaminathan et al., 2014) require at least interval scale data, whereas the log-response ratio (Pustejovsky, 2018) requires ratio scale data.

Applicability to Variable Data

NAP also presents another advantage: it does not reduce the data to a single mean/median or trend line. Instead, all measurements belonging to different conditions are compared pairwise. This makes NAP applicable even in the presence of high variability when a straight trend or mean/median line cannot be assumed to represent well the data within a phase. In addition, due to the way in which it is computed, NAP does not discard outliers (as all data are included in the pairwise comparisons), but it does not assign too much weight to any potential outlier. Specifically, each data point has the same weight and the distance between the two data points being compared is not considered; only the ordinal dominance of one over the other.

In contrast, the percentage of nonoverlapping data (PND; Scruggs et al., 1987) reduces the baseline data to a single value, whereas the percentage of data points exceeding the median (PEM; Ma, 2006) represents the baseline data with a single straight line.

Challenges When Using NAP and Recommendations for Addressing Them

In the current section, four challenges related to the use of NAP are reviewed and the corresponding recommendations are made. These challenges are listed in Table 1 and can be summarized as follows: (a) the difficulty of representing visually what is being quantified in NAP, which may make its interpretation less straightforward; (b) the presence of ceiling effects, which limit the quantification of the magnitude of effect; (c) the disregard of trend, which can lead to erroneous conclusions; and (d) the limitations entailed by using the p-values associated with NAP. Note that challenges (b) and (c) actually refer to other data features different from overlap (level and trend, respectively). This is the reason why ceiling effects and trend can lead to suboptimal performance of nonoverlap indices.

Lack of a Graphical Representation

The Challenge

Visual inspection can help detect data patterns that may lead to misleading conclusions when simply assessing the quantification of nonoverlap. This is related to two of the challenges discussed later: the ceiling effect and NAP’s disregard of trend.

The main challenge is that there is not a single visual representation of nonoverlap, as it depends on the specific operational definition (i.e., nonoverlap index) used. We will illustrate some options in Fig. 1, using data from Singh et al. (2007) on the verbal aggressive behavior of a 24-year-old participant called Michael, before and after mindfulness training. In what follows, we review the graphical representations for several nonoverlap indices, for some of which the comparisons performed are more easily visualized than for NAP.

Superimposing a Single Line

For PND (Scruggs et al., 1987), the graphical representation consists of marking the best (e.g., lowest) baseline data point with a line and extending it into the intervention phase (see the upper left panel of Fig. 1), as in Morgan and Morgan (2009) and Kratochwill et al. (2010). Similarly, Barton et al. (2018) project the whole range of the baseline data into the intervention phase, which is equivalent to projecting the best baseline measurement (as in PND) and also the worst one. For PEM (Ma, 2006), the graphical representation entails superimposing a line for the baseline median and extending it into the intervention phase (Lenz, 2013; Ma, 2006; Parker et al., 2011a, b, 2014), as illustrated in the upper right panel of Fig. 1.
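The reference lines described above translate directly into simple counts. The following sketch is illustrative only: the function names are hypothetical, strict exceedance of the reference line is an assumption (values lying exactly on the line are not counted), and the data are the small example of 2, 4, 7 (baseline) and 5, 9, 8 (intervention), in which an increase represents an improvement.

```python
from statistics import median


def percent_beyond(reference, intervention, increase_is_improvement=True):
    """Percentage of intervention points strictly on the improved side of a line."""
    if increase_is_improvement:
        beyond = [y for y in intervention if y > reference]
    else:
        beyond = [y for y in intervention if y < reference]
    return 100 * len(beyond) / len(intervention)


def pnd(baseline, intervention, increase_is_improvement=True):
    """Reference line: the best baseline data point."""
    best = max(baseline) if increase_is_improvement else min(baseline)
    return percent_beyond(best, intervention, increase_is_improvement)


def pem(baseline, intervention, increase_is_improvement=True):
    """Reference line: the baseline median."""
    return percent_beyond(median(baseline), intervention, increase_is_improvement)


baseline, intervention = [2, 4, 7], [5, 9, 8]
print(round(pnd(baseline, intervention), 1))  # 66.7 (only 9 and 8 exceed 7)
print(pem(baseline, intervention))            # 100.0 (all values exceed the median 4)
```

The two indices disagree on the same data because each reduces the baseline to a different single value, which is the point made in the text about superimposing a single line.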

Kratochwill et al. (2013) suggest detrending if necessary, before evaluating overlap, but did not further elaborate on the topic. Regarding nonoverlap indices taking trend into account, PEM-T (Wolery et al., 2010) fits a trend line to the baseline data via the split-middle method. Graphically, this trend line is extended into the intervention phase (Parker et al., 2011a, 2011b; Rakap et al., 2014), as illustrated in the middle left panel of Fig. 1. Regarding Tau by Tarlow (2017), the Theil-Sen trend line can be superimposed on the baseline data, as illustrated by Tarlow (2017) and also in the middle right panel of Fig. 1.

Overlap Zone

For other indices such as the Percentage of All Nonoverlapping Data (PAND; Parker et al., 2007) and the Improvement Rate Difference (IRD; Parker et al., 2009), the graphical representation is less clear. For instance, Parker et al., (2011a, 2011b) draw for IRD the same “nonoverlap line” as for PND and PAND and they (as well as Vannest & Ninci, 2015) also point at the possible data points that can be removed to eliminate overlap. Similarly, Parker and colleagues (2014) represent an “overlap zone” for PAND, without a specific explanation. For NAP (Parker & Vannest, 2009), Parker et al., (2011a, 2011b) draw also an “overlap zone”. We assume that this overlap zone refers to the range of values between the best (e.g., smallest) baseline measurement and the worst (e.g., largest) intervention phase measurement, as in Morley (2018). An overlap zone is represented in the lower left panel in Fig. 1.

Pairwise Comparisons

For NAP, focusing on individual data points rather than entire phases, Parker and colleagues (2014) depict specific examples of pairwise comparisons which are nonoverlaps (marked with a plus sign) and ties (marked with a T). Similar examples of comparisons are presented in the lower right panel of Fig. 1. Also focusing on individual data points comparisons, Rakap et al. (2014) represent, on the time series plot, above the dots that represent the data points, for each baseline measurement, the number of intervention phase measurements that improve it (plus ties). This is illustrated for the first two baseline measurements in the lower right panel of Fig. 1.

Data Matrix

In the context of Tau-UA vs. B–Trend A (Parker et al., 2011a, 2011b), pairwise comparisons across phases have also been represented by a data matrix, which could be argued to be more similar to a tabular display of the data than to a graph. In the shaded rectangle in Fig. 2, a plus sign represents an intervention phase value that improves a baseline phase value, a minus sign represents a deterioration, and a zero represents a tie. There are 56 (\(4\times 14\)) comparisons, of which 50 are improvements, two are deteriorations, and four are ties. The same comparisons take place in NAP, but as stated previously, the way in which overlaps and ties are dealt with is different. In any case, NAP (usually expressed as a percentage) can be converted to Tau-UA vs. B (expressed on a −1 to +1 scale) via \(Tau=2\frac{NAP}{100}-1\) (Pustejovsky, 2019). Parker, Vannest, and Davis (2011) represent the overlap zone in the same way for NAP and for Tau-UA vs. B, regardless of the fact that the definition of overlap in both indices is not the same (even when trend is not taken into account). The data matrix also represents the assessment of monotonic baseline trend (i.e., the triangle to the right of the shaded rectangle in Fig. 2), but we will deal with trend in a later section. Finally, it should be noted that Tarlow (2017) did not propose any graphical representation for his version of Tau (when not controlling for baseline trend), based on Kendall’s correlation.
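The conversion between NAP and Tau-UA vs. B can be checked with the counts reported for the data matrix (50 improvements, two deteriorations, and four ties). The short sketch below uses hypothetical function names and takes only those three counts as input.

```python
from math import isclose


def nap_percent(improvements, deteriorations, ties):
    """NAP as a percentage: improvements plus half-weighted ties over all pairs."""
    total = improvements + deteriorations + ties
    return 100 * (improvements + 0.5 * ties) / total


def tau_from_counts(improvements, deteriorations, ties):
    """Tau (A vs. B): improvements minus deteriorations over all pairs."""
    total = improvements + deteriorations + ties
    return (improvements - deteriorations) / total


def tau_from_nap(nap_pct):
    """Conversion Tau = 2 * NAP / 100 - 1."""
    return 2 * nap_pct / 100 - 1


counts = dict(improvements=50, deteriorations=2, ties=4)
nap_value = nap_percent(**counts)
print(round(nap_value, 2))                   # 92.86
print(round(tau_from_counts(**counts), 3))   # 0.857
print(isclose(tau_from_nap(nap_value), tau_from_counts(**counts)))  # True
```

The agreement follows from the fact that both indices use the same 56 comparisons and differ only in how overlaps and ties are weighted.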

Fig. 2 Data matrix for performing pairwise comparisons between measurements, using data from participant Michael as gathered by Singh et al. (2007)

The monotonic trend quantified in the context of Tau-UA vs. B–Trend A cannot be depicted visually beyond the data matrix presented in Fig. 2, as is also the case for the resulting removal of trend (Parker et al., 2011a, 2011b). Brossart et al. (2018) specifically mention the impossibility to visually represent Tau-UA vs. B–Trend A as a limitation, making it a “black box” (p. 14).

Recommendation for Addressing the Challenge

We propose an additional plot, the Time-Series Nonoverlap Plot, with two panels, to accompany the classical time-series line graph. One of these panels represents, for each measurement, how many data points it improves as compared to the other phase (i.e., how many nonoverlaps this measurement contributes). This information is the essential part of computing NAP. The other panel represents, for each measurement, how many of the previous data points from the same phase it improves. This latter plot helps assess the presence of monotonic trend within each phase, which is related to the third challenge discussed here (i.e., the fact that NAP disregards trend).
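The counts underlying the two panels can be sketched as follows. This is not the implementation used in the website mentioned in the abstract: the function names are hypothetical, ties across phases are given half a weight (by analogy with NAP), and for a baseline measurement the contributed nonoverlaps are taken to be the intervention measurements that improve on it, which is one possible reading of the description above.

```python
def improves(candidate, reference, increase_is_improvement=True):
    """1.0 if candidate is better than reference, 0.5 for a tie, 0.0 otherwise."""
    if candidate == reference:
        return 0.5
    return float((candidate > reference) == increase_is_improvement)


def across_phase_counts(baseline, intervention, increase_is_improvement=True):
    """Nonoverlaps contributed by each measurement relative to the other phase."""
    per_baseline = [
        sum(improves(b, a, increase_is_improvement) for b in intervention)
        for a in baseline
    ]
    per_intervention = [
        sum(improves(b, a, increase_is_improvement) for a in baseline)
        for b in intervention
    ]
    return per_baseline, per_intervention


def within_phase_counts(values, increase_is_improvement=True):
    """For each measurement, how many earlier same-phase points it strictly improves."""
    return [
        sum(improves(v, prev, increase_is_improvement) == 1.0 for prev in values[:i])
        for i, v in enumerate(values)
    ]


baseline, intervention = [2, 4, 7], [5, 9, 8]  # small illustrative data set
per_a, per_b = across_phase_counts(baseline, intervention)
print(per_a, per_b)                       # [3.0, 3.0, 2.0] [2.0, 3.0, 3.0]
print(within_phase_counts(baseline))      # [0, 1, 2]
print(within_phase_counts(intervention))  # [0, 1, 1]
print(sum(per_b) / (len(baseline) * len(intervention)))  # NAP as a proportion
```

Summing the across-phase counts of either phase and dividing by the number of pairwise comparisons recovers NAP, so the first panel shows how each measurement contributes to the index.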

Ceiling Effects

The Challenge

Nonoverlap indices suffer from a ceiling effect when complete nonoverlap is achieved (Carter, 2013; Landman et al., 2024; Van Norman et al., 2022). This effect makes the distinction between different degrees of intervention effectiveness impossible beyond stating that 100% nonoverlap was achieved. For instance, suppose the data on the percentage of intervals with problematic behavior in the baseline are 52, 91, and 89 and in the intervention phase are 17, 17, 32, 40, 13, and 6. NAP is 100% for these data and it would have been equal to 100% also in case: (a) all baseline measurements were 52 (as is the best baseline data point) and all intervention phase measurements were 40 (as is the worst intervention phase data point); and (b) all baseline measurements were 100 (i.e., the worst possible value) and all intervention measurements were 0 (i.e., the best possible value).
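The ceiling effect in this example can be verified numerically. The sketch below uses a hypothetical helper, `nap_percent`, and treats a decrease as an improvement, given that the dependent variable is the percentage of intervals with problematic behavior.

```python
def nap_percent(baseline, intervention, increase_is_improvement=True):
    """NAP as a percentage, with ties given half a weight."""
    score = 0.0
    for a in baseline:
        for b in intervention:
            if a == b:
                score += 0.5
            elif (b > a) == increase_is_improvement:
                score += 1.0
    return 100 * score / (len(baseline) * len(intervention))


scenarios = {
    "observed": ([52, 91, 89], [17, 17, 32, 40, 13, 6]),
    "(a) minimal separation": ([52] * 3, [40] * 6),
    "(b) maximal separation": ([100] * 3, [0] * 6),
}

for label, (baseline, intervention) in scenarios.items():
    # Every scenario yields 100.0 despite very different distances between phases.
    print(label, nap_percent(baseline, intervention, increase_is_improvement=False))
```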

Therefore, a quantification of nonoverlap may not always be conceptualized as an “effect size” (Carter, 2013; Wolery et al., 2010). A ceiling effect also entails that it may not be justified to state that two A-B comparisons for which 100% nonoverlap is obtained are consistent (i.e., represent a similar effect), because the degree of separation (i.e., the distance on the y-axis between the measurements from different phases) may not be the same. For that purpose, we recommend comparing the degree of separation across A-B comparisons as illustrated later.

Recommendation for Addressing the Challenge

The recommendation we make here is to accompany the NAP value with an additional quantification. When there is complete nonoverlap (i.e., NAP = 100%), this quantification is the degree of separation between the data belonging to the different conditions, allowing for distinguishing different magnitudes of effect. Formally, it is expressed as the difference between the best baseline measurement and the worst intervention phase measurement, expressed in the same measurement units as the dependent variable. Analogously, using the same calculation, when NAP ≠ 100%, the overlap zone is obtained (as depicted in the bottom left panel of Fig. 1). When there is such an overlap zone, it is also possible to compute the percentage of data points included in it. Both the separation zone and the overlap zone can be graphically represented by horizontal lines, superimposed on the Time-Series Nonoverlap Plot. Quantifying the degree of separation also allows assessing whether two data sets for which NAP = 100% actually represent consistent effects, i.e., whether the degree of separation is similar across replications within or between cases. When there is overlap, the percentage of data points within the overlap zone can also be used as a way of assessing consistency. In summary, the quantification of the distance between the best baseline data point and the worst intervention phase data point is not necessarily novel (as this distance represents the overlap zone in case there is not a complete nonoverlap), but it is potentially informative as a supplement to NAP.
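A minimal sketch of this supplementary quantification is given below. The function name and the returned dictionary are hypothetical, and two details are assumptions rather than part of the proposal as described: the boundaries of the overlap zone are treated as inclusive, and the percentage is computed over the data points of both phases together.

```python
def separation_or_overlap(baseline, intervention, increase_is_improvement=True):
    """Distance between the best baseline and the worst intervention measurement."""
    if increase_is_improvement:
        best_baseline, worst_intervention = max(baseline), min(intervention)
        gap = worst_intervention - best_baseline
    else:
        best_baseline, worst_intervention = min(baseline), max(intervention)
        gap = best_baseline - worst_intervention
    if gap > 0:  # complete nonoverlap: report the degree of separation
        return {"type": "separation", "size": gap, "percent_in_zone": 0.0}
    # Otherwise the same two values delimit the overlap zone.
    low, high = sorted((best_baseline, worst_intervention))
    data = list(baseline) + list(intervention)
    in_zone = sum(low <= y <= high for y in data)
    return {"type": "overlap", "size": -gap, "percent_in_zone": 100 * in_zone / len(data)}


# Complete nonoverlap (a decrease is an improvement): separation of 52 - 40 = 12.
print(separation_or_overlap([52, 91, 89], [17, 17, 32, 40, 13, 6],
                            increase_is_improvement=False))

# Overlap (an increase is an improvement): zone from 5 to 7, containing 2 of 6 points.
print(separation_or_overlap([2, 4, 7], [5, 9, 8]))
```

Reporting the size in the units of the dependent variable keeps the quantification directly comparable across A-B comparisons that share the same measure.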

Disregarding Trend

The Challenge

The different versions of Tau (Parker et al., 2011a, 2011b; Tarlow, 2017) have been proposed because NAP does not take trend into account and may lead to erroneous conclusions if an improving baseline trend is present. For instance, if an improving baseline trend continues exactly the same with no change during the intervention phase, this could lead to NAP = 100% when there is actually no effect due to the intervention. Conversely, a worsening baseline trend can be followed by an improving intervention phase trend and still get NAP = 50%, suggesting no effect. It should be noted that NAP is not the only quantification disregarding trend, as there are other instances among nonoverlap indices (e.g., PEM, PND) and indices focusing on level [e.g., the between-case standardized mean difference (Hedges et al., 2012, 2013), the log-response ratio (Pustejovsky, 2018)].
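Both situations can be reproduced with hypothetical data (the values below are invented for illustration and an increase is assumed to be an improvement). The helper `nap_percent` is likewise a hypothetical name.

```python
def nap_percent(baseline, intervention, increase_is_improvement=True):
    """NAP as a percentage, with ties given half a weight."""
    score = 0.0
    for a in baseline:
        for b in intervention:
            if a == b:
                score += 0.5
            elif (b > a) == increase_is_improvement:
                score += 1.0
    return 100 * score / (len(baseline) * len(intervention))


# An improving baseline trend that simply continues, unchanged, after the intervention.
continuing_trend = ([1, 2, 3, 4], [5, 6, 7, 8])
print(nap_percent(*continuing_trend))  # 100.0, although the slope never changes

# A worsening baseline followed by an improving intervention phase.
reversed_trend = ([6, 5, 4, 3], [3, 4, 5, 6])
print(nap_percent(*reversed_trend))    # 50.0, although the direction of change reverses
```

In both cases the index is computed correctly, yet it cannot reflect the order of the measurements within each phase, which is why a graphical check of trend is needed alongside it.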

Recommendation for Addressing the Challenge

Our recommendation is not to introduce a quantitative correction to NAP, as the Tau indices by Parker et al., (2011a, 2011b) and by Tarlow (2017) do, but rather to represent trend graphically in the Time-Series Nonoverlap Plot. Specifically, in order to assess the presence of baseline trend, our recommendation is to introduce the information from the Tau-UA vs. B–Trend A data matrix (Fig. 2) into the time series plot. We opted not to represent linear trend, as in PEM-T, given that a straight line may not represent well the data and also to avoid potentially unreasonable projections of linear trends (Manolov et al., 2019; Parker et al., 2011a, 2011b). Moreover, the split-middle method is not the only way of fitting a straight line to SCED data (Chen et al., 2019; Manolov, 2018; see also Janosky, 1992; Sullivan et al., 2015; Swan & Pustejovsky, 2018; Verboon & Peters, 2020, for modeling nonlinear data patterns). In relation to not choosing PEM-T and the Baseline corrected Tau (Tarlow, 2017), Van Norman et al. (2022) report some counterintuitive results (in terms of the sign of the quantifications and the sign of the slope estimates) after applying these indices (e.g., deteriorating intervention phase trends associated with quantifications suggesting improvement and improving intervention phase trends associated with quantifications suggesting deterioration).

Inference

The Challenge

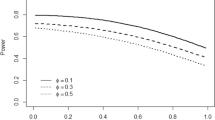

The values of nonoverlap indices such as NAP and Tau-UA vs. B can be accompanied by confidence intervals and p-values. However, any such inferential information referring to either NAP or Tau-UA vs. B is based on expressions for estimating the standard error of the indices, requiring the assumption of independent data (Pustejovsky et al., 2023a, 2023b; Solomon et al., 2015). Moreover, Pustejovsky (2016a) presents different possible expressions for the standard error of NAP (or probability of superiority). A further challenge in terms of inference is also present for Tau-UA vs. B–Trend A by Parker et al. (2011a, 2011b), because no confidence interval can be constructed once baseline trend has been controlled for, as the sampling distribution of the quantification is unknown.

Recommendation for Addressing the Challenge

In the current article we focus exclusively on the descriptive values of the nonoverlap indices, for two reasons. First, a descriptive value already provides an objective number that can be easily communicated across researchers and used in subsequent meta-analyses (see Footnote 5). Second, statistical inference (e.g., via a p-value) is only justified when there is random sampling from a population and/or random allocation in the design (Heyvaert & Onghena, 2014a), plus the aim to generalize the results to untreated individuals. Just as any fixed interpretative benchmark cannot be considered universally valid for interpreting the magnitude of effect (Vannest & Sallese, 2021), p-values do not replace the need for interpreting the nonoverlap (or any effect size) values. Specifically regarding p-values, several limitations have been highlighted in the SCED context, such as the difficulty of interpreting them correctly, diverting attention from the magnitude of the effect, and favoring publication bias (Branch, 2014). Consequently, experimental control and the judgments by the researchers (beyond automatic rules regarding whether a p-value is smaller than 0.05 or not) have been underlined as more important (Imam, 2021; Perone, 1999), as well as the need for replication in order to have information about the generality of findings (Branch, 2019). If the challenges outweigh the benefits of using p-values, it may be reasonable to avoid focusing (exclusively) on them for the time being.

Illustration of the Recommendations

Illustrative Data

We consider that the recommendations are most easily presented in depth, beyond the description provided so far, via an application to real SCED data. Specifically, the data used for the current illustration were gathered by Pierce and Schreibman (1994) and by Singh et al. (2007), both applying a multiple baseline design across participants and across behaviors. Multiple baseline design data were selected, given that this is the most commonly used SCED in published research (Pustejovsky, Swan, & English, 2023a, 2023b; Shadish & Sullivan, 2011; Tanious & Onghena, 2021; Zanuttini, 2020).

Pierce and Schreibman (1994) taught daily living skills to three low-functioning children (Jon, Howard, and Robby) diagnosed with autism spectrum disorder, via pictorial self-management as an intervention. The behaviors taught were as follows: (a) for Jon: setting the table, making lunch, and doing the laundry; (b) for Howard: setting the table, making a drink, and making the bed; and (c) for Robby: setting the table, getting dressed, and making lunch. The percentage of engagement was recorded not only for on-task behaviors, but also for inappropriate behaviors. Here, the focus is on a decrease of inappropriate behaviors for the three participants, during “setting the table”. Figure 3 represents the data from Pierce and Schreibman (1994).

Fig. 3. Percentage of 10-s intervals that contained inappropriate behaviors when setting the table: data gathered by Pierce and Schreibman (1994) for three participants

Singh et al. (2007) used mindfulness training for controlling physical and verbal aggressive behavior in three individuals (Jason, Michael, and Tim) diagnosed with several mental disorders such as depression, schizoaffective disorder, borderline personality, and antisocial personality. Here, the focus is on verbal aggressive behaviors. Figure 4 represents the data from Singh et al. (2007).

Fig. 4. Frequency of verbal aggressive behaviors: data gathered by Singh et al. (2007) for three participants

For both datasets, the measurements were retrieved from the plots published in the articles, using WebPlotDigitizer (https://automeris.io/WebPlotDigitizer/), a validated data retrieval tool (Aydin & Yassikaya, 2022; Drevon et al., 2017).

Time-Series Nonoverlap Plot for Taking Within-Phase Trend into Account and Representing Across-Phases Pairwise Nonoverlaps

Figure 5 represents the data for Robby from the Pierce and Schreibman (1994) study. We chose to illustrate the Time-Series Nonoverlap Plot for this participant, as it is only for him that some overlap is observed. Figure 6 represents the data for Michael (with improving baseline trend), Fig. 7 the data for Tim (considerable baseline variability), and Fig. 8 the data for Jason (complete nonoverlap), all from the Singh et al. (2007) study.

Fig. 5. Time-Series Nonoverlap Plot for participant Robby from the Pierce and Schreibman (1994) study. Note. The left panel represents within-phase monotonic trend, whereas the right panel represents across-phases pairwise comparisons

Fig. 6. Time-Series Nonoverlap Plot for participant Michael from the Singh et al. (2007) study. Note. The left panel represents within-phase monotonic trend, whereas the right panel represents across-phases pairwise comparisons

Fig. 7. Time-Series Nonoverlap Plot for participant Tim from the Singh et al. (2007) study. Note. The left panel represents within-phase monotonic trend, whereas the right panel represents across-phases pairwise comparisons

Fig. 8. Time-Series Nonoverlap Plot for participant Jason from the Singh et al. (2007) study. Note. The left panel represents within-phase monotonic trend, whereas the right panel represents across-phases pairwise comparisons

There are two panels in the plot. The left panel represents within-phase trend, in a way consistent with the data matrix for Tau-UA vs. B–Trend A as presented by Parker et al. (2011a, 2011b) for assessing monotonic trend and illustrated in Fig. 2. For the baseline phase, each data point is denoted by a tally of the previous baseline measurements that it improves. If there is a general improving trend, the tallies will increase with time. The data from Fig. 5 suggest that in general there is no improving trend: it is only noteworthy that the last three baseline measurements improve the third baseline data point. The data from the left panel of Fig. 6 suggest a certain improving baseline trend, as the first baseline value is the worst (largest) and the last (fourth) baseline value improves two of the three previous values. In the left panel of Fig. 7, we see that two of the last three measurements improve several previous baseline data points, but the pattern is apparently better conceptualized as variable and alternating rather than clearly improving (downwards). Thus, it is important to make a distinction between large values due to variability and large values representing trend. In order to have evidence for trend, an increasing sequence is required in the numbers next to each data point (i.e., each subsequent measurement improves more preceding measurements from the same phase). Otherwise, if the numbers do not increase with the progress of time, they only illustrate different kinds of variability (including the presence of outliers).

For the intervention phase, each data point is denoted by a tally of the previous intervention phase measurements that it improves. If there is an improving trend in the intervention phase, it should be reflected by these tallies increasing with time. For instance, the last two intervention phase measurements in Fig. 5 suggest an improving trend. However, more data are necessary to evaluate whether this is not part of an alternating (up-and-down) pattern, as the immediately preceding third and fourth intervention phase data points were worse than the first and second ones (i.e., improved no previous measurements from the same phase). The improving intervention phase trend is clearer in Figs. 7 and 8, although a floor effect is observed, as the measurements cannot be smaller than zero. In Fig. 7, there is also an improving intervention phase trend, with the number of improved previous data points increasing, but not as clear as in Figs. 7 and 8.
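The within-phase tallies shown in the left panel can be sketched in a few lines of Python. This is only an illustrative sketch, not the code behind the website: the function name `within_phase_tallies`, the `lower_is_better` flag, and the example values are hypothetical, and ties are assumed not to count as improvements, which the text does not specify.

```python
def within_phase_tallies(phase, lower_is_better=True):
    """For each measurement, count the earlier measurements from the
    same phase that it strictly improves (assumption: ties do not count)."""
    tallies = []
    for i, current in enumerate(phase):
        earlier = phase[:i]
        if lower_is_better:
            # Target behavior should decrease: a smaller value is an improvement
            tallies.append(sum(current < previous for previous in earlier))
        else:
            # Target behavior should increase: a larger value is an improvement
            tallies.append(sum(current > previous for previous in earlier))
    return tallies


# Hypothetical baseline for a behavior that is expected to decrease
baseline = [8, 6, 7, 4]
print(within_phase_tallies(baseline))  # [0, 1, 1, 3]
```

In this hypothetical phase the tallies do not decrease over time and end at their maximum, which is the kind of sequence that would be read as an improving trend rather than as mere variability.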

A formal comparison between the baseline and intervention trends is not represented, but if there is evidence for an improving baseline trend, any further conclusions need to be made with caution and require at least an improving intervention phase trend (unless the maximal possible score of the target behavior is reached). This is illustrated in Fig. 6.

The right panel has a different way of marking the data points. The digits depicted represent the quantification performed in NAP, in which all pairwise comparisons between values belonging to the two conditions are performed. This is tantamount to saying that the digits represent the shaded rectangle from the data matrix in Fig. 2. Specifically, each baseline data point is denoted by the number of intervention phase values it improves, representing overlaps. This is in contrast with Rakap et al. (2014), who depict, for each baseline data point, the number of intervention phase data points that improve it, representing nonoverlaps. For the intervention phase, each data point is denoted by the number of baseline values it improves, representing nonoverlaps. The Time-Series Nonoverlap Plot also includes an overlap zone superimposed on the data. On the right panel of Figs. 6, 7, and 8, only the baseline measurements within the overlap zone have values greater than 0 (i.e., are better than at least one intervention phase data point). Complementarily, the intervention phase values outside the overlap zone improve all baseline measurements (i.e., have a tally equal to the number of baseline data points).
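The across-phases tallies of the right panel, and the NAP value they relate to, can be illustrated with the following Python sketch. The function names, the `lower_is_better` flag, and the data are hypothetical. The tallies count strict improvements only (an assumption, as the handling of ties in the plot is not described here), whereas the NAP function gives half credit to ties, as in the usual definition of the index.

```python
def across_phase_tallies(baseline, intervention, lower_is_better=True):
    """Return (baseline_tallies, intervention_tallies): for each data point,
    the number of values from the other phase that it strictly improves."""
    if lower_is_better:
        better = lambda x, y: x < y
    else:
        better = lambda x, y: x > y
    # Baseline tallies represent overlaps (baseline value better than intervention value)
    baseline_tallies = [sum(better(a, b) for b in intervention) for a in baseline]
    # Intervention tallies represent nonoverlaps (intervention value better than baseline value)
    intervention_tallies = [sum(better(b, a) for a in baseline) for b in intervention]
    return baseline_tallies, intervention_tallies


def nap(baseline, intervention, lower_is_better=True):
    """Nonoverlap of All Pairs as a proportion; ties count as 0.5."""
    total = 0.0
    for a in baseline:
        for b in intervention:
            if a == b:
                total += 0.5
            elif (b < a) == lower_is_better:
                total += 1.0
    return total / (len(baseline) * len(intervention))


# Hypothetical data for a behavior that is expected to decrease
baseline = [5, 7, 3, 6]
intervention = [4, 2, 3, 1]
print(across_phase_tallies(baseline, intervention))  # ([0, 0, 1, 0], [3, 4, 3, 4])
print(nap(baseline, intervention))                   # 0.90625
```

In this example the intervention tallies sum to 14 of the 16 pairs and one pair is tied, which gives (14 + 0.5) / 16 = 0.90625.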

An intervention effect would be more evident if the baseline numbers are small and the intervention phase numbers are large. Figure 8 with complete nonoverlap is the clearest example, but an effect is also present in Figs. 5 and 6. In contrast, in Fig. 7, there are several baseline measurements that are lower (i.e., improved) in comparison to many of the intervention phase measurements and, complementarily, some intervention phase measurements improve only a few baseline phase data points. Finally, focusing on Fig. 7, the fact that the numbers in the intervention phase (i.e., baseline measurements improved) are generally larger for later measurement occasions is an indication of a progressive or a delayed effect.

Complementary Quantifications for Addressing Ceiling Effects

For two participants from the Pierce and Schreibman (1994) study there is complete nonoverlap, but the degree of separation (see Footnote 6) is greater for Howard (34.74%) than for Jon (12.00%), as summarized in Table 2. For Robby, the overlap zone is considerable, ranging from 26.10 to 87.92 percentage points, including almost 42% of the measurements from both phases. In general, there is no consistency in (non)overlap across all three participants; the shared area of separation between conditions for Jon and Howard ranges from 40.11 to 52.11%.

Regarding the Singh et al. (2007) data, there is complete nonoverlap only for Jason, with the degree of separation being 2 measurement units (see Footnote 7), as summarized in Table 3. For Michael, the overlap zone ranges from 2 to 4 units (including approximately 39% of data), whereas for Tim it is larger: ranging from 2 to 7 units and including 86% of the data. In general, there is no consistency in (non)overlap across all three participants; the shared overlap zone for Michael and Tim ranges from 2 to 4 units.
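The shared zone across tiers reported above can be read as the intersection of the per-participant ranges. The following Python sketch illustrates that reading; the function name `shared_zone` is hypothetical, and treating the shared zone as an interval intersection is our interpretation of the reported values rather than a documented algorithm. The two ranges are the overlap zones stated above for Michael and Tim.

```python
def shared_zone(zones):
    """Intersection of several (low, high) ranges; None if they do not intersect."""
    low = max(z[0] for z in zones)    # largest lower bound
    high = min(z[1] for z in zones)   # smallest upper bound
    return (low, high) if low <= high else None


# Overlap zones reported for Michael (2 to 4 units) and Tim (2 to 7 units)
michael = (2, 4)
tim = (2, 7)
print(shared_zone([michael, tim]))  # (2, 4)
```

The same function could be applied to separation zones from tiers with complete nonoverlap, provided that the bounds of each zone are available.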

Summary of the Information Provided by the Proposed Visual and Numerical Complements

Referring to the results, Pierce and Schreibman (1994) comment only that “inappropriate behavior was typically high during baseline for all three children. During posttreatment, inappropriate behavior decreased and on-task behavior increased to high levels, often 100%, for all 3 children” (p. 479). The plots and quantifications proposed provide more detailed information. Referring to “setting the table”, Table 2 shows that there is complete nonoverlap for two of the participants (with greater separation for Howard than for Jon) and overlap for one of them (Robby), with almost 42% of the values in the overlap zone. Specifically, for Robby, the left panel of Fig. 5 shows, for the baseline phase, that the last three baseline measurements are not as bad as the third measurement, but that there is no trend of improvement in the baseline (as the numbers do not increase). For the intervention phase, the left panel of Fig. 5 shows that the last two intervention phase measurements improve most of the preceding ones. The right panel of Fig. 5 shows that four of the six intervention phase measurements improve all baseline phase measurements, and five of the six intervention phase measurements improve at least five of the six baseline phase measurements. This more detailed information would help avoid excessively general conclusions regarding intervention effectiveness (i.e., by pointing out for whom the intervention worked).

The results for the Singh et al. (2007) data are summarized by the primary authors as “data presented in Fig. 1 show that, regardless of baseline frequencies, verbal and physical aggression decreased as training in mindfulness proceeded” (p. 320). Once again, the recommendations provide more nuanced information. Focusing on verbal aggressive behaviors, Table 3 shows that there is complete nonoverlap only for Jason, whereas there is a lot of overlap (86% of the measurements in the overlap zone) for Tim and less overlap (almost 39% of the values in the overlap zone) for Michael. Focusing on Michael, the left panel of Fig. 6 illustrates the general improving trend during the baseline and the intervention phases (values increasing in both conditions), which may indicate that his behavior improved naturally over time rather than as a result of the intervention. The right panel of Fig. 6 shows that most of the intervention phase measurements improve all the baseline phase measurements. Focusing on Tim, the left panel of Fig. 7 shows that there is no clear baseline trend (just variability), whereas there is an improving monotonic trend in the intervention phase (as the numbers increase). The right panel of Fig. 7 shows that the intervention effect can be considered progressive, as later intervention phase measurements improve more baseline data points than earlier ones do. In summary, the graphical representations suggested allow detecting trends either in the baseline phase (Michael) or in the intervention phase (Tim), whereas the numerical summaries allow quantifying the separation in presence of complete nonoverlap (Jason) and the different proportion of values in the overlap zone for Michael and Tim.

Discussion

Contribution

Visual Representation: Building on the Data Matrix and Overlap Zone

In terms of the graphical representation of overlap, we present a plot (right panel of Figs. 5, 6, 7, 8) that indicates, for each measurement obtained, the number of measurements it “improves” from the other condition (as in the comparisons performed in the context of NAP and Tau-UA vs. B by Parker et al., 2011a, 2011b). Beyond what NAP quantifies, this plot also includes the time sequence in which the measurements were obtained and thus makes it possible to combine the numerical values with the time information. Specifically, Parsonson and Baer (1992) state that, when discussing overlap, it is important to distinguish between points in the beginning versus the end of the phase, given that an overlap in the beginning can be better tolerated in an eventually useful intervention. Therefore, representing how many baseline data points each intervention data point improves can be useful for this distinction. If later intervention phase measurements improve more baseline phase measurements, this could be an indication of a gradual or progressive effect. In contrast, if only earlier intervention phase measurements show an improvement, but not later ones, this could be indicative of an immediate, but transient or temporary effect.

Another version of the plot (left panels of Figs. 5, 6, 7, 8) presents, for each data point, the number of previous measurements from the same phase that are improved, enabling the assessment of monotonic within-phase trend. This is well-aligned with the What Works Clearinghouse (2022) Standards, which suggest using NAP as a way of performing a within-phase assessment of whether there are trends within the same condition. Both panels of the plot build on the data matrix presented by Parker et al. (2011a, 2011b).

Furthermore, superimposing the overlap zone on the data avoids relying on a single baseline value (e.g., the best one or the median one) as a reference for comparing the intervention phase data to. The joint use of the overlap zone and the tallies of improved measurements from the other condition enables distinguishing which part of the intervention phase measurements (i.e., the earlier or later ones) improves more baseline data points. This makes the assessment of immediate and delayed effects easier.

Numerical Values: Beyond Ceiling Effects and Towards the Assessment of Consistency

Apart from proposing a plot for assessing overlap as defined in NAP, we suggest complementing the NAP value and the graphical summary with further quantifications, specifically the degree of separation between data from different conditions once complete nonoverlap is achieved, which overcomes the ceiling effect. The same calculation can be used when there is overlap: instead of quantifying separation, computing the difference between the best baseline measurement and the worst intervention phase measurement would lead to identifying the range of the overlap zone, which can be complemented by computing the percentage of values it contains. We consider these quantifications more informative and intuitive (as a summary, representing the degree of overlap) than the number of data points that have to be removed in order to achieve complete nonoverlap (as in PAND and IRD).
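The two complementary quantifications can be sketched in Python as follows. This is a hedged illustration, not the implementation used in the website: the function name `separation_or_overlap`, the returned dictionary keys, and the data are hypothetical, and the bounds of the overlap zone are assumed to be inclusive (so that the best baseline value and the worst intervention phase value are counted as lying within the zone).

```python
def separation_or_overlap(baseline, intervention, lower_is_better=True):
    """Degree of separation under complete nonoverlap; otherwise the
    overlap zone and the percentage of all measurements inside it."""
    if lower_is_better:
        best_baseline = min(baseline)
        worst_intervention = max(intervention)
        gap = best_baseline - worst_intervention
    else:
        best_baseline = max(baseline)
        worst_intervention = min(intervention)
        gap = worst_intervention - best_baseline

    if gap > 0:
        # Complete nonoverlap: distance in the units of the dependent variable
        return {"complete_nonoverlap": True, "separation": gap}

    # Overlap: zone bounded by the best baseline and worst intervention values
    low, high = sorted((best_baseline, worst_intervention))
    values = list(baseline) + list(intervention)
    inside = sum(low <= v <= high for v in values)  # assumption: inclusive bounds
    return {
        "complete_nonoverlap": False,
        "overlap_zone": (low, high),
        "percent_in_zone": 100 * inside / len(values),
    }


# Hypothetical data for a behavior that is expected to decrease
print(separation_or_overlap([9, 8, 10], [5, 3, 4]))
# {'complete_nonoverlap': True, 'separation': 3}
print(separation_or_overlap([5, 7, 3, 6], [4, 2, 3, 1]))
# {'complete_nonoverlap': False, 'overlap_zone': (3, 4), 'percent_in_zone': 37.5}
```

In the second example three of the eight measurements (one baseline and two intervention phase values) lie between 3 and 4, hence 37.5% of the data are in the overlap zone.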

The Time-Series Nonoverlap Plot (illustrated in Figs. 5, 6, 7 and 8) was discussed in the context of comparing adjacent phases, which represent different conditions. However, in the What Works Clearinghouse (2022) Standards it is stated that NAP can be used for assessing the consistency of similar phases (e.g., the first and second A phases in an ABAB design). The plots presented here can be used to check whether the expected overlap (as a way of conceptualizing similarity) is taking place or not. Beyond looking for a large NAP value as evidence of consistency across similar phases, it is possible to assess the consistency in nonoverlap across different phases. Specifically, we suggest assessing the consistency in overlap in a novel way, beyond comparing NAP values. When there is complete nonoverlap, beyond just saying that NAP = 100% for all A-B comparisons, it is possible to check whether the degree of separation is similar in size and whether this separation zone is located in the same values in the range of the dependent variable. Complementarily, in case of overlap, beyond just looking at whether the NAP is similar, it is possible to check whether the proportion of values in the overlap zone is similar and whether the overlap zone is in the same values in the range of the dependent variable.

Software Implementation

Previous proposals for graphical representations of level, trend, and variability (Bulté & Onghena, 2012) have been made available in a website (https://tamalkd.shinyapps.io/scda/), which is also the case for multilevel models (Declercq et al., 2020; http://34.251.13.245/MultiSCED/), the assessment of functional relations for multielement designs (Kranak & Hall, 2022; https://ansa.shinyapps.io/ansa/), and the assessment of consistency (Manolov & Tanious, 2022; https://manolov.shinyapps.io/Brinley/; Tanious et al., 2021; https://manolov.shinyapps.io/CONDAP/). Similarly, the current recommendations for graphical representation have been implemented in a freely available, menu-driven website (https://manolov.shinyapps.io/Overlap/); details on its use are available in the Appendix.

Applicability

Design Types

The recommendations are applicable to designs that involve phases (i.e., multiple-baseline, withdrawal/reversal, and changing criterion designs), the first of which are the most commonly used SCEDs, according to several reviews of published literature (Pustejovsky, Swan, & English, 2023a, 2023b; Shadish & Sullivan, 2011; Smith, 2012; Tanious & Onghena, 2021). Each A-B comparison for which level, trend, variability, and overlap are represented can be a tier in a multiple-baseline design across participants, across behaviors, or across settings. Similarly, each A-B comparison could stem from an ABAB design.

It should be noted that, in the context of alternating treatments designs (Barlow & Hayes, 1979) in which conditions change frequently, overlap can be defined in relation to whether the data paths in an alternating treatments design cross or are completely separated / differentiated (Horner & Odom, 2014; Riley-Tillman et al., 2020). This definition does not entail or require a complete nonoverlap in the sense of, for instance, NAP or Tau-UA vs. B being equal to 100%. In the context of changing criterion designs, overlap has not been traditionally considered as a main aspect to be assessed (Hartmann & Hall, 1976; Klein et al., 2017; McDougall, 2005), but it is possible to define the range of acceptable behavior in such a way as to ensure lack of overlap (Manolov et al., 2020).

Within-Study Assessment

Two simple quantifications are suggested: the percentage of data points within the overlap zone when there is overlap and the distance (expressed in the measurement units of the dependent variable) between the data from different conditions in case there is complete nonoverlap. We recommend using the degree of separation in the context of individual studies in order to help assess the magnitude of effect; in that sense, we are not recommending this degree of separation as another effect size index to be used in meta-analysis, given that it is expressed in measurement units that can differ across studies.

Need for Expert Judgment

No specific objective criterion is proposed as a formal decision rule regarding intervention effectiveness. This is in contrast with previous proposals for visual aids with a decision rule (e.g., Fisher et al., 2003; Manolov & Vannest, 2023), but it is in agreement with previous proposals for making the assessment of the six data features more systematic without including a clear and objective rule (Maggin et al., 2013; Wolfe et al., 2019a, 2019b) when visually inspecting time-series line graphs. Despite the absence of such a rule, it should be noted that relying on the judgment of the applied researchers (on the basis of the graphed data, the experimental manipulation, and their knowledge of the client, the issue dealt with, and the target behavior) is inherent to SCED research and data analysis (Branch, 2014; Imam, 2021; Perone, 1999).

Need for Considering Several Data Features

Despite the current focus on overlap, the recommendation made here, when assessing each A-B comparison separately, is to prioritize some of the data features (level, trend, variability, immediacy, overlap), according to the expected data pattern (Manolov et al., 2022; this is also commonly recommended in the context of randomization tests; Levin et al., 2017, 2021). When several A-B comparisons are considered, the idea would be to assess consistency for the focal data feature(s). In that sense, we are not advocating for an exclusive focus on overlap or an exclusive use of nonoverlap indices and the corresponding plots. We are rather suggesting that, when the researchers have already decided to use a nonoverlap index (e.g., due to the target behavior being measured in ordinal terms or due to an expectation of considerable variability that will make a summary such as a mean or trend line less useful), their interpretation can benefit from the additional plots and quantifications presented here.

Limitations and Future Research

An initial limitation refers to the fact that the graphical and numerical recommendations are mainly focused on static/summative analysis (as is also the case for NAP, on which they build), rather than on dynamic/formative analysis. However, if desired, the Time-Series Nonoverlap Plot can be constructed and the separation or overlap zone can be computed even if the data collection in the intervention phase is still ongoing.

Another limitation refers specifically to the recommendation for dealing with trend. The graphical representation involves a visual inspection of trend, but it ignores the "magnitude" of the trend (i.e., the slope of any straight trend line). In that sense, future research could compare the use of NAP accompanied by the Time-Series Nonoverlap Plot with the use of Tau-UA vs. B–Trend A (Parker et al., 2011a, 2011b) and the use of the Baseline corrected Tau (Tarlow, 2017).

A third limitation refers to the definition of overlap zone (Morley, 2018; Parker et al., 2011a, b, 2014) and the degree of separation. Given that both are based on identifying the best baseline measurement and the worst intervention phase measurement, they can be sensitive to outliers. Complementing the overlap zone with the percentage of values that are included in it, as suggested in the current article, is expected to mitigate this sensitivity: if the overlap is due to an outlier, a small percentage of data points would be included in the overlap zone.

The recommendations are theoretically based rather than empirically validated, which entails that further research is necessary. Given that the current article is best understood as a proof of concept including few examples, a broader field test with real data can be performed, comparing the conclusions reached after using only NAP and the conclusions reached after complementing NAP with the plot and quantifications suggested here. A different line of research could focus on simulation studies comparing the quantifications obtained from the current plot (i.e., percentage of data in the overlap zone) to other commonly used overlap measures such as NAP and Tau-UA vs. B–Trend A. Such a simulation study could help answer the question of whether different quantifications lead to converging or discrepant conclusions (according to the simulation conditions). In that context, it would also be possible to develop potential cut-off scores for the percentage of data in the overlap zone to categorize effects into weak, medium, and strong, as has been commonly done for other overlap measures.

Notes

Nevertheless, considering that there are other options for assessing trend (e.g., Manolov, 2018), using a nonoverlap index for that purpose may not be reasonable, taking into account that these are two different data features.

Although it will be discussed at length later in the article, it is worth noting from the outset that there are several indices called Tau. Here we discuss two indices proposed by Parker, Vannest, Davis, and Sauber (2011a, 2011b): Tau-UA vs. B only quantifies overlap disregarding trend, whereas Tau-UA vs. B–Trend A controls for baseline trend. Furthermore, Tarlow (2017) proposed the Baseline corrected Tau, which presents some differences both in the quantification of overlap and in the way in which baseline trend is corrected. These differences are commented on later.

For instance, for the data depicted in Figs. 1 and 3 (presented formally later in the article), Tau as defined by Parker, Vannest, Davis, and Sauber (2011a, 2011b) is equal to −0.58, whereas Tau as defined by Tarlow (2017) is equal to −0.86. Moreover, for certain data that visually show complete nonoverlap (e.g., participant Howard from the Pierce and Schreibman, 1994, study; see middle panel of Fig. 4 later in the article), Tau does not necessarily reach its bound (e.g., for these data it is equal to −0.76 and not to −1).

“Classical” meta-analysis entails using inverse-variance weighting (e.g., as when the between-case standardized mean difference is used; Shadish et al., 2014), which requires estimating the standard error of the effect size (here, the nonoverlap quantification). However, given that the standard error for nonoverlap indices is based on the assumption of independent data, it is possible to use a different weight such as the number of measurements (Horner & Kratochwill, 2012), to use uniform weighting for (i.e., a simple mean of) all effect size values, as illustrated by the results of the review by Jamshidi et al. (2023), or even to use the median of the effect sizes (Schlosser et al., 2008).

Given that in the Pierce and Schreibman (1994) study the dependent variable is measured as the percentage of 10-s intervals with inappropriate behavior, the separation is expressed as a percentage.

Given that in the Singh et al. (2007) study the dependent variable is measured as the frequency of aggressive behaviors, separation is expressed as number of behaviors.

References

Acion, L., Peterson, J. J., Temple, S., & Arndt, S. (2006). Probabilistic index: An intuitive non-parametric approach to measuring the size of treatment effects. Statistics in Medicine, 25(4), 591–602. https://doi.org/10.1002/sim.2256

Aydin, O., & Yassikaya, M. Y. (2022). Validity and reliability analysis of the PlotDigitizer software program for data extraction from single-case graphs. Perspectives on Behavior Science, 45(1), 239–257. https://doi.org/10.1007/s40614-021-00284-0

Barlow, D. H., & Hayes, S. C. (1979). Alternating treatments design: One strategy for comparing the effects of two treatments in a single subject. Journal of Applied Behavior Analysis, 12(2), 199–210. https://doi.org/10.1901/jaba.1979.12-199

Barton, E. E., Lloyd, B. P., Spriggs, A. D., & Gast, D. L. (2018). Visual analysis of graphic data. In J. R. Ledford & D. L. Gast (Eds.), Single case research methodology: Applications in special education and behavioral sciences (3rd ed., pp. 179–214). Routledge.

Barton, E. E., Meadan, H., & Fettig, A. (2019). Comparison of visual analysis, non-overlap methods, and effect sizes in the evaluation of parent implemented functional assessment based interventions. Research in Developmental Disabilities, 85, 31–41. https://doi.org/10.1016/j.ridd.2018.11.001

Branch, M. (2014). Malignant side effects of null-hypothesis significance testing. Theory & Psychology, 24(2), 256–277. https://doi.org/10.1177/0959354314525282

Branch, M. N. (2019). The “reproducibility crisis:” Might the methods used frequently in behavior-analysis research help? Perspectives on Behavior Science, 42(1), 77–89. https://doi.org/10.1007/s40614-018-0158-5

Brossart, D. F., Laird, V. C., & Armstrong, T. W. (2018). Interpreting Kendall’s Tau and Tau-U for single-case experimental designs. Cogent Psychology, 5(1), 1518687. https://doi.org/10.1080/23311908.2018.1518687

Brossart, D. F., Vannest, K., Davis, J., & Patience, M. (2014). Incorporating nonoverlap indices with visual analysis for quantifying intervention effectiveness in single-case experimental designs. Neuropsychological Rehabilitation, 24(3–4), 464–491. https://doi.org/10.1080/09602011.2013.868361

Bulté, I., & Onghena, P. (2012). When the truth hits you between the eyes: A software tool for the visual analysis of single-case experimental data. Methodology, 8(3), 104–114. https://doi.org/10.1027/1614-2241/a000042

Busse, R. T., McGill, R. J., & Kennedy, K. S. (2015). Methods for assessing single-case school-based intervention outcomes. Contemporary School Psychology, 19(3), 136–144. https://doi.org/10.1007/s40688-014-0025-7

Byun, T. M., Hitchcock, E. R., & Ferron, J. (2017). Masked visual analysis: Minimizing Type I error in visually guided single-case design for communication disorders. Journal of Speech, Language, and Hearing Research, 60(6), 1455–1466. https://doi.org/10.1044/2017_JSLHR-S-16-0344

Carter, M. (2013). Reconsidering overlap-based measures for quantitative synthesis of single-subject data: What they tell us and what they don’t. Behavior Modification, 37(3), 378–390. https://doi.org/10.1177/0145445513476609

Chen, L.-T., Peng, C.-Y. J., & Chen, M.-E. (2015). Computing tools for implementing standards for single-case designs. Behavior Modification, 39(6), 835–869. https://doi.org/10.1177/0145445515603706

Chen, M., Hyppa-Martin, J. K., Reichle, J. E., & Symons, F. J. (2016). Comparing single case design overlap-based effect size metrics from studies examining speech generating device interventions. American Journal on Intellectual and Developmental Disabilities, 121(3), 169–193. https://doi.org/10.1352/1944-7558-121.3.169

Chen, L. T., Wu, P. J., & Peng, C. Y. J. (2019). Accounting for baseline trends in intervention studies: Methods, effect sizes, and software. Cogent Psychology, 6(1), 1679941. https://doi.org/10.1080/23311908.2019.1679941

Costello, M. S., Bagley, R. F., Fernández Bustamante, L., & Deochand, N. (2022). Quantification of behavioral data with effect sizes and statistical significance tests. Journal of Applied Behavior Analysis, 55(4), 1068–1082. https://doi.org/10.1002/jaba.938

Danov, S. E., & Symons, F. J. (2008). A survey evaluation of the reliability of visual inspection and functional analysis graphs. Behavior Modification, 32(6), 828–839. https://doi.org/10.1177/0145445508318606

Declercq, L., Cools, W., Beretvas, S. N., Moeyaert, M., Ferron, J. M., & Van den Noortgate, W. (2020). MultiSCED: A tool for (meta-)analyzing single-case experimental data with multilevel modeling. Behavior Research Methods, 52(1), 177–192. https://doi.org/10.3758/s13428-019-01216-2

DeRosa, N. M., Sullivan, W. E., Roane, H. S., & Kadey, H. J. (2021). Single-case experimental designs. In W. W. Fisher, C. C. Piazza, & H. S. Roane (Eds.), Handbook of applied behavior analysis (2nd ed., pp. 155–171). The Guilford Press.

Dowdy, A., Peltier, C., Tincani, M., Schneider, W. J., Hantula, D. A., & Travers, J. C. (2021). Meta-analyses and effect sizes in applied behavior analysis: A review and discussion. Journal of Applied Behavior Analysis, 54(4), 1317–1340. https://doi.org/10.1002/jaba.862

Drevon, D., Fursa, S. R., & Malcolm, A. L. (2017). Intercoder reliability and validity of WebPlotDigitizer in extracting graphed data. Behavior Modification, 41(2), 323–339. https://doi.org/10.1177/0145445516673998

Ferron, J. M., Bell, B. A., Hess, M. R., Rendina-Gobioff, G., & Hibbard, S. T. (2009). Making treatment effect inferences from multiple-baseline data: The utility of multilevel modeling approaches. Behavior Research Methods, 41(2), 372–384. https://doi.org/10.3758/BRM.41.2.372

Ferron, J. M., Joo, S.-H., & Levin, J. R. (2017). A Monte Carlo evaluation of masked visual analysis in response-guided versus fixed-criteria multiple-baseline designs. Journal of Applied Behavior Analysis, 50(4), 701–716. https://doi.org/10.1002/jaba.410

Ferron, J. M., Kirby, M. S., & Lipien, L. (2024). Fine-grained effect sizes. School Psychology. Advance online publication. https://doi.org/10.1037/spq0000634

Fingerhut, J., Moeyaert, M., Manolov, R., Xu, X., & Park, K. H. (2023). Systematic review of descriptions and justifications provided for single-case quantification techniques. Behavior Modification, 47(5), 1115–1143. https://doi.org/10.1177/01454455231178469

Fingerhut, J., Xu, X., & Moeyaert, M. (2021). Impact of within-case variability on Tau-U indices and the hierarchical linear modeling approach for multiple-baseline design data: A Monte Carlo simulation study. Evidence-Based Communication Assessment and Intervention, 15(3), 115–141. https://doi.org/10.1080/17489539.2021.1933727

Fisher, W. W., Kelley, M. E., & Lomas, J. E. (2003). Visual aids and structured criteria for improving visual inspection and interpretation of single-case designs. Journal of Applied Behavior Analysis, 36(3), 387–406. https://doi.org/10.1901/jaba.2003.36-387

Gage, N. A., & Lewis, T. J. (2013). Analysis of effect for single-case design research. Journal of Applied Sport Psychology, 25(1), 46–60. https://doi.org/10.1080/10413200.2012.660673

Giannakakos, A. R., & Lanovaz, M. J. (2023). Using AB designs with nonoverlap effect size measures to support clinical decision-making: A Monte Carlo validation. Behavior Modification, 47(6), 1407–1422. https://doi.org/10.1177/0145445519860219

Grissom, R. J., & Kim, J. J. (2001). Review of assumptions and problems in the appropriate conceptualization of effect size. Psychological Methods, 6(2), 135–146. https://doi.org/10.1037/1082-989X.6.2.135

Harrington, M., & Velicer, W. F. (2015). Comparing visual and statistical analysis in single-case studies using published studies. Multivariate Behavioral Research, 50(2), 162–183. https://doi.org/10.1080/00273171.2014.973989

Hartmann, D. P., & Hall, R. V. (1976). The changing criterion design. Journal of Applied Behavior Analysis, 9(4), 527–532. https://doi.org/10.1901/jaba.1976.9-527

Hedges, L. V., & Olkin, I. (2016). Overlap between treatment and control distributions as an effect size measure in experiments. Psychological Methods, 21(1), 61–68. https://doi.org/10.1037/met0000042

Hedges, L. V., Pustejovsky, J. E., & Shadish, W. R. (2012). A standardized mean difference effect size for single case designs. Research Synthesis Methods, 3(3), 224–239. https://doi.org/10.1002/jrsm.1052

Heyvaert, M., & Onghena, P. (2014a). Analysis of single-case data: Randomisation tests for measures of effect size. Neuropsychological Rehabilitation, 24(3–4), 507–527. https://doi.org/10.1080/09602011.2013.818564

Heyvaert, M., & Onghena, P. (2014b). Randomization tests for single-case experiments: State of the art, state of the science, and state of the application. Journal of Contextual Behavioral Science, 3(1), 51–64. https://doi.org/10.1016/j.jcbs.2013.10.002

Heyvaert, M., Saenen, L., Maes, B., & Onghena, P. (2015). Comparing the percentage of non-overlapping data approach and the hierarchical linear modeling approach for synthesizing single-case studies in autism research. Research in Autism Spectrum Disorders, 11, 112–125. https://doi.org/10.1016/j.rasd.2014.12.002

Horner, R. H., & Kratochwill, T. R. (2012). Synthesizing single-case research to identify evidence-based practices: Some brief reflections. Journal of Behavioral Education, 21(3), 266–272. https://doi.org/10.1007/s10864-012-9152-2

Horner, R. H., & Odom, S. L. (2014). Constructing single-case research designs: Logic and options. In T. R. Kratochwill & J. R. Levin (Eds.), Single-case intervention research: Methodological and statistical advances (pp. 27–51). American Psychological Association. https://doi.org/10.1037/14376-002

Houle, T. T. (2009). Statistical analyses for single-case experimental designs. In D. H. Barlow, M. K. Nock, & M. Hersen (Eds.), Single case experimental designs: Strategies for studying behavior change (3rd ed., pp. 271–305). Pearson.

Huberty, C. J., & Lowman, L. L. (2000). Group overlap as a basis for effect size. Educational and Psychological Measurement, 60(4), 543–563. https://doi.org/10.1177/0013164400604004

Imam, A. A. (2021). Historically recontextualizing Sidman’s Tactics: How behavior analysis avoided psychology’s methodological Ouroboros. Journal of the Experimental Analysis of Behavior, 115(1), 115–128. https://doi.org/10.1002/jeab.661

Iversen, I. H. (2021). Sidman or statistics? Journal of the Experimental Analysis of Behavior, 115(1), 102–114. https://doi.org/10.1002/jeab.660

Jaksic, H., Vause, T., Frijters, J. C., & Feldman, M. (2018). A comparison of a novel application of hierarchical linear modeling and nonparametric analysis for single-subject designs. Behavior Analysis: Research and Practice, 18(2), 203–218. https://doi.org/10.1037/bar0000091

Jamshidi, L., Heyvaert, M., Declercq, L., Fernández-Castilla, B., Ferron, J. M., Moeyaert, M., Beretvas, S. N., Onghena, P., & Van den Noortgate, W. (2023). A systematic review of single-case experimental design meta-analyses: Characteristics of study designs, data, and analyses. Evidence-Based Communication Assessment and Intervention, 17(1), 6–30. https://doi.org/10.1080/17489539.2022.2089334

Janosky, J. E. (1992). Use of the nonparametric smoother for examination of data from a single-subject design. Behavior Modification, 16(3), 387–399. https://doi.org/10.1177/01454455920163005

Johnson, A. H., & Cook, B. G. (2019). Preregistration in single-case design research. Exceptional Children, 86(1), 95–112. https://doi.org/10.1177/0014402919868529

Karch, J. D. (2021). Psychologists should use Brunner-Munzel’s instead of Mann-Whitney’s U test as the default nonparametric procedure. Advances in Methods and Practices in Psychological Science, 4(2), 1–14. https://doi.org/10.1177/2515245921999602

Kipfmiller, K. J., Brodhead, M. T., Wolfe, K., LaLonde, K., Sipila, E. S., Bak, M. S., & Fisher, M. H. (2019). Training front-line employees to conduct visual analysis using a clinical decision-making model. Journal of Behavioral Education, 28(3), 301–322. https://doi.org/10.1007/s10864-018-09318-1

Kiresuk, T. J., & Sherman, R. (1968). Goal Attainment Scaling: A general method for evaluating comprehensive community mental health programs. Community Mental Health Journal, 4(6), 443–453. https://doi.org/10.1007/BF01530764

Klein, L. A., Houlihan, D., Vincent, J. L., & Panahon, C. J. (2017). Best practices in utilizing the changing criterion design. Behavior Analysis in Practice, 10(1), 52–61. https://doi.org/10.1007/s40617-014-0036-x

Kranak, M. P., & Hall, S. S. (2022). Implementing automated nonparametric statistical analysis on functional analysis data: A guide for practitioners and researchers. Perspectives on Behavior Science, 45(1), 53–75. https://doi.org/10.1007/s40614-021-00290-2

Kratochwill, T. R., Hitchcock, J., Horner, R. H., Levin, J. R., Odom, S. L., Rindskopf, D. M., & Shadish, W. R. (2010). Single-case designs technical documentation. Retrieved from What Works Clearinghouse website: https://ies.ed.gov/ncee/wwc/Docs/ReferenceResources/wwc_scd.pdf

Kratochwill, T. R., Hitchcock, J. H., Horner, R. H., Levin, J. R., Odom, S. L., Rindskopf, D. M., & Shadish, W. R. (2013). Single-case intervention research design standards. Remedial and Special Education, 34(1), 26–38. https://doi.org/10.1177/0741932512452794

Kratochwill, T. R., Horner, R. H., Levin, J. R., Machalicek, W., Ferron, J., & Johnson, A. (2021). Single-case design standards: An update and proposed upgrades. Journal of School Psychology, 89, 91–105. https://doi.org/10.1016/j.jsp.2021.10.006

Landman, W., Bogaerts, S., & Spreen, M. (2024). Typicality of Level Change (TLC) as an additional effect measure to NAP and Tau-U in single case research. Behavior Modification, 48(1), 51–74. https://doi.org/10.1177/0145445523119074

Lane, J. D., & Gast, D. L. (2014). Visual analysis in single case experimental design studies: Brief review and guidelines. Neuropsychological Rehabilitation, 24(3–4), 445–463. https://doi.org/10.1080/09602011.2013.815636

Ledford, J. R., Barton, E. E., Severini, K. E., & Zimmerman, K. N. (2019). A primer on single-case research designs: Contemporary use and analysis. American Journal on Intellectual and Developmental Disabilities, 124(1), 35–56. https://doi.org/10.1352/1944-7558-124.1.35

Lenz, A. S. (2013). Calculating effect size in single-case research: A comparison of nonoverlap methods. Measurement and Evaluation in Counseling and Development, 46(1), 64–73. https://doi.org/10.1177/0748175612456401

Levin, J. R., Ferron, J. M., & Gafurov, B. S. (2017). Additional comparisons of randomization-test procedures for single-case multiple-baseline designs: Alternative effect types. Journal of School Psychology, 63, 13–34. https://doi.org/10.1016/j.jsp.2017.02.003

Levin, J. R., Ferron, J. M., & Gafurov, B. S. (2021). Investigation of single-case multiple-baseline randomization tests of trend and variability. Educational Psychology Review, 33(2), 713–737. https://doi.org/10.1007/s10648-020-09549-7

Ma, H. H. (2006). An alternative method for quantitative synthesis of single-subject research: Percentage of data points exceeding the median. Behavior Modification, 30(5), 598–617. https://doi.org/10.1177/0145445504272974

Maggin, D. M., Barton, E., Reichow, B., Lane, K., & Shogren, K. A. (2022). Commentary on the What Works Clearinghouse Standards and Procedures Handbook (v. 4.1) for the review of single-case research. Remedial and Special Education, 43(6), 421–433. https://doi.org/10.1177/07419325211051317

Maggin, D. M., Briesch, A. M., & Chafouleas, S. M. (2013). An application of the What Works Clearinghouse standards for evaluating single-subject research: Synthesis of the self-management literature base. Remedial and Special Education, 34(1), 44–58. https://doi.org/10.1177/0741932511435176

Maggin, D. M., Cook, B. G., & Cook, L. (2018). Using single-case research designs to examine the effects of interventions in special education. Learning Disabilities Research & Practice, 33(4), 182–191. https://doi.org/10.1111/ldrp.12184

Maggin, D. M., Cook, B. G., & Cook, L. (2019). Making sense of single-case design effect sizes. Learning Disabilities Research & Practice, 34(3), 124–132. https://doi.org/10.1111/ldrp.12204

Maggin, D. M., O’Keefe, B. V., & Johnson, A. H. (2011). A quantitative synthesis of methodology in the meta-analysis of single-subject research for students with disabilities: 1985–2009. Exceptionality, 19(2), 109–135. https://doi.org/10.1080/09362835.2011.565725

Manolov, R. (2018). Linear trend in single-case visual and quantitative analyses. Behavior Modification, 42(5), 684–706. https://doi.org/10.1177/0145445517726301

Manolov, R., Moeyaert, M., & Fingerhut, J. (2022). A priori justification for effect measures in single-case experimental designs. Perspectives on Behavior Science, 45(1), 156–189. https://doi.org/10.1007/s40614-021-00282-2

Manolov, R., & Onghena, P. (2022). Defining and assessing immediacy in single case experimental designs. Journal of the Experimental Analysis of Behavior, 118(3), 462–492. https://doi.org/10.1002/jeab.799

Manolov, R., Solanas, A., & Leiva, D. (2010). Comparing “visual” effect size indices for single-case designs. Methodology, 6(2), 49–58. https://doi.org/10.1027/1614-2241/a000006

Manolov, R., Solanas, A., & Sierra, V. (2019). Extrapolating baseline trend in single-case data: Problems and tentative solutions. Behavior Research Methods, 51(6), 2847–2869. https://doi.org/10.3758/s13428-018-1165-x

Manolov, R., Solanas, A., & Sierra, V. (2020). Changing criterion designs: Integrating methodological and data analysis recommendations. The Journal of Experimental Education, 88(2), 335–350. https://doi.org/10.1080/00220973.2018.1553838

Manolov, R., & Tanious, R. (2022). Assessing consistency in single-case data features using modified Brinley plots. Behavior Modification, 46(3), 581–627. https://doi.org/10.1177/0145445520982969

Manolov, R., & Vannest, K. (2023). A visual aid and objective rule encompassing the data features of visual analysis. Behavior Modification, 47(6), 1345–1376. https://doi.org/10.1177/0145445519854323

McDougall, D. (2005). The range-bound changing criterion design. Behavioral Interventions, 20(2), 129–137. https://doi.org/10.1002/bin.189

McGraw, K. O., & Wong, S. P. (1992). A common language effect size statistic. Psychological Bulletin, 111(2), 361–365. https://doi.org/10.1037/0033-2909.111.2.361

Morgan, D. L., & Morgan, R. K. (2009). Single-case research methods for the behavioral and health sciences. Sage.

Morley, S. (2018). Single-case methods in clinical psychology: A practical guide. Routledge.

Natesan, P., & Hedges, L. V. (2017). Bayesian unknown change-point models to investigate immediacy in single case designs. Psychological Methods, 22(4), 743–759. https://doi.org/10.1037/met0000134

Nikles, J., Evans, K., Hams, A., & Sterling, M. (2022). A systematic review of N-of-1 trials and single case experimental designs in physiotherapy for musculoskeletal conditions. Musculoskeletal Science and Practice, 62, 102639. https://doi.org/10.1016/j.msksp.2022.102639

Ninci, J. (2023). Single-case data analysis: A practitioner guide for accurate and reliable decisions. Behavior Modification, 47(6), 1455–1481. https://doi.org/10.1177/0145445519867054