Abstract

Beck Depression Inventory-II (BDI-II) is one of the most widely used instruments for depression assessment. Stepankova Georgi et al. (2019) found that BDI-II yielded lower scores when administered to elderly participants in interview form immediately after a questionnaire-form administration. Stepankova Georgi et al. suggest that some participants misunderstand the instructions, which inflates their scores, and that the interview form of BDI-II has the potential to prevent such bias thanks to the interviewer’s feedback. However, many studies have found that BDI-II scores decrease simply due to repeated administration. Our study aims to test whether the hypothesis of Stepankova Georgi et al. (2019) is plausible, using a sample of young adults without cognitive impairment. We administered the Czech version of BDI-II in both questionnaire and interview form to a convenience sample of 125 young adults (Mage = 22.3, 60% women) and performed a Wilcoxon signed-rank test to compare mean scores within subjects. We show that both administration forms yield similar mean scores. The results imply that BDI-II can be administered to young adults without cognitive impairment in either form without the risk of substantial bias, although the interview form can still help prevent some respondents from misunderstanding the instructions.

BDI-II: Self-Report and Interview-based Administration Yield the Same Results in Young Adults

The Beck Depression Inventory-II (BDI-II; Beck et al., 1996) is one of the most commonly used instruments for depression assessment in research and clinical practice. BDI-II contains 21 items assessing various depressive symptoms (e.g., anhedonia, suicidal ideation) and can be administered as a questionnaire or as an interview. In the interview form, the administrator reads the questions and possible responses aloud and lets the respondent choose the most appropriate answer. The interview form is presumably intended to accommodate respondents with impaired cognitive or reading abilities and to prevent respondents from misunderstanding the meaning of the questions. The interview form can reasonably be expected to be used more frequently in clinical or therapeutic settings, whereas the questionnaire form is likely to be preferred in research.

Stepankova Georgi et al. (2019) found a large decrease in BDI-II scores (Cohen’s dz = 1.2, approximately 3.5 fewer points in sum scores, indicating lower intensity or fewer depressive symptoms) in older people during an immediately repeated interview-form administration, compared with the first administration via a paper-based questionnaire. Stepankova Georgi et al. attributed the decrease to the interviewer clarifying the instructions when participants expressed confusion (e.g., about the meaning of certain words or the time frame of the question). The authors reported that during the first administration, the elderly participants tended to compare how they had felt during the last two weeks, the period specified in the BDI-II instructions, with how they had felt in their youth (e.g., reporting a loss of energy with ageing instead of a loss of energy in the last two weeks), which might have substantially inflated the number and severity of reported symptoms. Additionally, some participants found the wording (especially expressions such as ‘as ever,’ ‘than usual,’ and ‘used to be’; p. 739) ambiguous and unclear. The authors hypothesized that the immediate second administration in interview form allowed participants to clarify the instructions and wording with the interviewer. The interviewer’s explanations could have helped participants focus on the intended time frame and clarified potentially ambiguous formulations, preventing the inflation of BDI-II scores and producing the reported difference between the questionnaire and interview forms of administration.
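
As a rough check on what the two reported figures imply together, recall that Cohen’s dz is the mean of the within-subject differences divided by their standard deviation. Treating both values as approximate, the implied standard deviation of the differences is about 2.9 points; this is our back-of-the-envelope calculation, not a value reported by Stepankova Georgi et al. (2019):

```latex
d_z = \frac{\bar{d}}{s_d}
\quad\Longrightarrow\quad
s_d = \frac{\bar{d}}{d_z} \approx \frac{3.5}{1.2} \approx 2.9 \text{ points}
```

Here \bar{d} is the mean decrease in sum scores from the first to the second administration and s_d is the standard deviation of those individual decreases.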

The explanation of Stepankova Georgi et al. (2019) seems reasonable. It is in line with research showing that older adults can provide unreliable answers to questions concerning clinical information, such as depressive symptoms, when self-reporting through a questionnaire (Hébert et al., 1996). Hauer et al. (2010) compared interview and self-report data from a clinical measure designed for use with older people and found the interview form superior to the questionnaire form in many regards, including test-retest reliability. However, attributing a causal effect is not warranted without counterbalanced conditions: all participants of Stepankova Georgi et al. were first administered the questionnaire and then the interview, and this fixed order could have affected the results. Related to this limitation, we argue that the effect of repeated measurement may also explain the difference in scores found by Stepankova Georgi et al. A number of studies have observed that BDI-II scores tend to decrease when the instrument is administered repeatedly (Choquette & Hesselbrock, 1987; Longwell & Truax, 2005; Sharpe & Gilbert, 1998). This effect has practical implications because people are often asked to fill out BDI-II multiple times to monitor their progress in therapy (Learmonth et al., 2008) or as a follow-up measure after completed treatment (Lemmens et al., 2019). Repeated measurement is also necessary for longitudinal studies of depression (Tibbs et al., 2020) and its individual dynamics (Bringmann et al., 2015). Moreover, Fried et al. (2016) found that scores on depression measures other than BDI-II also tend to decrease when administered repeatedly. Therefore, the decrease in BDI-II scores in the study of Stepankova Georgi et al. (2019) could also be attributed to the effect of repeated administration rather than to instruction or question clarification during the interview that followed the questionnaire. However, to the best of our knowledge, no study to date has examined whether immediate repeated measurement also leads to a drop in depression scores or whether the drop occurs only after a notable delay.

In this study, we aim to test which of the two effects more plausibly explains the results of Stepankova Georgi et al. (2019), using a sample of young adults. If the repeated-administration effect also occurs during immediate repeated administration, it should manifest as lower BDI-II scores in the second administration across different contexts and populations. We would then observe significantly lower scores in the second administration regardless of the administration order; whether the interview form came first or second would not matter. The competing explanation by Stepankova Georgi et al. (2019) assumes that the decrease in observed scores occurs when an older adult has a biased understanding of the questionnaire instructions or questions, and that this misunderstanding is then corrected through the interviewer’s feedback during the subsequent interview. This explanation would be plausible under either of two conditions. First, we might observe no consistent difference in the BDI-II scores of young adults, regardless of the administration form, because the effect described by Stepankova Georgi et al. (2019) should apply mostly to older adults or to people with impaired cognitive or linguistic abilities. Second, we might observe significantly lower BDI-II scores in young adults, but only when the interview is administered after the questionnaire. Essentially, this would replicate the score differences observed by Stepankova Georgi et al. (2019), suggesting that their proposed clarification effect is stronger than expected because it affects responding even in younger populations.
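
The two competing accounts make different predictions about how the second administration part compares with the first in each order group. The hypothetical helper below is our illustration, not part of the preregistered analysis: it encodes those predictions as stated above, and the outcome labels are assumed to stand in for the result of a significance test within each order group.

```python
from typing import List, Literal

# Direction of the second part's BDI-II score relative to the first part,
# as judged by a significance test within one order group.
Outcome = Literal["lower", "no_difference", "higher"]


def consistent_accounts(questionnaire_first: Outcome, interview_first: Outcome) -> List[str]:
    """Return the explanations whose predictions match the observed outcomes.

    questionnaire_first: second part (interview) vs. first part (questionnaire)
    interview_first: second part (questionnaire) vs. first part (interview)
    """
    accounts = []
    # Repeated-administration account: the second part is lower regardless of order.
    if questionnaire_first == "lower" and interview_first == "lower":
        accounts.append("repeated administration")
    # Clarification account, first condition: no consistent difference in young adults.
    if questionnaire_first == "no_difference" and interview_first == "no_difference":
        accounts.append("clarification: no effect in young adults")
    # Clarification account, second condition: lower only when the interview comes second.
    if questionnaire_first == "lower" and interview_first == "no_difference":
        accounts.append("clarification: effect extends to young adults")
    return accounts


print(consistent_accounts("lower", "lower"))  # ['repeated administration']
```

A pattern that returns an empty list, such as a higher score in the second part, is predicted by neither account.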

Method

We report how we determined our sample size, all data exclusions (if any), all data inclusion/exclusion criteria, whether inclusion/exclusion criteria were established prior to data analysis, all measures in the study, and all analyses, including all tested models. If we use inferential tests, we report exact p-values, effect sizes, and 95% confidence or credible intervals (Simmons et al., 2012). This study was preregistered. The analytic script, data, and study materials are available at https://osf.io/z4cb2/.

Given the serious and intimate nature of certain questions (e.g., on suicidal ideation), once the administration was completed, we conducted a semi-structured debriefing with each participant and addressed any concerns about their BDI-II results or mental health in general. Also, regardless of their results, we provided participants with an information leaflet on mental health support and information on a crisis helpline and a local crisis intervention centre and encouraged them to use the contacts should they feel mentally unwell.

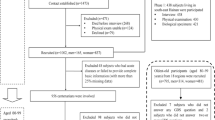

Participants

Recruitment

Participants needed to be 20–30 years old, have no personal history of mental disorders, and not be psychology students or graduates. We recruited a convenience sample of 137 participants in the hallways of the faculty building. The administrators approached potential participants, and those willing to take part signed a digital informed consent form (see Supplementary materials). We excluded one participant who answered only three of the 21 items and 11 cases with no data due to an administration error. To estimate the required sample size, we conducted a power analysis using G*Power 3.1 (Erdfelder et al., 1996). To detect an effect of Cohen’s dz = 1.2, comparable to that found by Stepankova Georgi et al. (2019), with α = 0.05 and β = 0.10 (i.e., power of .90), we would need at least 10 participants. However, we designed our study to be able to detect a much smaller effect. Based on a power analysis for a two-tailed Wilcoxon signed-rank test, we aimed to collect 125 participants to achieve α = 0.05 and β = 0.10, given a smallest effect of interest of Cohen’s dz = 0.3. We terminated data collection as soon as we had gathered 125 valid cases.
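
The sample sizes above can be approximated without G*Power. The sketch below is an assumption-laden approximation, not G*Power’s exact routine: it applies Guenther’s normal-theory formula for a two-tailed paired t-test and then inflates the result by the asymptotic relative efficiency (ARE) of the Wilcoxon signed-rank test under a normal parent distribution, 3/π ≈ 0.955. Under these assumptions it reproduces the figures reported here.

```python
import math
from statistics import NormalDist


def wilcoxon_signed_rank_n(dz: float, alpha: float = 0.05, power: float = 0.90) -> int:
    """Approximate N for a two-tailed Wilcoxon signed-rank test on paired data.

    Assumptions (not G*Power's exact algorithm):
    - Guenther's approximation for the paired (one-sample) t-test sample size;
    - an ARE of 3/pi, which holds when the differences are normally distributed.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-tailed critical value
    z_beta = std_normal.inv_cdf(power)  # quantile for the desired power (1 - beta)
    # Paired t-test sample size with Guenther's small-sample correction
    n_t = ((z_alpha + z_beta) / dz) ** 2 + z_alpha ** 2 / 2
    # The rank test needs more cases than the t-test for the same power
    are = 3 / math.pi
    return math.ceil(n_t / are)


for effect in (1.2, 0.3):
    print(effect, wilcoxon_signed_rank_n(effect))
# 1.2 10
# 0.3 125
```

With a less favourable parent distribution (a smaller ARE), the required N would grow, so treat these numbers as a lower bound under normality.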

Sample Characteristics

Of the remaining 125 participants, 75 (60%) were women, and one did not report their gender. The participants were 22.3 years old on average (SD = 2, min = 20, max = 28), and all were Caucasians of Czech or Slovak cultural background. Participants were almost exclusively undergraduate or graduate students. We did not collect data on income or socioeconomic status to reduce the time burden on participants and because these variables are not focal to our research question and are unlikely to interfere with the observed effects.

Measures

The Beck Depression Inventory-II (BDI-II) consists of 21 items measuring the severity of various depressive symptoms. Each item is answered by choosing, from a series of graded statements, the one that best describes the respondent’s situation; the statements are scored by symptom severity from zero (no depressive symptoms) to three (severe depressive symptoms). Our study used the Czech version of BDI-II by Preiss and Vacíř (1999). Internal consistency was good in this study (Cronbach’s α = 0.85, McDonald’s ωt = 0.85, RMSEA = 0.067), consistent with previous studies showing that the Czech version of BDI-II has good internal consistency (Ociskova et al., 2017) and acceptable test-retest reliability (Ptáček et al., 2016).
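
To illustrate the scoring rule and the internal-consistency coefficient mentioned above, the sketch below computes a respondent’s sum and mean item score and Cronbach’s α for a complete respondents × items matrix. The helpers are hypothetical and written for this example only; they are not the R code used in the study (presumably relying on the psych package), and they assume complete data, so they do not handle the planned missingness of this study’s design.

```python
from statistics import variance
from typing import List, Sequence, Tuple

VALID_SCORES = (0, 1, 2, 3)  # each BDI-II statement is scored 0 (none) to 3 (severe)


def sum_and_mean(responses: Sequence[int]) -> Tuple[int, float]:
    """Sum score and unweighted mean item score for one respondent."""
    if not responses or any(r not in VALID_SCORES for r in responses):
        raise ValueError("expected at least one item score, each an integer from 0 to 3")
    total = sum(responses)
    return total, total / len(responses)


def cronbach_alpha(data: List[List[int]]) -> float:
    """Cronbach's alpha for a complete matrix (rows = respondents, columns = items)."""
    k = len(data[0])  # number of items
    columns = list(zip(*data))  # transpose: one tuple of scores per item
    item_var_sum = sum(variance(col) for col in columns)  # sample item variances
    total_var = variance([sum(row) for row in data])  # sample variance of sum scores
    return k / (k - 1) * (1 - item_var_sum / total_var)


print(sum_and_mean([0, 1, 2, 3]))  # (6, 1.5)
print(cronbach_alpha([[0, 1], [1, 0], [2, 3], [3, 2]]))  # approximately 0.75
```

Because the mean item score divides by the number of items answered, it stays comparable between parts of 10 and 11 items, which is why mean rather than sum scores suit a split administration.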

Procedure

We administered BDI-II to each participant face-to-face and individually. To control for score differences caused by repeated measurement (see the Introduction), we administered each of the 21 items to each respondent only once. We grouped the items into two consecutive administration parts (questionnaire and interview). Each part consisted of 10 or 11 items, randomly selected separately for each participant (using the Qualtrics survey randomization), so that every participant was ultimately administered all BDI-II items, but each item only once. For each participant, it was randomly determined whether they would start with the questionnaire or the interview. The informed consent form and the questionnaire were presented and completed on a tablet. Participants who started with the questionnaire part handed the tablet to the administrator immediately after answering all items in that part, and the administrator then conducted the interview part. During this part, participants could also ask for clarification of any ambiguities. Unlike in Stepankova Georgi et al. (2019), the administrator recorded the responses in the interview part (see Notes) once the respondent indicated their preferred statement. Participants who started with the interview part followed exactly the same procedure; the only difference was the order of the administration forms.
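
The planned-missingness allocation described above can be sketched as follows. This is a hypothetical re-implementation for illustration only; the study used the Qualtrics randomization, whose internals we do not reproduce. For each participant, the 21 items are shuffled and split into parts of 10 and 11 items, and the order of the two forms is drawn at random.

```python
import random
from typing import Dict

N_ITEMS = 21  # BDI-II has 21 items


def allocate(rng: random.Random) -> Dict[str, object]:
    """Randomly split the 21 items between the two forms and randomize form order."""
    items = list(range(1, N_ITEMS + 1))
    rng.shuffle(items)  # a fresh random split for each participant
    cut = rng.choice([10, 11])  # one part gets 10 items, the other 11
    # Item numbers are sorted here only for readability of the printed plan
    questionnaire, interview = sorted(items[:cut]), sorted(items[cut:])
    order = rng.choice([("questionnaire", "interview"), ("interview", "questionnaire")])
    return {"questionnaire": questionnaire, "interview": interview, "order": order}


rng = random.Random(2024)  # arbitrary seed, for reproducibility of this sketch only
plan = allocate(rng)
print(plan["order"], len(plan["questionnaire"]), len(plan["interview"]))
```

Every participant thus answers each item exactly once, so no item is repeated across the two forms.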

Statistical Methods

As in Stepankova Georgi et al. (2019), we used the Wilcoxon signed-rank test to detect the administration form effect. Within each participant, we compared the mean BDI-II item score of the interview part to the mean item score of the questionnaire part. We set the significance level to α = .05. Analyses were conducted in R v. 4.3.0 (R Core Team, 2023) using the following packages: effectsize, foreign, ggplot2, ggpubr, psych, and tidyverse (Ben-Shachar et al., 2020; Kassambara, 2023; R Core Team, 2022; Revelle, 2023; Wickham, 2016; Wickham et al., 2019).
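
To make the test statistic concrete: for paired data, R’s wilcox.test reports V, the sum of the ranks of the positive within-subject differences after zero differences are dropped and tied absolute differences receive average ranks. The stdlib-only sketch below computes V and Cohen’s dz from per-participant mean scores. It is an illustration, not the study’s R script; it assumes differences are taken as interview minus questionnaire, and it omits the p-value, which R computes exactly only for small samples without ties or zeros and otherwise by a normal approximation with continuity correction.

```python
from statistics import mean, stdev
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda idx: values[idx])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        tied_rank = (start + end) / 2 + 1  # mean of ranks start+1 .. end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = tied_rank
        start = end + 1
    return ranks


def paired_differences(interview: Sequence[float], questionnaire: Sequence[float]) -> List[float]:
    # Rounding guards against spurious non-ties from floating-point subtraction
    return [round(i - q, 10) for i, q in zip(interview, questionnaire)]


def signed_rank_v(interview: Sequence[float], questionnaire: Sequence[float]) -> float:
    """V statistic: sum of ranks of the positive (interview - questionnaire) differences."""
    diffs = [d for d in paired_differences(interview, questionnaire) if d != 0]  # drop zeros
    ranks = average_ranks([abs(d) for d in diffs])
    return sum(r for r, d in zip(ranks, diffs) if d > 0)


def cohens_dz(interview: Sequence[float], questionnaire: Sequence[float]) -> float:
    """Mean within-subject difference divided by the sample SD of the differences."""
    diffs = paired_differences(interview, questionnaire)
    return mean(diffs) / stdev(diffs)


print(signed_rank_v([1, 1, 3, 4, 2], [0, 3, 0, 0, 2]))  # 8.0
print(cohens_dz([1, 2, 3], [0, 0, 0]))  # 2.0
```

A V above n(n + 1)/4 (for n non-zero differences) indicates that positive differences, here higher interview scores, dominate.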

Results

Figure 1 shows the distribution of the within-subject differences in mean scores (n = 125) between the two administration forms, regardless of the administration order. In the interview form of administration, the mean score was 0.68 (SE = 0.04), while in the questionnaire form, it was 0.64 (SE = 0.30). Overall, the Wilcoxon signed-rank test showed no significant difference between the mean scores of the two administration forms, V = 4312, p = .208, Cohen’s dz = 0.15, 95% CI [-0.03, 0.33].

Figure 2 expands on this comparison by considering the administration order. The group with the same administration order as Stepankova Georgi et al. (2019), i.e., interview after questionnaire (left graph in Fig. 2), scored slightly higher in the interview, n = 64, mean difference (interview minus questionnaire) M = 0.09, SE = 0.03, V = 1335, p = .026, Cohen’s dz = 0.35, 95% CI [0.09, 0.60]. In the group that completed the interview before the questionnaire, we found no significant difference between the scores from the two modes of administration, n = 61, M = -0.01, SE = 0.04, V = 846, p = .614, Cohen’s dz = -0.04, 95% CI [-0.29, 0.22].
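
The confidence intervals for Cohen’s dz can be approximately reproduced from n and dz alone. The sketch below assumes a common large-sample normal approximation for the standard error of dz, SE ≈ √(1/n + dz²/(2n)); the effectsize package (Ben-Shachar et al., 2020) used in the analysis may compute intervals differently (e.g., from the noncentral t distribution), and the reported dz values are themselves rounded, so the second decimal can differ.

```python
import math
from statistics import NormalDist
from typing import Tuple


def dz_confidence_interval(dz: float, n: int, level: float = 0.95) -> Tuple[float, float]:
    """Approximate CI for Cohen's dz using a large-sample normal approximation."""
    se = math.sqrt(1 / n + dz ** 2 / (2 * n))  # approximate standard error of dz
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 1.96 for a 95% interval
    return dz - z * se, dz + z * se


for dz, n in [(0.15, 125), (0.35, 64), (-0.04, 61)]:
    low, high = dz_confidence_interval(dz, n)
    print(f"dz = {dz:.2f}, n = {n}: [{low:.2f}, {high:.2f}]")
```

This prints [-0.03, 0.33], [0.10, 0.60], and [-0.29, 0.21]: the pooled interval matches the reported one, and the two order-specific intervals lie within 0.01 of the reported bounds.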

Discussion

In this study, we assessed whether the effect of instruction clarification suggested by Stepankova Georgi et al. (2019) is plausible by administering BDI-II in different forms to young adults. Overall, we found no differences in mean scores based on the form of administration. This is in line with Stepankova Georgi et al. (2019), who presume that the interview administration should prevent scores from being inflated by misunderstandings, which mostly affect older adults or people with impaired cognitive or language abilities who have trouble understanding the instructions in the first place. From this point of view, our results support Stepankova Georgi et al. (2019) because we found no such effect in healthy young adults, who are much less likely to experience such misunderstandings.

However, we found an unexpected small increase in the interview-form scores in the group interviewed after the questionnaire. This effect is surprising because its direction is opposite to what we would expect based on Stepankova Georgi et al. (2019), who argued that the interview form administered after the questionnaire form has the potential to yield lower scores due to instruction clarification. Nevertheless, we think our results support their notion that the interview administration of BDI-II helps prevent response bias due to misunderstanding. We found a slight difference in scores only when the interview was administered second; the group that had the interview first scored nearly the same in the subsequent questionnaire. This suggests that the interview itself might have helped to clear up some misunderstandings for this group from the very beginning. As a result, their mean scores were comparable across both administration forms, unlike in the group that completed the interview after the questionnaire.

Overall, our results indirectly support the hypothesis of Stepankova Georgi et al. (2019) that the interview administration of BDI-II has the potential to yield less biased scores, whether the bias would inflate or reduce them, compared with the questionnaire form. However, our study shows that when BDI-II is administered to participants who have little to no difficulty understanding and following instructions, this potential is limited. Another important finding of our study is that, for practical and research purposes, the choice of BDI-II form should not matter when interpreting the sum or mean scores of young adults, as long as all items are administered the same way. The choice between the questionnaire and the interview form may matter only in certain populations, such as older or cognitively impaired people, or when there is a general concern that participants may not understand the instructions properly. In such cases, the interview form should be preferred over the questionnaire.

Limitations

Due to our planned-missingness design, we did not test measurement invariance across administration forms at the item level. A measurement invariance assessment could have provided additional information on the functioning of individual items. On the other hand, our focus on mean scores reflects the current clinical practice of using the sum score of BDI-II items or their unweighted average. Also, similar to Stepankova Georgi et al. (2019), we worked with a sample without cognitive impairment. It is, therefore, problematic to generalize our findings to clinical samples, in which BDI-II is often administered.

Conclusion

We agree with Stepankova Georgi et al. (2019) that it is advisable to administer BDI-II to older adults in the interview form, especially when the respondents are at risk of misunderstanding the instructions or the items’ content. However, based on our results, practitioners and researchers need not worry about biased results due to the administration form in young adults unless there are other reasons to expect that the instructions may be unclear to them. The questionnaire form, which is also more time-efficient, should perform adequately in young adults.

Data and Material Availability

This study was preregistered. The analytic script, data, and study materials are available at https://osf.io/z4cb2/.

Notes

In Stepankova Georgi et al. (2019), the administrator read the items out loud and clarified the ambiguities, but the participants noted down their answers themselves.

References

Beck, A. T., Steer, R. A., & Brown, G. K. (1996). Beck Depression Inventory-II [Database record]. APA PsycTests. https://doi.org/10.1037/t00742-000

Ben-Shachar, M. S., Lüdecke, D., & Makowski, D. (2020). effectsize: Estimation of effect size indices and standardized parameters. Journal of Open Source Software, 5(56), 2815. https://doi.org/10.21105/joss.02815

Bringmann, L. F., Lemmens, L. H., Huibers, M. J., Borsboom, D., & Tuerlinckx, F. (2015). Revealing the dynamic network structure of the Beck Depression Inventory-II. Psychological Medicine, 45(4), 747–757. https://doi.org/10.1017/S0033291714001809

Choquette, K. A., & Hesselbrock, M. N. (1987). Effects of retesting with the Beck and Zung depression scales in alcoholics. Alcohol and Alcoholism, 22(3), 277–283. https://doi.org/10.1093/oxfordjournals.alcalc.a044709

R Core Team. (2023). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

R Core Team. (2022). foreign: Read data stored by ‘Minitab’, ‘S’, ‘SAS’, ‘SPSS’, ‘Stata’, ‘Systat’, ‘Weka’, ‘dBase’, … [R package version 0.8-84]. https://CRAN.R-project.org/package=foreign

Erdfelder, E., Faul, F., & Buchner, A. (1996). GPOWER: A general power analysis program. Behavior Research Methods, Instruments, & Computers, 28, 1–11. https://doi.org/10.3758/BF03203630

Fried, E. I., van Borkulo, C. D., Epskamp, S., Schoevers, R. A., Tuerlinckx, F., & Borsboom, D. (2016). Measuring depression over time… or not? Lack of unidimensionality and longitudinal measurement invariance in four common rating scales of depression. Psychological Assessment, 28(11), 1354. https://doi.org/10.1037/pas0000275

Hauer, K., Yardley, L., Beyer, N., Kempen, G. I. J. M., Dias, N., Campbell, M., & Todd, C. (2010). Validation of the Falls Efficacy Scale and Falls Efficacy Scale International in geriatric patients with and without cognitive impairment: Results of self-report and interview-based questionnaires. Gerontology, 56(2), 190–199. https://doi.org/10.1159/000236027

Hébert, R., Bravo, G., Korner-Bitensky, N., & Voyer, L. (1996). Refusal and information bias associated with postal questionnaires and face-to-face interviews in very elderly subjects. Journal of Clinical Epidemiology, 49(3), 373–381. https://doi.org/10.1016/0895-4356(95)00527-7

Kassambara, A. (2023). ggpubr: ‘ggplot2’ based publication ready plots [R package version 0.6.0]. https://CRAN.R-project.org/package=ggpubr

Learmonth, D., Trosh, J., Rai, S., Sewell, J., & Cavanagh, K. (2008). The role of computer-aided psychotherapy within an NHS CBT specialist service. Counselling and Psychotherapy Research, 8(2), 117–123. https://doi.org/10.1080/14733140801976290

Lemmens, L. H., Van Bronswijk, S. C., Peeters, F., Arntz, A., Hollon, S. D., & Huibers, M. J. (2019). Long-term outcomes of acute treatment with cognitive therapy v. interpersonal psychotherapy for adult depression: Follow-up of a randomized controlled trial. Psychological Medicine, 49(3), 465–473. https://doi.org/10.1017/S0033291718001083

Longwell, B. T., & Truax, P. (2005). The differential effects of weekly, monthly, and bimonthly administrations of the Beck Depression Inventory-II: Psychometric properties and clinical implications. Behavior Therapy, 36(3), 265–275. https://doi.org/10.1016/S0005-7894(05)80075-9

Ociskova, M., Prasko, J., Kupka, M., Marackova, M., Latalova, K., Cinculova, A., & Vrbova, K. (2017). Psychometric evaluation of the Czech Beck Depression Inventory-II in a sample of depressed patients and healthy controls. Neuroendocrinology Letters, 38(2), 98–106.

Preiss, M., & Vacíř, K. (1999). BDI-II. Beckova sebeposuzovací škála pro dospělé. Brno: Psychodiagnostika [Manual].

Ptáček, R., Raboch, J., Vňuková, M., Hlinka, J., & Anders, M. (2016). Beckova škála deprese BDI-II – standardizace a využití v praxi [Beck depression scale BDI-II – standardization and application in practice]. Česká a Slovenská Psychiatrie, 112(6), 270–274.

Revelle, W. (2023). psych: Procedures for psychological, psychometric, and personality research [R package version 2.3.3]. https://CRAN.R-project.org/package=psych

Sharpe, J. P., & Gilbert, D. G. (1998). Effects of repeated administration of the Beck Depression Inventory and other measures of negative mood states. Personality and Individual Differences, 24(4), 457–463. https://doi.org/10.1016/S0191-8869(97)00193-1

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2012). A 21 word solution. SSRN. https://doi.org/10.2139/ssrn.2160588

Stepankova Georgi, H., Vlckova, K., Lukavsky, J., Kopecek, M., & Bares, M. (2019). Beck Depression Inventory-II: Self-report or interview-based administrations show different results in older persons. International Psychogeriatrics, 31(5), 735–742. https://doi.org/10.1017/S1041610218001187

Tibbs, M. D., Huynh-Le, M. P., Reyes, A., Macari, A. C., Karunamuni, R., Tringale, K., Burkeen, J., Marshall, D., Xu, R., McDonald, C. R., et al. (2020). Longitudinal analysis of depression and anxiety symptoms as independent predictors of neurocognitive function in primary brain tumor patients. International Journal of Radiation Oncology - Biology - Physics, 108(5), 1229–1239. https://doi.org/10.1016/j.ijrobp.2020.07.002

Wickham, H. (2016). ggplot2: Elegant graphics for data analysis. Springer. https://ggplot2.tidyverse.org

Wickham, H., Averick, M., Bryan, J., Chang, W., McGowan, L. D., François, R., Grolemund, G., Hayes, A., Henry, L., Hester, J., Kuhn, M., Pedersen, T. L., Miller, E., Bache, S. M., Müller, K., Ooms, J., Robinson, D., Seidel, D. P., Spinu, V., & Yutani, H. (2019). Welcome to the tidyverse. Journal of Open Source Software, 4(43), 1686. https://doi.org/10.21105/joss.01686

Funding

The study and article preparation were not supported by any specific funding.

Open Access funding provided by the IReL Consortium

Author information

Contributions

All authors contributed to the study conception, design, data collection, and material and manuscript preparation. Analysis was performed by Jaroslav Gottfried.

Ethics declarations

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Ethics Approval

Since we did not collect any personal data that would allow for the identification of participants and no invasive, dangerous, or distressing procedures were involved, the study was exempt from institutional ethical approval.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gottfried, J., Chvojka, E., Klocek, A. et al. BDI-II: Self-Report and Interview-based Administration Yield the Same Results in Young Adults. J Psychopathol Behav Assess (2024). https://doi.org/10.1007/s10862-024-10154-z

DOI: https://doi.org/10.1007/s10862-024-10154-z