Abstract

This study uses structural equation modeling to investigate the relationships between pre-service teachers’ (PSTs’) course-taking history, beliefs about mathematics, beliefs about students’ mathematical ability, and opinions about (1) how student errors should be addressed when they occur and (2) how much emphasis should be given to various forms of assessment. The results indicate that some types of courses are strongly associated with beliefs about the nature of mathematics. Specifically, PSTs who have taken more mathematics courses are more likely to see mathematics as a set of rules. PSTs’ views about mathematics strongly predict their beliefs about who can do high-level mathematics, how they think student errors should be addressed, and which forms of assessment they think are most appropriate. Implications for teacher preparation programs are discussed.

Teachers’ beliefs and knowledge about their subject matter influence their classroom practices (Cross, 2009; Fives & Buehl, 2012; Pajares, 1992; White et al., 2005), which directly affect student learning opportunities and outcomes (see Minarni et al., 2018). Although mathematics researchers, teacher educators, and professional organizations have long stressed the use of student-centered techniques (e.g., Biggs, 1988; Boekaerts, 1997; Education Committee of the EMS, 2012, 2013a, 2013b; NCTM, 2000; Niemi, 2002; Vygotsky, 1978), teachers often revert to more teacher-centered methods, such as lecturing (Boaler & Selling, 2017; Charalambous, 2015; Davis et al., 2020; Lee & Huh, 2014; Noyes, 2012). This is likely because teachers need both the capacity and the desire to use reform-based instruction (Wellberg and Evans, 2022; McLaughlin, 1990), which includes content and pedagogical knowledge (Hiebert et al., 2019; Shulman, 1986) and a belief that their students can fully participate in, and benefit from, more collaborative and cognitively demanding work (Abrami et al., 2004; Cross, 2009; Sztajn, 2003).

While there is quantitative research on the relationships between mathematics teachers’ knowledge, beliefs about mathematics, and instructional practices (e.g., Campbell et al., 2014; Corkin et al., 2015; Lui & Bonner, 2016; Wilkins, 2008; Yang et al., 2020; Yates, 2006), the studies that have addressed the connections between teachers’ views of mathematics, their beliefs about students’ abilities to deeply engage with mathematical content, and their classroom assessment practices have been largely qualitative in nature (e.g., Abrami et al., 2004; Bray, 2011; Charalambous, 2015; Cimer & Cimer, 2010; Cross, 2009; Schulz & FitzPatrick, 2016; Tallman et al., 2016; Thompson, 1984). The extant qualitative work provides mathematics education researchers with valuable insights into how various aspects of teachers’ belief systems and classroom practices relate to one another. The aim of the present study is to complement and to extend these findings. Much of the extant work in this area was conducted using relatively small and localized samples of teachers. By using a broader, international sample, I hoped to determine whether the findings of these qualitative studies, which have been quite consistent with one another, are generalizable to the larger population of teachers. The studies that are currently available also typically focus on specific relationships between various combinations of teachers’ knowledge, beliefs, and classroom practices, and one aim of the present study is to explore how all these factors work together as a system.

In order to accomplish these goals, I used structural equation modeling (SEM) with data from the Mathematics Teaching in the 21st Century (MT21) survey (Schmidt, 2013) to investigate the following research questions:

1. How do the courses that pre-service teachers (PSTs) have taken relate to their beliefs about mathematics and about which students can do high-level mathematics?
2. How do PSTs’ beliefs about both mathematics and student ability relate to their opinions about how student errors should be addressed?
3. How do those beliefs relate to the amount of emphasis PSTs think is appropriate to give to various forms of classroom assessment?

The MT21 survey was an international pilot for the Teacher Education and Development Study in Mathematics (TEDS-M) survey (Tatto et al., 2008). While others have used data from the MT21 and TEDS-M surveys to investigate the relationships between PSTs’ content knowledge and beliefs about mathematics (e.g., Burroughs & Schmidt, 2014; Yang et al., 2020), none, to my knowledge, have used SEM to investigate how these constructs relate to beliefs about student ability, how student errors should be addressed, or assessment preferences. The benefit of using SEM for this research is that it combines measurement and regression models, such that a set of related survey items is considered to represent a latent (unobserved) variable (LV), which can then predict, and/or be predicted by, other variables in the regression model. SEM takes measurement error into account and produces model fit statistics and standard errors to help the researcher determine how well the hypothesized model fits the data. Additionally, when determining a person’s “score” on an LV, SEM scales item responses according to how strongly each item represents the LV, which helps produce results that are more accurate than those obtained using simpler methods. These aspects of SEM allowed me to use a combination of observed and latent variables to explore the relationships of interest in a statistically robust way.

Theoretical framework

If students are to be proficient in mathematics, they need to develop procedural fluency, which allows them to quickly perform the computations needed to correctly complete the problems with which they are presented (Kilpatrick et al., 2001; NCTM, 2000). However, developing students’ procedural fluency should not be the sole focus of mathematics classes, as students should ideally also develop other skills, such as adaptive reasoning, strategic competence, conceptual understanding, and a productive disposition toward mathematics (Kilpatrick et al., 2001). It seems to be much more difficult for students to develop these essential skills in teacher-centered classes, as students in such environments typically take a more passive role in the learning process, which often limits their opportunities to engage in the types of productive struggle that help facilitate the development of these skills and understandings (Stein & Lane, 1996; Stein et al., 1996; Tekkumru-Kisa & Stein, 2015; Tekkumru-Kisa et al., 2020). Even though mathematics education researchers have been promoting student-centered instruction with a focus on developing conceptual understanding and the strategic application of vital mathematical practices for decades (e.g., Boaler, 1998; Hiebert & Grouws, 2007; Yackel & Cobb, 1996), a teacher-centered approach to instruction is still prevalent in mathematics classrooms, particularly at the secondary level (Boaler & Selling, 2017; Davis et al., 2020; Lee & Huh, 2014; Noyes, 2012).

The present study is premised on the idea that student-centered instruction, in which students are active participants in their learning, is preferable to a teacher-centered approach where students are passive recipients of information. This view has substantial implications for the classroom culture both in terms of how student errors are addressed when they inevitably occur and for how students’ knowledge is assessed. For teachers to take up student-centered practices, they need to know how to implement them and to believe that their students will benefit from such an approach (Wellberg and Evans, 2022; McLaughlin, 1990).

Student-centered pedagogy

Education researchers have long emphasized the importance of actively engaging students during instruction (e.g., Biggs, 1988; Boekaerts, 1997; Niemi, 2002; Vygotsky, 1978), as this approach helps students retain information (Kvam, 2000; McCarthy & Anderson, 2000), build confidence (Townsend et al., 1998), and develop critical thinking skills (Kim et al., 2013). In these classes, students learn by doing rather than by watching. While traditional, teacher-centered instruction does have a place in modern classrooms, it should ideally not be the primary mode of instruction (NCTM, 2000), as students in teacher-centered classrooms are more prone to seeing mathematics as a fixed set of formulas and algorithms to be memorized and regurgitated (Franke et al., 2007). By focusing instead on helping students build their problem-solving and explanation skills, student-centered instruction can help facilitate views of mathematics as a set of interconnected processes and tools for solving novel problems (Franke et al., 2007).

Students who are actively involved in the learning process will necessarily make mistakes as they grapple with new concepts (McMillan & Moore, 2020). The ways that teachers address errors in the classroom can impact how students view both the subject and themselves as learners (Ball & Bass, 2000; Cowie, 2005; Franke et al., 2007; Gardee & Brodie, 2022; Steuer et al., 2013; Tulis, 2013). Similarly, the forms of assessment that a teacher uses can influence which aspects of mathematics their students believe to be most important and worthy of their time and effort (Crooks, 1988; Fukuzawa & DeBraga, 2019; Tallman et al., 2016). These two aspects of classroom practice are the focus of the present study.

Classroom discussions and student errors

One key component of student-centered pedagogy is learning through discussion (Carpenter et al., 1989; Cobb et al., 1997; Delaney, 1991; Helme & Clarke, 2001; Leikin & Zaslavsky, 1997; McKeachie & Kulik, 1975; Wade, 1994). These discussions may serve as instances of formative assessment, as they can expose inconsistencies or gaps in students’ reasoning, which teachers can then use to guide future instruction (Gardee & Brodie, 2022; Kazemi & Stipek, 2001; Lampert, 2001; Leinhardt & Steele, 2005). Borasi (1994) described student errors as “springboards for inquiry”, as they can create space for students to discuss mathematical ideas and to examine their own and others’ thinking. Although making mistakes is an essential part of learning (Dweck, 2006; McMillan, 2017; McMillan & Moore, 2020), errors are often treated as shameful and something to avoid (Gardee & Brodie, 2022; Ingram et al., 2015). This stigma can have differential impacts on students, as the social consequences of making a mistake tend to be harsher for those in marginalized groups (Lubienski, 2002). Therefore, if classroom conversations are to be engaging and useful, all students need to feel safe sharing their ideas, which requires a great deal of effort from the teacher in establishing a productive set of norms where students are allowed to make mistakes (McMillan & Moore, 2020; Walshaw & Anthony, 2008; Yackel et al., 1991). A large part of establishing these norms is how the teacher responds to incorrect or partially correct answers (Gardee & Brodie, 2022; Steuer et al., 2013).

Students are not always entirely correct or incorrect when discussing mathematical concepts. Rather, students’ statements are often somewhere along a continuum of accuracy, in which some parts of their reasoning are mathematically correct and other parts are not (Rougee, 2017). If a teacher ignores these responses, the student may not identify which aspects of their thinking need further consideration (Beswick, 2007; Kaufmann et al., 2022); however, if a teacher treats partially correct statements as entirely wrong and in need of total correction, they discount the understandings that the student has demonstrated and deny the student the opportunity to reflect on and to realign aspects of their thinking (Franke et al., 2009; Shaughnessy et al., 2020). Students have also been shown to develop negative feelings toward expressing their ideas in class when the teacher corrects their statements or has another student give the answer without allowing the original student space to reevaluate their thinking (Steuer et al., 2013; Tulis, 2013). Furthermore, providing students with correct answers without discussing the (mis)understandings that underlie students’ incorrect or partially correct statements can teach them that mathematics is a set of disjointed rules to be memorized rather than a coherent discipline (Ball & Bass, 2000) and that arriving at the correct answer is what is most important, not the understanding that gets them there (Franke et al., 2007).

Forms of assessment

Students’ understandings of what is important for them to learn are greatly influenced by the ways in which their knowledge is assessed (Brookhart, 2003; McMillan, 2003; Tallman et al., 2016). Formative assessment, in which teachers give continuous feedback to students about their progress (Black & Wiliam, 1998), has been shown to improve student learning (see Lee et al., 2020 for a review). If the tasks and the feedback that students receive are focused on the understanding and the application of mathematical concepts, assessment can also help students see mathematics as a connected web of ideas rather than as a set of formulas and algorithms (Chow, 2011). If, however, teachers’ approaches to classroom assessment center on the use of written tests comprised primarily of items that require students to recognize a known problem format and to apply the appropriate computation (e.g., solve 2x + 3 = 9 for x), students are likely to develop the belief that mathematics is primarily about memorizing and regurgitating rules (Tallman et al., 2016). Unfortunately, written tests are used extensively, with around half of teachers in the US giving at least one test or quiz per week (Banilower et al., 2018), for example. Furthermore, these exams tend to include a large proportion of computation-focused items (Wellberg, 2023) and to make up at least half of students’ final course grades (Guskey & Link, 2019).

While alternative forms of assessment, such as projects and reports, are much more engaging for students and have been shown to improve student learning and attitudes toward mathematics (e.g., Boaler, 1998; Laursen et al., 2011), they are rarely used in mathematics classrooms. As an example, fewer than 10% of eighth-grade students in the US in 2019 had mathematics teachers who placed a substantial amount of emphasis on projects, and nearly half of eighth-grade students had a teacher who did not use projects at all (Mullis et al., 2020). Similarly, researchers have suggested that having students write about mathematical concepts can help teachers assess depth of understanding (Bolte, 1999; Santos & Semana, 2015). Writing can also serve as a form of assessment as learning, as it offers students space to draw connections between concepts and has been shown to improve student achievement (Bell & Bell, 1985; Jurdak & Abu Zein, 1998; Zollman, 2012) and to promote the development of conceptual understanding and problem-solving skills (Hensberry & Jacobbe, 2012; Johanning, 2000; Pugalee, 2001, 2004; Steele, 2005). Despite these benefits, this strategy is rarely used in mathematics classrooms (Wellberg, 2023; Kosko, 2016).

Knowledge, beliefs, and classroom practices

While the strategies described above have been shown to improve student learning, teacher-centered instruction and assessment techniques are still much more prominent in classrooms (Wellberg, 2023; Banilower et al., 2018; Boaler & Selling, 2017; Charalambous, 2015; Davis et al., 2020; Lee & Huh, 2014; Noyes, 2012). One potential reason for this discrepancy between implemented classroom practices and the practices that research suggests are most beneficial for students is that it is often impractical for an individual teacher to use only student-centered techniques in their classroom. For example, there are often national-, state/province-, district-, and/or school-level policies around instruction and assessment that limit what teachers can do. There are also logistical factors, such as the length of class periods, large class sizes, the time it takes to develop and to grade/interpret assessments of student learning, and the amount of content that must be covered before end-of-year accountability testing that may incentivize teachers to adopt instruction and assessment strategies that they see as being more time efficient (Wellberg and Evans, 2022; Wellberg, 2023; Liang & Akiba, 2017; Senk et al., 1997). While these contextual factors do make it difficult for teachers to run purely student-centered classrooms, education researchers would hope that teachers would attempt to incorporate some student-centered strategies into their classroom practice.

While contextual factors certainly play a role in the proportion of student- and teacher-centered techniques that teachers can reasonably use, teachers’ pedagogical and subject-matter knowledge and teachers’ beliefs about the subject matter and about their students also have an enormous impact on their willingness to learn about and to implement student-centered instruction and assessment strategies (Wellberg, 2023; Fives & Buehl, 2016; McLaughlin, 1990). For a teacher to adopt a more student-centered approach, they must have both the capacity and the desire to do so (Wellberg and Evans, 2022; McLaughlin, 1990). This means that they need to know how to implement these pedagogical techniques and to believe that they will be able to do so successfully (Gordon et al., 2023; NRC, 2001). They also need to have adequate time, training, and administrative support to find/develop and to implement student-centered instruction and assessment strategies, which often take more time to prepare and to implement than do more traditional approaches (Wellberg and Evans, 2022; Wellberg, 2023; Senk et al., 1997). Finally, teachers need to believe that their students will actually engage in activities that require more than the passive reception of information and that these activities will help students develop deeper understandings of the content (Abrami et al., 2004; Cross, 2009; Sztajn, 2003). In the following sections, I describe commonly documented connections between teachers’ knowledge, beliefs, and classroom practices and how these relationships are often moderated by teachers’ beliefs about the group of students they are teaching.

Instructional practices

Secondary mathematics teachers typically have strong mathematics backgrounds. The results of the 2019 TIMSS, for example, indicate that across the countries that participated, on average, 89% of eighth-grade students were taught mathematics by a teacher who had earned a degree in mathematics and/or mathematics education (Mullis et al., 2020). Although teachers must deeply understand mathematical concepts to teach them, having taken more mathematics courses does not automatically mean they can teach all students effectively (Charalambous, 2015; Wilkins, 2008). In fact, high-school teachers, who generally have the most college-level mathematics education, tend to be the least receptive to instructional reforms, such as the Common Core State Standards in the US (Matlock et al., 2016). This resistance to implementing standards that were meant to promote a more student-centered instructional approach may be related to these teachers’ beliefs about what mathematics is and how it should be taught.

Believing that mathematics is static, in that it is a fixed set of interconnected facts, formulas, and procedures (Ernest, 1989; Thompson, 1992), is strongly associated with a traditional orientation toward teaching and learning (Cross, 2009; Yang et al., 2020). These beliefs may stem from teachers’ prior educational experiences in which their own teachers implicitly (or explicitly) communicated what mathematics is and how it should be taught (De Corte et al., 2008; Thompson, 1984). The more teachers are exposed to traditional views of mathematics as students, the more entrenched those views become, and the more likely these teachers are to emphasize procedural, teacher-centered learning in their own classrooms (Bray, 2011; Charalambous, 2015; Cross, 2009; Thompson, 1984; Windschitl, 2004). While there has been a slow shift toward student-centered instruction in university-level mathematics, particularly within the past decade, most undergraduate mathematics classes are still primarily lecture-based (Lutzer et al., 2007; Melhuish et al., 2022; Speer et al., 2010). It is, therefore, unsurprising that teachers with higher levels of mathematical content knowledge have been found to have less interest in inquiry-based pedagogy (Wilkins, 2008; Yang et al., 2020).

While rules-based beliefs about mathematics tend to be associated with more teacher-centered instruction, and views of mathematics as being about problem-solving tend to lend themselves to a more student-centered approach, there is evidence that teachers can hold both of these views simultaneously (Beswick, 2007). A common combination of beliefs, for example, is that problem-solving is an essential part of mathematics, but students must first learn the relevant formulas and algorithms before they can attempt to tackle complex problems, rather than learning by way of working through those problems (see Franzak, 2019; Thompson, 1984; Wilson & Cooney, 2002). While teachers may think that they are using reform-based instructional techniques, these conflicting ideas tend to impede their implementation, as teachers often step in with the correct answers when there are difficulties instead of allowing students to engage in productive struggle (Beswick, 2007; Russo et al., 2020; Stein & Lane, 1996; Tekkumru-Kisa et al., 2020). As a result, teachers often believe that their practices are much more student-centered than they may be judged to be by researchers (Beswick, 2007; Nathan & Knuth, 2003; Simmons et al., 1999).

In addition to beliefs about mathematics, teachers’ perceptions of the students in their class can have an enormous impact on their practices. This association is a reoccurring theme in the literature, with consistent findings that teachers use more traditional, procedure-driven instruction with groups of students they believe to be less able (e.g., Beswick, 2003, 2004; Bray, 2011; Browman et al., 2020; Corkin et al., 2015; Cross, 2009; Dunn, 2003; Hyland, 2005; Jackson et al., 2017; Louie, 2017; Pagiling et al., 2021; Park et al., 2016; Sztajn, 2003; Thompson, 1984). Teachers who have low expectations are less likely to have students work collaboratively and to discuss errors in class (Abrami et al., 2004; Alvidrez et al., 2022; Bray, 2011). Additionally, beliefs about mathematics as a set system that emphasizes correctness are strongly associated with a fixed mindset about students’ mathematical ability (Stipek et al., 2001) and a stronger focus on performance rather than on process (Biberman-Shalev et al., 2011). Therefore, teachers who believe that mathematics is rules-based may be less likely to believe that all students can engage deeply with the content and may use fewer student-centered practices as a result.

Classroom assessment

In addition to driving their instructional practices, teachers’ knowledge and beliefs also influence how they assess their students. Most teachers have had little assessment training (DeLuca & Bellara, 2013; Popham, 2009) and do not feel confident in their ability to properly assess their students (DeLuca & Klinger, 2010; Maclellan, 2004; Volante & Fazio, 2007). If a teacher has not been adequately trained in how to choose or to develop high-quality assessments and to interpret students’ responses, they are less likely to use assessments formatively to improve student learning (Heitink et al., 2016; Rashid & Jaidin, 2014; Zhang & Burry-Stock, 2003). Even if teachers are highly trained, the information they receive from the assessments they give their students, and what they can do with it, depends greatly upon what they think is important to assess. If teachers see mathematics as a set of procedures used to arrive at a single correct answer, they are more likely to assess computational skills (Wellberg, 2023; Cross, 2009; Sfard, 1991). If teachers, themselves, were taught in a way that emphasized algorithmic knowledge, they are more likely to hold this limited view of mathematics (Bray, 2011; Charalambous, 2015; Cross, 2009; Thompson, 1984). The aforementioned prevalence of lecture-based mathematics courses at the university level may explain why teachers with strong mathematical backgrounds tend to focus more on correctness over process when assessing student progress (Biberman-Shalev et al., 2011).

Teachers’ views of their students also influence their classroom assessment practices (Wellberg, 2023; Brookhart, 2003; Cimer & Cimer, 2010; Cross, 2009; DeLuca et al., 2018; McMillan, 2001; Schulz & FitzPatrick, 2016). If teachers do not believe that their students are capable of higher-level work, they are less likely to use tasks with high levels of cognitive demand and are more likely to assess rote memorization of formulas and procedures (Wellberg, 2023; Bray, 2011; Cimer & Cimer, 2010; Cross, 2009; McMillan, 2001; Schulz & FitzPatrick, 2016). Around one-third of teachers in a recent study reported differentiating assessments between classes based on their perceptions of the students in those classes (DeLuca et al., 2018). Given that, particularly at the secondary level, grades are a large factor in determining students’ academic success in terms of future course-taking and college admissions (e.g., Sahin et al., 2017; Wang, 2013), this differentiation may be a form of “pulling for” students. McMillan (2003) described this phenomenon as teachers making assessment and grading decisions based upon the idea “that it is best if all students are successful” (p. 38). If a teacher does not expect their students to be able to complete more rigorous, conceptually demanding tasks, they are less likely to use those tasks, as that could lower students’ grades and endanger their academic futures.

Study 1

Methods

The MT21 survey was administered in 2006 to secondary mathematics PSTs in six countries: Bulgaria, Germany, Korea, Mexico, Taiwan, and the US (Schmidt, 2013). Respondents were randomly given one of two survey forms. These two forms had many common sets of items, but there were also some sets of items that were exclusive to one form or the other. Only Form A included items that focused on PSTs’ beliefs about students’ mathematical abilities and about how student errors should be addressed, and only Form B included items that focused on classroom assessment techniques. SEM analysis is based upon the covariances among item groups, and a covariance cannot be estimated if no respondent has answered both sets of items. Because the items about student ability and assessment preferences were seen by disjoint sets of respondents, I was unable to examine those relationships. Consequently, I split my investigation into two studies, each of which used only data from the PSTs who had received the applicable form of the MT21 survey. I began with Study 1, in which I explored the relationships between course-taking, beliefs about mathematics and student ability, and intentions around how to address student errors using only the subset of respondents who received Form A.
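The reason the two item blocks cannot be modeled together can be illustrated with a minimal Python sketch (the analyses in this study were run in R). The toy records, the field names, and the `pairwise_covariance` helper are hypothetical stand-ins for the Form A and Form B item blocks; the sketch only shows that no covariance is defined when no respondent answered both blocks.

```python
from statistics import mean

# Hypothetical toy records: None marks an item block the respondent never saw.
respondents = [
    {"form": "A", "ability": 1, "assessment": None},
    {"form": "A", "ability": 0, "assessment": None},
    {"form": "B", "ability": None, "assessment": 3},
    {"form": "B", "ability": None, "assessment": 5},
]

def pairwise_covariance(rows, x, y):
    """Sample covariance over rows that answered both x and y.

    Returns None when fewer than two rows have both values,
    because the covariance is then undefined.
    """
    pairs = [(r[x], r[y]) for r in rows if r[x] is not None and r[y] is not None]
    if len(pairs) < 2:
        return None
    mean_x = mean(p[0] for p in pairs)
    mean_y = mean(p[1] for p in pairs)
    return sum((a - mean_x) * (b - mean_y) for a, b in pairs) / (len(pairs) - 1)

# The two forms share no respondents, so there is nothing to estimate.
print(pairwise_covariance(respondents, "ability", "assessment"))  # None
```

Items that appeared on both forms do not have this problem, which is why each study could still use the common item sets.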

Sample

The MT21 survey was taken by 2,628 mathematics PSTs from 34 institutions across six countries. Because my interest is in teachers who choose to teach mathematics, specifically, I filtered the sample to only include respondents who indicated that they wanted to teach any of the secondary grades (6–12). This removed 310 respondents and left me with an overall sample of 2,318 PSTs. Because I began my investigation with only those who received Form A, the final analytic sample for Study 1 was 1,118 PSTs. The survey sampled at the institution level within each country rather than at the PST level, so the researchers calculated sampling weights for each teacher. The provided sampling weights were used in all analyses to make the results more representative of the overall PST population. The sample counts and percentages of respondents are broken down by categories of interest in Table 1.
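As a rough illustration of what applying sampling weights does to a descriptive statistic, the following Python sketch computes a weighted proportion. The `weighted_proportion` helper, the flags, and the weight values are invented for the example; the actual MT21 weights and the way they enter the models are not reproduced here.

```python
def weighted_proportion(flags, weights):
    """Share of the weighted sample for which the flag is True."""
    if len(flags) != len(weights):
        raise ValueError("flags and weights must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(w for flag, w in zip(flags, weights) if flag) / total

# Hypothetical respondents: two of four have the characteristic of interest.
has_trait = [True, False, True, False]
weights = [2.0, 1.0, 1.0, 1.0]  # made-up weights, not MT21 values

unweighted = sum(has_trait) / len(has_trait)        # 2 / 4
weighted = weighted_proportion(has_trait, weights)  # (2.0 + 1.0) / 5.0
print(unweighted, weighted)
```

In this toy case the weighted share is larger than the unweighted one because a respondent with the characteristic carries a larger weight.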

The most common countries represented in this part of the sample were Taiwan (29.6%) and Germany (25.2%), and most respondents identified as female (58.4%). Nearly half of respondents (44.9%) were interested in teaching either middle or high school (labeled Secondary), and the others were fairly evenly split between wanting to teach only one or the other, with slightly more leaning toward high school only. Finally, it was most common for the PSTs to be in the last year of their teacher preparation programs, though around one-third of the respondents were in their first year.

Constructs measured

The MT21 survey included groups of items that asked whether respondents took a variety of mathematics, mathematics education, or education courses, whether they had the opportunity to learn about various classroom assessment techniques, their beliefs about the nature of mathematics and about students’ abilities to do mathematics, and how they thought student errors should be handled in the classroom. Because the MT21 survey was a pilot for a later survey (the TEDS-M), I used the scales indicated by the final version of the survey whenever possible. If the items for a given construct were well-aligned between the two surveys, I used confirmatory factor analysis (CFA) using the lavaan package (Rosseel, 2012) in the R statistical computing environment to check that the item groups formed coherent factors within the MT21 data. If the items composing a given scale had been altered between the two surveys, I split the data into random halves and conducted exploratory factor analysis (EFA) on one half using the psych package (Revelle, 2018) for the R statistical computing environment. Next, I used CFA with the other random half of the data to check the fit of the factors obtained in the EFA. Each time I used this process, it resulted in scales that were qualitatively similar to those found in the TEDS-M. The only set of items for which I did not follow these processes was the one regarding mathematics education courses. Nearly all these items were replaced between the MT21 and TEDS-M surveys, so I decided to omit these MT21 items from my analyses, as the survey developers had removed them.
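The random split-half step can be sketched in Python as follows. This is an assumption-laden illustration rather than the procedure actually run: the `split_halves` helper, the seed, and the ten respondent IDs are hypothetical, and the factor analyses themselves (done in R with psych and lavaan) are not shown.

```python
import random

def split_halves(ids, seed=0):
    """Shuffle respondent IDs reproducibly and cut them into two halves.

    The first half would go to the exploratory analysis and the second
    to the confirmatory analysis.
    """
    rng = random.Random(seed)  # local generator so the split is repeatable
    shuffled = list(ids)
    rng.shuffle(shuffled)
    middle = len(shuffled) // 2
    return shuffled[:middle], shuffled[middle:]

# Hypothetical respondent IDs.
efa_half, cfa_half = split_halves(range(10), seed=42)
print(sorted(efa_half), sorted(cfa_half))
```

Fixing the seed keeps the two halves identical across reruns, so the confirmatory half is never used to choose the factor structure it later tests.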

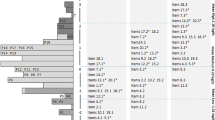

By the end of this process, I had four scales reflecting the topics included in the university-level courses the respondents had taken during their teacher training program: mathematics, education practice, education theory, and classroom assessment. In this section, I describe each of these scales. I also provide tables that present the survey text, the mean value, and the standardized CFA loading for each item. As previously mentioned, the mean and CFA values were calculated using the provided sampling weights.

The mathematics scale was comprised of twelve advanced mathematics topics that the PSTs reported that they either had or had not been exposed to (Table 2). The PSTs were also asked whether they had been exposed to a variety of education topics (Table 3). The education practices scale included general teaching methods and more specific topics such as using technology in the classroom and teaching diverse sets of students. The education theory scale included educational psychology, sociology, and philosophy as well as measurement theory. Because course-taking is often mandated by program requirements and not based upon individual decisions, I used the total number of course topics teachers had been exposed to in each of the three groups described above rather than having them represent LVs. I must emphasize that the sum scores for each group reflect the total number of topics taught to PSTs, which is not necessarily the same as the number of courses a PST took because multiple topics could have been addressed in the same course, or the same topic may have been covered in several different courses.
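A sum score of this kind is simply a count of the topics marked as covered. The Python sketch below assumes each topic is coded 1 (exposed) or 0 (not exposed); the `topic_sum_score` helper and the topic names are placeholders, not the MT21 item labels, and the handling of missing responses is not addressed.

```python
def topic_sum_score(exposure):
    """Count the topics a respondent reported having been exposed to.

    `exposure` maps a topic label to 1 (exposed) or 0 (not exposed).
    """
    for topic, value in exposure.items():
        if value not in (0, 1):
            raise ValueError(f"{topic}: expected 0 or 1, got {value!r}")
    return sum(exposure.values())

# Hypothetical respondent with placeholder topic labels.
respondent = {"topic_a": 1, "topic_b": 0, "topic_c": 1}
print(topic_sum_score(respondent))  # 2
```

The count says nothing about how many courses were involved, since one course can cover several topics and one topic can recur across courses.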

The final set of course topic items asked the PSTs about the opportunities they had to learn about various aspects of classroom assessment such as developing questions, analyzing student responses, and using the information they obtain from assessments to improve their teaching (Table 4). These items were originally presented with a six-point Likert scale ranging from 0 = “Not at all” to 5 = “To a great extent”, but I dichotomized the responses such that PSTs who selected 0–2 were assigned a score of zero, indicating relatively little opportunity and those who selected 3–5 were assigned a score of one, indicating moderate to high opportunity to learn these skills. This recoding greatly improved the fit of the CFA model and allowed me to retain more items than I would have if I had kept the original options. Because respondents gave their subjective impressions of their opportunities to learn assessment skills rather than giving a yes/no indication of their exposure to certain topics as they had on the other items, I included the assessment items as an LV instead of using a sum score.
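The recoding rule described above (0–2 becomes 0, 3–5 becomes 1) can be written as a short function. This is a sketch of the rule as stated in the text; the function name and the input validation are assumptions.

```python
def dichotomize(response):
    """Recode a 0-5 Likert response: 0-2 -> 0 (little), 3-5 -> 1 (moderate to high)."""
    if response not in range(6):
        raise ValueError("response must be an integer from 0 to 5")
    return 1 if response >= 3 else 0


# Apply the rule to every possible response option.
recoded = [dichotomize(r) for r in range(6)]
```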

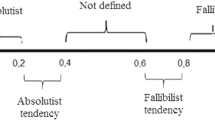

There were also three scales that measured respondents’ beliefs about the nature of mathematics and about students’ abilities to be successful in mathematics. The items measuring PSTs’ beliefs about the nature of mathematics were split into two LVs: mathematics as inquiry and mathematics as rules and procedures (Table 5). The items representing mathematics as inquiry included the ideas that students can discover mathematical concepts and apply them to real life problems. The set of items representing mathematics as a set of rules and procedures, on the other hand, included the ideas that students cannot properly solve mathematical problems if they have not been explicitly taught how to do so and that students must memorize a fixed set of facts and formulas to be successful in mathematics.

The final LV consisted of items asking about PSTs’ beliefs about which students are capable of being successful in mathematics (Table 6). These items focused on the idea that some people are naturally better at mathematics than are others. Because these items were all negatively worded, such that high responses indicated a more limited view of ability, I reverse scored the responses to these items so that the LV reflected a more open view of who can do mathematics. As with the assessment items, I dichotomized the responses to these items, which were originally presented with a six-point Likert scale from 0 = “Strongly disagree” to 5 = “Strongly agree.” Respondents who selected 0–2 were assigned a score of zero, indicating some level of disagreement, and those who selected 3–5 were assigned a score of one, indicating some level of agreement. The CFA fit statistics for the scales described above were all acceptable and are presented in Table 9 in Appendix.

A bit of context around the sampled teacher training programs

Unfortunately, the universities sampled in this research were anonymized in the data I had, so I was unable to determine what the official requirements were for the programs represented. A detailed description of the typical training programs implemented in the six sampled countries is beyond the scope of this study (see Schmidt et al., 2008 for this information), but I am obliged to note that these countries do have very different educational and social cultures that influence the training that PSTs receive, the beliefs that they hold, and the classroom practices that they believe to be most appropriate.

To provide some context around the training that PSTs in the six sampled countries received, I calculated for each country the weighted percentage of PSTs in the full sample who were in the last year of their preparation programs (see Footnote 2) who had been exposed to different numbers of topics in the three mathematics and education course categories previously described. The percentages for mathematics and education topics are presented in Tables 10 and 11, respectively, in Appendix. PSTs from Korea, Taiwan, and Bulgaria had typically been exposed to the largest number of mathematics topics, and there was the most variation in mathematics exposure for PSTs from the US. PSTs in Mexico and the US appear to have gotten the most training in education practice topics, and PSTs from Korea, the US, and Mexico had the most exposure to education theory topics. It is important to note that there is variability in the number of topics PSTs were exposed to within each country, which indicates that training programs are not highly standardized across universities in any of these six countries.

Analytic approach

I used SEM analyses to answer my research questions. The sections below present path diagrams (Hox & Bechger, 1998) for the hypothesized and alternative models that I used to address each question. SEM combines measurement and regression models to give researchers a sense of how people’s responses to items/groups of items predict their responses to other items/groups of items. In the path diagrams presented below, LVs are indicated by ovals and observed variables are indicated by rectangles. Presumed causal relationships between variables are indicated by unidirectional arrows, and variables that are not hypothesized to have a causal relationship but are still likely correlated with one another are indicated by a bidirectional, curved arrow. All variables that are dependent on other variables in the model have some amount of measurement error, which is indicated by a small circle with an “e” inside. This includes the latent variables, because they are determined by the sets of items described in Tables 2, 3, 4, 5, and 6.
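For readers who prefer equations to diagrams, the two parts of an SEM can be written in a common textbook form. The notation below is an assumption chosen for illustration and is not the exact specification of the models in this study:

\[
y = \Lambda \eta + \varepsilon, \qquad \eta = B\eta + \Gamma x + \zeta
\]

Here \(y\) holds the observed items (rectangles), \(\eta\) the LVs (ovals), \(\Lambda\) the factor loadings, and \(\varepsilon\) the item-level errors. In the regression part, \(x\) holds observed predictors such as the topic sum scores, \(B\) and \(\Gamma\) hold the path coefficients (the unidirectional arrows), and \(\zeta\) holds the error terms attached to the dependent LVs (the small “e” circles).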

To answer the first research question, I used the hypothesized and alternative models shown in Fig. 1. Commensurate with my theoretical framework, I expected that the scales reflecting exposure to various topics in mathematics, education theory and practice, and classroom assessment would predict the strength of respondents’ beliefs about the nature of mathematics as either inquiry- or rules-based and that those two LVs would predict the strength of beliefs about whether all students can do mathematics. I expected that greater exposure to university-level mathematics topics would be associated with a rules-based view. Conversely, the more training PSTs had in a variety of assessment techniques, the less likely they may be to see mathematics as just a set of rules to be followed, as an emphasis on the formative assessment techniques included in the classroom assessment LV would likely be paired with a focus on developing students’ conceptual understanding. I also hypothesized that teachers with a more rules-based view of mathematics would tend to believe that not all students can be successful with higher-level mathematics.

I also checked an alternative model (Fig. 1b) in which the course topics had direct relationships with beliefs about student ability rather than the indirect relationships that pass through the two beliefs about mathematics LVs shown in the hypothesized model (Fig. 1a). I compared the \({R}^{2}\) values for the two models and retained the model that explained the most variance for the beliefs about student ability LV. I refer to this model as the “beliefs model.”
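The retention rule described above can be illustrated with the two \({R}^{2}\) values reported for the beliefs about student ability LV in the Results (0.615 and 0.158). The function name and dictionary layout are assumptions for this sketch; the values themselves come from fitted SEMs that are not reproduced here.

```python
def retain_model(r_squared_by_model):
    """Return the name of the model with the highest R-squared for the target LV."""
    return max(r_squared_by_model, key=r_squared_by_model.get)


# R-squared for the beliefs about student ability LV, as reported in the Results.
ability_r_squared = {"hypothesized": 0.615, "alternative": 0.158}
retained = retain_model(ability_r_squared)
```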

The second research question asked how the course topics and beliefs explored in RQ1 relate to the ways that the PSTs think student errors should be addressed when they occur. The survey asked the PSTs how strongly they agreed that student errors should be corrected, discussed by the class, evaluated by other students, or ignored (Table 7). These items were presented with a six-point Likert scale from 0 = “Strongly disagree” to 5 = “Strongly agree.” I used the retained beliefs model as the base for a new model in which beliefs predicted PSTs’ ideas about how student errors should be addressed (Fig. 2). The student errors items did not form a coherent LV, so I kept the original response options and used each item as an individual outcome in the model. I anticipated that PSTs who had a more limited view of student ability and less exposure to a variety of assessment techniques in their training program would be more inclined to correct or to ignore errors than to have a deeper class discussion about them. Because they are related to assessing student understanding, I also hypothesized that PSTs’ opportunities to learn about assessment may predict their ideas about how to address student errors directly in addition to any indirect influence they may have through the beliefs LVs. I must also note that I did not have access to information about whether the PSTs who took this survey had classroom teaching experience, so I cannot be sure whether their responses to the student errors items are based upon their theoretical opinions or on their practical experience.

Although the existing literature led me to believe that ability beliefs would help determine teachers’ behaviors in a specific classroom context, the decontextualized nature of the MT21 survey may obscure this relationship. Consequently, while the hypothesized model (Fig. 2a) included relationships between all three beliefs LVs and the student errors variables, the alternative model (Fig. 2b) removed the paths from the student ability LV to the errors items. These paths are replaced by direct paths from the course topics variables. This allowed me to determine whether any non-assessment courses were able to predict PSTs’ ideas about how to address student errors over-and-above what could be predicted by beliefs about the nature of mathematics. To arrive at the retained model (which I refer to as the “errors model”), I compared the \({R}^{2}\) values of the models and inspected the regression results to determine which variables appeared to be the primary drivers of each outcome variable.

Results

Course-taking and beliefs

The fit statistics were almost identical for the two models (Table 12 in Appendix), but the \({R}^{2}\) value for the beliefs about student ability LV was considerably higher in the hypothesized model (0.615 versus 0.158) (Table 13 in Appendix). Consequently, I retained the hypothesized model. The regression results for the hypothesized and alternative models are presented in Table 14 in Appendix, and the path diagram for the retained beliefs model is shown in Fig. 3. This diagram shows the standardized relationships between items, with a value of one indicating a perfectly positive relationship, negative one indicating a perfectly negative relationship, and zero indicating no relationship. As expected, the more mathematics courses a PST had taken, the more likely they were to hold a rules-based view of mathematics. More education practice courses were associated with stronger views of mathematics as inquiry and weaker views of mathematics as rules-based. Interestingly, opportunities to learn about assessment were positively associated with both types of beliefs. The correlation between the two views of mathematics was statistically significant at only the p < 0.05 level and was quite small (0.18), which suggests that it is likely not practically significant. This indicates that one may be able to view mathematics as being about both inquiry and procedures.

As predicted, respondents’ views of the nature of mathematics had a significant association with their beliefs about student ability. Those with a strong rules-based view of mathematics were significantly less likely (−0.73) to think all students can do high-level mathematics. While seeing mathematics as inquiry-based had a moderately positive association with student ability beliefs (0.45), that relationship was much weaker than with a rules-based view. Although the \({R}^{2}\) values for the inquiry and rules-based views of mathematics LVs are relatively low (with course-taking explaining 11.1% and 23.2% of the variance in the LVs, respectively), the \({R}^{2}\) value for beliefs about student ability was very high at 0.615, which means that most (61.5%) of the variation we see in respondents’ reported beliefs about students’ mathematical abilities was explained by variation in their views on the nature of mathematics.

Beliefs and the handling of student errors

The regression results for the hypothesized, alternative, and retained errors models are presented in Table 15 in Appendix, and the retained model is presented in Fig. 4. In the hypothesized model, the two LVs representing beliefs about the nature of mathematics were typically significant predictors of the ways student errors may be addressed, though the LV concerning beliefs about student ability was not (Table 15 in Appendix). As discussed previously, this lack of significance is likely at least partly a result of the decontextualized nature of the survey. As anticipated, the classroom assessment training LV did predict PSTs’ responses to some of the student errors items beyond their indirect path through the beliefs about mathematics LVs in both the hypothesized and alternative models. The other course topic variables, however, were not significant predictors of how PSTs thought student errors should be addressed, with one exception. The number of mathematics topics PSTs had been exposed to significantly predicted whether they thought other students should evaluate incorrect answers in the alternative model.

To arrive at the retained model, I removed the beliefs about student ability LV as a possible predictor and proceeded with only the two mathematics beliefs LVs and the opportunities to learn about assessment LV as possible predictors of the outcome variables, where they had been significant in the original two models. I also kept mathematics topics as a predictor of whether PSTs reported that errors should be evaluated by other students. The retained model had acceptable fit statistics (Table 12 in Appendix), which were very similar to those for the hypothesized and alternative models.

Unsurprisingly, an inquiry view of mathematics was negatively associated with correcting or ignoring incorrect answers and positively associated with having students evaluate their classmates’ errors. The largest association between beliefs about mathematics and the ways that student errors should be addressed was the positive relationship between a rules-based view and correcting answers and moving on (0.41). The negative relationship between holding a rules-based view of mathematics and thinking students should evaluate their classmates’ errors was weaker but still practically significant at −0.21. Opportunities to learn about assessment only significantly predicted responses to the items about ignoring errors and having students discuss them as a class. Surprisingly, the association between opportunities to learn about classroom assessment and an inclination to simply ignore student errors when they occur was positive. This relationship, however, was very small (0.12) and only significant at the p < 0.05 level, which suggests that this relationship could be an artifact of the specific sample used in this analysis rather than being indicative of a general trend. The \({R}^{2}\) values for the outcome variables (Table 13 in Appendix) indicate that only around 2–5% of the variation in these items is explained by variation in PSTs’ beliefs about mathematics and opportunities to learn about classroom assessment, except for correcting and moving on, which had 19.7% of the variation explained by respondents’ reported beliefs about mathematics.

Discussion

The results of the retained beliefs model (Fig. 3) support previous findings that teachers’ own educational experiences predict how they view their subject matter (Charalambous, 2015; Cross, 2009; De Corte et al., 2008; Simmons et al., 1999; Thompson, 1984; Wilkins, 2008; Yang et al., 2020) and that those views, in turn, predict their assumptions about student ability (Bray, 2011; Cross, 2009). The strong relationship between a rules-based view of mathematics and the belief that not all students can do mathematics is concerning, as is the supporting relationship between a rules-based view and high-level mathematics course-taking.

These results can make teacher educators aware that those coming in with strong mathematics backgrounds may hold stronger rules-based views. This should not preclude students with this background from entering into training programs, but these programs should aim to counter those experiences with other courses that are shown to be associated with a more inquiry-based view of mathematics, such as courses around education practice. The small, and likely not practically significant, relationship between inquiry- and rules-based views of mathematics aligns with prior qualitative findings (Beswick, 2007) and is encouraging for teacher educators, as it suggests that even if candidates enter a teacher training program with strong rules-based views, they may still be able to develop stronger inquiry-based views, which may help PSTs adopt the belief that all students are capable of doing high-level work.

The finding that PSTs who have taken more mathematics courses tend to have a more rules-based view of the subject also has implications for university mathematics departments. It is doubtful that mathematics professors, who themselves have a deep knowledge of and appreciation for mathematical structures and the creative thinking needed to identify and to prove new mathematical concepts, want their students to develop simplistic, rules-based understandings of the subject. Although there has been some recent movement toward using a more student-centered approach in undergraduate mathematics courses (see Melhuish et al., 2022), more may need to be done to impress upon professors the potential consequences of using lecture-driven instruction.

One notable inconsistency of the current study with prior research is that the hypothesized errors model (Table 15 in Appendix) showed relatively weak associations between respondents’ beliefs about student ability and the ways that they thought errors should be handled in the classroom. There is ample evidence from other studies that beliefs about student ability can have an enormous impact on actual classroom practice (Beswick, 2004; Bosse et al., 2011; Bray, 2011; Browman et al., 2020; Cross, 2009; Pagiling et al., 2021; Park et al., 2016; Sztajn, 2003; Thompson, 1984). One key limitation of the present study that may explain this anomalous result is that the respondents were PSTs who may not have had classroom teaching experience, and the survey was presented in a general way rather than asking about a specific classroom environment. Teachers who espouse progressive views of mathematics often fail to implement associated practices when they teach students who they do not think can handle high-level work (Cross, 2009; Sztajn, 2003; Thompson, 1984). Consequently, it is possible that the decontextualized nature of the survey may have lowered the observed relationships between beliefs about student ability and intended techniques for addressing student errors in the classroom.

The fact that the analytic sample includes PSTs from six countries with distinct educational and social contexts may have also muddied the results. For example, there is ample evidence that teachers in the US often believe that racially minoritized students are less capable, and that belief is reflected in their classroom practice (Chubbuck, 2004; Diamond et al., 2004; Dunn, 2003; Franzak, 2019; Grantham & Ford, 2003; Henfield & Washington, 2012; Hyland, 2005; McGee & Martin, 2011; Niesz, 2006; Terrill & Mark, 2000). Those types of social prejudices may not be as prominent in sampled countries with less racial diversity. Future research is needed that looks at these relationships in context.

Study 2

In Study 1, I used the data from survey Form A only to investigate whether PSTs’ beliefs about student ability predicted the ways they thought student errors should be addressed, because these two sets of items were only present on that survey form. Unfortunately, I was unable to investigate the relationships between beliefs about student ability and assessment format preferences, because these two sets of items were on different forms of the survey. Rather, in Study 2, I focused on the relationships between PSTs’ beliefs about mathematics and their thoughts about various forms of assessment using the data from survey Form B.

Methods

Sample

The second study used the responses provided by the 1,200 PSTs who took Form B of the MT21 survey. Again, the provided sampling weights were used in all analyses to make the results more representative of the population. The sample counts and percentages of respondents are broken down by categories of interest in Table 1 and are very similar to those seen in the first study. One difference is that while Taiwan and Germany were still the most heavily represented countries, Germany now had the largest percentage of PSTs (31.1%) and Taiwan the second largest percentage (26.9%). Most respondents still identified as female (56.4%). Slightly more respondents stated that they would want to teach any secondary level (50.5%), and while the rest of the respondents were still a bit more inclined to only teach high school, the difference in the percentage of middle- and high-school-only responses was smaller than in Study 1. There was also a slightly higher percentage of PSTs in the last year of their teaching preparation programs than we saw in the group who took survey Form A.

Analytic approach

I used the same hypothesized and alternative models that I had used for the errors models in Study 1 (Fig. 2), but I removed the student ability beliefs LV and replaced the four student errors items with five survey items asking how much emphasis respondents thought was appropriate to give to various forms of assessment: formative assessment, projects, oral reports, written tests, and writing about their thinking in mathematics (Table 8). As in Study 1, I used the \({R}^{2}\) and regression coefficient values to determine a retained model, which I refer to as the “assessment model.” One thing of note in the assessment items used in this model is that the question asking about formative assessment specifically included tests as an example. While students and teachers can certainly glean information from tests about which concepts and skills a student has not yet mastered, this is not typically what classroom assessment researchers have in mind when they think about formative assessment, and the results of this model need to be interpreted accordingly. Also, I must again note that I do not know which PSTs had classroom teaching experience and, therefore, cannot be sure whether responses were based upon theoretical opinions or personal experience.

Results

The regression results for the hypothesized, alternative, and retained models are provided in Table 16 in Appendix, and the retained assessment model is presented in Fig. 5. Beliefs about mathematics significantly predicted responses to most assessment items. Projects, formative assessments, and oral assessments were also predicted by various course topics. For the sake of simplicity, the retained assessment model included only the paths from the mathematics beliefs LVs and the significant paths from course topics. The fit statistics did not change much between models (Table 12 in Appendix).

It should be noted that as a result of using a different subsample of survey respondents, the path values from course topics to beliefs have changed slightly from what we saw in Figs. 3 and 4. It is expected that random differences in the subsample would result in different values, and most of the changes are not large enough to be of concern. The notable exception is that the path from education theory courses to a rules-and-procedures view of mathematics was significant at the p < 0.05 level in the beliefs and errors models and was no longer significant in the assessment model. Similarly, the small correlation between the two types of beliefs about mathematics is no longer statistically significant.

As expected, it appears that a rules-based view of mathematics was most strongly associated with the use of written tests (0.39). Conversely, a more inquiry-based view of mathematics predicted increased use of writing about mathematics (0.34) and less emphasis on written tests, though that negative relationship was fairly small (−0.12). An inquiry-based view of mathematics was not significantly associated with the use of formative assessment, but a rules-based view was (0.17). This finding may be linked to the wording of the formative assessment item, which specifically mentioned the use of tests. Additionally, the more opportunities PSTs had to learn about various assessment techniques, the more likely they were to want to use formative assessment (0.21). The use of projects was predicted more strongly by the courses that PSTs had taken than by their beliefs about mathematics. While there was a small negative relationship between a rules-based view of mathematics and the use of projects (−0.12), the number of mathematics topics to which PSTs had been exposed was a much stronger predictor, with more topics being associated with less interest in using projects (−0.24). There was also a small positive relationship between exposure to education practice topics and interest in using projects (0.11).

The \({R}^{2}\) values for the assessment items (Table 13 in Appendix) were fairly low, indicating that course-taking and beliefs about mathematics only explained a small amount of the variation in responses. The form of assessment with the most variance explained by the predictors was written tests (16.1%). Course-taking and beliefs about mathematics explained roughly 12% of the variance for formative assessments, projects, and writing about mathematics, but they only explained 3.5% of the variance for the oral assessment item.

Discussion

The results of the assessment model are well-aligned with the prior literature on teacher beliefs about mathematics and approaches to assessment. As anticipated, PSTs who held a stronger rules-based view of mathematics were more likely to focus on traditional forms of assessment (tests) and less on alternative formats that are more likely to require a greater depth of conceptual understanding from students (Cross, 2009; Sfard, 1991). Interestingly, the use of formative assessment was associated with a rules-based view of mathematics and not with an inquiry-based view. This could be a result of the wording of the item (see Table 8), which specifically mentioned using tests for diagnostic purposes. If the item had offered the use of informal data from classroom discussions or observations of students’ performance on instructional tasks as examples instead, the relationship may have been different.

Another outcome that corroborates findings in the extant literature was the positive relationship between seeing mathematics as a process of inquiry and using writing in the classroom. Teachers who want their students to use mathematics as a toolkit to solve problems are more likely to require them to explain and to justify their thinking (Franke et al., 2007). Given that the items that compose the LV about opportunities to learn about assessment were focused on diagnosing student understanding, it is also unsurprising that PSTs who had more of that training were likely to plan to use formative assessment in their classrooms, as teachers typically feel more confident when they have been adequately trained (Heitink et al., 2016; Rashid & Jaidin, 2014; Zhang & Burry-Stock, 2003).

Conclusion

In the studies detailed above, I used SEM analysis to investigate the relationships between mathematics PSTs’ training, beliefs, and intentions around the handling of student errors and approaches to classroom assessment. There are many qualitative studies that have provided valuable, in-depth explorations of various combinations of these factors. My intention in conducting the present studies was to take a broad view of all the relationships of interest at once. In doing so, I was able to corroborate prior qualitative work around each pair-wise combination of the training, belief, and classroom practice factors previously described while also getting a sense of how these relationships form a kind of web of experiences that inform one another. I was also able to get a sense of the relative strength of these relationships.

Study 1 supported previous qualitative findings that teachers with stronger mathematics backgrounds are more likely to see mathematics as a set of rules (Cross, 2009; Simmons et al., 1999; Wilkins, 2008; Yang et al., 2020) and are, in turn, less likely to believe that all students are capable of being mathematically successful (Stipek et al., 2001). A rules-based view of mathematics was also associated with a preference for simply correcting student errors and moving on as opposed to engaging students in discussions around any misconceptions they may hold.

Study 2 explored the relationships between PSTs’ prior training and beliefs about mathematics and their preferred assessment formats. My findings support prior qualitative research around the relationships between beliefs and assessment preferences and also extend prior understandings by indicating that university-level coursework can also directly relate to PSTs’ preferred assessment strategies. PSTs with stronger rules-based views of mathematics were more likely to emphasize traditional tests as opposed to alternatives that would allow students to demonstrate deeper conceptual understandings of the material (Wellberg, 2023; Biberman-Shalev et al., 2011). The more PSTs saw mathematics as being about inquiry, on the other hand, the more likely they were to have students write about their thinking (see also Wellberg, 2023). Those who had more assessment training were more likely to plan to use their assessment results formatively, which supports previous findings (Zhang & Burry-Stock, 2003). A new finding in this study was that PSTs who had taken more university-level mathematics classes were less likely to assign projects, even when beliefs about mathematics as being rules-based (which is also strongly predicted by mathematics course-taking) were accounted for.

I used separate subsamples in each study to avoid issues of missing data resulting from the inclusion of form-specific sets of items. The change in sample slightly altered the relationships between course topics and beliefs about mathematics between the two studies. While most of these changes were minor, two relationships that had been significant at the p < 0.05 level in Study 1 became nonsignificant in Study 2. The overall stability of the directions and significance levels of the results in these two studies can be interpreted as evidence that most of the findings hold up across different samples, while the barely significant relationships between the two views of mathematics and between education theory courses and a rules-based view of mathematics seen in Study 1 may have been artifacts of that sample and not generalizable to the larger mathematics PST population.

The findings from these two studies have implications for teacher education in that teacher candidates who come in with a strong mathematics background may hold views of mathematics and student ability that may hinder their abilities to implement student-centered instruction and assessment techniques in their future classrooms. While teacher preparation programs should certainly not reject prospective students on this basis, teacher educators should be aware that candidates with high levels of mathematical knowledge may be in greater need of training specifically designed to counteract static views of mathematics and of students.

Limitations and future directions

The main limitation of the studies above is the lack of context around teacher education program requirements, PSTs’ practical classroom experience, and other institutional/policy constraints in the MT21 survey. There is ample evidence that teachers’ beliefs about their students greatly influence their classroom practice (e.g., Beswick, 2003, 2004; Bray, 2011; Browman et al., 2020; Corkin et al., 2015; Cross, 2009; DeLuca et al., 2018; Dunn, 2003; Hyland, 2005; Jackson et al., 2017; Louie, 2017; Pagiling et al., 2021; Park et al., 2016; Schulz & FitzPatrick, 2016; Sztajn, 2003; Thompson, 1984). It is likely that the nonsignificant associations found between the beliefs about student ability LV and the outcome variables were the result of the decontextualized nature of the survey. If the survey were given to in-service teachers, and they were asked to describe their practices with a specific class (or classes) of students, the relationships might be clearer. Future research is needed to determine how strongly class level and demographic composition predict teachers’ assessment practices.

Another limitation is the wording of some of the survey items. In particular, the item asking about formative assessment specifically mentioned using tests to diagnose gaps in students’ knowledge, which is not what formative assessment means to most classroom assessment experts. This wording issue also highlights the limitation that the survey only had one item to represent each form of assessment, which makes the results contingent upon PSTs’ interpretations of the wording of those items. Future research in this area may want to give participants more opportunities to describe their preferred practices by using surveys that include a wider spread of questions that can be combined into LVs rather than relying upon a single question for each type of assessment.

A final note is that the data used in these studies were collected in 2006, and the relationships between course-taking and beliefs about mathematics may have changed as teacher education programs have evolved.

Data availability

The data used to produce this work are available to the public online (https://doi.org/10.3886/ICPSR34430.v1).

Notes

Respondents were only presented with Male and Female options and were not able to specify alternative identities, though some respondents left the item blank.

Note that there were 93 such PSTs from Bulgaria, 267 from Germany, 104 from Korea, 138 from Mexico, 261 from Taiwan, and 193 from the USA in the full, unweighted sample.

References

Abrami, P. C., Poulsen, C., & Chambers, B. (2004). Teacher motivation to implement an educational innovation: Factors differentiating users and non-users of cooperative learning. Educational Psychology, 24(2), 201–216.

Alvidrez, M., Louie, N., & Tchoshanov, M. (2022). From mistakes, we learn? Mathematics teachers’ epistemological and positional framing of mistakes. Journal of Mathematics Teacher Education. https://doi.org/10.1007/s10857-022-09553-4

Ball, D. L., & Bass, H. (2000). Interweaving content and pedagogy in teaching and learning to teach: Knowing and using mathematics. In J. Boaler (Ed.), Multiple perspectives on mathematics teaching and learning (Vol. 1, pp. 83–104). Ablex.

Banilower, E. R., Smith, P. S., Malzahn, K. A., Plumley, C. L., Gordon, E. M., & Hayes, M. L. (2018). Report of the 2018 NSSME+. Horizon Research, Inc.

Bell, E. S., & Bell, R. N. (1985). Writing and mathematical problem solving: Arguments in favor of synthesis. School Science and Mathematics, 85(3), 210–221.

Beswick, K. (2003). Accounting for the contextual nature of teachers’ beliefs in considering their relationship to practice (vol. 1), pp. 152–159.

Beswick, K. (2004). The impact of teachers’ perceptions of student characteristics on the enactment of their beliefs. In M. J. Hoines & A. B. Fuglestad (Eds.), Proceedings of the 28th annual conference of the international group for the psychology of mathematics education (pp. 111–118).

Beswick, K. (2007). Teachers’ beliefs that matter in secondary mathematics classrooms. Educational Studies in Mathematics, 65(1), 95–120. https://doi.org/10.1007/s10649-006-9035-3

Biberman-Shalev, L., Sabbagh, C., Resh, N., & Kramarski, B. (2011). Grading styles and disciplinary expertise: The mediating role of the teacher’s perception of the subject matter. Teaching and Teacher Education, 27(5), 831–840. https://doi.org/10.1016/j.tate.2011.01.007

Biggs, J. (1988). The role of metacognition in enhancing learning. Australian Journal of Education, 32(2), 127–138. https://doi.org/10.1177/000494418803200201

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy and Practice, 5(1), 7–74.

Boaler, J. (1998). Open and closed mathematics: Student experiences and understandings. Journal for Research in Mathematics Education, 29(1), 41–62.

Boaler, J., & Selling, S. K. (2017). Psychological imprisonment or intellectual freedom? A longitudinal study of contrasting school mathematics approaches and their impact on adults’ lives. Journal for Research in Mathematics Education, 48(1), 78–105.

Boekaerts, M. (1997). Self-regulated learning: A new concept embraced by researchers, policy makers, educators, teachers, and students. Learning and Instruction, 7(2), 161–186. https://doi.org/10.1016/S0959-4752(96)00015-1

Bolte, L. A. (1999). Using concept maps and interpretive essays for assessment in mathematics. School Science and Mathematics, 99(1), 19–30. https://doi.org/10.1111/j.1949-8594.1999.tb17442.x

Borasi, R. (1994). Capitalizing on errors as “springboards for inquiry”: A teaching experiment. Journal for Research in Mathematics Education, 25(2), 166–208.

Bosse, M. J., Adu-Gyamfi, K., & Cheetham, M. (2011). Translations among mathematical representations: Teacher beliefs and practices. International Journal for Mathematics Teaching and Learning, 1–23.

Bray, W. S. (2011). A collective case study of the influence of teachers’ beliefs and knowledge on error-handling practices during class discussion of mathematics. Journal for Research in Mathematics Education, 42(1), 2–38. https://doi.org/10.5951/jresematheduc.42.1.0002

Brookhart, S. M. (2003). Developing measurement theory for classroom assessment purposes and uses. Educational Measurement: Issues and Practice, 22(4), 5–12. https://doi.org/10.1111/j.1745-3992.2003.tb00139.x

Browman, A. S., Miele, D. B., O’Dwyer, L. M., & May, S. C. (2020). Teachers’ growth mindsets and the differential treatment of high- and low-ability students [Preprint]. Open Science Framework. https://doi.org/10.31219/osf.io/mkh4s

Burroughs, N., & Schmidt, W. (2014). An examination of future primary teachers attitudes about the teaching of mathematics: An international perspective. In International perspectives on teacher knowledge, beliefs and opportunities to learn (pp. 279–296). Springer.

Campbell, P. F., Nishio, M., Smith, T. M., Clark, L. M., Conant, D. L., Rust, A. H., DePiper, J. N., Frank, T. J., Griffin, M. J., & Choi, Y. (2014). The relationship between teachers’ mathematical content and pedagogical knowledge, teachers’ perceptions, and student achievement. Journal for Research in Mathematics Education, 45(4), 419–459. https://doi.org/10.5951/jresematheduc.45.4.0419

Carpenter, T. P., Fennema, E., Chiang, C.-P., & Loef, M. (1989). Using knowledge of children’s mathematics thinking in classroom teaching: An experimental study. American Educational Research Journal, 26(4), 499–531.

Charalambous, C. Y. (2015). Working at the intersection of teacher knowledge, teacher beliefs, and teaching practice: A multiple-case study. Journal of Mathematics Teacher Education, 18(5), 427–445. https://doi.org/10.1007/s10857-015-9318-7

Chow, T.-C. F. (2011). Students’ difficulties, conceptions and attitudes towards learning algebra: An intervention study to improve teaching and learning.

Chubbuck, S. M. (2004). Whiteness enacted, whiteness disrupted: The complexity of personal congruence. American Educational Research Journal, 41(2), 301–333. https://doi.org/10.3102/00028312041002301

Cimer, S. O., & Cimer, A. (2010). What teachers assess and its consequences (vol. 11, no. 2, p. 24).

Cobb, P., Boufi, A., McClain, K., & Whitenack, J. (1997). Reflective discourse and collective reflection. Journal for Research in Mathematics Education, 28(3), 258–277.

Corkin, D. M., Ekmekci, A., & Papakonstantinou, A. (2015). Antecedents of teachers’ educational beliefs about mathematics and mathematical knowledge for teaching among in-service teachers in high poverty urban schools. Australian Journal of Teacher Education. https://doi.org/10.14221/ajte.2015v40n9.3

Cowie, B. (2005). Pupil commentary on assessment for learning. Curriculum Journal, 16(2), 137–151.

Crooks, T. J. (1988). The impact of classroom evaluation practices on students. Review of Educational Research, 58(4), 438–481.

Cross, D. I. (2009). Alignment, cohesion, and change: Examining mathematics teachers’ belief structures and their influence on instructional practices. Journal of Mathematics Teacher Education, 12(5), 325–346. https://doi.org/10.1007/s10857-009-9120-5

Davis, B., Towers, J., Chapman, O., Drefs, M., & Friesen, S. (2020). Exploring the relationship between mathematics teachers’ implicit associations and their enacted practices. Journal of Mathematics Teacher Education, 23(4), 407–428. https://doi.org/10.1007/s10857-019-09430-7

De Corte, E., Verschaffel, L., & Depaepe, F. (2008). Unraveling the relationship between students’ mathematics-related beliefs and the classroom culture. European Psychologist, 13(1), 24–36. https://doi.org/10.1027/1016-9040.13.1.24

Delaney, E. (1991). Applying geography in the classroom through structured discussions. Journal of Geography, 90(3), 129–133.

DeLuca, C., & Bellara, A. (2013). The current state of assessment education: Aligning policy, standards, and teacher education curriculum. Journal of Teacher Education, 64(4), 356–372.

DeLuca, C., & Klinger, D. A. (2010). Assessment literacy development: Identifying gaps in teacher candidates’ learning. Assessment in Education: Principles, Policy and Practice, 17(4), 419–438. https://doi.org/10.1080/0969594X.2010.516643

DeLuca, C., Valiquette, A., Coombs, A., LaPointe-McEwan, D., & Luhanga, U. (2018). Teachers’ approaches to classroom assessment: A large-scale survey. Assessment in Education: Principles, Policy and Practice, 25(4), 355–375. https://doi.org/10.1080/0969594X.2016.1244514

Diamond, J. B., Randolph, A., & Spillane, J. P. (2004). Teachers’ expectations and sense of responsibility for student learning: The importance of race, class, and organizational habitus. Anthropology and Education Quarterly, 35(1), 75–98. https://doi.org/10.1525/aeq.2004.35.1.75

Dunn, T. (2003). Preparing preservice teachers for diverse classrooms: Influences of CMS on beliefs and instructional practices (pp. 444–447).

Dweck, C. S. (2006). Mindset: The new psychology of success. Random House.

Education Committee of the European Mathematical Society. (2012). What are the reciprocal expectations between teacher and students? Solid findings in mathematics education on didactical contract. Newsletter of the European Mathematical Society, 84, 53–55.

Education Committee of the European Mathematical Society. (2013a). Sociomathematical norms: In search of the normative aspects of mathematical discussions. Newsletter of the European Mathematical Society, 88, 59–61.

Education Committee of the European Mathematical Society. (2013b). Solid findings on students’ attitudes to mathematics. Newsletter of the European Mathematical Society, 89, 51–53.

Ernest, P. (1989). The knowledge, beliefs and attitudes of the mathematics teacher: A model. Journal of Education for Teaching, 15(1), 13–33.

Fives, H., & Buehl, M. M. (2012). Spring cleaning for the “messy” construct of teachers’ beliefs: What are they? Which have been examined? What can they tell us? In K. R. Harris, S. Graham, T. Urdan, S. Graham, J. M. Royer, & M. Zeidner (Eds.), APA educational psychology handbook, Vol 2: Individual differences and cultural and contextual factors (pp. 471–499). American Psychological Association. https://doi.org/10.1037/13274-019

Fives, H., & Buehl, M. M. (2016). Teachers’ beliefs, in the context of policy reform. Policy Insights from the Behavioral and Brain Sciences, 3(1), 114–121. https://doi.org/10.1177/2372732215623554

Franke, M. L., Kazemi, E., & Battey, D. (2007). Mathematics teaching and classroom practice. In F. K. Lester (Ed.), Second handbook of research on mathematics teaching and learning (Vol. 1, pp. 225–256). Information Age Publishing.

Franke, M. L., Webb, N. M., Chan, A. G., Ing, M., Freund, D., & Battey, D. (2009). Teacher questioning to elicit students’ mathematical thinking in elementary school classrooms. Journal of Teacher Education, 60(4), 380–392. https://doi.org/10.1177/0022487109339906

Franzak, M. D. (2019). Mathematics as a racialized space: An analysis of secondary teachers’ beliefs of teaching mathematics to Latinx students [Dissertation]. New Mexico State University.

Fukuzawa, S., & DeBraga, M. (2019). Graded response method: Does question type influence the assessment of critical thinking. Journal of Curriculum and Teaching, 8(1), 1. https://doi.org/10.5430/jct.v8n1p1

Gardee, A., & Brodie, K. (2022). Relationships between teachers’ interactions with learner errors and learners’ mathematical identities. International Journal of Science and Mathematics Education, 20(1), 193–214.

Gordon, D., Blundell, C., Mills, R., & Bourke, T. (2023). Teacher self-efficacy and reform: A systematic literature review. The Australian Educational Researcher, 50(3), 801–821.

Grantham, T. C., & Ford, D. Y. (2003). Beyond self-concept and self-esteem: Racial identity and gifted African American students. The High School Journal, 87(1), 18–29.

Guskey, T. R., & Link, L. J. (2019). Exploring the factors teachers consider in determining students’ grades. Assessment in Education: Principles, Policy and Practice, 26(3), 303–320. https://doi.org/10.1080/0969594X.2018.1555515

Heitink, M. C., Van der Kleij, F. M., Veldkamp, B. P., Schildkamp, K., & Kippers, W. B. (2016). A systematic review of prerequisites for implementing assessment for learning in classroom practice. Educational Research Review, 17, 50–62.

Helme, S., & Clarke, D. (2001). Identifying cognitive engagement in the mathematics classroom. Mathematics Education Research Journal, 13(2), 133–153. https://doi.org/10.1007/BF03217103

Henfield, M. S., & Washington, A. R. (2012). “I want to do the right thing but what is it?”: White teachers’ experiences with African American students. The Journal of Negro Education, 81(2), 148. https://doi.org/10.7709/jnegroeducation.81.2.0148