Abstract

Selective segmentation involves incorporating user input to partition an image into foreground and background by discriminating between objects of a similar type. Typically, such methods involve introducing additional constraints to generic segmentation approaches. However, we show that this is often inconsistent with common assumptions about the image. The proposed method introduces a new fitting term that is more useful in practice than the Chan–Vese framework. In particular, the idea is to define a term that allows the background to consist of multiple regions of inhomogeneity. We provide experimental results comparing the proposed method with alternative approaches, which demonstrate its advantages and broaden the possible application of these methods.


1 Introduction

Image segmentation is an important application of image processing techniques in which some, or all, objects in an image are isolated from the background. In other words, for an image \(z(\varvec{x})\) defined on the image domain \(\varOmega \subset {\mathbb {R}}^{2}\), we find a partitioning of \(\varOmega \) into subregions of interest. In the case of two-phase approaches, this consists of the foreground domain \(\varOmega _\mathrm{F}\) and background domain \(\varOmega _\mathrm{B}\), such that \(\varOmega =\varOmega _\mathrm{F}\cup \varOmega _\mathrm{B}\). In this work, we approach this problem with variational methods, particularly in cases where user input is incorporated. Specifically, we consider the convex relaxation approach of [8, 14], among many others. This consists of a binary labelling problem where the aim is to compute a function \(u(x)\in \{0,1\}\) that takes the value 1 in \(\varOmega _\mathrm{F}\) and 0 in \(\varOmega _\mathrm{B}\). This is obtained by relaxing the constraint to \(u\in [0,1]\) and minimising a functional that fits the solution to the data, subject to certain conditions on the regularity of the boundary of the foreground regions.

We first introduce the seminal work of Chan and Vese [15], a segmentation model that uses the level set framework of Osher and Sethian [31]. This approach assumes that the image z is approximately piecewise-constant, but, as the minimisation problem is non-convex, the result depends on the initialisation of the level set function. To avoid this, Chan et al. [14] reformulated the Chan–Vese model using convex relaxation, which gives the following data fitting functional

where \(f_{1}(\varvec{x})\) and \(f_{2}(\varvec{x})\) are data fitting terms indicating the foreground and background regions, respectively. In particular, in [14, 15] these are given by

It is common to fix \(\lambda = \lambda _{1}=\lambda _{2}\). The introduction of binary labels to image segmentation was also proposed by Lie et al. [26], with the connections between [14] and [26] discussed in Wei et al. [44]. The data fitting functional is balanced against a regularisation term, which typically penalises the length of the contour. This is represented by the total variation (TV) of the function [15, 37] and is sometimes weighted by an edge detection function \(g(s) = 1/(1+\beta s^{2})\) [8, 33, 35, 39]. Therefore, the regularisation term is given as

The convex segmentation problem, assuming fixed constants \(c_{1}\) and \(c_{2}\), is then defined by

In the case where the intensity constants are unknown, it is also possible to minimise \(F_\mathrm{{CV}}\) alternately with respect to \(u\), \(c_{1}\) and \(c_{2}\); however, this makes the problem non-convex and hence dependent on the initialisation of u. Functionals of this type have been widely studied with respect to two-phase segmentation [8, 14, 15], which is our main interest. Alternative choices of data fitting terms can be used when different assumptions are made on the image z; examples include [1, 2, 16, 25, 40, 43]. Multiphase approaches [9, 42] are also closely related to this formulation, although in this paper we focus on the two-phase problem because of the applications of interest. It is also important to acknowledge analogous methods in the discrete setting, such as [4, 18, 22, 36]. We do not discuss such methods in detail here, although we introduce the work of [17] in Sect. 3 and compare corresponding results in Sect. 7.
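The edge detection function \(g(s) = 1/(1+\beta s^{2})\) is simple to evaluate. The short Python sketch below applies it to a 1D intensity profile; it assumes that s is the magnitude of a forward-difference gradient, and the profile and the value \(\beta = 100\) are hypothetical choices made only for illustration.

```python
def edge_function(s, beta):
    """Edge detector g(s) = 1 / (1 + beta * s**2): about 1 in flat regions, small at strong edges."""
    return 1.0 / (1.0 + beta * s * s)


def forward_gradient(z):
    """Forward differences of a 1D profile; the last entry is 0 (no change beyond the boundary)."""
    return [z[i + 1] - z[i] for i in range(len(z) - 1)] + [0.0]


profile = [0.1, 0.1, 0.1, 0.8, 0.8, 0.8]       # hypothetical step edge
weights = [edge_function(abs(s), beta=100.0) for s in forward_gradient(profile)]
print([round(w, 3) for w in weights])           # [1.0, 1.0, 0.02, 1.0, 1.0, 1.0]
```

Because g multiplies \(|\nabla u|\) in the weighted TV term, placing the contour where g is small (here, at the step) is cheaper, which draws the boundary towards strong edges.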

In selective segmentation, the idea is to apply additional constraints such that user input is incorporated to isolate specific objects of interest. It is common for the user to input marker points to form a set \({\mathscr {M}}\), where \( {\mathscr {M}}=\{ (x_{i},y_{i})\in \varOmega , 1\le i\le k\} \), and from this we can form a foreground region \({\mathscr {P}}\) whose interior points are inside the object to be segmented. In the case that \({\mathscr {M}}\) is provided, \({\mathscr {P}}\) will be a polygon, but any user-defined region in the foreground is consistent with the proposed method. Some examples of selective or interactive methods include [10, 17, 21, 22, 27, 30, 35, 38, 41, 48]. A particular application of this in medical imaging is organ contouring in computed tomography (CT) images. This is often done manually, which can be laborious and inefficient, and it is often not possible to enhance existing methods with training data. In cases where learning-based methods are applicable, the works of Xu et al. [46] and Bernard and Gygli [5] are state-of-the-art approaches. We define the additional constraints in selective segmentation as follows:

where \({\mathscr {D}}(\varvec{x})\) is a distance penalty term, such as those in [34, 35, 39], and \(\theta \) is a selection parameter. Essentially, the idea is that the selection term \({\mathscr {D}}(\varvec{x})\) (based on the region \({\mathscr {P}}\) formed by the user input marker set) should penalise regions of the background (as defined by the data fitting term \(f_{2}({\varvec{x}})\)) and also pixels far from \({\mathscr {P}}\). In this paper, we choose \({\mathscr {D}}(\varvec{x})\) to be the geodesic distance penalty proposed in [35]. Explicitly, the geodesic distance from the region \({\mathscr {P}}\) formed from the marker set is given by:

where \({\mathscr {D}}_{M}^{0}(\varvec{x})\) is the solution of the following PDE:

The function \(q(\varvec{x})\) is image dependent and controls the rate of increase in the distance. It is defined as a function similar to

where \(\varepsilon _{{\mathscr {D}}}\) is a small nonzero parameter and \(\beta _{G}\) is a non-negative tuning parameter. We set the value of \(\beta _{G} =1000\) and \(\varepsilon _{{\mathscr {D}}} = 10^{-3}\) throughout. Note that if \(q(\varvec{x})\equiv 1\), then the distance penalty \({\mathscr {D}}_{M}(\varvec{x})\) is simply the normalised Euclidean distance, as used in [39].
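The eikonal problem defining \({\mathscr {D}}_{M}^{0}\) can be approximated on the pixel grid by a shortest-path computation. The Python sketch below is a simplified stand-in for the solver used in [35]: it runs Dijkstra's algorithm on a 4-connected grid, assumes \(q(\varvec{x}) = \varepsilon _{{\mathscr {D}}} + \beta _{G}|\nabla z(\varvec{x})|^{2}\) (a form consistent with the roles of the two parameters above, but an assumption here), and normalises by the maximum distance. The helper names are hypothetical.

```python
import heapq


def local_cost(z, eps_d=1e-3, beta_g=1000.0):
    """Assumed cost q = eps_d + beta_g * |grad z|^2, with central differences clamped at the border."""
    rows, cols = len(z), len(z[0])
    q = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            gx = (z[i][min(j + 1, cols - 1)] - z[i][max(j - 1, 0)]) / 2.0
            gy = (z[min(i + 1, rows - 1)][j] - z[max(i - 1, 0)][j]) / 2.0
            q[i][j] = eps_d + beta_g * (gx * gx + gy * gy)
    return q


def geodesic_distance(q, seeds):
    """Dijkstra on a 4-connected grid; a step costs the mean of q at its two end pixels.

    seeds: set of (row, col) pixels inside the user region P, where the distance is 0.
    Returns the distance divided by its maximum, so values lie in [0, 1].
    """
    rows, cols = len(q), len(q[0])
    dist = [[float("inf")] * cols for _ in range(rows)]
    heap = []
    for (i, j) in seeds:
        dist[i][j] = 0.0
        heapq.heappush(heap, (0.0, i, j))
    while heap:
        d, i, j = heapq.heappop(heap)
        if d > dist[i][j]:
            continue  # stale entry: a shorter path to (i, j) was already found
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            a, b = i + di, j + dj
            if 0 <= a < rows and 0 <= b < cols:
                nd = d + 0.5 * (q[i][j] + q[a][b])
                if nd < dist[a][b]:
                    dist[a][b] = nd
                    heapq.heappush(heap, (nd, a, b))
    dmax = max(max(row) for row in dist)
    return [[v / dmax if dmax > 0 else 0.0 for v in row] for row in dist]


# Dark region on the left, bright region on the right, seed in the dark region.
z = [[0.2, 0.2, 0.2, 0.9, 0.9] for _ in range(5)]
D = geodesic_distance(local_cost(z), {(2, 0)})
```

With \(q\equiv 1\) and 4-connectivity this recovers the city-block distance rather than the Euclidean one; fast marching methods give a closer approximation of the continuous distance. With the image-dependent cost, pixels across the intensity edge are much further from the seed than pixels the same number of steps away on the seed's side.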

A general selective segmentation functional, assuming homogeneous target regions, is therefore given by:

Assuming that the optimal intensity constants \(c_{1}\) and \(c_{2}\) are fixed, the minimisation problem is then:

Again, it is possible to alternately minimise \(F_{S}(u,c_1,c_2)\) with respect to the constants \(c_{1}\) and \(c_{2}\) to obtain the average intensity in \(\varOmega _\mathrm{F}\) and \(\varOmega _\mathrm{B}\), respectively. However, in selective segmentation it is often sufficient to fix these according to the user input. In the framework of (9), the Chan–Vese terms [14, 15, 29] have limitations due to the dependence on \(c_{2}\). In conventional two-phase segmentation problems, it makes sense to penalise deviations from \(c_{2}\) outside the contour; however, for selective segmentation we need not consider the intensities outside of the object we have segmented. Regions outside the object should be penalised positively regardless of whether their intensity is above or below \(c_{1}\). The Chan–Vese terms cannot ensure this, as they are based on a fixed “exterior” intensity \(c_{2}\) and can lead to negative penalties on regions outside the object of interest. It is our aim in this paper to address this problem.

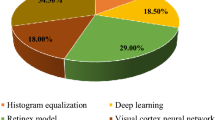

The motivation for this work comes from observing contradictions in using piecewise-constant intensity fitting terms in selective segmentation. Whilst good results are possible with this approach, the exceptions lead to severe limitations in practice. They are quite common in medical imaging, as demonstrated in Fig. 1, where the target foreground has a low intensity. Since the corresponding background includes large regions of low intensity, the optimal average intensities for this segmentation problem are \(c_{1}=0.1534\) and \(c_{2}=0.1878\). For cases where \(c_{1}\approx c_{2}\), we see from (1) that \(f_{1}-f_{2}\approx 0\) almost everywhere in the domain \(\varOmega \). This means that it is very difficult to achieve an adequate result without over-reliance on the user input or parameter selection.
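To see why the fitting term degenerates, expand the difference of the Chan–Vese terms \(f_{1}=(z-c_{1})^{2}\) and \(f_{2}=(z-c_{2})^{2}\):

\[
f_{1}-f_{2} = (z-c_{1})^{2}-(z-c_{2})^{2} = (c_{2}-c_{1})\,(2z-c_{1}-c_{2}).
\]

The difference is linear in z with slope \(2(c_{2}-c_{1})\) and changes sign at \(z=(c_{1}+c_{2})/2\). For the values of Fig. 1, \(c_{2}-c_{1}=0.0344\) and \((c_{1}+c_{2})/2=0.1706\), so for \(z\in [0,1]\) we have \(|f_{1}-f_{2}|\le 0.0344\times 1.6588\approx 0.057\), and every pixel darker than 0.1706, including dark background, is favoured as foreground by the fitting term.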

The central premise for applying Chan–Vese-type methods is the assumption that the image approximately consists of

where \(\eta \) is noise and \(\chi _{i}\) is the characteristic function of the region \(\varOmega _{i}\), for \(i=F,B\). The idea of selective segmentation is to incorporate user input to apply constraints that exclude regions that the fitting term would classify as foreground, based on their location in the image. We use a distance constraint which penalises the distance from the user input markers. However, a key problem for selective segmentation is that, when the optimal intensity values \(c_{1}\) and \(c_{2}\) are similar, the intensity fitting term becomes uninformative as the contour evolves. This is illustrated in Fig. 3. The purpose of our approach is to construct a model based on assumptions that are consistent with the observed image and any homogeneous target region of interest. A common aim in selective segmentation is to discriminate between objects of a similar intensity [34, 35, 39]. However, the fitting terms in previous formulations [24, 34, 35, 39] are not applicable in many cases, as the formulation contains contradictions in this context. We address this in detail in the following section.

In this paper, our main contribution is to highlight a crucial flaw in the assumptions behind many current selective segmentation approaches and to propose a new fitting term for such methods. We demonstrate how our reformulation is capable of achieving superior results and is more robust to parameter choices than existing approaches, allowing for more consistency in practice. In Sect. 2, we give a brief review of alternative intensity fitting terms proposed in the literature and discuss them in relation to selective segmentation. In Sect. 3, we briefly describe the alternative selective segmentation approaches against which we compare our method. In Sect. 4, we introduce the proposed model, focussing on a fitting term that allows for significant intensity variation in the background domain. In Sect. 5, we discuss the implementation of each approach in a convex relaxation framework; we provide the algorithm in Sect. 6 and detail some experimental results in Sect. 7. Finally, in Sect. 8 we give some concluding remarks.

2 Related Approaches

Here, we discuss work that has proposed alternative data fitting terms closely related to Chan–Vese [15]. In order to make direct comparisons, we convert each approach to the unified framework of convex relaxation [14]. It is worth noting that this alternative implementation is equivalent in some respects, but the results might differ slightly from those of the original methods. We consider these models in the context of selective segmentation, so all formulations have the following structure:

We are interested in the effectiveness of f(u) in this context, which we focus on next. In particular, we detail various choices of f(u) from the literature that are generalisations of the Chan–Vese approach. In the following, we denote by \(u_{\gamma }\) the result of thresholding the minimiser of a convex formulation, such as (11), at a value \(\gamma \in (0,1)\) in the conventional way [14], i.e. \(u_{\gamma }=1\) where \(u>\gamma \) and \(u_{\gamma }=0\) otherwise.

2.1 Region-Scalable Fitting (RSF) [25]

The data fitting term from the work of Li et al. [25], known as Region-Scalable Fitting (RSF), written in a form consistent with the convex relaxation technique of [14], is given by

where

and \(K_{\sigma }(\varvec{x})\) is chosen as a Gaussian kernel with scale parameter \(\sigma >0\). The RSF selective formulation is then given as follows:

The functions \(h_{1}(\varvec{x})\) and \(h_{2}(\varvec{x})\), which are generalisations of \(c_{1}\) and \(c_{2}\) from Chan–Vese, are updated iteratively by

Using the RSF fitting term, any deviations of z from \(h_{1}\) and \(h_{2}\) are smoothed by the convolution operator, \(K_{\sigma }\). This allows for intensity inhomogeneity in the foreground and background of target objects.
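The local fitting functions can be computed with a handful of convolutions. The 1D Python sketch below assumes the standard RSF update from [25] written for a relaxed u, namely \(h_{1} = K_{\sigma }*(uz)/K_{\sigma }*u\) and \(h_{2} = K_{\sigma }*((1-u)z)/K_{\sigma }*(1-u)\); the kernel truncation at \(3\sigma \), the replicated boundary and the small constant guarding the division are choices made for this sketch, not details taken from [25].

```python
import math


def gaussian_kernel(sigma):
    """Normalised 1D Gaussian K_sigma, truncated at three standard deviations."""
    radius = int(math.ceil(3 * sigma))
    k = [math.exp(-(t * t) / (2.0 * sigma * sigma)) for t in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]


def convolve(signal, kernel):
    """Same-size convolution with replicated boundary values (kernel has odd length)."""
    r, n = len(kernel) // 2, len(signal)
    return [sum(kernel[t + r] * signal[min(max(i - t, 0), n - 1)] for t in range(-r, r + 1))
            for i in range(n)]


def rsf_local_fits(z, u, sigma, tiny=1e-12):
    """Local weighted means h1 (inside, weights u) and h2 (outside, weights 1 - u)."""
    k = gaussian_kernel(sigma)
    v = [1.0 - ui for ui in u]
    num1 = convolve([zi * ui for zi, ui in zip(z, u)], k)
    num2 = convolve([zi * vi for zi, vi in zip(z, v)], k)
    den1, den2 = convolve(u, k), convolve(v, k)
    h1 = [a / (b + tiny) for a, b in zip(num1, den1)]
    h2 = [a / (b + tiny) for a, b in zip(num2, den2)]
    return h1, h2


# A dark object between two background regions of different brightness.
z = [0.5, 0.55, 0.6, 0.2, 0.2, 0.2, 0.7, 0.75, 0.8]
u = [0, 0, 0, 1, 1, 1, 0, 0, 0]
h1, h2 = rsf_local_fits(z, u, sigma=1.0)
```

The outside fit \(h_{2}\) follows the darker background on the left and the brighter background on the right, which a single constant \(c_{2}\) cannot do.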

2.2 Local Chan–Vese (LCV) Fitting [43]

Wang et al. [43] proposed the Local Chan–Vese (LCV) model. In terms of the equivalent convex formulation, the data fitting term is given by

where

and \(z^{*} = M_{k}*z\). Here, \(M_{k}\) is an averaging convolution with a \(k \times k\) window. The LCV selective formulation is then given as

The values \(c_{1},c_{2},d_{1},d_{2}\) which minimise this functional for \(u_{\gamma }\) are given by

The formulation is minimised iteratively. In the LCV model, the fitting functions \(f_{1}(\varvec{x})\) and \(f_{2}(\varvec{x})\) each include an additional term, and the terms are weighted by the parameters \(\alpha \) and \(\beta \). The principle of the LCV model is that the difference image \(z^{*}-z\) has higher contrast than z, so a two-phase segmentation can be computed on this image.
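The display equations for LCV are not reproduced in this text, so the 1D Python sketch below assumes fitting functions of the form \(f_{i} = \alpha (z-c_{i})^{2} + \beta (z^{*}-z-d_{i})^{2}\), with \(c_{i}\) and \(d_{i}\) the averages of z and of the difference image \(z^{*}-z\) over the regions given by \(u_{\gamma }\). This matches the description above (an additional term on the difference image, weighted against the Chan–Vese term), but the exact form should be checked against [43]. A k-point moving average stands in for the \(k\times k\) window \(M_{k}\).

```python
def mean_filter(z, k=3):
    """k-point moving average (k odd) with replicated boundary values, standing in for M_k."""
    r, n = k // 2, len(z)
    return [sum(z[min(max(i + t, 0), n - 1)] for t in range(-r, r + 1)) / k for i in range(n)]


def region_mean(values, mask):
    """Average of values over the pixels where the binary mask equals 1."""
    selected = [v for v, m in zip(values, mask) if m]
    return sum(selected) / len(selected)


def lcv_fitting(z, u_gamma, k=3, alpha=1.0, beta=1.0):
    """Assumed LCV fitting functions f1, f2, with c_i, d_i averaged over the thresholded u_gamma."""
    diff = [a - b for a, b in zip(mean_filter(z, k), z)]   # difference image z* - z
    outside = [1 - m for m in u_gamma]
    c1, c2 = region_mean(z, u_gamma), region_mean(z, outside)
    d1, d2 = region_mean(diff, u_gamma), region_mean(diff, outside)
    f1 = [alpha * (zi - c1) ** 2 + beta * (di - d1) ** 2 for zi, di in zip(z, diff)]
    f2 = [alpha * (zi - c2) ** 2 + beta * (di - d2) ** 2 for zi, di in zip(z, diff)]
    return f1, f2


z = [0.2] * 4 + [0.8] * 4           # dark object on the left, bright background on the right
u_gamma = [1] * 4 + [0] * 4
f1, f2 = lcv_fitting(z, u_gamma)
```

Away from the edge the difference image is close to zero, so the extra term mainly acts near intensity transitions, where \(z^{*}-z\) is large.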

2.3 Hybrid (HYB) Fitting [1]

Based on extending the LCV model, Ali et al. [1] proposed the following data fitting term,

where

Here, \(z^{*} = M_{k}*z\), \(w = z^{*}z\), and \(w^{*} = M_{k}*w\), with \(M_{k}\) the averaging convolution as used in the LCV model. The values \(c_{1},c_{2},d_{1},d_{2}\) are updated in a similar way to [43], with further details found in [1]. The authors refer to this approach as the Hybrid (HYB) Model. The HYB selective formulation is then given as

The key aim of the HYB model is to account for intensity inhomogeneity in the foreground and background of the image through the product image w. In LCV, the presence of the blurred image \(z^{*}\) in the data fitting term deals with intensity inhomogeneity, whilst including z helps identify contrast between regions. The authors found that the product image \(w=z^{*}z\) can improve the data fitting in both respects. Therefore, they construct an LCV-type fitting function with w rather than the original z. Their results suggest that this approach is more robust.
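The auxiliary images used by HYB are inexpensive to form. The 1D Python sketch below builds \(z^{*}=M_{k}*z\), the product image \(w=z^{*}z\) and its local average \(w^{*}=M_{k}*w\), with a k-point moving average and replicated boundaries standing in for \(M_{k}\); the HYB fitting terms themselves are not reproduced here.

```python
def mean_filter(z, k=3):
    """k-point moving average (k odd) with replicated boundary values, standing in for M_k."""
    r, n = k // 2, len(z)
    return [sum(z[min(max(i + t, 0), n - 1)] for t in range(-r, r + 1)) / k for i in range(n)]


def hyb_images(z, k=3):
    """Return z* = M_k * z, the product image w = z* z, and w* = M_k * w."""
    z_star = mean_filter(z, k)
    w = [a * b for a, b in zip(z_star, z)]
    return z_star, w, mean_filter(w, k)


z = [0.2, 0.2, 0.2, 0.8, 0.8, 0.8]
z_star, w, w_star = hyb_images(z)
```

In flat regions \(w\approx z^{2}\), so the ratio between the two intensities in this example grows from 4 in z to 16 in w.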

2.4 Generalised Averages (GAV) Fitting [2]

Recently, Ali et al. [2] proposed using the data fitting terms of Chan–Vese in a signed pressure force function framework [48]. They refer to this approach as Generalised Averages (GAV) as they update the intensity constants in an alternative way, detailed below. In the convex framework, we consider the selective GAV functional:

where \(f_\mathrm{{GAV}}(u)=f_\mathrm{{CV}}(u)\). This is identical to the CV selective formulation (8). However, the authors propose an alternative update for the fitting constants \(c_{1}\) and \(c_{2}\), given as follows:

with \(\beta \in {\mathbb {R}}\). If \(\beta = 1\), the approach is identical to CV. In [2], the authors assert that the proposed adjustments have the following properties. As \(\beta \rightarrow \infty \), \(c_{1}\) and \(c_{2}\) approach the maximum intensity in the foreground and background of the image, respectively. Similarly, as \(\beta \rightarrow -\infty \), \(c_{1}\) and \(c_{2}\) approach the minimum intensity in the foreground and background of the image, respectively. For example, if a high value of \(\beta \) is set, \(c_{1}\) will take a larger value than in CV, which can be useful for selective segmentation. Likewise, for the image in Fig. 1, we can achieve a larger \(c_{2}\) value by setting \(\beta >1\) and a smaller value by setting \(\beta <1\). There is therefore more flexibility when using this data fitting term in selective formulations. However, it involves the selection of the parameter \(\beta \), which can be difficult to optimise.
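The update formula is not reproduced above. The limiting behaviour described (\(\beta = 1\) recovers the mean, large positive or negative \(\beta \) tends towards the maximum or minimum) is exactly that of the Lehmer mean \(\sum z^{\beta }/\sum z^{\beta -1}\), so the Python sketch below assumes this form, applied to the foreground pixels for \(c_{1}\) and to the background pixels for \(c_{2}\). The intensities are hypothetical.

```python
def generalised_average(values, beta):
    """Lehmer-type mean sum(z**beta) / sum(z**(beta - 1)).

    beta = 1 gives the arithmetic mean; large positive (negative) beta moves the value
    towards the maximum (minimum). Intensities must be strictly positive so that
    negative powers are defined.
    """
    return sum(v ** beta for v in values) / sum(v ** (beta - 1) for v in values)


background = [0.1, 0.2, 0.6]        # hypothetical background intensities
for beta in (-20, 1, 20):
    print(beta, round(generalised_average(background, beta), 4))
```

A single parameter therefore slides the fitted constant continuously between the smallest and largest intensity in the region, which is the extra flexibility referred to above.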

3 Alternative Selective Segmentation Models

We now introduce two recent methods that incorporate user input to perform selective segmentation. Each involves input in the form of foreground/background regions to indicate relevant structures of interest. An example of this can be seen in Fig. 18, where red regions indicate foreground and blue regions indicate background. We compare against the work of Nguyen et al. [30], which uses a similar convex relaxation framework to the proposed approach, and Dong et al. [17], which uses a variation of the random walk approach. We summarise the essential aspects of each approach in the following.

3.1 Constrained Active Contours (CAC) [30]

The authors use a probability map, \(P({\varvec{x}})\), from Bai and Sapiro [4] where the geodesic distances to the foreground/background regions are denoted by \(D_\mathrm{F}({\varvec{x}})\) and \(D_\mathrm{B}({\varvec{x}})\), respectively. An approximation of the probability that a point \({\varvec{x}}\) belongs to the foreground is then given by

Foreground/background Gaussian mixture models (GMM) are estimated from the user input. The terms \(\mathrm{Pr}({\varvec{x}}|F)\) and \(\mathrm{Pr}({\varvec{x}}|B)\) denote the probability that a point, \({\varvec{x}}\), belongs to the foreground and background, respectively. The normalised log likelihood for each is then given by

GMMs are widely used in selective segmentation [4, 17, 18, 22, 36], and the authors in [30] incorporate this idea into the framework we consider with the following data fitting term:

for a weighting parameter \(\alpha _{0}\in [0,1]\). It is proposed that \(\alpha _{0}\) is selected automatically as follows:

where N is the total number of pixels in the image. Defining \(g_{0}\) as the function g(s) applied to the image \(z({\varvec{x}})\) and \(g_{p}\) applied to the GMM probability map \(P_\mathrm{F}({\varvec{x}})\), an enhanced edge function is defined as

for a weighting parameter \(\beta _{0}\in [0,1]\), which can be set automatically in a similar way to (28). Thus, Nguyen et al. [30] define the Constrained Active Contours (CAC) Model as

They obtain a solution using the split Bregman method of Goldstein et al. [19], although other methods are applicable and should yield similar results. As this is not the focus of this paper, we omit the details here. In Sect. 7, we compare our method against CAC to see how our data fitting term performs against a GMM-based approach.

3.2 Submarkov Random Walks (SRW) [17]

We now introduce a recent selective segmentation method by Dong et al. [17] known as Submarkov Random Walks (SRW). Rather than using the continuous framework of [14], this approach is based in the discrete setting where each pixel in the image is treated as a node in a weighted graph. Random walks (RW) have been widely used for segmentation since the work of Grady [22]. SRW is capable of achieving impressive results with user-defined foreground and background regions. The selective segmentation result can be obtained by assigning a label to each pixel based on the computed probabilities of the random walk approach. For brevity, we do not provide the full details of the method here; however, further details can be found in [17]. We compare SRW to our proposed approach on a CT data set in Sect. 7.4.

We now introduce essential notation to understand the approach of [17]. In RW, an image is formulated as a weighted undirected graph \(G=(V,E)\) with nodes \(v\in V\) and edges \(e\in E\subseteq V\times V\). Each node \(v_{i}\) represents an image pixel \(x_{i}\). An edge \(e_{ij}\) connects two nodes \(v_{i}\) and \(v_{j}\), and a weight \(w_{ij}\in W\) of edge \(e_{ij}\) measures the likelihood that a random walker will cross this edge:

where \(I_{i}\) and \(I_{j}\) are pixel intensities, with \(\sigma _{0},\varepsilon _{0}\in {\mathbb {R}}\). In SRW, the user indicates foreground/background regions in a similar way to CAC, as shown in Fig. 18, and the method can be viewed as a traditional random walker with added auxiliary nodes. In [17], the seeds are defined as a set of labelled nodes \(V_{M}=\{V^{l_{1}},V^{l_{2}},\ldots ,V^{l_{K}}\}\). A set of labels is defined, \(LS=\{l_{1},l_{2},\ldots ,l_{K}\}\), with K the number of labels, \(V^{l_{k}}=\{V_{1}^{l_{k}},V_{2}^{l_{k}},\ldots ,V_{M_{k}}^{l_{k}}\}\) the seeds with label \(l_{k}\), and \(M_{k}\) the number of seeds labelled \(l_{k}\). The prior is then constructed from the seeded nodes (defined by the user). Assuming a label \(l_{k}\) has an intensity distribution \(H_{k}\) (based on GMM learning), a set of auxiliary nodes \(H=\{h_{1},h_{2},\ldots ,h_{K}\}\) is added into an expanded graph \(G_{e}\) to define a graph with prior \({\bar{G}}\). Each prior node is connected with all nodes in V, and the weight \(w_{ih_{k}}\) of an edge between a prior node \(h_{k}\) and a node \(v_{i}\in V\) is proportional to \(u^{k}_{i}\), the probability density of \(H_{k}\) at \(v_{i}\).

The authors define the probabilities of each node \(v_{i}\in V\) belonging to label \(l_{k}\) as the average reaching probability, denoted \({\bar{r}}_{i}^{l_{k}}\). This term incorporates the auxiliary nodes introduced above and is dependent on multiple variables and parameters, including \(w_{ij}\) (31). Further details can be found in [17]. The segmentation result is then found by solving the following discrete optimisation problem:

where \({\bar{R}}_{i}\) represents the final label for each node. In other words, for a two-phase segmentation problem, \({\bar{R}}_{i}\) is analogous to the discretised solution of a convex relaxation problem in the continuous setting. Comparisons in terms of accuracy can therefore be made directly, which we elaborate on further in Sect. 7. The authors also detail the optimisation procedure and aspects of dealing with noise reduction.
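The random walk construction can be illustrated on the smallest possible graph. The Python sketch below is the classical random walker of Grady [22] (not SRW, whose auxiliary prior nodes it omits) on a 1D chain of pixels, with a foreground seed at the first pixel and a background seed at the last. It assumes a Gaussian edge weight \(w_{ij}=\exp (-(I_{i}-I_{j})^{2}/(2\sigma _{0}^{2}))+\varepsilon _{0}\), a common choice that may differ from (31). On a chain, the harmonic solution of the Dirichlet problem reduces to a ratio of summed resistances \(1/w_{ij}\), so no linear solver is needed.

```python
import math


def edge_weight(a, b, sigma0=0.1, eps0=1e-6):
    """Assumed Gaussian weight: close to 1 for similar intensities, close to eps0 across an edge."""
    return math.exp(-((a - b) ** 2) / (2.0 * sigma0 ** 2)) + eps0


def random_walker_chain(intensities, sigma0=0.1, eps0=1e-6):
    """Probability that a walker starting at each pixel reaches the foreground seed (first pixel)
    before the background seed (last pixel). On a chain this is the share of the total
    resistance lying between the pixel and the background seed."""
    resistances = [1.0 / edge_weight(a, b, sigma0, eps0)
                   for a, b in zip(intensities, intensities[1:])]
    total = sum(resistances)
    return [sum(resistances[i:]) / total for i in range(len(intensities))]


profile = [0.2, 0.2, 0.2, 0.8, 0.8, 0.8]
probs = random_walker_chain(profile)
labels = ["F" if p > 0.5 else "B" for p in probs]
```

Labelling each pixel by the larger of its two probabilities gives the two-phase result; in this example the boundary falls exactly at the intensity step, because almost all of the resistance is concentrated on the single low-weight edge.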

4 Proposed Model

In this section, we introduce the proposed data fitting term for selective segmentation. We consider objects that are approximately homogeneous in the target region. Implicitly, it is then assumed that the region \({\mathscr {P}}\), provided by the user, gives a reasonable approximation of the optimal \(c_{1}\) value, and therefore an appropriate foreground fitting function, \(f_{1}\), is given by CV (2). For this reason, it makes sense to retain this term in the proposed approach. The contradiction lies in how the background fitting function \(f_{2}\) is defined. Under the piecewise-constant assumptions of the image, and in many of the related approaches, the background is expected to be described by a single constant value, \(c_{2}\). If \(c_{1}\approx c_{2}\), then \(f_{2}\approx f_{1}\) everywhere, and therefore the fitting term cannot accurately separate background regions from the foreground. It is not practical to rely on \(f_{S}(u)\) to overcome this difficulty, as it would produce an over-dependence on the choice of \({\mathscr {M}}\) and \({\mathscr {P}}\), which is prohibitive in practice. An alternative function \(f_{2}\) must therefore be defined which is compatible with \(f_{1}\) and \(f_{S}(u)\). Here, we define a new data fitting term that penalises background objects in a way that avoids these problems, by allowing intensity variation above and below the value \(c_{1}\). In order to design a new functional, we first look at the original CV background fitting function

It is clear that in an approximately piecewise-constant image this function will be small outside the target region (i.e. where the image takes values near \(c_{2}\)) and larger inside the target region. Our aim is for a new fitting term to mimic this behaviour in a way that is consistent with selective segmentation, where only intensities close to the ‘foreground intensity’ \(c_{1}\) should incur a background penalty. It is beneficial to introduce two parameters, \(\gamma _{1}\) and \(\gamma _{2}\), to enforce the penalty on regions of intensity in the range \([c_{1}-\gamma _{1},c_{1}+\gamma _{2}]\), i.e. to enforce the penalty asymmetrically around \(c_{1}\). We propose the following function to achieve this:

This function takes its maximum value where \(z(\varvec{x})=c_{1}\) and is 0 for \(z(\varvec{x})<c_{1}-\gamma _{1}\) and for \(z(\varvec{x})>c_{1}+\gamma _{2}\). In Fig. 2, we provide a 1D representation of \({\tilde{f}}_{2}(\varvec{x})\) for various choices of \(\gamma _{1}\) and \(\gamma _{2}\), with \(z(\varvec{x})\in [0,1]\) and \(c_{1} = 0.5\). Here, it can be seen how the proposed data fitting term acts as a penalty in relation to a fixed constant \(c_{1}\). It is analogous to CV, whilst accounting for the idea of selective segmentation with a data fitting term. The main advantage of this term is that it removes the dependence on \(c_{2}\) from the formulation, a constant which has no meaningful relation to the solution of a selective segmentation problem: even when the foreground is relatively homogeneous, the background may have intensities of a similar value to \(c_{1}\), which causes difficulties in obtaining an accurate solution. We detail the proposed fitting term in the following subsection.
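Equation (33) is not reproduced in this text. As one function with the stated properties (maximum at \(c_{1}\), zero outside \((c_{1}-\gamma _{1},c_{1}+\gamma _{2})\), independent widths on each side), the Python sketch below uses a raised-cosine bump; this particular shape is an assumption for illustration, evaluated in the setting of Fig. 2 with \(c_{1}=0.5\).

```python
import math


def f2_tilde(z, c1, gamma1, gamma2):
    """Assumed raised-cosine background penalty (illustrative, not necessarily equation (33)).

    Equal to 2 at z = c1, decreasing smoothly to 0 at c1 - gamma1 (below) and
    c1 + gamma2 (above), and 0 for all other intensities.
    """
    if c1 - gamma1 < z <= c1:
        return 1.0 + math.cos(math.pi * (z - c1) / gamma1)
    if c1 < z < c1 + gamma2:
        return 1.0 + math.cos(math.pi * (z - c1) / gamma2)
    return 0.0


# 1D profile in the spirit of Fig. 2: c1 = 0.5 with an asymmetric window.
samples = [f2_tilde(t / 20.0, c1=0.5, gamma1=0.2, gamma2=0.4) for t in range(21)]
```

Intensities well above or well below \(c_{1}\) incur no background penalty, whichever side of \(c_{1}\) they lie on, which is the property the argument above relies on.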

4.1 New Fitting Term

We define the proposed data fitting functional as follows:

for \(f_{1}(\varvec{x})=(z-c_{1})^{2}\) and \({\tilde{f}}_{2}(\varvec{x})\) as defined in (33). This is consistent with the intensities of the observed object and with the concept of selective segmentation. In Fig. 3, we see the difference between the CV and proposed fitting terms for given user input on a CT image. For this image, the CV fitting terms are near 0 within the target region, despite there being a distinct homogeneous area with good contrast on the boundary. This illustrates the problem we aim to overcome, and the proposed fitting term is designed to avoid it in cases like this. By defining \({\tilde{f}}_{2}\) as in (33), there is no contradiction if the foreground and background intensities of the target region are similar.

For images where we assume that the target foreground is approximately homogeneous, we have generally found that fixing \(c_{1}\) according to the user input is preferable. We compute \(c_{1}\) as the average intensity inside the region \({\mathscr {P}}\) formed from the user input marker point set. We therefore propose to minimise the following functional with respect to \(u\in [0,1]\), given a fixed \(c_{1}\):

where \(f_{S}\) is the geodesic distance computed as described earlier using (6). The minimisation problem is given as

The model consists of weighted TV regularisation with a geodesic distance constraint as in [35]. However, alternative constraints are possible, such as the Euclidean distance [39] or moments [24]. It is important to note that we have defined the model in a similar framework to the related approaches discussed previously. The main idea is to establish how the proposed fitting term, \(f_\mathrm{{PM}}(u)\), performs compared to alternative methods. Next, we describe how we determine the values of \(\gamma _{1}\) and \(\gamma _{2}\) in the function \({\tilde{f}}_{2}(\varvec{x})\) automatically. This is important in practice, as it avoids any additional user input or parameter dependence to achieve an accurate result. In subsequent sections, we provide details of how we obtain a solution for the proposed model.
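Computing \(c_{1}\) from the markers, as described above, only requires a point-in-polygon test. The Python sketch below averages z over the pixel centres that fall inside the marker polygon \({\mathscr {P}}\), using the even–odd (ray casting) rule; the convention that a marker is an (x, y) = (column, row) pair and the handling of points exactly on the boundary are assumptions of this sketch.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd rule: cast a ray towards +x and count how many polygon edges it crosses."""
    inside = False
    n = len(polygon)
    for k in range(n):
        x1, y1 = polygon[k]
        x2, y2 = polygon[(k + 1) % n]
        if (y1 > y) != (y2 > y):                       # edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def c1_from_markers(z, markers):
    """Average intensity of z over pixel centres (column x, row y) inside the marker polygon."""
    values = [z[row][col]
              for row in range(len(z))
              for col in range(len(z[0]))
              if point_in_polygon(col, row, markers)]
    return sum(values) / len(values)


# Hypothetical 6x6 image: a dark square object on a bright background.
z = [[0.3 if 1 <= r <= 4 and 1 <= c <= 4 else 0.9 for c in range(6)] for r in range(6)]
markers = [(1.5, 1.5), (3.5, 1.5), (3.5, 3.5), (1.5, 3.5)]
c1 = c1_from_markers(z, markers)
```

Because \({\mathscr {P}}\) lies inside the object, only object pixels contribute, so \(c_{1}\) here equals the object intensity 0.3 rather than a mixture of object and background.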

4.2 Parameter Selection

For a particular problem, it is quite straightforward to optimise the choice of \(\gamma _{1}\) and \(\gamma _{2}\) experimentally, but we would like a method that is not sensitive to the choice of \(\gamma _{1}\) and \(\gamma _{2}\) and that does not require the user to choose these values manually. Therefore, in this section we explain how to choose these values automatically, based on justifiable assumptions about general selective segmentation problems. To select the parameters \(\gamma _{1}\) and \(\gamma _{2}\), we use Otsu’s method [32] to divide the histogram of image intensities into N partitions. Otsu’s thresholding is an automatic clustering method which chooses threshold values that minimise the intra-class variance. An efficient implementation is available in MATLAB as the function \(\texttt {multithresh}\), which we use to divide the histogram with \(N-1\) thresholds \(T_{i}\).

We use the thresholds from Otsu’s method to find \(\gamma _{1}\) and \(\gamma _{2}\) as follows. There are three cases to consider, based on the value of \(c_{1}\) computed from the user input: (i) \(T_{i-1}\le c_{1}\le T_{i}\) for some \(1<i\le N-1\), (ii) \( 0 \le c_{1}\le T_{1}\), (iii) \( T_{N-1} \le c_{1}\le 1\). For each case, we set the parameters as follows:

- (i) \(\gamma _{1} = c_{1} - T_{i-1},\ \ \ \gamma _{2} = T_{i} - c_{1}\)
- (ii) \(\gamma _{1}=c_{1},\ \ \ \gamma _{2} = T_{1} - c_{1}\)
- (iii) \(\gamma _{1} = c_{1}-T_{N-1},\ \ \ \gamma _{2} = 1-c_{1}\)

Choosing N too large could make \(\gamma _{1}\) and \(\gamma _{2}\) too small, as the histogram would be partitioned too finely. Generally, we only ever need to consider a maximum of 3 phases for selective segmentation. If the image has a large number of pixels with intensity on only one side of \(c_{1}\) (either above or below), the image can be considered two-phase in practice. Conversely, if large numbers of pixels have intensities both above and below \(c_{1}\), the image can essentially be considered three-phase in the context of selective segmentation. This is due to the way \(\tilde{f_{2}}\) has been defined. Therefore, we set \(N=3\) for all tests. In Fig. 4, we can see the Otsu thresholds chosen for various images given in this paper. They divide the peaks in the histogram well, and once we know the value of \(c_{1}\) (the approximation of the intensity of the object we would like to segment), we can automatically choose \(\gamma _{1}\) and \(\gamma _{2}\) according to this criterion.
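The selection rule can be sketched end to end. Rather than MATLAB's multithresh, the Python sketch below uses an exhaustive three-class Otsu search over a 64-bin histogram of intensities in [0, 1] (so N = 3 and two thresholds), followed by cases (i)–(iii). The bin count and the synthetic data are illustrative assumptions.

```python
def otsu_two_thresholds(values, bins=64):
    """Exhaustive 3-class Otsu on [0, 1]: thresholds T1 < T2 maximising the between-class
    variance, which is equivalent to minimising the intra-class variance."""
    hist = [0] * bins
    for v in values:
        hist[min(int(v * bins), bins - 1)] += 1
    n = float(len(values))
    centres = [(b + 0.5) / bins for b in range(bins)]
    mean_all = sum(h * c for h, c in zip(hist, centres)) / n

    def between(lo, hi):
        """Contribution w * (mu - mean)^2 of the class made of bins lo..hi-1."""
        count = sum(hist[lo:hi])
        if count == 0:
            return 0.0
        mu = sum(h * c for h, c in zip(hist[lo:hi], centres[lo:hi])) / count
        return (count / n) * (mu - mean_all) ** 2

    best, best_pair = -1.0, (1, 2)
    for a in range(1, bins - 1):
        for b in range(a + 1, bins):
            score = between(0, a) + between(a, b) + between(b, bins)
            if score > best:
                best, best_pair = score, (a, b)
    return best_pair[0] / bins, best_pair[1] / bins


def select_gammas(c1, t1, t2):
    """Cases (ii), (iii) and (i) for N = 3, given thresholds t1 < t2."""
    if c1 <= t1:                 # case (ii): c1 in the lowest class
        return c1, t1 - c1
    if c1 >= t2:                 # case (iii): c1 in the highest class
        return c1 - t2, 1.0 - c1
    return c1 - t1, t2 - c1      # case (i): t1 < c1 < t2


# Synthetic image with three intensity clusters; the user region has mean c1 = 0.5.
values = [centre + 0.1 * (k / 99.0 - 0.5) for centre in (0.1, 0.5, 0.9) for k in range(100)]
t1, t2 = otsu_two_thresholds(values)
gamma1, gamma2 = select_gammas(0.5, t1, t2)
```

Here \(c_{1}=0.5\) falls between the two thresholds (case (i)), so \(\gamma _{1}\) and \(\gamma _{2}\) are its distances to the neighbouring thresholds. Because the gaps between clusters are empty, any threshold inside a gap scores equally and the search simply keeps the first one it finds.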

5 Numerical Implementation

We now introduce the framework in which we compute a solution to the minimisation of the proposed model, as well as the related models introduced in Sects. 1 and 2. All consist of the minimisation problem

for \(X=\text {CV, RSF, LCV, HYB, GAV, PM}\), respectively. Minimisation problems of this type (37) have been widely studied in terms of continuous optimisation in imaging, including two-phase segmentation. A summary of such methods in recent years is given by Chambolle and Pock [13]. Details of the introduction of binary labels to image segmentation can be found in Lie et al. [26] and Chan et al. [14], and our numerical scheme follows the approach in [14]: enforcing the constraint in (37) with a penalty function and deriving the Euler–Lagrange equation of the regularised functional. We then solve the corresponding PDE by following a splitting scheme first applied to this kind of problem by Spencer and Chen [39]. Whilst the numerical details are not the focus of this work, it is important to note widely used alternatives. A summary of such approaches, describing major developments in this area and the connections between each method, is given in a review by Wei et al. [44].

It has proved very effective to exploit the duality in the functional and avoid smoothing the TV term. A prominent example is the split Bregman approach for segmentation by Goldstein et al. [19]. This is closely related to augmented Lagrangian methods, a matter further discussed by Boyd et al. [7]. Analogous approaches include the first-order primal-dual algorithm of Chambolle and Pock [12] and the max-flow/min-cut framework detailed by Yuan et al. [47]. Implementing such a numerical scheme for our problem would have practical advantages, primarily in terms of computational speed. However, in our numerical tests we are mainly interested in accuracy comparisons. For this purpose, the convex splitting algorithm of [39] is sufficient, and its extension of splitting schemes to convex segmentation problems may be of independent interest. Further details can be found in [35, 39]. In the following, we first discuss the minimisation of (37) in a general sense and then mention some important aspects in relation to the alternative fitting terms discussed in Sect. 2.

5.1 Finding the Global Minimiser

To solve this constrained convex minimisation problem (38), we use the Additive Operator Splitting (AOS) scheme from Gordeziani et al. [20], Lu et al. [28] and Weickert et al. [45]. This is used extensively for image segmentation models [34, 35, 39]. It allows the 2D problem to be split into two 1D problems, each solved separately, with the results combined in an efficient manner. We address some aspects of AOS in Sect. 6, with further details provided in [35, 39].

A challenge with the functional (35), particularly with respect to AOS, is that this is a constrained minimisation problem. Consequently, it is reformulated by introducing an exact penalty function, \(\nu (u)\), given in [14]. To simplify the formulation, we define

\(f(\varvec{x})\) is the function associated with \(f_{X}(u)\). We introduce a new parameter, \({\tilde{\lambda }}\), which allows us to balance the data fitting terms against the regularisation term more reliably. We still have only two main tuning parameters (\(\theta \) and \({\tilde{\lambda }}\)), as any variable parameters in \(f({\varvec{x}})\) are fixed according to the choices in the corresponding papers. The unconstrained minimisation problem is then given as:

We rescale the data term with \({\mathscr {F}}(\varvec{x}) = r(\varvec{x}) / ||r(\varvec{x})||_{\infty }\). In effect, this change is simply a rescaling of the parameters. It allows the parameter choices for different models to be more consistent, as the rescaled fitting terms are of comparable magnitude (\(|{\mathscr {F}}(\varvec{x})|\le 1\)). The problem (38) has the corresponding Euler–Lagrange equation (for fixed \(c_{1}\)):

in \(\varOmega \) and \(\frac{\partial u}{\partial \varvec{n}} = 0\) where \(\varvec{n}\) is the outward unit normal. The constraint is enforced for \(\alpha >\frac{{\tilde{\lambda }}}{2}||r(\varvec{x})||\) by [14]. Two parameters, \(\varepsilon _{1}\) and \(\varepsilon _{2}\), are introduced here. The former is to avoid singularities in the TV term, and the latter is associated with the regularised penalty function \(\nu _{\varepsilon _{2}}(u)\) from [39]:

with \(b_{\varepsilon _{2}}(u)=\sqrt{(2u-1)^{2}+\varepsilon _{2}}-1\) and regularised Heaviside function

The viscosity solution of the parabolic formulation of (39), obtained by multiplying the PDE by \(|\nabla u|\), exists and is unique. The general proof for a class of PDEs to which (39) belongs is included in [35], and we refer the reader there for the details. Once the solution to (39) is found, denoted \(u^{*}\), we define the computed foreground region as follows:

We select \(\gamma =0.5\) (although, according to Chan et al. [14], other values \(\gamma \in (0,1)\) would yield a similar result). In the following, we use the binary form of \(u^{*}\), denoted \(u_{\gamma }\), which partitions the domain into \(\varOmega _\mathrm{F}\) and \(\varOmega _\mathrm{B}\).
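Two discrete steps from this subsection are simple to state in code: the rescaling \({\mathscr {F}}(\varvec{x}) = r(\varvec{x}) / ||r(\varvec{x})||_{\infty }\) and the thresholding of \(u^{*}\) at \(\gamma \). The sketch below assumes that both \(r\) and \(u^{*}\) are available as NumPy arrays over the pixel grid and that the foreground is the set where \(u^{*} > \gamma \), since the displayed definition is not reproduced here. The function names are hypothetical.

```python
import numpy as np

def rescale_fitting_term(r):
    """Return F = r / ||r||_inf over the pixel grid, so that |F| <= 1 everywhere."""
    r = np.asarray(r, dtype=float)
    r_max = np.max(np.abs(r))
    if r_max == 0.0:
        return np.zeros_like(r)  # guard: an identically zero term stays zero
    return r / r_max

def binarise(u_star, gamma=0.5):
    """Foreground indicator u_gamma: 1 where u*(x) > gamma, 0 elsewhere (assumed definition)."""
    return (np.asarray(u_star, dtype=float) > gamma).astype(np.uint8)

# Hypothetical data-term values and relaxed solution on tiny grids.
r = np.array([[0.2, -4.0, 1.0],
              [2.0, 0.0, -0.5]])
F = rescale_fitting_term(r)
print(np.max(np.abs(F)))          # 1.0

u_star = np.array([[0.02, 0.48],
                   [0.51, 0.97]])
print(binarise(u_star))           # [[0 0]
                                  #  [1 1]]
```

Because \({\mathscr {F}}\) is bounded by one for every fitting term, a given value of \({\tilde{\lambda }}\) carries a similar weight across models, which is the point of the rescaling.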

5.2 Implementation for Related Models

The discussion in this section so far has used the function \(f(\varvec{x})\) associated with the data fitting functional \(f_{X}(u)\). The corresponding equations for the RSF, LCV, HYB and GAV models are detailed in Sect. 2, CV is discussed in Sect. 1, and our approach is given by (34). We use this implementation to obtain selective segmentation versions of each of those models, given by (37). When these terms contain parameter choices, we follow the advice in the corresponding papers as far as possible, unless we have found that alternatives improve results. In the next section, we will give the results of these models and compare them to our proposed approach.

Note We now discuss the tuning of parameters for the GAV model. It is noted in Sect. 2 that the GAV model requires a parameter \(\beta \) to adapt the \(c_{1}\) and \(c_{2}\) calculation. We find that it is better to consider \(c_{1}\) and \(c_{2}\) separately to achieve improved results, as sometimes we wish to tune the values to have a higher \(c_{1}\) and lower \(c_{2}\) (or vice versa) simultaneously. Therefore, we introduce parameters \(\beta _{1}\) and \(\beta _{2}\) to tune \(c_{1}\) and \(c_{2}\) as follows:

In all experiments, we tested the following combinations of \((\beta _{1},\beta _{2})\): (1.5, 0.5), (2, 0), \((3,-1)\), \((4,-2)\), (0.5, 1.5), (0, 2), \((-1,3)\) and \((-2,4)\). For each choice, we optimised the values of \({\tilde{\lambda }}\) and \(\theta \) according to the procedure described in Sect. 7.1. This allowed us to select the optimal combination of \((\beta _{1},\beta _{2})\) for each image.

6 Algorithm

Here, we will discuss the algorithm that we use to minimise the selective segmentation model (37). We utilise additive operator splitting techniques to solve the minimisation problem efficiently.

6.1 An Additive Operator Splitting (AOS) Scheme

Additive Operator Splitting (AOS) [20, 28, 45] is a widely used method for solving PDEs with linear and nonlinear diffusion terms [34, 35, 39] such as

AOS allows us to split the two-dimensional problem into two one-dimensional problems, which we solve separately and then combine. Each one-dimensional problem gives rise to a tridiagonal system of equations that can be solved efficiently by Thomas’ algorithm, and hence AOS is a very efficient method for solving PDEs of this type. As a semi-implicit method, AOS is more stable than an explicit scheme and therefore permits far larger time steps [45]. Note here that

and \(\mu =1\). The standard AOS scheme assumes that \(f_{0}\) does not depend on u, which is not the case here. A modification is therefore required for convex segmentation problems, first introduced by Spencer and Chen [39]. This non-standard formulation incorporates the regularised penalty term, \(\nu _{\varepsilon _{2}}(u)\), into the AOS scheme, as we briefly detail next.

The authors consider the Taylor expansions of \(\nu '_{\varepsilon _{2}}(u)\) around \(u=0\) and \(u=1\) and find that the coefficient b of the linear term in u is the same for both expansions. Therefore, for a change \(\delta u\) in u around \(u=0\) or \(u=1\), the change in \(\nu '_{\varepsilon _{2}}(u)\) can be approximated by \(b\cdot \delta u\). Based on this observation, the relevant interval is defined as

and a corresponding update function is given as

The solution for (44) is then obtained by discretising the equation as follows:

where \(A_{1}\) and \(A_{2}\) are discrete forms of \(\partial _{x}(G(u)\partial _{x})\) and \(\partial _{y}(G(u)\partial _{y})\), respectively (given in [35, 39]). The modified AOS update is then given by

where \({\tilde{B}}^{(k)} = \text {diag}(\tau \alpha {\tilde{b}}^{(k)})\) and \({\tilde{u}}^{(k)} = u^{(k)}+\tau (I+{\tilde{B}}^{(k)})^{-1}f_{0}^{(k)}\). Through the function \({\tilde{b}}\) and the parameter \(\zeta \), this scheme allows more control over the changes in \(f_{0}\) between iterations and therefore leads to more stable convergence. We refer the reader to [39] for full details of the numerical method.
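For orientation, the sketch below implements the standard linear AOS step of Weickert et al. [45] for a model equation of the form \(u_t = \partial _{x}(G\partial _{x}u) + \partial _{y}(G\partial _{y}u) + f\), that is, \(u^{(k+1)} = \frac{1}{2}\sum _{l=1}^{2}(I-2\tau A_{l})^{-1}(u^{(k)}+\tau f)\), with the diffusivity G frozen at the current iterate. It assumes unit grid spacing, zero-flux boundaries and averaging of G at half points; it does not include the modification of [39] (the terms \({\tilde{b}}\) and \(\zeta \)), and all function names are hypothetical. Each one-dimensional solve is a tridiagonal system handled by Thomas’ algorithm.

```python
import numpy as np

def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system by Thomas' algorithm (forward sweep, back substitution).

    Row i reads lower[i]*x[i-1] + diag[i]*x[i] + upper[i]*x[i+1] = rhs[i];
    lower[0] and upper[-1] are ignored. No pivoting: assumes diagonal dominance.
    """
    n = len(diag)
    c = np.zeros(n)
    d = np.zeros(n)
    c[0] = upper[0] / diag[0] if n > 1 else 0.0
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = np.empty(n)
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def implicit_1d(g, rhs, tau):
    """Solve (I - 2*tau*A) x = rhs, where A discretises d/ds(g d/ds) with zero-flux ends."""
    n = len(g)
    gh = 0.5 * (g[:-1] + g[1:])          # diffusivity at half points i+1/2
    lower = np.zeros(n)
    upper = np.zeros(n)
    diag = np.ones(n)
    lower[1:] = -2.0 * tau * gh
    upper[:-1] = -2.0 * tau * gh
    diag[:-1] += 2.0 * tau * gh
    diag[1:] += 2.0 * tau * gh
    return thomas(lower, diag, upper, rhs)

def aos_step(u, g, f, tau):
    """One linear AOS step: u_new = 0.5 * sum_l (I - 2*tau*A_l)^{-1} (u + tau*f)."""
    rhs = u + tau * f
    ux = np.empty_like(rhs)
    uy = np.empty_like(rhs)
    for i in range(rhs.shape[0]):        # 1D implicit solves along rows (x-direction)
        ux[i, :] = implicit_1d(g[i, :], rhs[i, :], tau)
    for j in range(rhs.shape[1]):        # 1D implicit solves along columns (y-direction)
        uy[:, j] = implicit_1d(g[:, j], rhs[:, j], tau)
    return 0.5 * (ux + uy)

# Usage on a hypothetical random field with constant diffusivity and no source term.
rng = np.random.default_rng(0)
u = rng.random((8, 10))
u_new = aos_step(u, np.ones_like(u), np.zeros_like(u), tau=1e-2)
print(np.isclose(u_new.mean(), u.mean()))   # True: zero-flux diffusion conserves the mean
```

Each row and column solve costs O(n), so one AOS step is linear in the number of pixels; this is what makes the two one-dimensional solves cheaper than a single two-dimensional implicit solve.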

6.2 The Proposed Algorithm

In Algorithm 1, we provide details of how we find the minimiser of the various selective segmentation models detailed above, defined by (37). The algorithm is in a general form to be applied to any of the approaches discussed so far. It is important to reiterate that alternative solvers to AOS are available, such as the dual formulation [3, 8, 11], split Bregman [19], augmented Lagrangian [6], primal-dual [12], and max-flow/min-cut [47]. In all experiments, we use a tolerance of \(10^{-4}\) for the stopping criterion and set \(\varepsilon _{1} = 10^{-4}\), \(\varepsilon _{2} = 10^{-1}\) and \(\tau = 10^{-2}\).

7 Results

In this section, we will present results obtained using the proposed model and compare them to using fitting terms from similar models (CV [15], RSF [25], LCV [43], HYB [1], GAV [2]), detailed in Sect. 2, and additional comparisons to alternative selective models. Specifically, we compare against the work of Nguyen et al. [30] and Dong et al. [17], referred to as CAC and SRW, respectively, and detailed in Sect. 3. We intend to provide an overview of how effective each approach is in a number of key respects and analyse their potential for practical use in a reliable and consistent manner. Our focus is on how each fitting term can be applied to a consistent selective segmentation framework, and how robust the proposed model is overall. The key questions we consider are:

(i) How sensitive are the results to variations of the parameters \({\tilde{\lambda }}\) and \(\theta \)?

(ii) Is the model capable of achieving accurate results?

(iii) To what extent is the proposed model dependent on the user input?

(iv) Does the model compare favourably against alternative selective methods?

Test Images We will perform initial tests on the images shown in Figs. 5, 6 and 7. We have provided the ground truth and initialisation used for each image. Test Images 1–3 are synthetic, Test Image 4 is an MRI scan of a knee, Test Images 5–6 are abdominal CT scans, and Test Images 7–9 are lung CT scans. They have been selected to present challenges relevant to the discussion in Sect. 2. We focus on medical images as this is the application of most interest to our work. In the following, we will discuss the results in terms of synthetic images (1–3) and real images (4–9). We also test the proposed approach on a larger data set of 30 CT images (a sample of which is presented in Fig. 18), comparing against existing selective methods detailed in Sect. 3.

Measuring Segmentation Accuracy In our tests, we use the Jaccard Coefficient [23], often referred to as the Tanimoto Coefficient (TC), to measure the quality of the segmentation. We define accuracy with respect to a ground truth, GT, given by a manual segmentation:

The Tanimoto Coefficient is then calculated as

where \(N(\cdot )\) refers to the number of points in the enclosed region. This takes values in the range [0, 1], with higher TC values indicating a more accurate segmentation. In the following, we will represent accuracy visually from red (\(\text {TC}=0\)) to green (\(\text {TC}=1\)), with the intermediate scaling of colours used shown in Fig. 8. This will be particularly relevant in Sect. 7.2.
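The Tanimoto (Jaccard) coefficient is the number of points in the intersection of the computed and ground-truth foreground regions divided by the number of points in their union. Assuming both regions are given as binary masks of equal size, a minimal sketch is as follows; the function name and the convention for two empty masks are our own.

```python
import numpy as np

def tanimoto(seg, gt):
    """Tanimoto (Jaccard) coefficient N(seg & gt) / N(seg | gt) for binary masks."""
    seg = np.asarray(seg, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    union = np.logical_or(seg, gt).sum()
    if union == 0:
        return 1.0  # convention for two empty masks (our assumption, not from the text)
    return float(np.logical_and(seg, gt).sum() / union)

gt = np.zeros((4, 4), dtype=bool)
gt[:2, :2] = True          # ground truth: 4 pixels
seg = np.zeros((4, 4), dtype=bool)
seg[:2, :3] = True         # segmentation: the same 4 pixels plus 2 extra
print(round(tanimoto(seg, gt), 4))   # 0.6667 (intersection 4, union 6)
```

Note that over-segmentation is penalised here even though every ground-truth pixel is found, since the two extra pixels enlarge the union.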

Note In Sect. 2.4, we mentioned the tuning of parameters in the GAV model. To be explicit, the optimal \((\beta _{1}, \beta _{2})\) pairs used in the following tests were \((4,-2)\) for Test Images 1 and 2, (1.5, 0.5) for Test Images 3, 4, and 6, (2, 0) for Test Image 5, and \((-2,4)\) for Test Images 7, 8, and 9. Results vary significantly as \((\beta _{1},\beta _{2})\) are varied, but we found these to be the best choices for each image.

The discussion of results is split into four sections, addressing the questions introduced above. First, in Sect. 7.1, we will examine the robustness to the parameters \({\tilde{\lambda }}\) and \(\theta \) for each model. Then, in Sect. 7.2, we will compare the optimal accuracy achieved by each method to determine what they are capable of in the context of selective segmentation for these examples. In Sect. 7.3, we will test the proposed model with respect to the user input. By randomising the input, we will determine to what extent the proposed model is suitable for use in practice. Finally, in Sect. 7.4 we will compare the proposed approach to the methods introduced in Sect. 3 on an additional CT data set. This will help further establish how the algorithm performs against competitive approaches in the literature.

7.1 Parameter Robustness

In these tests, we aim to demonstrate how sensitive each fitting term is to the choice of parameters. To accomplish this, we perform the segmentations for each of the models discussed (CV, RSF, LCV, HYB, GAV) and the proposed model for a wide range of parameters and compute the TC value. The parameter range used is \({\tilde{\lambda }},\theta \in [1,50]\). Due to computational constraints, we run the tests for each integer value of \({\tilde{\lambda }}\) and \(\theta \) from 1 to 10, and for every fifth value from 15 to 50. This aspect of a model’s performance is vital in practice: the less sensitive a model is to its parameter choices, the more relevant it is to potential applications. It should be noted that we do not test the selective models detailed in Sect. 3 with respect to parameter robustness, as we are using the authors’ implementation of each approach. Instead, we make direct comparisons in the following sections.
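The parameter grid described above can be written out explicitly: it contains 18 values per parameter and hence 324 \(({\tilde{\lambda }},\theta )\) pairs per model and image. In the sketch below, the scoring function is a hypothetical placeholder for running a segmentation with the given pair and computing its TC value, and placing \({\tilde{\lambda }}\) on rows and \(\theta \) on columns is our assumption.

```python
import numpy as np

# Integers 1..10, then every fifth value from 15 to 50.
LAMBDA_THETA_VALUES = list(range(1, 11)) + list(range(15, 51, 5))

def parameter_sweep(score_fn, values=LAMBDA_THETA_VALUES):
    """Return an array whose entry [i, j] is score_fn(values[i], values[j])."""
    heat = np.empty((len(values), len(values)))
    for i, lam in enumerate(values):
        for j, theta in enumerate(values):
            heat[i, j] = score_fn(lam, theta)
    return heat

def toy_score(lam, theta):
    # Hypothetical stand-in for "segment with (lam, theta), then compute TC".
    return 1.0 if theta <= lam else 0.5

heat = parameter_sweep(toy_score)
print(len(LAMBDA_THETA_VALUES), heat.size)   # 18 324
```

The resulting array can be drawn directly as a heatmap with a red-to-green colour map over [0, 1].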

Fig. 10 Segmentation results and TC values for the proposed model whilst varying \({\tilde{\lambda }}\) (with \(\theta = 4\)). The colours correspond to the TC value (green is TC = 1, red is TC = 0), consistent with the scale in Fig. 8. This is for Test Image 5, with the corresponding heatmap provided in Fig. 12.

Fig. 11 Heatmaps of TC values for combinations of \({\tilde{\lambda }}\) and \(\theta \). Each row and column is labelled according to the model used and the image tested. The colour is consistent with the scale in Fig. 8. Here, we present Test Images 1–3.

Fig. 12 Heatmaps of TC values for combinations of \({\tilde{\lambda }}\) and \(\theta \). Each row and column is labelled according to the model used and the image tested. The colour is consistent with the scale in Fig. 8. Here, we present Test Images 4–6.

Fig. 13 Heatmaps of TC values for combinations of \({\tilde{\lambda }}\) and \(\theta \). Each row and column is labelled according to the model used and the image tested. The colour is consistent with the scale in Fig. 8. Here, we present Test Images 7–9.

The TC values for the parameter sets \(({\tilde{\lambda }},\theta )\) are presented as heatmaps in Figs. 11, 12 and 13. A heatmap is a convenient way to display accuracy results for hundreds of tests concisely. In Fig. 9, we give an example heatmap with the same axes used for those in Figs. 11, 12 and 13. For each combination of parameter values \(({\tilde{\lambda }},\theta )\), we give the TC value of the segmentation result and represent it by the appropriate colour. The corresponding colour scale is shown in Fig. 8. Qualitatively, the greener the heatmap, the more accurate the model is over a wider range of parameters. Example results for Test Image 5 when varying \({\tilde{\lambda }}\) (with \(\theta =4\)) for the proposed model are given in Fig. 10, which shows what each accuracy value corresponds to visually.

Note The axes have been removed from the heatmaps in Figs. 11, 12 and 13 for presentational clarity. However, to be explicit, the axes used in all heatmaps are the same as those in Fig. 9.

Synthetic Images These results are presented in Fig. 11. For Test Images 1–2, we see poor parameter robustness from all competing models, except for GAV, which performs reasonably well. However, the proposed model has minimal parameter sensitivity for these images, with good results achieved for almost every combination of values tested. For Test Image 3, all models have a reasonable parameter range (except for RSF); however, the proposed model gives better quality results for a wider parameter range. The other models achieve reasonable results here because the foreground intensity of the ground truth is greater than that of the background \((c_1=0.75, c_2=0.49)\), whereas for Test Images 1–2 they are equal \((c_1=c_2=0.50)\). These results highlight the key advantage of the proposed model: it remains robust when the foreground and background intensities are equal.

Real Images In Fig. 12, we present results for Test Images 4–6. Here, the proposed model performs similarly to its competitors, because these images are more typical selective segmentation problems in the sense that there is a clear distinction between the foreground and background intensities. In particular, the values in each case are: Test Image 4 \((c_1=0.85, c_2=0.25)\), Test Image 5 \((c_1=0.70, c_2=0.19)\), and Test Image 6 \((c_1=0.73, c_2=0.20)\). It can be seen that the proposed model is competitive with previous approaches. Its performance is quite poor for Test Image 5, but it is arguably still the best for this challenging case. In Fig. 13, we present results for Test Images 7–9. Here, the proposed model significantly outperforms previous approaches for each image. This is mainly due to the type of image considered. Specifically, the true intensities are: Test Image 7 \((c_1=0.12, c_2=0.24)\), Test Image 8 \((c_1=0.10, c_2=0.23)\), and Test Image 9 \((c_1=0.08, c_2=0.14)\). The proposed model is capable of achieving accurate results where \(c_1\approx c_2\), whereas the other models fail completely in these cases.

7.2 Accuracy Comparisons

Here, we aim to address the question of whether each model is capable of achieving an accurate result. In other words, if factors such as parameter and user input sensitivity are ignored, how successful is each approach? In Table 1, we present the optimal TC values for each model found from the tests described in the previous section, with the highest value in bold. We include values for CAC [30] and SRW [17], which we have obtained by iteratively refining the user input and running the algorithm. It is worth mentioning that we are using the authors’ implementation of each method. For each image, the results presented in Table 1 are the most accurate we could obtain given a reasonable level of input (comparisons with identical input are discussed in Sect. 7.4). Immediately, we can see that the proposed model matches or outperforms the other models in terms of accuracy for almost all of the test images: RSF equals it for Test Image 1, SRW equals it for Test Images 1–3, and SRW beats it for Test Image 8. Below we will discuss some relevant details of the results, again by splitting the test images into synthetic and real.

Synthetic Images We observe that for Test Images 1 and 2 (where \(c_{1}=c_{2}\)) CV, LCV, and HYB fail completely. GAV performs well, with the proposed model and RSF being the most accurate with perfect results. For Test Image 3, all models are capable of achieving a good result. It should be noted that in this case \(c_1=0.75\) and \(c_2=0.49\). This difference enables the other models to perform well, although the proposed model is slightly superior with a perfect result. The alternative selective models also perform well for these images, although CAC has minor errors on the boundaries of the foreground for each image.

Real Images In Table 1, we can see that the proposed model is the most successful in terms of optimal accuracy. It is worth noting some inconsistency in the other models, with all but GAV having results that fall below TC \(= 0.9\) for at least one image. GAV performs well for Test Images 4–9, with the proposed model slightly outperforming it in each case. It is worth reminding the reader that for GAV the parameters \((\beta _{1},\beta _{2})\) have been refined for each example. Fixing these parameters across images results in more variability in the quality of results, whereas the proposed model requires no such parameter optimisation between examples. CAC and SRW perform reasonably well for these images, although their results are sometimes substandard for Test Images 4–7. This is despite extensive refinement of the user input to achieve an acceptable result. We present the optimal results for Test Image 9 in Fig. 14. Here, we can see how much variation there is in the quality of results for this lung CT image. CAC and SRW are competitive in this instance. Of the remaining approaches, GAV is the most competitive (TC \(= 0.919\)), but its result is visually inadequate. Two other models (CV, HYB) fail completely. In this case, the problem looks quite straightforward, and yet the other fitting terms are insufficient to produce a good result. Again, the proposed model tends to be superior in cases where \(c_{1}\approx c_{2}\) and is capable of achieving very good results for all the images considered. This highlights the advantages of the proposed fitting term.

Fig. 14 Optimal results for Test Image 9. The accuracy is represented by colour, consistent with the scale in Fig. 8. The proposed model outperforms most previous approaches in this case, often significantly.

Fig. 15 Boxplots of the TC values for 1000 random user inputs using the proposed model. We observe that the method is remarkably consistent. Even the worst results, excluding outliers, are competitive with the optimal results of the existing approaches shown in Table 1.

7.3 User Input Randomisation

One key consideration for the practical use of selective segmentation models is that the result is not too reliant on user input. With intricate user input, accurate results are almost guaranteed. However, the benefit of this kind of approach is that accuracy should be attainable with minimal, intuitive user input. One challenge in this setting is how to ascertain to what extent a method is dependent on the user input. In this section, we will generalise the user input for the proposed model in order to determine how sensitive it is in this respect. By generalising in this way, we will make two assumptions about the markers, \({\mathscr {M}}\), consistent with the above considerations:

(i) All points are within the target object.

(ii) Only 3 markers are selected.

We regard neither of these assumptions as too onerous for a user, and both are consistent with practical use. To perform this test, we randomly choose 1000 sets of 3 marker points and run the proposed model with each set. The parameters \({\tilde{\lambda }}\) and \(\theta \) are fixed at the values that gave the optimal TC values in Table 1. For each set of marker points, we compute the TC value of the corresponding result. The results for each image are summarised by boxplots in Fig. 15, with examples of the worst results, excluding outliers, shown in Fig. 16. Here, it can be seen that the worst result often outperforms the optimal results of the alternative models considered. Below we discuss the results for the test images, again splitting them into synthetic and real images. With the authors’ implementations of CAC and SRW, it was not possible to randomise the input in this way; instead, we make direct comparisons of input in the next section.
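A minimal sketch of the marker randomisation under assumptions (i) and (ii) follows. The three points are drawn uniformly and without replacement from the ground-truth foreground pixels; the uniform distribution, the function names, and the five-number summary (the quantities a boxplot displays before any outlier rule is applied) are our own illustrative choices rather than details given in the text.

```python
import numpy as np

def sample_markers(gt_mask, n_markers=3, rng=None):
    """Draw n_markers distinct (row, col) points uniformly from the ground-truth foreground."""
    rng = np.random.default_rng(rng)
    coords = np.argwhere(gt_mask)                     # every foreground pixel as (row, col)
    idx = rng.choice(len(coords), size=n_markers, replace=False)
    return coords[idx]

def five_number_summary(tc_values):
    """Minimum, quartiles and maximum of a set of TC values."""
    q = np.percentile(tc_values, [0, 25, 50, 75, 100])
    return dict(zip(["min", "q1", "median", "q3", "max"], q))

# Usage on a hypothetical 20 x 20 ground truth containing a 10 x 10 square object.
gt = np.zeros((20, 20), dtype=bool)
gt[5:15, 5:15] = True
rng = np.random.default_rng(0)
marker_sets = [sample_markers(gt, 3, rng) for _ in range(1000)]
print(len(marker_sets), marker_sets[0].shape)   # 1000 (3, 2)
```

Each marker set would then be passed to the segmentation, and the resulting 1000 TC values summarised per image.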

Synthetic Images For Test Images 1–3, we achieve near-perfect segmentations in all cases, shown by the mean TC lying between 0.99 and 1.00 (for Test Image 1, the mean is precisely 1.00) and a small variance around the mean. Therefore, we can conclude that for images of this type, where the foreground is homogeneous, our method is very robust to user input. Essentially, any reasonable set of markers should produce excellent results. It should be noted that the optimal results of comparable approaches (except SRW) are lower than the mean result of 1000 random tests for our method, as can be observed in Table 1. Furthermore, these methods often fail completely. This is a key result highlighting the advantages of our method. In visually simple cases (Test Images 1–3), our new data fitting term improves on existing approaches by modifying the underlying assumptions involved.

Real Images In all cases for Test Images 4–9, the mean values show that the segmentation results are highly accurate. The variances are also small, demonstrating robustness to variations in the user input. This is an important aspect of selective segmentation and highlights the advantages of the proposed fitting term. For Test Images 4–6, we observe more variability in the accuracy due to minor intensity inhomogeneity in the foreground, which makes the result more sensitive to the randomised user input. However, the results are still very good, with the mean accuracy being competitive with the optimal accuracy of comparable methods. In the case of the lung CT images (Test Images 7–9), the variance in TC values is very small, due to the homogeneity of the foreground. Again, it is important to compare the results of 1000 random tests using our proposed model to the optimal result of comparable methods. For these images, all of the methods (except GAV, CAC, and SRW) have at least one TC value below 0.9. However, GAV requires the tuning of additional parameters \((\beta _{1},\beta _{2})\), whilst the proposed model does not. The results for CAC and SRW also rely on extensive refinement of the user input to achieve this accuracy, whereas our results with random input compare favourably. The mean of our tests is similar to the optimal value of GAV, with one exception: for Test Image 9 (shown in Fig. 14), there is a significant gap in favour of our model. Again, from Fig. 16, we can see that the worst result of randomising the user input for the proposed model is competitive with the optimal results of the alternatives. This is one of the most encouraging aspects of the tests: the proposed model is remarkably robust to varying user input. It demonstrates that successful results with minimal, intuitive user input are possible for a range of examples.

7.4 Alternative Selective Methods

In order to further establish the robustness of our method, we now present the results of testing our approach against competing interactive segmentation methods on a larger data set. The results are presented in Fig. 17, showing a boxplot of accuracy in terms of TC on a set of 30 CT images (excluding outliers). The target structure we consider is the spleen, as this consists of a relatively homogeneous foreground, appropriate for the approach considered. The images have been manually contoured, providing ground truth for the data set. We compare CAC [30] and SRW [17] against our method with five variations of user input for each image. It is worth emphasising here that the input used in the tests is identical for each approach and was not refined in any way. It was designed to mimic what a user, unfamiliar with each approach, might select intuitively. A representative example for three images is shown in Fig. 18. This shows foreground (red) and background (blue) user input regions. For our method, we define the red region as \({\mathscr {P}}\) as discussed in Sect. 1 and enforce hard constraints on the blue region. We refer to the results of the proposed approach using this input as ours (i). We also include results of randomising the user input in an identical way to Sect. 7.3. For each image, we generate 1000 simulated user input choices, which we present as ours (ii). It is important to note that the only difference between ours (i) and (ii) is the definition of \({\mathscr {P}}\); the method and parameters are fixed between them.

The performance of CAC [30] is very good, as shown in Fig. 17. We have included an additional figure to highlight the difference between CAC and ours (i) and (ii) more precisely. This is shown in Fig. 19. (This is the same as Fig. 17 with TC restricted to [0.8, 1].) Here, we can see that the proposed approach has a slightly better median (0.96 compared to 0.94) and is generally more consistent than CAC. This is particularly evident when considering the worst TC results of CAC (0.19) against ours (0.87).

In Fig. 17, it can be seen that our method exceeds the performance of SRW by a large margin (0.95 compared to 0.66). One possible reason for this is that the input used, as displayed in Fig. 18, is restricted to be as intuitive as possible. SRW is capable of achieving improved results with more elaborate foreground/background input. However, it generally relies on a trial-and-error approach, which is not ideal in practice. This highlights an important advantage of our method: it is able to achieve a high standard of results with simple user input. This is reinforced by ours (ii), where the 30,000 random variations of the user input (1000 for each of the 30 images) do not cause a drop-off in accuracy compared to the 150 manual user input selections (five for each image). Again, this can be seen more clearly in Fig. 19. In fact, the results for the proposed approach with the random input are slightly better than with the manual input. This underlines the robustness of the model to user input, which is a vital aspect of selective segmentation.

8 Conclusion

In this paper, we have proposed a new intensity fitting term for use in selective segmentation. We have compared it to fitting terms from comparable approaches (CV, RSF, LCV, HYB, GAV), in order to address an underlying problem in selective segmentation: if the foreground is approximately homogeneous, what is the best way to define the intensity fitting term? Previous methods [34, 35, 39] involve contradictions in the formulation, which we attempt to address.

We have evaluated the success of the proposed model in four respects: parameter robustness, optimal accuracy, dependence on user input, and comparisons to competing selective models. Our focus is on medical applications, where the target object has approximately homogeneous intensity. In each respect, the proposed model performs very well, particularly in cases where the true foreground and background intensities are similar. We have shown that our method is remarkably insensitive to varying user input, highlighting its potential for use in practice, and that it also outperforms competitive algorithms in the literature.

References

Ali, H., Badshah, N., Chen, K., Khan, G.: A variational model with hybrid images data fitting energies for segmentation of images with intensity inhomogeneity. Pattern Recognit. 51, 27–42 (2016)

Ali, H., Badshah, N., Chen, K., Khan, G.A., Zikria, N.: Multiphase segmentation based on new signed pressure force functions and one level set function. Turk. J. Electr. Eng. Comput. Sci. 25, 2943–2955 (2017)

Aujol, J.F., Gilboa, G., Chan, T., Osher, S.: Structure-texture decomposition-modeling, algorithms, and parameter selection. Int. J. Comput. Vis. 67(1), 111–136 (2006)

Bai, X., Sapiro, G.: A geodesic framework for fast interactive image and video segmentation and matting. In: IEEE International Conference on Computer Vision, pp. 1–8 (2007)

Benard, A., Gygli, M.: Interactive video object segmentation in the wild (2017). CoRR arXiv:1801.00269

Bertsekas, D.P.: Constrained Optimization and Lagrange Multiplier Methods. Academic Press, Cambridge (2014)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

Bresson, X., Esedoglu, S., Vandergheynst, P., Thiran, J.P., Osher, S.: Fast global minimization of the active contour/snake model. J. Math. Imaging Vis. 28(2), 151–167 (2007)

Brox, T., Weickert, J.: Level set segmentation with multiple regions. IEEE Trans. Image Process. 15(10), 3213–3218 (2006)

Cai, X., Chan, R., Zeng, T.: A two-stage image segmentation method using a convex variant of the Mumford–Shah model and thresholding. SIAM J. Imaging Sci. 6(1), 368–390 (2013)

Chambolle, A.: An algorithm for total variation minimization and applications. J. Math. Imaging Vis. 20, 89–97 (2004)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40, 120–145 (2011)

Chambolle, A., Pock, T.: An introduction to continuous optimization for imaging. Acta Numer. 25, 161–319 (2016)

Chan, T., Esedoğlu, S., Nikolova, M.: Algorithms for finding global minimizers of image segmentation and denoising models. SIAM J. Appl. Math. 66(5), 1632–1648 (2006)

Chan, T., Vese, L.: Active contours without edges. IEEE Trans. Image Process. 10(2), 266–277 (2001)

Chen, D., Yang, M., Cohen, L.: Global minimum for a variant Mumford–Shah model with application to medical image segmentation. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 1(1), 48–60 (2013)

Dong, X., Shen, J., Shao, L.: Submarkov random walk for image segmentation. IEEE Trans. Image Process. 25(2), 516–527 (2016)

Falcao, A., Udupa, J., Miyazawa, F.: An ultrafast user-steered image segmentation paradigm: live wire on the fly. IEEE Trans. Med. Imaging 19(1), 55–62 (2000)

Goldstein, T., Bresson, X., Osher, S.: Geometric applications of the split Bregman method. J. Sci. Comput. 45(1–3), 272–293 (2010)

Gordeziani, D., Meladze, G.V.: The simulation of the third boundary value problem for multidimensional parabolic equations in an arbitrary domain by one-dimensional equations. Zhurnal Vychislitel’noi Matematiki i Matematicheskoi Fiziki 14(1), 246–250 (1974)

Gout, C., Guyader, C.L., Vese, L.: Segmentation under geometrical conditions with geodesic active contours and interpolation using level set methods. Numer. Algorithms 39, 155–173 (2005)

Grady, L.: Random walks for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 28(11), 1768–1783 (2006)

Jaccard, P.: The distribution of the flora in the alpine zone. 1. New Phytol. 11(2), 37–50 (1912)

Klodt, M., Steinbrücker, F., Cremers, D.: Moment constraints in convex optimization for segmentation and tracking. In: Farinella, G., Battiato, S., Cipolla, R. (eds.) Advanced Topics in Computer Vision, pp. 215–242. Springer, London (2013)

Li, C., Kao, C., Gore, J., Ding, Z.: Minimization of region-scalable fitting energy for image segmentation. IEEE Trans. Image Process. 17(10), 1940–1949 (2008)

Lie, J., Lysaker, M., Tai, X.: A binary level set model and some applications to Mumford–Shah image segmentation. IEEE Trans. Image Process. 15(5), 1171–1181 (2006)

Liu, C., Ng, M.K.P., Zeng, T.: Weighted variational model for selective image segmentation with application to medical images. Pattern Recognit. 76, 367–379 (2018)

Lu, T., Neittaanmäki, P., Tai, X.C.: A parallel splitting up method and its application to Navier–Stokes equations. Appl. Math. Lett. 4(2), 25–29 (1991)

Mumford, D., Shah, J.: Optimal approximation by piecewise smooth functions and associated variational problems. Commun. Pure Appl. Math. 42, 577–685 (1989)

Nguyen, T., Cai, J., Zhang, J., Zheng, J.: Robust interactive image segmentation using convex active contours. IEEE Trans. Image Process. 21, 3734–3743 (2012)

Osher, S., Sethian, J.: Fronts propagating with curvature-dependent speed: algorithms based on Hamilton–Jacobi formulations. J. Comput. Phys. 79(1), 12–49 (1988)

Otsu, N.: A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9(1), 62–66 (1979)

Perona, P., Malik, J.: Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 12(7), 629–639 (1990)

Rada, L., Chen, K.: Improved selective segmentation model using one level-set. J. Algorithms Comput. Technol. 7(4), 509–540 (2013)

Roberts, M., Chen, K., Irion, K.L.: A convex geodesic selective model for image segmentation. J. Math. Imaging Vis. (2018). https://doi.org/10.1007/s10851-018-0857-2

Rother, C., Kolmogorov, V., Blake, A.: GrabCut: interactive foreground extraction using iterated graph cuts. ACM SIGGRAPH 23(3), 1–6 (2004)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 60(1–4), 259–268 (1992)

Shen, J., Du, Y., Wang, W., Li, X.: Lazy random walks for superpixel segmentation. IEEE Trans. Image Process. 23(4), 1451–1462 (2014)

Spencer, J., Chen, K.: A convex and selective variational model for image segmentation. Commun. Math. Sci. 13(6), 1453–1472 (2015)

Spencer, J., Chen, K.: Stabilised bias field: segmentation with intensity inhomogeneity. J. Algorithms Comput. Technol. 10(4), 302–313 (2016)

Spencer, J., Chen, K., Duan, J.: Parameter-free selective segmentation with convex variational methods. IEEE Trans. Image Process. 28(5), 2163–2172 (2019)

Vese, L.A., Chan, T.F.: A multiphase level set framework for image segmentation using the Mumford and Shah model. Int. J. Comput. Vis. 50(3), 271–293 (2002)

Wang, X., Huang, D., Xu, H.: An efficient local Chan–Vese model for image segmentation. Pattern Recognit. 43(3), 603–618 (2010)

Wei, K., Tai, X., Chan, T., Leung, S.: Primal-dual method for continuous max-flow approaches. In: Proceedings of 5th ECCOMAS Conference on Computational Vision and Medical Image Processing, pp. 17–24 (2016)

Weickert, J., Ter Haar Romeny, B.M., Viergever, M.A.: Efficient and reliable schemes for nonlinear diffusion filtering. IEEE Trans. Image Process. 7(3), 398–410 (1998)

Xu, N., Price, B., Cohen, S., Yang, J., Huang, T.S.: Deep interactive object selection. In: IEEE Conference on Computer Vision and Pattern Recognition (2016)

Yuan, J., Bae, E., Tai, X., Boykov, Y.: A spatially continuous max-flow and min-cut framework for binary labeling problems. Numer. Math. 126(3), 559–587 (2013)

Zhang, K., Zhang, L., Song, H., Zhou, W.: Active contours with selective local or global segmentation: a new formulation and level set method. Image Vis. Comput. 28(4), 668–676 (2010)

Acknowledgements

The authors would like to thank the Isaac Newton Institute for Mathematical Sciences, Cambridge, for support and hospitality during the programme “Variational methods and effective algorithms for imaging and vision” where work on this paper was undertaken. This work was supported by EPSRC grant no EP/K032208/1. The first author wishes to thank the UK EPSRC, the Smith Institute for Industrial Mathematics, and the Liverpool Heart and Chest Hospital for supporting the work through an Industrial CASE award. The second author would like to acknowledge the support of the EPSRC grant EP/N014499/1. This work was generously supported by the Wellcome Trust Institutional Strategic Support Award (204909/Z/16/Z).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Roberts, M., Spencer, J. Chan–Vese Reformulation for Selective Image Segmentation. J Math Imaging Vis 61, 1173–1196 (2019). https://doi.org/10.1007/s10851-019-00893-0

DOI: https://doi.org/10.1007/s10851-019-00893-0