Abstract

Modeling Rician denoising of magnitude magnetic resonance images (MRI) in a Bayesian or generalized Tikhonov framework using total variation (TV) leads naturally to the consideration of nonlinear elliptic equations. These involve the so-called 1-Laplacian operator, and special care is needed to properly formulate the problem. The Rician statistics of the data are introduced through a singular equation with a reaction term defined in terms of modified Bessel functions of the first kind. An existence theory is provided here together with other qualitative properties of the solutions. Remarkably, each positive global minimum of the associated functional is one such solution. Moreover, we directly solve this nonsmooth nonconvex minimization problem using a convergent Proximal Point Algorithm. Numerical results based on synthetic and real MRI demonstrate a better performance of the proposed method when compared to previous TV-based models for Rician denoising which regularize or convexify the problem. Finally, an application on real Diffusion Tensor Images, an MRI modality strongly affected by Rician noise, is presented and discussed.

1 Introduction

Multiple applications in computer vision and digital image processing can be modeled using quasilinear elliptic equations. Variational formulations of these equations allow one to introduce a concept of weak solution, which is well adapted to image analysis, providing faithful discontinuous solutions. Furthermore, the discrete formulations of these equations are readily suited for fast image processing. In particular, medical image denoising is an important application which makes it possible to reduce the scanning time of patients while preserving good image quality. Moreover, several imaging applications like segmentation, classification, registration, super-resolution, object recognition, or tracking can benefit from preprocessed denoised images.

In this paper, we focus on the modality of Magnetic Resonance Imaging (MRI), where clinicians typically work with images contaminated by Rician noise. MRI scanners acquire complex data where both real and imaginary parts are corrupted with zero-mean uncorrelated Gaussian noise with equal variance. The calculation of the magnitude image transforms the original complex Gaussian noise into Rician noise [30, 31]. The Rician distribution differs considerably from a Gaussian distribution when low signal-to-noise-ratio (SNR) data are considered. This is the reason why several denoising methods that take into account the Rician distribution of the noise are focused on Diffusion-Weighted Images (DWI), one of the MRI modalities most severely affected by noise [9, 41, 44].
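The noise mechanism described above can be simulated in a few lines. The following is a minimal sketch, assuming a real-valued clean signal and independent Gaussian noise of standard deviation `sigma` on each channel; the function name `add_rician_noise` and the sample values are hypothetical and chosen only for illustration. It uses the standard identity that the second moment of a Rician variable with underlying signal u is \(u^2+2\sigma ^2\).

```python
import math
import random


def add_rician_noise(clean, sigma, rng):
    """Magnitude of a complex signal whose real and imaginary parts
    carry independent zero-mean Gaussian noise of std `sigma`."""
    noisy = []
    for u in clean:
        real_part = u + rng.gauss(0.0, sigma)   # noisy real channel
        imag_part = rng.gauss(0.0, sigma)       # noisy imaginary channel
        noisy.append(math.hypot(real_part, imag_part))
    return noisy


rng = random.Random(0)          # fixed seed so the run is reproducible
sigma = 1.0
n = 20000

# Low-SNR region (no signal): the magnitude is Rayleigh distributed.
background = add_rician_noise([0.0] * n, sigma, rng)
# High-SNR region: the magnitude is close to Gaussian around the signal.
tissue = add_rician_noise([5.0] * n, sigma, rng)

mean_background = sum(background) / n
mean_sq_background = sum(m * m for m in background) / n
mean_tissue = sum(tissue) / n
mean_sq_tissue = sum(m * m for m in tissue) / n

print("background: mean =", mean_background, " mean square =", mean_sq_background)
print("tissue:     mean =", mean_tissue, " mean square =", mean_sq_tissue)
```

The background mean is far from the true value 0, whereas the relative bias in the high-signal region is small, which illustrates why the Rician model matters mostly for low-SNR data.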

In particular, modeling these statistics in the framework of Tikhonov regularization through the Total Variation (TV) operator leads to a 1-Laplacian elliptic equation with a nonlinear lower-order term defined in terms of modified Bessel functions.

The TV operator

was introduced in the image processing community by Rudin, Osher, and Fatemi [38] through their celebrated denoising model (ROF in the following), which is the Gaussian counterpart of the Rician model we are considering. The 1-Laplacian operator characterizes the subdifferential of the TV functional; for a proof of this result in the \(L^2\)-framework, we refer to [5] (see [5, Proposition 1.10]). We point out that in [12], the 1-Laplacian operator has also been characterized as the pointwise subdifferential of the TV operator in the form

It is well known that inverse ill-posed problems can be dealt with in the framework of generalized Tikhonov regularization. The resulting functional is composed of two basic terms which reflect our belief in the data through one or more (hyper)-parameters weighting the amount of regularization. This in turn determines the smoothness of the denoised image, and functional analysis is invoked in order to select the appropriate functional space. Sobolev spaces are rapidly ruled out because of their excessive smoothing, which generates unrealistic continuous images. Thus the TV operator, a strongly nonlinear operator, emerges because it allows for (weak) distributional solutions in the very large space of functions of bounded variation, those whose gradient is a Radon measure [1]. Such a sophisticated setting is a generalized approach which allows for truly discontinuous functions and opens the way to theoretical as well as practical and accurate digital image processing [18]. Since the seminal paper of Rudin, Osher, and Fatemi [38], there has been a burst in the application of Total Variation regularization to many different image processing problems, which include inpainting, blind deconvolution, and multichannel image segmentation (see for instance [19] for a review on this topic). Fast and robust numerical methods have been proposed to exactly solve convex optimization problems with TV regularization, such as the dual approach of [15] and, more recently, the Split-Bregman method [29] and the primal–dual approach of [17].

Our proposed model equation arises as the (formal) Euler–Lagrange equation associated to an energy minimization problem obtained in a Bayesian framework. A key feature of this problem is that the nonlinear term modeling Rician noise in the energy functional can be a nonconvex, sign-changing function with a double-well profile. This leads to the study of nonconvex nonsmooth minimization problems. In fact, this nonconvexity of the energy functional is crucial because otherwise we could show uniqueness of the trivial solution \(u\equiv 0\). The variational minimization problem associated to the model equation we consider was proposed in [35], where the multivalued Euler–Lagrange equation for the 1-Laplacian operator is deduced as a first-order necessary optimality condition. This minimization problem was simultaneously and independently considered in [26], where blurring effects were included, and existence and comparison results in the pure denoising case were reported. In order to cope with the multivalued Euler–Lagrange equation, an \(\epsilon \)-regularization of the TV term was introduced in both works [26, 35]. More recently, in [20] a convex variational model for restoring blurred images corrupted by Rician noise has been proposed to overcome the difficulties related to the nonconvex nature of the original problem we are considering here.

The nonsmoothness of the model comes from the very singular 1-Laplacian elliptic equation, which was first studied as a limit of equations involving the p-Laplacian. The interest in studying such a case came from an optimal design problem in the theory of torsion and related geometrical problems (see [32] and [33] for constant data, and [21] for more general data). A suitable notion of solution for the 1-Laplacian had to wait until the turn of the century [3]. Other important related papers published in the early twenty-first century include [4, 6, 10, 11, 23, 24, 34]. Due to its unique properties, this operator has been the optimal choice for PDE-based image processing in the last twenty years. Briefly, the 1-Laplacian describes isotropic diffusion within each level surface with no diffusion across different level surfaces. In this way, its action does not over-regularize the data and preserves edges and fine details. This is not true when the p-Laplacian operator, for \(p>1\), is used, since an artificial smoothing is introduced.

While the ROF model has been mathematically studied and existence and uniqueness results have been obtained [16], the Euler–Lagrange quasilinear equation associated to the Rician problem has not yet been subjected to mathematical analysis. Notice that the same is true even for the semilinear equation accounting for Rician noise and linear diffusion. Here, we focus on the mathematical analysis of the TV-based Rician model. We show that the nonlinear 1-Laplacian problem has, aside from the trivial solution, at least one positive distributional solution which is also a global minimum of the energy problem (provided that the datum is big enough). This result makes the solutions of the TV-Rician denoising model attractive for application in MRI, and in particular for the DWI modality. The existence result is based on the consideration of a sequence of approximating problems of the p-Laplacian type for which, to our knowledge, no existence results are available because of the very special nonlinearity associated to the Rician noise term, although standard techniques can be used. When \(p=2\), existence and uniqueness of positive solutions is also deduced. In contrast, for general \(1\le p <2\), the uniqueness of positive solutions is still an open problem. Nevertheless, it is proved that for constant data we have uniqueness of constant solutions for any p.

The numerical resolution of the proposed model is also challenging because the energy functional is nonconvex for any p and also nonsmooth for \(p=1\). To cope with the nonconvexity, we propose a suitable decomposition of the energy functional, which allows us to write it as a Difference of Convex (DC) functionals. A primal–dual approach (suitable for nondifferentiable energy functionals such as the TV operator) embedded into a proximal algorithm (suitable for DC functionals) is then applied to show, also numerically, the convergence of the p-Laplacian approximate solutions to the true 1-Laplacian solution when \(p \rightarrow 1\). This provides a unified framework in which these problems can be solved using the same algorithm and then fairly compared. Our numerical method is then successfully compared with the primal gradient descent algorithm presented in [26] and the convexified models of [26] and [20].

This paper is organized as follows. In Sect. 2, we define the model problem characterizing the Bessel ratio function and its properties jointly with the statement of our main result (see Theorem 1 below). Weak solutions are defined in Sect. 3 where the main result is obtained considering the suitable regularizing approximating problems of the p-Laplacian type. Some qualitative properties are discussed in Sect. 4, before the numerical resolution of the related minimization problem is presented in Sect. 5. Finally, in Sect. 6, the performance of the algorithm is compared to other related methods and an application on real DTI is presented.

2 Preliminaries

2.1 The Model Problem and the Statement of the Main Result

Let \(\varOmega \) be an open, bounded domain in \({\mathbb {R}}^N\) (\(N\ge 2\)) with Lipschitz boundary \(\partial \varOmega \) (usually a rectangle in image processing). Thus, there exists an outer unit normal vector n(x) at \({\mathcal {H}}^{N-1}\)-almost every point \(x\in \partial \varOmega \); here and in what follows, \({\mathcal {H}}^{N-1}\) stands for the \((N-1)\)-dimensional Hausdorff measure.

We will consider in \(\varOmega \) a Neumann problem involving the 1-Laplacian. This operator has to be studied in the framework of functions of bounded variation. Recall that a function \(u\>:\>\varOmega \rightarrow {\mathbb {R}}\) is said to be of bounded variation if \(u\in L^1(\varOmega )\) and its distributional gradient Du is a (vector) Radon measure having finite total variation. We denote by \(BV(\varOmega )\) the space containing all functions of bounded variation. For a systematic study of this space, we refer to [1] (see also [28]). The appropriate concept of solution to deal with the Neumann problem for the 1-Laplacian is introduced in [3]. For a review on the early development of variational models in image processing and a deep study of equations involving the 1-Laplacian, see [6].

The boundary value problem in which we are interested is

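We shall assume that \(h':\, \varOmega \times {\mathbb {R}}\rightarrow {\mathbb {R}}\) is a nonmonotone Carathéodory function defined as

$$\begin{aligned} h'(x,u)=\frac{\lambda }{\sigma ^2}\Big [u-r(x,u)\,f(x)\Big ], \end{aligned}\tag{3}$$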

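where \(\lambda >0\) and \(\sigma ^2 \ne 0\) are given real parameters, \(f(x)\ge 0\) for almost all \(x\in \varOmega \), and the function

$$\begin{aligned} r(x,u)=\frac{I_1\big (u(x)f(x)/\sigma ^2\big )}{I_0\big (u(x)f(x)/\sigma ^2\big )} \end{aligned}\tag{4}$$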

is the ratio between the first- and zero-order modified Bessel functions of the first kind. Series representations and general properties can be found in [43]. Notice the dependence (that we shall omit) \(r_{\sigma } (x,u)=r(x,u)\) of the Bessel ratio function on the parameter \(\sigma ^2\), which is the estimated variance of the original Gaussian noise of the complex MRI data. This implicit dependence renders problem (2) a truly two-parameter problem, insofar as \(\sigma ^2\) cannot be scaled out from \(\lambda \) and has to be estimated from the noisy data f(x).

Assuming \(\lambda >0\), \(\sigma ^2 \ne 0\) fixed and \(f(x) \in L^{\infty } (\varOmega )\) given, problem (2) reads

The modified Bessel functions \(I_\nu (s)\), \(\nu \ge 0\), \(s\ge 0\), which define the ratio r(x, u) in (4), are monotone, exponentially growing functions, and this distinguishes their behavior from that of ordinary Bessel functions, which have oscillating wave-like forms [2, 37]. Moreover, \(I_0 (0)=1\), \(I_0 (s) >1\) for any \(s>0\), and \(I_\nu (0)=0\), \(I_\nu (s)>0\) for any \(s>0\) and \(\nu >0\), so \(r(x,0)=0\) and the Bessel ratio function r(x, u) in (4) is well-defined and nonnegative for any \(f\ge 0\) and \(u \ge 0\). Also, \(I_1 (s)<I_0 (s)\) for any \(s>0\), and then \(0\le r(x,u) <1\). By (3) we then have \(h' (x,0)=0\), and \(u\equiv 0\) is always a solution of (2) and (5) for any nonnegative datum f(x) and fixed parameters \(\lambda >0\) and \(\sigma ^2 \ne 0\).
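The properties of the Bessel ratio listed above are easy to check numerically. The sketch below is only illustrative: it evaluates \(I_0\) and \(I_1\) from their power series with the standard library, which is adequate for moderate arguments (say \(s\le 100\)); the helper names `bessel_i0_i1` and `bessel_ratio` are hypothetical. In practice, a library routine such as SciPy's exponentially scaled `scipy.special.i0e` and `scipy.special.i1e` would be a more robust choice for large arguments.

```python
def bessel_i0_i1(s, tol=1e-16, max_terms=2000):
    """Return (I_0(s), I_1(s)) for s >= 0 from their power series.
    Intended for moderate s only; large s would overflow."""
    q = 0.25 * s * s
    t0, t1 = 1.0, 0.5 * s            # k = 0 terms of the two series
    i0, i1 = t0, t1
    for k in range(1, max_terms):
        t0 *= q / (k * k)            # (s/2)^(2k) / (k!)^2
        t1 *= q / (k * (k + 1))      # (s/2)^(2k+1) / (k! (k+1)!)
        i0 += t0
        i1 += t1
        if t0 <= tol * i0:           # remaining terms are negligible
            break
    return i0, i1


def bessel_ratio(s):
    """r(s) = I_1(s) / I_0(s) for s >= 0."""
    i0, i1 = bessel_i0_i1(s)
    return i1 / i0


samples = [0.0, 1e-3, 0.5, 1.0, 5.0, 20.0, 80.0]
ratios = [bessel_ratio(s) for s in samples]
for s, r in zip(samples, ratios):
    print(f"s = {s:8.3f}   r(s) = {r:.6f}")
```

The printed values start at 0, increase with s, and stay strictly below 1, in agreement with \(0\le r(x,u)<1\).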

The specific form of \(h' (x,u)\) given in (3) describes the Rician noise distribution of a given datum image f(x) and it has been deduced in several papers dealing with medical imaging since the paper [9] where it was proposed for DTI. When dealing with the image processing application, we shall assume that \(f\in L^\infty (\varOmega )\) even if our existence theory applies more generally to \(f\in L^2 (\varOmega )\).

The function \(h' (x,u)\) is the Gateaux derivative of h(x, u):

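Using (3) we have

$$\begin{aligned} h(x,u)=\frac{\lambda }{2\sigma ^2}\,u^2-\lambda \log I_0\Big (\frac{u\,f(x)}{\sigma ^2}\Big ) \end{aligned}\tag{6}$$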

with \(h(x,0) =0\) and the logarithm is well-defined and nonnegative for any \(f\ge 0\) and \(u \ge 0\) because of \(I_0 (s)\ge 1\), \(\forall \,s\ge 0\).

Following the Bayesian modeling approach, the associated minimization problem is

where \(J_1 (u)=TV(u)\) is the Total Variation regularization functional, previously defined in (1), and that can also be denoted as

The fidelity term (modeling Rician noise) is

Notice that \(H(0,f)=0\), \(\forall \,f\).

The minimization problem for image denoising of Rician corrupted data is formulated as follows (an equivalent formulation is considered in [26]): given fixed real parameters \(\lambda >0\) and \(\sigma ^2 \ne 0\) and a noisy image \(f \in L^{\infty } (\varOmega )\), recover a clean image \(u \in BV(\varOmega )\cap L^{\infty } (\varOmega )\) minimizing the energy:

This minimization problem can naturally be studied in the \(L^2\)-setting since

(see (10) in Lemma 1 below). Thus, our main result can be stated as follows:

Theorem 1

Let \(\lambda >0\) and \(\sigma ^2 \ne 0\) be given real parameters. For every nonnegative \(f\in L^2(\varOmega )\), there exists a nonnegative \(u\in BV(\varOmega )\cap L^2(\varOmega )\) which is a solution to problem (2), in the sense of Subsection 3.1, and it is a global minimum of functional \(E_1\) in (8).

Remark 1

This existence result relates problem (2) and the global minimization of functional (8), which is a nonsmooth and nonconvex optimization problem. Its proof can be found in Sect. 3 below, while Sect. 4 is devoted to completing this theorem. Among other things, it is shown that the solution we find satisfies the following properties:

1. If \(f\in L^\infty (\varOmega )\), then \(u\in L^\infty (\varOmega )\) and \(\Vert u\Vert _\infty \le \Vert f\Vert _\infty \).

2. The solution u vanishes identically when \(f(x)\le \sqrt{2\sigma ^2}\) a.e. in \(\varOmega \).

3. The solution u is strictly positive when \(f(x)\ge \mu >\sqrt{2 \sigma ^2} \) a.e. \(x \in \varOmega \), and moreover \(E_1(u)<0\) holds.

This last feature provides a sufficient condition in order to have a nontrivial minimizer of functional (8).

Remark 2

The problem of minimizing \(E_1\) has also been considered by Getreuer et al. (their results were announced in [26] and proved in [27, 40]). It is worth comparing these results with those in the present paper since both approaches are very different. We prove our results through the formal Euler–Lagrange equation of the minimization problem, while the results in [27, 40] are obtained by direct methods. We explicitly point out two aspects:

1. Our existence result is more general, since we take data f belonging to \(L^2(\varOmega )\), and [27, 40] consider data \(f\in L^\infty (\varOmega )\) with the additional assumption \(\inf _{x\in \varOmega } f(x)\ge \alpha >0\).

2. One important feature of the present paper is that a simple condition is provided to distinguish data which lead to nontrivial solutions. Instead, the results by Getreuer et al. do not identify nontrivial solutions.

Remark 3

Uniqueness of nontrivial solutions is still an open problem. Using the same arguments from [8] and some properties of the modified Bessel functions, a comparison result for the solutions of the minimization problem is stated in [26, Theorem 2] and proved in [27, 40]. This comparison result establishes that, given \(0<f_1 < f_2\) a.e. in \(\varOmega \), one has \(u_1 \le u_2\) a.e. in \(\varOmega \), with \(u_1\), \(u_2\) being minimizers of (8) for \(f=f_1\), \(f=f_2\) respectively. Since \(f_1\) and \(f_2\) must be different, it does not imply uniqueness. Some partial results about uniqueness of nontrivial solutions will be presented in Sect. 4.2.

The existence result in Theorem 1 will be proved by approximating our functional through functionals defined on the Sobolev space \(W^{1,p}(\varOmega )\) and having p-growth (with \(p>1\)). The main advantage of these approximating functionals is their differentiability (in contrast with \(E_1\), which is not differentiable). So, we introduce, for subsequent analysis, the (differentiable) energy

Notice that \(E_p (0)=0\) for any \(p> 1\), and also \(E_1 (0)=0\). The weak (distributional) solutions of (5) formally coincide with the critical points of (8). The crucial point is that these energies (including (9) for \(p>1\)) may be nonconvex depending on the datum f and the (estimated) parameter \(\sigma ^2\). This fact does not depend on the regularizer; it is a feature of the Rician likelihood function. To explore this point further, we analyse the behavior of the Bessel ratio function defined in (4), which governs the qualitative properties of the energies (8) and (9). This leads to the coerciveness of the functional, shown in Sect. 2.3, which implies the existence result for the p-approximating problems in Sect. 2.4.
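The nonconvexity mentioned above can be observed on a toy discretization. The following sketch assumes a one-dimensional signal, the discrete total variation \(\sum _i |u_{i+1}-u_i|\), and the pointwise potential \(h(t)=\frac{\lambda }{2\sigma ^2}t^2-\lambda \log I_0(tf/\sigma ^2)\) obtained by integrating (3); the function names and the grid are hypothetical, and the parameter values \(\lambda =\sigma ^2=5\), \(f^2=20\) are the double-well case quoted in the caption of Fig. 2.

```python
import math


def log_i0(s):
    """log I_0(s) from the power series of I_0 (moderate |s| only)."""
    q = 0.25 * s * s
    term, total, k = 1.0, 1.0, 0
    while term > 1e-17 * total:
        k += 1
        term *= q / (k * k)
        total += term
    return math.log(total)


def h(t, f, lam, sigma2):
    """Pointwise Rician potential; even in t because I_0 is even."""
    return 0.5 * lam / sigma2 * t * t - lam * log_i0(abs(f * t) / sigma2)


def energy_1d(u, f, lam, sigma2):
    """Discrete analogue of E_1 = TV + fidelity on a 1D grid (assumed discretization)."""
    tv = sum(abs(b - a) for a, b in zip(u, u[1:]))
    fidelity = sum(h(ui, fi, lam, sigma2) for ui, fi in zip(u, f))
    return tv + fidelity


lam, sigma2 = 5.0, 5.0
n = 16
f = [math.sqrt(20.0)] * n            # constant datum with f^2 > 2 sigma^2

zero = [0.0] * n
plus = [3.0] * n                     # a positive constant candidate
minus = [-3.0] * n                   # its mirror image

e_zero = energy_1d(zero, f, lam, sigma2)
e_plus = energy_1d(plus, f, lam, sigma2)
e_minus = energy_1d(minus, f, lam, sigma2)
print("E(0) =", e_zero, " E(+3) =", e_plus, " E(-3) =", e_minus)

# The zero signal is the midpoint of `plus` and `minus`; a convex energy
# would satisfy E(0) <= (E(+3) + E(-3)) / 2, which fails here.
midpoint_gap = e_zero - 0.5 * (e_plus + e_minus)
print("E(midpoint) - average of endpoints =", midpoint_gap)
```

Since the gap is positive, the discrete energy violates midpoint convexity, and the negative value at the positive constant shows that the trivial solution is not the minimizer for this datum.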

2.2 A Nonconvex Semilinearity

The characterization of the model semilinearity h(x, u) leads to the study of the properties of the modified Bessel functions of the first kind. Our results are founded on some fundamental inequalities regarding the ratio function r(x, u) and its derivative, which can be found in [2]. These results will allow us to characterize suitable growth conditions related to the Rician statistics. Moreover, we shall prove that, depending on the data and parameters of the problem, \(h^{''} (x,u)\) is negative near \(u=0\), and hence \(h'\) is nonmonotone and h is nonconvex.

Lemma 1

Let \(h'\) be defined as in (3) with datum \(f(x)\ge 0\) and fixed parameters \(\lambda >0\) and \(\sigma ^2 \ne 0\). Then

and

Moreover

and

Proof

In order to simplify the notation when using the results of [2], we define \(s= u (x)f(x)/\sigma ^2 \) for fixed \(x\in \varOmega \) and denote the ratio function \(r(x,u)=r(s)=I_1 (s)/I_0 (s)\), \(s\ge 0\). Please notice that \(r'(x,u )\) is the Gateaux derivative, while \(r' (s)\) is the derivative with respect to the real, nonnegative parameter s.

By definition and the monotonicity properties of the modified Bessel functions, \(0\le r(s)<1\) for any \(s>0\) and \(r(s)\rightarrow 1\) when \(s\rightarrow \infty \). The first inequality is then straightforward. We simply use definition (3), the fact that \(0\le r(x,u) <1\), and the triangle inequality to deduce that \(h'\) verifies the sublinear growth condition (11) for a.e. \(x\in \varOmega \).

As a consequence, we obtain (10). Indeed,

In order to show (12), we compute the second derivative of h(x, u) with respect to u which reads

where we used that \(I_0^{''} (s)=I_1^{'} (s)=(1/2)[I_2 (s)+I_0 (s) ]\), \(\forall \,s\ge 0\) [2].

Reasoning as in [2] and using its formulas 11, 12, and 15 (p. 242), the following bounds hold:

Using that \(\displaystyle r'(s)=1-\displaystyle \frac{r(s)}{s}-r^2 (s)\), \(s>0\), and inequalities \(0< \displaystyle r' (s) < \displaystyle \frac{r(s)}{s}\), \(s>0\) (formula 15 in [2]) we get the improved bounds

for all \(s>0\).

To show (12), we derive with respect to u the relationship \(r(x,u)=r(s)\) to have

and

because \(\displaystyle r'(s) < \frac{1}{2}\) for any \(s\ge 0\) by (16). Using (15), the above inequality implies:

and (12) holds true. Finally (13) is checked using (15) and \(I_0 (0)=1\), \(I_\nu (0)=0\) for any \(\nu >0\). Because of \(I_2 (s)/I_0 (s) \rightarrow 1\), \(I_1 (s)/I_0 (s) \rightarrow 1\) when \(s\rightarrow \infty \) we deduce (14). \(\square \)

As a consequence of the above analysis \(h^{''}(x,u)>0\) a.e. in \(\varOmega \) for (uniformly) small data \(f(x) <\sqrt{2\sigma ^2}\) and then \(h'\) is monotone, increasing and uniqueness of the trivial solution can be deduced (see Sect. 4.2 below). Summing up we have shown (see Fig. 1) that \(h^{''} (x,0) >0\) for \(f (x) <\sqrt{2\sigma ^2} \), \(h^{''} (x,0) =0\) for \(f (x) =\sqrt{2\sigma ^2} \) and \(h^{''} (x,0) <0\) for \(f (x) >\sqrt{2\sigma ^2} \).
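The sign pattern of \(h''(x,0)\) summarized above can be reproduced numerically. The sketch below is an illustration under stated assumptions: it uses the expression \(h''(x,u)=\frac{\lambda }{\sigma ^2}\big [1-\frac{f^2}{\sigma ^2}\,r'(s)\big ]\) with \(s=uf/\sigma ^2\), obtained by differentiating (3) directly, together with the identity \(r'(s)=1-r(s)/s-r^2(s)\) and the limit value \(r'(0)=1/2\). The helper names are hypothetical, and the parameters (constant datum \(f=1\), ratio \(\lambda /\sigma ^2=3\), and \(f^2/\sigma ^2\in \{1,2,3\}\)) are those quoted in the caption of Fig. 1.

```python
def bessel_ratio(s):
    """r(s) = I_1(s) / I_0(s) from the power series (moderate s only)."""
    q = 0.25 * s * s
    t0, t1 = 1.0, 0.5 * s
    i0, i1 = t0, t1
    for k in range(1, 2000):
        t0 *= q / (k * k)
        t1 *= q / (k * (k + 1))
        i0 += t0
        i1 += t1
        if t0 <= 1e-16 * i0:
            break
    return i1 / i0


def ratio_derivative(s):
    """r'(s) = 1 - r(s)/s - r(s)^2, with the limit value 1/2 at s = 0."""
    if s < 1e-6:
        return 0.5
    r = bessel_ratio(s)
    return 1.0 - r / s - r * r


def h_second(u, f, lam, sigma2):
    """Second derivative of the Rician potential with respect to u."""
    s = f * u / sigma2
    return (lam / sigma2) * (1.0 - (f * f / sigma2) * ratio_derivative(s))


f = 1.0
at_zero, far_field = {}, {}
for ratio in (1.0, 2.0, 3.0):        # ratio = f^2 / sigma^2
    sigma2 = f * f / ratio
    lam = 3.0 * sigma2               # keeps lambda / sigma^2 = 3
    at_zero[ratio] = h_second(0.0, f, lam, sigma2)
    far_field[ratio] = h_second(20.0, f, lam, sigma2)
    print(f"f^2/sigma^2 = {ratio}: h''(0) = {at_zero[ratio]:+.4f}, "
          f"h''(20) = {far_field[ratio]:.4f}")
```

With these parameters, the value at \(u=0\) equals \(\frac{\lambda }{\sigma ^2}\big (1-\frac{f^2}{2\sigma ^2}\big )\), so it is positive, zero, and negative for the three ratios, while for large u the profile approaches \(\lambda /\sigma ^2\).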

Fig. 1 The profile of \(h^{''}(x,u)\) is computed for constant data \(f=1\) and parametric values \(f^2 /\sigma ^2=1\), \(f^2 /\sigma ^2=2\) and \(f^2 /\sigma ^2=3\). The values of \(\lambda \) are chosen to get a constant ratio \(\lambda /\sigma ^2=3\). A limit behavior is obtained when \(f^2 /\sigma ^2=2\) (\(\sigma =1/\sqrt{2}\), in red). For \(f^2 /\sigma ^2\le 2\), we have uniqueness. On the other hand, for \(f^2 /\sigma ^2>2\) we have \(f^2 >2\sigma ^2\), and the corresponding profile is negative in a neighborhood of \(s=0\). Some properties of \(h''(x,u)\), (12), (13), and (14), can be observed in the figure (Color figure online)

The same fact is true for small u, as \(h''(x,u)\) is continuous with respect to u. These properties characterize the local behavior of h(x, u) near \(u=0\). It turns out that \(h^{''} (x,u)\) is a sign-changing function depending on the datum f and the parameter \(\sigma ^2\). Then h(x, u) is possibly nonconvex, which implies that \(h' (x,u)\) is nonmonotone. Multiple solutions to problem (2) corresponding to critical points of the energy functional may exist. For \(f\equiv 0\), we have \(h(u)=(\lambda /2\sigma ^2 ) u^2 \), \(h' (u)=(\lambda /\sigma ^2 )u\), and \(h^{''} (u) =\lambda /\sigma ^2 >0\). Multiplying (formally) by u in the model equation appearing in (2) and integrating, it is easily seen that \(u\equiv 0\) is the unique solution. We shall see in Sect. 4.2 that the same phenomenon is true when f is small enough.

To get a deep insight into the features of the energy term related to Rician noisy data, in this subsection, we fix \(x\in \varOmega \) and describe the profile of \(h(x,\,u)\) defined in (6). The qualitative behavior of \(h''(u)\) and h(u) is shown in Figs. 1 and 2 respectively. We have

Lemma 2

Let h be defined as in (6) with datum \(f(x)\ge 0\), a.e. \(x\in \varOmega \) and fixed parameters \(\lambda >0\) and \(\sigma ^2 \ne 0\). Then

Moreover:

1. If \(f(x)^2\le 2\sigma ^2\) a.e. \(x\in \varOmega \), then the function \(t\mapsto h(x,t)\) is convex and its minimum is attained at 0.

2. If \(f(x)^2> 2\sigma ^2\) a.e. \(x\in \varOmega \), then \(t\mapsto h(x,t)\) has a unique positive critical point where it attains a global minimum.

Fig. 2 The double-well potential for parametric values \(\lambda =\sigma ^2 =5\) is obtained when \(f^2 >2\sigma ^2=10\). In the figure above, we represent the profile of function \(t\mapsto h(t)\) for \(f^2=\sigma ^2 =5\) (convex case), \(f^2=2\sigma ^2 =10\) (limiting behavior), \(f^2=4\sigma ^2 =20\) (double well)

Proof

We fix \(x\in \varOmega \). When \(f(x)=0\), the result is straightforward since then \(h(x,t)=\left( \displaystyle \frac{\lambda }{2\sigma ^2} \right) t^2\).

Assuming that \(f(x)>0\), we begin by showing the limit behavior. Consider (3) written in form

As \(h' (x,u)\) is the Gateaux derivative of h (x, u) we formally integrate in (0, |u|) with respect to u to obtain

We deduce from the boundedness \(| r (x,t) | \le 1\) a.e. in \(\varOmega \) for any t, the inequality

and owing to the fact that h(x, t) is an even function (because \(I_0 \) is even), it yields

Now, Young’s inequality implies

for any \(\epsilon >0\). Thus, (18) becomes

from where (17) follows choosing \(\epsilon <1\).

To go on, we need to know more features of the function \(\displaystyle s\mapsto \frac{r(s)}{s}\). Our starting point is (16). Indeed, letting \(s\rightarrow 0\) in (16), it yields \(\displaystyle \lim _{s\rightarrow 0}\frac{r(s)}{s}=\frac{1}{2}\) and letting \(s\rightarrow +\infty \), we deduce \(\displaystyle \lim _{s\rightarrow +\infty }\frac{r(s)}{s}=0\). On the other hand, (16) implies that the function \(s\mapsto \frac{r(s)}{s}\) is (strictly) decreasing in \([0,+\infty [\).

Next let w(x) be a positive critical point of

then \(h^\prime (x,w(x))=0\) and so \(\displaystyle w(x)=\displaystyle r\Big (\frac{f(x)w(x)}{\sigma ^2}\Big )f(x)\). In other words,

According to (16), it leads to the following dichotomy:

1. If \(0<f(x)^2\le 2\sigma ^2\), then \(\frac{\sigma ^2}{f(x)^2}\ge \frac{1}{2}\), so that we cannot find a positive critical point. In this case, h(x, t) is convex and its minimum is attained at 0.

2. If \(f(x)^2> 2\sigma ^2\), then \(0<\frac{\sigma ^2}{f(x)^2}<\frac{1}{2}\). As the function \(s\mapsto \frac{r(s)}{s}\) is (strictly) decreasing, recall (16), there exists a unique \(s_f>0\) satisfying

$$\begin{aligned} \frac{\sigma ^2}{f(x)^2}=\frac{r(s_f)}{s_f}\,. \end{aligned}$$

Choosing w(x) such that \(\frac{f(x)w(x)}{\sigma ^2}=s_f\), we deduce that \(w(x)>0\) and h(x, t) has a critical point at \(t=w(x)\). Since h(x, t) is negative in a neighborhood of 0 (as a consequence of \(h''(x,0)<0\) and \(h' (x,0)=h(x,0)=0\)) and \(\lim _{t\rightarrow \pm \infty }h(x,t)=+\infty \), it follows that h(x, t) has at least one local minimum, and hence that positive critical point must be a local minimum. Therefore, h(x, t) is an even function that, on \([0,+\infty [\), has the following profile: it is nonpositive and decreasing in [0, w(x)]; it attains a global minimum at the point w(x); from the point w(x) on, it is increasing and goes to \(+\infty \) as \(t\rightarrow +\infty \).\(\square \)

2.3 Coerciveness and Lower Bound

In this Subsection, we show that the energy minimization problem related to the (formal) Euler–Lagrange equation in (2) is coercive in \(BV (\varOmega )\cap L^2 (\varOmega )\) because the energy H(u, f) defined in (7) is coercive in \(L^2 (\varOmega )\). This shall be used to show that the energy \(E_1 (u)\) has, at least, a positive, nontrivial minimum (provided that the datum is big enough).

Integrating (19) in \(\varOmega \), using definition (7) and noticing that \(r(x,0)=0\) we deduce

where \(0< \epsilon < 1/2\), and the functional H(u, f) is coercive in \(L^2 (\varOmega )\). Then the energy functional \(E_p (u)\) in (9) is coercive in \(W^{1,p} (\varOmega )\cap L^2 (\varOmega )\) and \(E_1 (u)\) [defined in (8)] is coercive in \(BV (\varOmega )\cap L^2 (\varOmega )\). These energies are also (uniformly) bounded from below

2.4 Existence Result for the Approximating Problems

The analysis of problem (2) begins with the consideration of problems involving the p-Laplacian:

Since we want to let \(p\rightarrow 1\), it is enough to take \(1<p< 2\). For such p, the existence of a solution to (20) is a standard result, although we have not found references for this specific problem; we therefore include its proof for the sake of completeness. We shall prove the following adaptation of Theorem 1.

Proposition 1

Let \(1<p<2\) and \(\lambda >0\), \(\sigma ^2 \ne 0\) be given real parameters.

For every nonnegative \(f\in L^2(\varOmega )\), there exists a nonnegative \(u\in W^{1,p}(\varOmega )\cap L^2(\varOmega )\) which is a solution to problem (20) and it is a global minimum of functional \(E_p\).

Proof

Consider the functional, written in terms of (6),

Since the Euler–Lagrange equation corresponding to the functional \(E_p\) is (20) and \(E_p\) is differentiable, it is enough to find a nonnegative minimizer of \(E_p\) in the space \(W^{1,p}(\varOmega )\cap L^2(\varOmega )\).

The weak lower semicontinuity of \(E_p\) can be obtained as follows. If \((u_n)_n\) is a sequence in \(W^{1,p}(\varOmega )\cap L^2(\varOmega )\) such that

then, due to the lower semicontinuity of the p-norm and the 2-norm, it yields

To pass to the limit in the remainder term, another consequence is in order, namely: the sequence \((fu_n)_n\) is weakly convergent in \(L^1(\varOmega )\), so that it is equi-integrable. Thus, it follows from the estimate

that the sequence \(\Big (\lambda \log I_0\Big (\frac{u_nf}{\sigma ^2}\Big )\Big )_n\) is equi-integrable as well. Moreover, applying the compact embedding of \(W^{1,p}(\varOmega )\) into \(L^1(\varOmega )\), we will assume that

This fact implies

By Vitali’s Theorem we conclude that

On the other hand, we have already proved the coerciveness of \(E_p\) previously in Sect. 2.3. Therefore, there exists \(u\in W^{1,p}(\varOmega )\cap L^2(\varOmega )\) which minimizes \(E_p\).

Moreover, we may choose u to be nonnegative. This is a consequence of h(x, s) being an even function with respect to s, since this implies \( E_p(|u|)=E_p(u) \), and so |u| is a minimizer of \(E_p\) as well. \(\square \)

Remark 4

Regarding uniqueness for problem (20), we point out that the trivial solution \(u\equiv 0\) always exists. This solution may be unique if the datum is small enough (see Sect. 4 below).

Nevertheless, we are interested in uniqueness of positive solutions. When \(p=2\), we may invoke the results in [13] and, noting that the function \(\displaystyle u\mapsto \frac{r(x,u)}{u}\) is decreasing, deduce that the positive solution to (20) must be unique. Since \(\displaystyle u\mapsto \frac{r(x,u)}{u^{p-1}}\) is not decreasing, this argument does not hold for \(p<2\), so that we cannot presume that the positive solution we have found is unique.
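The monotonicity claims used in this remark can be checked numerically in the scalar variable \(s=uf/\sigma ^2\), which only rescales u by a positive constant. The sketch below is an illustration with hypothetical helper names: it samples \(r(s)/s\) (the case \(p=2\)) and \(r(s)/s^{p-1}\) for the sample value \(p=1.5\) on a grid, with the Bessel ratio evaluated by power series.

```python
def bessel_ratio(s):
    """r(s) = I_1(s) / I_0(s) from the power series (moderate s only)."""
    q = 0.25 * s * s
    t0, t1 = 1.0, 0.5 * s
    i0, i1 = t0, t1
    for k in range(1, 2000):
        t0 *= q / (k * k)
        t1 *= q / (k * (k + 1))
        i0 += t0
        i1 += t1
        if t0 <= 1e-16 * i0:
            break
    return i1 / i0


def quotient(s, p):
    """r(s) / s^(p-1), the quantity whose monotonicity matters."""
    return bessel_ratio(s) / s ** (p - 1.0)


grid = [0.1 * k for k in range(1, 101)]          # s in (0, 10]
q_p2 = [quotient(s, 2.0) for s in grid]          # r(s) / s
q_p15 = [quotient(s, 1.5) for s in grid]         # r(s) / sqrt(s)

decreasing_p2 = all(a > b for a, b in zip(q_p2, q_p2[1:]))
decreasing_p15 = all(a > b for a, b in zip(q_p15, q_p15[1:]))
print("p = 2.0: decreasing on the grid?", decreasing_p2)
print("p = 1.5: decreasing on the grid?", decreasing_p15)
print("p = 1.5 values at s = 0.1, 1, 10:", q_p15[0], q_p15[9], q_p15[99])
```

For \(p=1.5\) the quotient first increases (it behaves like \(\sqrt{s}/2\) near 0) and then decreases, which is why the argument valid for \(p=2\) does not carry over.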

3 Solving the Model Problem

In this section, we rigorously formulate the model equation formally introduced in (2). We shall prove the existence of a weak (distributional) solution which is a global minimum of the energy functional \(E_1 (u)\) in (8).

3.1 Definition of Solution for the Model Problem

We shall say that \(u\in BV (\varOmega )\cap L^2(\varOmega )\) is a weak solution of problem (2) if \(h'(x,u)\in L^2(\varOmega )\) and there exists a vector field \({\mathbf {z}}\in L^{\infty } (\varOmega , {\mathbb {R}}^N )\), with \(\Vert {\mathbf {z}} \Vert _\infty \le 1\), such that

1. \(-\displaystyle \text {div} ({\mathbf {z}}) + h' (x,u) =0 \qquad \text {in}\quad \mathcal{D}' (\varOmega ) \);

2. the equality \(( {\mathbf {z}} , Du ) =|Du|\) holds in the sense of measures;

3. \([{\mathbf {z}}, n] =0\), \(\mathcal{H}^{N-1}\)-a.e. on the boundary \(\partial \varOmega \).

Roughly speaking, \({\mathbf {z}}\) plays the role of \(\frac{Du}{|Du|}\). The expressions \(( {\mathbf {z}} , Du )\) and \([{\mathbf {z}}, n]\) make sense thanks to the Anzellotti theory (see [7] or [5, Appendix C]), which defines a Radon measure \(({\mathbf {z}}, Dw)\) when \(w\in BV (\varOmega )\cap L^2(\varOmega )\) and \(\text {div} ({\mathbf {z}})\in L^2(\varOmega )\), and provides the definition of a weak trace on \(\partial \varOmega \) of the normal component of \({\mathbf {z}}\), denoted by \([{\mathbf {z}}, n]\). That Radon measure is defined, as a distribution, by the expression

and its total variation satisfies the fundamental inequality

Furthermore, this theory also guarantees a Green’s formula that relates the function \([{\mathbf {z}}, n]\) and the measure \(({\mathbf {z}}, Dw)\)

Using this Green formula, we deduce a variational formulation of the solution to problem (2), namely

for all \(v\in BV(\varOmega )\cap L^2(\varOmega )\).

This formulation allows us to show in which sense solutions to problem (2) are critical points of the functional \(E_1=J_1+H\). In fact, it follows from (23) that

for all \(v\in BV(\varOmega )\cap L^2(\varOmega )\). Hence,

Remark 5

Observe that if we denote \(\displaystyle F(u)=\lambda \log \Big (I_0\Big (\frac{fu}{\sigma ^2}\Big )\Big )\), then we get that \(F^\prime (u)\) lies in the subdifferential at u of the convex functional defined by \(\displaystyle v\mapsto \frac{\lambda }{2\sigma ^2}\int _\varOmega v^2+\int _\varOmega |Dv|\).

3.2 A Priori Estimates

We now prove that problem (2) has a solution u for each \(f\in L^2(\varOmega )\) and that, moreover, \(u\ge 0\).

For \(1<p< 2\), consider \(u_p\in W^{1,p}(\varOmega )\cap L^2(\varOmega )\) a nonnegative solution to the approximating problem

The weak (variational) formulation of the boundary value problem (24), written in terms of (3) and (4), is

for all \( v\in W^{1,p} (\varOmega )\cap L^2(\varOmega )\). Choosing \(v=1\), we have the compatibility integral condition

i.e., \(h' (x,u_p)\) has mean zero and we easily deduce a first estimate:

We now use \(v=u_p\) as a test function in the variational formulation obtaining

hence the uniform estimate

It follows now from Young’s inequality that

Thus, \((u_p)_p\) is bounded in \(BV(\varOmega )\cap L^2(\varOmega )\) and there exist \(u\in BV(\varOmega )\cap L^2(\varOmega )\) and a subsequence, still denoted by \(u_p\), satisfying

We point out that \(u\ge 0\) because it is a pointwise limit of nonnegative functions. We deduce from \(u\in L^2(\varOmega )\) that \(h'(x,u)= (\lambda /\sigma ^2)[u- r(x,u)f] \in L^2(\varOmega )\) since \(r(x,u)\) is bounded. The boundedness of \((u_p)_p\) in \(BV(\varOmega )\) also implies that for every \(q,\, 1\le q<p'\), we have

So, for any \(q>1\) fixed, the sequence \(|\nabla u_p|^{p-2} \nabla u_p\) is bounded in \(L^q(\varOmega ;{\mathbb {R}}^N)\) and then there exists \({\mathbf {z}}_q\in L^q(\varOmega ;{\mathbb {R}}^N)\) such that, up to subsequences,

Moreover, by a diagonal argument we can find a limit \({\mathbf {z}}\) that does not depend on q, that is

Now by (28), we deduce

for \(1\le q<+\infty \) and for \( p\in ]1,q^\prime [\,\). Therefore, by lower semicontinuity of the norm, we have

Letting \(q\rightarrow \infty \), we get that \({\mathbf {z}}\in L^\infty (\varOmega ;{\mathbb {R}}^N)\) and

3.3 Checking that Function u is a Solution to the Model Problem (2)

We have to see that u satisfies the requirements of our definition (see Sect. 3.1 above).

Taking \(v=\varphi \in C_0^\infty (\varOmega )\) in (25) and letting \(p\rightarrow 1\), it yields

so that our equation holds in the sense of distributions.

Having proved condition 1 in the definition of solution, we proceed to check conditions 2 and 3. To begin with condition 2, consider \(\varphi \in C_0^\infty (\varOmega )\) such that \(\varphi \ge 0\). Taking \(u_p\varphi \) as a test function in (25), we obtain

We study each term in (30) in order to let \(p\rightarrow 1\). We apply Fatou’s Lemma to the first term. In the second, we use Young’s inequality and the lower semicontinuity of the total variation as follows:

The third term is handled using (27) and (29). On the right-hand side, it is enough to keep in mind that r is bounded. Therefore, (30) becomes

Taking into account that our equation holds in the sense of distributions and simplifying, we may write this inequality as

By (21), this is just

that is, \(|Du|\le ({\mathbf {z}},Du)\) as measures. The reverse inequality is a consequence of (22). Hence, condition 2 is proved.

It only remains to prove condition 3. To this end, consider \(v\in W^{1,2}(\varOmega )\) in (25) and take limits as p goes to 1. This yields

Using the equality \(\displaystyle \frac{\lambda }{\sigma ^2}(u- r(x,u)f)=\,\text {div }{\mathbf {z}}\), it follows that

so that Green’s formula implies

By a density argument, this leads to \([{\mathbf {z}}, n]=0\) \({\mathcal {H}}^{N-1}\)-a.e. on \(\partial \varOmega \).

Remark 6

We explicitly point out that the compatibility condition (26) also holds for the solution u to problem (2). To check this fact, it is enough to multiply

by a constant function and apply Green’s formula. Then we get

The same condition can be deduced letting \(p\rightarrow 1\) in (26) since \(|h'(x,u_p)|\le C(|u_p|+f)\) and \(u_p\rightarrow u\) strongly in \(L^1(\varOmega )\).

3.4 Function u is a Global Minimizer of Functional \(E_1\)

We will prove that the nonnegative function u considered in Sect. 3.2, which we have shown is a solution to problem (2) in Sect. 3.3, satisfies

We prove this in several steps.

Step 1 To begin with, assume that \(v\in W^{1,2}(\varOmega )\). Observe first that the interpolation inequality implies

for all \(1<p<2\). Thus,

On the other hand, as a consequence of Young’s inequality, we have

for all \(1<p<2\); so that

Hence, the conclusion is

that is

Since \(u_p\) is a minimizer of \(E_p\) and \(v\in W^{1,p}(\varOmega )\cap L^2(\varOmega )\), we obtain

for all \(1<p<2\). On account of (31), using the lower semicontinuity of functional \(E_1\) and Young’s inequality we deduce that

Step 2 Assume now that \(v\in W^{1,1}(\varOmega )\cap L^2(\varOmega )\) satisfies \(v\big |_{\partial \varOmega }\in W^{1/2,2}(\partial \varOmega )\). Then there exists \(w\in W^{1,2}(\varOmega )\) such that \(v\big |_{\partial \varOmega }=w\big |_{\partial \varOmega }\) and so \(v-w\in W_0^{1,1}(\varOmega )\cap L^2(\varOmega )\). Thus, there exists a sequence \((v_n)_n\) in \(C_0^\infty (\varOmega )\) such that

Since Step 1 gives

it follows that

Step 3 Consider the general case: \(v\in BV(\varOmega )\cap L^2(\varOmega )\). Some approximation sequences of v are in order. First (see [1, Theorem 3.9] and [28, Remark 2.12]), there exists a sequence \((v_n)_n\) in \(C^\infty (\varOmega )\cap W^{1,1}(\varOmega )\cap L^2(\varOmega )\) such that

On the other hand, given \(v\big |_{\partial \varOmega }\in L^1(\partial \varOmega )\), we may find a sequence \((\varphi _n)_n\) in \(W^{1/2,2}(\partial \varOmega )\) satisfying

For each \(n\in {\mathbb {N}}\), we apply [7, Lemma 5.5] to get \(w_n\in C(\varOmega )\cap W^{1,1}(\varOmega )\cap L^2(\varOmega )\) such that

Summing up, we have

-

1.

\(w_n+v_n\in C(\varOmega )\cap W^{1,1}(\varOmega )\cap L^2(\varOmega )\) for all \(n\in {\mathbb {N}}\);

-

2.

\(w_n+v_n\rightarrow v\), strongly in \(L^2(\varOmega )\);

-

3.

\((w_n+v_n)\big |_{\partial \varOmega }=\varphi _n\in W^{1/2,2}(\partial \varOmega )\) for all \(n\in {\mathbb {N}}\).

Moreover, since

and \(\varphi _n\rightarrow v\big |_{\partial \varOmega }\) strongly in \(L^{1}(\partial \varOmega )\), it follows that

The lower semicontinuity of the total variation now leads to

Therefore,

Finally, by Step 2, we already get

Letting n go to \(\infty \), we see that \(E_1(u)\le E_1(v)\). Since this fact holds for all \(v\in BV(\varOmega )\cap L^2(\varOmega )\), we are done.

4 Remarks and Properties of the Problem

4.1 Summability of the Solutions

We are interested in dealing with bounded data f. In this case, the solution we find is also bounded. More generally, we will see in this remark that if \(f\in L^q(\varOmega )\), with \(q>N\), then the solution u is bounded. It is enough to check that an \(L^\infty \)-estimate holds for the approximate solutions \(u_p\). Since \(q > N\), we have \(\frac{N}{q' (N-1)} > 1\). Fix \(p_0\) such that \(1< p_0 < \frac{N}{q' (N-1)}\), and take p such that \(1 < p \le p_0\). For any \(k >0\), consider the real function \(G_k(s) := (s - k)^+\), \(s \ge 0\). Taking \(G_k(u_p)\) as a test function in (25), we get

Disregarding a nonnegative term and applying \(r(x,u_p)\le 1\), Hölder’s inequality leads to

This is the starting point for applying the Stampacchia technique to obtain an \(L^\infty \)-estimate. One only needs to check that the various constants appearing in the calculations do not depend on p. Details can be found in [36, Theorem 3.5, Step 3].

Furthermore, if \(f \in L^\infty (\varOmega )\), we can be more precise and prove the estimate \(\Vert u\Vert _\infty \le \Vert f\Vert _\infty \). This inequality makes explicit and extends statement 8 of [26, Theorem 1].

Taking \(u_p^q\), with \(q>1\) large enough, as test function and dropping a nonnegative term, we obtain

It follows from Hölder’s inequality that

and so

Letting \(q\rightarrow \infty \), it yields \(\Vert u_p\Vert _\infty \le \Vert f\Vert _\infty \) for all \(1<p<2\), and recalling that u is the pointwise limit of \(u_p\), we are done.

4.2 Uniqueness

We will prove that if the function \(t\mapsto h^\prime (x,t)\) is increasing, then there exists at most one solution to (2).

Proof

Assume, to get a contradiction, that \(u_1\) and \(u_2\) are two solutions to (2) in the sense of the definition stated in Sect. 3.1 above. Denote by \({\mathbf {z}}_1\) and \({\mathbf {z}}_2\) the respective vector fields. It follows that

in the sense of distributions. Multiply both equations by \(u_1-u_2\), use Green’s formula, recall the second condition in the Definition of solution to problem (2) and subtract one expression from the other to obtain

The three terms are nonnegative since \(({\mathbf {z}}_i , Du_j)\le \Vert {\mathbf {z}}_i\Vert _\infty |Du_j|\le |Du_j| \), for \(i,j=1,2\), and the function \(t\mapsto h^\prime (x,t)\) is increasing. Hence, they must vanish; in particular,

and \(h'\) increasing implies \(u_1\equiv u_2\), as desired. The qualitative profiles of \(h'(u)\) depending on the data f and the parametric value \(\sigma ^2\) are shown in Fig. 3. \(\square \)

4.3 Nontrivial Solutions

We have already commented that there always exists a trivial solution \(u\equiv 0\). On the other hand, \(0\le f\le \sqrt{2\sigma ^2}\) implies that \(h'(x,s)\) is increasing with respect to s and, as a consequence of the uniqueness result of the previous subsection, there is no other solution aside from the trivial one. Nevertheless, we are interested in the case when \(f\in L^\infty (\varOmega )\) is a.e. above this threshold and in finding nontrivial solutions.

Fig. 3 Profile of \(h'(x,u)\) for fixed \(x\in \varOmega \) and the parametric values \(\lambda =10\), \(\sigma ^2 =10\) for different constant values of the data: \(f=2\), \(f=f^* =\sqrt{20}\), and \(f=10\). The limiting behavior is obtained when \(f=f^* =\sqrt{20} =\sqrt{ 2\sigma ^2}\). For \(f\le f^* \), we have uniqueness of the trivial solution. For \(f>f^* \), we have \(f^2 >2\sigma ^2\) and the corresponding profile is negative in a neighborhood of \(s=0\). Notice that, in this case, when u is small, \(h' <0\), and \(h'\) behaves as a reactive term (a source) in the Euler–Lagrange equation. When u is sufficiently large, \(h' >0\) and \(h'\) defines an absorption term (a sink) in the equation.
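The sign behavior of \(h'\) around the threshold \(f^*=\sqrt{2\sigma ^2}\) can be reproduced with a few lines of code. This is a minimal sketch based on the expression \(h'(x,u)=(\lambda /\sigma ^2)[u-r(x,u)f]\), assuming \(r(x,u)=I_1(s)/I_0(s)\) with \(s=fu/\sigma ^2\); the function names and the sample points are illustrative only.

```python
import math


def bessel_ratio(s, extra_terms=60):
    """Approximate I_1(s) / I_0(s) for s >= 0 by a backward continued fraction."""
    if s == 0.0:
        return 0.0
    rho = 0.0
    for n in range(int(s) + extra_terms, 0, -1):
        rho = 1.0 / (2.0 * n / s + rho)
    return rho


def h_prime(u, f, lam=10.0, sigma2=10.0):
    """h'(x,u) = (lam/sigma2) * (u - r*f), with r evaluated at s = f*u/sigma2."""
    return (lam / sigma2) * (u - bessel_ratio(f * u / sigma2) * f)


sigma2 = 10.0
f_star = math.sqrt(2.0 * sigma2)  # threshold datum for sigma^2 = 10
sample_u = (0.1, 1.0, 5.0, 20.0)
for f in (2.0, f_star, 10.0):
    values = ["%+.4f" % h_prime(u, f) for u in sample_u]
    print("f = %6.3f -> h' at u = %s: %s" % (f, sample_u, values))
```

For data at or below the threshold the sampled values stay positive, whereas for \(f=10\) the profile starts negative near the origin and becomes positive for large u.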

Although constant data are unrealistic, we study them to get nontrivial solutions. In this subsection, we show that if the datum is constant, \(f(x)=\mu \) with \(\mu >\sqrt{2\sigma ^2}\), then the solution is constant and nontrivial. It is worth remarking that we obtain uniqueness of positive solutions for constant data. In the next subsection, we will derive a criterion on the datum to obtain nontrivial solutions.

Considering (6), we define the function

which is related to the function h(x, u) by setting \(h(x,u)=h(f(x),u(x))\). For fixed \(x\in \varOmega \), we have \(\varGamma (\mu , t)= h(f(x),u(x))\) and we can use the results in the proof of Lemma 2, condition 2. Computing its derivative, we have, \(\forall \, \mu>0,\, t>0\),

and \(\varGamma (\mu ,t)\) is decreasing with respect to \(\mu \). Owing to \(\mu >\sqrt{2\sigma ^2}\), the function \(\varGamma (\mu ,t)\) attains a negative minimum at a positive point, say \(t=\gamma \) (see the end of the proof of Lemma 2, condition 2). Then, for fixed \(\mu \), \(\gamma = {{\mathrm{arg\,min}}}\, \varGamma (\mu ,t)\) satisfies \(\varGamma _t (\mu ,t) =0\), which is

Actually, there is exactly one positive point \(\gamma \) satisfying (33); to see this, it is enough to check that \(s_\mu =\frac{\mu \gamma }{\sigma ^2}\) is the unique solution to the problem

and this fact is a consequence of the function \(s\mapsto \frac{r(s)}{s}\) being decreasing in \([0,+\infty [\) (see (16)). Thus \(\gamma \) is given by (33) and satisfies

Taking \(u(x)=\gamma \) for all \(x\in \varOmega \), we find that u is the unique minimizer of the functional \(E_1\). Indeed, if \(v\in BV(\varOmega )\cap L^2(\varOmega )\), then \(h(x,u)\le h(x,v)\) and \(h(x,u)= h(x,v)\) only when \(v(x)=\gamma \) a.e., so that

and \(E_1(u)=E_1(v)\) only when \(u=v\).
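For constant data, the value \(\gamma \) can be computed by a scalar root search. The sketch below rests on two assumptions that should be read as such: the stationarity condition is taken in the form \(\gamma =\mu \, r(\mu \gamma /\sigma ^2)\) with \(r=I_1/I_0\), which is what \(h'=0\) gives, and \(\varGamma \) is normalized as \(\varGamma (\mu ,t)=\lambda \big (t^2/(2\sigma ^2)-\log I_0(\mu t/\sigma ^2)\big )\), so that \(\varGamma (\mu ,0)=0\). The names `gamma_min` and `Gamma`, the bisection, and the parameter values are illustrative.

```python
import math


def bessel_ratio(s, extra_terms=60):
    """Approximate I_1(s) / I_0(s) for s >= 0 by a backward continued fraction."""
    if s == 0.0:
        return 0.0
    rho = 0.0
    for n in range(int(s) + extra_terms, 0, -1):
        rho = 1.0 / (2.0 * n / s + rho)
    return rho


def log_i0(s):
    """log I_0(s) from the power series I_0(s) = sum_k (s^2/4)^k / (k!)^2."""
    term, total, k = 1.0, 1.0, 0
    while term > 1e-17 * total:
        k += 1
        term *= (s * s / 4.0) / (k * k)
        total += term
    return math.log(total)


def gamma_min(mu, sigma2, n_bisect=200):
    """Positive root of t = mu * r(mu*t/sigma2); returns 0 if mu^2 <= 2*sigma2."""
    if mu * mu <= 2.0 * sigma2:
        return 0.0
    lo, hi = 0.0, mu  # t - mu*r is negative near 0+ and positive at t = mu
    for _ in range(n_bisect):
        mid = 0.5 * (lo + hi)
        if mid - mu * bessel_ratio(mu * mid / sigma2) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def Gamma(mu, t, lam, sigma2):
    """Assumed pointwise energy lam * (t^2 / (2 sigma2) - log I_0(mu t / sigma2))."""
    return lam * (t * t / (2.0 * sigma2) - log_i0(mu * t / sigma2))


lam, sigma2, mu = 10.0, 10.0, 10.0
gamma = gamma_min(mu, sigma2)
print("gamma =", gamma, " Gamma(mu, gamma) =", Gamma(mu, gamma, lam, sigma2))
```

With these values the root lies strictly between 0 and \(\mu \), in agreement with the bound \(\Vert u\Vert _\infty \le \Vert f\Vert _\infty \) of Sect. 4.1, and the energy at the root is negative.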

4.4 Comparing with Constant Functions

Using the same notation as in the previous subsection, we may go further and prove that \(0\le \mu \le f(x)\) implies \(\gamma \le u(x)\) a.e. in \(\varOmega \), where \(\gamma \ge 0\) minimizes \(\varGamma (\mu ,t)\). We also assume that \(\mu >\sqrt{2\sigma ^2}\); otherwise \(\gamma =0\) and the inequality becomes obvious.

We begin by claiming that, for almost all \(x\in \varOmega \),

and

In both cases, we will use that the functions

and these facts are derived from (16). Notice that, for almost all \(x\in \varOmega \), the positive minimum w(x) of h(x, t) satisfies

It follows that

where \(s_f(x)=\frac{f(x)w(x)}{\sigma ^2}\). As seen in the previous subsection, a similar identity holds for the positive minimum \(\gamma \) of \(\varGamma (\mu ,t)\):

where \(s_{\mu }=\frac{\mu \gamma }{\sigma ^2}\). Hence, by (37), \(\mu \le f(x)\) implies \(s_{\mu }\le s_f(x)\) a.e. and so \(\mu \gamma \le f(x)w(x)\) a.e. Going back to (38), for almost all \(x\in \varOmega \), we have

where the last inequality is due to (37). Therefore, we have seen that \(w(x)\ge \gamma \) a.e. Finally, since \(h(x,\cdot )\) is decreasing in [0, w(x)] for almost all \(x\in \varOmega \), it yields that \(h(x,\cdot )\) is decreasing in \([0,\gamma ]\) for almost all \(x\in \varOmega \) and (35) is proved. The second claim follows using a similar argument.

Now we turn to check that \(u(x)\ge \gamma \) a.e. Since u is a global minimizer of functional \(E_1\), it follows that

Simplifying and dropping the nonnegative gradient term, we obtain

Applying now our first claim (35), we deduce that \(h(x,\gamma )< h(x,u)\) a.e. in \(\{u<\gamma \}\). Therefore, \(|\{u<\gamma \}|=0\), that is \(u(x)\ge \gamma \) a.e. in \(\varOmega \).

Starting from the inequality

it follows that

and our second claim (36) implies that \(u(x)\le \gamma _2\) a.e. in \(\varOmega \).

4.5 The Minimum is Decreasing with Respect to the Datum

In this remark, we make explicit the dependence on the data. To this end, we denote our functional by \(E^f_1\).

Let \(f_i\in L^2(\varOmega )\), \(i=1,2\), be two data and denote by \(u_i\) the corresponding function where the minimum of \(E^{f_i}_1\) is attained. We will show that \(f_1\le f_2\) implies \(E_1^{f_1}(u_1)\ge E_1^{f_2}(u_2)\).

Since \(f_1(x)\le f_2(x)\) implies \(H(v,f_1)\ge H(v,f_2)\) for all \(v\in BV(\varOmega )\cap L^2(\varOmega )\) (recall (32)), it follows that

Combining this fact with the previous subsection and having in mind (34), we get that \(f(x)\ge \mu >\sqrt{2\sigma ^2}\) implies

4.6 Resolvents of the Subdifferential

With a view to the numerical resolution of problem (2), we now consider some properties of the resolvents of the subdifferential of a (possibly) quadratically perturbed Total Variation energy functional.

It is well known that subdifferentials of convex functions have nonexpansive resolvents. Thanks to the characterization of the subdifferential of the Total Variation appearing in [5], we may make explicit this feature in our case. Indeed, fix \(\alpha \ge 0\) and set

Using [5, Lemma 2.4], it yields that \(u\in (I+c\,\partial G_1)^{-1}(f)\), with \(c>0\), if and only if u is a solution to

We point out that this problem has a unique solution (just follow the arguments in subsection 4.2).

Consider now \(u_i\) solution to problem (40) with datum \(f_i\), \(i=1,2\). In other words, we have \(u_i= (I+c\,\partial G_1)^{-1}(f_i)\), \(i=1,2\). Then there exist \({\mathbf {z}}_i\in L^\infty (\varOmega ;{\mathbb {R}}^N)\) satisfying the requirements of Sect. 3. Take \(u_1-u_2\) as test function in each equation (40) (that with datum \(f_1\) and that with datum \(f_2\)) and subtract them. Then we get

Dropping a nonnegative term and applying Hölder’s inequality, it follows that

from where we conclude

Therefore, if \(\alpha >0\), then the Lipschitz constant satisfies \(\frac{1}{1+c\alpha }<1\) and so each resolvent is actually a contraction. Similar, simpler arguments show that the same result is true for \(1<p< 2\):

5 Numerical Resolution

In this section we shall exploit the underlying structure of the minimization problem to write the corresponding energy functional as the difference of convex functions. For this, we consider functionals (8) and (9) defined as \(E_1 (u)=J_1 (u)+ H(u,f)\) and \( E_p (u)=J_p (u)+ H(u,f)\). Using (39) and (41), we can decompose them in the form \(E_1 (u)=G_1 (u)- F(u,f)\) and \( E_p (u)=G_p (u)- F(u,f)\) where (compare with (7))

The fundamental point is that the energy in (42) is convex. As a consequence, (8) and (9) are differences of convex energy functionals.

We now introduce a 2D discrete setting in which the functionals can be minimized by a convergent Proximal Point algorithm, in which a primal–dual method is used to solve the proximal operator for (39) and (41) together with a gradient ascent step for (42). The generalization to 3D (volumetric) datasets is straightforward.

5.1 Discrete Framework

Let \(\varOmega \subset {\mathbb {R}}^2\) be an ideally continuous rectangular image domain and consider a discretization in terms of a regular Cartesian grid \(\varOmega _h\) of size \(N \times M\): \((ih, jh), \,1 \le i \le N,\, 1\le j \le M\) where h denotes the grid spacing. The matrix \((u^h_{i,j})\) represents a discrete image where each pixel \(u^h_{i,j}\) is located at the corresponding node \((ih, jh)\). In what follows, we shall choose \(h = 1\) because it only causes a rescaling of the energy through the \(\lambda \) parameter. Henceforth, we shall drop the dependence on the mesh size and denote \(u^h =u\). Let \(X={\mathbb {R}}^{N \times M}\) be the space of solutions. We introduce the discrete gradient \(\nabla \,:\,X\rightarrow Y=X \times X\), defined as the forward finite-difference operator

except for \((\nabla u)^x_{N, j}=0\), and \((\nabla u)^y_{i, M} =0\). The discrete p-norm of the gradient for \(1\le p <2\) is

which for \(p=1\) is the discrete version of the isotropic TV operator (1) and for \(1< p <2\), is the discrete version of the \(J_p(u)\) term of the energy (9). The discrete energy for the functionals defined in (8) and (9) reads as

where the matrix \((f_{i, j})\) represents the discrete noisy image, with each pixel \(f_{i, j}\) located at the node (i, j).

Endowing the spaces X and Y with the standard Euclidean scalar product, the adjoint operator of the discrete gradient (43) is \(\nabla ^*=-\text {div}\).

Given \(p=(p^{x},\, p^{y}) \in Y\), we have

for \(2\le i \le N-1\) and \(2\le j \le M-1\). The term \((p_{i, j}^{x}-p_{i-1, j}^{x})\) is replaced with \(p_{i,j}^{x}\) if \(i=1\) and with \(-p_{i-1,j}^{x}\) if \(i=N\), while the term \((p_{i, j}^{y}-p_{i, j-1}^{y})\) is replaced with \(p_{i, j}^{y}\) if \(j=1\) and with \(-p_{i, j-1}^{y}\) if \(j=M\).
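The discrete operators of this subsection, and the adjoint relation \(\nabla ^*=-\text {div}\), can be illustrated as follows. This is a minimal pure-Python sketch with \(h=1\), using nested lists indexed as `u[i][j]` (0-based, whereas the text is 1-based); the function names and the small test arrays are illustrative.

```python
def grad(u):
    """Forward differences; zero in the last row (x) and last column (y)."""
    n, m = len(u), len(u[0])
    gx = [[u[i + 1][j] - u[i][j] if i < n - 1 else 0.0 for j in range(m)]
          for i in range(n)]
    gy = [[u[i][j + 1] - u[i][j] if j < m - 1 else 0.0 for j in range(m)]
          for i in range(n)]
    return gx, gy


def div(px, py):
    """Discrete divergence, built so that it is minus the adjoint of grad."""
    n, m = len(px), len(px[0])
    d = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            if i < n - 1:
                d[i][j] += px[i][j]
            if i > 0:
                d[i][j] -= px[i - 1][j]
            if j < m - 1:
                d[i][j] += py[i][j]
            if j > 0:
                d[i][j] -= py[i][j - 1]
    return d


def dot(a, b):
    """Euclidean scalar product of two arrays of the same shape."""
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


# Small 3 x 4 example with integer-valued entries (exact in floating point).
u = [[float((3 * i + 5 * j) % 7) for j in range(4)] for i in range(3)]
px = [[float((i * i + 2 * j) % 5) for j in range(4)] for i in range(3)]
py = [[float((2 * i + j * j) % 3) for j in range(4)] for i in range(3)]

gx, gy = grad(u)
lhs = dot(gx, px) + dot(gy, py)   # <grad u, p>
rhs = -dot(u, div(px, py))        # -<u, div p>
print("adjoint identity holds:", abs(lhs - rhs) < 1e-12)
```

The boundary branches in `div` are exactly the replacements described above for the first and last rows and columns.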

5.2 A Proximal Point Algorithm for Rician Denoising

In this section, we address the numerical resolution of the nonsmooth nonconvex minimization problem associated to the energy functional (8) \((p=1)\) and the smooth nonconvex approximating minimization problems related to the differentiable energy (9) \((1<p<2)\). To this end, we shall adapt a general proximal point algorithm for the minimization of the difference of convex (DC) functions proposed in [39]. A decomposition of the energy functional as a difference of convex (DC) functions is then proposed. This is based on the fact that \(I_0(s)\) is strictly log-convex which means that \(\log I_0 (s) \) is strictly convex and so is the energy term defined in (42).

In the discrete setting introduced before, we can then write the Rician denoising functional (44) as follows. Given f, let \(F : X\rightarrow {\mathbb {R}}\) and \(G_p : X\rightarrow {\mathbb {R}}\) be the discretized analog of functionals (42) and (39) \((p=1)\), (41) \((1<p<2)\):

The functional in (44) can be seen as the difference of two strictly convex proper l.s.c. functions \(G_p(u)\) and F(u):

Notice that \(G_p(u) \ge 0\), \(F(u) \ge 0\), and F is differentiable with Fréchet derivative \(F' (u)\).
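As an illustration of the smooth part of the decomposition, the following sketch evaluates a discrete F and its derivative pixel by pixel. It assumes \(F(u)=\lambda \sum _{i,j}\log I_0(f_{i,j}u_{i,j}/\sigma ^2)\), the discrete counterpart of the expression in Remark 5, so that \(F'(u)_{i,j}=(\lambda f_{i,j}/\sigma ^2)\, I_1/I_0\) evaluated at \(f_{i,j}u_{i,j}/\sigma ^2\). The function names and sample values are hypothetical, and the derivative is checked against a central finite difference.

```python
import math


def bessel_ratio(s, extra_terms=60):
    """Approximate I_1(s) / I_0(s) for s >= 0 by a backward continued fraction."""
    if s == 0.0:
        return 0.0
    rho = 0.0
    for n in range(int(s) + extra_terms, 0, -1):
        rho = 1.0 / (2.0 * n / s + rho)
    return rho


def log_i0(s):
    """log I_0(s) from the power series I_0(s) = sum_k (s^2/4)^k / (k!)^2."""
    term, total, k = 1.0, 1.0, 0
    while term > 1e-17 * total:
        k += 1
        term *= (s * s / 4.0) / (k * k)
        total += term
    return math.log(total)


def F(u, f, lam, sigma2):
    """Assumed discrete concave part: lam * sum log I_0(f*u/sigma2)."""
    return lam * sum(log_i0(fij * uij / sigma2)
                     for ru, rf in zip(u, f) for uij, fij in zip(ru, rf))


def F_prime(u, f, lam, sigma2):
    """Pixelwise derivative (lam * f / sigma2) * I_1/I_0 at f*u/sigma2."""
    return [[lam * fij / sigma2 * bessel_ratio(fij * uij / sigma2)
             for uij, fij in zip(ru, rf)] for ru, rf in zip(u, f)]


lam, sigma2 = 22.0, 225.0
f = [[30.0, 12.0], [0.0, 45.0]]
u = [[25.0, 10.0], [5.0, 40.0]]
w = F_prime(u, f, lam, sigma2)

# Central finite-difference check of the (0, 0) component.
eps = 1e-4
u_plus = [row[:] for row in u]
u_minus = [row[:] for row in u]
u_plus[0][0] += eps
u_minus[0][0] -= eps
fd = (F(u_plus, f, lam, sigma2) - F(u_minus, f, lam, sigma2)) / (2.0 * eps)
print("analytic:", w[0][0], " finite difference:", fd)
```

Since \(I_0\ge 1\), this F is nonnegative, in agreement with the remark above.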

Then we can find a global minimizer of \(E_p(u)\) by applying the following Proximal Point algorithm:

Given an initial point \(u_0=f\), let \(c_k= c\) for all k, and set \(k=0\) and \(\epsilon =10^{-6}\).

1. Compute \(w_k= F' (u_k)\).

2. Set \(y_k=u_k+c_k w_k\).

3. Compute \(u_{k+1}=(I+c_k \,\partial G_p)^{-1} (y_k)\).

4. If \(\Vert u_{k+1} -u_k\Vert _2/\Vert u_k\Vert _2<\epsilon \), stop. Otherwise, set \(k=k+1\) and return to Step 1.

Notice that we can write Steps 1–3 as

\(\displaystyle u_{k+1}=(I+c_k \,\partial G_p)^{-1} \big (u_k+c_k F' (u_k)\big ),\)

which is a forward–backward splitting algorithm (see for example [45]).
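The outer iteration can be illustrated on the simplest possible case, a single pixel with constant datum. This is only a sketch under explicit assumptions: the total variation term vanishes for constant images, the convex part is taken as \(G(u)=\frac{\lambda }{2\sigma ^2}u^2\), so its resolvent has the closed form \(y/(1+c\lambda /\sigma ^2)\), and \(F'(u)=(\lambda f/\sigma ^2)\,I_1/I_0\) evaluated at \(fu/\sigma ^2\). The function name and parameter values are hypothetical.

```python
def bessel_ratio(s, extra_terms=60):
    """Approximate I_1(s) / I_0(s) for s >= 0 by a backward continued fraction."""
    if s == 0.0:
        return 0.0
    rho = 0.0
    for n in range(int(s) + extra_terms, 0, -1):
        rho = 1.0 / (2.0 * n / s + rho)
    return rho


def proximal_point_scalar(f, lam, sigma2, c=1.0, eps=1e-6, max_iter=10000):
    """Steps 1-4 for one pixel (assumes f > 0); returns (u, iterations)."""
    alpha = lam / sigma2
    u = f                                                # initial point u_0 = f
    for k in range(max_iter):
        w = alpha * f * bessel_ratio(f * u / sigma2)     # Step 1: w_k = F'(u_k)
        y = u + c * w                                    # Step 2: forward step
        u_next = y / (1.0 + c * alpha)                   # Step 3: resolvent of G
        if abs(u_next - u) <= eps * abs(u):              # Step 4: relative change
            return u_next, k + 1
        u = u_next
    return u, max_iter


f, lam, sigma2 = 10.0, 10.0, 10.0
u_star, n_iter = proximal_point_scalar(f, lam, sigma2)
residual = abs(u_star - f * bessel_ratio(f * u_star / sigma2))
print("u* =", u_star, " iterations =", n_iter, " residual =", residual)
```

In this toy setting the fixed points of the iteration are exactly the solutions of \(u=f\,r(fu/\sigma ^2)\), that is, the constant critical points discussed in Sect. 4.3.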

Step 1 is explicitly given by

In Step 2 we set the descent direction for Step 3. Notice that any ascent direction for F is a descent direction for E. To compute the proximal operator \((I+c \,\partial G_p)^{-1} \) in Step 3, we need to solve the following strictly convex minimization problem:

Let \(R_p (u)=\displaystyle \frac{1}{p} \sum _{i,j} |(\nabla u)_{i,j}|^p \), and

Using Legendre–Fenchel duality, we write the minimization problem (45) as a saddle-point problem:

We distinguish two cases. When \(p=1\), the Fenchel conjugate \(R_{p}^*(v)\) is the indicator function \(I_K\) of the convex set \(K=\{ v \in Y\,:\, \Vert v\Vert _{\infty } \le 1\}\), i.e., \(I_K (v)=0\) if \(v\in K\), \(I_K (v)=+\infty \) if \(v\notin K\). In the differentiable case, \(1<p<2\), we have

with \(1/p+1/p'=1\). To solve this saddle-point problem (47), we use the Primal–Dual algorithm presented in [17]. This method allows a unified treatment of (47) for any p, thus handling the nondifferentiability of \(G_1 (u)\). This algorithm performs Step 3 in the k-th outer iteration of the Proximal Point algorithm and reads as follows:

Given \(u^0=y_k\), set \(v^0 =\bar{0}\), \(\tau _d=\tau _p=1/\sqrt{12}\), and \(\bar{u}^0=u^0\). Iterate until convergence:

(i) \(v^{n+1}=\left( I + \tau _d \partial R_{p}^* \right) ^{-1} (v^n + \tau _d \nabla \bar{u}^n)\)

(ii) \(u^{n+1}=(I + \tau _p \partial S)^{-1} (u^n + \tau _p \,\text {div}\, v^{n+1} )\)

(iii) \(\bar{u}^{n+1}=2u^{n+1}-u^n\)

This is an inner loop and the upper index n is the inner iteration counter. Steps (i) and (ii) compute the proximal operators corresponding to \(R_p^*(v)\) and \(S(u)\), which are given as follows.

Step (i) For \(p=1\), we compute \(\bar{v}^n =v^n+\tau _d \nabla \bar{u}^n\) and the resolvent operator with respect to \(R_1^*\) reduces to the pointwise Euclidean projection onto unit \(\ell ^2\) balls:

\(\displaystyle v^{n+1}_{i,j}=\frac{\bar{v}^n_{i,j}}{\max \big (1, |\bar{v}^n_{i,j}|\big )}.\)

For \(1<p<2\), with \(\bar{v}^n =v^n+\tau _d \nabla \bar{u}^n\), the computation of the resolvent operator \(\left( I + \tau _d \partial R_p^* \right) ^{-1} (\bar{v}^n ) \) requires solving the following strictly convex minimization problem:

The first-order necessary (and sufficient) condition for optimality reads as follows:

It is easily seen that \(f^n (v)\) is a continuous, monotonically increasing function with \(f^n (0)=\bar{v}^n\), and the equation \(f^n (v)=0\) has a unique real positive solution \(0<| v| \le |\bar{v}^n|\) for any \(p'\). For any fixed inner iteration n, we apply Newton’s method to solve the nonlinear equation, resulting in the following fixed-point iteration: set \(j=0\), \(v_{j}^{k,n+1} =v_{j}\), \(v_{j+1}^{k,n+1} =v_{j+1}\), and \(v_0 =v^{k,n}\). Compute, for \(j=1, 2, \ldots \) until convergence

Step (ii) The resolvent operator with respect to S poses simple pointwise quadratic problems. The solution is given by

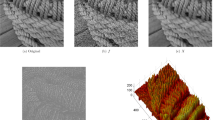

Fig. 5 Denoising results of the noisy phantom brain image of Fig. 4 using the p-Laplacian for \(p=1.75, 1.5, 1.25, 1.1\) and 1 (Total Variation)
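The inner primal–dual loop of Sect. 5.2 for \(p=1\) can be sketched as follows. This is an illustration under stated assumptions, not the implementation used for the experiments: the proximal problem is taken as \(\min _u \sum |\nabla u| + \frac{\alpha }{2}\Vert u\Vert ^2+\frac{1}{2c}\Vert u-y\Vert ^2\) with \(\alpha =\lambda /\sigma ^2\), the quadratic terms are grouped into S, the number of iterations is fixed instead of using a stopping test, and all names and test data are hypothetical.

```python
import math


def grad(u):
    """Forward differences with zero in the last row (x) and column (y)."""
    n, m = len(u), len(u[0])
    gx = [[u[i + 1][j] - u[i][j] if i < n - 1 else 0.0 for j in range(m)]
          for i in range(n)]
    gy = [[u[i][j + 1] - u[i][j] if j < m - 1 else 0.0 for j in range(m)]
          for i in range(n)]
    return gx, gy


def div(px, py):
    """Discrete divergence, minus the adjoint of grad."""
    n, m = len(px), len(px[0])
    d = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            if i < n - 1:
                d[i][j] += px[i][j]
            if i > 0:
                d[i][j] -= px[i - 1][j]
            if j < m - 1:
                d[i][j] += py[i][j]
            if j > 0:
                d[i][j] -= py[i][j - 1]
    return d


def prox_objective(u, y, alpha, c):
    """TV(u) + (alpha/2)|u|^2 + |u - y|^2 / (2c), the assumed problem (45)."""
    gx, gy = grad(u)
    tv = sum(math.hypot(a, b) for ra, rb in zip(gx, gy) for a, b in zip(ra, rb))
    quad = sum(0.5 * alpha * a * a + (a - b) ** 2 / (2.0 * c)
               for ru, ry in zip(u, y) for a, b in zip(ru, ry))
    return tv + quad


def prox_tv(y, alpha, c, n_iter=300, tau=1.0 / math.sqrt(12.0)):
    """Approximate (I + c dG_1)^{-1}(y) with steps (i)-(iii), tau_d = tau_p = tau."""
    n, m = len(y), len(y[0])
    u = [row[:] for row in y]
    u_bar = [row[:] for row in y]
    vx = [[0.0] * m for _ in range(n)]
    vy = [[0.0] * m for _ in range(n)]
    denom = 1.0 + tau * (alpha + 1.0 / c)
    for _ in range(n_iter):
        gx, gy = grad(u_bar)
        for i in range(n):
            for j in range(m):
                ax = vx[i][j] + tau * gx[i][j]
                ay = vy[i][j] + tau * gy[i][j]
                scale = max(1.0, math.hypot(ax, ay))     # (i) project on unit ball
                vx[i][j], vy[i][j] = ax / scale, ay / scale
        d = div(vx, vy)
        for i in range(n):
            for j in range(m):
                # (ii) pointwise quadratic problem for the assumed S
                u_new = (u[i][j] + tau * d[i][j] + tau * y[i][j] / c) / denom
                u_bar[i][j] = 2.0 * u_new - u[i][j]      # (iii) over-relaxation
                u[i][j] = u_new
    return u


# Step image with a deterministic perturbation, used only as test data.
y = [[(10.0 if j >= 3 else 2.0) + 0.5 * ((7 * i + 3 * j) % 5 - 2)
      for j in range(6)] for i in range(5)]
u_out = prox_tv(y, alpha=0.1, c=1.0)
print("objective at y:     ", prox_objective(y, y, 0.1, 1.0))
print("objective at output:", prox_objective(u_out, y, 0.1, 1.0))
```

For a constant input the dual variable stays at zero and the loop converges to \(y/(1+c\alpha )\), which is a convenient sanity check.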

6 Numerical Results

In this section we test the performance of the proposed numerical scheme. We first validate the results of the TV-Rician denoising method using the Proximal Point Algorithm (PPA), denoted by TV-Rician in the following. We also test the numerical convergence of the p-approximating problems. Then, we compare TV-Rician with previously proposed methods for TV-Rician-based denoising [20, 26, 35] for different images and noise intensities. Finally we present an application on real Diffusion Tensor Images (DTI), which is an MRI modality heavily affected by Rician noise [9, 41].

6.1 Numerical Scheme Validation

In order to assess the performance of the proposed algorithm, we used a synthetic brain image obtained from the BrainWeb Simulated Brain Database (see Note 1) at the Montreal Neurological Institute [22]. The central slice of the original phantom was extracted and normalized to the range [0, 255]. Finally, the slice was artificially contaminated with Rician noise with \(\sigma =15\). To quantify the denoising quality, we use two measures: the Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index (SSIM) [42].
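The contamination step and the PSNR measure can be sketched as follows. The noise model follows the description in the Introduction (magnitude of a complex signal whose real and imaginary parts carry independent zero-mean Gaussian noise of equal variance); the PSNR uses the standard definition with an assumed peak value of 255, matching the normalization above. Function names, the seed, and the test image are hypothetical, and SSIM is not covered.

```python
import math
import random


def add_rician_noise(image, sigma, rng):
    """Return |(u + sigma*n1) + i*sigma*n2| pixelwise, with n1, n2 ~ N(0, 1)."""
    return [[math.hypot(u + rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
             for u in row] for row in image]


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB (infinite for identical images)."""
    n_pixels = sum(len(row) for row in reference)
    mse = sum((a - b) ** 2
              for ra, rb in zip(reference, estimate)
              for a, b in zip(ra, rb)) / n_pixels
    return math.inf if mse == 0.0 else 10.0 * math.log10(peak * peak / mse)


rng = random.Random(0)                        # fixed seed for reproducibility
background = [[0.0] * 100 for _ in range(100)]
noisy = add_rician_noise(background, 15.0, rng)
mean_noisy = sum(map(sum, noisy)) / 10000.0
print("mean of noisy zero background:", mean_noisy)
print("PSNR of noisy background (dB):", psnr(background, noisy))
```

On a zero background the noisy values follow a Rayleigh law with mean \(\sigma \sqrt{\pi /2}\), which illustrates the positive bias of Rician noise in low-signal regions.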

In Fig. 4, we show the denoising results of the TV-Rician method for \(\lambda =22\). This \(\lambda \) value was optimized to obtain the best PSNR and SSIM with respect to the original phantom. We can see how in the denoised image (Fig. 4c) most of the noise has been removed, while the fine details are preserved. Using the same regularization parameter, we repeat this test solving (44) for different values of p, \(p=\{1.1, 1.25, 1.5, 1.75\}\), to numerically assess the convergence of the p-sequence of regularizing approximating \(u_p\) solutions when \(p\rightarrow 1\). The \(u_p\) solutions are shown in Fig. 5, where, as expected, the closer p gets to 1, the more similar the p-Laplacian solution is to the TV image. This p-convergence can also be observed when plotting the energy minimization evolution of the Proximal Point Algorithm for these same values of p and the TV case (see Fig. 6).

6.2 Comparison with Other Variational Methods for Rician Denoising

In order to cope with the difficulties of the nonsmooth nonconvex problem (8), several methods have been proposed for TV-based denoising of Rician contaminated images. The first of them uses an \(\epsilon \)-approximation of the TV term [26, 35] to obtain a smooth minimization problem. With this regularization, a gradient descent can be applied to solve the problem. In the following, this approach will be denoted as \(\hbox {TV}_\epsilon \)-Rician. In the work of [26], a convexification of the functional was also proposed. This new minimization problem is solved by a Split-Bregman approach [29]. We will refer to this convexification as the Getreuer model in the following. Finally, a different convexification of (8), obtained by adding the term \(\frac{1}{\sigma }\int _{\varOmega }(\sqrt{u}-\sqrt{f})^2 \mathrm{d}x\), has been recently presented in [20]. This new convex problem is then efficiently solved using a primal–dual algorithm [17]. For the comparisons, we will refer to this method as Chen–Zeng.

Fig. 7 Denoising results on the Liver image (a) for fixed parameters \(\sigma =20\), \(\gamma =0.035\). In b–e, the images resulting from applying the compared methods to the noisy image f (panel f), the noisy version of the original image I (panel a). In the second row, g–j, the absolute differences between the denoised images and I are shown. Careful inspection reveals a better performance of the proposed method (see e and j) in areas with lower SNR (dark zones)

All of these approaches rely on approximations of the problem (8) making it differentiable or convex. Notably, the proposed algorithm (TV-Rician) based on the PPA scheme copes with the original nonsmooth nonconvex functional. For this comparison, we use four images kindly provided by the authors of [20]: one natural image Camera man (256 \(\times \) 256), and three MR images Lumbar-Spine (200 \(\times \) 200), Brain \((217\,\times \,181)\), and Liver (214 \(\times \) 304). The images are then corrupted by Rician noise for \(\sigma =20\) and \(\sigma =30\). For the sake of fairness, all the algorithms were run until they fulfilled the same convergence criterion based on the relative difference between the functional energy in two consecutive iterations. In our test, we set the tolerance to \(1\times 10^{-7}\). \(\hbox {TV}_\epsilon \)-Rician, Getreuer and TV-Rician use a regularization parameter \(\lambda \) which multiplies the data fidelity term, while the Chen–Zeng algorithm uses a parameter \(\gamma =1/\lambda \) multiplying the TV term. For all tests, the regularization parameters were separately optimized to get the best PSNR and to get the best SSIM with respect to the original images. The results of this comparison are displayed in Table 1. Notice that the optimal regularization parameter for each case is displayed in the table as \(\gamma \).

We see that TV-Rician gets the best results in both PSNR and SSIM for the Camera man, the Lumbar-Spine, and the Liver images for all levels of noise. The differences with other methods increase for higher noise level \((\sigma =30)\), confirming that the original problem (8) is better suited than its approximations for Rician denoising. For the case of the phantom Brain image, the Getreuer model scores the best denoising results. In order to convexify the Rician data fidelity term, the authors in [26] substitute the original functional for small values of the solution by a linear approximation. This modified functional drives these values of the solution closer to 0 than the original Rician functional we considered. Since the background of the synthetic Brain image is 0, this model achieves a better solution for this image than the other methods. This effect can be observed in the other images. For instance, in Fig. 7h, the error of this model in the upper corners is considerably higher than in the rest of the algorithms because the background in the noise-free Liver image is not 0. Nevertheless, the proposed method (TV-Rician) gets higher PSNR and SSIM than \(\hbox {TV}_\epsilon \)-Rician and Chen–Zeng in the synthetic Brain image, and it is the best algorithm overall when computing the averaged PSNR and SSIM.

Moreover, when using the same regularization parameter for all the methods, the TV-Rician method also achieves a solution which is a lower minimum of (8). This comparison is performed for the Liver image and the parameters \(\sigma =20\) and \(\gamma =0.035\). These results are shown in Fig. 7 and Table 2: TV-Rician achieves the best denoising solution in terms of visual inspection, PSNR, and energy minimization.

Fig. 8 Tractography generated from a seed placed in the corpus callosum. At left, the tractography generated from the original DWI (and DTI) data. At right, the tractography generated from the TV-Rician denoised data. Particular areas where the tractographies differ because of the noise are indicated by white arrows in the image

6.3 Application on Real Diffusion Tensor Imaging of the Brain

The data we used consist of a Diffusion-Weighted Images (DWI) dataset provided by Fundación CIEN-Fundación Reina Sofía, acquired with a 3 Tesla General Electric scanner equipped with an 8-channel coil. The DWI were obtained with a single-shot spin-echo EPI sequence (FOV = 24 cm, TR = 9600 ms, TE = 91.5 ms, slice thickness = 2 mm, spacing = 0.6 mm, matrix size = 128 \(\times \) 128, NEX = 1). The dataset consists of one volume obtained with \(b=0\,\hbox {s/mm}^2\) and 45 volumes with \(b=1000\,\hbox {s/mm}^2\), corresponding to gradient directions that equally divide the 3-D space. These DWI, which represent diffusion measurements along multiple directions, are denoised by solving the proposed minimization problem (8) using the PPA. Then, Diffusion Tensor Images (DTI) are reconstructed from the original and denoised DWI data using the 3D Slicer tools (see Note 2).

DTI is one of the most popular methods for in vivo analysis of the white matter (WM) structure of the brain, helping to detect WM alterations that can be found from early stages in some degenerative diseases [25]. The DTI information is commonly used to generate a tractography of a particular area of the brain, which is a 3D representation of the WM fibers involved.

In Fig. 8, the tractographies generated from a seed placed in the corpus callosum are shown. White arrows indicate regions where the noise in the image generated from the original data (left) affects the reconstruction of specific tracts, which are nevertheless recovered in the tractography from the preprocessed data (right). To highlight the regions where the fiber reconstruction differs, we display the tractographies over a sagittal view of the Fractional Anisotropy (FA) generated from the same DTI data (Fig. 9). The left arcuate fasciculus cannot be reconstructed from the original data, but it is recovered after the preprocessing. The correct reconstruction of this tract matters because it is involved in key functions such as language and praxis [14].
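For readers less familiar with the FA maps used as background in Fig. 9, the following is a minimal sketch of the standard textbook definition of Fractional Anisotropy for a single symmetric \(3\times 3\) diffusion tensor. It is not taken from the paper or from 3D Slicer, whose own tools produced the results above; the function name `fractional_anisotropy` and the example diffusivities are illustrative assumptions.

```python
import math


def fractional_anisotropy(D):
    """FA of a symmetric 3x3 diffusion tensor D given as nested lists.

    Uses the identity FA = sqrt(3/2) * ||D - mean(D) * I||_F / ||D||_F,
    which equals the usual eigenvalue formula for symmetric tensors.
    """
    mean_diffusivity = (D[0][0] + D[1][1] + D[2][2]) / 3.0
    # Squared Frobenius norm of the full tensor.
    norm_sq = sum(D[i][j] ** 2 for i in range(3) for j in range(3))
    # Squared Frobenius norm of the deviatoric (trace-free) part.
    dev_sq = sum(
        (D[i][j] - (mean_diffusivity if i == j else 0.0)) ** 2
        for i in range(3)
        for j in range(3)
    )
    if norm_sq == 0.0:
        return 0.0  # convention for a vanishing tensor
    return math.sqrt(1.5 * dev_sq / norm_sq)


# Hypothetical, illustrative diffusivities (mm^2/s): one dominant direction,
# as expected inside a coherent fiber bundle.
fiber_like = [[1.7e-3, 0.0, 0.0], [0.0, 0.3e-3, 0.0], [0.0, 0.0, 0.3e-3]]
print(fractional_anisotropy(fiber_like))
```

FA is 0 for an isotropic tensor and 1 when diffusion occurs along a single direction, so noise that perturbs the fitted tensor also perturbs the FA map and the fiber tracking that relies on the same tensor field.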

7 Conclusions

In this paper, we presented the mathematical analysis of the quasilinear elliptic equation for the 1-Laplacian operator which arises from the minimization of the Total Variation-based energy functional modeling Rician denoising for MRI. Theoretical difficulties come from both ingredients of the model: the TV regularization term, which makes the problem nonsmooth, and the Rician statistics of the noise in MRI, which yield a nonconvex minimization problem. We provided sufficient conditions on the data for the existence of a bounded nontrivial BV solution of the elliptic equation, which turns out to be a global minimizer of the associated energy functional. Several qualitative properties of this solution have been deduced. The uniqueness of a strictly positive solution is still an open problem, although extensive numerical experiments not reported here suggest that there exists only one such solution.
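The nonconvexity induced by the Rician statistics can already be seen at the pointwise level. The sketch below assumes the standard Rician negative log-likelihood in the unknown \(u\), for a datum \(f\) and noise level \(\sigma \), with constants dropped: \(g(u) = u^2/(2\sigma ^2) - \log I_0(uf/\sigma ^2)\). The regularization weight and the exact notation of the functional in (8) may differ, and the function names and the truncated power series for the Bessel functions are illustrative choices, not the authors' implementation.

```python
import math


def bessel_i(n, x, terms=60):
    """Modified Bessel function of the first kind I_n(x), n = 0 or 1,
    from its power series (adequate for moderate |x|)."""
    half = x / 2.0
    return sum(
        half ** (2 * k + n) / (math.factorial(k) * math.factorial(k + n))
        for k in range(terms)
    )


def data_term(u, f, sigma):
    """Pointwise Rician data term g(u), additive constants dropped."""
    return u * u / (2.0 * sigma ** 2) - math.log(bessel_i(0, u * f / sigma ** 2))


def data_term_second_derivative(u, f, sigma):
    """g''(u) = 1/sigma^2 - c^2 * r'(c*u), with c = f/sigma^2 and
    r = I_1/I_0, whose derivative is r'(t) = 1 - r(t)/t - r(t)^2."""
    c = f / sigma ** 2
    t = c * u
    if t == 0.0:
        ratio_prime = 0.5  # limit of r'(t) as t -> 0
    else:
        r = bessel_i(1, t) / bessel_i(0, t)
        ratio_prime = 1.0 - r / t - r * r
    return 1.0 / sigma ** 2 - c * c * ratio_prime


for f in (1.0, 2.0):
    print(f, data_term_second_derivative(0.0, f, 1.0),
          data_term_second_derivative(3.0, f, 1.0))
```

At \(u=0\) the second derivative is \(1/\sigma ^2 - f^2/(2\sigma ^4)\), which is negative whenever \(f^2 > 2\sigma ^2\), while it becomes positive again for larger \(u\). Under the stated assumption on the data term, this change of sign is what prevents the fidelity term, and hence the energy, from being convex.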

We also proposed and implemented a convergent Proximal Point Algorithm to solve this nonsmooth nonconvex minimization problem. The numerical results demonstrate the effectiveness of the proposed method compared to previous approximations to TV-based Rician denoising. Finally, we tested our algorithm on in vivo DTI tractography, showing the benefits of preprocessing DWI data before DTI reconstruction.

Notes

1. Available at http://www.bic.mni.mcgill.ca/brainweb.

2. Freely available at http://www.slicer.org/.

References

Ambrosio, L., Fusco, N., Pallara, D.: Functions of Bounded Variation and Free Discontinuity Problems. Oxford University Press, New York (2000)

Amos, D.E.: Computation of modified Bessel functions and their ratios. Math. Comput. 28(125), 239–251 (1974)

Andreu, F., Ballester, C., Caselles, V., Mazón, J.M.: Minimizing total variation flow. Differ. Integr. Equ. 14(3), 321–360 (2001a)

Andreu, F., Ballester, C., Caselles, V., Mazón, J.M.: The Dirichlet problem for the total variation flow. J. Funct. Anal. 180(2), 347–403 (2001b)

Andreu, F., Caselles, V., Mazón, J.M.: Parabolic Quasilinear Equations Minimizing Linear Growth Functionals, vol. 223. Birkhäuser, Basel (2004a)

Andreu, F., Caselles, V., Mazón, J.M., Moll, S.: The minimizing total variation flow with measure initial conditions. Commun. Contemp. Math. 6(3), 431–494 (2004b)

Anzellotti, G.: Pairings between measures and bounded functions and compensated compactness. Annali di Matematica 135, 293–318 (1983)

Aubert, G., Aujol, J.: A variational approach to removing multiplicative noise. SIAM J. Appl. Math. 68(4), 925–946 (2008)

Basu, S., Fletcher, T., Whitaker, R.: Rician noise removal in diffusion tensor MRI. Int. Conf. Med. Image Comput. Comput.-Assisted Interven. 9(1), 117–125 (2006)

Bellettini, G., Caselles, V., Novaga, M.: The total variation flow in \({{\mathbb{R}}}^N\). J. Differ. Equ. 184(2), 475–525 (2002)

Bellettini, G., Caselles, V., Novaga, M.: Explicit solutions of the eigenvalue problem \({\text{ div }}(Du/|Du|)=u,\,\) in \({\mathbb{R}}^2\). SIAM J. Math. Anal. 36(4), 1095–1129 (2005)

Bredies, K., Holler, M.: A pointwise characterization of the subdifferential of the total variation functional. SFB-Report 11 (2012)

Brezis, H., Oswald, L.: Remarks on sublinear elliptic equations. Nonlinear Anal. 10(1), 55–64 (1986)

Catani, M., Thiebaut de Schotten, M.: A diffusion tensor imaging tractography atlas for virtual in vivo dissections. Cortex 44(8), 1105–1132 (2008)

Chambolle, A.: An algorithm for total variation minimization and applications. J. Math. Imaging Vis. 20(1–2), 89–97 (2004)

Chambolle, A., Lions, P.L.: Image recovery via total variation minimization and related problems. Numer. Math. 76(2), 167–188 (1997)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Chambolle, A., Caselles, V., Cremers, D., Novaga, M., Pock, T.: An introduction to total variation for image analysis. In: Fornasier, M. (ed.) Theoretical Foundations and Numerical Methods for Sparse Recovery, vol. 9, pp. 240–263. De Gruyter, Berlin (2010)

Chan, T., Esedoglu, S., Park, F., Yip, A.: Recent developments in total variation image restoration. Math. Models Comput. Vis. 17, 263 (2005)

Chen, L., Zeng, T.: A convex variational model for restoring blurred images with large Rician noise. J. Math. Imaging Vis. 53(1), 92–111 (2015)

Cicalese, M., Trombetti, C.: Asymptotic behaviour of solutions to p-Laplacian equation. Asymptot. Anal. 35(1), 27–40 (2003)

Cocosco, C.A., Kollokian, V., Kwan, R.K.S., Evans, A.C.: Brainweb: Online interface to a 3D MRI simulated brain database. Neuroimage 5(4), 425 (1997)

Demengel, F.: On some nonlinear equation involving the 1-Laplacian and trace map inequalities. Nonlinear Anal. 48(8), 1151–1163 (2002)

Demengel, F.: Functions locally almost 1-harmonic. Appl. Anal. 83(9), 865–896 (2004)

Gattellaro, G., Minati, L., Grisoli, M., Mariani, C., Carella, F., Osio, M., Ciceri, E., Albanese, A., Bruzzone, M.G.: White matter involvement in idiopathic Parkinson disease: a diffusion tensor imaging study. Am. J. Neuroradiol. 30(6), 1222–1226 (2009)

Getreuer, P., Tong, M., Vese, L.: A variational model for the restoration of MR images corrupted by blur and Rician noise. Lect. Notes Comput. Sci. Adv. Vis. Comput. 6938, 686–698 (2011a)

Getreuer, P., Tong, M., Vese, L.: Total variation based Rician denoising and deblurring model. UCLA CAM Report 11(67) (2011b)

Giusti, E.: Minimal Surfaces and Functions of Bounded Variation. Monographs in Mathematics, vol. 80. Birkhäuser, Boston (1984)

Goldstein, T., Osher, S.: The split Bregman method for L1-regularized problems. SIAM J. Imaging Sci. 2(2), 323–343 (2009)

Gudbjartsson, H., Patz, S.: The Rician distribution of noisy MRI data. Magn. Reson. Med. 34(6), 910–914 (1995)

Henkelman, R.: Measurement of signal intensities in the presence of noise in MR images. Med. Phys. 11(2), 232–233 (1985)

Kawohl, B.: From p-Laplace to Mean Curvature Operator and Related Questions. Preprint, Sonderforschungsbereich (1990)

Kawohl, B.: On a family of torsional creep problems. Journal für die reine und angewandte Mathematik 410, 1–22 (1991)

Kawohl, B., Fridman, V.: Isoperimetric estimates for the first eigenvalue of the \(p\)-Laplace operator and the Cheeger constant. Comment. Math. Univ. Carol. 44(4), 659–667 (2003)

Martin, A., Garamendi, J.F., Schiavi, E.: Iterated Rician denoising. In: Proceedings of International Conference on Image Processing, Computer Vision and Pattern Recognition (IPCV 2011), pp. 959–963. CSREA Press, Las Vegas, USA (2011)

Mazón, J.M., Segura de León, S.: The Dirichlet problem for a singular elliptic equation arising in the level set formulation of the inverse mean curvature flow. Adv. Calc. Var. 6, 123–164 (2013)

Neuman, E.: Inequalities involving modified Bessel functions of the first kind. J. Math. Anal. Appl. 171(1), 532–536 (1992)

Rudin, L., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D 60, 259–268 (1992)

Sun, W., Sampaio, R.J.B., Candido, M.A.B.: Proximal point algorithm for minimization of DC function. J. Comput. Math. 21(4), 451–463 (2003)

Tong, M.: Restoration of images in the presence of Rician noise and in the presence of atmospheric turbulence. PhD thesis, University of California, Los Angeles (2012)

Tristán-Vega, A., Aja-Fernández, S.: DWI filtering using joint information for DTI and HARDI. Med. Image Anal. 14(2), 205–218 (2010)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Watson, G.: A Treatise on the Theory of Bessel Functions. Cambridge University Press, Cambridge (1922)

Wiest-Daesslé, N., Prima, S., Coupé, P., Morrissey, S.P., Barillot, C.: Rician noise removal by non-Local Means filtering for low signal-to-noise ratio MRI: applications to DT-MRI. Med. Image Comput. Comput.-Assist. Interven. 11(Pt 2), 171–179 (2008)

Zhang, X., Burger, M., Bresson, X., Osher, S.: Bregmanized nonlocal regularization for deconvolution and sparse reconstruction. SIAM J. Imaging Sci. 3(3), 253–276 (2010)

Additional information

The first and second authors wish to thank the Spanish Ministerio de Economía y Competitividad for supporting Project TEC2012-39095-C03-02. The third author has been partially supported by the Spanish Ministerio de Economía y Competitividad under Project MTM2012-31103. Finally, the authors wish to thank the reviewers for their helpful comments, which improved the final manuscript.

Cite this article

Martín, A., Schiavi, E. & Segura de León, S. On 1-Laplacian Elliptic Equations Modeling Magnetic Resonance Image Rician Denoising. J Math Imaging Vis 57, 202–224 (2017). https://doi.org/10.1007/s10851-016-0675-3

DOI: https://doi.org/10.1007/s10851-016-0675-3