Abstract

Despite the many advantages and increasing adoption of Electron Beam Powder Bed Fusion (PBF-EB) additive manufacturing by industry, current PBF-EB systems remain largely unstable and prone to unpredictable anomalous behaviours. Additionally, although they feature in-situ process monitoring, PBF-EB systems show limited capabilities for the timely identification of process failures, which may result in considerable waste of production time and materials. These aspects are commonly recognized as barriers to the industrial breakthrough of PBF-EB technologies. Building on these considerations, our research aims to introduce real-time anomaly detection capabilities into the PBF-EB process. To do so, we build our case study on an Arcam EBM A2X system, one of the most widely used PBF-EB machines in industry, and access the most relevant variables made available by this machine during the layering process. To interpret these data effectively, we introduce a deep learning autoencoder-based anomaly detection framework. We demonstrate that this framework is able not only to identify anomalous patterns in such data early and in real time during the process, with an F1 score around 90%, but also to anticipate the failure of the current job by 6 h on average, and in one case by almost 20 h. This avoids waste of production time and opens the way to a more controllable PBF-EB process.

Introduction

Additive manufacturing (AM) refers to a large family of technologies able to grow a part starting from a 3D model, by joining materials layer upon layer. AM implements a completely digitalized production flow, from design to final object, without intermediate steps like the creation of molds or dies. This process has been shown to reduce development time and material waste, and to offer much higher flexibility than traditional subtractive technologies. Thanks to these characteristics, AM plays a fundamental role in the current digital industrial revolution era, as it enables the rapid production of customized components at a reduced cost (Fu & Körner, 2022).

Among AM technologies for metallic components, Powder Bed Fusion (PBF) technologies hold a dominant role in the market, being able to produce high-performance materials with almost unlimited geometrical freedom (Carolos & Cooper, 2022). As the name suggests, they use a high-power beam to fuse together each layer of material, which is supplied in powder form.

Even though the most common beam source is currently the laser (PBF-LB), Electron Beam Powder Bed Fusion (PBF-EB) has recently been receiving growing attention (Fu & Körner, 2022). PBF-EB exploits a high-power electron beam instead of a laser as the heat source to fuse together layers of conductive metal powders, such as titanium and titanium alloys, cobalt, chrome, copper, etc. The resulting parts, highly dense and mechanically strong, are widely adopted in diverse applications, ranging from turbine blades to hip implants (Ladani & Sadeghilaridjani, 2021).

Compared to a laser, an electron beam delivers much higher power, coupled with a faster beam speed, both resulting in a significantly shorter build time. Additionally, the high vacuum level maintained during the process and the preheating step before melting result in a high and homogeneous temperature on each layer, generating superior micro-structures from a mechanical point of view. As a result, components fabricated through PBF-EB show more homogeneous micro-structures with less geometrical distortion and lower residual stresses, which makes them less prone to crack formation (Klassen, 2018). All these aspects make PBF-EB one of the most promising technologies for the additive manufacturing of fully dense metallic objects with high productivity (Fu & Körner, 2022). Driven by these promises, decade-old companies in the medical field such as Lima Corporate SPA, Ala Ortho srl and Smith & Nephew moved the entire production from conventional to PBF-EB based processes for the serial production of acetabular cups and other types of prostheses (Sing et al., 2016; Mumith et al., 2018). In addition, PBF-EB opens the possibility of working with unique materials and is the only successful solution for processing some families of materials, such as intermetallics. For this reason, Avio Aero, a General Electric (GE) company, deploys the PBF-EB process for the serial production of TiAl low-pressure turbine blades, which are part of the GE9X engine. Due to the intrinsic properties of this material, these blades weigh 50% less than traditional Ni-based alloy blades (Dzogbewu & du Preez, 2021). Recently, the Swedish company VBN Components has developed a new family of materials processable only with PBF-EB, called Vibenite, which includes the world’s hardest steel for AM (VBN Components, 2023).

As the possible industrial applications of PBF-EB are rapidly growing, the demand for high process stability and control is becoming paramount (Xames et al., 2023; Wang et al., 2022; Herzog et al., 2023). Indeed, all AM processes are known for their inherent complexity. The success of a job, as well as the mechanical properties of the obtained components, may depend on a huge number of heterogeneous variables, whose inter-dependency is not fully known and is difficult to model (Sing & Yeong, 2020). As a consequence, the low robustness, stability and repeatability of the AM process become a major issue when compared to more traditional and consolidated manufacturing processes. Currently, this is seen as a barrier to a more widespread diffusion of AM technologies, including PBF-EB (Everton et al., 2016; Houser et al., 2023).

A commonly recognized solution to this problem is the continuous in-situ monitoring of industrial processes, which enables early identification of irregular behaviours and helps avoid instability and failure (Ladani & Sadeghilaridjani, 2021). To make this possible, manufacturers are improving the PBF-EB machines they bring to market in two ways. On the one hand, they equip them with a large number of heterogeneous sensors that constantly monitor all the stages of the layering process. On the other hand, they store the temporal evolution of the sensed variables, as well as of many process parameters (among others, electron beam current, power and intensity).

While the availability of in-situ monitoring is a first step towards a more controllable process, the interpretation of the collected data is very challenging. To date, the industrial PBF-EB machines on the market only feature very basic anomaly detection methods, implementing a set of independent thresholds on a number of critical variables. When one of these variables exceeds its safety threshold, which is typically undocumented and empirically set by the manufacturer during calibration, an alarm is triggered, eventually causing the job to shut down. Such basic methods are unsatisfactory mainly because they are limited in scope. By setting independent thresholds on the “relevant” parameters, they overlook the complexity of the process, which is known to be affected by the interaction of many variables (Houser et al., 2023). As a result, they do not allow the implementation of effective process control methods.

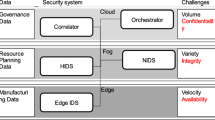

To address this issue, in this work we apply a more sophisticated anomaly detection methodology to the PBF-EB process, using Machine Learning (ML) to take advantage of all the information made available by an advanced machine. We start from the consideration that the PBF-EB data consist of multiple interdependent variables, hence falling under the definition of multivariate time series (MTS). Then, we take inspiration from the latest developments in MTS anomaly detection (MTSAD) to solve our problem (Belay et al., 2023; Tercan & Meisen, 2022). More specifically, we adopt the class of so-called reconstruction-based anomaly detection methods, where a generative deep learning model (typically, an autoencoder) is trained to reconstruct the data of a successful job, by considering all the available variables at once. Then, the reconstruction error of the autoencoder is exploited as an anomaly score, to identify early any deviation from the “normal” distribution of the data. This approach, which has proved effective in many other applications requiring MTS anomaly detection (Tziolas et al., 2022; Zhou et al., 2022; Li & Jung, 2023; Hong et al., 2022; Xiong et al., 2022), is promising in the case of PBF-EB for two main reasons. First, the autoencoder does not require any supervision on the anomalies that should be targeted, which is difficult to obtain. Second, it can detect deviations from the “normal” data distribution well before a possible system failure. Unlike traditional threshold-based techniques, this leaves room for the implementation of real-time process control procedures.

To the best of our knowledge, our study is the first attempt to apply time-series anomaly detection to PBF-EB, leveraging a reconstruction-based anomaly detector. As previously mentioned, our solution does not require specific external supervision on the anomalies that should be targeted, as it only leverages the data provided by the industrial machine. Furthermore, our method is real-time in principle, as it is able to identify anomalies while they occur during a process.

Specifically, in our research we use as a test-bed the Arcam EBM A2X, which is currently one of the most widely used PBF-EB machines in industry. We then make the following two major contributions:

(i) Starting from a description of the PBF-EB process and of its associated variables, we characterize the most relevant data made available by the machine. We use such data to build an ML-ready dataset, without any additional expert annotations.

(ii) Starting from the data of (i), we design and validate a reconstruction-based MTSAD framework, using an autoencoder as the backbone. Our experiments show that, by identifying anomalies while they occur during a process, our system is capable of predicting its failure at an early stage.

The remainder of the paper proceeds as follows: the “Machine learning for the in-situ monitoring of PBF processes” section outlines machine learning techniques for the in-situ monitoring of PBF processes, providing the necessary background for our work; the “PBF-EB technology” section presents a detailed overview of the PBF-EB process and the corresponding hardware employed in our study; the “Dataset collection and characterisation” section describes and characterizes the data that can be collected from the industrial machine used as the test-bed, and how these data were processed to serve as input to an MTSAD model; the “Methodologies” section provides the background and methodological details of our MTSAD solution, and the “Experimental results” section presents its experimental validation. Lastly, the “Conclusions and future developments” section provides a final overview of our findings and contributions and highlights possible future developments.

Background

Machine learning for the in-situ monitoring of PBF processes

AM is a promising technology for the current industrial panorama, providing the benefits of printing complex shapes, lower production cycle time, minimum waste, and cost efficiency over traditional manufacturing processes (Xames et al., 2023).

Nonetheless, diverse issues still hamper a wide adoption of AM technologies in industry (Xames et al., 2023; Wang et al., 2022). One factor is the inherent complexity of the fundamental process dynamics of AM, such as the thermal behavior behind PBF processes, which involves multi-physics mechanisms including heat absorption, high thermal gradients, and local melting and solidification of particles (Wang et al., 2022). This complex phenomenon, highly sensitive to the selection and optimization of the involved process parameters (e.g., beam power, scan speed, and beam shape), calls for synergies between designers, materials scientists, and manufacturers, which are not easy to achieve in real-life industrial scenarios.

A second factor is the interaction of the various phases and sub-phases involved in the typical AM workflow, which involves a large number of heterogeneous variables interacting with each other.

As a solution, manufacturers are equipping AM machines with a large number of heterogeneous sensors that constantly monitor all the stages of the layering process to ensure a better quality of the final product, as well as a safe process.

The result is a data-intensive manufacturing domain, which has recently opened the way to ML, a sub-field of artificial intelligence that is nowadays largely adopted to solve complex problems associated with data (Xames et al., 2023).

Indeed, over half of the literature on ML applied to AM focuses on PBF processes (Xames et al., 2023). This is mainly due to two reasons. On the one hand, PBF technologies, being able to produce high-performance materials with almost unlimited geometrical freedom, are promising in a wide range of application domains (Wang et al., 2022). On the other hand, an extensive adoption of such technologies is in part still hampered by several sources of process instability, which may lead to various kinds of defects or even failure (Grasso et al., 2018). Hence, the potential of these techniques has not yet been fully realized.

On top of these considerations, research efforts are spent in diverse areas of possible ML applications for AM processes, recently categorized in the longitudinal review by Xames et al. (2023): AM design, process parameters, in-situ process monitoring and control, parts properties and performance, inspection, testing and validation, and cybermanufacturing.

Since detecting any irregular behaviour of the PBF production line is an essential feature for developing an adaptive system able to ensure a better quality of the final products, process monitoring and control are receiving growing attention compared with other possible ML applications in AM (Xames et al., 2023).

In this regard, the most used sensors and in-situ data collection devices for PBF-LB processes may be grouped into three main categories: (i) non-contact temperature measurements (i.e., infrared imaging), (ii) imaging in the visible range, (iii) low-coherence interferometric imaging.

Additionally, other sensors employed include 2D laser displacement sensors, optical coherence tomography devices, accelerometers, ultrasound detectors, strain gauges, thermocouples and X-ray detectors (Everton et al., 2016).

Concerning the process monitoring of PBF-LB jobs specifically, a plethora of studies concentrate on defect detection through imaging or time-series based ML solutions. These mainly include support vector machines (Gobert et al., 2018; Scime & Beuth, 2019), decision trees and random forests (Khanzadeh et al., 2018; Mahmoudi et al., 2019), and convolutional neural networks, which are by far the most used solution (Liu et al., 2020; Yazdi et al., 2020; Caggiano et al., 2019; Shevchik et al., 2019).

As regards the PBF-EB process, industrial systems are typically equipped with many embedded sensors, for instance to monitor the vacuum chamber environment, the energy source and the different subsystems. On the other hand, monitoring of the powder bed in PBF-EB is more challenging due to the high temperatures involved in the process. For this reason, the techniques and sensors deployed for PBF-LB are not applicable in most cases. Research efforts have focused on monitoring the powder bed using cameras (Grasso, 2021), infrared cameras (Dinwiddie et al., 2013) and pyrometers (Cordero et al., 2017). However, these instruments have limited resistance to temperature and X-rays, and their performance is affected by the condensation of metal vapours (Fu & Körner, 2022). A new and most promising monitoring technique, called Electron Optical (ELO) imaging, exploits the back-scattered electrons created during the PBF-EB process to acquire detailed 2D and 3D images of the processed layer (Arnold et al., 2020; Bäreis et al., 2023).

However, little information is available on the potential of data-driven anomaly detection for PBF-EB, especially compared with existing research on PBF-LB (Houser et al., 2023). To the best of our knowledge, just two previously published studies have investigated the potential of data-driven time-series anomaly detection applied to PBF-EB processes (Grasso et al., 2018; Houser et al., 2023). Although the authors of these works provide the first systematic studies that prove the feasibility of applying ML to predict defects generated during the PBF-EB process, these studies have some recognized limitations. First, they are built on supervised learning algorithms, which require expert annotations to learn how to recognize defects. Obtaining these annotations is a significant challenge in a real industrial scenario. Second, real-time exploitation is not explored.

PBF-EB technology

This section briefly introduces the PBF-EB technology, outlining the main phases of a typical PBF-EB process.

PBF-EB leverages electrons at high speed to selectively melt a bed of metallic powder, exploiting the heat generated when the beam impacts the powder bed (Galati, 2021; Dev Singh et al., 2021). The interaction between the electron beam and the powder bed is the most critical phase of the process (Galati, 2021). During the impact, the particles charge negatively, and thus rapidly diffuse all over the chamber owing to the generated repulsion forces between them (Cordero et al., 2017). This is the so-called “smoke” phenomenon (Milberg & Sigl, 2008; Sigl et al., 2006). Typically, to avoid, or at least to limit this issue, the powder bed is heated up with a preheating step before the component melting phase (Galati, 2021; Milberg & Sigl, 2008). This tends to increase the cohesion of the particles, enhancing the thermal and electrical conductivity (Weiwei et al., 2011) and the charge dispersion.

In our research, we employed the Arcam EBM A2X system, outlined in Fig. 1, which is one of the most popular PBF-EB systems in industry. Like most of the devices available on the market, this system may be divided into two parts: the top column and the build chamber (see Fig. 1a and b, respectively).

As can be seen from Fig. 1a, the top column includes the electron gun and the magnetic lenses. The electron gun accommodates the cathode: a LaB\(_6\) crystal or a tungsten filament working at 60 kV and emitting electrons. The emitted electrons are accelerated up to 40% of the speed of light by an anodic potential and then collimated by the magnetic lenses, which are responsible for beam focusing and deflection (Körner, 2016).

Another central component of the electron beam gun is the grid cup (see Fig. 1a roughly in the middle). This element, located between the cathode and the anode, controls the current flow of the electrons reaching the anode. The voltage applied on the grid works as a gate: a negative voltage will push the electrons back toward the cathode and vice-versa. Hence, the grid cup acts as a filter, avoiding electron flow when not required.

The build chamber includes the steel build tank, the powder storage (a.k.a. hopper), and the powder distribution system (a.k.a. rake). See Fig. 1b for a schematic overview of these components. The steel build tank contains the build platform (Fig. 1b, bottom), which moves along the build direction by means of an electric motor. The Arcam A2X machine has two hoppers, one on each side of the build chamber. The powder is fed onto the build platform by gravity, while the rake system, made up of a series of thin and flexible stainless-steel teeth (Galati, 2021), collects and distributes it (the so-called fetch phase). The amount of distributed powder is monitored by two magnetic sensors in a closed-loop control: if the amount of powder differs from the calibrated one, the fetch positions are adjusted accordingly (Chandrasekar et al., 2020; Arcam, 2011) (see red circle in Fig. 1b and the corresponding inset).

A high vacuum level, ensured by four vacuum pumps, is maintained both in the beam column and in the build chamber where the pressure is kept respectively at around \(10^{-5}\) Pa and around \(10^{-3}\) Pa (Arcam, 2011). In addition, inert gas (typically Helium at a pressure of \(10^{-1}\) Pa) is insufflated during the process to avoid charge accumulation, guaranteeing high thermal stability, and accelerating the cooling phase (Gaytan et al., 2009).

Due to the high temperatures generated during the process, several thermocouples are installed in different areas of the machine to monitor the temperature evolution of some fundamental elements. To measure the temperature during the build job, a thermocouple is positioned under the start plate, while another monitors the temperature of the top column. If the top column thermocouple detects a value over a given threshold, the process is stopped to avoid damage to the electron gun.

In the following, a brief description of the main steps of a typical PBF-EB process is provided. Firstly, the start plate is heated, by means of the electron beam, up to a target temperature, depending on the given processed material (Mahale, 2009). After this step, the following sequence is repeated until the build job is completed (Körner, 2016):

1. Table lowering: the start plate is lowered by a distance equal to the layer thickness.

2. Powder spreading: the rake fetches the powder from one side and spreads the (new) powder bed.

3. Preheating 1: a defocused electron beam heats a portion of the new powder layer equal to the maximum area that contains all the components (Rizza et al., 2022). As mentioned above, this step produces sintering of the particles.

4. Preheating 2: the electron beam further heats only the portions of powder containing the parts to be built, over an area corresponding to an offset of the section that must be melted (Rizza et al., 2022).

5. Melting: the electron beam selectively melts the section of the component with the selected strategy (hatching and contouring (Galati et al., 2019b; Tammas-Williams et al., 2015)) and process parameters.

6. Post-heating: based on the amount of energy provided in the two preheating phases and in the melting phase, the electron beam can be used to further heat the section, or the section can be left to cool down through the Helium flow. This step achieves the energy equilibrium and the target average beam current over the layer (Galati et al., 2019c).

Once the build has been completed, the build chamber is filled with Helium, and the job is cooled down to room temperature. Then, the built part is removed from the chamber and cleaned of the sintered powder with a blasting operation. Lastly, the unmelted powder is collected and reused for subsequent jobs.

Dataset collection and characterisation

As already mentioned, our test-bed is an Arcam EBM A2X machine, monitored with different types of sensors observing the components and sub-processes during the activity of the machine. The system is also equipped with a set of internal alarms able to automatically stop the process in critical situations, in order to prevent severe damage to the machine. Most of these alarms do not provide early anomaly detection, as they are safety mechanisms that intervene only when the monitored variables have reached a severely critical value.

For each printing job, the system stores all the collected data in a log file that is constantly updated. To ease the data analysis, the machine manufacturer provides an internal closed-source software tool to extract and export all the gathered information to a .plg log file. This file reports different types of data in the form of values and absolute timestamps, organized into a number of categories:

(i) Settings for the main process parameters;

(ii) Internal software thresholds and machine-specific parameters;

(iii) Signals from sensors placed on different hardware components;

(iv) Control signals sent to the hardware components;

(v) Status of machine-generated alarms and warnings;

(vi) Outcome of internal calculations and machine elaborations.

The data are not logged at fixed sampling rates: a new entry is written only when a value changes appreciably with respect to the previous timestamp.

To obtain a dataset which is suitable to train and validate a generative model, the available data needs to be reorganized in the form of time-series of variables, that should ideally provide a complete and unbiased description of the evolution of a process. To achieve this purpose, we constructed the input time-series for the generative model starting from the machine generated log file, as follows:

(i) We dropped all the static settings and machine-specific parameters that do not show any change during the process;

(ii) We dropped outputs of internal elaborations and machine-generated alarm or warning signals.

The resulting data consist of a time-series of 16 different variables, which are reported in Table 1 together with their characterization, as provided by the manufacturer’s documentation. All the values corresponding to these variables were resampled at a common sampling period of 133 ms, to serve as the input to our generative model.
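
As an illustration of this resampling step, the following is a minimal sketch of how change-driven log entries could be mapped onto a uniform 133 ms grid. It assumes a zero-order hold (each grid point takes the most recently logged value), which is a natural choice for logs that record only changes but is not stated in the text; the function names and the `(timestamp, value)` event layout are hypothetical.

```python
from bisect import bisect_right


def resample_hold(events, t_start, t_end, period=0.133):
    """Resample (timestamp, value) change events onto a fixed time grid.

    Assumption: zero-order hold, i.e. each grid point takes the most
    recently logged value. Grid points before the first event get None.
    Timestamps and period are expressed in seconds.
    """
    events = sorted(events, key=lambda e: e[0])
    times = [t for t, _ in events]
    # Number of grid points that fit in [t_start, t_end].
    n_steps = int((t_end - t_start) / period + 1e-9) + 1
    resampled = []
    for k in range(n_steps):
        t = t_start + k * period
        idx = bisect_right(times, t) - 1  # last event at or before t
        resampled.append(events[idx][1] if idx >= 0 else None)
    return resampled


def resample_log(log, t_start, t_end, period=0.133):
    """Apply resample_hold to every variable of a {name: events} mapping."""
    return {
        name: resample_hold(events, t_start, t_end, period)
        for name, events in log.items()
    }
```

Applying `resample_log` to the 16 retained variables would yield equally long sequences that can be stacked into one multivariate sample per grid point.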

Besides the input signals to be reconstructed, our anomaly detector would greatly benefit from a ground-truth annotation, provided for each data point, or at least for each new layer, from which to learn a representation of anomalies. As already anticipated in the “Introduction” section, besides requiring a very in-depth understanding of the ongoing process, this annotation is cumbersome to collect and hardly possible to obtain in a real industrial setting.

To circumvent this problem, in our study we rely only on the information provided by the logs of the industrial machine, without any external manual annotations from experts of the process. To obtain an indirect ground-truth of the presence of anomalies in a given job, we leverage two machine-generated alarms, which flag the success or failure of a job: the Alarms.BuildDone and the Alarms.BuildFailed variables, shown and characterized in Table 2. In the logs, these two variables are set to the tuple (1, 0) when the job is successful, and to (0, 1) when the job has failed, which triggers an early stop of the building process. In our dataset, the tuple is translated into a binary label, where 0 represents a successful job and 1 represents a failed job.
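
The labelling rule just described can be written as a short sketch. The function name is hypothetical, and raising an error for any other combination of the two alarms (for instance, a log truncated before either flag is set) is an assumption, since the text only describes the two valid cases.

```python
def job_label(build_done, build_failed):
    """Map the (Alarms.BuildDone, Alarms.BuildFailed) pair to a binary label.

    Returns 0 for a successful job, i.e. (1, 0), and 1 for a failed job,
    i.e. (0, 1). Any other combination is rejected (an assumption of this
    sketch, not a behaviour described in the text).
    """
    pair = (int(build_done), int(build_failed))
    if pair == (1, 0):
        return 0
    if pair == (0, 1):
        return 1
    raise ValueError(f"unexpected alarm combination: {pair}")
```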

In this regard, the so-obtained ground-truth label refers to the final outcome of the whole given PBF-EB process, without providing any information at the sample level. Nonetheless, we can reasonably assume that a job with significant anomalies will have a failed outcome, and vice-versa, and use this indirect information to train and test our anomaly detector.

Besides the final outcome of each collected job, we exploited the variable Process.TimeApproximation.BuildDoneTime as additional ground truth for the end-time of the process (see Table 2). For successful jobs, this end-time coincides with the timestamp of the actual end of the process. For failed jobs, it coincides with the time the job was interrupted, or time-of-failure. Hence, it can be used as a baseline to assess the earliness of our predictions.

All the jobs collected in our dataset were produced using the Arcam A2X system available at the Integrated Additive Manufacturing Center at Politecnico di Torino. To obtain a sizeable dataset to train and test our framework, we collected data from 80 different jobs. These jobs are representative of all the stages of product development, from process design (process parameter optimization) to job design for production (manufacturing of the first technical prototypes or series).

Jobs consisting of simple geometries with square sections were used to obtain data with constant process parameters during part melting (Galati et al., 2022b). For the characterization of material properties, the geometries are more complex from the point of view of the process (e.g., circular sections); therefore, the Arcam A2X control was used to automatically adapt the process parameters according to the length to be melted (Galati et al., 2022b; Lunetto et al., 2020).

The production of real components was used to emulate real manufacturing conditions. These jobs included the geometrical features characterizing the PBF-EB process, namely bulky components and lattice structures, and the parameters were adapted accordingly (Galati et al., 2023). The jobs were also characterized by two kinds of nesting in the 3D volume: arrangement of multiple parts with the same geometry or arrangement of multiple parts with different geometries. The first condition is representative of a large series production, while the second is more representative of a factory working on demand or producing small batches. This heterogeneity results in an average time per layer that ranges from 23 s to 300 s across the jobs. The longer times correspond to jobs designed for process development, while the shorter ones correspond to jobs optimized for production. For jobs designed to produce real parts, the application sectors were aerospace, biomedical and automotive. Notably, the jobs also vary significantly in terms of materials (mostly titanium alloys, such as Ti6Al4V, Ti6242 and intermetallic TiAl).

For the sake of completeness, we report in Table 3 the range of the main process parameters used to fabricate the samples and components included in the collected jobs. Examples of geometries, their arrangement in the build volume and the process parameters of fabricated jobs that were included in the dataset can be found in published works such as Galati et al. (2019a, 2021) and Lunetto et al. (2020) for Ti6Al4V, or in Galati et al. (2022a) for an intermetallic TiAl.

Lastly, Fig. 2 shows a representative example of the whole time-series data associated with a PBF-EB manufacturing job extracted from the collected dataset. In the figure, a transient behavior at the beginning and at the end of the printing process can be easily recognized (see the red overlay in Fig. 2). This is mainly due to the warm-up and cool-down of the system, respectively before and after the printing of the given part. Since heating typically lasts between one and two hours, and cooling can take up to ten, a considerable amount of data is collected during such transient periods. To avoid biasing the training of the proposed solution with the transient response of the system, we opted to train it only on the steady state of the system (see the green overlay in Fig. 2).

Methodologies

In this section, we describe the methodological details of our proposed anomaly detection framework. As mentioned above, the proposed solution belongs to the class of reconstruction-based anomaly detectors, where anomaly detection is built upon a generative deep learning model, in our case an autoencoder, trained to reconstruct the data distribution of the successful jobs (Li & Jung, 2023).

As the name suggests, the autoencoder is a generative model with an encoder-decoder structure, where the encoder compresses the original input into a corresponding latent representation, while the decoder uses this latent representation to produce an output that mimics the original input. Notably, training an autoencoder neural network does not require any explicit annotation in the learning process, because it only leverages the reconstruction error between the generated output and the given input. This makes it ideal for our case, where the input data is in the form of a 16-dimensional multivariate time-series, without any annotation at the level of a single time point or interval. On the other hand, as already anticipated in the “Dataset collection and characterisation” section, our dataset provides annotations only at the job level.

Hence, we proceed in the following way:

(i) We train the autoencoder on a training set of successful jobs. By doing so, the autoencoder should be able to learn the inherent data distribution of such jobs.

(ii) Then, at inference time we exploit the reconstruction error of this autoencoder on new data as an anomaly score, to early identify any deviation from the “canonical” distribution. As a matter of fact, since the backbone autoencoder is trained only on the data collected during successful processes, it is reasonable to expect that large reconstruction errors will correspond to anomalous events, which are not included in the training set.

(iii) Based on the cumulative number of anomalous events detected during a process, we provide a continuous prediction of the outcome of the job. By doing so, we are able to identify a time-step after which the job is prone to failure.

To handle the multivariate time-series nature of our data, we implement both the encoder and the decoder as Long Short-Term Memory (LSTM) neural networks, which are known for their capability of capturing the complex temporal dynamics of multidimensional data by exploiting historical values (Su et al., 2019). By doing so, our methodology is able to model the latent interactions among all the 16 variables that constitute the input, instead of considering the single data streams independently.

Figure 3 shows an overview of the main steps of the proposed anomaly detection framework. In the rest of this section, we provide a detailed description of each single step.

First, as illustrated in Fig. 3b, given a PBF-EB job \(p^*\) of length T, we can denote the associated multivariate time-series, made up of \(M=16\) signals, as \({\varvec{{X}}}_{p^*} = \{{\varvec{{x}}}_{1, p^*},\ldots , {\varvec{{x}}}_{T, p^*}\}\) with \({\varvec{{x}}}_{t, p^*} \in \mathbb {R}^M\), and \(t \le T\). For simplicity, and with abuse of notation, we hereafter omit the explicit reference to the process \(p^*\).

Fig. 3 Architecture overview depicting the most salient modules of the proposed anomaly detection framework: (a) the available Arcam EBM A2X machine; (b) the gathered multivariate time-series data cohort; (c) the windowing phase; (d) the backbone autoencoder devoted to learn a plausible input reconstruction; (e) the computing of the reconstruction error as anomaly score; (f) the anomalous event identification step: the given time-series window is tagged as anomalous if it determines an anomaly score above the threshold \(\alpha \); (g) the total count of anomalous events with the downstream classification of the given input PBF-EB job

During the pre-processing phase, \({\varvec{{X}}}\) is cropped into a set of non-overlapping sub-sequences through a sliding window approach, with the window size \(\tau \) empirically set to 64. Thus, as shown in Fig. 3c, we can now represent our job \(p^*\) as a sequence of non-overlapping observations of length \(\tau \): \({\varvec{{W}}} = \{{\varvec{{w}}}_1,\ldots , {\varvec{{w}}}_{T-\tau +1}\}\), where \({\varvec{{w}}}_j = \{{\varvec{{x}}}_t,\ldots , {\varvec{{x}}}_{t+\tau -1}\} \in \mathbb {R}^{M\times \tau }\) is the generic \(j\)-th window. Then, each \({\varvec{{w}}}_j\) is fed into the LSTM-based autoencoder (see Fig. 3d). As already mentioned, the output of the autoencoder is a reconstructed version of the signal, \(\hat{{\varvec{{w}}}_j}\). Finally, as shown in Fig. 3e, we leverage the magnitude of the reconstruction error between the original windowed signal \({\varvec{{w}}}_j\) and the reconstructed one \(\hat{{\varvec{{w}}}_j}\) as an anomaly score to identify anomalous events, as follows:

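$$\begin{aligned} \hat{y}_{t} = {\left\{ \begin{array}{ll} 1 &{} \text {if } \Delta w_t > \alpha \\ 0 &{} \text {otherwise}\\ \end{array}\right. } \end{aligned}$$(1)

where \(\hat{y}_t\) is the predicted anomaly label for the given time-interval t (with 0 meaning normal and 1 meaning anomalous), \(\Delta w_t = \left\Vert {\varvec{{w}}}_j - \hat{{\varvec{{w}}}_j} \right\Vert \) is the anomaly score for the \(j\)-th window, and \(\alpha \) is a predefined threshold, detailed later.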
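The windowing and scoring steps described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the windows are taken as consecutive and non-overlapping, the norm is assumed to be the Euclidean one, and `reconstruct` is a hypothetical placeholder standing in for the trained autoencoder. All function names are ours and do not come from the original implementation.

```python
import math
from typing import Callable, List, Sequence

Window = List[Sequence[float]]  # tau time-steps, each holding M signal values


def split_windows(series: Sequence[Sequence[float]], tau: int) -> List[Window]:
    """Crop a (T x M) series into consecutive non-overlapping windows of length tau.

    Trailing samples that do not fill a whole window are dropped (an assumption).
    """
    return [list(series[i:i + tau]) for i in range(0, len(series) - tau + 1, tau)]


def anomaly_score(window: Window, reconstruction: Window) -> float:
    """Euclidean norm of the element-wise reconstruction error (assumed norm)."""
    return math.sqrt(sum((x - r) ** 2
                         for row, rec_row in zip(window, reconstruction)
                         for x, r in zip(row, rec_row)))


def label_windows(windows: List[Window],
                  reconstruct: Callable[[Window], Window],
                  alpha: float) -> List[int]:
    """Tag a window as anomalous (1) when its score is above alpha, else normal (0)."""
    return [1 if anomaly_score(w, reconstruct(w)) > alpha else 0 for w in windows]
```

In this sketch a perfect reconstruction yields a score of zero, so any positive threshold leaves the window tagged as normal.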

As mentioned before, our LSTM-based autoencoder is trained over a windowed multivariate time-series. This is typically done in time-series analysis to reduce the back-propagation effort, as well as to focus the weight updates on the most recent time-steps, which are assumed to be more relevant for the prediction (Li & Jung, 2023). Nonetheless, the absolute error \(\Delta w_t\) is continuous over time by definition. A representative example of the evolution of \(\Delta w_t\) over time-intervals is shown in Fig. 4, where the anomalous time-points are also highlighted in yellow.

To set the threshold \(\alpha \) (see Eq. 1), we exploit the mean and standard deviation of the distribution of \(\Delta w_t\), as is commonly done in statistics to detect outliers. That is, when the reconstruction error exceeds its mean by more than three standard deviations, the given event is tagged as anomalous (Li & Jung, 2023; Hong et al., 2022).
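As a minimal sketch of this three-sigma rule, the threshold can be computed from a collection of reconstruction errors. Which errors feed the statistics is not specified here; the example assumes they come from jobs known to be successful, and it uses the population standard deviation. The function name is hypothetical.

```python
import statistics
from typing import Sequence


def three_sigma_threshold(scores: Sequence[float]) -> float:
    """Return alpha = mean + 3 * standard deviation of the given anomaly scores.

    Assumption: `scores` are reconstruction errors from nominal (successful) data,
    and the population standard deviation is used.
    """
    mu = statistics.fmean(scores)
    sigma = statistics.pstdev(scores)
    return mu + 3.0 * sigma
```

A window is then tagged as anomalous when its score is above the returned value.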

After defining the anomaly score, we proceed in the following way to predict the final outcome of the job \(p^*\):

(i) For each sub-sequence \({\varvec{{w}}}_j \in p^*\), we compute the anomaly score \(\Delta w_t\). Based on this, we can establish whether the given sub-sequence is anomalous.

(ii) Every time a new anomalous sub-sequence is encountered, we update the cumulative count of the anomalies. In the case this count crosses a given threshold \(\delta \), the job is predicted as prone-to-failure. Hence, the final label of the job \(\hat{y}_{p^*}\) is obtained as follows:

$$\begin{aligned} \hat{y}_{p^*} = {\left\{ \begin{array}{ll} 1 &{} \text {if } (\mathop {\sum }\nolimits _{0}^{t}\hat{y}_{t}) \ge \delta , \text {with } \hat{y}_{t} \in \{0, 1\}\\ 0 &{} \text {otherwise}\\ \end{array}\right. } \end{aligned}$$(2)

where \(\sum _{0}^{t}\hat{y}_{t}\) is the count of the anomalies up to time t.
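The cumulative rule of Eq. 2 lends itself to a streaming implementation. The following sketch, with hypothetical names, scans the per-window labels in order and returns both the job label and the index of the first window at which the count reaches \(\delta \), which is the point from which the job would be flagged as prone to failure.

```python
from typing import Iterable, Optional, Tuple


def predict_job(labels: Iterable[int], delta: int) -> Tuple[int, Optional[int]]:
    """Apply the cumulative-count rule to a stream of 0/1 window labels.

    Returns (1, j) if the running count of anomalies reaches delta at window j,
    otherwise (0, None) once the stream is exhausted.
    """
    count = 0
    for j, y in enumerate(labels):
        count += y
        if count >= delta:
            return 1, j
    return 0, None
```

For instance, `predict_job([0, 1, 0, 1, 1, 0], delta=3)` flags the job at window index 4, where the third anomaly is observed.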

To set the parameter \(\delta \), we exploit an independent validation dataset containing examples of successful and failed jobs, and then choose the value that obtains the best compromise between false positive rate and sensitivity in the receiver operating characteristics (ROC) curve (Bradley, 1997).
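One possible way to pick such an operating point is sketched below. The exact selection criterion is not stated in the text; this example assumes Youden's J statistic (sensitivity minus false positive rate), a common choice, and takes as input the total anomaly count of each validation job together with its ground-truth outcome. Function and parameter names are hypothetical.

```python
from typing import Iterable, Optional, Sequence


def choose_delta(counts: Sequence[int],
                 failed: Sequence[bool],
                 candidates: Iterable[int]) -> Optional[int]:
    """Pick the candidate delta maximising sensitivity - false positive rate.

    counts[i] is the total number of anomalous windows in validation job i,
    failed[i] is True when that job actually failed. Ties keep the smallest delta
    encountered first. Youden's J is an assumed criterion, not the paper's.
    """
    positives = sum(1 for f in failed if f)
    negatives = len(failed) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("validation set must contain both successful and failed jobs")

    best_delta, best_j = None, float("-inf")
    for delta in candidates:
        predicted = [c >= delta for c in counts]
        tp = sum(1 for p, f in zip(predicted, failed) if p and f)
        fp = sum(1 for p, f in zip(predicted, failed) if p and not f)
        j = tp / positives - fp / negatives
        if j > best_j:
            best_delta, best_j = delta, j
    return best_delta
```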

Experimental results

In this section we present the experimental validation of the proposed anomaly detection framework.

While our proposed framework is able to identify single anomalous events occurring in a small window of time during a process, the accuracy of this identification cannot be directly quantified, because there is no corresponding ground-truth to compare with. Conversely, each process is annotated at the job level, in terms of final outcome (success or failure) and time of failure. Therefore, our performance assessment is done indirectly, by exploiting these job-level annotations. More specifically, we assess the quality of our system based on:

(i) The accuracy in the prediction of the final outcome;

(ii) The earliness of the prediction of a failed outcome, in relation to the given time-of-failure.

Experimental setup

The available cohort of collected PBF-EB jobs was randomly divided into three non-overlapping folds for training, validation and testing purposes, as reported in Table 4.

As already anticipated, the training set does not contain any failed jobs, as it is used to learn a model of what characterizes a successful job. Conversely, the validation and the test sets contain an equal number of successful and failed jobs. The validation fold contains jobs that are solely used to tune all the hyper-parameters of the framework. This includes all the network hyper-parameters, but also the threshold \(\delta \) upon which the prediction of failure is built. Finally, the jobs in the test set are solely used to compute the performance metrics.

As already described in the “Methodologies” section, the backbone of the proposed solution is an LSTM-based autoencoder. Its specific structure and architectural parameters are detailed in Table 5, for the sake of repeatability. The autoencoder was randomly initialized and then trained for 100 epochs using the Adam optimizer with a learning rate equal to 0.001, \(\beta _1\) and \(\beta _2\) parameters respectively set to 0.9 and 0.999, a batch size of 32, and the mean squared error as loss function. We adopted early stopping (i.e., training stops when the loss has not decreased for more than 10 epochs) to prevent model over-fitting.
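The early stopping criterion can be illustrated independently of any deep learning library. The sketch below takes a sequence of per-epoch loss values and returns the epoch at which training would halt, using the patience of 10 epochs and the cap of 100 epochs reported above. Which loss is monitored (training or validation) is not stated, so the input is left generic; the function name is hypothetical.

```python
from typing import Sequence


def stopping_epoch(losses: Sequence[float],
                   patience: int = 10,
                   max_epochs: int = 100) -> int:
    """Return the 1-based epoch at which training stops.

    Training halts once the loss has failed to improve on its best value
    for more than `patience` consecutive epochs, or at `max_epochs`.
    """
    best = float("inf")
    stale = 0  # consecutive epochs without improvement
    for epoch, loss in enumerate(losses[:max_epochs], start=1):
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale > patience:
                return epoch
    return min(len(losses), max_epochs)
```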

We optimized the autoencoder on the training set, leveraging a grid search over the following parameters: shape of the layers (the optimal values are reported in Table 5), batch size, and learning rate (set to 32 and 0.001, respectively).

Once the backbone autoencoder was trained, we exploited the ROC curve obtained on the validation subset to decide the value of the threshold \(\delta \). As can be seen from Fig. 5, \(\delta = 10\) is the value that identifies the best operating point in terms of sensitivity and false positive rate (see the “Methodologies” section for the methodological details).

No part of the test set was used in the threshold optimization, nor in the autoencoder training.

Performance assessment

To fully characterize the effectiveness of the proposed framework in predicting the final status of a PBF-EB job, we exploit a confusion matrix, where each row represents the instances in an actual class while each column represents the instances in a predicted class. We opted for this figure of merit since it makes it easy to see whether the system is confusing the two classes of interest. As further performance metrics, Table 6 reports the precision, the recall, and the F1-score, in percentage form, for the two separate classes. Specifically, precision is the fraction of relevant instances among the retrieved instances, recall is the fraction of relevant instances that were retrieved, and F1 is the harmonic mean of precision and recall. It thus symmetrically represents both precision and recall in one metric.
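For reference, the three metrics can be derived from the confusion-matrix counts of one class as in the sketch below. The counts in the usage note are purely illustrative and are not the values of Table 6; the function name is hypothetical.

```python
from typing import Tuple


def class_metrics(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute (precision, recall, F1) for one class from confusion-matrix counts.

    tp: instances of the class predicted as such,
    fp: instances of the other class predicted as this class,
    fn: instances of this class predicted as the other class.
    Undefined ratios (zero denominators) are reported as 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1
```

With illustrative counts `tp=9, fp=0, fn=1`, precision is 1.0, recall is 0.9 and F1 is 18/19, roughly 0.947.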

From the reported figures of merit, we can draw a number of interesting observations. First of all, our framework was able to correctly identify all the successful jobs. Furthermore, it had a 100% precision in detecting failed processes. This means that the anomaly detector is not over-pessimistic, and the risk of having to unnecessarily stop a healthy process is low. On the other hand, only one failed job was misclassified as successful (see the first row and second column in the confusion matrix of Fig. 6). Hence, the given framework is able to anticipate the failure of a job in most of the cases. Overall, the good balance of precision and recall in the two classes is reflected by the F1-score, which was higher than 90% in both cases.

Besides the performance on predicting the successful/failed outcome of a job, for all the jobs of the test set that were correctly predicted as prone to failure, we also assessed the time of the prediction, in relation to the ground-truth time-of-failure. More specifically, in Table 7 we report the difference between the given time-of-failure and the timestamp starting from which our system predicts the failure of the job. The rationale is: the larger this difference, the earlier the prediction that we are able to provide.
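The earliness measure amounts to a simple timestamp difference, sketched below with hypothetical names. The timestamps in the usage note are invented for illustration and do not correspond to any job in the dataset.

```python
from datetime import datetime


def lead_time_hours(first_alarm: datetime, time_of_failure: datetime) -> float:
    """Hours between the first failure prediction and the recorded time-of-failure.

    A larger positive value means the failure was anticipated earlier.
    """
    return (time_of_failure - first_alarm).total_seconds() / 3600.0
```

For example, an alarm raised at 08:00 for a job that fails at 14:30 on the same day gives a lead time of 6.5 h.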

As can be seen from the table, on average, our solution is able to predict a failure significantly in advance of the moment in which the system detects a fatal error and stops the process. In one case, this advance was almost 20 h. This is a very important outcome, as it opens the way to a significant improvement of the current work-flows, in terms of reduced wastage of production time, materials and energy resources. On top of that, it leaves ample room for the integration with finer process control strategies.

Fig. 7 F1 score, precision and recall of the proposed solution with different deep learning generative models as the backbone: a transformer-based autoencoder (T-AE), a variational autoencoder (V-AE), a convolutional autoencoder (C-AE), a recurrent neural network autoencoder (RNN-AE) and a long short-term memory (LSTM) autoencoder

Ablation studies

For the sake of completeness, we investigated different versions of the proposed methodology, by implementing an ablation study focused on the backbone generative model of our solution.

Specifically, we replaced the LSTM-based autoencoder with four other state-of-the-art deep learning-based generative options: a transformer-based autoencoder (T-AE), a variational autoencoder (V-AE), a convolutional autoencoder (C-AE), and a recurrent neural network autoencoder (RNN-AE). For the implementation details of each backbone, please refer to the corresponding table shown in the Online Resource 1. Each backbone model was trained and validated on the same folds reported in Table 4. Figure 7 shows the relative comparison among the different backbones by means of the F1 score, the precision and the recall obtained over the test set. As can be seen, the LSTM-based version is the best-performing setup.

Conclusions and future developments

In this study, we exploited the data collected from an Arcam EBM A2X industrial PBF-EB machine to train and test a reconstruction-based time-series anomaly detection framework. This framework, which is built on top of an autoencoder generative model, is able to indicate, with good accuracy, whether a given job will fail. We also demonstrated that the tool is able to anticipate the failures by a significant margin, and it is real-time in principle, in the sense that it is able to run in parallel with the production cycle, analyze the log as quickly as it is produced by the machine, and provide predictions within the time of production of a new layer. The practical implication of this achievement is twofold: (i) a job that is identified as prone to failure can be stopped well in advance (by 6 h, on average, and in one case by almost 20 h), thus saving considerable working time and material; (ii) appropriate corrective strategies can be adopted, so as to avoid the failure altogether.

While the first point is already easily implementable, as it is a direct consequence of detecting the anomaly, the second one requires further studies, in the direction of understanding which specific process parameters/conditions determined the anomaly. This can be obtained by integrating our model, which currently works as a black-box, with explainability/interpretability strategies (Gunning et al., 2019; Naqvi et al., 2024).

Besides the above-mentioned points, future efforts should be devoted to: (i) considerably enlarging the dataset on which the model is trained, optimized and tested, so as to establish the robustness of the proposed solution, and extending the case study to other types of machines, in order to prove its generalization capabilities; (ii) optimizing the solution in terms of latency, inference time, power consumption, etc., in order to enable execution on the edge and achieve a complete integration with the AM machine. This integration is not currently implemented in our study, because our experimentation involved a commercial PBF-EB machine with a completely closed system.

Data availability

All of the data used in this study, property of the Department of Management and Production Engineering of the Politecnico di Torino, are available from the corresponding author upon reasonable request and with permission of the Department of Management and Production Engineering of the Politecnico di Torino.

References

Arcam. (2011). Arcam A2X user manual [Computer software manual].

Arnold, C., Böhm, J., & Körner, C. (2020). In operando monitoring by analysis of backscattered electrons during electron beam melting. Advanced Engineering Materials, 22(9), 1901102.

Bäreis, J., Semjatov, N., Renner, J., Ye, J., Zongwen, F., & Körner, C. (2023). Electron-optical in-situ crack monitoring during electron beam powder bed fusion of the Ni-base superalloy CMSX-4. Progress in Additive Manufacturing, 8(5), 801–806.

Belay, M. A., Blakseth, S. S., Rasheed, A., & Salvo Rossi, P. (2023). Unsupervised anomaly detection for IoT-based multivariate time series: Existing solutions, performance analysis and future directions. Sensors, 23(5), 2844.

Bradley, A. P. (1997). The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognition, 30(7), 1145–1159.

Caggiano, A., Zhang, J., Alfieri, V., Caiazzo, F., Gao, R., & Teti, R. (2019). Machine learning-based image processing for on-line defect recognition in additive manufacturing. CIRP Annals, 68(1), 451–454.

Carolos, L. C., & Cooper, R. E. (2022). A review on the influence of process variables on the surface roughness of Ti-6Al-4V by electron beam powder bed fusion. Additive Manufacturing, 59, 103103.

Chandrasekar, S., Coble, J. B., Yoder, S., Nandwana, P., Dehoff, R. R., Paquit, V. C., & Babu, S. S. (2020). Investigating the effect of metal powder recycling in electron beam powder bed fusion using process log data. Additive Manufacturing, 32, 100994.

Cordero, Z. C., Meyer, H. M., Nandwana, P., & Dehoff, R. R. (2017). Powder bed charging during electron-beam additive manufacturing. Acta Materialia, 124, 437–445.

Dev Singh, D., Mahender, T., & Raji Reddy, A. (2021). Powder bed fusion process: A brief review. Materials Today: Proceedings, 46, 350–355.

Dinwiddie, R. B., Dehoff, R. R., Lloyd, P. D., Lowe, L. E., & Ulrich, J. B. (2013). Thermographic in-situ process monitoring of the electron-beam melting technology used in additive manufacturing. In Thermosense: Thermal infrared applications xxxv (Vol. 8705, pp. 156–164).

Dzogbewu, T. C., & du Preez, W. B. (2021). Additive manufacturing of Ti-based intermetallic alloys: A review and conceptualization of a next-generation machine. Materials, 14(15), 4317.

Everton, S. K., Hirsch, M., Stravroulakis, P., Leach, R. K., & Clare, A. T. (2016). Review of in-situ process monitoring and in-situ metrology for metal additive manufacturing. Materials & Design, 95, 431–445.

Fu, Z., & Körner, C. (2022). Actual state-of-the-art of electron beam powder bed fusion. European Journal of Materials, 2(1), 54–116.

Galati, M. (2021). Chapter 8—Electron beam melting process: a general overview. In J. Pou, A. Riveiro, & J. P. Davim (Eds.), Additive manufacturing (pp. 277–301). Elsevier.

Galati, M., Defanti, S., & Denti, L. (2022a). Performance analysis of electro-chemical machining of Ti-48Al-2Nb-2Cr produced by electron beam melting. Smart and Sustainable Manufacturing Systems, 6(1), 53–67.

Galati, M., Defanti, S., Saboori, A., Rizza, G., Tognoli, E., Vincenzi, N., & Iuliano, L. (2022b). An investigation on the processing conditions of Ti-6Al-2Sn-4Zr-2Mo by electron beam powder bed fusion: Microstructure, defect distribution, mechanical properties and dimensional accuracy. Additive Manufacturing, 50, 102564.

Galati, M., Giordano, M., & Iuliano, L. (2023). Process-aware optimisation of lattice structure by electron beam powder bed fusion. Progress in Additive Manufacturing, 8(3), 477–493.

Galati, M., Minetola, P., & Rizza, G. (2019a). Surface roughness characterisation and analysis of the electron beam melting (EBM) process. Materials, 12(13), 2211.

Galati, M., Snis, A., & Iuliano, L. (2019b). Experimental validation of a numerical thermal model of the EBM process for Ti6Al4V. Computers & Mathematics with Applications, 78(7), 2417–2427.

Galati, M., Snis, A., & Iuliano, L. (2019c). Powder bed properties modelling and 3D thermo-mechanical simulation of the additive manufacturing Electron Beam Melting process. Additive Manufacturing, 30, 100897.

Galati, M., Rizza, G., Defanti, S., & Denti, L. (2021). Surface roughness prediction model for electron beam melting (EBM) processing Ti6Al4V. Precision Engineering, 69, 19–28.

Gaytan, S. M., Murr, L. E., Medina, F., Martinez, E., Lopez, M. I., & Wicker, R. B. (2009). Advanced metal powder based manufacturing of complex components by electron beam melting. Materials Technology, 24(3), 180–190.

Gobert, C., Reutzel, E. W., Petrich, J., Nassar, A. R., & Phoha, S. (2018). Application of supervised machine learning for defect detection during metallic powder bed fusion additive manufacturing using high resolution imaging. Additive Manufacturing, 21, 517–528.

Grasso, M. (2021). In situ monitoring of powder bed fusion homogeneity in electron beam melting. Materials, 14(22), 7015.

Grasso, M., Gallina, F., & Colosimo, B. M. (2018). Data fusion methods for statistical process monitoring and quality characterization in metal additive manufacturing. Procedia Cirp, 75, 103–107.

Gunning, D., Stefik, M., Choi, J., Miller, T., Stumpf, S., & Yang, G.-Z. (2019). Xai–explainable artificial intelligence. Science Robotics, 4(37), 7120.

Herzog, T., Brandt, M., Trinchi, A., Sola, A., & Molotnikov, A. (2023). Process monitoring and machine learning for defect detection in laser-based metal additive manufacturing. Journal of Intelligent Manufacturing, 1–31.

Hong, S.H., Kyzer, T., Cornelius, J., Zahiri, F., & Wang, Y. (2022). Intelligent anomaly detection of robot manipulator based on energy consumption auditing. 2022 IEEE aerospace conference (AERO) (pp. 1–11).

Houser, E., Shashaani, S., Harrysson, O., & Jeon, Y. (2023). Predicting additive manufacturing defects with robust feature selection for imbalanced data. IISE Transactions, 1–26.

Khanzadeh, M., Chowdhury, S., Marufuzzaman, M., Tschopp, M. A., & Bian, L. (2018). Porosity prediction: Supervised-learning of thermal history for direct laser deposition. Journal of Manufacturing Systems, 47, 69–82.

Klassen, A. (2018). Simulation of evaporation phenomena in selective electron beam melting. FAU University Press.

Körner, C. (2016). Additive manufacturing of metallic components by selective electron beam melting—A review. International Materials Reviews, 61(5), 361–377.

Ladani, L., & Sadeghilaridjani, M. (2021). Review of powder bed fusion additive manufacturing for metals. Metals, 11(9), 1391.

Li, G., & Jung, J. J. (2023). Deep learning for anomaly detection in multivariate time series: Approaches, applications, and challenges. Information Fusion, 91, 93–102.

Liu, Q., Wu, H., Paul, M. J., He, P., Peng, Z., Gludovatz, B., Kruzic, J. J., Wang, C. H., & Li, X. (2020). Machine-learning assisted laser powder bed fusion process optimization for AlSi10Mg: New microstructure description indices and fracture mechanisms. Acta Materialia, 201, 316–328.

Lunetto, V., Galati, M., Settineri, L., & Iuliano, L. (2020). Unit process energy consumption analysis and models for electron beam melting (EBM): Effects of process and part designs. Additive Manufacturing, 33, 101115.

Mahale, T. R. (2009). Electron Beam Melting of Advanced Materials and Structures, mass customization, mass personalization (Unpublished doctoral dissertation). NC State.

Mahmoudi, M., Ezzat, A. A., & Elwany, A. (2019). Layerwise anomaly detection in laser powder-bed fusion metal additive manufacturing. Journal of Manufacturing Science and Engineering, 141(3), 031002.

Milberg, J., & Sigl, M. (2008). Electron beam sintering of metal powder. Production Engineering, 2(2), 117–122.

Mumith, A., Thomas, M., Shah, Z., Coathup, M., & Blunn, G. (2018). Additive manufacturing. The Bone & Joint Journal, 100–B(4), 455–460.

Naqvi, M. R., Elmhadhbi, L., Sarkar, A., Archimede, B., & Karray, M. H. (2024). Survey on ontology-based explainable AI in manufacturing. Journal of Intelligent Manufacturing, 1–23.

Rizza, G., Galati, M., & Iuliano, L. (2022). Simulating the sintering of powder particles during the preheating step of Electron Beam Melting process: Review, challenges and a proposal. Procedia CIRP, 112, 388–393.

Scime, L., & Beuth, J. (2019). Using machine learning to identify in-situ melt pool signatures indicative of flaw formation in a laser powder bed fusion additive manufacturing process. Additive Manufacturing, 25, 151–165.

Shevchik, S. A., Masinelli, G., Kenel, C., Leinenbach, C., & Wasmer, K. (2019). Deep learning for in situ and real-time quality monitoring in additive manufacturing using acoustic emission. IEEE Transactions on Industrial Informatics, 15(9), 5194–5203.

Sigl, M., Lutzmann, S., & Zaeh, M. F. (2006). Transient physical effects in electron beam sintering. In 17th solid freeform fabrication symposium. SFF (pp. 464–477).

Sing, S. L., An, J., Yeong, W. Y., & Wiria, F. E. (2016). Laser and electron-beam powder-bed additive manufacturing of metallic implants: A review on processes, materials and designs. Journal of Orthopaedic Research, 34(3), 369–385.

Sing, S. L., & Yeong, W. Y. (2020). Laser powder bed fusion for metal additive manufacturing: Perspectives on recent developments. Virtual and Physical Prototyping, 15(3), 359–370.

Su, Y., Zhao, Y., Niu, C., Liu, R., Sun, W., & Pei, D. (2019). Robust anomaly detection for multivariate time series through stochastic recurrent neural network. In Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining (pp. 2828–2837).

Tammas-Williams, S., Zhao, H., Léonard, F., Derguti, F., Todd, I., & Prangnell, P. B. (2015). XCT analysis of the influence of melt strategies on defect population in Ti-6Al-4V components manufactured by Selective Electron Beam Melting. Materials Characterization, 102, 47–61.

Tercan, H., & Meisen, T. (2022). Machine learning and deep learning based predictive quality in manufacturing: A systematic review. Journal of Intelligent Manufacturing, 33(7), 1879–1905.

Tziolas, T., Papageorgiou, K., Theodosiou, T., Papageorgiou, E., Mastos, T., & Papadopoulos, A. (2022). Autoencoders for anomaly detection in an industrial multivariate time series dataset. Engineering Proceedings, 18(1), 23.

VBN Components (n.d.). The vibenite materials—Hardest AM materials: Extreme wear-resistance. Retrieved May 5, 2023, from https://vbncomponents.com/materials/

Wang, P., Yang, Y., & Moghaddam, N. S. (2022). Process modeling in laser powder bed fusion towards defect detection and quality control via machine learning: The state-of-the-art and research challenges. Journal of Manufacturing Processes, 73, 961–984.

Weiwei, H., Wenpeng, J., Haiyan, L., Huiping, T., Xinting, K., & Yu, H. (2011). Research on preheating of titanium alloy powder in electron beam melting technology. Rare Metal Materials and Engineering, 40(12), 2072–2075.

Xames, M. D., Torsha, F. K., & Sarwar, F. (2023). A systematic literature review on recent trends of machine learning applications in additive manufacturing. Journal of Intelligent Manufacturing, 34(6), 2529–2555.

Xiong, Z., Zhu, D., Liu, D., He, S., & Zhao, L. (2022). Anomaly detection of metallurgical energy data based on iforest-ae. Applied Sciences, 12(19), 9977.

Yazdi, R. M., Imani, F., & Yang, H. (2020). A hybrid deep learning model of process-build interactions in additive manufacturing. Journal of Manufacturing Systems, 57, 460–468.

Zhou, H., Yu, K., Zhang, X., Wu, G., & Yazidi, A. (2022). Contrastive autoencoder for anomaly detection in multivariate time series. Information Sciences, 610, 266–280.

Funding

Open access funding provided by Politecnico di Torino within the CRUI-CARE Agreement. This research did not receive any specific grants from any funding agency in the public, commercial, or not-for-profit sectors.

Author information

Contributions

Conceptualization, M.G., E.P., S.D.C.; methodology, D.C.; software, D.C.; validation, D.C., F.P.; investigation, D.C., F.P. and S.D.C.; data curation, D.C., P.A. and M.G.; writing-original draft preparation, D.C., F.P., P.A.; writing-review and editing, M.G., E.P. and S.D.C.; supervision, M.G., E.P. and S.D.C. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cannizzaro, D., Antonioni, P., Ponzio, F. et al. Machine learning-enabled real-time anomaly detection for electron beam powder bed fusion additive manufacturing. J Intell Manuf (2024). https://doi.org/10.1007/s10845-024-02359-6

DOI: https://doi.org/10.1007/s10845-024-02359-6