Abstract

The challenge on the contemporary consumer goods market is a quick response to customer needs. It entails time restrictions that a manufacturer of semi-finished products (including metal products) must meet. This issue must be addressed during the design phase, which for most suppliers of semi-finished products takes place during the quotation preparation process. Our research investigates the possibility of applying Fuzzy Reasoning methods to shorten the design process that is part of quotation preparation. We present a study on the application of simplified models for solving technological tasks, which allow the expected properties of designed products to be obtained. The core of our concept is replacing numerical models and classical metamodels with rule-based reasoning. A quotation preparation process can then be supported by solving a technological problem without numerical experiments. Our goal was to validate this thesis based not only on the presentation of potential solutions but also on the results of simulation studies. The problem is illustrated with an example of thermal treatment of aluminum alloys, aimed at evaluating the summary fraction of precipitations as a function of time and technological parameters. We assumed that it is possible to use both unstructured and point numerical experiments for knowledge acquisition. Implementing this concept required hybrid knowledge acquisition methods that combine the results of point experiments with expert knowledge. A comparison of the obtained results with those obtained with metamodels shows a similar efficiency of both approaches, while our method is less time-consuming and laborious.


Introduction

This work aims to validate the hypothesis that it is possible to support technological decisions by applying methods of knowledge extraction based on examples or sparse observations and Fuzzy Rule-Based Systems (FRBS).

The contemporary market of consumer goods requires a quick response to customer needs. Therefore, semifinished product manufacturers must meet tight time restrictions. Metal products are, as a rule, semifinished products supplied to manufacturers of market products. While producers of final products have the option of negotiating deadlines with the client, the deadline imposed on their subcontractors for responding to demand is rigid and non-negotiable. Time-oriented production activities have become the answer to these challenges. They are a reaction to the situation, previously regarded as canonical, in which the cost of manufacturing is the only criterion for the selection of production processes. Time-oriented production processes are especially difficult in companies that manufacture products to order. This approach is defined as versatile manufacturing. Companies that operate following these rules negotiate with clients and compete with other companies for each order they would like to receive. They also customize a product for each client (Stonciuviene et al. 2020; Macioł 2017). This process is called Request for Quotation (RFQ). One form of versatile manufacturing company is the Engineering-to-Order (ETO) company. In their case, the client’s order requires designing a product. This kind of production pattern requires considering the procedures related to the technical preparation of production during its realization.

The vast majority of studies on shortening lead times and decreasing costs in versatile manufacturing focus on the manufacturing phase. It is assumed that the problem described above can be handled with production methods employing information technology (IT) and the concept of time compression supported by Production Control Systems (PCSs). These are, among others: JIT, KANBAN, Lean Manufacturing, Paired-Cell Overlapping Loops of Cards with Authorization (POLCA), Workload Control (WLC), Control of Balance by Card-Based Navigation (COBACABANA) (Gómez Paredes et al. 2020), and Quick Response Manufacturing (QRM). The latter approach combines elements of other concepts (Suri 2003) and is the most effective method of shortening the time of order fulfilment.

However, Mandolini et al. (2020) state that the production cost and the related lead time must be managed during the design phase, not only during manufacturing activities. Furthermore, design costs consume approximately 20% of the total budget of a new project, while typically 80% of manufacturing costs are determined during the design phase (Ulrich et al. 2010). Manufacturing parameters are identified during the design stage, and their definition affects, among other things, the selection of materials and the choice of technologies used in the production process (in the case of metal products these are generally casting, forming, heat treatment and machining). However, to reduce the time commitment of designers as much as possible, estimating technical parameters and costs at the design phase is only feasible if the evaluation is carried out automatically, starting from the virtual prototype of the product (i.e., a combination of a 3D CAD model, geometrical and non-geometrical attributes, and product manufacturing information) (Mandolini et al. 2020). Unfortunately, for most manufacturers of metal products, especially those employing the Engineering-to-Order (ETO) approach, full automation of the product life cycle is not possible. The problem is partially solved by approaches based on numerical modelling, among others the Digital Twin concept, which is part of the Industry 4.0 paradigm, or Integrated Computational Material Engineering (ICME) (Horstemeyer 2018), but this is rather a future solution, currently applicable in limited cases.

For versatile manufacturing companies, preparing time- and resource-demanding quotations is a daily routine, and they need to ensure that these processes are efficient (Stadnicka and Ratnayake 2018). A business process as important and complex as quotation preparation can be supported with many formalized methods. In the most general way, the methods supporting quotation preparation can be divided into two groups: quantitative and qualitative (Stonciuviene et al. 2020; Campi et al. 2020). The techniques in the first group can be used when it is possible to develop a suitable statistical or analytical model that allows a procedural determination of the production process characteristics. This depends on explicitly and formally defined features of the product (Favi et al. 2021). Unfortunately, for most products of the metal industry, it is not possible to build a statistical or analytical model that allows full estimation of the process parameters. The only solution is to use sophisticated, complex qualitative methods.

In many cases, determining the parameters of the production process and, consequently, the manufacturing costs and the possible lead time requires conducting material tests (in the present case, mainly metallurgical), which are necessary for a correct realisation of the quotation preparation process. Our research aimed to assess the possibility of using Fuzzy Reasoning methods to shorten the design process in quotation preparation. This problem boils down to finding, or rather confirming, the possibility of using simplified methods to solve tasks requiring materials knowledge.

The problem is illustrated with an example of designing the thermal treatment of aluminium alloys, an important technology for shaping the properties of parts.

The key problem that we were trying to solve was to create knowledge extraction methods for FRBS when there are not enough experiments (physical or numerical) available under RFQ conditions to allow the use of machine learning methods. We assumed that an adequate approach would be to combine knowledge from unstructured (often random) experiments with expert knowledge. For this purpose, we used our original methodology described below.

We have directed our research towards supporting ETO companies, because in their case the problem of rapid technology development is particularly important. However, this does not mean that the methodology we developed cannot be used wherever technological research is needed because a new order requires changes, as in Make To Order (MTO) production, or even in Make To Stock (MTS) production in order to adapt quickly to changing market needs. The aim of our research is to confirm that the use of Fuzzy Rule-Based Systems can, at least partially, replace in-depth material testing. We decided to base our FRBS model on the results of a previously developed numerical model.

Metamodeling in quotation preparation processes

As mentioned above, preparing the production of metal products comprises many stages, at least some of which involve conducting material tests. Developing even an approximate pattern of behaviour usually requires numerous independent experiments or simulations. This is not a problem when designing new materials, products, or technologies, where the design phase might last for months or even years. Under quotation preparation conditions, the problems are similar. Although quotation preparation is based on already well-known materials and processes, developing a solution that is not only correct but also economical is still a big challenge. At the same time, the time available to solve these problems is much shorter. It is indisputable that reliable results for the optimal design of a manufacturing process can be obtained through experiments and physical simulations, most often by prototyping. Under quotation preparation conditions, such a solution is practically excluded.

A solution to the problem of the impossibility of conducting physical tests before preparing the production process could be the direct use of theoretical formal models. Unfortunately, these models are limited to phenomena occurring under strictly defined boundary conditions and do not allow considering the simultaneous influence of various factors on processes describing actual phenomena. Since the 1950s it has been accepted that the solution to this problem can be Differential Methods, which combine the reliability and certainty of formal models with the possibility of describing complex and diverse phenomena - these methods will be called exact methods. As mentioned in Alizadeh et al. (2020) in the design of complex systems, computer experiments are frequently the only practical approach to obtaining a solution. The most common methods are Finite Element Analysis (FEA) packages to evaluate the performance of a structure, Computational Fluid Dynamics (CFD) packages to predict the flow characteristics of a fluid media and Monte Carlo Simulation (MCS) to estimate the reliability of a product (Viana et al. 2021). Modelling of the physical system means identifying, establishing, and analysing the input-output relationships of the system. Once the model is established with the help of properly planned experiments, it is possible to understand the quantitative change in the response values when the independent variables are changed from one set of values to another set without conducting real experiments (Parappagoudar and Vundavilli 2012). Unfortunately, an optimization of an engineering design request, which is the process of identifying the right combination of product parameters, cannot be solved with a single numerical model, even a very complex one. Managing of computations, including many simulation processes with several numerical models, is often done manually. It is also time-consuming and involves a step-by-step approach. 
The technological process includes several steps, and each of these steps requires the identification of at least several, usually more than a dozen, technical parameters. Because there are so many technical parameters, the trial-and-error approach is very inefficient. At different stages, it is necessary to use different numerical models based on different ways of describing input and output parameters (different ontologies). Using long-running computer simulations in design leads to a fundamental problem when trying to compare different competing options. It is also not possible to analyse all the combinations of variables that one would wish. Classical Design of Experiments (DoE) approaches, while widely applicable, are also inefficient because of high computational costs and strongly non-linear behaviour. On the one hand, applying a numerical model opens the possibility of optimization, but on the other, the computational resources for modelling and the human resources required to design such models are sizeable. The need for computational resources might be mitigated by applying metamodels (Kusiak et al. 2015).

There is a growing interest in utilizing design and analysis of computer experiment methods to replace the computationally expensive simulations with smooth analytic functions (metamodels) that can serve as surrogate models for efficient response estimation (Ye and Pan 2020). The basic approach is to construct a mathematical approximation of the expensive simulation code, which is then used in place of the original code. Metamodeling techniques aim at regression and/or interpolation fitting of the response data at the specified training (observation) points that are selected using one of the many DoE techniques. One might find many examples of metamodeling techniques in literature, i.a. Ye and Pan (2020); Viana et al. (2021), including Polynomial Response Surface (PRS) approximations, Multivariate Adaptive Regression Splines, Radial Basis Functions (RBF), Kriging (KR), Gaussian process, Neural Networks, Support Vector Regression (SVR) and others.

The essence of the metamodeling approach is to treat the modelled phenomenon or process as a black-box problem (Zhan et al. 2022). A black-box function is an unknown function that can be evaluated without knowing its internal structure or the physical or chemical processes involved. Instead, a list of inputs and corresponding outputs is provided. Unfortunately, in many cases, such an approach is a gross oversimplification. In metamodeling, regardless of the method used, three basic tasks must be conducted: (i) selecting a set of sample points in the design parameter space (i.e., an experimental design); (ii) fitting one or more statistical models to the sample points; and (iii) finding a method to interpolate the results between sample points. Many of the papers mentioned above show that metamodels give results similar to those obtained with exact methods, mainly FEA packages, although, for obvious reasons, the level of deviation from the results of physical experiments or from results describing real production processes is often difficult to determine.
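The three tasks can be illustrated with a minimal sketch. It assumes a Latin hypercube design, a first-order polynomial response surface fitted by least squares, and a toy function standing in for an expensive simulation. All names (`latin_hypercube`, `fit_linear_surface`, `toy_simulation`) are hypothetical and are not taken from the cited works.

```python
import random

def latin_hypercube(n_points, n_dims, rng):
    """(i) One sample per stratum in each dimension of the unit hypercube."""
    columns = []
    for _ in range(n_dims):
        strata = list(range(n_points))
        rng.shuffle(strata)
        columns.append([(s + rng.random()) / n_points for s in strata])
    return [list(point) for point in zip(*columns)]

def solve_linear_system(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x

def fit_linear_surface(points, responses):
    """(ii) Least-squares fit of y = b0 + b1*x1 + ... via the normal equations."""
    rows = [[1.0] + p for p in points]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    aty = [sum(r[i] * y for r, y in zip(rows, responses)) for i in range(k)]
    return solve_linear_system(ata, aty)

def predict(coeffs, point):
    """(iii) Evaluate the fitted surface at a point that was not simulated."""
    return coeffs[0] + sum(c * x for c, x in zip(coeffs[1:], point))

def toy_simulation(point):
    """Hypothetical stand-in for an expensive numerical model."""
    return 1.0 + 2.0 * point[0] - 3.0 * point[1]

rng = random.Random(0)
design = latin_hypercube(12, 2, rng)
coeffs = fit_linear_surface(design, [toy_simulation(p) for p in design])
estimate = predict(coeffs, [0.25, 0.75])
```

Because the toy response is itself linear, the surrogate reproduces it almost exactly. With a real simulation the quality of `estimate` would depend on the choice of design and model, which is the arbitrariness discussed in the text.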

One of the metamodeling approach’s benefits is to solve the technological problems necessary to implement the RFQ process in an acceptable time and, in most cases, efficiently. Unfortunately, the effectiveness of these methods highly depends on arbitrarily made decisions about the selection of sample points, fitting statistical model(s) to the sample points, and finding a method to interpolate results between sample points, which requires highly qualified human resources. The need for human-researcher efforts might be, at least partially, mitigated with the automatized design of metamodels (Macioł et al. 2018). Unfortunately, this does not solve all problems.

Obtaining an acceptable metamodel does not create new “knowledge” and thus does not allow for the generalization of the experience obtained. This is because metamodels have a purely stochastic character resulting from the black box concept.

Unfortunately, it is also the case that preparing many sophisticated metamodels is time-consuming and requires the use of large computational power.

A fuzzy rule-based approach to support the quotation preparation process

The essence of our solution is to replace classical metamodels, which interpolate the results of partial numerical analyses, with rule-based reasoning methods. In our opinion, it is possible to formulate “meta-knowledge” written in the form of rules, which allow the sought result values to be determined in a manner characteristic of expert systems. The methods of knowledge acquisition we propose allow establishing rules that bind input values to expected output values as declarative knowledge. At the same time, in contrast to classical metamodels, the process of knowledge acquisition can be carried out independently of a particular problem, based on appropriately designed experiments, the results of many random experiments, real data describing representative examples, or a sufficient number of results obtained by metamodels. An important role in formulating the knowledge model in such a system will be played by experts, who will be able to modify the rules established by formal methods. As a result of applying our approach, we may get a set of tools that will significantly support the quotation preparation process and that are applicable to many technological problems without the need to conduct numerical experiments, even in such a limited scope as when applying metamodels. Our research aims to prove that this approach gives results that are sufficient for quotation preparation and comparable to those obtainable using metamodels.

Our previous experience has shown that when combining knowledge from experiments and/or from experiments and expert judgments, it is necessary to use particularly expressive methods for representing knowledge extracted from differing sources. Research presented in the literature, as well as our previous experience (Macioł 2017), shows that the best results are to be expected from fuzzy logic reasoning systems (FLRS).

Our original proposal aims to use data from various sources supplemented by expert knowledge to maximally simplify the procedures involved in implementing RFQ procedures while guaranteeing an acceptable level of reliability of the obtained results. We assume that the source of data allowing the construction of the knowledge model can be both the results of random (unstructured) simulation experiments and the results of point experiments conducted in a much less elaborate grid of test patterns than in the previous studies described above. We also assume that the way knowledge is presented in the form of IF-THEN rules may allow for deliberate intervention by experts explaining or correcting the results extracted from the data.

The application of FLRS in modelling of manufacturing processes, including metal processing, is not a new concept. A comprehensive review of the applications of fuzzy logic in modelling of machining process can be found in Pandiyan et al. (2020). An interesting overview of the application of fuzzy logic to model unconventional machining techniques such as electric discharge machining (EDM), abrasive jet machining (AJM), ultrasonic machining (USM), electrochemical machining (ECM) and laser beam machining (LBM) is presented in Venkata Rao and Kalyankar (2014); Vignesh et al. (2019). The examples presented in those papers are particularly interesting due to the specific - novel - nature of these machining processes and thus the lack of established experimental results.

In each of these cases, the assumption is that the data sources for formulating the knowledge necessary for inference are either the result of carefully, though usually ad hoc, designed experiments or the result of previous large-scale studies. Each of the modelling methods presented above acquires knowledge from observations (a data-driven approach), as opposed to approaches in which knowledge is formulated by experts (a knowledge-based approach) (Mutlu et al. 2017). It is generally accepted that data-driven knowledge acquisition is much more effective (Hüllermeier 2015). Clearly, the data-driven approach requires the availability of at least a statistically significant number of patterns (observations). Our solution is to develop a methodology that combines the results of studies or statistical experiments with the results of targeted experiments supported by expert knowledge.

The assumption that knowledge is formulated by experts basing on their subjective judgments and/or unorganized or fragmentary dataset and not based on a statistically significant set of examples (observations) precludes in principle the possibility of verifying the correctness of so-formulated rules. However, similarly to the previous research, we have tried to evaluate the effectiveness of the inference method we formulated (or, in fact, knowledge acquisition methods), using data and results from previous research for this purpose.

Theoretical background

The essence of our solution is to replace the statistical methods used in classical meta-modelling with fuzzy inference methods, both at the stage of evaluating relations between explanatory and explained variables at selected points determined on the basis of examples and at the stage of interpolating between those points. Interpolation is traditionally understood as a numerical method that defines a so-called interpolation function, which takes predetermined values at fixed points called nodes and is used to determine intermediate values. In rule-based methods, by contrast, such a function is not formulated a priori. In our approach, experts formulate rules that bind values of outcome variables to premises described by linguistic variables, in the form of IF...THEN rules for all nodes. The interpolation in this case consists in determining which of the predefined rules apply to a particular case under analysis that does not belong to the set of cases defining the nodes. At the same time, experts define rules for determining the membership of crisp premise values in the fuzzy sets that define the premises. Such assumptions exclude the possibility of using “crisp” expert systems. According to our previous experience (Macioł 2017; Macioł et al. 2020), the problem can be solved by using fuzzy inference methods. Determining the resulting values for instances whose premise values do not belong to the nodes is done on an ad-hoc basis and involves assessing how “similar” a given instance of premises is to the predefined rule premises.

The usual first-choice approach for fuzzy reasoning methods is the Mamdani’s model. Another common solution to the interpolation problem is to use the Takagi-Sugeno model. Both of these models are well described in the literature. With respect to the problem presented in this paper, it is worth noting the differences in the method of determining the weights of the membership of the analyzed cases to particular examples (nodes). These differences will be presented below.

Our concept was derived from the need to create effective decision support systems in various areas (in our case, technology) in the absence of adequate research. We assume that the only source of knowledge is expert judgment based on fragmented experiments (physical or numerical). Such data sets are insufficient for systematic machine learning algorithms. In the case presented in our work, “crisp” inference methods seem to be the most natural choice. In this case, rules formulated directly by experts based on data from unsystematic experiments have the form of Horn clauses, which may be represented as IF \(\dots \) THEN rules:

\[ \text{IF } A_1 \text{ AND } A_2 \text{ AND } \dots \text{ AND } A_n \text{ THEN } B \]

where \(A_i\) and \(B\) are atoms in a selected logic (propositions, predicates, or other terms). In the case of “crisp” reasoning in expert systems, the atoms are most often in the form of:

\[ x \circ v \]

where \(x\) is a given value, \(v\) is an element of its domain, and \(\circ \) is a logical connective (\(=, <,>\), in, between and others) between them. For “crisp” reasoning, the whole process is controlled by a knowledge engineer cooperating with a domain expert.
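A minimal sketch of such “crisp” rules may help to show why they are too rigid for interpolation. Each atom is represented as a triple of variable, connective and reference value. The variable names, thresholds and conclusions are purely illustrative assumptions and do not come from the study.

```python
import operator

# Connectives an atom may use; "in" and "between" are wrapped as functions.
CONNECTIVES = {
    "=": operator.eq,
    "<": operator.lt,
    ">": operator.gt,
    "in": lambda value, options: value in options,
    "between": lambda value, bounds: bounds[0] <= value <= bounds[1],
}

def atom_holds(atom, facts):
    """Check one atom (variable, connective, reference) against known facts."""
    variable, connective, reference = atom
    return CONNECTIVES[connective](facts[variable], reference)

def infer(rules, facts):
    """Return the conclusion of the first rule whose premises all hold."""
    for premises, conclusion in rules:
        if all(atom_holds(atom, facts) for atom in premises):
            return conclusion
    return None

# Hypothetical rule base: IF A_1 AND A_2 THEN B.
rules = [
    ([("temperature", "between", (150, 200)), ("time", ">", 4)], "high"),
    ([("temperature", "<", 150)], "low"),
]

fired = infer(rules, {"temperature": 180, "time": 6})
not_covered = infer(rules, {"temperature": 180, "time": 2})
```

The second query matches no rule at all, so a crisp system gives no answer for cases that fall between the nodes defined by the experts.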

As mentioned above, the first-choice approach for fuzzy reasoning methods is Mamdani’s model. The rule scheme is very similar to the “crisp” scheme, except that the premises are formulated differently, which can be expressed in the form

\[ x_i \text{ is } X \]

where \(x_i\) is a crisp value of the current input and \(X\) is a linguistic term that represents a fuzzy set given by a membership function \(\mu _X(x)\).
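The sketch below illustrates Mamdani-style inference with premises of this kind. It assumes triangular membership functions, the minimum operator for combining premises, clipping of the consequent sets, aggregation by maximum and centroid defuzzification on a discrete grid. These are common textbook choices rather than the configuration used in the study, and the terms and numbers are hypothetical.

```python
def triangular(a, b, c):
    """Membership function rising from a to a peak at b and falling to c."""
    def mu(x):
        if x <= a or x >= c:
            return 0.0
        if x <= b:
            return (x - a) / (b - a)
        return (c - x) / (c - b)
    return mu

def mamdani(rules, inputs, output_grid):
    """Clip each consequent set by its rule strength, aggregate, take centroid."""
    aggregated = [0.0] * len(output_grid)
    for premises, consequent in rules:
        # Firing strength: the weakest premise membership (fuzzy AND as min).
        strength = min(mu(inputs[name]) for name, mu in premises.items())
        for i, y in enumerate(output_grid):
            aggregated[i] = max(aggregated[i], min(strength, consequent(y)))
    total = sum(aggregated)
    if total == 0.0:
        return None  # no rule fired
    return sum(y * m for y, m in zip(output_grid, aggregated)) / total

# Hypothetical linguistic terms for one input and one output.
temp_low = triangular(100.0, 150.0, 200.0)
temp_high = triangular(150.0, 200.0, 250.0)
fraction_low = triangular(0.0, 0.25, 0.5)
fraction_high = triangular(0.5, 0.75, 1.0)

rules = [
    ({"temperature": temp_low}, fraction_low),
    ({"temperature": temp_high}, fraction_high),
]
grid = [i / 100 for i in range(101)]

between_nodes = mamdani(rules, {"temperature": 175.0}, grid)
at_node = mamdani(rules, {"temperature": 150.0}, grid)
```

An input halfway between the two terms fires both rules equally and yields an output halfway between the two consequents, which is the interpolating behaviour that crisp rules lack.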

When the Takagi–Sugeno approach is employed, the form of a rule is more complex and can be expressed in the form proposed in Takagi and Sugeno (1985):

\[ R:\ \text{IF } f(x_1 \text{ is } A_1, \dots , x_k \text{ is } A_k) \text{ THEN } y = d(x_1, \dots , x_k) \]

where \(y\) is the variable of the conclusion whose value is inferred, \(x_1, \dots , x_k\) are the variables of the premises that also appear in the conclusion, \(A_1, \dots , A_k\) are fuzzy sets with linear membership functions representing a fuzzy subspace in which the implication \(R\) can be applied for reasoning, \(f\) denotes the logical functions connecting the propositions in the premise, and \(d\) is the function that implies the value of \(y\) when \(x_1, \dots , x_k\) satisfy the premise.
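A minimal sketch of Takagi–Sugeno inference follows. It assumes that the premise connective is the minimum operator and that the final output is the firing-strength-weighted average of the rule consequents, which is the usual formulation. The membership functions, rules and input values are hypothetical.

```python
def ramp_down(lo, hi):
    """Linear membership: 1 at or below lo, 0 at or above hi."""
    def mu(x):
        if x <= lo:
            return 1.0
        if x >= hi:
            return 0.0
        return (hi - x) / (hi - lo)
    return mu

def ramp_up(lo, hi):
    """Linear membership: 0 at or below lo, 1 at or above hi."""
    def mu(x):
        if x <= lo:
            return 0.0
        if x >= hi:
            return 1.0
        return (x - lo) / (hi - lo)
    return mu

def takagi_sugeno(rules, inputs):
    """Weighted average of rule consequents, weighted by firing strength."""
    weighted_sum = 0.0
    weight_total = 0.0
    for premises, consequent in rules:
        strength = min(mu(inputs[name]) for name, mu in premises)
        weighted_sum += strength * consequent(inputs)
        weight_total += strength
    if weight_total == 0.0:
        return None  # no rule fired
    return weighted_sum / weight_total

small = ramp_down(0.0, 10.0)
large = ramp_up(0.0, 10.0)

# Hypothetical rules: the conclusion of each rule is a function of the inputs.
rules = [
    ([("x1", small), ("x2", small)], lambda v: v["x1"] + v["x2"]),
    ([("x1", large)], lambda v: 2.0 * v["x1"]),
]

y = takagi_sugeno(rules, {"x1": 5.0, "x2": 2.5})
```

For this input both rules fire with strength 0.5, so the result is the mean of the two consequent values, 7.5 and 10.0.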

The first problem in terms of the knowledge-based approach in both Mamdani and T–S is identifying the membership functions of the variables composing the premises. Recall that we do not have a sufficient amount of data that would allow us to formulate the premises using machine learning methods. Hence, membership functions of preconditions must be determined subjectively. All these decisions are made by experts using their experience and knowledge, but mainly using available examples of linking values of premises with conclusions.

The next issue is to explicitly define the form of the conclusion. In our case, we are talking about functions of the summary fraction of precipitations in time, which can be treated either as a certain set of patterns (qualitative variable) or as the value of the fraction of precipitations obtained or predicted for a given time coordinate t (quantitative variable). We have assumed in our research that the latter will be implemented. We present the details in the “Numerical example” section. Then we have to formulate the rules as in classical (“crisp”) expert systems, but taking into account the fuzzy nature of inference. In our approach, the structure of the Knowledge Base must be formulated on the basis of subjective expert decisions. In our case, we have partially used techniques applied in the fuzzy version of the ID3 algorithm (Begenova and Avdeenko 2018). The Cartesian product of rule premises in the form of linguistic variables initially determines the structure of the knowledge base. In the next step, examples (all of them or those chosen by clustering methods) are automatically assigned to individual rules based on membership measures. The expert, evaluating the total value of the rule membership measure and drawing on their own knowledge, assigns a conclusion value to each rule.
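The construction of the rule skeleton and the assignment of examples can be sketched as follows. The sketch assumes that the membership of an example in a rule is the minimum of its premise memberships and that each example is assigned to the rule it matches best. The linguistic terms and the example data are hypothetical, and the conclusions are deliberately left for the expert to fill in.

```python
from itertools import product

def ramp_down(lo, hi):
    """Linear membership: 1 at or below lo, 0 at or above hi."""
    return lambda x: 1.0 if x <= lo else 0.0 if x >= hi else (hi - x) / (hi - lo)

def ramp_up(lo, hi):
    """Linear membership: 0 at or below lo, 1 at or above hi."""
    return lambda x: 0.0 if x <= lo else 1.0 if x >= hi else (x - lo) / (hi - lo)

# Hypothetical linguistic variables and their terms.
terms = {
    "temperature": {"low": ramp_down(150.0, 200.0), "high": ramp_up(150.0, 200.0)},
    "time": {"short": ramp_down(2.0, 6.0), "long": ramp_up(2.0, 6.0)},
}

def build_rule_skeleton(terms):
    """One rule premise per element of the Cartesian product of the terms."""
    names = list(terms)
    return [dict(zip(names, combo)) for combo in product(*(terms[n] for n in names))]

def rule_membership(rule, example, terms):
    """Degree to which an example satisfies all premises of a rule."""
    return min(terms[name][label](example[name]) for name, label in rule.items())

def assign_examples(rules, examples, terms):
    """Attach each example to its best rule and total the memberships per rule."""
    support = [{"rule": r, "total": 0.0, "examples": []} for r in rules]
    for example in examples:
        degrees = [rule_membership(r, example, terms) for r in rules]
        best = max(range(len(rules)), key=degrees.__getitem__)
        if degrees[best] > 0.0:
            support[best]["examples"].append(example)
        for entry, degree in zip(support, degrees):
            entry["total"] += degree
    return support

rules = build_rule_skeleton(terms)
examples = [
    {"temperature": 160.0, "time": 3.0, "fraction": 0.12},
    {"temperature": 195.0, "time": 5.5, "fraction": 0.41},
]
support = assign_examples(rules, examples, terms)
```

Each entry of `support` gives the expert the rule premise, the examples attached to it and the total membership, which is the information needed to assign a conclusion value.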

In the case of the T-S method, it is necessary to formulate a model that allows determining a function that implies the values of the conclusion. In the original version of the T-S approach, one of the regression analysis methods (the least squares method) is used to express the final form of the function defining the conclusion. Currently, many other methods described in the literature (Gu and Wang 2018) allow the formulation of such a function, among them global least squares, local least squares, product space clustering, evolutionary algorithms and many others. Unfortunately, all of these heuristic methods are based on the analysis of training data. In our case, there is no appropriate set of observations. To the best of the authors’ knowledge, there are no methods for formulating such a function based only on expert predictions. In our previous studies (Macioł 2017), we applied a set of equations described at the support points. It turned out that for a larger number of premises and for some combinations of premise values, the estimated conclusion value was outside the predicted range. To avoid this problem, we used an approach derived from the concept of linear programming (LP). LP is used to find the best fit of the conclusion function to the extreme values of the premises in each rule. The core of the process of generating functions employs support points. We assumed these to be the Cartesian product of the left and right endpoints of each precondition’s variables. For each combination of values of the preconditions’ variables, the numerical value of the conclusion must lie in the range set for the appropriate conclusion value. For each variable describing the premise, we consider two extreme values, and for them we build two conditions limiting the solution (in any case, the value of the function must not exceed the range of acceptable variability of the function describing the conclusion). At the same time, we introduced constraints which ensure that, at the extreme values of the preconditions’ variables, the total maximum distance of the conclusion value from its borders does not exceed a given value determined in relation to the difference between the lower and upper limits of the range of the conclusion. As an objective function, we adopted a function which, for extreme values of the premises’ variables, gives the minimum Manhattan distance of the conclusion value from the borders of its range.
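The role of the support points can be illustrated with a short sketch. It does not solve the linear program. It only enumerates the support points of one rule and checks whether a candidate linear conclusion function stays within the conclusion range at all of them. The linear form of the function, the variable names, the intervals and the coefficients are assumptions made for illustration. Checking the corners is sufficient for a linear function because it attains its extreme values over a box at the vertices.

```python
from itertools import product

def support_points(intervals):
    """Cartesian product of the left and right endpoints of each premise."""
    names = list(intervals)
    corners = product(*(intervals[name] for name in names))
    return [dict(zip(names, corner)) for corner in corners]

def conclusion_value(coeffs, point):
    """Assumed linear conclusion function: intercept plus weighted inputs."""
    return coeffs["intercept"] + sum(coeffs[name] * v for name, v in point.items())

def stays_in_range(coeffs, intervals, conclusion_range):
    """True if the function respects the conclusion range at every support point."""
    low, high = conclusion_range
    return all(
        low <= conclusion_value(coeffs, point) <= high
        for point in support_points(intervals)
    )

# Hypothetical rule: premise intervals and the admissible conclusion range.
intervals = {"temperature": (150.0, 200.0), "time": (2.0, 6.0)}
conclusion_range = (0.1, 0.7)

candidate = {"intercept": -0.5, "temperature": 0.004, "time": 0.05}
too_steep = {"intercept": -0.5, "temperature": 0.010, "time": 0.05}

points = support_points(intervals)
candidate_ok = stays_in_range(candidate, intervals, conclusion_range)
too_steep_ok = stays_in_range(too_steep, intervals, conclusion_range)
```

In a full implementation these range conditions would become the inequality constraints of the linear program, and a solver would choose the coefficients that minimise the objective described in the text.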

After solving the linear problem defined in this way for each of the rules, we set the parameters of the function that implies the value of \(y\) when the appropriate variables satisfy the premise:

\[ y_j = p_{0,j} + \sum _{i=1}^{k} p_{i,j}\, x_i \]

where \(p_{i,j}\) is the \(i\)th coefficient of the output function in the \(j\)th rule and \(x_i\) is the crisp value of input variable \(i\).

The reasoning in our approach can be carried out identically to the classical Mamdani’s and T-S approach.

Numerical example

Preparation of the technological process for metal parts under ETO conditions requires solving many subproblems. In an RFQ, the customer specifies the requirements to be met by the requested product but rarely specifies the way to achieve them. In the case of metal products, the requirements expected in the RFQ relate to the shape of the product, which is easy to assess in terms of manufacturability, but above all to the mechanical properties, which cannot be easily designed under ETO conditions. Numerous requirements of an economic, logistical, organisational and other nature are also specified in the RFQ. In the case of metal products, however, it is crucial to meet the mechanical property requirements in conjunction with the economic requirements. The decision that determines to a significant extent the attractiveness of the quotation and, after winning the contract, its effectiveness is which combination of material and processes will lead to the mechanical properties stated in the RFQ at the lowest cost. Because of the repeatedly mentioned complexity of metal product manufacturing processes, it is not possible to create formal methods that would allow solving this problem comprehensively. The only method is for an engineer to carry out the design process step by step, based on their knowledge and experience. Such an inherently ad-hoc process is significantly facilitated by IT tools that allow rapid and reasonably effective evaluation of the results of partial decisions. An example of such a partial decision is setting the parameters of a thermal treatment process that allows a significant increase in the mechanical properties of metal parts at reasonable cost.

The subject of our research was the problems associated with the thermal treatment of aluminium alloys. Designing an effective thermal treatment process for any new or even similar to previous RFQ orders requires the use of flexible numerical models. In the case of thermal treatment of aluminium alloys, we can consider exact models, metamodels and the proposed solution based on FLRS.

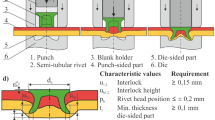

The direct numerical model of the microstructure behaviour of a particular aluminium alloy during thermo-mechanical treatment was designed at the Technical University of Graz and described in Macioł et al. (2015). The numerical model is based on the CALPHAD-based MatCalc simulation framework. The nature of such models allows for an infinite number of parameter combinations: there are hundreds of tweakable parameters, many of them continuous. This flexibility is a disadvantage in optimization tasks. Hence, the metamodel proposed by Macioł et al. (2018) was significantly constrained. It can reproduce only a single technological process for a single aluminium alloy (although three different initial states of the material are allowed). On the other hand, the process is controlled with six parameters instead of hundreds. The second important modification was replacing the time-consuming CALPHAD-based computations with a machine-learned “black-box” model using a Kriging approach. All details can be found in the cited paper; only the most important features are summarized below.

As mentioned above, the modelled process is a heat treatment of an aluminium alloy. The process consists of three phases: heating, holding at an elevated temperature, and cooling down. The temperature changes during heating and cooling are assumed to be linear, and the elevated temperature is assumed to be constant. While this is an obvious simplification, it allows the process to be represented with only four variables (the initial and final temperatures are constant and do not influence the process). Two additional parameters (Table 1) are the grain and subgrain sizes (independent variables that can be controlled by proper mechanical treatment before heat treatment).

The CALPHAD model allows a very thorough analysis of the obtained microstructure. Unfortunately, the numerous quantitative and qualitative output variables, valuable for a human researcher, cannot be directly used to constitute a useful objective function. Hence, the simplification of the metamodel also includes simplification of the output variables. Three aggregating measures were defined: a mean precipitation diameter, a number of precipitations per volume, and a weight fraction of precipitations. These measures represent the state of the microstructure after the heat treatment process and directly influence the mechanical properties of a treated part.

In our research, we used the detailed data made available to us, developed for Macioł et al. (2018). In the cited research, three initial material states, obtained with earlier computations, were considered: as-cast, homogenized, and hot-rolled. For the presented research, the cases concerning the as-cast material state were chosen.

Original data had been organized into four classes (Fig. 1). The input variables are presented in Table 1. The exemplary data for cases 1 and 700 are presented in Tables 2 and 3.

We aimed to investigate whether Artificial Intelligence methods can predict the summary fraction of precipitations as a function of time, based only on known values of the independent variables, without the need to conduct simulation experiments. The course of research based on the data provided was as follows:

1. Standardization of input variables values

2. Determining how to represent the summary fraction of precipitations as a function of time as the outcome variable

3. Transformation of the source descriptions of the summary fraction of precipitations to the agreed form

4. Identification of classes of the outcome variable, based on the available data

5. Definition of input variables in fuzzy form

6. Formulation of rules based on available data without using machine learning methods

7. Estimation of the resulting function of the summary fraction of precipitations based on the adopted inference mechanism

8. Determination of estimation error

The research cycle was carried out for different ways of defining the outcome variables and different methods of inference. The two most common methods of fuzzy reasoning, those of Mamdani (1975) and Takagi and Sugeno (1985), were used here.

The formulation of fuzzy knowledge model

The first step in formulating the preconditions of the model is to bring the domains, and hence the values, of all variables in the premises to a common base. In our case, all input variables have a quantitative character, but their domains differ significantly. Therefore, it was necessary to normalize the data. We used internal normalization, which transforms the original scales into the [0,1] range, with the lowest value of each input variable as a reference.
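The normalization step can be illustrated with a short sketch. It assumes a min-max scaling in which the lowest observed value of each variable maps to 0 and the highest to 1; the function name `normalize_columns` and the sample values are hypothetical and serve only as an illustration.

```python
def normalize_columns(rows):
    """Scale each column of a list of equal-length rows to the [0, 1] range."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    normalized = []
    for row in rows:
        normalized.append([
            # The lowest value of each variable is the reference (maps to 0).
            (x - lo) / (hi - lo) if hi > lo else 0.0
            for x, lo, hi in zip(row, lows, highs)
        ])
    return normalized


# Hypothetical raw inputs, three variables per example (illustrative values only).
samples = [
    [0.5, 400.0, 1000.0],
    [1.0, 450.0, 3000.0],
    [2.5, 500.0, 5000.0],
]
scaled = normalize_columns(samples)
```

After this step every input variable shares the same [0,1] domain, so the fuzzy subspaces of different variables can be defined on a common scale.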

Fuzzy reasoning methods require that all input variables are either presented directly as fuzzy linguistic variables or transformed into this form. In our case, all input variables are given in a crisp numerical form and therefore must be fuzzified. The ranges of the input variables of the premise are divided into fuzzy subspaces. The number of subspaces can be different for each variable. Each subspace is described by its left and right endpoints. The subspaces are not contiguous (the right endpoint of subspace i differs from the left endpoint of subspace \(i+1\)). In the absence of proper observations, the cardinality and the ranges of these subspaces must be based on subjective expert decisions. The number of rules, and therefore the potential reasoning efficiency, depends on this decision (the more rules, the more precise the representation of the variation of the resulting summary fraction of precipitations). On the other hand, increasing the cardinality of subspaces requires a higher number of experiments (if knowledge is acquired from point experiments) or makes it impossible to formulate conclusions for some data combinations (if knowledge is acquired from unsystematic, partial examples). A large cardinality of subspaces also makes it difficult for experts to correct the rules, which is the essence of our concept. The division into subspaces for each input variable and the values of the left and right endpoints were determined subjectively, but based on a rough analysis of the input data. Originally, it was assumed that all input variables would be presented on a two-step scale (low, high), because the number of rules in the knowledge base should be as small as possible. In that case, the number of examples, and hence the number of rules in the reasoning engine (the product of the cardinalities of the domains of all variables), is equal to \(2^6\) (64).
Unfortunately, preliminary research showed that such a model does not sufficiently describe the variability of the input variables and the effect of this variability on the course of the function describing the summary fraction of precipitations. In further research, it was decided that three input variables would be described on a two-value scale (low, high): \(X_1\), \(X_2\), \(X_6\), and three on a three-value scale (low, middle, high): \(X_3\), \(X_4\), \(X_5\). The variables that more strongly determine the summary fraction of precipitations are described in more detail. Knowledge about the significance of the input variables was derived from the analysis of available data supported by expert judgement.
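The size of the resulting rule base follows directly from the chosen scales. The sketch below enumerates the premises as the Cartesian product of the linguistic domains; the dictionary layout and the ordering of the rules are assumptions made for illustration and need not match the rule numbering used in the paper.

```python
from itertools import product

# Linguistic scales as described in the text: X1, X2, X6 use two terms,
# X3, X4, X5 use three terms.
TWO = ("low", "high")
THREE = ("low", "middle", "high")
domains = {"X1": TWO, "X2": TWO, "X3": THREE, "X4": THREE, "X5": THREE, "X6": TWO}

# Each rule premise is one tuple of the Cartesian product of the domains.
premises = [dict(zip(domains, combo)) for combo in product(*domains.values())]
rule_count = len(premises)

# The original two-term design for all six variables, for comparison.
initial_rule_count = len(list(product(TWO, repeat=6)))
```

With three two-term and three three-term variables the product is \(2^3 \cdot 3^3 = 216\) premises, compared with 64 for the original all-binary design.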

It is well known that the performance of a fuzzy reasoning tool depends on the selection of membership functions. These may be linear (i.e., triangular and trapezoidal) or non-linear (i.e., sigmoid, Gaussian, bell-shaped). It was necessary to decide which membership functions would be used to represent particular input variables. Since we did not have a statistically representative amount of data, it was not possible to use machine learning methods known from the literature (Fernández et al. 2015) for this purpose. In our approach, the formulation of the structure of the knowledge base must be based on subjective expert decisions.

It is assumed that a linear (trapezoidal) function represents the variability of the input variables sufficiently. Support for this assumption can be found in the literature (Patel et al. 2015). The model used for fuzzification of input values is presented in Tables 4 and 5 and Figs. 2 and 3.
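A trapezoidal membership function can be sketched as follows. The endpoints used for the `low` and `high` terms are hypothetical placeholders, not the values from Tables 4 and 5; they only show how two overlapping subspaces share the middle of a normalized range.

```python
def trapezoid(x, a, b, c, d):
    """Trapezoidal membership: 0 outside (a, d), 1 on [b, c], linear in between."""
    if b <= x <= c:
        return 1.0
    if x <= a or x >= d:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


# Hypothetical overlapping terms on a normalized [0, 1] input.
def low(x):
    return trapezoid(x, -1.0, 0.0, 0.3, 0.7)


def high(x):
    return trapezoid(x, 0.3, 0.7, 1.0, 2.0)
```

In this illustration a normalized input of 0.5 belongs to both terms with a membership of about 0.5, which is what allows one example to support several rules at once.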

While the input values in our model are of a numerical character, the output variable, which is a function of time describing a certain phenomenon, cannot be easily quantified. The objectives of our study require that the outcome variable be defined in a form that allows solving problems in the classification or regression form. In both cases, the outcome variable should be presented in a form that allows the examples to be distinguished and compared. We have assumed that each pattern presenting the summary fraction of precipitations (v) as a function of time can be written in tabular form as a relation \((t_{j,i}, v_{j,i})\) for each experiment (pattern). The similarity between the patterns can then be measured by any measure of the distance of the values \(v_{j,i}\) for the same values \(t_{j,i}\). The problem is that the data provided to us were generated according to the Piecewise Linear Functions (PLFs) method, extended by an original algorithm that generates so-called nodes \((t_{j,i}^*, v_{j,i}^*)\). An individual PLF is established for each output and for each initial material state; hence the metamodel finally consisted of 9 PLFs. Since the quality of interpolation of simulation results is very sensitive to the method of point selection, a dedicated algorithm had been developed. As a result, each pattern is described for different values \(t_{j,i}^*\). It was therefore necessary to interpolate the patterns, given for different and non-uniform sets of values of the independent variable, onto a homogeneous range T. Since some patterns can be complex, it is assumed that the step on T is the minimum timing accuracy (1 s). For each experiment j, calculations were made according to the obvious formula:

where

T is the duration of the experiment and \((t_{j,i}^*, v_{j,i}^*)\) is the ith node of the jth pattern.
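As an illustration of this step, the sketch below evaluates a pattern given by its nodes on a uniform 1 s grid. It assumes plain linear interpolation between consecutive nodes, which is what a piecewise linear function implies; the function name `sample_plf` and the node values are hypothetical.

```python
def sample_plf(nodes, duration, step=1.0):
    """Evaluate a piecewise linear function given by (t, v) nodes on a uniform grid."""
    nodes = sorted(nodes)
    values = []
    i = 0
    n_steps = int(duration / step)
    for s in range(n_steps + 1):
        t = s * step
        # Advance to the segment [t_i, t_{i+1}] that contains t.
        while i < len(nodes) - 2 and t > nodes[i + 1][0]:
            i += 1
        (t0, v0), (t1, v1) = nodes[i], nodes[i + 1]
        # Clamp outside the node range, interpolate linearly inside it.
        if t <= t0:
            values.append(v0)
        elif t >= t1:
            values.append(v1)
        else:
            values.append(v0 + (v1 - v0) * (t - t0) / (t1 - t0))
    return values


# Hypothetical nodes (time in seconds, summary fraction in arbitrary units).
nodes = [(0.0, 0.0), (4.0, 2.0), (10.0, 5.0)]
curve = sample_plf(nodes, duration=10.0)
```

After this resampling every pattern is defined for the same time values, so two patterns can be compared point by point.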

An additional problem that makes it difficult, or even impossible, to compare patterns is the existence of a specific autocorrelation between the result variable and the input variables. Among the input variables, we have three values: the heating rate, the holding time and the cooling rate, which together with the holding temperature determine the duration of the entire operation. At the same time, these values are so different that the durations of the operations are not comparable (the shortest time is 1 892 s, the longest is 32 591 s, and their distribution is non-linear). Therefore, for further analyses, we selected 700 examples for which the process execution time was less than 8 030 s (the set of examples was determined by the procedure of uniformly grouping examples by process duration). Unfortunately, this does not solve the problem of the dependence of the course of the resultant function on the three aforementioned parameters determining the process duration. We noticed that similarly shaped courses of the output variable, shifted in time, appear repeatedly, as can be seen in Fig. 4.

To remove this limitation, we applied an internal normalisation so that each example has the same number of points on the timeline (2000). The values \(v_i\) were transformed with the following procedure:

The result of the transformation is shown in Fig. 5. Reverting the transformation during reasoning is straightforward.
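One way to obtain a fixed number of points per example is sketched below. It is only an assumption about how such a normalisation could work: the curve, sampled uniformly in time, is linearly resampled onto equally spaced positions of a relative time axis. The function name `resample` and the short example curves are hypothetical, and the sketch is not the authors' procedure.

```python
def resample(values, n_points=2000):
    """Linearly resample a uniformly sampled curve onto n_points equally spaced points."""
    last = len(values) - 1
    out = []
    for p in range(n_points):
        # Position of the p-th output point on the original index axis.
        pos = p * last / (n_points - 1)
        i = min(int(pos), last - 1)
        frac = pos - i
        out.append(values[i] + (values[i + 1] - values[i]) * frac)
    return out


# Two hypothetical runs of different duration but with the same overall shape.
short_run = [0.0, 1.0, 2.0, 3.0]
long_run = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
a = resample(short_run, n_points=5)
b = resample(long_run, n_points=5)
```

In this illustration the two runs become identical after resampling, which is the effect needed to compare courses that differ mainly in duration. Keeping the original duration of each example alongside the resampled values would allow the time axis to be restored later.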

Despite the standardisation of the values describing the summary fraction of precipitations as a function of time, the problem of using patterns framed in this way in IF-THEN rules is not solved. In classical tasks solved with the support of rule systems, the output variable is a variable of crisp value (e.g., in the Takagi-Sugeno method) or a linguistic variable which, in control or regression problems, is transformed into a crisp value by defuzzification. Thus, the output variable has a single multiplicity (also for linguistic variables, in the sense that they are represented by fuzzy numbers). In our specific case, despite the operations aimed at standardising the description of the courses, the conclusion of the inference is a so-called multiply ordered variable. One of the considered solutions to this problem is to establish a set of patterns of “similar” courses of the output function and to assign labels to them. The labels of these patterns become the conclusions of the rules. As a result of the inference, we obtain a fuzzy number described by a membership function of its value to the set of labels (Mamdani) or a crisp numerical value from the range of continuous numerical values assigned to the set of labels (Takagi-Sugeno). In the classical problem of fuzzy reasoning, determining the final value of the output variable requires determining which pattern can be assigned to the set of input values (classification problem) or assigning it a crisp value by defuzzification (regression problem). In our case, the first solution seems obvious. However, preliminary research comparing the inference results with the courses of output functions for exemplary cases showed a high error rate. Contrary to the expectations resulting from previous studies, the error level was higher for the T-S method than for Mamdani’s method.
This is because the T-S method was designed for control and regression problems and only later adapted to classification tasks. Our earlier studies showed that in classification tasks the T-S method may give better results than other methods, but only for a small number of recognised classes (two or three). In our research, the number of classes is significantly higher, and even the sophisticated classification techniques we developed, based on numerical inference, result in a large error.

We decided that a better solution would be to treat the problem of recognizing courses described by a function as a regression task. Each conclusion of the fired rules is represented by a pattern, which is a function described on the timeline.

The problem related to the definition of output variables concerns how to define patterns and assign them to individual rules. As mentioned above, the resulting patterns are described by a function that assigns the summary fraction of precipitations to time points; we denote its values as \(c_{k,t}\), where k is the rule index and t is a point on the time axis. The solution to this problem depends strongly on how knowledge is obtained. We have assumed that our research concerns problems where there is no statistically significant amount of learning data. Here, there are two possibilities of knowledge acquisition for the reasoning system.

The first one concerns the situation when we have no information from previous experiments at our disposal and it is necessary to obtain it from appropriately planned point experiments. We establish a set of values of premises, choose a “representative” for them, conduct an experiment, and take the obtained function describing the course of the summary fraction of precipitations as the pattern representing the conclusion of the rule corresponding to the relevant set of premises. In this case, the function values are determined by a simple formula:

$$\begin{aligned} c_{k,t} = g_{k,t} \end{aligned}$$

where \(g_{k,t}\) is the normalized output value of the experiment assigned to the kth rule at time point t.

In the second case, we are dealing with a relatively large group of observations of a random nature (not related a priori to the structure of the input variables). In view of the unstructured nature of the available examples, it is necessary to formulate patterns of result functions and assign them to appropriate rules. We considered two potential solutions: example-independent and example-dependent. The first one (independent of examples, IE) was to group all samples based on similarity expressed by the Euclidean distance between corresponding points on the time axis. For calculations, the AgglomerativeClustering algorithm from the scikit-learn package for Python was applied (Pedregosa et al. 2011). After several tests verifying the quality of clustering, understood as the distance between samples within particular clusters and the distance between clusters, the following settings were adopted: 20 clusters, the Euclidean metric for computing the linkage, and the maximum (complete) linkage method, which uses the maximum distance between all observations of the two sets. Simplified results of clustering are shown in Fig. 6. The clusters obtained are very diverse in terms of the number of qualified examples, as shown in the diagram in Fig. 7. This confirms that the analysed input data are random in nature, which may limit the effectiveness of the IE knowledge acquisition method.
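To make the clustering criterion concrete, the sketch below implements a naive complete-linkage agglomerative clustering with the Euclidean distance in plain Python. It is an illustration of the technique, not the scikit-learn implementation used in the study; in scikit-learn the corresponding configuration would be `AgglomerativeClustering(n_clusters=20, linkage="complete")` with its default Euclidean distance. The function name `complete_linkage` and the toy curves are hypothetical.

```python
import math


def complete_linkage(samples, n_clusters):
    """Naive agglomerative clustering with Euclidean distance and complete linkage."""
    clusters = [[i] for i in range(len(samples))]

    def linkage(a, b):
        # Complete linkage: the largest pairwise distance between two clusters.
        return max(math.dist(samples[i], samples[j]) for i in a for j in b)

    while len(clusters) > n_clusters:
        best = None
        for p in range(len(clusters)):
            for q in range(p + 1, len(clusters)):
                d = linkage(clusters[p], clusters[q])
                if best is None or d < best[0]:
                    best = (d, p, q)
        # Merge the two closest clusters.
        _, p, q = best
        clusters[p] = clusters[p] + clusters[q]
        del clusters[q]

    labels = [0] * len(samples)
    for label, members in enumerate(clusters):
        for i in members:
            labels[i] = label
    return labels


# Toy curves sampled at three time points (illustrative values only).
curves = [[0.0, 0.0, 0.0], [0.1, 0.0, 0.1], [5.0, 5.0, 5.0], [5.1, 5.0, 4.9], [10.0, 10.0, 10.0]]
labels = complete_linkage(curves, n_clusters=3)
```

Because each curve is treated as a vector of values at common time points, the Euclidean distance compares the courses point by point, which is why the resampling onto a shared timeline is a prerequisite.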

For each cluster, we set a pattern whose values of the summary fraction of precipitations are calculated, at each point of the timeline, as the arithmetic mean of the values of all samples qualified for the given cluster: \(h_{l,t}\), where l is the index of the cluster and t is the point on the timeline. Since clustering is carried out independently of the values of input variables, it is necessary to assign a particular cluster, and in fact the pattern representing it, to particular rules. It has been assumed that the rule is assigned the cluster (pattern) containing the sample that is the “best” representation of the set of premises, i.e., the sample with the highest value of weight calculated by Mamdani’s or the Takagi-Sugeno method, respectively. If more than one pattern shows the same similarity, the expert decides on the allocation. Finally, the value \(c_{k,t}\) of the summary fraction of precipitations for time coordinate t in the conclusion of rule k is defined as:

$$\begin{aligned} c_{k,t} = h_{l,t} \end{aligned}$$(7)

where \(h_{l,t}\) is the averaged value for cluster l assigned to the kth rule at time point t.
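The IE variant can be sketched in two small steps: averaging the curves of each cluster and picking, for a rule, the cluster of the example that matches the rule premise best. The function names, the membership values and the curves are hypothetical; ties, which the text leaves to the expert, are resolved here simply by taking the first maximum.

```python
def cluster_patterns(curves, labels):
    """Average the curves of each cluster point by point on the timeline."""
    groups = {}
    for curve, label in zip(curves, labels):
        groups.setdefault(label, []).append(curve)
    return {
        label: [sum(column) / len(column) for column in zip(*members)]
        for label, members in groups.items()
    }


def assign_cluster(memberships, labels):
    """Pick the cluster of the example with the highest membership in the rule premise."""
    # Assumption: on a tie the first example wins; the text leaves ties to the expert.
    best_example = max(range(len(memberships)), key=memberships.__getitem__)
    return labels[best_example]


curves = [[0.0, 1.0, 2.0], [2.0, 3.0, 4.0], [10.0, 10.0, 10.0]]
labels = [0, 0, 1]
h = cluster_patterns(curves, labels)

# Hypothetical memberships of the three examples in the premise of one rule.
rule_memberships = [0.2, 0.1, 0.7]
c_k = h[assign_cluster(rule_memberships, labels)]
```

Here the third example represents the rule best, so the rule conclusion is the mean pattern of the cluster that contains it.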

A second solution to the problem of defining conclusions (dependent on examples, DE) relies more on the analysis of the samples “forming” the premises of all rules. When we have “redundant” but unstructured data, for some sets of premises (rules) described by crisp intervals we have many observations, while for other sets there are no data. However, observations can be described in the way used for fuzzy reasoning. Then the same sample may “to some extent” correspond to many patterns, even if none of them corresponds exactly. Once the measure of membership \(\mu _{j,k}\) of each example j to rule k has been established, a hypothetical function of the summary fraction of precipitations is generated by calculating its successive values as the weighted averages of the corresponding values of all examples considered for the rule. The estimated value \(c_{k,t}\) of the summary fraction of precipitations for time coordinate t in the conclusion of rule k is defined as:

$$\begin{aligned} {c_{k,t}} = \frac{{\sum \limits _{j = 1}^{ne} {{\mu _{j,k}}{g_{j,t}}} }}{{\sum \limits _{j = 1}^{ne} {{\mu _{j,k}}} }} \end{aligned}$$(8)

where ne is the number of examples, \(\mu _{j,k}\) is the measure of membership of the jth input value set (example) to the kth rule and \(g_{j,t}\) is the normalized output value of the jth example at time point t.
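The DE conclusion of a single rule is a membership-weighted average of the example curves, which can be sketched as below. The function name `de_conclusion` and the numbers are hypothetical; examples with zero membership do not influence the result.

```python
def de_conclusion(memberships, curves):
    """Membership-weighted average of example curves for one rule (DE variant)."""
    total = sum(memberships)
    if total == 0:
        raise ValueError("no example matches this rule premise")
    n_points = len(curves[0])
    return [
        sum(mu * curve[t] for mu, curve in zip(memberships, curves)) / total
        for t in range(n_points)
    ]


# Hypothetical memberships of three examples in one rule and their curves.
mu = [0.5, 0.25, 0.0]
g = [[1.0, 2.0], [3.0, 6.0], [100.0, 100.0]]
c_k = de_conclusion(mu, g)
```

Unlike the IE variant, every rule for which at least one example has non-zero membership receives its own pattern, shaped by all examples that are "to some extent" similar to its premise.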

Let us consider this using the example of Rule 23, the preconditions for which are set out in Table 6. This is an example tuple of the Cartesian product of the domains of all variables describing the premises.

Of the 700 examples analyzed, 7 are “similar” enough to the pattern written in Rule 23 and therefore must be included in the expert analysis. These examples are presented in Table 7.

The value of \(\mu _i\) as the membership function was set according to the formulas presented in Macioł and Rȩbiasz (2016). The way to determine this value, which is also used in inference, can be illustrated in the figures describing the procedure for example 353 (Fig. 8).

The predicted courses of the conclusion function of rule 23, generated with knowledge independent of examples and dependent on examples, are shown in Figs. 9 and 10. In the first case, the conclusion corresponds to the pattern assigned to example 353. In the second case, the conclusion is determined as a weighted average (the Takagi-Sugeno method was used for presentation purposes). Figures 11 and 12 show the same results for rule 86, employing 10 clustered cases.

While the definition of the conclusion in the knowledge model required original solutions, the other components of the model are basically standard.

The mechanism of inference in the case of Mamdani’s method does not differ from standard solutions. The ultimate form of the summary fraction of precipitations is determined according to the following formula, regardless of the mechanism of generating patterns describing the conclusions of the rules. The estimated value \(e_{j,t}\) of the summary fraction of precipitations for the time coordinate t as a conclusion of Mamdani’s reasoning is computed with the formula:

$$\begin{aligned} {e_{j,t}} = \frac{{\sum \limits _{k = 1}^{nr} {{\mu _{j,k}}{c_{k,t}}} }}{{\sum \limits _{k = 1}^{nr} {{\mu _{j,k}}} }} \end{aligned}$$(9)

where nr is the number of rules, \(\mu _{j,k}\) is the measure of membership of the jth input value set (example) to the kth rule and \(c_{k,t}\) is the value of the summary fraction of precipitations for time coordinate t in the conclusion of rule k, determined according to formula (7) or (8).

In our research, we adopted the PROD operator as the aggregation function for the measure of membership for rules. Tests using other operators did not bring visible changes in the simulation results.
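The inference step can be sketched as follows, assuming that the estimate is a membership-weighted average of the rule conclusions (the same form as formula (11) without the similarity index) and that the rule membership is the product of the memberships of its premise terms. The function names and all numbers are hypothetical.

```python
import math


def rule_membership(term_memberships):
    """PROD aggregation of the memberships of all premise terms of one rule."""
    return math.prod(term_memberships)


def infer_curve(rule_weights, conclusions):
    """Weighted average of rule conclusion curves, one value per time point."""
    total = sum(rule_weights)
    if total == 0:
        raise ValueError("no rule fired for this input")
    return [
        sum(w * c[t] for w, c in zip(rule_weights, conclusions)) / total
        for t in range(len(conclusions[0]))
    ]


# Hypothetical term memberships of one example in the premises of three rules.
weights = [
    rule_membership([1.0, 0.5]),
    rule_membership([0.5, 0.5]),
    rule_membership([0.0, 1.0]),
]
conclusions = [[0.0, 2.0], [4.0, 6.0], [9.0, 9.0]]
e = infer_curve(weights, conclusions)
```

With PROD aggregation a single premise term with zero membership switches the whole rule off, as happens for the third rule in this illustration.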

The output function in the T-S method adapted to our needs is an additional measure of whether a given example belongs to a fuzzy defined pattern. The parameters of this function were determined according to the methodology presented in “Metamodeling in quotation preparation processes” section.

In the case of T-S method with our modifications, a final course of a function of the summary fraction of precipitations \(e_{j,t}\) for time coordinate t for each case is defined with the following procedure:

1. As in the case of Mamdani’s approach, the measures \(\mu _{j,k}\) of whether a given set of premises belongs to the pattern of premises of each rule are determined.

2. The measure is corrected with a coefficient based on the function (4) that implies the value of \(y_{j,k}\) when appropriate variables satisfy the premise. As we proposed i.a. in Macioł et al. (2020), this “similarity index” is calculated as a distance from the middle of the \(<0, 1>\) range: the index is equal to 1 for \(y_{j,k}\) equal to 0.5, 0 for \(y_{j,k}\) greater than or equal to 1.0, and in other cases is computed with the formula:

$$\begin{aligned} d_{j,k} = \begin{cases} 0, &amp; y_{j,k} \ge 1 \\ 1-2 \cdot |0.5 - y_{j,k}|, &amp; y_{j,k} < 1 \end{cases} \end{aligned}$$(10)

3. Finally, we set a value \(e_{j,t}\) for each time coordinate t by the formula:

$$\begin{aligned} {e_{j,t}} = \frac{{\sum \limits _{k = 1}^{nr} {{\mu _{j,k}}{d_{j,k}}{c_{k,t}}} }}{{\sum \limits _{k = 1}^{nr} {{\mu _{j,k}}{d_{j,k}}} }} \end{aligned}$$(11)

where nr is the number of rules, \(\mu _{j,k}\) is the measure of membership of the jth input value set (example) to the kth rule, \({d_{j,k}}\) is the “similarity index” determined by formula (10) and \(c_{k,t}\) is the value of the summary fraction of precipitations for time coordinate t in the conclusion of rule k, determined according to formula (7) or (8).
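Formulas (10) and (11) translate directly into code. In the sketch below the values of \(\mu _{j,k}\) and \(y_{j,k}\) are hypothetical inputs; how \(y_{j,k}\) is obtained from function (4) is outside the scope of this illustration.

```python
def similarity_index(y):
    """Formula (10): 0 for y >= 1, otherwise 1 - 2 * |0.5 - y|."""
    if y >= 1.0:
        return 0.0
    return 1.0 - 2.0 * abs(0.5 - y)


def ts_estimate(mu, y, conclusions):
    """Formula (11): average of conclusions weighted by mu * d for each rule."""
    weights = [m * similarity_index(v) for m, v in zip(mu, y)]
    total = sum(weights)
    if total == 0:
        raise ValueError("all rule weights are zero")
    return [
        sum(w * c[t] for w, c in zip(weights, conclusions)) / total
        for t in range(len(conclusions[0]))
    ]


# Hypothetical values for one example and two rules.
mu = [0.8, 0.4]
y = [0.5, 0.75]
conclusions = [[1.0, 2.0], [3.0, 4.0]]
e = ts_estimate(mu, y, conclusions)
```

Compared with the Mamdani-style average, the similarity index additionally down-weights rules whose \(y_{j,k}\) lies far from the middle of the range, so the second rule contributes less here than its membership alone would suggest.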

The results of selected experiments

Of the many experiments carried out, we present those that gave the most promising results. These are four experiments: two for Mamdani’s inference method and two for T-S inference, in both cases for knowledge extracted by the independent-of-examples (IE) and dependent-on-examples (DE) methods of Knowledge Base formulation. In these experiments, after the rules had been formulated, the normalized and digitized (as described previously) courses of the summary fraction of precipitations were compared, for each of the 700 cases, with the courses predicted by the inference system. The estimation error of the summary fraction of precipitations, measured as a sum of the relative differences between the inference results and the actual shapes over all time coordinates, is defined with the formula:

where \(g_{j,t}\) is the normalized output value of the jth example at time point t and \(e_{j,t}\) is the estimated output value of the jth example at time point t.

Furthermore, for each of the four experiments, the maximal deviations \(x_j\) of the predicted values from the actual ones (maximal error), computed with the formula below, were analysed.

We also determined the number of examples for which the error was greater than selected limit values. The main result of the study, i.e. the mean and maximum estimation error for the four cases, is presented in Figs. 13, 14 and 15.

The results presented above clearly show that in the vast majority of examples the error level does not exceed 10% for the mean error, or slightly more than 20% for the maximal error. Unfortunately, there are a few examples in which these errors are much higher. In most cases, this applies to the same examples for all methods of knowledge extraction and inference. It should be remembered that the results considered as actual do not come from physical tests but are the results of numerical simulations, which are also subject to error.

To further analyse the effectiveness of our proposed method, we analysed the number of cases for which the predicted values differ significantly from the actual ones. Fig. 16 shows the number of examples with a maximum error greater than the specified level. The diagram should be interpreted as follows. The number of examples with a maximum error greater than 5% ranges from 566 to 643, which means that the number of examples almost perfectly matching the actual shapes is small and ranges from 57 to 134 (out of 700). However, when the limit is raised to 20%, the number of “wrong” examples decreases to between 73 and 168.
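The counting behind such a diagram can be sketched in a few lines. The per-example maximum errors below are hypothetical; the point of the illustration is that the number of well-matched examples is the complement of the count above the limit.

```python
def count_above(max_errors, limits):
    """For each limit, count the examples whose maximum error exceeds it."""
    return {limit: sum(1 for x in max_errors if x > limit) for limit in limits}


# Hypothetical per-example maximum errors (fractions, not percentages).
max_errors = [0.02, 0.04, 0.07, 0.12, 0.22, 0.31]
counts = count_above(max_errors, limits=(0.05, 0.20, 0.30))

# Examples that match the actual course to within 5%.
within_5_percent = len(max_errors) - counts[0.05]
```

Applied to the 700 examples of the study, the same complement explains the figures quoted in the text: 700 minus 643 gives 57 and 700 minus 566 gives 134.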

After consultation with technologists and considering our previous experience, we have concluded that a maximum error of 20% to 30% is acceptable. To illustrate the levels of this error, we present selected examples comparing actual and predicted shapes for the three error limits (Fig. 17). As seen from the graphs, the character of the predicted shapes does not differ significantly from the actual ones.

A comparison of the Knowledge Base formulation techniques and reasoning methods by the number of examples with a maximum error greater than 20%, 25% and 30% is shown in Figs. 18 and 19. The charts demonstrate significantly better results for knowledge acquisition based on the input data.

Aggregated (averaged by examples) simulation results are shown in Table 8 and Figs. 20 and 21.

The aggregated results confirm that, as in assessing the number of examples for which the predicted courses of functions differ significantly from the actual ones, the knowledge acquisition based on input data gives much better results both in the case of mean and maximum error. The best result for the evaluation of the average error was obtained for the system of learning from input data and inference using the Takagi-Sugeno method (4.07%). On the other hand, in the case of the evaluation of the maximum error, the Mamdani’s method gave the better result.

The results obtained by using the proposed method were compared with the effects of using the metamodel presented in the paper (Macioł et al. 2018). The authors do not provide precise calculations, informing only that the average deviation of the predicted values from the actual is about 2%, which is better than the results obtained using our method. However, the authors of the cited paper admit that there are examples for which this deviation is much higher, which also confirms our observations.

Technologists looking for the best method to predict the course of technological processes may be guided by the evaluation of the average or maximum error presented by us. In the case when we are dealing with a small number of manufactured products, the maximum error is significant, whereas as the number of products increases, the significance of the average error increases.

Conclusions

This manuscript has proposed a novel approach to solving technological problems related to operational management in metal products plants operating according to Engineering-to-Order (ETO) rules. Here, the client’s order requires the creation of a design, which in turn requires solving technological problems in the quotation preparation phase. We used a case study of microstructure evolution, in particular precipitation kinetics, in a commercial-grade aluminium alloy to demonstrate the proposed approach. The object of the analysis was the shape of the summary fraction of precipitations, obtained as the output of reasoning based on six selected input parameters. The results of our research show that it is possible to create effective mechanisms for modelling technological processes so that they can be used in the quotation preparation process. At the same time, we showed that such an effect can be obtained by combining different methods of knowledge acquisition with expert-based inference mechanisms. Fuzzy rule-based systems allow the formulation of decision models for the design of technologies supporting the multistage process of quotation preparation. The knowledge base for FRBS can be formulated from prior point experiments planned according to one of the many design of experiments (DoE) techniques, from results of prior partial (random) experiments or, as we presented in this paper, from results of partially designed or random numerical simulations using complex models and/or metamodels. As inference mechanisms, we used Mamdani’s and modified Takagi-Sugeno methods. Two methods of extracting knowledge from examples were used: one that made the conclusions of rules directly dependent on the values of the input variables, and another that generated patterns of output function courses by cluster analysis and then assigned them to the conclusions of all rules.

The evaluation of the results of simulations conducted with the developed reasoning methods consisted in comparing the obtained courses of the analysed output function with the courses obtained with exact methods (Macioł et al. 2018). Regardless of the reasoning mechanism, better results were obtained using the learning method that makes the conclusions dependent on the input data. The best result for the average error was obtained with learning from input data and inference using the Takagi-Sugeno method (4.07%). On the other hand, for the maximum error, the better result was given by Mamdani’s method with the same knowledge acquisition mechanism. Unfortunately, as in the studies on which our experiments were based, there were a few cases in which the inference results differed significantly from the results obtained with metamodels.

The comparison of our results with the results obtained with metamodels (Macioł et al. 2018) shows similar prediction efficiency. Our solution has an important advantage consisting in the fact that the formulation of knowledge allowing for the prediction of the course of technological processes is independent of the evaluation of a specific business case. This allows us to significantly simplify and shorten the quotation preparation process.

Our proposed method of rapid modeling of technological processes can be applied where it is not possible to conduct accurate physical or numerical studies, but where there are other unstructured sources of knowledge and there is the possibility of formulating knowledge by experts.

The results of our study show that fuzzy inference methods can effectively solve the problem of rapid technology design in the design process of metallic products under ETO conditions and, in some cases, also under MTO and MTS conditions, when it is necessary to modify or change the material used to complete the order (MTO) or the production plan (MTS).

References

Alizadeh, R., Allen, J. K., & Mistree, F. (2020). Managing computational complexity using surrogate models: a critical review. Research in Engineering Design, 31(3), 275–298. https://doi.org/10.1007/s00163-020-00336-7

Begenova, S., & Avdeenko, T. (2018). Building of fuzzy decision trees using ID3 algorithm. Journal of Physics: Conference Series, 1015(2), 022002. https://doi.org/10.1088/1742-6596/1015/2/022002

Campi, F., Mandolini, M., Favi, C., Checcacci, E., & Germani, M. (2020). An analytical cost estimation model for the design of axisymmetric components with open-die forging technology. The International Journal of Advanced Manufacturing Technology, 110(7), 1869–1892. https://doi.org/10.1007/s00170-020-05948-w

Favi, C., Campi, F., Mandolini, M., Martinelli, I., & Germani, M. (2021). Key features and novel trends for developing cost engineering methods for forged components: a systematic literature review. International Journal of Advanced Manufacturing Technology, 117(9–10), 2601–2625. https://doi.org/10.1007/s00170-021-07611-4

Fernández, A., López, V., del Jesus, M. J., & Herrera, F. (2015). Revisiting Evolutionary Fuzzy Systems: Taxonomy, applications, new trends and challenges. Knowledge-Based Systems, 80, 109–121. https://doi.org/10.1016/j.knosys.2015.01.013

Gómez Paredes, F. J., Godinho Filho, M., Thürer, M., Fernandes, N. O., & Jabbour, C. J. C. (2020). Factors for choosing production control systems in make-to-order shops: A systematic literature review. Journal of Intelligent Manufacturing. https://doi.org/10.1007/s10845-020-01673-z

Gu, X., & Wang, S. (2018). Bayesian Takagi-Sugeno-Kang fuzzy model and its joint learning of structure identification and parameter estimation. IEEE Transactions on Industrial Informatics, 14(12), 5327–5337. https://doi.org/10.1109/TII.2018.2813977

Horstemeyer, M. F. (2018). Integrated computational materials engineering (ICME) for metals: Concept and case studies. Wiley.

Hüllermeier, E. (2015). From knowledge-based to data-driven fuzzy modeling: Development, criticism, and alternative directions. Informatik-Spektrum, 38(6), 500–509. https://doi.org/10.1007/s00287-015-0931-8

Kusiak, J., Sztangret, Ł., & Pietrzyk, M. (2015). Effective strategies of metamodelling of industrial metallurgical processes. Advances in Engineering Software, 89, 90–97. https://doi.org/10.1016/j.advengsoft.2015.02.002

Macioł, A. (2017). Knowledge-based methods for cost estimation of metal casts. International Journal of Advanced Manufacturing Technology, 91(1–4), 641–656. https://doi.org/10.1007/s00170-016-9704-z

Macioł, A., Macioł, P., & Mrzygłód, B. (2020). Prediction of forging dies wear with the modified Takagi-Sugeno fuzzy identification method. Materials and Manufacturing Processes, 35(6), SI700–SI713. https://doi.org/10.1080/10426914.2020.1747627

Macioł, A., & Rȩbiasz, B. (2016). Advanced methods in investment projects evaluation. Krakow: AGH University of Science and Technology Press.

Macioł, P., Bureau, R., Poletti, C., Sommitsch, C., Warczok, P., & Kozeschnik, E. (2015). Agile multiscale modelling of the thermo-mechanical processing of an aluminium alloy. Key Engineering Materials, 651–653, 1319–1324. https://doi.org/10.4028/www.scientific.net/KEM.651-653.1319

Macioł, P., Szeliga, D., & Sztangret, Ł. (2018). Methodology for metamodelling of microstructure evolution: Precipitation kinetic case study. International Journal of Material Forming, 11(6), 867–878. https://doi.org/10.1007/s12289-017-1396-x

Mamdani, E., & Assilian, S. (1975). An experiment in linguistic synthesis with a fuzzy logic controller. International Journal of Man-Machine Studies. https://doi.org/10.1016/S0020-7373(75)80002-2

Mandolini, M., Campi, F., Favi, C., Germani, M., & Raffaeli, R. (2020). A framework for analytical cost estimation of mechanical components based on manufacturing knowledge representation. International Journal of Advanced Manufacturing Technology, 4, 1131–1151. https://doi.org/10.1007/s00170-020-05068-5

Mutlu, B., Sezer, E. A., & Nefeslioglu, H. A. (2017). A defuzzification-free hierarchical fuzzy system (DF-HFS): Rock mass rating prediction. Fuzzy Sets and Systems, 307, 50–66. https://doi.org/10.1016/j.fss.2016.01.001

Pandiyan, V., Shevchik, S., Wasmer, K., Castagne, S., & Tjahjowidodo, T. (2020). Modelling and monitoring of abrasive finishing processes using artificial intelligence techniques: A review. Journal of Manufacturing Processes, 57, 114–135. https://doi.org/10.1016/j.jmapro.2020.06.013

Parappagoudar, M., & Vundavilli, P. R. (2012). Application of modeling tools in manufacturing to improve quality and productivity with case study. Proceedings in Manufacturing Systems, 7(4), 193–198.

Patel, M. G., Krishna, P., & Parappagoudar, M. B. (2015). Prediction of secondary dendrite arm spacing in squeeze casting using fuzzy logic based approaches. Archives of Foundry Engineering, 15(1), 51–68. https://doi.org/10.1515/afe-2015-0011

Pedregosa, F., Weiss, R., Brucher, M., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Perrot, M., & Duchesnay, E. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12, 2825–2830.

Stadnicka, D., & Ratnayake, R. M. (2018). Development of additional indicators for quotation preparation performance management: VSM-based approach. Journal of Manufacturing Technology Management, 29(5), 866–885. https://doi.org/10.1108/JMTM-01-2017-0016

Stonciuviene, N., Usaite-Duonieliene, R., & Zinkeviciene, D. (2020). Integration of activity-based costing modifications and LEAN accounting into full cost calculation. Engineering Economics, 31(1), 50–60. https://doi.org/10.5755/j01.ee.31.1.23750

Suri, R. (2003). QRM and POLCA: A winning combination for manufacturing enterprises in the 21st century (Tech. Rep. No. May).

Takagi, T., & Sugeno, M. (1985). Fuzzy identification of systems and its applications to modeling and control. IEEE Transactions on Systems, Man, and Cybernetics, SMC-15(1), 116–132. https://doi.org/10.1109/TSMC.1985.6313399

Ulrich, K., Eppinger, S., & Yang, M. C. (2010). Product design and development. McGraw-Hill.

Venkata Rao, R., & Kalyankar, V. (2014). Optimization of modern machining processes using advanced optimization techniques: A review. International Journal of Advanced Manufacturing Technology, 73(5–8), 1159–1188. https://doi.org/10.1007/s00170-014-5894-4

Viana, F. A., Gogu, C., & Goel, T. (2021). Surrogate modeling: Tricks that endured the test of time and some recent developments. Structural and Multidisciplinary Optimization, 64(5), 2881–2908. https://doi.org/10.1007/s00158-021-03001-2

Vignesh, M., Sasindran, V., Arvind Krishna, S., Madusudhanan, A., & Gokulachandran, J. (2019). Predictive model development and optimization of surface roughness parameter in milling operations by means of fuzzy logic and artificial neural network approach. In S. Rao, C.S.P. Basavarajappa (Eds.), IOP Conference Series: Materials Science and Engineering (Vol. 577, p. 012011). https://doi.org/10.1088/1757-899X/577/1/012011

Ye, P., & Pan, G. (2020). Selecting the best quantity and variety of surrogates for an ensemble model. Mathematics. https://doi.org/10.3390/math8101721

Zhan, Z.-H., Shi, L., Tan, K. C., & Zhang, J. (2022). A survey on evolutionary computation for complex continuous optimization. Artificial Intelligence Review, 55(1), 59–110. https://doi.org/10.1007/s10462-021-10042-y

Acknowledgements

Piotr Macioł was supported by the Ministry of Science and Higher Education, project no. 16.16.110.663.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Andrzej Macioł and Piotr Macioł contributed equally to this work.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Macioł, A., Macioł, P. The use of Fuzzy rule-based systems in the design process of the metallic products on example of microstructure evolution prediction. J Intell Manuf 33, 1991–2012 (2022). https://doi.org/10.1007/s10845-022-01949-6

DOI: https://doi.org/10.1007/s10845-022-01949-6