Abstract

We introduce our implementation in HOL Light of the metatheory for Gödel–Löb provability logic (GL), covering soundness and completeness w.r.t. possible world semantics and featuring a prototype of a theorem prover for GL itself. The strategy we develop here to formalise the modal completeness proof overcomes the technical difficulty due to the non-compactness of GL and is an adaptation—according to the formal language and tools at hand—of the proof given in George Boolos’ 1995 monograph. Our theorem prover for GL relies then on this formalisation, is implemented as a tactic of HOL Light that mimics the proof search in the labelled sequent calculus \(\textsf{G3KGL}\), and works as a decision algorithm for the provability logic: if the algorithm positively terminates, the tactic succeeds in producing a HOL Light theorem stating that the input formula is a theorem of GL; if the algorithm negatively terminates, the tactic extracts a model falsifying the input formula. We discuss our code for the formal proof of modal completeness and the design of our proof search algorithm. Furthermore, we propose some examples of the latter’s interactive and automated use.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The origin of provability logic dates back to a short paper by Gödel [28] where propositions about provability are formalised through a unary operator \(\texttt{B}\) to give a classical reading of intuitionistic logic. That work opened the question of finding an adequate modal calculus for the formal properties of the provability predicate used in Gödel’s incompleteness theorems. The problem has been settled since the 1970s for many formal systems of arithmetic employing Gödel–Löb logic GL: the abstract properties of the formal provability predicate of any \(\Sigma _{1}\)-sound arithmetical theory T extending \(\mathsf {I\Sigma _{1}}\) [76] are captured by that modal system, as established by Solovay [73].

Solovay’s technique consists of an arithmetization of a relational countermodel for a given formula that is not a theorem of \(\mathbb{G}\mathbb{L}\), from which it is possible to define an appropriate arithmetical formula that is not a theorem of the mathematical system.Footnote 1

Therefore, on the one hand, completeness of formal systems w.r.t. the relevant relational semantics is still an unavoidable step in achieving the more substantial result of arithmetical completeness; on the other hand, however, the area of provability logic keeps flourishing and suggesting old and new open problems.Footnote 2

Our work starts then with a deep embedding of the syntax of propositional modal logic together with the corresponding relational semantics. Next, we introduce the traditional axiomatic calculus \(\mathbb{G}\mathbb{L}\) and prove the soundness of the system w.r.t. irreflexive transitive finite frames.

A more mathematical part then follows: our goal has been proving formally

Theorem 1

For any formula A, \(\mathbb{G}\mathbb{L}\vdash A\) iff A is true in any irreflexive transitive finite frame.

Since GL is not compact, the standard methodology based on canonical models [69] cannot be directly applied here. After Kozen and Parikh [43], it is common to restrict the construction to a finite subformula universe.Footnote 3 The same idea is basically used in Boolos [8] to prove modal completeness for GL. Proceeding in that same way, we have to formally verify a series of preliminary lemmas and constructions involving the behaviour of syntactical objects used in the standard proof of the completeness theorem. These unavoidable steps are often only proof-sketched in wide-adopted textbooks in logic—including [8]—for they mainly involve “standard” reasoning within the proof system we are dealing with. Nevertheless, when working in a formal setting, as we did with HOL Light, we need to split down the main goal into several subgoals, dealing with both the object- and the meta-level. Sometimes, the HOL Light automation does mirror—and, using automation mechanisms, simplify details of—the informal reasoning. On other occasions, we have to modify some aspects of the proof strategies, simplifying, through the computer tools at hand, some passages of the informal argument in Boolos [8], Chap. 5].

As it is known, for any logical calculus, a completeness result w.r.t. finite models—aka finite model property—implies the decidability of that very logic [36]. Therefore, the formal proof for Theorem 1 we discuss in the first part of the present work could be used, in principle, to develop a decision algorithm for \(\mathbb{G}\mathbb{L}\). That would be a valuable tool for automating in HOL Light the proof of theorems of GL.

Proof search in axiomatic calculi is a challenging task. In our formalisation, we had to develop several proofs in the axiomatic calculus for \(\mathbb{G}\mathbb{L}\), and only in a few cases, it has been possible to leave the proof search to the automation mechanism of HOL Light.

By having a formal proof of the finite model property, one could hope to solve the goal of checking whether a formula is a theorem of GL by shifting from the syntactic problem of finding a proof of that formula in the axiomatic calculus to the semantic problem of checking the validity of that formula in any finite model by applying automated first-order reasoning as implemented in the proof assistant.

Such a strategy has many shortcomings, unfortunately. We document some of its limits in Sect. 4.4 and at the beginning of the subsequent section, but the main points are the following: From the complexity theory viewpoint, a decision procedure based on the countermodel construction would be far from being optimal, belonging to EXPSPACE, rather than PSPACE, to which decidability of GL belongs [46];Footnote 4 from the practical viewpoint, implementing it in HOL Light would consists in a relatively simple formalisation exercise.

Structural proof theory for modal logics—briefly summarised in Sect. 5.1—suggests a more promising strategy.

Many contemporary sequent calculi for non-classical logics are based on an “internalisation” of possible world semantics in Gentzen’s original formalism [58, 67].

We resort to an explicit internalisation for developing our decision algorithm since our formalisation in HOL Light of Kripke semantics for GL is per se a labelling technique in disguise.

Therefore, in the second part of this paper, we introduce what might be considered a shallow embedding in HOL Light of Negri’s labelled sequent calculus \(\textsf{G3KGL}\) [53, 54].

To state it clearly: while in the first part, we defined the axiomatic system \(\mathbb{G}\mathbb{L}\) within our proof assistant employing an inductive definition of the derivability relation for \(\mathbb{G}\mathbb{L}\), in the second part, we define new tactics of HOL Light in order to perform a proof search in \(\textsf{G3KGL}\) by using the automation infrastructure provided by HOL Light itself.

By relying on the meta-theory for \(\textsf{G3KGL}\) developed in [54], we can safely claim that such an embedding provides a decision algorithm for GL: if proof search terminates on a modal formula given as input, HOL Light produces a new theorem, stating that the input formula is a theorem of GL; otherwise, our algorithm prints all the information necessary to construct an appropriate countermodel for the input formula.

Our code is integrated into the current HOL Light distribution and freely available from [35]. The files we will occasionally refer to during the presentation are freely accessible from there.

The present paper is structured as follows:

-

In Sect. 2, we introduce the basic ingredients of our development, namely the formal counterparts of the syntax and relational semantics for provability logic, along with some lemmas and general definitions which are useful to handle the implementation of these objects uniformly, i.e. without the restriction to the specific modal system we are interested in. The formalisation constitutes a large part of the file modal.ml;

-

In Sect. 3, we formally define the axiomatic calculus \(\mathbb{G}\mathbb{L}\) and prove neatly the validity lemma for this system. Moreover, we give formal proofs of several lemmas in \(\mathbb{G}\mathbb{L}\) (\(\mathbb{G}\mathbb{L}\)-lemmas, for short), whose majority is, in fact, common to all normal modal logics, so that our proofs might be re-used in subsequent implementations of different systems. This corresponds to the contents of our code in gl.ml;

-

In Sect. 4 we give our formal proof of modal completeness of \(\mathbb{G}\mathbb{L}\), starting with the definition of maximal consistent lists of formulas. In order to prove their syntactic properties—and, in particular, the extension lemma for consistent lists of formulas to maximal consistent lists—we use the \(\mathbb{G}\mathbb{L}\)-lemmas and at the same time, we adapt an already known general proof-strategy to maximise the gain from the formal tools provided by HOL Light—or, informally, from higher-order reasoning. At the end of the section, we give the formal definition of bisimilarity for our setup, and we prove the associated bisimulation theorem [69], Chap. 11]. Our notion of bisimilarity is polymorphic because it can relate classes of frames sitting on different types. With this tool at hand, we can correctly state our completeness theorem in its natural generality (COMPLETENESS_THEOREM_GEN)—i.e. for irreflexive, transitive finite frames over any (infinite) type—this way obtaining the finite model property for GL w.r.t. frames having finite sets of formulas as possible worlds, as in the standard Henkin’s construction. These results conclude the first part of the paper and are gathered in the file completeness.ml.

-

Section 5 opens the part of the paper describing our original theorem prover for GL. We collect some basic notions and techniques for proof-theoretic investigations on modal logic. In particular, we recall the methodology of explicit internalisation of relational semantics and the results that provide the meta-theory for our decision algorithm.

-

Finally, in Sect. 6, we describe our implementation of the labelled sequent calculus \(\textsf{G3KGL}\)—documented by the file decid.ml—to give a decision procedure for GL. We recover the formalisation of Kripke semantics for GL presented in Sect. 2 to define new tactics mimicking the rules for \(\textsf{G3KGL}\). Then, we properly define our decision algorithm by designing a specific terminating proof search strategy in the labelled sequent calculus. This way, we extend the HOL Light automation toolbox with an “internal” theorem prover for GL that can also produce a countermodel for any formula for which proof search fails. We propose some hands-on examples of use by considering modal principles that have a certain relevance for meta-mathematical investigations; the interested reader will find further examples in the file tests.ml.

-

Section 7 closes the paper and compares our results with related works on mechanised modal logic.

Our formalisation does not modify any original HOL Light tools, and it is therefore “foundationally safe”. Moreover, since we only used that original formal infrastructure, our results can be easily translated into another theorem prover belonging to the HOL family endowed with the same automation toolbox.

Revision notes.

This article is an expanded version of the conference paper [48], presented at Interactive Theorem Proving (ITP) 2021. Changes include adding Sects. 5 and 6 on our implementation in HOL Light of the theorem prover and countermodel constructor for GL, and some minor local revisions. An intermediate version of the contents discussed in the present paper appeared in [65]. Both authors wish to thank two anonymous referees for their valuable feedback and comments towards improving the presentation of the material we discuss here.

1.1 HOL Light Notation

The HOL Light proof assistant [35] is based on classical higher-order logic with polymorphic type variables and where equality is the only primitive notion. From a logical viewpoint, the formal engine defined by the term-conversions and inference rules underlying HOL Light is the same as that described in [47], extended by an infinity axiom and the classical characterization of Hilbert’s choice operator. From a practical perspective, it is a theorem prover privileging a procedural proof style development. I.e., when using it, we have to solve goals by applying tactics that reduce them to (hopefully) simpler subgoals so that the interactive aspect of proving is highlighted. Proof scripts can then be constructed using tacticals that compact the proof into a few lines of code evaluated by the machine.

In what follows, we will report several code fragments to give the flavour of our development and to provide additional documentation and information to the reader interested in the technical details. We partially edited the code to ease the reading of the mathematical formulas. For instance, we replaced the purely ASCII notations of HOL Light with the usual graphical notation. Every source code snippet in this paper has a link—indicated by the word “(sources)” on the right—to a copy of the source files stored on the Software HeritageFootnote 5 archive which is helpful to those who want to see the raw code and how it fits in its original context. Here is an example:

Note that we report theorems with their associated name (the name of its associated OCaml constant), and we write their statement prefixed with the turnstile symbol (\(\vdash \)). In the expository style, we omit formal proofs, but the meaning of definitions, lemmas, and theorems in natural language is clear.

The HOL Light printing mechanism omits type information completely. Therefore, we warn the reader about types when it might be helpful, or even indispensable, to avoid ambiguity—including the case of our main results, COMPLETENESS_THEOREM and COMPLETENESS_THEOREM_GEN.

We also recall that a Boolean function s : \(\alpha \) -> bool is also called a set on \(\alpha \) in the HOL parlance. The notation x IN s is equivalent to s x and must not be confused with a type annotation x : \(\alpha \).

As mentioned, our contribution is part of the HOL Light distribution. The reader interested in performing these results on her machine—and perhaps building further formalisation on top of it—can run our code with the command

at the HOL Light prompt.

2 Basics of Modal Logic

As we stated, we deal with a logic that extends classical propositional reasoning using a single modal operator intended to capture the abstract properties of the provability predicate for arithmetic.

To reason about and within this logic, we have to “teach” HOL Light—our meta-language—how to identify it, starting with its syntax—the object-language—and semantics—the interpretation of this very object-language.

From a foundational perspective, we want to keep everything neat and clean; therefore, we will define both the object-language and its interpretation with no relation to the HOL Light environment. In other terms: our formulas and operators are real syntactic objects which we keep distinct from their semantic counterparts—and from the logical operators of the theorem prover too.

2.1 Language and Semantics Defined

Let us start by fixing the propositional modal language \({\mathcal {L}}\) we will use throughout the present work. We consider all classical propositional operators—conjunction, disjunction, implication, equivalence, negation, along with the 0-ary symbols \(\top \) and \(\bot \)—and add a modal unary connective \(\Box \). The starting point is, as usual, a denumerable infinite set of propositional atoms \(a_{0},a_{1},\cdots \). Accordingly, formulas of this language will have one of the following forms

The following code extends the HOL system with an inductive type of formulas generated by the above syntactic constructions

Later in the code, the operators \( \texttt { \& \& }\), ||, - -> , \(\texttt {<->}\) are used infixed as usual. Next, we turn to semantics for our modal language. We use relational models—aka Kripke models.Footnote 6

Formally, a Kripke frame is made of a non-empty set ‘of possible worlds’ W, together with a binary relation R on W. To this, we add an evaluation function V, which assigns to each atom of our language and each world w in W a Boolean value. This is extended to a forcing relation holds, defined recursively on the structure of the input formula p, that computes the truth-value of p in a specific world w:

In the previous lines of code, f stands for a generic Kripke frame—i.e. a pair (W:W->bool,R:W->W->bool) of a set of worlds and an accessibility relation—and V:string->W->bool is an evaluation of propositional variables. Then, the validity of a formula p with respect to a frame (W,R), and a class of frames L, denoted respectively holds_in (W,R) p and L |= p, are

The above formalisation is essentially presented in Harrison’s HOL Light Tutorial [34, § 20]. Notice that the usual notion of Kripke frame requires that the set of possible worlds is non-empty: that condition could be imposed by adapting the valid relation. We have preferred to stick to Harrison’s original definitions in our code. However, in the next section, when we define the classes of frames, we are dealing with for GL, the requirement on W is correctly integrated with the corresponding types.

2.2 Frames for GL

For carrying out our formalisation, we are interested in the logic of the (non-empty) frames whose underlying relation R is transitive and conversely well-founded—aka Noetherian—on the corresponding set of possible worlds; in other terms, we want to study the modal tautologies in models based on an accessibility relation R on W such that

-

if xRy and yRz, then xRz; and

-

for no \(X\subseteq W\) there are infinite R-chains \(x_{0}Rx_{1}Rx_{2}\cdots \).

In HOL Light, WF R states that R is a well-founded relation: then, we express the latter condition as WF(\(\backslash \) x y. R y x). Here we see a recurrent motif in logic: defining a system from the semantic perspective requires non-trivial tools from the foundational point of view, for, in order to express the second condition, a first-order language is not enough. However, that is not an issue here since our underlying system is natively higher order:Footnote 7

We can characterize this class of frames by using a propositional language extended by a modal operator \(\Box \) that satisfies the Gödel–Löb axiom schema (\(\textsf{GL}\)) \(:\Box (\Box A\rightarrow A)\rightarrow \Box A.\) Here is the formal version of our claim:

The informal proof of the above result is standard and can be found in [8, Theorem 10] and in [69, Theorem 5.7]. The computer implementation of the proof is made easy thanks to Harrison’s tactic MODAL_SCHEMA_TAC for semantic reasoning in modal logic, documented in [34, § 20.3].

Using this preliminary result, we could say that the frame property of being transitive and Noetherian can be captured by Gödel–Löb modal axiom without recurring to a higher-order language.

Nevertheless, that class of frames is not particularly informative from a logical point of view: a frame in TRANSNT can be too huge to be used, e.g., for mechanically checking whether it does provide a countermodel for a formula of our logic. In fact, when aiming at a completeness theorem, one wants to consider models that are helpful for establishing further properties of the same logic. In the present case, the decidability of GL, which, as for any other normal modal logic, is a straightforward corollary of the finite model property [69], Chap. 13].

The frames we want to investigate are then those whose W is finite, and whose R is both irreflexive and transitive:

Now it is easy to see that ITF is a subclass of TRANSNT:

That will be the class of frames whose logic we are now going to define syntactically.

3 Axiomatizing GL

We want to identify the logical system generating all and only the modal tautologies for transitive Noetherian frames; more precisely, we want to isolate the generators of the modal tautologies in the subclass of transitive Noetherian frames which are finite, transitive, and irreflexive.Footnote 8

When dealing with the very notion of tautology—or theoremhood, discarding the complexity or structural aspects of derivability in a formal system—it is convenient to focus on axiomatic calculi. The calculus we deal with here is usually denoted by \(\mathbb{G}\mathbb{L}\).

It is clear from the definition of the forcing relation that for classical operators, any axiomatization of propositional classical logic will do the job. Here, we adopt a basic system in which only \(\rightarrow \) and \(\bot \) are primitive—from the axiomatic perspective—and all the remaining classical connectives are defined by axiom schemas and by the inference rule of Modus Ponens imposing their standard behaviour.

Therefore, to the classical engine, we add

-

the axiom schema \({\textsf{K}}\): \(\Box (A\rightarrow B)\rightarrow \Box A\rightarrow \Box B\);

-

the axiom schema \(\textsf{GL}\): \(\Box (\Box A\rightarrow A)\rightarrow \Box A\);

-

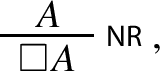

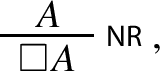

the necessitation rule \(\textsf{NR}\):

where A, B are generic formulas (not simply atoms). Then, here is the complete definition of the axiom system \(\mathbb{G}\mathbb{L}\). The inductive predicate GLaxiom encodes the set of axioms for GL:

The judgment \(\mathbb{G}\mathbb{L} \vdash A\), denoted |- - A in the machine code (not to be confused with the symbol for HOL theorems \(\vdash \)), is also inductively defined in the expected way:Footnote 9

3.1 Soundness Lemma

We can now prove that \(\mathbb{G}\mathbb{L}\) is sound—i.e. every formula derivable in the calculus is a tautology in the class of irreflexive transitive finite frames. This result is obtained by simply unfolding the relevant definitions and applying theorems TRANSNT_EQ_LOB and ITF_NT of Sect. 2.2:

From this, we get a model-theoretic proof of consistency for the calculus

We are now ready to consider the mechanised proof of completeness for the calculus w.r.t. this very class of frames.

3.2 \(\mathbb{G}\mathbb{L}\)-Lemmas

Proving some lemmas in the axiomatic calculus \(\mathbb{G}\mathbb{L}\) is a technical interlude necessary for obtaining the completeness result.

Following this aim, we denoted the classical axioms and rules of the system as the propositional schemas used by Harrison in the file Arithmetic/derived.ml of the HOL Light standard distribution [35]—where, in fact, many of our lemmas relying only on the propositional calculus are already proven there w.r.t. an axiomatic system for first-order classical logic; our further lemmas involving modal reasoning are denoted by names that are commonly used in informal presentations.

Therefore, the code in gl.ml mainly consists of the formalised proofs of those lemmas in \(\mathbb{G}\mathbb{L}\) that are useful for the formalised results we present in the next section. This file might be considered a “kernel” for further experiments in reasoning about axiomatic calculi using HOL Light. The lemmas we proved are, indeed, standard tautologies of classical propositional logic, along with specific theorems of minimal modal logic and its extension for transitive frames—i.e. of the systems \({\mathbb {K}}\) and \({\mathbb {K}}4\) [69]—, so that by applying minor changes in basic definitions, they are—so to speak—take-away proof scripts for extensions of that very minimal system within the realm of normal modal logics.

Whenever it was useful, we have also given a characterisation of classical operators in terms of an implicit (i.e. internal) deduction expressed by the connective - -> . When this internal deduction is from an empty set of assumptions, we named the HOL theorem with the suffix _th, and stated the deduction as a \(\mathbb{G}\mathbb{L}\)-lemma, such as

Moreover, we introduced some derived rules of the axiomatic system mimicking the behaviour in Gentzen’s formalism of classical connectives, as in e.g.

We had to prove about 120 such results of varying degrees of difficulty. We believe that this piece of code is well worth the effort of its development, for two main reasons to be considered – along with the just mentioned fact that they provide a (not so) minimal set of internal lemmas which can be moved to different axiomatic calculi at, basically, no cost.

On the one hand, these lemmas simplify the subsequent formal proofs involving consistent lists of formulas since they let us work formally within the scope of |- - so that we can rearrange subgoals according to their most useful equivalent form by applying the appropriate \(\mathbb{G}\mathbb{L}\)-lemma(s).

On the other hand, giving formal proofs of these lemmas of the calculus \(\mathbb{G}\mathbb{L}\) has been important for checking how much our proof-assistant is “friendly” and efficient in performing this specific task.

As it is known, any axiomatic system fits very well an investigation involving a notion of theoremhood-as-tautology for a specific logic, but its lack of naturalness w.r.t. the practice of developing proper derivations makes it an unsatisfactory model for structural aspects of deducibility. In more practical terms: developing a formal proof of a theorem in an axiomatic system by pencil and paper can be a dull and uninformative task, even when dealing with trivial propositions.

We, therefore, left the proof search to the HOL Light toolbox as much as possible. Unfortunately, we have to express mixed feelings about the general experience. In most cases, relying on this specific proof assistant’s automation tools did save our time and resources when trying to give a formal proof in \(\mathbb{G}\mathbb{L}\). Nevertheless, those automation tools did not turn out to be helpful at all in proving some \(\mathbb{G}\mathbb{L}\)-lemmas. In those cases, we had to search for the specific instances of axioms from which deriving the lemmas,Footnote 10 so that interactive proving them had advantages as well as traditional instruments of everyday mathematicians.

To stress the general point: it is possible—and valuable in general—to rely on the resources of HOL Light to develop formal proofs both about and within an axiomatic calculus for a specific logic, in particular when the lemmas of the object system have relevance or practical utility for mechanising (meta-)results on it; however, these very resources—and, as far as we can see, the tools of any other general proof assistant—do not look particularly satisfactory for pursuing investigations on derivability within axiomatic systems.

4 Modal Completeness

When dealing with normal modal logics, it is common to develop a proof of completeness w.r.t. relational semantics by using the so-called ‘canonical model method’. This approach can be summarised as a standard construction of countermodels made of maximal consistent sets of formulas and an appropriate accessibility relation, according to e.g. the textbooks [69] and [8].

For \(\mathbb{G}\mathbb{L}\), we cannot pursue this strategy since the logic is not compact: maximal consistent sets are (in general) infinite objects, though the notion of derivability involves only a finite set of formulas. We cannot, therefore, reduce the semantic notion of (in)coherent set of formulas to the syntactic one of (in)consistent set of formulas: when extending a consistent set of formulas to a maximal consistent one, we might end up with a syntactically consistent set that nevertheless cannot be semantically satisfied.

Despite this, it is possible to achieve a completeness result by

-

1.

identifying the relevant properties of maximal consistent sets of formulas; and

-

2.

modifying the definitions so that those properties hold for specific consistent sets of formulas related to the formula to which we want to find a countermodel.

That is the fundamental idea behind the proof in Boolos’ monograph [8], Chap. 5]. In that presentation, however, constructing a maximal consistent set from a simply consistent one is only proof-sketched and relies on a syntactic manipulation of formulas. By using HOL Light, we succeed in giving a detailed proof of completeness as directly as that by Boolos. Moreover, we can do that by carrying out in a very natural way a tweaked Lindenbaum construction to extend consistent lists to maximal consistent ones. This way, we succeed in preserving the standard Henkin-style completeness proofs, and, at the same time, we avoid the symbolic subtleties sketched in [8] that have the unpleasant consequence of making the formalised proof rather pedantic—or even dull.

Furthermore, the proof of the main lemma EXTEND_MAXIMAL_CONSISTENT is rather general and does not rely on any specific property of \(\mathbb{G}\mathbb{L}\): our strategy suits all the other normal (mono)modal logics—we only need to modify the subsequent definition of GL_STANDARD_REL according to the specific system under consideration. Thus, we provide a way for formally establishing completeness á la Henkin and the finite model property without resorting to filtrations [69] of canonical models for those systems.

4.1 Maximal Consistent Lists

Following the standard practice, we need to consider consistent finite sets of formulas for our proof of completeness. In principle, we can employ general sets of formulas in the formalisation. However, from the practical viewpoint, lists without repetitions are better suited since they are automatically finite and we can easily manipulate them by structural recursion. We define first the operation of finite conjunction of formulas in a list:Footnote 11

We prove some properties on lists of formulas and some \(\mathbb{G}\mathbb{L}\)-lemmas involving CONJLIST. These properties and lemma allow us to define the notion of consistent list of formulas and prove the main properties of this kind of objects:

In particular, we prove that:

-

a consistent list cannot contain both p and \(\texttt {Not}\;\texttt{p}\) for any formula \(\texttt{p}\), nor False;

-

for any consistent list \(\texttt{X}\) and formula \(\texttt{p}\), either \(\texttt{X}+\texttt{p}\) is consistent, or \(\texttt{X}+\texttt {Not}\;\texttt{p}\) is consistent, where \(+\) denotes the usual operation of appending an element to a list.

Our maximal consistent lists w.r.t. a given formula \(\texttt{p}\) will be consistent lists that do not contain repetitions and that contain, for any subformula of \(\texttt{p}\), that very subformula or its negation:Footnote 12

where X is a list of formulas and MEM q X is the membership relation for lists. We then establish the main closure property (MAXIMAL_CONSISTENT_LEMMA) of maximal consistent lists, namely closure under modus ponens (sources).

After proving some further lemmas with practical utility—in particular, the fact that any maximal consistent list behaves like a restricted bivalent evaluation for classical connectives—we can finally define the ideal (type of counter)model we are interested in.

We define first the relation of subsentences as

Next, given a formula p, we take as “standard”—and define the class GL_STANDARD_MODEL—those models consisting of:

-

C1.

the set of maximal consistent lists w.r.t. p made of subsentences of p—i.e. its subformulas or their negations—as possible worlds;

-

C2.

an accessibility relation R such that

-

a.

it is irreflexive and transitive, and

-

b.

for any subformula Box q of p and any world w, Box q is in w iff, for any x R-accessible from w, q is in x;

-

a.

-

C3.

an atomic evaluation that gives value T (true) to a in w iff a is a subformula of p.

The corresponding code is the following:

Notice that the conditions C1, C2.b and C3 are very general and do not relate to the logic under consideration: they constitute a minimal set of conditions required for normal modal systems; on the contrary, the condition C2.a is specific to GL, and needs to be properly mirrrored at the syntactic level. That is the role played by the last two lines of the definition GL_STANDARD_REL discussed at the end of the next section.

4.2 Maximal Extensions

What we have to do now is to show that the relation GL_STANDARD_MODEL on the type of relational models is non-empty. We achieve this by constructing suitable maximal consistent lists of formulas from specific consistent ones.

Our original strategy differs from the presentation in e.g. [8] for being closer to the standard Lindenbaum construction commonly used to prove completeness results. By doing so, we have been able to circumvent both many technicalities in formalising the combinatorial argument sketched by Boolos in [8, p. 79] and the problem – inherent to the Lindenbaum extension—due to the non-compactness of the system, as we mentioned before.

The main lemma states then that, from any consistent list \(\texttt{X}\) of subsentences of a formula \(\texttt{p}\), we can construct a maximal consistent list of subsentences of \(\texttt{p}\) by extending (if necessary) \(\texttt{X}\):

where X SUBLIST M denotes that each element of the list X is an elment of the list M.

The proof sketch is as follows: given a formula \(\texttt{p}\), we proceed in a step-by-step construction by iterating over the subformulas \(\texttt{q}\) of \(\texttt{p}\) not contained in X. At each step, we append to the list \(\texttt {X}\) the subformula \(\texttt{q}\)—if the resulting list is consistent—or its negation \(\texttt {Not}\; \texttt{q}\)—otherwise.

This way, we do not have to worry about the non-compactness of \(\mathbb{G}\mathbb{L}\) since we are working with finite objects—the type list—from the very beginning.

Henceforth, we see that—under the assumption that \(\texttt{p}\) is not a \(\mathbb{G}\mathbb{L}\)-lemma—the set of possible worlds in GL_STANDARD_FRAME w.r.t. \(\texttt{p}\) is non-empty, as required by the definition of relational structures:

Next, we have to define an \(\texttt{R}\) satisfying the conditions C2.a and C2b for a GL_STANDARD_FRAME; the following does the job:

Notice that the last two lines of this definition assure that the condition C2.a is satisfied, i.e., that we considering irreflexive transitive frames. Condition C2.b also needs to be satisfied: our ACCESSIBILITY_LEMMA assures thatFootnote 13

Such a “standard” accessibility relation (together with the set of the specific maximal consistent lists we are dealing with) defines then a structure in ITF with the required properties in order to satisfy the relation GL_STANDARD_FRAME:

Notice that we might easily modify the formal proofs of conditions C1, C2.b and C3 when dealing with different axiomatic systems, e.g. \({\mathbb {K}},{\mathbb {K}}4,{\mathbb {T}},{\mathbb {S}}4,{\mathbb {B}},{\mathbb {S}}5\), as it happens at the informal level in Boolos [8]. In fact, for each of each systems, we would only have to modify the definition of the “standard relation” (in particular, the last two lines of our GL_STANDARD_REL) and the parts of code in the proof of the accessibility lemma where we need to reason within the specific axiomatic calculus under investigation. For each of these additional logics, a semantic condition on standard frames (line 4 of the definition GL_STANDARD_FRAME) would then been defined formalising what [8], Chap. 5] reports on the topic.

4.3 Truth Lemma and Completeness

For our ideal model, it remains to reduce the semantic relation of forcing to the more tractable one of membership to the specific world. More formally, we prove—by induction on the complexity of the subformula \(\texttt{q}\) of \(\texttt{p}\)—that if \(\mathbb{G}\mathbb{L}\not \vdash \texttt{p}\), then for any world \(\texttt{w}\) of the standard model, \(\texttt{q}\) holds in \(\texttt{w}\) iff \(\texttt{q}\) is member of \(\texttt{w}\):Footnote 14

Finally, we can prove the main result: if \(\mathbb{G}\mathbb{L}\not \vdash \texttt{p}\), then the list \( [\texttt{Not}\; \texttt{p}]\) is consistent, and by applying EXTEND_MAXIMAL_CONSISTENT, we obtain a maximal consistent list \(\texttt{X}\) w.r.t. \(\texttt{p}\) that extends it, so that, by applying GL_truth_lemma, we have that \(\texttt{X}\not \Vdash \texttt{p}\) in our standard model. The corresponding formal proof reduces to the application of those previous results and the appropriate instantiations:

Notice that the family of frames ITF is polymorphic, but, at this stage, our result holds only for frames on the domain form list: the explicit type annotation would be

This is not an intrinsic limitation: the next section is devoted to generalising this theorem to frames on an arbitrary domain.

4.4 Generalizing via Bisimulation

As we stated before, our theorem COMPLETENESS_THEOREM provides the modal completeness for \(\mathbb{G}\mathbb{L}\) with respect to a semantics defined using models built on the type :form list. This result would suffice to provide a (very non-optimal) bound on the complexity of \(\mathbb{G}\mathbb{L}:\) Testing the validity of a formula of size n requires considering all models with cardinality k, for any \(k\le 2^{n}!\).

The same completeness result must also hold when considering models built on any infinite type. To obtain a formal proof of this fact, we need to establish a correspondence between models built on different types. It is well-known that a good way to make rigorous such a correspondence is through the notion of bisimulation [75].

In our context, given two frames (W1,R1) and (W2,R2) sitting respectively on types :A and :B, each with an evaluation function V1 and V2, a bisimulation is a binary relation Z:A->B->bool that relates two worlds w1:A and w2:B when they can simulate each other. The formal definition is as follows:

Then, we say that two worlds are bisimilar if there exists a bisimulation between them:

The key fact is that the semantic predicate holds respects bisimilarity:

From this, we can prove that bisimilarity preserves validity. The precise statements are the following:

In the last theorem, recall that the statement L(W,R) means that (W R) is a frame in the class of frames L.

Finally, we can explicitly define a bisimulation between ITF-models on the type :form list and on any infinite type :A. From this, it follows at once the desired generalization of completeness for \(\mathbb{G}\mathbb{L}\):

Furthermore, from the proof that the relation

defines a bisimulation between the ITF-standard model based on maximal consistent lists of formulas and the model based on corresponding sets of formulas, we obtain the traditional version of modal completeness, corresponding to theorem GL_COUNTERMODEL_FINITE_ SETS (sources) in our code. That, in turn, would enhance the complexity measure of derivability in \(\mathbb{G}\mathbb{L}\) as one would expect: now, in order to check whether a formula with size n is a theorem of Gödel–Löb logic, one may forget about the order of the subsentences, and consider all \(\texttt{ITF}\)-models with cardinality k, for any \(k\le 2^{n}\).

5 Decidability via Proof Theory

By using our EXTEND_MAXIMAL_CONSISTENT lemma, we succeeded in giving a rather neat mechanised proof of completeness w.r.t. relational semantics for \(\mathbb{G}\mathbb{L}\).Footnote 15 Since the relational countermodel we construct is finite, we can safely claimFootnote 16 that the finite model property holds for \(\mathbb{G}\mathbb{L}\).

As an immediate corollary, we have that theoremhood in \(\mathbb{G}\mathbb{L}\) is decidable, and, in principle, we could implement a decision procedure for that logic in OCaml. Our theorem GL_COUNTERMODEL_FINITE_SETS would also provide an explicit bound on the complexity of that task.

A naive approach to the implementation would proceed as follows.

We define the tactic NAIVE_GL_TAC and its associated rule NAIVE_GL_RULE that perform the following steps:

-

1.

Apply the completeness theorem w.r.t. finite sets;

-

2.

Unfold some definitions;

-

3.

Try to solve the resulting semantic problem using first-order reasoning.

Here is the corresponding code.

The above strategy can already prove some lemmas common to normal modal logics automatically but require some effort when derived in an axiomatic system. As an example, consider the following \(\mathbb{G}\mathbb{L}\)-lemma:

When developing a proof of it within the axiomatic calculus, we need to “help” HOL Light by instantiating several further \(\mathbb{G}\mathbb{L}\)-lemmas so that the resulting proof script consists of ten lines of code. On the contrary, our rule is able to check it in a few steps:

In spite of this, the automation offered by MESON tactic is often unsuccessful, even on trivial lemmas. For instance, the previous procedure is not even able to prove the basic instance Gödel-Löb scheme

This suggests that NAIVE_GL_TAC is based on a very inadequate strategy.

A better approach would consist of introducing an OCaml bound function on the size of the frames on which the validity of a formula A has to be checked, according to the cardinality remarks we made at the end of Sect. 4.4.

That is, for sure, a doable way to solve the task, but we were looking for a more principled and modern approach.

That is where structural proof theory has come to the rescue.

5.1 Bits of Structural Proof Theory

In very abstract terms, a deductive system consists of a set of starting formal expressions together with inference rules. Its principal aim is to find proofs of valid expressions w.r.t. a given logic L. A proof (or derivation) in a deductive system is obtained by application of the inference rules to starting expressions, followed by further application of the inference rules to the conclusion, and so on, recursively. A theorem (or lemma) in such a system is the formal expression obtained after a finite run of the procedure just sketched.

Such a definition captures the system \(\mathbb{G}\mathbb{L}\), and the derivability relation formalised in HOL Light by the predicate |- - of Sect. 3. Our previous remarks on \(\mathbb{G}\mathbb{L}\)-lemmas make explicit that this kind of deductive system has shortcomings that its computerisation per se cannot overcome.

The proof-theoretic paradigm behind \(\mathbb{G}\mathbb{L}\) and, more generally, axiomatic calculi could be called synthetic: proof search in such systems is not guided by the components of the formula one wishes to prove. The human prover and the proof assistant dealing with a formal derivability relation have to guess both the correct instances of the axiom schemas and the correct application order of inference rules required in the proof.

A better paradigm is provided by Gentzen’s sequent calculi, introduced first in [22, 23]. That work marks the advent of structural proof theory and the definite shift from investigations in synthetic deductive systems to analytic ones. Gentzen’s original calculi have been further refined by Ketonen [41] and Kleene [42] into the so-called \(\textsf{G3}\)-style systems.

In those systems, a sequent is a formal expression with shape

where \(\Gamma ,\Delta \) are finite multisets—i.e. finite lists modulo permutations—of formulas of a given language. The symbol \(\Rightarrow \) reflects the deducibility relation at the meta-level in the object language. \(\Gamma \) is called the antecedent of the sequent; \(\Delta \) is its consequent.

A derivation in a \(\textsf{G3}\)-style sequent calculus is a finite rooted tree labelled with sequents such that:

-

its leaves are labelled by initial sequents (the starting formal expressions of the abstract deductive system);

-

its intermediate nodes are labelled by sequents obtained from the sequent(s) labelling the node(s) directly above by a correct application of an inference rule of the calculus;

-

its root is the conclusion of the derivation, and it is called the end-sequent.

Figure 1 summarises the calculus \(\textsf{G3cp}\) for classical propositional logic. For each rule, one distinguishes:

-

its main formula, which is the formula occurring in the conclusion and containing the logical connective naming the rule;

-

its active formulas, which are the formulas occurring in the premise(s) of the rule;

-

its context, which consists of the formulas occurring in the premise(s) and the conclusion, untouched by the rule.

\(\textsf{G3}\)-style systems are the best available option for (efficiently) automating decision procedures: once an adequate—i.e. sound and complete—\(\textsf{G3}\)-calculus for a given logic L has been defined, in order to decide whether a formula is a theorem of L it suffices to start a root-first proof search of that exact formula in the related \(\textsf{G3}\)-calculus.

This is so because, by design, good \(\textsf{G3}\)-style systems satisfy the following desiderata:

-

1.

Analyticity: Each formula occurring in a derivation is a subformula of the formulas occurring lower in the derivation branch. This means that no guesses are required to the prover when developing a formal proof in the \(\textsf{G3}\)-calculus;

-

2.

Invertibility of all the rules: For each rule of the system, the derivability of the conclusion implies the derivability of the premise(s). This property avoids backtracking on the proof search. It also means that at each step of the proof search procedure no bit of information gets lost, so that the construction of one derivation tree is enough to decide the derivability of a sequent;

-

3.

Termination: Each proof search must come to an end. If the procedure’s final state generates a derivation, the end-sequent is a theorem; otherwise, it is generally possible to extract a refutation of the sequent from the failed proof search.Footnote 17

Satisfaction of all those desiderata by a pure Gentzen-style sequent calculus has been considered for long almost a mirage for non-classical logics. Times have changed with the advent of internalisation techniques of semantic notions in sequent calculi for non-classical logics.

The starting point of that perspective is still the basic \(\textsf{G3}\)-paradigm, but the formalism of the sequent system is extended either by

-

enriching the language of the calculus itself (explicit internalisation); or by

-

enriching the structure of sequents (implicit internalisation).

The implicit approach adds structural connectives to sequents other than ‘\(\Rightarrow \)’ and commas.Footnote 18 Most \({\textsf{G}}3\)-style calculi obtained this way provide, in general, very efficient decision procedures for the related logics. However, they are sometimes rather hard to design and might lack an additional and highly valuable desideratum of modularity.

The explicit approach reverses the situation. Explicit internalisation uses specific items to represent semantic elements; the formulas of the basic language are then labelled by those items and have shape e.g. x : A. That expression formalises the forcing relation we rephrased in Sect. 2.2, and classical propositional rules operate within the scope of labels. Furthermore, to handle modal operators, the antecedent of any sequent may now contain relational atoms of shape xRy, or any other expression borrowed from the semantics for the logic under investigation.

The rules for the modalities formalise the forcing condition for each modal connective. For instance, the rules in Fig. 2 define the labelled sequent calculus \(\textsf{G3K}\).

Extensions of the minimal normal modal logic K are obtained by rules for relational atoms, formalising the characteristic properties of each specific extension. For instance, the system \(\textsf{G3K4}\) for the logic K4 is defined by adding to \(\textsf{G3K}\) the rule

Labelled sequent calculi do satisfy, in general, the basic desiderata of \(\textsf{G3}\)-style systems and are rather modular.Footnote 19 However, since analyticity holds in a less strict version than the subformula principle mentioned in the previous definition, termination of proof search is sometimes hard to prove and, in many cases, produces complexity results far from optimal.

In the present paper, internalisation is considered only in its explicit version for some syntactic economy: we adopted Negri’s labelled sequent calculus [53] to achieve our goal of implementing a decision algorithm for GL.

Before proceeding, let us clarify the methodology behind labelled sequents for normal modal logics.

For those logics, labelled sequent calculi are based on a language \({\mathcal {L}}_{LS}\) which extends \({\mathcal {L}}\) with a set of world labels and a set of relational atoms of the form xRy for world labels x, y. Formulas of \({\mathcal {L}}_{LS}\) have only two possible forms

where A is a formula of \({\mathcal {L}}\).

Sequents are now pairs \(\Gamma \Rightarrow \Delta \) of multisets of formulas of \({\mathcal {L}}_{LS}\).

Accordingly, the rules for all logical operators are based on the inductive definition of the forcing relation between worlds and formulas of \({\mathcal {L}}\), here denoted by x : A. In particular, the forcing condition for the \(\Box \) modality

determines the following rules:

with the side condition for \({\mathcal {R}}\Box \) that y does not occur in \(\Gamma ,\Delta \).

This is the very general framework behind the labelled sequent calculus \(\textsf{G3K}\) for the basic normal modal logic \({\mathbb {K}}\) we defined in Fig. 2. The results presented by Negri and von Plato in [57], Chap. 6] assure that any extension of \({\mathbb {K}}\) that is semantically characterised by (co)geometric frame conditionsFootnote 20 can also be captured by an extension of \(\textsf{G3K}\) with appropriate (co)geometric rules.

Any of such extensions will keep the good structural properties of \(\textsf{G3cp}\). Most relevantly, proving termination of the proof search in these calculi provides a neat proof of decidability for the corresponding logics, which allows the direct construction of a countermodel for a given formula generating a failed proof search. In many cases, such a proof of decidability is obtained as follows: because of the structural correspondence between the proof tree generated during a backward proof search and the relational frames at the semantic level, proving the finite model property (hence, decidability) for a given logic reduces to proving that the such a root-first search generates only a finite number of new labels; in general, a specific proof search strategy needs to be defined for each system under consideration, in order to prevent looping interactions of (some of) the rules of the given calculus. A detailed account of the methodology is given in Garg et al. [21].

6 Implementing \(\textsf{G3KGL}\)

The frame conditions characterising GL—i.e. Noetherianity and transitivity, or, equivalently, irreflexivity, transitivity and finiteness—cannot be expressed by a (co)geometric implication since finiteness and Noetherianity are intrinsically second order.

Therefore it is not possible to define a labelled sequent calculus for GL by simply extending \(\textsf{G3K}\) with naive semantic rules.

Nevertheless, it is still possible to internalise the possible world semantics by relying on a modified definition of the forcing relation—valid for \(\texttt{ITF}\) models—in which the standard condition for \(\Box \) is substituted by the following

Following Negri [53], this suggests modifying the \({\mathcal {R}}\Box \) rule according to the right-to-left direction of (1).

The resulting labelled sequent calculus \(\textsf{G3KGL}\) is then characterised by the initial sequents, the propositional rules and the \({\mathcal {L}}\Box \) in Fig. 2 together with the rules in Fig. 3.

The rule \({\mathcal {R}}\Box ^{{L\ddot{o}b}}\) obeys to the side condition of not being y free in \(\Gamma ,\Delta \), in line with the universal quantifier used in the forcing condition.

In Negri [54], it is proven that \(\textsf{G3KGL}\) has good structural properties. Moreover, in the same paper, it is easily shown that the proof search in this calculus is terminating. Its failure allows to construct a countermodel for the formula at the root of the derivation tree: it suffices to consider the top sequent of an open branch, and assume that all the labelled formulas and relational atoms in its antecedent are true, while all the labelled formulas in its consequent are false. Termination is assured by imposing saturation conditions on sequents that might prevent the application of useless rules, according to the Schütte-Takeuti-Tait reduction for classical \(\textsf{G3}\) calculi. The reader is referred to Negri [54] for a detailed description of the proof search algorithm for \(\textsf{G3KGL}\).

This way, a syntactic proof of decidability for GL is obtained.

6.1 How to Use \(\textsf{G3KGL}\)

It is not hard to see how to use both our formalisation of modal completeness and the already known proof theory for \(\textsf{G3KGL}\) to the aim of implementing a decision algorithm in HOL Light for GL: our predicate holds (W,R) V A x corresponds precisely to the labelled formula x : A. Thus we have three different ways of expressing the fact that a world x forces A in a given model \(\langle W,R,V\rangle \):

Semantic notation | \(x\Vdash A\) |

|---|---|

Labelled sequent calculus notation | x : A |

HOL Light notation | holds (W,R) V A x |

This correspondence suggests that a deep embedding of \(\textsf{G3KGL}\) is unnecessary. Since internalising possible world semantics in sequent calculi is, in fact, a syntactic formalisation of that very semantics, we can use our own formalisation in HOL Light of validity in Kripke frames and adapt the goal stack mechanism of the theorem prover to develop \(\textsf{G3KGL}\) proofs by relying on that very mechanism.

That adaptation starts with generalising the standard tactics for classical propositional logic already defined in HOL Light.

Let us call any expression of forcing in HOL Light notation a holds-proposition.

As an abstract deductive system, the logical engine underlying the proof development in HOL Light consists of a single-consequent sequent calculus for higher-order logic. We must work on a multi-consequent sequent calculus made of multisets of holds-propositions and (formalised) relational atoms. To handle commas, we recur to the logical operators of HOL Light: a comma in the antecedent is formalised by a conjunction; in the consequent, it is formalised by disjunction.

Since we cannot directly define multisets, we need to formalise the sequent calculus rules to operate on lists and handle permutations through standard conversions of a goal with the general shape of an n-ary disjunction of holds-propositions, for \(n\ge 0\).

The intermediate tactics we now need to define operate

-

on the components of a general goal-term consisting of a finite disjunction of holds-propositions: these correspond to the labelled formulas that appear on the right-hand side of a sequent of \(\textsf{G3KGL}\) so that some of our tactics can behave as the appropriate right rules of that calculus;

-

on the list of hypotheses of the goal stack, which correspond to the labelled formulas and possible relational atoms that occur on the left-hand side of a sequent of \(\textsf{G3KGL}\), so that some of our tactics can behave as the appropriate left rules of that calculus.

In order to make the automation of the process easier, among the hypotheses of each goal stack, we make explicit the assumptions about the transitivity of the accessibility relation, the left-to-right direction of the standard forcing condition for the \(\Box \), and, separately, the right-to-left direction of the forcing condition for the same modality in ITF models. This way, the formal counterparts of, respectively, \(\textit{Trans}\), \({\mathcal {L}}\Box \), and \({\mathcal {R}}\Box ^{{L\ddot{o}b}}\) can be executed adequately by combining standard tactics of HOL Light.

Our classical propositional tactics implement, for purely practical reasons, left and right rules for the (bi) implication-free fragment of \({\mathcal {L}}\). Therefore, the only intermediate tactics determining a branching in the derivation tree—i.e. the generation of subgoals in HOL Light goal-stack—are those corresponding to \({\mathcal {L}}\vee \) and \({\mathcal {R}}\wedge \). The translation of a formula of \({\mathcal {L}}_{LS}\), given as a goal, to its equivalent formula in the implemented fragment is produced by our conversion HOLDS_NNFC_UNFOLD_CONV (sources). A similar conversion is defined for the labelled formulas that appear among the hypotheses, i.e. on the left-hand side of our formal sequents.

Specific tactics handle left rules for propositional connectives: each is defined by using HOL Light theorem tactic(al)s, which can be thought of as operators on a given goal, taking theorems as input to apply a resulting tactic to the latter. For instance, the left rule for negation \({\mathcal {L}}\lnot \) is defined by

which uses the propositional tautology \(\lnot P \Longrightarrow (P \vee Q) \Longrightarrow Q\) in an MP rule instantiated with a negated holds-proposition occurring among the hypotheses; then it adds the holds-proposition among the disjuncts of the new goal, as expected. \({\mathcal {R}}\lnot \) is defined analogously by NEG_RIGHT_TAC (sources).

On the contrary, \({\mathcal {L}}\vee \) and \({\mathcal {R}}\wedge \) are implemented by combining theorem tactic(al)s based on the basic operators CONJ_TAC and DISJ_CASES.

Modal rules are handled employing the explicit hypotheses we previously mentioned to deal with \({\mathcal {L}}\Box \) and \({\mathcal {R}}\Box ^{{L\ddot{o}b}}\). The same approach also works for the rule Trans, which we handle through a theorem tactical ACC_TCL (sources) operating on the relational atoms among the hypotheses of a current goal stack.

This basically completes the formalisation—or shallow embedding—of \(\textsf{G3KGL}\) in HOL Light.

6.2 Design of the Proof Search

Our efforts now turn to run, in HOL Light, an automated proof search w.r.t. the implementation of \(\textsf{G3KGL}\) we have sketched in the previous section.

We can again rely on theorem tactic(al)s to build the main algorithm, but we need to define them recursively this time.

First, we have to apply recursively the left rules for propositional connectives, as well as the \({\mathcal {L}}\Box \) rule: this is made possible by the theorem tactic HOLDS_TAC (sources). Furthermore, we need to saturate the sequents w.r.t. the latter modal rule, by considering all the possible relational atoms and applications of the rule for transitivity, and, eventually, further left rules: that is the job of the theorem tactic SATURATE_ACC_TAC (sources).

After that, it is possible to proceed with the \({\mathcal {R}}\Box ^{\text {L}\ddot{\textrm{o}}\text {b}}\): that is trigged by the BOX_RIGHT_TAC (sources), which operates by applying (the implementation of) \({\mathcal {R}}\Box ^{\text {L}\ddot{\textrm{o}}\text {b}}\) after SATURATE_ ACC_TAC and HOLDS_TAC.

At this point, it is possible to optimise the application of BOX_RIGHT_TAC by applying the latter tactic after a “sorting tactic” SORT_BOX_TAC (sources): that tactic performs a conversion of the goal term and orders it so that priority is given to negated holds-propositions, followed by those holds-propositions formalising the forcing of a boxed formula. Each of these types of holds-propositions are sorted furthermore as follows:

holds WR V p w precedes holds WR V q w if p occurs free in q and q does not occur free in p; or if p is “less than” q w.r.t. the structural ordering of types provided by the OCaml module Pervasives.

We programmed the tactic GL_TAC to perform the complete proof search; from that tactic, we define the expected GL_RULE (sources).

To keep it short, our tactic works as expected:

-

1.

Given a formula A of \({\mathcal {L}}\), let-terms are rewritten together with definable modal operators, and the goal is set to |- A;

-

2.

A model \(\langle W,R,V\rangle \) and a world \(w\in W\)—where W sits on the type num—are introduced. The main goal is now holds (W,R) V A w;

-

3.

Explicit additional hypotheses are introduced to be able to handle modal and relational rules, as anticipated before;

-

4.

All possible propositional rules are applied after unfolding the modified definition of the predicate holds given by HOLDS_NNFC_UNFOLD_CONV. This assures that at each step of the proof search, the goal term is a finite conjunction of disjunctions of positive and negative holds-propositions. As usual, priority is given to non-branching rules, i.e. to those that do not generate subgoals. Furthermore, the hypothesis list is checked, and Trans is applied whenever possible; the same holds for \({\mathcal {L}}\Box \), which is applied any appropriate hypothesis after the tactic triggering transitivity. Each new goal term is reordered by SORT_BOX_TAC, which always precedes the implementation of \({\mathcal {R}}\Box ^{{L\ddot{o}b}}\).

The procedure is repeated starting from step 2. The tactic governing this repetition is FIRST o map CHANGED_TAC, which triggers the correct tactic—corresponding to a specific step of the very procedure—in GL_STEP_TAC (sources) that does not fail.

At each step, moreover, the following condition is checked by calling ASM_REWRITE_TAC:

- Closing:

-

The same holds-proposition occurs both among the current hypotheses and the disjuncts of the (sub)goal; or holds (W,R) V False x occurs in the current hypothesis list for some label x.

This condition states that the current branch is closed, i.e. an initial sequent has been reached, or the sequent currently analysed has a labelled formula \(x:\bot \) in the antecedent.

Termination of the proof search is assured by the results presented in [54]. Therefore, we can justify our choice to conclude GL_TAC by a FAIL_TAC that, when none of the steps 2–4 can be repeated during a proof search, our algorithm terminates, informing us that a countermodel for the input formula can be built.

That is exactly the job of our GL_BUILD_COUNTERMODEL (sources) tactic: it considers the goal state which the previous tactics of GL_TAC stopped at; collects all the hypotheses, discarding the meta-hypotheses; and negates all the disjuncts constituting the goal term. Again, by referring to the results in [54, 58], we know that this information suffices to the user to construct a relational countermodel for the formula A given as input to our theorem prover for GL.

6.3 Some Examples

Because of its adequate arithmetical semantics, Gödel–Löb logic reveals an exceptionally simple instrument to study the arithmetical phenomenon of self-reference, as well as Gödel’s results concerning (in)completeness and (un)provability of consistency.

From a formal viewpoint, an arithmetical realisation \(*\) in Peano arithmetic (PA)Footnote 21 of our modal language consists of a function commuting with propositional connectives and such that \((\Box A)^{*}:=Bew(\ulcorner A^{*}\urcorner )\), where Bew(x) is the formal provability predicate for PA.

Under this interpretation, we will read modal formulas as follows:

\(\Box A\) | A is provable in PA |

\(\lnot \Box \lnot A\) | A is consistent with PA |

\(\lnot \Box A\) | A is unprovable in PA |

\(\Box \lnot A\) | A is refutable in PA |

\((\Box A)\vee (\Box \lnot A)\) | A is decidable in PA |

\((\lnot \Box A)\wedge (\lnot \Box \lnot A)\) | A is undecidable in PA |

\(\Box (A \leftrightarrow B)\) | A and B are equivalent over PA |

\(\Box \bot \) | PA is inconsistent |

\(\lnot \Box \bot ,\; \Diamond \top \) | PA is consistent |

We tested our procedure GL_RULE on some examples.Footnote 22 First, as a sanity check, we applied it to all the schemas (including axioms but excluding rules) that were initially proven directly in the GL calculus (see our discussion in Sect. 3.2). In total, 56 such lemmas were (re-)proven by our procedure in about 3.5 seconds.

Next, we used the procedure to prove some other results of meta-mathematical relevance; for instance:

-

Undecidability of consistency. If PA does not prove its inconsistency, then its consistency is undecidable. The corresponding modal formula is

$$\begin{aligned} \lnot (\Box \Box \bot )\rightarrow \lnot (\Box \lnot \Box \bot ) \wedge \lnot (\Box \lnot \lnot \Box \bot ) \end{aligned}$$ -

Undecidability of Gödel’s formula. The formula stating its own unprovability is undecidable in PA, if the latter does not prove its inconsistency. The corresponding modal formula is

$$\begin{aligned} \Box (A \leftrightarrow \lnot \Box A) \wedge \lnot \Box \Box \bot \rightarrow \lnot \Box A \wedge \lnot \Box \lnot A \end{aligned}$$ -

Reflection and iterated consistency.

$$\begin{aligned} \Box ((\Box p \rightarrow p) \rightarrow \Diamond \Diamond \top ) \rightarrow \Diamond \Diamond \top \rightarrow \Box p \rightarrow p \end{aligned}$$ -

Formalised Gödel’s second incompleteness theorem. In PA, the following is provable: If PA is consistent, it cannot prove its own consistency. The corresponding modal formula is

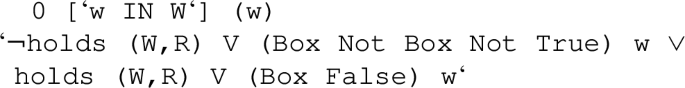

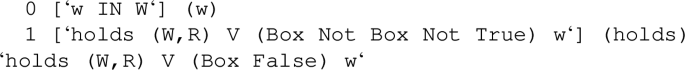

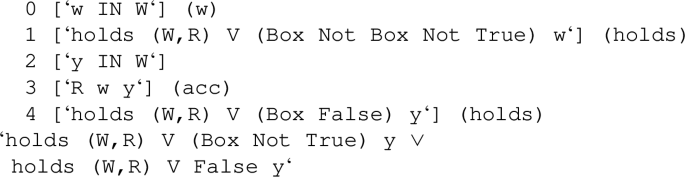

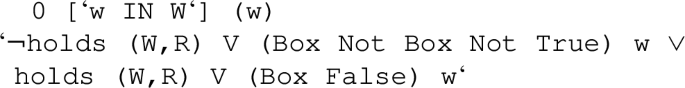

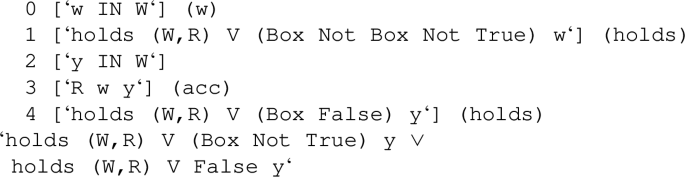

$$\begin{aligned} \lnot \Box \bot \;\rightarrow \;\lnot \Box \Diamond \top \end{aligned}$$For the reader’s sake, we describe for this specific example the main steps constituting both the decision procedure (GL_RULE) and the shallow embedding of \(\textsf{G3KGL}\) in the interactive proof mechanism of HOL Light. First, the goal is set to

Then, by applying the completeness theorem and unfolding and sorting the disjuncts (HOLDS_NNFC_UNFOLD_CONV, and SORT_BOX_TAC discussed above), we obtain the following goal stack:

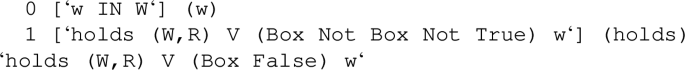

Next, by using \({\mathcal {R}}\lnot \) (NEG_RIGHT_TAC), we have

Now, we can trigger \({\mathcal {R}}\Box ^{{L\ddot{o}b}}\) followed by \({\mathcal {L}}\Box \) (BOX_RIGHT_TAC), to obtain:

By now, the second disjunct is deleted, and by applying \({\mathcal {R}}\Box ^{{L\ddot{o}b}}\) followed by \({\mathcal {R}}\lnot \), we are done by \({\mathcal {L}}\bot \) (closing condition by ASM_REWRITE_TAC).

As already discussed, most of these proofs seem to be unfeasible using a generic proof search approach such as the one implemented by MESON or METIS, either axiomatically or semantically (see Sect. 3.2 and the beginning of Sect. 5, respectively). Thus, obtaining the formal proof in HOL Light of some of the above four results using more basic techniques can be challenging. Our procedure can prove them in a few seconds.

Finally, we tested the ability of our procedure to find countermodels. For instance, consider this reflection principle, which is a non-theorem in GL:

Our procedure fails (meaning it does not produce a theorem) on this formula and warns the user that a countermodel has been constructed. As expected, the structure that the countermodel constructor GL_BUILD_COUNTERMODEL returns is the one that can be graphically rendered as

7 Related Work

Our formalisation gives a mechanical proof of completeness for \(\mathbb{G}\mathbb{L}\) in HOL Light which sticks to the original Henkin’s method for classical logic. In its standard version, its nature is synthetic and intrinsically semantic [18]. As we stated before, it is the core of the canonical model construction for most normal modal logics.

That approach does not work for \(\mathbb{G}\mathbb{L}\) because of its non-compactness. This issue is usually sidestepped by restricting to a finite subformula universe, as done in Boolos [8]. Nevertheless, we build the subformula universe in our mechanised proof by recurring to a restricted version of Henkin’s construction without resorting to the syntactic manipulations that are proof-sketched in [8], Chap. 5].

As far as we know, no other mechanised proof of modal completeness for \(\mathbb{G}\mathbb{L}\) has been given before, despite there exist formalisations of similar results for several other logics.

In particular, both Doczkal and Bard [13], and Doczkal and Smolka [14] deal with completeness and decidability of non-compact modal systems—namely, converse PDL and CTL, respectively. They use Coq/SSReflect to give constructive proofs of completeness on the basis of Kozen and Parikh [43] for PDL and Emerson and Halpern [16] for CTL. Moreover, they propose a simulation of natural deduction for their formal axiomatic systems to make the proof development of lemmas in the Hilbert calculi that are required in the formalisation of completeness results easier. Their methodology is based on a variant of pruning [38], and the proposed constructive proof of completeness provides an algorithm to build derivations in the appropriate axiomatic calculi for valid formulas.

Formal proof of semantic completeness for classical logic has defined an established trend in interactive theorem proving since Shankar [72], where a Hintikka-style strategy is used to define a theoremhood checker for formulas built up by negation and disjunction only.

A very general treatment of systems for classical propositional logic is given in Michaelis and Nipkow [50]. They investigate an axiomatic calculus in Isabelle/HOL along with natural deduction, sequent calculus, and resolution system, and completeness is proven by Hintikka-style method for sequent calculus first, to be lifted then to the other formalisms through translations of each system into the others. Their formalisation is more ambitious than ours, but, at the same time, it is focused on a very different aim. A similar overview of meta-theoretical results for several calculi formalised in Isabelle/HOL is given in Blanchette [7], who provides a more general investigation, though unrelated to modal logics.

Regarding intuitionistic modalities, Bak [3] gives a constructive proof of completeness for IS4 w.r.t. a specific relational semantics verified in Agda. It uses natural deduction and applies modal completeness to obtain a normalisation result for the terms of the associated \(\lambda \)-calculus.

Bentzen [6] presents a Henkin-style completeness proof for \({\mathbb {S}}5\) formalised in Lean. That work applies the standard method of canonical models—since \({\mathbb {S}}5\) is compact.

More recently, Xu and Norrish [77] used the HOL4 theorem prover to treat model theory of modal systems. For future work, it might be interesting to use their formalisation along with the main lines of our implementation of axiomatic calculi to merge the two presentations—syntactic and semantic—exhaustively.

Our formalisation, however, has been led by the aim of developing a (prototypical) theorem prover in HOL Light for normal modal logics. The results concerning GL that we have presented here can be considered a case study of our original underlying methodology.

Automated deduction for modal logic has become a relevant scientific activity recently. An exhaustive comparison of our prover with other implementations of modal systems is beyond our scope. Nevertheless, we care to mention at least three different development lines on that trend.

The work [30] by Goré and Kikkert consists of a highly efficient hybridism of SAT solvers, modal clause-learning, and tableaux methods for modal systems. That prover deals with minimal modal logic K and its extensions T and S4. The current version of their implementation does not produce proof or a countermodel for the input formula; however, the code is publicly available, and minor tweaks should make it do so.

The conference paper [26] by Girlando and Straßburger presents a theorem prover for intuitionistic modal logics implementing proof search for Tait-style nested sequent calculi in Prolog. Because of the structural properties of those calculi, that prover returns, for each input formula, a proof in the appropriate calculus or a countermodel extracted from the failed proof search in the system. Similar remarks could be formulated for the implementation described in [27] concerning several logics for counterfactuals.

The latter formalisations are just two examples of an established modus operandi in implementing proof search in extended sequent calculi for non-classical logics by using the mere depth-first search mechanism of Prolog. Other instances of that line are e.g. [1, 24, 25][59,60,61,62], and [12]. None of those provers deals explicitly with GL, but that development approach would find no issue formalising \(\textsf{G3KGL}\) too.

Similar remarks about Papapanagiotou and Fleuriot [64] could be made. They propose a general framework for object-level reasoning with multiset-based sequent calculi in HOL Light. More precisely, they present a deep embedding of those systems by defining an appropriate relation between multisets in HOL Light and encode two \(\textsf{G1}\) calculi: a fragment of intuitionistic propositional logic and its Curry-Howard analogous type theory. Specific tactics are then defined to perform an interactive proof search of a given sequent. For our purposes, it might be interesting to check whether their implementation may be of some help to enhance the performance and functionality of our prototypical theorem prover.Footnote 23

It is worth noticing that the paper [31] by Goré et al. formalises directly in Coq the \(\textsf{G3}\)-style calculus \(\textsf{GLS}\) and proves that the system is structurally complete; most notably, it gives a computer checked proof that the cut rule is admissible in that calculus, and by this formalisation, it clarifies many aspects of the structural analysis of this logic that had remained opaque at the “pencil and paper” level.Footnote 24

A specific tableaux based theorem prover for GL is described in Goré and Kelly [29], where they also discuss many efficiency related aspects of their artifact.

When writing this prototype, we aimed at high flexibility in experimenting with the certification of GL tautologies and with constructing possible countermodels to a modal input formula. The current stage of the artefact is heavily based on the HOL Light tactic machinery and is slightly naive in some aspects, most notably concerning efficiency. A more mature approach would provide a dedicated procedure for the search phase, which uses the tactic mechanism in the last stage of its execution, as done in tactics such as METIS, MESON or ARITH, implemented, for instance, by Harrison [33], Hurd [37], and Färber and Kaliszyk [17].

An immediate step would be to enhance the implementation of formal proofs in \(\textsf{G3KGL}\) so that derivation trees are represented as proof objects in the HOL Logic and checked by our procedure. Incidentally, this would constitute an alternative implementation of the labelled sequent calculus using the paradigm of deep embedding instead of shallow embedding. Moreover, we could use it to construct concrete proof trees for \(\textsf{G3KGL}\). The ideal goal would be to make the prover run in PSPACE.

We also intend to measure the performance of our prototype—and its eventual definitive version—using a benchmark set for GL in Balsiger et al. Logics Workbenchs [4], and compare it with, e.g. Goré and Kelly’s theorem prover.

Moving from the experiments about GL we propose in the present work, we plan to develop a more general mechanism to deal with (ideally) the whole set of normal modal logics within HOL Light. At the same time, we intend to add the necessary machinery to check the generated countermodels without affecting the program’s performance.

Notes

In contemporary research, this is still the main strategy to prove arithmetical completeness for other modalities for provability and related concepts, particularly for interpretability logics.

Some of them are closely related to the field of proof theory; others point at developing a uniform proof-strategy to establish adequate semantics in formal theories of arithmetic having different strengths and flavours. The reader is referred to Beklemishev and Visser [5] for a survey of open problems in provability logics.

We are grateful to an anonymous referee for pointing us to that work.

Refer to Sect. 4.4 for the explicit bound one can obtain for the complexity of such a decision procedure.

See Copeland [10] for the historical development of this notion.

We warn the reader about a potentially misleading notation. In the following statement, two interrelated mathematical objects occur, both denoted by W for convenience: one is the type W, and the other is the set W on the former—in a sense explained in the introduction about the HOL Light notation.

Notice that the lemma ITF_NT allows us to derive the former result as a corollary of the latter.

Small modifications to limit the application of \(\textsf{NR}\) on the definition of |- - would introduce the notion of derivability from a set of assumptions, so that the deduction theorem would hold [32].

The HOL Light tactics for first-order reasoning MESON and METIS were unable, for example, to instantiate autonomously the obvious middle formula for the transitivity of an implication, or even the specific formulas of a schema to apply to the goal in order to rewrite it.

Notice that in this definition, we perform a case analysis where the singleton list is treated separately (i.e. we have CONJLIST [p] = p). This is slightly uncomfortable in certain formal proof steps: in retrospect, we might have used a simpler version of this function. However, since this is a minor detail, we preferred not to change our code.

Here we define the set of subformulas of \(\texttt{p}\) as the reflexive, transitive closure of the set of formulas on which the main connective of \(\texttt{p}\) operates: this way, the definition is simplified, and it is easier to establish standard properties of the set of subformulas employing general higher-order lemmas in HOL Light for the closure of a given relation.