Abstract

The educational significance of eliciting students’ implicit theories of intelligence is well established, with the majority of this work focussing on theories regarding entity and incremental beliefs. However, a second paradigm through which to view implicit theories exists in the prototypical nature of intelligence. This study initiates an investigation into students’ beliefs concerning intellectual behaviours through the lens of prototypical definitions within STEM education. To achieve this, the methodology designed by Sternberg et al. (J Pers Soc Psychol 41(1):37–55, 1981) was adopted, with surveys administered to students of technology education requiring participants to describe characteristics of intelligent behaviour. A factor analytic approach including exploratory factor analysis, confirmatory factor analysis and structural equation modelling was taken in analysing the data to determine the underlying constructs which the participants viewed as critical in their definition of intelligence. The findings of this study illustrate that students of technology education perceive intelligence to be multifaceted, comprising three factors: social, general and technological competences. Implications for educational practice are discussed relative to these findings. While this study initially focuses on the domain of technology education, a mandate for further work in other disciplines is discussed.

Introduction

Theories of intelligence can be broadly divided into two categories: implicit theories and explicit theories (Spinath et al. 2003). Implicit theories describe people’s conceptions of intelligence, with pertinent frameworks emerging from their amalgams. Explicit theories differ as they derive from empirical evidence of cognitive processing. Models of intelligence from both categories have significant value in a variety of fields such as education, occupational psychology and cognitive science. However, their contributions to such fields differ in important ways. Explicit theories, due to their empirical foundations, offer evidence-based schematics illustrating networks of cognitive functions. Examples include the Cattell-Horn-Carroll (CHC) theory of intelligence (Schneider and McGrew 2012), which has emerged largely as a result of psychometric research on intelligence test scores; the theory of the ‘Adaptive Toolbox’ (Gigerenzer 2001; Gigerenzer and Todd 1999), which describes a set of heuristics generated from research on human problem solving and decision making; and the Parieto-Frontal Integration Theory (P-FIT) of intelligence (Jung and Haier 2007), which synthesises neurological evidence to present a “parsimonious account for many of the empirical observations, to date, which relate individual differences in intelligence test scores to variations in brain structure and function” (p. 135). Implicit theories serve a different purpose than describing cognitive functioning. Sternberg (2000) presents four such merits: (1) implicit theories govern the way people evaluate their own intelligence and that of others, (2) they give rise to explicit theories, (3) they are useful in auditing the validity of explicit theories, and (4) they can help illuminate cross-cultural differences pertinent to intellectual and cognitive development.
A fifth function of implicit theories concerns their pragmatic potential within educational settings, both in their predictive capacity for academic performance (e.g. Dweck and Leggett 1988) and their capacity to elicit intellectual traits of importance within a discipline (e.g. Sternberg et al. 1981). Implicit theories have also been shown to affect the enacted behaviour of teachers (Brevik 2014; Pui-Wah and Stimpson 2004), further emphasising their educational significance.

Implicit theories of intelligence as predictors of academic achievement

One perspective of implicit theories of intelligence which is regularly adopted within investigations into academic ability is that of ‘entity’ and ‘incremental’ beliefs (Dweck and Leggett 1988). This perspective describes an implicit belief system that ability or intelligence is either fixed (entity belief) or malleable (incremental belief). While typically considered to be a continuum from entity to incremental (Tarbetsky et al. 2016) some researchers adopt a dichotomous position (Kennett and Keefer 2006). People with entity beliefs view intelligence and ability as uncontrollable constructs which can only be demonstrated but not developed (Tarbetsky et al. 2016). People who hold these beliefs have been associated with a fear of failure (Dweck and Leggett 1988) and are therefore prone to adopting behaviour which can lead to the abandonment of self-regulatory strategies in problem solving (Dweck 1999; Stipek and Gralinski 1996). In contrast, people who hold incremental beliefs react more positively to challenges as they perceive such experiences as positive influences on learning (Dweck 1999). This framework is often subscribed to when examining retention within education. Underpinned by the recognition of academic achievement as a predictor of retention (Stinebrickner and Stinebrickner 2014), significant efforts have been invested in examining the role of implicit beliefs relative to such achievement with pertinent findings indicating a positive association (Blackwell et al. 2007; Dai and Cromley 2014; Dupeyrat and Mariné 2005). Specifically within Science, Technology, Engineering and Mathematics (STEM) disciplines, Dai and Cromley (2014) elucidate the importance of students’ developing and maintaining incremental beliefs towards their abilities. Critically, the adaptive nature of these implicit beliefs has been illuminated (Flanigan et al. 2017; Shively and Ryan 2013) identifying the capacity for pragmatic attempts to positively affect educational change.

Implicit theories of the prototypical nature of intelligence

Implicit theories viewed through the lens of entity and incremental beliefs have been investigated more broadly within education than just with regard to achievement and retention. They have also been shown to be associated with other constructs including academic motivation (Ommundsen et al. 2005), cognitive engagement (Dupeyrat and Mariné 2005), learning and achievement goals (Blackwell et al. 2007; Dinger and Dickhäuser 2013), epistemic beliefs and goal orientations (Chen and Pajares 2010), self-efficacy (Chen and Pajares 2010; Davis et al. 2011), and self-regulated learning (Burnette et al. 2013; Greene et al. 2010). While this is clearly an important framework, an alternative position on implicit theories exists with respect to the ‘prototypical’ nature of intelligence. This perspective emerged from the recognition that intelligence cannot be explicitly defined (Neisser 1979). Neisser (1979), an early proponent of this view, recounts a symposium conducted by the Journal of Educational Psychology in 1921 concerning experts’ definitions of intelligence, in which a number of prominent theorists offered definitions in an attempt to arrive at a unified understanding. The definitions offered include being “able to carry on abstract thinking” (Terman 1921, p. 128), involving “sensory capacity; capacity for perceptual recognition; quickness, range or flexibility of association; facility and imagination; span or steadiness of attention; quickness or alertness in response” (Freeman 1921, p. 133), and to have “learned, or can learn to adjust [oneself] to [one’s] environment” (Colvin 1921, p. 136). These definitions illustrate a range of emergent themes such as the qualification of intelligence as a combination of multiple specific capacities (Freeman 1921; Haggerty 1921; Thurstone 1921) and its association with the environment (Colvin 1921; Pintner 1921).
A second symposium was subsequently held with the aim of revising the aforementioned definitions (Sternberg and Detterman 1986). A moderate overlap (p = .5) was found between frequencies of listed behaviours across the symposia and the discussion as to whether intelligence is singular or manifold continued with no consensus (Sternberg 2000). Differences across the symposia did emerge such as the introduction of the concept of metacognition as an element of intelligence in the latter symposium, as well as seeing a greater emphasis on the interaction between knowledge and mental process, and on context and culture (Sternberg 2000). Sternberg (2000) argues that the increased emphasis on the interaction between knowledge and mental process stemmed from the origination of the computational ontology of intelligence supporting the view that conceptions of intelligence will continue to evolve in tandem with the progression of pertinent research agendas.

In the absence of an explicit definition for intelligence, Neisser (1979) promoted the idea of intelligence as being prototypical in nature. Utilising the work of Rosch as a foundation (Rosch 1977; Rosch and Mervis 1975; Rosch et al. 1976), Neisser analogises the concept of an ‘intelligent person’ to that of a ‘chair’ at a categorical level. Within each ‘Roschian’ category exists a list of descriptive properties. In the example of a chair, these may include properties such as containing a horizontal or near horizontal surface to sit on, legs for support, a vertical or near vertical surface to act as a back support, and being constructed to sit on. Neisser (1979, p. 182) describes the prototype of a category or concept as being “that instance (if there is one) which displays all the typical properties”. Stemming from this, an intelligent person, or by extension intelligence, can be prototyped through the extrapolation of typical descriptive properties ascribed by people within a specific cultural context. While Dweck’s work is useful in eliciting conceptions regarding the nature of intelligence, this framework affords the capacity to determine what is being described when referring to intelligence.

One of the earliest pieces of empirical evidence to support the adoption of a prototypical approach towards intelligence is the seminal work of Sternberg (Sternberg 1985; Sternberg et al. 1981). Initially, Sternberg et al. (1981) sought to establish whether experts conceived intelligence differently than laypeople. Across multiple experiments and adopting the use of surveys as a primary method, both cohorts were asked to list behaviours characteristic of intelligence, academic intelligence, everyday intelligence, and unintelligence, and to rate themselves on a Likert-type scale for each characteristic. Subsequent to this, different cohorts of each demographic then rated the previously generated list of behaviours on their importance in defining an ideally intelligent, academically intelligent, and everyday intelligent person, and on how characteristic each behaviour was of these people. While many interesting findings emerged, of most interest to examining the prototypical nature of intelligence are the results of a factor analysis on the characteristic ratings. Both demographics conceived intelligence as a three factor structure. For experts, intelligence was conceived to include verbal intelligence, problem-solving ability, and practical intelligence, while for laypeople it was conceived as including practical problem-solving ability, verbal ability, and social competence. Interestingly, Sternberg et al. (1981) noted how the first two factors for each cohort appear similar to the constructs of fluid and crystallised intelligence as described in Cattell and Horn’s Gf–Gc Theory (Cattell 1941, 1963; Cattell and Horn 1978; Horn and Cattell 1966). The third factor for each cohort describes a practical intelligence. For experts, this was literally termed ‘practical intelligence’ and for laypeople it was termed ‘social competence’.
This appears to be a cohort specific factor describing a set of behaviours important specifically but not exclusively within each demographic’s cultural context. In this respect, this approach provides an interesting methodology to capture the idiosyncrasies of perceived intelligence in multiple cultural contexts, with such knowledge ultimately affording the capacity to fulfil each of the functions of implicit theories of intelligence that Sternberg (2000) alludes to.

Subsequent to the work of Sternberg et al. (1981), a number of studies have been conducted which examine implicit theories of intelligence from a prototypical perspective. These have been conducted across various demographics including lay adults (Fry 1984; Mugny and Carugati 1989), experts (Mason and Rebok 1984), and children (Leahy and Hunt 1983; Yussen and Kane 1983). In addition, conceptions of intelligence spanning the adult life span have been examined (Berg and Sternberg 1992; Cornelius et al. 1989). Results from this work indicate that the prototypical characteristics of an intelligent person vary for people of different experiential backgrounds and of different ages. Sternberg (1985), as an extension of his original investigation, further examined conceptions of intelligence in conjunction with those of wisdom and creativity. Interestingly, he found that each construct showed convergent-discriminant validity with respect to each other. Participants also utilised their implicit theories in the evaluations of themselves and of hypothetical others. These findings illustrate the importance of understanding people’s implicit theories to assist in examining personal and interpersonal judgements and interactions. More recently, the methodology utilised by Sternberg et al. (1981) was adopted in a study where the prototypical characteristics of intelligence were framed through the theory of multiple intelligences (Gardner 1983). The aim of the study was to investigate gender differences in intelligence estimation, and its results corresponded to those of other studies in confirming the hypothesis that females make lower self-estimates than males (Pérez et al. 2010). Finally, the prototypical paradigm of investigation has been adopted in studies which examine constructs other than those of intelligence. Notably, it has been adopted in studies concerned with defining the concept of emotion (Fehr and Russell 1984; Russell 1991).
This approach was adopted as it is difficult to offer a classical definition for the construct of emotion; however, when viewed from a prototypical perspective it becomes easier to understand.

Intelligence and ability in technology education

Studies on the prototypical nature of intelligence have traditionally been conducted with domain general cohorts. Conducting similar investigations in a domain specific context evokes a paradigm of expertise which needs to be differentiated from intellectual ability. This particular study aspires to elicit conceptions of intelligence within STEM education from the perspective of technology education. Technology education has evolved from a vocational heritage (Dow 2006; Gibson 2008; Ritz 2009; Stables 2008) and as a result there are many pertinent skillsets in which people can develop expertise. Examples of such skillsets include engineering drawing, computer aided design (CAD), sketching, and model creation (Lin 2016). It is important to note that while these skillsets are important within the discipline, they do not constitute intellectual traits but rather activities which are operationalised through the utilisation of intellectual processes. To examine intelligence within the discipline, it is therefore important to examine the frameworks which describe activity at a macro level, and in the case of technology education such frameworks typically centre around the construct of ‘technological capability’. This concept has traditionally been difficult to define (Gagel 2004). One definition ascribed to the term suggests having an “understanding [of] appropriate concepts and processes; the ability to apply knowledge and skills by thinking and acting confidently, imaginatively, creatively and with sensitivity; [and] the ability to evaluate technological activities, artefacts and systems critically and constructively” (Scottish 1996, p. 7). Gibson’s (2008) model provides structure to this definition by describing technological capability as the unison of skills, values and problem solving underpinned by appropriate conceptual knowledge.
Black and Harrison’s (1985) model adds an additional dimension to the term through their recognition of the dichotomy of designing and making. They define technological capability as being able “to perform, to originate, to get things done, [and] to make and stand by decisions” (Black and Harrison 1985, p. 6). Despite slight variances in each definition, there are important commonalities. One trait which is regularly alluded to is the capacity to problem solve within the technological context.

The present study

As previously noted, this study aims to uncover implicit theories of intelligence within STEM education. As STEM constitutes the amalgam of four unique disciplines, it is important to extrapolate these individually to ensure that the potential nuanced perspectives of each discipline are uncovered. Results from each discipline could then be synthesised into a holistic theoretical model to be examined more intimately in the context of practice. As shown through the work of Sternberg et al. (1981), a cohort specific factor may emerge which is unique to the cohort and therefore the discipline. Understanding the remit of such a factor has significant potential for guiding the evolution of the discipline and for pedagogical planning. In addition to this, there has been a resurgence in the investigation of cognition in STEM education, specifically in relation to spatial ability (Lubinski 2010; Uttal and Cohen 2012; Wai et al. 2009). While spatial ability is a significant intellectual trait in its own right, it is paramount that other potential traits which merit investigation within STEM education and specifically technology education are identified. By understanding the behavioural and intellectual traits which are important to technology education, pedagogical practices can be designed around these to more effectively enhance student learning opportunities.

For this study, the methodology used by Sternberg et al. (1981) was adopted with minor variances. Two experiments were conducted: the aim of the first was to generate a list of behaviours characteristic of intelligence within STEM education, and the aim of the second was to generate a prototypical model of the participants’ implicit theories within this context. The study cohort (N = 404; males = 383, females = 21) consisted of undergraduate Initial Teacher Education (ITE) students specialising in technology education. Additional subject areas studied by these students other than technology and education include mathematics, design and communication graphics, material science and engineering. The students within this cohort can be described as quasi-experts (Kaufman et al. 2013): they do not hold the formal qualification to be considered discipline experts, but they are more informed than laypeople. This is an important consideration as engagement in the pertinent ITE programme provides exposure to contemporary educational theory in technology education while also providing the pragmatic experience of being a student. This suggests that conceptions of intelligence within this cohort will derive from both types of experience, providing a holistic prototypical model. All students from the 4 year groups of the undergraduate degree programme were included in the cohort; however, as the surveys were administered on a voluntary basis, not all students participated in each one.

Experiment 1

Participants

As discussed, participation in this study was voluntary and not all students responded to the survey instrument. In this experiment a total of 205 students responded to the survey, meaning that results from this sample have a margin of error of ± 4.81% at the 95% confidence level. A full breakdown of the participants for this experiment is provided in Table 1.
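The reported margin of error can be reproduced with a short calculation. The sketch below assumes a worst-case proportion of .5, a z value of 1.96, and a finite population correction for the cohort of N = 404; the source does not state which formula was used, so these are assumptions, and the function name is illustrative only.

```python
import math


def margin_of_error(sample_n, population_n, z=1.96, p=0.5):
    """Margin of error for a proportion, with finite population correction.

    Assumptions (not stated in the source): worst-case proportion p = .5
    and z = 1.96 for a 95% confidence level.
    """
    standard_error = math.sqrt(p * (1 - p) / sample_n)
    # The correction shrinks the error because the sample is a large
    # share of a small, known population.
    fpc = math.sqrt((population_n - sample_n) / (population_n - 1))
    return z * standard_error * fpc


# 205 respondents from the cohort of 404 students
print(f"{margin_of_error(205, 404):.2%}")
```

Under these assumptions the calculation returns the ± 4.81% figure reported for this sample.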

Design and implementation

The survey for this experiment was anonymous. The first part consisted of an initial set of questions to gather the demographic information presented in Table 1. The second part of the survey contained one question which asked participants to “list all behaviours characteristic of intelligence in the context of STEM (Science, Technology, Engineering and Mathematics) education”. This question was designed to reflect the intent of Sternberg et al.’s (1981, p. 40) initial question which asked participants to “list behaviors characteristic of intelligence, academic intelligence, everyday intelligence, or unintelligence” however it added the contextual element of STEM education. The survey was created electronically and distributed individually to all students within the cohort.

Treatment of data

As participants were listing behaviours, minor variations in language emerged. For example, the characteristic of “problem-solving” was frequently cited with variations such as “the ability to solve problems”, “problem solving ability”, and “problem-solving skills”. Therefore, prior to further analysis, all listed characteristics were coded to remove duplicates emerging from minor variations in language.

Experiment 1 results

A total list of 84 unique behaviours was generated as a result of the survey from Experiment 1 (see Table 5 for the full list). An overview of the item statistics for each year group within the sample is presented in Table 2. Interestingly, the number of answers offered by participants increased with each successive year group. This is perhaps reflective of greater experience and a more developed conception of intelligence. The low item reliability statistics across year groups (.165–.399) reflect a widely varied selection of behaviours offered by participating students. However, the high subject reliability statistics (.706–.867) suggest that despite offering varied sets of behaviours, there was a high level of consensus within year groups as to what intelligence within the discipline constitutes. The higher reliability statistics in the 3rd and 4th year groups further suggest that a crystallisation of conceptions is attained through educational experience.

Further to analysing the reliability of behaviours offered by the participants, the correlations between year groups in the frequencies of each listed behaviour were examined. The results are presented in Table 3. The correlations range from strong (r = .625) to very strong (r = .842) (Evans 1996) and were all significant at the p < .001 level which, in conjunction with the subject reliability statistics, suggests that not only is there a shared conception of intelligence within year groups but there is consensus between groups as well.

Experiment 2

Participants

In this experiment a total of 213 students responded to the survey, meaning that results from this sample have a margin of error of ± 4.62% at the 95% confidence level. While this experiment contains a different sample than Experiment 1, the participant population remained the same. A full breakdown of the participants for this experiment is provided in Table 4.

Design and implementation

The survey for this experiment was anonymous. The first part consisted of an initial set of questions to gather the demographic information presented in Table 4. The second part of the survey contained the list of 84 behavioural characteristics generated from Experiment 1 with one question which asked participants to “rate how important each of these characteristics are in defining ‘your’ conception/understanding of an intelligent person within STEM education”. The order of the items was randomised for each individual participant to prevent the occurrence of an order bias. Each behaviour was rated on a 5-point Likert scale with the ratings “1 - Not important at all”, “2 - Unimportant”, “3 - Neither important nor unimportant”, “4 - Important”, and “5 - Very important” (Cohen et al. 2007). This question was designed to reflect the intent of Sternberg et al.’s (1981) question regarding how characteristic behaviours were of intelligence; however, it again added the contextual element of STEM education. The survey was created electronically and distributed individually to all students within the cohort.

Data screening

As the multivariate analyses of exploratory factor analysis (EFA), confirmatory factor analysis (CFA), and structural equation modelling (SEM) utilised to analyse the data from this experiment assume normal distributions and are sensitive to extreme outliers, the data was screened for both univariate and multivariate outliers prior to conducting these tests (Kline 2016). Univariate outliers were identified as values which exceeded three standard deviations from the mean. In total, 123 data points (.68% of the dataset) were identified as univariate outliers under this criterion and were transformed to the value equal to three standard deviations from the mean (Kline 2016). Data was then screened for multivariate outliers using both the Mahalanobis D and Cook’s D statistics. The criterion for identifying outliers with the Mahalanobis D statistic was p < .001 (Kline 2016) and for the Cook’s D statistic it was any instance greater than 1 (Cook 1977). While no cases were identified as multivariate outliers under the Cook’s D criterion, seven cases (3.29% of the dataset) were identified as outliers under the Mahalanobis D statistic. These seven cases were excluded from the analysis, leaving a total dataset of 206 responses. Additionally, skewness and kurtosis values for all behaviours were within acceptable limits of ± 2 (Gravetter and Wallnau 2014; Trochim and Donnelly 2006).
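The two screening steps can be sketched with NumPy as follows. This is a minimal illustration, not the authors’ procedure: it assumes a participants-by-behaviours matrix of ratings, the function names are hypothetical, and the cut-off for the squared Mahalanobis distance (typically taken from a chi-square distribution with degrees of freedom equal to the number of variables, at p < .001) is left to the caller rather than computed here.

```python
import numpy as np


def winsorise_3sd(data, n_sd=3.0):
    """Pull values lying beyond n_sd standard deviations of their column
    mean back to that boundary; also return how many values were changed."""
    data = np.asarray(data, dtype=float)
    mean = data.mean(axis=0)
    sd = data.std(axis=0, ddof=1)  # sample standard deviation per column
    lower, upper = mean - n_sd * sd, mean + n_sd * sd
    n_changed = int(((data < lower) | (data > upper)).sum())
    return np.clip(data, lower, upper), n_changed


def mahalanobis_d2(data):
    """Squared Mahalanobis distance of each row from the column centroid."""
    data = np.asarray(data, dtype=float)
    centred = data - data.mean(axis=0)
    # pinv is used so that a near-singular covariance matrix does not fail
    inv_cov = np.linalg.pinv(np.cov(data, rowvar=False))
    return np.einsum("ij,jk,ik->i", centred, inv_cov, centred)


def multivariate_outliers(data, d2_cutoff):
    """Row indices whose squared distance exceeds a caller-supplied cut-off."""
    return np.flatnonzero(mahalanobis_d2(data) > d2_cutoff)
```

Rows returned by `multivariate_outliers` would then be dropped before the factor analyses, mirroring the exclusion of the seven cases described above.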

Experiment 2 results

Descriptive statistics

Prior to conducting any statistical analyses, it was of interest to examine the rank order of behaviours according to how important they were in defining the participants’ conceptions of intelligence. An observation of the standard deviation values suggests a relatively high degree of consensus among the participants. The behaviours listed range considerably in terms of how important they were, with a minimum mean value of 1.831 (Being awkward) and a maximum of 4.516 (Being interested in the subject area). An examination of the lower ranked items illustrates that social actions which could be described as negative, inhibitory or nonsocial, such as “being awkward” and “being antisocial”, are not considered important characteristics in defining intelligence, with mean scores rising considerably where behaviours transition to neutral and positive traits (Table 5).

Correlations and reliability statistics

Subsequent to examining the descriptive statistics and rank order of the behavioural traits, correlations between year groups, item reliability and subject reliability coefficients were determined (Table 6). All observed correlations were very strong (r = .919 to r = .980) (Evans 1996) and were all significant at the p < .001 level. In addition, all reliability statistics were very high, with the minimum item reliability statistic being observed within the 4th year cohort (α = .927) and the minimum subject reliability statistic being observed within the 3rd year cohort (α = .974). These results indicate there is a very strong conception of what it means to be intelligent within STEM education within this cohort. The strength of this conception is further emphasised when considering the results of Sternberg et al.’s (1981) study, where correlations among experts ranged from r = .67 to .90 and among laypeople ranged from r = .36 to .81. Interestingly, there appears to be little variance between year groups; however, considering that the strongest correlations are observed between the 1st and 2nd year cohorts (r = .972) and between the 3rd and 4th year cohorts (r = .980), there may be a shift in thinking occurring as the students transition from the initial 2 years to the latter 2 years of study. Finally, considering the strength of these results, it is important to appreciate the reliability statistics from Experiment 1, which highlight the large variance in the participants’ individual understandings of STEM intelligence.
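For readers unfamiliar with the α coefficients reported here, the sketch below computes Cronbach’s alpha for a participants-by-behaviours matrix of ratings. It assumes that α denotes Cronbach’s alpha; the source does not detail how the item and subject reliability statistics were derived, so applying the same function to the transposed matrix (treating participants as the ‘items’) is offered only as one plausible way of obtaining a participant-wise coefficient.

```python
import numpy as np


def cronbach_alpha(scores):
    """Cronbach's alpha for a matrix with one row per case and one
    column per item (assumed layout, not taken from the source)."""
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]                               # number of items
    item_variances = scores.var(axis=0, ddof=1)       # variance of each item
    total_variance = scores.sum(axis=1).var(ddof=1)   # variance of row totals
    return (k / (k - 1)) * (1 - item_variances.sum() / total_variance)


# Hypothetical usage on a ratings matrix named `ratings`:
# alpha_across_columns = cronbach_alpha(ratings)
# alpha_across_rows = cronbach_alpha(np.asarray(ratings).T)
```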

Exploratory factor analysis

A factor analytic approach was adopted for the final element of the analysis. This included a combination of exploratory factor analyses (EFA), confirmatory factor analyses (CFA) and structural equation modelling (SEM). EFA was selected as the intent of this analysis was to determine underlying relationships between the variables in the dataset (Byrne 2005). Specifically, the maximum likelihood method of extraction was selected as in the data screening stage assumptions of normality were not violated (Fabrigar et al. 1999). An oblique promax rotation was selected as it was hypothesised that the factors would correlate (Osborne 2015).

To determine the factorability of the dataset for the EFA a number of approaches were used. The correlation matrix was examined and revealed that 455 out of 3486 correlations were above .3. The anti-image correlation matrix was examined and showed anti-image correlations greater than .5 for all 84 variables. The Kaiser–Meyer–Olkin measure of sampling adequacy was .821, above the recommended value of .6 (Kaiser 1974), and Bartlett’s test of sphericity was significant (χ²(3486) = 8067.943, p < .001). These criteria suggest a reasonable level of factorability within the data (Tabachnick and Fidell 2007).
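Two of these checks are straightforward to sketch. With 84 variables there are 84 × 83 / 2 = 3486 unique correlations, which is also the degrees of freedom of Bartlett’s test. The illustrative function below counts the correlations above the threshold and computes Bartlett’s chi-square statistic using the standard formula −(n − 1 − (2p + 5)/6) ln|R|. Treating “above .3” as an absolute value is an assumption, as are the function and key names.

```python
import numpy as np


def factorability_summary(data, threshold=0.3):
    """Count sizeable correlations and compute Bartlett's sphericity statistic
    for a cases-by-variables matrix."""
    data = np.asarray(data, dtype=float)
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    # Unique off-diagonal correlations: p * (p - 1) / 2 of them
    upper = corr[np.triu_indices(p, k=1)]
    # Assumption: the threshold is applied to absolute correlations
    n_above = int((np.abs(upper) > threshold).sum())
    # slogdet avoids underflow of the determinant for large matrices
    _, log_det = np.linalg.slogdet(corr)
    chi_square = -(n - 1 - (2 * p + 5) / 6) * log_det
    return {
        "n_pairs": int(upper.size),
        "n_above": n_above,
        "chi_square": float(chi_square),
        "df": p * (p - 1) // 2,
    }
```

The p value would then be read from a chi-square distribution with the returned degrees of freedom.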

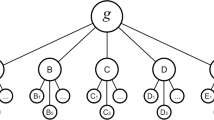

To determine the number of factors to extract, a number of criteria were examined including eigenvalues > 1 (Kaiser 1960), a scree test (Cattell 1966) and a parallel analysis (Horn 1965). Horn’s parallel analysis has been identified as one of the most accurate a priori empirical criteria, with the scree test sometimes a useful addition (Velicer et al. 2000). Figure 1 illustrates the scree plot with parallel analysis. An examination of the number of factors with eigenvalues > 1 suggests a 23 factor solution. The result of the parallel analysis suggests a five factor solution. Finally, an examination of the scree plot further corroborates a five factor solution; however, it suggests merit in examining three and four factor solutions as well. Therefore, EFAs were conducted with three, four and five factor solutions.
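The logic of parallel analysis can be sketched as follows: eigenvalues of the observed correlation matrix are compared with those obtained from random data of the same dimensions, and factors are retained while the observed eigenvalue exceeds its random counterpart. This is one common variant (eigenvalues of the unreduced correlation matrix, normally distributed random data, 95th percentile reference); the source does not specify which variant was used, so these choices and all names are assumptions.

```python
import numpy as np


def sorted_eigenvalues(data):
    """Eigenvalues of the correlation matrix, largest first."""
    corr = np.corrcoef(data, rowvar=False)
    return np.sort(np.linalg.eigvalsh(corr))[::-1]


def parallel_analysis(data, n_iterations=100, percentile=95, seed=0):
    """Return the suggested number of factors plus observed and reference
    eigenvalues for a cases-by-variables matrix."""
    data = np.asarray(data, dtype=float)
    n, p = data.shape
    rng = np.random.default_rng(seed)
    observed = sorted_eigenvalues(data)
    random_eigs = np.empty((n_iterations, p))
    for i in range(n_iterations):
        # Uncorrelated random data with the same shape as the real data
        random_eigs[i] = sorted_eigenvalues(rng.standard_normal((n, p)))
    reference = np.percentile(random_eigs, percentile, axis=0)
    exceeds = observed > reference
    # Count the leading run of observed eigenvalues above the reference
    n_factors = p if exceeds.all() else int(np.argmin(exceeds))
    return n_factors, observed, reference
```

For comparison, the eigenvalue > 1 criterion corresponds to `int((observed > 1).sum())`, which tends to suggest more factors than parallel analysis, as in the 23 versus five factor results reported above.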

The results of the three EFAs are presented in Table 7 (five factor solution), Table 8 (four factor solution) and Table 9 (three factor solution). In each instance only variables with a salient loading of > .4 on at least one factor are represented. No variable had a salient loading of > .4 on more than one factor and therefore a simple structure was attained (Thurstone 1947) in each circumstance.
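The salience rule described here can be expressed as a small check on a pattern matrix of loadings. In this illustrative sketch, rows are variables and columns are factors; using absolute loadings is an assumption, and the function name is hypothetical.

```python
import numpy as np


def salient_loading_summary(loadings, cutoff=0.4):
    """Return indices of variables with at least one salient loading and
    indices of variables that load saliently on more than one factor."""
    # Assumption: salience is judged on the absolute size of the loading
    loadings = np.abs(np.asarray(loadings, dtype=float))
    salient_count = (loadings > cutoff).sum(axis=1)
    retained = np.flatnonzero(salient_count >= 1)
    cross_loading = np.flatnonzero(salient_count > 1)
    return retained, cross_loading
```

An empty `cross_loading` result corresponds to the simple structure reported for each of the three solutions.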

The first two factors of the five factor model (Table 7) appear to represent factors describing ‘social competence’ and ‘general competence’. The social competence factor was named to reflect the factor found by Sternberg et al. (1981). The general competence factor was named to reflect Spearman’s (1904) idea of a general intelligence (g) while preserving the idea that the variables describe a level of competency. The third factor, through its inclusion of craft skill, imagination, and designerly abilities, suggests a discipline specific factor relative to the cohort and was therefore named ‘technological competence’. The fourth factor includes a series of behaviours arguably not conducive to positive social interactions such as being antisocial, regularly procrastinating and being awkward. While being quiet and reserved does not necessarily mean a person is not socially adept, in this instance the behaviour is posited to be reflective of a person who does not actively seek to engage in social interactions. Therefore this factor was termed ‘nonsocial behaviour’ to encompass both a lack of social skills and/or a reservation towards social interaction. The fifth factor, containing only three variables, is difficult to ascribe a name to. While being mathematical and being scientific appear to suggest a distinct type of personality, the high level of literacy factor is arguably representative of many different personas. Therefore, the tentative title of ‘scientific presence’ was ascribed to this factor under the position that in this circumstance, the level of literacy variable was considered to represent a level of technical literacy (Dakers 2006; Ingerman and Collier-Reed 2011).

In the four factor model (Table 8) the factor structure remained similar to the five factor solution; however, the fifth factor from the previous model (scientific presence) no longer emerged. Considering that it only described three variables, two of which no longer had a salient loading of > .4 on any of the remaining factors in the four factor solution, this suggests that the five factor solution may be representative of overextraction (Wood et al. 1996). In the three factor solution the previously named technological competence factor no longer emerged. In the five factor model it correlated moderately with the general competence factor (.414) with a similar correlation being observed between the two factors in the four factor model (.487). Many of the variables from the technological competence factor can be observed within the variables of the previously described general competence factor and therefore in the three factor model the general competence factor was renamed to ‘general and technological competence’ to reflect this change. However, considering the evidence for a cohort specific factor from the work of Sternberg et al. (1981) and the clear distinction between the variables in the general competence and technological competence factors, it is posited that the three factor model is representative of underextraction (Wood et al. 1996).

Confirmatory factor analysis

Building on the results from the EFA, further analysis was conducted through both CFA and SEM. While the four factor model is the most theoretically sound, both the three and five factor models were also initially examined through SEM to confirm which model best fit the data. In addition to examining these models, the existence of the nonsocial behaviour factor is questionable in terms of how characteristic it is of intelligence. An examination of the mean scores achieved by the variables within it suggests that it is not an important factor (Table 5). Therefore, SEM was also conducted on the five and four factor models with this factor excluded. The three factor solution was not examined without the nonsocial behaviour factor as the model would require an additional constraint to make it identifiable. The results of this analysis are presented in Table 10.

A number of fit indices were included to support the interpretation of the model of best fit. These include the relative chi-square statistic (χ2/df) (Wheaton, Muthén, Alwin, and Summers 1977) which should have values < 2 (Tabachnick and Fidell 2007; Ullman 2001), the goodness-of-fit index (GFI) (Jöreskog and Sörbom 1986) which should be ≥ .95 (Shevlin and Miles 1998), the adjusted goodness-of-fit index (AGFI) (Jöreskog and Sörbom 1986) which should be ≥ .90 (Hooper et al. 2008), the comparative fit index (CFI) (Bentler 1990) which has a cut-off point of ≥ .95 (Hu and Bentler 1999), the root mean square error of approximation (RMSEA) (Steiger and Lind 1980, cited in Steiger 1990) with a cut-off point of ≤ .06 (Hu and Bentler 1999; Lei and Wu 2007), and the Tucker-Lewis index (TLI) (Tucker and Lewis 1973) which is advised to be ≥ .95 (Lei and Wu 2007).
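For readers less familiar with these indices, the following sketch shows how four of them are commonly computed from the chi-square statistics of the fitted model and of the baseline (independence) model. It uses the standard textbook definitions rather than the output of any particular SEM package. The input values are hypothetical and are not taken from Table 10, and the use of N − 1 in the RMSEA denominator is an assumption, as some software uses N. The GFI and AGFI are omitted because they require the observed and model-implied covariance matrices.

```python
import math


def relative_chi_square(chi2, df):
    """Relative chi-square: the model chi-square divided by its degrees of freedom."""
    return chi2 / df


def rmsea(chi2, df, n):
    """Root mean square error of approximation (assumes the N - 1 convention)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model, df_model, chi2_base, df_base):
    """Comparative fit index relative to the baseline model."""
    numerator = max(chi2_model - df_model, 0.0)
    denominator = max(chi2_model - df_model, chi2_base - df_base, 0.0)
    return 1.0 if denominator == 0 else 1.0 - numerator / denominator


def tli(chi2_model, df_model, chi2_base, df_base):
    """Tucker-Lewis index relative to the baseline model."""
    base_ratio = chi2_base / df_base
    model_ratio = chi2_model / df_model
    return (base_ratio - model_ratio) / (base_ratio - 1.0)


# Hypothetical values for illustration only.
chi2_m, df_m = 150.0, 100      # fitted model
chi2_b, df_b = 1100.0, 120     # baseline (independence) model
n = 201                        # sample size

print(round(relative_chi_square(chi2_m, df_m), 3))
print(round(rmsea(chi2_m, df_m, n), 3))
print(round(cfi(chi2_m, df_m, chi2_b, df_b), 3))
print(round(tli(chi2_m, df_m, chi2_b, df_b), 3))
```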

However, models not meeting these cut-off points should not necessarily be rejected as there are degrees of model fit. For the RMSEA, it is suggested that values lower than .08 are indicative of reasonable fit with values lower than .05 indicating good fit, while for CFI values greater than .90 are suggested to indicate reasonable fit and values greater than .95 to indicate good fit (Kline 2005). Based on this, many researchers opt for .90 as the cut-off point for the GFI, AGFI, TLI and CFI indices and .08 for the RMSEA (e.g. Engle et al. 1999; Kozhevnikov and Hegarty 2001; Maeda and Yoon 2015; Vander Heyden et al. 2016). In addition to this, the GFI and AGFI indices are susceptible to effects caused by sample size (Hooper et al. 2008; Sharma et al. 2005) and therefore should not be used exclusively.
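The two sets of cut-off points described above can be compared with a small helper such as the one below. This is an illustrative sketch only. The dictionary names and the index values are hypothetical and are not those reported in Table 10. Treating the relaxed cut-offs as inclusive (≥ .90 and ≤ .08), and carrying the χ2/df criterion over unchanged, are assumptions.

```python
import operator

# Strict cut-off points as listed in the text.
STRICT = {
    "chi2_df": (operator.lt, 2.0),
    "GFI": (operator.ge, 0.95),
    "AGFI": (operator.ge, 0.90),
    "CFI": (operator.ge, 0.95),
    "RMSEA": (operator.le, 0.06),
    "TLI": (operator.ge, 0.95),
}

# Relaxed cut-off points: .90 for GFI, AGFI, TLI and CFI, and .08 for RMSEA.
# Assumption: the chi2/df criterion is unchanged and the bounds are inclusive.
RELAXED = dict(STRICT)
RELAXED.update({
    "GFI": (operator.ge, 0.90),
    "AGFI": (operator.ge, 0.90),
    "CFI": (operator.ge, 0.90),
    "TLI": (operator.ge, 0.90),
    "RMSEA": (operator.le, 0.08),
})


def check_fit(indices, cutoffs):
    """Map each supplied fit index to True (criterion met) or False."""
    return {name: compare(indices[name], bound)
            for name, (compare, bound) in cutoffs.items()
            if name in indices}


# Hypothetical fit indices for a single model.
model = {"chi2_df": 1.5, "GFI": 0.88, "AGFI": 0.86,
         "CFI": 0.93, "RMSEA": 0.05, "TLI": 0.92}

print(check_fit(model, STRICT))
print(check_fit(model, RELAXED))
```

Under these hypothetical values the CFI and TLI fail the strict criteria but meet the relaxed ones, while the GFI and AGFI fail both.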

An examination of the fit indices for each model reveals that all models meet the χ2/df and RMSEA criteria but no model meets the criteria for GFI, AGFI, CFI or TLI. As model D (the EFA four factor solution excluding the nonsocial behaviour factor) is the best fitting for these criteria, modifications were made by removing observed variables with low loadings on latent factors (Table 11). The approach taken was to remove a small number of variables at a time which loaded below .5 on their respective latent factor. A final model (Model D4) was examined in which all observed variables had loadings of ≥ .5.
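The stepwise refinement described above, in which a small number of low-loading variables are removed and the model is re-estimated, can be outlined as follows. This is a sketch under stated assumptions rather than the authors' procedure: `prune_low_loadings`, `batch_size` and the `refit` callback are hypothetical names, the re-estimation step is left to whichever SEM software is in use, and removing the lowest loadings first is an assumption, as the text does not specify the order.

```python
def prune_low_loadings(loadings, refit, threshold=0.5, batch_size=2):
    """Iteratively drop observed variables loading below `threshold`.

    loadings   : dict mapping variable name -> loading on its latent factor
    refit      : callable taking the retained variable names and returning a
                 new dict of loadings (hypothetical stand-in for re-estimating
                 the model in SEM software)
    batch_size : how many variables to remove per round (assumed to be small)

    Returns the final loadings and the list of variables removed per round.
    """
    removed_per_round = []
    while True:
        # Variables below the threshold, lowest loading first (assumed order).
        low = sorted((name for name, value in loadings.items()
                      if abs(value) < threshold),
                     key=lambda name: abs(loadings[name]))
        if not low:
            return loadings, removed_per_round
        drop = low[:batch_size]
        removed_per_round.append(drop)
        kept = [name for name in loadings if name not in drop]
        loadings = refit(kept)


# Stand-in for re-estimation: loadings are simply looked up, not re-estimated.
# In practice the loadings would change after every refit.
BASE = {"v1": 0.80, "v2": 0.45, "v3": 0.30, "v4": 0.60, "v5": 0.49}


def demo_refit(kept):
    return {name: BASE[name] for name in kept}


final, rounds = prune_low_loadings(dict(BASE), demo_refit)
print(final)
print(rounds)
```

The loop stops once every retained variable loads at or above the threshold, which corresponds to the stopping condition reported for Model D4.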

Ultimately no model achieved the criteria for model fit under the GFI and AGFI indices; however, due to the previously described argument regarding sample size effects, this was not regarded as critical to the analysis. After the second round of adjustments (Model D2(SEM)), reasonable model fit was achieved under the TLI and CFI indices. These were improved upon in subsequent refinements (Models D3(SEM) and D4(SEM) respectively). It was decided not to remove any more observed variables to improve Model D4(SEM) as its current structure provided a clear perspective of each latent variable and further reductions would render factor interpretation theoretically difficult. The final models are presented in Figs. 2 and 3, which show the factor loadings on the participants’ combined implicit theory of intelligence and the covariances between these factors.

Discussion

Implications for human intelligence research

Sternberg (1984) postulates the potential for a ‘common core’ of intellectual functions which are culturally shared. This construct is premised on the theory that certain intellectual behaviours are associated more with being human in general than with operating in any specific discipline. Defining the components of this common core as ‘metacomponents’ of intelligence, Sternberg posits them to include recognising the existence and nature of a problem, deciding upon the processes needed to solve the problem, deciding upon a strategy into which to combine those processes, deciding upon a mental representation upon which the processes and strategy will act, allocating processing resources in an efficacious way, monitoring one’s place in problem solving, being sensitive to the existence and nature of feedback, knowing what to do in response to this feedback, and actually acting upon this feedback (Sternberg 1980, 1982, 1984). Considering this theory in conjunction with the results of Sternberg et al.’s (1981) study and the results of this study suggests that, at least from an implicit perspective, the acknowledgement of such a common core does exist. Explicit evidence for a common core is offered through the wealth of psychometric research conducted with the aim of eliciting an empirically based objective theory of human intelligence. Of particular relevance is the theory of fluid and crystallised intelligence (Gf–Gc theory). The Gf–Gc theory was first theorised by Cattell (1941, 1943) as an advancement of Spearman’s (1904) idea of a singular general intelligence, g, into the dichotomy of a fluid and a crystallised intelligence. Cattell (1943) conceived his theory of fluid and crystallised intelligences from observations of intelligence tests designed for children and their lack of applicability to adult populations. Synthesising the observations of the adult dissociation of cognitive speed from power and the diminished g saturation in adult intellectual performances with neurological evidence identifying localised brain lesions as affecting children generally while a corresponding lesion affects adults more in terms of speeded tasks, abstract reasoning problems, and unfamiliar performances than in vocabulary, information and comprehension (e.g. Hebb 1941, 1942), Cattell (1943) postulated the potential for general intelligence to comprise two separate entities. Fluid intelligence is defined as “a facility in reasoning, particularly where adaptation to new situations is required” while crystallised intelligence is defined as “accessible stores of knowledge and the ability to acquire further knowledge via familiar learning strategies” (Wasserman and Tulsky 2005, p. 18). Sternberg et al. (1981) identified factors in both the expert and laypeople cohorts resembling fluid and crystallised intelligence factors, and the general competence factor apparent in this study also aligns with a fluid intelligence factor. The general competence factor identified within this study is reflective of Sternberg’s idea of a common core, with its included variables corresponding to his descriptions of metacomponents. Interestingly, a factor similar to a crystallised intelligence factor was not identified within this study; however, this is posited to be reflective of the nature of knowledge inherent within technology education.

Significance within STEM education

In order to appreciate the nature of the three factors identified from this study it is paramount that the context of technology education is examined. The results of this study do not suggest a crystallised intelligence factor as being perceived as inherently characteristic of intelligence within technology education. Considering crystallised intelligence as associated with having and developing a defined knowledge base, it is interesting that such a factor should not emerge within a specific discipline. While models of technological capability suggest the importance of an appropriate knowledge base (e.g. Gibson 2008), it is arguable that an explicit and defined knowledge base does not exist within the discipline. A reason for this is offered by McCormick (1997) who notes that technological activity is multidimensional, drawing on subjects such as science, mathematics and engineering, and that it is found in all spheres of life, making defining an explicit knowledge base very difficult. Instead, McCormick (1997) suggests that explicit technological knowledge will be relative to specific tasks and circumstances. Kimbell (2011) argues that technological knowledge is inherently different to scientific knowledge whereby scientific knowledge is concerned with literal truths and technological knowledge is more aptly associated with usefulness. As design is a core element of technology education, absolute knowledge is not always necessary. Instead, Kimbell (2011) suggests that ‘provisional knowledge’ is more aligned with the discipline and that learners reside in an “indeterminate zone of activity where hunch, half-knowledge and intuition are essential ingredients” (p. 7) in using their provisional knowledge to support further inquiry in response to particular tasks. Aligning with this and recognising that design is embedded within a personal and social context, Williams (2009, pp. 248–249) argues that “the domain of knowledge as a separate entity is irrelevant; the relevance of knowledge is determined by its application to the technological issue at hand. So the skill does not lie in the recall and application of knowledge, but in the decisions about, and sourcing of, what knowledge is relevant”.

The results of this study indicate that a defined knowledge base is not perceived as being inherently important within the discipline. Having empirical data corroborating this perspective from a cohort who are engaging with the domain both as learners and educators is particularly advantageous due to their unique position. From a pedagogical perspective, this presents educators with a particularly interesting challenge in that they need to negotiate the type of explicit knowledge they should teach as a medium for developing the broader intellectual and behavioural traits pertinent within the discipline. Furthermore, the findings from this study serve to facilitate the development of an empirically supported explicit theory as this evidence suggests that domain-free general capacities (Schneider and McGrew 2012) may serve as an auspicious core set of cognitive factors pertinent to technology education. It is important to note that this argument does not aspire to dilute the importance of developing technical expertise within the discipline as an advanced level of expertise in particular areas does typically constitute a developed and refined content knowledge. Instead, it serves to reiterate the position that, at least within technology education, intelligence and expertise can be seen as separable constructs. Intelligence within the discipline is multifaceted, perceived to consist of general, technological and social competencies. Expertise in specific areas is critical; however, the particular type of expertise developed will be in response to a specific need. Therefore, when considering pedagogy in technology education, as the nature of explicit technological knowledge can be variable, the results of this study are significant in refining the type of general competencies which teachers should aim to develop while they themselves use their own professional judgement in the design and selection of specific knowledge and tasks.

The results of this study do suggest a social competence factor and a general competence factor similar to fluid intelligence. Building on the idea that a defined knowledge base may not exist, these factors further describe the applied nature of technology education. Considering the magnitude of influence that design has within technology education, students regularly encounter novel situations. Fluid intelligence describes the ability to engage in such situations and a recognition of this substantiates the idea that intellectual behaviour in technology education is more concerned with a capacity to react to and negotiate within one’s environment rather than having acquired highly developed schema. Further considering the nature of technology education, design is recognised as a social activity (Hamilton 2003, 2004; Murphy and Hennessy 2001). Conversation is placed at the core of the educational process (Trebell 2007) where teacher–student and student–student interactions are critical for learning. Specifically within technology education, Murphy and Hennessy (2001) have shown that students seek opportunities to interact with peers even when not explicitly advocated for within the pedagogical approach adopted by the teacher. The social competence factor which emerged within this study reflects this idea and the importance of such social interactions within technology education is reflected in the contemporary agenda to support discourse through the provision of a shared language (O’Connor 2016; O’Connor et al. 2016a, b). Interestingly, a tentatively named nonsocial behaviour factor did emerge. While ultimately its removal did increase the model fit of the data, its initial presence is further suggestive of the importance of social skills within the discipline. It emerged in the results of Experiment 1 as some participants conceived intelligence as synonymous with an antisocial or nonsocial stereotype; however, such behaviours were ultimately considered unimportant in the cohort’s shared conception.

Perhaps of most importance to the discipline is the technological competence factor which emerged in the final model. Its importance to the cohort is shown in the SEM model (Fig. 2) as it has the highest loading (.93) on their implicit theory of intelligence. The variables which describe this factor are particularly interesting. While the “having good coordination” variable is ambiguous, it is posited that this was conceived as having a similar meaning to achieving a high level of craft skill. This particular theme is extremely pertinent to the discipline as the philosophical underpinnings of the discipline emphasise the criticality of craft skill and the ‘make’ aspect of the subject.

As technology education is only one of the four disciplines which describe STEM education, it would be of significant interest to conduct similar studies in the areas of science, engineering and mathematics education where the results of each could be synthesised to provide a holistic model of implicit theories within STEM. In particular, it would be interesting to identify if cohort specific factors similar to the technological competence factor would emerge as conceptions of intelligence have a significant role within multiple areas of education. It is posited that a factor similar to the general competence factor in this study would exist within the other disciplines with a factor similar to crystallised intelligence also being probable due to more explicit knowledge bases. One of the most significant implications of this work and for its progression is in its usefulness in developing an explicit theory of intelligence. There have been calls to make education and pedagogy more scientific (OECD 2002) and while many relevant explicit theories exist and are being developed, knowing what is perceived to constitute intellectual behaviour within specific disciplines can add an additional perspective to guide this research to further support teaching and learning practices.

Limitations and future research

There are a number of limitations which should be considered when interpreting the generalisability of the results and in framing pertinent future research agendas. Firstly, little work has been conducted with this particular methodology to examine prototypical conceptions of intelligence in education since the work of Sternberg (Sternberg 1985; Sternberg et al. 1981). Therefore, while theoretically a person’s belief about the structure of intelligence should influence their educational experience and engagement, further work needs to be conducted to objectively examine such effects. Substantial work in the area of entity and incremental beliefs, as previously discussed, shows that beliefs about the nature of intelligence have a significant effect; however, the generalisability of implicit theories cannot be assumed to include the prototypical perspective.

Secondly, this study was conducted with a cohort representative of only one cultural context. In this case the participant population comprised of a cohort of ITE students in one institution who have therefore been exposed to an educational philosophy synonymous with that context. In order to reduce the potential bias associated with an inherent pedagogy, ITE students from other technology education courses in other institutions and countries should be included to support generalisation. In addition, the cohort represents only one group of stakeholders within technology education. As quasi-experts, the knowledge base is not sufficient to provide certainty in an absolute model of theoretical intelligence within STEM education from the perspective of technology education. The perspectives of other stakeholders such as primary and post-primary pupils, primary and post-primary teachers, international technology education researchers and experts, and pertinent governmental representatives should be considered.

Thirdly, the study only considered one discipline from within STEM education and therefore further evidence is required to support the hypothesis of a cohort specific factor. It would be of significant interest to determine if ITE students from science, engineering and mathematics disciplines also conceived a cohort specific factor pertinent to their implicit theories of intelligence and to examine the similarities and variances between these. Combining the implicit theories of intelligence from all disciplines may result in a model containing factors which are appropriate to all disciplines which could be classified generally as perceived core intellectual traits within STEM education. This would support the creation of an explicit theoretical model of cognitive factors pertinent to STEM education through the adoption of empirical and objective measures, with core general factors pertinent to all disciplines and peripheral factors associated with individual disciplines.

Fourthly, as the list of behaviours generated in the initial experiment governed the remit of the second experiment, it may be appropriate for future studies to include an additional data collection phase in the interim. For example, the individual questionnaire from Experiment 1 could be succeeded by a series of focus groups in a quasi-Delphi approach where individual perspectives may act as a stimulus to prompt further thoughts from their peers. This approach would preserve the unbiased nature of the data from Experiment 1 but may increase the number of variables for Experiment 2 and would increase their validity.

Finally, the sample size for the multivariate analyses conducted in Experiment 2 could be considered small. While the sample (n > 200) is sufficient (Tabachnick and Fidell 2007), it would be advantageous to increase this. While the model fit indices from the CFA and SEM analyses suggest this was not a necessity, this would further reduce the biases previously discussed and would add to the statistical strength of the analysis.

Conclusion

The findings of this study provide insight into the way students of technology education perceive intelligence. While the importance of educators understanding students’ implicit theories about intelligence concerning entity and incremental beliefs is acknowledged (Flanigan et al. 2017), it is also of paramount importance for educators to understand what students perceive to be intelligent behaviour. A misalignment between student and teacher expectations has the potential to elicit many negative educational implications and therefore the knowledge of student beliefs can support the development of mutual understandings.

The findings of this study suggest that students of technology education perceive intelligence within the discipline to be multifaceted, comprising three factors including social, general and technological competences. As these are context specific, it would now be advantageous to synthesise these results with similar findings from other educational disciplines. Within technology education, these results afford educators, researchers and other stakeholders a lens through which to view educational planning and provision. Within the broader remit of STEM, the results of this study have the potential to frame the unique position of technology education. At a minimum, the technological competence factor allows for this, while the social and general competence factors facilitate comparisons with additional disciplines.

References

Bentler, P. (1990). Comparative fit indexes in structural models. Psychological Bulletin, 107(2), 238–246.

Berg, C., & Sternberg, R. (1992). Adults’ conceptions of intelligence across the adult life span. Psychology and Aging, 7(2), 221–231.

Black, P., & Harrison, G. (1985). In place of confusion: Technology and science in the school curriculum. London: Nuffield-Chelsea Curriculum Trust and the National Centre for School Technology.

Blackwell, L., Trzesniewski, K., & Dweck, C. (2007). Implicit theories of intelligence predict achievement across an adolescent transition: A longitudinal study and an intervention. Child Development, 78(1), 246–263.

Brevik, L. (2014). Making implicit practice explicit: How do upper secondary teachers describe their reading comprehension strategies instruction? International Journal of Educational Research, 67(1), 52–66.

Burnette, J., O’Boyle, E., VanEpps, E., Pollack, J., & Finkel, E. (2013). Mind-sets matter: A meta-analytic review of implicit theories and self-regulation. Psychological Bulletin, 139(3), 655–701.

Byrne, B. (2005). Factor analytic models: Viewing the structure of an assessment instrument from three perspectives. Journal of Personality Assessment, 85(1), 17–32.

Cattell, R. (1941). Some theoretical issues in adult intelligence testing. Psychological Bulletin, 38(7), 592.

Cattell, R. (1943). The measurement of adult intelligence. Psychological Bulletin, 40(3), 153–193.

Cattell, R. (1963). Theory of fluid and crystallized intelligence: A critical experiment. Journal of Educational Psychology, 54(1), 1–22.

Cattell, R. (1966). The scree test for the number of factors. Multivariate Behavioral Research, 1(2), 245–276.

Cattell, R., & Horn, J. (1978). A check on the theory of fluid and crystallized intelligence with description of new subtest designs. Journal of Educational Measurement, 15(3), 139–164.

Chen, J., & Pajares, F. (2010). Implicit theories of ability of grade 6 science students: Relation to epistemological beliefs and academic motivation and achievement in science. Contemporary Educational Psychology, 35(1), 75–87.

Cohen, L., Manion, L., & Morrison, K. (2007). Research methods in education (6th ed.). Oxfordshire: Routledge.

Colvin, S. (1921). Intelligence and its measurement IV. Journal of Educational Psychology, 12(2), 136–139.

Cook, R. D. (1977). Detection of influential observation in linear regression. Technometrics, 19(1), 15–18.

Cornelius, S., Kenny, S., & Caspi, A. (1989). Academic and everyday intelligence in adulthood: Conceptions of self and ability tests. In J. Sinnott (Ed.), Everyday problem solving (pp. 191–210). New York: Praeger Publishers.

Dai, T., & Cromley, J. (2014). Changes in implicit theories of ability in biology and dropout from STEM majors: A latent growth curve approach. Contemporary Educational Psychology, 39(3), 233–247.

Dakers, J. (Ed.). (2006). Defining technological literacy: Towards an epistemological framework. New York and Hampshire: Palgrave Macmillan.

Davis, J., Burnette, J., Allison, S., & Stone, H. (2011). Against the odds: Academic underdogs benefit from incremental theories. Social Psychology of Education, 14(3), 331–346.

Dinger, F., & Dickhäuser, O. (2013). Does implicit theory of intelligence cause achievement goals? Evidence from an experimental study. International Journal of Educational Research, 61(1), 38–47.

Dow, W. (2006). The need to change pedagogies in science and technology subjects: A European perspective. International Journal of Technology and Design Education, 16(3), 307–321.

Dupeyrat, C., & Mariné, C. (2005). Implicit theories of intelligence, goal orientation, cognitive engagement, and achievement: A test of Dweck’s model with returning to school adults. Contemporary Educational Psychology, 30(1), 43–59.

Dweck, C. (1999). Self-theories: Their role in motivation, personality, and development. Philadelphia: Psychology Press.

Dweck, C., & Leggett, E. (1988). A social-cognitive approach to motivation and personality. Psychological Review, 95(2), 256–273.

Engle, R., Tuholski, S., Laughlin, J., & Conway, A. (1999). Working memory, short-term memory, and general fluid intelligence: A latent-variable approach. Journal of Experimental Psychology: General, 128(3), 309–331.

Evans, J. (1996). Straightforward statistics for the behavioral sciences. California: Brooks/Cole Publishing Company.

Fabrigar, L., Wegener, D., MacCallum, R., & Strahan, E. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4(3), 272–299.

Fehr, B., & Russell, J. (1984). Concept of emotion viewed from a prototype perspective. Journal of Experimental Psychology: General, 113(3), 464–486.

Flanigan, A., Peteranetz, M., Shell, D., & Soh, L.-K. (2017). Implicit intelligence beliefs of computer science students: Exploring change across the semester. Contemporary Educational Psychology, 48(2017), 179–196.

Freeman, F. (1921). Intelligence and its measurement III. Educational and Psychological Measurement, 12(3), 133–136.

Fry, P. (1984). Teachers’ conceptions of students’ intelligence and intelligent functioning: A cross-sectional study of elementary, secondary and tertiary level teachers. International Journal of Psychology, 19(4), 457–474.

Gagel, C. (2004). Technology profile: An assessment strategy for technological literacy. The Journal of Technology Studies, 30(4), 38–44.

Gardner, H. (1983). Frames of mind: The theory of multiple intelligences. London: Heinemann.

Gibson, K. (2008). Technology and technological knowledge: A challenge for school curricula. Teachers and Teaching, 14(1), 3–15.

Gigerenzer, G. (2001). The adaptive toolbox. In G. Gigerenzer & R. Selten (Eds.), Bounded rationality: The adaptive toolbox (pp. 37–50). Cambridge: The MIT Press.

Gigerenzer, G., & Todd, P. M. (1999). Fast and frugal heuristics: The adaptive toolbox. In G. Gigerenzer, P. Todd, & A. R. Group (Eds.), Simple heuristics that make us smart (pp. 3–34). New York: Oxford University Press.

Gravetter, F., & Wallnau, L. (2014). Essentials of statistics for the behavioural sciences. California: Wadsworth.

Greene, J., Costa, L.-J., Robertson, J., Pan, Y., & Deekens, V. (2010). Exploring relations among college students’ prior knowledge, implicit theories of intelligence, and self-regulated learning in a hypermedia environment. Computers & Education, 55(3), 1027–1043.

Haggerty, M. (1921). Intelligence and its measurement XIII. Journal of Educational Psychology, 12(4), 212–216.

Hamilton, J. (2003). Interaction, dialogue and a creative spirit of inquiry. In E. Norman & D. Spendlove (Eds.), Design matters: DATA international research conference 2003 (pp. 35–44). Warwickshire: The Design and Technology Association.

Hamilton, J. (2004). Enhancing learning through dialogue and reasoning within collaborative problem solving. In E. Norman, D. Spendlove, P. Grover, & A. Mitchell (Eds.), Creativity and innovation: DATA international research conference (pp. 89–101). Sheffield: The Design and Technology Association.

Hebb, D. (1941). Clinical evidence concerning the nature of normal adult test performance. Psychological Bulletin, 38(7), 593.

Hebb, D. (1942). The effect of early and late brain injury upon test scores, and the nature of normal adult intelligence. Proceedings of the American Philosophical Society, 85(3), 275–292.

Hooper, D., Coughlan, J., & Mullen, M. (2008). Structural equation modelling: Guidelines for determining model fit. Electronic Journal of Business Research Methods, 6(1), 53–60.

Horn, J. (1965). A rationale and test for the number of factors in factor analysis. Psychometrika, 30(2), 179–185.

Horn, J., & Cattell, R. (1966). Refinement and test of the theory of fluid and crystallized general intelligences. Journal of Educational Psychology, 57(5), 253–270.

Hu, L., & Bentler, P. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1–55.

Ingerman, A., & Collier-Reed, B. (2011). Technological literacy reconsidered: A model for enactment. International Journal of Technology and Design Education, 21(2), 137–148.

Jöreskog, K., & Sörbom, D. (1986). LISREL VI: Analysis of linear structural relationships by maximum likelihood, instrumental variables, and least squares methods. Indianapolis: Scientific Software Inc.

Jung, R., & Haier, R. (2007). The parieto-frontal integration theory (P-FIT) of intelligence: Converging neuroimaging evidence. Behavioral and Brain Sciences, 30(1), 135–187.

Kaiser, H. (1960). The application of electronic computers to factor analysis. Educational and Psychological Measurement, 20(1), 141–151.

Kaiser, H. (1974). An index of factorial simplicity. Psychometrika, 39(1), 31–36.

Kaufman, J., Baer, J., Cropley, D., Reiter-Palmon, R., & Sinnett, S. (2013). Furious activity vs. understanding: How much expertise is needed to evaluate creative work? Psychology of Aesthetics, Creativity, and the Arts, 7(4), 332–340.

Kennett, D., & Keefer, K. (2006). Impact of learned resourcefulness and theories of intelligence on academic achievement of university students: An integrated approach. Educational Psychology, 26(3), 441–457.

Kimbell, R. (2011). Wrong… but right enough. Design and Technology Education: An International Journal, 16(2), 6–7.

Kline, R. (2005). Principles and practice of structural equation modelling. New York: The Guilford Press.

Kline, R. (2016). Principles and practice of structural equation modelling. New York: Guilford Press.

Kozhevnikov, M., & Hegarty, M. (2001). A dissociation between object manipulation spatial ability and spatial orientation ability. Memory & Cognition, 29(5), 745–756.

Leahy, R., & Hunt, T. (1983). A cognitive-developmental approach to the development of conceptions of intelligence. In R. Leahy (Ed.), The child’s construction of social inequality (pp. 135–160). New York: Academic Press.

Lei, P.-W., & Wu, Q. (2007). Introduction to structural equation modeling: Issues and practical considerations. Educational Measurement: Issues and Practice, 26(3), 33–43.

Lin, H. (2016). Influence of design training and spatial solution strategies on spatial ability performance. International Journal of Technology and Design Education, 26(1), 123–131.

Lubinski, D. (2010). Spatial ability and STEM: A sleeping giant for talent identification and development. Personality and Individual Differences, 49(4), 344–351.

Maeda, Y., & Yoon, S. Y. (2015). Are gender differences in spatial ability real or an artifact? Evaluation of measurement invariance on the revised PSVT:R. Journal of Psychoeducational Assessment, 33(8), 1–7.

Mason, C., & Rebok, G. (1984). Psychologists’ self-perceptions of their intellectual aging. International Journal of Behavioral Development, 7(3), 255–266.

McCormick, R. (1997). Conceptual and procedural knowledge. International Journal of Technology and Design Education, 7(1–2), 141–159.

Mugny, G., & Carugati, F. (1989). Social representations of intelligence. Cambridge: Cambridge University Press.

Murphy, P., & Hennessy, S. (2001). Realising the potential—And lost opportunities—For peer collaboration in a D&T setting. International Journal of Technology and Design Education, 11(3), 203–237.

Neisser, U. (1979). The concept of intelligence. In R. Sternberg & D. Detterman (Eds.), Human intelligence: Perspectives on its theory and measurement (pp. 179–189). New Jersey: Ablex.

O’Connor, A. (2016). Supporting discourse using technology-mediated communication: A model for enhancing practice in second level education. Limerick: University of Limerick.

O’Connor, A., Seery, N., & Canty, D. (2016a). The experiential domain: Developing a model for enhancing practice in D&T education. International Journal of Technology and Design Education. https://doi.org/10.1007/s10798-016-9378-8.

O’Connor, A., Seery, N., & Canty, D. (2016b). The psychological domain: Enhancing traditional practice in K-12 education. In N. Ostashewski, J. Howell, & M. Cleveland-Innes (Eds.), Optimizing K-12 education through online and blended learning (pp. 109–126). Hershey, PA: IGI Global.

OECD. (2002). Understanding the brain: Towards a new learning science. Paris: Organisation for Economic Co-Operation and Development.

Ommundsen, Y., Haugen, R., & Lund, T. (2005). Academic self-concept, implicit theories of ability, and self-regulation strategies. Scandinavian Journal of Educational Research, 49(5), 461–474.

Osborne, J. (2015). What is rotating in exploratory factor analysis? Practical Assessment, Research & Evaluation, 20(2), 1–7.

Pérez, L., González, C., & Beltrán, J. (2010). Parental estimates of their own and their relatives’ intelligence: A Spanish replication. Learning and Individual Differences, 20(6), 669–676.

Pintner, R. (1921). Intelligence and its measurement V. Journal of Educational Psychology, 12(3), 139–143.

Pui-Wah, D. C., & Stimpson, P. (2004). Articulating contrasts in kindergarten teachers’ implicit knowledge on play-based learning. International Journal of Educational Research, 41(1), 339–352.

Ritz, J. (2009). A new generation of goals for technology education. Journal of Technology Education, 20(2), 50–64.

Rosch, E. (1977). Human categorization. In N. Warren (Ed.), Studies in cross-cultural psychology (pp. 1–49). London: Academic Press.

Rosch, E., & Mervis, C. (1975). Family resemblances: Studies in the internal structure of categories. Cognitive Psychology, 7(4), 573–605.

Rosch, E., Mervis, C., Gray, W., Johnson, D., & Boyes-Braem, P. (1976). Basic objects in natural categories. Cognitive Psychology, 8(3), 382–439.

Russell, J. (1991). In defense of a prototype approach to emotion concepts. Journal of Personality and Social Psychology, 60(1), 37–47.

Schneider, J., & McGrew, K. (2012). The Cattell–Horn–Carroll model of intelligence. In D. Flanagan & P. Harrison (Eds.), Contemporary intellectual assessment: Theories, tests, and issues (3rd ed., pp. 99–144). New York: Guilford Press.

Scottish CCC. (1996). Technology education in Scottish schools: A statement of position. Dundee: Scottish Consultative Council of the Curriculum.

Sharma, S., Mukherjee, S., Kumar, A., & Dillon, W. R. (2005). A simulation study to investigate the use of cutoff values for assessing model fit in covariance structure models. Journal of Business Research, 58(7), 935–943.

Shevlin, M., & Miles, J. (1998). Effects of sample size, model specification and factor loadings on the GFI in confirmatory factor analysis. Personality and Individual Differences, 25(1), 85–90.

Shively, R., & Ryan, C. (2013). Longitudinal changes in college math students’ implicit theories of intelligence. Social Psychology of Education, 16(2), 241–256.

Spearman, C. (1904). “General intelligence”, objectively determined and measured. The American Journal of Psychology, 15(2), 201–292.

Spinath, B., Spinath, F., Riemann, R., & Angleitner, A. (2003). Implicit theories about personality and intelligence and their relationship to actual personality and intelligence. Personality and Individual Differences, 35(4), 939–951.

Stables, K. (2008). Designing matters; designing minds: The importance of nurturing the designerly in young people. Design and Technology Education: An International Journal, 13(3), 8–18.

Steiger, J. (1990). Structural model evaluation and modification: An interval estimation approach. Multivariate Behavioral Research, 25(2), 173–180.

Sternberg, R. (1980). Sketch of a componential subtheory of human intelligence. Behavioral and Brain Sciences, 3(4), 573–584.

Sternberg, R. (1982). A componential approach to intellectual development. In R. Sternberg (Ed.), Advances in the psychology of human intelligence (Vol. 1, pp. 413–463). New Jersey: Erlbaum.

Sternberg, R. (1984). A contextualist view of the nature of intelligence. International Journal of Psychology, 19(3), 307–334.

Sternberg, R. (1985). Implicit theories of intelligence, creativity, and wisdom. Journal of Personality and Social Psychology, 49(3), 607–627.

Sternberg, R. (2000). The concept of intelligence. In R. Sternberg (Ed.), Handbook of intelligence (pp. 3–15). Cambridge: Cambridge University Press.

Sternberg, R., Conway, B., Ketron, J., & Bernstein, M. (1981). People’s conceptions of intelligence. Journal of Personality and Social Psychology, 41(1), 37–55.

Sternberg, R., & Detterman, D. (Eds.). (1986). What is intelligence? Contemporary viewpoints on its nature and definition. New Jersey: Ablex.

Stinebrickner, R., & Stinebrickner, T. (2014). Academic performance and college dropout: Using longitudinal expectations data to estimate a learning model. Journal of Labor Economics, 32(3), 601–644.

Stipek, D., & Gralinski, J. H. (1996). Children’s beliefs about intelligence and school performance. Journal of Educational Psychology, 88(3), 397–407.

Tabachnick, B., & Fidell, L. (2007). Using multivariate statistics (5th ed.). Massachusetts: Allyn & Bacon.

Tarbetsky, A., Collie, R., & Martin, A. (2016). The role of implicit theories of intelligence and ability in predicting achievement for indigenous (aboriginal) Australian students. Contemporary Educational Psychology, 47(1), 61–71.

Terman, L. (1921). Intelligence and its measurement II. Journal of Educational Psychology, 12(3), 127–133.

Thurstone, L. L. (1921). Intelligence and its measurement X. Journal of Educational Psychology, 12(4), 201–207.

Thurstone, L. L. (1947). Multiple-factor analysis: A development and expansion of the vectors of mind. Chicago: University of Chicago Press.

Trebell, D. (2007). A literature review in search of an appropriate theoretical perspective to frame a study of designerly activity in secondary design and technology. In E. Norman & D. Spendlove (Eds.), The design and technology association international research conference 2007 (pp. 91–94). Wellesbourne: The Design and Technology Association.

Trochim, W., & Donnelly, J. (2006). The research methods knowledge base. Ohio: Atomic Dog Publishing.

Tucker, L., & Lewis, C. (1973). A reliability coefficient for maximum likelihood factor analysis. Psychometrika, 38(1), 1–10.

Ullman, J. (2001). Structural equation modelling. In B. Tabachnick & L. Fidell (Eds.), Using multivariate statistics (4th ed., pp. 653–771). Massachusetts: Allyn & Bacon.

Uttal, D., & Cohen, C. (2012). Spatial thinking and STEM education: When, why, and how? Psychology of Learning and Motivation, 57(1), 147–181.

Vander Heyden, K., Huizinga, M., Kan, K.-J., & Jolles, J. (2016). A developmental perspective on spatial reasoning: Dissociating object transformation from viewer transformation ability. Cognitive Development, 38(1), 63–74.

Velicer, W., Eaton, C., & Fava, J. (2000). Construct explication through factor or component analysis: A review and evaluation of alternative procedures for determining the number of factors or components. In R. Goffin & E. Helmes (Eds.), Problems and solutions in human assessment: Honoring Douglas N. Jackson at seventy (pp. 47–71). Boston: Kluwer Academic.

Wai, J., Lubinski, D., & Benbow, C. (2009). Spatial ability for STEM domains: Aligning over 50 years of cumulative psychological knowledge solidifies its importance. Journal of Educational Psychology, 101(4), 817–835.

Wasserman, J., & Tulsky, D. (2005). A history of intelligence assessment. In D. Flanagan & P. Harrison (Eds.), Contemporary intellectual assessment: Theories, tests, and issues (pp. 3–22). New York: The Guilford Press.

Wheaton, B., Muthén, B., Alwin, D., & Summers, G. (1977). Assessing reliability and stability in panel models. Sociological Methodology, 8(1), 84–136.

Williams, P. J. (2009). Technological literacy: A multliteracies approach for democracy. International Journal of Technology and Design Education, 19(3), 237–254.

Wood, J., Tataryn, D., & Gorsuch, R. (1996). Effects of under- and overextraction on principal axis factor analysis with varimax rotation. Psychological Methods, 1(4), 354–365.

Yussen, S., & Kane, P. (1983). Children’s ideas about intellectual ability. In R. Leahy (Ed.), The child’s construction of social inequality (pp. 109–134). New York: Academic Press.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Buckley, J., O’Connor, A., Seery, N. et al. Implicit theories of intelligence in STEM education: perspectives through the lens of technology education students. Int J Technol Des Educ 29, 75–106 (2019). https://doi.org/10.1007/s10798-017-9438-8

DOI: https://doi.org/10.1007/s10798-017-9438-8