Abstract

The emerging information revolution makes it necessary to manage vast amounts of unstructured data rapidly. As the world becomes increasingly populated by IoT devices and sensors that can sense their surroundings and communicate with each other, a digital environment has been created with vast volumes of volatile and diverse data. Traditional AI and machine learning techniques designed for deterministic situations are not suitable for such environments. Because each device in this digital environment requires a large number of parameters, it is desirable for the AI to be adaptive and self-building (i.e. self-structuring, self-configuring, self-learning), rather than structurally and parametrically pre-defined. This study explores the benefits of self-building AI and machine learning with unsupervised learning for empowering big data analytics in smart city environments. Using the growing self-organizing map, a new suite of self-building AI is proposed. The self-building AI overcomes the limitations of traditional AI and enables data processing in dynamic smart city environments. With cloud computing platforms, the self-building AI can integrate data analytics applications that currently work in silos. The new paradigm of self-building AI and its value are demonstrated using IoT, video surveillance, and action recognition applications.

1 Introduction

Urban migration is an increasing trend in the twenty-first century, and it is estimated that more than 68% of the world's population will live in urban environments by 2050 (World population projection by UN 2018). Such migration will strain the ability of cities to cope, creating an urgent need for smarter ways to manage challenges such as congestion, traffic and transport, increased crime rates, social disorder, and the growing demand for and distribution of utilities and resources; smart cities have been proposed as the solution (Gupta et al. 2019). Using technological advancement as the base, smart cities are expected not only to cater to the needs of a huge increase in population, but also to provide improved living environments and business functions, to utilize resources more efficiently and responsibly, and to be environmentally sustainable (Kar et al. 2019; Pappas et al. 2018). In such environments, the city and home infrastructures, human behaviors and the technology that captures such behaviors in digital form develop into an eco-system with dependencies and interactions. Artificial Intelligence (AI) and data analytics are becoming essential enablers for such eco-systems to function, and it is important to understand the limitations of existing AI and data analytics technologies and to address them for the future.

Basic technology-facilitated functions within smart cities will include the collection of data using sensors, CCTV cameras and smart energy meters, as well as social media engines that capture real-time human activity. These data are then relayed through communication systems such as fibre optics, broadband networks, the internet and Bluetooth (Alter 2019), thereby generating a digitalized eco-system of Big Data. Such smart environments, augmented by analytics, have the potential to drive optimized and informed decision making (Mikalef et al. 2020). For smart city services to take shape, large amounts of data emerging from many sources must be (i) collected, (ii) integrated, and (iii) analyzed to generate insights, so that informed actions and decisions can be taken automatically and/or semi-automatically (Jones et al. 2017; Eldrandaly et al. 2019; Guelzim and Obaidat 2016). The data generated are generally very large in volume and diverse, may be structured, semi-structured or unstructured, and thus require big data management and analysis techniques to be handled effectively (Sivarajah et al. 2017; Allam and Dhunny 2019). While Internet of Things (IoT) technologies have extended the ability to capture smart city environments at high granularity (Li et al. 2015), cloud computing technologies have emerged as a solution for big data integration and as a foundation that combines physical infrastructure with service delivery platforms for managing, processing, and synthesizing huge flows of information in real time. Cloud computing systems have demonstrated capabilities in smart cities for moving data into the cloud, indexing and searching it, and coordinating large-scale cloud databases (Lin and Chen 2012).

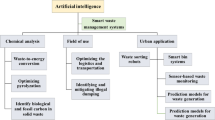

Artificial Intelligence (AI) has the potential to analyze the collected and integrated big data and to use the derived insights to optimize operational costs and resources and enable sound citizen engagement in smart city environments. As discussed in (Guelzim and Obaidat 2016), public safety and security could be enhanced by AI through sophisticated surveillance technologies, accident pattern monitoring, linking crime databases and combating gang violence. AI can also help with crowd management, crowd size estimation, behaviour prediction, object tracking and rapid response to incidents (Emam 2015; L. Wang et al. 2010). Utilities management and the optimal use of resources such as distributed energy and water are further applications that could benefit from AI (De Silva et al. 2020). Social media analytics to understand citizen needs in real time and AI-powered chatbots for routine communication have also shown value (Adikari et al. 2019; Peng et al. 2019).

In smart city environments, the types of data captured will vary widely, from images, videos and sensor readings to electricity and water consumption, social media and text. A key factor is that an effectively unbounded number of situations could occur in such an environment (Mohammad et al. 2019). Although cloud computing, AI, machine learning and advanced analytics have provided much value for smart cities, three key constraints limit the realization of the advantages of these technological advances. The first is that AI and advanced analytics are currently applied in silos, as isolated applications, due to the lack of information integration and sharing mechanisms (Bundy 2017; Varlamov et al. 2019). The second is the need for significant human involvement when applying AI and machine learning in a volatile and dynamic environment, which makes fast, real-time automated applications difficult (B. Liu 2018; Nawaratne et al. 2019a, b, c). The third arises because the majority of AI and machine learning techniques used for smart city applications rely on supervised learning, which is better suited to deterministic situations and requires past data labelled with known outcomes; in many smart city situations, even when labelled or classified past data are available for training, the labels quickly become obsolete due to the fast-changing dynamics (Nawaratne et al. 2018). As such, unsupervised machine learning techniques that need no pre-labelled data, and that can self-learn and incrementally adapt to new situations, become more relevant (Nallaperuma et al. 2019). The research discussed in this paper proposes an innovative solution to these problems based on a new paradigm of self-building AI.

Since each incident, occurrence, behavior and situation will differ in terms of time taken, number of people and objects involved, spatial features, background and types of data representation, smart city environments are non-deterministic, and deciding on the appropriate architecture or structure of machine learning models becomes a difficult or even impossible task (Mohammad et al. 2019). Therefore, the main requirements of AI and machine learning for dynamic and volatile (non-deterministic) environments such as smart cities are:

1. The ability to self-learn and adapt without pre-labelled past data
2. The ability to self-build the architecture or network structure to represent a particular situation

The main contribution of this paper is a self-building AI framework that provides self-learning and self-adapting capability within an unsupervised learning paradigm, addressing the key issues highlighted above in smart city environments. The framework is built using the Growing Self-Organizing Map (GSOM) algorithm and its extensions as core components. As such, this paper proposes the GSOM algorithm as the base technology for a novel concept of machine learning models with the ability to self-build and self-learn (unsupervised learning) to match individual situations. The GSOM (Alahakoon et al. 2000) is a variant of the unsupervised learning algorithm called the Self-Organizing Map (SOM) (Kohonen 1997) with the ability to adapt its structure depending on the input data. The self-building capability of the model is harnessed to reduce the need for human involvement in utilizing machine learning at the ‘front end’ data capture and initial analysis stage, where real-time anomaly detection, pattern and trend detection, and prediction could be achieved. The captured data could then be passed on for further specialized processing and advanced analytics. A further innovation based on the GSOM, the Distributed GSOM (Jayaratne et al. 2017), is proposed as a data fusion technology to develop a ‘positioning’ mechanism for local incidents and detected patterns. The proposed techniques can provide a Global Position Map for local on-site applications and for integrated global processing where appropriate.

The rest of the paper is organised as follows. Section 2 provides the contextual and theoretical background for the work. The proposed framework, with local and global processing using cloud platforms, is described in Section 3. Section 4 provides experimental results demonstrating the effect of self-building AI in several smart city situations, and Section 5 concludes the paper with an extensive discussion of the applicability of the proposed framework in real-world situations.

2 Background

The background and foundations for the research described in this paper are twofold: the application context and the theoretical background. The research focuses on the smart city environment, its enabling technologies and its technological infrastructure. Section 2.1 therefore outlines smart cities, cloud computing platforms for smart cities and how AI is being used within smart cities. The proposed technology solution is based on an unsupervised machine learning technique called the Self-Organizing Map (SOM) and a suite of self-building (structure-adapting) versions of the SOM developed in the past decade. The theoretical background of these base techniques and algorithms is described in Section 2.2, and they are used as the components of the proposed solution in Section 3.

2.1 Context

2.1.1 Smart Cities

Cities with heavy populations place an escalating burden on transportation, energy, water, buildings, security and many other services, resulting in poor livability, workability and sustainability. To remedy such circumstances, it is important to bring advances in technology into practical applications. This brings up the concept of ‘Smart Cities’, which can be defined as “A city that monitors and integrates conditions of all of its critical infrastructures, including roads, bridges, tunnels, rails, subways, airports, sea-ports, communications, water, power, even major buildings, can better optimize its resources, plan its preventive maintenance activities, and monitor security aspects while maximizing services to its citizens.” (Hall et al. 2000). A multitude of world cities have embarked on smart city projects, including Seoul, New York, Tokyo, and Shanghai. These cities may seem futuristic; however, with current advances in technology, and especially cloud computing, they are exploiting to a certain extent what current technology has to offer (Guelzim and Obaidat 2016). Ultimately, in the vision of ‘Smart Cities’, the future city should be safe, secure, environmentally green and efficient, with all utility functions such as power, water and transportation designed, constructed and maintained using integrated materials, sensors, electronics and networks interfaced with computerized systems comprising databases, tracking and real-time decision-making algorithms. Therefore, communication and connectedness between these sensors, networks and electronics are a critically important part of the smart city eco-system.

2.1.2 Cloud Vs Edge Computing for Smart Cities

Cloud computing represents the delivery of hardware and software resources on demand over worldwide networks (Marinescu 2017). Current cloud computing systems have demonstrated considerable capabilities for moving data into the cloud, indexing and searching it, and coordinating large-scale cloud computer systems across networks (Guelzim and Obaidat 2016). Cloud computing can provide a sustainable and efficient solution to fill the communication and connectedness gap in smart city eco-systems. Furthermore, cloud computing for smart cities could provide a common global approach to communication and connectedness (Petrolo et al. 2017).

“Edge computing” refers to a distributed computing paradigm that brings data storage and computing power closer to the devices or data sources that require them, thereby reducing latency and bandwidth usage. This contrasts with cloud computing, which can be considered a more centralized form of data storage and processing. Chen et al. proposed a self-organizing architecture that uses edge computing as a platform for enabling services within a smart city environment (B.-W. Chen et al. 2019). In the AI framework proposed in this paper, we adopt a combined edge and cloud computing approach that harnesses the advantages of both.

2.1.3 Smart Cities and AI

Big data and cheap computing have enabled the explosion of AI in software applications supporting every aspect of life. With the radical transformation of smart city eco-systems brought about by big data streams generated by large arrays of smart sensors and surveillance feeds, it is timely and pertinent to harness the potential of AI to uplift smart city eco-systems.

With recent advancements in AI, a number of approaches have been suggested for improving different aspects of smart cities, such as smart road networks, intelligent surveillance and smart electricity. In (Lana et al. 2018), the authors present a comprehensive review of novel AI techniques for road traffic forecasting, which supports forecasting-based optimization aimed at relieving road congestion. Garcia-Font et al. (2016) present a comparative study of anomaly detection techniques for smart city wireless networks using real data from the smart city of Barcelona.

However, the majority of AI and machine learning techniques used for smart city applications rely on supervised learning, which requires past data labelled with known outcomes. The availability of such labelled data is a significant problem in many smart city situations, and even when it is available, the labels can quickly become obsolete due to the fast-changing dynamics. As such, unsupervised machine learning techniques that need no pre-labelled data, and that can self-learn and incrementally adapt to new situations, become more relevant (Nawaratne et al. 2018).

Thus, focusing on the unsupervised domain, (Silva et al. 2011) presents a self-learning algorithm for analysing electricity consumption patterns from smart meter readings. Further, Nawaratne et al. present an AI-based intelligent surveillance approach to detect anomalies in smart city and smart factory contexts (Nawaratne et al. 2017), using an unsupervised, incremental machine learning approach. Moving along the unsupervised AI spectrum, (Kiran et al. 2018) provides a comprehensive overview of novel unsupervised deep learning techniques for anomaly detection in video surveillance footage, applicable to smart city contexts. A further key limitation of current AI applications in smart cities is that they are carried out in silos and in isolation, whereas combining and integrating these applications could provide far more value.

2.2 Theoretical Background

2.2.1 The Need for Unsupervised Learning to Cater Technology Advancement

Given the importance of developing advanced AI systems, it is vital to select a proper algorithmic base from the outset. The widely used approaches in algorithmic development are: i) mathematical modelling, ii) supervised learning, and iii) unsupervised learning. The first relates to the development of mathematical models that represent a rigid formulation of the data, whereas the latter two, the supervised and unsupervised machine learning paradigms, learn representations from the experience derived from input data.

Nature is unpredictable, if not indeterminate. In (Cziko 2016), the complexity of nature is argued from multiple directions, including individual differences between entities, chaos theory, the evolutionary nature of natural entities, the role of consciousness and free will in human behavior, and the implications of quantum mechanics for the feasibility of modeling the natural environment (Boccaletti et al. 2000). Given this indeterminism, it is not practical to develop mathematical models that represent input stimuli from the natural environment. Building on machine learning paradigms is therefore more suitable, as they derive representations from previous experience, similar to how humans perceive nature and learn from it.

Machine learning mainly comprises two learning paradigms: supervised learning and unsupervised learning. In supervised learning, the algorithm infers a function from a set of labelled training examples; the aim is to approximate a mapping function such that, given a new input, it can infer (or predict) the output. In contrast, unsupervised learning aims to find the hidden structure in unlabelled data. The main difference between the two is therefore the availability of labelled data, or ground truth: supervised learning requires prior knowledge of what the output values for the training samples should be, while unsupervised learning does not.

In typical smart city environments, a wide array of sensors captures the environment in the form of images, videos, sensor readings, social media, text, etc. Due to the non-deterministic nature of the environment, an effectively unbounded number of situations could occur. Yet most AI and machine learning techniques used for smart city applications rely on supervised learning, which is better suited to deterministic situations and requires past data labelled with known outcomes. In many smart city situations, even when labelled or classified past data are available for training, the labels become obsolete due to the fast-changing dynamics. A system developed using supervised AI will typically need a large number of training examples in order to learn effectively, yet training data are often labelled manually, which is highly labour-intensive and time-consuming. The world is highly complex, with many possible tasks, making it almost impossible to label a large number of examples for every possible task for an AI algorithm to learn. Adding further complications, the environment changes constantly, so any labelling must be redone frequently and regularly to stay useful, which makes it a daunting task for humans. As such, unsupervised machine learning techniques that need no pre-labelled data, and that can self-learn and incrementally adapt to new situations, become more relevant (Nallaperuma et al. 2019). In this light, future AI systems are expected to incorporate higher degrees of unsupervised learning in order to generate value from unlabelled data (Nawaratne et al. 2019a, 2019b, 2019c).

Usually, unsupervised machine learning approaches group data with similar characteristics. This process is known as clustering: the unsupervised learning process separates the input space into groups of data points, called clusters, based on the similarity between the data points. The outcomes of this learning process therefore represent the similarity of the information presented at the inputs, and unsupervised learning models have memory, learning and pattern recognition capacity (Tan et al. 2016). The K-Means algorithm and the Self-Organizing Map (SOM) are two families of unsupervised learning algorithms that have been widely used in smart city applications (W.-C. Liu and Lin 2017; Nallaperuma et al. 2019; Nawaratne et al. 2019a, 2019b, 2019c; Yang et al. 2020). In recent work, Melo Riveros et al. compared the validity and robustness of K-means and SOM in a smart healthcare case study. SOM outperformed K-means when evaluated with Cohen's Kappa index for concordance and, where SOM was used in a classification context, with higher sensitivity, specificity, precision and negative predictive value (NPV) (Melo Riveros et al. 2019). Further, Chen et al. compared SOM and K-means for natural language clustering and found that K-means is sensitive to the initial distribution, whereas the overall clustering performance of SOM is better than that of K-means (Y. Chen et al. 2010). SOM was also found to perform well on noisy inputs and to preserve topology. Given its ability to represent complex data environments and to preserve topology, SOM presents a viable option for further exploring self-organization in the context of unsupervised learning.
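For readers less familiar with K-means, the sketch below shows Lloyd's algorithm in Python with NumPy: points are repeatedly assigned to their nearest centroid, and each centroid is moved to the mean of its members. It is illustrative only and is not the implementation used in the cited comparisons; the function names, the deterministic farthest-first initialisation and the synthetic two-blob data are assumptions made for this example. The explicit initialisation step is where the sensitivity to the initial distribution noted above enters.

```python
import numpy as np


def farthest_first_init(x: np.ndarray, k: int) -> np.ndarray:
    """Pick k starting centroids: the first point, then repeatedly the point
    farthest from every centroid chosen so far (deterministic)."""
    centroids = [x[0]]
    for _ in range(1, k):
        chosen = np.array(centroids)
        # Distance from each point to its nearest already-chosen centroid
        d = np.min(np.linalg.norm(x[:, None, :] - chosen[None, :, :], axis=2), axis=1)
        centroids.append(x[np.argmax(d)])
    return np.array(centroids, dtype=float)


def kmeans(x: np.ndarray, k: int, n_iter: int = 100):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    centroids = farthest_first_init(x, k)
    labels = np.zeros(len(x), dtype=int)
    for _ in range(n_iter):
        # Assignment step: every point joins its nearest centroid
        dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        # Update step: move each centroid to the mean of its members
        new_centroids = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return centroids, labels


rng = np.random.default_rng(0)
data = np.vstack([rng.normal(0.0, 0.3, (50, 2)), rng.normal(5.0, 0.3, (50, 2))])
centroids, labels = kmeans(data, k=2)
```

Swapping `farthest_first_init` for a random choice of starting points can lead to different final clusterings on harder data, which is one practical face of that sensitivity.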

In general, self-organization can be seen as a natural phenomenon that has been recreated computationally to achieve unsupervised learning resembling both the biological brain and natural phenomena. Self-organization can be understood as a process in which a form of overall order arises from local interactions between parts of an initially disordered system. The process is spontaneous when sufficient energy is available, without any need for control by external agents. In chaos theory, self-organization provides islands of predictability in a sea of chaotic unpredictability (Khadartsev and Eskov 2014).

On this premise, Teuvo Kohonen's Self-Organizing Feature Map (abbreviated as SOFM or SOM) is a neural network inspired by the human cerebral cortex that maps a nonlinear, high-dimensional input space onto a reduced-dimensional, discretized representation while preserving the topological relationships of the input space (Kohonen 1997). Based on Hebbian learning (Hebb 1949), competition and correlative learning (Webber 1991), as input signals are presented, neurons compete amongst themselves for ownership of each input, and the winner strengthens its relationship with that input.

Prior research has suggested that competitive learning might constitute a viable mechanism, given that the response properties of cells in the visual cortex could develop to form coding units suitable for representing the visual stimuli encountered in natural life (Webber 1991). Competitive learning can therefore be regarded as a suitable self-building foundation for computational models, which in turn makes self-organization a viable candidate for the proposed approach.

2.2.2 Self Organizing Map (SOM)

The Self-Organizing Map (SOM), as introduced above, is a neural network that produces a reduced-dimensional, typically two-dimensional, representation of the input space while preserving its topological relations. This enables exploratory analysis of the input data space in a wide range of applications.

In the SOM, the network is initialized as a lattice of neurons, each with a weight vector $w_k(t) \in \mathbb{R}^n$ that lives in the input space, while the lattice coordinates form the output space. During training, each input vector $x_i \in \mathbb{R}^n$ is presented to the network, and the best matching unit (BMU) is determined from the distance between the input and the weight vectors of the neurons. The Euclidean distance is usually chosen, and this distance is called the quantization error $E$, given by Eq. (1).

$$E = \lVert x_i - w_k(t) \rVert = \sqrt{\sum_{j=1}^{n} \left(x_{i,j} - w_{k,j}(t)\right)^2} \tag{1}$$

After the quantization error is calculated, the neuron with the lowest quantization error is selected as the Best Matching Unit (BMU), or winning node, $c$. The winning node and its neighbours are then updated using Eq. (2).

$$w_k(t+1) = w_k(t) + \alpha(t)\, h_{ck}(t) \left[x_i - w_k(t)\right] \tag{2}$$

In Eq. (2), $w_k(t+1)$ is the updated weight vector of the $k$th neuron and $w_k(t)$ is its previous value, $\alpha(t)$ is a learning rate that decays over time, and $h_{ck}(t)$ is a neighbourhood function centred on the winning node $c$ whose extent also shrinks over time. Generally, the whole input set is presented to the SOM a predefined number of times, referred to as training iterations.
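The training loop implied by the BMU search and the weight update (Eqs. (1) and (2)) can be sketched as follows. This is a minimal online SOM in Python with NumPy, not the implementation used in the cited works; the 5 x 5 lattice, the Gaussian form of the neighbourhood function, the linear decay schedules for the learning rate and neighbourhood width, and the synthetic two-cluster data are all assumptions chosen for illustration.

```python
import numpy as np


def train_som(data, rows=5, cols=5, n_iter=20, alpha0=0.5, sigma0=2.0, seed=0):
    """Online SOM training: BMU by Euclidean distance, then neighbourhood update."""
    rng = np.random.default_rng(seed)
    # One weight vector w_k in R^n per lattice node, randomly initialised in [0, 1)
    weights = rng.random((rows * cols, data.shape[1]))
    # Fixed 2-D lattice coordinates of the nodes (the output space)
    grid = np.array([(r, c) for r in range(rows) for c in range(cols)], dtype=float)
    total_steps, step = n_iter * len(data), 0
    for _ in range(n_iter):
        for x in data[rng.permutation(len(data))]:
            frac = step / total_steps
            alpha = alpha0 * (1.0 - frac)            # time-decaying learning rate
            sigma = max(sigma0 * (1.0 - frac), 0.5)  # shrinking neighbourhood width
            # Eq. (1): Euclidean quantization error for every node; BMU = minimum
            bmu = np.argmin(np.linalg.norm(weights - x, axis=1))
            # Gaussian neighbourhood h_ck based on lattice distance to the BMU c
            lattice_d2 = np.sum((grid - grid[bmu]) ** 2, axis=1)
            h = np.exp(-lattice_d2 / (2.0 * sigma ** 2))
            # Eq. (2): w_k(t+1) = w_k(t) + alpha(t) * h_ck(t) * (x - w_k(t))
            weights += alpha * h[:, None] * (x - weights)
            step += 1
    return weights, grid


def mean_quantization_error(data, weights):
    """Average distance from each input to its best matching unit."""
    d = np.linalg.norm(data[:, None, :] - weights[None, :, :], axis=2)
    return d.min(axis=1).mean()


rng = np.random.default_rng(1)
data = np.vstack([rng.normal(0.2, 0.02, (100, 3)), rng.normal(0.8, 0.02, (100, 3))])
initial = np.random.default_rng(0).random((25, 3))  # same start as train_som(seed=0)
weights, grid = train_som(data)
```

Because the lattice size (`rows`, `cols`) must be fixed before training starts, the sketch also makes visible the limitation discussed next.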

In the SOM algorithm, the size and dimensionality of the reduced-dimensional grid must be defined in advance. However, SOM is typically employed for exploratory analytics tasks, in which little or no information about the data is available upfront. Having to define the structure and dimensionality beforehand therefore limits the ability of the self-organizing structure to represent the input data space as it is. This is a key limitation of the SOM.

2.2.3 Growing SOM (GSOM)

To overcome the limitations of the SOM architecture, several studies have introduced growing variants of the SOM. Growing Cell Structures (Fritzke 1994) is one such variant, in which a k-dimensional network is built, with the constant k predefined. Nodes are inserted periodically, after a fixed number of adaptation steps, next to the node that has accumulated the highest error. The network grows until a stopping criterion is met, either a predefined network size or a predefined maximum accumulated error. Grow When Required (Marsland et al. 2002) is another growing variant of the SOM, which adds nodes whenever the network does not adequately match the input.

The growing self-organizing map (GSOM) (Alahakoon et al. 2000) is an improved growing variant of the SOM in which the output network starts with a minimal number of nodes and grows from its boundary based on heuristics and the input representation. The initial GSOM structure generally has four nodes. The GSOM has two phases: first, a growing phase in which the network grows and adjusts its weights to sufficiently represent the input space; and second, a smoothing phase in which the node weights are fine-tuned.

In the growing phase, as the input vectors are presented to the map over a number of iterations, the best matching unit (BMU) for each input accumulates quantization error based on the distance between the input and its weight vector. A neuron is considered to under-represent the input space once its accumulated quantization error exceeds the growth threshold (GT), defined in Eq. (3). In that case, new nodes are inserted into the network to represent the input space sufficiently: if the BMU is on the boundary of the map, the map is grown by adding new neurons around it; otherwise, the error is spread among its neighbouring neurons. Newly added neurons are initialized to match the weights of the existing neighbouring neurons.
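A single growing-phase step, as described above, might look like the following Python sketch. It is a simplified illustration under stated assumptions, not the reference GSOM implementation: the map is stored as a dictionary keyed by hypothetical lattice coordinates, only the BMU and its four lattice neighbours are adapted, each new node takes the mean weight of its existing neighbours, the winner's error is reset after growth, and the interior case uses an error-distribution factor `fd` (setting the winner's error to GT/2 and raising each neighbour's error by `fd` times its current value), modelled on the error-distribution rule commonly described for the GSOM. The names `init_gsom`, `growing_step` and `fd` are our own.

```python
import numpy as np

OFFSETS = [(0, 1), (0, -1), (1, 0), (-1, 0)]  # 4-connected rectangular lattice


def lattice_neighbours(node):
    i, j = node
    return [(i + di, j + dj) for di, dj in OFFSETS]


def init_gsom(dim, seed=0):
    """Start from the usual minimal 2 x 2 map of four nodes."""
    rng = np.random.default_rng(seed)
    weights = {(i, j): rng.random(dim) for i in (0, 1) for j in (0, 1)}
    errors = {node: 0.0 for node in weights}
    return weights, errors


def growing_step(x, weights, errors, gt, lr=0.1, fd=0.5):
    """Present one input x during the growing phase (dicts are modified in place)."""
    bmu = min(weights, key=lambda n: np.linalg.norm(x - weights[n]))
    errors[bmu] += np.linalg.norm(x - weights[bmu])  # accumulate quantization error
    # Move the BMU and its existing lattice neighbours towards x
    for n in [bmu] + [m for m in lattice_neighbours(bmu) if m in weights]:
        weights[n] += lr * (x - weights[n])
    if errors[bmu] <= gt:
        return
    free = [m for m in lattice_neighbours(bmu) if m not in weights]
    if free:
        # Boundary node: grow into every free position; each new node takes the
        # mean of its existing neighbours (a simplified initialisation rule)
        for m in free:
            existing = [weights[k] for k in lattice_neighbours(m) if k in weights]
            weights[m] = np.mean(existing, axis=0)
            errors[m] = 0.0
        errors[bmu] = 0.0  # assumption: reset the winner's error after growth
    else:
        # Interior node: keep GT/2 and raise each neighbour's error by a factor fd
        errors[bmu] = gt / 2.0
        for m in lattice_neighbours(bmu):
            errors[m] += fd * errors[m]


data = np.random.default_rng(1).random((200, 3))
weights, errors = init_gsom(dim=3)
gt = -3 * np.log(0.5)  # growth threshold GT = -D ln(SF) for D = 3, SF = 0.5
for _ in range(2):
    for x in data:
        growing_step(x, weights, errors, gt)
```

Starting from four nodes, the map gains nodes only where inputs keep accumulating error, so its final size is decided by the data and the threshold rather than fixed in advance.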

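The GT is determined by the number of dimensions $D$ of the input space and a newly introduced parameter, the spread factor ($SF$, with $0 < SF < 1$), as given in Eq. (3). The SF can be used to control the spread of the map independently of the dimensionality of the dataset: a lower SF yields a higher GT and hence a smaller, coarser map, while a higher SF yields a more spread-out, detailed map.

$$GT = -D \times \ln(SF) \tag{3}$$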
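To make the role of the spread factor concrete, the snippet below evaluates the growth threshold $GT = -D \times \ln(SF)$ for a fixed dimensionality and three spread factors. It is a direct transcription of the formula; the function name `growth_threshold` is our own.

```python
import math


def growth_threshold(n_dims: int, spread_factor: float) -> float:
    """GT = -D * ln(SF), valid for 0 < SF < 1."""
    if not 0.0 < spread_factor < 1.0:
        raise ValueError("spread factor must lie strictly between 0 and 1")
    return -n_dims * math.log(spread_factor)


# Same dimensionality D = 10, three spread factors
for sf in (0.1, 0.5, 0.9):
    print(f"SF={sf}: GT={growth_threshold(10, sf):.3f}")
```

For D = 10 this prints GT values of 23.026, 6.931 and 1.054 for SF = 0.1, 0.5 and 0.9. A small SF therefore demands far more accumulated error before a node may grow, keeping the map compact, whereas an SF close to 1 lets the map spread.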

In the smoothing phase, as in the growing phase, inputs are presented and weights are adjusted. However, no new neurons are inserted, because the purpose of this phase is to smooth out any remaining quantization error.

The capability of self-building (self-structuring) without any prior knowledge of the data space is one of the key advantages of the GSOM over the SOM and its variants. The GSOM is capable of self-structuring in the latent space to discriminate between distinct inputs as well as to cluster similar inputs. Its ability to control the spread of the map enables structural adaptation and hierarchical clustering, two important aspects of the human sensory perception system.

2.2.4 Distributed GSOM

A major drawback of the SOM algorithm and its growing variants is their time complexity, which makes them unsuitable for Big Data applications in their current form. In (Ganegedara and Alahakoon 2012; Ganegedara and Alahakoon 2011), a parallel version of the GSOM algorithm that uses data parallelism and horizontal data splitting was proposed as a solution. A number of data partitioning strategies were also proposed, including random partitioning, class-based partitioning and high-level clustering-based partitioning. The main advantage of the proposed algorithm is that it preserves the final map of the whole dataset. Sammon's projection (Ganegedara and Alahakoon 2011) is used to generate the final map from the intermediate SOMs. Sammon's projection is based on point mapping of higher-dimensional vectors to a lower-dimensional space such that the inherent structure of the data is preserved.
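Sammon's projection can be sketched as the minimisation of Sammon's stress, $E = \frac{1}{\sum_{i<j} d^*_{ij}} \sum_{i<j} \frac{(d^*_{ij} - d_{ij})^2}{d^*_{ij}}$, where $d^*_{ij}$ are distances in the original space and $d_{ij}$ are distances in the projection. The Python sketch below uses plain gradient descent rather than the pseudo-Newton update of Sammon's original method, rescales distances to unit mean (which leaves the stress unchanged), and keeps the lowest-stress iterate. These choices, the function name and the random test data are assumptions for illustration, not details of the cited Distributed GSOM.

```python
import numpy as np


def sammon(x, n_components=2, n_iter=300, lr=0.1, seed=0):
    """Return (projection, stress); assumes x has no duplicate rows."""
    n = len(x)
    iu = np.triu_indices(n, 1)
    d_star = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=2)
    unit = d_star[iu].mean()
    d_star = d_star / unit           # rescale input-space distances to mean 1
    np.fill_diagonal(d_star, 1.0)    # diagonal is never used; avoids 0/0 below
    c = d_star[iu].sum()

    def pairwise(y):
        return np.linalg.norm(y[:, None, :] - y[None, :, :], axis=2)

    def stress(y):
        d = pairwise(y)
        return ((d_star[iu] - d[iu]) ** 2 / d_star[iu]).sum() / c

    y = np.random.default_rng(seed).normal(scale=1e-2, size=(n, n_components))
    best_y, best_s = y.copy(), stress(y)
    for _ in range(n_iter):
        d = pairwise(y)
        np.fill_diagonal(d, 1.0)
        d = np.maximum(d, 1e-12)
        coef = (d_star - d) / (d_star * d)    # (d*_ij - d_ij) / (d*_ij * d_ij)
        np.fill_diagonal(coef, 0.0)
        diff = y[:, None, :] - y[None, :, :]  # y_i - y_j
        # Gradient of the stress, rescaled by the positive factor c / (n - 1)
        grad = -2.0 / (n - 1) * (coef[:, :, None] * diff).sum(axis=1)
        y = y - lr * grad
        s = stress(y)
        if s < best_s:
            best_y, best_s = y.copy(), s
    return best_y * unit, best_s


points = np.random.default_rng(2).normal(size=(30, 5))
y, s = sammon(points)
```

Dividing each error term by $d^*_{ij}$ makes small original distances count more, which is why Sammon's projection tends to keep local neighbourhoods intact.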

The Distributed GSOM uses a distributed growing phase in which multiple smaller SOMs are trained on partitions of the data in parallel. Algorithms for the MapReduce, bulk synchronous parallel (BSP) and resilient distributed dataset (RDD) paradigms have been developed based on the working principles of the Distributed GSOM algorithm and implemented on the popular platforms Apache Hadoop, Apache Hama and Apache Spark, respectively.
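The data-parallel pattern described above (partition the rows, learn locally, then combine) is sketched below in Python. It shows random and class-based partitioning, a "map" step that learns a few prototypes per partition, and a "reduce" step that pools them. Everything here is a hypothetical simplification: `local_prototypes` is plain competitive learning standing in for a small GSOM, threads stand in for Hadoop, Hama or Spark workers, and the final map-building step (Sammon's projection in the cited work) is omitted.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor


def random_partition(data, n_parts, seed=0):
    """Random horizontal split of the rows into n_parts partitions."""
    idx = np.random.default_rng(seed).permutation(len(data))
    return [data[chunk] for chunk in np.array_split(idx, n_parts)]


def class_partition(data, labels):
    """Class-based split: one partition per class label."""
    return [data[labels == c] for c in np.unique(labels)]


def local_prototypes(part, n_protos=4, n_iter=10, seed=0):
    """Hypothetical stand-in for training one small map on one partition:
    winner-take-all competitive learning with a decaying learning rate."""
    rng = np.random.default_rng(seed)
    protos = part[rng.choice(len(part), n_protos, replace=False)].astype(float)
    for t in range(n_iter):
        lr = 0.5 * (1.0 - t / n_iter)
        for x in part:
            winner = np.argmin(np.linalg.norm(protos - x, axis=1))
            protos[winner] += lr * (x - protos[winner])
    return protos


def distributed_prototypes(parts, n_workers=4):
    # "Map": learn prototypes for each partition independently. Threads are used
    # here only for illustration; the cited work targets Hadoop, Hama and Spark.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        local = list(pool.map(local_prototypes, parts))
    # "Reduce": pool the local prototypes; a final map would be built from these
    return np.vstack(local)


rng = np.random.default_rng(3)
data = rng.random((400, 3))
parts = random_partition(data, n_parts=4)
protos = distributed_prototypes(parts)
by_class = class_partition(data, labels=np.arange(400) % 2)
```

Because each partition is processed without reference to the others, the map step parallelises trivially; the cost is that the partitioning strategy influences which local prototypes emerge.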

2.2.5 GSOM Fusion

In the context of growing self-organization, there has been a focus on the hierarchical element of clustering, because hierarchical information processing closely resembles how the human brain perceives its external environment through different senses and processes that information to produce an ultimate perception. As such, an enhanced variant of the GSOM, the Multi–Layer GSOM (A. Fonseka and Alahakoon 2010), has been developed, motivated by hierarchical sensory information processing in the human brain.

The Multi–Layer GSOM approach uses a layered architecture that resembles the cortical hierarchy of the human brain to build a concept (cluster) hierarchy. The input data is first presented to the lowest-level GSOM, which learns trained weights, or prototypes, that represent the input space. This set of prototypes is then fed to the next layer, which is trained in turn. The process is repeated until a certain criterion is met.
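The layer-by-layer feeding of prototypes can be sketched as below. To keep the example short, each "layer" is trained with k-means instead of a GSOM, and a hypothetical stopping criterion halves the number of prototypes per layer until fewer than `min_k` would remain; neither choice is taken from the Multi–Layer GSOM work.

```python
import numpy as np


def layer_prototypes(x, k, n_iter=50):
    """Hypothetical stand-in for one GSOM layer: k-means prototypes of x."""
    # Deterministic start: k evenly spaced rows of x
    protos = x[np.linspace(0, len(x) - 1, k).astype(int)].astype(float)
    for _ in range(n_iter):
        labels = np.argmin(np.linalg.norm(x[:, None] - protos[None], axis=2), axis=1)
        for j in range(k):
            if np.any(labels == j):
                protos[j] = x[labels == j].mean(axis=0)
    return protos


def build_hierarchy(data, first_k=16, min_k=2):
    """Train layer after layer, each on the previous layer's prototypes."""
    layers, x, k = [], data, first_k
    while min_k <= k <= len(x):
        protos = layer_prototypes(x, k)
        layers.append(protos)
        x, k = protos, k // 2  # the next, coarser layer sees only the prototypes
    return layers


data = np.random.default_rng(5).random((200, 2))
layers = build_hierarchy(data)
print([len(p) for p in layers])  # → [16, 8, 4, 2]
```

Each higher layer summarises the one below it, so the top layers hold the most abstract concepts and the bottom layer the most granular ones.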

The Multi–Layer GSOM algorithm is based on several layers of growing self-organizing maps which are connected together (Fig. 1).

Generally, the Multi–Layer GSOM employs several layers of GSOMs (representing the cortical layers of the human brain) to build a cluster (concept) hierarchy for the input data space, and then calculates a cluster validity index for each layer to select the GSOM layer that represents the input space most accurately. Layered GSOMs capture more abstract concepts at the top levels of the hierarchy, while drilling down through the levels yields more granular and detailed concepts.
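The paragraph does not name the cluster validity index used. As one possibility, the sketch below scores each layer with the Davies-Bouldin index (lower is better) by assigning every input to its nearest prototype in that layer, and then picks the best-scoring layer. The choice of Davies-Bouldin, the function names and the toy three-cluster data are assumptions for illustration.

```python
import numpy as np


def davies_bouldin(x, protos):
    """Davies-Bouldin index of x assigned to its nearest prototype (lower is better)."""
    labels = np.argmin(np.linalg.norm(x[:, None] - protos[None], axis=2), axis=1)
    used = [j for j in range(len(protos)) if np.any(labels == j)]
    if len(used) < 2:
        return np.inf
    c = protos[used]
    # Scatter s_i: mean distance of a cluster's members to its prototype
    s = np.array([np.linalg.norm(x[labels == j] - protos[j], axis=1).mean() for j in used])
    m = np.linalg.norm(c[:, None] - c[None], axis=2)  # prototype separations
    np.fill_diagonal(m, np.inf)  # exclude i == j from the maximum
    ratios = (s[:, None] + s[None]) / m
    return ratios.max(axis=1).mean()


def select_layer(x, layers):
    """Index of the layer with the lowest Davies-Bouldin score, plus all scores."""
    scores = [davies_bouldin(x, protos) for protos in layers]
    return int(np.argmin(scores)), scores


rng = np.random.default_rng(4)
centres = np.array([[0.0, 0.0], [5.0, 0.0], [0.0, 5.0]])
x = np.vstack([c + rng.normal(0.0, 0.3, (50, 2)) for c in centres])
candidate_layers = [centres, np.array([[2.5, 0.0], [0.0, 5.0]])]
best, scores = select_layer(x, candidate_layers)
```

Here the layer whose prototypes sit on the three true cluster centres scores lower than the two-prototype layer that merges two clusters, so it is selected.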

Jayaratne et al. (2019) extended this work to fuse multimodal sensory information by proposing a multisensory self-organizing neural architecture. The architecture consists of GSOM layers that learn individual modalities. It also incorporates scalable computing for self-organization, so processing can scale to large datasets with short computation times. Lateral associative connections capture the co-occurrence relationships across individual modalities for cross-modal fusion, yielding a multimodal representation. The multimodal fusion algorithm was evaluated on audio-visual datasets of utterances to compare the quality of multimodal fusion against individual unimodal representations. The evaluation demonstrated its capability to form effective multimodal representations from congruent multisensory stimuli in short computation times. A further limitation of the traditional SOM is its inability to adapt to changing dimensions (variables). The GSOM fusion extension addresses this by incorporating dynamic time warping, which calculates the difference between records of similar data types that have different numbers of variables and so creates a common mapping (Fonseka et al. 2011). In addition, when there are significant variations in the type of variables collected (even within the same type of application), Jayaratne et al. (2017) create multiple GSOMs. They place a concept graph layer above these GSOMs to link the GSOM clusters using either existing or custom-built knowledge hierarchies/ontologies, which enables new information to be transferred after the growing phase.
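Dynamic time warping, mentioned above for comparing records with different numbers of variables, is illustrated below with the textbook dynamic-programming formulation (Python, NumPy). How Fonseka et al. (2011) apply it inside GSOM fusion is not detailed here. Treating each record's variables as a 1-D sequence with an absolute-difference local cost is an assumption.

```python
import numpy as np


def dtw_distance(a, b):
    """Classic dynamic time warping distance between two 1-D sequences
    that may have different lengths (here: different numbers of variables)."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])  # local cost of aligning a[i-1] with b[j-1]
            # Extend the cheapest of the three admissible warping steps.
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return cost[n, m]
```

For example, `[1, 2, 3]` and `[1, 2, 2, 3]` have a DTW distance of zero, because the warping path absorbs the repeated value that a point-wise distance could not handle.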

Table 1 summarizes the evolution of the self-building AI algorithms. The starting point is the primitive self-organizing map (SOM), which produces a reduced-dimensional representation of the input space while preserving its topological relations. From there, unsupervised self-building AI has evolved into hierarchical, multimodal fusion-based self-organization architectures. These are implemented on distributed computing environments and enable the exploration and latent representation of the natural world and behaviours using Big Data.

3 Proposed Self-Building AI Framework for Smart Cities

3.1 Overview of the Framework

This section describes the proposed AI framework for smart cities, which is based on unsupervised machine learning and uses structure-adapting GSOM-based AI algorithms as its components. The framework addresses two problems. First, AI and advanced analytics are carried out in silos, as isolated applications, due to the lack of information integration and sharing mechanisms. Second, significant human involvement is needed to build the structure of the models. Figure 2 represents the framework as three layers. The on-site and local layers sit within local machines, while the global layer could be implemented as a platform layer within a cloud platform.

The first (lower) layer handles on-site data capture with devices such as CCTV cameras or sensors, and generates the on-site GSOM after initial data pre-processing. The on-site GSOM could be spawned with each new incident (or at regular time intervals), and the map is transferred to layer 2 for local GSOM generation and local analytics. The on-site GSOM also receives inputs from the upper layers with updates on global and local trends, anomalies and patterns. This enables on-site processing to detect occurrences of interest and generate alerts.

The second (local processing) layer receives inputs from on-site processing and spawns a local GSOM, which is passed on to layer 3, Cloud PaaS processing. The local GSOM may be passed on to the global layer at a suitable level of granularity, set through the GSOM spread factor to represent an appropriate level of abstraction. The appropriate spread factor will be self-generated based on the abstraction required by the individual application or the level of summarization required for confidentiality. Layer 2 regularly receives a global GSOM, which provides the ‘positioning’ of the local data within the overall domain. For instance, consider a pedestrian-monitoring video camera whose local videos show only a low density of pedestrians. The global map might show that cameras in other regions captured high densities and faster-moving pedestrian flows, providing an overall understanding of the bigger picture.

The third layer (cloud and global processing) receives inputs from multiple local processing units of similar applications and generates a global GSOM using the Distributed GSOM technique. This global mapping supports global analytics to detect global patterns and insights. It is also passed on to the second (local) layer to provide the global positioning described above.

With their ability to spawn when required and self-build according to the needs of each local situation, GSOMs reduce the need for human involvement in the process. Their unsupervised learning capability enables the models to learn patterns from inputs without pre-labelled data. The global GSOMs facilitate the integration of multiple data sources from the same domain and therefore serve the valuable function of positioning local data within the bigger picture. The global GSOMs thus address the problem of applications working in isolation. After the initial unsupervised processing with GSOMs, the data could be directed to specialized supervised analytics using appropriate techniques. The series and layers of GSOMs thus act as position identifiers that enable the outcomes of different applications from multiple locations to be linked.

3.2 Task Flow within the Proposed AI Framework

This section details the tasks and functionality of the local and global layers. Figure 3 elaborates the task flow within layer 2 (local) and layer 3 (global). Layer 2 has three main tasks: local GSOM generation, granularity calibration and local analytics. Once the local GSOM is generated from the information provided by layer 1 (on-site processing), the content of the GSOM clusters is compared with the application requirements. For example, if the application is facial recognition, the cluster content will be analysed to determine whether the clusters provide suitable images. Where they do not, the GSOM will self-generate different levels of abstraction using spread factors, and the resulting clusters will be analysed until suitable outcomes are found. In this way, layer 2 uses the GSOM's ability to self-structure into multiple levels of abstraction to self-generate outcomes that match the needs of the application. Further localized analysis and trend capture will be conducted to generate local insights. In layer 3, once a global GSOM is generated, a cluster disambiguation phase is carried out. Because GSOMs are unsupervised, the cluster content may not directly relate to the needs of the application. The disambiguation phase therefore brings in domain ontologies and other available domain knowledge to resolve ambiguity and associate the diverse contents of clusters with each other. The global GSOM is used as the base for global analysis, and the outcomes are then communicated to the local sites (where appropriate).
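The layer-2 granularity calibration loop can be sketched generically in Python. Several names here are hypothetical placeholders, not part of the framework's published interface:

- the candidate spread factors and their ascending order;
- `build_map`, which builds a local map at a given spread factor;
- `is_suitable`, which checks the resulting clusters against the application requirement, for example whether they yield usable face images.

```python
from typing import Callable, Optional, Sequence, Tuple, TypeVar

M = TypeVar("M")  # whatever object represents a trained local map


def calibrate_granularity(
    build_map: Callable[[float], M],
    is_suitable: Callable[[M], bool],
    spread_factors: Sequence[float] = (0.1, 0.3, 0.5, 0.7, 0.9),
) -> Optional[Tuple[float, M]]:
    """Rebuild the local map at increasing spread factors until its clusters
    satisfy the application's requirement; return the first match."""
    for sf in spread_factors:
        candidate = build_map(sf)
        if is_suitable(candidate):
            return sf, candidate
    return None  # no tested granularity met the requirement
```

Keeping map construction and the suitability test as injected callables mirrors the text's separation between the GSOM, which is application-agnostic, and the application requirement it is checked against.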

4 Experiments

This section evaluates the proposed AI framework in a smart city context. Smart cities offer a number of sensory modalities, such as vehicular traffic data, pollution data, weather data, parking data and surveillance data. Among these, video surveillance data can be considered one of the most complex types in terms of computational overhead (Nawaratne et al. 2019a, 2019b, 2019c). We therefore conduct experiments on the proposed self-building AI framework using three video benchmark datasets applicable to smart city environments: the CUHK Avenue dataset (C. Lu et al. 2013), the UCSD Ped dataset (Mahadevan et al. 2010) and the Action Recognition Dataset (Choi et al. 2009). With this empirical evaluation, we demonstrate the capability of self-building AI to represent input data in an exploratory manner for further processing. The functionality of each component of the framework is demonstrated separately. For this experiment, the self-building AI platform is realized using the GSOM algorithm discussed in Section 2.2.3. In instances with multiple streams of large data volumes, however, a superlinear speedup can be achieved using the scalable implementation of GSOM presented in Section 2.2.4.

4.1 Experimental Setup

The experiments are intended to demonstrate the capabilities of Layers 2 and 3 of the proposed AI framework. The experimental setup is fourfold:

1. First, we evaluate local feature map generation at the on-site local processors residing in Layer 2 of the proposed AI framework. We derive SOM-based local feature maps to demonstrate the limitations that exist in structuring the input data space into a latent space representation. The objective of this experiment is to explore the validity of using a self-structuring AI core algorithm to represent the input space with context-based structural adaptation. The experiment thereby validates the benefit of structural adaptation in the AI technology.

2. Second, we develop the local feature maps using the growing counterpart of SOM, i.e., GSOM, with multi-granular structural adaptation, and show how granularity calibration can be implemented based on the requirements of the context. This capability enables the AI to self-build representations at different levels of abstraction. These representations can be calibrated to link objects or events of interest from different source data or timelines.

3. Third, we compare the optimal representation for selected datasets with respect to their context and granularity. This demonstrates the capability of structural adaptation to optimally represent the input data for further processing. This feature of self-building AI enables objects or events to be represented optimally without human involvement.

4. Fourth, we evaluate the Global Position Maps derived using GSOM for multiple data sources, demonstrating the development of regions around similar categories based on visual features. This experiment justifies the use of GSOM self-building AI technology for generating a global mapping from multiple distributed applications.

Experiments 1–3 demonstrate the capabilities of Layer 2 of the proposed AI framework workflow, whereas the fourth experiment demonstrates the applicability of Layer 3.

4.2 Datasets

The CUHK Avenue dataset (C. Lu et al. 2013) records street activity on the campus of the Chinese University of Hong Kong and was acquired using a stationary video camera. It contains a total of 37 video samples. These range from normal human behaviour, such as people walking on walkways and groups of people meeting on them, to unusual behaviour, such as people throwing objects, loitering, walking towards the camera, walking on the grass, and abandoned objects.

The UCSD Pedestrian dataset (Mahadevan et al. 2010) was captured from a camera focused on a pedestrian walkway. Its video samples cover different crowd scenes, ranging from sparse to dense crowd flow. Here we use the training set, which contains 34 video samples. For experimental purposes, we manually labelled the videos into four crowd-density categories (low, mid, high, very high).

The Action Recognition Dataset (Choi et al. 2009) was selected to demonstrate fusion-based exploratory analytics, as it contains 44 short video clips acquired under unconstrained real-world conditions. The videos are 640 × 480 pixels in size and were recorded using a consumer handheld camera. The scenarios are broadly grouped into road surveillance and indoor surveillance. Road surveillance scenarios contain videos of pedestrians crossing the road, walking on the sidewalk, waiting near a bus stop, walking in groups and having conversations beside the road. Indoor surveillance scenarios contain videos of people having conversations in shopping malls, waiting in queues for delivery at food courts and walking in an indoor environment.

As the video samples have different resolutions, we pre-process the inputs by resizing the extracted frames to 30 × 30 pixels and scaling pixel values to between 0 and 1. We then extract the histogram of optical flow (HOF) (T. Wang and Snoussi 2012) and the histogram of oriented gradients (HOG) (Dalal and Triggs 2005) as the feature vector for online structural adaptation.
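The resizing and normalization steps, together with a simplified gradient-orientation feature, can be sketched with NumPy as follows. The sketch rests on these assumptions:

- frames are 8-bit greyscale arrays, so scaling to [0, 1] divides by 255;
- resizing is nearest-neighbour;
- the orientation histogram is computed over the whole 30 × 30 frame, without the cell and block normalization of Dalal and Triggs (2005).

The HOF feature is omitted because it requires a dense optical-flow estimate (for instance from OpenCV's `calcOpticalFlowFarneback`), which is not reproduced here.

```python
import numpy as np


def resize_nearest(frame, size=30):
    """Nearest-neighbour resize of a 2-D greyscale frame to size x size."""
    h, w = frame.shape
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return frame[np.ix_(rows, cols)]


def normalise(frame):
    """Scale 8-bit pixel values to [0, 1] (assumes uint8 input)."""
    return frame.astype(float) / 255.0


def orientation_histogram(frame, bins=9):
    """Simplified, global histogram of oriented gradients: unsigned gradient
    orientations over the whole frame, weighted by gradient magnitude."""
    gy, gx = np.gradient(frame.astype(float))  # derivatives along rows, cols
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0
    hist, _ = np.histogram(ang, bins=bins, range=(0.0, 180.0), weights=mag)
    total = hist.sum()
    return hist / total if total > 0 else hist
```

A horizontal intensity ramp, for example, puts all of its gradient mass into the first (0°) orientation bin.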

4.3 Representation of Smart City Video on SOM

The aim of the first experiment is to demonstrate the qualitative effect of using a self-building AI algorithm to develop local feature maps at the on-site local processors that reside in Layer 2 of the proposed AI framework. To this end, we analyse GSOM with respect to its constrained counterpart, i.e., SOM. We first developed SOM-based local feature maps for the UCSD pedestrian dataset (Fig. 4) and the Avenue dataset (Fig. 5), which we treat as two data sources of our system. For these feature maps, we used a 20 × 20 map with a learning rate of 0.01, trained for 100 iterations.
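A fixed-grid SOM with the stated settings (20 × 20 map, learning rate 0.01, 100 iterations) might look like the NumPy sketch below. Several details are assumptions, since the paper does not specify them: the random weight initialization, the Gaussian neighbourhood, its linearly shrinking radius, and treating one iteration as one pass over the data.

```python
import numpy as np


def train_som(data, rows=20, cols=20, lr=0.01, iterations=100, seed=0):
    """Online SOM on a fixed rows x cols grid. The Gaussian neighbourhood and
    its linearly shrinking radius are assumptions, not taken from the paper."""
    rng = np.random.default_rng(seed)
    n, dim = data.shape
    weights = rng.random((rows, cols, dim))
    # Grid coordinates of every node, used for neighbourhood distances.
    grid = np.stack(np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij"), axis=-1)
    sigma0 = max(rows, cols) / 2.0
    for it in range(iterations):
        sigma = sigma0 * (1.0 - it / iterations) + 1e-3
        for x in data[rng.permutation(n)]:
            # Best-matching unit: node whose weight vector is closest to x.
            dist = np.sum((weights - x) ** 2, axis=2)
            bmu = np.unravel_index(np.argmin(dist), dist.shape)
            # Gaussian neighbourhood around the BMU on the fixed grid.
            g = np.sum((grid - np.array(bmu)) ** 2, axis=2)
            h = np.exp(-g / (2.0 * sigma ** 2))
            weights += lr * h[..., None] * (x - weights)
    return weights
```

The map size is fixed before training. No matter how the data are distributed, they must be squeezed into these 400 nodes, which is the rigidity discussed in this section.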

The latent representation of the pedestrian flow in the UCSD dataset is visualized in the SOM map shown in Fig. 4, where the colour code indicates pedestrian flow density. Dense pedestrian movements are clustered in the center of the SOM map, whereas low- and mid-density videos are scattered throughout, without any well-defined cluster region. Analysis of the SOM visualization shows that the self-structuring of the neural network has been restricted by its rigid structure (the predefined 20 × 20 grid). Consequently, the dense pedestrian movements learnt initially were firmly clustered, while the remaining scenarios were prevented from spreading as their feature representation required.

The latent space representation of the Avenue dataset is plotted in Fig. 5, together with the frames clustered at each SOM node. A fine-grained analysis identified 4 unique regions, each with images of different characteristics. Figure 5 (A) is composed of idle frames with minimal crowd presence and negligible motion activity. Figure 5 (B) contains frames in which a large crowd stays idle, with slight movements in the distance. Figure 5 (C) contains rapid movements across the frame (left to right), whereas Fig. 5 (D) contains crowd movements towards and away from the camera.

Both SOMs developed on the UCSD and Avenue datasets are limited by their predefined map size. The structural adaptation of the node map is therefore restricted, which limits its natural spread to a predefined threshold. Because of this limitation, we propose using the growing variant of the SOM, i.e., GSOM, for the structural adaptation of the video input data in the proposed AI framework workflow, specifically in Layer 2. From a smart city perspective, the diversity and distribution of input data in a single stream (e.g., video data) can be unpredictable, so forming a predefined structure is unrealistic. This further emphasizes the need for an unconstrained self-organizing algorithm such as GSOM.

4.4 Representation of Smart City Video on Structural Adapting Network

The second experiment aims to demonstrate the capability of GSOM to overcome the limitations of constrained self-organization shown above. In addition, we explore the multi-granular structural adaptation capability of GSOM and how granularity calibration can be implemented based on the requirements of the context. The capability shown here relates to Layer 2 of the proposed self-building AI framework workflow. In this experiment, we develop growing feature maps for the two selected datasets using the GSOM algorithm. Because GSOM is unconstrained, we do not need to specify a map size. Instead, we let the GSOM algorithm structurally adapt to the dynamics of the input feature space of the video data.

The growing feature maps at multiple granularities for the UCSD dataset are depicted in Fig. 6. Structural adaptation was conducted at three levels of abstraction, namely spread factors of 0.3, 0.5 and 0.8. For demonstration purposes, we selected 8 distinct video frames with different crowd density characteristics (plotted identically in Fig. 6), and the colour code indicates crowd flow density. The network grows wider as the spread factor increases. At the same time, data points that were close together in Fig. 6 (a) move apart as the spread increases, as in Fig. 6 (b). This enables the GSOM map to represent the input data space in greater detail through different calibrations of the spread factor.
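The spread factor controls growth through the GSOM growth threshold, GT = -D × ln(SF), where D is the input dimensionality (Alahakoon et al. 2000). A boundary node grows new neighbours when its accumulated error exceeds GT. The short Python calculation below uses a hypothetical D = 100 to show why larger spread factors produce wider maps: GT falls as SF rises, so nodes reach the growth condition sooner.

```python
import math


def growth_threshold(dim, spread_factor):
    """GSOM growth threshold GT = -D * ln(SF) (Alahakoon et al. 2000)."""
    if not 0.0 < spread_factor < 1.0:
        raise ValueError("spread factor must lie in (0, 1)")
    return -dim * math.log(spread_factor)


# D = 100 is a hypothetical feature dimensionality used only for illustration.
for sf in (0.3, 0.5, 0.8):
    print(f"SF={sf}: GT={growth_threshold(100, sf):.1f}")
# SF=0.3: GT=120.4
# SF=0.5: GT=69.3
# SF=0.8: GT=22.3
```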

Similarly, we derive GSOM feature maps (Fig. 7) for the Avenue dataset at different abstraction levels. The observations made for the UCSD dataset apply here: calibrating the spread factor changes the granularity of the representation of the input data.

The feature maps at multiple abstraction levels show that structural adaptation is possible with the GSOM algorithm. Here, the growth (structural adaptation) of the neural network is not restricted by a predefined map size. Instead, the input data space decides how the map should spread, restricted only by a friction parameter (i.e., the spread factor). Thus, in contrast to SOM, GSOM can structurally adapt to the dynamics of the input data space and is therefore well suited for use in the proposed framework. In the smart city context, multi-granular exploration is essential, as the distribution and diversity of input data are not known a priori. For instance, consider a scenario that requires facial recognition from a surveillance camera. Depending on the target person’s position relative to the camera (i.e., distance from the camera lens), the granularity at which the face should be identified may need to change.

In addition to the structural adaptation analysis, we conducted a quantitative analysis of the topology preservation of SOM and GSOM for both datasets. We used the topographic error (TE) proposed by Kiviluoto (1996). TE measures the proportion of data vectors in the input space whose first and second best matching units (BMUs) are not adjacent in the output space. The TE is defined in Eqs. (4) and (5), where K is the number of input data points.
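For reference, the standard form of Kiviluoto's topographic error, consistent with the description above, is given below. Here $\mathbf{x}_k$ denotes the $k$-th input vector and $u(\cdot)$ indicates a topographic error for that input.

```latex
TE = \frac{1}{K} \sum_{k=1}^{K} u(\mathbf{x}_k),
\qquad
u(\mathbf{x}_k) =
\begin{cases}
1, & \text{if the first and second BMUs of } \mathbf{x}_k \text{ are not adjacent on the map},\\
0, & \text{otherwise.}
\end{cases}
```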

The TE is zero for a perfectly topology-preserving map. In practice, TE can be used to compare topology preservation across self-organized feature maps. We evaluated topology preservation for the UCSD Pedestrian and Avenue datasets, and Table 2 presents the results. For both datasets, GSOM preserves topology better than SOM.
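The topographic error can be computed directly from a trained map. The NumPy sketch below works both for a full SOM grid and for a GSOM's irregular node set, given each node's integer lattice coordinates. Treating two nodes as adjacent only when they are 4-neighbours on a rectangular lattice is an assumption. A SOM with 8-neighbour or hexagonal adjacency would need a different adjacency test.

```python
import numpy as np


def topographic_error(data, weights, coords):
    """Kiviluoto's topographic error: fraction of inputs whose first and second
    best-matching units are not adjacent on the map.

    weights: (N, D) node weight vectors; coords: (N, 2) integer grid positions.
    Adjacency is assumed to be the 4-neighbourhood of a rectangular lattice.
    """
    errors = 0
    for x in data:
        dist = np.sum((weights - x) ** 2, axis=1)
        first, second = np.argsort(dist)[:2]  # indices of 1st and 2nd BMU
        if np.abs(coords[first] - coords[second]).sum() != 1:
            errors += 1
    return errors / len(data)
```

On a three-node line map whose weights are ordered along the line, inputs between neighbouring weights give TE = 0. Swapping two of the weights breaks the ordering and produces non-adjacent BMU pairs.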

In the next experiment, we will further explore the positioning capability of the proposed AI framework.

4.5 Structural Adaptation with Context Awareness

The objective of the third experiment is to evaluate the optimal structural adaptation of the feature maps for predefined context requirements. For the UCSD data source, we define the context requirement as detecting the flow of pedestrians. For the Avenue data source, it is detecting forward-facing frames that are suitable for face recognition given pixelation constraints (i.e., people directly facing the camera with large pixel coverage).

Fine-grained analysis of the UCSD feature maps shows that the minimally spread feature map (Fig. 8 (a)) is optimal for representing crowd density. This is based on both the color-coded nodes and the manually tagged sample video frames (note that Fig. 6 (a) is replicated as Fig. 8 (a)). For the Avenue dataset, we analyzed the manually tagged video frames highlighted by the pointers in Fig. 7. This region analysis shows that the nodes of the SF = 0.3 feature map (Fig. 7 (a)) are closely grouped, so forward-facing frames and frames of people walking across the scene are represented close together. The feature map with SF = 0.8 (Fig. 7 (c)) shows a sparse representation, whereas the feature map with SF = 0.5 (Fig. 7 (b)) provides a fine cluster of forward-facing frames. Therefore, for the current context requirement of identifying forward-facing frames, the feature map with SF = 0.5 is optimal, and we have replicated Fig. 7 (b) as Fig. 8 (b) for close comparison. Note that if the context requirement differs, the optimal feature map may also differ.

In smart cities, diverse sensory devices have specific requirements. For instance, CCTV cameras may be designed to capture anomalies in surveillance contexts, detect faces at entrances, automatically detect vehicle license plates and/or vehicle speeds, or perform general surveillance. These cameras have different objectives, so each needs to be calibrated for its specific context requirement:

- anomaly detection cameras should be calibrated to capture movements at high granularity so that subtle changes in motion are detected;
- facial detection cameras should be calibrated to obtain an optimal representation of face regions;
- vehicle detection cameras should be calibrated to zoom into vehicle license plates and extract that specific region.

The ability to derive an optimal structural adaptation of each local feature map for predefined context requirements is therefore a key capability that the proposed self-building AI framework offers smart city applications.

4.6 Fusion Map for Smart City Video

The fourth experiment explores the Global Position Maps derived using the growing self-structuring algorithm for multiple data sources. It demonstrates the development of regions around similar categories based on visual features. For this experiment, we use the Action Recognition Dataset to demonstrate the fusion of diverse videos into regions of the same map. Because the UCSD and Avenue datasets are narrowly focused, we augmented them with video samples from the Action Recognition Dataset to increase the diversity of video contexts. We evaluated the fused feature map at a lower spread factor of 0.3 and a higher spread factor of 0.5 to explore multi-granular representations, as illustrated in Fig. 9.

Figure 9 (a) illustrates the initial region analysis of the surveillance data, while Fig. 9 (b) shows regions A and B expanded into finer detail. In Fig. 9 (a), region A contains videos of people walking on pathways, on sidewalks, between buildings and in parks. The UCSD Pedestrian video samples used previously have been clustered into this region, as they contain pedestrians walking on a pathway. In Fig. 9 (b), the same region has expanded and its videos have split into sub-regions at a finer granularity. One sub-region contains people walking on roadside sidewalks, and another contains people walking on sidewalks near parks. One video, in which people walk between buildings, has moved to a single node with no similar videos, as it was the only video of that kind. Similarly, in Fig. 9 (a), region B contains videos of people crossing the road and of moving vehicles. In Fig. 9 (b), this region has expanded so that videos of passing vehicles are clustered together and videos of people crossing the road are clustered together. The Avenue video samples have been clustered in region C. However, region C remains compact in both GSOM maps. The increase in spread factor was not sufficient to expand this cluster, which includes indoor videos from a shopping environment where people gather in groups.

We clustered the video samples into five regions, as shown in Fig. 10:

- Region 1 contains videos of people in indoor environments, where people meet, have casual conversations, etc.
- Region 2 groups video samples of people in queues. Interestingly, the Avenue dataset video samples have been grouped here, especially the frames where people stand still in the shade, similar to a queue.
- Region 3 contains outdoor and garden locations as well as the pedestrian walkways near them. The UCSD dataset video samples have also been grouped into this region.
- Regions 4 and 5 contain similar types of road traffic video samples. Region 4 mostly shows people waiting near the road, and region 5 shows pedestrian crossings.

Apart from these, a few video samples with similar characteristics were not grouped into the selected regions. This is due to our feature representation approach, which relies on the flow (temporal) and gradient (spatial) features of the video.

This experiment demonstrates how the fusion of local on-site map outcomes can be used to develop a global, holistic understanding of the context and thereby provide feedback to local on-site maps on context requirements. The experiment presented only the interpretable regions in order to convey the outcomes. In practice, however, non-human-interpretable clustered regions will also be taken into account when developing the holistic view. In smart city applications, the fusion of multiple sensory modalities can help provide a holistic view of the environment. Further, GSOM makes it possible to distinguish viewpoints and cluster them for more convenient surveillance.

4.7 Analysis of Computational Overhead

The experiments were carried out on a 2.4 GHz multicore CPU with 16 GB of memory and an NVIDIA GeForce GTX 950M GPU. The average processing times for activity representation (HOG and HOF features) and self-organization were 12 milliseconds and 144.7 milliseconds per frame, respectively. The overall computation time is therefore 156.7 milliseconds per frame, i.e., the algorithm can process about 6.4 frames per second (FPS). The runtime analysis shows that most of the computation occurs in the self-organization phase. Speeding up this phase with a parallelized implementation could therefore further improve runtime efficiency.

A typical video camera acquires surveillance footage at 30 FPS, i.e., the interval between two consecutive frames is about 33.3 milliseconds. In video surveillance, however, an anomaly or unexpected action typically unfolds over a considerably longer period than that. For instance, for pedestrian surveillance, the proposed runtime (i.e., 157 milliseconds per frame) can be considered adequate for anomaly detection. By contrast, a vehicle travelling on a highway at 100 km/h would move close to 4.36 m during those 157 milliseconds. The proposed computational technique can therefore be considered near-real-time. Its runtime efficiency could be further improved with better computing resources for real-time use.
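The timing figures in this section can be checked with a few lines of Python. The input values are the ones reported here; only the arithmetic is added.

```python
# Per-frame processing times reported in this section, in milliseconds.
feature_ms = 12.0   # activity representation (HOG and HOF features)
selforg_ms = 144.7  # self-organization

total_ms = feature_ms + selforg_ms   # overall per-frame time
fps = 1000.0 / total_ms              # frames processed per second
frame_gap_ms = 1000.0 / 30.0         # gap between frames of a 30 FPS camera

# Distance a vehicle travelling at 100 km/h covers while one frame is processed.
speed_mps = 100.0 * 1000.0 / 3600.0
distance_m = speed_mps * total_ms / 1000.0

print(f"{total_ms:.1f} ms/frame, {fps:.1f} FPS")    # 156.7 ms/frame, 6.4 FPS
print(f"camera frame gap: {frame_gap_ms:.1f} ms")   # camera frame gap: 33.3 ms
print(f"vehicle moves {distance_m:.2f} m")          # vehicle moves 4.35 m
```

Using the rounded figure of 157 ms instead of 156.7 ms gives the 4.36 m quoted in the text.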

5 Discussion and Conclusion

Smart cities are becoming an essential requirement for most countries around the world due to migration of the population into urban areas. Using technological advancement as the base, smart cities are expected not only to cater to the needs of a huge increase in population, but also provide improved living environments, utilize resources more efficiently and responsibly as well as be environmentally sustainable. The main technologies that are expected to support the creation of smart cities are the cloud based IT systems, and AI and machine learning based intelligent data processing and advanced analytics.

5.1 Theoretical Implications

This research introduces and highlights unsupervised learning and self-structuring AI algorithms to cater to the needs of the big data era. In the past, supervised machine learning techniques have been more popular, because AI/machine learning was used for clearly defined tasks that are well separated and scoped. As described in the introduction, the new digital era has a plethora of technologies and processors generating streams of data. In this era, it is not only possible but necessary for AI and machine learning to process natural events and situations represented digitally.

This paper highlights three major limitations of current AI and machine learning technology when used in volatile, dynamic and non-deterministic environments such as smart cities. First, most current smart city AI applications are carried out in isolation, due to the difficulty of integrating different localities or data sources. Second, human expertise and experience are needed to decide the appropriate structure (or architecture) of the model, which makes real-time automated applications difficult. Third, labelled past data are needed to build and train supervised models. These limitations arise from the assumption of deterministic environments when using these techniques. Such training/learning was suitable for post-mortem processing and analysis of past data. It is not at all suitable for taking advantage of the new digital environments and ecosystems, where data and digital representations impact and influence each other. With big data, it is often difficult or impossible to know the distributions, dimensions, granularity and frequency of the data well enough to pre-build appropriate AI structures. As a solution, this paper proposes self-structuring AI, which can be created with a few starting nodes and develops a suitable structure incrementally to suit the environment as represented by the data. The proposed framework is built upon a suite of unsupervised self-organization algorithms, with the Growing SOM as the base building block.

The extendibility of the proposed framework to diverse, multimodal data sources addresses the first limitation discussed above. The new structure-adapting AI framework enables local processing and modelling of data as well as the generation of a global positioning of related data sources. This global positioning links different but related applications and data sources, enabling localized applications to ascertain their relative position. Detailed descriptions of the different tasks and processing requirements were provided, and the key components and functionality were demonstrated using several smart city benchmark data sets. The second and third limitations are addressed by using unsupervised machine learning with self-organization as the building blocks. Owing to the unsupervised self-learning capability of the GSOM algorithm, the framework requires neither human involvement nor labelled (annotated) data sets to function. The paper further presents extensions to the GSOM algorithm that enable incremental unsupervised learning, GSOM fusion, and distributed and scalable GSOM learning. These extensions address the practical needs of real situations using unsupervised learning algorithms.

Although many AI-based applications are currently used successfully in smart cities, the real advantage lies in the ability to consider the data created by multiple sources as a whole. This paper proposes self-building AI as a base for addressing the key limitations in the holistic processing, integration and analysis of multiple data sources. The volume, variety and volatility of the data (Big Data) make it essential that the technology can self-adapt, as human involvement becomes impossible. The self-building AI proposed in this paper can self-learn in an unsupervised manner. It can grow or shrink its architecture to optimally represent the data and develop multiple levels of abstract representation of the same event. Instead of attempting to match each individual event with similar events or behaviours, it will therefore be possible to identify similarity in an abstract sense. For example, the system could recognize a level of traffic density and drill down to possible causes such as road works or large trucks. It could also link to other sources, such as pedestrian movements, to identify causality or predict next events.

5.2 Practical Implications

Because it can generate global scenarios from local information, the proposed technology could support decisions on diverting traffic during emergencies and positioning police and security personnel, help to understand the spread of flu or other epidemics within neighborhoods, optimize road or infrastructure repairs based on traffic and public functions, and improve the delivery of essential services such as electricity, gas and water.

In this paper, the proposed self-building AI framework is primarily focused on smart cities, where it can be materialized as Big Data-driven traffic management systems (Bandaragoda et al. 2020; Nallaperuma et al. 2019); intelligent video surveillance of public places and pedestrian walkways with the capability to detect anomalies (Nawaratne et al. 2019a, 2019b, 2019c), recognize human actions (Nawaratne et al. 2019a, 2019b, 2019c) and summarise surveillance video to detect unusual behaviour (Gunawardena et al. 2020); intelligent energy meter reading for smart energy (Silva et al. 2011); and digital health (Carey et al. 2019) in smart city environments. In addition, the framework can even be extended to develop resource-efficient computing infrastructure that supports the effective implementation of smart cities (Jayaratne et al. 2019; Kleyko et al. 2019).

Future work will focus on conducting specialized local and global analytics over time to ascertain the value of the framework's ability to update continuously based on new data. It is also planned to implement the framework in a cloud environment to further simulate the complete local and global functionality, including inter-layer communication.

References

Adikari, A., De Silva, D., Alahakoon, D., & Yu, X. (2019). A cognitive model for emotion awareness in industrial Chatbots. 2019 IEEE 17th international conference on industrial informatics (INDIN), 1, 183–186.

Alahakoon, D., Halgamuge, S. K., & Srinivasan, B. (2000). Dynamic self-organizing maps with controlled growth for knowledge discovery. IEEE Transactions on Neural Networks, 11(3), 601–614. https://doi.org/10.1109/72.846732.

Allam, Z., & Dhunny, Z. A. (2019). On big data, artificial intelligence and smart cities. Cities, 89, 80–91.

Alter, S. (2019). Making sense of smartness in the context of smart devices and smart systems. Information Systems Frontiers, 1–13.

Bandaragoda, T., Adikari, A., Nawaratne, R., Nallaperuma, D., Luhach, A. Kr., Kempitiya, T., Nguyen, S., Alahakoon, D., De Silva, D., & Chilamkurti, N. (2020). Artificial intelligence based commuter behaviour profiling framework using internet of things for real-time decision-making. Neural Computing and Applications. https://doi.org/10.1007/s00521-020-04736-7.

Boccaletti, S., Grebogi, C., Lai, Y.-C., Mancini, H., & Maza, D. (2000). The control of chaos: Theory and applications. Physics Reports, 329(3), 103–197. https://doi.org/10.1016/S0370-1573(99)00096-4.

Bundy, A. (2017). Preparing for the future of artificial intelligence. Springer.

Carey, L., Walsh, A., Adikari, A., Goodin, P., Alahakoon, D., De Silva, D., Ong, K.-L., Nilsson, M., & Boyd, L. (2019). Finding the intersection of neuroplasticity, stroke recovery, and learning: Scope and contributions to stroke rehabilitation. Neural Plasticity, 2019, 1–15. https://doi.org/10.1155/2019/5232374.

Chen, Y., Qin, B., Liu, T., Liu, Y., & Li, S. (2010). The comparison of SOM and K-means for text clustering. Computer and Information Science, 3(2), 268–274.

Chen, B.-W., Imran, M., Nasser, N., & Shoaib, M. (2019). Self-aware Autonomous City: From sensing to planning. IEEE Communications Magazine, 57(4), 33–39. https://doi.org/10.1109/MCOM.2019.1800628.

Choi, W., Shahid, K., & Savarese, S. (2009). What are they doing?: Collective activity classification using spatio-temporal relationship among people. Computer vision workshops (ICCV workshops), 2009 IEEE 12th international conference on, 1282–1289.

Cziko, G. A. (2016). Unpredictability and indeterminism in human behavior: Arguments and implications for educational research. Educational Researcher. https://doi.org/10.3102/0013189X018003017.

Dalal, N., & Triggs, B. (2005). Histograms of oriented gradients for human detection. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), 1, 886–893. https://doi.org/10.1109/CVPR.2005.177.

De Silva, D., Sierla, S., Alahakoon, D., Osipov, E., Yu, X., & Vyatkin, V. (2020). Toward intelligent industrial informatics: A review of current developments and future directions of artificial intelligence in industrial applications. IEEE Industrial Electronics Magazine, 14(2), 57–72. https://doi.org/10.1109/MIE.2019.2952165.

Eldrandaly, K. A., Abdel-Basset, M., & Abdel-Fatah, L. (2019). PTZ-surveillance coverage based on artificial intelligence for smart cities. International Journal of Information Management, 49, 520–532. https://doi.org/10.1016/j.ijinfomgt.2019.04.017.

Emam, A. (2015). Intelligent drowsy eye detection using image mining. Information Systems Frontiers, 17(4), 947–960.

Fonseka, A., & Alahakoon, D. (2010). Exploratory data analysis with multi-layer growing self-organizing maps. 2010 fifth international conference on information and automation for sustainability, 132–137. https://doi.org/10.1109/ICIAFS.2010.5715648.

Fonseka, A., Alahakoon, D., & Rajapakse, J. (2011). A dynamic unsupervised laterally connected neural network architecture for integrative pattern discovery. In B.-L. Lu, L. Zhang, & J. Kwok (Eds.), Neural information processing (pp. 761–770). Berlin Heidelberg: Springer.

Fritzke, B. (1994). Growing cell structures—A self-organizing network for unsupervised and supervised learning. Neural Networks, 7(9), 1441–1460.

Ganegedara, H., & Alahakoon, D. (2011). Scalable data clustering: A Sammon’s projection based technique for merging GSOMs. In B.-L. Lu, L. Zhang, & J. Kwok (Eds.), Neural information processing (pp. 193–202). Berlin Heidelberg: Springer.

Ganegedara, H., & Alahakoon, D. (2012). Redundancy reduction in self-organising map merging for scalable data clustering. The 2012 International Joint Conference on Neural Networks (IJCNN), 1–8. https://doi.org/10.1109/IJCNN.2012.6252722.

Garcia-Font, V., Garrigues, C., & Rifà-Pous, H. (2016). A comparative study of anomaly detection techniques for Smart City wireless sensor networks. Sensors (Basel, Switzerland), 16(6). https://doi.org/10.3390/s16060868.

Guelzim, T., & Obaidat, M. S. (2016). Cloud computing systems for smart cities and homes. In M. S. Obaidat & P. Nicopolitidis (Eds.), Smart Cities and Homes (pp. 241–260). Morgan Kaufmann. https://doi.org/10.1016/B978-0-12-803454-5.00012-2.

Gunawardena, P., Amila, O., Sudarshana, H., Nawaratne, R., Luhach, A. Kr., Alahakoon, D., Perera, A. S., Chitraranjan, C., Chilamkurti, N., & De Silva, D. (2020). Real-time automated video highlight generation with dual-stream hierarchical growing self-organizing maps. Journal of Real-Time Image Processing. https://doi.org/10.1007/s11554-020-00957-0.

Gupta, P., Chauhan, S., & Jaiswal, M. P. (2019). Classification of Smart City research—A descriptive literature review and future research agenda. Information Systems Frontiers, 21(3), 661–685. https://doi.org/10.1007/s10796-019-09911-3.

Hall, R. E., Bowerman, B., Braverman, J., Taylor, J., Todosow, H., & Von Wimmersperg, U. (2000). The vision of a smart city (BNL-67902; 04042). Upton, NY (US): Brookhaven National Lab. https://www.osti.gov/biblio/773961.

Hebb, D. O. (1949). The organization of behavior; a neuropsychological theory. Wiley.

Jayaratne, M., Alahakoon, D., Silva, D. D., & Yu, X. (2017). Apache spark based distributed self-organizing map algorithm for sensor data analysis. IECON 2017 - 43rd Annual Conference of the IEEE Industrial Electronics Society, 8343–8349. https://doi.org/10.1109/IECON.2017.8217465.

Jayaratne, M., Silva, D. de, & Alahakoon, D. (2019). Unsupervised machine learning based scalable fusion for active perception. IEEE Transactions on Automation Science and Engineering, 1–11. https://doi.org/10.1109/TASE.2019.2910508.

Jones, S., Irani, Z., Sivarajah, U., & Love, P. E. (2017). Risks and rewards of cloud computing in the UK public sector: A reflection on three Organisational case studies. Information Systems Frontiers, 1–24.

Kar, A. K., Ilavarasan, V., Gupta, M. P., Janssen, M., & Kothari, R. (2019). Moving beyond smart cities: Digital nations for social innovation & sustainability. Information Systems Frontiers, 21(3), 495–501.

Khadartsev, A. A., & Eskov, V. M. (2014). Chaos theory and self-Organization Systems in Recovery Medicine: A scientific review. Integrative Medicine International, 1(4), 226–233. https://doi.org/10.1159/000377679.

Kiran, B. R., Thomas, D. M., & Parakkal, R. (2018). An overview of deep learning based methods for unsupervised and semi-supervised anomaly detection in videos. Journal of Imaging, 4(2), 36. https://doi.org/10.3390/jimaging4020036.

Kiviluoto, K. (1996). Topology preservation in self-organizing maps. Proceedings of International Conference on Neural Networks (ICNN’96), 1(1), 294–299. https://doi.org/10.1109/ICNN.1996.548907.

Kleyko, D., Osipov, E., Silva, D. D., Wiklund, U., & Alahakoon, D. (2019). Integer self-organizing maps for digital hardware. International Joint Conference on Neural Networks (IJCNN), 2019, 1–8. https://doi.org/10.1109/IJCNN.2019.8852471.

Kohonen, T. (1997). Exploration of very large databases by self-organizing maps. Proceedings of International Conference on Neural Networks (ICNN’97), 1, PL1–PL6.

Lana, I., Ser, J. D., Velez, M., & Vlahogianni, E. I. (2018). Road traffic forecasting: Recent advances and new challenges. IEEE Intelligent Transportation Systems Magazine, 10(2), 93–109. https://doi.org/10.1109/MITS.2018.2806634.

Li, S., Da Xu, L., & Zhao, S. (2015). The internet of things: A survey. Information Systems Frontiers, 17(2), 243–259.

Lin, A., & Chen, N.-C. (2012). Cloud computing as an innovation: Perception, attitude, and adoption. International Journal of Information Management, 32(6), 533–540. https://doi.org/10.1016/j.ijinfomgt.2012.04.001.

Liu, B. (2018). Natural intelligence—The human factor in AI.

Liu, W. C., & Lin, C. H. (2017). A hierarchical license plate recognition system using supervised K-means and support vector machine. 2017 international conference on applied system innovation (ICASI), 1622–1625.

Lu, C., Shi, J., & Jia, J. (2013). Abnormal event detection at 150 FPS in MATLAB. 2013 IEEE international conference on computer vision, 2720–2727. https://doi.org/10.1109/ICCV.2013.338.

Mahadevan, V., Li, W., Bhalodia, V., & Vasconcelos, N. (2010). Anomaly detection in crowded scenes. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2010, 1975–1981. https://doi.org/10.1109/CVPR.2010.5539872.

Marinescu, D. C. (2017). Cloud computing: Theory and practice. Morgan Kaufmann.

Marsland, S., Shapiro, J., & Nehmzow, U. (2002). A self-organising network that grows when required. Neural Networks, 15(8–9), 1041–1058.

Melo Riveros, N. A., Cardenas Espitia, B. A., & Aparicio Pico, L. E. (2019). Comparison between K-means and self-organizing maps algorithms used for diagnosis spinal column patients. Informatics in Medicine Unlocked, 16, 100206. https://doi.org/10.1016/j.imu.2019.100206.

Mikalef, P., Pappas, I. O., Krogstie, J., & Pavlou, P. A. (2020). Big data and business analytics: A research agenda for realizing business value. Information & Management, 57(1), 103237.

Mohammad, N., Muhammad, S., Bashar, A., & Khan, M. A. (2019). Formal analysis of human-assisted smart city emergency services. IEEE Access, 7, 60376–60388.

Nallaperuma, D., Nawaratne, R., Bandaragoda, T., Adikari, A., Nguyen, S., Kempitiya, T., Silva, D. D., Alahakoon, D., & Pothuhera, D. (2019). Online incremental machine learning platform for big data-driven smart traffic management. IEEE Transactions on Intelligent Transportation Systems, 20, 1–12. https://doi.org/10.1109/TITS.2019.2924883.

Nawaratne, R., Bandaragoda, T., Adikari, A., Alahakoon, D., De Silva, D., & Yu, X. (2017). Incremental knowledge acquisition and self-learning for autonomous video surveillance. IECON 2017 - 43rd Annual Conference of the IEEE Industrial Electronics Society, 4790–4795. https://doi.org/10.1109/IECON.2017.8216826.

Nawaratne, R., Alahakoon, D., De Silva, D., Chhetri, P., & Chilamkurti, N. (2018). Self-evolving intelligent algorithms for facilitating data interoperability in IoT environments. Future Generation Computer Systems, 86, 421–432. https://doi.org/10.1016/j.future.2018.02.049.

Nawaratne, R., Alahakoon, D., Silva, D. D., & Yu, X. (2019a). Spatiotemporal anomaly detection using deep learning for real-time video surveillance. IEEE Transactions on Industrial Informatics, 16(1), 393–402. https://doi.org/10.1109/TII.2019.2938527.

Nawaratne, R., Alahakoon, D., De Silva, D., Kumara, H., & Yu, X. (2019b). Hierarchical two-stream growing self-organizing maps with transience for human activity recognition. IEEE Transactions on Industrial Informatics, 1–1. https://doi.org/10.1109/TII.2019.2957454.

Nawaratne, R., Alahakoon, D., De Silva, D., & Yu, X. (2019c). HT-GSOM: Dynamic self-organizing map with transience for human activity recognition. 2019 IEEE 17th international conference on industrial informatics (INDIN), 1, 270–273. https://doi.org/10.1109/INDIN41052.2019.8972260.

Pappas, I. O., Mikalef, P., Giannakos, M. N., Krogstie, J., & Lekakos, G. (2018). Big data and business analytics ecosystems: Paving the way towards digital transformation and sustainable societies. Springer.