Abstract

We argue for an account of chemical reactivities as chancy propensities, in accordance with the ‘complex nexus of chance’ defended by one of us in the past. Reactivities are typically quantified as proportions, and an expression such as “A + B → C” does not entail that under the right conditions some given amounts of A and B react to give the mass of C that theoretically corresponds to the stoichiometry of the reaction. Instead, what is produced is a fraction α < 1 of this theoretical amount; the corresponding percentage is usually known as the yield, which expresses the relative preponderance of the reaction. This quantity is then routinely tested in the laboratory against the actual yields observed for the different reactions. Thus, on our account, reactivities ambiguously refer to three quantities at once. First, they refer to the underlying propensities effectively acting in the reaction mechanisms, which in ‘chemical chemistry’ (Schummer in Hyle 4:129–162, 1998) are commonly represented by means of Lewis structures. Second, reactivities represent the probabilities that these propensities give rise to, for any amount of the reactants to combine as prescribed. This second notion is best understood as a single case chance and corresponds to a theoretical stoichiometric yield. Finally, reactivities represent the actual yields observed in experimental runs, which provide the requisite evidence for or against both the mechanisms and the single case chances ascribed.


Probability: ontology and statistical modelling methodology

The role of probability in chemical modelling practice is a remarkably understudied philosophical topic. While there have been many discussions of reductionism and ontology within the philosophy of chemistry, the nature of chance and probability in these discussions, and in chemistry at large, remains underexplored. This is surprising since, for chemistry, the reductive basis cannot but ultimately be atomic physics, and hence quantum mechanics. Since many take quantum mechanics to introduce irreducible objective chance into the description of atomic and sub-atomic processes, one would expect the discussion to turn to a large extent on chemical probabilities. Yet probability barely makes an appearance in the philosophy of chemistry literature. One explanation is that reductionism to physics has few friends these days; but the more likely explanation is an (unfounded) rejection of any macroscopic objective chances. The rejection is unfounded because in the philosophy of probability there are nowadays some good arguments in favor of macroscopic objective chance, and not just in an indeterministic world. It may be just as well to begin by reviewing some of these arguments, and how they allow for objective chances in molecular organic and inorganic chemistry, including reactivity phenomena.

There are two types of argument for the reality of objective macro-chances. One derives from considerations of ontology and related issues of reduction. The other, by contrast, comes out of statistical modelling practice. For several reasons, we ascribe greater weight to the second set of arguments, but let us first quickly run through the first type. Hoefer (2019) and Glynn (2010), amongst others, have argued that macroscopic chance can be real even if the underlying dynamics of our world is deterministic. They both draw in different ways on David Lewis’ (1986) theory of chance and argue that, on a generous version of Lewis’ best system analysis, chances can certainly be real at any level of description, and not merely in microphysics. For the systems that best systematize the Humean mosaic at these levels (the mosaic being the whole tapestry of actual spatiotemporal coincidences, which everything else supervenes on according to the doctrine of Humeanism) may well be those systems that contain chances defined over macro-events and processes at those levels. Thus, a best system analysis of chances that understands the Humean mosaic generously to include higher level primitive facts, not merely pointwise spacetime coincidences, ought to have no problem accommodating chance at those levels. This contrasts with other theoretical approaches to chance, including, most pointedly, any approach that expressly links chance to the indeterministic character of the dynamics. And while it is true that Lewis’ original account rules out macro-chances, this is not so much the result of the best system analysis of chance per se as the outcome of an ancillary commitment to a strong form of empiricism, which is encapsulated in Lewis’ thesis of Humean supervenience.
That is, unless one insists on a sort of strong empiricism à la Lewis ruling out composite macro-events from the Humean mosaic, there is no reason why chances may not appear at higher levels of description, as part of the best system laws that account for the phenomena at those levels. Hence, there is scope within a best system analysis of chances for higher level emergent chance. Ontology per se does not rule out macroscopic chances.

Yet, when it comes to understanding the nature of chance, ontology has overall been a rather poor guide. Except for such generous extensions of the best system analysis, no account of the nature of chance genuinely holds water. The frequency and propensity interpretations of probability attempt to fully define probability and suffer from genuine difficulties as a result. Many of us think that these difficulties make those two interpretations unviable, because at their heart lies an untenable identity thesis between probabilities and either frequencies or propensities. The difficulties are related to the notorious reference class problem and to Humphreys’ paradox, respectively. As regards the former problem (Gillies 2000; Hájek 1996, 2009; Hoefer 2019; Strevens 2016), the fact that the reference classes (or collectives, as Von Mises (1928 [1981]) called them) are undefined indicates that frequencies are not well defined. They rather depend on a given place selection within a sequence, and on the ongoing hypothetical progression of finite frequencies. By contrast, objective probabilities, or chances, have a robustness and stability that is lacking in frequencies. Any attempt to solve this problem by appeal to limit theorems for indefinitely long sequences also meets objections that many of us regard as insurmountable (Hájek 2009).
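To make the dependence on place selection concrete, here is a minimal Python sketch. The alternating sequence and the helper `relative_frequency` are hypothetical illustrations, not drawn from the works cited. Von Mises excluded such sequences from counting as collectives precisely by requiring invariance under place selection, so the sketch only shows how a finite frequency is relative to the selection rule applied to a sequence.

```python
def relative_frequency(outcomes, select=lambda i: True):
    """Relative frequency of 1s among the outcomes at positions i picked by `select`."""
    chosen = [o for i, o in enumerate(outcomes) if select(i)]
    return sum(chosen) / len(chosen)


# Hypothetical finite sequence of 100 coin tosses (1 = heads), strictly alternating.
tosses = [1, 0] * 50

overall = relative_frequency(tosses)                            # 0.5
even_places = relative_frequency(tosses, lambda i: i % 2 == 0)  # 1.0
odd_places = relative_frequency(tosses, lambda i: i % 2 == 1)   # 0.0
print(overall, even_places, odd_places)
```

The same 100 outcomes thus support frequencies of 0.5, 1.0 or 0.0, depending on which subsequence is taken as the reference class.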

The propensity interpretation of objective probability fares no better. Wesley Salmon first provided arguments to the effect that not all conditional probabilities can be understood as propensities. This is most obvious when considering the inverses of those conditional probabilities that can be regarded as propensities, such as the propensity that shooting someone has to kill them. While Prob (K/S) > Prob (K/not S) may be regarded as measuring the strength of the tendency that shooting has to kill, the inverse inequality Prob (S/K) > Prob (S/not K), which follows by Bayes’ theorem, cannot be similarly rendered as a propensity. Killing evidently has no retrospective propensity to cause any shooting. Even the statement sounds wrong: the causal arrow, not just the temporal arrow, is clearly drawn the wrong way.
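The symmetry of probabilistic relevance behind Salmon’s point can be checked numerically. The Python sketch below uses an invented joint distribution over S (shooting) and K (killing); the numbers are purely illustrative, not data. Both the forward inequality and its inverse hold, although only the former is a plausible candidate for a propensity.

```python
# Invented joint probabilities over (S, K); illustrative only, not empirical data.
JOINT = {
    (True, True): 0.08,    # shooting and killing
    (True, False): 0.02,   # shooting, no killing
    (False, True): 0.01,   # killing without shooting
    (False, False): 0.89,  # neither
}


def prob(event):
    """Total probability of the outcomes (s, k) that satisfy `event`."""
    return sum(p for outcome, p in JOINT.items() if event(*outcome))


def cond(a, b):
    """Ratio definition of conditional probability: Prob(a/b) = Prob(a & b) / Prob(b)."""
    return prob(lambda s, k: a(s, k) and b(s, k)) / prob(b)


def S(s, k):
    return s


def K(s, k):
    return k


def not_S(s, k):
    return not s


def not_K(s, k):
    return not k


forward = cond(K, S) > cond(K, not_S)  # Prob(K/S) > Prob(K/not S)
inverse = cond(S, K) > cond(S, not_K)  # Prob(S/K) > Prob(S/not K)
print(forward, inverse)  # True True
```

The inverse conditional probability is perfectly well defined by the ratio formula; what it lacks is any causal reading as a tendency of killings to produce shootings.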

A few years later, Humphreys (1985, 2004) presented an argument to the effect that, conversely, not all propensities are probabilities: there are perfectly legitimate propensities that cannot be given a probability interpretation. Humphreys conceived of a thought experiment in which the physical propensities of a photon, if expressed as conditional probabilities, evidently cannot obey the Kolmogorov axioms. Briefly, consider a source that emits photons spontaneously at some time t1; a few of these reach a half-silvered mirror at a certain distance later, at t2; some of these are then absorbed, and some make it through and are transmitted at time t3. For each photon, denote by \(B_{t_1}\) the background conditions at the time t1 of its emission, by \(I_{t_2}\) the event of its incidence upon the mirror, and by \(T_{t_3}\) the event of its transmission through the mirror. If we then suppose that propensities are conditional probabilities, Humphreys (2004, p. 669) argues that in the physical situation described the propensities at the time of emission take the following values:

(i) \(\mathrm{Prop}_{t_1}(T_{t_3}/I_{t_2} \,\&\, B_{t_1}) = p > 0\).

(ii) \(1 > \mathrm{Prop}_{t_1}(I_{t_2}/B_{t_1}) = q > 0\).

(iii) \(\mathrm{Prop}_{t_1}(T_{t_3}/{\sim}I_{t_2} \,\&\, B_{t_1}) = 0\).

He then shows (conclusively, to our minds) that these expressions, together with a reasonable assumption regarding forward-in-time causation, are inconsistent with the Kolmogorov axioms; specifically, they violate the fourth axiom, namely the one that defines conditional probability as the ratio Prob (A/B) = Prob (A&B)/Prob (B). By reductio, either the Kolmogorov axioms must be replaced with a different formulation, or (the solution that we adopt here; see Suárez 2013) the identity thesis that proclaims propensities to be probabilities is false. In statistical modelling practice, propensities are intended not to interpret probabilities but to explain them.
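The inconsistency can be reconstructed as follows. This is a sketch in the notation of (i) to (iii), assuming that the ‘reasonable assumption’ takes the form of Humphreys’ conditional-independence premise: since the transmission at t3 cannot influence the earlier incidence at t2, \(\mathrm{Prop}_{t_1}(I_{t_2}/T_{t_3} \,\&\, B_{t_1}) = \mathrm{Prop}_{t_1}(I_{t_2}/B_{t_1}) = q\). Applying Bayes’ theorem, which follows from the ratio definition of conditional probability, to (i) to (iii) gives:

```latex
\begin{aligned}
\mathrm{Prop}_{t_1}(I_{t_2}/T_{t_3}\,\&\,B_{t_1})
&= \frac{\mathrm{Prop}_{t_1}(T_{t_3}/I_{t_2}\,\&\,B_{t_1})\,\mathrm{Prop}_{t_1}(I_{t_2}/B_{t_1})}
{\mathrm{Prop}_{t_1}(T_{t_3}/I_{t_2}\,\&\,B_{t_1})\,\mathrm{Prop}_{t_1}(I_{t_2}/B_{t_1})
 + \mathrm{Prop}_{t_1}(T_{t_3}/{\sim}I_{t_2}\,\&\,B_{t_1})\,\mathrm{Prop}_{t_1}({\sim}I_{t_2}/B_{t_1})} \\
&= \frac{pq}{pq + 0\cdot(1-q)} = 1 .
\end{aligned}
```

Since p > 0 and q > 0, the denominator is non-zero. Combined with the conditional-independence premise, the result forces q = 1, which contradicts (ii).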

The frequency and propensity interpretations of probability are part of an ontological approach to the nature of probability. They are different attempts at answering the same questions regarding the truth makers of probability statements. They thus attempt to provide some referent in the world for what ‘chance’ can putatively denote. On a frequency account, statements about chances are essentially about the ratios of outcomes within given (actual and finite, or hypothetical and infinite) sequences. On a propensity account, by contrast, they are statements about the dispositions of certain objects and systems to generate those frequencies (see Note 1). So, although they postulate different entities in the world as the referent of the term “chance”, or “objective probability”, they agree that there is just one thing in the world that our statements about chance refer to. Yet neither of these approaches to objective chance succeeds. We need a different approach. We suggest one that does not start with issues of ontology and the metaphysics of chance. Instead, we propose to start with statistical modelling practice and extract ontological conclusions only when needed (Suárez 2020). Since modelling practices are heavily context dependent, and located within precisely defined and highly idiosyncratic locales and communities, it also follows that those ontological conclusions will lack any generality. They will be rather specific to the contexts at hand.

In this paper, we propose to apply this approach to chance to the modelling practice in what we call ‘chemical chemistry’. We then argue that significant lessons can be learned from applying it to the sort of chemical modelling practice that is typically employed for calculating reactivities. More specifically, we argue that the practice calls for a tripartite distinction between propensities, single-case probabilities, and frequencies, where none is subsumed under the others or in any way analyzed away (Sect. 4). This has some implications for how best to conceptualize the chemical bond in chemical elements and reactions, and we suggest that the nature of the bond is best approached as a complex nexus of these three different notions, as they are functionally operative within the practice of modelling chemical reactivities.

Lewis structures: reactivities, stabilization, and the stoichiometric yield

In an interesting paper, Joachim Schummer (1998) emphasized what he called the ‘chemical core’ of chemistry, namely the classical Lewis structures that ordinary chemists continue to employ in their everyday work to obtain the reactivities of possible compounds and their yields in laboratory situations. All that matters to these chemists is whatever laboratory techniques are effective to anticipate, predict and control the reactivities and yields of different compounds. This is in striking contrast with the high-minded and theoretical approach that quantum and physical chemists often take, which involves explanatory models of the physical aspects of molecular bonding, either in a semi-classical approach or, ideally, in a fully quantum treatment. Schummer finds the latter sort of practice relatively esoteric and rarely part of what goes on in most chemical laboratories and in most chemists’ practice. The ordinary work of most chemists, the ‘chemical core of chemistry’, still mainly involves the application of classical, old-fashioned Lewis structures as indicative rules of thumb in the representation of covalent bonds, that is, as inferential blueprints, or vehicles towards the target of calculating yields.

We agree with this view and would like to use Schummer’s work as a linchpin for the distinction between what we call ‘chemical chemistry’ and the branch of physical chemistry that is better known to philosophers. The former is the common practice of using classical chemical structures to model the formation of compounds, in order to understand reactivities descriptively. The latter is, by contrast, the attempt, often of a theoretical kind, to provide an explanatory physical foundation for chemistry, which is presumably to be found in quantum mechanics. (As a referee points out, the distinction between explanation and understanding is by now widespread in philosophy of science, and we follow essentially common lore in appealing to it.) If relative yields between the different compound elements of a given reaction are regarded as relative probabilities, then the distinction between physical and chemical chemistry maps onto the distinction we drew in the first part of the article between ontological and methodological approaches to probability. Physical chemistry, recall, is ultimately interested in finding out the underlying and fundamental truth makers of reactivity yields. The presumed foundations of these reactions lie in quantum mechanical interactions between electrons in covalent bonds, which explain the resulting energetic values of the different compounds. From this perspective, these quantum chemical interaction Hamiltonians fundamentally determine yields in any given situation. It may be very hard, even impossible, to carry out the quantum mechanical treatment for most reactions, but according to this viewpoint this is merely a practical limitation. In theory, at least, the truth makers for all reactivities are well founded in the quantum mechanical description of the underlying molecular processes.

Just as quantum physical chemistry purports to provide the truth makers (the ontology) for our probabilistic ascriptions regarding reactivity yields, so it is the role of ‘chemical chemistry’ to describe much of the methodological practice: the bulk of chemical modelling in laboratories across the world. According to Schummer, this involves no sophisticated quantum mechanical treatment, but rather the much more common application of Lewis structures in rough-and-ready predictions and calculations of the reactivity yields ordinarily observed in laboratory situations. As in the first part of this article, and as regards reactivity yields, preponderances, and percentages in their putative probabilistic interpretation, physical chemistry is an attempt to interpret away those quantities in the more apt terms of quantum mechanics, while chemical chemistry is devoted entirely to establishing methodological rules for inferring those quantities whenever they are required for practical purposes. The striking thing is that here, as in discussions over the ontology of probability, disagreement about the nature, reach, and consistency of the quantum mechanical treatments is rife. By contrast, the application of Lewis structures in ordinary chemical practice is both entrenched and relatively uncontroversial. It thus seems properly in line with the spirit of our proposal to concentrate on the latter, the practical methods, since this suits our purpose of providing an overall description of the common methodology for obtaining reactivity yields and preponderances.

Chemical reactivities suggest an indeterministic approach to the mechanisms underlying chemical reactions. For example, an expression such as “A + B → C” does not mean that in the appropriate conditions given amounts of the substances A and B react to give the mass of C that theoretically corresponds to the stoichiometry of the reaction. Instead, what is produced is a percentage or fraction α of this theoretical amount. This fraction α is usually known as the yield of the reaction. An α < 1 can be attributed to two different causes:

(i) Impurities in the reactants, or side reactions with other substances in the environment. In other words, “A + B” is only an approximate description of the initial state. This is, in fact, a version of the more general problem of the unavoidably limited precision with which we can specify the initial state of the reactants. Similar issues plague the interpretation of physical probabilities in deterministic systems.

(ii) In general, given a group of reactants there is more than one possible reaction between them. Thus, in our model example, “A + B → C” does not fully describe the reactivity of A and B. To specify the reactivity of A and B it would be more accurate to write:

A + B → C

A + B → D

A + B → E

And so on to exhaust all the possible reactions between A and B. Thus, the yield α for a given product also expresses the preponderance of its reaction.
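A worked example may help separate the two uses of the yield. The Python sketch below assumes, hypothetically, that each competing reaction A + B → C, D, E consumes A and B in the same 1:1 ratio, so that yields can be computed on a molar basis; the amounts are invented for illustration and the helper names are our own.

```python
def yield_fraction(actual_mol, theoretical_mol):
    """Yield alpha: fraction of the stoichiometric (theoretical) amount actually obtained."""
    return actual_mol / theoretical_mol


def relative_yields(product_mol):
    """Normalize the amounts of the competing products into proportions summing to 1."""
    total = sum(product_mol.values())
    return {name: amount / total for name, amount in product_mol.items()}


# Hypothetical run: the reactants could in theory give 1.0 mol of any single product.
theoretical = 1.0
obtained = {"C": 0.60, "D": 0.25, "E": 0.10}

alphas = {name: yield_fraction(mol, theoretical) for name, mol in obtained.items()}
print(alphas)                     # alpha for each product, each < 1
print(sum(alphas.values()))       # < 1: the shortfall is the kind of loss listed under (i)
print(relative_yields(obtained))  # preponderance of each reaction among C, D and E
```

On this reading, α = 0.6 for C expresses how far the reaction to C falls short of the stoichiometric ideal, while the normalized proportions express the preponderance of each reaction relative to its competitors, which is case (ii) above.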

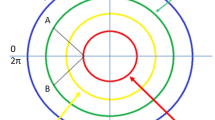

We focus here on the second case, which expressly suggests that yields measure the relative likelihoods or chances of the possible reactions. The usual explanation of reactivity within chemical chemistry is closely linked to a reaction mechanism: “a specification, by means of a sequence of elementary chemical steps, of the detailed process by which a chemical change occurs” (Lowry and Richardson, 1987, p. 190). And the usual way of presenting a mechanism is by means of graphical Lewis structures (Lewis 1913, 1916, 1923 [1966]). For example, Fig. 1 represents the reactions of electrophilic aromatic substitution:

These graphs can also be used to study the influence of a substituent group on the relative yields when more than one product is generated. For example, an amino group (-NH2) directs the reaction to the ortho and para products, by means of a resonance stabilization of the cationic aromatic intermediate (Fig. 2). In the reaction to the meta product, on the other hand, the positive charge cannot be delocalized over the amino group, so the intermediate is less stable (Fig. 3). Consequently, the yields of the ortho and para substitutions are expected to be of the same order, and both substantially greater than the yield of the meta substitution. Such predictions can be extended to other activating groups and have been experimentally confirmed for decades. They thus figure in any introductory textbook on organic reactions, and not merely for didactic purposes.
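Because such predictions are only ordinal, testing them against laboratory yields amounts to checking an ordering rather than a number. The Python sketch below encodes the qualitative prediction for an amino-substituted ring; the thresholds `same_order_factor` and `dominance_factor`, the function name, and the sample yields are hypothetical choices for illustration, not values from the literature.

```python
def matches_ortho_para_prediction(yields, same_order_factor=3.0, dominance_factor=5.0):
    """Check observed relative yields against the qualitative prediction:
    ortho and para of the same order, both substantially greater than meta.
    The two factors are hypothetical thresholds for 'same order' and 'substantially'."""
    o, p, m = yields["ortho"], yields["para"], yields["meta"]
    same_order = max(o, p) / min(o, p) <= same_order_factor
    both_dominate = min(o, p) >= dominance_factor * m
    return same_order and both_dominate


# Invented sample yields, for illustration only.
print(matches_ortho_para_prediction({"ortho": 0.45, "para": 0.50, "meta": 0.05}))  # True
print(matches_ortho_para_prediction({"ortho": 0.30, "para": 0.30, "meta": 0.40}))  # False
```

The design choice is deliberate: the Lewis-structure argument fixes only a ranking, so any numerical threshold is an extra, explicitly stated assumption of the test.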

To conclude, Lewis structures are graphical descriptions of reactivities of the diverse atomic elements they involve, enabling us to infer both their possible reactions and their qualitative relative proportions. They capture functional powers of the respective mechanisms, including some observable features of the competing reactions, such as their kinetics or their stereospecificity. As a result, we shall now urge, this justifies taking them to represent underlying propensities displaying themselves in single case chances that may be known imprecisely, and perhaps only qualitatively.

The complex nexus of the chemical bond

The role of Lewis structures within chemical theorizing may thus seem paradoxical. On the one hand, they are critically instrumental to chemical chemists’ explanations of reactivities and yields. On the other hand, they afford only a limited degree of control over most of these reactions. We propose to address the apparent paradox by turning to the nature of the chemical bond. As is well known, this has been the focus of much philosophical debate in the last decade or so (Hendry 2008, 2021; Weisberg 2008; Seifert 2022). The idea that there are in essence two different views of the nature of the bond, one structural and the other energetic, originates in Hendry (2008). The structural conception of the bond is the one employed in classical Lewis structures, where the bond is conveniently represented as a link between atoms that symbolizes one or more shared electron pairs. On this view the bond is genuinely a real thing, standing between the atoms that compose a given molecule. And it is the properties of this thing, however structurally described, that putatively explain the reactivity and the yields of most reactions. We take this conception of the bond to roughly inform the implicit notion that is most prevalent amongst practitioners of ‘chemical chemistry’. It is of course also prevalent throughout the educational establishment, and not only in secondary school, where its proximity to everyday conceptions and simplified models of the atom gives it currency. It is a fair comment that most of us begin thinking about molecular compounds, reactions, and chemical reactivity with very much this notion of the bond in mind. Yet its importance goes even beyond this context: graphs of Lewis structures depicting such bonds are a critical core of professional chemists’ reasoning patterns about molecular structure (Barradas-Solas and Sánchez Gómez 2014).

By contrast, the energetic conception arises in the context of Linus Pauling’s attempts to ground the use of classical chemical structures in quantum mechanics, in view of the obvious deficiencies of the classical structural formulas. Hendry (2008, pp. 917ff) and Weisberg (2008) emphasize two deficiencies: quantum mechanical holism or non-separability, and charge delocalization. The first is the familiar problem of the indistinguishability of identical particles, which entails that molecular wavefunctions must have a definite symmetry under electron permutations (for electrons, the total wavefunction must be antisymmetric). Yet the picture in the classical structural formulas is of bonds made up of obviously distinguishable electrons. And this is clearly wrong: electrons cannot figure in molecular wavefunctions if understood as possessing haecceitist identities of their own, and a chemical bond cannot really be the mere physical sum of the electrons that are shared in covalent formulas. This is compounded by the second problem, namely the fact that the electron charge density in a molecule is delocalized, or highly smeared out. This entails that the bonds depicted in Lewis structures cannot in fact exist as depicted, for, contrary to the depiction, neither the atoms that compose the molecules in Lewis structures nor the electrons that they share are precisely localized at any given point of space.

Hence, Lewis structures would mislead if taken at face value, as a 3D representation of the actual physical structure of the bond, and chemists rightly reject such an interpretation. But what other value can they have as representations? We suggest instead to interpret Lewis structures as effective tools for the inference of the relevant capacities of atoms in a molecule to combine and form compounds. As Hendry (2008, p. 911) puts it, Lewis structures represent “their capacities to combine with fixed numbers of atoms of other elements”. On our account of the role of probability in mechanisms of chemical reactivity, this entails that Lewis structures represent the atoms’ propensities for recombination with other atoms rather than–as they would, if they were literal depictions–the physical makeup of the molecules that they form. Hence, in the context of possible combinations of atoms within any molecules, Lewis structures display the propensities for every possible bonding reaction.

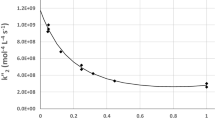

What the energetic view contributes is the apt representation of the single case chances that display such propensities. As we saw, Lewis structures provide no formal or quantitative probabilities. Yet stoichiometric yields may be rendered as imprecise probabilities, and this is particularly the case when we analyze the relative yields in different reactions. The explanation both of what the possible reactions are and of how likely each of them is must inevitably refer to the molecular quantum wavefunction. The squared modulus of that wavefunction, if only it were possible to calculate it, yields the appropriate probabilities; so it seems natural to suppose that the observed yields would correspond to those quantum mechanical probabilities, even in cases where we are very far from being able to calculate them, or in any way to solve the Schrödinger equation for the molecular wavefunction. On the energetic view, there are no bonds per se, but rather ‘bonding’ events, phenomena, or effects. Such ‘bondings’ correspond to the lowest-energy configurations of atoms within the molecular wavefunction. Thus, stable configurations persist if they are energetically cost efficient for the molecules to maintain. The relative stoichiometric yields thus reflect the relative costs carried by each possible bonding. To make this point more formally, let us briefly review reaction mechanism models within quantum chemistry.

In the Born–Oppenheimer approximation, the reactants and any possible arrangement of the products must correspond to minima of the potential energy hypersurface on which the nuclei are assumed to move. The points of this hypersurface are given by the eigenvalues of the electronic Schrödinger equation for the system at each nuclear configuration. A reaction then corresponds to a transition from one of the minima (that of the reactants) to another one, namely that describing the products. A typical model of a reaction mechanism in quantum chemistry ascribes a nuclear wave packet to the reactants and lets it evolve over the whole hypersurface until a stationary state is reached, in accordance with the Born–Oppenheimer version of the Schrödinger equation for the nuclear coordinates (Öhrn 2015). Although the final stationary nuclear wavefunction is spread over the hypersurface, its amplitude is concentrated around the different minima. The probabilities for a given reaction, and thus the relative stoichiometric yields, are given by the squared amplitudes of the wavefunction integrated around the respective minima. On this picture, then, bonding events are essentially probabilistic configurations, and the precise single case probabilities are provided by solutions to the Schrödinger equation, when these are available.
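The step from a stationary nuclear wavefunction to relative yields can be sketched in one dimension. In the Python toy below, the single ‘reaction coordinate’, the two Gaussian packets standing for product minima C and D, their amplitudes, and the barrier at x = 0 are all hypothetical; a real model would evolve a wave packet on a multidimensional Born–Oppenheimer surface. The sketch only shows how integrating the squared amplitude over each basin turns a wavefunction into reaction probabilities.

```python
import math


def gaussian(x, centre, width):
    """Unnormalized Gaussian amplitude centred on a (hypothetical) potential minimum."""
    return math.exp(-((x - centre) ** 2) / (2 * width ** 2))


def basin_probabilities(xs, psi, barrier_x):
    """Integrate |psi|^2 on a uniform grid, split at barrier_x, and normalize.
    Returns (probability in the left basin, probability in the right basin)."""
    density = [abs(v) ** 2 for v in psi]
    total = sum(density)  # the uniform grid spacing cancels in the ratio
    left = sum(d for x, d in zip(xs, density) if x < barrier_x)
    return left / total, 1 - left / total


# Hypothetical stationary state with |c_C|^2 : |c_D|^2 = 0.7 : 0.3.
c_C, c_D = math.sqrt(0.7), math.sqrt(0.3)
n, x_min, x_max = 2001, -3.0, 3.0
xs = [x_min + i * (x_max - x_min) / (n - 1) for i in range(n)]
psi = [c_C * gaussian(x, -1.0, 0.2) + c_D * gaussian(x, 1.0, 0.2) for x in xs]

p_C, p_D = basin_probabilities(xs, psi, barrier_x=0.0)
print(round(p_C, 3), round(p_D, 3))  # approximately 0.7 and 0.3
```

Because the two packets barely overlap, the basin probabilities reproduce the squared amplitudes; with strongly overlapping packets the split at the barrier would itself become a modelling choice.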

As is well known, however, the Schrödinger equation cannot be solved analytically beyond the one-electron cation H2+ (and even this only within the Born–Oppenheimer framework). In all other cases we have at our disposal only a diverse range of approximations. Weisberg (2008) chronicles them well, beginning with the valence bond models, which assume that two isolated atoms are brought together to interact, satisfying neither delocalization nor indistinguishability. In the simple version for H2, each hydrogen nucleus has an associated wavefunction, a and b respectively, and each of these brings along one of two distinguishable electrons, 1 and 2. The wavefunctions are then combined into the product wavefunction \(\psi = c\,a(1)\,b(2)\). This yields an estimate for the dissociation energy of the molecule, that is, the energy required to pull the atoms apart, remove the interaction and destroy the bonding. This estimate can be checked experimentally and turns out to be only a distant approximation (Weisberg 2008, p. 937).

A fully quantum mechanical model satisfying indistinguishability was famously provided by Heitler and London (1927). They added a term with the electrons’ positions permuted, \(\psi = c\,a(2)\,b(1)\), corresponding to the electrons ‘swapping places’, to yield the linear combination \(\psi = c\,a(1)\,b(2) + c\,a(2)\,b(1)\). This description provides estimates for the dissociation energy much closer to the experimental results, but it does not allow us to assign individual identities to the electrons, as Lewis structures do, and, moreover, it delocalizes the electrons rather dramatically. Thus, the Heitler and London model only allows us to calculate the probability density function for electron localization around the molecule, with sharp interchangeable peaks at each of the atomic centers. The model can be refined further by adding correction terms to the wavefunction that account for ionic configurations, which arise when the electron density is skewed heavily towards one of the atomic centers. Further molecular orbital models have been devised in which the atomic centers are assumed to be at rest and the electronic wavefunction is derived for the entire molecule. The resulting energy profile determines the equilibrium distances between the nuclei and the electrons. So there is hardly any localization going on beyond the electron density function itself, which moreover fluctuates in response to the underlying nuclear potential fields. Yet these models are even more empirically accurate, coming very close to the experimentally observed molecular dissociation energies. It seems that, in general, further delocalization, and a wider spread in the probability density function for the individual electrons’ positions, leads to greater molecular stability and brings the models closer to experimental results (Weisberg 2008, p. 938).
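The contrast between the simple product wavefunction and the Heitler–London combination can be illustrated with one-dimensional toy ‘orbitals’. In the Python sketch below, the exponential functions `a` and `b`, the separation `R`, the sample points and the grid are hypothetical stand-ins rather than real hydrogen 1s orbitals, and the spin part (a singlet, antisymmetric under exchange) is omitted. The sketch checks that only the Heitler–London spatial function is unchanged when electrons 1 and 2 swap, and that in it electron 1 is no more likely to be found on nucleus A’s side than on nucleus B’s.

```python
import math

R = 4.0  # hypothetical internuclear separation (arbitrary units)


def a(x):
    """Toy 1D orbital centred on nucleus A at x = -R/2 (an assumption, not a real 1s orbital)."""
    return math.exp(-abs(x + R / 2))


def b(x):
    """Toy 1D orbital centred on nucleus B at x = +R/2."""
    return math.exp(-abs(x - R / 2))


def psi_product(x1, x2):
    """Simple product a(1)b(2): electron 1 is tied to A, electron 2 to B."""
    return a(x1) * b(x2)


def psi_heitler_london(x1, x2):
    """Heitler-London spatial function a(1)b(2) + a(2)b(1); normalization constant omitted."""
    return a(x1) * b(x2) + a(x2) * b(x1)


def exchange_symmetric(psi, points):
    """True if psi(x1, x2) equals psi(x2, x1) for every sampled pair."""
    return all(math.isclose(psi(x1, x2), psi(x2, x1)) for x1 in points for x2 in points)


def prob_electron1_near_a(psi, n=200, half_width=8.0):
    """Fraction of |psi|^2 (midpoint grid) with electron 1 at x1 < 0, i.e. on A's side."""
    dx = 2 * half_width / n
    xs = [-half_width + (i + 0.5) * dx for i in range(n)]
    total = near_a = 0.0
    for x1 in xs:
        for x2 in xs:
            density = psi(x1, x2) ** 2
            total += density
            if x1 < 0:
                near_a += density
    return near_a / total


samples = [-2.0, -0.5, 1.0, 2.5]
print(exchange_symmetric(psi_product, samples))             # False
print(exchange_symmetric(psi_heitler_london, samples))      # True
print(round(prob_electron1_near_a(psi_product), 2))         # close to 1
print(round(prob_electron1_near_a(psi_heitler_london), 2))  # 0.5
```

In the product function the label ‘electron 1’ still picks out the electron on A, as in a Lewis structure; in the symmetrized function the label no longer tracks any particular atom.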

We thus argue that Lewis structures are representations of the underlying chemical propensities of molecular compounds. These propensities display themselves in the electronic probability density functions for stable molecules, which are best represented, when they can be, in molecular orbital and covalent bond models. While Lewis structures depict the nuclei and the electrons as localized and distinguishable, the probability functions describe delocalized, indistinguishable electron densities. The “capacities to combine” (Hendry dixit) of atomic elements are therefore displayed in single case chances over stable molecular configurations. And the relative stabilizations of these configurations, we argue, are good indicators of the likelihoods of the reactions. When molecular models for these stabilizations are available, the probabilities can be predicted with extremely good, albeit not complete, accuracy. When the models are not available, we can presume that those probabilities exist nonetheless, even if we cannot quantitatively predict them. In that case, Lewis structures serve only to delimit the possibilities in the sample space, as it were (since Lewis structures inform us about the range of possible reactions), but they cannot quantify over this space in any way. Yet this is not atypical in statistical modelling, which is sometimes qualitative and can only produce ordinal rankings of likely possibilities. Finally, the models and their prescribed density functions can then be tested in the laboratory by confronting them with the obtained stoichiometric yields. The underlying tripartite probabilistic structure of chemical bond modelling is thus no different from what arguably also goes on in physics (Suárez 2007), or evolutionary biology (Suárez 2022).

The application of the complex nexus of chance to chemical reactions thus requires us to keep separate the Lewis structures, the covalent and molecular orbital models of the bond, and the laboratory stoichiometric yields. This triad (describing theoretical propensities, single case modelled probabilities, and laboratory frequencies) renders what we may call the complex nexus of the chemical bond. This nexus results from a pragmatic attitude to the nature of the bond, attending to the methodology that chemists use to establish ‘bondings’ while refraining from inquiring further into their ontology. This has the great advantage of accommodating both the structural and the energetic conceptions of the bond. The structural conception is right to suppose that the best or most readily available description of the capacities of atomic elements to bond is structural. But the energetic conception is right to suppose that the single case probabilities that display those propensities are best captured in semi-classical quantum models. In these models, sub-molecular and directional ‘bonds’ are not self-standing entities, but rather correspond to dynamical bonding processes that determine the probabilities for different stabilizations. These models, together with their probabilistic predictions, can then be tested against the relative stoichiometric proportions of reactants as measured in laboratories. The “chemical bond” is thus revealed to be a convenient label for all three notions and their mutual interrelations in the practice of modelling chemical reactions.

Notes

There are in fact two types of propensity theory: long-run and single case theories (see, e.g., Gillies 2000 for an excellent exposition, or Suárez 2020). The long-run theory assumes that propensities are dispositions to generate certain frequencies of outcomes in long-run sequences of identically prepared, repeated experiments. The single case theory, by contrast, assumes that they are dispositions to generate a given outcome with a certain probability in each experimental run. Take the case of a coin toss. On the long-run theory, a fair coin has a propensity ½ to land heads if it generates a long sequence of tosses in which it lands heads about half of the time; on the single case theory, it has this propensity if it generates, in every toss, the outcome heads-up with probability ½. Note the revealing appearance of the term “probability” in the definiens of the latter notion. This is significant, since it shows that, on a single case propensity account, propensity and probability are in fact distinct: a propensity is a disposition to manifest a probability.
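The contrast between the two notions can be made concrete with a simulated coin. In the sketch below, which is purely illustrative and assumes independent tosses, with Python's pseudo-random generator standing in for a chance set-up, the single case probability is the parameter `p_heads` that applies to every toss, while the long-run frequency is a statistic computed from a finite sequence of outcomes. The function names are hypothetical. The two values coincide only approximately, and only in long sequences, which is consistent with keeping probability and frequency apart.

```python
import random


def toss(p_heads: float, rng: random.Random) -> str:
    """One run of the chance set-up: heads with single case probability p_heads."""
    return "H" if rng.random() < p_heads else "T"


def relative_frequency(outcomes: list[str], face: str = "H") -> float:
    """Relative frequency of a face in a finite sequence of outcomes."""
    return outcomes.count(face) / len(outcomes)


def run(p_heads: float, n_tosses: int, seed: int = 0) -> float:
    """Toss the coin n_tosses times and return the observed frequency of heads."""
    rng = random.Random(seed)  # seeded so that a given run is reproducible
    outcomes = [toss(p_heads, rng) for _ in range(n_tosses)]
    return relative_frequency(outcomes)


if __name__ == "__main__":
    p = 0.5  # the same single case probability applies to every toss
    for n in (10, 100, 10_000, 1_000_000):
        print(f"{n:>9} tosses: frequency of heads = {run(p, n):.4f}")
```

Short runs may stray well away from 0.5, while long runs cluster around it; yet in each individual toss the probability is ½ throughout.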

References

Barradas-Solas, F., Sánchez Gómez, P.J.: Orbitals in chemical education: an analysis through their graphical representation. Chem. Educ. Res. Pract. 15(3), 311–319 (2014)

Gillies, D.: Philosophical Theories of Probability, 1st edn. Routledge, New York (2000)

Glynn, L.: Deterministic chance. Br. J. Philos. Sci. 61(1), 51–80 (2010)

Hájek, A.: ‘Mises redux’–redux: fifteen arguments against finite frequentism. Erkenntnis 45(2/3), 209–227 (1996)

Hájek, A.: Fifteen arguments against hypothetical frequentism. Erkenntnis 70(2), 211–235 (2009)

Heitler, W., London, F.: Wechselwirkung neutraler Atome und homöopolare Bindung nach der Quantenmechanik. Z. Phys. 44(6–7), 455–472 (1927)

Hendry, R.F.: Two conceptions of the chemical bond. Philos. Sci. 75(5), 909–920 (2008)

Hendry, R.F.: Structure, scale and emergence. Stud. Hist. Philos. Sci. Part A 85, 44–53 (2021)

Hoefer, C.: Chance in the World: A Humean Guide to Objective Chance. Oxford Studies in Philosophy of Science. Oxford University Press, Oxford (2019)

Humphreys, P.: Why propensities cannot be probabilities. Philos. Rev. 94(4), 557–570 (1985)

Humphreys, P.: Some considerations on conditional chances. Br. J. Philos. Sci. 55(4), 667–680 (2004)

Lewis, D.: A subjectivist’s guide to objective chance. In: Philosophical Papers, vol. 2, pp. 83–132. Oxford University Press, Oxford (1986)

Lewis, G.N.: Valence and tautomerism. J. Am. Chem. Soc. 35, 1448–1455 (1913)

Lewis, G.N.: The atom and the molecule. J. Am. Chem. Soc. 38, 762–785 (1916)

Lewis, G.N.: Valence and the Structure of Atoms and Molecules. Dover, New York (1923)

Lowry, T., Richardson, K.: Mechanism and Theory in Organic Chemistry, 2nd edn. Harper and Row, New York (1987)

Öhrn, Y.: Time-dependent treatment of molecular processes. Adv. Quantum Chem. 70, 69–109 (2015)

Schummer, J.: The chemical core of chemistry I: a conceptual approach. Hyle 4, 129–162 (1998)

Seifert, V.: The chemical bond is a real pattern. Philos. Sci. 1, 47 (2022)

Smith, M., March, J.: Advanced Organic Chemistry: Reactions, Mechanisms, and Structure, 6th edn. Wiley-Interscience, New York (2007)

Strevens, M.: The reference class problem in evolutionary biology: distinguishing selection from drift. In: Pence, C., Ramsey, G. (eds.) Chance in Evolution. University of Chicago Press, Chicago (2016)

Suárez, M.: Quantum propensities. Stud. Hist. Philos. Mod. Phys. 38(2), 418–438 (2007)

Suárez, M.: Propensities and pragmatism. J. Philos. 110(2), 61–92 (2013)

Suárez, M.: Propensities, probabilities, and experimental statistics. In: Massimi, M., Romeijn, J.W., Schurz, G. (eds.) EPSA15 Selected Papers: European Studies in Philosophy of Science, vol. 5, pp. 335–345. Springer, Dordrecht (2017)

Suárez, M.: Philosophy of Probability and Statistical Modelling. Cambridge Elements in the Philosophy of Science. Cambridge University Press, Cambridge (2020)

Suárez, M.: The complex nexus of evolutionary fitness. Eur. J. Philos. Sci. 12, 9 (2022)

Von Mises, R.: Probability, Statistics, and Truth (English translation of the 1928 German original). Dover, New York (1957) [1981]

Weisberg, M.: Challenges to the structural conception of chemical bonding. Philos. Sci. 75(5), 932–946 (2008)

Acknowledgements

We thank the audience at the ISPC conference (Lille, France, August 2022) as well as two referees for comments, and acknowledge financial support from the Spanish Agency for Research (AEI) projects PGC2018-099423-B-I00 and PID2021-126416NB-I00.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Suárez, M., Sánchez Gómez, P.J. Reactivity in chemistry: the propensity view. Found Chem 25, 369–380 (2023). https://doi.org/10.1007/s10698-023-09477-8

DOI: https://doi.org/10.1007/s10698-023-09477-8