Abstract

The conditional extremes framework allows for event-based stochastic modeling of dependent extremes, and has recently been extended to spatial and spatio-temporal settings. After standardizing the marginal distributions and applying an appropriate linear normalization, certain non-stationary Gaussian processes can be used as asymptotically-motivated models for the process conditioned on threshold exceedances at a fixed reference location and time. In this work, we adapt existing conditional extremes models to allow for the handling of large spatial datasets. This involves specifying the model for spatial observations at d locations in terms of a latent \(m\ll d\) dimensional Gaussian model, whose structure is specified by a Gaussian Markov random field. We perform Bayesian inference for such models for datasets containing thousands of observation locations using the integrated nested Laplace approximation, or INLA. We explain how constraints on the spatial and spatio-temporal Gaussian processes, arising from the conditioning mechanism, can be implemented through the latent variable approach without losing the computationally convenient Markov property. We discuss tools for the comparison of models via their posterior distributions, and illustrate the flexibility of the approach with gridded Red Sea surface temperature data at over 6,000 observed locations. Posterior sampling is exploited to study the probability distribution of cluster functionals of spatial and spatio-temporal extreme episodes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Statistical modeling of spatial extremes

The availability of increasingly detailed spatial and spatio-temporal datasets has motivated a recent surge in methodological developments to model such data. In this work, we are concerned with modeling extreme values of spatial or spatio-temporal processes, which we denote by \(\{Y(s): s \in \mathcal {S} \subseteq \mathbb {R}^2\}\) and \(\{Y(s,t): (s,t) \in \mathcal {S}\times \mathcal {T} \subseteq \mathbb {R}^2\times \mathbb {R}_+\}\). The goal of modeling spatio-temporal extremes is often to enable extrapolation from observed extreme values to future, more intense episodes, and consequently requires careful selection of models suited to this delicate task.

Early work on spatial extremes focused almost exclusively on max-stable processes (Smith 1990; Coles 1993; Schlather 2002; Padoan et al. 2010; Davison and Gholamrezaee 2012). These are the limiting objects that arise through the operation of taking pointwise maxima of n weakly dependent and identically distributed copies of a spatial process. However, this is a poor strategy when data exhibit a property known as asymptotic independence, which means that the limiting process of maxima consists of everywhere-independent random variables. Moreover, even when the process is asymptotically dependent, meaning that the limit has spatial coherence, the fact that the resulting process is formed from many underlying original events (Dombry and Kabluchko 2018) can hinder both interpretability and inference. More recently, analogues of max-stable processes suited to event-level data have been developed (Ferreira and de Haan 2014; Dombry and Ribatet 2015; Thibaud and Opitz 2015; de Fondeville and Davison 2021), but use of these generalized Pareto or r-Pareto processes also requires strong assumptions on the extremal dependence structure.

Broadly, spatial process data can be split according to whether they exhibit asymptotic independence or asymptotic dependence. As mentioned, these can be characterized by whether the data display independence or dependence in the limiting distribution of pointwise maxima, but when considering threshold exceedances other definitions are more useful. Consider two spatial locations \(s, s+h \in \mathcal {S}\). For \(Y(s) \sim F_s\), define the tail correlation function (Strokorb et al. 2015) as

If \(\chi (s,s+h) = 0\) for all \(h \ne 0\) then Y is asymptotically independent, or asymptotically dependent where limit (1) is positive for all h. Intermediate scenarios of asymptotic dependence up to a certain distance are also possible. Asymptotic dependence is a minimum requirement for use of max-stable or Pareto process models, but in practice more rigid assumptions are imposed as these models do not allow for any weakening of dependence with the level of the event — a feature common in most environmental datasets.

Recent work on modeling of spatial extremes has focused on the twin challenges of incorporating flexible extremal dependence structures, and developing models and inference techniques that allow for large numbers of observation locations. Huser et al. (2017, 2021) suggest Gaussian scale mixture models, for which both types of extremal dependence can be captured depending on the distribution of the scaling variable. The model of Huser and Wadsworth (2019) was the first to offer a smooth transition between dependence classes, meaning it is not necessary to make a choice before fitting the model. However, owing to complicated likelihoods, each of these models is limited in practice to datasets with tens of observation locations. Modifications in Zhang et al. (2021) suggest that hundreds of sites might be possible, but further scalability looks elusive for now.

Wadsworth and Tawn (2022) proposed an alternative approach based on a spatial adaptation of the multivariate conditional extreme value model (Heffernan and Tawn 2004; Heffernan and Resnick 2007), which has been further extended to the space-time case by Simpson and Wadsworth (2021). Both types of extremal dependence can be handled and the likelihoods involved are much simpler. However, application thus far has still been limited to hundreds of observation locations. In this work, we seek to exploit the power of Gaussian Markov random fields and the integrated nested Laplace approximation (INLA) in this context in order to permit substantially higher dimensional inference and prediction, and to achieve more flexible modeling by replacing parametric structures with semi-parametric extensions. We note that, in the restricted case of Pareto processes, de Fondeville and Davison (2018) perform inference for a 3600-dimensional problem via a gradient-score algorithm. In this work, we handle inference for problems of comparable dimension, using the more flexible conditional extremes models with likelihood-based inference. Opitz et al. (2018) have previously used INLA in an extreme value analysis context, focusing on regression modeling of threshold exceedances, but this is the first time it has been utilized in the conditional extremes framework.

In the remainder of the introduction, we provide background on the conditional extremes approach, and introduce briefly the idea of the INLA methodology. In Section 2, we detail modifications to the conditional modeling approach that allow for inference through a latent variable framework using INLA; it is these tools that enable inference to become feasible at thousands of observation locations.

1.2 Conditional extremes models

The aforementioned conditional extremes approaches, originating with Heffernan and Tawn (2004) in the multivariate case, involve the construction of models by conditioning on exceedances of a high threshold in a single variable. The spatial setting studied by Wadsworth and Tawn (2022), and subsequent spatio-temporal extension of Simpson and Wadsworth (2021), require conditioning on threshold exceedances at a single spatial or spatio-temporal location, with additional structure being introduced by exploiting the proximity of the other locations to this conditioning site.

In the spatial setting, denote by \(\{X(s): s \in \mathcal {S}\}\) a stationary and isotropic process with marginal distributions possessing exponential-type upper tails, i.e., \(\Pr \{X(s)>x\}\sim ce^{-x}\) as \(x\rightarrow \infty\), \(c>0\). This is achieved in practice via a marginal transformation, explained further in Section 4.2. Let \(s_0\) denote the conditioning site. We assume that \(\{X(s)\}\) possesses a joint density, so that conditioning on the events \(\{X(s_0)>u\}\) or \(\{X(s_0)=u\}\) as \(u \rightarrow \infty\) leads to the same limiting process (Wadsworth and Tawn 2022); see also Drees and Janßen (2017) for further discussion on the conditioning event in a multivariate setting. We comment on handling anisotropy in Section 7.

The assumption of stationarity of the process \(\{X(s)\}\) is usually convenient to derive flexible conditional extremes models, as done by Wadsworth and Tawn (2022), but also to obtain stationarity with respect to the choice of the conditioning location \(s_0\), such that inference carried out for a given and observed \(s_0\) can be generalized conveniently to other conditioning locations. However, stationarity with respect to \(s_0\) is not strictly necessary for the model to work, and if there is a particular location of interest to study this should be chosen as the conditioning location.

For a finite set of locations \(s_1,\dots ,s_d\), Wadsworth and Tawn (2022) assume that there exist normalizing functions \(a_{s-s_0}(\cdot )\) and \(b_{s-s_0}(\cdot )\) such that as \(u\rightarrow \infty\),

where \(\varvec {z} = (z_1,\ldots , z_d)\), and the vector \(\{Z^0(s_1),\ldots ,Z^0(s_d)\}\) represents a finite-dimensional distribution of some stochastic process \(\{Z^0(s)\}\), referred to as the residual process. Several theoretical examples are provided therein to illustrate this assumption. The first of the normalizing functions is constrained to take values \(a_0(x)=x\) and \(a_{s-s_0}(x)\in [0,x]\), and is usually non-increasing as the distance between s and \(s_0\) increases: the residual process therefore satisfies \(Z^0(s_0)=0\). Furthermore, under assumption (2), the excess of the conditioning variable \(X(s_0)-u\mid X(s_0)>u\) is exponentially distributed, and independent of the residual process.

Assumption (2) is exploited for modeling by assuming that it holds approximately above a high threshold u. In particular, we can assume that

Suitable choices for \(a_{s-s_0}(\cdot ),b_{s-s_0}(\cdot )\) and \(\{Z^0(s)\}\) lead to models with different characteristics. Wadsworth and Tawn (2022) propose a theoretically-motivated parametric form for the normalizing function \(a_{s-s_0}(\cdot )\), as well as three different parametric models for \(b_{s-s_0}(\cdot )\) that are able to capture different tail dependence features. They propose constructing the residual process by first considering some stationary Gaussian process \(\{Z(s)\}\), and either subtracting \(Z(s_0)\) or conditioning on \(Z(s_0)=0\) to ensure the condition \(Z^0(s_0)=0\) on \(\{Z^0(s)\}\) is satisfied. Marginal transformations of \(\{Z^0(s)\}\) are considered therein in order to increase the flexibility of the models.

We note that assumptions (2) and (3) depend on the choice of \(s_0\). In some applications, there may be a location of particular interest that would make a natural candidate for \(s_0\), but for other scenarios the choice is not evident. However, under the assumption that \(\{X(s)\}\) possesses a stationary dependence structure, in the sense that the joint distributions are invariant to translation, the form of the normalization functions \(a_{s-s_0},b_{s-s_0}\) and the form of the residual process \(\{Z^0(s)\}\) do not in fact depend on \(s_0\), so that inference made using one conditioning location is applicable at any location. We discuss this issue further in Section 4.6.

The approach to inference taken by Wadsworth and Tawn (2022) involves a composite likelihood. This allows different locations to play the role of the conditioning site, and combines information across each of these. Inference under this “vanilla” version of the model, with \(\{Z^0(s)\}\) constructed from a Gaussian process and parametric forms for the normalizing functions, can currently be performed for hundreds of observation locations. However, scalability to thousands of locations is impeded by the \(O(d^3)\)-complexity of matrix inversion in the Gaussian process part of the likelihood. Additionally, in contrast to other areas of spatial statistics, we have n replicates of the process to be used for inference; this is less problematic than having large d, since computation time increases linearly in n assuming the replicates are independent.

1.3 INLA and the latent variable approach

In order to facilitate higher dimensional inference, we represent our model for observations at d locations in terms of an m-dimensional latent Gaussian model, where \(m \ll d\). This has the effect of creating a model that is amenable to use of the INLA framework, which allows for fast and accurate inference on the Bayesian posterior distribution of a parameter vector of interest, \(\varvec{\theta }\). It is particularly computationally convenient when the m-dimensional latent Gaussian component is endowed with a Gaussian Markov covariance structure, so that the precision matrix is sparse.

The general form of the likelihood for models amenable to inference via INLA is

where the observations \({\varvec{v}} =({v}_1,\ldots ,{v}_d)^\top \in \mathbb {R}^d\), but the vector \({\varvec\eta } = (\eta _1,\ldots ,\eta _d)\) with \(\eta _i=\eta _i( \varvec{W})\) a linear function of the m-dimensional latent Gaussian process. This specification of the distribution of \({\varvec\eta }\), via a sparse precision matrix, allows for inference on the posterior distribution of interest, \(\pi ({\varvec\theta }|{\varvec{v}})\), through a Laplace approximation to the necessary integrals. Note that the vector \({\varvec\theta }\) also includes parameters of the latent Gaussian model, and is usually termed the hyperparameter vector.

A benefit of this Bayesian approach to statistical modeling with latent variables, over alternatives like the EM algorithm or Laplace approximations applied in a frequentist setting, is that parameters, predictions and uncertainties can be estimated simultaneously, and prior distributions can be used to incorporate expert knowledge and control the complexity of the model or its components with respect to simpler baselines. Moreover, the availability of the R software package R-INLA (Rue et al. 2017) facilitates the implementation, reusability and adaptation of our models and code, making them suitable for use with datasets other than the one considered in this paper. One of the main challenges we face is reconciling the form of the conditional extremes models with the formulation in Eq. (4) that is allowed under this framework. We outline our general strategy in Section 2, but defer more detailed computational and implementation details to Section 6. Our motivation for this is to provide readers with a general understanding of the methodology, unimpeded by extensive technical details. However, we note that implementation is a substantial part of the task and therefore Section 6 provides interested parties with the necessary particulars.

1.4 Overview of paper

The remainder of the paper is structured as follows. In Section 2, we provide a discussion on flexible forms for the conditional spatial extremes model that are possible under the latent variable framework. We discuss details of our inferential approach in Section 3, then apply this to a dataset of Red Sea surface temperatures in Section 4, considering a range of diagnostics to aid model selection and the assessment of model fit. A spatio-temporal extension is presented in Section 5. Section 6 is aimed at those readers interested in the specifics of implementation, and includes more detail on INLA, the construction of Gauss-Markov random fields with approximate Matérn covariance, and implementation of our models in R-INLA. Section 7 concludes with a discussion. Supplementary Material contains code for implementing the models we develop, and is available at https://github.com/essimpson/INLA-conditional-extremes.

2 The latent variable approach and model formulations

2.1 Overview

In this section, we begin by outlining details of the latent variable approach. We then build on the conditional extremes modeling assumption given in Eq. (3) to allow for higher-dimensional inference under this latent variable framework. We discuss specific variants of the conditional extremes model that are possible in this case, summarizing the options in Section 2.5.

2.2 Generalities on the latent variable approach

Here, we provide some general details on the latent variable approach for spatial modeling, denoting the observed data generically by \(\varvec{V} = (V_1,\ldots ,V_d)^\top\), which in our context will correspond to observations at d spatial locations. When modeling spatial extreme values, it is always necessary to have replications of the spatial process in question in order to distinguish between marginal distributions and dependence structures, and to define extreme events. We comment further on the handling of temporal replication in Sections 6.5 and 6.6; we will later also explicitly model temporal, as well as spatial, dependence.

In hierarchical modeling with latent Gaussian processes, we define a latent, unobserved Gaussian process \(\varvec{W}=({W}_1,\ldots ,{W}_m)^\top\), with m denoting the number of ‘locations’ of the latent process. These could encompass the spatial locations used to discretize the spatial domain, or the knots used in spline functions, for example. We assume conditional independence of the observations V with respect to W, and use the so-called observation matrix \(A\in \mathbb {R}^{d\times m}\) to define a linear predictor

that linearly combines the latent variables in W into components \(\eta _i\) associated with \(V_i\), \(i=1,\ldots ,d\). The components \(\eta _i\) represent a parameter of the probability distribution of \(V_i\). The matrix A is deterministic and is fixed before estimating the model. For instance, A handles the piecewise linear spatial interpolation from the m locations represented by the latent Gaussian vector W towards the d observed sites; for this, W may contain the values of a spatial field at locations \(\tilde{s}_1,\ldots ,\tilde{s}_m\), and A has i-th line \(A_i=(0,\ldots ,0,1,0,\ldots ,0)\) if the observation location \(s_i\) of \(V_i\) coincides with one of the locations \(\tilde{s}_{j_0}\), where the 1-entry is at the \(j_0\)th position. Otherwise, several entries of \(A_i\) could have non-zero weight to implement interpolation between the \(\tilde{s}_{j}\)-locations. The distribution of \(\varvec\eta\) is also multivariate Gaussian due to the linear transformation. The univariate probability distribution of \(V_i\), often referred to as the likelihood model, can be Gaussian or non-Gaussian and is parametrized by the linear predictor \(\eta _i\), and potentially by other hyperparameters. The vector of hyperparameters (i.e., parameters that are not components of one of the Gaussian vectors W and \(\varvec\eta\)), such as those related to variance, spatial dependence range, or smoothness of a spline curve, is denoted by \(\varvec\theta\). Following standard practice from the Bayesian literature, we use \(\pi (\cdot )\) to denote various generic probability distributions. Then, the hierarchical model is structured as follows:

The matrix \(Q(\varvec\theta )\) denotes the precision matrix of the latent Gaussian vector W, whose variance-covariance structure may depend on some of the hyperparameters in \(\varvec\theta\) that we seek to estimate. In the case of observations \(V_i\) having a Gaussian distribution, we can set the Gaussian mean as the linear predictor \(\eta _i\). Then, the conditional variance \(\sigma ^2\) of \(V_i\) given \(\eta _i\) is a hyperparameter, and we define

Under the latent variable framework, if \(d>m\) as in our setting, we always need a small positive variance \(\sigma ^2>0\) in Eq. (5), since there is no observation matrix A that would allow for an exact solution to the equation \(\varvec{V} = A \varvec{W}\) with given \(\varvec{V}\). The presence of this independent component with positive variance is therefore a necessity for the latent variable approach. In some modeling contexts it can be interpreted as a useful model feature, e.g., to capture measurement errors. We discuss the consequences of this \(\sigma ^2\) parameter on our conditional model in Section 2.3. With the Gaussian likelihood (5), the multivariate distribution of V given \(\sigma ^2\) is still Gaussian, and in this case the Laplace approximation to the posterior \(\pi ({\varvec\theta }|{\varvec{v}})\) with the observation v of V is exact and therefore does not induce any approximation biases.

A major benefit of the construction with latent variables is that the dimension of the latent vector W is not directly determined by the number of observations d. The computational complexity and stability of matrix operations (e.g., determinants, matrix products, solution of linear systems) arising in the likelihood calculations for the above Bayesian hierarchical model is therefore mainly determined by the tractability of the precision matrix \(Q(\varvec\theta )\), whose dimension can be controlled independently from the number of observations. Such matrix operations can be implemented very efficiently if precision matrices are sparse (Rue and Held 2005). If data are replicated with dependence between replications, such as spatial data observed at regular time steps in spatio-temporal modeling, the sparsity property can be preserved in the precision matrix of the latent space-time process W. In this work, we will make assumptions related to the separability of space and time, which allows us to generate sparse space-time precision matrices by combining sparse precision matrices of a purely spatial and a purely temporal process using the Kronecker product of the two matrices; see Sections 6.5 and 6.6 for further details.

2.3 The latent variable approach for conditional extremes model inference

Here, we explain how the latent variable approach can be used within the conditional extremes framework to reduce the dimension of the residual process \(\{Z^0(s)\}\) for inferential purposes. We begin by presenting the conditional extremes model with parametric forms for the normalizing functions \(a_{s-s_0}\) and \(b_{s-s_0}\), following the approach of Wadsworth and Tawn (2022). In Section 2.4, we propose a more flexible semi-parametric form for \(a_{s-s_0}\) that further exploits the latent variable framework.

Consider the conditional extremes model presented in Eq. (3). Fixing \(a_{s-s_0}(x)=x\) and \(b_{s-s_0}(x)=1\) enforces asymptotic dependence, but setting \(a_{s-s_0}(x) = x\alpha (s-s_0)\) and allowing the form of \(\alpha (s-s_0)\) to depend on the distance from the conditioning location is the key aspect that enables modeling of asymptotic independence as well. To capture asymptotic independence, Wadsworth and Tawn (2022) propose a parametric form for \(\alpha (\cdot )\), defining

The resulting function \(a_{s-s_0}\) satisfies the constraint that \(a_0(x)=x\) and has \(a_{s-s_0}(x)\) decreasing as the distance to \(s_0\) increases. Wadsworth and Tawn (2022) propose three different parametric forms for the normalizing function \(b_{s-s_0}\), each with different modeling aims. We focus on the option of \(b_{s-s_0}(x)=x^\beta\), with \(\beta \in [0,1)\), throughout the rest of the paper, including the simple special case where we fix \(\beta =0\).

The existing conditional extremes approach must be simplified and slightly modified to allow for inference using the latent variable framework. First, in Section 1.2, we discussed that Wadsworth and Tawn (2022) consider marginal transformations of the residual process to increase the flexibility of their approach. A special case of this is to simply restrict \(\{Z^0(s)\}\) to have Gaussian margins. The approach we take to adopt the framework described in Section 2.2 is to assume that

We will represent the terms \(a_{s-s_0}(x)\) and \(Z^0(s)\) using basis functions and latent Gaussian variables, using a semi-parametric formulation of \(a_{s-s_0}(x)\), as we will further explain in the following two subsections. Moreover, as highlighted in Eq. (5), the use of a latent process of dimension \(m<d\) to facilitate computation requires the introduction of a small additive noise term, with variance \(\sigma ^2>0\) common to each of the observation locations. Taking this into consideration, the numerical representation of the conditional extremes model (7) that we actually implement in the INLA framework is

for \(i=1,\dots ,d\), where we set \(\epsilon _0 = 0\) at \(s_0\). A key point here is that the Gaussian noise does not represent a model feature to capture measurement error or add extra roughness to the process; it is simply included for computational feasibility.

The latent variable approach described in Section 2.2 can be applied to the residual process in Eq. (7), providing us with a “low-rank” representation of \(\{Z^0(s)\}\). The constraint that \(Z^0(s_0)=0\) can be enforced by manipulating the observation matrix A; further detail on this is provided in Section 6.4. In this case, assuming the parametric form (6) for \(\alpha (s-s_0)\) in \(a_{s-s_0}(x)=x\alpha (s-s_0)\) requires estimation of the parameters \((\lambda ,\kappa )\), in addition to the parameter \(\beta\) of the \(b_{s-s_0}\) function. Under the latent variable framework, these can be included as part of the hyperparameter vector \(\varvec\theta\). The dimension of \(\varvec{\theta }\) must remain moderate (say, at most 10 to 20 components), since INLA requires numerical integration with integrand functions defined on the hyperparameter space. In the implementation of INLA using R-INLA, estimation of the three parameters \((\lambda ,\kappa ,\beta )\) requires the use of specific user-defined (“generic”) models, which we describe in Section 6.6. We emphasize that the use of generic R-INLA models allows for the implementation of other relevant parametric forms for the functions \(a_{s-s_0}\) and \(b_{s-s_0}\), if the above choices do not provide a satisfactory fit.

For the function \(a_{s-s_0}(x)\), which is part of the mean function of the Gaussian process that we use as model for \(X(s)\mid [X(s_0)=x]\), an alternative to parametric forms is to adopt a semi-parametric approach by constructing \(a_{s-s_0}(x)\) as an additive contribution to the linear predictor with multivariate Gaussian prior distribution. However, the function \(b_{s-s_0}(x)\), which arises as part of the variance function of the Gaussian process that we use as model for \(X(s)\mid [X(s_0)=x]\), must have a parametric form with a small number of parameters included in the hyperparameter vector \(\varvec\theta\). Indeed, in the current implementation of the INLA framework in R-INLA it is not possible to represent both \(b_{s-s_0}(x)\) and \(\{Z^0(s)\}\) as latent Gaussian components, given the restriction of using a linear transformation from W to \(\varvec\eta\). This parametric form is achieved by our choice to set \(b_{s-s_0}(x)=x^\beta\). Some frequentist estimation approaches for generalized additive models implement Laplace approximation techniques where semi-parametric forms of the variance of the Gaussian likelihood are possible (Wood 2011). However, this approach is currently not available within R-INLA and may come at the price of less stable estimation due to identifiability problems and less accurate Laplace approximations, and estimation can become particularly cumbersome with large datasets, such that we do not pursue it here.

2.4 Semi-parametric modeling of \(a_{s-s_0}\)

In subsequent sections we focus on semi-parametric forms of \(a_{s-s_0}(x)\) (that continue to include the term \(\alpha (s-s_0)x\)) for their novelty, increased flexibility and computational convenience. Such semi-parametric forms can be implemented by using a B-spline function for \(\alpha (s-s_0)\), which appears as an additive component of the linear predictor \(\varvec\eta\). This is computationally convenient since INLA can handle a large number of latent Gaussian variables in \(\varvec {W}\) when calculating accurate deterministic approximations to posterior distributions, via the Laplace approximation. We constrain this function to have \(\alpha (0)=1\), ensuring that \(a_{0}(x)=x\) for the form \(a_{s-s_0}(x) = x\alpha (s-s_0)\).

In extension to the models for conditional spatial and spatio-temporal extremes developed by Wadsworth and Tawn (2022) and Simpson and Wadsworth (2021), we can further increase the flexibility of the conditional mean model by explicitly including a second spline function, denoted \(\gamma (s-s_0)\) and with \(\gamma (0)=0\), that is not multiplied by the value of the process at the conditioning site. To clarify, this implies that we have

with \(\gamma (s-s_0)\) also incorporated as a component of the linear predictor \(\varvec \eta\). An example where such a deterministic component arises is given by the conditional extremes model corresponding to the Brown–Resnick type max-stable processes (Kabluchko et al. 2009) with log-Gaussian spectral function (see Proposition 4 of Dombry et al. 2016), which are widely used in statistical approaches based on the asymptotically dependent limit models mentioned in Section 1; in this case, we obtain

with a centered Gaussian process \(\{Z(s)\}\). Therefore, by setting \(\alpha (s-s_0)=1\), in this model the \(\gamma\)-term corresponds to the semi-variogram, \(\text {Var}(Z(s)-Z(s_0))/2\). We note that for the Brown–Resnick process, \(\gamma\) should indeed correspond to a valid semi-variogram, although we will not constrain it as such in our implementation to allow for greater flexibility. However, we underline that in the INLA framework there is no impediment to using parametric forms of \(\gamma\) with parameters included in the hyperparameter vector \({\varvec\theta }\).

2.5 Proposed new models and their latent variable representation

To summarize, in the implementation of the conditional spatial extremes modeling assumption (7) using R-INLA, we propose to explore several options for the form of the model: setting \(\alpha (s-s_0)=1\) everywhere or using a spline function; whether or not to include the second spline term \(\gamma (s-s_0)\); and whether to include the parameter \(\beta\) or to fix it at 0. Together, this means that all models can be written as special cases of the representation

where we require \(\alpha (s-s_0)\le 1\) as a necessary condition for stationarity, and we suppose that \(\{Z^0(s)\}\) has a Gaussian structure, further described in Sections 6.3 and 6.4; and \(\epsilon _i \sim \mathcal {N}(0,\sigma ^2)\) i.i.d.. This opens up the framework of conditional Gaussian models and the potential for efficient inference via INLA, while closely following the conditional extremes formulation. In particular, the joint distribution of \(\{X(s_i): i=1,\ldots , d\}\), not conditional on the value of \(X(s_0)\), is non-Gaussian.

Finally, we give an illustration, linking model (9) to the general notation and principles outlined in Section 2.2. Our observation vector V is the process \(\{X(s)\}\) observed at d locations: \(\varvec{X}=(X(s_1),\ldots ,X(s_d))^\top\). The latent Gaussian component W consists of components for \(\alpha ,\gamma\), and \(\{Z^0(s)\}\): \({\varvec{W}} = ({\varvec{W}}_\alpha ^\top , {\varvec{W}}_\gamma ^\top , {\varvec{W}}_Z^\top )^\top \in \mathbb {R}^{m_{\alpha }} \times \mathbb {R}^{m_\gamma } \times \mathbb {R}^{m_Z}\), with \(m_\alpha +m_\gamma +m_Z = m\). The observation matrix \(A \in \mathbb {R}^{d \times m}\) is the concatenation of matrices for each component: \(A_\alpha \in \mathbb {R}^{d \times m_\alpha }\), \(A_\gamma \in \mathbb {R}^{d \times m_\gamma }\), and \(A_S \in \mathbb {R}^{d \times m_Z}\). We include the \(x^\beta\)-term into the process \(\varvec{W}_Z\) if we want to estimate the parameter \(\beta\), such that it does not appear in the fixed, observation matrix \(A_S\); if \(\beta\) is fixed, we could instead include the \(x^\beta\)-term into \(A_S\).

We emphasize that the model is applied to replicates of the observed process X and that while the \(\alpha\), \(\gamma\) and \(\beta\) components are fixed, the residual process and error term generally vary across replicates. All together for the jth replicate \(\varvec{X}_j\), we get

with i.i.d. Gaussian components in \(\varvec{\epsilon }_j = (\epsilon _{1,j},\ldots ,\epsilon _{d,j})^\top\), and where the j-subscripts highlight the components that vary with replicate. Therefore, using general notations from Section 2.2, the components of the linear Gaussian predictor of the INLA-model related to replicate j are \(\varvec\eta _j = A_j {\varvec{W}}_j\), with \(A_j=(x_j A_\alpha ,A_\gamma ,A_{S})\) and \({\varvec{W}}_j = ({\varvec{W}}_\alpha ^\top , {\varvec{W}}_\gamma ^\top , {\varvec{W}}_{Z,j}^\top )^\top\), and the full linear predictor is \(\varvec\eta = ( \varvec\eta _1^\top , \varvec\eta _2^\top ,\ldots )^\top\). To implement model (9) in an efficient manner for a large number of observation locations, we need to carefully consider computations related to the residual process \(\{Z^0(s)\}\); this is explained in detail in Section 6.4.

3 Inference for conditional spatial extremes

3.1 Overview

In Section 4, we apply variants of model (9) to the Red Sea surface temperature data, with the different model forms summarized in Table 1. In this section, we discuss certain considerations necessary to carry out inference and techniques to compare the candidate models. In Section 3.2, we begin with a discussion of the transformation to exponential-tailed marginal distributions that are required for conditional extremes modeling. We discuss construction of the observation matrix A and choices of prior distributions for the hyperparameters in Section 3.3. In Sections 3.4 and 3.5, we present two approaches for model selection and validation, both of which are conveniently implemented in the R-INLA package and therefore straightforward to apply in our setting.

3.2 Marginal transformation

To ensure the marginal distributions of the data have the required exponential upper tails, we suggest transforming to Laplace scale, as proposed by Keef et al. (2013). This is achieved using a semi-parametric transformation. Let Y denote the surface temperature observations at a single location. We assume these observations follow a generalized Pareto distribution above some high threshold v to be selected, and use an empirical distribution function below v, denoted by \(\tilde{F}(\cdot )\). That is, we assume the distribution function

for \(\lambda _v=1-F(v)\), \(\sigma _v>0\) and \(y_+=\max (y,0)\). Having fitted this model, we obtain standard Laplace margins via the transformation

This transformation should be applied separately for each spatial location, and we estimate the parameters of the generalized Pareto distributions using the ismev package in R (Heffernan and Stephenson 2018). It is possible to include covariate information in the marginal parameters and impose spatial smoothness on these, but we do not take this for instance by using flexible generalized additive models (see Castro-Camilo et al. (2021) for details), but we do not take this approach.

3.3 Spatial discretization and prior distributions for hyperparameters

We now discuss the distribution of the latent processes \({\varvec{W}}_\alpha , {\varvec{W}}_\gamma\) and \({\varvec{W}}_Z\), as defined in Section 2.5. Gauss–Markov distributions for these components, with approximate Matérn covariance, are achieved through the stochastic partial differential equation (SPDE) approach of Lindgren et al. (2011). The locations of the components of the multivariate Gaussian vector \({\varvec{W}}_Z\) defining the latent spatial process are placed at the nodes of a triangulation covering the study area. To generate this spatial discretization of the latent Gaussian process, and the spatial observation matrix \(A_S\) to link it to observations, we use a mesh. An example of this will be discussed for our Red Sea application in Section 4.3 and demonstrated pictorially in Fig. 2. Full technical details of the construction of spatial and spatio-temporal precision matrices Q for each component, and the observation matrix A, are provided in Section 6.

For the model components \(\alpha (s-s_0)\) and \(\gamma (s-s_0)\), we propose the use of one-dimensional splines, since they are functions only of the distance to the conditioning site, \(s-s_0\). Therefore, in contrast to the multivariate vector \({\varvec{W}}_Z\), the latent processes \(\varvec{W}_\alpha\) and \(\varvec{W}_\gamma\) correspond to Gaussian variables anchored at the spline knots used to discretize the interval \([0,d_{max})\) with an appropriately chosen maximum distance \(d_{max}\). In each case, we suggest choosing equally-spaced knots, with the left boundary knot placed at the distance \(s-s_0=0\) where we enforce the constraints that \(\alpha (0)=1\) and \(\gamma (0)=0\). For both \(\alpha (s-s_0)\) and \(\gamma (s-s_0)\), we interpolate between the knots using quadratic spline functions, which we have found to provide more flexibility than their linear counterparts. For the SPDE priors corresponding to these spline components, we suggest fixing the range and standard deviation, since we consider that estimating these parameters is not crucial. This avoids the very high computational cost that can arise when we estimate too many hyperparameters with R-INLA.

For specifying the prior distributions of the hyperparameters (e.g., variances, spatial ranges, autocorrelation coefficients for the space-time extension) we use the concept of penalized complexity (PC) priors (Simpson et al. 2017), which has become the standard approach in the INLA framework. PC priors control model complexity by shrinking model components towards a simpler baseline model, using a constant rate penalty expressed through the Kullback-Leibler divergence of the more complex model with respect to the baseline. In practice, only the rate parameter has to be chosen by the modeler, and it can be determined indirectly—but in a unique and intuitive way—by setting a threshold value r and a probability \(p\in (0,1)\) such that \(\textrm{Pr}(\text {hyperparameter}>r)=p\), with > replaced by < in some cases, depending on the role of the parameter. For example, the standard baseline model for a variance parameter of a latent and centered Gaussian prior component \(\varvec{W}\) contributing to the linear predictor \(\varvec{\eta }\) is a variance of 0, which corresponds to the absence of this component from the model, and the PC prior of the standard deviation corresponds to an exponential distribution. Analogously, we can set the PC prior for the variance parameter \(\sigma ^2\) of the observation errors \(\epsilon _i\) in Eq. (9). The parameter \(\sigma ^2\) could instead be fixed to a very small value, but to ensure we have the right amount of variance associated with \(\epsilon\) to obtain a numerically stable estimation procedure, we prefer to estimate its value from the data. For the Matérn covariance function, PC priors are defined jointly for the range and the variance, with the baseline given by infinite range and variance 0; in particular, the inverse of the range parameter has a PC prior given by an exponential distribution, see Fuglstad et al. (2019) for details. As explained by Simpson et al. (2017), PC priors are not useful to “regularize” models, i.e., to select a moderate number of model components among a very large number of possible model components. Rather, they are used to control the complexity of a moderate number of well-chosen components that always remain present in the posterior model, and they do not put any positive prior mass on the baseline model.

3.4 Model selection using WAIC

Conveniently, implementation in R-INLA allows for automatic estimation of certain information criteria that can be used for model selection. Two such criteria are the deviance information criterion (DIC), and the widely applicable or Watanabe-Akaike information criterion (WAIC) of Watanabe (2013). We favour the latter since it captures posterior uncertainty more fully than the DIC. This, and other, benefits of the WAIC over the DIC are discussed by Vehtari et al. (2017), where an explanation of how to estimate the WAIC is also provided. Using our general notation for latent variable models, as in Section 2.2, suppose that the posterior distribution of the vector of model parameters \(\varvec{\tilde{\theta }}=(\varvec{\theta }^\top , \varvec{W}^\top )^\top\) is represented by a sample \(\tilde{\varvec{\theta }}^s\), \(s=1,\dots ,S\), with the corresponding sample variance operator given by \(\mathbb {V}^S_{s=1}(\cdot )\). Given the observations \(v_i\), \(i=1,\ldots ,d\), the WAIC is then estimated as

with the first term providing an estimate of the log predictive density, and the second an estimate of the effective number of parameters. Within R-INLA, we do not generate a representative sample, but the sample means and variances with respect to \(\tilde{\varvec{\theta }}^s\) in the above equation are estimated based on R-INLA’s internal representation of posterior distributions; see also the estimation technique for the DIC explained in Rue et al. (2009, Section 6.4). Smaller values of the WAIC indicate more successful model fits, and we will use this criterion to inform our choice of model for the Red Sea data in Section 4.4.

3.5 Cross validation procedures

As mentioned previously, the main aim of fitting conditional extremes models is usually to extrapolate extreme event probabilities and related quantities to levels that have not been previously observed. However, the INLA framework also lends itself to the task of interpolation, e.g., making predictions for unobserved locations. Although interpolation is not our aim, here we discuss some procedures that allow for the assessment of models in this setting. For model selection, we can use cross-validated predictive measures, based on leave-one-out cross-validation (LOO-CV). These are relatively quick to estimate with INLA without the need to re-estimate the full model; see Rue et al. (2009, Section 2007 6.3). Here, one possible summary measure is the conditional predictive ordinate (CPO), corresponding to the predictive density of observation \(v_i\) given all the other observations \(\varvec{v}_{-i}\) i.e.,

for \(i=1,\dots ,d\). Log-transformed values of CPO define the log-scores often used in the context of prediction and forecasting (Gneiting and Raftery ). A model with higher CPO values usually indicates better predictions. We note that the CPO is not usually used for extreme value models where interpolation is often not considered the main goal. It will not be particularly informative in our application since the loss of information from holding out a single observation is negligible in the case of densely observed processes with very smooth surface. However, we include it as it may be useful for other applications where spatial and temporal interpolation are important, for example when data are observed at irregularly scattered meteorological stations, and due to the simplicity of its calculation in R-INLA.

One can also consider the probability integral transform (PIT) corresponding to the distribution function of the predictive density, evaluated at the observed value \(v_i\), i.e.,

If the predictive model for single hold-out observations appropriately captures the variability in the observed data, then the PIT values will be close to uniform. If in a histogram of PIT values, the mass concentrates strongly towards the boundaries, then two-sided predictive credible intervals (CIs) will be too narrow; by contrast, if mass concentrates in the middle of the histogram, then these predictive CIs will be too large. We refer the reader to Czado et al. (2009) for more background on PITs. We discuss such cross validation procedures in Section 4.4.

4 Application to modeling Red Sea surface temperature extremes

4.1 Overview

In this section, we propose specific model structures for the general model (9) and illustrate an application of our approach using a dataset of Red Sea surface temperatures, the spatio-temporal extremes of which have also been studied by Hazra and Huser (2021) and Simpson and Wadsworth (2021), for instance. We focus on the purely spatial case here, and consider further spatio-temporal modeling extensions for this dataset in Section 5. We use the methods discussed in Sections 3.4 and 3.5 to assess the relative suitability of the proposed models for the Red Sea data. For the best-fitting model, we present additional results, and conclude with a discussion of consequences of using a single, fixed conditioning site. Throughout this section, the threshold u in conditional model (9) is taken to be the 0.95 quantile of the transformed data, following Simpson and Wadsworth (2021). For the sake of a brevity, we do not compare results for different thresholds in the following.

4.2 Red Sea surface temperature data

The surface temperature dataset comprises daily observations for the years 1985 to 2015 for 16703 locations across the Red Sea, corresponding to \(0.05^\circ \times 0.05^\circ\) grid cells. We focus only on the months of July to September to approximately eliminate the effects of seasonality. More information on the data, which were obtained from a combination of satellite and in situ observations, can be found in Donlon et al. (2012). Extreme events in this dataset are of interest, since particularly high water temperatures can be detrimental to marine life, e.g., causing coral bleaching, and in some cases coral mortality.

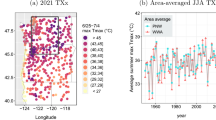

Simpson and Wadsworth (2021) apply their conditional spatio-temporal extremes model to a subset of 54 grid cells located across the north of the Red Sea. In this paper, we instead focus on a southern portion of the Red Sea, where coral bleaching is currently more of a concern (Fine et al. 2019). We demonstrate our approach using datasets of two different spatial dimensions; the first dataset contains 6239 grid cells, corresponding to all available locations in our region of interest, while the second dataset is obtained by taking locations at every third longitude and latitude value in this region, leaving 678 grid cells to consider. These two sets of spatial locations are shown in Fig. 1. Simpson and Wadsworth (2021) consider their 54 spatial locations at five time-points, resulting in a lower dimensional problem than both the datasets we consider here. On the other hand, Hazra and Huser (2021) model the full set of 16703 grid cells, but they ensure computational feasibility by implementing so-called ‘low-rank’ modeling techniques using spatial basis functions given by the dominant empirical orthogonal functions, obtained from preliminary empirical estimation of the covariance matrix of the data.

There are two transformations that we apply to these data as a preliminary step. First, as our study region lies away from the equator, one degree in latitude and one degree in longitude correspond to different distances. To correct for this we apply a transformation, multiplying the longitude and latitude values by 1.04 and 1.11, respectively, such that spatial coordinates are expressed in units of approximately 100 km. Our resulting spatial domain measures approximately 400 km between the east and west coasts and 500 km from north to south. We use the Euclidean distance on these transformed coordinates to measure spatial distances in the remainder of the paper. We also transform the margins to Laplace scale using the approach outlined in Section 3.2. We take v in (11) to be the empirical 0.95 quantile of Y, here representing the observed sea surface temperature at an individual location, so that \(\lambda _v=0.05\). Any temporal trend in the marginal distributions could also be accounted for at this stage, e.g., using the approach of Eastoe and Tawn (2009), but we found no clear evidence that this was necessary in our case.

In the remainder of this section, we apply a variety of conditional spatial extremes models to the Red Sea data with the large spatial dataset in Fig. 1, and apply several model selection and diagnostic techniques. For the conditioning site, we select a location lying towards the centre of the spatial domain of interest. We discuss this choice further in Section 4.6, where we present additional results based on the moderate dataset. In Appendix A, we provide an initial assessment of the spatial extremal dependence properties of the sea surface temperature data, based on the tail correlation function defined in Eq. (1). These results demonstrate that there is weakening dependence in the data at increasingly extreme levels, which provides an initial indication that models exhibiting asymptotic independence should be more appropriate here.

4.3 The mesh and prior distributions for the Red Sea data application

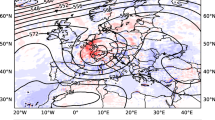

As discussed in Section 3.3, in order to carry out our latent variable approach to model inference, we require a triangulation of the area of interest. The mesh we use for the spatial domain in the southern Red Sea is shown in Fig. 2. This was generated using R-INLA, requiring the selection of tuning parameters related to the maximum edge lengths in the inner and outer sections of the mesh; these were chosen such that the dimension of the resulting mesh is much less than the number of spatial locations, and so that the most dense region corresponds to the area where we have observations. Our spatial triangulation mesh has 541 nodes, i.e., the dimension of the latent process is approximately 8.7% of the size of the large dataset, and similar in size to the moderate dataset. In this case, the extension of the mesh beyond the study region is reasonably big, in order to avoid boundary effects of the SPDE for the sea surface temperatures, whose spatial dependence range is known to be relatively large; see Simpson and Wadsworth (2021). We use a coarser resolution in the extension region to keep the number of latent Gaussian variables as small as reasonably possible.

The large set of spatial locations (red dots) and the corresponding triangulation mesh used for the SPDE model with an inner and outer boundary (blue lines). The inner boundary delimits a high-resolution zone covering the study area, while the outer boundary delimits an extension zone with lower resolution to prevent effects of SPDE boundary conditions in the study area

Due to the availability of many observations in the Red Sea dataset, we found the hyperparameter priors to only have a small impact on posterior inference in our application, and that the credible intervals of the hyperparameters are very narrow. We have chosen moderately informative PC priors through the following specification:

where \(\sigma _{Z}\) and \(\rho _{Z}\) are the standard deviation and the empirical range, respectively, of the unconstrained spatial Matérn fields \(\{Z(s)\}\). Where the \(\beta\)-parameter is to be estimated as part of a specified model, we choose a log-normal prior where the normal has mean \(-\log (2)\) and variance 1. This does not guarantee estimates of \(\beta <1\), but such a constraint could be included within the generic-model framework if required. The Matérn covariance function is also specified by a shape parameter \(\nu\). We fix \(\nu =0.5\), corresponding to an exponential correlation function. Sensitivity to \(\nu\) can be checked by comparing fitted models across different values, as demonstrated in Appendix B. We find this to have little effect on the results for our data.

As discussed in Section 3.3, for each spline function, we place one knot at the boundary where \(s=s_0\) and use a further 14 equidistant interior knots. This quantity provides a reasonable balance between the reduced flexibility that occurs when using too few knots, and the computational cost and numerical instability (owing to near singular precision matrices) that may arise with using too many. For these spline components, we have fixed the range to 100 km and the standard deviation to 0.5. If we wished to obtain very smooth posterior estimates of the spline function, we could choose parameters that lead to stronger constraints on the (prior) variability of the spline curve. We will demonstrate the estimated spline functions for some of our models in Section 4.5.

4.4 Variants of the spatial model and model comparison

In Section 2.4, we discussed choices of the normalizing functions \(a_{s-s_0}(x)\) and \(b_{s-s_0}(x)\) that are possible under the INLA framework. In Table 1, we summarize the models we consider based on the structures outlined in Eq. (9). We fit models with these different forms and subsequently select the best model for our data. Model 0 has \(\alpha (s-s_0)=1\), \(\beta =0\), resulting in a very simple asymptotically dependent model. Model 2 is also asymptotically dependent, but allows for weaker dependence than in Model 0 due to the drift that is captured by the \(\gamma (s-s_0)\) term, supposed to be negative in practice. In Model 6, the residual process has been removed, so that all variability is forced to be captured by the term \(\epsilon _i\) in Eq. (9). Models 0 and 6 are meant to act as simple baselines to which we can compare the other models, but we would not expect them to perform sufficiently well in practice. As mentioned in Section 1.2, Wadsworth and Tawn (2022) propose two options for constructing the residual process \(\{Z^0(s)\}\), each based on manipulation of a stationary Gaussian process \(\{Z(s)\}\). For all models in Table 1, we focus on a residual process of the form \(\{Z^0(s)\}=\{Z(s)\}-Z(s_0)\), further detail on which is given in Section 6.4.

Alongside the models in Table 1, we provide the corresponding WAIC and CPO values, as discussed in Sections 3.4 and 3.5, respectively. The computation times for each model are also included, as this information may also aid model selection where there is similar performance under the other criteria.

Beginning with the WAIC, we first recall that smaller values of this criterion are preferred. Models 1 and 3 are simplified versions of Models 5 and 4, respectively, in that the value of \(\beta\) is fixed to 0 rather than estimated directly in R-INLA. In both cases, the results are very similar whether \(\beta\) is estimated or fixed, suggesting the simpler models with \(\beta =0\) are still effective. The estimated WAIC values suggest Models 3 and 4 provide the best fit for our data. The common structure in these models are the terms \(\alpha (s-s_0)x\) and \(\gamma (s-s_0)\), indicating that their inclusion is important. In Model 4, the posterior mean estimate of \(\beta\) is 0.29, but despite simplifying the model, setting \(\beta =0\) as in Model 3 provides competitive results.

On the other hand, the CPO results are relatively similar across Models 0 to 5, but clearly substantially better than Model 6, which we include purely for comparison. Model 6 performs poorly here since all spatially correlated residual variation has been removed. We provide a histogram of PIT values for Model 3 in Appendix C, with equivalent plots for Models 0 to 5 being very similar. The histogram has a peak in the middle, suggesting that the posterior predictive densities for single observations are generally slightly too “flat”; however, here the variability in the posterior predictive distributions is very small throughout. Therefore, the fact of slightly overestimating the true variability, which is very small, does not cause too much concern about the model fit. If the PIT values concentrated strongly at 0 and 1, this would indicate that posterior predictive distributions would not allow for enough uncertainty, i.e., the model would be overconfident with its predictions; however, this is not the case here. Due to the smoothness of our data we essentially have perfect predictions using Models 0 to 5, and these plots are not particularly informative, but again may be useful in settings where spatio-temporal interpolation is a modeling goal.

Finally, we consider using a further cross validation procedure to compare the different models. This involves removing all data for locations lying in a quadrant to the south-east of the conditioning site, and using our methods to estimate these values. The difference between the estimates and original data, on the Laplace scale, can be summarized using the root mean square error (RMSE). These results are also provided in Table 1, where Model 3 gives the best results, although is only slightly favoured over the other Models 0 to 5.

4.5 Results for Model 3

For our application, it is difficult to distinguish between the performance of Models 0 to 5 using the cross validation approaches, but Models 3 and 4 both perform well in terms of the WAIC. We note that the run-time for Model 3, provided in Table 1, is less than one third of the run-time of Model 4 for this data, so we choose to focus on Model 3 here due to its simplicity. In general, simpler models have quicker computation times, but this is not necessarily always the case; we comment further on this in Section 5.3. We provide a summary of the fitted model parameters for Model 3, excluding the spline functions, in Table 2. The estimated value of \(\sigma ^2\) is very small, as expected. The Matérn covariance of the process \(\{Z(s)\}\) has a reasonably large dependence range, estimated to be 428.2 km.

We now consider the estimated spline functions \(\alpha (s-s_0)\) and \(\gamma (s-s_0)\) for Model 3; these are shown in Fig. 3. For comparison, we also show the estimate of \(\alpha (s-s_0)\) for Model 1 and \(\gamma (s-s_0)\) for Model 2. These two models are similar to Model 3, in that \(\beta =0\), but \(\gamma (s-s_0)=0\) for Model 1 and \(\alpha (s-s_0)=1\) for Model 2, for all \(s\in \mathcal {S}\). For Model 1, the \(\alpha (s-s_0)\) spline function generally decreases monotonically with distance, as would be expected in spatial modeling. For Model 3, the interaction between the two spline functions makes this feature harder to assess, but further investigations have shown that although \(\alpha (s-s_0)\) and \(\gamma (s-s_0)\) are not monotonic in form, the combination \(\alpha (s-s_0) x+\gamma (s-s_0)\) is usually decreasing for \(x\ge u\); i.e., there is posterior negative correlation, and transfer of information between the two spline functions. Some examples of this are given in Fig. 6 and will be discussed in Section 4.6. The behaviour of the \(\gamma (s-s_0)\) spline function for Model 2 is similar to that of the \(\alpha (s-s_0)\) functions for Models 1 and 3, highlighting that all three models are able to capture similar features of the data despite their different forms. The success of Model 3 over Models 1 and 2 can be attributed to the additional flexibility obtained via the inclusion of both spline functions. We note that in terms of representing the data, there may be little difference between suitable models, as we see here. However, we should also consider the diagnostics relating to our specific modeling purpose, which in our case is extrapolation. The results in Appendix A demonstrate that there is weakening dependence in the Red Sea data. The asymptotic dependence of Model 2 means that it cannot capture this feature, and is therefore unsuitable here. In Appendix A, we compare the empirical tail correlation estimates to ones obtained using simulations from our fitted Model 3. This model goes some way to capturing the observed weakening dependence, although the estimates do not decrease as quickly as for the empirical results. We comment further on this issue below.

Left: the spatial domain separated into 17 regions; the region labels begin at 1 in the centre of the domain, and increase with distance from the centre. The conditioning site \(s_0\) is shown in red. Right: the estimated proportion of locations that exceed the 0.95 quantile, given it is exceeded at \(s_0\) using Model 3 (green) and equivalent empirical results (purple)

Our fitted models can be used to obtain estimates of quantities relevant to the data application. For sea surface temperatures, we may be interested in the spatial extent of extreme events. High surface water temperatures can be an indicator of potentially damaging conditions for coral reefs, so it may be useful to determine how far-reaching such events could be. To consider such results, we fix the model hyperparameters and spline functions to their posterior means, and simulate directly from the spatial residual process of Model 3. If a thorough assessment of the uncertainty in these estimates was required, we could take repeated samples from the posterior distributions of the model parameters fixed to their posterior mean, and use each of these to simulate from the model. However, assessing the predictive distribution in this way is computationally more expensive, so we proceed without this step.

We separate the spatial domain into the 17 regions demonstrated in the left panel of Fig. 4. Given that the value at the conditioning site exceeds the 0.95 quantile, we estimate the proportion of locations in each region that also exceed this quantile. Results obtained via 10,000 simulations from Model 3 are shown in the right panel of Fig. 4, alongside empirical estimates from the data. These results suggest that Model 3 provides a successful fit of the extreme events, particularly within the first ten regions, which correspond to distances up to approximately 200 km from the conditioning site. At longer distances, the results do differ, which may be due to the comparatively small number of locations that contribute to the model fit in these regions and to some mild non-stationarities arising close to the coastline. The spatial dependence range of extreme events appears to be relatively long, since even for region 17, the empirical estimates are approximately 0.2 and are therefore much higher than the value of 0.05 expected for classical independence of \(\{X(s)\}\) at large distances. In Appendix C, we present a similar diagnostic where we instead extrapolate to the 0.99 quantile. Comparing the empirical results to those in Fig. 4, we again see that the data exhibits weakening dependence as we increase the threshold level. This suggests that an asymptotically independent model, as we have with Model 3, is appropriate; an asymptotically dependent model would not have captured this feature. However, these diagnostics do suggest that the dependence does not weaken quickly enough in our fitted model. It is possible that this could have been improved by a different threshold choice, but investigating this is beyond the scope of the paper.

4.6 Sensitivity to the conditioning site

A natural question when applying the conditional approach to spatial extreme value modeling, is how to select the conditioning location. Under an assumption of spatial stationarity in the dependence structure, the parameters of the conditional model defined in Eq. (3) should be the same regardless of the location \(s_0\). However, since the data are used in slightly different ways for each conditioning site, and because the stationarity assumption is rarely perfect, we can expect some variation in parameter estimates for different choices of \(s_0\).

In Wadsworth and Tawn (2022) and Simpson and Wadsworth (2021), this issue was circumvented by using a composite likelihood that combined all possible individual likelihoods for each conditioning site, leading to estimation of a single set of parameters that reduced sampling variability and represented the data well on average. However, bootstrap methods are needed to assess parameter uncertainty, and as the composite likelihood is formed from the product of d full likelihoods, the approach scales poorly with the number of conditioning sites. Composite likelihoods do not tie in naturally to Bayesian inference as facilitated by the INLA framework, and so to keep the scalability, and straightforward interpretation of parameter uncertainty, we focus on implementations with a single conditioning location. Sensitivity to the particular location can be assessed similarly to other modeling choices, such as the threshold above which the model is fitted.

In particular, different conditioning sites may lead us to select different forms of the models described in Table 1, as well as the resulting parameter estimates. To assess this, we fit all seven models to the moderate dataset, using 39 different conditioning sites on a grid across the spatial domain, with the mesh and prior distributions selected as previously. We compare the models using the WAIC, as described in Section 3.4. The results are shown in Fig. 5, where we demonstrate the best two models for each conditioning site. For the majority of cases, Model 4 performs the best in terms of the WAIC, and in fact, it is in the top two best-performing models for all conditioning sites. The best two performing models are either Models 3 and 4 or Models 4 and 5 for all conditioning locations. This demonstrates that there is reasonable agreement across the spatial domain, and suggests that using just one conditioning location should not cause an issue in terms of model selection.

To further consider how restrictive it is to only fit models at one conditioning site, we can compare the spline functions estimated using different locations for \(s_0\). We again focus on results for Model 3, as in Section 4.5, and consider estimates of \(\alpha (s-s_0)u + \gamma (s-s_0)\), with u representing the threshold used for fitting. We demonstrate the estimates of this function in Fig. 6, for the same 39 conditioning sites used in Fig. 5, highlighting results for four of these sites situated across the spatial domain. Overall, the estimated functions are reasonably similar, particularly for shorter distances. There is one function that appears to be an outlier, corresponding to a conditioning site located on the coast. Although the other coastal conditioning sites we consider do not have this issue, it does suggest that some care should be taken here.

As a final test on the sensitivity to the conditioning site, we consider the implications if we fit Model 3 at one conditioning site, and use this for inference at another location. In particular, we take the results from Section 4.5, using a conditioning site near the centre of the spatial domain, and use these to make inference at a conditioning site located on the coast. We use a method analogous to the one used to create Fig. 4. That is, we separate the spatial domain into regions, and for each one, we estimate the proportion of locations that take values above their 0.95 quantile, given that this quantile is exceeded at the conditioning location. In Fig. 7, we compare results based on simulations from the fitted model to empirical estimates. Although a different conditioning location was used to obtain the model fit, the results are still good, particularly up to moderate distances, supporting our use of a single conditioning site for inference. One issue that is highlighted here is that by fitting the model at a central conditioning site, the maximum distance to \(s_0\) is around 391 km, so we are not able to make inference about the full domain for a conditioning site near the boundary, where the maximum distance to other locations is much larger. This aspect should be taken into account when choosing a conditioning site for inference. This issue is specific to the use of spline functions for \(\alpha (s-s_0)\) and \(\gamma (s-s_0)\), and there is no such problem for parametric functions such as the one proposed by Wadsworth and Tawn (2022) for \(\alpha (s-s_0)\). There is therefore a trade-off here between the flexbility of the splines and the spatial extrapolation possible using parametric functions.

Left: the spatial domain separated into regions; the region labels begin at 1 at \(s_0\) (red), and increase with distance from this location. Right: the estimated proportion of locations that exceed the 0.95 quantile, given it is exceeded at \(s_0\) using Model 3 (green) and equivalent empirical results (purple)

5 Inference for conditional space-time extremes

5.1 Conditional spatio-temporal extremes models

Simpson and Wadsworth (2021) extend assumption (2) to a spatio-temporal setting. The aim is to model the stationary process \(\{X(s,t):(s,t)\in \mathcal {S}\times \mathcal {T}\}\) which also has marginal distributions with exponential upper tails. The conditioning site is now taken to be a single observed space-time location \((s_0,t_0)\), and the model is constructed for a finite number of points \((s_1,t_1),(s_1,t_2),\dots ,(s_d,t_\ell )\) pertaining to the process at d spatial locations and \(\ell\) points in time, where data may be missing for some of the space-time points. The structure of the conditional extremes assumption is very similar to the spatial case, in particular, it is assumed that there exist functions \(a_{(s,t)-(s_0,t_0)}(\cdot )\) and \(b_{(s,t)-(s_0,t_0)}(\cdot )\) such that as \(u\rightarrow \infty\),

for \(\{Z^0(s_i,t_j)\}_{\begin{array}{c} i=1,\dots ,d,\\ j=1,\dots ,\ell \end{array}}\) representing finite-dimensional realizations of a spatio-temporal residual process \(\{Z^0(s,t)\}\). Once more the excesses \(X(s_0,t_0)-u|X(s_0,t_0)>u\) are independent of the residual process as \(u \rightarrow \infty\), and the constraints on the residual process \(\{Z^0(s,t)\}\) and normalizing function \(a_{(s,t)-(s_0,t_0)}(\cdot )\) are analogous to the spatial case. We consider spatio-temporal variants of spatial models 1, 3, 4, and 5, which provided the best WAIC values, in Section 4.4; see the model summary in Table 3. In order to preserve sparsity in the precision matrix of the relevant latent variables, a simple autoregressive structure is employed for the temporal aspect of the residual process; further details are provided in Section 6.5. Specifically, we construct the process \(\{Z^0(s,t)\}\) as \(\{Z(s,t)\}-Z(s_0,t_0)\) using the first-order autoregressive structure in combination with the spatial SPDE model as described in Eq. (14). For the temporal auto-correlation coefficient \(\rho\) in Eq. (14), we use the same type of prior distribution as for the other hyperparameters, and therefore opt again for a PC prior. The baseline could be either \(\rho =0\) (no dependence) or \(\rho =1\) (full dependence); here, we choose \(\rho =0\) and a moderately informative prior through the specification \(\textrm{Pr}(\rho > 0.5) = 0.5\). The prior distributions for \(\alpha (s-s_0,t-t_0)\) and \(\gamma (s-s_0,t-t_0)\) are constructed according to Eq. (14), with a one-dimensional SPDE model for a quadratic spline with 14 interior knots deployed for spatial distance and replicated for each of the \(\ell\) time points, with prior temporal dependence of spline coefficients for consecutive time lags controlled by a first-order autoregressive structure; the resulting Gaussian prior processes are conditioned to have \(\alpha (0,0)=1\) and \(\gamma (0,0)=0\). Contrary to \(Z^0\), the components \(\alpha\) and \(\gamma\) are deterministic in the conditional extremes framework, but through the semi-parametric formulation we can handle them in the same way within the INLA framework. Using Gaussian process priors for spline coefficients allows for high modeling flexiblity through a relatively large number of basis functions, where hyperparameters ensure an appropriate smoothness of estimated functions.

5.2 Spatio-temporal Red Sea surface temperature data

Since the spatio-temporal models are more computationally intensive than their spatial counterparts due to a larger number of hyperparameters, and more complex precision matrices, we focus only on the moderate set of spatial locations demonstrated in Fig. 1, which contains 678 spatial locations; this will still result in a substantial number of dimensions when we also take the temporal aspect into account.

To carry out inference for the conditional spatio-temporal model, we must separate the data into temporal blocks of equal length, with the aim that each block corresponds to an independent observation from the process \(\{X(s,t)\}\). We first apply a version of the runs method of Smith and Weissman (1994) to decluster the data. Each cluster corresponds to a series of observations starting and ending with an exceedance of the threshold u at the conditioning site, with clusters separated by at least r non-exceedances of u. Once these clusters are obtained, we take the first observation in each one as the start of an extreme episode, with the following six days making up the rest of the block. Declustering is applied only with respect to the spatial conditioning site \(s_0\), but we still consider observations across all spatial locations at the corresponding time-points. We select the tuning parameter in the runs method to be \(r=12\); this is chosen following the approach of Simpson and Wadsworth (2021), who check for stability in the number of clusters obtained using different values of r, and note that since we focus only on summer months, blocks should not be allowed to span multiple years. This declustering approach yields 28 non-overlapping blocks of seven days to which we can apply our four spatio-temporal models.

5.3 Model selection, forecasting and cross validation

We compare the four models using similar criteria as in the spatial case. The WAIC and average CPO values are presented in Table 3, where the most complex Model 4 performs best in terms of the WAIC, while a slightly better CPO value arises for Model 3. We note that the model selected using the WAIC has the same form in both the spatial and spatio-temporal cases.

We also compare fitted and observed values using a variant of the root mean square error (RMSE). The results of within-sample RMSEs are almost identical for the four models, and therefore not included in Table 3, yielding a value of 0.077. To assess predictive performance, it is more interesting to consider an additional variant of cross validation in the spatio-temporal case to test the forecasting ability of the models. We carry out seven-fold cross validation by randomly separating our 28 declustered blocks into groups of four, and for each of these groups we remove the observations at all locations for days two to seven. We then fit the model using the remaining data in each case, and obtain predictions for the data that have been removed. This cross validation procedure is straightforward to implement, as in R-INLA it is possible to obtain predictions (e.g., posterior predictive means), including for time-points or spatial locations without observations. We compare the predicted values with the observations that were previously removed, presenting the cross validation root mean square error (RMSE\(_{\text {CV}}\)) in Table 3. Again, the results are quite similar, but Model 3 performs slightly better than the others. Finally, run-times are reported in the table and range between 1 hour and 4 hours, using 2 cores on machines with 32Gb of memory. When comparing the spatial models with the corresponding space-time models having the same spatial component, the order of run-times changes in our results. We emphasize that, on average, more complex latent models will require longer run-times with INLA if the observations remain the same. However, the Laplace approximations conducted by INLA require iterative optimization steps to find modes of high-dimensional functions, and in some cases these optimization steps may be substantially more computer-intensive for a simpler model, for instance when the mode is relatively hard to identify. Therefore, there is no contradiction in the reported results.

6 Computational and implementation details

6.1 Introduction

This section provides further details on INLA, the SPDE approach, and specifics of implementation that are necessary to gain a full understanding of our methods, but not to appreciate the general ideas behind the approach.

6.2 Bayesian inference with the integrated nested Laplace approximation