Abstract

We speculate on the development and availability of innovative propulsion techniques in the 2040s that will allow us to fly a spacecraft outside the Solar System (at 150 AU and more) in a reasonable amount of time, in order to directly probe our (gravitational) Solar System neighborhood and answer pressing questions regarding the dark sector (dark energy and dark matter). We identify two closely related main science goals, as well as secondary objectives, that could be fulfilled by a mission dedicated to probing the local dark sector: (i) begin the exploration of gravitation’s low-acceleration regime with a spacecraft and (ii) improve our knowledge of the local dark matter and baryon densities. These questions can be answered by directly measuring the gravitational potential with an atomic clock on-board a spacecraft on an outbound Solar System orbit, and by comparing the spacecraft’s trajectory with that predicted by General Relativity through the combination of ranging data and the in-situ measurement (and correction) of non-gravitational accelerations with an on-board accelerometer. Despite a wealth of new experiments coming online in the near future that will bring new knowledge about the dark sector, it is very unlikely that these science questions will be closed in the next two decades. More importantly, answering them is likely to be even more urgent then than it is now. Tracking a spacecraft carrying a clock and an accelerometer as it leaves the Solar System may well be the easiest and fastest way to directly probe our dark environment.

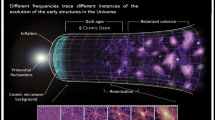

1 When the universe goes dark

General Relativity (GR) may arguably be the most elegant physics theory. It describes gravitation as the simple manifestation of spacetime’s geometry, while recovering Newton’s description of gravitation as a classical inverse-square law (ISL) force in weak gravitational fields and for velocities small compared to the speed of light. In its century of existence, GR has been extremely successful at describing gravity. From Mercury’s perihelion to gravitational lensing, to the direct detection of gravitational waves, it has successfully passed all experimental tests (see e.g. [117] and [66] and references therein). Most importantly, GR allowed for the advent of mathematical cosmology and is the cornerstone of the current hot Big Bang description of our Universe’s history.

Sitting next to GR, the Standard Model (SM) was built from the realization that the microscopic world is intrinsically quantum. Although perhaps less elegant than GR because of its numerous free parameters, it is both highly predictive and efficient not only at describing the behavior of microscopic particles, but also at mastering key technologies. Increasingly large particle accelerators and detectors have allowed for the discovery of all particles predicted by the model, up to the celebrated Brout-Englert-Higgs boson [1, 41].

Although both frameworks (GR and SM) leave few doubts about their validity and seem unassailable in their respective regimes (low vs high energy phenomena, macroscopic vs microscopic worlds), difficulties have been lurking for decades. Firstly, the question of whether GR and the SM should and could be unified remains open. Major theoretical endeavors delivered models such as string theory, but still fail to provide a coherent, unified vision of our Universe. Secondly, unexpected components turn out to make up most of the Universe’s mass-energy budget: dark matter and dark energy are undoubtedly the largest conundrums of modern fundamental physics.

1.1 Dark matter

From observations of the Coma galaxy cluster, [121] was the first to pinpoint the problem of missing matter: the motion of galaxies could not be explained from the luminous matter only. At the onset of the 1980s, [97] noticed a similar behavior on galactic scales: galaxies rotate faster than expected based on their observed luminosity. Dark matter interacts only weakly with baryonic matter, but its gravitational interaction is necessary not only to account for galaxies’ rotation curves and the dynamics of cluster galaxies, but also to explain peaks in the Cosmic Microwave Background (CMB) spectrum and the whole structure formation; no model is able to build galaxies without its presence.

Astronomers failed to find this missing matter as massive compact baryonic objects (MACHOs), making a stronger case for non-baryonic matter. Maps of the dark matter distribution at large scale, pioneered with weak gravitational lensing by [78], are now common (see e.g. [112] or [40]), and a cosmic web structured by (cold) dark matter is now central to the cosmological model.

Meanwhile, new approaches to gravitation, like MOND [80], were developed to explain observations without the need for dark matter. However, observations of colliding galaxy clusters [44], and the finding that dark matter seems to be heated up by star formation in dwarf galaxies [90], tend to support the existence of a non-baryonic, particle dark component, although sterile neutrinos could also act as dark matter [32].

While astronomers were looking for dark matter gravitational signatures, particle physicists did not remain idle. The supersymmetric extension of the SM predicts new massive particles, the lightest of which was expected to account almost exactly for the amount of dark matter in the Universe. Alas, this “WIMP (Weakly Interacting Massive Particle) miracle” was a lure and has somewhat faded by now, after intensive indirect and direct searches failed to find it. Better and better direct detection experiments have been running for decades (see e.g. [22, 101] for recent reviews). Aside from DAMA/LIBRA’s observation of an annually modulated event rate [26], no experiment ever saw the signature of a WIMP hitting its detector. The axion, hypothesized as a by-product of a possible solution to the strong CP-problem in the 1970s [74], is another good candidate still actively sought, as are newer axion-like particles (ALPs). Nevertheless, only upper bounds on the WIMPs’ mass and cross-section, and on the couplings of axions and ALPs to photons or electrons, have so far been reported.

The current consensus remains the existence of a non-baryonic matter interacting with baryonic matter mostly gravitationally, that pervades the Universe and clumps as halos hosting galaxies and clusters of galaxies [88].

1.2 Cosmological constant, dark energy, and modified gravity

The observation of the accelerated expansion of the Universe from the measurement of the distance of supernovae [85, 93] added a new twist to cosmology and fundamental physics. Baryonic and dark matter were not alone: a new component was even dominating the mass-energy budget of the Universe. Since then, all cosmological probes have converged and their concordance allowed cosmologists to robustly infer the acceleration of the cosmological expansion [2, 88].

The most natural candidate to explain this acceleration is Einstein’s cosmological constant Λ [39], which may be linked to the vacuum energy. Adding it to cold dark matter, the ΛCDM cosmological model was born. However, the observed value of Λ is around 120 orders of magnitude smaller than the naive expectation from Quantum Field Theory (QFT), which should be set by the Planck mass: this cosmological constant problem is still unexplained.

The acceleration of the expansion can also be caused by a dynamical, repulsive fluid. Measuring the equation of state of this dark energy has become the grail of observational cosmology, with new and near future surveys: for instance (the list is far from exhaustive), the Dark Energy Survey has already published first results [2], while ESA’s Euclid and NASA’s Roman Space Telescope missions are planned to finely measure weak lensing on half the sky, and provide percent constraints on dark energy within ten years.

In the dark energy view, GR keeps its central role as the theory of gravitation, assumed valid on all scales; it is the content of the Universe which is modified. The accelerated expansion can also be explained the other way around: no new component is added to the Universe, but GR is subsumed by a more general theory of gravitation that passes Solar System and laboratory tests while having a different behavior on cosmological scales. A plethora of models have been proposed (e.g. [68, 70]), of which the scalar-tensor theories are the simplest.

GR describes the gravitational force as mediated by a single rank-2 tensor field. There is good reason to couple matter fields to gravity in this way, but there is no good reason to think that the field equation of gravity should not contain other fields. It is then possible to speculate on the existence of other such fields. The simplest way to go beyond GR and modify gravity is then to add an extra scalar field: such scalar-tensor theories are well established and studied theories of Modified Gravity [48]. From a phenomenological point of view, scalar-tensor theories link the cosmic acceleration to a deviation from GR on large scales. They can therefore be seen as candidates to explain the accelerated rate of expansion without the need to consider dark energy as a physical component. Furthermore, they arise naturally as the dimensionally reduced effective theories of higher dimensional theories, such as string theory; hence, testing them can allow us to shed light on the low-energy limit of quantum gravity theories.

Scalar fields that mediate a long range force able to affect the Universe’s dynamics should also significantly modify gravity in the Solar System, in such a way that GR should not have passed any experimental test. Screening mechanisms have been proposed to alleviate this difficulty [70]. In these scenarios, (modified) gravity is environment-dependent, in such a way that gravity is modified at large scales (low density) but is consistent with the current constraints on GR at small scale (high density). Several such models have been put forth, like the chameleon [71, 72], the symmetron [64], the Vainshtein mechanism [111], the K-Mouflage [20, 35] or the dilaton [34, 46, 47].

Those modified gravity models all predict the existence of a new, fifth force, that should be detectable through a violation of the ISL or of the equivalence principle. Despite numerous efforts, no such deviation has been detected so far (see e.g. [4, 24, 56, 108] and references therein).

1.3 Aim and layout of this White Paper; mission concept summary

Theoretical, observational and experimental efforts are still underway to shed light on dark energy or modified gravity. This White Paper proposes new ways to peek more deeply than ever into this dark sector by directly probing it in the Solar System neighborhood.

To this aim, we propose to probe gravitation in the uncharted low-acceleration regime prevalent in the Solar System outskirts. An atomic clock onboard a spacecraft drifting out of the Solar System allows for a direct measurement of the local metric. Meanwhile, an onboard accelerometer measures all non-gravitational forces acting on the spacecraft, so that they can be subtracted off, while a ranging technique allows for a direct assessment of the geodetic motion of the spacecraft. Measuring the geodetic motion is equivalent to measuring the Levi-Civita connection (by comparison to clocks that directly measure the metric). Not only does this mission concept allow for a test of gravity, but it also allows for the measurement of the local dark matter density disentangled from the baryonic density (as baryonic matter creates measurable friction on the spacecraft), thereby helping to break the degeneracy between the local dark matter density and the dark matter cross-section in direct detection experiments.

As the time required to fly a spacecraft farther than the Kuiper belt with traditional propulsion and space navigation techniques is prohibitively long, we will need to rely on new types of propulsion. We propose to use the concept elaborated for the Breakthrough Starshot project, using a solar sail illuminated by a high-power laser from the ground. Although this concept is in a very early phase and it is far from clear how this will evolve, there is growing interest in developing new propulsion systems [76] to enable agile, quick-response exploration within the Solar System and to enable investigation of the outer Solar System in a timescale shorter than current propulsion allows [81]. We consider it is not unreasonable to expect that there may be developments in the coming decades which could be exploited for the science described in this White Paper. With these caveats in mind, rough orders of magnitude allow us to expect to be able to send a probe of mass less than 1000 kg to hundreds of AU in two to four years, which would make it a viable mission. Recently, [19] showed how a similar mission (though in a slightly different context) can be feasible.
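To give a feel for the orders of magnitude quoted above, the following minimal sketch computes the mean speed needed to cover a given heliocentric distance in a given time. It assumes a straight radial path at constant speed, ignoring the acceleration phase and the Sun's gravity; the function name `mean_cruise_speed_km_s` is purely illustrative and is not part of any mission design.

```python
AU_M = 1.495978707e11        # astronomical unit in metres
YEAR_S = 365.25 * 86400.0    # Julian year in seconds


def mean_cruise_speed_km_s(distance_au, duration_yr):
    """Mean speed (km/s) needed to cover distance_au in duration_yr.

    Assumption: straight radial path at constant speed; the acceleration
    phase and the Sun's gravitational deceleration are ignored.
    """
    return distance_au * AU_M / (duration_yr * YEAR_S) / 1e3


if __name__ == "__main__":
    # Illustrative case: 150 AU reached in two, three, or four years.
    for years in (2.0, 3.0, 4.0):
        speed = mean_cruise_speed_km_s(150.0, years)
        print(f"150 AU in {years:.0f} yr: mean speed of about {speed:.0f} km/s")
```

Under these assumptions, reaching 150 AU in two to four years calls for a mean speed of a few hundred km/s, which is why the concept relies on new types of propulsion.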

Such a mission is expected to be an M-class mission, although it highly depends on the availability and cost to use the Breakthrough Starshot laser, or similar, in the 2040s.

Section 2 presents, in as much detail as possible, state-of-the-art experimental efforts to shed light on the aforementioned conundrums. We detail our science case in this section. Section 3 gives a broad outline of how a spacecraft (carrying an atomic clock, an accelerometer and tracked with a ranging technique) could perform it, and provides secondary science objectives that could easily be reached. In Section 4, we attempt to give a realistic landscape of the related science in the 2040s. We mention the expected technical challenges in Section 5, then conclude in Section 6.

2 Shedding light on the local galactic dark sector

In this section, we show how direct measurements of non-gravitational forces in the Solar System neighborhood (meaning, outside the Solar System) are a necessary step to go beyond current experimental constraints on gravitation, dark energy, and dark matter.

2.1 Dark energy and gravitation

2.1.1 Fifth force searches in the solar system: current status

All theoretical attempts at explaining the three limitations mentioned in Section 1.2 modify GR and Newtonian dynamics, either at small scale or very large scale, or both. In particular, if one of them is correct, we should detect a violation of the gravitational ISL. Hence, in the weak field limit, measurements of the dynamics of gravitationally-bound objects (e.g. the behavior of a torsion balance in the Earth’s gravity field, the trajectory of an interplanetary probe, or the recession of galaxies) should show a deviation from what is expected from Newton’s equations. In other words, a fifth force should be detectable [56].

It is common practice to parametrize a deviation from the ISL with a Yukawa potential, such that the gravitational potential created by a point-mass of mass M at a distance r is [4, 56]

\[V(r) = -\frac{GM}{r}\left[1 + \alpha\, e^{-r/\lambda}\right] \tag{1}\]

where G is the gravitational constant, α is the (dimensionless) strength of the Yukawa potential relative to Newtonian gravity, and λ is its range. Note that in this paper, we focus on composition-independent violations of Newtonian dynamics, meaning that the coupling constant α does not depend on the test mass used for the experiment. Composition-dependent tests exist, which assume that α depends on the nature of the test masses; in practice, they test the weak equivalence principle (see [24] and [54] for up-to-date constraints on composition-dependent tests).
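As an illustration of this parametrization, the sketch below evaluates a Yukawa-corrected potential and the corresponding radial acceleration. It assumes the usual form V(r) = -GM/r [1 + α exp(-r/λ)]; the function names and the sample values of α and λ are hypothetical and chosen only for illustration.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2


def yukawa_potential(r, mass, alpha, lam):
    """Assumed form: V(r) = -G M / r * [1 + alpha * exp(-r / lam)]."""
    return -G * mass / r * (1.0 + alpha * math.exp(-r / lam))


def yukawa_acceleration(r, mass, alpha, lam):
    """Magnitude of the inward radial acceleration, dV/dr."""
    return G * mass / r**2 * (1.0 + alpha * (1.0 + r / lam) * math.exp(-r / lam))


def fractional_deviation(r, alpha, lam):
    """Relative departure of the acceleration from the inverse-square law."""
    return alpha * (1.0 + r / lam) * math.exp(-r / lam)


if __name__ == "__main__":
    alpha, lam = 1e-6, 1.0  # hypothetical strength and range (arbitrary length unit)
    for r in (0.01, 1.0, 10.0):
        print(f"r/lambda = {r:5.2f}: deviation = {fractional_deviation(r, alpha, lam):.3e}")
```

The deviation tends to α for r much smaller than λ and is exponentially suppressed for r much larger than λ, which is why an experiment is mostly sensitive to ranges comparable to the distances it probes.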

Figure 1 shows the current constraints on ISL-violations from a Yukawa potential, from millimeter scales to 1000 AU. A large region of the (α, λ) plane is still allowed by current experiments at submillimetre scales. Significant efforts are made to better constrain this region, most notably with torsion pendulums, micro-cantilevers, or Casimir-force experiments [4, 5, 82]. Although these scales may be probed by bringing laboratory experiments to space (although it is not clear whether the performance would be improved [23]), we do not explore this possibility in this White Paper, but only concentrate on ranges larger than planetary scales. Note that geophysical scales can be probed by torsion pendulums using the Earth as the attractor (e.g. [115]), while constraints on Earth-orbit scales are provided by a wealth of Earth-orbiting satellite experiments [43, 49, 65, 77], though care should be taken to properly account for the shape of the Earth [25].

Larger scales (from a few to hundreds of AU) can only be probed by Solar System experiments, since astrophysical and cosmological observations are by definition sensitive to much larger scales. The tightest constraints come from measurements by Lunar Laser Ranging (LLR; e.g. [83]). The LLR consists of range measurements of the Moon, so that its orbit is extremely finely monitored, with a subcentimetre precision. The most sensitive observable for testing the ISL with LLR is the anomalous precession of the lunar orbit, due to the Earth’s quadrupole field, perturbations from other Solar System bodies, and relativistic effects. Those perturbations and effects can be parametrized as a Yukawa interaction, thereby providing the constraints shown in Fig. 1.

Larger scales can be probed through planetary ephemerides [55, 113] and ranging. Ranging is done by bouncing radar signals off planets, or using microwave signals transmitted by orbiting spacecraft. As shown in Fig. 1, such techniques provide a tight constraint on α [73]. However, this constraint quickly loosens as the scale increases towards the outer Solar System scale.

Probing those scales (the largest scales reachable by instruments in a reasonable amount of time) can be done by measuring the orbit of an outbound spacecraft, as it drifts away from the Sun and the planets [59, 67]. The most notable existing test in this domain was performed by NASA during the extended Pioneer 10 and 11 missions. The test resulted in the so-called Pioneer anomaly [12, 13], finally accounted for by an anisotropic heat emission from the spacecraft themselves [95, 109].

The effect of non-gravitational forces could be definitively accounted for if, instead of relying on spacecraft and environment models, whose accuracy can always be doubted, we were able to measure them directly. This can be easily done with an onboard accelerometer, as proved by missions such as GOCE [100], LISA Pathfinder [16], and MICROSCOPE [108]. By combining the model-independent measurements of non-gravitational accelerations provided by an on-board accelerometer with radio tracking data, it becomes possible to improve by orders of magnitude the precision with which the spacecraft’s gravitational acceleration is compared with theory. Such experimental concepts have been proposed e.g. by [42] and [38]. This is an aspect of the mission outline that we propose in this White Paper.

2.1.2 Gravitation’s low-acceleration frontier

Baker et al. [18] classify probes and experimental/observational tests of gravitation in the potential–curvature plane (left and right panels of Fig. 2, respectively). There, the potential 𝜖 and curvature ξ are loosely defined as the Newtonian gravitational potential

\[\epsilon \equiv \frac{GM}{rc^{2}} \tag{2}\]

and (the square root of) the Kretschmann scalar

\[\xi \equiv \left(R^{\alpha\beta\gamma\delta}R_{\alpha\beta\gamma\delta}\right)^{1/2} = \sqrt{48}\,\frac{GM}{r^{3}c^{2}} \tag{3}\]

created by a spherical body (Schwarzschild metric) of mass M at a distance r, where c is the speed of light and R is the Riemann curvature tensor.

Fig. 2 Gravitational potential–curvature plane. Left: Astrophysical, cosmological and experimental probes. Right: Observationally and experimentally tested (currently or planned) regions are shown in color; the shaded region is the so-called “desert”, where no experimental data are currently available, but where a new intermediate regime of gravitation could be found, bridging between GR at higher curvature and “dark energy gravitation” at smaller curvature. Exploring this desert should be set as a priority. Figure adapted from [18].

It is clear that this plane is divided in four main regions:

- highest curvatures and potentials correspond to compact objects and can be tested with gravitational wave observatories;
- smaller curvatures correspond to Solar System objects (small potential) and to the Galactic Center’s S-stars (higher potential); the former can be tested with planet ephemerides and spacecraft (see above), while the latter can be tested with Galactic Center observations;
- very small curvatures correspond to cosmological probes (galaxies, large scale structures) and can be tested e.g. with CMB observations or galaxy surveys;
- a desert of probes and (most importantly) of tests lies between the Solar System scale tests and cosmological tests. All kinds of speculation are allowed in this regime, and we can easily imagine that gravitation enjoys a gradual change of regime between compact objects and Solar System scales (“high” curvature, where GR holds) and cosmological scales (“very low” curvature, where GR seems to break), without even needing any of the screening mechanisms mentioned in Section 1.2.

The Solar System tests that we discussed above are shown by the “Earth S/C” symbols and the filled circles lying on the black slanted line (that stands for the Sun as the source of gravitation). It is clear that in order to barely approach the potential–curvature desert, we must precisely monitor how trans-Neptunian objects (either planetoids or spacecraft) behave under the influence of the Sun’s gravity. Having a test-mass at least 150 AU from the Sun would allow us to actually enter that uncharted desert. Additionally, when parametrizing a deviation from Newtonian gravity as a Yukawa potential, we can see from Fig. 1 that flying a spacecraft at 150 AU from the Sun and farther would test a fifth force with a range for which no constraints exist yet.
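To make the position of such a test mass in the potential–curvature plane concrete, the following sketch evaluates both quantities along the Sun's line. It assumes the definitions of Baker et al. [18], namely 𝜖 = GM/(rc²) and ξ = √48 GM/(r³c²); the function names and the sample distances (1, 30 and 150 AU) are illustrative choices.

```python
import math

GM_SUN = 1.32712440018e20  # solar gravitational parameter, m^3 s^-2
C = 299792458.0            # speed of light, m/s
AU_M = 1.495978707e11      # astronomical unit, m


def potential_eps(r_m, gm=GM_SUN):
    """Dimensionless potential, assumed form eps = GM / (r c^2)."""
    return gm / (r_m * C**2)


def curvature_xi(r_m, gm=GM_SUN):
    """Curvature in m^-2, assumed form xi = sqrt(48) GM / (r^3 c^2)."""
    return math.sqrt(48.0) * gm / (r_m**3 * C**2)


if __name__ == "__main__":
    for r_au in (1.0, 30.0, 150.0):
        r_m = r_au * AU_M
        print(f"r = {r_au:6.1f} AU: eps = {potential_eps(r_m):.3e}, "
              f"xi = {curvature_xi(r_m):.3e} m^-2")
```

Because ξ falls as r⁻³ while 𝜖 falls only as r⁻¹, moving outward along the Sun's line lowers the curvature much faster than the potential, which is what allows a distant spacecraft to approach the desert.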

In Fig. 2, we added the regions that could be tested by monitoring the motion of a spacecraft in the Jupiter and Neptune systems. Although such measurements would probe still untested regions of the potential–curvature plane, they remain even farther than tests in the Earth orbit from the potential–curvature desert: albeit interesting in their own right, their constraining and exploratory potential on the fundamentals of gravitation is much less than having a test mass 150 AU from the Sun.

We can then state the first goal of our “dark sector” science case as:

Science goal #1– Begin the exploration of the potential–curvature desert with a spacecraft. This can be done by directly measuring the gravitational potential with an atomic clock on-board the spacecraft, and by comparing the spacecraft’s trajectory with that predicted by GR through the combination of ranging data and the in-situ measurement (and correction) of non-gravitational accelerations with an on-board accelerometer (Section 3). Only by entering this potential–curvature desert will we be able to gain new experimental clues about gravity, and perhaps about physics beyond GR. We could in particular enter a new intermediate regime that bridges GR in the Solar System and “dark energy gravitation” at cosmological scales. Additionally, we would approach MOND’s acceleration, thereby allowing us to directly test MOND [19].

2.2 Dark matter density measurement

2.2.1 (Direct) detection techniques and their link to the solar neighborhood

Although dark matter mostly interacts gravitationally with baryonic matter, it is expected that it leaves tiny signatures observable non-gravitationally. Thus, indirect and direct detection methods have been devised to hunt for it.

Indirect detection relies on observing electromagnetic signals or neutrinos originating from the annihilation of dark matter particles (see e.g. [104] for a review). We will not deal with such indirect detection techniques in the remainder of this paper.

Direct detection techniques have been invented to look for different species of dark matter: axions are looked for via their resonant conversion in an external magnetic field using microwave cavity experiments [74], while WIMPs are expected to occasionally interact with heavy nuclei, thereby creating a detectable nuclear recoil (see [101] for a review of existing experiments); moreover, light dark matter (below the GeV scale) can be searched for via scattering off electrons [52], while ultra-light dark matter can cause tiny but apparent oscillations in the fundamental constants that can cause minute variations in the frequency of atomic transitions [17, 60, 107] and a fifth force (e.g. [24]).

For illustrative purposes, we now restrict our discussion to the spin-independent direct detection of WIMPs, through the elastic scattering off nuclei. Figure 3 shows the current limits in the WIMP mass – WIMP-nucleon cross-section plane from several direct detection experiments. The differential rate for WIMP scattering is degenerate between the local WIMP density in the galactic halo \(\rho_0\) and the WIMP-nucleus differential cross-section \(d\sigma/dE_R\) [21, 101]

\[\frac{dR}{dE_R} = N_N\,\frac{\rho_0}{m_W}\int_{v_{\min}}^{v_{\max}} f(v)\, v\, \frac{d\sigma}{dE_R}\, dv \tag{4}\]

where \(N_N\) is the number of target nuclei, \(m_W\) is the WIMP mass, \(E_R\) is the energy transferred to the recoiling nucleus, and v and f(v) are the WIMP velocity and velocity distribution in the Earth frame. The lowest allowed velocity \(v_{\min}\) is that needed for a WIMP to induce a detectable nuclear recoil, while the maximum one \(v_{\max}=533^{+54}_{-41}\) km/s corresponds to the escape velocity [87], meaning that WIMPs with \(v>v_{\max}\) are not bound to the Galaxy.

The WIMP velocity distribution f(v) must be estimated with numerical simulations [33, 62, 105, 114], though new observations could constrain f(v) [98]. Since it cannot be determined through the dynamics of a spacecraft, we will ignore it in the remainder of this paper, but instead focus on how and why we can measure the local dark matter density ρ0. We should first note that current estimates mostly agree in the range ρ0 = (0.4 – 1.5) GeV/c²/cm³ [37, 103].
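The practical consequence of this spread can be sketched in a few lines. Since the scattering rate depends on the local density and on the cross-section only through their product (for a fixed WIMP mass and velocity distribution), an upper limit on the cross-section derived under one assumed ρ0 rescales inversely with ρ0. The function name and the input limit below are hypothetical placeholders, not values taken from any experiment.

```python
def rescale_cross_section_limit(sigma_limit, rho_assumed, rho_new):
    """Rescale a cross-section upper limit to a different local density.

    Assumption: fixed WIMP mass and velocity distribution, so that the
    expected rate is proportional to rho_0 * sigma.
    """
    if rho_assumed <= 0.0 or rho_new <= 0.0:
        raise ValueError("densities must be positive")
    return sigma_limit * rho_assumed / rho_new


if __name__ == "__main__":
    sigma_limit = 1e-46  # cm^2, hypothetical limit derived with rho_0 = 0.4
    for rho_new in (0.4, 1.0, 1.5):  # GeV/c^2/cm^3, spanning the quoted range
        rescaled = rescale_cross_section_limit(sigma_limit, 0.4, rho_new)
        print(f"rho_0 = {rho_new:.1f}: limit = {rescaled:.2e} cm^2")
```

Across the quoted range of ρ0, the same data would therefore translate into cross-section limits that differ by a factor of almost four.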

It is clear from the degeneracy in (4) that, were ρ0 revised, upper limits of Fig. 3 would be affected [58]. It is therefore particularly important to have an estimate as precise as possible of ρ0. Two traditional ways to measure it have been used for decades by astronomers. We propose a novel, in-situ way, in Section 2.2.2. But first, we summarize those two traditional observational ways (see e.g. [89] and references therein).

The local dark matter density is an average of the Galactic dark matter halo density in a small region about the Solar System (at 8 kpc from the center of the Galaxy). The first method to estimate it then relies on the measurement of the rotation curve of the Galaxy and on a model of the dark matter halo. The halo is usually taken to be an isothermal sphere with density ρ(r) ∝ r⁻², so that we recover the flat rotation curve, but it can also be described by a more realistic model such as a Navarro-Frenk-White (NFW) halo [84]. A bad model choice is obviously a source of systematic uncertainties. The second method does not rely on a halo model, but uses the vertical motion of stars close to the Sun. The main systematics come from the distance and velocity measurement of those stars (so that K-stars are preferentially chosen), though robust techniques have been developed [57]. Combining the two methods allows astronomers to assess whether the Galactic halo is prolate or oblate, and to look for a dark disc.
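The link between the isothermal profile and a flat rotation curve can be checked with a short sketch. It assumes a singular isothermal sphere, ρ(r) = ρ0 (r0/r)², with no baryonic contribution, so the resulting velocity is a halo-only figure and not a model of the Milky Way; the function names and the normalisation (ρ0 = 0.4 GeV/c²/cm³ at r0 = 8 kpc) are illustrative.

```python
import math

G = 6.674e-11                            # gravitational constant, m^3 kg^-1 s^-2
KPC_M = 3.0857e19                        # kiloparsec in metres
GEV_CM3_TO_KG_M3 = 1.78266192e-27 * 1e6  # (GeV/c^2)/cm^3 to kg/m^3


def enclosed_mass(r_m, rho0, r0_m):
    """Mass inside r for rho(r) = rho0 * (r0 / r)**2, i.e. 4 pi rho0 r0^2 r."""
    return 4.0 * math.pi * rho0 * r0_m**2 * r_m


def circular_velocity(r_m, rho0, r0_m):
    """Circular velocity sqrt(G M(<r) / r); independent of r for this profile."""
    return math.sqrt(G * enclosed_mass(r_m, rho0, r0_m) / r_m)


if __name__ == "__main__":
    rho0 = 0.4 * GEV_CM3_TO_KG_M3  # illustrative local density
    r0 = 8.0 * KPC_M               # galactocentric distance of the Solar System
    for r_kpc in (2.0, 8.0, 20.0):
        v = circular_velocity(r_kpc * KPC_M, rho0, r0)
        print(f"r = {r_kpc:4.1f} kpc: v_c = {v / 1e3:.0f} km/s")
```

The enclosed mass grows linearly with r, so the circular velocity is the same at every radius; a different halo model would change this behaviour, which is the source of the systematic uncertainty mentioned above.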

Since the measured ρ0 must be extrapolated to the position of the Earth, the local homogeneity of the dark matter halo is an important point. In particular, any clump or stream of dark matter, as well as dark matter trapped by the Sun’s potential [86] may bias the extrapolation. Although dark matter simulations’ resolution is no better than a dozen parsecs, we have good reasons to assume that the possible effects are negligible, and to consider the local dark matter halo as homogeneous [89]. The presence of a dark disc on top of the halo should not cause a problem either, as it was shown that if such a disc exists, it is thick enough not to influence the homogeneity of the close-by dark matter [89].

We shall close this presentation with a major caveat [53, 58, 89]: although measurements of ρ0 relying on dark-matter-only simulations are very robust, adding baryons to the simulations significantly complicates things. However, baryons are clear components of our neighborhood, and therefore must be accounted for.

2.2.2 In-situ measurement of the local dark matter density

Tracking a spacecraft far enough from the Sun, we can in principle estimate the contribution of the Galactic dark matter to its dynamics. We then use the same techniques as those presented above, with the stellar tracers replaced by the spacecraft.

The discussion above also highlighted two possible (linked) caveats: (i) speculating on the homogeneity of the local dark matter distribution to estimate its density in terrestrial laboratories from astrophysics estimates averaged on the Solar System neighborhood and (ii) adding baryons to dark-matter-only simulations complicates predictions about the Galactic dark matter halo. These caveats highlight the difficulty of calibrating models without an in-situ measurement of physical quantities. This is especially true in our Galactic neighborhood, since it is dominated by baryons, so that hunting for dark matter is particularly difficult.

The interplay between dark matter and baryons is most easily seen in the uncertainty on ρ0 stemming from our imperfect knowledge of the baryonic contribution to the local dynamical mass, as shown with the baryonic surface density Σb in Fig. 4 [103].

Better understanding the respective contributions and distributions of dark matter and baryons through experimental in-situ measurements is not only important for direct detection experiments (as it allows us to better estimate ρ0) but also to improve our knowledge of our local environment. Although dark-matter-only simulations expect a smooth dark matter distribution, we could be surprised to discover small clumps, streams, and passing clouds of dark matter: indeed, as already mentioned, ultra-light dark matter (clumped e.g. in the form of topological defects or axion stars) can cause minute variations in the frequency of atomic transitions; an atomic clock going through such a cloud would be temporarily desynchronized compared to Earth-bound clocks [51, 96, 116]. Only a direct assessment of the gravitational environment at very small scales will allow us to close this question: as dark matter can affect the rate of a clock, it can be done with a spacecraft carrying a clock, as shown in Section 3; if the clump is sufficiently massive, its crossing can be determined by the precise monitoring of the spacecraft’s trajectory.

Regarding baryonic matter, an accelerometer on-board a spacecraft measures all non-gravitational forces applied to the satellite (gas and dust friction, solar radiation pressure, outgassing...). It is then an invaluable tool to directly probe the distribution of baryons along its trajectory. In other words, it provides an in-situ experimental measurement of the local contribution of dust and gas in Σb.

Such measurements will also be valuable for cosmology, as they could be used to calibrate galaxy evolution simulations, and to better understand the systematics that still plague weak lensing surveys [99, 102].

We can then state the second goal of our “dark sector” science case as:

Science goal #2– Improve our knowledge of the local dark matter and baryon densities. It can be fulfilled by monitoring the dynamics of a spacecraft in the Solar System neighborhood, the spacecraft carrying a clock and an accelerometer. The clock will be sensitive to local dark matter inhomogeneities. The combination of ranging and accelerometric data will also be sensitive to local gravitational disturbances, such as those that could be created by a massive enough clump. Finally, accelerometric data will be sensitive to the friction of any baryonic matter (dust and gas) on the spacecraft, allowing for a direct measurement of the baryonic matter density along the spacecraft trajectory. This will allow us to perform the first truly local measurement of the dark matter halo density ρ0 and to improve the characterization of the dark matter constraints from direct detection experiments.

3 A very deep space mission concept

In this section, we present the concept of a mission that could fulfill the science goals presented above.

3.1 Overview and requirements

3.1.1 Science goal #1: low-acceleration gravitation

This goal can be fulfilled by directly measuring the gravitational potential and by looking for a deviation from the ISL.

Gravitational potential

The universal redshift of clocks when subjected to a gravitational potential is one of the key predictions of General Relativity (GR) and, more generally, of all metric theories of gravitation. It represents an aspect of the Einstein Equivalence Principle (EEP) often referred to as Local Position Invariance (LPI) [117], and it makes clocks direct probes of the gravitational potential (and thus of the \(g_{00}\) component of the metric tensor). In GR, the frequency difference of two ideal clocks is proportional to [92]

\[ \frac{w_g - w_s}{c^{2}} + \frac{v_g^{2} - v_s^{2}}{2c^{2}} + O(c^{-4}) \qquad (5) \]

where w is the Newtonian potential and v the coordinate velocity, with g and s standing for the ground and the in-space clocks. In theories different from GR this relation is modified, leading to a different time and space dependence of the frequency difference. This can be tested by comparing two clocks at distant locations (different values of w and v) via the exchange of an electromagnetic signal.

An outbound Solar System trajectory (large potential difference) and a low uncertainty on the observable (5) allow for a relative uncertainty on the redshift determination given by the clock bias divided by the maximum value of \(\Delta w/c^{2}\). Assuming that the Earth station motion and its local gravitational potential can be known and corrected to uncertainty levels below \(10^{-17}\) in relative frequency (10 cm on geocentric distance), which, although challenging, is within present capabilities, then for an onboard clock similar to ACES’ PHARAO (see below), with a \(10^{-17}\) bias [92], at a distance of 150 AU this corresponds to a test with a relative uncertainty of \(10^{-9}\), an improvement by almost four orders of magnitude on the uncertainty obtained by the currently most sensitive experiments [50, 63].
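The order of magnitude quoted above can be checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes that the potential difference is dominated by the Sun, that the ground clock sits at 1 AU, and it ignores the Earth's own potential and the velocity term; the function names are ours.

```python
# Minimal sketch: relative uncertainty of a redshift test, taken as the clock
# bias divided by the solar potential difference Delta w / c^2.
# ASSUMPTIONS: Sun-only potential, ground clock at 1 AU, velocity term ignored.

GM_SUN = 1.32712440018e20   # m^3/s^2, heliocentric gravitational parameter
C = 299_792_458.0           # m/s, speed of light
AU = 1.495978707e11         # m, astronomical unit


def solar_potential_difference(r_near_au, r_far_au):
    """Delta w / c^2 between two heliocentric distances given in AU."""
    return GM_SUN / C**2 * (1.0 / (r_near_au * AU) - 1.0 / (r_far_au * AU))


def redshift_test_uncertainty(clock_bias, r_near_au=1.0, r_far_au=150.0):
    """Relative uncertainty on the redshift for a given fractional clock bias."""
    return clock_bias / solar_potential_difference(r_near_au, r_far_au)


delta_w = solar_potential_difference(1.0, 150.0)
uncertainty = redshift_test_uncertainty(1e-17)
print(f"Delta w / c^2 between 1 AU and 150 AU: {delta_w:.2e}")
print(f"relative uncertainty for a 1e-17 bias: {uncertainty:.1e}")
```

Under these assumptions the potential difference is close to \(10^{-8}\), so that a \(10^{-17}\) clock bias gives a relative uncertainty of about \(10^{-9}\), in line with the figure quoted in the text.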

We can thus take as a requirement that the clock’s bias is less than \(10^{-17}\).

Inverse Square Law violation

A definitive deviation from the ISL can be detected as a deviation from the trajectory predicted by GR when taking into account the gravity of the Solar System’s bodies. Two things are needed: an accurate orbit restitution, and the assurance that the spacecraft follows a geodesic. The former can be done through orbit tracking with Radio-Science, while the latter can be ensured with a drag-free spacecraft, whose trajectory is forced to be a geodesic by actively canceling non-gravitational forces; alternatively, we can measure the non-gravitational forces with a DC accelerometer, and correct for them when estimating the orbit, therefore not needing a drag-free spacecraft. Although a model of non-gravitational forces is commonly used to correct for them, we argue that no model is better than an empirical measurement, and hence that an accelerometer (or drag-free spacecraft) is needed to definitively confirm any measured deviation from the ISL.

The required accuracy on non-gravitational accelerations is driven by the accuracy of the orbit estimation and depends on the integration time for the orbit restitution: deviations from GR should be searched for in time segments as short as possible, to minimize the effect of the drift. As shown by [59], a one-meter deviation from a Keplerian orbit can be detected in a few days, requiring a precision on non-gravitational accelerations of the order of \(10^{-12}\) m/s². Getting down to such a precision would significantly improve the current constraints given by the Pioneer probes, which assumed a bias in acceleration of \(10^{-10}\) m/s².

The requirements for the large scale test of the ISL can then be summarized as [38, 59]:

- measure the orbit of the spacecraft with a precision better than 1 m, all along the mission, from the Earth to hundreds of AU

- measure non-gravitational accelerations at the order of \(10^{-12}\) m/s²
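The link between the two requirements above can be illustrated by asking how far an uncorrected constant acceleration displaces the spacecraft over an orbit-restitution segment. The sketch below is a simplification of ours (constant acceleration along a fixed direction, position error ½ a t²) and does not reproduce the analysis of [59].

```python
# Minimal sketch: position error accumulated by an uncorrected constant
# acceleration over an orbit-restitution segment.
# ASSUMPTION: constant acceleration along a fixed direction, error = 0.5*a*t^2.

DAY_S = 86_400.0


def position_error(accel_bias, duration_s):
    """Position error (m) after duration_s seconds of a constant acceleration bias."""
    return 0.5 * accel_bias * duration_s**2


for bias in (1e-10, 1e-12):          # m/s^2: Pioneer-like bias vs. requirement
    for days in (1, 5, 10):
        err = position_error(bias, days * DAY_S)
        print(f"bias {bias:.0e} m/s^2 over {days:2d} d: {err:8.3f} m")
```

With this simple model, a \(10^{-12}\) m/s² residual stays below the meter level over a five-day segment, whereas a \(10^{-10}\) m/s² bias exceeds it.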

3.1.2 Science goal #2: dark matter: search and local density measurement

The same experimental apparatus as used for Science goal #1 is able to fulfill this goal. Although precise requirements must still be computed, we can expect that the clock will detect inhomogeneities in the dark matter distribution. While the accelerometer will be perfectly suited to measure the baryon distribution through the friction applied to the spacecraft, combining its measurements with ranging data should enable us to detect massive enough inhomogeneities in the gravitational field, possibly originating from dark matter clumps or streams.

3.2 Mission profile

The main factor limiting the viability of very deep space missions with conventional propulsion systems and space navigation techniques is time. It took between 20 and 25 years for the Voyager and Pioneer probes to reach 50 AU; New Horizons, albeit faster, took 15 years. Drawing on these numbers, reaching 100 AU and more would take a prohibitively long time.

Therefore, if we want to reach gravitation’s low-acceleration regime, we must envisage new propulsion methods. Although there are formidable technical challenges to be overcome, there is growing interest in developing new propulsion systems [81] and several innovative concepts have been put forth to send interstellar nanoprobes. For instance, the Breakthrough Starshot project aims to send a nanoprobe (of about 10 g) to Proxima Centauri. In order to reach this star (at about 4.2 light-years), the probe will be given a strong impulse with a laser (shot from the ground) hitting a solar sail. The designers of the project expect the probe to reach 15% of the speed of light.

We consider building on this concept to design a mission aiming to test the dark sector in the outskirts of the Solar System. Let m and M be the mass of Breakthrough Starshot’s probe and of our spacecraft, respectively. Assuming that the velocity of the Breakthrough Starshot probe is 0.15c and that the ground laser transfers the same amount of energy to our spacecraft (equipped with a solar sail), equating the (non-relativistic) kinetic energies gives the spacecraft velocity V as simply

\[ V = 0.15\,c\,\sqrt{\frac{m}{M}} \]

Assuming m = 10 g, a spacecraft of mass between 100 kg and 1000 kg should be able to reach 150 AU in 2 years to 6 years.
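The scaling can be made explicit with a short calculation. The sketch below assumes that equal kinetic energies are transferred in the non-relativistic limit (½ m v² = ½ M V², hence V = v √(m/M)), that the cruise is at constant velocity, and that the acceleration phase and the Sun's gravity are negligible; the function names are ours.

```python
# Minimal sketch: velocity and cruise time of a spacecraft of mass M receiving
# the same kinetic energy as a probe of mass m launched at 0.15 c.
# ASSUMPTIONS: non-relativistic energies, constant-velocity cruise, no solar
# deceleration, no acceleration phase.
import math

C_KM_S = 299_792.458        # speed of light, km/s
AU_KM = 1.495978707e8       # astronomical unit, km
YEAR_S = 365.25 * 86_400.0  # Julian year, s


def scaled_velocity(m_probe_kg, m_craft_kg, v_probe_km_s=0.15 * C_KM_S):
    """Velocity (km/s) of the heavier spacecraft for equal kinetic energy."""
    return v_probe_km_s * math.sqrt(m_probe_kg / m_craft_kg)


def cruise_time_years(distance_au, v_km_s):
    """Time (years) to cover distance_au at constant velocity v_km_s."""
    return distance_au * AU_KM / v_km_s / YEAR_S


for mass in (100.0, 1000.0):
    v = scaled_velocity(0.010, mass)
    t = cruise_time_years(150.0, v)
    print(f"M = {mass:6.0f} kg: V = {v:6.1f} km/s, 150 AU in {t:.1f} yr")
```

Under these idealized assumptions the cruise takes between roughly 1.6 and 5 years, of the same order as the 2 to 6 years quoted above.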

The mission profile is then straightforward. First, launch the spacecraft into Low Earth Orbit. As for MICROSCOPE, it could be a passenger of a Soyuz-like launcher. Second, accelerate it with Breakthrough Starshot’s laser to put it onto a high-velocity Solar System outbound trajectory. A gravitational boost with Jupiter or Saturn could be envisioned to gain more speed in the first stage of its cruise.

This mission concept crucially depends (and speculates) on the availability of the Breakthrough Starshot (or similar) laser propulsion system. If such a system exists in the 2040s and can be used intensively (and thereby at a low usage cost), then our concept could fit into an M-class mission.

We detail the possible payload and platform below. We then conclude the section with the discussion of possible secondary science goals.

3.3 Payload

3.3.1 Clock

As shown above, a clock is directly sensitive to the gravitational potential. We thus propose to embark an atomic clock, the design of which can be based on the PHARAO clock, set to fly as part of the ACES experiment on-board the International Space Station in the early 2020s [92].

In microgravity, the linewidth of the atomic resonance of the clock will be tuned by two orders of magnitude, down to sub-Hertz values (from 11 Hz to 110 mHz), 5 times narrower than in Earth-based atomic fountains. After clock optimization, performances in the \(10^{-16}\) range are expected both for frequency instability and inaccuracy.

Developed by CNES, the cold atom clock PHARAO combines laser cooling techniques and microgravity conditions to significantly increase the interaction time and consequently reduce the linewidth of the clock transition. Improved stability and better control of systematic effects will be demonstrated in the space environment. PHARAO can reach a fractional frequency instability of \(10^{-13}\,\tau^{-1/2}\), where τ is the integration time expressed in seconds, and an inaccuracy of a few parts in \(10^{16}\).
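The instability law quoted above translates directly into an averaging time for a given target. The sketch below assumes that the \(\tau^{-1/2}\) behavior (white frequency noise) holds at all integration times, which is an idealization; in practice the accuracy floor of a few parts in \(10^{16}\) limits the gain from averaging.

```python
# Minimal sketch: averaging time needed to reach a target fractional frequency
# instability, for sigma(tau) = sigma_1s * tau**-0.5.
# ASSUMPTION: the tau**-0.5 law holds at all integration times.

def instability(tau_s, sigma_1s=1e-13):
    """Fractional frequency instability after tau_s seconds of integration."""
    return sigma_1s / tau_s**0.5


def averaging_time(target, sigma_1s=1e-13):
    """Integration time (s) needed to reach the target instability."""
    return (sigma_1s / target) ** 2


for target in (1e-15, 1e-16):
    tau = averaging_time(target)
    print(f"target {target:.0e}: tau = {tau:.1e} s ({tau / 86_400.0:.2f} days)")
```

With these assumptions, the \(10^{-16}\) range is reached after about \(10^{6}\) s, i.e. somewhat less than two weeks of integration.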

Frequency transfer (via microwave link) with time deviation better than 0.3 ps at 300 s, 7 ps at 1 day, and 23 ps at 10 days of integration time will be demonstrated by ACES.

3.3.2 Electrostatic absolute accelerometer

Correcting for non-gravitational accelerations can be done with an unbiased (DC) accelerometer. One is currently under development at ONERA: the Gravity Advanced Package (GAP) is composed of an electrostatic accelerometer (MicroSTAR), based on ONERA’s expertise in the field of accelerometry and gravimetry (CHAMP, GRACE, GOCE, and MICROSCOPE missions), and a bias calibration system [75]. Ready-to-fly technology is used with original improvements aimed at reducing power consumption, size, and weight. The bias calibration system consists of a flip mechanism which allows for a 180° rotation of the accelerometer to be carried out at regularly spaced times. The flip allows the calibration of the instrument bias along two directions, by comparing the acceleration measurement in the two positions.
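The principle of the flip calibration can be sketched for a single axis. The example below is a toy model of ours, not the GAP processing chain: it assumes that the external acceleration and the bias are both constant between the two positions and it ignores noise, scale-factor errors, and misalignments. Flipping the instrument by 180° changes the sign of the external acceleration in the instrument frame but not that of the bias, so the half-difference and half-sum of the two readings separate them.

```python
# Minimal sketch of bias rejection by a 180-degree flip (single axis).
# ASSUMPTIONS: constant external acceleration and constant bias between the
# two positions; noise, scale factor and misalignments are ignored.

def split_signal_and_bias(m_direct, m_flipped):
    """Return (external acceleration, instrument bias) from the two readings."""
    signal = 0.5 * (m_direct - m_flipped)
    bias = 0.5 * (m_direct + m_flipped)
    return signal, bias


# Hypothetical values, for illustration only (m/s^2):
TRUE_ACCEL = 3.0e-10
TRUE_BIAS = 5.0e-8

m_direct = TRUE_ACCEL + TRUE_BIAS     # reading in the nominal position
m_flipped = -TRUE_ACCEL + TRUE_BIAS   # reading after the 180-degree flip

accel, bias = split_signal_and_bias(m_direct, m_flipped)
print(f"recovered acceleration: {accel:.3e} m/s^2")
print(f"recovered bias:         {bias:.3e} m/s^2")
```

In this idealized case the external acceleration is recovered even though it is two orders of magnitude smaller than the bias.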

The three-axis electrostatic accelerometers developed at ONERA are based on the electrostatic levitation of the instrument’s inertial mass with almost no mechanical contact with the instrument frame. The test-mass is then controlled by electrostatic forces and torques generated by six servo loops applying well-measured equal voltages on symmetric electrodes. Measurements of the electrostatic forces and torques provide the six outputs of the accelerometer. The mechanical core of the accelerometer is fixed on a sole plate and enclosed in a hermetic housing in order to maintain a good vacuum around the proof-mass (Fig. 5, middle and right panels). The electronic boards are implemented around the housing. The control of the proof-mass is performed by low consumption analog functions.

The Bias Rejection System is equipped with a rotating actuator and a high-resolution angle encoder working in closed loop operation (Fig. 5, left). The actuator for the MicroSTAR Sensor Unit rotation is a stepper motor with worm gear. The electronic boards for driving the stepper motor and controlling the closed servo loop are located inside the housing. The electrical connection between Sensor Unit and Interface Control Unit is given by thin flexible shielded wires designed for the corresponding environment and durability requirements. Sliding contacts are not necessary because the maximum turning angle is 270° in one direction. A second small actuator (linear servo motor) is foreseen to block the moving part of the rotating actuator during transport and launch.

The global performance of the acceleration measurement is allocated towards the different contributors that appear in the expression of the measurement equation. Eight posts are defined with respect to the different contributors: at first order (assuming that the non-gravitational acceleration aNG is the dominant term in a perfect measurement), the measured acceleration is:

where \(n\) is the instrumental noise, \(b\) MicroSTAR’s bias, \(a_{\mathrm{parasitic,acc}}\) some parasitic acceleration acting as a bias, \([K]\) the scale factor, \([K_2]\) the quadratic factor, and \([R + S]\) accounts for rotations and misalignments, \([\dot{\varOmega}] + [\varOmega^{2}]\) for the inertial acceleration, \(a_{\mathrm{parasitic,SC}}\) for the spacecraft self-gravity, and \((-[U]r + 2[\varOmega]\dot{r} + \ddot{r})\) describes gravity gradient and deformation.

The left panel of Fig. 6 shows the current noise of the MicroSTAR accelerometer, with different contributors: a level of \(10^{-9}\) m/s²/Hz\(^{1/2}\) is obtained over the \([10^{-5}, 1]\) Hz band, with a measurement range of \(1.8 \times 10^{-4}\) m/s². The bias modulation signal, consisting of regular 180° flips of the accelerometer, allows us to band-pass filter the accelerometer noise around the modulation frequency. The right panel of Fig. 6 shows, for different periods of modulation and calibration, that after rejecting the bias, GAP is able to measure absolute accelerations down to \(10^{-12}\) m/s².

3.3.3 Tracking, clock synchronization, and communication

Although the precision attainable on the orbit determination at distances larger than 150 AU is uncertain, the spacecraft’s orbit can be determined through ranging, Doppler tracking, and Very Long Baseline Interferometry (VLBI).

Ranging should rely on the Deep Space Network (DSN), through the measurement of the round-trip time of an uplink signal retransmitted by the spacecraft. The power necessary to run the transponder onboard the spacecraft, as well as the antenna gain, are at the moment not precisely known. Experience from the Voyager and New Horizons probes will be beneficial to quantify them.
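To give a sense of the time scales involved in two-way ranging, the sketch below computes the round-trip light time at a given distance and the timing precision equivalent to a given range precision. It is a vacuum, straight-line estimate of ours that ignores the Earth's position around the Sun, propagation media, relativistic delays, and the transponder delay.

```python
# Minimal sketch: round-trip light time and timing precision for two-way ranging.
# ASSUMPTIONS: straight-line propagation in vacuum, no transponder delay,
# distance counted from the ground station.

C = 299_792_458.0      # m/s, speed of light
AU = 1.495978707e11    # m, astronomical unit


def round_trip_light_time(distance_au):
    """Two-way light time (s) to a spacecraft at distance_au."""
    return 2.0 * distance_au * AU / C


def timing_precision_for_range(range_precision_m):
    """Round-trip timing precision (s) equivalent to a one-way range precision."""
    return 2.0 * range_precision_m / C


rt = round_trip_light_time(150.0)
dt = timing_precision_for_range(1.0)
print(f"round trip at 150 AU: {rt / 3600.0:.1f} h")
print(f"timing precision for 1 m: {dt * 1e9:.1f} ns")
```

Under these assumptions, a signal takes more than 41 hours to return from 150 AU, and a 1 m range precision corresponds to timing the round trip at the level of a few nanoseconds.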

A microwave link will enable Doppler tracking of the spacecraft and the synchronization of the onboard clock with on-ground clocks.

Orbit determination should be performed regularly; however, we do not expect that a very large duty cycle will be necessary. Therefore, this mission should not need very much access to DSN facilities.

3.4 Platform

The spacecraft should provide the lowest and most axisymmetrical gravitational field, and should make the dry mass center of gravity, the propellant center of gravity, the radiation pressure force line, and the accelerometer coincide as closely as possible. Furthermore, the satellite should be as rigid as possible to minimize the impact of vibrations and glitches due to mechanical constraints, which could contaminate the science measurements. A platform similar to that of MICROSCOPE could be envisaged. Thus, its mass can be expected to be about 500 kg.

Radioisotope Thermoelectric Generators (RTGs) are required to operate a spacecraft beyond Jupiter. Furthermore, it is known that RTGs can create non-gravitational forces through the radiation they emit (they are the best candidates to explain the Pioneer anomaly [95, 109]). The onboard accelerometer will measure these forces, so that they can be corrected for.

A propulsion module will be needed only if deep-space maneuvers are required. This will depend on the orbit definition, and may largely affect the design of the spacecraft. To minimize the self-gravity nuisance from a propulsion module in the spacecraft, we could envision using an external propulsion module to set the spacecraft on its final orbit, which would eventually be released once the orbit is reached.

The attitude of the spacecraft may be passively controlled, at least in part, by spinning it slowly about its principal axis of symmetry; counter-rotating the payload would allow us to perform tests in a non-rotating frame. The attitude can be estimated with stellar sensors combined with the onboard accelerometers.

3.5 Secondary objectives

A space mission such as that presented above allows for several secondary objectives:

Science goal #3-1

Measurement of Post-Newtonian parameters

The Parametrized Post-Newtonian (PPN) formalism relies on linearizing Einstein’s equations in the weak field and low velocity regime [117]. A spacecraft leaving the Solar System is in this exact regime, such that the mission concept described above can provide new constraints on those parameters. For instance, the Eddington parameter γ (which measures how much space curvature is produced by unit rest mass) can be estimated during any solar conjunction during the spacecraft’s cruise to repeat the Cassini relativity experiment [28].

Science goal #3-2

Variation of fundamental constants

All deviations from GR mentioned in this White Paper can be attributed to the variation of fundamental constants [110]. The space mission concept that we depicted can thus be seen as a direct probe of the evolution of those constants.

Science goal #3-3

Kuiper belt exploration

Since their first detection [69], Kuiper Belt Objects (KBOs) have revolutionized our understanding of the history of the Solar System. Thousands of them have since been discovered, exhibiting a remarkable diversity in their properties (see e.g. [36] for a review about KBOs). A spacecraft passing through the Kuiper belt is affected by its gravitational potential, its shape, and the possible dust distributed in the area. Combined with giant telescopes expected to be online in a few decades, a clock and an accelerometer on-board the spacecraft are the perfect tools to measure them (see e.g. the SAGAS science case [118]).

Science goal #3-4

Heliosphere, heliopause, and heliosheath

Thanks to its onboard accelerometer, the outbound spacecraft presented above will be able not only to monitor the variation of solar radiation pressure as it drifts away from the center of the Solar System, but also to measure all non-gravitational forces (solar and interstellar winds) as it crosses the heliopause. It will then largely complement the data already provided by the Voyager missions as they left the Solar System a few years ago. NASA’s Senior Review 2008 of the Mission Operations and Data Analysis Program for the Heliophysics Operating Missions (Footnote 3) highlighted the importance of continuing to obtain Voyager data to gain in-situ knowledge of the heliosheath.

Science goal #3-5

Measurement of gravitational waves background

Reynaud et al. [91] proposed a new way to set bounds on gravitational-wave backgrounds created by astrophysical and cosmological sources by performing clock comparisons between a ground clock and a remote spacecraft equipped with an ultra-stable clock, rather than only ranging to an onboard transponder. Their investigation can be used in a mission concept such as that described above.

4 Scientific landscape in the 2030s

In this section, we attempt a short anticipation of the scientific landscape in the 2030s to 2040s. We can identify five possible advances in the fields of interest of this White Paper.

- Test of GR in the strong field regime with LISA: the opening of the gravitational Universe with LISA (to be launched in the early 2030s) will allow for significantly improved tests of GR with compact objects. However, we should not expect these observations to explain dark energy or dark matter.

- Improved constraints on dark energy and dark matter: full-sky surveys like the Rubin observatory (previously known as LSST), Euclid, and the Roman Space Telescope (previously known as WFIRST) will be completed. We can expect them to significantly tighten the constraints on the dark energy equation of state and on the distribution of dark matter at cosmological scale. Whether they will strengthen the GR+ΛCDM model or find new physics (perhaps in the line of the current tension between local and early-Universe measurements of the Hubble constant, e.g. [94]) is a difficult question to answer. In any case, they will remain blind to the gravitational potential–curvature desert mentioned above.

- Fifth force laboratory and ground tests will have improved and probably excluded more of the small-range interaction parameter space. However, by definition, they will remain blind to those deviations from GR that could be measured at hundreds of AU from the Sun.

- The dark matter parameter space will be better constrained by direct detection experiments and LHC searches. As for WIMPs, it is possible that the mass – cross-section plane of Fig. 3 is completely excluded, down to the neutrino floor, by 2030, or that WIMPs have been detected. Nevertheless, the degeneracy between the local dark matter density and the WIMP cross-section will remain. We do not see any plan to lift it without an in-situ measurement performed by a spacecraft in the neighborhood of the Solar System. Similar arguments can apply to other dark matter candidates (even those that may be invented in the next decade).

- Giant (ground-based) telescopes and the JWST will be online: they will bring new discoveries of KBOs, which could be complemented by flying through the Kuiper belt.

None of those potential advances should close the science goals highlighted in this White Paper. It then seems that flying a spacecraft hundreds of AU from the Sun and entering the low-acceleration regime of gravitation is the only way to gain fast insight into the dark sector, even as late as 2040 or 2050.

5 Technological challenges

The payload will be inherited from already flown missions. The accelerometer will be derived from those onboard Earth gravitational field observation missions (GOCE, GRACE, GRACE Follow-On) or fundamental physics missions (LISA Pathfinder). Nevertheless, it should be miniaturized to minimize the spacecraft’s total weight. The clock can be derived from the ACES/PHARAO clock, which should fly on the International Space Station in the early 2020s.

The platform should not present any technological challenge, apart from providing a gravitational background as quiet as possible.

We identify a handful of potentially major technological challenges:

- propulsion: sending a spacecraft to 150 AU in a relatively short amount of time cannot rely on traditional chemical propulsion and space navigation techniques, so that new innovative propulsion systems must be proposed and developed. Speculating on the development of interstellar (nano)probes accelerated with a ground-based high-power laser, we could bet on the availability of such a laser to sufficiently accelerate a spacecraft with a mass of a few hundred kilograms.

- communication and tracking: although precise requirements on the orbit determination at distances larger than 150 AU must still be computed, it is unclear whether the current ranging technology will be precise enough. The power of the transponder and the antenna gain will have to be maximized.

- power: although radioisotope thermoelectric generators (RTGs) are technologically ready for a spacecraft on a fast outbound Solar System orbit, an uncertainty remains about the policies that will regulate the launch of RTG-powered spacecraft in a few decades.

- shielding: a shield will be required to send an atomic clock to outer space. This has never been done. For comparison, ACES will fly in Low Earth Orbit, where such shielding is not necessary.

6 Conclusion

Speculating on the development and availability of new innovative propulsion techniques in the 2040s, we presented open science questions related to the characterization of the dark sector. Flying a spacecraft out of the Solar System would allow us to enter the uncharted low-acceleration regime of gravitation, where we may witness deviations from GR, hinting toward a new theory in which gravitation would depend on the spacetime curvature and evolve from the well-tested Solar System regime of GR to the puzzling behavior we observe at cosmological scales. Deviations from GR could be efficiently looked for by finely tracking the trajectory of the spacecraft carrying an atomic clock and an accelerometer. The clock directly probes the gravitational potential, while the accelerometer measures all non-gravitational accelerations applied to the spacecraft, allowing us to subtract them and directly compare its trajectory to that predicted by GR.

Moreover, such a mission will have the potential to probe our gravitational environment, i.e. to disentangle the contributions of baryons and dark matter. As a consequence, it will be able to measure in-situ the local density of dark matter, which directly affects the constraints on dark matter’s characteristics inferred from on-ground direct detection experiments. It will also allow for the estimation of the homogeneity of the dark matter distribution in the Solar System neighborhood, thereby allowing for a better calibration of cosmological simulations and impacting cosmological surveys still affected by the poorly understood coupling between baryonic and dark matter. Finally, a flying atomic clock could detect clumps of ultralight dark matter via its effects on the frequency of atomic transitions.

Beyond the secondary science goals that we identified (measurement of PPN parameters and of the constancy of fundamental constants, exploration of the Kuiper belt and of the heliosheath, as well as measurement of the gravitational wave stochastic background), such a mission will bring new insights about the low-acceleration regime of gravitation. As stellar systems form in this regime, it is of primary importance to better understand it, at a time when exoplanets are routinely found and provide both new clues and new puzzles about the formation of planetary systems.

We shall conclude this White Paper by emphasizing that despite a wealth of new experiments coming online in the near future, which will bring new knowledge about the dark sector, it is very unlikely that the science questions that we presented will be closed. More importantly, it is likely that answering them will be even more urgent than it is today. Tracking a spacecraft carrying a clock and an accelerometer as it leaves the Solar System may well be the easiest and fastest way to directly probe our dark environment.

References

Aad, G., Abajyan, T., Abbott, B., Abdallah, J., Abdel Khalek, S., Abdelalim, A.A., Abdinov, O., Aben, R., Abi, B., Abolins, M., et al.: Observation of a new particle in the search for the Standard Model Higgs boson with the ATLAS detector at the LHC. Phys. Lett. B 716, 1–29 (2012). https://doi.org/10.1016/j.physletb.2012.08.020, arXiv:1207.7214

Abbott, T.M.C., Abdalla, F.B., Alarcon, A., Aleksić, J., Allam, S., Allen, S., Amara, A., Annis, J., Asorey, J., Avila, S., Bacon, D., Balbinot, E., Banerji, M., Banik, N., Barkhouse, W., Baumer, M., Baxter, E., Bechtol, K., Becker, M.R., Benoit-Lévy, A., Benson, B.A., Bernstein, G.M., Bertin, E., Blazek, J., Bridle, S.L., Brooks, D., Brout, D., Buckley-Geer, E., Burke, D.L., Busha, M.T., Campos, A., Capozzi, D., Carnero Rosell, A., Carrasco Kind, M., Carretero, J., Castander, F.J., Cawthon, R., Chang, C., Chen, N., Childress, M., Choi, A., Conselice, C., Crittenden, R., Crocce, M., Cunha, C.E., D’Andrea, C.B., da Costa, L.N., Das, R., Davis, T.M., Davis, C., De Vicente, J., DePoy, D.L., DeRose, J., Desai, S., Diehl, H.T., Dietrich, J.P., Dodelson, S., Doel, P., Drlica-Wagner, A., Eifler, T.F., Elliott, A.E., Elsner, F., Elvin-Poole, J., Estrada, J., Evrard, A.E., Fang, Y., Fernandez, E., Ferté, A., Finley, D.A., Flaugher, B., Fosalba, P., Friedrich, O., Frieman, J., García-Bellido, J., Garcia-Fernandez, M., Gatti, M., Gaztanaga, E., Gerdes, D.W., Giannantonio, T., Gill, M.S.S., Glazebrook, K., Goldstein, D.A., Gruen, D., Gruendl, R.A., Gschwend, J., Gutierrez, G., Hamilton, S., Hartley, W.G., Hinton, S.R., Honscheid, K., Hoyle, B., Huterer, D., Jain, B., James, D.J., Jarvis, M., Jeltema, T., Johnson, M.D., Johnson, M.W.G., Kacprzak, T., Kent, S., Kim, A.G., King, A., Kirk, D., Kokron, N., Kovacs, A., Krause, E., Krawiec, C., Kremin, A., Kuehn, K., Kuhlmann, S., Kuropatkin, N., Lacasa, F., Lahav, O., Li, T.S., Liddle, A.R., Lidman, C., Lima, M., Lin, H., MacCrann, N., Maia, M.A.G., Makler, M., Manera, M., March, M., Marshall, J.L., Martini, P., McMahon, R.G., Melchior, P., Menanteau, F., Miquel, R., Miranda, V., Mudd, D., Muir, J., Möller, A., Neilsen, E., Nichol, R.C., Nord, B., Nugent, P., Ogando, R.L.C., Palmese, A., Peacock, J., Peiris, H.V., Peoples, J., Percival, W.J., Petravick, D., Plazas, A.A., Porredon, A., Prat, J., Pujol, A., Rau, M.M., Refregier, A., Ricker, P.M., Roe, N., Rollins, R.P., Romer, A.K., Roodman, A., Rosenfeld, R., Ross, A.J., Rozo, E., Rykoff, E.S., Sako, M., Salvador, A.I., Samuroff, S., Sánchez, C., Sanchez, E., Santiago, B., Scarpine, V., Schindler, R., Scolnic, D., Secco, L.F., Serrano, S., Sevilla-Noarbe, I., Sheldon, E., Smith, R.C., Smith, M., Smith, J., Soares-Santos, M., Sobreira, F., Suchyta, E., Tarle, G., Thomas, D., Troxel, M.A., Tucker, D.L., Tucker, B.E., Uddin, S.A., Varga, T.N., Vielzeuf, P., Vikram, V., Vivas, A.K., Walker, A.R., Wang, M., Wechsler, R.H., Weller, J., Wester, W., Wolf, R.C., Yanny, B., Yuan, F., Zenteno, A., Zhang, B., Zhang, Y., Zuntz, J., Dark Energy Survey Collaboration: Dark Energy Survey year 1 results: Cosmological constraints from galaxy clustering and weak lensing. Phys. Rev. D 98(4), 043526 (2018). https://doi.org/10.1103/PhysRevD.98.043526, arXiv:1708.01530

Abdelhameed, A.H., et al.: First results from the CRESST-III low-mass dark matter program. arXiv:1904.00498 (2019)

Adelberger, E.G., Heckel, B.R., Nelson, A.E.: Tests of the gravitational inverse-square law. Annu. Rev. Nucl. Part. Sci. 53, 77–121 (2003). https://doi.org/10.1146/annurev.nucl.53.041002.110503, hep-ph/0307284

Adelberger, E.G., Gundlach, J.H., Heckel, B.R., Hoedl, S., Schlamminger, S.: Torsion balance experiments: A low-energy frontier of particle physics. Prog. Part. Nucl. Phys. 62(1), 102–134 (2009). https://doi.org/10.1016/j.ppnp.2008.08.002

Adhikari, G., et al.: An experiment to search for dark-matter interactions using sodium iodide detectors. Nature 564(7734), 83–86 (2018). https://doi.org/10.1038/s41586-018-0739-1, arXiv:1906.01791. Erratum: Nature 566(7742), E2 (2019). https://doi.org/10.1038/s41586-019-0890-3

Agnes, P., Albuquerque, I.F.M., Alexander, T., Alton, A.K., Araujo, G.R., Asner, D.M., Ave, M., Back, H.O., Baldin, B., Batignani, G.: Low-mass dark matter search with the DarkSide-50 experiment. Phys. Rev. Lett. 121(8), 081307 (2018). https://doi.org/10.1103/PhysRevLett.121.081307

Agnese, R., Anderson, A.J., Aramaki, T., Asai, M., Baker, W., Balakishiyeva, D., Barker, D., Basu Thakur, R., Bauer, D.A., Billard, J.: New results from the search for low-mass weakly interacting massive particles with the CDMS low ionization threshold experiment. Phys. Rev. Lett. 116(7), 071301 (2016). https://doi.org/10.1103/PhysRevLett.116.071301

Agnese, R., Aralis, T., Aramaki, T., Arnquist, I.J., Azadbakht, E., Baker, W., Banik, S., Barker, D., Bauer, D.A., Binder, T.: First dark matter constraints from a SuperCDMS single-charge sensitive detector. Phys. Rev. Lett. 121(5), 051301 (2018). https://doi.org/10.1103/PhysRevLett.121.051301, arXiv:1804.10697

Ajaj, R., Amaudruz, P.A., Araujo, G.R., Baldwin, M., Batygov, M., Beltran, B., Bina, C.E., Bonatt, J., Boulay, M.G., Broerman, B.: Search for dark matter with a 231-day exposure of liquid argon using DEAP-3600 at SNOLAB. arXiv:1902.04048 (2019)

Akerib, D.S., Alsum, S., Araújo, H.M., Bai, X., Bailey, A.J., Balajthy, J., Beltrame, P., Bernard, E.P., Bernstein, A., Biesiadzinski, T.P.: Results from a search for dark matter in the complete LUX exposure. Phys. Rev. Lett. 118(2), 021303 (2017). https://doi.org/10.1103/PhysRevLett.118.021303, arXiv:1608.07648

Anderson, J.D., Laing, P.A., Lau, E.L., Liu, A.S., Nieto, M.M., Turyshev, S.G.: Indication, from Pioneer 10/11, Galileo, and Ulysses data, of an apparent anomalous, weak, long-range acceleration. Phys. Rev. Lett. 81, 2858–2861 (1998). https://doi.org/10.1103/PhysRevLett.81.2858, arXiv:gr-qc/9808081

Anderson, J.D., Laing, P.A., Lau, E.L., Liu, A.S., Nieto, M.M., Turyshev, S.G.: Study of the anomalous acceleration of Pioneer 10 and 11. Phys. Rev. D 65(8), 082004 (2002). https://doi.org/10.1103/PhysRevD.65.082004, arXiv:gr-qc/0104064

Aprile, E., Aalbers, J., Agostini, F., Alfonsi, M., Amaro, F.D., Anthony, M., Arneodo, F., Barrow, P., Baudis, L., Bauermeister, B.: XENON100 dark matter results from a combination of 477 live days. Phys. Rev. D 94(12), 122001 (2016). https://doi.org/10.1103/PhysRevD.94.122001, arXiv:1609.06154

Aprile, E., Aalbers, J., Agostini, F., Alfonsi, M., Althueser, L., Amaro, F.D., Anthony, M., Arneodo, F., Baudis, L., Bauermeister, B.: Dark matter search results from a one ton-year exposure of XENON1T. Phys. Rev. Lett. 121(11), 111302 (2018). https://doi.org/10.1103/PhysRevLett.121.111302, arXiv:1805.12562

Armano, M., Audley, H., Auger, G., Baird, J.T., Bassan, M., Binetruy, P., Born, M., Bortoluzzi, D., Brandt, N., Caleno, M., Carbone, L., Cavalleri, A., Cesarini, A., Ciani, G., Congedo, G., Cruise, A.M., Danzmann, K., de Deus Silva, M., De Rosa, R., Diaz-Aguiló, M., Di Fiore, L., Diepholz, I., Dixon, G., Dolesi, R., Dunbar, N., Ferraioli, L., Ferroni, V., Fichter, W., Fitzsimons, E.D., Flatscher, R., Freschi, M., García Marín, A.F., García Marirrodriga, C., Gerndt, R., Gesa, L., Gibert, F., Giardini, D., Giusteri, R., Guzmán, F., Grado, A., Grimani, C., Grynagier, A., Grzymisch, J., Harrison, I., Heinzel, G., Hewitson, M., Hollington, D., Hoyland, D., Hueller, M., Inchauspé, H., Jennrich, O., Jetzer, P., Johann, U., Johlander, B., Karnesis, N., Kaune, B., Korsakova, N., Killow, C.J., Lobo, J.A., Lloro, I., Liu, L., López-Zaragoza, J.P., Maarschalkerweerd, R., Mance, D., Martín, V., Martin-Polo, L., Martino, J., Martin-Porqueras, F., Madden, S., Mateos, I., McNamara, P.W., Mendes, J., Mendes, L., Monsky, A., Nicolodi, D., Nofrarias, M., Paczkowski, S., Perreur-Lloyd, M., Petiteau, A., Pivato, P., Plagnol, E., Prat, P., Ragnit, U., Raïs, B., Ramos-Castro, J., Reiche, J., Robertson, D.I., Rozemeijer, H., Rivas, F., Russano, G., Sanjuán, J., Sarra, P., Schleicher, A., Shaul, D., Slutsky, J., Sopuerta, C.F., Stanga, R., Steier, F., Sumner, T., Texier, D., Thorpe, J.I., Trenkel, C., Tröbs, M., Tu, H.B., Vetrugno, D., Vitale, S., Wand, V., Wanner, G., Ward, H., Warren, C., Wass, P.J., Wealthy, D., Weber, W.J., Wissel, L., Wittchen, A., Zambotti, A., Zanoni, C., Ziegler, T., Zweifel, P.: Sub-Femto-g Free Fall for space-based gravitational wave observatories: LISA Pathfinder results. Phys. Rev. Lett. 116(23), 231101 (2016). https://doi.org/10.1103/PhysRevLett.116.231101

Arvanitaki, A., Huang, J., Van Tilburg, K.: Searching for dilaton dark matter with atomic clocks. Phys. Rev. D 91(1), 015015 (2015). https://doi.org/10.1103/PhysRevD.91.015015, arXiv:1405.2925

Baker, T., Psaltis, D., Skordis, C.: Linking tests of gravity on all scales: from the strong-field regime to cosmology. Astrophys. J. 802(1), 63 (2015). https://doi.org/10.1088/0004-637X/802/1/63, arXiv:1412.3455

Banik, I., Kroupa, P.: Testing gravity with interstellar precursor missions. Mon. Not. R. Astron. Soc. 487(2), 2665–2672 (2019). https://doi.org/10.1093/mnras/stz1508, arXiv:1907.00006

Barreira, A., Brax, P., Clesse, S., Li, B., Valageas, P.: K-mouflage gravity models that pass Solar System and cosmological constraints. Phys. Rev. D 91(12), 123522 (2015). https://doi.org/10.1103/PhysRevD.91.123522, arXiv:1504.01493

Baudis, L.: Direct dark matter detection: The next decade. Phys. Dark Universe 1, 94–108 (2012). https://doi.org/10.1016/j.dark.2012.10.006, arXiv:1211.7222

Baudis, L.: Dark matter searches. Ann. Phys. 528(1-2), 74–83 (2016). https://doi.org/10.1002/andp.201500114, arXiv:1509.00869

Bergé, J.: ISLAND: the Inverse Square Law And Newtonian Dynamics space explorer. In: Proceedings of the 52nd Rencontres de Moriond in Gravitation, vol. 52, p. 191 (2017). arXiv:1809.00698

Bergé, J., Brax, P., Métris, G., Pernot-Borràs, M., Touboul, P., Uzan, J.P.: MICROSCOPE Mission: First constraints on the violation of the weak equivalence principle by a light scalar Dilaton. Phys. Rev. Lett. 120(14), 141101 (2018a). https://doi.org/10.1103/PhysRevLett.120.141101, arXiv:1502.03167, arXiv:1712.00483

Bergé, J., Brax, P., Pernot-Borràs, M., Uzan, J.P.: Interpretation of geodesy experiments in non-Newtonian theories of gravity. Classical and Quantum Gravity 35(23), 234001 (2018). https://doi.org/10.1088/1361-6382/aae9a1, arXiv:1808.00340

Bernabei, R., Belli, P., Cappella, F., Cerulli, R., Dai, C.J., D’Angelo, A., He, H.L., Incicchitti, A., Kuang, H.H., Ma, J.M., Montecchia, F., Nozzoli, F., Prosperi, D., Sheng, X.D., Ye, Z.P.: First results from DAMA/LIBRA and the combined results with DAMA/NaI. European Phys. J. C 56, 333 (2008). https://doi.org/10.1140/epjc/s10052-008-0662-y, arXiv:0804.2741

Bernabei, R., Belli, P., Bussolotti, A., Cappella, F., Caracciolo, V., Cerulli, R., Dai, C.J., d’Angelo, A., Di Marco, A., He, H.L.: First model independent results from DAMA/LIBRA-Phase2. Universe 4(11), 116 (2018). https://doi.org/10.3390/universe4110116, arXiv:1805.10486

Bertotti, B., Iess, L., Tortora, P.: A test of general relativity using radio links with the Cassini spacecraft. Nature 425(6956), 374–376 (2003). https://doi.org/10.1038/nature01997

Bienaymé, O., Famaey, B., Siebert, A., Freeman, K.C., Gibson, B.K., Gilmore, G., Grebel, E.K., Bland-Hawthorn, J., Kordopatis, G., Munari, U., Navarro, J.F., Parker, Q., Reid, W., Seabroke, G.M., Siviero, A., Steinmetz, M., Watson, F., Wyse, R.F.G., Zwitter, T.: Weighing the local dark matter with RAVE red clump stars. Astron. Astrophys. 571, A92 (2014). https://doi.org/10.1051/0004-6361/201424478, arXiv:1406.6896

Bovy, J., Rix, H.W.: A direct dynamical measurement of the Milky Way’s disk surface density profile, disk scale length, and dark matter profile at 4 kpc ≲ R ≲ 9 kpc. Astrophys. J. 779(2), 115 (2013). https://doi.org/10.1088/0004-637X/779/2/115, arXiv:1309.0809

Bovy, J., Tremaine, S.: On the local dark matter density. Astrophys. J. 756, 89 (2012). https://doi.org/10.1088/0004-637X/756/1/89, arXiv:1205.4033

Boyarsky, A., Drewes, M., Lasserre, T., Mertens, S., Ruchayskiy, O.: Sterile neutrino dark matter. Prog. Part. Nucl. Phys. 104, 1–45 (2019). https://doi.org/10.1016/j.ppnp.2018.07.004, arXiv:1807.07938

Bozorgnia, N., Calore, F., Schaller, M., Lovell, M., Bertone, G., Frenk, C.S., Crain, R.A., Navarro, J.F., Schaye, J., Theuns, T.: Simulated Milky Way analogues: implications for dark matter direct searches. J. Cosmol. Astropart. Phys. 2016(5), 024 (2016). https://doi.org/10.1088/1475-7516/2016/05/024, arXiv:1601.04707

Brax, P., van de Bruck, C., Davis, A.C., Shaw, D.: Dilaton and modified gravity. Phys. Rev. D 82(6), 063519 (2010). https://doi.org/10.1103/PhysRevD.82.063519, arXiv:1005.3735

Brax, P., Rizzo, L.A., Valageas, P.: K-mouflage effects on clusters of galaxies. Phys. Rev. D 92(4), 043519 (2015). https://doi.org/10.1103/PhysRevD.92.043519, arXiv:1505.05671

Brown, M.E.: The Compositions of Kuiper Belt Objects. Annu. Rev. Earth Planet. Sci. 40, 467–494 (2012). https://doi.org/10.1146/annurev-earth-042711-105352, arXiv:1112.2764

Buch, J., Leung, S.C.J., Fan, J.: Using Gaia DR2 to constrain local dark matter density and thin dark disk. J. Cosmol. Astropart. Phys. 2019(4), 026 (2019). https://doi.org/10.1088/1475-7516/2019/04/026, arXiv:1808.05603

Buscaino, B., DeBra, D., Graham, P.W., Gratta, G., Wiser, T.D.: Testing long-distance modifications of gravity to 100 astronomical units. Phys. Rev. D 92(10), 104048 (2015). https://doi.org/10.1103/PhysRevD.92.104048, arXiv:1508.06273

Carroll, S.M.: The cosmological constant. Living Rev. Relativ. 4, 1 (2001). https://doi.org/10.12942/lrr-2001-1, arXiv:astro-ph/0004075

Chang, C., Pujol, A., Mawdsley, B., Bacon, D., Elvin-Poole, J., Melchior, P., Kovács, A., Jain, B., Leistedt, B., Giannantonio, T.: Dark Energy Survey Year 1 results: curved-sky weak lensing mass map. Mon. Not. R. Astron. Soc. 475(3), 3165–3190 (2018). https://doi.org/10.1093/mnras/stx3363, arXiv:1708.01535

Chatrchyan, S., Khachatryan, V., Sirunyan, A.M., Tumasyan, A., Adam, W., Aguilo, E., Bergauer, T., Dragicevic, M., Erö, J., Fabjan, C., et al.: Observation of a new boson at a mass of 125 GeV with the CMS experiment at the LHC. Phys. Lett. B 716, 30–61 (2012). https://doi.org/10.1016/j.physletb.2012.08.021, arXiv:1207.7235

Christophe, B., Andersen, P.H., Anderson, J.D., Asmar, S., Bério, P., Bertolami, O., Bingham, R., Bondu, F., Bouyer, P., Bremer, S., Courty, J.-M., Dittus, H., Foulon, B., Gil, P., Johann, U., Jordan, J.F., Kent, B., Lämmerzahl, C., Lévy, A., Métris, G., Olsen, O., Pàramos, J., Prestage, J.D., Progrebenko, S.V., Rasel, E., Rathke, A., Reynaud, S., Rievers, B., Samain, E., Sumner, T.J., Theil, S., Touboul, P., Turyshev, S., Vrancken, P., Wolf, P., Yu, N.: Odyssey: a solar system mission. Exp. Astron. 23, 529–547 (2009). https://doi.org/10.1007/s10686-008-9084-y, arXiv:0711.2007

Ciufolini, I., Paolozzi, A., Koenig, R., Pavlis, E.C., Ries, J., Matzner, R., Gurzadyan, V., Penrose, R., Sindoni, G., Paris, C.: Fundamental Physics and General Relativity with the LARES and LAGEOS satellites. Nucl. Phys. B Proc. Suppl. 243, 180–193 (2013). https://doi.org/10.1016/j.nuclphysbps.2013.09.005, arXiv:1309.1699

Clowe, D., Bradač, M., Gonzalez, A.H., Markevitch, M., Randall, S.W., Jones, C., Zaritsky, D.: A Direct Empirical Proof of the Existence of Dark Matter. Astrophys. J. 648, L109–L113 (2006). https://doi.org/10.1086/508162, arXiv:astro-ph/0608407

Cui, X., Abdukerim, A., Chen, W., Chen, X., Chen, Y., Dong, B., Fang, D., Fu, C., Giboni, K., Giuliani, F.: Dark matter results from 54-ton-day exposure of PandaX-II experiment. Phys. Rev. Lett. 119(18), 181302 (2017). https://doi.org/10.1103/PhysRevLett.119.181302

Damour, T., Donoghue, J.F.: Equivalence principle violations and couplings of a light dilaton. Phys. Rev. D 82(8), 084033 (2010a). https://doi.org/10.1103/PhysRevD.82.084033, arXiv:1007.2792

Damour, T., Donoghue, J.F.: FAST TRACK COMMUNICATION: Phenomenology of the equivalence principle with light scalars. Classical and Quantum Gravity 27(20), 202001 (2010b). https://doi.org/10.1088/0264-9381/27/20/202001, arXiv:1007.2790

Damour, T., Esposito-Farese, G.: Tensor-multi-scalar theories of gravitation. Classical and Quantum Gravity 9, 2093–2176 (1992). https://doi.org/10.1088/0264-9381/9/9/015

Delva, P., Hees, A., Wolf, P.: Clocks in space for tests of fundamental physics. Space Sci. Rev. 212(3-4), 1385–1421 (2017). https://doi.org/10.1007/s11214-017-0361-9

Delva, P., Puchades, N., Schönemann, E., Dilssner, F., Courde, C., Bertone, S., Gonzalez, F., Hees, A., Le Poncin-Lafitte, C., Meynadier, F., Prieto-Cerdeira, R., Sohet, B., Ventura-Traveset, J., Wolf, P.: Gravitational redshift test using eccentric Galileo satellites. Phys. Rev. Lett. 121(23), 231101 (2018). https://doi.org/10.1103/PhysRevLett.121.231101, arXiv:1812.03711

Derevianko, A., Pospelov, M.: Hunting for topological dark matter with atomic clocks. Nat. Phys. 10, 933–936 (2014). https://doi.org/10.1038/nphys3137, arXiv:1311.1244

Essig, R., Mardon, J., Volansky, T.: Direct detection of sub-GeV dark matter. Phys. Rev. D 85(7), 076007 (2012). https://doi.org/10.1103/PhysRevD.85.076007, arXiv:1108.5383

Evans, N.W., O’Hare, C.A.J., McCabe, C.: Refinement of the standard halo model for dark matter searches in light of the Gaia Sausage. Phys. Rev. D 99(2), 023012 (2019). https://doi.org/10.1103/PhysRevD.99.023012, arXiv:1810.11468

Fayet, P.: MICROSCOPE limits on the strength of a new force with comparisons to gravity and electromagnetism. Phys. Rev. D 99(5), 055043 (2019). https://doi.org/10.1103/PhysRevD.99.055043, arXiv:1809.04991

Fienga, A., Laskar, J., Kuchynka, P., Le Poncin-Lafitte, C., Manche, H., Gastineau, M.: Gravity tests with INPOP planetary ephemerides. In: Klioner, S.A., Seidelmann, P.K., Soffel, M.H. (eds.) Relativity in Fundamental Astronomy: Dynamics, Reference Frames, and Data Analysis, IAU Symposium, vol. 261, pp. 159–169 (2010). https://doi.org/10.1017/S1743921309990330, arXiv:0906.3962

Fischbach, E., Talmadge, C.L.: The Search for Non-Newtonian Gravity (1999)

Garbari, S., Liu, C., Read, J.I., Lake, G.: A new determination of the local dark matter density from the kinematics of K dwarfs. Mon. Not. R. Astron. Soc. 425(2), 1445–1458 (2012). https://doi.org/10.1111/j.1365-2966.2012.21608.x, arXiv:1206.0015

Green, A.M.: Astrophysical uncertainties on the local dark matter distribution and direct detection experiments. J. Phys. G Nuclear Phys. 44(8), 084001 (2017). https://doi.org/10.1088/1361-6471/aa7819, arXiv:1703.10102

Hees, A., Lamine, B., Reynaud, S., Jaekel, M.T., Le Poncin-Lafitte, C., Lainey, V., Füzfa, A., Courty, J.M., Dehant, V., Wolf, P.: Radioscience simulations in general relativity and in alternative theories of gravity. Classical and Quantum Gravity 29(23), 235027 (2012). https://doi.org/10.1088/0264-9381/29/23/235027, arXiv:1201.5041

Hees, A., Guéna, J., Abgrall, M., Bize, S., Wolf, P.: Searching for an oscillating massive scalar field as a dark matter candidate using atomic hyperfine frequency comparisons. Phys. Rev. Lett. 117(6), 061301 (2016). https://doi.org/10.1103/PhysRevLett.117.061301, arXiv:1604.08514

Hees, A., Do, T., Ghez, A.M., Martinez, G.D., Naoz, S., Becklin, E.E., Boehle, A., Chappell, S., Chu, D., Dehghanfar, A., Kosmo, K., Lu, J.R., Matthews, K., Morris, M.R., Sakai, S., Schödel, R., Witzel, G.: Testing general relativity with stellar orbits around the supermassive black hole in our galactic center. Phys. Rev. Lett. 118(21), 211101 (2017). https://doi.org/10.1103/PhysRevLett.118.211101, arXiv:1705.07902

Helmi, A., Babusiaux, C., Koppelman, H.H., Massari, D., Veljanoski, J., Brown, A.G.A.: The merger that led to the formation of the Milky Way’s inner stellar halo and thick disk. Nature 563(7729), 85–88 (2018). https://doi.org/10.1038/s41586-018-0625-x, arXiv:1806.06038

Herrmann, S., Finke, F., Lülf, M., Kichakova, O., Puetzfeld, D., Knickmann, D., List, M., Rievers, B., Giorgi, G., Günther, C., Dittus, H., Prieto-Cerdeira, R., Dilssner, F., Gonzalez, F., Schönemann, E., Ventura-Traveset, J., Lämmerzahl, C.: Test of the gravitational redshift with Galileo satellites in an eccentric orbit. Phys. Rev. Lett. 121(23), 231102 (2018). https://doi.org/10.1103/PhysRevLett.121.231102, arXiv:1812.09161

Hinterbichler, K., Khoury, J.: Screening long-range forces through local symmetry restoration. Phys. Rev. Lett. 104(23), 231301 (2010). https://doi.org/10.1103/PhysRevLett.104.231301, arXiv:1001.4525

Iorio, L.: Constraints on a Yukawa gravitational potential from laser data of LAGEOS satellites. Phys. Lett. A 298, 315–318 (2002). https://doi.org/10.1016/S0375-9601(02)00580-7, arXiv:gr-qc/0201081

Ishak, M.: Testing general relativity in cosmology. Living Rev. Relativ. 22(1), 1 (2019). https://doi.org/10.1007/s41114-018-0017-4, arXiv:1806.10122

Jaekel, M.T., Reynaud, S.: Gravity tests in the solar system and the Pioneer anomaly. Modern Phys. Lett. A 20, 1047–1055 (2005). https://doi.org/10.1142/S0217732305017275, arXiv:gr-qc/0410148

Jain, B., Khoury, J.: Cosmological tests of gravity. Ann. Phys. 325(7), 1479–1516 (2010). https://doi.org/10.1016/j.aop.2010.04.002, arXiv:1004.3294