Abstract

Despite the accuracy of machine learning (ML) techniques in predicting invalid bug reports, as shown in earlier research, and the importance of early identification of invalid bug reports in software maintenance, the adoption of ML techniques for this task in industrial practice is yet to be investigated. In this study, we used a technology transfer model to guide the adoption of an ML technique at a company for the early identification of invalid bug reports. In the process, we also identify necessary conditions for adopting such techniques in practice. We followed a case study research approach with various design and analysis iterations for technology transfer activities. We collected data from bug repositories, through focus groups, a questionnaire, and a presentation and feedback session with an expert. As expected, we found that an ML technique can identify invalid bug reports with acceptable accuracy at an early stage. However, the technique’s accuracy drops over time in its operational use due to changes in the product, the used technologies, or the development organization. Such changes may require retraining the ML model. During validation, practitioners highlighted the need to understand the ML technique’s predictions to trust the predictions. We found that a visual (using a state-of-the-art ML interpretation framework) and descriptive explanation of the prediction increases the trustability of the technique compared to just presenting the results of the validity predictions. We conclude that trustability, integration with the existing toolchain, and maintaining the techniques’ accuracy over time are critical for increasing the likelihood of adoption.


1 Introduction

Users of software products report undesired behaviors of the products as bug reports. Companies address these bug reports to improve their products. The number of such bug reports may be high for large products. For instance, Mozilla received 224,408 bug reports in 12 months, and Firefox received 126,967 bug reports in 18 months (Fan et al. 2018). However, submitted bug reports may be invalid. Invalid bug reports are bug reports that do not describe deviations from the defined behavior of a system and are often related to misunderstandings of the system’s functionality (Sun 2011). For Mozilla and Firefox, Fan et al. (2018) found that 31% and 77%, respectively, of the submitted bug reports were invalid.

When developers are assigned a bug report, they need to investigate if a corrective action in the code is required. To arrive at a suitable action, they need to understand the issue together with other stakeholders. This involves replication of the issue, consulting product documentation, or discussions with product owners and bug report authors. When a bug report assigned to a developer turns out to be invalid, a lot of resources have already been spent unnecessarily on its investigation. In Laiq et al. (2022), we found that 14–17% of bug reports assigned to developers turn out to be invalid. Automatically determining the likely validity of a bug report before assignment to developers may reduce the effort significantly.

Several studies (Zanetti et al. 2013; Fan et al. 2018) have used ML and deep learning (He et al. 2020) techniques for predicting the validity of bug reports with high predictive accuracy. However, these studies do not involve practitioners, use only archival bug report data from open-source software systems, and do not conduct evaluations in real settings. Moreover, the proposed techniques have not yet been integrated into bug management processes or practices.

Despite the potential advantages of early identification of invalid bug reports in practice, the adoption of the proposed techniques in practice is yet to be investigated. Thus, in this study, we use a technology transfer model (Gorschek et al. 2006) to guide the adoption of an ML technique for the early identification of invalid bug reports in practice.

There may be several factors that affect the adoption of ML techniques (Rana et al. 2014b; Paleyes et al. 2022). In our previous work (Laiq et al. 2022), we found that it is critical for practitioners to understand the prediction results of the ML technique. However, the explainability aspect of ML techniques to determine the validity of bug reports has not been studied. Thus, in this study, we investigate how the explainability of ML techniques for early identification of invalid bug reports can be supported by means of current approaches for interpreting the predictions of ML techniques.

Another important factor for adoption is an ML technique’s performance over time. When systems evolve over time, so will the bug reports since existing issues will be solved, new features will be added, and usage patterns will change. The performance of an ML technique will likely degrade when underlying concepts in the data change; this variation is known as concept drift (Ekanayake et al. 2012). Earlier research on bug prediction has indicated that the data used for bug prediction suffers from concept drift (Bennin et al. 2020; Kabir et al. 2020). In our study, this issue became apparent when the deployed model in the company could not match the accuracy level we achieved on archival data from the company. Thus, we decided to investigate the reasons for this mismatch. After ruling out any implementation issue that may explain the poor performance, we investigated whether concept drift may explain the mismatch.

In this paper, we present an industrial case study to evaluate the adoption of an ML technique in real industrial settings for the early identification of invalid bug reports. Our study provides new insights into the adoption of ML techniques in proprietary contexts as we go beyond the prediction accuracy achieved on archived data, which has been the case mainly in previous work (Fan et al. 2018; He et al. 2020).

The outline of this paper is as follows. Section 2 describes the related work on determining the validity of bug reports and adopting ML techniques in software development practice. We also describe how the current paper extends our previous work on the topic. Sections 3 and 4 present the research method of our study, i.e., the case description, data collection, and design and analysis iteration for each research question. In Section 5, we present the results of our study. In Section 6, we discuss our findings. Section 7 describes the validity threats to our study. Finally, in Section 8, we conclude the paper.

2 Related Work

In this section, we discuss the related work on the validity of bug reports and the adoption of ML techniques in software development practice and describe how we extend our previous work on the topic.

2.1 Bug Report Validity

Few studies have investigated the validity of bug reports. The studies most relevant to our work (Zanetti et al. 2013; Fan et al. 2018; He et al. 2020) are described below.

Zanetti et al. (2013) construct a collaboration graph capturing the connections between people working on a defect’s resolution. They use two fields of bug reports from Mozilla Firefox, Mozilla Thunderbird, Eclipse, and Netbeans projects to identify a connection: (1) the field listing to whom a bug report is assigned and (2) the field listing other people who are tagged as relevant for the bug report resolution. From the resulting graphs, nine features (e.g., the clusters of highly connected people) were extracted and used to predict the validity of bug reports using a Support Vector Machine (SVM) classifier.

Building on the technique of Zanetti et al. (2013), Fan et al. (2018) complemented the graph-based features with features extracted from the bug report’s text and the submitter’s past experience. They used 33 features as input to SVM and Random Forest classifiers for predicting the validity of bug reports. Their technique considerably improved the prediction accuracy for invalid bug reports compared to Zanetti et al. (2013).

A shared limitation of the techniques by Zanetti et al. (2013) and Fan et al. (2018) is that the features required for prediction might not be available in the early stages of bug management. He et al. (2020) found that the fields required for creating the collaboration graph of Zanetti et al. (2013) were unavailable for a newly submitted bug report even after three days. Similarly, Fan et al. (2018) extract collaboration-related features from comments on bug reports, which are unavailable for a newly submitted bug report. Thus, these two prediction techniques cannot be used in practice in the early stages of bug management (He et al. 2020).

Therefore, He et al. (2020) only used summaries and descriptions of the bug reports. They applied a deep learning technique, a convolutional neural network (CNN), in the open-source software (OSS) context. Their technique improved the accuracy of invalid bug report prediction compared to the technique of Fan et al. (2018). However, their technique may be prone to overfitting since they split data into training and testing sets comprising a fixed number of bug reports instead of using incremental folds for evaluation. Furthermore, their technique could not outperform the technique of Fan et al. (2018) for all projects.

The studies described above mainly focus on predictive accuracy, do not involve practitioners, only use archival bug reports, and do not conduct evaluations through use in real-world settings. In this study, we use the technology transfer model of Gorschek et al. (2006) to guide the adoption of an ML technique in practice for the early identification of invalid bug reports, which involves various validation steps. Furthermore, we elicit practitioners’ adoption concerns before and after the use of an ML-based tool through the technology adoption framework of Rana et al. (2014b).

2.2 Adoption of ML-Techniques in Software Development Practice

Several studies have focused on adopting ML techniques in practice to assist practitioners in software maintenance tasks such as bug assignment and prediction (Oliveira et al. 2021; Borg et al. 2022; Rana et al. 2014a; Aktas and Yilmaz 2020). However, to the best of our knowledge, no study has investigated the adoption of an ML technique in practice for the early identification of invalid bug reports.

A recent survey by Paleyes et al. (2022) reviewed case studies and experience reports on the industrial adoption of ML techniques. They identified various challenges, such as the explainability of the ML technique’s predictions, computational cost, integration, and updating of the ML model to handle concept drift. Rana et al. (2014b) identified similar adoption concerns of practitioners for ML techniques in the context of bug prediction. Moreover, Rana et al. (2014b) proposed a framework for the adoption of ML techniques in industry.

In this study, we use the framework of Rana et al. (2014b) to identify the adoption concerns of practitioners regarding the ML technique for the early identification of invalid bug reports. We further investigate solutions to address the challenges of explainability and concept drift, which were observed when ML techniques were used at the case company.

2.3 Extension of Our Previous Work

This paper significantly extends our previous work (Laiq et al. 2022), where we: (i) quantified and characterized the problem of invalid bug reports in a closed-source context, (ii) evaluated various ML techniques for predicting invalid bug reports using bug reports from an industrial setting, (iii) performed an initial validation of the applied ML technique with practitioners in industry (without the practitioners using the technique in operation), and (iv) identified the practitioners’ adoption concerns for the ML technique using a technology adoption framework.

In this work, we (v) considered additional techniques, including XGBoost, CNN, and Bidirectional Encoder Representations from Transformers (BERT), (vi) explored additional features to improve the accuracy of the ML techniques, (vii) conducted an initial in-use evaluation of the applied ML technique in an industrial setting (by having practitioners use the ML technique in operations to predict the validity of newly arriving bug reports), (viii) addressed the explainability concern of practitioners regarding the ML technique, and (ix) investigated the implications of concept drift in the bug reports data that we use to train ML techniques for early identification of invalid bug reports.

Furthermore, we extend the work (i)–(iv) with additional data from the two products (P1, P2) used in our previous work, with data from a new product (P3), and with more responses for identifying the adoption concerns of practitioners (34 responses, compared to only three previously).

Fig. 1 An overview of industrial problems/challenges, activities, and research questions matched with the technology transfer model by Gorschek et al. (2006)

3 Research Method

The study’s overall goal is to investigate the industrial adoption of an ML technique for the early identification of invalid bug reports.

To achieve this goal, we followed a technology transfer model (Gorschek et al. 2006) to introduce the use of ML techniques at a company. The model guided the engagement and collaboration with the case company (see Fig. 1). The iterative process it recommends has several confidence-building steps to increase the buy-in from the practitioners. Several design and analysis iterations were conducted during this collaboration with the case company. In these iterations, we followed the case study research guidelines (Runeson and Höst 2009) to answer the following research questions:

- RQ 1: What are the characteristics of invalid bug reports in a closed-source context? This research question aims to quantify and characterize the problem of invalid bug reports in the case company (step 1 in Fig. 1). We assessed the amount, lead time, priority, severity, origins, and handling of the bug reports. Additionally, we also aim to collect practitioners’ opinions regarding (a) the importance of early identification of invalid bug reports, (b) the importance of the tool support for early identification of invalid bug reports, and (c) the potential use cases of the tool support for identifying invalid bug reports.

- RQ 2: How effective are existing techniques for predicting the validity of bug reports in a large-scale closed-source context? This research question aims to identify ML techniques that yield the most promising results in a closed-source context (step 3 in Fig. 1). We investigate the accuracy of various ML techniques for predicting the validity of bug reports using past bug reports from several products at the case company. Furthermore, for one of the three products used in this study, the practitioners use an ML technique to predict the validity of newly submitted bug reports in live operation (step 6 in Fig. 1).

- RQ 3: What factors are important for practitioners when deciding to adopt an ML technique for predicting the validity of bug reports? This research question aims to identify important factors practitioners are concerned about while deciding to adopt an ML technique for determining invalid bug reports (step 5 in Fig. 1).

- RQ 4: How can we support practitioners in interpreting the predictions of bug report validity? In this research question, we explore how current approaches for explaining the prediction results of ML techniques may help practitioners understand and trust the predictions made by ML techniques regarding the validity of bug reports. The question became apparent during the initial validation of the chosen ML technique at the case company (i.e., step 5 in Fig. 1).

- RQ 5: How large an issue is concept drift in bug reports data used for predicting the validity of bug reports? In this research question, we aim to investigate the concept drift (Bennin et al. 2020) in bug reports data and how it may affect the ML techniques for predicting the validity of bug reports. The question became apparent during the operational use of the chosen ML technique (i.e., step 6 in Fig. 1).

3.1 Study Context

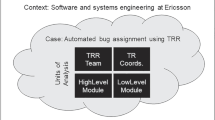

Figure 2 presents the context, case, and units of analysis (Runeson and Höst 2009). The case company is a large telecommunication vendor in Sweden, developing and maintaining large software products. It employs many individuals across multiple nations and specializes in developing embedded systems within the Information and Communications Technology (ICT) field. It uses a highly standardized development approach (e.g., it is TL9000 and ISO 9001 certified), and its products comply with several standards set forth by IEEE, 3GPP, and ITU. The development teams use agile practices and principles. The majority of the code at the company is written in C++, JavaScript, and Java.

At the case company, bug reports are filed from different sources, such as customers, testers, and developers. The change control board then reviews and assigns the submitted bug reports to a relevant development team. In the next step, developers use the submitted information to recreate the issue, identify root causes, and fix issues. However, in many cases, bug reports do not describe erroneous system behavior, i.e., issues in the code. Such bug reports are resolved as invalid.

3.2 Operational Definition of Invalid Bug Reports

Similar to prior work (Zanetti et al. 2013; Fan et al. 2018; He et al. 2020), we defined invalid bug reports according to the working definition at the case company. Generally, invalid bug reports are bug reports that do not describe deviations from the defined behavior of a system. At the case company, invalid bug reports have one of the following resolution labels: no such requirements exist, configuration issues, misunderstanding of functionality, future requirements, and insufficient information.

Examples of valid and invalid bug reports: Below, we briefly discuss examples of valid and invalid bug reports reported in Bugzilla:

- Invalid bug report (https://bugzilla.mozilla.org/show_bug.cgi?id=1702998). Heading: “Bookmarks Panel gets wide when opening the folder tree.” See the detailed description in Fig. 3. The description states that there is an issue with the bookmarks panel, i.e., “the panel should grow vertically to accommodate the folder tree, but not horizontally.” However, the answer to the submitted bug report clarifies that it is an expected behavior. Thus, the bug report is resolved as invalid. This issue might have been submitted due to a misunderstanding of the system functionality or a lack of knowledge about the feature.

- Invalid bug report (https://bugzilla.mozilla.org/show_bug.cgi?id=1392584). Heading: “The hover state of the search field should match the spec.” See the detailed description in Fig. 4. The description states an issue with the hover state of the search field, as it does not match the specification. However, the answer to the bug report clarifies that this is not a bug (i.e., it is an expected behavior) but an issue with specification (i.e., documentation), and it will be updated. Eventually, the bug report was resolved as invalid because it described an issue with the documentation, not the code.

- Invalid bug report (https://bugzilla.mozilla.org/show_bug.cgi?id=1421432). Heading: “Imported bookmarks don’t show up in Highlights.” See the detailed description in Fig. 5. The description states an issue with the highlights for imported bookmarks. However, the answer to the submitted bug report clarifies that the bug is invalid; we actually get bookmarks, except for Safari, i.e., for Safari, we only import bookmarks, no history, so no highlights will show up. This issue might have been submitted due to a misunderstanding of the system functionality or a lack of knowledge about the feature.

- Valid bug report (https://bugzilla.mozilla.org/show_bug.cgi?id=1719539). Heading: “Pocket button can crash the browser in debug mode due to csp in about:pocket-saved.” See the detailed description in Fig. 6. The description states an issue with the pocket button, i.e., the pocket button can crash the browser in debug mode due to csp in about:pocket-saved. The submitted bug report provides detailed steps for reproducing the bug and also suggests a solution to fix the issue. In response, the suggested solution is used to solve the issue, and finally, the bug is fixed with a status of “fixed” as the bug report is valid.

3.3 Study Participants, Investigated Products and Survey Participants

3.3.1 Study Participants

This study is conducted with the active collaboration of practitioners from the case company. We collected their feedback at various stages of the adopted technology transfer model (see Fig. 1) using focus group meetings, a questionnaire, and a presentation and feedback session with an expert. The profiles of the study participants are summarized in Table 1.

3.3.2 Studied Products

In this study, we investigated three products (P1, P2, and P3) of the case company. Detailed information about investigated products is shown in Table 2.

3.3.3 Survey Participants

We used convenience sampling to recruit respondents for the survey. In addition to the respondents from the case company, other respondents were invited from our contact networks. These respondents were asked to recruit more respondents. Using convenience sampling, we received 34 responses, of which 12 belonged to the case company.

As shown in Fig. 7(a), the participants of the survey are highly experienced in software development with diverse roles (e.g., developers and project managers, see Fig. 7(d)). The participants belong to companies of varying sizes (i.e., small, medium, and large based on OECD (2023) criteria, see Fig. 7(b)), and most of them (28 out of 34) are familiar with ML for various reasons, e.g., they have tried ML using existing libraries (e.g., scikit-learn) in previous projects, see Fig. 7(c).

3.4 Data Collection

We collected data at various stages of the technology transfer model (see Fig. 1). Table 3 shows the collected data and its mapping to each research question (indicating the usage of data in answering questions). Furthermore, we also collected and reviewed the bug management-related documentation to understand how bug reports are managed at the case company.

4 Design and Analysis Iterations

For each of the five research questions (see Section 3), we followed a design (including activities like technique implementation and data collection) and analysis iteration, which are described in detail in the remainder of the section.

4.1 Design and Analysis Iteration for RQ1 (What are the Characteristics of Invalid Bug Reports in a Closed-Source Context?)

We used descriptive statistics and graphs to understand the prevalence and characteristics of bug reports at the case company. We visualized the characteristics of invalid bug reports, such as their influx, grouping based on their origins, the average time from submission to resolution, priority, and mapping to software/system quality characteristics.

At first, the analysis mentioned above was done manually on a subset of bug reports, and the results were presented to practitioners in the first focus group meeting. Then, we automated the analysis as a diagnostic tool (a Python-based pipeline) because of the case company’s interest. In the updated version of the diagnostic tool, we added support for a grouping of bug reports based on testing levels used at the case company. Later, the results were presented in the second focus group meeting.

Moreover, we used a questionnaire to collect practitioners’ opinions about the importance of early identification of invalid bug reports and the need for tool support in this regard. We used a four-level scale of importance: “Essential” (must have), “Worthwhile” (nice to have), “Unimportant,” and “Unwise” (detraction). We also asked the practitioners to provide potential use cases for a tool enabling the identification of invalid bug reports.

4.2 Design and Analysis Iteration for RQ2 (How Effective are Existing Techniques for Predicting the Validity of Bug Reports in a Large-Scale Closed-Source Context?)

To answer RQ2, we applied various ML techniques to bug reports data from three products (P1, P2, and P3) at the case company. The technology transfer model followed in this study (see Fig. 1) guided us to follow several validation steps described in the remainder of the section.

4.2.1 Validation in Academia

We identified ML techniques from the literature that may yield promising results in the selected case company. We selected Support Vector Machines (SVM), Random Forest (RF), Logistic Regression (LR), XGBoost (XGB), CNN, and BERT for the following reasons:

(a) We chose SVM and RF, as Fan et al. (2018) found that these techniques can effectively identify invalid bug reports. Although their research was conducted in the context of open-source software (OSS), their work is relevant as the features used in their work and ours are similar. We use the bug report’s description and heading along with the submitter’s validity rate, total bug reports count, and recent bug reports count. Similarly, we also extract and use the features from the description of bug reports, such as “has_steps_to_reproduce” and “has_attachment.”

(b) We chose LR because of its simplicity as a classification model and its prior use and performance in a closed-source context for the automatic assignment of bug reports to engineers at a large telecommunication company (Borg et al. 2022). Although the tasks differed (i.e., bug assignment to a relevant engineer vs. our task to identify invalid bug reports), we consider these findings relevant. We also used similar features to the ones used by Borg et al. (2022), including the bug report description, summary, and priority.

(c) We chose XGB because of its performance for similar classification tasks, e.g., requirements and email spam classification (Nguyen 2021; Mustapha et al. 2020).

(d) We chose CNN, as He et al. (2020) showed that CNN can achieve slightly higher performance compared to simple ML techniques proposed by Fan et al. (2018) for identifying invalid bug reports.

(e) We chose BERT because transformer-based models are considered state-of-the-art models for similar classification tasks, e.g., text classification (Sun et al. 2019) and sentiment classification (Wu et al. 2021; Biswas et al. 2020).

During validation in academia (step 4, Fig. 1), the selected techniques listed above were trained and tested on archival data from the case company. The implementation details (e.g., the used hyperparameters) of the above techniques can be found in Appendix A.

4.2.2 Initial Validation in Industry

After testing the techniques on archival data, we presented the results to the practitioners (step 5, see Fig. 1). We used two focus group meetings to collect the practitioners’ feedback to improve the proposed solution.

Furthermore, we used a questionnaire to identify the concerns of practitioners when adopting an ML technique to determine the validity of newly submitted bug reports.

4.2.3 In-Use Validation

Based on the results of using the tool on archival data, the practitioners decided to test the tool in the limited scope of one product only (i.e., product P1). We handed over the tool to the practitioners managing bug reports for product P1 at the case company.

The deployed tool had the added functionality of recording the tool predictions for each bug report as well as the practitioners’ judgments. For example, the data about a bug report with id #456 (not a real ID) contained the following information: predicted confidence level of valid: 88%, predicted confidence level of invalid: 12%, predicted class: valid, and practitioner’s final judgment: valid.

We quantitatively analyzed the tool’s performance on newly submitted bug reports in 2022 using the recorded information.
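The kind of record described above can be sketched as follows. This is an illustrative sketch only, not the deployed tool: the class name `PredictionRecord`, its field names, and the `agreement_rate` helper are hypothetical, and deriving the predicted class from the two confidence values is an assumption.

```python
from dataclasses import dataclass


@dataclass
class PredictionRecord:
    """One logged prediction (hypothetical field names)."""
    bug_id: str
    confidence_valid: float      # predicted confidence that the report is valid
    confidence_invalid: float    # predicted confidence that the report is invalid
    practitioner_judgment: str   # "valid" or "invalid"

    @property
    def predicted_class(self) -> str:
        # Assumption: the predicted class is the one with the higher confidence.
        if self.confidence_valid >= self.confidence_invalid:
            return "valid"
        return "invalid"


def agreement_rate(records):
    """Share of records where the prediction matches the practitioner's judgment."""
    matches = sum(r.predicted_class == r.practitioner_judgment for r in records)
    return matches / len(records)


records = [
    PredictionRecord("#456", 0.88, 0.12, "valid"),   # example values from the text
    PredictionRecord("#457", 0.30, 0.70, "valid"),   # made-up second record
]
print(agreement_rate(records))
```

A simple agreement rate is used here only to show how such records could be analyzed; it is not necessarily the measure applied in the study.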

4.2.4 Feature Selection

Table 4 presents the features we used in the prediction model of the ML techniques. The feature selection is based on previous work, including Laiq et al. (2022) and Fan et al. (2018).

The listed features can be calculated immediately from a newly submitted bug report. For example, features related to the submitter’s experience (F4, F5, and F13) can be derived from the past submission history of the submitter. Similarly, F1, F2, and F6–F11 can be obtained from the description and heading of a bug report.

Unlike Fan et al. (2018), we do not use features related to the readability, completeness, and collaboration network dimensions. Readability and completeness have negligible significance in distinguishing valid from invalid bug reports (Fan et al. 2018). Moreover, the collaboration network is not available at the case company, and it may also be unavailable in the open-source context in the early stages of bug management (He et al. 2020).

We calculate features F6–F11 using regular expressions from the description of a bug report. Our approach is similar to that used by Fan et al. (2018). For example, we employ regular expressions that use terms like “steps to reproduce” and “reproduce steps” to determine whether a bug report contains information about reproducing the issue. For detailed information on the extraction of these features, please refer to Fan et al. (2018).
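A minimal sketch of this kind of regular-expression check is shown below. The two “reproduce” terms come from the text; the attachment pattern and the function name `extract_flags` are hypothetical, and the exact expressions used in the study are not reproduced here.

```python
import re

# Terms taken from the text: "steps to reproduce" and "reproduce steps".
STEPS_PATTERN = re.compile(r"steps\s+to\s+reproduce|reproduce\s+steps", re.IGNORECASE)

# Hypothetical pattern for illustration only; not from the study.
ATTACHMENT_PATTERN = re.compile(r"\battach(?:ed|ment|ments)\b", re.IGNORECASE)


def extract_flags(description: str) -> dict:
    """Derive binary features from the free-text description of a bug report."""
    return {
        "has_steps_to_reproduce": bool(STEPS_PATTERN.search(description)),
        "has_attachment": bool(ATTACHMENT_PATTERN.search(description)),
    }


print(extract_flags("Steps to reproduce: 1. Open the panel. See attached log."))
```

Because the flags depend only on the description text, they can be computed as soon as a report is submitted.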

Submitter experience-related features (F4, F5, and F13) are calculated based on the historical data of an individual submitter; for example, F5: Submitter Validity Rate is a ratio of valid to total bug reports submitted by a submitter.

The F5: Submitter Total Bug Count feature does not take into account the impact of time on a submitter’s experience. For instance, if a particular submitter has submitted 100 bug reports, F5: Submitter Total Bug Count for their next bug report will be 100, regardless of when they submit it, which could be a year later. Such a gap will likely decrease the likelihood that the submitted bug report is valid, since the submitter may no longer be familiar with the systems/processes. Thus, to cover the temporal evolution of the submitter-related metrics, we use F13: Submitter Recent Bug Count, which captures whether the submitter has been active recently (i.e., within a 90-day window, similar to Fan et al. (2018)).
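The three submitter-related features can be sketched as follows. This is an illustration under stated assumptions rather than the study’s implementation: the function name, the input format (a list of `(date, is_valid)` pairs), and the fallback rate of 0.0 for submitters without history are all hypothetical.

```python
from datetime import date, timedelta


def submitter_features(history, submitted_on, window_days=90):
    """Compute submitter features for a report submitted on `submitted_on`.

    `history` holds (submission_date, is_valid) pairs for the submitter's
    earlier bug reports (assumed input format).
    """
    # Only reports submitted before the new one count as experience.
    past = [(d, v) for d, v in history if d < submitted_on]
    total = len(past)
    valid = sum(1 for _, v in past if v)

    # Reports inside the recency window (90 days, as mentioned in the text).
    window_start = submitted_on - timedelta(days=window_days)
    recent = sum(1 for d, _ in past if d >= window_start)

    return {
        # Assumption: a submitter without history gets a rate of 0.0.
        "validity_rate": valid / total if total else 0.0,
        "total_bug_count": total,
        "recent_bug_count": recent,
    }


history = [
    (date(2021, 1, 10), True),
    (date(2021, 6, 1), False),
    (date(2022, 1, 5), True),
    (date(2022, 2, 1), True),
]
features = submitter_features(history, date(2022, 3, 1))
print(features)
```

In this made-up history, the total count is four, but only the two reports from early 2022 fall inside the 90-day window, which is the distinction the recent-count feature is meant to capture.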

4.2.5 Feature Extraction

In this section, we describe the process we followed for feature extraction.

Textual Feature Extraction. Standard data preprocessing is applied to textual features, including the removal of unnecessary data and stop words.

Unnecessary data includes whitespace and specific headings in the template used at the case company for describing a bug report. Stop words are commonly used words, such as “the,” “we,” and “you,” that do not contribute to the prediction model.

After removing unnecessary data and stop words, we convert our textual features into vectors using: (a) a regular Term Frequency-Inverse Document Frequency (TF-IDF) term weighting scheme, which is commonly used in software engineering for text mining and information retrieval (Borg et al. 2014); (b) word2vec for word embedding using the Skip-gram algorithm; and (c) DistilBERT for word embedding. Transformer-based models are considered state-of-the-art for word embedding and can potentially achieve better accuracy in validity prediction than TF-IDF and word2vec.

TF-IDF is used with all the selected ML techniques, DistilBERT-based embeddings are used with the two top-performing ML techniques, and word2vec is used with CNN.
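To make the TF-IDF weighting concrete, the following pure-Python sketch removes stop words and computes the basic weight, i.e., term frequency multiplied by log(N/df). It is an illustration only: the stop-word list is a tiny made-up subset, the function names are hypothetical, and library implementations commonly add smoothing and normalization that are omitted here.

```python
import math
from collections import Counter

# Illustrative subset of stop words (the text names "the", "we", and "you").
STOP_WORDS = {"the", "we", "you"}


def tokenize(text):
    """Lowercase, split on whitespace, and drop stop words."""
    return [t for t in text.lower().split() if t not in STOP_WORDS]


def tfidf_vectors(documents):
    """Return the vocabulary and one TF-IDF vector per document."""
    tokenized = [tokenize(d) for d in documents]
    vocab = sorted({t for doc in tokenized for t in doc})
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term occurs.
    df = Counter(t for doc in tokenized for t in set(doc))

    vectors = []
    for doc in tokenized:
        counts = Counter(doc)
        length = len(doc) or 1  # avoid division by zero for empty documents
        vectors.append(
            [(counts[t] / length) * math.log(n_docs / df[t]) for t in vocab]
        )
    return vocab, vectors


vocab, vectors = tfidf_vectors(["the panel crash", "the panel opens"])
print(vocab)
print(vectors)
```

A term that occurs in every document (here “panel”) receives a weight of zero, so the weighting emphasizes terms that distinguish one bug report from another.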

Categorical Feature Extraction. We use the one-hot encoding technique to convert categorical features into vector form. In one-hot encoding, data for the categorical attribute is represented by a binary vector, where the length of the vector represents the number of possible values of the categorical attribute. In the binary vector, only a single position holds 1 for a particular data sample, and the remaining positions contain 0. For instance, if bug priority has categorical values [High, Medium, Low], a bug report with Medium priority would be coded as \(\langle 0,1,0 \rangle\).
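The priority example above can be reproduced with a few lines of Python. The helper name `one_hot` is hypothetical, and raising an error for unknown values is a design choice made for this sketch.

```python
def one_hot(value, categories):
    """Encode `value` as a binary vector over the ordered `categories`."""
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1 if c == value else 0 for c in categories]


priorities = ["High", "Medium", "Low"]
print(one_hot("Medium", priorities))
```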

Numerical Feature Extraction The numeric features are preprocessed using the StandardScaler technique to provide standardized input to the prediction model. StandardScaler is typically used to handle numeric data. It standardizes the data by subtracting the mean from each input and dividing the result by the standard deviation, shifting the distribution to have approximately zero mean and unit standard deviation.
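The standardization step can be sketched without any library as follows. The helper `standardize` and the example counts are hypothetical; the sketch uses the population standard deviation, which is also what scikit-learn's StandardScaler uses.

```python
import statistics

def standardize(values):
    """Subtract the mean and divide by the (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        # A constant feature carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

# Hypothetical numeric feature, e.g., a submitter's recent bug count.
counts = [2, 4, 4, 4, 5, 5, 7, 9]
scaled = standardize(counts)
```

For these example counts the mean is 5 and the standard deviation is 2, so the value 9 maps to 2.0 and the value 2 maps to -1.5.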

4.2.6 Experimental Setup

There are various evaluation approaches, such as cross-validation, testing on fixed data sets, and time splitting or incremental. In this work, similar to Bhattacharya et al. (2012), Wang et al. (2014) and Bettenburg et al. (2008), we used an incremental approach. This approach reduces experimental bias (Witten and Frank 2002), and it is appropriate in our context since we aim to predict the validity of future bug reports. Therefore, we divided our dataset into 11 folds after sorting chronologically. Then, we performed our experiments as follows: In the first run, fold-1 was used for training and fold-2 for testing. In the second run, fold-1 and fold-2 were used for training and fold-3 for testing. This process was repeated ten times, and in the last run, fold-1 to fold-10 were used for training and fold-11 for testing. Finally, we measured the average prediction results over each fold.
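The incremental setup described above can be sketched as follows. The function name `incremental_splits` and the sample count are hypothetical, and the sketch assumes equally sized folds over chronologically sorted bug reports. scikit-learn's `TimeSeriesSplit` offers a similar expanding-window scheme.

```python
def incremental_splits(n_samples, n_folds=11):
    """Yield (train_indices, test_indices) for an expanding-window evaluation.

    Samples are assumed to be sorted chronologically. In run k, folds 1..k
    are used for training and fold k+1 for testing.
    """
    bounds = [round(i * n_samples / n_folds) for i in range(n_folds + 1)]
    for run in range(1, n_folds):
        train = list(range(0, bounds[run]))
        test = list(range(bounds[run], bounds[run + 1]))
        yield train, test

# Hypothetical dataset with 110 bug reports, i.e., 10 reports per fold.
splits = list(incremental_splits(110))
print(len(splits))  # 10
```

Because every training index precedes every test index, no run uses future bug reports to predict past ones.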

4.2.7 Evaluation Metrics

We chose the Area Under the Curve (AUC) of the receiver operating characteristic (ROC) (Huang and Ling 2005) for evaluating the performance of the models. AUC is unbiased and robust towards imbalanced datasets (Tantithamthavorn and Hassan 2018; Lessmann et al. 2008). AUC scores range from 0 to 1. The higher the value, the better the classification performance. An AUC of 0.5 is no better than a random guess for binary classifications.

The value of AUC is calculated by plotting the ROC curve using True Positive Rate (TPR) and False Positive Rate (FPR) for all thresholds. TPR is plotted on the y-axis and FPR on the x-axis.

The threshold value (i.e., a value in the range of 0 to 1) is compared with the predicted probability value. If the predicted probability value is higher than the threshold for a bug report, it is classified as valid and invalid otherwise. However, AUC is threshold-independent (Bradley 1997) and evaluates prediction performance against all thresholds.
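The relationship between thresholds, the ROC curve, and AUC can be illustrated with a minimal sketch. The labels and scores are hypothetical, the valid class is encoded as 1, and a report is treated as predicted valid when its score is at or above the threshold (a convention chosen for this sketch).

```python
def roc_points(labels, scores):
    """Return ROC points (FPR, TPR), one per distinct threshold."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / neg, tp / pos))
    return points

def auc(points):
    """Area under the ROC curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

# Hypothetical predictions: 1 = valid, 0 = invalid.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
area = auc(roc_points(labels, scores))
```

In this example, eight of the nine (valid, invalid) pairs are ranked correctly, which gives an AUC of 8/9, independent of any single threshold.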

Additionally, we measure Matthews Correlation Coefficient (MCC) (Matthews 1975), recall, F1-score, and precision. The aim of measuring these metrics is to check the reliability of the primary evaluation metric (i.e., AUC) on imbalanced datasets.

MCC is suitable for evaluating imbalanced data sets (Halimu et al. 2019) and widely used in the biomedical field (Matthews 1975). MCC is a correlation coefficient between the predicted and observed classes and can be calculated from the confusion matrix. MCC values range from -1 to 1, where 1 indicates perfect classification and -1 perfect misclassification. An MCC of 0 is no better than coin tossing.

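The normalized MCC (nMCC), which rescales MCC to the range 0 to 1, can be calculated as follows:

$$\begin{aligned} nMCC = \frac{MCC + 1}{2} \end{aligned}$$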
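A minimal sketch of both measures is given below. It assumes the common rescaling nMCC = (MCC + 1) / 2, which is consistent with the MCC of 0.267 and nMCC of 0.63 reported for the in-use validation; the confusion matrix counts are hypothetical.

```python
import math

def mcc(tp, fp, fn, tn):
    """Matthews Correlation Coefficient computed from a confusion matrix."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention, MCC is set to 0 when a row or column of the matrix is empty.
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom

def nmcc(value):
    """Rescale MCC from [-1, 1] to [0, 1] (assumed definition)."""
    return (value + 1) / 2

# Hypothetical confusion matrix with the valid class as positive.
score = mcc(tp=90, fp=5, fn=10, tn=15)
normalized = nmcc(score)
```

With this rescaling, an nMCC of 0.5 corresponds to an MCC of 0, i.e., to chance-level prediction.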

4.3 Design and Analysis Iteration for RQ3 (What Factors are Important for Practitioners When Deciding to Adopt an ML Technique for Predicting the Validity of Bug Reports?)

To identify practitioners’ concerns when deciding to adopt an ML technique for the early identification of invalid bug reports, we use the technology adoption framework proposed by Rana et al. (2014b). Our goal is to provide insight into the factors that practitioners prioritize when deciding to adopt ML techniques for identifying invalid bug reports, as well as to identify their main concerns.

The framework consists of nine factors. These factors further include sub-factors that are important for practitioners to assess prior to deciding to adopt (or not to adopt) an ML technique. The framework has been evaluated on an ML technique for software bug prediction (Rana et al. 2014a). Likewise, we identify and evaluate such aspects in our context, i.e., an ML technique for determining the validity of bug reports. Table 5 shows the identified factors and their attributes. To understand the perceived importance of these factors, we used a web-based questionnaire to collect practitioners’ opinions regarding their importance. We used a scale of importance ranging from “Essential” (must have), “Worthwhile” (nice to have), “Unimportant” and “Unwise” (detraction).

4.4 Design and Analysis Iteration for RQ4 (How Can we Support Practitioners in Interpreting the Predictions of Bug Report Validity?)

When we presented the evaluation results of ML techniques for validity prediction at the case company, the need for explainability became apparent, i.e., practitioners wanted to know the underlying reasons behind predicting a bug report as valid or invalid.

To support the explainability of ML techniques, frameworks such as Local Interpretable Model-agnostic Explanations (LIME) by Ribeiro et al. (2016) and SHapley Additive exPlanations (SHAP) by Lundberg and Lee (2017) could be utilized. However, LIME is limited to local explainability (i.e., it can only be used for explaining the prediction of a specific instance). SHAP provides support for both local and global explanations (i.e., it also describes the overall process of how an ML model works). Furthermore, SHAP is more accurate and theoretically sound compared to LIME (Linardatos et al. 2020). Thus, in this study, we use the SHAP framework by Lundberg and Lee (2017) to explain the prediction results and features that differentiate valid and invalid bug reports.

We conducted a feedback session with a practitioner (Software Engineer & Quality Architect, see Table 1) to evaluate the usefulness of the SHAP framework for explaining the prediction of the ML technique. We conducted our feedback session as follows:

- At first, the selected practitioner was given brief information about how we predict the validity of newly submitted bug reports.

- Then we presented one of the examples to the practitioner, i.e., explaining the prediction results for a bug report.

- Afterward, the practitioner was asked to read the SHAP explanations for the nine randomly selected instances to assess whether the SHAP explanations helped understand those predictions (i.e., prediction of those instances as valid or invalid bug reports). Random selection was performed to avoid selection bias.

- Finally, we collected feedback from the practitioner based on the interpretations of the nine predictions in the previous step. We used the following question for collecting feedback on each sample: On a scale of 1 to 5, how helpful was the explanation for understanding the prediction made by the ML technique? One being not at all helpful and 5 being very helpful.

4.5 Design and Analysis Iteration for RQ5 (How Large an Issue is Concept Drift in Bug Reports Data Used for Predicting the Validity of Bug Reports?)

When the model was deployed in the company, it could not match the accuracy level we achieved on archival data from the company. Thus, we investigated the reasons for this mismatch. After ruling out any implementation issue that may explain poor performance, we investigated if concept drift may explain the mismatch.

To identify concept drift in our bug reports data, we followed the same hypothesis as used in previous work (Klinkenberg and Joachims 2000; Last 2002; Nishida and Yamauchi 2007), i.e., if the performance of an ML model degrades (in terms of prediction error rate) on the current window (i.e., bug reports data of 90 days) compared to the older window, there is concept drift; otherwise, there is no concept drift.

As shown in Fig. 8, similar to previous work (Zliobaite 2010; Kabir et al. 2019), we used an incremental window-based approach with a full-memory training strategy. Similar to previous work (Li et al. 2020; Xu et al. 2018), we chose a window size of 90 days, i.e., bug reports data of 90 days. We conducted our experiments as follows:

- At first, we trained our ML model on 67% of the data belonging to an old window (w_o) and then tested it on (a) the remaining 33% from the old window and (b) the data from a recent window (w_r).

- We then calculated the statistical difference of the model prediction error rate on w_o and w_r. To measure statistical significance, we used a strategy similar to de Lima Cabral and de Barros (2018), i.e., we tested the significance of concept drift between old and recent windows using Fisher’s exact test. Fisher’s exact test is relevant in our context as it is not affected by sample size, data imbalance, or data sparsity (Mehta 1994). The 2x2 contingency table of old and recent windows for Fisher’s exact test is calculated as follows:

  $$\begin{aligned} Errors = [w_{r}, w_{o}] \end{aligned} \tag{8}$$

  $$\begin{aligned} Hits = [w_{r}, w_{o}] \end{aligned} \tag{9}$$

- Finally, we calculated the p-value. If it is less than 0.05, we reject the null hypothesis (i.e., the two windows are of the same type) and consider the alternative hypothesis that there is a difference between the distribution of the two windows, i.e., the presence of concept drift.
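The drift check can be sketched as follows. This is a self-contained illustration of a two-sided Fisher’s exact test on the Errors/Hits table, not the implementation used in the study; the function names and the error counts are hypothetical. In practice, a library routine such as `scipy.stats.fisher_exact` could be used instead.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided p-value of Fisher's exact test for the table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability of observing x in the top-left cell.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_observed = prob(a)
    low, high = max(0, col1 - row2), min(row1, col1)
    # Sum the probabilities of all tables at least as extreme as the observed one.
    return sum(prob(x) for x in range(low, high + 1)
               if prob(x) <= p_observed * (1 + 1e-7))

def has_concept_drift(errors_recent, hits_recent, errors_old, hits_old, alpha=0.05):
    """Flag drift when the error rates of the two windows differ significantly."""
    p = fisher_exact_two_sided(errors_recent, errors_old, hits_recent, hits_old)
    return p < alpha, p

# Hypothetical counts: 30% errors on the recent window vs. 10% on the old window.
drift, p_value = has_concept_drift(errors_recent=30, hits_recent=70,
                                   errors_old=10, hits_old=90)
```

With these example counts the p-value is well below 0.05, so the sketch flags a drift point; with identical error rates in both windows it would not.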

5 Results and Analysis

5.1 RQ1: What are the Characteristics of Invalid Bug Reports in a Closed-Source Context?

A diagnostic tool was used to analyze invalid bug reports’ characteristics in terms of their prevalence, lead time, and priority. In Table 6, we present the lead time and median priority of valid (V) and invalid (I) bug reports for all three products. For P1, P2, and P3, we get around 18, 16, and 13 mean calendar days, respectively. The average lead time of invalid bug reports is similar to valid bug reports, and the average priority of three products for invalid bug reports is medium (B). This signifies the need to address the challenge of early identification of invalid bug reports at the case company.

Similarly, we analyzed the origin of invalid bug reports for all three products (P1, P2, and P3) by mapping bug reports to their corresponding testing levels used at the case company. However, we observed that most of the faults slip through to later stages (i.e., verification or verification on the target environment). Likewise, most of the software/system quality characteristics were mapped to similar categories, i.e., functional correctness and functional completeness.

For products P1, P2, and P3, 18%, 16%, and 13% of the bug reports were invalid, respectively. Over the years, P1 has improved a bit in terms of reducing the percentage of invalid bug reports. However, P2 and P3 have not changed much. Fan et al. (2018) reported 31% and 77% of invalid bug reports for the open-source projects Mozilla and Firefox, respectively. In contrast to open-source, the percentage is smaller. A potential reason for that could be the rigorous processes for bug writing being followed in a proprietary context and the discrepancy of skills and motivation of the bug submitters (Bachmann and Bernstein 2009).

In addition to quantitative analysis of the characteristics of invalid bug reports, we also collected practitioners’ opinions regarding (a) the importance of early identification of invalid bug reports, (b) the importance of tool support for early identification of invalid bug reports, and (c) the potential use cases of the tool support for identifying invalid bug reports. Below, we summarize the results of their responses.

Importance of early identification of invalid bug reports and tool support: Figure 9(a) shows that overall, the respondents considered the early identification of invalid bug reports as important. 20 of the 34 respondents considered early identification of invalid bug reports essential, and 12 considered it worthwhile, i.e., nice to have.

However, regarding tool support for identifying invalid bug reports, only 10 of the 34 respondents considered it essential, and a majority, 22 of the 34 respondents, considered it worthwhile.

Only two respondents considered the early identification of invalid bug reports as “Unimportant” and “Unwise”. The same respondents considered tool support for the early identification of invalid bug reports as “Unwise”. However, we noticed discrepancies in their rating and their responses to other questions. One of the two respondents who rated the early identification of invalid bug reports as unimportant rated having a tool to support the early identification of invalid bug reports as worthwhile. Also, in the free text field, the same respondent states that the tool could be useful in preventing the registration of such bug reports in the first place. The second respondent, who had rated early identification of invalid bug reports as unwise, states in the free text field that an ML tool could be used to provide feedback to the author of a bug report before it is registered in the system. Similarly, in the free text field, the respondents state that the tool could be useful during bug assignment and in preventing the registration of such invalid bug reports.

Potential use cases of the tool support for identifying invalid bug reports: As shown in Fig. 9(b), out of 34 respondents, 23 chose “flag likely invalid bug reports for downstream developers” as a potential use case of the tool. Another 15 respondents chose “as input for prioritizing bug reports” and “identify a candidate bug report for an additional review” as potential use cases for the tool. On further analysis of the survey responses, we found that a total of 31 respondents out of 34 agreed with at least one of the three potential use cases of the tool listed in the figure. Additionally, three respondents suggested a new use case for the tool, which is to use it to receive feedback when registering (reporting) the bug reports.

5.2 RQ2: How Effective are Existing Techniques for Predicting the Validity of Bug Reports in a Large-Scale Closed-Source Context?

In the following subsections, we present the results of the validation steps that were guided by the technology transfer model followed in this study (see Fig. 1).

5.2.1 Results of Validation in Academia

In Table 7, we present the results of the applied ML techniques using (a) the features F1–F5 and (b) the complete list of features that include newly added features (F6–F13), see Section 4.2.4 for details about feature selection. In Figs. 10, 11 and 12, we show the AUC values for each ML technique applied to three studied products over different folds.

Our results are comparable to previous work in an open-source context (Fan et al. 2018; He et al. 2020). Using the newly added features on P1, the Logistic Regression (LR) model achieved an AUC of 0.845, compared to an AUC of 0.851 for the old LR-based model, i.e., slightly lower than the old model, but the difference is small. Likewise, the performance of the Random Forest, SVM, and XGB classifiers has not changed much.

Generally, XGB is known for its superior performance compared to LR. However, our results indicate that the performance of XGB is slightly lower than that of the LR. A possible explanation for this could be data imbalance. Shahri et al. (2021) also reported that LR performed better than XGB on imbalanced datasets.

Table 8 shows the results of the top-performing simple ML technique (i.e., LR with TF-IDF) and its comparison with CNN, fine-tuned BERT and models using DistilBERT-based embeddings. The models based on DistilBERT embeddings and CNN achieve slightly higher AUC than simple ML techniques with TF-IDF. However, the fine-tuned BERT-based models notably outperform all other models.

5.2.2 Results of Initial Validation in Industry

We conducted a focus group meeting with practitioners and presented the results of the ML techniques. The practitioners’ overall response was positive. However, in the feedback, practitioners asked for providing explainability support with the predictions, i.e., underlying reasons behind predicting a bug report as valid or invalid.

Furthermore, it was decided to proceed with Logistic Regression, which was the best-performing among the simple ML techniques compared in the study. Although the Fine-tuned BERT-based model produced better results than LR (TF-IDF), we decided to use simpler techniques due to practitioners’ preferences (see detailed discussion in Section 6.6) and the similar results we achieved using LR (TF-IDF), see Table 8.

Likewise, most practitioners (i.e., 4 out of 5) suggested not applying any class-rebalancing techniques to handle data imbalance (i.e., our dataset is skewed towards the valid class of bug reports) because class-rebalancing techniques tend to produce false positives (Tantithamthavorn et al. 2018), i.e., they will misclassify valid bug reports in our context. Their collective feedback emphasized the importance of avoiding the misprediction of valid bug reports as invalid, as it would have significant consequences in their context.

The practitioners responded that the biased prediction for the valid class is acceptable for them (i.e., the prediction of invalid bug reports as valid), while the prediction of valid bug reports as invalid should be handled carefully. Thus, we did not add penalties for misclassifying the invalid bug reports class and proceeded for in-use validation with these settings, i.e., class_weight hyperparameter of the Logistic Regression was set to default value.

Based on the feedback during the initial validation in the industry, we improved the ML-based tool as follows: (a) the use of SHAP to support explainability, (b) the improvement of the user interface (UI) of the ML-based tool, i.e., the addition of UI that allows users to upload data and make predictions using the existing model. To ensure the up-to-date use of recent data, including submitter metrics, we added support for uploading the latest data and retraining the model before making a prediction. We also added support to display the prediction confidence level for valid or invalid bug reports. Additionally, some improvements were made to the diagnostic tool used to analyze the characteristics of valid and invalid bug reports that we incorporated in the updated version of the tool (see details in Section 4.1).

5.2.3 Results of In-Use Validation

We developed a prototype tool implementing the ML technique selected by practitioners. The tool was trained on data from 2016 to 2021 and was handed over to the company for operational use to assess the validity of newly submitted bug reports in 2022. In this section, we will present the results of the in-use validation of the tool.

Based on our quantitative analysis, we found that our tool correctly classified 110 newly submitted bug reports out of 153 (130 valid and 23 invalid bug reports), which is 72% accuracy. The results of other metrics were as follows: AUC: 0.78, MCC: 0.267, nMCC: 0.63, Invalid F1-score: 0.39, Invalid Precision: 0.29, Invalid Recall: 0.60, Valid F1-score: 0.82, Valid Precision: 0.91, and Valid Recall: 0.74. The tool’s performance has decreased during the in-use validation compared to validation in academia, i.e., 0.78 AUC compared to 0.851 AUC. This prompted us to look for any changes that might have impacted the performance of the ML technique, such as product, technology, process, or organization.
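The reported figures can be cross-checked with a short calculation. The confusion matrix below is not reported in the text; it is an inferred reconstruction (an assumption) chosen so that 110 of the 153 reports are classified correctly, with the valid class treated as positive.

```python
import math

# Inferred counts (assumption): 130 valid and 23 invalid bug reports.
tp, fn = 96, 34  # valid reports predicted as valid / as invalid
tn, fp = 14, 9   # invalid reports predicted as invalid / as valid

def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

accuracy = (tp + tn) / (tp + fn + tn + fp)
valid_precision = tp / (tp + fp)
valid_recall = tp / (tp + fn)
invalid_precision = tn / (tn + fn)
invalid_recall = tn / (tn + fp)
valid_f1 = f1(valid_precision, valid_recall)
invalid_f1 = f1(invalid_precision, invalid_recall)

mcc = (tp * tn - fp * fn) / math.sqrt(
    (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
)
nmcc = (mcc + 1) / 2  # assumed rescaling of MCC to the range 0 to 1

print(round(accuracy, 2), round(mcc, 3), round(nmcc, 2))
```

Under this reconstruction, accuracy, precision, F1-score, MCC, and nMCC match the reported values after rounding. The invalid recall comes out at roughly 0.61 rather than the reported 0.60, which may be due to truncation. The low invalid precision shows that most bug reports flagged as invalid were in fact valid, which is the type of error the practitioners wanted handled carefully.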

On further analysis, we found that there were more than 20 new bug report submitters during 2022 in the investigated product (P1). Although they were not new at the case company, their total bug count and validity rate features (F4 and F5, see Table 4) were computed as zero, as no prior bug reports were submitted by them for P1. As the model treated these submitters as new employees, it impacted the accuracy of the ML technique.

Together with the practitioners, we decided to set the validity rate to 0.5 for people who are new to the product but have some relevant experience, and 0 for those who are new and do not have an understanding of their systems. The rationale behind this suggestion was that individuals who understand their systems are less likely to submit invalid bug reports than those who are new and have limited knowledge. With this modification, the ML technique could correctly classify bug reports with 82% accuracy. The practitioners’ feedback was positive on the tool’s performance, and they acknowledged that the accuracy was reasonable.
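The agreed fallback can be sketched as a small helper. The function name, its parameters, and the definition of the validity rate as the share of a submitter’s earlier reports that were valid are assumptions made for illustration.

```python
def submitter_validity_rate(valid_count, total_count, has_relevant_experience):
    """Validity rate of a submitter, with a fallback for submitters new to the product.

    Assumed definition: share of the submitter's earlier reports that were valid.
    """
    if total_count > 0:
        return valid_count / total_count
    # No history for this product: use the defaults agreed with the practitioners.
    return 0.5 if has_relevant_experience else 0.0

print(submitter_validity_rate(19, 20, True))   # 0.95
print(submitter_validity_rate(0, 0, True))     # 0.5
print(submitter_validity_rate(0, 0, False))    # 0.0
```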

5.3 RQ3: What Factors are Important for Practitioners When Deciding to Adopt an ML Technique for Predicting the Validity of Bug Reports?

We now present the results of the practitioners’ opinion survey regarding the importance of various adoption factors that practitioners consider when deciding to adopt an ML technique for predicting the validity of bug reports (see summary in Fig. 13). The survey had 34 responses. Out of the 34 responses, 12 belong to the case company, which could potentially impact the overall trend. However, we analyzed those 12 and the remaining 22 responses separately and found the same trend.

Two out of 34 practitioners consistently rated most adoption factors as “Not at all important” or “Slightly unimportant.” The response counts highlighted in red in Fig. 13 are mainly from those two practitioners. It would have been interesting to know the rationales behind such a rating, but unfortunately, we could not get in touch with those two practitioners as they did not provide their email addresses.

Among the adoption factors that practitioners rated, the high accuracy of the predictions was rated as “Very important” by the highest number of practitioners (i.e., 17), followed by the explainability of the predictions and compatibility of the ML-based tool with existing systems. Both of them were rated as “Very important” by 16 practitioners.

Among the other adoption factors, the low cost of data collection and licensing was rated as “Very important” by 15 practitioners, followed by easy integration into the current workflow, easy integration with existing systems, low cost for training and education of people, and availability of technical support, all of which were rated as “Very important” by 14 practitioners.

Of the remaining adoption factors, the low cost for installation, low cost for maintenance, buy-in from relevant stakeholders, and availability as an open-source tool were rated as “Very important” by 12 practitioners, followed by the ability to handle a large amount of data, low cost for computational resources, short training time, and low learning curve, which were rated as “Very important” by 11 practitioners. The adaptability to different products/projects was rated as “Very important” by nine practitioners.

While analyzing the survey results, we found several noteworthy findings described below: (a) Two practitioners rated the explainability of predictions as “Very important.” However, they rated high prediction accuracy as “Not at all important.” This may indicate that explainability is more important than accuracy for them. (b) Another practitioner considers adaptability to different products/projects as “Not at all important,” while accuracy and explainability were rated very important by that practitioner. (c) Among the others, similar trends were observed for several practitioners. For example, three practitioners did not consider the tool’s availability as an open-source tool important but rated all other factors as “Important” or “Very important” in general.

Qualitative feedback of practitioners regarding the important adoption factors: In addition to the factors shown in Fig. 13, we also collected feedback from practitioners in a free text field about other important adoption factors for the ML-based tool for identifying invalid bug reports. Below, we list the additional factors that practitioners highlighted in the comments:

- The ML-based tool should have a self-explanatory user interface.

- The ML-based tool should be easy to configure.

- The usability (not user interface but the ease of use) of the ML-based tool is key to the adoption, i.e., it should be easy to use.

- The ML-based tool for identifying invalid bug reports should be simple and not disrupt the workflow while providing developers additional useful information (i.e., the validity of bug reports).

- The ML-based tool should be designed to assist support staff in their work without causing frustration to customers, i.e., the tool should carefully handle false predictions of invalid bug reports.

5.4 RQ4: How Can we Support Practitioners in Interpreting the Predictions of Bug Report Validity?

To address the practitioners’ feedback regarding the need for explanations of predictions, we added an explainability aspect using the SHAP framework by Lundberg and Lee (2017). The SHAP value represents how much each feature contributes to an ML technique’s prediction on average (i.e., the average marginal contribution of a feature), considering all possible combinations of features (Molnar 2023). In other words, the SHAP value indicates whether or not a feature increases the ML technique’s prediction over a random baseline (Lundberg and Lee 2017).

In our binary classification problem (i.e., valid/invalid bug report), we want to predict valid and invalid bug reports. The valid class is positive and the invalid class is negative. By default, our ML technique attempts to predict a valid class. Thus, a positive SHAP value increases prediction towards a valid class (i.e., a valid bug report). Likewise, a negative SHAP value increases prediction towards an invalid class (i.e., an invalid bug report).

In Figs. 14, 15, 16, 17, 18 and 19, we summarize our analysis of the ML techniques’ interpretation using the SHAP framework by Lundberg and Lee (2017). Figure 14 shows the top 10 most important features (see Footnote 2) using a bee swarm plot for the Logistic Regression model. The features are sorted according to their importance on the y-axis, and their SHAP values for each instance of training data are visualized using the bee swarm plot on the x-axis. The red color indicates a high feature value, and the blue color indicates a low feature value. Here, we can see that the submitter validity rate is the most important feature in the Logistic Regression model. We can see the same in Fig. 15 for the XGBoost model. This seems quite rational and is in line with the literature (Fan et al. 2018; Laiq et al. 2022), i.e., people with high validity rates are likely to submit valid bug reports and vice-versa. Likewise, we can see that the submitter’s total bug count is also an important feature in Logistic Regression and XGBoost models.

To demonstrate our findings, we further explain one valid and one invalid bug report using the SHAP force and waterfall plots; see Figs. 16–19. In Figs. 16 and 17, we can see two SHAP force plots depicting how different features contribute to the ML technique’s predictions for predicting the two selected bug reports as valid/invalid. The features in red contribute towards a positive class (i.e., valid bug report) and their length shows their importance. We can see in Fig. 16 that the submitter validity rate is the biggest contributor to predicting the selected bug report as valid (i.e., the submitter of this bug report has a 95% validity rate). Similarly, in Fig. 17, we can see that the submitter validity rate feature contribution is high compared to other features for predicting the selected bug report as invalid. Likewise, in Figs. 18 and 19, we can see two plots picturing the contribution of each feature using the SHAP waterfall plot for predicting the two selected bug reports as valid/invalid. The negative values indicate a probability of less than 0.5 for the valid class. The bottom of the waterfall plot starts with the expected value of the model output (e.g., E[f(x)] = -0.332 in Fig. 18), and then each row shows how the contribution of each feature for valid (red) and invalid (blue) classes changes the value from the expected model output using the background training data for the selected prediction, i.e., f(x) = 2.72, see Fig. 18. These findings are also in line with our summary plots for both Logistic Regression and XGBoost models, see Figs. 14 and 15.
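The additive structure behind the waterfall plots can be illustrated with a minimal sketch that computes exact Shapley values for a small linear model. This is not the SHAP library and not the model from the study: the weights, feature values, and the use of a single background point (e.g., the mean of the training data) as the baseline are assumptions made for illustration.

```python
from itertools import permutations
from math import factorial

def shapley_values(predict, x, background):
    """Exact Shapley values of predict(x) relative to predict(background).

    Each feature's value is its average marginal contribution over all
    orderings in which features are switched from the background to x.
    """
    n = len(x)
    phi = [0.0] * n
    for order in permutations(range(n)):
        current = list(background)
        previous = predict(current)
        for i in order:
            current[i] = x[i]
            updated = predict(current)
            phi[i] += updated - previous
            previous = updated
    return [p / factorial(n) for p in phi]

# Hypothetical linear model in log-odds space; positive output favors "valid".
# Assumed features: validity rate, scaled total bug count, scaled text length.
WEIGHTS = [3.0, 0.5, -1.0]
BIAS = -0.5

def predict(features):
    return BIAS + sum(w * f for w, f in zip(WEIGHTS, features))

background = [0.8, 0.0, 0.0]  # assumed baseline, e.g., training data means
report = [0.95, 1.2, 0.4]     # hypothetical bug report
phi = shapley_values(predict, report, background)
```

The contributions sum to the difference between the model output for the report and the baseline output, which is the step from E[f(x)] to f(x) shown in a waterfall plot. Positive contributions push the prediction towards the valid class and negative ones towards the invalid class.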

We conducted a feedback session with a practitioner using nine randomly selected examples to evaluate the usefulness of the added explainability support to the ML techniques for determining the validity of bug reports. On a scale of 1 to 5 (1 being not at all helpful and 5 being very helpful), the practitioner rated the SHAP-generated explanations as follows: (a) two samples received a rating of 5, (b) four samples received a rating of 4, (c) one sample received a rating of 3, and (d) two samples received a rating of 2.

Overall, the practitioner’s response was also positive, and the practitioner commented that: “The explanation for both predictions valid and invalid seems reasonable, and I can understand from these explanations why these bug reports are predicted as valid and invalid.”

Our evaluation involves a single practitioner and nine samples. This is a limitation of our study. However, other researchers have demonstrated SHAP’s usefulness, even on a larger scale. For instance, Santos et al. (2020) used SHAP to explain software defect predictions to practitioners. In a survey of 40 developers, they found that most could understand the local explanation generated by SHAP. Additionally, SHAP has been extensively applied in various fields like medicine, banking, power, and irrigation with promising results (Moscato et al. 2021; Zhang et al. 2022, 2020; Jamei et al. 2024). Another indicator of SHAP’s impact is that it has received over 17k citations since it was proposed in 2017.

Considering the existing research on SHAP (see above), evaluating the nine samples with the practitioner should be sufficient to demonstrate its use as a proof of concept in our context, i.e., its use in explaining the prediction of ML techniques to assess the validity of bug reports.

5.5 RQ5: How Large an Issue is Concept Drift in Bug Reports Data Used for Determining the Validity of Bug Reports?

To investigate concept drift in bug reports data used for determining the validity of bug reports, we followed an incremental approach with a 90-day window size similar to previous work (see Section 4.5). We applied both Logistic Regression and SVM classifiers and measured the significance of their prediction error rate on the old and recent windows. Table 9 presents the results of our analysis for the three products (see highlighted drift points in boldface text).

In product P1, during windows 3, 4, and 5, both the Logistic Regression and SVM have identified drift points in data. However, after that, the distribution shows no significant differences in the prediction error rate of old and recent windows until window 13, where we see another drift point. Afterward, the distribution is stable again, and the model performance does not degrade. In product P2, during window 2, both the Logistic Regression and SVM have identified a drift in data. The distribution is then stable until window 15, where we can see another drift point. SVM has identified one more drift point for window 19, and in window 22, both SVM and Logistic Regression detected a drift point. Likewise, for product P3, the first drift has been identified during window 5 by the Logistic Regression, and the second and third drift points are identified for window 17 (i.e., by SVM only) and window 18 (i.e., by both the Logistic Regression and SVM).

6 Discussion

In this section, we discuss the findings of our study.

6.1 Need for Explainability and SHAP

During the initial validation in the industry, the practitioners indicated an interest in using the proposed ML technique for determining the validity of bug reports at early stages. However, their main concern was a lack of explanations behind predictions (i.e., why the predicted bug report is valid or invalid). These findings are in line with other ML-based software analytics approaches (Dam et al. 2018) where one of the main challenges is to provide an explanation of the black box models. We addressed this challenge using the SHAP framework (Lundberg and Lee 2017).

SHAP can help improve the explainability of predictions made by ML techniques. However, it has some limitations, which we discuss below:

- In our study, we found that SHAP-generated explanations may not always be helpful for practitioners. On a scale from 1 to 5 (1 being not at all helpful and 5 being very helpful), 2 of the 9 instances received a rating of 2, and 1 received a rating of 3.

- SHAP can be computationally expensive for large datasets or complex models, e.g., pixel data (Mosca et al. 2022). In our study, we used simple ML techniques on data from around 3300 bug reports, and we did not observe such an issue. We ran our experiments on a machine with 64-bit Windows 10, an Intel(R) Core(TM) i7 processor, and 16 GB of RAM.

- Slack et al. (2020) showed that explanations generated by LIME and SHAP may fail to reveal discriminatory biases of black-box classifiers in sensitive domains, e.g., criminal justice and credit scoring. They proposed and assessed a novel framework that can conceal such biases from these explanation methods.

- The assumptions made by SHAP can sometimes lead to the loss of important information, such as dependency relationships between features (Mosca et al. 2022). For example, the symmetry property of Shapley values treats features as independent, which holds only in some cases. Several approaches have been proposed for incorporating causal dependencies (e.g., Frye et al. 2020; Heskes et al. 2020).

- SHAP uses Shapley values to generate explanations for a model and its predictions. However, research has shown that the approach used by Shapley values to calculate feature importance is not suitable for quantifying feature importance in general (Kumar et al. 2020).

- Kumar et al. (2020) also argue that explanations based on Shapley values are not always easy to understand and do not always match human expectations, i.e., we usually want explanations that make sense to us as humans.
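To make the quantity behind these limitations concrete, the following minimal sketch computes exact Shapley values for a single prediction of a toy scoring function. It is not the algorithm implemented in the SHAP framework, which relies on more efficient approximations. The scoring function, its three features, and the choice to replace "absent" features with a fixed baseline value are illustrative assumptions. The baseline replacement in particular varies each feature independently of the others, which is one way the dependency issue noted above can arise.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values of one prediction (exponential in the feature count)."""
    n = len(instance)

    def value(subset):
        # Features outside the coalition are set to their baseline value (assumption).
        mixed = [instance[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                phi += weight * (value(coalition | {i}) - value(coalition))
        phis.append(phi)
    return phis


def toy_score(x):
    """Hypothetical score with one additive term and one feature interaction."""
    return 0.2 + 0.5 * x[0] + 0.3 * x[1] * x[2]


phis = shapley_values(toy_score, instance=[1, 1, 1], baseline=[0, 0, 0])
```

In this toy case, the interaction term is split equally between the second and third features, and the values sum to the difference between the prediction for the instance and the prediction for the baseline.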

6.2 Need for Not Mislabeling Valid Bug Reports as Invalid

During the initial validation in industry, practitioners highlighted that the ML technique should carefully handle false negatives (i.e., valid bug reports predicted as invalid) due to the implications for bug management at the case company. Predicting an invalid bug report as valid could be fine because the report will not be affected by subsequent actions such as deprioritization. However, if a valid bug report is predicted to be invalid and such an action is taken, it will have negative consequences. For instance, delaying the resolution of a valid bug could lead to other problems, such as further bugs in the system and customer dissatisfaction.

To handle the aforementioned situations, one solution we proposed, which practitioners also acknowledged, was accepting predictions for invalid bug reports when the ML technique is at least 90% confident.
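The 90% rule can be sketched as follows. The function name, the label strings, and the assumption that the classifier outputs a probability for the invalid class are illustrative; only the 90% threshold comes from the discussion with practitioners.

```python
def triage_decision(p_invalid, threshold=0.90):
    """Accept an 'invalid' prediction only when the model is confident enough.

    p_invalid: predicted probability that the bug report is invalid (assumed input).
    Reports below the threshold stay in the normal flow and are treated as valid.
    """
    if not 0.0 <= p_invalid <= 1.0:
        raise ValueError("p_invalid must be a probability between 0 and 1")
    return "invalid" if p_invalid >= threshold else "treat_as_valid"
```

With this rule, a report predicted invalid with a probability of 0.95 would be accepted as invalid, whereas one with a probability of 0.89 would continue through the regular bug management process. This trades missed invalid reports for fewer valid reports mislabeled as invalid.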

6.3 Potential Uses of the Predictions of Bug Report Validity

The findings from in-use validation indicate the potential of the proposed solution for supporting practitioners in identifying invalid bug reports early during the bug management process. Early identification here means as soon as a new bug report arrives in a bug tracking system and before it is assigned to a relevant developer or team. Early identification of invalid bug reports has practical implications. For instance, if the validity of a newly submitted bug report is evaluated before the bug triage process begins (i.e., before the change control board (CCB) screening, as shown in Fig. 20), it will significantly help practitioners use developers’ resources effectively by prioritizing the assignment of developers to bug reports that are likely to be valid.

In Fig. 20, the dotted line represents the ML technique’s suggestion to the CCB, development teams, or developers in the decision-making process. Additionally, as suggested by several practitioners, the ML technique’s suggestion could also be used to provide feedback during the bug report registration stage.

The ML technique’s goal is to assist the CCB and other stakeholders downstream in the bug management process by indicating the likely validity of a bug report. However, this is still only decision support, e.g., the CCB still has to decide on subsequent actions for the bug report.

Fig. 20 Existing bug assignment flow at the case company (in black) and proposed automatic validity support (in green), adopted from Laiq et al. (2022)

In this study, we only conducted an initial in-use validation of the proposed approach to predict the validity of newly submitted bug reports on a single product, which is a limitation.

6.4 Reflections on the Use of the Technology Transfer Model for Industrial Adoption of an ML Technique

Transferring solutions from academia to industry is challenging, and several approaches for successful technology transfer have been proposed (Daun et al. 2023). In this study, we used Gorschek et al. (2006)’s technology transfer model to guide the adoption of an ML technique at a company (see Fig. 1). Following the model, we systematically developed, evaluated, and iteratively improved our proposed solution based on the practitioners’ feedback. The iterative approach, with several degrees of validation and involvement of practitioners in various steps, helped practitioners gain confidence in the proposed ML-based solution. This confidence is evident in one of the teams using the ML technique in their operations. By facilitating the actual use of the techniques in practice, the model enabled us to identify additional necessary conditions for operationalizing the proposed ML-based solution, such as the need for explainability and maintaining the accuracy of the ML technique over time.

6.5 Concept Drift in the Context of Predicting the Validity of Bug Reports

Our findings from the concept drift analysis indicate the presence of drift points in the bug report data used to determine the validity of bug reports (see Section 5.5). The drift points in the data will likely affect the prediction performance of the ML technique in its operational use. The performance degradation will have consequences for the use of the ML technique in practice for predicting the validity of bug reports. Thus, it is important to continuously monitor the model’s performance, identify such drift points, and take necessary actions, such as retraining the model as soon as recent data is available and treating current predictions with caution.

To deal with concept drift in the context of predicting the validity of bug reports using ML techniques, the following strategies can be used:

- Monitoring and alerting: The performance of an ML technique should be continuously monitored, and alerts should be sent to relevant people about the performance and the presence of concept drift. Based on the significance of the change in the technique’s error rate, software quality assurance teams should take relevant actions, such as retraining the technique’s model as soon as new data is available and treating current predictions with caution.

- Regular model updates: After the model is deployed, it is necessary to update it to ensure that it reflects recent trends in the data (Paleyes et al. 2022). That is, the ML model used for predicting the validity of bug reports should be updated at regular intervals to adapt to changing data distributions. However, simply updating the existing model may not always be sufficient to handle concept drift. A new model or tuning of the existing model might be needed, which is time-consuming, resource-intensive, and requires expertise. Thus, there is a need for an approach that can automatically find a suitable model for handling concept drift. The growing field of automated machine learning may be suitable for addressing this challenge (Hutter et al. 2019).

- Use of topic modeling and similarity measuring techniques: Topic modeling and similarity measuring techniques can be used to highlight the presence of concept drift in newly reported bug reports. In the case of topic modeling, topics can be generated from past bug report data, and newly reported bug reports can be classified into the existing topics. If the newly submitted bug reports do not match any topic within a defined similarity threshold, it could indicate concept drift. Likewise, similarity measuring techniques can be used to find similar past bug reports for a newly submitted bug report to identify whether the submitted bug report is related to old or new concepts.
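The similarity-based strategy can be sketched as follows. This is a deliberately simplified illustration: it uses bag-of-words cosine similarity rather than a topic model, and the function names and the threshold of 0.2 are hypothetical choices that would need to be calibrated on real bug report data.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two term-frequency Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def max_similarity(new_report, past_reports):
    """Highest similarity between a new report and any past report."""
    new_vec = Counter(tokenize(new_report))
    return max(
        (cosine_similarity(new_vec, Counter(tokenize(p))) for p in past_reports),
        default=0.0,
    )


def looks_novel(new_report, past_reports, threshold=0.2):
    """Flag a report that resembles no past report (assumed threshold)."""
    return max_similarity(new_report, past_reports) < threshold
```

A growing share of reports flagged by `looks_novel` over time could serve as an early indication that newly submitted bug reports relate to new concepts, before labeled data is available to measure the error rate directly.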

Impact of data size on concept drift detection: Here, we discuss the potential impact of data size on detecting concept drift. As shown in Fig. 8, similar to previous studies (Zliobaite 2010; Kabir et al. 2019), we used an incremental window-based approach with a full-memory training strategy. This approach might be affected by the data size. However, based on the results shown in Table 9, an impact of data size on concept drift (CD) detection seems unlikely. For example, for P1, we identified CD during windows 3, 4, 5, and 13, that is, both when the data size is small and when it is large. Similarly, for P2, we identified CD during windows 2, 15, and 22.
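The incremental, full-memory windowing can be sketched as follows: the training set grows with every window, which is why later windows are evaluated against much more data than earlier ones. The function names, the zero-based window numbering, and the handling of window boundaries are illustrative assumptions and may differ from the setup shown in Fig. 8.

```python
from datetime import date


def window_index(created, start, window_days=90):
    """Zero-based index of the fixed-size window that a creation date falls into."""
    return (created - start).days // window_days


def full_memory_splits(reports, window_days=90):
    """Yield (window, train, test): train on all earlier windows, test on the next.

    reports: iterable of (creation_date, payload) pairs (assumed input format).
    """
    reports = sorted(reports, key=lambda r: r[0])
    if not reports:
        return
    start = reports[0][0]
    windows = {}
    for created, payload in reports:
        windows.setdefault(window_index(created, start, window_days), []).append(payload)
    ordered = sorted(windows)
    train = []
    for prev, cur in zip(ordered, ordered[1:]):
        train.extend(windows[prev])  # full memory: nothing is ever discarded
        yield cur, list(train), windows[cur]


example = [
    (date(2023, 1, 1), "a"),
    (date(2023, 2, 1), "b"),
    (date(2023, 4, 15), "c"),
    (date(2023, 8, 1), "d"),
]
splits = list(full_memory_splits(example))
```

Each yielded split can then be used to train a classifier on `train`, measure its error rate on `test`, and compare that rate with the error rate on older data.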

6.6 Overall Implication

In this study, we used simple techniques to assess the validity of newly submitted bug reports at an early stage. We chose simple ML techniques for the industrial evaluation of early identification of invalid bug reports for the following reasons:

(a) Practitioners wanted to use simpler ML techniques as long as they delivered comparable results. Previous research (He et al. 2020) and our investigation (see Table 8) found that simple ML techniques achieved comparable results to the deep learning technique, fine-tuned BERT and the techniques based on DistilBERT embeddings.

(b) Using simple ML techniques, we achieved comparable results, i.e., He et al. (2020)’s deep learning approach achieved an average AUC of 0.85, while we achieved 0.82. Furthermore, He et al. (2020) also reported that their DL technique could not outperform Fan et al. (2018)’s simple ML techniques for all projects, i.e., for one project, Fan et al. (2018)’s simple ML technique achieved an AUC of 0.92, compared to 0.86 for He et al. (2020)’s deep learning technique.

(c) One of the main reasons for choosing simple ML techniques was the adoption challenges of complex techniques (Paleyes et al. 2022), e.g., long-term maintenance and a steep learning curve. Borg et al. (2022) reported similar observations for adopting an ML-based tool in practice for automatic bug assignment, i.e., practitioners prefer a simple solution that can deliver reasonably good performance over a complex one. A simple model could help to demonstrate the concept of the proposed ML solution and get the end-to-end setup in place easily (Paleyes et al. 2022), which also helps in long-term maintenance (Borg et al. 2022). Haldar et al. (2019) reported that at Airbnb, they faced several issues and wasted several cycles in setting up a complex deep learning-based model. After several failed attempts, they eventually had to simplify it. Only after simplifying their deep learning model could they put it into the production pipeline.

Considering the adoption challenges (mentioned above) of ML techniques in practice and the requirement of practitioners, we wanted to keep our solution simple, i.e., simple techniques that produce results with comparable accuracy to complex techniques such as deep learning. Thus, we opted for simple ML techniques.

The results of both initial and in-use validation indicate the proposed solution’s potential, i.e., the ML technique for the early identification of invalid bug reports. Overall, the feedback from the practitioners was positive.

6.7 Takeaways from the Industrial Adoption of ML Techniques for Early Identification of Invalid Bug Reports

Below, we describe takeaways from our investigation of the industrial adoption of ML techniques for the early identification of invalid bug reports.

Use simpler techniques: We found that simple ML techniques produce comparable results to deep learning techniques and techniques based on BERT for identifying invalid bug reports. Furthermore, the simpler techniques are easier to understand and offer additional advantages, such as shorter training time and lower computational resource requirements. These advantages make simpler techniques more appropriate for practical adoption.

Additionally, in industry-academia collaboration, practitioners may have requirements to use simple ML techniques that can deliver comparable results to complex techniques and offer additional advantages, such as shorter training time and fewer computational resources. For instance, the practitioners of the case company where we studied the problem of identifying invalid bug reports at an early stage had such requirements. Thus, researchers should prioritize simple ML techniques in industry-academia collaboration to improve the likelihood of adopting ML techniques.

Explainability improves trustworthiness: Similar to other research (see Section 6.1), in the context of identifying invalid bug reports using ML techniques, we found that explainability is important for practitioners, and it improves the trustworthiness of an ML-based solution. Therefore, when designing ML-based solutions to support practitioners in their daily activities, we suggest providing explainability support to improve the likelihood of adopting ML techniques in practice.