Abstract

Human values such as honesty, social responsibility, fairness, privacy, and the like are things considered important by individuals and society. Software systems, including mobile software applications (apps), may ignore or violate such values, leading to negative effects in various ways for individuals and society. While some works have investigated different aspects of human values in software engineering, this mixed-methods study focuses on honesty as a critical human value. In particular, we studied (i) how to detect honesty violations in mobile apps, (ii) the types of honesty violations in mobile apps, and (iii) the perspectives of app developers on these detected honesty violations. We first develop and evaluate 7 machine learning (ML) models to automatically detect violations of the value of honesty in app reviews from an end-user perspective. The most promising was a Deep Neural Network model with F1 score of 0.921. We then conducted a manual analysis of 401 reviews containing honesty violations and characterised honesty violations in mobile apps into 10 categories: unfair cancellation and refund policies; false advertisements; delusive subscriptions; cheating systems; inaccurate information; unfair fees; no service; deletion of reviews; impersonation; and fraudulent-looking apps. A developer survey and interview study with mobile developers then identified 7 key causes behind honesty violations in mobile apps and 8 strategies to avoid or fix such violations. The findings of our developer study also articulate the negative consequences that honesty violations might bring for businesses, developers, and users. Finally, the app developers’ feedback shows that our prototype ML-based models can have promising benefits in practice.

1 Introduction

Human values, such as integrity, privacy, curiosity, security, and honesty, are the guiding principles for what people consider important in life (Cheng and Fleischmann 2010). These values influence the choices, decisions, relationships, and the concept of ethics for people and society at large whether or not they are formally articulated in this terminology (Schwartz 1992). The relationship between human values and technologies is important, especially for ubiquitous technologies like mobile software applications (apps) (Obie et al. 2021). Mobile apps are a convenience to modern society and have seen usage in carrying out both simple and complex tasks, from entertainment (e.g., video sharing apps) and health (e.g., fitness trackers) to finance (e.g., banking apps). End-users of these apps hold certain expectations influenced by their human values considerations, e.g., the privacy of data, transparency of processes in apps, and ethical behaviour of platforms and software companies (Obie et al. 2021). The violation of these value considerations is detrimental to the end-user, software platforms, companies, and society in general (Whittle et al. 2011).

Recent work on human values in software engineering (SE), based on the Schwartz theory of basic human values (Schwartz 1992, 2012), has mapped human values to specific ethical principles. For example, Perera et al. mapped values to the GDPR principles (Perera et al. 2019) and Winter et al. mapped values to the ACM Code of Ethics (Winter et al. 2018). Other studies such as Obie et al. (2021) have explored the violation of human values in mobile apps using app reviews as a proxy. The recent study by Obie et al. showed that the value of honesty (a sub-item of benevolence based on Schwartz theory (Schwartz 1992)) is violated by mobile apps (Obie et al. 2021).

Honesty, often perceived to be a very important human value (Miller 2021), describes a character quality of being sincere, truthful, fair, and straightforward, and refraining from lying, cheating, deceit, and fraud (Dictionary 2012). The importance of the value of honesty is clearly articulated in the ACM Code of Ethics: “Honesty is an essential component of trust. A computing professional should be transparent and provide full disclosure of all pertinent system limitations and potential problems. Making deliberately false or misleading claims, fabricating or falsifying data, and other dishonest conduct are a violation of the Code.” (Gotterbarn et al. 2017). Nonetheless, there have been many flagrant violations of the value of honesty by mobile app platforms and software companies in ways that are collectively called dark patterns (Dong et al. 2018; van Haasteren et al. 2019; Hu et al. 2019; Samhi et al. 2022; Gao et al. 2022). These dark patterns, a violation of the value of honesty, entail sophisticated design practices that can trick or manipulate consumers into buying products or services or giving up their privacy (Barr 2022). Furthermore, some of these dark patterns and honesty violations have been flagged in a recent report by the Federal Trade Commission (FTC) (Henderson 2022). Other examples include companies deliberately hiding data breaches from the authorities and customers (Bowman 2021; Shaffery 2021). These violations of the value of honesty result in decreased trust from users, poor uptake of apps, and reputational and financial damage to the organisations involved. This also emphasises the need to consider human values more proactively in software engineering practice.

This work aims to gain a comprehensive understanding of honesty violations in mobile apps. To this end, we conducted a mixed-methods study. Given that users’ comments expressed in app reviews have been shown to be a proxy for detecting users’ challenges and requirements (AlOmar et al. 2021; Obie et al. 2021; Shams et al. 2020; Di Sorbo et al. 2016; Guzman and Maalej 2014), we first developed and evaluated seven machine learning models to learn the features that are representative of the violation of honesty in app reviews. The best-performing model (a Deep Neural Network) has an F1 score of 0.921, a precision of 0.911, and a recall of 0.932. Beyond the automatic detection of honesty violations, we then manually analysed 401 reviews containing honesty violations. Our resulting taxonomy shows that honesty violations can be characterised into ten categories: unfair cancellation and refund policies, false advertisements, delusive subscriptions, cheating systems, inaccurate information, unfair fees, no service, deletion of reviews, impersonation, and fraudulent-looking apps. Finally, we conducted a developer study with mobile app developers to explore their experiences with honesty violations. The analysis of qualitative data from the developer study identified a set of causes (business, developer, app platform, user and competitor drivers), the parties responsible for honesty violations (product owners, managers, business analysts, and user support roles, in addition to developers and the business), the consequences of honesty violations for businesses, app developers, and end users (e.g., bad reputation for the company, developers experiencing negative emotions, and identity theft of users), and the strategies app developers use to avoid (e.g., strengthening designing practices) and fix (e.g., thoroughly investigating the violation and fixing it) honesty violations in the apps they develop.
The developer study also shows that the automatic detection of honesty violations in app reviews brings several benefits to businesses, developers, app platforms, and users. From this point onwards, the terms “mobile app developer” and “developer” are used interchangeably.

We published preliminary results of our machine learning-based classification work at the Mining Software Repositories (MSR) conference in 2022 (Obie et al. 2022). We substantially extend this previously published work here by (i) the inclusion of two new machine learning models, deep neural network (DNN) and generative adversarial network (GAN), with the new DNN replacing the prior support vector machine (SVM) as the best model; (ii) evaluation of these new models; and (iii) adding a new research question (RQ3). RQ3 includes four sub-questions to seek mobile developers’ views on the causes behind honesty violations in mobile apps (RQ 3.1), the potential consequences of honesty violations (RQ3.2), possible solutions to avoid or fix such violations (RQ3.3), and potential benefits of automated identification of honesty violations in mobile apps (RQ3.4).

This work makes the following key contributions:

- We present several machine learning models and datasets to aid the automatic detection of the violation of the human value of honesty in app reviews. Our publicly available replication package supports researchers and practitioners to adapt, replicate, and validate our study (Obie et al. 2022).

- We provide insights into the different categories of honesty violations prevalent in app reviews by creating a taxonomy based on a manual analysis of the honesty violations dataset.

- We survey 70 app developer practitioners and interview 3 practitioners to get their feedback on the prevalence of honesty violations in their mobile apps, the causes of these issues, and feedback on our proposed machine learning-based classifier to help identify such violations from user app reviews.

- We present an actionable framework for developers which gives a better understanding of the causes and consequences of honesty violations and strategies that can be used to avoid and fix honesty violations.

- We present a set of practical implications and future research directions to deal with the challenges of the violations of the human value of honesty in apps that would benefit end-users and society.

The rest of the paper is organised as follows: Section 2 highlights motivating examples of honesty violations. Section 3 summarises the related studies. In Section 4, we elaborate on the research design. The findings of this study are reported in Sections 5, 6, and 7 for different research questions. Section 8 reflects on the findings and provides implications, followed by reporting on possible threats in Section 9. We conclude the paper in Section 10.

2 Motivating Examples

Consider an example review of dubious charges to a user account for a calendar reminder app: “I’ve been charged $45+ on 2 separate occasions in the month I’ve had the ‘premium’ version. It advertises $3.50 for a premium subscription but saw nowhere where it said they would make additional charges.”

Such reviews are common for many subscription-based apps. They are also very common for apps with optional premium versions where users find themselves unwittingly signed up to the premium charges. Many end users perceive these as deliberate attempts by app providers to dishonestly make money. Some companies have been convicted of such dishonest practices. For example, Shaw Academy offered users a free trial to its online education platform, charged them a subscription fee even if they had cancelled before the end of the trial period, and refused to refund them (Yiacoumi 2021). Following an investigation, the Australian Competition & Consumer Commission (ACCC) ordered the company to refund approximately $50,000 to the affected users and to pledge to improve its system (Yiacoumi 2021).

Consider another example of dishonesty from a dating app. The dating platform (http://www.Match.com) has been accused of faking love interests using bots and fake profiles to fool consumers into buying subscriptions and exposing them to the risk of fraud and other deceptive practices (Perez 2019). During a period of over three years, the company allegedly delivered marketing emails (i.e., the “You have caught his eye” notification) to potential consumers after the company’s internal system had already flagged the message sender as a suspected bot or scammer. The company also allegedly violated the “Restore Online Shoppers’ Confidence Act” (ROSCA) by making the unsubscription process tedious. Internal company documents showed that users needed to make more than six clicks to cancel their subscription. This resulted in the U.S. Federal Trade Commission (FTC) suing http://www.Match.com for “deceptive advertising, billing, and cancellation practices” (Perez 2019).

3 Related Work

Mining App Reviews: Many works have provided insights into app user reviews and how these reviews can aid software professionals in app requirements, design, maintenance (Pelloni et al. 2018; Carreño and Winbladh 2013; Seyff et al. 2010) and evolution (Ciurumelea et al. 2017; Li et al. 2018, 2010; Palomba et al. 2015). Guzman and Maalej adopted Natural Language Processing (NLP) techniques to locate fine-grained app features in reviews with the aim of supporting software requirements tasks (Guzman and Maalej 2014). A related work utilised Latent Dirichlet Allocation (LDA) technique and linguistic rules to group feature requests from users as expressed in their reviews, and the results from this study showed that users care about frequent updates, improved support, more customisation options, and new levels (for game apps) (Iacob and Harrison 2013).

Some studies have focused on the automatic classification of app reviews into useful categories. To aid software professionals in prioritising accessibility issues, AlOmar et al. developed a machine learning model for identifying accessibility-related complaints in app reviews (AlOmar et al. 2021). Panichella et al. introduced a taxonomy for classifying app reviews and, using a combination of NLP and sentiment analysis, classified app reviews into their proposed taxonomy (Panichella et al. 2015).

Other works have introduced tools to support the extraction of insights from app reviews. For example, Vu et al. proposed MARK, a keyword-based tool for detecting trends and changes that relate to occurrences of serious issues in reviews (Phong et al. 2015). Similarly, Di Sorbo et al. introduced SURF, a tool that condenses thousands of reviews into coherent summaries to support change requests and planning of software releases (Di Sorbo et al. 2016). Our own work has classified various app reviews into different human value violations (Obie et al. 2021, 2021), human-centric issues discussed in app reviews (Mathews et al. 2021; Khalajzadeh et al. 2022), and a myriad of problems users have with eHealth apps (Haggag et al. 2022).

The above studies show that app reviews are a useful resource for gathering requirements, detecting issues, and more generally supporting software professionals in evolving their apps. This work also aims to support app maintenance and evolution by effectively detecting potential violations of the value of honesty from the user’s perspective in app reviews. In addition, it would aid software professionals in delivering software products that build trust in users, as the honesty (real or perceived) of companies can affect how users engage with their products (Zhu et al. 2021).

Human Values in Software Engineering (SE): Human values are enduring beliefs that a specific mode of conduct or end state of existence is personally or socially preferable to an opposite or converse mode of conduct or end state of existence (Rokeach 1973). Human values have been well-studied in the social sciences and have begun to see adoption in other fields, including design (Aldewereld et al. 2015) and software engineering (SE) (Mougouei 2020; Li et al. 2021).

The study of human values in SE is a relatively nascent line of research (Perera et al. 2020; Mougouei et al. 2018) and is mostly based on the widely accepted and adopted Schwartz theory of basic human values (Schwartz 1992, 2012). The Schwartz theory is built on a survey conducted in over 80 countries covering different demographics. This theory categorises values into 10 broad categories, namely: self-direction, stimulation, hedonism, achievement, power, security, conformity, tradition, benevolence, and universalism. These 10 categories, in turn, are made up of 58 value items, e.g., the value category of benevolence covers the value items of honesty, responsible, helpful, forgiving, loyal, mature love, a spiritual life, meaning in life, and true friendship (cf. Schwartz 1992). Our focus in this work is on the value item of honesty, motivated by the prevalence of the value category of benevolence in prior research (Obie et al. 2021), the recent cases of violations of honesty by companies reported in the media, e.g., Perez (2019); Yiacoumi (2021), and the need to understand this phenomenon more closely in SE.

Studies in the social sciences have investigated the value of (dis)honesty at the individual and organisational levels (Fochmann et al. 2021), and the policy implication of dishonesty in everyday life (Mazar and Ariely 2006); while others have explored the motivation for dishonest behaviours (Cheating 2016) including students in classroom settings (Lang 2013) and workers in crowd-working environments (Jacquemet et al. 2021). Keyes argues that euphemising the violation of the value of honesty desensitises people to its implications and consequences in society (Keyes 2004).

However, within the context of SE, Whittle et al. argued that software companies need to consider human values in the development of software systems and make them “first-class” entities throughout the software development life cycle (Whittle et al. 2011). Another study made a case for the evolution of current software practices and frameworks to embed human values in technology, instead of a revolution of the SE field (Hussain et al. 2020).

Another line of research considered methods for measuring human values in SE. For example, Winter et al. introduced the Values Q-sort instrument for measuring human values in SE (Winter et al. 2018). Applying the Values Q-sort instrument to 12 software engineers resulted in 3 software engineer values “prototypes”. Similarly, Shams et al. utilised the portrait values questionnaire (PVQ) to elicit the values of 193 Bangladeshi female farmers in a mobile app development project (Shams et al. 2021). The result of the study showed that conformity and security were the most important values, while power, hedonism, and stimulation were the least important. More recently, Obie et al. argued that the instruments for eliciting and measuring values should be contextualised to specific domains (Obie et al. 2021).

App Reviews and Human Values: Recent studies have adopted the use of app reviews as an auxiliary data source for eliciting values requirements. Shams et al. analysed 1,522 reviews from 29 agricultural mobile apps to understand the values that are both represented and missing from these apps (Shams et al. 2020). Obie et al. proposed a keyword dictionary-based NLP classifier to detect the value categories violated in app reviews (Obie et al. 2021). The results of the application of the classifier to 22,119 reviews showed that benevolence and self-direction were the most violated categories, while conformity and tradition were the least violated.

Related works such as Shams et al. (2020); Obie et al. (2021) have provided insights into violations of value categories. Our work complements these by zooming in on a specific value item, honesty (within the most violated category of benevolence (Obie et al. 2021)), to provide a more nuanced understanding of its violations. In addition, we provide a taxonomy of the different categories of honesty violations in reviews to better understand how the violation of the value of honesty is reported. Our practitioner survey and interviews suggest that the automated identification of honesty violations from app reviews would be practically useful. We hope that other researchers will be encouraged to investigate other specific value items, how users discuss their violations in app reviews, and more generally to explore the field of human values in SE.

4 Research Design

Our goal in this study is to develop a deep understanding of honesty violations in mobile apps by automatically identifying reviews discussing honesty violations, categorising the types of honesty violations, and exploring app developers’ perspectives on such violations. To do this, we formulated the following research questions (RQs):

- RQ1. Can we effectively identify reviews documenting honesty violations automatically? We formed a large labelled dataset of app reviews and then trained a variety of machine learning classifiers to answer this RQ. Our best-performing classifier has an F1 score of 0.921.

- RQ2. What types of honesty violations are reported in these app reviews? We manually inspected a sample of 401 honesty violation reviews and classified the honesty violations represented by each into ten distinct categories.

- RQ3. What is app developers’ experience with honesty violations in the mobile apps they develop and their perspective on automatic detection of honesty violations? We developed four sub-questions to answer this RQ, using in-depth interviews and a broad survey with the participation of 73 mobile app practitioners.

RQ3.1. What are the causes of honesty violations in mobile apps, and who is responsible for them? We want to know, according to developers’ experience with honesty violations in the mobile apps they develop, what causes these violations and who is responsible for them.

RQ3.2. What are the consequences of honesty violations in mobile apps on the end users and app developers/owners according to developers’ experience? The goal of this RQ is to understand the impacts of honesty violations on end users and on the developers/owners of the mobile apps, as experienced by the mobile app developers.

RQ3.3. What strategies do developers use to handle honesty violations in mobile apps? This RQ aims to identify what strategies mobile app developers use to avoid and/or fix reported honesty violations in mobile apps (or if they indeed do so).

RQ3.4. What are the benefits of automatically detecting honesty violations in mobile apps? Through this research question, we explore the potential benefits of automatic detection of honesty violations.

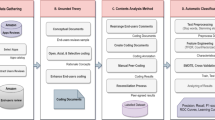

Fig. 1 Approach for answering the above research questions

A high-level overview of our mixed-methods approach is given in Fig. 1 and elaborated in figures and text in the respective sections answering the research questions.

5 Automatic Classification of Honesty Violations (RQ1)

5.1 A Dataset of Honesty-Related Reviews

Our first step for answering RQ1 and RQ2 is creating a dataset of user reviews documenting perceived honesty violations by apps.

5.1.1 Data Collection

To build this dataset, we collected a total of 236,660 reviews: 214,053 reviews from the public dataset of Eler et al. (2019), and an additional 22,607 reviews from the public dataset of Obie et al. (2021). These reviews were collected from a total of 713 apps in 25 categories. The apps and reviews were intended to cover a diverse range of categories and audiences. Table 1 summarises the statistics of our combined app review dataset. Our dataset is available in our replication package (Obie et al. 2022).

5.1.2 Data Labeling

Given the sheer size of the dataset and the manual labour required to label it, we used two approaches to label the 236,660 reviews: a keyword-based approach and manual labelling. We first adopted a set of keywords to filter the 236,660 reviews to include only those related to the value of honesty. These keywords are based on the dictionary of human values created by Obie et al. (2021). The set of keywords comprises a total of 48 words semantically related to honesty. The keywords are available in our replication package (Obie et al. 2022).

After applying this keyword filter, the number of reviews was reduced from 236,660 reviews to 4,885 potential candidate honesty-related reviews (we call these 4,885 reviews honesty_potential reviews).
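The filtering step can be sketched as follows. This is a minimal illustration rather than the study's implementation: the three keywords are hypothetical stand-ins for the 48-word list in the replication package, and whole-word, case-insensitive matching is an assumption.

```python
import re

# Hypothetical stand-in keywords; the study's actual 48-word list
# is published in its replication package.
HONESTY_KEYWORDS = {"honest", "scam", "misleading"}


def mentions_honesty(review, keywords=HONESTY_KEYWORDS):
    """Return True if any keyword occurs as a whole word in the review."""
    words = set(re.findall(r"[a-z']+", review.lower()))
    return not keywords.isdisjoint(words)


def filter_candidates(reviews, keywords=HONESTY_KEYWORDS):
    """Keep only reviews that contain at least one keyword."""
    return [r for r in reviews if mentions_honesty(r, keywords)]
```

A review such as "To be honest, it works fine." passes this filter even though it reports no violation, which is the kind of false positive a keyword match alone cannot exclude.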

However, a keyword-based approach is error-prone and may produce many false positives. Hence, we manually analysed the honesty_potential (4,885) reviews to exclude the false positives. This combination of keyword filtering followed by a manual check has been applied in recent studies (Eler et al. 2019; AlOmar et al. 2021).

The honesty_potential (4,885) reviews were labelled and validated in 25% increments in the following manner. The first analyst labelled the first 25% of the honesty_potential reviews to determine which of the reviews contain a violation of the value of honesty as perceived by the user in the review. The second analyst validated the outcome. Disagreements were resolved in a meeting using the negotiated agreement approach to address issues of reliability (Campbell et al. 2013; Morrissey 1974). Then the next 25% were labelled by the first analyst, validated by the second analyst, and disagreements resolved in a meeting as in the first round. The same procedure was repeated for the third and fourth rounds of the labelling process. The labelling and validation were done over eight weeks to avoid fatigue. Based on our manual labelling, we found that out of the 4,885 filtered reviews (the honesty_potential reviews), only 401 were honesty violations reviews, i.e., true positives. We refer to these 401 honesty violations reviews as honesty_violations reviews. During the labelling process, a total of 105 reviews among the 4,885 honesty_potential reviews required further discussion before they were resolved. Because we adopted the negotiated agreement technique, measures like inter-rater agreement are not applicable. The negotiated agreement technique was employed because it is beneficial in research like ours where the main objective is to generate novel insights (Campbell et al. 2013; Morrissey 1974).

Next, we randomly selected 401 reviews from the remaining 4,484 honesty_potential reviews (4,885 honesty_potential reviews - 401 honesty_violations reviews). We refer to these 401 reviews, which contain honesty-related keywords (but not violations), as honesty_non_violations reviews. We used a total of 802 reviews, 401 honesty_violations and 401 honesty_non_violations reviews, to build a balanced dataset called the honesty_discussion dataset for training and evaluating machine learning models in Section 5. We note here that using the manually validated false-positive honesty_non_violations reviews is important for machine learning models. This is because these reviews include keywords that are lexically related to honesty but semantically irrelevant to honesty violations, an important difference we want our models to learn. In summary, the honesty_discussion dataset consists of 802 reviews: 401 honesty_violations reviews and 401 honesty_non_violations reviews. These 802 reviews result from manually labelling 4,885 reviews and form a verified and balanced dataset for training a more effective classifier. Other studies have used similar numbers of text documents in classification tasks (Levin and Yehudai 2017, 2019).
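The construction of the balanced dataset can be sketched as below. The function name, the fixed seed, and the 1/0 label encoding are assumptions made for illustration; the text does not specify how the random selection was implemented.

```python
import random


def build_balanced_dataset(violations, non_violation_pool, seed=0):
    """Pair every violation review with an equal-sized random sample
    of non-violation reviews, labelled 1 and 0 respectively."""
    rng = random.Random(seed)  # fixed seed (an assumption) for repeatability
    sampled = rng.sample(non_violation_pool, k=len(violations))
    dataset = [(text, 1) for text in violations]
    dataset += [(text, 0) for text in sampled]
    rng.shuffle(dataset)
    return dataset
```

With 401 violation reviews and a pool of 4,484 non-violation reviews, this yields 802 labelled reviews, half in each class.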

5.2 Classification Approach

Manually classifying honesty violations in app reviews is challenging for practitioners because it is error-prone, labour-intensive, and demands substantial domain expertise. Hence, an automated approach is required to recognise honesty violations in app reviews. This research question aims to develop machine learning models that automatically differentiate between honesty_violations and honesty_non_violations reviews. As shown in Fig. 2, the machine learning models are applied to the honesty_discussion dataset of 802 reviews, which consists of 401 honesty_violations reviews and 401 honesty_non_violations reviews.

5.2.1 Data Preparation

We applied some common techniques to remove possible noise from the honesty_discussion dataset. This step was needed so a learning model can classify reviews correctly. To achieve this, we applied natural language processing techniques such as removing capitalisation, removing emojis, tokenising, removing stop words, and removing punctuation.

Case Normalisation: is the process of transforming original review texts into lowercase. This type of text cleaning helps us avoid repeated features of the same words with different letter cases (e.g., “Honesty” and “honesty”). Furthermore, converting the text into lowercase does not affect its context or the users’ expressions in our scenario.

Emoji Removal: Emojis are icons or a few Unicode characters that allow users to convey ideas, concepts, and emotions. If emojis are not carefully preprocessed, they can potentially affect the performance of a model in terms of accuracy. Hence, we removed emojis from the review texts.

Tokenisation: is the process of splitting each original text into a set of words that do not contain white space. We divided app reviews into their constituent sets of words.

Stop-Word Removal: Stop words such as is, am, are, for, the, and others do not contain the conceptual meaning of a review and create noise for a classification model. Removing stop words from the review texts helps us avoid repeated features of the same phrases (e.g., “the bank account” and “bank account”). In our experiment, we used a comprehensive set of stop words that are well-known to the natural language processing community. While the removal of negative contractions such as “doesn’t”, “couldn’t”, “won’t”, and others can affect the decision of machine learning models in classifying text in some cases, our empirical analysis shows that in this instance, this approach does not have a large influence on the overall performance of our model.

Punctuation Removal: We observed many reviews in the data collection containing punctuation such as “...”, “??”, “:(”, and others that do not significantly contribute to a classification model. Hence, we removed punctuation from the app reviews.
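The five cleaning steps above can be combined into one function, sketched here under stated assumptions: the stop-word list is a small illustrative subset, the emoji pattern covers only a rough set of Unicode ranges, and punctuation is stripped before tokenisation so that stop words next to punctuation are still matched. The exact order and tooling used in the study are not given in the text.

```python
import re
import string

# Small illustrative stop-word list (an assumption); the study used a
# larger, standard list.
STOP_WORDS = {"is", "am", "are", "for", "the", "a", "an", "and", "to", "of"}

# Rough emoji ranges (an assumption; not exhaustive).
EMOJI_PATTERN = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")

# Map every ASCII punctuation character to a space.
PUNCTUATION_TABLE = str.maketrans(
    string.punctuation, " " * len(string.punctuation)
)


def preprocess(review):
    """Clean one review and return its remaining tokens."""
    text = review.lower()                      # case normalisation
    text = EMOJI_PATTERN.sub(" ", text)        # emoji removal
    text = text.translate(PUNCTUATION_TABLE)   # punctuation removal
    tokens = text.split()                      # tokenisation on white space
    return [t for t in tokens if t not in STOP_WORDS]  # stop-word removal
```

For example, a review reading "The bank account is a SCAM!!!" followed by an angry-face emoji reduces to the tokens bank, account, and scam.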

5.2.2 Feature Extraction

After cleansing and preprocessing the dataset, we converted the app reviews in the dataset into their vector representation by using the pre-trained Bidirectional Encoder Representations from Transformers model (Devlin et al. 2019), known as BERT. This is a language representation model trained on the BooksCorpus with 800 million words (Zhu et al. 2015) and English Wikipedia with 2.5 billion words. The model receives a sequence of words as input and outputs a sequence of vectors. The model converted the review texts with different words into 768-dimensional vectors used as input into a machine learning model. Each of these vectors is estimated by the average of the embedded vectors of its constituent words. For instance, given a review text s that consists of n words, \(s = (w_{1}, \ldots , w_{n})\), then \(\mathbf {s} \approx \frac{1}{n} (\mathbf {w_{1}} + \ldots + \mathbf {w_{n}})\), where \(\mathbf {w_{1}}, \ldots , \mathbf {w_{n}}\) are the embedded vectors of \(w_{1}, \ldots , w_{n}\). Furthermore, these vectors capture both a semantic meaning and a contextualised meaning of their corresponding app reviews.
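The averaging step can be illustrated with a short sketch. It uses toy two-dimensional vectors in place of the 768-dimensional BERT outputs, and the function name `mean_pool` is hypothetical.

```python
def mean_pool(word_vectors):
    """Average a list of equal-length word vectors into one review vector."""
    n = len(word_vectors)
    if n == 0:
        raise ValueError("a review needs at least one word vector")
    dim = len(word_vectors[0])
    return [sum(vec[d] for vec in word_vectors) / n for d in range(dim)]
```

For two word vectors (1, 2) and (3, 6), the review vector is (2, 4); the same computation applies unchanged to 768-dimensional inputs.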

5.2.3 Model Selection and Tuning

Selecting a classification model that yields the optimal result is challenging. We selected seven models that are commonly used for text classification in the natural language processing community (Aggarwal and Zhai 2012), namely Support Vector Machine (SVM), Decision Trees (DT), Neural Network (NN), Logistic Regression (LR), Gradient Boosting Trees (GBT), Deep Neural Network (DNN), and Generative Adversarial Networks (GANs). Below is a brief description of each classification model used in our work.

Logistic Regression (LR) is a linear classifier. The data is fitted into a logistic function that generates the binary output such as 0 (i.e., an honesty_non_violation app review) or 1 (i.e., an honesty violation app review) based on probability.
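As a minimal sketch of how such a classifier turns a feature vector into a binary label, the snippet below applies the logistic function to a linear combination of the features. The weights, bias, and the 0.5 decision threshold are hypothetical; in practice the weights are learned from the training data.

```python
import math


def predict_proba(weights, bias, features):
    """Logistic function applied to a linear combination of the features."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def predict_label(weights, bias, features, threshold=0.5):
    """Return 1 (honesty violation) or 0 (non-violation)."""
    return 1 if predict_proba(weights, bias, features) >= threshold else 0
```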

Support Vector Machine (SVM) (Noble 2006) is a classifier that finds hyperplane(s) in N-dimensional space (i.e., the number of features), which can further distinguish the data into multiple categories.

Decision Trees (DT) are a non-parametric supervised learning method used for classification and regression. DT predict the value of a target variable by learning simple decision rules inferred from the data features. Given a 768-dimensional vector representation of a particular review text, DT classifies the review text into the category selected by most trees.

Gradient Boosting Trees (GBT) is an ensemble learner that builds trees and boosts them for classification. Each new tree corrects the errors of the previous trees fitted on the same data. This process of repeatedly correcting errors is known as boosting. In addition, the gradient descent algorithm is used for optimisation during boosting; hence, the method is called gradient boosting trees. The model classifies app reviews into a category based on the entire ensemble of trees.

Neural Network (NN) is a multilayer perceptron model which contains a set of interconnected layers where each layer contains a finite number of nodes. Each neural network architecture has one input layer, at least one hidden layer, and one output layer. The input data is transformed layer by layer via the activation function(s). During the training process, optimisation techniques such as stochastic gradient descent are used to optimise the performance of the model. The classified category of a particular app review is the collected result from the output layer.

Deep Neural Network (DNN) is the extension of NN with a larger number of hidden layers that support the model to deeply learn the features in the vector representation of an app review (i.e., the embedding vector). Layers in the DNN are placed in consecutive order where the number of nodes subsequently decreases layer by layer. The first layer of the DNN is an input layer which contains N number of nodes corresponding to N-dimensions of the embedding vector. Nodes in one layer are, then, fully connected to nodes in the next layer. The Sigmoid function is applied to transform the last hidden layer of the DNN to the output layer, which contains the classified category of an app review.
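The forward pass described above can be sketched as follows. This is only an illustration under stated assumptions: the layer widths in `sizes`, the ReLU activation in the hidden layers, the random weights, and the 0.5 decision threshold are all hypothetical choices, not the configuration of our trained model.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Matrix:
    """Random weights for one fully connected layer; the bias is stored last in each row."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_in + 1)] for _ in range(n_out)]


def dense(x: List[float], weights: Matrix) -> List[float]:
    """Weighted sum plus bias for every node in the layer."""
    return [sum(w * v for w, v in zip(row[:-1], x)) + row[-1] for row in weights]


def relu(z: List[float]) -> List[float]:
    return [max(0.0, v) for v in z]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def forward(x: List[float], layers: List[Matrix]) -> float:
    """Pass an embedding through the hidden layers, then a sigmoid output node."""
    *hidden, output = layers
    for weights in hidden:
        x = relu(dense(x, weights))
    return sigmoid(dense(x, output)[0])


rng = random.Random(0)
sizes = [768, 256, 64, 16, 1]  # hypothetical widths that shrink layer by layer
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
embedding = [rng.uniform(-1.0, 1.0) for _ in range(sizes[0])]  # stand-in review vector
probability = forward(embedding, layers)
label = 1 if probability >= 0.5 else 0  # 1 = honesty violation, 0 = non-violation
```

The input width matches the 768 dimensions of the embedding vector, and the single sigmoid output can be read as the probability that a review reports an honesty violation.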

Generative Adversarial Networks (GANs) (Goodfellow et al. 2014) is a generative neural network model that is widely used to generate high-quality data for evaluating machine learning tasks such as classification and prediction. The model consists of two networks: the generator network and the discriminator network. The generator network learns to produce embedding vectors of app reviews with an incorrect category. Both the embedding vectors with the correct category and the generated embedding vectors are then used to train the discriminator network to classify the category of an app review. This aims to make the discriminator more robust when classifying app reviews with less labelled data.

Finding the hyperparameters for models to generate optimal results is known as the fine-tuning process. We use grid search cross-validation to perform an exhaustive search to find the best set of hyperparameters for each classifier. To reproduce our results, we provide the selected hyperparameters for each selected model and the open-source GitHub repository in Obie et al. (2022).
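An exhaustive grid search can be sketched as below. The hyperparameter names, the candidate values, and the `toy_cv_score` function are hypothetical placeholders; in practice the scoring function would train a classifier with the given hyperparameters and return its mean cross-validated score. The actual hyperparameters we selected are those listed in the repository cited above.

```python
from itertools import product
from typing import Any, Callable, Dict, List, Tuple


def grid_search(
    grid: Dict[str, List[Any]],
    score: Callable[[Dict[str, Any]], float],
) -> Tuple[Dict[str, Any], float]:
    """Score every combination in the grid and return the best one."""
    names = list(grid)
    best_params: Dict[str, Any] = {}
    best_score = float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        current = score(params)
        if current > best_score:
            best_params, best_score = params, current
    return best_params, best_score


def toy_cv_score(params: Dict[str, Any]) -> float:
    # Stand-in for a mean validation score; peaks at learning_rate=0.01, hidden_layers=3.
    return (
        1.0
        - abs(params["learning_rate"] - 0.01) * 10
        - abs(params["hidden_layers"] - 3) * 0.05
    )


grid = {"learning_rate": [0.001, 0.01, 0.1], "hidden_layers": [2, 3, 4]}
best_params, best_score = grid_search(grid, toy_cv_score)
```

The cost grows with the product of the candidate list lengths (here 3 × 3 = 9 evaluations), which is why the search is called exhaustive.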

5.2.4 Cross Validation

To estimate the variance of the performance for each classification model, we used a K-fold cross-validation technique (Kohavi et al. 1995). The technique splits the data into K equal-sized subsets, where one of the subsets is used for validation and the remaining subsets are used for training. This process is repeated K times, and the average of the validation scores is used to measure the performance of each classification model. In this study, we used 10-fold cross-validation. We split the dataset in Sect. 5.1 into 10 chunks that contain an equal number of app reviews. Then, we perform the evaluation process where the training dataset contains 9 chunks and the remaining chunk is used as the testing dataset. This is repeated until each chunk has been used as the testing dataset once. This approach helps us understand how well our selected models perform on unseen data.
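The splitting procedure can be sketched as follows. The helper names are hypothetical, the sample count of 802 is used only for illustration, and the sketch splits indices in order; shuffling or stratifying by class before splitting is a common refinement that is omitted here.

```python
from typing import Callable, Iterator, List, Tuple


def k_fold_indices(n_samples: int, k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, test) index lists so that each sample is tested exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    indices = list(range(n_samples))
    base, extra = divmod(n_samples, k)  # fold sizes differ by at most one
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


def cross_validate(
    n_samples: int,
    k: int,
    evaluate: Callable[[List[int], List[int]], float],
) -> float:
    """Average the score returned by `evaluate` over the k train/test splits."""
    scores = [evaluate(train, test) for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


folds = list(k_fold_indices(802, 10))
```

Here `evaluate` would train a model on the training indices and return its score on the test indices; averaging over the 10 folds gives the reported estimate.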

5.3 Results

In this section, we report the results of our experiment evaluating the performance of the different machine learning models. We adopted the generally accepted metrics of accuracy, precision, recall, and F1 score for this purpose. Other metrics such as the Matthews Correlation Coefficient (MCC) and confusion table are shown in Table 2. We note here that all of the models performed well (with F1 scores of 0.79 and above).
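For reference, the standard definitions of these metrics can be written in terms of the four cells of a confusion table. The sketch below uses made-up counts purely for illustration; they are not the values in Table 2.

```python
import math
from typing import Dict


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Accuracy, precision, recall, F1 and MCC from confusion-table counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "mcc": mcc,
    }


# Hypothetical counts, not taken from the paper.
metrics = classification_metrics(tp=90, fp=10, fn=10, tn=90)
```

Unlike accuracy, MCC uses all four cells symmetrically, which makes it informative even when the two classes are imbalanced.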

Table 3 shows the results of our 7 different machine learning classification algorithms. The DNN algorithm came out to be the best performing model with an accuracy of 0.914, precision of 0.911, recall of 0.932, and an F1 score of 0.921. The second-best performing algorithm is the SVM model, with an accuracy of 0.889, precision of 0.949, recall of 0.841, and an F1 score of 0.892. The high performance of our DNN model makes it useful in practical applications for detecting the violation of the value of honesty in reviews.

One of the aims of our work is to introduce an automatic method for detecting honesty violation reviews that performs better than current approaches. Similar studies on text classification have compared their approaches to either the current state-of-the-art or a baseline classifier (AlOmar et al. 2021; Maldonado et al. 2017). Hence we compare our best-performing machine learning model (DNN) with a baseline classifier only since there is no current state-of-the-art in detecting the violation of honesty in app reviews, similar to what recent works have done (AlOmar et al. 2021; Maldonado et al. 2017).

We used the statistics of our dataset to compute the metrics of the baseline classifier. The precision of the baseline classifier can be computed by dividing the number of honesty violation reviews by the total number of honesty_potential reviews:

The recall is 0.5, as there are only two outcomes for a review classification: honesty violations reviews or honesty_non_violations reviews, with a 0.5 probability of a review containing the violation of the value of honesty. Based on the precision and recall values, we compute the F1 score of the baseline classifier as:

Table 4 summarises the comparison of our best-performing machine learning model (DNN) with the baseline. As can be seen, the DNN model performs better than the baseline classifier: it has an F1 score of 0.921, while the baseline classifier has an F1 score of 0.1412. Table 4 also shows that our DNN model outperforms the baseline classifier by a factor of 6.523 in detecting honesty violation reviews.
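The reported figures can be checked with a short calculation, assuming the standard definition of F1 as the harmonic mean of precision and recall. The sketch recomputes the DNN's F1 score from its reported precision and recall, and the improvement factor from the two reported F1 scores; the variable names are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values reported in this section.
dnn_f1 = f1_score(precision=0.911, recall=0.932)
baseline_f1 = 0.1412

# Improvement of the DNN over the baseline, using the rounded DNN F1 score.
improvement = round(dnn_f1, 3) / baseline_f1
```

With the baseline recall fixed at 0.5, the same function reduces to precision / (precision + 0.5), so the baseline F1 score is determined entirely by the proportion of honesty violation reviews in the dataset.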

The robustness of the DNN model on unseen samples was evaluated using 401 data samples that were randomly selected from the remaining 4,083 (\(4,885 - 802\)) honesty_non_violations reviews. Based on the data in Table 2, we can show that the accuracy of the DNN model in classifying honesty_non_violations reviews is 0.814, which is calculated as:

where TN indicates true negatives. The accuracy of the model on the unseen samples (verified honesty_non_violations reviews only), however, reduces to 0.768. To further understand the limitations of the DNN model, the false positive reviews (non-violation reviews incorrectly classified as violations) were selected and analysed. Examples of these reviews include:

“It was great! I loved it! But then there was a little problem. At day 5 of me using it, there was a bit of lag on the app. I checked my connection and my phone but everything was fine, then the next morning when I opened the app, it was pure black. I waited for 4-7 minutes but nothing happened. I restarted the app then open it again then everything was fine. Please fix this problem I just don’t want this to happen. I really love this app so please fix it. Thank you.”

“It was nice to see the changes you made. It is easier to delete and move things but, you then overdo it. You overcompensated. More ads. Now, it keeps telling me I’m offline when I’m not. I get to the page, touch visit, nothings happening except it telling me I’m offline, check my network connection. It was fine the other day. All my other apps are working, not sure what is going on. Help.”

“Pinterest used to be a great app for recipes with the occasional ad. Now it’s the single worst app to have because of the overabundance of ads. My screen jumps up and down and will not hold the recipe in place and when I finally do get to the recipe, my screen goes black and the app closes. With all my prep work on the counter ready to go I have to go back and endure the same pain of finding the recipe, scrolling past the ads, and hoping it doesn’t do the same again.”

In these cases, the reviews focus on describing technical issues encountered while using the app rather than claiming that the app provides inaccurate information. Furthermore, the reviews mention “ads” but do not say whether the ads were relevant to the user’s preferences. Given the sentiment of such reviews, the content of the ads shown in the app may be neutral or relevant to the user’s preferences. These examples demonstrate two potential limitations of the DNN model: (1) confusion between technical issues and inaccurate information; and (2) confusion between ads and false ads.

6 Categories of Honesty Violations (RQ2)

6.1 Categorisation Approach

While the machine learning models in Section 5 could effectively distinguish between honesty violations reviews and honesty non-violations reviews, we are also interested in understanding the types of honesty violations reported in reviews. To this end, we applied the open coding procedure (Glaser et al. 1968) on the 401 honesty_violations reviews. As discussed in Sect. 5.1, these reviews include honesty violations. First, an analyst followed the open coding technique to label all these 401 reviews and identified 10 types of honesty violations. The 401 honesty_violations reviews were assigned to these 10 categories. The results of the open coding were stored in an Excel spreadsheet file and shared with the second and third analysts. Then, the second analyst cross-checked the first 100 labelled reviews while the third analyst cross-checked the remaining 301 labelled reviews. Next, the first analyst held Zoom meetings with the second and third analysts to discuss and resolve the conflicts and disagreements. Note that the disagreements were resolved using the negotiated agreement approach (Campbell et al. 2013; Morrissey 1974). During the labelling, we had a total of 21 reviews among the 401 honesty_violations reviews that we discussed further and resolved. Sometimes we discussed the reviews not because we had different labels, but because the analyst identified the review and recommended further clarification and discussion. However, we were able to come to an agreement for all of the reviews after discussions. Due to the fact that we adopted the negotiated agreement technique, measures like inter-rater agreement are not applicable. The negotiated agreement technique was employed because it is beneficial in research like ours where the main objective is to generate novel insights (Campbell et al. 2013; Morrissey 1974).

6.2 Results

Our analysis of the 401 honesty_violations reviews revealed 10 categories of honesty violations reported in app reviews. Below we provide a definition of these categories, sample reviews, and a summary of their prevalence. While we highlight the different categories within the violation of the value of honesty and provide example reviews, we note that the categories are not mutually exclusive. Table 5 shows these categories and the frequency of the corresponding reviews per category.

6.2.1 Unfair Cancellation and Refund Policies

This category covers all reviews where the users perceive the cancellation and refund policy as unfair, nontransparent, or deliberately misleading. It also includes situations where the user feels that the developers deliberately make it difficult for the user to cancel their subscription. For example, in some apps, the user can sign up for a subscription with the click of a button within the app but cannot cancel the subscription from within the app; the user is asked to log in to a website to cancel the subscription. In other cases, the cancellation instruction is not clear and leads to a loop of cancellation steps. Examples of reviews claiming these practices include:

“The app allows you to accidentally sign up to premium with a push of a button. When you want to cancel, however, you can’t do that via the app... You have to go to the webpage, enter details and cancel there.”

“Deceptive billing practices - information on cancelling is circular; emailed a link that advises to email. [It] doesn’t have colour tag functionality across web and app; very poor UX and worse customer service.”

Sometimes, the app also makes it easy for the user to mistakenly activate a premium subscription in the way the interface and flow are designed, e.g.:

“Use with caution. It’s unscrupulous about signing you up for a subscription when you’re skipping past the in-app ads. It’s not made clear once you’ve subscribed, and there’s no way of cancelling it through the app.”

Another aspect of this category focuses on situations where the user perceives the refund steps and policies to be dishonest and unfair. This also involves situations where the refund policy does not cater to accidental subscriptions, e.g.:

“DO NOT SIGN UP FOR FREE TRIAL! IT IS A SCAM. YOU WILL GET CHARGED ANYWAY, AND YOU WILL NEVER GET YOUR MONEY BACK!! Once again, after numerous attempts to blame Google, this developer has still not refunded my $38. Once again, I cancelled 3 full days before the free trial ended but was still charged. Once again, [I] contacted the developer, who told me that I would receive a full refund within 7 to 10 days, and still nothing. I have saved the email, pricing this to be true. DO NOT TRUST THIS DEVELOPER. SCAM!!!!”

6.2.2 False Advertisements

This category relates to situations where the user perceives that the features and functionalities advertised by the developers are not contained in the app. The user downloads the app or pays for a subscription in order to access certain functionalities or features, only to find that the descriptions, including screenshots on the app distribution platform, differ from the functionalities actually available in the app. Two examples of these are shown below:

“Couldn’t find Google Assistant integration anywhere. Even though it’s been advertised everywhere when searching the web for the app... It’s even in the description of the app here. That’s false advertising. I will edit my review when it’s out of Beta and working in the final version.”

“The app doesn’t listen to the watch at all. I’ve tried completing and snoozing and it does nothing. The watch app can only add tasks, so the screenshots they’re sharing here are DECEPTIVE.”

In some cases, the app lures users into downloading the app on the basis that it is free-for-use only for the user to find out that the free-for-use is a trial version for a specific time period and not perpetually free as implied in the app description:

“The actual free version doesn’t allow you anything, not even to learn how to use the app properly. That role is filled by 7 days of free premium. The free, on the description, is a lie. Is a paid-only app with temporary free access to its full features that gets practically useless after the 7-day trial... I don’t like to be lied to.”

In addition, the app developers (through the app description) make promises to users to give them certain benefits like a free premium subscription when a particular action is carried out (e.g., inviting a particular number of friends to sign up). However, they never truly fulfil their promises when the user fulfils their end of the bargain. These unfulfilled obligations are perceived by the end-user as a violation of honesty, e.g.:

“I love this app however I sent the link to several friends and they got the app and I received no premium time whatsoever. Don’t be dishonest with your apps. That’s lame.”

Another example relates to scenarios where the user is invited to make certain commitments based on a future reward and the developers bail out on their prior commitment:

“Shame on Them! Liars. I paid for the season pass TWICE (ONCE for my apple device and the other for my Samsung Device). I was falsely promised access to ALL FUTURE CONTENT. Now they are trying to charge me for the Parisian Inspired TOKENS! HOW DARE THEY LIE AND BAIT AND SWITCH.”

6.2.3 Delusive Subscriptions

Any review describing complaints related to unfair or nontransparent automatic subscription processes is classified under this category. There are instances where no notifications are provided to let the user know they are subscribed to the app or premium version of the app, and the user only finds out about the subscription from the deductions in their bank accounts:

“I just realised that I have been charged for some crappy premium service fee which I had no idea about when using the app. Why is this charge by default? Why was I not informed in the first place? Beware of scam for useless monthly premium fees!”

“I can’t believe I was charged 55.99. What are you giving me? Gold? I unsubscribed but saw mysterious charge in my bank account.”

Additionally, there is the issue of lack of user consent in the subscription process where certain apps do not provide a confirmation mechanism that prevents accidental subscriptions by the user, e.g.:

“Made me pay 1 year worth of subscription without my confirmation. Only used its free trial because I had to use it once. What a scam...”

In some scenarios, the automatic subscription is hidden behind an in-app ad/feature, and an unsuspecting user who clicks on the feature is automatically subscribed to the premium version of the app without a clear warning or confirmation, e.g.:

“Deceptive practices. If you click the in-app “ad" that simply says enable notifications, you’ll automatically be signed up and billed for their premium service. This bypasses the Google/Apple stores subscription model and bills your card directly. Not to mention it’s impossible to downgrade from this service in the app itself; you have to visit their website, which is a deliberately obstructive hurdle considering you can upgrade in the app just fine.”

6.2.4 Cheating Systems

All reviews concerning the user’s perception of fraud by other persons or cheating within the inner workings of the app are classified under this category. Users complain of unfairness in either the process or outcome of the app, especially processes/outcomes that are supposedly statistically random. Accusations of this kind are prevalent but subjective, and they may not reflect what actually happened. However, we labelled these kinds of reviews based on the perception of the users as captured in their comments. Reviews related to this category are mostly found in games or game-like systems. For example:

“This game cheats. It uses words not found in the dictionary. Also it told me a word was unplayable, but it was the first best word option.”

“I play it with my sister often. However, there is the problem of the game and AI cheating. I rolled a 2 and a 3 at the start of the game and it moved me FOUR spaces forward not five. Four. That happened several times and I can assure you I was looking everytime it happened. I am very disappointed at the fact this game is cheating...”

In some of the reviews, users complain that the game works properly when the user loses and parts with money and only freezes when the AI system in the app is about to lose. Based on the reviews, the users seem to be using real money in the games/apps. This complaint is a recurring theme within this category:

“You have to pay for it, then the game just freezes when you win against the CPU? Reset it over and again, keeps freezing unless it rolls something to not land on my property. Also, is the dice rigged against the CPU? Honesty? With as much as I owned in the beginning, none of the 3 CPUs would land on anything I owned. Anytime the last CPU needs to raise money, game freezes, guess ya just can’t win.”

“there’s a glitch in it that freezes the game from continuing when you’re winning. The dice just disappears, but the trains and clouds and aircrafts keep moving. It’s like It is designed so that one doesn’t win them.”

“When playing against the computers when you’re about to win and bankrupt the final computer the game conveniently freezes. It does not allow you to win. Not a very fun game to play, I want my money back.”

We consider this category important as some of these apps require the use of real money to play or for in-app purchases. If apps are dishonest in the underlying process of the systems that are expected to be fair, then that constitutes not only a violation of the value of honesty, it might potentially be a crime. This is worth considering, especially when the exact issue is raised by several users:

“Although you say that the dice is random, i cannot help but feel that it is rigged. Take a look at your reviews, there are many other players that feel the same. Can’t be all of us are wrong. Or maybe we are suffering from mass hysteria?”

Other non-game examples include cases where the user reports not having the full value of the fee they were charged for the app and feels cheated. For instance:

“Whenever I pay for parking the app always steals 5 minutes off my parking time. For example, I pay for 60 minutes and the timer starts at 54 minutes and 59 seconds. I am very upset, this has been happening for a while and probably to many more people as well. That is a lot of money!”

“This app will not give you re requested amount of parking time. If you park for 15 minutes it will immediately say you have 11 minutes left. I understand that you have to charge but at least give me the requested amount of parking time.”

6.2.5 Inaccurate Information

This category covers reviews in which users perceive that the app provides false or inaccurate information. This includes situations where inaccurate information can increase the likelihood of the user inadvertently making wrong selections at a cost to them. In the review below, the user complains that the design of an app feature tricks them into paying for the wrong parking spot:

“When you need to pay for additional time, and click ’Recent’ to pay for the most Recently parked in place - the first item is not the place you just parked in so it tricks you into paying for the wrong place (dark pattern). Please make the Recent accurately reflect the most recently parked in place.”

Another example review in this category is quite severe as it relates to a health emergency app providing potentially inaccurate information that might be detrimental to the user:

“Try to use this in an actual emergency and you’ll just end up as a dead idiot holding a cellphone. The information is either useless or completely false in most cases. Don’t bother downloading.”

Other less severe but important reviews where the user perceives the app provides inaccurate information or notification are shown below:

“Do not buy unless you are sure you want to. You will NOT be able to get it set up and working within the 15 minute refund window. The instructions online are so cryptic it (and wrong).”

“Very annoying every time when you open the app it shows you have a notification. Then checking your notifications you don’t have any.”

6.2.6 Unfair Fees

This category relates to issues surrounding what the user considers to be unfair fees or charges. This also applies to cases where the user feels that they have not received a fair deal or that the app charges more money than it ought to. Because the definition of honesty also covers fairness, we also consider these kinds of issues a potential violation of the value of honesty. In the example below, the user complains of being charged more than they think is fair; they were charged a car parking rate for parking a bike.

“Went through the sign up process and parked my bike in a bike parking zone. Put in the correct zone details for the bike parking area and got charged a car parking rate. Rang support and they said there is no bike parking at that location. I explained there was and they told me to ring the council.”

Other examples of fees considered by the user to be unfair are:

“The app charges you 0.25 per transaction. So I paid 0.75 to pay for parking it charged me 0.25 service fee then I extended my parking 0.25 and it charged me again 0.25!!! Biggest scam in the world.”

“The only annoying things are that I have to buy any extra Monopoly Board in the same game when I already paid the main game. Can you not give extra Monopoly Boards in the same game for free. You are not fair!”

This category is also reflected in the form of hidden charges where the user is not aware of subsequent charges made to their account. These hidden charges can take the form of a vague bill (as shown in the review below) or not notifying the user with respect to extra charges.

“This is a notorious company with horrible app I’ve ever used. They hide the history and details very deep for you to check and trace. And the monthly bill is also vague. I experienced they secretly bill me!”

“LOOK OUT PEOPLE. THIS IS A SCAM. THEY DID NOT WARN OF A DEPOSIT FEE AND THEY TOOK 33% OF THE DEPOSIT. I RECOMMEND SUING THEM NOW.”

Another related issue within this category is dubious charges where the user account has been charged, and it is not clear why those charges occur. Abnormally high fees (more than the standard subscription fees) and overcharging of the user account are also captured under this category. For example:

“It charged me £74.50 when I bought a ticket for £1.50 it’s a absolute scam I want my money back!”

6.2.7 No Service

This category mainly covers reviews in which the user complains of not being able to access the app’s main functionality after purchase, leading to undesirable consequences for the user. The main difference between the false advertisement category and this category is that the former deals with features/functionalities of the app that do not work as advertised. The latter deals with situations where the app does not work at all, i.e., does not even serve its main purpose for the user after the user has made financial commitments in the form of a purchase or subscription. In the example below, the user is fined for illegal parking after paying for parking using the app:

“Horrible experience with this app. Causing a lot of frustrations with users. when it fails and I get a ticket there is no much help I can get. sometimes I just pay the fines just because the complaint system is awfully inconvenient. I feel cheated and it looks like a money making tool for whoever is collecting the fines.”

Another related example is shown below:

“I spent 20 euros with all the DLCs included, I feel pretty deceived not being able to play the game.”

6.2.8 Deletion of Reviews

This category highlights reviews where the app developers are suspected of deleting reviews left by the user, especially negative reviews. A review captures user feedback, describing their experience of an app, and intending users of an app typically consult the reviews left by other users on the app distribution platform before downloading the app (Obie et al. 2021). Thus, the act of deleting unfavourable reviews by the app developers is perceived as a dishonest practice by the users because leaving only positive reviews may not paint an accurate picture of the app. Users may also feel like the app developers are trying to hide their complaints or other nefarious practices.

It can be argued that certain comments are deleted by app developers because those comments contain ad hominem attacks from the users instead of complaints relating to the app itself. While it is debatable whether app developers are justified in deleting perhaps vitriolic ad hominem comments, we do not make any judgement as to this but simply categorise users’ perceptions and complaints of this practice as captured in their reviews. Examples of reviews depicting this accusation are shown below:

“I left them a negative review and the developer deleted it. Now I’m going to review them on YouTube and all social media platforms. Basically, they are scammers.”

“Deleted my honest review. Warning. Steer clear. They keep trying to make you slip up and pay for premium. I signed up for a free trial last year and they make it too difficult for you to find where to cancel. Was charged about $40... shame such a good app is tarnished by such shady practices.”

6.2.9 Impersonation

An impersonation is an act of pretending to be another person or entity (Dictionary 2021). It also involves the act of giving a false account of the nature of something. This category covers all reviews relating to impersonation or misrepresentation by the app or app developers. This includes scenarios where an app pretends to have the authority of (or relationship to) an organisation when in reality, it has no such relationship. An example review is captured below:

“STAY AWAY... this app is a scam. the stickers make it look like it’s Brisbane council approved. it’s not and they are no help. I still got a fine for using the app correctly and the Brisbane council parking police have no access to check if you have paid or not and do not accept this as a payment method.”

Another example in this category reflects situations where users feel that they are interacting with bots instead of humans when they have signed up to the platform to interact with humans. This is similar to false advertising-related lawsuits of the Match.com platform described in Section 2. An example of this is:

“Good game, fake players online. I wanted a challenging Monopoly game. But when I start. I can tell that some are bots not real people online. For example, they quickly trade when it is their turn. A normal human will take some time to choose options.”

6.2.10 Fraudulent-looking Apps

This category includes reviews reporting suspicious-looking apps based on observations of users or apps deemed to be fake by the users. We created a separate category for these kinds of reviews. Although the users flag the apps in these reviews as fraudulent, they do not provide specific reasons for their accusations beyond their perception of the app as fake or fraudulent. Furthermore, these types of reviews do not fit any of the categories described above, and we sought to highlight them based on the user accusations captured in their reviews. Examples of these reviews include:

“...Be careful with this kind of dishonest apps”

“This is a fraud app don’t download”

Furthermore, Table 6 shows the breakdown of honesty violations across different app categories. Out of the 401 honesty_violations reviews, Games (28.9%), Auto & Vehicles (22.2%), and Finance (12.7%) are the app categories with the highest number of honesty violations, while Medical (0.2%) and Music & Audio (0.2%) are the app categories with the lowest number of honesty violations.

The Games category, which has the highest number of honesty violations, often experiences a high degree of competition, as developers strive to attract users to their apps amidst a crowded marketplace. This fierce competition might lead some developers to misrepresent or exaggerate the features of their games to improve visibility and attract a larger audience.

Apps within the Auto & Vehicles category may experience a similar pressure to compete for users. These apps often target niche markets and specific user demographics, such as those searching for parking services. In an attempt to attract these users, developers might also stretch the utility of their app features to increase exposure, and perhaps hide certain features that the users expect to be standard behind a paywall, e.g., a notification that parking is about to expire. Knowingly or unknowingly, the designs of these apps sometimes mislead users into accidentally signing up for a premium subscription to access these features. The Finance category often includes apps that provide services related to money management, investment, and banking. Due to the lucrative nature of the financial sector, dishonest developers may engage in deceitful practices to target users seeking financial advice or services (e.g., crypto trading), which can lead to increased revenue for the app and the developers.

The Medical category is likely to have a lower number of honesty violations due to the critical nature of the information and services provided by these apps. Users rely on medical apps for accurate information and reliable tools, and any violation of honesty could significantly affect their health and well-being. As a result, there is a higher expectation of integrity from developers and a stronger regulatory environment for such apps, which may contribute to the low number of honesty violations in this category. Apps in the Music & Audio category may experience fewer instances of dishonesty due to the relatively straightforward nature of the services they provide. Users typically expect to access music or audio content, and there may be less room for misrepresentation or exaggeration.

7 Developers’ Experience With Honesty Violations in Mobile Apps (RQ3)

7.1 Practitioner Study Design Approach

Our analysis of app reviews in RQ1 and RQ2 indicates that honesty violations exist in mobile apps from the perspective of end users. But what about app developers’ experience with them? This motivated us to explore mobile app developers’ experience with honesty violations in mobile apps.

We took an interview and survey-based approach, referred to as the developers’ study (Fig. 3), to understand developers’ experience with honesty violations in the mobile apps they develop. We conducted a set of in-depth semi-structured interviews and, in parallel, a broad survey, both with mobile app developers. Collecting data through both interviews and a survey strengthened our findings. The replication package, which consists of the artefacts we developed to collect data in both studies, is available online (Footnote 4). In this section, we first explain the interview study and then the survey study.

7.1.1 Step Int: Interview Study

Step Int.1: Protocol Development: For the interview study, to recruit participants, we prepared an advertisement and an explanatory statement; and to collect data, we prepared a pre-interview questionnaire and an interview guide. The details about the artefacts are explained under participant recruitment and data collection below.

Step Int.2: Participant Recruitment: We recruited participants by sharing the explanatory statement and posting an advertisement on social media platforms such as LinkedIn, Twitter, and Facebook with a link to the explanatory statement. The explanatory statement consisted of details of the study, including the procedure, potential benefits, and how we preserve the confidentiality of the participants. Potential participants contacted us, showing their interest in participating in the interview study. We recruited three participants to proceed with the data collection.

Step Int.3: Data Collection: Data collection for the interview study consisted of two parts: a pre-interview questionnaire and an online interview.

The data collection for the interviews was done by the third author, who has a pragmatic view and has 8+ years of experience working with and interviewing developers.

Pre-interview Questionnaire. Each participant was given a pre-interview questionnaire to fill in before the interview. The questionnaire consisted of questions about their demographics (age, gender, country of residence, professional experience, including total experience in the software industry and total mobile app development experience), context (type of apps the participants develop based on the list of app types as in Google Play Store, types and frequency of honesty violations the participant had experienced for their apps), who is responsible for honesty violations in mobile apps, and the participant’s opinion about automatic detection of honesty violations in mobile apps (usefulness, beneficiaries, how beneficial). We included the definition of honesty and honesty violations at the beginning of the pre-interview questionnaire so that participants’ interpretation of the terms aligns with ours. We also repeated the definition of honesty violations at the beginning of each relevant question section to ensure that participants’ interpretation of the term remains the same until the end of the questionnaire. The pre-interview questionnaire was hosted on Qualtrics (Footnote 5) and took around fifteen minutes to complete. Having a pre-interview questionnaire helped us collect data on closed-ended questions early, so that we had a high-level understanding of participants’ background with honesty violations in mobile apps and more time for in-depth discussions during the interviews.

Online Interview. After we confirmed that participants had filled out our pre-interview questionnaire, we conducted online interviews with them at an agreed time using Zoom. Each semi-structured interview lasted approximately thirty minutes and was audio recorded. During the interviews, we focused on asking the participants open-ended questions so that we could gain richer data on their experiences with honesty violations in mobile apps. The interviews started by showing the participants some examples of honesty violations reported in mobile app reviews. This made it easier for the participants to answer the questions. First, we asked the participants about the reasons for honesty violations happening in mobile apps, then asked about the impact of honesty violations on end users and developers/owners of the mobile apps. After that, we asked participants what possible strategies exist to avoid honesty violations. We also asked them what strategies they adopted to address honesty violations that they had encountered in their mobile apps (if any). The interviews ended with an open question, allowing the participants to share anything else they wished to share about honesty violations in mobile apps. After the interviews ended, the audio recordings were transcribed using Otter (Footnote 6).

Step Int.4: Data Analysis:

Qualitative data analysis. The qualitative data collected were analysed using open coding and constant comparison techniques as in Glaser and Strauss’s Grounded Theory (Glaser et al. 1968). The third author analysed the data and findings were shared with the team in weekly meetings. We used MAXQDA (Footnote 7) to analyse the data. The responses to the questions (raw data) were interpreted in small chunks of words (codes), and they were constantly compared to group similar codes together to develop the concepts. The concepts were then constantly compared to develop categories. Figure 4 shows an example of qualitative analysis.

Quantitative data analysis. As some closed-ended questions of the interview study were repeated in the survey study, the quantitative data were analysed together with the quantitative data collected from the survey study. Descriptive statistics were used to analyse the data.

7.1.2 Step Survey: Survey Study

Step Survey.1: Survey Questionnaire Development: We developed a questionnaire with a mix of open-ended and closed-ended questions. The survey contained demographics, contexts, causes, consequences, strategies, and automatic detection of honesty violations in mobile apps. The questions on demographics, context, and automatic detection were the same questions we used in the pre-interview questionnaire of the interview study. We further used open-ended questions to allow participants to freely share their experiences about causes, consequences, and strategies.

Step Survey.2: Participant Recruitment: We used Prolific (Footnote 8) to recruit participants for the survey study. The participants were recruited in batches (10 x 7), which resulted in 70 participants in total.

Step Survey.3: Data Collection: The data collection of the survey study was straightforward. The link to the survey questionnaire hosted on Qualtrics was shared through a Prolific post. The participants took around twenty minutes on average to complete the survey.

Step Survey.4: Data Analysis: The same procedure as in the interview study was followed to analyse the collected data.

7.2 Interview and Survey Study Results: Participant Information and Their Context

7.2.1 Participant Information.

Figure 5 summarises the participant information (location, gender, age, total experience, mobile app development experience). The largest group of participants was from Spain (10 participants), followed by South Africa and Greece (8 participants each). Most participants were male (59 participants). Participants had an average total software engineering experience of 8.55 years and an average mobile app development experience of 2.60 years.

7.2.2 Types of Mobile Apps Participants Develop.

A summary of the types of mobile apps our participants develop is shown in Fig. 6. Developers are not limited to developing one type of app, and our participants selected many types; the app type they mentioned most often was tools (13.33%).

7.2.3 Developer Experience: Reported Honesty Violations In App Reviews.

We asked our participants which types of honesty violations had been reported for the apps they developed for the company they worked for. The results are shown in Fig. 7. According to our participants’ experience, the honesty violation most often reported by users is inaccurate information (sometimes + about half the time + most of the time = 73.97% of participants). This is followed by no service (sometimes + about half the time + most of the time = 54.79% of participants).
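The combined percentages above can be reproduced by summing the frequency levels that indicate a violation was reported and dividing by the number of respondents. The following is a minimal sketch only: it assumes 73 respondents (70 survey plus 3 interview participants, which is consistent with the reported percentages), the "never" label is an assumed name for the remaining answer option, and the per-level counts are hypothetical placeholders chosen solely so that the total matches the reported figure for inaccurate information; they are not the study's data.

```python
from collections import Counter

# Frequency levels counted as "reported" in the text above.
REPORTED_LEVELS = ("sometimes", "about half the time", "most of the time")


def share_reporting(responses):
    """Percentage of respondents whose answer is one of REPORTED_LEVELS."""
    counts = Counter(responses)
    reported = sum(counts[level] for level in REPORTED_LEVELS)
    return round(100 * reported / len(responses), 2)


# Hypothetical split of 73 answers for one violation type (NOT the study's data).
# "never" is an assumed label for the remaining answer option.
example = (
    ["never"] * 19
    + ["sometimes"] * 30
    + ["about half the time"] * 14
    + ["most of the time"] * 10
)

print(share_reporting(example))  # 54 of 73 respondents -> 73.97
```

Under the same assumption of 73 respondents, the 54.79% reported for no service corresponds to 40 respondents.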

7.3 Interview and Survey Study Results

We identified several causes and consequences of honesty violations in mobile apps, along with strategies for mitigating and fixing them. We also examined how useful automatic detection of honesty violations is and what benefits it brings: it mitigates many causes and consequences of honesty violations and helps improve strategies for handling honesty violations in mobile apps. These are summarised in Table 7 and explained in the subsections below. We quote interview participants as IP<ID> and survey participants as SP<ID>.

7.3.1 Causes (RQ3.1)

QUANTITATIVE FINDINGS: The largest share of participants (22.65%) selected product owners as responsible for honesty violations in mobile apps, followed by developers (20.54%), managers (19.34%), business analysts (13.81%), and user support roles (7.18%) (Fig. 8). However, in the answers to the open-ended questions and during the interviews, the experiences participants shared concerned businesses, developers, app platforms, users, and competitors causing honesty violations (explained under qualitative findings below). It should also be noted that in agile contexts, as is common practice at present, developers are cross-functional and play multiple roles.

QUALITATIVE FINDINGS: Our participants identified several causes of the existence of honesty violations in mobile apps. We categorised them according to what the driver of the violation seems to be: business drivers, developer drivers, app platform drivers, user drivers, and competitor drivers. Some honesty violations in mobile apps may of course have multiple of these drivers.

Business Drivers. We found both intentional and unintentional reasons, driven by business needs or perceived needs, for the existence of honesty violations in mobile apps.

Intentional reasons: Businesses intentionally violate honesty in mobile apps, as identified by our participants. The reasons mentioned for these ill-intended activities include revenue maximisation and market competition.

Maximise revenue: Our participants mentioned that when the objective of a business is to gain more profit, they may be tempted to scam/fool their app users:

“I try to think these ‘violations’ aren’t meant to be there, but I did see ‘tricks’ to implement them, mostly: unfair fees or hidden fees, and mostly is because corporate greediness” - SP46

This has become relatively easy as the smartphone user population is high and most users are not knowledgeable enough to understand the violation:

“It’s an easy way to make money since most of the population has a smartphone and many of those users are not knowledgeable.” - SP26

As shared by SP37, tricking users in order to make more money has also led to bad practices such as investing R & D effort in these tricks:

“I think though that most app developers that do that kind of violations are not at all naive and do it for the money. In every app store (Apple or Google) there are tons of apps that aim to make the most money with the cheapest tricks. I feel like that a good chunk of the market is only there to milk naive consumers. I have heard stories of fellow developers/managers that pour more R & D on how to trick people out of their money rather than put time and effort to come up with a good idea for an app and polish it” - SP37

Market competition: Due to high competition in the mobile app market, businesses may make releases that include honesty violations. These could be innovative features: