Abstract

Edge computing is a distributed computing paradigm aiming at ensuring low latency in modern data intensive applications (e.g., video streaming and IoT). It consists of deploying computation and storage nodes close to the end-users. Unfortunately, being distributed and close to end-users, Edge systems have a wider attack surface (e.g., they may be physically reachable) and are more complex to update than other types of systems (e.g., Cloud systems) thus requiring thorough security testing activities, possibly tailored to be cost-effective. To support the development of effective and automated Edge security testing solutions, we conducted an empirical study of vulnerabilities affecting Edge frameworks. The study is driven by eight research questions that aim to determine what test triggers, test harnesses, test oracles, and input types should be considered when defining new security testing approaches dedicated to Edge systems. preconditions and inputs leading to a successful exploit, the security properties being violated, the most frequent vulnerability types, the software behaviours and developer mistakes associated to these vulnerabilities, and the severity of Edge vulnerabilities. We have inspected 147 vulnerabilities of four popular Edge frameworks. Our findings indicate that vulnerabilities slip through the testing process because of the complexity of the Edge features. Indeed, they can’t be exhaustively tested in-house because of the large number of combinations of inputs, outputs, and interfaces to be tested. Since we observed that most of the vulnerabilities do not affect the system integrity and, further, only one action (e.g., requesting a URL) is sufficient to exploit a vulnerability

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Business and private individuals are increasingly relying on data-intensive services provided by remote systems; examples include music streaming, video conferencing, E-gaming, cloud storage, and remote surveillance.

Because of the real-time transmission of large amounts of data, latency is one of the main issues affecting the above-mentioned services. To minimize latency, the Edge Computing paradigm has been introduced Stankovic (2014). It consists of distributed storage and computing resources close to the end-users with the objective of minimizing latency and ensuring real-time services.

When data is the main asset of a service, security is a major concern. Unfortunately, by moving data and computation closer to the end-user (e.g., TV boxes), service providers have less control on the infrastructure, which is often physically accessible, might be difficult to update (e.g., because updates take place overnight when the system is turned off), and might be installed on a large number of diverse hardware and OS layers whose configurations might be difficult to be tested extensively. Consequently, compared to services executed on traditional infrastructures (e.g., Cloud), services executed on Edge computing infrastructures may expose a wider set of attack surfaces (e.g., because physically accessible) and be more likely affected by vulnerabilities (e.g., because it is not possible to test all the configurations of the software, or because it is not possible to ensure that the underlying environment is up to date).

Because of the reasons above, infrastructure providers are looking for solutions to assess that Edge frameworks and applications are free from vulnerabilities. In this paper, we focus on software security testing, which, differently from other approaches (e.g., security analysis), provides evidence of the presence of vulnerabilities; for example, test failures show how an attacker can exploit a vulnerability.

As a starting point towards the definition of Edge security testing solutions we conduct an empirical study of the vulnerabilities affecting Edge frameworks. Our study partially relies on a recent study by Gazzola (2017). The work of Gazzola et al., although focused on functional failures and not security aspects, has guided us towards the characterization of the vulnerable components (e.g., plugins), the type of failures being observed (e.g., signalled or silent), the complexity of the required testing procedures (i.e., how many actions should be performed to detect a vulnerability), and the reasons why vulnerabilities slip through the development process (e.g., because of the combinatorial explosion of the inputs to be tested). In addition, different from Gazzola et al., we characterized the preconditions (e.g., sub-nets should be set-up) and the inputs (e.g., sending crafted messages) required to exploit Edge vulnerabilities. Finally, similar to other vulnerability studies by Mazuera-Rozo et al. (2019), we analyzed the distribution of MITRE (2022) identifiers (i.e., types of weaknesses leading to Also, we studied their severity, based on the CVSS entries of the National Vulnerability Database (2022).

In total, we defined eight research questions. We surveyed 263 bug reports concerning four Edge frameworks (Mainflux Framework 2022, K3OS 2022a, KubeEdge 2023, and Zetta 2022a). Among them, we identified 147 vulnerability reports. Our results show that the large number of combinations of configurations and inputs (i.e., combinatorial explosion) is the main reasons for security vulnerabilities not being detected at testing time (\(\textbf{RQ}_{1}\)). Vulnerabilities mostly affect the main Edge framework components (i.e., controllers), a minor presence is observed in network components and plugins, while other components (i.e., APIs, drivers, services, and resources) are less affected (\(\textbf{RQ}_{2}\)). Generally, vulnerabilities can be observed when the software under test (SUT) is in a specific state or configuration (\(\textbf{RQ}_{2}\), \(\textbf{RQ}_{4}\)), which clarifies why vulnerabilities are not detected at testing time because of combinatorial explosion. Security failures (\(\textbf{RQ}_{3}\)) are silent (i.e., not detected by the SUT) and concern value failures (e.g., illegal data being returned), network (e.g., data erroneously routed), or actions (e.g., the software performs illegal operations on the environment). Once the SUT is in the vulnerable state, vulnerabilities can be exploited with a single action (\(\textbf{RQ}_{5A}\)) that usually consists of providing specific data (\(\textbf{RQ}_{5B}\)) to the SUT. The security property that is likely violated by Edge vulnerabilities is confidentiality (\(\textbf{RQ}_{6}\)). Confidentiality issues are mainly due to developer mistakes concerning authentication mechanisms or information management errors (\(\textbf{RQ}_{7}\)). Further, failures are observed because the SUT performs improper access control or improper control of resources over lifetime (\(\textbf{RQ}_{7}\)). NVD data indicates that more than 50% of Edge vulnerabilities have a high severity and are easy to exploit, thus highlighting their criticality and the need for improved testing solutions (\(\textbf{RQ}_{8}\)).

Based on the characteristics summarized above, to ensure timely discovery of vulnerabilities (e.g., before attackers), we suggest to automatically execute test cases directly in the field (e.g., on the deployed Edge system); such practice is known as field-based testing (Bertolino et al. 2021). Indeed, automated testing might be executed, in the field, when configurations not tested in-house are observed; also, the detection of vulnerabilities might be simplified by the fact that only a single action is sufficient to exploit them. Further, testing might focus on confidentiality thus not requiring the identification of mechanisms to compensate for integrity problems caused by the testing process itself. All the data collected to perform our study are available online (Malik and Pastore 2023).

This manuscript proceeds as follows. Section 2 presents background information including a glossary. Section 3 describes the study design. Section 4 presents our results. Section 5 presents a discussion of threats to validity. Section 6 provides reflections on the research directions for Edge security testing, based on our results. Section 7 discusses related work. Section 8 concludes the manuscript.

2 Background

In this section we provide a brief overview of Edge technology, related studies, and a glossary.

2.1 Edge Computing

The Edge computing paradigm has been introduced to enable data transfer with extremely low latency for real-time services. Well known services relying on Edge computing include, for example, E-sports Alvin Jude (2023), live streams broadcasts Todd Erdley (2023); SES Luxembourg (2022), package tracking Murphy (1995), and internet connectivity services for cruise lines SES Luxembourg (2022b) and aviation SES Luxembourg (2022a).

The development of services leveraging the Edge paradigm is supported by Edge frameworks; well known examples are KubeEdge (2022b), Yomo Framework (2022), K3OS (2022a), and Mainflux Framework (2022). In this paper, we rely on the term Edge framework to indicate a set of software components, including Web services and APIs, that are extended to provide a service relying on the Edge paradigm. Our definition is consistent with the definition of framework provided by IEEE: partially completed software subsystem that can be extended by appropriately instantiating some specific plugins IEEE:SSE (2017). Further, our definition of Edge framework recalls the definition provided by Fayad and Schmidt for middleware integration frameworks, which are used to integrate distributed applications and components; middleware integration frameworks are designed to enhance the ability of software developers to modularize, reuse, and extend their software infrastructure to work seamlessly in a distributed environment Fayad and Schmidt (1997). An Edge framework integrates a broad range of technologies including Cloud services and virtualization environments; therefore, an Edge framework is often implemented as an integration of multiple frameworks developed by third parties. In this paper, we treat all the technologies cooperating with an Edge framework as one single framework. We call Edge application the software that implements the logic to provide a service to the end-user. We call Edge system what results from the integration of an Edge framework, one or more Edge applications, and external services that the Edge framework and applications may be configured to interact with.

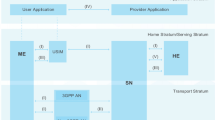

Figure 1 provides a generic architecture of an Edge system. In Figure 1, the software components that constitute the Edge framework are annotated with the UML stereotype SUT (i.e., software under test). We use the term SUT to identify Edge frameworks’ components because they are the target of our investigation.

The main architectural components in an Edge system are Cloud servers, Edge servers, and Nodes. Cloud servers provide centralized services (e.g., end-user authentication for a video streaming).Edge servers are deployed close to the end-user to minimize latency Bai et al. (2020); for example, they include caching mechanisms for the data provided by the Cloud server thus reducing latency. Nodes, instead, are deployed at the end-user’s side; depending on the service provided through the Edge system, Nodes might be desktop computers, sensors, or IP camera. In Figure 1, Nodes are annotated with the stereotype Node. The Edge system may interact with external components providing specific services, for example a network file system. In Figure 1, we annotated external services with the stereotype Service.

The Cloud server executes a Cloud server controller component that interacts with the Edge server controller through the Edge server API. The Cloud server controller manages the Edge server instances (e.g., to provide monitoring and policy enforcement). Also, it provides and collect service data. Examples of provided data are on-demand video streaming and file streaming. Examples of collected data include information about devices (e.g., offline status of a surveillance camera) or end-user data (e.g., movies’ rating or list of videos watched in a video streaming service).

The Edge server executes the Edge server controller, which has the responsibility of controlling access to resources, instantiate drivers, access plugins, manage resources, and control nodes. We use the term resource to indicate any medium used to store data, for example, configuration files or databases (see the Resource stereotype in Figure 1).

The Edge server controller includes a container manager, which is responsible for managing containers and Nodes. The Edge server controller usually integrates an MQTT (1995) to communicate with devices.

Nodes execute the Edge client, which integrates the client of the MQTT component. The Edge client sends the data gathered from the physical environment (e.g., temperature) to the Edge server. Desktop Nodes usually execute Virtual Machine (VM) Nodes, which may execute multiple Pods. Pods are the smallest deployable units of computing that can be managed by Container Managers Kubernetes (2022).

In the rest of the paper, we use the term software environment to refer to the operating system or any software component not belonging to the categories SUT and (SUT’s) API.

2.2 Testing of Edge Systems

Edge frameworks are tested according to standard software engineering practices Hagar (2002). Information about the development process in place for proprietary frameworks is limited; however, we note that large companies embrace a testing culture and provide test automation support for the developers of Edge applications (e.g., for Microsoft 2022). The open-source frameworks considered in our study (i.e., KubeEdge, Mainflux Framework 2022, K3OS 2022a, and Zetta) are supported by private companies. KubeEdge is supported by the Cloud Native Computing Fundation and 27 additional private companies (e.g., ARM 2022, Huawei 2022, ci4rail 2022); Mainflux is developed and maintained by Mainflux Labs, which is a for-profit technology company; K3OS is part of Rancher, a framework developed by the open source software development company Suse (2022). However, since industry participation in open source projects does not provide any guarantee about software security Gopalakrishn et al. (2022), we investigated the testing procedures in place for the subjects of our study and describe them in the following paragraphs

All the open-source frameworks considered in our study include automated test suites. KubeEdge includes automated unit KubeEdge (2022a), integration KubeEdge (2023a) and system test cases KubeEdge (2022c). Also, KubeEdge’s development process includes on code review activities (e.g., contributions are revised by senior membersFootnote 1) and two security teams KubeEdge (2023d, 2023e) that audit the system and respond to reports of security issues. Finally, KubeEdge is based on Kubernetes, whose development team includes a group of security experts Kubernetes (2022a). Mainflux includes automated test cases and a dedicated benchmark Mainflux (2022b); further, MainFlux Labs perform security audits MainFlux (2022). Finally, both K3OS (2022) and Zetta (2022) include automated test suites. To conclude, automated test execution is a state-of-the-practice approach for Edge frameworks; however, security seems to be better targeted by KubeEdge and, partly, by Mainflux.

The literature on Edge security highlights that security assurance of Edge systems should account for multiple attack surfaces (from physical layer to data security) and holistic, dedicated analyses are missing Jin et al. (2022). A recent survey of attack strategies and defense mechanisms for Edge systems points out that one of the causes of security vulnerabilities in Edge systems is the non-migratability of most security frameworks to the Edge context Xiao et al. (2019); further, the provided attack descriptions show that, in general, the identification of security vulnerabilities is often delegated to manual activities (e.g., side-channel attacks or specification-based testing Chen et al. 2014) and automated tools concern vulnerabilities that might affect related systems (e.g., code injection or dictionary attacks for authentication). The lack of automated security testing solutions for Edge can be noticed from other surveys on the topic Alwarafy et al. (2021); Mosenia and Jha (2017), that suggest manual testing as a key solution to determine if the system appropriately respond to attack scenarios, thus further motivating our work. Finally, these surveys on attack methods do not provide details about the underlying vulnerabilities, thus, contrary to our work, not supporting the development of automated vulnerability testing solutions dedicated to the Edge.

2.3 Field Failures

Field failures are caused by faults that escape from the in-house testing process. For their characterization we refer to the work of Gazzola et al. Gazzola (2017), who performed a comprehensive study about causes and nature of field failures (i.e., failures affecting software deployed in the production environment or at end-user premises).

The study of Gazzola et al. is based on bug reports of open-source software (i.e., OpenOffice, Eclipse, and Nuxeo). The analysis in the study is based on four research questions:

-

Why are faults not detected at testing time? Authors classified faults that are not detected at testing time into five categories (i.e., Irreproducible execution condition, Unknown application condition, Unknown environment condition, Combinatorial explosion, and Bad testing).

-

Which elements of the field are involved in field failures? Authors identified five possible elements (i.e., Resources, Plugins, OS, Driver, Network) to be involved in field failures; sometimes none of them is involved.

-

What kinds of field failures can be observed? Following the literature on the topic Bondavalli and Simoncini (1995); Aysan et al. (2008); Avizienis et al. (2004); Chillarege et al. (1992); Cinque et al. (2007), authors classified failures according to failure types and detectability. They report three failure types: value, timing and system failures. As for detectability, they focus on three categories, which are signaled, unhandled, and silent.

-

How many steps are needed to reproduce a field failure? Authors report on the number of user actions (called steps) required to reproduce a failure.

Different from Gazzola et al., we do not target faults affecting the functional properties of the software but faults affecting its security properties. Also, we have extended and refined the set of research questions considered in our study. Precisely, our refined research questions aim to facilitate the identification of security testing solutions to address the limitations of current security testing tools and practices. In our study, we address eight research questions instead of four.

2.4 Security Testing Glossary

Below, we provide definitions for security terminology appearing in the paper; we do not sort terms in alphabetical order but provide term definitions before their use in following descriptions.

Security failure. A security failure is a violation of the security requirements of the system.

Vulnerability. A vulnerability is a “weakness in an information system, system security procedures, internal controls, or implementation that could be exploited or triggered by a threat source” Dempsey et al. (2022). In our work we focus on vulnerabilities affecting Edge frameworks, in other words, mistakes in the implementation, design, or configuration of the Edge framework that prevent either the framework or the software running on it from fulfilling its security requirements.

A vulnerability is said to be exploited by a malicious user U through an input sequence I, when (a) the malicious user provides the input sequence I to the software under test, (b) the input sequence exercises the vulnerability (i.e., the software executes the functionality affected by the weakness), and (c) a security failure is observed (i.e., there is a violation of security requirements). In a software testing context, it is the software tester who aims to identify input sequences that may reveal the presence of vulnerabilities.

Test oracle. A test oracle (or, simply, an oracle) is a procedure to determine if the software behaves according to its specifications Barr et al. (2015), otherwise a test failure should be reported. In the context of security testing, test oracles should report security failures. Test oracles may either be automated or manual; in this paper, we focus on automated test oracles because we look for testing solutions that can be automatically executed.

CVE. MITRE (2022a) is a database managed by the MITRE Corporation (2022). It lists publicly disclosed vulnerabilities.

The CVE list is enumerated and managed by the CVE Numbering Authorities (CVE Numbering Authorities 2022. All the registered vulnerabilities are characterized with a univocal identifier, a textual description, and additional details including severity, registration date, vulnerable product.

CWE. Common Weaknesses Enumeration (CWE) is a public database managed by the MITRE Corporation (2022). It lists the weaknesses that may lead to a vulnerability; a weakness can be an invalid action taken by the software or a developer mistake performed when implementing or designing the software. For each weakness, the CWE database reports the CWE ID, its description, the creation date, a link to the NVD database, and references to external links (e.g., GitHub) to further explain the details about the vulnerability.

The CWE weaknesses constitute a catalog of vulnerability types organized according to different views (i.e, taxonomies) that group them in a hierarchical structure. The top level entries of such structures are called pillars. The views considered in our study are research concepts and software development. We excluded views that concern hardware design, are mappings to other taxonomies, or concern problems related to specific systems. The research concepts view focuses on the software behaviour and includes the following categories: Improper Access Control, Improper Interaction Between Multiple Entities, Improper Control of a Resource Through its Lifetime, Incorrect Calculation, Insufficient Control Flow Management, Protection Mechanism Failure, Incorrect Comparison, Improper Handling of Exceptional Conditions, Improper Neutralization, Improper Adherence to Coding Standards. The software development concepts view focuses on the development (e.g., design and programming) mistakes that lead to the vulnerability; it consists of 40 pillars including, among the others, API / Function Errors, File Handling Issues, Data Validation Issues, and Memory Errors.

Security properties. In our work we consider three security properties of software (i.e., Confidentiality, Integrity, Availability — CIA) that we define according to the NIST Information Security report NIST-800-137 Dempsey et al. (2022):

-

Confidentiality concerns “preserving authorized restrictions on information access and disclosure, including means for protecting personal privacy and proprietary information” Dempsey et al. (2022).

-

Integrity concerns “guarding against improper information modification or destruction, and includes ensuring information non-repudiation and authenticity” Dempsey et al. (2022).

-

Availability concerns “ensuring timely and reliable access to and use of information” Dempsey et al. (2022).

NVD. The National Vulnerability Database (NVD) is the U.S. government repository of vulnerability data National Vulnerability Database (2022). Vulnerabilities are reported using the Security Content Automation Protocol (SCAP), which consists of information including, among others, the CVE data and the Common Vulnerability Scoring System (CVSS). All the CVE vulnerabilities appears also on the NVD repository.

CVSS. The Common Vulnerability Scoring System (CVSS) is a framework for communicating the characteristics and severity of software vulnerabilities Common Vulnerability Scoring System (1995). According to CVSS, each vulnerability is associated to a set of attributes: Attack Vector, which captures the context of the attack (Network, Adjacent, Local, Physical), Attack Complexity (Low, High), Privileges Required (None, Low, High), User Interaction, which indicates if the attacker needs to interact with another user (None, Required), Scope, which indicates whether a vulnerability in one vulnerable component impacts resources in components beyond its security scope (Unchanged, Changed), and Impact Metrics. Impact Metrics report how much the software security properties (i.e., Confidentiality, Integrity, and Availability) might be impacted (High, Low, None) by an exploit for the vulnerability. The CVSS attributes are represented through a string that reports the initials of each attribute along with its value. For example, for CVSS version 3.1, the string

indicates a Local (L) Attack Vector (AV), Low (L) Attack Complexity (AC), Low (L) Privileges Required (PR) to exploit the vulnerability, No interaction with an additional user being required (User Interaction, UI), Unchanged (U) Scope (S), and High impact (H) on Confidentiality (C), Integrity (I), and Availability (A).

The CVSS attribute values are used to derive a score between 0 and 10 that captures the severity of a vulnerability; score ranges are interpreted as follows: None (0.0), Low (0.1-3.9), Medium (4.0-6.9), High (7.0-8.9), Critical (9.0-10.0).

3 Study Design

The goal of our study is to investigate security vulnerabilities affecting Edge computing frameworks. The purpose is to identify the characteristics of Edge vulnerabilities with the aim of driving improvements in the security testing process and supporting the identification of appropriate solutions for the development of security testing tools. The context consists of 147 vulnerabilities reported between January 2019 and December 2021. They concern four Edge frameworks, which are KubeEdge, Mainflux, K3os and Zetta. All the data used in our study are available online Malik and Pastore (2023).

This study addresses eleven research questions, which we defined by focusing on those aspects that may drive the definition of an automated security testing technique. We focus on aspects that help identifying the testing opportunity (i.e, determine in which scenarios existing methods are insufficient), evaluating the feasibility of security testing automation (e.g., to avoid severe consequences on the integrity of the system), and defining the technical solution (i.e., design an input selection strategy, an automated test oracle, test harnesses and, in general, supporting procedures).

Figure 2 provides an overview of the relations between our research questions (RQs) and the final objective of this work (i.e., support the development of effective testing approaches for Edge systems); precisely, in Figure 2, we organize our RQs according to their objectives (i.e., identifying the testing opportunity, evaluating the feasibility of security testing automation, and defining the technical solution) and indicate which information is acquired by addressing each RQ. In this manuscript, our RQs are sorted according to the data used to address them: first we present the RQs addressed through the manual inspection of vulnerability reports (\(\textbf{RQ}_{1}\) to \(\textbf{RQ}_{6}\), with the four RQs inspired by Gazzola et al.’s work first), then we present research questions addressed using data available on the CVE and NVD databases (\(\textbf{RQ}_{7}\) to \(\textbf{RQ}_{8}\)). A detailed description of our research questions follows:

\({\textbf{RQ}}_{1}\): Why are Edge vulnerabilities not detected during testing?

Like any other software system, an Edge system shall undergo a security testing phase in which engineers verify that it meets its security requirements Felderer et al. (2016); Mais et al. (2018). The presence of vulnerabilities not being detected during testing but discovered later (e.g., once the system has been already released and deployed in the field), indicates pitfalls in the testing process. This research question aims to determine the reasons that prevented the detection of vulnerabilities during testing and whether further research is needed to prevent security failures in the field or, alternatively, if field failures can be avoided simply through the improvement of the testing process in place (i.e., testing was insufficiently conducted).

\({\textbf{RQ}}_{2}\): What are the types of components involved in a security failure?

Similarly to the study of Gazzola et al., we aim to determine which components are involved in a security failure. However, to better support the definition of automated security testing techniques, we aim to distinguish between (A) the failing components, which indicate what should be the targets of test oracles, (B) the components that should be in a specific state to exploit the vulnerability, which may indicate the conditions under which the software should be tested (e.g., with an overloaded network), (C) the components receiving the input, which influence the type of input interfaces that should be managed by the testing technique (e.g., a Web interface or an input file), and (D) the vulnerable components, which indicate what to test. The analysis of the types of components involved in a security failure should support the identification of appropriate testing strategies. Therefore, we refine our research question into four:

-

\({\textbf{RQ}}_{2A}\) What are the components manifesting an Edge security failure?

-

\({\textbf{RQ}}_{2B}\) What are the components that are in the state required to exploit an Edge vulnerability?

-

\({\textbf{RQ}}_{2C}\) What are the components that receive the inputs that trigger an Edge vulnerability?

-

\({\textbf{RQ}}_{2D}\) What are the faulty (i.e., vulnerable) Edge components?

\({\textbf{RQ}}_3\): What kind of failures are observed when an Edge vulnerability is exploited?

To automatically test a software system, it is necessary to specify test oracles (see Section 2.4). The implementation of an automated test oracle depends on the nature of the failures to be detected; for instance, the program logic required to automatically detect a crash might be based on response timeout, which is likely different than the logic required to detect unauthorized access to a resource, which might consist of verifying the data returned to the caller.

\({\textbf{RQ}}_4\): What is the nature of the precondition enabling the attacker to exploit Edge vulnerabilities?

A vulnerability may be exploited only if a certain precondition holds (e.g., a subnet has been set-up). Since it might be difficult for an automated approach to meet certain preconditions (e.g., automatically set-up a network), to evaluate the potential benefits of test automation (e.g., the proportion of vulnerabilities it might detect), we investigate the nature of such preconditions for different vulnerabilities.

\({\textbf{RQ}}_5\): What Inputs Enable Exploiting Edge Vulnerabilities?

The effectiveness of a test automation approach depends on the degree of complexity of the input to be generated, which we may characterize in terms of the number of required interactions with the SUT and the structure and type of input actions to perform (e.g., providing data, changing software configurations, or simulating network disruptions). For instance, a vulnerability that requires a long input sequence to be exploited may be more difficult to detect than one can be detected with single input. We therefore refine \({\textbf{RQ}}_5\)into two separate questions:

-

\({\textbf{RQ}}_{5A}\): How many Steps are Required to Exploit an Edge Vulnerability?

-

\({\textbf{RQ}}_{5B}\): What is the Nature of the Input Action Enabling the Attacker to Exploit a Vulnerability?

simulating network disruptions).

\({\textbf{RQ}}_{6}\): What Security Properties are Violated by Edge Vulnerabilities?

The type of security properties being violated by Edge security failures impact on the definition of automated oracles. Also, they may affect the test harness solutionsFootnote 2 to put in place. For example, vulnerabilities that affect availability can be detected by oracles that look for the lack of responses from the system; instead, to detect authorization vulnerabilities it is necessary an oracle that is aware of the system’s access policies. Concerning test harness, after discovering availability issues, it may be necessary to restart the system (e.g., to prevent blocking other testing processes), which is not required after discovering confidentiality problems (confidentiality issues do not alter the state of the system). Instead, the discovery of an integrity issue may imply restoring the configuration of the system after discovery.

\(\textbf{RQ}_7\): What Faults Cause Edge Vulnerabilities?

The input selection strategy implemented by a test automation approach depends on the types of faults being targeted. In the case of security testing, for example, the inputs to be selected to identify an SQL injection attack are different than the ones used to detect a path traversal vulnerability (e.g., they rely on different grammars). To categorize faults, we can rely on the CWE vulnerability types, which is well-known and largely adopted taxonomy. Additional aspects to take into account are the erroneous software behaviors caused by the vulnerability (e.g., improper access control) and by the developer mistakes leading to the vulnerability (e.g., memory buffer errors). Erroneous software behaviors are captured by the CWE pillars for the CWE view Research concepts; developer mistakes are captured by the CWE pillars for the CWE view Developer concepts. We therefore refine \(\textbf{RQ}_7\)into three RQsthat reflect the information collected in our process:

-

\(\textbf{RQ}_{7A}\): What is the CWE Vulnerability Type?

-

\(\textbf{RQ}_{7B}\): What are the Erronous Software Behaviours Leading to Edge Security Failures?

-

\(\textbf{RQ}_{7C}\): What are the Developer Mistakes Leading to Edge Vulnerabilities?

\(\textbf{RQ}_{8}\): How Severe are Edge Vulnerabilities?

To evaluate the importance of improving Edge security testing approaches, \(\textbf{RQ}_{8}\)discusses severity based on NVD CVSS scores (see Section 2.4); severity analysis provides an indication about the urgency for automated security testing approaches.

\({\textbf{RQ}}_{1}\), \({\textbf{RQ}}_{2}\), \({\textbf{RQ}}_3\), and \({\textbf{RQ}}_{5A}\)are inspired by the work of Gazzola et al.; however, we have extended the analysis method to better fit the context of this study. Precisely, the taxonomies used to address \({\textbf{RQ}}_{1}\)and \({\textbf{RQ}}_{5A}\)match the one used by Gazzola et al.; the taxonomies used for \({\textbf{RQ}}_{2}\)and \({\textbf{RQ}}_3\)are an extension of the one proposed by Gazzola et al. Further, we address \({\textbf{RQ}}_4\)and \({\textbf{RQ}}_{5B}\)using a taxonomy that we introduce in this article. For \({\textbf{RQ}}_{6}\)we rely on the CIA security properties (but we distinguish between data and system integrity). For \(\textbf{RQ}_{7A}\), \(\textbf{RQ}_{7B}\), \(\textbf{RQ}_{7C}\)we rely on CWE categories. Finally, for \(\textbf{RQ}_{8}\), we rely on NVD CVSS attributes.

3.1 Data Collection

Figure 3 provides an overview of the process adopted to collect data and answer our research questions.

For our study, we selected Edge frameworks that fulfill the following criteria: (C1) being open-source and publicly available, which enables the investigation of software patches for a better understanding of the vulnerability, (C2) having active user base (i.e., users reporting bugs and vulnerabilities online) and support (i.e., responses are provided to 90 (C3) having at least five vulnerabilities reported by end-users either on the CVE databases or GitHub (not all the vulnerabilities are necessarily reported on the CVE database).

We focus on Edge frameworks rather than services or applications developed to run on Edge frameworks since the latter delegate security management to the underlying frameworks KubeEdge (2022).

First, we have identified 15 open-source Edge frameworks by executing a Web search with the Google search engine; we searched for the keywords ‘edge framework’ and ‘IoT framework’. The identified frameworks are shown in Table 1, whereas columns C1, C2, and C3 indicate which of the above-mentioned criteria had been satisfied.

Based on our criteria, we selected as subjects of our study KubeEdge, Mainflux, Zetta, and K3os. KubeEdge (2022b) is the framework with the largest number of users providing comments in the issue tracker, probably because it is the most widely adopted one. It is developed as an open-source project by (CNCF) Clound Native Computing Foundation (2022). It is an open-source product built upon Kubernetes (2022c), which is a system for automating deployment, scaling, and management of containerized applications. KubeEdge extends containerization capabilities to Edge devices. KubeEdge’s bug reports and vulnerabilities are available on its GitHub page KubeEdge (2023) and MITRE (2022a), respectively.

Mainflux Framework (2022) is an open-source framework designed by Mainflux Labs to support smart devices in the Internet of Things (IoT) ecosystem. It has a simpler architecture than KubeEdge (i.e., less components) and serves as a middleware between Edge devices and cloud-based orchestration platforms; it targets systems that largely rely on the Edge paradigm (i.e., IoT). Its bug reports can be accessed on GitHub Mainflux (2022a).

Zetta (2022a) is an open-source, Web-based Edge framework which provides connectivity to different types of smart devices. The Zetta’s centralized device controller (Zetta hub) is designed to work on low-powered devices capable of running an OS such as BeagleBone Black, Intel Edison, or Raspberry Pi. Zetta’s bug reports and vulnerabilities are available on the GitHub and CWE database Zetta (2022b).

K3OS (2022a) is an open-source Edge framework designed to work in low resource environments with the capability of being managed through a light-weight Kubernetes dashboard called k3s. For example, it is used by Rancher, a multi-Cloud container management platform Rancher (2022).

For each Edge framework, we analyzed the vulnerabilities reported in its bug repository (GitHub) and the ones appearing in the CVE database. To identify vulnerabilities in the GitHub repository, we used the GitHub built-in search functions to search for bug reports containing security-related keywords (i.e., security, vulnerability, crash, and privacy) either in their title or in the description of the vulnerability.

To select vulnerabilities in the CVE database, we used the built-in search function to identify CVE records including the name of the framework. Also, we searched for vulnerabilities referring to components implementing the containerization and communication features used by our frameworks, which are MQTT brokers (e.g., Mosquitto 2022 and VerneMQ Broker 2022), Raspberry pi (configured as end-device or client manager for pods), and container managers (i.e., Kubernetes, Docker, and Cri-o). Precisely, KubeEdge components include Kubernetes, Cri-o, Raspberry Pi, Mosquitto or verneMQ, whereas Mainflux components include only Docker. K3os components include Kubernetes; Zetta’s components include Raspberry Pi. However, to avoid duplicates in our study, the Edge vulnerabilities concerning Kubernetes (61, in total) and Raspberry Pi (two, in total) had been counted as part of KubeEdge only. Since we do not aim to compare frameworks but study the nature of Edge vulnerabilities, our choice should not bias our results.

In our study, we considered all the GitHub bug reports submitted till 31 November 2021, and all the CVE vulnerabilities dated between 1 January 2019 and 31 November 2021.

Table 2 provides the number of reports collected from GitHub and CVE, for each selected framework. The total number of reports ranges from 5 (Zetta) to 125 (KubeEdge); unsurprisingly, such number is related to the complexity of the framework (i.e., the largest frameworks, including their dependencies, are the ones with the largest number of vulnerabilities).

Column Vulnerabilities in Table 2 cin the collected reports, which are 201, in total. Vulnerability reports were identified by the first author of the paper who read all the report descriptions. Among all the vulnerability reports, we excluded the ones that concern Edge components (e.g., Docker) but affect features not used by Edge frameworks. An example is vulnerability MITRE: CVE202131938 (2022) in Kubernetes (2022c), which concerns the Microsoft Visual Studio Code Kubernetes tool Microsoft (2022). Such tool is not executed at runtime within the Edge system but is used at configuration time to implement scripts for the Kubernetes framework; therefore, the vulnerability is out of scope. After filtering, we count 147 vulnerabilities affecting the Edge frameworks considered in our study (see column Edge vulnerabilities in Table 2). Please note that the requirement of minimum five vulnerabilities to select an Edge framework for our study concern the total number of vulnerability-related reports in GitHub or CVE (i.e., column Total in Table 2), not the number of Edge vulnerabilities selected at the end of the process.

3.2 Analysis Method

This section explains the metrics and the procedures put in place to answer our research questions based on the collected vulnerability reports.

For our study, we proceeded as follows. The first author of the paper has carefully read the 147 vulnerability reports indicated above along with links to related electronic documents (e.g., detailed vulnerability descriptions provided on the frameworks’ Web sites) and code commits registered on their versioning systems (e.g., git code commits selected by relying on either the vulnerability ID or a bug fix ID reported in related electronic documents). We resorted to the inspection of code commits when the description of the vulnerability was not clear (i.e., it did not enable us to answer some of our RQs). By reading the vulnerability descriptions and the related electronic resources, to address each RQ, the first author (1) classified each vulnerability according to the categories specified to address \({\textbf{RQ}}_{1}\)to \({\textbf{RQ}}_{6}\)and (2) collected the data required to address \(\textbf{RQ}_{7A}\)to \(\textbf{RQ}_{8}\). To minimize subjectivity in the manual classification, the authors of the paper have defined together the answers for each RQand discussed at least one concrete case for each class. In practice, the first 30 vulnerabilities inspected at the beginning of the project had been reviewed by both the two authors to ensure common understanding. Further, randomly selected cases and unclear cases had been discussed. In total, about 50 vulnerabilities had been inspected by both authors. For a subset of the first 30 vulnerabilities there had been disagreement due to definition of common terminology and criteria, which lead the first author to re-classify, from scratch, all the 30 vulnerabilities till agreement was reached. For the remaining 20 randomly selected cases, the two authors were in agreement. Addressing \(\textbf{RQ}_7\)and \(\textbf{RQ}_{8}\)did not require any specific agreement between the authors because it relies on information available with the vulnerability report.

Table 3 provides the data collected for the vulnerabilities mentioned as examples in the following paragraphs.

3.2.1 \({\textbf{RQ}}_{1}\): Why are Edge Vulnerabilities not Detected During Testing?

To address this research question, we classify each vulnerability report according to the same five categories reported in Gazzola’s work:

-

Irreproducible Execution Condition (IEC). It indicates that the vulnerability cannot be identified at testing time because it is not feasible to reproduce the conditions under which it can be exploited. An example is Kubernetes vulnerability MITRE: CVE20213499 (2022), which reports that Kubernetes is unable to apply multiple DNS firewall rules during egress communication (i.e., communication leaving the local network). Without knowing the specific firewall rules to apply during testing, it is unlikely to discover this vulnerability.

-

Unknown Application Condition (UAC). It indicates that the security failure depends on an input that was not identified by the testing engineer because not specified in the documentation. An example is vulnerability MITRE: CVE20208565 (2022), which reports that, with logging level 9, the system exposes administrator details by writing them in logs as plain text, including authorization and bearer token (i.e., an hexadecimal string used for requesting access to a resource). Since the availability of logging level 9 is not well documented Kubernetes (2022b), testing engineers may have overlooked it.

-

Unknown Environment Condition (UEC). It indicates that the precondition or the type of input required for triggering the vulnerability depends on a characteristic of the environment (software environment or physical environment) that was not known to security engineers (e.g., because not well documented). name-space, thus escalating privileges, which may compromise the cluster, causing massive DOS in the system. An example is Kubernetes vulnerability report MITRE: CVE20208559 (2022), which indicates that a malicious user can redirect update requests. This vulnerability has been likely not discovered at development time because of the limited documentation on redirect responses, which concerns the communication protocol. to identify the timestamp at which ESC was deployed, possible combination in brute-force attack could be minimized.

-

Combinatorial Explosion (CE). Sometimes, to detect a vulnerability at testing time, it is necessary to exercise the system with inputs derived by combining values belonging to different input partitionsFootnote 3, for different input parameters or configurations. When the system is large, the combination of values belonging to different input partitions for different parameters and functions lead to a number of test cases that is very large and thus infeasible to be defined, executed, or verified (i.e., the number of test cases explode). Also, when inputs can have a complex structure adhering to a specific grammar (e.g., xpaths), testing different combinations of valid and invalid grammar tokens becomes challenging. Unfortunately, without details about the development budget for our case study subjects, it is not possible to determine a threshold above which it is impractical for software engineers to test different input (or grammar token) combinations. Therefore, we conservatively assume that combinatorial explosion is the cause of any vulnerability that can be triggered only with specific combinations of input parameters, independently from the number of parameters, input partitions, or grammar tokens, for the vulnerable function. Indeed, in large systems, it is common practice for engineers to limit testing cost by exercising only few combinations of inputs (e.g., by relying on the weak equivalence class testing strategy Amman and Offutt (2016)). Please note that although functional testing approaches such as N-wise coverage Amman and Offutt (2016) may have enabled engineers to address combinatorial explosion and discover vulnerabilities, the available information does not enable us to determine if such strategies had been applied in our case study subjects. Therefore, we simply report all the combinatorial cases together, independently of the strategy followed to test them. An example CE is provided by the Kubernetes vulnerability report MITRE: CVE202125737 (2022); it indicates that the user can redirect network traffic into a subnet, which is typically not allowed by the administrator.

-

Bad Testing (BT). We consider a vulnerability to slip through the testing process because of bad testing when it is not possible to find a justification for the lack of testing effectiveness in terms of lack of feasibility (i.e., IEC), lack of documentation (i.e., UEC and UAC), or lack of test budget (i.e., CE). In practice, following the guidelines of Gazzola et al., anything not categorized in the above-mentioned scenarios is considered due to bad testing Gazzola (2017). In practice, as for the study of Gazzola et al., we conservatively consider caused by bad testing only those cases where a basic security feature of the SUT is always not functioning as specified (e.g., when access to a feature is always granted, even if the username/password combination is wrong).

Our classification has been performed by reading each vulnerability report to determine the features that should be exercised to detect the vulnerability. Further, we inspected the available documentation to (1) determine UAC and UEC cases (they concern the lack of detailed documentation) and (2) to determine what are the possible input partitions. When available, we also inspected bug-fix commits to have a better understanding of the vulnerability. Although it is not possible to know the exact cause of each field failure without involving the actual developers of the frameworks, our investigation helps determining reasonable ones (i.e., causes that may not be true for the considered case study but might have been true for a system with the same characteristics).

3.2.2 \({\textbf{RQ}}_{2}\): What are the Types of Components Involved in a Security Failure?

This research question aims to characterize the components exercised when a security failure is observed. sub-question for \({\textbf{RQ}}_{2}\)are listed below: As mentioned in Section 3, this research question is divided into four:

-

\({\textbf{RQ}}_{2A}\) What are the components manifesting an Edge security failure?

-

\({\textbf{RQ}}_{2B}\) What are the components that are in the state required to exploit an Edge vulnerability?

-

\({\textbf{RQ}}_{2C}\) What are the components that receive the inputs that trigger an Edge vulnerability?

-

\({\textbf{RQ}}_{2D}\) What are the faulty (i.e., vulnerable) Edge components?

The above-mentioned RQs are addressed by tracing, for each vulnerability report, the types of components involved in the activities captured by \({\textbf{RQ}}_{2A}\)- \({\textbf{RQ}}_{2D}\). We have refined the list of components introduced by Gazzola et al., which included resources, plugins, OS, drivers, and network. Our refined list of components includes additional elements that characterize Edge systems (see Section 2.1), which are API, Nodes, and hardware (i.e., the machine on which the software is running). Also, we explicitly indicate if the failure concerns the SUT (i.e., the Edge framework under test). We exclude the OS category from our analysis because the activity of the OS is generally invisible to the Edge frameworks and we did not identify any vulnerability related to it; further, OS-support tools are often part of Edge frameworks themselves.

All our components are described in the following:

-

Resources.Resource refers to any software medium used to store data, for example files or databases. An example is given by Kubernetes vulnerability report MITRE: CVE202028914 (2023), which indicates that a malicious user can access restricted folders (i.e., resources) with both read and write permissions using a guest account.

-

Drivers. Driver indicate devices drivers for the operating system controlled by the Edge server controller (see Section 2.1).

-

Plugins.A plugin is an add-on component or module that enhances the system’s capabilities. An example is provided by Kubernetes vulnerability MITRE: CVE202131938 (2022), which concerns the Kubernetes plugin Helm. Helm exchanges username and password without encryption, therefore, a malicious user may introduce a custom URI in the system configuration to steal the username and passwords of its users. In the case of \({\textbf{RQ}}_{2A}\), it is Helm (i.e., the plugin) what experiences the effect of the vulnerability (i.e., receives username and password). For \({\textbf{RQ}}_{2B}\), the component in the required state is a resource; precisely, a configuration file that contains the custom URI to exploit the vulnerability. For \({\textbf{RQ}}_{2C}\), the component receiving the input that triggers the vulnerability is the Helm plugin. For \({\textbf{RQ}}_{2D}\), the faulty component is the SUT, since it should not allow end-users to change the configuration files in which the Helm URI is located. Another case is provided by the docker vulnerability MITRE: VE202139159 (2022), where the faulty component is the plugin matrix-media-repo. The plugin matrix-media-repo minimizes the size of the images saved on the server side. However, accessing stored images from the database requires a decompression process; a malicious user may upload special crafted images that exhaust the decompression process and cause a security failure (i.e., a denial-of-service) on the SUT (i.e., KubeEdge).

-

Software Under Test (SUT). We introduced this term to indicate cases in which issues concern the Edge framework under test. An example is provided by vulnerability MITRE: CVE202134431 (2022) in Docker, in which the faulty component is the Mosquitto (2022) MQTT Broker (SUT, according to Figure 1). During the handshake process between the client and the server, a CONNECT packet should be sent from the client to the server only once. The server is responsible for processing the CONNECT request and reply; the presence of multiple CONNECT requests being sent to the server by a same client is considered a protocol violation which results in the client being disconnected. The vulnerability concerns Mosquitto, in which the disconnection of a client leads to a memory leak that may end-up into a denial-of-service. In the example, the node is the component affected by the effects of the vulnerability (\({\textbf{RQ}}_{2A}\)), the network protocol should be in a specific state (i.e., the CONNECT state) (\({\textbf{RQ}}_{2B}\)), the network receives the input which triggers the vulnerability (\({\textbf{RQ}}_{2C}\)), and the SUT (i.e., Mosquitto) is the faulty component (\({\textbf{RQ}}_{2D}\)).

-

Services. Services are executable programs that provide the data required by the SUT. An example is provided by Kubernetes vulnerability report MITRE:CVE201911252 (2022), which indicates that the services bound to loopback address (127.0.0.1) are accessible by other hosts on the network. Those services should only be accessible to local processes. In this case, these loopback services are the components experiencing the effects of the vulnerability (\({\textbf{RQ}}_{2A}\)).

-

Network. Components implementing network-related functionalities (i.e., communication protocols, firewalls, and ports) belong to this category. An example is provided by the Kubernetes vulnerability report MITRE: CVE202128448 (2022), which describes the incapability to enforce multiple firewall rules for DNS traffic during egress communication. In CVE-2021-28448, for \({\textbf{RQ}}_{2A}\), the SUT is what experiences the effects of the security failure since its data could be shared with otherwise restricted URLs over the Internet (DNS filters are not working properly). For \({\textbf{RQ}}_{2B}\), the network (specifically the network firewall) is the component in the state required to exploit a vulnerability. For \({\textbf{RQ}}_{2C}\), it is the network what receives the input traffic exploiting the vulnerability. For \({\textbf{RQ}}_{2D}\), it is the network the vulnerable component.

-

Node. A Node is an execution environment; it includes a file system and all the programs and services running on it. In this category, we include also virtual machines and Pods. An example is provided by vulnerability MITRE:CVE202015157 (2022) in Kubernetes; it concerns Pods leaking passwords to a phishing URI. In kubernetes, a container can be exported using two formats (i.e., .OCI and .v2). Importing a container from these images initiates dependency resolution through the Web. A malicious user can inject a phishing URL as a dependency to be resolved during the import of container; it will enable the malicious user to steal credentials. Importing an infected container image will thus result in credentials theft during dependency resolution. In the example, the newly deployed node is what it is compromised (\({\textbf{RQ}}_{2A}\)), the node is also what needs to be in the state that requires resolving dependencies (\({\textbf{RQ}}_{2B}\)), the SUT (i.e., Kubernetes) is what receives the input to import the container from an image (\({\textbf{RQ}}_{2C}\)), Kubernetes (i.e., our SUT) is the faulty component (\({\textbf{RQ}}_{2D}\)).

-

API. API indicates the components implementing the APIs used for controlling the Edge system (see Section 2.1). An example vulnerability is MITRE: CVE202132783 (2022), which concerns the Contour controller API in Kubernetes. Typically, an access request from outside of the network is prohibited, therefore, the access is denied. However, the Contour controller is not capable of correctly handling multiple access requests thus resulting in a denial of service (DoS). In CVE-2021-32783, it is the SUT what is compromised after exploiting the vulnerability (\({\textbf{RQ}}_{2A}\)). Instead, it is the Contour API that is faulty (\({\textbf{RQ}}_{2D}\)), needs to be in the necessary state to exploit the vulnerability (\({\textbf{RQ}}_{2B}\)), and receives the input that triggers the vulnerability (\({\textbf{RQ}}_{2C}\)).

-

Hardware. Hardware refers to the hardware components of the system, which include physical devices running the SUT (e.g., IoT devices, servers, or desktops) and network assets (e.g., routers and switches). An example is provided by vulnerability MITRE: CVE202138545 (2022) in Raspberry Pi, which results in a Glowworm attack Nassi et al. (2021). When speakers are connected to Raspberry Pi, voltage fluctuations caused by the use of speakers impact on the power supplied to the led of the Raspberry Pi module. If the led light is monitored, voltage fluctuations can be reconstructed and it is possible to reproduce the sound being played on the speakers Nassi et al. (2022). In the example, the failure affects a hardware component (\({\textbf{RQ}}_{2A}\)); indeed, the led violates the implicit security requirement “it should not be possible to determine the sounds being played from light fluctuations”. Further, the hardware should be in the necessary state (i.e., speakers being connected, \({\textbf{RQ}}_{2B}\)), the hardware is the component that receives the input (sound data) to trigger the vulnerability (\({\textbf{RQ}}_{2C}\)), and the hardware is the faulty component (i.e., it does not include a mechanism to avoid such light fluctuations, \({\textbf{RQ}}_{2D}\)).

Please note that not all the components mentioned above may be part of Edge framework distributions; indeed, only SUT, API, and Resources (e.g., configuration files) are released with Edge framework distributions. The other components (i.e., Drivers, Plugins, Services, Network, Node, Hardware) are usually developed by third-parties but are strongly coupled with an Edge framework and their CVEs provide references to such Edge framework. (\({\textbf{RQ}}_{2B}\)), or show the failure (\({\textbf{RQ}}_{2A}\)). Second, components concerning \({\textbf{RQ}}_{2A}\)to \({\textbf{RQ}}_{2C}\). Examples of the second category of components follow. One example is CVE-2021-26928, which concerns the service BIRD daemon (it can be exploited to disrupt the integrity of Kubernetes). Another case is CVE-2020-13597, which concerns the Network layer of Calico and leads to information disclosure if IPv6 is enabled but unused. Last CVE-2021-38545, which concerns the hardware of Raspberry Pi.

3.2.3 \({\textbf{RQ}}_3\): What Kind of Failures are Observed When an Edge Vulnerability is Exploited?

Like Gazzola et al., for each vulnerability we determine failure type and detectability based on the description in the vulnerability report and bug fix commit, when available. Gazzola et al. determined category entries based on the taxonomies of Bondavalli and Simoncini (1995), Aysan et al. (2008), Avizienis et al. (2004), Chillarege et al. (1992), and Cinque et al. (2007). We extended their set with entries specific for our security context.

The failure type concerns how a failure appears to an observer external to the system Gazzola (2017). We extended the set of failure types provided by Gazzola et al. (i.e., value, timing, or system) with two additional entries (i.e., action, and network). They are all described below.

-

Value. Value failures occur when the system provides an output that does not match its specifications. In our context, they range from returning an illegal value (e.g, after exploiting an integrity vulnerability), to providing sensitive information (e.g., for a vulnerability concerning confidentiality).

-

Timing. Timing failures include two cases: (1) the system takes longer than expected (according to specifications) to generate an output, (2) the system takes shorter than expected to generate output. An example is KubeEdge GitHub issue #1736 KubeEdge (2023c), which indicates that, during initialization, a Pod may try to allocate a storage volume according to configuration files that shall be provided by the Edge-core (i.e., the Edge server controller). Since the Pod is unable to find the configuration files in the directory, it hangs and results in a denial-of-service (i.e., a timing failure).

-

System. System failures occur when the system crashes. An example is provided by the vulnerability report MITRE: CVE202128166 (2022), which concerns Mosquitto communicating with an MQTT broker. CVE-2021-28166 indicates that an authenticated MQTT client can send a crafted packet CONNACK (connection Acknowledgment) to the broker thus causing a null pointer dereference that crashes the system (system failure). vulnerability. Different types of failures are discussed below for a clear understanding.

-

Action. Action failures consist of the system performing an illegal interaction with the environment. We introduced this category to compensate for the original categorization by Avizienis et al. (2004) used by Gazzola et al., which considers the SUT as a black-box and excludes the possibility to observe other output interfaces rather than the ones with the end-user. To further clarify the difference between action failures and value failures, we report that a value failure occurs when a system output is expected (e.g., after an input or periodically) but the output data does not match specifications, an action failure occurs when the output is not expected at all. An example is the vulnerability report MITRE: CVE202035514 (2023) of Kubernetes, which indicates that OpenShift, a containerization platform, fails to enforce restrictive write access policy for the Kubernetes kubeconfig file thus allowing an illegal modification (i.e., the action). Another case is Docker vulnerability CVE-2020-8564, which indicates that registry credentials are written into log files (i.e., the action) when Docker is configured with logging level 4.

-

Network. Network failures concern any aspect of the network. Since networking components follow dedicated protocols, network failures (i.e., failing to comply with the protocol) are unlikely to belong to any category described above; for this reason, we introduced a specific category. An example is provided by the Kubernetes vulnerability report MITRE: CVE20208558 (2023); it describes a case in which services bound to the loopback address are accessible by other pods and containers on the local LAN network. Any other category different than network failure would not clearly capture the characteristics of such a failure.

The detectability attribute characterizes the difficulty of detecting the failure. Following Gazzola et al., we consider the categories signaled, unhandled, and silent. From the work of Gazzola et al., we exclude self-healed since Edge systems do not include any self-healing feature for security issues.

-

Signalled. It concerns cases in which the system prompts an error message. This could primarily happen when an application encounters memory errors, prompting the user with an error message and asking for further actions. An example case is the KubeEdge GitHub report #2362, which indicates that the Edge device prompts an error because it is unable to connect with the Cloud through its API. memory to overflow; therefore, the operating system (OS) terminates the application.

-

Unhandled. A failure that the Edge system does not handle and that leads to a crash. The system does not detect the failure, while the user detects the uncontrolled crash of the application. An example is the GitHub issue #335 Zetta (2023) of Zetta, which is about a memory overflow leading to a crash. It occurs when a dependency request is installed before the handler process starts, it leads to a slow but continuous memory consumption resulting in a crash.

-

Silent A security failure that is not detected; consequently, the system operates with wrong parameters and values thus producing undesirable behaviors and output. This is the case of failures that are observable (e.g., the person who reported the bug was capable of observing them) but not automatically reported by the system as such (e.g., because implementing the logic to automatically determine if the system fails is not feasible since it relates to the oracle problem in software testing Barr et al. 2015; Mai et al. 2019). as a plugin, so that configuration files to access the plugin are stored locally in the kubernetes system. The configuration file contains the URI for the helm plugin, which is used with username and password for access. The username and password are not secured and are shared in plain text. A malicious user can modify the URL in the helm configuration file with a custom URI, which receives credentials upon request. An example is provided by the Kubernetes vulnerability report MITRE: CVE20208563 (2022), which indicates that with logging level set to 4, the credentials of the vsphere controller are written into the controller log file as plain text. Only an end-user inspecting the log may notice such a security failure.

3.2.4 \({\textbf{RQ}}_4\): What is the Nature of the Precondition Enabling the Attacker to Exploit Edge Vulnerabilities?

To address this research question, for each vulnerability, we keep track of the type of precondition that shall hold to enable exploiting the vulnerability, based on the description appearing in the vulnerability report. secure communication but a malicious user is capable of crafting and manipulating the second token at his end to escalate privilege’s and gain access to otherwise restricted modules of the system. Precondition for exploiting the vulnerability specifies the system to be in specific configured state which in the example is the use of older version of ESP. We identified the following categories:

-

Data. What brings the system into a vulnerable state is a specific sequence of input data. A example is the vulnerability MITRE:CVE202015157 (2022) presented earlier; it affects a Kubernetes Pod, which may leak passwords to a phishing URI while resolving malicious dependencies during the import of a container. In this case, the data consists of the phishing dependencies inserted by a malicious user.

-

Lack of Data. What brings the system into the vulnerable state is the lack of an expected input (e.g., a missing initialization of a resource). It differs from Data since, in this case, the required data is not provided; in the case of Data, instead, the data is provided but with crafted values or in an unexpected order.An example is KubeEdge bug report #2362 MITRE (2022), which indicates that the end-user cannot connect to the Kubernetes server (availability problem) because no credentials are shared between the Cloud server and the Edge server. In this case the problem depends on a specific connection command not being automatically executed on the Cloud server.

-

Resource Busy. It indicates that a required resource cannot be accessed because it is already busy. An example is provided by Kubernetes bug report #1017 KubeEdge (2023b), which indicates that two different go-routine requests for a resource already in use make the system unavailable.

-

Resource Unavailable. It indicates that a required resource does not exist in the system. An example is Kubernetes vulnerability MITRE: CVE202035514 (2023), which indicates that the Kubelet Edge device agent fails to manage the storage in a Pod; indeed, increasing the storage consumption may lead to writing data to the configuration files of a Kubelet agent resulting in compromising the Node. In this case, the unavailable resource is the file system storage.

-

System Configuration. It indicates a misconfiguration of the system. An example is vulnerability MITRE:CVE202013597 (2022) in Calico (a network security solution for containers); if a Pod is configured to work on IPv4 and meanwhile IPv6 is enabled and not being used, a specifically crafted request may cause the Pod to disclose information or cause a DoS.

-

Delay Causing Missing Resource. It indicates the case in which a delay (e.g., in input, output, or module initialization) causes any resource to be missing (it differs from Resource Unavailable since in this case the missing resource is an output of the SUT). An example for such case was presented earlier, it concerns KubeEdge report #1736 KubeEdge (2023c), which indicates that, during the initialization of the SUT, a Pod tries to allocate storage volume using configuration files that should be created by the Edge-core. If the initialization of the Edge-core is delayed, then the pod is unable to find the configuration files in the directory and ends up with a denial of service.

-

None. This case indicates that there is no precondition to be satisfied in order to exploit the vulnerability.

3.2.5 \({\textbf{RQ}}_{5A}\): How many Steps are Required to Exploit an Edge Vulnerability?

To answer this research question we determine, by reading the vulnerability report, the number of steps required to exploit the vulnerability, once the system is in the state required to exploit the vulnerability. However, the type of action to be performed depends on the case study subject. Generally, a step is an action that can be described with a simple sentence using terminology that is well-understood in the domain. For example, the sentence delete the content of the configuration file settings.xml is a single step even if, in practice, implies opening a file first. For example, the Kubernetes vulnerability MITRE: CVE202120218 (2022) reports a single step, consisting of executing the copy command on the Fabric8 plugin Fabric8 Maven Plugin (2022). This step enables a malicious user to share restricted files and folders in the system. The docker vulnerability report MITRE:CVE-2014-5278 (2022), instead, describes a single step which consists of creating a new container with a name already assigned on the host. The vulnerability enables an attacker to intercept commands and control other containers with the same name.

3.2.6 \({\textbf{RQ}}_{5B}\): What is the Nature of the Input Action Enabling the Attacker to Exploit a Vulnerability?

This research question aims to characterize the types of inputs that enable a malicious user to exploit a vulnerability. We rely on the same categories reported for \({\textbf{RQ}}_4\). An example concerning the Data category is that of Kubernetes vulnerability MITRE: CVE202121334 (2022), which reports that an input request for cloning a container image (the name of the image is the required data) will result into the disclosure of information associated with the container image.

The category None should be used when no input is needed to exploit the vulnerability. This may be the case for vulnerabilities leading to the printout of credentials in log files without the need for further inputs from a malicious user.

3.2.7 \({\textbf{RQ}}_{6}\): What Security Properties are Violated by Edge Vulnerabilities?

We address this research question by determining the security property that is violated when the vulnerability is successfully exploited. We consider availability, confidentiality, and integrity, which are the security properties described in most security standards (see Section 2.4). Concerning integrity, we distinguish between data integrity and system integrity. They are all described in the following:

-

Availability. An example availability issue appears in KubeEdge bug report #1017 KubeEdge (2023b), which has been introduced previously. It concerns two go-routines trying to access, concurrently, a same web-socket. As a result, only one of the two routines succeeds; consequently, the availability of the function implemented by the failing routine is compromised.

-

Data Integrity. Data-integrity restricts our focus on the integrity of the data stored by either the SUT or the environment in which the SUT is working. An example is provided by the Kubernetes vulnerability report MITRE: CVE202121251 (2022). It concerns the tarutils tool, which is used to extract compressed files. This vulnerability is a zip slip vulnerability, i.e., a vulnerability that enables an attacker to overwrite arbitrary files when the compressed file is packed in a specific manner.

-

System Integrity. It concerns cases in which exploiting the vulnerability leads to a modification of the configuration of the system. An example is the CVE vulnerability MITRE:CVE20202211 (2022), which concerns the Jenkins Kubernetes CI/CD plugin. The YAML parser in the plugin is not configured properly; consequently, it allows the upload of arbitrary file types, which leads to remote code execution therefore compromising the system integrity. Generating multiple connection request to Contour results in DoS.

-

Confidentiality. This category concern vulnerabilities affecting confidentiality. An example is the CVE vulnerability report MITRE: CVE20208566 (2002), which concerns the Ceph RADOS Block Device (RBD). RBD is the Kubernetes component for storage provisioning. When logging level is set to 4, RBD writes sensitive information (i.e., passwords) to the log file in plain text.

Violated security properties are reported also in NVD CVSS attributes (see Section 2); precisely, CVSS attributes capture the impact that a vulnerability has on each security property (i.e., None, Low, High). However, we do not have CVSS IDs for all the vulnerabilities considered in our study but only for the ones collected from the CVE database. Further, CVSS attributes capture all the security properties that might be affected, which results in multiple security properties being likely violated by each vulnerability; in our analysis, instead, we report only one security property for each vulnerability, which we identify as either the security property that is easier to violate through an exploit (e.g., less steps to perform) or, if multiple properties can be violated with a same simple input, the security property that can be identified as being violated first. For example, the malicious modification of the configuration of the system (system integrity) may result in a Node not responding to requests (availability); in this case, although both system integrity and availability are violated, system integrity is the first property being violated. The reason for our choice is that, with our study, we aim to drive the implementation of software testing tools, which will likely discover scenarios that are short and easy to process; in other words, they will detect violations of security properties that are easier to trigger and report the first security being violated (without waiting for other effects).

3.2.8 \(\textbf{RQ}_7\): What Faults Cause Edge Vulnerabilities?

In the following, we present the three different kinds of data collected to address \(\textbf{RQ}_7\):

-

\(\textbf{RQ}_{7A}\): What is the CWE Vulnerability Type? We keep track of the CWE IDs associated to each vulnerability report. Although there is no guarantee that every CVE vulnerability report presents a set of CWE IDs capturing the vulnerability type, they are usually reported (for our case study subjects, 89.8% of the vulnerabilities present a CWE ID, see Section 4.8). The vulnerabilities without a CWE ID are not considered to address \(\textbf{RQ}_{7A}\).

-

\(\textbf{RQ}_{7B}\): What are the Erronous Software Behaviours Leading to Edge Security Failures? For each CWE ID associated to a vulnerability, we inspect the Research Concept taxonomy and identify the corresponding pillars.

-

\(\textbf{RQ}_{7C}\): What are the Developer Mistakes Leading to Edge Vulnerabilities? For each CWE ID associated to a vulnerability, we inspect the Developer Concept taxonomy and identify the corresponding pillars.

3.2.9 \(\textbf{RQ}_{8}\): How Severe are Edge Vulnerabilities?

For each CVE vulnerability, we inspect the corresponding entry in the NVD database and keep track of both the NVD severity score and the CVSS entry.

To discuss severity, we comment on the distribution of CVSS scores; for example, a high median for the CVSS score is a strong motivation for improvement in Edge security testing practices. Also, we report the percentage of vulnerabilities with a high impact on security properties.

To discuss the easiness of attacks, which should lead to easy test automation, we discuss the distribution of CVSS attributes Attack Complexity (Low/High) and Privileges Required (None/Low/High).

4 Results

This section presents our findings; each research question (RQ) is discussed individually. In this section we do not provide example cases for the vulnerabilities investigated in our study because they are already described in Section 3.2; the reader can also refer to Table 3 to search for example cases through the manuscript.

For \({\textbf{RQ}}_{1}\)to \({\textbf{RQ}}_{5A}\), we also compare our results with those of Gazzola (2017); \({\textbf{RQ}}_4\)to \(\textbf{RQ}_{8}\), instead, were not studied by Gazzola et al.

4.1 \({\textbf{RQ}}_{1}\): Why are Edge Vulnerabilities not Detected During Testing?