Abstract

Cloud-native applications constitute a recent trend for designing large-scale software systems. However, even though several cloud-native tools and patterns have emerged to support scalability, there is no commonly accepted method to empirically benchmark their scalability. In this study, we present a benchmarking method, allowing researchers and practitioners to conduct empirical scalability evaluations of cloud-native applications, frameworks, and deployment options. Our benchmarking method consists of scalability metrics, measurement methods, and an architecture for a scalability benchmarking tool, particularly suited for cloud-native applications. Following fundamental scalability definitions and established benchmarking best practices, we propose to quantify scalability by performing isolated experiments for different load and resource combinations, which asses whether specified service level objectives (SLOs) are achieved. To balance usability and reproducibility, our benchmarking method provides configuration options, controlling the trade-off between overall execution time and statistical grounding. We perform an extensive experimental evaluation of our method’s configuration options for the special case of event-driven microservices. For this purpose, we use benchmark implementations of the two stream processing frameworks Kafka Streams and Flink and run our experiments in two public clouds and one private cloud. We find that, independent of the cloud platform, it only takes a few repetitions (≤ 5) and short execution times (≤ 5 minutes) to assess whether SLOs are achieved. Combined with our findings from evaluating different search strategies, we conclude that our method allows to benchmark scalability in reasonable time.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Following the rise of cloud computing as preferred deployment infrastructure for many applications, we are now witnessing how large-scale software systems are increasingly being designed as “cloud-native” applications (Gannon et al. 2017; Kratzke and Quint 2017). Under the umbrella term “cloud-native”, a wide range of tools and patterns emerged for simplifying, accelerating, and securing the development and operation of software systems in the cloud. Key concepts are containers, dynamic orchestration, and microservices, which provide a new level of hardware abstraction, while still providing a high flexibility regarding the system’s deployment. An entire ecosystem of such tools has grown in recent years under the umbrella of the Cloud Native Computing Foundation,Footnote 1 a suborganization within the Linux Foundation. Most prominent among these tools is probably Kubernetes (Burns et al. 2016), which has become the de-facto standard orchestration tool for cloud-native applications. Nowadays, all major cloud providers offer managed Kubernetes clusters. With such offerings, users only specify the desired cluster size (e.g., number of virtual or physical nodes and properties of nodes). For the actual operation of their applications (e.g., scaling, updating, or repairing), they only interact with the Kubernetes API. Recently, a further level of abstraction can be observed. For example, the Google Cloud Platform launched its Kubernetes Autopilot in 2021, which adjusts underlying node pools based on the current demand and only charges users for the containers they actually deploy.

A definition of the term “cloud-native” is provided by the Cloud Native Computing Foundation, which states that “cloud native technologies empower organizations to build and run scalable applications in modern, dynamic environments such as public, private, and hybrid clouds” (Cloud Native Computing Foundation 2018). This definition already includes the requirement for scalability. Similarly, microservice architectures (a common pattern for cloud-native applications (Balalaie et al. 2016)) are often adopted to cope with scalability requirements (Kratzke and Quint 2017; Soldani et al. 2018; Knoche and Hasselbring 2019). However, although scalability is often named as a crucial motivation for adopting cloud-native architectures or deployments, research is lacking a commonly accepted method to empirically assess and compare the scalability of cloud-native applications.

In empirical software engineering research, benchmarks are an established research method to compare different methods, techniques, and tools based on a standardized method (Sim et al. 2003; Tichy 2014; Hasselbring 2021). For traditional performance attributes such as latency or throughput, well-known (and often straightforward) metrics and measurement methods exists (Kounev et al. 2020). For scalability, in particular in the context of cloud-native applications, the situation is different: On the one hand, precise definitions exist and were refined over the last two decades (Jogalekar and Woodside 2000; Duboc et al. 2007; Weber et al. 2014). On the other hand, we observe that for cloud applications, no commonly accepted scalability benchmarking method exists, employed benchmarking methods are insufficiently described or not aligned with scalability definitions, and benchmarking methods for other application types cannot be transferred. Even though most studies from academia and industry share similar understandings of scalability, the lack of well-defined metrics and measurement methods contradicts the fundamental principle of benchmarking.

With this paper, we aim to bridge the gap between research on defining scalability and experimental scalability evaluations of cloud-native applications. Our goal is to provide a solid framework, allowing researchers and practitioners to conduct empirical scalability evaluations. We propose, discuss, and evaluate a benchmarking method that can be used by benchmark designers (e.g., standardization organizations or research communities) and benchmarkers (e.g., software engineers, cloud vendors, or researchers), who wish to benchmark the scalability of different software artifacts or deployment options. Our study consists of two parts: a pre-study for engineering the benchmarking method and an experimental evaluation.

Pre-study

We review scalability definitions and benchmarking best practices from industrial consortia and academia. We analyze which quality attributes of benchmarks have to be fulfilled by which benchmarking components and derive requirements for fulfilling them for the special case of scalability benchmarking. Based on the distinction between metric, measurement method, and tool architecture, we engineer a benchmarking method implementing the particular requirements.

We require that a scalability metric for cloud-native applications should be aligned with accepted definitions of scalability in cloud computing. We find that this can be achieved by two scalability metrics that describe scalability as functions. Our demand metric indicates how the required amount of provisioned resources evolves with increasing load intensities. Our capacity metric indicates how the processible load intensity evolves with increasing amounts of provisioned resources. Both metrics use the notion of service level objectives (SLOs) for quantifying whether a certain resource amount can handle a certain load intensity.

A scalability measurement method should provide statistically grounded results to allow for reproducibility. On the other hand, it should also not be too time consuming to remain usable. While measuring scalability according to our proposed metrics provides an accurate quantification of a system’s scalability, it also requires many experiments to be performed. Combined with the need for sufficiently long experiment durations or repetitions, this may lead to long runtimes for benchmarking scalability. Our proposed measurement method provides several configuration options to balance the overall runtime and statistically grounding of its results.

For a scalability benchmarking tool, we require in particular usability to support reproducibility and verifiability of benchmarking studies. This includes a simple installation and the declarative description of benchmarks and their executions. Due to the complexity of such a benchmarking tool, the tool and corresponding benchmarks should not be coupled. We propose a benchmarking architecture fulfilling these requirements by adopting common patterns for managing cloud-native applications. Additionally, this architecture contains a data model for separately describing benchmarks and their executions, taking the different types of actors involved in benchmarking into account.

Experimental evaluation

We experimentally evaluate whether our proposed method allows one to obtain statistically grounded results within reasonable execution times. We use an implementation of our proposed benchmarking tool architecture to experimentally evaluate the individual configuration parameters, which control the statistically grounding of benchmark results as well as the corresponding execution times. Test subjects of our experiments are two stream processing engines, which implement a set of four benchmarks. Our experiments are conducted in Kubernetes clusters running at two public cloud vendors (Google Cloud Platform and Oracle Cloud Infrastructure) and one private cloud. Specifically, we address the following research questions:

- RQ 1 :

-

For how long should experiments be executed that evaluate whether a certain combination of load intensity and provisioned resources fulfill the specified SLOs?

- RQ 2 :

-

How many repetitions of such experiments should be performed?

- RQ 3 :

-

How does the assessment of SLOs evolve with increasing resource amounts?

- RQ 4 :

-

How does the assessment of SLOs evolve with increasing load intensities?

We find that in most cases, it only takes a few repetitions (≤ 5) and short execution times (≤ 5 minutes) to assess whether a certain resource amount can handle a certain load intensity. When accepting a small error in the derived resource demand, also resource-load combinations for which this assessment is more extensive can be run with a few repetitions and short execution times. Moreover, the space of load intensities and resource amounts experiments are executed for can massively be reduced with search strategies for both our presented metrics.

Recommendation

As benchmarking activities are often restricted in time or resources, we recommend to focus on expanding the evaluated load and resource space instead of exhaustively evaluate individual load and resource combinations. While individual experiments should be repeated and warm-up periods should be considered, evaluating more load intensities or resource amounts allows to gain a better impression of a system’s scalability.

Contributions

In summary, the main contributions of this paper are as follows:

-

Requirements on scalability benchmarking of cloud-native applications, arranged by scalability metric, measurement method, and benchmarking tool architecture.

-

A scalability measurement method, which performs isolated experiments for different load intensities and resource amounts in a configurable manner and evaluates whether specified service level objectives (SLOs) are met.

-

An architecture for a cloud-native scalability benchmarking tool implementing the proposed measurement method. It supports different benchmarks, which do not need to be explicitly designed for scalability benchmarking.

-

An extensive evaluation of different configuration options of our proposed method. A replication package and the collected data of our experiments is published as supplemental material (Henning and Hasselbring 2021b), such that other researchers may repeat and extend our work.

The remainder of this paper starts by providing the background and context of this study in Section 2. Based on this overview, we derive requirements for a scalability metric, a measurement method, and a benchmarking tool in Section 3. Afterwards, we propose scalability metrics in Section 4, a corresponding scalability measurement method in Section 5, and our scalability benchmarking architecture in Section 6. We experimentally evaluate our proposed benchmarking method in Section 7 and discuss related work in Section 8. Section 9 concludes this paper and points out future research directions.

2 Context and Background

In this section, we provide the context and background of this paper. Benchmarks consist of multiple components to empirically evaluate a quality, namely scalability in this work. Figure 1 shows these components and highlights the scope of this paper. The goal of this paper is not to present new benchmarks, i.e., SUTs and load generator, as those do already exists. Instead, we present a scalability benchmarking method, which consists of metrics, measurement method, and an architecture for executing such benchmarks. In the following, we briefly describe the components of benchmarks along with respective quality attributes in Section 2.1 and provide an overview of scalability definitions in Section 2.2.

2.1 Benchmarking in Empirical Software Engineering

2.1.1 Components of Benchmarks

The recently published ACM SIGSOFT Empirical Standard for Benchmarking (Ralph et al. 2021; Hasselbring 2021) names four essential components of a benchmark:Footnote 2

-

the quality to be benchmarked (e.g., performance, availability, scalability, security)

-

the metric(s) to quantify the quality

-

the measurement method(s) for the metric (if not obvious)

-

the workload, usage profile and/or task sample the system under test is subject to (i.e., what the system is doing when the measures are taken)

In addition, benchmarks usually come with a benchmarking tool to automate the benchmarking process. A typical benchmarking tool architecture contains separate components for the load generation and SUT (Bermbach et al. 2017). The SUT is either a ready-to-use software (or service) or a software (or service) that implements a task sample defined by the benchmark. The load generation component stresses the SUT according to the workload or usage profile defined by the benchmark. Additional components in a benchmarking architecture are responsible for experiment controlling, data collection, and data analysis and, thus, implement the benchmark’s measurement method (Bermbach et al. 2017). Sometimes also a visualization component for passive observation is included (Bermbach et al. 2017).

2.1.2 Quality Attributes of Benchmarks

A set of five desired quality attributes for benchmarks is presented by v Kistowski et al. (2015), which represents the perspectives of the SPEC and TPC committees:

-

Relevance How closely the benchmark behavior correlates to behaviors that are of interest to consumers of the results

-

Reproducibility The ability to consistently produce similar results when the benchmark is run with the same test configuration

-

Fairness Allowing different test configurations to compete on their merits with-out artificial limitations

-

Verifiability Providing confidence that a benchmark result is accurate

-

Usability Avoiding roadblocks for users to run the benchmark in their test environments

These and similar quality attributes can also be found by Sim et al. (2003), Huppler (2009), and Folkerts et al. (2013) as well as in textbooks on (cloud) benchmarking (Bermbach et al. 2017; Kounev et al. 2020).

2.1.3 Application-driven Benchmark Design

Often a distinction between microbenchmarking and application benchmarking is made (Kounev et al. 2020). We focus on benchmarking entire cloud-native applications or independently deployable components such as microservices as those can only be scaled at a whole. This implies that from a benchmarker’s perspective, the SUT is a black box and we have to rely on metrics exposed by the orchestration platforms, middlewares, or the SUT itself.

2.2 Definition of Scalability

Initial definitions for scalability of distributed systems were presented by Bondi (2000) and Jogalekar and Woodside (2000), which were later generalized by Duboc et al. (2007).

2.2.1 Scalability in Cloud Computing

More recently, such definitions have been specified to target the peculiarities of scalability in cloud computing (Herbst et al. 2013; Lehrig et al. 2015; Brataas et al. 2017). A definition of scalability in cloud computing is, for example, given by Herbst et al. (2013), which states that “scalability is the ability of [a] system to sustain increasing workloads by making use of additional resources”. In a subsequent work, Weber et al. (2014) further refine this definition and highlight that scalability is characterized by the following three attributes:

- Load intensity:

-

is the input variable to which a system is subjected. Scalability is evaluated within a range of load intensities.

- Service levels objectives (SLOs):

-

are measurable quality criteria that have to be fulfilled for every load intensity.

- Provisioned resources:

-

can be increased to meet the SLOs if load intensities increase.

A software system can be considered scalable within a certain load intensity range if for all load intensities within that range it is able to meet its service level objectives, potentially by using additional resources. Both load intensity and provisioned resources can be evaluated with respect to different dimensions. Typical load dimensions are, for example, the amount of concurrent users or number of requests, while resources are often varied in the number of processing instances or equipment of the individual instances. This understanding of scalability (albeit less formally) is shared by textbooks addressed to practitioners (Kleppmann 2017; Gorton 2022).

A similar definition, although formulated inversely, is used in multiple publications of the “CloudScale” project (Lehrig et al. 2015; Brataas et al. 2017; Brataas et al. 2021). They define scalability as “a system’s ability to increase its capacity by consuming more resources” (Brataas et al. 2021), where capacity describes the maximum load the system can handle while fulfilling all “quality thresholds”. Here, the notion of quality thresholds corresponds to what Weber et al. (2014) and others call SLOs.

2.2.2 Vertical and Horizontal Scalability

A distinction is often made between horizontal and vertical scalability (Michael et al. 2007; Lehrig et al. 2015). While horizontal scaling refers to adding computing nodes to cope with increasing load intensities, vertical scaling means adding resources to a single node. A special case of vertical scaling in cloud computing is migrating from one VM type to another (Weber et al. 2014). In cloud-native deployments, the underlying physical or virtualized hardware is usually abstracted by containerization and orchestration techniques. Nevertheless, different types of scaling resources also exist in cloud-native applications. The scalability definition presented previously covers both horizontal and vertical scalability as both refer to different types of provisioned resources (Weber et al. 2014).

2.2.3 Scalability vs. Elasticity

Another quality that is often used in cloud computing is elasticity (Lehrig et al. 2015). Scalability and elasticity are related, but elasticity takes temporal aspects into account and describes how fast and how precisely a system adapts its provided resources to changing load intensities (Herbst et al. 2013; Islam et al. 2012). Scalability, on the other hand, is a prerequisite for elasticity, but is a time-free notion describing whether increasing load intensities can be handled eventually.

3 Requirements on Scalability Benchmarking

To identify requirements for scalability benchmarking method, we build upon the established benchmark quality attributes listed in Section 2.1.2. For each quality attribute, we identify the benchmark components (cf. Section 2.1.1) that can contribute most to implementing the attribute. Based on this, we derive for each benchmark component covered by this work (metric, measurement method, and tool architecture) a set of requirements that needs to be fulfilled for benchmarking scalability.

Table 1 describes the desired behavior of the individual benchmark components in order to implement the respective quality attribute. We conclude that relevance and fairness have mainly to be implemented at the level of the task sample and, to some extent, by the metric. The measurement method and the tool architecture, on the other hand, should primarily be designed for reproducibility, verifiability, and usability. In the following, we propose a set of requirements for scalability benchmarking for each of the benchmark components metric, measurement method, and tool architecture.

3.1 Requirements for Scalability Metrics

As described in Section 2, scalability is defined by the three attributes load intensity, service levels objectives (SLOs), and provisioned resources. We aim for a general scalability metric, which is applicable to different types of systems and scalability evaluations. Therefore, we require that this metric is not restricted to a specific type of any of these attributes.

Support for different load types

Various types of load for a cloud-native application exist. For example, in the context of web-based systems load is often considered as the number of requests arriving at a web server within some period of time, while in event-driven architectures it is often the amount of messages written to a dedicated messaging system. Such load types can be further broken down to distinguish, for example, between the amount of concurrent users sending requests and the frequency users send requests with. Other typical load types are the size per message or request or, in the case of request–response systems (e.g., databases), the size of responses. In previous work, we also highlighted domain-specific load types for the case of big data stream processing (Henning and Hasselbring 2021c).

Support for different SLOs

The notion of SLOs in scalability definitions provides us a measure to check, whether a system is able to handle a certain load intensity. Typical SLOs are, for example, that no more than a certain percentage of requests or messages may be processed with a certain latency (e.g., maximum allowed latency at the 99.9 percentile) or that no more than a certain amount of requests is discarded. The choice of such SLOs always depends on the application domain and should not be defined by the scalability metric. Additionally, a metric should also support multiple SLOs, which all have to be fulfilled.

Support for different resource types

Depending on the desired deployment, the resources that can be added to sustain increasing workloads may be of different types. According to the traditional distinction between vertical and horizontal scalability, this means upgrading the computing capabilities of existing nodes or expanding the node pool by additional nodes. In orchestrated cloud-native architectures, the assignment of application or service instances is usually abstracted and managed by a tool such Kubernetes. One option is to increase the requested CPU or memory resources for a so-called pod, which may contain multiple containers. Kubernetes then ensures that the pod will be scheduled on a node, with provides sufficient resources. This can be seen as a form of “virtualized” vertical scaling. On the other hand, pods can also be scaled by increasing their number of replicas, which corresponds to “virtualized” horizontal scaling. Here, we use the term virtualized since it is not guaranteed that indeed more nodes or more powerful nodes are used. Nonetheless, even underlying hardware or VMs should be supported to be scaled such as machine sizes configured via the cloud provider.

3.2 Requirements for the Scalability Measurement Method

Robust statistical grounding

Performance experiments exhibit a large variability in their results for various reasons (Maricq et al. 2018). For experiments in public cloud environments, this variability is even larger due to effects of changing physical hardware or software of different customers running on the same hardware (Abedi and Brecht 2017). In order to obtain reproducible results, measurements should therefore be repeated and the confidence in the final results should be quantified (Papadopoulos et al. 2021). Scalability benchmarking conducts performance experiments to assess whether SLOs are achieved. This means that experiments in scalability evaluations should be executed for a sufficient amount of time as well as repeated multiple times.

Time-efficient execution

Increasing the statistical grounding of performance experiments as described above leads to longer execution times. Hence, the requirement for reproducibility conflicts with the requirement for usability and, thus, verifiability as with increasing execution time also costs increase. For a usable measurement method, we therefore require to find a balance between statistically grounded results and a time-efficient execution.

3.3 Requirements for the Scalability Benchmarking Architecture

Operating a distributed software system in the cloud is a complex task, which indeed motivated the development of powerful orchestration tools such as Kubernetes (Burns et al. 2016). Typical situations that have to be handled are, for example, unpredictable network connections, deviations in the underlying hardware or software infrastructure as well as complex requirements on the order of starting many interacting components. Such situations must also be accounted for when running benchmarks in orchestrated cloud platforms, where experiments should be executed for several hours without user intervention to achieve usability of the benchmarking tool. Hence, we require approaches, which are similar to those that are used for operating cloud-native applications.

Simple Installation

Installing or deploying cloud-native tools is often complex as even for a single tool or service, several resources have to be deployed, such as Deployments, Services, ConfigMaps and many others in the case of Kubernetes. This becomes even more difficult if for different clusters or cloud providers, different adjustments have to be made. With our Theodolite benchmarking tool (Henning and Hasselbring 2021c), for example, we experienced that persistent volumes are created differently for different cloud providers, components must explicitly be deployed on certain node classes, or certain features of a benchmarking setup should be disabled due to missing permissions. To simplify the installation of a benchmarking tool, we thus require the adoption of patterns and tools that are established for setting up cloud-native applications in production.

Declarative benchmark and experiment definition

A common situation when operating software systems in orchestrated cloud platform is that the desired and previously configured system state deviates form the actual state. Orchestration tools such as Kubernetes address this by providing declarative APIs, which are used to describe the desired state of the system. The orchestration tools continuously compare the actual state to the desired state and perform the necessary reconfigurations. Accordingly, we also require that cloud-native benchmarking tools should be designed in a way such that users only describe what system they would like to benchmark with which configuration, while the benchmarking tool handles the actual execution.

Support for different benchmarks and SUTs

There already exists a set of reference implementations and benchmarks for different types of systems, focusing on different attributes. For a generic benchmarking tool, we require that it should be able to support different benchmarks.

Support for different SUT configurations

With benchmarks, often not only different systems or frameworks are compared, but also different configurations or deployment options. A generic benchmarking tool should support setting these configurations via its declarative API such that no new installation or even re-building of the benchmark implementation is required.

4 Scalability Metrics

We proposed and discussed the scalability metrics presented in this section at the International Workshop on Load Testing and Benchmarking of Software Systems 2021 for the special case of stream processing systems (Henning and Hasselbring 2021a). From there, we received the feedback that these preliminary metrics could be widened in their scope to cover cloud-native applications in general.

Our metrics take up the three attributes of scalability in cloud computing presented in Section 2.2.1 and generalize them according to our requirements from Section 3. We define the load type as the set of possible load intensities for that type, denoted as L. For example, when studying scalability regarding the number of incoming messages per unit of time, L would simply be the set of natural numbers. Similarly, we define the resource type as the set of possible resources, denoted as R. While for horizontal scalability, R is typically the set of possible instance numbers (e.g., container or VM instances), for vertical scalability, R is the set of possible CPU or memory configurations (e.g., for a container or VM). We also require that there exists an ordering on both sets L and R. We define the set of all SLOs as S and denote an SLO s ∈ S as Boolean-valued function

with slos(l,r) = true if a system deployed with r resource amounts does not violate SLO s when processing load intensity l.

Based on the previous characterization of scalability, we propose two functions as metrics for scalability. In many cases, both functions are inverse to each other. However, we expect both metrics to have advantages, as discussed in our previous paper (Henning and Hasselbring 2021a).

Resource Demand Metric

The first function maps load intensities to the resources, which are at least required for handling these loads. We denote the metric as demand: L → R, defined as:Footnote 3

The demand metric shows how the resource demand evolves with increasing load intensities. Ideally, the resource demand increases linearly. However, in practice higher loads often require excessively more resources or cannot be handled at all, independently of the provisioned resources.

Load Capacity Metric

Our second metric maps provisioned resource amounts to the maximum load, these resources can handle. We denote this metric as capacity: R → L, defined as:

Analogously to the demand metric, the capacity metric shows at which rate processing capabilities increase with increasing resources. It allows to easily determine whether a system only scales up to a maximum resource amount (e.g., when a maximum degree of parallelism is reached). This is the case if increasing resources do not lead to higher load capacities.

According to the requirements identified in Section 3, both our metrics do not make any assumption on the type of load, resource, or SLO. This implies that these metrics allow to evaluate the same system with respect to different load and resources of varying dimensions. Typical load dimensions are, for example, the number of concurrent users at a system, the amount of parallel requests, or the size of requests. Also multi-dimensional load and resource types (e.g., different VM configurations) could be evaluated, provided that there is an ordering on the load or resource values to be tested. For cloud configuration options, such an ordering usually exists in terms of the costs per configuration (Brataas et al. 2017).

5 Scalability Measurement Method

Our scalability measurement method approximates our scalability metrics by running experiments with finite subsets of the considered load and resource types, \(L^{\prime } \subseteq L\) and \(R^{\prime } \subseteq R\). The sizes of the chosen subsets \(L^{\prime }\) and \(R^{\prime }\) determine the resolution of the metrics, but also the overall runtime of the method. The basic idea of our measurement method is to run isolated experiments for various load \(l \in L^{\prime }\) and resource \(r \in R^{\prime }\) combinations, which serve to evaluate whether specified SLOs are met. We decided to run these experiments in isolation as scalability does not take temporal aspects into account (e.g., how fast can a SUT react to a changing load, cf. Section 2.2.3). Measuring the throughput for a fixed, high load or increasing the load at runtime might cause wrong results (Henning and Hasselbring 2021a). Testing only at a fixed high load also fails to reveal deviations from expected trends, such as a linear increase in resource utilization with the load and nearly constant memory occupancy at constant load.

In the following, we describe the two main components of our proposed measurement method: the execution of experiments to evaluate whether SLOs are met and search strategies, which determine the SLO experiments to be executed. Afterwards, we discuss how the requirements for statistical grounding and time-efficient execution relate with each other.

5.1 SLO Experiments

Formally, an SLO experiment determines whether for a given set of SLOs S, a SUT deployed with \(r \in R^{\prime }\) resources can handle a load \(l \in L^{\prime }\) in a sense that each SLO s ∈ S is met, i.e., slos(l,r) = true.

Our measurement method deploys the SUT with r resources and generates the constant load l over some period of time. During this time, the SUT is monitored and data is collected, which is relevant to evaluate the SLOs. For example, for an SLO that sets a limit on the maximal latency of processed messages, monitoring would continuously measure the processing latency. The duration for which SLO experiments are executed should be chosen such that enough measuring data is available to draw statistically rigorous conclusions. On the other hand, this duration should not be unnecessarily long to achieve the required time-efficient execution and, thus, increase usability. To meet the requirement for statistically grounded results, measured values of an initial time period are discarded (warm-up period). Measurements during this time usually deviate from those of the further execution as, for example, optimizations are performed after start-up. Another measure to increase statistical rigor is to repeat SLO experiments with the same load and resource combination. To finally compute slos(l,r), the monitored data points of all repetitions are aggregated in an SLO-specific way.

5.2 Search Strategies

Our proposed scalability measurement method is configurable by a search strategy, which determines the SLO experiments that will be performed to accurately approximate the scalability metrics. In the case of the demand metric, the goal is to find the minimal required resources for each load intensity \(l \in L^{\prime }\). For the capacity metric, the maximal processible load intensity for each resource configuration \(r \in R^{\prime }\) should be found.

Figure 2 gives an overview of selected search strategies, which we describe in the following for the case of the demand metric. Nonetheless, these strategies can easily be transferred to the capacity metric. Apart from these examples, also more complex strategies are conceivable. Figure 3 provides an illustrative example of our measurement method for each strategy. A colored cell corresponds to an SLO experiment for a certain load intensity and resource configuration, which is executed by the respective search strategy. Green cells represent that the corresponding SLO experiment determined that the tested resources are sufficient to handle the tested load. Red cells represent that the resources are not sufficient. Framed cells indicate the lowest sufficient resources per load intensity. The resulting demand function is plotted in Fig. 3a.

Full search

The full search strategy (see Fig. 3a) performs SLO experiments for each combination of resource configuration and load intensity. Its advantage is that it allows for extensive evaluation after the benchmark has been executed. This also includes that based on the same SLO experiments, both the demand and the capacity metric can be evaluated. However, this comes at the cost of significantly longer execution times.

Linear search

The linear search strategy (see Fig. 3b) reduces the overall execution time by not running SLO experiments whose results are not required by the metric. That is, as soon as a sufficient resource configuration for a certain load intensity is found, no further resource configurations are tested for that load.

Binary search

The binary search strategy (see Fig. 3c) adopts the well known algorithm for sorted arrays. That is, the strategy starts by performing the SLO experiments for the middle resource configuration. Depending on whether this experiment was successful or not, it then continues searching in the lower or upper half, respectively. The binary search is particularly advantageous if the search space is very large (i.e, larger than in Fig. 3). However it is based on the assumption that with additional resources for the same load, performance does not substantially decrease. More formally, this strategy assumes:

We evaluate this assumption for the special case of event-driven microservices in Section 7.4 and show that it does not hold in all cases.

Lower bound restriction

The lower bound restriction (see Fig. 3d–f) is an example for a search strategy that uses the results of already performed SLO experiments to narrow the search space. It starts searching (with another strategy) beginning from the minimal required resources of all lower load intensities. Note that when combined with the binary search strategy, the lower bound restriction may also cause different experiments to be performed (see upper right of Fig. 3f). The lower bound restriction is based on the assumption that with increasing load intensity, the resource demand never decreases. More formally, this strategy assumes:

In Section 7.4, we show that for the special case of event-driven microservices, we are safe to make this assumption.

5.3 Balancing Statistical Grounding and Time-efficiency

The runtime of a scalability benchmark execution depends on the number of evaluated resource amounts \(\vert R^{\prime }\vert \), the amount of evaluated load intensities \(\vert L^{\prime }\vert \), the duration of an SLO experiment τe as well as the associated warm-up period τw, the number of SLO experiment repetitions ρ, and the applied search strategy δ. Likewise, these values also control the statistical grounding of the results. Table 2 summarizes the effect of each configuration option on statistical grounding, while the following formulas show the runtime Φ for both the demand and the capacity metric:

6 Scalability Benchmarking Architecture

In this section we propose an architecture for a benchmarking tool that implements our proposed measurement method and, thus, our proposed scalability metrics. Moreover, our architecture is designed according to the requirements defined in Section 3. We start by outlining our proposed benchmarking process in Section 6.1, which distinguishes the definition of benchmarks and their execution. Afterwards, we present a data model for defining these benchmarks and executions in Section 6.2. In Section 6.3, we present how benchmarks and executions defined with this model can be executed in cloud-native environments.

6.1 Overview of the Benchmarking Process

Figure 4 gives an overview of our proposed scalability benchmarking process. In general, we can observe two actors involved in benchmarking:

Benchmark designers

are, for example, researchers, engineers, or standardization committees, which are experts regarding a specific type of application or software service. They are able to construct representative and relevant task samples or workloads for that type of software. Moreover, they know about relevant load intensity types, resources types, and SLOs, regrading which scalability should be evaluated. Benchmark designers bundle all of this in Benchmarks. Benchmarks can be published as supplemental material of research papers, but ideally they are versioned and maintained in public repositories (e.g., at GitHub). Benchmarks are stateless as they can be executed arbitrarily often.

Benchmarkers

intend to compare and rank different existing SUTs, evaluate new methods or tools against a defined standard, or repeat previous experiments. A detailed description of the benchmarker actor can be found by Kounev et al. (2020). Benchmarkers retrieve existing Benchmarks from their public repositories and execute them in the desired cloud environment. For this purpose, they describe the experimental setup for running a single Benchmark in a so-called Execution. Benchmarkers deploy both the Execution and the corresponding Benchmark to the benchmarking tool, which applies our proposed scalability measurement method. Executions are then assigned a state, which is typically something like Pending, Running, Finished, or Failed if an error occurred. Executions can be shared, for example, as part of a research study that benchmarks the scalability of different SUTs. The same or other benchmarkers can then again retrieve and copy Executions, for example, to replicate benchmarking studies.

6.2 Benchmarking Data Model

Based on the previously distinction between benchmarks and their executions, we propose a data model for defining them in a declarative way. Figure 5 visualizes the central elements of our data model and their relations as UML class diagram. In the following, we describe this data model starting from the central entities Benchmark and Execution.

Benchmark

A Benchmark is a static representation of a SUT and an associated Load Generator, where SUT and Load Generator are represented as sets of Deployment Artifacts. Such Deployment Artifacts are, for example, definitions of Kubernetes resources such as Pods, Services, or ConfigMaps.Footnote 4

According to our scalability metrics, benchmarks support different SLOs, Load Types, and Resource Types. An SLO represents the computations on gathered monitoring data, which are necessary to check an SLO. This may include the queries to the monitoring system, statistical calculation on the returned data, thresholds, or warm-up durations. Load Types and Resource Types are both represented as sets of Deployment Artifact Patchers. These patchers are associated with a Deployment Artifact and modify it in a certain way when running an SLO experiment.

Existing cloud-native benchmarks for other qualities can be utilized to define scalability benchmarks by aggregating their deployment artifacts and specifying load types, resource types, and SLOs. Benchmarks do not have a life-cycle and can be executed arbitrarily often by Executions.

Execution

An Execution represents a one-time execution of a benchmark with a specific configuration. It evaluates a subset of the SLOs provided by the Benchmark, which can additionally be configured by an SLO Configuration, which adjusts SLO parameters such as warm-up duration or thresholds. As specified by our measurement method, scalability is benchmarked for a finite set of load intensities of a certain load type and a finite set of resource amounts of a certain resource type. In our data model, these sets are represented as Loads and Resources. Since the Benchmark declares its supported Load Types and Resource Types, the specified Loads and Resources refer to the corresponding Load Type or Resource Type, respectively (thus the subset constraints).

Furthermore, an Execution can configure the SUT and the Load Generator by Deployment Configurations. Such Deployment Configurations consist of a Deployment Artifact Patcher and a fixed value, which the corresponding deployment is patched with. This allows, for example, to evaluate different configurations of the same SUT, for example, via environment variables. An Execution supports the configuration options of our measurement method discussed in Section 5, namely a Search Strategy as well as a Repetition Count and an Experiment Duration for the SLO experiments. Warm-up period durations are SLO-specific and, thus, specified as part of SLO Configurations.

In contrast to Benchmarks, Executions have a life-cycle. They can be planned, executed, or aborted. Each execution of a benchmark is represented by an individual entity. This supports repeatability as executions can be archived and shared.

6.3 Benchmark Execution in Cloud-Native Environments

We propose a benchmarking tool architecture based on the operator pattern (Ibryam and Huss 2019). This pattern is increasingly used to reduce the complexity of operating applications by integrating domain knowledge into the orchestration process. Core of this pattern are the operator and, in the case of Kubernetes, so-called Custom Resource Definitions (CRDs). Artifacts (or resources) of these CRDs can be created, altered, or remove by the user via the API of the orchestration tool. In a sophisticated reconciliation process, the operator continuously observes the currently deployed artifacts and may react to changes by creating, modifying or deleting other deployment artifacts.

Figure 6 shows our proposed architecture for a cloud-native scalability benchmarking tool. We envisage CRDs for the Benchmark and Execution entities of our benchmarking data model such that benchmarkers can deploy Benchmarks and Executions to the orchestration API. Whenever new Executions are created, the scalability benchmarking operator is notified and, if no other benchmark is currently executed, it starts executing a benchmark according to the specified Execution. This means, it alters the Deployment Artifacts of the SUT and the load generator according the provided Deployment Configuration, applies the configured search strategy to decide which SLO experiments should be performed, and also adjusts the Deployment Artifacts according to the selected load and resources. It then deploys all (potentially adjusted) Deployment Artifacts for the specified duration and repeats this procedure multiple times according to the defined Execution.

During this time, a monitoring component such as PrometheusFootnote 5 collects monitoring data of the SUT, which is then used by the operator to evaluate the specified SLOs. The operator stores all (raw) results persistently to allow for offline analysis, archiving, and sharing. Additionally, a visualization tool such as GrafanaFootnote 6 might be used to let benchmarkers observe the execution of benchmarks.

This architecture causes a significant effort for the installation of the entire benchmarking infrastructure. To implement the requirement for a simple implementation, we propose to employ a cloud-native package management tool such as Helm,Footnote 7 which installs the operator, the CRDs as well as dependent systems such as the monitoring and the visualization tool.

7 Experimental Evaluation

In this section, we perform an experimental evaluation of our proposed scalability benchmarking method to answer the research questions posed in Section 1. Specifically, we empirically evaluate the effect of our benchmarking method’s configuration options (see Section 5.3) on the statistically grounding of its results. The overarching goal of this evaluation is to find configuration parameters such that the results are reproducible, while the overall execution time is kept as short as possible.

We conduct our experiments for multiple SUTs, which implement different benchmarks, employ different software frameworks, and run in different cloud environments. This way, we also seek to find out whether the choice of configuration parameters should depend on the cloud provider, implementation, or benchmark. We focus on benchmarks for a specific type of cloud-native applications, namely event-driven microservices, to make the individual results comparable. However, our evaluation method is also intended to serve as a blueprint to repeat our evaluation for other SUTs.

After a detailed description of our experimental setup in Section 7.1, we conduct the following evaluations:

-

In Section 7.2, we address RQ 1 and study the duration SLO experiments are executed for as well as their warm-up period duration. We evaluate how both durations should be chosen such that we can decide with sufficiently high confidence whether evaluated SLOs are achieved.

-

In Section 7.3, we address RQ 2 and evaluate how many repetitions of an SLO experiment should be performed to decide with sufficiently high confidence whether evaluated SLOs are achieved.

-

In Section 7.4, we address RQ 3 and evaluate how the assessment of SLOs evolves with increasing resource amounts. This evaluation helps in determining whether the binary search strategy can applied with our demand metric and whether the lower bound restriction strategy can be applied with our capacity metric.

-

In Section 7.5, we perform a similar evaluation to address RQ 4 and evaluate how the assessment of SLOs evolves with increasing load intensities. This evaluation helps in determining whether the binary search strategy can be applied with our capacity metric and whether the lower bound restriction strategy can be applied with our demand metric.

In all four sections, we first describe the employed experiment design, before we present and discuss the experiment results. Finally, we discuss threats to validity in Section 7.6.

7.1 Experiment Setup

In the following, we present the general experiment setup for the following evaluations. First, we introduce our Theodolite scalability benchmarking tool, which implements our proposed benchmarking method. Afterwards, we describe the SUTs used for our evaluations. Our SUTs are implementations of benchmarks for event-driven microservices. Event-driven microservices are an emerging architectural style, in which microservices primarily communicate via asynchronous messaging. To parallelize data processing, such microservices employ distributed stream processing techniques (Fragkoulis et al. 2020). We consider 4 benchmarks, which are implemented by 2 stream processing engines and executed in 3 cloud environments. This results in 24 SUTs as summarized in Table 3.

7.1.1 The Theodolite Reference Implementation

Our cloud-native benchmarking tool Theodolite implements the architecture presented in Section 6 and, thus, our proposed benchmarking method. Theodolite is open sourceFootnote 8 research software (Hasselbring et al. 2020) with publicly available documentation.Footnote 9 We presented an early version of Theodolite in a previous publication (Henning and Hasselbring 2021c) along with benchmarks for distributed stream processing engines. Section 8 discusses how we extend our previous work.

We use the following technologies in Theodolite: Kubernetes as orchestration tool, Helm as package manager, Prometheus for monitoring the SUT, and Grafana for the visualization. These technologies are generally understood as being part of the cloud-native landscape.Footnote 10 In addition to our proposed architecture, Theodolite includes first-class support for configuring and monitoring a messaging system. Such a messaging system is used in almost all benchmarking studies for distributed stream processing and should therefore be also included in a scalability benchmarking tool (Henning and Hasselbring 2021c). For the implementation of our proposed architecture, this means that for an SLO experiment not only Kubernetes resources are deployed, but also corresponding messaging topics are created. We decided to not consider the messaging system as part of the SUT as, for example, in the case of Apache Kafka, its deployment takes a significant amount of time.

7.1.2 The Theodolite Stream Processing Benchmarks

Theodolite accepts benchmarks that are defined according to the data model depicted in Fig. 5. That is, a benchmark consists of a task sample (SUT and load generator) as well as supported load types, resource types, and SLOs. Theodolite comes with 4 benchmarks for event-driven microservices, which we use for our evaluation. In the following, we give an overview of these benchmarks and show how we employ them.

Task Samples

In our previous paper (Henning and Hasselbring 2021c), we derived 4 task samples from common stream processing use cases for analyzing Industrial Internet of Things sensor data (Henning et al. 2021). The individual tasks samples are:

- UC1:

-

Incoming messages are written to an external database. This task sample focuses solely on data processing and, therefore, is stateless.

- UC2:

-

Incoming messages are aggregated by a message key within fixed-size, non-sliding time windows to reduce the total quantity of messages (downsampling).

- UC3:

-

Incoming messages are aggregated by their key and a time attribute (e.g., day of week) in large sliding windows. Such computations are common to compute a seasonal trend over a long period of time (e.g., an aggregated weekly course over a period of several months).

- UC4:

-

Incoming messages are aggregated to groups and groups of groups in a hierarchical fashion (Henning and Hasselbring 2020).

For a more detailed description, please refer to our original publication (Henning and Hasselbring 2021c).

We evaluate implementations of these tasks samples with the two stream processing engines Apache Kafka Streams (Wang et al. 2021) and Apache Flink (Carbone et al. 2015).

Load type

A stream processing engine is usually subject to a load of messages coming from a central messaging system. In many of such systems, messages contain a key, which is used for data partitioning and, thus, the major means for parallelizing stream processing tasks (Fragkoulis et al. 2020). In our evaluation, we therefore focus on scaling with the amount of distinct message keys per unit of time. For benchmark UC1, UC2, and UC3, the load type corresponds to the amount of keys, where for each key one message per second is generated. For benchmark UC4, the load type corresponds to nested groups n (Henning and Hasselbring 2021c), which results in 4n keys, generating one message per second. For reasons of conciseness, we present the generated load also as 4n messages per second instead of the group size n in the results tables of our evaluation.

Resource type

The resource type used in our evaluation is the number of instances of the stream processing engine. This corresponds to what we referred to as “virtualized” horizontal scaling in Section 3. For Kafka Streams, this is simply the amount of pods, containing the same Kafka Streams application. All necessary coordination among instances to distribute tasks and data is then handled by the Kafka Streams framework. For Flink, the resource type is the amount of Taskmanager pods. Additionally, an environment variable has to be set, which notifies the Flink instances about the desired parallelism, which in our case corresponds to the amount of Taskmanager pods. Flink’s coordinating Jobmanager pod is not scaled, which is the suggested deployment.Footnote 11

SLO

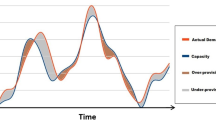

The SLO we use for this evaluation is based on the lag trend metric (Henning and Hasselbring 2021a). The lag of a stream processing job describes how many messages are queued in the messaging system, which have not been processed yet. The lag trend describes the average increase (or decrease) of the lag per second. It can be measured by monitoring the lag and computing a trend line using linear regression. The slope of this line is the lag trend. Figure 7 illustrates the concept of the lag trend.

Examples of the monitored lag and the trend line computed using linear regression (Henning and Hasselbring 2021c)

We use the lag trend metric to define an SLO, whose function evaluates to true if the lag trend does not exceed a certain threshold. The basic idea is: A fluctuating lag is acceptable (e.g., because message are consumed in batches) as long as the amount of queued messages does not increase over a longer period of time. Ideally, the threshold should be 0 as a non-positive lag trend means that messages can be processed as fast as they arrive. However, it makes sense to allow for a small increase as even when observing an almost constant lag, a slightly rising or falling trend line will be computed due to outliers.

We expect that in most cases, checking the lag trend alone suffices as an SLO for stream processing engines. The architectures of modern engines make it unlikely that SLOs such as a maximum tolerable processing latency can be fulfilled by scaling provisioned resources. An advantage of defining an SLO based on the lag is that it can be collected very efficiently and does require data from the stream processing engine, which might be incorrect under high load. In our experiments, Prometheus queries the current lag every 15 s from Apache Kafka, the messaging system used in our experiments.

7.1.3 Evaluated Cloud Platforms

The experimental evaluations presented in this section are performed in two public and one private cloud platforms. The two public cloud vendors are Google Cloud Platform (GCP) and Oracle Cloud Infrastructure (OCI), where we rely on the managed Kubernetes services with virtual machine nodes. We chose Google Cloud Platform as it is one of the largest cloud providers, whose Kubernetes offering can be regarded as most matured since Google significantly leads the Kubernetes development. Oracle Cloud Infrastructure is representative of a niche cloud provider, which provides a less sophisticated managed Kubernetes service. As private cloud infrastructure, we chose the Software Performance Engineering Lab (SPEL) at Kiel University. In contrast to the public clouds, its Kubernetes cluster runs on 5 bare metal nodes with considerably more powerful hardware. Besides also representing a realistic deployment platform used in many industries, the private cloud serves as a reference, ruling our the influence of public cloud performance peculiarities. Table 4 summarizes the configuration of the Kubernetes clusters, we use in our evaluation.

7.1.4 Replication Package

We provide a replication package and the collected data of our experiments as supplemental material (Henning and Hasselbring 2021b), allowing other researchers to repeat and extend our work. Our replication package includes the Theodolite Executions (see Section 6.2) used in our experiments and interactive notebooks used for analyzing our experiment results. An additional online version of our notebooks is available as a web service.Footnote 12

7.2 Evaluation of Warm-up and SLO Experiment Duration

Our scalability measurement method and, thus, our proposed benchmarking tool architecture is configurable by the duration, SLO experiments are executed for, and by the duration that is considered as warm-up period. We evaluate how the choice of warm-up period and duration influences the result of the SLO experiments. Goal of this evaluation is to minimize the experiment duration, without substantially scarifying the quality of the results.

7.2.1 Experiment Design

With this evaluation, we perform SLO experiments for all 24 SUTs depicted in Table 3. For each SUT, we aim to select SLO experiments, in which the resource amounts approximately correspond to the resource demand of the load. Those are probably the most difficult to access, while combinations of high load and few resources or vice versa are likely to require less time to be evaluated.

We performed preliminary experiments to find reasonable load-resource combinations to evaluate. For each SUT, we determine an approximation of the load that can be handled by 4–5 instances. We found those instance counts to be reliably working in all cloud platforms. To find suitable load-resource combinations, we run single, explorative experiments for a short time and manually observe the lag via Theodolite’s dashboard (Henning and Hasselbring 2021c). These results are not statistically grounded and do not necessarily represent the real resource demand. Instead, they represent a deployment, in which the provisioned resources approximately match the resource demand to bootstrap the following experimental evaluations. In addition to the resource amounts that approximately match the demand of a load, we perform experiments for one instance more and less, representing a slight over or underprovisioning. In our private cloud environment, we additionally perform these experiments with loads twice as high. Also for this case we find approximately matching instance counts, but due to higher instance numbers, we use two instances more and less to represent over or underprovisioning. Table 5 shows the load intensities and resource amounts that we use for the following evaluation.

To obtain an approximation of the true, long-term lag trend, we perform the SLO experiments in this evaluation over a period of one hour. According to our measurement method and the lag trend SLO, we let Theodolite monitor the lag during this time. For each experiment, we compute the lag trend over the entire experiment duration with different warm-up periods. The computed lag trends serve as reference values, used in the following approach to reduce the experiment duration.

For each experiment and evaluated warm-up period, we now evaluate how much shorter the experiment duration can be chosen such that the result of the SLO evaluation does not deviate from the reference value. For this purpose, we retroactively reduce the experiment duration by discarding the latest measurements. We evaluate two options as decision criterion for when no further measurements should be discarded:

-

1.

We reduce the duration as long as the computed trend slope does not deviate by more than a certain error from the reference value.

-

2.

We reduce the duration as long the binary result of the SLO evaluation does not change. More specifically, we first determine whether the reference values exceeds a threshold t. Then we reduce the duration as long as the lag trend does not rises above t or falls below t.

Our replication package (Henning and Hasselbring 2021b) allows to evaluate our experiment results according to the described method for different warm-up durations, allowed errors and lag trend thresholds.

7.2.2 Results and Discussion

Our results show that when using a maximum allowed error as decision criterion, the time required to reach a stable value decreases with increasing allowed error. Figure 8 illustrates this for errors of 1%, 10%, and 20% with a warm-up duration of 120 s. However, we observe the same trend also for other errors and warm-up durations. We cannot identify a significant impact of the cloud provider or the stream processing engine on the required execution time. Our results suggest that more complex stream processing benchmarks require shorter execution times, but this would need further experiments.

When using a maximum allowed error as decision criterion, we observe that even with an allowed error of 20%, the required execution time remains excessively high: For example with the data presented in Fig. 8, more than 50% of the experiments, require more than half an hour execution time for a single SLO experiment. Referring to the runtime formula of our method (see Section 5.3), this would quickly lead to a total runtime of several days. On the other hand, the time required to decide whether the lag trend exceeds a threshold is significantly lower, independently of the cloud provider, stream processing engine, and benchmark. While Fig. 8 illustrates this observation for a threshold of t = 2000 with a warm-up duration of 120 s, the results for other thresholds t > 0 and warm-up durations are quite similar. Thresholds close to t = 0 require longer experiment durations, but as described in Section 7.1.2 allowing for a small lag trend increase is sensible. As for our scalability metric we are ultimately only interested in whether the SLO is met, we only look at the executing times required to decide if the lag trend does not exceed the threshold.

Figure 9 summarizes the required execution times with a threshold of t = 2000 for different warm-up durations between 30 s and 480 s (multiples of the sampling interval) and all evaluated SUTs as box plots. We observe that in the vast majority of cases, warm-up periods of 60 s and 120 s result in required execution times of less than 5 minutes. Summarized over all SUTs, longer warm-up durations lead to less variability in the required execution duration, but also cause longer execution times in most of the cases. We make similar observations independently of the chosen threshold.

From our experiment results, we consider a warm-up duration of 120 s to be a good trade-off. In contrast to 60 s warm-up, 120 s result in longer median execution times, but minimize the execution duration for the vast majority of experiments (see the upper whisker). When only looking at the private cloud or benchmark UC3, also significant shorter warm-up durations of 30 s could be chosen.

Table 6 shows the execution times for all evaluated SUTs for a warm-up duration of 120 s and a threshold of t = 2000. In line with Fig. 9, we see that certain resource-load combinations require significant longer execution times. However, we can observe that in these cases testing the same load with slightly less or slightly more instances only requires a fraction of the time. Hence, with a significantly shorter execution time, we can get a good approximation of the resource demand.

7.3 Evaluation of Repetition Count

Our scalability measurement method and, thus, our proposed benchmarking tool architecture support repeating SLO experiments multiple times to increase the confidence of their result. In this section, we evaluate how many repetitions are required to decide with sufficiently high confidence whether SLOs are met.

7.3.1 Experiment Design

As in the previous evaluation, we perform SLO experiments for all 24 SUTs depicted in Table 3 with the same amounts of resources and load intensities (see Table 5). According to our results from the evaluation of warm-up and experiment duration, we run each SLO experiment for 5 minutes with the first 2 minutes considered as warm-up period. We perform 30 repetitions of each experiment as suggested, for example, by Kounev et al. (2020) to apply the Central Limit Theorem.

For the majority of SUTs, we observed a normal distribution on the computed lag trend slopes. Deriving mean \(\overline {x}\) and standard deviation s (with N − 1 degrees of freedom) of the lag trend slopes for an SUT, we can now approximate how many repetitions are required to obtain a certain confidence interval for the true mean (Kounev et al. 2020). However, similar to the previous evaluation, we are ultimately only interested in whether the lag trend slope is above or below a threshold t. Thus, we do not need to approximate the number of repetitions to obtain a two-sided confidence interval with a certain error around the mean, but instead only consider a one-sided confidence interval of \((-\infty , t)\) or \((t, \infty )\), respectively. We approximate the required number of repetitions n for such a 95% confidence interval with:

7.3.2 Results and Discussion

Figure 10 summarizes the approximated number of repetitions for different thresholds t as box plots. Our replication package (Henning and Hasselbring 2021b) allows to obtain these values also for other thresholds. We observe that independent of the chosen threshold, the required number of repetitions for most SUTs is very low: 50% of all SUTs only require 1–2 repetitions. Furthermore, the observed variability decreases with higher thresholds. While in most cases for t = 0 up to 12 repetitions are necessary, this value decreases to 6 repetitions for t = 1000 and 5 repetition for t = 2000. For t = 10000 even in the vast majority of cases only one repetition is necessary. However, a threshold of t = 10000 means that an increase of 10 000 messages per second is tolerable, which in some evaluated configurations already corresponds to half the generated load. This raises the chance of considering resource amounts as sufficient which in fact are not. A dependency on the threshold can be observed independently of the cloud platform, stream processing engine, and the benchmark. Generally, when looking at thresholds t ≥ 1000, slightly more repetitions are required in the public clouds (with more repetitions in the Google cloud than in the Oracle cloud). A possible explanation is that performance in public clouds is often influenced by co-located tenants (“noisy neighbor”) and, thus, is less stable (Leitner and Cito 2016). In the private cloud, on the other hand, we exclusively control the entire hardware. However, the observed deviation between public and private cloud is rather low. Another explanation, thus, could simply be that considerably more computing resources are available in our private cloud, resulting in a lower hardware utilization. It is also noticeable that Flink requires more repetitions than Kafka Streams. We cannot identify a clear pattern suggesting that particular benchmarks require more repetitions than others.

Table 7 shows the approximated number of repetitions of all evaluated SUTs and load intensities for different numbers of instances and a threshold of t = 2000. In addition to the box plots presented in Fig. 10, we can see that in certain cases very high numbers of repetitions (highlighted in red) would be required in order to tell with sufficiently high confidence whether the lag trend slope is above or below the threshold. However, in almost all of these cases, we would only need a few repetitions when evaluating the same SUT with slightly more or fewer instances. We also observed this when choosing a different threshold. Transferred to our scalability measurement method, this means that only a few repetitions are required to obtain a good approximation of the resource demand. Therefore, only a few repetitions are required to determine the demand function when accepting a small error in the function.

7.4 Evaluation of SLO with Increasing Resources

In this section, we evaluate how the computed lag trend evolves with increasing the provisioned resource amounts, while keeping the generated load constant. The goal of this evaluation is to analyze whether SLOs might be violated for higher resource amounts when they have been achieved before for lower resource amounts.

7.4.1 Experiment Design

In this evaluation, we evaluate selected SUTs in more detail. From our previous evaluation, we observed that the results for both public clouds do not differ significantly. The same applies for the benchmarks. To not go beyond the scope of this paper, we focus on the two benchmarks UC2 and UC3 and restrict our experiments to the private cloud and the Google cloud. This results in 8 SUTs (see Table 8).

For each SUT, we conduct a set of isolated SLO experiments, in which we generate a constant load, equal to the loads of Table 5, and different resource amounts. We repeat each SLO experiment 5 times with 5 minutes of experiment duration including 2 minutes of warm-up. Table 8 summarizes the experiment set-up. For each SLO experiment, we compute the lag trend allowing us to analyze how the lag trend evolves with increasing resource amounts. In Google Cloud Platform, we additionally performed SLO experiments in a Kubernetes cluster with 6 instead of 3 nodes as explained in the following section.

7.4.2 Results and Discussion

Figure 11 shows for each evaluated SUT how the median lag trend evolves with increasing resource amounts. Additionally, a horizontal line at a lag trend of 2000 is drawn to visualize a possible threshold for the lag trend metric.

In general, we can observe that in the private cloud the lag trend decreases with increasing amounts of instances, until it reaches a value of approximately 0 and, thus, falls below the defined threshold. This marks the resource demand of the tested load intensity according to our demand metric. After that, the lag trend fluctuates considerably for 3 out of 4 SUTs, before it stabilizes at around 0. These fluctuations occur more strongly with Flink than with Kafka Streams and more strongly with UC3 than with UC2. In particular, we can observe that 10 instances seem to perform better than 9 and 11 instances. One possible reason for this may be found in the fact that we use 40 Kafka partitions and the stream processing engines might work particularly efficiently if the partition count is a multiple of the instance count.

For the experiments in the public cloud, these effects can not clearly be observed. However, we observe that with a cluster size of 3 nodes, the lag trend increases again after some point when further instances are added. As we expect this to be due to exhausted node resources, we repeat the same experiments in a Kubernetes cluster with twice the number of nodes. From this, we can see that the same instance numbers result in lower lag trends and, especially, the lag trend remains below the threshold for higher instance numbers. Thus, we see our assumption confirmed that the increase for higher loads is caused by a high utilization of the cluster.

Regardless of the actual reasons for both observations, we can conclude that the lag trend is not always decreasing with higher resource amounts. Therefore, our proposed binary search strategy must be used with caution for the demand metric. For our capacity metric, this means that increasing resources might also lead to violations of SLOs such that the lower bound restriction can not always be applied.

7.5 Evaluation of SLO with Increasing Load

In this section, we now evaluate how the lag trend slope evolves with increasing loads, while fixing the number of processing instances. The goal is to analyze whether SLOs might be violated for lower load intensities while they are achieved for higher loads.

7.5.1 Experiment Design

Similar to the previous evaluation, we now fix the amount of instances and perform a set of SLO experiments for increasing load intensities. The remaining setup corresponds to that of Section 7.4 and is summarized in Table 9.

7.5.2 Results and Discussion

Figure 12 shows for each evaluated SUT how the median lag trend evolves with increasing load intensities. Again, a horizontal line at a lag trend of 2000 visualizes a possible threshold for the lag trend metric.

For 7 out of 8 evaluated SUTs, we observe that for low load intensities the lag trend stays reasonably constant and fluctuates only slightly around 0, until a certain load intensities is reached. In all of these cases, it does not exceed 2000, which suggests that t = 2000 is a reasonable order of magnitude for the threshold. For the Kafka Streams implementation of UC3 in Google Cloud Platform, the lag trend is always greater than the threshold since our evaluated load intensities are too high. For all other SUTs, we can first observe a slight drop in the lag trend once a certain load intensity is exceeded, which is followed by monotonically increase. This marks the capacity of the evaluated resource configuration according to our capacity metric, i.e., the maximal load it can process. The drop can be explained by the fact that the load is already high enough such that messages are massively queuing up while the SUT starts up. Once the SUT reaches its normal throughput, messages have already been accumulated and are then continuously processed, leading to a decrease in the lag. The drop of Flink deployments is stronger compared to Kafka Streams since Flink has a longer start-up time as we investigated manually. Again, the Kafka Streams implementation of UC3 in Google Cloud Platform is the only SUT, for which the the lag trend is not monotonically increasing. More specifically, for load intensities of 20 000 and 40 000 messages per second, the lag decreases. However, as we can not make this observation on other than the median data, we expect this to be outliers.

In summary, we conclude that the binary search strategy can be used to evaluate large sets of load intensities with the capacity metric, at least when benchmarking event-driven microservices with an SLO based on the lag trend metric. As furthermore the computed lag trend is monotonically increasing after exceeding the defined threshold, we expect also the lower bound restriction to be applicable for the demand metric.

7.6 Threats to Validity

The goal of this experimental evaluation was to assess how configuration options of our scalability benchmarking method influence their results. In the following, we report on the threats and limitations to the validity of our evaluation.

Threats to Internal Validity

Cloud platforms in general allow to make only little assumptions regarding the underlying hardware or software infrastructure (Bermbach et al. 2017). Due to techniques such as containerization, cloud-native application abstract this even further. Hence, we only have little influence on the execution environment and cannot control possible influences on our result. Major part of this evaluation was to investigate the variability of results and hence the underlying processing capabilities of stream processing engines. Nevertheless, we reused the same clusters among all our experiments such that we cannot rule out that a recreation of the cluster on potential other hardware or with other co-located VMs will cause different results. Furthermore, we only performed our experiments in a relatively short time frame. We performed experiments of the same type mostly in a sequence such that we cannot rule out that general performance variations over several hours bias our results. While early works on cloud benchmarking found that performance exhibits clear seasonal patterns (Iosup et al. 2011), more recent research were not able to confirm this (Leitner and Cito 2016). Our cluster configuration of the private cloud and the public clouds is very different, which may make it difficult to compare them.

Threats to External Validity