Abstract

Test-to-code traceability links model the relationships between test artefacts and code artefacts. When utilised during the development process, these links help developers to keep test code in sync with tested code, reducing the rate of test failures and missed faults. Test-to-code traceability links can also help developers to maintain an accurate mental model of the system, reducing the risk of architectural degradation when making changes. However, establishing and maintaining these links manually places an extra burden on developers and is error-prone. This paper presents TCTracer, an approach and implementation for the automatic establishment of test-to-code traceability links. Unlike existing work, TCTracer operates at both the method level and the class level, allowing us to establish links between tests and functions, as well as between test classes and tested classes. We improve over existing approaches by combining an ensemble of new and established techniques that utilise both dynamic and static information, and by exploiting a synergistic flow of information between the method and class levels. An evaluation of TCTracer on five large, well-studied open source systems demonstrates that, on average, we can establish test-to-function links with a mean average precision (MAP) of 85% and test-class-to-class links with an MAP of 92%.


1 Introduction

Unit testing is an integral part of software development; however, to fully realise the benefits of unit testing, it is necessary to maintain an accurate picture of the relationships between the tests and the tested code. Traceability links provide an intuitive mechanism for modelling these relationships.

Once established, test-to-code traceability links can improve the software engineering process in several ways, including making changes to the system safer, facilitating the reuse of artefacts, and aiding program comprehension (De Lucia et al. 2008; Antoniol et al. 2002; Winkler and von Pilgrim 2010). Changes to the system become safer because, when a developer changes a piece of tested code, they can use the traceability links to easily discover which tests also need to be changed, and vice versa. This promotes the co-evolution of code, as it highlights to the developer the code that needs to evolve along with a change. This is important as previous work has shown that test repair and test modification are common and important tasks (Pinto et al. 2012) and that co-evolution is desirable but typically does not happen consistently over the course of a project (Zaidman et al. 2011). Zaidman et al. found that testing is often done in short, intense periods separated by periods of increasing test stagnation. Co-evolution, therefore, is often not consistent in practice, and automatically establishing test-to-code traceability links could help to improve this and reduce the risk of desynchronisation between the tests and the code, an issue that can cause test failures and prevent the discovery of new faults. While developers can use fault localisation techniques to discover which functions may be causing test failures, traceability links have the benefit of being bidirectional, so developers can also start from a function and find the corresponding tests. Traceability links are also used in regression test suite optimisation in continuous integration (Elsner et al. 2021) to identify and execute the tests that are potentially affected by a change, in settings where executing the full test suite would be too expensive. This parallel between test-to-code traceability link establishment and regression test case selection is also noted by Soetens et al. (2016), who found that existing test-to-code traceability techniques, such as naming conventions, fixture element types, static call graphs, and last call before assert (LCBA), can work well but are highly situational.

Industrial need for the automated establishment of test-to-code traceability links is demonstrated by Ståhl et al. (2017) through case studies and developer interviews. The developer interviews were organised around themes, and the theme that encompasses this work, ‘Test Results and Fault Tracing’, attracted the largest number of relevant statements, with interviewees describing it, for example, as ‘particularly important’ and ‘super crucial’. Using trace links to ‘drill down’ when troubleshooting failed tests was specifically mentioned. The developers also made clear that automation is crucial, as manual traceability handling is a major blocker for more frequent deliveries of software. Traceability is also gaining importance due to the recent growth of machine learning for software engineering, where traceability links have been used to build corpora of training data. Watson et al. (2020) and White and Krinke (2018, 2020) are examples of work that utilise test-to-code traceability links to build a training corpus for neural networks that generate test code for a given function. In this use case, test-to-code traceability links are used to train sequence-to-sequence models that take a function as input and generate test code. Therefore, a large, high-quality data set of test-to-code traceability links is required to train and test the model. As the performance of the model depends on the size and quality of the data set, approaches that establish traceability links automatically and accurately can produce larger data sets with less noise, thus improving the ability of the models to solve these problems.

While some projects have made an effort to have developers manually maintain traceability links, this practice is not common as it creates extra work for developers. Instead, developers often employ naming conventions, e.g., matching the names of test classes with the names of tested classes, with ‘Test’ appended. In most instances where projects have attempted to manually maintain traceability links, these have been at the class level, where the number of links is more manageable and the relationships between test artefacts and tested artefacts are usually simple. Therefore, to avoid creating extra work for developers and the errors associated with the manual maintenance of traceability links, the research community has focused on developing approaches for the automatic establishment of traceability links.

The difficulty in establishing test-to-code links lies in the fact that not all code executed by a test is part of the code that is being tested. This is because many tests call functions that are not amongst the functions under test, such as helper functions, getters and setters, or functions that initialise the state of an object before the functions under test are invoked. Therefore, simply considering all executed code as tested code (Hurdugaci and Zaidman 2012) is not an accurate technique for establishing test-to-code traceability links.

In this paper, we present TCTracer, an approach and implementation which aims to overcome the weaknesses of existing test-to-code traceability link establishment approaches by employing a wide range of techniques that utilise information from dynamic call traces and static information. TCTracer also combines these techniques into a single score that performs better overall than any individual technique. In addition, unlike previous work, TCTracer is applied at both the method level and the class level, which allows us to establish links between individual tests and their tested functions as well as between whole test classes and their tested classes. TCTracer uses its multilevel aspect to create a flow of information between the levels that can improve effectiveness.

Our approach is evaluated using a manually curated ground truth (White and Krinke 2021), at both the method and class levels, from five non-trivial and well-studied subject projects. Our findings show that, on average, our combined technique achieves an increase in effectiveness over existing techniques at both the method and the class levels. At the class level, our findings reveal that static naming techniques alone can produce results equivalent to the combined score.

In addition to this evaluation, we conduct experiments to assess an alternative technique for combining scores using machine learning (ML), the effect of weighting techniques during combination, and a manual investigation into the causes of false negatives and false positives.

This is an extension of our previous work (White et al. 2020), where we first introduced TCTracer. We build on the previous work by incorporating static techniques, investigating alternative combination methods and technique weighting schemes, expanding the ground truth, and performing a more in-depth analysis of the accuracy of the approach.

The main contributions of this paper are:

-

An approach to test-to-code traceability that utilises an ensemble of techniques using dynamic and static information and a multilevel flow of information.

-

A comparative evaluation of each technique at both the method and class levels and across information types.

-

An evaluation of the benefit gained by utilising multilevel information.

-

An evaluation of two methods for combining individual techniques and the effect of weighting individual techniques prior to combination.

-

A manual investigation into the causes of false positive and false negative links.

-

An updated manually curated ground truth dataset (White and Krinke 2021) of test-to-function and test-class-to-class links.

The paper is structured as follows: Section 2 provides the background and motivation for this work by presenting a review of previous work which explores the current state-of-the-art and highlights the weaknesses of existing approaches which TCTracer addresses. Section 3 presents an overview of the approach used by TCTracer. Section 4 provides the details of the techniques used, while Section 5 describes how we utilise the techniques within our approach to generate the predicted traceability links. Section 6 describes the implementation of TCTracer and TCagent, the Java Virtual Machine agent used for the collection of the dynamic trace information. Section 7 presents the evaluation, including the experimental setup, research questions, and results, and Section 8 presents a discussion of these results and other findings and discussion points, including the main takeaways. Section 9 describes the internal and external threats to validity and ethical considerations. Section 10 discusses additional related work that does not form part of the background in Section 2. Finally, Section 11 presents the overall conclusion of the work.

2 Background

Establishing and maintaining traceability links between tests and their tested functionality has received significant attention from the research community, as traceability links have multiple applications in the software engineering process, such as determining which test cases need to be rerun after a change has been made, maintaining consistency during refactoring, and providing a form of documentation. Test-to-code traceability can, for example, help to locate the fault that causes a test case to fail. Qusef et al. (2014) describe these benefits in detail, and Parizi et al. (2014) present an overview of the achievements and challenges of test-to-code traceability. Prior research has investigated the use of gamification to improve the manual maintenance of traceability links (Parizi 2016; Meimandi Parizi et al. 2015), but this approach has not seen significant adoption.

Most previous work on test-to-code traceability (see Parizi et al. (2014) for an overview) has focused on the class level, where test classes are linked to their tested classes (Van Rompaey and Demeyer 2009; Qusef et al. 2014; Gethers et al. 2011; Kicsi et al. 2018; Csuvik et al. 2019a, 2019b).

Van Rompaey and Demeyer (2009) is the closest work to ours: they investigate six traceability techniques to link test classes to classes-under-test over three projects, from which they extracted a ground truth of 59 links. They report perfect precision and recall for the use of naming conventions, but very low precision and recall for using similarity (LSI) between test classes and classes-under-test. Van Rompaey and Demeyer investigate mostly static techniques and only use tracing to establish LCBA. While they only investigate the class level, we investigate dynamic techniques at both the class and the method level over much larger ground truths.

SCOTCH+ (Source code and Concept based Test to Code traceability Hunter) is a traceability system introduced by Qusef et al. (2014) that achieves better accuracy and provides more benefit to developers than LCBA or NC (Qusef et al. 2013). SCOTCH+ applies dynamic slicing to identify a set of candidate tested classes which it then filters using a textual coupling analysis called Close Coupling between Classes (CCBC) and name similarity (NS) scores.

Kicsi et al. (2018) explore the use of Latent Semantic Indexing (LSI) over source code to establish traceability links between test classes and tested classes by assuming that a test class should be lexically similar to its tested class. They extract a ground truth from five open source systems by extracting only the links between test classes and tested classes that follow (exact) naming conventions. They report that the ground truth link is ranked first in between 30% and 62% of cases and appears in the top 5 in between 57% and 89% of cases, suggesting a low recall (precision is not investigated). Csuvik et al. (2019b) replaced LSI with word embeddings within the same approach and report better precision when using word embeddings (recall was not investigated). They also compare LSI, word embeddings and TF-IDF (Csuvik et al. 2019a) in the same way and report that word embeddings perform best in terms of precision and recall.

Not much work has been done on the method level (Bouillon et al. 2007; Hurdugaci and Zaidman 2012; Ghafari et al. 2015), where individual unit tests are linked to their tested functions, despite being shown to be helpful for developers (Hurdugaci and Zaidman 2012).

EzUnit (Bouillon et al. 2007) is a framework that allows developers to annotate tests with links to the method-under-test. To do so, it performs static analysis and identifies the methods called by a test which are suggested for annotation. EzUnit highlights the linked methods when an error in the test occurs. A similar tool is TestNForce (Hurdugaci and Zaidman 2012) which links tests to methods-under-test. Like our approach, tracing is used to identify the methods that are called by a test. No further filtering is done and their approach will thus include a large number of utility methods leading to low precision. Ghafari et al. (2015) also work at the method level where they break down test cases into sub-scenarios for which they attempt to establish the tested function, termed the focal method. This is done using static data flow analysis. The results for this technique are promising, however, two of the four subjects used for the evaluation are very small (130 and 43 tests), while the other two are still smaller than our smallest subject. As it is easier to achieve higher precision and recall on smaller projects, due to fewer candidate links, the results cannot be directly compared to those presented in this paper.

Our work is the first to address both the class level and the method level simultaneously. This allows us to construct both types of links and utilise a cross-level flow of information to improve overall performance. This gives our approach a more accurate and fine-grained view of the relationships between the artefacts. Our work also distinguishes itself from previous work by utilising both dynamic and static information and ranking potential links, instead of the purely static information that has typically been used before to generate sets of (unranked) links.

The development of a new approach to test-to-code traceability establishment is motivated primarily by the fact that all existing techniques have weaknesses that make them unsuitable for use as a general solution. One of the most common techniques for establishing traceability links, naming conventions (NC), is a good example of this. This approach relies on the naming conventions for test artefacts (unit tests or test classes) to identify their links to tested artefacts (functions or classes). For example, JUnit 3 required a prefix of ‘test’ to identify test methods. The specific conventions used may vary between projects; however, the standard convention is that a test artefact should share the same name as the artefact that it is testing, with test prepended or appended (Van Rompaey and Demeyer 2009; Madeja and Porubän 2019). For example, a function named union will be considered to be tested by a test named testUnion. However, this technique is not effective if the project does not adhere to the naming conventions and can have poor recall even for projects that do. This is because it assumes a one-to-one relationship between test artefacts and tested artefacts, when this is not always the case. The Commons Collections project provides a real-world example of this, where the function disjunction is tested by the tests testDisjunctionAsUnionMinusIntersection and testDisjunctionAsSymmetricDifference. As this is a one-to-many relationship, the names do not match the naming conventions and NC would not be able to recover these links. While test-to-code traceability based on name similarity has good accuracy at the class level, as developers usually follow naming conventions for test classes, at the method level there are various guidelines on how to name a test method.
Madeja and Porubän (2019) investigated 5 popular Android projects and found that only 49% of tests contain the full name of the method-under-test in the test name and that 76% of tests contain a partial name of a method-under-test in the test name.

Last Call Before Assert (LCBA) is another existing technique that has severe limitations. LCBA operates on the assumption that the function which returned last before an assert is called is the function that the assert is testing. However, this assumption is often incorrect. One common example of this is when the purpose of a tested function is to change the state of an object. In this case, to check that the function has performed the correct operation, a state checking function must be called to get the changed state so that it can be compared to an oracle. This causes LCBA to incorrectly identify the state checking function as the tested function. Even if the tested function does directly return the value that needs to be checked, this value will often not be checked by an assert immediately after being returned. This could be because the test needs to call helper functions before the assert, possibly to establish the oracle.

Finally, textual similarity measures based on information retrieval techniques have also been used in an attempt to recover test-to-code traceability links, with varying degrees of success (Antoniol et al. 2002; Csuvik et al. 2019a). However, none of them are sufficient on their own as techniques designed for natural language do not directly translate to code. This is due to the bimodality of code which leads to the possibility that two code snippets may be closely related semantically but completely different lexically, or vice-versa (Allamanis et al. 2018).

Given these inherent weaknesses in the individual existing techniques, there is a strong motivation to design a new approach that, while exploiting the strengths of the individual techniques, collectively overcomes their weaknesses. This is the approach utilised in TCTracer and presented in this paper.

A secondary motivation for the development of a new approach to test-to-code traceability stems from the fact that existing work has only focused on either the method level or the class level. As both levels can provide useful information to a developer, we were motivated to develop a single approach that worked at both levels simultaneously. This resulted in the multilevel aspect of TCTracer, which in turn facilitated the use of multilevel information flow to further increase the effectiveness of the approach.

3 Approach

Our approach utilises dynamic call traces and static information to create candidate links between test artefacts and tested artefacts. It assigns scores to the candidate links using an ensemble of techniques and these scores are used to rank the candidates and predict which of them are true test-to-code traceability links. The predicted links can then be used, e.g., in an IDE, to navigate between tests and the tested artefacts.

We utilise dynamic information as it provides us with the call traces showing which functions were executed by which tests, thus providing a natural filtering that serves as a starting place for establishing traceability links. However, as dynamic analysis requires the system-under-analysis to be executed, gathering dynamic information is not possible in all scenarios, such as where a large and diverse corpus of code is being used. For example, if an approach uses a corpus that includes the top 1000 GitHub projects, having to build and execute every project would be prohibitively time-consuming. In this scenario, static information is the only practical information source and we, therefore, incorporated techniques that only require static information to determine the usefulness of the approach in this scenario.

As we are establishing links on the method-level as well as on the class-level, we use the terms function or method-under-test when referring to a tested method and the terms tested class or class-under-test for the class-level. Moreover, on the class-level, a class-under-test is tested by one or more test classes, and on the method-level, a method-under-test is tested by one or more test methods.

Our multilevel approach starts by dynamically collecting information about the function calls made by each test: specifically, which functions were called, the depth in the call stack of each call relative to the calling test, and the set of functions that were executed immediately before an assert. Static information, which consists of the fully qualified names (FQNs) and bodies of all classes, test classes, functions, and tests, is also collected by parsing the source code of the project-under-analysis. We then apply an ensemble of traceability techniques at the method level, using the collected dynamic and static information. This results in a set of test-to-function scores for each technique, each of which encodes the likelihood that a given function is the tested function for a given test that calls it. We refer to these scores collectively as the method level information. The same process is then applied at the class level, where sets of test-class-to-class scores are established using the same techniques, providing us with the class level information. At this stage, we create a cross-level flow of information by utilising the method level information for class level predictions and the class level information to augment the method level predictions.

To compute our scores, we start with the techniques that utilise dynamic information, for which we selected two existing test-to-code traceability techniques and formulated six new techniques. Five of the techniques produce a score in the interval [0,1] for every possible link, indicating the likelihood that the link is correct, while the other three (NC, NCC, and LCBA) produce binary scores. For the techniques that utilise static information, we selected the applicable dynamic techniques and modified them to work with static instead of dynamic information. We also compute a combined score from all the individual techniques; the method of combining scores is explored in Section 7.2.5. These scores are used to rank the candidate links so that those ranked highest are the most likely to be true traceability links. Thresholds are then applied to construct the sets of predicted links.
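To make the rank-then-threshold step concrete, here is a minimal Python sketch. It assumes, purely for illustration, that the combined score is the unweighted mean of the individual technique scores (the combination actually used is examined in Section 7.2.5); the scores and the threshold value are made up, and the function names `combine` and `predict_links` are hypothetical.

```python
from statistics import mean

# A candidate link pairs a test with a function it executes.
Candidate = tuple[str, str]


def combine(scores: dict[str, float]) -> float:
    """Assumed combination: the unweighted mean of the per-technique scores."""
    return mean(scores.values())


def predict_links(candidates: dict[Candidate, dict[str, float]],
                  threshold: float) -> list[tuple[Candidate, float]]:
    """Rank candidates by combined score (highest first) and keep those at or above the threshold."""
    ranked = sorted(((link, combine(scores)) for link, scores in candidates.items()),
                    key=lambda item: item[1], reverse=True)
    return [(link, score) for link, score in ranked if score >= threshold]


# Made-up scores: NC and LCBA are binary, LCS-B is real-valued.
candidates = {
    ("testUnion", "union"): {"NC": 1.0, "LCBA": 1.0, "LCS-B": 1.0},
    ("testUnion", "size"): {"NC": 0.0, "LCBA": 1.0, "LCS-B": 0.2},
    ("testUnion", "add"): {"NC": 0.0, "LCBA": 0.0, "LCS-B": 0.0},
}
for link, score in predict_links(candidates, threshold=0.5):
    print(link, round(score, 2))
```

Only the ("testUnion", "union") candidate survives the 0.5 threshold here; lowering the threshold would admit size as well, trading precision for recall.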

We describe our techniques in the following section where, for simplicity, we will present them at the method level. To apply them on the class level, test classes are used instead of test methods and tested classes instead of tested functions.

4 Techniques

As discussed in Section 2, existing test-to-code traceability techniques have weaknesses that we try to overcome with new techniques. Despite their weaknesses, we selected two established techniques, Naming Conventions (NC) (Van Rompaey and Demeyer 2009) and Last Call Before Assert (LCBA) (Van Rompaey and Demeyer 2009), because they perform well in certain situations. The new techniques formulated for TCTracer include four string-based techniques, all of which utilise name similarity: a variant of Naming Conventions (NCC), two variants of Longest Common Subsequence (LCS-B and LCS-U), and the Levenshtein edit distance (Levenshtein 1966). Two statistical call-based techniques (SCTs), based on Tarantula fault localisation (Jones et al. 2002) and Term Frequency–Inverse Document Frequency (TFIDF) (Manning et al. 2010), complete the new techniques. Each technique is discussed in its dynamic variant (Section 4.1) and, where one exists, its static variant (Section 4.2).

The original NC was selected for our technique ensemble as it should have high precision, especially in projects where the naming conventions are strictly followed, and it is a common method by which developers identify the tests for a given method during development (Hurdugaci and Zaidman 2012; Madeja and Porubän 2019). LCBA was selected as it can perform well in certain circumstances, specifically when the tests use an assert to check the value returned from a function immediately after that function is called. As both NC and LCBA are well-established techniques for test-to-code traceability recovery (Qusef et al. 2013, 2014; Madeja and Porubän 2019; Csuvik et al. 2019a), they also make good comparison points for our other techniques.

NCC requires that the name of the test contains the name of the tested artefact. It was included in the technique ensemble as it utilises the strengths of NC but should achieve higher recall, as it can establish many-to-one relationships between tests and functions, as opposed to the solely one-to-one relationships that are discoverable with traditional NC. This helps to alleviate some of the problems with traditional NC discussed in Section 2. LCS-B and LCS-U compute the length of the longest common subsequence of the test and tested-artefact names relative to the lengths of those names. They were used as they utilise the same intuitions as NC and NCC respectively but, instead of producing a binary score, they produce a real-valued score that indicates how close the potential link is to satisfying NC/NCC. This is useful as there are instances where NC/NCC are not satisfied but are very close to being satisfied, for example, in the case of NC, if there are extra words before or after the name of the function or, in the case of NCC, if the name of the function is abbreviated or has grammatical differences in the name of the test case. In these instances, the real-valued scores of LCS-B and LCS-U are more useful than the binary scores of NC and NCC, as we can still determine whether a test and a function are likely to be related. We include the normalised Levenshtein distance between the names as a technique, as it provides a different view of name similarity from the longest common subsequence used in the LCS-B and LCS-U techniques. For these naming techniques, we use the simple method or class names, as using FQNs causes the scores to have less difference between them. For example, all the FQNs in Commons Lang share the common prefix org.apache.commons.lang3 and many share even longer prefixes. Using the FQNs, therefore, squashes the distribution of the naming scores towards the high end, making it more difficult to distinguish correct links from incorrect links.
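The effect of FQNs on the score distribution can be illustrated with a small Python sketch of an LCS-B-style score (the length of the longest common subsequence divided by the length of the longer name). The names below are hypothetical Commons Lang-style examples, and for simplicity we assume that test has already been removed from both the class and method parts of the test's FQN.

```python
def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence, via two-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for ch_a in a:
        curr = [0]
        for j, ch_b in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if ch_a == ch_b else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def lcs_b(test_name: str, function_name: str) -> float:
    """LCS length divided by the length of the longer of the two names."""
    return lcs_length(test_name, function_name) / max(len(test_name), len(function_name))


# Simple names: related vs. unrelated candidate function.
simple_related = lcs_b("isempty", "isempty")
simple_unrelated = lcs_b("isempty", "trim")

# The same comparison on lower-cased FQNs that share a package and class prefix.
prefix = "org.apache.commons.lang3.stringutils."
fqn_related = lcs_b(prefix + "isempty", prefix + "isempty")
fqn_unrelated = lcs_b(prefix + "isempty", prefix + "trim")

print(f"simple: related {simple_related:.2f}, unrelated {simple_unrelated:.2f}")
print(f"FQN:    related {fqn_related:.2f}, unrelated {fqn_unrelated:.2f}")
```

On these inputs the unrelated function trim scores about 0.29 against isempty when simple names are compared, but about 0.89 once the shared 37-character prefix is included, so related and unrelated candidates become much harder to separate.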

We include the Tarantula technique because, intuitively, recovering test-to-code traceability links is similar to fault localisation: if a function is causing a test to fail, it is likely that the function and the test should be linked. Our intuition, therefore, is that adapting a well-known fault localisation technique to traceability may yield an effective method of recovering test-to-code trace links. The inclusion of the TFIDF technique is motivated similarly: we view the task of determining the relevance of terms to a document as analogous to the task of determining which functions are most relevant to a test case, and therefore which functions are most likely to be the targets of that test. As TFIDF is a standard, well-tested method of establishing term relevance, we adapted it to test-to-code traceability.
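This section does not give the formulas for the two statistical call-based techniques, so the Python sketch below shows one plausible adaptation under stated assumptions rather than TCTracer's exact definitions. For Tarantula, we assume that the test under analysis is treated as the only failing test and all other tests as passing; for TFIDF, tests play the role of documents, the functions they call play the role of terms, and call counts serve as term frequencies. The trace data is invented.

```python
import math
from collections import Counter

# Hypothetical dynamic traces: for each test, how often each function was called.
Traces = dict[str, Counter]


def tarantula(traces: Traces, test: str) -> dict[str, float]:
    """Tarantula suspiciousness of each function executed by `test`.

    Assumption: `test` is the only "failing" test and all others "pass", so
    failed(f) / total_failed is 1 for every function that `test` calls.
    """
    others = [t for t in traces if t != test]
    scores = {}
    for func in traces[test]:
        passed = sum(1 for other in others if func in traces[other])
        passed_ratio = passed / len(others) if others else 0.0
        scores[func] = 1.0 / (1.0 + passed_ratio)
    return scores


def tfidf(traces: Traces, test: str) -> dict[str, float]:
    """TF-IDF with tests as documents and the functions they call as terms."""
    n_tests = len(traces)
    total_calls = sum(traces[test].values())
    scores = {}
    for func, count in traces[test].items():
        doc_freq = sum(1 for calls in traces.values() if func in calls)
        scores[func] = (count / total_calls) * math.log(n_tests / doc_freq)
    return scores


traces = {
    "testUnion": Counter({"union": 1, "add": 4, "size": 1}),
    "testAdd": Counter({"add": 2, "size": 1}),
    "testSize": Counter({"add": 3, "size": 1}),
}
print(tarantula(traces, "testUnion"))
print(tfidf(traces, "testUnion"))
```

In this toy example, union, which only testUnion calls, receives the highest score from both techniques, whereas add and size, which every test calls, are down-weighted (their TFIDF score is 0 because log(3/3) = 0).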

All of the above techniques will be evaluated to identify individual strengths and weaknesses and compared to the established techniques NC and LCBA to establish if their known weaknesses can be overcome. We also include all techniques in a combined score as we believe that each technique has the potential to provide at least some information that cannot be wholly obtained using any other technique.

All of our techniques utilise dynamic trace information and, where possible, we have adapted the techniques to create variants that use static information. We opted to adapt only the naming-based techniques to use static information because using the call-based techniques would require static call graphs, which are inherently an over-approximation with regard to polymorphic function calls that are resolved at run-time. This results in very low precision when they are used for traceability techniques.

We have discarded a series of other techniques. First, Fixture Element Types (FET) (Van Rompaey and Demeyer 2009) and SCOTCH+ (Qusef et al. 2014) cannot be applied at the method level. Second, Static Call Graph (SCG), Lexical Analysis (LA), and Co-Evolution (Co-Ev) have been discarded because of their low precision and recall (Van Rompaey and Demeyer 2009; Parizi et al. 2014; Kicsi et al. 2018).

4.1 Dynamic Techniques

In this subsection we describe the techniques that use dynamic information to compute traceability scores.

4.1.1 Naming Conventions

As naming conventions can change between projects (Van Rompaey and Demeyer 2009), we have selected two techniques for traceability recovery using naming conventions: traditional and contains.

Traditional Naming Conventions (NC). NC establishes links by considering a function to be linked to a test if the name of the test is the same as the name of the function after the word test has been removed from the test name. For example, a function named union will be considered to be tested by a test named testUnion. A positive NC result is counted as a score of 1 and a negative result as 0:

$$\mathrm{NC}(t, f) = \begin{cases} 1 & \text{if } n_t = n_f \\ 0 & \text{otherwise} \end{cases}$$

where $n_t$ and $n_f$ are the names of $t$ and $f$ respectively, after the word test has been removed from the name of test $t$.

Naming Conventions – Contains (NCC). NCC is a derivative of traditional NC which replaces the requirement that the test name must match the function name exactly with the more relaxed requirement that the test name only needs to contain the function name. Therefore, NCC considers a function to be linked to a test if the name of the test contains the name of the function, after removing test from the test name. A positive NCC result is counted as a score of 1, while a negative NCC result is counted as 0:

$$\mathrm{NCC}(t, f) = \begin{cases} 1 & \text{if } n_t \text{ contains } n_f \\ 0 & \text{otherwise} \end{cases}$$
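The two naming-convention rules can be sketched in a few lines of Python. The case-insensitive comparison and the plain removal of the substring test are assumptions about the normalisation, and the helper names are hypothetical; the example uses the Commons Collections tests for disjunction mentioned in Section 2.

```python
def strip_test(test_name: str) -> str:
    """Lower-case a simple test name and remove the word 'test' (assumed normalisation)."""
    return test_name.lower().replace("test", "")


def nc(test_name: str, function_name: str) -> int:
    """Traditional naming conventions: 1 on an exact name match, else 0."""
    return int(strip_test(test_name) == function_name.lower())


def ncc(test_name: str, function_name: str) -> int:
    """Naming conventions - contains: 1 if the test name contains the function name, else 0."""
    return int(function_name.lower() in strip_test(test_name))


tests = ["testUnion",
         "testDisjunctionAsUnionMinusIntersection",
         "testDisjunctionAsSymmetricDifference"]
for test in tests:
    print(test, "NC:", nc(test, "disjunction"), "NCC:", ncc(test, "disjunction"))
```

Neither Commons Collections test is linked to disjunction by NC, whereas NCC links both. The relaxation is not free, however: ncc("testDisjunctionAsUnionMinusIntersection", "union") also returns 1.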

4.1.2 Name Similarity

Name similarity is a variation of the Naming Conventions approach and is based on the premise that developers, following established naming conventions, give unit tests names that are similar to or match the name of the function. Our hypothesis is that name similarity measures have the potential to perform better than the existing NC approach as they are less strict on exact matches and allow for slight variations in name, for example, due to grammatical reasons. For instance, a method named clone would not be identified under NC for a test named testCloning, whereas it would be possible under name similarity measures for clone to be assigned a high traceability score with testCloning. We consider the name for a method to be simply the name of the method in lower case without the class name and with the string test removed from test names when performing comparisons. For example, for the fully qualified method name com.example.ExampleClass.testComputeScore(boolean), we perform name similarity comparisons on computescore.
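A minimal Python sketch of this normalisation, assuming Java-style fully qualified names with a parenthesised parameter list. The function name `normalise` and its `is_test` flag are hypothetical; the flag ensures that test is removed only from test names, so that a function such as isLatest, whose name happens to contain test, is left intact.

```python
def normalise(fq_method: str, is_test: bool) -> str:
    """Reduce a fully qualified method name to the form used for name comparisons."""
    name = fq_method.split("(", 1)[0]   # drop the parameter list
    name = name.rsplit(".", 1)[-1]      # drop the package and class name
    name = name.lower()
    # Remove the string 'test' from test names only.
    return name.replace("test", "") if is_test else name


print(normalise("com.example.ExampleClass.testComputeScore(boolean)", is_test=True))
# computescore
```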

To compute the name similarity, we use two well-established techniques, Longest Common Subsequence (LCS) and Levenshtein Distance.

To establish the LCS similarity, we compare the length of the longest common subsequence of the function and test names with the lengths of those names. The LCS techniques give a higher score to function names that have more characters in common with (and in the same order as) a test name.

Longest Common Subsequence – Both (LCS-B). In the first LCS variant, we maximise the score at 1 when the test and function names coincide exactly (aligned with the behaviour of the NC approach), that is, when \(n_t = n_f\) and hence \(\mathrm{LCS}(n_t,n_f) = n_t\). We divide the length of the LCS by the greater of the lengths of the two strings as follows:

\[ \mathrm{score}_{\mathrm{LCS\text{-}B}}(t,f) = \frac{|\mathrm{LCS}(n_t,n_f)|}{\max(|n_t|,|n_f|)} \]

Longest Common Subsequence – Unit (LCS-U). In the second variant, we divide the length of the LCS by the length of the function name only. This variant is more closely aligned with the behaviour of the NCC approach, with the score maximised at 1 when the function name is contained in the test name.

\[ \mathrm{score}_{\mathrm{LCS\text{-}U}}(t,f) = \frac{|\mathrm{LCS}(n_t,n_f)|}{|n_f|} \]
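Both LCS variants can be sketched with a standard dynamic-programming LCS. The inputs are assumed to be names that have already been lower-cased and stripped of "test"; the function names are illustrative only. The example uses the clone/testCloning pair from the text.

```python
def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for ch_a in a:
        curr = [0]
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def lcs_b(test_name: str, function_name: str) -> float:
    """LCS-B: LCS length divided by the longer name; 1 only for identical names."""
    longest = max(len(test_name), len(function_name))
    return lcs_length(test_name, function_name) / longest if longest else 0.0


def lcs_u(test_name: str, function_name: str) -> float:
    """LCS-U: LCS length divided by the function name length; 1 whenever the
    function name is a subsequence (for example, a substring) of the test name."""
    if not function_name:
        return 0.0
    return lcs_length(test_name, function_name) / len(function_name)


print(round(lcs_b("cloning", "clone"), 3))  # 0.571
print(round(lcs_u("cloning", "clone"), 3))  # 0.8
print(lcs_u("unionofsets", "union"))        # 1.0
```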

Levenshtein Distance. The Levenshtein distance (Levenshtein 1966), often known as edit distance, measures the distance between two strings as the minimum number of single-character edits (insertions, deletions, or substitutions) needed to transform one string into the other. Under this technique, the distances between the function names and test names are computed, and links with the lowest Levenshtein distance are awarded the highest scores. We first normalise the Levenshtein distance by dividing it by the length of the longer string and then take the complement so that higher scores are given to closer strings:

\[ \mathrm{score}_{\mathrm{Leven}}(t,f) = 1 - \frac{\mathrm{lev}(n_t,n_f)}{\max(|n_t|,|n_f|)} \]

where \(\mathrm{lev}(n_t,n_f)\) is the Levenshtein distance between the two names.
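A minimal sketch of the normalised Levenshtein score, again assuming already-normalised names; the helper names are illustrative.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        curr = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def levenshtein_score(test_name: str, function_name: str) -> float:
    """Complement of the distance normalised by the length of the longer name."""
    longest = max(len(test_name), len(function_name))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(test_name, function_name) / longest


print(levenshtein("kitten", "sitting"))                 # 3
print(round(levenshtein_score("cloning", "clone"), 3))  # 0.571
```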

4.1.3 Last Call Before Assert (LCBA)

LCBA attempts to establish traceability links by working on the assumption that the function that returned last before an assert is called is the function that the assert is testing. Therefore, LCBA will establish links between a test and every function that returned last before an assert that appears in that test. In TCTracer, if an LCBA link is established between a test and a function, it is counted as a traceability score of 1, while no LCBA link is counted as a score of 0:

\[ \mathrm{score}_{\mathrm{LCBA}}(t,f) = \begin{cases} 1 & \text{if } f \in \mathrm{LCBA}(t) \\ 0 & \text{otherwise} \end{cases} \]

where \(\mathrm{LCBA}(t)\) is the set of functions that returned last before an assert in test t.
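The collection of LCBA links from a single test's trace can be sketched as below. The trace format (a list of `("return", name)` and `("assert", "")` events) is a hypothetical simplification, not TCTracer's actual log format, and the function names are made up for the example.

```python
from typing import Iterable, Optional, Set, Tuple


def lcba_functions(events: Iterable[Tuple[str, str]]) -> Set[str]:
    """Return every function that was the last to return before an assert.

    `events` is a hypothetical, simplified trace of one test: ("return", name)
    when a project function returns and ("assert", "") when an assert is called.
    """
    linked: Set[str] = set()
    last_return: Optional[str] = None
    for kind, name in events:
        if kind == "return":
            last_return = name
        elif kind == "assert" and last_return is not None:
            linked.add(last_return)
    return linked


trace = [("return", "Set.<init>"), ("return", "Set.add"), ("return", "Set.union"),
         ("assert", ""), ("return", "Set.size"), ("assert", "")]
print(sorted(lcba_functions(trace)))  # ['Set.size', 'Set.union']
```

A function in the returned set receives an LCBA score of 1 for that test; all other functions receive 0.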

4.1.4 Tarantula

Tarantula (Jones et al. 2002) is an automatic fault localisation technique that assigns a suspiciousness value to code, with higher suspiciousness values indicating a higher probability of the code in question being responsible for the fault. Using fault localisation for traceability rests on the idea that, if the current test failed, the technique would point to the entity most relevant to that test. The suspiciousness of a code entity e is defined as follows:

\[ \mathrm{susp}(e) = \frac{\mathit{failed}(e)/\mathit{totalfailed}}{\mathit{passed}(e)/\mathit{totalpassed} + \mathit{failed}(e)/\mathit{totalfailed}} \tag{8} \]

where failed(e) is the number of tests that executed e and failed, totalfailed is the number of tests that failed in total, passed(e) is the number of tests that executed e and passed, and totalpassed is the number of tests that passed in total.

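To obtain the traceability score for a given test-to-function pair, where the test executes the function, we compute the suspiciousness of the function with respect to the test, assuming that the test under consideration fails and all others pass. This heuristic identifies the methods that are most specific to the current test: Tarantula decreases the suspiciousness of methods executed by passing tests, which in our setting are the other tests that execute the method. Substituting \(\mathit{failed}(f) = \mathit{totalfailed} = 1\), \(\mathit{passed}(f) = |\{t' \in \mathrm{T} \setminus \{t\} \mid f \in t'\}|\), and \(\mathit{totalpassed} = |\mathrm{T}| - 1\) into (8) gives our traceability score:

\[ \mathrm{score}_{\mathrm{Tarantula}}(t,f) = \frac{1}{1 + \frac{|\{t' \in \mathrm{T} \setminus \{t\} \mid f \in t'\}|}{|\mathrm{T}| - 1}} \]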

where T is the set of all tests in the test suite and \(f \in t^{\prime }\) indicates that function f is executed by test \(t^{\prime }\). For pairs where the test t does not execute the function f, a score of 0 is assigned.
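A minimal sketch of this per-test Tarantula score, assuming the execution data is available as a mapping from each test to the set of functions it executes (a hypothetical input format):

```python
from typing import Dict, Set, Tuple


def tarantula_scores(executed: Dict[str, Set[str]]) -> Dict[Tuple[str, str], float]:
    """Score every executed (test, function) pair, treating that test as the only failure.

    `executed` maps each test to the set of functions it executes; pairs that are
    not executed are omitted, which corresponds to a score of 0.
    """
    tests = list(executed)
    total_passed = len(tests) - 1  # every other test is assumed to pass
    scores: Dict[Tuple[str, str], float] = {}
    for t in tests:
        for f in executed[t]:
            passed = sum(1 for other in tests if other != t and f in executed[other])
            passed_ratio = passed / total_passed if total_passed else 0.0
            # failed(f) / totalfailed = 1 / 1, so suspiciousness reduces to 1 / (1 + passed ratio)
            scores[(t, f)] = 1.0 / (1.0 + passed_ratio)
    return scores


executed = {"t1": {"a", "b"}, "t2": {"b"}, "t3": {"b", "c"}}
scores = tarantula_scores(executed)
print(scores[("t1", "a")], scores[("t1", "b")])  # 1.0 0.5
```

Function a, executed only by t1, gets the maximum score, while b, executed by every test, is penalised.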

4.1.5 Term Frequency–Inverse Document Frequency (TFIDF)

Term frequency–inverse document frequency (TFIDF) is a measure traditionally used in information retrieval to determine how significant a term is to a document. TFIDF takes into account the prevalence of the term in the document and in the corpus as a whole, with the intuition being that if a term is frequent in a particular document but not frequent in the rest of the corpus, that term must carry a high significance to the document and carries useful information about the semantics of the document. We apply this to the domain of test-to-code traceability by having tests take the role of the documents and functions take the role of the terms. This expresses the intuition that if a function is executed frequently by a particular test and infrequently by other tests, it is likely that the test is testing the function. We define our traceability score using TFIDF as:

\[ \mathrm{score}_{\mathrm{TFIDF}}(t,f) = \mathrm{tf}(t,f) \cdot \mathrm{idf}(f) \]

The usual definition of the term frequency (tf) function does not match the test/function scenario. Thus, tf and idf are defined as:

where T is the set of all tests in the test suite and F is the set of all functions in the system. The tf function measures how the information of a test is spread over the called functions and the idf function measures how common the function is over all tests.
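As the tf and idf definitions are not reproduced above, the following sketch uses assumed forms that follow the description: tf(t,f) spreads a test's weight evenly over the functions it calls (1 divided by the number of functions executed by t), and idf(f) is the usual log(|T| / number of tests executing f). Both formulas are assumptions for illustration, not necessarily the exact definitions used by TCTracer.

```python
import math
from typing import Dict, Set, Tuple


def tfidf_scores(executed: Dict[str, Set[str]]) -> Dict[Tuple[str, str], float]:
    """TFIDF with tests as documents and executed functions as terms.

    Assumed definitions (for illustration only):
      tf(t, f) = 1 / number of functions executed by t
      idf(f)   = log(|T| / number of tests executing f)
    """
    n_tests = len(executed)
    test_count: Dict[str, int] = {}
    for functions in executed.values():
        for f in functions:
            test_count[f] = test_count.get(f, 0) + 1
    scores: Dict[Tuple[str, str], float] = {}
    for t, functions in executed.items():
        for f in functions:
            tf = 1.0 / len(functions)
            scores[(t, f)] = tf * math.log(n_tests / test_count[f])
    return scores


executed = {"t1": {"a", "b"}, "t2": {"b"}, "t3": {"b", "c"}}
scores = tfidf_scores(executed)
print(round(scores[("t1", "a")], 3), scores[("t1", "b")])  # 0.549 0.0
```

A function executed by every test (b) gets an idf of 0 and therefore no score, matching the intuition in the text.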

4.2 Static Techniques

In this section, we describe the techniques we selected to adapt to using static information and the changes that we had to make to them.

4.2.1 Naming Conventions

For the static versions of naming conventions, we use the same variants that we use for our dynamic versions, namely the traditional and contains variants. However, in contrast to how they are used in the dynamic approach, when using them statically we must utilise both the function name and the class name. This is because in the dynamic approach we only use the names of functions that have been executed, whereas in the static approach we use all the functions in the project. Therefore, if we were to use only the function name in the static approach, we would likely have very low precision, as multiple classes often contain functions of the same name. This effect is most obvious for commonly overridden functions such as toString. In this instance, using only the simple name would result in any test for a toString function being linked to all toString functions in the project instead of just the one belonging to the appropriate class.

Static Naming Conventions (Static NC). Similar to the dynamic approach, we compare function and test names after the word test has been removed from the test name. However, we now also incorporate the class name and perform the same comparison with the test class name. Therefore, we link a test to a function if the test and function names match and the names of the test class and the function's class match.

\[ \mathrm{score}_{\text{Static NC}}(t,f) = \begin{cases} 1 & \text{if } n_t = n_f \text{ and } n_{tc} = n_{fc} \\ 0 & \text{otherwise} \end{cases} \]

where \(n_t\) and \(n_f\) are the names of t and f respectively and \(n_{tc}\) and \(n_{fc}\) are the names of the classes containing t and f respectively, after the word test has been removed from the names of the test and test class.

Static Naming Conventions – Contains (Static NCC). Similar to static NC, we adapt the NCC technique from the dynamic version to incorporate the class names for the static version. Therefore, static NCC considers a function to be linked to a test if the name of the test contains the name of the function and the name of the test class contains the name of the function's class, after removing test from the test name and test class name.
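The static variants only add a class-name check to the dynamic ones. A minimal sketch, assuming the same case-insensitive "remove every occurrence of test" rule as before (the helper names are hypothetical); the first two calls illustrate the toString problem described above.

```python
def strip_test(name: str) -> str:
    """Lower-case a name and remove the string "test" (assumed stripping rule)."""
    return name.lower().replace("test", "")


def static_nc(test_class: str, test: str, func_class: str, func: str) -> int:
    """Static NC: both the method names and the class names must match exactly."""
    return int(strip_test(test) == func.lower()
               and strip_test(test_class) == func_class.lower())


def static_ncc(test_class: str, test: str, func_class: str, func: str) -> int:
    """Static NCC: the stripped test (class) name must contain the function (class) name."""
    return int(func.lower() in strip_test(test)
               and func_class.lower() in strip_test(test_class))


# A toString test in FooTest is linked to Foo.toString but not to Bar.toString.
print(static_nc("FooTest", "testToString", "Foo", "toString"))  # 1
print(static_nc("FooTest", "testToString", "Bar", "toString"))  # 0
print(static_ncc("StringUtilsTest", "testIsEmptyWithNull", "StringUtils", "isEmpty"))  # 1
```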

4.2.2 Static Name Similarity

We use the same name similarity techniques in our static approach as in our dynamic approach, namely LCS-B, LCS-U, and Levenshtein distance. The way the scores are computed remains unchanged from the dynamic techniques, as described in Section 4.1. However, for the static techniques, we use the fully qualified names (FQNs) of the functions and the tests instead of just the simple names, and we remove the word test from anywhere it appears in the whole FQN of the test or test class. We use the FQN because, as with the static naming conventions techniques, we have to account for the fact that multiple classes are likely to have functions of the same name. Using the FQNs accounts for this, as well as for the situation where different packages may contain classes of the same name.

4.3 Score Scaling

Our approach utilises two techniques for scaling traceability scores, which can be applied independently or composed.

4.3.1 Call Depth Discounting

Tests often do not invoke the tested functions directly, for example when a public method delegates the actual implementation to a private method. The TCTracer approach utilises the intuition that the relative depth between a test and a function in the call stack can serve as an indicator of whether the function is tested by the test. We hypothesise that functions that are closer to a test in the call stack are more likely to be the tested functions than functions that are far away. Therefore, we utilise a relative call depth discount factor γ ∈ [0,1], which discounts the traceability score for a test-to-function pair in proportion to the distance between them in the call stack:

where \(\mathrm{score}_d\) is the discounted score, score is the non-discounted score, and dist(t,f) is the distance between the test and the function in the call stack. We subtract one from the distance so that no discount is applied to functions that are called directly by the test.
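The discount equation itself is not reproduced above, so the sketch below assumes one plausible geometric form, \(\mathrm{score}_d = \mathrm{score} \cdot \gamma^{\mathrm{dist}(t,f) - 1}\), which respects the two stated properties: γ lies in [0,1], and directly called functions (distance 1) are not discounted. The exact form used by TCTracer may differ.

```python
def discounted_score(score: float, dist: int, gamma: float) -> float:
    """Discount a score by call depth, assuming the form score * gamma ** (dist - 1).

    dist is the call-stack distance between test and function (1 = called directly),
    so directly called functions keep their full score.
    """
    if dist < 1:
        raise ValueError("dist must be at least 1")
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    return score * gamma ** (dist - 1)


print(discounted_score(0.8, 1, 0.5))  # 0.8
print(discounted_score(0.8, 3, 0.5))  # 0.2
```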

4.3.2 Normalisation

The computed scores can be used to rank the possible links to called functions within a test directly, using the top-ranked link as the most likely link. However, the actual distribution of scores can vary between techniques and between tests. Therefore, we normalise the scores so that the largest score within a test is 1:

\[ \mathrm{score}_n(t,f) = \frac{\mathrm{score}(t,f)}{\max_{f' \in \mathrm{F}} \mathrm{score}(t,f')} \tag{15} \]

where \(\mathrm{score}_n\) is the normalised score. Normalisation allows us to define a threshold around the top-ranked link.
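Per-test normalisation can be sketched as follows, assuming scores are stored as a mapping from each test to its function scores (a hypothetical representation). How TCTracer handles a test whose scores are all zero is not stated; the sketch simply leaves such a row at 0.

```python
from typing import Dict


def normalise_rows(scores: Dict[str, Dict[str, float]]) -> Dict[str, Dict[str, float]]:
    """Divide every score of a test by that test's largest score (all-zero rows stay 0)."""
    normalised = {}
    for test, row in scores.items():
        top = max(row.values(), default=0.0)
        normalised[test] = {f: (s / top if top > 0 else 0.0) for f, s in row.items()}
    return normalised


print(normalise_rows({"t1": {"a": 0.2, "b": 0.8}}))  # {'t1': {'a': 0.25, 'b': 1.0}}
```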

In the end, we focus on the thirteen individual techniques shown in Table 1. The NC and NCC variants and LCBA are binary, i.e., they produce scores of either 1 or 0, which are used directly. The other eight, non-binary techniques are normalised and use call depth discounting.

5 Link Prediction

To construct link predictions, we first apply our traceability techniques to the method level and class level individually. The techniques can be directly applied to the class level by using the test classes instead of test methods and tested classes instead of tested methods. The information extracted from each level is then propagated between levels to produce another set of links at each level. The propagation is done by utilising method level scores in the computation of class level scores and class level scores in the computation of method level scores.

5.1 Method-Level Prediction

The process starts by executing each of our individual traceability techniques at the method level, resulting in a matrix of scores for each technique:

\[ \mathbf{M} \in \mathbb{R}^{|\mathrm{T}| \times |\mathrm{F}|} \]

where T is the set of all tests in the system and F is the set of all functions. Each element of M is the traceability score for a given test-to-function pair \((t,f) \in \mathrm{T} \times \mathrm{F}\).

Another matrix is then constructed for the combined technique by averaging over all the individual technique matrices and normalising the rows, using (15).

Each of these matrices, one for each individual technique plus one for the combined technique, is used to build sets of predicted test-to-function traceability links. To convert the real-valued scores into boolean link/no-link predictions, we introduce a set of thresholds, one for each technique (shown in Table 1), and consider scores above the threshold as positive link predictions. Equation (17) defines how each set of method level traceability links is constructed.

\[ \mathrm{L}_{\mathbf{M}} = \{ (t,f) \in \mathrm{T} \times \mathrm{F} \mid \mathbf{M}_{tf} > \tau \} \tag{17} \]

where \(\mathbf{M}_{tf}\) is the score for the given test-to-function pair and τ is the threshold for the technique.
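The simple combination and the threshold step can be sketched together. Matrices are represented here as dictionaries from (test, function) pairs to scores, with missing pairs treated as 0; the threshold 0.6 is arbitrary and stands in for a technique's threshold from Table 1. All names are illustrative.

```python
from typing import Dict, List, Set, Tuple

Pair = Tuple[str, str]  # (test, function)


def combine(matrices: List[Dict[Pair, float]]) -> Dict[Pair, float]:
    """Average the technique scores for each pair, then normalise per test."""
    pairs = set().union(*matrices)
    averaged = {p: sum(m.get(p, 0.0) for m in matrices) / len(matrices) for p in pairs}
    top: Dict[str, float] = {}
    for (t, _), s in averaged.items():
        top[t] = max(top.get(t, 0.0), s)
    return {(t, f): (s / top[t] if top[t] > 0 else 0.0) for (t, f), s in averaged.items()}


def predict_links(matrix: Dict[Pair, float], tau: float) -> Set[Pair]:
    """Turn real-valued scores into link predictions: keep pairs scoring above tau."""
    return {pair for pair, score in matrix.items() if score > tau}


nc = {("t1", "union"): 1.0, ("t1", "size"): 0.0}
lcs_b = {("t1", "union"): 0.6, ("t1", "size"): 0.9}
combined = combine([nc, lcs_b])
print(predict_links(combined, tau=0.6))  # {('t1', 'union')}
```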

5.2 Class-Level Prediction

We now move to the class level where, in the same way as at the method level, we apply our individual traceability techniques and combine them, resulting in one matrix for each individual technique plus one for the combined technique:

\[ \mathbf{C} \in \mathbb{R}^{|\mathrm{TC}| \times |\mathrm{FC}|} \]

where TC is the set of all test classes in the system and FC is the set of all non-test classes. Each element of C is the traceability score for a given test-class-to-class pair \((c_t,c_f) \in \mathrm{TC} \times \mathrm{FC}\).

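Similarly to the method level, C is used to compute sets of class level traceability links using (19).

\[ \mathrm{L}_{\mathbf{C}} = \{ (c_t,c_f) \in \mathrm{TC} \times \mathrm{FC} \mid \mathbf{C}_{c_t c_f} > \tau \} \tag{19} \]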

5.3 Method- to Class-Level Propagation

Given the method level and class level score matrices, we can now propagate information across levels. First, we elevate the method level information to the class level by extracting scores from M and organising them into class level pairs. This allows us to use them for computing class level traceability scores. To do this, for each test-class-to-class pair \((c_t,c_f)\), we construct a matrix \(\mathbf{EM}(c_t,c_f)\) to hold the relevant method level information:

\[ \mathbf{EM}(c_t,c_f) \in \mathbb{R}^{|t(c_t)| \times |f(c_f)|} \]

where \(t(c_t)\) is the set of tests in test class \(c_t\) and \(f(c_f)\) is the set of functions in class \(c_f\). Each element of \(\mathbf{EM}(c_t,c_f)\) is the method level traceability score for a given test-to-function pair \((t,f) \in t(c_t) \times f(c_f)\).

To obtain the traceability score for the test-class-to-class pair, the method level scores in \(\mathbf{EM}(c_t,c_f)\) are summed along both dimensions, resulting in the scalar score \(\sum_{t \in t(c_t)} \sum_{f \in f(c_f)} \mathbf{M}_{tf}\).

This process is executed for each test-class-to-class pair in the system, and the produced scores are collected into a matrix, with the same shape as C, that holds the scores for all pairs:

\[ \mathbf{EM} \in \mathbb{R}^{|\mathrm{TC}| \times |\mathrm{FC}|} \]

Therefore, each element of EM is the score for a given test-class-to-class pair \((c_t,c_f) \in \mathrm{TC} \times \mathrm{FC}\) that is derived from method level information. All rows in EM are normalised using (15).
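The elevation step can be sketched by summing method-level scores into their enclosing class pairs and then normalising per test class. The `class_of` mapping from method to class and the example classes are hypothetical; missing pairs are treated as score 0, so summing over the stored pairs is equivalent to summing the whole sub-matrix.

```python
from typing import Dict, Tuple


def elevate(method_scores: Dict[Tuple[str, str], float],
            class_of: Dict[str, str]) -> Dict[Tuple[str, str], float]:
    """Sum method-level scores into (test class, class) pairs, then normalise per test class."""
    summed: Dict[Tuple[str, str], float] = {}
    for (test, function), score in method_scores.items():
        key = (class_of[test], class_of[function])
        summed[key] = summed.get(key, 0.0) + score
    top: Dict[str, float] = {}
    for (test_class, _), score in summed.items():
        top[test_class] = max(top.get(test_class, 0.0), score)
    return {(tc, c): (s / top[tc] if top[tc] > 0 else 0.0) for (tc, c), s in summed.items()}


class_of = {"t1": "SetTest", "t2": "SetTest", "union": "Set", "size": "Set", "hash": "Util"}
method_scores = {("t1", "union"): 1.0, ("t1", "hash"): 0.5, ("t2", "size"): 1.0}
print(elevate(method_scores, class_of))
# {('SetTest', 'Set'): 1.0, ('SetTest', 'Util'): 0.25}
```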

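The scores in EM are then used to produce a set of class level predicted links using (22).

\[ \mathrm{L}_{\mathbf{EM}} = \{ (c_t,c_f) \in \mathrm{TC} \times \mathrm{FC} \mid \mathbf{EM}_{c_t c_f} > \tau \} \tag{22} \]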

5.4 Class- to Method-Level Propagation

To propagate information from the class level to the method level, we take the method level information in M and augment it with the class level information in C, creating a new matrix \(\mathbf {AM} \in \mathbb {R}^{|\mathrm {T}| \times {} |\mathrm {F}|}\). For each test-to-function pair (t,f), the augmentation is performed by first finding the test-class-to-class pair \((c_t,c_f)\) that corresponds to the test-to-function pair, i.e., the test class \(c_t\) that contains the test t and the tested class \(c_f\) that contains the function f. We then take the score for the method level pair from M and the score for the class level pair from C and multiply them to produce the augmented method level score for AM, as shown in (23).

\[ \mathbf{AM}_{tf} = \mathbf{M}_{tf} \cdot \mathbf{C}_{c(t)\,c(f)} \tag{23} \]

where c(m) returns the class containing method m.

From AM, the set of augmented method level traceability link predictions is produced using (24).

\[ \mathrm{L}_{\mathbf{AM}} = \{ (t,f) \in \mathrm{T} \times \mathrm{F} \mid \mathbf{AM}_{tf} > \tau \} \tag{24} \]
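Augmentation is an element-wise product with the score of the enclosing class pair. A minimal sketch using the same hypothetical dictionary representation and `class_of` mapping as the elevation example:

```python
from typing import Dict, Tuple

Pair = Tuple[str, str]


def augment(method_scores: Dict[Pair, float], class_scores: Dict[Pair, float],
            class_of: Dict[str, str]) -> Dict[Pair, float]:
    """Multiply each method-level score by the score of its (test class, class) pair."""
    return {(t, f): s * class_scores.get((class_of[t], class_of[f]), 0.0)
            for (t, f), s in method_scores.items()}


class_of = {"t1": "SetTest", "t2": "SetTest", "union": "Set", "size": "Set", "hash": "Util"}
method_scores = {("t1", "union"): 1.0, ("t1", "hash"): 0.5, ("t2", "size"): 1.0}
class_scores = {("SetTest", "Set"): 1.0, ("SetTest", "Util"): 0.25}
print(augment(method_scores, class_scores, class_of))
# {('t1', 'union'): 1.0, ('t1', 'hash'): 0.125, ('t2', 'size'): 1.0}
```

A function in a class that is weakly linked to the test class (hash in Util) has its method-level score reduced accordingly.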

6 Implementation

TCTracer is compatible with any Java system that uses the JUnit 3, 4, or 5 test framework and is compatible with Java 8 or newer. Dynamic trace data is collected from JUnit test suite executions, which is then used for computing the dynamic traceability links by the techniques described in Section 4.1.

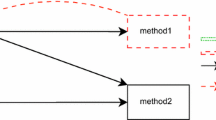

To collect the dynamic execution traces, TCTracer requires the system-under-analysis to be instrumented. The Java Agent API was used for this as it provides access to the bytecode of Java classes and allows for them to be transformed before being loaded by the JVM. As shown in Fig. 1, the instructions for transforming the bytecode are provided by a Java program, TCagent, which is passed to the JVM at runtime through the -javaagent flag. TCagent utilises the ByteBuddy library and allows us to easily transform the bytecode of the running system to log the data that is used by TCTracer to compute the traceability links.

The execution traces are parsed to collect the dynamic information for each test and record the set of methods that were the last return before an assert was called, as is needed for LCBA. Methods that are not defined in the project-under-analysis, such as those from third-party APIs, are filtered out.

The static information is obtained by scanning for .java files in the source and test folders of the project-under-analysis and using JavaParser to parse the classes and test classes. These are used to extract the functions and tests from the project, which are then stored in TCTracer.

The main challenge of working with static information is the number of test-to-function pairs for which we need to compute scores. This is essentially the total number of tests in the project-under-analysis multiplied by the total number of functions, which leads to a very large number of candidate pairs, even in medium-sized projects. For example, Commons Lang has a total of 9,522,771 candidate pairs. This creates a problem for link prediction, as it makes the matrices very large and increases the time and space complexity of the analysis to the point where it is intractable even on high-resource computers. To work around this problem, we set a threshold on the sum of the static scores, which we use to discard any candidate pair that does not meet the threshold before progressing to the link prediction phase. This threshold was set by finding the highest value that does not have any impact on recall and, therefore, does not filter out any true links. This does not mean that we achieve 100% recall overall, only that recall is not lower than it would be without this threshold. By doing this, we can filter out over 90% of the candidate links before the link prediction phase, effectively managing the size of the matrices, while not affecting the ability of TCTracer to find correct links.
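The pre-filtering idea can be sketched as a generator that scores test-to-function pairs one at a time and only keeps those whose summed static score meets the threshold, so the full candidate matrix is never stored. The scoring function and the threshold of 0.5 are placeholders for illustration; TCTracer's actual threshold was chosen as described above.

```python
from itertools import product
from typing import Callable, Iterable, Iterator, List, Tuple

ScoreFn = Callable[[str, str], float]


def candidate_pairs(tests: Iterable[str], functions: Iterable[str],
                    score_fns: List[ScoreFn], threshold: float
                    ) -> Iterator[Tuple[Tuple[str, str], List[float]]]:
    """Yield only the (test, function) pairs whose summed static score meets the threshold."""
    for test, function in product(tests, functions):
        scores = [fn(test, function) for fn in score_fns]
        if sum(scores) >= threshold:
            yield (test, function), scores


def nc(test: str, function: str) -> float:
    """Placeholder static score: exact name match after removing "test"."""
    return float(test.lower().replace("test", "") == function.lower())


kept = dict(candidate_pairs(["testUnion", "testSize"], ["union", "size", "hash"],
                            [nc], threshold=0.5))
print(sorted(kept))  # [('testSize', 'size'), ('testUnion', 'union')]
```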

In the final phase, TCTracer computes the sets of predicted links described in Section 5 using the dynamic information, the static information, and configuration parameters, such as threshold and call depth discount factor. If a ground truth is present, TCTracer computes the evaluation metrics for each set of predicted links.

7 Evaluation

This section presents our research questions, the design of the experiments carried out to answer these questions, the results, and a discussion of the findings.

7.1 Experimental Setup

The experimental setup consists of running TCTracer on a set of open source subjects and computing a set of evaluation measures for each subject, using a manually established ground truth.

Subjects. For our subjects, we selected three well-known open source projects that are written in Java and utilise the JUnit testing framework: Commons IO, Commons Lang, and JFreeChart. These subjects were selected as they are well known, widely used, and sufficiently large to demonstrate the applicability of TCTracer to real-world systems. For the evaluation of TCTracer, we established a ground truth for these projects at both the method level and the class level. To establish the method level ground truth, we used a team of three judges, one PhD student and two final-year undergraduate students, who each independently inspected a set of tests selected uniformly at random from the subjects and made determinations about which functions were tested by each test. In order to make these judgements, the judges looked at evidence such as which functions were called, how often they were called, how many other functions were called, how often called functions were called by other tests, the names of the tests, and which functions returned values that were then checked by an assert. After conducting this process independently, the judges collectively inspected any instances where there were disagreements and were able to reach a final, unanimous judgement, resulting in full inter-rater agreement.

In addition to our own method level ground truth, we searched for existing ground truths that had been used in previous work to broaden our results and cross-validate our ground truth creation protocol. This resulted in the discovery of two other ground truths, which contained seven projects between them. We investigated the given link sets for all seven of these projects but decided to use only one of them. The reasons for rejecting the link sets for the other projects were numerous, with all of the rejected link sets suffering from multiple problems. These problems included a lack of random sampling, poor project selection, including interface methods as tested methods, choosing functions in base classes that were tested by many identical tests, choosing tests that are too similar to each other, and inaccuracies in the links. Only the links for the project Gson from the TestRoutes (Kicsi et al. 2020) data set were not affected by any of these issues, allowing us to utilise them. In total, the method level ground truth contains 218 oracle links. An analysis of the method level ground truth shows that the number of functions per test ranges from 1 to 12, with a median of 1 for all projects and a mean of 1.66. The difference between the median and the mean is due to a handful of tests in each project having an unusually large number of tested functions. For example, in Gson, there is one test with 12 tested functions and another with 11 tested functions, which raises the mean for Gson to 1.89 while its median is still 1.

The class level ground truth was provided mostly by the developers as, in all three projects, a subset of the test classes contain a comment at the start of the class specifying which classes it tests. These developer-provided links were extracted and then manually verified by a judge to confirm that they are still valid. To boost the number of links for the project with the fewest developer-provided links, Commons IO, a random sample was drawn from the set of all test classes, and the tested classes for this sample were decided by two judges in the same way as for the method level sample, again resulting in full inter-rater agreement. Another class level ground truth had previously been established by SCOTCH+ (Qusef et al. 2014), which we also investigated for use. However, due to the age of its projects, each of them either could no longer be built or was incompatible with our tracing agent, TCagent, which requires Java 8 or newer. The only ground truth links that we were able to use were for Apache Ant, and the results cannot be compared directly, as the oldest version of Apache Ant that was compatible with TCagent was newer than the version used by SCOTCH+. The links that we used from SCOTCH+ were independently established by three judges with an average inter-rater agreement of 90%. In total, our class level ground truth contains 608 links. Information about the subjects and ground truth is given in Table 2.

As we use Gson at the method level, we also investigated using it for a class level ground truth; however, the nature of this project does not lend itself to an evaluation at the class level. This is because most of the test classes test the same class, com.google.gson.Gson, as the library exposes its serialisation and deserialisation methods through this class, which makes up the bulk of the library's interface. Thus, the test classes testing different aspects of serialisation and deserialisation are all linked to this single class. This makes Gson unrepresentative of software projects in general and, therefore, not useful for an evaluation that needs to produce well-generalised results.

In a similar fashion, as we only have class level links for the Ant project, we also investigated using Ant for a method level ground truth. However, Ant is not suited to a method level evaluation, as many of the tests do not unit test individual functions but instead test the execution of Ant tasks. A set of Ant tasks has been predefined for testing purposes, and the tests call into the execute method of the task runner to run them. The runner then runs the task and returns the output, which is then checked. This testing pattern does not fit our approach, as it more closely resembles integration testing than unit testing and does not allow us to establish clear test-to-function relationships.

Some previous work (Csuvik et al. 2019a) has used naming conventions to establish a ground truth. However, as demonstrated by our work, this technique has low recall and would introduce bias. Ultimately, when creating a new ground truth, one cannot simply apply an existing traceability technique, as it causes a bias towards that type of technique.

Evaluation Measures. The evaluation measures we selected are: precision, recall, F1 score, mean average precision (MAP), and area under the precision-recall curve (AUC) (Manning et al. 2010). We selected precision and recall as they are elementary measures for evaluating the performance of a binary classifier and allow us to measure the proportion of true positives out of all positive predictions and the proportion of all positive examples that are retrieved. As precision and recall generally represent a trade-off between each other, the F1 score is a useful measure as it evenly weights both precision and recall, allowing us to determine which techniques best handle the trade-off. We also use the mean average precision (MAP) as it takes into account the rank of the true positives in our link prediction lists. This is useful information as it shows which techniques are better at ranking true positives higher than false positives and will also punish techniques that more often return no positives at all.
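The set-based measures and MAP can be sketched as follows, using the standard information-retrieval definition of average precision, in which relevant links that are never retrieved contribute a precision of 0. Treating the tests in the ground truth as the queries is an assumption about how MAP is aggregated here; the function names are illustrative.

```python
from typing import Dict, List, Set, Tuple


def precision_recall_f1(predicted: Set, truth: Set) -> Tuple[float, float, float]:
    """Precision, recall and F1 of a set of predicted links against the ground truth."""
    tp = len(predicted & truth)
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(truth) if truth else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(ranked: List[str], relevant: Set[str]) -> float:
    """Mean of precision@k over the ranks k of the relevant items; missed items count as 0."""
    hits, total = 0, 0.0
    for k, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            total += hits / k
    return total / len(relevant) if relevant else 0.0


def mean_average_precision(rankings: Dict[str, List[str]],
                           truth: Dict[str, Set[str]]) -> float:
    """MAP over the tests in the ground truth; tests with no ranked links score 0."""
    if not truth:
        return 0.0
    return sum(average_precision(rankings.get(t, []), rel)
               for t, rel in truth.items()) / len(truth)


print(precision_recall_f1({("t1", "a"), ("t1", "b")}, {("t1", "a"), ("t1", "c")}))
# (0.5, 0.5, 0.5)
print(round(mean_average_precision({"t1": ["a", "b", "c"]}, {"t1": {"a", "c"}}), 3))
# 0.833
```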

Finally, we use the area under the precision-recall curve (AUC) as it gives us a view of the performance of each technique that is threshold independent. As most of our techniques need a threshold to make predictions, the performance of these techniques can be very sensitive to the values used for their thresholds. An incorrectly chosen threshold can give the wrong impression of the usefulness of a technique and, therefore, while we have attempted to select the best threshold for each technique, AUC gives us a general measure of the performance of these techniques that is not affected by threshold values. We selected a precision-recall (PR) curve over a receiver operating characteristic (ROC) curve because the classes in our domain are unbalanced (there are many more negative links than positive links), and PR curves exhibit better characteristics in this situation (Davis and Goadrich 2006). All scores are presented as integer percentages for the sake of readability.

Van Rompaey and Demeyer (2009) also measure applicability, i.e., the ratio of tests for which at least one link is retrieved. However, because of the normalisation that we apply, all non-binary techniques will always produce at least one link, resulting in 100% applicability.

7.2 Research Questions

In the following, we will evaluate the presented techniques according to a list of research questions:

1. How effective are our techniques at the method level?
2. How effective are our techniques at the class level?
3. What effectiveness is achieved by utilising method level information for class level traceability?
4. Can we improve method level predictions by augmenting with class level information?
5. Can we improve predictions by combining the individual technique scores into a single score?
6. What are the reasons for the occurrence of false negatives and false positives?

The six research questions and findings will be presented below. While the first five research questions are answered with a quantitative evaluation, the sixth required a qualitative evaluation. The results are discussed in Section 8.

7.2.1 Research Question 1 (Method Level)

How effective are our techniques at the method level?

This research question investigates how effective each of the techniques is for establishing test-to-function links using only method level information. To answer this question, we compute the evaluation measures over the link sets produced using (17).

Findings

From the results for RQ1, shown in Table 3, we see that, on average, LCS-U is the most desirable as it performs best for F1 and AUC while only trailing the best MAP (LCS-B) by one point. This means that it is good at balancing precision and recall, is consistent when changing thresholds, and could benefit from a further optimised threshold selection. For precision alone, NC is the best, while LCS-B is best for recall. When comparing variants, the dynamic techniques consistently outperform the static techniques.

7.2.2 Research Question 2 (Class Level)

How effective are our techniques at the class level?

This research question investigates how effective each of the techniques is for establishing test-class-to-class links, using only class level information. To answer this question, we compute the evaluation measures over the link sets produced using (19).

Findings

From the results for RQ2, shown in Table 4, we see that Static Levenshtein is the most desirable overall with the best F1 score and only one point lower than the best MAP (Static LCS-B) and the best AUC (Leven). It is also evident that at the class level, the static techniques outperform the dynamic techniques with generally higher precision and recall. For pure precision, NC variants win again.

7.2.3 Research Question 3 (Elevated Method Level)

What effectiveness is achieved by utilising method level information for class level traceability?

This research question investigates how each of the techniques performs for establishing test-class-to-class links when we use method level information that has been elevated to the class level. To answer this question, we compute the evaluation measures over the link sets produced using (22).

Findings

From the results shown in Table 5 we see that TFIDF is the best for MAP, F1 score, and AUC by a clear margin. Static NC wins on precision and this time LCS-B is best for recall.

7.2.4 Research Question 4 (Augmented Method Level)

Can we improve method level predictions by augmenting with class level information?

This research question investigates if the method level traceability performance can be improved by augmenting the method level information with class level information. To answer this question, we compute the evaluation measures over the link sets produced using (24).

Findings

The results for RQ4, shown in Table 6, show that, on average, LCS-U is the most desirable technique when utilising augmented scores, as it has the highest F1 and AUC scores. However, the average scores for LCS-U are similar to the average scores when using the unaugmented method level technique. For pure precision, TFIDF is the best technique. It can be observed that, for a number of techniques, the augmentation produces a drastically higher number of false positives.

7.2.5 Research Question 5 (Technique Combination)

As described in Section 5, we also compute a combined score which averages and normalises the individual technique scores. As this score takes a simple average and weights all techniques equally we refer to it as the simple combination method.

Technique Exclusion: Can we achieve optimal performance with a subset of the individual techniques?

Given that we are combining a set of techniques, it is natural to ask if any of the techniques are redundant, or even harmful to performance, and if we can optimise or improve performance by removing any of the techniques from the combined score. To investigate this, we ran the method level experiments again looking at the combined technique performance when one of the individual techniques was excluded. This analysis was run once for each technique to determine what the impact of removing that technique was.

Technique Weighting: Can we improve the performance of the combined scores by weighting individual techniques differently?

As we are combining techniques, it is natural to investigate whether we can improve the results achieved using the combined score by weighting the individual technique scores before combining them. To perform a weighting, we must define a weight vector that contains a value for each of the thirteen individual techniques, which we can multiply with the scores matrix before combination. This gives us an extremely large space of possible weight vectors to choose from. There are many possible approaches for determining the values of the weight vector, as it is simply an optimisation problem to which a wide range of search-based approaches can be applied. However, before investing large amounts of time and resources into optimising the weight vector, some preliminary work is required to determine whether using weightings even has the potential to deliver significant results. To test the hypothesis that weighting can significantly change the results, we used a simple approach called precision-based weighting, which allows us to select a weight vector that should intuitively have a good chance of being beneficial. When using precision-based weighting, we set the weight of a technique to the precision achieved by that individual technique. For example, the weight for the Levenshtein technique at the method level is set to 0.66, as it achieves a 66% precision in the RQ1 results. This provides an intuitive weight vector, as the more precise a technique is, the higher it is weighted.
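Precision-based weighting can be sketched as a weighted version of the simple combination: each technique's scores are multiplied by its weight, averaged, and then normalised per test. Only two techniques are shown; the Levenshtein weight of 0.66 is the example from the text and the NC weight of 1.0 reflects its perfect precision, while the scores themselves are made up for illustration.

```python
from typing import Dict, Tuple

Pair = Tuple[str, str]  # (test, function)


def weighted_combine(technique_scores: Dict[str, Dict[Pair, float]],
                     weights: Dict[str, float]) -> Dict[Pair, float]:
    """Weight each technique's scores, average them, then normalise per test."""
    pairs = set().union(*technique_scores.values())
    n = len(technique_scores)
    averaged = {p: sum(weights[name] * scores.get(p, 0.0)
                       for name, scores in technique_scores.items()) / n
                for p in pairs}
    top: Dict[str, float] = {}
    for (t, _), s in averaged.items():
        top[t] = max(top.get(t, 0.0), s)
    return {(t, f): (s / top[t] if top[t] > 0 else 0.0) for (t, f), s in averaged.items()}


# Precision-based weights: each technique's weight is its own precision.
weights = {"NC": 1.0, "Levenshtein": 0.66}
technique_scores = {
    "NC": {("t1", "union"): 1.0, ("t1", "size"): 0.0},
    "Levenshtein": {("t1", "union"): 0.5, ("t1", "size"): 1.0},
}
combined = weighted_combine(technique_scores, weights)
print(combined[("t1", "union")], round(combined[("t1", "size")], 3))  # 1.0 0.496
```

With equal weights, size would score 0.5/0.75 ≈ 0.667 here; the lower Levenshtein weight reduces its influence relative to the more precise NC technique.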

Machine Learning: Can a machine learning method for technique combination outperform our standard approach?

Choosing the right weights for combining the scores is complex due to the size of the search space. We, therefore, investigate if a machine learning method can outperform our standard approach.

As the development of machine learning techniques for combination is not a primary focus of this paper, we opted for a very simple feedforward network with a single hidden layer of 64 units and a single output unit. The input to the network is the vector of individual technique scores for a test and a function, and the real-valued output is interpreted as the probability that the test and function form a true link. We implemented this using the keras.Sequential model from TensorFlow with the mean squared error loss function and the Adam optimiser. We trained for 12000 steps, checkpointing every 1000 steps, and selected the checkpoint with the best accuracy for inference.

The biggest challenge with this approach is obtaining a labelled data set for training and validation. As discussed in Section 7.1, creating ground truth data sets for traceability is time-consuming and error-prone, so manually creating a data set large enough to train a neural network is not feasible. Our solution was to augment our manually created ground truth with extra links, extracted by assuming that links with an NC score of 1 are true positives and that links with a very low sum of technique scores are true negatives. We make the first assumption because the results for RQ1 and RQ2 show that the NC technique has perfect precision. We need the second assumption because we must label as many true negatives as true positives to obtain a balanced data set. We therefore sum the scores of all techniques for each link and mark the N lowest-scoring links as true negatives, where N is the number of links marked as true positives using the manual ground truth and the NC assumption. This gives 2N links in total, with a 50/50 split between true positive and true negative labels. We drew the training and validation sets from the Commons IO, Commons Lang, and JFreeChart projects, leaving the Gson and Apache Ant projects as hold-outs to check for overfitting to specific projects.
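The labelling procedure described above can be sketched as follows. The data layout (a dictionary from (test, function) pairs to per-technique scores, with an "NC" key) is an assumption for illustration, as are the example names and scores; ties in the summed score are broken by Python's stable sort, i.e. by insertion order.

```python
from typing import Dict, Set, Tuple

Link = Tuple[str, str]  # (test name, function name)


def build_balanced_labels(
    scores: Dict[Link, Dict[str, float]],
    ground_truth: Set[Link],
) -> Dict[Link, int]:
    """Label links 1 (true positive) or 0 (true negative) for training."""
    # Positives: the manual ground truth plus every link whose NC score is 1.
    positives = set(ground_truth)
    positives |= {link for link, s in scores.items() if s.get("NC", 0.0) == 1.0}
    n = len(positives)

    # Negatives: the n remaining links with the lowest summed technique score.
    unlabelled = [link for link in scores if link not in positives]
    unlabelled.sort(key=lambda link: sum(scores[link].values()))
    negatives = unlabelled[:n]

    labels = {link: 1 for link in positives}
    labels.update({link: 0 for link in negatives})
    return labels


# Hypothetical scores for two techniques.
scores = {
    ("testReverse", "reverse"): {"NC": 1.0, "LCS-U": 1.0},
    ("testIssue123", "strip"): {"NC": 0.0, "LCS-U": 0.4},
    ("testTrim", "length"): {"NC": 0.0, "LCS-U": 0.1},
    ("testReverse", "isEmpty"): {"NC": 0.0, "LCS-U": 0.05},
    ("testTrim", "trimToNull"): {"NC": 0.0, "LCS-U": 0.7},
}
labels = build_balanced_labels(scores, ground_truth={("testIssue123", "strip")})
```

Here one positive comes from the manual ground truth and one from the NC assumption, so the two lowest-scoring remaining links become negatives and the higher-scoring trimToNull link stays unlabelled.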

Findings

The results, shown in Tables 7 and 8, reveal that, on average, the simple combined technique outperforms every individual technique. The technique exclusion results (not shown in the tables) revealed that removing any technique made the results worse for at least two of the projects, with the exception of naming conventions, whose removal had no effect on the results. Precision-based weighting underperforms the unweighted combination at the method level, while at the class level the two approaches are essentially equivalent. Finally, the standard combination approach consistently outperforms the neural-network-based combination.

7.2.6 Research Question 6 (False Negative and False Positive Analysis)

What are the reasons for the occurrence of false negatives and false positives?

Although we achieve high F1 scores using the simple combined technique, there are still instances where it produces false positives and false negatives. Investigating these instances and determining their causes is useful, as it may reveal ways to improve the approach or opportunities to improve the software engineering process. To do this, we investigated every false positive and false negative produced by the simple combined score at the method level in the Commons IO, Commons Lang, and JFreeChart projects and categorised its cause. For each case, we determined the cause and either added it as a new category or assigned the case to an existing category if we had already encountered that cause. The resulting categories are defined in Table 9.

Findings

The results for RQ6, shown in Table 10, show that the largest sources of false negatives are the tested function scoring lower in the naming techniques than some other, non-tested function, and the test being named after an issue number rather than after a tested function. The primary sources of false positives are non-tested functions being called frequently, which leads to high scores from the SCTs, and calls to fail not being captured by LCBA, because these calls are not executed in passing tests.

7.3 Extended Manual Precision Evaluation

To further demonstrate the generalisability of the results, we executed TCTracer on two further projects, Commons Net and Commons Text, and manually labelled a sample of 25 predicted links from each project as true positives or false positives. The links were produced by the combined technique with simple combination, as RQ5 shows this to be the most effective technique, and were selected uniformly at random. Two judges rated the links independently, and the inter-rater agreement was computed using Fleiss’ kappa. Once the inter-rater agreement over the original ratings had been computed, the judges conferred to resolve differing judgements, leaving one canonical set of judgements from which the precision was calculated.
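For reference, the sketch below implements the standard Fleiss' kappa formula; it is not TCTracer code. The input layout is an assumption: one row per rated link, giving how many judges labelled it a true positive and how many a false positive. The ratings are hypothetical.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa; counts[i][j] = number of raters who put item i in category j.

    Every item must be rated by the same number of raters (at least two).
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    n_categories = len(counts[0])

    # p_j: share of all ratings that fall into category j.
    p = [sum(row[j] for row in counts) / (n_items * n_raters) for j in range(n_categories)]
    # P_i: fraction of rater pairs that agree on item i.
    agreement = [sum(c * (c - 1) for c in row) / (n_raters * (n_raters - 1)) for row in counts]

    p_bar = sum(agreement) / n_items  # observed agreement
    p_e = sum(pj * pj for pj in p)    # agreement expected by chance
    return (p_bar - p_e) / (1 - p_e)  # undefined if p_e == 1 (all ratings in one category)


# Hypothetical ratings from two judges: columns are [true positive, false positive].
ratings = [[2, 0], [2, 0], [0, 2], [1, 1]]
kappa = fleiss_kappa(ratings)
```

With three agreements out of four items, observed agreement is 0.75 against a chance agreement of 0.53125, giving a kappa of 7/15 (about 0.47).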

The results, presented in Table 11, show a very large difference between the two projects: Commons Text performs very well with 88% precision, while Commons Net lags behind with only 20%. There are several reasons for this. Firstly, Commons Net contains a sizeable number of empty tests and abstract functions. TCTracer does not currently filter these out, so whenever one of these empty tests or abstract functions was predicted in a link, that link was necessarily a false positive. This issue could easily be resolved by filtering out such artefacts. Another contributing factor is that Commons Net contains many classes with very similar names because they implement the same logic for different protocols. For example, UnixFTPEntryParser, VMSFTPEntryParser, NTFTPEntryParser, OS2FTPEntryParser, OS400FTPEntryParser, and others have very similar names, which makes false positives more likely. In projects where this occurs regularly, it may be possible to partially mitigate the effect by setting stricter thresholds to reduce the number of false positives.
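The filtering fix suggested above could be applied as a post-processing step on predicted links. This is a hypothetical sketch: the Method record and its is_abstract and statement_count fields stand in for whatever metadata a static analysis would provide, and the method names are illustrative only.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Method:
    """Hypothetical metadata for a test or tested function."""
    name: str
    is_abstract: bool = False
    statement_count: int = 0  # statements in the method body


def drop_trivial_links(links: Iterable[Tuple[Method, Method]]) -> List[Tuple[Method, Method]]:
    """Remove predicted (test, function) links whose test is empty or whose function is abstract."""
    return [
        (test, function)
        for test, function in links
        if test.statement_count > 0 and not function.is_abstract
    ]


empty_test = Method("testPlaceholder")
real_test = Method("testParseEntry", statement_count=4)
abstract_parser = Method("parseFTPEntry", is_abstract=True)
concrete_parser = Method("parseFTPEntry", statement_count=12)

kept = drop_trivial_links([
    (empty_test, concrete_parser),
    (real_test, abstract_parser),
    (real_test, concrete_parser),
])
```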

7.4 Parameter Value Selection

Our approach includes several tunable parameters: a threshold value for each technique and the call depth discount factor, all of which are real numbers. The current values for the thresholds and the call depth discount were established in a pre-study with a smaller ground truth and a smaller set of projects. In the pre-study, we empirically determined the threshold for each technique by starting from zero and incrementing the threshold in steps of 0.01, recording the precision, recall, and F1 score at each step. We then gathered all of the results and selected the thresholds whose F1 scores generalised well across projects. We therefore consider the current thresholds to be sufficiently general and the best performing overall. However, score distributions can vary between projects, and practitioners may want to tune the thresholds for a specific project if they have a ground truth or some other heuristic on which to base this decision. In the absence of a way to measure precision and recall on a specific project, we suggest that practitioners use the given thresholds.
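The threshold search can be sketched as a sweep from 0 to 1 in steps of 0.01 for one technique on one project. The sketch assumes that scores are normalised to [0, 1] and that a link is predicted when its score reaches the threshold; selecting thresholds that generalise across projects, as in the pre-study, would repeat the sweep per project and compare the results, which is not shown. The function names and data are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Link = Tuple[str, str]  # (test name, function name)
Row = Tuple[float, float, float, float]  # (threshold, precision, recall, F1)


def sweep_thresholds(scores: Dict[Link, float], truth: Set[Link], step: float = 0.01) -> List[Row]:
    """Record (threshold, precision, recall, F1) for thresholds 0, step, 2*step, ..., 1."""
    results = []
    for i in range(int(round(1 / step)) + 1):
        threshold = round(i * step, 6)  # avoid floating-point drift such as 0.41000000000000003
        predicted = {link for link, score in scores.items() if score >= threshold}
        tp = len(predicted & truth)
        precision = tp / len(predicted) if predicted else 0.0
        recall = tp / len(truth) if truth else 0.0
        f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
        results.append((threshold, precision, recall, f1))
    return results


def best_threshold(results: List[Row]) -> float:
    """Threshold with the highest F1 (max keeps the first, i.e. lowest, threshold on ties)."""
    return max(results, key=lambda r: r[3])[0]


# Hypothetical scores from one technique on one project.
scores = {("testWrite", "write"): 0.9, ("testWrite", "close"): 0.4, ("testRead", "read"): 0.7}
truth = {("testWrite", "write"), ("testRead", "read")}
sweep = sweep_thresholds(scores, truth)
chosen = best_threshold(sweep)
```

In this toy data every threshold from 0.41 to 0.70 achieves an F1 of 1.0, so the tie-breaking rule decides the result; comparing the curves of several projects is one way to choose within such a plateau.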

We also observed that a discount factor of at most 0.5 usually gives the highest F1 score, and that varying the factor between 0 and 0.5 does not change the results. Increasing the factor above 0.5 has only a small effect on recall but a larger negative effect on precision, lowering the F1 score overall. Given these results, we selected a final discount factor of 0.5.

7.5 Call Depth Discounting Analysis

As discussed in Section 4.3.1, we incorporate the principle of call depth discounting into our approach, as it encodes the assumption that the further a function is from its calling test in the call stack, the less likely it is that the function is tested by that test. Using our evaluation, we can assess the accuracy of this assumption by examining the depth of the known tested functions in our ground truth links. This data, shown in Table 12, reveals that Commons IO is the only project with more than one tested function that is not called directly by the test and therefore has a depth greater than zero. These results support the assumption behind call depth discounting in general. However, as Commons IO has a relatively large number of such examples, the validity of this assumption can vary between projects. We therefore also assessed how well our approach handles tested functions with a depth greater than zero when they do occur. Table 12 also shows the number of ground truth links at each depth that we discover using the combined score: our approach handles tested functions at depths one and two very well, correctly identifying seven of the eight tested functions at these depths. We do not discover the few tested functions at depths three and four, as the scores are so heavily discounted at these depths that we very rarely make a prediction there. In general, this is the correct behaviour, as the few counter-examples at depths three and four are outliers in the way the tests are implemented and are not representative of tests in general.
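A minimal sketch of call depth discounting follows, assuming a geometric discount in which a function reached at depth d (0 for a function the test calls directly) has its contribution scaled by factor**d, and in which the shallowest occurrence wins when a function appears at several depths. Section 4.3.1 defines the actual formula, so treat this as illustrative only; the function name and trace are hypothetical.

```python
from typing import Dict, Iterable, Tuple


def depth_weights(calls: Iterable[Tuple[str, int]], discount: float = 0.5) -> Dict[str, float]:
    """Map each called function to discount**depth, keeping its shallowest (highest-weight) occurrence.

    Depth 0 means the test calls the function directly.
    """
    weights: Dict[str, float] = {}
    for function, depth in calls:
        weight = discount ** depth
        weights[function] = max(weights.get(function, 0.0), weight)
    return weights


# Hypothetical (function, depth) pairs observed while running one test.
trace = [
    ("IOUtils.copy", 0),
    ("IOUtils.copyLarge", 1),
    ("IOUtils.copyLarge", 2),
    ("OutputStream.write", 3),
]
weights = depth_weights(trace)
```

Under this assumption, a factor of 0.5 leaves a function at depth three with only 0.125 of its weight, which is in line with the observation that predictions at depths three and four are rare.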
A breakdown of the number of functions we predict to be the tested function at each depth is presented in Table 13. These numbers are broadly in line with the distribution of tested functions over depths in the ground truth data: the two projects that have tested functions at a depth greater than zero are also the two projects with a lower proportion of predicted functions at depth zero.

8 Discussion

The results reveal insights that allow us to draw conclusions about the relative effectiveness of the techniques and about the differences between the projects, weighting schemes, and combination approaches.

8.1 Techniques

First, we compare the naming conventions techniques, NC and NCC. At the method level, NC has perfect precision for the dynamic variant and very high precision for the static variant. This is expected, as a test and a function are unlikely to share the same name, once the word "test" has been removed, without being linked. However, this strictness results in generally low recall for NC. In contrast, NCC trades off some of this precision for more recall, resulting in better F1 scores for the NCC variants. However, due to their overall low recall at the method level, NC and NCC are unsuitable on their own at that level, where developers often follow other naming conventions. This observation was also made by Madeja and Porubän (2019). At the class level, NC beats NCC for F1 score, as it is easier for developers to maintain traditional naming conventions at this level than at the method level; recall therefore does not suffer as much with NC at the class level.
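To make the precision/recall trade-off concrete, here is a simplified sketch of the two naming-convention checks at the method level. NC requires the test name, with a leading or trailing "test" removed, to equal the function name; NCC is assumed here to be a "contains" check that only requires the function name to appear within it. Case-insensitive matching and the regular expression are simplifying assumptions, and the method names are hypothetical.

```python
import re

_TEST_AFFIX = re.compile(r"^test|test$", re.IGNORECASE)


def _strip_test(test_name: str) -> str:
    """Remove a leading or trailing 'test' from a test method name and lowercase it."""
    return _TEST_AFFIX.sub("", test_name).lower()


def nc(test_name: str, function_name: str) -> float:
    """Naming conventions: 1.0 only if the stripped test name equals the function name."""
    return 1.0 if _strip_test(test_name) == function_name.lower() else 0.0


def ncc(test_name: str, function_name: str) -> float:
    """Naming conventions (contains): 1.0 if the function name occurs in the stripped test name."""
    return 1.0 if function_name.lower() in _strip_test(test_name) else 0.0
```

Under these assumptions, NCC links testWriteLines to both write and writeLines, whereas NC links it to neither, which illustrates how NCC gains recall at the cost of precision.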

When comparing LCS-U and LCS-B, we see that LCS-U usually performs better for precision, whereas LCS-B generally performs better for recall. This could be explained by the interaction between the score distributions and the way the thresholds are selected. As the two techniques produce different score distributions and the thresholds are optimised for F1 score, the point at which F1 is maximised may differ between them, with the optimal F1 being reached at a high-recall/low-precision point for LCS-B and a high-precision/low-recall point for LCS-U. This matches the thresholds chosen by this process, 0.55 for LCS-B and 0.75 for LCS-U, as higher threshold values are typically associated with higher precision.
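The difference between the two score distributions can be illustrated with a sketch that assumes LCS is the longest common subsequence of the lowercased names, with LCS-U normalising by the length of the function name and LCS-B by the length of the longer name. These normalisations are assumptions for illustration rather than a restatement of TCTracer's definitions.

```python
def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b (dynamic programming, O(len(a) * len(b)))."""
    previous = [0] * (len(b) + 1)
    for char_a in a:
        current = [0]
        for j, char_b in enumerate(b, start=1):
            if char_a == char_b:
                current.append(previous[j - 1] + 1)
            else:
                current.append(max(previous[j], current[j - 1]))
        previous = current
    return previous[-1]


def lcs_u(test_name: str, function_name: str) -> float:
    """Assumed unidirectional variant: share of the function name covered by the LCS."""
    t, f = test_name.lower(), function_name.lower()
    return lcs_length(t, f) / len(f) if f else 0.0


def lcs_b(test_name: str, function_name: str) -> float:
    """Assumed bidirectional variant: LCS length relative to the longer of the two names."""
    t, f = test_name.lower(), function_name.lower()
    longest = max(len(t), len(f))
    return lcs_length(t, f) / longest if longest else 0.0
```

Under these assumptions, LCS-U is never lower than LCS-B for the same pair (for example, 1.0 versus 5/9 for testWrite and write), which is consistent with LCS-U operating at the higher threshold.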

However, as the difference in precision is greater than the difference in recall, LCS-U is the better choice overall, as evidenced by its higher F1 score.

LCBA performs poorly in general but is especially bad for Commons IO in RQ1. This is an artefact of the nature of Commons IO, where the effect of many function calls is to change some state rather than to return the result of a computation. The return values of these calls therefore cannot as often be tested by simply comparing them to an oracle; instead, a further function call is required to check that the state was changed correctly. This causes many false positives for LCBA. Commons Lang is the opposite of Commons IO in this regard, as tested functions usually have their return values checked against oracles immediately after returning, resulting in a relatively high LCBA score. These differences arise from the simplicity of the LCBA heuristic, which cannot distinguish between acting and asserting methods (when the arrange–act–assert pattern is used).
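The weakness described above can be seen in a simplified, binary version of the last-call-before-assert heuristic applied to a test's dynamic call trace. Treating any call whose method name starts with "assert" as an assertion, and the "Class.method" trace format, are assumptions; the LCBA technique in the paper produces a score rather than a set, and the class names here are hypothetical.

```python
from typing import List, Optional, Set


def lcba_candidates(trace: List[str]) -> Set[str]:
    """Return the functions called immediately before each assertion in a call trace."""
    candidates: Set[str] = set()
    last_call: Optional[str] = None
    for call in trace:
        method_name = call.rsplit(".", 1)[-1]
        if method_name.startswith("assert"):
            if last_call is not None:
                candidates.add(last_call)
        else:
            last_call = call
    return candidates


# State-changing style: the act (write) is followed by a query before the assertion.
io_style = lcba_candidates(["Buffer.write", "Buffer.size", "Assert.assertEquals"])
# Return-value style: the tested function's result is asserted immediately.
lang_style = lcba_candidates(["StringUtils.reverse", "Assert.assertEquals"])
```

In the state-changing trace, the heuristic picks the query method size rather than the acting method write, producing the kind of false positive seen for Commons IO; in the return-value trace, it picks the tested function directly.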