Abstract

Testing students on information that they do not know might seem like a fruitless endeavor. After all, why give anyone a test that they are guaranteed to fail because they have not yet learned the material? Remarkably, a growing body of research indicates that such testing—formally known as prequestioning or pretesting—can benefit learning if there is an opportunity to study the correct answers afterwards. This prequestioning effect or pretesting effect has been successfully demonstrated with a variety of learning materials, despite many erroneous responses being generated on initial tests, and in conjunction with text materials, videos, lectures, and/or correct answer feedback. In this review, we summarize the emerging evidence for prequestioning and pretesting effects on memory and transfer of learning. Uses of pre-instruction testing in the classroom, theoretical explanations, and other considerations are addressed. The evidence to date indicates that prequestioning and pretesting can often enhance learning, but the extent of that enhancement may vary due to differences in procedure or how learning is assessed. The underlying cognitive mechanisms, which can be represented by a three-stage framework, appear to involve test-induced changes in subsequent learning behaviors and possibly other processes. Further research is needed to clarify moderating factors, theoretical issues, and best practices for educational applications.

In the science of learning, the concept of test-enhanced learning has become largely synonymous with retrieval practice, which is the strategy of taking practice tests on information that has previously been learned. That association is justified: Retrieval practice is well-established as a potent enhancer of learning for a plethora of materials across a wide array of educationally relevant circumstances (for reviews, see Carpenter, 2012; Carpenter et al., 2022; Dunlosky et al., 2013; Pan & Rickard, 2018; Roediger & Karpicke, 2006; Rowland, 2014, and others). Taking practice tests on information that has already been learned helps consolidate knowledge and increases its future accessibility (Bjork, 1975; Roediger & Butler, 2011). Research dating back to the 1960s and 1970s, however, suggests that an alternative approach that is, in a way, the opposite of retrieval practice—taking practice tests before information has been learned—may also be beneficial for learning. That approach, which is commonly known as prequestioning or pretesting (and sometimes adjunct prequestioning, errorful generation, failed testing, unsuccessful testing, among other appellations), is the subject of this review.

The effects of prequestioning were first addressed in the adjunct questions literature, a body of research that peaked in the 1970s and examined the pedagogical consequences of test questions encountered before, during, or after the reading of text materials (i.e., adjunct prequestions, adjunct postquestions, or advance questions; for reviews, see Anderson & Biddle, 1975; Frase, 1968; Hamilton, 1985; Rickards, 1979; for a meta-analysis, see Hamaker, 1986). In an early example, Rothkopf (1966) experimentally manipulated whether participants attempted practice questions or not prior to reading excerpts of a book on marine life. When asked to recall information targeted by practice questions on a subsequent posttest, participants that had engaged in prior testing substantially outperformed participants that had not. The implication is that testing led participants to better encode (and, as a consequence, better remember) at least some portions of the information that they were exposed to. That performance advantage, which is now known as the prequestioning effect or the pretesting effect, underscores the potential of prequestioning and pretesting as useful learning strategies in their own right. Evidence of prequestioning and pretesting effects was repeatedly observed in that literature (e.g., Berlyne, 1954; Hartley, 1973; Memory, 1983; Pressley et al., 1990; Rickards, 1976a, 1976b; Samuels, 1969), but not in all cases (e.g., Frase, 1968; Gustafson & Toole, 1970; Rothkopf & Bisbicos, 1967; Sagaria & Di Vesta, 1977). Studies in the adjunct questions literature, however, commonly lacked appropriate reference conditions, had no controls (or measures) of time-on-task, and intermixed prequestions with retrieval practice (for discussion, see Carver, 1971), all of which complicate interpretation.

The modern prequestioning and pretesting literature, in contrast, incorporates more robust experimental controls and exclusively focuses on the case of practice testing prior to learning activities. It can be traced to Kornell et al. (2009) and Richland et al. (2009), which investigated prequestioning and pretesting for the cases of trivia facts/paired associate words and science texts, respectively, and reported robust learning benefits. Those results revitalized interest in prequestioning and pretesting, and in the decade and a half since, over 60 peer-reviewed articles have been published on the topic. Aside from a recent introductory review (Carpenter et al., 2023) and other works that address selected aspects of this literature (e.g., retrieval success as in Kornell & Vaughn, 2016; learning from errors as in Mera et al., 2022; Metcalfe, 2017), however, there has not yet been a comprehensive, up-to-date review that focuses specifically on the prequestioning and pretesting literature.

The present review examines that literature to address the overarching question: Are prequestioning and pretesting viable learning strategies? Anecdotally, a common perspective among some cognitive and educational psychologists is that any benefits of such testing are small in magnitude and ephemeral (i.e., only manifesting when learning is assessed very shortly afterwards), especially relative to retrieval practice. This review examines the literature for evidence of that perspective. It begins by defining common approaches that have been used to investigate prequestioning and pretesting. Studies that focus on benefits for memory as well as transfer of learning are then examined. These studies involve laboratory, online, or classroom settings, various forms of practice testing, and different timing and retention intervals. Next, metacognitive factors and theoretical explanations for prequestioning and pretesting effects are considered. Finally, other relevant learning strategies and phenomena, educational applications, directions for future research, and conclusions are discussed.

It should be noted that the terms “prequestion” and “pretest” have been used interchangeably in the literature. Historically, “prequestion” dates back to the adjunct questions literature, whereas “pretest” grew in prominence following Richland et al.’s (2009) titular mention of the “pretesting effect” and has been used in over half of the relevant studies published since. “Prequestion” has been used most frequently for studies involving text or video materials, although “pretesting” has also been used in cases involving such materials. This review includes studies that have used either of these terms, and as such, for ease of exposition, we henceforth use an all-encompassing alternative, “pre-instruction testing.”

How Effects of Pre-instruction Testing Are Investigated

Studies in the pre-instruction testing literature commonly feature an experimental design with two conditions: an experimental group and a control group. Participants may be randomly assigned to either group. Alternatively, in a within-participants design, all participants undergo an experimental condition and a control condition and, across those conditions, learn two or more sets of materials (with at least one set randomly assigned to each condition). Participants in the experimental group or condition will (1) engage in practice testing of information that is to be learned (i.e., target materials), (2) have an opportunity to learn the correct answers to the practice tests that they had experienced, and then (3) take a posttest. During practice testing, participants may attempt anywhere from one question to several dozen questions, and the pace of such testing may be carefully timed or self-paced. The posttest, which is also called a final test or criterial test, may occur at any time after (1) and (2) have been completed, including immediately or after a delay ranging from several minutes to several weeks. The posttest is used to measure learning.
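The two assignment schemes described above can be illustrated with a minimal Python sketch. It is offered only as an illustration under stated assumptions, not as the procedure of any particular study: the function names, the equal split between groups, and the fixed random seed are all hypothetical choices.

```python
import random


def assign_between(participant_ids, seed=0):
    """Between-participants design: randomly split participants into
    an experimental group and a control group (hypothetical equal split)."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "experimental": sorted(shuffled[:half]),
        "control": sorted(shuffled[half:]),
    }


def assign_within(material_sets, seed=0):
    """Within-participants design: randomly assign sets of materials to the
    two conditions so that each condition receives at least one set."""
    if len(material_sets) < 2:
        raise ValueError("need at least two sets of materials")
    rng = random.Random(seed)
    shuffled = list(material_sets)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2  # at least 1 because there are >= 2 sets
    return {"experimental": shuffled[:half], "control": shuffled[half:]}


# Illustrative use with made-up identifiers
groups = assign_between(range(1, 21), seed=1)
conditions = assign_within(["passage_A", "passage_B"], seed=1)
```

In the within-participants case, every participant contributes data to both conditions, so the random assignment applies to the materials rather than to the people.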

Although studies in the literature commonly feature an experimental group or condition that undergoes steps (1) to (3), the precise implementation of (1) and (2) varies between studies and often depends on the materials being learned. In some cases, (1) and (2) occur in separate phases, whereas in other cases, both are intermingled. Separation of (1) and (2) is more common when the materials are relatively content-rich, as in the case of text passages. The most common implementations of (1) and (2) are further considered in the next section.

The control group or condition typically does not engage in any practice testing. Rather, the initial activity usually is an opportunity to learn correct answers (or, more accurately, correct information that includes the answers to questions that will appear on a subsequent posttest). This learning opportunity is followed by a posttest that is essentially identical to the posttest that is administered to the experimental group. In some cases, however, a non-testing control activity may occur first. That control activity may simply control for time on task (e.g., solving math problems as in the case of Pan et al., 2020a, 2020b). Alternatively, the activity may also have the potential to facilitate learning of target materials (e.g., reading but not answering questions as in the case of Richland et al., 2009, experiment 5). In such cases, the control activity is followed by the opportunity to learn correct information.

The difference in performance between the experimental and control groups or conditions on the posttest, if any, serves as the measure of the effects of pre-instruction testing. In the literature, better performance in the experimental group or condition—that is, higher proportion correct on the posttest—has commonly been observed. The magnitude of that advantage is the focus of at least two recent quantitative meta-analyses (King-Shepard et al., n.d.; St. Hilaire et al., 2023).
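As a minimal sketch of the measure described above, the following Python snippet computes the difference in mean proportion correct between the two groups. The function names and the data are hypothetical, and the sketch covers only the descriptive difference; published studies and meta-analyses additionally rely on inferential statistics and standardized effect sizes, which are not shown here.

```python
from statistics import mean


def proportion_correct(scores):
    """Proportion of posttest questions answered correctly by one participant.
    `scores` is a list of 1 (correct) and 0 (incorrect) values."""
    return sum(scores) / len(scores)


def pretesting_effect(experimental, control):
    """Mean proportion correct in the experimental group minus that in the
    control group; positive values favor pre-instruction testing."""
    exp_mean = mean([proportion_correct(p) for p in experimental])
    ctl_mean = mean([proportion_correct(p) for p in control])
    return exp_mean - ctl_mean


# Made-up posttest data: two participants per group, four questions each
experimental = [[1, 1, 0, 1], [1, 0, 1, 1]]
control = [[1, 0, 0, 1], [0, 1, 0, 0]]
print(pretesting_effect(experimental, control))  # prints 0.375
```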

Arrangement of Practice Testing and Subsequent Learning Opportunities

Schematics depicting the two most common implementations of pre-instruction testing, which differ primarily in the arrangement of (1) and (2), are presented in Fig. 1. In the upper panel, (1) and (2) occur in separate phases (i.e., all practice testing occurs prior to any opportunity to learn the correct answers). This approach has commonly been employed when pre-instruction tests are used to learn content from text passages (e.g., Little & Bjork, 2016), educational videos (e.g., Carpenter & Toftness, 2017), or live lectures (e.g., Carpenter et al., 2018). It is common for (2) to occur immediately after (1), although a delay of anywhere from several minutes to over several hours may occur. As the correct answers are embedded within a larger body of content, participants usually must discover those answers by engaging in searching behaviors—that is, by sifting through text, video, or lecture materials to find content that is directly relevant to the questions they had attempted. With this approach, participants are often exposed to more than just the correct answers, and as discussed in the next section, that exposure has repercussions for how learning can be assessed on the posttest.

Fig. 1 Two common approaches for investigating the effects of pre-instruction testing on learning. In the upper panel, participants engage in practice testing prior to a learning opportunity or engage in that learning opportunity without any practice testing at all. This approach is commonly used with relatively information-rich materials such as text passages. In the lower panel, participants engage in practice testing with immediate correct answer feedback or study correct information without any practice testing at all. This approach is commonly used with simpler materials such as word pairs or facts. With both approaches, learning is assessed on a subsequent posttest.

In the lower panel, (1) and (2) are intermingled using practice testing with immediate correct answer feedback. Specifically, after each question, the correct answer is displayed for participants to study. This approach, which often requires computer presentation, has been used in the cases of learning word pairs (e.g., Huelser & Metcalfe, 2012), foreign language translations (e.g., Seabrooke et al., 2019a, 2019b), and trivia facts or statements (e.g., Kornell, 2014). In such cases, learning the correct answer is a matter of attending to the provided feedback and no searching is needed. Moreover, exposure to additional materials beyond the correct answers is usually quite limited or nonexistent.

Although the two approaches depicted in Fig. 1 encompass most studies in this literature, other approaches exist. For instance, in some studies, the provision of correct answer feedback is not immediate, but rather delayed by several minutes or longer (e.g., Kornell, 2014), and there may be intervening activities beforehand (e.g., studying other items). In other studies, both immediate correct answer feedback and subsequent learning opportunities are provided (e.g., Pan & Sana, 2020, experiment 4). That is, after each practice question, the correct answer is displayed, and after completing practice testing with correct answer feedback, participants have a second opportunity to learn the correct answers from a text passage, video, lecture, or other materials. In yet other cases, pre-instruction testing is combined with, or compared against, retrieval practice (e.g., de Lima & Jaeger, 2020; Geller et al., 2017). Such approaches are considered later in this review.

Yet another approach involves interpolated prequestioning or interpolated pretesting. It entails repeated cycles of practice testing followed by learning opportunities (e.g., Carpenter & Toftness, 2017; Pan et al., 2020a, 2020b). A schematic of this approach is displayed in Fig. 2. Interpolated testing can be used to divide a set of target materials, such as a video lecture, into a series of smaller segments. Participants still engage in practice testing before learning the correct answers, but do so one segment at a time. This approach originated with the adjunct questions literature, wherein text passages were often interspersed with questions preceding relevant content at intervals of one or more paragraphs (e.g., Rothkopf, 1966).
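The cyclical structure of interpolated testing can be sketched in Python as follows. This is an illustrative sketch only: the function name, the event labels, and the numbers of segments and questions are hypothetical and are not taken from any specific study.

```python
def interpolated_schedule(segments, questions, per_segment):
    """Build an ordered list of events in which the practice questions for a
    segment are always attempted before that segment is studied."""
    if len(questions) != len(segments) * per_segment:
        raise ValueError("need exactly per_segment questions for each segment")
    schedule = []
    for i, segment in enumerate(segments):
        start = i * per_segment
        # Practice testing on content the participant has not yet seen
        for question in questions[start:start + per_segment]:
            schedule.append(("test", question))
        # Learning opportunity that contains the correct answers
        schedule.append(("study", segment))
    return schedule


# Illustrative use: a video divided into three segments, two questions each
segments = ["segment_1", "segment_2", "segment_3"]
questions = ["q1", "q2", "q3", "q4", "q5", "q6"]
schedule = interpolated_schedule(segments, questions, per_segment=2)
# The first three events are:
# ("test", "q1"), ("test", "q2"), ("study", "segment_1")
```

Setting the number of segments to one reduces this schedule to the separate-phases arrangement, in which all practice testing precedes the entire learning opportunity.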

Measuring Retention and Transfer of Learning

The posttest assesses the level of learning that was attained in the experimental and control groups or conditions. In so doing, it addresses the relative effectiveness of learning through pre-instruction testing followed by studying versus studying without such testing. As described next, memory for directly tested materials is almost always assessed on the posttest. In some instances, the posttest is also used to assess the learning of materials that were not directly tested—that is, untested materials that were either presented for study or previously unpresented. Doing so addresses the extent of any pre-instruction testing benefit for those materials.

Memory for Directly Tested Materials

The posttest typically features questions that are identical or nearly identical to those that were used during pre-instruction testing. These questions measure retention or memory for the content targeted by the practice questions. For example, in Carpenter and Toftness (2017), participants attempted the practice question, “How many families originally settled on the island of Rapa Nui?”, viewed an educational video about the history of Easter Island (which is also called Rapa Nui), and received the exact same question on a subsequent posttest. If pre-instruction testing was effective at enhancing memory for tested content, then performance on that posttest question should have been higher than among participants that had not practiced with that question (i.e., those in the control group), as was observed.

As a related matter, if knowledge of the target materials prior to the experiment is low, as is commonly the case, then performance during practice testing should also be low. Any improvement from practice test to posttest on the same questions, therefore, would indicate that learning occurred. Indeed, such improvements are commonly observed in the experimental group, indicating that the correct answers were successfully learned after practice testing had occurred.

Transfer to Untested Materials Presented for Study

In some cases, the posttest includes questions that participants had not previously seen or attempted. These questions commonly assess knowledge of content that was not directly targeted by practice questions, but was still available for participants to study during the subsequent learning opportunity. For example, in Carpenter and Toftness (2017), the posttest also included the question, “What was the approximate population of Rapa Nui from 1722 to the 1860s?”, which the aforementioned participants had not seen before. The correct answer to that question, however, was presented in one of the video segments that those participants did see. For the experimental group, such “new” questions measured whether the effects of practice testing generalize, or transfer, to untested but previously presented materials, as opposed to “old” questions that measured memory for previously tested content.

It is important to note that the distinction between “old” (retention) and “new” (transfer) questions on the posttest applies primarily to the experimental group. For the control group, all posttest questions are usually entirely “new.” For ease of comparison, however, posttest questions administered to the control group may be yoked (that is, given “old” and “new” classifications) to questions administered to the experimental group. In other cases, the control group’s performance on the posttest is reported in its entirety without separation into “old” and “new” categories.

The inclusion of posttest questions that assess knowledge of untested materials presented for study is common in studies where the correct answers to pre-instruction test questions are discovered by searching through text, video, or lecture materials. In studies where the correct answers are learned solely through correct answer feedback, which usually involve simpler materials such as word pairs or trivia facts, assessing knowledge of untested materials is rare (for exceptions, see Hays et al., 2013, Experiment 3; Pan et al., 2019).

Transfer to Untested and Previously Unpresented Materials

In several studies, the posttest features questions that address other forms of transfer, including drawing inferences (e.g., St. Hilaire et al., 2019) and classifying new exemplars (e.g., Sana, Yan, et al., 2020a, 2020b). These questions might still be categorized as “new” given that they were not previously presented to participants. The correct answers to these questions, however, cannot be lifted directly from materials presented earlier in the study. Rather, answering these questions correctly requires drawing a conclusion or deriving knowledge that was not directly stated in the materials (in the case of inferences) or applying prior learning to newly presented information (in the case of classifying new exemplars). Research in the literature involving transfer of learning to new scenarios, situations, and other contexts (for related taxonomies of transfer, see Barnett & Ceci, 2002; Pan & Rickard, 2018) is currently in its infancy.

Specific Versus General Benefits of Pre-instruction Testing

When the posttest measures learning of directly tested and untested content (that is, by including “old” and “new” questions), an advantage of pre-instruction testing (i.e., a prequestioning effect or a pretesting effect) may occur across two common scenarios. In the first scenario, the experimental group outperforms the control group on both types of questions. If so, that would constitute a general benefit of prior testing wherein the learning of directly tested and untested materials is enhanced. In the second scenario, the experimental group outperforms the control group but only on questions that previously appeared on the prior practice test (i.e., “old” questions). If so, that would constitute a specific benefit of prior testing wherein only the learning of directly tested materials is enhanced (Carpenter et al., 2023; see also Anderson & Biddle, 1975; Hartley & Davies, 1976). Whether pre-instruction testing commonly yields specific or general benefits is a major question in this literature.
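The distinction between the two scenarios can be expressed as a simple decision rule, sketched below in Python. The sketch is a simplification under stated assumptions: the function name and the `threshold` parameter are hypothetical, and a fixed threshold stands in for the inferential statistics that studies actually use to decide whether a group difference is reliable.

```python
def classify_benefit(old_advantage, new_advantage, threshold=0.0):
    """Classify a posttest outcome from the experimental-minus-control
    difference in proportion correct on "old" (directly tested) and
    "new" (untested) questions."""
    old_benefit = old_advantage > threshold
    new_benefit = new_advantage > threshold
    if old_benefit and new_benefit:
        return "general"   # tested and untested materials both enhanced
    if old_benefit:
        return "specific"  # only directly tested materials enhanced
    return "other"         # neither of the two common scenarios


# Made-up differences in proportion correct
print(classify_benefit(0.20, 0.10))  # prints general
print(classify_benefit(0.20, 0.00))  # prints specific
```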

Effects of Pre-instruction Testing on Learning

The following sections summarize the evidence for the effects of pre-instruction testing across a host of circumstances. First, effects on directly tested and untested information are addressed. Second, studies conducted in classrooms and other authentic educational settings, which constitute exceptions to the standard practice of relying on laboratory settings or online experiment platforms, are summarized. Next, effects involving different test formats are considered. Finally, studies that investigated different temporal intervals between practice testing and subsequent learning opportunities, as well as studies that featured a posttest that was administered after an extended retention interval, are discussed (Table 1).

Except where noted, all of the studies reviewed here feature a separate non-testing control group or condition against which the efficacy of pre-instruction testing was determined (cf. de Lima & Jaeger, 2020; Geller et al., 2017; McDaniel et al., 2011; Overoye et al., 2021). In such groups or conditions, learning without any practice testing occurred in a separate group of participants or a separate set of materials. Moreover, retrieval practice was not implemented prior to the posttest (cf. Welhaf et al., 2022). Finally, in most studies, the participants were undergraduate students or individuals of similar age. Studies involving other age groups are specifically noted as such.

Effects on Directly Tested Information

Nearly all studies of pre-instruction testing have addressed effects on directly tested information. The following studies reported data for posttest questions targeting memory of such information. As described next, these studies involved text or video materials.

Text Materials

Pre-instruction testing has been shown to enhance memory for directly tested information drawn from text passages. A prominent example is that of Richland et al. (2009), wherein participants read a two-page passage about a visual disorder, cerebral achromatopsia. In the experimental group, participants spent two minutes attempting five practice questions drawn from the passage (e.g., “What is total color blindness caused by brain damage called?”), then read the passage for eight minutes. In the control group, participants spent the entire ten-minute period reading the passage. Prior knowledge of the text passage was low; in the experimental group, participants typically answered three-quarters or more of the practice questions incorrectly. When the test questions were re-administered on an immediate posttest (experiments 1–3) or on a one-week delayed posttest (experiment 4), however, participants in the experimental group consistently outperformed the control group (see Fig. 3, upper panel). These results compellingly demonstrate the capacity of pre-instruction testing to enhance memory for the correct answers when those answers are subsequently presented in a text passage. Multiple studies have since reported similar results with text passages involving such topics as biographies, geography, history, oceanography, physics, science fiction, statistics, and weather (e.g., Hausman & Rhodes, 2018, Experiment 1; James & Storm, 2019, Experiments 1–4; Kliegl et al., 2022, Experiment 2; Little & Bjork, 2016; Sana et al., 2020a, 2020b; Sana & Carpenter, 2023, Experiment 1; St. Hilaire et al., 2019; St. Hilaire & Carpenter, 2020).

Enhanced memory for directly tested information drawn from text statements (e.g., trivia or other types of facts) has also been demonstrated following pre-instruction testing. For instance, in Kornell et al. (2009, experiment 1), participants learned a series of 20 fictional trivia facts, all presented one at a time in question-and-answer form (e.g., “Q: What treaty ended the Calumet War? A: Harris”). For half of the facts, the question and answer were presented simultaneously for 5 s, during which participants read both; for the other half, participants first attempted to answer the question for 8 s before being presented with the correct answer for 5 s (i.e., correct answer feedback). During practice testing, participants were unable to answer any questions correctly. On an immediate posttest, however, participants better remembered the answers to previously tested, as opposed to untested, trivia facts. Improved memory for factual content learned via pre-instruction testing with correct answer feedback has since been replicated across multiple other studies (e.g., Kornell, 2014; see Fig. 3, middle panel), including at a delay of up to 48 h (Vaughn et al., 2017), with use of search engines to obtain correct answers (e.g., Storm et al., 2022), and with various types of facts (e.g., obscure trivia, fictional facts, historical facts).

A large portion of the studies in this literature has used paired associate words (e.g., doctor-nurse) as learning materials. A characteristic finding has been that taking practice tests on the target word of a pair that had not previously been learned (e.g., doctor-???), followed by immediate correct answer feedback, can enhance memory for the target word. That result, however, is predicated on the two words in a given pair having at least a weak semantic association (e.g., Hays et al., 2013; Kliegl et al., 2022; Kornell, 2014; Kornell et al., 2009; Zawadzka et al., 2023; and others). If that semantic association is absent (e.g., door-shoe), then no advantage of prior testing may occur if the posttest involves cued recall (e.g., Grimaldi & Karpicke, 2012; Huelser & Metcalfe, 2012; Knight et al., 2012). Benefits of pre-instruction testing for weakly associated word pairs have since been replicated with children of kindergarten and early elementary school age, but not preschool age (Carneiro et al., 2018). Further studies have shown benefits for the case of word triplets (e.g., gift, rose, wine), indicating that the benefit of pre-instruction testing for cue-and-target materials is not limited to paired associates (Pan et al., 2019; see also Metcalfe & Huelser, 2020).

Other studies have focused on learning the definitions of foreign language vocabulary or unfamiliar words in one’s native language. For instance, in Potts and Shanks (2014), participants learned Euskara-English translations (e.g., urmael—pond) or the definitions of obscure English words (e.g., frampold—quarrelsome). Each translation or definition was learned in multiple ways, of which two were as follows: The word and its definition (or translation) were read in their entirety, or participants guessed the definition prior to viewing the correct answer (i.e., practice testing with feedback). On a multiple-choice posttest, the definitions of words that had previously been tested were better recognized than those that had been read. Improved recognition of definitions following pre-instruction testing has been replicated in several other studies (e.g., Potts et al., 2019; Seabrooke et al., 2019a, 2019b, 2021a, 2021b). When the posttest involves cued recall, however, a benefit of pre-instruction testing has typically not been observed (Butowska et al., 2021; Seabrooke et al., 2019a, 2019b). That result bears some similarity to the aforementioned findings involving paired associates that lack a semantic association (although, see Carpenter et al., 2012 for an exception).

Video Materials

Studies of pre-instruction testing with video-based materials have also reported memory benefits. In Carpenter and Toftness (2017), for instance, participants viewed a seven-minute educational video about Easter Island. In the experimental condition, six practice questions were interpolated amongst two-minute segments of video (two questions before each segment), whereas in the control condition, no practice questions were administered. On an immediate posttest, participants in the experimental condition exhibited better memory for directly tested information relative to the control condition (see Fig. 3, bottom panel). Benefits of pre-instruction testing for directly tested video content have since been repeatedly observed (e.g., James & Storm, 2019; Pan et al., 2020a, 2020b; Sana & Carpenter, 2023; St. Hilaire & Carpenter, 2020; Toftness et al., 2018). The length of the videos for which such benefits have been demonstrated has ranged from 5 to 30 min (e.g., James & Storm, 2019; St. Hilaire & Carpenter, 2020), and the addressed topics have included psychology, statistics, and history. Administering all practice questions prior to the entire video (e.g., Toftness et al., 2018) and interpolating questions throughout segments of video (e.g., Pan et al., 2020a, 2020b) have both been effective at enhancing memory.

Effects on Untested Information

A subset of the literature has investigated the effects of pre-instruction testing on the learning of information that is not directly tested. Studies addressing this issue can be classified into two categories based on the nature of the untested materials. In the more common category, untested materials were presented for participants to study during the experiment. In the other category, untested materials were not directly available for study and participants had to draw inferences or apply what they had learned to classify new information on the posttest.

Untested Materials Presented for Study

Unlike with directly tested materials, the evidence involving untested materials presented for study is highly inconsistent. Those results are summarized next. Possible explanations are considered later in this review.

Among studies involving text passages, results have been mixed. For instance, in Richland et al. (2009), enhanced learning of untested information presented for study (i.e., available in the provided text passage) was observed in one experiment but not in any of the other four similarly designed experiments. That result contrasts with the benefits of pre-instruction testing for directly tested information that were observed in all experiments. No benefit or decrement for untested information, relative to a non-testing control condition, has been observed in several other studies involving text passages (e.g., James & Storm, 2019, experiments 1–4; St. Hilaire et al., 2019, experiment 1; St. Hilaire & Carpenter, 2020). Exceptions include Little and Bjork (2016), Sana and Carpenter (2023), and St. Hilaire et al. (2019, experiment 2), wherein benefits of pre-instruction testing were observed for both tested and untested materials.

Among studies involving simpler materials, namely word pairs or triplets, two studies have addressed whether guessing a target word and studying the correct answer will yield improved memory for the cue word or words. Across two experiments, Pan et al. (2019) found that such testing did enhance learning of untested cues from word triplets (e.g., guessing the answer to gift, rose, ???, which is wine, will enhance the ability to correctly answer ???, rose, wine). Hays et al. (2013, experiment 3) observed a similar result for the case of word pairs. These findings suggest that pre-instruction testing can foster learning that is, to a degree, transferable from the answers (to simple questions) to aspects of the questions themselves.

Among studies involving video materials, results have also been mixed. Whereas James and Storm (2019, experiment 5), Pan et al. (2020a, 2020b, experiment 1), St. Hilaire and Carpenter (2020), and Toftness et al. (2018) observed no benefits of pre-instruction testing for untested materials presented in videos, Carpenter and Toftness (2017), Pan et al. (2020a, 2020b, experiment 2), and Sana and Carpenter (2023, experiment 1) did observe such benefits. As with studies involving text passages, however, a benefit for directly tested materials was observed in all of those studies.

Untested and Previously Unpresented Materials

Evidence regarding the effects of pre-instruction testing on the ability to draw inferences from a text passage (e.g., after reading a text passage about different types of brakes, inferring the primary difference between mechanical brakes and hydraulic brakes) is relatively limited. Of the two studies that have addressed the issue to date, Hausman and Rhodes (2018) found no evidence of improved inferences following pre-instruction testing on expository text passages, whereas St. Hilaire et al. (2019) observed improved performance on inference questions but only when participants were required to make inferences during practice testing and search for the answers to those inference questions while reading. Based on those limited results, pre-instruction testing does not guarantee improved inference ability, although such improvements are possible.

At least one study to date has investigated the efficacy of pre-instruction testing for learning to classify new exemplars of previously studied categories. In Sana, Yan et al. (2020a, 2020b, experiment 1), participants learned to recognize three types of statistical procedures (chi-squared test, Kruskal–Wallis test, and Wilcoxon signed-rank test) and, for each procedure, either studied example scenarios or attempted four practice questions prior to studying the scenarios. On a 5-min delayed classification test (wherein participants were presented with new scenarios and had to correctly identify the appropriate statistical procedure that should be used), participants who had engaged in practice testing outperformed those who had not. A second experiment observed the same results even when the control condition studied the practice questions and their answers beforehand. This result suggests that pre-instruction testing has the potential to enhance classification skills.

Effects in Authentic Educational Settings

At least four studies conducted in authentic educational settings have compared pre-instruction testing against a separate non-testing control group or condition. In Beckman (2008), undergraduate students in two sections of an aerospace course completed three units of the course; in one section, each unit was prefaced by a pretest targeting the learning objectives for that course, whereas in the other section, no pretests were administered. Each pretest was scored before being returned to the student. On high-stakes tests conducted at the end of each unit, students that had engaged in pretesting scored an average of 9–12% higher.

Benefits of pre-instruction classroom testing as measured on subsequent posttests but using more robust experimental designs (i.e., random assignment of students to groups or conditions) have also been reported by Carpenter et al. (2018) and Soderstrom and Bjork (2023). Both studies entailed the administration of practice quizzes at the start of lectures. Carpenter et al. (2018) had students in an undergraduate introductory psychology laboratory course attempt or not attempt a single prequestion individually on laptop computers at the start of a lecture period. No correct answer feedback was provided. At the end of the lecture period, all students completed a two-question posttest on which a benefit of prior testing was observed (approximately 7% better performance in the prequestion condition for previously tested content). A week later, however, when the posttest was administered a second time, test performance was numerically, but not significantly, higher for students who had engaged in prequestioning. These results suggest that pre-instruction testing can enhance learning at least over the short term, but as implemented by Carpenter et al., such testing did not reliably improve long-term learning over that due to a single posttest (i.e., retrieval practice).

Soderstrom and Bjork (2023) administered multiple-choice pretests via pen and paper at the start of several lectures during a 10-week undergraduate psychology research methods course. No correct answer feedback was provided. On a high-stakes final exam administered at the end of the course, students performed 8–9% better on questions addressing content that had been previously pretested. That benefit was observed both for final exam questions that were identical to those that were used during pretesting, as well as final exam questions that targeted related but different information from that which was pretested. In this case, the results suggest that pre-instruction testing can yield learning improvements that persist for at least several weeks.

Janelli and Lipnevich (2021) investigated the effects of pre-instruction testing in a Massive Open Online Course (MOOC) about climate change. Students enrolled in the MOOC took a pretest at the start of each of five course modules or did not take any pretests at all. Depending on condition assignment, students received various forms of feedback or no feedback at all. The results showed no overall benefit of pretesting or feedback on subsequent exam performance, but with an important caveat: Students that had received pretests were more likely to drop out of the MOOC as it progressed, but among the students that persisted until the end of the MOOC, exam performance was better if they had received pretests. The authors concluded that pretesting in MOOCs has both negative and positive effects—that is, it can not only reduce persistence, perhaps by affecting motivation, but also enhance learning among the students that persevere.

Lima and Jaeger (2020) had fourth and fifth grade students read an age-appropriate encyclopedic text passage with key words partially removed and replaced with blanks. Students guessed the missing words, then re-read the passage with all words intact. When presented with the text passage again after one week, this time with previously tested keywords and other keywords removed, students were better able to recall the words that had been tested. That result highlights the potential of such testing to enhance learning at the elementary school level.

In a recent study of pre-instruction testing in clinical settings, Willis et al. (2020) had medical students learn a laparoscopic procedure in a simulation laboratory by attempting the procedure before watching an instructional video (the “struggle-first” group) or watching the video prior to attempting the procedure (the “instruction-first” group). On an immediate posttest, participants in the struggle-first group were able to complete the procedure more quickly and with fewer errors. The authors attributed that result to a pre-instruction testing effect for procedural skills (alternatively, invention activities or productive failure may have played a role; both strategies are discussed later in this review).

Studies conducted in authentic educational settings but without a separate non-testing control group or condition (i.e., one in which pre-instruction testing did not occur) provide further evidence. In McDaniel et al. (2011; in a middle school science course; see also Geller et al., 2017, in an undergraduate chemical engineering course), students completed practice questions prior to or at the beginning of lecture sessions. When the questions were re-presented on subsequent posttests, performance was improved. That result indicates that learning occurred following the administration of the practice questions. The conclusions that can be drawn from those studies, however, are limited due to the lack of control groups.

Effects Involving Different Test Formats

Effects of pre-instruction testing have been investigated using practice tests and posttests in a variety of test formats. With few exceptions, benefits of pre-instruction testing have been observed regardless of test format.

Practice Test Format

Practice tests in cued recall or short answer (e.g., Carpenter et al., 2018; Richland et al., 2009), fill-in-the-blank (e.g., Richland et al., 2009), and multiple-choice format (e.g., Toftness et al., 2018) have all been shown to elicit pre-instruction testing effects. Table 2 features examples of each test format. Pan and Sana (2021; experiments 2–4), for example, manipulated whether participants received multiple-choice or cued recall pretests; the format of the practice test did not substantially influence the magnitude of the resulting pre-instruction testing effects.

In some circumstances, however, the test format used for practice testing can be influential. For instance, in Little and Bjork (2016), participants took multiple-choice or cued recall pretests on information drawn from text passages about the planet Saturn, Yellowstone National Park, and stimulant drugs. The multiple-choice questions featured competitive lures, which were incorrect answer alternatives referencing information in the passage (e.g., “Q: What is the tallest geyser in Yellowstone National Park – Old Faithful, Steamboat Geyser, Castle Geyser, or Daisy Geyser? A: Steamboat Geyser.”), whereas the cued recall questions, by their very nature, did not include any lures. The competitive lures (e.g., “Castle Geyser”) would ultimately be the correct answers to transfer questions on a cued recall posttest. On the posttest, whereas a pre-instruction testing effect for directly tested information was observed regardless of practice test format, a transfer effect for untested information was observed only following multiple-choice pretesting. A crucial factor for successful transfer in that case appears to have been prior exposure to untested information, in a practice testing context, via the lures that were present only in the multiple-choice pretests. Simply viewing the untested information in a non-testing context (i.e., studying) did not yield the same degree of learning (Little & Bjork, 2016). More broadly, these results suggest that not only is test format potentially influential for pre-instruction testing effects, but so is the manner in which a given format is implemented (for related discussions in the adjunct questions literature, including possible effects on the learning of directly tested and untested information, see Anderson & Biddle, 1975; Hamaker, 1986).

Posttest Format

Pre-instruction testing effects have also been observed on posttests involving recall or short answer (e.g., Carpenter et al., 2018; Richland et al., 2009), fill-in-the-blank (e.g., Richland et al., 2009), multiple-choice (e.g., Toftness et al., 2018), and recognition format (e.g., Potts et al., 2019). Table 2 features examples of each test format. These results suggest that the posttest format may not be crucially important to detect benefits of pre-instruction testing. An exception, however, involves the cases of semantically unrelated paired associate words and unfamiliar foreign language translations. In studies using such materials wherein both cued recall and recognition posttests have been administered, a benefit of prior testing has been observed only in the case of recognition tests (e.g., Seabrooke et al., 2021a, 2021b). That result has yet to be fully explained, although one account posits that pretesting enhances attention to correct answer feedback regardless of the degree to which a cue and target are semantically associated.

Effects Across Different Timing and Retention Intervals

Most studies in the literature have featured little-to-no interval of time between pre-instruction testing and subsequent learning opportunities. The retention interval prior to the posttest is, in many cases, also as short as a few minutes or none. Exceptions to these patterns, which provide insights into implementation issues and the durability of observed effects, are discussed next.

Interval Between Practice Testing and Subsequent Learning Opportunities

In the case of paired associates, immediate correct answer feedback is often necessary for improved memory of pretested target words to occur. In Grimaldi and Karpicke (2012), Vaughn and Rawson (2012), and Hays et al. (2013), the correct answer was either displayed immediately after participants had entered their guess for a given word pair or withheld until guesses had been made for multiple or all to-be-learned word pairs. In all three studies, only the provision of immediate correct answer feedback yielded a pretesting effect relative to a non-testing control condition on a subsequent cued recall posttest, and delaying feedback nullified that advantage. These results contrast with findings involving text passages and other materials, wherein participants may not discover the correct answers until a learning opportunity that is at least several minutes later and after other information has been encountered in the intervening time period (e.g., Richland et al., 2009). (An alternative explanation suggested by a reviewer, however, is that in the aforementioned studies with paired associates, the lack of a pretesting effect in the delayed feedback case may be due to a spacing effect in the non-testing control condition.)

To address the discrepancy between studies of paired associates versus other materials, Kornell (2014) investigated the efficacy of delayed feedback following pre-instruction testing with materials that are more semantically detailed than paired associates, namely trivia facts. Across three experiments, pre-instruction testing enhanced learning regardless of whether feedback was withheld until other intervening items had been encountered or even 24 h after participants had finished practice testing on all to-be-learned items (see Fig. 3, middle panel, for a depiction of the results). In fact, when the timing of feedback was manipulated within a single experiment, there was no difference in pre-instruction testing effect magnitude between immediate versus delayed feedback conditions (Kornell, 2014, experiment 2). These results suggest that benefits of pre-instruction testing for non-paired associate materials (i.e., more semantically detailed or complex materials as in the case of facts and text passages) can manifest even in cases where learning of the correct answers is delayed.

Retention Interval Prior to the Posttest

Among studies that have assessed learning after a retention interval of at least 24 h, there continues to be evidence of pre-instruction testing effects. As illustrated in Table 1, studies involving paired or triple associate words (e.g., Kornell et al., 2009; Pan et al., 2019; Yan et al., 2014; see also Potts et al., 2019), trivia facts (e.g., Kornell, 2014), and text passages (e.g., Kliegl et al., 2022; Little & Bjork, 2016) have all shown memory improvements that persist for at least 24 h, and in the case of text passages, at least 7 days. The reported memory improvements after at least 24 h, in effect size terms, range from Cohen’s d = 0.44 to well over 2.0 (for discussion of interpreting effect size magnitude, see Kraft, 2020).
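For readers less familiar with effect sizes, the following minimal sketch shows one common way to compute Cohen's d for two independent groups, using the pooled standard deviation. The function name `cohens_d` and the scores are hypothetical and purely illustrative; they are not data from any study cited here, and individual studies (particularly those with within-subject designs) may have used a different variant of the formula.

```python
import math
from statistics import mean, variance


def cohens_d(treatment, control):
    """Cohen's d for two independent groups, using the pooled SD.

    Assumes each group has at least two scores; `variance` is the
    sample variance (n - 1 in the denominator).
    """
    n1, n2 = len(treatment), len(control)
    pooled_var = (
        (n1 - 1) * variance(treatment) + (n2 - 1) * variance(control)
    ) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / math.sqrt(pooled_var)


# Hypothetical posttest proportions correct (illustrative only).
pretested = [0.70, 0.80, 0.60, 0.90, 0.75]
read_only = [0.60, 0.70, 0.50, 0.80, 0.65]

d = cohens_d(pretested, read_only)
print(f"Cohen's d = {d:.2f}")  # approximately 0.89 for these made-up scores
```

In this made-up example, a 10-percentage-point difference in means with a pooled standard deviation of about 0.11 corresponds to d of roughly 0.89, which falls within the range of values reported above.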

Few of those studies, however, have addressed transfer to untested materials seen during study. In the case of triple associate words, Pan et al. (2019) reported successful transfer to cue words at a 48-h retention interval. Using text passages, Richland et al. (2009) reported no evidence of transfer at extended retention intervals, whereas Little and Bjork (2016) reported successful transfer at a 48-h retention interval. In all three studies, the transfer results at a delay mirrored patterns observed with shorter retention intervals.

Kliegl et al. (2022) investigated the efficacy of pre-instruction testing for text passages across three retention intervals—1 min, 30 min, and 1 week—within a single experiment. The magnitude of the pre-instruction testing effect grew with retention interval and was largest at a 1-week interval. That result led the authors to conclude that the benefits of pre-instruction testing manifest more fully after longer retention intervals. If so, that would suggest that the magnitude of the effects that have been reported in the current literature, most involving very brief or immediate retention intervals, may be understating the potency of pre-instruction testing for memory.

Additionally, pre-instruction testing effects have been observed in three classroom studies (Beckman, 2008; Janelli & Lipnevich, 2021; Soderstrom & Bjork, 2023), wherein the duration between practice testing and a subsequent, high-stakes test ranged from a few days to many weeks. Those results further reinforce the conclusion that the benefits of pre-instruction testing persist across educationally meaningful retention intervals.

Metacognitive Considerations

Many learners do not recognize the benefits of pre-instruction testing even after experiencing and benefiting from it. For example, Huelser and Metcalfe (2012; experiment 2) had participants learn semantically related and unrelated word pairs via pretesting and reading, take a posttest on those pairs, and then rank order the effectiveness of the techniques that they had used. Although pretested word pairs were the best remembered (in the case of semantically related words), participants nevertheless ranked pretesting as the least effective. Similarly, when asked to make predictions about the likelihood of future recall (i.e., judgments of learning or JOLs) of word pairs that were pretested or read, participants tend to give lower predictions to pretested pairs (e.g., Pan & Rivers, 2023; Potts & Shanks, 2014; Yang et al., 2017; Zawadzka & Hanczakowski, 2019). Those predictions were at odds with subsequent posttest results.

The inability to recognize the benefits of pre-instruction testing, which constitutes a potent metacognitive illusion (Bjork et al., 2013), may stem from a tendency to eschew (or at least not favor) pre-instruction testing and other error-prone strategies. In a survey by Yang et al. (2017; experiment 3), for example, when presented with a hypothetical scenario involving the use of pretesting or reading to learn word pairs, 78% of respondents rated reading as more effective. In another survey by Pan et al. (2020a, 2020b), when presented with a hypothetical scenario that entailed learning an academic subject via a guess-and-study approach or a study-only approach, 56% of respondents rated the former and 44% rated the latter as more likely to be effective. Although more favorable towards pre-instruction testing, that result is far from a strong endorsement. In the same survey, 81% of respondents also endorsed the importance of avoiding errors during the learning process, even though making errors is a hallmark of pre-instruction testing. Overall, survey data suggest that learners often hold beliefs about learning that may predispose them to favor non-testing methods instead (for related discussions, see Huelser & Metcalfe, 2012; Metcalfe, 2017).

Two studies have investigated ways to promote metacognitive awareness of the benefits of pre-instruction testing. In Yang et al. (2017; experiment 4), participants read a brief explanation about the benefits of “errorful generation” prior to learning word pairs via pretesting and reading; doing so resulted in item-level JOLs that were less biased towards reading (but still favoring it) and JOLs over all items that favored pretesting. In Pan and Rivers (2023; experiments 3–5), participants completed two rounds of pretesting and reading on word pairs, making JOLs, and taking a posttest; after receiving performance feedback on the first-round posttest (i.e., how they scored on pretested versus read items), participants’ second-round JOLs favored pretesting outright. These results suggest that providing learners with explanations or other information about the benefits of pre-instruction testing can help correct metacognitive misperceptions.

Theoretical Perspectives

Researchers have proposed a variety of theoretical accounts to explain pre-instruction testing effects. Some accounts are relatively general, reflecting the incipient state of theoretical understanding in this literature, whereas others are more detailed and suggest specific cognitive mechanisms. Prominent and recent perspectives in the pre-instruction testing literature are summarized next. A list of those accounts, which vary in their emphasis on processes occurring during the event of taking a practice test and/or during subsequent learning opportunities, is presented in Table 3. Additional theoretical perspectives involving potentially related learning strategies and phenomena, including test-potentiated new learning and retrieval practice, are addressed later in this review.

Pre-instruction Testing with Immediate Correct Answer Feedback

At least four theoretical accounts have been proposed to explain pre-instruction testing effects on paired associate words with immediate correct answer feedback (for reviews, see Mera et al., 2022; Metcalfe, 2017). One such account, the mediator or mediation account, involves the generation of mediators (i.e., words that link cues and targets) during practice testing. This account was previously developed as an explanation for the retrieval practice effect (Carpenter, 2011; Carpenter & Yeung, 2017; Pyc & Rawson, 2010; see also Soraci et al., 1999). In the case of pre-instruction testing, the mediator account proposes that incorrect responses made during practice testing take the form of mediator words (e.g., for the word pair mother–child, the mediator word father). These mediators, in turn, support improved recall of the correct answers on a posttest. On such posttests, the mediator words are presumably recalled and used as mental “stepping stones” to the correct answer (Huelser & Metcalfe, 2012; Kornell et al., 2009; cf. Metcalfe & Huelser, 2020). The mediator account is broadly consistent with the idea of multiple potential retrieval routes to a correct answer (Kornell et al., 2009).

The search set account posits that the attempt to answer a practice test question activates a set of potential candidate answers (i.e., the search set), one of which is the correct answer. When the correct answer is presented in the form of immediate feedback, the encoding of the correct answer (which is presumably already activated in memory) is enhanced (Grimaldi & Karpicke, 2012). By this account, the correct answer must be presented immediately after a practice test trial, while the search set is still active, in order for improved learning to result. Both the search set and mediator accounts are consistent with spreading activation accounts of semantic memory (Collins & Loftus, 1975).

The recursive reminding account, which is adapted from theoretical explanations of change detection in multi-list, paired associate learning studies (Jacoby & Wahlheim, 2013; Wahlheim & Jacoby, 2013), proposes that the experience of generating an error and learning the correct answer through feedback are encoded in the same episodic event (Mera et al., 2022; Metcalfe, 2017). On a posttest, when individuals recall the context in which they generated an error, they should also be able to remember the correct answer given that it was encoded in the same episodic event. By this account, it is recursive reminding that gives rise to improved posttest performance.

The prediction error account suggests that the discrepancy between an erroneous response and subsequent correct answer feedback triggers an error signal, which in turn enhances attention and subsequent learning (Kang et al., 2011; Metcalfe, 2017; see also Brod, 2021). This account draws from the cognitive neuroscience research on learning processes involved in detecting and correcting errors (e.g., Ergo et al., 2020; Wang & Yang, 2023). It is reminiscent of the hypercorrection effect, which is the finding that errors made with high confidence are more likely to be corrected on a posttest (Butterfield & Metcalfe, 2001) and which is possibly due to the greater level of surprise that is felt upon the discovery of such errors (Butterfield & Mangels, 2003). (Owing to differences in experimental procedure, however, the confidence that a learner may have in their answers to a pretest question, and the corresponding level of surprise that they may experience upon learning the correct answer, may not always correspond to the conditions that give rise to the hypercorrection effect.)

The foregoing accounts potentially could be applied to scenarios involving materials other than paired associate words and without immediate correct answer feedback. Doing so, however, may encounter some difficulties. For example, it is unclear whether mediators would be generated when the learning materials are not individual cues and targets. Moreover, it seems unlikely that a search set would remain active in the case of delayed feedback (cf. Kornell, 2014). The recursive reminding and prediction error accounts, in contrast, appear to be applicable to a wider range of stimuli and learning scenarios without need for substantial modification.

Pre-instruction Testing with Text Passage, Video, and Lecture Materials

Several theoretical accounts have been applied to pre-instruction testing effects for text passage, video, and lecture materials, as well as cases where immediate correct answer feedback is not provided (for discussion see Carpenter et al., 2023). These accounts, which are often descriptive in nature, are largely consistent with the mathemagenic hypothesis from the adjunct questions literature (Rothkopf, 1966; Rothkopf & Bisbicos, 1967; see also Anderson & Biddle, 1975; Bull, 1973). The mathemagenic hypothesis posits that the experience of answering adjunct questions causes learners to process subsequently presented text materials more thoroughly. Among such accounts, it has been suggested that pre-instruction testing affects interest (Little & Bjork, 2011), curiosity (Geller et al., 2017; see also Berlyne, 1954), and/or attention (Pan et al., 2020a, 2020b), any of which may cause learners to profit more from subsequent learning opportunities.

Recent empirical research provides support for some of these accounts. For instance, there is evidence that pre-instruction testing may improve attention. In Pan et al. (2020a, 2020b), participants viewed a 26-min, four-segment video lecture about signal detection theory accompanied by pretests or no pretests (a control activity, solving math problems, occurred instead). They rated their level of attention after each video segment. For each segment, self-reported attention was higher in the pretested condition, which performed better on a subsequent posttest; a mediation analysis further revealed links between attention levels and posttest performance. These results suggest that improved attention, or reductions in the tendency to mind wander away from the task at hand, may underpin pre-instruction testing effects involving video and other types of materials (although see Welhaf et al., 2022 for a critique of that conclusion).

At least two eye tracking studies suggest that pre-instruction testing gives learners an idea of the kinds of information that they should seek out or pay attention to, shaping subsequent learning behaviors as a result. Lewis and Mensink (2012) had participants attempt or not attempt pre-instruction questions prior to reading text passages about space travel, physiology, or epidemiology; in most cases, engaging in prior testing caused learners to spend more time rereading and revisiting sentences that were relevant to the questions. Similarly, Yang et al. (2021) found that pre-instruction testing caused participants to fixate longer on portions of a 5-min video lecture about nutrition that pertained to the questions (although that effect was limited to participants with high achievement motivation). The results of both studies are broadly consistent with the suggestion that pre-instruction testing provides a “metacognitive ‘reality check’” (Carpenter & Toftness, 2017, p. 105), highlighting what one does and does not know and, in turn, encouraging information seeking or exploratory behaviors that lead to improved learning (see also Little & Bjork, 2016, Experiment 2). As with the attention account, a metacognitive ‘reality check’ could manifest following pre-instruction testing on a wide range of materials ranging from text passages to video lectures.

There is also evidence that memories for the practice test questions themselves play a role in pre-instruction testing effects. In St. Hilaire and Carpenter (2020; experiments 2–4; see also Pan et al., 2020a, 2020b, experiment 2), participants attempted a dozen questions prior to watching a 31-min video lecture about information theory. While watching the video, participants were asked to take notes on information relevant to the questions (experiment 2) or write down/identify the answers to the questions (experiments 3–4). A pre-instruction testing effect relative to a non-testing control condition was observed only in cases where participants were able to recall the questions they had attempted (as evidenced by their notes or answer identification behaviors during the video). That result suggests that episodic memories for specific questions are an important contributor to pre-instruction testing effects (although it should be noted that a specific as opposed to general effect of pre-instruction testing on memory was observed in that study).

The attentional window hypothesis recently proposed by Sana and Carpenter (2023) provides a way to integrate the aforementioned accounts—that is, whether processes posited by the mathemagenic hypothesis and/or other theoretical perspectives of pre-instruction testing effects occur as a result of testing—and address conflicting data regarding specific versus general effects (i.e., inconsistency across studies). This hypothesis posits that pre-instruction testing opens an attentional window wherein learners actively search for the answers to practice test questions, and once those answers are discovered, the window closes. An implication of that account is that practice questions targeting information that will be encountered at a later point in a subsequent learning opportunity will increase the likelihood of a general benefit when untested information appears earlier in the learning opportunity. In other words, untested information benefits by being “in the attentional window” when it occurs prior to the tested information. In two experiments with practice questions that targeted information appearing early or late in a subsequently presented text passage or video, Sana and Carpenter reported results consistent with the attentional window hypothesis (i.e., specific versus general benefits, respectively, when the questions targeted content that appeared early prior to untested content, versus late after untested content, in the learning materials). The purported attentional window could entail greater levels of interest, curiosity, and potentially other cognitive processes that lead to improved learning.

A Three-Stage Theoretical Framework for Pre-instruction Testing Effects

Additional research is needed to further explicate the theoretical mechanisms that are responsible for pre-instruction testing effects. The foregoing theoretical perspectives, however, are largely consistent with the following three-stage framework. We propose this framework, which is depicted in Fig. 4, to help organize the perspectives proposed thus far, some of which fit into specific stages, and to clarify the psychological processes that may be involved when pre-instruction testing is used.

Three-stage theoretical framework showing three potential routes to improved posttest performance. (a) Taking a practice test triggers a general psychological process or state, such as enhanced curiosity, that affects subsequent learning behaviors indirectly. (b) Taking a practice test causes memories to be formed (e.g., for a particular question) that drive specific learning behaviors (e.g., answer search). (c) Taking a practice test causes memories to be formed that act as retrieval cues on a subsequent posttest (largely but not entirely bypassing the second stage)

In the first stage, the event of taking a practice test engages one or more psychological processes that are not triggered, or triggered to a lesser extent, by non-testing methods. These processes may be relatively general, such as an enhanced state of curiosity; alternatively, they may be more specific, such as forming a memory for a particular practice question, generating a set of possible answers, developing a mental framework for the information that is to be learned, or generating more retrieval routes to a particular answer. In the second stage, which occurs during a subsequent learning opportunity or during the presentation of correct answer feedback, the encoding of information into long-term memory is optimized to a greater extent than in a non-testing condition. These optimizations may involve improved attention, searching for specific answers, connecting answers to memories for practice questions, and other processes that do not occur or only occur to a lesser degree in a non-testing condition. In the third and final stage, which occurs during a subsequent posttest, improved performance in the tested (i.e., experimental) condition is driven by better encoded information from the second stage, and/or with potential assistance from specific memories (e.g., mediators) or expanded retrieval routes that were formed in the first stage.

The processes described above can be categorized into three potential routes for improved posttest performance. As detailed in Fig. 4, pre-instruction testing may directly or indirectly affect subsequent learning behaviors (routes a and b), leading to improved recall. As an alternative and perhaps less likely possibility (route c), memories for the practice test event itself may benefit posttest performance even without having a major impact on subsequent learning behaviors. For instance, the generation of guesses on a pretest (or consideration of the possibilities, principles, or theories addressed by a prequestion) may better equip a learner to answer a subsequent posttest question than if they had not engaged in such guessing or consideration.

The three-stage framework outlines ways that pre-instruction testing may confer a learning benefit on information that was specifically tested. As described previously, however, pre-instruction testing can also confer a general benefit on information from the learning episode that was not tested. Although the general benefit is far less common and theoretical progress toward understanding this effect is in its very early stages, the evidence so far points to a mechanism whereby pre-instruction questions orient attention to both tested and untested information during a learning episode. Consistent with this idea, pre-instruction testing is more likely to lead to both specific and general benefits when the untested information shares a noticeable relationship with the tested information (e.g., a pretest over a particular type of geyser leads to better learning of other, untested information about geysers; Little & Bjork, 2016), or when untested information is placed before tested information in the learning episode (Sana & Carpenter, 2023). Both manipulations increase the chances that pre-instruction testing will, by virtue of the relatedness and proximity between tested and untested content, stimulate attention to both types of information. The fact that these general benefits do not occur automatically, but instead appear to be sensitive to the learning materials and how they support a learner’s engagement, suggests that the general benefits arise during the second stage. Although more research is certainly needed, these findings highlight attentional processes as candidate mechanisms contributing to the general benefits of pre-instruction testing.

As an additional point, the theoretical accounts for specific or general benefits of pre-instruction testing are not necessarily mutually exclusive. It is possible that multiple cognitive mechanisms and processes contribute to these effects. The relative contributions of these processes at each stage may also vary according to the materials being learned, the way pre-instruction testing is implemented, and other factors. Finally, for related theorizing that addresses the case of incorrect or correct responses to cued recall tests, see Kornell et al. (2015; also Kornell & Vaughn, 2016; Rickard & Pan, 2018).

Comparable Learning Strategies and Phenomena

Several other learning strategies resemble, or might be considered variants of, pre-instruction testing. Studies involving these strategies provide further context for theoretical and practical issues. Additionally, some studies have investigated the effects of pre-instruction testing in combination with, or in comparison against, organizational signals (e.g., text headings or previews) or retrieval practice. Such studies exemplify an emerging line of research that addresses two or more evidence-based learning strategies simultaneously (for related discussions, see McDaniel, 2023; Roelle et al., 2023).

Test-Potentiated Learning

Taking a test prior to a study opportunity can lead to improved learning from that study opportunity. Research on that phenomenon, which is known as test-potentiated learning, has typically been conducted using materials that are studied prior to being tested (i.e., retrieval practice) and then restudied (e.g., Arnold & McDermott, 2013; Izawa, 1970). By definition, pre-instruction testing effects could be regarded as forms of test-potentiated learning. More recently, a growing body of literature has also demonstrated that taking tests can improve subsequent learning of new materials, a phenomenon known as test-potentiated new learning or the forward testing effect (for reviews see Chan et al., 2018; Pastötter & Bauml, 2014; Yang et al., 2018; see also Boustani & Shanks, 2022). It has been suggested that the pre-instruction testing effect constitutes an example of test-potentiated new learning (e.g., Chan et al., 2018), although unlike most studies in the literature on that phenomenon, pre-instruction testing does not entail a study opportunity prior to an initial test and does not typically involve practice testing on information that is markedly different from subsequently studied information.

A host of theoretical explanations has been proffered for test-potentiated learning and test-potentiated new learning (for discussions see Chan et al., 2018; Yang et al., 2018). The explanations that are arguably most applicable to pre-instruction testing involve learners adopting more optimal encoding or retrieval strategies after practice testing. For instance, it has been suggested that the experience of taking a test causes learners to exert greater effort at encoding subsequently presented information. Alternatively, learners may focus on encoding the types of information that they were previously tested on. Those changes in learning behaviors may stem from knowledge of the type of test to follow, improved awareness of one’s own state of knowledge, or from the experience of retrieval failures.

Retrieval Practice

At least two studies to date have investigated whether pre-instruction testing prior to a lecture, followed by retrieval practice on content from that lecture, is more effective than retrieval practice alone. In Geller et al. (2017) and Carpenter et al. (2018), undergraduate students attempted a single pre-instruction question prior to a given lecture, during which the answer to the question was learned, and then attempted that question again at the end of the lecture (as a form of retrieval practice). Another, previously unpracticed question was also included at the end of the lecture for retrieval practice. On a posttest occurring up to one week later, students attempted both questions again. Performance was not significantly better for the question that had been practiced twice (first as a pre-instruction question and then as retrieval practice) versus the question that had been practiced once (as retrieval practice only). These results suggest that the effects of pre-instruction testing, at least in a low-dosage form and without any further enhancements (e.g., specialized instructions or detailed feedback), neither augment nor detract from the effects of retrieval practice. The theoretical basis for these results remains to be determined. The authors of both studies, however, speculated that how pre-instruction testing was implemented and characteristics of the course materials may have been factors (Carpenter et al., 2018; Geller et al., 2017).

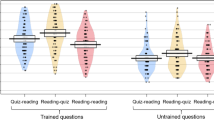

Three studies to date have directly compared the efficacy of pre-instruction testing with retrieval practice. In Latimier et al. (2019), online participants took a multiple-choice practice test with immediate correct answer feedback either before (i.e., pretesting) or after (i.e., retrieval practice) reading biology text passages. On a one-week delayed posttest, both pretesting and retrieval practice effects were observed, but the effects were larger in the case of retrieval practice. De Lima and Jaeger (2020) included a retrieval practice condition wherein elementary school students attempted to fill in missing keywords in an encyclopedia text after having read that text with all words intact; that condition outperformed a pretesting condition on a one-week delayed test. Across five experiments, Pan and Sana (2021) had participants complete practice questions in cued recall or multiple-choice format before or after reading an encyclopedic text passage. On posttests conducted after a 5-min or 48-h delay, performance was consistently higher in the pretesting versus retrieval practice conditions (with an advantage of ds = 0.30 and 0.14, across all experiments, for pretesting versus retrieval practice).

The results of these three studies (two showing an advantage for retrieval practice and one showing an advantage for pre-instruction testing) present an interesting theoretical puzzle. The mixed results raise the question of whether retrieval practice and pre-instruction testing evoke the same or different cognitive processes. On one hand, Kornell and Vaughn (2016) have argued that retrieval practice and pre-instruction testing represent two ways to tap into a common, two-stage mechanism for learning from retrieval attempts. On the other hand, Pan and Sana (2021) have speculated that separate mechanisms may be involved (i.e., retrieval practice helps consolidate prior learning in long-term memory and pre-instruction testing spurs improvements in the subsequent encoding of information).

Invention Activities and Productive Failure

Having students attempt to develop formulas or procedures, followed by instruction on the correct formulas or procedures, can yield better learning than receiving such instruction outright. Evidence for the benefits of invention or inventing activities comes from studies in the domains of statistics and physics (e.g., Chin et al., 2016; Schwartz et al., 2011; Schwartz & Martin, 2004; see also Brydges et al., 2022). In alignment with some of the aforementioned theories of pre-instruction testing effects, the benefits of engaging in invention activities have been attributed to positive influences of those activities on subsequent learning behaviors (Schwartz et al., 2011). Alternatively, engaging in invention activities may prevent misconceptions that arise from receiving instruction over procedures and concepts before an opportunity to apply those procedures and concepts (Schwartz et al., 2011).

The productive failure literature (e.g., Kapur, 2008, 2015; for a meta-analysis, see Sinha & Kapur, 2021) indicates that having learners attempt to solve complex problems prior to instruction can be more effective than receiving instruction from the outset. Studies of productive failure have been conducted primarily in the domains of mathematics, physical sciences, and medicine and, unlike most studies of pre-instruction testing, often involve group activities (e.g., Kapur & Bielaczyc, 2012). Explanations of productive failure effects include scaffolding via the activation of relevant knowledge, attention being focused on critical problem features, and highlighting knowledge gaps (Loibl & Rummel, 2014; Sinha & Kapur, 2021). These explanations also resemble some of the theoretical accounts in the pre-instruction testing literature.

Organizational Signals and Learning Objectives

The use of signaling devices in text materials, including headings, summaries, previews, overviews, and typographical cues (e.g., underlining, bolding, italics, and capitalization), can improve memory for the information targeted by such devices (for a review, see Lorch, 1989). Such devices appear to do so by orienting readers’ attention to specific portions of a given text, which also resembles some of the theorizing in the pre-instruction testing and adjunct questions literatures (e.g., Hartley & Davies, 1976; Lewis & Mensink, 2012; see also Hamilton, 1985). Directly addressing that suggestion, Richland et al. (2009; experiments 3–5; cf. James & Storm, 2019, experiment 4) compared the effects of pretesting versus reading on a text passage that featured key sentences in italics or keywords presented in bold. Across all experiments, pretesting enhanced memory over a read-only condition. Those results suggest that the effects of pre-instruction testing for text materials surpass those conferred by signaling devices in the form of typographical cues.

Sana et al. (2020a, 2020b; experiment 2) compared the efficacy of reading learning objectives (i.e., statements about specific information that students should learn) versus taking pretests on learning objectives prior to reading text passages on neuroscience topics. On a 5-min delayed posttest, participants were better able to recall content targeted by the learning objectives when they had previously taken pretests. Similar to Richland et al.’s (2009) findings for typographical cues, these results suggest that the benefits of pretests exceed those conferred by learning objectives and possibly other statements read in advance of a learning opportunity.

Educational Applications