Abstract

Much research shows academic self-concept and achievement are reciprocally related over time, based on traditional longitudinal data cross-lag-panel models (CLPM) supporting a reciprocal effects model (REM). However, recent research has challenged CLPM's appropriateness, arguing that CLPMs with random intercepts (RI-CLPMs) provide a more robust (within-person) perspective and better control for unmeasured covariates. However, there is much confusion in educational-psychology research concerning appropriate research questions and interpretations of RI-CLPMs and CLPMs. To clarify this confusion, we juxtapose CLPMs and RI-CLPMs relating math self-concept (MSCs), school grades, and achievement tests over the five years of compulsory secondary schooling (N = 3,425). We extend basic models to evaluate: directional ordering among three rather than only two constructs; longitudinal invariance over time (multiple school years) and multiple groups (school tracks); lag-2 paths between non-adjacent waves; and covariates (gender, primary-school math and verbal achievement). Across all basic and extended RI-CLPMs and CLPMs, there was consistent support for the REM bidirectional-ordering hypothesis that self-concept and achievement are each a cause and an effect of the other. Consistent with the logic of these models, extensions of the basic models had more effect on CLPMs, but the direction and statistical significance of cross-lagged paths were largely unaffected for both RI-CLPMs and CLPMs. This substantive-methodological synergy has important implications for theory, methodology, and policy/practice; we support the importance of MSC as a predictor of subsequent achievement and demonstrate a more robust methodological framework for evaluating longitudinal-panel models.


Self-concept is a person’s perceptions of themselves, formed through their experiences with and interpretations of their environment, and impacted by others’ evaluations. It affects how we act, feel, and adjust to a changing environment. In educational settings, the focus of our study, previous research has shown that academic self-concept (ASC) is linked to a variety of educational outcomes, including academic achievement (Marsh & Craven, 2006; Marsh & Martin, 2011; Marsh, et al., 2018a, 2018b; Marsh, Hau, et al., 2005; Marsh, Trautwein, et al., 2005), interest and satisfaction in school, achievement emotions (Marsh, et al., 2018a, 2018b; Pekrun, 2006; Pekrun et al., 2017), course selection (Marsh & Yeung, 1997; Marsh et al., 2019; Parker et al., 2014), persistence, and long-term attainment (Guo et al., 2015; Guo, Marsh, et al., 2015; Guo, Parker, et al., 2015; Guo, Parker, et al., 2015; Marsh & O’Mara, 2008). Particularly good support for the generalizability of the correlation between ASC and achievement comes from cross-national studies. The positive correlations between ASC and achievement generalize over countries based on studies using Programme for International Student Assessment (PISA) data (Basarkod et al., 2020; Marsh & Hau, 2003; Nagengast & Marsh, 2011; Seaton et al., 2009) and the combined Trends in Mathematics and Science Study (TIMSS) and Progress in International Reading Literacy Study (PIRLS) database. However, even though ASC and achievement are substantially correlated, a critical question with important theoretical and policy-practice implications is the directional ordering of these constructs. That is, does this correlation reflect a non-causal association, causal effects of prior ASC on subsequent achievement, causal effects of prior achievement on subsequent ASC, or causal effects in both directions?

Here we briefly review the considerable body of research showing that ASC and achievement are reciprocally related over time, supporting a reciprocal effects model (REM). However, nearly all this research is based on a between-person perspective using traditional cross-lag-panel models (CLPMs) of longitudinal data. In contrast to this traditional approach, recent research has challenged CLPMs' appropriateness, arguing that CLPMs fail to uncover the within-person effects linking ASC and achievement (Murayama et al., 2017). CLPMs with random intercepts (RI-CLPMs; Hamaker et al., 2015) have been proposed to provide a more robust (within-person) perspective and better control for unmeasured covariates. However, there is limited research juxtaposing results from these two approaches in educational psychology, and much ambiguity about the appropriate use and interpretation of these models and their extensions. Hence, the overarching purpose of the present investigation is to compare these two approaches and demonstrate important extensions to them. In particular, a critical unanswered question is whether the substantial body of REM findings based on the between-person (CLPM) approach holds up for within-person (RI-CLPM) analyses and extensions of the CLPM. Following Marsh and Hau (2006), who originally coined the term, our study is a methodological-substantive synergy that applies a cutting-edge methodology to address substantive issues with implications for theory, methodology, and policy/practice.

Support for the Reciprocal Effects Model

Byrne (1984) proposed three criteria that studies addressing directional ordering must satisfy: (a) a statistical relationship must be established, (b) a clearly established time precedence must be evident, and (c) a causal model must be tested using appropriate statistical techniques such as the use of structural equation models (SEMs). Traditional approaches to this issue (Calsyn & Kenny, 1977) took an “either-or” approach—either prior achievement leads to subsequent ASC (a skill development model) or prior ASC leads to subsequent achievement (a self-enhancement model). However, integrating theoretical and statistical perspectives, Marsh (1990) argued for a dynamic reciprocal effects model (REM) that incorporates both the skill development and the self-enhancement model (see also Pekrun, 1990). This theoretical model predicts that better ASCs lead to better achievement, and that better achievement leads to better ASCs. We emphasize that the theoretical hypothesis is clearly causal. Marsh further noted that it was well established that students base their ASCs at least in part on their prior achievement (the skill development path). Hence the critical issue is whether higher ASCs also lead to higher achievement, regardless of whether this self-enhancement path is larger or smaller than the skill-development path.

The REM hypothesis is clearly causal in nature, a theoretical hypothesis of the causal ordering of variables over time. Indeed, the REM naturally leads to the hypothesis of reciprocal effects that are causal. Thus, Marsh, Trautwein, et al. (2005, p. 397) conclude: Reciprocal effects models of longitudinal data show that ASC is both a cause and an effect of achievement. The REM generated a substantial research literature that treats these reciprocal effects as causal and a number of methodological studies on how to test it (e.g., Usami, Murayama, et al., 2019; Usami, Todo, et al., 2019). For example, from a between-person perspective, a critical empirical question is: For students with the same levels of achievement at wave one, will students with higher ASCs have higher achievement in subsequent waves than those with lower ASCs? Positive evidence answering this question would support REM hypotheses and might reflect a causal effect. However, this interpretation is compromised by a lack of control for a potentially infinite number of covariates (i.e., effects might change if researchers controlled for the right covariates). Our position is that appropriate statistical models capture one key component of causality, namely directionality, by providing empirical tests of directional "causal" hypotheses.

However, as is always the case with claims of causality, competing explanations can compromise this interpretation. Nevertheless, to deflect concerns about using the broad, potentially ambiguous term of causality (or Granger causality; Granger, 1969), we use the more focused term of "directionality" (i.e., tests of the directionality of effects rather than tests of causal ordering). Indeed, tests of the directionality of effects have a long history in this area of research. Thus, for example, Bandura (1986) noted that causal relations between self-efficacy and outcomes are bidirectional (i.e., reciprocal) rather than unidirectional. We also note that the terms directional, unidirectional, and bidirectional are widely used in relation to cross-lagged panel studies (e.g., Bailey et al., 2020; Wu & Griffin, 2012). Thus, the term directionality (and the reliance on bidirectional models) has a long history concerning our theoretical hypotheses, is more focused than the term causality, and better reflects what is being tested. Nevertheless, we emphasize that the REM hypothesizes a bidirectional model that is causal. Hence, the central question is whether empirically demonstrated reciprocal relations between ASC and achievement can be given a causal interpretation that is consistent with the REM hypothesis.

REM's theoretical hypothesis of the directional ordering of causal relations is testable when both ASC and achievement are collected in at least two but preferably three or more waves of data. Following Marsh (1990), there is a substantial research literature supporting REM predictions, including comprehensive meta-analyses and systematic reviews (e.g., Huang, 2011; Valentine et al., 2004; Wu et al., 2021; also see Marsh & Craven, 2006; Marsh & Martin, 2011). Consistent with ASC theory and research, it is not surprising that prior achievement affects ASC. However, the meta-analyses demonstrated that the effect of prior ASC on subsequent achievement, controlling for the effects of prior achievement, was also highly significant overall and positive in most of the studies they considered. These authors clearly interpreted support for the REM hypothesis of reciprocal effects as causal effects, noting implications concerning the need for interventions that simultaneously enhance both ASC and achievement. Thus, Marsh and Craven (2006; also see Huang, 2011) argued: "If practitioners enhance self-concepts without improving performance, then the gains in self-concept are likely to be short-lived…. If practitioners improve performance without also fostering participants' self-beliefs in their capabilities, then the performance gains are also unlikely to be long-lasting" (p. 159).

In REM studies, achievement is typically assessed by standardized tests or school grades, but the different achievement indicators have different implications. School grades are a particularly salient source of feedback to students and their parents, are easily compared among classmates, and have important implications for academic careers. Hence, school grades tend to be more correlated with ASCs than test scores (Marsh et al., 2014, 2014a, 2014b, 2014c; Marsh et al., 2014; Marsh, Hau, et al., 2005; Marsh, Morin, et al., 2014; Marsh, Trautwein, et al., 2005). However, school grades typically are idiosyncratic to particular teachers, settings, and schools. In particular, teachers typically grade on a curve, allocating the best and worst grades to the relatively better and poorer performing students within a classroom. Hence, teachers use the classroom as a narrow frame of reference in their grading procedure, largely ignoring students' absolute levels of achievement in their class relative to a common metric that generalizes over all students. Although the classic meta-analyses support REM hypotheses for both school grades and test scores, most individual studies have included only one of these achievement indicators. Moreover, few studies have fully juxtaposed the two over an extended developmental period nor evaluated the consistency of effects over time.

In a critique of CLPMs like those used in nearly all REM studies, Hamaker et al. (2015) proposed the RI-CLPM to analyze within-person relations over time. They argue that this approach is more appropriate for evaluating within-person relations between constructs. Specifically, the RI-CLPM shows how within-person deviations in one construct are related to subsequent within-person deviations in another construct. CLPMs confound the within- and between-person processes.

Based on this within-person perspective, Ehm et al. (2019, 2021) evaluated support for the REM based on CLPMs and RI-CLPMs using the same sample of young primary school students in separate studies of reading and mathematics. For reading constructs, Ehm et al. (2019) found support for REM with CLPMs but not RI-CLPMs. Indeed, their RI-CLPMs provided no support for either skill development or self-enhancement perspectives; there were no statistically significant cross-paths from prior ASC to subsequent achievement or from prior achievement to subsequent ASC. For math outcomes (Ehm et al., 2021), the CLPM and RI-CLPM results were similar and provided partial support for the REM. For both models, the cross-lagged effects were non-significant from Year 1 to Year 2, but there were reciprocal effects for Year 2 to Year 3. In their discussion of limitations and directions for further research, Ehm et al. noted issues specific to the measurement of ASC with young children, developmental processes that are evolving at these young ages, and the need to test the generalizability of the results with older children. Indeed, a variety of theoretical perspectives suggest that children only begin to use social comparison processes as a basis of self-evaluation at the age of 7 or 8 (e.g., Harter, 1998; Piaget & Inhelder, 1969; Ruble, 1983; but also see Marsh et al., 2002). Hence, there might have been a developmental shift in the qualitative nature of ASCs, potentially undermining the rationale of the CLPMs and especially the RI-CLPMs. Emphasizing this issue and noting prior support for the REM was based largely on CLPMs with secondary-school students rather than primary-school students, Ehm et al. (2019, 2021) called for studies comparing CLPMs and RI-CLPMs based on secondary students: "Further research and also open discussions about the appropriateness of different assumptions and corresponding methods for analyzing the longitudinal relations among achievement and self-concept are necessary" (Ehm et al., 2019, p. 33).

In response to the call for further research by Ehm et al. (2019), our study is a methodological-substantive synergy. We juxtapose the application of RI-CLPMs, CLPMs, and extensions of these models to test REM hypotheses for a large longitudinal study of secondary students. Substantively, we evaluate the REM for math self-concept (MSC), math achievement test scores, and math school grades over the first five years of secondary school. Methodologically, we critically evaluate and compare results for CLPMs and RI-CLPMs. In addition, we demonstrate extensions of basic CLPMs and RI-CLPMs, focusing on the measurement model, the inclusion of covariates, generalizability over multiple groups, invariance over time, and incorporation of three constructs (MSC, math school grades, and math test scores) into a single model (i.e., tripartite rather than the typical bivariate CLPMs and RI-CLPMs). For these purposes, we provide further analyses and extend the analyses presented by Marsh et al. (2018a, 2018b).

In theory, reciprocal effects linking ASC and achievement can be located at both within- and between-person levels. Specifically, from a cognitive-motivational perspective, effects of achievement on ASC, and effects of ASC on achievement, are built on within-person mechanisms. A typical causal process may involve the following steps. First, achievement (e.g., one’s grade in math) is perceived by the individual student and then attributed to ability. Especially with cumulative success or failure and consistent attributions to ability or lack of ability, respectively, these attributions lead to the formation of self-perceptions of ability that are stored in long-term memory and can be reported as self-concept of ability. Second, when confronted with achievement tasks, the student re-activates task-related ASCs from memory. These ASCs guide motivation to invest effort and make strategic choices, which, in turn, contributes to subsequent achievement (Marsh, Pekrun, Murayama et al., 2016). From this perspective, reciprocal effects of ASC and achievement are located within persons (i.e., within the individual brain) in the first place. However, when repeated over time, the within-person effects can contribute to between-person differences in achievement and ASC and drive the between-person effects that link between-person distributions of the two variables over time, as traditionally analyzed in between-person CLPMs.

Furthermore, it is unclear whether either traditional CLPMs or RI-CLPMs capture these within-person processes. In particular, although the RI-CLPM takes a within-person perspective, it does not actually posit within-person mechanisms to explain the reciprocal effects. Thus, for example, Niepel et al. (2021) argue that these traditional CLPM and RI-CLPM approaches leave the intraindividual dynamics (within-person processes) in a black box. Hence, although the RI-CLPM provides a within-person perspective in terms of the underlying statistical model (a residualized or person-centered statistical analysis), it does not test within-person mechanisms that lead to the reciprocal effects between ASC and achievement. Hence, terms such as person-centered or residualized models might more appropriately describe the RI-CLPM approach than the term within-person perspective. This is an important distinction in evaluating the strengths of the RI-CLPM. Of course, it is possible to extend both the CLPM and RI-CLPMs to test within-person mediating mechanisms. Thus, for example, Marsh, Hau, et al. (2005), Marsh, Trautwein, et al. (2005) evaluated the role of academic interest in tests of the REM based on the CLPM, showing that support for the REM was little affected by the inclusion of math interest measured at each wave of the design. They suggested the need for research that includes a variety of academic choice behaviors to evaluate better mediational processes underpinning the REM.

Methodological Focus: Models of Cross-lagged Panel Data and Reciprocal Effects

Cross-Lagged Panel Data

Cross-lagged panel data are used to test REM hypotheses about relations between MSC and math achievement. In panel designs, the same variables (MSC and math achievement) are measured repeatedly over time. Critical parameters are the stability paths (leading from one variable to the same variable in the next wave) and the cross-lag paths leading from one variable to the other variable in the next wave (e.g., effects of prior MSC on future math achievement, controlling for prior math achievement). The critical results are the directionality of the cross-lag effects—if there is a directional ordering among the variables and whether it is unidirectional or reciprocal.
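The roles of the stability and cross-lag paths can be illustrated with a minimal simulation sketch. It is not the latent-variable SEM used in this study: it assumes manifest (error-free) scores, two constructs only, and ordinary least squares in place of SEM estimation. The function names, the path labels (`stab_x`, `x_to_y`, and so on), and all parameter values are hypothetical choices made for illustration.

```python
import numpy as np


def simulate_panel(n=5000, waves=3, stab_x=0.5, stab_y=0.5,
                   x_to_y=0.2, y_to_x=0.1, seed=0):
    """Simulate manifest scores for x (e.g., MSC) and y (e.g., achievement).

    All parameter values are arbitrary illustrations, not study estimates.
    """
    rng = np.random.default_rng(seed)
    x = np.empty((n, waves))
    y = np.empty((n, waves))
    x[:, 0] = rng.normal(size=n)
    y[:, 0] = 0.5 * x[:, 0] + rng.normal(scale=0.75 ** 0.5, size=n)
    for t in range(1, waves):
        # Each wave depends on both variables at the previous wave.
        x[:, t] = (stab_x * x[:, t - 1] + y_to_x * y[:, t - 1]
                   + rng.normal(scale=0.7, size=n))
        y[:, t] = (stab_y * y[:, t - 1] + x_to_y * x[:, t - 1]
                   + rng.normal(scale=0.7, size=n))
    return x, y


def lag1_paths(x, y, t):
    """Estimate the lag-1 paths into wave t by ordinary least squares."""
    design = np.column_stack([np.ones(len(x)), x[:, t - 1], y[:, t - 1]])
    into_x, *_ = np.linalg.lstsq(design, x[:, t], rcond=None)
    into_y, *_ = np.linalg.lstsq(design, y[:, t], rcond=None)
    return {
        "stab_x": into_x[1], "y_to_x": into_x[2],  # paths into x at wave t
        "x_to_y": into_y[1], "stab_y": into_y[2],  # paths into y at wave t
    }


if __name__ == "__main__":
    x, y = simulate_panel()
    for name, value in lag1_paths(x, y, t=1).items():
        print(f"{name}: {value:.3f}")
```

In this sketch, a reciprocal pattern corresponds to both `x_to_y` and `y_to_x` being reliably non-zero, whereas a unidirectional pattern corresponds to only one of them being so.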

Although it is possible to test CLPMs with only two waves, basic RI-CLPMs require at least three waves, and even more waves are desirable. Because most tests of REMs are based on only two waves of data, studies typically considered only lag-1 effects (i.e., paths relating variables in adjacent waves). However, when there are three or more waves, it is possible to consider the invariance of effects over multiple waves and paths between non-adjacent waves (i.e., lag-2 effects). Thus, Marsh and colleagues (Arens et al., 2017; Marsh et al., 2017, 2018a, 2018b) argued that lag-2 effects are typical in CLPMs and should be included in CLPMs. They further noted that the improved fit was achieved primarily by adding lag-2 stability coefficients; lag-2 cross-paths were largely non-significant, whereas lag-1 cross-lag paths were relatively unaffected by the inclusion of additional lag-2 paths. Lag-2 paths might have a theoretical basis (e.g., skills mastered in school year t may be directly relevant in year t + 2 and beyond, in addition to their relevance to year t + 1). However, including lag-2 paths also provides a more robust control for prior effects and potentially confounding covariates than models that control for only a single prior wave (Lüdtke & Robitzsch, 2021; Marsh, et al., 2018a, 2018b; VanderWeele et al., 2020). Hamaker and colleagues (Hamaker et al., 2015; Mulder & Hamaker, 2021) also suggested that CLPMs often have to include lag-2 effects to achieve a goodness-of-fit comparable to the RI-CLPMs. As suggested by Marsh et al. (2018a, 2018b), for us, the critical issues are whether the addition of lag-2 paths improves fit and particularly whether their inclusion alters the interpretation of the cross-lag paths and support for REMs (i.e., a sensitivity test).
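The sensitivity-test logic can be sketched with simulated data. The example below assumes three waves of manifest scores in which wave 3 depends on wave 1 through lag-2 stability paths only (no lag-2 cross-paths), and it uses ordinary least squares rather than SEM. The variable names, the `ols` helper, and all coefficients are hypothetical.

```python
import numpy as np


def simulate_lag2_panel(n=20000, seed=1):
    """Three waves of x and y; wave 3 also depends on wave 1 (lag-2 stability)."""
    rng = np.random.default_rng(seed)

    def noise():
        return rng.normal(scale=0.7, size=n)

    x1 = rng.normal(size=n)
    y1 = 0.5 * x1 + rng.normal(scale=0.75 ** 0.5, size=n)
    x2 = 0.5 * x1 + 0.10 * y1 + noise()
    y2 = 0.5 * y1 + 0.15 * x1 + noise()
    # Lag-2 stability paths (0.2) but no lag-2 cross-paths.
    x3 = 0.5 * x2 + 0.10 * y2 + 0.2 * x1 + noise()
    y3 = 0.5 * y2 + 0.15 * x2 + 0.2 * y1 + noise()
    return {"x1": x1, "y1": y1, "x2": x2, "y2": y2, "x3": x3, "y3": y3}


def ols(data, outcome, predictors):
    """Return a {predictor: slope} dict from an OLS fit with an intercept."""
    columns = [np.ones(len(data[outcome]))] + [data[p] for p in predictors]
    coef, *_ = np.linalg.lstsq(np.column_stack(columns), data[outcome], rcond=None)
    return dict(zip(predictors, coef[1:]))


if __name__ == "__main__":
    data = simulate_lag2_panel()
    lag1_only = ols(data, "y3", ["x2", "y2"])
    with_lag2 = ols(data, "y3", ["x2", "y2", "x1", "y1"])
    # Sensitivity check: does the lag-1 cross-path change once lag-2 paths enter?
    print("x2 -> y3, lag-1 only:", round(lag1_only["x2"], 3))
    print("x2 -> y3, with lag-2:", round(with_lag2["x2"], 3))
    print("y1 -> y3 (lag-2 stability):", round(with_lag2["y1"], 3))
```

Comparing the `x2` coefficient across the two fits mirrors the question posed above: whether support for the cross-lag path depends on including lag-2 paths.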

Recent research has contrasted a wide variety of complex statistical models that can be applied to cross-lag-panel data (Kenny & Zautra, 1995; McArdle, 2009; Orth et al., 2021; Usami, Murayama, et al., 2019; Usami, Todo, et al., 2019; Zyphur et al., 2020). However, comparisons of the different models based on multiple data sets (Orth et al., 2021) or simulated data (Usami, Murayama, et al., 2019; Usami, Todo, et al., 2019) showed that, among these models, only CLPMs and, to a lesser extent, RI-CLPMs reliably converged to proper solutions. CLPMs converged to proper solutions consistently, whereas RI-CLPMs sometimes did not, even when the RI-CLPM structure was used to generate the simulated data (Usami et al., 2019a, 2019b). Furthermore, Orth et al. reported that the CLPM produced more consistent cross-lagged effects both within and between samples.

Because CLPMs are nested under RI-CLPMs, RI-CLPMs necessarily result in a better fit for indices that do not correct for CLPMs' greater parsimony. This is sometimes used to argue in favor of RI-CLPMs over CLPMs. However, Orth et al. (2021) noted that the choice of models should also be based on theoretical grounds and appropriate interpretations of the results rather than only goodness-of-fit. Hence, goodness-of-fit should be only one of the considerations in the choice of models and their interpretation. Furthermore, Marsh et al. (2018a, 2018b, p. 271; also see Lüdtke & Robitzsch, 2021) argued that the addition of lag-2 paths substantially improved the fit of a CLPM and served for "providing stronger controls for preexisting differences." Although they did not consider a RI-CLPM, the fit of their CLPM with covariates and lag-2 effects approached the fit of the corresponding measurement model (in which all constructs were merely correlated). Because the RI-CLPM and CLPM are both nested under the measurement model, this suggests that their extended CLPM with lag-2 effects would have fit their data as well as a RI-CLPM. If this were the case more generally, goodness-of-fit would no longer be a critical issue in comparing the RI-CLPM and the extended CLPM with lag-2 effects. In the present investigation, we pursue this issue in our juxtaposition of the two models.

Distinguishing Between RI-CLPM (Within-Person) and CLPM (Between-Person) Perspectives

Historically, tests of the REM in ASC research have been based almost entirely on CLPMs, but, following Hamaker and colleagues (Hamaker & Muthén, 2020; Hamaker et al., 2015, 2020; Mulder & Hamaker, 2021), there has been a recent surge in the popularity of RI-CLPMs. Nevertheless, Orth et al. (2021) emphasized that the two models address different questions, result in different interpretations, and are based on different assumptions. Because both models and their juxtaposition are relevant, we argue that it is crucial to understand how the underlying rationales of these two models differ (see Fig. 1).

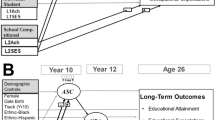

Fig. 1. Diagram of cross-lag-panel-model (A) and random intercept cross-lag-panel-model (B) with covariates. Three constructs were measured in the first five years of secondary school (Years 5–9): X = math self-concept; Y = math grade; Z = math standardized test. Covariates included verbal and math achievement from the last year of primary school (Year 4). Math self-concept was based on responses to 6 items, but all other constructs were single-item constructs. To avoid clutter, the diagram excludes correlated uniquenesses relating responses to the same math self-concept item administered in different years, and correlated residual covariances among the three constructs in Years 6–9.

Structural Characteristics

The primary structural difference between the two models is that RI-CLPMs include a stable trait factor (Tx, Ty, and Tz in Fig. 1), whereas CLPMs do not. In this sense, CLPMs are nested under the RI-CLPM. CLPMs evaluate how the effects of individual differences at each wave are related to those in subsequent waves (an undecomposed between-person perspective). RI-CLPMs evaluate how within-person deviations at each wave differ from a student's stable trait (a decomposed between-person difference), and how these within-person differences from one wave are related to those in the next wave (a within-person perspective).
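The structural contrast can be written compactly. The following is a sketch for two constructs only (X and Y; Z is analogous), using the T and A labels from Fig. 1. The wave-specific means $\mu$, the residuals $e$, and the subscript convention for the paths (first subscript = outcome, second = predictor) are notational assumptions introduced here for illustration and may not match the labeling in the figure.

```latex
% RI-CLPM: each score is a wave mean + a stable trait + a within-person deviation,
% and the lagged paths relate the deviations A across adjacent waves.
\begin{align}
X_{it} &= \mu_{x,t} + T_{x,i} + A_{x,it}, \\
Y_{it} &= \mu_{y,t} + T_{y,i} + A_{y,it}, \\
A_{x,it} &= B_{xx}\,A_{x,i(t-1)} + B_{xy}\,A_{y,i(t-1)} + e_{x,it}, \\
A_{y,it} &= B_{yy}\,A_{y,i(t-1)} + B_{yx}\,A_{x,i(t-1)} + e_{y,it}.
\end{align}

% CLPM: the special case with no trait variance,
% Var(T_{x,i}) = Var(T_{y,i}) = 0, so the lagged paths relate the
% undecomposed (mean-centered) scores directly.
\begin{align}
X_{it} - \mu_{x,t} &= B_{xx}\,(X_{i(t-1)} - \mu_{x,t-1}) + B_{xy}\,(Y_{i(t-1)} - \mu_{y,t-1}) + e_{x,it}, \\
Y_{it} - \mu_{y,t} &= B_{yy}\,(Y_{i(t-1)} - \mu_{y,t-1}) + B_{yx}\,(X_{i(t-1)} - \mu_{x,t-1}) + e_{y,it}.
\end{align}
```

Written this way, fixing the trait variances (and covariances) to zero reduces the first set of equations to the second, which is the sense in which the CLPM is nested under the RI-CLPM.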

Importantly, CLPMs and RI-CLPMs differ in the interpretation of the term "between-person." In CLPMs, between-person effects reflect a combination of within-person (i.e., deviations from a global trait) and between-person (e.g., stable trait) effects. This is consistent with the term's use in most individual difference studies of relations among variables and most cross-sectional studies. However, RI-CLPMs decompose these effects into separate components reflecting within- and between-person components. Thus, within the context of each model, the use of the generic term between-person is appropriate. However, to avoid confusion, we refer to these as "decomposed" between-person effects (RI-CLPM) and "undecomposed" between-person effects (CLPM).

For our study based on three latent constructs, the critical parameters for both RI-CLPMs and CLPMs are the auto-regressive stability paths (Bxx, Byy, and Bzz in Fig. 1), and particularly the cross-paths relating achievement and MSC (Bxy, Byx, Bxz, Bzx in Fig. 1), but also cross-paths relating the two indicators (school grades and test scores) of math achievement (Byz and Bzy). If both sets of paths leading from achievement to MSC and from MSC to achievement are statistically significant, the variables are said to be reciprocally related. If only one of the sets of paths is significant (and differs significantly from the other path), the directional ordering is said to be unidirectional rather than reciprocal. Most recent CLPM tests of REMs are latent, at least for the ASC construct (i.e., there are multiple indicators of the ASC factor, shown as the unlabeled boxes in Fig. 1). However, the latent versions of the RI-CLPM have been developed only recently, so this model has few substantive applications (Mulder & Hamaker, 2020; also Ehm et al., 2019, 2021).

We also note that most applications of latent RI-CLPMs are bivariate models, based on two constructs (e.g., MSC and test scores) or separate analyses of each pair of constructs when more than two are considered (e.g., Ehm et al., 2019, 2021). However, our focus is on relations among three constructs (MSC, test scores, and school grades). Hence we extend the basic bivariate RI-CLPMs and CLPMs to include three constructs: tripartite RI-CLPMs and CLPMs. Our study is one of only a few applications of the tripartite RI-CLPMs with latent variables, particularly for REM studies of ASC and achievement (but see Van Lissa et al., 2021; Burns et al., 2019; also see Hübner et al., 2022; we elaborate on the importance of this contribution in Discussion section).

RI-CLPMs and CLPMs Address Different Research Questions

For longitudinal panel data, the multiple waves (level 1) are nested under the person (level 2). Here, the level 2 variables in RI-CLPMs are the average levels of ASC and achievement over time for each student (i.e., the random intercepts or latent means; Hamaker et al., 2015). The assumption is that for a given student, these represent stable traits that are consistent over time for the duration of the study but differ from student to student. In contrast, the CLPM is like a single-level model that evaluates relations between ASC and achievement within-waves and over time without controlling for person-level differences in these variables (an undecomposed between-person focus that does not separate within-person and between-person effects). The RI-CLPM estimates relations between these variables after controlling (decomposed) between-person stable trait effects, person-level intercepts; that is, it provides a within-person perspective.

To simplify this distinction, we focus on MSC (X in Fig. 1) and achievement, which could refer to either math school grades (Y in Fig. 1) or math test scores (Z in Fig. 1), or both. However, these same distinctions also apply to other pairs of variables. It is important to note that the undecomposed between-person effects in CLPMs are the effects of individual differences in some construct X (MSC) on change in the individual differences in achievement (Y or Z in Fig. 1). This is based on the covariance of X (wave-1) and achievement (wave-2), controlling wave-1 variables. Thus, for CLPMs, change is based on the residual change in individual differences in achievement from wave-1 to wave-2 and aims to predict change in individual differences (or rank-order change). In contrast, change in the RI-CLPM is based on how within-person deviations for X at wave-1 (i.e., the difference between X at wave-1 from the latent mean of X across all waves) are related to deviations in achievement at wave-2, controlling deviations in wave-1 variables. These two perspectives are easily confounded, but are related to different research questions and often lead to different interpretations. To make this distinction more concrete, we offer the following research questions that are appropriate for each model:

- CLPMs: When students have high MSCs (compared to other students), are they more or less likely to experience a subsequent rank-order increase in math school grades (compared to other students)? Likewise, when students have a high achievement (compared to other students), are they more or less likely to experience a subsequent rank-order increase in MSC (compared to other students)? Thus, do individual differences in MSC positively predict rank-order change in relative achievement, and do individual differences in achievement positively predict rank-order change in relative MSC?

- RI-CLPMs: When students experience higher than their usual MSC (compared to their long-term average MSC over the duration of the study), are they more or less likely to experience a subsequent higher than their usual achievement (compared to their long-term average achievement over the duration of the study)? Likewise, when students experience higher than their usual achievement (compared to their long-term average achievement), are they more or less likely to experience a subsequent higher than their usual MSC (compared to their long-term average MSC)?

The Role of Covariates

For both RI-CLPMs and CLPMs, the possible confounding of effects with unmeasured covariates is a potentially serious threat to interpreting results. Advocates of the RI-CLPM argue that the critical advantage of this approach is that it provides greater protection for time-invariant (between-person) covariates that are not measured as part of the study (e.g., Hamaker et al., 2015; Mulder & Hamaker, 2020). The logic is that true time-invariant (between-person) covariates will only affect the global trait factors (Tx, Ty, & Tz in Fig. 1), which are statistically independent of the within-person autoregressive factors (Ax, Ay & Az in Fig. 1). Thus, unmeasured (undecomposed between-person) time-invariant covariates might affect the sizes of the (decomposed between-person) global trait factors. However, they do not affect the within-person autoregressive factors used to test directional ordering (i.e., statistically, the random intercept factor and the within-person components are independent; Hamaker et al., 2015). This has critical interpretational advantages, particularly concerning tests of directional ordering.
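The logic of this argument can be illustrated with a small simulation sketch. It does not fit an RI-CLPM. Instead, it generates data in which an unmeasured time-invariant covariate drives both stable traits while the within-person components have no cross-lagged effects, and then compares a regression on the undecomposed scores with the same regression on the simulated within-person components (which are known here only because the data are simulated). All names and values are hypothetical.

```python
import numpy as np


def simulate_trait_confounding(n=20000, waves=3, phi=0.5, seed=2):
    """Stable traits share an unmeasured cause; deviations have no cross-lags."""
    rng = np.random.default_rng(seed)
    c = rng.normal(size=n)  # unmeasured time-invariant covariate (assumed)
    trait_x = c + rng.normal(scale=0.5, size=n)
    trait_y = c + rng.normal(scale=0.5, size=n)
    dev_x = np.empty((n, waves))
    dev_y = np.empty((n, waves))
    start_sd = (1.0 / (1.0 - phi ** 2)) ** 0.5  # stationary SD of the AR(1) process
    dev_x[:, 0] = rng.normal(scale=start_sd, size=n)
    dev_y[:, 0] = rng.normal(scale=start_sd, size=n)
    for t in range(1, waves):
        # Autoregression only: the true within-person cross-lag is zero.
        dev_x[:, t] = phi * dev_x[:, t - 1] + rng.normal(size=n)
        dev_y[:, t] = phi * dev_y[:, t - 1] + rng.normal(size=n)
    x = trait_x[:, None] + dev_x
    y = trait_y[:, None] + dev_y
    return x, y, dev_x, dev_y


def cross_path_x_to_y(x, y, t=1):
    """OLS slope of prior x on y at wave t, controlling for prior y."""
    design = np.column_stack([np.ones(len(x)), x[:, t - 1], y[:, t - 1]])
    coef, *_ = np.linalg.lstsq(design, y[:, t], rcond=None)
    return coef[1]


if __name__ == "__main__":
    x, y, dev_x, dev_y = simulate_trait_confounding()
    print("undecomposed scores:", round(cross_path_x_to_y(x, y), 3))
    print("within-person components:", round(cross_path_x_to_y(dev_x, dev_y), 3))
```

Under these assumptions, the cross-path estimated from the undecomposed scores is positive even though no within-person cross-lag exists, whereas the estimate based on the within-person components is close to zero.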

RI-CLPMs can incorporate measured covariates as part of the study. For these measured covariates, Mulder and Hamaker (2020) proposed that they should be regressed on the manifest trait scores for variables measured by a single indicator (school grades and test scores in the present investigation). Although they did not consider a fully latent model with covariates, we interpret their approach to mean that covariates are regressed on the undecomposed latent variables (i.e., the X latent factor representing MSC in Fig. 1) rather than the manifest indicators of each trait or the within-person autoregressive factors (the Ax factors in Fig. 1). However, for a fixed covariate with time-invariant effects, all or most of the time-invariant effects will be absorbed by the global trait factors (Tx, Ty & Tz in Fig. 1).

CLPMs also control for measured variables by including them as additional covariates in the statistical models. However, CLPMs assume that all relevant covariates are measured or captured by those included covariates, a selection-on-observables strategy (Little, 2013; Reichardt, 2019). For example, if we are interested in estimating the cross-lagged effect of Xt-1 on Yt, we need to assume that all relevant variables that affect Xt-1 as well as Yt are included. Covariates that are constant across the investigated time period (e.g., demographic variables, achievement in primary school) can be easily included in CLPMs as additional predictors of Xt and Yt at each wave t. This is also the case for covariates Zt that vary across time (e.g., grades, math interest), even though the issue of time-varying covariates has received less attention in the application of CLPMs. One challenging aspect of time-varying covariates is that they should not be affected by the treatment. For example, if we estimate the effect of Xt-1 (e.g., ASC at wave t–1) on Yt (e.g., test scores at wave t) and include Zt-1 (e.g., grades at wave t–1) as a time-varying covariate in the CLPM, we need to rule out that Zt-1 has been affected by Xt-1. Otherwise, Zt-1 would not be a confounder but a mediator (i.e., a variable that is on the causal pathway from Xt-1 to Yt). In practical applications, it is often difficult to decide whether Zt-1 acts as a mediator or confounder, particularly when the time-varying covariate Zt-1 is measured at the same time as the treatment Xt-1 (e.g., see Marsh, Hau, et al., 2005; Marsh, Trautwein, et al., 2005, on the role of time-varying measures of academic interest in REM tests of reciprocal effects between MSC and achievement).

Overall, the CLPMs are based on the assumption that all relevant covariates (time-invariant and time-varying) are measured (or controlled by those that are measured). Thus, compared to RI-CLPMs—which control the effects of time-invariant confounders—CLPMs provide less protection against the confounding effects of unmeasured (time-invariant) confounders. However, both models provide limited protection concerning unmeasured time-varying covariates or fixed-covariates whose effects vary over time.

Neither CLPMs nor the RI-CLPMs provide particularly good controls for unmeasured time-varying covariates (or fixed covariates measured only once with effects that vary from wave to wave, possibly reflecting an unmeasured process). However, support for the consistency of effects over waves (based on invariance tests) suggests that these potentially confounding covariates specific to a particular wave do not substantially affect the results. Furthermore, Marsh et al., (2018a, 2018b) suggest that the extension of the CLPM to include lag-2 effects provides stronger controls for covariates.

The most effective way to control the effects of unmeasured (fixed and time-varying) covariates is to measure them and include them in statistical models. Thus, the selection of covariates is crucial for RI-CLPMs and particularly CLPMs. Hence, we find it surprising that this issue has been given limited attention in the design, analysis, and interpretation of these models (see VanderWeele, 2019, for discussion of alternative strategies for selecting covariates; also see Hübner et al., 2022).

Cattell’s (1966) Data Cube

Cattell's (1966) data cube helps distinguish within- and between-person perspectives. It represents data concerning three dimensions: persons, variables, and occasions (Marsh & Grayson, 1994). Voelkle et al. (2014) emphasize that the vast majority of educational and psychological studies focus on relations between variables across persons (undecomposed between-person variation) and, to a much smaller extent, relations between variables across occasions (decomposed within-person variation). In his classic manifesto on an idiographic approach to psychology, Molenaar (2004) noted the need to shift from a focus on the level of interindividual variation in the population to the level of intraindividual variation characterizing the life histories of individual subjects. Noting the importance of between-person questions (e.g., MSC predicts achievement), Voelkle et al. (2014) also emphasized the importance of evaluating the consistency of within-person effects over time for a given individual. However, they emphasized that interindividual and intraindividual effects are only likely to be equivalent under very limited conditions (ergodicity): stationarity (invariance over time of means, variances, and covariances) and homogeneity (same relations between variables for all individuals, such that the same generating model can be applied to all individuals and individuals are not grouped or nested). Because these conditions are unlikely to be met, Voelkle et al. (2014) argued that rather than seeing within- and between-person analyses as competing research paradigms, the focus should be on appropriate research questions and, perhaps, the juxtaposition between interpretations based on the two models.

Juxtaposing CLPMs and RI-CLPMs

CLPMs and RI-CLPMs are very different. They differ in terms of their conceptual and statistical underpinnings and the questions they address. Historically, most studies of unidirectional, bidirectional, and reciprocal effects have used CLPMs (an undecomposed between-person perspective). However, there has been a surge in the popularity of RI-CLPMs (a within-person model based on a decomposed between-person perspective) and much discussion about the relevance of each.

The inability of the traditional CLPM to disaggregate within (i.e., state-like) and between (i.e., trait-like) effects (Curran & Bauer, 2011) has led to important criticisms of this approach (e.g., Berry & Willoughby, 2017; Hamaker et al., 2015; Mund & Nestler, 2019). These criticisms have led to the emergence of a wide variety of new models specifically designed to address this limitation (e.g., Bianconcini & Bollen, 2018; Curran et al., 2014; Hamaker et al., 2015; Mund & Nestler, 2019; Zyphur et al., 2020). As a result, the recent surge in popularity of the RI-CLPM has created a zeitgeist in which some educational psychology researchers suggest that the decomposed between- and within-person perspective provided by the RI-CLPM is always more appropriate (Núñez-Regueiro et al., 2021; but also see discussion by Asendorpf, 2021; Orth et al., 2021).

However, several researchers recently critiqued considering the RI-CLPM as the default approach to analyzing cross-lagged panel designs (Asendorpf, 2021; Hübner et al., 2022; Lüdtke & Robitzsch, 2021; Orth et al., 2021). More specifically, Lüdtke and Robitzsch (2021) studied the RI-CLPM from a causal inference perspective (e.g., Imbens & Rubin, 2015; Pearl et al., 2016) using mathematical derivation and simulated data. Their overall goal, consistent with our study, was "to provide a more balanced discussion of two main approaches (CLPM and RI-CLPM) for analyzing cross-lagged panel designs, and we would like to emphasize that—despite recent methodological recommendations—there are still good reasons to use the traditional CLPM when estimating cross-lagged effects" (p. 3). Using simulated data, they showed that the RI-CLPM has limited ability to control for unmeasured confounder variables, including fixed confounders (e.g., demographic variables), when their effects vary over time. Drawing in part on earlier research by Marsh et al. (2018a, 2018b), Lüdtke and Robitzsch noted the beneficial consequences of including lag-2 effects to provide a stronger control for confounding (also see VanderWeele et al., 2019; 2020). They also noted that the inclusion of lag-2 effects in CLPMs resulted in goodness-of-fit that was as good as RI-CLPMs. Thus, the choice of models is not a question of fit, and this positions CLPMs with lag-2 effects as a viable alternative to the RI-CLPM, even in terms of goodness-of-fit. Noting that there are still issues with CLPMs, even with the inclusion of lag-2 effects, they argued for a selection-on-observables CLPM approach based on the observed information in the data (previous measures of the treatment and outcome, and additional covariates), instead of stable trait factors (that are based on modeling assumptions in RI-CLPMs). This approach is consistent with VanderWeele et al.’s (2020; see also VanderWeele et al., 2016) perspective on causal inference with longitudinal data and has also been recently emphasized by Hübner et al. (2022).

CLPMs and RI-CLPMs address different questions and often result in different—even contradictory—interpretations. Because the strengths, weaknesses, and appropriate interpretations of each are the basis of ongoing research and extensions of these models, applied researchers need to understand the differences between the two. In the present investigation, we intend merely to present a more balanced view of the different perspectives concerning the issue of the causal ordering of ASC and achievement based on appropriate cross-lagged panel data. We aim this presentation to applied researchers based on what might be the classic application of the cross-lagged panel design in educational psychology, the REM that dates back to at least the 1970s (e.g., Calsyn & Kenny, 1977) and has been the basis of many studies and multiple meta-analyses. Our study is one of the first to juxtapose the theoretical rationale and results based on CLPMs, RI-CLPMs, and extensions of these models in tests of the directional ordering of ASC and achievement. In pursuit of these aims, we operationalize extensions of particularly RI-CLPMs that have mainly been demonstrated with simulated data, consider lag-2 effects, and show their usefulness in our applied study (VanderWeele et al., 2020).

The Present Investigation: Two A Priori Research Hypotheses and Three Research Questions

In the present investigation, we chose for purposes of secondary data analysis what we judged to be the strongest database to juxtapose CLPMs and RI-CLPMs relating ASC, school grades, and achievement tests across secondary school years. The Project for the Analysis of Learning and Achievement in Mathematics (PALMA; Arens et al., 2017; Frenzel et al., 2012; Marsh et al., 2017, 2018a, 2018b; Marsh, Parker, et al., 2016; Marsh, Pekrun, et al., 2016; Pekrun, 2006; Pekrun et al., 2017, 2019) is a large-scale longitudinal study investigating the development of math achievement and its determinants during secondary school years. Although the directional ordering of achievement and math self-concept has been a component of previous PALMA research, this has always been from a between-person perspective. In this sense, PALMA is ideally suited for our purpose of juxtaposing CLPMs and RI-CLPMs in support of the REM. Here we extend these models to test longitudinal invariance over time (multiple school years) and multiple groups (school tracks), lag-2 paths between non-adjacent school years, and covariates (gender; primary school math and verbal achievement). CLPMs and particularly RI-CLPMs are typically based on two variables (bivariate models). However, here we extend the models to include three variables (tripartite models; MSC and the two distinct forms of achievement). The key issues here involve juxtaposing between- and within-student perspectives on the directional ordering of three variables: MSC and two achievement indicators.

Based on our review of the substantive literature on achievement and MSC, we offer the following two research hypotheses (where there is a clear basis for offering a priori directional hypotheses). In addition, we offer three additional research questions that involve critical issues and extensions of the basic CLPMs and RI-CLPMs for which there is not sufficient basis for offering a priori hypotheses. For the research questions, we also discuss the relevant issues.

Research Hypotheses

Research Hypothesis 1: Directional Ordering: Model of Reciprocal Effects.

We hypothesize a priori that students’ MSC will be reciprocally related to both measures of achievement (grades and test scores). The paths from MSC in one wave to both achievement measures in subsequent waves will be significantly positive. Likewise, the paths from both achievement measures in one wave to MSC in the next wave will be significantly positive (see Fig. 1). Our hypotheses are consistent with the REM and extensive research showing that MSC and achievement are reciprocally related (Huang, 2011; Marsh & Craven, 2006; Valentine et al., 2004; Wu et al., 2021). Also consistent with previous research, we view these hypothesized cross-lagged effects as "causal" (but see earlier discussion of the rationale for using the term "directional ordering").

Research Hypothesis 2: Alternative Measures of Achievement.

Based on prior research and following from Research Hypothesis 1, we hypothesize a priori that all stability and cross-paths will be positive and statistically significant in models based on test scores, school grades, and the combination of test scores and school grades. Also, following previous research (e.g., Marsh, 2007; also see Wu et al.'s, 2021, meta-analysis), we anticipate that MSC within and across waves will be more highly correlated with school grades than test scores. Compared to models relating MSC to each of these achievement indicators separately, we anticipate that the combined model based on all three will have smaller paths, particularly stability paths for the two achievement measures and cross-paths relating the two achievement measures and MSC. However, we hypothesize that support for Research Hypothesis 1 will generalize over all models based on alternative measures of achievement.

Research Questions: Juxtaposition and Extensions of CLPMs and RI-CLPMs to Evaluate Sensitivity

Research Question 1: Juxtaposition of CLPM and RI-CLPM Results.

The central research question is whether support for the REM (Research Hypothesis 1) differs for CLPMs and RI-CLPMs. Although there is overwhelming support for the REM based on prior research, we note that particularly at the secondary school level, this support is largely based on CLPMs rather than RI-CLPMs. However, as Hamaker et al. (2015) and others (also see earlier discussion) emphasize, there is no a priori basis for anticipating how estimates from the two models will differ in size or even direction. Hence, we leave this issue as a research question.

Research Question 2: Extended Models: Lag-2 Effects.

For both CLPMs and RI-CLPMs, we evaluated extended models with lag-2 effects. Following earlier discussion (e.g., Marsh et al., 2018a, 2018b; also see Lüdtke & Robitzsch, 2021), this extension should substantially improve the fit of the CLPMs. However, we see this extension of the models as a sensitivity test to determine whether the inclusion of lag-2 effects influences support for Research Hypothesis 1, a substantive issue. Nevertheless, an important methodological contribution is to test the supposition following from Marsh et al. (2018a, 2018b; also see Lüdtke & Robitzsch, 2021) that adding lag-2 paths to the CLPM will largely eliminate differences in goodness-of-fit and provide stronger controls for preexisting differences.

Research Question 3: Extended Models: Covariates and Multiple Groups.

We evaluated extended models for CLPMs and RI-CLPMs that included controls for covariates (gender; prior verbal and math achievement from primary school, before starting secondary school) and multiple groups (school tracks). Although there is substantive interest in how these effects are related to students’ achievement and MSC, our primary focus is on how these covariates affect the results concerning directional ordering. Thus we again see this extension of the models as a sensitivity test in relation to support for Research Hypothesis 1, and thus a research question.

Method

Sample

Our study is based on secondary data analysis of data from PALMA, a large-scale longitudinal study investigating the development of math achievement and its determinants during secondary school in Germany. The Data Processing and Research Center (DPC) of the International Association for the Evaluation of Educational Achievement (IEA) conducted sampling and the assessments. Samples were drawn from secondary schools within the state of Bavaria and were representative of the student population of this state in terms of student characteristics such as gender, urban versus rural location, and family background (SES; for details, see Pekrun et al., 2007). The data consisted of five measurement waves spanning Grades 5 to 9 and school grades from the last year of primary school (Year 4). On the basis of the primary school results, students (N = 3,425; 50% girls; mean age = 11.7 at Year 5, SD = 0.7) were allocated to either the high-achievement (Gymnasium: 37%), middle-achievement (Realschule: 30%), or low-achievement (Hauptschule: 33%) school tracks. Students answered the questionnaire in the first two weeks of July, toward the end of each successive school year. All instruments were administered in the students’ classrooms by trained external test administrators. Participation in the study was voluntary, and parental consent was obtained for all students. The agreement was high (100% for schools and over 90% for students at each data wave), and the final sample closely represented the intended sample and population more generally (Pekrun et al., 2007). Surveys were anonymized to ensure participant confidentiality.

Measures

MSC was measured in each of the five secondary school years (Years 5–9) with the same set of six items, using a 5-point Likert scale: not true, hardly true, somewhat true, largely true, or absolutely true. Across the five waves, the alpha estimates of reliability were consistently high (Year 5 α = 0.88; Year 6 α = 0.89; Year 7 α = 0.89; Year 8 α = 0.91; Year 9 α = 0.92). The items used to measure MSC were: “In math, I am a talented student”; “It is easy for me to understand things in math”; “I can solve math problems well”; “It is easy for me to write tests/exams in math”; “It is easy for me to learn something in math”; “If the math teacher asks a question, I usually know the right answer.”

Students’ achievement was measured with school grades (math in Years 4–9; German in Year 4) and math standardized achievement test scores (Years 5–9). School grades were end-of-the-year final grades obtained from school documents. The standardized PALMA Math Achievement Test (Murayama et al., 2013; Pekrun et al., 2007) was based on multiple-choice and open-ended items to measure students’ modeling and algorithmic competencies in arithmetic, algebra, and geometry. The test was constructed using multi-matrix sampling with a balanced incomplete block design; the number of items increased with each wave, varying between 60 and 90 items across the five waves, with anchor items to allow for the linkage of the two test forms and the five measurement points. The achievement scores were scaled using one-parameter logistic item response theory, confirming the unidimensionality and longitudinal invariance of the test scales (Murayama et al., 2013).

Statistical Analyses

All analyses were done with Mplus (Muthén & Muthén, 2008–20, Version 8). We used the robust maximum likelihood estimator (MLR), which is robust against many violations of normality assumptions.

Missing Data.

As is typical in large longitudinal field studies, a substantial portion of the sample had missing data for at least one measurement wave due primarily to absence or students changing schools. Across the five waves, 39% participated in all five measurement waves (i.e., Grades 5 to 9), and 9%, 19%, 15%, and 18% took part in four, three, two, or one of the assessments, respectively. We included all students who responded to at least one wave. Particularly in longitudinal studies, there is increasing awareness of the limitations of traditional approaches to missing data (Enders, 2010). Here, we applied the full-information maximum likelihood (FIML) method to fully use cases with missing data (Enders, 2010). FIML results in trustworthy, unbiased estimates for missing values even in the case of large numbers of missing values (Enders, 2010) and is an appropriate method to manage missing data in large longitudinal studies (Jeličič et al., 2009). More specifically, as emphasized in classic discussions of missing data (e.g., Newman, 2014), under the missing-at-random (MAR) assumption that is the basis of FIML, missingness is allowed to be conditional on all variables included in the analyses, but does not depend on the values of variables that are missing. This implies that missing values can be conditional on the same variable's values collected in a different wave in a longitudinal panel design. This feature of the data makes it unlikely that MAR assumptions are seriously violated, as the key situation of not-MAR is when missingness is related to the variable itself. Hence, having multiple waves of parallel data provides strong protection against this violation of the MAR assumption. Also, the appropriateness of FIML is further strengthened by support for the invariance of parameter estimates over time (see subsequent discussion of invariance constraints).

Goodness-of-Fit.

Applied SEM studies typically focus on fit indices that are relatively sample-size independent (Marsh et al., 2004; Marsh, Hau, & Grayson 2005), such as the root-mean-square error of approximation (RMSEA), the Tucker-Lewis index (TLI), and the comparative fit index (CFI). Population values of TLI and CFI vary along a 0-to-1 continuum, in which values greater than .90 and .95 typically reflect acceptable and excellent fits to the data, respectively. Values smaller than .08 and .06 for the RMSEA support acceptable and good model fits, respectively. For comparing nested models, Cheung and Rensvold (2002) and Chen (2007) suggested that if the decrease in fit for the more parsimonious model is less than .01 for incremental fit indices such as the CFI, there is reasonable support for the more parsimonious model. For indices that incorporate a penalty for lack of parsimony, such as the RMSEA and the TLI, it is also possible for a more restrictive model to result in a better fit than would a less restrictive model. For present purposes, to facilitate communication, we present primarily TLIs in the written summaries of the results. In addition, however, we present the Chi-square, degrees-of-freedom, RMSEA, CFI, and TLI in the corresponding tables. Nevertheless, these cut-off values for these indices constitute only rough descriptive guidelines rather than “golden rules” (Marsh et al., 2004).
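For readers less familiar with how these indices relate to the chi-square statistic, the following minimal sketch computes RMSEA, CFI, and TLI from their standard maximum-likelihood definitions. The function name and the input values are hypothetical. The sketch also ignores the scaling corrections that Mplus applies with the MLR estimator and the multiple-group adjustment to the RMSEA, and some programs use N rather than N − 1 in the RMSEA denominator, so it should not be expected to reproduce the values in our tables exactly.

```python
import math


def fit_indices(chi2_m, df_m, chi2_b, df_b, n):
    """Return (RMSEA, CFI, TLI) for a target model (m) and a baseline model (b).

    Standard single-group ML formulas; no MLR scaling correction is applied.
    """
    # Estimated noncentrality of each model, truncated at zero.
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, 0.0)

    rmsea = math.sqrt(d_m / (df_m * (n - 1)))

    denom = max(d_m, d_b)
    cfi = 1.0 if denom == 0 else 1.0 - d_m / denom

    # TLI compares chi-square/df ratios and so rewards parsimony.
    ratio_m = chi2_m / df_m
    ratio_b = chi2_b / df_b
    tli = (ratio_b - ratio_m) / (ratio_b - 1.0)

    return rmsea, cfi, tli


# Hypothetical input values, chosen only to illustrate the calculation.
rmsea, cfi, tli = fit_indices(chi2_m=150.0, df_m=100, chi2_b=5100.0, df_b=120, n=1001)
print(f"RMSEA = {rmsea:.3f}, CFI = {cfi:.3f}, TLI = {tli:.3f}")
```

Because the TLI and RMSEA are based on the chi-square relative to the degrees of freedom, adding constraints that cost little in chi-square can improve them, which is why a more restrictive model can fit better on these indices.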

Invariance Constraints

A Well-Defined Measurement Model.

Particularly as multiple MSC indicators are parallel over the multiple waves, it is relevant to test measurement invariance over time and multiple groups (the three school tracks). For both longitudinal and multiple group data, it is typical to evaluate a set of models that systematically vary the invariance constraints (Marsh et al., 2014; Marsh, Morin, et al., 2014; Marsh, Parker, et al., 2016; Meredith, 1993; Millsap, 2012): configural (no invariance constraints), metric (factor loading invariance), and scalar (intercept invariance). For longitudinal data, Marsh et al. (2013) recommended that correlated uniquenesses relating residual variance terms for the same item in different waves should also be tested (Jöreskog, 1979; Marsh & Hau, 1996). Failure to include them will typically undermine goodness-of-fit and bias parameter estimates. Thus, the measurement model's invariance is relevant to the rationale underlying statistical models of longitudinal data. These invariance constraints also substantially reduce the number of estimated parameters, resulting in a more parsimonious model. Particularly in complex models, this can also improve the convergence behavior of models and increase power. There are two aspects to invariance in the present investigation: invariance over the multiple groups (different academic tracks) and invariance over time.

Importantly, these preliminary tests of the measurement model are not based on any particular model (e.g., CLPM or RI-CLPM) but merely evaluate the extent to which the constructs are well-defined. Nevertheless, unless there is reasonable support for at least configural invariance, the application of subsequent CLPMs and particularly RI-CLPMs is dubious. If there is no support for the invariance of factor loadings, then tests of invariance of other parameters associated with these factors (e.g., stability and cross-paths in CLPMs and RI-CLPMs) are also dubious.

Longitudinal Structural Invariance Constraints

For CLPM studies based on three or more data waves, Marsh et al. (2018a, 2018b) adopted the term "developmental equilibrium" for the imposition of invariance for the stability paths and the cross-lagged paths over time. Their focus was mainly on whether the sizes of the stability and cross-paths varied as a function of age for school students. However, this pattern of constraints is also typical in RI-CLPMs (Mulder & Hamaker, 2020), greatly facilitating the interpretation of results. Nevertheless, because this terminology is somewhat idiosyncratic to developmental studies, we refer to this set of constraints as longitudinal equilibrium. In invoking this constraint, we constrained to be invariant over time the three stability paths (Byy, Bxx, & Bzz; Fig. 1) and the six cross-lag paths (Bxy, Byx, Bxz, Bzx, Byz, Bzy; Fig. 1).
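In matrix form, the longitudinal equilibrium constraint can be sketched as follows for the lag-1 CLPM with five waves. The path labels are those of Fig. 1; the subscript convention (first subscript for the outcome, second for the predictor), the residual terms e, and the omission of intercepts are assumptions made here for illustration.

```latex
\begin{pmatrix} x_t \\ y_t \\ z_t \end{pmatrix}
=
\underbrace{\begin{pmatrix}
B_{xx} & B_{xy} & B_{xz} \\
B_{yx} & B_{yy} & B_{yz} \\
B_{zx} & B_{zy} & B_{zz}
\end{pmatrix}}_{\mathbf{B}_t}
\begin{pmatrix} x_{t-1} \\ y_{t-1} \\ z_{t-1} \end{pmatrix}
+
\begin{pmatrix} e_{x,t} \\ e_{y,t} \\ e_{z,t} \end{pmatrix},
\qquad t = 2, \dots, 5,
\qquad
\mathbf{B}_2 = \mathbf{B}_3 = \mathbf{B}_4 = \mathbf{B}_5 .
```

With five waves there are four lag-1 intervals, so the constraint reduces the 9 × 4 = 36 stability and cross-lag paths to 9 estimated values. In the RI-CLPM, the same constraint is applied to the paths among the within-person autoregressive factors rather than among the undecomposed factors.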

Preliminary Analyses

Longitudinal Invariance

We tested a series of measurement models based on invariance over time. The models were based on responses to 40 indicators: six MSC items, one math test score, and one math school grade in each of five waves (i.e., 8 indicators × 5 waves). For present purposes, to facilitate interpretations, all items for MSC were standardized (M = 0, SD = 1) to a common metric, based on wave-1 responses (i.e., Year 5, the first year of secondary school). Following the recommendation of Marsh et al. (2013), our a priori model included correlated uniquenesses relating residual variance terms for the same items at different waves (for further discussion, see Marsh et al., 1996; Jöreskog, 1979). As expected, the measurement model with no correlated uniquenesses (MM0 in Table 1) provided a poorer fit than other measurement models. Measurement model MM1 (configural invariance), with correlated uniquenesses but no invariance constraints, provided a very good fit to the data (RMSEA = .016, CFI = .989, TLI = .986; see results in Table 1). Model MM2 (metric invariance), with the imposition of factor loading invariance, also resulted in a good fit (RMSEA = .018, CFI = .987, TLI = .982). In the final model, MM3 (scalar invariance), the imposition of intercept invariance resulted in a slightly poorer fit (RMSEA = .020, CFI = .983, TLI = .979), but one that was still excellent based on traditional guidelines. The results demonstrate that the factor structure generalizes well over the multiple waves (the first five years of secondary school).

Multi-Group Invariance

Next, we tested a series of measurement models based on invariance over the multiple groups (representing the three academic tracks). We based these models on the same data as the tests of longitudinal invariance. Measurement model MM4 (configural invariance), with correlated uniquenesses but no invariance constraints over the multiple groups, provided a very good fit to the data (RMSEA = .018, CFI = .987, TLI = .982; Table 1). Model MM5 (metric invariance), with the imposition of factor loading invariance, also resulted in a good fit (RMSEA = .018, CFI = .987, TLI = .983), as did model MM6 (scalar invariance; RMSEA = .019, CFI = .985, TLI = .982). The results demonstrate that the factor structure generalizes well over the multiple groups.
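The comparison of nested invariance models can be made explicit by applying the guideline described under Goodness-of-Fit (a decrease in CFI of less than .01 supports the more parsimonious model) to the CFI values reported for the longitudinal and multi-group tests. The following sketch is illustrative only; the helper function and variable names are hypothetical, and the cut-off is a rough guideline rather than a strict rule.

```python
# CFI values as reported in the text for the nested measurement models.
longitudinal = [("MM1 configural", 0.989), ("MM2 metric", 0.987), ("MM3 scalar", 0.983)]
multigroup = [("MM4 configural", 0.987), ("MM5 metric", 0.987), ("MM6 scalar", 0.985)]

CUTOFF = 0.01  # guideline from Cheung and Rensvold (2002) and Chen (2007)


def cfi_drops(models):
    """Return (more constrained model, decrease in CFI) for each adjacent pair."""
    return [
        (models[i + 1][0], round(models[i][1] - models[i + 1][1], 3))
        for i in range(len(models) - 1)
    ]


for name, drop in cfi_drops(longitudinal) + cfi_drops(multigroup):
    verdict = "retain" if drop < CUTOFF else "question"
    print(f"{name}: CFI decrease = {drop:.3f} -> {verdict} the added constraints")
```

Every step from configural to metric to scalar invariance lowers the CFI by well under .01, which is the basis for retaining the more constrained models.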

Combining Longitudinal Invariance and Multi-Group Invariance

When considered separately, our results support scalar invariance over time (longitudinal invariance) and academic track (multigroup invariance). In the final model (MM7; also see Supplemental Materials for Mplus syntax), we simultaneously impose scalar invariance for both time and group. The fit of this highly constrained model is very good (TLI = .976; Table 1), further demonstrating that the factor structure generalizes over the multiple waves and multiple groups.

We use this final measurement model MM7 for subsequent CLPMs and RI-CLPMs. To facilitate interpretations, we identified all solutions by fixing the factor loading of the first indicator of each MSC factor to a constant value. However, instead of fixing the value to 1.0, we fixed it to the standardized factor loading in the scalar invariance solution. This results in a model in which factor loadings are invariant over time and group. The factor variance is 1.0 in wave-1, but is allowed to vary across waves. In this way, all responses are standardized relative to a common metric (that facilitates comparing parameter estimates in different waves), resulting in an unstandardized solution similar to a standardized solution. This parameterization is particularly useful in the comparison of different cross-lag paths associated with different constructs.

We also note that all subsequent CLPMs and RI-CLPMs considered here are nested under our measurement model MM7. Hence, the measurement model MM7 provides an important basis of comparison for all subsequent CLPMs and RI-CLPMs, the structural invariance constraints imposed on them, and their extensions to include additional lagged parameters. More specifically, because all relations among the 15 factors (MSC, school grades, and test scores in each of the five waves) are freely estimated, this model MM7 is fully saturated in terms of these relations. In contrast, all the various CLPMs and RI-CLPMs place constraints upon these relations. To the extent that the constraints are reasonable, the fit of the constrained CLPMs and RI-CLPMs should approach that of our measurement model MM7. We consider this a fundamentally important contribution to evaluating fit for the CLPMs and RI-CLPMs that is rarely considered, even in studies based on fully latent CLPMs and RI-CLPMs.

Results

Relations Among the Variables

Table 2 is a latent correlation matrix of relations between the 15 factors (MSC, school grades, and test scores in each of the five waves). This is a latent multitrait–multimethod (MTMM) correlation matrix in which time is the "method" factor (for further discussion, see Marsh, Huppert, et al., 2020; Marsh et al., 2010). The results indicate that all three constructs are highly stable and consistent over the five waves. The average lag-1 correlations (i.e., test–retest correlations in adjacent waves separated by one year) for matching traits are .71 (.68-.75) for MSC, .82 (.77-.86) for test scores, and .64 (.58-.68) for school grades. Reflecting the typical simplex pattern, lag-2 test–retest correlations are somewhat smaller, but still substantial for all three constructs. Indeed, Year 5 factors are significantly correlated even with Year 9 factors, particularly for test scores (r = .71) but also for MSC (r = .50) and school grades (r = .45). These results indicate substantial stability over time for all three constructs, consistent with the rationale for the RI-CLPM.
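One way to see why this pattern is consistent with the rationale for the RI-CLPM is to compare the reported Year 5 to Year 9 correlations with what a purely first-order autoregressive (simplex) process would imply. The sketch below rests on simplifying assumptions that are ours rather than part of the reported analyses: it treats the average lag-1 correlation as constant over waves and assumes a stationary first-order process, under which the lag-k correlation is the lag-1 correlation raised to the power k.

```python
# Average lag-1 and Year 5 to Year 9 (lag-4) latent correlations reported in the text.
reported = {
    "MSC": {"lag1": 0.71, "lag4": 0.50},
    "test scores": {"lag1": 0.82, "lag4": 0.71},
    "school grades": {"lag1": 0.64, "lag4": 0.45},
}


def ar1_implied(lag1, lag):
    """Correlation implied at a given lag by a stationary first-order process."""
    return lag1 ** lag


excess = {}
for construct, r in reported.items():
    implied = ar1_implied(r["lag1"], 4)
    excess[construct] = r["lag4"] - implied
    print(f"{construct}: implied lag-4 r = {implied:.2f}, reported r = {r['lag4']:.2f}")
```

For all three constructs, the reported lag-4 correlation is clearly larger than the value implied by this simple process. Under these assumptions, that is the pattern expected when a stable trait-like component contributes to scores at every wave.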

Compared to the stability (test–retest) correlations, mean correlations among different constructs within the same wave are systematically smaller: .34 for MSC and test scores; .56 for MSC and school grades; and .45 for school grades and test scores. Consistent with expectations (see Research Hypotheses), MSCs are systematically more highly correlated with school grades than with test scores. Although lag-1 correlations among the different constructs are lower than those in the same wave, the pattern of correlations remains consistent. These results support the distinctiveness (discriminant validity) of the three constructs.

Also relevant are the relations of the covariates (demographic variables) with our measures of MSC, school grades, and test scores (Table 2). Our primary interest is how incorporating these covariates into our model affects support for the REM hypotheses. However, we are also interested in relations of the covariates with MSC and achievement, and their consistency over time. Gender differences consistently favor boys for MSC and, to a lesser extent, math test scores. However, there are almost no gender differences in terms of math school grades. Also consistent with gender stereotypes, achievement at the end of primary school favors girls for verbal achievement and boys for math achievement. Primary school math grades consistently correlated highly with math test scores over the subsequent five years (.65 to .70). However, they were also significantly correlated with math school grades and MSC in subsequent years. Compared to primary school math grades, primary school German grades were less positively correlated with math test scores and grades, and were almost uncorrelated with MSC. Thus, for purposes of the present investigation, primary school grades provide particularly strong covariates to control achievement levels from before the start of secondary school.

The results also demonstrate differences between the tracks. Not surprisingly, the largest differences are for primary school grades that were the main basis for assigning students to secondary school tracks (.62 and .58 for high track, -.66 and -.66 for low track). Differences in test scores are also substantial (.43 to .53 for high track, -.60 to -.51 for low track). In contrast, reflecting grading-on-a-curve and well-established frame-of-reference effects, track differences are much smaller for school grades and MSCs (for further discussion, see Marsh, et al., 2018a, 2018b).

Directional Ordering

Directional Ordering: CLPMs

For the basic CLPMs (see Basic Models MB1a-MB3a in Tables 3 and 4), we found support for our a priori (REM) hypotheses for paths from MSC to school grades (MB1a), test scores (MB2a), and the combination of school grades and test scores (MB3a). In addition, the model fit was good for all three basic CLPMs. However, the fit for the CLPM MB3a (Table 3, e.g., TLI = .954) was not as good as the corresponding measurement model MM7 (Table 1, e.g., TLI = .976).

The critical parameter estimates of the CLPMs (see Fig. 1) for testing REM's hypotheses are the cross-lag paths relating two achievement measures and MSC (Bxy, Byx, Bxz, Bzx; the values are shaded in Table 4). However, also of interest are the stability coefficients (Bxx, Byy, Bzz), and the cross-lag paths relating the two achievement measures (Bzy, Byz). We note that each of these paths was constrained to be equal across the five waves of data. In support of REM hypotheses, all eight of these paths are significantly positive in all three models (MB1a, MB2a, and MB3a in Table 4). Also consistent with Research Hypothesis 2, paths in MB3a based on all three constructs are somewhat smaller than the corresponding paths in models with MSC and only grades (MB1a) or MSC and only test scores (MB2a).

Nevertheless, even in the more demanding model MB3a, all stability and cross-lag paths are significantly positive and consistent with models MB1a and MB2a. Substantively, the interpretation of the CLPM results is straightforward. There are modest but highly consistent reciprocal effects between MSC, math school grades, and math test scores.
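For readers who find the path notation easier to follow in equation form, the lag-1 structural part of the three-construct CLPM can be sketched as below. This is our schematic, not a reproduction of Fig. 1. We assume that the first subscript names the outcome and the second the predictor from the preceding wave, and we leave open which construct is labelled x, y, or z; Fig. 1 remains the authoritative definition. The residual terms e are our notation.

```latex
\begin{aligned}
x_{t} &= B_{xx}\,x_{t-1} + B_{xy}\,y_{t-1} + B_{xz}\,z_{t-1} + e_{x,t} \\
y_{t} &= B_{yx}\,x_{t-1} + B_{yy}\,y_{t-1} + B_{yz}\,z_{t-1} + e_{y,t} \\
z_{t} &= B_{zx}\,x_{t-1} + B_{zy}\,y_{t-1} + B_{zz}\,z_{t-1} + e_{z,t}
\end{aligned}
\qquad t = 2, \dots, 5
```

The absence of a wave index on the B coefficients expresses the invariance constraint described above: each path takes the same value for every pair of adjacent waves.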

The primary focus for Research Hypotheses 1 and 2 is the autoregressive stability and cross-lagged paths relating constructs from one wave to the next. However, it is also relevant to consider the undecomposed (between-person) within-wave variances and covariances (Table 5). At wave 1, these are substantial, but residual variances and covariances for waves 2–4 (controlling values from the preceding wave) are substantially smaller. Nevertheless, the variance explained in each construct by the same constructs in the immediately preceding wave is substantial (MultR2 = .55, MSC; .54, tests; .46, grades).

Directional Ordering: RI-CLPMs

For RI-CLPMs (Tables 3, 4 and 5), we evaluated models that parallel the corresponding CLPMs, including their structural invariance constraints. Again, we found support for the REM hypotheses (Research Hypotheses 1 and 2) relating MSC to school grades (MB1b), test scores (MB2b), and the combination of school grades and test scores (MB3b). Each of these models had an excellent fit to the data (TLIs: .971-.976). Furthermore, the fit of MB3b approached the fit of the corresponding measurement model (TLI = .971 for MB3b in Table 3 and .976 for MM7 in Table 1).

The global trait factors representing decomposed between-person differences are the unique feature of RI-CLPMs. Consistent with expectations and in support of the appropriateness of the RI-CLPMs, the global trait factors account for much of the variance in MSC, test scores, and school grades (Table 5). Furthermore, these trait factors are substantially correlated (rs = .64 to .80, Table 5). In contrast, the variances and covariances for the within-person components are substantially smaller, although they are all positive and statistically significant (Table 5). Because the variance components for the global trait factors are substantial, the variance explained in each construct by the same constructs in the immediately preceding wave is modest (MultR2 = .25, MSC; .08, tests; .15, grades).
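The decomposition behind these global trait factors can be written schematically as follows. This is the generic random-intercept formulation, shown for one construct only, and not a reproduction of Fig. 1. The symbols are our assumptions: a wave-specific mean (mu), a person-specific, time-invariant trait factor (kappa), a within-person deviation marked with an asterisk, and a residual (u).

```latex
\begin{aligned}
x_{it} &= \mu_{x,t} + \kappa_{x,i} + x^{*}_{it} \\
x^{*}_{it} &= B_{xx}\,x^{*}_{i,t-1} + B_{xy}\,y^{*}_{i,t-1} + B_{xz}\,z^{*}_{i,t-1} + u_{x,it}
\end{aligned}
```

The stability and cross-lagged paths operate only on the within-person deviations, so stable between-person differences captured by the trait factor no longer contribute to the variance explained from one wave to the next.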

In support of REM hypotheses, all three models' stability and cross-lag paths are significantly positive (MB1b, MB2b, and MB3b in Table 4). Although the paths in MB3b based on all three constructs are somewhat smaller than the corresponding paths in MB1b and MB2b, the differences are small. Thus, RI-CLPMs show modest reciprocal effects between MSC, math school grades, and math test scores in support of REM hypotheses.

Directional Ordering: Juxtaposing CLPMs and RI-CLPMs

In Research Question 3, we noted no clear a priori basis for predicting differences in results based on latent CLPMs and RI-CLPMs, and few studies comparing them empirically. In this respect, juxtaposing CLPMs and RI-CLPMs is substantively important for ASC research and for better understanding CLPMs and RI-CLPMs. Here we highlight several key findings from comparing results from CLPMs and RI-CLPMs.

First, the fit indices are marginally better for RI-CLPMs than the CLPMs (Table 3). However, given that the CLPMs are nested under RI-CLPMs, this is not surprising. Indeed, it is surprising that the differences are not larger, given the substantial stability of the constructs.

Second, the major difference in the path coefficients (Table 4) is the substantially smaller stability coefficients for the RI-CLPMs. Again, this is consistent with the theoretical rationale and control for random intercepts in RI-CLPMs (see Fig. 1 and earlier discussion on the different conceptualization of between-person differences).

Third, the variance explained in each construct by the same constructs in the immediately preceding wave is substantially larger for CLPMs (MultR2 = .46 to .55) than for RI-CLPMs (MultR2 = .08 to .25). This is a natural consequence of the substantial global trait factors in the RI-CLPMs.
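The logic behind the second and third points can be illustrated with a small simulation. The sketch below is ours and uses arbitrary parameter values; it is not an analysis of the study data and it does not fit a latent CLPM or RI-CLPM. It generates one construct as a stable person-level trait plus a within-person first-order autoregressive process, then compares the lag-1 regression slope and variance explained for the undecomposed scores with those for the within-person component alone. All function and variable names are hypothetical.

```python
import numpy as np


def simulate(n_persons=20000, n_waves=5, phi=0.3, trait_sd=1.0, seed=0):
    """Simulate scores = stable trait + within-person AR(1) deviations."""
    rng = np.random.default_rng(seed)
    trait = rng.normal(0.0, trait_sd, size=n_persons)
    within = np.empty((n_persons, n_waves))
    within[:, 0] = rng.normal(0.0, 1.0, size=n_persons)
    innov_sd = np.sqrt(1.0 - phi**2)  # keeps within-person variance at 1
    for t in range(1, n_waves):
        within[:, t] = phi * within[:, t - 1] + rng.normal(0.0, innov_sd, size=n_persons)
    observed = trait[:, None] + within
    return observed, within


def lag1_slope_and_r2(scores):
    """Pooled regression of wave t on wave t-1: returns (slope, R squared)."""
    prev = scores[:, :-1].ravel()
    curr = scores[:, 1:].ravel()
    r = np.corrcoef(prev, curr)[0, 1]
    slope = r * curr.std() / prev.std()
    return slope, r**2


observed, within = simulate()
print("undecomposed scores:", lag1_slope_and_r2(observed))
print("within-person part: ", lag1_slope_and_r2(within))
```

With equal trait and within-person variances and a true within-person stability of .30, the expected slope for the undecomposed scores is (1 + .30) / 2 = .65, so both the stability coefficient and the variance explained are considerably larger when the trait is not separated out. The direction of this contrast matches the pattern described above, although the simulated values are not meant to reproduce the reported estimates.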

Most importantly, both CLPMs and RI-CLPMs support the REM hypotheses, particularly for paths relating the two achievement measures and MSC (Bxy, Byx, Bxz, Bzx; the paths are shaded in Table 4). Each of these cross-lag paths is modest (.061 to .136 for CLPM MB3a; .067 to .133 for RI-CLPM MB3b), but highly significant and consistent across CLPMs and RI-CLPMs. In summary, there is good support for REM hypotheses based on both CLPMs and RI-CLPMs.

Extended Models for CLPMs and RI-CLPMs

In the next series of models, we extend the basic CLPMs and RI-CLPMs based on model MB3a (CLPM) and MB3b (RI-CLPM; Tables 3 and 4) in several respects. First (the "ML" models in Tables 6 and 7), we included lag-2 paths (see Research Question 4) between non-adjacent waves (e.g., paths relating factors in wave 1 to wave 3, wave 2 to wave 4, etc.). Second (the "MC" models in Tables 6 and 7), we added covariates to the basic models (see Research Question 5). Finally, we tested models with additional lag-2 paths and covariates (the "MLC" models in Tables 6 and 7).

CLPMs: Lag-2 Paths

We note that the basic model with no lags (MB3a in Table 3) is nested under these ML models (ML1a and ML2a), and all these models are nested under the measurement model (MM7 in Table 1), providing appropriate bases for evaluating goodness-of-fit. Consistent with expectations, additional lag-2 paths noticeably improved the fit of the CLPMs (Table 6). Compared to the fit of the lag-1 CLPM (TLI = 0.955, MB3a in Table 3), the CLPM with lag-2 paths was better (TLI = .972, ML1a in Table 6). In Model ML2a, we showed that this improved fit was primarily a function of the stability paths when we eliminated the lag-2 cross-lagged paths (TLI = .971, ML2a in Table 6). We also note that the fits of ML2a and ML2b (Table 6) were only marginally less than the fit of the corresponding measurement model (TLI = .976, MM7 in Table 1). As noted earlier, this measurement model MM7 is fully saturated in relation to the structural model constraints imposed in the CLPMs. This comparison further supports these constraints and the need to extend the CLPM to include additional lagged effects (also see earlier discussion). Nevertheless, the critical issue is how the inclusion of the lag-2 stability path influences support for the REM (Research Hypotheses 1 and 2).

Compared to the corresponding basic CLPM with no lag-2 paths (MB3a in Table 3), the stability and cross-lagged paths for models with additional lags (ML1a and ML2a, Table 7) are smaller. Not surprisingly, these differences were particularly evident for the stability coefficients (i.e., lag-1 effects were smaller when we added lag-2 paths). Thus, for example, the lag-1 stability paths in ML2a that also included lag-2 stability paths (.458, .401, .531; Table 7) were substantially smaller than the corresponding values in MB3a with only lag-1 paths (.583, .450, .675; Table 4). Although there were also differences in the cross-lagged paths relating achievement and MSC (.045, .060, .099 & .067 for ML1a vs. .061, .070, .136 & .067 for MB3a), these differences were much smaller. Critically, all the stability and cross-lagged paths were significantly positive in all CLPMs with and without lag-2 paths. Importantly, the four critical cross-lag paths that test REM (shaded in Tables 4 and 7) were all significantly positive, even though the values were slightly smaller for the CLPM that included lag-2 paths.
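Why lag-1 stability shrinks once lag-2 stability paths are added can be sketched with a simple regression on simulated data. This is our illustration with arbitrary parameter values, not the study's latent models. It assumes a single construct made up of a stable person-level trait plus a within-person first-order autoregressive process, and it compares an ordinary least squares regression on the preceding wave only with one that also includes the wave before that. The helper `ols` and all variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n_persons, phi = 50000, 0.3

# Stable trait plus within-person AR(1) deviations over three waves.
trait = rng.normal(size=n_persons)
within = np.empty((n_persons, 3))
within[:, 0] = rng.normal(size=n_persons)
for t in (1, 2):
    innovation = rng.normal(scale=np.sqrt(1 - phi**2), size=n_persons)
    within[:, t] = phi * within[:, t - 1] + innovation
x = trait[:, None] + within


def ols(outcome, *predictors):
    """Least-squares slopes (intercept estimated but dropped from the output)."""
    design = np.column_stack([np.ones_like(outcome), *predictors])
    coef, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    return coef[1:]


(b1_only,) = ols(x[:, 2], x[:, 1])          # lag-1 stability only
b1, b2 = ols(x[:, 2], x[:, 1], x[:, 0])     # lag-1 and lag-2 stability
print(f"lag-1 only: {b1_only:.3f}; with lag-2 added: lag-1 = {b1:.3f}, lag-2 = {b2:.3f}")
```

In this setup the lag-2 predictor picks up part of the stable trait variance that the lag-1 path would otherwise absorb, so the lag-1 coefficient drops and the lag-2 coefficient is positive even though the within-person process has no lag-2 effect. That is one reading of why lag-2 paths can act as a partial control for time-invariant influences.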

CLPMs: Covariates

Models with covariates are not nested under models considered thus far. Hence, the fit indices are not directly comparable. To provide a basis of comparison, we fit a model in which we constrained all paths from the three covariates (math and verbal achievement from primary school and gender) to MSC, grades, and test scores to be zero (MC3a in Table 6, TLI = 0.932). The fit improved when these paths were freely estimated (MC1a in Table 6, TLI = 0.957). These results indicate that the effects of these covariates were not substantial, even though the covariates correlated substantially with our outcome variables (Table 2). Hence, it is not surprising that the autoregressive stability and cross-lagged path coefficients were not substantially affected by including the covariates (Models MC1a & MC2a in Table 7 compared to model MB3a in Table 4). Indeed, the changes were small and not even consistent in direction for the four critical cross-lagged paths relating achievement and MSC (those shaded in Tables 4 and 7). Nevertheless, there was some evidence that these covariates had effects beyond the first wave of data, consistent with our recommendation that paths from covariates to all data waves should be considered.

CLPM: Additional Lags and Covariates

Consistent with earlier discussion, the addition of both lag-2 paths and covariates (MLC models in Tables 6 and 7) led to a better fit (e.g., TLI = 0.957 for MC1a and TLI = 0.971 for MLC1a). Although comparisons with models in which covariate paths were constrained to be zero again showed that there were covariate effects, these effects were modest (e.g., TLI = 0.971 for MLC1a vs. TLI = 0.949 for MLC3a). Unsurprisingly, lag-1 stability paths are smaller for these MLC models than the corresponding MB, ML, and MC models. However, the lag-1 stability coefficients for the MLC models are similar in size to those for the corresponding ML models, suggesting that the effects of lag-2 paths are greater than the effects of the covariates. This pattern of results suggests that the inclusion of lag-2 effects provides some control for unmeasured, time-invariant covariates.

The critical parameters for testing the REM hypotheses are the cross-lag paths relating achievement and MSC (shaded in Tables 4 and 7). These four paths in MLC1a (Table 7) are all significantly positive and similar in size to the corresponding paths in the basic CLPM: 0.060, 0.101, 0.086, 0.069 (MLC1a, Table 7) vs. 0.059, 0.091, 0.085, 0.066 (MB3a, Table 4). In summary, the reciprocal effects that were hypothesized by the REM were robust against the inclusion of lag-2 paths and the effects of additional covariates.

Extended Models for RI-CLPMs