Abstract

Generative artificial intelligence (GenAI), including large language models (LLMs), has developed rapidly and emerged as a means of supporting students in their academic writing process. Keeping pace with the technical and educational landscape requires careful consideration of the opportunities and challenges that GenAI-assisted systems create within education, a necessary starting point for fully leveraging their potential for learning and teaching. It is therefore crucial to gather insights from diverse perspectives and from the use cases of actual users, particularly the unique voices and needs of students. To this end, this study explored students' perceptions and experiences of GenAI-assisted academic writing through in-depth interviews with 20 Chinese students in higher education, conducted after they completed academic writing tasks using a ChatGPT4-embedded writing system developed by the research team. The study found that students expected AI to serve multiple roles, including multi-tasking writing assistant, virtual tutor, and digital peer, to support multifaceted writing processes and performance. Students perceived that GenAI-assisted writing could benefit them in three areas: the writing process, writing performance, and the affective domain. They also identified AI-related, student-related, and task-related challenges experienced during the GenAI-assisted writing activity. These findings contribute to a more nuanced, student-inclusive understanding of GenAI's impact on academic writing and offer implications for educational AI design and instructional design.

1 Introduction

English academic writing is a complex, multifaceted activity that requires coordinating multiple cognitive skills and knowledge to orchestrate writing processes such as goal setting, problem-solving, and the strategic management of memory resources (Allen & McNamara, 2017; Flower & Hayes, 1981). The writing process is unique to each student: students bring their own characteristics, including linguistic and general literacy backgrounds, prior domain knowledge, and affective states (e.g., attitudes toward academic writing, engagement with the task), all of which can affect their performance (Gupta et al., 2022; Pineteh, 2014). This process can be even more challenging for English as a second language (ESL) students, who often face language barriers. Yet educators generally lack the time to offer personalized instruction and formative feedback, leaving students with too few opportunities to practice writing and incorporate feedback to improve their writing (Aldabbus & Almansouri, 2022; Gupta et al., 2022).

In response to such challenges, generative artificial intelligence (GenAI)-powered writing tools have emerged to support students in their academic writing process and enhance ESL learners’ writing skills. GenAI refers to intelligent algorithms that can interact with users through natural language exchanges (conversations) to generate new content, refine responses to meet users’ needs, and simulate human intelligence (Choudhurri, 2023; Ng et al., 2021). For instance, GenAI writing systems offer continuous support across the stages of the academic writing process, from ideation (e.g., generating possible research questions and ideas) to editing and proofreading (e.g., offering corrections and checking grammar) (Dale & Viethen, 2021; Rowland, 2023). Beyond automated evaluation and correction, AI writing systems facilitate students’ metacognition by allowing them to identify and correct language errors (Fitria, 2021), notice dissonance in their writing (Gayed et al., 2022), and improve their manuscript’s overall clarity and coherence (Liu et al., 2023). For more proficient ESL learners aiming to further improve their writing, AI writing systems provide essential pedagogical support, including guidance that can improve writing quality, such as rewriting text to enhance lexical diversity and stylistic variation, identifying errors (Fitria, 2021), and adopting a preferred writing style (Marzuk et al., 2023). Additionally, AI writing systems assist students with literature reviews by identifying relevant research articles (Behrooz et al., 2023), supplying background information on writing topics (Chichekian & Benteux, 2022; Rowland, 2023), summarizing texts (Behrooz et al., 2023), and providing recommendations tailored to students’ preferences and search patterns (Chichekian & Benteux, 2022; Rowland, 2023). AI writing systems also offer real-time translation and interpretation, enabling students to overcome language barriers, access and assimilate content in multiple languages, and learn from diverse perspectives (Salvagno et al., 2023).

Despite these benefits, there are still challenges and concerns associated with using GenAI for academic writing tasks, including data privacy, intellectual property, students’ over-reliance on automated tools, and plagiarism (Lund & Wang, 2023; Ray, 2023; Tlili et al., 2023). Further, GenAI has been widely reported to hallucinate, that is, to generate inaccurate content or guidance that nonetheless appears realistic (Alkaissi & McFarlane, 2023). Doubts about its accuracy can leave users unsure of how to use GenAI and, in turn, lead them to feel that it may not be a helpful resource (Choudhuri et al., 2023). Finally, GenAI output is produced by algorithms and training data that are not transparently available to end-users and may reinforce biased or discriminatory viewpoints, further eroding trust in AI systems (Fengchun & Wayne, 2023). Because AI-powered writing tools are usually used without supervision or guidance, students may require support to interact with them in an educationally relevant manner. To use these tools effectively, students must cultivate skills such as communication skills to prompt GenAI for appropriate responses and critical thinking to evaluate GenAI content and integrate it into their academic work (Choudhuri et al., 2023). Without instruction, there is a risk of misapplication, such as intentional or unintentional plagiarism or the unreflective adoption of recommendations (e.g., Prentice & Kinden, 2018; Rogerson & McCarthy, 2017). This suggests a need for more comprehensive research on the pedagogical considerations and ethical use of GenAI in educational contexts (Fengchun & Wayne, 2023).
In this regard, researchers emphasize the importance of gathering interpretable and actionable insights from learners, who are the main users of GenAI in learning and the subjects of these interactions, in order to understand the circumstances in which such systems may be appropriately used, how students relate to GenAI, and what challenges arise during GenAI-assisted writing tasks (Rosé et al., 2019; Zawacki-Richter et al., 2019). Such understanding will support the creation of personalized instructional and technical interventions that cater to individual student needs and are educationally meaningful. This study therefore seeks to bridge this gap by exploring students’ perceptions and experiences of GenAI-assisted writing through the following research questions:

(1) What are students’ perceptions of the expected roles of AI in AI-assisted academic writing tasks?
(2) What are students’ perceptions of the advantages of AI-assisted academic writing?
(3) What are students’ perceptions of the challenges of AI-assisted academic writing?

By reflecting on the diverse roles students expect GenAI to play in academic writing and the benefits of and barriers to GenAI-assisted writing that they perceive, the findings of this study can contribute to a holistic understanding of GenAI’s impact on academic writing and offer implications for educational AI design and instructional design.

2 Literature review

Written communication can vary widely across writers and genres, depending on stylistic preferences, goals, contexts, and the intended audience. In higher education settings, students are expected to learn academic writing, which includes specific writing processes, structures, and characteristics that will support their academic success. Academic writing provides students with a mechanism by which they can exercise their critical thinking skills, demonstrate their knowledge, and advocate for their unique stance on a topic to persuade readers to accept their findings or conclusions (Swales & Feak, 2012). More than an act of communication, academic writing supports student engagement in the learning process, allowing students to learn different ways of constructing knowledge (Hyland, 2014). However, for ESL students, academic writing presents the added challenge of expressing themselves in a second language, which can inhibit their ability to convey their ideas effectively and incorporate various viewpoints in their writing (Zhang, 2023).

These challenges create opportunities for AI to serve in various roles that support students in their writing and learning. First, students may have difficulty consistently applying grammatical, spelling, and notational standards, as well as structural and organizational norms. Such standards may differ across academic communities, which have developed their own processes for knowledge-sharing and consensus-making, and across sub-genres such as academic essays, literature reviews, or dissertations (Hyland, 2014). Academic writing should generally be concise and free of grammatical, notational, and spelling errors, and it often follows common structural and organizational patterns (McKinley & Rose, 2018). To address this challenge, AI can serve as a proofreader, helping students ensure that their grammar and spelling are correct so that they can submit a polished final product (Malik et al., 2023). Recent research on how students interact with ChatGPT has shown that many university students already use AI, specifically to proofread their assignments (Črček & Patekar, 2023; Singh et al., 2023), and AI proofreading has been found to be comparable to human proofreading (Heintz et al., 2022). As human proofreaders are not always readily available, such an application of AI could streamline the writing process for students. Nonetheless, AI may not be a perfect substitute for humans in this respect. An evaluation of Wordvice AI, a proofreading tool, found that it outperformed the built-in proofreading of Google Docs and Microsoft Word but still identified only 77% of the issues identified by a human proofreader (Heintz et al., 2022). While AI may help correct typos, spelling errors, and grammar mistakes, it fails to grasp nuance or a writer’s intent.
While these studies highlight students’ general awareness and use of AI technologies to support writing, they do not specifically explore use by ESL students, leaving a gap in the literature regarding the use of AI to support students for whom English is not their primary language.

Second, some students may find it difficult to synthesize research, develop a coherent argument, conduct a literature review, or contribute to the scholarly body of knowledge (Malik et al., 2023). AI-embedded tools can help students navigate these issues by serving as a writing tutor and providing feedback on their essays, which can, in turn, be incorporated to improve the completed work (Kasneci et al., 2023; Rowland, 2023; Singh et al., 2023). Students are already discovering that they can get immediate feedback from AI, whereas feedback from teachers or peers may be delayed or arrive too late to incorporate into an assignment (Huang et al., 2023). Additionally, an AI-based chatbot could be used to support outline creation for a writing task. According to Lin & Chang (2020), such AI support enhances writing structure and promotes an enjoyable learning experience. Moreover, the chatbot's questioning and prompting helped students become more critical of their writing, creating a space where they could pause and reflect on their work, which led to improved self-regulation (Lin & Chang, 2020). While such studies describe rich use cases for applying AI to academic writing, they do not solicit the in-depth student perspectives needed to optimize learners’ use of this technology and are mostly limited to close-ended survey items such as “I've used AI-generated essay outlines to efficiently arrange my ideas before writing” (Malik et al., 2023). Such survey responses can signal general student perceptions of AI, but they do not uncover which specific aspects of the technology are most effective or present challenges to student users.

Many instructors who have been exposed to GenAI believe it can enhance equity in academic writing for those who struggle with written communication (e.g., students with disabilities or ESL students) because it can act as a writing resource (Cardon et al., 2023). Specifically, AI can support translation, making it easier to navigate language barriers, and can be useful for general writing and revision (Huang et al., 2022). In addition, students have expressed the belief that AI can act as a colleague with whom they can socialize, a partner that helps them brainstorm, enhances their creativity, and makes them feel supported as they write (Kim & Cho, 2023). However, while students may view AI as a potential learning partner, AI could also heighten anxiety. For example, when a chatbot was introduced as a written conversational partner in a writing course, students’ anxiety levels were reported to increase, potentially because of the AI interaction itself or a lack of familiarity with chatbots (El Shazly, 2021). Further research into student perspectives on AI use could reveal whether such anxiety is typical and, if so, point to its underlying cause so that AI developers, instructional designers, and educators can address it.

While AI can address challenges students face in academic writing, GenAI-assisted writing and learning present their own challenges. Specifically, several ethical concerns have been identified in the current discourse about GenAI. Within the academic community, there is genuine concern that AI use will result in plagiarism, particularly if academic writers do not think critically about AI suggestions and simply adopt whatever it recommends (Salvagno et al., 2023). Additionally, GenAI can reflect any bias contained in its training data or held by its developers. As a result, by over-relying on GenAI without appropriate skepticism, academic writers could help perpetuate such bias (Salvagno et al., 2023). While educators and researchers are voicing these ethical concerns, the degree to which students themselves are wrestling with these issues remains underexplored, and the voices of students who use GenAI are lacking. Insights from students, who come from diverse backgrounds and are the primary users of such tools, are therefore crucial for a comprehensive understanding of the tools’ effectiveness.

To address the gaps highlighted above, we explore the perspectives of students, the primary users of these tools, to highlight the benefits of and barriers to using GenAI for academic writing in a higher education context. Understanding their unique perspectives and experiences can help ensure that the tools adequately address students' challenges and place students at the center of the design and implementation process. While the benefits identified thus far are impressive, research into GenAI in education, including higher education, is still in its early stages, and more remains to be learned by engaging directly with students.

3 Research methods

3.1 Participants and context

This study was conducted at an international Sino-British joint-venture, research-led university in Suzhou, mainland China, that provides English-medium instruction (EMI). Prior to admission, undergraduate students whose primary language is not English must achieve a minimum score of 80 on the TOEFL iBT (Internet-based test) or 5.5 on the IELTS, while graduate students must achieve a TOEFL iBT score of 90 or an IELTS score of 6.5. In addition, the university's English language center delivers credit-bearing English language modules (e.g., academic writing and speaking, college English) in the first year of most degree programs to help students transition to using English in an academic EMI context and to enable their academic success. For master's and doctoral students, academic English courses (e.g., academic English writing, English for international academic communication) are necessary because these students are required to write a dissertation in English, present at international conferences in English, and publish high-quality papers in international journals.

The study adopted both purposeful and snowball sampling to explore and categorize diverse perceptions of GenAI-assisted academic English writing. Following the principle of maximum variation sampling (Dörnyei, 2007), twenty participants were selected to vary in educational background (major, degree level), academic writing skill (IELTS writing score obtained within the past 6 months), and AI literacy. To measure participants’ AI literacy, we adapted Long & Magerko (2020) into a survey using 7-point Likert scales (from “Strongly Disagree = 1” to “Strongly Agree = 7”) and classified participants into two groups (higher or lower AI literacy).
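The two-group AI literacy classification can be illustrated with a short sketch. The paper does not state how the groups were formed, so this example assumes that each participant's score is the mean of their 7-point Likert items and that participants are split at the median, a rule that yields an even split whenever no scores tie at the median. The function names `ai_literacy_scores` and `split_by_median` are hypothetical, and the sketch is not the authors' procedure.

```python
from statistics import mean, median


def ai_literacy_scores(responses: dict[str, list[int]]) -> dict[str, float]:
    """Average each participant's 7-point Likert items (1 = Strongly Disagree, 7 = Strongly Agree)."""
    scores = {}
    for pid, items in responses.items():
        # Reject empty or out-of-range answers before averaging.
        if not items or any(not 1 <= x <= 7 for x in items):
            raise ValueError(f"{pid}: each item must be a rating from 1 to 7")
        scores[pid] = mean(items)
    return scores


def split_by_median(scores: dict[str, float]) -> dict[str, str]:
    """Label scores above the median 'higher' and the rest 'lower' (assumed rule, hypothetical)."""
    cut = median(scores.values())
    return {pid: "higher" if s > cut else "lower" for pid, s in scores.items()}
```

A median split is only one plausible rule; a fixed cut-off such as the scale midpoint (4) would be another, and it could produce unequal groups.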

Of the 20 participants (Table 1), 10 were female (50%) and 10 were male (50%), spanning three degree levels (seven Bachelor's, eight Master's, and five Doctoral students). Their IELTS scores ranged from 5 to 7.5. Participants were also evenly divided between the high and low AI literacy categories. This study received ethical approval from the university’s Institutional Review Board (NO. ER-AOFE-12781164620220903234812), and informed consent was obtained from all participants.

3.2 Data collection

The study conducted a semi-structured elicitation interview of 60–90 minutes with each participant via Zoom. To facilitate the interviews, each student was invited to write an academic essay on one of four randomly assigned topics (see Appendix 1) before the interview. To support the writing activity, we developed a ChatGPT4-embedded writing system called Writing With GPT (WWG), adapted from Han et al. (2023), using the Unity 3D game engine (version 2021.2.3f1), as presented in Fig. 1. Our platform features two types of prompts that facilitate conversations between ChatGPT and students: (1) a hidden prompt (pre-prompting) that instructs ChatGPT to take an academic instructor role (see Appendix 1) and (2) an open prompt through which students initiate a dialogue with the system during their writing process (panel (B) of Fig. 1).
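The two-prompt design can be illustrated by showing how a hidden instructor prompt might be combined with a student's open prompt before each request to the model. This is a minimal sketch, not the WWG implementation (which was built in Unity 3D). It assumes the role/content message layout used by chat-style LLM APIs, and the names `HIDDEN_PROMPT`, `build_messages`, and `visible_turns` are hypothetical. The placeholder prompt text only stands in for the actual pre-prompt given in Appendix 1.

```python
# Hypothetical placeholder; the study's actual pre-prompt is in its Appendix 1.
HIDDEN_PROMPT = "You are an academic writing instructor helping a student with an essay."


def build_messages(history, student_input, hidden_prompt=HIDDEN_PROMPT):
    """Assemble the message list sent to the model for one turn.

    The hidden prompt is prepended as a system message on every turn,
    prior student/assistant turns are replayed in order, and the new
    open prompt from the student is appended last. `history` is not modified.
    """
    messages = [{"role": "system", "content": hidden_prompt}]
    messages.extend(history)
    messages.append({"role": "user", "content": student_input})
    return messages


def visible_turns(messages):
    """Return only the turns a student would see; the system prompt stays hidden."""
    return [m for m in messages if m["role"] != "system"]
```

Keeping the hidden prompt out of the stored history means it is re-applied on every turn without ever appearing in the student's view of the conversation.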

All participants were randomly assigned one of three topics (see Appendix 2) from the IELTS academic writing task (Task 2), which requires students to present a point of view, argument, or problem. Students may be asked to agree or disagree with a point of view or argument, discuss two opposing viewpoints, or write about the advantages and disadvantages of a topic, in at least 250 words. Before each task, we explained the study's objectives and methods. Additionally, every participant received a comprehensive guide containing detailed instructions for academic essay writing and for academic writing with GenAI, the writing topics, and the four assessment criteria for IELTS academic writing tasks (i.e., task response, coherence and cohesion, lexical resource, and grammatical range and accuracy). Participants were asked to write an essay of more than 250 words in English within 60 minutes. Furthermore, to familiarize participants with the system interface, we provided a concise instructional video showcasing the system's primary functions and the purpose of each feature, and participants were then allowed to test the system for 20 minutes. This ensured that the time invested in learning the tool did not encroach upon their task completion time and also minimized the influence of their initial impressions on the feedback provided during the subsequent interview.

After the writing activity with ChatGPT, each participant's final essay and a screen recording of their writing process were collected and used to facilitate the interviews. Participants were asked about their ChatGPT-assisted writing experience with questions such as "What roles did you expect ChatGPT to play during the completion of your writing tasks?", "In what ways did GPT help your writing task process?", and "What aspects of ChatGPT do you think have negatively affected your writing process?". The interview guiding questions are presented in Appendix 3. To avoid language barriers, interviews were conducted in either Mandarin Chinese (the participants' first language) or English (the medium of instruction), at the participant's choice. All interviews were recorded, transcribed, and member-checked, and interviews conducted in Chinese were then translated into English. To ensure the accuracy of the data, transcripts of interviews conducted in Chinese underwent back translation by a researcher proficient in both languages, which entailed comparing the original transcripts with their translated counterparts to identify any discrepancies or errors. By describing these measures, we aim to provide transparency regarding the steps taken to uphold the validity and accuracy of our data, thereby enhancing the credibility and reliability of our findings.

3.3 Data analysis

To uncover emerging themes related to participants' perceptions of GenAI in academic writing, we employed a mixed inductive and deductive thematic analysis (Clarke et al., 2015) in seven steps: transcription, data familiarization, coding, thematic analysis, data reduction, interpretation, and validation. First, the interviews were transcribed verbatim to capture all nuances and expressions of the participants. During data familiarization, the researchers immersed themselves in the data to gain a comprehensive understanding of the content and context of the interviews. Subsequently, two researchers independently conducted a deductive thematic analysis, using their own perspective lenses to categorize the interview data into patterned themes (Glesne, 2016). An inductive analysis was then applied to identify novel codes and themes not initially recognized. During data reduction, the generated sub-themes were systematically reviewed, with some merged and others divided into further sub-themes. This iterative interpretive process continued until the researchers reached unanimous agreement on each theme during data interpretation. Finally, the themes and sub-themes underwent a rigorous validation process involving member checks, iterative discussions, and consensus-building among the researchers to ensure credibility and trustworthiness (Glesne, 2016). While critically reflecting on the translated transcripts and converting them into sub-themes, we took care to maintain the participants' original voices. In total, nine themes were derived (see Appendix 4): three themes with 4 sub-themes for the expected roles of GenAI in academic writing tasks (RQ1), three themes with 11 sub-themes for the advantages of GenAI-assisted academic writing (RQ2), and three themes with 15 sub-themes for the barriers to GenAI-assisted academic writing (RQ3).

4 Findings and discussion

4.1 Students’ perceptions of the expected roles of AI in AI-assisted academic writing tasks

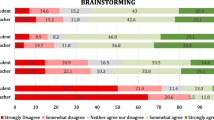

The themes that emerged from students’ responses regarding the expected roles of AI are summarized in Table 2. Each theme encompasses several sub-themes, with percentages indicating how frequently each sub-theme was mentioned. Each of the 28 student statements was categorized into the themes, and a single statement could be coded under multiple sub-themes, reflecting the multifaceted expectations and perceptions students have regarding AI.
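Because a single statement could be coded under several sub-themes, sub-theme percentages computed against the total number of statements need not sum to 100%. The sketch below assumes that each percentage is the share of all statements coded with that sub-theme; the paper does not spell out the formula, and `subtheme_percentages` is a hypothetical helper rather than the authors' analysis code.

```python
from collections import Counter


def subtheme_percentages(coded_statements: list[set[str]]) -> dict[str, float]:
    """Return the percentage of statements coded under each sub-theme.

    Each statement is a set of sub-theme labels; a statement may carry
    several labels, so the percentages can add up to more than 100%.
    """
    total = len(coded_statements)
    if total == 0:
        return {}
    # Sets guarantee each label is counted at most once per statement.
    counts = Counter(label for labels in coded_statements for label in labels)
    # The denominator is the number of statements, not the number of codes.
    return {label: round(100 * n / total, 1) for label, n in counts.most_common()}
```

For example, four toy statements (not study data) coded {search engine}, {proofreader, thought-provoker}, {proofreader}, and {virtual tutor} give 50.0% for proofreader and 25.0% for each other label, totalling 125%.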

4.1.1 Multi-tasking writing assistant

One distinct role that students identified emerged from an expectation that the GenAI writing system should act as a search engine. For instance, P8 expressed: "If I have anything I want to search, I just ask GPT." Similarly, P6 said they used it for "inquiring about the concept of some academic terms." Given that search engines play a vital role in everyday life by providing information within a fraction of a second for various user queries (Sekaran et al., 2020), these findings reveal that students expect the GenAI writing system similarly to help them access, integrate, and leverage domain-specific knowledge and content to support comprehension and to meet the expectations of writing-related tasks (e.g., literature searches and information retrieval).

Another significant role of the GenAI writing system that emerged was that of a thought-provoker. Students in this study expected it to stimulate and inspire the generation of ideas, encourage critical thinking (“I hoped it could encourage me to think sharp in analyzing theoretical framework or existing research trends”, P10), foster creativity, explore and consider different perspectives (“I somehow expected it to challenge me with some questions that I have never thought of to give me a new fresh thought”, P9), and delve into deeper layers of understanding (“Perhaps it could help me to get into a deeper discussion”, P2). While existing literature considers the role of AI in writing to be continuously generating possible ideas with engaging questions (Dale & Viethen, 2021; Rowland, 2023), students in this study envisioned GenAI as a thought facilitator that supports various thinking processes during writing tasks, rather than merely providing information or guidance.

Furthermore, students anticipated that GenAI would serve as an entry-level writer. In particular, students perceived the GenAI writing system as an entity that conducts research on the writing topic and uses technical writing expertise to generate at least an initial draft that serves as a starting point for the student (see Appendix 4). It is worth noting, however, that students did not intend to have GenAI write the essay for them. Rather, students believed GenAI’s initial draft could help them expand, clarify, and modify ideas and organize content into a meaningful sequence or flow.

AI’s initial draft at least works as a baseline that allows me to review and alter the content and develop it to be a more cohesive text that meets with the purpose and audience of this writing task (P4).

Lastly, students may find that when their writing is riddled with grammar, punctuation, or spelling mistakes, they face an emotional barrier that makes them feel stuck during the task. Students recognized that improving their writing skills requires acknowledging and correcting these mistakes, and this is where they expected GenAI to serve as a proofreader. In Appendix 4, P9’s quotations reveal two important insights. First, students find it less anxiety-inducing and embarrassing to show their error-laden work to AI: they felt less self-conscious about making mistakes in front of the AI writing system than in front of human instructors or friends, consistent with many existing studies (Alemi et al., 2015; Van den Berghe et al., 2019). Second, students are not merely seeking the correction of grammatical errors; they aim to use proofreading as a tool for self-awareness. They want to identify their weaknesses and become more sensitive to their written communication through the process, and they see the feedback provided by GenAI as a valuable resource for developing overall writing composition abilities. This underscores the perception of GenAI as a proofreader that functions as a supportive, non-judgmental partner in students’ writing journey, allowing them to learn from mistakes to improve their writing skills.

4.1.2 Private virtual tutor on-demand within a minute or less

GenAI, conceived as a private virtual tutor, was identified as a significant role of AI in AI-assisted writing. Given that AI can offer personalized interactions by comprehending and interpreting students’ inputs and generating personalized answers in real time (Imran & Almusharraf, 2023), students specifically anticipated that GenAI would serve as a one-on-one personalized instructor. As highlighted in Appendix 4, AI’s capacity to offer step-by-step guidance whenever students want and need it instills a belief in continuous on-demand tutoring and positions GenAI as a highly influential and versatile writing assistant (Sallam, 2023). In addition, P6 particularly emphasized GenAI’s cost-effectiveness and efficiency, stating that “such quality one-on-one lessons are too expensive and not feasible” when referring to human instructors. These views reveal two important insights. First, students expect AI, functioning as a private virtual tutor with personalized interaction, to be cost-effective and efficient and to provide real-time responses that complement the weaknesses of human tutors (i.e., their inability to offer real-time feedback) (Kim, 2023). Second, these expectations suggest promising potential to support students’ writing skills from a lifelong learning perspective rather than as a one-time event, as AI could give students flexible and continuous access to writing education (students can learn when, how, and what they want to learn) and build a long-term relationship with them.

4.1.3 Digital peer

Students have embraced GenAI as more than just a functional tool; they perceive it as a digital peer throughout their writing journey. As P3 expressed, 'I feel a strong sense of friendship when I talk to him. When I ask it to do some tasks for me, it responds with a like, "certainly, yes, of course,"' which gave the student 'a strong sense of security and friendliness.' These views demonstrate that students appreciate AI’s mutual and responsive interaction, which not only enhances the sense of entertainment but also cultivates a robust sense of community (McKinnon, 2023). Students expect GenAI, as a digital peer or friend, to create a continuous collaborative environment reminiscent of the mutual, dynamic interactions experienced during the learning and writing process. These views mark a shift away from the tool-centric conception of technology, in which AI is positioned as a powerful learning tool for effectively attaining predetermined learning goals, toward an agent-centric approach informed by post-humanist theories such as Actor-Network Theory (ANT). This approach highlights the interplay of humans and technology and acknowledges that both students and AI are agents in their own right who transact learning activities with each other in mutually engaging and supportive ways (Kim & Cho, 2023).

4.2 Students’ perceived advantages of GenAI-assisted academic writing

Table 3 presents the findings from the thematic analysis of students’ responses (31 in total) on the perceived advantages of using GenAI in writing. The primary themes identified were a productive writing process, improved writing performance, and an enhanced affective domain. To follow the general stages of the writing process (Wale & Bogale, 2021), the sub-themes under the productive writing process theme are presented in the order of the writing process rather than by frequency.

4.2.1 Productive writing process

Students identified several key advantages throughout the writing process, which we categorized into four stages: ideation, planning, drafting, and revision. First, during the early stages of writing, students found that GenAI enhanced their ideation by deepening their understanding of the knowledge and issues surrounding the writing topic. GenAI gathered diverse perspectives from different sources, generated ideas by mapping out its content, identified potential correlations and gaps by offering sufficient and relevant content, and asked questions back to students to help them specify or further develop their focus (see Appendix 4). This finding suggests that, by streamlining the ideation process, GenAI not only expedites the generation of ideas but also empowers students to approach subsequent stages of the writing process with a well-defined and enriched conceptual framework (Aldabbus & Almansouri, 2022; Gupta et al., 2022).

In the planning stage, where students organize and focus their ideas to structure their writing, the perceived assistance extended to structuring the overall outline, from organizing main points logically to creating a coherent flow. Students valued GenAI’s guidance in two critical aspects of planning: 1) structuring the content and 2) planning the process (see Appendix 4). This included outlining main points logically (“it provide outline of the instruction or of some paragraphs,” P2; “the structure of my essay has become more logical,” P3), clarifying that the topic aligned with the intended task structure (“this-topic- part, the GPT helped me to have a clear way,” P19), and ensuring a coherent flow in the writing structure (“GPT gave me some specific instruction such as the structure of the whole text,” P5). These accounts also illustrate how students interacted with GenAI to plan the entire writing process, including creating a roadmap for approaching the task, setting goals, defining tasks, and establishing a completion plan for each phase.

As writing unfolds, it traces a trajectory from general information to increasingly specific details relevant to the writer’s stance and the task (McKinley & Rose, 2018). While crafting their initial drafts, students reported that GenAI helped them move organically from broad concepts to intricacies directly related to their position, ensuring a cohesive and well-structured progression of information. For instance, AI systematically broke down the writing process into tasks or sections to enhance efficiency (see Appendix 4), crafted initial supporting sentences for relevant evidence, or produced finalized sentences based on students’ initial drafts (“put my initial idea and text and AI somehow further enriches my writing or AI generates a brief paragraph and I further develop with strong arguments,” P4). Interestingly, this composing process tended to be collaborative and iterative: GenAI generated text or drafts, and students then repeatedly went through cycles of writing words, phrases, and sentences and reviewing both their own and GenAI’s writing to synthesize them, ensuring a thorough and cohesively structured draft.

In the revision phase, the final stage of the writing process, students acknowledged GenAI’s valuable contribution not only to traditional editing and proofreading but also to strengthening their metacognitive skills. GenAI played a pivotal part in guiding self-correction, helping students identify and rectify language errors, inconsistencies, and dissonance in their writing, and it actively contributed to enhancing the clarity and coherence of their drafts (Gayed et al., 2022). Beyond being a mere editor, GenAI took on the role of a thoughtful reviewer. In particular, students expressed deep appreciation for GenAI’s instantaneous feedback, which enabled them to refine their work iteratively. This iterative refinement facilitated the production of a polished final draft, embodying the collaborative efforts of the student and GenAI in achieving a well-crafted piece of writing.

4.2.2 Improving writing performance

Students perceived a significant enhancement in their writing quality through GenAI-assisted writing, encompassing aspects such as clarity, coherence, organization, grammar, punctuation, and vocabulary usage (e.g., “the spelling of words and the correct use of grammar,” P7; “polish the sentences I write which can make the article more coherent,” P8; “grammar correction and vocabulary complexion,” P6). In turn, students perceived that GenAI improved how effectively, clearly, and coherently they conveyed their intended message to their target audience.

Another key advantage perceived by students was the improvement in writing speed facilitated by AI. GenAI provided continuous support throughout the stages of academic writing (see Section 4.2.1). In particular, students perceived that GenAI could reduce certain inefficiencies in the writing process, such as collecting information from browsers, repeatedly searching similar resources, and double-checking the accuracy of the information they had collected. This made the writing process more efficient and expeditious while enabling students to focus on tasks requiring higher-order thinking (i.e., critically organizing the data they need for their writing and selectively choosing relevant information during the writing process).

Lastly, students found that AI improved their topic knowledge. Academic writing necessitates a profound understanding of the writing topic that builds on students’ background knowledge. As P2 said: “GPT kindly explained certain terms that I need to understand for the writing topic and gave me many examples. With its help, I became clear what to explain and argue”. Her quote is in line with Parodi (2007), who highlighted that comprehensive topic knowledge helps students establish a well-structured situation model of the writing topic, improving the accuracy and coverage of content and enabling them to create their own text product; AI’s assistance in acquiring such knowledge can support this process.

4.2.3 Enhancing the affective domain

First, students described how GenAI infused joy into their writing experiences. Students felt joy while posing questions to GPT and receiving continuous, sometimes insightful, responses. This transformed the typically solitary writing task into an enjoyable and interactive experience (see Appendix 4).

Second, fostering question generation was identified as another advantage of GenAI-assisted writing. For instance, P8 stated: “I must ask questions more specifically, more clearly to have better content from GenAI. When working with AI, I have to be active in making questions”. His view resonates with inquiry-based writing instruction, a way of gaining knowledge and skills by asking for information (Wale & Bogale, 2021). In this regard, GenAI supports inquiry-based writing instruction by guiding students to pose questions, examine sources, synthesize data, propose answers, and explain and communicate arguments, which helps them develop their knowledge and facilitates the writing process (Sandoval, 2005).

Third, AI played a crucial role in increasing the support students perceived. The presence of this invisible support significantly enhanced students’ sense of relief and feeling of being supported during the writing process (see Appendix 4). This finding is in line with existing literature highlighting that students’ perceived co-presence (Mason, 1994) offers “emotional comfort and support in the virtual environment” (Kim & Cho, 2023, p. 7). Students’ acknowledgment of AI as a source of companionship, comfort, and assistance suggests that AI can not only enhance academic performance but also provide emotional support to students navigating virtual learning environments, fostering psychological stability during the challenges of writing.

Finally, the perceived availability of AI support, when needed, seemed to significantly promote students’ self-efficacy towards task achievement.

I'm not a native speaker so I cannot express myself well in English. But with GPT’s support, writing tasks became less burdensome. Overall, I think my writing tasks have been smoothly managed. With a bit of support, I can also be good at writing in English (P2).

P2’s quote shows that AI support not only reduces the perceived difficulty of writing tasks but also acts as a powerful catalyst for fostering students’ belief in their own ability to accomplish the writing task successfully (Nazari et al., 2021).

4.3 Students' perceived barriers in GenAI-assisted academic writing

Table 4 presents the results of the thematic analysis of students’ responses (38 in total) on the perceived barriers to using GenAI in writing. The table outlines the main themes identified, namely obstacles related to GenAI itself, to students, and to specific writing tasks, while students’ responses encompassed a range of sub-themes that illustrate the diverse challenges they perceive in integrating GenAI into their writing processes.

4.3.1 AI-related

First, GenAI’s hallucination (Alkaissi & McFarlane, 2023), a phenomenon in which the system generates text that is semantically or syntactically plausible but factually incorrect or nonsensical, was found to be the most prevalent challenge of GenAI-assisted academic writing. Students in the study reported that ChatGPT generated content and references that appeared credible and relevant to the writing topic on the surface but lacked factual authenticity and accuracy. Such inaccurate and misleading information can perpetuate misconceptions about the topic among students. Further, it requires students to conduct a time-intensive review of the precision, credibility, and authenticity of AI-generated content. In addition, given that academic writing demands a meticulous interplay of discipline-specific knowledge and unwavering adherence to scholarly standards (e.g., rigorous and credible sources and evidence), AI hallucinations undermine the very purpose of academic writing and scholarly contribution.

Second, AI’s lack of contextual understanding was another significant challenge to effective GenAI-assisted writing. In academic writing tasks, students need to go beyond summarizing relevant information. They are required to situate ideas, arguments, or practices in a larger context, construct the reader’s view of a broad-scale situation, and call attention to the relationships surrounding an issue (Epting, 2018). In this respect, students expected ChatGPT to help them perceive the broader context in which content exists and make sense of outside information, connecting contextual features with their linguistic realizations (e.g., expression of content and organization of text). Yet ChatGPT merely responded with generic, broad information without comprehending complex social dynamics and situations such as individual variation, socio-political concerns, historical viewpoints, or the distinctive issues encountered by different societies and cultures. It thus failed to offer in-depth topic and disciplinary knowledge or nuanced interpretations of the content it generated for students.

Third, while ChatGPT offers large amounts of information at lightning speed, students pointed out that its lack of higher-order thinking posed a significant challenge. Such thinking is reflected in the top three levels of Bloom’s revised taxonomy: (1) analysis (the ability to deconstruct information and/or examine it from differing perspectives), (2) evaluation (the ability to form educated judgments and relevant critiques), and (3) creation (the ability to present learned information in new and unique ways) (Anderson & Krathwohl, 2001). In this regard, Choudhuri et al. (2023) explained that when AI generates an exponential volume of information and content without helping to synthesize it in real time, it increases cognitive load, fatigue, and frustration in comprehending, managing, and processing that content.

Fourth, AI’s lack of human awareness (in this study, awareness of the students), which many studies have pointed out, posed another challenge. This included AI’s inability to understand the student’s context or environment, such as unique writing styles, tone, techniques, communication styles, and learning environment (Endsley, 2000; Jiang et al., 2023). As P18 described in Appendix 4, students expected AI to be conscious of their individual writing processes, styles, and voice, and to revise or give feedback that improves the quality of their writing without losing their authentic style and voice. This finding is corroborated by earlier AIED research, which identified human awareness as an essential component in building educational AI and called for student-centered, education-sensitive AI-embedded systems that understand students’ diverse characteristics within the educational domain so that they fit into existing educational practices and processes (Lee et al., 2023).

Fifth, AI’s lack of understanding of the culture associated with the language was perceived as another challenge. As Lustig and Koester (2010) highlight, language use is more than simply sending or receiving information; rather, it involves individuals from different cultures with different orientations and interpretations in meaning making. Accordingly, AI’s limited cultural understanding when communicating in Chinese led to breakdowns in conversation or resulted in errors and misunderstandings or misinterpretations of the context required to interpret and communicate (see P4’s quote in Appendix 4).

Sixth, another AI-related challenge was its lack of relationship skills. Students described that although ChatGPT generally communicates in a neutral and sound way, it cannot build positive relationships through methods such as incorporating humor, sharing and asking for thoughts, recognizing students’ strengths and improvements, developing a sense of friendship, or offering motivational and emotional support to make interactions more productive, rewarding, and pleasing. These findings are concordant with existing research calling for affective and empathetic AI that accounts for socio-emotional interactions with students, fosters students’ affective domain (e.g., motivation and engagement) during the learning process, and creates constructive interaction and collaborative experiences between students and GenAI (Kim et al., 2024; Kim & Lee, 2020).

Seventh, ChatGPT’s lack of pedagogical skills was found to be an important challenge. As discussed earlier, students expected AI to serve not only as a writing assistant but also as a writing tutor. However, a GenAI-assisted writing system lacks effectiveness without the ability to plan, initiate, lead, develop, and assess instruction both in domain-specific areas, such as writing, and in topic knowledge, and to integrate content understanding with instruction. Without these capabilities, students may not gain a thorough understanding of the AI-provided content or learn how to blend it into their writing tasks. Furthermore, the system should create valuable learning opportunities and experiences, including active learning, inquiry-based learning, and educationally meaningful communication during student-GenAI interaction (Kim & Cho, 2023).

Eighth, a lack of interoperability, also identified by previous studies (Kim & Cho, 2023; Lehne et al., 2019), was perceived as another challenge. Students wanted a centralized hub that would allow them to gather a wealth of content and viewpoints from different platforms, which they could then compare and contrast to save the time and effort of browsing different websites and platforms. This would help them streamline essay organization and efficiently narrow down their arguments. In addition, as P10 described in Appendix 4, an interoperable AI system could further empower students by putting them in control of their writing tasks. Rather than being limited to a single platform, students could explore various options and find the best fit for their needs, accessing different views, content, and suggestions to make decisions that align with their needs on writing tasks. This would allow students to become active consumers and managers of AI-generated content in their tasks rather than passive recipients of AI suggestions (Kim & Cho, 2023).

Last but not least, another crucial challenge was AI’s inability to explain its knowledge: what it knows, how it knows it, and why it suggests certain information. As P13 described in Appendix 4, students were well aware of AI’s operational characteristics, such as the black-box issue, in which the reasoning behind AI’s decision-making is indecipherable. Yet students expected AI to offer clear interpretations of its suggestions with transparent explanations for its recommendations so that they could easily understand its suggestions and content. This would enable students to reproduce or improve the AI-generated content and would facilitate the writing process. Reflecting the needs and aims of feedback, a form of explanation in education, AI’s explanations could improve understanding, prompt self-monitoring and self-direction, and scaffold the learning process (Hattie & Timperley, 2007). Moreover, explanation can be seen as a relational process through which AI systems may encourage positive motivation and help learners build confidence and self-esteem (Price et al., 2010). Students perceived explanation not just as an end product but as a process that requires diverse cognitive and social interactions, including troubleshooting, justification, communication, and trust, and as a knowledge transfer process from an explainer (the AI system) to an explainee (the student) (Miller, 2019; Srinivasan & Chander, 2021). This indicates that a GenAI writing system that interacts with students needs to consider such socio-technical perspectives and employ a range of explainable AI techniques and methodologies to better assist sensemaking (Alqaraawi et al., 2020), enhance user trust (Yang et al., 2020), or enable shared decision-making between the student and the AI system (Kim et al., 2024; Liao et al., 2020).

4.3.2 Student-related

First, students in the study emphasized the importance of AI literacy for engaging effectively with AI (e.g., crafting precise and effective prompts to guide AI toward generating responses that are both accurate and pertinent to students’ needs). Statements such as “Is AI incapable of handling this?” (P11) or “I assumed it (ChatGPT) would understand my intention even if I spoke vaguely” (P16) illustrate that a deficiency in understanding and using AI hinders the full utilization of its functions, thereby impeding the effectiveness of student-AI interaction in learning tasks. Specifically, AI literacy is crucial for comprehending AI-generated content, navigating AI system interfaces, and engaging in prompt design, all of which affect how fully AI can be utilized.

Second, students’ negative attitudes toward AI were found to be another prevailing challenge. These attitudes encompassed concerns about the negative side effects of GenAI-assisted writing and potential adverse consequences or unintended outcomes of GenAI, such as biases and errors. As P6’s quotes in Appendix 4 show, students’ negative attitudes toward AI could cause reluctance to engage fully with AI-assisted tasks and limit the potential uses of AI in the writing process.

Third, students’ lack of higher-order thinking emerged as a barrier in GenAI-assisted writing. For instance, students faced challenges in critically evaluating AI-generated content, formulating logical arguments, and engaging in nuanced reasoning about AI-generated content. Bloom’s taxonomy categorizes cognitive skills into higher-order skills (e.g., analyzing and synthesizing) and lower-order skills (e.g., remembering and applying) (Anderson & Krathwohl, 2001; Bloom et al., 1956); on this basis, higher-order thinking can be expressed as the interaction and application of skills such as logical reasoning, questioning assumptions, and considering and evaluating alternative explanations (Spector & Ma, 2019). Because these skills are integral to the constructive use of AI, especially in writing tasks that require reasoning loops along the spiral of human thinking, students’ struggles with them become apparent.

Fourth, students’ lack of topic knowledge for the task was found to be another challenge. Topic knowledge, also known as background knowledge, encompasses what a person already knows about a topic (Marzano, 2004) and is crucial for generating content independently. Numerous studies have reported a relationship between background knowledge and achievement (Bloom, 1976; Schiefele & Krapp, 1996), as well as its correlation with the extent to which a person learns and reflects on that topic. This relationship also affected collaboration with GenAI: writing on unfamiliar topics restricted students’ ability to contribute unique perspectives because they lacked background knowledge. For instance, P1’s statement in Appendix 4 illustrates the relationship between topic knowledge and the writing task. Consequently, these students had to rely heavily on AI suggestions to guide their writing process and content creation.

Lastly, a lack of writing skills acted as a barrier in collaborative writing with GenAI. Students expressed that a certain level of writing proficiency was essential for optimizing the quality of AI-generated drafts. They conveyed a sense of limitation in making further modifications and adjustments to the drafts, suggesting that a lack of writing skills hindered their ability to fully capitalize on AI support (see Appendix 4). This suggests that students need a foundational level of writing competence to utilize AI suggestions effectively and produce high-quality written content.

4.3.3 Task-related

Given the 30-minute time limit allocated for the writing activity, time constraint emerged as a barrier. For example, P1 expressed: “But in the writing task, I did not have enough time for fully assessing GenAI-generated contents. Sometimes I had to just use its contents since I didn’t have enough time to make changes.” His view reflects the practical challenges students faced in managing their time effectively within the designated window, leading to increased reliance on GenAI to handle the entire writing process. This finding echoes earlier research highlighting that time constraints, the disparity between the available time and the time needed to address a task (Benson & Beach, 1996; Rastegary & Landy, 1993), can impact students’ decision-making and task operation behavior (Gonzalez, 2004).

5 Conclusion

This study explored the expected role of GenAI in academic writing, including advantages and challenges, from students’ perspectives. The findings suggest a range of implications for educational AI design and instructional strategies for AI-assisted learning to positively impact students’ learning.

First, students articulated the various roles they expected GenAI to serve during academic writing tasks and acknowledged the benefits of GenAI-assisted writing. Specifically, students viewed AI not as a mere content generator but as an active agent in their learning (e.g., virtual tutor, digital peer) that fosters their learning experience and performance. Additionally, students expected AI to embrace human-like traits and characteristics, including contextual understanding, higher-order thinking, human awareness, cultural awareness, relationship skills, and pedagogical skills. Such expectations could lead some individuals, particularly non-academic audiences, to ask questions such as “Will AI take over teaching in schools?” or “Will AI ever reach human-level intelligence?”. The findings of the current study do not support replacing human tutors or building human-like AI. Rather, they echo existing research emphasizing the importance of designing human-centered AI in education that prioritizes the needs, characteristics, and experiences of human users, such as students and teachers. This entails designing learning experiences that enhance learners’ performance by leveraging AI to amplify the complementary strengths of humans and AI, thereby promoting active collaboration through human-AI interaction in educational contexts (Kim et al., 2022; Kim, 2023; Kim & Cho, 2023). In this respect, post-humanist theories such as Actor-Network Theory (ANT) offer alternative perspectives on the roles of technology and the design of educational AI. ANT highlights the agency of both humans and technology and proposes a symbiotic interaction and relationship between them, in which neither is reduced to nor dismissed in favor of the other; both students and AI are active agents that transact the learning task while continuously influencing each other.
This means that the roles of AI and the nature and quality of student-AI interaction for learning should be both mutually engaging and supportive (Latour, 2005; Kim & Cho, 2023). Reflecting on this, a rich line of research proposes diversifying the roles of GenAI to maximize its potential for strengthening instructional practices and learning experiences while avoiding both overuse (misuse) and underuse (disuse) of AI, for a balanced symbiosis of AI and human interaction in educational settings (Molenaar, 2022; Renz & Vladova, 2021). For instance, Kim (2023) suggests that AI could serve as a case library that enables case-based reasoning in collaboration with human teachers. Alternatively, AI could be designed as a Teachable Agent (TA) that capitalizes on learning-by-teaching interactions, in which students learn by teaching the TA (e.g., assessing its knowledge by asking it questions or getting it to solve problems) (Biswas et al., 2005; Chase et al., 2009). Given that human learning is a multifaceted system characterized by various levels of abstraction and interactions between subsystems, interaction between students and GenAI systems requires careful consideration. This calls for a comprehensive, integrated understanding of the interplay between human-human interaction and human-technology interaction within socio-technical systems. This approach, informed by insights from both domains, recognizes that the human element is intricately linked to the technological elements, with each influencing and conditioning the other (Emery, 1959). Building on this perspective, future research should prioritize developing theoretical and conceptual foundations for human-centered AI in learning by integrating current cognitive or behaviorist learning theories from education (e.g., the relationship between schema theory and how learners process and organize information while interacting with AI).
This understanding is critical for designing GenAI systems, which are complex learning systems involving dynamic interactions and underlying learning mechanisms among students, instructors, AI, and learning tasks, influenced by process-related, technical, and ethical factors (Kim et al., 2024; Kusters et al., 2020). Integrating these perspectives may shed light on student-AI complementarity, the optimal roles of GenAI systems, students, and instructors, and curriculum design, such as determining learning objectives, content organization, and assessment plans for AI-assisted learning and teaching. It also calls for a framework that not only links various studies together for cumulative knowledge building but also enables a deeper examination of student-AI interaction (SAI) in learning tasks. To address this, a comprehensive framework is needed that elucidates interaction patterns, identifies significant factors or variables influencing SAI, and informs the design of educational AI systems aligned with educational objectives. By embracing a theoretical perspective and adopting a systematic approach to studying SAI, both researchers and practitioners can deepen their understanding of the complex dynamics between students and AI technologies in educational settings. Moreover, such efforts will contribute to the advancement of educational AI research and the development of more effective, learner-centric learning environments.

Second, this study found a strong need to develop students’ capacity for prompt engineering, the process of crafting, optimizing, and employing text that can be interpreted and understood by GenAI. This would enable better communication with GenAI, harnessing its capability to perform tasks (e.g., generating educational content) as intended and ensuring accurate, relevant, and high-quality outcomes. This requires teachers to develop instructional strategies and AI-driven instructional designs that build substantial understanding of and experience with subject-specific AI applications (e.g., for academic writing) and that foster AI literacy, the ability to effectively use, collaborate with, and act as critical consumers of AI (Long & Magerko, 2020). While discussions of AI literacy tend to be narrowly positioned within computer science or STEM-related subjects (Casal-Otero et al., 2023), this study positions AI literacy as extensible and embedded across disciplines, including literacy education, while being carefully understood and contextualized within subject-specific contexts (Kim et al., 2022). By integrating AI literacy across disciplines and domains, students can better connect their knowledge of AI to the domain knowledge they learn, develop a multidisciplinary understanding of AI and its diverse implications across academic areas, and critically assess AI technologies to make informed decisions in various contexts (Kim et al., 2022; Ng et al., 2021). In this regard, educators should be encouraged to develop interdisciplinary and collaborative AIED curricula in higher education. For instance, courses in prompt engineering could integrate concepts, components, and skills from interdisciplinary domains.
This could include technical aspects of prompt engineering, such as understanding large language models (LLMs), training data, and prompting techniques (e.g., zero-shot, few-shot, and chain-of-thought prompting), supported by programming knowledge from computer science or data science. Additionally, these courses should include contextual knowledge related to using an LLM to perform tasks, subject-matter expertise, and elements of philosophy and ethics to discuss cultural sensitivity, fairness, and bias in prompts and their outputs.

Furthermore, fostering the higher-order thinking skills of both students and AI is crucial. For instance, students expected AI to analyze different sources of information, evaluate the relevance of generated content, and create unique and personal content. At the same time, students perceived their own higher-order thinking skills, such as logical reasoning, critically questioning GenAI-generated content, and considering and evaluating alternative explanations, as important in avoiding AI-related challenges. These challenges could include a limited diversity of perspectives, further narrowing of analytical outcomes, or over-reliance on AI that results in contextually hollow interpretations. These findings are consistent with existing research emphasizing that AIED should aspire to noble educational goals, such as fostering students’ metacognition and higher-order thinking skills to realize lifelong and deep learning, and should alleviate, not create, wicked problems in the educational domain, rather than aiming to improve performance on standardized exams by spoon-feeding customized and prespecified content (Holmes et al., 2022; Kim et al., 2022; Kim, 2023). Bearing this in mind, educational AI should be designed to support the cognitive skills necessary for higher-order thinking; for instance, GenAI could draw on Bloom’s taxonomy to nudge and guide students to think critically, develop higher-level thinking questions (Zhu, 2015), analyze patterns in students’ responses, assess higher-order inquiry skills, and challenge students to think more deeply and critically through personalized feedback (Ketelhut et al., 2010).
Meanwhile, educators need to adopt effective instructional measures grounded in an in-depth understanding of the multifaceted nature of higher-order thinking, methodical frameworks, and effective pedagogies for reasoning and questioning. Such measures can guide students to augment their higher-order thinking with AI and to engage in educationally relevant interactions during AI-assisted learning.

This study advances an in-depth understanding of students’ perceptions, expectations, and barriers related to using GenAI for writing tasks, and it presents implications for enhancing GenAI-assisted instruction and the design of educational AI. However, the study has some limitations that future research should consider. First, although the current study considered students’ different characteristics, such as majors, AI literacy levels, writing skills, and gender, to explore diverse perceptions of GenAI-assisted writing, a sample of 20 Chinese students is not large enough to fully reflect students’ views. In addition, all participants in this study were from a Sino-British international university. Although our findings still provide valuable insights into broader educational issues and trends, especially given the increasing globalization of higher education, the specific context and curriculum of a Sino-British university, along with the students' diverse educational backgrounds, may have influenced their perspectives and experiences. Therefore, future studies could combine qualitative inquiry with quantitative research methods, involving larger numbers of students, different learning tasks (e.g., argumentative discussions, creative writing), and different student characteristics (e.g., attitude toward AI, interaction fluency with AI) across diverse learning environments and cultures. Second, the interviews were conducted after students’ one-time interaction with the system developed by the research team, which may not fully reveal how their perceptions change as interactions with the system evolve over longer timescales (Kim et al., 2022; Kim, 2023). Future studies could adopt a longitudinal design in an actual classroom setting to explore students’ perceptions at different stages of GenAI-assisted writing.
Furthermore, future research could use qualitative data analysis (QDA) software (e.g., NVivo, Atlas.ti, MAXQDA) or text-mining techniques for alternative data analysis and interpretation.

Data availability

N/A

Code availability

N/A

References

Aldabbus, S., & Almansouri, E. (2022). Academic writing difficulties encountered by university EFL learners. British Journal of English Linguistics, 10(3), 1–11. https://doi.org/10.37745/bjel.2013/vol10n3111.

Alemi, M., Meghdari, A., & Ghazisaedy, M. (2015). The impact of social robotics on L2 learners’ anxiety and attitude in English vocabulary acquisition. International Journal of Social Robotics, 7(4), 523–535. https://doi.org/10.1007/s12369-015-0286-y.

Alkaissi, H., & McFarlane, S. I. (2023). Artificial hallucinations in ChatGPT: Implications in scientific writing. Cureus, 15(2). https://doi.org/10.7759/cureus.35179.

Allen, L. K., & McNamara, D. S. (2017). Five building blocks for comprehension strategy instruction. In J. A. León & I. Escudero (Eds.), Reading comprehension in educational settings (pp. 125–144). John Benjamins. https://doi.org/10.1075/swll.16.05all.

Alqaraawi, A., Schuessler, M., Weiß, P., Costanza, E., & Berthouze, N. (2020, March). Evaluating saliency map explanations for convolutional neural networks: A user study. In Proceedings of the 25th International Conference on Intelligent User Interfaces (pp. 275–285). https://doi.org/10.1145/3377325.3377519.

Anderson, L. W., & Krathwohl, D. R. (2001). A taxonomy of learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. Longman.

Behrooz, H., Lipizzi, C., Korfiatis, G., Ilbeigi, M., Powell, M., & Nouri, M. (2023). Towards automating the identification of sustainable projects seeking financial support: An AI-powered approach. Sustainability, 15(12), 9701. https://doi.org/10.3390/su15129701.

Benson, L., & Beach, L. R. (1996). The effects of time constraints on the prechoice screening of decision options. Organizational Behavior and Human Decision Processes, 67(2), 222–228. https://doi.org/10.1006/obhd.1996.0075.

Biswas, G., Schwartz, D. L., Leelawong, K., Vye, N., & TAG-V. (2005). Learning by teaching: A new agent paradigm for educational software. Applied Artificial Intelligence, 19(3–4), 363–392. https://doi.org/10.1080/08839510590910200.

Bloom, B. S., Engelhart, M. D., Furst, E. J., Hill, W. H., & Krathwohl, D. R. (1956). Taxonomy of educational objectives: The classification of educational goals. Handbook I: Cognitive domain. David McKay.

Bloom, B. S. (1976). Human characteristics and school learning. McGraw-Hill.

Cardon, P., Fleischmann, C., Aritz, J., Logemann, M., & Heidewald, J. (2023). The challenges and opportunities of AI-assisted writing: Developing AI literacy for the AI age. Business and Professional Communication Quarterly, 86(3), 257–295. https://doi.org/10.1177/23294906231176517.

Casal-Otero, L., Catala, A., Fernández-Morante, C., Taboada, M., Cebreiro, B., & Barro, S. (2023). AI literacy in K-12: A systematic literature review. International Journal of STEM Education, 10, Article 29. https://doi.org/10.1186/s40594-023-00418-7.

Chase, C. C., Chin, D. B., Oppezzo, M. A., & Schwartz, D. L. (2009). Teachable agents and the protégé effect: Increasing the effort towards learning. Journal of Science Education and Technology, 18(4), 334–352. https://doi.org/10.1007/s10956-009-9180-4.

Chichekian, T., & Benteux, B. (2022). The potential of learning with (and not from) artificial intelligence in education. Frontiers in Artificial Intelligence, 5. https://doi.org/10.3389/frai.2022.903051.

Choudhuri, R., Liu, D., Steinmacher, I., Gerosa, M., & Sarma, A. (2023, December). How far are we? The triumphs and trials of generative AI in learning software engineering. In Proceedings of the International Conference on Software Engineering (pp. 1–13). https://doi.org/10.1145/3597503.3639201.

Clarke, V., Braun, V., & Hayfield, N. (2015). Thematic analysis. In J. A. Smith (Ed.), Qualitative psychology: A practical guide to research methods (pp. 222–248). Sage.

Črček, & Patekar, J. (2023). Writing with AI: University students’ use of ChatGPT. Journal of Language and Education, 9(4), 128–138. https://doi.org/10.17323/jle.2023.17379.

Dale, R., & Viethen, J. (2021). The automated writing assistance landscape in 2021. Natural Language Engineering, 27(4), 511–518. https://doi.org/10.1017/s1351324921000164.

Dörnyei, Z. (2007). Research methods in applied linguistics: Quantitative, qualitative and mixed methodologies. Oxford University Press.

El Shazly, R. (2021). Effects of artificial intelligence on English speaking anxiety and speaking performance: A case study. Expert Systems, 38(3), 1–15. https://doi.org/10.1111/exsy.12667.

Emery, F. E. (1959). Characteristics of socio-technical systems: A critical review of theories and facts about the effects of technological change on the internal structure of work organisations; with special reference to the effects of higher mechanisation and automation. Tavistock Institute of Human Relations.

Endsley, M. R. (2000). Theoretical underpinnings of situation awareness: A critical review. In M. R. Endsley, & D. J. Garland (Eds.), Situation awareness analysis and measurement (pp. 3–32). Lawrence Erlbaum Associates, Inc.

Fengchun, M., & Wayne, H. (2023). Guidance for generative AI in education and research. UNESCO Publishing. https://doi.org/10.54675/EWZM9535.

Fitria, T. N. (2021). Grammarly as AI-powered English writing assistant: Students’ alternative for writing English. Metathesis: Journal of English Language, Literature, and Teaching, 5(1), 65–78. https://doi.org/10.31002/metathesis.v5i1.3519.

Flower, L., & Hayes, J. R. (1981). A cognitive process theory of writing. College Composition and Communication, 32(4), 365–387. https://doi.org/10.2307/356600.

Gayed, J. M., Carlon, M. K. J., Oriola, A. M., & Cross, J. S. (2022). Exploring an AI-based writing assistant's impact on English language learners. Computers and Education: Artificial Intelligence, 3, 100055. https://doi.org/10.1016/j.caeai.2022.100055.

Glesne, C. (2016). Becoming qualitative researchers: An introduction. Pearson.

Gonzalez, C. (2004). Learning to make decisions in dynamic environments: Effects of time constraints and cognitive abilities. Human Factors: The Journal of the Human Factors and Ergonomics Society, 46(3), 449–460. https://doi.org/10.1518/hfes.46.3.449.50395.

Gupta, S., Jaiswal, A., Paramasivam, A., & Kotecha, J. (2022). Academic writing challenges and supports: Perspectives of international doctoral students and their supervisors. Frontiers in Education, 7, 891534. https://doi.org/10.3389/feduc.2022.891534.

Han, J., Yoo, H., Kim, Y., Myung, J., Kim, M., Lim, H., Kim, J., Lee, T. Y., Hong, H., Ahn, S. Y., & Oh, A. (2023). RECIPE: How to integrate ChatGPT into EFL writing education. In D. Spikol, O. Viberg, A. Martínez-Monés, & P. Guo (Eds.), Proceedings of the Tenth ACM Conference on Learning @ Scale (pp. 416–420). Association for Computing Machinery. https://doi.org/10.1145/3573051.3596200.

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112. https://doi.org/10.3102/003465430298487.

Heintz, K., Roh, Y., & Lee, J. (2022). Comparing the accuracy and effectiveness of Wordvice AI Proofreader to two automated editing tools and human editors. Science Editing, 9(1), 37–45. https://doi.org/10.6087/kcse.261.

Holmes, W., Persson, J., Chounta, I. A., Wasson, B., & Dimitrova, V. (2022). Artificial intelligence and education: A critical view through the lens of human rights, democracy and the rule of law. Council of Europe.

Huang, Zou, D., Cheng, G., Chen, X., & Xie, H. (2023). Trends, research issues and applications of artificial intelligence in language education. Educational Technology & Society, 26(1), 112–131. https://doi.org/10.30191/ETS.202301_26(1).0009.

Hyland, K. (2014). English for academic purposes. In C. Leung & B. V. Street (Eds.), The Routledge companion to English studies (pp. 392–404). Routledge. https://doi.org/10.4324/9781315852515.

Imran, M., & Almusharraf, N. (2023). Analyzing the role of ChatGPT as a writing assistant at higher education level: A systematic review of the literature. Contemporary Educational Technology, 15(4), ep464. https://doi.org/10.30935/cedtech/13605.

Jiang, J., Karran, A. J., Coursaris, C. K., Léger, P. M., & Beringer, J. (2023). A situation awareness perspective on human-AI interaction: Tensions and opportunities. International Journal of Human-Computer Interaction, 39(9), 1789–1806. https://doi.org/10.1080/10447318.2022.2093863.

Epting, L. K. (2018). Pedagogy of academic narrative: Insights from they say/I say: The moves that matter in academic writing by Graff and Birkenstein (2014). Perspectives on Behavior Science, 41(2), 561–568. https://doi.org/10.1007/s40614-018-0175-4.

Kasneci, Sessler, K., Küchemann, S., Bannert, M., Dementieva, D., Fischer, F., Gasser, U., Groh, G., Günnemann, S., Hüllermeier, E., Krusche, S., Kutyniok, G., Michaeli, T., Nerdel, C., Pfeffer, J., Poquet, O., Sailer, M., Schmidt, A., Seidel, T., & Kasneci, G. (2023). ChatGPT for good? On opportunities and challenges of large language models for education. Learning and Individual Differences, 103, 102274. https://doi.org/10.1016/j.lindif.2023.102274.

Ketelhut, D. J., Nelson, B. C., Clarke, J., & Dede, C. (2010). A multi-user virtual environment for building and assessing higher order inquiry skills in science. British Journal of Educational Technology, 41(1), 56–68. https://doi.org/10.1111/j.1467-8535.2009.01036.x.

Kim, J. (2023). Leading teachers' perspective on teacher-AI collaboration in education. Education and Information Technologies, 1–32. https://doi.org/10.1007/s10639-023-12109-5.

Kim, J., Lee, H., & Cho, Y. H. (2022). Learning design to support student-AI collaboration: Perspectives of leading teachers for AI in education. Education and Information Technologies, 27(5), 6069–6104. https://doi.org/10.1007/s10639-021-10831-6.

Kim, J., & Lee, S. S. (2020). A comparative study on the expected roles and appearance of social robots according to students’ personality traits. Journal of Education & Culture, 26(3), 71–91. https://doi.org/10.24159/joec.2020.26.3.71.

Kim, J., & Cho, Y. H. (2023). My teammate is AI: Understanding students’ perceptions of student-AI collaboration in drawing tasks. Asia Pacific Journal of Education, 1–15. https://doi.org/10.1080/02188791.2023.2286206.

Kim, J., Ham, Y. H., & Lee, S. S. (2024). Differences in student-AI collaboration process on a drawing task: Focusing on students' attitude toward AI and the level of drawing skills. Australasian Journal of Educational Technology. https://doi.org/10.14742/ajet.8859.

Kusters, R., Misevic, D., Berry, H., Cully, A., Le Cunff, Y., Dandoy, L., & Wehbi, F. (2020). Interdisciplinary research in artificial intelligence: Challenges and opportunities. Frontiers in Big Data, 3, 577974. https://doi.org/10.3389/fdata.2020.577974.

Latour, B. (2005). Reassembling the social: An introduction to actor-network-theory. Oxford University Press.