Abstract

Existing algorithms for subgroup discovery with numerical targets do not optimize the error or target variable dispersion of the groups they find. This often leads to unreliable or inconsistent statements about the data, rendering practical applications, especially in scientific domains, futile. Therefore, we here extend the optimistic estimator framework for optimal subgroup discovery to a new class of objective functions: we show how tight estimators can be computed efficiently for all functions that are determined by subgroup size (non-decreasing dependence), the subgroup median value, and a dispersion measure around the median (non-increasing dependence). In the important special case when dispersion is measured using the mean absolute deviation from the median, this novel approach yields a linear time algorithm. Empirical evaluation on a wide range of datasets shows that, when used within branch-and-bound search, this approach is highly efficient and indeed discovers subgroups with much smaller errors.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Subgroup discovery is a well-established KDD technique (Klösgen 1996; Friedman and Fisher 1999; Bay and Pazzani 2001; see Atzmueller 2015 for a recent survey) with applications, e.g., in Medicine (Schmidt et al. 2010), Social Science (Grosskreutz et al. 2010), and Materials Science (Goldsmith et al. 2017). In contrast to global modeling, which is concerned with the complete characterization of some variable defined for a given population, subgroup discovery aims to detect intuitive descriptions of subpopulations in which, locally, the target variable has an interesting or useful distribution. In scientific domains, like the ones mentioned above, such local patterns are typically considered useful if they are not too specific (in terms of subpopulation size) and indicate insightful facts about the underlying physical process that governs the target variable. Such facts could for instance be: ‘patients of specific demographics experience a low response to some treatment’ or ‘materials with specific atomic composition exhibit a high thermal conductivity’. For numeric (metric) variables, subgroups need to satisfy two criteria to truthfully represent such statements: the local distribution of the target variable must have a shifted central tendency (effect), and group members must be described well by that shift (consistency). The second requirement is captured by the group’s dispersion, which determines the average error of associating group members with the central tendency value (see also Song et al. 2016).

Despite all three parameters—size, central tendency, and dispersion—being important, the only known approach for the efficient discovery of globally optimal subgroups, branch-and-bound search (Webb 1995; Wrobel 1997), is restricted to objective functions that only take into account size and central tendency. That is, if we denote by Q some subpopulation of our global population P then the objective functions f currently available to branch-and-bound can be written as

where \(c\) is some measure of central tendency (usually mean or median) and g is a function that is monotonically increasing in the subpopulation size \(|Q|\). A problem with all such functions is that they inherently favor larger groups with scattered target values over smaller more focused groups with the same central tendency. That is, they favor the discovery of inconsistent statements over consistent ones—surprisingly often identifying groups with a local error that is almost as high or even higher than the global error (see Fig. 1 for an illustration of this problem that abounded from the authors’ research in Materials Science). Although dispersion-corrected objective functions that counter-balance size by dispersion have been proposed (e.g., ‘t-score’ by Klösgen 2002 or ‘mmad’ by Pieters et al. 2010), it remained unclear how to employ such functions outside of heuristic optimization frameworks such as greedy beam search (Lavrač et al. 2004) or selector sampling (Boley et al. 2012; Li and Zaki 2016). Despite often finding interesting groups, such frameworks do not guarantee the detection of optimal results, which can not only be problematic for missing important discoveries but also because they therefore can never guarantee the absence of high quality groups—which often is an insight equally important as the presence of a strong pattern. For instance, in our example in Fig. 1, it would be remarkable to establish that long-range interactions are to a large degree independent of nanocluster geometry.

To gain an understanding of the contribution of long-range van der Waals interactions (y-axis; above) to the total energy (x-axis; above) of gas-phase gold nanoclusters, subgroup discovery is used to analyze a dataset of such clusters simulated ab initio by density functional theory (Goldsmith et al. 2017); available features describe nanocluster geometry and contain, e.g., number of atoms a, fraction of atoms with i bonds \(c_i\), and radius of gyration r. Here, similar to other scientific scenarios, a subgroup constitutes a useful piece of knowledge if it conveys a statement about a remarkable amount of van der Waals energy (captured by the group’s central tendency) with high consistency (captured by the group’s dispersion/error); optimal selector \(\sigma _0\) with standard objective has high error and contains a large fraction of gold nanoclusters with a target value below the global median (0.13) (a); this is not the case for selector \(\sigma _1\) discovered through dispersion-corrected objective (b), which therefore can be more consistently stated to describe gold nanoclusters with high van der Waals energy

Therefore, in this paper (Sect. 3), we extend branch-and-bound search to objective functions of the form

where g is monotonically increasing in the subpopulation size, monotonically decreasing in any dispersion measure \(d\) around the median, and, besides that, depends only (but in arbitrary form) on the subpopulation median. This involves developing an efficient algorithm for computing the tight optimistic estimator given by the optimal value of the objective function among all possible subsets of target values:

which has been shown to be a crucial ingredient for the practical applicability of branch-and-bound (Grosskreutz et al. 2008; Lemmerich et al. 2016). So far, the most general approach to this problem (first codified in Lemmerich et al. (2016); generalized here in Sect. 3.1) is to maintain a sorted list of target values throughout the search process and then to compute Eq. (3) as the maximum of all subsets \(R_i \subseteq Q\) that contain all target values of Q down to target value i—an algorithm that does not generalize to objective functions depending on dispersion. This paper presents an alternative idea (Sect. 3.2) where we do not fix the size of subset \(R_i\) as in the previous approach but instead fix its median to target value i. It turns out that this suffices to efficiently compute the tight optimistic estimator for all objective functions of the form of Eq. (2). Moreover, we end up with a linear time algorithm (Sec. 3.3) in the important special case where the dependence on size and dispersion is determined by the dispersion-corrected coverage defined by

where \({\texttt {amd}}\) denotes the mean absolute deviation from the median. This is the same computational complexity as the objective function itself. Consequently, this new approach can discover subgroups according to a more refined selection criterion without increasing the worst-case computational cost. Additionally, as demonstrated by empirical results on a wide range of datasets (Sect. 4), it is also highly efficient and successfully reduces the error of result subgroups in practice.

2 Subgroup discovery

Before developing the novel approach to tight optimistic estimator computation, we recall in this section the necessary basics of optimal subgroup discovery with numeric target attributes. We focus on concepts that are essential from the optimization point of view (see, e.g., Duivesteijn and Knobbe 2011 and references therein for statistical considerations). As notional convention, we are using the symbol \([m]\) for a positive integer m to denote the set of integers \(\{1,\ldots ,m\}\). Also, for a real-valued expression x we write \((x)_+\) to denote \(\max \{x,0\}\). A summary of the most important notations used in this paper can be found in “Appendix C”.

2.1 Description languages, objective functions, and closed selectors

Let P denote our given global population of entities, for each of which we know the value of a real target variable \(y\!:P \rightarrow {\mathbb {R}}\) and additional descriptive information that is captured in some abstract description language \({\mathcal {L}}\) of subgroup selectors \(\sigma \! : P \rightarrow \{{\text {true}},{\text {false}}\}\). Each of these selectors describes a subpopulation \(\mathbf{ext }(\sigma ) \subseteq P\) defined by

that is referred to as the extension of \(\sigma \). Subgroup discovery is concerned with finding selectors \(\sigma \in {\mathcal {L}}\) that have a useful (or interesting) distribution of target values in their extension \(y_\sigma =\{y(p) : p \in \mathbf{ext }(\sigma )\}\). This notion of usefulness is given by an objective function \(f\! : {\mathcal {L}} \rightarrow {\mathbb {R}}\). That is, the formal goal is to find selectors \(\sigma \in {\mathcal {L}}\) with maximal \(f(\sigma )\). Since we assume f to be a function of the multiset of y-values, let us define \(f(\sigma )=f(\mathbf{ext }(\sigma ))=f(y_\sigma )\) to be used interchangeably for convenience. One example of a commonly used objective function is the impact measure \({\mathtt {ipa}}\) (see Webb 2001; here a scaled but order-equivalent version is given) defined by

where \({\texttt {cov}}(Q)=|Q|/|P|\) denotes the coverage or relative size of Q (here—and wherever else convenient—we identify a subpopulation \(Q \subseteq P\) with the multiset of its target values).

The standard description language in the subgroup discovery literatureFootnote 1 is the language \({\mathcal {L}}_{\text {cnj}}\) consisting of logical conjunctions of a number of base propositions (or predicates). That is, \(\sigma \in {\mathcal {L}}_{\text {cnj}}\) are of the form

where the \(\pi _{i_j}\) are taken from a pool of base propositions \(\varPi =\{\pi _1,\ldots ,\pi _k\}\). These propositions usually correspond to equality or inequality constraints with respect to one variable x out of a set of description variables \(\{x_1,\ldots ,x_n\}\) that are observed for all population members (e.g., \(\pi (p)\equiv x(p) \ge v\)). However, for the scope of this paper it is sufficient to simply regard them as abstract Boolean functions \(\pi \! : P \rightarrow \{{\text {true}},{\text {false}}\}\). In this paper, we focus in particular on the refined language of closed conjunctions \({\mathcal {C}}_{\text {cnj}}\subseteq {\mathcal {L}}_{\text {cnj}}\) (Pasquier et al. 1999), which is defined as \({\mathcal {C}}_{\text {cnj}}=\{\sigma \in {\mathcal {L}}_{\text {cnj}}: {\mathbf {c}}(\sigma )=\sigma \}\) by the fixpoints of the closure operation \({\mathbf {c}}\! : {\mathcal {L}}_{\text {cnj}} \rightarrow {\mathcal {L}}_{\text {cnj}}\) given by

These are selectors to which no further proposition can be added without reducing their extension, and it can be shown that \({\mathcal {C}}_{\text {cnj}}\) contains at most one selector for each possible extension. While this can reduce the search space for finding optimal subgroups by several orders of magnitude, closed conjunctions are the longest (and most redundant) description for their extension and thus do not constitute intuitive descriptions by themselves. Hence, for reporting concrete selectors (as in Fig. 1), closed conjunctions have to be simplified to selectors of approximately minimum length that describe the same extension (Boley and Grosskreutz 2009).

2.2 Branch-and-bound and optimistic estimators

The standard algorithmic approach for finding optimal subgroups with respect to a given objective function is branch-and-bound search—a versatile algorithmic puzzle solving framework with several forms and flavors (see, e.g., Mehlhorn and Sanders 2008, Chap. 12.4). At its core, all of its variants assume the availability and efficient computability of two ingredients:

-

1.

A refinement operator \({\mathbf {r}}\! : {\mathcal {L}} \rightarrow 2^{\mathcal {L}}\) that is monotone, i.e., for \(\sigma , \varphi \in {\mathcal {L}}\) with \(\varphi \in {\mathbf {r}}(\sigma )\) it holds that \(\mathbf{ext }(\varphi ) \subseteq \mathbf{ext }(\sigma )\), and that non-redundantly generates \({\mathcal {L}}\). That is, there is a root selector \(\bot \in {\mathcal {L}}\) such that for every \(\sigma \in {\mathcal {L}}\) there is a unique sequence of selectors \(\bot =\sigma _0, \sigma _1, \ldots , \sigma _l=\sigma \) with \(\sigma _{i} \in {\mathbf {r}}(\sigma _{i-1})\). In other words, the refinement operator implicitly represents a directed tree (arborescence) on the description language \({\mathcal {L}}\) rooted in \(\bot \).

-

2.

An optimistic estimator (or bounding function) \(\hat{f}\! : {\mathcal {L}} \rightarrow {\mathbb {R}}\) that bounds from above the attainable subgroup value of a selector among all more specific selectors, i.e., it holds that \(\hat{f}(\sigma ) \ge f(\varphi )\) for all \(\varphi \in {\mathcal {L}}\) with \(\mathbf{ext }(\varphi ) \subseteq \mathbf{ext }(\sigma )\).

Based on these ingredients, a branch-and-bound algorithm simply enumerates all elements of \({\mathcal {L}}\) starting from \(\bot \) using \({\mathbf {r}}\) (branch), but—based on \(\hat{f}\)—avoids expanding descriptions that cannot yield an improvement over the best subgroups found so far (bound). Depending on the order in which language elements are expanded, one distinguishes between depth-first, breadth-first, breadth-first iterative deepening, and best-first search. In the last variant, the optimistic estimator is not only used for pruning the search space, but also to select the next element to be expanded, which is particularly appealing for informed, i.e., tight optimistic estimators. An important feature of branch-and-bound is that it effortlessly allows to speed-up the search in a sound way by relaxing the result requirement from being f-optimal to just being an a -approximation. That is, the found solution \(\sigma \) satisfies for all \(\sigma ' \in {\mathcal {L}}\) that \(f(\sigma )/f(\sigma ') \ge a\) for some approximation factor \(a \in (0,1]\). The pseudo-code given in Algorithm 1 summarizes all of the above ideas. Note that, for the sake of clarity, we omitted here some other common parameters such as a depth-limit and multiple solutions (top-k), which are straightforward to incorporate (see Lemmerich et al. 2016).

An efficiently computable refinement operator has to be constructed specifically for the desired description language. For example for the language of conjunctions \({\mathcal {L}}_{\text {cnj}}\), one can define \({\mathbf {r}}_{\text {cnj}}\! : {\mathcal {L}}_{\text {cnj}} \rightarrow {{\mathcal {L}}_{\text {cnj}}}\) by

where we identify a conjunction with the set of base propositions it contains. For the closed conjunctions \({\mathbf {c}}_{\text {cnj}}\), let us define the lexicographical prefix of a conjunction \(\sigma \in {\mathcal {L}}_{\text {cnj}}\) and a base proposition index \(i \in [k]\) as \(\sigma \!\!\mid _{i}=\sigma \cap \{\pi _1, \dots , \pi _i\}\). Moreover, let us denote with \(\mathbf{i }(\sigma )\) the minimal index such that the i-prefix of \(\sigma \) is extension-preserving, i.e., \( \mathbf{i }(\sigma )=\min \{i \! : \,\mathbf{ext }(\sigma \!\!\mid _{i})=\mathbf{ext }(\sigma )\}\). With this we can construct a refinement operator (Uno et al. 2004) \({\mathbf {r}}_{\text {ccj}}\! : {\mathcal {C}}_{\text {cnj}} \rightarrow 2^{{\mathcal {C}}_{\text {cnj}}}\) as

That is, a selector \(\varphi \) is among the refinements of \(\sigma \) if \(\varphi \) can be generated by an application of the closure operator given in Eq. (5) that is prefix-preserving.

How to obtain an optimistic estimator for an objective function of interest depends on the definition of that objective. For instance, the coverage function \({\texttt {cov}}\) is a valid optimistic estimator for the impact function \({\mathtt {ipa}}\) as defined in Eq. (4), because the second factor of the impact function is upper bounded by 1. In fact there are many different optimistic estimators for a given objective function. Clearly, the smaller the value of the bounding function for a candidate subpopulation, the higher is the potential for pruning the corresponding branch from the enumeration tree. Ideally, one would like to use \(\hat{f}(\sigma )=\max \{f(\varphi ) \! : \,\mathbf{ext }(\varphi ) \subseteq \mathbf{ext }(\sigma ) \}\), which is the most strict function that still is a valid optimistic estimator. Computing this function, however, is as hard as the whole subgroup optimization problem. Thus, as a next best option, one can disregard subset selectability and consider the (selection-unaware) tight optimistic estimator (Grosskreutz et al. 2008) given by

This leaves us with a new combinatorial optimization problem: given a subpopulation \(Q \subseteq P\), find a sub-selection of Q that maximizes f. In the following section we will discuss strategies for solving this optimization problem efficiently for different classes of objective functions—including dispersion-corrected objectives.

3 Efficiently computable tight optimistic estimators

We are going to develop an efficient algorithm for the tight optimistic estimator in three steps: First, we review and reformulate a general algorithm for the classic case of non-dispersion-aware objective functions. Then we transfer the main idea of this algorithm to the case of dispersion-corrected objectives based on the median, and finally we consider a subclass of these functions where the approach can be computed in linear time. Throughout this section we will identify a given subpopulation \(Q \subseteq P\) with the multiset of its target values \(\{y_1,\ldots ,y_m\}\) and assume that the target values are indexed in ascending order, i.e., \(y_i \le y_j\) for \(i \le j\). Also, for two multisets \(Y=\{y_1,\ldots ,y_m\}\) and \(Z=\{z_1,\ldots ,z_{m'}\}\) indexed in ascending order we say that Y is element-wise less or equal to Z and write \(Y \le _eZ\) if \(y_i \le z_i\) for all \(i \in [\min \{m,m'\}]\).

3.1 The standard case: monotone functions of a central tendency measure

The most general previous approach for computing the tight optimistic estimator for subgroup discovery with a metric target variable is described by Lemmerich et al. (2016), where it is referred to as estimation by ordering. Here, we review this approach and give a uniform and generalized version of that paper’s results. For this, we define the general notion of a measure of central tendency as follows.

Definition 1

We call a mapping \(c\! : {\mathbb {N}}^{\mathbb {R}} \rightarrow {\mathbb {R}}\) a (monotone) measure of central tendency if for all multisets \(Y,Z \in {\mathbb {N}}^{\mathbb {R}}\) with \(Y \le _eZ\) it holds that \(c(Y) \le c(Z)\).

One can check that this definition applies to the standard measures of central tendency, i.e., the arithmetic and geometric mean as well as the median Footnote 2 \({\texttt {med}}(Q)=y_{\lceil m/2 \rceil }\), and also to weighted variants of them (note, however, that it does not apply to the mode). With this we can define the class of objective functions for which the tight optimistic estimator can be computed efficiently by the standard approach as follows. We call \(f\! : 2^{P} \rightarrow {\mathbb {R}}\) a monotone level 1 objective function if it can be written as

where \(c\) is some measure of central tendency and g is a function that is non-decreasing in both of its arguments. One can check that the impact measure \({\mathtt {ipa}}\) falls under this category of functions as do many of its variants.

The central observation for computing the tight optimistic estimator for monotone level 1 functions is that the optimum value must be attained on a sub-multiset that contains a consecutive segment of elements of Q from the top element w.r.t. y down to some cut-off element. Formally, let us define the top sequence of sub-multisets of Q as \( T_i=\{y_{m-i+1},\ldots , y_m\} \) for \(i \in [m]\) and note the following observation:

Proposition 1

Let f be a monotone level 1 objective function. Then the tight optimistic estimator of f can be computed as the maximum value on the top sequence, i.e., \( \hat{f}(Q)=\max \{f(T_i) \! : \,i \in [m]\} \).

Proof

Let \(R \subseteq Q\) be of size k with \(R=\{y_{i_1},\ldots ,y_{i_k}\}\). Since \(y_{i_j}\le y_{m-j+1}\), we have for the top sequence element \(T_k\) that \(R \le _eT_k\) and, hence, \(c(R) \le c(T_k)\) implying

It follows that for each sub-multiset of Q there is a top sequence element of at least equal objective value. \(\square \)

From this insight it is easy to derive an \({\mathcal {O}}(m)\) algorithm for computing the tight optimistic estimator under the additional assumption that we can compute g and the “incremental central tendency problem” \((i,Q,(c(T_1),\ldots ,c(T_{i-1})) \mapsto c(T_i)\) in constant time. Note that computing the incremental problem in constant time implies to only access a constant number of target values and of the previously computed central tendency values. This can for instance be done for \(c={\texttt {mean}}\) via the incremental formula \({\texttt {mean}}(T_i)=((i-1)\,{\texttt {mean}}(T_{i-1})+y_{m-i+1})/i\) or for \(c={\texttt {med}}\) through direct index access of either of the two values \(y_{m-\lfloor (i-1)/2 \rfloor }\) or \(y_{m-\lceil (i-1)/2 \rceil }\). Since, according to Proposition 1, we have to evaluate f only for the m candidates \(T_i\) to find \(\hat{f}(Q)\) we can do so in time \({\mathcal {O}}(m)\) by solving the problem incrementally for \(i=1,\ldots ,m\). The same overall approach can be readily generalized for objective functions that are monotonically decreasing in the central tendency or those that can be written as the maximum of one monotonically increasing and one monotonically decreasing level 1 function. However, it breaks down for objective functions that depend on more than just size and central tendency—which inherently is the case when we want to incorporate dispersion-control.

3.2 Dispersion-corrected objective functions based on the median

We will now extend the previous recipe for computing the tight optimistic estimator to objective functions that depend not only on subpopulation size and central tendency but also on the target value dispersion in the subgroup. Specifically, we focus on the median as measure of central tendency and consider functions that are both monotonically increasing in the described subpopulation size and monotonically decreasing in some dispersion measure around the median. To precisely describe this class of functions, we first have to formalize the notion of dispersion measure around the median. For our purpose the following definition suffices. Let us denote by \(Y_{\varDelta }^{{\texttt {med}}}\) the multiset of absolute differences to the median of a multiset \(Y \in {\mathbb {N}}^{\mathbb {R}}\), i.e., \(Y_{\varDelta }^{{\texttt {med}}}=\{|y_1-{\texttt {med}}(Y)|,\ldots ,|y_m-{\texttt {med}}(Y)|\}\).

Definition 2

We call a mapping \(d\! : {\mathbb {N}}^{\mathbb {R}} \rightarrow {\mathbb {R}}\) a dispersion measure around the median if \(d(Y)\) is monotone with respect to the multiset of absolute differences to its median \(Y_{\varDelta }^{{\texttt {med}}}\), i.e., if \(Y_{\varDelta }^{{\texttt {med}}} \le _eZ_{\varDelta }^{{\texttt {med}}}\) then \(d(Y) \le d(Z)\).

One can check that this definition contains the measures median absolute deviation around the median \({\texttt {mmd}}(Y)={\texttt {med}}(Y_{\varDelta }^{{\texttt {med}}})\), the root mean of squared deviations around the median \({\texttt {rsm}}(Y)={\texttt {mean}}(\{x^2 \! : \,x \in Y_{\varDelta }^{{\texttt {med}}}\})^{1/2}\), as well as the mean absolute deviation around the median \({\texttt {amd}}(Y)={\texttt {mean}}(Y_{\varDelta }^{{\texttt {med}}})\).Footnote 3 Based on Def. 2 we can specify the class of objective functions that we aim to tackle as follows: we call a function \(f\! : 2^{P} \rightarrow {\mathbb {R}}\) a dispersion-corrected or level 2 objective function (based on the median) if it can be written as

where \(d\) is some dispersion measure around the median and \(g\! : {\mathbb {R}}^3 \rightarrow {\mathbb {R}}\) is a real function that is non-decreasing in its first argument and non-increasing in its third argument (without any monotonicity requirement for the second argument).

Median sequence sets \(Q_1,\ldots ,Q_{21}\) (colored in red with median elements \(y_z\) marked by black dot) for 21 random values \(y_{1}, \ldots , y_{21}\) w.r.t. objective function \(f(Q)=|Q|/|P|-{\texttt {smd}}(Q)/{\texttt {smd}}(P)\)—where \({\texttt {smd}}(\cdot )\) denotes sum of absolute deviations from the median; the sets are identical for any arbitrary dependence on the median \({\texttt {med}}(Q)\) that could be added to f, and for any such function the optimal value is attained among those 21 sets (Proposition 2) (Color figure online)

Our recipe for optimizing these functions is then to consider only subpopulations \(R \subseteq Q\) that can be formed by selecting all individuals with a target value in some interval. Formally, for a fixed index \(z \in \{1,\ldots ,m\}\) define \(m_z \le m\) as the maximal cardinality of a sub-multiset of the target values that has median index z, i.e.,

Now, for \(k \in [m_z]\), let us define \(Q^k_{z}\) as the set with k consecutive elements around index z. That is

With this we can define the elements of the median sequence \(Q_z\) as those subsets of the form of Eq. (8) that maximize f for some fixed index \(z \in [m]\). That is, \(Q_z=Q^{k^*_z}_z\) where \(k^*_z \in [m_z]\) is minimal with

Thus, the number \(k^*_z\) is the smallest cardinality that maximizes the trade-off of size and dispersion encoded by g (given the fixed median \(y_z={\texttt {med}}(Q^k_z)\) for all k).

Figure 2 shows an exemplary median sequence based on 21 random target values. Note how the set sizes \(k^*_z\) vary non-monotonically for increasing median indices z (e.g., \(k^*_{10}=13\), \(k^*_{11}=10\), and \(k^*_{12}=11\)). The precise behavior of the \(k^*_z\)-sequence is determined by the cluster structure of the target values and the specific level-2 objective function. Below we will see that for some functions there is an additional regularity in the \(k^*_z\)-sequence that allows further algorithmic exploitation. For now, let us first note that, as desired, searching the median sequence is sufficient for finding optimal subsets of Q independent of the precise objective:

Proposition 2

Let f be a dispersion-corrected objective function based on the median. Then the tight optimistic estimator of f can be computed as the maximum value on the median sequence, i.e., \( \hat{f}(Q)=\max \{f(Q_z) \! : \,z \in [m]\} \).

Proof

For a sub-multiset \(R \subseteq Q\) let us define the gap count \(\gamma (R)\) as

Let \(O \subseteq Q\) be an f-maximizer with minimal gap count, i.e., \(f(R) < f(O)\) for all R with \(\gamma (R)<\gamma (O)\). Assume that \(\gamma (O)>0\). That means there is a \(y \in Q \setminus O\) such that \(\min O< y < \max O\). Define

Per definition we have \(|S|=|O|\) and \({\texttt {med}}(S)={\texttt {med}}(O)\). Additionally, we can check that \(S_{\varDelta }^{{\texttt {med}}} \le _eO_{\varDelta }^{{\texttt {med}}}\), and, hence, \(d(S) \le d(Q)\). This implies that

However, per definition of S it also holds that \(\gamma (S) < \gamma (O)\), which contradicts that O is an f-optimizer with minimal gap count. Hence, any f-maximizer O must have a gap count of zero. In other words, O is of the form \(O=Q^k_z\) as in Eq. (8) for some median \(z \in [m]\) and some cardinality \(k \in [m_z]\) and per definition we have \(f(Q_z) \ge f(O)\) as required. \(\square \)

Consequently, we can compute the tight optimistic estimator for any dispersion-corrected objective function based on the median in time \({\mathcal {O}}(m^2)\) for subpopulations of size m—again, given a suitable incremental formula for \(d\). While this is not generally a practical algorithm in itself, it is a useful departure point for designing one. In the next section we show how it can be brought down to linear time when we introduce some additional constraints on the objective function.

Dispersion-corrected coverage of the sets \(Q^k_z\) as defined in Eq. (8) for median indices \(z \in \{10,11,12,13\}\) and the 21 random target values from Fig. 2; the sets \(Q_z\) can be found in incremental constant time since optimal size \(k^*_z\) is within a constant range of \(k^*_{z+1}\) (Theorem 3)

3.3 Reaching linear time—objectives based on dispersion-corrected coverage

Equipped with the general concept of the median sequence, we can now address the special case of dispersion-corrected objective functions where the trade-off between the subpopulation size and target value dispersion is captured by a linear function of size and the sum of absolute differences from the median. Concretely, let us define the dispersion-corrected coverage (w.r.t. absolute median deviation) by

where \({\texttt {smd}}(Q)=\sum _{y \in Q}|y-{\texttt {med}}(Q)|\) denotes the sum of absolute deviations from the median. We then consider objective functions based on the dispersion-corrected coverage of the form

where g is non-decreasing in its first argument. Let us note, however, that we could replace the \({\texttt {dcc}}\) function by any linear function that depends positively on \(|Q|\) and negatively on \({\texttt {smd}}\). It is easy to verify that function of this form also obey the more general definition of level-2 objective functions given in Sec. 3.2, and, hence can be optimized via the median sequence.

The key to computing the tight optimistic estimator \(\hat{f}\) in linear time for functions based on dispersion-corrected coverage is then that the members of the median sequence \(Q_z\) can be computed incrementally in constant time. Indeed, we can prove the following theorem, which states that the optimal size for a multiset around median index z is within 3 of the optimal size for a multiset around median index \(z+1\)—a fact that can also be observed in the example given in Fig. 2.

Theorem 3

Let f be of the form of Eq. (9). For \(z \in [m-1]\) it holds for the size \(k_z^*\) of the f-optimal multiset with median z that

One idea to prove this theorem is to show that (a) the gain in f for increasing the multiset around a median index z is alternating between two discrete concave functions and (b) that the gains for growing multisets between two consecutive median indices are bounding each other. For an intuitive understanding of this argument, Fig. 3 shows for four different median indices \(z \in \{10,11,12,13\}\) the dispersion-corrected coverage for the sets \(Q^k_z\) as a function in k. On closer inspection, we can observe that when considering only every second segment of each function graph, the corresponding \({\texttt {dcc}}\)-values have a concave shape. A detailed proof, which is rather long and partially technical, can be found in “Appendix A”.

It follows that, after computing the objective value of \(Q_m\) trivially as \(f(Q_m)=g(1/|P|,y_m)\), we can obtain \(f(Q_{z-1})\) for \(z=m,\ldots ,2\) by checking the at most seven candidate set sizes given by Eq. (10) as

with \(k_z^-=\max (k_z^*-3,1)\) and \(k_z^+=\min (k_z^*+3,m_z)\). For this strategy to result in an overall linear time algorithm, it remains to see that we can compute individual evaluations of f in constant time (after some initial \({\mathcal {O}}(m)\) pre-processing step).

As a general data structure for quickly computing sums of absolute deviations from a center point, we can define for \(i \in [m]\) the cumulative left error \(e_l(i)\) and the cumulative right error \(e_r(i)\) as

Note that we can compute these error terms for all \(i \in [m]\) by iterating over the ordered target values in time \({\mathcal {O}}(m)\) via the recursions

and \(e_l(1)=e_r(m)=0\). Subsequently, we can compute sums of deviations from center points of arbitrary subpopulations in constant time, as the following statement shows (see “Appendix B” for a proof).

Proposition 4

Let \(Q=\{y_1,\ldots ,y_a, \ldots , y_z, \dots , y_b,\ldots , y_m\}\) be a multiset with \(1 \le a<z<b \le m\) and \(y_i\le y_{j}\) for \(i\le j\). Then the sum of absolute deviations to \(y_i\) of all elements of the submultiset \(\{y_a, \ldots , y_z, \ldots , y_b\}\) can be expressed as

With this we can compute \(k \mapsto f(Q_z^k)\) in constant time (assuming g can be computed in constant time). Together with Proposition 2 and Theorem 3 this results in a linear time algorithm for computing \(Q \mapsto \hat{f}(Q)\) (see Algorithm 2 for a pseudo-code that summarizes all ideas).

4 Dispersion-corrected subgroup discovery in practice

The overall result of Sect. 3 is an efficient algorithm for dispersion-corrected subgroup discovery which, e.g., allows us to replace the coverage term in standard objective functions by the dispersion-corrected coverage. To evaluate this efficiency claim as well as the value of dispersion-correction, let us consider as objective the normalized and dispersion-corrected impact function based on the median, i.e., \(f_1(Q)={\texttt {dcc}}(Q) {\texttt {mds}}_+(Q)\) where \({\texttt {mds}}_+\) is the positive relative median shift

This function obeys Eq. (9); thus, its tight optimistic estimator can be computed using the linear time algorithm from Sect. 3.3. The following empirical results were gathered by applying it to a range of publicly available real-world datasets.Footnote 4 We will first investigate the effect of dispersion-correction on the output before turning to the effect of the tight optimistic estimator on the computation time.

Normalized median of optimal subgroup w.r.t. uncorrected positive median shift \((Q_0)\) and w.r.t. dispersion-corrected positive median shift (\(Q_1\)) for 25 test datasets, sorted according to median difference. Error bars show mean absolute median deviation of subgroups; groups marked red have larger deviation than global deviation; fill color indicates group coverage from 0 (white) to 1 (black) (Color figure online)

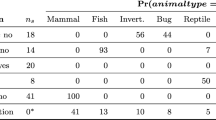

4.1 Selection bias of dispersion-correction and its statistical merit

To investigate the selection bias of \(f_1\) let us also consider the non-dispersion corrected variant \(f_0(Q)={\texttt {cov}}(Q) {\texttt {mds}}_+(Q)\), where we simply replace the dispersion-corrected coverage by the ordinary coverage. This function is a monotone level 1 function, hence, its tight optimistic estimator \(\hat{f_0}\) can be computed in linear time using the top sequence approach. Figure 4 shows the characteristics of the optimal subgroups that are discovered with respect to both of these objective functions (see also Table. 1 for exact values) where for all datasets the language of closed conjunctions \({\mathcal {C}}_{\text {cnj}}\) has been used as description language.

The first observation is that—as enforced by design—for all datasets the mean absolute deviation from the median is lower for the dispersion-corrected variant (except in one case where both functions yield the same subgroup). On average the dispersion for \(f_1\) is 49 percent of the global dispersion, whereas it is 113 percent for \(f_0\), i.e., when not optimizing the dispersion it is on average higher in the subgroups than in the global population. When it comes to the other subgroup characteristics, coverage and median target value, the global picture is that \(f_1\) discovers somewhat more specific groups (mean coverage 0.3 versus 0.44 for \(f_0\)) with higher median shift (on average 0.73 normalized median deviations higher). However, in contrast to dispersion, the behavior for median shift and coverage varies across the datasets. In Fig. 4, the datasets are ordered according to the difference in subgroup medians between the optimal subgroups w.r.t. \(f_0\) and those w.r.t. \(f_1\). This ordering reveals the following categorization of outcomes: When our description language is not able to reduce the error of subgroups with very high median value, \(f_1\) settles for more coherent groups with a less extreme but still outstanding central tendency. On the other end of the scale, when no coherent groups with moderate size and median shift can be identified, the dispersion-corrected objective selects very small groups with the most extreme target values. The majority of datasets obey the global trend of dispersion-correction leading to somewhat more specific subgroups with higher median that are, as intended, more coherent.

To determine based on these empirical observations whether we should generally favor dispersion correction, we have to specify an application context that specifies the relative importance of coverage, central tendency, and dispersion. For that let us consider the common statistical setting in which we do not observe the full global population P but instead subgroup discovery is performed only on an i.i.d. sample \(P' \subseteq P\) yielding subpopulations \(Q'=\sigma (P')\). While \(\sigma \) has been optimized w.r.t. the statistics on that sample \(Q'\) we are actually interested in the properties of the full subpopulation \(Q = \sigma (P)\). For instance, a natural question is what is the minimal y-value that we expect to see in a random individual \(q \in Q\) with high confidence. That is, we prefer subgroups with an as high as possible threshold \(l\) such that a random \(q \in Q\) satisfies with probabilityFootnote 5 \(1-\delta \) that \(y(q) \ge l\). This criterion gives rise to a natural trade-off between the three evaluation metrics through the empirical Chebycheff inequality (see Kabán 2012, Eq. (17)), according to which we can compute such a value as \({\texttt {mean}}(Q')-\epsilon (Q')\) where

and \({\texttt {var}}(Y)=\sum _{y \in Y} (y-{\texttt {mean}}(Y))^2 / (|Y|-1)\) is the sample variance. Note that this expression is only defined for sample subpopulations with a size of at least \(1/\delta \). For smaller subgroups our best guess for a threshold value would be the one derived from the global sample \({\texttt {mean}}(P')-\epsilon (P')\) (which we assume to be large enough to determine an \(\epsilon \)-value). This gives rise to the following standardized lower confidence bound score \(\tilde{l}\) that evaluates how much a subgroup improves over the global \(l\) value:

The plot on the left side of Fig. 5 shows the score values of the optimal subgroup w.r.t. to \(f_1\) (\(\tilde{l}_1\)) and \(f_0\) (\(\tilde{l}_0\)) using confidence parameter \(\delta =0.05\). Except for three exceptions (datasets 3,4, and 12), the subgroup resulting from \(f_1\) provides a higher lower bound than those from the non-dispersion corrected variant \(f_0\). That is, the data shows a strong advantage for dispersion correction when we are interested in selectors that mostly select individuals with a high target value from the underlying population P. In order to test the significance of these results, we can employ the Bayesian sign-test (Benavoli et al. 2014), a modern alternative to classic frequentist null hypothesis tests that avoids many of the well-known disadvantages of those (see Demšar 2008; Benavoli et al. 2016). With Bayesian hypothesis tests, we can directly evaluate the posterior probabilities of hypotheses given our experimental data instead of just rejecting a null hypothesis based on some arbitrary significance level. Moreover, we differentiate between sample size and effect size by the introduction of a region of practical equivalence (rope). Here, we are interested in the relative difference \(\tilde{z}=(\tilde{l}_1 - \tilde{l}_0)/(\max \{\tilde{l}_0, \tilde{l}_1\})\) on average for random subgroup discovery problems. Using a conservative choice for the rope, we call the two objective functions practically equivalent if the mean \(\tilde{z}\)-value is at most \(r=0.1\). Choosing the prior belief that \(f_0\) is superior, i.e., \(\tilde{z} < -r\), with a prior weight of 1, the procedure yields based on our 25 test datasets the posterior probability of approximately 1 that \(\tilde{z} > r\) on average (see the right part of Fig. 5 for in illustration of the posterior belief). Hence, we can conclude that dispersion-correction improves the relative lower confidence bound of target values on average by more than 10 percent when compared to the non-dispersion-corrected function.

Effect of dispersion correction on lower bound of 95-percent confidence interval of target variable; (left) improvement over global lower bound in standard deviations of dispersion-corrected objective (\(\tilde{l}_1\)) and non-dispersion-corrected objective (\(\tilde{l}_0\)) with annotations showing ids of datasets where either method provides no improvement; (right) posterior joint probabilities of the events that normalized difference \((\tilde{l}_1-\tilde{l}_0)/\max \{\tilde{l}_0,\tilde{l}_1\}\) is larger than 0.1 (\(\Pr (\tilde{l}_1)\)), less than \(-0.1\) (\(\Pr (\tilde{l}_0)\)), or within \([-0.1,0.1]\) (\(\Pr ({\mathrm {rope}})\)) according to Bayesian sign-test in barycentric coordinates (sections correspond to regions where corresponding event is maximum a posteriori outcome)

Effect of tight optimistic estimator; (right) optimistic estimation (\(\hat{f_1}(\sigma ^+_1)\), \(\hat{f_0}(\sigma ^+_0)\)) of remaining search space and value of current best solution (\(f_1(\sigma ^*_1)\), \(f_1(\sigma ^*_0)\)) resulting from tight estimator and top sequence estimator, respectively—per processed nodes for dataset binaries; (left) speedup factor (\(t_0/t_1\)) in increasing order for all datasets plus node-reduction factor (\(|{\mathcal {E}}_0|/|{\mathcal {E}}_1|\)), which indicate potential of further improvements of estimator algorithm

4.2 Efficiency of the tight optimistic estimator

To study the effect of the tight optimistic estimator, let us compare its performance to that of a baseline estimator that can be computed with the standard top sequence approach. Since \(f_1\) is upper bounded by \(f_0\), \(\hat{f_0}\) is a valid, albeit non-tight, optimistic estimator for \(f_1\) and can thus be used for this purpose. The exact speed-up factor is determined by the ratio of enumerated nodes for both variants as well as the ratio of computation times for an individual optimistic estimator computation. While both factors determine the practically relevant outcome, the number of nodes evaluated is a much more stable quantity, which indicates the full underlying speed-up potential independent of implementation details. Similarly, “number of nodes evaluated” is also an insightful unit of time for measuring optimization progress. Therefore, in addition to the computation time in seconds \(t_0\) and \(t_1\), let us denote by \({\mathcal {E}}_0, {\mathcal {E}}_1\subseteq {\mathcal {L}}\) the set of nodes enumerated by branch-and-bound using \(\hat{f_0}\) and \(\hat{f_1}\), respectively—but in both cases for optimizing the dispersion-corrected objective \(f_1\). Moreover, when running branch-and-bound with optimistic estimator \(\hat{f_i}\), let us denote by \(\sigma ^*_i(n)\) and \(\sigma ^+_i(n)\) the best selector found and the top element of the priority queue (w.r.t. \(\hat{f_i}\)), respectively, after n nodes have been enumerated.

Figure 6 (left) shows the speed-up factor \(t_1/t_0\) on a logarithmic axis for all datasets in increasing order along with the potential speed-up factors \(|{\mathcal {E}}_0|/|{\mathcal {E}}_1|\) (see Table 1 for numerical values). There are seven datasets for which the speed-up is minor followed by four datasets with a modest speed-up factor of 2. For the remaining 14 datasets, however, we have substantial speed-up factors between 4 and 20 and in four cases immense values between 100 and 4000. This demonstrates the decisive potential effect of tight value estimation even when compared to another non-trivial estimator like \(\hat{f_0}\) (which itself improves over simpler options by orders of magnitude; see Lemmerich et al. 2016). Similar to the results in Sect. 4.1, the Bayesian sign-test for the normalized difference \(z=(t_1-t_0)/\max \{t_1,t_0\}\) with the prior set to practical equivalence (\(z \in [-0.1,0.1]\)) reveals that the posterior probability of \(\hat{f}_1\) being superior to \(\hat{f}_0\) is apx. 1. In almost all cases the potential speed-up given by the ratio of enumerated nodes is considerably higher than the actual speed-up, which shows that, despite the same asymptotic time complexity, an individual computation of the tight optimistic estimator is slower than the simpler top sequence based estimator—but also indicates that there is room for improvements in the implementation.

Examining the optimization progress over time for the binaries dataset, which exhibits the most extreme speed-up (right plot in Fig. 6), we can see that not only does the tight optimistic estimator close the gap between best current selector and current highest potential selector much faster—thus creating the huge speed-up factor—but also that it causes better solutions to be found earlier. This is an important property when we want to use the algorithm as an anytime algorithm, i.e., when allowing the user to terminate computation preemptively, which is important in interactive data analysis systems. This is an advantage enabled specifically by using the tight optimistic estimator in conjunction with the best-first node expansion strategy.

5 Conclusion

During the preceding sections, we developed and evaluated an effective algorithm for simultaneously optimizing size, central tendency, and dispersion in subgroup discovery with a numerical target. This algorithm is based on two central results: (1) the tight optimistic estimator for any objective function that is based on some dispersion measure around the median can be computed as the function’s maximum on a linear-sized sequence of sets—the median sequence (Proposition 2); and (2) for objective functions based on the concept of the dispersion-corrected coverage w.r.t. the absolute deviation from the median, the individual sets of the median sequence can be generated in incremental constant time (Theorem 3).

Among the possible applications of the proposed approach, the perhaps most important one is to replace the standard coverage term in classic objective functions by the dispersion-corrected coverage, i.e., the relative subgroup size minus the relative subgroup dispersion, to reduce the error of result subgroups—where error refers to the descriptive or predictive inaccuracy incurred when assuming the median value of a subgroup for all its members. As we saw empirically for the impact function (based on the median), this correction also has a statistical advantage resulting in subgroups where we can assume larger target values for unseen group members with high confidence. In addition to enabling dispersion-correction to known objective functions, the presented algorithm also provides novel degrees of freedom, which might be interesting to exploit in their own right: The dependence on the median is not required to be monotone, which allows to incorporate a more sophisticated influence of the central tendency value than simple monotone average shifts. For instance, given a suitable statistical model for the global distribution, the effect of the median could be a function of the probability \({\mathbb {P}}[{\texttt {med}}(Q)]\), e.g., its Shannon information content. Furthermore, the feasible dispersion measures allow for interesting weighting schemes, which include possibilities of asymmetric effects of the error (e.g., for only punishing one-sided deviation from the median). More generally, let us note that numerical subgroup discovery algorithms are also often applicable in settings where numerical association rules are sought (see Aumann and Lindell 2003). The appeal of branch-and-bound optimization is here that it circumvents the expensive enumeration step of all frequent (high coverage) sets.

Regarding the limitations of the presented approach, let us note that it cannot be directly applied to the previously proposed dispersion-aware functions, i.e., the t-score \({\mathtt {tsc}}(Q)=\sqrt{|Q|}({\texttt {mean}}(Q)-{\texttt {mean}}(P))/{\texttt {std}}(Q)\) and the mmad score for ranked data \({\mathtt {mmd}}(Q)=|Q|/(2{\texttt {med}}(Q)+{\texttt {mmd}}(Q))\). While both of these functions can be optimized via the median sequence approach (assuming a t-score variant based on the median), we are lacking an efficient incremental formula for computing the individual function values for all median sequence sets, i.e., a replacement for Theorem 3. Though finding such a replacement in future research is conceivable, this leaves us for the moment with a quadratic time algorithm (in the subgroup size) for the tight optimistic estimator, which is not generally feasible (although potentially useful for smaller datasets or as part of a hybrid optimistic estimator, which uses the approach for sufficiently small subgroups only).

Since they share basic monotonicities, it is possible to use functions based on dispersion-corrected coverage as an optimization proxy for the above mentioned objectives. For instance, the ranking of the top 20 subgroups w.r.t. the dispersion-corrected binomial quality function, \({\mathtt {dcb}}(Q)=\sqrt{{\texttt {dcc}}(Q)}({\texttt {med}}(Q)-{\texttt {med}}(P))\), turns out to have a mean Spearman rank correlation coefficient with the median-based t-score of apx. 0.783 on five randomly selected test datasets (delta_elv, laser, stock, treasury, gold). However, a more systematic understanding of the differences and commonalities of these functions is necessary to reliably replace them with one another. Moreover, the correlation deteriorates quite sharply when we compare to the original mean/variance based t-score (mean Spearman correlation coefficient 0.567), which points to the perhaps more fundamental limitation of the presented approach for dispersion-correction: it relies on using the median as measure of central tendency. While the median and the mean absolute deviation from the median are an interpretable, robust, and sound combination of measures (the median of a set of values minimizes the sum of absolute deviations), the mean and the variance are just as sound, are potentially more relevant when sensitivity to outliers is required, and provide a wealth of statistical tools (e.g., Chebyshev’s inequality used above).

Hence, a straightforward but valuable direction for future work is the extension of efficient tight optimistic estimator computation to dispersion-correction based on the mean and variance. A basic observation for this task is that objective functions based on dispersion measures around the mean must also attain their maximum on gap-free intervals of target values. However, for a given collection of target values, there is a quadratic number of intervals such that a further idea is required in order to attain an efficient, i.e., (log-)linear time algorithm. Another valuable direction for future research is the extension of consistency and error optimization to the case of multidimensional target variables where subgroup parameters can represent complex statistical models (known as exceptional model miningDuivesteijn et al. 2016). While this setting is algorithmically more challenging than the univariate case covered here, the underlying motivation remains: balancing group size and exceptionality, i.e., distance of local to global model parameters, with consistency, i.e., local model fit, should lead to the discovery of more meaningful statements about the data and the underlying domain.

Notes

In this paper, we are using the simple definition of the median as the 0.5-quantile (as opposed to defining it as \((y_{m/2}+y_{1+m/2})/2\) for even m), which simplifies many of the definitions below and additionally is well-defined in settings where averaging of target values is undesired.

We work here with the given definition of dispersion measure because of its simplicity. Note, however, that all subsequent arguments can be extended in a straightforward way to a wider class of dispersion measures by considering the multisets of positive and negative deviations separately. This wider class also contains the interquartile range and certain asymmetric measures, which are not covered by Def. 2.

Datasets contain all regression datasets from the KEEL repository (Alcalá et al. 2010) with at least 5 attributes and two materials datasets from the Nomad Repository nomad-coe.eu/; see Table. 1. Implementation available in open source Java library realKD bitbucket.org/realKD/. Computation times determined on MacBook Pro 3.1 GHz Intel Core i7.

The probability is w.r.t. to the distribution with which the sample \(P' \subseteq P\) is drawn.

References

Alcalá J, Fernández A, Luengo J, Derrac J, García S, Sánchez L, Herrera F (2010) Keel data-mining software tool: data set repository, integration of algorithms and experimental analysis framework. J Mult Valued Log Soft Comput 17(2–3):255–287

Atzmueller M (2015) Subgroup discovery. Wiley Interdiscip Rev Data Min Knowl Discov 5(1):35–49

Aumann Y, Lindell Y (2003) A statistical theory for quantitative association rules. J Intell Inf Syst 20(3):255–283

Bay SD, Pazzani MJ (2001) Detecting group differences: mining contrast sets. Data Min Knowl Discov 5(3):213–246

Benavoli A, Corani G, Mangili F, Zaffalon M, Ruggeri F (2014) A Bayesian Wilcoxon signed-rank test based on the Dirichlet process. In: ICML. pp 1026–1034

Benavoli A, Corani G, Demsar J, Zaffalon M (2016) Time for a change: a tutorial for comparing multiple classifiers through Bayesian analysis. arXiv:1606.04316

Boley M, Grosskreutz H (2009) Non-redundant subgroup discovery using a closure system. In: Joint European conference on machine learning and knowledge discovery in databases. Springer, pp 179–194

Boley M, Moens S, Gärtner T (2012) Linear space direct pattern sampling using coupling from the past. In: Proceedings of the 18th ACM SIGKDD international conference on Knowledge discovery and data mining. ACM, pp 69–77

Demšar J (2008) On the appropriateness of statistical tests in machine learning. In: Workshop on evaluation methods for machine learning in conjunction with ICML

Duivesteijn W, Knobbe A (2011) Exploiting false discoveries-statistical validation of patterns and quality measures in subgroup discovery. IEEE 11th international conference on data mining. IEEE, pp 151–160

Duivesteijn W, Feelders AJ, Knobbe A (2016) Exceptional model mining. Data Min Knowl Discov 30(1):47–98

Friedman JH, Fisher NI (1999) Bump hunting in high-dimensional data. Stat Comput 9(2):123–143

Goldsmith BR, Boley M, Vreeken J, Scheffler M, Ghiringhelli LM (2017) Uncovering structure-property relationships of materials by subgroup discovery. New J Phys 19(1):13–31

Grosskreutz H, Rüping S, Wrobel S (2008) Tight optimistic estimates for fast subgroup discovery. In: Joint European conference on machine learning and knowledge discovery in databases. Springer, pp 440–456

Grosskreutz H, Boley M, Krause-Traudes M (2010) Subgroup discovery for election analysis: a case study in descriptive data mining. In: International conference on discovery science. Springer, pp 57–71

Huan J, Wang W, Prins J (2003) Efficient mining of frequent subgraphs in the presence of isomorphism. In: 3rd IEEE international conference on data mining. IEEE, pp 549–552

Kabán A (2012) Non-parametric detection of meaningless distances in high dimensional data. Stat Comput 22(2):375–385

Klösgen W (1996) Explora: a multipattern and multistrategy discovery assistant. In: Advances in knowledge discovery and data mining. American Association for Artificial Intelligence, pp 249–271

Klösgen W (2002) Data mining tasks and methods: subgroup discovery: deviation analysis. In: Handbook of data mining and knowledge discovery. Oxford University Press Inc., pp 354–361

Lavrač N, Kavšek B, Flach P, Todorovski L (2004) Subgroup discovery with cn2-sd. J Mach Learn Res 5:153–188

Lemmerich F, Atzmueller M, Puppe F (2016) Fast exhaustive subgroup discovery with numerical target concepts. Data Min Knowl Discov 30(3):711–762

Li G, Zaki MJ (2016) Sampling frequent and minimal boolean patterns: theory and application in classification. Data Min Knowl Discov 30(1):181–225

Mehlhorn K, Sanders P (2008) Algorithms and data structures: the basic toolbox. Springer, Berlin

Parthasarathy S, Zaki MJ, Ogihara M, Dwarkadas S (1999) Incremental and interactive sequence mining. In: Proceedings of 8th international conference on information and knowledge management. ACM, pp 251–258

Pasquier N, Bastide Y, Taouil R, Lakhal L (1999) Efficient mining of association rules using closed itemset lattices. Inf Syst 24(1):25–46

Pieters BF, Knobbe A, Dzeroski S (2010) Subgroup discovery in ranked data, with an application to gene set enrichment. In: Proceedings preference learning workshop (PL 2010) at ECML PKDD, vol 10. pp 1–18

Schmidt J, Hapfelmeier A, Mueller M, Perneczky R, Kurz A, Drzezga A, Kramer S (2010) Interpreting pet scans by structured patient data: a data mining case study in dementia research. Knowl Inf Syst 24(1):149–170

Song H, Kull M, Flach P, Kalogridis G (2016) Subgroup discovery with proper scoring rules. In: Joint European conference on machine learning and knowledge discovery in databases. Springer, pp 492–510

Uno T, Asai T, Uchida Y, Arimura H (2004) An efficient algorithm for enumerating closed patterns in transaction databases. In: International conference on discovery science. Springer, pp 16–31

Webb GI (1995) Opus: an efficient admissible algorithm for unordered search. J Artif Intell Res 3:431–465

Webb GI (2001) Discovering associations with numeric variables. In: Proceedings of the 7th ACM SIGKDD international conference on Knowledge discovery and data mining. ACM, pp 383–388

Wrobel S (1997) An algorithm for multi-relational discovery of subgroups. In: European symposium on principles of data mining and knowledge discovery. Springer, pp 78–87

Acknowledgements

Open access funding provided by Max Planck Society. The authors thank the anonymous reviewers for their useful and constructive suggestions. Jilles Vreeken and Mario Boley are supported by the Cluster of Excellence “Multimodal Computing and Interaction” within the Excellence Initiative of the German Federal Government. Bryan R. Goldsmith acknowledges support from the Alexander von Humboldt-Foundation with a Postdoctoral Fellowship. Additionally, this work was supported through the European Union’s Horizon 2020 research and innovation program under Grant agreement No. 676580 with The Novel Materials Discovery (NOMAD) Laboratory, a European Center of Excellence.

Author information

Authors and Affiliations

Corresponding author

Additional information

Responsible editors: Kurt Driessens, Dragi Kocev, Marko Robnik-Šikonja, Myra Spiliopoulou.

Appendices

Appendix A: Proof of Theorem 3

In order to proof Theorem 3, let us start by noting that for functions of the form of Eq. (9), finding the set size \(k^*_z\) corresponds to maximizing the dispersion-corrected coverage among all multisets with consecutive elements around median \(y_z\) (as defined in Eq. 8). In order to analyze this problem, let us write

for the dispersion-corrected coverage of the multiset \(Q^k_z\). Let \(\varDelta h_z\! : [m_z] \rightarrow {\mathbb {R}}\) denote the difference or gain function of \(h_z\), i.e., \(\varDelta h_z(k)=h_z(k)-h_z(k-1)\) where we consider \(Q^0_z=\emptyset \) and, hence, \(h_z(0)=0\). With this definition we can show that \(h_z\) is alternating between two concave functions, i.e., considering either only the even or only the odd subset of its domain, the gains are monotonically decreasing. More precisely:

Lemma 5

For all \(k \in [m_z] \setminus \{1,2\}\) we have that \(\varDelta h_z(k) \le \varDelta h_z(k-2)\).

Proof

For \(k \in [m_z]\), let us denote by \(q^k_z\) the additional y-value that \(Q_z^k\) contains compared to \(Q_z^{k-1}\) (considering \(Q_z^{0}=\emptyset \)), i.e., \(Q_z^k \setminus Q_z^{k-1}=\{q_z^k\}\). We can check that

With this and using the shorthands \(n=|P|\) and \(d={\texttt {smd}}(P)\) we can write

\(\square \)

One important consequence of this fact is that the operation of growing a set around median z by two elements—one to the left and one to the right—has monotonically decreasing gains. In other words, the smoothed function \(h_z(k)=h_z(k)+h_z(k-1)\) is concave or formally

Moreover, we can relate the gain functions of consecutive median indices as follows.

Lemma 6

Let \(z \in [m] \setminus \{1\}\) and \(k \in [m_{z-1}]\setminus \{1,2,3\}\). It holds that

Proof

For this proof, let us use the same shorthands as in the proof of Lemma 5 and start by noting that for all \(i \in [m]\) and \(k \in [m_z] \setminus \{1\}\) we have the equality

which we can see by extending

We can then show Eq. (14) by applying Eq. (16) two times to

and finally by checking separately the case k odd

and the case k even

Similarly, for Eq. (15) by applying Eq. (16) two times we can write

\(\square \)

Combining all of the above we can finally proof our main result as follows.

Proof

(Theorem 3) We start by showing that every \(k \in [m_{z+1}]\) with \(k<{k^*_{z}-3}\) can not be an optimizer of \(h_{z+1}\). It follows that \(k^*_{z}-3 \le k^*_{z+1}\), and, hence, \(k^*_{z} \le k^*_{z+1}+3\) as required for the upper bound. Indeed, we have

Analogously, for the lower bound, we show that every \(k \in [m_{z+1}]\) with \(k>{k^*_{z}+3}\) can not be the smallest optimizer of \(h_{z+1}\). It follows that \(k^*_{z}+3 \ge k^*_{z+1}\), and, hence, \(k^*_{z} \ge k^*_{z+1}-3\) as required. Indeed, we can write

\(\square \)

Appendix B: Additional proofs

Proof

(Proposition 4) Using \(d_{ij}\) as a shorthand for \(y_j-y_i\) for \(i,j \in [m]\) with \(i\le j\) we can write

\(\square \)

Appendix C: Summary of used notations

Symbol | Meaning | Defined in |

|---|---|---|

\(|\cdot |\) | Cardinality of a set or absolute value of a number | – |

\([k]\) | Set of integers \(\{1,\ldots ,k\}\) | |

\((x)_+\) | \(\max \{x,0\}\) for a real-valued expression x | |

\(\le _e\) | Element-wise less-or-equal relation for multisets of real values | |

\(2^{X}\) | Power set of a set X, i.e., set of all of its subsets | – |

\({\mathbb {N}}^X\) | Set of all multisets containing elements from set X | – |

\(\sigma \), \(\varphi \) | Subgroup selectors \(\sigma , \varphi \! : P \rightarrow \{{\text {true}},{\text {false}}\}\) | |

\(c\) | Measure of central tendency | |

\(d\) | Measure of dispersion | |

\(e_l(i)\), \(e_r(i)\) | Left and right cumulative errors of target values up to value i | |

f | Objective function | |

\(\hat{f}\) | Tight optimistic estimator of objective function f | |

m | Number of elements in subpopulation Q | |

\(m_z\) | Maximal size parameter k for consecutive value set \(Q_{z}^k\) | |

\(k^*_z\) | f-maximizing size parameter k for consecutive value set \(Q_{z}^k\) | |

y | Numeric target attribute \(y\! : P \rightarrow {\mathbb {R}}\) | |

\(y_i\) | i-th target value of subpopulation w.r.t. ascending order | |

P | Global population of given subgroup discovery problem | |

Q | Some subpopulation \(Q \subseteq P\) | |

\(Q_z\) | Median sequence element with median index z | |

\(Q^k_z\) | Submultiset of Q with k consecutive elements around index z | |

\(T_i\) | Top sequence element i, i.e., \(T_i=\{y_{m-i+1},\dots , y_m\}\) | |

Y | Real-valued multiset | |

\(Y_{\varDelta }^{{\texttt {med}}}\) | multiset of differences of elements in Y to its median | |

\({\mathcal {L}}\), \({\mathcal {L}}_{\text {cnj}}\) | Description language and language of conjunctions | |

\({\mathcal {C}}_{\text {cnj}}\) | Language of closed conjunctions | |

\({\texttt {amd}}(Q)\) | Mean absolute deviation of y-values in Q to their median | |

\({\texttt {cov}}(Q)\) | Coverage, i.e., relative size \(|Q|/|P|\) of subpopulation Q | |

\({\texttt {dcc}}(Q)\) | Dispersion-corrected coverage of subpopulation Q | |

\({\texttt {mean}}(Q)\) | Arithmetic mean of y-values in Q | – |

\({\mathtt {ipa}}{(Q)}\) | Impact, i.e., weighted mean-shift, of subpopulation Q | |

\({\texttt {med}}(Q)\) | Median of y-values in Q | |

\({\texttt {smd}}(Q)\) | Sum of absolute deviations of y-values in Q to their median |

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Boley, M., Goldsmith, B.R., Ghiringhelli, L.M. et al. Identifying consistent statements about numerical data with dispersion-corrected subgroup discovery. Data Min Knowl Disc 31, 1391–1418 (2017). https://doi.org/10.1007/s10618-017-0520-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10618-017-0520-3