Abstract

Subgroup Discovery is the process of finding and describing sufficiently large subsets of a given population that have unusual distributional characteristics with regard to some target attribute. Such subgroups can be used as a statistical summary which improves on the default summary of stating the overall distribution in the population. A natural way to evaluate such summaries is to quantify the difference between predicted and empirical distribution of the target. In this paper we propose to use proper scoring rules, a well-known family of evaluation measures for assessing the goodness of probability estimators, to obtain theoretically well-founded evaluation measures for subgroup discovery. From this perspective, one subgroup is better than another if it has lower divergence of target probability estimates from the actual labels on average. We demonstrate empirically on both synthetic and real-world data that this leads to higher quality statistical summaries than the existing methods based on measures such as Weighted Relative Accuracy.


1 Introduction

Statistical models intend to capture the distributional information in a domain of interest. While a global statistical model is useful, it is often also of interest to capture local variations exhibited in a subset of the data. Recognising such subsets can provide valuable knowledge and opportunities to improve performance at tasks relying on the statistical model. In the area of machine learning and data mining, the problem of obtaining such statistically different subsets is known as Subgroup Discovery (SD) [6, 7, 10, 17], loosely defined as the process of finding and describing sufficiently large subsets of a given population that have unusual distributional characteristics with regard to some target attribute.

Consider a synthetic toy data set relating to someone’s dietary habits. It contains two (discretised) features: the time of the day, denoted as \(X_1 \in \{ Morning , Afternoon , Evening \}\) and the calorie consumption in the diet, denoted as \(X_2 \in \{ Low , Medium , High \}\). The target variable is \(Y \in \{ Weekday , Weekend \}\). Figure 1 visualises the data, with two potentially interesting subgroups (shaded areas). The subgroup on the right concentrates on the area of maximum statistical deviation (high calorie intake in the evening is more common during the weekend), while the one on the left covers both medium and high calorie intake in the evening. In this paper we study reasons why one of these subgroups might be preferred over the other.

Fig. 1. An example bivariate data set with two subgroups (shaded areas) defined on the discretised features, both capturing an area of statistical deviation in comparison to the overall population. The subgroup on the left is preferred by a commonly used evaluation measure (WRAcc), while the right subgroup is preferred by one of the measures we propose in this paper.

Clearly, if a subgroup is small, distributional differences may arise purely by chance in sampling, so a trade-off between subgroup size and distributional deviation needs to be made. Statistical tests such as \(\chi ^2\) can be used, but they tend to over-emphasise size: a very large subgroup with a small deviation is more likely to be picked up than a medium-sized subgroup with a considerable deviation. The p-values reported in rule-based approaches [10] tend to suffer from the same issue.

Historically, SD developed as a variation on rule learning and other logic-based approaches, and hence it is not surprising that many existing quality measures have been adapted from decision trees and rule-based classifiers. For instance, [1] explored the use of Gini-split (among several others) as a quality measure for subgroups, which hypothesises that a good binary split in a decision tree also establishes a good subgroup. One of the most commonly used measures is Weighted Relative Accuracy (WRAcc), which can be seen as an adaptation of precision, a measure that is used as a search heuristic in rule learners such as CN2 [3]. Many other subgroup quality measures have been introduced in the literature; see [6] for an overview.

Evaluation methods for SD depend on the task for which subgroups need to be found. In [10], the subgroups are used to construct a ranking model, and the area under the corresponding ROC curve is used as an evaluation measure. In [1] the obtained subgroups are used as features for a decision tree and hence they can be evaluated according to the classification performance of the trees. However, the predictive task used in evaluation (ranking or classification) is then different from the descriptive SD task, and it is unclear how the predictive task affects the choice of subgroup quality measure.

In this paper we propose a novel approach to evaluate subgroups as summaries which improve on the default summary of stating the overall distribution in the population. A natural way to evaluate such summaries is to quantify the difference between predicted and empirical distribution of the target. This suggests using proper scoring rules, a well-known family of evaluation measures for assessing the goodness of probability estimators, to obtain theoretically well-founded evaluation measures for subgroup discovery. From this perspective, one subgroup is better than another if, on average, it has a lower divergence of target probability estimates from the actual labels.

We derive a novel SD method to directly optimise for the proposed evaluation measure, from first principles. The method is based on a generative probabilistic model, which allows us to formally prove the validity of the method. We perform experiments on a synthetic data set where the theoretically optimal subgroup is known, and demonstrate that our method outperforms alternative methods in the sense that it finds subgroups that are closer to the theoretically optimal one. Additionally, we perform experiments on 20 UCI data sets which demonstrate that the proposed method is superior in summarising the statistical properties of the data.

The structure of this paper is as follows. Section 2 introduces the notations and concepts for SD. Section 3 provides an overview of Proper Scoring Rules (PSRs) and describes related quality measures. In Sect. 4 we propose a novel generative modelling approach to address the summarisation problem, and derive the corresponding measures. Section 5 evaluates the proposed quality measures against existing measures and Sect. 6 presents related work. Section 7 concludes this paper and discusses possible future research directions.

2 Subgroup Discovery

We start by introducing some notation. Consider a dataset \((X_i,Y_i)\), \(i=1,\dots ,n\) in the instance space \((\mathbb {X},\mathbb {Y})\). We assume a multi-class target variable, representing the k classes in \(\mathbb {Y}\) by unit vectors, i.e. class j is represented by the vector with 1 at position j and 0 everywhere else. The set of all considered subgroups is indicated by \(\mathbb {G} \subset 2^{\mathbb {X}}\). This set is typically generated by a subgroup language (e.g., the set of all conjunctions over some fixed set of literals) but here it suffices to deal with subgroups extensionally. A subgroup \(g\in \mathbb {G}\) can then be identified with its characteristic function \(g:\mathbb {X}\rightarrow \{0,1\}\) determining whether an instance \(X_i\) is in the subgroup (\(g(X_i)=1\)) or not (\(g(X_i)=0\)). A subgroup quality measure is a function \(\phi :\mathbb {G}\rightarrow \mathbb {R}\) such that better subgroups g get a higher \(\phi (g)\). The task of SD is then to find the subgroup \(g^*\) with the highest value of \(\phi \), i.e. \(g^* = {{{\mathrm{arg\,max}}}}_{g \in \mathbb {G}} \phi (g)\).

A wide range of quality measures has been proposed in the literature. A common way of defining a quality measure is to separate it into two factors: the deviation factor and the size factor. The deviation factor compares the local statistic to the global statistic. In the case of a discrete target variable, the deviation factor can be seen as a function that takes two estimates of class probabilities as input and outputs a single number indicating how different these two estimates are. The size factor is normally treated as a correction term that encourages the method to find larger subgroups, as small subgroups tend to be less valuable.

One of the most widely adopted quality measures is the Weighted Relative Accuracy (WRAcc) family [1, 2, 9, 10]. For a binary target this essentially is the covariance between the target variable and subgroup membership: since these are both Bernoulli variables this takes values in the interval \([-0.25, 0.25]\). For a multi-class target we take the average of all one-against-rest binary WRAcc values, taking the absolute value of the latter to avoid positive and negative covariances cancelling out [1]. For our purposes we derive a related but unnormalised quantity, as follows.

Denote the overall class distribution in the data set by \(\pi =(\sum _{i=1}^n Y_i)/n\) (note that \(Y_i\) and \(\pi \) are vectors of length k). Let m denote the number of training set instances belonging to the subgroup g, i.e. \(m=\sum _{i=1}^{n}g(X_i)\). Denote the class distribution in the subgroup by \(\rho ^{(g)}\), i.e., \(\rho ^{(g)}=(\sum _{i=1}^{n}g(X_i)\cdot Y_i)/m\). Then an unnormalised version of Multi-class Weighted Relative Accuracy (MWRAcc) can be calculated as:

\[ \phi _{MWRAcc}(g) = \sum _{j=1}^{k} m \left| \rho ^{(g)}_j - \pi _j \right| \tag{1} \]

The definition of [1] is obtained from this by normalising with \(n\cdot k\), where n is the number of training instances and k is the number of classes (both constant). Our version can be interpreted as the sum of absolute differences between observed and expected class counts in the subgroup.
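As a minimal sketch, the unnormalised measure \(\sum _{j} m\,|\rho ^{(g)}_j - \pi _j|\) can be computed from a list of class indices and a 0/1 membership vector \(g(X_i)\). Encoding classes as indices rather than unit vectors, and the function name `mwracc_unnormalised`, are illustrative assumptions rather than part of the method description.

```python
def mwracc_unnormalised(labels, in_subgroup, k):
    """Unnormalised multi-class WRAcc: sum over classes j of m * |rho_j - pi_j|.

    labels      -- class indices in {0, ..., k-1}, one per instance
    in_subgroup -- 0/1 values g(X_i), one per instance
    """
    n = len(labels)
    m = sum(in_subgroup)
    if m == 0:
        return 0.0
    # overall class distribution pi and subgroup class distribution rho
    pi = [labels.count(j) / n for j in range(k)]
    sub = [y for y, g in zip(labels, in_subgroup) if g]
    rho = [sub.count(j) / m for j in range(k)]
    # m * rho_j is the observed count of class j in g, m * pi_j the expected one
    return sum(m * abs(r - p) for r, p in zip(rho, pi))


labels = [0, 0, 0, 1, 1, 1, 1, 1]
g = [1, 1, 1, 1, 0, 0, 0, 0]
print(mwracc_unnormalised(labels, g, k=2))            # 3.0
print(mwracc_unnormalised(labels, g, k=2) / (8 * 2))  # 0.1875, normalised by n * k
```

Dividing by \(n\cdot k\) recovers the normalised variant of [1], as the last line of the example shows.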

3 Proper Scoring Rules

The class distribution \(\pi \) is a very simple way to summarise the target variable across the whole training dataset. That is, we summarise the label vectors \(Y_1,\dots ,Y_n\) with the summary \(S^{\pi }\) where we define \(S^{\pi }_i=\pi \) for \(i=1,\dots ,n\). Another possibility is to separately summarise a particular subgroup g with its class distribution \(\rho ^{(g)}\) while its complement is summarised with \(\pi \). We denote this summary by \(S^{g,\rho ^{(g)},\pi }\), and for an instance i this summary predicts \(S^{g,\rho ^{(g)},\pi }_i=\rho ^{(g)}\) if \(g(X_i)=1\) and \(S^{g,\rho ^{(g)},\pi }_i=\pi \) if \(g(X_i)=0\), which can be jointly written as \(S^{g,\rho ^{(g)},\pi }_i=\rho ^{(g)} g(X_i)+\pi (1-g(X_i))\). One could then ask which of the subgroups gives the best summary, and whether the summary is better than the default summary \(S^{\pi }\). In order to assess this, we need a way to calculate the extent to which the probability estimates within the summary deviate from the actual labels.

Proper Scoring Rules (PSRs) have been widely adopted in machine learning and statistics to assess the goodness of probability estimates [16]. A scoring rule is a function \(\psi : \mathbb {S} \times \mathbb {Y} \rightarrow \mathbb {R}\) that assigns a real-valued loss to the estimate \(S_i\) within the summary S with respect to the actual label \(Y_i\) of instance i. Two of the most commonly adopted scoring rules are the Brier Score (BS) and Log-loss (LL), which are defined as:

\[ \psi _{BS}(S_i,Y_i) = \sum _{j=1}^{k} (S_{i,j} - Y_{i,j})^2 \tag{2} \]

\[ \psi _{LL}(S_i,Y_i) = -\log S_{i,*} \tag{3} \]

where \(Y_{i,j}=1\) if the i-th instance is of the j-th class and 0 otherwise, \(S_{i,j}\) is the probability estimate of class j for the i-th instance, and \(S_{i,*}\) denotes the probability estimate of the i-th instance for the true class as determined by \(Y_i\).
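The two scoring rules can be sketched per instance as follows, assuming the estimate is a plain list of class probabilities and the true class is given by its index (so `s[y]` plays the role of \(S_{i,*}\)). The natural logarithm is an assumption here; the base only rescales the log-loss. The function names are hypothetical.

```python
import math


def brier(s, y):
    """Brier score of probability vector s when the true class index is y."""
    return sum((s_j - (1.0 if j == y else 0.0)) ** 2 for j, s_j in enumerate(s))


def log_loss(s, y):
    """Log-loss: minus the natural log of the probability given to the true class."""
    return -math.log(s[y])


estimate = [0.7, 0.2, 0.1]
print(brier(estimate, 0))     # approximately 0.14
print(log_loss(estimate, 0))  # approximately 0.357
```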

The distance from a whole summary S to the actual labels can then be calculated as follows:

\[ \psi '(S,Y) = \sum _{i=1}^{n} \psi (S_i,Y_i) \tag{4} \]

The scoring rule \(\psi \) is proper if \({{{\mathrm{arg\,min}}}}_p\psi '(S^p,Y)=\pi \) for any Y, i.e., if the actual class distribution is the minimiser of the scoring rule. In particular, both BS and LL are proper.
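Properness can be checked numerically on a toy example. The sketch below predicts the same distribution \(p\) for every instance of a binary data set with \(\pi = (0.3, 0.7)\) and searches a grid of candidate values; under both rules the summed loss is smallest at \(p_0 = 0.3\). The data, grid and helper names are illustrative assumptions.

```python
import math


def brier(s, y):
    return sum((s_j - (1.0 if j == y else 0.0)) ** 2 for j, s_j in enumerate(s))


def log_loss(s, y):
    return -math.log(s[y])


def total_loss(p, labels, psi):
    """psi'(S^p, Y) as in Eq. (4): the same estimate p is used for every instance."""
    return sum(psi(p, y) for y in labels)


labels = [0] * 3 + [1] * 7                # empirical distribution pi = (0.3, 0.7)
grid = [i / 100 for i in range(1, 100)]   # candidate p_0 values, avoiding log(0)
for psi in (brier, log_loss):
    best = min(grid, key=lambda p0: total_loss([p0, 1 - p0], labels, psi))
    print(psi.__name__, best)             # prints 0.3 for both rules
```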

For every proper scoring rule \(\psi \) there is a corresponding divergence measure d which quantifies how much a class probability distribution diverges from another class distribution. Formally, the divergence d(p, q) is the expected value of the difference \(\psi (p,Y)-\psi (q,Y)\) where Y is drawn from the distribution q. The divergences corresponding to BS and LL are the squared error and the Kullback-Leibler (KL) divergence, respectively:

\[ d_{BS}(p,q) = \sum _{j=1}^{k} (p_j - q_j)^2 \tag{5} \]

\[ d_{LL}(p,q) = \sum _{j=1}^{k} q_j \log \frac{q_j}{p_j} = KL(q \,\|\, p) \tag{6} \]

For more details see [8].
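The defining property \(d(p,q) = \mathbb {E}_{Y\sim q}[\psi (p,Y) - \psi (q,Y)]\) can be verified numerically. This sketch assumes the BS divergence is the squared Euclidean distance and the LL divergence is \(KL(q\,\|\,p)\), which is what the expectation works out to for these two rules; all function names are illustrative.

```python
import math


def brier(s, y):
    return sum((s_j - (1.0 if j == y else 0.0)) ** 2 for j, s_j in enumerate(s))


def log_loss(s, y):
    return -math.log(s[y])


def d_bs(p, q):
    """Divergence of the Brier score: squared Euclidean distance between p and q."""
    return sum((p_j - q_j) ** 2 for p_j, q_j in zip(p, q))


def d_ll(p, q):
    """Divergence of log-loss: KL(q || p); classes with q_j = 0 contribute 0."""
    return sum(q_j * math.log(q_j / p_j) for p_j, q_j in zip(p, q) if q_j > 0)


def expected_excess_loss(psi, p, q):
    """E[psi(p, Y) - psi(q, Y)] for Y drawn from q (the definition of d(p, q))."""
    return sum(q_y * (psi(p, y) - psi(q, y)) for y, q_y in enumerate(q))


p, q = [0.2, 0.5, 0.3], [0.6, 0.1, 0.3]
print(d_bs(p, q), expected_excess_loss(brier, p, q))     # agree up to rounding
print(d_ll(p, q), expected_excess_loss(log_loss, p, q))  # agree up to rounding
```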

3.1 Information Gain

Suppose we now want to decide whether to summarise the whole dataset by \(S^\pi \) or by \(S^{g,\rho ^{(g)},\pi }\) for some g. For this, let us take a proper scoring rule \(\psi \) and its sum \(\psi '\) across the dataset to quantify the loss of a summary with respect to the actual labels. We can now define the quality of a subgroup g as the gain in \(\psi '\) of the summary \(S^{g,\rho ^{(g)},\pi }\) over the default summary \(S^\pi \), that is:

\[ \phi _{IG}(g) = \psi '(S^{\pi },Y) - \psi '(S^{g,\rho ^{(g)},\pi },Y) \tag{7} \]

In principle, we could consider summaries \(S^{g,\rho ,\pi }\) for any other class distribution \(\rho \). However, the summary with \(\rho ^{(g)}\) is special among these, as it maximises the gain over the summary \(S^\pi \) due to the properness of the scoring rule. This is stated in the following theorem:

Theorem 1

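Let \(\psi ,\psi ',d\) be a proper scoring rule, its sum across the dataset, and its corresponding divergence measure, respectively. Then for any given subgroup g the following holds:

\[ \mathop{\mathrm{arg\,max}}_{\rho } \left[ \psi '(S^{\pi },Y) - \psi '(S^{g,\rho ,\pi },Y) \right] = \rho ^{(g)} \tag{8} \]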

where \(\rho ^{(g)}\) denotes the class distribution within the subgroup g. The maximum value achieved is \(m\cdot d(\pi ,\rho ^{(g)})\) where m is the size of the subgroup g.

Proofs of all theorems are provided in Appendix A.

The theorem implies that Eq. (7) can be rewritten as follows:

\[ \phi _{IG}(g) = m \cdot d(\pi ,\rho ^{(g)}) \tag{9} \]

In words, this quality measure multiplies the size of the subgroup by the divergence of the overall class distribution from the distribution within the subgroup (see Note 1).

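If we consider Log-loss as the proper scoring rule, then the corresponding information gain measure is:

\[ \phi _{IG\text{-}LL}(g) = m \cdot KL(\rho ^{(g)} \,\|\, \pi ) = m \sum _{j=1}^{k} \rho ^{(g)}_j \log \frac{\rho ^{(g)}_j}{\pi _j} \tag{10} \]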

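where KL is the Kullback-Leibler divergence. For the Brier score the corresponding measure is based on the squared error:

\[ \phi _{IG\text{-}BS}(g) = m \sum _{j=1}^{k} (\pi _j - \rho ^{(g)}_j)^2 \tag{11} \]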

where \(\rho ^{(g)}_{j}\) is the proportion of the j-th class in the subgroup g.
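As a sanity check of Theorem 1, the gain of \(S^{g,\rho ^{(g)},\pi }\) over \(S^\pi \) can be computed by brute force and compared with the closed forms \(m\sum _j(\pi _j-\rho ^{(g)}_j)^2\) and \(m\cdot KL(\rho ^{(g)}\,\|\,\pi )\). The toy labels and helper names below are hypothetical.

```python
import math


def brier(s, y):
    return sum((s_j - (1.0 if j == y else 0.0)) ** 2 for j, s_j in enumerate(s))


def log_loss(s, y):
    return -math.log(s[y])


def class_dist(labels, k):
    return [labels.count(j) / len(labels) for j in range(k)]


def info_gain(labels, g, k, psi):
    """phi_IG(g) as in Eq. (7): loss of S^pi minus loss of S^{g, rho(g), pi}."""
    pi = class_dist(labels, k)
    rho = class_dist([y for y, g_i in zip(labels, g) if g_i], k)
    loss_default = sum(psi(pi, y) for y in labels)
    loss_summary = sum(psi(rho if g_i else pi, y) for y, g_i in zip(labels, g))
    return loss_default - loss_summary


labels = [0, 0, 0, 1, 1, 1, 1, 1]
g = [1, 1, 1, 1, 0, 0, 0, 0]
pi, rho, m = class_dist(labels, 2), [0.75, 0.25], sum(g)

# closed forms from Theorem 1: IG-BS = m * d_BS(pi, rho), IG-LL = m * KL(rho || pi)
ig_bs = m * sum((p_j - r_j) ** 2 for p_j, r_j in zip(pi, rho))
ig_ll = m * sum(r_j * math.log(r_j / p_j) for p_j, r_j in zip(pi, rho))
print(info_gain(labels, g, 2, brier), ig_bs)     # both approximately 1.125
print(info_gain(labels, g, 2, log_loss), ig_ll)  # both approximately 1.163
```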

These information gain measures have a long history in machine learning, for example in decision tree learning where they measure the decrease in impurity when splitting a parent node into two children nodes. If we measure impurity by Shannon entropy this leads to Quinlan’s information gain splitting criterion; and if we measure impurity by the Gini index we obtain Gini-split. We have shown how they can be unified from the perspective of Proper Scoring Rules; we now proceed to improve them.

4 Generative Modelling

SD is generally applied in a context where one observes a set of data points from a particular domain and the task is to extract information from the data. As mentioned in the introduction, such information can then be used to improve the performance of corresponding applications. Therefore, it is desirable that the subgroups, as the representation of the obtained knowledge, generalise to future data observed in the same domain.

Two problems need addressing when generalising to future data. First, the class distribution \(\rho ^{(g)}\) is calculated on a (small) sample and can therefore be a poor estimate of the actual distribution in the future. Second, it is not certain whether the actual distribution of the subgroup is different from the overall distribution \(\pi \). In order to capture these aspects we employ a generative model to generate a new test instance Y of the subgroup g. We assume that the observed (training) instances of subgroup g were generated according to the same model, which is defined as follows.

4.1 The Generative Model

First, we fix the default k-class distribution \(\pi \). We then decide whether the distribution of the subgroup g is different from the default (\(Z=1\)) or the same as default (\(Z=0\)):

\[ Z \sim \mathrm{Bernoulli}(\gamma ) \tag{12} \]

where \(\gamma \) is our prior belief that \(Z=1\). If \(Z=1\) then we generate the class distribution Q for the subgroup g:

\[ Q \sim Dir[\beta ] \tag{13} \]

where \(Dir[\beta ]\) is the k-dimensional Dirichlet distribution with parameter vector \(\beta \). Finally, we assume that the test instance Y and the training instances of the subgroup g are all independent and identically distributed (iid). For simplicity of notation, let us assume that the training instances within g are the first m instances \(Y_1,\dots ,Y_m\). The distribution of \(Y_1,\dots ,Y_m\) and the test label Y is as follows:

\[ Y, Y_1, \dots , Y_m \sim \begin{cases} Cat(Q) & \text{if } Z=1 \\ Cat(\pi ) & \text{if } Z=0 \end{cases} \tag{14} \]

where Cat is the categorical distribution with the given class probabilities. In the experiments reported later we used non-informative priors for Z and Q (\(\gamma = 0.5\) and \(\beta = (1,\dots ,1)\), respectively).
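A minimal sampler for this generative model might look as follows. It draws the Dirichlet vector by normalising independent Gamma\((\beta _j, 1)\) variates (a standard construction) and uses Python's `random` module; the function names and the label encoding as class indices are our own illustrative choices, not code from the paper.

```python
import random


def sample_dirichlet(beta, rng):
    """Dirichlet sample via normalised Gamma(beta_j, 1) draws."""
    draws = [rng.gammavariate(b, 1.0) for b in beta]
    total = sum(draws)
    return [d / total for d in draws]


def sample_subgroup(pi, m, gamma=0.5, beta=None, rng=None):
    """Draw (Z, Q, labels) for one subgroup of size m under the generative model.

    Labels are class indices; beta defaults to the uniform prior (1, ..., 1).
    """
    rng = rng if rng is not None else random.Random()
    beta = beta if beta is not None else [1.0] * len(pi)
    z = 1 if rng.random() < gamma else 0                     # Z ~ Bernoulli(gamma)
    q = sample_dirichlet(beta, rng) if z == 1 else list(pi)  # Q ~ Dir[beta], else pi
    labels = rng.choices(range(len(pi)), weights=q, k=m)     # iid Cat(Q)
    return z, q, labels


z, q, labels = sample_subgroup([2 / 3, 1 / 3], m=28, rng=random.Random(0))
print(z, [round(x, 3) for x in q], labels.count(0), labels.count(1))
```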

4.2 Proposed Quality Measures

The above model can be used to generate instances for a subgroup g. We will now exploit this model to derive two subgroup quality measures: the first takes into account the uncertainty about the true class distribution in the subgroup, while the second also models our uncertainty about whether it differs from the background distribution. To this end, we consider the task of choosing the \(\rho \) that maximises the expected gain in \(\psi '\) on the test instances. The following theorem solves this task, conditioning on the observed class distribution within the subgroup and on the assumption that this subgroup is different from the background (\(Z=1\)).

Theorem 2

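Consider a subgroup as generated with the model above. Denote the counts of each class in the training set of this subgroup by \(C=\sum _{i=1}^m Y_i\). Then

\[ \mathop{\mathrm{arg\,max}}_{\rho } \, \mathbb {E}\left[ \psi (\pi ,Y) - \psi (\rho ,Y) \mid C=c, Z=1 \right] = \frac{c+\beta }{m+\sum _{j=1}^{k}\beta _j} \tag{15} \]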

Denoting this quantity by \(\hat{\rho }\), the achieved maximum is \(d(\pi ,\hat{\rho })\), where d is the divergence measure corresponding to \(\psi \).

In the experiments we use \(\beta =(1,\dots ,1)\) and hence the gain is maximised when predicting the Laplace-corrected probabilities, i.e., adding 1 to all counts and then normalising. According to this theorem we propose a novel quality measure which takes into account the uncertainty about the class distribution:

\[ \phi _{d}(g) = m \cdot d(\pi ,\hat{\rho }) \tag{16} \]

where m is the size of the subgroup.
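Under the uniform prior \(\beta =(1,\dots ,1)\), \(\hat{\rho }\) is the Laplace-corrected estimate. The sketch below (hypothetical names, with the Brier divergence assumed as \(d\)) shows how this shrinks the score of a one-instance pure subgroup: with \(\pi =(0.5,0.5)\), the plain estimate \((1,0)\) would give \(m\cdot d_{BS}=0.5\), whereas \(\phi _d\) gives about 0.056.

```python
def rho_hat(counts, beta):
    """Posterior predictive class distribution (c_j + beta_j) / (m + sum(beta))."""
    m, beta_0 = sum(counts), sum(beta)
    return [(c + b) / (m + beta_0) for c, b in zip(counts, beta)]


def d_bs(p, q):
    return sum((p_j - q_j) ** 2 for p_j, q_j in zip(p, q))


def phi_d(counts, pi, d, beta=None):
    """m * d(pi, rho_hat): quality measure using the smoothed class estimate."""
    beta = beta if beta is not None else [1.0] * len(counts)
    return sum(counts) * d(pi, rho_hat(counts, beta))


pi = [0.5, 0.5]
print(rho_hat([1, 0], [1.0, 1.0]))  # approximately [0.667, 0.333]
print(phi_d([1, 0], pi, d_bs))      # about 0.056, much smaller than 0.5
print(phi_d([20, 0], pi, d_bs))     # a larger pure subgroup scores far higher
```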

The following theorem differs from the previous theorem by not conditioning on \(Z=1\). Hence, it additionally takes into account the uncertainty about whether the distribution of the subgroup is different from the background.

Theorem 3

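Consider a subgroup as generated with the model above and denote C as above. Then

\[ \mathop{\mathrm{arg\,max}}_{\rho } \, \mathbb {E}\left[ \psi (\pi ,Y) - \psi (\rho ,Y) \mid C=c \right] = a \cdot \frac{c+\beta }{m+\sum _{j=1}^{k}\beta _j} + (1-a) \cdot \pi \tag{17} \]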

where \(a=\mathbb {P}[Z=1|C=c]\). Denote this quantity by \(\hat{\hat{\rho }}\). Then the achieved maximum value is \(d(\pi ,\hat{\hat{\rho }})\), where d is the divergence measure corresponding to \(\psi \).

Following this theorem we propose another novel quality measure, which takes into account both the uncertainty about the class distribution and about whether it is different from the background distribution:

\[ \phi _{PSR}(g) = m \cdot d(\pi ,\hat{\hat{\rho }}) \tag{18} \]

where m is the size of the subgroup. In order to calculate the value of \(a=\mathbb {P}[Z=1|C=c]\) we have the following theorem:

Theorem 4

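Consider a subgroup as generated with the model above and denote C as above. Then the following equalities hold:

\[ \mathbb {P}[C=c \mid Z=0] = \frac{m!}{\prod _{j=1}^{k} c_j!} \prod _{j=1}^{k} \pi _j^{c_j} \]

\[ \mathbb {P}[C=c \mid Z=1] = \frac{m!}{\prod _{j=1}^{k} c_j!} \cdot \frac{\Gamma (\beta _0)}{\Gamma (m+\beta _0)} \prod _{j=1}^{k} \frac{\Gamma (c_j+\beta _j)}{\Gamma (\beta _j)} \]

\[ a = \mathbb {P}[Z=1 \mid C=c] = \frac{\gamma \, \mathbb {P}[C=c \mid Z=1]}{\gamma \, \mathbb {P}[C=c \mid Z=1] + (1-\gamma )\, \mathbb {P}[C=c \mid Z=0]} \]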

where \(\beta _0=\sum _{j=1}^k \beta _j\).
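The posterior \(a\) can be evaluated in log space with `math.lgamma` to avoid overflow in the Gamma functions; the multinomial coefficient is common to both likelihoods and cancels in \(a\). The sketch below assumes \(0<\gamma <1\) and the Brier divergence for \(d\); the function names and toy counts are illustrative. It then forms \(\hat{\hat{\rho }} = a\,\hat{\rho } + (1-a)\,\pi \) and the PSR measure.

```python
import math


def log_evidence_z1(counts, beta):
    """log P[C=c | Z=1] without the multinomial coefficient (it cancels in a)."""
    m, beta_0 = sum(counts), sum(beta)
    return (math.lgamma(beta_0) - math.lgamma(m + beta_0)
            + sum(math.lgamma(c + b) - math.lgamma(b) for c, b in zip(counts, beta)))


def log_evidence_z0(counts, pi):
    """log P[C=c | Z=0] without the multinomial coefficient."""
    return sum(c * math.log(p) for c, p in zip(counts, pi) if c > 0)


def posterior_z1(counts, pi, gamma=0.5, beta=None):
    """a = P[Z=1 | C=c], computed as a numerically stable logistic function."""
    beta = beta if beta is not None else [1.0] * len(counts)
    diff = (math.log(1 - gamma) + log_evidence_z0(counts, pi)) - (
        math.log(gamma) + log_evidence_z1(counts, beta))
    if diff > 0:
        e = math.exp(-diff)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(diff))


def d_bs(p, q):
    return sum((p_j - q_j) ** 2 for p_j, q_j in zip(p, q))


def phi_psr(counts, pi, d, gamma=0.5, beta=None):
    """m * d(pi, a * rho_hat + (1 - a) * pi)."""
    beta = beta if beta is not None else [1.0] * len(counts)
    m, beta_0 = sum(counts), sum(beta)
    a = posterior_z1(counts, pi, gamma, beta)
    rho_hat = [(c + b) / (m + beta_0) for c, b in zip(counts, beta)]
    rho_hat_hat = [a * r + (1 - a) * p for r, p in zip(rho_hat, pi)]
    return m * d(pi, rho_hat_hat)


pi = [0.5, 0.5]
print(posterior_z1([1, 0], pi))    # about 0.5: one instance carries no evidence here
print(posterior_z1([20, 0], pi))   # close to 1: clearly different from pi
print(posterior_z1([10, 10], pi))  # about 0.21: balanced counts favour Z = 0
print(phi_psr([20, 0], pi, d_bs))  # about 8.26
```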

Referring back to Fig. 1 in the introduction, the subgroup on the left was discovered with \(\phi _{WRAcc}\) as quality measure and the right one by \(\phi _{PSR}\) with Brier Score. While the WRAcc subgroup has larger coverage, the PSR measure captures a more distinct statistical deviation of the class distribution in the subgroup.

5 Experiments

In this section we experimentally investigate the performance of our proposed measures. The experiments are separated into two parts. For the first part we generated synthetic data, such that we know the true subgroup. In the second part we applied our methods to UCI data to investigate summarisation performance.

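For our proposed measures, we adopt the generalised divergences of BS and LL as given in Sect. 3, Eqs. (5) and (6). Plugging these into Eqs. (16) and (18) we obtain four novel measures d-BS, d-LL, PSR-BS and PSR-LL. We compare these proposals against a range of subgroup evaluation measures used in the literature: Weighted Relative Accuracy (WRAcc), IG-LL (Eq. (10)), IG-BS (Eq. (11)), as well as the \(\chi ^2\) statistic of the contingency table between subgroup membership and class, which is defined as follows:

\[ \phi _{\chi ^2}(g) = \sum _{j=1}^{k} \left[ \frac{(m\rho ^{(g)}_j - m\pi _j)^2}{m\pi _j} + \frac{((n-m)\bar{\rho }^{(g)}_j - (n-m)\pi _j)^2}{(n-m)\pi _j} \right] \]

where \(\bar{\rho }^{(g)}\) denotes the class distribution in the complement of g.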
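Assuming the standard Pearson form over the 2 × k table of subgroup membership versus class, the statistic can be computed from counts as below (hypothetical function name; cells with zero expected count are skipped).

```python
def chi2_statistic(labels, g, k):
    """Pearson chi-squared statistic of the 2 x k table (subgroup/complement x class).

    labels -- class indices in {0, ..., k-1}; g -- 0/1 subgroup memberships
    """
    n, m = len(labels), sum(g)
    table = [[0] * k, [0] * k]              # row 0: in g, row 1: complement
    for y, g_i in zip(labels, g):
        table[0 if g_i else 1][y] += 1
    class_totals = [table[0][j] + table[1][j] for j in range(k)]
    row_totals = [m, n - m]
    stat = 0.0
    for r in range(2):
        for j in range(k):
            expected = row_totals[r] * class_totals[j] / n
            if expected > 0:                # skip empty rows or classes
                stat += (table[r][j] - expected) ** 2 / expected
    return stat


labels = [0, 0, 0, 1, 1, 1, 1, 1]
g = [1, 1, 1, 1, 0, 0, 0, 0]
print(chi2_statistic(labels, g, 2))  # approximately 4.8
```

For two rows the sum simplifies to \(\frac{mn}{n-m}\sum _j (\rho ^{(g)}_j-\pi _j)^2/\pi _j\), which makes the dependence on subgroup size explicit.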

5.1 Synthetic Data

In the experiments on the synthetic data we evaluate how good the methods are in revealing the true subgroup used in generating the data, as well as in producing good summaries of the data.

To provide a more intuitive illustration, we construct our data set according to a real-life scenario. Suppose one has been using a wearable device to record, for a whole year, whether daily exercises were performed. As it turned out, exercises were performed on 146 out of 365 days, which gives an empirical probability of 2/5 that exercises were performed on a random day. According to the website of the wearable device, the corresponding figure is about 1/3 for the general population. It is possible that the overall exercise frequency was simply different, but a more plausible explanation might be that more exercise was performed during a particular period only. SD can hence be applied to recognise the period of more intensive exercise and to summarise the corresponding exercise frequency.

Following this scenario, the feature space consists of the 52 weeks of the year, hence \(\mathbb {X} = \{1,...,52\}\). We define the subgroup language as the set of all intervals of 2 to 8 consecutive weeks. The data set is assumed to cover a single year from January to December. This setting allows us to perform exhaustive search over the subgroup language. As our aim here is to compare different quality measures, exhaustive search avoids the bias that a greedy search algorithm would introduce.
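The interval language is small enough to enumerate: for each length L from 2 to 8 there are 53 - L possible start weeks, 336 candidates in total. The sketch below enumerates them, expands a week interval into a day-level indicator, and runs exhaustive search with a pluggable quality function; the unnormalised binary WRAcc used in the example and all names are illustrative assumptions.

```python
def interval_subgroups(n_weeks=52, min_len=2, max_len=8):
    """All week intervals (start, end), 1-based and inclusive, of length min_len..max_len."""
    return [(s, s + length - 1)
            for length in range(min_len, max_len + 1)
            for s in range(1, n_weeks - length + 2)]


def membership(interval, n_weeks=52, days_per_week=7):
    """0/1 subgroup indicator for every day of the year, week by week."""
    start, end = interval
    return [1 if start <= w <= end else 0
            for w in range(1, n_weeks + 1) for _ in range(days_per_week)]


def exhaustive_search(labels, quality):
    """Return the interval maximising quality(labels, g) over the whole language."""
    return max(interval_subgroups(), key=lambda iv: quality(labels, membership(iv)))


def wracc_binary(labels, g):
    """Unnormalised MWRAcc for k = 2: 2 * |observed - expected count of class 1|."""
    n, m = len(labels), sum(g)
    pos, pos_g = sum(labels), sum(y for y, g_i in zip(labels, g) if g_i)
    return 2 * abs(pos_g - m * pos / n)


print(len(interval_subgroups()))  # 336 candidate subgroups
# toy year: exercise (class 1) on every day of weeks 20-23 and on no other day
labels = membership((20, 23))
print(exhaustive_search(labels, wracc_binary))  # (20, 23)
```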

The data are then generated as described in the previous section. Given the default class distribution \(\pi \), the subgroup class distribution Q is sampled from a Dirichlet prior and a true subgroup is selected uniformly from the language. Consequently, the 7 days of each week are labelled according to Q if the week lies in the true subgroup, and according to \(\pi \) otherwise.

We evaluate each subgroup quality measure by comparing the obtained subgroup against the true subgroup, measuring the similarity of their respective indicator functions Z and \(\hat{Z}\). For similarity we use the F-score, as we are not really interested in the ‘true negatives’ (instances in the complements of both the true and the discovered subgroups). The F-score in this case can be computed as follows, where \(Z_i\) and \(\hat{Z}_i\) indicate whether instance i belongs to the true subgroup and to the obtained subgroup, respectively:

\[ F = \frac{2 \sum _{i} Z_i \hat{Z}_i}{\sum _{i} Z_i + \sum _{i} \hat{Z}_i} \]

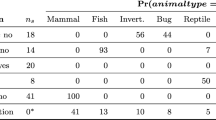
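The F-score, and a micro-average that pools overlaps and subgroup sizes over several synthetic sequences before taking the ratio (our assumption of how the micro-averaging is applied), can be sketched as follows; the week-to-day expansion mirrors the 52 × 7 layout of the synthetic data, and all names are hypothetical.

```python
def f_score(z_true, z_pred):
    """F1 between true and discovered subgroup indicators; true negatives are ignored."""
    overlap = sum(1 for a, b in zip(z_true, z_pred) if a and b)
    total = sum(z_true) + sum(z_pred)
    return 2 * overlap / total if total else 0.0


def micro_f_score(pairs):
    """Micro-average: pool overlaps and sizes over (z_true, z_pred) pairs."""
    overlap = sum(sum(1 for a, b in zip(t, p) if a and b) for t, p in pairs)
    total = sum(sum(t) + sum(p) for t, p in pairs)
    return 2 * overlap / total if total else 0.0


def days_in_weeks(start, end, n_weeks=52, days_per_week=7):
    return [1 if start <= w <= end else 0
            for w in range(1, n_weeks + 1) for _ in range(days_per_week)]


z_true = days_in_weeks(10, 13)   # true subgroup: weeks 10-13
z_found = days_in_weeks(11, 14)  # discovered subgroup: weeks 11-14
print(f_score(z_true, z_found))  # 0.75
```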

The results are given in Table 1 as the micro-averaged F-scores from 5 000 synthetic sequences, for different values of \(\pi _1\) (the first component of the class distribution vector). We can see that the PSR-based approaches generally outperform existing measures, with a slight advantage for Log-loss over Brier score. The information gain-based methods perform particularly poorly, as they have a preference for pure subgroups, whereas for skewed \(\pi \) it would be advantageous to look for subgroups with a more uniform class distribution. As \(\pi \) becomes more uniform, the ‘true’ subgroup becomes more random and harder to identify, which is why all methods are expected to perform poorly for \(\pi _1 \approx 0.5\). The variance is quite high across all methods, probably because the data set is quite small (\(52\cdot 7=364\) instances).

Since a better statistical summary is essentially our aim, the results are also evaluated according to their overall loss on a test set (also of length 1 year) drawn from the same distribution. For each quality measure, a subgroup is obtained from the training fold together with the local statistical summary (\(\hat{\hat{\rho }}\) for \(\phi _{PSR}\), \(\hat{\rho }\) for other quality measures). The loss for the obtained summarisation can then be calculated as in Eq. (4). The corresponding results are given in Tables 2 and 3 for both Brier score and Log-loss. We see a similar pattern as with the F-score results.

5.2 UCI Data

We proceed to compare our method with existing approaches on UCI data sets [13]. We selected the same 20 UCI data sets as described in [1]. Information on the number of attributes and instances of each data set is provided in the appendix.

The subgroup language we used here is conjunctive normal form, with disjunctions (only) between values of the same feature, and conjunctions among disjunctions involving different features. All features are treated as nominal. If the original feature is numeric and contains more than 100 values, it is discretised into 16 bins.

Since exhaustive search is intractable for most data sets in this experiment, we perform beam search instead. The beam width is set to 32 (i.e., 32 candidate subgroups are kept for refinement in the next round), and the number of refinement rounds is set to 8.
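The beam search can be sketched as below. For brevity this hypothetical version only refines a description by adding one single-value literal for a feature not yet used, so it covers conjunctions but not the within-feature disjunctions of the subgroup language described above; the defaults of width 32 and 8 rounds match the settings in the text. Any quality measure, including the PSR-based ones, can be passed in as `quality`.

```python
def beam_search(rows, labels, quality, width=32, depth=8):
    """Beam search over conjunctions of feature=value literals.

    rows    -- list of dicts mapping feature name to a nominal value
    quality -- function(labels, g) -> float, where g is the 0/1 membership list
    Returns the best description found (a frozenset of (feature, value) pairs)
    together with its quality.
    """
    features = sorted({f for row in rows for f in row})
    values = {f: sorted({row[f] for row in rows}) for f in features}

    def members(desc):
        return [1 if all(row[f] == v for f, v in desc) else 0 for row in rows]

    beam, seen = [frozenset()], set()
    best_desc, best_q = None, float("-inf")
    for _ in range(depth):                  # one refinement round per iteration
        candidates = []
        for desc in beam:
            used = {f for f, _ in desc}
            for f in features:
                if f in used:               # at most one literal per feature here
                    continue
                for v in values[f]:
                    new = desc | {(f, v)}
                    if new in seen:
                        continue
                    seen.add(new)
                    g = members(new)
                    if sum(g) > 0:          # skip empty subgroups
                        candidates.append((quality(labels, g), new))
        if not candidates:
            break
        candidates.sort(key=lambda t: t[0], reverse=True)
        beam = [desc for _, desc in candidates[:width]]  # keep the `width` best
        if candidates[0][0] > best_q:
            best_q, best_desc = candidates[0]
    return best_desc, best_q


def wracc_binary(labels, g):
    n, m = len(labels), sum(g)
    pos, pos_g = sum(labels), sum(y for y, g_i in zip(labels, g) if g_i)
    return 2 * abs(pos_g - m * pos / n)


rows = [{"a": a, "b": b} for a in "xy" for b in "uv" for _ in range(5)]
labels = [1 if r["a"] == "x" and r["b"] == "u" else 0 for r in rows]
print(beam_search(rows, labels, wracc_binary))  # description {a=x, b=u}, quality 7.5
```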

The resulting average Brier scores and Log-loss are given in Tables 4 and 5. All the results are obtained by 10-fold cross-validation. As in the previous experiment, a subgroup is learned on the training folds and the class distribution estimated on the test fold is then used to compute the corresponding loss.

Given these results, it can be seen that our proposed measures generally outperform WRAcc, Chi2 and both versions of information gain. The PSR measures (first two columns) are never outperformed by the generalised divergence measures (last two columns), so we recommend using the former unless simplicity of implementation is an issue (the latter do not require estimating a). The choice between BS and LL is still an ongoing debate in the community. Here we used both, as the two best-known proper scoring rules, to demonstrate that our novel measures can be applied with either.

6 Related Work

As is the case for supervised rule learning in general, SD comprises three major components: description language, quality measure and search algorithm. A detailed comparison with rule learning can be found in [15]. While early work in SD has been surveyed in [6], we briefly describe some recent progress in the area.

Regarding the subgroup description language, most existing work defines it through logical operations on attribute values. In [14] the authors present an approach to construct more informative descriptions on numeric and nominal attributes in linear time. The proposed algorithm is able to find the optimal interval for numeric attributes and the optimal set of values for nominal attributes. The results show improvements in the quality of the obtained subgroups compared to traditional descriptions.

In terms of quality measures, recent work has focused on the extension of traditional measures with improved statistical modelling. In [4, 11] Exceptional Model Mining (EMM) was introduced as a framework to support improved target concepts with different model classes. For example, if linear regression models are trained on the whole data set and on different candidate subgroups, the quality of subgroups can be evaluated by comparing the regression coefficients of the global model and the local subgroup model. In [5] the authors extend the framework to support predictive statistical information. This further allows subgroups to be found where a scoring classifier’s performance deviates from its overall performance.

With respect to the search algorithm, while greedy search algorithms have been widely adopted in existing implementations, recent work in [12] presents a fast exhaustive search strategy for numerical target concepts. The authors propose and illustrate novel bounds on different types of quality measures. The exhaustive search can then be performed efficiently via additional pruning techniques.

7 Conclusion

In this paper we investigated how to discover subgroups that are optimal in the sense of maximally improving the global statistical summary of a given data set. By assuming that the (discrete) statistical summary is to be evaluated with a proper scoring rule, we derived the corresponding quality measures from first principles. We also proposed a generative model to consider the optimal statistical summary for any candidate subgroup. By performing experiments on both synthetic data and UCI data, we showed that our measures provide better summaries in comparison with existing methods.

The major advantage of adopting our generative model is that it prevents finding small subgroups with extreme distributions. This can be seen as applying a regularisation on the class distribution, similar to performing Laplace smoothing in decision tree learning. In the experiments, we observe that the novel measures tend to perform better on small data sets (e.g. Contact-lenses, Labor).

Since in this paper we assume that only the subgroup with the highest gain will be discovered, one major direction for further work is to investigate multiple subgroups that can together improve the overall statistical summary. Previous Subgroup Discovery algorithms have extended the covering algorithm to weighted covering in order to promote the discovery of overlapping subgroups [10]. We expect that the PSR approach will be able to derive appropriate weight updates in a principled fashion.

Another direction would be to generalise our approach to numeric target variables. Although in general PSRs are designed to work with discrete random variables, Log-loss has been widely adopted in Bayesian analysis, which provides an interface to extend our approach to a general form of statistical modelling.

Notes

1. In general, divergence measures are not symmetric, so \(d(\pi ,\rho ^{(g)})\) is different from \(d(\rho ^{(g)},\pi )\).

References

[1] Abudawood, T., Flach, P.: Evaluation measures for multi-class subgroup discovery. In: Buntine, W., Grobelnik, M., Mladenić, D., Shawe-Taylor, J. (eds.) ECML PKDD 2009. LNCS (LNAI), vol. 5781, pp. 35–50. Springer, Heidelberg (2009). doi:10.1007/978-3-642-04180-8_20

[2] Atzmueller, M., Lemmerich, F.: Fast subgroup discovery for continuous target concepts. In: Rauch, J., Raś, Z.W., Berka, P., Elomaa, T. (eds.) ISMIS 2009. LNCS (LNAI), vol. 5722, pp. 35–44. Springer, Heidelberg (2009). doi:10.1007/978-3-642-04125-9_7

[3] Clark, P., Boswell, R.: Rule induction with CN2: some recent improvements. In: Kodratoff, Y. (ed.) EWSL 1991. LNCS, vol. 482, pp. 151–163. Springer, Heidelberg (1991). doi:10.1007/BFb0017011

[4] Duivesteijn, W., Feelders, A.J., Knobbe, A.: Exceptional model mining. Data Min. Knowl. Discovery 30(1), 47–98 (2016)

[5] Duivesteijn, W., Thaele, J.: Understanding where your classifier does (not) work - the SCaPE model class for EMM. In: 2014 IEEE International Conference on Data Mining (ICDM), pp. 809–814. IEEE (2014)

[6] Herrera, F., Carmona, C.J., González, P., del Jesus, M.J.: An overview on subgroup discovery: foundations and applications. Knowl. Inf. Syst. 29(3), 495–525 (2011)

[7] Klösgen, W.: Explora: a multipattern and multistrategy discovery assistant. In: Fayyad, U.M., Piatetsky-Shapiro, G., Smyth, P., Uthurusamy, R. (eds.) Advances in Knowledge Discovery and Data Mining, pp. 249–271. American Association for Artificial Intelligence, Menlo Park (1996)

[8] Kull, M., Flach, P.: Novel decompositions of proper scoring rules for classification: score adjustment as precursor to calibration. In: Appice, A., Rodrigues, P.P., Santos Costa, V., Soares, C., Gama, J., Jorge, A. (eds.) ECML PKDD 2015. LNCS (LNAI), vol. 9284, pp. 68–85. Springer, Heidelberg (2015). doi:10.1007/978-3-319-23528-8_5

[9] Lavrač, N., Flach, P., Zupan, B.: Rule evaluation measures: a unifying view. In: Džeroski, S., Flach, P. (eds.) ILP 1999. LNCS (LNAI), vol. 1634, pp. 174–185. Springer, Heidelberg (1999). doi:10.1007/3-540-48751-4_17

[10] Lavrač, N., Kavšek, B., Flach, P., Todorovski, L.: Subgroup discovery with CN2-SD. J. Mach. Learn. Res. 5, 153–188 (2004)

[11] Leman, D., Feelders, A., Knobbe, A.: Exceptional model mining. In: Daelemans, W., Goethals, B., Morik, K. (eds.) ECML PKDD 2008. LNCS (LNAI), vol. 5212, pp. 1–16. Springer, Heidelberg (2008). doi:10.1007/978-3-540-87481-2_1

[12] Lemmerich, F., Atzmueller, M., Puppe, F.: Fast exhaustive subgroup discovery with numerical target concepts. Data Min. Knowl. Disc. 30(3), 711–762 (2016)

[13] Lichman, M.: UCI machine learning repository (2013). http://archive.ics.uci.edu/ml

[14] Mampaey, M., Nijssen, S., Feelders, A., Knobbe, A.: Efficient algorithms for finding richer subgroup descriptions in numeric and nominal data. In: IEEE International Conference on Data Mining, pp. 499–508 (2012)

[15] Novak, P.K., Lavrač, N., Webb, G.I.: Supervised descriptive rule discovery: a unifying survey of contrast set, emerging pattern and subgroup mining. J. Mach. Learn. Res. 10, 377–403 (2009)

[16] Winkler, R.L.: Scoring rules and the evaluation of probability assessors. J. Am. Stat. Assoc. 64(327), 1073–1078 (1969)

[17] Wrobel, S.: An algorithm for multi-relational discovery of subgroups. In: Komorowski, J., Zytkow, J. (eds.) PKDD 1997. LNCS, pp. 78–87. Springer, Heidelberg (1997). doi:10.1007/3-540-63223-9_108

Acknowledgements

This work was supported by the SPHERE Interdisciplinary Research Collaboration, funded by the UK Engineering and Physical Sciences Research Council under grant EP/K031910/1; and the REFRAME project granted by the European Coordinated Research on Long-Term Challenges in Information and Communication Sciences & Technologies ERA-Net (CHIST-ERA), and funded by the Engineering and Physical Sciences Research Council in the UK under grant EP/K018728/1. Hao Song would like to thank Toshiba Research Europe Ltd, Telecommunications Research Laboratory, for funding his doctoral research within SPHERE.

Appendices

Appendix A: Proofs

Lemma 1

Let \(\psi \) be a proper scoring rule and d its corresponding divergence measure. If \(S,S'\) are random vectors representing two sets of class probability estimates for a random variable T representing the actual class, then

\[\mathbb {E}[\psi (S,T)-\psi (S',T)]=\mathbb {E}[d(S,\mathbb {E}[T])]-\mathbb {E}[d(S',\mathbb {E}[T])].\]

Proof

By Lemma 1 in the supplementary material of [8], \(\mathbb {E}[\psi (S,T)]=\mathbb {E}[d(S,T)]=\mathbb {E}[d(S,\mathbb {E}[T])]+\mathbb {E}[d(\mathbb {E}[T],T)]\), and the analogous decomposition holds for \(S'\). The second term is identical in both decompositions, so it cancels when they are subtracted, yielding the required result.
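
Lemma 1 can be checked numerically in the special case used by the theorems below, where \(S\) and \(S'\) are constant estimates and T is a label drawn uniformly from a finite sample (so the outer expectations on the right-hand side are trivial). The sketch below assumes two common proper scoring rules as examples: the Brier score, whose divergence is the squared Euclidean distance, and log loss, whose divergence \(d(s,q)\) is the Kullback-Leibler divergence \(\mathrm{KL}(q\,\|\,s)\). The helper names and the toy labels are illustrative only.

```python
import math

def brier(s, y):
    """Brier score psi(s, y): squared error between estimate s and one-hot label y."""
    return sum((sj - yj) ** 2 for sj, yj in zip(s, y))

def brier_div(s, q):
    """Brier divergence d(s, q): squared Euclidean distance."""
    return sum((sj - qj) ** 2 for sj, qj in zip(s, q))

def log_loss(s, y):
    """Log loss psi(s, y): minus the log-probability assigned to the true class."""
    return -sum(math.log(sj) for sj, yj in zip(s, y) if yj == 1)

def log_div(s, q):
    """Log-loss divergence d(s, q) = KL(q || s)."""
    return sum(qj * math.log(qj / sj) for sj, qj in zip(s, q) if qj > 0)

def lemma1_sides(psi, d, s, s_prime, labels):
    """Both sides of Lemma 1 for constant estimates s, s' and T uniform over labels."""
    n, k = len(labels), len(labels[0])
    mean_t = [sum(y[j] for y in labels) / n for j in range(k)]  # E[T]
    lhs = sum(psi(s, y) - psi(s_prime, y) for y in labels) / n  # E[psi(S,T) - psi(S',T)]
    rhs = d(s, mean_t) - d(s_prime, mean_t)  # S, S' constant, so E[d(S, E[T])] = d(s, E[T])
    return lhs, rhs

labels = [(1, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 0, 0)]  # toy one-hot labels
s, s_prime = (0.5, 0.3, 0.2), (0.2, 0.2, 0.6)
for name, psi, d in [("Brier", brier, brier_div), ("log loss", log_loss, log_div)]:
    lhs, rhs = lemma1_sides(psi, d, s, s_prime, labels)
    print(f"{name}: LHS = {lhs:.6f}, RHS = {rhs:.6f}")
```

Both rules print matching left- and right-hand sides, because the term \(\mathbb {E}[d(\mathbb {E}[T],T)]\) that the proof cancels is the same for \(S\) and \(S'\).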

Theorem 1

Let \(\psi ,\psi ',d\) be a proper scoring rule, its sum across the dataset, and its corresponding divergence measure, respectively. Then for any given subgroup g the following holds:

\[\arg \max _{\rho }\left[ \psi '(S^\pi ,Y)-\psi '(S^{g,\rho ,\pi },Y)\right] =\rho ^{(g)},\]

where \(\rho ^{(g)}\) denotes the class distribution within the subgroup g. The achieved maximum value is \(m\cdot d(\pi ,\rho ^{(g)})\), where m is the size of the subgroup g.

Proof

For simplicity of notation, assume that the training instances within g are \(Y_1,\dots ,Y_m\) (the first m instances), and let T be a random variable obtained by choosing one of \(Y_1,\dots ,Y_m\) uniformly at random. The summaries \(S^\pi \) and \(S^{g,\rho ,\pi }\) coincide on instances \(m+1,\dots ,n\), hence \(\psi '(S^\pi ,Y)-\psi '(S^{g,\rho ,\pi },Y)=m\cdot \mathbb {E}[\psi (\pi ,T)-\psi (\rho ,T)]\). By Lemma 1 this equals \(m\cdot \mathbb {E}[d(\pi ,\mathbb {E}[T])]-m\cdot \mathbb {E}[d(\rho ,\mathbb {E}[T])]\). Since \(\mathbb {E}[T]=\rho ^{(g)}\), the first term does not depend on \(\rho \), while the second term is non-negative and equals zero for \(\rho =\rho ^{(g)}\). Hence \(\rho ^{(g)}\) attains the maximum, whose value is \(m\cdot d(\pi ,\rho ^{(g)})\), which is exactly the required result.
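
Theorem 1 can be illustrated on a toy dataset. The sketch below assumes the Brier score as \(\psi \), takes the default summary \(\pi \) to be the overall class distribution, and represents \(S^{g,\rho ,\pi }\) as a summary predicting \(\rho \) inside g and \(\pi \) elsewhere; the data and function names are hypothetical. It checks that the gain at \(\rho ^{(g)}\) equals \(m\cdot d(\pi ,\rho ^{(g)})\) and that no estimate on a coarse grid does better.

```python
def brier(s, y):
    """Brier score psi(s, y) for estimate s and one-hot label y."""
    return sum((sj - yj) ** 2 for sj, yj in zip(s, y))

def brier_div(s, q):
    """Brier divergence d(s, q): squared Euclidean distance."""
    return sum((sj - qj) ** 2 for sj, qj in zip(s, q))

def class_distribution(labels):
    """Empirical class distribution of a list of one-hot labels."""
    n, k = len(labels), len(labels[0])
    return [sum(y[j] for y in labels) / n for j in range(k)]

def gain(labels, in_group, rho, pi, psi=brier):
    """psi'(S^pi, Y) - psi'(S^{g,rho,pi}, Y): summed-score improvement when the
    summary predicts rho inside subgroup g and pi everywhere else."""
    default = sum(psi(pi, y) for y in labels)
    summary = sum(psi(rho if g else pi, y) for y, g in zip(labels, in_group))
    return default - summary

# Hypothetical binary data: the first 4 instances form subgroup g.
labels = [(1, 0)] * 3 + [(0, 1)] + [(1, 0)] * 2 + [(0, 1)] * 4
in_group = [True] * 4 + [False] * 6
pi = class_distribution(labels)  # assumed default summary: population distribution
rho_g = class_distribution([y for y, g in zip(labels, in_group) if g])
m = sum(in_group)

best = gain(labels, in_group, rho_g, pi)
print(best, m * brier_div(pi, rho_g))  # the two values agree (Theorem 1)
# No constant estimate on a grid of binary distributions beats rho_g.
grid = [(p / 100, 1 - p / 100) for p in range(101)]
print(all(gain(labels, in_group, rho, pi) <= best + 1e-12 for rho in grid))
```

Instances outside g contribute identically to both summed scores, which is why only the m subgroup members matter, mirroring the first step of the proof.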

Theorem 2

Consider a subgroup generated by the model above, and denote the class counts in the training set of this subgroup by \(C=\sum _{i=1}^m Y_i\). Then

\[\arg \max _{\rho }\,\mathbb {E}[\psi '(\pi ,Y)-\psi '(\rho ,Y)|C=c,Z=1]=\frac{c+\beta }{\sum _{j=1}^k (c_j+\beta _j)}.\]

Denoting this quantity by \(\hat{\rho }\), the achieved maximum is \(d(\pi ,\hat{\rho })\), where d is the divergence measure corresponding to \(\psi \).

Proof

Let T be a random variable obtained by choosing one of \(Y_1,\dots ,Y_m\) uniformly at random. Then \(\mathbb {E}[\psi '(\pi ,Y)-\psi '(\rho ,Y)|C=c,Z=1]=\mathbb {E}[\psi (\pi ,T)-\psi (\rho ,T)|C=c,Z=1]\). By Lemma 1 this equals \(d(\pi ,\mathbb {E}[T|C=c,Z=1])-d(\rho ,\mathbb {E}[T|C=c,Z=1])\). Since the first term does not depend on \(\rho \), this quantity is maximised by minimising the second divergence. As with any divergence, its minimal value is zero, attained when its two arguments are equal, i.e., \(\rho =\mathbb {E}[T|C=c,Z=1]\). It remains to prove that \(\mathbb {E}[T|C=c,Z=1]=\frac{c+\beta }{\sum _{j=1}^k (c_j+\beta _j)}\). This holds because the right-hand side is the posterior mean of the class distribution under the Dirichlet prior \(Dir(\beta )\) after observing counts \(c_1,\dots ,c_k\) of the classes \(1,\dots ,k\), respectively.
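
The estimate \(\hat{\rho }\) of Theorem 2 is a one-line computation. The sketch below uses hypothetical counts, a hypothetical uniform prior \(Dir(1,1)\), and the Brier divergence (an assumed choice of \(\psi \)) to report the gain \(d(\pi ,\hat{\rho })\); none of these values come from the paper's experiments.

```python
def posterior_mean(counts, beta):
    """Theorem 2 estimate: (c + beta) / sum_j (c_j + beta_j), the Dir(beta) posterior mean."""
    total = sum(counts) + sum(beta)
    return [(c + b) / total for c, b in zip(counts, beta)]

def brier_div(s, q):
    """Brier divergence d(s, q): squared Euclidean distance."""
    return sum((sj - qj) ** 2 for sj, qj in zip(s, q))

counts = [8, 2]    # hypothetical class counts c in a subgroup of size m = 10
beta = [1.0, 1.0]  # hypothetical uniform Dirichlet prior
pi = [0.5, 0.5]    # hypothetical population class distribution
rho_hat = posterior_mean(counts, beta)
print(rho_hat)                 # [0.75, 0.25]
print(brier_div(pi, rho_hat))  # 0.125
```

With counts (8, 2) the empirical frequency of the first class is 0.8, whereas \(\hat{\rho }\) gives 0.75: the prior pulls the estimate towards its own mean. As all \(\beta _j\to 0\), \(\hat{\rho }\) approaches the empirical class distribution \(\rho ^{(g)}\) of Theorem 1.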

Theorem 3

Consider a subgroup generated by the model above, and denote C as above. Then

\[\arg \max _{\rho }\,\mathbb {E}[\psi '(\pi ,Y)-\psi '(\rho ,Y)|C=c]=a\hat{\rho }+(1-a)\pi ,\]

where \(a=\mathbb {P}[Z=1|C=c]\) and \(\hat{\rho }\) is as defined in Theorem 2. Denote this quantity by \(\hat{\hat{\rho }}\). Then the achieved maximum value is \(d(\pi ,\hat{\hat{\rho }})\), where d is the divergence measure corresponding to \(\psi \).

Proof

Let T be a random variable obtained by choosing one of \(Y_1,\dots ,Y_m\) uniformly at random. Then \(\mathbb {E}[\psi '(\pi ,Y)-\psi '(\rho ,Y)|C=c]=\mathbb {E}[\psi (\pi ,T)-\psi (\rho ,T)|C=c]\). By Lemma 1 this equals \(d(\pi ,\mathbb {E}[T|C=c])-d(\rho ,\mathbb {E}[T|C=c])\). Since the first term does not depend on \(\rho \), this quantity is maximised by minimising the second divergence, which attains its minimal value of zero when its two arguments are equal, i.e., \(\rho =\mathbb {E}[T|C=c]\). It remains to prove that \(\mathbb {E}[T|C=c]=a\hat{\rho }+(1-a)\pi \), where \(\hat{\rho }\) is defined in Theorem 2. Indeed, \(\mathbb {E}[T|C=c]=\mathbb {P}[Z=1|C=c]\,\mathbb {E}[T|C=c,Z=1]+\mathbb {P}[Z=0|C=c]\,\mathbb {E}[T|C=c,Z=0]=a\hat{\rho }+(1-a)\pi \), where \(\mathbb {E}[T|C=c,Z=0]=\pi \) because Y (and therefore T) is drawn from a Bernoulli distribution with mean \(ZQ+(1-Z)\pi \). The achieved maximum is therefore \(d(\pi ,\hat{\hat{\rho }})\).
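
Theorem 3 shrinks \(\hat{\rho }\) towards \(\pi \) in proportion to the posterior probability a that the subgroup is genuine. A minimal sketch, assuming hypothetical values of a, a hypothetical \(\hat{\rho }\) and \(\pi \), and the Brier divergence as the example d:

```python
def shrunk_estimate(a, rho_hat, pi):
    """Theorem 3 estimate a * rho_hat + (1 - a) * pi, where a = P[Z=1 | C=c]."""
    return [a * r + (1 - a) * p for r, p in zip(rho_hat, pi)]

def brier_div(s, q):
    """Brier divergence d(s, q): squared Euclidean distance."""
    return sum((sj - qj) ** 2 for sj, qj in zip(s, q))

rho_hat = [0.75, 0.25]  # Theorem 2 estimate for hypothetical counts (8, 2) under Dir(1, 1)
pi = [0.5, 0.5]         # hypothetical population class distribution
for a in (0.0, 0.25, 0.5, 1.0):  # hypothetical values of P[Z=1 | C=c]
    est = shrunk_estimate(a, rho_hat, pi)
    print(a, est, brier_div(pi, est))  # gain d(pi, rho_hat_hat)
```

For the Brier score, \(\hat{\hat{\rho }}-\pi =a(\hat{\rho }-\pi )\), so the gain \(d(\pi ,\hat{\hat{\rho }})=a^2\,d(\pi ,\hat{\rho })\): it vanishes at a = 0 and recovers the Theorem 2 value at a = 1.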

Theorem 4

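Consider a subgroup generated by the model above, and denote C as above. Then the following equalities hold:

\[\mathbb {P}[Z=1|C=c]=\frac{\gamma \,\mathbb {P}[C=c|Z=1]}{\gamma \,\mathbb {P}[C=c|Z=1]+(1-\gamma )\,\mathbb {P}[C=c|Z=0]},\]

\[\mathbb {P}[C=c|Z=1]=\frac{m!\,\Gamma (\beta _0)}{\Gamma (m+\beta _0)}\prod _{j=1}^k\frac{\Gamma (c_j+\beta _j)}{c_j!\,\Gamma (\beta _j)},\]

\[\mathbb {P}[C=c|Z=0]=\frac{m!}{\prod _{j=1}^k c_j!}\prod _{j=1}^k\pi _j^{c_j},\]

where \(\beta _0=\sum _{j=1}^k \beta _j\).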

Proof

Since \(\mathbb {P}[Z=1]=\gamma \), the first result follows from Bayes' formula \(\mathbb {P}[Z=1|C=c]=\frac{\mathbb {P}[C=c|Z=1]\mathbb {P}[Z=1]}{\mathbb {P}[C=c]}\), with \(\mathbb {P}[C=c]=\gamma \,\mathbb {P}[C=c|Z=1]+(1-\gamma )\,\mathbb {P}[C=c|Z=0]\). To obtain the second result, note that in the subgroup (\(Z=1\)) the class distribution is drawn from \(Dir(\beta )\), so C follows a Dirichlet-multinomial distribution; the stated result is simply its probability mass function with prior \(Dir(\beta )\) and m trials. The third result is simply the probability mass function of the multinomial distribution with m trials and class probabilities \(\pi \).
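
The quantity a used in Theorem 3 can be computed from the results of Theorem 4 (Bayes' formula, the Dirichlet-multinomial probability mass function and the multinomial probability mass function). The sketch below works in log space with Python's `math.lgamma` to avoid overflow for large subgroups, assumes every \(\pi _j>0\) and \(0<\gamma <1\), and uses hypothetical inputs; the function names are illustrative, not taken from the authors' code.

```python
import math

def log_dirichlet_multinomial(counts, beta):
    """log P[C=c | Z=1]: Dirichlet-multinomial pmf with prior Dir(beta), m = sum(counts)."""
    m, beta0 = sum(counts), sum(beta)
    out = math.lgamma(m + 1) + math.lgamma(beta0) - math.lgamma(m + beta0)
    for c, b in zip(counts, beta):
        out += math.lgamma(c + b) - math.lgamma(c + 1) - math.lgamma(b)
    return out

def log_multinomial(counts, pi):
    """log P[C=c | Z=0]: multinomial pmf with class probabilities pi (all assumed > 0)."""
    out = math.lgamma(sum(counts) + 1)
    for c, p in zip(counts, pi):
        out += c * math.log(p) - math.lgamma(c + 1)
    return out

def posterior_genuine(counts, beta, pi, gamma):
    """a = P[Z=1 | C=c] by Bayes' formula with prior P[Z=1] = gamma (0 < gamma < 1)."""
    log_z1 = math.log(gamma) + log_dirichlet_multinomial(counts, beta)
    log_z0 = math.log(1 - gamma) + log_multinomial(counts, pi)
    diff = log_z0 - log_z1
    # Numerically stable logistic: a = 1 / (1 + exp(diff)).
    if diff > 0:
        e = math.exp(-diff)
        return e / (1 + e)
    return 1 / (1 + math.exp(diff))

counts, beta, pi, gamma = [8, 2], [1.0, 1.0], [0.5, 0.5], 0.5  # hypothetical inputs
a = posterior_genuine(counts, beta, pi, gamma)
total = sum(counts) + sum(beta)
rho_hat = [(c + b) / total for c, b in zip(counts, beta)]         # Theorem 2
rho_hat_hat = [a * r + (1 - a) * p for r, p in zip(rho_hat, pi)]  # Theorem 3
print(a, rho_hat_hat)
```

For these inputs the Dirichlet-multinomial term is 1/11 (a \(Dir(1,1)\) prior makes every binary count vector equally likely) and the multinomial term is 45/1024, so a = 1024/1519 ≈ 0.674.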

Appendix B: Information for the UCI Data

See Table 6.

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Song, H., Kull, M., Flach, P., Kalogridis, G. (2016). Subgroup Discovery with Proper Scoring Rules. In: Frasconi, P., Landwehr, N., Manco, G., Vreeken, J. (eds.) Machine Learning and Knowledge Discovery in Databases. ECML PKDD 2016. Lecture Notes in Computer Science, vol. 9852. Springer, Cham. https://doi.org/10.1007/978-3-319-46227-1_31

DOI: https://doi.org/10.1007/978-3-319-46227-1_31

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46226-4

Online ISBN: 978-3-319-46227-1

eBook Packages: Computer Science, Computer Science (R0)