Abstract

A new Levenberg–Marquardt (LM) method for solving nonlinear least squares problems with convex constraints is described. Various versions of the LM method have been proposed, their main differences being in the choice of a damping parameter. In this paper, we propose a new rule for updating the parameter so as to achieve both global and local convergence even under the presence of a convex constraint set. The key to our results is a new perspective of the LM method from majorization-minimization methods. Specifically, we show that if the damping parameter is set in a specific way, the objective function of the standard subproblem in LM methods becomes an upper bound on the original objective function under certain standard assumptions. Our method solves a sequence of the subproblems approximately using an (accelerated) projected gradient method. It finds an \(\varepsilon\)-stationary point after \(O(\varepsilon ^{-2})\) computation and achieves local quadratic convergence for zero-residual problems under a local error bound condition. Numerical results on compressed sensing and matrix factorization show that our method converges faster in many cases than existing methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this study, we consider the constrained nonlinear least-squares problem:

where \(\Vert \cdot \Vert\) denotes the \(\ell _2\)-norm, \(F: {\mathbb {R}}^d \rightarrow {\mathbb {R}}^n\) is a continuously differentiable function, and \({\mathcal {C}} \subseteq {\mathbb {R}}^d\) is a closed convex set. If there exists a point \(x \in {\mathcal {C}}\) such that \(F(x) = {\textbf{0}}\), the problem is said to be zero-residual, and is reduced to the constrained nonlinear equation:

Such problems cover a wide range of applications, including chemical equilibrium systems [48], economic equilibrium problems [20], power flow equations [61], nonnegative matrix factorization [7, 42], phase retrieval [11, 63], nonlinear compressed sensing [8], and learning constrained neural networks [17].

Levenberg–Marquardt (LM) methods [43, 47] are efficient iterative algorithms for solving problem (1); they were originally developed for unconstrained cases (i.e., \({\mathcal {C}} = {\mathbb {R}}^d\)) and later extended to constrained cases by [40]. Given a current point \(x_k \in {\mathcal {C}}\), an LM method defines a model function \(m^k_\uplambda : {\mathbb {R}}^d \rightarrow {\mathbb {R}}\) with a damping parameter \(\uplambda > 0\):

where \(F_k :=F(x_k) \in {\mathbb {R}}^d\) and \(J_k :=J(x_k) \in {\mathbb {R}}^{n \times d}\) with \(J: {\mathbb {R}}^d \rightarrow {\mathbb {R}}^{n \times d}\) being the Jacobian matrix function of F. The next point \(x_{k+1} \in {\mathcal {C}}\) is set to an exact or approximate solution to the convex subproblem:

for some \(\uplambda = \uplambda _k\). Various versions of this method have been proposed, and their theoretical and practical performances largely depend on how the damping parameter \(\uplambda _k\) is updated.

1.1 Our contribution

We propose an LM method with a new rule for updating \(\uplambda _k\). Our method is based on majorization-minimization (MM) methods, which successively minimize a majorization or, in other words, an upper bound on the objective function. The key to our method is the fact that the model \(m^k_\uplambda\) defined in (2) is a majorization of the objective f under certain standard assumptions. This MM perspective enables us to create an LM method with desirable properties, including global and local convergence guarantees. Although there exist several MM methods for problem (1) and relevant problems [3, 4, 38, 50, 53], as far as we know, no studies have elucidated that the model in (2) is a majorization of f. Another feature of our LM method is the way of generating an approximate solution of subproblem (3). It is sufficient to apply one iteration of a projected gradient method to (3) for deriving the iteration complexity of our LM method, which leads to an overall complexity bound.

Our contributions are summarized as follows:

-

(i)

A new MM-based LM method We prove that the LM model defined in (2) is a majorization of f if the damping parameter \(\uplambda\) is sufficiently large. See Lemma 1 for a precise statement. This result provides us with a new update rule of \(\uplambda\), bringing about a new LM method for solving problem (1).

-

(ii)

Iteration and overall complexity for finding a stationary point The iteration complexity of our LM method for finding an \(\varepsilon\)-stationary point (see Definition 1) is proved to be \(O(\varepsilon ^{-2})\) under mild assumptions on the Jacobian. Because the computational complexity per iteration of our method does not depend on \(\varepsilon\), the overall complexity is also evaluated as \(O(\varepsilon ^{-2})\) through

$$\begin{aligned} (\text {Overall complexity}) = (\text {Iteration complexity}) \times (\text {Complexity per iteration}). \end{aligned}$$ -

(iii)

Local quadratic convergence For zero-residual problems, assume that a starting point \(x_0 \in {\mathcal {C}}\) is sufficiently close to an optimal solution, and assume standard conditions, including a local error bound condition. Then, if the subproblems are solved with sufficient accuracy, a solution sequence \((x_k)\) generated by our method converges quadratically to an optimal solution. See Theorem 2 for a precise statement.

-

(iv)

Improved convergence results even for unconstrained problems Our method achieves both the \(O(\varepsilon ^{-2})\) iteration complexity bound and local quadratic convergence. An LM method having such global and local convergence results is new for unconstrained and constrained problems, as shown in Table 1.

Numerical results show that our method converges faster and is more robust than existing LM-type methods [22, 26, 36, 40], a projected gradient method, and a trust-region reflective method [10, 58].

1.2 Oracle model for overall complexity bounds

To evaluate the overall complexity of LM methods, we count the number of basic operations—evaluation of F(x), Jacobian-vector multiplications J(x)u and \(J(x)^\top v\), and projection onto \({\mathcal {C}}\)—required to find an \(\varepsilon\)-stationary point, following [21, Sect. 6]. The important point is that we do not assume an evaluation of \(J_k :=J(x_k)\) but access the Jacobian only through products \(J_k u\) and \(J_k^\top v\) to solve subproblem (3). Computing vectors \(J_k u\) and \(J_k^\top v\) for given \(u \in {\mathbb {R}}^d\) and \(v \in {\mathbb {R}}^n\) is much cheaper than evaluating the matrix \(J_k\).Footnote 1 Avoiding the computation of the \(n \times d\) matrix \(J_k\) makes algorithms practical for large-scale problems where n and d amount to thousands or millions. We note that some existing LM-type methods [3, 4, 12,13,14,15,16, 36] compute the Jacobian explicitly.

1.3 Paper organization

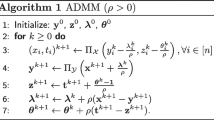

In Sect. 2, we review LM methods and related algorithms for problem (1). In Sect. 3, a key lemma is presented and the LM method (Algorithm 1) is derived based on the lemma. Sections 4 and 5 show theoretical results for Algorithm 1: iteration complexity, overall complexity, and local quadratic convergence. In Sect. 6, we generalize Algorithm 1 and present a more practical variant of Algorithm 1. This variant also achieves the theoretical guarantees given for Algorithm 1 in Sects. 4 and 5. Section 7 provides some numerical results and Sect. 8 concludes the paper.

1.4 Notation

Let \({\mathbb {R}}^d\) denote a d-dimensional Euclidean space equipped with the \(\ell _2\)-norm \(\Vert \cdot \Vert\) and the standard inner product \(\langle \cdot , \cdot \rangle\). For a matrix \(A \in {\mathbb {R}}^{m \times n}\), let \(\Vert A\Vert\) denote its spectral norm, or its largest singular value. For \(a \in {\mathbb {R}}\), let \(\lceil a\rceil\) denote the least integer greater than or equal to a.

2 Comparison with related works

We review existing methods for problem (1) and compare them with our work.

2.1 General methods

Algorithms for general nonconvex optimization problems, not just for least-squares problems, also solve problem (1). For example, the projected gradient method have an overall complexity bound of \(O(\varepsilon ^{-2})\); our LM method enjoys local quadratic convergence in addition to that bound, which seems difficult to achieve with general first-order methods. Figure 1 illustrates that our LM successfully minimizes the Rosenbrock function, a valley-like function that is notoriously difficult to minimize numerically. Although quadratic convergence is proved only locally around an optimal solution, in practice, the LM method may perform considerably better than general first-order methods, even when started far from the optimum.

Minimization of the Rosenbrock function [56], \(f(x, y) = (x - 1)^2 + 100 (y - x^2)^2\). Both the gradient descent (GD) and our LM start from \((-1, 1)\) and converge to the optimal solution, (1, 1). One marker corresponds to one iteration, and the GD and LM are truncated after 1000 and 20 iterations, respectively

Some methods, such as the Newton method, achieve local quadratic convergence using second-order or higher-order derivatives of f; our LM achieves it without the second-order derivative. Besides the fact that our LM does not require a computationally demanding Hessian matrix, it has another advantage: subproblem (3) is very tractable. Whereas our subproblem is smooth and strongly convex, those in second- or higher-order methods are nonconvex in general. The matter becomes more severe under the presence of constraints because the subproblems may be NP-hard, as pointed out in [15].

2.2 Specialized methods for least squares

Several methods, including the LM method, utilize the least-squares structure of problem (1). Focusing on those algorithms without second-order derivatives, we review them from three points of view: (i) subproblem, (ii) complexity for finding a stationary point, and (iii) local superlinear convergence. Most of the methods discussed in this section are summarized in Table 1. The table shows the following:

-

Our method can achieve an overall computational complexity bound, \(O(\varepsilon ^{-2}) \times O(1) = O(\varepsilon ^{-2})\), for finding an \(\varepsilon\)-stationary point for constrained problems.

-

To the best of our knowledge, this is the first LM that achieves such a complexity bound with local quadratic convergence, even for unconstrained problems.

2.2.1 Subproblems

Most algorithms for the nonlinear least-squares problem (1) generate a solution sequence \((x_k)_{k \in {\mathbb {N}}}\) by repeatedly solving convex subproblems, and we focus on such algorithms. There are three popular subproblems, in addition to the LM subproblem (3):

where \(\uplambda , \Delta > 0\) are properly defined constants. Methods using subproblems (4), (5), and (6) have been proposed and analyzed in [3, 4, 16, 50], [3], and [12, 24, 32, 64], respectively. Other works [13,14,15] propose methods with a more general version of (5). These four subproblems (3)–(6) are closely related in theory; one subproblem becomes equivalent to the others with specific choices of the parameters \(\uplambda\) and \(\Delta\).

In practice, these four subproblems are quite different, and the LM subproblem (3) is the most tractable one because the objective function \(m^k_\uplambda\) is smooth and strongly convex. Thanks to smoothness and strong convexity, we can efficiently solve subproblem (3) with linearly convergent methods such as the projected gradient method. Note that the objective function of (4) is nonsmooth, and (5) and (6) are not necessarily strongly convex. Although some algorithms for subproblems (4)–(6) without constraints have been proposed [4, 13, 64], efficient algorithms are nontrivial under the presence of constraints. Hence, the LM method is more practical than methods using other subproblems.

2.2.2 Complexity for finding a stationary point

For unconstrained zero-residual problems, Nesterov [50] proposed a method with subproblem (4) and showed that the method finds an \(\varepsilon\)-stationary point after \(O(\varepsilon ^{-2})\) iterations under a strong assumption (see footnote 2 of Table 1 for details). After that, for unconstrained (possibly) nonzero-residual problems, several methods with subproblems (3), (4), and (6) have been proposed [12, 57, 66], and they achieve the same iteration complexity bound under weaker assumptions such as the Lipschitz continuity of J or \(\nabla f\). The method of [12] has been extended for constrained problems [16].Footnote 2 These methods [12, 16, 50, 57, 66] have the iteration complexity bound, but computational complexity per iteration, i.e., complexity for a subproblem, is unclear.

The key to bounding complexity per iteration is that we do not need to solve subproblems so accurately to derive the iteration complexity bound. Several algorithms have been proposed based on this fact for both unconstrained [5, 6, 13, 14] and constrained [15] problems. They use a point that decreases the model function value sufficiently compared to the value at the current iterate \(x_k\). Such a point can be computed with an \(\varepsilon\)-independent number of basic operations: evaluation of F(x), Jacobian-vector multiplications J(x)u and \(J(x)^\top v\), and projection onto \({\mathcal {C}}\). Thus, the methods in [5, 13,14,15] achieve the overall complexity \(O(\varepsilon ^{-2}) \times O(1) = O(\varepsilon ^{-2})\).

Our LM method also finds an \(\varepsilon\)-stationary point within \(O(\varepsilon ^{-2})\) iterations, and the complexity per iteration is O(1) when subproblems are solved approximately like [5, 6, 13,14,15]. Thus, the overall complexity amounts to \(O(\varepsilon ^{-2})\) same as [5, 13,14,15].

2.2.3 Local superlinear convergence

For unconstrained zero-residual problems, many methods with subproblems (3)–(6) have achieved local quadratic convergence under a local error bound condition [3, 19, 23, 29,30,31,32,33,34,35, 62]. These local convergence results have been extended to constrained problems [1, 22, 26, 40]. Some methods [24, 64] have local convergence of an arbitrarily order less than 2. Other methods [25, 27, 28] achieve local (nearly) cubic convergence by solving two subproblems in one iteration. We note that the local convergence analyses in [4, 50] assume the solution sequence \((x_k)\) is in the neighborhood of a solution \(x^*\) such that \(F(x^*) = {\textbf{0}}\) and \({{\,\textrm{rank}\,}}J(x^*) = n\), which is a stronger assumption than the local error bound.

Among these methods, some [1, 3, 4, 19, 22, 29, 31, 35] use an approximate solution to subproblems while preserving local quadratic convergence. The approximate solution is more accurate than that used to derive the global complexity mentioned in the previous section. We also use the same kind of approximate solution as [1, 19, 29, 31, 35] to prove local quadratic convergence. See Condition 2 in Sect. 6 for the details of the approximate solution.

3 Majorization lemma and proposed method

Here, we will prove a majorization lemma that shows that the LM model \(m^k_\uplambda\) defined in (2) is an upper bound on the objective function. In view of this lemma, we can characterize our LM method as a majorization-minimization (MM) method.

For \(a, b \in {\mathbb {R}}^d\), we denote the sublevel set and the line segment by

3.1 LM method as majorization-minimization

MM is a framework for nonconvex optimization that successively performs (approximate) minimization of an upper bound on the objective function. The following lemma, a majorization lemma, shows that the model \(m^k_\uplambda\) defined in (2) is an upper bound on the objective f over some region under certain assumptions.

Lemma 1

Let \({\mathcal {X}} \subseteq {\mathbb {R}}^d\) be any closed convex set, and suppose \(x_k \in {\mathcal {X}}\). Moreover, assume that for some constant \(L > 0\),

Then for any \(\uplambda > 0\) and \(x \in {\mathcal {X}}\) such that

the following bound holds:

The proof is given in Sect. A.2.

The assumption in (9) is the Lipschitz continuity of J and is analogous to the Lipschitz continuity of \(\nabla f\), which is often used in the analysis of first-order methods. Equation (10) requires a sufficiently large damping parameter, which corresponds to a sufficiently small step-size for first-order methods. Equation (11) requires the point \(x \in {\mathcal {X}}\) to be a solution that is at least as good as the current point \(x_k \in {\mathcal {X}}\) in terms of the model function value.

3.2 Proposed LM method

Based on Lemma 1, we propose an LM method that solves problem (1). The proposed LM is formally described in Algorithm 1 and is outlined below. First, in Line 1, three parameters are initialized: an estimate M of the Lipschitz constant L of J, a parameter \(\eta\) used for solving subproblems, and the iteration counter k. Line 3 sets \(\uplambda\) using M as an estimate of L based on (10). Then, the inner loop of Lines 4-10 solves subproblem (3) approximately by a projected gradient method. The details of the inner loop will be described later. Lines 12–15 check if the current \(\uplambda\) and the computed solution x are acceptable. If \(\uplambda\) and x satisfy (12), they are accepted as \(\uplambda _k\) and \(x_{k+1}\). Otherwise, the current value of M is judged to be small as an estimate of L in light of Lemma 1 and is increased. We refer to the former case as a “successful” iteration and the latter as an “unsuccessful” iteration. Note that k represents not the number of outer iterations but that of only successful iterations. As shown later in Lemma 5(ii) and Theorem 2(i), the number of unsuccessful iterations is upper-bounded by a constant under certain assumptions.

Inner loop for subproblem In the inner loop of Lines 4-10, subproblem (3) is solved approximately by the projected gradient method. Here, the operator \({{\,\textrm{proj}\,}}_{{\mathcal {C}}}\) in Line 5 is the projection operator defined by

The parameter t is the inner iteration counter, and the parameter \(\eta\) is the inverse step-size that is adaptively chosen by a standard backtracking technique in Lines 6-9. As shown in Lemma 6(ii) later, Line 9 is executed a finite number of times under certain standard assumptions. Hence, the inner loop must stop after a finite number of iterations.

Input parameters Algorithm 1 has several input parameters. The parameters \(M_0\) and \(\alpha\) are used to estimate the Lipschitz constant of the Jacobian J, and the parameters \(\eta _0\) and \(\alpha _{\textrm{in}}\) are used to control the step-size in the inner loop. The parameters T and c control how accurately the subproblems are solved through the stopping criteria of the inner loop. Here, note that we allow for \(T = \infty\). As we will prove in Sect. 4, the algorithm has an iteration complexity bound for an \(\varepsilon\)-stationary point regardless of the choice of the input parameters. However, to obtain an overall complexity bound or local quadratic convergence, there are restrictions on the choice of T, as explained in the next paragraph.

Stopping criteria for inner loop There are two types of stopping criteria as in Line 10, and the inner loop terminates when at least one of them is satisfied. If \(T < \infty\), the projected gradient method stops after executing Line 7 at most T times, and then the overall complexity for an \(\varepsilon\)-stationary point is guaranteed to be \(O(\varepsilon ^{-2})\). If \(T = \infty\), we have to solve subproblems more accurately to find a \((c\uplambda \Vert F_k\Vert )\)-stationary point of the subproblem, and then Algorithm 1 achieves local quadratic convergence.

Remark 1

To make the algorithm more practical, we can introduce other parameters \(0< \beta < 1\) and \(M_{\min } > 0\), and update \(M \leftarrow \max \{ \beta M, M_{\min } \}\) after every successful iteration. As with the gradient descent method, such an operation prevents the estimate M from being too large and eliminates the need to choose \(M_0\) carefully. Inserting this operation never deteriorates the complexity bounds described in Sect. 4 and the local quadratic convergence in Sect. 5.

Remark 2

Some methods (e.g., [57, 66]) use the condition

with some \(0< \theta < 1\) to determine whether the computed solution x to the subproblem is acceptable. Our acceptance condition (12) is stronger than the classical one since (12) is equivalent to

under condition (11). Therefore, Lemma 1 is stronger than the classical statement that condition (13) holds if \(\uplambda\) is sufficiently large.

4 Iteration complexity and overall complexity

We will prove that Algorithm 1 finds an \(\varepsilon\)-stationary point of problem (1) within \(O(\varepsilon ^{-2})\) outer iterations. Futhermore, we will prove that under \(T < \infty\), the overall complexity for an \(\varepsilon\)-stationary point is also \(O(\varepsilon ^{-2})\). Throughout this section, \((x_k)\) and \((\uplambda _k)\) denote the sequences generated by the algorithm.

4.1 Assumptions

We make the following assumptions to derive the complexity bound. Recall that the sublevel set \({\mathcal {S}}(a)\) and the line segment \({\mathcal {L}}(a, b)\) are defined in (7) and (8) and that \(x_0 \in {\mathcal {C}}\) denotes the starting point of Algorithm 1.

Assumption 1

For some constants \(\sigma , L > 0\),

-

(i)

\(\Vert J(x)\Vert \le \sigma\), \(\forall x \in {\mathcal {C}} \cap {\mathcal {S}}(x_0)\),

-

(ii)

\(\Vert J(y) - J(x)\Vert \le L\Vert y - x\Vert\), \(\forall x,y \in {\mathcal {C}}\) s.t. \({\mathcal {L}}(x, y) \subseteq {\mathcal {S}}(x_0)\).

Assumption 1(i) means the \(\sigma\)-boundedness of J on \({\mathcal {C}} \cap {\mathcal {S}}(x_0)\). Assumption 1(ii) is similar to the L-Lipschitz continuity of J on \({\mathcal {C}} \cap {\mathcal {S}}(x_0)\) but weaker due to the condition of \({\mathcal {L}}(x, y) \subseteq {\mathcal {S}}(x_0)\). Assumption 1 is milder than the assumptions in the previous work that discussed the iteration complexity, even when \({\mathcal {C}} = {\mathbb {R}}^d\). For example, the analysis in [66] assumes f and J to be Lipschitz continuous on \({\mathbb {R}}^d\), which implies the boundedness of J on \({\mathbb {R}}^d\).

4.2 Approximate stationary point

Before analyzing the algorithm, we define an \(\varepsilon\)-stationary point for constrained optimization problems. Let \(\iota _{{\mathcal {C}}}: {\mathbb {R}}^d \rightarrow {\mathbb {R}}\cup \{ +\infty \}\) be the indicator function of the closed convex set \({\mathcal {C}} \subseteq {\mathbb {R}}^d\). For a convex function \(g: {\mathbb {R}}^d \rightarrow {\mathbb {R}}\cup \{ +\infty \}\), its subdifferential at \(x \in {\mathbb {R}}^d\) is the set defined by \(\partial g(x) :=\{ p \in {\mathbb {R}}^d \,|\, g(y) \ge g(x) + \langle p, y - x \rangle , \ \forall y \in {\mathbb {R}}^d \}\).

Definition 1

(see, e.g., Definition 1 in [51]) For \(\varepsilon > 0\), a point \(x \in {\mathcal {C}}\) is said to be an \(\varepsilon\)-stationary point of the problem \(\min _{x \in {\mathcal {C}}} f(x)\) if

This definition is consistent with the unconstrained case; the above inequalities are equivalent to \(\Vert \nabla f(x)\Vert \le \varepsilon\) when \({\mathcal {C}} = {\mathbb {R}}^d\). There is another equivalent definition of an \(\varepsilon\)-stationary point, which we will also use.

Lemma 2

For \(x \in {\mathcal {C}}\) and \(\varepsilon > 0\), condition (14) is equivalent to

Proof

The tangent cone \({\mathcal {T}}(x)\) of \({\mathcal {C}}\) at \(x \in {\mathcal {C}}\) is defined by

Note that

because \({\mathcal {C}}\) is a closed convex set and \(\partial \iota _C(x)\) is the normal cone of \({\mathcal {C}}\). We have

Therefore, condition (14) is equivalent to

which is also equivalent to (15). \(\square\)

A useful tool for deriving iteration complexity bounds is gradient mapping (see, e.g., [49]), also known as projected gradient [41] or reduced gradient [52]. For \(\eta > 0\), the projected gradient operator \({\mathcal {P}}_\eta : {\mathcal {C}} \rightarrow {\mathcal {C}}\) and the gradient mapping \({\mathcal {G}}_\eta : {\mathcal {C}} \rightarrow {\mathbb {R}}^d\) for problem (1) are defined by

The following lemma shows the relationship between an \(\varepsilon\)-stationary point and the gradient mapping.

Lemma 3

Suppose that Assumption 1holds, and let

Then, for any \(x \in {\mathcal {C}} \cap {\mathcal {S}}(x_0)\) and \(\eta \ge L_f\), the point \({\mathcal {P}}_\eta (x)\) is a \((2 \Vert {\mathcal {G}}_\eta (x)\Vert )\)-stationary point of problem (1).

The proof is given in Sect. A.4. This lemma will be used for the proof of Theorem 1(ii).

Although Lemma 3 looks quite similar to [51, Corollary 1], there exists a significant difference in their assumptions. Indeed, Lemma 3 assumes the boundedness and the Lipschitz property of J only on a (possibly) nonconvex set \({\mathcal {C}} \cap {\mathcal {S}}(x_0)\), whereas [51, Corollary 1] assumes the Lipschitz continuity on the whole space \({\mathbb {R}}^d\). This makes our proof more complicated than in [51, Corollary 1].

4.3 Preliminary lemmas

First, we bound the decrease in the model function value due to the inner loop. For \(\eta > 0\), we define the function \({\mathcal {D}}_\eta : {\mathcal {C}} \rightarrow {\mathbb {R}}\) by

We see that \({\mathcal {D}}_\eta (x) \ge -\langle \nabla f(x), x - x \rangle - \frac{\eta }{2}\Vert x - x\Vert ^2 = 0\) for all \(x \in {\mathcal {C}}\). In addition, \({\mathcal {D}}_\eta (x)\) is decreasing with respect to \(\eta\).

Lemma 4

The solution x obtained in Line 11 of Algorithm 1 satisfies

where k, \(\uplambda\), and \(\eta\) are parameters in Algorithm 1.

Proof

The second inequality in (22) follows from the nonnegativity of \({\mathcal {D}}_\eta (x)\), and therefore we will prove the first one. Let \(T'\) denote the value of t when the inner loop is completed, and for each \(0 \le t \le T'\), let \(\eta _{k,t}\) denote the values of \(\eta\) when \(x_{k,t}\) is obtained through Line 7. Our aim is to prove the first inequality in (22) with \((x, \eta )=(x_{k,T'},\eta _{k,T'})\). We have

Since \({\mathcal {D}}_\eta (x_k)\) is decreasing in \(\eta\) and \(\eta _{k,1} \le \eta _{k,2} \le \dots \le \eta _{k,T'}\), we have \({\mathcal {D}}_{\eta _{k,1}}(x_k) \ge {\mathcal {D}}_{\eta _{k,T'}}(x_k)\). On the other hand, we have \(m^k_\uplambda (x_{k,1}) \ge \dots \ge m^k_\uplambda (x_{k, T'})\). Combining these inequalities, we obtain the desired result. \(\square\)

From the above lemma and Line 12, it follows that for all k,

This monotonicity of \(f(x_k)\) in k is an important property of the majorization-minimization and will be used in our analysis.

The following two lemmas show that the parameters M and \(\eta\) in the algorithm are upper-bounded, and hence Lines 9 and 15 are executed only a finite number of times per single run.

Lemma 5

Suppose that Assumption 1(ii) holds, and let

where \(M_0\) and \(\alpha\) are the inputs of Algorithm 1. Then,

-

(i)

The parameter M in Algorithm 1 always satisfies \(M \le {\bar{M}}\);

-

(ii)

Throughout the algorithm, the number of unsuccessful iterations is at most \(\lceil \log _\alpha ({\bar{M}} / M_0)\rceil = O(1)\).

Proof

We have \({\mathcal {S}}(x_k) \subseteq {\mathcal {S}}(x_0)\) from (23), and therefore Assumption 1(ii) implies (9) with \({\mathcal {X}} = {\mathcal {C}}\). On the other hand, (22) directly implies (11). Hence, by Lemma 1 with \({\mathcal {X}} = {\mathcal {C}}\) and Lemma 4, if \(M \ge L\) holds at Line 3, the condition in Line 12 must be true. Therefore, if \(M_0 \ge L\), no unsuccessful iterations occur and the parameter M always satisfies \(M = M_0\). Otherwise, there exists an integer \(l \ge 1\) such that \(L \le \alpha ^l M_0 < \alpha L\). Since \(M = \alpha ^l M_0\) after l unsuccessful iterations, the parameter M always satisfies \(M < \alpha L\). Consequently, we obtain the first result, and the second follows from the first. \(\square\)

Lemma 6

Suppose that Assumption 1holds, and let

where \(\eta _0\) and \(\alpha _{\textrm{in}}\) are the inputs of Algorithm 1 and \({\bar{M}}\) is defined in (24). Then,

-

(i)

the parameter \(\eta\) in Algorithm 1 always satisfies \(\eta \le {\bar{\eta }}\);

-

(ii)

throughout the algorithm, Line 9 will be executed at most \(\lceil \log _{\alpha _\textrm{in}} ({\bar{\eta }} / \eta _0)\rceil = O(1)\) times.

Proof

Since the function \(m^k_\uplambda\) defined by (2) has the \((\Vert J_k\Vert ^2 + \uplambda )\)-Lipschitz continuous gradient, we have

(see, e.g., [52, Eq. (2.1.9)]). We also have \(\Vert J_k\Vert ^2 + \uplambda \le \sigma ^2 + {\bar{M}} \Vert F_0\Vert\) from Assumption 1(i) and Lemma 5. Therefore, the inequality in Line 6 must hold if \(\eta \ge \sigma ^2 + {\bar{M}} \Vert F_0\Vert\). With the same arguments as in Lemma 5, we obtain the desired results. \(\square\)

As we can see from the proofs of Lemmas 5 and 6, if \(M_0 \ge L\) and \(\eta _0 \ge \sigma ^2 + M_0 \Vert F_0\Vert\), then no unsuccessful iterations occur in both outer and inner loops. Adjusting M and \(\eta\) adaptively as in the presented algorithm avoids a too small step-size in practice.

4.4 Iteration complexity and overall complexity

We use the following lemma for the analysis.

Lemma 7

Proof

By the first-order optimality condition on (18) and the convexity of \({\mathcal {C}}\), we have

Using this inequality, we obtain

\(\square\)

We show the asymptotic global convergence and the iteration complexity bound of Algorithm 1.

Theorem 1

Suppose that Assumption 1holds, and define \({\bar{\eta }}\) by (25). Then,

-

(i)

\(\displaystyle \lim _{k \rightarrow \infty } \Vert {\mathcal {G}}_{{\bar{\eta }}}(x_k)\Vert = 0\), and therefore, any accumulation point of \((x_k)\) is a stationary point of problem (1);

-

(ii)

\({\mathcal {P}}_{{\bar{\eta }}}(x_k)\) is an \(\varepsilon\)-stationary point of problem (1) for some \(k = O(\varepsilon ^{-2})\).

Proof

We have

Summing up this inequality for \(k=0,1,\dots ,K-1\), we obtain

for all \(K \ge 0\). Therefore, we also have \(\sum _{k=0}^{\infty } \Vert {\mathcal {G}}_{{\bar{\eta }}}(x_k)\Vert ^2 \le 2 {\bar{\eta }} f(x_0)\), yielding \(\lim _{k \rightarrow \infty } \Vert {\mathcal {G}}_{{\bar{\eta }}}(x_k)\Vert = 0\), the first result.

Combining (27) with \(\min _{0 \le k < K} \Vert {\mathcal {G}}_{{\bar{\eta }}}(x_k)\Vert ^2 \le \frac{1}{K} \sum _{k=0}^{K-1} \Vert {\mathcal {G}}_{{\bar{\eta }}}(x_k)\Vert ^2\), we have \(\Vert {\mathcal {G}}_{{\bar{\eta }}}(x_k)\Vert \le \varepsilon / 2\) for some \(k = O(\varepsilon ^{-2})\). For such \(x_k\), the point \({\mathcal {P}}_{{\bar{\eta }}}(x_k)\) is an \(\varepsilon\)-stationary point from Lemma 3 and \({\bar{\eta }} \ge L_f\). Thus, we have obtained the second result. \(\square\)

From Lemma 5(ii) and Theorem 1(ii), we obtain the iteration complexity bound of our algorithm as follows.

Corollary 1

Under Assumption 1, Algorithm 1 finds an \(\varepsilon\)-stationary point within \(O(\varepsilon ^{-2})\) outer iterations, namely, \(O(\varepsilon ^{-2})\) successful and unsuccessful iterations.

From this iteration complexity bound and Lemma 6(ii), we also obtain the overall complexity bound.

Corollary 2

Suppose that Assumption 1holds. Then, Algorithm 1 with \(T < \infty\) finds an \(\varepsilon\)-stationary point after \(O(\varepsilon ^{-2} T)\) basic operations.

We use the term basic operations to refer to evaluation of F(x), Jacobian-vector multiplications J(x)u and \(J(x)^\top v\), and projection onto \({\mathcal {C}}\) as in Sect. 1.2.

In order to compute an \(\varepsilon\)-stationary point based on Theorem 1(ii), knowledge of the value of \({\bar{\eta }}\) is required. However, this requirement can be circumvented with a slight modification of the algorithm. See Sect. A.5 for the details.

5 Local quadratic convergence

For zero-residual problems, we will prove that the sequence \((x_k)\) generated by Algorithm 1 with \(T = \infty\) converges locally quadratically to an optimal solution. Let us denote the set of optimal solutions to problem (1) by \({\mathcal {X}}^* :=\{ x \in {\mathcal {C}} \,|\, F(x) = {\textbf{0}} \}\) and the distance between \(x \in {\mathbb {R}}^d\) and \({\mathcal {X}}^*\) simply by \({{\,\textrm{dist}\,}}(x) :=\min _{y \in {\mathcal {X}}^*} \Vert y - x\Vert\). Throughout this section, we fix a point \(x^* \in {\mathcal {X}}^*\) and denote a neighborhood of \(x^*\) by \({\mathcal {B}}(r) :=\{ x \in {\mathbb {R}}^d \,|\, \Vert x - x^*\Vert \le r \}\) for \(r > 0\).Footnote 3 As in the previous section, we denote the sequences generated by Algorithm 1 with \(T = \infty\) by \((x_k)\) and \((\uplambda _k)\).

5.1 Assumptions

We make the following assumptions to prove local quadratic convergence.

Assumption 2

-

(i)

There exists \(x \in {\mathcal {C}}\) such that \(F(x) = {\textbf{0}}\).

For some constants \(\rho , L, r > 0\),

-

(ii)

\(\rho {{\,\textrm{dist}\,}}(x) \le \Vert F(x)\Vert\), \(\forall x \in {\mathcal {C}} \cap {\mathcal {B}}(r)\),

-

(iii)

\(\Vert J(y) - J(x)\Vert \le L\Vert y - x\Vert\), \(\forall x,y \in {\mathcal {C}} \cap {\mathcal {B}}(r)\).

Assumption 2(i) requires the problem to be zero-residual, Assumption 2(ii) is called a local error bound condition, and Assumption 2(iii) is the local Lipschitz continuity of J. These assumptions are used in the previous analyses of LM methods [1, 3, 19, 22, 23, 29, 30, 33, 34, 40, 62].

5.2 Fundamental inequalities for analysis

Since \({\mathcal {C}} \cap {\mathcal {B}}(r)\) is compact, there exists a constant \(\sigma > 0\) such that

which implies

Let \(\sigma\) denote such a constant in the rest of this section.

For a point \(x \in {\mathbb {R}}^d\), let \({{\tilde{x}}} \in {\mathcal {X}}^*\) denote an optimal solution closest to x; \(\Vert {{\tilde{x}}} - x\Vert = {{\,\textrm{dist}\,}}(x)\). In particular, \({{\tilde{x}}}_k\) denotes one of the closest solutions to \(x_k\) for each \(k \ge 0\). Since \(\Vert {{\tilde{a}}} - x^*\Vert \le \Vert {{\tilde{a}}} - a\Vert + \Vert a - x^*\Vert \le 2\Vert a - x^*\Vert\), we have

Therefore, (29) with \(y :={{\tilde{x}}}\) implies

From the stopping criterion in Line 10 of Algorithm 1 with \(T = \infty\) and Definition 1, the solution x obtained in Line 11 satisfies

From the definition of \(x_{k+1}\) and \(\uplambda _k\), we also have the inequality with \((x, \uplambda ) = (x_{k+1}, \uplambda _k)\), i.e.,

5.3 Preliminary lemma

Lemma 8

Suppose that Assumption 2holds, and define \({\bar{M}}\) by (24). Define the constants \(C_1, C_2, \delta > 0\) by

where \(M_0\) and c are the inputs of Algorithm 1. Assume that \(x_k \in {\mathcal {B}}(\delta )\) and \(M \le {\bar{M}}\) hold at Line 3. Then,

-

(i)

The solution x obtained in Line 11 satisfies

$$\begin{aligned} \Vert x - x_k\Vert \le C_1 {{\,\textrm{dist}\,}}(x_k); \end{aligned}$$(35) -

(ii)

\(M \le {\bar{M}}\) holds when \(x_{k+1}\) is obtained;

-

(iii)

The following hold:

$$\begin{aligned} \Vert x_{k+1} - x_k\Vert&\le C_1 {{\,\textrm{dist}\,}}(x_k), \end{aligned}$$(36)$$\begin{aligned} {{\,\textrm{dist}\,}}(x_{k+1})&\le C_2 {{\,\textrm{dist}\,}}(x_k)^2. \end{aligned}$$(37)

Proof of Lemma 8(i)

From \(x_k \in {\mathcal {B}}(\delta )\), \(\delta \le r/2\), and (30), we have

Moreover, we have from \(\nabla m^k_{\uplambda }(x) = J_k^\top (F_k + J_k(x - x_k)) + \uplambda (x - x_k)\) that

We bound the terms (A)–(C) as follows:

where the first and second inequalities follow from (32) and (31), respectively, and the last inequality follows from the arithmetic and geometric means;

where the first inequality follows from \(4\langle a, b \rangle = \Vert a+b\Vert ^2-\Vert a-b\Vert ^2 \ge -\Vert a-b\Vert ^2\) and the second inequality from Lemma 9(ii), (38), and Assumption 2(iii);

Combining these bounds and rearranging terms yield

From (38), Assumption 2(ii), and \(\uplambda = M \Vert F_k\Vert \ge M_0 \Vert F_k\Vert\), we have

Applying this bound to the second term on the right-hand side of (39), we obtain the desired result (35). \(\square\)

Proof of Lemma 8(ii)

As in Lemma 8(i), let x denote the x obtained in Line 11. By (34c), (35), and \(x_k \in {\mathcal {B}}(\delta )\), we have

i.e.,

We now have \(x_k, x \in {\mathcal {C}} \cap {\mathcal {B}}(r)\). As in the proof of Lemma 5(i), by using Lemma 1 with \({\mathcal {X}} :={\mathcal {C}} \cap {\mathcal {B}}(r)\), we see that if \(M \ge L\) holds at Line 3, the outer iteration must be successful. This leads to the desired result. \(\square\)

Proof of Lemma 8(iii)

Equation (36) follows from Lemmas 8(i) and 8(ii). We prove (37) below. From (30) and (40), we have \(x_{k+1}, {{\tilde{x}}}_{k+1} \in {\mathcal {B}}(r)\). Moreover, we have

and bound the terms (D)–(F) as follows:

by Lemma 9(ii), Assumption 2(ii), and \(\Vert F_{k+1}\Vert \le \Vert F_k\Vert\) from (23); and

Combining these bounds yields

We bound \(\Vert F_k\Vert\) and \(\Vert F_{k+1}\Vert\) in the above inequality by using Assumption 2(ii) and (31), yielding

which implies the desired result (37). \(\square\)

5.4 Local quadratic convergence

Let us state the local quadratic convergence result of Algorithm 1.

Theorem 2

Suppose that Assumption 2holds, and define \({\bar{M}}\) by (24). Set \(x_0 \in {\mathcal {B}}(\delta _0)\) for a sufficiently small constant \(\delta _0 > 0\) such that

where \(C_1\), \(C_2\), and \(\delta\) are the constants defined in (34a)–(34c). Then,

-

(i)

The number of unsuccessful iterations is at most \(\lceil \log _\alpha ({\bar{M}} / M_0)\rceil = O(1)\), and

-

(ii)

The sequence \((x_k)\) converges quadratically to an optimal solution \({\hat{x}} \in {\mathcal {X}}^*\).

Proof

First, we will prove that

for all \(k \ge 0\) by induction. For \(k=0\), (42a) and (42b) are obvious. For a fixed \(K \ge 0\), assume (42a) and (42b) for all \(k \le K\). We then have (36), (37) and (42b) for \(k \le K+1\) by Lemma 8. To complete the induction, we prove (42a) for \(k = K+1\). Solving the recursion of (37) and using \({{\,\textrm{dist}\,}}(x_0) \le \delta _0\), we have

for all \(k \le K+1\). We obtain (42a) for \(k = K+1\) as follows:

Now, we have proved (42a) and (42b) for all \(k \ge 0\). \(\square\)

Proof of Theorem 2(ii)

Note that we have proved (37) and (43) for all \(k \ge 0\) in the proof of Theorem 2(i). By (43) and \(C_2\delta _0<1\) in (41), we have

As with (43), we have for \(i \ge k\),

Using this bound and (35), we obtain

for all k, l such that \(0 \le k < l\). Equations (45) and (44) imply that \((x_k)\) is a Cauchy sequence. Accordingly, the sequence \((x_k)\) converges to a point \({\hat{x}} \in {\mathcal {X}}^*\) by (44). Thus, we obtain

which implies Theorem 2(ii). \(\square\)

6 Practical variant of the proposed method

We present a more practical variant (Algorithm 3) of Algorithm 1, which also achieves the theoretical guarantees given for Algorithm 1 in Sects. 4 and 5.

6.1 Generalized version of Algorithm 1

To obtain the practical variant, we first present a generalized framework of Algorithm 1. Algorithm 1 runs the vanilla projected gradient (PG) method in the inner loop. This PG can be replaced with other algorithms keeping \(O(\varepsilon ^{-2})\) iteration complexity and quadratic convergence that were gained for Algorithm 1. Indeed, these theoretical results rely on the fact that the x obtained in Line 11 of Algorithm 1 satisfies the following conditions:

Condition 1

(for \(O(\varepsilon ^{-2})\) iteration complexity bound) There exists a constant \(\gamma > 0\) such that for all k,

Condition 2

(for local quadratic convergence) Both of the following hold:

-

(i)

\(m^k_\uplambda (x) \le m^k_\uplambda (x_k)\) for all k;

-

(ii)

There exists a constant \(c > 0\) such that x is a \((c\uplambda \Vert F_k\Vert )\)-stationary point of subproblem (3) for all k.

This fact yields a general algorithmic framework that achieves the \(O(\varepsilon ^{-2})\) iteration complexity bound together with the quadratic convergence as in Algorithm 2.

In Line 4 of Algorithm 2, any globally convergent algorithm for subproblem (3) can be employed. For example, we may use (block) coordinate descent methods, Frank-Wolfe methods, interior point methods, active set methods, or augmented Lagrangian methods. For unconstrained cases, since the subproblem reduces to solving a system of linear equations, we may use conjugate gradient methods or direct methods, including Gaussian elimination.

6.2 Proposed method with an accelerated projected gradient

A practical example of Algorithm 2 is presented in Algorithm 3. This algorithm employs the accelerated projected gradient (APG) method [45, Algorithm 1] with the adaptive restarting technique [54, Sect. 3.2] to solve subproblems and adopts the additional parameters mentioned in Remark 1. Since the solution x obtained in Line 19 of Algorithm 3 satisfies Condition 1, this algorithm enjoys the \(O(\varepsilon ^{-2})\) iteration complexity bound. In addition, it also achieves the \(O(\varepsilon ^{-2})\) overall complexity bound if \(T < \infty\) as with Corollary 2, and it achieves local quadratic convergence if \(T = \infty\). Algorithm 3 will be used for the numerical experiments in the next section.

7 Numerical experiments

We examine the practical performance of the proposed method. We implemented all methods in Python with SciPy [58] and JAX [9] and executed them on a computer with Apple M1 Chip (8 cores, 3.2 GHz) and 16 GB RAM.

7.1 Problem setting

We consider three types of instances: (i) compressed sensing with quadratic measurement, (ii) nonnegative matrix factorization with missing values, and (iii) autoencoder with MNIST dataset.

7.1.1 Compressed sensing with quadratic measurement

Given \(A_1,\dots ,A_n \in {\mathbb {R}}^{r \times d}\), \(b_1,\dots ,b_n \in {\mathbb {R}}^d\), and \(c_1,\dots ,c_n, R \in {\mathbb {R}}\), we consider the following problem:

where \(\Vert \cdot \Vert _1\) denotes the \(\ell _1\)-norm. Problem (46) formulates the situation where a sparse vector \(x^* \in {\mathbb {R}}^d\) is recovered from a small number (i.e., \(n < d\)) of quadratic observations, \(\frac{1}{2r} \Vert A_i x^*\Vert ^2 + \langle b_i, x^* \rangle\) for \(i = 1, \dots , n\). Such a problem arises in the context of compressed sensing [8, 44] and phase retrieval [11, 63]. Problem (46) can be transformed into the form of problem (1).

Generating instances First, we generate the optimal solution \(x^* \in {\mathbb {R}}^d\) with only \(d_{\textrm{nnz}} \,(< d)\) nonzero entries. The indexes of the nonzero entries are chosen uniformly randomly, and the value of those elements are independently drawn from the uniform distribution on \([-x_{\textrm{max}}, x_{\textrm{max}}]\). Each entry of \(A_i\)’s and \(b_i\)’s is drawn independently from the standard normal distribution \({\mathcal {N}}(0, 1)\). Then, we set \(R = \Vert x^*\Vert _1\) and \(c_i = \frac{1}{2r} \Vert A_i x^*\Vert ^2 + \langle b_i, x^* \rangle\) for all i. We fix \(d = 200\), \(r = 10\), and \(n = 50\), and set \(d_{\textrm{nnz}} \in \{ 5, 10, 20 \}\) and \(x_{\textrm{max}} \in \{ 0.1, 1 \}\). We set the starting point for each algorithm as \(x_0 = {\textbf{0}}\).

7.1.2 Nonnegative matrix factorization with missing values

Given \(A \in {\mathbb {R}}^{m \times n}\) and \(H \in \{ 0, 1 \}^{m \times n}\), we consider the following problem:

where \(\odot\) denotes the elementwise product, \(X \ge O\) and \(Y \ge O\) denote elementwise inequalities, and \(\Vert \cdot \Vert _{\textrm{F}}\) denotes the Frobenius norm. Problem (47) formulates the situation where a data matrix A with some missing entries is approximated by the product \(X Y^\top\) of two nonnegative matrices. Such a problem is called nonnegative matrix factorization (NMF) with missing values and is widely used for nonnegative data analysis, especially for collaborative filtering [46, 65]. For more information on NMF, see [7, 59] and the references therein. Problem (47) can also be written as problem (1).

Generating instances To generate A and H, we introduce two parameters: \(\gamma \ge 1\) and \(0 < p \le 1\). The parameters \(\gamma\) and p control the condition number of A and the number of 1’s in H, respectively. Let \(l :=\min \{ m, n \}\). First, a matrix \({{\tilde{A}}} \in {\mathbb {R}}^{m \times n}\) is generated by \({{\tilde{A}}} = U D V^\top\), and then the matrix A is obtained by normalizing \({{\tilde{A}}} = ({{\tilde{a}}}_{ij})_{i,j}\) as \(A = {{\tilde{A}}} / \max _{i, j} {{\tilde{a}}}_{ij}\). Here, each entry of \(U \in {\mathbb {R}}^{m \times l}\) and \(V \in {\mathbb {R}}^{n \times l}\) follows independently the uniform distribution on [0, 1], and \(D = {{\,\textrm{diag}\,}}(\gamma ^{0}, \gamma ^{-1/l}, \gamma ^{-2/l},\dots , \gamma ^{-(l-1)/l} ) \in {\mathbb {R}}^{l \times l}\) is a diagonal matrix. H is a random matrix whose entries follow independently the Bernoulli distribution with parameter p, i.e., each entry of H is 1 with probability p. We fix \(m = n = 50\) and \(\gamma = 10^5\), and set \(r \in \{ 10, 40 \}\) and \(p \in \{ 0.02, 0.1, 0.5 \}\). Since \((X, Y) = (O, O)\) is a stationary point of problem (47), we set the starting point to random matrices whose entries independently follow the uniform distribution on \([0, 10^{-3}]\).

7.1.3 Autoencoder with MNIST dataset

The third instance is highly nonlinear and large-scale. In machine learning, autoencoders (see, e.g., [37, Section 14]) are a popular model to compress real-world data, represented as high-dimensional vectors, into low-dimensional vectors. Given p-dimensional data \(a_1, \dots , a_N \in {\mathbb {R}}^p\), autoencoders try to learn an encoder \(\phi _x^{\textrm{enc}}: {\mathbb {R}}^p \rightarrow {\mathbb {R}}^q\) and a decoder \(\phi _y^{\textrm{dec}}: {\mathbb {R}}^q \rightarrow {\mathbb {R}}^p\), where \(q < p\). Here, x and y are parameters to be learned by solving the following optimization problem:

As we see from the optimization problem above, the autoencoder aims to extract latent features that can be used to reconstruct the original data.

For this experiment, we use the MNIST hand-written digit dataset. Each data is a \(28 \times 28\) pixel grayscale image, which is represented as a vector \(a_i \in [0, 1]^p\) with \(p = 28 \times 28 = 728\). The dataset contains 60,000 training data, of which \(N = 1000\) were randomly chosen for use. We set \(q = 16\); our model encodes 728-dimensional data into 16 dimensions. Both encoder and decoder are two-layer neural networks with a hidden layer of size 64 and logistic sigmoid activation functions. Specifically, the encoder \(\phi _x^{\textrm{enc}}\) is written as

Here, S is the elementwise logistic sigmoid function, and \(W_1 \in {\mathbb {R}}^{728 \times 64}\), \(b_1 \in {\mathbb {R}}^{64}\), \(W_2 \in {\mathbb {R}}^{64 \times 16}\), and \(b_2 \in {\mathbb {R}}^{16}\) are parameters of the network; \(x = ((W_i, b_i))_{i=1}^2\). The decoder \(\phi _y^{\textrm{dec}}\) is formulated in a similar way. When we rewrite problem (48) in the form of (1), the dimension of the function \(F: {\mathbb {R}}^d \rightarrow {\mathbb {R}}^n\) is \(d = 96{,}104\) and \(n = Np = 728{,}000\).

7.2 Algorithms and implementation

We compare the proposed method with six existing methods. The details are below.

Proposed (Algorithm 3) and Proposed-NA (Algorithm 1) method To see the effect of acceleration for subproblems, we implemented both Algorithms 1 and 3; Algorithm 3 is expected to be faster, of course. In Line 10 of Algorithm 1 and Line 18 of Algorithm 3, we have to check if \(x_{k,t}\) is a \((c\uplambda \Vert F_k\Vert )\)-stationary point, but it is not very easy. We thus replace the criterion with one using gradient mapping, i.e., check if \(\eta \Vert x_{k,t} - y\Vert \le c\uplambda \Vert F_k\Vert\). The input parameters of Algorithm 1 and 3 are set to \(M_0 = \eta _0 = 1\), \(\alpha = \alpha _{\textrm{in}} = 2\), \(\beta = \beta _{\textrm{in}} = 0.9\), \(M_{\min } = 10^{-10}\), \(T = 100\), and \(c = 1\).

Fan method [26, Algorithm 2.1] and KYF method [40, Algorithm 2.12] The Fan and KYF methods are constrained LM methods with a global convergence guarantee. To solve subproblem (3), an APG method is used as well as Algorithm 3 for a fair comparison. The difference from the APG in Algorithm 3 is in the stopping criterion; the condition “\(x_{k,t}\) is a \((c\uplambda \Vert F_k\Vert )\)-stationary point” in Line 18 of Algorithm 3 is replaced with \(\eta \Vert x_{k,t} - y\Vert \le 10^{-9}\).Footnote 4 The input parameters in [26, 40] are set to \(\mu = 10^{-4}\), \(\beta = 0.9\), \(\sigma = 10^{-4}\), \(\gamma = 0.99995\), and \(\delta \in \{ 1, 2 \}\), following the recommendations of [26, 40].Footnote 5

Facchinei method [22, Algorithm 3] This is a constrained LM method that allows subproblems to be solved inexactly. We solve the subproblems in almost the same way as the Fan and KYF methods. The input parameters in [22] are set to \(\gamma _0 = 1\) and \(S = 2\).

GGO method [36, Algorithm G-LMA-IP] This is an LM-type method that requires the solution of a linear system at each iteration. The main advantage of this algorithm is that it does not require exact projection and can be applied to problems with a complex feasible region. Still, it is reported to perform well even when the projection is easy to compute exactly [36]. The linear systems are solved via QR decomposition (scipy.linalg.qr [58]) and the input parameters in [36] are set to \(M \in \{ 1, 15 \}\), \(\eta _1 = 10^{-4}\), \(\eta _2 = 10^{-2}\), \(\eta _3 = 10^{10}\), \(\gamma = 10^{-3}\), \(\beta = 1/2\), and \(\theta _k = 0\), following [36].Footnote 6

Projected gradient (PG) method The PG method is one of the most standard first-order methods for problem (1). The step-size is adaptively chosen in a similar way to the APG in Algorithm 3 with \(\eta _0 = 1\), \(\alpha _{\textrm{in}} = 2\), and \(\beta _{\textrm{in}} = 0.9\).

Trust-region reflective (TRF) method This is an interior trust-region method for box-constrained nonlinear optimization. It was proposed in [10] and is implemented in SciPy [58] with several improvements. For the TRF method, we call scipy.optimize.least_squares [58] with a gtol=1e-5 option to avoid the long execution time caused by searching for too precise a solution.

Other information As mentioned in Sect. 1.2, there are two ways to handle Jacobian matrices: explicitly computing \(J_k :=J(x_k)\) or using Jacobian-vector products \(J_k u\) and \(J_k^\top v\). In our experiments, the latter implementation outperformed the former, so we adopted the latter if possible (i.e., for Proposed, Proposed-NA, Fan, KYF, Facchinei, and PG).Footnote 7 We note that GGO is based on QR decomposition, which is probably impossible to implement using Jacobian-vector products.

For projection onto the feasible region of problem (46), we employ [18, Algorithm 1], whose time complexity is \(O(d \log d)\).

7.3 Results

7.3.1 Compressed sensing and NMF

Figures 2 and 3 show the results of compressed sensing in (46) and NMF in (47). Each figure consists of six subfigures, and they consist of two plots; the upper one shows the worst case among ten randomly generated instances, and the lower one shows the best case.Footnote 8

Results of compressed sensing (problem (46))

Results of NMF (problem (47))

Tables 2 and 3 provide more detailed information. For the tables, each algorithm is stopped when either of the following conditions is fulfilled: (i) the algorithm finds a point where the norm of the gradient mapping is less than \(10^{-5}\); (ii) the execution time exceeds 10 seconds. The “Success” column indicates the percentage of instances (out of 10) that ended up satisfying condition (i). The other columns show the averages of the following values: the objective function value reached, the gradient-mapping norm, the execution time, the number of iterations, and the number of basic operations. JVP stands for Jacobian-vector products.

A remarkable feature of our method is its stability in addition to fast convergence. For example, while the Fan and KYF methods perform well in most cases, they sometimes do not converge fast, as shown in Table 2(e) and (f). The proposed method shows the best or comparable performance in all our settings compared to the other methods. This suggests that our method is stable without careful parameter tuning.

As seen from Tables 3(d)–(f), the Facchinei and GGO methods do not work well in some cases. As for the Facchinei method, the reason is presumably that the method does not guarantee global convergence. For GGO, it is observed from the tables that the number of iterations performed within the time limit is small, say 3 or 4. It is because the method at each iteration computes a Jacobian explicitly and solves a linear system, resulting in a high cost per iteration for large-scale problems. Our method guarantees global convergence and repeats relatively low-cost iterations without Jacobian computation, which also leads to a stable performance.

Figure 4 shows the results of the TRF method. Since this method can only handle box constraints, the results only of problem (47) are presented. One marker corresponds to one instance, representing the elapsed time and the obtained objective value.Footnote 9 TRF takes more time to converge than the proposed method; in particular, comparing Table 3(f) and Fig. 4f, we see that the elapsed time is about 1000 times longer than ours. This result may be due to the difference in how TRF and ours handle the constraint. When the optimal solution or a stationary point is at the boundary of the constraint set, our method can reach the boundary in a finite number of iterations. However, TRF does not, as it is an interior point method.

Results of NMF (problem (47)) by the TRF method

7.3.2 Autoencoder with MNIST

Figure 5 shows the results of problem (48). The results of the GGO method are omitted because the method explicitly computes the Jacobian, but it was infeasible in this large-scale setting, where \(d = 96{,}104\) and \(n = 728{,}000\). Among the existing methods, the PG method converges the fastest, but the proposed method converges about five times faster than PG. This result suggests that our method is also effective for large-scale and highly nonlinear problems.

Results of autoencoder with MNIST (problem (48))

8 Conclusion and future work

We proposed an LM method for solving constrained least-squares problems. Our method finds an \(\varepsilon\)-stationary point of (possibly) nonzero-residual problems after \(O(\varepsilon ^{-2})\) computation, and also achieves local quadratic convergence for zero-residual problems. There are few LM methods having both overall complexity bounds and local quadratic convergence even for unconstrained problems; in fact, our investigation yielded only one such algorithm [6]. The key to our analysis is a simple update rule for \((\uplambda _k)\) and the majorization lemma (Lemma 1).

We may be able to extend the convergence analysis shown in this paper to different problem settings. For example, it would be interesting to derive an overall complexity bound of LM methods for a nonsmooth function F. It would be also interesting to integrate a stochastic technique into our LM method against problems with F of a huge size. Finally, in recent years, studies on local convergence analysis for non-zero residual problems are progressive [2, 6, 39]. It is important to research our LM method further in this line.

Code availability

The source code used in our numerical experiments is available on https://github.com/n-marumo/constrained-lm.

Notes

If \(x^*\) is an interior point of the constraint \({\mathcal {C}}\), the problem can be regarded as an unconstrained one, and the quadratic convergence is easier to prove. We do not assume this, i.e., \(x^*\) may be on the boundary of \({\mathcal {C}}\).

Since \(c\uplambda \Vert F_k\Vert\) in Algorithm 3 derives from our update rule of \(\uplambda\) and our analysis, it does not seem appropriate to use a criterion with \(c\uplambda \Vert F_k\Vert\) directly in another algorithm. We thus use \(\eta \Vert x_{k,t} - y\Vert \le 10^{-9}\) instead of \(\eta \Vert x_{k,t} - y\Vert \le c\uplambda \Vert F_k\Vert\) here.

\(\delta = 1\) and \(\delta = 2\) correspond to the Fan method and the KYF method, respectively.

Because the algorithm with \(M = 1\) outperformed \(M = 15\) in our experiments, we omit the results with \(M = 15\).

When using the Jacobian-vector products, i.e., not computing the Jacobian explicitly, almost all of the algorithm’s runtime is spent solving subproblems.

Here, for each method, we determine the best and worst cases out of ten instances as follows. Each algorithm is stopped when either of the following conditions is fulfilled: (i) the objective function value falls below \(10^{-10}\); (ii) the execution time exceeds 10 seconds. First, we define that the case stopped by condition (i) is better than that stopped by condition (ii). Then, among the cases stopped by condition (i), the case with a shorter execution time is defined as better. Similarly, among the cases stopped by condition (ii), the case with a smaller objective value is defined as better. Note that from the above definition, the instances corresponding to plots in the same figure may be distinct.

We ran the TRF method for ten instances for each (r, p), but Fig. 4f has only nine markers because the algorithm stopped with the error “SVD did not converge” for one instance.

References

Behling, R., Fischer, A.: A unified local convergence analysis of inexact constrained Levenberg–Marquardt methods. Optim. Lett. 6(5), 927–940 (2012)

Behling, R., Gonçalves, D.S., Santos, S.A.: Local convergence analysis of the Levenberg–Marquardt framework for nonzero-residue nonlinear least-squares problems under an error bound condition. J. Optim. Theory Appl. 183(3), 1099–1122 (2019)

Bellavia, S., Morini, B.: Strong local convergence properties of adaptive regularized methods for nonlinear least squares. IMA J. Numer. Anal. 35(2), 947–968 (2015)

Bellavia, S., Cartis, C., Gould, N.I., Morini, B., Toint, P.L.: Convergence of a regularized Euclidean residual algorithm for nonlinear least-squares. SIAM J. Numer. Anal. 48(1), 1–29 (2010)

Bellavia, S., Gratton, S., Riccietti, E.: A Levenberg–Marquardt method for large nonlinear least-squares problems with dynamic accuracy in functions and gradients. Numer. Math. 140(3), 791–825 (2018)

Bergou, E.H., Diouane, Y., Kungurtsev, V.: Convergence and complexity analysis of a Levenberg–Marquardt algorithm for inverse problems. J. Optim. Theory Appl. 185(3), 927–944 (2020)

Berry, M.W., Browne, M., Langville, A.N., Pauca, V.P., Plemmons, R.J.: Algorithms and applications for approximate nonnegative matrix factorization. Comput. Stat. Data Anal. 52(1), 155–173 (2007)

Blumensath, T.: Compressed sensing with nonlinear observations and related nonlinear optimization problems. IEEE Trans. Inf. Theory 59(6), 3466–3474 (2013)

Bradbury, J., Frostig, R., Hawkins, P., Johnson, M. J., Leary, C., Maclaurin, D., Necula, G., Paszke, A., VanderPlas, J., Wanderman-Milne, S., Zhang, Q.: JAX: composable transformations of Python+NumPy programs (2018). http://github.com/google/jax

Branch, M.A., Coleman, T.F., Li, Y.: A subspace, interior, and conjugate gradient method for large-scale bound-constrained minimization problems. SIAM J. Sci. Comput. 21(1), 1–23 (1999)

Candes, E.J., Li, X., Soltanolkotabi, M.: Phase retrieval via Wirtinger flow: theory and algorithms. IEEE Trans. Inf. Theory 61(4), 1985–2007 (2015)

Cartis, C., Gould, N.I., Toint, P.L.: On the evaluation complexity of composite function minimization with applications to nonconvex nonlinear programming. SIAM J. Optim. 21(4), 1721–1739 (2011)

Cartis, C., Gould, N.I.M., Toint, P.L.: Adaptive cubic regularisation methods for unconstrained optimization. Part I: motivation, convergence and numerical results. Math. Program. 127(2), 245–295 (2011)

Cartis, C., Gould, N.I.M., Toint, P.L.: Adaptive cubic regularisation methods for unconstrained optimization. Part II: worst-case function-and derivative-evaluation complexity. Math. Program. 130(2), 295–319 (2011)

Cartis, C., Gould, N.I.M., Toint, P.L.: An adaptive cubic regularization algorithm for nonconvex optimization with convex constraints and its function-evaluation complexity. IMA J. Numer. Anal. 32(4), 1662–1695 (2012)

Cartis, C., Gould, N., Toint, P.L.: Strong evaluation complexity bounds for arbitrary-order optimization of nonconvex nonsmooth composite functions. arXiv preprint arXiv:2001.10802 (2020)

Chorowski, J., Zurada, J.M.: Learning understandable neural networks with nonnegative weight constraints. IEEE Trans. Neural Netw. Learn. Syst. 26(1), 62–69 (2014)

Condat, L.: Fast projection onto the simplex and the \(l_1\) ball. Math. Program. 158(1), 575–585 (2016)

Dan, H., Yamashita, N., Fukushima, M.: Convergence properties of the inexact Levenberg–Marquardt method under local error bound conditions. Optim. Methods Softw. 17(4), 605–626 (2002)

Dirkse, S.P., Ferris, M.C.: MCPLIB: a collection of nonlinear mixed complementarity problems. Optim. Methods Softw. 5(4), 319–345 (1995)

Drusvyatskiy, D., Paquette, C.: Efficiency of minimizing compositions of convex functions and smooth maps. Math. Program. 178(1–2), 503–558 (2019)

Facchinei, F., Fischer, A., Herrich, M.: A family of Newton methods for nonsmooth constrained systems with nonisolated solutions. Math. Methods Oper. Res. 77(3), 433–443 (2013)

Fan, J.: A modified Levenberg-Marquardt algorithm for singular system of nonlinear equations. J. Comput. Math. 21, 625–636 (2003)

Fan, J.: Convergence rate of the trust region method for nonlinear equations under local error bound condition. Comput. Optim. Appl. 34(2), 215–227 (2006)

Fan, J.: The modified Levenberg–Marquardt method for nonlinear equations with cubic convergence. Math. Comput. 81(277), 447–466 (2012)

Fan, J.: On the Levenberg–Marquardt methods for convex constrained nonlinear equations. J. Ind. Manag. Optim. 9(1), 227–241 (2013)

Fan, J.: Accelerating the modified Levenberg–Marquardt method for nonlinear equations. Math. Comput. 83(287), 1173–1187 (2014)

Fan, J., Lu, N.: On the modified trust region algorithm for nonlinear equations. Optim. Methods Softw. 30(3), 478–491 (2015)

Fan, J., Pan, J.: Inexact Levenberg–Marquardt method for nonlinear equations. Discrete Contin. Dyn. Syst. Ser. B 4(4), 1223–1232 (2004)

Fan, J., Pan, J.: Convergence properties of a self-adaptive Levenberg–Marquardt algorithm under local error bound condition. Comput. Optim. Appl. 34(1), 47–62 (2006)

Fan, J., Pan, J.: On the convergence rate of the inexact Levenberg–Marquardt method. J. Ind. Manag. Optim. 7(1), 199–210 (2011)

Fan, J., Pan, J.: An improved trust region algorithm for nonlinear equations. Comput. Optim. Appl. 48(1), 59–70 (2011)

Fan, J., Yuan, Y.: On the convergence of a new Levenberg–Marquardt method. Technical Report 005, AMSS, Chinese Academy of Sciences (2001)

Fan, J., Yuan, Y.: On the quadratic convergence of the Levenberg–Marquardt method without nonsingularity assumption. Computing 74(1), 23–39 (2005)

Fischer, A., Shukla, P., Wang, M.: On the inexactness level of robust Levenberg–Marquardt methods. Optimization 59(2), 273–287 (2010)

Gonçalves, D.S., Gonçalves, M.L., Oliveira, F.R.: An inexact projected LM type algorithm for solving convex constrained nonlinear equations. J. Comput. Appl. Math. 391, 113421 (2021)

Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning. MIT Press, Cambridge (2016)

Griewank, A.: The modification of Newton’s method for unconstrained optimization by bounding cubic terms. Technical report, Technical report NA/12, Department of Applied Mathematics and Theoretical Physics, University of Cambridge (1981)

Ipsen, I.C., Kelley, C., Pope, S.: Rank-deficient nonlinear least squares problems and subset selection. SIAM J. Numer. Anal. 49(3), 1244–1266 (2011)

Kanzow, C., Yamashita, N., Fukushima, M.: Levenberg–Marquardt methods with strong local convergence properties for solving nonlinear equations with convex constraints. J. Comput. Appl. Math. 172(2), 375–397 (2004)

Lan, G., Monteiro, R.D.C.: Iteration-complexity of first-order penalty methods for convex programming. Math. Program. 138(1–2), 115–139 (2013)

Lee, D., Seung, H.S.: Algorithms for non-negative matrix factorization. In: Leen, T., Dietterich, T., Tresp, V. (eds.) Advances in Neural Information Processing Systems, vol. 13. MIT Press, Cambridge https://proceedings.neurips.cc/paper/2000/file/f9d1152547c0bde01830b7e8bd60024c-Paper.pdf (2000)

Levenberg, K.: A method for the solution of certain non-linear problems in least squares. Q. Appl. Aathematics 2(2), 164–168 (1944)

Li, X., Voroninski, V.: Sparse signal recovery from quadratic measurements via convex programming. SIAM J. Math. Anal. 45(5), 3019–3033 (2013)

Lin, Q., Xiao, L.: An adaptive accelerated proximal gradient method and its homotopy continuation for sparse optimization. In: Xing, E.P., Jebara, T. (eds.) Proceedings of the 31st International Conference on Machine Learning, voll. 32, pp. 73–81. PMLR. (2014)

Luo, X., Zhou, M., Xia, Y., Zhu, Q.: An efficient non-negative matrix-factorization-based approach to collaborative filtering for recommender systems. IEEE Trans. Ind. Inf. 10(2), 1273–1284 (2014)

Marquardt, D.W.: An algorithm for least-squares estimation of nonlinear parameters. J. Soc. Ind. Appl. Math. 11(2), 431–441 (1963)

Meintjes, K., Morgan, A.P.: A methodology for solving chemical equilibrium systems. Appl. Math. Comput. 22(4), 333–361 (1987)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Kluwer Academic Publishers, Massachusetts (2004)

Nesterov, Y.: Modified Gauss–Newton scheme with worst case guarantees for global performance. Optimisation Methods and Software 22(3), 469–483 (2007)

Nesterov, Y.: Gradient methods for minimizing composite functions. Math. Program. 140(1), 125–161 (2013)

Nesterov, Y.: Lectures on Convex Optimization, vol. 137. Springer, Berlin (2018)

Nesterov, Y., Polyak, B.T.: Cubic regularization of Newton method and its global performance. Math. Program. 108(1), 177–205 (2006)

O’donoghue, B., Candès, E.: Adaptive restart for accelerated gradient schemes. Found. Comput. Math. 15(3), 715–732 (2015)

Osborne, M.R.: Nonlinear least squares—the Levenberg algorithm revisited. J. Aust. Math. Soc. Ser. B. Appl. Math. 19(3), 343–357 (1976)

Rosenbrock, H.: An automatic method for finding the greatest or least value of a function. Comput. J. 3(3), 175–184 (1960)

Ueda, K., Yamashita, N.: On a global complexity bound of the Levenberg–Marquardt method. J. Optim. Theory Appl. 147(3), 443–453 (2010)

Virtanen, P., Gommers, R., Oliphant, T.E., Haberland, M., Reddy, T., Cournapeau, D., Burovski, E., Peterson, P., Weckesser, W., Bright, J., van der Walt, S.J., Brett, M., Wilson, J., Millman, K.J., Mayorov, N., Nelson, A.R.J., Jones, E., Kern, R., Larson, E., Carey, C.J., Polat, İ, Feng, Y., Moore, E.W., VanderPlas, J., Laxalde, D., Perktold, J., Cimrman, R., Henriksen, I., Quintero, E.A., Harris, C.R., Archibald, A.M., Ribeiro, A.H., Pedregosa, F., vanMulbregt, P.: SciPy 1.0 Contributors. SciPy 1.0. Fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020)

Wang, Y.-X., Zhang, Y.-J.: Nonnegative matrix factorization: a comprehensive review. IEEE Trans. Knowl. Data Eng. 25(6), 1336–1353 (2012)

Wiltschko, A., Johnson, M.: The Autodiff Cookbook—JAX documentation. https://jax.readthedocs.io/en/latest/notebooks/autodiff_cookbook.html

Wood, A.J., Wollenberg, B.F., Sheblé, G.B.: Power Generation, Operation, and Control. Wiley, New York (2013)

Yamashita, N., Fukushima, M.: On the rate of convergence of the Levenberg–Marquardt method. In: Alefeld, G., Chen, X. (eds.) Topics in Numerical Analysis, pp. 239–249. Springer, Vienna (2001)

Zhang, H., Zhou, Y., Liang, Y., Chi, Y.: A nonconvex approach for phase retrieval: reshaped Wirtinger flow and incremental algorithms. J. Mach. Learn. Res. 18(1), 5164–5198 (2017)

Zhang, J.-L., Wang, Y.: A new trust region method for nonlinear equations. Math. Methods Oper. Res. 58(2), 283–298 (2003)

Zhang, S., Wang, W., Ford, J., Makedon, F.: Learning from incomplete ratings using non-negative matrix factorization. In Proceedings of the 2006 SIAM International Conference on Data Mining, pp. 549–553. SIAM (2006)

Zhao, R., Fan, J.: Global complexity bound of the Levenberg–Marquardt method. Optim. Methods Softw. 31, 805–814 (2016)

Acknowledgements

We thank the anonymous referees for their careful reading of our manuscript and their helpful comments.

Funding

Open access funding provided by University of Tokyo. This work was supported by JSPS KAKENHI Grant Numbers 20K19748 and 19H04069, and JST ERATO Grand Number JPMJER1903.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Lemmas and proofs

Lemmas and proofs

1.1 Lemma on Lipschitz-like properties

Recall that the line segment \({\mathcal {L}}(a, b)\) is defined in (8).

Lemma 9

Let \({\mathcal {X}} \subseteq {\mathbb {R}}^d\) be any (possibly nonconvex) set. For some constants \(\sigma , L > 0\), consider the following two sets of conditions:

and

Then,

- (i)

- (ii)

- (iii)

Proof

By applying the multivariate mean value theorem, i.e.,

we can easily obtain Lemma 9(i) and (ii). Lemma 9(iii) is obtained as follows:

The last inequality follows from (49a), (49b), and Lemma 9(i). \(\square\)

Remark 3

By replacing (F, J) with \((f, \nabla f)\) in Lemma 9(ii),

implies

1.2 Proof of Lemma 1

The proof requires the following lemma, which is useful for deriving the majorization lemma for (general) MM-based algorithms under the assumption of Lipschitz continuity only on a sublevel set. We will use this lemma to prove Lemma 1 as well as Proposition 1.

Lemma 10

Let \({\mathcal {X}} \subseteq {\mathbb {R}}^d\) be any convex set. Fix a point \(x_k \in {\mathcal {X}}\), and a strictly convex function \({{\tilde{m}}}: {\mathbb {R}}^d \rightarrow {\mathbb {R}}\) such that \({{\tilde{m}}}(x_k) = f(x_k)\) and \(\nabla {{\tilde{m}}}(x_k) = \nabla f(x_k)\). We consider three subsets of \({\mathcal {X}}\):

If

then \({\mathcal {R}}_1 \subseteq {\mathcal {R}}_2\), and therefore \({\mathcal {R}}_1 \subseteq {\mathcal {R}}_2 \subseteq {\mathcal {R}}_3\).

Proof

We fix \(x \in {\mathcal {R}}_1\) arbitrarily and will show \(x \in {\mathcal {R}}_2\). This is obvious if \(x = x_k\), and thus, let \(x \ne x_k\) below. Accordingly, we have

since

By the Taylor expansion

together with (53), there exists \(\theta _1 > 0\) such that

We will prove \(x \in {\mathcal {R}}_2\) by contradiction; assume \(x \notin {\mathcal {R}}_2\), i.e., there exists \(\theta _2 \in [0, 1]\) such that

Combining (55) and (54) with the intermediate value theorem yields that there exists \(\theta _3 \in (\theta _1, \theta _2)\) such that

Note that (56b) is equivalent to \((1 - \theta _3)x_k + \theta _3 x \in {\mathcal {R}}_2\). On the other hand, we also have \((1 - \theta _3)x_k + \theta _3 x \in {\mathcal {R}}_1\) by the convexity of \({\mathcal {R}}_1\) and \(x_k, x \in {\mathcal {R}}_1\). Thus, we have

by (52). Therefore, we obtain

which is a contradiction. \(\square\)

Now, we prove Lemma 1.

Proof of Lemma 1

The model function \(m^k_\uplambda\) is strictly convex and satisfies \(m^k_\uplambda (x_k) = f(x_k)\) and \(\nabla m^k_\uplambda (x_k) = \nabla f(x_k)\). We use Lemma 10 with \({{\tilde{m}}} :=m^k_\uplambda\). Note that (11) and (12) correspond to \(x \in {\mathcal {R}}_1\) and \(x \in {\mathcal {R}}_3\), respectively, where \({\mathcal {R}}_1\) and \({\mathcal {R}}_3\) are defined in (51). Thus, by Lemma 10, it suffices to prove (52). We fix \(x \in {\mathcal {R}}_1 \cap {\mathcal {R}}_2\) arbitrarily and will show \(x \in {\mathcal {R}}_3\). Let \(u :=x - x_k\). From the convexity of \({\mathcal {X}}\), \(x \in {\mathcal {R}}_2\), (9), and Lemma 9(ii), we have

From the inequality of arithmetic and geometric means, we have

Furthermore, by (2) and \(x \in {\mathcal {R}}_1\), we have

Using these inequalities, we obtain \(x \in {\mathcal {R}}_3\) as follows:

\(\square\)

The proof of Lemma 1 is a little complicated mainly because x and y in (9) are restricted on the sublevel set \({\mathcal {S}}(x_k)\). If we assume the Lipschitz continuity of J on the convex set \({\mathcal {X}}\) as in [66], Lemma 10 is unnecessary and the proof of Lemma 1 can be simplified.

1.3 Proposition on projected gradient methods

Proposition 1

Fix a point \(x_k \in {\mathcal {C}}\). For some constant \(L_f > 0\), assume that

Then, for \(\eta \ge L_f\),

Proof

For \(\eta \ge L_f\), we define

and use Lemma 10 with this function and \({\mathcal {X}} = {\mathcal {C}}\). Note that this \({{\tilde{m}}}\) is strictly convex and satisfies that \({{\tilde{m}}}(x_k) = f(x_k)\) and \(\nabla {{\tilde{m}}}(x_k) = \nabla f(x_k)\). By (61), Remark 3, and \(\eta \ge L_f\), we have \({\mathcal {R}}_2 \subseteq {\mathcal {R}}_3\), where \({\mathcal {R}}_2\) and \({\mathcal {R}}_3\) are defined in (51), and we therefore have (52). Thus, by Lemma 10, we obtain \({\mathcal {R}}_1 \subseteq {\mathcal {R}}_2 \subseteq {\mathcal {R}}_3\), which yields \({\mathcal {P}}_\eta (x_k) = \mathop {\textrm{argmin}}\nolimits _{x \in {\mathcal {C}}} {{\tilde{m}}}(x) \in {\mathcal {R}}_1 \subseteq {\mathcal {R}}_2 \subseteq {\mathcal {R}}_3\). The first result (62) is equivalent to \({\mathcal {P}}_\eta (x_k) \in {\mathcal {R}}_2\), and the second (63) is equivalent to \({\mathcal {P}}_\eta (x_k) \in {\mathcal {R}}_3\) since \({{\tilde{m}}} ({\mathcal {P}}_\eta (x_k)) = f(x_k) - {\mathcal {D}}_\eta (x_k)\). \(\square\)

1.4 Proof of Lemma 3

To prove Lemma 3, we first show the following Lipschitz-like property on \(\nabla f\).

Lemma 11

Let Assumption 1hold and define \(L_f\) by (20). Then, for \(\eta \ge L_{f}\), we have

Proof

Fix a point \(x' \in {\mathcal {C}} \cap {\mathcal {S}}(x_0)\) arbitrarily. Since \(\Vert F(x')\Vert \le \Vert F(x_0)\Vert = \Vert F_0\Vert\), Assumption 1 and Lemma 9(iii) with \({\mathcal {X}} = {\mathcal {C}} \cap {\mathcal {S}}(x')\) imply

By Proposition 1 and (64), we have \({\mathcal {L}}(x', {\mathcal {P}}_\eta (x')) \subseteq {\mathcal {S}}(x')\) for \(\eta \ge L_f\). Therefore, by using (64) again, we obtain

which is the desired result. \(\square\)

Now, we prove Lemma 3.

Proof of Lemma 3

Since

from the definitions of \({\mathcal {P}}_\eta\) and \({\mathcal {G}}_\eta\) in (18) and (19), we obtain

\(\square\)

1.5 Relaxing an assumption in Theorem 1(ii)

In order to compute an \(\varepsilon\)-stationary point based on Theorem 1(ii), knowledge of the value of \({\bar{\eta }}\) is required. However, this requirement can be circumvented with a slight modification of the algorithm. We show it in this section.

Let \(\eta _k\) be the value of \(\eta\) when \(x_k\) is obtained in Algorithm 1. As with the proof of Theorem 1(ii), we can show that

for some \(k = O(\varepsilon ^{-2})\). If (66) and \(\eta _{k+1} \ge L_f\) hold, then \({\mathcal {P}}_{\eta _{k+1}}(x_k)\) is an \(\varepsilon\)-stationary point by Lemma 3, but \(\eta _{k+1} \ge L_f\) is not necessarily true. To address this issue, we modify Algorithm 1 a little.

As can be seen from the proof of Lemma 3, even if \(\eta < L_f\), the point \({\mathcal {P}}_\eta (x_k)\) is an \(\varepsilon\)-stationary point of problem (1) as long as \(\Vert {\mathcal {G}}_{\eta }(x_k)\Vert \le \varepsilon / 2\) and the following hold:

Thus, by updating \(\eta\) by \(\eta \leftarrow \alpha _{\textrm{in}} \eta\) until (67) is satisfied when \(x_k\) is obtained in Algorithm 1, we can guarantee that \({\mathcal {P}}_{\eta _{k+1}}(x_k)\) is an \(\varepsilon\)-stationary point for some \(k = O(\varepsilon ^{-2})\). Since (67) must hold for \(\eta \ge L_f\) by Lemma 11, this modification of the algorithm does not sacrifice Lemma 6 and other convergence guarantees. The important point here is that we can check if (67) holds with no prior knowledge of constants (e.g., \(\sigma\), L, and \(L_f\)) of the problem. We have obtained the modified algorithm that does not require the knowledge of the constants.

Rights and permissions