Abstract

Sparsity finds applications in diverse areas such as statistics, machine learning, and signal processing. Computations over sparse structures are less complex compared to their dense counterparts and need less storage. This paper proposes a heuristic method for retrieving sparse approximate solutions of optimization problems via minimizing the \(\ell _{p}\) quasi-norm, where \(0<p<1\). An iterative two-block algorithm for minimizing the \(\ell _{p}\) quasi-norm subject to convex constraints is proposed. The proposed algorithm requires solving for the roots of a scalar degree polynomial as opposed to applying a soft thresholding operator in the case of \(\ell _{1}\) norm minimization. The algorithm’s merit relies on its ability to solve the \(\ell _{p}\) quasi-norm minimization subject to any convex constraints set. For the specific case of constraints defined by differentiable functions with Lipschitz continuous gradient, a second, faster algorithm is proposed. Using a proximal gradient step, we mitigate the convex projection step and hence enhance the algorithm’s speed while proving its convergence. We present various applications where the proposed algorithm excels, namely, sparse signal reconstruction, system identification, and matrix completion. The results demonstrate the significant gains obtained by the proposed algorithm compared to other \(\ell _{p}\) quasi-norm based methods presented in previous literature.

Similar content being viewed by others

1 Introduction

1.1 Motivation

In numerical analysis and scientific computing, a sparse matrix/array is the one with many of its elements being zeros. The number of zeros divided by the total number of elements is called sparsity. Sparse data is often easier to store and process. Hence, techniques for deriving sparse solutions and exploiting them have attracted the attention of many researchers in various engineering fields like machine learning, signal processing, and control theory.

The taxonomy of sparsity can be studied through the Rank Minimization Problem (RMP). It has been lately considered in many engineering applications including control design and system identification. This is because the notions of complexity and system order can be closely related to the matrix rank. The RMP can be formulated as follows:

where \(\textbf{X} \in \mathbb {R}^{m\times n}\) and \(\mathcal {M}\subset \mathbb {R}^{m\times n}\) is a convex set. The problem (1) in its generality is NP-hard [1]. Therefore, polynomial time algorithms for solving large-scale problems of the form in (1) are not currently known. Hence, recently adopted methods for solving such problems are approximate and structured heuristics. A special case of RMP is the Sparse Vector Recovery (SVR) problem involving \(\ell _{0}\) pseudo-norm minimization given by:

where \(\textbf{x} \in \mathbb {R}^{n}\), \(\mathcal {V}\subset \mathbb {R}^n\) is a closed convex set and \(\left\Vert \cdot \right\Vert _{0}\) counts the number of the non-zero elements of its argument. From the definition of the rank being the number of non-zero singular values of a matrix, it can be easily realized that (1) is a generalized form of (2).

Numerous studies, which will be expounded upon in the subsequent section, have individually addressed effective solution methods for the problems presented in (1) and (2). These approaches utilize Schatten-p and \(\ell _{p}\) quasi-norm relaxations, respectively. However, existing methods in this domain often either assume a predefined structure for the convex set \(\mathcal {M}\) in (1) or exclusively cater to the specialized case articulated in (2). Consequently, these methods lack comprehensive applicability. Leveraging the inherent relationship between the Schatten-p quasi-norm and the \(\ell _{p}\) quasi-norm of matrix singular values, we endeavor to formulate an efficient heuristic method based on Schatten-p relaxation. This method is devised to address both problems in a unified manner. The proposed approach begins with the introduction of an algorithm for solving the \(\ell _{p}\) quasi-norm relaxation of the SVR problem presented in (2). Subsequently, recognizing that (2) constitutes a specific case of (1), we utilize the developed \(\ell _{p}\) quasi-norm minimization algorithm as a foundational component for constructing the envisaged generalized algorithm for RMPs.

1.2 Related work

1.2.1 Sparse vector recovery

Given that many signals exhibit sparsity or compressibility, the SVR problem has found widespread applications in fields such as object recognition, classification, and compressed sensing, as evidenced by studies such as [2,3,4]. The concept of sparse representation of signals and systems has been extensively discussed in [5], where the authors conducted a comprehensive review of both theoretical and empirical results pertaining to sparse optimization. They also derived the sufficient conditions necessary for ensuring uniqueness, stability, and computational feasibility. Moreover, [5] explores diverse applications of the SVR problem, contending that in certain tasks involving denoising and compression, methods rooted in sparse optimization offer state-of-the-art solutions.

The problem of constructing sparse solutions for undetermined linear systems has garnered significant attention. A survey conducted in [6] comprehensively examined existing algorithms for sparse approximation. The reviewed methods encompassed various approaches, including greedy methods [7, 8], techniques rooted in convex relaxation [3, 4], those employing non-convex optimization strategies [9, 10], and approaches necessitating brute force [11]. The authors discussed the computational demands of these algorithms and elucidated their interrelationships.

Sparse optimization problems of the form \(\min {f(\textbf{x}) + \mu g(\textbf{x}) }\) have been extensively explored in the literature, where \(g(\textbf{x})\) serves as a sparsity-inducing function, f represents a loss function capturing measurement errors, and \(\mu >0\) functions as a trade-off parameter balancing data fidelity and sparsity. In [12], the authors addressed a sparse recovery problem involving a set of corrupted measurements. By defining \(g(\cdot )\) as the \(\ell _{1}\) norm, they established a sufficient condition for exact sparse signal recovery, specifically the Restricted Isometry Property (RIP).

Motivated by the convergence of the \(\ell _{p}\) quasi-norm to the \(\ell _{0}\) pseudo-norm as \(p \rightarrow 0\), the problem was extended in [13] by setting g as the \(\ell _{p}\) quasi-norm for \(p\in (0,1)\). The authors presented theoretical results showcasing the \(\ell _{p}\) quasi-norm’s capability to recover sparse signals from noisy measurements. Under more relaxed RIP conditions, it was demonstrated that the \(\ell _{p}\) quasi-norm provides superior theoretical guarantees in terms of stability and robustness compared to \(\ell _{1}\) minimization.

In [9], the authors considered the problem of SVR via \(\ell _{p}\) quasi-norm minimization from a limited number of linear measurements of the target signal. However, the proposed approach faced limitations due to its higher computational complexity compared to the \(\ell _{1}\) norm. In [14], Fourier-based algorithms for convex optimization were leveraged to solve sparse signal reconstruction problems via \(\ell _{p}\) quasi-norm minimization, demonstrating a combination of the construction capabilities of non-convex methods with the speed of convex ones.

An alternative approach for sparse reconstruction was proposed in [15], replacing the non-convex function with a quadratic convex one. Furthermore, [16] introduced an Alternating Direction Method of Multipliers (ADMM) [17] based algorithm enforcing both sparsity and group sparsity using non-convex regularization. Additionally, [18] proposed an iterative half-thresholding algorithm for expedited solutions of \(\ell _{0.5}\) regularization. The authors not only established the existence of the resolvent of the gradient of the \(\ell _{0.5}\) quasi-norm but also derived its analytic expression and provided a thresholding representation for the solutions. The convergence of this iterative half-thresholding algorithm was studied in [19], demonstrating its convergence to a local minimizer of the regularized problem with a linear convergence rate.

Conditions for the convergence of an ADMM algorithm aimed at minimizing the sum of a smooth function with a bounded Hessian and a non-smooth function are established in [20]. In [21], the convergence of ADMM is analyzed for the minimization of a non-convex and potentially non-smooth objective function subject to equality constraints. The derived convergence guarantee extends to various non-convex objectives, encompassing piece-wise linear functions, \(\ell _{p}\) quasi-norm, and Schatten-p quasi-norm (\(0<p<1\)), while accommodating non-convex constraints. Several works have explored the \(\ell _{1-2}\) relaxation objective, defined the difference between \(\ell _{1}\) and \(\ell _{2}\) norms, i.e., \(\ell _{1}-\ell _{2}\), with [22] providing a theoretical analysis on SVR through weighted \(\ell _{1-2}\) minimization when partial support information is available. Recovery conditions for exact SVR within a \(\ell _{1-2}\) objective framework are derived in [23, 24], along with references therein, establishing the theoretical foundation for ensuring accurate SVR outcomes.

1.2.2 Rank minimization

In [25], the authors sought to determine the least order dynamic output feedback, utilizing the formulation akin to (1), capable of stabilizing a linear time-invariant system. Their approach involved minimizing the trace, as opposed to the rank, resulting in a Semi-Definite Program (SDP) amenable to efficient solution techniques. Notably, their solution was specifically applicable to symmetric and square matrices. Building upon this work, [26] introduced a generalization of the aforementioned approach. This extension involved replacing the rank in the objective function with the summation of the singular values of the matrix, commonly known as the nuclear norm. The authors demonstrated that this modification yields the convex envelope of the non-convex rank objective, reducing to the original trace heuristic when the decision matrix assumes the form of a symmetric Positive Semi-Definite (PSD) matrix.

In [27], an alternative heuristic based on the logarithm of the determinant was introduced as a surrogate for rank minimization within the subspace of PSD matrices. The authors demonstrated that this formulation could be effectively solved through a sequence of trace minimization problems. In a related study, [28] delved into existing trace and log determinant heuristics, exploring their applications for computing a low-rank approximation in various scenarios. Specifically, the applications encompassed obtaining simple data models with interpretability by approximating covariance matrices for a given dataset.

Drawing inspiration from the success of the \(\ell _{p}\) quasi-norm \((0<p<1)\) for sparse signal reconstruction, an alternative method aims to enforce low-rank structure using the Schatten-p quasi-norm. This norm is defined as the \(\ell _{p}\) quasi-norm of the singular values. In [29], the authors addressed the matrix completion problem, which involves constructing a low-rank matrix based on a subset of its entries. Instead of minimizing the nuclear norm, they proposed a Schatten-p quasi-norm formulation and investigated its convergence properties. To enhance the robustness of the solution, [30] combined the Schatten-p quasi-norm for low-rank recovery with the \(\ell _{p}\) quasi-norm \((0 < p \le 1)\) of prediction errors on the observed entries. The authors introduced an algorithm based on ADMM, which demonstrated superior numerical performance compared to other completion methods. In a non-convex approach for matrix optimization problems involving sparsity, [31] developed a technique using a generalized shrinkage operation. This method enhances the separation of moving objects from the stationary background by decomposing video into low-rank and sparse components, presenting advantages over the convex case.

1.3 Contributions

In spite of the commendable performance exhibited by the array of algorithms outlined in Sects. 1.2.1 and 1.2.2, each designed to address different relaxations of (1) and (2), it is essential to acknowledge their problem-specific nature, primarily grounded in the specific structural attributes of the convex constraint sets they address. This issue of specialization results in a lack of generality across problem domains.

In this paper, we present a versatile algorithm grounded in the principles of projections onto constraint sets. A distinctive feature of this approach lies in its minimal reliance on problem-specific structural constraints, prioritizing the foundational characteristic of closed convexity. The works [32, 33] delve into a comprehensive exploration, analyzing the intrinsic attributes of the projection operation onto constraint sets. While the former addresses the issue without incorporating a crucial coupling condition for polynomial equations, the latter assumes prior knowledge of the projection technique for each given point on \(\ell _{p}\) balls.

Initially, we propose an ADMM based algorithm, termed as \(\ell _{p}\) Quasi-Norm ADMM (pQN-ADMM), designed to solve the \(\ell _{p}\) quasi-norm relaxation of (2). At each iteration, the pivotal operation involves computing Euclidean projections onto specific convex and non-convex sets. Notably, the algorithm exhibits two key properties: 1) Its computational complexity aligns with that of \(\ell _{1}\) minimization algorithms, with the additional task of solving for the roots of a polynomial; 2) It does not necessitate a specific structure for the convex set.

Subsequently, we extend the application of the proposed algorithm to address the relaxation of (1) by embracing the Schatten-p quasi-norm. In this extension, we leverage the equivalence between minimizing the \(\ell _{p}\) quasi-norm of the vector of singular values and minimizing the Schatten-p quasi-norm. Our study encompasses the following numerical instances:

-

1

An example employing SVR, wherein the primary objective is the recovery of the sparsest feasible vector from given realizations.

-

2

A matrix completion example, where the overarching goal is the reconstruction of an unknown low-rank matrix based on a limited subset of observed entries.

-

3

Addressing a time-domain system identification problem, specifically tailored for minimum-order system detection.

Our numerical results compellingly showcase the competitiveness of pQN-ADMM when bench-marked against several state-of-the-art baseline methods.

Conclusively, given the inherent reliance of the derived algorithm on a convex projection step in each iteration, our endeavor is directed towards the formulation of an expedited algorithm accompanied by a rigorous mathematical convergence guarantee. Focusing on a subset of problems where the constraint set manifests as a polytope, we leverage principles from the Proximal Gradient (PG) method to formulate a rapid algorithm. The convergence of this algorithm is established with a rate of \(O(\frac{1}{K})\), where K denotes the iteration budget assigned to the algorithm.

2 Notation

Unless otherwise specified, we denote vectors with lowercase boldface letters, i.e., \(\textbf{x}\), with i-th entry as \(x_{i}\), while matrices are in uppercase, i.e. \(\textbf{X}\), with (i, j)-th entry as \(x_{i,j}\). For an integer \(n \in \mathbb {Z}_{+}\), \([n]{\mathop {=}\limits ^{\Delta }}\{1,\ldots ,n\}\). \(\textbf{1}\) represents a vector of all entries equal to 1, while \(\mathbbm {1}_{\mathcal {G}}(.)\) is an indicator function to the set \(\mathcal {G}\), i.e., it evaluates to zero if its argument belongs to the set \(\mathcal {G}\) and is \(+\infty\) otherwise.

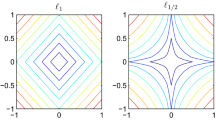

For a vector \(\textbf{x}\in \mathbb {R}^{n}\), the general \(\ell _{p}\) norm is defined as:

where, we let \(\left\Vert \textbf{x} \right\Vert\) be the well-known Euclidean norm, i.e., \(p=2\). When \(0<p<1\), the expression in (3) is termed as a quasi-norm satisfying the same axioms of the norm except the triangular inequality making it a non-convex function.

For a matrix \(\textbf{X}\), \(\left\Vert \textbf{X}\right\Vert\) represents the spectral norm, which is defined as the square root of the maximum eigenvalue of the matrix \(\textbf{X}^{\textrm{H}}\textbf{X}\). \(\textbf{X}^{\textrm{H}}\) refers to the complex conjugate transpose of \(\textbf{X}\), denoted as \(\textbf{X}^{\top }\). On the other hand, \(\left\Vert .\right\Vert _{\textrm{f}}\) signifies the Frobenius norm of a matrix.

The Schatten-p quasi-norm of a matrix \(\textbf{X}\) is defined as:

where \(\sigma _{i}(\textbf{X})\) is the i-th singular value of the matrix \(\textbf{X}\). We utilize the \(*\) subscript in (4) to differentiate the matrix Schatten-p quasi-norm from vector \(\ell _{p}\) case defined in (3). When \(p=1\), (4) yields the nuclear norm which is the convex envelope of the rank function. Throughout the paper, we consider a non-convex relaxation for the rank function, specifically \(p=1/2\).

We define the ceiling operator, denoted as \(\lceil \cdot \rceil\), the vectorization operator \(\textrm{vec}(\textbf{X}) \in \mathbb {R}^{mn}\), representing the vector obtained by stacking the columns of the matrix \(\textbf{X}\in \mathbb {R}^{m\times n}\), and the Hankel operator Hankel(.), producing a Hankel matrix from the provided vector arguments. We define the sign operator, denoted as \(\text {sign}(\cdot )\), which outputs -1, 0, or 1 corresponding to a negative, zero, or positive argument, respectively.

3 Sparse vector recovery algorithm

3.1 Problem formulation

This section develops a method for approximating the solution of (2) using the following relaxation:

where \(p \in (0, 1]\) and \(\mathcal {V}\) is a closed convex set. Problem (5) is convex for \(p\ge 1\); hence, can be solved to optimality efficiently. However, the problem becomes non-convex when \(p<1\). We present a gradient-based algorithm and consequently, it may not always converge to a global optimum solution but only to a stationary point. An epigraph equivalent formulation of (5) is obtained by introducing the variable \(\textbf{t}=[t_{i}]_{i \in [n]}\):

Let \(\mathcal {X} \subset \mathbb {R}^{2}\) denote the epigraph of the scalar function \(|x|^{p}\), i.e., \(\mathcal {X}=\{(x,t) \in \mathbb {R}^2: t \ge |x|^{p}\}\), which is a non-convex set for \(p<1\). Then, (6) can be cast as:

ADMM, as introduced in [17], leverages the inherent problem structure to partition the optimization process into simpler sub-problems, which are solved iteratively. To achieve this, auxiliary variables \(\textbf{y}=[y_{i}]_{i \in [n]}\) and \(\textbf{z}=[z_{i}]_{i \in [n]}\) are introduced, leading to an ADMM reformulation of the problem defined in (7):

The dual variables associated with the constraints \(\textbf{x}=\textbf{y}\) and \(\textbf{t}=\textbf{z}\) are \(\varvec{\lambda }\) and \(\varvec{\theta }\), respectively. Throughout the paper, the colons in the constraints of an optimization problem serve as a means to associate the constraint (appearing on the left side of the colon) with its corresponding Lagrange multiplier (found on the right side of the colon). The Lagrangian function corresponding to (8) augmented with a quadratic penalty on the violation of the equality constraints with penalty parameter \(\rho > 0\), is given by:

Considering the two block variables \((\textbf{x},\textbf{t})\) and \((\textbf{y},\textbf{z})\), ADMM consists of the following iterations:

Given the augmented Lagrangian function expressed in (9), it is evident from (10) that the variables \(\textbf{x}\) and \(\textbf{t}\) are iteratively updated by solving the following non-convex problem:

Exploiting the separable structure of (14), one immediately concludes that (14) can be split into n independent 2-dimensional problems that can be solved in parallel, i.e., for each \(i \in [n]\):

where \(\Pi _{\mathcal {X}}(.)\) denotes the Euclidean projection operator onto the set \(\mathcal {X}\). Furthermore, (9) and (11) imply that \(\textbf{y}\) and \(\textbf{z}\) are independently updated as follows:

Algorithm 1 summarizes the proposed ADMM algorithm. It is clear that \(\textbf{z}\), \(\varvec{\lambda }\), and \(\varvec{\theta }\) merit closed-form updates. However, updating \((\textbf{x},\textbf{t})\) requires solving n non-convex problems. Our strategy for dealing with this issue is presented in the following section.

3.2 Non-convex projection

In this section, we present the method used to tackle the non-convex projection problem required to update \(\textbf{x}\) and \(\textbf{t}\).

As it is clear from (15), \(\textbf{x}\) and \(\textbf{t}\) can be updated element-wise via performing a projection operation onto the non-convex set \(\mathcal {X}\), one for each \(i \in [n]\). The n projection problems can be run independently in parallel. We now outline the proposed idea for solving one such projection, i.e., we suppress the dependence on the index of the entry of \(\textbf{x}\) and \(\textbf{t}\). For \((\bar{x},\bar{t}) \in \mathbb {R}^{2}\), \(\Pi _{\mathcal {X}}(\bar{x},\bar{t})\) entails solving:

If \(\bar{t} \ge |\bar{x}|^{p}\), then trivially \(\Pi _{\mathcal {X}}(\bar{x},\bar{t})=(\bar{x},\bar{t})\). Thus, we focus on the case in which \(\bar{t} < |\bar{x}|^{p}\). The following theorem states the necessary optimality conditions for (18).

Theorem 1

Let \(\bar{t} < |\bar{x}|^{p}\), and \((x^{*},t^{*})\) be an optimal solution of (18). Then, the following properties are satisfied:

-

(a)

\({\text{sign}}(x*) = {\text{sign}}(\bar{x})\),

-

(b)

\(t^{*} \ge \bar{t}\),

-

(c)

\(|x^{*}|^{p} \ge \bar{t}\),

-

(d)

\(t^{*}=|x^{*}|^{p}\).

Proof

We prove the statements by contradiction as follows:

-

(a)

Suppose that \({\text{sign}}(x^{*}) \ne {\text{sign}}(\bar{x})\), then:

$$\begin{aligned} |x^{*}-\bar{x}|\!=\!|x^{*}-0|\!+\!|\bar{x}-0| > |\bar{x}-0|, \end{aligned}$$(19)i.e., \((x^{*}\!-\!\bar{x})^{2} \!>\! (0\!-\!\bar{x})^{2}\). Hence, \(g(x^{*},t^{*})-g(0,t^{*}) \!>\! 0\). Moreover, the feasibility of \((x^{*},t^{*})\) implies that \(t^{*}>0\). Thus, \((0,t^{*})\) is feasible and attains a lower objective value than that attained by \((x^{*},t^{*})\). This contradicts the optimality of \((x^{*},t^{*})\).

-

(b)

Assume that \(t^{*} < \bar{t}\). Then:

$$\begin{aligned} g(x^{*},t^{*})-g(x^{*},\bar{t})=(t^{*}-\bar{t})^{2} > 0. \end{aligned}$$(20)Furthermore, by the feasibility of \((x^{*},t^{*})\), we have \(|x^{*}|^{p} \le t^{*} < \bar{t}\). Thus, \((x^{*},\bar{t})\) is feasible and attains a lower objective value than that attained by \((x^{*},t^{*})\). This contradicts the optimality of \((x^{*},t^{*})\).

-

(c)

Suppose that \(|x^{*}|^{p} < \bar{t}\), i.e.,

$$\begin{aligned} -\bar{t}^{\frac{1}{p}}< x^{*}< \bar{t}^{\frac{1}{p}}. \end{aligned}$$(21)We now consider two cases, \(\bar{x}>0\) and \(\bar{x}<0\). First, let \(\bar{x} > 0\). Then, we have by \((\textit{a})\) and (21) that \(0<x^{*}<\bar{t}^{\frac{1}{p}}\). Since \(\bar{t}<|\bar{x}|^{p}\), i.e., \((\bar{x},\bar{t}) \notin \mathcal {X}\), therefore \(\bar{t}^{\frac{1}{p}}<\bar{x}\) and hence, \(0<x^{*}<\bar{t}^{\frac{1}{p}}<\bar{x}\). Pick \(x_{0} > 0\) such that \(|x_{0}|^{p}=\bar{t}\), i.e., \(x_{0}=\bar{t}^{\frac{1}{p}}\). Then clearly, \(x^{*}< x_{0} < \bar{x}\). Thus, we have:

$$\begin{aligned} g(x^{*},t^{*})-g(x_{0},t^{*})=(x^{*}-\bar{x})^{2}-(x_{0}-\bar{x})^{2} > 0, \end{aligned}$$(22)where the last inequality follows the just proven identity that \(x^{*}< x_{0} < \bar{x}\). Moreover, we have \(|x_{0}|^{p}=\bar{t} \le t^{*}\) by \((\textit{b})\). Thus, \((x_{0},t^{*})\) is feasible and attains a lower objective value than that attained by \((x^{*},t^{*})\). This contradicts the optimality of \((x^{*},t^{*})\). On the other hand, let \(\bar{x}<0\). Then, we have by \((\textit{a})\) and (21) that \(-\bar{t}^{\frac{1}{p}}< x^{*} < 0\). Since \(\bar{t}<|\bar{x}|^{p}\), i.e., \((\bar{x},\bar{t}) \notin \mathcal {X}\), then \(\bar{t}^{\frac{1}{p}}<|\bar{x}|\), i.e., \(\bar{x}<-\bar{t}^{\frac{1}{p}}\). Therefore, \(\bar{x}< -\bar{t}^{\frac{1}{p}} < x^{*}.\) Pick \(x_{0}<0\) such that \(|x_{0}|^{p}=\bar{t}\), i.e., \(x_{0}=-\bar{t}^{\frac{1}{p}}\). Then, (22) also holds when \(\bar{x}<0\). Note that \(|x_{0}|^{p} = \bar{t} \le t^{*}\) by \((\textit{b})\). Thus, \((x_{0},t^{*})\) is feasible and attains a lower objective value than that attained by \((x^{*},t^{*})\). This contradicts the optimality of \((x^{*},t^{*})\).

-

(d)

The feasibility of \((x^{*},t^{*})\) eliminates the possibility that \(t^{*}<|x^{*}|^{p}\). Now let \(t^{*}>|x^{*}|^{p}\) and pick \(t_{0}=|x^{*}|^{p}\). Then, \(\bar{t} \le |x^{*}|^{p}=t_{0}<t^{*}\), where the first inequality follows from \((\textit{c})\). Then, \(0 \le t_{0}-\bar{t} < t^{*}-\bar{t}\). Thus, we have:

$$\begin{aligned} g(x^{*},t^{*})-g(x^{*},t_{0})=(t^{*}-\bar{t})^{2}-(t_{0}-\bar{t})^{2} > 0, \end{aligned}$$(23)Furthermore, the feasibility of \((x^{*},t_{0})\) follows trivially from the choice of \(t_{0}\). Thus, \((x^{*},t_{0})\) is feasible and attains a lower objective value than that attained by \((x^{*},t^{*})\). This contradicts the optimality of \((x^{*},t^{*})\).

This concludes the proof. \(\square\)

We now make use of the fact that for (18), an optimal solution \((x^{*},t^{*})\) satisfies \(t^{*}=|x^{*}|^{p}\) and hence, (18) reduces to solving:

The first order necessary optimality condition for (24) implies the following:

By the symmetry of the function \(|x|^{p}\), without loss of generality, assume that \(x^{*}>0\) and let \(0< p=\frac{s}{q} < 1\) for some \(s,q \in \mathbb {Z}_{+}\). A change of variables \(a^{q}=x^{*}\) plugged in (25) shows that finding an optimal solution for (18) reduces to finding a root of the following scalar degree 2q polynomial:

To determine \(\Pi _{\mathcal {X}}(\bar{x},\bar{t})\), the objective is to find a root denoted as \(a^{*}\) for the polynomial in (26), while ensuring that the pair \((a^{*^q},a^{*^s})\) minimizes the function g(x, t). Algorithm 2 provides a summary of the method employed to address problem (18).

When both \(x^{*}=0\) and \(t^{*}=0\), the objective function evaluates to \(g(0,0)=\bar{x}^{2}+\bar{t}^{2}\). To optimize the objective function while upholding the constraint \(t\ge |x|^{p}\), the choice is made to set \(x^{*}=0\) and \(t^{*}=\max \{0,\bar{t}\}\). This decision ensures that \(g(0, t^{*})\le g(0,0)\). In instances where \(\bar{x}=0\) and \(\bar{t}\le |\bar{x}|^{p}\), indicating that \(\bar{t}\le 0\), the choice is to set \(x^{*}=0\). Consequently, this results in \(g(0, t)=(t-\bar{t})^{2}=(t+|\bar{t}|)^{2}\). To meet the constraint \(t^{*}\ge |x^{*}|^{p}\), the optimal selection is \(t^{*}=0\), which stands as the most suitable option for minimizing g(0, t).

3.3 Convex projection

The convex projection for \({\textbf {y}}\)-update in (16) can be formulated as the following convex optimization problem:

Convex problems can be solved by a variety of contemporary methods including bundle methods [34], sub-gradient projection [35], interior point methods [36], and ellipsoid methods [37]. The efficiency of optimization techniques relies mainly on exploiting the structure of the constraint set. As discussed in Sect. 1.3, our objective is to address the problem outlined in (5) with minimal assumptions on the set \(\mathcal {V}\). Our only requirement is that \(\mathcal {V}\) is a closed and convex set. Nevertheless, if feasible, one should capitalize on the inherent structure of \(\mathcal {V}\) to potentially streamline the computational complexity involved in solving (27).

4 Rank minimization algorithm

We consider the problem in (1) and propose a method for approximating its solution efficiently. The Schatten-p heuristic of (1) can be written as:

where \(\textrm{L}=\min \{m,n\}\) and \(\sigma _{i}(\textbf{X})\) is the ith singular value of \(\textbf{X}\). In the scenario where \(p=1\), (28) represents a convex problem, akin to the nuclear norm heuristic. We now consider a non-convex relaxation, specifically for the case where \(0<p<1\). The problem in (28) attains an epi-graph form:

such that \(\textbf{t}=[t_{i}]_{i\in [\textrm{L}]}\). Defining the epi-graph set \(\mathcal {Y}\) for the function \(\sigma (X)\), where \(\mathcal {Y}{\mathop {=}\limits ^{\Delta }}\{(\sigma (\textbf{X}),t)\!\in \! \mathbb {R}^{2}\!:\!|\sigma (\textbf{X})|^{p}\!\le \! t\} \subseteq \mathbb {R}^{2}\), the problem in (29) can be written as:

To formulate the problem in a manner amenable to ADMM, we introduce auxiliary variables, \(\textbf{Y} \in \mathbb {R}^{m\times n}\) and \(\textbf{z}=[z_{i}]_{i\in [\textrm{L}]}\). This transformation leads to the following representation of the problem in (30):

where \(\varvec{\Lambda }\), \(\varvec{\theta }\) are the dual variables associated with \(\textbf{X}\) and \(\textbf{t}\) respectively. Similar to (9), the Lagrangian function associated with (31), augmented with a quadratic penalty for the equality constraint violation with a parameter \(\rho >0\), can be represented as:

where \(Tr\{.\}\) is the trace operator. Given the 2-tuples \((\textbf{X},\textbf{t})\) and \((\textbf{Y},\textbf{z}\)), the ADMM iterations are as follows:

4.1 \((\textbf{X},\textbf{t})\) Update

By completing the square and employing some straightforward algebraic manipulations, it can be demonstrated that the problem described in (33) is equivalent to:

where \(\bar{\textbf{X}}^{k}{\mathop {=}\limits ^{\Delta }}\textbf{Y}^{k}-\frac{\varvec{\Lambda }^{k}}{\rho }\) and \(\bar{\textbf{t}}^{k}{\mathop {=}\limits ^{\Delta }}\textbf{z}^{k}-\frac{\varvec{\theta }^{k}}{\rho }\). For simplicity, we will omit the iteration index k. Let’s assume that \(\textbf{X} = \textbf{P}{\varvec{{\Sigma }}} \textbf{Q}^{\top }\) and \(\bar{\textbf{X}} = \textbf{U}{\varvec{{\Delta }}} \textbf{V}^{\top }\) represent the Singular Value Decomposition (SVD) of \(\textbf{X}\) and \(\bar{\textbf{X}}\), respectively. Here, \({\varvec{{\Sigma }}}\) and \({\varvec{{\Delta }}}\) are diagonal matrices with the singular values associated with \(\textbf{X}\) and \(\bar{\textbf{X}}\), while \(\textbf{P}\), \(\textbf{U}\), \(\textbf{Q}\), and \(\textbf{V}\) are unitary matrices. Following the steps in [38, Theorem 3], we can express the first term of (38) as:

where (a) is because \(\textbf{P}^{\top }\textbf{P}=\textbf{Q}^{\top }\textbf{Q}=\textbf{U}^{\top }\textbf{U}=\textbf{V}^{\top }\textbf{V}=\textbf{I}_{\textrm{L}\times \textrm{L}}\) with \(\textbf{I}_{\textrm{L}\times \textrm{L}}\) being an identity matrix of size \(\textrm{L}\), and exploiting the circular property of the trace while (b) holds is from the main result of [39]. In order to make \(\left\Vert \textbf{X}-\bar{\textbf{X}}^{k} \right\Vert _{\textrm{f}}^{2}\) achieve its derived lower bound, we set \(\textbf{P}=\textbf{U}\) and \(\textbf{Q}=\textbf{V}\).

The problem in (38) is then equivalent to:

where \(\textbf{x}=[x_{i}]_{i\in [\textrm{L}]}\) and \(\bar{\textbf{x}}=[\bar{x}_{i}]_{i\in [\textrm{L}]}\) are the vectors of singular values of the matrices \(\textbf{X}\) and \(\bar{\textbf{X}}\) respectively. The optimal solution \(\textbf{X}^{*}\) for (38) can be determined by first finding the optimal \(\textbf{x}^{*}\) for (40), and then obtaining \(\textbf{X}^{*}=\textbf{U} \varvec{\Sigma }^{*} \textbf{V}^{\top }\), where \(\varvec{\Sigma }^{*}=\text {diag}(\textbf{x}^{*})\) and \(\text {diag}(.)\) denotes an operator that transforms a vector into its corresponding diagonal matrix. Given that the problem in (40) is separable, we will proceed by omitting the index i and focus solely on solving:

It can be realized that (41) is similar to (18), hence, its optimal solution can be found by applying Algorithm 2.

4.2 \((\textbf{Y},\textbf{z})\) update

Upon updating \((\textbf{X},\textbf{t})\) with \(\varvec{\Lambda }\) and \(\varvec{\theta }\) held constant, the problem in (34) can be reformulated as:

which is clearly a convex optimization problem representing the projection of the point \(\textbf{X}^{k+1}+\frac{\varvec{\Lambda }^{k}}{\rho }\) on the set \(\mathcal {M}\) and can be solved by various known class of algorithms as discussed in Sect. 3.3. Following the update of \(\textbf{Y}\), the update for \(\textbf{z}\) in (35) is as follows:

which results in a closed-form solution for \(\textbf{z}^{k+1} = \textbf{t}^{k+1} + \frac{\varvec{\theta }^{k} - \textbf{1}}{\rho }\).

5 Proximal gradient algorithm

The pQN-ADMM algorithm adeptly handles the \(\ell _{p}\) relaxation of (2), refraining from assuming any specific structure for \(\mathcal {V}\) beyond its closed and convex nature. Primarily, the algorithm hinges on the computation of Euclidean projections onto \(\mathcal {V}\), as outlined in (27).

In this section, we consider a sub-class of problems with a specific structure for the convex set of the form \(\mathcal {V}=\{\textbf{x}:\,f(\textbf{x})\le 0\}\), where \(f(\textbf{x})\) is a convex function with Lipschitz continuous gradient. i.e., f is L-smooth: \(\left\Vert \nabla f(\textbf{x})-\nabla f(\textbf{y})\right\Vert \le L\left\Vert \textbf{x}-\textbf{y}\right\Vert\) for all \(\textbf{x},\textbf{y} \in \mathbb {R}^{n}\). Specifically, in order to solve:

we aim to develop an efficient algorithm with some convergence guarantees for the following Lagrangian relaxation:

where \(\mu \ge 0\) is the dual multiplier that captures the trade-off between solution sparsity and fidelity. It is imperative to acknowledge that (44) and (45) exhibit a relationship, albeit not being strictly equivalent.

A canonical problem for the regularized risk minimization has the following form:

where h is an L-smooth loss function, and g represents the regularizer term. In cases where both g and h exhibit convexity, the Proximal Gradient (PG) algorithm [40] can iteratively compute a solution to (46) through PG steps.

where \(\textbf{prox}_{g/\lambda }(.){\mathop {=}\limits ^{\Delta }}\mathop {\textrm{argmin}}\limits _{\textbf{x}} g(\textbf{x}) + \frac{\lambda }{2} \left\Vert \textbf{x}-\cdot \right\Vert ^{2}\), for some constant \(\lambda\). When g is convex, the proximal map \(\textbf{prox}_{g/\lambda }\) is well-defined, thus, the PG step can be computed.

In comparing both (45) and (46), it is observed that the convexity assumption of \(g(\textbf{x})\) in (46) is not met for \(\Vert \textbf{x}\Vert _{p}^{p}\) in (45). When the regularizer is a continuous non-convex function, the proximal map \(\textbf{prox}_{g/\lambda }\) may not exist, and computing it in closed form becomes a challenging task.

On the contrary, in the case of \(\Vert \textbf{x}\Vert _{p}^{p}\), leveraging similar reasoning as employed in the non-convex projection step introduced in Sect. 3.2, our objective is to derive an analytical solution that can be efficiently computed. Specifically, assuming \(p\in (0,1)\) is a positive rational number, the proposed method for computing the proximal map of \(\Vert \textbf{x}\Vert _{p}^{p}\) involves finding the roots of a polynomial of order 2q, where \(q\in \mathbb {Z}_{+}\) such that \(p=s/q\) for some \(s\in \mathbb {Z}_{+}\).

Since f is L-smooth, for all \(\textbf{x}, \textbf{y} \in \mathbb {R}^{n}\), we have:

Given \(\textbf{x}^k\), replacing \(f(\textbf{x})\) with the upper bound in (48) for \(\textbf{y}=\textbf{x}^k\), the prox-gradient operation naturally arises as follows:

By completing the square, (49) yields to:

Defining \({\bar{\text{x}}}^{k} \mathop = \limits^{\Delta } {\text{x}}^{k} - \frac{1}{L}\nabla f({\text{x}}^{{\text{k}}} )\), (50) can be rewritten as:

which is clearly a separable structure in the entries of \(\textbf{x}\). Therefore, for each \(i\in [n]\), we have:

where \(\bar{g}:\mathbb {R}\rightarrow \mathbb {R}_+\) such that \(\bar{g}(t)=|t|^{p}\) for some positive rational \(p\in (0,1)\).

Next, we consider a generic form of (52), i.e., given some \(\bar{t}\in \mathbb {R}\), we would like to compute:

The first-order optimality condition for (53) can be written as:

Using similar arguments as in Sect. 3.2, we can conclude that the optimal solution \(t^{*}\) attains the property that \(\text {sign}(t^{*})=\text {sign}(\bar{t})\). Without loss of generality, exploiting the symmetry of the function \(\bar{g}\), we only consider the case when \(\bar{t}>0\); hence, the optimal solution \(t^{*}\) is the smallest positive root of the following polynomial:

Similar to (26), suppose \(0< p = \frac{s}{q} < 1\) for some positive integers s and q. By employing the variable transformation \(a \triangleq (t^{*})^{\frac{1}{q}}\), the optimality condition in (55) is simplified to the task of finding the roots of a polynomial of degree 2q:

In order to solve (44) effectively, we will employ Algorithm 3, which implements the non-convex inexact Accelerated Proximal Gradient (APG) descent method as presented in [41, Algorithm 2]. In summary, Algorithm 3 is designed to tackle composite problems of the form in (46), making the assumptions that h is L-smooth and g is a proper lower-semicontinuous function such that \(F \triangleq h + g\) is bounded from below and coercive. This means that \(\lim _{\Vert \textbf{x}\Vert \rightarrow \infty }F(\textbf{x}) = +\infty\). It is important to note that neither h nor g are required to be convex. Algorithm 3 can be summarized as follows:

-

An extrapolation \(\textbf{y}_{k}\) is generated as introduced in [42] for the APG algorithm (step 3).

-

Steps 4 through 9 encompass a mechanism for a non-monotone update of the objective function. Specifically, \(F(\textbf{y}_{k})\) undergoes scrutiny concerning its relation to the maximum among the most recent l objective values. Step 9 is responsible for adjusting the gradient step accordingly. This adjustment occasionally allows \(\textbf{y}^{k}\) to increase the objective, resulting in a situation where \(F(\textbf{y}^{k})\) becomes lower than the maximum objective value observed in the latest l iterations.

-

Steps 11 and 12 represent the solution of the PG step using the non-convex projection method.

In the next part, we show that Algorithm 3 converges to a critical point and it exhibits a convergence rate of \(O(\frac{1}{K})\), where K is the iteration budget that is given to the algorithm.

Definition 1

([43]) The Frechet sub-differential of F at \(\textbf{x}\) is

The sub-differential of F at \(\textbf{x}\) is

Definition 2

[43] \(\textbf{x}\) is a critical point of F if \(0\in \partial g(\textbf{x})+\nabla h(\textbf{x})\).

By comparing (46) and (45), it can be realized that the functions \(g(\textbf{x})\) and \(h(\textbf{x})\) in definition 2 are equal to \(\left\Vert \textbf{x}\right\Vert _{p}^{p}\) and \(\frac{\mu }{2}f(\textbf{x})\), respectively.

Theorem 2

The sequence \(\textbf{x}^{k}\) generated from Algorithm 3 has at least one limit point and all the generated limit points are critical points of (45). Moreover, the algorithm converges with rate \(O(\frac{1}{K})\), where K is the iteration budget given to the algorithm.

Proof

It can easily be verified that our problem in (45) satisfies all required assumptions for Algorithm 3. Indeed,

-

1

The function \(g(\textbf{x})=\left\Vert \textbf{x}\right\Vert _{p}^{p}\) is a proper and lower semi-continuous function.

-

2

The gradient of \(h(\textbf{x})=\frac{\mu }{2}f(\textbf{x})\) is \(\bar{L}\)-Lipschitz smooth, i.e., \(\left\Vert \nabla h(\textbf{x})-\nabla h(\textbf{y})\right\Vert \le \bar{L}\left\Vert \textbf{x}-\textbf{y}\right\Vert\) for all \(\textbf{x},\textbf{y} \in \mathbb {R}^{n}\), with \(\bar{L}=\frac{\mu }{2}L\).

-

3

\(F(\textbf{x})\!=\!g(\textbf{x})\!+\!h(\textbf{x})\) is bounded from below, i.e., \(F(\textbf{x})\!\ge \!0\).

-

4

\(\lim _{\left\Vert \textbf{x}\right\Vert \rightarrow \infty }F(\textbf{x})=\infty\).

-

5

Let \(\mathcal {G}(\textbf{x}){\mathop {=}\limits ^{\Delta }}\textbf{x}-\textbf{prox}_{g/\lambda }(\textbf{x}-\nabla h(\textbf{x})/L)\). From [43, 44], \(\left\Vert \mathcal {G}(\textbf{x})\right\Vert ^{2}\) can be used to measure how far \(\textbf{x}\) is from optimality. Specifically, \(\textbf{x}\) is a critical point of (46) if and only if \(\mathcal {G}(\textbf{x})=0.\)

-

6

The introduced non-convex projection method is an exact solution for the proximal gradient step. This is because it is based on finding the roots of a polynomial of order 2q in (56).

Therefore, from Theorem 4.1 and Proposition 4.3 of [41], the sequence generated by Algorithm 3 converges to a critical point of (45). Additionally, \(\left\Vert \mathcal {G}(\textbf{x}^{k})\right\Vert ^{2}\) converges with rate \(O(\frac{1}{K})\), thereby completing the proof. \(\square\)

Remark 1

The global convergence of several exact iterative methods that solve (46) has been explored, under the framework of Kurdyka-Lojasiewicz (KL) theory, in various additional literature including [43, 45,46,47,48]. Other work (see [49] and references therein) considered the linear convergence of non-exact algorithms with relaxations on the assumptions of KL theory, however, it is difficult to verify that the sequence generated by Algorithm 3 satisfies the relaxed assumptions stated in [49].

6 Numerical results

In this section, we present numerical examples to illustrate the application of the pQN-ADMM algorithm, as expounded in Algorithm 1, and the non-convex projection method delineated in Algorithm 2. Within each of the ensuing examples, we conduct comparative analyses with the convex \(\ell _{1}\) relaxation solution, achieved through the use of the MOSEK solver [50], and alternative \(\ell _{0.5}\)-based solutions previously proposed in the literature.

The degree of the polynomial for which the roots are determined during the non-convex projection step depends on the value of q in the context of \(p=\frac{s}{q}\). It might lead one to speculate that the computational complexity of the non-convex projection step is contingent on the specific value of p, suggesting that lower values of p result in slower algorithm performance. In order to explore this aspect, we systematically performed the non-convex projection step 200 times on a vector of 1024 elements, as part of a sparse vector reconstruction example. Throughout this process, we systematically varied the values of the parameter p, considering a range of p values, specifically \(p\in \{\frac{1}{2},\frac{1}{3},\frac{1}{4},\frac{1}{5}\}\). The average time to perform the non-convex projection for the entire vector, where the roots of (26) for each p are computed using the “root” command in MATLAB, is observed to be nearly constant, approximately 0.03 s. Furthermore, our numerical experiments in this particular example indicated that for \(p\in \{\frac{1}{3},\frac{1}{4},\frac{1}{5}\}\), no substantial improvement over the \(\ell _{0.5}\) case was observed. As a result, these cases are currently undergoing further investigation and are not included in the numerical results section.

6.1 Sparse vector recovery (SVR)

In this section, we implement a sparse vector reconstruction problem and compare the solution of the pQN-ADMM algorithm with the \(\ell _{1}\) convex relaxation along with an \(\ell _{0.5}\) relaxation solution and Linear Approximation for Index Tracking (LAIT), as presented in [51] and [52], respectively.

Let \(n=2^{10}\) and \(m=n/4\), randomly construct the sparse binary matrix, \({\textbf {M}}\in \mathbb {R}^{m \times \frac{n}{2}}\), with a few number of ones in each column. The number of ones in each column of \(\textbf{M}\) is generated independently and randomly in the range of integers between 10 and 20, and their locations are randomly chosen independently for each column. Let \(\textbf{U}=[\textbf{M},-\textbf{M}]\), which is the vertical concatenation of the matrix \({\textbf {M}}\) and its negative. Following the same setup in [53], the column orthogonality in \(\textbf{U}\) is not satisfied. Let \(\textbf{x}_{\textrm{opt}} \in \mathbb {R}^{n}\) be a reference signal with \(\Vert \textbf{x}_{\textrm{opt}}\Vert _{0}=\lceil 0.2n \rceil\), where the non-zero locations are chosen uniformly at random with the values following a zero mean, unit variance Gaussian distribution. Let \({\textbf {v}}=\textbf{U}\textbf{x}_{\textrm{opt}}+{\textbf {n}}\) be the allowable measurement, where \({\textbf {n}} \in \mathbb {R}^{m}\) is a Gaussian random vector with zero mean and co-variance matrix \(\sigma ^{2}\textbf{I}_{m\times m}\), where \(\textbf{I}\) is the identity matrix. The sparse vector is reconstructed from \({\textbf {v}}\) by solving (5) with \(\mathcal {V}=\{ \textbf{x}: \Vert \textbf{U}\textbf{x}-\textbf{v}\Vert /\Vert \textbf{v}\Vert -\epsilon \le 0 \}\), where \(\epsilon =\frac{3\sigma }{\Vert \textbf{v}\Vert }\). All the algorithms are terminated if \(\Vert \textbf{x}^{k}-\textbf{x}^{k-1}\Vert /\Vert \textbf{x}^{k-1}\Vert \le 10^{-4}\) or a budget of 200 iterations is consumed.

Figure 1 depicts the correlation between sparsity levels and noise variances concerning solutions derived through \(\ell _{1}\) norm minimization, \(\ell _{0.5}\), LAIT, and pQN-ADMM techniques. A threshold of \(10^{-6}\) was imposed, designating entries of the solution vector as zero if they fell below this threshold. The reported outcomes are based on the average results obtained from 20 independently conducted random iterations. Notably, it becomes evident that the pQN-ADMM algorithm consistently yields solutions with higher sparsity levels in comparison to its counterpart baseline methods, across a range of \(\sigma ^{2}\) values. As \(\sigma ^{2}\) increases, the sparsity level for all approaches decreases, attributable to the heightened scarcity of information pertaining to the original signal within the realization vector, thereby compromising the precision of the reconstruction process.

6.2 Rank minimization problem (RMP)

Within this section, our primary focus is directed towards the exploration of the pQN-ADMM algorithm within the RMP framework, as presented in Sect. 4. We commence by engaging in a matrix completion scenario, presenting an extensive comparative analysis pitting the pQN-ADMM algorithm against various baseline methods rooted in the Schatten-p quasi-norm framework.

Additionally, we delve into a time domain system identification example. Notably, we restrict our comparative analysis to the convex nuclear norm. This singularity in focus arises from the unique constraint nature of the problem at hand, specifically the Hankel constraint. To the best of our knowledge, there are no other Schatten-p-based algorithms capable of addressing constraints of this specific nature in the proposed formulation. This serves to underscore the remarkable versatility of the pQN-ADMM algorithm in handling a broad spectrum of constraints, be they within the vector or matrix domain.

6.2.1 Matrix completion

In this section, we apply our algorithm (pQN-ADMM) to a matrix completion example and compare the result to the Matrix Iterative Re-weighted Least Squares (MatrixIRLS) [54, 55], truncated Iterative Re-weighted unconstrained Lq (tIRucLq) [56] and Iterative Re-weighted Least Squares (sIRLS-p & IRLS-p) [57] algorithms. The matrix completion problem is a special case of the low-rank minimization where a linear transform takes a few random entries of an ambiguous matrix \(\textbf{X}\!\in \! \mathbb {R}^{m\times n}\). Given only these entries, the goal is to approximate \(\textbf{X}\) and find the missing ones. The matrix completion problem with low-rank recovery can be approximated by,

where \(\mathcal {A}:\mathbb {R}^{m\times n} \rightarrow \mathbb {R}^{q}\) is a linear map with \(q \ll mn\) and \(\textbf{b} \in \mathbb {R}^{q}\). To facilitate the application of the aforementioned algorithms, the linear transform \(\mathcal {A}(\textbf{X})\) will be reformulated as \(\textbf{A} \textrm{vec}(\textbf{X})\), where \(\textbf{A} \in \mathbb {R}^{q\times mn}\) and \(\textrm{vec}(\textbf{X}) \in \mathbb {R}^{mn}\) represents a vector obtained by stacking the columns of the matrix \(\textbf{X}\).

A random matrix \(\textbf{M} \in \mathbb {R}^{m\times n}\) with rank \(\textrm{r}\) is created using the following method: 1) \(\textbf{M}=\textbf{M}_{\textrm{L}}\textbf{M}_{\textrm{R}}^{\top }\), where \(\textbf{M}_{\textrm{L}} \in \mathbb {R}^{m\times \textrm{r}}\) and \(\textbf{M}_{\textrm{R}} \in \mathbb {R}^{n\times \textrm{r}}\). 2) The entries of both \(\textbf{M}_{\textrm{L}}\) and \(\textbf{M}_{\textrm{R}}\) are i.i.d Gaussian random variables with zero mean and unit variance. Let \(\hat{\textbf{M}}=\textbf{M}+\textbf{Z}\), where \(\textbf{Z} \in \mathbb {R}^{m\times n}\) is a Gaussian noise with each entry being an i.i.d Gaussian random variable with zero mean and variance \(\sigma ^{2}\). The vector \(\textbf{b}\) is then created by selecting random q elements from \(\textrm{vec}(\hat{\textbf{M}})\). Since \(\textbf{b}=\textbf{A}\textrm{vec}(\hat{\textbf{M}})\), one can easily construct the matrix \(\textbf{A}\) which is a sparse matrix where each row is composed of a value 1 at the index of the corresponding selected entry in the vector \(\textbf{b}\) while the rest are zeros. We set \(m=n=100\), \(\textrm{r}=5\) and \(p=0.5\). Let \(\textrm{d}_{\textrm{r}}=\textrm{r}(m+n-\textrm{r})\) denotes the dimension of the set of rank \(\textrm{r}\) matrices and define \(\textrm{s}=\frac{q}{mn}\) as the sampling ratio. We assume that \(\textrm{s}=0.195\) which yields to \(q=1950\). It can be realized that \(\frac{\textrm{d}_{\textrm{r}}}{q}<1\). We set \(\sigma =0.1\), \(\epsilon =10^{-3}\), and let the algorithms terminate if a budget of 1000 iterations is reached. To compare the solutions across different algorithms, where \(\textbf{X}^{*}\) represents the solution for (59), we evaluate the average of 50 runs based on two metrics: a) the Relative Frobenius Distance (RFD) to the matrix \(\textbf{M}\), defined as \(RFD=\frac{\Vert \textbf{X}^{*}-\textbf{M}\Vert _{\textrm{f}}}{\Vert \textbf{M}\Vert _{\textrm{f}}}\), and b) the Relative Error to Singular (REtS) values of \(\textbf{M}\), given by \(REtS_{i}=\frac{|\sigma _{i}(\textbf{X}^{*})-\sigma _{i}(\textbf{M})|}{\sigma _{i}(\textbf{M})}\) for \(i\in [\min \{m,n\}]\).

In Fig. 2a, b, we report the average RFD and REtS values for all the algorithms. Despite that, all the baselines are designed to exploit the specific structure of the matrix completion problem, described in (59), while the proposed pQN-ADMM doesn’t, it is competitive against them all. This in turn shows the effectiveness of the pQN-ADMM algorithm in solving the rank minimization problems without requiring any prior information about the structure of the associated convex set.

6.2.2 Time domain system identification

We consider a stable Single Input Single Output (SISO) system operating in discrete time, wherein the input vector \(\textbf{u} \in \mathbb {R}^{T}\) corresponds to a temporal span denoted by T, representing the number of input samples. The system is characterized by an impulse response consisting of a fixed number of samples denoted as n. The resultant output of the system is represented by \(\textbf{y} \in \mathbb {R}^{m}\). However, in practical scenarios, only noisy realizations, denoted as \(\hat{\textbf{y}}\), are observable. This realization is expressed as \(\hat{\textbf{y}}{\mathop {=}\limits ^{\Delta }}\textbf{y}+\textbf{z}=\textbf{h} \circledast \textbf{u} +\textbf{z}\), where \(\textbf{h} \in \mathbb {R}^{n}\) signifies the system’s original impulse response, \(\textbf{z} \in \mathbb {R}^{m}\) is a random vector with entries drawn independently from a uniform distribution within the range \([-0.25, 0.25]\), and \(\circledast\) denotes the convolution operator.

Exploiting the window property of convolution, which asserts that \(m=n+T-1\), we establish the relationship among the components \(u_{i}\), \(h_{i}\), and \(y_{i}\) through the linear convolution relation \(y_{i}=\sum _{j=-\infty }^{\infty }h_{j}u_{i-j}\). Herein, \(u_{i}\), \(h_{i}\), and \(y_{i}\) represent the ith components of the vectors \(\textbf{u}\), \(\textbf{h}\), and \(\textbf{y}\), respectively. To succinctly represent the convolution, let \(\textbf{T} \in \mathbb {R}^{m\times n}\) be the Toeplitz matrix formed by the input \(\textbf{u}\), allowing us to express \(\textbf{h} \circledast \textbf{u}=\textbf{h} \textbf{T}^\top\). Furthermore, assuming \(\textbf{x}\in \mathbb {R}^{n}\) to be an impulse response variable, we introduce \(\textbf{X} \in \mathbb {R}^{n\times n}\) as a Hankel matrix formed by the entries of \(\textbf{x}\). From [58,59,60,61], the minimum order time domain system identification problem can be formulated as:

(60b) ensures that \(\textbf{X}\) is a Hankel matrix and (60c) holds to make the result by applying the input, \(\textbf{u}\), to the optimal impulse response, \(\textbf{x}\), fit the available noisy data, \(\hat{\textbf{y}}\), in a non-trivial sense. Defining the convex set \(\mathcal {C}\!{\mathop {=}\limits ^{\Delta }}\!\{\textbf{X} \!\in \!\mathbb {R}^{n\times n}:\left\Vert \hat{\textbf{y}}\!-\!\textbf{x} \textbf{T}^\top \right\Vert ^{2}\!\!\!-\!\epsilon \le 0, \textbf{X}\!=\!Hankel(\textbf{x})\}\), (60) can be cast as:

which is clearly identical to the problem in (1). The problem was solved using the same pQN-ADMM approach discussed in Sect. 4.

Let \(T = m = 50\) and \(n = 40\). It is pertinent to note that \(m < T + n - 1\), is a reasonable assumption aligning with practical applications where only a specific window is available to observe the output. The simulation is conducted across 5 distinct original system orders denoted by \(\eta \in \{2,4,6,8,10\}\). An input vector, \(\textbf{u}\), is generated, with its elements being independent and following a uniform distribution over the interval \([-5, 5]\). For each \(\eta\):

-

1

Fifty random stable systems are generated using the ’drss’ command in MATLAB.

-

2

The generated input is applied to each system, yielding the corresponding noisy output \(\hat{\textbf{y}}\).

-

3

Given the output \(\hat{\textbf{y}}\), the problem specified in (60) is solved, and the rank of the corresponding system is computed using singular value decomposition.

-

4

The obtained results are averaged to derive the corresponding average rank for each original \(\eta\).

Figure 3 presents the average rank results obtained through the nuclear norm and pQN-ADMM heuristics. The outcomes correspond to two distinct threshold values, wherein the threshold is defined as the value below which the singular value is considered zero. Notably, the introduced pQN-ADMM approach demonstrates superior performance compared to the nuclear norm heuristic for both threshold values. Furthermore, as the threshold value decreases from \(10^{-4}\) to \(10^{-5}\), the pQN-ADMM’s behavior remains consistent, while the average rank for the nuclear norm exhibits an increase. This observation underscores the robustness of the derived pQN-ADMM relative to the nuclear norm approach.

Table 1 provides the standard deviation values for the algorithms. It is evident that the standard deviation remains constant for the pQN-ADMM when altering the threshold; conversely, it increases for the nuclear norm as the threshold value decreases.

6.3 Accelerated proximal gradient (APG) algorithm

In this section, we present numerical results for the APG method, as outlined in Algorithm 3. Our primary objective is to address the minimization problem (45) with \(f(\textbf{x})=\left\Vert \textbf{A}\textbf{x}-\textbf{b}\right\Vert ^{2}\).

Consistent with the approach in [62], we initiate the process by generating the target signal \(\textbf{x}^{*}\) through:

where the design parameters \(\Lambda \subset [n]\), and \(\Theta _i^{(1)}, \Theta _i^{(2)}\) for \(i\in \Lambda\) are chosen as follows:

-

1

The index set \(\Lambda \subset [n]\) is constructed by selecting a subset of [n] with cardinality s uniformly at random;

-

2

\(\{\Theta _{i}^{(1)}\}_{i\in \Lambda }\) are Independent and Identically Distributed (IID) Bernoulli random variables taking values \(\pm 1\) with equal probability;

-

3

\(\{\Theta _{i}^{(2)}\}_{i\in \Lambda }\) are IID uniform [0, 1] random variables.

The measurement matrix \(\textbf{A} \in \mathbb {R}^{m\times n}\) is constructed as a partial Discrete Cosine Transform (DCT) matrix, with its rows corresponding to \(m<n\) frequencies. Specifically, these m indices are selected uniformly at random from the set [n]. The noisy measurement vector \(\textbf{b} \in \mathbb {R}^{m}\) is subsequently defined as \(\textbf{b}=\textbf{A}(\textbf{x}^{*}+\varvec{\epsilon }_{1})+\varvec{\epsilon }_{2}\), where \(\varvec{\epsilon }_{1}\) and \(\varvec{\epsilon }_{2}\) are IID random vectors with entries following zero mean Gaussian distributions with variances \(\sigma _{1}^{2}\) and \(\sigma _{2}^{2}\), respectively.

In our experiments, \(n=4096\), \(s=\lceil 0.5m\rceil\) and the APG algorithm memory to 5, i.e., \(l=5\) in Algorithm 3. Following the medium noise setup in [63], we set \(\sigma _1=0.005\), \(\sigma _2=0.001\).

For the objective function \(f(\textbf{x})=\left\Vert \textbf{A}\textbf{x}-\textbf{b}\right\Vert ^{2}\), the Lipschitz constant is given by \(L=2\Vert \textbf{A}\Vert ^2\). Our experimental design encompasses varying values of m, representing the number of noisy measurements, and \(\mu\), serving as the trade-off parameter in (45). For each unique combination of \((m,\mu )\), we conduct 20 random instances of the triplet \((\textbf{x}^{*}, \textbf{A}, \textbf{b})\) to account for the inherent statistical variability of the problem. Each random instance is subsequently solved using Algorithm 3, and the average performance is reported. The termination criterion for Algorithm 3 is defined as the relative error between consecutive iterates satisfies \(\left\Vert \textbf{x}^{k}-\textbf{x}^{k-1}\right\Vert /\left\Vert \textbf{x}^{k-1}\right\Vert \le 10^{-5}\).

In our experiments, we conducted a comparative analysis of solving (45) for \(p=0.5\) against \(p=1\), corresponding to \(\ell _1\)-optimization for sparse recovery. Specifically, for \(p=0.5\), denoting \(\ell _{0.5}\) minimization, we employed Algorithm 3, referred to as \(\ell _{0.5}\) exact. Additionally, we utilized Algorithm 2 from [64], denoted as \(\ell _{0.5}\) approx. Conversely, for \(p=1\), where the \(\ell _1\)-minimization problem is convex, we employed the FISTA algorithm from [42]. The solutions are denoted as \(\bar{\textbf{x}}\), while the target signal, derived from (62), is denoted as \(\textbf{x}^{*}\). In Algorithm 3, we initialized \(\textbf{x}^{0}\) as a zero vector, and \(\textbf{x}^{1}\) was set to the \(\ell _{1}\) norm solution.

Figures 4 and 5 illustrate the relationship between average error, sparsity, and \(\mu\) for various values of n/m. A discernible trend is observed wherein the average error decreases while sparsity increases with an increase in \(\mu\). When \(\mu\) is small, greater emphasis is placed on the loss function, emphasizing \(\ell _{0.5}\) quasi-norm minimization. Consequently, the sparsity level, as depicted in Fig. 5, remains low. Conversely, for higher values of \(\mu\), more weight is assigned to the regularization term’s minimization, resolving \(\left\Vert \textbf{A}\textbf{x}-\textbf{b}\right\Vert ^{2}\), resulting in decreased error (Fig. 4) accompanied by an increase in sparsity.

Figure 6 provides insight into the statistics of the number of iterations until convergence for both the \(\ell _{0.5}\) exact and approximate algorithms. Notably, with a sufficient number of available realizations, specifically for \(n/m=8\) and \(n/m=16\), both algorithms require approximately the same number of iterations. However, as the number of available realizations decreases, particularly for \(n/m=32\) and higher, the exact proximal solution exhibits a significantly lower number of iterations to converge. This observation, coupled with the findings in Figs. 4 and 5, suggests that our algorithm not only yields a comparable solution to the approximate method but also converges with fewer iterations.

7 Conclusion

In this paper, we introduced a non-convex ADMM algorithm, denoted as pQN-ADMM, designed for solving the \(\ell _{p}\) quasi-norm minimization problem. Significantly, our proposed algorithm serves as a versatile approach for tackling \(\ell _{p}\) problems, as it does not rely on specific structural assumptions for the convex constraint set. Moreover, we delved into the problem of solving a non-convex relaxation of RMPs utilizing the Schatten-p quasi-norm. This relaxation was established as the \(\ell _{p}\) minimization of the singular values of the variable matrix, rendering it amenable to the pQN-ADMM algorithm. For scenarios involving constraints defined by differentiable functions with Lipschitz continuous gradients, a proximal gradient step was employed, mitigating the need for a convex projection step. This enhancement not only accelerates the algorithm but also ensures its convergence. Illustrating the numerical results, we applied the pQN-ADMM to diverse examples, encompassing sparse vector reconstruction, matrix completion, and system identification. The algorithm demonstrated competitiveness against various\(\ell _{p}\)-based baselines, underscoring its efficacy across a spectrum of applications.

Availability of data and materials

Not applicable.

References

L. Vandenberghe, S. Boyd, Semidefinite programming. SIAM Rev. 38(1), 49–95 (1996). https://doi.org/10.1137/1038003

J. Wright, A.Y. Yang, A. Ganesh, S.S. Sastry, Y. Ma, Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 31(2), 210–227 (2009)

E.J. Candes, J. Romberg, T. Tao, Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2006)

D.L. Donoho, Compressed sensing. IEEE Trans. Inf. Theory 52(4), 1289–1306 (2006)

A.M. Bruckstein, D.L. Donoho, M. Elad, From sparse solutions of systems of equations to sparse modeling of signals and images. SIAM Rev. 51(1), 34–81 (2009)

J.A. Tropp, S.J. Wright, Computational methods for sparse solution of linear inverse problems. Proc. IEEE 98(6), 948–958 (2010)

S.G. Mallat, Z. Zhang, Matching pursuits with time-frequency dictionaries. IEEE Trans. Signal Process. 41(12), 3397–3415 (1993)

J.A. Tropp, Greed is good: algorithmic results for sparse approximation. IEEE Trans. Inf. Theory 50(10), 2231–2242 (2004)

R. Chartrand, Exact reconstruction of sparse signals via nonconvex minimization. IEEE Signal Process. Lett. 14(10), 707–710 (2007)

R. Chartrand, W. Yin, Iteratively reweighted algorithms for compressive sensing. in 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 3869–3872 (2008). IEEE

A. Miller, Subset Selection in Regression (CRC Press, 2002)

E.J. Candes, T. Tao, Decoding by linear programming. IEEE Trans. Inf. Theory 51(12), 4203–4215 (2005)

R. Saab, R. Chartrand, O. Yilmaz, Stable sparse approximations via nonconvex optimization. in 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 3885–3888 (2008)

R. Chartrand, Fast algorithms for nonconvex compressive sensing: MRI reconstruction from very few data. in 2009 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, pp. 262–265 (2009)

N. Mourad, J.P. Reilly, Minimizing nonconvex functions for sparse vector reconstruction. IEEE Trans. Signal Process. 58(7), 3485–3496 (2010)

R. Chartrand, B. Wohlberg, A nonconvex ADMM algorithm for group sparsity with sparse groups. in 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 6009–6013 (2013). https://doi.org/10.1109/ICASSP.2013.6638818

S. Boyd, N. Parikh, E. Chu, Distributed Optimization and Statistical Learning Via the Alternating Direction Method of Multipliers (Now Publishers Inc, 2011)

Z. Xu, X. Chang, F. Xu, H. Zhang, \(l_{1/2}\) regularization: a thresholding representation theory and a fast solver. IEEE Trans. Neural Netw. Learn. Syst. 23(7), 1013–1027 (2012). https://doi.org/10.1109/TNNLS.2012.2197412

J. Zeng, S. Lin, Y. Wang, Z. Xu, \(l_{1/2}\) regularization: convergence of iterative half thresholding algorithm. IEEE Trans. Signal Process. 62(9), 2317–2329 (2014). https://doi.org/10.1109/TSP.2014.2309076

G. Li, T.K. Pong, Global convergence of splitting methods for nonconvex composite optimization. SIAM J. Optim. 25(4), 2434–2460 (2015)

Y. Wang, W. Yin, J. Zeng, Global convergence of ADMM in nonconvex nonsmooth optimization. J. Sci. Comput. 78(1), 29–63 (2019)

J. Zhang, S. Zhang, W. Wang, Robust signal recovery for \(\ell _{1-2}\) minimization via prior support information. Inverse Prob. 37(11), 115001 (2021). https://doi.org/10.1088/1361-6420/ac274a

W. Wang, J. Zhang, Performance guarantees of regularized \(\ell _{1-2}\) minimization for robust sparse recovery. Signal Process. 201, 108730 (2022)

X. Luo, N. Feng, X. Guo, Z. Zhang, Exact recovery of sparse signals with side information. EURASIP J. Adv. Signal Process. 2022(1), 1–14 (2022)

M. Mesbahi, A semi-definite programming solution of the least order dynamic output feedback synthesis problem. in Proceedings of the 38th IEEE Conference on Decision and Control (Cat. No.99CH36304), vol. 2, pp. 1851–18562 (1999). https://doi.org/10.1109/CDC.1999.830903

M. Fazel, H. Hindi, S.P. Boyd, A rank minimization heuristic with application to minimum order system approximation. in Proceedings of the 2001 American Control Conference. (Cat. No.01CH37148), vol. 6, pp. 4734–47396 (2001)

M. Fazel, H. Hindi, S.P. Boyd, Log-det heuristic for matrix rank minimization with applications to hankel and euclidean distance matrices. in Proceedings of the 2003 American Control Conference, 2003., vol. 3, pp. 2156–21623 (2003)

M. Fazel, H. Hindi, S. Boyd, Rank minimization and applications in system theory. in Proceedings of the 2004 American Control Conference, vol. 4, pp. 3273–32784 (2004)

F. Nie, H. Huang, C. Ding, Low-rank matrix recovery via efficient Schatten p-norm minimization. in Twenty-sixth AAAI Conference on Artificial Intelligence (2012)

F. Nie, H. Wang, H. Huang, C. Ding, Joint schatten \(p\)-norm and \(l_{p}\) norm robust matrix completion for missing value recovery. Knowl. Inf. Syst. 42(3), 525–544 (2015)

R. Chartrand, Nonconvex splitting for regularized low-rank + sparse decomposition. IEEE Trans. Signal Process. 60(11), 5810–5819 (2012). https://doi.org/10.1109/TSP.2012.2208955

M.D. Gupta, S. Kumar, Non-convex p-norm projection for robust sparsity. in 2013 IEEE International Conference on Computer Vision, pp. 1593–1600 (2013). https://doi.org/10.1109/ICCV.2013.201

S. Bahmani, B. Raj, A unifying analysis of projected gradient descent for \(\ell _{p}\)-constrained least squares. Appl. Comput. Harmon. Anal. 34(3), 366–378 (2013)

C. Helmberg, F. Rendl, A spectral bundle method for semidefinite programming. SIAM J. Optim. 10(3), 673–696 (2000)

A. Beck, M. Teboulle, Mirror descent and nonlinear projected subgradient methods for convex optimization. Oper. Res. Lett. 31(3), 167–175 (2003)

Y. Nesterov, A. Nemirovskii, Interior-point Polynomial Algorithms in Convex Programming (SIAM, 1994)

A. Ben-Tal, A. Nemirovski, Lectures on Modern Convex Optimization: Analysis, Algorithms, and Engineering Applications (SIAM, 2001)

Z. Zha, X. Zhang, Y. Wu, Q. Wang, X. Liu, L. Tang, X. Yuan, Non-convex weighted \(l_{p}\) nuclear norm based ADMM framework for image restoration. Neurocomputing 311, 209–224 (2018)

L. Mirsky, A trace inequality of John von Neumann. Monatshefte für mathematik 79(4), 303–306 (1975)

N. Parikh, S. Boyd, Proximal algorithms. Found. Trends Optim. 1(3), 127–239 (2014)

Q. Yao, J.T. Kwok, F. Gao, W. Chen, T.-Y. Liu, Efficient inexact proximal gradient algorithm for nonconvex problems. arXiv preprint arXiv:1612.09069 (2016)

A. Beck, M. Teboulle, A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imag. Sci. 2(1), 183–202 (2009)

H. Attouch, J. Bolte, B.F. Svaiter, Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward-backward splitting, and regularized Gauss-Seidel methods. Math. Program. 137(1), 91–129 (2013)

P. Gong, C. Zhang, Z. Lu, J. Huang, J. Ye, A general iterative shrinkage and thresholding algorithm for non-convex regularized optimization problems. in International Conference on Machine Learning, pp. 37–45 (2013). PMLR

H. Attouch, J. Bolte, P. Redont, A. Soubeyran, Proximal alternating minimization and projection methods for nonconvex problems: an approach based on the Kurdyka-Lojasiewicz inequality. Math. Oper. Res. 35(2), 438–457 (2010)

J. Bolte, S. Sabach, M. Teboulle, Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 146(1), 459–494 (2014)

M. Razaviyayn, M. Hong, Z.-Q. Luo, A unified convergence analysis of block successive minimization methods for nonsmooth optimization. SIAM J. Optim. 23(2), 1126–1153 (2013)

P. Tseng, S. Yun, A coordinate gradient descent method for nonsmooth separable minimization. Math. Program. 117(1), 387–423 (2009)

Y. Hu, C. Li, K. Meng, X. Yang, Linear convergence of inexact descent method and inexact proximal gradient algorithms for lower-order regularization problems. J. Global Optim. 79(4), 853–883 (2021)

M. ApS, The MOSEK Optimization Toolbox for MATLAB Manual. Version 9.0. (2019). http://docs.mosek.com/9.0/toolbox/index.html

S. Foucart, M.-J. Lai, Sparsest solutions of under-determined linear systems via \(\ell _{q}\)-minimization for \(0<q\le 1\). Appl. Comput. Harmon. Anal. 26(3), 395–407 (2009). https://doi.org/10.1016/j.acha.2008.09.001

K. Benidis, Y. Feng, D.P. Palomar, Sparse portfolios for high-dimensional financial index tracking. IEEE Trans. Signal Process. 66(1), 155–170 (2018). https://doi.org/10.1109/TSP.2017.2762286

D. Ge, X. Jiang, Y. Ye, A note on the complexity of \(\ell _{p}\) minimization. Math. Program. 129(2), 285–299 (2011)

C. Kümmerle, C. Mayrink Verdun, Escaping saddle points in ill-conditioned matrix completion with a scalable second order method. in Workshop on Beyond First Order Methods in ML Systems at the\(37^{th}\) International Conference on Machine Learning (2020)

C. Kümmerle, C. Mayrink Verdun, A scalable second order method for ill-conditioned matrix completion from few samples. in International Conference on Machine Learning (ICML) (2021)

M.-J. Lai, Y. Xu, W. Yin, Improved iteratively reweighted least squares for unconstrained smoothed \(\ell {q}\) minimization. SIAM J. Numer. Anal. 51(2), 927–957 (2013)

K. Mohan, M. Fazel, Iterative reweighted algorithms for matrix rank minimization. J. Mach. Learn. Res. 13(110), 3441–3473 (2012)

K. Mohan, M. Fazel, Reweighted nuclear norm minimization with application to system identification. in Proceedings of the 2010 American Control Conference, pp. 2953–2959 (2010)

M. Sznaier, M. Ayazoglu, T. Inanc, Fast structured nuclear norm minimization with applications to set membership systems identification. IEEE Trans. Autom. Control 59(10), 2837–2842 (2014)

Z. Liu, L. Vandenberghe, Semidefinite programming methods for system realization and identification. in Proceedings of the 48h IEEE Conference on Decision and Control (CDC) Held Jointly with 2009 28th Chinese Control Conference, pp. 4676–4681 (2009)

M. Fazel, T.K. Pong, D. Sun, P. Tseng, Hankel matrix rank minimization with applications to system identification and realization. SIAM J. Matrix Anal. Appl. 34(3), 946–977 (2013)

N.S. Aybat, G. Iyengar, A first-order augmented Lagrangian method for compressed sensing. SIAM J. Optim. 22(2), 429–459 (2012)

E.T. Hale, W. Yin, Y. Zhang, A fixed-point continuation method for l1-regularized minimization with applications to compressed sensing. CAAM TR07-07, Rice University 43, 44 (2007)

C. O’Brien, M.D. Plumbley, Inexact proximal operators for \(\ell _{p}\)-Quasi-norm minimization. in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 4724–4728 (2018). https://doi.org/10.1109/ICASSP.2018.8462524

Acknowledgements

Not applicable.

Funding

This work has been partially supported by the National Institutes of Health (NIH) Grant R01 HL142732 and National Science Foundation (NSF) Grant 1808266.

Author information

Authors and Affiliations

Contributions

The problem formulation was initiated by CM. Analysis and development of the non-convex projection algorithms for sparse vector recovery and rank minimization were jointly developed by OS and ME. In section 6, ME conducted the experiments related to sparse vectors, while OS was responsible for the remaining numerical experiments and writing the paper. NS proposed the proximal gradient method and designed the framework for its numerical experiments. The paper was reviewed and edited by CM and NS. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

All the authors provide EURASIP the consent for publication.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sleem, O.M., Ashour, M.E., Aybat, N.S. et al. Lp quasi-norm minimization: algorithm and applications. EURASIP J. Adv. Signal Process. 2024, 22 (2024). https://doi.org/10.1186/s13634-024-01114-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13634-024-01114-6