Abstract

We propose a method for finding approximate solutions to multiple-choice knapsack problems. To this end, we transform the multiple-choice knapsack problem into a bi-objective optimization problem whose solution set contains solutions of the original multiple-choice knapsack problem. The method relies on solving a series of suitably defined linearly scalarized bi-objective problems. The novelty that makes the method computationally attractive is that these linearly scalarized bi-objective problems can be solved explicitly by closed-form formulae. The method is computationally analyzed on a set of large-scale problem instances (test problems) of two categories: uncorrelated and weakly correlated. Computational results show that after solving, on average, 10 scalarized bi-objective problems, the optimal value of the original knapsack problem is approximated with an accuracy comparable to the accuracies obtained by the greedy algorithm and an exact algorithm. More importantly, the respective approximate solution to the original knapsack problem (for which the approximate optimal value is attained) can be found without resorting to dynamic programming. In the test problems, the number of multiple-choice constraints ranges up to hundreds, with hundreds of variables in each constraint.


1 Introduction

The multi-dimensional multiple-choice knapsack problem (MMCKP) and the multiple-choice knapsack problem (MCKP) are classical generalizations of the knapsack problem (KP) and are applied to modeling many real-life problems, e.g., in project (investments) portfolio selection [21, 29], capital budgeting [24], advertising [27], component selection in IT systems [16, 25], computer networks management [17], adaptive multimedia systems [14], and others.

The multiple-choice knapsack problem (MCKP) is formulated as follows. Given are k sets \(N_{1}, N_{2},\ldots ,N_{k}\) of items, of cardinality \(|N_{i}|=n_{i}\), \(i=1,\ldots ,k\). Each item of each set has been assigned real-valued nonnegative ‘profit’ \(p_{ij}\ge 0\) and ‘cost’ \(c_{ij}\ge 0\), \(i=1,\ldots ,k\), \(j=1,\ldots ,n_{i}\).

The problem consists in choosing exactly one item from each set \(N_{i}\) so that the total cost does not exceed a given \(b\ge 0\) and the total profit is maximized.

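Let \(x_{ij}\), \(i=1,\ldots ,k\), \(j=1,\ldots ,n_{i}\), be defined as

$$\begin{aligned} x_{ij}:={\left\{ \begin{array}{ll} 1 &{} \text {if item } j \text { from set } N_{i} \text { is chosen,}\\ 0 &{} \text {otherwise.} \end{array}\right. } \end{aligned}$$

Note that all \(x_{ij}\) form a vector x of length \(n=\sum _{i=1}^{k}n_{i}\), \(x\in {\mathbb {R}}^{n}\), and we write

$$\begin{aligned} x:=(x_{11},\ldots ,x_{1n_{1}},x_{21},\ldots ,x_{2n_{2}},\ldots ,x_{k1},\ldots ,x_{kn_{k}})^\mathrm{T}. \end{aligned}$$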

In this paper, we adopt the convention that a vector x is a column vector, and hence the transpose of x, denoted by \(x^\mathrm{T}\), is a row vector.

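Problem (MCKP) is of the form

$$\begin{aligned} \begin{array}{ll} \max &{} \sum _{i=1}^{k}\sum _{j=1}^{n_{i}}p_{ij}x_{ij}\\ \text {subject to} &{} \sum _{i=1}^{k}\sum _{j=1}^{n_{i}}c_{ij}x_{ij}\le b,\\ &{} \sum _{j=1}^{n_{i}}x_{ij}=1,\ \ i=1,\ldots ,k,\\ &{} x_{ij}\in \{0,1\},\ \ i=1,\ldots ,k,\ \ j=1,\ldots ,n_{i}. \end{array} \end{aligned}$$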

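By using the above notations, problem (MCKP) can be equivalently rewritten in the vector form

$$\begin{aligned} \begin{array}{ll} \max &{} p^\mathrm{T}x\\ \text {subject to} &{} c^\mathrm{T}x\le b,\\ &{} x\in X:=\{x\in {\mathbb {R}}^{n}\ |\ \sum _{j=1}^{n_{i}}x_{ij}=1,\ x_{ij}\in \{0,1\},\ i=1,\ldots ,k,\ j=1,\ldots ,n_{i}\}, \end{array} \end{aligned}$$

where p and c are vectors from \({\mathbb {R}}^{n}\),

$$\begin{aligned} p:=(p_{11},\ldots ,p_{1n_{1}},\ldots ,p_{k1},\ldots ,p_{kn_{k}})^\mathrm{T},\quad c:=(c_{11},\ldots ,c_{1n_{1}},\ldots ,c_{k1},\ldots ,c_{kn_{k}})^\mathrm{T}, \end{aligned}$$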

and for any vectors \(u, v\in {\mathbb {R}}^{n}\), the scalar product \(u^\mathrm{T}v\) is defined in the usual way as \(u^\mathrm{T}v:=\sum _{i=1}^{n}u_{i}v_{i}\).

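The feasible set F of problem (MCKP) is defined by a single linear inequality constraint and the constraint \(x\in X\), i.e.,

$$\begin{aligned} F:=\{x\in {\mathbb {R}}^{n}\ |\ c^\mathrm{T}x\le b,\ x\in X\} \end{aligned}$$

and finally

$$\begin{aligned} \begin{array}{ll} \max &{} p^\mathrm{T}x\\ \text {subject to} &{} x\in F. \end{array} \end{aligned}$$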

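The optimal value of problem (MCKP) is equal to \(\max _{x\in F} \ p^\mathrm{T} x\) and the solution set \(S^{*}\) is given as

$$\begin{aligned} S^{*}:=\{{\bar{x}}\in F\ |\ p^\mathrm{T}{\bar{x}}=\max _{x\in F}\ p^\mathrm{T}x\}. \end{aligned}$$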
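To make the definitions of the feasible set F and the solution set \(S^{*}\) concrete, the following minimal sketch enumerates all choices of one item per set and keeps a feasible choice of maximal profit. It is an illustration only, exponential in k, and not the method proposed in this paper; the function name and the toy data are hypothetical.

```python
from itertools import product

def solve_mckp_brute_force(profits, costs, budget):
    """Enumerate every x in X (one item per class) and return (best_profit, best_choice).

    profits[i][j] and costs[i][j] play the roles of p_ij and c_ij;
    a choice is a tuple of item indices, one per class.
    Returns (None, None) when the feasible set F is empty.
    """
    best_profit, best_choice = None, None
    for choice in product(*(range(len(row)) for row in profits)):
        total_cost = sum(costs[i][j] for i, j in enumerate(choice))
        if total_cost > budget:
            continue  # violates c^T x <= b, so this x is not in F
        total_profit = sum(profits[i][j] for i, j in enumerate(choice))
        if best_profit is None or total_profit > best_profit:
            best_profit, best_choice = total_profit, choice
    return best_profit, best_choice

# Toy instance (hypothetical data): k = 2 classes with 3 and 2 items.
toy_profits = [[6, 9, 4], [5, 8]]
toy_costs = [[3, 7, 1], [2, 6]]
print(solve_mckp_brute_force(toy_profits, toy_costs, budget=9))
```

Only the first optimal choice encountered is returned, so the sketch yields one element of \(S^{*}\) rather than the whole set.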

Problem (MCKP) is \(\mathcal{N}\mathcal{P}\)-hard. The approaches to solving (MCKP) include heuristics [1, 12], exact methods providing upper bounds for the optimal value of the profit together with the corresponding approximate solutions [26], and exact methods providing solutions [18]. There are algorithms that efficiently solve (MCKP) without sorting and reduction [8, 28] or with sorting and reduction [4]. Solving (MCKP) with a linear relaxation (by neglecting the constraints \(x_{ij}\in \{0,1\},\ \ i=1,\ldots ,k, \ \ j=1,\ldots ,n_{i}\)) gives upper bounds on the value of the optimal profit. Upper bounds can also be obtained with the help of the Lagrange relaxation. These facts and other features of (MCKP) are described in detail in monographs [13, 19].

Exact branch-and-bound methods [6] (integer programming), even those using commercial optimization software (e.g., LINGO, CPLEX), can have trouble solving large (MCKP) problems. A branch-and-bound algorithm with a quick solution of the relaxation of reduced problems was proposed by Sinha and Zoltners [27]. Dudziński and Walukiewicz proposed an algorithm with pseudo-polynomial complexity [5].

Algorithms that use dynamic programming require integer data values and, for large-scale problems, a large amount of memory for backtracking (finding solutions in set X); see also the monograph [19]. The algorithm we propose does not need the data to be integers.

Heuristic algorithms, based on solving linear (or continuous) relaxation of (MCKP) and dynamic programming [7, 22, 24] are reported to be fast, but have limitations typical for dynamic programming.

The most recent approach “reduce and solve” [2, 10] is based on reducing the problem by proposed pseudo cuts and then solving the reduced problems by a Mixed Integer Programming (MIP) solver.

In the present paper, we propose a new exact (not heuristic) method which provides approximate optimal profits together with the corresponding approximate solutions. The method is based on multi-objective optimization techniques. Namely, we start by formulating a linear bi-objective problem (BP) related to the original problem (MCKP). After investigating the relationships between (MCKP) and (BP) problems, we propose an algorithm for solving (MCKP) via a series of scalarized linear bi-objective problems \((BS(\lambda ))\).

The main advantage of the proposed method is that the scalarized linear bi-objective problems \((BS(\lambda ))\) can be explicitly solved by exploiting the structure of the set X. Namely, these scalarized problems can be decomposed into k independent subproblems, the solutions of which are given by simple closed-form formulas. This feature makes our method particularly suitable for parallelization and allows solutions of the scalarized problems to be generated quickly and efficiently.

The experiments show that the method we propose very quickly generates an outcome \({\hat{x}}\in F\) which is an approximate solution to (MCKP). Moreover, a lower bound (LB) and an upper bound (UB) for the optimal profit are provided.

The obtained approximate solution \({\hat{x}}\in F\) could serve as a good starting point for other, e.g., heuristic or exact algorithms for finding an optimal solution to the problem (MCKP).

The organization of the paper is as follows. In Sect. 2, we provide preliminary facts on multi-objective optimization problems and we formulate a bi-objective optimization problem (BP) associated with (MCKP). In Sect. 3, we investigate the relationships between the problem (BP) and the original problem (MCKP). In Sect. 4, we formulate scalarized problems \((BS(\lambda ))\) for bi-objective problem (BP) and we provide closed-form formulae for solutions to problems \((BS(\lambda ))\) by decomposing them into k independent subproblems \((BS(\lambda ))_{i}\), \(i=1,\ldots ,k\). In Sect. 5, we present our method (together with the pseudo-code) which provides a lower bound (LB) for the optimal profit together with the corresponding approximate feasible solution \({\hat{x}}\in F\) to (MCKP) for which the bound (LB) is attained. In Sect. 6, we report on the results of numerical experiments. The last section concludes.

2 Multi-objective optimization problems

Let \(f_{i}:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\), \(i=1,\ldots ,k\), be functions defined on \({\mathbb {R}}^{n}\) and \(\varOmega \subset {\mathbb {R}}^{n}\) be a subset in \({\mathbb {R}}^{n}\).

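The multi-objective optimization problem is defined as

$$\begin{aligned} \begin{array}{ll} \text {V}\max &{} (f_{1}(x),\ldots ,f_{k}(x))\\ \text {subject to} &{} x\in \varOmega , \end{array} \end{aligned}$$(P)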

where the symbol \('\text {V}\max '\) means that solutions to problem (P) are understood in the sense of Pareto efficiency defined in Definition 2.1.

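Let

$$\begin{aligned} {\mathbb {R}}^{k}_{+}:=\{y=(y_{1},\ldots ,y_{k})\in {\mathbb {R}}^{k}\ |\ y_{i}\ge 0,\ i=1,\ldots ,k\}. \end{aligned}$$

Definition 2.1

A point \(x^{*}\in \varOmega \) is a Pareto efficient (Pareto maximal) solution to (P) if

$$\begin{aligned} (f(\varOmega )-f(x^{*}))\cap {\mathbb {R}}^{k}_{+}=\{0\},\quad \text {where } f:=(f_{1},\ldots ,f_{k}). \end{aligned}$$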

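In other words, \(x^{*}\in \varOmega \) is a Pareto efficient solution to (P) if there is no \({\bar{x}}\in \varOmega \) such that

$$\begin{aligned} f_{i}({\bar{x}})\ge f_{i}(x^{*})\ \text { for } i=1,\ldots ,k\quad \text {and}\quad f_{\ell }({\bar{x}})> f_{\ell }(x^{*})\ \text { for some } 1\le \ell \le k. \end{aligned}$$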

The problem (P) where all the functions \(f_{i}\), \(i=1,\ldots ,k\) are linear is called a linear multi-objective optimization problem.
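Definition 2.1 can be checked directly on a finite list of outcomes. The sketch below works on outcome vectors \((f_{1}(x),\ldots ,f_{k}(x))\) rather than on the solutions themselves and assumes that every criterion is maximized; the function name is hypothetical.

```python
def pareto_efficient(outcomes):
    """Return the outcomes that are not dominated by any other outcome in the list."""
    def dominates(u, v):
        # u dominates v: u is at least as good in every criterion and differs from v
        return u != v and all(a >= b for a, b in zip(u, v))
    return [v for v in outcomes if not any(dominates(u, v) for u in outcomes)]

print(pareto_efficient([(3, 1), (2, 2), (1, 3), (2, 1), (1, 1)]))
```

The pairwise comparison takes quadratic time in the number of outcomes, which is adequate for small illustrations only.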

Remark 2.1

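The bi-objective problem

$$\begin{aligned} \begin{array}{ll} \text {V}\max &{} f_{1}(x)\\ \text {V}\min &{} f_{2}(x)\\ \text {subject to} &{} x\in \varOmega \end{array} \end{aligned}$$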

with Pareto solutions \(x^{*}\in \varOmega \) defined as

where

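is equivalent to the problem

$$\begin{aligned} \begin{array}{ll} \text {V}\max &{} (f_{1}(x),-f_{2}(x))\\ \text {subject to} &{} x\in \varOmega \end{array} \end{aligned}$$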

in the sense that Pareto efficient solution sets (as subsets of the feasible set \(\varOmega \)) coincide and Pareto elements (the images in \({\mathbb {R}}^{2}\) of Pareto efficient solutions) differ in sign in the second component.

2.1 A bi-objective optimization problem related to (MCKP)

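In relation to the original multiple-choice knapsack problem (MCKP), we consider the linear bi-objective binary optimization problem (BP1) of the form

$$\begin{aligned} \begin{array}{ll} \text {V}\max &{} \sum _{i=1}^{k}\sum _{j=1}^{n_{i}}p_{ij}x_{ij}\\ \text {V}\min &{} \sum _{i=1}^{k}\sum _{j=1}^{n_{i}}c_{ij}x_{ij}\\ \text {subject to} &{} x\in X. \end{array} \end{aligned}$$(BP1)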

In this problem, the left-hand side of the linear inequality constraint \(c^\mathrm{T}x\le b\) of (MCKP) becomes a second criterion and the constraint set reduces to the set X. The motivation for considering the bi-objective problem (BP1) is twofold.

The first motivation comes from the fact that in (MCKP) the inequality

$$\begin{aligned} \sum _{i=1}^{k}\sum _{j=1}^{n_{i}}c_{ij}x_{ij}\le b \end{aligned}$$

is usually seen as a budget (in general: a resource) constraint with the left-hand side to be preferably not greater than a given available budget b. In the bi-objective problem (BP1), this requirement is represented through the minimization of \(\sum _{i=1}^{k}\sum _{j=1}^{n_{i}}c_{ij}x_{ij}\). In Theorem 3.1 of Sect. 3, we show that under relatively mild conditions among solutions of the bi-objective problem (BP1) [or the equivalent problem (BP)] there are solutions to problem (MCKP).

The second motivation is important from the algorithmic point of view and is related to the fact that in the proposed algorithm we are able to exploit efficiently the specific structure of the constraint set X which contains k linear equality constraints (each one referring to a different group of variables) and the binary conditions only. More precisely, the set X can be represented as the Cartesian product

$$\begin{aligned} X=X^{1}\times X^{2}\times \cdots \times X^{k} \end{aligned}$$(3)

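of the sets \(X^{i}\), where \(X^{i}:=\{x^{i}\in {\mathbb {R}}^{n_{i}}\ |\ \sum _{j=1}^{n_{i}}x_{ij}=1, x_{ij}\in \{0,1\},\ j=1,\ldots ,n_{i}\}\), \(i=1,\ldots ,k\) and

$$\begin{aligned} x=(x^{1},x^{2},\ldots ,x^{k})^\mathrm{T}, \end{aligned}$$

i.e.,

$$\begin{aligned} x=(\underbrace{x_{11},\ldots ,x_{1n_{1}}}_{x^{1}},\underbrace{x_{21},\ldots ,x_{2n_{2}}}_{x^{2}},\ldots ,\underbrace{x_{k1},\ldots ,x_{kn_{k}}}_{x^{k}})^\mathrm{T} \end{aligned}$$

and \(x^{i}=(x_{i1},\ldots ,x_{in_{i}})\). Accordingly,

$$\begin{aligned} p=(p^{1},p^{2},\ldots ,p^{k})^\mathrm{T},\quad c=(c^{1},c^{2},\ldots ,c^{k})^\mathrm{T},\quad p^{i}:=(p_{i1},\ldots ,p_{in_{i}}),\quad c^{i}:=(c_{i1},\ldots ,c_{in_{i}}). \end{aligned}$$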

Note that due to the presence of the budget inequality constraint the feasible set F of problem (MCKP) cannot be represented in the form analogous to (3).

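According to Remark 2.1, problem (BP1) can be equivalently reformulated in the form

$$\begin{aligned} \begin{array}{ll} \text {V}\max &{} (p^\mathrm{T}x,(-c)^\mathrm{T}x)\\ \text {subject to} &{} x\in X. \end{array} \end{aligned}$$(BP)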

3 The relationships between (BP) and (MCKP)

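Starting from the multiple-choice knapsack problem (MCKP) of the form

$$\begin{aligned} \begin{array}{ll} \max &{} p^\mathrm{T}x\\ \text {subject to} &{} x\in F, \end{array} \end{aligned}$$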

in the present section we analyse relationships between problems (MCKP) and (BP).

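We start with a basic observation. Recall first that (MCKP) is solvable, i.e., the feasible set F is nonempty if

$$\begin{aligned} C_{min}:=\min _{x\in X} c^\mathrm{T}x\le b. \end{aligned}$$

On the other hand, if \(b\ge \max _{x\in X} c^\mathrm{T}x\), (MCKP) is trivially solvable. Thus, in the sequel we assume that

$$\begin{aligned} C_{min}\le b<C_{max}:=\max _{x\in X} c^\mathrm{T}x. \end{aligned}$$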

Let \(P_{max}:=\max _{x\in X} p^\mathrm{T}x\), i.e., \(P_{max}\) is the maximal value of the function \(p^\mathrm{T}x\) on the set X. The following observations are essential for further considerations.

1. First, among the elements of X which realize the maximal value \(P_{max}\), there exists at least one which is feasible for (MCKP), i.e., there exists \(x_{p}\in X\), \(p^\mathrm{T}x_{p}= P_{max}\) such that \(c^\mathrm{T}x_{p}\le b\), i.e.,

$$\begin{aligned} C_{min}\le c^\mathrm{T}x_{p}\le b<C_{max}. \end{aligned}$$(6)

Then, clearly, \(x_{p}\) solves (MCKP).

2. Second, none of the elements which realize the maximal value \(P_{max}\) is feasible for (MCKP), i.e., for every \(x_{p}\in X\) with \(p^\mathrm{T}x_{p}= P_{max}\) we have \(c^\mathrm{T}x_{p}> b\), so that

$$\begin{aligned} C_{min}\le b<c^\mathrm{T}x_{p}\le C_{max}. \end{aligned}$$(7)

In the sequel, we concentrate on Case 2, characterized by (7). This case is related to problem (BP). To see this let us introduce some additional notations. Let \(x_{cmin}\in X\) and \(x_{pmax}\in X\) be defined as

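Let \(S_{bo}\) be the set of all Pareto solutions to the bi-objective problem (BP),

$$\begin{aligned} S_{bo}:=\{x\in X\ |\ x \text { is a Pareto efficient solution to (BP)}\} \end{aligned}$$

(cf. Definition 2.1). The following lemma holds.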

Lemma 3.1

Assume that we are in Case 2, i.e., condition (7) holds. There exists a Pareto solution to the bi-objective optimization problem (BP), \({\bar{x}}\in S_{bo}\), which is feasible to problem (MCKP), i.e., \(c^\mathrm{T}{\bar{x}}\le b\), which amounts to \({\bar{x}}\in F\).

Proof

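According to Definition 2.1, both \(x_{pmax}\in X\) and \(x_{cmin}\in X\) are Pareto efficient solutions to (BP), i.e., there is no \(x\in X\) such that \((p^\mathrm{T}x, c^\mathrm{T}x)\ne (p^\mathrm{T}x_{pmax}, c^\mathrm{T}x_{pmax})\) and

$$\begin{aligned} p^\mathrm{T}x\ge p^\mathrm{T}x_{pmax}\quad \text {and}\quad (-c)^\mathrm{T}x\ge (-c)^\mathrm{T}x_{pmax}, \end{aligned}$$

and there is no \(x\in X\) such that \((p^\mathrm{T}x, c^\mathrm{T}x)\ne (p^\mathrm{T}x_{cmin}, c^\mathrm{T}x_{cmin})\) and

$$\begin{aligned} p^\mathrm{T}x\ge p^\mathrm{T}x_{cmin}\quad \text {and}\quad (-c)^\mathrm{T}x\ge (-c)^\mathrm{T}x_{cmin}. \end{aligned}$$

Moreover, by (7),

$$\begin{aligned} c^\mathrm{T}x_{cmin}\le b<c^\mathrm{T}x_{pmax}. \end{aligned}$$(8)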

In view of (8), \({\bar{x}}=x_{cmin}\in S_{bo}\) and \({\bar{x}}=x_{cmin}\in F\) (\(c^\mathrm{T}x_{cmin}\le b\)) which means that \({\bar{x}}\) is feasible to problem (MCKP), which concludes the proof. \(\square \)

Now we are ready to formulate the result establishing the relationship between solutions of (MCKP) and Pareto efficient solutions of (BP) in the case where the condition (7) holds.

Theorem 3.1

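Suppose we are given problem (MCKP) satisfying condition (7). Let \(x^{*}\in X\) be a Pareto solution to (BP), such that

$$\begin{aligned} b-c^\mathrm{T}x^{*}=\min _{x\in S_{bo},\ b-c^\mathrm{T}x\ge 0}\ (b-c^\mathrm{T}x). \end{aligned}$$(*)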

Then \(x^{*}\) solves (MCKP).

Proof

Observe first that, by Lemma 3.1, there exist \(x\in S_{bo}\) satisfying the constraint \(c^\mathrm{T}x\le b\), i.e., condition \((*)\) is not dummy.

By contradiction, suppose that a feasible element \(x^{*}\in F\), i.e., \(x^{*}\in X\), \(c^\mathrm{T}x^{*}\le b\), is not a solution to (MCKP), i.e., there exists an \(x_{1}\in X\), such that

$$\begin{aligned} c^\mathrm{T}x_{1}\le b\quad \text {and}\quad p^\mathrm{T}x_{1}>p^\mathrm{T}x^{*}. \end{aligned}$$

We show that \(x^{*}\) cannot satisfy condition \((*)\). If \(c^\mathrm{T}x_{1}\le c^\mathrm{T} x^{*}\), then \(x^{*}\) is not a Pareto solution to (BP), i.e., \(x^{*}\not \in S_{bo}\), and \(x^{*}\) does not satisfy condition \((*)\). Otherwise, \(c^\mathrm{T}x_{1}> c^\mathrm{T} x^{*}\), i.e.,

$$\begin{aligned} b-c^\mathrm{T}x^{*}>b-c^\mathrm{T}x_{1}\ge 0. \end{aligned}$$(9)

If \(x_{1}\in S_{bo}\), then, since \(x^{*}\in S_{bo}\), it follows from (9) that \(x^{*}\) cannot satisfy condition \((*)\).

If \(x_{1}\not \in S_{bo}\), there exists \(x_{2}\in S_{bo}\) which dominates \(x_{1}\), i.e., \((p^\mathrm{T}x_{2}, (-c)^\mathrm{T}x_{2})\in (p^\mathrm{T}x_{1}, (-c)^\mathrm{T}x_{1})+{\mathbb {R}}_{+}^{2}\). Again, if \(c^\mathrm{T}x_{2}\le c^\mathrm{T} x^{*}\), then \(x^{*}\) is not a Pareto solution to (BP), i.e., \(x^{*}\) cannot satisfy condition \((*)\). Otherwise, if \(c^\mathrm{T}x_{2}> c^\mathrm{T} x^{*}\), then either \(x^{*}\not \in S_{bo}\) and consequently \(x^{*}\) cannot satisfy condition \((*)\), or \(x^{*}\in S_{bo}\), in which case \(b-c^\mathrm{T}x^{*}> b-c^\mathrm{T} x_{1}\) and \(x^{*}\) does not satisfy condition \((*)\), a contradiction which completes the proof. \(\square \)

Theorem 3.1 says that under condition (7) any solution to (BP) satisfying condition \((*)\) solves problem (MCKP). General relations between constrained optimization and multi-objective programming were investigated in [15].
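Theorem 3.1 can be verified by enumeration on a tiny instance. The sketch below lists all elements of X, filters the Pareto efficient ones for (BP), selects the feasible one with the smallest slack \(b-c^\mathrm{T}x\), and compares its profit with the optimal profit of (MCKP). The function names and the toy data are hypothetical, and the sketch assumes \(C_{min}\le b\) so that a feasible Pareto solution exists.

```python
from itertools import product

def outcome(choice, profits, costs):
    """Return (p^T x, c^T x) for a choice of one item index per class."""
    profit = sum(profits[i][j] for i, j in enumerate(choice))
    cost = sum(costs[i][j] for i, j in enumerate(choice))
    return profit, cost

def check_theorem_3_1(profits, costs, budget):
    """Return (profit of the Pareto solution satisfying (*), optimal profit of MCKP)."""
    choices = list(product(*(range(len(row)) for row in profits)))
    outs = {x: outcome(x, profits, costs) for x in choices}

    def dominated(x):
        px, cx = outs[x]
        # some other outcome has profit at least as high and cost at least as low
        return any((py, cy) != (px, cx) and py >= px and cy <= cx
                   for py, cy in outs.values())

    pareto = [x for x in choices if not dominated(x)]                  # S_bo
    feasible_pareto = [x for x in pareto if outs[x][1] <= budget]
    x_star = min(feasible_pareto, key=lambda x: budget - outs[x][1])   # condition (*)
    best_profit = max(outs[x][0] for x in choices if outs[x][1] <= budget)
    return outs[x_star][0], best_profit

print(check_theorem_3_1([[3, 9, 4], [5, 8]], [[3, 7, 1], [2, 6]], budget=9))
```

For this instance the maximal profit on X is 17 at cost 13, so condition (7) holds with b = 9, and both returned values are equal.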

Based on Theorem 3.1, in Sect. 5 we provide an algorithm for finding \(x\in S_{bo}\), a Pareto solution to (BP), which is feasible to problem (MCKP) and for which the condition \((*)\) is either satisfied or is, in some sense, as close as possible to being satisfied. In this latter case, the algorithm provides upper and lower bounds for the optimal value of (MCKP) (see Fig. 1).

Fig. 1 Illustration of the content of Theorem 3.1. Black dots: outcomes of Pareto efficient solutions to (BP); star: Pareto efficient outcome to (BP) which solves (MCKP)

4 Decomposition of the scalarized bi-objective problem (BP)

In the present section, we consider problem \((BS(\lambda _{1},\lambda _{2}))\) defined by (10) which is a linear scalarization of problem (BP). In our algorithm BISSA, presented in Sect. 5, we obtain an approximate feasible solution to (MCKP) by solving a (usually very small) number of problems of the form \((BS(\lambda _{1},\lambda _{2}))\). The main advantage of basing our algorithm on problems \((BS(\lambda _{1},\lambda _{2}))\) is that they are explicitly solvable by simple closed-form expressions (17).

For problem (BP) the following classical scalarization result holds.

Theorem 4.1

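[9, 20] If there exist \(\lambda _{\ell }>0\), \(\ell =1,2\), such that \(x^{*}\in X\) is a solution to the scalarized problem

$$\begin{aligned} \max _{x\in X}\ \lambda _{1}p^\mathrm{T}x+\lambda _{2}(-c)^\mathrm{T}x \qquad (BS(\lambda _{1},\lambda _{2})) \end{aligned}$$(10)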

then \(x^{*}\) is a Pareto efficient solution to problem (BP).

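Without losing generality we can assume that \(\sum _{\ell =1}^{2}\lambda _{\ell }=1\). In the sequel, we consider, for \(0<\lambda <1\), scalarized problems of the form

$$\begin{aligned} \max _{x\in X}\ \lambda p^\mathrm{T}x+(1-\lambda )(-c)^\mathrm{T}x \qquad (BS(\lambda )) \end{aligned}$$(11)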

Remark 4.1

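According to Theorem 4.1, solutions to problems

$$\begin{aligned} \max _{x\in X}\ p^\mathrm{T}x,\qquad \max _{x\in X}\ (-c)^\mathrm{T}x \end{aligned}$$(12)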

need not be Pareto efficient because the weights are not both positive. However, there exist Pareto efficient solutions to (BP) among solutions to these problems.

Namely, there exist \(\varepsilon _{1}>0\) and \(\varepsilon _{2}>0\) such that solutions to problems

and

are Pareto efficient solutions to problems (12), respectively. Suitable \(\varepsilon _{1}\) and \(\varepsilon _{2}\) will be determined in the next section.

4.1 Decomposition

Due to the highly structured form of the set X and the possibility of representing X in the form (3),

$$\begin{aligned} X=X^{1}\times X^{2}\times \cdots \times X^{k}, \end{aligned}$$

we can provide explicit formulae for solving problems \((BS(\lambda ))\). To this aim we decompose problems \((BS(\lambda ))\) as follows.

Recall that by using the notation (4) we can put any \(x\in X\) in the form

$$\begin{aligned} x=(x^{1},x^{2},\ldots ,x^{k})^\mathrm{T}, \end{aligned}$$

where \(x^{i}=(x_{i1},\ldots ,x_{in_{i}})\), \(i=1,\ldots ,k\), and \(\sum _{j=1}^{n_{i}}x_{ij}=1\).

Let \(0<\lambda <1\). According to (3) we have

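for \(i=1,\ldots ,k\). Consider problems \((BS(\lambda ))_{i}\), \(i=1,\ldots ,k\), of the form

$$\begin{aligned} \max _{x^{i}\in X^{i}}\ \lambda (p^{i})^\mathrm{T}x^{i}+(1-\lambda )(-c^{i})^\mathrm{T}x^{i}. \qquad (BS(\lambda ))_{i} \end{aligned}$$(13)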

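By solving problems \((BS(\lambda ))_{i}\), \(i=1,\ldots ,k\), we find their solutions \({\bar{x}}^{i}\). We shall show that

$$\begin{aligned} {\bar{x}}^{*}:=({\bar{x}}^{1},{\bar{x}}^{2},\ldots ,{\bar{x}}^{k})^\mathrm{T} \end{aligned}$$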

solves \((BS(\lambda ))\). Thus, problem (11) is decomposed into k subproblems (13), the solutions of which form solutions to (11).

Note that similar decomposed problems with feasible sets \(X^i\) and other objective functions have already been considered in [3] in relation to multi-dimensional multiple-choice knapsack problems.

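Now we give closed-form formulae for solutions of \((BS(\lambda ))_{i}\). For \(i=1,\ldots ,k\), let

$$\begin{aligned} V_{i}:=\max \{\lambda p_{ij}+(1-\lambda )(-c_{ij})\ |\ 1\le j\le n_{i}\} \end{aligned}$$(14)

and let \(1\le j^{*}_{i}\le n_{i}\) be the index number for which the value \(V_{i}\) is attained, i.e.,

$$\begin{aligned} \lambda p_{ij^{*}_{i}}+(1-\lambda )(-c_{ij^{*}_{i}})=V_{i}. \end{aligned}$$(15)

We show that

$$\begin{aligned} {\bar{x}}^{i}:=(0,\ldots ,0,1,0,\ldots ,0),\quad \text {with 1 at position } j^{*}_{i}, \end{aligned}$$(16)

is a solution to \((BS(\lambda ))_{i}\) and

$$\begin{aligned} {\bar{x}}^{*}:=({\bar{x}}^{1},{\bar{x}}^{2},\ldots ,{\bar{x}}^{k})^\mathrm{T} \end{aligned}$$(17)

is a solution to \((BS(\lambda ))\). The optimal value of \((BS(\lambda ))\) is

$$\begin{aligned} V:=V_{1}+V_{2}+\cdots +V_{k}. \end{aligned}$$(18)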

Namely, the following proposition holds.

Proposition 4.1

Any element \({\bar{x}}^{i}\in {\mathbb {R}}^{n_{i}}\) given by (16) solves \((BS(\lambda ))_{i}\) for \(i=1,\ldots ,k\) and any \({\bar{x}}^{*}\in {\mathbb {R}}^{n}\) given by (17) solves problem \((BS(\lambda ))\).

Proof

Clearly, \({\bar{x}}^{i}\) are feasible for \((BS(\lambda ))_{i}\), \(i=1,\ldots ,k\), because \({\bar{x}}^{i}\) is of the form (16) and hence belongs to the set \(X^{i}\) which is the constraint set of \((BS(\lambda ))_{i}\). Consequently, \({\bar{x}}^{*}\) defined by (17) is feasible for \((BS(\lambda ))\) because all the components are binary and the linear equality constraints

$$\begin{aligned} \sum _{j=1}^{n_{i}}{\bar{x}}^{*}_{ij}=1,\quad i=1,\ldots ,k, \end{aligned}$$

are satisfied.

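To see that \({\bar{x}}^{i}\) are also optimal for \((BS(\lambda ))_{i}\), \(i=1,\ldots ,k\), suppose, to the contrary, that there exist \(1\le i\le k\) and an element \(y\in {\mathbb {R}}^{n_{i}}\) which is feasible for \((BS(\lambda ))_{i}\) with the value of the objective function strictly greater than the value at \({\bar{x}}^{i}\), i.e.,

$$\begin{aligned} \lambda (p^{i})^\mathrm{T}y+(1-\lambda )(-c^{i})^\mathrm{T}y>\lambda (p^{i})^\mathrm{T}{\bar{x}}^{i}+(1-\lambda )(-c^{i})^\mathrm{T}{\bar{x}}^{i}=V_{i}. \end{aligned}$$

This, however, would mean that there exists an index \(1\le j\le n_{i}\) such that

$$\begin{aligned} \lambda p_{ij}+(1-\lambda )(-c_{ij})>V_{i}, \end{aligned}$$

contrary to the definition of \(j^{*}_{i}\).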

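To see that \({\bar{x}}^{*}\) is optimal for \((BS(\lambda ))\), suppose, to the contrary, that there exists an element \(y\in {\mathbb {R}}^{n}\) which is feasible for \((BS(\lambda ))\) and the value of the objective function at y is strictly greater than the value of the objective function at \({\bar{x}}^{*}\), i.e.,

$$\begin{aligned} \lambda p^\mathrm{T}y+(1-\lambda )(-c)^\mathrm{T}y>\lambda p^\mathrm{T}{\bar{x}}^{*}+(1-\lambda )(-c)^\mathrm{T}{\bar{x}}^{*}. \end{aligned}$$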

In the same way as previously, we get the contradiction with the definition of the components of \({\bar{x}}^{*}\) given by (17). \(\square \)

Let us observe that each optimization problem \((BS(\lambda ))_{i}\) can be solved in time \(O(n_{i})\), hence problem \((BS(\lambda ))\) can be solved in time O(n), where \(n=\sum _{i=1}^{k}n_{i}\).
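The closed-form solution described in Proposition 4.1 amounts to one linear scan per class. The following minimal sketch returns the chosen index in each class together with the optimal value of \((BS(\lambda ))\); the function name and the toy data are hypothetical, and ties are broken by taking the first maximizer, which is only one of the possible solutions.

```python
def solve_scalarized(profits, costs, lam):
    """Solve (BS(lambda)) class by class.

    In each class i, pick an index j maximizing lam * p_ij - (1 - lam) * c_ij.
    Returns (choice, value), where choice[i] is the selected index in class i
    and value is the sum of the per-class maxima V_i.
    """
    choice, value = [], 0
    for p_row, c_row in zip(profits, costs):
        scores = [lam * p - (1 - lam) * c for p, c in zip(p_row, c_row)]
        j_star = max(range(len(scores)), key=scores.__getitem__)  # first maximizer
        choice.append(j_star)
        value += scores[j_star]  # contribution V_i of class i
    return choice, value

print(solve_scalarized([[3, 9, 4], [5, 8]], [[3, 7, 1], [2, 6]], lam=0.5))
```

Because the classes are processed independently, the loop body could be distributed over several workers without any coordination beyond summing the values.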

Clearly, one can have more than one solution to \((BS(\lambda ))_{i}\), \(i=1,\ldots ,k\). In the next section, according to Theorem 3.1, from among all the solutions of \((BS(\lambda ))\) we choose the one for which the value of the second criterion is not smaller than \(-b\) and as close as possible to \(-b\).

Note that by using Proposition 4.1, one can easily solve problems (P1) and (P2) defined in Remark 4.1, i.e., by applying (18) we immediately get

the optimal values of (P1) and (P2) and by (17), we find their solutions \({\bar{x}}_{1}\) and \({\bar{x}}_{2}\), respectively.

Proposition 4.1 and formula (17) allow us to find \(\varepsilon _{1}>0\) and \(\varepsilon _{2}>0\) as defined in Remark 4.1. By (17), it is easy to find elements \({\bar{x}}_{1},{\bar{x}}_{2}\in X\) such that

Put

and let

where decr(p) and \(decr(-c)\) denote the smallest nonzero decrease on X of functions p and \((-c)\) from \({F}_{1}\) and \({F}_{2}\), respectively. Note that decr(p) and \(decr(-c)\) can easily be found.

Remark 4.2

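The following formulas describe decr(p) and \(decr(-c)\),

$$\begin{aligned} decr(p):=\min _{1\le i\le k}\ (p_{max}^{i}-p_{submax}^{i}),\qquad decr(-c):=\min _{1\le i\le k}\ ((-c)_{max}^{i}-(-c)_{submax}^{i}), \end{aligned}$$

where \(p^{i}\) and \(c^{i}\), \(i=1,\ldots ,k\), are defined by (4), \(p_{max}^{i}\), \((-c)_{max}^{i}\) are the maximal values and \(p_{submax}^{i}\), \((-c)_{submax}^{i}\), \(i=1,\ldots ,k\), are the submaximal values of functions \((p^{i})^\mathrm{T}x^{i}\), \(((-c)^{i})^\mathrm{T}x^{i}\), \(x^{i}\in X^{i}\), \(i=1,\ldots ,k\).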

For any \(1\le i\le k\), the submaximal values of a linear function \((d^{i})^\mathrm{T}x^{i}\) on \(X^{i}\) can be found by: ordering first the coefficients of the function \((d^{i})\) decreasingly,

and next observing that the submaximal (i.e., smaller than maximal but as close as possible to the maximal) value of \((d^{i})\) on \(X^{i}\) is attained for

Based on Remark 4.2 one can find values of \(p_{submax}^{i}\) and \((-c)_{submax}^{i}\) in time \(O(n_{i})\), \(i=1,\ldots ,k\), even without any sorting. It can be done for a given i by finding a maximal value among all \(p_{ij}\) (\(-c_{ij}\)), \(j=1,\ldots ,n_{i}\), except those equal to \(p_{max}^{i}\) (\((-c)_{max}^{i}\)). Therefore the computational cost of calculating decr(p) and \(decr(-c)\) is O(n).
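The linear-time computation without sorting can be sketched as two passes over each class: one to find the maximum and one to find the largest value strictly below it. The function names are hypothetical, and skipping classes in which all coefficients are equal (so that no submaximal value exists) is an assumption of this sketch.

```python
def submax_gap(values):
    """Return max(values) minus the largest value strictly below the maximum.

    Returns None when all values are equal, i.e., no submaximal value exists.
    """
    top = max(values)                         # first pass: maximal value
    below = [v for v in values if v < top]    # second pass: values below the maximum
    return top - max(below) if below else None

def decr(rows):
    """Smallest nonzero decrease of a separable linear function from its maximum on X."""
    gaps = [g for g in (submax_gap(row) for row in rows) if g is not None]
    return min(gaps) if gaps else None

profits = [[3, 9, 4], [5, 8]]
costs = [[3, 7, 1], [2, 6]]
print(decr(profits))                                   # decr(p)
print(decr([[-c for c in row] for row in costs]))      # decr(-c)
```

The smallest nonzero decrease is obtained by changing a single class from its best item to its second-best distinct value, which is why the minimum over classes suffices.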

We have the following fact.

Proposition 4.2

Let \(F_{1}\), \(F_{2 }\), \({\bar{F}}_{1}\), \({\bar{F}}_{2}\), \({\bar{V}}_{1}\), \({\bar{V}}_{2}\) be as defined above. The problems

and

where

give Pareto efficient solutions to problem (BP), \({\bar{x}}_{1}\) and \({\bar{x}}_{2}\), respectively. Moreover,

i.e., \({\bar{x}}_{1}\), \({\bar{x}}_{2}\) solve problems (12), respectively.

Proof

Follows immediately from the adopted notations, see Fig. 2. For instance, the objective of problem (P1) is represented by the straight line passing through points \((F_{1},{\bar{F}}_{2})\) and \(({\bar{V}}_{1},F_{2})\), i.e.,

which gives (20). The choice of \(F_{1}\), \({\bar{F}}_{2}\) and \({\bar{V}}_{1}\), \(F_{2}\) guarantees that \({\bar{x}}_{1}\) solves (P1) (and analogously for \({\bar{x}}_{2}\) which solves (P2)). \(\square \)

5 Bi-objective approximate solution search algorithm (BISSA) for solving (MCKP)

In this section, we propose the bi-objective approximate solution search algorithm BISSA, for finding an element \({\hat{x}}\in F\) which is an approximate solution to (MCKP). The algorithm relies on solving a series of problems \((BS(\lambda ))\) defined by (11) for \(0<\lambda <1\) chosen in such a way that the Pareto solutions \(x(\lambda )\) to \((BS(\lambda ))\) are feasible for (MCKP), i.e., \((-c)^\mathrm{T}x(\lambda )+b\ge 0\), and \((-c)^\mathrm{T}x(\lambda )+b\) diminishes for subsequent \(\lambda \).

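According to Theorem 4.1, each solution to \((BS(\lambda ))\) solves the linear bi-objective optimization problem (BP),

$$\begin{aligned} \begin{array}{ll} \text {V}\max &{} (p^\mathrm{T}x,(-c)^\mathrm{T}x)\\ \text {subject to} &{} x\in X. \end{array} \end{aligned}$$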

According to Theorem 3.1, any Pareto efficient solution \(x^{*}\) to problem (BP) which is feasible to (MCKP), i.e., \((-c)^\mathrm{T}x^{*}\ge -b\), and satisfies condition \((*)\), i.e.,

$$\begin{aligned} (-c)^\mathrm{T}x^{*}+b=\min _{x\in S_{bo},\ (-c)^\mathrm{T}x+b\ge 0}\ ((-c)^\mathrm{T}x+b), \end{aligned}$$

solves problem (MCKP). Since problems \((BS(\lambda ))\) are defined with the help of linear scalarization, we are not able, in general, to enumerate all \(x\in S_{bo}\) such that \((-c)^\mathrm{T}x+b\ge 0\) in order to find an \(x^{*}\) which satisfies condition \((*)\). On the other hand, by using linear scalarization, we are able to decompose and easily solve problems \((BS(\lambda ))\).

The BISSA algorithm aims at finding a Pareto efficient solution \({\hat{x}}\in X\) to (BP) which is feasible to (MCKP), i.e., \(c^\mathrm{T}{\hat{x}}\le b\), for which the value of \(b-c^\mathrm{T}{\hat{x}}\) is as small as possible (but not necessarily minimal) and which approaches condition \((*)\) of Theorem 3.1 as closely as possible.

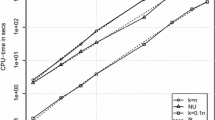

Here, we give a description of the BISSA algorithm. The first step of the algorithm (lines 1–5) is to find solutions to problems (P1) and (P2) as well as their outcomes. The solutions are the extreme Pareto solutions to problem (BP). Those points named \({(a_{1},b_{1})}^0\) and \({(a_{2},b_{2})}^0\) are presented in Fig. 3. Then (lines 6-9), in order to assert whether a solution to problem (MCKP) exists or not, a basic checking is made against value \(-b\). If the algorithm reaches line 10, no solution has been found yet, and we can begin the exploration of the search space.

We calculate \(\lambda \) according to line 13. The value of \(\lambda \) is the slope of the straight line joining \((a_{1},b_{1})\) and \((a_{2},b_{2})\). At the same time it is the scalarization parameter defining the problem \((BS(\lambda ))\) [formula (11)]. The outcome of the solution to problem \((BS(\lambda ))\) cannot lie below the straight line determined by points \((a_{1},b_{1})\) and \((a_{2},b_{2})\). It must lie on or above this line, as it is a Pareto efficient solution to problem (BP). Then, problem \((BS(\lambda ))\) is solved (line 14) by using formulae (16) and (17). Next, in lines 15–27 of the repeat-until loop, the search space is scanned to find solutions to problem (BP) which are feasible to problem (MCKP). If there exist solutions with outcomes lying above the straight line determined by \(\lambda \) (the condition in line 15 is true), either the search space is narrowed (by determining new points \((a_{1},b_{1})\) and \((a_{2},b_{2})\), see Fig. 3, and points with upper index equal to 1) and the loop continues, or the solution to problem (MCKP) is derived. If not, the solution x from set S whose outcome lies above the line determined by \(-b\) [a feasible solution to problem (MCKP)] and for which the value \(f_{2}(x)+b\) is minimal in this set is an approximate solution (\({\hat{x}}\)) to problem (MCKP), and the loop terminates. Finally (line 28), the upper bound \(f_{1}({\hat{x}})+u\) on the profit value of the exact solution to problem (MCKP) is calculated.

The BISSA algorithm finds either an exact solution to problem (MCKP), or (after reaching line 27) a lower bound (LB) with its solution \({\hat{x}}\) and an upper bound (UB) (see Fig. 3). A solution found by the algorithm is, in general, only an approximate solution to problem (MCKP) because a triangle (called further the triangle of uncertainty) determined by points \((f_{1}({\hat{x}}), f_{2}({\hat{x}})), (f_{1}({\hat{x}})+u, -b), (f_{1}({\hat{x}}),-b)\) may contain other Pareto outcomes [candidates for outcomes of exact solutions to problem (MCKP)] which the proposed algorithm is not able to derive. The reason is that we use a scalarization technique based on weighted sums of criteria functions to obtain Pareto solutions to problem (BP).

Let us recall that each instance of the optimization problem \((BS(\lambda ))\) can be solved in time O(n), but the number of these instances solved by the proposed algorithm depends on the size of the problem (values k and \(n_{i}\)) and the data.
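The search described in this section can be illustrated by the following simplified sketch. It is not the pseudo-code of BISSA: it keeps a single solution of \((BS(\lambda ))\) per value of \(\lambda \) instead of scanning the whole solution set S, it starts from lexicographic extreme points instead of the solutions of (P1) and (P2), and its upper bound follows from the inequality \(\lambda p^\mathrm{T}x\le V+(1-\lambda )b\), valid for every feasible x, where V is the optimal value of \((BS(\lambda ))\). All function names are hypothetical, and integer data are assumed so that \(\lambda \) can be kept as an exact fraction.

```python
from fractions import Fraction

def outcome(profits, costs, x):
    """Return (f1, f2) = (p^T x, -c^T x) for a choice x of one item index per class."""
    f1 = sum(p[j] for p, j in zip(profits, x))
    f2 = -sum(c[j] for c, j in zip(costs, x))
    return f1, f2

def solve_scalarized(profits, costs, lam):
    """Closed-form solution of (BS(lambda)): one maximizer per class (first one on ties)."""
    return [max(range(len(p)), key=lambda j: lam * p[j] - (1 - lam) * c[j])
            for p, c in zip(profits, costs)]

def bissa_sketch(profits, costs, budget):
    """Return (x_hat, lower_bound, upper_bound), or None if no feasible choice exists."""
    # Lexicographic extremes, used here as stand-ins for the solutions of (P1) and (P2).
    x_p = [max(range(len(p)), key=lambda j: (p[j], -c[j])) for p, c in zip(profits, costs)]
    x_c = [max(range(len(p)), key=lambda j: (-c[j], p[j])) for p, c in zip(profits, costs)]
    a1, b1 = outcome(profits, costs, x_p)   # highest profit
    a2, b2 = outcome(profits, costs, x_c)   # lowest cost
    if b2 < -budget:
        return None                          # even the cheapest choice exceeds b
    if b1 >= -budget:
        return x_p, a1, a1                   # the max-profit choice is feasible (Case 1)
    x_hat = x_c
    while True:
        # Weight for which both end points have the same scalarized value.
        lam = Fraction(b2 - b1, (a1 - a2) + (b2 - b1))
        line_value = lam * a2 + (1 - lam) * b2
        x = solve_scalarized(profits, costs, lam)
        f1, f2 = outcome(profits, costs, x)
        if lam * f1 + (1 - lam) * f2 <= line_value:
            break                            # no outcome strictly above the line
        if f2 >= -budget:
            x_hat, a2, b2 = x, f1, f2        # new feasible end point
        else:
            a1, b1 = f1, f2                  # new infeasible end point
    upper = (line_value + (1 - lam) * budget) / lam
    return x_hat, a2, upper

print(bissa_sketch([[3, 9, 4], [5, 8]], [[3, 7, 1], [2, 6]], budget=9))
```

On this toy instance the lower and upper bounds coincide at 14, so the returned choice is optimal; in general the two bounds differ and the returned choice is only an approximate solution.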

6 Computational experiments

Most publicly available test instances refer not to the (MCKP) problem (let us recall, that there is only one inequality or budget constraint in the problem we consider) but to multi-dimensional knapsack problems. Due to this fact we generate new random instances (available from the authors on request). However, to compare solutions obtained by the BISSA algorithm to the exact solutions we used the minimal algorithm for the multiple-choice knapsack problem [22] which we call EXACT and its implementation in C [23]. The EXACT algorithm gives the profit value of the optimal solution as well as the solution obtained by the greedy algorithm for the (MCKP) problem, so the quality of the BISSA algorithm approximate solutions can be assessed in terms of the difference or relative difference between profit values of approximate solutions and exact ones.

Since the difficulty of knapsack problems (see, e.g., the monograph [19]) depends on the correlation between profits and weights of items, we conducted two computational experiments: Experiment 1 with uncorrelated data instances (easy to solve) and Experiment 2 with weakly correlated data instances (more difficult to solve) (cf. [11]). We explain later why weakly correlated problems are more difficult for the BISSA algorithm than uncorrelated ones.

To prepare test problems (data instances) we used a method proposed in [22] and our own procedure for calculating total cost values.

The BISSA algorithm has been implemented in C. The implementation of the BISSA algorithm was run on an off-the-shelf laptop (2 GHz AMD processor, Windows 10), and the implementation of the EXACT algorithm was run on a PC (4 x 3.2 GHz Intel processor, Linux). The running time of the BISSA and EXACT algorithms for each of the test problems was below one second.

The columns of the tables containing the experimental results are as follows.

- 1: Problem no.
- 2: Profit of the exact solution found by the EXACT algorithm.
- 3: Profit of the approximate solution found by the BISSA algorithm.
- 4: Difference between 2 and 3.
- 5: Relative (%) difference between 2 and 3.
- 6: Upper bound for (MCKP) found by the BISSA algorithm.
- 7: The difference between the upper bound and profit of the approximate solution.
- 8: The relative difference between the upper bound and profit of the approximate solution.
- 9: Upper bound for (MCKP) found by the greedy algorithm.
- 10: Number of \((BS(\lambda ))\) problems solved by the BISSA algorithm.

Experiment 1: uncorrelated data (unc) instances

We generated 10 test problems assuming that \(k=10\) and \(n_{i}=1000, i=1,\ldots ,k\) (problem set (unc, 10, 1000)), 10 test problems assuming that \(k=100\) and \(n_{i}=100, i=1,\ldots ,k\) (problem set (unc, 100, 100)), and 10 test problems assuming that \(k=1000\) and \(n_{i}=10, i=1,\ldots ,k\) (problem set (unc, 1000, 10)). For each test problem profits (\(p_{ij}\)) and costs (\(c_{ij}\)) of items were randomly distributed (according to the uniform distribution) in [1, R], \(R=10000\). Profits and costs of items were integers. For each test problem the total cost b was equal to either \(c + random(0,\frac{1}{4}*c)\) or \(c - random(0,\frac{1}{4}*c)\) (each selected with probability 0.5), where \(c=\frac{1}{2}\sum _{i=1}^{k}(\min _{j=1,\ldots ,n_{i}}c_{ij}+\max _{j=1,\ldots ,n_{i}}c_{ij})\), and random(0, r) denotes an integer selected randomly (according to the uniform distribution) from [0, r].

The results for problem sets (unc, 10, 1000), (unc, 100, 100) and (unc, 1000, 10) are given, respectively, in Tables 1, 2 and 3.

Experiment 2: weakly correlated (wco) data instances

We generated 10 test problems assuming that \(k=20\) and \(n_{i}=20, i=1,\ldots ,k\) (problem set (wco, 20, 20)). For each test problem costs (\(c_{ij}\)) of items in set \(N_{i}\) were randomly distributed (according to the uniform distribution) in [1, R], \(R=10000\), and profits of items (\(p_{ij}\)) in this set were randomly distributed in \([c_{ij}-10, c_{ij}+10]\), such that \(p_{ij}\ge 1\). Profits and costs of items were integers. For each test problem the total cost b was calculated as for Experiment 1.
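A possible Python sketch of this weakly correlated scheme is given below. It is an illustration under stated assumptions rather than the generator used in the experiments: the name `generate_wco` is hypothetical, the condition \(p_{ij}\ge 1\) is enforced here by truncating the sampling interval at 1 (the text does not say how it was enforced), and \(\frac{1}{4}c\) is rounded down before drawing the random integer.

```python
import random


def generate_wco(k, n, R=10000, spread=10, seed=0):
    """Weakly correlated instance with k classes of n items each.

    Costs are integers uniform in [1, R]; each profit is an integer drawn
    from [c_ij - spread, c_ij + spread].
    Assumption: p_ij >= 1 is enforced by truncating the interval at 1.
    """
    rng = random.Random(seed)
    costs = [[rng.randint(1, R) for _ in range(n)] for _ in range(k)]
    profits = [
        [rng.randint(max(1, c - spread), c + spread) for c in row]
        for row in costs
    ]
    # Total cost b computed as in Experiment 1.
    c_mid = 0.5 * sum(min(row) + max(row) for row in costs)
    delta = rng.randint(0, int(c_mid / 4))  # assumption: floor of c/4
    b = c_mid + delta if rng.random() < 0.5 else c_mid - delta
    return profits, costs, b


if __name__ == "__main__":
    # One instance of the size used in problem set (wco, 20, 20).
    profits, costs, b = generate_wco(k=20, n=20, seed=1)
    print(len(profits), len(profits[0]), b)
```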

The results for problem set (wco, 20, 20) are given in Table 4.

In the case of uncorrelated data instances, the BISSA algorithm was able to find approximate solutions (and profit values) to problems with 10000 binary variables in reasonable time. The relative differences between the profit values of exact and approximate solutions are small for each of the test problems. Upper bounds found by the BISSA algorithm are almost the same as upper bounds found by the greedy algorithm for (MCKP). Even for the problem set (unc, 1000, 10), the number of \((BS(\lambda ))\) problems solved by the BISSA algorithm is small relative to the number of decision variables.

In the case of weakly correlated data instances, the BISSA algorithm solved problems with 400 binary variables in reasonable time. The relative difference between profit values of exact and approximate solutions is, on average, greater than for uncorrelated test problems. As one can see in Table 4, upper bounds found by the BISSA algorithm are almost the same as upper bounds found by the greedy algorithm for (MCKP). The reason why the weakly correlated instances that the BISSA algorithm solves in reasonable time have significantly fewer variables than the uncorrelated ones is as follows. In line 24 of the BISSA algorithm, in order to find an element \({\hat{x}}\), we have to go through the solution set S to the problem \((BS(\lambda ))\) [the complete scan of set S according to values of the second objective function of problem (BP)]. For weakly correlated data instances, the cardinality of the set S may be large even for problems belonging to class (wco, 30, 30). We conducted experiments for problem class (wco, 30, 30). For the most difficult test problem in this class, the cardinality of solution set S to the problem \((BS(\lambda ))\) was 199,065,600. For larger weakly correlated problems, that number may be even larger.
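The growth of S can be illustrated with a small Python sketch. It rests on assumptions that are not restated in this section: that \((BS(\lambda ))\) maximizes a weighted sum of the form \(\lambda _{1}\sum p_{ij}x_{ij}-\lambda _{2}\sum c_{ij}x_{ij}\) subject only to choosing exactly one item from each set \(N_{i}\), so that the problem decomposes by set and S is the Cartesian product of the per-set maximizers. The names `argmax_sets` and `solution_set_size` are hypothetical.

```python
from math import prod


def argmax_sets(profits, costs, lam1, lam2):
    """For each set N_i, return indices of items maximizing lam1*p - lam2*c.

    Assumption: the scalarized objective is separable over the sets, so each
    set can be handled independently.
    """
    result = []
    for p_row, c_row in zip(profits, costs):
        scores = [lam1 * p - lam2 * c for p, c in zip(p_row, c_row)]
        best = max(scores)
        result.append([j for j, s in enumerate(scores) if s == best])
    return result


def solution_set_size(profits, costs, lam1, lam2):
    """Cardinality of S: the product of the numbers of per-set maximizers."""
    return prod(len(s) for s in argmax_sets(profits, costs, lam1, lam2))


if __name__ == "__main__":
    # Toy data with profits close to costs, as in weakly correlated instances.
    profits = [[5, 7, 9], [4, 6, 3]]
    costs = [[4, 6, 8], [3, 5, 9]]
    print(argmax_sets(profits, costs, 1, 1))        # [[0, 1, 2], [0, 1]]
    print(solution_set_size(profits, costs, 1, 1))  # 6
```

Under these assumptions, a few ties in each of 30 sets already multiply into a very large S. When profits stay within a narrow band around costs, such ties are likely for weights with \(\lambda _{1}\approx \lambda _{2}\), which is one plausible reading of the behaviour reported above.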

7 Conclusions and future work

A new approximate method of solving multiple-choice knapsack problems by replacing the budget constraint with the second objective function has been presented. Such a relaxation of the original problem allows smart scanning of the decision space by quickly solving the binary linear optimization problem (this is possible because the problem decomposes into easy subproblems that are solved independently). Let us note that our method can also be used for finding an upper bound for the multi-dimensional multiple-choice knapsack problem (MMCKP) via the relaxation obtained by summing up all the linear inequality constraints [1].

The method can be compared to the greedy algorithm for multiple-choice knapsack problems, which also finds, in general, an approximate solution and an upper bound.

Two preliminary computational experiments have been conducted to check how the proposed algorithm behaves for easy-to-solve (uncorrelated) instances and hard-to-solve (weakly correlated) instances. The results have been compared to results obtained by the exact state-of-the-art algorithm for multiple-choice knapsack problems [22]. For weakly correlated problems, the number of solution outcomes which have to be checked in order to derive the triangle of uncertainty (and hence also an approximate solution to the problem and its upper bound) grows fast with the size of the problem. Therefore, the weakly correlated problem instances that we are able to solve in reasonable time are smaller than the uncorrelated ones.

It is worth emphasizing that in the proposed method profits and costs of items, as well as the total cost, can be real numbers. This could be of value when one wants to solve multiple-choice knapsack problems without converting real numbers into integers (as one has to do for dynamic programming methods).

Further work will cover investigations of how the algorithm behaves for weakly and strongly correlated instances, as well as the issue of finding a better solution by a smart “scanning” of the triangle of uncertainty.

References

1. Akbar, M.M., Rahman, M.S., Kaykobad, M., Manning, E.G., Shoja, G.C.: Solving the multidimensional multiple-choice knapsack problem by constructing convex hulls. Comput. Oper. Res. 33(5), 1259–1273 (2006). https://doi.org/10.1016/j.cor.2004.09.016

2. Chen, Y., Hao, J.K.: A reduce and solve approach for the multiple-choice multidimensional knapsack problem. Eur. J. Oper. Res. 239(2), 313–322 (2014). https://doi.org/10.1016/j.ejor.2014.05.025

3. Cherfi, N., Hifi, M.: A column generation method for the multiple-choice multi-dimensional knapsack problem. Comput. Optim. Appl. 46(1), 51–73 (2010). https://doi.org/10.1007/s10589-008-9184-7

4. Dudzinski, K., Walukiewicz, S.: A fast algorithm for the linear multiple-choice knapsack problem. Oper. Res. Lett. 3(4), 205–209 (1984). https://doi.org/10.1016/0167-6377(84)90027-0

5. Dudzinski, K., Walukiewicz, S.: Exact methods for the knapsack problem and its generalizations. Eur. J. Oper. Res. 28(1), 3–21 (1987). https://doi.org/10.1016/0377-2217(87)90165-2

6. Dyer, M., Kayal, N., Walker, J.: A branch and bound algorithm for solving the multiple-choice knapsack problem. J. Comput. Appl. Math. 11(2), 231–249 (1984). https://doi.org/10.1016/0377-0427(84)90023-2

7. Dyer, M., Riha, W., Walker, J.: A hybrid dynamic programming/branch-and-bound algorithm for the multiple-choice knapsack problem. J. Comput. Appl. Math. 58(1), 43–54 (1995). https://doi.org/10.1016/0377-0427(93)E0264-M

8. Dyer, M.E.: An O(n) algorithm for the multiple-choice knapsack linear program. Math. Program. 29(1), 57–63 (1984). https://doi.org/10.1007/BF02591729

9. Ehrgott, M.: Multicriteria Optimization. Springer, Berlin (2005). http://www.springer.com/gp/book/9783540213987

10. Gao, C., Lu, G., Yao, X., Li, J.: An iterative pseudo-gap enumeration approach for the multidimensional multiple-choice knapsack problem. Eur. J. Oper. Res. (2016). https://doi.org/10.1016/j.ejor.2016.11.042

11. Han, B., Leblet, J., Simon, G.: Hard multidimensional multiple choice knapsack problems, an empirical study. Comput. Oper. Res. 37(1), 172–181 (2010). https://doi.org/10.1016/j.cor.2009.04.006

12. Hifi, M., Michrafy, M., Sbihi, A.: Heuristic algorithms for the multiple-choice multidimensional knapsack problem. J. Oper. Res. Soc. 55(12), 1323–1332 (2004). https://doi.org/10.1057/palgrave.jors.2601796

13. Kellerer, H., Pferschy, U., Pisinger, D.: Knapsack Problems. Springer (2004). https://books.google.pl/books?id=u5DB7gck08YC

14. Khan, M.S.: Quality adaptation in a multisession multimedia system: model, algorithms, and architecture. Ph.D. thesis, AAINQ36645, University of Victoria, Victoria (1998)

15. Klamroth, K., Tind, J.: Constrained optimization using multiple objective programming. J. Glob. Optim. 37(3), 325–355 (2007). https://doi.org/10.1007/s10898-006-9052-x

16. Kwong, C., Mu, L., Tang, J., Luo, X.: Optimization of software components selection for component-based software system development. Comput. Ind. Eng. 58(4), 618–624 (2010). https://doi.org/10.1016/j.cie.2010.01.003

17. Lee, C., Lehoczky, J., Rajkumar, R.R., Siewiorek, D.: On quality of service optimization with discrete QoS options. In: Proceedings of the IEEE Real-Time Technology and Applications Symposium, pp. 276–286 (1999)

18. Martello, S., Pisinger, D., Toth, P.: New trends in exact algorithms for the 0–1 knapsack problem. Eur. J. Oper. Res. 123(2), 325–332 (2000). https://doi.org/10.1016/S0377-2217(99)00260-X

19. Martello, S., Toth, P.: Knapsack Problems: Algorithms and Computer Implementations. Wiley, New York (1990)

20. Miettinen, K.: Nonlinear Multiobjective Optimization. Kluwer Academic Publishers (1999). https://doi.org/10.1007/978-1-4615-5563-6. http://users.jyu.fi/~miettine/book/

21. Nauss, R.M.: The 0–1 knapsack problem with multiple choice constraints. Eur. J. Oper. Res. 2(2), 125–131 (1978). https://doi.org/10.1016/0377-2217(78)90108-X

22. Pisinger, D.: A minimal algorithm for the multiple-choice knapsack problem. Eur. J. Oper. Res. 83(2), 394–410 (1995). https://doi.org/10.1016/0377-2217(95)00015-I

23. Pisinger, D.: Program code in C (1995). http://www.diku.dk/~pisinger/minknap.c (downloaded in 2016)

24. Pisinger, D.: Budgeting with bounded multiple-choice constraints. Eur. J. Oper. Res. 129(3), 471–480 (2001). https://doi.org/10.1016/S0377-2217(99)00451-8

25. Pyzel, P.: Propozycja metody oceny efektywnosci systemow MIS. In: Myslinski, A. (ed.) Techniki Informacyjne - Teoria i Zastosowania, Wybrane Problemy, vol. 2(14), pp. 59–70. Instytut Badan Systemowych PAN, Warszawa (2012)

26. Sbihi, A.: A best first search exact algorithm for the multiple-choice multidimensional knapsack problem. J. Comb. Optim. 13(4), 337–351 (2007). https://doi.org/10.1007/s10878-006-9035-3

27. Sinha, P., Zoltners, A.A.: The multiple-choice knapsack problem. Oper. Res. 27(3), 503–515 (1979). https://doi.org/10.1287/opre.27.3.503

28. Zemel, E.: An O(n) algorithm for the linear multiple choice knapsack problem and related problems. Inf. Process. Lett. 18(3), 123–128 (1984). https://doi.org/10.1016/0020-0190(84)90014-0

29. Zhong, T., Young, R.: Multiple choice knapsack problem: example of planning choice in transportation. Eval. Program Plan. 33(2), 128–137 (2010). https://doi.org/10.1016/j.evalprogplan.2009.06.007

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bednarczuk, E.M., Miroforidis, J. & Pyzel, P. A multi-criteria approach to approximate solution of multiple-choice knapsack problem. Comput Optim Appl 70, 889–910 (2018). https://doi.org/10.1007/s10589-018-9988-z

DOI: https://doi.org/10.1007/s10589-018-9988-z