Abstract

The aim of this work is the numerical homogenization of a parabolic problem with several time and spatial scales using the heterogeneous multiscale method. We replace the original cell problem with an alternative one that uses Dirichlet boundary values and initial values instead of periodic boundary and time conditions. Further, we give a detailed a priori error analysis of the fully discrete method, i.e., with discretization in space and time of both the macroscopic problem and the cell problems. Numerical experiments illustrate the theoretical convergence rates.


1 Introduction

Problems with multiple spatial and temporal scales occur in a variety of phenomena and materials. Prominent examples are saltwater intrusion, storage of radioactive waste, and composite materials [7, 15, 19]. These examples have in common that both macroscopic and microscopic scales occur, which makes them particularly challenging from a numerical point of view. From the application point of view, however, it is often sufficient to know the macroscopic properties. Therefore, it is highly relevant to develop methods that take all small-scale effects into account without having to compute them simultaneously with the macroscale. This is the main idea of (numerical) homogenization.

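In this work, we are interested in the following parabolic problem

\[ \partial_t u^{\epsilon}(t,x) - \nabla\cdot\Bigl(a\bigl(t,x,\tfrac{t}{\epsilon^2},\tfrac{x}{\epsilon}\bigr)\nabla u^{\epsilon}(t,x)\Bigr) = f(t,x) \quad \text{in } (0,T)\times\varOmega \]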

with initial and boundary conditions. The precise setting is given further below. The coefficient \(a\bigl (t,x, \frac{t}{\epsilon ^2}, \frac{x}{\epsilon }\bigr )\) is called the time-space multiscale coefficient and represents physical properties of the considered material. If we use standard finite element and time stepping methods, we obtain sufficiently accurate solutions only for small time steps and fine grids, as the following example illustrates.

Example 1.1

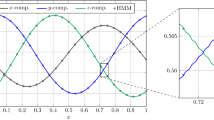

Let \(\varOmega = (0,1)\) and \(T = 1\). Furthermore, we consider

Let the initial condition be \( u^{\epsilon }(0,x) = 0\) for all \(x \in \varOmega \). Figure 1a shows the \(L^2\)-error between the numerical solution and a reference solution. The reference solution was computed using finite elements with grid width \(h= 10^{-6}\) and the implicit Euler method with time step size \(\tau = 1/100\). Theory predicts quadratic convergence; however, here this only occurs for small grid sizes.

More precisely, the error only starts to decrease once \(h < \epsilon \), see Fig. 1a. Similar observations can be made for the time step, where one even needs \(\tau <\epsilon ^2\) in general. The reason is that \(u^\epsilon \) is highly oscillatory in space and time, see Fig. 1b.

To tackle the outlined challenges, various multiscale methods have been proposed. Focusing on approaches for parabolic space-time multiscale problems, examples include generalized multiscale finite element methods [12], non-local multicontinua schemes [18], high-dimensional (sparse) finite element methods [28], an approach based on an appropriate global coordinate transform [23], a method in the spirit of the Variational Multiscale Method and the Localized Orthogonal Decomposition [21] as well as optimal local subspaces [26, 27]. Here, we consider problems that are locally periodic in space and time. Hence, we employ the Heterogeneous Multiscale Method (HMM), first introduced by E and Engquist [13], see also the reviews [1, 3]. The HMM has been successfully applied to various time-dependent problems such as (nonlinear) parabolic problems [2, 4,5,6], time-dependent Maxwell equations [14, 16, 17] or the heat equation for lithium ion batteries [30]. We use the finite element version of the HMM, but note that other discretization types such as discontinuous Galerkin schemes are generally possible as well.

The present contribution is inspired by [22], which considers the same parabolic model problem and analyzes a semi-discrete HMM for it. More precisely, the microscopic cell problems are assumed to be solved exactly (analytically) in [22]. Our main contribution is to propose a suitable discretization of these cell problems and to prove rigorous error estimates for the resulting fully discrete HMM. A particular challenge for the estimate is to balance the mesh size and the time step on the one hand and the period \(\epsilon \) on the other hand. Further, we illustrate our theoretical results with numerical experiments and thereby underline the applicability of the method.

The paper is organized as follows. In Sect. 2, we introduce the setting and present the main homogenization results. In Sect. 3, we derive the fully discrete finite element heterogeneous multiscale method. The error of the macroscopic discretization is estimated in Sect. 4 and the error arising from the microscopic modeling is investigated in Sect. 5. Finally, numerical results are presented in Sect. 6.

2 Setting

In this section, we present our model problem and the associated homogenization results. Throughout the paper, we use standard notation for function spaces, in particular the Lebesgue space \(L^2\), the Sobolev spaces \(H^1\) and \(H^1_0\), as well as Bochner spaces for time-dependent functions. We denote the \(L^2\)-scalar product (with respect to space) by \(\langle \cdot , \cdot \rangle _0\) and the \(L^2\)-norm by \(\Vert \cdot \Vert _0\). Furthermore, we mark spaces of periodic functions by \(\#\). Let \(X_{\#}(\varOmega )\) be such a space for an arbitrary \(\varOmega \subset \mathbb {R}^d\); then the subspace \(X_{\#,0}(\varOmega ) \subset X_{\#}(\varOmega )\) consists of all functions whose integral over \(\varOmega \) is 0.

2.1 Model problem

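Let \(\varOmega \subset \mathbb {R}^d\) be a bounded Lipschitz domain, \(T>0\) the final time and \(Y:= (-\frac{1}{2},\frac{1}{2})^d\). We consider the following parabolic problem

\[ \begin{aligned} \partial_t u^{\epsilon} - \nabla\cdot\bigl(a^{\epsilon}\nabla u^{\epsilon}\bigr) &= f &&\text{in } (0,T)\times\varOmega,\\ u^{\epsilon} &= 0 &&\text{on } (0,T)\times\partial\varOmega,\\ u^{\epsilon}(0,\cdot) &= u_0 &&\text{in } \varOmega, \end{aligned}\tag{2.1} \]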

where \( f \in L^2((0,T),L^2(\varOmega )) \) and \( u_0 \in H_0^1(\varOmega )\). These conditions are sufficient for the well-posedness of (2.1). For the error estimates, we will later assume more regularity, see the discussion after Theorem 4.1. The time-space multiscale coefficient \(a^{\epsilon }\) from Sect. 1 is given by \(a^{\epsilon }(t,x) = a\bigl (t,x,\frac{t}{\epsilon ^2},\frac{x}{\epsilon }\bigr )\) with a matrix-valued function \(a(t,x,s,y) \in C([0,T] \times {\bar{\varOmega }} \times [0,1] \times {\bar{Y}}, \mathbb {R}^{d \times d}_{sym})\). The function a is \((0,1)\times Y\)-periodic with respect to s and y. Furthermore, it is uniformly coercive and bounded, i.e., there are constants \(\varLambda , \lambda > 0\) such that for all \(\xi , \eta \in \mathbb {R}^d\)

\[ \xi\cdot a(t,x,s,y)\,\xi \ge \lambda|\xi|^2, \qquad |\eta\cdot a(t,x,s,y)\,\xi| \le \varLambda|\xi|\,|\eta| \]

for all \((t,x,s,y) \in [0,T] \times {\overline{\varOmega }} \times [0,1] \times Y\). Further, we assume that a is Lipschitz continuous in t and x.

2.2 Homogenized problem

Analytical homogenization results for (2.1) were obtained in [8, 28]. For ease of presentation, we follow the traditional approach of asymptotic expansions here, but we emphasize that the same results are obtained with the more recent approach of time-space multiscale convergence as in [28], which is a generalization of two-scale convergence. Based on the multiscale asymptotic expansion

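it is shown that \(U_0\) solves the homogenized problem

\[ \begin{aligned} \partial_t U_0 - \nabla\cdot\bigl(A_0\nabla U_0\bigr) &= f &&\text{in } (0,T)\times\varOmega,\\ U_0 &= 0 &&\text{on } (0,T)\times\partial\varOmega,\\ U_0(0,\cdot) &= u_0 &&\text{in } \varOmega. \end{aligned}\tag{2.3} \]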

Here, the homogenized coefficient \(A_0\) is defined by

where \(\delta _{jk}\) denotes the Kronecker delta. The function \(\chi ^i \in L^2((0,T) \times \varOmega \times (0,1), H^1_{\#,0}(Y)) \cap L^2((0,T) \times \varOmega ,H^1_{\#}((0,1),H^{-1}_{\#,0}(Y)))\) solves the cell problem

Using these \(\chi ^i\), \(U_1\) in the asymptotic expansion (2.2) can be written as

Theorems 2.1 and 2.3 in [8, Chapter 2, Section 1.7] show that

We call \(U_0\) the homogenized solution. \(U_0\) describes the macroscopic behavior of \(u^{\epsilon }\), because it depends only on the macroscopic variables t and x. \(U_1\) is called the first-order corrector.

Remark 2.1

In general, \(A_0\) is not symmetric for \(d>1\), since

The last term does not vanish in general, but it is zero for \(i = j\) due to integration by parts and the time-periodicity of \(\chi ^i\).

In the following, we reformulate \(A_0\) in a way that we use later to derive the discretized problem. We transform the reference cell \((0,1) \times Y\) to a general cell \((t,t + \epsilon ^2) \times (\{x_0\} + I_{\epsilon })\) with \(I_\epsilon {:}{=}\epsilon Y\) for fixed \(t \in [0,T)\) and \( x_0 \in \varOmega \). Applying the chain rule and the change-of-variables formula allows us to write

3 The finite-element heterogeneous multiscale method (FE-HMM)

Based on the results of Sect. 2, we want to compute an approximation of the homogenized solution \(U_0\) with the Finite-Element Heterogeneous Multiscale Method (FE-HMM). This method was already introduced in [22], but under the assumption that the cell problems (2.5) can be solved exactly (analytically). The main aim of this section is to also introduce the (microscopic) discretization of the cell problems, which yields a fully discrete method. Further, we account for the non-symmetry of \(A_0\). This leads to a slightly different formulation compared with [22], where the symmetric part of \(A_0\) was considered throughout. In the following, we derive the full method step by step, which we hope makes the final formulation easier to understand and the error estimates in the following sections easier to follow.

We start with the discretized macro problem. For the spatial discretization, we use linear finite elements based on a triangulation \(\mathcal {T}_H\), and for the time discretization we use the implicit Euler method. More precisely, let \(V_{H} \subset H_0^1(\varOmega )\) be the space of all piecewise linear functions which are zero on \(\partial \varOmega \) and let \(\tau = T/N\) be the time step size. For \(1 \le n\le N\), we set \( t_n = n \tau \). Further, we define \(U_H^0:= Q_Hu_0\), where \(Q_H:L^2(\varOmega )\rightarrow V_H\) is the \(L^2\)-projection.

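For \(1 \le n \le N\), let \(U_H^n \in V_H\) be the solution of the discretized equation

\[ \Bigl\langle \frac{\bar{\partial} U_H^n}{\partial t}, \varPsi_H \Bigr\rangle_0 + B[t_n, U_H^n, \varPsi_H] = \langle f^n, \varPsi_H\rangle_0 \quad \text{for all } \varPsi_H \in V_H, \tag{3.1} \]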

where \(f^n(x)= f(t_n,x)\) and \( \dfrac{{\bar{\partial }} U_H^n}{\partial t} = (U^n_H - U^{n-1} _H)/ \tau \). Here, the discrete bilinear form \(B[t_n,\cdot ,\cdot ]\) is defined for any \(\varPhi _H, \varPsi _H \in V_H\) via

\[ B[t_n,\varPhi_H,\varPsi_H] = \sum_{K\in\mathcal{T}_H} |K|\,\nabla\varPsi_H(x_K)\cdot A_0(t_n,x_K)\nabla\varPhi_H(x_K), \]

i.e., the integral is approximated by a quadrature formula, where \(x_K\) denotes the barycenter of \(K\in {\mathcal {T}}_H\). If we now consider the individual summands, we could calculate \(A_0(t_n,x_K)\) starting from Eq. (2.7). However, this would have several disadvantages. First, we would have to compute \(A_0(t_n,x_K) \) for all time points \(t_n\), which would require a lot of memory depending on the time step size. Furthermore, we want to change the boundary conditions later, which is not possible with this approach. Therefore, the idea is to compute \(\nabla \varPsi _H(x_K)\cdot A_0(t_n,x_K)\nabla \varPhi _H(x_K)\) directly. For this, set \(I_{\epsilon ,K}:= \{x_K\} + I_{\epsilon }\) and use the reformulation (2.7) and (2.8) to obtain

where

with

\(\phi ^{\epsilon }_{\#}\) solves the equivalent cell problem

We can thus give the first discretization for the bilinear form in (3.1)

Note that \( B_{H,\#}\) is generally not symmetric.

In practice, the period may be known only approximately. Therefore, we consider the case of a cell side length \(\delta > \epsilon \) and a cell time \(\sigma > \epsilon ^2\), where the ratios \(\frac{\delta }{\epsilon }\) and \(\frac{\sigma }{\epsilon ^2} \) are not integers. Then the periodic boundary conditions no longer hold (see [1, p. 164] for the stationary case), and we need alternative boundary and initial values. We approximate \(\nabla \varPsi _H \cdot A_0(t_n) \nabla \varPhi _H\) by replacing \(\epsilon \) by \(\delta \) and \(\epsilon ^2\) by \(\sigma \) in (3.3), and also X by

in (3.4). Then we approximate

where \(\phi ^{\epsilon }\) solves the initial value problem

This means that we replace periodic boundary conditions by Dirichlet ones and the time “boundary value problem” by an initial value problem.
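The effect of Dirichlet data on a cell whose length is not a multiple of \(\epsilon \) can already be seen in the simplest setting. The following sketch assumes d = 1, a stationary cell problem (so the initial value plays no role) and the hypothetical coefficient \(b(y) = 2 + \sin (2\pi y)\), whose homogenized value is its harmonic mean \(\sqrt{3}\). In 1D the flux \(b\,\partial _x\phi \) of the Dirichlet cell solution is constant and equals \(\delta \) divided by the integral of \(1/b(x/\epsilon )\) over the cell, which the sketch evaluates with a fine midpoint rule. It illustrates the mechanism only and is not the authors' implementation.

```python
import numpy as np


def dirichlet_cell_flux_1d(b, eps, delta, x_K=0.0, n=200_000):
    """Flux of the stationary 1D Dirichlet cell problem
    -(b(x/eps) phi')' = 0 on x_K + delta * (-1/2, 1/2), phi = x - x_K on the boundary.
    In 1D the flux b * phi' is constant and equals delta / int_cell 1 / b(x/eps) dx."""
    h = delta / n
    mid = x_K - 0.5 * delta + h * (np.arange(n) + 0.5)  # midpoint-rule nodes
    return float(delta / np.sum(h / b(mid / eps)))


def b(y):
    # hypothetical 1-periodic coefficient; its harmonic mean is sqrt(3)
    return 2.0 + np.sin(2.0 * np.pi * y)


eps = 0.01
for ratio in (5.3, 10.3, 20.3, 40.3):  # delta / eps deliberately not an integer
    q = dirichlet_cell_flux_1d(b, eps, ratio * eps, x_K=0.123)
    print(ratio, q, abs(q - np.sqrt(3.0)))
```

The deviation from \(\sqrt{3}\) comes only from the incomplete period at the end of the cell; for this b it stays below roughly \(2.2\,\epsilon /\delta \), and it vanishes up to quadrature error when \(\delta /\epsilon \) is an integer.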

In the following, we consider the bilinear form resulting from the above approximation. We set \( \mathcal {Q}_{n,K}:= (t_n,t_n + \sigma ) \times I_{\delta ,K}\) and define

where

To finally obtain the fully discrete method, we consider a triangulation \(T_{{\tilde{h}}}\) of the unit cell Y and the resulting triangulation \(T_h(I_{\delta ,K})\) of the scaled and shifted cell with the finite element space \(V_h^p\subset H_0^1(I_{\delta ,K})\), which consists of all piecewise polynomials of degree \(p \ge 2\). We stress that the mesh size h refers to the scaled triangulation \(T_h(I_{\delta ,K})\). Let \(1\le k \le N_{cell}\), \(\theta = \frac{\sigma }{N_{cell}}\) and \(s_k = k \theta \). For any \(\varPhi _H \in V_H\), we seek \(\phi _{h,k}^{\epsilon }\in \varPhi _H + V_h^p\) as the unique solution of the discrete cell problem

with \(\phi _{h,0}^{\epsilon } = \varPhi _H\).

Combining the approximations above, we obtain the following bilinear form

where

Remark 3.1

In the above definition of \(A_{H,h}\), we used the trapezoidal rule to approximate the time integral; this is also the choice in our numerical experiments below. Other quadrature rules can be used as well. In practice, the spatial integral over \(I_{\delta , K}\) is also approximated by a quadrature rule.
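To make the construction of \(A_{H,h}\) concrete, here is a hypothetical 1D sketch (not the authors' FEniCS code). Assumptions: d = 1 and a unit macroscopic slope, so that \(A_{H,h}(t_n,x_K)\) is taken to be the space-time average of \(a^\epsilon _{n,K}\,\partial _x\phi ^\epsilon _{h,k}\) over the cell (the precise formula is not reproduced in this text); P1 elements instead of the degree \(p\ge 2\) elements above; dense NumPy solves. The cell problem uses Dirichlet and initial data \(\varPhi (x) = x - x_K\), implicit Euler with \(\theta = \sigma /N_{cell}\), and the trapezoidal rule in time as in Remark 3.1. The names `cell_average_1d` and `a_fast` are illustrative.

```python
import numpy as np


def cell_average_1d(a_fast, eps, delta, sigma, n_el, n_steps, t_n=0.0, x_K=0.0):
    """Hypothetical 1D sketch of A_{H,h}(t_n, x_K) for a unit macroscopic slope.

    a_fast(s, y) is 1-periodic in s and y (slow variables frozen at (t_n, x_K)).
    Cell problem: Dirichlet and initial data Phi(x) = x - x_K on the cell
    x_K + delta * (-1/2, 1/2), P1 elements, implicit Euler with
    theta = sigma / n_steps, trapezoidal rule for the time average.
    """
    x = x_K - 0.5 * delta + np.linspace(0.0, delta, n_el + 1)  # cell nodes
    h = delta / n_el
    mid = 0.5 * (x[:-1] + x[1:])  # element midpoints (one-point quadrature)
    theta = sigma / n_steps
    e = np.arange(n_el)  # element e connects nodes e and e + 1

    def assemble(diag_val, off_val):
        """Assemble per-element 2x2 blocks [[d, o], [o, d]] into a global matrix."""
        A = np.zeros((n_el + 1, n_el + 1))
        np.add.at(A, (e, e), diag_val)
        np.add.at(A, (e + 1, e + 1), diag_val)
        np.add.at(A, (e, e + 1), off_val)
        np.add.at(A, (e + 1, e), off_val)
        return A

    M = assemble(h / 3.0, h / 6.0)  # P1 mass matrix

    def coeff(t_loc):
        return a_fast((t_n + t_loc) / eps**2, mid / eps)

    def flux(phi, t_loc):
        # (1/delta) * int_cell a^eps dphi/dx dx; dphi/dx is constant per element
        return np.sum(coeff(t_loc) * np.diff(phi)) / delta

    inner, bnd = slice(1, n_el), [0, n_el]
    phi = x - x_K  # phi_{h,0} = Phi; boundary values stay equal to Phi
    fluxes = [flux(phi, 0.0)]
    for k in range(1, n_steps + 1):
        t_loc = k * theta
        a_el = coeff(t_loc)
        S = M + theta * assemble(a_el / h, -a_el / h)  # mass + theta * stiffness
        # interior rows only; known Dirichlet values moved to the right-hand side
        rhs = (M @ phi)[inner] - S[inner][:, bnd] @ phi[bnd]
        phi[inner] = np.linalg.solve(S[inner, inner], rhs)
        fluxes.append(flux(phi, t_loc))
    fluxes = np.array(fluxes)
    # trapezoidal weights theta/2, theta, ..., theta, theta/2, divided by sigma
    return float(theta * (fluxes.sum() - 0.5 * (fluxes[0] + fluxes[-1])) / sigma)


def a_fast(s, y):
    # hypothetical separable coefficient g(s) * b(y)
    return (1.0 + 0.5 * np.sin(2 * np.pi * s)) * (2.0 + np.sin(2 * np.pi * y))


A_hh = cell_average_1d(a_fast, eps=0.05, delta=0.5, sigma=0.1013,
                       n_el=200, n_steps=400, x_K=0.3)
print(A_hh, np.sqrt(3.0))
```

For this separable coefficient, the stationary corrector of the spatial factor \(2 + \sin (2\pi y)\) also solves the space-time cell problem, so \(A_0\) equals the time mean of \(1 + \frac{1}{2}\sin (2\pi s)\) (which is 1) times the harmonic mean \(\sqrt{3}\) of the spatial factor. The sketch should therefore return a value close to \(\sqrt{3}\approx 1.732\) and clearly below the arithmetic mean 2; the small remaining deviation stems from the incomplete last time period (\(\sigma /\epsilon ^2\) is not an integer here) and the initial transient.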

We reformulate the discrete homogenized equation by replacing \(B[t_n,\varPhi _H,\varPsi _H]\) with \(B_{H,h}[t_n,\varPhi _H,\varPsi _H]\) in (3.1): Seek \(U_H^n\in V_H\) such that

where again \(U_H^0=Q_H u_0\).
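Once the coefficient at the barycenters is available, the scheme above is a standard P1 discretization with implicit Euler time stepping. A minimal 1D sketch of that time stepping follows, assuming a constant effective coefficient `A_eff` as a stand-in for \(A_{H,h}(t_n,x_K)\) (so no cell problems are solved here), the manufactured solution \(U_0(t,x) = e^{-t}\sin (\pi x)\), nodal interpolation instead of \(Q_H\), and an interpolated load vector; all of these are simplifications for illustration. Refining with \(\tau \sim H^2\) makes the spatial and temporal error contributions shrink at the same rate, so the experimental order of convergence should be close to 2.

```python
import numpy as np


def p1_matrices(n_el):
    """P1 mass and stiffness matrices on a uniform mesh of (0, 1), restricted
    to the interior nodes (homogeneous Dirichlet conditions)."""
    H = 1.0 / n_el
    m = n_el - 1  # number of interior nodes
    off = np.ones(m - 1)
    M = H / 6.0 * (4.0 * np.eye(m) + np.diag(off, 1) + np.diag(off, -1))
    K = (2.0 * np.eye(m) - np.diag(off, 1) - np.diag(off, -1)) / H
    x = np.linspace(0.0, 1.0, n_el + 1)[1:-1]
    return M, K, x


def macro_error(n_el, n_steps, A_eff=3.0, T=1.0):
    """Implicit Euler + P1 for dU/dt - A_eff U'' = f on (0, 1), with the
    manufactured solution U_0(t, x) = exp(-t) sin(pi x); returns the
    (mass-matrix) L2 error at the final time T."""
    M, K, x = p1_matrices(n_el)
    tau = T / n_steps

    def exact(t):
        return np.exp(-t) * np.sin(np.pi * x)

    def f(t):
        return (A_eff * np.pi**2 - 1.0) * exact(t)

    U = exact(0.0)  # nodal interpolant instead of the L2 projection Q_H
    lhs = M + tau * A_eff * K  # A_eff constant, so the matrix never changes
    for n in range(1, n_steps + 1):
        # M (U^n - U^{n-1}) / tau + A_eff K U^n = M f(t_n)  (interpolated load)
        U = np.linalg.solve(lhs, M @ U + tau * (M @ f(n * tau)))
    err = U - exact(T)
    return float(np.sqrt(err @ M @ err))


# tau ~ H^2, so the O(H^2) space and O(tau) time errors shrink together
n_els = [8, 16, 32]
errors = [macro_error(n, n**2) for n in n_els]
eocs = [float(np.log2(errors[i] / errors[i + 1])) for i in range(len(errors) - 1)]
print(errors, eocs)  # experimental orders should be close to 2
```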

Remark 3.2

The restriction to piecewise linear functions for the macrodiscretization is important for proving the claimed error bounds later. For finite elements of higher degree, one approach is to consider the linearization \(\varPhi _{H,lin}\) of finite element functions \(\varPhi _H \in V_H\), where

We refer to [1] for details in the stationary case.

In the following, we show well-posedness as well as error estimates for the FE-HMM. Note that for the well-posedness, it is sufficient to show stability for the macrodiscretization, since the microproblem is “only” used to calculate the homogenized coefficient \(A_{H,h}\) or the discrete bilinear form \(B_{H,h}\), respectively.

4 Error estimation of the macrodiscretization

We start with a stability result which we will use to show coercivity and boundedness of \(B_{H,h}\). The statement and the proof are similar to [22, Lemma 2.1]. However, we consider here the time-discretized case.

Lemma 4.1

Let \(\varOmega \subset \mathbb {R}^d\) be a bounded domain, \( T >0\) and \(\varPhi \) a linear function. Further, let \(\varphi \) be a solution to the following problem.

where \(a(t,x) = (a_{ij}(t,x))_{i,j = 1\dots d}\) fulfills the following conditions

where \({\text {Id}}_d\) is the \(d \times d\) identity matrix. Let \(V_{h}^p \subset H_0^1(\varOmega )\) be the space of all piecewise polynomials of degree p on \(\varOmega \) with respect to a simplicial mesh \({\mathcal {T}}_h\). For \(1 \le n \le N\), we define \(\theta = T/N\), \(t_n = n \theta \) and let \(\varphi _h^n \in \varPhi + V_h^p\) be the weak solution of the discrete problem

for all \(z_h \in V_h^p\) and \(\varphi _h^0 = \varPhi \). Then, for all \( n \in \{0,\dots ,N\}\), it holds that

Proof

Let \( n \in \{0,\dots ,N \}\) be arbitrary. Since \( \varphi _h^n = \varPhi \) on \(\partial \varOmega \) and \( \nabla \varPhi \) is constant, integration by parts yields

and, hence,

Since the second term is nonnegative, the first inequality (4.2) is proved. For the other inequality (4.3), we use that \(\varphi \) is the weak solution of (4.1). We choose \(\varphi _h^ n - \varPhi \) as the test function to obtain

From the Cauchy–Schwarz inequality and boundedness of a it follows

Inserting this inequality into the equation above and using that

we finally obtain

Finally, dividing by \(\bigl (\sum _{n = 1}^N \theta \int _{\varOmega } \nabla (\varphi _h^ n - \varPhi )(x) \cdot a(t_n,x) \nabla (\varphi _h^ n - \varPhi )(x)\, dx \bigr )^{1/2}\) finishes the proof. \(\square \)

Using this lemma, we now show the boundedness and coercivity of the discretized bilinear form \(B_{H,h}\).

Lemma 4.2

For all \( n \in \{1,\dots , N\}\), \(B_{H,h}[t_n,\cdot ,\cdot ]: V_H\times V_H \rightarrow \mathbb {R}\) is a coercive and bounded bilinear form.

Proof

Using the definition of \(\phi ^{\epsilon }_{h,k}\), we obtain with Lemma 4.1 and \(\sigma =N_{cell} \theta \)

where we used in the last step that \(\nabla \varPhi _H\) is constant. Summation over K shows the boundedness of \(B_{H,h}\).

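It remains to show the coercivity. Since \( \frac{A_{H,h}(t_n,x_K) - A_{H,h}(t_n,x_K)^T}{2} \) is skew-symmetric, its quadratic form vanishes, i.e., \(\xi \cdot \frac{A_{H,h}(t_n,x_K) - A_{H,h}(t_n,x_K)^T}{2}\,\xi = 0\) for all \(\xi \in \mathbb {R}^d\). It follows that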

For the symmetric part, we obtain with (2.6)

With the lower bound on \(a_{n,K}^\epsilon \) and Lemma 4.1, we calculate

and the coercivity of \(B_{H,h}\) follows by summation over K and the fact that \(\varPhi _H\) is piecewise linear. \(\square \)
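The coercivity argument only uses that the skew-symmetric part of \(A_{H,h}\) drops out of the quadratic form, so coercivity is governed by the symmetric part alone. A small NumPy check of this fact, with a hypothetical non-symmetric \(2\times 2\) matrix standing in for \(A_{H,h}(t_n,x_K)\); the coercivity constant of \(\xi \mapsto \xi \cdot A\xi \) is the smallest eigenvalue of \((A + A^T)/2\).

```python
import numpy as np

# hypothetical non-symmetric coefficient matrix (stand-in for A_{H,h}(t_n, x_K))
A = np.array([[2.0, 1.5],
              [-0.5, 1.0]])
sym = 0.5 * (A + A.T)   # symmetric part
skew = 0.5 * (A - A.T)  # skew-symmetric part

# smallest eigenvalue of the symmetric part = coercivity constant of xi -> xi . A xi
lam_min = np.linalg.eigvalsh(sym).min()

rng = np.random.default_rng(0)
xi = rng.standard_normal((1000, 2))
quad_A = np.einsum("ni,ij,nj->n", xi, A, xi)
quad_sym = np.einsum("ni,ij,nj->n", xi, sym, xi)
quad_skew = np.einsum("ni,ij,nj->n", xi, skew, xi)  # vanishes for every xi
print(lam_min, np.abs(quad_skew).max())
```

Here the symmetric part has trace 3 and determinant 1.75, so `lam_min` equals \((3-\sqrt{2})/2\approx 0.79\).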

The macrodiscretization is thus a usual finite element discretization with implicit Euler time stepping of a coercive and bounded parabolic (discrete) problem, which directly implies its stability. We will now present the main error estimate which is of similar form as in [22]. We define the error arising from the estimation of microscopic data as

where

Note that the definition is analogous to [22], but includes the microscopic discretization by comparing \(A_0\) with \(A_{H,h}\) rather than with \(A_H\). Using the same perturbation argument as in [22], one directly obtains the main error estimate.

Theorem 4.1

Let \(U_0\) and \(U^n_H\) be solutions of (2.3) and (3.11), respectively. If a and \(U_0\) are sufficiently regular, there exists a constant C independent of \(\epsilon , \delta , \sigma , H, \tau \) such that

where  is defined as

is defined as

for all \(\varPhi = \{\varPhi ^k\}_{k=1}^n\) with \(\varPhi ^k \in H_0^1(\varOmega )\).

It should be noted here that the constants in Theorem 4.1 depend on the constants \( \lambda , \varLambda \) as well as the Lipschitz constant of a (cf. e.g. [22]). We further assume that \(f \in C^2((0,T), H_0^2(\varOmega )) \) and \(u_0 \in H_0^4(\varOmega )\) fulfill the compatibility condition [25, Equation (1.3)] for \(q = 2\). Thus, the required regularity of \(U_0\) in time can be deduced by [25, (1.4)]. Using the same arguments as in the time-independent case [11] we obtain the required regularity in space.

5 Estimation of e(HMM)

In this section, we prove the error bound for e(HMM). In contrast to [22], we also consider the error of the microscopic discretization, which requires additional effort. Further slight differences to [22] arise from the lacking symmetry of \(A_0\) and \(A_{H,h}\). Instead of estimating e(HMM) directly, we introduce auxiliary matrices \({\tilde{A}}\) and \({\tilde{A}}_H\) and calculate the error with respect to them. Let \(\varPhi _H, \varPsi _H \in V_H\). \({\tilde{A}}\) is defined via

where \(\phi ^{\epsilon }_{\#}\) solves (3.5). Note that \({\tilde{A}}\) uses the same macroscopic discretization as \(A_H\), but solves the cell problem with periodic boundary conditions in space as well as time. \({\tilde{A}}_H\) is defined via

where \(\phi ^{\epsilon }\) solves (3.6). Note that \({\tilde{A}}_H\) includes the approximation of the temporal integral, but in contrast to \(A_{H,h}\) solves the microscopic cell problems exactly. In the following, we write \(\varPhi ,\varPsi \) instead of \(\varPhi _H,\varPsi _H\) for simplicity and omit the variables in the integrals for readability. The central result of this section is

Theorem 5.1

If a is sufficiently regular, then for any \(\alpha >0\) it holds that

where \(\sigma \) and \(\delta \) are the time and cell size, respectively, of the cell problem (3.6).

The first two terms arise from the oversampling as well as the change of the temporal and spatial boundary conditions and are already present in [22]. The other terms come from the discretization of the cell problems, where the leading-order terms are \(h/\epsilon \) and \(\theta /\epsilon ^2\). Linear convergence in time is expected due to the choice of the implicit Euler method for time stepping. Since we are in the non-symmetric case, we only obtain a theoretical convergence order of h in space. If \(A_0\) is symmetric, a convergence order of \(h^2\) is expected. In a different setting with a non-symmetric homogenized coefficient, [14] even observed \(h^2\) convergence numerically. The term \(h^{3-\alpha }/\epsilon ^3\) occurs due to the \(H^{-1}\)-norm estimate and the geometry of the cells \(I_{\delta ,K}\): since we consider hypercubes, we only obtain \(H^{3- \alpha }\)-regularity for the solution of the dual elliptic cell problem, with \( \alpha > 0\) arbitrarily small. Details can be found in the appendix. To sum up, when choosing \(\delta \) and \(\sigma \) in practice, one has to balance the oversampling error and the microdiscretization error in e(HMM). Further, note that, in contrast to [22], no additional terms \(\delta \) and \(\sigma \) appear. The reason is that we fix the macroscopic variables in the coefficient (so-called macroscopic collocation of the HMM, cf. [3]).

For the proof we use the triangle inequality and our auxiliary matrices \({\tilde{A}}\) and \({\tilde{A}}_H\) via

In the following, these terms will be estimated in four steps.

1. Step: Estimate \(\Vert A_0 - {\tilde{A}}\Vert \). This error is caused by the wrong cell time and cell size.

To compare \(A_0\) with \({\tilde{A}}\), we first need an alternative representation of \( \nabla \varPsi \cdot A_0(t_n,x_K) \nabla \varPhi \). Let \(l = \lfloor \sigma / \epsilon ^2 \rfloor \), \(\kappa = \lfloor \delta /\epsilon \rfloor \) and \( \tilde{\mathcal {Q}}_{n,K}:= (t_n,t_n + l \epsilon ^2) \times I_{\kappa \epsilon ,K}\). Since \({\chi _n^{\epsilon }}\) is the solution of problem (2.5), it follows that

With that, we can handle the first step of estimating e(HMM).

Proposition 5.1

There exists a constant C such that

Proof

From the calculation above it follows that

We now consider the terms one by one. We use that \( \delta - \epsilon \le \kappa \epsilon \le \delta \) and \( \sigma - \epsilon ^2 \le l \epsilon ^2 \le \sigma \) to estimate

Using the same arguments, the estimate follows for \(G_2\) as well

To estimate \(G_3\) we use that \( l\dfrac{\epsilon ^2}{\sigma } \ge 1 - \dfrac{\epsilon ^2}{\sigma }\) and obtain

Altogether, after substitution and from the boundedness of \(a^{\epsilon }\) it follows that

\(\square \)
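The \(\epsilon ^2/\sigma \) (and, analogously, \(\epsilon /\delta \)) contributions in Proposition 5.1 stem from averaging a periodic quantity over a window that is not a whole number of periods. The following closed-form sketch isolates this mechanism for a single sinusoid with temporal period \(\epsilon ^2\) (the sinusoid and the value of \(\epsilon \) are chosen only for illustration): the window mean is exact for whole periods and otherwise deviates by at most \(\epsilon ^2/(\pi \sigma )\).

```python
import math


def window_mean_error(period, window):
    """|(1/window) * int_0^window sin(2*pi*t/period) dt| in closed form, i.e. the
    deviation of the window mean of 2 + sin(2*pi*t/period) from its period mean 2."""
    return abs(period * (1.0 - math.cos(2.0 * math.pi * window / period))
               / (2.0 * math.pi * window))


eps = 0.01
period = eps**2  # temporal period eps^2 of the fast variable
for m in (10, 20, 40):
    whole = window_mean_error(period, m * period)          # sigma = m * eps^2
    extra = window_mean_error(period, (m + 0.5) * period)  # half a period extra
    print(m, whole, extra, 1.0 / (math.pi * (m + 0.5)))    # extra matches the last value
```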

2. Step: Estimate \(\Vert {\tilde{A}} - A_H \Vert \). This error can be described as the error of using wrong boundary values and wrong time conditions.

Define \(\zeta ^{\epsilon }: = \phi ^{\epsilon } -\phi ^{\epsilon }_{\#}\). Then \(\zeta ^{\epsilon }\) satisfies the following differential equation

We derive an estimate for \(\zeta ^{\epsilon }\) in the following. This in turn provides a bound for the term \(\phi ^{\epsilon } - \phi ^{\epsilon }_{\#}\), which appears in the final calculation of the error of \( A_H \) with respect to \( {\tilde{A}}\).

Lemma 5.1

There exists a constant C independent of \(\epsilon , \delta , \sigma \) such that

for all \(\varPhi \in V_H\).

The proof can be found in [22, Lemma 3.3]. We can now complete the second step as well.

Proposition 5.2

It holds that

Proof

Using Lemma 5.1 we obtain

where we used again that \(\nabla \varPhi \) is constant. \(\square \)

3. Step: Estimate \(\Vert A_H - {\tilde{A}}_H\Vert \). This error can essentially be described as a quadrature error.

Proposition 5.3

For \( \varPhi , \varPsi \in V_H\) we obtain

The proof follows directly from the order of the trapezoidal rule. In general, if we use a quadrature rule of order q in the definition of \(A_{H,h}\) (and, consequently, of \({\tilde{A}}_H\)), this error is of order \( \theta ^q\).
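Proposition 5.3 only relies on the trapezoidal rule having order q = 2 in the step size \(\theta \). A minimal check of that order, using the exponential function on (0, 1) as an arbitrary smooth test integrand (a non-periodic one, since for smooth periodic integrands over whole periods the trapezoidal rule is far more accurate): halving \(\theta \) should reduce the error by a factor of about 4.

```python
import math


def trapezoid_mean(f, length, n):
    """Trapezoidal approximation of (1/length) * int_0^length f(s) ds with n
    sub-intervals of width theta = length / n (weights theta/2, theta, ..., theta/2)."""
    theta = length / n
    interior = sum(f(k * theta) for k in range(1, n))
    return theta * (0.5 * f(0.0) + interior + 0.5 * f(length)) / length


exact = math.e - 1.0  # mean of exp over (0, 1)
errors = [abs(trapezoid_mean(math.exp, 1.0, n) - exact) for n in (10, 20, 40, 80)]
orders = [math.log2(errors[i] / errors[i + 1]) for i in range(len(errors) - 1)]
print(orders)  # observed orders close to q = 2
```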

4. Step: Estimate \(\Vert {\tilde{A}}_H - A_{H,h}\Vert \). This term describes the error from the microscopic discretization. A direct calculation shows that

where

satisfies

for all \(z\in H^1_0(I_{\delta , K})\) and \(\eta (0,x) = 0\).

Analogously,

where \( \eta _{h,k} = (\eta _{h,k}^ 1, \dots , \eta _{h,k}^d )\in (V_h^p(I_{\delta ,K}) )^d\) satisfies

for all \(z_h\in V_h^p(I_{\delta ,K})\) and \(\eta _{h,0}^i=0\) for all \(i=1,\ldots ,d\). Clearly, \(\eta \) and \(\eta _{h,k}\) depend on \(\epsilon , \delta \) and \(\sigma \). To investigate this dependence, we rescale \(\eta \) in the following lemma.

Lemma 5.2

Define \(\xi ^i \in L^2((0, \frac{\sigma }{\epsilon ^2}), H_0^1(I_{\delta /\epsilon }))\) via \(\xi ^i(s,y) = \frac{1}{\epsilon } \eta ^i(t_n + \epsilon ^2\,s, x_K + \epsilon y)\). Then \(\xi ^i\) solves

where \({\tilde{a}}^{\epsilon }_{n,K}(s,y) = a(t_n,x_K,\frac{t_n}{\epsilon ^2}+ s,\frac{x_K}{\epsilon } + y)\).

Proof

Define

Using the transformation and chain rule, we obtain

\(\square \)
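The proof of Lemma 5.2 is a chain-rule computation. Written out as a sketch, assuming \(a^{\epsilon }_{n,K}(t,x) = a(t_n,x_K,\frac{t}{\epsilon ^2},\frac{x}{\epsilon })\) (our reading of the notation, consistent with the definition of \({\tilde{a}}^{\epsilon }_{n,K}\) above) and sufficient smoothness of \(\eta \), and with \(t = t_n + \epsilon ^2 s\) and \(x = x_K + \epsilon y\), one has \({\tilde{a}}^{\epsilon }_{n,K}(s,y) = a^{\epsilon }_{n,K}(t,x)\) and

\[ \partial_s \xi^i(s,y) = \epsilon\,(\partial_t \eta^i)(t,x), \qquad \nabla_y \xi^i(s,y) = (\nabla_x \eta^i)(t,x), \]

\[ \partial_s \xi^i - \nabla_y\cdot\bigl({\tilde{a}}^{\epsilon}_{n,K}\nabla_y \xi^i\bigr) = \epsilon\,\Bigl[\partial_t \eta^i - \nabla_x\cdot\bigl(a^{\epsilon}_{n,K}\nabla_x \eta^i\bigr)\Bigr](t,x), \]

where \(s\in (0,\frac{\sigma }{\epsilon ^2})\) corresponds to \(t\in (t_n,t_n+\sigma )\) and \(y\in I_{\delta /\epsilon }\) to \(x \in I_{\delta ,K}\). The divergence picks up the factor \(\epsilon \) because \(\nabla _y\cdot [G(x_K+\epsilon y)] = \epsilon \,(\nabla _x\cdot G)(x_K+\epsilon y)\) for any vector field G.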

Based on the rescaling of \(\eta \), we estimate the error between \(\eta \) and \(\eta _{h,k}\), which is the key ingredient for the bound of \(\Vert {\tilde{A}}_H - A_{H,h}\Vert \).

Proposition 5.4

Assume that a is sufficiently regular. Then, it holds for any \(\alpha >0\)

Proof

Let \(\varPhi _H, \varPsi _H \in V_H\). We obtain with the boundedness of \(a_{n,K}^\epsilon \) and the fact that \(\nabla \varPsi _H\) is piecewise constant

where we used Theorem A.1 in the last step. We now employ Lemma 5.2 and regularity results for parabolic problems [24] to estimate the above terms as follows

Inserting these inequalities, we get

\(\square \)

Summing up, we have proved the estimate for e(HMM).

Proof of Theorem 5.1

Using (5.1) and Propositions 5.1, 5.2, 5.3 and 5.4 we obtain the desired error bound.

6 Numerical experiments

In the following, numerical results computed with the Heterogeneous Multiscale Method are presented. The convergence rate with respect to the time step and mesh width is investigated for the macrodiscretization as well as for the microdiscretization. The implementation was done in Python using the FEniCS software library [20] (version 2019.2.0).

6.1 Setting

We choose \(\varOmega = (0,1)\) and \(T = 1\). Let the initial condition be \( u^{\epsilon }(0,x) = 0\) for all \(x \in \varOmega \). As the exact solution of the homogenized equation we choose

and accordingly the right-hand side

with the homogenized coefficient \(A_0\). As in Sect. 4, the error between the numerical solution \(U_H\) and the exact solution \(U_0\) is investigated. For this purpose, we consider the error \(\Vert U_H^N - U_0(t_N,\cdot )\Vert _{L^2(\varOmega )}\) at the final time \(t_N\). As seen in Theorem 5.1, the error bound of e(HMM) depends on the terms \(\sigma \), \(\frac{\epsilon }{\delta }\), and \(\frac{\epsilon ^2}{\sigma }\), which stem from the modified boundary and initial values. When choosing the parameters \(\delta \) and \(\sigma \), it is important that \(H \gg \delta \) and \(H \gg \sigma \). Here, as suggested by [22], we choose \(\delta = \epsilon ^{1/3} \) and \(\sigma = \epsilon ^{2/3}\).
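For a concrete feel of this parameter choice, the following sketch evaluates \(\delta = \epsilon ^{1/3}\), \(\sigma = \epsilon ^{2/3}\) and the resulting ratios for a sample value \(\epsilon = 10^{-3}\) (chosen only for illustration). With this choice, \(\epsilon /\delta = \epsilon ^{2/3}\) and \(\epsilon ^2/\sigma = \epsilon ^{4/3}\), while each cell contains \(\epsilon ^{-2/3}\) spatial and \(\epsilon ^{-4/3}\) temporal periods; the function name `hmm_cell_parameters` is hypothetical.

```python
def hmm_cell_parameters(eps):
    """Cell size delta = eps^(1/3) and cell time sigma = eps^(2/3), together with
    the resulting oversampling ratios and the number of periods per cell."""
    delta = eps ** (1.0 / 3.0)
    sigma = eps ** (2.0 / 3.0)
    return {
        "delta": delta,
        "sigma": sigma,
        "eps/delta": eps / delta,           # = eps^(2/3)
        "eps^2/sigma": eps**2 / sigma,      # = eps^(4/3)
        "periods in space": delta / eps,    # = eps^(-2/3)
        "periods in time": sigma / eps**2,  # = eps^(-4/3)
    }


print(hmm_cell_parameters(1e-3))
```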

6.2 First example

In the first example, we select the coefficient as

According to [28], \(A_0 \approx 3.352429824667637\). For simplicity and more efficient calculation, in f the coefficient \(A_0\) is replaced by this value. Figure 2 (left) shows the error in the \(L^2\) norm over the grid width H for different cell grid widths h. Time step sizes were fixed as \(\tau = \frac{1}{15}\) and \(\theta = \frac{\sigma }{15}\). It can be clearly seen that for coarser cell grid widths \(h = 2^{-7} \) and \(h = 2^{-8}\) the error of microdiscretization dominates and therefore we do not get convergence order \(H^2\). However, for fine cell grid widths, the expected order shows up. Similarly, we obtain linear convergence w.r.t. H in the \(H^1(\varOmega )\) norm (Fig. 2 right). Note that for the \(H^1\) norm, convergence in the macro mesh size can already be observed for relatively large cell grid widths. These results agree nicely with our findings in Theorems 4.1 and 5.1.

6.2.1 Second example

In the next example we set

independent of s. A straightforward calculation shows

We again consider the error between the numerical solution \(U_H\) and the exact solution \(U_0\). As in the previous example, we choose the time step sizes \(\tau = \frac{1}{15}\) and \(\theta = \frac{\sigma }{15}\). We first consider the \(L^2\)-error and study its convergence with respect to H, see Fig. 3 (left). For \(h = 2^{-3}\), no convergence can be seen. For the finer grid widths \(h = 2^{-7} \) and \(h = 2^{-8}\), quadratic convergence is initially observed, but the microerror still dominates. Only for very fine cell grid widths does quadratic convergence appear. The fact that the error flattens out for \(h = 2^{-10}\) is probably due to rounding errors. Figure 3 (right) shows the convergence in the \(H^1\) norm. Here, for sufficiently small cell grid widths, the expected linear convergence is also observed. The results are again in agreement with the theory and the observations for the first example.

In addition, we investigate the error of the time discretization. For this, we fix \(H = \frac{1}{10}\) and \( h= \frac{1}{1000}\). Figure 4 shows that for fixed cell time step size \( \theta = \frac{\sigma }{4}\) linear convergence in the macro time step \(\tau \) can be observed. Again, this underlines our theoretically predicted results.

References

Abdulle, A.: The finite element heterogeneous multiscale method: a computational strategy for multiscale PDEs. In: Multiple Scales Problems in Biomathematics, Mechanics, Physics and Numerics, International Series. Mathematical Sciences and Applications, vol. 31, pp. 133–181. Gakkotosho, Tokyo (2009)

Abdulle, A.: Numerical homogenization methods for parabolic monotone problems. In: Building Bridges: Connections and Challenges in Modern Approaches to Numerical Partial Differential Equations, Lecture Notes in Computational Science and Engineering, vol. 114, pp. 1–38. Springer, Cham (2016)

Abdulle, A., E, W., Engquist, B., Vanden-Eijnden, E.: The heterogeneous multiscale method. Acta Numer. 21, 1–87 (2012). https://doi.org/10.1017/S0962492912000025

Abdulle, A., Huber, M.E.: Finite element heterogeneous multiscale method for nonlinear monotone parabolic homogenization problems. ESAIM Math. Model. Numer. Anal. 50(6), 1659–1697 (2016). https://doi.org/10.1051/m2an/2016003

Abdulle, A., Huber, M.E., Vilmart, G.: Linearized numerical homogenization method for nonlinear monotone parabolic multiscale problems. Multiscale Model. Simul. 13(3), 916–952 (2015). https://doi.org/10.1137/140975504

Abdulle, A., Vilmart, G.: Coupling heterogeneous multiscale FEM with Runge–Kutta methods for parabolic homogenization problems: a fully discrete spacetime analysis. Math. Models Methods Appl. Sci. 22(6), 1250002, 40 (2012). https://doi.org/10.1142/S0218202512500029

Apoung Kamga, J.B., Pironneau, O.: Numerical zoom for multiscale problems with an application to nuclear waste disposal. J. Comput. Phys. 224(1), 403–413 (2007). https://doi.org/10.1016/j.jcp.2007.03.020

Bensoussan, A., Lions, J.L., Papanicolaou, G.: Asymptotic Analysis for Periodic Structures, Studies in Mathematics and its Applications, vol. 5. North-Holland Publishing Co., Amsterdam (1978)

Bourlard, M., Dauge, M., Lubuma, M.S., Nicaise, S.: Coefficients of the singularities for elliptic boundary value problems on domains with conical points. III. Finite element methods on polygonal domains. SIAM J. Numer. Anal. 29(1), 136–155 (1992). https://doi.org/10.1137/0729009

Brenner, S.C., Scott, L.R.: The Mathematical Theory of Finite Element Methods, Texts in Applied Mathematics, vol. 15, 3rd edn. Springer, New York (2008). https://doi.org/10.1007/978-0-387-75934-0

Chatzipantelidis, P., Lazarov, R.D., Thomée, V.: Error estimates for a finite volume element method for parabolic equations in convex polygonal domains. Numer. Methods Partial Differ. Equ. 20(5), 650–674 (2004). https://doi.org/10.1002/num.20006

Chung, E.T., Efendiev, Y., Leung, W.T., Ye, S.: Generalized multiscale finite element methods for space-time heterogeneous parabolic equations. Comput. Math. Appl. 76(2), 419–437 (2018). https://doi.org/10.1016/j.camwa.2018.04.028

E, W., Engquist, B.: The heterogeneous multiscale methods. Commun. Math. Sci. 1(1), 87–132 (2003)

Freese, P.: The heterogeneous multiscale method for dispersive Maxwell systems. Multiscale Model. Simul. 20(2), 769–797 (2022). https://doi.org/10.1137/21M1443960

Held, R., Attinger, S., Kinzelbach, W.: Homogenization and effective parameters for the Henry problem in heterogeneous formations. Water Resour. Res. 41(11) (2005)

Hochbruck, M., Maier, B., Stohrer, C.: Heterogeneous multiscale method for Maxwell’s equations. Multiscale Model. Simul. 17(4), 1147–1171 (2019). https://doi.org/10.1137/18M1234072

Hochbruck, M., Stohrer, C.: Finite element heterogeneous multiscale method for time-dependent Maxwell’s equations. In: Spectral and High Order Methods for Partial Differential Equations—ICOSAHOM 2016, Lecture Notes in Computational Science and Engineering, vol. 119, pp. 269–281. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-65870-4_18

Hu, J., Leung, W.T., Chung, E., Efendiev, Y., Pun, S.M.: Space-time non-local multi-continua upscaling for parabolic equations with moving channelized media. arXiv:2106.12010 (2021)

Kalamkarov, A.L., Andrianov, I.V., Danishevs’kyy, V.V.: Asymptotic homogenization of composite materials and structures. American Society of Mechanical Engineers Digital Collection (2009)

Langtangen, H.P., Logg, A.: Solving PDEs in Python: The FEniCS Tutorial I. Springer, New York (2017)

Ljung, P., Maier, R., Målqvist, A.: A space-time multiscale method for parabolic problems. arXiv:2109.06647 (2021)

Ming, P., Zhang, P.: Analysis of the heterogeneous multiscale method for parabolic homogenization problems. Math. Comput. 76(257), 153–177 (2007). https://doi.org/10.1090/S0025-5718-06-01909-0

Owhadi, H., Zhang, L.: Homogenization of parabolic equations with a continuum of space and time scales. SIAM J. Numer. Anal. 46(1), 1–36 (2007/2008). https://doi.org/10.1137/060670420

Sammon, P.H.: Convergence estimates for semidiscrete parabolic equation approximations. SIAM J. Numer. Anal. 19(1), 68–92 (1982). https://doi.org/10.1137/0719002

Savaré, G.: \(A(\Theta )\)-stable approximations of abstract Cauchy problems. Numer. Math. 65(3), 319–335 (1993). https://doi.org/10.1007/BF01385755

Schleuß, J., Smetana, K.: Optimal local approximation spaces for parabolic problems. Multiscale Model. Simul. 20(1), 551–582 (2022). https://doi.org/10.1137/20M1384294

Schleuß, J., Smetana, K., ter Maat, L.: Randomized quasi-optimal local approximation spaces in time. arXiv:2203.06276 (2022)

Tan, W.C., Hoang, V.H.: High dimensional finite elements for time-space multiscale parabolic equations. Adv. Comput. Math. 45(3), 1291–1327 (2019). https://doi.org/10.1007/s10444-018-09657-7

Thomée, V.: Galerkin Finite Element Methods for Parabolic Problems, Springer Series in Computational Mathematics, vol. 25. Springer, Berlin (2006)

Veszelka, Z.: Anwendung der Finite-Elemente-Heterogene-Multiskalen-Methode auf thermische Prozesse in großformatigen Lithium-Ionen-Batterien. Ph.D. thesis, Karlsruher Institut für Technologie (2022)

Acknowledgements

We thank the anonymous reviewers for their remarks, which helped to improve the paper.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest related to this work.

Additional information

Communicated by Axel Målqvist.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under project number 496556642. Major parts of this work were accomplished while BV was affiliated with the Karlsruher Institut für Technologie.

A Finite element error estimates for parabolic problems

In this appendix, we present and prove an a priori error estimate for the finite element discretization of an (abstract) parabolic problem with a time-dependent coefficient. The statement and its proof are very similar to well-known results in the literature, cf., e.g., [29]. We aim to extract the highest possible spatial convergence order for each term. This is crucial to obtain (almost) balanced orders between h and \(\epsilon \) in Proposition 5.4. In the following, we use \(\Vert \cdot \Vert _m\) to denote the \(H^m(\varOmega )\)-norm for any \(m\in \mathbb {R}\).

Theorem A.1

Let \(\varOmega \subset \mathbb {R}^d\) be a hypercube and \( T >0\), let \(b:[0,T] \times H^1(\varOmega ) \times H^1(\varOmega ) \rightarrow \mathbb {R}\) be bounded, coercive, and Lipschitz continuous with respect to the time t, and let \(f:[0,T] \rightarrow L^2(\varOmega )\) be continuously differentiable. Denote by u the solution of the following weak problem

Let further \(u_h^n \in V_h^p\) be the fully discrete approximation of u at time \(t_n = n\tau \) obtained with the implicit Euler method with time step size \(\tau \) and finite elements of order \(p\ge 2\). Then, for any \(\alpha >0\), we have
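To make the setting of Theorem A.1 concrete, the following is a minimal sketch of such a fully discrete scheme (implicit Euler in time, quadratic Lagrange elements in space) for a one-dimensional model problem. It is not the authors' implementation and it only illustrates the structure of the scheme, not the estimate itself. The unit interval, the homogeneous Dirichlet conditions, the coefficient \(a(t,x) = 1 + x\sin (t)/2\), the manufactured solution \(u(t,x) = e^{-t}\sin (\pi x)\) and all function names (`assemble`, `solve`, `l2_error`) are assumptions chosen for illustration. Each step solves \((M + \tau A(t_n))U^n = MU^{n-1} + \tau F(t_n)\), where M, \(A(t_n)\) and \(F(t_n)\) are the mass matrix, the stiffness matrix of \(b(t_n,\cdot ,\cdot )\) and the load vector.

```python
import numpy as np

# Gauss-Legendre rule mapped from [-1, 1] to the reference element [0, 1]
_GP, _GW = np.polynomial.legendre.leggauss(4)
XI, W = 0.5 * (_GP + 1.0), 0.5 * _GW


def p2_basis(xi):
    """Quadratic Lagrange basis on [0, 1] (nodes 0, 1/2, 1) and its derivatives."""
    phi = np.array([2 * xi**2 - 3 * xi + 1, 4 * xi - 4 * xi**2, 2 * xi**2 - xi])
    dphi = np.array([4 * xi - 3, 4 - 8 * xi, 4 * xi - 1])
    return phi, dphi


def a_coef(t, x):
    # Hypothetical smooth, time-dependent coefficient (assumption)
    return 1.0 + 0.5 * x * np.sin(t)


def u_exact(t, x):
    # Hypothetical manufactured solution with zero Dirichlet values (assumption)
    return np.exp(-t) * np.sin(np.pi * x)


def f_rhs(t, x):
    # f = u_t - (a u_x)_x for the manufactured solution above
    e = np.exp(-t)
    return (-e * np.sin(np.pi * x)
            - 0.5 * np.pi * np.sin(t) * e * np.cos(np.pi * x)
            + a_coef(t, x) * np.pi**2 * e * np.sin(np.pi * x))


def assemble(t, n_el):
    """Mass matrix, stiffness matrix of b(t, ., .) and load vector on a uniform mesh."""
    h = 1.0 / n_el
    n_dof = 2 * n_el + 1
    M, A, F = np.zeros((n_dof, n_dof)), np.zeros((n_dof, n_dof)), np.zeros(n_dof)
    phi, dphi = p2_basis(XI)
    for k in range(n_el):
        x = k * h + h * XI                  # quadrature points in element k
        wq = h * W                          # weights scaled by the Jacobian h
        idx = [2 * k, 2 * k + 1, 2 * k + 2]  # left vertex, midpoint, right vertex
        M[np.ix_(idx, idx)] += (phi * wq) @ phi.T
        A[np.ix_(idx, idx)] += (dphi * a_coef(t, x) * wq) @ dphi.T / h**2
        F[idx] += (phi * f_rhs(t, x) * wq).sum(axis=1)
    return M, A, F


def solve(n_el, tau, T=1.0):
    """Implicit Euler: (M + tau A(t_n)) U^n = M U^{n-1} + tau F(t_n)."""
    n_dof = 2 * n_el + 1
    u = u_exact(0.0, np.linspace(0.0, 1.0, n_dof))  # nodal interpolant of u(0)
    inner = slice(1, n_dof - 1)                      # drop Dirichlet boundary dofs
    n_steps = int(round(T / tau))
    for n in range(1, n_steps + 1):
        M, A, F = assemble(n * tau, n_el)
        rhs = M @ u + tau * F
        u_new = np.zeros(n_dof)
        u_new[inner] = np.linalg.solve((M + tau * A)[inner, inner], rhs[inner])
        u = u_new
    return u, n_steps * tau


def l2_error(n_el, u, t):
    """L2 error of the P2 function with nodal values u against u_exact(t, .)."""
    h = 1.0 / n_el
    phi, _ = p2_basis(XI)
    err2 = 0.0
    for k in range(n_el):
        x = k * h + h * XI
        uh = u[2 * k:2 * k + 3] @ phi       # discrete solution at quadrature points
        err2 += np.sum(h * W * (uh - u_exact(t, x)) ** 2)
    return np.sqrt(err2)


for n_el, tau in [(4, 0.05), (8, 0.0125), (16, 0.003125)]:
    u, T = solve(n_el, tau)
    print(f"h = 1/{n_el}, tau = {tau}: L2 error = {l2_error(n_el, u, T):.2e}")
```

Refining h and \(\tau \) simultaneously, the printed errors should decrease; the dense matrices are chosen only for brevity.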

Proof

We denote by \(R_h\) the Ritz projection onto \(V_h^p\) with respect to \(b(t, \cdot ,\cdot )\). Note that \(R_h\) depends on the time t, but we omit this dependence for better readability. Inserting the Ritz projection of the exact solution into the discrete equation and using a standard stability estimate, cf., e.g., [29], yields

where

The first term can be bounded by following the arguments of [29]. Set \(e = u - R_h u\). Let \(w \in H^1(\varOmega )\) be arbitrary and let \(z \in H_0^1(\varOmega ) \) be the solution of \(b(\cdot ,v,z) = (w,v)_{0} \) for all \(v \in H_0^1(\varOmega )\). Since \(\varOmega \) is a hypercube, elliptic regularity estimates yield \(z \in H^{3-\alpha }(\varOmega )\) for any \(\alpha >0\) [9]. Choosing \( v= \partial _t e\), we obtain

where the second equality follows from differentiating the Galerkin orthogonality \(b(\cdot ,e,v_h) = 0\), valid for all \(v_h \in V_h^p\), with respect to t. Here, \(b^{\prime }(\cdot ,\cdot ,\cdot )\) denotes the bilinear form obtained from \(b(\cdot ,\cdot , \cdot )\) by differentiating its coefficients with respect to t. We get

By elliptic regularity and convergence results for (at least) quadratic finite elements [9, 10]

For sufficiently small h, it follows from [9, 10] that

For the second term in \(d_n\), we again use projection estimates and a Taylor expansion

Using the triangle inequality and estimates for the Ritz projection error of the exact solution, cf. [10, 29], we obtain (A.2). \(\square \)
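For orientation, the structure of this argument can be summarized as follows. This is our sketch of the standard splitting from [29], not a reproduction of the omitted displays. It assumes that the fully discrete scheme reads \((\bar\partial u_h^n, v_h)_0 + b(t_n, u_h^n, v_h) = (f(t_n), v_h)_0\) for all \(v_h \in V_h^p\) with the backward difference quotient \(\bar\partial v^n = (v^n - v^{n-1})/\tau \); the defect \(d_n\) above may differ from \(\omega ^n\) in details. The stability bound in the third line only uses \(b(t_n, v, v) \ge 0\), which follows from coercivity. The fourth line is the differentiated Galerkin orthogonality from the duality step (for time-independent \(v_h \in V_h^p\)), and the last line is the integral form of the Taylor expansion, which gives \(\tau \sum _{j=1}^n \Vert \bar\partial u(t_j) - \partial _t u(t_j)\Vert _0 \le \tau \int _0^{t_n} \Vert \partial _t^2 u(s)\Vert _0 \,\mathrm {d}s\).

```latex
\begin{aligned}
u_h^n - u(t_n) &= \theta^n + \rho^n, \qquad
  \theta^n = u_h^n - R_h u(t_n), \quad \rho^n = R_h u(t_n) - u(t_n),\\
(\bar\partial \theta^n, v_h)_0 + b(t_n, \theta^n, v_h) &= -(\omega^n, v_h)_0,
  \qquad \omega^n = \bar\partial (R_h u - u)(t_n)
  + \bigl(\bar\partial u(t_n) - \partial_t u(t_n)\bigr),\\
\|\theta^n\|_0 &\le \|\theta^0\|_0 + \tau \sum_{j=1}^{n} \|\omega^j\|_0,\\
(w, \partial_t e)_0 = b(t, \partial_t e, z)
  &= b(t, \partial_t e, z - v_h) - b'(t, e, v_h),\\
\bar\partial u(t_n) - \partial_t u(t_n)
  &= -\frac{1}{\tau}\int_{t_{n-1}}^{t_n} (s - t_{n-1})\, \partial_t^2 u(s)\,\mathrm{d}s .
\end{aligned}
```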

We emphasize that (at least) quadratic finite elements are necessary to obtain the required bounds for the Ritz projection error in the \(H^{-1}\)-norm, cf. [9, 10]. Since our domain of interest is a hypercube, the solution of an elliptic problem with right-hand side in \(H^1\) only has \(H^{3-\alpha }\)-regularity for any \(\alpha >0\).
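The convergence of the quadratic Ritz projection that the proof relies on can be observed in a simple experiment. The following minimal sketch is not taken from the paper: it works in one space dimension, where the corner singularities responsible for the reduced \(H^{3-\alpha }\)-regularity do not occur, and it uses a hypothetical coefficient \(a(x) = 2 + x\), the target function \(u(x) = \sin (\pi x)\) and a hypothetical helper `ritz_l2_error`. It computes \(R_h u \in V_h^2\) with \(b(R_h u, v_h) = b(u, v_h)\) for all \(v_h\), where \(b(u,v) = \int _0^1 a u' v' \,\mathrm {d}x\), and prints the observed \(L^2\)-orders, which for such smooth data should be close to \(p+1 = 3\).

```python
import numpy as np

_GP, _GW = np.polynomial.legendre.leggauss(4)
XI, W = 0.5 * (_GP + 1.0), 0.5 * _GW          # Gauss rule on [0, 1]
# Quadratic Lagrange basis (nodes 0, 1/2, 1) and derivatives at the Gauss points
PHI = np.array([2 * XI**2 - 3 * XI + 1, 4 * XI - 4 * XI**2, 2 * XI**2 - XI])
DPHI = np.array([4 * XI - 3, 4 - 8 * XI, 4 * XI - 1])


def a_coef(x):
    return 2.0 + x                              # hypothetical coefficient (assumption)


def u(x):
    return np.sin(np.pi * x)                    # hypothetical target function


def du(x):
    return np.pi * np.cos(np.pi * x)


def ritz_l2_error(n_el):
    """L2 error of the P2 Ritz projection of u w.r.t. b(u, v) = int a u' v' dx."""
    h = 1.0 / n_el
    n_dof = 2 * n_el + 1
    A, rhs = np.zeros((n_dof, n_dof)), np.zeros(n_dof)
    for k in range(n_el):
        x = k * h + h * XI
        idx = [2 * k, 2 * k + 1, 2 * k + 2]
        wq = h * W * a_coef(x)                  # quadrature weights times coefficient
        A[np.ix_(idx, idx)] += (DPHI * wq) @ DPHI.T / h**2
        rhs[idx] += (DPHI / h * du(x) * wq).sum(axis=1)  # b(u, phi_i)
    r = np.zeros(n_dof)                         # u vanishes at x = 0 and x = 1
    r[1:-1] = np.linalg.solve(A[1:-1, 1:-1], rhs[1:-1])
    err2 = 0.0
    for k in range(n_el):
        x = k * h + h * XI
        err2 += np.sum(h * W * (r[2 * k:2 * k + 3] @ PHI - u(x)) ** 2)
    return np.sqrt(err2)


errors = {n: ritz_l2_error(n) for n in (8, 16, 32)}
for n_c, n_f in [(8, 16), (16, 32)]:
    eoc = np.log(errors[n_c] / errors[n_f]) / np.log(2.0)
    print(f"h = 1/{n_f}: L2 error {errors[n_f]:.2e}, observed order {eoc:.2f}")
```

Replacing the quadratic basis by piecewise linear functions would lower the observed \(L^2\)-order to two, which is one way to see why the estimates above require \(p \ge 2\).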

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eckhardt, D., Verfürth, B. Fully discrete heterogeneous multiscale method for parabolic problems with multiple spatial and temporal scales. Bit Numer Math 63, 35 (2023). https://doi.org/10.1007/s10543-023-00973-z

DOI: https://doi.org/10.1007/s10543-023-00973-z

Keywords

- Multiscale method

- Numerical homogenization

- Parabolic problem

- Time-space multiscale coefficient

- A priori error estimates