Abstract

We introduce an explicit adaptive Milstein method for stochastic differential equations with no commutativity condition. The drift and diffusion are separately locally Lipschitz and together satisfy a monotone condition. This method relies on a class of path-bounded time-stepping strategies which work by reducing the stepsize as solutions approach the boundary of a sphere, invoking a backstop method in the event that the timestep becomes too small. We prove that such schemes are strongly \(L_2\) convergent of order one. This order is inherited by an explicit adaptive Euler–Maruyama scheme in the additive noise case. Moreover we show that the probability of using the backstop method at any step can be made arbitrarily small. We compare our method to other fixed-step Milstein variants on a range of test problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We investigate the use of adaptive time-stepping strategies in the construction of a strongly convergent explicit Milstein-type numerical scheme for a d-dimensional stochastic differential equation (SDE) of Itô-type on the probability space \((\Omega , {\mathcal {F}}, {\mathbb {P}})\),

for \(t\in [0,T]\), \(T\ge 0\) and \(i=1,\dots , m\in {\mathbb {N}}\), where \(W=[W_1,\cdots , W_m]^T\) is an m-dimensional Wiener process, the drift coefficient \(f: {\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) and the diffusion coefficient \(g: {\mathbb {R}}^d\rightarrow {\mathbb {R}}^{d\times m}\) each satisfy a local Lipschitz condition along with a polynomial growth condition and, together, a monotone condition. Both are twice continuously differentiable; see Assumptions 2.1 and 2.2. Throughout, we take the initial vector \(X(0)=X_0\in {\mathbb {R}}^d\) to be deterministic.

It was pointed out in [34] that, because the Euler–Maruyama and Euler–Milstein methods coincide in the additive noise case, and as a consequence of the analysis in [15], an explicit Milstein scheme over a uniform mesh cannot converge in \({L}_p\) to solutions of (1.1). We propose here an adaptive variant of the explicit Milstein method that achieves strong \({L}_2\) convergence of order one to solutions of (1.1). As an immediate consequence of this, in the case of additive noise an adaptive Euler–Maruyama method also has \({L}_2\) convergence of order one. To prove our convergence result it is essential to introduce a new variant of the admissible class of time-stepping strategies introduced in [17, 18], which we call path-bounded strategies.

Several variants on the fixed-step Milstein method have been proposed, see for example the tamed Milstein [20, 34], projected and split-step backward Milstein [1], truncated Milstein [10], implicit Milstein methods [13, 35] and a recent tamed stochastic Runge–Kutta (of order one) method of [8], all designed to converge strongly to solutions of SDEs with more general drift and diffusions, such as in (1.1). However, with few exceptions (see [1, 20]) explicit methods of this kind have only examined the case where the diffusion coefficients \(g_i\) satisfies a commutativity condition. We do not impose a commutativity restriction and hence must consider the associated Lévy areas (see Lemma 2.2).

A review of methods that adapt the timestep in order to control local error may be found in the introduction to [17]; we cite here [2, 7, 16, 21, 28, 31] and remark that our purpose is instead to handle the nonlinear response of the discrete system see also [5, 6] and discussion in [17, 18]. A common feature of the adaptivity is the use of both a minimum and maximum time step where the magnitude of the minimum step is controlled by a free parameter which requires some a-priori knowledge on the part of the user. The approach of [5, 6] was recently extended to McKean–Vlasov equations in [30] and include a Milstein approximation. In addition we note the fully adaptive Milstein method proposed in [14] for a scalar SDE with light constraints on the coefficients. There the authors stated that such a method was easy to implement but hard to analyse and as a result considered a different, but related method.

Our framework for adaptivity was introduced in [17] for an explicit Euler–Maruyama method, and has since been extended to SDE systems with monotone coefficients in [18] and to SPDE methods in [3]. These methods all use a backstop method when the chosen strategy attempts to select a stepsize below the minimum step. We demonstrate here, for a path-bounded strategy, that the probability of using the backstop can be made arbitrarily small by choosing an appropriately large \(\rho \), and an appropriately small \(h_{\max }\). This is consistent with observation, and with the intuitive notion that the use of the backstop should be rare in practice.

The structure of the article is as follows. Mathematical preliminaries are considered in Sect. 2, including precise specifications of the conditions imposed on each f and \(g_i\), and the characterisation of an explicit Milstein method on an arbitrary mesh. The construction and result of the adaptive time-stepping strategy is outlined in Sect. 3, where we formulate the adaptive Milstein scheme with backstop which will be the subject of our main theorem. Both main results: on strong \(L_2\) convergence and on the probability of using the backstop method, are stated in Sect. 4; we defer their proofs to Sect. 7. In Sect. 5 we compare the adaptive scheme numerically to other fixed step methods and illustrate both convergence and efficiency. The proof of Lemma 2.2 is in Appendix A.

2 Mathematical preliminaries

We consider the d-dimensional Itô-type SDE (1.1) and for the remainder of the article let \(({\mathcal {F}}_t)_{t\ge 0}\) be the natural filtration of W. For all \(x \in {\mathbb {R}}^d\) and for all \(\phi (x)\in \textrm{C}^2({\mathbb {R}}^d,{\mathbb {R}}^d)\), the Jacobian matrix of \(\phi (x)\) is denoted \({\textbf{D}}\phi (x)\in {\mathcal {L}}({\mathbb {R}}^d,{\mathbb {R}}^d)\); the second derivative of \(\phi (x)\) with respect to a vector x forms a 3-tensor and is denoted \({\textbf{D}}^2\phi (x)\in {\mathcal {L}}({\mathbb {R}}^{d\times d},{\mathbb {R}}^d)\); and \([x]^2:=x\otimes x\) stands for the outer product of x and itself. Furthermore, let \(\Vert \cdot \Vert \) denote the standard \(l^2\) norm in \({\mathbb {R}}^d\), \(\Vert \cdot \Vert _{{\textbf{F}}(a\times b)}\) the Frobenious norm of the matrix in \({\mathbb {R}}^{a\times b}\); for simplicity we write \(\Vert \cdot \Vert _{{\textbf{F}}}\) as the Frobenious norm of the matrix in \({\mathbb {R}}^{d\times d}\). \(\Vert \cdot \Vert _{{\textbf{T}}_3}\) denotes the induced tensor norm (spectral norm) of the 3-tensor in \({\mathbb {R}}^{d\times d\times d}\) and it is defined as \(\big \Vert \cdot \big \Vert _{{\textbf{T}}_3}:=\sup _{h_1,h_2\in {\mathbb {R}}^d, \Vert h_1\Vert ,\Vert h_2\Vert \le 1}\big \Vert \cdot (h_1\otimes h_2)\big \Vert \). For \(a,b\in {\mathbb {R}}\), \(a\vee b\) denotes max\(\{a,b\}\) and \(a\wedge b\) denotes min\(\{a,b\}\). We frequently make use of the elementary inequality

and of the following two standard extensions of Jensen’s inequality (see [23, Corollary A.10]). For \(f\in L^1\), if \(p\ge 1\),

For \(a_i\in {\mathbb {R}}\) and \(p\ge 1\),

We now present our assumptions on f and \(g_i\) in (1.1).

Assumption 2.1

Let \(f\in \textrm{C}^2({\mathbb {R}}^d,{\mathbb {R}}^d)\) and \(g\in \textrm{C}^2({\mathbb {R}}^d,{\mathbb {R}}^{d\times m})\) with \(g_i(x)=[g_{1,i}(x),\dots ,g_{d,i}(x)]^T\in \textrm{C}^2({\mathbb {R}}^d,{\mathbb {R}}^{d})\). For each \(\varkappa \ge 1\) there exist \(L_{\varkappa }>0\) such that

for \(x,y\in {\mathbb {R}}^d\) with \(\Vert x\Vert \vee \Vert y\Vert \le \varkappa \), and there exists \(c\ge 0\) such that for some \(\eta \ge 2\)

In addition, for some constants \(c_{ 3,4,5,6 }\), \(q_1\), \(q_2\ge 0\); \(i=1,\dots ,m\), we have

Furthermore, for some \(c_{1,2}\ge 0\); \(i=1,\dots ,m\), we have

Under (2.4) and (2.5), the SDE (1.1) has a unique strong solution on any interval [0, T], where \(T < \infty \) on the filtered probability space \((\Omega , {\mathcal {F}}, ({\mathcal {F}}_t )_{t \ge 0}, {\mathbb {P}})\), see [11, 25] and [33].

Assumption 2.2

Suppose that (2.5) in Assumption 2.1 holds with

where \(q:=q_1\vee q_2\), \(q_1\) and \(q_2\) are from (2.7) in Assumption 2.1.

We now give the following Lemma on moments of the solution.

Lemma 2.1

[26, Lem. 4.2] Let f and g satisfy (2.4) and (2.5), and suppose that Assumption 2.2 holds. If g further satisfies (2.7), then there is a constant \(C_{\texttt {X}} >0\) such that the solution of (1.1) satisfies

Next we present the fixed-step Milstein method (see [19, Sec. 10.3]) that is the basis of the adaptive method presented in this article.

Definition 2.1

(Milstein method) For \(n\in {\mathbb {N}}\), \(s\in [t_n, t_{n+1}]\) and given \(Y(t_n)\), the fixed-step Milstein scheme for (1.1), interpolated over the interval \([t_n,t_{n+1}]\), is given by

where following [1, 34], the stochastic integral and the iterated stochastic integral are defined as

Expanding the last term in (2.10) we have that

where the term \(A_{ij}^{t_n,s}\) is the Lévy area (see for example [22, Eq. (1.2.2)]) defined by

and we have used the relations \(I_{i,i}^{t_n,s} = \frac{1}{2}\big ( (I_{i}^{t_n,s})^2 - |t-s|\big )\) and \(I_{i,j}^{t_n,s} + I_{j,i}^{t_n,s} = I_{i}^{t_n,s} I_{j}^{t_n,s}\). As mentioned in the introduction many authors assume the following commutativity condition: suppose that \({\textbf{D}}g_i(y)g_j(y)={\textbf{D}}g_j(y)g_i(y)\) for all \(i,j=1,\dots , m\) and \(y\in {\mathbb {R}}^d\). When this holds, the last term in (2.12) vanishes, avoiding the need for any analysis of \(A_{ij}^{t_n,s}\) defined in (2.13). We do not impose such a condition in this paper, and therefore make use of the following conditional moment bounds on the Lévy areas.

Lemma 2.2

(Lévy Area) For all \(i,j =1,\dots ,m\), \(0\le t_n\le s<T\) and for a pair of Wiener process \((W_i(r),W_j(r))^T\) where \(r\in [t_n,s]\) and the Lévy area \(A_{ij}^{t_n,s}\) defined in (2.13), there exists a finite constant \(C_{\texttt {LA}}\) whose explicit form is in (A.1) such that for \(k\ge 1\)

For proof see Appendix A.

3 Adaptive time-stepping strategies

To deal with the extra terms that arise from Milstein over Euler–Maruyama type discretisations, we introduce a new class of time-stepping strategies in Definition 3.5. Let \(\{h_{n+1}\}_{n\in {\mathbb {N}}}\) be a sequence of strictly positive random timesteps with corresponding random times \(\{t_n:=\sum _{i=1}^{n}h_i\}_{n\in {\mathbb {N}}\backslash \{0\}}\), where \(t_0=0\).

Definition 3.1

Suppose that each member of \(\{t_n\}_{n\in {\mathbb {N}}\backslash \{0\}}\) is an \({\mathcal {F}}_t\)-stopping time: i.e. \(\{t_n\le t\}\in {\mathcal {F}}_t\) for all \(t\ge 0\), where \(({\mathcal {F}}_t)_{t\ge 0}\) is the natural filtration of W. If \(\tau \) is any \(({\mathcal {F}}_t)\)-stopping time, then (see [27, p. 14])

In particular this allows us to condition on \({\mathcal {F}}_{t_n}\) at any point on the random time-set \(\{t_n\}_{n\in {\mathbb {N}}}\).

Assumption 3.1

For the sequence of random timesteps \(\{h_{n+1}\}_{n\in {\mathbb {N}}}\), there are constant values \(h_{\max }>h_{\min }>0\), \(\rho >1\) such that \(h_{\max }=\rho h_{\min }\), and

In addition, we assume each \(h_{n+1}\) is \({\mathcal {F}}_{t_n}\)-measurable.

Definition 3.2

Let \(N^{(t)}\) be a random integer such that

and let \(N=N^{(T)}\) and \(t_N=T\), so that T is always the last point on the mesh. Note that \(N^{(t)}\) indicates the step number such that \(t\in \big [t_{N^{(t)}-1},\,t_{N^{(t)}}\big ]\). Furthermore, by Assumption 3.1, \(N^{(t)}\) only takes values in the finite set \(\{N^{(t)}_{\min },\dots ,N^{(t)}_{\max }\}\), where \(N^{(t)}_{\min }:=\lfloor t/h_{\max }\rfloor \) and \(N^{(t)}_{\max }:=\lceil t/h_{\min }\rceil \).

In Assumption 3.1, the lower bound \(h_{\min }\) given by (3.2) ensures that a simulation over the interval [0, T] can be completed in a finite number of time steps. In the event that at time \(t_n\) our strategy attempts to select a stepsize \(h_{n+1} \le h_{\min }\), we instead apply a single step of a backstop method (\(\varphi \) in Definition 3.3 below), a known method that satisfies a mean-square consistency requirement with deterministic step \(h_{n+1}=h_{\min }\) (see also discussion in Remarks 3.1 and 5.1).

First we recall the Milstein method expressed as a map. Over each step \([t_n,t_{n+1}]\) the Milstein map \(\theta :{\mathbb {R}}^d\times {\mathbb {R}} \times {\mathbb {R}}\rightarrow {\mathbb {R}}^d\) is defined as

Following [18, Def. 9], we now define an adaptive Milstein scheme combining the Milstein method and a backstop method.

Definition 3.3

(Adaptive Milstein Scheme) Let \(\{h_{n+1}\}_{n\in {\mathbb {N}}}\) satisfy Assumption 3.1. Using indicator functions to distinguish the backstop case when \(h_{n+1}=h_{\min }\) (and allowing for the possibility that the final step taken to time T is smaller than \(h_{\min }\), in which case the backstop is also used), we define the continuous form of an adaptive Milstein scheme associated with a particular time-stepping strategy \(\{h_{n+1}\}_{n\in {\mathbb {N}}}\) as

for \(s\in [t_n,t_{n+1}]\), \(n\in {\mathbb {N}}\), \({\widetilde{Y}}(0)=X(0)\), and \(\theta \) is as given in (3.4). Thus the scheme is characterised by the sequence of tuples, \(\big \{\big (\widetilde{Y}(s)\big )_{s\in [t_n,t_{n+1}]},h_{n+1}\big \}_{n\in {\mathbb {N}}}\). The backstop map \(\varphi :{\mathbb {R}}^d\times {\mathbb {R}} \times {\mathbb {R}} \rightarrow {\mathbb {R}}^d\) in (3.5) satisfies for each \(n \in {\mathbb {N}}\)

a.s, for positive constants \(C_{B_1}\) and \(C_{B_2}\).

Throughout the article it is notationally convenient to make the following definition.

Definition 3.4

Let \({\widetilde{Y}}\) be as given in Definition 3.3 and define for each \(n\in {\mathbb {N}}\)

Remark 3.1

The upper bound \(h_{\max }\) prevents step sizes from becoming too large and allows us to examine strong convergence of the adaptive Milstein method (3.5) to solutions of (1.1) as \(h_{\max }\rightarrow 0\) (and hence as \(h_{\min }\rightarrow 0\)). Note that \(\varphi \) satisfies (3.6) if the backstop method satisfies a mean-square consistency requirement. In practice, instead of testing (3.6), we choose a backstop method that is strongly convergent with rate 1.

Remark 3.2

For all \(i,j=1,2,\dots , m\), \(I_{i}^{t_n,t_{n+1}}\) in (2.11) is a Wiener increment taken over a random step of length \(h_{n+1}\), which itself may depend on \({{\widetilde{Y}}}(t_n)\) and therefore is not necessarily independent and normally distributed. However, since \(h_{n+1}\) is \({\mathcal {F}}_{t_n}\)-measurable, then \(I_{i}^{t_n,t_{n+1}}\) is \({\mathcal {F}}_{t_n}\)-conditionally normally distributed and by the Optional Sampling Theorem (see for example [32]), for all \(p=0,1,2,\dots \)

where \(\varvec{\gamma }_{p}:=2^{p/2}\Gamma \left( (p+1)/2 \right) \pi ^{-1/2}\), and \(\Gamma \) is the Gamma function (see for example [29, p. 148]). In implementation, it is sufficient to replace the sequence of Wiener increments with i.i.d. \({\mathcal {N}} (0, 1)\) random variables scaled at each step by the \({\mathcal {F}}_{t_n}\)-measurable random variable \(\sqrt{h_{n+1}}\).

We now provide a specific example of a time-stepping strategy that we use in Sect. 5 and that satisfies the assumptions for our convergence proof in Theorem 4.1. Suppose that for each \(n=0,\dots , N-1\) and some fixed constant \(\kappa >0\), we choose constant values \(h_{\max }>h_{\min }>0\), \(\rho >1\) such that \(h_{\max }=\rho h_{\min }\) and

Then (3.2) in Assumption 3.1 holds for (3.11). Notice also that, from (3.11), the following bound applies on the event \(\{h_{\min }< h_{n+1} \le h_{\max }\}\):

The strategy given by (3.11) is admissible in the sense given in [17, 18]. However, it also motivates the following class of time-stepping strategies to which our convergence analysis applies.

Definition 3.5

(Path-bounded time-stepping strategies) Let \(\big \{{{\widetilde{Y}}}(t_n),h_{n+1} \big \}_{n\in {\mathbb {N}}}\) be a numerical approximation for (1.1) given by (3.5), associated with a timestep sequence \(\{h_{n+1}\}_{n\in {\mathbb {N}}}\) satisfying Assumption 3.1. We say that \(\{h_{n+1}\}_{n\in {\mathbb {N}}}\) is a path-bounded time-stepping strategy for (3.5) if there exist real non-negative constants \(0\le Q<R\) (where R may be infinite if \(Q\ne 0\)) such that on the event \(\{h_{\min }< h_{n+1} \le h_{\max }\}\),

Note that throughout this paper we use a strategy where \(Q=0\) and \(R<\infty \). As we will see in Sect. 5.2, a careful choice of the parameter \(\kappa \) can be used to minimise invocations of the backstop method when \(\rho \) is fixed.

4 Main results

Our first main result shows strong convergence with order 1 of solutions of (3.5) to solutions of (1.1) when \(\{h_{n+1}\}_{n\in {\mathbb {N}}}\) is a path-bounded time-stepping strategy ensuring that (3.12) holds.

Theorem 4.1

(Strong Convergence) Let \((X(t))_{t\in [0,T]}\) be a solution of (1.1) with initial value \(X(0) = X_0{\in {\mathbb {R}}^d}\). Suppose that the conditions of Assumptions 2.1 and 2.2 hold. Let \(\big \{\big (\widetilde{Y}(s)\big )_{s\in [t_n,t_{n+1}]},h_{n+1}\big \}_{n\in {\mathbb {N}}}\) be the adaptive Milstein scheme given in Definition 3.3 with initial value for the first component \({{\widetilde{Y}}}_0 = X_0\) and path-bounded time-stepping strategy \(\{h_{n+1}\}_{n\in {\mathbb {N}}}\) satisfying the conditions of Definition 3.5 for some \(R<\infty \). Then there exists a constant \(C(R,\rho ,T) > 0\) such that

Furthermore,

The proof of Theorem 4.1, which is given in Sect. 7.2, accounts for the properties of the random sequences \(\{t_n\}_{n\in {\mathbb {N}}}\) and \(\{h_{n+1}\}_{n\in {\mathbb {N}}}\) and uses (3.12) to compensate for the non-Lipschitz drift and diffusion.

Our second main result shows that for the specific strategy given by (3.11) the probability of needing a backstop method can be made arbitrarily small by taking \(\rho \) sufficiently large for fixed \(\kappa \).

Theorem 4.2

(Probability of Backstop) Let all the conditions of Theorem 4.1 hold, and suppose that the path-bounded time-stepping strategy \(\{h_{n+1}\}_{n\in {\mathbb {N}}}\) also satisfies (3.11). Let \(C(R,\rho ,T)\) be the error constant in estimate (4.1) from the statement of Theorem 4.1.

For any fixed \(\kappa \ge 1\) there exists a constant \(C_{\text {prob}}=C_{\text {prob}}(T,R,h_{\max })\) such that, for \(h_{\max }\,\le \,1/C(R,\rho ,T)\),

Further for arbitrarily small tolerance \(\varepsilon \in (0,1)\), there exists \(\rho >0\) such that

For proof see Sect. 7.3.

5 Numerical examples

Remark 5.1

We use the adaptive strategy in (3.11). We ensure that we reach the final time by taking \(h_{N}=T-t_{N-1}\) as our final step, and in a situation where this is smaller than \(h_{\min }\) we use the backstop method (this is compatible with the proofs below).

In the numerical experiments below, we set the adaptive Milstein scheme (AMil) as in (3.5) with (3.11) as the choice of \(h_{n+1}\). Projected Milstein (PMil) in [1, Eq. (24)] is set to be the backstop method of AMil and the reference method of all models. Then we compare the strong convergence,looking at the root mean square (RMS) error, and efficiency, by comparing the CPU time, of AMil and PMil, Split-Step Backward Milstein method (SSBM) [1, Eq. (25)], the new variant of Milstein (TMil) in [20], and the Tamed Stochastic Runge–Kutta of order 1.0 (TSRK1) method [8, Eq. (3.8) (3.9)]. For the non-adaptive schemes, to examine strong convergence, we take as the fixed step \(h_{\text {mean}}\) the average of all time steps over each path and each Monte Carlo realization \(m = 1,\dots , M\) so that

where \(N_{m}\) denotes the number of steps taken on the \(m^{th}\) sample path to reach T.

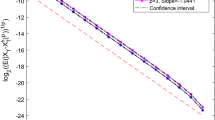

Strong convergence and efficiency of model (5.1) with a and b for additive noise; c and d for multiplicative noise. e Two paths of timestep h for \(\rho =2,6\) and in f the estimated probability of using \(h_{\min }\) for the multiplicative noise model with \(M=100\) realizations

5.1 One-dimensional test equations with multiplicative and additive noise

In order to demonstrate strong convergence of order one for a scalar test equation with non-globally Lipschitz drift, consider

For illustrating both the multiplicative and additive noise cases, we estimate the RMS error by a Monte Carlo method using \(M=1000\) trajectories for \(h_{\max }=[2^{-14}, 2^{-12}, 2^{-10}, 2^{-8}, 2^{-6}]\), \(\rho =2^2\), \(\kappa =1\), and use as a reference solution PMil over a mesh with uniform step sizes \(h_{\text {ref}}=2^{-18}\).

For additive noise we set \(G(x)=\sigma \) in (5.1), and for multiplicative noise we set \(G(x)=\sigma (1-x^2)\) with \(\sigma =0.2\) and \(X(0)=11\) in both cases. Strong convergence of order one is displayed by all methods in Fig. 1 part (a) and (c) for the additive and multiplicative cases respectively, with the efficiency displayed in parts (b) and (d).

Finally, consider Theorem 4.2. We illustrate that the probability of our time-stepping strategy selecting \(h_{\min }\), and therefore triggering an application of the backstop method, can be made arbitrarily small at every step by an appropriate choice of \(\rho \) (with fixed \(\kappa =1\)). Consider (5.1) again with \(G(x)=\sigma (1-x^2)\), this time with \(X(0)=100\), \(\kappa , T=1\), \(h_{\max }=2^{-20}\) and \(\rho =[2, 4, \dots , 16]\). In Fig. 1e, we plot two paths of h when \(\rho =2, 6\). Observe that when \(\rho =2\) the backstop is triggered only for the first \(10^5\) steps approximately, whereas once \(\rho \) is increase to 6 this is reduced to the first \(2\times 10^4\) steps approximately. Estimated probabilities of using \(h_{\min }\) are plotted on a log-log scale as a function of \(\rho \) in Fig. 1f (with \(M=100\) realizations). The estimated probability of using \(h_{\min }\) declines to zero as \(\rho \) increases. We observe a rate close to \(-1\), matching that in (4.3) with \(\kappa =1\).

5.2 One-dimensional model of telomere shortening

The following one-dimensional SDE model was given in [9, Eq. (A6)] for modelling the shortening over time of telomere length L in DNA replication

The parameter c determines the underlying decay rate of the length and a controls the intensity at which random breaks occur in the telomere; we take \((a,c)=(0.41\times 10^{-6},7.5)\) as in [9]. In this example we fix \(\rho =4\), instead adjusting the parameter \(\kappa \) in (3.11) to control use of the backstop method. Individual paths are shown in Fig. 2 where we take \(h_{\max }=2^{-18}\), and \(h=2^{-20}\) for the fixed step methods.

We set \(L(0)=1000\), noting from [9] that initial values could be as high as (say) \(L(0)=6000\) and remain physically realistic. The end of the interval of valid simulation is determined by the first time at which trajectories reach zero, and is therefore random. However this is not observed to occur in the timescale (25 days) we consider here.

By design PMil projects the data onto a ball of radius determined in part by the growth of the drift term. We see in Fig. 2a that (PMil) immediately is reduced to approximately 200.

Contrarily, the design of TMil scales both drift and diffusion terms by \(1/(1+h|L|^2)\) for this model. When \(h|L|^2\) is large this scaling can damp out changes from step to step, and in Fig. 2a we see that TMil shows as (spuriously) almost constant. The paths of the other methods, AMil, SSBM and TSRK1 are close together as shown in Fig. 2a and in high detail in (b).

Notice that we used \(\kappa =8\) in (3.11) for AMil method to reduce the chance of requiring the backstop method PMil while keeping \(\rho =4\). We avoid setting \(\kappa =1\) in this case because \(L(0)=1000\) and so the adaptive step \(h_{n+1}\) would too frequently require the backstop method.

Single paths of the Telomere length SDE (5.2) solved over 25 days. b shows a detailed plot from (a)

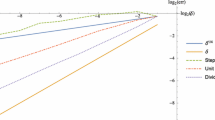

Two-dimensional system (5.3). a and b show the strong convergence and efficiency for diagonal noise, c and d with commutative and e and f for non-commutative noise. We choose \(a=3\), \(\sigma =0.2\) and \(b=1.5\)

5.3 Two-dimensional test systems

We now consider three (\(i=1,2,3\)) different SDEs:

with \(W(t)=[W_1(t),W_2(t)]^T\), where \(W_1\) and \(W_2\) are independent scalar Wiener processes, \(X(t)=[X_1(t),X_2(t)]^T\), \(F(x)=[x_2-3x_1^3,x_1-3x_2^3]^T\), and

\(G_1\) is an example of diagonal noise, \(G_2\) commutative noise, and \(G_3\) non-commutative noise.

For \(G_1\) and \(G_2\) we use \(h_{\max }=[2^{-14}, 2^{-12}, 2^{-10}, 2^{-8}, 2^{-6}]\), \(h_{\text {ref}}=2^{-18}\), \(\rho =4\) and \(\kappa =1\). In Fig. 3a, c, we see order one strong convergence for all methods. Parts (b) and (d) show the efficiency of the adaptive method.

For \(i=3\), the non-commutative noise case, take \(h_{\max }=[2^{-8}, 2^{-7}, 2^{-6}, 2^{-5}, 2^{-4}]\), \( h_{\text {ref}}=2^{-11}\), \(\rho =2^2\) and \(X(0)=[3,4]^T\). To simulate the Lévy areas we follow the method in [12, Sec. 4.3], which is based on the Euler approximation of a system of SDEs. Again, we observe order one convergence for all methods in Fig. 3e and that AMil is the most efficient in (f). Note that as TSRK1 is only supported theoretically for commutative noise we do not consider it here.

6 Preliminary lemmas

We present five lemmas necessary for the proof of Theorem 4.1 and Theorem 4.2. Throughout this section we assume that f and g satisfy Assumptions 2.1 and (except for Lemma 6.4) that we are on the event \(\{h_{\min }<h_{n+1}\le h_{\max }\}\) so that (3.12) holds of Definition 3.5. We use (2.6), (2.8) and (2.7) to define some bounded constant coefficients depending on \(R<\infty \). The constants in (6.1) are then used in the development of a one-step error bound for the adaptive part of the scheme.

The following lemma provides a bound for the even conditional moments of the iterated stochastic integral in (2.11).

Lemma 6.1

(Iterated Stochastic Integral) Let \(\big \{\big (\widetilde{Y}(s)\big )_{s\in [t_n,t_{n+1}]},h_{n+1}\big \}_{n\in {\mathbb {N}}}\) be the adaptive Milstein scheme given in Definitions 3.3 and 3.5. Then there exists a constant \(C_{\texttt {ISI}}\) such that for \(k\ge 1\), \(n\in {\mathbb {N}}\) and \(s\in [t_n,t_{n+1}]\), on the event \(\{h_{\min }<h_{n+1}\le h_{\max }\}\)

where

Here, \(\varvec{\gamma }_{p}\) is from (3.10), \(C^{}_{\texttt {LA}}\left( 2k\right) \) is from Lemma 2.2 with explicit form given in (A.1), and the R dependence in \(C^{}_{\texttt {ISI}}\left( k,R\right) \) arises from (6.1).

Proof

First of all, for convenience we set

By (2.12) and (2.3), we have, for \(s\in [t_n, t_{n+1}]\) and \(n\in {\mathbb {N}}\),

Applying (2.3) again and by submultiplicativity of the Euclidean norm and the fact that the induced matrix 2-norm is bounded above by the Frobenius norm, for \(s\in [t_n, t_{n+1}]\) and \(n\in {\mathbb {N}}\), we get

Applying conditional expectations on both sides, together with the pairwise conditional independence of \(I_{i}^{t_n,s}\) and \(I_{j}^{t_n,s}\) for \(i\ne j\), (2.6) and (6.1), we have for \(s\in [t_n, t_{n+1}]\) and \(n\in {\mathbb {N}}\)

Using (3.9), (3.10) and (2.14) we have

where \(C^{}_{\texttt {ISI}}\left( k,R\right) \) is in (6.3). \(\square \)

The following lemma provides a bound on the conditional moments of the adaptive Milstein scheme in (3.5) over one step, in the case where the method applies the map \(\theta \).

Lemma 6.2

Consider \(\big \{\big (\widetilde{Y}(s)\big )_{s\in [t_n,t_{n+1}]},h_{n+1}\big \}_{n\in {\mathbb {N}}}\) from Definitions 3.3 and 3.5, and let \((Y_{\theta }(s))_{s\in (t_n,t_{n+1}]}\) be as defined in Definition 3.4. Then there exists a constant \(C_{ Y_{\theta }}> 0\) such that for \(k\ge 1\), \(n\in {\mathbb {N}}\) and \(s\in {(}t_n, t_{n+1}]\), on the event \(\{h_{\min }<h_{n+1}\le h_{\max }\}\),

where

with the constant \(C_{\texttt {ISI}}\) from Lemma 6.1.

Proof

By (3.7), (3.4) and (2.3), we have, for \(s\in {(}t_n, t_{n+1}]\) and \(n\in {\mathbb {N}}\),

Applying (2.3), (3.12) and (6.1) for \(s\in {(}t_n, t_{n+1}]\) and \(n\in {\mathbb {N}}\), it yields

Taking conditional expectation on both sides, with Jensen’s inequality on the last term we have for \(s\in {(}t_n, t_{n+1}]\) and \(n\in {\mathbb {N}}\)

Using (3.9), (6.2) from Lemma 6.1 and since \(|s-t_n|\le h_{\max }\le 1\) (3.2) we have

where \(C_{Y_{\theta }}(k,R)\) is in (6.5). \(\square \)

The following lemma proves regularity in time of the adaptive Milstein scheme in (3.5) when applying the map \(\theta \).

Lemma 6.3

(Scheme Regularity) Consider \(\big \{\big (\widetilde{Y}(s)\big )_{s\in [t_n,t_{n+1}]},h_{n+1}\big \}_{n\in {\mathbb {N}}}\) in Definitions 3.3 and 3.5, and let \((Y_{\theta }(s))_{s\in {(}t_n,t_{n+1}]}\) be as defined in Definition 3.4. Then there exists a constant \(C_{\texttt {SR}}\) such that for \(k\ge 1\), \(n\in {\mathbb {N}}\) and \(s\in {(}t_n, t_{n+1}]\), on the event \(\{h_{\min }<h_{n+1}\le h_{\max }\}\)

where

with the constant \(C_{\texttt {ISI}}\) from Lemma 6.1.

Proof

The method of proof is similar to the proof of Lemma 6.2. \(\square \)

Remark 6.1

Our analysis requires a certain number of finite moments for the SDE (1.1), and it is necessary to track exactly what those are in order to see that the conditions of Assumption 2.2 are not violated. To this end, we introduce a superscript notation for random variables appearing as conditional expectations at this point. The notation should be interpreted according to the following example: in (6.9) below the random variable \(C_{\texttt {PR}}^{\{2k(q+2)\}}\) requires \(2k(q+2)\) finite moments of the SDE (1.1) to have finite expectation.

The following lemma examines the regularity of solutions of the SDE (1.1).

Lemma 6.4

(Path Regularity) Let f, g also satisfy Assumption 2.2, and let \((X(s))_{s\in [t_n,t_{n+1}]}\) be a solution of (1.1). Then there exists an \({\mathcal {F}}_{t_n}\)-measurable random variable \({\overline{C}}_{\texttt {PR}}^{\{2k(q+2)\}}\) such that for \(k\ge 1\), \(n\in {\mathbb {N}}\) and \(s\in [t_n,t_{n+1}]\) a.s.

where \(q=q_1\vee q_2\) is as defined in Assumption 2.2. Where a.s.

where the expectation of \({\overline{C}}_\texttt {PR}^{\{2k(q+2)\}}\) is denoted \(C^{}_{\texttt {PR}}\left( k\right) \), given by

Proof

The method of proof follows that of [25, Thm. 7.1]. The bound (6.10) follows from (2.9) and Assumption 2.2. \(\square \)

The following lemma provides a bound on the even conditional moments of the remainder term from a Taylor expansion of either the drift f or diffusion g, around \({\widetilde{Y}}(t_n)\).

Lemma 6.5

(Taylor Error) Consider \(\big \{\big (\widetilde{Y}(s)\big )_{s\in [t_n,t_{n+1}]},h_{n+1}\big \}_{n\in {\mathbb {N}}}\) from

Definitions 3.3 and 3.5, and let \((Y_{\theta }(s))_{s\in [t_n,t_{n+1}]}\) be as defined in Definition 3.4. Let \(u\in \{f, g\}\) and set \(c_{{\textbf{D}}2}:=c_1\vee c_2\). Then there exists a constant \(C_{\texttt {TE}}\) such that for \(k\ge 1\), \(n\in {\mathbb {N}}\) and \(s\in [t_n, t_{n+1}]\), on the event \(\{h_{\min }<h_{n+1}\le h_{\max }\}\),

where \(C_{\texttt {TE}}\left( k,R \right) :=c_{{\textbf{D}}2}^{2k}\left( 1+ 3^{2kq+1}\left( R^{2kq}+ C_{Y_{\theta }}\left( k,R \right) \right) \right) \), where \(C_{Y_{\theta }}\big (k,R\big )\) is from Lemma 6.2.

Proof

By using (2.2), (2.3), (2.8), Lemma 6.2, (3.12) and since \(c_{{\textbf{D}}2}=c_1 \vee c_2\), \(q=q_1 \vee q_2\) we have

where \((1-\epsilon )^{2k}\,\epsilon ^{2kq}\le 1\) for \(k,q\ge 1\) and \(\epsilon \in [0,1]\). \(\square \)

7 Proof of main theorems

In this section we prove the strong convergence result of Theorem 4.1 and Theorem 4.2 on the probability of using the backstop and the role of \(\rho \).

7.1 Setting up the error function

Notice that \({{\widetilde{Y}}} (s)\), from the explicit adaptive Milstein scheme (3.5), takes either the Milstein map \(\theta \) in (3.4) or the backstop map \(\varphi \) in (3.6) depending on the value of \(h_{n+1}\). Thus, we define the error by

for \(s\in [t_n, t_{n+1}]\) and \(n\in {\mathbb {N}}\). Here

and \(Y_{\theta }(s)\) is as defined in Definition 3.4 and

with

To simplify the proof of Theorem 4.1 and Theorem 4.2, we require two lemmas. First, we find the second-moment bound of \(\Delta g_i\) in (7.5) on the event \(\{h_{\min }<h_{n+1}\le h_{\max }\}\) (so that (3.12) holds).

Lemma 7.1

Let g satisfy Assumption 2.1 and \(\Delta g_i\) be as in (7.5). Take \(s\in [t_n,t_{n+1}]\), let X(s) be a solution of (1.1), consider \(\big (\widetilde{Y}(s),h_{n+1}\big )\) from Definitions 3.3 and 3.5, and let \(Y_{\theta }(s)\) be as defined in Definition 3.4. In this case there exists a constant \(C_{G}\) such that, on the event \(\{h_{\min }<h_{n+1}\le h_{\max }\}\),

where

and \(C_{\texttt {ISI}}\), \(C_{\texttt {TE}}\) and \(C_{\texttt {SR}}\) are from Lemmas 6.1, 6.5 and 6.3, respectively.

Proof

Substitute \(\Delta g_i\) by (7.5) in the LHS of (7.6), add and subtract \(g_i\big ( Y_{\theta }(s)\big )\), and use (2.3) to get

To analyse \(G_{1}\), we expand \(g_i ( Y_{\theta }(s) )\) using Taylor’s theorem (see for example [23, A.1]) around \(g_i\big ({{\widetilde{Y}}}(t_n)\big )\) to get

where we recall from Sect. 2 that \([\cdot ]^2\) represents the outer product of a vector with itself. Substituting (7.9) into \(G_{1}\) in (7.8), taking out \({\textbf{D}}g_i\big ({{\widetilde{Y}}}(t_n)\big )\) as a common factor, and applying (2.3) gives

For \(G_{1.1}\) in (7.10), by submultiplicativity of the Euclidean norm and the fact that the induced matrix 2-norm is bounded above by the Frobenius norm; by (3.7), (6.1) and (6.2) in the statement of Lemma 6.1 with \(k=1\), we have

For \(G_{1.2}\) in (7.10), we apply (2.2), the Cauchy-Schwarz inequality, then using (6.11) in Lemma 6.5 with \(k=2\) and (6.6) in Lemma 6.3 with \(k=4\) we get

Substituting the bounds (7.11) and (7.12) back to (7.10) before bringing together the terms in (7.8), we have

where \(C_G(R) \) is given in (7.7). By bounding \(\Vert g_i\Vert ^2\) with \(\Vert g\Vert _{{\textbf{F}}(d\times m)}^2\), the statement of Lemma 7.1 follows. \(\square \)

The second lemma in the following gives the conditional second-moment bound of \(E_{\theta }(s)\) as in (7.3), which is the first part of the one-step error in (7.1).

Lemma 7.2

Let f, g satisfy Assumption 2.1 and 2.2. Let X(s) be a solution of (1.1) and \({{\widetilde{E}}}(s)\) be given by (7.1) with \(E_{\theta }(s)\) defined in (7.3), with \(s\in [t_n,t_{n+1}]\), \(n\in {\mathbb {N}}\). In this case there exists a constant \(C_E\) and an \({\mathcal {F}}_{t_n}\)-measurable random variable \(\overline{C}^{\{4(q+2)\}}_{M}\) such that

where

with constant \(K_1\) as defined in (7.40). The \({\mathcal {F}}_{t_n}\)-measurable random variable \(\overline{C}^{\{4(q+2)\}}_M\) is given by

with the \({\mathcal {F}}_{t_n}\)-measurable random variable \(\overline{K}^{\{4(q+2)\}}_2\) in (7.41), constant \(C_{G}\) in Lemma 7.1. Denote \({\mathbb {E}}\left[ {\overline{C}^{\{4(q+2)\}}_M(R)} \right] =:C_M(R)\), the finiteness of which is ensured in (7.44).

We recall that the superscript notation in (7.15) follows the convention introduced in the statement of Lemma 6.4 and indicates the number of finite moments required of the SDE solution.

Proof

Throughout the proof, we restrict attention to trajectories on the event \(\{h_{\min }<h_{n+1}\le h_{\max }\}\), since by (7.3), \(E_\theta (s)\) is only nonzero on this event, otherwise (7.13) holds trivially. Applying the stopping time variant of Itô formula (see Mao & Yuan [27]) to (7.3), we have,

Take expectations on both sides conditional upon \({\mathcal {F}}_{t_n}\), and since \(\int _{t_n}^{t_{n+1}}\big |J_f\big | dr\) has finite expectation (by the boundedness of \(\widetilde{Y}(t_n)\) in (3.12) and the finiteness of absolute moments of X(r) see (2.9)), using Fubini’s Theorem (see for example [4, Proposition 12.10]) and (3.8) we have,

By Lemma 7.1, we have the bound of \({\Vert }J_{g_i}{\Vert ^2}\) in (7.16) as

For \(J_f\), by substituting \(\Delta f\) with (7.4) with adding in and subtracting out \(f(Y_{\theta }(r))\), we have

Substituting (7.18) and (7.17) back into (7.16), we have

where

For H in (7.18), and in a similar way to (7.9), we expand \(f(Y_{\theta }(r))\) using Taylor’s theorem around \({{\widetilde{Y}}}(t_n)\) to have

Then we substitute \(Y_{\theta }(r)\) in the first term on the RHS of (7.21) with (3.4) where we use the expanded form of the map as characterised in (2.12) for \(s=r\). Therefore, for the last term on the RHS of (7.19), we have

where

We will now determine suitable upper bounds for each of \(H_1\), \(H_2\), \(H_3\), \(H_4\), \(H_5\), and \(H_6\) in turn. For \(H_1\) in (7.22), by the Cauchy-Schwarz inequality, (2.1), and (6.1), we have

Next, for the analysis of \(H_2\) in (7.22), by (3.8), we firstly have

By (2.3), the Cauchy-Schwarz inequality, (6.1) and (3.9) we also have

Then, for \(H_2\) in (7.22) we firstly expand \(E_{\theta }(r)\) using (7.3) to have

For \(H_{2.1}\) in (7.26), by (7.24) we have

For \(H_{2.2}\) in (7.26), by adding in and subtracting out \(f(X(t_n))\) in \(\Delta f\) in (7.4):

Similar to \(H_{2.1}\) in (7.27), we have \(H_{2.22} = 0\). For \(H_{2.21}\) in (7.28), using the Cauchy-Schwarz inequality and (7.25) we have

By Taylor expansion of f(X(p)) around \(f(X(t_n))\) to first order, and using (2.6), the Cauchy-Schwarz inequality, Lemma 6.4 with \(k=2\) and (2.3):

where

Substituting (7.30) back to (7.29) and using that \(H_{2.22}=0\), we have

For \(H_{2.3}\) as in (7.26), using the Cauchy-Schwarz inequality, (2.3), (6.1), (3.9) and Itô ’s isometry we have

Then, by Lemma 7.1 we have

Since the integrand \({\mathbb {E}}\Big [\big \Vert g(X(p))-g(Y_{\theta }(p))\big \Vert _{{\textbf{F}}(d\times m)}^2 \Big |{\mathcal {F}}_{t_n}\Big ]\) is non-negative for all \(p\in [t_n,t_{n+1}]\), we can replace the upper limit of integration with \(t_{n+1}\). With \(\sqrt{a+b}\le \sqrt{a}+\sqrt{b}\), we have

Notice that we changed the variable of integration from p back to r for consistency. Substituting (7.27), (7.32) and (7.33) back into (7.26), we have

For \(H_{3}\) in (7.22), by the Cauchy-Schwarz inequality, triangle inequality, (2.3), (2.1), (3.10), (2.6) and (6.1) we have

For \(H_{4}\) in (7.22), by the Cauchy-Schwarz inequality, conditional independence of the Itô integrals, (3.9), triangle inequality, (2.1), Itô ’s isometry, (2.6), and (6.1), we have

For \(H_{5}\) in (7.22), by the Cauchy-Schwarz inequality, triangle inequality, (2.1), (6.1), (2.6), and Lemma 2.2 with \(b=2\), we have

For \(H_{6}\) in (7.22), by the Cauchy-Schwarz inequality, triangle inequality, and (2.1) we have (noting that \(\Vert [\cdot ]^2 \Vert _{\textbf{F}}=\Vert \cdot \Vert ^2\))

From (6.6) in Lemma 6.3 with \(k=4\), we have \(H_{6.1}\le C^{}_{\texttt {SR}}\left( 4,R\right) ^{1/2} |r-t_n|^2.\) From (6.11) in Lemma 6.5 with \(k=2\), we have \(H_{6.2} \le C^{}_{\texttt {TE}}\left( 2,R\right) ^{1/2}\). Therefore, \(H_{6}\) in (7.22) becomes

Substituting (7.23), (7.34), (7.35), (7.36), (7.37) and (7.38) back into (7.22) for H, we have

where

and with \({\overline{C}}^{\{4(q+2)\}}_{H2.21}\) from (7.31)

Substituting \({\mathbb {E}}[H|{\mathcal {F}}_{t_n}]\) from (7.39) back into (7.19), we have

Using (2.1) on the last term on the RHS of (7.42), we have

where \({\overline{C}}^{\{4(q+2)\}}_M\) is as defined in (7.15). Recall \(J_{f,g}\) is given in (7.20) so that

By Assumption 2.2 we can apply the monotone condition (2.5):

where \(C_E(R)\) is in (7.14).

To obtain the the final estimate on \(C_M(R)\) in the Lemma we use the explicit form of \({\overline{C}}_M^{\{4(q+2)\}}\), \(\overline{K}_2^{\{4(q+2)\}}\), \({\overline{C}}_{H2.21}^{\{4(q+2)\}}\), given by (7.15), (7.41), and (7.31) respectively, (6.10) in the statement of Lemma 6.5, (2.9), and Assumption 2.2 we bound the expectation of \(\overline{C}_M^{\{4(q+2)\}}\) as follows,

\(\square \)

7.2 Proof of Theorem 4.1 on strong convergence

Proof

Firstly, by (7.1) we have the conditional second-moment bound of the one-step error as

where by (3.6) and (7.2), the one-step error bound of the backstop map yields

Therefore, by substituting (7.13) and (7.46) into (7.45), and recalling (7.1) we have for any \(h_{n+1}\) that satisfies Assumption 3.1,

where we define \(\Gamma _1\), \({\overline{\Gamma }}_2\) and by (7.44) its expected form as

For a fixed \(t>0\), let \(N^{(t)}\) be as in Definition 3.2, we multiply both sides of (7.47) with the indicator function \({\textbf{1}}_{\{N^{(t)}> n+1\}}\) and sum up the steps excluding the last step \(N^{(t)}\) to have

Since \(t\in \big [t_{N^{(t)}-1},t_{N^{(t)}}\big ]\), we use (7.47) to express the last step, noting that it holds when \(t_n,t_{n+1}\) are replaced by \(t_{N^{(t)}-1}\) and t respectively:

By adding the both sides of (7.49) and (7.50), and taking an expectation:

where we analyse (7.51) (\(\text {LHS}\le \text {R}_1+\text {R}_2\)) below. For the LHS in (7.51), \(N^{(t)}\) is a random number taking value from \(N^{(t)}_{\min }\) to \(N^{(t)}_{\max }\), and \({\textbf{1}}_{\{N^{(t)}> n+1\}}\) is an \({\mathcal {F}}_{t_{n}}\)-measurable random variable. Therefore it is useful decompose the range of n into three parts on each trajectory. First, when \(n<N^{(t)}-1\), then \(1_{\{N^{(t)}>n+1\}}=1_{\{N^{(t)}>n\}}=1\). Second, when \(n=N^{(t)}-1\), then \(1_{\{N^{(t)}>n+1\}}=0\) and \(1_{\{N^{(t)}>n\}}=1\). Finally, when \(n>N^{(t)}-1\), then \(1_{\{N^{(t)}>n+1\}}=1_{\{N^{(t)}>n\}}=0\). Hence we obtain a telescoping sum with the appropriate cancellation that terminates at \({\mathbb {E}}\big [\Vert {\widetilde{E}}(t_{N^{(t)}-1})\Vert ^2\,1_{\{N^{(t)}>N^{(t)}-1\}}\big ]={\mathbb {E}}\big [\Vert {\widetilde{E}}(t_{N^{(t)}-1})\Vert ^2\big ]\). Applying this with the tower property for conditional expectations, and using the fact that \(\Vert {\widetilde{E}}(t_0)\Vert ^2=0\), we have

Consider the term \(\text {R}_1\) on the RHS of (7.51). By Definition 3.2 we have each \(n=N^{(r)}-1\) for \(r\in [t_n,t_{n+1}]\). So we restate \({\mathcal {F}}_{t_n}\) as \({\mathcal {F}}_{t_{N^{(r)}-1}}\), and the indicator function as \({\textbf{1}}_{\{N^{(t)}>N^{(r)}\}}\). Summing up all the steps results in an integral from 0 to \(t_{N^{(t)}-1}\) that

For \(\text {R}_2\) in (7.51), by (7.48), Definition 3.2 and \(\rho h_{\min }=h_{\max }\) we have

We see that \(4(q+2)\) is the minimum number of finite SDE moments required for a finite \(\text {R}_2\), and this is guaranteed by Assumption 2.2. Combining (7.52), (7.53) and (7.54) back into (7.51), for all \(t\in [0,T]\) we have

By Gronwall’s inequality (see [25, Thm. 8.1]), we have for all \(t\in [0,T]\)

Taking the maximum over t on the both sides, the proof follows with

\(\square \)

7.3 Proof of Theorem 4.2 on the probability of using the backstop

Proof

By (3.11) and by the Markov inequality we have

By adding in and subtracting out \(X(t_n)\) together with the tower property of conditional expectation, (2.3), (7.1) and (2.9) we have

Next, we repeatedly substitute (7.47) for decreasing values of n into the RHS of (7.57) until \(n=0\). Then with tower property, Definition 3.2, (3.11) and (7.48) we have

Since the integrand \({\mathbb {E}}\Big [\big \Vert \widetilde{E}(r)\big \Vert ^2\Big | {\mathcal {F}}_{t_{N^{(r)}-1}}\Big ]\) in the second term on the RHS of (7.58) is almost surely non-negative for all \(r\in [0,T]\), we can replace the upper limit of integration with T. Using \({{\widetilde{E}}}(t_{0})=0\), (3.11), the tower property of conditional expectation, and (7.55) from Theorem 4.1, we have

By choosing \(h_{\max }\le 1/C(R,\rho ,T)\), we substitute (7.59) into (7.58) and then (7.56) to get

and the rest of the proof follows. \(\square \)

References

Beyn, W.J., Isaak, E., Kruse, R.: Stochastic C-stability and B-consistency of explicit and implicit Milstein-type schemes. J. Sci. Comput. 70(3), 1042–1077 (2017)

Burrage, P.M., Herdiana, R., Burrage, K.: Adaptive stepsize based on control theory for stochastic differential equations. J. Comput. Appl. Math. 171(1–2), 317–336 (2004). https://doi.org/10.1016/j.cam.2004.01.027

Campbell, S., Lord, G.: Adaptive time-stepping for stochastic partial differential equations with non-Lipschitz drift. arXiv preprint arXiv:1812.09036 (2018)

Dineen, S.: Probability Theory in Finance: a mathematical guide to the Black–Scholes Formula. Graduate studies in mathematics; v.70. American Mathematical Society, Universities Press (2011)

Fang, W., Giles, M.B.: Adaptive Euler–Maruyama method for SDEs with non-globally Lipschitz drift. In: International Conference on Monte Carlo and Quasi-Monte Carlo Methods in Scientific Computing, pp. 217–234. Springer (2016)

Fang, W., Giles, M.B.: Adaptive Euler–Maruyama method for SDEs with nonglobally Lipschitz drift. Ann. Appl. Probab. 30(2), 526–560 (2020)

Gaines, J., Lyons, T.: Variable step size control in the numerical solution of stochastic differential equations. SIAM J. Appl. Math. 57(5), 1455–1484 (1997). https://doi.org/10.1137/S0036139995286515

Gan, S., He, Y., Wang, X.: Tamed Runge–Kutta methods for SDEs with super-linearly growing drift and diffusion coefficients. Appl. Numer. Math. 152, 379–402 (2020)

Grasman, J., Salomons, H., Verhulst, S.: Stochastic modeling of length-dependent telomere shortening in Corvus monedula. J. Theor. Biol. 282(1), 1–6 (2011)

Guo, Q., Liu, W., Mao, X., Yue, R.: The truncated Milstein method for stochastic differential equations with commutative noise. J. Comput. Appl. Math. 338, 298–310 (2018). https://doi.org/10.1016/j.cam.2018.01.014

Hasminskii, R.: Stochastic Stability of Differential Equations. Sijthoff & Noordhoff (1980)

Higham, D.J., Kloeden, P.E.: Maple and MATLAB for stochastic differential equations in finance. In: Programming Languages and Systems in Computational Economics and Finance, pp. 233–269. Springer (2002)

Higham, D.J., Mao, X., Szpruch, L.: Convergence, non-negativity and stability of a new Milstein scheme with applications to finance. Discrete and Continuous Dynamical Systems B, pp. 2083–2100 (2013)

Hofmann, N., Müller-Gronbach, T., Ritter, K.: The optimal discretization of stochastic differential equations. J. Complex. 17(1), 117–153 (2001). https://doi.org/10.1006/jcom.2000.0570

Hutzenthaler, M., Jentzen, A., Kloeden, P.E.: Strong and weak divergence in finite time of Euler’s method for stochastic differential equations with non-globally Lipschitz continuous coefficients. Proc. R. Soc. Lond. A Math. Phys. Eng. Sci. 467(2130), 1563–1576 (2011)

Ilie, S., Jackson, K.R., Enright, W.H.: Adaptive time-stepping for the strong numerical solution of stochastic differential equations. Numer. Algorithms 68(4), 791–812 (2015). https://doi.org/10.1007/s11075-014-9872-6

Kelly, C., Lord, G.J.: Adaptive time-stepping strategies for nonlinear stochastic systems. IMA J. Numer. Anal. 38(3), 1523–1549 (2018)

Kelly, C., Lord, G.J.: Adaptive Euler methods for stochastic systems with non-globally Lipschitz coefficients. Numerical Algorithms pp. 1–27 (2021)

Kloeden, P., Platen, E.: Numerical methods for stochastic differential equations. Stoch. Hydrol. Hydraul. 5(2), 172–172 (1991)

Kumar, C., Sabanis, S.: On Milstein approximations with varying coefficients: the case of super-linear diffusion coefficients. BIT Numer. Math. 59(4), 929–968 (2019). https://doi.org/10.1007/s10543-019-00756-5

Lamba, H., Mattingly, J.C., Stuart, A.M.: An adaptive Euler-Maruyama scheme for SDEs: convergence and stability. IMA J. Numer. Anal. 27(3), 479–506 (2007). https://doi.org/10.1093/imanum/drl032

Lévy, P.: Wiener’s random function, and other Laplacian random functions. In: Proceedings of the Second Berkeley Symposium on Mathematical Statistics and Probability. The Regents of the University of California (1951)

Lord, G.J., Powell, C.E., Shardlow, T.: An Introduction to Computational Stochastic PDEs. Cambridge University Press, Cambridge (2014)

Malham, S., Wiese, A.: Efficient almost-exact Lévy area sampling. Stat. Probab. Lett. 88, 50–55 (2014)

Mao, X.: Stochastic Differential Equations and Applications, 2nd edn. Woodhead Publishing, Cambridge (2007)

Mao, X.: The truncated Euler-Maruyama method for stochastic differential equations. J. Comput. Appl. Math. 290, 370–384 (2015)

Mao, X., Yuan, C.: Stochastic Differential Equations with Markovian Switching. Imperial College Press, London (2006)

Mauthner, S.: Step size control in the numerical solution of stochastic differential equations. J. Comput. Appl. Math. 100(1), 93–109 (1998). https://doi.org/10.1016/S0377-0427(98)00139-3

Papoulis, A., Pillai, S.U.: Probability, Random Variables, and Stochastic Processes, 4th edn. McGraw-Hill, New York (2002)

Reisinger, C., Stockinger, W.: An adaptive Euler–Maruyama scheme for Mckean–Vlasov SDEs with super-linear growth and application to the mean-field FitzHugh–Nagumo model. arXiv preprint arXiv:2005.06034 (2020)

Shardlow, T., Taylor, P.: On the pathwise approximation of stochastic differential equations. BIT Numer. Math. 56(3), 1101–1129 (2016)

Shiryaev, A.: Probability, 2nd edn. Springer, Berlin (1996)

Tretyakov, M.V., Zhang, Z.: A fundamental mean-square convergence theorem for SDEs with locally Lipschitz coefficients and its applications. SIAM J. Numer. Anal. 51(6), 3135–3162 (2013)

Wang, X., Gan, S.: The tamed Milstein method for commutative stochastic differential equations with non-globally Lipschitz continuous coefficients. J. Differ. Equ. Appl. 19(3), 466–490 (2013)

Yao, J., Gan, S.: Stability of the drift-implicit and double-implicit Milstein schemes for nonlinear SDEs. Appl. Math. Comput. 339, 294–301 (2018). https://doi.org/10.1016/j.amc.2018.07.026

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Communicated by David Cohen.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Proof of Lemma 2.2 (Lévy Area)

Appendix A: Proof of Lemma 2.2 (Lévy Area)

Proof

Set \(\texttt{i}^2=-1\). Since the pair of Wiener processes \((W_i(r),W_j(r))^T\), \(r\in [t_n, s]\), are mutually independent, by [22, Eq. (1.3.5)] the characteristic function of the Lévy area (2.13) is given by \(\phi (\lambda )=(\cosh \left( \frac{1}{2}|s-t_n|\lambda \right) )^{-1}.\) This was applied in the context of numerical methods for SDEs in [24]. The Taylor expansion of the function \(\cosh \left( \frac{1}{2}|s-t_n|\lambda \right) \) around 0 gives

where \({\textbf {E}}_{2N}\) stands for the \(2N^{\text {th}}\) Euler number, which may be expressed as

All odd Euler numbers are zero. The \(k^{\text {th}}\) derivative of the characteristic function with respect to \(\lambda \) is

As \(\lambda \rightarrow 0\), since all terms vanish unless \(k=2N\), we have

In the calculation of expectations, we make use of the mutual independence, conditional upon \({\mathcal {F}}_{t_n}\), of the pair of Brownian increments \((W_i(t),W_j(t))^T\). Therefore, the \(k^{\text {th}}\) conditional moment of \(A_{ij}^{t_n,s}\) is

where for all \(a=1,2,3,\dots \)

which is finite, as a finite product of finite factors. When k is even, we have

When k is odd, i.e. \(k=2c+1\) for all \(c=0,1,2,\dots \), we have a.s.

Therefore, in conclusion we have

where

\(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kelly, C., Lord, G.J. & Sun, F. Strong convergence of an adaptive time-stepping Milstein method for SDEs with monotone coefficients. Bit Numer Math 63, 33 (2023). https://doi.org/10.1007/s10543-023-00969-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10543-023-00969-9

Keywords

- Stochastic differential equations

- Adaptive time-stepping

- Milstein method

- Non-globally Lipschitz coefficients

- Strong convergence