Abstract

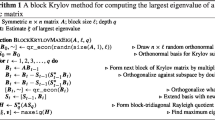

We present randomized algorithms based on block Krylov subspace methods for estimating the trace and log-determinant of Hermitian positive semi-definite matrices. Using the properties of Chebyshev polynomials and Gaussian random matrices, we analyze the error of the proposed estimators and obtain expectation and concentration error bounds. These bounds improve on the corresponding ones given in the literature. Numerical experiments are presented to illustrate the performance of the algorithms and to test the error bounds.
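As a rough illustration of the idea, a generic randomized block Krylov estimator can be sketched as follows. This is a minimal sketch under stated assumptions, not the paper's Algorithm 1 or 2, whose details are not reproduced here: the helper name `block_krylov_estimates`, the parameters `k` (block size) and `q` (number of extra Krylov blocks), and the choice of \(\log \det (I+A)\) as the log-determinant target are all assumptions made for the example.

```python
import numpy as np


def block_krylov_estimates(A, k, q, rng):
    """Estimate Tr(A) and log det(I + A) for a Hermitian PSD matrix A.

    Hypothetical helper: k (block size) and q (extra Krylov blocks) are
    assumed parameter names, not taken from the paper.
    """
    n = A.shape[0]
    Omega = rng.standard_normal((n, k))      # Gaussian random test matrix
    blocks = [A @ Omega]
    for _ in range(q):
        blocks.append(A @ blocks[-1])        # next Krylov block
    K = np.hstack(blocks)                    # [A Omega, ..., A^(q+1) Omega]
    Q, _ = np.linalg.qr(K)                   # orthonormal basis of the block Krylov space
    T = Q.conj().T @ A @ Q                   # compression of A onto that space
    T = (T + T.conj().T) / 2                 # remove rounding asymmetry
    trace_est = np.trace(T).real
    _, logdet_est = np.linalg.slogdet(np.eye(T.shape[0]) + T)
    return trace_est, logdet_est


# Test matrix with known, rapidly decaying eigenvalues (an assumed example).
rng = np.random.default_rng(0)
n = 200
eigs = 0.5 ** np.arange(n)
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
A = (U * eigs) @ U.T                         # A = U diag(eigs) U^T
A = (A + A.T) / 2

trace_est, logdet_est = block_krylov_estimates(A, k=10, q=2, rng=rng)
exact_trace = eigs.sum()
exact_logdet = np.log1p(eigs).sum()
print("trace:  estimate", trace_est, "exact", exact_trace)
print("logdet: estimate", logdet_est, "exact", exact_logdet)
```

Because the basis has orthonormal columns, both estimates computed this way are lower bounds on the exact values for a positive semi-definite matrix, so the error is one-sided.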

Notes

Note that even if we can access the individual entries of the matrices, the cost of computing an LU or Cholesky factorization is prohibitive.

In fact, the speedup of Algorithms 1 and 2 with structured random matrices is very limited, because a single multiplication by A destroys the structure of the random matrices.

In fact, this case does not arise in practice: with the same input settings we would naturally choose Algorithm 2, because it achieves higher accuracy at similar computational complexity. Here, we mainly want to show that our bounds may still be tighter even in this impractical and unfair case.

Note that \(\mathrm{image}(Q)\subset \mathrm{image}({\widehat{Q}})\), so \(\mathrm{Tr}(A)-\mathrm{Tr}(T)\le \mathrm{Tr}(A)-\mathrm{Tr}(QQ^{*}A)\). Proceeding as in the remainder of the proof of the upper bounds, we recover the structural upper bounds for the trace estimator given in [32]. That is, the upper bounds from [32] also apply to Algorithm 2. The case of the log-determinant estimator is similar.
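The trace inequality in this note can be checked numerically. The sketch below assumes that \(T={\widehat{Q}}^{*}A{\widehat{Q}}\), that \(Q\) is an orthonormal basis of a subspace-iteration sample \(A^{q}\Omega\), and that \({\widehat{Q}}\) is an orthonormal basis of a block Krylov space containing it; these constructions and the variable names are assumptions for illustration, since the note itself does not define them. The inequality holds because \({\widehat{Q}}{\widehat{Q}}^{*}-QQ^{*}\) is an orthogonal projector when the subspaces are nested, so its product with a positive semi-definite \(A\) has non-negative trace.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k, q = 100, 5, 2

G = rng.standard_normal((n, n))
A = G @ G.T                                   # symmetric positive semi-definite test matrix
Omega = rng.standard_normal((n, k))           # Gaussian test matrix

# Krylov blocks A Omega, A^2 Omega, ..., A^q Omega (assumed construction).
blocks = [A @ Omega]
for _ in range(q - 1):
    blocks.append(A @ blocks[-1])

# Q: basis of the last block only (subspace-iteration style).
Q, _ = np.linalg.qr(blocks[-1])

# Q_hat: basis of all blocks; the last block is placed first so that the
# leading k columns of Q_hat span image(Q), making the nesting explicit.
Q_hat, _ = np.linalg.qr(np.hstack(blocks[::-1]))

T = Q_hat.T @ A @ Q_hat                       # compression onto the larger space
gap_krylov = np.trace(A) - np.trace(T)        # Tr(A) - Tr(T)
gap_subspace = np.trace(A) - np.trace(Q.T @ A @ Q)   # Tr(A) - Tr(Q Q^* A)

print("Tr(A) - Tr(T)       =", gap_krylov)
print("Tr(A) - Tr(QQ^* A)  =", gap_subspace)
```

The larger subspace can only capture more of the trace, which is why any structural upper bound on the second gap carries over to the first.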

References

Aizenbud, Y., Averbuch, A.: Matrix decompositions using sub-Gaussian random matrices. Inf. Infer. J. IMA 8(3), 445–469 (2019)

Aizenbud, Y., Shabat, G., Averbuch, A.: Randomized LU decomposition using sparse projections. Comput. Math. Appl. 72(9), 2525–2534 (2016)

Anitescu, M., Chen, J., Wang, L.: A matrix-free approach for solving the parametric Gaussian process maximum likelihood problem. SIAM J. Sci. Comput. 34(1), A240–A262 (2012)

Aune, E., Simpson, D.P., Eidsvik, J.: Parameter estimation in high dimensional Gaussian distributions. Stat. Comput. 24(2), 247–263 (2014)

Avron, H., Toledo, S.: Randomized algorithms for estimating the trace of an implicit symmetric positive semi-definite matrix. J. ACM 58(2), Art. 8 (2011)

Barry, R.P., Pace, R.K.: Monte Carlo estimates of the log determinant of large sparse matrices. Linear Algebra Appl. 289(1–3), 41–54 (1999)

Boutsidis, C., Drineas, P., Kambadur, P., Kontopoulou, E.M., Zouzias, A.: A randomized algorithm for approximating the log determinant of a symmetric positive definite matrix. Linear Algebra Appl. 533, 95–117 (2017)

Clarkson, K.L., Woodruff, D.P.: Low-rank approximation and regression in input sparsity time. J. ACM 63(6), Art. 54 (2017)

Drineas, P., Ipsen, I.C.F., Kontopoulou, E.M., Magdon-Ismail, M.: Structural convergence results for approximation of dominant subspaces from block Krylov spaces. SIAM J. Matrix Anal. Appl. 39(2), 567–586 (2018)

Feng, X., Yu, W., Li, Y.: Faster matrix completion using randomized SVD. arXiv:1810.06860 (2018)

Gittens, A., Kambadur, P., Boutsidis, C.: Approximate spectral clustering via randomized sketching. arXiv:1311.2854 (2013)

Golub, G.H., Von Matt, U.: Generalized cross-validation for large-scale problems. J. Comput. Graph. Stat. 6(1), 1–34 (1997)

Gu, M.: Subspace iteration randomization and singular value problems. SIAM J. Sci. Comput. 37(3), A1139–A1173 (2015)

Haber, E., Horesh, L., Tenorio, L.: Numerical methods for experimental design of large-scale linear ill-posed inverse problems. Inverse Probl. 24(5), 055012 (2008)

Halko, N., Martinsson, P.G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53(2), 217–288 (2011)

Martinsson, P.-G., Voronin, S.: A randomized blocked algorithm for efficiently computing rank-revealing factorizations of matrices. SIAM J. Sci. Comput. 38, S485–S507 (2016)

Han, I., Malioutov, D., Avron, H., Shin, J.: Approximating spectral sums of large-scale matrices using stochastic Chebyshev approximations. SIAM J. Sci. Comput. 39(4), A1558–A1585 (2017)

Han, I., Malioutov, D., Shin, J.: Large-scale log-determinant computation through stochastic Chebyshev expansions. In: Proceedings of the 32nd International Conference on Machine Learning, pp. 908–917 (2015)

Higham, N.J.: Accuracy and Stability of Numerical Algorithms, 2nd edn. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2002)

Horn, R.A., Johnson, C.R.: Topics in Matrix Analysis. Cambridge University Press, Cambridge (1991)

Horn, R.A., Johnson, C.R.: Matrix Analysis, 2nd edn. Cambridge University Press, Cambridge (2013)

Hutchinson, M.F.: A stochastic estimator of the trace of the influence matrix for Laplacian smoothing splines. Commun. Stat.-Simul. Comput. 19(2), 433–450 (1990)

Li, H.Y., Yin, S.H.: Single-pass randomized algorithms for LU decomposition. Linear Algebra Appl. 595, 101–122 (2020)

Li, R.C., Zhang, L.H.: Convergence of the block Lanczos method for eigenvalue clusters. Numer. Math. 131(1), 83–113 (2015)

Lin, L.: Randomized estimation of spectral densities of large matrices made accurate. Numer. Math. 136(1), 183–213 (2017)

Musco, C., Musco, C.: Randomized block Krylov methods for stronger and faster approximate singular value decomposition. Adv. Neural Inf. Process. Syst. 28, 1396–1404 (2015)

Nelson, J., Nguyên, H.L.: OSNAP: faster numerical linear algebra algorithms via sparser subspace embeddings. In: IEEE 54th Annual Symposium on Foundations of Computer Science (FOCS), pp. 117–126 (2013)

Ouellette, D.V.: Schur complements and statistics. Linear Algebra Appl. 36, 187–295 (1981)

Pace, R.K., LeSage, J.P.: Chebyshev approximation of log-determinants of spatial weight matrices. Comput. Stat. Data Anal. 45(2), 179–196 (2004)

Roosta-Khorasani, F., Ascher, U.: Improved bounds on sample size for implicit matrix trace estimators. Found. Comput. Math. 15(5), 1187–1212 (2015)

Saad, Y.: Numerical Methods for Large Eigenvalue Problems, revised edn. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA (2011)

Saibaba, A.K., Alexanderian, A., Ipsen, I.C.F.: Randomized matrix-free trace and log-determinant estimators. Numer. Math. 137(2), 353–395 (2017)

Tropp, J.A.: Improved analysis of the subsampled randomized Hadamard transform. Adv. Adapt. Data Anal. 3, 115–126 (2011)

Yu, W.J., Gu, Y., Li, Y.H.: Efficient randomized algorithms for the fixed-precision low-rank matrix approximation. SIAM J. Matrix Anal. Appl. 39(3), 1339–1359 (2018)

Yuan, Q., Gu, M., Li, B.: Superlinear convergence of randomized block Lanczos algorithm. arXiv:1808.06287 (2018)

Zhang, Y., Leithead, W.E., Leith, D.J., Walshe, L.: Log-det approximation based on uniformly distributed seeds and its application to Gaussian process regression. J. Comput. Appl. Math. 220(1–2), 198–214 (2008)

Zhang, Y., Leithead, W.E.: Approximate implementation of the logarithm of the matrix determinant in Gaussian process regression. J. Stat. Comput. Simul. 77(4), 329–348 (2007)

Additional information

Communicated by Marko Huhtanen.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The work is supported by the National Natural Science Foundation of China (No. 11671060) and the Natural Science Foundation Project of CQ CSTC (No. cstc2019jcyj-msxmX0267).

Cite this article

Li, H., Zhu, Y. Randomized block Krylov subspace methods for trace and log-determinant estimators. Bit Numer Math 61, 911–939 (2021). https://doi.org/10.1007/s10543-021-00850-7

DOI: https://doi.org/10.1007/s10543-021-00850-7

Keywords

- Randomized algorithm

- Krylov subspace method

- Trace estimator

- Log-determinant estimator

- Chebyshev polynomials