Abstract

In 3D printing processes, many defects related to thermal stress can have a significant negative impact on the shape and size of the structure. Such anomalies in the heat transfer of the printing process need to be detected at an early stage. Understanding heat transfer is crucial, and simulation models can offer insights while reducing the need for costly experiments. Traditional numerical solvers for heat transfer can be complex to adapt to diverse printed part geometries, and their reliance on predefined mathematical models limits their flexibility. Our physics-informed deep learning (PIDL) approach eliminates the need for discretization, simplifying the analysis of complex geometries and enabling automation. A drawback of parametric PIDL is its limited scalability to high-dimensional problems: the computational time, energy and cost of training prevent real-time analysis, whereas printing a single layer often takes only a few seconds. We present an energy-efficient transfer and training strategy that significantly reduces the computational effort of PIDL. The approach is able to quantify relevant effects of thermal stresses and mitigate errors during selective laser melting (SLM). To this end, heat transfer is modelled, simulated and analysed using high-dimensional data obtained from printing experiments with metal components of different geometries. The proposed method is applied to the forward problem of heat transfer prediction. The model is governed by the heat equation, which is integrated into a deep neural network (DNN).

1 Introduction

Selective laser melting (SLM) is a revolutionary additive manufacturing (AM) process that offers unparalleled capabilities for producing intricate and customized components [1, 2]. This technology also brings challenges: one of the main influencing factors in SLM processes is thermal stress, which can lead to thermomechanical distortion, porosity and cracks in the component [3]. To prevent or mitigate such failures, real-time anomaly detection and thermal process monitoring are required, and understanding heat transfer is therefore crucial for analyzing the behaviour of thermal stresses. Simulation models can be developed to gain a better understanding of 3D printing processes and to reduce expensive experiments. In computational modeling and simulation, classical numerical solvers have long been the standard choice for heat transfer problems. However, they often require time-consuming and complicated modifications to adapt to new printed part geometries, especially complex ones. Discretization, a fundamental component of numerical methods, becomes particularly challenging in such cases, and the lack of automation exacerbates the problem. Moreover, these classical solvers are constrained by their reliance on predefined mathematical models and equations, which limits their ability to learn from data [4,5,6]. In contrast, PIDL offers a promising way to overcome these limitations. It eliminates the need for discretization, thus simplifying the treatment of complex geometries and offering a higher degree of automation. PIDL models can also learn complex physical interactions directly from sensor data, thereby bridging the gap between numerical simulation and real-world sensor measurements. However, the lack of interpretability of DNNs makes it difficult to gain detailed insights into the underlying physics of the learned gap. A further drawback of parametric physics-informed neural networks (PINNs) is their limited scalability to high-dimensional problems.
Because training requires sampling in each parameter dimension, the curse of dimensionality is a limiting factor for many applications, such as 3D printing. The time, energy and cost of training prevent real-time fault mitigation. To realise the full potential of parametric PINNs, energy-efficient transfer and training strategies are urgently needed. To overcome the computational burden of DNN training, we propose a pre-trained foundation model whose parameters are fine-tuned with each newly printed layer, exploiting the similar heat transfer properties of adjacent layers. This significantly reduces the training time and allows real-time analysis. PINNs also lack theoretical guarantees: their accuracy and stability depend on the quantity and quality of sensor data. For this reason, we propose an intelligent data-driven simulation model using high-dimensional data from real printing experiments with different part geometries. The process of heat transfer can be described by the heat equation, and we integrate this descriptive knowledge into the learning process of a DNN. We consider an application scenario in which four different part geometries were printed. In addition, we aim to demonstrate that a generic algorithm can work independently of the build geometry to facilitate intelligent automation in AM. Our contributions are as follows:

-

We propose a PINN to predict temperature fields and detect anomalies using real measurement data.

-

The approach is evaluated by comparing it with a numerical simulation using the finite volume method (FVM).

-

We show that computational time, cost and energy of training can be reduced by the use of pre-trained foundation models.

-

We extend the input dimension of the DNN to include not only the spatial and temporal domain, but also the laser power as an essential process parameter. This allows us to obtain an arbitrary number of simulations for a defined laser power range with a single training process.

This paper is structured as follows. Section 2 presents related work that serves as a foundation for this paper, while Section 3 presents the models and techniques required to simulate the thermal processes in AM. This includes the formulation of the partial differential equation (PDE), the definition of the initial-boundary value problem and the design of the individual loss functions. Section 4 presents the approximate solution for heat transfer in AM, and the PINN solution is evaluated by comparison with the FVM. Section 5 discusses sensor data generation, sensor data preparation, evaluation metrics and physical characteristics of the printing process. Section 6 shows the results of adding high-dimensional real measurement data to the simulations and explains how anomalies are detected. Section 7 presents the discussion. Section 8 summarises the work and outlines possibilities for further work.

2 Related work

2.1 3D printing

In recent years, 3D printing has experienced a significant upswing. Components can be produced more flexibly and largely independently of their geometric complexity. In particular, the SLM process has great potential to play a major role in the future. SLM starts from a CAD model that is digitally divided into slices in advance. A laser then melts and fuses a bed of metal powder layer by layer, reducing a three-dimensional manufacturing task to a sequence of two-dimensional ones. Jandyal et al. discussed the advantages and disadvantages of numerous 3D printing processes and presented the fields of application of the individual processes and materials in Industry 4.0 [7]. Uçak et al. provided a review of the conventional and micro-engineering machinability of 3D printed metallic materials produced by SLM and suggested machining strategies for these novel engineered materials [8]. Padmakumar et al. summarised the three main techniques for the manufacture of metal parts, including SLM, and presented the process variables, metallurgical and mechanical properties and manufacturing challenges [9]. Cozzolino et al. investigated the SLM process for IN718 over a wide range of process parameters to analyse their influence on efficiency and energy consumption [10]. Liu et al. gave an overview of recent advances in additively manufactured components made of the Ti6Al4V alloy and discussed the effects of defects on mechanical properties [1]. Motallebi et al. summarised the hot deformation behaviour and the constitutive description of the yield stress of AM parts [11]. In our work, we conducted a large number of SLM experiments to generate high-dimensional data for different build geometries. The materials used are the nickel-based alloy IN718 and the stainless steel 316L.

2.2 Numerical simulation

Numerical simulations are a powerful tool for reducing the number of expensive experiments in highly productive processes. The various physical interactions within a complex system can be described by PDEs, and numerical approximations to these PDEs provide a viable way to simulate physical processes. There is a long history of research on numerical approximation methods such as the Finite Difference Method, the FVM and the Finite Element Method [12,13,14,15,16]. Research milestones in these areas have led to a better understanding of the repeated heating and cooling cycles in SLM processes. One of the pioneering works in this area is that of Mukherjee et al., who modelled and simulated powder bed fusion using the FVM and evaluated the results for different materials, including stainless steel and titanium-, nickel- and aluminium-based alloys [17, 18]. Ansari et al. investigated the process parameters of SLM using multiphysics simulation [19]. Ahmed et al. presented a recent numerical investigation of the effects of residual stresses on the effective mechanical properties of 3D printed lattices [20]. Li et al. and Liu et al. investigated the behaviour of heat conduction in the SLM process [21, 22]. We build on this research in numerical simulation and use the FVM to solve the formulated initial-boundary value problem. We use the results to evaluate and benchmark the predictions of our PIDL approach.

2.3 Machine learning

Machine learning (ML) research continues to break new ground. From autonomous driving to cancer detection in medicine to seismology, ML is improving many different fields, and some of these applications are only made possible by ML [23,24,25]. With ever-increasing data generation capabilities, ML has great potential for optimising production processes in complex engineering systems. Naisiri et al. reviewed applications of ML for predicting the mechanical behaviour of 3D printed parts, including benefits, challenges and perspectives [26]. Another review of ML in 3D printing, covering applications, potentials and challenges, is presented by Goh et al. [27]. Farhankhan et al. presented an ML model based on a convolutional neural network for real-time defect detection that prevents production losses and reduces human involvement in quality control [28]. Nguyen et al. employed a new data-driven ML platform based on multilayer perceptron and convolutional neural network models that predicts optimised parameters of the 3D printing process [29]. Tamir et al. developed a creative solution for ML-based monitoring and optimisation of processing parameters [30]. Pham et al. proposed a simple surrogate model for predicting temperature evolution and melt pool size using data from an FEM model [31]. A further work dealing with multimodal ML in 3D printing is given by Bauer et al., in which an autoencoder is combined with our PINN to demonstrate the correlation of different features in heterogeneous sensor data for monitoring 3D printing processes [32].

2.4 Physics-informed neural networks

With the goal of monitoring and understanding physical processes such as 3D printing in a data-driven way, it is an intuitive decision to combine ML algorithms with physical models and bring together the best of both worlds. Accordingly, technical applications and new developments of PINNs have increased, especially in the last three years. The general idea is to accelerate learning and increase prediction accuracy by informing the DNN about the underlying physical laws. The basis for such approaches was developed by Raissi et al., who showed on a number of benchmark problems in physics how well DNNs can be used as universal function approximators to solve PDEs. Inverse problems and parameter identification of PDEs are another milestone of their research [33]. Building on this work, Qu et al. proposed another approach to discover inverse computational problems from noisy data using physically constrained ML [34]. A good review of PINNs applied to a variety of heat transfer problems is given by Cai et al. [35]. Uhrich et al. used a highly simplified two-dimensional model to predict temperature fields within the 3D print with special consideration of the heat source [36]. Du et al. developed a physics-informed machine learning approach combined with mechanistic modelling and experimentation to reduce defects in AM [37]. Zhu et al. presented a PINN model that takes into account heat transfer and fluid flow; the network is fed with the Navier-Stokes equations in addition to the energy conservation equation [38]. Wessel et al. developed the Lagrangian PINN, which uses high-order implicit Runge-Kutta time integration [39]. Uhrich et al. employed a differential equation-inspired ML approach for valve fault prediction [40]. Henkes et al. developed a number of sampling strategies for PINNs, which we include in our work [41]. Similar to our work, Zobeiry et al. and Lia et al. showed the benefits of using PINNs in additive manufacturing [42, 43].
PINNs come with a number of challenges. First, there is the complex model design. We propose a specific neural network architecture for our problem, incorporating the laser power and designing the individual loss functions. Second, there is limited theory on stability and robustness, and prediction accuracy depends on the quantity and quality of measurement data; we therefore make use of high-dimensional data from real printing experiments. Third, regularisation is challenging: PINNs can fit the data well without satisfying the PDE, and the initial and boundary values must also be satisfied. Similar to Zhu et al., we suggest the use of hard boundary conditions. Previous research has mainly shown how well PINNs can approximate PDE solutions. However, the main challenge not addressed by previous research is computational scalability for high-dimensional problems. In general, PINNs require computationally expensive training, because points must be sampled in each input dimension. This prevents real-time analysis in 3D printing. Based on the similar heat transfer behaviour of adjacent printing layers, we propose a pre-trained foundation model and a transfer learning strategy to significantly reduce training costs. Furthermore, we recommend using the prediction of our PIDL approach as a reference for the heat transfer behaviour: deviations between predictions and observations are used to detect anomalies and monitor the printing process.

3 Problem formulation and methodology

To model heat transfer in 3D printing, we introduce the governing initial-boundary value problem for approximating a solution to the heat equation. We also describe the design of our PINN.

3.1 Heat exchange in AM

Heat can be transferred in several ways. Firstly, heat is transferred by direct physical contact (conduction); metal parts and the metal powder bed have excellent thermal conductivity. Secondly, the laser melts the metal, changing its state of aggregation from solid to liquid. In this case, heat is exchanged not only by conduction but also by convection, as currents in the liquid transport the heat. A third mechanism, radiation, exchanges heat by electromagnetic waves without contact between objects. All three heat exchange mechanisms are relevant to 3D printing and help to understand the thermal processes that occur during SLM. We make simplifying assumptions and restrict ourselves to heat conduction including a phase transition from solid to liquid.

3.1.1 Heat conduction

Heat transfer by conduction (diffusion) in SLM can be formalised using the heat equation, a PDE describing the distribution of heat over time in a given region. It is an application of the basic diffusion equation:

\[\rho c \frac{\partial T}{\partial t} = \nabla \cdot \left(\kappa \nabla T\right)\]

with the specific heat capacity c, the density \(\rho \), the thermal conductivity \(\kappa \) and the temperature T. \(\kappa \) and c are temperature-dependent parameters.

3.1.2 Phase change

The heat transfer model can include a phase transition. As SLM is performed on alloys, the phase change does not occur instantaneously at a single temperature; instead, the solid-to-liquid transition takes place between a solidus temperature \(T_s\) and a liquidus temperature \(T_l\) [44]. To incorporate this into the PDE, a liquid fraction \(f_l(T)\) is introduced, which is 0 for solid and 1 for liquid material. Using this function, the latent heat H of fusion is included in the heat equation through an additional source term [45]. For the model of pure heat conduction, this results in the modified PDE:

\[\rho c \frac{\partial T}{\partial t} = \nabla \cdot \left(\kappa \nabla T\right) - \rho H \frac{\partial f_l}{\partial t}\]

One option for modelling the transition between \(T_s\) and \(T_l\) is a parameterized logistic function.

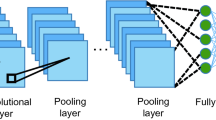

PINN Architecture - The DNN consists of two parts. One is the "uninformed" part to find a temperature approximation function. The second part influences the training process and the finding of the function with information about the heat conduction in the form of the PDE and the Neumann boundary condition
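The logistic transition between \(T_s\) and \(T_l\) described in Section 3.1.2 can be illustrated with a short NumPy sketch. It assumes a logistic liquid fraction \(f_l(T) = 1/(1+\exp(-k(T - T_m)))\) centred at \(T_m = (T_s+T_l)/2\), with a hypothetical steepness k chosen so that \(f_l = 0.01\) at \(T_s\) and 0.99 at \(T_l\); the paper's actual parameterisation is not reproduced here. Assuming the latent heat enters as the source term \(-\rho H\,\partial f_l/\partial t\), the chain rule \(\partial f_l/\partial t = f_l'(T)\,\partial T/\partial t\) lets it be folded into an apparent heat capacity \(c + H f_l'(T)\). The solidus, liquidus, c and H values below are illustrative placeholders.

```python
import numpy as np

def default_steepness(T_s, T_l):
    """Hypothetical choice of k: f_l = 0.01 at T_s and f_l = 0.99 at T_l."""
    return 2.0 * np.log(99.0) / (T_l - T_s)

def liquid_fraction(T, T_s, T_l, k=None):
    """Logistic liquid fraction f_l(T): about 0 below T_s, about 1 above T_l."""
    k = default_steepness(T_s, T_l) if k is None else k
    T_mid = 0.5 * (T_s + T_l)
    return 1.0 / (1.0 + np.exp(-k * (np.asarray(T, dtype=float) - T_mid)))

def apparent_heat_capacity(T, c, H, T_s, T_l, k=None):
    """c + H * df_l/dT, using df_l/dT = k * f_l * (1 - f_l) for the logistic curve."""
    k = default_steepness(T_s, T_l) if k is None else k
    f = liquid_fraction(T, T_s, T_l, k)
    return c + H * k * f * (1.0 - f)

# Illustrative placeholder values (K, J/(kg K), J/kg), not the paper's material data.
T = np.linspace(1400.0, 1700.0, 7)
print(liquid_fraction(T, 1533.0, 1609.0))
print(apparent_heat_capacity(T, 435.0, 2.1e5, 1533.0, 1609.0))
```

Integrating the extra capacity \(H f_l'(T)\) over a temperature range that covers the whole melting interval returns H, so the latent heat is neither lost nor counted twice by this reformulation.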

3.1.3 Initial and boundary value problem

To solve this PDE uniquely, an initial-boundary value problem must be formulated. Dirichlet boundary conditions prescribe the value of the function on the boundary of the domain, whereas the Neumann boundary condition prescribes the normal derivative of the function, here on the top surface. The initial condition is specified as follows:

Dirichlet conditions are given below:

The heat flux q across the top surface defines the Neumann boundary condition:

with \(\textbf{r}_\textbf{0} = (\frac{x_\textrm{b}}{4}, \frac{y_\textrm{b}}{2})\)

Here, q describes the Gaussian heat flux of the energy source at the top surface, p is the laser power, \(x_b\) and \(y_b\) are the distances from the laser beam axis, \(r_b\) is the laser beam radius, A is the absorptivity of the laser energy and v is the scanning speed.
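The moving Gaussian heat source can be sketched as follows. The exact expression from the paper is not reproduced here; the sketch assumes the widely used form \(q = \frac{2Ap}{\pi r_b^2}\exp\left(-\frac{2r^2}{r_b^2}\right)\), where r is the distance from the beam axis and the beam moves along x at the scanning speed v. The values of A, \(r_b\) and the start position are placeholders; p = 250 W and v = 0.2 m/s are taken from Section 4.1. A useful sanity check is that the flux integrates to the absorbed power A p.

```python
import numpy as np

def gaussian_flux(x, y, t, p, A=0.5, r_b=50e-6, v=0.2, x0=0.0, y0=0.0):
    """Absorbed surface heat flux (W/m^2) of a Gaussian beam moving along +x.

    A (absorptivity), r_b (1/e^2 beam radius) and the start point (x0, y0) are
    illustrative assumptions; v = 0.2 m/s matches Section 4.1.
    """
    xc = x0 + v * t                          # beam centre at time t
    r2 = (x - xc) ** 2 + (y - y0) ** 2       # squared distance from the beam axis
    return 2.0 * A * p / (np.pi * r_b ** 2) * np.exp(-2.0 * r2 / r_b ** 2)

# Integrate the flux over a patch of the top surface around the beam.
x = np.linspace(-0.5e-3, 0.5e-3, 801)
X, Y = np.meshgrid(x, x, indexing="ij")
dx = x[1] - x[0]
q = gaussian_flux(X, Y, t=1e-3, p=250.0)     # beam centre at x = 0.2 mm
print(q.sum() * dx * dx)                     # close to A * p = 125 W
```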

3.2 Neural network design

Based on the universal approximation theorem, we propose to train a neural network to find a function that approximates the solution of the initial-boundary value problem described above. With no restrictions on the number of hidden layers and neurons, DNNs with non-polynomial activation functions can approximate arbitrarily complex continuous functions [46]. As shown in Fig. 1, a fully connected feed-forward network is created to find an approximate function that represents the heat transfer in 3D printing. This approximation function is the input of a proposed transformation function that satisfies the initial and boundary conditions, and the resulting temperature function is fed into the PINN. The derivatives required for the heat equation are calculated using automatic differentiation [47]. There are two regularisation functions: one is the heat equation itself, and the second enforces the Neumann boundary condition on the top surface. A distinctive feature is the extension of the DNN input by the laser power as an additional dimension besides the spatio-temporal domain. The laser power can be treated either as a fixed parameter or as an input variable spanning a wide range.
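To illustrate how the PDE residual \(R_1\) is formed from a network output, the sketch below uses a one-hidden-layer tanh network whose derivatives are written in closed form instead of being obtained by automatic differentiation, so it runs with NumPy alone. The weights are random and untrained, the inputs (t, x, y, z, p) are assumed to be scaled to [0, 1], and the material properties are constant, illustrative values, whereas the paper uses temperature-dependent \(\kappa\) and c. The hard-constraint transformation is omitted, so the residual only shows the structure of the computation.

```python
import numpy as np

rng = np.random.default_rng(0)
N_HIDDEN = 32
# Hypothetical, untrained weights of a one-hidden-layer tanh network.
# Inputs are u = (t, x, y, z, p), assumed to be scaled to [0, 1].
W = rng.normal(size=(N_HIDDEN, 5))
b = rng.normal(size=N_HIDDEN)
a = rng.normal(size=N_HIDDEN)

def network(u):
    """Return N(u), dN/du_j and d2N/du_j^2 for a batch u of shape (n, 5)."""
    h = np.tanh(u @ W.T + b)          # hidden activations, shape (n, N_HIDDEN)
    dh = 1.0 - h ** 2                 # tanh'(s)
    d2h = -2.0 * h * dh               # tanh''(s)
    N = h @ a
    grad = (dh * a) @ W               # first derivatives, shape (n, 5)
    second = (d2h * a) @ (W ** 2)     # pure second derivatives, shape (n, 5)
    return N, grad, second

def heat_residual(u, rho=8190.0, c=435.0, kappa=11.4):
    """R1 = rho*c*dT/dt - kappa*(T_xx + T_yy + T_zz) with constant, illustrative properties."""
    _, grad, second = network(u)
    return rho * c * grad[:, 0] - kappa * second[:, 1:4].sum(axis=1)

u = rng.uniform(size=(4, 5))          # four hypothetical collocation points
print(heat_residual(u))
```

Because the laser power p is simply a fifth input column, the same residual can be evaluated for any power in the training range without changing the network.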

3.2.1 Loss function

The training of the PINN is controlled by the loss function \(\mathcal {L}(W,b)\), with the aim of reaching a global minimum. \(R_1\) is the PDE residual for predicting heat transfer without latent heat in the spatio-temporal domain, the phase change from solid to liquid is included in \(\tilde{R_1}\), and the Neumann boundary condition is enforced by \(R_2\). W and b are the trainable weights and biases of the PINN. The two loss terms are weighted by \(\lambda _1\) and \(\lambda _2\), which take values between 0 and 1, and summed to form the total loss.
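A minimal sketch of such a weighted loss, assuming mean squared residuals and the sign convention \(R_2 = \kappa\,\partial T/\partial z - q\) on the top surface with z pointing out of the part. Both are common choices but assumptions here, and the residual values below are hypothetical, non-dimensional numbers.

```python
import numpy as np

def neumann_residual(dT_dz, q, kappa):
    """R2 = kappa * dT/dz - q on the top surface (z pointing out of the part, assumed)."""
    return kappa * dT_dz - q

def total_loss(r1, r2, lam1=1.0, lam2=1.0):
    """Weighted sum of mean squared residuals: lam1 * MSE(R1) + lam2 * MSE(R2)."""
    return lam1 * np.mean(np.square(r1)) + lam2 * np.mean(np.square(r2))

# Hypothetical, non-dimensional residuals at interior (R1) and top-surface (R2) points.
r1 = np.array([0.2, -0.1, 0.05])
r2 = neumann_residual(np.array([0.9, 2.1]), np.array([1.0, 2.0]), kappa=1.0)
print(total_loss(r1, r2, lam1=1.0, lam2=0.5))
```

In practice the residuals are usually non-dimensionalised before weighting, since \(R_1\) and \(R_2\) otherwise differ by many orders of magnitude.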

3.2.2 Hard initial-boundary conditions

To avoid an even more complex multi-objective optimisation, in which the number of loss terms grows with the number of constraints, we take a different approach from most other papers dealing with PINNs. Two newly introduced functions, I and \(\mathcal {B}\), ensure that the initial and Dirichlet boundary conditions are satisfied exactly. The scalar temperature field T is defined by these two functions and the output of the trained neural network:

\[T(t,x,y,z,p) = I(t_0,x,y,z,p) + \mathcal {B}(t,x,y,z,p)\, N(t,x,y,z,p)\]

\(\mathcal {B}(t, x,y,z,p)\) is a distribution function that is zero on the initial and boundary domains. \(I(t_0,x,y,z,p)\) contains the initial and boundary values and is zero in the spatio-temporal domain where the heat transfer behaviour is unknown. N(t, x, y, z, p) is the output of the PINN. \(I(t_0,x,y,z,p)\) and \(\mathcal {B}(t,x,y,z,p)\) can either be learned with a neural network or defined analytically.
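The hard-constraint construction \(T = I + \mathcal{B}\,N\) can be sketched for a simple case. This sketch assumes a uniform ambient temperature \(T_0 = 292\) K as both initial and Dirichlet value, and Dirichlet faces at x = 0, \(x_{max}\), y = 0, \(y_{max}\) and z = 0, leaving the top surface \(z = z_{max}\) to the Neumann loss; the paper's actual boundary assignment and transformation are not reproduced. The polynomial \(\mathcal{B}\) and the optional `scale` factor (standing in for an empirical constant) are hypothetical choices, and the dependence on p is dropped for brevity.

```python
import numpy as np

T0 = 292.0                                             # ambient temperature in K (Section 4.1)
X_MAX, Y_MAX, Z_MAX, T_MAX = 1e-3, 0.23e-3, 0.1e-3, 2e-3  # domain size from Section 4.1

def distance_factor(t, x, y, z):
    """B: zero at t = 0 and on the assumed Dirichlet faces x = 0, x_max, y = 0, y_max, z = 0."""
    sx = (x / X_MAX) * (1.0 - x / X_MAX)
    sy = (y / Y_MAX) * (1.0 - y / Y_MAX)
    return (t / T_MAX) * sx * sy * (z / Z_MAX)

def hard_constrained_temperature(net_out, t, x, y, z, scale=1.0):
    """T = I + B * N with I = T0 (assumed uniform initial and boundary temperature)."""
    return T0 + scale * distance_factor(t, x, y, z) * net_out

# Whatever the network outputs, T equals T0 at t = 0 and on the Dirichlet faces.
print(hard_constrained_temperature(np.array([123.0, -7.0]), 0.0, 0.5e-3, 0.1e-3, 0.05e-3))
```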

4 Prediction and performance of physics-informed deep learning

4.1 Solution of initial-boundary value problem

In this section we present the solution of the initial boundary value problem described above. The optimisation was performed on the domain:

with \(x_\textrm{max} = 1\times 10^{-3}\)m, \(y_\textrm{max} = 0.23\times 10^{-3}\)m, \(z_\textrm{max} = 0.1\times 10^{-3}\)m and \(t_\textrm{max} = 2\times 10^{-3}\)s. \(1\times 10^{4}\) points were sampled within this domain using the Hammersley sequence, and \(2\times 10^{4}\) additional points were generated on the top surface, where a Gaussian heat flux was applied via (8). The laser power was set to \(p = 250\) W and the scanning speed to \(v = 200\times 10^{-3}\)m/s. An initial optimisation was performed with the L-BFGS optimiser of SciPy for 2293 iterations. After a first convergence, \(1\times 10^{5}\) additional points within the domain were generated and sorted by their loss values. The \(2\times 10^{3}\) points with the highest loss were added to the training set to focus on relevant areas of the domain. Their positions in time and along the x-axis are shown in Fig. 2.
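The Hammersley point set used for the collocation points can be generated in a few lines. In d dimensions, point i of n has first coordinate i/n, and the remaining coordinates are the van der Corput radical inverses of i in successive prime bases. This is a minimal sketch; the coordinate order (t, x, y, z) and a domain starting at the origin are assumptions, while the upper bounds are the values given above.

```python
import numpy as np

PRIMES = (2, 3, 5, 7, 11, 13)

def radical_inverse(i, base):
    """Van der Corput radical inverse: mirror the base-`base` digits of i about the point."""
    inv, f = 0.0, 1.0 / base
    while i > 0:
        inv += f * (i % base)
        i //= base
        f /= base
    return inv

def hammersley(n, dim):
    """n Hammersley points in [0, 1)^dim: first coordinate i/n, the rest radical inverses."""
    pts = np.empty((n, dim))
    for i in range(n):
        pts[i, 0] = i / n
        for d in range(1, dim):
            pts[i, d] = radical_inverse(i, PRIMES[d - 1])
    return pts

def scale_to_domain(pts, lower, upper):
    """Map points from the unit cube to the box [lower, upper]."""
    lower, upper = np.asarray(lower, dtype=float), np.asarray(upper, dtype=float)
    return lower + pts * (upper - lower)

lower = [0.0, 0.0, 0.0, 0.0]                 # (t, x, y, z), origin assumed at 0
upper = [2e-3, 1e-3, 0.23e-3, 0.1e-3]        # t_max, x_max, y_max, z_max
collocation = scale_to_domain(hammersley(10_000, 4), lower, upper)
print(collocation.shape)
```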

Most of the points with high losses are located near the laser position. Training continues with the 2000 resampled points in this area for 3000 further iterations; this sampling strategy is called adaptive resampling. The benefits of optimising the two loss functions (10) and (12) in terms of convergence can be seen in Fig. 3. The effect of adaptive resampling is evident: there is a short-term penalty for the resampled points, but the loss values decrease afterwards. After about 5000 epochs, a minimum of \(\mathcal {L} = 2.00\times 10^{-3}\) is reached. The thermal and mechanical parameters required for the solution are given in Table 1.
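The adaptive resampling step can be sketched as follows: draw many uniform candidate points, score them by the squared PDE residual, and append only the highest-scoring ones to the training set (\(10^5\) candidates and \(2\times 10^3\) kept points in Section 4.1). The residual function below is a hypothetical stand-in that peaks along the laser path x = vt; in practice it would be the residual of the partially trained PINN.

```python
import numpy as np

def select_hardest(candidates, losses, n_keep):
    """Return the n_keep candidate points with the largest loss values."""
    order = np.argsort(losses)[::-1]          # indices sorted by descending loss
    return candidates[order[:n_keep]]

def adaptive_resample(train_pts, residual_fn, lower, upper, n_candidates, n_keep, rng):
    """Sample candidates uniformly, score them by squared residual, append the hardest."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    cand = lower + rng.uniform(size=(n_candidates, lower.size)) * (upper - lower)
    losses = residual_fn(cand) ** 2
    return np.vstack([train_pts, select_hardest(cand, losses, n_keep)])

def toy_residual(u):
    """Hypothetical residual that peaks along the laser path x = v * t (v = 0.2 m/s)."""
    t, x = u[:, 0], u[:, 1]
    return np.exp(-((x - 0.2 * t) / 5e-5) ** 2)

rng = np.random.default_rng(0)
train = rng.uniform(size=(100, 2)) * [2e-3, 1e-3]          # initial (t, x) points
train = adaptive_resample(train, toy_residual, [0, 0], [2e-3, 1e-3], 100_000, 2_000, rng)
print(train.shape)
```

As in Fig. 2, the added points cluster around the laser position, where the residual is largest.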

To enforce the initial and boundary conditions through the hard constraints introduced in (14), we propose the following transformation of the output:

where \(T_0\) is the ambient temperature, T is the PINN prediction, x, y, z, t describe the spatio-temporal domain, and \(x_{max}\) and \(y_{max}\) are the maxima of the x and y domains. The factor \(5 \cdot 10^{25}\) is an empirical value. Figure 4 shows the solution of the initial-boundary value problem at three different time steps. The ambient temperature is 292 K, and the material heats up to 4019 K. The molten region is marked by the dashed line. In Fig. 5, the temperature is plotted along one spatial dimension over time for several layer thicknesses. The melting temperature is reached down to a depth of \(5.0\times 10^{-5}\)m.

4.2 Comparison with FVM

OpenFOAM, an optimised PDE solver based on the FVM [48], is used to evaluate the proposed PINN framework and provides reproducible reference results for the PINN optimisation.
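The reference solutions here come from OpenFOAM. To make the finite-volume idea concrete, the following toy sketch (not OpenFOAM, and much simpler than the 3D model) solves one-dimensional conduction through the depth of the part with an explicit scheme: heat q enters through the top face and the bottom is held at the ambient temperature. Material properties are constant, illustrative values. Each cell's temperature changes by the net flux across its two faces, which makes the scheme conservative, and the steady state is the linear profile \(T_0 + qz/\kappa\), which gives an easy correctness check.

```python
import numpy as np

def fvm_1d_heat(q, T0, L=1e-4, n=20, rho=8190.0, c=435.0, kappa=11.4, t_end=0.03):
    """Explicit 1D finite-volume solver: T(0) = T0 (Dirichlet), kappa*dT/dz = q at z = L."""
    dz = L / n
    alpha = kappa / (rho * c)
    dt = 0.3 * dz ** 2 / alpha                     # below the explicit stability limit
    T = np.full(n, T0, dtype=float)                # cell-centred temperatures
    for _ in range(int(np.ceil(t_end / dt))):
        flux = np.empty(n + 1)                     # conductive heat flux in +z at each face
        flux[0] = -kappa * (T[0] - T0) / (0.5 * dz)   # bottom face, half-cell distance
        flux[1:-1] = -kappa * np.diff(T) / dz          # interior faces
        flux[-1] = -q                                   # heat q enters through the top face
        T = T - dt / (rho * c * dz) * np.diff(flux)     # net inflow raises the temperature
    z = (np.arange(n) + 0.5) * dz                  # cell-centre positions
    return z, T

z, T = fvm_1d_heat(q=1e6, T0=292.0)
print(T[-1])                                       # top-cell temperature
```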

4.2.1 Heat transfer without phase transition

As can be seen in Fig. 6, the approximate solution obtained with the PINN is comparable to that of the FVM. The relative error between the two solutions is less than 5 percent except in the boundary region, as shown in Fig. 7. The OpenFOAM implementation smooths the edge discontinuities that result from different boundary conditions, whereas in the PINN approach these discontinuities remain present. Furthermore, the largest deviation between the two solutions is found in the laser region. This comparison suggests that PINNs are a competitive alternative PDE solver.
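Since the error formula itself is not reproduced here, the following sketch assumes the pointwise relative error \(|T_{PINN} - T_{FVM}|/|T_{FVM}|\) with temperatures in kelvin, together with a helper that excludes a band along the domain edges, where the largest deviations are reported. Function names, the band width and the sample values are hypothetical.

```python
import numpy as np

def relative_error(T_pinn, T_ref):
    """Pointwise relative error |T_pinn - T_ref| / |T_ref| (temperatures in K)."""
    return np.abs(T_pinn - T_ref) / np.abs(T_ref)

def interior(field, width=1):
    """Drop a band of `width` cells along every edge, e.g. to exclude boundary artefacts."""
    return field[(slice(width, -width),) * field.ndim]

def fraction_below(err, threshold=0.05):
    """Share of points whose relative error is below the threshold (5 percent by default)."""
    return float(np.mean(err < threshold))

T_ref = np.array([300.0, 400.0, 1000.0, 500.0])    # hypothetical FVM values
T_pinn = np.array([303.0, 440.0, 1000.0, 520.0])   # hypothetical PINN values
print(fraction_below(relative_error(T_pinn, T_ref)))
```

Using kelvin matters: the same absolute deviation gives a larger relative error when temperatures are expressed in degrees Celsius.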

Influence of Latent Heat - x-profiles of the temperature at \(y=0.5\,y_{max},\ z=z_{max},\ t=t_{max}\) computed with OpenFOAM and predicted by the PINN, without (top) and with (middle) latent heat, and the temperature change due to latent heat extracted from the profiles above for OpenFOAM and the PINN (bottom)

4.2.2 Latent heat of fusion

To account for the phase transition, the modified loss function (13), consisting of (11) and (12), is used. In Fig. 8, the top and middle plots depict two temperature profiles along the x-axis at the surface of the domain in the final time frame, comparing the case without latent heat with the case where it is present. Without latent heat, the PINN and the classical numerical method agree well. When latent heat is considered, however, noticeable differences arise, particularly in the region where the liquid metal solidifies again after the laser has moved away. This discrepancy arises from the release of latent heat during the phase transition, which causes a subsequent rise in temperature. OpenFOAM captures this behaviour accurately, whereas it is less pronounced in the PINN results, as shown in the bottom plot of Fig. 8. Specifically, the decrease in temperature at this point is notably smaller in the PINN results than in OpenFOAM. Overall, the impact of latent heat on the predicted temperatures is relatively minor. While qualitative agreement is generally observed, an exception lies in the region preceding melting, where the PINN predicts a temperature decrease, which contradicts physical expectations: latent heat effects should only appear once melting begins, which is consistent with the behaviour modelled by OpenFOAM. Given the marginal influence of latent heat, it is disregarded in subsequent analyses.

4.3 Transfer learning

PINNs have the major disadvantage of being computationally expensive to train, especially in high-dimensional spaces. To overcome this, we explore the benefits of an initially trained foundation model combined with transfer learning. To do this, we take the model weights and biases of the solution presented above and fine-tune them to approximate solutions for different laser powers, with all other parameters unchanged. The computational benefits are investigated by comparing the losses, as can be seen in Fig. 9. For laser powers close to that of the pre-trained foundation model, the computation time is reduced significantly; the further the laser power is from the pre-trained one, the longer the training takes. However, computation time is saved in all cases. For all solutions, 20000 points on the boundaries and 10000 collocation points within the domain were sampled.
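The effect of warm-starting from a foundation model can be illustrated with a deliberately simple toy problem rather than a PINN: a linear least-squares surrogate whose target scales linearly with the laser power p, trained with plain gradient descent. The features, weights, learning rate and tolerance are all hypothetical. Fine-tuning the p = 250 W weights for p = 220 W starts much closer to the new optimum and therefore needs fewer iterations than training from scratch; how large the saving is for a real PINN depends on how far the new laser power is from the pre-trained one, as Fig. 9 indicates.

```python
import numpy as np

rng = np.random.default_rng(0)
PHI = rng.normal(size=(200, 10)) / np.sqrt(200)   # hypothetical fixed features
C_TRUE = rng.normal(size=10)

def target(p):
    """Toy 'temperature field' that scales linearly with the laser power p."""
    return p * (PHI @ C_TRUE)

def train(p, w0, lr=0.4, tol=1e-10, max_iter=10_000):
    """Gradient descent on 0.5 * ||PHI w - y||^2; returns weights and iterations used."""
    w, y = w0.copy(), target(p)
    for it in range(max_iter):
        r = PHI @ w - y
        if 0.5 * r @ r < tol:
            return w, it
        w = w - lr * (PHI.T @ r)
    return w, max_iter

w_250, _ = train(250.0, np.zeros(10))             # "foundation model" trained at p = 250 W
w_cold, it_cold = train(220.0, np.zeros(10))      # training from scratch at p = 220 W
w_warm, it_warm = train(220.0, w_250)             # fine-tuning from the pre-trained weights
print(it_cold, it_warm)
```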

4.4 Laser power parameter network

As already shown in Fig. 1, we propose to include the laser power, an essential process parameter, as an additional input dimension. We expect the benefit of a single training process for a series of simulations covering a range of laser powers \(p \in [100\,\textrm{W}, 300\,\textrm{W}]\). To ensure the formalised initial-boundary conditions in (14), the transformation was changed to:

since the temperature was expected to rise with increasing power. This is embedded in the formula by the multiplication with p, which is intended to improve the optimisation process. The constant c in this transformation was set to \(5\times 10^{23}\), two orders of magnitude lower than in the original simulation. 10000 points on the boundaries and 10000 collocation points within the domain were sampled, keeping all other parameters identical. After 10222 iterations with the L-BFGS optimiser, 10000 additional points were sampled both on the boundary and within the domain, and the PINN was optimised again for approximately \(10^4\) iterations. The resampling strategy was repeated three times. Convergence was reached after 31380 iterations.

Figures 10 and 11 compare the pre-trained model, the parameter network and the numerical simulation in terms of the molten fraction and the mean surface temperature, respectively. All three models agree well. Because the laser power is a continuous input variable of the parameter network, the DNN predicts not only the discrete laser powers of the numerical simulation and the pre-trained model, but also any laser power in between. The result is a continuous solution over the laser power range.

Solving Time - Left: solving/training time for the numerical simulation and the pre-trained models; the numerical simulation is much faster than the pre-trained models. Right: total solution time for eight simulations at different laser power levels; the parameter PINN contains any number of simulations within the laser power interval

To train the DNN, we use a Tesla V100 GPU, 32 GB RAM and an 8-core CPU. As can be seen in Fig. 12, the training time of the pre-trained neural networks at p = 220 W is comparable to the solution time of OpenFOAM. For all eight laser power simulations combined, the parameter network takes much longer to solve than the numerical method or the pre-trained model. However, as the number of simulations grows large, the parameter network will outperform the FVM and the pre-trained model, as no additional training is required. The major advantage of PINNs lies in their capacity to incorporate measurement data, which we illustrate in the following sections.

5 Experiments

This section presents the SIEMENS experiments and temperature observations. Sections 5.1, 5.2 and 5.3 are based on [36].

5.1 Design, process and sensor data generation

The two laser melting machines used in this work have different build volumes of 250x250x325 mm\(^3\) and 280x280x365 mm\(^3\), respectively. Each machine is equipped with a thermal imaging camera with a maximum frame rate of 200 Hz and a resolution of 382x288 pixels. To limit the amount of data generated, the frame rate was reduced to 3 Hz. The thermal imager measures thermal emission in the \(7\mu m\) to \(14\mu m\) wavelength range, which is influenced by the emissivity of the object under study. The work focused on two heat-resistant materials commonly used in industrial laser melting processes, the steel AISI 316L and the nickel-based alloy IN718, which are popular due to favourable properties such as recyclability and their wide range of potential applications. AISI 316L is a stainless steel whose chemical composition makes it resistant to non-oxidising acids and chlorinated media; its low carbon content distinguishes it from AISI 316. The nickel-based alloy IN718 is well suited for use in energy technology, the oil and gas industry, and the aerospace and racing industries owing to its corrosion resistance and its high tensile, fatigue, creep and fracture strength up to \(700^{\circ }\)C. In a series of experiments, a large number of component geometries with a wide range of properties were produced, including turbine blades, inverted pyramids, tension rods and a benchmark component, as shown in Fig. 13.

5.2 Planck's law

A thermal imager does not measure the temperature of a surface directly. Rather, it collects the thermal radiation emitted by the surface, which is correlated with the temperature of that surface. Radiation is emitted by any body above absolute zero. The amount of radiation per unit area and per unit wavelength for a black body is given by Planck's law:
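
\[ M_{\lambda }(\lambda ,T)=\frac{2\pi h c^{2}}{\lambda ^{5}}\,\frac{1}{\exp \left( \frac{hc}{\lambda kT}\right) -1} \]
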
with the wavelength \(\lambda \), the absolute temperature T, the Boltzmann constant k, the speed of light c and the Planck constant h. The radiation detected by the camera is the integral of Planck’s law over the camera’s spectral band; for this camera model, the integral runs from \(7\mu m\) to \(14\mu m\), as shown in Fig. 14.
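The band integral can be evaluated numerically. This is a minimal sketch assuming the hemispherical form of Planck’s law (power per unit area and unit wavelength) and a plain trapezoidal rule; the function names and the number of integration steps are illustrative choices, and a real camera additionally applies a spectral sensitivity curve that is ignored here.

```python
import math

H = 6.62607015e-34   # Planck constant h [J s]
C = 2.99792458e8     # speed of light c [m/s]
K_B = 1.380649e-23   # Boltzmann constant k [J/K]


def spectral_exitance(wavelength_m, temperature_k):
    """Black-body power per unit area and unit wavelength [W/m^3] from Planck's law."""
    prefactor = 2.0 * math.pi * H * C**2 / wavelength_m**5
    exponent = H * C / (wavelength_m * K_B * temperature_k)
    return prefactor / math.expm1(exponent)  # expm1(x) = exp(x) - 1, accurate for small x


def band_exitance(temperature_k, lo_m=7e-6, hi_m=14e-6, steps=2000):
    """Integrate Planck's law over [lo_m, hi_m] with the trapezoidal rule [W/m^2]."""
    dx = (hi_m - lo_m) / steps
    total = 0.5 * (spectral_exitance(lo_m, temperature_k) + spectral_exitance(hi_m, temperature_k))
    for i in range(1, steps):
        total += spectral_exitance(lo_m + i * dx, temperature_k)
    return total * dx


SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, used only to show the in-band fraction
for t in (300.0, 500.0, 800.0):
    band = band_exitance(t)
    print(f"{t:.0f} K: {band:.1f} W/m^2 in band, {band / (SIGMA * t**4):.0%} of total emission")
```

The in-band fraction shows that the camera only sees part of the total emission, which is why the band integral, rather than the total emitted power, links the camera signal to the temperature.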

5.3 Emissivity

Temperatures derived in this way apply only to perfect black bodies. Metal surfaces are not black bodies, so their emissivity must be taken into account. Depending on the oxidation and polishing of the surface, the emissivity can range from close to 1 down to 0.02. Our experiments with metal powder and printed surfaces showed that a glossy printed surface has an emissivity of 0.2, whereas the powder has an emissivity of 0.6. Because the emissivity setting and the temperature range affect the camera’s internal amplifiers and reference voltages, we chose an emissivity of 0.4, which allows metal surfaces to be monitored without overexposure. As a result, a single setup can be used to monitor the entire job, and the data remain consistent even after recalibration. Although the calculated temperatures may not exactly match the true temperatures of the surfaces or the powder, a measured temperature increase reflects a genuine increase. For PINN training and prediction, the exact absolute temperature is not essential, because the model is fitted to the sensor data. What matters is estimating the temperature change and predicting which temperature changes in the component are acceptable.
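To illustrate why a fixed emissivity setting yields consistent but not exact temperatures, the sketch below converts a camera reading made with an emissivity setting of 0.4 into the temperature that a surface with a different true emissivity would need to produce the same in-band signal. It assumes a simplified camera model in which the signal is proportional to the emissivity times the \(7\mu m\) to \(14\mu m\) band integral of Planck’s law, ignoring reflected ambient radiation and atmospheric transmission; the function names are hypothetical.

```python
import math

H, C, K_B = 6.62607015e-34, 2.99792458e8, 1.380649e-23  # SI constants h, c, k


def band_exitance(t_k, lo_m=7e-6, hi_m=14e-6, steps=1000):
    """Black-body emission between lo_m and hi_m [W/m^2] (trapezoidal rule)."""
    def planck(lam):
        return 2 * math.pi * H * C**2 / lam**5 / math.expm1(H * C / (lam * K_B * t_k))
    dx = (hi_m - lo_m) / steps
    inner = sum(planck(lo_m + i * dx) for i in range(1, steps))
    return (0.5 * (planck(lo_m) + planck(hi_m)) + inner) * dx


def corrected_temperature(t_cam_k, eps_set=0.4, eps_true=0.2, t_lo=200.0, t_hi=3000.0):
    """Temperature a surface with emissivity eps_true needs to emit the in-band signal
    that the camera attributed to t_cam_k under the emissivity setting eps_set.

    Simplified model (assumption): signal = emissivity * band_exitance(T); reflections
    and the atmosphere are ignored. Solved by bisection on [t_lo, t_hi], which must
    bracket the answer.
    """
    target = eps_set * band_exitance(t_cam_k)
    for _ in range(60):
        mid = 0.5 * (t_lo + t_hi)
        if eps_true * band_exitance(mid) < target:
            t_lo = mid      # emission too low: the true temperature is higher
        else:
            t_hi = mid
    return 0.5 * (t_lo + t_hi)


for eps in (0.2, 0.4, 0.6):  # glossy printed surface, camera setting, powder
    print(eps, round(corrected_temperature(450.0, eps_true=eps), 1))
```

For any fixed true emissivity, a higher camera reading still maps to a higher surface temperature, which is the property the temperature-change analysis relies on.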

5.4 Sensor data preparation

When several components are printed on the build platform, efficient data preparation is crucial for the subsequent analysis of their thermal characteristics. The challenge is to accurately identify and isolate the individual components in thermal images of the entire platform. As a first step, we apply object detection to automatically locate the printed components on the build platform and define the regions of interest in the thermal images. A pre-trained convolutional neural network classifies the components by their thermal intensity; this classification supports component identification and also helps to characterise the thermal behaviour of each component. The same network generates a bounding box around each identified component. Figure 15 shows an example thermal image in which the printed pyramids are correctly detected and enclosed in bounding boxes. These bounding boxes define the regions of interest for the subsequent heat transfer analysis.
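Once the detector has produced bounding boxes, each component can be cut out of the platform image for the per-component analysis. The sketch below assumes boxes given as (x_min, y_min, x_max, y_max) pixel coordinates; it does not reproduce the convolutional network itself, and the helper name and the summary statistics are hypothetical.

```python
import numpy as np


def crop_regions(frame, boxes):
    """Cut one region of interest per bounding box out of a full-platform thermal frame.

    frame: 2-D array of temperatures indexed [row (y), column (x)].
    boxes: iterable of (x_min, y_min, x_max, y_max) pixel coordinates, max exclusive.
    Returns a list of (box, crop, summary) tuples; boxes entirely outside the frame are skipped.
    """
    height, width = frame.shape
    regions = []
    for x0, y0, x1, y1 in boxes:
        # Clip every coordinate to the image so boxes touching or leaving the border stay valid
        cx0, cx1 = min(max(x0, 0), width), min(max(x1, 0), width)
        cy0, cy1 = min(max(y0, 0), height), min(max(y1, 0), height)
        crop = frame[cy0:cy1, cx0:cx1]
        if crop.size == 0:
            continue
        summary = {"max": float(crop.max()), "mean": float(crop.mean())}
        regions.append(((x0, y0, x1, y1), crop, summary))
    return regions


frame = np.full((288, 382), 400.0)   # synthetic 382 x 288 platform image at 400 K
frame[50:80, 100:140] = 650.0        # one hot component
rois = crop_regions(frame, [(100, 50, 140, 80), (370, 280, 400, 300)])
print(rois[0][2])                    # {'max': 650.0, 'mean': 650.0}
```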

5.5 Evaluation metric

To assess the effectiveness and accuracy of the proposed approach for predicting heat transfer in AM, we carry out a comprehensive evaluation. This section outlines the evaluation criteria and the methodology used to measure the performance of the model. The fundamental objective is to compare the heat transfer predictions of our model with the actual sensor data recorded for a printed layer of a component during the AM process. This comparison directly measures the predictive capability of the model and its ability to reproduce real-world heat transfer dynamics. To quantify the agreement between the model predictions and the sensor data, we use the mean squared error (MSE) as the evaluation metric:
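
\[ \mathrm{MSE}=\frac{1}{N}\sum _{i=1}^{N}\left( \hat{T}_{i}-T_{i}\right) ^{2}, \]

where N is the number of evaluated points, \(\hat{T}_{i}\) is the temperature predicted by the model and \(T_{i}\) is the measured temperature at point i.
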
The MSE is expected to be small, approaching zero, for high-quality printed layers, where the heat transfer prediction closely matches the sensor data. This first allows the model to be evaluated in terms of its ability to predict the heat transfer dynamics of high-quality layers. Conversely, when anomalies or deviations occur in the heat transfer process, the discrepancy between the model predictions and the sensor data grows, and the MSE increases. If an empirically defined MSE threshold is exceeded, an anomaly can be assumed. The magnitude of the MSE also provides a quantitative indication of the severity of deviations or anomalies in the AM process, which makes it a valuable tool for identifying and diagnosing problems during production.
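The metric and the threshold test can be written in a few lines. This is a sketch under the assumption that prediction and measurement are sampled on the same pixel grid; the optional component mask and the threshold value are hypothetical inputs, since the empirically defined threshold is not stated here.

```python
import numpy as np


def layer_mse(predicted, measured, mask=None):
    """Mean squared error between PINN prediction and sensor data for one layer."""
    diff = np.asarray(predicted, dtype=float) - np.asarray(measured, dtype=float)
    if mask is not None:                      # restrict to pixels that belong to the part
        diff = diff[np.asarray(mask, dtype=bool)]
    return float(np.mean(diff ** 2))


def is_anomalous(predicted, measured, threshold, mask=None):
    """Flag a layer whose MSE exceeds an empirically chosen threshold."""
    return layer_mse(predicted, measured, mask) > threshold


pred = np.array([[500.0, 510.0], [520.0, 530.0]])
meas = np.array([[502.0, 508.0], [521.0, 560.0]])
print(layer_mse(pred, meas))                     # 227.25
print(is_anomalous(pred, meas, threshold=50.0))  # True
```

A single strongly deviating pixel dominates the MSE here, which is the intended behaviour for flagging local overheating but also a reason to restrict the metric to the component mask.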

6 Embedding high-dimensional data

Building on the evaluation of the PINN approach in Section 4.1, we propose an approach that incorporates high-dimensional sensor data into the model in addition to the information provided by the PDE and the initial and boundary conditions. We also show how anomaly detection can help prevent defects in 3D printing processes.

6.1 Geometric sampling

Heat transfer is simulated as described in Section 4.1, but with particular attention to the component geometries. To this end, collocation points are sampled randomly within the component geometry, as shown in Fig. 16 for the top surface. Four component geometries are considered: the turbine blade, the tie rod, the pyramid and the benchmark component. An empirical temperature limit separates the component from the surrounding powder bed; using this boundary and the measured data, random points can be sampled within the actual part geometry.
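A minimal version of this sampling step is sketched below. It assumes a single 2-D frame of the top surface, a per-pixel temperature threshold, and a hypothetical pixel_size that converts pixel indices into physical units; sampling in the z-direction would additionally require the layer height, which is not covered here.

```python
import numpy as np


def sample_collocation_points(frame, temp_limit, n_points, pixel_size=1.0, seed=0):
    """Draw random (x, y) collocation points inside the part region of a thermal frame.

    Pixels hotter than temp_limit count as part, all others as powder bed. Pixels are
    chosen uniformly with replacement and each point is placed uniformly inside its
    pixel, so the points are uniformly distributed over the part area.
    """
    rng = np.random.default_rng(seed)
    rows, cols = np.nonzero(frame > temp_limit)        # pixel indices of the part
    if rows.size == 0:
        raise ValueError("no pixel exceeds the temperature limit")
    pick = rng.integers(0, rows.size, size=n_points)   # random part pixels
    offset = rng.random((n_points, 2))                 # position inside each pixel
    x = (cols[pick] + offset[:, 0]) * pixel_size
    y = (rows[pick] + offset[:, 1]) * pixel_size
    return np.column_stack([x, y])


frame = np.full((40, 60), 398.5)    # powder bed at ambient temperature
frame[10:20, 30:50] = 700.0         # hypothetical hot part region
points = sample_collocation_points(frame, temp_limit=500.0, n_points=2000)
print(points.shape)                 # (2000, 2)
```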

6.2 Heat transfer prediction

For the simulations of the temperature distribution of all four components, we use the same initial boundary value problem as formalised in Section 3.1. The initial conditions at the top surface are given by the temperature observations. Based on the real measurements, the ambient temperature is set to 398.5 K, which approximately corresponds to the temperature of the build plate. Since all components are made of 316L stainless steel, all other thermal and mechanical properties are the same as those described in Section 4.1, except for the laser power p, which is fixed at 150 W. The Adam optimizer is used to minimise the loss function. \(\mathcal {B}\) and I from (14) are learned by a neural network. The PINN solution for all four components at a fixed time in the x, y plane is shown in Fig. 17.

The absolute error

\[ e=\left| \hat{T}-T\right| \]

between the PINN prediction \(\hat{T}\) and the measured temperature T is sufficiently small. An exposure time and laser position detection method is used to make the results comparable with the measured data. We train the network for 10000 iterations using 87000 collocation points, then use adaptive resampling to resample points in regions of the domain with high loss, and repeat this process twice. Training is completed after 30500 iterations. The PINN reproduces the temperature fields of the measured data and additionally predicts the temperature distribution in the z-direction, where no temperature observations are available.
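The resampling rule is not spelled out here, so the sketch below uses residual-based adaptive refinement as a stand-in: draw many candidate points, evaluate the PDE residual there, and add the candidates with the largest residuals to the training set. The toy residual function standing in for a trained PINN and all parameter values are hypothetical.

```python
import numpy as np


def adaptive_resample(points, residual_fn, n_candidates, n_new, bounds, seed=0):
    """Residual-based adaptive refinement of a collocation set.

    points: current collocation points, shape (n, dim)
    residual_fn: maps an (m, dim) array to the PDE residual at each point
    bounds: (lower, upper) corners of the box in which candidates are drawn
    """
    rng = np.random.default_rng(seed)
    lower, upper = (np.asarray(b, dtype=float) for b in bounds)
    candidates = lower + (upper - lower) * rng.random((n_candidates, lower.size))
    magnitude = np.abs(residual_fn(candidates))
    worst = np.argsort(magnitude)[-n_new:]     # indices of the n_new largest residuals
    return np.vstack([points, candidates[worst]])


def toy_residual(p):
    # Stand-in for a trained PINN: large residual near a hypothetical laser spot at (0.5, 0.5)
    return np.exp(-50.0 * np.sum((p - 0.5) ** 2, axis=1))


initial = np.random.default_rng(1).random((500, 2))
refined = adaptive_resample(initial, toy_residual, n_candidates=5000, n_new=200,
                            bounds=([0.0, 0.0], [1.0, 1.0]))
print(refined.shape)   # (700, 2)
```

In a train, resample, repeat schedule like the one described, this function would be called between training rounds, with the trained network's residual in place of toy_residual.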

6.3 Transfer learning

To simulate the entire printing process of a component using temperature observations, a PINN must be trained for each printed layer. Following the pre-training and fine-tuning paradigm, we first train a foundation model and then refine its trainable weights for each successive printed layer. This is motivated by the results on pre-trained models in Section 4.3 and by the observation that the heat transfer in adjacent layers behaves similarly. The foundation model therefore needs only a few adjustment steps to be transferred to the heat transfer prediction of the upcoming print layers, and we expect the computational time, cost and energy required for training to be reduced significantly. As Fig. 18 shows, the optimisation loss of each subsequent layer starts close to the minimum reached for the previous layer. Consequently, the number of training iterations per layer, and thus the training time, can be reduced significantly.

Loss of Pre-trained Models - Left: Training process using pre-trained models to simulate the heat transfer of adjacent layers. Transfer learning significantly reduces the number of training iterations. Right: Pre-trained models significantly reduce the training/solving time. While the initial training of the foundation model for the heat transfer prediction of a printed layer takes about 400 s, the heat transfer of subsequent layers can be predicted in approximately 20 s.
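The warm-start idea can be illustrated without a PINN. In the sketch below, a toy linear least-squares model stands in for the network, and the parameters of each layer drift slightly from those of the previous layer, mimicking the similarity of adjacent layers. Training from scratch is compared with fine-tuning the previous solution. The model, drift size and tolerance are hypothetical, and the orthonormal design matrix is an assumption chosen so that only the starting point differs between the two runs.

```python
import numpy as np


def train(X, y, w0, lr=0.25, tol=1e-10, max_iter=10_000):
    """Gradient descent on the mean squared error; returns (weights, iterations used)."""
    w = w0.copy()
    n = X.shape[0]
    for it in range(max_iter):
        residual = X @ w - y
        if np.mean(residual ** 2) < tol:
            return w, it
        w -= lr * (2.0 / n) * (X.T @ residual)
    return w, max_iter


rng = np.random.default_rng(0)
n, d = 200, 8
# Orthonormal columns scaled so that X.T @ X / n is the identity: every direction
# converges at the same rate, so only the starting point differs between the runs.
X = np.linalg.qr(rng.normal(size=(n, d)))[0] * np.sqrt(n)

w_true = rng.normal(size=d)              # hypothetical parameters of the first layer
w_prev = np.zeros(d)                     # the first layer starts from scratch
cold_iters, warm_iters = [], []
for layer in range(5):
    y = X @ w_true
    _, it_cold = train(X, y, np.zeros(d))    # retrain every layer from scratch
    w_prev, it_warm = train(X, y, w_prev)    # fine-tune the previous layer's weights
    cold_iters.append(it_cold)
    warm_iters.append(it_warm)
    w_true = w_true + 0.01 * rng.normal(size=d)  # adjacent layers behave similarly
print("from scratch:", cold_iters)
print("warm start:  ", warm_iters)
```

After the first layer, the warm-started runs reach the tolerance in fewer iterations. This mirrors the drop from about 400 s to about 20 s per layer reported in Fig. 18, although the size of the gain in this toy problem says nothing about the PINN itself.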

6.4 Anomaly detection and decision making

Physics-based machine learning simulation can be used to optimise 3D printing processes. Thermal stress leads to variations in part quality. To prevent this, we propose anomaly detection based on the models described above. For this purpose, the digital heat twin acts as a target state and the observations should be as close as possible to this target state to ensure a flawless printing process with high quality standards. A gap between the digital heat twin and the measured data indicates anomalies in the process. In addition, information on why a component is deforming can be obtained from its thermal stress behaviour, which is described by:

where \(\nabla T\) denotes the gradient of the scalar temperature field. High thermal stresses are therefore expected in regions of the component with high temperature gradients, which are predicted by the PINN.
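Because high thermal stress is linked to high temperature gradients, a simple screening proxy is the magnitude of \(\nabla T\) on a grid. The sketch below assumes that the PINN prediction has been evaluated on a regular x, y grid and uses finite differences (np.gradient); a PINN would normally provide the derivatives directly through automatic differentiation. It is a heuristic only and does not replace the thermal stress formulation; the quantile threshold is hypothetical.

```python
import numpy as np


def gradient_magnitude(temperature, dx=1.0, dy=1.0):
    """|grad T| of a temperature field sampled on a regular grid indexed [y, x]."""
    dT_dy, dT_dx = np.gradient(temperature, dy, dx)  # central differences, one-sided at the edges
    return np.hypot(dT_dx, dT_dy)


def high_gradient_mask(temperature, quantile=0.95, dx=1.0, dy=1.0):
    """Mark grid points whose gradient magnitude lies in the top (1 - quantile) share."""
    g = gradient_magnitude(temperature, dx, dy)
    return g >= np.quantile(g, quantile)


y, x = np.mgrid[0:10, 0:20].astype(float)
field = 400.0 + 0.5 * x**2                     # hypothetical field, steepest at the right edge
mask = high_gradient_mask(field)
print(np.nonzero(mask.any(axis=0))[0])         # [19]
```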

Figure 19 shows the temperature field of a high-quality pyramid printed with IN718 at a fixed time in the x, y plane, together with its thermal stress behaviour. The stresses are increased at the edges and corners, which are generally the critical areas of a component during the printing process, whereas the thermal stress inside the edges is low.

Thermal Stress 316L - PINN prediction of thermal stress. There is a noticeable gap between the prediction of the digital heat twin and the observations: the top surface is warmer than it should be, the thermal stress inside the edges is high, and the boundary condition is violated. This indicates a faulty printed layer.

In contrast, Fig. 20 shows the predicted temperature field of a pyramid that was severely deformed after printing with 316L stainless steel. The measured data show a completely different temperature field. High stresses occur inside the edges on the top surface, and the boundary conditions are not satisfied either. Several indications therefore point to anomalies in the printing process. These anomalies can be quantified over the entire printing process to support decision making. If many successive layers are defective, it is advisable to stop the printing process at an early stage, which saves material and costs. Alternatively, the laser power can be adjusted up or down according to the peak temperature and the thermal load. This avoids high thermal stresses that could adversely affect the shape and size of the part, so the printing process can continue while part quality is ensured and defects are reduced. Figure 21 shows this anomaly detection for two pyramids from two different print jobs with two different materials. The absolute distance between the PINN prediction and the measured data is calculated for a specific critical point near the corner of the top surface over a printing period of more than three hours.

Large differences between PINN prediction and observation are detected, particularly in the last third of the print. Based on this, it can be recommended to stop the printing process as soon as, for example, five faulty layers have been detected. The complete algorithm for simulation and real-time anomaly detection is shown in Fig. 22. Real-time defect detection is only possible with a pre-trained model and depends on the part size. For the parts built in this demonstration, the average printing time for one layer is approximately 30 s. Since training the PINN from a pre-trained model takes about 20 s, real-time simulation and detection appear feasible.
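The stopping rule and the real-time condition can be expressed compactly. This sketch assumes that "five faulty layers" means five consecutive layers whose deviation at the critical point exceeds a threshold; both the consecutive-layer interpretation and the threshold value are assumptions, and the function names are hypothetical.

```python
def stop_layer(deviations, threshold, max_faulty=5):
    """Index of the layer after which printing should stop, or None if it never triggers.

    deviations: per-layer |PINN prediction - observation| at the critical point [K]
    threshold: empirically chosen deviation limit [K]
    max_faulty: number of consecutive faulty layers that triggers a stop
    """
    run = 0
    for layer, deviation in enumerate(deviations):
        run = run + 1 if deviation > threshold else 0   # length of the current faulty streak
        if run >= max_faulty:
            return layer
    return None


def keeps_up(train_seconds, layer_seconds):
    """Layer-wise fine-tuning is real-time capable if it finishes before the next layer."""
    return train_seconds < layer_seconds


deviations = [3, 4, 25, 2, 30, 28, 35, 40, 33, 5]   # hypothetical deviations [K]
print(stop_layer(deviations, threshold=20))        # 8
print(keeps_up(20, 30), keeps_up(400, 30))         # True False
```

With the timings reported above, fine-tuning (about 20 s) fits within the printing time of one layer (about 30 s), whereas training the foundation model from scratch (about 400 s) would not.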

Anomaly Detection - Deviation between prediction and observation for a critical point near the upper left corner of the part over a printing time of more than three hours for two printed pyramids. Large distances indicate anomalies in the printing process. Defective layers consistently occur in the last third of the 316L print.

7 Discussion

Our PIDL approach is a competitive method for simulating heat transfer in 3D printing. Our results are comparable to those of numerical simulation with FVM and to real measurement data. The approach can process measurement data and has the potential to optimise 3D printing processes. Previous research has shown that PINNs work well as PDE solvers, in particular for heat transfer in 3D printing. However, model complexity and training effort have so far prevented real-time anomaly detection. We therefore exploit the similarity of heat transfer between adjacent print layers as an efficient transfer learning and training strategy to refine pre-trained foundation models. This significantly reduces the time, cost and energy required for training, so that real-time monitoring is possible despite the short printing time of a single layer. In addition, we have shown how the heat transfer prediction of our PINN can serve as a reference label for a high-quality printed layer. A deviation between this prediction and the sensor observations indicates anomalies in the printing process that result in degraded quality. However, our approach has limitations. Our model only considers heat conduction. We were able to show that including the phase change from solid to liquid had only a very small effect and did not change the heat transfer prediction significantly; the latent heat could therefore be neglected. The model is simplified and can be extended to include radiation and convection. Including convection and radiation would add further loss terms, leading to a more complex optimisation in which the loss terms span several orders of magnitude. Moreover, our data-driven method has not yet been compared with other existing machine learning models; such a comparison would allow a more detailed evaluation of the proposed model in the future.
We have also shown that, beyond a certain number of simulations, the parameter network offers performance advantages over the other two approaches. Since industrial applications usually involve a large number of different simulations, this approach may become increasingly important. Another interesting question is how the laser power parameter network can be used to optimise laser power control throughout the printing process; for example, the PINN already contains solutions for compensating global and local overheating. Other process parameters, such as the scanning speed of the laser, could also be included as additional input dimensions to determine the optimal parameter configuration for a printing process. In general, the computation time can be reduced in several ways. The training of the DNN could be parallelised, which has not yet been done, and the DNN architecture (number of hidden layers and neurons) could be optimised for more efficient prediction. Real-time simulation and anomaly detection have not yet been tested in a real production plant. However, we have shown that the quality of the real printed parts correlates with the thermal stress prediction and the anomaly detection. The major advantage of our approach over purely data-driven ML algorithms is that defect detection is interpretable: it is explained by the heat transfer behaviour and heat accumulation that cause pores, cracks, unevenness and other quality-degrading properties. Purely data-driven ML approaches can also classify defects, but without a scientific understanding of their cause.

8 Conclusion

In this paper, we have presented a PIDL approach to simulate heat transfer in 3D printing based on solving an initial boundary value problem of the heat equation. We proposed a pre-trained model and a laser power parameter network to reduce computational time and evaluated them against numerical simulation results. In the second part of the paper, we demonstrated a real use case with components of different quality characteristics and showed how PINNs and cooperative data can be used to optimise manufacturing processes. The predictive capability was evaluated on different component geometries. We developed an anomaly detection method and tested and evaluated it on high-quality and fully deformed components. The method is able to detect defective layers that lead to dents, cracks, pores and deformations in the component, and it helps to understand the thermodynamic cause. We showed that transfer learning based on the similarity of adjacent print layers is the key to enabling data-driven real-time analyses. The work is a proof of concept and offers perspectives for further work and implementation concepts for industrial manufacturing. It lays the foundation for future challenges related to automation, Industry 4.0 and large data volumes in the analysis of manufacturing processes, with a particular focus on physically motivated problems. Further research can evaluate the results in more detail, and a comparison with other existing machine learning methods should be made. Future work could also investigate whether the presented method can be transferred to other 3D printing technologies that use other materials, such as plastics.

Code and Data Availability

Selected data and code supporting the findings of this study are available on request from the corresponding author. The data sets generated by Martin Schäfer and Oliver Theile are not publicly accessible due to internal requirements of SIEMENS.

References

Liu S, Shin YC (2019) Additive manufacturing of Ti6Al4V alloy: a review. Mater Design 164:107552. https://doi.org/10.1016/j.matdes.2018.107552

Miranda G, Faria S, Bartolomeu F, Pinto E, Alves N, Peixinho N et al (2019) A study on the production of thin-walled Ti6Al4V parts by selective laser melting. J Manuf Process 39:346–355. https://doi.org/10.1016/j.jmapro.2018.12.036

Singla AK, Banerjee M, Sharma A, Singh J, Bansal A, Gupta MK et al (2021) Selective laser melting of Ti6Al4V alloy: process parameters, defects and post-treatments. J Manuf Process 64:161–187. https://doi.org/10.1016/j.jmapro.2021.01.009

Waqar S, Guo K, Sun J (2021) FEM analysis of thermal and residual stress profile in selective laser melting of 316L stainless steel. J Manuf Process 66:81–100. https://doi.org/10.1016/j.jmapro.2021.03.040

Li Y, Zhou K, Tor SB, Chua CK, Leong KF (2017) Heat transfer and phase transition in the selective laser melting process. Int J Heat Mass Transf 108:2408–2416. https://doi.org/10.1016/j.ijheatmasstransfer.2017.01.093

Roy S, Juha M, Shephard MS, Maniatty AM (2018) Heat transfer model and finite element formulation for simulation of selective laser melting. Comput Mech 62(3):273–284. https://doi.org/10.1007/s00466-017-1496-y

Jandyal A, Chaturvedi I, Wazir I, Raina A, Ul Haq MI (2022) 3D printing - a review of processes, materials and applications in industry 4.0. Sustain Oper Comput 3:33–42. https://doi.org/10.1016/j.susoc.2021.09.004

Uçak N, Çiçek A, Aslantas K (2022) Machinability of 3D printed metallic materials fabricated by selective laser melting and electron beam melting: a review. J Manuf Process 80:414–457. https://doi.org/10.1016/j.jmapro.2022.06.023

MP (2020) Additive Manufacturing of Tungsten Carbide Hardmetal Parts by Selective Laser Melting (SLM), Selective Laser Sintering (SLS) and Binder Jet 3D Printing (BJ3DP) techniques. Lasers Manufac Mater Process 7(3):338–371. https://doi.org/10.1007/s40516-020-00124-0

Cozzolino E, Lopresto V, Borrelli D, Caraviello A, Astarita A (2022) An integrated approach to investigate the energy consumption for manufacturing and surface finishing 3D printed Inconel 718 parts. J Manuf Process 79:193–205. https://doi.org/10.1016/j.jmapro.2022.04.045

Motallebi R, Savaedi Z, Mirzadeh H (2022) Additive manufacturing - a review of hot deformation behavior and constitutive modeling of flow stress. Curr Opin Solid State Mater Sci 26(3):100992. https://doi.org/10.1016/j.cossms.2022.100992

Wahyudi S, Lestari P, Gapsari F (2021) Application of Finite Difference Methods (FDM) on mathematical model of bioheat transfer of one-dimensional in human skin exposed environment condition. J Mech Eng Res Develop 44(5):1–9

Versteeg HK, Malalasekera W (2007) An introduction to computational fluid dynamics: the finite volume method. Pearson education

LeVeque RJ (2002) Finite volume methods for hyperbolic problems. Cambridge University Press, vol 31

Kang F, Zhong-Ci S (1996) Finite element methods. In: Mathematical theory of elastic structures, pp 289–385

Hsu TR (2012) The finite element method in thermomechanics. Springer Science & Business Media

Mukherjee T, Wei HL, De A, DebRoy T (2018) Heat and fluid flow in additive manufacturing-Part I: modeling of powder bed fusion. Comput Mater Sci 150:304–313. https://doi.org/10.1016/j.commatsci.2018.04.022

Mukherjee T, Wei HL, De A, DebRoy T (2018) Heat and fluid flow in additive manufacturing - Part II: powder bed fusion of stainless steel, and titanium, nickel and aluminum base alloys. Comput Mater Sci 150:369–380. https://doi.org/10.1016/j.commatsci.2018.04.027

Ansari P, Salamci MU (2022) On the selective laser melting based additive manufacturing of AlSi10Mg: the process parameter investigation through multiphysics simulation and experimental validation. J Alloy Compd 890:161873. https://doi.org/10.1016/j.jallcom.2021.161873

Ahmed N, Barsoum I, Abu Al-Rub RK (2022) Numerical investigation on the effect of residual stresses on the effective mechanical properties of 3D-printed TPMS lattices. Metals 12(8):1344. https://doi.org/10.3390/met12081344

Li Y, Gu D (2014) Parametric analysis of thermal behavior during selective laser melting additive manufacturing of aluminum alloy powder. Mater Design 63:856–867. https://doi.org/10.1016/j.matdes.2014.07.006

Liu B, Li BQ, Li Z, Bai P, Wang Y, Kuai Z (2019) Numerical investigation on heat transfer of multi-laser processing during selective laser melting of AlSi10Mg. Results Phys 12:454–459. https://doi.org/10.1016/j.rinp.2018.11.075

Saba T (2020) Recent advancement in cancer detection using machine learning: systematic survey of decades, comparisons and challenges. J Infect Public Health 13(9):1274–1289. https://doi.org/10.1016/j.jiph.2020.06.033

Dimililer K, Dindar H, Al-Turjman F (2021) Deep learning, machine learning and internet of things in geophysical engineering applications: an overview. Microprocess Microsyst 80:103613. https://doi.org/10.1016/j.micpro.2020.103613

Huang Y, Du J, Yang Z, Zhou Z, Zhang L, Chen H (2022) A survey on trajectory-prediction methods for autonomous driving. IEEE Trans Intell Vehicles 7(3):652–674. https://doi.org/10.1109/TIV.2022.3167103

Nasiri S, Khosravani MR (2021) Machine learning in predicting mechanical behavior of additively manufactured parts. J Mater Res Technol 14:1137–1153. https://doi.org/10.1016/j.jmrt.2021.07.004

Goh GD, Sing SL, Yeong WY (2021) A review on machine learning in 3D printing: applications, potential, and challenges. Artif Intell Rev 54(1):63–94. https://doi.org/10.1007/s10462-020-09876-9

Farhan Khan M, Alam A, Ateeb Siddiqui M, Saad Alam M, Rafat Y, Salik N et al (2021) Real-time defect detection in 3D printing using machine learning. Materials Today: Proceedings 42:521–528. https://doi.org/10.1016/j.matpr.2020.10.482

Nguyen PD, Nguyen TQ, Tao QB, Vogel F, Nguyen-Xuan H (2022) A data-driven machine learning approach for the 3D printing process optimisation. Virtual Phys Prototyping 17(4):768–786. https://doi.org/10.1080/17452759.2022.2068446

Tamir TS, Xiong G, Fang Q, Yang Y, Shen Z, Zhou M et al (2022) Machine-learning-based monitoring and optimization of processing parameters in 3D printing. Int J Computer Integrated Manufac pp 1–17. https://doi.org/10.1080/0951192X.2022.2145019

Pham TQD, Hoang TV, van Tran X, Pham QT, Fetni S, Duchêne L et al (2022) Fast and accurate prediction of temperature evolutions in additive manufacturing process using deep learning. J Intell Manuf. https://doi.org/10.1007/s10845-021-01896-8

Bauer M, Uhrich B, Schäfer M, Theile O, Augenstein C, Rahm E (2023) Multi-modal artificial intelligence in additive manufacturing: combining thermal and camera images for 3D-print quality monitoring. In: Proceedings of the 25th International conference on enterprise information systems. SCITEPRESS - Science and Technology Publications, pp 539–546

Raissi M, Perdikaris P, Karniadakis GE (2019) Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J Comput Phys 378:686–707. https://doi.org/10.1016/j.jcp.2018.10.045

Qu H, Liu H, Jiang S, Wang J, Hou Y (2023) Discovery the inverse variational problems from noisy data by physics-constrained machine learning. Appl Intell 53(9):11229–11240. https://doi.org/10.1007/s10489-022-04079-x

Cai S, Wang Z, Wang S, Perdikaris P, Karniadakis GE (2021) Physics-informed neural networks for heat transfer problems. J Heat Transfer 143(6). https://doi.org/10.1115/1.4050542

Uhrich B, Schäfer M, Theile O, Rahm E (2023) Using physics-informed machine learning to optimize 3D printing processes. In: Correia Vasco JO, de Amorim Almeida H, Gonçalves Rodrigues Marto A, Bento Capela CA, Da Silva Craveiro FG, Da Coelho Rocha Terreiro Galha Bárt HM et al (eds) Progress in digital and physical manufacturing. Springer Tracts in Additive Manufacturing. Cham: Springer International Publishing, pp 206–221

Du Y, Mukherjee T, DebRoy T (2021) Physics-informed machine learning and mechanistic modeling of additive manufacturing to reduce defects. Appl Mater Today 24:101123. https://doi.org/10.1016/j.apmt.2021.101123

Zhu Q, Liu Z, Yan J (2021) Machine learning for metal additive manufacturing: predicting temperature and melt pool fluid dynamics using physics-informed neural networks. Comput Mech 67(2):619–635. https://doi.org/10.1007/s00466-020-01952-9

Wessels H, Weißenfels C, Wriggers P (2020) The neural particle method - an updated Lagrangian physics informed neural network for computational fluid dynamics. Comput Methods Appl Mech Eng 368:113127. https://doi.org/10.1016/j.cma.2020.113127

Uhrich B, Hlubek N, Häntschel T, Rahm E (2023) Using differential equation inspired machine learning for valve faults prediction. In: 2023 IEEE 21st International conference on industrial informatics (INDIN). IEEE, pp 1–8

Henkes A, Wessels H, Mahnken R (2022) Physics informed neural networks for continuum micromechanics. Comput Methods Appl Mech Eng 393:114790. https://doi.org/10.1016/j.cma.2022.114790

Zobeiry N, Humfeld KD (2021) A physics-informed machine learning approach for solving heat transfer equation in advanced manufacturing and engineering applications. Eng Appl Artif Intell 101:104232. https://doi.org/10.1016/j.engappai.2021.104232

Liao S, Xue T, Jeong J, Webster S, Ehmann K, Cao J (2023) Hybrid thermal modeling of additive manufacturing processes using physics-informed neural networks for temperature prediction and parameter identification. Comput Mech https://doi.org/10.1007/s00466-022-02257-9

Wright W (2019) Essentials of materials science and engineering. Cengage Learning

Voller VR, Swaminathan C (1991) General source-based method for solidification phase change. Numer Heat Transfer Part B Fundam 19(2):175–189

Hornik K, Stinchcombe M, White H (1989) Multilayer feedforward networks are universal approximators. Neural Netw 2(5):359–366. https://doi.org/10.1016/0893-6080(89)90020-8

Baydin AG, Pearlmutter BA, Radul AA, Siskind JM (2018) Automatic differentiation in machine learning: a survey. J Mach Learn Res 18:1–43

OpenCFD Ltd OpenFOAM: The open source CFD toolbox. Programmer’s Guide

Acknowledgements

The authors acknowledge the financial support by the Federal Ministry of Education and Research of Germany and by the Sächsische Staatsministerium für Wissenschaft Kultur und Tourismus in the program Center of Excellence for AI-research “Center for Scalable Data Analytics and Artificial Intelligence Dresden/Leipzig”, project identification number: ScaDS.AI. All results presented refer to the TWIN project, funded by the Federal Ministry of Education and Research, Germany (02K18D052).

Funding

Open Access funding enabled and organized by Projekt DEAL. The work is funded by the Federal Ministry of Education and Research of Germany and by the Sächsische Staatsministerium für Wissenschaft Kultur und Tourismus in the program Center of Excellence for AI-research “Center for Scalable Data Analytics and Artificial Intelligence Dresden/Leipzig”, project identification number: ScaDS.AI, and by the Federal Ministry of Education and Research under grant 02K18D052 (TWIN project).

Author information

Contributions

Benjamin Uhrich made substantial contributions to the conception and design of the article and to the data analysis. Benjamin Uhrich and Nils Pfeifer contributed to the methods, analysis and investigations of the article. The experiments and the sensor data generation were carried out by Oliver Theile and Martin Schäfer. The first draft of the manuscript was written by Benjamin Uhrich. All authors commented on earlier versions of the manuscript. All authors read and approved the final version of the manuscript. The work was supervised by Erhard Rahm. Conceptualization: Benjamin Uhrich; Methodology: Benjamin Uhrich, Nils Pfeifer; Formal analysis and investigation: Benjamin Uhrich, Nils Pfeifer; Visualization: Benjamin Uhrich, Nils Pfeifer; Writing - original draft preparation: Benjamin Uhrich; Writing - review and editing: Benjamin Uhrich, Nils Pfeifer, Martin Schäfer, Oliver Theile and Erhard Rahm; Supervision: Erhard Rahm

Ethics declarations

Competing Interests

The authors do not have any relevant financial or non-financial interests to report. The authors have no competing interests to declare that would be relevant to the content of this article. All authors certify that they have no affiliation or involvement with any organisation or entity that has any financial or non-financial interest in the subject matter or materials discussed in this manuscript. No material discussed in this article has any financial or proprietary interest for the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Uhrich, B., Pfeifer, N., Schäfer, M. et al. Physics-informed deep learning to quantify anomalies for real-time fault mitigation in 3D printing. Appl Intell 54, 4736–4755 (2024). https://doi.org/10.1007/s10489-024-05402-4

DOI: https://doi.org/10.1007/s10489-024-05402-4