Abstract

The network, with some or all characteristics of scale-free, self-similarity, self-organization, attractor and small world, is defined as a complex network. The identification of significant spreaders is an indispensable research direction in complex networks, which aims to discover nodes that play a crucial role in the structure and function of the network. Since influencers are essential for studying the security of the network and controlling the propagation process of the network, their assessment methods are of great significance and practical value to solve many problems. However, how to effectively combine global information with local information is still an open problem. To solve this problem, the generalized mechanics model is further improved in this paper. A generalized mechanics model based on information entropy is proposed to discover crucial spreaders in complex networks. The influence of each neighbor node on local information is quantified by information entropy, and the interaction between each node on global information is considered by calculating the shortest distance. Extensive tests on eleven real networks indicate the proposed approach is much faster and more precise than traditional ways and state-of-the-art benchmarks. At the same time, it is effective to use our approach to identify influencers in complex networks.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A complex network involves multidisciplinary knowledge and theoretical foundations, which can effectively model different components or factors in a complex system [1]. There is no denying that complex networks have been applied diffusely in dissimilar fields such as natural sciences [2,3,4], social sciences [5, 6] and medical sciences [7,8,9]. With the continuous rise of research on complex networks, influencers identification, as an indispensable branch of complex networks, has become increasingly important [10, 11]. Not only does the identification of key nodes have high guiding value in theory, but also it has comprehensive applications in diverse fields such as the transmission of infectious disease [12], cyber security [13], biological information [14], and social networking services [15,16,17]. Therefore, a large number of excellent algorithms have been gradually proposed. These algorithms are approximately classified into neighborhood-based centrality [18, 19], path-based centrality, and eigenvectors. The advantages and specific steps of each algorithm are described in Section 2.

Recently, some interaction-based gravity model algorithms have been put forward. The gravitational force of one node on another is proportional to the degree and inversely proportional to the distance between them in the gravity model. The algorithm based on the law of universal gravitation were put forward by Li et al. [20]. In addition, Liu et al. [21] further improved it. The high computational complexity is weakened, and the noise that may be generated by long-distance interactions is reduced. Li et al. [22] put forth a generalized gravity model (GGC for short), which takes into account the local clustering coefficient as the local information. But the parameters are more difficult to determine.

However, most of the above methods only consider local information or global information, which has certain limitations. The method that only considers local information creates the advantage of easy calculation. But it does not measure the structural information of the entire network, which leads to partial and inaccurate results. In addition, in some applications like the spread of infectious diseases, it is necessary to consider the global impact of nodes. This process needs to calculate how many other nodes are infected by infectious nodes within a certain period of time, which takes into account global information. At the same time, it is not feasible to consider only the global impact, which leads to high complexity. In some cases, this limitation will hinder its application. For example, it is not suitable for large-scale networks. Therefore, there is an open issue that is how to take advantage of local and global conditions to evaluate the influencers in complex networks. In order to settle the problem, a generalized mechanics model based on information entropy is proposed (named as Information Entropy-based Gravity Model, INEG for short), which combines local and global information. INEG not only makes the obtained results more accurate, but also reduces the computational complexity.

The main contribution of the paper is that information entropy is used to measure the uncertain information around the nodes. Uncertain information can be regarded as the uncertainty of the node spreading information to its neighbors. In other words, it is the probability that the neighbor is selected by the node. In the local information, INEG takes into consideration the influence of neighbor nodes in detail. The relative score is introduced in the global information, which is expressed as the weight of each node in the entire network. All in all, our main innovation is an effective combination of local and global information. What’s more, another highlight of this paper is that location information is introduced. The degree of a node is treated as mass in the traditional gravity model, which is rough. In fact, the influence of a node is not only related to its degree, but also to its position in the network. Nodes at the core of the network tend to be more important than edge nodes because of the higher connection density between core nodes. Further, nodes with higher connection density are more able to influence other nodes. For example, there are two nodes of the same degree at different locations in the network. Obviously, a node at the core of the network has more influence than a node at the edge of the network. Therefore, location information needs to be considered in identifying critical nodes. Through the proposed inheritance rule, the obtained b-index is used to record the distance between the node and the peripheral part. The f -index is used to correct nodes with low b-index but close to the core of the network. Finally, the node mass with location information is generated according to the mixed index composed of b and f.

The rest of this article is organized as follows. First of all, Section 2 describes some preliminary knowledge and basic algorithms of complex networks briefly. At the same time, three state-of-the-art approaches are given. Furthermore, the improved INEG method is presented in Section 3. Then, Section 4 demonstrates the rationality of our approach through several experiments. Some applications of this method are discussed in Section 5. Finally, the conclusions and future prospects are analysed in Section 6.

2 Related work

The representative methods based on neighborhood centrality include degree centrality (DC for short) [23], K-shell decomposition [24,25,26,27,28], and H-index [29] methods. DC holds the view that a node with more neighbors has a greater influence [23]. Nevertheless, the main disadvantage of DC is that only local information is considered. The K-shell decomposition [24] cogitates the location information of the nodes in the network. It claims that a node with a more central position in the network generally has a greater influence. Notwithstanding, its degree is smaller. But its result is too rough to discriminate nodes. The H-index deems that nodes connected to multiple neighbors with high degrees are more influential. Besides, closeness centrality (CC for short) [30] and betweenness centrality (BC for short) [31] are two representative path-based centralities ways. CC [30], which takes into account the global information of the network, evaluates the significance of one node by summarizing all the distances between it and the other nodes in the network. While BC [31] cogitates the number of shortest paths passing through a target node. Two well-studied methods based on eigenvector are the eigenvector centrality (EC for short) [32] method and the PageRank algorithm (PC for short) [33, 34]. For EC [32], the influence of a node rests with both the number of its neighbor nodes and their significances. But the information of other nodes connected to a node is completely used to evaluate the importance of the node. PC [33] ranks web pages on the basis of the link relationships of web pages. For PC, the importance of a page in the World Wide Web is determined by the number and quality of the pages that link to it. Hence, if a page is pointed to by many high-quality pages, the quality of this page is also high.

In the following subsections, firstly, the specific steps of the above methods are described. Then the recent algorithms concerning generalized mechanics model are reviewed. Lastly, two state-of-the-art algorithms are introduced.

2.1 The classic measures

2.1.1 Degree centrality

DC, which describes the direct influence of nodes, is the simplest and most intuitive way to evaluate the importance of nodes in the network. It claims that a node with a higher degree can directly affect more neighbors, which means it is more crucial. In order to compare nodes that have the same degree in networks of different scales, a normalized DC [23] is given, which is defined as follows:

where \(k_{i}= {\sum }^{}_{i}{a_{ij}} \) is the degree of node i, aij stands for the connection between node i and node j, and the denominator n − 1 is the maximum degree value possible for the node.

2.1.2 K-shell algorithm

The K-shell algorithm emphasizes the location information of nodes and quantifies the importance of nodes by assigning them to different shells. The method considers that the nodes in the center of the network are more important, while the nodes closer to the edge are less important. Nodes with larger shells are closer to the network core. The specific steps of the K-shell algorithm are as follows. In the first step, nodes with degree k = 1 and their connected edges are sequentially removed from the network. Since the first step in the removal process may reduce the degree of the node, it is necessary to continue to remove the node with degree k ≤ 1 and its connected edges. In the second step, when there are no nodes with degree k ≤ 1 in the network, create 1 − shell for the nodes removed in the first step and let their shell = 1. In the third step, the degree value is incremented by 1, and the above process is repeated until each node has a unique corresponding shell. After the execution of the above process, 2 − shell, 3 − shell and so on can be obtained. Finally, all nodes in the network are divided into different shells, and the nodes in each shell have the same shell value. Obviously, nodes in the same shell are considered to have the same importance, while nodes in the inner shell have higher influence.

2.1.3 Closeness centrality

CC determines the significance of a node by summarizing the distance between the target node and all other nodes. If the average distance between a node and other nodes is smaller, the influence on the network is greater. Given a connected network with n nodes, the CC [30] of node i is defined as:

where di stands for the average shortest distance from node i to other nodes in the network, and dij is the shortest path distance between node i and node j.

2.1.4 Betweenness centrality

BC describes the flow transmitted through the shortest path between pairs of nodes in the network. It evaluates the importance of nodes by calculating the concentration of paths. BC clings to the perspective that a node would have a greater influence if there are more shortest paths pass through it. The BC [31] of node i is defined as:

where Gst stands for paths between node s and node t, and gst is the shortest paths between s and t via node i.

2.1.5 Eigenvector centrality

The EC focuses on the surrounding environment where the node is located, such as the quantity and quality of neighbor nodes. And its score value is related to the sum of the scores of its neighbors. Given the adjacency matrix A of a network, the EC [32] of node i is defined as follows:

where xi is recorded as the importance metric value of node i, which is the value of the i th term in the normalized eigenvector of the largest eigenvalue of A. λ is a non-zero constant, which meets the following requirements when it reaches a steady state after multiple iterations:

where x is the eigenvector corresponding to the eigenvalue λ of matrix A.

2.1.6 PageRank algorithm

PC is the most famous algorithm in the field of web page ranking. At the initial moment, each node is given the same PR, and then iterated. At each step, the current PR of each node is equally assigned to all the nodes it points to. The process will end until the PR of each node is stable. The new PR of each node is the sum of its obtained PR, so the definition of the PR of node i at time t is as follows [33]:

where kj stands for the out degree of node j.

2.2 The measures based on gravity model

2.2.1 Gravity centrality algorithm

Inspired by the law of universal gravitation, gravity centrality (GC for short) regards the node’s degree as mass, and the shortest path distance in the network as the distance between two nodes. In order to solve the time-consuming and noise problem, a truncation radius is introduced here. The GC of node i is defined as [20]:

where ki and kj denote the degree of node i and node j respectively, dij represents the shortest path distance between node i and node j, and R stands for the affected radius. Li et al. [20] found an empirical relationship between the truncation radius R and the average path length \(\left \langle d \right \rangle \) through a large number of experiments. Therefore, the best results are obtained when R is set to \(0.5 \left \langle d \right \rangle \).

2.2.2 Weighted gravity centrality algorithm

The weighted gravity centrality (WGC for short) assigns a certain weight to each node through the eigenvector, which is an improvement to GC, and it can be defined as follows [21]:

where ei is the i th value of the normalized eigenvector corresponding to the largest eigenvalue of matrix A.

2.2.3 Generalized gravity centrality algorithm

GGC [22] regards the propagation ability of the node as the mass. A network is given, and it is defined as:

where dij indicates the distance between node i and node j, and R is the influenced radius. α (α ≥ 0) is a parameter, which can be flexibly modified in practical applications. Ci and Cj are the clustering coefficients, where \(C_{i}= \frac {2n_{i}}{k_{i}(k_{i}-1)}\), \(C_{j}= \frac {2n_{j}}{k_{j}(k_{j}-1)}\), ki and kj denote the degree of node i and node j respectively.

2.3 Two state-of-the-art measures

2.3.1 Local information dimensionality

The local information dimensionality (LID for short) [35] uses Shannon entropy [36, 37] to consider the number of nodes in each box. The information [38,39,40,41,42] of the nodes is adequately measured [43]. The LID of node i is defined as:

where d represents the derivative, l stands for the size of the box, ni(l) denotes the number of nodes in the box, and N shows the total amount of nodes in the network.

2.3.2 Fuzzy local dimension

Because the distance from each node to the central node is diverse, the contribution of each node is also different. As a result, fuzzy sets [44,45,46] are used for assigning nodes that contribute to the local dimensionality [47] in the fuzzy local dimension (FLD for short) [48]. The definition of FLD is as follows:

where rt stands for the radius from the center node i, ε shows the size of the box, and \(N_{i \_ r}\) represents the number of real nodes when the shortest distance between i and j is less than the ε. Aij(ε) represents the membership function when the distance from j to i is less than ε, where \(A_{ij} (\varepsilon )=exp(- \frac {d^{2}_{ij}}{\varepsilon ^{2}})\).

3 Proposed method

3.1 Algorithmic procedure

In this paper, a generalized mechanics model (named as Information Entropy-based Gravity Model) based on information entropy is proposed. The highlight of our approach is that the influence of each neighbor node is considered in the local information. INEG uses entropy to score each neighbor node, and finally each node is assigned to an objective score. The global information is quantified as the distance between each pair of nodes, and the objective score of each node is converted into a relative score to modify the relative interaction between nodes. What’s more, location information is introduced to form the mass. The major contribution of this model is to combine local information and global information effectively, and form the relative score of each node objectively. It consists of the following five steps, and the flowchart is shown in Fig. 1.

- Step 1::

-

Construct the network.

Input a real connected undirected network, and output the adjacency matrix of this network.

- Step 2::

-

Calculate the degree distribution and the shortest distance.

The adjacency matrix is input, the degree of each node and the shortest distance between different nodes are output.

- Step 3::

-

Calculate the information entropy and get the weight.

The adjacency matrix and the degree distribution matrix as input, then the information entropy of each node is calculated. Finally, the weight of each node is output.

- Step 4::

-

Calculate the mass of the nodes.

Input the degree distribution matrix from the second step and output the mass of nodes with position information.

- Step 5::

-

Calculate the interaction force and get the influence node.

Input the mass, shortest distance, and weight of each node. Then the interaction force between the nodes is calculated. Output the ranking of the node influence in the complex networks.

We will introduce each step in detail in Section 3.2 to Section 3.6.

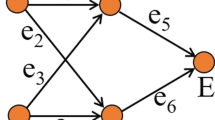

3.2 Step 1: construct the network

Given a real connected undirected network represented by G(V,E), where V and E are the set of nodes and edges respectively. And the adjacency matrix A is used to store G, where the element aij in the matrix A represents the connection relationship between the node i and the node j. aij = 0 means there is no connection between i and j. On the contrary, aij = 1 means there is a connection between i and j.

3.3 Step 2: calculate the degree distribution and the shortest distance

The adjacency matrix A of the network is given, then the degree of node i can be defined as follows:

where ki denotes the degree of node i, and aij represents the connection between i and j. At the same time, the shortest distance between disparate pairs of nodes is defined as the number of edges on the shortest path connecting these two nodes. The shortest path is an ordered list (PATHij = {(i0,i1),(i1,i2),…,(in− 1,in)}) containing the fewest connections, the number of which is L. The shortest distance dij is the length L of the shortest path. In this paper, Floyd’s algorithm [49] is used to calculate the shortest path in the network.

3.4 Step 3: Calculate the information entropy and get the weight

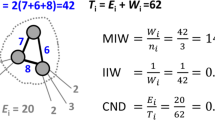

Each node in the network is affected by the global network and the local network. The local network of any node i in a complex network is denoted by L(N,D), where N represents the set of nodes in the local network, D denotes the degree set of each node in N. As shown in Fig. 2, the local network of node 1 is composed of blue nodes. N = {1,2,3,4,5}, D = {4,2,3,3,4}. We use P(i) to represent the probability set of i, which is defined as follows:

The local area network of each node includes its neighbor nodes and itself, so the scale m of the probability set is the number of node sets N. The local probability set p(i,s) of node i is defined as follows:

For node 1, m = 5, and the probability set of node 1 is \(P(1)=[\frac {4}{16}, \frac {2}{16}, \frac {3}{16}, \frac {3}{16}, \frac {4}{16}]\).

In the traditional gravity model, only the influence caused by the degree and distance of the node is considered, but the neighborhood information of the node is not considered. A node’s neighbor degree distribution is used to represent its neighborhood information. If a node’s neighbor degree distribution is more uniform, it will have less influence on the deviation of information propagation. For example, there are two nodes in the network with the same degree. If the neighbor degree distribution of a node is more uniform, the uncertainty in the direction of information diffusion will be greater. This means that it can spread the information better, and obviously its influence is greater. For another node whose neighbor degree distribution is not uniform, it has a higher probability of propagating information to nodes with a smaller degree. Therefore, it is less important. To quantify the degree distribution in the local network of nodes, the information entropy is introduced. It is well known that information entropy is used to measure the chaotic degree of distribution. The more uniform the distribution is, the greater the information entropy is. Information entropy, the expectation concerning the total amount of information, is defined as follows:

The function of the coefficient \(\frac {1}{\log (n)}\) is to make the data distributed between 0 and 1. In Fig. 2, the entropy E1 representing node 1 is the relative score of node 1 in the local network. \(E_{1}= - \frac {1}{\log (5)} \times (\frac {4}{16}\log \frac {4}{16}+\frac {2}{16}\log \frac {2}{16}+\frac {3}{16}\log \frac {3}{16}+\frac {3}{16}\log \frac {3}{16}+\frac {4}{16}\log \frac {4}{16})\).

3.5 Step 4: calculate the mass of the nodes

Traditionally, a node’s K-shell value equal to x indicates that it forms a complete subgraph with x other nodes in the network (this complete subgraph contains x + 1 nodes). A larger x denotes that the complete subgraph formed by it is larger, which means that it has a higher connection density. That is to say, a node with a larger K-shell value is more important. However, the hierarchical results of the traditional K-shell algorithm are rough, and nodes in the same shell cannot be distinguished. Therefore, a mixed location index km, which is the sum of the b index and the f index (km = b + f), is proposed. Figure 3 visually shows the b and f values of the nodes in the network. The specific calculation process is as follows.

-

1.

The calculation process of the b index

The b-index is used to measure the distance of a node from the periphery of the system. A global iteration counter is introduced based on the traditional K-shell. In order to highlight the importance of the core part compared to the periphery and to distinguish the nodes as much as possible, inheritance rules are proposed. Whenever the algorithm runs to a new shell (ie shell + 1), the b value of the node in the new shell will first inherit the b value from the neighbor nodes in the previous shell. Then, the number of global iterations to delete itself is counted. That is to say, a node’s b value is equal to the b of its neighbors in the previous shell plus its own global iteration b. The global iteration b index actually refers to the number of iterations to delete node i. The allocation steps for the b-index are described below. Initialize shell = 1 and b = 1. In the first step, the node with degree equal to shell (shell = 1) is removed from the graph and assigned b = 1. When there are nodes in the graph with degree equal to shell, repeat this step, but increase b by 1. The second step, first increment the shell by 1. Next, the initial b-values of all nodes in the new shell are equal to the sum of their neighbors’ b-values in the previous shell. Nodes with degree equal to shell are then again removed from the graph, and the global b value for a given counter is added to the b of the node. Each time a round of node deletion is performed, the b index is incremented by 1. When there are nodes in the graph with degree less than or equal to shell, this process is repeated until all nodes are removed. In this approach, nodes with larger b are closer to the core of the graph. A node whose b-value is not less than any of its neighbors’ b-values is considered a system core, and a system can have multiple cores.

-

2.

The calculation process of the f index

The f -index measures how close a node is to the system core, and is primarily used to try to improve those nodes that are close to the system core but have lower b. The f -index depends on the b-index assigned in the previous step, and the specific process is as follows. In the first step, the nodes located in the core of the graph are identified. Then, the geometric mean of the b-index of these nodes is obtained, which is the f -index value of these nodes. In the second step, the traversal starts from the core of the graph. All nodes with the highest f value in the graph assign values (f − 1) to the f -index of their undeleted neighbor nodes. After the assignment, remove from the graph those nodes with the largest f. In the third step, this process is repeated until all nodes in the graph are deleted.

Finally, the mass of node i introducing location information is expressed as follows:

where kmi represents the mixed position index of node i. \(km(\max \limits )\) and \(km(\min \limits )\) represent the maximum and minimum values of the mixed location indicators in the graph, respectively. \(\frac {km_{i}-km(\min \limits )}{km(\max \limits )-km(\min \limits )}\) stands for normalization of mixed location metrics. Obviously, when \(km_{i}=km(\min \limits )\), the location information will have no effect on the mass of the node. This means that when the mixed location metric is minimal, the main factor affecting node mass is degree.

3.6 Step 5: calculate the interaction force

In the mechanical model, the gravity is proportional to the product of their masses and inversely proportional to the square of their distance. By analogy with the mechanical model and combining the above steps, the interaction force at node i is defined as:

where dij indicates the shortest distance between i and j. \(R=0.5 \left \langle d \right \rangle \), which follows the setting principle of (7). In general, the generalized mechanics model based on information entropy consists of three parts: the mass, the shortest distance, and the information entropy weight of the node itself.

4 Experiments

In this section, we conduct three different experiments on eleven real networks and compare them with ten different methods in order to test the effectiveness of INEG.

4.1 Description of the data set

Eleven networks in the real world are selected, including collaboration networks (Jazz [50], NS [51], and GrQc [52]), communication networks (Email [53] and EEC [54]), social networks (PB [55], Facebook [56], and WV [57]), transportation networks (USAir [58]), infrastructure networks (Power [59]) and technical network (Router [60]).

Jazz [50] describes the different collaborative relationships between jazz musicians. NS [51] shows a collaborative network of scientists. In Jazz and NS, a node denotes a musician and a scientist respectively, and an edge represents the cooperation between any two musicians or scientists. GrQc [52] covers collaborative scientific papers on general relativity and the quantum universe from the arXiv preprint site. Email network [53] reveals the email exchange among members. EEC [54] depicts a network of European research members exchanging mail. For Email and EEC, a node indicates a user. If two users communicate with each other, there will be an edge between a pair of nodes. PB [55] represents the blogs’ connection relationship in the USA. The Facebook social circle is described in Facebook [56]. The dataset includes node features (profiles), circles, and ego-networks. WV [57] summarizes the network of Wikipedia voting issues. In Wikipedia, in order for a user to become an administrator, an administrative request needs to be issued. Then, the Wikipedia community decides who is promoted to be an administrator through public voting. USAir [58] is the American transportation aviation network, which describes the major airports and corresponding airlines in the United States. A node indicates an airport, and an edge denotes airlines between different airports. Power [59] represents the power grid in the western United States. For Power, the node represents a generator, converter, or substation. And an edge indicates a power line. Router [60] denotes a symmetrical snapshot of the Internet structure at the autonomous system level. These data sets can be downloaded from http://snap.stanford.edu/data/ and http://vlado.fmf.uni-lj.si/pub/networks/data/.

The specific information of these networks is shown in Table 1, where N represents the node, E denotes the edge. \(\left \langle d \right \rangle \) shows the average value of the shortest distance in the network, \(\left \langle k \right \rangle \) represents the average value of the network. C is the clustering coefficient of the network.

4.2 Simulation

4.2.1 Top-ten nodes

The SI model is the simplest infectious disease model, which can be used to evaluate the spreading ability of each node and reveal the influence of nodes. In the SI model [61], the nodes of the network are segmented into two states: susceptible state (Class S) and infectious state (Class I). The susceptible nodes refer to the nodes that don’t have the disease, but lack immunological competence, and are prone to be infected after contact with the infected nodes. Infected nodes refer to nodes infected with infectious diseases, which have a probability to affect their neighbor’s susceptible nodes and turn them into infected state. Initially, one selected node (called the seed) is in the I state while the other nodes are in the S state. When the process begins, the initially infected node has an ability to infect its surrounding nodes in S state with the probability β. Castellano and Pastor-Satorras [62] suggest that the infectious rate β is slightly above the epidemic threshold probability βc(\(\beta _{c}=\frac {\left \langle k \right \rangle }{\left \langle k^{2} \right \rangle -\left \langle k \right \rangle }\)). The standard SI model sequence for each network is obtained according to the epidemic threshold probability.

The top-ten nodes of the twelve measures on eleven different networks are demonstrated in Table 2. Because different methods evaluate importance from different aspects of the network structure, the ranking lists they obtained would be different. The ranking list of the SI model, which represents the standard sequence of the network, shows the top-ten key nodes in each network. For a clearer display, the same nodes in the ranking list for each measure and the standard sequence are shown in red, and different nodes are shown in black. For example, if node 1 is identified by the standard SI model and also identified by the DC, node 1 in the DC is marked in red. The effectiveness of each method is demonstrated by comparing each measure with the standard sequence. Compare each measure to the standard sequence to demonstrate their effectiveness. A method is more efficient if it has more nodes in common with the standard sequence.

It can be seen from Table 2 that the effectiveness of INEG is generally relatively high in all networks. In Jazz, INEG has six nodes that are the same as the standard sequence. It is second only to DC, CC, and GGC. For USAir, the ranking list for each method is almost identical to the standard sequence. The recognition performance of INEG is only inferior to GGC and LID in NS. Except for CC, BC, LID, and FLD, other measures have eight nodes that are the same as the standard sequence in EEC. For Email, CC and LID are tied for first place in performance. In PB, DC and PC are identical to the standard sequence. For Facebook, EC has the worst performance. For GrQc, the capabilities of INEG, DC, GC, and WGC are tied for first place. INEG’s performance ranks third in Router. For WV, LID identifies two vital nodes, which proves its performance is not good.

4.2.2 Individuation

The Individuation experiment, which is used to count the frequency of nodes under different rankings, is defined as follows:

where nu represents the number of nodes with unique scores, and n denotes the total number of nodes in the network. The frequency metric depicts the proportion of nodes with unique scores in the network. The experiment is used to evaluate the ability to distinguish nodes of each method. An approach with a larger rank and a smaller frequency means that it scores nodes more spread out. Therefore, this method can better distinguish the importance of nodes in the network. For example, if a method assigns the same score to all nodes in extreme cases, the ability of this method to distinguish nodes is extremely poor. This means that all nodes are ranked first and the method cannot identify crucial nodes. The specific values of the experiment are shown in Table 3. Red indicates the best performance, blue represents second, and green denotes third. What’s more, the macro effects on ten networks are shown in Figs. 4 and 5.

For Jazz, the highest ranking and lowest frequency are obtained by INEG. INEG’s capability is only inferior to WGC in USAir. INEG’s performance ranks second in NS. For EEC and PB, INEG defeats other methods. WGC and INEG tied for first place in Email. For Facebook, GrQc, and Router, the performance of INEG is only inferior to WGC. In WV, the best performance is demonstrated by INEG.

4.2.3 Kendall’s tau coefficient

The Kendall correlation coefficient [63] is named after Maurice Kendall, and often uses the Greek letter τ to indicate its value. It is a statistical value used to measure the correlation between two random sequences. Two random sequences X and Y with N elements are given, and their i th (1 ≤ i ≤ N) elements are represented by Xi and Yi respectively. At the same time, the corresponding elements form a set XY, which contains elements (Xi,Yi) (1 ≤ i ≤ N). Considered any pair of elements (Xi,Yi) and (Xj,Yj) in the set, when Xi > Xj and Yi > Yj or Xi < Xj and Yi < Yj occurs, this pair of elements is regarded consistent. When Xi > Xj and Yi < Yj or Xi < Xj and Yi > Yj occurs, this pair of elements is deemed inconsistent. When Xi = Xj and Yi = Yj, this pair of elements is considered neither consistent nor inconsistent. Therefore, Kendall correlation coefficient is defined as follows:

where nc indicates the number of identical pairs. On the contrary, nd represents the number of discordant pairs. And n denotes the length of the sequence. Its value lies between − 1 and 1. τ = 1 indicates the two sequences have totally the same rank, while τ = − 1 is the opposite.

The higher the τ is, the more precise the centrality measure is. We use Kendall’s tau coefficient to measure the correlation between the ranked list of different methods and the ranked list of standard SI model. Time t is set to 10. The quantity of infected nodes in ten steps (F(10)) is used to represent the infection ability of each node.

The results in the small-scale networks are indicated in Fig. 6. INEG defeats five methods in Jazz. The trend of INEG is roughly the same as that of WGC in USAir. In addition, INEG is superior to similar methods based on gravity models like GGC. For NS, the trends of GC and INEG are almost the same. What’s more, INEG ranks first when 0.1 ≤ β ≤ 0.12. INEG’s performance is similar to WGC in EEC. In addition, the highest τ value is obtained by INEG when 0.1 ≤ β ≤ 0.13. For Email, the performance of INEG is the best when β = 0.19. For PB, INEG and GC have almost the same trend. In particular, they almost defeat other approaches. At the same time, Fig. 7 demonstrates the results in the large-scale networks. The τ value obtained by INEG is almost the same as that obtained by GC in GrQc. Especially when 0.1 ≤ β ≤ 0.11, INEG ranks first. For Power, the trend shown by INEG is almost the same as that shown by GC. And their τ values are higher than those acquired by other methods. In Router, INEG ranks first when 0.12 ≤ β ≤ 0.15. For WV, INEG acquires the highest τ value when 0.16 ≤ β ≤ 0.21.

Kendall’s tau coefficient τ between the infection ability acquired by the SI model and the other methods for six small-scale networks. The X-axis represents the infection rate, the Y-axis indicates the Kendall’s tau coefficient, and each point represents the value of τ under the corresponding infection rate

Kendall’s tau coefficient τ between the infection ability acquired by the SI model and the other methods for four large-scale networks. The X-axis represents the infection rate, the Y-axis indicates the Kendall’s tau coefficient, and each point represents the value of τ under the corresponding infection rate

5 Applications

5.1 Explore the application of immune strategies

Immunization strategy is one of the most basic structural problems in network science. Its main goal is to effectively control the spread of diseases by vaccinating some people. The method proposed in this paper can mine this part of the key population in a large-scale network, thereby reducing the consequence caused by the virus and achieving a better immune effect.

The SI model experiment is used to measure the immune effect of each method. The top-ten nodes of each method are selected as their initial infection nodes, and β is set to the epidemic threshold probability. The ranking of the top ten nodes has been given in the Top-ten nodes experiment. We conducted one hundred experiments and the results are revealed in Figs. 8 and 9. In a certain period of time, a method that gets more infection nodes indicates that the nodes it initially chooses are more influential. In other words, the performance of this approach is better.

The number of infected nodes for dissimilar initial nodes (top-10 nodes) acquired by different approaches in six small-scale networks. The X-axis indicates the experimental simulation time t, the Y-axis denotes the number of infected nodes in the network at a specific time t, and each point reveals the number of infected nodes generated in the corresponding time

The number of infected nodes for dissimilar initial nodes (top-10 nodes) acquired by different approaches in four large-scale networks. The X-axis indicates the experimental simulation time t, the Y-axis denotes the number of infected nodes in the network at a specific time t, and each point reveals the number of infected nodes generated in the corresponding time

The experimental results in the small-scale networks are revealed in Fig. 8. For Jazz, the most infected nodes are demonstrated by INEG when t ≥ 31. Meanwhile, INEG exhibits the best performance when all approaches reach stability. In USAir, the strongest infectious ability and the perfect stability are proved by INEG when t ≥ 26. Faster infection rate is revealed by INEG in the initial stage of the NS. All methods show almost the same trend in EEC. In addition, INEG ranks fifth. For Email, CC has the strongest infection ability which surpasses other methods. For PB, the most infected nodes are acquired by INEG when t ≥ 45. Figure 9 shows the experimental results in the large-scale networks. The largest number of nodes is obtained by LID in GrQc. In Power, INEG outperforms other methods almost the entire time period. For Router, INEG’s performance ranks fourth when t = 46. For WV, INEG defeats other measures almost the entire time period.

5.2 Other applications

5.2.1 Applications for finding super-spreaders

With the outbreak of COVID-19, the World Health Organization has increased its emphasis on epidemic control. During the entire process of the outbreak, the discovery and isolation of ’super-spreaders’ has become a key measure to control the epidemic. Super-spreader, which is defined as the transmission of the virus to more than ten patients, is the key node in the epidemic transmission network. The method proposed in this paper is used to find super-spreaders in epidemic transmission models.

5.2.2 Figure pooling application

Pooling in graph classification tasks is a fundamental problem to be solved by graph neural networks. Graph neural network is a deep learning method based on graph structure, which is used for processing data structure in non-Euclidean space. Graph classification, which is a typical task in graph neural networks, is used to learn a graph classification model from the figure to the corresponding label. Graph classification focuses on the global information of graph data, including structure information and attribute information of each node. In convolutional neural networks, hierarchical pooling is an important method used to gradually extract global information. As one of the main pooling mechanism methods, Top-K is a process of continuously discarding nodes for graphs with irregular structures. Important nodes that account for a fixed proportion are looked for to replace global information, and then the remaining nodes are ignored. The approach proposed in this paper can be applied to find crucial nodes with a fixed ratio.

6 Conclusion

The paper proposes a generalized mechanics model based on information entropy to evaluate the influence of nodes in the network. The method not only considers the global information by calculating the shortest path, but also considers the degree of the node itself in the local information. In addition, the influence received from neighbor nodes is quantified in detail, and the information entropy of the node is introduced to objectively assign its own weight to each node. At the same time, location information is introduced to form the mass. Local information and global information are better combined. The proposed method in this paper performs well in different experiments on several real networks, which offers a broad prospect for identifying critical nodes.

Some ongoing works are as follows. Our approach only considers the influence of first-order neighbor nodes, while the influence of second-order or higher-order neighbor nodes are supposed to be expected. At the same time, the method in this paper is suitable for undirected networks. In the future, we will take into account improvements to the weighted network.

References

Newman ME (2003) The structure and function of complex networks. SIAM Rev 45(2):167–256. https://doi.org/10.1137/S003614450342480

Huang C, Li M, Cao F, Fujita H, Li Z, Wu X (2022) Are graph convolutional networks with random weights feasible? IEEE Trans Pattern Anal Mach Intell :1–18

Hayashi T, Fujita H, Hernandez-Matamoros A (2021) Less complexity one-class classification approach using construction error of convolutional image transformation network. Inf Sci 560:217–234. https://doi.org/10.1016/j.ins.2021.01.069

Chen L, Deng Y, Cheong KH (2021) Probability transformation of mass function: A weighted network method based on the ordered visibility graph. Eng Appl Artif Intell 105:104438. https://doi.org/10.1016/j.engappai.2021.104438

Zareie A, Sakellariou R (2021) Minimizing the spread of misinformation in online social networks: A survey. J Netw Comput Appl 186:103094–0. https://doi.org/10.1016/j.jnca.2021.103094

Wen T, Gao Q, Chen Y-w, Cheong KH (2022) Exploring the vulnerability of transportation networks by entropy: A case study of Asia–Europe maritime transportation network. Reliab Eng Syst Saf :108578

Cimr D, Fujita H, Tomaskova H, Cimler R, Selamat A (2022) Automatic seizure detection by convolutional neural networks with computational complexity analysis. Comput Methods Prog Biomed 229:107277. https://doi.org/10.1016/j.cmpb.2022.107277

Tanveer M, Rashid AH, Ganaie M, Reza M, Razzak I, Hua K-L (2021) Classification of Alzheimer’s disease using ensemble of deep neural networks trained through transfer learning. IEEE J Biomed Health Inf 26(4):1453–1463. https://doi.org/10.1109/JBHI.2021.3083274https://doi.org/10.1109/JBHI.2021.3083274

Wang S-H, Govindaraj VV, Górriz JM, Zhang X, Zhang Y-D (2021) Covid-19 classification by FGCNet with deep feature fusion from graph convolutional network and convolutional neural network. Inf Fusion 67:208–229. https://doi.org/10.1016/j.inffus.2020.10.004

Zhang H, Zhong S, Deng Y, Cheong KH (2021) LFIC: Identifying influential nodes in complex networks by local fuzzy information centrality. IEEE Trans Fuzzy Syst:3284–3296. https://doi.org/10.1109/TFUZZ.2021.3112226

Lei M, Cheong KH (2022) Node influence ranking in complex networks: A local structure entropy approach. Chaos Solitons Fractals 160:112136. https://doi.org/10.1016/j.chaos.2022.112136

Zareie A, Sheikhahmadi A, Jalili M (2020) Identification of influential users in social network using gray wolf optimization algorithm. Expert Syst Appl 142:112971. https://doi.org/10.1016/j.eswa.2019.112971

Wang X, Zhou W, Li R, Cao J, Lin X (2018) Improving robustness of interdependent networks by a new coupling strategy. Phys A Stat Mech Appl 492:1075–1080. https://doi.org/10.1016/j.physa.2017.11.037

Lei X, Yang X, Fujita H (2019) Random walk based method to identify essential proteins by integrating network topology and biological characteristics. Knowl-Based Syst 167:53–67. https://doi.org/10.1016/j.knosys.2019.01.012

Sheikhahmadi A, Zareie A (2020) Identifying influential spreaders using multi-objective artificial bee colony optimization. Appl Soft Comput 94:106436. https://doi.org/10.1016/j.asoc.2020.106436

He Q, Sun L, Wang X, Wang Z, Huang M, Yi B, Wang Y, Ma L (2021) Positive opinion maximization in signed social networks. Inf Sci 558:34–49. https://doi.org/10.1016/j.ins.2020.12.091

Lin L-F, Li Y-M (2021) An efficient approach to identify social disseminators for timely information diffusion. Inf Sci 544:78–96. https://doi.org/10.1016/j.ins.2020.07.040

Zareie A, Sheikhahmadi A, Jalili M, Fasaei MSK (2020) Finding influential nodes in social networks based on neighborhood correlation coefficient. Knowl-Based Syst 194:105580. https://doi.org/10.1016/j.knosys.2020.105580

Xiao F, Wen J, Pedrycz W (2022) Generalized divergence-based decision making method with an application to pattern classification. IEEE Trans Knowl Data Eng :1–1

Li Z, Ren T, Ma X, Liu S, Zhang Y, Zhou T (2019) Identifying influential spreaders by gravity model. Sci Rep 9(1):1–7. https://doi.org/10.1038/s41598-019-44930-9

Liu F, Wang Z, Deng Y (2020) GMM: A generalized mechanics model for identifying the importance of nodes in complex networks. Knowl-Based Syst 193:105464. https://doi.org/10.1016/j.knosys.2019.105464

Li H, Shang Q, Deng Y (2021) A generalized gravity model for influential spreaders identification in complex networks. Chaos, Solitons Fractals 143:110456. https://doi.org/10.1016/j.chaos.2020.110456

Bonacich P (1972) Factoring and weighting approaches to status scores and clique identification. J Math Sociol 2(1):113–120. https://doi.org/10.1080/0022250X.1972.9989806

Kitsak M, Gallos LK, Havlin S, Liljeros F, Muchnik L, Stanley HE, Makse HA (2010) Identification of influential spreaders in complex networks. Nature Phys 6(11):888–893. https://doi.org/10.1038/nphys1746

Zareie A, Sheikhahmadi A (2018) A hierarchical approach for influential node ranking in complex social networks. Expert Syst Appl 93:200–211. https://doi.org/10.1016/j.eswa.2017.10.018

Maji G, Mandal S, Sen S (2020) A systematic survey on influential spreaders identification in complex networks with a focus on K-shell based techniques. Expert Syst Appl 161:113681. https://doi.org/10.1016/j.eswa.2020.113681

Maji G, Namtirtha A, Dutta A, Malta MC (2020) Influential spreaders identification in complex networks with improved k-shell hybrid method. Expert Syst Appl 144:113092. https://doi.org/10.1016/j.eswa.2019.113092

Maji G (2020) Influential spreaders identification in complex networks with potential edge weight based k-shell degree neighborhood method. J Comput Sci 39:101055. https://doi.org/10.1016/j.jocs.2019.101055

Zareie A, Sheikhahmadi A (2019) EHC: Extended H-index centrality measure for identification of users’ spreading influence in complex networks. Phys A Stat Mech Appl 514:141–155. https://doi.org/10.1016/j.physa.2018.09.064

Freeman LC (1978) Centrality in social networks conceptual clarification. Soc Netw 1(3):215–239. https://doi.org/10.1016/0378-8733(78)90021-7

Newman ME (2005) A measure of betweenness centrality based on random walks. Soc Netw 27 (1):39–54. https://doi.org/10.1016/j.socnet.2004.11.009

Bonacich P, Lloyd P (2001) Eigenvector-like measures of centrality for asymmetric relations. Soc Netw 23(3):191–201. https://doi.org/10.1016/S0378-8733(01)00038-7

Brin S, Page L (2012) Reprint of: The anatomy of a large-scale hypertextual web search engine. Comput Netw 56(18):3825–3833. https://doi.org/10.1016/j.comnet.2012.10.007

Wang Y, Wang M, Fujita H (2020) Word Sense Disambiguation: A comprehensive knowledge exploitation framework. Knowl-Based Syst 190:105030. https://doi.org/10.1016/j.knosys.2019.105030

Wen T, Deng Y (2020) Identification of influencers in complex networks by local information dimensionality. Inf Sci 512:549–562. https://doi.org/10.1016/j.ins.2019.10.003

Xiao F, Pedrycz W (2022) Negation of the quantum mass function for multisource quantum information fusion with its application to pattern classification. IEEE Trans Pattern Anal Mach Intell:1–1. https://doi.org/10.1109/TPAMI.2022.3167045

Fan W, Xiao F (2022) A complex Jensen–Shannon divergence in complex evidence theory with its application in multi-source information fusion. Eng Appl Artif Intell 116:105362. https://doi.org/10.1016/j.engappai.2022.105362

Deng Y (2022) Random permutation set. Int J Comput Commun Control 17(1):4542. https://doi.org/10.15837/ijccc.2022.1.4542

Zhu C, Xiao F, Cao Z (2022) A generalized Rényi divergence for multi-source information fusion with its application in EEG data analysis. Inf Sci 605:225–243. https://doi.org/10.1016/j.ins.2022.05.012

Xiao F (2022) GEJS: A generalized evidential divergence measure for multisource information fusion. IEEE Trans Syst Man Cybernet Syst :1–13

Deng Y (2020) Information volume of mass function. Int J Comput Commun Contr 15(6):3983. https://doi.org/10.15837/ijccc.2020.6.3983

Xiao F, Cao Z, Lin C-T (2022) A complex weighted discounting multisource information fusion with its application in pattern classification. IEEE Trans Knowl Data Eng:1–16. https://doi.org/10.1109/TKDE.2022.3206871

Deng Y (2020) Uncertainty measure in evidence theory. Sci China Inf Sci 63(11):1–19. https://doi.org/10.1007/s11432-020-3006-9

Zhang S, Xiao F (2022) A TFN-based uncertainty modeling method in complex evidence theory for decision making. Inf Sci 619:193–207. https://doi.org/10.1016/j.ins.2022.11.014

Xiao F (2022) Generalized quantum evidence theory. Appl Intell:1–16. https://doi.org/10.1007/s10489-022-04181-0

Zheng L, Xiao F (2022) Complex interval number-based uncertainty modeling method with its application in decision fusion. Int J Intell Syst:1–18. https://doi.org/10.1002/int.23070

Qiang C, Deng Y, Cheong KH (2022) Information fractal dimension of mass function, vol 30. https://doi.org/10.1142/S0218348X22501109

Wen T, Jiang W (2019) Identifying influential nodes based on fuzzy local dimension in complex networks. Chaos, Solitons Fractals 119:332–342. https://doi.org/10.1016/j.chaos.2019.01.011

Floyd RW (1962) Algorithm 97: shortest path. Commun ACM 5(6):345. https://doi.org/10.1145/367766.368168

Gleiser PM, Danon L (2003) Community structure in jazz. Adv Compl Syst 6(04):565–573. https://doi.org/10.1142/S0219525903001067

Newman ME (2006) Finding community structure in networks using the eigenvectors of matrices. Phys Rev E 74(3):036104. https://doi.org/10.1103/PhysRevE.74.036104

Leskovec J, Kleinberg J, Faloutsos C (2007) Graph evolution: Densification and shrinking diameters. ACM Trans Knowl Disc Data (TKDD) 1(1):2–es. https://doi.org/10.1145/1217299.1217301

Guimera R, Danon L, Diaz-Guilera A, Giralt F, Arenas A (2003) Self-similar community structure in a network of human interactions. Phys Rev E 68(6):065103. https://doi.org/10.1103/PhysRevE.68.065103

Yin H, Benson AR, Leskovec J, Gleich DF (2017) Local higher-order graph clustering. In: Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining, pp 555–564. https://doi.org/10.1145/3097983.3098069

Adamic LA, Glance N (2005) The political blogosphere and the 2004 US election: divided they blog. In: Proceedings of the 3rd international workshop on link discovery, pp 36–43. https://doi.org/10.1145/1134271.1134277

Mcauley J, Leskovec J (2014) Discovering social circles in ego networks. ACM Trans Knowl Disc Data (TKDD) 8(1):1–28. https://doi.org/10.1145/2556612

Leskovec J, Huttenlocher D, Kleinberg J (2010) Predicting positive and negative links in online social networks. In: Proceedings of the 19th international conference on world wide web, pp 641–650. https://doi.org/10.1145/1772690.1772756

Colizza V, Pastor-Satorras R, Vespignani A (2007) Reaction–diffusion processes and metapopulation models in heterogeneous networks. Nature Phys 3(4):276–282. https://doi.org/10.1038/nphys560

Watts DJ, Strogatz SH (1998) Collective dynamics of ‘small-world’ networks. Nature 393 (6684):440–442. https://doi.org/10.1038/30918

Spring N, Mahajan R, Wetherall D, Anderson T (2004) Measuring ISP topologies with Rocketfuel. IEEE/ACM Trans Netw 12(1):2–16. https://doi.org/10.1109/TNET.2003.822655

Yang M, Chen G, Fu X (2011) A modified SIS model with an infective medium on complex networks and its global stability. Phys A Stat Mech Appl 390(12):2408–2413. https://doi.org/10.1016/j.physa.2011.02.007

Castellano C, Pastor-Satorras R (2010) Thresholds for epidemic spreading in networks. Phys Rev Lett 105(21):218701. https://doi.org/10.1103/PhysRevLett.105.218701

Kendall MG (1938) A new measure of rank correlation. Biometrika 30 (1/2):81–93. https://doi.org/10.1093/biomet/30.1-2.81

Acknowledgements

The authors greatly appreciate the reviewers’ suggestions and the editor’s encouragement. This research is supported by the National Natural Science Foundation of China (No. 62003280), Chongqing Talents: Exceptional Young Talents Project (No. cstc2022ycjh-bgzxm0070), Natural Science Foundation of Chongqing, China (No. CSTB2022NSCQ-MSX0531), and Chongqing Overseas Scholars Innovation Program (No. cx2022024).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, S., Xiao, F. A mechanics model based on information entropy for identifying influencers in complex networks. Appl Intell 53, 18450–18469 (2023). https://doi.org/10.1007/s10489-023-04457-z

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-023-04457-z