Abstract

Advances in imaging techniques enable consecutive image sequences to be acquired for quality monitoring of manufacturing production lines. Registration of these image sequences is essential for in-line pattern inspection and metrology, e.g., in the printing process of flexible electronics. However, conventional image registration algorithms cannot produce accurate results when the images contain duplicate and deformable patterns, as is common in the manufacturing process. This failure arises because conventional algorithms use only spatial and pixel intensity information for registration. Considering the temporal continuity of the product images, in this paper we propose a closed-loop feedback registration algorithm. The algorithm leverages the temporal and spatial relationships of the consecutive images for fast, accurate, and robust point matching. The experimental results show that our algorithm finds about 100% more matching point pairs with a lower root mean squared error and reduces the running time by up to 86.5% compared to other state-of-the-art outlier removal algorithms.

References

Ma Y, Niu D, Zhang J, Zhao X, Yang B, Zhang C (2022) Unsupervised deformable image registration network for 3D medical images. Appl Intell 52:766–779. https://doi.org/10.1007/s10489-021-02196-7

Kawulok M, Benecki P, Piechaczek S, Hrynczenko K, Kostrzewa D, Nalepa J (2019) Deep learning for multiple-image super-resolution. IEEE Geosci Remote Sens Lett 17:1062–1066

Devi PRS, Baskaran R (2021) SL2E-AFRE: personalized 3D face reconstruction using autoencoder with simultaneous subspace learning and landmark estimation. Appl Intell 51:2253–2268. https://doi.org/10.1007/s10489-020-02000-y

Hosseini MS, Moradi MH (2022) Adaptive fuzzy-SIFT rule-based registration for 3D cardiac motion estimation. Appl Intell 52:1615–1629. https://doi.org/10.1007/s10489-021-02430-2

Mehmood Z, Mahmood T, Javid MA (2018) Content-based image retrieval and semantic automatic image annotation based on the weighted average of triangular histograms using support vector machine. Appl Intell 48:166–181. https://doi.org/10.1007/s10489-017-0957-5

Chen J, Xu Y, Zhang C, Xu Z, Meng X, Wang J (2019) An improved two-stream 3D convolutional neural network for human action recognition. In: 2019 25th International Conference on Automation and Computing (ICAC), pp 1–6. https://doi.org/10.23919/IConAC.2019.8894962

Du X, Anthony BW, Kojimoto NC (2015) Grid-based matching for full-field large-area deformation measurement. Opt Lasers Eng 66:307–319

Wang X, Liu X, Zhu H, Ma S (2017) Spatial-temporal subset based digital image correlation considering the temporal continuity of deformation. Opt Lasers Eng 90:247–253

Lowe DG (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60:91–110

Bay H, Tuytelaars T, Van Gool L (2006) SURF: speeded up robust features. In: Leonardis A, Bischof H, Pinz A (eds.) European conference on computer vision. Springer, Berlin, Heidelberg, pp 404–417. https://doi.org/10.1007/11744023_32

Calonder M, Lepetit V, Strecha C, Fua P (2010) BRIEF: binary robust independent elementary features. In: Daniilidis K, Maragos P, Paragios N (eds.) European conference on computer vision. Springer, Berlin, Heidelberg, pp 778–792. https://doi.org/10.1007/978-3-642-15561-1_56

Aldana-Iuit J, Mishkin D, Chum O, Matas J (2020) Saddle: fast and repeatable features with good coverage. Image Vis Comput 97:3807

Harris CG, Stephens M (1988) A combined corner and edge detector. In: Alvey vision conference. Citeseer, vol 15, pp 147–151. https://doi.org/10.5244/C.2.23

Zaragoza J, Chin T-J, Tran Q-H et al (2014) As-projective-as-possible image stitching with moving DLT. IEEE Trans Pattern Anal Mach Intell 36:1285–1298

Rublee E, Rabaud V, Konolige K, Bradski G (2011) ORB: an efficient alternative to SIFT or SURF. In: 2011 international conference on computer vision. IEEE, pp 2564–2571. https://doi.org/10.1109/ICCV.2011.6126544

DeTone D, Malisiewicz T, Rabinovich A (2018) SuperPoint: self-supervised interest point detection and description. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, pp 224–236

Aryal S, Ting KM, Washio T, Haffari G (2017) Data-dependent dissimilarity measure: an effective alternative to geometric distance measures. Knowl Inf Syst 53:479–506

Fischler MA, Bolles RC (1981) Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun ACM 24:381–395. https://doi.org/10.1145/358669.358692

Barath D, Noskova J, Ivashechkin M, Matas J (2020) MAGSAC++, a fast, reliable and accurate robust estimator. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 1304–1312

Chen S, Zhong S, Xue B, Li X, Zhao L, Chang CI (2020) Iterative scale-invariant feature transform for remote sensing image registration. IEEE Trans Geosci Remote Sens 59:3244–3265

Sarlin P-E, DeTone D, Malisiewicz T, Rabinovich A (2020) SuperGlue: learning feature matching with graph neural networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 4938–4947

Sun J, Shen Z, Wang Y, Bao H, Zhou X (2021) LoFTR: detector-free local feature matching with transformers. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 8922–8931

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I (2017) Attention is all you need. In: Guyon I, Von Luxburg U, Bengio S, Wallach H, Fergus R, Vishwanathan S, Garnett R (eds.) Advances in Neural Information Processing Systems. Curran Associates, Inc. https://proceedings.neurips.cc/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

Szeliski R (2006) Image alignment and stitching. In: Handbook of mathematical models in computer vision. Springer, Boston, pp 273–292. https://doi.org/10.1007/0-387-28831-7_17

Yan J, Du X (2020) Real-time web tension prediction using web moving speed and natural vibration frequency. Meas Sci Technol 31:115205

Burkardt J (2014) The truncated normal distribution. Department of Scientific Computing Website, Florida State University 1–35

Vedaldi A, Fulkerson B (2010) VLFeat: an open and portable library of computer vision algorithms. In: Proceedings of the 18th ACM international conference on multimedia. Association for Computing Machinery, New York, pp 1469–1472. https://doi.org/10.1145/1873951.1874249

Pixelink Capture Software (n.d.) https://pixelink.com/products/software/pixelink-capture-software/. Accessed 25 Jul 2020

Triggs B, McLauchlan PF, Hartley RI, Fitzgibbon AW (1999) Bundle adjustment—a modern synthesis. In: Triggs B, Zisserman A, Szeliski R (eds.) Vision Algorithms: Theory and Practice. Springer, Berlin, Heidelberg, pp 298–372. https://doi.org/10.1007/3-540-44480-7_21

DiMeo P, Sun L, Du X (2021) Fast and accurate autofocus control using Gaussian standard deviation and gradient-based binning. Opt Express 29:19862–19878. https://doi.org/10.1364/OE.425118

Ma R (2021) Grid-based-patterns creation. https://github.com/cucum13er/Grid-based-patterns-creation. Accessed 3 May 2021

Acknowledgements

This work is supported in part by the National Science Foundation (CMMI #1916866, CMMI #1942185, and CMMI #1907250). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: The vibration measurement in the z-direction of the roll-to-roll printing system

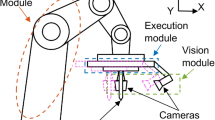

Figure 9 shows the setup of the roll-to-roll printing system in our lab. The displacement sensor is installed to measure the distance between the sensor and the web. Figure 10 shows 80 seconds of data acquired by the sensor. The maximum displacement in the z-direction is only 387 nm, which is negligible compared with the mm-scale displacements in the x and y directions.

Appendix 2: Expedition of matching algorithm for real-time applications

While the complexity of the proposed matching algorithm has been reduced to O(n) in Section 3.2, its calculation can be further expedited for real-time applications. Instead of using loops to match each element of $P_i(k)$ with $P_{i-1}(j)$ as shown in Fig. 11a and b, vectorized multiplication of the feature matrices can be adopted for the SSD calculation.

The SSD of two feature vectors, e.g., $V_{i-1}(j)$ and $V_i(k)$, can be calculated by:

$$SSD_{jk} = \sum_{l=1}^{d} \left( V_{i-1}(j)_l - V_i(k)_l \right)^2 \qquad (11)$$

where $\sum$ is the summation operator, $l$ indexes the elements of the vectors, and $d$ is their dimension. However, calculating $SSD_{jk}$ for all vector pairs in series using loops is time-consuming. In the following, we parallelize the calculation by vectorization. Mathematically, Eq. (11) can be expressed as:

$$SSD_{jk} = V_{i-1}(j)\,V_{i-1}(j)^T - 2\,V_{i-1}(j)\,V_i(k)^T + V_i(k)\,V_i(k)^T \qquad (12)$$

where $V_i(k)^T$ denotes the transpose of $V_i(k)$. Expanding Eq. (12) from a one-to-one SSD into m × n SSDs (meaning there are m features in $V_{i-1}$ and n features in $V_i$), we can calculate all the SSDs between $V_{i-1}$ and $V_i$ by

$$SSD = \operatorname{diag}\!\left(V_{i-1}V_{i-1}^T\right)\mathbf{1}_n^T - 2\,V_{i-1}V_i^T + \mathbf{1}_m \operatorname{diag}\!\left(V_iV_i^T\right)^T \qquad (13)$$

where $V_{i-1}$ and $V_i$ are the m × d and n × d matrices whose rows are the feature vectors, $\operatorname{diag}(\cdot)$ returns the diagonal of a matrix as a column vector, $\mathbf{1}_n$ is the all-ones column vector of length n, and the element at the jth row and kth column of $SSD$ is $SSD_{jk}$. The total FLOPs of Eqs. (11) and (13) are similar. However, the SSD calculation time is significantly reduced by the vectorization in Eq. (13): by taking advantage of the parallel computing capability of multi-core CPUs or GPUs, the matrix multiplication in Eq. (13) runs much faster than the sequential loops of Eq. (11). In terms of sequential steps, the vectorization reduces the SSD calculation from O(n) loop iterations to O(1) matrix operations. The running speed was evaluated in Section 4. Figure 11 shows the comparison of the two methods. Figure 11a shows the loop implementation of the feature matching algorithm. Figure 11b shows the SSD calculation process of (a). Figure 11c shows the vectorized implementation of the feature matching algorithm. Figure 11d shows the SSD calculation process of (c). As an example, the dimensions of all the feature vectors are set to 1 × 128.
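The difference between the loop-based and the vectorized SSD calculation can be illustrated with a short NumPy sketch. This is only an illustration under stated assumptions, not the implementation evaluated in Section 4: the function names `ssd_loop` and `ssd_vectorized`, the random descriptors, and the sizes m = 5 and n = 7 are hypothetical, and the descriptor length of 128 follows the example above.

```python
import numpy as np


def ssd_loop(v_prev: np.ndarray, v_curr: np.ndarray) -> np.ndarray:
    """SSD of every descriptor pair, one pair at a time (loop form)."""
    m, n = v_prev.shape[0], v_curr.shape[0]
    ssd = np.empty((m, n))
    for j in range(m):
        for k in range(n):
            diff = v_prev[j] - v_curr[k]
            ssd[j, k] = np.dot(diff, diff)
    return ssd


def ssd_vectorized(v_prev: np.ndarray, v_curr: np.ndarray) -> np.ndarray:
    """Same m x n SSD matrix from a single matrix product (vectorized form)."""
    sq_prev = np.sum(v_prev ** 2, axis=1)[:, None]  # shape (m, 1)
    sq_curr = np.sum(v_curr ** 2, axis=1)[None, :]  # shape (1, n)
    ssd = sq_prev - 2.0 * (v_prev @ v_curr.T) + sq_curr
    return np.maximum(ssd, 0.0)  # clip tiny negatives caused by round-off


# Hypothetical data: m = 5 and n = 7 descriptors of length 128.
rng = np.random.default_rng(0)
v_prev = rng.random((5, 128))
v_curr = rng.random((7, 128))

ssd = ssd_vectorized(v_prev, v_curr)
best_match = ssd.argmin(axis=1)  # lowest-SSD candidate for each previous feature
```

Both functions return the same matrix up to floating-point round-off. The vectorized form delegates the work to an optimized matrix product, which is where the practical speed-up comes from.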

Appendix 3: More experimental results on simulation data

1.1 Simulation data experiments

In this sub-section, we evaluate the performance of our closed-loop feedback registration algorithm with synthetic images. Consecutive repetitive and regular printed patterns are commonly encountered in real-world flexible electronics manufacturing due to the nature of printing, e.g., roll-to-roll printing. To simulate the images of such repetitive and regular grid-based patterns in a real-world production line, three synthetic image datasets are created. Each of the three datasets contains twenty images of 400 × 400 pixels and has its own basic grid pattern. The first dataset consists of squares with a side length of 41 pixels, the second dataset consists of circles with a radius of 20 pixels, and the last dataset consists of hexagons with a radius of 20 pixels. All these patterns are randomly deformed by local affine transformations. In addition, each image is corrupted by Gaussian blur filtering with a mask size of 7 × 7, salt and pepper noise with a density of 0.05, and white Gaussian noise with a variance of 0.02. We use a distortion factor to define the level of local affine deformation, i.e., the greater the distortion factor is, the broader the random translation, rotation, scaling, and shear ranges are (readers can check the source code in [31] for more details). Figure 12 shows an example of the three datasets. For each image in the three synthetic datasets, the center positions of all the deformed patterns are saved as the ground truth when the image is created. To simulate the production lines in the real-world scenario, we impose a fixed displacement between every two consecutive images (see the red arrows in Fig. 14). The moving speed of each dataset is dx = dy = 60 pixels/frame, i.e., the distance between the centers of two adjacent undistorted patterns.
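As a rough illustration of how such a synthetic image can be produced, the following NumPy sketch draws an undistorted circle grid and adds the two noise types with the parameters quoted above. It is a simplified sketch and not the generator in [31]: the local affine deformation and the 7 × 7 Gaussian blur are omitted, the function names, the grid offset of 30 pixels, the intensity range [0, 1], and the order in which the noise is applied are all assumptions.

```python
import numpy as np


def make_circle_grid(size=400, radius=20, pitch=60, offset=30):
    """Draw filled circles on a regular grid and return the image and the centers."""
    yy, xx = np.mgrid[0:size, 0:size]
    image = np.zeros((size, size), dtype=float)
    centers = []
    for cy in range(offset, size, pitch):
        for cx in range(offset, size, pitch):
            image[(yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2] = 1.0
            centers.append((cx, cy))  # ground-truth center in (x, y) order
    return image, np.array(centers, dtype=float)


def corrupt(image, rng, sp_density=0.05, gauss_var=0.02):
    """Add white Gaussian noise, then salt and pepper noise (assumed order)."""
    noisy = image + rng.normal(0.0, np.sqrt(gauss_var), image.shape)
    u = rng.random(image.shape)
    noisy[u < sp_density / 2] = 0.0        # pepper
    noisy[u > 1.0 - sp_density / 2] = 1.0  # salt
    return np.clip(noisy, 0.0, 1.0)


rng = np.random.default_rng(0)
clean, centers = make_circle_grid()
noisy = corrupt(clean, rng)
```

With a pitch of 60 pixels, a displacement of dx = dy = 60 pixels/frame moves every pattern onto the position of its diagonal neighbor, which is why consecutive frames are hard to tell apart from appearance alone.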

To evaluate the performance of the proposed algorithm, three feature-based algorithms are implemented. Each of the SIFT, SURF, and ORB detectors and descriptors is used on a sequence of grid-based images to detect and describe the interest points. Then, these interest points are matched through the four methods described below. In the first matching method, we implement the lowest SSD method directly. In the second method, the RANSAC outlier removal algorithm is applied to refine the matching results of the first method. In the third method (only when the SIFT detector and descriptor are adopted), a recent variant of SIFT, ISIFT [20], is implemented. ISIFT iteratively optimizes the results of RANSAC based on mutual information and shows higher registration accuracy than SIFT in remote sensing images. In the fourth method, the proposed closed-loop feedback matching method is implemented. An example of the matching results is shown in Fig. 13, where all of the results are based on the SIFT detector and descriptor. The results of the SURF and ORB detectors and descriptors are shown in the next sub-section. From Fig. 13a-c, we can see that both the traditional and the state-of-the-art feature-based matching algorithms failed to find the correct correspondences between two grid-based images. The reason is that these algorithms ignore the smooth motion of the moving target and the continuous spatiotemporal information in the consecutive image sequence, and thus cannot discriminate between similar grid patterns. In contrast, as shown in Fig. 13d, by taking advantage of the prior registration results, our proposed closed-loop feedback matching algorithm yields more accurate and more abundant matching correspondences.
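The following toy sketch illustrates why motion information from a prior registration helps on repetitive patterns. It is not the closed-loop feedback algorithm itself, whose details are given in the main text; the function `gated_match`, the gating radius, the predicted shift, and the identical descriptors are all hypothetical and chosen only to make the ambiguity visible.

```python
import numpy as np


def gated_match(pts_prev, desc_prev, pts_curr, desc_curr, predicted_shift, radius):
    """Lowest-SSD match restricted to candidates near the predicted position."""
    matches = []
    for j, (p, d) in enumerate(zip(pts_prev, desc_prev)):
        predicted = p + predicted_shift
        dist = np.linalg.norm(pts_curr - predicted, axis=1)
        candidates = np.flatnonzero(dist <= radius)
        if candidates.size == 0:
            continue  # no plausible candidate for this point
        ssd = np.sum((desc_curr[candidates] - d) ** 2, axis=1)
        matches.append((j, int(candidates[np.argmin(ssd)])))
    return matches


# Toy grid: nine identical patterns, so every descriptor is the same.
grid = np.array([(x, y) for y in (0, 60, 120) for x in (0, 60, 120)], dtype=float)
pts_prev, pts_curr = grid, grid + 60.0  # true motion of 60 pixels in x and y
desc_prev = np.ones((9, 8))
desc_curr = np.ones((9, 8))

# Appearance only: all SSDs are zero, so every point picks candidate 0.
ssd_all = ((desc_prev[:, None, :] - desc_curr[None, :, :]) ** 2).sum(axis=2)
naive = ssd_all.argmin(axis=1)

# With a rough shift estimate (assumed to come from the previous frame pair).
gated = gated_match(pts_prev, desc_prev, pts_curr, desc_curr,
                    predicted_shift=np.array([55.0, 58.0]), radius=15.0)
```

In this toy case the appearance-only matcher assigns every point to the same candidate, while the gated matcher recovers the one-to-one correspondences even though the predicted shift is a few pixels off.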

To further quantify the matching accuracy of the proposed algorithm, we first use the average Euclidean distance between the detected centers (we define the detected centers as the correspondences located within 5 pixels of the ground-truth centers) and the ground-truth centers to measure the detected center error. Then, we also define the detected center ratio, which is the ratio of the number of detected centers to the number of ground-truth correspondences. Based on the moving speed (dx = dy = 60 pixels/frame in our experiment), the ground-truth centers of two consecutive images can be easily matched, and these matches are defined as the ground-truth correspondences. Finally, to quantify the accuracy of all the detected matching correspondences (centers and non-centers), we compute the respective RMSE using:

$$RMSE_k = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left\| P_{k-1}(i) - T_{proj}(k)\,P_k(i) \right\|_2^2} \qquad (14)$$

where $P_{k-1}(i)$ and $P_k(i)$ represent the coordinates of the ith matching point pair in image$_{k-1}$ and image$_k$, and $N$ is the number of matching point pairs. $T_{proj}(k)$ can be calculated using the ground-truth correspondences and the DLT method. Using Eq. (14), we transform the matching points in image$_k$ into the coordinate system of image$_{k-1}$ and then calculate the root mean square of the L2 distances of all the matching pairs in the coordinates of image$_{k-1}$. Figure 14a shows an example of the ground-truth correspondences. Figure 14b shows the matching result of the same image pair using the SURF detector and descriptor with our closed-loop feedback matching algorithm. The small black circles are the detected pattern centers of this image pair.
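The three measures described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions rather than the evaluation code used for Fig. 15: the function names are hypothetical, the DLT is the basic unnormalized variant, and the 3 × 3 grid of centers and the 1-pixel offset in the example are made-up data.

```python
import numpy as np


def fit_homography_dlt(src, dst):
    """Basic DLT: estimate the 3x3 matrix H with dst ~ H @ src from >= 4 pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)  # right singular vector of the smallest singular value
    return h / h[2, 2]


def apply_homography(h, pts):
    """Map (N, 2) points through H using homogeneous coordinates."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]


def registration_rmse(pts_prev, pts_curr, t_proj):
    """RMSE of matched pairs after mapping image k points into image k-1."""
    residual = pts_prev - apply_homography(t_proj, pts_curr)
    return float(np.sqrt(np.mean(np.sum(residual ** 2, axis=1))))


def center_metrics(matched_pts, gt_centers, n_gt_correspondences, tol=5.0):
    """Detected center error and detected center ratio for one image."""
    dists = np.linalg.norm(matched_pts[:, None, :] - gt_centers[None, :, :], axis=2)
    nearest = dists.min(axis=1)
    is_center = nearest <= tol  # within 5 pixels of a ground-truth center
    error = float(nearest[is_center].mean()) if is_center.any() else float("nan")
    ratio = float(is_center.sum()) / n_gt_correspondences
    return error, ratio


# Made-up example: nine ground-truth centers displaced by 60 pixels per frame.
gt_prev = np.array([(x, y) for y in (30, 90, 150) for x in (30, 90, 150)], dtype=float)
gt_curr = gt_prev + 60.0
t_proj = fit_homography_dlt(gt_curr, gt_prev)  # maps image k into image k-1

# Four detected matches, each 1 pixel off in x in the previous image.
matched_prev = gt_prev[:4] + np.array([1.0, 0.0])
matched_curr = gt_curr[:4]

rmse = registration_rmse(matched_prev, matched_curr, t_proj)
center_error, center_ratio = center_metrics(matched_prev, gt_prev, len(gt_prev))
```

In this example the RMSE and the detected center error are both about 1 pixel, and the detected center ratio is 4/9, since only four of the nine ground-truth correspondences are recovered.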

Fig. 14 Matching correspondences comparison. The red arrows represent the moving direction of the simulated production line. (a) Ground-truth correspondences calculated from the moving speed and the center positions of the patterns. (b) Matching results calculated by the SURF detector and descriptor and our closed-loop feedback matching algorithm

Figure 15 shows the quantitative errors from all three synthetic datasets. The horizontal axes are the distortion factors, the vertical axes on the left (blue color) are the errors in pixels, and the vertical axes on the right (red color) are the detected center ratios. For the different distortion factors, the blue lines are the average detected center errors, the green lines are the average RMSEs of all the detected correspondences, and the red lines are the average detected center ratios. Figure 15a shows the errors using the SIFT detector and descriptor with our matching algorithm, Fig. 15b shows the errors using the SURF detector and descriptor with our matching algorithm, and Fig. 15c shows the errors using the ORB detector and descriptor with our matching algorithm. The detected center errors of all three detectors and descriptors are less than 2 pixels. This shows that our matching algorithm can locate the distorted pattern centers accurately. Meanwhile, the SURF detector finds the most centers and keeps the detected center ratio stable even when the distortion factor increases up to 2.0. The SIFT detector finds fewer centers than SURF, and its detected center ratio decreases as the distortion factor increases. The pattern centers near the image edges are partially missed, as can be seen in Fig. 14b. This is because the scale-space detectors in the SURF and SIFT algorithms (Hessian-based and DoG-based, respectively) are not stable on the borders of the images due to the zero-padding used in the blurring process. The ORB detector finds the most centers in the circle pattern dataset, fewer in the hexagon pattern dataset, and the fewest in the square pattern dataset. This is because the ORB detector is more sensitive to corners than to the centers of the patterns in the hexagon and square pattern datasets. Furthermore, compared to the other methods mentioned before, our closed-loop feedback matching algorithm successfully finds correct correspondences in the highly distorted (distortion factor up to 2.0) image sequences. Using the SIFT and SURF detectors and descriptors, the RMSEs are less than 3.5 pixels even when the distortion factor is up to 2.0. Using the ORB detector and descriptor, the RMSE is slightly higher than with SIFT and SURF but still less than 8 pixels. This is because the SIFT and SURF descriptors are more robust to noise due to their longer feature vectors. Note that the local distortions cannot be fully represented by a single projective transformation matrix ($T_{proj}(k)$ in Eq. (14), calculated from the ground-truth centers), so part of the RMSE stems from this model mismatch rather than from incorrect matches. Therefore, the RMSEs of the closed-loop feedback matching algorithm are acceptable.

1.2 More experiment results

Figures 16, 17, 18, and 19 show the matching results of our algorithm using the ORB and SURF detectors and descriptors. They are mostly consistent with the SIFT results (Fig. 13d).

Appendix 4: More experiment results on real-world moving flexible targets

Figure 20 shows matching examples of the proposed algorithm with the ORB and SURF detectors and descriptors on the R2R_Autofocus1 and R2R_Autofocus2 datasets. Figure 21 shows the matching results of the two deep-learning-based end-to-end registration algorithms, SuperGlue and LoFTR, on the R2R_Autofocus2 dataset.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ma, R., Du, X. Closed-loop feedback registration for consecutive images of moving flexible targets. Appl Intell 53, 10647–10667 (2023). https://doi.org/10.1007/s10489-022-04068-0

DOI: https://doi.org/10.1007/s10489-022-04068-0