Abstract

Incomplete Multi-View Clustering (IMVC) aims to find an optimal clustering solution for incomplete multi-view data, in which certain views suffer from missing instances. However, most existing IMVC methods still have several drawbacks in practical applications: they cannot handle arbitrary incomplete scenarios; their computational cost is relatively high; they often ignore valuable nonlinear relations among samples; and they do not sufficiently exploit the complementary information among views. To address these issues, in this paper we present a novel and flexible unified graph learning framework, called Multiple Kernel-based Anchor Graph coupled low-rank Tensor learning for Incomplete Multi-View Clustering (MKAGT_IMVC), whose goal is to adaptively learn the optimal unified similarity matrix from all incomplete views. Specifically, MKAGT_IMVC introduces an improved anchor selection strategy tailored to the characteristics of incomplete multi-view data. Then, a novel cross-view anchor graph fusion mechanism is introduced to construct multiple fused complete anchor graphs, which capture more of the intra-view and inter-view nonlinear relations. Moreover, a graph learning model combining a low-rank tensor constraint and a consensus graph constraint is developed, where all fused complete anchor graphs are regarded as prior knowledge to initialize this model. Extensive experiments on eight incomplete multi-view datasets show that our method outperforms several state-of-the-art methods in both clustering performance and running time.


1 Introduction

In many practical applications, data are collected from various sensors or by diverse processing methods; such data, characterized by multiple perspectives, are referred to as multi-view data in the machine learning community [1]. For instance, a specific piece of news can be reported simultaneously by multiple news organizations [2], and an image can be described with multiple visual features, such as GIST, PHOG, and LBP [3]. Generally speaking, multi-view data are more comprehensive than any individual view because multiple views contain complementary and consistent information [4]. Thus, making efficient use of the information across views can improve the performance of various machine learning tasks.

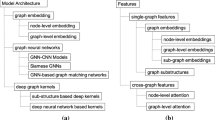

Multi-View Clustering (MVC), which partitions samples into groups more accurately by efficiently utilizing the complementary and consistent information among multiple views, has become an important research topic owing to the wide application of multi-view data. Existing methods can be roughly classified into three types based on the techniques involved: matrix factorization-based methods [5, 6], graph-based methods [7,8,9,10,11,12], and deep learning-based methods [13, 14]. Note that these MVC methods usually rely on a basic assumption that all instances are fully observed in each view. In many real-world applications, however, each view may lose some instances for various reasons, such as human error or sensor failure. For example, texts and images are usually regarded as two views of a document, while some documents may be missing texts or images [15]; magnetic resonance images and blood test results can both be used to diagnose diseases, while some patients may have only one of them [16]. Such data are generally referred to as incomplete multi-view data. Missing instances not only significantly reduce the information quality of each view but also make it more difficult to mine the complementary and consistent information between views. Consequently, conventional MVC methods inevitably degrade significantly, or even fail, on incomplete multi-view data, especially data with a high missing rate [17]. Because incomplete multi-view data are both important and complex, Incomplete Multi-View Clustering (IMVC) has received widespread attention in recent years, and a variety of IMVC methods have been proposed under different theoretical frameworks [18].
Roughly speaking, in terms of the techniques involved, existing IMVC methods can be categorized into three categories: matrix factorization-based methods, deep learning-based methods, and graph-based methods.

The matrix factorization-based methods [17, 19, 20] aim to learn an optimal low-dimensional consistent representation directly from multiple incomplete views via matrix factorization. For example, as a pioneering work dealing with missing instances in all views, Li et al. [17] proposed Partial multi-View Clustering (PVC), which adopts non-negative matrix factorization (NMF) and ℓ1-norm regularization to learn a latent consistent representation from two views. Unfortunately, PVC can only handle incomplete multi-view data with two views and ignores differences in the information quality of the views. By combining weighted semi-NMF and ℓ2,1-norm regularization, Hu and Chen [19] further introduced Doubly Aligned Incomplete Multi-view Clustering (DAIMC) to learn an optimal unified latent feature matrix from all views. Nevertheless, DAIMC fails to explore the underlying relations between instances in each view. Wen et al. [20] provided a more effective and flexible IMVC framework, called Generalized Incomplete Multi-view Clustering with Flexible Locality Structure Diffusion (GIMC_FLSD), which integrates local structure preservation and individual representation learning to obtain the optimal consistent representation. However, real-world data generally exhibit nonlinear structure, whereas GIMC_FLSD encodes the data directly in a linear space and therefore fails to capture many nonlinear relations among samples.

The deep learning-based methods [21,22,23,24,25,26] build deep neural networks to adaptively learn an optimal consistent representation in a nonlinear space. For example, inspired by Generative Adversarial Networks (GAN), [21,22,23] leverage the common representation generated by a GAN to infer the missing data and learn the optimal consistent representation simultaneously. Lin et al. [24] adopted contrastive learning and a dual prediction mechanism so that consistency learning and data recovery mutually boost each other. However, these deep methods require a large number of aligned samples that appear in all views to guarantee good performance. To further improve the effectiveness and flexibility of deep methods, [25] and [26] integrate view-specific encoders, graph embedding, a fusion layer, and view-specific decoders into a joint pre-training network to deal with incomplete multi-view data. Nevertheless, these two methods cannot perform the clustering task directly in an end-to-end manner.

The graph-based methods [15, 16, 27,28,29,30,31,32,33,34] focus on finding an optimal fused graph from the graphs computed on all views, where these graphs encode the relationships among all samples. According to how the initial graphs are constructed, we roughly group them into available instance-based methods, imputation-based methods, and anchor-based methods. Among the available instance-based methods, [15] and [16] directly use the available instances of each view to construct a low-dimensional similarity graph and exploit an index matrix, which records which instances are available in the corresponding view, to restore it to a complete similarity graph. The complete similarity graph is then integrated into the optimization framework of consistent representation learning. However, this kind of method generally has a high computational cost, which limits its practical applications. As representative imputation-based methods, [27,28,29,30,31] impute each incomplete graph and learn the optimal consensus clustering matrix simultaneously. To further explore the hidden information and interpretability of the missing views, Wen et al. [32] proposed IMVTSC-MVI, which recovers the missing instances rather than the missing graph. However, imputation-based methods may introduce noise and therefore cannot guarantee improved clustering performance. As for anchor-based methods, [33] and [34] recently proposed selecting samples that appear in all views as anchor points, which are then used to build the anchor graph of each view. Although these two methods reduce the computational complexity, especially on large-scale datasets, they require a sufficient number of samples that appear in all views, which makes them inflexible.

To sum up, although most of the aforementioned methods have achieved state-of-the-art performance on incomplete multi-view clustering tasks, they still have the following limitations in practical applications. First, many methods are inflexible, are suitable only for specific incomplete scenarios, and usually have relatively high computational cost. Second, the underlying and significant nonlinear relations among samples are ignored. Third, the complementary information among all views is not sufficiently exploited. To address these issues, in this article we propose a novel and flexible unified graph learning framework, called Multiple Kernel-based Anchor Graph coupled low-rank Tensor learning for Incomplete Multi-View Clustering (MKAGT_IMVC). Specifically, as shown in Fig. 1, MKAGT_IMVC consists of three key steps. First, we adopt an improved version of the directly alternate sampling (DAS) method [12] to efficiently generate a batch of representative anchor points from incomplete multi-view data. The selected anchor points are used to build a complete anchor graph for each view, and all complete anchor graphs are then directly combined into a fused complete anchor graph, which greatly increases the flexibility to handle arbitrary incomplete scenarios and effectively reduces the computational cost. Second, under the cross-view anchor graph fusion mechanism and given multiple predefined kernel functions, the proposed method obtains a corresponding number of fused complete anchor graphs that record many valuable nonlinear relationships among samples. Third, we develop a graph learning model based on subspace representation, in which all the fused complete anchor graphs are regarded as prior knowledge to initialize the model. To guarantee that the complementary and consistent information of multiple views is fully explored, a low-rank tensor constraint and a consensus graph constraint are jointly imposed on this graph learning model.
Therefore, MKAGT_IMVC can adaptively learn the optimal unified similarity matrix by solving the above graph learning model with the Alternating Direction Method of Multipliers (ADMM).

The main contributions and novelty of this article are listed as follows:

1. We innovatively exploit a simple and efficient anchor selection approach to generate a batch of representative anchors from incomplete multi-view data, and use these anchors to construct view-wise complete anchor graphs.

2. Instead of combining view-wise similarity graphs by imputing missing samples, we provide a novel cross-view anchor graph fusion paradigm that constructs fused complete anchor graphs as prior knowledge for incomplete multi-view data. This fusion mechanism not only captures more nonlinear relations between samples but also adequately exploits the intra-view and inter-view information.

3. We present a novel and effective unified graph learning framework (MKAGT_IMVC) that incorporates this prior knowledge into a subspace representation based graph learning model for incomplete multi-view clustering, where the prior knowledge serves as the initial input of the model. Moreover, the model integrates a low-rank tensor constraint and a consensus graph constraint to learn the optimal unified similarity matrix.

4. We conduct extensive experiments showing that our algorithm outperforms several state-of-the-art methods in both clustering performance and running time.

2 Notations and related works

In this section, we first introduce the notations used throughout this paper. Then, we briefly analyze several closely related works of the proposed method.

2.1 Notations

In this paper, we use italic lowercase letters, bold italic lowercase letters, bold italic capital letters, and bold calligraphic letters to denote scalars, vectors, matrices, and tensors, respectively (e.g., \(a\), \(\boldsymbol {a}\), \(\boldsymbol {A}\), \(\mathscr {A}\)). We denote by \(\boldsymbol {I} \in \mathbb {R}^{n \times n}\) the identity matrix of compatible size and by \(\boldsymbol {1}\) the all-one vector of appropriate length. For convenience, Table 1 lists some basic notations and their descriptions.

2.2 Related works

2.2.1 Anchor-based Partial Multi-view Clustering (APMC)

APMC [33] uses anchor points to construct intra-view and inter-view similarities to address the IMVC problem. Specifically, APMC consists of two steps: constructing the fused similarity graph with anchor points and obtaining the cluster indicators via spectral clustering. APMC first selects the common instances appearing in all views to build an instance-to-anchor graph for each view, and then integrates all instance-to-anchor graphs into the unified similarity matrix S. Next, APMC performs spectral clustering on S. The spectral clustering model is written as:

where \(\boldsymbol {F} \in \mathbb {R}^{n \times c}\) is the cluster indicator matrix and c is the number of clusters. Since the unified similarity matrix S has the structure of an anchor graph, the cluster indicator matrix F can be derived by Singular Value Decomposition [34]. Finally, APMC runs k-means on F to obtain the clustering results. More details can be found in [33].
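Because S is built from an anchor graph, the SVD route avoids an n × n eigendecomposition. The following is a minimal NumPy sketch, assuming the common anchor-graph form \(\boldsymbol {S} = \boldsymbol {Z}\boldsymbol {\Lambda}^{-1}\boldsymbol {Z}^{T}\) with \(\boldsymbol {\Lambda} = diag(\boldsymbol {Z}^{T}\boldsymbol {1})\) and an n × l anchor graph Z (the exact construction in [33] may differ); the function name `anchor_spectral_embedding` is hypothetical.

```python
import numpy as np


def anchor_spectral_embedding(Z: np.ndarray, c: int, eps: float = 1e-12) -> np.ndarray:
    """Top-c eigenvectors of S = Z diag(Z^T 1)^{-1} Z^T without forming S.

    Z is an n x l nonnegative anchor graph (assumed input format).
    Since S = B B^T with B = Z Lambda^{-1/2}, the left singular vectors of the
    n x l matrix B are eigenvectors of S, ordered by decreasing eigenvalue.
    """
    col_sums = np.maximum(Z.sum(axis=0), eps)       # diagonal of Lambda
    B = Z / np.sqrt(col_sums)                        # B = Z Lambda^{-1/2}
    U, _, _ = np.linalg.svd(B, full_matrices=False)  # thin SVD, O(n l^2)
    return U[:, :c]                                  # F with F^T F = I
```

Running k-means on the rows of the returned F then yields the cluster labels, as described above; the cost stays linear in n for a fixed number of anchors.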

Although APMC reduces the computational complexity, especially on large-scale datasets, it requires a sufficient number of samples that appear in all views, which makes it inflexible. In addition, performing spectral clustering directly on the unified similarity matrix S cannot guarantee that the optimal clustering results are obtained adaptively.

2.2.2 Low-rank representation based multi-view subspace clustering

Among low-rank representation (LRR) based multi-view subspace clustering methods, this paper mainly refers to graph learning methods that learn a unified graph from multiple views by imposing various constraints, e.g., low-rank, sparse, and consensus constraints. Given a multi-view dataset \(\{\boldsymbol {X}^{(v)} \in \mathbb {R}^{d_{v} \times n}\}_{v=1}^{V}\), the general framework of LRR based multi-view subspace clustering can be formulated as follows [35, 36]:

where α > 0 and β > 0 are trade-off parameters that balance the corresponding terms, \(\boldsymbol {E}^{(v)} \in \mathbb {R}^{d_{v} \times n}\) denotes the reconstruction error matrix of the v-th view, and \(\boldsymbol {S}^{(v)} \in \mathbb {R}^{n \times n}\) and \(\boldsymbol {S^{*}} \in \mathbb {R}^{n \times n}\) denote the subspace representation matrix of the v-th view and the consistent representation shared by all subspace representation matrices, respectively. When \(\boldsymbol {S}^{(v)}\) is subject to the constraints \(\boldsymbol {S}^{(v)} \geqslant 0,~diag(\boldsymbol {S}^{(v)}) = 0,~(\boldsymbol {S}^{(v)})^{T} \boldsymbol {1} = \boldsymbol {1}\), it can be regarded as a similarity matrix reflecting the similarities among instances [36]. The nuclear norm \(\|\boldsymbol {S}^{(v)}\|_{*}\) is the low-rank regularization term, which helps capture the intrinsic geometric structure of the v-th view. \(\Psi(\boldsymbol {E}^{(v)})\) models different kinds of noise with various norms, e.g., \(\|\boldsymbol {E}^{(v)}\|_{1}, \|\boldsymbol {E}^{(v)}\|_{2,1}\), and \(\|\boldsymbol {E}^{(v)}\|_{F}^{2}\). \(Cons(\boldsymbol {S}^{(v)}, \boldsymbol {S}^{*})\) is a function that learns the consistent representation \(\boldsymbol {S}^{*}\) from the view-specific subspace representations \(\boldsymbol {S}^{(v)}\).
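Solvers for nuclear-norm regularized models of this kind commonly handle the \(\|\boldsymbol {S}^{(v)}\|_{*}\) term through its proximal operator, singular value thresholding (SVT). The sketch below illustrates that generic building block only; it is an assumption about how such models are typically optimized, not the specific solver of [35, 36], and the function name is hypothetical.

```python
import numpy as np


def singular_value_threshold(M: np.ndarray, tau: float) -> np.ndarray:
    """Proximal operator of tau * ||.||_*.

    Returns argmin_X tau * ||X||_* + 0.5 * ||X - M||_F^2.
    """
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)   # soft-threshold the singular values
    return (U * s_shrunk) @ Vt            # U diag(s_shrunk) V^T
```

Shrinking every singular value by tau and clipping at zero is what drives the solution toward low rank: singular values below tau vanish entirely.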

However, the model in (2) has several drawbacks. First, it requires all views to be complete; in other words, every sample must be fully observed in every view. Second, real-world datasets usually exhibit nonlinear structure, yet the subspace representation matrices in this model are encoded directly in a linear space, so the model cannot handle nonlinear data well. Third, this model cannot effectively learn the complementary information from all views to improve clustering performance.

3 Preliminaries

3.1 Problem definition

In incomplete multi-view data, each sample is not only characterized by multiple views but is also usually missing from some of them. To facilitate the discussion, we define an “instance” as the feature representation of a sample in a given view. Assume that we are given a dataset \(\{\boldsymbol {X}^{(1)}, \boldsymbol {X}^{(2)}, \cdots , \boldsymbol {X}^{(V)}\}\) with V views, where the v-th view \(\boldsymbol {X}^{(v)} = [\boldsymbol {x}_{1}^{(v)}, \boldsymbol {x}_{2}^{(v)}, \cdots , \boldsymbol {x}_{n}^{(v)}] \in \mathbb {R}^{d_{v} \times n}\) consists of both available and missing instances, and \(\boldsymbol {x}_{i}^{(v)} \in \mathbb {R}^{d_{v}}\) denotes the i-th instance in the v-th view. Similarly, \(\boldsymbol {Y}^{(v)} \in \mathbb {R}^{d_{v} \times n_{v}}\) and \(\boldsymbol {y}_{i}^{(v)} \in \mathbb {R}^{d_{v}}\) denote the matrix of available instances and the i-th available instance in the v-th view, respectively. n and \(n_{v}\) denote the number of samples and the number of available instances in the v-th view, respectively; \(d_{v}\) is the feature dimensionality of the v-th view; and c is the number of clusters.

3.2 Tensor nuclear norm

For our method, the tensor nuclear norm is a key component for improving clustering performance, so we first introduce the related definitions. We denote by \(\mathscr {I} \in \mathbb {R}^{n_{1} \times n_{1} \times n_{3}}\) the identity tensor, whose first frontal slice is the n1 × n1 identity matrix and whose remaining frontal slices are zero. The transpose of \(\mathscr {A} \in \mathbb {R}^{n_{1} \times n_{2} \times n_{3}}\) is \(\mathscr {A}^{T} \in \mathbb {R}^{n_{2} \times n_{1} \times n_{3}}\), obtained by transposing each frontal slice and then reversing the order of the transposed frontal slices 2 through n3. A tensor \(\mathscr {A} \in \mathbb {R}^{n_{1} \times n_{1} \times n_{3}}\) is orthogonal if it satisfies \(\mathscr {A}^{T} * \mathscr {A} = \mathscr {A} * \mathscr {A}^{T} = \mathscr {I}\), where \(*\) denotes the t-product. More details of the tensor nuclear norm can be found in [37, 38].

3.2.1 Tensor Singular Value Decomposition (t-SVD)

Given a tensor \(\mathscr {A} \in \mathbb {R}^{n_{1} \times n_{2} \times n_{3}}\), its tensor Singular Value Decomposition (t-SVD) is defined as:

\[\mathscr {A} = \mathscr {U} * \mathscr {S} * \mathscr {V}^{T},\]

where \(\mathscr {U} \in \mathbb {R}^{n_{1} \times n_{1} \times n_{3}}\) and \(\mathscr {V} \in \mathbb {R}^{n_{2} \times n_{2} \times n_{3}}\) are orthogonal tensors, and \(\mathscr {S} \in \mathbb {R}^{n_{1} \times n_{2} \times n_{3}}\) is an f-diagonal tensor, i.e., each of its frontal slices is a diagonal matrix.
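The t-SVD is usually computed in the Fourier domain: apply the discrete Fourier transform along the third mode, take a matrix SVD of every frontal slice, and transform back. Below is a minimal NumPy sketch of that standard construction; the helper names `t_product`, `t_transpose`, and `t_svd` are hypothetical. It exploits the conjugate symmetry of the Fourier slices of a real tensor so that the returned factors are real.

```python
import numpy as np


def t_product(A: np.ndarray, B: np.ndarray) -> np.ndarray:
    """t-product A * B: slice-wise matrix products in the Fourier domain."""
    Af, Bf = np.fft.fft(A, axis=2), np.fft.fft(B, axis=2)
    Cf = np.einsum("ijk,jlk->ilk", Af, Bf)
    return np.fft.ifft(Cf, axis=2).real


def t_transpose(A: np.ndarray) -> np.ndarray:
    """Transpose each frontal slice, then reverse the order of slices 2..n3."""
    At = np.transpose(A, (1, 0, 2))
    return np.concatenate([At[:, :, :1], At[:, :, :0:-1]], axis=2)


def t_svd(A: np.ndarray):
    """Return real tensors U, S, V with A = U * S * V^T."""
    n1, n2, n3 = A.shape
    Af = np.fft.fft(A, axis=2)
    Uf = np.zeros((n1, n1, n3), dtype=complex)
    Sf = np.zeros((n1, n2, n3), dtype=complex)
    Vf = np.zeros((n2, n2, n3), dtype=complex)
    r = min(n1, n2)
    for k in range(n3 // 2 + 1):
        slice_k = Af[:, :, k]
        if k == 0 or 2 * k == n3:          # these DFT slices are real for real A
            slice_k = slice_k.real
        U, s, Vh = np.linalg.svd(slice_k)  # full SVD of one frontal slice
        Uf[:, :, k] = U
        Vf[:, :, k] = Vh.conj().T
        Sf[np.arange(r), np.arange(r), k] = s
    for k in range(n3 // 2 + 1, n3):       # conjugate symmetry keeps U, S, V real
        Uf[:, :, k] = Uf[:, :, n3 - k].conj()
        Vf[:, :, k] = Vf[:, :, n3 - k].conj()
        Sf[:, :, k] = Sf[:, :, n3 - k]
    return tuple(np.fft.ifft(T, axis=2).real for T in (Uf, Sf, Vf))
```

For example, `U, S, V = t_svd(A)` followed by `t_product(t_product(U, S), t_transpose(V))` reproduces A up to floating-point error, and `t_product(t_transpose(U), U)` gives the identity tensor, matching the orthogonality definition in Section 3.2.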

3.2.2 t-SVD based Tensor Nuclear Norm (t-SVD-TNN)

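For a tensor \(\mathscr {A} \in \mathbb {R}^{n_{1} \times n_{2} \times n_{3}}\), the t-SVD based Tensor Nuclear Norm (t-SVD-TNN) of \(\mathscr {A}\), denoted as \(\|\mathscr {A}\|_{\circledast }\), is defined as:

\[\|\mathscr {A}\|_{\circledast } = {\sum }_{k=1}^{n_{3}} \|\mathscr {A}_{f}^{(k)}\|_{*} = {\sum }_{k=1}^{n_{3}} {\sum }_{i=1}^{\min (n_{1}, n_{2})} \mathscr {S}_{f}^{(k)}(i,i),\]

where \(\mathscr {A}_{f}\) is obtained by applying the discrete Fourier transform to \(\mathscr {A}\) along the third mode, and \({\sum }_{k=1}^{n_{3}} \|\mathscr {A}_{f}^{(k)}\|_{*}\) denotes the sum of the singular values of all frontal slices of \(\mathscr {A}_{f}\). \(\mathscr {S}_{f}^{(k)}\) is obtained by performing Singular Value Decomposition on \(\mathscr {A}_{f}^{(k)}\), i.e., \(\mathscr {A}_{f}^{(k)} = \mathscr {U}_{f}^{(k)} \mathscr {S}_{f}^{(k)} \mathscr {V}_{f}^{(k)H}\), where \((\cdot)^{H}\) denotes the conjugate transpose, and \(\mathscr {S}_{f}^{(k)}(i,i)\) is the i-th singular value of \(\mathscr {A}_{f}^{(k)}\).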
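The definition above translates almost line by line into NumPy. This is a minimal sketch with a hypothetical function name; note that some works scale this quantity by 1/n3, whereas the sketch follows the unscaled sum stated here.

```python
import numpy as np


def tensor_nuclear_norm(A: np.ndarray) -> float:
    """t-SVD based TNN: sum of singular values of every DFT frontal slice."""
    Af = np.fft.fft(A, axis=2)   # A_f: DFT along the third mode
    return float(sum(np.linalg.svd(Af[:, :, k], compute_uv=False).sum()
                     for k in range(A.shape[2])))
```

As a sanity check, a tensor whose first frontal slice is a matrix M and whose other slices are zero has every Fourier slice equal to M, so its TNN equals n3 times the matrix nuclear norm of M.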

4 The proposed method

In this section, we elaborate on a novel approach, termed Multiple Kernel-based Anchor Graph coupled low-rank Tensor learning for Incomplete Multi-View Clustering (MKAGT_IMVC), which effectively learns a consistent representation from incomplete views. Specifically, MKAGT_IMVC consists of three key steps: generating anchor points for each incomplete view, constructing multiple fused complete anchor graphs, and developing the subspace representation-based graph learning model. The general framework of MKAGT_IMVC is shown in Fig. 1.

4.1 Anchor selection of incomplete multi-view data

Recently, several studies [12, 33, 34, 39] have suggested that the performance of multi-view clustering can be effectively improved by constructing anchor graphs with an anchor-based strategy, where the anchor graphs are regarded as prior knowledge and used as the initial input for multi-view learning. For multi-view data, two anchor selection methods are frequently used: the random policy and the k-means policy. The random policy randomly samples a fraction of the samples as anchor points. Although simple and efficient, it cannot guarantee that the selected anchor points are representative, which leads to considerable instability in the final results. By contrast, the k-means policy uses cluster centroids as anchor points, which are more representative than randomly selected ones. Specifically, it first concatenates the features of all views into a joint feature, then performs k-means on the joint feature to obtain joint centroids, and finally splits the joint centroids by view to obtain the anchor points of each view [39]. However, k-means is sensitive to the initial centroids, which causes instability similar to that of random sampling, and alleviating this drawback requires many independent runs of k-means. Intuitively, samples belonging to the same cluster usually have similar feature representations, whereas samples from different clusters differ significantly. Based on this intuition, Li et al. [12] proposed an anchor selection strategy called the directly alternate sampling method (DAS), which strikes a compromise between the efficiency of the random policy and the certainty of the k-means policy.
DAS was originally designed to select anchor points from the concatenated features of all views in complete multi-view data. However, when many instances are missing in some views, concatenating first and then selecting anchor points inevitably causes DAS to degenerate or even fail. For incomplete multi-view data, [33, 34] proposed regarding the samples that appear in all views as anchor points. Unfortunately, such samples are often rare or even nonexistent. A common assumption in multi-view clustering is that all views share an analogous cluster structure [36]. Motivated by this assumption, we extend DAS to incomplete multi-view data by performing anchor selection separately on the available instances of each view. Specifically, for each view, we first obtain the available instance matrix \(\boldsymbol {Y}^{(v)} \in \mathbb {R}^{d_{v} \times n_{v}}\) by removing the missing instances from \(\boldsymbol {X}^{(v)} \in \mathbb {R}^{d_{v} \times n}\). Then, we calculate the intuitive score \(\boldsymbol {s}^{(v)} \in \mathbb {R}^{n_{v}}\) of the v-th view according to the following formula:

where Tra(⋅) is a non-negative transformation of an instance that subtracts the smallest element of its feature representation from every element. The motivation of (5) is that the feature representations of instances in the same cluster usually differ less than those of instances in different clusters. Hence, instances of the same cluster tend to have similar scores, computed by summing their shifted feature values. We therefore choose anchor points based on the scores of the instances in each view. Specifically, we pick the instance with the largest score as the initial anchor point in the v-th view according to the following formula:

To ensure that the anchor points cover as many clusters as possible, the scores must be updated in an alternating manner so that successive anchor points are drawn from different clusters. To this end, we first standardize the score of every instance by dividing it by the largest score:

Consequently, the score of the currently selected instance is scaled to 1.

We always select the instance with the largest score as the next anchor point. Therefore, the score update must satisfy two key guidelines. First, the score of the selected instance must be set to zero to avoid repeated selection. Second, extremely low or high scores should be diminished and medial scores relatively exaggerated, so that the next anchor point and the currently selected one come from clusters that are as different as possible [12]. To this end, the score update is defined by the following formula:

This procedure, whose cost per iteration is linear in \(n_{v}\), is repeated \(l_{v}\) times to obtain \(l_{v}\) anchor points for the corresponding view, where \(l_{v}\) is usually larger than the number of clusters c. Finally, we obtain the anchor point sets \(\{\boldsymbol {U}^{(v)} \}_{v=1}^{V}\) from all views, where \(\boldsymbol {U}^{(v)} = [\boldsymbol {u}_{1}^{(v)}, \boldsymbol {u}_{2}^{(v)}, \cdots , \boldsymbol {u}_{l_{v}}^{(v)}] \in \mathbb {R}^{d_{v} \times l_{v}}\).
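The per-view selection loop can be sketched in NumPy as below. This is a minimal sketch assuming that the score update in (8) takes the DAS form \(\boldsymbol {s} \leftarrow \boldsymbol {s} \odot (\boldsymbol {1} - \boldsymbol {s})\) from [12]; that function is zero at 0 and at 1 and peaks at 0.5, which matches the two guidelines above. The function name `das_anchors` and the early-stopping guard are illustrative additions, not part of the original description.

```python
import numpy as np


def das_anchors(Y: np.ndarray, num_anchors: int):
    """Per-view DAS-style anchor selection on the available instances.

    Y: d_v x n_v matrix whose columns are the available instances of one view.
    Returns (U, idx): the selected anchor columns and their column indices.
    """
    # Tra(.): shift each instance so that its smallest feature becomes 0.
    shifted = Y - Y.min(axis=0, keepdims=True)
    scores = shifted.sum(axis=0)                # intuitive score per instance
    idx = []
    for _ in range(num_anchors):
        best = int(np.argmax(scores))
        if scores[best] <= 0:                   # no selectable instance left
            break
        idx.append(best)
        scores = scores / scores[best]          # standardize: selected score -> 1
        scores = scores * (1.0 - scores)        # assumed update: 1 -> 0, extremes shrink
    return Y[:, idx], idx
```

Applying it to each \(\boldsymbol {Y}^{(v)}\) with \(l_{v}\) anchors yields \(\boldsymbol {U}^{(v)}\). After the O(d_v n_v) scoring step, each iteration costs O(n_v), so selecting \(l_{v}\) anchors costs O(l_v n_v) per view.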

4.2 Construction of multiple kernel-based fused complete anchor graphs

Inspired by the kernel trick used in multi-view clustering methods [9, 40, 41], kernel methods can map samples with nonlinear structure in the original space into a high-dimensional feature space in which they exhibit a linear subspace structure, which plays a critical role in addressing the clustering problem. Specifically, for the v-th view, we are given the available instance matrix \(\boldsymbol {Y}^{(v)} \in \mathbb {R}^{d_{v} \times n_{v}}\) and the anchor matrix \(\boldsymbol {U}^{(v)} \in \mathbb {R}^{d_{v} \times l_{v}}\). Furthermore, a kernel pool composed of m kernel functions \(\{K^{(p)}(\cdot )\}_{p=1}^{m}\) is given in advance. Considering all views and these predefined kernel functions, the similarity between the i-th available instance \(\boldsymbol {y}_{i}^{(v)}\) and the j-th anchor point \(\boldsymbol {u}_{j}^{(v)}\) can be expressed as follows:

where \(K^{(p)}(\cdot )\) is the p-th predefined kernel function and \(\phi ^{(p)}(\cdot )\) is the corresponding implicit mapping function, which maps instances from the original space into the high-dimensional feature space and need not be defined explicitly.
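The kernel pool is not fixed in this part of the text. As a hypothetical example, a pool of m = 3 kernels (a Gaussian kernel at two bandwidths and a degree-2 polynomial kernel) can be evaluated between all available instances and anchors of one view as follows. The kernel choices, bandwidths, and function names are assumptions for illustration; the kernels are chosen to give nonnegative similarities.

```python
from functools import partial

import numpy as np


def gaussian_kernel(Y: np.ndarray, U: np.ndarray, sigma: float) -> np.ndarray:
    """K[i, j] = exp(-||y_i - u_j||^2 / (2 sigma^2)) for columns y_i of Y, u_j of U."""
    sq_dist = ((Y ** 2).sum(axis=0)[:, None] + (U ** 2).sum(axis=0)[None, :]
               - 2.0 * Y.T @ U)
    return np.exp(-np.maximum(sq_dist, 0.0) / (2.0 * sigma ** 2))


def polynomial_kernel(Y: np.ndarray, U: np.ndarray, degree: int = 2,
                      c0: float = 1.0) -> np.ndarray:
    """K[i, j] = (y_i^T u_j + c0)^degree."""
    return (Y.T @ U + c0) ** degree


# Hypothetical pool {K^(p)}_{p=1}^m with m = 3; each maps (Y, U) to an n_v x l_v matrix.
KERNEL_POOL = [
    partial(gaussian_kernel, sigma=0.5),
    partial(gaussian_kernel, sigma=1.0),
    partial(polynomial_kernel, degree=2),
]


def kernel_similarities(Y: np.ndarray, U: np.ndarray) -> list:
    """Return the m matrices K^(p)(y_i, u_j), one per kernel in the pool."""
    return [kernel(Y, U) for kernel in KERNEL_POOL]
```

Computing squared distances through inner products keeps every evaluation a single matrix product, so each kernel costs O(d_v n_v l_v) per view.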

Moreover, for each predefined kernel function, by calculating the similarities between the available instances and the anchor points in each view, we obtain the elements of the truncated anchor graph, defined as follows [33]:

where \(\Omega _{v} \subset \{1, 2, \cdots , l_{v}\}\) denotes the index set of the k nearest neighbors of \(\boldsymbol {y}_{i}^{(v)}\) in \(\boldsymbol {U}^{(v)}\) for the v-th view, and \(\boldsymbol {\bar {Z}}^{(pv)} \in \mathbb {R}^{n_{v} \times l_{v}}\) denotes the truncated anchor graph calculated by the p-th kernel function in the v-th view.
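The element-wise formula (10) is not reproduced here. The following minimal sketch assumes, as in many anchor-graph constructions, that each row keeps only the kernel similarities of its k most similar anchors and normalizes them to sum to one, and that the kernel is nonnegative (e.g., Gaussian). With a Gaussian kernel, the k largest similarities correspond to the k nearest anchors. The name `truncated_anchor_graph` is hypothetical.

```python
import numpy as np


def truncated_anchor_graph(K: np.ndarray, k: int) -> np.ndarray:
    """Keep each row's k largest similarities and row-normalize them.

    K: n_v x l_v nonnegative kernel similarities between available instances
       (rows) and anchors (columns), e.g. from a Gaussian kernel.
    """
    n_v, l_v = K.shape
    if not 1 <= k <= l_v:
        raise ValueError("k must lie in [1, l_v]")
    # Column indices of the k most similar anchors per row (the set Omega_v).
    nearest = np.argpartition(-K, kth=k - 1, axis=1)[:, :k]
    rows = np.arange(n_v)[:, None]
    Z = np.zeros_like(K, dtype=float)
    Z[rows, nearest] = K[rows, nearest]
    return Z / Z.sum(axis=1, keepdims=True)   # each row sums to one
```

`np.argpartition` finds the k largest entries per row in linear time without fully sorting, which matters when \(l_{v}\) is large.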

Once we get the truncated anchor graph \(\boldsymbol {\bar {Z}}^{(pv)}\), according to [42], the anchor graph \(\boldsymbol {\bar {W}}^{(pv)}\) between all available instances can be approximated as follows:

where \(\boldsymbol {\bar {\Lambda }}^{(pv)} = diag((\boldsymbol {\bar {Z}}^{(pv)})^{T} \boldsymbol {1}) \in \mathbb {R}^{l_{v} \times l_{v}}\) is a diagonal matrix, and \(\boldsymbol {\bar {W}}^{(pv)} \in \mathbb {R}^{n_{v} \times n_{v}}\) is the anchor graph computed from the available instances of the v-th view with the p-th kernel function.
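Assuming the approximation in (11) takes the standard anchor-graph form of [42], \(\boldsymbol {\bar {W}}^{(pv)} = \boldsymbol {\bar {Z}}^{(pv)} (\boldsymbol {\bar {\Lambda }}^{(pv)})^{-1} (\boldsymbol {\bar {Z}}^{(pv)})^{T}\), it can be formed without any dense matrix inversion because \(\boldsymbol {\bar {\Lambda }}^{(pv)}\) is diagonal. A minimal sketch with a hypothetical function name:

```python
import numpy as np


def anchor_graph(Z_bar: np.ndarray) -> np.ndarray:
    """W_bar = Z_bar Lambda^{-1} Z_bar^T with Lambda = diag(Z_bar^T 1).

    Anchors never selected by any instance (zero column sum) are skipped
    instead of causing a division by zero.
    """
    lam = Z_bar.sum(axis=0)                              # diagonal of Lambda
    inv_lam = np.divide(1.0, lam, out=np.zeros_like(lam), where=lam > 0)
    return (Z_bar * inv_lam) @ Z_bar.T                  # n_v x n_v
```

When every row of \(\boldsymbol {\bar {Z}}^{(pv)}\) sums to one and every anchor is used, the result is symmetric, nonnegative, and doubly stochastic, which is why it can serve directly as a similarity graph.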

The original incomplete multi-view dataset \(\{\boldsymbol {X}^{(v)} \in \mathbb {R}^{d_{v} \times n}\}_{v=1}^{V}\) contains both available and missing instances in each view. However, the anchor graph \(\boldsymbol {\bar {W}}^{(pv)}\) in (11) only reflects the relationships among the available instances of each view. Moreover, the number of available instances differs across views. As a result, the views cannot be fused directly, especially when many instances are missing. To address these issues, some methods adopt an imputation strategy to recover the missing graphs or instances in each view [27,28,29,30,31,32], which may introduce noise and prevent optimal results. Unlike these methods, we use the index matrix \(\boldsymbol {H}^{(v)} \in \mathbb {R}^{n \times n_{v}}\) to expand \(\boldsymbol {\bar {W}}^{(pv)}\) into a complete anchor graph \(\boldsymbol {W}^{(pv)} \in \mathbb {R}^{n \times n}\) with the same number and order of samples as the original dataset. Thus, \(\boldsymbol {W}^{(pv)}\) can be defined as follows:

where \(\boldsymbol {W}^{(pv)}\) is a complete anchor graph covering all samples, including those whose instances are missing in the v-th view, and \(\boldsymbol {H}^{(v)} \in \mathbb {R}^{n \times n_{v}}\) is an index matrix that records the available and missing information of the v-th view. Specifically, the elements of the index matrix \(\boldsymbol {H}^{(v)}\) are given by the following formula:
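A minimal sketch of this completion step follows. It assumes that \(\boldsymbol {H}^{(v)}_{ij} = 1\) exactly when the j-th available instance of the v-th view belongs to the i-th sample, and 0 otherwise, and that the completion takes the form \(\boldsymbol {W}^{(pv)} = \boldsymbol {H}^{(v)} \boldsymbol {\bar {W}}^{(pv)} (\boldsymbol {H}^{(v)})^{T}\). The function names and the `available` index array are hypothetical.

```python
import numpy as np


def index_matrix(available: np.ndarray, n: int) -> np.ndarray:
    """H in R^{n x n_v}: H[i, j] = 1 iff the j-th available instance is sample i."""
    available = np.asarray(available)
    H = np.zeros((n, available.size))
    H[available, np.arange(available.size)] = 1.0
    return H


def complete_anchor_graph(W_bar: np.ndarray, available: np.ndarray, n: int) -> np.ndarray:
    """W = H W_bar H^T; rows and columns of missing samples stay zero."""
    H = index_matrix(available, n)
    return H @ W_bar @ H.T
```

Because H only permutes and pads, the same matrix can be filled without forming H, e.g., `W[np.ix_(available, available)] = W_bar` on a zero n × n array, which avoids the O(n² n_v) products.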

In an incomplete multi-view dataset, the number of available instances per sample is highly imbalanced, especially when the number of views is large: some samples appear in all views, while others appear in only one. Therefore, if all views are fused directly into the fused complete anchor graph \({\sum }_{v=1}^{V}\boldsymbol {W}^{(pv)}\), the representations of different samples have different scales in the affine space. To address this, after fusion we divide the feature representation of each sample by its number of available instances, so that all samples have the same representation scale in the affine space. The fused complete anchor graph \(\boldsymbol {W}^{(p)} \in \mathbb {R}^{n \times n}\) is then defined as follows:

where \(\boldsymbol {A} = diag(\boldsymbol {h}) \in \mathbb {R}^{n \times n}\) is a diagonal matrix and \(\boldsymbol {h} = [h_{1}, h_{2}, \cdots , h_{n}] \in \mathbb {R}^{n}\) records the number of available instances of each sample, i.e., the number of views in which it is observed. Specifically, h can be expressed as follows:

where \(\boldsymbol {H}_{\cdot j}^{(v)}\) is the j-th column vector of index matrix H(v) in the v-th view.
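Assuming (14) takes the form \(\boldsymbol {W}^{(p)} = \boldsymbol {A}^{-1} {\sum }_{v=1}^{V} \boldsymbol {W}^{(pv)}\), so that row i of the summed graph is divided by \(h_{i}\), the fusion for one kernel can be sketched as below. The sketch also assumes that every sample is observed in at least one view (\(h_{i} \geqslant 1\)); the function name is hypothetical.

```python
import numpy as np


def fuse_complete_graphs(W_list, H_list) -> np.ndarray:
    """W^(p) = A^{-1} sum_v W^(pv) with A = diag(h).

    W_list: the V complete anchor graphs W^(pv) (each n x n) for one kernel p.
    H_list: the V index matrices H^(v) (each n x n_v).
    """
    # Summing the columns of H^(v) gives an n-vector of 0/1 flags; summing over
    # views gives h_i = number of views in which sample i is observed.
    h = sum(H.sum(axis=1) for H in H_list)
    if np.any(h == 0):
        raise ValueError("every sample must be observed in at least one view")
    return sum(W_list) / h[:, None]        # divide row i by h_i
```

For example, with four samples where view 1 observes samples {0, 1, 2} and view 2 observes {1, 2, 3}, samples 1 and 2 have \(h_{i} = 2\) and their rows of the summed graph are halved, while samples 0 and 3 keep their single-view rows.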

4.3 Construction of unified graph learning model

4.3.1 Low-Rank tensor based multi-view representation learning

For an incomplete multi-view dataset, m fused complete anchor graphs \(\{\boldsymbol {W}^{(p)}\}_{p=1}^{m}\) can be constructed with m different kernel functions. We then regard these fused complete anchor graphs as prior knowledge and incorporate them into the low-rank representation based subspace clustering model in (2). However, that model cannot effectively capture and propagate the complementary information among all views to improve clustering performance. Borrowing the idea of the t-SVD based tensor nuclear norm [43], we extend the matrix nuclear norm to a low-rank tensor constraint. Specifically, we first construct a similarity graph tensor \(\mathscr {S} \in \mathbb {R}^{n \times n \times m}\) by stacking the m candidate similarity graphs \(\{\boldsymbol {S}^{(p)}\}_{p=1}^{m}\), where the tensor can be learned by imposing various constraints, e.g., a low-rank tensor constraint, a sparse constraint, and a subspace representation constraint. Then, by rotating the similarity graph tensor, we can better explore the complementary information among the candidate similarity graphs [43]. Therefore, the learning model can be written as:

where λ1 > 0 is a trade-off parameter; \(\boldsymbol {W}^{(p)} \in \mathbb {R}^{n \times n}\) is the fused complete anchor graph computed with the p-th kernel function, which serves as the feature representation of all samples in the affine space; \(\boldsymbol {S^{(p)}} \in \mathbb {R}^{n \times n}\) denotes the corresponding candidate similarity graph; \(\boldsymbol {E}^{(p)} \in \mathbb {R}^{n \times n}\) represents the corresponding reconstruction error matrix; φ(⋅) is an operator that stacks all candidate similarity graphs \(\{ \boldsymbol {S}^{(p)} \}_{p=1}^{m}\) into a third-order tensor \(\mathscr {S} \in \mathbb {R}^{n \times n \times m}\); and the constraints \(\boldsymbol {S}^{(p)} \geqslant 0,~diag(\boldsymbol {S}^{(p)}) = 0,~\boldsymbol {S}^{(p)} \boldsymbol {1} = \boldsymbol {1}\) ensure that S(p) has the properties of a graph reflecting the similarity among all samples.

4.3.2 Consensus representation learning

Generally, multi-view learning models outperform single-view models mainly because of the following two principles [18]: (1) the complementary principle, which states that multi-view data contain complementary information, i.e., different views describe the data from distinct perspectives; (2) the consensus principle, which states that all views of the multi-view data share a similar cluster structure. However, the model in (16) ignores the consensus principle, which may lead to a trivial solution. Motivated by this, we develop the following consensus representation based multi-view learning model to improve the clustering performance:

where \(\frac {1}{m}{\sum }_{r=1}^{m}\boldsymbol {S}^{(r)}\) is regarded as the consensus representation by averaging all the candidate similarity graphs.
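For concreteness, the disagreement between each candidate graph and the averaged consensus graph can be computed as in the NumPy sketch below. The helper name is hypothetical, and weighting the sum by λ2 follows the form that appears later in sub-problem (21).

```python
import numpy as np


def consensus_penalty(S_list, lam2):
    """lam2 * sum_p ||S^(p) - (1/m) sum_r S^(r)||_F^2 (hypothetical helper)."""
    S = np.stack(S_list)          # shape (m, n, n)
    consensus = S.mean(axis=0)    # (1/m) * sum_r S^(r), the consensus representation
    return lam2 * np.sum((S - consensus) ** 2)
```

The penalty is zero exactly when all candidate graphs coincide, which is the sense in which the term enforces a shared cluster structure.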

4.3.3 Objective function of MKAGT_IMVC

To sum up, for incomplete multi-view data, we combine the low-rank tensor representation based multi-view subspace learning model in (16) and the consensus representation based multi-view learning model in (17) into one unified model. This ensures that the final model not only satisfies the two principles of multi-view learning but also brings all candidate similarity graphs \(\{\boldsymbol {S}^{(p)} \}_{p=1}^{m}\) closer to the optimal subspace structure. Hence, the objective function of MKAGT_IMVC is formulated as follows:

where λ1 and λ2 are trade-off parameters that balance the corresponding terms. In this final objective function, the first term imposes an l1-norm sparsity constraint on the reconstruction error matrices; the second term is the low-rank tensor constraint on the similarity graph tensor, which not only effectively captures the complementary information but also encourages each candidate similarity graph to have the optimal low-rank subspace structure; the third term is the consensus constraint on the candidate similarity graphs, which minimizes the disagreement among their cluster structures.
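For reference, the three terms described above, read together with the sub-problems (21), (26), and (29) derived below, are consistent with the following form of the objective. This is a reconstruction for readability rather than the typeset equation (18); in particular, the unit weight on the tensor nuclear norm is inferred from sub-problem (26).

$$ \begin{array}{ll} \min\limits_{\{\boldsymbol{S}^{(p)}, \boldsymbol{E}^{(p)}\}_{p=1}^{m}} & \lambda_{1}\sum\limits_{p=1}^{m}\|\boldsymbol{E}^{(p)}\|_{1} + \|\mathscr{S}\|_{\circledast} + \lambda_{2}\sum\limits_{p=1}^{m}\|\boldsymbol{S}^{(p)} - \frac{1}{m}\sum\limits_{r=1}^{m}\boldsymbol{S}^{(r)}\|_{F}^{2} \\ s.t. & \boldsymbol{W}^{(p)} = \boldsymbol{W}^{(p)}\boldsymbol{S}^{(p)} + \boldsymbol{E}^{(p)},~\mathscr{S} = \varphi(\boldsymbol{S}^{(1)}, \ldots, \boldsymbol{S}^{(m)}), \\ & \boldsymbol{S}^{(p)} \geqslant 0,~diag(\boldsymbol{S}^{(p)}) = 0,~\boldsymbol{S}^{(p)}\boldsymbol{1} = \boldsymbol{1},~p = 1, \ldots, m. \end{array} $$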

4.4 Optimization of proposed method

It is intractable to obtain an analytical solution of the final model in (18) directly, since it is a non-convex problem [44] jointly in multiple unknown variables. Therefore, we adopt the alternating direction method of multipliers (ADMM) to solve it. Following the principle of ADMM, we introduce auxiliary variables \(\left \{\boldsymbol {G}^{(p)}\right \}_{p=1}^{m}\) and the constraint \(\mathscr {S} = \mathscr {G}\), where \(\mathscr {G}\) stacks \(\left \{\boldsymbol {G}^{(p)}\right \}_{p=1}^{m}\) in the same way as φ(⋅), to make the objective function separable. Then, (18) can be reformulated as follows:

where \(\boldsymbol {\Theta } = \left \{\boldsymbol {S}^{(p)}, \boldsymbol {E}^{(p)}, \boldsymbol {G}^{(p)}\right \}_{p=1}^{m}\) represents a collection of all variables to be optimized.

Moreover, the augmented Lagrangian function of (19) can be defined as follows:

where \(\left \{\boldsymbol {B}^{(p)} \in \mathbb {R}^{n \times n} \right \}_{p=1}^{m}\) and \(\left \{\boldsymbol {C}^{(p)} \in \mathbb {R}^{n \times n} \right \}_{p=1}^{m}\) are the Lagrange multipliers, and μ > 0 is the penalty parameter. Under the ADMM framework, each variable is then updated by iteratively solving the following sub-problems.

-

Step 1. Update \(\left \{\boldsymbol {S}^{(p)}\right \}_{p=1}^{m}\): With the other variables fixed and the terms unrelated to S(p) removed, each candidate similarity graph S(p) can be optimized independently through the following sub-problem:

$$ \begin{array}{ll} \min\limits_{\boldsymbol{S}^{(p)}} & \lambda_{2} \|\boldsymbol{S}^{(p)} - \frac{1}{m}\sum\limits_{r=1}^{m}\boldsymbol{S}^{(r)}\|_{F}^{2} \\ & + \frac{\mu}{2} \| \boldsymbol{W}^{(p)} - \boldsymbol{W}^{(p)}\boldsymbol{S}^{(p)} - \boldsymbol{E}^{(p)} + \frac{\boldsymbol{B}^{(p)}}{\mu} \|_{F}^{2} \\ & + \frac{\mu}{2} \| \boldsymbol{S}^{(p)} - \boldsymbol{G}^{(p)} + \frac{\boldsymbol{C}^{(p)}}{\mu} \|_{F}^{2} \\ s.t. & \boldsymbol{S}^{(p)} \geqslant 0,~diag(\boldsymbol{S}^{(p)}) = 0,~\boldsymbol{S}^{(p)} \boldsymbol{1} = \boldsymbol{1} \end{array} $$ (21)

By taking the partial derivative of the objective in (21) with respect to S(p), temporarily ignoring the constraints, and setting it to zero, we obtain the closed-form solution:

$$ \begin{array}{ll} &\widehat{\boldsymbol{S}}^{(p)} = \left( \frac{2 \lambda_{2} (m - 1)^{2}}{m^{2}}\boldsymbol{I} + \mu\boldsymbol{I} + \mu(\boldsymbol{W}^{(p)})^{T}\boldsymbol{W}^{(p)} \right)^{-1} \\ &\left( \frac{2 \lambda_{2} (m - 1)}{m^{2}}\sum\limits_{r=1,~r \neq p}^{m}\boldsymbol{S}^{(r)} + \mu\boldsymbol{G}^{(p)} - \boldsymbol{C}^{(p)} + \boldsymbol{Q}^{(p)} \right), \end{array} $$ (22)

where \(\boldsymbol{Q}^{(p)} = (\boldsymbol{W}^{(p)})^{T}\left(\mu(\boldsymbol{W}^{(p)} - \boldsymbol{E}^{(p)}) + \boldsymbol{B}^{(p)}\right)\). Then, we project \(\widehat {\boldsymbol {S}}^{(p)}\) onto the constraint set, i.e., the optimal S(p) is obtained from the following problem:

$$ \boldsymbol{S}^{(p)} = \operatorname*{arg~min}\limits_{\boldsymbol{S}^{(p)} \geqslant 0,~diag(\boldsymbol{S}^{(p)}) = 0,~\boldsymbol{S}^{(p)} \boldsymbol{1} = \boldsymbol{1}} \|\boldsymbol{S}^{(p)} - \widehat{\boldsymbol{S}}^{(p)}\|_{F}^{2}. $$ (23)

Problem (23) decouples over the rows of S(p) and can be solved with the efficient iterative algorithm presented in [32, 45]:

$$ S_{(i, j)}^{(p)}= \begin{cases} 0,&~i = j\\ \left( \widehat{S}_{(i, j)}^{(p)} + \eta_{i}^{(p)}\right)_{+},&~i \neq j\\ \end{cases} $$ (24)

where \((\cdot)_{+} = \max(\cdot, 0)\) keeps the non-negative elements and sets the negative elements to zero, and \(\eta _{i}^{(p)}\) is calculated as:

$$ \eta_{i}^{(p)} =\frac{1}{n-1} - \frac{1}{n-1}{\sum}_{j=1,~j \neq i}^{n}\widehat{S}_{(i, j)}^{(p)}. $$ (25)

-

Step 2. Update \(\left \{\boldsymbol {G}^{(p)}\right \}_{p=1}^{m}\): With the other variables fixed and the terms unrelated to G(p) removed, the auxiliary variables \(\left \{\boldsymbol {G}^{(p)}\right \}_{p=1}^{m}\) are obtained from the following sub-problem:

$$ \left\{\boldsymbol{G}^{(p)}\right\}_{p=1}^{m} = \operatorname*{arg~min}\limits_{\left\{\boldsymbol{G}^{(p)}\right\}_{p=1}^{m}} \|\mathscr{G}\|_{\circledast} + \frac{\mu}{2}{\sum}_{p=1}^{m} \| \boldsymbol{S}^{(p)} - \boldsymbol{G}^{(p)} + \frac{\boldsymbol{C}^{(p)}}{\mu} \|_{F}^{2}. $$ (26)

Note that \(\left \{\boldsymbol {G}^{(p)}\right \}_{p=1}^{m}\), \(\left \{\boldsymbol {S}^{(p)}\right \}_{p=1}^{m}\), and \(\left \{\boldsymbol {C}^{(p)}\right \}_{p=1}^{m}\) can be stacked into the tensors \(\mathscr {G} \in \mathbb {R}^{n \times n \times m}\), \(\mathscr {S} \in \mathbb {R}^{n \times n \times m}\), and \(\mathscr {C} \in \mathbb {R}^{n \times n \times m}\), respectively. Therefore, problem (26) is equivalent to the following problem:

$$ \mathscr{G} = \operatorname*{arg~min}\limits_{\mathscr{G}} \|\mathscr{G}\|_{\circledast} + \frac{\mu}{2} \| \mathscr{F} - \mathscr{G} \|_{F}^{2} , $$ (27)

where \(\mathscr {F} = \mathscr {S} + \mathscr {C}/\mu\). Following [43], we first rotate \(\mathscr {F}\) and \(\mathscr {G}\) from size n × n × m to n × m × n. The rotation benefits the proposed model in three aspects. First, in each frontal slice, all samples can be represented by view-specific self-representation coefficients in the Fourier domain. Second, since each frontal slice involves all samples and all views, the model captures the complementary information more effectively. Third, the rotation significantly reduces the computational cost of t-SVD-TNN in the Fourier domain [43]: each of the n frontal slices becomes an n × m matrix whose SVD costs \(\mathcal {O}(n m^{2})\), instead of \(\mathcal {O}(n^{3})\) for each of the m slices of size n × n.

The minimization problem (27) has the closed-form solution achieved through the tensor tubal-shrinkage operator [32, 46]:

$$ \mathscr{G} = {\Gamma}_{\tilde{\mu}}(\mathscr{F})= \mathscr{U} * {\Gamma}_{\tilde{\mu}}(\mathscr{Q})* \mathscr{V}^{T}, $$ (28)

where \(\tilde {\mu } = n / \mu \), and \(\mathscr {F} = \mathscr {U} * \mathscr {Q} * \mathscr {V}^{T}\) is obtained by the t-SVD operation [47]. Moreover, \({\Gamma }_{\tilde {\mu }}(\mathscr {Q}) = \mathscr {Q} * \mathscr {J}\), where \(\mathscr {J} \in \mathbb {R}^{n \times m \times n}\) is an f-diagonal tensor whose diagonal elements in the Fourier domain are defined as \(\mathscr {J}_{f}(i,i,k) = \max\left (1 - \tilde {\mu } \big / \mathscr {Q}_{f}^{(k)}(i,i), 0\right )\).

-

Step 3. Update \(\left \{\boldsymbol {E}^{(p)}\right \}_{p=1}^{m}\): With the other variables fixed and the terms unrelated to E(p) removed, each reconstruction error matrix E(p) is obtained from the following sub-problem:

$$ \min\limits_{\boldsymbol{E}^{(p)}}\lambda_{1} \|\boldsymbol{E}^{(p)}\|_{1}+ \frac{\mu}{2} \| \boldsymbol{W}^{(p)} - \boldsymbol{W}^{(p)}\boldsymbol{S}^{(p)} - \boldsymbol{E}^{(p)} + \frac{\boldsymbol{B}^{(p)}}{\mu} \|_{F}^{2}. $$ (29)

Problem (29) has the following closed-form solution:

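$$ \boldsymbol{E}^{(p)} = {\Pi}_{\lambda_{1}/\mu} (\boldsymbol{W}^{(p)} - \boldsymbol{W}^{(p)}\boldsymbol{S}^{(p)} + \frac{\boldsymbol{B}^{(p)}}{\mu} ), $$ (30)

where \({\Pi }_{\tau }(x) = \mathrm{sign}(x)\max(|x| - \tau, 0)\) is the element-wise shrinkage (soft-thresholding) operator [48], applied here with \(\tau = \lambda _{1}/\mu \).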

-

Step 4. Update ADMM variables: The Lagrange multipliers \(\left \{\boldsymbol {B}^{(p)}\right \}_{p=1}^{m}\), \(\left \{\boldsymbol {C}^{(p)}\right \}_{p=1}^{m}\) and penalty parameter μ can be updated as follows:

$$ \begin{array}{lll} &\boldsymbol{B}^{(p)} = \boldsymbol{B}^{(p)} + \mu(\boldsymbol{W}^{(p)} - \boldsymbol{W}^{(p)}\boldsymbol{S}^{(p)} - \boldsymbol{E}^{(p)}) \\ &\boldsymbol{C}^{(p)} = \boldsymbol{C}^{(p)} + \mu(\boldsymbol{S}^{(p)} - \boldsymbol{G}^{(p)})\\ &\mu = \min(\rho\mu, \mu_{max}), \end{array} $$ (31)

where ρ and μmax are predefined ADMM constants.
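Step 1 can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation, and it makes two substitutions: the closed form (22) is evaluated with `np.linalg.solve` instead of an explicit inverse, and the row-wise problem (23) is solved with the standard sort-based Euclidean projection onto the probability simplex (applied to the off-diagonal entries of each row), which gives the same shift as (25) whenever no entry is clipped to zero. The function names and the list-of-arrays layout for S, W, E, B, C, and G are hypothetical.

```python
import numpy as np


def project_to_simplex(v):
    """Euclidean projection of v onto {x >= 0, sum(x) = 1} (sort-based)."""
    u = np.sort(v)[::-1]
    css = np.cumsum(u)
    k = np.arange(1, v.size + 1)
    # Largest index whose shifted value stays positive
    rho = np.nonzero(u + (1.0 - css) / k > 0)[0][-1]
    eta = (1.0 - css[rho]) / (rho + 1)  # plays the role of eta_i in (25)
    return np.maximum(v + eta, 0.0)


def update_S(p, S, W, E, B, C, G, lam2, mu):
    """Step-1 update of S^(p); every argument list holds m arrays of shape (n, n)."""
    m = len(S)
    n = W[p].shape[0]
    Wp = W[p]
    # Left-hand matrix of (22)
    lhs = (2 * lam2 * (m - 1) ** 2 / m**2 + mu) * np.eye(n) + mu * Wp.T @ Wp
    # Right-hand side of (22), with Q^(p) = W^T (mu (W - E) + B)
    others = sum((S[r] for r in range(m) if r != p), np.zeros((n, n)))
    Q = Wp.T @ (mu * (Wp - E[p]) + B[p])
    rhs = 2 * lam2 * (m - 1) / m**2 * others + mu * G[p] - C[p] + Q
    S_hat = np.linalg.solve(lhs, rhs)
    # Projection (23): zero diagonal, off-diagonal entries of each row on the simplex
    S_new = np.zeros_like(S_hat)
    for i in range(n):
        off = np.arange(n) != i
        S_new[i, off] = project_to_simplex(S_hat[i, off])
    return S_new
```

Solving the linear system is preferred over forming the inverse because the left-hand matrix is symmetric positive definite and `solve` is both cheaper and numerically more stable.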

In each iteration, the error of the algorithm is computed as follows:

where M(p) = W(p) − W(p)S(p) − E(p) and N(p) = S(p) − G(p), φ(⋅) stacks all matrices into a third-order tensor, and ψ(⋅) converts a tensor into a vector. Then, the convergence condition of the algorithm is formulated as follows:

where t denotes the t-th iteration and 𝜖 is a predefined threshold.
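Steps 2 to 4, together with a residual for the stopping rule, can be sketched in NumPy in the same style. Several choices here are assumptions rather than details given above: the rotation from n × n × m to n × m × n is taken to swap the second and third modes, the shrinkage threshold is passed in as `tau` (the text sets μ̃ = n/μ), and the error in (32) is assumed to be the largest absolute entry of the stacked residuals M(p) and N(p). All function names are hypothetical.

```python
import numpy as np


def tubal_shrink(F, tau):
    """Tubal shrinkage (28): soft-threshold singular values of each Fourier-domain slice."""
    Ff = np.fft.fft(F, axis=2)
    Gf = np.empty_like(Ff)
    for k in range(F.shape[2]):
        U, s, Vh = np.linalg.svd(Ff[:, :, k], full_matrices=False)
        Gf[:, :, k] = (U * np.maximum(s - tau, 0.0)) @ Vh
    return np.real(np.fft.ifft(Gf, axis=2))


def update_G(S, C, mu, tau):
    """Step 2: stack S + C/mu, rotate n x n x m -> n x m x n, shrink, rotate back."""
    F = np.stack([Sp + Cp / mu for Sp, Cp in zip(S, C)], axis=2)  # n x n x m
    Gr = tubal_shrink(np.transpose(F, (0, 2, 1)), tau)             # n x m x n
    G = np.transpose(Gr, (0, 2, 1))                                # back to n x n x m
    return [G[:, :, p] for p in range(G.shape[2])]


def soft_threshold(X, tau):
    """Element-wise shrinkage operator used in (30)."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)


def update_E(W, S, B, lam1, mu):
    """Step 3: E^(p) = Pi_{lam1/mu}(W - W S + B / mu)."""
    return [soft_threshold(Wp - Wp @ Sp + Bp / mu, lam1 / mu) for Wp, Sp, Bp in zip(W, S, B)]


def update_multipliers(W, S, E, G, B, C, mu, rho, mu_max):
    """Step 4: multiplier and penalty updates (31)."""
    B = [Bp + mu * (Wp - Wp @ Sp - Ep) for Bp, Wp, Sp, Ep in zip(B, W, S, E)]
    C = [Cp + mu * (Sp - Gp) for Cp, Sp, Gp in zip(C, S, G)]
    return B, C, min(rho * mu, mu_max)


def residual(W, S, E, G):
    """Assumed form of (32): largest absolute entry of the stacked M^(p) and N^(p)."""
    M = np.stack([Wp - Wp @ Sp - Ep for Wp, Sp, Ep in zip(W, S, E)])
    N = np.stack([Sp - Gp for Sp, Gp in zip(S, G)])
    return max(np.abs(M).max(), np.abs(N).max())
```

In a full loop, after the Step 1 update of every S(p), one iteration would call `update_G(S, C, mu, tau=n / mu)`, then `update_E` and `update_multipliers`, and stop once `residual` falls below 𝜖.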

The optimization procedure terminates once the convergence condition (33) is satisfied. After obtaining the optimal similarity graphs \(\{ \boldsymbol {S}^{(p)} \}_{p=1}^{m}\), we use the following formula to obtain the optimal unified similarity matrix S∗:

MKAGT_IMVC then performs spectral clustering on the optimal unified similarity matrix S∗ to obtain the clustering results. The complete optimization procedure of MKAGT_IMVC is summarized in Algorithm 1.
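The final clustering step can be illustrated with a small NumPy sketch of normalized spectral clustering. Because the formula for S∗ is not reproduced in this section, the sketch takes S∗ as given and symmetrizes it as (S∗ + S∗ᵀ)/2, which is an assumption; the plain k-means with deterministic farthest-point seeding is a simplified stand-in for the repeated k-means runs used in the experiments. The function name is hypothetical.

```python
import numpy as np


def spectral_clustering(S_star, c, n_iter=100):
    """Normalized spectral clustering on a similarity matrix (illustrative sketch)."""
    A = 0.5 * (S_star + S_star.T)                       # assumed symmetrization
    d = A.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(d, 1e-12))
    # Symmetric normalized Laplacian L = I - D^{-1/2} A D^{-1/2}
    L = np.eye(A.shape[0]) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]
    _, vecs = np.linalg.eigh(L)                         # eigenvalues in ascending order
    Y = vecs[:, :c]                                     # eigenvectors of the c smallest eigenvalues
    Y = Y / np.maximum(np.linalg.norm(Y, axis=1, keepdims=True), 1e-12)

    # Deterministic farthest-point seeding, then Lloyd iterations (plain k-means)
    centers = [Y[0]]
    for _ in range(1, c):
        d2 = np.min([np.sum((Y - ctr) ** 2, axis=1) for ctr in centers], axis=0)
        centers.append(Y[np.argmax(d2)])
    centers = np.array(centers)
    for _ in range(n_iter):
        dists = ((Y[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        new_centers = centers.copy()
        for j in range(c):
            if np.any(labels == j):
                new_centers[j] = Y[labels == j].mean(axis=0)
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels
```

On a block-diagonal similarity matrix with c blocks, the c smallest eigenvectors span the block indicators, so each block ends up in its own cluster.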

4.5 Theoretical convergence analysis

As described in Section 4.4, the optimization of the objective function (20) can be separated into three convex sub-problems, each of which is solved optimally with respect to its corresponding variable. Hence, the objective function (20) decreases monotonically as all variables are updated alternately, and the convergence of problem (20) can be guaranteed after a number of iterations.

4.6 Computational complexity analysis

As shown in Algorithm 1, the computational complexity of the proposed MKAGT_IMVC mainly comes from three stages: generating the anchor point set, constructing multiple fused complete anchor graphs, and iteratively solving the joint objective function.

In the first stage, we adopt DAS to generate \(l_{v}\) anchor points from the available instances of each incomplete view. This requires \(l_{v}\) iterations, each of which executes a linear operation on an \(n_{v}\)-dimensional score vector. Hence, the computational complexity of this stage is \(\mathcal {O}(l_{v}n_{v})\) for each view.

In the second stage, we construct the truncated anchor graph with (10) and the complete anchor graph with (12), whose main computational costs are \(\mathcal {O}(n_{v} l_{v} d_{v})\) and \(\mathcal {O}(n {n_{v}^{2}} + n^{2} n_{v})\), respectively. Considering that \(n_{v} \ll n\), \(l_{v} \ll n\), and \(d_{v} \ll n\) for large-scale incomplete multi-view data, the computational complexity of constructing the fused complete anchor graphs with (14) under m predefined kernel functions can be approximated as \(\mathcal {O}({\sum }_{v=1}^{V}(m n^{2} n_{v}))\).

In the third stage, the joint optimization comprises four steps, which update \(\left \{\boldsymbol {S}^{(p)}\right \}_{p=1}^{m}\), \(\left \{\boldsymbol {G}^{(p)}\right \}_{p=1}^{m}\), \(\left \{\boldsymbol {E}^{(p)}\right \}_{p=1}^{m}\), and the ADMM variables \(\left \{\boldsymbol {B}^{(p)}\right \}_{p=1}^{m}\), \(\left \{\boldsymbol {C}^{(p)}\right \}_{p=1}^{m}\), and μ, respectively. In Step 1, the major cost of updating \(\left \{\boldsymbol {S}^{(p)}\right \}_{p=1}^{m}\) is the matrix inverse in (22), which requires \(\mathcal {O}(m n^{3})\). In Step 2, updating \(\left \{\boldsymbol {G}^{(p)}\right \}_{p=1}^{m}\) requires three core operations: FFT, t-SVD, and inverse FFT. For a tensor \(\mathscr {G} \in \mathbb {R}^{n \times m \times n}\), the costs of these operations are about \(\mathcal {O}(m n^{2}log(n))\), \(\mathcal {O}(m^{2} n^{2})\), and \(\mathcal {O}(m n^{2}log(n))\), respectively [40]. Therefore, the total computational complexity of this step is \(\mathcal {O}(m n^{2}log(n) + m^{2} n^{2})\). In Step 3, the main cost of updating \(\left \{\boldsymbol {E}^{(p)}\right \}_{p=1}^{m}\) is the matrix multiplication in (30), which requires \(\mathcal {O}(m n^{3})\). In Step 4, updating the ADMM variables \(\left \{\boldsymbol {B}^{(p)}\right \}_{p=1}^{m}\), \(\left \{\boldsymbol {C}^{(p)}\right \}_{p=1}^{m}\), and μ also mainly involves the matrix addition and multiplication operations in (31), whose complexity is \(\mathcal {O}(m n^{3})\).

According to the above analysis, the total computational complexity of the three stages is \(\mathcal {O}\left ({\sum }_{v=1}^{V}(l_{v}n_{v} + m n^{2} n_{v}) + t(3 m n^{3} + m n^{2}log(n) + m^{2} n^{2})\right )\), where t denotes the number of iterations of the optimization process in the third stage. After the solver converges, spectral clustering on the optimal unified similarity matrix S∗ usually costs \(\mathcal {O}(n^{3})\). Moreover, since \(n_{v} \ll n\) and \(l_{v} \ll n\), and V, m, and t are usually small in practice, the overall computational complexity of Algorithm 1 is dominated by \(\mathcal {O}(n^{3})\).
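To see why the cubic term dominates, the per-iteration terms of the third stage can be evaluated for concrete sizes. The values n = 10000 (the size of Hdigit) and m = 3 (the number of kernel functions used in the experiments) are chosen only for illustration; constant factors are ignored, and the natural logarithm is an arbitrary choice.

```python
import math


def per_iteration_terms(n, m):
    """Dominant per-iteration terms of the third stage (constant factors ignored)."""
    return {
        "matrix ops (3 m n^3)": 3 * m * n**3,
        "FFT/IFFT (m n^2 log n)": m * n**2 * math.log(n),
        "slice SVDs (m^2 n^2)": m**2 * n**2,
    }


terms = per_iteration_terms(n=10_000, m=3)
for name, value in terms.items():
    print(f"{name:>24}: {value:.2e}")
```

For these sizes the cubic term exceeds each of the other two by more than three orders of magnitude, which is why the overall cost is summarized as O(n^3).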

5 Experiments and analyses

In this section, we comprehensively evaluate the effectiveness and superiority of our method (MKAGT_IMVC) through extensive experiments on various benchmark multi-view datasets. Specifically, we compare it with several state-of-the-art incomplete multi-view clustering methods in terms of clustering performance and computational efficiency. In addition, we experimentally examine the anchor selection strategy, kernel selection strategy, parameter sensitivity, and convergence property to verify the stability of MKAGT_IMVC. All experiments are implemented in MATLAB R2016a and run on a Windows 10 PC with an Intel(R) Xeon(R) W-2123 CPU @ 3.60 GHz and 32 GB RAM.

5.1 Experimental settings

5.1.1 Description of datasets and incomplete multi-view data construction

We adopt eight widely used real-world multi-view datasets to validate the superiority of the proposed MKAGT_IMVC. Table 2 summarizes the important statistical information of these datasets, where the first three are naturally incomplete and the last five are complete. We briefly give the descriptions of these datasets as follows.

-

1.

3 Sources DatasetFootnote 1 (3Sources): 3Sources is a naturally incomplete multi-view text dataset. It consists of 416 distinct news stories annotated with six topical areas, which were reported by three well-known online news organizations, i.e., BBC, Guardian, and Reuters. Of these stories, 53 are reported by one news organization, 194 by two news organizations, and 169 by all three news organizations. The sample incomplete rate of this dataset is 59.38%.

-

2.

BBCSport DatasetFootnote 2 (BBCSport): BBCSport is a naturally incomplete synthetic multi-view text dataset [2]. It consists of 737 sports news articles from the BBC Sport website, where each article was split into two related segments of text and categorized into five topical areas. Of these articles, 193 are available in only one segment and 544 are available in both segments. The sample incomplete rate of this dataset is 26.19%.

-

3.

BBC DatasetFootnote 3 (BBC): BBC is a naturally incomplete synthetic multi-view text dataset [2]. It consists of 2225 stories from the BBC news website, where each story was split into four related segments of text and categorized into five topical areas. Of these stories, 213 are reported in one segment, 744 are reported in two segments, 583 are reported in three segments, and 685 are reported in four segments. The sample incomplete rate of this dataset is 69.21%.

-

4.

Caltech101-7 Image DatasetFootnote 4 (Caltech7): Caltech7 is a complete multi-view image dataset [49]. It is a subset of the frequently used Caltech101 consisting of 1474 images, where each image is represented by six kinds of features [50]. Caltech7 is composed of seven object categories, i.e., Windsor-Chairs, Stop-Sign, Snoopy, Motorbikes, Garfield, Face, and Dollar-Bills.

-

5.

Multiple Features handwritten numerals DatasetFootnote 5 (Mfeat): Mfeat is a complete multi-view image dataset. It consists of 2000 handwritten numerals (‘0’–‘9’) from the UCI repository, where each handwritten numeral is described by six feature sets [51]. Mfeat is categorized into ten groups, and each group has 200 samples.

-

6.

UC Merced Land Use DatasetFootnote 6 (LandUse-21): LandUse-21 is a complete multi-view image dataset. It consists of 2100 land use images selected from aerial orthoimagery [52], where each image is represented by three kinds of features, i.e., GIST, PHOG, and LBP [3]. LandUse-21 contains 21 categories, and each category has 100 images.

-

7.

15-Scene Image DatasetFootnote 7 (Scene-15): Scene-15 is a complete multi-view image dataset. It consists of 4485 images of indoor and outdoor environments [53], where each image is described by three kinds of features, i.e., GIST, PHOG, and LBP [3]. Scene-15 contains 15 natural scene categories, each of which has 200 to 400 images.

-

8.

Handwritten digit 2 sources DatasetFootnote 8 (Hdigit): Hdigit is a complete multi-view image dataset. It consists of 10000 handwritten digits (‘0’–‘9’) collected from two sources, i.e., MNIST and USPS [11]. Hdigit is categorized into ten groups, and each group has 1000 samples.

- Incomplete Multi-view Data Construction:

-

In our experiments, since the 3Sources, BBCSport, and BBC datasets are naturally incomplete multi-view datasets, we conduct the comparative experiments on them directly. For the other five complete multi-view datasets, we first randomly select ξ% of the samples in each dataset as incomplete samples, which suffer from missing instances in some views. Then, subject to the condition that each sample is observed in at least one view, we randomly generate a binary vector b = (b1,b2,⋯ ,bV) for each incomplete sample, where bi = 0 means that the instance of the sample in the i-th view is missing and its features are set to 0. In this way, we construct five incomplete multi-view datasets, each with different sample incomplete rates ξ% (ξ = 70, 90, 100), to fully assess the effectiveness of the proposed method, where ξ = 100 means that all samples are incomplete.
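The construction of the incomplete patterns can be sketched as follows. The helper name, the use of NumPy's random generator, and the requirement that every incomplete sample misses at least one view (so that it is genuinely incomplete) are assumptions of this sketch; the text above only requires that each sample be observed in at least one view. The sketch assumes V ≥ 2 and that rows of each view matrix are samples.

```python
import numpy as np


def make_incomplete(X_views, xi, seed=0):
    """Mask instances of a complete multi-view dataset (hypothetical helper).

    X_views: list of V arrays of shape (n, d_v), rows are samples.
    xi: sample incomplete rate in percent (e.g. 70, 90, 100).
    Returns the masked views and an (n, V) 0/1 availability matrix.
    """
    rng = np.random.default_rng(seed)
    V = len(X_views)
    n = X_views[0].shape[0]
    avail = np.ones((n, V), dtype=int)
    n_inc = int(round(n * xi / 100))
    incomplete = rng.choice(n, size=n_inc, replace=False)
    for i in incomplete:
        # Redraw b until it has at least one observed (1) and one missing (0) view
        while True:
            b = rng.integers(0, 2, size=V)
            if 0 < b.sum() < V:
                break
        avail[i] = b
    # Set the features of missing instances to 0, as described above
    masked = [X * avail[:, [v]] for v, X in enumerate(X_views)]
    return masked, avail
```

Repeating the call with different seeds yields the several random incomplete patterns per sample incomplete rate that the experiments average over.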

5.1.2 Compared Methods

To comprehensively evaluate the clustering performance of the proposed MKAGT_IMVC, we compare it with the following state-of-the-art incomplete multi-view clustering methods.

-

1.

Best Single View (BSV) [54]: This method populates missing instances with the mean value of the available instances on each view. Then, BSV performs standard spectral clustering [55] on all views independently and reports the best result.

-

2.

Concat [54]: This method concatenates the features of all views into one long feature vector and performs standard spectral clustering on these concatenated features to obtain the final clustering result.

-

3.

DAIMC [19]: This method learns a unified feature matrix from all views by combining weighted semi-nonnegative matrix factorization and L2,1-norm regularized regression. Then, DAIMC performs k-means on the unified feature matrix to obtain the final clustering result.

-

4.

PIC [30]: This method converts missing instances into missing similarity values in each view. Then, PIC learns a consistent Laplacian matrix by using spectral perturbation theory to balance all views. Lastly, PIC performs standard spectral clustering on the consistent Laplacian matrix to obtain the final clustering result.

-

5.

MKKM-IK-MKC [27]: This method develops a unified learning framework, which jointly optimizes the multiple kernel imputation and consistent representation learning to achieve better clustering. Then, MKKM-IK-MKC performs k-means on the consistent representation to get the final clustering result.

-

6.

IMSC_AGL [15]: This method designs a joint optimization framework, which integrates the optimal similarity graph construction for each view and consistent representation learning for all views. Then, IMSC_AGL performs k-means on the consistent representation to get the final clustering result.

-

7.

AGC_IMC [31]: This method develops a unified learning framework, which combines incomplete graphs recovering and consistent representation learning to obtain the optimal consistent representation. Then, AGC_IMC performs k-means on the optimal consistent representation to get the final clustering result.

-

8.

GIMC_FLSD [20]: This method develops a matrix factorization model, which integrates individual representation learning for each view and consensus representation learning for all views. Meanwhile, GIMC_FLSD imposes adaptively weighted learning on the model to obtain the optimal consistent representation. Lastly, GIMC_FLSD performs k-means on the optimal consistent representation to get the final clustering result.

-

9.

APMC [33]: This method uses the samples observed in all views as anchors to construct the fused similarity matrix. Then, APMC performs standard spectral clustering on the fused similarity matrix to obtain the final clustering result.

-

10.

IMVTSC-MVI [32]: This method develops a low-rank representation framework with multiple constraints that simultaneously recovers the missing instances in each view and learns the optimal similarity graph from all views. Then, IMVTSC-MVI performs standard spectral clustering on the optimal similarity graph to obtain the final clustering result.

For all compared methods, we obtain the publicly available source codes from the authors' homepages. For a fair and conclusive comparison, we run these codes either with the default parameter values or with parameters tuned as suggested in the original papers to obtain their best performance. For example, the number of nearest neighbors in the relevant compared methods is set either to the default value in the source code or to 10% of the total number of samples in each dataset.

For the proposed MKAGT_IMVC, we adopt three commonly used kernel functions to construct the view-wise truncated anchor graphs, which are defined as follows:

-

1.

Radial Basis Function (RBF) Kernel:

$$ K(\boldsymbol{y}, \boldsymbol{u}) = \exp \left( -\gamma \Vert \boldsymbol{y} - \boldsymbol{u} \Vert^{2} \right), $$ (35)

-

2.

Cosine Similarity Kernel [56]:

$$ K(\boldsymbol{y}, \boldsymbol{u}) = 1 - \frac{\boldsymbol{y}^{T} \boldsymbol{u}} {\Vert \boldsymbol{y} \Vert \Vert \boldsymbol{u} \Vert}, $$ (36)

-

3.

Radial Basis Function (RBF) Chi-Square Kernel [57]:

$$ K(\boldsymbol{y}, \boldsymbol{u}) = \exp \left( -\gamma (\frac{1}{2} {\sum}_{i=1}^{d} \frac{(y_{i} - u_{i})^{2}}{(y_{i} + u_{i})})\right). $$(37)

Without loss of generality, the parameter γ in the RBF kernel (35) and in the RBF chi-square kernel (37) is set to 1. Furthermore, we empirically set the number of nearest neighbors to k = c for creating the view-wise truncated anchor graphs, where c is the number of clusters of the raw data. For the 3Sources dataset, we set the parameters of the joint learning model of MKAGT_IMVC to λ1 = 0.001 and λ2 = 0.1, while for the other datasets with different sample incomplete rates we set λ1 = 0.001 and λ2 = 1. According to (43), we empirically set the ratio of anchors to all available instances for the 3Sources, BBCSport, BBC, Caltech7, Mfeat, LandUse-21, Scene-15, and Hdigit datasets as η = {0.58, 0.8, 0.1, 1, 0.4, 1, 0.5, 0.2}, respectively, where these multi-view datasets have different sample incomplete rates.
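The three kernels (35) to (37) can be written in NumPy as below, with γ = 1 as in the experiments. Two details are assumptions of this sketch: in (37), terms with y_i + u_i = 0 are treated as contributing zero (the formula is undefined there, and the kernel is meant for non-negative features such as histograms), and (36) is implemented exactly as written, i.e., as one minus the cosine similarity, which behaves as a dissimilarity. The function names are hypothetical.

```python
import numpy as np


def rbf_kernel(y, u, gamma=1.0):
    """(35): exp(-gamma * ||y - u||^2)."""
    return np.exp(-gamma * np.sum((y - u) ** 2))


def cosine_kernel(y, u):
    """(36) as written: 1 - y^T u / (||y|| ||u||)."""
    return 1.0 - (y @ u) / (np.linalg.norm(y) * np.linalg.norm(u))


def rbf_chi2_kernel(y, u, gamma=1.0):
    """(37): exp(-gamma * 0.5 * sum_i (y_i - u_i)^2 / (y_i + u_i)); 0/0 terms count as 0."""
    num = (y - u) ** 2
    den = y + u
    chi2 = np.sum(np.divide(num, den, out=np.zeros_like(num, dtype=float), where=den != 0))
    return np.exp(-gamma * 0.5 * chi2)
```

For identical inputs, the two RBF-type kernels return 1 and (36) returns 0, which makes the opposite orientation of (36) easy to check.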

Since k-means is sensitive to the initial centroids, we run k-means 20 times in each experiment to eliminate the influence of random initialization, with the maximum number of k-means iterations set to 1000. Meanwhile, we randomly generate five different incomplete patterns with the same sample incomplete rate in the manner described above, run all compared methods on these five patterns, and report the average values with standard deviations. Because different data preprocessing schemes and parameter ranges are used, some results may deviate from the corresponding published results.

5.1.3 Evaluation metrics

Without loss of generality, we adopt three commonly used evaluation metrics, following [10], to assess the performance of different methods: Clustering Accuracy (ACC), Normalized Mutual Information (NMI), and Purity. Each metric is computed from the obtained cluster labels of all samples and the ground-truth labels of the dataset, and a higher value indicates better performance. Specifically, the three metrics are defined as follows.

The first metric, ACC, measures the clustering accuracy and is defined as

$$ ACC = \frac{\sum_{i=1}^{n}\delta\left(l_{i}, map(c_{i})\right)}{n}, $$

where \(l_{i}\) and \(c_{i}\) denote the ground-truth label and the obtained cluster label of the i-th sample, respectively; n is the number of samples; δ(x, y) is the delta function, which equals 1 if x = y and 0 otherwise; and map(ci) is the optimal permutation mapping function that maps the cluster label ci to the ground-truth label li, obtained with the Kuhn-Munkres algorithm.

The second metric, NMI, assesses the quality of the obtained clusters:

where the sets C = {c1,c2,⋯ ,cc} and L = {l1,l2,⋯ ,lc} denote the clusters obtained by a clustering method and the ground-truth clusters, respectively; the constant c is the number of clusters of the raw data; MI(C,L) denotes the mutual information between C and L; and E(C) and E(L) denote the entropies of C and L, respectively.

Let \(n_{i}\) denote the number of samples in the obtained cluster \(\boldsymbol {c}_{i}~(1 \leqslant i \leqslant c)\), and let \(n_{j}\) denote the number of samples in the ground-truth cluster \(\boldsymbol {l}_{j}~(1 \leqslant j \leqslant c)\). Then, NMI can be rewritten as

where ni,j denotes the number of samples in the common parts of ci and lj.

The third metric, Purity, measures the percentage of correctly assigned labels when each obtained cluster is assigned to its most frequent ground-truth class, and thus evaluates the effectiveness of the clustering method.
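All three metrics can be computed from the contingency table between the obtained clusters and the ground-truth classes, as in the sketch below. It uses SciPy's `linear_sum_assignment`, an optimal linear assignment solver that yields the same optimal mapping as the Kuhn-Munkres algorithm, for map(·) in ACC. NMI is normalized here by the geometric mean √(E(C)E(L)), which is one common choice and an assumption, since the exact normalization formula is not reproduced in this section. The function names are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def contingency(labels_true, labels_pred):
    """Rows: obtained clusters c_i; columns: ground-truth classes l_j; entries n_ij."""
    _, t = np.unique(labels_true, return_inverse=True)
    _, p = np.unique(labels_pred, return_inverse=True)
    table = np.zeros((p.max() + 1, t.max() + 1), dtype=np.int64)
    np.add.at(table, (p, t), 1)
    return table


def acc(labels_true, labels_pred):
    table = contingency(labels_true, labels_pred)
    rows, cols = linear_sum_assignment(-table)  # maximize the number of matched samples
    return table[rows, cols].sum() / table.sum()


def purity(labels_true, labels_pred):
    table = contingency(labels_true, labels_pred)
    return table.max(axis=1).sum() / table.sum()


def nmi(labels_true, labels_pred):
    table = contingency(labels_true, labels_pred).astype(float)
    n = table.sum()
    n_i, n_j = table.sum(axis=1), table.sum(axis=0)
    nz = table > 0
    mi = np.sum(table[nz] / n * np.log(n * table[nz] / np.outer(n_i, n_j)[nz]))
    h_c = -np.sum(n_i / n * np.log(n_i / n))
    h_l = -np.sum(n_j / n * np.log(n_j / n))
    return mi / np.sqrt(h_c * h_l)
```

A relabeled but otherwise perfect clustering scores 1 on all three metrics, since the contingency table is then a permutation of a diagonal table.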

5.2 Clustering results and analysis

To evaluate the effectiveness and superiority of the proposed MKAGT_IMVC, we compare it with a variety of incomplete multi-view clustering methods on eight incomplete multi-view datasets, including three naturally incomplete datasets and five randomly constructed incomplete datasets. Tables 3 and 4 present the results of all compared methods on the naturally incomplete datasets and on the constructed incomplete datasets with the sample incomplete rates mentioned above, respectively, in terms of ACC, NMI, Purity, and CPU running time. The CPU running time is measured from the input of the original features of the multi-view dataset to the output of the cluster labels. From these experimental results, we make the following observations:

-

1.

BSV and Concat perform worse than the other incomplete multi-view clustering approaches in terms of ACC, NMI, and Purity in most incomplete situations of these datasets. BSV simply fills the missing instances of each view with the same mean value, which tends to group all missing instances of that view into the same cluster. Meanwhile, by directly concatenating the features of all views into one long feature vector, Concat may introduce a large amount of redundant information, which is the main reason for its poor clustering results. Thus, the experimental results demonstrate that it is worthwhile to exploit the relationships among multiple views to improve the clustering performance.

-

2.

The proposed MKAGT_IMVC method can handle arbitrary incomplete multi-view datasets, such as the three naturally incomplete multi-view datasets in Table 3 and the five randomly constructed incomplete multi-view datasets in Table 4, each of which is tested with sample incomplete rates of 70%, 90%, and 100%. On most of these incomplete multi-view datasets, MKAGT_IMVC significantly outperforms all the compared methods in terms of ACC, NMI, and Purity. For example, Tables 3 and 4 show that all three metrics obtained by MKAGT_IMVC on the BBCSport, BBC, and Mfeat datasets are very close to 100%. Table 4 also shows that the ACC, NMI, and Purity of the proposed method and of all the compared methods are relatively low on the LandUse-21 and Scene-15 datasets. The main reason is that LandUse-21 and Scene-15 are more complex than the other datasets; in particular, they have 21 and 15 clusters, respectively, which is many more than the other datasets. This makes it very challenging for all methods to fit the spatial distribution of the data. Nevertheless, at sample incomplete rates of 70%/90%/100%, the proposed MKAGT_IMVC method outperforms the second-best method in ACC, NMI, and Purity by about 14%/22%/17%, 18%/27%/21%, and 13%/23%/16%, respectively, on the LandUse-21 dataset, and by about 31%/32%/30%, 36%/38%/36%, and 25%/27%/24%, respectively, on the Scene-15 dataset. On the Hdigit dataset, at sample incomplete rates of 90% and 100%, it outperforms the second-best method in ACC/NMI/Purity by about 15%/8%/13% and 50%/33%/48%, respectively.
The main reason is that MKAGT_IMVC learns an optimal unified similarity matrix from multiple views by jointly considering three key steps: (1) generating highly representative anchor points; (2) constructing multiple fused complete anchor graphs with various kernel functions; and (3) developing an effective subspace representation based graph learning model that integrates a low-rank tensor constraint and a consensus graph constraint.

3. Tables 3 and 4 also report the CPU running time. As the number of samples increases, the running time of all methods increases significantly, especially on the Scene-15 and Hdigit datasets. APMC requires much less CPU time than all the other comparison methods in most incomplete settings, especially on the large-scale dataset (i.e., Hdigit). The main reason is that APMC constructs the similarity graph from generated anchor points and optimizes it with a non-iterative algorithm. This indicates that the anchor point strategy can effectively improve the efficiency of incomplete multi-view clustering. However, the clustering performance of APMC in terms of ACC, NMI, and Purity is relatively weak compared with the other competitors, and APMC cannot handle datasets with a sample incomplete rate of 100% or datasets with more than three views. In contrast, MKAGT_IMVC achieves the best clustering performance in terms of ACC, NMI, and Purity while requiring much less CPU time than several state-of-the-art competitors (i.e., IMSC_AGL, AGC_IMC, and IMVTSC-MVI) in most scenarios, which validates its advantages in both clustering ability and running time.

4. Table 4 shows that the standard deviations of ACC, NMI, and Purity obtained by MKAGT_IMVC are larger than those of the other competitors on the Scene-15 dataset with sample incomplete rates of 70% and 100%, and on the Hdigit dataset with a sample incomplete rate of 70%. This is mainly because, in some random incomplete patterns with the same sample incomplete rate, the generated anchor points are not representative enough or are too few. In addition, as the sample incomplete rate increases, the clustering performance of all comparison methods in terms of ACC, NMI, and Purity generally decreases. However, MKAGT_IMVC still maintains stable ACC, NMI, and Purity in most cases, especially on the large-scale dataset (i.e., Hdigit). The main reason is that MKAGT_IMVC selects a sufficient number of representative instances in each view as anchor points to construct the anchor graphs.

5.3 Effect of anchor selection

We explore the effect of anchor selection on the clustering performance of MKAGT_IMVC through two core questions: how the results change when MKAGT_IMVC adopts different anchor strategies, and how they change with different numbers of anchors. Since the anchor point selected by DAS at one step and the anchor point selected at the next step belong to different clusters with high probability, we assume that the anchor points selected within c steps are evenly distributed over the c clusters. Accordingly, the number of anchors can be obtained by the following expression:

where \(n_Y\) records the number of available instances in each view, c denotes the number of clusters, η denotes the proportion of anchors to the available instances, floor(⋅) rounds each element to the nearest integer less than or equal to that element, and ceil(⋅) rounds each element to the nearest integer greater than or equal to that element.
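The following Python sketch illustrates a DAS-style, score-and-suppress anchor selection applied view by view to the available instances only. The scoring rule (row sums of min-max scaled features) and the multiplicative distance-based update are assumptions made for illustration; the improved DAS used in MKAGT_IMVC may differ in these details, and the number of anchors `m` is passed in directly rather than derived from η, c, and \(n_Y\). The names `das_style_anchors` and `incomplete_view_anchors` are hypothetical.

```python
import numpy as np

def das_style_anchors(X, m):
    """Greedy score-and-suppress anchor selection on one view (DAS-style sketch).

    X: (n, d) available instances of the view; m: number of anchors (m <= n).
    Returns local row indices of the selected anchors, in selection order.
    """
    n = X.shape[0]
    if m > n:
        raise ValueError("cannot select more anchors than available instances")
    # Assumption: min-max scale each feature to [0, 1]; row sums are the initial scores.
    span = X.max(axis=0) - X.min(axis=0)
    Xs = (X - X.min(axis=0)) / np.where(span > 0, span, 1.0)
    score = Xs.sum(axis=1)
    available = np.ones(n, dtype=bool)
    chosen = []
    for _ in range(m):
        # Pick the highest-scoring instance that has not been chosen yet.
        idx = int(np.argmax(np.where(available, score, -np.inf)))
        chosen.append(idx)
        available[idx] = False
        # Distances to the new anchor scaled to [0, 1]: instances close to it
        # get small factors, so the next pick tends to lie elsewhere.
        dist = np.linalg.norm(Xs - Xs[idx], axis=1)
        score = score * dist / (dist.max() + 1e-12)
        # Rescale so repeated multiplications do not underflow.
        top = score[available].max() if available.any() else 0.0
        if top > 0:
            score = score / top
    return np.array(chosen, dtype=int)

def incomplete_view_anchors(X, present, m):
    """Select m anchors among the available rows of one view.

    X: (n, d) features of the view (rows of missing instances are ignored);
    present: boolean (n,) availability mask. Returns global sample indices.
    """
    avail = np.flatnonzero(present)
    return avail[das_style_anchors(X[avail], m)]
```

Because chosen instances are masked out, no instance is selected twice, and running the selection separately on each view's available rows keeps the anchors within the observed data of that view.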

Table 5 presents the effect of different anchor strategies on the clustering performance of MKAGT_IMVC. We adopt four anchor selection strategies: the widely used k-means strategy, the random strategy, the strategy of taking the common instances that appear in all views, and the improved DAS for incomplete multi-view data, where DAS uses the same number of anchor points as the random strategy. The results show that DAS yields more stable clustering performance in terms of ACC, NMI, and Purity than the other three strategies. In addition, on the medium-size dataset (i.e., Scene-15), DAS not only leads clearly in clustering performance but also requires much less CPU time than the random strategy with the same number of anchor points. The main reason is that DAS can efficiently select a sufficient number of highly representative anchor points from incomplete multi-view datasets. Therefore, the improved DAS is the most suitable anchor selection strategy for MKAGT_IMVC.

Fig. 2 shows the effect of the number of anchor points on the clustering performance of MKAGT_IMVC. The ACC and NMI curves already start at relatively high values compared with all the reported values. On the small-size datasets (i.e., BBCSport, Caltech7, and BBC), the clustering performance rises steadily as the number of anchor points increases, whereas on the medium-size dataset (i.e., Scene-15) it fluctuates within a certain range. Empirically, the optimal clustering performance is obtained when the proportion of anchors to the available instances η is around 0.5. Moreover, the CPU running time rises rapidly as the number of anchor points increases. Therefore, for small-size datasets we should use as many anchor points as practical and search for the best number of anchors in the direction of \(\eta \geqslant 0.5\), while for medium-size or large-size datasets we can search in the direction of \(\eta \leqslant 0.5\).

5.4 Effect of kernel selection

To investigate how combinations of different predefined kernel functions affect the clustering performance of MKAGT_IMVC, we adopt three predefined kernel functions, namely the RBF kernel, the cosine similarity kernel, and the RBF chi-square kernel, and form seven combinations for comparative experiments on four incomplete multi-view datasets, as shown in Table 6. For the kernel parameter γ of each combination, values are taken in turn from the set {10, 1, 0.1, 0.01} according to the number of kernel functions in the combination. The results show that the proposed combination of multiple kernels yields more robust clustering performance in terms of ACC, NMI, and Purity than the other combinations, especially on the medium-size datasets. For example, on the Scene-15 dataset, the proposed combination not only obtains the best clustering performance but also requires much less CPU time than most other combinations. The main reason is that, when multiple incomplete views are fused, using several predefined kernel functions with different properties to construct multiple fused complete anchor graphs allows MKAGT_IMVC to sufficiently mine intra-view and inter-view information and to capture more nonlinear relations between samples. These results demonstrate that adopting a variety of predefined kernel functions with different kernel spaces improves the effectiveness, robustness, and efficiency of the clustering results.
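For reference, the three kernels named above can be written as similarity functions between n instances and m anchors. The following Python sketch uses the standard textbook forms (the exponentiated chi-square form is the same as scikit-learn's `chi2_kernel`); whether MKAGT_IMVC uses exactly these normalizations is an assumption, and the function names are hypothetical. It also enumerates the seven kernel combinations, which are simply the non-empty subsets of the three kernels. The γ values are left as parameters.

```python
import itertools
import numpy as np

def rbf_kernel(X, A, gamma):
    """exp(-gamma * ||x - a||^2) between rows of X (n, d) and anchors A (m, d)."""
    sq = (X ** 2).sum(axis=1)[:, None] + (A ** 2).sum(axis=1)[None, :] - 2.0 * X @ A.T
    return np.exp(-gamma * np.maximum(sq, 0.0))  # clip tiny negative round-off

def cosine_kernel(X, A, eps=1e-12):
    """Cosine similarity between rows of X and rows of A."""
    Xn = X / (np.linalg.norm(X, axis=1, keepdims=True) + eps)
    An = A / (np.linalg.norm(A, axis=1, keepdims=True) + eps)
    return Xn @ An.T

def chi2_rbf_kernel(X, A, gamma, eps=1e-12):
    """exp(-gamma * sum_k (x_k - a_k)^2 / (x_k + a_k)); assumes non-negative features."""
    diff = X[:, None, :] - A[None, :, :]
    total = X[:, None, :] + A[None, :, :]
    return np.exp(-gamma * (diff ** 2 / (total + eps)).sum(axis=-1))

KERNELS = ("rbf", "cosine", "chi2_rbf")
# Every non-empty subset of the three kernels: 2**3 - 1 = 7 combinations.
COMBINATIONS = [combo for r in range(1, len(KERNELS) + 1)
                for combo in itertools.combinations(KERNELS, r)]
```

Each function returns an (n, m) anchor similarity matrix, so a multi-kernel variant can build one anchor graph per kernel in a combination from the same set of anchors.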

5.5 Parameter sensitivity analysis

There are two tunable parameters, λ1 and λ2, in our final objective function (18). We next explore how different parameter combinations influence clustering performance. Fig. 3 reports ACC under different combinations of the two trade-off parameters λ1 and λ2 on the BBCSport, BBC, Caltech7, and Scene-15 datasets, where λ1 and λ2 are both chosen from {1e−4, 1e−3, 1e−2, 1e−1, 1e0, 1e1, 1e2, 1e3, 1e4}. The results show that MKAGT_IMVC is somewhat sensitive to λ1 and λ2, but in most cases setting λ1 = 0.001 and λ2 = 1 yields the best clustering performance. The optimal value of λ2 is thus about three orders of magnitude larger than that of λ1. This is reasonable because λ2 adjusts the weight of the diversity term in (18), which mainly captures the complementary and consistent information of multiple views. These experiments further demonstrate that our method can effectively capture complementary and consistent information between views to improve clustering performance.
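The parameter study above amounts to an exhaustive grid search over 9 × 9 = 81 pairs. A minimal Python sketch follows; `score_fn` is a hypothetical callable that would run the clustering with the given (λ1, λ2) and return a metric such as ACC, and `toy_score` is only a stand-in peaked at λ1 = 0.001 and λ2 = 1 to show the mechanics, not a model of the real results.

```python
import itertools
import math

# The nine candidate values 1e-4, 1e-3, ..., 1e4 used for both lambda1 and lambda2.
LAMBDA_GRID = [10.0 ** k for k in range(-4, 5)]

def grid_search(score_fn, grid=LAMBDA_GRID):
    """Evaluate score_fn(lam1, lam2) on every grid pair; return the best pair and all scores."""
    scores = {(l1, l2): score_fn(l1, l2) for l1, l2 in itertools.product(grid, grid)}
    best = max(scores, key=scores.get)
    return best, scores

def toy_score(l1, l2):
    """Hypothetical stand-in for ACC, highest at lambda1 = 1e-3, lambda2 = 1."""
    return -((math.log10(l1) + 3.0) ** 2 + math.log10(l2) ** 2)

best, scores = grid_search(toy_score)
```

Since every pair requires a full clustering run, the cost of this search grows with the square of the grid size, which is one practical reason to report a default setting such as λ1 = 0.001 and λ2 = 1.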

5.6 Empirical convergence analysis

In Section 4.5, we analyzed the convergence of MKAGT_IMVC theoretically. Here, we further examine it empirically. Fig. 4 reports the convergence curves on four incomplete multi-view datasets. The objective function values decrease monotonically and stabilize within 20 iterations in all cases, which further demonstrates that MKAGT_IMVC converges quickly in practice.
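Empirical convergence of this kind is typically checked from the recorded objective values. The Python sketch below tests for monotone decrease and reports the first iteration whose relative change falls below a tolerance. The relative-change rule and `tol = 1e-6` are assumptions, not necessarily the stopping criterion used by MKAGT_IMVC, the name `check_convergence` is hypothetical, and the objective history in the example is made up.

```python
def check_convergence(objective, tol=1e-6):
    """Check a sequence of per-iteration objective values.

    Returns (monotone, t): monotone is True if the values never increase
    (up to a tiny floating-point slack), and t is the first iteration whose
    relative change |f[t-1] - f[t]| / |f[t-1]| is below tol, or None.
    """
    monotone = all(b <= a + 1e-12 * max(1.0, abs(a))
                   for a, b in zip(objective, objective[1:]))
    for t in range(1, len(objective)):
        prev, cur = objective[t - 1], objective[t]
        if abs(prev - cur) / max(abs(prev), 1e-12) < tol:
            return monotone, t
    return monotone, None

# Made-up objective history that decreases and then levels off.
history = [10.0, 5.0, 3.0, 2.5, 2.5]
monotone, converged_at = check_convergence(history)
```

A relative rather than absolute threshold keeps the test meaningful regardless of the scale of the objective, which differs across datasets.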

6 Conclusion

Although recently proposed incomplete multi-view clustering methods have achieved advanced performance, they still have several limitations, such as inflexibility and relatively high computational cost, neglect of valuable nonlinear relations among samples, and insufficient exploration of complementary information among views. This paper develops a novel and flexible unified graph learning framework, called MKAGT_IMVC, to address these drawbacks. MKAGT_IMVC first extends an anchor selection approach that can simply and effectively generate a set of representative anchor points. Then, based on the selected anchor points and predefined kernel functions, it constructs multiple fused complete anchor graphs to capture more valuable nonlinear relations. Moreover, MKAGT_IMVC develops a graph learning model that integrates a low-rank tensor constraint and a consensus graph constraint, where all fused complete anchor graphs are regarded as prior knowledge to initialize the model. These key steps distinguish our method from most existing methods and make it superior to them. Extensive experiments show that our method outperforms most state-of-the-art competitors in terms of both clustering ability and running time. In the future, we plan to study how to select the number of anchors adaptively rather than through experimental assessment.


References

Bickel S, Scheffer T (2004) Multi-view clustering. In: Fourth IEEE international conference on data mining (ICDM’04), vol 4, Citeseer, pp 19–26

Greene D, Cunningham P (2006) Practical solutions to the problem of diagonal dominance in kernel document clustering. In: Proceedings of the 23rd international conference on machine learning, pp 377–384

Dai D, Van Gool L (2013) Ensemble projection for semi-supervised image classification. In: Proceedings of the IEEE international conference on computer vision, pp 2072–2079

Li Y, Yang M, Zhang ZM (2019) A survey of multi-view representation learning. IEEE Trans Knowl Data Eng 31(10):1863–1883

Tolić D, Antulov-Fantulin N, Kopriva I (2018) A nonlinear orthogonal non-negative matrix factorization approach to subspace clustering. Pattern Recogn 82:40–55

Huang S, Kang Z, Xu Z (2020) Auto-weighted multi-view clustering via deep matrix decomposition. Pattern Recogn 97:107015

Zhang Z, Liu L, Shen F, Shen HT, Shao L (2019) Binary multi-view clustering. IEEE Trans Pattern Anal Mach Intell 41(7):1774–1782

Zhang C, Fu H, Hu Q, Cao X, Xie Y, Tao D, Xu D (2020) Generalized latent multi-view subspace clustering. IEEE Trans Pattern Anal Mach Intell 42(1):86–99

Zhang G-Y, Zhou Y-R, He X-Y, Wang C-D, Huang D (2020) One-step kernel multi-view subspace clustering. Knowl-Based Syst 189:105126

Zhan K, Nie F, Wang J, Yang Y (2019) Multiview consensus graph clustering. IEEE Trans Image Process 28(3):1261–1270

Wang H, Yang Y, Liu B (2020) GMC: Graph-based multi-view clustering. IEEE Trans Knowl Data Eng 32(6):1116–1129

Li X, Zhang H, Wang R, Nie F (2020) Multi-view clustering: a scalable and parameter-free bipartite graph fusion method. IEEE Trans Pattern Anal Mach Intell, 1–1

Li Z, Wang Q, Tao Z, Gao Q, Yang Z (2019) Deep adversarial multi-view clustering network. In: IJCAI’19 Proceedings of the Twenty-Eighth international joint conference on artificial intelligence, pp 2952–2958

Xu J, Ren Y, Li G, Pan L, Zhu C, Xu Z (2021) Deep embedded multi-view clustering with collaborative training. Inf Sci 573:279–290

Wen J, Xu Y, Liu H (2020) Incomplete multiview spectral clustering with adaptive graph learning. IEEE Trans Cybern 50(4):1418–1429

Liu J, Teng S, Fei L, Zhang W, Fang X, Zhang Z, Wu N (2021) A novel consensus learning approach to incomplete multi-view clustering. Pattern Recogn 115:107890

Li S-Y, Jiang Y, Zhou Z-H (2014) Partial multi-view clustering. In: Twenty-eighth AAAI conference on artificial intelligence

Yang Y, Wang H (2018) Multi-view clustering: A survey. Big Data Mining and Analytics 1(2):83–107

Hu M, Chen S (2018) Doubly aligned incomplete multi-view clustering. In: Proceedings of the Twenty-Seventh international joint conference on artificial intelligence, pp 2262–2268

Wen J, Zhang Z, Zhang Z, Fei L, Wang M (2021) Generalized incomplete multiview clustering with flexible locality structure diffusion. IEEE Trans Cybern 51(1):101–114

Xu C, Guan Z, Zhao W, Wu H, Niu Y, Ling B (2019) Adversarial incomplete multi-view clustering. In: IJCAI’19 Proceedings of the Twenty-Eighth international joint conference on artificial intelligence, pp 3933–3939

Wang Q, Ding Z, Tao Z, Gao Q, Fu Y (2021) Generative partial multi-view clustering with adaptive fusion and cycle consistency. IEEE Trans Image Process 30:1771–1783

Zhang C, Cui Y, Han Z, Zhou JT, Fu H, Hu Q (2020) Deep partial multi-view learning. IEEE Trans Pattern Anal Mach Intell, 1–1