Abstract

Knowledge discovery combined with network structure is an emerging field of network data analysis and mining. Three-way concept analysis is a method that fits human reasoning in uncertain decisions and analysis. When three-way concept analysis is placed against the background of a network, not only the three-way rules but also the network characteristic values of these rules need to be obtained, which is of great significance for concept cognition in the network. This paper combines complex network analysis with the formal context of three-way decision. Firstly, the network formal context of three-way decision (NFC3WD) is proposed to unify the two studies into one data framework. Then, the network weaken-concepts of three-way decision (NWC3WD) and their corresponding sub-networks are studied. As a result, we can not only find the network weaken-concepts but also know the average influence of each sub-network, as well as the influence difference within it. Furthermore, the concept logic of the network and the properties of its operators are put forward, which lays a foundation for designing the rule extraction algorithm. Subsequently, a bidirectional rule extraction algorithm and a reduction algorithm based on confidence degree are explored. These algorithms are applied to diagnosis examples of COVID-19, from which we can not only obtain diagnostic rules but also learn, through network eigenvalues, the importance in the network of the population corresponding to these rules. Finally, experimental analysis is made to show the superiority of the proposed method.


1 Introduction

As society becomes increasingly networked, much data collection and analysis has a corresponding network context behind it. In current research on decision problems in networks, such as diagnosis and epidemic prevention in infectious disease networks, objects can often be divided by each attribute into a positive domain, a negative domain and a boundary domain. Therefore, the methods of three-way decision and three-way rule extraction can be used to study them. Moreover, uniting complex network analysis with the formal context of three-way decision, and mining three-way network concepts and rules, has become a hot research topic with great theoretical and practical significance.

Formal concept analysis represents concepts and their hierarchy in a mathematical, formal way [1], and rules can be obtained through the implication relationship between concepts [2]. Furthermore, concept cognition and learning have been used as the basis of rule extraction in complex systems [3,4,5,6,7]. Kumar et al. [3], Mi et al. [4] and Zhao et al. [5] conducted a series of studies from the perspective of granular concept cognitive learning. To improve the efficiency and flexibility of concept learning, Li et al. [6] explored concept learning through granular computing from the perspective of cognitive computing. Based on the concept of granularity structure, Yao [7] combined granular computing and cognitive psychology and proposed the triangle of information computing as well as the triangle theory of granular computing to further incorporate granular computing into artificial intelligence. Because concepts in a network are complex, they may not constitute concepts under Galois connections and may only satisfy the unilateral mapping of weak concepts. Therefore, building on the above research, the cognition and learning of weak concepts in a network are studied in this paper.

Meanwhile, how to perform three-way decision making in the real world has also been studied. For instance, References [8,9,10,11] described in detail the cognitive advantages of three-way decision making from different aspects. In addition, based on the results of cognitive science, Yao [12] studied the two fields of three-way decision and granular computing, as well as the interaction between them. To make this theory meet more needs, many scholars have conducted related research [13, 14], such as multi-agent three-way decision making based on rough set decision theory [15] and a discussion of approximate rough sets from the viewpoint of three-way decision theory [16]. Zou et al. [17] proposed a formal concept analysis method for linguistic-valued data based on lattice implication algebra. These theories and applications have deepened the understanding of three-way decision theory and broadened its scope of application.

Owing to the wide application of three-way decision and formal concepts, Yao [12], Wang et al. [18], and Qi et al. [19] have made three-way concept analysis an important tool for knowledge discovery and data analysis. Gaeta et al. [20] used temporal information granules and three-way formal concept analysis for spatial and temporal reasoning. For the attribute reduction problem of the three-way concept lattice, Ren and Wei [21] proposed four kinds of reductions and gave a method for computing them. In addition, Li et al. [22] proposed three-way cognitive concept learning through multiple granularities. Yao and Herbert [23] introduced the attribute selection problem into a three-way decision construction algorithm and applied it to a medical network system. Yao [24] examined geometric structures, graphical representations, and semantical interpretations of triads in terms of basic geometric notions, as well as more complex structures derived from these basic notions. Deng et al. [25] introduced three-way decision into a multi-scale decision information system (MS-DIS) with reference to fuzzy-neighborhood classes, and derived the three-way decision rules from the MS-DIS. These works have driven the science of three-way decision making towards the field of artificial intelligence. Within this line of work, rule extraction is a key research direction [26,27,28,29,30,31]. Hu et al. [26] investigated rule acquisition in the generalized one-sided formal context and in the multi-scale generalized one-sided formal context. Wu et al. [27] studied optimal scale selection and rule acquisition in incomplete multi-scale decision tables.

The above studies are based on the three-way formal context, which does not contain information about the network, that is, about object-object relationships. At the same time, more and more data has a network background, and in practical applications such as infectious disease networks, three-way decision theory with a network background urgently needs to be studied.

Moreover, in recent years, complex network analysis of disease diagnosis has yielded fruitful research results in many fields [32,33,34,35,36,37,38,39,40,41,42]. These studies can be divided into three directions: the study of network topological eigenvalues [35, 36, 39], the study of network trends using dynamic system methods [40, 41], and the combination of data-driven methods and physical methods [42]. Barabasi [35,36,37] proposed network investigation to uncover the functional organization of cells and identify new disease genes, and revealed the biological significance of disease-associated mutations. Furthermore, this kind of network can identify drug targets and biomarkers for complex diseases [35,36,37]. In the research on infectious disease networks in particular [43,44,45], Pinto et al. [39] used several complex network models widely known in the literature to verify their topological effects on the propagation of the disease. Salje et al. [40] estimated the impact of lockdown and current population immunity by applying models to hospital and death data. Nande et al. [41] established a random epidemic model to examine the impact of the clinical progress of COVID-19 and the structure of the transmission network on the outcome of social distancing interventions. Gaeta et al. [43] combined the three-way decision model and graph theory to analyze the spread of COVID-19. Despite the effectiveness of the above methods, they still face the challenges of large-scale datasets and of determining multiple parameters. Moreover, these complex network analysis methods seldom consider the attribute characteristics of network nodes.

To tackle this problem, there has been some prior work on network cognition and learning in formal contexts. Snasel et al. [46] introduced formal concept analysis into social networks to facilitate understanding of internal structures and discussed the computational complexity of social network analysis. Hao et al. [47, 48] utilized formal concept analysis for efficient detection of k-clique communities in networks. Peters and Ramanna [49] used information obtained from proximal three-way decision to study social networks. Ma et al. [50] linked complex networks and concept cognition through adjacency and association matrices in the same data framework, combining the advantages of the two research fields, and investigated the generation and propagation of network weaken-concepts. Liu et al. [51] studied the problem of network community division based on the network formal context, which considers the characteristics of both network structure and formal contexts. Yan and Li [52] proposed a dynamic concept updating method based on three-way decision and network formal context.

Motivated by the above analysis, we further combine complex network analysis with three-way concept analysis and make full use of the advantages of the two methods. For networks of infectious diseases, we take the network nodes as objects and view a network edge as a relation between objects. Moreover, using an adjacency matrix to represent the structure of a network, the matrix of conditional attributes and that of decision attributes, whose attribute values are 1, -1 and 0, can be combined with it to form the network formal context of three-way decision (NFC3WD). This gives a uniform data framework for the two methods. As a result, we can not only find the network concepts but also know the network eigenvalues of the obtained concepts, which lays a foundation for the further study of network rule extraction.

In other words, this study can lay a foundation for concept cognition and three-way decision in network data analysis and mining. It is therefore important to unify complex network analysis and the formal context of three-way decision into the same framework, since doing so not only builds a bridge between complex networks and three-way decision but also deepens theoretical and applied research in both fields.

The main contributions of this paper are summarized as follows:

(1) The NFC3WD is proposed, which unifies the two studies mentioned above into one data framework.

(2) The network weaken-concepts of three-way decision (NWC3WD) are presented. As a result, we can not only find the network weaken-concepts but also know the average influence of the corresponding sub-network, as well as the influence difference within the sub-network.

(3) The bidirectional rule extraction algorithm and the reduction algorithm based on network weaken-concepts and confidence degree are explored.

The rest of this paper is organized as follows. In Section 2, basic notions related to three-way concept analysis are introduced. In Section 3, the NFC3WD is put forward and explained with a simple example of an infectious disease network. In Section 4, network weaken-concepts and their corresponding sub-networks are proposed, and the characteristic values of the sub-networks are given to describe the average influence and the difference in influence within the sub-networks. Furthermore, to obtain the decision rules of NFC3WD, the network weaken-concept logic is presented in Section 5. In Section 6, the extraction algorithm and the reduction algorithm of network rules are investigated. Considering the prevention and control of infectious disease networks, the network structure-based suspected case prevention algorithm and the sequential decision algorithm for infectious disease control are further developed. In Section 7, these algorithms are verified by constructing network adjacency matrices from the UCI databases.

2 Related theoretical foundation

Definition 1

[1]. Let (U,A,I) be a formal context, where U = {x1,x2,⋯,xn} is a set of non-empty finite objects, A = {a1,a2,⋯,am} is a set of non-empty finite attributes, and I is the binary relation on the Cartesian product U × A. Among them, (x,a) ∈ I means that the object x has the attribute a, while (x,a)∉I means that the object x does not have the attribute a. In order to describe the formal concept, the following operators need to be defined: for \(\forall X\subseteq U,B\subseteq A\),
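The operators themselves are not reproduced in this text. For reference, the standard derivation operators of formal concept analysis, written in the notation of Definition 1, are:

\[
X^{*}=\{a\in A \mid \forall x\in X,\ (x,a)\in I\},\qquad
B^{*}=\{x\in U \mid \forall a\in B,\ (x,a)\in I\}.
\]

Definition 3 additionally uses negative operators \(X^{\bar{*}}\) and \(B^{\bar{*}}\). Under the usual convention of three-way concept analysis, which is assumed here rather than taken from this text, they are obtained by replacing \((x,a)\in I\) with \((x,a)\notin I\):

\[
X^{\bar{*}}=\{a\in A \mid \forall x\in X,\ (x,a)\notin I\},\qquad
B^{\bar{*}}=\{x\in U \mid \forall a\in B,\ (x,a)\notin I\}.
\]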

Definition 2

[1]. Let (U,A,I) be a formal context. For \(\forall X\subseteq U,B\subseteq A\), if X∗ = B, B∗ = X, then we call (X,B) as a concept, and X and B are the extent and intent of the concept, respectively.

Definition 3

[19]. Let (U,A,I) be a formal context. For \(\forall X,Y\subseteq U,B,C\subseteq A \), a pair of three-way operators derived from the object is defined as \(\lessdot \): \(\mathcal {P}(U )\to \mathcal {D}\mathcal {P}(A )\), \({X^{\lessdot }}=(X^{*},X^{\bar {*}} )\), and \(\gtrdot \): \(\mathcal {D}\mathcal {P}(A )\to \mathcal {P}(U )\), \((B,C )^{\gtrdot }=\{ x\in U| x\in B^{*},x\in {C^{{\bar {*}}}} \}=B^{*}\cap {C^{{\bar {*}}}}\). A pair of three-way operators derived from the attribute is defined as \(\lessdot \): \(\mathcal {P}(A )\to \mathcal {D}\mathcal {P}(U )\), \({{B}^{\lessdot }}=(B^{*},{{B}^{{\bar {*}}}} )\), and \(\gtrdot \): \(\mathcal {D}\mathcal {P}(U )\to \mathcal {P}(A )\), \((X,Y )^{\gtrdot }=\{ a\in A| a\in X^{*},a\in Y^{\bar {*}} \}=X^{*}\cap {{Y}^{{\bar {*}}}}\).

Definition 4

[19]. Let (U,A,I) be a formal context. For \(\forall X\subseteq U,B,C\subseteq A\), if \({{X}^{\lessdot }}=(B,C )\), \({{(B,C )}^{\gtrdot }}=X\), then (X,(B,C)) is called a three-way concept derived from the object, or simply OE-concept, where X is the extent of the OE-concept, and (B,C) is the intent of the OE-concept.

Definition 5

[19]. Let (U,A,I) be a formal context. For \(\forall X,Y\subseteq U,B\subseteq A\), if \({{(X,Y )}^{\gtrdot }}=B,{{B}^{\lessdot }}=(X,Y )\), then ((X,Y ),B) is called a three-way concept derived from the attribute, or simply AE-concept, where (X,Y ) is the extent of the AE-concept, and B is the intent of the AE-concept.
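As a minimal sketch of Definitions 3 and 4, the object-induced operators can be written as set comprehensions over a small formal context. The context below is hypothetical, the function names are illustrative, and the negative operator is assumed to collect the attributes that no object in X has (the usual convention in three-way concept analysis, not spelled out in this section).

```python
# Toy formal context; the objects, attributes and relation are hypothetical.
U = {1, 2, 3}
A = {"a", "b", "c"}
I = {(1, "a"), (1, "b"), (2, "a"), (3, "c")}


def pos_of_objects(X):
    """X*: attributes possessed by every object in X."""
    return {a for a in A if all((x, a) in I for x in X)}


def neg_of_objects(X):
    """Assumed negative operator: attributes possessed by no object in X."""
    return {a for a in A if all((x, a) not in I for x in X)}


def pos_of_attributes(B):
    """B*: objects possessing every attribute in B."""
    return {x for x in U if all((x, a) in I for a in B)}


def neg_of_attributes(C):
    """Assumed negative operator: objects possessing no attribute in C."""
    return {x for x in U if all((x, a) not in I for a in C)}


def oe_up(X):
    """Object-induced operator: X -> (X*, X^bar*)."""
    return pos_of_objects(X), neg_of_objects(X)


def oe_down(B, C):
    """Object-induced operator: (B, C) -> B* intersected with C^bar*."""
    return pos_of_attributes(B) & neg_of_attributes(C)


def is_oe_concept(X, B, C):
    """Check the two conditions of Definition 4."""
    return oe_up(X) == (B, C) and oe_down(B, C) == X


X = {1, 2}
B, C = oe_up(X)
print(B, C, is_oe_concept(X, B, C))
```

On this toy context, X = {1, 2} yields the intent ({a}, {c}) and closes back onto X, so it is an OE-concept, whereas X = {1, 3} maps to (∅, ∅), whose image is all of U, so it is not.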

Definition 6

[50]. The quadruple (U,M,A,I) is called a network formal context if U = {x1,x2,⋯,xn} is a set of network nodes, A = {a1,a2,⋯,am} is a set of non-empty finite attributes, M = {M1,M2,⋯ ,Mk} is the structure matrix of the network, Ml (l = 1,2,⋯ ,k) is the l-order adjacency matrix of the network, and I = {I1,I2,⋯,Ik,Ik+ 1}, where I1,I2,⋯ ,Ik are the binary relations on the Cartesian product U × U, and Ik+ 1 is the binary relation on the Cartesian product U × A. Among them, (xi,xj) ∈ Il means that the nodes xi and xj are adjacent to each other through at least l edges, and (xi,aj) ∈ Ik+ 1 means that the object xi has the attribute aj.

3 The network formal context of three-way decision

Definition 7

The quintuple \((U,M,\mathcal {C},\mathcal {D},I)\) is called a network formal context of three-way decision, or simply NFC3WD, if U = {x1,x2,⋯,xn} is a set of network nodes, M = {M1,M2,⋯ ,Mk} is the structure matrix of the network, \(\mathcal {C}=\{c_{1},c_{2},\cdots ,c_{m}\}\) is a set of conditional attributes, \(\mathcal {D}=\{d_{1},d_{2},{\cdots } ,d_{r}\}\) is a set of decision attributes, and \(I=\{ I_{1},I_{2},\cdots ,I_{k},I_{\mathcal {C}},I_{\mathcal {D}}\}\). Let \(I_{l}=M_{l}=(m_{ij}^{l})_{n\times n}\) denote the l-order adjacency matrix of the network. When the nodes xi and xj are adjacent to each other through at least l edges, \(m_{ij}^{l}=1\); otherwise, \(m_{ij}^{l}=0\). \(I_{\mathcal {C}}:U\times \mathcal {C}\to \{ -1,0,1\}\), \(I_{\mathcal {D}}:U\times \mathcal {D}\to \{-1,0,1\}\) are the binary relations on the Cartesian product \(U\times \mathcal {C}\) and \(U\times \mathcal {D}\), respectively. \(I_{\mathcal {C}}^{c_{r}}(x_{i})\), \(I_{\mathcal {D}}^{d_{p}}(x_{i})\) represent the values of xi under the attributes cr and dp; \(I_{\mathcal {C}}^{c_{r}}(x_{i})=1\), \(I_{\mathcal {D}}^{d_{p}}(x_{i})=1\) denote that the node xi has the attributes cr and dp; \(I_{\mathcal {C}}^{c_{r}}(x_{i})=-1\), \(I_{\mathcal {D}}^{d_{p}}(x_{i})=-1\) denote that the node xi does not have the attributes cr and dp; \(I_{\mathcal {C}}^{c_{r}}(x_{i})=0\), \(I_{\mathcal {D}}^{d_{p}}(x_{i})=0\) denote that it is not sure whether the node xi has the attributes cr and dp.

Example 1

Table 1 shows a NFC3WD \((U,M,\mathcal {C},\mathcal {D},I)\). The matrices M1,M2,⋯,Mk on the left reflect the adjacency structure between the network nodes. The information of \(\mathcal {C}\) and \(\mathcal {D}\) on the right reflects the values of the network nodes under the conditional attributes and decision attributes, respectively.

Example 2

Suppose there is an infectious disease network as shown in Fig. 1. By Definition 7, we can get a NFC3WD from the network, as shown in Table 2. Setting k = 1 in Mk, we obtain the first-order adjacency matrix M1 of the network.

Here, U = {1,2,3,4,5,6,7,8,9,10,11,12,13,14,15} represents 15 patients in the infectious disease network, \(\mathcal {C}=\{a,b,c,d,e,f,g,h,i,j,k,l\}\) represents 12 symptoms of patients, and \(\mathcal {D}=\{m,n,o\}\) represents 3 infectious diseases. The symptoms from a to l are: stuffy nose, sneeze, cough, dry cough, physical weakness, body aches, headache, positive nucleic acid test, the presence of CT imaging, total white blood cell count is normal or low and lymphocyte count is decreased, fever, viral gene sequencing is highly homologous to COVID-19. In addition, m, n, o mean cold, COVID-19 and flu, respectively.

For the attributes that the nodes are not sure to have, they are followed with “\(\sim \)” in the figure. Taking the decision attribute “n” as an example, three colors represent three disease conditions. The green node means that it definitely has COVID-19, the orange node means that it is not sure whether it suffers from COVID-19, and the blue node means that it does not suffer from COVID-19. Taking the node 8 as an example, the label \(bce \sim fgk, m \sim o\) indicates that it definitely has the conditional attributes b, c, f, g, k, it is not sure whether the node 8 has the conditional attribute e, it definitely has the decision attribute o, and it is not sure whether the node 8 has the decision attribute m.

Take node 2 as an example. In the matrix M1, the data (1,0,1,1,0,1,0,1,1,0,1,0,1,1,1) in the second row shows that node 2 is first-order adjacent to the nodes 1, 3, 4, 6, 8, 9, 11, 13, 14 and 15.

Based on the information of \(\mathcal {C}\) and \(\mathcal {D}\), the data (−1,−1,−1,−1,1,1,1,0,0,0,1,−1,−1,−1,1) in the second row shows that node 2 owns the conditional attributes e, f, g, k, does not own the conditional attributes a, b, c, d, l, and may or may not own the conditional attributes h, i, j; it owns the decision attribute o and does not own the decision attributes m, n.
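The reading of node 2's two rows can be reproduced with a short sketch. The two tuples are copied from the text above; the helper names `neighbours` and `split_by_value` are illustrative and not part of the paper.

```python
COND = list("abcdefghijkl")  # conditional attributes a..l
DEC = list("mno")            # decision attributes m, n, o

# Row of node 2 in M1 and in the attribute part of the NFC3WD (from the text).
m1_row_2 = (1, 0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 1, 1, 1)
attr_row_2 = (-1, -1, -1, -1, 1, 1, 1, 0, 0, 0, 1, -1, -1, -1, 1)


def neighbours(row):
    """1-based indices of the nodes marked adjacent in an adjacency row."""
    return [j + 1 for j, v in enumerate(row) if v == 1]


def split_by_value(names, values):
    """Group attribute names by value: 1 (has), -1 (has not), 0 (not sure)."""
    groups = {1: [], -1: [], 0: []}
    for name, v in zip(names, values):
        groups[v].append(name)
    return groups


cond = split_by_value(COND, attr_row_2[:len(COND)])
dec = split_by_value(DEC, attr_row_2[len(COND):])

print(neighbours(m1_row_2))
print(cond)
print(dec)
```

The first 12 entries of the attribute row belong to the conditional attributes and the last 3 to the decision attributes, which is why the row is split at `len(COND)`.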

From the above example, it is observed that the NFC3WD can be obtained from a network. Conversely, it is also possible to obtain the corresponding network from a NFC3WD.

4 Network weaken-concept and its sub-networks under NFC3WD

Definition 8

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD. For \(\forall X\in {{2}^{U}},C\in {{2}^{\mathcal {C}}},D\in {{2}^{\mathcal {D}}}\), we define the following notations:

(1) \({X^{*<{\mathcal {C}}_{P}>}}=\{ c_{r}\in \mathcal {C}| \forall {x_{i}}\in X,I_{\mathcal {C}}^{c_{r}}(x_{i})=1 \}\) denotes the set of conditional attributes that all the elements in the node set X must have in common.

Here, \(*<{\mathcal {C}}_{P}>\) is just a symbol, which represents an operator, so are \(*<{\mathcal {C}}_{N}>\), \(*<{\mathcal {C}}_{B}>\), \(*<{\mathcal {D}}_{P}>\), \(*<{\mathcal {D}}_{N}>\), \(*<{\mathcal {D}}_{B}>\), \(*<{\mathcal {X}}_{P}>\), \(*<{\mathcal {X}}_{N}>\) and \(*<{\mathcal {X}}_{B}>\).

(2) \({X^{*<{\mathcal {C}}_{N}>}}=\{ c_{r}\in \mathcal {C}| \forall {x_{i}}\in X,I_{\mathcal {C}}^{c_{r}}(x_{i})=-1\}\) denotes the set of conditional attributes that all the elements in the node set X don’t have in common.

(3) \({X^{*<{\mathcal {C}}_{B}>}}=\{ c_{r}\in \mathcal {C}| \forall x_{i}\in X,I_{\mathcal {C}}^{c_{r}}(x_{i})=0 \}\) denotes the set of conditional attributes that it is not sure whether all the elements in the node set X have in common.

(4) \({X^{*<{\mathcal {D}}_{P}>}}=\{ d_{p}\in \mathcal {D}| \forall x_{i}\in X,I_{\mathcal {D}}^{{d_{p}}}({x_{i}})=1\}\) denotes the set of decision attributes that all the elements in the node set X must have in common.

(5) \({X^{*<{\mathcal {D}}_{N}>}}=\{ d_{p}\in \mathcal {D}| \forall x_{i}\in X,I_{\mathcal {D}}^{{d_{p}}}({x_{i}})=-1\}\) denotes the set of decision attributes that all the elements in the node set X don’t have in common.

(6) \({X^{*<{\mathcal {D}}_{B}>}}=\{ d_{p}\in \mathcal {D}| \forall x_{i}\in X,I_{\mathcal {D}}^{{d_{p}}}({x_{i}})=0 \}\) denotes the set of decision attributes that it is not sure whether all the elements in the node set X have in common.

(7) \({C^{*<{\mathcal {X}}_{P}>}}=\{ x_{i}\in U| \forall c_{r}\in C,I_{\mathcal {C}}^{c_{r}}(x_{i})=1 \}\) denotes the set of nodes that must have all the conditional attributes in C.

(8) \({C^{*<{\mathcal {X}}_{N}>}}=\{ x_{i}\in U| \forall c_{r}\in C,I_{\mathcal {C}}^{c_{r}}(x_{i})=-1\}\) denotes the set of nodes that have no conditional attributes in C.

(9) \({C^{*<{\mathcal {X}}_{B}>}}=\{ x_{i}\in U| \forall c_{r}\in C,I_{\mathcal {C}}^{c_{r}}(x_{i})=0 \}\) denotes the set of nodes that it is not sure whether they have all the conditional attributes in C.

(10) \({D^{*<{\mathcal {X}}_{P}>}}=\{ x_{i}\in U| \forall d_{p}\in D,I_{\mathcal {D}}^{d_{p}}({x_{i}})=1\}\) denotes the set of nodes that must have all the decision attributes in D.

(11) \({D^{*<{\mathcal {X}}_{N}>}}=\{ x_{i}\in U| \forall d_{p}\in D,I_{\mathcal {D}}^{d_{p}}({x_{i}})=-1\}\) denotes the set of nodes that have no decision attributes in D.

(12) \({D^{*<{\mathcal {X}}_{B}>}}=\{ x_{i}\in U| \forall d_{p}\in D,I_{\mathcal {D}}^{{d_{p}}}({x_{i}})=0 \}\) denotes the set of nodes that it is not sure whether they have all the decision attributes in D.

(13) \({(C^{*<{\mathcal {X}}_{P}>} )^{*<{\mathcal {D}}_{P}>}}=\{ d_{p}\in \mathcal {D}| \forall x_{i}\in C^{*<{\mathcal {X}}_{P}>},I_{\mathcal {D}}^{{d_{p}}}({x_{i}})=1\}\) denotes the set of decision attributes that all the elements in the node set \({C^{*<{\mathcal {X}}_{P}>}}\) must have in common.

(14) \({(C^{*<{\mathcal {X}}_{P}>} )^{*<{\mathcal {D}}_{N}>}}=\{ d_{p}\in \mathcal {D}| \forall x_{i}\in C^{*<{\mathcal {X}}_{P}>},I_{\mathcal {D}}^{{d_{p}}}({x_{i}})=-1\}\) denotes the set of decision attributes that all the elements in the node set \({C^{*<{\mathcal {X}}_{P}>}}\) don’t have in common.

(15) \({(C^{*<{\mathcal {X}}_{P}>} )^{*<{\mathcal {D}}_{B}>}}=\{ d_{p}\in \mathcal {D}| \forall x_{i}\in C^{*<{\mathcal {X}}_{P}>},I_{\mathcal {D}}^{{d_{p}}}({x_{i}})=0 \}\) denotes the set of decision attributes that it is not sure whether all the elements in the node set \({C^{*<{\mathcal {X}}_{P}>}}\) have in common.

(16) \({(D^{*<{\mathcal {X}}_{P}>} )^{*<{\mathcal {C}}_{P}>}}=\{ c_{r}\in \mathcal {C}| \forall x_{i}\in D^{*<{\mathcal {X}}_{P}>},I_{\mathcal {C}}^{c_{r}}(x_{i})=1 \}\) denotes the set of conditional attributes that all the elements in the node set \({{D}^{*<{\mathcal {X}}_{P}>}}\) must have in common.

(17) \({(D^{*<{\mathcal {X}}_{P}>} )^{*<{\mathcal {C}}_{N}>}}=\{ c_{r}\in \mathcal {C}| \forall x_{i}\in D^{*<{\mathcal {X}}_{P}>},I_{\mathcal {C}}^{c_{r}}(x_{i})=-1 \}\) denotes the set of conditional attributes that all the elements in the node set \({{D}^{*<{\mathcal {X}}_{P}>}}\) don’t have in common.

(18) \({(D^{*<{\mathcal {X}}_{P}>} )^{*<{\mathcal {C}}_{B}>}}=\{ c_{r}\in \mathcal {C}| \forall x_{i}\in D^{*<{\mathcal {X}}_{P}>},I_{\mathcal {C}}^{c_{r}}(x_{i})=0 \}\) denotes the set of conditional attributes that it is not sure whether all the elements in the node set \({{D}^{*<{\mathcal {X}}_{P}>}}\) have in common.

(19) \({(D^{*<{\mathcal {X}}_{N}>} )^{*<{\mathcal {C}}_{P}>}}=\{ c_{r}\in \mathcal {C}| \forall x_{i}\in {D^{*<{\mathcal {X}}_{N}>}},I_{\mathcal {C}}^{c_{r}}(x_{i})=1 \}\) denotes the set of conditional attributes that all the elements in the node set \({D^{*<{\mathcal {X}}_{N}>}}\) must have in common.

(20) \({(D^{*<{\mathcal {X}}_{N}>} )^{*<{\mathcal {C}}_{N}>}}=\{ c_{r}\in \mathcal {C}| \forall x_{i}\in {D^{*<{\mathcal {X}}_{N}>}},I_{\mathcal {C}}^{c_{r}}(x_{i})=-1 \}\) denotes the set of conditional attributes that all the elements in the node set \({D^{*<{\mathcal {X}}_{N}>}}\) don’t have in common.

(21) \({(D^{*<{\mathcal {X}}_{N}>} )^{*<{\mathcal {C}}_{B}>}}=\{ c_{r}\in \mathcal {C}| \forall x_{i}\in {D^{*<{\mathcal {X}}_{N}>}},I_{\mathcal {C}}^{c_{r}}(x_{i})=0 \}\) denotes the set of conditional attributes that it is not sure whether all the elements in the node set \({D^{*<{\mathcal {X}}_{N}>}}\) have in common.

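Similarly, the remaining compositions used in Definition 10, such as \((C^{*<{\mathcal {X}}_{N}>} )^{*<{\mathcal {D}}_{P}>}\) and \((D^{*<{\mathcal {X}}_{B}>} )^{*<{\mathcal {C}}_{P}>}\), can be defined by replacing the inner node set accordingly.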

The notations in Definition 8 will be illustrated in Example 3.
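All of the operators in Definition 8 follow one of two patterns: attributes on which every node of a set takes a given value, or nodes that take a given value on every attribute of a set. A minimal sketch of these two patterns is given below. The three-valued tables are hypothetical (they are not Table 2), and the function names `common_attrs` and `nodes_with` are illustrative.

```python
# Hypothetical three-valued tables: node -> attribute -> value in {1, -1, 0}.
I_C = {
    1: {"a": 1, "b": -1, "c": 0},
    2: {"a": 1, "b": -1, "c": 1},
    3: {"a": -1, "b": 0, "c": 0},
}
I_D = {
    1: {"m": 1, "n": -1},
    2: {"m": 1, "n": 0},
    3: {"m": -1, "n": 0},
}


def common_attrs(table, X, value):
    """Attributes on which every node in X takes `value`.

    value = 1, -1, 0 corresponds to the subscripts P, N, B of the operators
    applied to a node set X. An empty X returns all attributes.
    """
    attrs = next(iter(table.values())).keys()
    return {a for a in attrs if all(table[x][a] == value for x in X)}


def nodes_with(table, attrs, value):
    """Nodes that take `value` on every attribute in `attrs`.

    value = 1, -1, 0 corresponds to the subscripts P, N, B of the operators
    applied to an attribute set C or D.
    """
    return {x for x, row in table.items() if all(row[a] == value for a in attrs)}


X = {1, 2}
print(common_attrs(I_C, X, 1), common_attrs(I_C, X, -1), common_attrs(I_C, X, 0))
print(common_attrs(I_D, X, 1), common_attrs(I_D, X, -1), common_attrs(I_D, X, 0))
print(nodes_with(I_C, {"c"}, 0), nodes_with(I_D, {"n"}, 0))
```

The composed operators, items (13) to (21), are then obtained by feeding the result of `nodes_with` into `common_attrs`.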

From Definition 8, we find that some of the operators, such as \({C^{*<{\mathcal {X}}_{P}>}}\), also determine sub-networks. So the network characteristic values of their corresponding sub-networks should be discussed. In this way, not only can the network weaken-concept be found, but also the characteristics of the sub-network can be described quantitatively.

Definition 9

[50]. Let \(\mathfrak {M}=\{ {\mathfrak {M}_{1}},{\mathfrak {M}_{2}} \}\) be the characteristic values of the network,

Here, \(c_{D}(i)=\sum \limits _{j=1}^{N}{(|x_{j}|+\sum \limits _{k=1}^{L}{|a_{ijk}|} )}\) denotes the degree of the node xi, |xj| denotes the number of edges adjacent to the node xi, \(\sum \limits _{k=1}^{L}{|a_{ijk}|}\) denotes the number of attributes shared by the nodes xi and xj, N is the number of nodes in the corresponding network, L is the number of attributes in the NFC3WD, and \(c_{D_{{\max \limits } }}\) is the largest value of cD(i). Then \({{\mathfrak {M}}_{1}}\) is called the average degree, which represents the average influence of the corresponding sub-network, and \(\mathfrak {M}_{2}\) is the difference of the influence, which represents the degree of difference of influence among the nodes within the sub-network.

The larger the \(\mathfrak {M}_{1}\), the greater the influence of the network. The larger the \(\mathfrak {M}_{2}\), the greater the difference of influence between the nodes in the network, that is, the greater the structural heterogeneity. On the contrary, the structural heterogeneity is smaller.
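The displayed expressions for \(\mathfrak {M}_{1}\) and \(\mathfrak {M}_{2}\) in Definition 9 do not appear in this text. The values reported in Example 3 are all consistent with a normalizing factor of \(16(N-1)\), which would equal \((N-1)(L+1)\) for the \(L=15\) attributes of Example 2. The following form is therefore an inference from those numbers, not a formula taken from [50], and the reading of the factor 16 as \(L+1\) is a guess:

\[
\mathfrak {M}_{1}=\frac{\sum _{i=1}^{N}c_{D}(i)}{(N-1)(L+1)},\qquad
\mathfrak {M}_{2}=\frac{\sum _{i=1}^{N}\bigl(c_{D_{\max }}-c_{D}(i)\bigr)}{(N-1)(L+1)}.
\]

For instance, with \(c_{D}=(17,16,18,17)\) and \(N=4\), this gives \(\mathfrak {M}_{1}=68/48\approx 1.42\) and \(\mathfrak {M}_{2}=4/48\approx 0.08\), matching item (1) of Example 3.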

Based on Definitions 8 and 9, the notion of network weaken-concepts of NFC3WD is given below.

Definition 10

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD. For \(\forall X\in 2^{U},C\in 2^{\mathcal {C}},D\in 2^{\mathcal {D}}\), we can define the network weaken-concepts of NFC3WD as follows:

(1) \((\mathfrak {M}, X, X^{*<{\mathcal {C}}_{P}>}, X^{*<{\mathcal {C}}_{N}>}, X^{*<{\mathcal {C}}_{B}>}, X^{*<{\mathcal {D}}_{P}>}, X^{*<{\mathcal {D}}_{N}>}, X^{*<{\mathcal {D}}_{B}>})\) is called a network weaken-concept of NFC3WD induced by the node set X, or simply NWC3WD.

(2) \((\mathfrak {M}, C^{*<{\mathcal {X}}_{P}>}, (C^{*<{\mathcal {X}}_{P}>} )^{*<{\mathcal {D}}_{P}>}, (C^{*<{\mathcal {X}}_{P}>})^{*<{\mathcal {D}}_{N}>}, (C^{*<{\mathcal {X}}_{P}>} )^{*<{\mathcal {D}}_{B}>})\) is called a NWC3WD induced by the set of nodes that have all the condition attributes in C.

(3) \((\mathfrak {M},C^{*<{\mathcal {X}}_{N}>}, (C^{*<{\mathcal {X}}_{N}>} )^{*<{\mathcal {D}}_{P}>} , (C^{*<{\mathcal {X}}_{N}>} )^{*<{\mathcal {D}}_{N}>},(C^{*<{\mathcal {X}}_{N}>} )^{*<{\mathcal {D}}_{B}>})\) is called a NWC3WD induced by the set of nodes that have no condition attributes in C.

(4) \((\mathfrak {M}, D^{*< {\mathcal {X}}_{P}>} , (D^{*<{\mathcal {X}}_{P}>} )^{* <{\mathcal {C}}_{P}>} , (D^{*<{\mathcal {X}}_{P}>} )^{*<{\mathcal {C}}_{N}>},(D^{*<{\mathcal {X}}_{P}>} )^{*<{\mathcal {C}}_{B}>})\) is called a NWC3WD induced by the set of nodes that have all the decision attributes in D.

(5) \((\mathfrak {M}, D^{*<{\mathcal {X}}_{N}> }, (D^{*<{\mathcal {X}}_{N}>})^{*<{\mathcal {C}}_{P}>} , (D^{*<{\mathcal {X}}_{N}>})^{*<{\mathcal {C}}_{N}>},(D^{*<{\mathcal {X}}_{N}>} )^{*<{\mathcal {C}}_{B}>})\) is called a NWC3WD induced by the set of nodes that have no decision attributes in D.

(6) \((\mathfrak {M}, D^{* <{\mathcal {X}}_{B}>} , (D^{*<{\mathcal {X}}_{B}>} )^{*<{\mathcal {C}}_{P}>} , (D^{*<{\mathcal {X}}_{B}>} )^{*<{\mathcal {C}}_{N}>},(D^{*<{\mathcal {X}}_{B}>} )^{*<{\mathcal {C}}_{B}>})\) is called a NWC3WD induced by the set of nodes that it is not sure whether they have all the decision attributes in D.
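Items (2) and (3) of Definition 10 can be sketched as a two-step computation: first select the nodes that take a fixed value on every attribute in C, then collect the decision attributes on which all selected nodes agree. The tables and the function name `induced_by_conditions` below are hypothetical, and the characteristic values \(\mathfrak {M}\) are left out because they depend on the network structure.

```python
# Hypothetical three-valued tables: node -> attribute -> value in {1, -1, 0}.
I_C = {1: {"a": 1, "b": 1}, 2: {"a": 1, "b": 1}, 3: {"a": 1, "b": -1}}
I_D = {1: {"m": -1, "n": 1}, 2: {"m": -1, "n": 0}, 3: {"m": 1, "n": 1}}
DEC = ["m", "n"]  # decision attributes of the toy context


def induced_by_conditions(C, value=1):
    """Return (nodes, D_P, D_N, D_B) for the condition attribute set C.

    value=1 selects the nodes that have every attribute in C (item (2));
    value=-1 selects the nodes that have none of them (item (3)).
    """
    nodes = {x for x, row in I_C.items() if all(row[c] == value for c in C)}

    def common(v):
        # Decision attributes on which every selected node takes the value v.
        return {d for d in DEC if all(I_D[x][d] == v for x in nodes)}

    return nodes, common(1), common(-1), common(0)


print(induced_by_conditions({"a", "b"}))      # nodes having both a and b
print(induced_by_conditions({"b"}, value=-1)) # nodes not having b
```

In the toy data, nodes 1 and 2 have both a and b and agree only on not having m, so the positive and boundary decision sets are empty. This illustrates why these structures are called weaken-concepts: only a one-sided mapping from the node set to the attribute sets is required.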

The above notations are further explained through the following example.

Example 3

Using the NFC3WD in Example 2, by Definitions 8, 9 and 10, we can compute the following NWC3WDs.

(1) The NWC3WD induced by the node set X.

Let X = {3,4,10,12}. Then we have \({X^{*<\mathcal {C}_{P}>}}=\{ d,e,k \}\), \({X^{*<\mathcal {C}_{N}>}}=\{ a,b,c \}\), \(X^{*<\mathcal {C}_{B}>}=\varnothing \), \(X^{*<\mathcal {D}_{P}>}=\{n\}\), \(X^{*<\mathcal {D}_{N}>}=\{m\}\), \(X^{*<\mathcal {D}_{B}>}=\varnothing \), cD(3) = 17, cD(4) = 16, cD(10) = 18, cD(12) = 17, \({\mathfrak M}_{1}=1.42\), and \({\mathfrak M}_{2}=0.08\). So, by Definition 10 (1), the NWC3WD induced by the node set X is \((\{1.42,0.08\},\{3,4,10,12\},\{d,e,k\},\{a,b,c\},\varnothing ,\{n\},\{m\},\varnothing )\).

It shows that in the sub-network X = {3,4,10,12} corresponding to this weaken-concept, the average influence of the nodes is 1.42, and the difference of influence between the nodes is 0.08.

(2) The NWC3WD induced by the node set \(C^{*<\mathcal {X}_{P}>}\).

Let C1 = {d,e,h,k}. Then \(C_{1}^{*<\mathcal {X}_{P}>}=\{3,10,12\}\), \((C_{1}^{*<\mathcal {X}_{P}>})^{*<\mathcal {D}_{P}>}=\{n\}\), \((C_{1}^{*<\mathcal {X}_{P}>})^{*<\mathcal {D}_{N}>}=\{m\}\), \((C_{1}^{*<\mathcal {X}_{P}>})^{*<\mathcal {D}_{B}>}=\varnothing \), cD(3) = 12, cD(10) = 12, cD(12) = 12, \(\mathfrak {M}_{1}=1.13\), and \(\mathfrak {M}_{2}=0\). So, by Definition 10 (2), the NWC3WD induced by the node set \(C_{1}^{*<\mathcal {X}_{P}>}\) is \((\{1.13,0\},\{3,10,12\},\{n\},\{m\},\varnothing )\).

It shows that in the sub-network \(C_{1}^{*<\mathcal {X}_{P}>}=\{3,10,12\}\) corresponding to this weaken-concept, the average influence of the nodes is 1.13, and the difference of influence between the nodes is 0.

(3) The NWC3WD induced by the node set \(C^{*<\mathcal {X}_{N}>}\).

Let C2 = {h,l}. Then \(C_{2}^{*<\mathcal {X}_{N}>}=\{1,8\}\), and we can get \((C_{2}^{*<\mathcal {X}_{N}>} )^{*<\mathcal {D}_{P}>}=\varnothing \), \((C_{2}^{*<\mathcal {X}_{N}>} )^{*<\mathcal {D}_{N}>}=\{ n \}\), \((C_{2}^{*<\mathcal {X}_{N}>} )^{*<\mathcal {D}_{B}>}=\varnothing \), cD(1) = 4, cD(8) = 4, \(\mathfrak {M}_{1}=0.5\), \(\mathfrak {M}_{2}=0\). So, by Definition 10 (3), the NWC3WD induced by the node set \(C_{2}^{*<\mathcal {X}_{N}>}\) is \((\{0.5,0\},\{1,8\},\varnothing ,\{n\},\varnothing )\).

It shows that in the sub-network \(C_{2}^{*<\mathcal {X}_{N}>}=\{1,8\}\) corresponding to this weaken-concept, the average influence of the nodes is 0.5, and the difference of influence between the nodes is 0.

(4) The NWC3WD induced by the node set of \(D^{*<\mathcal {X}_{P}>}\).

Let D1 = {n}. Then \(D_{1}^{*<\mathcal {X}_{P}>}=\{3,4,10,12\}\), and we can get \((D_{1}^{*<\mathcal {X}_{P}>} )^{*<\mathcal {C}_{P}>}=\{ d,e,k \}\), \((D_{1}^{*<\mathcal {X}_{P}>} )^{*<\mathcal {C}_{N}>}=\{a,b,c\}\), \((D_{1}^{*<\mathcal {X}_{P}>} )^{*<\mathcal {C}_{B}>}=\varnothing \), cD(3) = 17, cD(4) = 16, cD(10) = 18, cD(12) = 17, \(\mathfrak {M}_{1}=1.42\), \(\mathfrak {M}_{2}=0.08\). So, by Definition 10 (4), the NWC3WD induced by the node set of \(D_{1}^{*<\mathcal {X}_{P}>}\) is \((\{1.42,0.08\},\{3,4,10,12\},\{d,e,k\},\{a,b,c\},\varnothing )\).

It shows that in the sub-network \(D_{1}^{*<\mathcal {X}_{P}>}=\{3,4,10,12\}\) corresponding to this weaken-concept, the average influence of the nodes is 1.42, and the difference of influence between the nodes is 0.08.

(5) The NWC3WD induced by the node set of \(D^{*<\mathcal {X}_{N}>}\).

Let D2 = {n,o}. Then \(D_{2}^{*<\mathcal {X}_{N}>}=\{ 1,9,14 \}\), and we can get \((D_{2}^{*<\mathcal {X}_{N}>} )^{*<\mathcal {C}_{P}>}=\{ a,b \}\), \((D_{2}^{*<\mathcal {X}_{N}>} )^{*<\mathcal {C}_{N}>}=\{ d,e,f,h \}\), \((D_{2}^{*<\mathcal {X}_{N}>} )^{*<\mathcal {C}_{B}>}=\varnothing \), cD(1) = 9, cD(9) = 8, cD(14) = 9, \(\mathfrak {M}_{1}=0.81\), \(\mathfrak {M}_{2}=0.03\). So, the NWC3WD induced by the node set of \(D_{2}^{*<\mathcal {X}_{N}>}\) is:

It shows that in the sub-network \(D_{2}^{*<\mathcal {X}_{N}>}=\{ 1,9,14 \}\) corresponding to this weaken-concept, the average influence of the nodes is 0.81, and the difference of influence between the nodes is 0.03.

(6) The NWC3WD induced by the node set of \(D^{*<\mathcal {X}_{B}>}\).

Let D3 = {n,o}. Then \(D_{3}^{*<\mathcal {X}_{B}>}=\{6,7,11,15\}\), \((D_{3}^{*<\mathcal {X}_{B}>})^{*<\mathcal {C}_{P}>}=\{ g \}\), \((D_{3}^{*<\mathcal {X}_{B}>} )^{*<\mathcal {C}_{N}>}=\{ a,b,c \}\), \((D_{3}^{*<\mathcal {X}_{B}>} )^{*<\mathcal {C}_{B}>}=\{ h,l \}\), cD(6) = 2, cD(7) = 8, cD(11) = 9, cD(15) = 11, \(\mathfrak {M}_{1}=0.63\), and \(\mathfrak {M}_{2}=0.29\). So, the NWC3WD induced by the node set of \(D_{3}^{*<\mathcal {X}_{B}>}\) is:

It shows that in the sub-network \(D_{3}^{*<\mathcal {X}_{B}>}=\{6,7,11,15\}\) corresponding to this weaken-concept, the average influence of the nodes is 0.63, and the difference of influence between the nodes is 0.29.

5 The network weaken-concept logic of three-way decision

In order to further discuss the rules between NWC3WDs, we need to introduce logic between the network weaken-concepts of three-way decision.

Note that the logical description language of NWC3WD follows that of the rough set theory. Firstly, we can define the antecedent formula and the consequent formula corresponding to the network weaken-concept. Conversely, the corresponding attribute sets can be obtained by the antecedent formula and the consequent formula. Moreover, the network decision rule extraction algorithm and its rule confidence degree can be investigated.

The logic language of NWC3WD uses φ, ψ to represent the antecedent formula and the consequent formula, and adopts the logical connectives ∧, ∨, ¬, → and \(\leftarrow \) to form more complex logical expressions in a recursive manner.

Definition 11

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD. For \(\forall C\in {{2}^{\mathcal C}}, D\in {{2}^{\mathcal {D}}}\), the antecedent formula and the consequent formula are denoted by \(\varphi _{x}(C )=\underset {{{c}_{i}}\in C}{\mathop {\wedge }} (c_{i},I_{\mathcal {C}}^{c_{i}}(x ) )\), \(\psi _{x}(D )=\underset {{{d}_{i}}\in D}{\mathop {\wedge }} (d_{i},I_{\mathcal {D}}^{d_{i}}(x) )\), respectively.

In particular, \({{\varphi }_{P}}(C)=\underset {c_{i}\in C}{\mathop {\wedge }}(c_{i},1 ),{{\varphi }_{N}}(C )=\underset {c_{i}\in C}{\mathop {\wedge }}(c_{i},-1 )\), \({{\varphi }_{B}}(C )=\underset {c_{i}\in C}{\mathop {\wedge }} (c_{i},0 )\), \({{\psi }_{P}}(D )=\underset {d_{i}\in D}{\mathop {\wedge }} (d_{i},1 )\), \({{\psi }_{N}}(D )=\underset {d_{i}\in D}{\mathop {\wedge }} (d_{i},-1 )\), \({{\psi }_{B}}(D )=\underset {d_{i}\in D}{\mathop {\wedge }} (d_{i},0 )\).

Definition 12

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD. For \(\forall X\in 2^{U}\), the antecedent formula and consequent formula of the node set X are defined as:

Similarly, for \(\forall D\in {{2}^{\mathcal {D}}}\), the antecedent formula and consequent formula of the decision attribute set D can be defined as:

Example 4

Let D1 = {n}. We can get \(\varphi _{P} ((D_{1}^{*<\mathcal {X}_{P}>})^{*<\mathcal {C}_{P}>})=(d,1 )\wedge (e,1 )\wedge (k,1 )\), \(\varphi _{N} ((D_{1}^{*<\mathcal {X}_{N}>} )^{*<\mathcal {C}_{N}>} )=(d,-1 )\), and \(\varphi _{B} ((D_{1}^{*<\mathcal {X}_{B}>} )^{*<\mathcal {C}_{B}>} )=(h,0 )\wedge (l,0 )\).

Definition 13

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD. For \(\forall C\in {{2}^{\mathcal C}}, D\in {{2}^{\mathcal {D}}}\),

is the set of conditional attributes corresponding to the antecedent formula φP(C).

Similarly, we can define

In addition, we can define

They are in fact the sets of decision attributes corresponding to the consequent formulas ψP(D), ψN(D) and ψB(D), respectively.

Example 5

Let φP(C1) = (d,1) ∧ (e,1) ∧ (k,1). Then it is easy to get \((\varphi _{P}(C_{1} ))^{*<\mathcal {C}>}=\{ d,e,k \}\).

Definition 14

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD, and \(C^{*<\varphi _{P}>}=\underset {c_{i}\in C}{\mathop {\wedge }} (c_{i},1 )\), \(C^{*<\varphi _{N}>}=\underset {c_{i}\in C}{\mathop {\wedge }} (c_{i},-1 )\), \(C^{*<\varphi _{B}>}=\underset {c_{i}\in C}{\mathop {\wedge }}(c_{i},0 )\) be the logical formulas of conditional attributes. We rewrite them uniformly as \(C^{*<\varphi _{x}>}=\underset {c_{i}\in C}{\mathop {\wedge }} (c_{i},I_{\mathcal {C}}^{c_{i}}(x))\). That is, \(C^{*<\varphi _{x}>}\) is equivalent to φx(C).

Similarly, the logical formulas for decision attributes can also be defined as \(D^{*<\psi _{x}>}=\underset {d_{i}\in D}{\mathop {\wedge }} (d_{i},I_{\mathcal {D}}^{d_{i}}(x))\). In particular, \(D^{*<{{\psi }_{P}}>}=\underset {d_{i}\in D}{\mathop {\wedge }}(d_{i},1 ), D^{*<\psi _{N}>}=\underset {d_{i}\in D}{\mathop {\wedge }} (d_{i},-1 )\), and \(D^{*<\psi _{B}>}=\underset {d_{i}\in D}{\mathop {\wedge }} (d_{i},0 )\).

Example 6

Let D1 = {m,n,o}. Then we can get \(D_{1}^{*<\psi _{N}>}=(m,-1 )\wedge (n,-1 )\wedge (o,-1 ), D_{1}^{*<\psi _{P}>}=(m,1 )\wedge (n,1 )\wedge (o,1 )\), and \(D_{1}^{*<\psi _{B}>}=(m,0 )\wedge (n,0 )\wedge (o,0 )\).

Property 1

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD. For \(\forall X\in 2^{U},C\in {{2}^{\mathcal {C}}},D\in {{2}^{\mathcal {D}}}\), \((\varphi _{x}(C ))^{*<\mathcal {C}>}, (\psi _{x}(D))^{*<\mathcal {D}>}, C^{*<\varphi _{x} >}\) and \(D^{*<\psi _{x} >}\) have the following properties:

(1) \(((\varphi _{P}(C ))^{*<\mathcal {C}>} )^{*<\varphi _{P}>}=\varphi _{P}(C)\), \(((\psi _{P}(D))^{*<\mathcal {D}>})^{*<\psi _{P}>}=\psi _{P}(D)\);

(2) \(((\varphi _{N}(C))^{*<\mathcal {C}>} )^{*<\varphi _{N}>}=\varphi _{N}(C)\), \(((\psi _{N}(D))^{*<\mathcal {D}>} )^{*<\psi _{N}>}=\psi _{N}(D)\);

(3) \(((\varphi _{B}(C ))^{*<\mathcal {C}>} )^{*<\varphi _{B}>}=\varphi _{B}(C)\), \(((\psi _{B}(D))^{*<\mathcal {D}>} )^{*<\psi _{B}>}=\psi _{B}(D)\);

(4) \((C^{*<\varphi _{P}>})^{*<\mathcal {C}>}=C\), \((D^{*<\psi _{P}>})^{*<\mathcal {D}>}=D\);

(5) \((C^{*<\varphi _{N}>})^{*<\mathcal {C}>}=C\), \((D^{*<\psi _{N}>})^{*<\mathcal {D}>}=D\);

(6) \((C^{*<\varphi _{B}>})^{*<\mathcal {C}>}=C\), \((D^{*<\psi _{B}>})^{*<\mathcal {D}>}=D.\)

Proof

We only prove (1) and (4) since the rest can be similarly proved.

(1) According to Definition 13, we can get \((\varphi _{P}(C ))^{*<\mathcal {C}>}=\{ c_{i}\in \mathcal {C}|\varphi _{P}(C )=\underset {c_{i}\in C}{\mathop {\wedge }} (c_{i},1 ) \}=C\), then \(((\varphi _{P}(C ))^{*<\mathcal {C}>})^{*<\varphi _{P}>}=(C )^{*<\varphi _{P}>}=\underset {c_{i}\in C}{\mathop {\wedge }} (c_{i},1 )=\varphi _{P}(C )\).

In a similar way, we can obtain \(((\psi _{P}(D))^{*<\mathcal {D}>})^{*<\psi _{P}>}=\psi _{P}(D)\).

(4) By Definition 14, we can get \(C^{*<\varphi _{P}>}=\underset {c_{i}\in C}{\mathop {\wedge }} (c_{i},1 )\), and \((C^{*<\varphi _{P}>} )^{*<\mathcal {C}>}={{(\underset {c_{i}\in C}{\mathop {\wedge }} (c_{i},1 ) )}^{*<\mathcal {C}>}}=C\).

In a similar way, we can obtain \((D^{*<\psi _{P}>})^{*<\mathcal {D}>}=D\). □
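The round-trip identities of Property 1 can be checked mechanically on a small example. The sketch below assumes a formula is encoded as a set of (attribute, value) pairs; `to_formula` and `to_attrs` are hypothetical names standing for the operators \(C^{*<\varphi _{x}>}\) and \((\varphi _{x}(C))^{*<\mathcal {C}>}\).

```python
def to_formula(attrs, value):
    """C^{*<phi>}: attribute set -> conjunction of (c, value) atoms."""
    return frozenset((c, value) for c in attrs)

def to_attrs(f):
    """(phi)^{*<C>}: conjunction of atoms -> set of attributes it mentions."""
    return frozenset(c for c, _ in f)

C = frozenset({"d", "e", "k"})
for value in (1, -1, 0):            # positive, negative, boundary
    phi = to_formula(C, value)
    # Items (1)-(3): formula -> attributes -> formula returns the formula.
    assert to_formula(to_attrs(phi), value) == phi
    # Items (4)-(6): attributes -> formula -> attributes returns the set.
    assert to_attrs(to_formula(C, value)) == C
```

The check is only an illustration on one attribute set; the general statement is what the proof above establishes.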

Note that \(\varphi _{x} (C )^{*<\mathcal {C}>}\) and \(C^{*<\varphi _{x} >}=\varphi _{x} (C)\) are a pair of operators, so are \(\psi _{x}(D)^{*<\mathcal {D}>}\) and \({D}^{*<\psi _{x} >}=\psi _{x}(D)\). The properties of these operators are discussed below.

For convenience, we do not distinguish the operators φP, φN, φB, and use φx to represent them uniformly. For the operators ψP,ψN,ψB, we use ψx to represent them uniformly.

Property 2

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD. For \(\forall X\in 2^{U},C_{1},C_{2}\in 2^{\mathcal {C}}, D_{1},D_{2}\in 2^{\mathcal {D}}\), the attribute sets \(\varphi _{x}(C)^{*<\mathcal {C}>}\) and \(\psi _{x}(D)^{*<\mathcal {D}>}\) for logical formulas satisfy the following properties:

(1) \(({\varphi _{x}}(C_{1}))^{*<\mathcal {C}>}\cup ({\varphi _{x}}(C_{2} ))^{*<\mathcal {C}>}=({\varphi _{x}}(C_{1} )\wedge {\varphi _{x}} (C_{2} ) )^{*<\mathcal {C}>}\);

(2) \(({\psi _{x}}(D_{1} ))^{*<\mathcal {D}>}\cup ({\psi _{x}}(D_{2}))^{*<\mathcal {D}>}=({\psi _{x}}(D_{1} )\wedge {\psi _{x}}(D_{2} ) )^{*<\mathcal {D}>}\).

Proof

By Definition 14 and Property 1, we can get \({\varphi _{x}}(C_{1} )=\underset {c_{i}\in C_{1}}{\mathop {\wedge }} (c_{i},I_{\mathcal {C}}^{c_{i}}(x))\), \({\varphi _{x}}(C_{2})=\underset {c_{k}\in C_{2}}{\mathop {\wedge }} (c_{k},I_{\mathcal {C}}^{c_{k}}(x))\), then \(({\varphi _{x}}(C_{1} ))^{*<\mathcal {C}>}=C_{1}\), \(({\varphi _{x}}(C_{2} ))^{*<\mathcal {C}>}=C_{2}\). As a result, \(({\varphi _{x}}(C_{1} ))^{*<\mathcal {C}>}\cup ({\varphi _{x}}(C_{2} ))^{*<\mathcal {C}>}=C_{1}\cup C_{2}\). On the other hand, \(({{\varphi _{x}}}(C_{1} )\wedge {{\varphi _{x}}}(C_{2} ))^{*<\mathcal {C}>}=((\underset {{c_{i}\in C_{1}}}{\mathop {\wedge }} (c_{i},I_{\mathcal {C}}^{c_{i}}(x) ))\wedge (\underset {c_{k}\in C_{2}}{\mathop {\wedge }} (c_{k},I_{\mathcal {C}}^{c_{k}}(x) ) ))^{*<\mathcal {C}>}=(\underset {c_{k}\in C_{1}\cup C_{2}}{\mathop {\wedge }} (c_{k},I_{\mathcal {C}}^{c_{k}}(x) ))^{*<\mathcal {C}>} =C_{1}\cup C_{2}\). So, \(({\varphi _{x}}(C_{1}))^{*<\mathcal {C}>}\cup ({\varphi _{x}}(C_{2} ))^{*<\mathcal {C}>}=({\varphi _{x}}(C_{1} )\wedge {\varphi _{x}} (C_{2} ) )^{*<\mathcal {C}>}\).

In a similar way, we can prove \(({\psi _{x}}(D_{1} ))^{*<\mathcal {D}>}\cup ({\psi _{x}}(D_{2}))^{*<\mathcal {D}>}=({\psi _{x}}(D_{1} )\wedge {\psi _{x}}(D_{2} ) )^{*<\mathcal {D}>}\). □

Property 3

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD. For \(\forall C_{1},C_{2}\in 2^{\mathcal {C}},D_{1},D_{2}\in 2^{\mathcal {D}}\), the logical formula \(C^{*<\varphi _{x} >}\) of conditional attributes and the logical formula \(D^{*<{\psi _{x}}>}\) of decision attributes satisfy the following properties:

(1) \((C_{1}\cup C_{2} )^{*<\varphi _{x} >}=C_{1}^{*<\varphi _{x} >}\wedge C_{2}^{*<\varphi _{x} >}\), \((D_{1}\cup D_{2} )^{*<{\psi _{x}}>}=D_{1}^{*<{\psi _{x}}>}\wedge D_{2}^{*<{\psi _{x}}>}\);

(2) \(C_{1}^{*<\varphi _{x} >}\wedge C_{2}^{*<\varphi _{x} >}\Rightarrow (C_{1}\cap C_{2} )^{*<\varphi _{x} >}\), \(D_{1}^{*<{\psi _{x}}>}\wedge D_{2}^{*<{\psi _{x}}>}\Rightarrow {{(D_{1}\cap D_{2} )}^{*<{\psi _{x}}>}}.\)

Proof

(1) By Definition 14, we obtain \(C_{1}^{*<\varphi _{x} >}=\underset {c_{i}\in C_{1}}{\mathop {\wedge }}(c_{i},I_{\mathcal {C}}^{c_{i}}(x) ),C_{2}^{*<\varphi _{x} >}=\underset {c_{i}\in C_{2}}{\mathop {\wedge }} (c_{i},I_{\mathcal {C}}^{c_{i}}(x))\). So \( C_{1}^{*<\varphi _{x} >}\wedge C_{2}^{*<\varphi _{x}>}=(\underset {c_{i}\in C_{1}}{\mathop {\wedge }} (c_{i},I_{\mathcal {C}}^{c_{i}}(x)) )\wedge (\underset {c_{i}\in C_{2}}{\mathop {\wedge }} (c_{i},I_{\mathcal {C}}^{c_{i}}(x)) ) =\underset {c_{i}\in C_{1}\cup C_{2}}{\mathop {\wedge }} (c_{i},I_{\mathcal {C}}^{c_{i}}(x))=(C_{1}\cup C_{2} )^{*<\varphi _{x} >}\). Similarly, we can prove \((D_{1}\cup D_{2} )^{*<{\psi _{x}}>}=D_{1}^{*<\psi _{x}>}\wedge D_{2}^{*<{\psi _{x}}>}\).

(2) According to the first item of Property 3, we get \(C_{1}^{*<\varphi _{x}>}\wedge C_{2}^{*<\varphi _{x}>}={{(C_{1}\cup C_{2} )}^{*<\varphi _{x}>}}=\underset {c_{k}\in C_{1}\cup C_{2}}{\mathop {\wedge }}(c_{k},I_{\mathcal {C}}^{c_{k}}(x))\Rightarrow \underset {c_{k}\in C_{1}\cap C_{2}}{\mathop {\wedge }} (c_{k},I_{\mathcal {C}}^{c_{k}}(x))=(C_{1}\cap C_{2})^{*<\varphi _{x}>}\). Similarly, we can prove \(D_{1}^{*<\psi _{x}>}\wedge D_{2}^{*<{\psi _{x}}>}\Rightarrow (D_{1}\cap D_{2} )^{*<\psi _{x}>}\). □
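Properties 2 and 3 relate set union of attributes to conjunction of formulas. A minimal sketch of this correspondence is given below, assuming that the values \(I_{\mathcal {C}}^{c}(x)\) of one node x are stored in a dictionary; the row `row_x` and the helper names are hypothetical, and implication between conjunctions is read syntactically (every atom of the conclusion occurs in the premise).

```python
# Hypothetical row of one node x: conditional attribute -> value in {1, -1, 0}.
row_x = {"d": 1, "e": 1, "h": 0, "k": 1, "l": -1}

def phi_x(attrs, row):
    """C^{*<phi_x>}: conjunction of (c, I(x, c)) over c in attrs."""
    return frozenset((c, row[c]) for c in attrs)

def attrs_of(f):
    """(phi_x(C))^{*<C>}: the attributes mentioned by a formula."""
    return frozenset(c for c, _ in f)

def implies(f, g):
    """For pure conjunctions, f => g when every atom of g is an atom of f."""
    return g <= f

C1, C2 = {"d", "e", "h"}, {"h", "k", "l"}
f1, f2 = phi_x(C1, row_x), phi_x(C2, row_x)
conj = f1 | f2      # the conjunction of two conjunctions is the union of atoms

assert attrs_of(f1) | attrs_of(f2) == attrs_of(conj)   # Property 2 (1)
assert phi_x(C1 | C2, row_x) == conj                   # Property 3 (1)
assert implies(conj, phi_x(C1 & C2, row_x))            # Property 3 (2)
```

The converse of Property 3 (2) fails in general, which the same encoding shows: the formula over C1 ∩ C2 = {h} does not contain the atoms for d, e, k or l.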

In order to extract the rules between NWC3WDs and analyze them later, we continue to give some operators related to the rules.

Definition 15

Let \(r_{ij}^{*<\mathcal {X}>}=\{ x_{k}\in U| x_{k}\vDash r_{ij} \}\), where \(x_{k}\vDash r_{ij}\) means that the node xk satisfies the rule rij : φi → ψj. All the rules satisfied by xk are represented by \(\mathcal {R}(x_{k} )=\{ r_{ij}\in R_{u} | x_{k}\vDash r_{ij}\}\), where Ru = {rij|rij : φi → ψj} denotes a set of the rules extracted from the network weaken-concepts of three-way decision.

Definition 16

For \(r\subseteq R_{u}\), let \(r^{*<\mathcal {X}>}=\{ x_{k}\in U| \forall r_{ij}\in r,x_{k}\vDash r_{ij} \}=\underset {r_{ij}\in r}{\mathop {\bigcap }} r_{ij}^{*<\mathcal {X}>}\). That is, \(r^{*<\mathcal {X}>}\) is the set of the nodes in U that satisfy all the rules in r.

Definition 17

For \(X\subseteq U\), let \(X^{*<\mathcal {R}>}=\{ r_{ij}\in R_{u}| \forall x_{k}\in X,x_{k}\vDash r_{ij}\}=\underset {x_{k}\in X}{\mathop {\bigcap }} \mathcal {R}(x_{k} )\). That is, \(X^{*<\mathcal {R}>}\) is the set of the rules which are commonly satisfied by all the nodes in X.

Property 4

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD. For \(\forall X_{1},X_{2}\in 2^{U}\), the following properties hold:

(1) \((X_{1}\cup X_{2} )^{*<\mathcal {R}>}=X_{1}^{*<\mathcal {R}>}\cap X_{2}^{*<\mathcal {R}>}\);

(2) \((X_{1}\cap X_{2} )^{*<\mathcal {R}>}\supseteq X_{1}^{*<\mathcal {R}>}\cup X_{2}^{*<\mathcal {R}>}\).

Proof

(1) As \(X_{1}^{*<\mathcal {R}>}=\underset {x_{k}\in X_{1}}{\mathop {\bigcap }} \mathcal {R}(x_{k} )\) and \(X_{2}^{*<\mathcal {R}>}=\underset {x_{k}\in X_{2}}{\mathop {\bigcap }} \mathcal {R}(x_{k} )\), we can get \(X_{1}^{*<\mathcal {R}>}\cap X_{2}^{*<\mathcal {R}>}=\left (\underset {x_{k}\in X_{1}}{\mathop {\bigcap }} \mathcal {R}(x_{k}) \right )\bigcap \left (\underset {x_{k}\in X_{2}}{\mathop {\bigcap }} \mathcal {R}(x_{k}) \right ) =\underset {x_{k}\in X_{1}\cup X_{2}}{\mathop {\bigcap }} \mathcal {R}(x_{k}) =(X_{1}\cup X_{2} )^{*<\mathcal {R}>}.\)

(2) As X1 = (X1 − X2) ∪ (X1 ∩ X2), X2 = (X2 − X1) ∪ (X1 ∩ X2), according to the first item of Property 4, we can get \( X_{1}^{*<\mathcal {R}>}=\underset {x_{k}\in (X_{1}-X_{2} )\cup (X_{1}\cap X_{2} )}{\mathop {\bigcap }} \mathcal {R}(x_{k})=(X_{1}-X_{2} )^{*<\mathcal {R}>}\cap (X_{1}\cap X_{2} )^{*<\mathcal {R}>}\). Similarly, we can obtain \(X_{2}^{*<\mathcal {R}>}=(X_{2}-X_{1} )^{*<\mathcal {R}>}\cap (X_{1}\cap X_{2} )^{*<\mathcal {R}>}\).

To sum up, \(X_{1}^{*<\mathcal {R}>}\cup X_{2}^{*<\mathcal {R}>}=((X_{1}-X_{2} )^{*<\mathcal {R}>}\cup (X_{2}-X_{1} )^{*<\mathcal {R}>} )\cap (X_{1}\cap X_{2} )^{*<\mathcal {R}>}\subseteq (X_{1}\cap X_{2} )^{*<\mathcal {R}>}\). □

Property 5

Let \((U,M,\mathcal {C},\mathcal {D},I)\) be a NFC3WD and Ru = {rij|rij : φi → ψj} be a set of the rules extracted from the network weaken-concepts of three-way decision. For \(\forall r_{1},r_{2}\subseteq R_{u}\), the following properties hold:

(1) \({{(r_{1}\cup r_{2} )}^{*<\mathcal {X}>}}=r_{1}^{*<\mathcal {X}>}\cap r_{2}^{*<\mathcal {X}>}\);

(2) \({{(r_{1}\cap r_{2} )}^{*<\mathcal {X}>}}\supseteq r_{1}^{*<\mathcal {X}>}\cup r_{2}^{*<\mathcal {X}>}\).

Proof

(1) According to \(r_{1}^{*<\mathcal {X}>}=\underset {r_{ij}\in r_{1}}{\mathop {\bigcap }} r_{ij}^{*<\mathcal {X}>}\) and \(r_{2}^{*<\mathcal {X}>}=\underset {r_{ij}\in r_{2}}{\mathop {\bigcap }} r_{ij}^{*<\mathcal {X}>}\), we can get \(r_{1}^{*<\mathcal {X}>}\cap r_{2}^{*<\mathcal {X}>}=\left (\underset {r_{ij}\in r_{1}}{\mathop {\bigcap }} r_{ij}^{*<\mathcal {X}>}\right )\bigcap \left (\underset {r_{ij}\in r_{2}}{\mathop {\bigcap }} r_{ij}^{*<\mathcal {X}>}\right )=\underset {r_{ij}\in r_{1}\cup r_{2}}{\mathop {\bigcap }} r_{ij}^{*<\mathcal {X}>}={{(r_{1}\cup r_{2} )}^{*<\mathcal {X}>}}\).

(2) As r1 = (r1 ∩ r2) ∪ (r1 − r2), r2 = (r1 ∩ r2) ∪ (r2 − r1), according to the first item of Property 5, we can get \(r_{1}^{*<\mathcal {X}>}=(r_{1}\cap r_{2} )^{*<\mathcal {X}>}\cap (r_{1}-r_{2} )^{*<\mathcal {X}>}\), \(r_{2}^{*<\mathcal {X}>}=(r_{1}\cap r_{2} )^{*<\mathcal {X}>}\cap (r_{2}-r_{1} )^{*<\mathcal {X}>}\). Then we obtain \(r_{1}^{*<\mathcal {X}>}\cup r_{2}^{*<\mathcal {X}>}=\left ((r_{1}\cap r_{2} )^{*<\mathcal {X}>}\cap (r_{1}-r_{2} )^{*<\mathcal {X}>} \right )\bigcup \left ((r_{1}\cap r_{2} )^{*<\mathcal {X}>}\cap (r_{2}-r_{1} )^{*<\mathcal {X}>} \right )=(r_{1}\cap r_{2} )^{*<\mathcal {X}>}\cap ((r_{1}-r_{2} )^{*<\mathcal {X}>}\cup (r_{2}-r_{1} )^{*<\mathcal {X}>} )\subseteq (r_{1}\cap r_{2} )^{*<\mathcal {X}>}\). □
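The operators of Definitions 16 and 17 and the identities in Properties 4 and 5 can be illustrated on a toy example. The node-to-rule assignment `rules_of` below is hypothetical data invented for this sketch, and the function names are assumptions; the empty intersection is taken to be the whole universe, as is usual for such operators.

```python
# Hypothetical data: R(x_k), the set of rules satisfied by each node x_k.
rules_of = {
    1: {"r1", "r2"},
    2: {"r1"},
    3: {"r1", "r2", "r3"},
    4: {"r3"},
}
U = set(rules_of)
R_u = set().union(*rules_of.values())

def nodes_of(rule_set):
    """r^{*<X>}: nodes that satisfy every rule in rule_set (Definition 16)."""
    return {x for x in U if rule_set <= rules_of[x]}

def common_rules(node_set):
    """X^{*<R>}: rules satisfied by every node in node_set (Definition 17)."""
    return {r for r in R_u if all(r in rules_of[x] for x in node_set)}

X1, X2 = {1, 3}, {3, 4}
# Property 4 (1) and (2)
assert common_rules(X1 | X2) == common_rules(X1) & common_rules(X2)
assert common_rules(X1 & X2) >= common_rules(X1) | common_rules(X2)

ra, rb = {"r1"}, {"r2", "r3"}
# Property 5 (1) and (2)
assert nodes_of(ra | rb) == nodes_of(ra) & nodes_of(rb)
assert nodes_of(ra & rb) >= nodes_of(ra) | nodes_of(rb)
```

Both operators are order-reversing: enlarging the node set can only shrink the set of common rules, and enlarging the rule set can only shrink the set of satisfying nodes.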

Based on the above properties and the network weaken-concept logic, the extraction and simplification of the rules are ready to be discussed.

6 The bidirectional rule extraction and simplification for the network weaken-concepts of three-way decision

6.1 The bidirectional rule extraction based on NWC3WD

Definition 18

For the rule \(\varphi \xrightarrow {\mu }\psi \), its confidence degree is defined as

It represents the ratio of the number of nodes that satisfy both the antecedent φ and the consequent ψ to the number of nodes that satisfy the antecedent φ.

Definition 19

We call \(\psi \xrightarrow {\omega }\varphi \) the reverse rule of \(\varphi \xrightarrow {\mu }\psi \), and its confidence degree is defined as

It represents the ratio of the number of nodes that satisfy both the formulas φ and ψ to the number of nodes that satisfy the consequent ψ.
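To make the two confidence degrees concrete, the following sketch computes μ and ω from their verbal descriptions in Definitions 18 and 19. The toy table, the function names, and the convention that a rule with no supporting node has confidence 0 are assumptions made here, not part of the original definitions.

```python
from fractions import Fraction

# Hypothetical three-way table: node -> {attribute: value in {1, -1, 0}}.
table = {
    1: {"d": 1, "k": 1, "n": 1},
    2: {"d": 1, "k": 1, "n": 1},
    3: {"d": 1, "k": -1, "n": -1},
    4: {"d": -1, "k": -1, "n": -1},
}

def satisfies(row, atoms):
    """A node satisfies a conjunction when it matches every (attr, value) atom."""
    return all(row.get(a) == v for a, v in atoms)

def confidence(table, premise, conclusion):
    """|nodes satisfying premise and conclusion| / |nodes satisfying premise|."""
    support = [row for row in table.values() if satisfies(row, premise)]
    if not support:
        return Fraction(0)   # assumption: unsupported rules get confidence 0
    hits = sum(1 for row in support if satisfies(row, conclusion))
    return Fraction(hits, len(support))

phi = {("d", 1)}                       # antecedent
psi = {("n", 1)}                       # consequent
mu = confidence(table, phi, psi)       # confidence of phi -> psi
omega = confidence(table, psi, phi)    # confidence of the reverse rule psi -> phi
print(mu, omega)                       # 2/3 1
```

In this toy table every node with (n,1) also has (d,1), so ω = 1, whereas node 3 has (d,1) without (n,1), so μ < 1; the two directions therefore carry different information.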

By Definitions 18 and 19, the larger ω and μ are, the higher the homogeneity of the corresponding rules. It is also easy to see that the reverse rule of \(\psi \xrightarrow {\omega }\varphi \) is \(\varphi \xrightarrow {\mu }\psi \).

According to the above definitions, we can first get \(\psi \xrightarrow {\omega }\varphi \), and then get \(\varphi \xrightarrow {\mu }\psi \). Given the purpose of mining diagnostic rules, we only discuss the following five types of rules. To achieve this task, the idea of bidirectional rule extraction is adopted. For the sake of simplicity, let Di = {di}. We compute \(D_{i}^{*<\mathcal {X}_{P}>}\), \(D_{i}^{*<\mathcal {X}_{N}>}\), \(D_{i}^{*<\mathcal {X}_{B}>}\), and further find \((D_{i}^{*<\mathcal {X}_{P}>} )^{*<\mathcal {C}_{P}>}\), \((D_{i}^{*<\mathcal {X}_{N}>} )^{*<\mathcal {C}_{N}>}\), \((D_{i}^{*<\mathcal {X}_{B}>} )^{*<\mathcal {C}_{B}>}\) which are the conditional attribute sets corresponding to \(D_{i}^{*<\mathcal {X}_{P}>}\), \(D_{i}^{*<\mathcal {X}_{N}>}\) and \(D_{i}^{*<\mathcal {X}_{B}>}\), respectively. Thus, we obtain \(r:\psi \xrightarrow {\omega }\varphi \) as follows:

Then, for \(x_{j}\in D_{i}^{*<\mathcal {X}_{P}>}\) and \(x_{k}\in D_{i}^{*<\mathcal {X}_{N}>}\), we can further get \(\{ x_{j} \}^{*<\mathcal {C}_{P}>}\) and \(\{ x_{k} \}^{*<\mathcal {C}_{N}>}\). Finally, taking \(\{ x_{j} \}^{*<\mathcal {C}_{P}>}\) and \(\{ x_{k} \}^{*<\mathcal {C}_{N}>}\) as prior information to mine the reverse rule \(r:\varphi \xrightarrow {\mu }\psi \), we obtain

For \(r_{ij}:\psi _{i}\xrightarrow {\omega _{ij}}\varphi _{j}\) and its reverse rule \(r_{ji}:\varphi _{j}\xrightarrow {\mu _{ji}}\psi _{i}\), if ωij = μji = 1, it is said that the rules \(r_{ij}:\psi _{i}\xrightarrow {\omega _{ij}}\varphi _{j}\) and \(r_{ji}:\varphi _{j}\xrightarrow {\mu _{ji}}\psi _{i}\) are equivalent, and they are coordination rules.

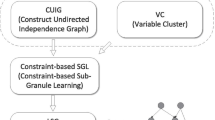

The above idea of rule extraction is a kind of bidirectional mining: we first get the rule rij, and then its reverse rule rji. The schematic diagram of bidirectional rule extraction is shown in Fig. 2.

Based on the above discussion, the bidirectional rule extraction algorithm based on NFC3WD is given in Algorithm 1, where Step 1 is the initialization process, Steps 2-5 are to divide the object set into three categories according to different decision attribute values, Steps 6-14 are to extract the corresponding rules from the decision attributes, and Steps 15-28 are to traverse each object to obtain the rules. In addition, the time complexity of Algorithm 1 in the worst case is \(O(| U |^{2}| \mathcal {C} ||\mathcal {D}|)\).
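The bidirectional idea can be sketched for a single decision attribute and a single region as follows. This is a simplified illustration under stated assumptions, not a reproduction of Algorithm 1: the toy table, the function names, and the handling of an empty node set are hypothetical.

```python
# Hypothetical three-way table: node -> {attribute: value in {1, -1, 0}}.
table = {
    1: {"d": 1, "e": 1, "h": 1, "n": 1},
    2: {"d": 1, "e": 1, "h": -1, "n": 1},
    3: {"d": -1, "e": 1, "h": -1, "n": -1},
}
cond_attrs = ["d", "e", "h"]

def nodes_with(table, attrs, value):
    """D^{*<X_v>}: nodes taking `value` on every attribute in attrs."""
    return {x for x, row in table.items() if all(row[a] == value for a in attrs)}

def common_attrs(table, nodes, cond_attrs, value):
    """X^{*<C_v>}: conditional attributes equal to `value` on every node."""
    return {c for c in cond_attrs if all(table[x][c] == value for x in nodes)}

def bidirectional_rules(table, cond_attrs, d, value):
    """Return (psi -> phi, set of phi -> psi rules) for the atom (d, value)."""
    X = nodes_with(table, [d], value)
    if not X:                      # assumption: no nodes, no rules
        return None, set()
    psi = frozenset({(d, value)})
    # Backward direction: from the decision value to the shared conditions.
    shared = common_attrs(table, X, cond_attrs, value)
    backward = (psi, frozenset((c, value) for c in shared))
    # Forward direction: one candidate rule per node in X.
    forward = {
        (frozenset((c, value)
                   for c in common_attrs(table, {x}, cond_attrs, value)), psi)
        for x in X
    }
    return backward, forward

backward, forward = bidirectional_rules(table, cond_attrs, "n", 1)
```

With this toy table, the backward rule is (n,1) → (d,1) ∧ (e,1), and the forward direction yields one rule per positive node, which can then be scored by its confidence degree.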

Example 7

Based on the NFC3WDs in Example 2 and Algorithm 1, the diagnostic rules for COVID-19 can be obtained. Considering the medical common knowledge of COVID-19, we select the appropriate conditional attributes d, e, f, g, h, i, j, k and l for rule extraction. It starts with the decision attribute n which means COVID-19. Then we get \(\{n\}^{*<\mathcal {C}_{P}>}\), \(\{n\}^{*<\mathcal {C}_{N}>}\), \(\{n\}^{*<\mathcal {C}_{B}>}\), and we further obtain the following rules:

Rule 1: \((n,1 )\xrightarrow {\omega _{P} =1}(d,1 )\wedge (e,1 )\wedge (k,1 )\).

Rule 2: \((n,-1 )\xrightarrow {\omega _{N} =1}(d,-1 )\).

Rule 3: \((n,0 )\xrightarrow {\omega _{B}=1}(h,0 )\wedge (l,0)\).

On the contrary, starting with \(x_{i}\in n^{*<\mathcal {X}_{P}>}\) and \(x_{j}\in n^{*<\mathcal {X}_{N}>}\), we can obtain \(\{ x_{i} \}^{*<\mathcal {C}_{P}>}\) and \(\{ x_{j} \}^{*<\mathcal {C}_{N}>}\). Then, we get the corresponding rules as follows:

Rule 4: \((d,1 )\wedge (e,1 )\wedge (h,1)\wedge (j,1 )\wedge (k,1 )\xrightarrow {\mu _{P} =1}(n,1)\).

Rule 5: \((d,1 )\wedge (e,1 )\wedge (f,1 )\wedge (k,1)\wedge (l,1 )\xrightarrow {\mu _{P} =1}(n,1 )\).

Rule 6: \((d,1 )\wedge (e,1 )\wedge (f,1 )\wedge (g,1 )\wedge (h,1 )\wedge (k,1 )\xrightarrow {\mu _{P} =1}(n,1 )\).

Rule 7: \((d,1 )\wedge (e,1 )\wedge (h,1 )\wedge (i,1 )\wedge (k,1 )\xrightarrow {\mu _{P} =1}(n,1 )\).

Rule 8: \((d,-1)\wedge (e,-1 )\wedge (f,-1 )\wedge (g,-1 )\wedge (h,-1)\wedge (i,-1 )\wedge (j,-1)\wedge (l,-1 )\xrightarrow {\mu _{N} =1}(n,-1)\).

Rule 9: \((d,-1 )\wedge (l,-1 )\xrightarrow {\mu _{N} =1}(n,-1 )\).

Rule 10: \((d,-1)\wedge (h,-1 )\wedge (i,-1 )\wedge (j,-1)\wedge (l,-1 )\xrightarrow {\mu _{N} =1}(n,-1)\).

Rule 11: \((d,-1)\wedge (e,-1 )\wedge (f,-1 )\wedge (g,-1 )\wedge (h,-1)\wedge (k,-1 )\xrightarrow {\mu _{N} =1}(n,-1)\).

Rule 12: \((d,-1 )\wedge (i,-1 )\wedge (j,-1)\wedge (l,-1)\xrightarrow {\mu _{N} =1}(n,-1)\).

Rule 13: \((d,-1 )\wedge (e,-1 )\wedge (f,-1 )\wedge (h,-1)\wedge (k,-1 )\xrightarrow {\mu _{N} =1}(n,-1)\).

6.2 Reduction of three-way decision network rules

This subsection discusses the reduction of three-way decision network rules. That is, we find and remove reducible conditional attributes from the antecedent of a rule while preserving the confidence degree of the rule.

Definition 20

For the rule \((c_{1},I_{\mathcal {C}}^{c_{1}}(x ) )\wedge {\cdots } \wedge (c_{i},I_{\mathcal {C}}^{c_{i}}(x))\wedge {\cdots } \wedge (c_{m},I_{\mathcal {C}}^{c_{m}}(x ) )\xrightarrow {\mu }(d_{1},I_{\mathcal {D}}^{d_{1}}(x ) )\wedge {\cdots } \wedge (d_{r},I_{\mathcal {D}}^{d_{r}}(x ) )\), we remove the conditional attribute ci and get the rule \((c_{1},I_{\mathcal {C}}^{c_{1}}(x ) )\wedge {\cdots } \wedge (c_{i-1},I_{\mathcal {C}}^{c_{i-1}}(x ))\wedge (c_{i+1},I_{\mathcal {C}}^{c_{i+1}}(x ))\wedge {\cdots } \wedge (c_{m},I_{\mathcal {C}}^{c_{m}}(x ) )\xrightarrow {\mu _{i}}(d_{1},I_{\mathcal {D}}^{d_{1}}(x ) )\wedge {\cdots } \wedge (d_{r},I_{\mathcal {D}}^{d_{r}}(x ) )\). If μ = μi, it is said that ci is reducible in the rule.

Definition 21

If each conditional attribute in the rule \((c_{1},I_{\mathcal {C}}^{c_{1}}(x ))\wedge {\cdots } \wedge (c_{m},I_{\mathcal {C}}^{c_{m}}(x ) )\xrightarrow {\mu } (d_{1},I_{\mathcal {D}}^{d_{1}}(x ) )\wedge {\cdots } \wedge (d_{r},I_{\mathcal {D}}^{d_{r}}(x ) )\) is irreducible, the rule is called a simplest rule.

The rule reduction algorithm is given as Algorithm 2, where Step 1 is the initialization process, Steps 3-8 obtain the reducible attributes, and Steps 9-11 obtain the reduced rule according to Definitions 20 and 21. In addition, the time complexity of Algorithm 2 in the worst case is \(O(|\mathcal {C}||U|)\).
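A greedy reading of Definitions 20 and 21 can be sketched as follows. This is not Algorithm 2 itself: the toy table, the left-to-right removal order, and the choice never to empty the antecedent are assumptions of this sketch. Because the result depends on the removal order, a rule may have more than one simplest form, and this sketch returns only one of them.

```python
from fractions import Fraction

# Hypothetical three-way table: node -> {attribute: value in {1, -1, 0}}.
table = {
    1: {"d": 1, "e": 1, "h": 1, "n": 1},
    2: {"d": 1, "e": 1, "h": 1, "n": 1},
    3: {"d": 1, "e": 1, "h": -1, "n": -1},
    4: {"d": -1, "e": -1, "h": -1, "n": -1},
}

def satisfies(row, atoms):
    return all(row.get(a) == v for a, v in atoms)

def confidence(table, antecedent, consequent):
    support = [row for row in table.values() if satisfies(row, antecedent)]
    if not support:
        return Fraction(0)   # assumption: unsupported rules get confidence 0
    hits = sum(1 for row in support if satisfies(row, consequent))
    return Fraction(hits, len(support))

def reduce_rule(table, antecedent, consequent):
    """Drop atoms one by one while the confidence degree stays unchanged."""
    mu = confidence(table, antecedent, consequent)
    kept = list(antecedent)
    for atom in list(kept):
        trial = [a for a in kept if a != atom]
        # An atom is reducible when removing it preserves mu (Definition 20).
        if trial and confidence(table, trial, consequent) == mu:
            kept = trial
    return kept, mu

rule = [("d", 1), ("e", 1), ("h", 1)]
reduced, mu = reduce_rule(table, rule, [("n", 1)])
print(reduced, mu)   # [('h', 1)] 1
```

Exact fractions are used so that the equality test between confidence degrees does not depend on floating-point rounding.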

Example 8

We take Rules 4-13 obtained in Example 7 to illustrate the idea of rule reduction, since the antecedents of Rules 1-3 are already in their simplest forms.

For Rule 4: \((d,1 )\wedge (e,1 )\wedge (h,1 )\wedge (j,1 )\wedge (k,1 )\xrightarrow {\mu _{P} =1}(n,1 )\), the conditional attributes d, e, j and k are removed one by one, and the new confidence degree μP is still equal to 1. So, the simplest form of this rule after reduction is

$$ (h,1 )\xrightarrow{\mu_{P} =1}(n,1 ). $$

A similar simplification of Rule 6 and Rule 7 can be performed, and the final reduction results are the same as that of Rule 4. Similarly, the reduction of Rule 5 is performed, and its simplest form is

For Rule 10, we get two simplest rules:

A similar simplification can also be performed for Rules 8, 9, 11, 12, 13, and the final reduction results are the same as that of Rule 10.

According to the above rules, COVID-19 can be diagnosed according to whether nucleic acid test is positive and the virus gene sequencing of the node is highly homologous to COVID-19.

Note that the symptoms of COVID-19 and flu are very similar. So, it is natural for us to add these two conditional attributes into the antecedents of the rules for flu diagnosis, and the following rules are obtained.

Rule 14: \((e,1 )\wedge (f,1 )\wedge (g,1 )\wedge (k,1 )\wedge (l,-1 )\xrightarrow {\mu _{P} =1}(o,1 )\).

Rule 15: \((f,1 )\wedge (g,1 )\wedge (k,1 )\wedge (h,-1 )\wedge (l,-1)\xrightarrow {\mu _{P} =1}(o,1 )\).

In a similar manner, Rule 14 and Rule 15 can be reduced as follows:

6.3 The rule extraction based on three-way decision network structure

Considering the characteristics of infectious diseases spreading on the network, the spread of infectious diseases is often different under different network structures, so are the prevention and control measures.

Definition 22

Let \(Nb_{k}(x_{i})\) be the set of all k-order neighbors of the node \(x_{i}\), and we call \(Nb_{k}(x_{i})\) the k-order adjacency set of \(x_{i}\). In particular, the set of first-order neighbors of \(x_{i}\) is denoted by \(Nb(x_{i})\).
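One possible way to compute a k-order adjacency set is a breadth-first expansion, sketched below. The sketch assumes that a k-order neighbor is a node whose shortest-path distance from the source is exactly k, which the definition above does not state explicitly; the graph `adj` is hypothetical toy data.

```python
# Hypothetical undirected network: node -> set of first-order neighbors.
adj = {1: {2, 3}, 2: {1, 4}, 3: {1}, 4: {2, 5}, 5: {4}}

def k_order_neighbors(adj, source, k):
    """Nodes at shortest-path distance exactly k from source (assumed reading)."""
    frontier, seen = {source}, {source}
    for _ in range(k):
        # Expand one step and discard nodes already reached at a shorter distance.
        frontier = {v for u in frontier for v in adj[u]} - seen
        seen |= frontier
    return frontier

print(k_order_neighbors(adj, 1, 1))   # {2, 3}
print(k_order_neighbors(adj, 1, 2))   # {4}
```

If the intended meaning is instead "all nodes within distance k", the function would return `seen - {source}` rather than the last frontier.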

When a case xi is found in an infectious disease network, the node connected to the case xi becomes a suspected case.

The main framework of suspected case recognition based on the network structure is given in Algorithm 3, where \(X_{i}=\bigcup \limits _{j}{Nb(x_{j} )}\) denotes the set of nodes that have been in contact with the patients confirmed to suffer from the disease di. When a target node has had contact with a confirmed case, it is put into the set of suspected cases, and the rules of suspected cases can then be mined. In addition, the time complexity of Algorithm 3 in the worst case is \(O(|\mathcal {D}|| U |^{2}+|\mathcal {C}||\mathcal {D}||U|)\).

Example 9

According to the network data in Example 2 and Algorithm 3, the set of suspected cases of COVID-19 in the network structure is {5,6,7,11,15}. The detailed analysis is given below.

The nodes 3, 4, 10 and 12 are diagnosed with COVID-19, so the set of nodes who have contact with these patients is {2,5,6,7,11,13,15}. However, the viral gene sequencing of the nodes 2 and 13 is not highly homologous to COVID-19, which means that they are not suspected cases of COVID-19. Node 5 shows that the total white blood cell count is normal or low and lymphocyte count is decreased, and there are CT imaging findings, which is a suspected case of COVID-19. Node 6 shows headache and the total white blood cell count is normal or low and lymphocyte count is decreased, so it is a suspected case of COVID-19. Nodes 7, 11 and 15 have dry cough, fatigue, body aches, headaches and other symptoms, so they are suspected cases of COVID-19.
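The two steps used in this example, collecting the contacts of confirmed cases and then screening them, can be sketched as follows. The graph, the excluded set, and the function names are hypothetical; the screening predicate stands in for whatever attribute test is applied (here, in the example above, the gene-sequencing check), and the sketch is not a reproduction of Algorithm 3.

```python
# Hypothetical contact network: node -> set of first-order neighbors.
adj = {1: {2, 3}, 2: {1, 3, 4}, 3: {1, 2, 5}, 4: {2}, 5: {3}}

def contacts(adj, confirmed):
    """Union of Nb(x_j) over confirmed cases x_j, minus the confirmed cases."""
    out = set()
    for x in confirmed:
        out |= adj[x]
    return out - set(confirmed)

def suspected(adj, confirmed, is_excluded):
    """Contacts that are not ruled out by the screening predicate."""
    return {x for x in contacts(adj, confirmed) if not is_excluded(x)}

confirmed = {1, 2}
ruled_out = {4}                       # hypothetical: node 4 fails the screening
print(contacts(adj, confirmed))       # {3, 4}
print(suspected(adj, confirmed, lambda x: x in ruled_out))   # {3}
```

Keeping the screening step as a separate predicate makes it easy to swap in a different exclusion criterion without touching the network traversal.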

6.4 The sequential decision making based on the network structure

In Subsection 6.3, we have discussed how to find the suspected cases of COVID-19. So, it is necessary to take additional measures to control them. To achieve this task, we need to further investigate sequential decision making based on the network structure.

Definition 23

For \(\forall x_{j}\in Nb_{k}(x_{i})\), let \(d_{t}(x_{i})=v_{t}\). Then we denote

where \(d_{t}(x_{i})\) is the value of the decision attribute of \(x_{i}\) at time t, and \(S(d_{t+1}(x_{j}))\) is the measure taken at time t + 1 for the node \(x_{j}\) that has contact with \(x_{i}\).

Example 10

Additional measures are taken for the nodes xj who are first-order adjacent to xi when xi takes different values under COVID-19. The details are shown in Fig. 3.

Suppose that node 3 in Example 2 is the target node. Since node 3 is diagnosed with COVID-19 at time t, we have \(d_{t}(3)=1\). The set of its first-order adjacent nodes is Nb(3) = {2,4,6,10,11,12,13,15}, so these nodes need to be placed in centralized isolation at time t + 1.

7 Experiments and results

In this section, some numerical experiments are conducted to evaluate the performances of Algorithms 1 and 2, and to illustrate the effectiveness of the algorithm and the rationality of the concept cognition method under NFC3WD.

7.1 Experimental environment and related descriptions

The experiments were run on a machine with the Windows 10 operating system, 16.0 GB of RAM and a 2.10 GHz CPU, and the code was written in Matlab.

Due to the confidentiality of infectious disease data, we can only test our algorithms by combining UCI data sets with their induced networks. Nine UCI data sets (Footnote 1) were selected for the experiments: Heart Disease, Breast Cancer Wisconsin (Original), Iris, Acute Inflammations, Seeds, Breast Cancer Coimbra, Hepatitis, Lymphography, Spect Heart. The details of the chosen data sets are shown in Table 3.

Since these nine data sets are presented in the form of multi-valued attributes or continuous attributes, by considering the need for creating the network formal contexts of three-way decision, the data should be pre-processed before analysis. For convenience, the attribute labels in each data set are represented by letters a,b,⋯ in turn.

7.2 Data preprocessing

The first step is three-way value conversion. Taking the Heart Disease data set as an example, the original data were transformed into the network formal context of three-way decision. Note that the original data contain two types of attributes: continuous attributes and multi-valued attributes. Combining general medical knowledge, the test data were divided into three parts: Normal, Abnormal, and Uncertain. The Normal part was set to -1, the Abnormal part was set to 1, and the rest to 0. In particular, for the attribute Sex, the male value was set to 1, and the female value was set to -1. The attribute values of the Heart Disease data set were transformed as shown in Table 4.

Then, the adjacency matrix was constructed by using the similarity between nodes. Here, the similarity degree of two nodes was defined as the ratio of the number of attributes on which the two nodes take the same value to the total number of attributes. With the similarity threshold set to 0.5, \(m_{ij}=1\) when the similarity degree between \(x_{i}\) and \(x_{j}\) is more than 0.5; otherwise, \(m_{ij}=0\). Finally, the adjacency matrix \(M=(m_{ij})_{303\times 303}\) between nodes was generated.
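The construction of the adjacency matrix from attribute similarity can be sketched as follows. The four rows are hypothetical toy data, and leaving the diagonal at 0 (no self-loops) is an assumption of this sketch, since the text does not say how \(m_{ii}\) is set.

```python
# Hypothetical three-way rows: one list of attribute values per node.
rows = [
    [1, 1, -1, 0],
    [1, 1, -1, 1],
    [-1, 0, 1, 1],
    [1, -1, 1, 1],
]

def similarity(u, v):
    """Fraction of attributes on which two nodes take the same value."""
    return sum(a == b for a, b in zip(u, v)) / len(u)

def adjacency(rows, threshold=0.5):
    """m_ij = 1 when similarity(x_i, x_j) > threshold, else 0 (diagonal left 0)."""
    n = len(rows)
    M = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if similarity(rows[i], rows[j]) > threshold:
                M[i][j] = M[j][i] = 1   # the network is undirected
    return M

M = adjacency(rows)
for line in M:
    print(line)
```

Only the first two rows agree on more than half of their attributes, so the only edge in this toy network joins them; pairs with similarity exactly 0.5 are not connected because the comparison is strict.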

7.3 Experimental results

Based on the rule extraction algorithm and the rule reduction algorithm (see Algorithms 1 and 2 for details), we can calculate the values \(\mu ,\omega ,{{\mathfrak {M}}_{1}},{{\mathfrak {M}}_{2}}\) of each rule, and the results are shown in Tables 5 and 6.

In order to understand the data in Tables 5 and 6, additional explanations are given below. For the sake of brevity, the 14 attributes corresponding to the Heart Disease data set are denoted by letters a,b,⋯ ,n, and n is used as the decision attribute. Firstly, we get the rules from back to front: \({{\psi }_{P}}(d_{i},1 )\to \varphi _{P}({{(D_{i}^{*<\mathcal {X}_{P}>} )}^{*<\mathcal {C}_{P}>}} )\), \(\psi _{N}(d_{i},-1 )\to \varphi _{N}({{(D_{i}^{*<\mathcal {X}_{N}>} )}^{*<\mathcal {C}_{N}>}} )\), and then the rules from front to back are \(\varphi _{P}({{\{ x_{j} \}}^{*<\mathcal {C}_{P}>}} )\to (d_{i},1 )\), \(\varphi _{N}({{\{ x_{j} \}}^{*<\mathcal {C}_{N}>}} )\to (d_{i},-1 )\). Thus, we can generate the bidirectional rule extraction results, and the main rules in Table 5 are the three rules with the largest ω.

In Table 5, the rules with the greatest ω are r1 and r2, which also have the greatest μ. For r1 and r2, \(\mathfrak {M}_1\) is the largest, while \({{\mathfrak {M}}_{2}}\) is small. That is, the sub-network corresponding to these rules has a large average importance but a small difference in importance, so the rules satisfy structural homogeneity. We also find that ω = μ, so r1 and r2 satisfy rule coordination. The remaining rules can be analyzed similarly.
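To make the reading of the two network eigenvalues concrete, the sketch below computes an average influence and an influence difference for the nodes covered by a rule. It rests on loudly stated assumptions: node influence is taken to be the node degree in the whole network, the first value is the mean, and the second is the population standard deviation. The paper's formal definitions of \(\mathfrak {M}_1\) and \(\mathfrak {M}_2\) appear in an earlier section and may differ from these stand-ins.

```python
from statistics import mean, pstdev


def degrees(adj):
    """Degree of every node in an undirected 0/1 adjacency matrix."""
    return [sum(row) for row in adj]


def subnetwork_values(adj, nodes):
    """Return (average influence, influence difference) for `nodes`.

    Assumptions (not taken from the paper): influence = degree in the
    full network, difference = population standard deviation.
    """
    influence = [degrees(adj)[i] for i in nodes]
    return mean(influence), pstdev(influence)


# Toy network with edges 0-1, 0-2, 0-3 and 1-2 (made-up data).
adj = [
    [0, 1, 1, 1],
    [1, 0, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
]
m1, m2 = subnetwork_values(adj, nodes=[0, 1, 3])
```

Under these assumptions, a large first value with a small second value corresponds to the structural homogeneity described above, while a large second value would indicate structural heterogeneity.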

The experimental results for the remaining four data sets are shown in Table 6, from which we can draw the following conclusions:

In the Breast Cancer data set, the rule with the largest ω is (f,1) → (j,1). Here μ = 0.8127 is the ratio of the number of nodes that satisfy the rule (f,1) → (j,1) to the number of nodes that have the conditional attribute f. Likewise, ω = 0.8967 is the ratio of the number of nodes that satisfy the rule (j,1) → (f,1) to the number of nodes that have the decision attribute j. \({\mathfrak M}_1= 90.7643\) indicates that, in the sub-network formed by the nodes that satisfy this rule, the average influence of the nodes is 90.7643. \({\mathfrak M}_2= 46.8699\) indicates that the difference in influence between nodes in this sub-network is 46.8699. The remaining rules can be explained similarly.
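The two ratios can be sketched in a few lines. The snippet assumes that each node is a dictionary from attribute names to three-way values and that "having" an attribute means taking the stated value; the function name and the toy rows are hypothetical and do not reproduce the Breast Cancer data.

```python
def bidirectional_confidence(rows, cond, dec):
    """Return (mu, omega) for the rule cond -> dec.

    cond and dec are (attribute, value) pairs.
    mu    = |nodes with cond and dec| / |nodes with cond|
    omega = |nodes with cond and dec| / |nodes with dec|
    """
    c_attr, c_val = cond
    d_attr, d_val = dec
    n_cond = sum(1 for r in rows if r[c_attr] == c_val)
    n_dec = sum(1 for r in rows if r[d_attr] == d_val)
    n_both = sum(
        1 for r in rows if r[c_attr] == c_val and r[d_attr] == d_val
    )
    mu = n_both / n_cond if n_cond else 0.0
    omega = n_both / n_dec if n_dec else 0.0
    return mu, omega


# Five made-up nodes with a condition attribute f and a decision attribute j.
rows = [
    {"f": 1, "j": 1},
    {"f": 1, "j": 1},
    {"f": 1, "j": -1},
    {"f": 1, "j": 0},
    {"f": -1, "j": 1},
]
mu, omega = bidirectional_confidence(rows, cond=("f", 1), dec=("j", 1))
```

In this toy example four nodes have (f,1), three have (j,1), and two have both, so the two directions yield different confidence values.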

In the Breast Cancer and Acute Inflammations data sets, the rule with medium ω has the largest \(\mathfrak M_1\) and the smallest \(\mathfrak M_2\). That is, in its corresponding sub-network, the average influence of the nodes is relatively large, but the difference in influence is small. So, the rule satisfies medium rule homogeneity and higher structural homogeneity.

In the Iris data set, the rule with the largest ω has the largest \(\mathfrak M_1\) and \(\mathfrak M_2\). That is, in its corresponding sub-network, the average influence of the nodes is large, and the difference in influence is also large. So, the rule satisfies higher rule homogeneity and structural heterogeneity.

In the Seeds data set, the rule with medium ω has the largest \(\mathfrak M_1\) and a medium \(\mathfrak M_2\). So, the rule satisfies medium rule homogeneity and structural heterogeneity.

7.4 Comparison of the proposed algorithm BiR with some machine learning methods

In this subsection, we compare the proposed algorithm BiR (more precisely, the classifier induced by BiR) with the method in [25] and with some common machine learning methods: the Bayes net, Random forest, and Decision tree algorithms. The algorithm in [25] is a multi-scale rule extraction algorithm that applies when every attribute has multiple scales. The Bayes net algorithm uses a graphical model to describe the relationships in the data; it has clear semantics and is easy to understand. The Random forest algorithm trains multiple trees and combines their predictions. The Decision tree used here is pruned by reduced-error pruning. To evaluate the performance of the proposed algorithm, we selected two evaluation indicators: accuracy and AUC. The larger the values of these two indicators, the better the performance of the algorithm being evaluated. The detailed evaluation results are reported in Table 7, and the best results are shown in bold.
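For reference, the two evaluation indicators can be computed as in the sketch below. It is a generic, dependency-free illustration and not the authors' evaluation code: it assumes a binary task with positive label 1, computes AUC by pairwise ranking (ties count as one half), and uses made-up labels and scores.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def auc(y_true, scores, positive=1):
    """Area under the ROC curve via pairwise comparison of scores.

    Equals the probability that a random positive sample receives a
    higher score than a random negative one (ties count 0.5).
    """
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Made-up labels (1 / -1), scores, and thresholded predictions.
y_true = [1, 1, -1, -1]
scores = [0.9, 0.4, 0.6, 0.2]
y_pred = [1 if s > 0.5 else -1 for s in scores]

acc = accuracy(y_true, y_pred)
area = auc(y_true, scores)
```

The pairwise form is quadratic in the number of samples, which is acceptable for data sets of a few hundred rows such as those used here.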

As shown in Table 7 and Fig. 4, the AUC and accuracy values of BiR are greater than those of the compared algorithms on all data sets except the Breast Cancer Wisconsin data set. Its average accuracy is 91.50%, which is 4.79% higher than that of the second-ranked Bayes net and 8.52% higher than that of the last-ranked Decision tree. BiR also appears to have better overall performance and to be more stable than the other selected algorithms.

Specifically, we compare our algorithm with the one in [25]. The advantage of the method in [25] is that it is suitable for decision tables with multiple scales of conditional attributes and only one scale of decision attributes. However, its final rules have only one scale for each conditional attribute. For the comparison, we discretized each conditional attribute into three values at three different scales. Under this setting, our algorithm still outperforms the algorithm in [25].

Taken together, the comparison results indicate that bidirectional rule extraction improves the rule extraction ability of the proposed model.

8 Conclusion

The study of network formal contexts makes it possible to combine complex network analysis with formal concept analysis. In this paper, the network formal context of three-way decision (NFC3WD) has been used to obtain, from network data with three-way decisions, both the network weaken-concepts and their network characteristic values. Bidirectional rule extraction and reduction algorithms for the network weaken-concepts of three-way decision have been developed to demonstrate the effectiveness of the NFC3WD method.

In addition, some interesting problems need further investigation. For example, within the NFC3WD framework, it remains open how to learn the way and speed at which network weaken-concepts spread, how viewpoints influence one another under the network weaken-concepts, and how such viewpoints are finally formed.

References

Ganter B, Wille R (1999) Formal concept analysis: mathematical foundations. Springer, Berlin

Xu WH, Li JH, Wei L, Zhang T (2016) Formal concept analysis: theory and application. Science Press, Beijing

Kumar CA, Ishwarya MS, Loo CK (2015) Formal concept analysis approach to cognitive functionalities of bidirectional associative memory. Biol Inspired Cogn Archit 12:20–33

Mi YL, Li JH, Liu WQ, Lin J (2018) Research on granular concept cognitive learning system under Map Reduce framework. Chin J Electron 46(2):289–297

Zhao YX, Li JH, Liu WQ, Xu WH (2017) Cognitive concept learning from incomplete information. Int J Mach Learn Cybern 17(4):1–12

Li JH, Mei CL, Xu WH, Qian YH (2015) Concept learning via granular computing: a cognitive viewpoint. Inf Sci 298:447–467

Yao YY (2016) A triarchic theory of granular computing. Granul Comput 1(2):145–157

Yao YY (2010) Three-way decisions with probabilistic rough sets. Inf Sci 180(3):341–353

Yao YY (2011) The superiority of three-way decisions in probabilistic rough set models. Inf Sci 181(6):1080–1096

Yao YY (2012) An outline of a theory of three-way decisions. Lect Notes Comput Sci 7413:1–17

Yao YY (2016) Three-way decisions and cognitive computing. Cogn Comput 8:543–554

Yao YY (2018) Three-way decision and granular computing. Int J Approx Reason 103:107–123

Fujita H, Gaeta A, Loia V, Orciuoli F (2019) Resilience analysis of critical infrastructures: a cognitive approach based on granular computing. IEEE Trans Cybern 49(5):1835–1848

Zhi HL, Qi JJ (2022) Common-possible concept analysis: a granule description viewpoint. Appl Intell 52:2975–2986

Yang XP, Yao JT (2012) Modelling multi-agent three-way decisions with decision theoretic rough sets. Fundamenta Informaticae 115(2–3):157–171

Deng XF, Yao YY (2014) Decision-theoretic three-way approximations of fuzzy sets. Inf Sci 279:702–715

Zou L, Kang N, Che L, Liu X (2022) Linguistic-valued layered concept lattice and its rule extraction. Int J Mach Learn Cybern 13(2):83–98

Wang Z, Wei L, Qi JJ, Qian T (2020) Attribute reduction of SE-ISI concept lattices for incomplete contexts. Soft Comput 24:15143–15158

Qi JJ, Wei L, Yao YY (2014) Three-way formal concept analysis. Lect Notes Comput Sci 8818:732–741

Gaeta A, Loia V, Orciuoli F, Parente M (2021) Spatial and temporal reasoning with granular computing and three way formal concept analysis. Granul Comput 6(4):797–813

Ren RS, Wei L (2016) The attribute reductions of three-way concept lattices. Knowl-Based Syst 99:92–102

Li JH, Huang CC, Qi JJ, Qian YH, Liu WQ (2017) Three-way cognitive concept learning via multi-granularity. Inf Sci 378:244–263

Yao JT, Herbert JP (2007) Web-based support systems with rough set analysis. In: Proceedings of international conference on rough sets and intelligent systems paradigms. Springer, Berlin, pp 360–370

Yao YY (2021) The geometry of three-way decision. Appl Intell 51:6298–6325

Deng J, Zhan JM, Wu WZ (2021) A three-way decision methodology to multi-attribute decision-making in multi-scale decision information systems. Inf Sci 568:175–198

Hu ZY, Shao MW, Liu H, Mi JS (2021) Cognitive computing and rule extraction in generalized one-sided formal contexts. Cogn Comput. https://doi.org/10.1007/s12559-021-09868-z

Wu WZ, Qian Y, Li TJ, Gu SM (2017) On rule acquisition in incomplete multi-scale decision tables. Inf Sci 378:282–302

Simsek MU, Okay FY, Ozdemir S (2021) A deep learning-based CEP rule extraction framework for IoT data. J Supercomput 77:8563–8592

Liu L, Qian T, Wei L (2016) Rules extraction in formal decision contexts based on attribute-induced three-way concept lattices. Journal of Northwest University (Natural Science Edition) 46(4):481–487

Ren RS, Wei L, Qi JJ (2018) Rules acquisition on three-way weakly consistent formal decision contexts. Journal of Shandong University (Natural Science) 53(6):76–85

Wang YB, Yang SC (2018) Rules extraction in formal decision contexts based on the merging of three-way concept lattices. Journal of University of Electronic Science and Technology of China 47(6):913–920

Lin JR (2009) Social network analysis: theory method and application. Beijing Normal University Publishing House, Beijing

Guizani N, Elghariani A, Kobes J, Ghafoor A (2019) Effects of social network structure on epidemic disease spread dynamics with application to ad hoc networks. IEEE Netw 33(3):139–145

He L, Zhu LH (2021) Modeling the COVID-19 epidemic and awareness diffusion on multiplex networks. Commun Theor Phys 73(3):14–22

Barabasi AL, Oltvai ZN (2004) Network biology: understanding the cell’s functional organization. Nat Rev Genet 5(2):101–113

Barabasi AL (2009) Scale-free networks: a decade and beyond. Science 325(5939):412–413

Barabasi AL, Gulbahce N, Loscalzo J (2011) Network medicine: a network-based approach to human disease. Nat Rev Genet 12(1):56–68

Gysi DM, Valle ID, Zitnik M, Ameli A, Gan X, Varol O, Ghiassian SD, Patten JJ, Davey RA, Loscalzo J, Barabasi AL (2021) Network medicine framework for identifying drug-repurposing opportunities for covid-19. Proc Natl Acad Sci 118(19):e2025581118

Pinto ER, Nepomuceno EG, Campanharo ASLO (2020) Impact of network topology on the spread of infectious diseases. Trends Comput Appl Math 21(1):95–115

Salje H, Kiem CT, Lefrancq N, Courtejoie N, Bosetti P, Paireau J, Andronico A, Hoze N, Richet J, Dubost CL, Strat YL, Lessler J, Levy-Bruhl D, Fontanet A, Opatowski L, Boelle PY, Cauchemez S (2020) Estimating the burden of SARS-cov-2 in France. Science 369(6500):208–211

Nande A, Adlam B, Sheen J, Levy MZ, Hill AL (2021) Dynamics of COVID-19 under social distancing measures are driven by transmission network structure. PLoS Comput Biol 17(2):e1008684

Liu R, Zhong JY, Hong RH, Chen E, Aihara K, Chen P, Chen LN (2021) Predicting local COVID-19 outbreaks and infectious disease epidemics based on landscape network entropy. Science Bulletin 66(22):2265–2270

Gaeta A, Loia V, Orciuoli F (2021) A method based on graph theory and three way decisions to evaluate critical regions in epidemic diffusion. Appl Intell 51(5):2939–2955

Li SP, Zhao XR (2020) Network percolation of the disease transmission based on bipartite networks. Int J Mod Phys B 34(6):2050029

Sahu IK, Panda GK, Das SK (2019) Application of rough set theory in medical health care data analytics. Int J Adv Sci Technol 129:29–42

Snasel V, Horak Z, Abraham A (2008) Understanding social networks using formal concept analysis. In: Proceedings of the international conference on web intelligence and intelligent agent technology. IEEE Computer Society, Washington, DC, pp 390–393

Hao F, Park DS, Min JY, Jeong YS, Park JH (2016) K-cliques mining in dynamic social networks based on triadic formal concept analysis. Neurocomputing 209:57–66

Hao F, Min G, Pei Z, Park DS, Yang LT (2017) K-clique community detection in social networks based on formal concept analysis. IEEE Syst J 11(1):250–259

Peters JF, Ramanna S (2021) Proximal three-way decisions: theory and applications in social networks. Knowl-Based Syst 91:4–15

Ma N, Fan M, Li JH (2019) Concept-cognitive learning under complex network. Journal of Nanjing University (Natural Sciences) 55(4):609–623

Liu WX, Fan M, Li JH (2021) Research on community division method under network formal context. Journal of Frontiers of Computer Science and Technology 15(8):1441–1449

Yan MY, Li JH (2022) Knowledge discovery and updating under the evolution of network formal contexts based on three-way decision. Inf Sci 601:18–38

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 11971211 and 12171388).

Ethics declarations

Conflict of Interests

The authors declare that they have no conflict of interest.
