Abstract

Predicting the number of COVID-19 cases in a geographical area is important for the management of health resources and for decision making. Several methods have been proposed for COVID-19 case prediction, but their interpretability is limited: they do not explain COVID-19’s incubation period or the major trends of disease transmission. To explain prediction results in terms of the incubation period and transmission trends, this paper presents the Multivariate Shapelet Learning (MSL) model, which learns shapelets from historical observations in multiple areas. An experimental evaluation compares the prediction performance of eleven algorithms using data collected from 50 US provinces/states. Results show that the proposed method is effective and efficient. The learned shapelets explain increasing and decreasing trends of new confirmed cases, and reveal that the COVID-19 incubation period in the USA is around 28 days.

1 Introduction

The Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2) has caused a pandemic around the world and has become a global threat in recent months [1]. The disease it causes is known as Coronavirus Disease 2019 (COVID-19). By the end of September 2020, COVID-19 had caused 7,720,830 active cases and 1,013,992 deaths. China reported the outbreak in Wuhan city in late December 2019, and the World Health Organization (WHO) characterized COVID-19 as a pandemic on March 11, 2020.

Governments and authorities have been struggling to make critical decisions. COVID-19 prediction is a new research area and an important tool for anticipating future situations, informing decisions such as the allocation of medical supplies and the dispatch of medical staff. Prediction tools forecast the spread of the virus and support decisions on preventive medicine and healthcare intervention strategies.

Several methods have been proposed to predict the number of COVID-19 cases. These methods build a separate model for each area and ignore the interconnections among areas. Moreover, their interpretability is poor: they cannot reveal properties such as COVID-19’s incubation period [2] or its transmission trends.

Our goal is to develop a highly interpretable model [3] to predict upcoming COVID-19 cases in multiple areas. The model should tackle two issues: (1) determining COVID-19’s incubation period among geographically connected areas, such as the provinces/states of a country; (2) identifying the key transmission trends in multiple connected areas.

Inspired by the interpretability of shapelets [4], we exploit shapelets to represent key trends of the COVID-19 time series in multiple areas, and use the shapelet length to denote the length of the incubation period. Shapelets were introduced to enhance classifiers [5]: they are defined as discriminative subsequences of time series that belong to a common class. Over the past decade, shapelet studies have mainly focused on improving classifier accuracy and speed. Can shapelets serve as representative subsequences of several interrelated time series? Can they also predict the upcoming values of those time series?

We propose a model named “Multivariate Shapelet Learning (MSL)” to answer both questions efficiently. MSL consists of four procedures. (1) To reduce the search complexity, we adopt the one-step-ahead split to window the multivariate time series. A further benefit of this split is that it transforms time series data into supervised data, so that MSL can be trained. (2) We design a shapelet layer to store the shapelets. (3) To learn and keep shapelets in the shapelet layer, we use the softmin distance to measure the distance between a time series and a shapelet. (4) A linear layer connects the softmin distances to the model outputs. Good shapelets can therefore be found by minimizing the gap between the model outputs and the real observations.

The major contributions of this paper are summarized below.

-

(1)

The determination of COVID-19’s incubation period in geographically connected areas.

-

(2)

The identification of COVID-19 transmission trends in geographically connected areas. These trends are visualized by the learned shapelets, which demonstrates the high interpretability of MSL.

-

(3)

The MSL model, proposed to simultaneously predict upcoming new COVID-19 cases in multiple areas with better performance than the compared methods.

The rest of this paper is organized as follows. Section 2 reviews related work. Section 3 gives formulations and notations. Section 4 describes the proposed method. Section 5 describes the COVID-19 data, performance criteria and experimental configurations. Section 6 analyses the experimental results and visualizes the learned shapelets. Finally, Section 7 concludes the paper.

2 Related work

This section reviews recent studies related to this research.

2.1 COVID-19 prediction

Based on the nature of the methods, we categorize COVID-19 prediction methods into parsimonious methods and mathematical methods.

Parsimonious methods fit a linear or non-linear function to training data and have shown positive effects on early prediction of the pandemic [6]. [7] uses ARIMA models and polynomial functions to predict daily cumulative COVID-19 cases in 145 countries, tuning a separate model for each country. [8] uses ARIMA models to predict daily cumulative confirmed cases in three European countries. [9] uses ARIMA models to predict daily new confirmed cases over a 7-day period.

Mathematical methods model epidemic situations to enhance predictions. [10] applies mathematical models to describe the outbreak among passengers and crew members on the Diamond Princess cruise ship. [11] performs forward prediction and backward inference of the epidemic situation. [12] introduces a Composite Monte Carlo method, enhanced by deep learning and fuzzy rule induction, to predict daily new confirmed COVID-19 cases. [13] exploits an epidemic propagation model to predict daily cumulative confirmed cases in the five worst-affected states of India.

The above methods build a model for each time series. Hence, the interrelationships among these time series, such as transmission between geographical areas, are ignored. Moreover, neither parsimonious nor mathematical methods can interpret their models, e.g., determine incubation periods or identify the key trends of disease transmission. In this paper, an interpretable model based on shapelet learning is proposed to predict COVID-19 cases in several interconnected areas.

2.2 Shapelet learning

The concept of shapelets was first introduced in [4] for data mining. Its original definition is “subsequences that are in some sense maximally representative of a class”. Shapelets are highly interpretable and provide good explanations, but finding good shapelets efficiently is still a challenge.

Depending on how shapelets are obtained, existing methods fall into two categories: (1) shapelet mining, which optimizes the search for the optimal time series segment, e.g., brute-force search [14] and tree-based pruning [15]; (2) shapelet learning, which learns several shapelets by optimizing a classification loss function, e.g., LTS [5] and FastLTS [16]. As a key component of shapelet learning, the distance measurement between a shapelet and a segment has been studied as well [17]. These methods are developed for time series classification: their goal is to learn discriminative shapelets from the input time series of each class [18].
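To make the segment-based distance concrete, the following plain-Python sketch computes the classic subsequence distance used in shapelet mining: the minimum, over all contiguous segments with the shapelet's length, of the mean squared difference to the shapelet. It illustrates the general idea only; the function name `shapelet_distance` is hypothetical, and the exact distance used in [4], [5] or [17] may differ (for example, a z-normalized Euclidean distance).

```python
from typing import Sequence


def shapelet_distance(series: Sequence[float], shapelet: Sequence[float]) -> float:
    """Minimum mean squared difference between a shapelet and any
    equally long contiguous segment of a series (sliding-window search)."""
    L = len(shapelet)
    if L == 0 or L > len(series):
        raise ValueError("shapelet must be non-empty and no longer than the series")
    best = float("inf")
    # Slide the shapelet over every start position j = 0 .. len(series) - L.
    for j in range(len(series) - L + 1):
        d = sum((series[j + t] - shapelet[t]) ** 2 for t in range(L)) / L
        best = min(best, d)
    return best


# The shapelet [2, 3] matches the segment starting at index 1 exactly.
print(shapelet_distance([1.0, 2.0, 3.0, 2.0], [2.0, 3.0]))  # 0.0
```

For a series of length n and a shapelet of length L, one call costs O((n - L + 1) L) operations, and the cost grows further with the number of candidate shapelets. This sliding search is the burden that a fixed windowing of the inputs can avoid.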

In contrast, we learn representative shapelets from multiple time series and generate predictions for each time series, i.e., a regression task. These shapelets are likely to resemble segments that are similar or common across the series. We adopt the shapelet concept to describe the key data points of the observed time series. Meanwhile, we use the one-step-ahead split method to segment multiple time series; based on this split, the shapelets are learned with linear complexity.

3 Formulations and notations

This section gives formulations and notations. The main symbols used are listed in Table 1.

Multivariate time series (MTS)

We adopt an MTS to describe the observed daily new confirmed cases in multiple provinces/states of the USA. The symbol \(\boldsymbol {Z} \in \mathbb {R}^{I \times K}\) denotes the cases of I areas over K consecutive days.

Look-back window

A look-back window of size T is an ordered subsequence of an MTS and is used to observe the cases over a certain period. We use the symbol \(\boldsymbol {Z}_{:, t+1:t+T} \in \mathbb {R}^{I \times T}\) to denote a look-back window.

Shapelet

A shapelet of size T is an ordered sequence of values and is employed to represent the key data points of a series. To represent the key data points of an MTS, we need several candidate shapelets, denoted by \(\boldsymbol {S} \in \mathbb {R}^{C \times T}\), where C is the number of shapelets.

Distances between shapelets and MTS

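Distance measurement is a critical step in learning shapelets from an MTS. For a look-back window \(\boldsymbol {Z}_{:, t+1:t+T}\), the distance between a time series and a shapelet is defined as:

$$ \begin{aligned} D_{i,c} = \frac{1}{T} {\sum}_{\tau=1}^{T} \left(\boldsymbol{Z}_{i,t+\tau} - \boldsymbol{S}_{c,\tau}\right)^{2}, \end{aligned} $$ (1)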

where \(D_{i,c}\) is the distance between the i-th time series and the c-th shapelet, and \(\boldsymbol {D} \in \mathbb {R}^{I \times C}\) collects the distances between the candidate shapelets \(\boldsymbol {S}\) and the observations \(\boldsymbol {Z}\).

MTS prediction problem

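Typically, the MTS prediction problem is formulated as:

$$ \begin{aligned} \hat{\boldsymbol{Z}}_{:,T+1} = F\left(\boldsymbol{Z}_{:,1:T}\right), \end{aligned} $$ (2)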

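where \(\hat {\boldsymbol {Z}}_{:,T+1}\in \mathbb {R}^{I}\) denotes the predicted values of the I areas on the next day, \(\boldsymbol {Z}_{:,1:T} = [\boldsymbol {Z}_{:,1}, \boldsymbol {Z}_{:,2}, \cdots, \boldsymbol {Z}_{:,T}]\) denotes the observations over a look-back window of size T, and \(F(\cdot)\) is the mapping function.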

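The problem of MTS prediction via shapelet learning is formulated as:

$$ \begin{aligned} \hat{\boldsymbol{Z}}_{:,T+1} = F\left(\boldsymbol{Z}_{:,1:T}, \boldsymbol{S}\right), \end{aligned} $$ (3)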

where \(\boldsymbol {S} \in \mathbb {R}^{C \times T}\) denotes the learned shapelets.

4 The MSL model

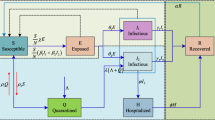

This section describes the proposed MSL, whose overall diagram is shown in Fig. 1.

First, the MTS data are normalized (see the upper left part of Fig. 1). Because the ranges of confirmed cases differ significantly across regions, we normalize the data into [0, 1]. The normalized data also speed up model training.

Second, the MTS data are transformed into supervised data (see the upper left part of Fig. 1). Because MTS data cannot be fed directly into a model, we use the one-step-ahead split to convert them into supervised data, which are then used to train the models.

Third, shapelets are learned (see the light-blue shaded parts of Fig. 1). This stage has two tasks: (1) obtaining key data points, i.e., shapelets; (2) accurately predicting future values. For the first task, we design a shapelet layer, a distance layer, and a softmin layer to learn parameters that are close or similar to important inputs. For the second task, we add a linear layer that receives the softmin (minimum) distances and generates predictions.

Finally, the model outputs are de-normalized into predicted values (see the upper right part of Fig. 1). This step is needed because the model is trained on normalized data.

The pseudo code for training MSL is shown in Algorithm 1.

4.1 Normalization and time series transformation

Min-Max normalization

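The Min-Max normalization is chosen to compress all the variables into the range [0,1]. The normalization formula and its de-normalization formula are as follows:

$$ \begin{aligned} \boldsymbol{d}^{\prime} = \frac{\boldsymbol{d} - \min(\boldsymbol{d})}{\max(\boldsymbol{d}) - \min(\boldsymbol{d})}, \end{aligned} $$ (4)

$$ \begin{aligned} \boldsymbol{d} = \boldsymbol{d}^{\prime} \left(\max(\boldsymbol{d}) - \min(\boldsymbol{d})\right) + \min(\boldsymbol{d}), \end{aligned} $$ (5)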

where \(\boldsymbol {d} \in \mathbb {R}^{K}\) denotes a vector of all the observed samples, M is the number of observed samples, \(\boldsymbol {d}^{\prime } \in \mathbb {R}^{K}\) is the normalized data, \(\max \limits (\boldsymbol {d})\) is the maximum value of d, and \(\min \limits (\boldsymbol {d})\) is the minimum value of d. The de-normalization formula is applied for outputs of models in the post-processing stage.
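A minimal sketch of the Min-Max normalization in (4) and its inverse, applied to the observations of one area in plain Python. The function names are hypothetical, and mapping a constant series to 0 is an added assumption to avoid division by zero.

```python
from typing import List, Sequence, Tuple


def min_max_normalize(d: Sequence[float]) -> Tuple[List[float], float, float]:
    """Scale one area's observations into [0, 1], as in (4).

    Also returns min(d) and max(d), which are needed later to
    de-normalize the model outputs.
    """
    lo, hi = min(d), max(d)
    span = hi - lo
    if span == 0:
        # Assumption: a constant series is mapped to 0 to avoid dividing by zero.
        return [0.0 for _ in d], lo, hi
    return [(x - lo) / span for x in d], lo, hi


def min_max_denormalize(d_norm: Sequence[float], lo: float, hi: float) -> List[float]:
    """Map values in [0, 1] back to the original scale (inverse of (4))."""
    return [x * (hi - lo) + lo for x in d_norm]


norm, lo, hi = min_max_normalize([10.0, 30.0, 20.0, 50.0])
print(norm)                                # [0.0, 0.5, 0.25, 1.0]
print(min_max_denormalize(norm, lo, hi))   # [10.0, 30.0, 20.0, 50.0]
```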

One-step-ahead split

Given an MTS Z with K consecutive time intervals, the one-step-ahead split is formulated as:

where the left part gives the inputs of the model, a.k.a. the windowed MTS, and the right part gives the outputs of the model. For lucid presentation, let \(\boldsymbol {\mathcal {X}} \in \mathbb {R}^{(K-T-1) \times I \times T}\) and \(\boldsymbol {\mathcal {Y}} \in \mathbb {R}^{(K-T-1) \times 1 \times T}\) denote inputs and outputs, respectively. Each sample in \((\boldsymbol {\mathcal {X}}, \boldsymbol {\mathcal {Y}})\) is denoted by \((\boldsymbol {X} \in \mathbb {R}^{I \times T}, \boldsymbol {Y} \in \mathbb {R}^{1 \times T})\).
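The split equation itself is not reproduced in this text, so the following is only a minimal sketch of a one-step-ahead split. It assumes that each input is an I x T look-back window and that its target is the next-day value of every area, matching \(\hat {\boldsymbol {Z}}_{:,T+1} \in \mathbb {R}^{I}\) in Section 3. The function name `one_step_ahead_split` is hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]  # rows are areas, columns are time steps


def one_step_ahead_split(Z: Sequence[Sequence[float]], T: int) -> Tuple[List[Matrix], List[List[float]]]:
    """Turn an I x K multivariate series into supervised (X, y) pairs.

    Each input X is the I x T window Z[:, k:k+T]; its target y is the
    value of every area on the following day, Z[:, k+T].
    """
    K = len(Z[0])
    if not 0 < T < K:
        raise ValueError("window size T must satisfy 0 < T < K")
    inputs, targets = [], []
    for k in range(K - T):  # K - T windows under this 0-based convention
        inputs.append([list(row[k:k + T]) for row in Z])
        targets.append([row[k + T] for row in Z])
    return inputs, targets


X, y = one_step_ahead_split([[1, 2, 3, 4], [5, 6, 7, 8]], T=2)
print(X)  # [[[1, 2], [5, 6]], [[2, 3], [6, 7]]]
print(y)  # [[3, 7], [4, 8]]
```

Under this convention, a series of K days yields K - T pairs, which differs by one from the K - T - 1 stated above; the exact count depends on the indexing convention.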

4.2 Shapelet learning and prediction generation

Shapelet layer

The shapelet layer receives the input X, and passes the values of shapelets to the subsequent distance layer.

Let C denote the number of shapelets. The shapelets can then be denoted by \(S \in \mathbb {R}^{C \times T}\). From the data structure perspective, a shapelet of length T is an ordered sequence of values [5].

The shapelets are parameters designed to approximate the key data points of the input data, which helps predict future values. Approximating shapelets from historical observations consists of two steps. First, we calculate the distances between all input windowed time series and the shapelets. Second, a softmin layer determines the minimum distance.

Distance layer

This layer receives the inputs and the shapelets from the prior layers. To find the minimum distance between inputs and shapelets, the distances between them must first be calculated.

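The distances between inputs and shapelets are formulated as:

$$ \begin{aligned} D_{i,c} = \frac{1}{T} {\sum}_{t=1}^{T} \left(\boldsymbol{X}_{i,t} - \boldsymbol{S}_{c,t}\right)^{2}, \end{aligned} $$ (7)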

where \(D_{i,c}\) denotes the distance between the i-th windowed time series and the c-th shapelet, \(\boldsymbol {X}_{i,t}\) is the COVID-19 case value in the i-th area at the t-th input time step, and \(\boldsymbol {S}_{c,t}\) is the value of the c-th shapelet at the t-th time step.

Other distance measures, such as the Euclidean distance, could also be used to calculate the distances between inputs and shapelets. We choose the mean squared error (MSE) because the loss function of MSL is also MSE.
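A minimal sketch of the distance layer, assuming the MSE distance described above and plain Python lists (an I x T input window and C x T shapelets). The function name `distance_layer` is hypothetical.

```python
from typing import List, Sequence


def distance_layer(X: Sequence[Sequence[float]], S: Sequence[Sequence[float]]) -> List[List[float]]:
    """Return the I x C matrix D with D[i][c] = mean_t (X[i][t] - S[c][t]) ** 2.

    X is one windowed input (I areas x T steps); S holds C shapelets of length T.
    """
    T = len(S[0])
    if any(len(row) != T for row in X) or any(len(s) != T for s in S):
        raise ValueError("inputs and shapelets must share the window length T")
    return [[sum((x[t] - s[t]) ** 2 for t in range(T)) / T for s in S] for x in X]


print(distance_layer([[0.0, 0.0], [1.0, 1.0]], [[0.0, 0.0], [1.0, 0.0]]))
# [[0.0, 0.5], [1.0, 0.5]]
```

Because the input window and the shapelets have the same length T, each distance is a single O(T) comparison rather than a sliding search.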

Softmin layer

This layer receives the distances, applies the softmin function to them, and delivers the softmin distances to the subsequent linear layer.

The minimum distance identifies the target shapelet, i.e., the shapelet closest (most similar) to the inputs. We adopt the softmin function, a smooth approximation of selecting the closest shapelet, and feed its output into a linear layer to generate predictions. The softmin distance is defined as:

where α is a constant parameter, and \(M_{i,c}\) is the softmin distance. α should be negative, since a large positive value would cause numerical overflow. Based on our experience, we set α to -10.
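The softmin formula itself is not reproduced in this text. As an assumption, the following sketch uses the normalized-exponential form \(M_{i,c} = e^{\alpha D_{i,c}} / \sum_{c'} e^{\alpha D_{i,c'}}\) over the C shapelets, which with α = -10 gives the largest weight to the closest shapelet. It may differ from the authors' exact formula; the function name `softmin_layer` is hypothetical.

```python
import math
from typing import List, Sequence


def softmin_layer(D: Sequence[Sequence[float]], alpha: float = -10.0) -> List[List[float]]:
    """ASSUMED softmin: M[i][c] = exp(alpha * D[i][c]) / sum_c' exp(alpha * D[i][c']).

    With alpha < 0, the shapelet with the smallest distance gets the largest
    weight, and each row of M sums to 1.
    """
    M = []
    for row in D:
        z = [alpha * d for d in row]
        z_max = max(z)  # subtract the row maximum before exponentiating (stability)
        e = [math.exp(v - z_max) for v in z]
        total = sum(e)
        M.append([v / total for v in e])
    return M


M = softmin_layer([[0.0, 0.5], [0.2, 0.2]])
print([round(v, 4) for v in M[0]])  # [0.9933, 0.0067]
print(M[1])                         # [0.5, 0.5]
```

Since distances are non-negative and α < 0, each term exp(αD) is at most 1, so this form cannot overflow; subtracting the row maximum additionally keeps at least one term equal to 1, so the normalizing sum cannot underflow to zero.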

Linear layer

This layer linearly combines the softmin distances, and generates values for post-processing. The combination of the softmin distances is formulated as follows:

where \(\hat {\boldsymbol {P}} \in \mathbb {R}^{1 \times T}\) denotes the model outputs, and \(\boldsymbol {W} \in \mathbb {R}^{C}\) denotes the shared weights assigned to the received softmin distances.
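The four layers can be chained into a forward pass. The sketch below is a hypothetical plain-Python illustration, not the authors' implementation: it assumes the softmin normalizes exp(α D) over the C shapelets, that the linear layer has no bias term, and that it outputs one next-day value per area (the \(\hat {\boldsymbol {Z}}_{:,T+1} \in \mathbb {R}^{I}\) of Section 3). The names `msl_forward` and `mse_loss` are illustrative.

```python
import math
from typing import List, Sequence


def msl_forward(X: Sequence[Sequence[float]], S: Sequence[Sequence[float]],
                W: Sequence[float], alpha: float = -10.0) -> List[float]:
    """Hypothetical MSL forward pass for one normalized I x T window X.

    S holds C shapelets of length T; W holds C linear weights shared by all
    areas. Returns one prediction per area (I values).
    """
    T = len(S[0])
    preds = []
    for x in X:
        # Distance layer: mean squared error between the window and each shapelet.
        D = [sum((x[t] - s[t]) ** 2 for t in range(T)) / T for s in S]
        # Softmin layer (assumed normalized-exponential form over the C shapelets).
        z = [alpha * d for d in D]
        z_max = max(z)
        e = [math.exp(v - z_max) for v in z]
        total = sum(e)
        M = [v / total for v in e]
        # Linear layer: weighted sum of the softmin outputs with shared weights W.
        preds.append(sum(w * m for w, m in zip(W, M)))
    return preds


def mse_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """MSE between predictions and the normalized next-day observations."""
    return sum((p - y) ** 2 for p, y in zip(pred, target)) / len(pred)


preds = msl_forward([[0.0, 0.0], [1.0, 1.0]], S=[[0.0, 0.0], [1.0, 1.0]], W=[0.2, 0.8])
print([round(p, 3) for p in preds])  # [0.2, 0.8]
```

Each area's window is matched almost entirely to its closest shapelet, so its prediction is close to that shapelet's weight. Training would adjust S and W to minimize `mse_loss`, e.g., with Adam as in Section 5.4; the optimizer is omitted here.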

5 Experimental configuration

This section describes the data collection, evaluation metrics, comparison methods and experimental configurations.

5.1 Data collection

The COVID-19 data are publicly available and updated daily on GitHub (Footnote 1). The basic statistics of COVID-19 cases in the US provinces/states are listed in Table 2.

The collected data cover the period from January 22, 2020 to September 17, 2020. On some days, tens of thousands of new COVID-19 infections were confirmed. US territories outside the 50 provinces/states are excluded, and the confirmed cases in the 50 provinces/states are counted daily. We organize the statistics into two groups, cumulative confirmed cases and new confirmed cases, each consisting of the minimum value, maximum value, mean value and standard deviation.

The outbreaks in California, Florida and Texas are the most serious in the USA, and in recent days these states have commonly reported more than 10,000 new confirmed cases. By contrast, the situations in Vermont, Wyoming, Maine and Alaska are moderate: each has fewer than 7,000 infected persons in total.

STD denotes the standard deviation, which measures the dispersion of a set of data points. Regarding the STD of new confirmed cases in each province/state, (1) the values for California, Florida and Texas all exceed 200,000, indicating serious outbreaks; (2) by contrast, the values for Vermont, Wyoming, Maine and Alaska indicate relatively stable situations.

Regarding the maximum values of new confirmed cases, (1) California, Florida and Texas all reach about 1500, indicating a persistent threat; (2) Vermont and Maine both stay below 100, indicating that their situations are under control. The main reason for these differences is that the first group of states has large populations; government control measures and policies are another potential reason.

This paper aims to predict upcoming new COVID-19 cases for these 50 provinces/states. Moreover, COVID-19’s incubation period in the US and its major transmission trends should be learned from these observations. To create a highly interpretable model, we learn core shapelets from past observations.

5.2 Metrics

Many evaluation metrics can be applied to measure the performance of MTS prediction. For a fair comparison, we follow the metrics in [20,21,22]. The three metrics are formulated as follows:

-

Relative Absolute Error (RAE):

$$ \begin{aligned} RAE = \frac{{\sum}_{(i,t)\in {\varOmega}_{Test}}{| \boldsymbol{Z}_{i,t} - \hat{\boldsymbol{Z}}_{i,t} |}} {{\sum}_{(i,t)\in {\varOmega}_{Test}}{|\boldsymbol{Z}_{i,t} - mean(\boldsymbol{Z}_{i,:}) |}}, \end{aligned} $$ (10)

-

Relative Squared Error (RSE):

$$ \begin{aligned} RSE = \frac{\sqrt{{\sum}_{(i,t)\in {\varOmega}_{Test}}{(\boldsymbol{Z}_{i,t} - \hat{\boldsymbol{Z}}_{i,t})^{2}}}} {\sqrt{{\sum}_{(i,t)\in {\varOmega}_{Test}}{(\boldsymbol{Z}_{i,t} - mean(\boldsymbol{Z}_{i,:}) )^{2}}}}, \end{aligned} $$ (11)

-

Empirical Correlation Coefficient (CORR):

$$ \begin{aligned} &CORR = \\ & \frac{1}{I} {\sum}_{i=1}^{I} \frac{{\sum}_{t}{(\boldsymbol{Z}_{i,t} - mean(\boldsymbol{Z}_{i,:}))(\hat{\boldsymbol{Z}}_{i,t} - mean(\hat{\boldsymbol{Z}}_{i,:}))}}{\sqrt{{\sum}_{t}{(\boldsymbol{Z}_{i,t} - mean(\boldsymbol{Z}_{i,:}))^{2}} {\sum}_{t}{(\hat{\boldsymbol{Z}}_{i,t} - mean(\hat{\boldsymbol{Z}}_{i,:}))^{2}}}}, \end{aligned} $$ (12)

where \(\boldsymbol {Z}, \hat {\boldsymbol {Z}} \in \mathbb {R}^{I \times N}\) are the ground-truth values and the model predictions in the MTS task, respectively. I is the number of areas, N is the number of time steps in the testing set, and \({\varOmega}_{Test}\) is the set of time stamps used for testing.

RAE is a normalized version of the mean absolute error (MAE), and RSE is a normalized version of the root mean squared error (RMSE). Hence, neither RAE nor RSE is sensitive to the data scale. For RAE and RSE, lower values indicate better performance, whereas for CORR, higher values are better. RAE and RSE measure prediction accuracy, while CORR measures the similarity between the predicted and observed trends.
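The following plain-Python sketch computes the three metrics. It assumes the usual definitions of these normalized errors: no square root in RAE, ground-truth per-area means in the RAE and RSE denominators, and, for CORR, a per-area Pearson correlation averaged over the I areas. The function name `evaluate` is hypothetical.

```python
import math
from typing import Sequence, Tuple


def evaluate(Z: Sequence[Sequence[float]], Z_hat: Sequence[Sequence[float]]) -> Tuple[float, float, float]:
    """Return (RAE, RSE, CORR) for I x N ground truth Z and predictions Z_hat.

    Assumes no area's ground truth or prediction is constant over the test
    period (otherwise a CORR denominator would be zero).
    """
    abs_err = abs_dev = sq_err = sq_dev = 0.0
    corrs = []
    for z, zh in zip(Z, Z_hat):
        mz, mzh = sum(z) / len(z), sum(zh) / len(zh)
        abs_err += sum(abs(a - b) for a, b in zip(z, zh))
        abs_dev += sum(abs(a - mz) for a in z)
        sq_err += sum((a - b) ** 2 for a, b in zip(z, zh))
        sq_dev += sum((a - mz) ** 2 for a in z)
        # Pearson correlation of area i: a single sum of products in the numerator.
        cov = sum((a - mz) * (b - mzh) for a, b in zip(z, zh))
        var = sum((a - mz) ** 2 for a in z) * sum((b - mzh) ** 2 for b in zh)
        corrs.append(cov / math.sqrt(var))
    rae = abs_err / abs_dev
    rse = math.sqrt(sq_err) / math.sqrt(sq_dev)
    corr = sum(corrs) / len(corrs)
    return rae, rse, corr


# Predictions that follow the trend but are shifted up by one case.
print(evaluate([[1.0, 2.0, 3.0]], [[2.0, 3.0, 4.0]]))
```

For this shifted prediction, CORR is 1.0 while RAE is 1.5 and RSE is about 1.22, illustrating that CORR rewards matching trends whereas RAE and RSE penalize the constant offset.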

5.3 Methods for comparison

The proposed model is compared with following methods:

-

GAR combines an autoregressive component with a log-linear component, and allows the use of global features to compensate for the lack of data.

-

AR is a statistical method for processing time series and a kind of linear predictive model. Its advantage is that it needs little data and predicts a variable from that variable's own past values.

-

VAR [23] is a generalization of AR that maps the past observations of all variables to the future value of each variable. MA and ARMA can also be transformed into VAR under certain conditions.

-

LSTM [24] is a kind of recurrent neural network, which is composed of a cell, an input gate, an output gate and a forget gate. The number of hidden neurons is tuned to optimize the model.

-

GRU [25] is a variant of LSTM that merges the forget and input gates into a single update gate, adds a reset gate, and merges the cell state into the hidden state. The number of hidden neurons of GRU is adjusted to control the scale of the network.

-

Encoder-decoder (ED) [26] is a widely used end-to-end framework in deep learning, which uses one RNN for the encoding process and another for the decoding process.

-

LSTNet [21] contains a convolutional layer [27] to extract the local dependency patterns, a recurrent layer to capture long-term dependency patterns, and a recurrent-skip layer to capture periodic properties in the input data for prediction.

GAR, AR and VAR are traditional baseline methods. LSTM, GRU and ED are RNN-based methods designed for time series or sequential data. LSTNet is a state-of-the-art method based on deep neural networks and designed for MTS data.

5.4 Configurations

All models are trained using the Adam optimizer [19]. The mean squared error (MSE) is chosen as the loss function of all the models. The batch size is set to 32. For RNN, LSTM, ED and LSTNet, the number of hidden neurons is in {32, 64}. Their learning rates are set to 0.001.

The COVID-19 case data are divided into two subsets: the first part, from January 22, 2020 to July 31, 2020, is used to build and train models; the remaining part, from August 1, 2020 to September 17, 2020, is used to assess the learned models. The ratio of the training set to the test set is 8 : 2.
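The 8 : 2 ratio can be checked with a short calculation using Python's standard `datetime` module, assuming both end dates of each period are counted:

```python
from datetime import date

# Count days inclusively: subtracting two dates excludes the end date, so add 1.
train_days = (date(2020, 7, 31) - date(2020, 1, 22)).days + 1
test_days = (date(2020, 9, 17) - date(2020, 8, 1)).days + 1

print(train_days, test_days)                        # 192 48
print(train_days / (train_days + test_days))        # 0.8
```

This gives 192 training days and 48 test days, i.e., exactly 80% of the 240 days are used for training.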

6 Experimental results and analyses

This section reports experiments on the parameters of the proposed MSL and compares MSL with other methods. These experiments are intended to address the following questions:

-

(1)

How do T and C affect predictions? Specifically, how do the input data and the learned shapelets affect predictions?

-

(2)

Can MSL outperform other comparable methods?

-

(3)

Finally, what insights can be drawn from the learned shapelets?

6.1 Effects of C and T

To study how the shapelets and windowed time series affect shapelet learning and predictions, we measure the performance in terms of RAE, RSE and CORR, varying C or T while holding the other parameters fixed. Because the number of combinations of C and T is large, we search them efficiently: C is first set to a small random value, and T is then tuned to obtain the best prediction performance. T can also be tuned using one of the comparison methods; in these experiments, the comparison methods perform best when T = 28.

The effects of C are plotted in Fig. 2. As Figs. 2a and 2b show, when we hold the window size at T = 28 and vary the number of shapelets C from 2 to 7 in steps of 1, the optimal values of both RAE and RSE are found at C = 3. As Fig. 2c reveals, the optimal value of CORR is found at C = 2. A small C yields few learned shapelets, and these few shapelets give better prediction performance, which suggests that disease outbreaks exhibit only a few distinctive features. Indeed, the trends of COVID-19 outbreaks in the provinces/states of the USA are similar.

The effects of T are plotted in Fig. 3. As presented in Figs. 3a, 3b and 3c, the optimal values of these metrics are found at T = 28, marked by the red dashed lines. Compared with quick-onset diseases such as HFMD [28, 29] and infectious diarrhea [2], the optimal window size T for COVID-19 is larger. A possible reason is that COVID-19 has a longer incubation period than these quick-onset diseases, or that it can spread to other persons during the incubation period.

6.2 Method comparison

The experiments on the number of shapelets C and the window size T show that the optimal values of the three metrics are found at around C = 3 and T = 28. Note that the optimal value of CORR is found at C = 2 when T = 28. CORR measures differences in direction between two vectors, so it can also be used to measure the trend differences between real and predicted confirmed cases. For a fair comparison, the input window size T of all methods is set to 28, and the parameter C of MSL is set to 3.

As presented in Fig. 4, all the comparison methods are well tuned, and their performance is measured in terms of RAE, RSE and CORR.

The following summarizes the key conclusions we observe from the results:

-

(1)

The proposed MSL outperforms the other methods in terms of all three metrics.

-

(2)

The GAR has the second best performance in terms of RAE and CORR.

-

(3)

The AR has the second best performance in terms of RSE.

-

(4)

In terms of RAE, LSTM performs worse than the other methods.

-

(5)

In terms of RSE, VAR and LSTM have the worst and second-worst performance, respectively.

-

(6)

In terms of CORR, ED has the worst performance.

GAR linearly transforms inputs to targets and shares common weights across all input variables (i.e., areas). AR linearly transforms the inputs of an area to the target of that area, and its weights are not shared across areas. GAR has the second-best performance in terms of RAE and CORR, and AR has the second-best performance in terms of RSE. This suggests that, to some extent, new confirmed cases in the US grow linearly.

VAR linearly connects all inputs to all targets, mapping each target from all the inputs. However, it performs worst in terms of RSE, and the RNN models (LSTM, GRU and ED) also perform poorly. This suggests that the disease statuses of any two states are relatively independent.

MSL globally considers important subsequences of time series data. It learns core shapelets from the inputs and generates predictions based on the learned shapelets. We therefore analyze the learned shapelets to understand where the improvements come from.

6.3 Analyses on shapelets

Fig. 5 visualizes the three learned shapelets. Since the best prediction performance is found with C = 3 shapelets and window size T = 28, we obtain three shapelets, each of length 28. To ease comparison and interpretation, the shapelets are mapped to the range [0,1] using the Min-Max normalization presented in (4).

The major observations from these learned shapelets are as follows:

-

(1)

The periods in the three shapelets are around seven days long. This indicates that human activities in the USA have a strong weekly periodic connection to the disease.

-

(2)

In the periods shown in Figs. 5a and 5c, new confirmed cases decrease for two days and then increase strongly over the following five days.

-

(3)

According to the slopes of the trend lines, the growth rate is larger than the descent rate. This suggests that infection among people is intensifying.

These observations indicate that new cases in some areas (e.g., California) are still increasing quickly, while others are slowly decreasing. MSL learns these trends and then generates predictions based on them. The observations also suggest that future prediction models should consider periodic events, such as weekdays and weekends.

7 Conclusions

This paper investigated the simultaneous prediction of upcoming new confirmed COVID-19 cases in 50 provinces/states of the USA. We proposed MSL to generate predictions via shapelet learning. Experimental results on real data show the effectiveness of the proposed method. Meanwhile, the experimental analyses show that the COVID-19 incubation period is around 28 days. Moreover, the three learned shapelets depict the increasing and decreasing trends of the disease.

In the future, multi-horizon COVID-19 prediction will be investigated, which would provide further insights for disease prevention and control.

References

WHO (2020) Coronavirus disease (COVID-19) outbreak situation. https://www.who.int/emergencies/diseases/novel-coronavirus-2019 (accessed 2020)

Wang Z, Huang Y, He B, Luo T, Wang Y, Fu Y (2020) Short-term infectious diarrhea prediction using weather and search data in Xiamen, China. Sci Program 2020:1–12. https://doi.org/10.1155/2020/8814222

Assaf R, Schumann A (2019) Explainable deep neural networks for multivariate time series predictions. In: Proceedings of the 28th international joint conference on artificial intelligence, IJCAI Organization, Macao, China, pp 6488–6490

Ye L, Keogh EJ (2009) Time series shapelets: a new primitive for data mining. In: Proceedings of the 15th international conference on knowledge discovery and data mining, ACM, Paris, France, pp 947–956

Grabocka J, Schilling N, Wistuba M, Schmidt-Thieme L (2014) Learning time-series shapelets. In: Proceedings of the 20th international conference on knowledge discovery and data mining, ACM, New York, US, pp 392–401

Bertozzi AL, Franco E, Mohler G, Short MB, Sledge D (2020) The challenges of modeling and forecasting the spread of COVID-19. Proceedings of the National Academy of Sciences 117(29):16732–16738. https://doi.org/10.1073/pnas.2006520117

Hernandez-Matamoros A, Fujita H, Hayashi T, Perez-Meana H (2020) Forecasting of COVID19 per regions using ARIMA models and polynomial functions. Appl Soft Comput 96:106610. https://doi.org/10.1016/j.asoc.2020.106610

Ceylan Z (2020) Estimation of COVID-19 prevalence in Italy, Spain, and France. Science of The Total Environment 729:138817. https://doi.org/10.1016/j.scitotenv.2020.138817

Singh RK, Rani M, Bhagavathula AS, Sah R, Rodriguez-Morales AJ, Kalita H, Nanda C, Sharma S, Sharma YD, Rabaan AA, Rahmani J, Kumar P (2020) Prediction of the COVID-19 pandemic for the top 15 affected countries: Advanced autoregressive integrated moving average (ARIMA) model. JMIR Public Health Surveill 6(2):e19115. https://doi.org/10.2196/19115

Mizumoto K, Chowell G (2020) Transmission potential of the novel coronavirus (COVID-19) onboard the Diamond Princess Cruises Ship, 2020. Infectious Disease Modelling 5:264–270. https://doi.org/10.1016/j.idm.2020.02.003

Li L, Yang Z, Dang Z, Meng C, Huang J, Meng H, Wang D, Chen G, Zhang J, Peng H, Shao Y (2020) Propagation analysis and prediction of the COVID-19. Infectious Disease Modelling 5:282–292. https://doi.org/10.1016/j.idm.2020.03.002

Fong SJ, Li G, Dey N, Crespo RG, Herrera-Viedma E (2020) Composite Monte Carlo decision making under high uncertainty of novel coronavirus epidemic using hybridized deep learning and fuzzy rule induction. Appl Soft Comput 93:106282. https://doi.org/10.1016/j.asoc.2020.106282

Farooq J, Bazaz MA (2020) A deep learning algorithm for modeling and forecasting of COVID-19 in five worst affected states of India. Alexandria Engineering Journal. https://doi.org/10.1016/j.aej.2020.09.037

Keogh EJ, Rakthanmanon T (2013) Fast shapelets: A scalable algorithm for discovering time series shapelets. In: Proceedings of the 13th SIAM international conference on data mining, SIAM, Austin, Texas, USA, pp 668–676

Li G, Yan W, Wu Z (2019) Discovering shapelets with key points in time series classification. Expert Syst Appl 132:76–86. https://doi.org/10.1016/j.eswa.2019.04.062

Hou L, Kwok JT, Zurada JM (2016) Efficient learning of timeseries shapelets. In: Proceedings of the 30th international conference on artificial intelligence, AAAI Press, Phoenix, Arizona, USA, pp 1209–1215

Deng H, Chen W, Shen Q, Ma AJ, Yuen PC, Feng G (2020) Invariant subspace learning for time series data based on dynamic time warping distance. Pattern Recogn 102:107210. https://doi.org/10.1016/j.patcog.2020.107210

Raychaudhuri DS, Grabocka J, Schmidt-Thieme L (2017) Channel masking for multivariate time series shapelets. arXiv:1711.00812 [cs]

Kingma DP, Ba J (2015) Adam: A method for stochastic optimization. In: Proceedings of the 3rd international conference on learning representations, OpenReview.net, San Diego, CA, USA

Shih S-Y, Sun F-K, Lee H-Y (2019) Temporal pattern attention for multivariate time series forecasting. Mach Learn 108(8-9):1421–1441. https://doi.org/10.1007/s10994-019-05815-0

Lai G, Chang W-C, Yang Y, Liu H (2018) Modeling long- and short-term temporal patterns with deep neural networks. In: Proceedings of the 41st international conference on research and development in information retrieval, ACM, Ann Arbor, MI, USA, pp 95–104

Chang Y-Y, Sun F-Y, Wu Y-H, Lin S-D (2018) A memory-network based solution for multivariate time-series forecasting. arXiv:1809.02105 [cs]

Zhang GP (2003) Time series forecasting using a hybrid ARIMA and neural network model. Neurocomputing 50:159–175. https://doi.org/10.1016/S0925-2312(01)00702-0

Cao J, Li Z, Li J (2019) Financial time series forecasting model based on CEEMDAN and LSTM. Physica A: Statistical Mechanics and its Applications 519:127–139. https://doi.org/10.1016/j.physa.2018.11.061

Cho K, van Merrienboer B, Bahdanau D, Bengio Y (2014) On the properties of neural machine translation: Encoder-decoder approaches. In: The 8th EMNLP workshop on syntax, semantics and structure in statistical translation, Doha, Qatar, pp 103–111

Cho K, van Merrienboer B, Gülçehre C, Bahdanau D, Bougares F, Schwenk H, Bengio Y (2014) Learning phrase representations using RNN encoder-decoder for statistical machine translation. In: Proceedings of the 2014 conference on empirical methods in natural language processing, Doha, Qatar, pp 1724–1734

Djenouri Y, Srivastava G, Li JC-W (2020) Fast and accurate convolution neural network for detecting manufacturing data. IEEE Transactions on Industrial Informatics, p 1–1. https://doi.org/10.1109/TII.2020.3001493

Wang Z, Huang Y, He B (2021) Dual-grained representation for hand, foot, and mouth disease prediction within public health cyber-physical systems. Software: Practice and Experience, Early View. https://doi.org/10.1002/spe.2940

Wang Z, Huang Y, He B, Luo T, Wang Y, Lin Y (2019) TDDF: HFMD outpatients prediction based on time series decomposition and heterogenous data fusion in Xiamen, China. In: Proceedings of the 15th international conference advanced data mining and applications, Dalian, China, pp 658–667

Acknowledgments

This work was supported in part by Jimei University (no. ZP2021013), the Education Department of Fujian Province (CN) (no. JAT200277), the Natural Science Foundation of Fujian Province (CN) (no. 2019J01713) and the Natural Science Foundation of China (nos. 41971424 and 61701191). The authors would like to thank the editor and anonymous reviewers for their helpful comments in improving the quality of this paper.

Ethics declarations

Conflict of Interests

None.

Additional information

This article belongs to the Topical Collection: Artificial Intelligence Applications for COVID-19, Detection, Control, Prediction, and Diagnosis

Cite this article

Wang, Z., Cai, B. COVID-19 cases prediction in multiple areas via shapelet learning. Appl Intell 52, 595–606 (2022). https://doi.org/10.1007/s10489-021-02391-6

DOI: https://doi.org/10.1007/s10489-021-02391-6