Abstract

The goal of this paper is the assessment of an optimal reimbursement strategy for employer-based health insurance plans (HP), that cover several categories of medical services. Indeed, a health plan may offer several cost-sharing provisions for these categories, and the “Percent Expense Paid” by the plan also known as “Actuarial Value” represents a summary measure of the protection provided. Starting from a wide industrial applied model in insurance for rate-making, the generalized linear models (GLM), we estimate the expected value and variance of the health expenditure for each category. Moreover, different reimbursement rules (e.g. deductibles, co-payments, policy limits, etc.) involve a change of the “Actuarial Value” calculated as the ratio between the benefits paid by the plan (i.e. reimbursement amounts) and the expenses paid by policyholders (i.e. expenditures). The latter is the percentage of expenditure reimbursed by the plan and is sometimes defined in actuarial literature as Indicated Deductible Relativity (IDR); an IDR can be calculated for each category covered by the Health Plan or per policyholder and is a commonly used method for scoring the benefits of health insurance. Hence, we calculate the optimal IDR for each category, using the optimization problem proposed by de by Finetti (Il problema dei pieni, Giornale dell’istituto italiano degli attuari, 1940) in the context of proportional reinsurance. The goal is the minimization of the variance of the total reimbursement of the Health Plan by fixing the total gain. Furthermore, we propose a numerical application to a real dataset, containing observed expenditures of an Italian HP.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In many developed countries, the ageing of the population due to lower fertility and mortality rates and the increasing cost of new medical technologies are leading to increase in health spending. This phenomenon has been accentuated by the spread of the Covid-19. In 2019, OECD countries spent, on average, around 8.8% of their GDP on health care, with an average per capita health expenditure estimated to be more than USD 4000 (OECD, 2021). Government financing schemes are the main form of financing of health care in many of OECD countries but rising costs, the economic crisis and budgetary constraints have led to financial cuts in many countries and a reduction of the overall share of public health spending (Rechel, 2019). This leads to an increased demand for enrolment in health plans and for buying health insurance policy on the market. e.g., in Italy health plans policyholders increased from less than 7 million in 2013 to more than 14 million in 2019 (Ministero della Salute, 2021).

The first pillar in the Italian health system is represented by the National Health Insurance (Servizio Sanitario Nazionale, SSN) that accounts for 74% of total health spending (OECD, 2021). The SSN does not allow people to opt out of the system, therefore there are no substitute insurance but only complementary and supplementary private health insurance. They cover several medical services not provided by SSN, offer a higher level of comfort in hospital facilities and allow for a wider choice between public and private providers (Tikkanen et al., 2020). The second pillar is mainly characterized by private group health plans that are differentiated in “full-insured” and “self-insured”.

Financial sustainability analysis of “full-insured” and “self-insured” health plans requires the adoption of the actuarial risk assessment methodology (Carroll & Mange, 2017). This is especially true for a self-insured health plan which is a true risk-taking entity as it retains the risk for paying medical claims made by the plan’s participants and operates on its own.

Academic literature has mainly focused on forecasting the annual cost of healthcare in both the public and the private sectors due to the overall impact on the economy. Projections for annual healthcare expenditure, for an individual or a group, has been obtained using several methodologies: loss models, multivariate regression models (Frees et al., 2011, 2013), phase-type model (see Govorun et al. (2015) and literature therein) and, more recently, artificial intelligence methodologies (Vimont et al., 2021; Duncan et al., 2016). For a review of models applied to healthcare in an actuarial context reader can refer to Yang et al. (2016) and Duncan (2018).

A standard methodology to estimate health plans expenditure are two-part models, where the first part models a frequency component and the second a claim amount (or expenditure) (Frees et al., 2011; Duncan et al., 2016). In actuarial literature, frequency-severity approach (Frees, 2014) has been extensively used in modeling non-life insurance claim amount. In a standard frequency-severity approach the number of claims and expenditure per claim are used. For an example of the use of a frequency-severity model in health insurance see (Keeler & Rolph, 1988).

A key element in determining the expenditure of a health plan is the introduction of reimbursement limitations (e.g. deductibles, co-payments,...). On the one hand, they reduce costs for the health plan through cost sharing between plan and policyholders and allow contribution reduction, on the other hand they motivate policyholders to reduce expenses and, more in general, counteract moral hazard (Daykin et al., 1993; van Winssen et al., 2016).

Coverage modification changes the claim frequency and severity distributions (e.g. censoring or truncation). Parametric loss models for deductible ratemaking allow to measure the effects of coverage modification on claim frequency and severity distributions. The main advantage of these models is that they are accurate and allow to calculate theoretically correct deductible rates (Klugman et al., 2012).

Alternatively, the regression approach could be adopted, including the deductible as an explanatory variable inside a Generalized Linear Models (GLM) (Lee, 2017). A standard approach proposed in the actuarial literature for reimbursement limitation is focused on the estimate of “Indicated Deductible Relativity” (IDR) that provides an assessment of how much the insurance loss is reduced by a deductible. Considering that a health plan offers several medical services, reimbursement rules may differ among services (e.g. dental care, specialist medical visits). Therefore, in determining a reimbursement limitation strategy the level of protection offered to members should also be considered, along with the costs and risks of the health plan. Cost sharing strategies can make it difficult for members to understand the degree of coverage offered by a health plan. A possible index is given by the Actuarial Value (AV). AV is a measure of the relative percentages paid by a health benefits plan and its members. It is calculated using the expected health claims from a standard population, along with a plan’s cost-sharing provisions, to simulate the payment of claims. It ranges from 0 for a plan that pays nothing to 1 for a plan that pays all medical expenses.

The main goal of the paper is to assess the optimal IDR for each health service, by defining a mean-variance optimization problem. In this way, we calculate the IDRs by minimizing the total variance of the expenditure of the Health Plan (HP), fixing the expected gain. The optimization problem is solved both in the special case of no-correlation and the general case of correlation among health services. Such a problem adapts, in the health insurance context, the original optimization problem introduced by de Finetti De Finetti (1940) in the context of proportional reinsurance. Furthermore, our optimization problem requests as input the estimate of the mean and variance of expenditure for each health service. Hence, we propose a regression approach based on GLM. Starting from an Italian health plan data set, we compare two approaches: Two-Part and Tweedy. In general, these two approaches are used to determine the expected value of the response variable but results are not significantly different. However, for the purposes of this work is relevant to measure the variance of the expenditure in order to compare the optimization results using different methods to estimate mean and variance of the expenditure.

The paper is organized as follows. Section 2 describes the actuarial framework, the introduction of reimbursement limitation and the optimization problem. Section 3 compares Two-Part regression model and Tweedie regression model for the estimate of mean and variance of the expenditure. Section 4 presents a case study based on a real dataset and discusses the main findings. Section 5 concludes.

2 Actuarial framework and optimization problem

2.1 The frequency-severity model

We consider a health plan (HP) offering health care services to r policyholders. We group the health services in J groups (or ’branch’). Let:

-

i index the ith policyholder, \(1\le i \le r\);

-

j index the jth branch of health expenditure, \(1\le j \le J\).

Following a standard approach in actuarial literature, we adopt a double stochastic aggregate expenditure model, where both the number of claims and the size of each claim are stochastic variables (see, for instance, (Daykin et al., 1993)), the so called frequency-severity model. Therefore, we separately model the number of claims in a certain time period (frequency) and the expenditure per claim (severity). In the following we denote with:

-

N the random variable (r.v.), number of claims per year;

-

Y the r.v. expenditure for single claim;

In the classical collective risk model, the expenditure of the i-th policyholder given a specific branch j is:

where \(Y_{i,j,k}\) is the r.v. expenditure for ith policyholder, due to the kth claim in the jth branch.

Under the typical assumption of independence between \(N_{i,j}\) and \(Y_{i,j,k}\) and identical distribution on \(Y_{i,j,k}\sim Y_{i,j}, \forall k=1,\ldots ,N_{i,j}\) (However, there are works that investigate the dependence between frequency and claim amount. For further details, see Garrido et al. (2016) and Clemente et al. (2022)), we get the well-known formulas for mean and variance of \(Z_{ij}\) see (Daykin et al., 1993):

In order to reduce its expenditures, the health plan could introduce some payment limitations. The reimbursement amount \(L_{i,j,k}\), after the application of a coverage modification, is obtained as a function \(h(Y_{i,j,k},\Theta )\), with \(\Theta \) a set of possible coverage limitation rules. To measure the impact of the reimbursement rules on the expenditure, we focus on the r.v. proportion of expenditure reimbursed (IDR) for a single policyholder, service and claim:

whereas, the \(IDR_j\) at branch level is:

2.2 The effect of reimbursement limitations in the profit and loss of an health plan.

In the following we assume no administrative and operational costs and the related expense loading. We assume that the total contribution requested by the health plan for the jth service group, \(C_j\), is determined as the sum of the expected expenditures for the service, \(\textrm{E}[Z_j]\), and a safety loading \(m_j\):

Considering the typical actuarial assumption of independence between policyholders, the expected value and variance of the expenditure for single branch are:

respectively.

In the following, we assume that a proportional reimbursement rule (coinsurance) is applied to each claim, based on a coinsurance factor \(0\le \alpha _j\le 1\) for each branch j. Hence the r.v. reimbursement for single claim \(L_{i,j,k}\) are obtained as follows:

where \(\alpha _j\) is the jth element of the J-vector \(\varvec{\alpha }=(\alpha _1,\ldots ,\alpha _J)\) and it represents the proportion of expenditure reimbursed for a single claim.

It is easy to show by Eq. (5) that \(\alpha _j\) corresponds to the Indicated Deductible Relativity of the jth branch (i.e. \(IDR_j=\alpha _j\)).

Anyway, the introduction of a policy limitation reduces not only the reimbursement at branch level but also the contribution of the same proportion. Then, we can define a new r.v. profit (loss) for the jth branch as follows:

whose expected value is \(\textrm{E}\left[ G_j(\alpha _j)\right] =\alpha _j\cdot m_j\).

Therefore, the total expected gain of the HP after the introduction of the reimbursement rule is a function of the \(IDR_j=\alpha _j\) as follows:

It is important to note that the r.v. expected total profit for the HP, before the application of the reimbursement rules, i.e. if \(\alpha _j=1, j=1,\ldots , J\), is \(\textrm{E}\left[ G({\textbf {1}})\right] =\sum _{j=1}^{J}m_j\).

Under the assumption that the health service groups are uncorrelated, the variance of total profit is given by:

whereas, for correlated service groups \(Var[G(\varvec{\alpha })]\) is:

where \(\sigma ^2_j=\textrm{Var}\left[ Z_j \right] \) is the variance of \(Z_j\) and \(\sigma _{jw}=\textrm{cov}\left[ Z_j,Z_w \right] \) is the covariance between \(Z_j\) and \(Z_w\).

2.3 The optimization problem

Once the expected value and variance of r.v. \(Z_j\) have been defined, it is possible to introduce an optimization problem to determine the optimal \(IDR_j=\alpha _j, j=1,\ldots , J\) for each branch. The optimal strategy is achieved by finding the optimal vector \((\varvec{\alpha }^*)\) that minimizes the total variance \(\textrm{Var}\left[ G(\varvec{\alpha }) \right] \) once the expected profit target \(\bar{G}\) has been set. Then, considering \(\Sigma \) as the non singular variance covariance matrix, we are able to formalize the optimization problem:

where \({\textbf {m}}\) is the J-vector of safety loadings, whose generic element is \(m_j\).

Following Pressacco and Ziani (2010), it is possible to write the Lagrangian of this problem as:

Making recourse to the Karush-Kuhn-Tucker (KKT) conditions, also known as the Kuhn-Tucker conditions, it turns out that \(\alpha ^{*}\) is optimal if there exists a triplet \(\left( \tau ^{*},{\textbf {u}}^{*},{\textbf {v}}^{*} \right) \) non negative vector such that:

-

i)

\(\varvec{\alpha }^{*}\) minimizes \(L(\varvec{\alpha },\tau ,{\textbf {u}}^{*},{\textbf {v}}^{*})\);

-

ii)

\(\varvec{\alpha }^{*}\) is feasible;

-

iii)

either \({\alpha _j}^{*}=0\) or \({v_j}^{*}=0\) (or both) and either \({\alpha _j}^{*}=1\) or \({u_j}^{*}=0\) (or both).

The optimization problem (14) is the same proposed in De Finetti (1940), where a solution for the optimal retention problem in reinsurance market is provided.

De Finetti described an algorithm to solve this optimization problem for uncorrelated case and suggested a procedure to solve the general case of correlated risks under implicit “regularity hypothesis” (see Pressacco and Ziani (2010)). The basic idea underlying the de Finetti solution, even in the case of correlation, is that, rather than dealing with two goal variables (variance and expectation), it is convenient to work with what he called advantage functions:

The advantage functions intuitively capture the advantage coming at \(\alpha \) from a small reduction of the jth risk. As stated in Pressacco and Ziani (2010), “Given the form of the advantage functions, it was easily seen that this implied a movement on a segment of the cube characterized by the set of equations \(F_i(x) = \lambda \) for all the current best performers. Here \(\lambda \) plays the role of the benefit parameter. And we should go on this segment until the advantage function of another, previously non efficient, risk matches the current value of the benefit parameter, thus becoming a member of the efficient set. Accordingly, at this point, the direction of the efficient path is changed as it is defined by a new set of equations, with the addition of the equation of the newcomer risk.” (Pressacco and Ziani, 73).

In our context it means that, starting from the case of absence of reimbursement rules, i.e. \(\bar{G}=\textrm{E}\left[ G({\textbf {1}}) \right] ={\textbf {m}}\), the introduction of reimbursement rules requires the reduction of the most efficient branch in terms of advantage function, i.e. \(\left\{ j:\underset{j}{max}F_j({\textbf {1}})\right\} \).The reduction on branch j stops when the advantage function of another branch, say w, becomes higher than the one measured on j.

To find the path of the optimal solutions, we solve the optimal problem (14) considering several decreasing value of the expected contribution \(\bar{G}\).

In order to solve the optimization problem, it is firstly necessary to get a robust estimate of \(\hat{\textrm{E}}\left[ Z_{ij}\right] \) and \(\hat{\textrm{Var}}\left[ Z_{ij}\right] \) and than calculate \(\hat{\textrm{E}}\left[ Z_{j}\right] \) and \(\hat{\textrm{Var}}\left[ Z_{j}\right] \) according to Eqs. (7) and (8). To this aim we propose in the following section two actuarial approaches based on regression models.

3 One-part model versus two-part model for health expenditure estimate

In actuarial literature, to predict the cost of a claim, it is a usual approach to decompose two-part data, one part for the frequency - indicating whether a claim has occurred or, more generally, the number of claims - one part for the severity, indicating the amount of a claim. This approach is also known as Two-Part or Two-Stage model (Duan et al., 1983) and in health care expenditure analysis has been proposed in Frees (2010), Frees et al. (2013), among others.

However, the request of health-care services can be influenced by a set of demographic and/or geographic variables, among others. Hence, an univariate analysis for each homogeneous risk class is not feasible, and a multivariate approach is more appropriate. We adopt a GLM (Mc Cullagh & Nelder, 1989) as it represents the standard model in industry for ratemaking. For further details on the basic theory of GLM see Appendix 1. For a full description of the regression model in actuarial and financial application reader can refer to Frees (2010).

As the expenditure per single policyholder and category has a mass probability at zero and a positive, continuous, and often right-skewed component for positive values, Tweedie regression is a valid alternative to predict such an expenditure (Kurz, 2017) respect to Two-Part models.

3.1 A negative binomial-Gamma two-part regression model for the estimate of the health expenditure

Fixing the jth branch, the goal is the calculation of \(\textrm{E}[N_{i,j}]\), \(\textrm{Var}[N_{i,j}]\), \(\textrm{E}[Y_{i,j}]\), \(\textrm{Var}[Y_{i,j}]\) necessary for the estimate of mean and variance of \(Z_{i,j}\) (see (2) and (3)). To this aim, we consider a two part model where a Negative binomial regression is used for the estimate of frequency component \(N_j\) and a Gamma regression for the estimate of severity component \(Y_j\).

Considering the (29) with a logarithm link function it is possible to write the density of Negative Binomial r.v. as:

Then a likelihood function is associated to the Negative Binomial model:

Therefore, it is possible to estimate \(\beta _{N_j}\) and \(\theta _j\) parameters, by maximizing the (18). Exploiting (29) and (28) it is possible to calculate an estimate for \(\textrm{E}\left[ N_{i,j}\right] \) and \(\textrm{Var}\left[ N_{i,j}\right] \). It is important to note that a number J of Negative Binomial GLM must be carried on.

For the severity component, selecting the logarithm link function it is possible to write the density of Gamma r.v. as:

Then, a likelihood function is associated to the Gamma model:

Therefore, it is possible to estimate \(\beta _{Y_j}\) and \(\eta _j\) parameters, by maximizing the (20). Exploiting (29) and (28) it is possible to calculate an estimate for \(\textrm{E}\left[ Y_{i,j}\right] \) and \(\textrm{Var}\left[ Y_{i,j}\right] \). It is important to note that a number J of Gamma GLM must be carried on.

3.2 Tweedie regression model for the estimate of mean and variance of the expenditure

In this section, we use a Tweedy regression to get by a single model an estimate of mean and conditional variance of a response variable. Consider the collective risk model in Eq. (1), we temporarily remove for sake of simplicity the dependence on i and j. Suppose that N has a Poisson distribution with mean \(\psi \), and each Y has a gamma distribution shape parameter \(\zeta \) and scale parameter \(\rho \). The Tweedie distribution is derived as the Poisson sum of gamma variables. By using iterated expectations, mean and variance can be expressed as:

Now, define three parameters \(\mu \), \(\phi \), and p through the following reparametrization

it is easy to show that \(\textrm{E}(Z)=\mu \) and \(\textrm{Var} (Z)=\phi \mu ^{p}\) (see Kaas (2005), Tweedie (1956)). As a consequence, under these assumptions Z denotes a Tweedie random variable with mean \(\mu >0\), variance \(\phi \mu ^{p}\) \(\left( \phi >0\right) \), where \(\phi \) is the so-called dispersion parameter, and \(p \in (- \infty ,0] \cup [1,\infty )\) the power parameter.

It is worth noting that Tweedie distribution is a special case of exponential dispersion models, a class of models used to describe the random component in GLMs, allowing us to use the Tweedie distribution with GLMs to model \(\textrm{E}(Z_{i,j})\) and \(\textrm{Var}(Z_{i,j})\).

The conditional expected expenditure and variance for the ith policyholder and jth branch are given by:

The estimate of the row-vector of regression parameters \({\beta }_{Z_j}\), of the dispersion parameter \(\phi \) and the power p are provided by a Maximum Likelihood Estimation (MLE) approach (see Frees (2014)).

It is worth noting that a number J of Tweedy regressions must be carried on. In the following section, we report a numerical investigation applied to a real dataset.

4 Numerical application

4.1 Data set

We consider a dataset from an Italian HP, where \(J=5\) branches are covered. A description of each branch is shown in Table 1.

The observation has a time frame of three years (2017, 2018 and 2019). Each year contains claims from \(r=16,206\) policyholders per year, whose age varies from 0 to 69. In the following we report some descriptive statistics of this HP between 2017 and 2019.

In Table 2 the number of claims and total expenditures (data in EUR thousand) are classified for branch; SMV (\(j=5\)) is the branch with the greatest number of claims, whereas the greatest annual expenditure corresponds to DC (\(j=3\)).

In Table 3, the observed frequency for the three most claimed branches (SMV, DC and OH) are reported for sex and age class.

The SMV shows greater frequency for females than males with a peak in age class [50,60). It is also important to highlight that the females have a higher frequency also for DC and OH, but differences between gender are lower than SMV case.

In Table 4, observed average expenditure per claim for the three most claimed branches (SMV, DC and OH) are reported for sex and age class.

The OH has no significant differences for sex, conversely females are in general more expensive than males for SMV and DC.

In Table 5 observed average per policyholder expenditure for the three most claimed branches (SMV, DC and OH) are reported for sex and age class. It is important to note that in SMV case females have an expenditure per policyholder greater than males, with a peak in age class [40;50). In DC and OH case, the higher riskiness of females is confirmed; furthermore, considering the whole population, the most expensive age classes are [50;60) for DC (144.85 EUR thousand) and [40;50) for OH (16.67 EUR thousand).

4.2 Regression models results

In the following paragraph, regression models outcomes are reported. All the regression models introduced in the previous section use sex and age as covariates. This choice is motivated by actuarial practice and literature (Frees, 2014; Duncan, 2018), where this demographic factors are considered crucial in each model for the annual expenditure. We limit the analysis to this two risk factors to facilitate the representation of results. Age variable is treated as quantitative and we use a polynomial function whose degree is selected by a stepwise procedure.

For the estimate of the expected number of claims we consider a negative binomial model; in Table 6 the results are largely in line with those found in the literature (Frees et al., 2011); indeed, sex and age are statistically significant and females shows greater frequencies than males for all the branches in accordance with the observed frequencies in Table 3. The stepwise regression suggests a third-degree polynomial to model age, without the coefficient of degree 1.

For the amount of expenditures (Gamma regression model), Table 7 shows that almost every age coefficient is not significant. It is important to note that the most significant age coefficients are the ones of SMV, in accordance with 4. Age is modelled by a third-degree polynomial without the coefficient of degree 1.

As previously explained, a valid alternative approach to estimate mean and variance of \(Z_{i,j}\) consists on the application of Tweedie regression, whose results are reported in Table 8. As one can see, females are riskier than males, in accordance with observations reported in Table 5. The coefficients are all significant. Age is modeled by a third-degree polynomial without the coefficient of degree 1.

In order to measure the estimation accuracy, we introduce the goodness-of-fit (GoF) measure called Nash-Sutcliffe Efficiency (NSE). The latter is a normalized statistic that determines the relative magnitude of the residual variance compared to the measured data variance (see (Nash & Sutcliffe, 1970)). Given a dependent variable y and its estimate \(\hat{y}\), NSE can be defined as: \(NSE=1- \frac{\sum (y - \hat{y})^2 }{\sum (y -E(y))^2}\).

The NSE can be interpreted as test statistic for the accuracy of model predictions. The NSE ranges from \(-\infty \) to 1: if \(NSE=1\), there is a perfect match of the modeled to the observed data; if \(NSE=0\), the model predictions are as accurate as the mean of the observed data, if \(-\infty<NSE<0\), the observed mean is a better predictor than the model. It is important to note that NSE is a GoF measure that allows to accept or reject the model using 0 as a critical value.

In Table 9: we report results for NSE:

where all the values are compliant with acceptance.

Finally, exploiting Eqs. (2) and (3) it is possible to calculate \(\textrm{E}[Z_{i,j}]\) and \(\textrm{Var}[Z_{i,j}]\) in the case of Two-Part model. whereas the Tweedie model gives a direct estimate of this values. By (7) and (8) we can get an estimate of mean and variance of the expenditure for each branch, whose results for 2019 are reported in Table 10

The branch DC has the greatest variance of expenditure. If we consider the ratio between variance and mean representing the variance per unit of mean, the DC value is the greatest (1558 and 1807 in two-part and Tweedie estimate respectively) and the OH value is the lowest (102 and 96 in two-part and Tweedie estimate respectively) confirming the ranking observed in Table 11

4.3 The calculation of the optimal IDR

We show an example of the calculation of optimal IDRs in the case of no-correlation and correlation among branches. Before proceeding, we need to establish the general rule to assess the risk loading \(m_j\) for each branch j. To this aim, standard actuarial pricing principles (see Gerber (1979)) use a functional that assigns a loaded premium, called pure premium, to any distribution of the expenditure per policyholder. Traditional “loaded” net premium principles correct the equivalence principle to generate a premium that is bigger than the expected loss. In the following context we use the simplest method, the so-called Equivalence Premium, that starting from the expected loss assigns a positive “safety loading” to avoid ruin with a certainty proportional to the expected loss, i.e. \(m_j=\beta \cdot \textrm{E}[Z_j]\), \(\beta >0\). Therefore, in the following we consider \(\beta =10\%\) for each branch and the risk loading \(m_j \, (j=1,\ldots , 5)\) are obtained according to the values \(\textrm{E}[Z_j]\) show in Table 10. To find the path of the optimal solutions, we solve the optimal problem proposed in Eq. (14) considering several decreasing values of the expected contribution (\(\bar{G}\)) according to a proportion \(0\le \delta \le 1\) of the maximum contribution \(\textrm{E}[G({\textbf {1}})]\), i.e. \(\bar{G}=\delta \cdot \textrm{E}[G({\textbf {1}})]\). These solutions are equivalent to consider an overall IDR (or AV) equal to \(\delta \).

4.3.1 The no-correlation case

Assuming the absence of correlation among branches, the optimization problem in Eq. (14) can be solved numerically using Eq. (15) where the objective function is easily expressed as the weighted sum of the \(\textrm{Var}[Z_j]\), with weights \(\alpha _j\).

As an alternative, identical solutions can be achieved by following the De Finetti “algorithm” (for further details see Pressacco and Ziani (2010)) proposed in his famous “problema de pieni” (De Finetti (1940)). The de Finetti “algorithm”, in the uncorrelated case, can be summarized as follows:

-

i)

order branches by the terms \(F_j(\textbf{1})=\frac{\sigma ^2_j }{m_j}\), \(j=1,\ldots ,J\), which is the expression of the advantage function in Eq. (16) in the no-correlation case;

-

ii)

by reducing the expected gain to a level \(\bar{G}\), the IDRs must be reduced consequently, following the order defined in step i: the first IDR to be reduced is the one for which \(\frac{\sigma ^2_j }{m_j}\) is maximum and the last to be reduced is the one for which \(\frac{\sigma ^2_j }{m_j}\) is minimum;

-

iii)

For any \(0\le \lambda \le \max _j {\frac{\sigma ^2_j }{m_j}}\), an optimal retention is given by: \(\alpha _j^*=min\left\{ \frac{\lambda }{\frac{\sigma ^2_j }{m_j}},1 \right\} \)

As previously stated \(\lambda \) plays the role of the benefit parameter. Table 11 shows the values of \(F_j(\textbf{1})\) obtained using values in Table 10.

As observable, according to the de Finetti algorithm we expect that the first branch to move is DC and the last OH. This is confirmed by value reported in Table 12 where the optimal IDR respect to a decreasing contribution \(\delta \), according to the Two-Part regression model. Similar values are obtained with the Tweedie regression model.

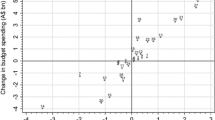

Fig. 1 gives a straight representation of the concept of the functioning of the de Finetti algorithm. Indeed, the first branch reduced is DC as it has the maximum \(F_3(\textbf{1})\), whereas the last one is OH where is observed the minimum \(F_1(\textbf{1})\).It is worth noting that, that the second highest value of the ratio is \(F_2(\textbf{1})\) (SC) where the Tweedie model shows a higher value compared to the Two-Part model. This involves a quicker movement from 1 to 0 of SC IDR concerning higher values of \(\delta \), in the Tweedie model compared to Two-Part one.

4.3.2 The general case of correlated branches

To provide an analysis on correlated branches, it is necessary to introduce the correlation matrix \(\Omega \) and, consequently, calculate the variance/covariance matrix \(\Sigma \), concerning the two regression models provided.

Once again, the optimization problem in Eq. (14) can be solved numerically and, as an alternative, de Finetti “algorithm” can be improved. Anyway, the latter, is based on the advantage function in Eq. (16) where the \(F_j(\varvec{\alpha })=\lambda \) depends on variance and covariance between branches. Hence, a more complex algorithm compared to the one introduced in the no-correlation case is required. For further details, the reader can refer to Pressacco and Serafini (2007). However, the basic rule of the maximum advantage function to identify starting point for the first declining risk is respected. Table 13 shows the ranking obtained in the correlated branches cases.

As one can see in Table 14, the first branch to move is DC and the last is OH as in the no-correlation case. Anyway, this time, the Tweedie model does not show similar values as shown in Fig. 2. Indeed, the ranking in this case changes, as the branch with maximum value of \(F_i(\textbf{1})\) is SC instead of DC (see Table 13) as in the Two-Part model.

5 Conclusion

This study investigates a methodological framework to assess optimal reimbursement strategy for employer-based health insurance plans. The reimbursement strategy is measured in terms of the percentage of expenditure reimbursed by the health plan defined in actuarial literature as Indicated Deductible Relativity and in the U.S. Actuarial Value. The IDR may be different for each category of services or branch provided by HP and the optimal strategy may be found assuming as objective the minimization of the risk, measured through the variance, or maximizing the expected gain. To this aim, we propose an optimization problem similar to the one proposed and solved by (De Finetti (1940)) in the context of proportional reinsurance. Firstly, we adopt a basic actuarial regression model based on a two-part approach (Negative Binominal - Gamma model) and we compare it with a Tweedie regression model. The latter has the main advantage to analyze the distribution of the loss, representing the total expenditure per policyholder, as a whole. This distribution is particularly indicated considering the large number of zero claims we observe in the data for each group of services. The regression models allow for the calculation of the mean and variance of the expenditure r.v. for each policyholder profile considered. Then, assuming independence among policyholders, we assess the overall expected expenditure and variance for each branch. These values are considered as the inputs of the optimization problem whose aim is to identify the optimal IDR for each branch considering the absence or the presence of correlation among branches.

We calibrate our model to a real database that offers five types of services. The findings provided confirm that the advantage function, as defined by de Finetti, represents the main quantity to be controlled to define an optimal strategy. In the case of the HP, the positive correlation observed among branches represents another relevant element to be considered. As observable, in the following Fig. 3 we compare the set of optimal solutions obtained with \(\delta =0.60\).

The quantitative analysis shows that to obtain the same gain, the positive correlation cases require a greater reduction of IDR. In such cases, the IDR is reduced over \(50\%\) but this condition may be not feasible as the IDR or AV is a commonly used method by policyholders for scoring a health insurance plan.

In the US HP context, it is relevant that the IDR is not lower than 60% as stated in Obamacare (2010) to ensure that policyholder can have a proper insurance cover. This means that further researches can be addressed to use 60% as lower limit for \(\alpha \) in the optimization problem (14). Such new constraint will give a sub-optimal result but is more compliant with indications in Obamacare (2010).

References

Carroll H., Mange J. (2017). The actuarial role in self-insurance: A health section strategic initiative. In Health Watch Issue 84, SOA.

Clemente, G. P., Savelli, N., Spedicato, G. A., & Zappa, D. (2022). Modeling general practitioners’ total drug costs through GAMLSS and collective risk models. North American Actuarial Journal, 26(4), 610–625.

Daykin, C. D., Pentikainen, T., & Pesonen, M. (1993). Practical risk theory for actuaries. Chapman and Hall/CRC.

De Finetti, B., (1940). Il problema dei pieni. Giornale dell’istituto italiano degli attuari

Duan, N., Manning, W. G., Morris, C. N., & Newhouse, J. P. (1983). A comparison of alternative models for the demand for medical care. J of Business and Economic Statistics, 1, 115–126.

Duncan I., (2018). Healthcare risk adjustment and predictive modeling, (2nd edn), ACTEX Learning.

Duncan, I., Loginov, M., & Ludkovski, M. (2016). Testing alternative regression frameworks for predictive modeling of health care costs. North American Actuarial Journal, 20(1), 65–87.

Frees, E. W. (2010). Regression modeling with actuarial and financial applications. Cambridge University Press.

Frees, E. W. (2014). Frequency and severity models. In E. W. Frees, G. Meyers, & R. A. Derrig (Eds.), Predictive modeling applications in actuarial science. Cambridge University Press.

Frees, E. W., Gao, J., & Rosenberg, M. A. (2011). Predicting the frequency and amount of health care expenditures. North American Actuarial Journal, 15(3), 377–392.

Frees, E. W., Jin, X., & Lin, X. (2013). Actuarial applications of multivariate two-part regression models. Annals of Actuarial Science, 7, 258–287.

Garrido J., Genest C., Schulz J., (2016). Generalized linear models for dependent frequency and severity of insurance claims. Insurance: Mathematics and Economics, Elsevier, vol. 70, (C), pp. 205–15.

Gerber, H., (1979). An introduction to mathematical risk theory. Huebner Foundation.

Govorun, M., Latouche, G., & Loisel, S. (2015). Phase-type aging modeling for health dependent costs. Insurance Mathematics and Economics, 62, 173–183.

Kaas, R., (2005). Compound poisson distribution and GLM’s-Tweedie’s distribution in proceedings of the Contact Forum “3rd actuarial and financial mathematics day”, pp. 3–2. Brussels: Royal Flemish Academy of Belgium for Science and the Arts.

Keeler, E. B., & Rolph, J. E. (1988). The demand for episodes of treatment in the health insurance experiment. Journal of Health Economics, 7, 337–367.

Klugman, S. A., Panjer, H. H., & Willmot, G. E. (2012). Loss models: from data to decisions (4th ed.). Wiley.

Kurz, C. F. (2017). Tweedie distributions for fitting semicontinuous health care utilization cost data. BMC Medical Research Methodology, 17, 171.

Lee, G. Y. (2016). General insurance deductible ratemaking. North American Actuarial Journal, 21(4), 620–638.

Mc Cullagh, P. A., & Nelder, J. A. (1989). Generalized linear models. Taylor & Francis.

Ministero della Salute, Le attività dell’Anagrafe fondi sanitari e i dati del Sistema Informativo Anagrafe Fondi, Rome (2021).

Nash, J. E., & Sutcliffe, J. V. (1970). River flow forecasting through conceptual models part I-a discussion of principles. Journal of Hydrology, 10(3), 282–290.

OECD.: Health at a glance 2021: OECD indicators, OECD Publishing, (2021). https://doi.org/10.1787/ae3016b9-en

Pressacco, F., Ziani, L., (2010). Bruno de Finetti forerunner of modern finance. Convegno di studi su Economia e Incertezza, Trieste, 23 ottobre 2009, Trieste, EUT Edizioni Università di Trieste, pp. 65–84.

Pressacco, F., & Serafini, P. (2007). The origins of the mean-variance approach in finance: Revisiting de Finetti 65 years later. Decisions in Economics and Finance, 30, 19–49.

Rechel, B. (2019). Funding for public health in Europe in decline? Health Policy, 123(1), 21–26. https://doi.org/10.1016/j.healthpol.2018.11.014

The patient protection and affordable care act of 2010, Pub. L. No. 111-148, 110 Stat. 2033 (2010). https://www.govinfo.gov/content/pkg/PLAW-111publ148/pdf/PLAW-111publ148.pdf

Tikkanen, R., Osborn, R., Mossialos, E., Djordjevic, A. and Wharton, G., (2020). International profiles of health care systems [online] Commonwealthfund.org. Available at: https://www.commonwealthfund.org/sites/default/files/2020-12/International_Profiles_of_Health_Care_Systems_Dec2020.pdf

Tweedie, M. C. K. (1956). Some statistical properties of inverse Gaussian distributions. Virginia Journal of Science New Series, 7, 160–165.

van Winssen, K. P., van Kleef, R., & C., van de Ven, W.P. (2016). Potential determinants of deductible uptake in health insurance: How to increase uptake in The Netherlands? The European Journal of Health Economics,17(9), 1059–1072.

Vimont, A., Leleu, H., & Durand-Zaleski, I. (2021). Machine learning versus regression modelling in predicting individual healthcare costs from a representative sample of the nationwide claims database in France. The European Journal of Health Economics, 23(2), 211–223.

Yang, S.-Y., Wang, C.-W., & Huang, H.-C. (2016). The valuation of lifetime health insurance policies with limited coverage. Journal Risk and Insurance, 83, 777–800.

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

Fabio Baione declares he has no conflict of interests, Davide Biancalana declares he has no conflict of interests, Massimiliano Menzietti declares he has no conflict of interests. This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A Basic elements of GLMs

Appendix A Basic elements of GLMs

This section deals with the introduction of the essential elements of the Generalized Linear Models, which are regression techniques that are useful in the insurance context, amongst others, and were introduced by Nelder and Mc Cullagh (Mc Cullagh and Nelder (1989)). GLMs are based on the following three building blocks:

-

1.

The dependent variable Y belongs to the exponential family with the probability density function \(f\left( y,\kappa , \varphi \right) =exp\left\{ \frac{y \kappa -b\left( \kappa \right) }{a\left( \varphi \right) }+c\left( y,\varphi \right) \right\} \), where a, b and c are known functions; \(\kappa \) and \(\varphi \) are unknown canonical and dispersion parameters, respectively. The function \(a\left( \varphi \right) \) is commonly in the form \(a\left( \varphi \right) =\varphi / \omega \), where \(\varphi \) is constant over observation, and \(\omega \) is a known prior weight that can vary from observation to observation.

-

2.

A linear combination of independent variables is considered as \(\vartheta =\sum _{h}{x_h}\beta _h \), where \(\beta _h\) are unknown parameters and \({x}_{h}\) are given values of predictors.

-

3.

The dependence of the conditional mean is described by a link function g, which is strictly monotonous and twice differentiable \(\textrm{E}\left( Y\left| \right. x\right) =\textrm{E}\left( Y\right) =\mu =g^{-1} \left( \vartheta \right) \).

The expectation and variance can be obtained under the assumption that b is twice continuously differentiable, as follows:

where the latter expression can be rewritten using the variance function which is defined as:

It means that the variance only depends on the mean value. The maximum likelihood method is used to estimate the parameters of GLMs.

In the following Table 15, the members of the exponential family we refer to herein are shown.

The gamma distribution is positive right-skewed, with a sharp peak and a long tail to the right. Gamma is the most adopted distribution in practice for modeling severity.

Let \(\left( \textbf{x}_i,y_i\right) \) be a member of the set of observations \(\left( i=1,\ldots ,r\right) \) where \(y_i\) is a dependent variable in the regression equation, and \(\textbf{x}_i=\left( x_{i1},\ldots ,x_{im}\right) \) is a row-vector of independent variables (covariates). The conditional mean for the ith policyholder is given by:

where \(\beta \) is the column-vector of regression coefficients, introduced in point 2 of this paragraph. In some cases, the choice of the link function g is bound with the features of statistical procedures.

In applications, the choice is often made to simplify practical considerations. In the insurance ratemaking context, a logarithm function is usually selected as it allows to combine two models by means of a factorization of their conditional expectation. Then a log-link function eq. (29) could be re-written as:

Moreover, GLM provide also an estimate of conditioned variance and of the whole conditioned probability distribution by estimating the dispersion parameter \(\varphi \). Overdispersion is a phenomenon that is often observed in practice where the variance need not to be equal to the expected value and \(\varphi \) scales the relationship between the variance and the mean as in (28). An estimate of the dispersion parameter could be provided in several ways. The most robust model error estimation is based on Pearson’s chi-square statistic \(X^2\) (see Mc Cullagh and Nelder (1989)). An approximately unbiased estimator of \(\varphi \) is:

The solution follows statistical theory where \(X^2\) is approximately \(\chi _{r-k}^2\)- distributed, with k standing for the number of regression coefficients, so that \(\textrm{E}\left[ X^2\right] \sim r-k\).

The knowledge of conditional mean and variance for each \(\left( i=1,\ldots ,r\right) \) allows to well-define the parameters for the exponential family distributions used in GLMs.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Baione, F., Biancalana, D. & Menzietti, M. Optimal reimbursement limitation for a health plan. Ann Oper Res (2023). https://doi.org/10.1007/s10479-023-05677-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10479-023-05677-9