Abstract

Passive investment strategies, such as those implemented by Exchange Traded Funds (ETFs), have gained increasing popularity among investors. In this context, smart beta products promise to deliver improved performance or lower risk through the implementation of systematic investing strategies, and they are also typically more cost-effective than traditional active management. The majority of research on index replication focuses on minimizing tracking error relative to a benchmark index, implementing constraints to improve performance, or restricting the number of assets included in portfolios. Our focus is on enhancing the benchmark through a limited number of deviations from the benchmark. We propose a range of innovative investment strategies aimed at minimizing asymmetric deviation measures related to expectiles and quantiles, while also controlling for the deviation of portfolio weights from the benchmark composition through penalization. This approach, as compared to traditional minimum tracking error volatility strategies, places a greater emphasis on the overall risk of the portfolio, rather than just the risk relative to the benchmark. The use of penalization also helps to mitigate estimation risk and minimize turnover, as compared to strategies without penalization. Through empirical analysis using simulated and real-world data, we critically examine the benefits and drawbacks of the proposed strategies in comparison to state-of-the-art tracking models.


1 Introduction

Over the past few decades, passive investment products, including ETFs and similar offerings, have attracted growing attention. These products aim to mimic the performance of a benchmark with lower management costs than active funds. Their popularity is largely driven by low fees, high liquidity and high transparency (Trackinsight, 2022). In recent years, the market for passive investment funds has seen its product lines diversify to meet investors’ needs. Among these products, active ETFs and smart beta products have attracted particular interest. They are positioned as a compromise between active and passive investment, promising improved performance and lower risk, while still maintaining low management costs through the use of systematic investment strategies (Richard & Roncalli, 2015).

According to the Global ETF Survey conducted by Trackinsight (Trackinsight, 2022), more than 55% of institutional investors hold active ETFs in their portfolios, and 37% of the investors plan to increase their exposure to these products by at least 5% in the next year. The interest is motivated by portfolio diversification, alpha potential, and reduced costs compared to active funds. The range of strategies and investment goals adopted by these funds is vast, and further research is necessary to fully understand their future development and to backtest their performance (Arnott et al., 2016).

The extensive academic literature on enhanced index tracking mostly concentrates on replicating the index with a limited number of assets and on increasing expected returns over the benchmark (see for example de Paulo et al., 2016 and Guastaroba et al., 2020 and references therein). The classical set-up typically involves the minimization of the so-called tracking error volatility (TEV) with respect to the benchmark, while imposing a constraint on the excess return (Roll, 1992). Enhanced index tracking with cardinality constraints (Maringer & Oyewumi, 2007; Canakgoz & Beasley, 2009; Guastaroba et al., 2016) is known to be NP-hard, and different methods have been proposed, ranging from global search heuristics to regularization methods for finding the optimal sparse portfolios (Krink et al., 2009; Sant’Anna et al., 2017). While heuristics can address the optimization problem directly, they often come at a cost in terms of computational complexity and may not guarantee convergence to the global optimum (Scozzari et al., 2013). Regularization methods based on convex penalties, such as Slope (Kremer et al., 2020, 2021), can identify sparse optimal solutions by imposing a penalty on the asset weight vector. As an alternative, non-convex penalties, such as \(\ell _q\), Log and Scad, have also shown interesting performance in index tracking (Giuzio et al., 2018), although they come with an additional optimization and computational burden. Additionally, optimization models have been used to improve the trade-off between transaction costs and similarity to the benchmark (Strub & Baumann, 2018), and to handle liquidity constraints (Vieira et al., 2021).

So far, the literature has largely centered on improving replication models with the aim of enhancing performance and controlling portfolio size. However, little attention has been given to reducing the overall risk of portfolios in relation to replication strategies. Jorion (2003) highlights that focusing solely on tracking error volatility may result in suboptimal allocations that decrease the TEV from the benchmark but increase the overall risk. In fact, as Jorion (2003) and Bertrand (2010) pointed out, constraining the tracking error volatility induces the manager to optimize only in the excess-return space, while ignoring the investor’s overall portfolio risk. Hence, the variance of the tracking error of the optimal portfolios does not depend on the choice of the benchmark, and it is positively related to the expected excess return. Indeed, Jorion (2002) found that a sample of enhanced index funds, which may be generated through excess-return optimization, tends to exhibit higher risk levels than the benchmark. The study shows that by setting a fixed tracking error volatility (TEV) and adding a constraint on the total portfolio volatility, the performance of the managed portfolio can be significantly improved. Moreover, Bertrand (2010) suggests that allowing the tracking error to vary while maintaining a fixed level of risk aversion to variance can lead to desirable properties for the tracking portfolio.

Unlike traditional replication strategies, which tend to concentrate on higher returns and portfolio size control, we propose an approach that places emphasis on risk management as part of the replication process. This is in line with Jorion (2002, 2003) and Bertrand (2010), and our contribution introduces two main improvements compared to these works. First, we go beyond a mean-variance framework, proposing investment strategies based on the minimization of asymmetric deviation and risk measures as in Giacometti et al. (2021). Hence we expect an improvement in the tail risk of the portfolio. Second, we control the deviations from the benchmark portfolio composition using penalization methods rather than by considering the TEV or other tracking error measures. In fact, we set up an optimization problem where we minimize a deviation measure under a set of constraints, and we add to the objective function a penalty term on the difference between the portfolio’s and the benchmark’s weights. This penalty limits the deviations from the benchmark in a flexible and effective way. The penalty-based control of the benchmark deviation offers practical benefits for the implementation of investment strategies: by using LASSO and elastic net penalties we obtain portfolios in which only a limited number of assets have weights different from the ones in the benchmark. This allows the asset manager to implement the optimal strategy by first replicating the benchmark and then adding a limited number of positions in individual stocks. An alternative approach to limiting the number of assets whose exposure differs from the benchmark is to include cardinality constraints, which have the drawback of significantly increasing the complexity of the optimization problem (see Footnote 1).

Our strategy has several advantages. First, compared to minimum TEV optimization, it focuses on the total risk of the portfolio instead of the risk relative to the benchmark. Second, compared to Jorion (2003), the model can be easily used outside the mean-variance framework (e.g. using more appropriate risk measures that focus on the tails). We show that the optimization problem can be easily solved by quadratic programming. By contrast, introducing TEV constraints or non-convex penalties could lead to non-quadratic problems. Third, the introduction of regularization through the elastic net penalty brings advantages in terms of out-of-sample performance, as it deals effectively with multicollinearity and high-dimensional problems (i.e. a large number of assets and short time series). Finally, by penalizing deviations in weights from the benchmark instead of constraining the TEV, our strategy potentially reduces modeling risk, as new constraints may further increase estimation error.

The general framework is flexible and can accommodate different types of risk functionals and regularization penalties. Empirical analysis on simulated data and on the S&P 100 US, FTSE 100, and EUROSTOXX 50 indices provides the opportunity to critically evaluate the advantages and disadvantages of the proposed framework in comparison to current state-of-the-art tracking models.

The paper is structured as follows. In Sect. 2, we discuss the building blocks of our model, in particular asymmetric deviation measures and the choice of the appropriate loss function. Many risk management applications can be nested in this setting, which provides a link between finance and estimation theory. In Sect. 3, we introduce the Minimum Tracking Error Deviation Measures portfolio. In Sect. 4, we present our enhanced replication model, while Sect. 5 comments on the empirical results. Section 6 reports the main conclusions.

2 Deviation measures and loss function

We start our analysis with a discussion of deviation measures, since our benchmark replication framework requires, on the one hand, quantifying the deviation of a portfolio from the benchmark and, on the other hand, minimizing a risk measure computed on the entire tracking portfolio. A key aspect of the analysis is the notion of deviation measures and their relationship with risk measures. We focus here on a family of asymmetric deviation measures and present the main analytical concepts needed for the analysis.

Denote by \({\mathbb {L}}^{p}\) the set of all random variables X with \({\mathbb {E}}[ \vert X\vert ^{p} ] < +\infty \), defined on a probability space \((\Omega , {\mathcal {F}}, {\mathbb {P}})\), where \(p \in [1, +\infty )\). Consider a univariate random variable X with \(X \in {\mathbb {L}}^{p}\), a real-valued function \(L(\cdot )\), called the loss function, and a scalar \(\xi \in {\mathbb {R}}\).

A location measure is the value around which the data have the minimum dispersion; the mean and the median are the most popular examples. Denote by \(\mathcal {S}_L(X)\) the location measure (also called the statistic, following Rockafellar and Uryasev, 2013) induced by the loss function \(L(\cdot )\), which can be obtained as the solution of the following variational problem:

$$\begin{aligned} \mathcal {S}_L(X) = \arg \min _{\xi \in {\mathbb {R}}} {\mathbb {E}}[L(X-\xi )], \end{aligned}$$

where \({\mathbb {E}}(\cdot )\) is the expectation operator.

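The associated deviation measure \(D_L(X)\) describes the dispersion of a random variable around the measure of location \(\mathcal {S}_L(X)\), and can be obtained as the minimum value of the same problem:

$$\begin{aligned} D_L(X) = \min _{\xi \in {\mathbb {R}}} {\mathbb {E}}[L(X-\xi )]. \end{aligned}$$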

Variance and standard deviation are the most commonly used deviation measures in the financial literature.

A popular family of loss functions is \(L(x) =\vert x\vert ^p \), the p-th power of the absolute value. If \(p=1\) we recover the median as location and the mean absolute deviation (MAD) as deviation, while if \(p=2\) we recover the mean as the location and the variance as deviation.

In both cases the deviation is computed by weighting departures above and below the location measure in the same way. In order to introduce an asymmetric deviation measure, an asymmetric loss function is considered:

$$\begin{aligned} L_{p,\tau }(X) = X^p_{+}+\frac{(1-\tau )}{\tau }X^p_{-}. \end{aligned}$$

Positive and negative departures are identified by the two piecewise linear functions \(X_{+} = \max {(0,X)}\) and \(X_{-} = \max {(0,-X)}\). The measure is asymmetric as it assigns different weights to negative and positive departures (\(1-\tau \) to \(X_{-}\) and \(\tau \) to \(X_{+}\), with \(\tau \in (0,1)\)). For financial applications, it is sensible to use a value of \(\tau <0.5\) that overweights the negative values, thus giving more relevance to the lower tail than to the upper one. Considering the specific cases of p equal to 1 and 2, we notice that:

-

If \(p=1\), the loss function is an asymmetric, piecewise linear, convex function

$$\begin{aligned} L_{1,\tau }(X) = \rho (X) = X_{+}+\frac{(1-\tau )}{\tau }X_{-} \end{aligned}$$

and the associated location, with its sign reversed, is the Value at Risk with a confidence level \(1-\tau \), denoted \(VaR_{1-\tau }\), where

$$\begin{aligned} VaR_{1-\tau }(X):= - \arg \min _{\xi \in {\mathbb {R}}} \left( {\mathbb {E}}[(X-\xi )_+] + \frac{(1-\tau )}{\tau } {\mathbb {E}}[(X-\xi )_-]\right) . \end{aligned}$$

The deviation \(\mathcal {D}_{\rho }(X)\) is strictly linked to the Conditional Value at Risk with a confidence level \(1-\tau \), denoted \(CVaR_{1-\tau }\). The relationship between the deviation \(\mathcal {D}_{\rho }(X)\) and \(CVaR_{1-\tau }\) is given by

$$\begin{aligned} \mathcal {D}_{\rho }(X) = CVaR_{1-\tau }(X) + {\mathbb {E}}[X]. \end{aligned}$$

The relationship between risk and deviation measures has been investigated by Rockafellar and Uryasev (2013), who proposed the risk quadrangle, a framework that links risk and deviation measures through an estimation procedure based on the minimization of an error measure given by the expected value of a loss function.

-

If \(p=2\), the loss function is a piecewise quadratic convex function:

$$\begin{aligned} L_{2,\tau }(X) = \eta (X) = X^2_{+}+\frac{(1-\tau )}{\tau }X^2_{-} \end{aligned}$$

and the location obtained is, with its sign reversed, the expectile introduced by Newey and Powell (1987). Expectiles have attracted a lot of research attention (e.g. Bellini and Di Bernardino, 2017; Jakobsons, 2016) thanks to their properties, namely that they are coherent and elicitable. The extension of the quadrangle to expectiles is discussed in Giacometti et al. (2021). The \(1-\tau \) expectile is defined as:

$$\begin{aligned} EVaR_{1-\tau }(X):= - \arg \min _{\xi \in {\mathbb {R}}} \left( {\mathbb {E}}[(X-\xi )^2_+] + \frac{(1-\tau )}{\tau } {\mathbb {E}}[(X-\xi )^2_-]\right) . \end{aligned}$$

The associated deviation \(\mathcal {D}_{\eta }(X)\) is known as asymmetric variance or variancile:

$$\begin{aligned} \mathcal {D}_{\eta }(X) = \min _{\xi \in {\mathbb {R}}}{{\mathbb {E}}[\eta (X - \xi )]}. \end{aligned}$$
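As an illustration of the two cases above, the following minimal sketch computes the sample versions of the locations and deviations induced by \(\rho \) and \(\eta \). It is not the authors' implementation: the function names, the toy return sample and the value \(\tau =0.25\) are assumptions chosen for illustration, and the expectile is obtained with a simple iteratively reweighted mean.

```python
import statistics

def asym_loss(x, p, tau):
    """Asymmetric loss x_+^p + (1 - tau) / tau * x_-^p (hypothetical helper)."""
    pos, neg = max(x, 0.0), max(-x, 0.0)
    return pos ** p + (1.0 - tau) / tau * neg ** p

def expected_loss(sample, xi, p, tau):
    """Sample average of the loss applied to the departures from xi."""
    return sum(asym_loss(x - xi, p, tau) for x in sample) / len(sample)

def quantile_location(sample, tau):
    """Minimiser for p = 1: for a finite sample, some sample point is optimal."""
    return min(sorted(sample), key=lambda xi: expected_loss(sample, xi, 1, tau))

def expectile_location(sample, tau, n_iter=200):
    """Minimiser for p = 2, found by iterating a weighted mean to its fixed point."""
    xi = statistics.fmean(sample)
    for _ in range(n_iter):
        weights = [1.0 if x > xi else (1.0 - tau) / tau for x in sample]
        xi = sum(w * x for w, x in zip(weights, sample)) / sum(weights)
    return xi

def deviation(sample, p, tau):
    """Deviation = minimum expected loss, attained at the location."""
    if p == 1:
        xi = quantile_location(sample, tau)
    else:
        xi = expectile_location(sample, tau)
    return expected_loss(sample, xi, p, tau)

# Toy return sample and tau, both assumed for illustration only.
returns = [-0.08, -0.03, -0.01, 0.00, 0.01, 0.02, 0.02, 0.03, 0.04, 0.06]
tau = 0.25
var_level = -quantile_location(returns, tau)    # sample VaR at level 1 - tau
evar_level = -expectile_location(returns, tau)  # sample EVaR at level 1 - tau
d_rho = deviation(returns, 1, tau)              # equals sample CVaR + mean
d_eta = deviation(returns, 2, tau)              # asymmetric variance
```

With \(\tau =0.5\) the two branches reduce to the symmetric cases: the \(p=2\) deviation is the (population) variance and the \(p=1\) deviation is the mean absolute deviation around the median.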

In practice, it is relevant to quantify how different two random variables are. A replicating portfolio is constructed by minimizing a tracking error deviation measure that quantifies how different a benchmark and a portfolio (i.e. a linear combination of stocks) are. A natural way to quantify the discrepancy is to proceed as we do for scalars and compute a measure of divergence between the two. The most popular choices are the Euclidean distance, the squared distance or, alternatively, the absolute difference. Hence, consider the loss \(L_{p,\tau }\) applied to the difference between X and Y:

$$\begin{aligned} L_{p,\tau }(X-Y) = (X-Y)^p_{+}+\frac{(1-\tau )}{\tau }(X-Y)^p_{-}, \end{aligned}$$

defined for variables \(X,Y\in {\mathbb {L}}^p\). As discussed in the following section, the divergence \(L_{p,\tau }\) has a straightforward application in the index tracking problem, where an investor needs to find an asset allocation that tracks a benchmark as closely as possible. More specifically, when one of the random variables is given (the benchmark), we can search for a set of portfolio weights that minimizes the deviation between the tracking portfolio and the benchmark. Depending on the values of p and \(\tau \) we have different properties:

-

Piecewise quadratic function (\(p=2\)). When \(\tau =0.5\), we obtain the squared loss function typically used in regression settings, where the error is the expected loss. This choice of the loss function has two properties: it is always non-negative and it grows quadratically with the absolute difference, penalizing large departures from the mean symmetrically. Conversely, when the two random variables are very close, their squared difference will be tiny. If \(\tau <0.5\) we have an asymmetric penalization of the errors, which assigns more weight to negative departures. This makes it possible to estimate and better control left-tail risk.

-

Piecewise linear function (\(p=1\)). When \(\tau =0.5\), the loss function is the absolute loss. This choice of the loss function has two properties: it is always non-negative, but it grows only linearly with the absolute difference, and it is more accurate than the quadratic loss in the search for a zero-loss solution of the associated median regression model. When \(\tau <0.5\), we have an asymmetric penalization of the errors (i.e. a modified Koenker and Bassett error function), as used in the estimation of quantile regression. Quantile regression, which explains the behavior of random variables at different quantiles, is well suited to examining tail risk and is one of the main tools employed by researchers to analyze the tail risk of asset or portfolio returns (Adrian, 2016).

3 The minimum tracking error deviation measures portfolio

ETFs provide a convenient and low-cost way to implement indexing or passive management. While ETFs are structured to track an index, they could just as easily be designed to mirror any existing mutual fund or to pursue a particular investment objective. These products typically aim to replicate the performance of an index as accurately as possible, either by perfectly replicating the composition of the index, or by performing an optimized sampling of the index asset holdings. Other ETFs offer investors products that aim to deliver above-average returns, i.e. with an enhancement component. These instruments, which are typically known as smart beta ETFs or active ETFs, are increasingly popular and lie between active and passive investment strategies.

In the academic literature, an enhanced tracking portfolio is typically built by minimizing a tracking error deviation measure such as the TEV (tracking error volatility, Rudolf et al., 1992), the TEMAD (tracking error mean absolute deviation, Rudolf et al., 1999) or CVaR-based ratio measures (Guastaroba et al., 2020), while including constraints on performance, such as an expected return larger than that of the benchmark.

Given \(\varvec{R}\) an n-variate random variable denoting the universe of stock returns and \(R_{bmk}\) the univariate benchmark return, consider an \([n \times 1]\) vector of weights \(w\in {\mathbb {R}}^n\), where n is the number of assets, and \(\tau \) is the confidence level with \(\tau \in (0, 1)\) for VaR, CVaR and expectiles. An index tracking portfolio scheme that minimizes the deviation based on the loss \(L_{p,\tau }\) can be formulated as:

where \(\varvec{w}_{bmk}\) is the vector of the benchmark’s weights, the tracking error is \(TE(\varvec{w})=\varvec{w}' \varvec{R}- R_{bmk}\), \(R_{bmk}= \varvec{w}_{bmk}'\varvec{R}\) and k is an enhancement parameter (see Footnote 2).

This approach has two main drawbacks. First, the tracking portfolio composition is likely to be different from the benchmark, showing more concentrated positions or extreme short/long positions, which increases the potential effect of estimation error on out-of-sample portfolio performance. Second, the overall risk of the replicating portfolio is ignored, as the optimization focuses on minimizing the deviations from the benchmark without any constraint on the total risk of the portfolio. This leads to a sub-optimal replication in terms of total risk. The sub-optimality is due to a lack of control over the co-movements between the benchmark and the replicating portfolio. To illustrate the point, consider a mean-variance setting in which the risk of the portfolio is measured by its variance \({\mathbb {V}}(\varvec{w}'\varvec{R})\). Let \(q= \varvec{w}-\varvec{w}_{bmk}\) and let \(\varvec{\Sigma }\) be the covariance matrix of \(\varvec{R}\); then we can decompose the portfolio variance as:

$$\begin{aligned} {\mathbb {V}}(\varvec{w}'\varvec{R}) = {\mathbb {V}}(R_{bmk}) + {\mathbb {V}}(TE) + 2\,{\mathbb {C}}(bmk,TE) = \varvec{w}_{bmk}'\varvec{\Sigma }\varvec{w}_{bmk} + q'\varvec{\Sigma }q + 2\,q'\varvec{\Sigma }\varvec{w}_{bmk}, \end{aligned}$$

where \({\mathbb {C}}(bmk,TE)\) is the covariance between the benchmark and the tracking error. Since the variance of the tracking error \({\mathbb {V}}(TE)\) cannot be negative, the risk of the portfolio can be smaller than that of the benchmark only if \({\mathbb {C}}(bmk,TE)\) is negative. Hence, by minimizing the TEV\(= \sqrt{{\mathbb {V}}(TE)}\) as in Roll (1992), an investor cannot guarantee a reduction in the risk of the portfolio, as this approach does not explicitly control the sign and magnitude of the covariance \({\mathbb {C}}(bmk,TE)\) (see Footnote 3).
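The decomposition of the portfolio variance into benchmark variance, tracking error variance and twice their covariance can be checked numerically on any pair of return series. The sketch below does so on a short, made-up sample; the function names and the data are assumptions for illustration only.

```python
import statistics

def cov(x, y):
    """Sample covariance of two equally long return series."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (len(x) - 1)

def risk_decomposition(port, bmk):
    """Return the terms of V(port) = V(bmk) + V(TE) + 2 C(bmk, TE)."""
    te = [p - b for p, b in zip(port, bmk)]  # tracking error series
    return {
        "var_port": cov(port, port),
        "var_bmk": cov(bmk, bmk),
        "var_te": cov(te, te),
        "cov_bmk_te": cov(bmk, te),
    }

# Hypothetical returns: the portfolio is a dampened version of the benchmark.
bmk = [0.010, -0.020, 0.015, -0.005, 0.020, -0.010]
port = [0.008, -0.012, 0.012, -0.002, 0.015, -0.006]

d = risk_decomposition(port, bmk)
rebuilt = d["var_bmk"] + d["var_te"] + 2.0 * d["cov_bmk_te"]
```

In this toy sample the tracking error is negatively related to the benchmark, so the portfolio variance falls below the benchmark variance even though the tracking error variance is strictly positive.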

Coming back to the problem in Eq. (2), assume we consider the p-power loss function with \(p=2\) and \(\tau =0.5\). In other words, the asset manager minimizes the TEV of the portfolio. The minimization of the TEV is equivalent to the minimization of the variance of the replicating portfolio minus twice its covariance with the benchmark, since the benchmark variance does not depend on the weights (see Footnote 4).
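The equivalence can be seen by expanding the squared TEV. The short derivation below is a sketch that assumes \(\varvec{\Sigma }\) denotes the covariance matrix of \(\varvec{R}\); it only uses the definition \(TE(\varvec{w})=\varvec{w}'\varvec{R}-R_{bmk}\) given above.

$$\begin{aligned} TEV^2(\varvec{w}) = {\mathbb {V}}(\varvec{w}'\varvec{R}-R_{bmk}) = \underbrace{\varvec{w}'\varvec{\Sigma }\varvec{w}}_{{\mathbb {V}}(\varvec{w}'\varvec{R})} - 2\,\underbrace{\varvec{w}'\varvec{\Sigma }\varvec{w}_{bmk}}_{{\mathbb {C}}(\varvec{w}'\varvec{R},R_{bmk})} + \underbrace{\varvec{w}_{bmk}'\varvec{\Sigma }\varvec{w}_{bmk}}_{{\mathbb {V}}(R_{bmk})}. \end{aligned}$$

The last term is constant in \(\varvec{w}\), so the minimizer of the TEV rewards covariance with the benchmark and is indifferent to the level of total risk that results.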

In principle, the investor can end up with the minimum TEV but with an overall riskier portfolio that is positively correlated with the benchmark, as highlighted by Jorion (2003). A less correlated portfolio can provide a better solution in terms of the risk of the tracking portfolio, with a similar tracking error and a lower overall risk level. As suggested by Jorion (2003), the overall risk can be controlled by reformulating the problem as the maximization of the excess return, subject to the portfolio volatility being equal to the benchmark volatility and the tracking error volatility being equal to a positive constant. Denoting by \(\varvec{\Sigma }\) the covariance matrix of \(\varvec{R}\), we have \(\varvec{w}'\varvec{\Sigma }\varvec{w} = \varvec{w}_{bmk}'\varvec{\Sigma }\varvec{w}_{bmk} + TEV^2(\varvec{w}) + 2(\varvec{w}-\varvec{w}_{bmk})'\varvec{\Sigma }\varvec{w}_{bmk}\), so these constraints imply that \((\varvec{w}-\varvec{w}_{bmk})'\varvec{\Sigma }\varvec{w}_{bmk}=-TEV^2(\varvec{w})/2\), i.e. the benchmark deviations have a negative covariance with the index (see Footnote 5).

With these considerations in mind, we propose an alternative framework that aims to control the risk of the replicating portfolio directly and to avoid the problems related to a high concentration in a few assets.

4 Penalized enhanced replication strategies

The aim of this section is to formulate an enhanced tracking model that controls the deviation between an index or benchmark and a replicating portfolio, while minimizing the overall portfolio risk and at the same time maintaining a high level of diversification. This strategy follows the guidelines proposed by Jorion (2003). The idea is to find the portfolio with the best risk-return profile by imposing a penalization on the portfolio weights rather than an explicit constraint on the tracking error volatility. The advantage of our framework is that it can be applied outside the mean-variance approach, considering for instance deviation measures that focus on the tails, while still resulting, for suitable penalization functions, in quadratic programming formulations that are easy to solve with available software. By contrast, TEV constraints may lead to non-quadratic optimization problems. The goal of our model is to reduce the tracking error by shrinking the number of positions with weights different from the benchmark, reducing at the same time the estimation error, as specified below:

where \(\mathcal {D}(\cdot )\) is a suitable deviation measure, \(f_\lambda (\cdot )\) is a penalization function applied to the difference between the portfolio’s and the benchmark’s weights, and k is a target expected return. When the optimization problem includes an expected return constraint, minimizing a risk measure is equivalent to minimizing the corresponding deviation measure, since in the risk quadrangle framework \(\mathcal {R}(X)=\mathcal {D}(X)-E(X)\) (Rockafellar & Uryasev, 2013). For this reason, in the rest of the work we refer interchangeably to the minimization of risk and the minimization of a deviation measure.

The proposed penalization function applied to the difference between portfolio’s and benchmark’s weights is known as elastic net (EN), a convex combination of the piecewise linear LASSO and the piecewise quadratic RIDGE functions:

where \(\Vert \cdot \Vert _1\) and \(\Vert \cdot \Vert _2\) are the \(\ell _1\)- and \(\ell _2\)-norm, respectively, and \( \alpha \in [0,1]\) and \(\lambda >0\) are the parameters that control the shape and intensity of the penalization (Zou & Hastie, 2005). The EN method combines the characteristics of the LASSO and RIDGE (see Fig. 1). LASSO performs both continuous shrinkage toward zero and automatic selection, inducing sparsity. Moreover, if there is a group of highly correlated variables, the LASSO tends to select one variable from the group and ignore the others. RIDGE does not produce parsimonious models, as it keeps all the variables in the model. Its advantage is that it can better deal with multicollinearity and exhibits better prediction properties than LASSO (Tibshirani, 1996). The EN penalty exploits the characteristics of both LASSO and RIDGE, while still resulting in a convex penalty.
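To make the role of the two norms concrete, the sketch below evaluates an elastic net penalty on the deviations from the benchmark weights. The exact scaling is an assumption: we take \(\lambda \,[\alpha \Vert q\Vert _1 + (1-\alpha )\Vert q\Vert _2^2]\) with \(q=\varvec{w}-\varvec{w}_{bmk}\), which matches the description of \(\alpha =1\) as pure LASSO and \(\alpha =0\) as pure RIDGE but may differ from the authors' formula by constant factors. The function names and weights are hypothetical.

```python
def elastic_net_penalty(w, w_bmk, lam, alpha):
    """lam * (alpha * ||q||_1 + (1 - alpha) * ||q||_2^2), with q = w - w_bmk.

    The scaling of the two terms is an assumption made for illustration.
    """
    q = [wi - bi for wi, bi in zip(w, w_bmk)]
    l1 = sum(abs(qi) for qi in q)
    l2_squared = sum(qi * qi for qi in q)
    return lam * (alpha * l1 + (1.0 - alpha) * l2_squared)

def active_positions(w, w_bmk, tol=1e-10):
    """Indices of the assets whose weight differs from the benchmark weight."""
    return [i for i, (wi, bi) in enumerate(zip(w, w_bmk)) if abs(wi - bi) > tol]

# Hypothetical four-asset example: only two weights deviate from the benchmark.
w_bmk = [0.25, 0.25, 0.25, 0.25]
w = [0.35, 0.25, 0.15, 0.25]

lasso_part = elastic_net_penalty(w, w_bmk, lam=2.0, alpha=1.0)
ridge_part = elastic_net_penalty(w, w_bmk, lam=2.0, alpha=0.0)
mixed = elastic_net_penalty(w, w_bmk, lam=2.0, alpha=0.5)
deviating = active_positions(w, w_bmk)
```

For small deviations the \(\ell _1\) term dominates the squared \(\ell _2\) term, which is why the LASSO component is the one that pushes individual deviations exactly to zero.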

The parameter \(\lambda \) controls the intensity or amount of regularization. For \(\lambda = 0\) we obtain the un-regularized optimal portfolio, which does not control for the deviation from the benchmark. For positive and increasing values of \(\lambda \) we have a progressively more regularized portfolio and finally, for \(\lambda \rightarrow \infty \) and \(k=0 \), the solution collapses to the benchmark portfolio (see Fig. 2).

The parameter \(\alpha \) controls the model selection ability, i.e. the sparsity of the portfolio weights relative to those of the benchmark. For \(\alpha = 1\) we have a pure LASSO penalty, while for \(\alpha = 0\) we have a pure RIDGE penalty. In our analysis we set either \(\alpha =0.5\) or \(\alpha =1\).
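The effect of \(\lambda \) and \(\alpha \) can be seen in closed form in a deliberately simplified setting: two assets, variance as the deviation measure, a budget constraint only (no return target), and the penalty scaled as \(\lambda \,[\alpha \Vert q\Vert _1 + (1-\alpha )\Vert q\Vert _2^2]\). All of these are assumptions made for illustration and do not reproduce the paper's optimization problem. Writing the weights as \((x, 1-x)\), the penalized problem reduces to a one-dimensional soft-thresholding step.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t, returning exactly 0.0 when |z| <= t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0

def two_asset_penalized_weight(var1, var2, cov12, x_bmk, lam, alpha):
    """Weight of asset 1 minimising variance plus an elastic net penalty.

    Weights are (x, 1 - x), so the deviation from the benchmark is (d, -d)
    with d = x - x_bmk, ||q||_1 = 2|d| and ||q||_2^2 = 2 d^2.
    """
    spread = var1 + var2 - 2.0 * cov12
    a = 2.0 * spread                   # second derivative of the variance in x
    x_mv = (var2 - cov12) / spread     # unpenalised minimum variance weight
    z = x_mv - x_bmk                   # unpenalised deviation from the benchmark
    d = soft_threshold(a * z, 2.0 * lam * alpha) / (a + 4.0 * lam * (1.0 - alpha))
    return x_bmk + d

# Hypothetical inputs: variances 0.04 and 0.09, covariance 0.01, 50/50 benchmark.
args = dict(var1=0.04, var2=0.09, cov12=0.01, x_bmk=0.5)
x_free = two_asset_penalized_weight(lam=0.0, alpha=1.0, **args)
x_lasso_mild = two_asset_penalized_weight(lam=0.01, alpha=1.0, **args)
x_lasso_strong = two_asset_penalized_weight(lam=0.03, alpha=1.0, **args)
x_ridge_strong = two_asset_penalized_weight(lam=0.03, alpha=0.0, **args)
```

With the LASSO penalty the solution sits exactly on the benchmark once \(\lambda \) is large enough, whereas the RIDGE penalty with the same \(\lambda \) only shrinks the deviation without setting it to zero.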

For the deviation measures \(\mathcal {D}_{\eta }\) and \(\mathcal {D}_{\rho }\), we propose a formulation of the optimization problem with EN penalty as a quadratic program. For the minimum \(\eta _\tau \) deviation portfolio (expectile), the optimization can be expressed as follows:

For the minimum \(\rho _\tau \) deviation portfolio (quantile), the optimization problem is formulated as follows:

where \(\varvec{\nu }, \varvec{\gamma }\), and \(\varvec{\phi }\) are positive auxiliary variables. Both optimization problems are quadratic programs with linear constraints and can be easily solved with available software, which makes the proposed framework even more appealing.

To make the portfolios more suitable for real-world implementation, we further introduce turnover and gross exposure constraints:

where \(\varvec{w}^{t_-}\) is the vector of portfolio weights immediately before the rebalancing, and GE, \(TO \in {\mathbb {R}}^+\) are the maximum gross exposure and turnover, respectively. The constraints can be linearized using standard linear programming techniques (see e.g. Gass, 2003).
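As a rough illustration of these two constraints, the sketch below checks a candidate allocation against a maximum gross exposure and a maximum turnover. The definitions used here are assumptions: gross exposure is taken as the \(\ell _1\)-norm of the weights and turnover as the \(\ell _1\)-norm of the change in weights at rebalancing (some authors halve the latter). Function names and numbers are hypothetical.

```python
def gross_exposure(w):
    """Sum of absolute weights (long plus short positions)."""
    return sum(abs(wi) for wi in w)

def turnover(w, w_prev):
    """Sum of absolute weight changes relative to the pre-rebalancing weights."""
    return sum(abs(wi - pi) for wi, pi in zip(w, w_prev))

def is_implementable(w, w_prev, max_ge, max_to, tol=1e-12):
    """True when both the gross exposure and the turnover limits are respected."""
    return (gross_exposure(w) <= max_ge + tol
            and turnover(w, w_prev) <= max_to + tol)

# Hypothetical weights before and after rebalancing (one short position).
w_prev = [0.3, 0.3, 0.4]
w_new = [0.5, 0.6, -0.1]

ge = gross_exposure(w_new)        # about 1.2, i.e. a 110/10 long/short book
to = turnover(w_new, w_prev)      # about 1.0
ok_loose = is_implementable(w_new, w_prev, max_ge=1.3, max_to=1.0)
ok_long_only = is_implementable(w_new, w_prev, max_ge=1.0, max_to=1.0)
```

A gross exposure limit of 1 together with the budget constraint rules out short positions, which is why the second check fails for this allocation.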

5 Empirical analysis

In this section, we present an in-sample study on simulated data and an out-of-sample analysis on real data. In the in-sample analysis, we compare the optimal portfolio in Eq. (5), estimated on a grid of values of \(\lambda \), to the minimum TEV portfolio in Eq. (2) and to the benchmark. The aim of this analysis is to test the effectiveness of the weight penalization scheme in reducing the tracking error volatility of the portfolio as the strength of the penalization increases, as discussed in Sect. 4, and to compare the proposed portfolio strategies that minimize different deviation measures. Finally, we test the out-of-sample performance of the proposed optimal asset allocations on three datasets, constructed from the constituents of three US and European indices.

5.1 In-sample simulation analysis

We generated the data from an n-variate Student’s t-distribution with \(n= 90\) and 5 degrees of freedom. Moreover, the first and second moments of the distribution are calibrated on the returns of 90 randomly selected constituents of the S&P 500 index in the period 01/01/2007 – 30/06/2020, in order to obtain synthetic but still realistic data (see Footnote 6).
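A data-generating step of this kind can be sketched with the standard representation of a multivariate Student’s t vector as a Gaussian vector divided by the square root of an independent scaled chi-square variable. The code below is only an illustration under stated assumptions: the function names, the two-asset mean vector and covariance matrix, and the seed are hypothetical, and the scale matrix is rescaled by \((\nu -2)/\nu \) so that the simulated covariance matches the target one (which requires \(\nu >2\)).

```python
import math
import random

def cholesky(a):
    """Lower-triangular Cholesky factor of a symmetric positive definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low

def sample_multivariate_t(mean, cov, df, n_obs, seed=0):
    """Draw n_obs vectors from a multivariate t with given mean and covariance."""
    rng = random.Random(seed)
    n = len(mean)
    # A t vector with scale matrix S has covariance df / (df - 2) * S (df > 2).
    scale = [[c * (df - 2.0) / df for c in row] for row in cov]
    low = cholesky(scale)
    draws = []
    for _ in range(n_obs):
        z = [rng.gauss(0.0, 1.0) for _ in range(n)]
        chi2 = rng.gammavariate(df / 2.0, 2.0)   # chi-square with df degrees
        mix = math.sqrt(df / chi2)               # common heavy-tail factor
        draws.append([
            mean[i] + mix * sum(low[i][k] * z[k] for k in range(i + 1))
            for i in range(n)
        ])
    return draws

# Hypothetical daily moments for two assets; 500 observations as in one window.
mu = [0.0004, 0.0003]
sigma = [[0.0004, 0.0001], [0.0001, 0.0009]]
window = sample_multivariate_t(mu, sigma, df=5, n_obs=500, seed=42)
```

Because all assets share the same mixing factor, extreme observations tend to occur jointly, which is the feature that distinguishes this sampler from independent t draws.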

We first study the role of the parameter \(\lambda \) on the portfolio by computing the optimal penalized portfolio strategy on a grid of \(\lambda \) values. We set the values to be exponentially spaced, and the extremes are tuned to include a relevant range of portfolios.
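An exponentially spaced grid is simply a geometric sequence between the two extremes. The sketch below builds one; the function name, the end points and the number of points are assumptions, since the text does not report the values used.

```python
def lambda_grid(lam_min, lam_max, n_points):
    """Geometric (exponentially spaced) grid from lam_min to lam_max inclusive."""
    if lam_min <= 0 or lam_max <= lam_min or n_points < 2:
        raise ValueError("need 0 < lam_min < lam_max and at least two points")
    ratio = (lam_max / lam_min) ** (1.0 / (n_points - 1))
    return [lam_min * ratio ** i for i in range(n_points)]

# Hypothetical extremes: five values from 1e-4 to 1, one per decade.
grid = lambda_grid(1e-4, 1.0, 5)
```

Consecutive values differ by a constant factor, so the grid samples weak and strong penalization regimes equally densely on a logarithmic scale.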

Figure 3 shows the in-sample tracking error volatility, the portfolio volatility, the correlation between the tracking error and the benchmark, and the \(\ell _1\)-norm of the difference between the weights of the portfolio and the weights of the benchmark. In other words, we analyze separately the different constituents of Eq. 3 in relation to the strength of the penalization. We set the length of the estimation windows equal to 500 days and we take the average across 10 simulation runs. For brevity, in this in-sample analysis, we focus on a quadratic and symmetric loss function consistent with the mean-variance framework, imposing an expected extra return of the portfolio over the benchmark of \(k=1\%\) per year. We call these portfolios minimum risk portfolios to evoke the idea that we minimize a risk or deviation measure (in this example the variance) and progressively approach the benchmark by increasing the strength of the penalization applied to the weights. In this analysis, we set \(\alpha =1\), which corresponds to the case of the LASSO penalty. As a comparison for the minimum risk portfolios, we consider the portfolio obtained by directly minimizing the TEV as in problem (2), with the same extra expected return constraint. We call these portfolios minimum TEV portfolios.

Fig. 3 A Tracking Error Volatility (TEV). B Portfolio volatility. C Covariance between the Tracking Error and the benchmark (Cov(TE, bmk)). D \(\ell _1\)-norm of the difference between portfolio and benchmark weights. Results are shown for our penalized minimum risk portfolio (Eq. 5) based on variance and for the minimum TEV portfolio, for a grid of exponentially spaced values of \(\lambda \)

Panels A to D of Fig. 3 show the behavior of the constituents of Eq. 3 as the strength of the penalization increases, as well as the deviation from the benchmark in terms of weight composition. We see in Panel A that the minimum risk portfolio has lower TEV for larger values of \(\lambda \), as the portfolio weights approach the benchmark (see Fig. 2). The relationship is not linear, and the value of TEV does not reach zero due to the presence of the extra expected return constraint. The TEV of the minimum risk portfolios remains higher than that of the minimum TEV portfolios for all levels of \(\lambda \), although it converges to reasonably small levels (less than 1% per year). Panel B shows the volatility of the portfolio, a quantity that measures the total risk of the portfolio rather than the risk relative to the benchmark. Here, we notice that the minimum TEV portfolio has a standard deviation very close to that of the benchmark, while the minimum risk portfolio has a smaller standard deviation for lower values of the penalization parameter \(\lambda \). That is, by using a minimum risk portfolio with a low penalization, the investor has the opportunity to reduce the overall risk compared to the benchmark, albeit at the cost of a higher tracking error volatility. Panel C shows that the optimal portfolios are characterized by a negative covariance between the tracking error (TE) and the benchmark, which is consistent with the analysis in Sect. 3. Hence, this negative covariance contributes to reducing the risk of the optimal portfolio compared to the benchmark. Finally, Panel D shows the \(\ell _1\)-norm (i.e. the sum of the absolute values) of the differences between portfolio and benchmark weights, and we see an inverse relationship between this indicator and the penalization parameter \(\lambda \), meaning that the weights of the portfolio converge to those of the benchmark as \(\lambda \) increases.

Together, the plots in Fig. 3 show that the proposed framework makes it possible to handle the trade-off between risk control and adherence to the benchmark without imposing any explicit constraint on the tracking error volatility or on any other deviation measure computed on the tracking error. It offers flexibility in the choice of the risk measure targeted in the optimization and extends the principles of Jorion (2003) and Bertrand (2010) outside the mean-variance framework.

In-sample portfolio variance, portfolio deviation \(\mathcal {D}_{\eta }\) and \(\mathcal {D}_{\rho }\) versus tracking error volatility for portfolio strategies that minimize Variance, \(\mathcal {D}_{\eta }\) and \(\mathcal {D}_{\rho }\) for a set of penalized portfolios computed using a grid of exponentially spaced values of \(\lambda \)

As a further analysis, we compare the portfolio performance of optimization schemes based on alternative deviation measures on the same dataset. We focus on the following three measures: \({\mathcal {D}}_\eta (X)\) (asymmetric piecewise quadratic function), \({\mathcal {D}}_\rho (X)\) (asymmetric piecewise linear function, equivalent to minimizing the CVaR of the portfolio) and \(\sigma ^2(X)\) (quadratic function, equivalent to minimizing the variance). For each optimization framework, we consider the equally weighted portfolio as the benchmark, a target excess return over the benchmark of \(k=1\%\) per year, and \(\tau =0.1\). We compare the proposed deviation measures on an exponentially spaced grid of \(\lambda \) values. Figure 4 provides a comparison of the optimization schemes: Panel A compares the portfolios in terms of standard deviation, Panel B in terms of \({\mathcal {D}}_\eta (X)\) and Panel C in terms of \({\mathcal {D}}_\rho (X)\). As expected, we see a trade-off between risk and TEV for all the deviation measures, and each optimal portfolio provides the lowest risk according to the measure it optimizes at each level of TEV; for instance, the penalized mean-variance portfolio obtains a lower variance than the others, and so on.

Interestingly, we see that the portfolios are relatively close to each other in terms of risk, regardless of the specific measure used. This is not surprising as we expect that the advantage of using measures of deviation focused on the tails may be more relevant for data with non-elliptical distributions.
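To make the three deviation measures concrete, the following is a minimal sketch of how the quantile-based and expectile-based deviations could be computed on a sample of returns. It assumes the usual pinball loss and asymmetric squared loss, each evaluated at its own minimizer; the function names and the normalization are illustrative assumptions, and the definitions used in the paper may differ by a constant factor.

```python
import numpy as np

def quantile_deviation(x, tau):
    """Mean pinball loss at the empirical tau-quantile (assumed form of D_rho)."""
    x = np.sort(np.asarray(x, dtype=float))
    k = max(int(np.ceil(len(x) * tau)) - 1, 0)  # order statistic minimizing the loss
    u = x - x[k]
    return float(np.mean(u * (tau - (u < 0))))

def expectile(x, tau, tol=1e-12, max_iter=1000):
    """Sample tau-expectile via fixed-point iteration on the weighted mean."""
    x = np.asarray(x, dtype=float)
    e = x.mean()
    for _ in range(max_iter):
        w = np.where(x > e, tau, 1.0 - tau)
        e_new = np.sum(w * x) / np.sum(w)
        if abs(e_new - e) < tol:
            return float(e_new)
        e = e_new
    return float(e)

def expectile_deviation(x, tau):
    """Mean asymmetric squared loss at the tau-expectile (assumed form of D_eta)."""
    x = np.asarray(x, dtype=float)
    e = expectile(x, tau)
    w = np.where(x > e, tau, 1.0 - tau)
    return float(np.mean(w * (x - e) ** 2))

# Illustrative data only: at tau = 0.5 both measures reduce to symmetric ones.
sample = np.array([1.0, 2.0, 3.0, 4.0])
d_rho = quantile_deviation(sample, 0.5)   # half the mean absolute deviation from the median
d_eta = expectile_deviation(sample, 0.5)  # half the (population) variance
```

With \(\tau =0.5\) the two measures are symmetric; smaller values such as \(\tau =0.1\) put more weight on the left tail of the return distribution.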

5.2 Out-of-sample real-world analysis

5.2.1 The data

To test the performance of the proposed optimal asset allocations, we conduct an empirical analysis on real-world data. We consider three datasets, constructed from the constituents of the following indices: Standard and Poor’s 100 index (SP100), FTSE 100 index (FTSE100), and EUROSTOXX 50 index (EUROSTOXX). We include in the analysis only the stocks for which a complete time series of daily data is available, obtaining 91, 90, and 49 stocks for SP100, FTSE100 and EUROSTOXX, respectively.

We estimate the optimal portfolio weights using a rolling window of 500 daily observations, and we recalibrate the portfolio every twenty days. The investing period is from 01/01/2012 to 12/12/2022. The benchmark is a synthetic cap-weighted index constructed using the stocks in the dataset, in order to avoid dealing with changing index composition. We impose a turnover constraint of 10% at every recalibration, and we consider a gross exposure constraint of 110% (meaning that short positions should not exceed 5% of the portfolio). Concerning the overperformance, we require in the optimization problem a daily extra-return over the benchmark of 0.0001 (corresponding to roughly 2.5% per year). In Appendix C we perform several robustness checks on the optimization parameters and the selection of the estimation set.
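The rolling scheme described above can be sketched as a simple index schedule. This is only an illustration under stated assumptions: the function name is hypothetical, indices are end-exclusive positions in the array of daily observations, and the last holding period is truncated at the end of the sample.

```python
def rolling_schedule(n_obs, window=500, step=20):
    """Yield (est_start, est_end, hold_end) index triples, end-exclusive.

    Weights are estimated on observations [est_start, est_end) and held
    over [est_end, hold_end).
    """
    t = window
    while t < n_obs:
        yield (t - window, t, min(t + step, n_obs))
        t += step

# Toy sample of 560 daily observations: three estimation/holding periods.
schedule = list(rolling_schedule(560))
```

Each triple shifts the estimation window forward by twenty days, so consecutive estimation windows overlap on 480 observations.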

5.2.2 Tuning of the penalization parameters

The tuning of the parameter \(\lambda \) (for both the LASSO and EN portfolios) is performed using cross validation on past data, and this calibration aims to find a balance between risk and tracking error. In particular, for each estimation window we use the previous 10 in-sample rolling windows as training sets to estimate the optimal portfolios for different values of \(\lambda \). The corresponding 10 out-of-sample rolling windows are the test sets used to compute the average standard deviation of the optimal portfolios. We stress that this calibration procedure uses only past data. Moreover, reusing previous rolling windows for the calibration reduces the computational cost: the optimal portfolios for the cross validation do not need to be recomputed, as we can use the results already obtained for the portfolio optimization in the previous windows. We choose the parameter over an exponentially spaced grid of \(\lambda \) values by picking the \(\lambda \) that minimizes the following function:

where \(\sigma _{X,\lambda ,i}\) and \(TEV_{X,\lambda ,i}\) are the volatility and the tracking error volatility of the optimal portfolio X in the i-th estimation window with penalization \(\lambda \), and \(z(X)=\frac{X-{\bar{X}}}{\sigma _X}\) is the z-score computed within each cross-validation test window across all \(\lambda \) values (this standardization prevents the choice of \(\lambda \) from being dominated by test windows with anomalous market conditions). This procedure allows us to strike a balance between tracking accuracy and overall risk: an investor interested in a different equilibrium between risk and tracking error may apply weights to the two additive components in (10). If the standard deviation component in (10) is overweighted, then the priority is to reduce portfolio risk and a smaller \(\lambda \) is chosen; conversely, if tracking is most important for the investor, the TEV component should be overweighted, leading to a portfolio that tracks the benchmark more closely. Finally, we underline that the advantage of tuning \(\lambda \) using cross-validation, rather than applying a constraint to the TEV as in Jorion (2002), is that our procedure can account for the presence of estimation error: if the optimal portfolios with low \(\lambda \) tend to perform badly in the test windows (e.g. in case of strong market turmoil or regime switches), the procedure can select a portfolio more aligned with the benchmark, reducing the effects of estimation error. In the empirical analysis we consider 8 exponentially spaced values, and the upper and lower values are chosen in order to include a relevant range of portfolios (see Appendix B for an example of the visual inspection tools used in the empirical analysis).
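The selection rule can be illustrated with a short sketch. It assumes that the criterion in (10) is the sum, over the test windows, of \(z(\sigma _{X,\lambda ,i}) + z(TEV_{X,\lambda ,i})\), which is consistent with the description above but is not reproduced from the paper. The function name, the optional component weights and the toy numbers are hypothetical.

```python
import numpy as np

def select_lambda(sigma, tev, lambdas, w_sigma=1.0, w_tev=1.0):
    """Pick the lambda minimizing the summed z-scores of volatility and TEV.

    sigma, tev: arrays of shape (n_windows, n_lambdas) with out-of-sample
    volatility and tracking error volatility per test window and lambda.
    """
    sigma = np.asarray(sigma, dtype=float)
    tev = np.asarray(tev, dtype=float)

    def zscore(a):
        # Standardize within each test window (row), across lambda values.
        mu = a.mean(axis=1, keepdims=True)
        sd = a.std(axis=1, keepdims=True)
        return (a - mu) / np.where(sd > 0, sd, 1.0)

    score = (w_sigma * zscore(sigma) + w_tev * zscore(tev)).sum(axis=0)
    return lambdas[int(np.argmin(score))], score

# Toy inputs: volatility rises and TEV falls as lambda grows.
lambdas = [0.001, 0.01, 0.1]
sigma = [[1.0, 2.0, 4.0], [1.0, 2.0, 4.0]]
tev = [[4.0, 1.0, 0.5], [4.0, 1.0, 0.5]]

balanced, _ = select_lambda(sigma, tev, lambdas)
risk_first, _ = select_lambda(sigma, tev, lambdas, w_sigma=10.0)
tracking_first, _ = select_lambda(sigma, tev, lambdas, w_tev=10.0)
```

In this toy case, overweighting the volatility component selects the smallest \(\lambda \), while overweighting the TEV component selects the largest, in line with the discussion above.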

The parameter \(\alpha \) for the EN portfolio, which controls the ratio between the LASSO and RIDGE penalties, is not tuned; instead it is fixed at either 0.5 or 1 (the latter being equivalent to the LASSO penalty). As discussed in Giacometti et al. (2021), \(\alpha \) represents a choice of the investor, who may wish to obtain a portfolio that is more or less concentrated in some assets (in our case, the deviations from the benchmark are more or less concentrated in some of the assets). The value of 0.5 allows a comparison with LASSO, while maintaining a larger number of assets with zero deviation from the benchmark weight. We stress that a future line of research may explore parameter tuning based on market conditions, similarly to, e.g., dynamic smart beta strategies.

5.2.3 Empirical results

A list of the acronyms used for the different asset allocation models is provided in Table 1, while the description is reported in Appendix A. We consider the asset allocation techniques discussed so far, as well as the equally weighted portfolio (EW) and minimum tracking error volatility (min-TEV) portfolios (Roll, 1992) as benchmarks.

Table 2 reports the out-of-sample results in terms of risk and performance measures of the portfolios obtained by applying the proposed allocation strategies to the three datasets in the period 2012–2022, with a target daily expected return 0.0001 higher than the benchmark (\(\kappa =0.0001\)). We are mostly interested in evaluating the penalized strategies (MQ-L, MQ-EN, ME-L, and ME-EN) compared to the non-penalized optimal strategies (MQ, ME), the benchmark and two common strategies (min-TEV, and the equally weighted portfolio).

We see that the most effective strategies in reducing risk are the non-penalized portfolios (MQ, ME), which have smaller CVaR and standard deviation across all the specifications. The penalized strategies (MQ-L, MQ-EN, ME-L, and ME-EN), despite losing against the non-penalized ones, are less risky than the benchmark and the min-TEV strategies, and with only a few exceptions they are less risky than the equally weighted portfolios. Looking at Sharpe ratios and average returns, we see that the non-penalized portfolios show mixed results, with large deviations (both positive and negative) from the benchmark performance. In contrast, the average returns of the penalized strategies are approximately aligned with those of the benchmark and of the min-TEV portfolios. We stress that expected returns are known to be challenging to forecast, and their integration in portfolio optimization is always a relevant source of estimation error (see e.g. Michaud, 1989; Black and Litterman, 1992). By constraining the weights of the portfolio to be close to those of the benchmark we limit the modeling risk, reducing our exposure to estimation error. The higher modeling risk is also reflected in the maximum drawdown, which is particularly high for non-penalized portfolios in the SP100 dataset. This high maximum drawdown is likely due to the underperformance of the non-penalized models in turbulent markets, such as during the COVID crisis. In contrast, the penalized portfolios show maximum drawdowns lower than or aligned with those of the benchmark, since they tend to behave closely to the benchmark and to control tail risk. Focusing on the TEV, we see that penalized portfolios adhere very closely to the benchmark performance. This has both positive and negative implications: on the positive side, it limits the exposure to modeling risk.
Moreover, low tracking error is typically appreciated by investors interested in passive funds (Trackinsight, 2022). On the negative side, a low tracking error may reduce opportunities to obtain better risk-adjusted performance. As shown in Sect. 3, to reduce the volatility of the portfolio compared to the benchmark, the correlation between the tracking error and the benchmark has to be negative. Indeed, we see that the correlations are negative for all the proposed portfolios (see last column), while in the FTSE100 and the EUROSTOXX datasets the min-TEV strategy results in correlations close to zero.

Table 3 reports the corresponding portfolio statistics related to the portfolio turnover, gross exposure, density and excess density. The portfolio turnover is computed as \(\sum _{s = 1}^{{\bar{s}}}(\sum _{i = 1}^{n}\vert w_i^{(t_s)}-w_i^{(t_s^-)}\vert /2)/{\bar{s}}\), where \(s = 1,\dots ,{\bar{s}}\) indexes the rebalancing periods, \(t_s\) is the instant at the end of period s, immediately after the portfolio rebalancing, and \(t_s^-\) is the instant immediately before the rebalancing. Gross exposure is the sum of the absolute values of all long and short positions. Finally, the density is defined as the percentage of assets with non-zero weight, while the excess density is the percentage of assets with weights different from the benchmark.
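As an illustration of these definitions, the statistics can be computed as in the sketch below. The function names, the numerical tolerance used to decide whether a weight is non-zero, and the toy weights are assumptions made for the example.

```python
import numpy as np

def turnover(w_after, w_before):
    """Average one-way turnover over rebalancing dates.

    w_after, w_before: arrays of shape (n_rebalancings, n_assets) with the
    weights immediately after and immediately before each rebalancing.
    """
    diff = np.abs(np.asarray(w_after, float) - np.asarray(w_before, float))
    return float(np.mean(diff.sum(axis=1) / 2.0))

def gross_exposure(w):
    """Sum of absolute values of long and short positions."""
    return float(np.abs(np.asarray(w, float)).sum())

def density(w, tol=1e-8):
    """Percentage of assets with non-zero weight (tol is an assumed threshold)."""
    return 100.0 * float(np.mean(np.abs(np.asarray(w, float)) > tol))

def excess_density(w, w_bmk, tol=1e-8):
    """Percentage of assets whose weight differs from the benchmark weight."""
    d = np.asarray(w, float) - np.asarray(w_bmk, float)
    return 100.0 * float(np.mean(np.abs(d) > tol))

# Toy example: a fully invested portfolio with a 5% short position.
w_bmk = np.array([0.50, 0.50, 0.00, 0.00])
w = np.array([0.55, 0.50, 0.00, -0.05])

stats = {
    "turnover": turnover(w[None, :], w_bmk[None, :]),
    "gross_exposure": gross_exposure(w),
    "density": density(w),
    "excess_density": excess_density(w, w_bmk),
}
```

The toy portfolio is fully invested with a 5% short position, so its gross exposure equals 110%, the limit used in the empirical analysis.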

Comparing the optimal portfolios with and without penalization, we see that the non-penalized ones typically have higher gross exposure and turnover, while the penalized ones have more limited short positions and a smaller turnover. Indeed, the gross exposure and turnover constraints are typically binding for the non-penalized portfolios, while they are not for the penalized ones. The penalized portfolios show their capacity to control the TEV with limited turnover and short exposures.

The analysis of excess density helps us to appreciate the differences between the LASSO and the elastic net penalty: the former finds portfolios with a lower number of positions with weights different from those of the benchmark. This feature may be preferable if an investor aims to implement this strategy by adding individual positions to an already existing replicating portfolio or a cheap replication instrument such as an ETF. The minimum-TEV portfolio, in contrast, has non-zero positions in more than 60% of the assets for all the datasets considered.

Tables 4, 5, and 6 show the significance of the differences in standard deviation, CVaR, and Sharpe ratio, respectively. For the standard deviation and the Sharpe ratio we use the bootstrap procedures of Ledoit and Wolf (2011) and Ledoit and Wolf (2008). For the CVaR we obtain a confidence interval by estimating the standard error of the difference between CVaRs using the bootstrap procedure in Ledoit and Wolf (2008), and we then test whether the difference is significantly different from zero. We find that most of the differences are statistically significant at the 1% or 5% level. In contrast, the differences in Sharpe ratios are in most cases not statistically significant. This lack of significance may be related to the very high estimation error typically associated with expected returns (see e.g. Michaud, 1989; Black and Litterman, 1992).

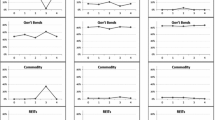

SP100. Time series evolution of portfolio wealth (1 USD at the beginning of the out-of-sample period), tracking error volatility (60 days rolling averages), excess returns over benchmark, optimal \(\lambda \). For brevity we report only the plots for the MQ and MQ-L. Other results are available upon request

FTSE100. Time series evolution of portfolio wealth (1 GBP at the beginning of the out-of-sample period), tracking error volatility (60 days rolling averages), excess returns over benchmark, optimal \(\lambda \). For brevity we report only the plots for the MQ and MQ-L. Other results are available upon request

Figures 5, 6, and 7 report some time series of the portfolios for the three datasets. For brevity we report only the images for the ME and ME-L portfolios. Plots for the quantile-based portfolios and for the elastic net penalty lead to similar considerations. The top-left panels show the evolution of the wealth of 1 Dollar/Euro/Pound invested in 2012. We see that the wealth of the penalized portfolios (ME-L) tracks closely that of the benchmark for all three datasets. For the non-penalized (ME) portfolios instead, we see that the final wealth is higher than the benchmark for the FTSE100 and EUROSTOXX datasets, while it is noticeably lower for the SP100. The plots of excess returns over the benchmark (bottom-left panels) confirm the sizeable deviations of the ME portfolios, both positive and negative. The top-right panels report the TEV, which is lower and more stable for the penalized portfolios (ME-L) than for the unpenalized portfolios (ME). Finally, the bottom-right panels show the evolution of the optimal \(\lambda \).

Concerning computational times, the optimization runs relatively fast: on an Intel i7-9750H 6-core processor with 16 GB of RAM, MATLAB R2021a, and no parallelization, the EN penalized portfolios were the slowest, and for each rolling window it took less than 5 s to compute the 8 optimizations required for the cross-validation of \(\lambda \) on the largest dataset, SP100 (91 assets).

EUROSTOXX 50. Time series evolution of portfolio wealth (1 EUR at the beginning of the out-of-sample period), tracking error volatility (60 days rolling averages), excess returns over benchmark, optimal \(\lambda \). For brevity we report only the plots for the MQ and MQ-L. Other results are available upon request

6 Conclusion

Passive and smart-beta strategies are increasingly popular among investors. The literature on (sparse) index replication portfolio strategies often overlooks the relevance of the correlation between TE and index returns, leading to potential inefficiencies. Indeed, the focus is often on minimizing the TEV, failing to consider explicitly the overall portfolio risk, which can be quantified by different risk measures. Here, we propose a new framework based on general risk definitions, which makes it possible to explicitly consider tail-risk measures. Moreover, we introduce a penalization scheme based on EN regularization, which controls for the deviations with respect to the index portfolio holdings. We show that the proposed optimization schemes can still be solved by quadratic programming for EN, making the new framework an attractive and flexible tool for investors. The in-sample analysis highlights the effect of the penalization parameters as well as the desirable properties of the proposed optimal strategies with respect to state-of-the-art tracking models. In particular, we show that by using a minimum risk portfolio with low penalization, the investor can reduce the overall risk compared to the benchmark, at the price of a slightly higher tracking error volatility. Moreover, the optimal portfolios are characterized by a negative covariance between the tracking error and the benchmark, which contributes to reducing the overall portfolio risk. The new framework thus makes it possible to handle the trade-off between risk control and adherence to the benchmark without imposing any explicit constraint on the tracking error volatility or other deviation measures that would potentially result in non-quadratic optimization. An added benefit of the penalization methods that we use is that they result in portfolios whose weights deviate from the benchmark for only a small number of assets.
This makes the strategies easier to implement, as an investor only needs to enter a limited number of positions on top of a passive replication fund that they already manage, or that can be purchased in the market at low cost. Focusing on the real-world out-of-sample analysis, we notice that the proposed strategies lead to a reduction in risk compared to the benchmark, together with small tracking error volatility, limited turnover, and conservative asset weights, thereby avoiding extreme positions. The negative correlations between the tracking error and the benchmarks make it possible to propose a replicating strategy that provides a hedge on the global portfolio. Further research high on the agenda is to explore parameter tuning based on market conditions, similarly to, e.g., dynamic smart beta strategies, as well as to consider alternative penalization functions capable of extending the appealing properties of the proposed strategies to further contexts of application, including robo-advisory.

Notes

Our approach still requires investing in all the assets in the benchmark, potentially leading to relatively high costs, especially when tracking indices with a large number of constituents. Still, according to Dyer and Guest (2022), 52% of funds that track an index use a full replication strategy and, when compared to products that adopt a sampling replication strategy, the ones with full replication typically have lower costs, lower turnover, and better tracking performance. This suggests that in several settings full replication may be a feasible strategy. Moreover, even if the replication of the index is not convenient, an investor could buy a passive ETF for the index and adjust this position with exposures to individual securities to obtain the desired optimal allocation.

Note that if \(k=0\) and there are no additional constraints, then \(\varvec{w}=\varvec{w}_{bmk}\) and the optimal solution is an exact replication of the benchmark.

Indeed, Jorion (2002) shows that for a sample of stock based enhanced index funds the risk of the portfolios is systematically higher than the benchmark.

Let \(q= \varvec{w}-\varvec{w}_{bmk}\); then \(TEV=\sqrt{q'\Sigma q}\). We can decompose it as follows: \(TEV^2(\varvec{w}) =\varvec{w}'\varvec{\Sigma }\varvec{w}-2 \varvec{w}'\varvec{\Sigma }\varvec{w}_{bmk}+\varvec{w}_{bmk}'\varvec{\Sigma }\varvec{w}_{bmk}\), where the last addendum is constant.

Let \(q= \varvec{w}-\varvec{w}_{bmk}\) and suppose that \( (\varvec{w}_{bmk}+q)'\Sigma (\varvec{w}_{bmk}+q)= \sigma _{bmk}^2 \), i.e. the portfolio has the same variance as the benchmark. Expanding the left-hand side gives \(\sigma _{bmk}^2+2q'\Sigma \varvec{w}_{bmk}+q'\Sigma q\), which implies \( 2q'\Sigma \varvec{w}_{bmk}= -TEV^2\), where \(TEV=\sqrt{q'\Sigma q }\).
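The two identities in the notes above can be checked numerically. The sketch below uses a randomly generated covariance matrix and an arbitrary perturbation of an equally weighted benchmark; all inputs are illustrative assumptions and are not data from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5

# Random symmetric positive definite covariance matrix (illustrative only).
A = rng.normal(size=(n, n))
Sigma = A @ A.T + n * np.eye(n)

w_bmk = np.full(n, 1.0 / n)                     # equally weighted benchmark
w = w_bmk + rng.normal(scale=0.05, size=n)      # arbitrary portfolio
q = w - w_bmk                                   # active weights

tev2 = q @ Sigma @ q                            # squared tracking error volatility
var_p = w @ Sigma @ w                           # portfolio variance
var_b = w_bmk @ Sigma @ w_bmk                   # benchmark variance
cov_te_b = q @ Sigma @ w_bmk                    # Cov(TE, bmk)

# First note: TEV^2 = w'Sw - 2 w'S w_bmk + w_bmk'S w_bmk
decomposition = var_p - 2 * (w @ Sigma @ w_bmk) + var_b

# Second note, general form: var_p = var_b + TEV^2 + 2 Cov(TE, bmk),
# so var_p = var_b holds exactly when 2 Cov(TE, bmk) = -TEV^2.
reconstructed_var_p = var_b + tev2 + 2 * cov_te_b
```

Since \(TEV^2\) is non-negative, the general form shows that the portfolio variance can fall below the benchmark variance only when the covariance between the tracking error and the benchmark is negative.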

The estimated parameters and the sample of random data used for the estimation are available upon request.

References

Adrian, T., & Brunnermeier, M. K. (2016). CoVaR. The American Economic Review, 106(7), 1705–1741.

Arnott, R., Beck, N., Kalesnik, V., & West, J. (2016). How can “smart beta” go horribly wrong. Available at SSRN 3040949.

Bellini, F., & Di Bernardino, E. (2017). Risk management with expectiles. The European Journal of Finance, 23(6), 487–506.

Bertrand, P. (2010). Another look at portfolio optimization under tracking-error constraints. Financial Analysts Journal, 66(3), 78–90.

Black, F., & Litterman, R. (1992). Global portfolio optimization. Financial Analysts Journal, 48(5), 28–43.

Canakgoz, N. A., & Beasley, J. E. (2009). Mixed-integer programming approaches for index tracking and enhanced indexation. European Journal of Operational Research, 196(1), 384–399.

de Paulo, W. L., de Oliveira, E. M., & do Valle Costa, O. L. (2016). Enhanced index tracking optimal portfolio selection. Finance Research Letters, 16, 93–102.

Dyer, T., & Guest, N. (2022). A tale of two index funds: Full replication vs. representative sampling. Available at SSRN 4057537.

Gass, S. I. (2003). Linear programming: Methods and applications. Courier Corporation.

Giacometti, R., Torri, G., & Paterlini, S. (2021). Tail risks in large portfolio selection: penalized quantile and expectile minimum deviation models. Quantitative Finance, 21(2), 243–261.

Giuzio, M., Eichhorn-Schott, K., Paterlini, S., & Weber, V. (2018). Tracking hedge funds returns using sparse clones. Annals of Operations Research, 266(1–2), 349–371.

Guastaroba, G., Mansini, R., Ogryczak, W., & Speranza, M. (2016). Linear programming models based on omega ratio for the enhanced index tracking problem. European Journal of Operational Research, 251(3), 938–956.

Guastaroba, G., Mansini, R., Ogryczak, W., & Speranza, M. G. (2020). Enhanced index tracking with CVaR-based ratio measures. Annals of Operations Research, 1–49.

Jakobsons, E. (2016). Scenario aggregation method for portfolio expectile optimization. Statistics & Risk Modeling, 33(1–2), 51–65.

Jorion, P. (2002). Enhanced index funds and tracking error optimization. Graduate School of Management, University of California at Irvine: Unpublished Paper.

Jorion, P. (2003). Portfolio optimization with tracking-error constraints. Financial Analysts Journal, 59(5), 70–82.

Kremer, P., Brzyski, D., Bogdan, M., & Paterlini, S. (2021). Sparse index clones via the sorted \(\ell _1\)-norm. Quantitative Finance, 15(1), 1–32.

Kremer, P., Lee, S., Bogdan, M., & Paterlini, S. (2020). Sparse portfolio selection via the sorted \(\ell _1\)-norm. Journal of Banking & Finance, 110, 105687.

Krink, T., Mittnik, S., & Paterlini, S. (2009). Differential evolution and combinatorial search for constrained index tracking. Annals of Operations Research, 172, 153–176.

Ledoit, O., & Wolf, M. (2008). Robust performance hypothesis testing with the Sharpe ratio. Journal of Empirical Finance, 15(5), 850–859.

Ledoit, O., & Wolf, M. (2011). Robust performances hypothesis testing with the variance. Wilmott, 2011(55), 86–89.

Maringer, D., & Oyewumi, O. (2007). Index tracking with constrained portfolios. Intelligent Systems in Accounting, Finance & Management: International Journal, 15(1–2), 57–71.

Michaud, R. O. (1989). The Markowitz optimization enigma: Is ‘optimized’ optimal? Financial Analysts Journal, 45(1), 31–42.

Newey, W. K., & Powell, J. L. (1987). Asymmetric least squares estimation and testing. Econometrica: Journal of the Econometric Society, 55(4), 819–847.

Richard, J.-C., & Roncalli, T. (2015). Smart beta: Managing diversification of minimum variance portfolios. In Risk-Based and Factor Investing, (pp. 31–63). Elsevier.

Rockafellar, R. T., & Uryasev, S. (2013). The fundamental risk quadrangle in risk management, optimization and statistical estimation. Surveys in Operations Research and Management Science, 18(1–2), 33–53.

Roll, R. (1992). A mean/variance analysis of tracking error. Journal of Portfolio Management, 18(4), 13–22.

Rudolf, M., Wolter, H.-J., & Zimmermann, H. (1999). A linear model for tracking error minimization. Journal of Banking & Finance, 23(1), 85–103.

Sant’Anna, L. R., Filomena, T. P., Guedes, P. C., & Borenstein, D. (2017). Index tracking with controlled number of assets using a hybrid heuristic combining genetic algorithm and non-linear programming. Annals of Operations Research, 258(2), 849–867.

Scozzari, A., Tardella, F., Paterlini, S., & Krink, T. (2013). Exact and heuristic approaches for the index tracking problem with UCITS constraints. Annals of Operations Research, 205(1), 235–250.

Strub, O., & Baumann, P. (2018). Optimal construction and rebalancing of index-tracking portfolios. European Journal of Operational Research, 264(1), 370–387.

Tibshirani, R. (1996). Regression shrinkage and selection via the LASSO. Journal of the Royal Statistical Society: Series B, 58(1), 267–288.

Trackinsight (2022). Global ETF survey 2022. Trackinsight: Technical report.

Vieira, E. B. F., Filomena, T. P., Sant’Anna, L. R., & Lejeune, M. A. (2021). Liquidity-constrained index tracking optimization models. Annals of Operations Research, 1–46.

Zou, H., & Hastie, T. (2005). Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society: Series B, 67(2), 301–320.

Funding

This work was supported by the Czech Science Foundation (GACR) under Project 19-11965S, and SGS Research Project SP2021/15 of VSB–TU Ostrava. Open access funding provided by Università degli studi di Bergamo within the CRUI-CARE Agreement.

Appendices

A The optimization models

Model 1: MQ. Minimize the quantile-based deviation \(\mathcal {D}_{\rho _{\tau }}\) of the portfolio with no penalization, subject to turnover and gross exposure constraints. Note that this model does not control in any way for the tracking error with respect to the benchmark. The expected return of the portfolio can be set to any value; typically we use the expected return of the benchmark plus an enhancement \(\kappa \).

Model 2: MQ-L. Minimize the same quantile deviation measure \(\mathcal {D}_{\rho _{\tau }}\) considered in (11), but with a LASSO penalty on the difference between the benchmark weights and the portfolio weights (the stronger the penalty, the closer the optimal portfolio is to the benchmark). The parameter \(\lambda \) is calibrated using a cross validation procedure.

Model 3: MQ-EN. Minimize the same quantile deviation measure \(\mathcal {D}_{\rho _{\tau }}\) considered in (11), but with an EN penalty on the difference between the benchmark weights and the portfolio weights (the stronger the penalty, the closer the optimal portfolio is to the benchmark). The parameter \(\lambda \) is tuned using a cross validation procedure, while the parameter \(\alpha \) is set to 0.5.

Model 4: ME. Minimize the expectile-based deviation \(\mathcal {D}_{\eta _{\tau }}\) of the portfolio with no penalization. Note that this model does not control in any way for the tracking error with respect to the benchmark. The expected return of the portfolio can be set to any value; typically we use the expected return of the benchmark plus an enhancement k.

Model 5: ME-L. Minimize the same expectile deviation measure \(\mathcal {D}_{\eta _{\tau }}\) considered in Eq. (14), but with a LASSO penalty on the difference between the benchmark weights and the portfolio weights (the stronger the penalty, the closer the optimal portfolio is to the benchmark). The parameter \(\lambda \) is calibrated using a cross validation procedure.

Model 6: ME-EN. Minimize the same expectile deviation measure \(\mathcal {D}_{\eta _{\tau }}\) considered in Eq. (14), but with an EN penalty on the difference between the benchmark weights and the portfolio weights (the stronger the penalty, the closer the optimal portfolio is to the benchmark). The parameter \(\lambda \) is tuned using a cross validation procedure, while the parameter \(\alpha \) is set to 0.5.

Model 7: EW. Equally weighted portfolio. It is a well-known benchmark for portfolio allocations.

Model 8: TEV Minimize the Tracking Error Volatility under budget and expected return constraints:

We underline that for \(k=0\) the optimal portfolio is equivalent to the benchmark. For this reason this portfolio is omitted for the analyses with zero excess return over the benchmark (Tables 2 and 3).
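A minimal sketch of the min-TEV problem of Model 8 follows, assuming the objective \((\varvec{w}-\varvec{w}_{bmk})'\varvec{\Sigma }(\varvec{w}-\varvec{w}_{bmk})\) with only the budget constraint and an expected return target equal to the benchmark return plus k. Under these assumptions the problem has equality constraints only and can be solved through its KKT linear system. The turnover and gross exposure constraints used in the empirical analysis are ignored here, and the function name and inputs are hypothetical.

```python
import numpy as np

def min_tev_weights(Sigma, mu, w_bmk, k):
    """Minimize (w - w_bmk)' Sigma (w - w_bmk) s.t. sum(w) = 1, mu'w = mu'w_bmk + k.

    Written in terms of active weights q = w - w_bmk: 1'q = 0 and mu'q = k.
    Assumes Sigma is positive definite and mu is not a constant vector.
    """
    n = len(mu)
    A = np.vstack([np.ones(n), mu])              # constraint matrix on q
    b = np.array([0.0, k])
    # Stationarity: 2 Sigma q + A' nu = 0; feasibility: A q = b.
    kkt = np.block([[2.0 * Sigma, A.T], [A, np.zeros((2, 2))]])
    rhs = np.concatenate([np.zeros(n), b])
    q = np.linalg.solve(kkt, rhs)[:n]
    return w_bmk + q

# Illustrative inputs only (not data from the paper).
rng = np.random.default_rng(1)
n = 6
B = rng.normal(size=(n, n))
Sigma = B @ B.T / n + 0.1 * np.eye(n)
mu = rng.normal(loc=5e-4, scale=2e-4, size=n)
w_bmk = np.full(n, 1.0 / n)

w_enhanced = min_tev_weights(Sigma, mu, w_bmk, k=1e-4)
w_zero = min_tev_weights(Sigma, mu, w_bmk, k=0.0)
```

With \(k=0\) the right-hand side of the system is zero, so the active weights vanish and the solution coincides with the benchmark, as stated above.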

Model 9: BMK Buy and hold investment in the index. Note that we do not consider the actual returns of the index, but a synthetic capitalization version of the index to avoid dealing with changing composition.

B Diagnostic plots for the calibration of \(\lambda \)

The penalization procedure applied to the portfolios requires the tuning of the parameter \(\lambda \). The goal of this step is to balance risk and TEV, and we use the cross-validation procedure described in Sect. 5.2.2. The value of \(\lambda \) is selected on an exponential grid whose extremes are defined through the analysis of diagnostic plots. Figure 8 reports an example of these plots. The upper panel shows the evolution of the wealth for the portfolios constructed using a fixed \(\lambda \), as well as the benchmark and the portfolio with the optimal \(\lambda \). The bottom panels report the out-of-sample CVaR, TEV, and excess density for the portfolios with fixed \(\lambda \) (blue line), and for the one with the optimal \(\lambda \) (horizontal orange line). We see that the grid of values allows us to span a set of portfolios between the unregularized optimal portfolios and the (enhanced) benchmark, and that the portfolio with the tuned \(\lambda \) strikes a balance between risk and TEV with a very low excess density (i.e. with only a few assets deviating from benchmark weights). For illustrative purposes we show here the results for the entire sample, while in a real-world application the fine-tuning of the extremes of the \(\lambda \) grid is performed on past data. For brevity we report the plots for a single dataset and asset allocation. Other portfolios and datasets show analogous results, which are available upon request.

Diagnostic plots for the calibration of lambda. Evolution of wealth for portfolios with fixed lambda, no penalization (\(\lambda =0\)), and the benchmark (top panel), CVaR (bottom-left panel), TEV (center-bottom panel), and excess density (bottom-right panel). CVaR and TEV are computed on the entire out-of-sample period, excess density is the average across all the investment periods. In the bottom panels the blue line is for fixed \(\lambda \) portfolios, and the horizontal orange line is the value for the portfolio with the optimal \(\lambda \) tuned with cross validation. For brevity we report only the plots for the MQ portfolios with EN penalty for the dataset SP100

C Robustness checks

In this section we report the results for a set of robustness checks. In particular, we test different lengths of the in-sample time series (125 days in Table 7 and 250 days in Table 8; baseline is 500); different lengths of the holding period (10 days in Table 9 and 40 days in Table 10; baseline is 20 days); a higher expected excess return (0.0002 per day, corresponding to roughly 5% per year, Table 11); a long-only constraint (Table 12); and portfolios optimized using weekly returns instead of daily returns (Table 13).

Overall, the results confirm those in the main text. In more detail, we notice that the effect of changing the estimation window size or the holding period is limited, resulting in qualitatively similar results. Increasing the expected return tends to increase the realized returns, although the effects are not as sharp as implied by the constraint. This is likely due to the well-known difficulty of estimating expected returns. Interestingly, we see that the imposition of long-only constraints reduces the ability of the strategy to reduce risk, suggesting that a moderate amount of short selling has beneficial effects in terms of risk reduction. Allowing larger short positions by setting the maximum gross exposure to 120% instead has little effect on the penalized portfolios, since the constraint in most cases is not binding (on the contrary, the constraint is typically binding for the non-penalized minimum-deviation portfolios). Finally, the analysis on weekly returns shows qualitatively similar results, suggesting that the methodology is also suitable for weekly data.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Torri, G., Giacometti, R. & Paterlini, S. Penalized enhanced portfolio replication with asymmetric deviation measures. Ann Oper Res 332, 481–531 (2024). https://doi.org/10.1007/s10479-023-05576-z

DOI: https://doi.org/10.1007/s10479-023-05576-z