Abstract

This paper develops a model for predicting the failure time of a wide class of weighted k-out-of-n reliability systems. To this end, we adopt a rational expectation-type approach by artificially creating an information set based on the observation of a collection of systems of the same class–the catalog. Specifically, we establish the connection between a synthetic statistical measure of the surviving components’ weights and the failure time of the systems. In detail, we follow the evolution of the systems in the catalog from the starting point to their failure, which occurs after the failure of some of their components. Then, we store the pairs given by the measure of the surviving components’ weights and the failure time. Finally, we use these pairs to predict the failure times of a set of new systems–the in-vivo systems–conditioned on the specific values of the considered statistical measure. We test different statistical measures for predicting the failure time of the in-vivo systems. As a result, we give insights into which statistical measure is most effective in providing a reliable estimate of the systems’ failure time. A discussion of the initial distribution of the weights is also carried out.

1 Introduction

In reliability theory, it is important to assess the failure times of systems with interconnected components, and this leads to interesting questions in mathematical statistics and probability modelling. Here, we propose a stochastic model for evaluating and predicting the expected time of failure of a class of weighted k-out-of-n systems, introduced by Wu and Chen (1994). We recall that weighted k-out-of-n systems have weighted components, and the failure of the system occurs when the surviving components have an aggregated weight below a given threshold. As we will see, we depart slightly from the original conceptualization of weighted k-out-of-n systems. In particular, a system fails when a given number of its components fail, and the weight of a failed component is assumed to be suitably reallocated to the surviving components, under the so-called reallocation rule.

To this end, we apply a Bayesian approach from a rational expectations perspective.

Some words on our conceptualization of rational expectations are in order. We postpone part of them to the end of the introduction, when the framework of the model will be clear.

Rational expectations are currently used in finance to estimate the fundamental price of a given asset. The fundamental price is obtained as the expected value of the price at time t conditioned on all available information relevant to the given asset. Since people are assumed to be rational (i.e., to make trading decisions without biases), taking the expected value should give the fair or fundamental price. The idea is that every time new information relevant to the price of an asset arrives (e.g., new information about earnings, interest rates, mergers, etc.), it affects the fundamental price of the asset. In a similar fashion, our idea is that every time new information arrives concerning individual component failure, this should lead to a new (optimal) prediction for the failure of the ensemble of components, i.e., system failure. We would like to point out that in finance “all relevant information” is in principle unbounded and hard to quantify: e.g., how does news of the sickness of the CEO affect the future earnings of a company? In contrast, in our case “information” is straightforward to quantify via the new values of the component weights of the system. Specifically, as we will see, we estimate the expected failure time of the given systems conditioned on the distribution of their weights, on the basis of an extensive collection of preliminary experiments in which such weights are observed.

Generally, in the literature (see, for example, Sanyal et al., 1997; Krishnamurthy & Mathur, 1997; Gokhale et al., 1998; Yacoub et al., 2004), the failure of reliability systems is forecast by using scenario analysis based on the observation of real systems. Once the failure of a system has been observed, scenario analysis is performed to understand what happened to that specific system.

To predict the failure time of the investigated systems, referred to here as “in-vivo” systems, we use all the information stored in a previously constructed “information set”. In particular, we create a catalogue of synthetic systems and observe their time evolution, from the beginning to failure. For each system in the catalogue, we store the time-varying weights (i.e., the configurations of the weights) and the associated failure times.

As said before, we adopt a rational expectation-type approach. The concept of rational expectation comes from the economic context. In this respect, in a celebrated paper, Muth (1961) pointed out the need for reliable predictions of the way expectations change once the available information and/or the structure of the considered system change. This can be seen as the starting point of rational expectations theory. We adopt this perspective by developing a rational expectation-type model for predicting the failure time of our class of reliability systems on the basis of the available information. In more detail, we build the information set by creating the catalog of systems of the same class and observing the connection between their ‘performance’ and their failure times. For us, the considered systems are weighted k-out-of-n systems and the performance is measured through a pre-selected synthetic measure of their weights.

Under this perspective, rational expectations are obtained by taking the mean of the failure times of the systems of the catalog, conditioned on a specific value of the performance. This procedure is applied for each observed value of the performance. In so doing, the prediction of the failure time conditioned on the performance is obtained as an arithmetic mean, which plays here the role of the expected value, of the failure times of the systems in the catalog having the same value of the performance. We take the arithmetic mean because we have no reason to over- or under-weight the contribution of any system of the catalog. Then, we compare these expected values with the failure times of in-vivo systems with a similar configuration of the weights. In doing so, we explore the informative content of the weights of the system components, so that we can then use an extensive simulation approach to forecast failure times.
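As a rough illustration of the conditional arithmetic mean described above, the following Python sketch groups catalog entries by performance value and averages their failure times. The function names, the toy catalog and the bin width `bin_width` are illustrative assumptions, not part of the model; binning is introduced here only because two simulated systems rarely share exactly the same real-valued performance.

```python
from collections import defaultdict
from statistics import mean


def rational_expectation_table(catalog, bin_width=0.05):
    """Map each performance bin to the arithmetic mean of the failure times in it.

    `catalog` is an iterable of (performance, failure_time) pairs.
    `bin_width` is a hypothetical tolerance used to decide that two
    performance values are "the same".
    """
    buckets = defaultdict(list)
    for performance, failure_time in catalog:
        buckets[round(performance / bin_width)].append(failure_time)
    # Every system in a bin contributes with the same weight.
    return {key: mean(times) for key, times in buckets.items()}


def predict_failure_time(table, performance, bin_width=0.05):
    """Return the catalog mean for the bin of `performance`, or None if the bin is empty."""
    return table.get(round(performance / bin_width))


# Toy catalog: (value of the statistical measure, observed failure time).
catalog = [(0.10, 12.0), (0.11, 14.0), (0.30, 6.0), (0.31, 8.0), (0.29, 7.0)]
table = rational_expectation_table(catalog)
prediction_low = predict_failure_time(table, 0.10)   # mean of 12.0 and 14.0
prediction_high = predict_failure_time(table, 0.30)  # mean of 6.0, 8.0 and 7.0
```

A value of the measure that never occurs in the catalog yields `None` in this sketch, which mirrors the fact that the prediction is only available for observed values of the performance.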

The method is inspired by the one proposed by Andersen and Sornette (2005) and Sornette and Andersen (2006) for predicting the failure time of the overall system, conditioned on the information revealed by the damage that has occurred up to the time at which the system is evaluated (i.e., according to the particular weight configuration). This idea was in turn influenced by the method known as “reverse tracing of precursors” (RTP) (see Keilis-Borok et al., 2004; Shebalin et al., 2004) for earthquake prediction based on seismicity patterns.

There are two aspects to this. First, we discuss the initial distribution of the weights to identify which of them leads to more effective rational expectations-based predictions. Second, we hypothesize that the similarity of the weight configurations is captured by the similarity of one of their statistical indicators; accordingly, we test several statistical indicators to find out which has the highest predictive power.

For the initial distribution of the weights, we follow the insights of Li and Zuo (2002), Sarhan (2005), Asadi and Bayramoglu (2006), Eryilmaz (2011), Van Gemund and Reijns (2012), and Zhang (2020), and compare five different initial distributions of the weights, viz., the uniform distribution in the unit interval and four types of Beta distribution, whose parameters cover the cases of symmetry and asymmetry to the left and to the right.
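A minimal sketch of how such initial weights could be drawn is given below. The shape parameters in the usage lines and the normalization step are assumptions made for illustration: the text above does not report the Beta parameters, and dividing by the total is simply one way to obtain relative weights that sum to one.

```python
import random


def initial_weights(n, a=None, b=None, rng=random):
    """Draw n raw weights and normalize them to sum to 1.

    With a and b left as None the raw weights are uniform on the unit
    interval; otherwise they follow a Beta(a, b) distribution.
    """
    if a is None or b is None:
        raw = [rng.random() for _ in range(n)]
    else:
        raw = [rng.betavariate(a, b) for _ in range(n)]
    total = sum(raw)
    return [x / total for x in raw]


rng = random.Random(42)  # fixed seed so the sketch is reproducible
uniform_weights = initial_weights(10, rng=rng)
symmetric_weights = initial_weights(10, 2.0, 2.0, rng=rng)     # hypothetical symmetric Beta
right_skewed_weights = initial_weights(10, 2.0, 5.0, rng=rng)  # hypothetical asymmetric Beta
left_skewed_weights = initial_weights(10, 5.0, 2.0, rng=rng)   # hypothetical asymmetric Beta
```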

We also exploit the existing literature to some extent for the analysis of the statistical indicators, considering the variance, skewness, kurtosis, Gini coefficient, and Shannon entropy. These statistical dimensions were chosen for their crucial informative content.

Concerning the use of moments in forecasting models, some authors consider the lowest moments of the distributions (the second in our case, i.e., the variance) to be more efficient than the higher moments (the third, skewness, and the fourth, kurtosis), which are attested to be less stable and reliable (see, e.g., Reijns & Van Gemund, 2007; Amari et al., 2012; Ramberg et al., 1979; Kinateder & Papavassiliou, 2019).

The usefulness of the Gini coefficient when dealing with failure prediction models is discussed by Ooghe and Spaenjers (2010).

Shannon entropy has been used for predictive purposes especially in papers dealing with extreme events (see, e.g., Franks & Beven, 1997; Mahanta et al., 2013; Karmakar et al., 2019; Ray & Chattopadhyay, 2021). That said, to the best of our knowledge, our paper is the first to exploit Shannon entropy for predicting the failure time of a class of weighted k-out-of-n reliability systems. In our model, the use of Shannon entropy leads to very satisfactory predictive results. Therefore, this statistical measure turns out to be an excellent tool, especially in the presence of very small values in the weight distribution of the system components.
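For concreteness, the five indicators can be computed from a weight vector as in the sketch below. The specific conventions are assumptions, since the text does not fix them: population (not sample) moments, non-excess kurtosis, the mean-absolute-difference form of the Gini coefficient, and natural logarithms for the entropy.

```python
import math


def variance(w):
    """Population variance of the weights."""
    m = sum(w) / len(w)
    return sum((x - m) ** 2 for x in w) / len(w)


def standardized_moment(w, k):
    """k-th standardized moment; NaN when all weights are equal."""
    m = sum(w) / len(w)
    s = math.sqrt(variance(w))
    if s == 0:
        return float("nan")
    return sum(((x - m) / s) ** k for x in w) / len(w)


def skewness(w):
    return standardized_moment(w, 3)


def kurtosis(w):
    return standardized_moment(w, 4)


def gini(w):
    """Gini coefficient as the mean absolute difference divided by twice the mean."""
    n = len(w)
    m = sum(w) / n
    return sum(abs(x - y) for x in w for y in w) / (2 * n * n * m)


def shannon_entropy(w):
    """Shannon entropy of the normalized weights; zero weights contribute nothing."""
    total = sum(w)
    return -sum((x / total) * math.log(x / total) for x in w if x > 0)


weights = [0.1, 0.15, 0.3, 0.2, 0.25]
indicators = {
    "variance": variance(weights),
    "skewness": skewness(weights),
    "kurtosis": kurtosis(weights),
    "gini": gini(weights),
    "entropy": shannon_entropy(weights),
}
```

Skipping zero weights in the entropy is what lets the same function be applied after some components have failed and their weights have become null.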

Notably, the present paper provides a bridge between reliability theory and rational expectations.

Indeed, the main message of this paper is the relevance of a rational expectations-type approach for making predictions in reliability theory. As time goes by, the growing amount of available information clearly improves the ability to predict failure times. Thus, after the initial phase, in which the available information is highly random and rational expectations yield no gain, the statistical indicators included in the analysis prove to be good predictive tools. The increase in knowledge about the system allows us to achieve better performance than the benchmark. In particular, at every time step, we observe an improvement in the error curves.

Our results agree with the existing literature regarding the initial weight distributions, confirming the predictive superiority of the negative-exponential distribution. Surprisingly, among the statistical measures, the Gini coefficient and the Shannon entropy give us the best predictive results, even though they are almost never used in forecasting models.

The rest of the paper is organized as follows. Section 2 contains a review of the main literature relating to our research. Section 3 discusses the relevant reliability systems, with particular reference to their structure, the main properties of their components, and the failure rule. Section 4 is devoted to the extensive simulations validating the theoretical proposal. Section 5 contains the results and a critical discussion. The last section concludes and suggests future lines of research.

2 Literature review

Here we review the basic literature that has inspired this research. There are two main themes: k-out-of-n reliability systems and rational expectations.

The k-out-of-n systems involve a variety of special cases and generalizations. However, we can distinguish two types of systems depending on the heterogeneity of their components. Specifically, systems with homogeneous components are ones where all the components contribute equally to the reliability of the system; otherwise a system is said to have heterogeneous components.

As already said in the Introduction, scientific research on weighted k-out-of-n systems was introduced in Wu and Chen (1994). Studies of systems with homogeneous components and related applications can be found in Ge and Wang (1990); Boland and El-Neweihi (1998), and Milczek (2003). The heterogeneous case is more challenging. Cases with random and mutually independent weights can be found in Cerqueti (2021); Xie and Pham (2005); Li and Zuo (2008); Eryilmaz and Bozbulut (2014); Eryilmaz (2014, 2019); Taghipour and Kassaei (2015); Zhang (2018, 2020), and Sheu et al. (2019). In all the cases, the prediction of the failure times of k-out-of-n systems has seen the development of several methods and is widely debated among scholars. It is worth mentioning some of the most relevant contributions:

- Da Costa Bueno and do Carmo (2007) applied active redundancy or minimal standby redundancy depending on the nature of the treated systems, using a martingale approach.
- Eryilmaz (2012) explored the mean residual lifetime as a fundamental characteristic to be used for dynamic reliability analysis.
- Wang et al. (2012) considered the reliability estimation of weighted k-out-of-n multi-state systems.
- Zhang et al. (2019) used a Monte Carlo simulation approach to confirm the accuracy of a model which assesses the reliability of a given system on the basis of the available information.

More generally, one can distinguish two main approaches to failure prediction in k-out-of-n systems in the existing literature: a probabilistic approach that seeks to calculate the probability distribution of a system’s failure time using techniques of stochastic calculus, and a Bayesian computational approach that estimates the average failure time of a system, conditioned on the description of a scenario in which the evolution of the given reliability system is observed.

In the former group, Oe et al. (1980) used autoregressive models to predict the failure of a stochastic system. For this purpose, they considered four types of performance indicators: quadratic distance of autoregressive parameter differences, variance of the residuals, Kullback information, and the distance of the Kullback information (divergence measure). Furthermore, Azaron et al. (2005) used the reliability function for systems with standby redundancy. The system fails when all connections between input and output that are connected to the main components are broken. From a different viewpoint, Parsa et al. (2018) introduced a new stochastic order based on the Gini-type index, showing how it could be used to gain information about the ageing properties of reliability systems, and thus establishing the characteristics of active or already failed components.

In the probabilistic approach, a key role is played by the so-called coherent systems, i.e., systems without irrelevant components; moreover, such systems certainly work when all the components are active and fail when all the components have failed. An important contribution here is due to Navarro and coauthors, who give some insight into the case of dependent components (see Navarro et al., 2005, 2013, 2015).

In the same context, Gupta et al. (2015) compared the residual lifetime and the inactivity time of failed components of coherent systems with the lifetime of a system that had the same structure and the same dependence. In doing so, the paper cited is particularly close to our own approach, in that it proposes a comparison between a test system and the investigated one–as we do here, with the comparison between the investigated systems and those in the catalogue. In contrast, Zarezadeh et al. (2018) investigated the joint reliability of two coherent systems with shared components, obtaining a pseudo-mixture representation for the joint distribution of the failure time.

The Bayesian approach includes many papers that estimate the average time to failure of these systems using asymmetric loss functions. Among them, Mastran (1976) presented a procedure for exploring the connection between component failure and system collapse in the case of independent components. Mastran and Singpurwalla (1978) also proposed examples of series systems with independent components and parallel systems with component interdependence, using prior data to build in this interdependence. Barlow (1985) modeled a combination of information between components and systems by exploiting lifetime data. Martz et al. (1988) proposed a very detailed procedure and explanatory examples for either test or prior data at three or more configuration levels in the system. Martz and Waller (1990) extended Martz et al. (1988) to the particular cases of series and parallel subsystems. Regarding applications, we should mention Van Noortwijk et al. (1995), who developed a Bayesian failure model for the observable deterioration characteristics in a hydraulic field. Gunawan and Papalambros (2006) presented a Bayesian-type reliability system model in the field of engineering. Kim et al. (2011) explored deteriorating systems by conditioning on monitoring data based on three-state continuous-time homogeneous Markov processes. Along the same lines, Aktekin and Caglar (2013) presented Markov chain Monte Carlo methods in the area of software reliability to investigate the failure rate of systems with components that change stochastically. Regarding Bayesian methods, it is also worth mentioning Bhattacharya (1967), El-Sayyad (1967), Canfield (1970), Varian (1975), Zellner (1986), and Basu and Ebrahimi (1991).

In line with several of the above-mentioned studies, the weighted k-out-of-n systems presented here have components with an inner dependence structure. The failure of a given system is assumed to depend on the number and importance of the components. The approach we follow is of Bayesian type. Indeed, our aim is to estimate the failure time of a system by using the information collected in an observed catalogue as the prior. In doing so, we introduce a new element of reliability theory by adopting a rational expectations perspective.

The way expectations are created is a classical theme in the economic debate. Indeed, in the modern theory of behavioral economics, agents are divided into two different categories depending on the hypothesis they use to form economic expectations: those who follow the hypothesis of adaptive expectations, and others who instead follow the hypothesis of rational expectations. Under the first hypothesis, future economic decisions are made according to what happened in the past (see, e.g., Friedman, 1957; Chow, 1991). In the context of rational expectations, however, future outcomes are computed as the conditional expectation of the observed realizations of the quantity to be predicted given the available information (see the breakthrough contributions by Muth, 1961; Lucas, 1972; Sargent et al., 1973; Sargent & Wallace, 1975; Barro, 1976).

The rational expectations hypothesis is a fundamental assumption in many theoretical models, with implications for economic analysis, and thanks to the increasing accessibility of big data in recent years, studies have been carried out on the use of rational expectations to identify prediction errors in large samples. So, rational expectations are important in any situation in which the agents’ behavior is influenced by expectations (see Maddock and Carter (1982)).

In the context of forecasting, we mention Atici et al. (2014), who applied Cagan’s model of hyperinflation on discrete time domains. From a different perspective, Becker et al. (2007) compared the rational expectations hypothesis with the bounds and likelihood heuristic to explain average forecasting behavior.

The rational expectations perspective proposed here is used to forecast the failure time of a reliability system, given the available information. Specifically, we check whether the conditional expectation of the realizations in the catalogue of such a quantity given a peculiar state of the weights leads to a suitable identification of the failure time of a system with the same weight configuration.

The crucial aspect of our paper concerns the use of statistical measures to synthesize the available information on the components’ weights in order to predict the failure time of a weighted k-out-of-n system. Specifically, we assess the role of each of the considered statistical measures in predicting the failure time of the system. Furthermore, we assess how the initial distribution of the weights affects the prediction. In doing so, we adopt a rational expectations-type approach in the context of reliability theory with an affordable computational complexity. This is the main novelty of our paper.

3 The reliability system

We consider a probability space \((\Omega ,\mathcal {F},\mathbb {P})\) containing all the random quantities used throughout the paper. We denote the expected value operator related to the probability measure \(\mathbb {P}\) by \(\mathbb {E}\).

We denote the reliability system–or, simply, the system–by \(\textbf{S}\), and assume that it comprises n components denoted by \(C_{1},\dots ,C_{n}\) and collected in a set \(\mathcal {C}\).

As we will see, in our framework the system can be considered to be of weighted k-out-of-n type in the sense that it fails when some of its components fail.

The state of \(\textbf{S}\) is a binary quantity. If the system is active and works, then its state is 1. Otherwise, the state of \(\textbf{S}\) is 0, and the system is said to have failed. The state of \(\textbf{S}\) evolves in time and is denoted by Y(t) at time \(t \ge 0\). At the beginning of the analysis (time \(t=0\)), the system is naturally assumed to be in state 1.

Analogously, the state of the j-th component \(C_j\) at time t is denoted by \(Y_j(t)\), and it takes value 1 when \(C_j\) is active and 0 when \(C_j\) has failed. At time \(t=0\) we have \(Y_j(0)=1\), for each \(j=1, \dots , n\).

3.1 The structure of the system

To express the dependence of the state of \(\textbf{S}\) on the states of its components, we simply introduce a function \(\phi :\{0,1\}^n \rightarrow \{0,1\}\) such that

\[Y(t)=\phi \left( Y_1(t),\dots ,Y_n(t)\right) , \qquad t \ge 0. \tag{1}\]

In reliability theory, \(\phi \) is usually called the structure function of the system.

The elements of \(\{0,1\}^n\) are called configurations of the states of the components of the system or, briefly, configurations.

The function \(\phi \) in (1) has the role of clustering the set of configurations into two subsets: those leading to failure (F) of the system and those associated with the not-failed (NF) system. Thus, we say that \(K_F\subseteq \{0,1\}^n\) is the collection of configurations such that \(\phi (x_F)=0\), for each \(x_F \in K_F\), while \(K_{NF}\subseteq \{0,1\}^n\) is the collection of configurations such that \(\phi (x_{NF})=1\), for each \(x_{NF} \in K_{NF}\). By definition, \(\{K_F,K_{NF}\}\) is a partition of \(\{0,1\}^n\).

We may reasonably assume that the system is coherent and that the following three conditions are satisfied:

First, \((0, \dots , 0)\in K_F\) and \((1, \dots , 1)\in K_{NF}\). This condition means that, when all the components of the system are active (not active), then the system as a whole is also active (not active).

Second, \(\phi \) is non-decreasing with respect to its components. This has an intuitive explanation: the failure of one of the components of the system might worsen the state of the system and cannot improve it.

Third, each component is able to determine the failure of the system. Formally, this condition states that, for each \(j=1,\dots , n\), there exists \(\left( y_1, \dots , y_{j-1},y_{j+1}, \dots , y_{n}\right) \in \{0,1\}^{n-1}\) such that \((y_1, \dots , y_{j-1},1,y_{j+1}, \dots , y_{n}) \in K_{NF}\) and \((y_1, \dots , y_{j-1},0,y_{j+1}, \dots , y_{n}) \in K_{F}\).
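The three conditions can be checked by brute force for small n, as in the following sketch. The weighted-threshold structure function used as an example, with integer weights and a threshold of 60, is a hypothetical choice made for illustration and is not taken from the paper.

```python
from itertools import product


def is_coherent(phi, n):
    """Check the three coherence conditions for phi: {0,1}^n -> {0,1} by enumeration."""
    configs = list(product((0, 1), repeat=n))

    # First condition: all components failed -> system failed; all active -> system active.
    if phi((0,) * n) != 0 or phi((1,) * n) != 1:
        return False

    # Second condition: switching a component from 0 to 1 never lowers phi.
    for x in configs:
        for j in range(n):
            if x[j] == 0 and phi(x) > phi(x[:j] + (1,) + x[j + 1:]):
                return False

    # Third condition: for each component there is a configuration of the
    # others in which its state alone decides the state of the system.
    for j in range(n):
        relevant = any(
            phi(x[:j] + (1,) + x[j + 1:]) == 1 and phi(x[:j] + (0,) + x[j + 1:]) == 0
            for x in configs
        )
        if not relevant:
            return False
    return True


# Hypothetical example: the system works while the surviving weight is at least 60.
WEIGHTS = (10, 15, 30, 20, 25)
THRESHOLD = 60


def threshold_phi(x):
    return 1 if sum(w for w, state in zip(WEIGHTS, x) if state) >= THRESHOLD else 0


coherent = is_coherent(threshold_phi, len(WEIGHTS))
# A structure function that ignores its second component violates the third condition.
not_coherent = is_coherent(lambda x: x[0], 2)
```

Integer weights are used here only to avoid floating-point ties at the threshold; the enumeration itself costs on the order of n·2^n evaluations of the structure function, so it is only practical for small systems.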

3.2 Components and weights

We now note three natural assumptions about the components of the system, inspired by standard reliability theory. First, the components of the system do not all have the same “relevance”. This means that a possible measure of the centrality of the components’ role in the overall system would lead to a heterogeneous distribution. As we will explain in more detail soon, “relevance” here stands for the role played by a component’s failure in contributing to the failure of the system. Second, the components of the system are interconnected and exhibit different levels of interconnection. Third, relevance and interconnection levels change over time, with the changing status of the components of the system. We now spell this out.

For each \(j=1, \dots , n\) and \(t \ge 0\), the relative importance of the component \(C_j\) over the entire system at time t is measured by \(\alpha _j(t)\), where \(\alpha _j:[0, +\infty ) \rightarrow [0,1]\) and \(\sum _{j=1}^n \alpha _j(t)=1\), for each t.

For each \(t \ge 0\), we collect the \(\alpha (t)\)’s in a time-varying vector \(\textbf{a}(t)=(\alpha _j(t))_{j}\), where

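If a component is not active at time t, then its relevance for the system is null. Moreover, each active component has positive relative relevance, i.e., the system does not contain irrelevant active components. Formally, for each \(j=1, \dots , n\) and \(t \ge 0\),

\[\alpha _j(t)=0 \quad \Longleftrightarrow \quad Y_j(t)=0. \tag{3}\]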

Condition (3) is useful, in that it allows us to describe the status of the system’s components directly through the \(\alpha \)’s.

For each \(j=1, \dots , n\), the relative relevance of \(C_j\) varies with the state of each of the system’s components. Once a component fails, it disappears from the reliability system (i.e., its relative relevance becomes null), and the relative relevances of the remaining active components are modified on the basis of a suitably defined reallocation rule.

Fig. 1 Blob graph representing the proportional reallocation rule described in Example 1. [Top] A component has failed and is deleted from the system. [Bottom] The relevance of the failed component is reallocated over the remaining active components in proportion to their \(\alpha \) values (bubble size) before the failure

The next example proposes a way to build a reallocation rule.

Example 1

Consider a system \(\textbf{S}\) whose component set is \(\mathcal {C}=\{C_1,C_2,C_3,C_4,C_5\}\). Assume that, at time \(t=0\), we have \(\alpha _1(0)=0.1\), \(\alpha _2(0)=0.15\), \(\alpha _3(0)=0.3\), \(\alpha _4(0)=0.2\), \(\alpha _5(0)=0.25\).

Now, suppose that the first failure of one of the components of the system occurs at time \(t=7\), when \(C_3\) fails. Of course, \(\alpha _j(t)=\alpha _j(0)\), for each \(t \in [0,7)\) and \(j=1,2,3,4,5\). Moreover, \(\alpha _3(7)=0\).

We consider a specific reallocation rule which states that the relevance is reallocated over the remaining active components in proportion to their \(\alpha \) values before the failure (see Fig. 1). This means that

In general, if \(\tau _1, \tau _2\) are the dates of two consecutive failures, with \(\tau _1<\tau _2\), we have

The \(\alpha \)’s are step functions, with jumps each time one of the components fails.
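The proportional rule of Example 1 can be sketched in a few lines of Python. The function name and the zero-based indexing are illustrative choices; the numbers are those of the example.

```python
def reallocate_proportionally(alpha, failed):
    """Set the weight of the failed component to zero and spread it over the
    others in proportion to their weights just before the failure."""
    remaining = sum(a for j, a in enumerate(alpha) if j != failed)
    if remaining == 0:
        raise ValueError("no active component left to receive the weight")
    return [
        0.0 if j == failed else a + alpha[failed] * a / remaining
        for j, a in enumerate(alpha)
    ]


alpha_before = [0.1, 0.15, 0.3, 0.2, 0.25]  # alpha_j(0) for C_1, ..., C_5
alpha_after = reallocate_proportionally(alpha_before, failed=2)  # C_3 fails at t = 7
```

With these inputs, the weights after the failure are approximately 0.143, 0.214, 0, 0.286 and 0.357, i.e., each surviving weight is divided by 1 − 0.3 = 0.7, and they again sum to one. Components that have already failed keep a null weight, because a zero weight receives a zero share.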

Regarding the interconnections among the components, we define their time-varying relative levels using functions \(w_{ij}:[0, +\infty ) \rightarrow [0,1]\), for each \(i,j=1, \dots , n\), so that \(w_{ij}(t)\) is the relative level of the interconnection between \(C_i\) and \(C_j\) at time \(t \ge 0\). We assume that the arcs in the resulting network are oriented, so that in general \(w_{ij}(t) \ne w_{ji}(t)\), for each t. Moreover, by construction, \(\sum _{i,j=1}^n w_{ij}(t)=1\), for each t. We also assume that self-connections do not exist in our network, i.e., \(w_{ii}(t)=0\), for each i and t.

For each \(t \ge 0\), the w(t)’s are collected in a time-varying matrix \(\textbf{w}(t)=(w_{ij}(t))_{i,j}\), with

If \(C_i\) is a non-active component at time t, then \(w_{ij}(t)=w_{ji}(t)=0\), for each \(j=1,\dots , n\). This condition simply formulates the idea that a failed component is disconnected from the system. It suggests that the failure of a component might generate disconnections among the components of the system.

The behavior of the w’s is analogous to that of the \(\alpha \)’s. In this case, too, the relative levels of interconnections change when one of the components of \(\textbf{S}\) changes its state, and there is a reallocation rule for the remaining levels of interconnection.

We denote the whole set of reallocation rules for the weights on nodes and arcs by \(\mathcal {R}\). Therefore, a natural rewriting of the system \(\textbf{S}\) with components in \(\mathcal {C}\) and reallocation rule \(\mathcal {R}\) at time t is

Notice that (5) highlights the observable features of the system with a given set of components and a specific reallocation rule, i.e., the weights on the nodes and on the arcs. Thus, according to (5), we can refer to \(\{\bar{\textbf{a}}, \bar{\textbf{w}}\}\) as an observation of the system at a given time, where \(\bar{\textbf{a}} \in [0,1]^n\) and \(\bar{\textbf{w}} \in [0,1]^{n \times n}\).

When needed, we will conveniently remove the dependence on t from the quantities in (5).

3.3 Failure of the system

As mentioned, time \(t=0\) represents today–the point at which we begin to observe the evolution of the system. Since the system is coherent in the sense that there are no irrelevant components, at time \(t=0\) all the components are active and the system works. The failure of the system is then a random event, which occurs when the system achieves one of the configurations belonging to \(K_F\).

We define the system lifetime by

\[\mathcal {T}=\inf \{t \ge 0: Y(t)=0\}. \tag{6}\]

Analogously, the n-dimensional vector of component lifetimes is \(\textbf{X}=(X_{1},\dots ,X_{n})\), where

\[X_j=\inf \{t \ge 0: Y_j(t)=0\}, \qquad j=1,\dots ,n. \tag{7}\]

To be as general as possible, we assume that the lifetimes of the components of the system \(\{X_{1}, \dots , X_{n}\}\) are not independent random variables and do not share the same distribution. In fact, at each component failure, the \(\alpha \)’s and w’s change in accordance with the reallocation rule \(\mathcal {R}\); this also modifies the probability of subsequent failures of the system components in the very natural case where failures depend on the weights. Moreover, we can reasonably assume that the failure of the system coincides with the failure of one of its components.

To fix ideas, we provide an example.

Example 2

Assume that \(\mathcal {C}=\{C_1, C_2,C_3,C_4,C_5\}\) and

Suppose that the reallocation rules \(\mathcal {R}\) for relative relevance and interconnection levels are of proportional type, as in Example 1. Such reallocations are implemented if the system has not failed.

Furthermore, assume that the failure of a component has a twofold nature: on the one hand, it can be brought about by an idiosyncratic shock; on the other, it can be driven by the failure of the other components. Specifically, we hypothesize that, if a given component fails, then other components that are only connected to that component will fail as well, independently of their levels of interconnection. In contrast, the idiosyncratic shocks are assumed to be captured by a Poisson process with parameter \(\lambda \)–giving the timing of the failures–jointly with a uniform process over \(\mathcal {C}\), independent of the Poisson process, which identifies the failed component.

Moreover, suppose that the system fails at the first time in which components with aggregated relative relevance greater than 0.4 fail.

Now, suppose that the first failure is observed at time \(t=8\), when \(C_2\) fails. Then, automatically, \(C_3\) fails as well, since it is connected only to \(C_2\). The aggregate relative relevance of the two failed components just before the failures is \(\alpha _2(8^-)+\alpha _3(8^-)=0.5+0.2=0.7>0.4\), and the system fails.
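The mechanism of Example 2 can be simulated along the following lines. Several ingredients are assumptions made only to obtain a runnable sketch: the weights of \(C_1\), \(C_4\), \(C_5\) and the neighbour sets are hypothetical (only \(\alpha _2=0.5\), \(\alpha _3=0.2\) and the fact that \(C_3\) is connected only to \(C_2\) come from the example), connections are treated as undirected, shocks hit active components only, and the cascade is applied one level deep.

```python
import random


def failing_set(hit, neighbors, active):
    """Components failing together: the one hit by the shock plus every active
    component whose only active neighbour is the one that was hit."""
    return {hit} | {j for j in active if (neighbors[j] & active) == {hit}}


def simulate_failure_time(alpha, neighbors, rate, threshold=0.4, rng=random):
    """Simulate idiosyncratic shocks until the system fails; return the failure time."""
    alpha = list(alpha)
    active = set(range(len(alpha)))
    t = 0.0
    while True:
        t += rng.expovariate(rate)        # Poisson process: exponential waiting times
        hit = rng.choice(sorted(active))  # uniform choice among active components
        failing = failing_set(hit, neighbors, active)
        lost = sum(alpha[j] for j in failing)
        if lost > threshold:              # failure rule of the example
            return t
        # The system survives: proportional reallocation over the survivors.
        for j in active:
            alpha[j] = 0.0 if j in failing else alpha[j] / (1.0 - lost)
        active -= failing


# Zero-based indices: component j stands for C_{j+1}.
alpha0 = [0.1, 0.5, 0.2, 0.1, 0.1]                        # C_1, C_4, C_5 weights are hypothetical
neighbors = {0: {1, 3}, 1: {0, 2}, 2: {1}, 3: {0, 4}, 4: {3}}  # hypothetical network

first_event = failing_set(1, neighbors, set(range(5)))    # a shock on C_2
lifetime = simulate_failure_time(alpha0, neighbors, rate=0.1, rng=random.Random(1))
```

In this sketch a shock on \(C_2\) takes \(C_3\) down with it, and their joint weight 0.7 exceeds the threshold, reproducing the outcome described in the example.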

To compute the expected failure time of the system under a rational expectations approach, we use the information contained in the specific values of the weights at time t, namely \((\textbf{a}(t), \textbf{w}(t))\). Specifically, we compute the expected value of \(\mathcal {T}\) at time t, conditioned on the specific values of the weights \((\textbf{a}(t), \textbf{w}(t))\).

If \(RE_t\) is the value of the rational expectations prediction, issued at time t, of the failure time \(\mathcal {T}\), given all the possible observations of the system, we have

The formula (8) gives the expected value of the lifetime of \(\textbf{S}(t)\) conditioned on the specific observations of the state of the system at time t, viz., \((\bar{\textbf{a}}(t), \bar{\textbf{w}}(t))\).

We note the link to rational expectations used in finance, with the left-hand side of (8) corresponding to the fundamental price of an asset, which can be obtained as the expected value of the price at time t conditioned on all available information relevant for the given asset. In finance, the idea is that every time new information arrives (e.g., new information about earnings, interest rates, mergers, etc.), this will impact the fundamental price of an asset. In a similar fashion, our idea is that every time new information arrives concerning individual component failure, this should lead to a new (optimal) prediction for the failure of the ensemble of components, i.e., system failure. We would like to point out that in finance “all relevant information” is in principle unlimited and very hard to quantify: e.g., how does news of the sickness of the CEO impact the future earnings of a company? In contrast, in our case “information” is straightforward to quantify via the new values of \((\bar{\textbf{a}}(t), \bar{\textbf{w}}(t))\).

In order to calculate, for a given system at a given time, the conditioned expected value in (8) for the investigated systems, referred to here as “in vivo” systems, the central trick is to first create a “catalogue” of information. To produce the catalogue, we generate a set of M systems and we follow their lives from the beginning to failure. For each time t, we record the state of each system of the catalogue \((\bar{\textbf{a}}(t), \bar{\textbf{w}}(t))\) and the failure time \(\mathcal {T}\), i.e., we effectively record the pair (\((\bar{\textbf{a}}(t), \bar{\textbf{w}}(t))\), \(\mathcal {T}\)). In this way, we create an “information set” \(\textbf{I}^M\). Now, each in vivo system at each time step t presents a specific configuration \((\bar{\textbf{a}}(t), \bar{\textbf{w}}(t))\), and we can predict its failure time \(RE_t\) using (8) and referring to the systems in \(\textbf{I}^M\).

In practice, the use of (8) to create a large enough information set on a computer requires a lot of CPU time and storage space. We reduce the dimensionality of the problem by introducing a function f that maps the weights \((\bar{\textbf{a}}(t), \bar{\textbf{w}}(t))\) into real numbers. We then replace (8) by

The idea now is to test our method using the variance, skewness, kurtosis, Gini coefficient, and Shannon entropy as the function f. The next section details the simulation procedure.
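The five candidate choices of f can be sketched in a few lines of Python. This is only an illustrative sketch: the text does not state which estimator conventions are used, so the code assumes population (biased) moments, non-excess kurtosis, the natural logarithm, and an entropy computed on the weights rescaled to sum to one. All function names are hypothetical.

```python
import math


def variance(w):
    """Population variance of the weights (assumed convention)."""
    mu = sum(w) / len(w)
    return sum((x - mu) ** 2 for x in w) / len(w)


def _standardized_moment(w, k):
    """k-th central moment divided by the k-th power of the standard deviation."""
    mu = sum(w) / len(w)
    var = variance(w)
    if var == 0:
        return 0.0  # degenerate case: all weights equal
    return sum((x - mu) ** k for x in w) / len(w) / var ** (k / 2)


def skewness(w):
    return _standardized_moment(w, 3)


def kurtosis(w):
    return _standardized_moment(w, 4)  # non-excess kurtosis (assumed)


def gini(w):
    """Gini coefficient computed from the sorted weights."""
    n, total = len(w), sum(w)
    if total == 0:
        return 0.0
    ranked = sorted(w)
    return sum((2 * i - n - 1) * x for i, x in enumerate(ranked, start=1)) / (n * total)


def shannon_entropy(w):
    """Shannon entropy (natural log) of the weights rescaled to sum to one."""
    total = sum(w)
    if total <= 0:
        return 0.0
    return -sum((x / total) * math.log(x / total) for x in w if x > 0)
```

Each function maps a vector of surviving weights to a single real number, which is what makes the conditioning one-dimensional rather than n-dimensional.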

4 Simulation experiments

We now present the simulation procedure used to test our methodological proposal.

4.1 Specifying the systems

The scenario analyses performed in the works dealing with prediction simulate real systems and are based on all the observations of the simulated systems (see, e.g., Sanyal et al. (1997), Krishnamurthy & Mathur (1997), Gokhale et al. (1998) and Yacoub et al. (2004)). Unlike this approach, we compare the failure times of the real systems (which we call "in-vivo" systems) with those observed in a previously recorded catalog (the "information set") in correspondence to several statistical measures of the weights of the survived components. In detail, we first consider the distribution of the variance, skewness, kurtosis, Gini coefficient and Shannon entropy of the weights of the catalog systems’ components as the individual components fail. Then, we condition the failure times on various percentiles of these statistical measures to see what happens at different levels (small, medium and large). Finally, we compare the conditioned failure times of the "in-vivo" systems with those observed in the information set, hence obtaining insights into the predictive role of the considered statistical measures.

More specifically, the procedure for the failure of components works in a stepwise form.

The system is assumed to fail the first time the number of failed components exceeds N/2.

The reallocation rule is of proportional type, as in Example 1, so the relevance of the failed component is reallocated over the remaining active components in proportion to their \(\alpha \) values before the failure. Such a reallocation rule, together with the condition for failure described above, clearly indicates the stochastic interdependence of the lifetimes of the system components. This said, for the sake of simplicity, we keep this interdependence implicit by setting the links among the components equal, i.e., at any given time t, any active component \(C_i\) is connected with the same strength \(w_{ij}\) to any other active component \(C_j\), whence all the entries in \(\textbf{w}(t)\) can be taken as equal to unity, for each time t. We can thus remove any further reference to the matrix \(\textbf{w}\).
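As a minimal sketch of the proportional reallocation described above, the following Python function redistributes the relevance of a failed component over the active ones in proportion to their \(\alpha \) values before the failure. The function name and the list-based representation of the weights are assumptions made for illustration; failed components are assumed to carry weight zero.

```python
def reallocate_proportional(alpha, j):
    """Return the weights after component j fails.

    The weight alpha[j] is shared among the other components in proportion
    to their own weights before the failure. Components that have already
    failed have weight 0 and therefore receive nothing.
    """
    remaining = sum(a for i, a in enumerate(alpha) if i != j)
    new_alpha = [0.0] * len(alpha)
    if remaining == 0:
        return new_alpha  # no active component left to receive the weight
    for i, a in enumerate(alpha):
        if i != j:
            new_alpha[i] = a + alpha[j] * a / remaining
    return new_alpha
```

For instance, with weights (0.5, 0.2, 0.3) and a failure of the first component, the surviving weights become 0.4 and 0.6, so the total relevance is preserved.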

We now consider the condition for the failure of a component. At a generic time t, one component, say \(C_j\), is selected at random, i.e., from a uniform distribution on the set of active components, as the candidate failed component. Then, a random number r is sampled from a uniform distribution U(0, 1). If \(\alpha _j(t) > r\), the component \(C_j\) fails at time t, and \(\alpha _j(s)=0\), for each \(s>t\). On the other hand, if \(\alpha _j(t) \le r\), the component \(C_j\) does not fail. This procedure is then reiterated at time \(t+1\) and so on, until the system fails.
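The component-failure mechanism and the system-failure condition can be sketched together as follows. This is an illustrative simulation under stated assumptions, not the authors' code: the first draw is assumed to take place at \(t=1\), the initial weights are assumed positive and summing to one, and the names `simulate_system` and `history` are hypothetical.

```python
import random


def simulate_system(alpha0, rng):
    """Simulate one system until more than half of its components have failed.

    Returns the failure time and the list of weight vectors at t = 0, 1, ...
    """
    alpha = list(alpha0)
    n = len(alpha)
    active = list(range(n))
    history = [list(alpha)]
    failed = 0
    t = 0
    while failed <= n / 2:
        t += 1
        j = rng.choice(active)       # candidate chosen uniformly among active components
        r = rng.random()             # r ~ U(0, 1)
        if alpha[j] > r:             # the candidate fails
            share = alpha[j]
            alpha[j] = 0.0
            active.remove(j)
            failed += 1
            rest = sum(alpha[i] for i in active)
            # Reallocate proportionally only if the system is still alive.
            if failed <= n / 2 and rest > 0:
                for i in active:
                    alpha[i] += share * alpha[i] / rest
        history.append(list(alpha))
    return t, history


t_fail, history = simulate_system([0.1] * 10, random.Random(0))
```

Because heavier components are more likely to pass the test \(\alpha _j(t) > r\), and each failure concentrates weight on the survivors, failures tend to accelerate as the system deteriorates.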

4.2 Stepwise description of the procedure

We consider two different sets of systems: information set systems and in-vivo systems. The in-vivo systems are the ones whose failure we wish to predict, while the information set systems are those we use to create an information set, from which we can issue a prediction for an in-vivo system via Eq. 9. We first create an information set by letting a certain number of systems M fail. For each time t we record the state of the system \(f(\bar{\textbf{a}}(t))\), and when the system fails, at say time \(\mathcal {T}\), we record the pair (\(f(\bar{\textbf{a}}(t)\)),\(\mathcal {T}\)). Repeating this procedure for M different systems, we thereby create an “information set” \(\textbf{I}(t)^M_f\). The idea behind the rational expectations approach is then, for each in vivo system at each time step t, to use the systems in \(\textbf{I}(t)^M_f\) with the same information to give an averaged evaluation of the failure time via (9).

We present the simulation procedure in a stepwise form:

1. For each function f, we build the information set \(\textbf{I}_f=(\textbf{I}(t)^m_f:\, t \ge 0; \, m=1,\dots , M)\) by creating and following the lives of M systems from time \(t=0\) until they fail. For each time t we record the state of the m-th system \(f(\bar{\textbf{a}}_m(t))\), and when the system fails, at say time \(\mathcal {T}_m\), we record the pair (\(f(\bar{\textbf{a}}_m(t)),\mathcal {T}_m\)). We do this for each \(m=1, \dots , M\).

2. We follow the same procedure by creating X in-vivo systems and following their lives from the beginning at time \(t=0\) until they fail. For each time t, we record the state of the x-th system \(f(\bar{\textbf{a}}_x(t))\), and its failure time \(\mathcal {T}_x\), i.e., the pair (\(f(\bar{\textbf{a}}_x(t))\),\(\mathcal {T}_x\)).

3. We now compute the rational expectations in (9) on the basis of the information set \(\textbf{I}_f\).

   - First, we state and check a tolerance threshold condition. Specifically, we fix a tolerance level \(\Theta >0\); then, for each in vivo system \(\bar{x}=1, \dots , X\), time \(\bar{t} =0,1,\dots , \mathcal {T}_{\bar{x}}\), and observed configuration \(f(\bar{\textbf{a}}_{\bar{x}}(\bar{t}))\), we identify the systems in the catalogue with label \(m \in \{1, \dots , M\}\) such that the following condition holds:

     $$\begin{aligned} |f(\bar{\textbf{a}}_{\bar{x}}(\bar{t}))- f(\bar{\textbf{a}}_m(\bar{t}))| < \Theta . \end{aligned}$$ (10)

     We denote the number of systems in the catalogue for which (10) is satisfied by \(m[f(\bar{\textbf{a}}_{\bar{x}}(\bar{t}))]\). Hypothetically, one might have \(m[f(\bar{\textbf{a}}_{\bar{x}}(\bar{t}))]=0\). In this unlucky case, the catalogue does not provide complete information about the configurations of the systems. To avoid such an inconsistency, we have selected values of \(\Theta \) and M large enough to guarantee that \(m[f(\bar{\textbf{a}}_{\bar{x}}(\bar{t}))]>0\) for each \(\bar{x}\) and \(\bar{t}\) (see the next subsection, where we introduce the parameter set we used here).

   - To apply (9), we compute the arithmetic mean of the failure times of the systems of the catalogue satisfying (10), so that

     $$\begin{aligned} \mathbb {E}\left[ \mathcal {T}\,|\, f(\bar{\textbf{a}}_{\bar{x}}(\bar{t}))\right] = \frac{1}{m[f(\bar{\textbf{a}}_{\bar{x}}(\bar{t}))]} \sum _{m=1}^M \mathcal {T}_m \cdot 1(m \text { satisfies }(10)), \end{aligned}$$ (11)

     where \(1(\bullet )\) is the indicator function with value 1 if \(\bullet \) is satisfied and 0 otherwise.

4. In order to assess the quality of such predictions, we proceed as follows:

   - First, at each time \(\bar{t}\), we consider the 10th, 50th, and 90th percentiles of the distributions of the observed configurations of the catalogue \(\bar{\textbf{a}}_m(\bar{t})\)’s, with \(m=1, \dots , M\). We denote these percentiles by \(\bar{\textbf{a}}^{(10)}(\bar{t})\), \(\bar{\textbf{a}}^{(50)}(\bar{t})\), and \(\bar{\textbf{a}}^{(90)}(\bar{t})\), respectively.

   - We assign system \({\bar{x}}\) at time \(\bar{t}\) with configuration \(\bar{\textbf{a}}_{\bar{x}}(\bar{t})\) to the percentile \(\bar{\textbf{a}}^{(per(\bar{x}))}(\bar{t})\) if and only if the following condition is satisfied:

     $$\begin{aligned} |\bar{\textbf{a}}_{\bar{x}}(\bar{t}) - \bar{\textbf{a}}^{(per(\bar{x}))}(\bar{t})| < 0.8\times std(\bar{t}), \end{aligned}$$ (12)

     where \(per=10,50,90\), \(per(\bar{x})\) is the percentile related to \(\bar{x}\), and \(std(\bar{t})\) is the standard deviation of the configurations of the in vivo systems at time \(\bar{t}\), namely the \(\bar{\textbf{a}}_{x}(\bar{t}) \)’s with \(x =1, \dots , X\).

   - We compute

     $$\begin{aligned} \tilde{\mathbb {E}}\left[ \mathcal {T}\,|\, f(\bar{\textbf{a}}_{\bar{x}}(\bar{t}))\right] = \frac{1}{|per(\bar{x})|} \sum _{x=1}^X \mathcal {T}_x \cdot 1(x \text { satisfies }(12)), \end{aligned}$$ (13)

     where \(|per(\bar{x})|\) is the cardinality of the set of the percentile \(per(\bar{x})\).

   - We compute the error at time \(\bar{t}\) and at a given percentile per, i.e., the absolute difference between the term in (11) and the one in (13), conditioned on the percentile per:

     $$\begin{aligned} E_{RE}|per(\bar{t})=\left| \mathbb {E}\left[ \mathcal {T}\,|\, f(\bar{\textbf{a}}_{\bar{x}}(\bar{t}))\right] -\tilde{\mathbb {E}}\left[ \mathcal {T}\,|\, f(\bar{\textbf{a}}_{\bar{x}}(\bar{t}))\right] \right| . \end{aligned}$$ (14)

     In this way, at each time \(\bar{t}\) we obtain three different distributions of errors conditioned on the percentiles:

     - \(E_{RE}|10(\bar{t})\)
     - \(E_{RE}|50(\bar{t})\)
     - \(E_{RE}|90(\bar{t})\)

   - We also compare the distributions in (14) with a naive benchmark error \(E_B\) given by the errors made without the use of rational expectations, as follows:

     $$\begin{aligned} E_B=\frac{1}{X}\sum _{x=1}^X \left| \frac{1}{M} \sum _{m=1}^M \mathcal {T}_m - \mathcal {T}_x \right| \end{aligned}$$ (15)

     As the formula (15) states, the naive benchmark always predicts the failure time of a given in vivo system as the average failure time of the systems in the information set. The benchmark prediction is a natural way of making forecasts without using rational expectations, since it ignores the current state of the system.

   - For the purposes of comparison, we normalize all the times at the percentile level, so that for each percentile per, the maximum time over all the in vivo systems in which \(\bar{\textbf{a}}^{(per)}\) is observed is unity, while the minimum time is 0.
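The matching condition (10), the conditional mean (11) and the benchmark error (15) can be sketched as follows. This is a hedged illustration only: the catalogue is assumed to be available, for a fixed time \(\bar{t}\), as a list of pairs made of the measure value and the failure time of each catalogue system still recorded at that time, and the function names are hypothetical. Returning `None` when no catalogue system matches is an illustrative choice for the case \(m[\cdot ]=0\).

```python
def re_prediction(f_value, catalogue_at_t, theta):
    """Conditional mean of the failure time, as in (11).

    catalogue_at_t: list of (f_m, T_m) pairs observed at the same time.
    theta: tolerance level used in condition (10).
    """
    matches = [T_m for f_m, T_m in catalogue_at_t if abs(f_value - f_m) < theta]
    if not matches:
        return None  # the catalogue carries no information for this configuration
    return sum(matches) / len(matches)


def benchmark_error(catalogue_failure_times, in_vivo_failure_times):
    """Naive benchmark error (15): always predict the catalogue's mean failure time."""
    mean_T = sum(catalogue_failure_times) / len(catalogue_failure_times)
    errors = [abs(mean_T - T_x) for T_x in in_vivo_failure_times]
    return sum(errors) / len(errors)


# Toy catalogue at a fixed time: (measure value, failure time).
catalogue = [(0.10, 8), (0.12, 10), (0.30, 20)]
prediction = re_prediction(0.11, catalogue, theta=0.05)  # averages the first two systems
```

In the toy catalogue, only the first two systems lie within the tolerance of the observed value 0.11, so the prediction is the mean of their failure times, whereas the benchmark would use all three.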

4.3 Setting the parameters

The parameters for the catalogue and in vivo systems are: \(n=10\), \(M=5000\) and \(X=5000\). This choice leads to satisfactory results without resulting in too much computational complexity.

The initial distribution of the weights in \(\textbf{a}(0)\) is assumed to be generated from different types of random variables with particular characteristics. Specifically, we take:

- Uniform distribution U(0, 1).

- Some cases of the two-parameter Beta distribution B(a, b). This distribution has support in (0,1); moreover, depending on the values assigned to the shape parameters a and b, the density function of B(a, b) can behave in different ways. We consider four combinations of shape parameters (see Fig. 2):

  - \(a=1\) and \(b=3\), which is an asymmetric distribution more concentrated over the values close to zero;

  - \(a=b=0.5\), which is a symmetric distribution bimodal over the endpoints 0 and 1;

  - \(a=b=2\), which corresponds to a platykurtic symmetric distribution centered in 0.5;

  - \(a=1\) and \(b=0.5\), which is an asymmetric distribution with a high concentration of values close to 1.

  Note that a B(a, b) with \(a=b=1\) is a special case, corresponding to U(0, 1).

The tolerance level \(\Theta \) plays a key role in the analysis, as (10) suggests. We evaluated three different tolerance levels, taking \(\Theta =0.005, 0.05, 0.5\).

It turned out that the results were scarcely affected by the tolerance levels. Thus, we present here only the case \(\Theta =0.05\).
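To make the parameter setting concrete, the following sketch draws initial weights from the five distributions listed above using Python's standard library. Rescaling the draws so that they sum to one is an assumption made here so that the weights can be read as relative relevances; the text does not spell out this step. The dictionary and function names are hypothetical.

```python
import random

# Shape parameters (a, b) of the Beta distributions; None stands for U(0, 1).
INITIAL_DISTRIBUTIONS = {
    "uniform": None,
    "beta(1,3)": (1.0, 3.0),
    "beta(0.5,0.5)": (0.5, 0.5),
    "beta(2,2)": (2.0, 2.0),
    "beta(1,0.5)": (1.0, 0.5),
}


def initial_weights(n, shape, rng):
    """Draw n raw weights and rescale them to sum to one (assumed normalization)."""
    if shape is None:
        raw = [rng.random() for _ in range(n)]
    else:
        a, b = shape
        raw = [rng.betavariate(a, b) for _ in range(n)]
    total = sum(raw)
    return [x / total for x in raw]


rng = random.Random(42)
weights = {name: initial_weights(10, shape, rng)
           for name, shape in INITIAL_DISTRIBUTIONS.items()}
```

With \(n=10\) as in the parameter set above, each entry of `weights` is one possible starting vector \(\textbf{a}(0)\) for a catalogue or in vivo system.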

5 Results and discussion

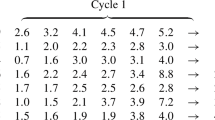

The main results of our method are all encompassed in Figs. 3, 4, 5, 6 and 7. Each figure has three panels A–C, corresponding to the percentiles \(per=10,50,90\). For each plot, the y-axis shows the error in the prediction given by (14), while the x-axis shows the time. The five different graphs correspond to the five initial distributions of the weights.

To allow an intuitive analysis of the predictive performance of the five statistical indicators f and compare the systems, time is normalized to unity at failure as explained in the last section.

The straight line in each plot corresponds to the performance of the benchmark prediction (15).

A quick look at all three panels in all five figures gives an intuitive confirmation of how well our method works: in all five figures, in all three panels, and for all five functions our predictions are better than the benchmark at almost any time t. More specifically, the only clear but very short period where it would be better to use the benchmark prediction would be if the initial weights were randomly distributed, in the case of the 50% percentile of the variance in the prediction of the in vivo systems. In this special case, we observe that the benchmark initially outperforms the rational expectations predictions; however, for longer times–even in this extreme case–the rational expectations predictions beat the benchmark predictions.

Moreover, the rational expectations predictions with any function f become greatly superior to the benchmark prediction for times that are not too far away from failure. This outcome remains valid for any of the five considered distributions of the initial weights of the nodes. This is a clear illustration that the more information you have of a given in vivo system, the better your prediction can be. In contrast, close to the starting time–e.g., if we consider the case where only one node has yet been broken – all the in vivo systems look similar. In this case, the difference in performance of the various functions f is negligible, whatever initial weight distribution is selected. In short, the best you can do without the benefit of any further information is to make the naive benchmark prediction. But as the in vivo system begins to deteriorate, every additional broken node provides new information, and that information should be used to optimize the prediction.

Fig. 3 Uniform distribution of initial weights. The y-axis shows the prediction error (absolute value) and the x-axis shows the time normalized so that failure occurs at \(t=1\) in order to compare performance of the predictions across systems. Symbols correspond to the five different choices of function f as indicated in the key. Panel a) shows the errors of the 10th percentile of the distribution for each function calculated using the information set \(\textbf{I}^M\). Panels b) and c) show likewise the errors of the 50th and 90th percentiles. The horizontal line in each panel shows the performance of the benchmark prediction

It is clear that, because the information captured by each of the five possible functions f is different, each function offers different possibilities for optimizing predictions. Consider the case of initially uniformly distributed weights shown in Fig. 3. Note that, for systems with abnormally small values of the statistical indicator (the 10% percentile), the Shannon entropy gives predictions of the failure times that are better than those given by the other measures described by f around 80% of the time. However, the particular in vivo system that is observed to deteriorate may not be one with a small Shannon entropy. It may instead have a persistently large Gini coefficient, say belonging to the 90% percentile. In this case, it is the Gini coefficient that provides the optimal predictions.

This finding illustrates that as time goes by one should opportunistically switch between the different measures introduced by the five choices for f. This combined approach will ensure the path to globally optimal predictions, i.e., ones that are optimal at any given time t.

Fig. 4 Beta distribution of initial weights with \(a=1\) and \(b=3\). See caption to Fig. 3 for further explanation

So, what happens to the other initial weight distributions? Fig. 4 shows that the case of the Beta distribution with \(a=1\) and \(b=3\) has many features similar to the uniform distribution in Fig. 3, such as the Shannon entropy being the best function for making predictions for the 10% and 50% percentiles, while the Gini coefficient is the best for the 90% percentile. So the fact of having a system with a higher initial concentration of small nodes, like the Beta distribution with \(a=1\) and \(b=3\), does not seem to give rise to a profoundly different optimal choice in our rational expectations predictions about the statistical measures.

This particular initial weight distribution corresponds to what in the literature is considered the ideal distribution to allow the best minimization of the prediction errors in absolute value, the negative exponential distribution. Following the insights of some literature contributions such as Li & Zuo (2002), Sarhan (2005), Asadi and Bayramoglu (2006), and Eryilmaz (2011), we confirmed the predictive superiority of this form of distribution over the others. In fact, by comparing Fig. 4 with the others we can notice that the level of error drops slightly compared to the uniformly distributed realizations already from the starting point–which is the one with zero information available. The predictive gain is very high instead, when compared to the other distributions.

Consider then the more extreme case of systems that tend to have clusters of both small and large nodes, but few nodes of moderate size, corresponding to the Beta distribution with \(a=b=0.5\), illustrated in Fig. 5. Again the Shannon entropy works well for the 10% and 50% percentiles, but now the 90% percentile of the kurtosis predicts just as well as the 90% percentile of the Shannon entropy, while it is still the 90% percentile of the Gini coefficient that performs best.

Fig. 5 Beta distribution of initial weights with \(a=b=0.5\). See caption to Fig. 3 for further explanation

For systems that have their nodes concentrated around their mean, corresponding to the Beta distribution with \(a=b=2\) shown in Fig. 6, the Shannon entropy performs best only for small percentiles, while the Gini coefficient is better for the 50% and 90% percentiles. Somewhat surprisingly, for systems that have their nodes concentrated around large values, corresponding to the Beta distribution with \(a=1\) and \(b=0.5\) shown in Fig. 7, performance does not differ notably from the uniform distribution (Fig. 3).

Fig. 6 Beta distribution of initial weights with \(a=b=2\). See caption to Fig. 3 for further explanation

Fig. 7 Beta distribution of initial weights with \(a=1\) and \(b=0.5\). See caption to Fig. 3 for further explanation

We note that the Gini coefficient is not usually used as a performance measure in failure prediction models. One exception is Ooghe and Spaenjers (2010), whose authors would agree with us that the Gini coefficient is a powerful and attractive measure. In accordance with the conclusions of this study, the Gini coefficient turns out to be an excellent predictive tool, especially for very high abnormal values. In the literature, several studies have been carried out in the field of reliability theory–specifically, considering k-out-of-n systems–which exploit the various statistical moments to validate their models. We refer for example to Reijns and Van Gemund (2007) or Amari et al. (2012). According to these authors, lower moments are more robust than higher moments. In contrast, our results seem in general to indicate an advantage in using the kurtosis rather than the variance, and both the variance and the kurtosis seem in general to perform better than the skewness. However, there are exceptions, notably for the asymmetric distributions which allow large values–i.e., the Beta distribution with \(a=1\) and \(b=0.5\). For the 90% percentile for these two distributions, the skewness equals or outperforms both the variance and the kurtosis. This makes sense: for an asymmetric distribution, knowing that the remaining set of nodes has a large skewness indicates a system that has a large fraction of nodes with large values. This information is useful in predicting the (probably longer than usual) failure time. We confirm the predictive goodness of the Shannon entropy in all the proposed cases–in line with the other papers using it as a forecasting tool (see, e.g., Franks & Beven, 1997; Mahanta et al., 2013; Karmakar et al., 2019; Ray & Chattopadhyay, 2021).

6 Conclusions

In this paper, we have shown that using rational expectations – together with the idea of using different measures that can change over time–enables us to come up with tools for failure predictions for weighted k-out-of-n systems. The core idea in our method is that every time a node breaks, this provides new information, and one should use this information via rational expectations to optimize predictions continually.

Through extensive computer simulations, we have shown how our new method can dynamically outperform static predictions. We have explored how the procedure works for systems with five different initial distributions of the weights of the nodes. To see how different information influences the predictions for systems with different initial distributions of the weights, we have tested five measures: variance, skewness, kurtosis, Gini coefficient, and Shannon entropy. As we have shown, different measures may be optimal depending on the given initial distribution of a system. However, as we have also shown, we can obtain optimal predictions by adopting a dynamic perspective–viz., by switching between different measures at different times, depending on the state of deterioration of the given system.

The presented framework is general and can be suitably modified and adapted to several contexts. In particular, it is easy to modify the method to apply it for any given initial distribution of weights. Obviously, the five measures we introduced were meant only to illustrate how using different information might be optimal depending on the state of the system and the initial distribution of the weights. Our claim is not that these measures are “the best” but rather to illustrate their different impact on the accuracy of prediction. Better measures might possibly be found for different circumstances.

We argue that our method could have real practical relevance in economics and finance, for example, for banking networks or for assessing the systemic risk of a country, the Eurozone, or sovereign credit, among other things. Our future research will focus on such practical examples.

Actually, the rational expectations prediction method should lead to a broad range of studies. A natural extension would be to try out other reallocation rules instead of the proportional one; for other systems, it may be more natural to apply a uniform reallocation rule or a threshold-based reallocation rule. One could also consider rules that depend on the trajectories of previous failures. And as mentioned beforehand, the analysis could be extended by comparing additional statistical indicators: the Frosini index to compare with the Gini coefficient, the Bienaymé–Chebyshev inequality, the Pearson index to capture the dependence between the components, and Goodman and Kruskal’s index, taking into account the correlation between the components. Other measures belonging to the Shannon family of entropies could also be studied, such as the Kullback–Leibler divergence, the Jeffreys distance, the K divergence, or the Jensen difference. In short, we encourage the reader to use our method to study the performance of other measures for their specific system of choice.

References

Aktekin, T., & Caglar, T. (2013). Imperfect debugging in software reliability: A Bayesian approach. European Journal of Operational Research, 227(1), 112–121.

Amari, S. V., Pham, H., & Misra, R. B. (2012). Reliability characteristics of \( k \)-out-of-\( n \) warm standby systems. IEEE Transactions on Reliability, 61(4), 1007–1018.

Andersen, J. V., & Sornette, D. (2005). Predicting failure using conditioning on damage history: Demonstration on percolation and hierarchical fiber bundles. Physical Review E, 72(5), 056124.

Asadi, M., & Bayramoglu, I. (2006). The mean residual life function of a k-out-of-n structure at the system level. IEEE Transactions on Reliability, 55(2), 314–318.

Atici, F. M., Ekiz, F., & Lebedinsky, A. (2014). Cagan type rational expectation model on complex discrete time domains. European Journal of Operational Research, 237(1), 148–151.

Azaron, A., Katagiri, H., Sakawa, M., & Modarres, M. (2005). Reliability function of a class of time-dependent systems with standby redundancy. European Journal of Operational Research, 164(2), 378–386.

Bal, J., Cheung, Y., & Hsu-Che, W. (2013). Entropy for business failure prediction: An improved prediction model for the construction industry. Advances in Decision Sciences, 2013, 1–13.

Barlow, R. E. (1985). Combining component and system information in system reliability calculation. Probabilistic Methods in the Mechanics of Solids and Structures (pp. 375–383). Berlin, Heidelberg: Springer.

Barlow, R. E., & Heidtmann, K. D. (1984). Computing k-out-of-n system reliability. IEEE Transactions on Reliability, 33(4), 322–323.

Barro, R. J. (1976). Rational expectations and the role of monetary policy. Journal of Monetary Economics, 2(1), 1–32.

Basu, A. P., & Ebrahimi, N. (1991). Bayesian approach to life testing and reliability estimation using asymmetric loss function. Journal of Statistical Planning and Inference, 29(1–2), 21–31.

Becker, O., Leitner, J., & Leopold-Wildburger, U. (2007). Heuristic modeling of expectation formation in a complex experimental information environment. European Journal of Operational Research, 176(2), 975–985.

Bhattacharya, S. K. (1967). Bayesian approach to life testing and reliability estimation. Journal of the American Statistical Association, 62(317), 48–62.

Blanchard, O. J., & Watson, M. W. (1982). Bubbles, rational expectations and financial markets (No. w0945). National Bureau of Economic Research.

Boland, P. J., & El-Neweihi, E. (1998). Statistical and information based (physical) minimal repair for k out of n systems. Journal of Applied Probability, 35(3), 731–740.

Canfield, R. V. (1970). A Bayesian approach to reliability estimation using a loss function. IEEE Transactions on Reliability, 19(1), 13–16.

Cerqueti, R. (2021). A new concept of reliability system and applications in finance. Annals of Operations Research. https://doi.org/10.1007/s10479-021-04150-9

Chow, G. C. (1991). Rational versus adaptive expectations in present value models. Econometric Decision Models (pp. 269–284). Berlin, Heidelberg: Springer.

Da Costa Bueno, V., & do Carmo, I. M. (2007). Active redundancy allocation for a k-out-of-n: F system of dependent components. European Journal of Operational Research, 176(2), 1041–1051.

Delcey, T., & Sergi, F. (2019). The Efficient Market Hypothesis and Rational Expectations. How Did They Meet and Live (Happily?) Ever After.

El-Sayyad, G. M. (1967). Estimation of the parameter of an exponential distribution. Journal of the Royal Statistical Society: Series B (Methodological), 29(3), 525–532.

Eryilmaz, S. (2011). Dynamic behavior of k-out-of-n: G systems. Operations Research Letters, 39(2), 155–159.

Eryilmaz, S. (2012). On the mean residual life of a k-out-of-n: G system with a single cold standby component. European Journal of Operational Research, 222(2), 273–277.

Eryilmaz, S. (2014). Multivariate copula based dynamic reliability modeling with application to weighted-k-out-of-n systems of dependent components. Structural Safety, 51, 23–28.

Eryilmaz, S. (2019). (k1, k2, ..., km)-out-of-n system and its reliability. Journal of Computational and Applied Mathematics, 346, 591–598.

Eryilmaz, S., & Bozbulut, A. R. (2014). Computing marginal and joint Birnbaum, and Barlow-Proschan importances in weighted-k-out-of-n: G systems. Computers & Industrial Engineering, 72, 255–260.

Franks, S. W., & Beven, K. J. (1997). Bayesian estimation of uncertainty in land surface–atmosphere flux predictions. Journal of Geophysical Research: Atmospheres, 102(D20), 23991–23999.

Friedman, M. (1957). Introduction to A theory of the consumption function. A theory of the consumption function (pp. 1–6). Princeton: Princeton University Press.

Ge, G., & Wang, L. (1990). Exact reliability formula for consecutive-k-out-of-n: F systems with homogeneous Markov dependence. IEEE Transactions on Reliability, 39(5), 600–602.

Gokhale, S. S., Lyu, M. R., & Trivedi, K. S. (1998). Reliability simulation of component-based software systems. In Proceedings Ninth International Symposium on Software Reliability Engineering, (pp. 192-201).

Gunawan, S., & Papalambros, P. Y. (2006). A Bayesian approach to reliability-based optimization with incomplete information. Journal of Mechanical Design, 128(4), 909–918.

Gupta, N., Misra, N., & Kumar, S. (2015). Stochastic comparisons of residual lifetimes and inactivity times of coherent systems with dependent identically distributed components. European Journal of Operational Research, 240(2), 425–430.

Hansen, L. P., & Singleton, K. J. (1982). Generalized instrumental variables estimation of nonlinear rational expectations models. Econometrica Journal of the Econometric Society, 50(5), 1269–1286.

Hecht, M., & Hecht, H. (2000). Use of importance sampling and related techniques to measure very high reliability software. In 2000 IEEE Aerospace Conference. Proceedings, (vol. 4, pp. 533-546).

Jain, S. P., & Gopal, K. (1985). Recursive algorithm for reliability evaluation of k-out-of-n: G system. IEEE Transactions on Reliability, 34(2), 144–150.

Karmakar, S., Goswami, S., & Chattopadhyay, S. (2019). Exploring the pre-and summer-monsoon surface air temperature over eastern India using Shannon entropy and temporal Hurst exponents through rescaled range analysis. Atmospheric Research, 217, 57–62.

Keilis-Borok, V., Shebalin, P., Gabrielov, A., & Turcotte, D. (2004). Reverse tracing of short-term earthquake precursors. Physics of the Earth and Planetary Interiors, 145(1–4), 75–85.

Kim, M. J., Jiang, R., Makis, V., & Lee, C. G. (2011). Optimal Bayesian fault prediction scheme for a partially observable system subject to random failure. European Journal of Operational Research, 214(2), 331–339.

Kinateder, H., & Papavassiliou, V. G. (2019). Sovereign bond return prediction with realized higher moments. Journal of International Financial Markets, Institutions and Money, 62, 53–73.

Krishnamurthy, S., & Mathur, A. P. (1997). On the estimation of reliability of a software system using reliabilities of its components. In Proceedings the Eighth International Symposium on Software Reliability Engineering (pp. 146–155).

Li, X., & Zuo, M. J. (2002). On the behaviour of some new ageing properties based upon the residual life of k-out-of-n systems. Journal of Applied Probability, 39, 426–433.

Li, W., & Zuo, M. J. (2008). Reliability evaluation of multi-state weighted k-out-of-n systems. Reliability Engineering & System Safety, 93(1), 160–167.

Lucas, R. E., Jr. (1972). Expectations and the neutrality of money. Journal of Economic Theory, 4(2), 103–124.

Maddock, R., & Carter, M. (1982). A child’s guide to rational expectations. Journal of Economic Literature, 20(1), 39–51.

Mahanta, S., Chutia, R., & Baruah, H. K. (2013). Uncertainty analysis in atmospheric dispersion using Shannon entropy. Annals of Fuzzy Mathematics and Informatics, 5(2), 417–427.

Martz, H. F., & Waller, R. A. (1990). Bayesian reliability analysis of complex series/parallel systems of binomial subsystems and components. Technometrics, 32(4), 407–416.

Martz, H. F., Waller, R. A., & Fickas, E. T. (1988). Bayesian reliability analysis of series systems of binomial subsystems and components. Technometrics, 30(2), 143–154.

Mastran, D. V. (1976). Incorporating component and system test data into the same assessment: A Bayesian approach. Operations Research, 24(3), 491–499.

Mastran, D. V., & Singpurwalla, N. D. (1978). A Bayesian estimation of the reliability of coherent structures. Operations Research, 26(4), 663–672.

Milczek, B. (2003). On the class of limit reliability functions of homogeneous series k-out-of-n systems. Applied Mathematics and Computation, 137(1), 161–176.

Muth, J. F. (1961). Rational expectations and the theory of price movements. Econometrica: Journal of the Econometric Society, 29(3), 315–335.

Navarro, J., del Águila, Y., Sordo, M. A., & Suárez-Llorens, A. (2013). Stochastic ordering properties for systems with dependent identically distributed components. Applied Stochastic Models in Business and Industry, 29(3), 264–278.

Navarro, J., Pellerey, F., & Di Crescenzo, A. (2015). Orderings of coherent systems with randomized dependent components. European Journal of Operational Research, 240(1), 127–139.

Navarro, J., Ruiz, J. M., & Sandoval, C. J. (2005). A note on comparisons among coherent systems with dependent components using signatures. Statistics and Probability Letters, 72(2), 179–185.

Oe, S., Soeda, T., & Nakamizo, T. (1980). A method of predicting failure or life for stochastic systems by using autoregressive models. International Journal of Systems Science, 11(10), 1177–1188.

Ooghe, H., & Spaenjers, C. (2010). A note on performance measures for business failure prediction models. Applied Economics Letters, 17(1), 67–70.

Parsa, M., Di Crescenzo, A., & Jabbari, H. (2018). Analysis of reliability systems via Gini-type index. European Journal of Operational Research, 264(1), 340–353.

Pham, H., & Upadhyaya, S. J. (1988). The efficiency of computing the reliability of k-out-of-n systems. IEEE Transactions on Reliability, 37(5), 521–523.

Rai, S., Sarje, A. K., Prasad, E. V., & Kumar, A. (1987). Two recursive algorithms for computing the reliability of k-out-of-n systems. IEEE Transactions on Reliability, 36(2), 261–265.

Ramberg, J. S., Dudewicz, E. J., Tadikamalla, P. R., & Mykytka, E. F. (1979). A probability distribution and its uses in fitting data. Technometrics, 21(2), 201–214.

Ray, S. N., & Chattopadhyay, S. (2021). Analyzing surface air temperature and rainfall in univariate framework, quantifying uncertainty through Shannon entropy and prediction through artificial neural network. Earth Science Informatics, 14(1), 485–503.

Reijns, G. L., & Van Gemund, A. J. (2007). Reliability analysis of hierarchical systems using statistical moments. IEEE Transactions on Reliability, 56(3), 525–533.

Sanyal, S., Shah, V., & Bhattacharya, S. (1997). Framework of a software reliability engineering tool. In Proceedings 1997 High-Assurance Engineering Workshop (pp. 114–119).

Sargent, T. J., Fand, D., & Goldfeld, S. (1973). Rational expectations, the real rate of interest, and the natural rate of unemployment. Brookings Papers on Economic Activity, 1973(2), 429–480.

Sargent, T. J., & Wallace, N. (1975). Rational expectations, the optimal monetary instrument, and the optimal money supply rule. Journal of Political Economy, 83(2), 241–254.

Sarhan, A. M. (2005). Reliability equivalence factors of a parallel system. Reliability Engineering & System Safety, 87(3), 405–411.

Shebalin, P., Keilis-Borok, V., Zaliapin, I., Uyeda, S., Nagao, T., & Tsybin, N. (2004). Advance short-term prediction of the large Tokachi-oki earthquake, September 25, 2003, M = 8.1: A case history. Earth, Planets and Space, 56(8), 715–724.

Sheu, S. H., Liu, T. H., Tsai, H. N., & Zhang, Z. G. (2019). Optimization issues in k-out-of-n systems. Applied Mathematical Modelling, 73, 563–580.

Sornette, D., & Andersen, J. V. (2006). Optimal prediction of time-to-failure from information revealed by damage. Europhysics Letters, 74(5), 778.

Taghipour, S., & Kassaei, M. L. (2015). Periodic inspection optimization of a k-out-of-n load-sharing system. IEEE Transactions on Reliability, 64(3), 1116–1127.

Van Gemund, A. J., & Reijns, G. L. (2012). Reliability analysis of k-out-of-n systems with single cold standby using Pearson distributions. IEEE Transactions on Reliability, 61(2), 526–532.

Van Noortwijk, J. M., Cooke, R. M., & Kok, M. (1995). A Bayesian failure model based on isotropic deterioration. European Journal of Operational Research, 82(2), 270–282.

Varian, H. R. (1975). A Bayesian approach to real estate assessment. Studies in Bayesian Econometrics and Statistics in Honor of Leonard J. Savage, 195–208.

Wang, Y., Li, L., Huang, S., & Chang, Q. (2012). Reliability and covariance estimation of weighted k-out-of-n multi-state systems. European Journal of Operational Research, 221(1), 138–147.

Wu, J. S., & Chen, R. J. (1994). An algorithm for computing the reliability of weighted k-out-of-n system. IEEE Transactions on Reliability, 43, 327–328.

Xie, M., & Pham, H. (2005). Modeling the reliability of threshold weighted voting systems. Reliability Engineering & System Safety, 87(1), 53–63.

Yacoub, S., Cukic, B., & Ammar, H. H. (2004). A scenario-based reliability analysis approach for component-based software. IEEE Transactions on Reliability, 53(4), 465–480.

Zarezadeh, S., Mohammadi, L., & Balakrishnan, N. (2018). On the joint signature of several coherent systems with some shared components. European Journal of Operational Research, 264(3), 1092–1100.

Zellner, A. (1986). Bayesian estimation and prediction using asymmetric loss functions. Journal of the American Statistical Association, 81(394), 446–451.

Zhang, Y. (2018). Optimal allocation of active redundancies in weighted k-out-of-n systems. Statistics & Probability Letters, 135, 110–117.

Zhang, Y. (2020). Reliability analysis of randomly weighted k-out-of-n systems with heterogeneous components. Reliability Engineering & System Safety, 205, 107184.

Zhang, N., Fouladirad, M., & Barros, A. (2019). Reliability-based measures and prognostic analysis of a K-out-of-N system in a random environment. European Journal of Operational Research, 272(3), 1120–1131.

Zhang, M. H., Shen, X. H., He, L., & Zhang, K. S. (2018). Application of differential entropy in characterizing the deformation inhomogeneity and life prediction of low-cycle fatigue of metals. Materials, 11(10), 1917.

Funding

Open access funding provided by Università degli Studi Roma Tre within the CRUI-CARE Agreement.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A preliminary version of the work was posted on HAL, see https://halshs.archives-ouvertes.fr/halshs-01673338v2.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Andersen, J. V., Cerqueti, R. & Riccioni, J. Rational expectations as a tool for predicting failure of weighted k-out-of-n reliability systems. Ann Oper Res 326, 295–316 (2023). https://doi.org/10.1007/s10479-023-05300-x

DOI: https://doi.org/10.1007/s10479-023-05300-x