Abstract

Increasing levels of urbanisation and the rapid growth of modern cities require that particular attention be paid to ensuring the safety and protection of living conditions for their inhabitants. In this context, natural and human-induced disasters pose a major threat to the safety and normal operation of buildings and infrastructures. Consequently, disaster management and built asset operations require modern tools that help stakeholders prepare for, and respond more effectively to, such critical events. This study explores the potential of artificial intelligence in these operational fields by developing a deep learning model that provides a rapid assessment of an asset's structural condition following a seismic excitation. The proposed simulation model accurately predicts the damage status of individual elements in a built asset, thus supporting operational improvements across all disaster management phases. In addition, the development integrates the deep learning algorithm into building information modelling and uploads the resulting graphical information to a web dashboard. Following the proposed framework, an integrative model is designed that provides a visual, user-friendly interface allowing different stakeholders to navigate and comprehend essential information on the effects of a disaster, thus enabling quicker decision making and strengthening operational resilience in critical events.


1 Introduction

The unexpected occurrence of disasters, both natural and human-induced, often has dramatic consequences, including human losses and significant economic impacts (Dubey et al., 2019a; GAR, 2019). These critical events usually highlight the unpreparedness of communities to respond promptly and efficiently (Alsubaie et al., 2016). Indeed, the need to develop swift and accurate responses to disasters is strongly emphasised in the frameworks and regulations of the United Nations Office for Disaster Risk Reduction (UNDRR, 2021).

Consequently, the literature on disaster management (DM) is attracting growing attention, and DM is developing as a discipline that aims to optimise operations management in terms of efficient planning and effective response to emergency situations, thus minimising the adverse impacts of catastrophes on the population and the surrounding environment (Adnan et al., 2015; Bang, 2014). Nevertheless, research into DM from the perspective of operations management is relatively new (Gupta et al., 2016). Moreover, the uniqueness of disasters, their intrinsic uncertainty and the operational constraints associated with these critical events mean that DM is considered a challenging area from an operational perspective (Rolland et al., 2010). Hence, disaster operations have been identified in the operations research literature as a domain of growing importance, where new studies and different approaches are required (Altay & Green, 2006; Holguín-Veras et al., 2012; Kovács & Spens, 2011; Seifert et al., 2018; Vanany et al., 2021).

Disasters hugely affect the built environment. In this regard, the rapid growth in human population, together with rural–urban migration, is increasing urbanisation (United Nations, 2019). Consequently, the number of built assets (buildings and infrastructures) damaged by calamitous events has grown dramatically in recent years (GAR, 2015). Furthermore, protecting the critical infrastructures that provide key services to communities is crucial to reduce disaster vulnerability and enable operations in the aftermath of such events. There is therefore an identified need to assess and improve the operational resilience of built assets (Bosher et al., 2007; Cerè et al., 2017).

Research on the application of emerging digital technologies in DM has recently gained momentum (Marić et al., 2021; Rodríguez-Espíndola et al., 2020). Technology shows considerable potential to benefit the pre- and post-event stages of DM (Marić et al., 2021). Nonetheless, some challenges surrounding the use of technologies in DM have also been identified: first, the need for improved information sharing among stakeholders (Sakurai & Murayama, 2019); second, the need to integrate digital tools so that information is rapidly available and easily understandable, thereby accelerating decision making (Waring et al., 2018; Zhang et al., 2019).

Among the disruptive technologies with applications in DM, artificial intelligence (AI) and its subsets, such as machine learning (ML) and big data analytics (BDA), are already showing their potential to address problems in disaster operations due to their capability to make accurate predictions, which is a key factor given the unexpected nature of disasters (Akter & Wamba, 2019). In addition, the capability of these technologies to rapidly process huge amounts of data (including images) gives quick access to essential information in this time-sensitive context (Ofli et al., 2016). AI also has the potential to play a prominent role in improving decision making across the four DM phases (Sun et al., 2020).

However, the use of deep learning (DL), one of the principal branches of ML, has not been widely researched in DM, and applying DL algorithms as tools to improve decision making in DM is still in its infancy (Aqib et al., 2020; Pouyanfar et al., 2019). The use of DL to improve the operational resilience of built assets is therefore a largely unresearched topic in an area where the need for interdisciplinary approaches has been stressed in order to gain greater understanding (Belhadi et al., 2021; Bosher et al., 2007; Haigh & Amaratunga, 2010). Another unresolved issue when applying DL in DM is how to transform the outputs of complex analyses into information that different stakeholders can easily understand, thus enabling quicker decision making (e.g., Devaraj et al., 2021; Wei et al., 2002).

Given the aforementioned context, the main purpose of this research article is to explore the application of DL to improve the operational resilience of built assets and to enhance disaster management operations. To achieve this aim comprehensively, three research objectives are defined. First, to develop an ML model that is able to predict a built asset's damage status. Second, to integrate the ML model into a data-rich 3D model that is uploaded to a web dashboard, combining information and making the ML outputs easy to understand. Third, to assess the potential of this DL-enhanced simulation tool for built asset operations and DM.

This study proposes a framework for developing a rigorous simulation method that integrates a DL model and provides visual information on the damage status of built assets after a disaster. Following this framework, a DL algorithm that is able to predict built asset safety levels was first designed. The DL model was then integrated into a visual 3D environment that is easy to understand and share. This integrative visual model has considerable potential for running analyses and reacting more efficiently in the event of an extreme hazard. Overall, applying this simulation model has important implications for DM in terms of increasing the operational resilience of the built environment.

The rest of the article is structured as follows: Sect. 2 provides the theoretical contextualisation and positioning of this study by reviewing the literature on disaster management, built asset resilience and the role of emerging technologies in these operational fields; Sect. 3 presents the methodological framework and a detailed explanation and justification of the research method adopted; Sect. 4 presents the framework's empirical application and the results of the development; Sect. 5 discusses the main implications of the DL-enhanced simulation tool, outlining its limitations and future research lines; and finally, Sect. 6 summarises the main conclusions.

2 Theoretical background

This section presents an overview of the existing literature of relevance to this research study. It begins with a contextualisation of the disaster management discipline and concept of built environment resilience. Digitalisation in disaster management and built asset operations are then examined, with particular focus on the current role of AI in these fields. The challenges identified in the literature motivating this research are then presented.

2.1 Disaster management

The term disaster is used to describe an extreme adverse event, either gradual or sudden, that causes wide-scale damage and/or casualties and disrupts communities, systems and infrastructures in the surrounding area (Adnan et al., 2015; Bang, 2014; Gunasekaran et al., 2018). Disasters include both natural (earthquakes, floods, wildfires, volcanic eruptions, tsunamis, etc.) and human-induced (war, terrorism, cyber-attacks, etc.) events. These critical events have co-existed with civilisation since ancient times, and their increasing frequency and degree of disruption have led to the emergence and development of DM as a discipline (Adnan et al., 2015; Bang, 2014).

DM is a discipline that essentially seeks to minimise the adverse consequences of ruinous events. DM comprises a series of actions designed by governments, non-governmental organisations (NGOs), policymakers, private organisations and academia, which aim to develop frameworks and regulations that optimise operations in both the pre- and post-event stages (Altay et al., 2018; Dubey et al., 2019b; Tufekci & Wallace, 1998). These two main stages are usually divided into four phases that make up the DM cycle: (1) Mitigation, (2) Preparedness, (3) Response and (4) Recovery (Fig. 1).

The Mitigation phase includes actions taken in advance of an extreme event that are aimed at preventing the onset of a disaster and/or minimising its impact (Altay & Green, 2006). The Preparedness phase refers to actions that ensure an effective response, including the delivery of effective and timely warnings (UNISDR, 2021). In the Response phase, actions are aimed at implementing procedures to preserve life, the environment and the social and economic structures of the impacted area (Altay & Green, 2006). The Recovery phase encompasses actions deployed after the impact of a disaster; these can be long-term and aim to stabilise the situation in the affected area and restore the status quo (Altay & Green, 2006).

2.2 The importance of a resilient built environment in disaster management

Disasters hugely affect the built environment and cause significant disruption to economic and social activities (Malalgoda & Amaratunga, 2015). In this respect, increasing urbanisation, driven by human population growth and the global trend towards living in cities, is leading to a higher number of damaged built assets after calamitous events (United Nations, 2019). Built assets can be affected both structurally and non-structurally, and the impact falls on three key components: structure, systems and occupants (Cerè et al., 2017). In terms of natural disasters, infrastructures and buildings often suffer the effects of flooding (Kelman & Spence, 2004), earthquakes (Hall et al., 1995; Hu et al., 2012), tsunamis (Park et al., 2013) and high winds (Eurocode, 2010).

The built environment plays a crucial role in protecting people from natural and human-induced disasters and helps to increase society's resilience (Haigh & Amaratunga, 2010; Ransolin et al., 2020). The importance of the built environment in DM is best understood by viewing it in a broad context, as the setting in which most human endeavours take place (Bartuska & Young, 2007). Consequently, damage to the built environment may lead to dramatic situations such as the relocation of populations, or even environmental disasters when industrial facilities (chemical, nuclear, etc.) are affected (Krausmann et al., 2010). This underlines the importance of creating a resilient built environment that is designed, built, operated and maintained to maximise the capability of built asset key components (structure, systems and occupants) to withstand the impact of an extreme event (Bosher, 2008b). The operational resilience of built assets is especially important for critical facilities and infrastructures (those essential to key operations in the economy, defence and public health) that need to be fully operational during and after a disaster (Bosher et al., 2007; McAllister, 2013). Indeed, the built environment's lack of resilience is considered a key factor in the greater risk of wider damage caused by hazardous events (Mannakkara & Wilkinson, 2013). Likewise, in the post-disaster phase, damaged critical infrastructures increase the vulnerability of communities to subsequent or future hazards (Haigh & Amaratunga, 2010).

Therefore, it is essential to increase built environment resilience, and new technologies are expected to play an increasingly important role in achieving this (Amaratunga & Haigh, 2011). Nonetheless, the higher costs associated with creating resilient buildings and infrastructures, together with negative connotations of resilience enhancement (i.e., heightened perceptions of risk), have been identified as factors hindering the development of a more resilient built environment (Bosher, 2008a, 2014). What is clear, however, is the increasing need for agents related to the built environment (architects, civil engineers, operators and owners) to better understand the importance of creating operational resilience for built assets (Malalgoda & Amaratunga, 2015). In this respect, there is currently a call for interdisciplinary approaches, including technological and DM perspectives, to create better equipped and more resilient built assets (Bosher et al., 2007; Doorn et al., 2019; Haigh & Amaratunga, 2010).

2.3 Digitalisation and AI in disaster management

Previous DM literature reveals a trend stressing the need for further research into the application of new technologies to improve operational quality and efficiency in this area (Modgil et al., 2020; Vinck, 2013; Wassenhove, 2006). Accordingly, studies in this research line have become more numerous since the beginning of the second decade of the twenty-first century (Behl & Dutta, 2019; Marić et al., 2021).

Digitalisation enables both internal and inter-organisational processes to be integrated and offers solutions for the automation and "smartization" of activities (Ghobakhloo, 2020; Schroeder et al., 2016; Xu et al., 2018). Recent studies have analysed the effect of digital technologies in different DM domains, such as risk assessment (Ivanov et al., 2019); humanitarian logistics (Delmonteil & Rancourt, 2017); supply chain resilience (Bag et al., 2021); agility, trust and coordination in humanitarian supply chains (HSC) (Dubey et al., 2020; Kabra & Ramesh, 2015; Lu et al., 2018; Shayganmehr et al., 2021); supply chain management (Ye et al., 2022); emergency relief (Gavidia, 2017); epidemic control strategies (Kumar et al., 2021); and emergency-response information systems (Abdalla & Esmail, 2018). In this respect, digital technologies are showing their potential in DM to improve collaboration and coordination among stakeholders, enhance performance assessment methods and alleviate the adverse consequences of disasters (Marić et al., 2021; Xiong et al., 2021). Increased digitalisation in DM also demands front-line operators who are technologically savvy (Chatterjee et al., 2022).

The integration of AI in operations research has been explored since the early 2000s (Dixon & Ginsberg, 2000), with scheduling, maintenance, forecasting, fault identification and process design deemed the key areas of application (Guner et al., 2016; Kobbacy et al., 2007; Kumar et al., 2022). Moreover, optimisation, a core goal of operations research, is now being enhanced by AI-aided decision making (Nedělková et al., 2018). Also, AI tools for prediction and reasoning, such as DL, are helping to improve the reliance of communities on operations research (Kourou et al., 2015; Min & Lee, 2005).

Applying AI in DM is particularly beneficial for decision-making processes (Sun et al., 2020). The prominent use of AI in DM may be linked to the uncertainty that characterises this field, which makes AI's ability to enable prognostic decision making particularly suitable (Gupta et al., 2021). Recent literature outlines different AI applications in DM: improving resilience and decision making in HSC (Griffith et al., 2019; Guillaume et al., 2014; Papadopoulos et al., 2017; Sahebjamnia et al., 2017; Swaminathan, 2018); predicting natural disasters (Stickley et al., 2016); optimising warehousing in HSC (Dash et al., 2019); disaster response (Mulder et al., 2016; Ofli et al., 2016); decision support systems (Drosio & Stanek, 2016; Horita et al., 2017); and predicting crowd behaviour and improving efficiency in DM (Bellomo et al., 2016; Ragini et al., 2018).

2.4 Digital transformation and AI in built asset operations

The AECOO (architects, engineers, contractors, operators, owners) industry is undergoing a period of digital transformation (Hjelseth, 2017). This process has been triggered by the emergence of building information modelling (BIM) technologies and tools that are transforming how data are created, stored and shared in the built environment (Galera-Zarco et al., 2016; Kubicki et al., 2019).

BIM methodology can be understood as a set of processes, technologies or activities that enhance collaboration among disciplines and organisations within the AECOO industry and subsequently improve productivity and efficiency, thus leading to better overall construction project management throughout the entire lifecycle (Azhar et al., 2012; Ghaffarianhoseini et al., 2017; Succar, 2009). In BIM methodology, the central focus is on producing a digital three-dimensional (3D), data-rich model that includes graphical and non-graphical information on the built asset (Criminale & Langar, 2017; Ghaffarianhoseini et al., 2017). This data-rich 3D model becomes an information hub for the physical asset that can be leveraged by integrating it with other digital technologies to support operations, facility management and maintenance (Ghaffarianhoseini et al., 2017; Liu et al., 2020a, 2020b; Rüppel & Schatz, 2011).

Although the BIM paradigm was essentially created to improve collaboration among stakeholders in the design and construction phases, it increasingly extends into adjacent research areas throughout the built asset's lifecycle (Boje et al., 2020). This evolution of the BIM paradigm is driving the expansion of digital technologies such as the Internet of Things (IoT) and AI subsets (ML, DL, BDA), which have the potential to be integrated into BIM (Boje et al., 2020; Howell & Rezgui, 2018). Incorporating these emerging technologies into BIM enables built asset operations to become automated and intelligent, thus providing efficient support for facility managers in decision-making processes (Liu et al., 2020a, 2020b). Nonetheless, BIM currently faces software and data interoperability challenges that prevent these technologies from reaching their full potential within BIM as solutions for automating operations and extending them to broader contexts (Pauwels et al., 2017).

In particular, integrating AI into BIM enables interactive, bidirectional information flows that can provide value to end-users (Deng et al., 2021; Liu et al., 2020a, 2020b). This integration mainly benefits the monitoring and control of built assets throughout their lifecycle (Deng et al., 2021). Specifically, applications of AI in built asset operations include predictive maintenance for facility buildings (Bouabdallaoui et al., 2021), condition monitoring and diagnostics (Lebold et al., 2003), fault diagnosis (Hallaji et al., 2021), building energy behaviour (Halhoul Merabet et al., 2021; Wang et al., 2021), HVAC control (Azuatalam et al., 2020), inhabitant and activity recognition (Zou et al., 2018), structural damage detection (Feng et al., 2019; Gharehbaghi et al., 2020; Mangalathu et al., 2019) and anomaly detection (Araya et al., 2017).

2.5 Challenges in the literature and research gap

Although the published literature pays increasing attention to DM, public administrations, policymakers and NGOs call for further research so as to join forces in the common objective of being better prepared to respond to disasters more effectively (Altay et al., 2018; Dubey et al., 2019a, 2019b). Concurrently, with regard to developing a more resilient built environment, a lack of methods and tools to enhance and assess built asset resilience has been identified (Ribeiro & Gonçalves, 2019), and the need for multidisciplinary studies to fill this gap has been specifically underlined (Bosher & Dainty, 2011; Cai et al., 2018; Cerè et al., 2017; Shavindree et al., 2022).

Nevertheless, digital technologies are demonstrating their potential to reduce the negative effects of calamitous events and mitigate their impact on critical infrastructures (Pedraza-Martinez & Wassenhove, 2016). Indeed, in its Agenda 2030, the United Nations Office for Disaster Risk Reduction recently expressed the need for more applied research on the use of new technologies to improve DM operations (UNDRR, 2020). Among these digital technologies, the design and development of AI-based tools able to support decision making in uncertain contexts, such as disasters, remains unresearched (Alanne & Sierla, 2022). In particular, further research is needed to develop AI decision-support frameworks for complex systems, such as built asset operations following a disaster (Chen et al., 2021).

Structural damage detection has been identified as an AI application field in built asset operations that offers the potential to improve operational resilience in the built environment (Feng et al., 2019; Gharehbaghi et al., 2020; Mangalathu et al., 2019). Nevertheless, most of these approaches study damage at the macro level (i.e., areas affected by a natural disaster) and do not assess the damaged asset at the individual-component level (e.g., Hajeb et al., 2020; Mangalathu et al., 2020; Naito et al., 2020). Similarly, many of these studies come from engineering fields, and the AI developments presented remain difficult to understand and interpret. In particular, the lack of visualisation tools reduces stakeholders' chances of rapidly becoming aware of a damaged built asset's functional status (Liu et al., 2020b). In a time-sensitive field such as DM, this factor becomes critical, as decisions need to be taken rapidly after reaching a quick consensus among different stakeholders. In addition, visual information may reduce the likelihood of accidents and poor management (Lin & Wald, 2007). This highlights the importance of integrating into DM visual tools that enable easy and rapid understanding of information derived from complex algorithms.

Finally, integrating AI into BIM to enhance built asset management and provide visual solutions is a field that requires further research (Lu et al., 2020). In this respect, simulation models combining BIM and AI are yielding results in energy-related studies (Clarke, 2018). Nonetheless, there is a call for future research that explores the integration of technologies in BIM and explains how AI-enabled asset operations tools are developed (Lu et al., 2020).

3 Methodology

3.1 Research design

This research aims to explore DL’s potential to benefit both built asset operations and DM in an extreme event. With this purpose in mind, a framework is proposed that leads to the development of a rigorous simulation method which integrates a DL algorithm into BIM to supply accurate, visual information on built asset damage status, and support critical decision making.

This study adopted a positivist research approach, modelling a solution in a rational way while maintaining a technology-centred orientation. The research design combines prototyping and simulation: first, a DL algorithm that performs predictive analysis was prototyped; this algorithm was then integrated into the BIM model and a simulation was run. This method suits the study objectives, as simulations offer the opportunity to attain a holistic and dynamic perspective from different disciplines and provide a comprehensive understanding of system behaviour. Furthermore, simulations help to address the intrinsic challenges of complex and dynamic processes such as strategy formulation, assessment and implementation. Finally, to assess the potential of this DL-enhanced simulation tool, its applicability for building operational resilience in extreme events was discussed by confronting the tool's possibilities with the challenges identified in the DM literature.

The proposed framework includes the following stages (Fig. 2):

1. Creating a 3D BIM model of a built asset that includes data on the relevant parameters (e.g., material characteristics, cost, downtime) for each element
2. Using structural and probabilistic analysis software to generate a dataset that reflects how different intensity levels of an extreme event (e.g., earthquakes, flooding, explosions) affect the built asset's structural behaviour
3. Prototyping a DL model that uses the previously created dataset to predict the damage status of each element of the built asset
4. Integrating the DL model into the 3D BIM model in order to combine BIM information with the information predicted by the DL algorithm
5. Visualising the DL algorithm outputs in the 3D BIM model and integrating the smart 3D model into a visual web dashboard

The framework considers the development of a DL model as a predictive tool. DL algorithms have been shown to effectively address a variety of problems that other machine learning algorithms struggle with. DL is considered highly efficient in terms of performance and is the most popular method in the AI community for solving new complex problems (LeCun et al., 2015; Palanisamy et al., 2008; Yuan et al., 2020). This performance is attributed to the non-linear functions operating within the hidden layers of DL models. For example, DL is used in complex problem-solving tasks with large datasets, such as environmental remote sensing (which is potentially the case here) or image and speech recognition (LeCun et al., 2015; Palanisamy et al., 2008; Yuan et al., 2020).
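To make the role of hidden-layer non-linearities concrete, the following minimal sketch shows the forward pass of a small fully connected network that maps a feature vector to probabilities over the five DS classes (0 to 4) used later in the method. The layer sizes, the ReLU activation, the softmax output and the random, untrained weights are illustrative assumptions, not the architecture used in this study.

```python
import math
import random

def relu(xs):
    # Non-linear activation applied element-wise in each hidden layer
    return [max(0.0, x) for x in xs]

def dense(xs, weights, biases):
    # Fully connected layer: one output per row of `weights`
    return [sum(w * x for w, x in zip(row, xs)) + b for row, b in zip(weights, biases)]

def softmax(zs):
    # Subtract the maximum for numerical stability before exponentiating
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(n_in, n_out, rng):
    # Random (untrained) weights and zero biases, for illustration only
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(features, layers):
    """Hidden layers use ReLU; the last layer outputs DS class probabilities."""
    h = features
    for weights, biases in layers[:-1]:
        h = relu(dense(h, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(h, weights, biases))

rng = random.Random(0)
# Hypothetical sizes: 4 input features, two hidden layers of 8 units, 5 DS classes
layers = [init_layer(4, 8, rng), init_layer(8, 8, rng), init_layer(8, 5, rng)]
probs = forward([0.3, 0.01, 0.02, 1.0], layers)
predicted_ds = max(range(len(probs)), key=probs.__getitem__)
```

Without the `relu` calls, the stacked layers would collapse into a single linear map; the non-linearity is what allows additional depth to add expressive power.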

The use of BIM serves a dual objective. On the one hand, by combining the data produced by the DL model with data already stored in the BIM model, higher levels of accuracy can be reached in health and safety, cost and downtime analyses (Charalambos et al., 2014). On the other hand, the BIM model is intended to increase the knowledge and awareness of facility owners, occupants and other related stakeholders regarding an impacted built asset's functional status (Liu et al., 2020a, 2020b).

4 Research method

The generic framework proposed in the previous section is specified here. The aim is to provide a paradigmatic example of the framework, using a building as a basic case of built asset and earthquakes as a type of extreme event that occurs all over the world. Indeed, seismic excitations have severe adverse consequences in terms of the number of deaths, people affected and total annual damage (EM-DAT, 2021). Moreover, the United Nations has stated that eight of the ten most populous cities are located on fault lines (United Nations, 2010).

This section presents a detailed explanation of the stages followed in the development of a simulation method that is able to predict and visualise building damage status after a seismic excitation (Fig. 3).

Stage 1: 3D model development

In this first stage, the 3D model of the asset is created using a BIM authoring tool (e.g., Autodesk Revit). Modelling is carried out on an individual basis, meaning that each element is modelled separately. At this point, non-graphical information (parameters) can be added to each element. The type and amount of non-graphical data contained in the 3D model determine which analyses are possible once these data are combined with the results of the DL model. For example, data such as each element's repair time and cost can be combined with the data predicted by the DL model to produce an accurate repair cost analysis immediately after an extreme event. Therefore, the parameters included in the 3D model depend on the type of analysis that the built asset's operators and other relevant stakeholders require.
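As an illustration of how BIM parameters could interact with DL predictions, the sketch below combines a per-element repair cost and repair time, stored as they might be as BIM parameters for each DS, with a predicted DS for each element. The element identifiers, the per-DS cost and time tables, and the rule of summing repair times (i.e., assuming repairs are done one after another) are hypothetical assumptions for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass
class Element:
    element_id: str           # hypothetical BIM element identifier
    repair_cost: list[float]  # hypothetical repair cost for DS = 0..4
    repair_days: list[float]  # hypothetical repair time for DS = 0..4

def repair_summary(elements, predicted_ds):
    """Combine BIM parameters with DL-predicted DS values.

    predicted_ds maps element_id -> DS (0..4). Repair times are summed,
    i.e. repairs are assumed to be carried out sequentially.
    """
    total_cost = 0.0
    total_days = 0.0
    for el in elements:
        ds = predicted_ds[el.element_id]
        total_cost += el.repair_cost[ds]
        total_days += el.repair_days[ds]
    return total_cost, total_days

elements = [
    Element("column-01", [0, 500, 2000, 8000, 15000], [0, 1, 3, 10, 20]),
    Element("wall-07", [0, 200, 900, 3000, 6000], [0, 0.5, 2, 5, 9]),
]
summary = repair_summary(elements, {"column-01": 2, "wall-07": 1})
```

Here `summary` is `(2200.0, 3.5)`. Taking the maximum instead of the sum of repair times would model fully parallel repairs; which rule fits depends on the operators' repair strategy.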

In the case of a building, information on each room's safety level is particularly relevant. However, as modelling takes place on an individual basis, all the elements belonging to each room need to be tagged and grouped. This process can be done manually, but for complex buildings it may be time-consuming; it can instead be automated using a visual programming language for BIM authoring tools (e.g., Dynamo). Indeed, this visual programming language has the potential to tag elements automatically according to needs and preferences.
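Once elements carry a room tag, room-level information can be derived from element-level predictions. The minimal sketch below groups elements by a hypothetical room tag and assigns each room the worst (highest) DS among its elements; this conservative aggregation rule is an assumption made here for illustration, not a rule stated in the framework.

```python
from collections import defaultdict

def room_damage_status(element_rooms, element_ds):
    """Aggregate element-level DS to room level.

    element_rooms: element_id -> room tag (e.g. assigned with Dynamo)
    element_ds:    element_id -> predicted DS (0..4)
    Returns room tag -> worst DS among the room's elements (assumed rule).
    """
    rooms = defaultdict(list)
    for element_id, room in element_rooms.items():
        rooms[room].append(element_ds[element_id])
    return {room: max(values) for room, values in rooms.items()}

status = room_damage_status(
    {"c1": "R101", "w1": "R101", "w2": "R102"},  # hypothetical tags
    {"c1": 1, "w1": 3, "w2": 0},
)
```

With these inputs, room R101 takes DS 3 from wall `w1`, and room R102 stays at DS 0.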

Stage 2: Generating the dataset to feed the DL model

In the second stage, a dataset to train the DL model is created. The building's structural model created in BIM can be imported into structural analysis software (e.g., CSi SAP 2000). The purpose of using this software is to obtain data on the building's structural behaviour under a series of seismic excitations. For this purpose, seismic ground motion records were collected from the INNOSEIS database. This database, containing medium- and high-intensity seismic records, was created by the European Commission's Research Fund for Coal and Steel to examine the design of innovative steel-based earthquake-resistant elements. As the database is intended for testing innovative solutions related to seismic excitations, the acceleration time history of each seismic excitation recorded in it was used to generate the data that feed the DL model (INNOSEIS, 2021).

For each ground motion, non-linear time history analysis was performed in the structural analysis software to identify the relative floor displacement (RFD) of each storey of the building. The software also computed each floor's storey drift ratio (SDR) in the critical direction. Equation (1) describes the calculation of each floor's SDR: the difference between the RFDs of two adjacent storeys divided by the storey height. RFD is obtained by subtracting the ground displacement from the absolute maximum displacement recorded on each floor (Eq. 2).
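The two definitions above can be sketched as follows, assuming the displacement histories have been exported from the structural analysis software as plain lists in consistent units. Equation (2) is read here as the peak absolute floor displacement minus the peak absolute ground displacement; if it is instead meant as the peak of the floor-minus-ground history, the first function would use `max(abs(u - ug) for u, ug in zip(history, ground_history))`. The function names are hypothetical.

```python
def relative_floor_displacements(floor_histories, ground_history):
    """Eq. (2) as read here: peak |floor displacement| - peak |ground displacement|.

    floor_histories: one displacement history per floor, ordered bottom to top.
    """
    ground_peak = max(abs(u) for u in ground_history)
    return [max(abs(u) for u in history) - ground_peak for history in floor_histories]

def storey_drift_ratios(rfd, storey_heights):
    """Eq. (1): (RFD of upper floor - RFD of lower floor) / storey height.

    The ground level is taken as the lower floor of the first storey (RFD = 0).
    """
    below = [0.0] + rfd[:-1]
    return [(top - bottom) / h for top, bottom, h in zip(rfd, below, storey_heights)]

ground = [0.0, 0.01, -0.02]                        # hypothetical histories (m)
floors = [[0.0, 0.03, -0.05], [0.1, -0.09, 0.04]]
rfd = relative_floor_displacements(floors, ground)  # approx. [0.03, 0.08]
sdr = storey_drift_ratios(rfd, [3.0, 3.0])          # approx. [0.01, 0.0167]
```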

Equation (3) calculates each ground motion's intensity measure (IM) as the square root of the product of the ground motion's spectral accelerations in both directions, i.e., their geometric mean. Both spectral accelerations of each ground motion are calculated using Newmark's linear acceleration method, implemented in a Python script (Online Appendix A) (Chopra, 2007).
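Online Appendix A is not reproduced here; the following is an independent minimal sketch of the technique named above. It applies Newmark's method with γ = 1/2 and β = 1/6 (the linear acceleration method), following the algorithm for linear systems given by Chopra (2007), to a linear single-degree-of-freedom oscillator to obtain the pseudo-spectral acceleration at one period, and then computes the geometric-mean IM of Eq. (3). The 5% default damping ratio, the function names and the use of pseudo-spectral (rather than total) acceleration are assumptions. The linear acceleration method is only conditionally stable, so the time step must satisfy Δt/T ≤ 0.551.

```python
import math

def newmark_sa(ground_accel, dt, period, damping=0.05):
    """Pseudo-spectral acceleration of a linear SDOF oscillator (unit mass).

    Newmark's method with gamma = 1/2 and beta = 1/6 (linear acceleration
    method), using the step-by-step scheme for linear systems.
    """
    gamma, beta = 0.5, 1.0 / 6.0
    if dt / period > 0.551:
        raise ValueError("time step too large for the linear acceleration method")
    m = 1.0
    wn = 2.0 * math.pi / period               # natural circular frequency
    k = m * wn ** 2                           # stiffness
    c = 2.0 * damping * m * wn                # viscous damping coefficient
    p = [-m * ag for ag in ground_accel]      # effective earthquake force

    u, v = 0.0, 0.0                           # oscillator starts at rest
    a = (p[0] - c * v - k * u) / m
    a1 = m / (beta * dt ** 2) + gamma * c / (beta * dt)
    a2 = m / (beta * dt) + (gamma / beta - 1.0) * c
    a3 = (1.0 / (2.0 * beta) - 1.0) * m + dt * (gamma / (2.0 * beta) - 1.0) * c
    k_hat = k + a1

    u_peak = 0.0
    for i in range(len(p) - 1):
        p_hat = p[i + 1] + a1 * u + a2 * v + a3 * a
        u_next = p_hat / k_hat
        v_next = ((gamma / (beta * dt)) * (u_next - u)
                  + (1.0 - gamma / beta) * v
                  + dt * (1.0 - gamma / (2.0 * beta)) * a)
        a_next = ((u_next - u) / (beta * dt ** 2)
                  - v / (beta * dt)
                  - (1.0 / (2.0 * beta) - 1.0) * a)
        u, v, a = u_next, v_next, a_next
        u_peak = max(u_peak, abs(u))
    return wn ** 2 * u_peak                   # same units as ground_accel

def intensity_measure(sa_x, sa_y):
    """Eq. (3): geometric mean of the spectral accelerations in both directions."""
    return math.sqrt(sa_x * sa_y)
```

As a quick sanity check, a suddenly applied constant ground acceleration a0 drives an undamped oscillator to twice its static displacement, so `newmark_sa([1.0] * 2001, 0.001, 1.0, damping=0.0)` should return a value close to 2.0.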

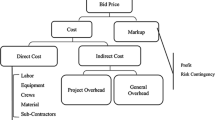

The above results are needed to calculate the damage status (DS), which is the target variable of the DL model. Hence, to obtain this variable for the dataset, the damage produced by each ground motion was analysed. The performance-based earthquake engineering (PBEE) framework was adopted in this method (Günay & Mosalam, 2013). PBEE is a process that aims to improve seismic risk decision making by identifying a built asset's performance metrics (Deierlein et al., 2003; Zhang et al., 2018). Characterising the damage to the built asset's elements is key to identifying its performance metrics. Comparing the damage caused to elements by seismic excitations with their strength limits, as defined by the American Society of Civil Engineers, helps engineers identify the thresholds for immediate occupancy (IO, DS ≥ 1), life safety (LS, DS ≥ 2) and collapse prevention (CP, DS ≥ 3) (ASCE, 2007). These performance metrics are therefore linked to civil engineering concepts such as the built asset's structural response metrics (Fig. 4) (Deierlein et al., 2003; Zhang et al., 2018).
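The thresholds quoted above can be written as a simple lookup. This sketch returns every performance threshold that a given DS reaches; the function name is hypothetical, and reporting all reached thresholds (rather than only the most severe) is a presentational choice made here.

```python
def performance_levels(ds):
    """PBEE thresholds reached by a damage status, per the definitions above:
    IO if DS >= 1, LS if DS >= 2, CP if DS >= 3."""
    if not 0 <= ds <= 4:
        raise ValueError("DS is expected to be an integer from 0 to 4")
    thresholds = [("IO", 1), ("LS", 2), ("CP", 3)]
    return [label for label, minimum in thresholds if ds >= minimum]
```

For example, an element with DS = 2 has reached the IO and LS thresholds but not CP.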

Fig. 4 Damage status thresholds for a built asset (adapted from Deierlein et al., 2003)

An increasingly popular PBEE-based methodology for assessing a built asset’s structural performance was developed by the US Federal Emergency Management Agency (FEMA) (Cremen & Baker, 2019; Zeng et al., 2016). FEMA created the Performance Assessment Calculation Tool (PACT), which uses a probabilistic method to link each element’s damage to the likelihood of an unsafe environment by checking the probability that the damage exceeds predefined thresholds (Zhang et al., 2018).

PACT is used in this study to obtain DS values for each element of the building (PACT, 2021). PACT requires the category and quantity of the elements on each floor to be specified. The previously calculated SDR and IM values are then entered into PACT for each ground motion. As part of its analysis, PACT runs a Monte Carlo simulation that generates a distribution of 200 possible DS scenarios, ranging from zero to four (0, 1, 2, 3, 4), for each element on each storey. In this study, the most frequent value among the 200 DS scenarios produced by each ground motion was selected as the most likely DS of each element on each storey.
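PACT’s internal procedure is not reproduced here. As a hedged illustration of the idea, the sketch samples 200 damage-state realisations from lognormal fragility curves (the functional form used in the FEMA P-58 methodology that PACT implements) and then keeps the most frequent value, as done in this study. The fragility medians and dispersion are invented placeholders, the storey drift ratio is used as the demand parameter, and the sampling scheme is a simplification.

```python
import math
import random
from collections import Counter
from typing import List, Sequence

# Hypothetical fragility parameters for one element type (NOT PACT's database values):
# median storey drift ratio at which DS >= 1, 2, 3, 4 is reached, and a common dispersion.
MEDIANS = [0.005, 0.010, 0.020, 0.040]
BETA = 0.4


def normal_cdf(x: float) -> float:
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def exceedance_probabilities(edp: float, medians: Sequence[float], beta: float) -> List[float]:
    """P(DS >= k | EDP) for k = 1..len(medians), from lognormal fragility curves."""
    if edp <= 0:
        return [0.0] * len(medians)
    return [normal_cdf(math.log(edp / theta) / beta) for theta in medians]


def sample_damage_states(edp: float, medians: Sequence[float], beta: float,
                         n: int = 200, seed: int = 0) -> List[int]:
    """Draw n Monte Carlo realisations of the damage state (0..len(medians)).

    With increasing medians and a common beta the exceedance probabilities are
    non-increasing, so counting how many of them exceed a uniform draw r gives
    the highest damage state k with r < P(DS >= k), or 0 if there is none.
    """
    rng = random.Random(seed)
    probs = exceedance_probabilities(edp, medians, beta)
    samples = []
    for _ in range(n):
        r = rng.random()
        samples.append(sum(1 for p in probs if r < p))
    return samples


def most_likely_damage_state(samples: Sequence[int]) -> int:
    """Mode of the realisations (ties resolved by first occurrence)."""
    return Counter(samples).most_common(1)[0][0]
```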

At the end of this second stage, a dataset for each element on each storey is produced (Fig. 5).

For every ground motion, the dataset contains the IM, the RFD at the bottom (RFDB) and top (RFDT) floors of each storey, and the DS. For example, if this methodology is applied to a built asset with K elements, the dataset’s final format is as shown in Table 1.


Stage 3: DL Model development

This stage prototypes a DL algorithm capable of predicting the DS of each element for a given ground motion. First, because the dataset created in the previous stage contains a categorical column that identifies the element type, the categorical values must be transformed into dummy variables. This process creates a new column for each categorical value, which contains a one if the row corresponds to that categorical value and a zero otherwise. This is shown in Table 2.

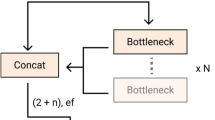
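The dummy-variable step can be sketched in plain Python as follows. The four category names come from Sect. 5.3; their alphabetical order, and therefore which category becomes the all-zero reference, is our assumption. The same kind of encoding can be obtained with `pandas.get_dummies(..., drop_first=True)`.

```python
from typing import List, Sequence

# Element categories from Sect. 5.3; the alphabetical order (and hence which
# category becomes the all-zero reference) is an assumption for illustration.
LEVELS = ["Bracings", "Columns", "Curtain Walls", "Interior Walls"]


def dummy_encode(values: Sequence[str], levels: Sequence[str], drop_first: bool = True) -> List[List[int]]:
    """Turn each categorical value into 0/1 dummy columns.

    With drop_first=True the first level is the reference category and is
    represented by all zeros, so k categories yield k - 1 columns.
    """
    unknown = set(values) - set(levels)
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    kept = list(levels[1:]) if drop_first else list(levels)
    return [[1 if value == level else 0 for level in kept] for value in values]


rows = dummy_encode(["Columns", "Bracings", "Interior Walls"], LEVELS)
print(rows)  # [[1, 0, 0], [0, 0, 0], [0, 0, 1]]
```

Dropping one level avoids a redundant column, which is why four element categories produce three dummy variables in Sect. 5.3.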

Following this initial step, a DL model is developed for the multinomial classification of the DS of each built asset element. The DL model’s architecture (Online Appendix B) includes an input layer, an output layer and N hidden layers of neurons (Fig. 6) (LeCun et al., 2015).

Fig. 6 An example of a multi-layered neural network (adapted from LeCun et al., 2015)

The key elements considered in the DL model development are: (1) the neurons, (2) activation functions, (3) loss functions and (4) optimisation techniques. First, the input values are fed into the neurons of the input layer, and their signals travel through all the synapses engineered in the model to produce an output. In this methodology, the neurons of the output layer produce a categorical value. Prior to model training, and to improve training efficiency, the standardisation function is applied to the input values (Eq. 4) (LeCun et al., 2012). This function subtracts the mean from each value and divides the result by the standard deviation.
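A minimal sketch of the standardisation in Eq. (4), including persistence of the fitted parameters so that inputs at prediction time are scaled exactly as during training. It behaves like scikit-learn’s `StandardScaler` (which also uses the population standard deviation), but whether the authors used that class is not stated, so this is an assumption; the function names are hypothetical.

```python
import pickle
import statistics
from typing import Dict, List, Sequence

Scaler = Dict[str, List[float]]


def fit_scaler(rows: Sequence[Sequence[float]]) -> Scaler:
    """Per-column mean and population standard deviation of the training inputs."""
    columns = list(zip(*rows))
    return {
        "mean": [statistics.mean(col) for col in columns],
        "std": [statistics.pstdev(col) for col in columns],
    }


def standardise(rows: Sequence[Sequence[float]], scaler: Scaler) -> List[List[float]]:
    """Eq. (4): z = (x - mean) / std; constant columns (std == 0) are mapped to 0."""
    return [
        [(x - m) / s if s > 0 else 0.0 for x, m, s in zip(row, scaler["mean"], scaler["std"])]
        for row in rows
    ]


def save_scaler(scaler: Scaler, path: str) -> None:
    """Persist the fitted parameters (here with pickle) for reuse at prediction time."""
    with open(path, "wb") as f:
        pickle.dump(scaler, f)


def load_scaler(path: str) -> Scaler:
    with open(path, "rb") as f:
        return pickle.load(f)
```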

Each neuron contained in the model’s layers is linked via synapses to the neurons contained in the following layer. The synapse function is responsible for passing the signal to each set of neurons. This is the linear function described in Eq. (5) (LeCun et al., 2015). Weight “w” in the equation describes the synapse’s importance (“weight”) between the two layers, as it dictates whether the signal passes or not, and to what extent it does so (LeCun et al., 2012).

The value received by each neuron in a layer is then transformed by an activation function specified by the model’s engineer. The activation function determines whether, and how strongly, the neuron is activated (Nwankpa et al., 2018). In this methodology, the non-linear rectified linear unit (ReLU) activation function, f(x) = max(0, x), is selected for the neurons in the hidden layers (Eq. 6), where x is the neuron’s input value. ReLU was chosen for the hidden layers because it has been applied successfully by the AI community in recent years (LeCun et al., 2015). Moreover, since this study aims to predict categorical values, the activation function selected for the output layer is softmax (Eq. 7) (Nwankpa et al., 2018), where z is a vector of K real numbers that is normalised into a probability distribution of K probabilities proportional to the exponentials of the input numbers.
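The forward computations of Eqs. (5) to (7) can be sketched in plain Python. We assume that Eq. (5) is the usual weighted sum plus a bias term b, as in standard dense layers. The weights in the example are arbitrary illustrative numbers.

```python
import math
from typing import List, Sequence


def dense(x: Sequence[float], weights: Sequence[Sequence[float]], biases: Sequence[float]) -> List[float]:
    """Weighted sum per neuron of the next layer: z_j = sum_i w_ji * x_i + b_j (bias assumed)."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b for w_row, b in zip(weights, biases)]


def relu(z: Sequence[float]) -> List[float]:
    """Eq. (6): max(0, x) applied element-wise."""
    return [max(0.0, v) for v in z]


def softmax(z: Sequence[float]) -> List[float]:
    """Eq. (7): exponentials normalised to sum to 1 (max subtracted for numerical stability)."""
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical weights for a 2-input, 2-neuron hidden layer.
hidden = relu(dense([1.0, 2.0], weights=[[1.0, -1.0], [0.5, 0.5]], biases=[0.0, 1.0]))
print(hidden)  # [0.0, 2.5]
```

Subtracting the maximum before exponentiating leaves the softmax output unchanged but avoids overflow for large inputs.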

During DL model training, the predicted values of the output-layer neurons are compared with their actual values, and the error is calculated with a loss function (e.g., mean squared error or cross-entropy). As the methodology is applied to categorical values, the categorical cross-entropy loss function is selected (Eq. 8) (Zhang, 2019), where \(\hat{y}_i\) is the i-th scalar value in the model output, \(y_i\) is the corresponding target value, and the output size is the number of scalar values in the model output.
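A short sketch of the categorical cross-entropy of Eq. (8) for a single damage-status prediction, plus its average over a batch. The small `eps` floor that avoids log(0) is our addition; deep learning libraries apply a similar clip.

```python
import math
from typing import List, Sequence


def one_hot(ds: int, num_classes: int = 5) -> List[float]:
    """Encode a damage status 0..4 as a one-hot target vector."""
    return [1.0 if k == ds else 0.0 for k in range(num_classes)]


def categorical_cross_entropy(y_true: Sequence[float], y_pred: Sequence[float], eps: float = 1e-12) -> float:
    """Eq. (8): -sum_i y_i * log(y_hat_i); eps guards against log(0)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


def mean_loss(targets: Sequence[int], predictions: Sequence[Sequence[float]]) -> float:
    """Average loss over a batch, as typically minimised during training."""
    total = sum(categorical_cross_entropy(one_hot(t, len(p)), p) for t, p in zip(targets, predictions))
    return total / len(targets)
```

With a one-hot target, only the predicted probability of the true class contributes, so the loss reduces to -log of that probability.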

After this step, the DL model starts the back propagation process (Fig. 7), which propagates the error backwards from the output layer and, based on a learning algorithm, adjusts the weights of Eq. (5) and recalculates the predicted values until the weights that minimise the loss are identified (LeCun et al., 2015). The optimisation function applied in this methodology for model training is adaptive moment estimation (Adam) (Eqs. 9, 10), which combines the momentum and root mean square propagation gradient descent methods. In these equations, m is the exponentially decaying average of past gradients at time t (initially 0), w are the weights at time t, ε is a small positive constant (\(10^{-8}\)), u is the exponentially decaying average of the squares of past gradients at time t, and β1 and β2 (0.9 and 0.999) are the decay rates of these averages, which come from the momentum and root mean square propagation methods, respectively.
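The sketch below implements one Adam update with bias correction as published by Kingma and Ba, using the symbols m and u from the text. The learning rate is not given in the text: 0.001 is the commonly used default, and 0.1 in the toy example is chosen only so that the quadratic converges quickly.

```python
import math
from typing import List, Sequence, Tuple


def adam_step(
    w: Sequence[float],
    grad: Sequence[float],
    m: Sequence[float],
    u: Sequence[float],
    t: int,
    lr: float = 0.001,
    beta1: float = 0.9,
    beta2: float = 0.999,
    eps: float = 1e-8,
) -> Tuple[List[float], List[float], List[float]]:
    """One Adam update for step t >= 1; returns the new (w, m, u)."""
    m = [beta1 * mi + (1 - beta1) * g for mi, g in zip(m, grad)]      # momentum-style average
    u = [beta2 * ui + (1 - beta2) * g * g for ui, g in zip(u, grad)]  # RMSprop-style average
    m_hat = [mi / (1 - beta1**t) for mi in m]                         # bias correction
    u_hat = [ui / (1 - beta2**t) for ui in u]
    w = [wi - lr * mh / (math.sqrt(uh) + eps) for wi, mh, uh in zip(w, m_hat, u_hat)]
    return w, m, u


# Toy problem: minimise f(w) = (w - 3)^2, whose gradient is 2 (w - 3).
w, m, u = [0.0], [0.0], [0.0]
for t in range(1, 501):
    grad = [2.0 * (w[0] - 3.0)]
    w, m, u = adam_step(w, grad, m, u, t, lr=0.1)
print(w[0])
```

Because each step is scaled by the square root of the averaged squared gradients, the first update has a magnitude close to the learning rate regardless of the raw gradient size.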

Fig. 7 Error calculation and back propagation process (adapted from LeCun et al., 2015)

Adam is commonly used for large datasets because it is computationally efficient, requires little memory and works well with noisy gradients (Kingma & Ba, 2017). Furthermore, to identify the best DL model, an algorithm was designed that runs a random search over specific hyperparameters to find the best-performing set. The hyperparameters targeted were the number of hidden layers in the DL model and the number of neurons in each one. Two arrays containing a range of values for each hyperparameter were therefore created. The random search process was then applied (Agrawal, 2021): 10 iterations were run, each randomly combining values of the two hyperparameters and fitting the DL model with them, and the set of values that achieved the best performance was returned. Next, having identified the best hyperparameters, a k-fold cross-validation test was performed (Fig. 8) to check that the model’s performance did not depend on a particular split of the dataset. Cross-validation estimates how the model is expected to perform when making predictions on data not used during training, and therefore provides a less biased estimate of the DL model’s predictive skill.
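The random search and k-fold splitting can be sketched as follows. `evaluate` is a hypothetical stand-in for training the DL model with a given number of hidden layers and neurons and returning a validation score (for example, mean accuracy over the k folds). For simplicity the sketch uses one neuron count for all hidden layers. scikit-learn’s `RandomizedSearchCV` (with its `n_iter` parameter) and `KFold` provide library versions of both steps.

```python
import random
from typing import Callable, Iterator, List, Optional, Sequence, Tuple


def k_fold_indices(n: int, k: int, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, test) index lists for k folds after a single shuffle."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def random_search(
    layer_options: Sequence[int],
    neuron_options: Sequence[int],
    evaluate: Callable[[int, int], float],
    n_iter: int = 10,
    seed: int = 0,
) -> Tuple[Optional[Tuple[int, int]], float]:
    """Randomly combine (hidden layers, neurons per layer) n_iter times and keep the best score.

    `evaluate` is a placeholder for training the model and returning a
    validation score; higher is better.
    """
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = (rng.choice(layer_options), rng.choice(neuron_options))
        score = evaluate(*params)
        if score > best_score:
            best, best_score = params, score
    return best, best_score
```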

Finally, having defined all the necessary parameters, the model was trained on the dataset produced in Stage 2, tested and saved to a file. The scaling parameters used for data standardisation were also saved to a file.


Stage 4: Integrating the DL Model into BIM

In the fourth stage, the DL algorithm was integrated into the 3D BIM model created in Stage 1. To achieve this, a visual programming language for BIM authoring tools (Dynamo) was used. The integration process was performed in two sub-stages:

First, the DL model’s input values had to be assembled for each built asset element or group of elements. To implement this, a code block was written that receives a file containing the ground motion data and the time-history of accelerations in both directions. A second code block receives the time-history of each floor’s displacements in the critical direction. A code item that calculates the IM and another that receives the RFD data were then integrated, followed by a code item that assigns the correct dummy variable to each element. Finally, these data are merged into a list so that the input values are structured in the format expected by the DL model.

Second, the DL model and the file containing the scaling parameters used to standardise its input values were integrated. The previously created list for each element or group of elements was then fed into the DL model, and the predictions simulating the final performance of the built asset’s elements on each floor in the critical direction were computed. The algorithms developed were the same for every floor in the building; the only modification was the code block specifying the level it referred to.


Stage 5: Visualising the information predicted by the DL model in BIM and integrating it into a web dashboard

In this stage, dynamic visualisations of the information predicted by the DL model were generated, with the objective of providing insightful visual information that integrates the DL algorithm’s outputs. To achieve this, a new algorithm was developed in the same visual programming environment (Dynamo) that assigns the predicted DS to each built asset element as a new parameter. Integrating the DL model’s outputs into BIM involves four steps. First, every element in the 3D model is allocated to a specific room. Second, a list is created for each room containing only its allocated elements. Third, the algorithm compares the DS parameter values of the elements in each room and assigns the most critical value to the room as a new parameter, called the safety level. Fourth, the algorithm calculates the cost of repairing each room to an accepted safety level, as well as the room’s complete repair cost; both costs are assigned as parameters to the elements belonging to the room. Applying this algorithm enables rooms to be visualised in five colours (green, chartreuse yellow, yellow, orange, red) depending on their predicted safety level.
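The room-level logic of the second and third steps, together with the colour coding, can be sketched in plain Python. This is not the authors’ Dynamo graph. The element IDs and room tags are hypothetical, and the mapping of colours from DS 0 (green) to DS 4 (red) is our assumption based on the order in which the colours are listed.

```python
from typing import Dict

# Assumed mapping of safety level (highest DS in the room, 0..4) to the five colours.
SAFETY_COLOURS = {0: "green", 1: "chartreuse yellow", 2: "yellow", 3: "orange", 4: "red"}


def room_safety_levels(element_ds: Dict[str, int], element_room: Dict[str, str]) -> Dict[str, int]:
    """Safety level of each room = most critical (highest) DS among its elements."""
    levels: Dict[str, int] = {}
    for element, ds in element_ds.items():
        room = element_room[element]
        levels[room] = max(levels.get(room, 0), ds)
    return levels


# Hypothetical element IDs, room tags and predicted DS values.
element_room = {"C1": "R101", "W1": "R101", "G1": "R102", "B1": "R102"}
element_ds = {"C1": 1, "W1": 3, "G1": 0, "B1": 0}
levels = room_safety_levels(element_ds, element_room)
colours = {room: SAFETY_COLOURS[level] for room, level in levels.items()}
print(colours)  # {'R101': 'orange', 'R102': 'green'}
```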

Finally, as using a BIM authoring tool can be challenging for inexperienced users, the 3D BIM model is integrated into a web platform with a user-friendly dashboard (Autodesk Forge). The platform connects the 3D BIM model to the cloud via an application programming interface (API). To visualise the safety level and repair cost information for each room on the web dashboard, this information must be stored in the 3D elements that make up each room. As a result, a dashboard is created with all the key information, linking the 3D model to statistical charts on safety levels and repair costs.

5 Empirical analysis

This section describes the application of the proposed method to our unit of study, covering all aspects of its implementation and the results obtained.

5.1 Designing the 3D BIM model

As a unit of study, a 3D model was designed and developed using a BIM authoring tool (Autodesk Revit). To simplify calculations and make the application of the method easier to follow, a simple six-storey building was created. The building’s plan dimensions are 21 × 28 m, and each storey is 3.5 m high (Fig. 9). The floor plans are identical for all six storeys (Fig. 10).

Regarding the non-graphical information included in the model, a set of parameters is assigned to each element and each room in the building (Fig. 11).

The parameter “room tag” is assigned to each element to show the room it belongs to; this room allocation was automated using Dynamo. A DS parameter was also created to store each element’s DS as predicted by the DL model, together with a repair cost parameter (in US dollars) that stores each element’s repair cost (Table 3). At room level, a safety level parameter was created to store each room’s safety based on the DS of its elements. Finally, two further parameters were created for each room to store its repair costs: the cost of repairing the room to an accepted safety level, “Room Repair Cost (SL < 2)”, and the total repair cost, “Room (Total) Repair Cost (SL < 1)” (Table 4).

It is worth noting that the simulation method can be applied either to all the building’s elements or to a selected set of them. Likewise, seismic excitations can be analysed either in the critical direction or in both directions. To avoid further complexity in this empirical analysis, the simulation method was applied only in the critical direction. Similarly, based on their role in structural behaviour, the following structural elements were selected for examination: (1) metal panel walls; (2) glazed walls; (3) steel columns/beams; (4) steel bracings (Table 5).

5.2 Generating the dataset for the DL model

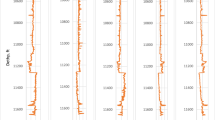

The building’s structural elements in the 3D BIM model were exported to structural analysis software to perform the structural calculations. Non-linear time history analyses were then conducted using ground motions from the INNOSEIS database. The building’s structural behaviour was calculated for 150 different ground motions (30 original records from the INNOSEIS database and 120 scaled in the critical direction). Structural software (SAP 2000) was used to calculate the RFD for each floor and the respective SDR (Fig. 12). In addition, Newmark’s linear acceleration method was implemented in a Python script to calculate the IM for each ground motion (Online Appendix A).

The PACT tool was then used to obtain the elements’ DS. PACT requires the elements to be categorised and a set of inputs to be introduced in order to produce results (PACT, 2021). To run the analysis, the structural elements were categorised as shown in Table 6: the element types in the building were specified, grouped and quantified for each floor. The SDR and IM calculated in the previous step were introduced as input values for each element. A simplified probabilistic analysis then evaluated the response of the examined building elements to each ground motion.

The same category of structural elements yields different results for the same ground motion depending on the storey’s location, as each storey has a different SDR. A dataset of 150 rows (one per ground motion) was therefore generated for each of the four element categories on each of the six storeys. Merging all these datasets produced a final dataset of 3600 rows (150 × 4 × 6) with the following columns: (1) the ground motion’s IM, (2) the RFD for the bottom and top floors, (3) the element type and (4) the element’s final damage status. A sample of the dataset in its final format is shown in Table 7.
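The merge can be sketched as one row per (ground motion, storey, element category). The column names follow the text only loosely and are assumptions, and the placeholder numbers merely check the shape of the result; they are not study data.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

CATEGORIES = ["Columns", "Bracings", "Interior Walls", "Curtain Walls"]


def build_dataset(im: Sequence[float], rfd: Sequence[Sequence[float]],
                  ds: Dict[Tuple[int, int, str], int]) -> List[dict]:
    """One row per (ground motion, storey, element category).

    im[g]     : IM of ground motion g
    rfd[g][f] : RFD of floor f for ground motion g (f = 0 is the ground)
    ds[...]   : most likely DS for (g, storey, category), e.g. the mode of the PACT scenarios
    """
    rows = []
    n_storeys = len(rfd[0]) - 1
    for g, storey, cat in product(range(len(im)), range(1, n_storeys + 1), CATEGORIES):
        rows.append({
            "IM": im[g],
            "RFDB": rfd[g][storey - 1],  # bottom floor of the storey
            "RFDT": rfd[g][storey],      # top floor of the storey
            "Element": cat,
            "DS": ds[(g, storey, cat)],
        })
    return rows


# Placeholder values only: 150 ground motions x 6 storeys x 4 categories.
im = [0.1] * 150
rfd = [[0.0, 0.01, 0.02, 0.03, 0.04, 0.05, 0.06]] * 150
ds = {(g, s, c): 0 for g in range(150) for s in range(1, 7) for c in CATEGORIES}
dataset = build_dataset(im, rfd, ds)
print(len(dataset))  # 3600
```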

5.3 DL model development

First, the dataset was pre-processed to transform the categorical values in the element category column into dummy variables. Three dummy variables were created because this study examines four element categories (Columns, Bracings, Interior Walls, Curtain Walls), with one category serving as the reference (Fig. 13). The standardisation scaling function (Eq. 4) was then applied to the six input values (IM, RFD bottom floor, RFD top floor and the three dummy variables).

A DL model was then designed (Online Appendix B). The activation, loss and optimiser functions (ReLU, softmax, categorical cross-entropy and Adam) were selected following the architecture described and justified in the research method (Stage 3). As explained in the methodology, multiple iterations with a variety of values were examined to tune the number of hidden layers and the number of neurons in each layer. The final model has an input layer with six neurons, three hidden layers with 40, 40 and 10 neurons respectively, and an output layer with five neurons, one for each possible DS value from 0 to 4 (Fig. 14).
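As a quick check of the model size, the sketch counts the trainable parameters of the reported 6-40-40-10-5 architecture, assuming fully connected layers with biases (as in a Keras `Dense` layer). It also lists the layer order implied by the dropout rate of 0.3 reported for this model. It trains nothing and is not Online Appendix B.

```python
from typing import List, Sequence

LAYER_SIZES = [6, 40, 40, 10, 5]  # input, three hidden layers, output (Sect. 5.3)
DROPOUT_RATE = 0.3                 # dropout after the input layer and each hidden layer


def dense_parameter_count(sizes: Sequence[int]) -> int:
    """Trainable weights plus biases of fully connected layers (dropout adds none)."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))


def layer_stack(sizes: Sequence[int], dropout: float) -> List[str]:
    """Readable stack: each Dense layer is preceded by a Dropout layer."""
    stack = []
    n_dense = len(sizes) - 1
    for i, n_out in enumerate(sizes[1:]):
        activation = "softmax" if i == n_dense - 1 else "relu"
        stack.append(f"Dropout({dropout}) -> Dense({n_out}, {activation})")
    return stack


print(dense_parameter_count(LAYER_SIZES))  # 2385
for line in layer_stack(LAYER_SIZES, DROPOUT_RATE):
    print(line)
```

With roughly 2400 parameters and 2520 training rows (70% of 3600), the network is small relative to the dataset, which is consistent with the use of dropout to limit overfitting.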

In addition, to prevent overfitting in the neural network, dropout layers with a rate of 0.3 were added after the input layer and each hidden layer. The dataset was then split in two: 70% of the data was used for training and the remaining 30% to test the DL model. The DL model was trained to predict the damage status of the structural elements for a given ground motion. On the test set, the model achieved 97% accuracy, as shown in the confusion matrix (Fig. 15). The trained DL model and the scaling parameters were then saved in an H5 file and a pickle file, respectively.
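The 70/30 split and the accuracy read from a confusion matrix can be sketched as follows. A simple random (unstratified) split is assumed because the text does not say how the split was made, and the labels in the check are illustrative.

```python
import random
from typing import List, Sequence, Tuple


def train_test_split_indices(n: int, test_fraction: float = 0.3, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle row indices once and hold out test_fraction of them for testing."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_fraction)
    return idx[n_test:], idx[:n_test]


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], num_classes: int = 5) -> List[List[int]]:
    """cm[i][j] counts samples with true DS i predicted as DS j."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracy(cm: Sequence[Sequence[int]]) -> float:
    """Share of samples on the diagonal (correct predictions)."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(sum(row) for row in cm)
```

For the 3600-row dataset this split yields 2520 training rows and 1080 test rows; the off-diagonal cells of the matrix show which damage states are confused with each other.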

5.4 Integrating the DL model into BIM

In this stage, Dynamo software is used to integrate the DL model into BIM. A flowchart of the main steps followed in this process is presented in Fig. 16.

After designing the 3D model and prototyping the DL algorithm, the trained DL model and the scaling parameters are loaded and linked to the BIM model via a Python script (Online Appendix C). The scaling parameters are required because the six input values must be standardised in the same way as during training before they are fed into the DL model. Moreover, a ground motion must be selected to run the simulation (Fig. 17).

The ground motion’s acceleration time-histories in both directions and each floor’s displacement time-histories in the critical direction are structured as files in their specific format. They are then inserted into the Dynamo algorithm to start the simulation. The algorithm generates a list of values for each storey containing the ground motion’s IM and the RFD for the bottom (RFDB) and top (RFDT) floors (Fig. 18) (Online Appendix D). The dummy variables categorising each structural element are then added to the same list, so that a list is created for each element category on each storey. This list contains the input values, which are scaled with the saved scaling parameters and then fed into the DL model to predict the damage status of each element category (Online Appendix C).
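The following sketch shows what the per-storey step might look like inside a Python node: build the six-value input for each element category, standardise it with the saved parameters, and take the most probable DS. It is not Online Appendix C or D. The dummy codes are hypothetical, and `predict_proba` is a placeholder for the trained model’s prediction call (for a Keras model, `model.predict` on a batch).

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical dummy codes (first category as the all-zero reference).
DUMMIES: Dict[str, Tuple[int, int, int]] = {
    "Bracings": (0, 0, 0),
    "Columns": (1, 0, 0),
    "Curtain Walls": (0, 1, 0),
    "Interior Walls": (0, 0, 1),
}


def storey_inputs(im: float, rfd_bottom: float, rfd_top: float,
                  mean: Sequence[float], std: Sequence[float]) -> Dict[str, List[float]]:
    """Build and standardise the six-value input vector for each element category on one storey."""
    inputs = {}
    for category, dummies in DUMMIES.items():
        raw = [im, rfd_bottom, rfd_top, *dummies]
        inputs[category] = [(x - m) / s if s > 0 else 0.0 for x, m, s in zip(raw, mean, std)]
    return inputs


def predict_damage_states(inputs: Dict[str, List[float]],
                          predict_proba: Callable[[List[float]], Sequence[float]]) -> Dict[str, int]:
    """Pick the DS (0..4) with the highest predicted probability for each category."""
    result = {}
    for category, x in inputs.items():
        probs = predict_proba(x)
        result[category] = max(range(len(probs)), key=lambda k: probs[k])
    return result
```

Because the same function is called once per storey with that storey’s RFDB and RFDT, it mirrors the remark that only the level information changes from floor to floor.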

5.5 Visualising the DL model outputs in BIM and integrating the smart model into a web dashboard

In the final stage, the algorithm developed in Dynamo assigns each building element to its corresponding room, using the room tag parameter created for each examined element. The room’s safety level is then assigned based on the highest DS among the elements that make up the room. The algorithm assesses each room’s safety level and computes two repair costs for each room: the cost of the repairs needed to bring the safety level down to the accepted level, and the total repair cost (Fig. 19).
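A sketch of our reading of the two room-level costs defined in Sect. 5.1 (Table 4). To bring a room’s safety level below a threshold, every element with DS at or above that threshold must be repaired. A threshold of 2 therefore gives the “Room Repair Cost (SL < 2)” and a threshold of 1 gives the total repair cost. We assume each element carries a single repair cost tied to its predicted DS; the records below are hypothetical and the Table 3 values are not reproduced.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple


def room_repair_costs(elements: Sequence[Tuple[str, int, float]], threshold: int) -> Dict[str, float]:
    """Sum, per room, the repair costs of elements whose DS >= threshold.

    Repairing those elements brings the room's safety level below `threshold`.
    """
    costs: Dict[str, float] = defaultdict(float)
    for room, ds, cost in elements:
        costs[room] += cost if ds >= threshold else 0.0
    return dict(costs)


# Hypothetical (room tag, predicted DS, element repair cost in USD) records.
elements = [("R101", 3, 5000.0), ("R101", 1, 800.0), ("R102", 0, 0.0), ("R102", 2, 1200.0)]
print(room_repair_costs(elements, threshold=2))  # {'R101': 5000.0, 'R102': 1200.0}
print(room_repair_costs(elements, threshold=1))  # {'R101': 5800.0, 'R102': 1200.0}
```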

Finally, the algorithm provides visual information on each room in Revit using colour tags in green, chartreuse yellow, yellow, orange and red, based on the room’s safety level (Figs. 20 and 21).

The final step in this stage is to generate a user-friendly interface so that the building’s stakeholders can navigate and visualise the results obtained in the BIM model. In order to do so, the BIM model with all the graphical and non-graphical information (parameters) was saved and uploaded to a local server containing the Autodesk Forge dashboard (Forge, 2021). Figures 22 and 23 show examples of visual results using this interface.

6 Implications, limitations and future research

This section presents the main implications of the research study, and discusses the potential of the prototyped DL-enhanced simulation tool for both DM and built asset operations management.

6.1 Implications for disaster management and the strengthening of operational resilience in the built environment

This research puts forward a five-stage framework to develop a simulation method that integrates a DL algorithm into BIM. This tool ultimately has the potential to provide visual information on damage to a built asset after a disaster, and to produce useful insights associated with such damage (safety levels, repair costs, expected downtime, etc.). The five stages making up our proposed framework are: (1) creating a 3D BIM model; (2) developing a dataset that reflects the structural behaviour of the different elements comprising the asset under different intensities of a particular extreme event; (3) developing a DL algorithm able to predict the DS of each analysed element of the built asset; (4) integrating the DL model into the 3D BIM model in order to combine the information from both sources; (5) creating different visualisations that reflect the key insights gained in an easily understandable format.

In this study, a step-by-step guide has been produced showing a paradigmatic application of this framework. To do so, a six-storey building was used as the archetype of a built asset, and earthquakes were considered as an illustrative example of an extreme event that can impact the built environment. The empirical results of this exemplary application show that the DL-enhanced simulation method has the potential to have an impact on all the phases of the DM cycle, and not only on the response phase, where the majority of AI applications in this field are concentrated (Sun et al., 2020). By using this simulation tool, the different actors involved in DM can gain a better understanding of the potential damage to the built environment caused by an extreme event of any given magnitude. Anticipating the most likely impacts on different assets would help improve strategic plans that alleviate the adverse consequences of a disaster, as has been shown in previous studies (Stickley et al., 2016). In the Preparedness phase, this simulation tool could help stakeholders draw up better evacuation plans based on the predicted damage to the built environment, complementing other technologies in emergency evacuation planning (Yin et al., 2020; Yoo & Choi, 2019). Similarly, in the Response phase, accurate responses could be designed according to the most probable simulated scenario, resulting in efficient health and safety actions to protect built assets and end-users. Furthermore, since this simulation method can potentially generate reliable visual information extremely rapidly, it can be leveraged in the Response phase immediately after the disaster. In this respect, effective visual risk communication is recognised as a key element of emergency management, as it allows risk and disaster managers to react quickly and transfer information directly to the general public (Stephens et al., 2015).
Once the disaster’s magnitude is known or, at least, estimated, this DL-enhanced tool is able to simulate outcomes in order to establish the built asset’s safety levels. This would provide emergency and rescue teams with a realistic picture of the impact on the environment, enabling them to improve evacuation and rescue plans. This point responds to one of the needs identified for the use of technology in humanitarian operations (Marić et al., 2021). Finally, in the Recovery phase, the information supplied by this tool could be very useful for assessing the damage to impacted assets and understanding which of the asset’s elements are most unsafe. Such information is crucial for preventing subsequent accidents during recovery actions (Krausmann et al., 2011). Moreover, reconstruction would be improved, since the repair costs and the time needed to repair buildings and infrastructures would be understood more quickly and more accurately.

This study also contributes to the digitalisation of the AECOO sector and responds to one of the challenges in BIM research by providing an exemplary application that integrates a DL model into BIM (Pauwels et al., 2017). This research exploits the visual potential and information contained in a 3D BIM model as a platform to integrate the valuable insights generated by the DL model. Developing this kind of simulation tool could help to identify, from the built asset’s initial design phase, which parameters need to be integrated into BIM in order to run different types of simulation analyses that enhance built asset operations. In this damage prediction application, decisions to improve built asset resilience can be made at the design phase, thus saving the cost of making improvements once an asset has been built. Hence, this tool can be applied to design a safer and more resilient built environment, contributing to future-proof buildings and infrastructures (Love et al., 2018). Furthermore, using the BIM model to store information in the digital building database makes it possible to interact with predicted information and to carry out different analyses (e.g., repair cost analysis or recovery time analysis).

In addition to the above, this tool can help to better understand how to operate a built asset in the event of a disaster by helping operators to react more swiftly and effectively. This could be particularly important for the operability of critical infrastructures after a disaster (Alsubaie et al., 2016). Better knowledge of the impacts on built assets could lead to shorter recovery times and better understanding of how to continue operating safely. This is crucial when the operability of these critical assets is essential in order to respond to the calamitous event.

Finally, integrating the model into a web dashboard supports asset owners and operators by providing a user-friendly interface to navigate, plan and assess parameters. This improves the interpretability of the DL model’s results compared with previous studies applying AI to structural damage detection.

6.2 Key contributions and practical insights

It is argued here that the key contribution of this research is not the prototyped DL-enhanced simulation tool itself, but rather what this tool illustrates and the door it opens for similar or alternative developments. Thus, an important outcome of this study is a five-stage framework that is adaptable to other contexts and applications. It can be applied to any built asset affected by any extreme event that could damage the built environment. Accordingly, different scenarios can be simulated to respond to the needs that DM actors and built asset owners and operators define based on their specific contexts.

We also believe that the potential of this simulation tool for health and safety analysis must be outlined; indeed, emergency teams can apply this type of simulation tool to increase their capability to find survivors in destroyed and severely damaged buildings after a catastrophe.

Furthermore, focusing on the empirical results, the simulation of the built asset’s final DS can help insurance companies calculate monetary losses under different scenarios. Hence, the uncertainty associated with extreme events can be reduced and more affordable insurance policies offered, increasing the number of insured assets and resulting in a less vulnerable built environment. This application is particularly relevant given data from EM-DAT, a recognised global database on natural and technological disasters, which show that only a small proportion of the total damage costs caused by seismic excitations was insured (Fig. 24) (EM-DAT, 2021).

In conclusion, it is important to note that the framework herein could be specifically adapted to better understand how to respond to particular natural disasters which are more likely to occur in a given region or context, and where the specific data available to feed the DL model are better in terms of volume and quality.

6.3 Limitations

The limitations of this study mainly concern the unit of study’s relative simplicity. The rationale behind this decision is that a model was sought in which the framework’s different stages were recognisable and the analyses easy to follow; also, to avoid the risk of adding unnecessary complexity to this novel approach. Following this logic, a very simple building was examined and only a few key elements contained in the 3D model were selected to run the structural analysis, whose results were entered into the DL model. Moreover, the empirical analysis only examined the seismic excitation’s critical direction.

Furthermore, although the framework is generalisable, it must be acknowledged that specific DL models would have to be developed and trained depending on the built asset’s specific characteristics (e.g. geometry, rigidity, etc.). Regarding the integration of the DL model into BIM, there could be additional difficulties when applied to more complex cases, but this would only involve small modifications to the Dynamo environment, depending on the file format containing the recorded data.

Finally, the input data for training the DL model were based on a probabilistic structural analysis, so there is a degree of uncertainty in the simulation method’s outputs following a seismic excitation. Consequently, statutory inspections of the asset should still be carried out. This simulation method does not seek to replace the legally established methods for examining an asset’s structural health, but rather to increase awareness of structural damage both before and shortly after a disaster.

6.4 Future research

It is proposed that the integration of real-time data into the model by using Internet of Things (IoT) technology should be investigated. It would enable the development of a Digital Twin where more accurate algorithms could be designed, since they would be fed real data, leading to improved outputs and applications.

Another line of future work could be to test the framework on more complex built assets by implementing more detailed structural analyses and considering more elements, in order to provide a more comprehensive dataset to train the DL algorithm. The framework could also be applied to a BIM model containing more information to be combined with the output of the DL algorithm, enabling improved and more varied analyses (e.g., health and safety, monetary). Applying this methodology to a real-world building with a fully developed BIM model would therefore be an interesting case study.

Finally, further assessment is required of how the visual information produced by the simulation model can be used, in order to find new ways of informing end-users and thereby reduce casualties.

7 Conclusion

This research responds to several challenges posed by recent literature on DM, operations management and built asset management. First, it addresses the need for interdisciplinary approaches that include technology in order to respond more effectively to disasters (Altay et al., 2018; Dubey et al., 2019a, 2019b; Wassenhove, 2006). Second, it responds to calls for the potential of AI, and DL in particular, to be explored to enhance operational solutions in uncertain contexts such as disasters (Alanne & Sierla, 2022). Third, it addresses the need to improve operational resilience in the built environment (Gharehbaghi et al., 2020; Mangalathu et al., 2019) and to apply new technologies to make decision making quicker (Lin & Wald, 2007).

The proposed framework for developing a DL-enhanced simulation method, and its application to the case described, fulfil the proposed research objectives. A DL model was prototyped that is able to predict the DS of a built asset’s elements. Moreover, different algorithms were implemented in a visual programming language for BIM (Dynamo) that detail the integration of a DL model into a BIM environment. In addition, the simulation model creates visual information that is easy to understand and share, thus supporting quick decision-making. The implications section then discusses the simulation tool’s applicability to the different DM stages in relation to current challenges, as well as the method’s potential to strengthen the operational resilience of the built environment.

In conclusion, it can be argued that the DL approach implemented in this article demonstrates the potential of DL, particularly in combination with BIM, as a powerful tool for performing analyses that can improve operations management and decision making in uncertain environments.

References

Abdalla, R., & Esmail, M. (2018). WebGIS for disaster management and emergency response. Springer.

Adnan, A., Ramli, M., & Sk Abd Razak, S. M. (2015). Disaster management and mitigation for earthquakes: Are we ready?

Agrawal, T. (2021). Hyperparameter optimization using scikit-learn. In T. Agrawal (Ed.), Hyperparameter optimization in machine learning: make your machine learning and deep learning models more efficient. APress.

Akter, S., & Wamba, S. F. (2019). Big data and disaster management: A systematic review and agenda for future research. Annals of Operations Research, 283(1), 939–959. https://doi.org/10.1007/s10479-017-2584-2

Alanne, K., & Sierla, S. (2022). An overview of machine learning applications for smart buildings. Sustainable Cities and Society, 76, 103445. https://doi.org/10.1016/j.scs.2021.103445

Alsubaie, A., Alutaibi, K., & Martí, J. (2016). Resilience assessment of interdependent critical infrastructure. In E. Rome, M. Theocharidou, & S. Wolthusen (Eds.), Critical information infrastructures security. Springer International Publishing.

Altay, N., & Green, W. G. (2006). OR/MS research in disaster operations management. European Journal of Operational Research, 175(1), 475–493. https://doi.org/10.1016/j.ejor.2005.05.016

Altay, N., Gunasekaran, A., Dubey, R., & Childe, S. J. (2018). Agility and resilience as antecedents of supply chain performance under moderating effects of organizational culture within the humanitarian setting: A dynamic capability view. Production Planning and Control, 29(14), 1158–1174. https://doi.org/10.1080/09537287.2018.1542174

Amaratunga, D., & Haigh, R. (2011). Post-disaster reconstruction of the built environment: Rebuilding for resilience. Wiley.

Aqib, M., Mehmood, R., Alzahrani, A., & Katib, I. (2020). A smart disaster management system for future cities using deep learning, GPUs, and in-memory computing. In R. Mehmood, S. See, I. Katib, & I. Chlamtac (Eds.), Smart infrastructure and applications: foundations for smarter cities and societies. Springer International Publishing.

Araya, D. B., Grolinger, K., ElYamany, H. F., Capretz, M. A. M., & Bitsuamlak, G. (2017). An ensemble learning framework for anomaly detection in building energy consumption. Energy and Buildings, 144, 191–206. https://doi.org/10.1016/j.enbuild.2017.02.058

ASCE. (2007). Seismic rehabilitation of existing buildings. American Society of Civil Engineers. https://doi.org/10.1061/9780784408841

Azhar, S., Khalfan, M., & Maqsood, T. (2012). Building information modelling (BIM): Now and beyond. Construction Economics and Building. https://doi.org/10.5130/AJCEB.v12i4.3032

Azuatalam, D., Lee, W.-L., de Nijs, F., & Liebman, A. (2020). Reinforcement learning for whole-building HVAC control and demand response. Energy and AI, 2, 100020. https://doi.org/10.1016/j.egyai.2020.100020

Bag, S., Gupta, S., Choi, T.-M., & Kumar, A. (2021). Roles of innovation leadership on using big data analytics to establish resilient healthcare supply chains to combat the COVID-19 pandemic: a multimethodological study. IEEE Transactions on Engineering Management. https://doi.org/10.1109/TEM.2021.3101590

Bang, H. N. (2014). General overview of the disaster management framework in Cameroon. Disasters, 38(3), 562–586. https://doi.org/10.1111/disa.12061

Bartuska, T. J., & Young, G. (2007). The built environment: Definition and scope. The Built Environment: A Collaborative Inquiry into Design and Planning, 2, 3–14.

Behl, A., & Dutta, P. (2019). Humanitarian supply chain management: A thematic literature review and future directions of research. Annals of Operations Research, 283(1), 1001–1044. https://doi.org/10.1007/s10479-018-2806-2

Belhadi, A., Mani, V., Kamble, S. S., Khan, S. A. R., & Verma, S. (2021). Artificial intelligence-driven innovation for enhancing supply chain resilience and performance under the effect of supply chain dynamism: An empirical investigation. Annals of Operations Research. https://doi.org/10.1007/s10479-021-03956-x

Bellomo, N., Clarke, D., Gibelli, L., Townsend, P., & Vreugdenhil, B. J. (2016). Human behaviours in evacuation crowd dynamics: From modelling to “big data” toward crisis management. Physics of Life Reviews, 18, 1–21. https://doi.org/10.1016/j.plrev.2016.05.014

Boje, C., Guerriero, A., Kubicki, S., & Rezgui, Y. (2020). Towards a semantic construction digital twin: Directions for future research. Automation in Construction, 114, 103179. https://doi.org/10.1016/j.autcon.2020.103179

Bosher, L. (2008b). Introduction: The need for built-in resilience. In Hazards and the built environment. Routledge.

Bosher, L. (2008). Hazards and the built environment: Attaining built-in resilience. Routledge.

Bosher, L. (2014). Built-in resilience through disaster risk reduction: Operational issues. Building Research and Information, 42(2), 240–254. https://doi.org/10.1080/09613218.2014.858203

Bosher, L., Carrillo, P., Dainty, A., Glass, J., & Price, A. (2007). Realising a resilient and sustainable built environment: Towards a strategic agenda for the United Kingdom. Disasters, 31(3), 236–255. https://doi.org/10.1111/j.1467-7717.2007.01007.x

Bosher, L., & Dainty, A. (2011). Disaster risk reduction and ‘built-in’ resilience: Towards overarching principles for construction practice. Disasters, 35(1), 1–18. https://doi.org/10.1111/j.1467-7717.2010.01189.x

Bouabdallaoui, Y., Lafhaj, Z., Yim, P., Ducoulombier, L., & Bennadji, B. (2021). Predictive maintenance in building facilities: a machine learning-based approach. Sensors. https://doi.org/10.3390/s21041044

Cai, H., Lam, N. S. N., Qiang, Y., Zou, L., Correll, R. M., & Mihunov, V. (2018). A synthesis of disaster resilience measurement methods and indices. International Journal of Disaster Risk Reduction, 31, 844–855. https://doi.org/10.1016/j.ijdrr.2018.07.015

Cerè, G., Rezgui, Y., & Zhao, W. (2017). Critical review of existing built environment resilience frameworks: Directions for future research. International Journal of Disaster Risk Reduction, 25, 173–189. https://doi.org/10.1016/j.ijdrr.2017.09.018

Charalambos, G., Dimitrios, V., & Symeon, C. (2014). Damage assessment, cost estimating, and scheduling for post-earthquake building rehabilitation using BIM. Computing in Civil and Building Engineering. https://doi.org/10.1061/9780784413616.050

Chatterjee, S., Chaudhuri, R., González, V. I., Kumar, A., & Singh, S. K. (2022). Resource integration and dynamic capability of frontline employee during COVID-19 pandemic: From value creation and engineering management perspectives. Technological Forecasting and Social Change, 176, 121446. https://doi.org/10.1016/j.techfore.2021.121446

Chen, J., Lim, C. P., Tan, K. H., Govindan, K., & Kumar, A. (2021). Artificial intelligence-based human-centric decision support framework: An application to predictive maintenance in asset management under pandemic environments. Annals of Operations Research. https://doi.org/10.1007/s10479-021-04373-w

Chopra, A. K. (2007). Dynamics of structures. Pearson Education.

Clarke, J. (2018). The role of building operational emulation in realizing a resilient built environment. Architectural Science Review, 61(5), 358–361. https://doi.org/10.1080/00038628.2018.1502157

Criminale, A., & Langar, S. (2017). Challenges with BIM Implementation: A Review of Literature.

Dash, R., McMurtrey, M., Rebman, C., & Kar, U. K. (2019). Application of artificial intelligence in automation of supply chain management. Journal of Strategic Innovation and Sustainability, 14(3), 4585.

Deierlein, G., Krawinkler, H., & Cornell, C. (2003). A framework for performance-based earthquake engineering.

Delmonteil, F.-X., & Rancourt, M.-È. (2017). The role of satellite technologies in relief logistics. Journal of Humanitarian Logistics and Supply Chain Management, 7(1), 57–78. https://doi.org/10.1108/JHLSCM-07-2016-0031

Deng, M., Menassa, C. C., & Kamat, V. R. (2021). From BIM to digital twins: A systematic review of the evolution of intelligent building representations in the AEC-FM industry. Journal of Information Technology in Construction (ITcon), 26(5), 58–83. https://doi.org/10.36680/j.itcon.2021.005

Devaraj, J., Ganesan, S., Elavarasan, R. M., & Subramaniam, U. (2021). A novel deep learning based model for tropical intensity estimation and post-disaster management of hurricanes. Applied Sciences. https://doi.org/10.3390/app11094129

Dixon, H. E., & Ginsberg, M. L. (2000). Combining satisfiability techniques from AI and OR. The Knowledge Engineering Review, 15(1), 31–45. https://doi.org/10.1017/S0269888900001041

Doorn, N., Gardoni, P., & Murphy, C. (2019). A multidisciplinary definition and evaluation of resilience: The role of social justice in defining resilience. Sustainable and Resilient Infrastructure, 4(3), 112–123. https://doi.org/10.1080/23789689.2018.1428162

Drosio, S., & Stanek, S. (2016). The Big Data concept as a contributor of added value to crisis decision support systems. Journal of Decision Systems, 25(sup1), 228–239. https://doi.org/10.1080/12460125.2016.1187404

Dubey, R., Altay, N., & Blome, C. (2019a). Swift trust and commitment: The missing links for humanitarian supply chain coordination? Annals of Operations Research, 283(1), 159–177. https://doi.org/10.1007/s10479-017-2676-z

Dubey, R., Bryde, D. J., Foropon, C., Graham, G., Giannakis, M., & Mishra, D. B. (2020). Agility in humanitarian supply chain: An organizational information processing perspective and relational view. Annals of Operations Research. https://doi.org/10.1007/s10479-020-03824-0

Dubey, R., Gunasekaran, A., Childe, S. J., Roubaud, D., Fosso Wamba, S., Giannakis, M., & Foropon, C. (2019b). Big data analytics and organizational culture as complements to swift trust and collaborative performance in the humanitarian supply chain. International Journal of Production Economics, 210, 120–136. https://doi.org/10.1016/j.ijpe.2019.01.023

EM-DAT. (2021). EM-DAT Public [Database]. EM-DAT Public. https://public.emdat.be/

European Committee for Standardization (CEN). (2010). Eurocode 1: Actions on structures – Part 1-4: General actions – wind actions. EN 1991-1-4:2005/AC:2010 (E). Europe: European Standard (Eurocode), European Committee for Standardization (CEN).

Feng, C., Zhang, H., Wang, S., Li, Y., Wang, H., & Yan, F. (2019). Structural damage detection using deep convolutional neural network and transfer learning. KSCE Journal of Civil Engineering, 23(10), 4493–4502. https://doi.org/10.1007/s12205-019-0437-z

Forge. (2021). Autodesk Forge. Learn Forge. https://learnforge.autodesk.io/#/

Galera-Zarco, C. G., Bustinza, O., & Perez, V. F. (2016). Adding value: How to develop a servitisation strategy in civil engineering. Proceedings of the Institution of Civil Engineers Civil Engineering, 169(1), 35–40. https://doi.org/10.1680/jcien.15.00023

Gavidia, J. V. (2017). A model for enterprise resource planning in emergency humanitarian logistics. Journal of Humanitarian Logistics and Supply Chain Management, 7(3), 246–265. https://doi.org/10.1108/JHLSCM-02-2017-0004

Ghaffarianhoseini, A., Tookey, J., Ghaffarianhoseini, A., Naismith, N., Azhar, S., Efimova, O., & Raahemifar, K. (2017). Building Information Modelling (BIM) uptake: Clear benefits, understanding its implementation, risks and challenges. Renewable and Sustainable Energy Reviews, 75, 1046–1053. https://doi.org/10.1016/j.rser.2016.11.083

Gharehbaghi, V. R., Nguyen, A., Noroozinejad Farsangi, E., & Yang, T. Y. (2020). Supervised damage and deterioration detection in building structures using an enhanced autoregressive time-series approach. Journal of Building Engineering, 30, 101292. https://doi.org/10.1016/j.jobe.2020.101292

Ghobakhloo, M. (2020). Industry 4.0, digitization, and opportunities for sustainability. Journal of Cleaner Production, 252, 119869. https://doi.org/10.1016/j.jclepro.2019.119869

Global assessment report on disaster risk reduction 2015. (n.d.). Retrieved 29 December 2021, from https://www.undrr.org/publication/global-assessment-report-disaster-risk-reduction-2015

Global assessment report on disaster risk reduction 2019. (n.d.). Retrieved 29 December 2021, from https://www.undrr.org/publication/global-assessment-report-disaster-risk-reduction-2019

Griffith, D. A., Boehmke, B., Bradley, R. V., Hazen, B. T., & Johnson, A. W. (2019). Embedded analytics: Improving decision support for humanitarian logistics operations. Annals of Operations Research, 283(1), 247–265. https://doi.org/10.1007/s10479-017-2607-z

Guillaume, R., Marques, G., Thierry, C., & Dubois, D. (2014). Decision support with ill-known criteria in the collaborative supply chain context. Engineering Applications of Artificial Intelligence, 36, 1–11. https://doi.org/10.1016/j.engappai.2014.06.013

Günay, S., & Mosalam, K. M. (2013). PEER performance-based earthquake engineering methodology, revisited. Journal of Earthquake Engineering, 17(6), 829–858. https://doi.org/10.1080/13632469.2013.787377

Gunasekaran, A., Dubey, R., Wamba, S. F., Papadopoulos, T., Hazen, B. T., & Ngai, E. W. T. (2018). Bridging humanitarian operations management and organisational theory. International Journal of Production Research, 56(21), 6735–6740.