Abstract

Artificial intelligence (AI) is increasingly being used to detect fatal diseases such as cancer. The potential to reduce human error, speed up diagnosis, and make judgments more consistent are the primary motives for using these applications. Artificial neural networks (ANNs) and convolutional neural networks (CNNs) are popular AI techniques that are increasingly used in diagnosis. Numerous researchers have explored and evaluated the AI methods used to detect various cancer types. This study presents a thorough evaluation of the AI techniques used in cancer detection, based on extensively researched studies and research trials published on the subject. The manuscript evaluates and compares the AI methods applied to the detection of six primary cancer types: breast, lung, colorectal, prostate, skin, and digestive cancer. To determine how well these models compare with the judgments of medical professionals, the assessments made by the developed models and by experts are compared.

1 Introduction

Recent advances in AI applications in the medical sector have proven successful for rapid and precise diagnosis. Assessing the AI approaches used in cancer detection is essential because cancer is one of the deadliest diseases and timely, accurate diagnosis is critical. Many researchers have conducted studies and developed methods to assess the effectiveness of particular cancer detection technologies.

The primary objective of this study is to provide, in a single manuscript, a broad view of the methodologies applied to the six primary cancer types by analyzing and contrasting them. The article gives readers a comprehensive picture of cutting-edge AI methodologies and is intended to stimulate further constructive research.

AI has made advances in many medical fields, including diagnosis, treatment, drug development, and patient care. Several studies on AI in cancer diagnosis have been undertaken. Cancer remains a leading cause of death worldwide, so error-free diagnosis is crucial. AI has proven highly accurate in the imaging diagnosis of tumors (namely, pathological and endoscopic diagnosis), and it assists doctors in providing more accurate and effective medical care to patients (Dong et al. 2020).

Correct identification requires extracting quantitative information for an informed diagnosis. However, human error is possible, and complex errors compound the problem. To treat patients effectively, AI should be used in medical image assessment, whose three major pillars are image segmentation, image registration, and image visualization. In developed countries, a multidisciplinary team (MDT) of specialists and medical professionals determines the most effective course of therapy for a condition, and AI supports this process by gathering data from numerous sources for a comprehensive analysis and treatment plan.

It is often expected (Shastry and Sanjay 2022) that the more information health professionals have about a patient, the more precise their diagnosis will be. However, the quantity of information currently available to health practitioners is sometimes overwhelming. Machine learning (ML) offers a solution because it can find pertinent patterns in complicated data. ML is applicable to “precision cancer treatment”, which seeks to reliably predict the appropriate drug treatments for a given patient based on the unique genetic profile of that patient’s tumor (Injadat et al. 2021).

Deep learning (DL), a branch of machine learning that simulates aspects of human intelligence, is employed in drug discovery, identification, and diagnostics. It uses artificial neural networks (ANNs), in which artificial neurons process the input through one or more hidden layers and generate the output. DL has been used in machine-assisted surgical procedures for gynecological disorders and heart valve replacement, and it is expected to play a significant role in the fight against cancer (Shastry and Sanjay 2022).

The literature reviewed here is drawn primarily from relevant past studies on AI in cancer diagnosis. This survey covers 36 research and review papers that detail AI applications and compare them across the diagnosis of major cancers. The review was created by thoroughly examining the relevant prior research and compiling the data in an organized manner. The primary objectives are to collect, in a single manuscript, information about currently used and leading AI applications, their performance, comparisons between study experiments, and their future scope. The paper reviews a heterogeneous set of studies covering diverse cancer types in order to draw clear conclusions about the usage and influence of AI techniques in cancer detection. Furthermore, drawing on numerous studies enables a clear comparison of methodologies to identify the best-performing models, thereby stimulating future research.

Past studies on recent developments in AI for cancer detection have primarily emphasized the evaluation of a particular method or experiment that led to a more effective model. Other experiments compared various approaches to determine which are most effective against specific malignancies. This review adopts a different strategy by offering a thorough analysis of six significant cancer types. The research is compared, described, and tabulated, and potential new methods arising from the study experiments are discussed. The primary medical modalities and AI techniques discussed in the paper are shown in Fig. 1.

AI techniques are powerful tools in the fight against cancer. AI is increasingly being used for cancer screening, and automated approaches to cancer detection are being implemented with its help. Computer algorithms are used to analyze magnetic resonance imaging (MRI) scans, leaving little room for error. AI is also making an immense contribution to drug discovery, and the technology it offers is improving cancer surveillance (Saba 2020). This paper discusses a variety of AI models for each cancer type. Each method uses a different dataset to train and test its model, so the training data for any given technique may be biased. A generalized approach to AI for cancer detection is therefore needed. Data preprocessing is an important strategy and should be performed so that the training data represent diverse groups. Another strategy is to use bias detection techniques during model development and deployment, which involves analyzing the data and the model’s outputs to identify potential biases.
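As a minimal illustration of the bias analysis described above, the sketch below computes sensitivity and specificity separately for each patient subgroup and flags a metric when its spread across subgroups exceeds a tolerance. The function names, the subgroup labels, and the 0.05 tolerance are hypothetical choices for illustration, not a method taken from the reviewed studies.

```python
import math
from collections import defaultdict


def subgroup_rates(y_true, y_pred, groups):
    """Return {group: (sensitivity, specificity)} for binary labels (1 = cancer)."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == 1:
            c["tp" if pred == 1 else "fn"] += 1
        else:
            c["tn" if pred == 0 else "fp"] += 1
    rates = {}
    for group, c in counts.items():
        positives, negatives = c["tp"] + c["fn"], c["tn"] + c["fp"]
        sens = c["tp"] / positives if positives else math.nan
        spec = c["tn"] / negatives if negatives else math.nan
        rates[group] = (sens, spec)
    return rates


def flag_gaps(rates, max_gap=0.05):
    """Flag a metric when its range across subgroups exceeds max_gap (undefined values ignored)."""
    flags = {}
    for index, name in enumerate(("sensitivity", "specificity")):
        values = [r[index] for r in rates.values() if not math.isnan(r[index])]
        flags[name] = bool(values) and max(values) - min(values) > max_gap
    return flags


# Hypothetical predictions for two subgroups, "A" and "B".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = subgroup_rates(y_true, y_pred, groups)
print(rates)             # {'A': (1.0, 1.0), 'B': (0.5, 0.5)}
print(flag_gaps(rates))  # {'sensitivity': True, 'specificity': True}
```

In practice the subgroups would be clinically meaningful strata (for example, acquisition site or patient demographics), and a flagged gap is a prompt for closer investigation rather than proof of bias.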

This paper is organized as follows. Section 1, the introduction, gives an extensive overview of AI applications in six primary malignancies. Section 2 presents a thorough analysis of the AI methods used to detect breast cancer. Section 3 describes lung cancer detection techniques in detail. Section 4 presents AI techniques for colorectal cancer and their analysis. Section 5 presents AI-based prostate cancer detection techniques. Section 6 covers skin cancer, and Sect. 7 covers digestive cancer detection techniques. Section 8 describes the ML approaches used for personalized cancer treatment based on genotypic features. Section 9 discusses all the techniques studied, including the problems with AI techniques and the confidentiality of data. Section 10 presents the conclusion and future research directions.

2 AI techniques for breast cancer

AI-based breast cancer detection techniques use artificial intelligence and machine learning algorithms to analyze medical data, including mammograms, MRIs, and ultrasound scans, for the early detection and diagnosis of breast cancer. These algorithms recognize patterns and anomalies within the images that may indicate tumors or suspicious lesions, often with high accuracy, and by continuously learning from large amounts of data, AI systems can improve their performance over time. AI-based solutions help radiologists become more skilled investigators and aid in the early diagnosis of breast cancer. Mammography, tomography, breast ultrasound, MRI, CT, and PET are the most frequently used tools for diagnosing breast cancer (Shastry and Sanjay 2022). Mammography, also known as breast screening, can detect breast cancer during screening while requiring a minimal amount of time. Ultrasound sends sound waves into the body to examine its internal structure: a transducer placed on the skin emits the waves, the tissues act as obstacles that reflect them, and the resulting echoes are recorded and converted to grayscale values for digital analysis. Positron emission tomography (PET) uses 18F-fluorodeoxyglucose (FDG), a radiolabeled tracer that is taken up by cancer cells, so imaging the body allows physicians to locate a tumor. MRI is also employed for detection. Elastography makes it possible to distinguish breast cancer tissue, which is stiffer than normal parenchyma; like ultrasound, it images the tissue with sound waves whose echoes are recorded and converted to grayscale for digital analysis. Elastography can differentiate between malignant and benign tumors (Sharif 2021).
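The conversion of echo values to grayscale mentioned above is commonly done by log compression. The sketch below is a simplified assumption of how that step can work, not a description of any particular scanner: envelope amplitudes are expressed in decibels relative to the strongest echo, clipped to an assumed 60 dB display range, and mapped linearly to 8-bit grey levels (0 to 255).

```python
import math


def echoes_to_grayscale(amplitudes, dynamic_range_db=60.0):
    """Map non-negative echo envelope amplitudes to 8-bit grey levels by log compression."""
    peak = max(amplitudes)
    if peak <= 0:
        return [0] * len(amplitudes)  # no echo at all: a black image line
    levels = []
    for a in amplitudes:
        # Level in decibels relative to the strongest echo (0 dB at the peak).
        db = 20.0 * math.log10(a / peak) if a > 0 else -math.inf
        # Clip to the displayed dynamic range, then rescale linearly to 0..255.
        db = max(db, -dynamic_range_db)
        levels.append(round(255 * (db + dynamic_range_db) / dynamic_range_db))
    return levels


print(echoes_to_grayscale([1.0, 0.1, 0.001, 0.0]))  # [255, 170, 0, 0]
```

Log compression is used because echo amplitudes span several orders of magnitude; a linear mapping would leave weak tissue echoes almost black next to strong reflectors.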
Active microwave imaging is a recently developed technique for the early detection of breast cancer. When exposed to microwaves, cancerous tissues exhibit properties very different from those of healthy tissues (Bindu et al. 2006).

Mammography has proven to be one of the most useful screening tools for breast cancer. In previous investigations, half of the breast tumors identified on mammography were evident in retrospect on prior mammograms. Double reading of mammograms has proven more effective than single reading, increasing sensitivity by 5–15% (Watanabe et al. 2019). Nevertheless, 10–30% of breast cancers are missed on mammography, which is ascribed to dense parenchyma obscuring lesions, inadequate positioning, perception errors, and interpretation errors.

The goal of one study (Kim 2020) was to develop an AI algorithm for breast cancer diagnosis on mammography and to determine whether it improves diagnostic accuracy. The algorithm was developed and validated using 170,230 mammography examinations from five institutions in the United States, South Korea, and the United Kingdom. For the multicentre, observer-blinded reader study, 320 mammograms were obtained independently from two institutions: 160 cancer-positive, 64 benign, and 96 normal. Fourteen radiologists acted as readers and assessed each mammogram for the likelihood of malignancy (LOM), the location of malignancy, and the need to recall the patient, first without and then with the AI algorithm’s assistance. The performance of the AI and the radiologists was evaluated using the LOM-based area under the receiver operating characteristic curve (AUROC), as well as recall-based sensitivity and specificity.
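The LOM-based AUROC used above can be computed directly from readers’ scores: it equals the probability that a randomly chosen cancer case receives a higher LOM than a randomly chosen non-cancer case, with ties counted as one half (the Mann-Whitney formulation). The sketch below uses hypothetical LOM scores; the study’s own statistical software and any method for averaging across readers are not reproduced.

```python
def auroc(scores_pos, scores_neg):
    """AUROC as P(score of a positive > score of a negative), ties counting 1/2."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical LOM scores (0-100) for cancer and non-cancer mammograms.
lom_cancer = [90, 70, 40]
lom_non_cancer = [60, 30, 20, 10]
print(auroc(lom_cancer, lom_non_cancer))  # about 0.917 (11 of 12 pairs ranked correctly)
```

The pairwise loop is quadratic, which is fine for a reader study of a few hundred cases; for large datasets a rank-based computation gives the same value faster.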

To diagnose breast cancer, an AI system was built using the largest breast cancer dataset among existing AI algorithms. Because it was trained with data from multiple institutions, the algorithm showed comparable performance on validation datasets from different nations. Trained on large-scale mammography data, the AI program outperformed radiologists in diagnosis, notably for early-stage invasive breast cancers. To better understand AI behavior, the mammographic characteristics of the tumors found by the AI system were compared with those found by radiologists. This comparison is shown in Fig. 2.

Compared with radiologists, the AI algorithm built from large-scale mammography data performed better in breast cancer detection. The considerable increase in radiologists’ performance when assisted by AI justifies using AI as a diagnostic support tool for mammography. According to this study, AI has the potential to improve early-stage breast cancer diagnosis in mammography. Radiologists’ performance was greatly enhanced with AI assistance, particularly in dense breasts, which represent one of the primary challenges in screening. Such advances can increase screen-detected malignancies and decrease interval cancers, improving the effectiveness of mammography screening (Kim 2020).

In a separate comparison, radiologists’ accuracy was compared with that of an AI system using a non-inferiority null hypothesis (margin = 0.05) based on the difference in the area under the receiver operating characteristic curve (AUROC). The sensitivities of the radiologists and the AI system were compared at the same level of specificity using a standard analysis of variance (ANOVA) for multi-reader multi-case (MRMC) studies with two modalities. The AUC of the AI system (0.840) was statistically non-inferior to that of the 101 radiologists (0.814); the difference of 0.026 favored the AI system, which also showed slightly higher sensitivity at low and medium specificity.
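The non-inferiority comparison described above can be written out as follows, with δ the 0.05 margin and LCL the lower limit of the 95% confidence interval for the AUC difference. The decision rule in the last line is the standard formulation of such a test, stated here as an assumption rather than a detail reported in this section.

```latex
\begin{aligned}
H_0 &:\; \mathrm{AUC}_{\mathrm{AI}} - \mathrm{AUC}_{\mathrm{rad}} \le -\delta,
\qquad
H_1 :\; \mathrm{AUC}_{\mathrm{AI}} - \mathrm{AUC}_{\mathrm{rad}} > -\delta,
\qquad \delta = 0.05,\\
\hat{\Delta} &= 0.840 - 0.814 = 0.026,\\
\text{non-inferiority} &\iff \mathrm{LCL}_{95\%}(\hat{\Delta}) > -\delta .
\end{aligned}
```

A positive point estimate alone is not enough; the whole confidence interval must stay above −0.05 for the AI system to be declared non-inferior.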

Although the AI system performed better than the radiologists collectively, it consistently performed worse than the best radiologists. An ideal AI system should perform at the limits of the imaging modality itself; in other words, it should detect every cancer that is visible on mammography (mammographically occult cancers remain out of reach) while minimizing false positives. Such systems are suitable for mass population screening because they provide better, more consistent results in a short time, and they are especially beneficial in countries that lack experienced breast radiologists. The tested AI system, based on deep learning algorithms, performs similarly to an average radiologist in detecting breast cancer on mammography (Rodriguez-Ruiz et al. 2019).

Radiologists reviewed the patients’ prior mammograms and classified this retrospective data into three categories:

1. Actionable: 155 prior mammograms from 90 patients fell into this category.
2. Non-actionable: the lesion that was later biopsied fell below the recall threshold on the prior mammogram, so the mammogram could not be used.
3. Excluded: mammograms of patients with a prior ipsilateral lumpectomy, as well as synthetic or three-dimensional tomosynthesis images, were excluded (Watanabe et al. 2019).

cmAssist is a recently developed AI-based computer-aided detection (AI-CAD) system for mammography that uses deep learning, a prominent kind of artificial intelligence. It combines several unique deep-learning-based networks to achieve high sensitivity without sacrificing specificity. In addition, to enrich the varied appearances of cancers and benign structures in the training dataset, the algorithms are trained using a proprietary, patent-pending data enrichment approach. The study dataset consisted of 2D full-field digital mammograms (FFDM) collected retrospectively from a community healthcare facility in Southern California. All patients were women aged 40–90 years who had a biopsy between October 2011 and March 2017. Of the 1393 patients, 499 had a cancer biopsy and 973 had a benign biopsy, with 79 having both (499 + 973 − 79 = 1393). The training set consists of images acquired on a variety of mammography machines.

Radiologist accuracy improved substantially with cmAssist: the area under the receiver operating characteristic (ROC) curve (AUC) for the reader group increased by 7.2% (two-sided p = 0.01). With cmAssist, all radiologists also achieved a substantial increase in cancer detection rate (CDR) (two-sided p = 0.030 at the 95% confidence level).

When radiologists used cmAssist, their accuracy and sensitivity for identifying previously missed cancers improved significantly. The improvement in CDR across the radiologists on the reader panel ranged from 6 to 64% (mean 27%), with no increase in the number of false-positive recalls.

On this dataset of missed malignancies, cmAssist achieved a maximum feasible sensitivity of 98% in stand-alone mode. Work on AI-assisted false-positive reduction is underway and is expected to yield even greater gains in cancer diagnosis accuracy (Watanabe et al. 2019).

2.1 Breast cancer detection using artificial neural networks

Breast cancer detection can be addressed effectively as a basic data mining problem such as classification or regression, and artificial neural networks are frequently used to identify breast cancer with good accuracy. An ANN consists of a collection of linked neurons organized into three kinds of layers: input, hidden, and output. Such a network learns to perform its assigned task from a sufficient number of training instances. The input layer has as many neurons as there are features in the dataset. The hidden part of the network may contain several layers, which are together regarded as a single hidden component. In past experiments with ANN, 31 input neurons were connected to 9 neurons in the first hidden layer, with a 9–9 mapping between the first two hidden layers. Because the problem is binary classification, there is only a single output neuron.

Ten-fold cross-validation was used, meaning that the dataset was divided into ten groups of (nearly) equal size. The dataset is the breast cancer dataset from the University of California Irvine (UCI) machine learning repository, which consists of 699 instances labeled benign or malignant: 458 (65.50%) benign and 241 (34.50%) malignant. The model was trained for 100 epochs with a batch size of five, using a nonlinear activation function in the hidden layers and a sigmoid at the output layer. The ANN achieved an accuracy of 98.24%. The authors report that the ANN model was more robust and accurate than the other methods considered and that it has the potential to make major advances in breast cancer prediction.
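The ten-fold procedure above can be sketched as follows. Because 699 is not a multiple of ten, the folds cannot all be exactly equal: nine hold 70 instances and one holds 69. The function name, the shuffling, and the fixed seed are illustrative assumptions; the original experiment may have split the data differently (for example, with class-stratified folds).

```python
import random


def k_fold_splits(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation.

    Indices are shuffled once with a fixed seed, then cut into k folds whose
    sizes differ by at most one; each fold is the test set exactly once.
    """
    order = list(range(n_samples))
    random.Random(seed).shuffle(order)
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(order[start:start + size])
        start += size
    for i, test_idx in enumerate(folds):
        train_idx = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train_idx, test_idx


# 699 instances, as in the UCI breast cancer dataset described above.
splits = list(k_fold_splits(699, k=10))
print([len(test) for _, test in splits])  # [70, 70, 70, 70, 70, 70, 70, 70, 70, 69]
```

The reported accuracy is then the average over the ten test folds, so every instance is used for testing exactly once.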

However, the ANN approach does not account for training time or false negative (FN) outcomes. Researchers therefore proposed a new strategy that uses convolutional autoencoders for breast cancer detection. The proposed hybrid convolutional network uses two convolutional autoencoder models: the first extracts features and the second classifies them. Once the most important attributes have been learned, a fully connected layer takes the output of the convolutional layers and classifies the input images as benign or malignant. The suggested model achieves superior performance with a shorter training time, and its sensitivity of 93.50% is better than that reported in previous studies (Sharif 2021).

Research has shown ANN to be highly accurate in breast cancer diagnosis. However, the method has some limitations:

1. Several parameters, such as the number of hidden layers and hidden nodes, the learning rate, and the activation function, must be tuned at the beginning of the training process.
2. Training takes a long time because of the complex architecture and the parameter updates in each iteration, which incur high computational costs.
3. Training can become trapped in local minima, so optimal performance cannot be guaranteed.

Several attempts have been made to overcome these limitations of neural networks. Huang and Babri showed that single-hidden-layer feedforward networks (SLFNs) can tackle these problems using a three-step learning procedure termed the extreme learning machine (ELM). ELM was compared with traditional gradient-based back-propagation artificial neural networks (BP-ANN) in terms of sensitivity, specificity, and accuracy, and extreme learning machine neural networks (ELM ANN) generally gave better results than BP-ANN. Doctors may benefit from such intelligent classification algorithms, particularly through fewer errors by inexperienced practitioners.

Three main differences between BP ANN and ELM ANN follow from their definitions:

1. BP ANN requires adjusting several parameters, such as the number of hidden nodes, the momentum, the learning rate, and the termination criteria, whereas ELM ANN is a simple algorithm that only requires the number of hidden nodes to be defined, with no further tuning.
2. ELM ANN can use both differentiable and non-differentiable activation functions in the hidden and output nodes, whereas BP ANN can only use differentiable activation functions.
3. BP ANN training seeks only a low training error and may terminate at a local minimum, whereas ELM ANN seeks both the smallest training error and the smallest norm of the output weights, which lets it produce better-generalized models and reach the global minimum of its objective.
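To make the contrast concrete, here is a minimal NumPy sketch of a basic ELM of the kind described above. It is a generic illustration, not Prasetyo et al.’s implementation: the sigmoid activation, the 20 hidden nodes, the random normal initialization, and the toy data are assumptions. The only design choice is the number of hidden nodes, and the output weights come from a single pseudoinverse (minimum-norm least squares) rather than iterative gradient descent, matching the first and third differences listed above.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def elm_fit(X, y, n_hidden=20, seed=0):
    """Basic ELM: random hidden layer, output weights solved by least squares.

    Only n_hidden is chosen by the user; input weights and biases are drawn at
    random and never updated, so there is no learning rate or epoch loop.
    """
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(X.shape[1], n_hidden))  # random input-to-hidden weights
    b = rng.normal(size=n_hidden)                # random hidden biases
    H = sigmoid(X @ W + b)                       # hidden-layer output matrix
    beta = np.linalg.pinv(H) @ y                 # minimum-norm least-squares output weights
    return W, b, beta


def elm_predict(model, X, threshold=0.5):
    W, b, beta = model
    return (sigmoid(X @ W + b) @ beta > threshold).astype(int)


# Hypothetical, well-separated two-class data (0 = benign, 1 = malignant).
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-2.0, 0.5, size=(50, 2)), rng.normal(2.0, 0.5, size=(50, 2))])
y = np.array([0] * 50 + [1] * 50)
model = elm_fit(X, y)
accuracy = float((elm_predict(model, X) == y).mean())
print(f"training accuracy: {accuracy:.2f}")
```

Because the hidden layer is fixed, fitting reduces to one linear least-squares solve, which is why ELM training is typically much faster than back-propagation.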

Accuracy, specificity, and sensitivity were used as the performance measures for classification, and similar experiments were conducted with BP ANN for comparison.

ELM ANN surpassed BP ANN in breast cancer diagnosis: although its specificity was slightly lower, its sensitivity and accuracy were significantly better. Based on these outcomes, the researchers concluded that ELM ANN generalizes better than BP ANN. These findings were obtained on the Breast Cancer Wisconsin Dataset from the University of Wisconsin Hospital, Madison, created by Dr. William H. Wolberg. The dataset consists of 699 instances with 10 attributes plus the class attribute, with a class distribution of 65.5% benign (458 instances) and 34.5% malignant (241 instances) (Prasetyo et al. 2014).

2.2 Breast cancer detection using active microwave imaging

Microwave imaging is an emerging technique with medical applications that studies how microwaves interact with tissues. Malignant tissues show considerably more scattering than normal tissues, which can help detect cancer in its early stages, when it may still be curable. The technique relies on malignant tissues having a higher water content than normal ones.

The methods used in active microwave imaging are described below:

1. Confocal microwave technique: this non-ionizing method exploits the translucency of breast tissue to microwaves to create a dielectric contrast between tissues based on their water content. Cancerous tissue is detected by time-shifting and summing the received signals. Data acquisition: a single antenna acting as a transceiver is placed around the sample, an observation is taken every 10 degrees, and the signals recorded at every step are added to obtain the final signal. The data are validated using finite-difference time-domain analysis.
2. 2D microwave tomographic imaging: this is the conventional microwave method used in breast cancer diagnosis. Data acquisition: the breast sample is illuminated with a bow-tie antenna at a frequency of 3000 MHz. The antenna is operated as in the confocal microwave technique, with readings taken in steps of 10 degrees.

Experiments with active microwave imaging led to the following conclusions:

- Active microwave imaging is very useful for detecting cancer in its early stages.
- Microwave tomographic imaging can effectively distinguish and image tissues that differ significantly in dielectric permittivity.
- Tumor location can be determined using confocal imaging, based on variations in signal strength caused by differences in water content (Bindu et al. 2006).
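The time-shifting and summing step of the confocal technique can be illustrated with a simple delay-and-sum sketch. For each candidate point, the round-trip travel time from every antenna position to that point is converted into a sample delay, the recorded traces are read at those delays and summed, and the squared sum is taken as the image intensity, so echoes from a real scatterer add coherently. The antenna geometry (a 5 cm circle sampled every 10 degrees), the propagation speed, the sampling rate, and the noise-free synthetic traces are all illustrative assumptions, not parameters from Bindu et al. (2006).

```python
import math


def focal_intensity(traces, antennas, point, speed, fs):
    """Delay-and-sum (confocal) intensity at one candidate point.

    traces:   one sampled backscatter signal per antenna position
    antennas: (x, y) antenna position for each trace, in metres
    point:    (x, y) candidate location inside the breast, in metres
    speed:    assumed propagation speed in tissue, m/s
    fs:       sampling rate, Hz
    """
    total = 0.0
    for trace, (ax, ay) in zip(traces, antennas):
        distance = math.hypot(point[0] - ax, point[1] - ay)
        k = round(2.0 * distance / speed * fs)  # round-trip delay in samples
        if k < len(trace):
            total += trace[k]                   # time-shift, then sum
    return total ** 2                           # coherent sum -> intensity


# Hypothetical set-up: 36 positions, one every 10 degrees on a 5 cm circle.
radius, speed, fs = 0.05, 1.0e8, 1.0e11
antennas = [(radius * math.cos(math.radians(a)), radius * math.sin(math.radians(a)))
            for a in range(0, 360, 10)]
scatterer = (0.01, 0.0)

# Synthetic noise-free traces: a unit echo at each position's round-trip delay.
traces = []
for ax, ay in antennas:
    trace = [0.0] * 400
    delay = round(2.0 * math.hypot(scatterer[0] - ax, scatterer[1] - ay) / speed * fs)
    trace[delay] = 1.0
    traces.append(trace)

print(focal_intensity(traces, antennas, scatterer, speed, fs))      # 1296.0 (36 echoes aligned)
print(focal_intensity(traces, antennas, (-0.02, 0.01), speed, fs))  # far below 1296
```

Scanning this function over a grid of candidate points produces the image; the brightest pixel marks the estimated tumor location.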

Table 1 summarizes the observations on the AI-enabled breast cancer detection techniques.

3 AI enabled lung cancer detection techniques

AI techniques applied to breast cancer and to lung cancer share many similarities, although each cancer type presents unique challenges and considerations. AI solutions are therefore tailored to the specific characteristics and complexities of each cancer type and to the clinical context in which they are deployed. The safety of AI-based approaches to cancer diagnosis and treatment is assessed with a similar set of tools for breast and lung cancer.

AI-based lung cancer detection techniques use advanced machine learning algorithms to analyze medical data including chest X-rays, CT scans, and PET scans for the early detection and diagnosis of lung cancer. These algorithms are trained on large datasets of annotated images to recognize subtle patterns and abnormalities indicative of lung tumors, often achieving high levels of accuracy in classification tasks. AI systems can assist radiologists by flagging suspicious areas for further examination, reducing interpretation time, and potentially improving diagnostic accuracy. The most popular ways of detecting lung cancer are lung radiography and computed tomography (CT) scans (Mustafa and Azizi 2016).

3.1 CT lung cancer detection

AI-assisted CT lung cancer detection aids physicians by lowering their workload and improving hospital operational processes, giving them more time to build high-quality doctor-patient relationships. Computer-aided detection (CAD) on CT scans relieves clinicians’ workload and increases efficiency by discovering lung cancer nodules that would otherwise go undetected (Sathykumar and Munoz 2020).

Small-cell lung carcinoma (SCLC) and non-small-cell lung carcinoma (NSCLC) are the two main types of lung cancer distinguished for treatment purposes. Lung cancer staging determines how far the cancer has spread from its initial site and is one of the factors that influence lung cancer diagnosis and possible treatments (Mustafa and Azizi 2016).

Radiologists use X-ray imaging of the lungs to diagnose early lung cancer, but distinguishing normal tissue from an early tumor makes this monitoring challenging. The study aimed to develop intelligent application software that identifies and categorizes early tumor types using an artificial neural network. Such precise detection results may help radiologists analyze and diagnose patients’ diseases more effectively, so that early lung tumor findings allow patients to begin treatment before the disease becomes dangerous (Pandiangan et al. 2019).

The authors compared and assessed the results of four separate research experiments. The models were evaluated with metrics including sensitivity, specificity, accuracy, the receiver operating characteristic (ROC) curve, and the area under that curve (AUC). High specificity indicates that few cancer-free cases are misdiagnosed as lung cancer, whereas low specificity indicates a high rate of false positives. A model’s accuracy is the percentage of data that were correctly classified. Finally, the ROC curve and its AUC were used to compare model performance across the various research groups.
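The metrics named above follow directly from confusion-matrix counts. The sketch below simply restates the standard definitions with hypothetical counts; it does not reproduce any of the four studies. It also shows why low specificity signals many false positives: every false positive is a cancer-free case that was not correctly cleared.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity and accuracy from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)                # cancers correctly flagged
    specificity = tn / (tn + fp)                # cancer-free cases correctly cleared
    accuracy = (tp + tn) / (tp + fp + tn + fn)  # all cases correctly classified
    return sensitivity, specificity, accuracy


# Hypothetical counts: 50 cancer and 50 cancer-free images.
print(diagnostic_metrics(tp=45, fp=10, tn=40, fn=5))  # (0.9, 0.8, 0.85)
```

Accuracy depends on the class balance of the test set, which is one reason studies also report sensitivity, specificity, and AUC.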

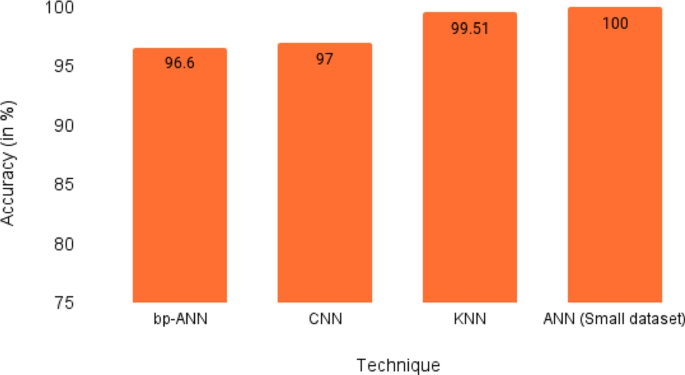

In a research experiment by Toğaçar et al., the authors used lung CT images for lung nodule cancer detection. Image augmentation, minimum redundancy maximum relevance (MRMR), principal component analysis (PCA), and suitable feature selection made the model very accurate. Among the configurations tested, deep features combined with MRMR and a k-nearest neighbors (KNN) classifier gave the best result, with an accuracy of 99.51%. The three other studies considered obtained less accurate results, which is attributed to their lack of image augmentation and feature selection techniques (Sathykumar and Munoz 2020).
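The final classification step of the best configuration above, a k-nearest neighbors (KNN) vote over selected features, can be sketched in a few lines. This is a generic KNN illustration under assumed inputs: the deep-feature extraction and MRMR selection used by Toğaçar et al. are not reproduced, and the two-dimensional vectors and k = 3 are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Label x by majority vote among its k nearest training vectors (Euclidean)."""
    nearest = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D feature vectors (e.g. after feature selection); 0 = benign, 1 = nodule.
train_X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (5.0, 5.0), (5.0, 6.0), (6.0, 5.0)]
train_y = [0, 0, 0, 1, 1, 1]
print(knn_predict(train_X, train_y, (0.5, 0.5)))  # 0
print(knn_predict(train_X, train_y, (5.5, 5.5)))  # 1
```

KNN has no training phase, so its accuracy depends heavily on how discriminative the selected features are, which is consistent with the reported benefit of feature selection.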

3.2 AI based lung segmentation techniques

The researchers devised a lung segmentation technique that improves segmentation accuracy and separates and removes the trachea from the lungs. Digital image processing techniques were used to improve quality and accuracy, illustrating how the field has evolved.

The research approach was designed to meet the study’s goals. Conventional lung X-ray image processing and ANN procedures are used to identify and classify early cancers, and the processing results help enhance visual observation of the target object. The image processing pipeline comprises pre-processing, noise reduction, image enhancement, lung segmentation, object edge detection, and tumor boundary identification. These steps are needed because the low contrast of X-ray images makes it difficult to distinguish between malignant and non-malignant tumors.

The study uses the back-propagation technique, whose benefit is that it iteratively reduces the error between the actual output and the expected output. The performance of the artificial neural network is presented graphically.

Image processing techniques were applied to 50 standard lung X-ray images from patients, comprising 25 samples with lung nodules and 25 without. The resulting AI machine identified 10 samples correctly; it works by combining two-dimensional (2D) X-ray images with previously studied tumor characteristics. The authors expect that, after training on a large dataset, it could achieve close to 100% detection performance (Pandiangan et al. 2019).

More recently, Cengil and Cinar proposed a CNN-based model for lung cancer prediction that uses the TensorFlow library for the detection mechanism. They used the SPIE-AAPM-LungX dataset, which contains images of 60 patients. The model achieved an accuracy of 70%, which falls short of the required standards.

Sasikala et al. presented another CNN-based approach, applied to the Lung Image Database Consortium and Image Database Resource Initiative (LIDC-IDRI) dataset. Median filters in the pre-processing layers removed unwanted features from the images, and the approach achieved an accuracy of 96%. Gunaydin et al. presented a comparative study of models such as KNN, support vector machines (SVMs), and decision trees for detecting lung cancer, using the Standard Digital Image Database of the Japanese Society of Radiological Technology. The techniques showed good results, with an accuracy of 95.05%. Asuntha and Srinivasan proposed a different model that combines deep learning with efficient feature extraction techniques such as the wavelet transform, histograms of oriented gradients (HOG), Zernike moments, the scale-invariant feature transform (SIFT), and local binary patterns (LBP). After the primary features were extracted, fuzzy particle swarm optimization was used to select the most discriminative ones. The model was tested on several datasets and achieved an accuracy of 97% (Patel 2022).
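Median filtering, used in the pre-processing layers of Sasikala et al.’s approach, replaces each pixel with the median of its neighborhood, which removes isolated noisy pixels while largely preserving edges. The sketch below assumes a 3×3 window and replicated border pixels; the kernel size and border handling of the original work are not stated here.

```python
def median_filter_3x3(image):
    """Apply a 3x3 median filter to a 2-D list of grey levels, replicating edge pixels."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = []
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), h - 1)  # clamp row index to the border
                    cc = min(max(c + dc, 0), w - 1)  # clamp column index to the border
                    window.append(image[rr][cc])
            window.sort()
            out[r][c] = window[4]                    # median of the 9 values
    return out


# A uniform patch with one "salt" noise pixel in the centre.
noisy = [[10, 10, 10],
         [10, 255, 10],
         [10, 10, 10]]
print(median_filter_3x3(noisy))  # [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
```

Unlike a mean filter, the median ignores a single extreme value entirely, so the noisy pixel disappears instead of being smeared into its neighbors.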

Chemotherapy, radiation, and surgery are the main treatments for lung cancer, depending on its type. One of the most commonly used surgical treatments for early-stage lung cancer is the removal of a lung lobe. The choice of chemotherapy depends on the type of tumor. In advanced cases, chemotherapy improves overall survival as well as quality of life compared with supportive care alone. For patients who cannot undergo surgery, radiotherapy is frequently combined with chemotherapy. Smoking cessation and prevention are both effective ways to avoid developing lung cancer. Long-term vitamin A, D, or E supplementation has not been shown to reduce the incidence of lung cancer. Greater consumption of fruits and vegetables appears to reduce the risk, although no established link between diet and lung cancer exists (Mustafa and Azizi 2016).

Among the many types of cancer, lung cancer has distinctive development and spreading processes: it can affect normal cells and disturb cell signaling, altering the regulation of cell division. Many studies have addressed the diagnosis of cancer or pre-cancerous stages using algorithms based on artificial intelligence systems. Some of these algorithms are supervised and others unsupervised, and they rely on different features extracted from pathological images. According to Sokouti et al., no systematic review and meta-analysis had previously evaluated the performance and current status of existing AI approaches to lung cancer. Their systematic review and meta-analysis searched well-known databases using a Boolean query combining lung cancer and artificial intelligence systems, and followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses of Diagnostic Test Accuracy studies (PRISMA-DTA). English-language papers that used prediction models to distinguish between healthy and malignant cell images were retrieved from the databases. Publications reporting the necessary data, namely the true positive, true negative, false positive, and false negative counts and the total sample size, were then selected from the search results; studies with insufficient data were excluded from further analysis.

The included studies employed a variety of artificial intelligence systems, including support vector machines (SVMs), artificial neural networks (ANNs) with diverse training strategies, and statistical techniques, each using different features extracted from lung cancer images. In each case, the AI system makes a decision similar to that of a clinician diagnosing lung cancer. The I-squared (I²) statistic computed with the Meta-DiSc software enables a detailed assessment of heterogeneity in a meta-analysis and of the influence of the included studies on it; I² values of roughly 25%, 50%, and 75% are conventionally interpreted as low, moderate, and high heterogeneity, respectively. The summary receiver operating characteristic (SROC) analysis gave pooled estimates of sensitivity, specificity, diagnostic odds ratio, and AUC of 0.77, 0.74, 17.22, and 0.872, respectively. Given this high AUC, the authors concluded that AI systems are effective in differentiating between types of lung cancer. The meta-analysis also reported how the performance of AI systems for lung cancer diagnosis has developed over time. Finally, two publication-bias tests indicated that publication bias may be present. While sensitivity and specificity showed moderate trends, the diagnostic odds ratio and AUC showed strongly increasing trends (Sokouti et al. 2019).
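The per-study counts the review extracts (true and false positives and negatives) determine each study's accuracy measures, and Higgins' I² summarizes heterogeneity from Cochran's Q. The sketch below shows these textbook formulas in Python. The function names and the three sets of counts are hypothetical, and it does not reproduce Meta-DiSc's pooling, which uses weighted or random-effects models to combine studies.

```python
import math


def accuracy_measures(tp, fp, fn, tn):
    """Per-study sensitivity, specificity and diagnostic odds ratio (DOR)."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    dor = (tp * tn) / (fp * fn)  # assumes fp and fn are non-zero
    return sensitivity, specificity, dor


def log_dor_variance(tp, fp, fn, tn):
    """Approximate variance of ln(DOR): sum of reciprocals of the four cells."""
    return 1 / tp + 1 / fp + 1 / fn + 1 / tn


def i_squared(estimates, variances):
    """Higgins' I^2 (in %) from per-study estimates and their variances.

    Cochran's Q uses inverse-variance weights, and
    I^2 = max(0, (Q - df) / Q) * 100 with df = k - 1.
    """
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    return 0.0 if q == 0 else max(0.0, (q - df) / q) * 100.0


# Hypothetical 2x2 counts (tp, fp, fn, tn) for three studies.
studies = [(80, 20, 20, 80), (45, 15, 5, 35), (60, 30, 40, 70)]
log_dors, variances = [], []
for tp, fp, fn, tn in studies:
    sens, spec, dor = accuracy_measures(tp, fp, fn, tn)
    log_dors.append(math.log(dor))
    variances.append(log_dor_variance(tp, fp, fn, tn))
    print(f"sensitivity={sens:.2f} specificity={spec:.2f} DOR={dor:.1f}")
print(f"I^2 of ln(DOR) across studies: {i_squared(log_dors, variances):.1f}%")
```

The DOR is analysed on the log scale because its sampling distribution is far closer to normal there, which is what the inverse-variance weights assume.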

Table 2 summarizes the key observations on AI techniques for lung cancer detection.

4 AI techniques for colorectal cancer

The lung cancer detection techniques discussed in the previous section share some commonalities with colorectal cancer (CRC) in terms of diagnostic and treatment challenges, although the two cancers differ in anatomical location. Clinical decision support systems are used for both lung and colorectal cancer. The use of artificial intelligence for tumor evaluation in CRC has grown significantly in recent years. Tumor recognition involves evaluating both categorical and numerical data, and obtaining a correct diagnosis from clinical data early in the medical assessment can reduce the chance of human error while also saving time. Colonoscopy, increasingly supported by computer-aided diagnosis, is the most commonly used method for detecting and screening colorectal cancer (Lorenzovici and Dulf 2021).

4.1 Prediction of colorectal cancer using AI

The high incidence and mortality of CRC raise the question of how artificial intelligence can be used in colorectal cancer epidemiology. As broad approaches to analyzing research data have emerged, deep learning has begun to be applied at several stages of epidemiological studies. However, these applications face difficulties related to data access, classification, and accuracy. Facets of AI used for colorectal cancer prediction include:

1. GeoAI: GeoAI is a subset of AI that was initially used in environmental health and is now being adopted by healthcare professionals. It uses AI to gather more specialized data from information about a given region. Such information is readily available on a wide range of topics, including geographic (terrain), dietary, and healthcare data. The idea behind GeoAI is that regions with a higher rate of colorectal cancer stand to gain the most from this kind of data collection.

2. Digital epidemiology: This is a promising but potentially divisive area of AI. It obtains data from digital sources such as social media and digital devices; such data have been used for the early identification of SARS and the real-time surveillance of COVID-19. This approach gives doctors access to a vast body of knowledge that was previously unavailable and can help with early disease identification and public health surveillance. However, the information obtained may not be what doctors need.

3. Data mining: Data mining is another practical tool. It analyzes massive amounts of data gathered from several sources and extracts facts and patterns from them. When applied to colorectal cancer, data mining tools help uncover connections, associations, and other hidden characteristics that might otherwise go unexplored and may hold important clues to the causes of colorectal cancer (Yu and Helwig 2022).

4.2 AI techniques in colorectal cancer screening

1. Colonoscopy: Colonoscopy is highly rated because of its high sensitivity and its ability to support decisions about lesions. However, small or flat polyps have a high chance of being missed by the naked eye (Yu and Helwig 2022). Colonoscopy is performed with a flexible tube (endoscope) that has a small video camera at its tip. Traditional colonoscopy is effective at detecting polyps larger than 10 mm. Although dye-based chromoendoscopy (DCE) can identify small or flat polyps, it has significant limitations, such as interobserver and intraobserver variability. AI-based methods can use white-light images alone to determine whether polyps are present in a video frame. Polyp characterization then catalogs the type of polyp after it has been detected: depending on whether the polyp appears benign, pre-malignant, or malignant, the doctor can make a better judgment about whether to remove it. Based on their pathogenesis, colorectal polyps are classified as adenomatous, hyperplastic, serrated, or inflammatory. AI may help clinicians distinguish between these polyp types in the future, which is a promising development. Although hyperplastic polyps are generally non-neoplastic, the sessile serrated adenoma, a form of serrated polyp, is considered a precancerous lesion (Viscaino and Bustos 2021). An experimental study of colonoscopy involving 1058 patients concluded that colonoscopy reduces mortality.

2. Blood tests: Studies have found that certain blood test results and features can be used to identify patients at risk of CRC. One such test is the complete blood count (CBC): slow bleeding from colorectal tumors can cause iron-deficiency anemia, which this test can reveal. A machine learning model, MeScore, has been developed and rigorously tested for estimating CRC risk.

3. CT colonography: CT colonography (CTC) is a non-invasive imaging test for CRC. Computer-aided detection (CAD) can support CTC in detecting and distinguishing between lesions and can increase its detection capacity.

4. Colon capsule endoscopy: This is a minimally invasive procedure. It requires a more intensive laxative regimen, which also helps flush the capsule out of the gastrointestinal (GI) tract. The images must be read manually, which increases the error rate, so AI techniques are applied to these images to help interpret the results.

5. Polyp characterization: This is a critical step, because the characterization of polyps carries a lot of weight in treatment decisions. Here, AI is used to predict whether lesions are malignant or benign. Although most colorectal polyps are hyperplastic, a high-precision diagnosis remains essential. A retrospective study at three hospitals constructed and evaluated deep learning models for the automatic histological classification of colorectal lesions in conventional white-light colonoscopy images. The study included 3828 images from 1339 participants, and the results suggest that deep learning models are promising for this task.

6. Magnifying narrow-band imaging: Narrow-band imaging (NBI) is an image-enhanced endoscopy technique that uses optical filters to illuminate tissue sequentially with narrow bands of green and blue light. It is useful for polyp characterization. In one study, a computer-based classification method was tested on 214 participants, who underwent magnifying NBI colonoscopy and had a total of 434 polyps measuring 10 mm or less. The diagnostic agreement between two specialists and the computer-aided classification system was 98.7%, with no significant difference between them.

7. Magnifying chromoendoscopy: This technique enhances the visualization of pit patterns on the surface of polyps to help distinguish benign from neoplastic polyps. An automated computer system named HuPAS, which can outline the boundaries of the pits, was evaluated. Its results agreed 100% with the expert diagnosis for type I and type II pits, and 96.6% and 96.7% for types IIIL and IV, respectively.

8. Endocytoscopy: This technique magnifies real-time images 380- to 500-fold, giving clear visuals. In endocytoscopy (EC), a contact light microscopy system is integrated into the distal tip of the colonoscope, allowing instantaneous evaluation of nuclei and cytological structures. CAD-EC was developed to automate this expert-dependent diagnosis. Using a database of images from 242 patients, CAD-EC showed a sensitivity of 89.4%, a specificity of 98.9%, an accuracy of 94.1%, a positive predictive value (PPV) of 98.8%, and a negative predictive value (NPV) of 90.1% in differentiating invasive cancer from adenoma.

9. Confocal endomicroscopy/confocal laser endomicroscopy: This technique allows real-time ×1000 image magnification. It requires extensive training and is therefore performed only by experts. One study assessed the ability of computer-based automated classification of probe-based confocal laser endomicroscopy (pCLE) recordings to distinguish between neoplastic and non-neoplastic polyps, and compared it with experienced endoscopists who made a diagnosis based solely on the pCLE recordings. The automated classification and the experts achieved a sensitivity of 92.5% vs. 91.4%, a specificity of 83.3% vs. 84.7%, and an accuracy of 89.6% vs. 89.6%, respectively. These differences were not statistically significant.

10. Laser-induced fluorescence spectroscopy (LIFS): This technique provides real-time, automated differentiation between benign and neoplastic polyps. It uses an optical fiber device called WavSTAT, which delivers laser light to the targeted tissue and analyzes the light returned by the tissue to produce a result. A newer version of LIFS using WavSTAT4 has been studied; its accuracy in predicting polyp histology was 84.7%, and for diminutive polyps in the distal colorectum only, the NPV for excluding adenomatous histology rose to 100%. Separately, electronic medical record data from two unrelated patient populations in Israel and the UK were combined to develop an ML-based model for identifying individuals at high risk of colorectal cancer from attributes such as the complete blood count (CBC), age, and sex (Goyal and Mann 2020).
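Several of the screening methods above report sensitivity and specificity alongside predictive values (CAD-EC) or compare a classifier with experts on the same cases (pCLE). Two textbook calculations help interpret such figures: predictive values depend on disease prevalence through Bayes' rule, and paired classifier-versus-expert comparisons are commonly tested with McNemar's test. The sketch below is illustrative only; the function names, the assumed prevalences, and the discordant counts are hypothetical, not data from the cited studies.

```python
import math


def predictive_values(sensitivity, specificity, prevalence):
    """Positive and negative predictive values from Bayes' rule."""
    tp = sensitivity * prevalence               # true-positive fraction
    fp = (1 - specificity) * (1 - prevalence)   # false-positive fraction
    fn = (1 - sensitivity) * prevalence         # false-negative fraction
    tn = specificity * (1 - prevalence)         # true-negative fraction
    return tp / (tp + fp), tn / (tn + fn)


def mcnemar_p_value(b, c):
    """Two-sided McNemar test with continuity correction (chi-square, 1 df).

    b: cases the classifier got right and the expert got wrong
    c: cases the expert got right and the classifier got wrong
    """
    if b + c == 0:
        return 1.0
    chi2 = max(0, abs(b - c) - 1) ** 2 / (b + c)
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Sensitivity/specificity as reported for CAD-EC, at two assumed prevalences.
for prevalence in (0.5, 0.1):
    ppv, npv = predictive_values(0.894, 0.989, prevalence)
    print(f"prevalence={prevalence:.0%}: PPV={ppv:.3f}, NPV={npv:.3f}")

# Hypothetical discordant counts from a paired reader study.
print(f"McNemar p = {mcnemar_p_value(12, 10):.2f}")
```

At an assumed 50% prevalence these rates give a PPV close to the reported 98.8%, whereas at 10% the PPV falls to about 0.90 and the NPV rises, so predictive values measured in a study population do not transfer directly to a screening population.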


4.3 AI for colorectal cancer diagnosis

Machine learning techniques are gaining prominence in many sectors, including the development of computer-aided diagnosis (CAD) systems. Decision trees are the most widely used traditional machine learning algorithms for data analysis in medical applications. An important factor when working with CAD in tumor recognition is confidence analysis (Lorenzovici and Dulf 2021). CAD tries to locate aberrant or suspicious areas in order to improve detection rates while lowering the false-negative rate (FNR) (Viscaino and Bustos 2021).

1. Endoscopy and MRI/CT imaging: One of the most effective approaches to diagnosing colorectal cancer combines convolutional neural networks (CNNs), a type of ANN, and computer vision with endoscopy and MRI/CT imaging. The system analyzes a vast number of images to find patterns and objects. Segmentation technology, which derives from computer vision's ability to distinguish between objects, has enabled the first steps in improving endoscopy; ideally, segmentation would separate anatomical structures from malignant and normal masses. Pattern recognition is then used to distinguish between normal and abnormal conditions. Detection, segmentation, and classification of images are currently the areas where AI has most influenced this kind of imaging, alongside general efforts to enhance image quality for easier reading and advances in segmentation technology. Besides improving diagnostic accuracy, deep learning in colonoscopy also reduces variability in detection rates. One major challenge is handling larger artifacts, which can lead to misinterpretation of data or false-positive results, but the main issue is the lack of relevant datasets. Using CNNs for image or object identification also brings some general limitations. Pixel appearance anomalies, such as missing or irregular pixelation, can cause misinterpretations. Because anatomy differs between individuals, it may be perceived incorrectly. Anatomical variations or patient positioning can affect how an image is taken, causing positional changes that may lead to misinterpretation. In addition, it can be difficult to distinguish useful information from artifacts when segmenting data.
The limitations of endoscopy and CT/MRI imaging are a reminder that AI is still being improved and is not yet ready for full clinical application; they also highlight the continued need for technicians in the interpretation of imaging.

2. Genetic and pathological diagnosis: Some progress has been made in training AI to categorize cancers based on histology. However, these systems have identification problems when inflammatory tissue is present in the datasets. This is not necessarily a failure of AI itself: CNNs require higher-quality training data, and stain normalization of hematoxylin and eosin (H&E) images can help AI increase its accuracy. Accuracy may be improved further by paying more attention to characteristics such as texture, spatial relationships, and morphology. Fuzzy systems have also proven effective because they allow more thorough interpretation of information. This approach can be used in any type of pathology to find abnormalities or forecast the possibility of malignancy based on predetermined criteria (Yu and Helwig 2022).
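Stain normalization reduces colour differences between H&E slides prepared or scanned at different sites before they are fed to a CNN. A common family of methods, such as Reinhard's, matches the per-channel colour statistics of a source image to those of a reference image. The sketch below is a deliberately simplified variant that matches the mean and standard deviation of each RGB channel directly, whereas Reinhard's method performs the matching in the lαβ colour space; the function name and the toy pixel values are hypothetical.

```python
from statistics import mean, pstdev


def match_channel_stats(source, reference):
    """Match each RGB channel of `source` to the mean/std of `reference`.

    Both images are lists of (r, g, b) pixels with values in 0-255.
    Simplified RGB variant of Reinhard-style colour transfer (an assumption);
    Reinhard et al. apply the same mean/std matching in l-alpha-beta space.
    """
    normalized_channels = []
    for ch in range(3):
        src = [px[ch] for px in source]
        ref = [px[ch] for px in reference]
        src_mean, src_std = mean(src), pstdev(src)
        ref_mean, ref_std = mean(ref), pstdev(ref)
        scale = ref_std / src_std if src_std > 0 else 1.0
        normalized_channels.append(
            # Centre on the source mean, rescale to the reference spread,
            # shift to the reference mean, then clip to the valid range.
            [min(255.0, max(0.0, (v - src_mean) * scale + ref_mean)) for v in src]
        )
    # Re-assemble per-pixel (r, g, b) tuples from the three channels.
    return list(zip(*normalized_channels))


# Toy "slides": a darker, higher-contrast source and a paler reference stain.
source = [(200, 120, 180), (150, 80, 140), (100, 40, 100)]
reference = [(180, 100, 170), (160, 90, 150), (140, 80, 130)]
print(match_channel_stats(source, reference))
```

Because the toy channel values are evenly spaced, the output here coincides with the reference; on real image tiles only the channel statistics are matched, not the individual pixels.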

4.4 AI for colorectal cancer treatment

1. Therapeutic assessment: In rectal cancer treatment, AI models enable early differentiation between complete response (CR) and non-response (NR) to therapy. AI makes it possible to understand some of the metabolic processes and drug-induced changes that are directly related to the development of colorectal cancer, and it has significantly improved the processing of complex networks of biological information. The accuracy of ANN-based prediction for colorectal cancer is also becoming increasingly important. ANNs are characterized by nonlinear models that are adaptable to medical and clinical research. Advantages observed with this technique include the following:

   1. It can improve the optimization process, yielding adaptable nonlinear models that are cost-effective, particularly with very large datasets.

   2. It predicts clinical outcomes with high accuracy and reliability.

   3. It improves academic dialogue and knowledge dissemination.

2. IBM Watson for Oncology: Clinical decision-support systems (CDSSs), a new AI trend in therapeutic recommendations, show enormous potential for cancer therapy management given the rapid growth of clinical information. By imitating human reasoning, they aim to acquire and analyze knowledge more efficiently than traditional manual review. AI has expedited the selection of cancer therapies through a cognitive computing system called IBM Watson for Oncology (WFO), which is gaining recognition in the field of cancer therapy. WFO formulates recommendations using natural language processing and clinical data from many sources (treatment guidelines, expert opinions, literature, and medical records). In studies on CT imaging and AI, the datasets included 10,000 to 40,000 CT images gathered by the research groups themselves. AI training data are typically labeled for supervised learning, with clearly linked input and output values. However, most of a case record consists of doctors' notes and other unstructured, patient-specific information, which makes it challenging to feed into WFO (Yu and Helwig 2022).

Colorectal surgery is another promising area for AI. Research shows that AI greatly reduced the need for additional surgery after endoscopic resection of T1 CRC: a supervised support vector machine model was developed to identify patients who required additional surgical intervention. Because surgery is difficult, time-consuming, and non-scalable, the use of AI in surgery remains limited, and at present AI's output serves mainly to expedite decision-making (Yu and Helwig 2022).

Lorenzovici et al. performed an experiment to maximize the effectiveness of a colorectal cancer detection system using an innovative dataset. For every patient, the dataset contains 33 blood and urine test results, and the patient's living environment was also taken into account when determining the diagnosis. Various machine learning approaches, including classification and shallow and deep neural networks, were used to create an intelligent computer-aided colorectal cancer diagnosis system. The problem was addressed in two ways: as a classification problem solved with traditional machine learning algorithms, and as a regression problem solved with artificial neural networks. In the first approach, pre-processing classified each record in the dataset as unhealthy (denoted by 1) or healthy (denoted by 0); the presence of other diseases or risk factors was likewise denoted by 1, and their absence by 0. Two methods were used to obtain the response variable: absolute deviation, and assigning a weight to each predictor. The first method calculated the weights on the assumption that each variable had roughly the same effect on the diagnosis, whereas the second determined the weights by expanding each variable's healthy and unhealthy ranges. Although the second technique appeared more rational than the first, the results did not support this assumption.
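One possible reading of the first, equal-weight method is sketched below: each predictor is flagged as abnormal (1) or normal (0) against a healthy range, and a continuous response is formed from the range-scaled absolute deviations with every predictor weighted equally. The predictor names, healthy ranges, and the scaling by range width are placeholders invented for illustration; the study's 33 parameters and its exact formula are not reproduced here.

```python
# Hypothetical healthy reference ranges {predictor: (low, high)}; the study's
# actual 33 blood/urine parameters and ranges are not reproduced here.
HEALTHY_RANGES = {
    "marker_a": (4.0, 10.0),
    "marker_b": (12.0, 17.0),
    "marker_c": (150.0, 400.0),
}


def deviation(value, low, high):
    """Absolute deviation outside the healthy range, scaled by the range width."""
    if value < low:
        return (low - value) / (high - low)
    if value > high:
        return (value - high) / (high - low)
    return 0.0


def encode_record(record, has_other_risk_factor):
    """Binary encoding: 1 = outside healthy range (or risk factor present), 0 = normal."""
    flags = {name: int(deviation(record[name], low, high) > 0)
             for name, (low, high) in HEALTHY_RANGES.items()}
    flags["other_risk_factor"] = int(has_other_risk_factor)
    return flags


def equal_weight_response(record):
    """Continuous response: mean scaled deviation, every predictor weighted equally."""
    devs = [deviation(record[name], low, high)
            for name, (low, high) in HEALTHY_RANGES.items()]
    return sum(devs) / len(devs)


patient = {"marker_a": 12.0, "marker_b": 13.0, "marker_c": 100.0}
print(encode_record(patient, has_other_risk_factor=False))
print(round(equal_weight_response(patient), 3))
```

The second method described in the text would replace the equal weights with predictor-specific ones derived from expanded healthy and unhealthy ranges.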

The first goal of the experiment was to solve the binary classification problem, which requires a labeled response variable representing the expected output of the model for each input. After the predictors and the response variable were obtained, various models were trained with the Classification Learner app in MATLAB, using two separate validation strategies with three settings each. The first strategy was k-fold cross-validation, with 5, 25, and 50 folds. The second was holdout validation, with 5%, 15%, and 25% of the data held out for validation.
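The two validation strategies can be sketched in plain Python as index generators. This is not MATLAB's Classification Learner; the function names, the random seed, and the 100-record example size are assumptions chosen only to show how the splits are formed.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Yield (train, validation) index lists for k-fold cross-validation."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k near-equal folds
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


def holdout_indices(n, holdout_fraction, seed=0):
    """Return (train, validation) with a fraction of the records held out."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_val = round(n * holdout_fraction)
    return indices[n_val:], indices[:n_val]


n_records = 100  # hypothetical dataset size
for k in (5, 25, 50):
    sizes = sorted({len(val) for _, val in k_fold_indices(n_records, k)})
    print(f"{k}-fold: validation fold sizes {sizes}")
for frac in (0.05, 0.15, 0.25):
    train, val = holdout_indices(n_records, frac)
    print(f"{frac:.0%} held out: {len(val)} validation / {len(train)} training records")
```

In k-fold cross-validation every record is used for validation exactly once, so a larger k leaves more data for training in each round at the cost of more training runs; a single holdout split is cheaper but its estimate depends on which records happen to be held out.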

In the second approach, because deep neural networks tend to outperform basic machine learning algorithms on large datasets, the regression problem was solved not with the Regression Learner but with MATLAB's Neural Network Toolbox. The first three networks were shallow neural networks with a single hidden layer and a varying number of neurons: a network with three hidden neurons reached a mean squared error (MSE) of 1.94 after training, and the second and third networks had 10 and 20 hidden neurons, respectively. The next three networks used a five-hidden-layer architecture and are therefore called deep neural networks; evaluation after training showed that the first of these had the worst performance. After training, the models' performances were compared. Traditional machine learning techniques achieved a maximum accuracy of 77.8% on the binary classification problem, whereas the regression problem addressed with deep neural networks performed substantially better in terms of MSE, reaching 0.0000529. The authors concluded that deep neural networks are more reliable and more efficient than traditional machine learning algorithms for colorectal cancer detection (Lorenzovici and Dulf 2021).
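To make the architecture terms concrete, the sketch below builds a shallow network (one hidden layer with a configurable number of neurons) and computes the mean squared error used to compare the networks. It only runs a forward pass with random weights; training by backpropagation, as performed by MATLAB's toolbox, is omitted. The tanh activation, the three input features, and the data are assumptions.

```python
import math
import random


def init_shallow_net(n_inputs, n_hidden, seed=0):
    """Random weights for a network with one hidden layer and one linear output."""
    rng = random.Random(seed)
    return {
        "w1": [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(net, x):
    """tanh hidden layer followed by a single linear output neuron (regression)."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(net["w1"], net["b1"])]
    return sum(w * h for w, h in zip(net["w2"], hidden)) + net["b2"]


def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical inputs (3 features each) and regression targets.
X = [[0.1, 0.5, 0.2], [0.9, 0.3, 0.7], [0.4, 0.8, 0.6]]
y = [0.0, 1.0, 0.5]
for n_hidden in (3, 10, 20):  # hidden-layer sizes mentioned in the text
    net = init_shallow_net(n_inputs=3, n_hidden=n_hidden)
    predictions = [forward(net, x) for x in X]
    print(n_hidden, "hidden neurons, untrained MSE:",
          round(mean_squared_error(y, predictions), 3))
```

Training would adjust `w1`, `b1`, `w2`, and `b2` to reduce this MSE; a deep variant such as the five-hidden-layer networks simply stacks further hidden layers between the input and the output neuron.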

Although evaluation of slides by pathologists is the standard for cancer detection, growing workloads have driven the development of computerized screening. Such screening uses whole slide images (WSIs), which are digital versions of glass slides, and computer-aided diagnosis (CAD) is made possible by applying medical image analysis, CNNs, and AI. As CRC cases increase, so does the number of specimens that must be examined per diagnosis; one study showed that only 7.4% of specimens were positive for cancer.

A deep learning model was trained to screen colorectal specimens for colonic malignancies, improving detection and classification. The algorithm, developed by Qritive, consists of two models: the first detects high-risk regions in whole slide images (WSIs) by gland segmentation, and the second classifies WSIs into “low risk” (benign, inflammation) and “high risk” (dysplasia, malignancy) categories. WSIs of 294 colorectal specimens were collected from the pathology archives of Singapore General Hospital and from The Cancer Genome Atlas (TCGA). The attributes used were histological features present in the WSIs, such as glandular structures, dysplastic regions, blood vessels, necrosis, mucin, and inflammation, which served to train the AI models to detect and classify high-risk regions indicative of malignancy. Slides with poor image quality or other malignancies were excluded. The gland segmentation model is a deep learning model that uses a Faster Region-Based Convolutional Neural Network (Faster R-CNN) with ResNet-101 for feature extraction. The second model is a gradient-boosted decision tree classifier that classifies WSIs as high or low risk. The model was initially trained on 39 WSIs annotated by pathologists. To train the gland segmentation model, the WSIs were divided into tiles; tiles containing tissue were retained and categorized, and the others were discarded. The model was trained iteratively to minimize the loss function. Data augmentation by rotation, mirroring, contrast and brightness changes, Gaussian noise, and Gaussian blur was used to enlarge the training set and make the model more robust.
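The tiling, tissue-filtering, and augmentation steps can be sketched on a toy grayscale image stored as nested lists. This is not Qritive's pipeline: the tile size, the tissue rule (mean intensity below an assumed brightness threshold, since glass background in brightfield slides is near-white), and the function names are illustrative assumptions, and contrast changes and Gaussian blur are omitted for brevity.

```python
import random


def tile_image(image, tile_size):
    """Split a 2-D grayscale image into non-overlapping square tiles.

    Partial tiles at the right and bottom edges are dropped for simplicity.
    """
    tiles = []
    for r in range(0, len(image) - tile_size + 1, tile_size):
        for c in range(0, len(image[0]) - tile_size + 1, tile_size):
            tiles.append([row[c:c + tile_size] for row in image[r:r + tile_size]])
    return tiles


def has_tissue(tile, background_level=220):
    """Assume near-white tiles are glass background and darker tiles contain tissue."""
    values = [v for row in tile for v in row]
    return sum(values) / len(values) < background_level


def augment(tile, rng):
    """Random 90-degree rotations, mirroring, a brightness shift and Gaussian noise."""
    out = [row[:] for row in tile]
    for _ in range(rng.randint(0, 3)):
        out = [list(row) for row in zip(*out[::-1])]  # rotate 90 degrees clockwise
    if rng.random() < 0.5:
        out = [row[::-1] for row in out]  # mirror left-right
    shift = rng.uniform(-20, 20)  # global brightness change
    return [[min(255.0, max(0.0, v + shift + rng.gauss(0, 5))) for v in row]
            for row in out]


# Toy 4x4 "slide": tissue (dark) on the left half, glass background on the right.
slide = [[100, 100, 240, 240] for _ in range(4)]
tissue_tiles = [t for t in tile_image(slide, 2) if has_tissue(t)]
rng = random.Random(42)
augmented = [augment(t, rng) for t in tissue_tiles]
print(len(tissue_tiles), "tissue tiles kept;", len(augmented), "augmented copies")
```

Because rotations and mirroring do not change tissue labels in histology, each annotated tile can yield several plausible training variants at no annotation cost.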

The trained segmentation model was applied to 105 WSIs from Singapore General Hospital, and each slide was quantified using the following features:

1. The area classified as dysplasia or adenocarcinoma with at least 70% certainty.

2. The area-weighted average prediction confidence for adenocarcinoma or dysplasia objects.

3. A Boolean flag set to 1 if the slide contains a prediction with more than 85% certainty.

4. The bottom 1st-percentile prediction value for cancer or dysplasia.
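The four slide-level features listed above can be computed from the segmentation model's object predictions roughly as follows, producing the feature vector that a gradient-boosted classifier would then consume. The input format (an area and a confidence per dysplasia or adenocarcinoma object), the nearest-rank percentile, and the function name are assumptions; only the 70% and 85% thresholds come from the text.

```python
import math


def slide_features(objects):
    """Slide-level features from (area, confidence) pairs for dysplasia/adenocarcinoma objects."""
    if not objects:
        return {"area_conf_ge_70": 0.0, "area_weighted_conf": 0.0,
                "any_conf_gt_85": 0, "conf_p1": 0.0}
    total_area = sum(area for area, _ in objects)
    confidences = sorted(conf for _, conf in objects)
    rank = max(1, math.ceil(0.01 * len(confidences)))  # nearest-rank 1st percentile
    return {
        # 1. Area predicted as dysplasia/adenocarcinoma with >= 70% certainty.
        "area_conf_ge_70": sum(area for area, conf in objects if conf >= 0.70),
        # 2. Area-weighted average prediction confidence.
        "area_weighted_conf": sum(area * conf for area, conf in objects) / total_area,
        # 3. Boolean flag: any prediction above 85% certainty.
        "any_conf_gt_85": int(any(conf > 0.85 for _, conf in objects)),
        # 4. Bottom 1st-percentile prediction confidence.
        "conf_p1": confidences[rank - 1],
    }


# Hypothetical detections on one slide: (area in pixels, confidence).
detections = [(100, 0.90), (50, 0.60), (50, 0.75)]
print(slide_features(detections))
```

Summarizing many object-level predictions into a fixed-length vector lets a conventional classifier work at the slide level regardless of how many objects each slide contains.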

After these numerical features were computed, fivefold cross-validation was used to train, tune, and compare several models, and the best-performing model was selected. This model was tested on 150 WSIs, and its results were compared with those of pathologists. It correctly classified 119 of the 150 slides (an accuracy of about 79.3%), with a high sensitivity of 97.4%, a specificity of 60.3%, and an area under the ROC curve (AUC) of 0.917.
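The reported figures can be cross-checked with a little arithmetic. With P high-risk and 150 − P low-risk test slides, the sensitivity and specificity fix how many slides of each kind are classified correctly, and requiring 119 correct slides in total narrows the possibilities. The sketch below searches for integer counts consistent with all three numbers (allowing for rounding to one decimal place); the resulting matrix is derived here and is not copied from Table 3, and the function name is hypothetical.

```python
def consistent_confusion_matrices(total, correct, sensitivity, specificity, tol=0.0005):
    """Return every (tp, fn, tn, fp) matching the totals and the rounded rates."""
    matches = []
    for positives in range(1, total):
        negatives = total - positives
        for tp in range(positives + 1):
            tn = correct - tp
            if not 0 <= tn <= negatives:
                continue
            if (abs(tp / positives - sensitivity) <= tol
                    and abs(tn / negatives - specificity) <= tol):
                matches.append((tp, positives - tp, tn, negatives - tn))
    return matches


# Figures from the text: 150 test slides, 119 correct, sensitivity 97.4%, specificity 60.3%.
for tp, fn, tn, fp in consistent_confusion_matrices(150, 119, 0.974, 0.603):
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    print(f"TP={tp} FN={fn} TN={tn} FP={fp} accuracy={accuracy:.3f}")
```

Only one matrix fits: 75 true positives, 2 false negatives, 44 true negatives, and 29 false positives. Its accuracy is 119/150 ≈ 79.3%, so a value of 91.7% for this model is consistent with an AUC of 0.917 rather than with accuracy; the many false positives also show the trade-off behind the high sensitivity.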

The confusion matrix of the predicted biopsy results is shown in Table 3.

On the testing dataset, the model's strong performance indicates close agreement between the AI model and the pathologists. With a sensitivity of 97.4%, the model minimizes false negatives (Goyal and Mann 2020), so that high-risk CRC specimens are reliably identified. The identified specimens can then be sent to a pathologist for final verification. The AI model can thus be used as a screening tool that reduces pathologists' diagnostic burden by flagging significant cases (Ho and Zhao 2022; Viscaino and Bustos 2021).

Table 4 lists the observations in literature for colorectal cancer.

5 Prostate cancer

Although the risk factors, diagnostic methods, and treatment modalities of prostate cancer differ from those of breast, lung, and colorectal cancer, common AI methods apply to all four. Medical image analysis is the technique they share. AI techniques for colorectal cancer analyze medical imaging data such as CT scans, and AI techniques for skin cancer, especially melanoma, use deep learning to analyze dermatoscopic images and identify malignant lesions from their visual patterns. By combining imaging data with machine learning, AI improves early detection and treatment strategies, highlighting its transformative potential in cancer care. Prostate cancer is the second leading cause of cancer death in men and the most frequently diagnosed non-skin malignancy in men. The volume of prostate biopsies has increased while urological pathologists are scarce, which strains the capacity to diagnose prostate cancer; these difficulties are also encountered during prostate therapeutic interventions (Booven and Kuchakulla 2021). Prostate cancer should be detected and localized early, while it is still curable, since excellent cancer-specific survival is expected for most localized disease. Radiation therapy and radical prostatectomy are common treatments for patients with locally advanced, high-risk disease. The pathologic grade from the Gleason grading system is the main factor in clinical risk evaluation. Generally, cancers with only Gleason pattern 3 are regarded as low risk, whereas tumors with dominant Gleason patterns 4 and 5 carry an increased risk of metastatic disease and cancer death (Harmon and Tuncer 2019).

It is anticipated that the development and acceptance of digital pathology technology will increase the precision of anatomic pathology diagnosis. Machine-learning algorithms have shown some accuracy in recognizing disease phenotypes and characteristics when applied to digital images (Perincheri and Levi 2021).

The value of multiparametric magnetic resonance imaging (mpMRI) for detecting prostate cancer is widely recognized. mpMRI provides high-contrast, high-resolution anatomical images of the prostate and pelvic area, but radiologists need a high degree of expertise to assess and interpret all mpMRI sequences. A body of literature uses the functional imaging properties of mpMRI to describe the complex heterogeneity of localized prostate cancer. Over the past ten years, the use of AI applications in both radiology and pathology has risen significantly (Harmon and Tuncer 2019).

5.1 AI in localized prostate cancer

AI is expected to contribute to prostate mpMRI particularly by increasing the sensitivity of prostate cancer diagnosis and lowering inter-reader variability. The largest benefit is seen in the transition zone (TZ), where automatic detection enables readers of average expertise to reach 83.8% sensitivity, compared with 66.9% using mpMRI alone. Litjens et al. showed that integrating AI-based prediction with the Prostate Imaging Reporting and Data System (PI-RADS) improves both cancer detection and the classification of clinically significant (aggressive) disease. Although there have been fewer studies on automated detection of prostate cancer on mpMRI using deep learning, early research suggests higher detection rates than earlier approaches; here, PI-RADS v2 classification combined with automated detection improved the diagnosis of clinically significant cancer compared with detection by radiologists alone. AI algorithms are not yet well suited to spatially targeting aggressive foci within a lesion on mpMRI for biopsy. To enable more precise spatial learning, voxel-based classifiers should be trained against a reference that labels each tumor “patch” according to its regional Gleason score (Harmon and Tuncer 2019).

Machine learning algorithms for prostate cancer pathology often include semantic segmentation of stromal, epithelial, and lumen components. Because spatially annotating digital pathology images at full resolution is very time-consuming, this degree of spatial annotation is not feasible. In theory, more detailed pathologic information on each lesion would be needed as input to improve spatial labeling at the mpMRI level. Using AI in pre-processing to enable more detailed histopathologic spatial analysis alongside radiologic imaging has shown potential. Kwak et al. found that tissue component density is substantially correlated with MRI signal characteristics, and Chatterjee et al. showed that the differences between tumors with Gleason patterns 3, 4, and 5 were larger for gland component volumes than for cellularity measures. The detection and classification of intermediate-grade prostate tumors is currently constrained by both pathology and imaging. Most models are built from weakly labeled data, and few high-quality annotations are available. Better pathological classifier performance can strengthen the foundation for high-resolution radiologic labeling (Harmon and Tuncer 2019).

Automated Gleason grading of digital pathology images has been the main focus of AI research in prostate cancer pathology. Traditional machine-learning techniques built classifiers from hand-crafted features extracted under relatively uniform conditions. More recent developments in deep learning may improve the performance of prostate cancer grading systems. In one example, deep learning and machine learning were combined to create a novel network for prostate cancer diagnosis and grading. It identified epithelium with 99% accuracy, while accuracy for low- and high-grade disease ranged from 71% to 79%. As processing power increases, new possibilities will open up for image-based tasks at full resolution (Harmon and Tuncer 2019).
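To make the contrast with deep learning concrete, the traditional approach can be sketched as hand-crafted features feeding a simple classifier. The following are illustrative assumptions:

- the three features (mean intensity, intensity spread, mean gradient magnitude);
- the nearest-centroid classifier;
- the synthetic "smooth" versus "textured" patches, which stand in for real tissue classes.

This is not the network described in the cited study.

```python
import numpy as np


def handcrafted_features(patch: np.ndarray) -> np.ndarray:
    """Hand-crafted descriptors of a grayscale patch: mean, std, mean gradient magnitude."""
    p = patch.astype(float)
    gy, gx = np.gradient(p)
    return np.array([p.mean(), p.std(), np.hypot(gx, gy).mean()])


class NearestCentroid:
    """Assigns each sample to the class whose standardized feature centroid is closest."""

    def fit(self, X: np.ndarray, y: np.ndarray) -> "NearestCentroid":
        self.mu_, self.sigma_ = X.mean(axis=0), X.std(axis=0) + 1e-12
        Z = (X - self.mu_) / self.sigma_
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([Z[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        Z = (X - self.mu_) / self.sigma_
        dist = np.linalg.norm(Z[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.classes_[dist.argmin(axis=1)]


def make_patches(rng, noise_sd, n):
    """n synthetic 32x32 patches around gray level 128 with the given noise level."""
    return [128 + rng.normal(0, noise_sd, (32, 32)) for _ in range(n)]


# Synthetic data: class 0 = smooth patches, class 1 = heavily textured patches
rng = np.random.default_rng(1)
X_train = np.array([handcrafted_features(p)
                    for p in make_patches(rng, 5, 20) + make_patches(rng, 30, 20)])
y_train = np.array([0] * 20 + [1] * 20)
X_test = np.array([handcrafted_features(p)
                   for p in make_patches(rng, 5, 10) + make_patches(rng, 30, 10)])
y_test = np.array([0] * 10 + [1] * 10)
clf = NearestCentroid().fit(X_train, y_train)
accuracy = float((clf.predict(X_test) == y_test).mean())
```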

5.2 AI methods in prostate cancer diagnosis

1. ANN and Histopathologic Diagnosis of Prostate Cancer: Prostate cancer diagnosis frequently depends on the histological recognition of prostatic adenocarcinoma. Numerous studies have demonstrated the potential of ANNs to support more precise patient counseling and to overcome variability in histopathologic interpretation.

2. ANN and Magnetic Resonance Imaging (MRI) Diagnosis of Prostate Cancer: MRI has been studied both for detecting prostate cancer and for assessing its aggressiveness. MR spectroscopy, T2-weighted MR imaging, and apparent diffusion coefficient (ADC) mapping have been useful for evaluating prostate cancer, but there is still disagreement about how best to apply them. ANN-based classifiers built on these imaging techniques have been proposed as potential tools for non-invasive detection of tumor progression and for decision support in active surveillance programs.

3. Artificial Neural Network in Biomarker Diagnosis and Risk Stratification: PSA testing has helped to inform prostate cancer diagnosis and prognosis. Over the past ten years, a large number of additional biomarkers have been discovered and incorporated into clinical assays. ANNs can greatly aid in analyzing and validating these biomarkers. Several studies have built clinical prediction models using machine learning techniques. Their findings show that computationally directed proteomics can identify novel non-invasive biomarkers.

4. ANN for Patient Interaction and Patient-Centered Care: Many prostate cancer patients remain uncertain about their treatment options after diagnosis. Understanding how specific therapies are delivered may therefore help patients feel more at ease and be more satisfied with their care. Numerous studies show the potential of ANNs for building useful patient-centered tools. Such tools inform patients about their treatment options and help monitor disease progression.

5. Classification System for Prostate Cancer Risk Stratification Using ANN: Several classification criteria are used in conjunction with ANNs to assign patients to risk groups. These groups span seven categories, ranging from extremely low to very high risk. Although these risk categories provide a solid framework for stratification, none of them accounts for the possibility of recurrence. A deeper understanding of the mechanisms underlying recurrence could therefore help refine the risk variables and, consequently, guide the selection of the most appropriate therapies (Booven and Kuchakulla 2021).
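The ANN-based risk stratification in items 3 and 5 above can be sketched as a small feed-forward network trained on tabular patient features. This minimal NumPy version makes several assumptions:

- two standardized, hypothetical input features (for example, a PSA-derived value and a grade-derived value);
- a simplified binary low/high-risk target, instead of the multi-category schemes described above;
- synthetic data and placeholder hyperparameters, not values from any cited study.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_mlp(X, y, hidden=8, lr=0.5, epochs=2000, seed=0):
    """One-hidden-layer network (tanh -> sigmoid) trained by full-batch gradient
    descent on mean binary cross-entropy."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0, 0.5, (X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0, 0.5, (hidden, 1))
    b2 = np.zeros(1)
    t = y.reshape(-1, 1).astype(float)
    n = len(X)
    for _ in range(epochs):
        h = np.tanh(X @ W1 + b1)          # hidden activations
        p = sigmoid(h @ W2 + b2)          # predicted probability of high risk
        dz2 = (p - t) / n                 # d(loss)/d(output logit)
        dh = (dz2 @ W2.T) * (1 - h ** 2)  # backpropagate through tanh (before updating W2)
        W2 -= lr * (h.T @ dz2)
        b2 -= lr * dz2.sum(axis=0)
        W1 -= lr * (X.T @ dh)
        b1 -= lr * dh.sum(axis=0)
    return W1, b1, W2, b2


def predict_proba(params, X):
    W1, b1, W2, b2 = params
    return sigmoid(np.tanh(X @ W1 + b1) @ W2 + b2).ravel()


# Synthetic cohort: two hypothetical standardized features, label 1 = high risk
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(int)
params = train_mlp(X, y)
proba = predict_proba(params, X)
accuracy = float(((proba >= 0.5) == y).mean())
```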

5.3 Study experiments

This section presents a study that evaluated the performance of Paige Prostate on prostate core biopsies. Sudhir Perincheri et al. tested the algorithm on whole-slide images from Yale Medicine that it had never seen before. Paige Prostate is a machine-learning algorithm that classifies a whole-slide image as “suspicious” if it detects any prostatic lesion and as “not suspicious” otherwise. It was developed at Memorial Sloan Kettering Cancer Center (MSKCC) in New York using data from the center’s digital slide collection.

The study investigated two possible use cases. The first is a pre-screening tool that identifies negative cores a pathologist does not need to evaluate manually. The second is a second-read tool that identifies cancer foci the pathologist missed. The analysis included 1876 prostate core biopsies from 118 consecutive patients. The reference diagnosis for each core was the diagnosis clinically recorded by a board-certified pathologist. The Paige Prostate algorithm was run on scanned, de-identified stained slides.
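The two use cases differ only in how the algorithm's label is combined with the pathologist's read. The minimal sketch below uses a hypothetical `Core` record and label strings chosen for illustration. It is not Paige Prostate's actual interface.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Core:
    core_id: str
    ai_label: str                         # "suspicious" or "not suspicious" (algorithm output)
    pathologist_dx: Optional[str] = None  # e.g. "benign" or "adenocarcinoma"; None if unread


def prescreen(cores: List[Core]) -> List[Core]:
    """Use case 1: only cores the algorithm calls suspicious are queued for manual review."""
    return [c for c in cores if c.ai_label == "suspicious"]


def second_read(cores: List[Core]) -> List[Core]:
    """Use case 2: flag cores the algorithm calls suspicious but the pathologist read as benign."""
    return [c for c in cores
            if c.ai_label == "suspicious" and c.pathologist_dx == "benign"]


cores = [
    Core("A", "suspicious", "adenocarcinoma"),
    Core("B", "not suspicious", "benign"),
    Core("C", "suspicious", "benign"),
]
review_queue = prescreen(cores)
flagged = second_read(cores)
```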

The algorithm classified a core biopsy as suspicious if it detected any abnormality other than high-grade prostatic intraepithelial neoplasia (HG-PIN).

It also flagged slides as out of distribution when the core-needle biopsy slide differed noticeably from the thumbnail. Digital images and/or glass slides were inspected to confirm that diagnostic lesional tissue was present at the scanned level. Two genitourinary pathologists, blinded to the final diagnosis and to the algorithm’s classification, manually reviewed the whole slides. The discrepant core biopsy images were mixed with 30 randomly chosen core biopsy images. A core that the algorithm classified as “suspicious” was considered concordant when at least one of the two reviewers observed atypia, and discrepant when neither did. After this blinded assessment, the same pathologists re-evaluated the images of any discrepant cores with the algorithm’s area of concern highlighted.

5.3.1 Results of the study experiment

Of the 118 patients represented by the 1876 core biopsies, 86 were found to have prostatic adenocarcinoma and 32 were cancer-free. Paige Prostate classified at least one core as suspicious in 84 of the 86 patients with adenocarcinoma. It classified no core as suspicious in 26 of the 32 patients without carcinoma or glandular atypia.

Among the cores labeled “not suspicious”, 46 were discrepant with the final diagnosis. Sixteen of these, with a final diagnosis of adenocarcinoma, were faulty scans that could not be interpreted on manual review. In a further 19 discrepant cores, the scanned image contained no diagnostic lesional tissue. Excluding the 16 faulty scans and counting the 19 cores without diagnostic tissue in the scanned image as concordant leaves 11 discrepant cores.

Of the 477 core biopsies classified as “suspicious” by Paige Prostate, 34 had a final diagnosis of benign prostatic tissue. In 16 of these, at least one of the reviewers identified atypical glands on manual review. The system flagged two cores as out of distribution because they lacked prostatic glandular tissue. The remaining cores were entirely benign, except for one core that showed granulomatous prostatitis.

For suspicious cores, Paige Prostate provides a tool that highlights regions of concern. After re-examining the suspicious discrepant cores with these Paige Prostate annotations, at least one of the two manual reviewers classified six more cores as atypical. To avoid bias, the discrepant cores were mixed with 30 randomly chosen core biopsy images, 22 of which were benign, before manual review. Apart from one core with adenocarcinoma, the manual readings agreed with the final diagnoses. On this data set, Paige Prostate showed a positive predictive value of 97.9%, a negative predictive value of 99.2%, a sensitivity of 97.7%, and a specificity of 99.3%.
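The four reported metrics all follow from a 2×2 confusion matrix of algorithm calls against the final diagnosis. The sketch below computes them; the counts in the example are hypothetical and are not the study's data.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Sensitivity, specificity, PPV and NPV from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # share of positive cores called suspicious
        "specificity": tn / (tn + fp),  # share of benign cores called not suspicious
        "ppv": tp / (tp + fp),          # share of suspicious calls that are truly positive
        "npv": tn / (tn + fn),          # share of not-suspicious calls that are truly benign
    }


# Hypothetical counts for illustration only
metrics = diagnostic_metrics(tp=90, fp=10, tn=80, fn=20)
```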

The study by Sudhir Perincheri et al. carefully examined the model’s performance on unseen data and identified areas where the algorithm could be improved. Their findings suggest that better detection of out-of-focus scans could reduce the false-negative rate. Conversely, disregarding images with a single focus smaller than 0.25 mm would reduce the algorithm’s false-positive rate. Paige Prostate has the potential to be a powerful tool with a wide range of use cases in clinical anatomic pathology (Perincheri and Levi 2021).
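Both suggested improvements can be expressed as post-processing rules around the classifier. Only the 0.25 mm cut-off comes from the study; the rest of this sketch rests on assumptions:

- the focus check uses the variance of a discrete Laplacian, a common generic focus measure that Paige Prostate does not necessarily use;
- the blur threshold is hypothetical;
- an upstream detector is assumed to supply the size, in millimetres, of each suspicious focus.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Focus measure: variance of the 4-neighbour discrete Laplacian (low values = blurry)."""
    g = gray.astype(float)
    lap = g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:] - 4 * g[1:-1, 1:-1]
    return float(lap.var())


def post_filter(gray, focus_sizes_mm, blur_threshold, min_single_focus_mm=0.25):
    """Return 'rescan', 'not suspicious' or 'suspicious' for one scanned core."""
    if laplacian_variance(gray) < blur_threshold:
        return "rescan"          # likely out of focus: a negative call is not trustworthy
    if not focus_sizes_mm:
        return "not suspicious"
    if len(focus_sizes_mm) == 1 and focus_sizes_mm[0] < min_single_focus_mm:
        return "not suspicious"  # disregard a single tiny focus to cut false positives
    return "suspicious"


sharp = (np.indices((32, 32)).sum(axis=0) % 2) * 255.0  # checkerboard: high-frequency detail
blurry = np.full((32, 32), 128.0)                         # flat image: no detail at all
```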

In a systematic review, Derek J. Van Booven et al. analyzed advances in AI-based artificial neural networks (ANNs) and their current role in prostate cancer diagnosis and management. The authors searched PubMed, Embase, and the Web of Science for publications on machine learning and active surveillance in the detection, staging, and management of prostate cancer. Decisions during the diagnosis and treatment of prostate cancer require interpreting evidence from several modalities. MRI-guided biopsies, genomic biomarkers, Gleason grading, and PSA levels are just a few of the tools used to diagnose and risk-stratify patients and then monitor them during follow-up. Artificial intelligence can make feasible a process that is extremely challenging and time-consuming for humans, allowing clinicians to recognize complex correlations and manage vast volumes of data. ANN-based tools could integrate seamlessly with some of the key instruments used in prostate cancer detection while reducing subjectivity. Such tools could make better use of limited resources and improve the overall efficiency and accuracy of prostate cancer diagnosis and treatment (Booven and Kuchakulla 2021).

The close relationship between histology and functional imaging parameters offers an exceptional opportunity for AI applications to improve detection, classification, and overall prognostication. More systematic assessment and characterization in pathological and radiologic interpretation are clinically necessary for prostate cancer. Continued progress in this area requires mature data sets with high-quality annotations (Harmon and Tuncer 2019).

Observations from the literature on prostate cancer are summarized in Table 5.

6 AI techniques for skin cancer detection

In most of the available literature, the Gray Level Co-occurrence Matrix¹ (GLCM) is the go-to technique for feature extraction and GoogLeNet for classification. Such models achieved an average accuracy of around 85%. Among the reviewed studies, Esteva et al. achieved a notable accuracy of 96% using GoogLeNet.
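GLCM feature extraction can be sketched in a few lines of NumPy. The quantization to 8 gray levels, the single horizontal offset, and the three Haralick-style statistics below are illustrative choices, not the settings of any cited study. scikit-image also provides `skimage.feature.graycomatrix` and `graycoprops` for the same purpose.

```python
import numpy as np


def glcm(gray: np.ndarray, levels: int = 8, dx: int = 1, dy: int = 0) -> np.ndarray:
    """Normalized, symmetric gray-level co-occurrence matrix for one offset (dy, dx >= 0)."""
    q = np.floor(gray.astype(float) / 256.0 * levels).astype(int)  # quantize 0..255
    q = np.clip(q, 0, levels - 1)
    h, w = q.shape
    ref = q[0:h - dy, 0:w - dx]  # reference pixels
    nbr = q[dy:h, dx:w]          # neighbours at offset (dy, dx)
    m = np.zeros((levels, levels))
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1)  # count co-occurring level pairs
    m = m + m.T                                  # make the matrix symmetric
    return m / m.sum()


def glcm_features(p: np.ndarray) -> dict:
    """Contrast, energy and homogeneity of a normalized GLCM."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float(((i - j) ** 2 * p).sum()),
        "energy": float((p ** 2).sum()),
        "homogeneity": float((p / (1.0 + np.abs(i - j))).sum()),
    }


flat = np.full((16, 16), 100)                          # uniform texture
checker = (np.indices((16, 16)).sum(axis=0) % 2) * 255  # maximally alternating texture
flat_features = glcm_features(glcm(flat))
checker_features = glcm_features(glcm(checker))
```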

Stanley et al. used fuzzy-based histogram analysis to differentiate benign skin lesions from melanomas in clinical images. The model was trained on 129 melanoma and 129 benign clinical images. The results show that it distinguishes the two classes with true positive rates between 89.17% and 93.30% and true negative rates between 84.33% and 86.04%.
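Histogram-based fuzzy classification can be illustrated in much-simplified form. Each quantized color bin receives a degree of membership in the "melanoma" class from training-set color histograms. A lesion is then scored by the average membership of its pixels. The bin count, the membership definition, the 0.5 decision threshold, and the synthetic images are assumptions for illustration; this is not Stanley et al.'s exact formulation.

```python
import numpy as np


def color_bins(rgb: np.ndarray, bins_per_channel: int = 4) -> np.ndarray:
    """Map each 8-bit RGB pixel to one joint color-bin index."""
    q = np.clip(rgb.astype(int) * bins_per_channel // 256, 0, bins_per_channel - 1)
    return (q[..., 0] * bins_per_channel + q[..., 1]) * bins_per_channel + q[..., 2]


def fit_membership(melanoma_imgs, benign_imgs, bins_per_channel: int = 4) -> np.ndarray:
    """Fuzzy membership of each color bin in the melanoma class, from normalized histograms."""
    n_bins = bins_per_channel ** 3

    def histogram(images):
        h = np.zeros(n_bins)
        for img in images:
            h += np.bincount(color_bins(img, bins_per_channel).ravel(), minlength=n_bins)
        return h / h.sum()

    h_mel, h_ben = histogram(melanoma_imgs), histogram(benign_imgs)
    total = h_mel + h_ben
    # Bins never seen in training get a neutral membership of 0.5
    return np.divide(h_mel, total, out=np.full(n_bins, 0.5), where=total > 0)


def lesion_score(rgb: np.ndarray, membership: np.ndarray, bins_per_channel: int = 4) -> float:
    """Average melanoma membership over all pixels of a lesion image."""
    return float(membership[color_bins(rgb, bins_per_channel)].mean())


# Synthetic training data: dark brown "melanoma" vs light "benign" lesion colors
dark = np.full((8, 8, 3), [60, 30, 20], dtype=np.uint8)
light = np.full((8, 8, 3), [200, 170, 150], dtype=np.uint8)
membership = fit_membership([dark], [light])
is_melanoma = lesion_score(dark, membership) > 0.5  # hypothetical decision threshold
```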

Al Nazi and Abir proposed a model that uses augmentation techniques to compensate for the small number of images in the available datasets. The ISIC 2018 and PH² datasets were used for this purpose. The model achieved 92% accuracy despite the limited number of images.
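The sketch below shows label-preserving augmentation, assuming square 8-bit RGB lesion images held as NumPy arrays. The particular transforms (90-degree rotations, flips, mild brightness scaling) and the number of copies are illustrative assumptions. They are not necessarily the ones Al Nazi and Abir used.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return one randomly transformed copy: 90-degree rotation, optional flips,
    and brightness jitter."""
    out = np.rot90(image, k=int(rng.integers(0, 4)), axes=(0, 1))
    if rng.random() < 0.5:
        out = np.flip(out, axis=1)  # horizontal flip
    if rng.random() < 0.5:
        out = np.flip(out, axis=0)  # vertical flip
    scale = rng.uniform(0.9, 1.1)   # mild brightness change
    return np.clip(out.astype(float) * scale, 0, 255).astype(np.uint8)


def expand_dataset(images, labels, copies_per_image, seed=0):
    """Keep the originals and add `copies_per_image` augmented copies with the same label."""
    rng = np.random.default_rng(seed)
    aug_x, aug_y = list(images), list(labels)
    for img, lab in zip(images, labels):
        for _ in range(copies_per_image):
            aug_x.append(augment(img, rng))
            aug_y.append(lab)
    return aug_x, aug_y


imgs = [np.random.default_rng(i).integers(0, 256, (16, 16, 3), dtype=np.uint8)
        for i in range(3)]
X_aug, y_aug = expand_dataset(imgs, [0, 1, 1], copies_per_image=4)
```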

Han et al. proposed a region-based CNN model for skin cancer prediction, trained on 1,106,886 images. Results were reported for two configurations. One had 80% sensitivity for malignant nodules and an F1 score of 72.1%; the other had 90% sensitivity and an F1 score of 76.1%. The model was reported to be more accurate than dermatologists (Patel 2022).
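Reporting results "at 80% sensitivity" or "at 90% sensitivity" means choosing a decision threshold on the model's output score, then measuring F1 at that threshold. The sketch below assumes the model emits one malignancy score per lesion. The scores are made up, and this is not Han et al.'s evaluation code.

```python
import numpy as np


def threshold_for_sensitivity(scores, labels, target: float) -> float:
    """Highest score threshold at which at least `target` of the positives are detected."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    if not 0 < target <= 1:
        raise ValueError("target must be in (0, 1]")
    pos = np.sort(scores[labels])[::-1]          # positive-class scores, descending
    k = int(np.ceil(target * len(pos) - 1e-9))   # positives that must be caught
    return float(pos[k - 1])                     # predict positive if score >= threshold


def f1_at(scores, labels, threshold: float) -> float:
    """F1 score when every sample with score >= threshold is called malignant."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pred = scores >= threshold
    tp = int(np.sum(pred & labels))
    fp = int(np.sum(pred & ~labels))
    fn = int(np.sum(~pred & labels))
    return 2 * tp / (2 * tp + fp + fn)


# Made-up scores: 10 malignant lesions followed by 5 benign ones
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05,
          0.65, 0.35, 0.15, 0.55, 0.25]
labels = [1] * 10 + [0] * 5
thr80 = threshold_for_sensitivity(scores, labels, 0.80)
thr90 = threshold_for_sensitivity(scores, labels, 0.90)
```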

Computer-aided diagnosis (CAD) systems can readily extract features such as color variation, asymmetry, and texture. Several methods have been developed to improve skin cancer diagnosis, such as the 7-point checklist, the ABCD rule, and the Menzies method. Computer-aided melanoma diagnosis involves four major steps:

- acquisition of the skin lesion image;
- segmentation of the lesion from the surrounding skin, to detect regions of interest;
- extraction of geometric features, which contributes to accuracy;
- feature classification.

Feature extraction in CAD relies mainly on the ABCD rule, i.e., asymmetry, border irregularity, color, and diameter (Kanimozhi and Murthi 2016). Such systems have assisted physicians in handling suspicious cases. Less experienced doctors can also use them as an additional tool to obtain a preliminary estimate and improve patient follow-up. These techniques are often divided into two categories: the first extracts attributes from skin images, and the second uses image properties such as texture and color to recognize patterns.
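Given a segmented lesion, simple ABCD-style descriptors can be computed directly from the binary mask and the color image. The measures below are common illustrative choices assumed for this sketch, not the exact definitions used by Kanimozhi and Murthi (2016):

- asymmetry as mismatch with the lesion's mirror images;
- border irregularity as a compactness index;
- color variation as per-channel standard deviation;
- diameter as the maximum boundary-point distance, in pixels.

```python
import numpy as np


def abcd_features(mask: np.ndarray, rgb: np.ndarray) -> dict:
    """ABCD-style descriptors of one segmented lesion (mask: HxW bool, rgb: HxWx3)."""
    m = np.asarray(mask, dtype=bool)
    area = int(m.sum())
    if area == 0:
        raise ValueError("empty lesion mask")

    # A: mismatch between the lesion and its mirror images about the bounding-box axes
    ys, xs = np.nonzero(m)
    crop = m[ys.min():ys.max() + 1, xs.min():xs.max() + 1]
    asym_lr = np.logical_xor(crop, crop[:, ::-1]).sum() / area
    asym_ud = np.logical_xor(crop, crop[::-1, :]).sum() / area

    # B: compactness P^2 / (4*pi*A); larger values mean a more irregular border
    p = np.pad(m, 1)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    boundary = m & ~interior
    compactness = boundary.sum() ** 2 / (4 * np.pi * area)

    # C: color variation as the mean per-channel standard deviation inside the lesion
    color_std = float(rgb[m].astype(float).std(axis=0).mean())

    # D: largest distance between two boundary pixels, in pixels
    pts = np.argwhere(boundary).astype(float)
    diffs = pts[:, None, :] - pts[None, :, :]
    diameter = float(np.sqrt((diffs ** 2).sum(axis=-1)).max())

    return {
        "asymmetry": float((asym_lr + asym_ud) / 2),
        "border_compactness": float(compactness),
        "color_std": color_std,
        "diameter_px": diameter,
    }


square = np.zeros((20, 20), dtype=bool)
square[5:15, 5:15] = True
skin = np.full((20, 20, 3), 120, dtype=np.uint8)
features = abcd_features(square, skin)
```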

6.1 AI-based melanoma detection

One technique used for melanoma detection is computerized detection with artificial neural networks, which has been reported to be more helpful and powerful than dermoscopy alone. MATLAB has been used extensively for image processing and for developing diagnostic algorithms. The methodology for detecting melanoma using image processing is outlined below:

1. Image pre-processing: The lesion image may be captured with any lens and under any lighting conditions, so it must first be pre-processed.

(a) Image scaling: The image is enlarged or reduced so that the lesion is clearly visible.

(b) RGB to grayscale: The color image is then converted into a grayscale image using MATLAB's rgb2gray function.

(c)