Abstract

The amount of data generated by the rapidly developing Smart Internet of Things is increasing exponentially, and traditional machine learning can no longer meet the requirements for training complex models on such large volumes of data. Federated learning, a new paradigm for training statistical models in distributed edge networks, alleviates the problems of integrating and training on massive, heterogeneous data while protecting private data. Edge computing processes data at the edge layer, close to the data sources, to ensure low-latency processing; it provides high-bandwidth communication and a stable network environment, and relieves the pressure of processing massive data on a single node in the cloud center. Combining edge computing with federated learning can further optimize computing, communication, and data security in the edge Internet of Things. This review investigates the development status of federated learning and expounds on its basic principles. Then, in view of the security attacks and privacy leakage problems of federated learning in the edge Internet of Things, it surveys relevant work on cryptographic technologies (such as secure multi-party computation, homomorphic encryption, and secret sharing), perturbation schemes (such as differential privacy), adversarial training, and other privacy and security protection measures. Finally, challenges and future research directions for the integration of edge computing and federated learning are discussed.


1 Introduction

As a new Internet technology, the Smart Internet of Things (SIoT) has ushered in a new wave of development alongside the rise of artificial intelligence technologies, which can greatly advance intelligence in various fields (Shen et al. 2023). IoT devices have increasingly advanced sensing, storage, computing, and processing capabilities. They are widely deployed for various sensing tasks, such as wearable medical monitoring devices that assist in diagnosis (Jan et al. 2023), smart agricultural monitors (Garg and Alam 2023), and smart industrial control (Adhikari et al. 2023). Successful practice in these fields rests on big data, large-scale data analysis, and the training and application of machine learning (ML) models. However, traditional centralized ML processes must now cope with ever-growing, multi-source, heterogeneous, and complex distributed IoT data, which cannot be managed by relying only on the limited functional services provided by cloud service centers (Ge et al. 2023; Shuvo et al. 2022). The cloud-centric computing model uploads data collected by edge-IoT devices to a central cloud server for centralized storage, processing, and computing. Nevertheless, this centralized model cannot withstand the explosive growth in data volume. Moreover, uploading privacy-related user data to the cloud center raises security issues and increases the risk of privacy leakage. Edge computing (EC) is a new distributed computing paradigm (Shi et al. 2016) that stores, processes, and applies data collected by edge-IoT devices on the edge side, close to the data source. The EC approach effectively reduces the data processing pressure on the cloud center and relieves the transmission bottleneck, and keeping the training data on edge device nodes avoids the risk of private data leakage to a certain extent (Li et al. 2022a; Ranaweera et al. 2021). EC approaches combining ML (Hua et al. 2023; Ning et al. 2023) and deep learning (DL) (Ahmad et al. 2023; Zhang et al. 2023a; Ghosh and Grolinger 2020) have been applied in many fields.

Federated learning (FL) is a paradigm for training statistical learning models on distributed edge networks that was proposed by Google in 2016 (McMahan et al. 2017). It is a mainstream solution to the problems of huge communication overheads, data privacy and security, and heterogeneous data fusion (Fan et al. 2023; Xu et al. 2023). As a distributed ML (DML) method, FL trains a global model without sharing local source data. In FL, each local device only needs to encrypt its local model parameter updates and upload them to a central aggregation server. The server then uses the federated averaging algorithm to obtain a global model parameter update. Next, each local device downloads and decrypts the global model parameters and uses them for its local ML model training. This process is repeated until the maximum number of iterations or the required model accuracy is reached. FL has been successfully applied in medical (Fan et al. 2022; Li et al. 2023a; Myrzashova et al. 2023; Sharma and Guleria 2023), industrial (Zheng et al. 2023; Guo et al. 2023), agricultural (Durrant et al. 2022; Friha et al. 2021) and other fields. Because it enables models to be trained on edge devices, EC is an appropriate environment for FL. Therefore, problems such as high communication costs, privacy and data security needs, and heterogeneous data isolation in edge-Internet of Things (edge-IoT) networks can be alleviated by using FL in the EC environment, and FL mitigates the privacy and security problem of sensitive data in ML under EC. However, uploading and downloading model update parameters, training iterations, and other processes still expose the FL environment to a series of risks. For example, malicious hackers and dishonest participants may use the model parameters to infer the original data (Phong et al. 2017). Data security and privacy-preserving schemes for FL in an EC environment face the following threats and challenges.

-

Data security threats. In an EC environment, communications between devices, and between devices and aggregation servers, may be subject to various network attacks, and edge devices are usually distributed in untrusted environments where they may suffer physical attacks or malicious tampering. FL systems in an EC environment send data to the edge layer close to the data source for processing, which still carries data security risks. These systems may also be subject to various forms of attack, such as poisoning attacks: a malicious client may send incorrect model updates to the parameter aggregation server to probe the datasets of the other participants or to degrade model accuracy. In the edge-IoT network environment, participants can join the FL system at any time, so the system must verify their identity and credibility before granting access. Building a trusted FL system in an untrusted environment remains a key issue in the design of FL security systems.

-

Data privacy-preserving issues. Edge computing moves services closer to end users, and FL transfers models from edge service nodes to users' local devices for training, mitigating the risk of privacy leakage caused by user data leaving the local area. However, information such as model training parameters or gradients communicated between local devices and edge service nodes may still leak information about the local raw dataset (Ge et al. 2023). Meanwhile, because service providers are usually honest but curious and have the most private information at their disposal, even partial model updates may lead to serious privacy leakage. Moreover, constantly evolving attack types impose new requirements on research into privacy-preserving FL techniques under edge computing.

-

Communication and computation overhead. The rapid popularization of SIoT applications has led to explosive growth in the amount of data at edge nodes. The high computational cost of training on massive data and the high communication cost of exchanging large-scale model parameters have seriously hindered the widespread application of FL techniques. Meanwhile, the low computational performance and limited communication bandwidth of edge devices, together with their real-time and high quality-of-service requirements, also pose challenges to the research of novel FL techniques in EC environments.

-

Device heterogeneity. In EC, FL enables large-scale device collaboration for training AI models in a privacy-preserving manner. However, the number of edge devices involved in FL is huge, and the performance and computational power of their hardware may vary greatly. Meanwhile, edge devices are widely geographically distributed, which may lead to heterogeneity problems such as network latency, instability, and bandwidth limitations. Finally, each edge device participating in FL usually holds a non-independent and identically distributed (non-IID) dataset, and such heterogeneous data sources have a large negative impact on the accuracy of FL models. Device heterogeneity in IoT EC environments therefore poses significant challenges for FL implementations.

Several current research works are devoted to the innovation and application of FL theory in EC. Highly mobile edge terminal devices exposed to the open edge network environment are prone to various malicious attacks, which not only affect the performance of the FL model but also bring serious data security and privacy leakage problems to the FL process. To address the data security problem of the FL process under EC, Huang et al. (2023a) proposed a reliable FL mechanism for mobile EC: they designed reputation-based endpoint selection algorithms to construct the reputation model and conceal the selected endpoints, and maintained model performance through elite campaigning to reduce the impact of poisoning attacks on the model. Ni et al. (2023) proposed a new Byzantine-robust FL framework that identifies and discards malicious gradients through a dual filtering mechanism and uses an adaptive weight adjustment scheme to dynamically reduce the aggregation weights of potentially malicious gradients, realizing secure and trustworthy FL in IoT. Li et al. (2023b) noted that FL is vulnerable to security attacks in distributed adversarial environments and that non-IID data further weakens the robustness of existing FL methods, and proposed the Mini-FL scheme. This scheme performs unsupervised learning on the received gradients to define a grouping policy; the aggregation server groups the received gradients according to this policy and calculates the weighted average of the gradients in each group to update the global model. Existing FL data security schemes mainly involve endpoint selection, secure communication between hardware devices, secure model aggregation, and security detection, and development and innovation in these technologies are ongoing.
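To make the idea of reputation-based endpoint selection concrete, the following is a minimal sketch under stated assumptions; it is not the mechanism of Huang et al. (2023a). The scoring rule is hypothetical: each client's reputation is blended with how well its latest update agrees (by cosine similarity) with the coordinate-wise median of all updates, and the k most reputable clients are selected for the next round. The names `update_reputation`, `select_clients`, `alpha`, and `prior` are illustrative.

```python
import math

# Hypothetical reputation-based client selection; the scoring rule is an
# illustrative assumption, not the mechanism of any cited paper.

def cosine(a, b):
    """Cosine similarity of two update vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def coordinate_median(vectors):
    """Coordinate-wise median, used here as a robust reference update."""
    ref = []
    for col in zip(*vectors):
        s = sorted(col)
        mid = len(s) // 2
        ref.append(s[mid] if len(s) % 2 else (s[mid - 1] + s[mid]) / 2)
    return ref

def update_reputation(reputation, updates, alpha=0.5, prior=0.5):
    """Blend each client's previous reputation (or `prior` for new clients) with
    how well its update agrees with the reference; negative agreement counts as 0."""
    ref = coordinate_median(list(updates.values()))
    new = dict(reputation)
    for cid, u in updates.items():
        score = max(0.0, cosine(u, ref))
        new[cid] = alpha * reputation.get(cid, prior) + (1 - alpha) * score
    return new

def select_clients(reputation, k):
    """Choose the k most reputable clients for the next training round."""
    return sorted(reputation, key=reputation.get, reverse=True)[:k]

# Toy round: client "c" submits an update pointing in the opposite direction.
updates = {"a": [1.0, 1.0], "b": [0.9, 1.1], "c": [-5.0, -5.0]}
reputation = update_reputation({}, updates)
chosen = select_clients(reputation, 2)
```

Here client "c" ends the round with reputation 0.25 and is excluded, while "a" and "b" are kept. Repeating the update over many rounds lets a client's history, not a single update, decide whether it is selected.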

To address the privacy protection of the FL process under EC, Zhu et al. (2023) proposed an FL model enhanced with reinforcement learning and designed a partially encrypted secure multiparty broadcast computation algorithm that combines the advantages of end-to-end homomorphic encryption and secure multiparty computation, enabling edge devices and roadside units to train the learning model collaboratively without exposing the original data. Li et al. (2023c) proposed a ubiquitous intelligent FL privacy protection scheme that uses matrix masks to secure transmission between embedded devices and edge servers, while using differential privacy mechanisms to train residual models on edge servers, providing privacy protection for data under EC. Liu et al. (2023a) noted that most FL privacy protection protocols provide only single-round privacy guarantees and proposed a long-term privacy-preserving aggregation protocol, which uses a batch partition deletion update policy and integrates with advanced privacy-preserving aggregation protocols to satisfy single- or multi-round privacy guarantees. Privacy protection is an important issue in combining FL and EC, and existing technical solutions mainly include encryption and differential privacy techniques. Because the FL process in an EC environment involves information exchange and model training among multiple devices or edge nodes, it requires comprehensive consideration of algorithms, protocols, policies, and other factors.
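The masking idea behind several of these schemes can be illustrated with a generic pairwise additive-masking sketch. It is not the matrix-mask design of Li et al. (2023c). Assumptions: updates are already encoded as integers, arithmetic is modulo an assumed `MODULUS`, one seeded random generator stands in for pairwise key agreement, and client dropouts are not handled. Each pair of clients shares a random vector that one adds and the other subtracts, so the server learns only the sum.

```python
import random

MODULUS = 2 ** 32  # assumed ring size; real-valued updates would be fixed-point encoded first

def pairwise_masks(client_ids, dim, seed=0):
    """Give every pair (i, j), i < j, a shared random vector s_ij.
    Client i adds s_ij and client j subtracts it, so masks cancel in the sum.
    Assumption: one seeded RNG stands in for pairwise key agreement."""
    rng = random.Random(seed)
    masks = {c: [0] * dim for c in client_ids}
    ids = sorted(client_ids)
    for a in range(len(ids)):
        for b in range(a + 1, len(ids)):
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            i, j = ids[a], ids[b]
            masks[i] = [(m + s) % MODULUS for m, s in zip(masks[i], shared)]
            masks[j] = [(m - s) % MODULUS for m, s in zip(masks[j], shared)]
    return masks

def mask_update(update, mask):
    """What a client actually sends: its integer-encoded update plus its mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]

def aggregate(masked_updates):
    """The server sums the masked vectors; the pairwise masks cancel out."""
    dim = len(masked_updates[0])
    return [sum(v[k] for v in masked_updates) % MODULUS for k in range(dim)]

updates = {"A": [1, 2, 3], "B": [4, 5, 6], "C": [7, 8, 9]}
masks = pairwise_masks(list(updates), dim=3)
sent = [mask_update(updates[c], masks[c]) for c in updates]
total = aggregate(sent)  # equals the plain sum [12, 15, 18]
```

Each individual vector in `sent` looks uniformly random to the server, yet the aggregate is exact. Practical protocols add secret sharing of the mask seeds so the sum can still be recovered when some clients drop out.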

Unlike other published works on FL within EC, this paper explicitly delineates the landscape of security issues and privacy threats intrinsic to FL within edge networks. It also categorizes and explains the adverse consequences of diverse privacy and security attacks and analyses their impacts on the FL process. Compared with the literature that explores data security and privacy protection in FL under EC (Huang et al. 2023a; Ni et al. 2023; Li et al. 2023b, 2023c; Zhu et al. 2023; Liu et al. 2023a), this review systematically sorts out current research results on privacy-preserving FL in edge networks and focuses on two problems, multi-party collusion to steal private data and malicious adversaries disrupting the FL process, together with the corresponding security defense schemes.

This article aims to provide a comprehensive discussion of the development of safe and reliable FL systems in an EC environment. First, we introduce concepts related to EC and FL. We then summarize the data security risks and privacy leakage threats in the current edge-IoT environment. Next, we review the research progress on FL's existing privacy data security protection technologies in EC. Finally, we analyse future research hotspots in FL privacy security protection in edge-IoT, and provide several research suggestions for establishing a secure and trusted FL system under edge-IoT.

2 Fundamentals of federated learning

This section introduces the concepts and working principles of EC and FL. We also summarize the data security and privacy leakage attacks faced by FL systems in current edge-IoT environments.

2.1 Related technologies

2.1.1 Edge computing

At present, ML training data mainly come from edge-IoT devices, which produce not only large amounts of data but also highly heterogeneous data. With increasing attention being paid to the protection of private data, the traditional architecture of centralized processing in a cloud center can no longer meet current technological needs. As a new type of distributed computing technology, EC centers on loading raw data into edge network devices (such as edge servers) for storage, processing, and computing. In the edge-IoT, breakthroughs in EC technology mean that many ML models can be trained locally without delivering data to a cloud center. EC processes and analyses data in real time near the data source, offering high data processing efficiency, strong real-time performance, and low network latency. Because this mode is closer to the user and meets requirements at the edge node, it effectively improves computing efficiency, reduces channel pressure, and protects the security of private data.

2.1.2 Deep learning

DL is currently used in a wide range of applications, including computer vision and natural language processing. Terminal devices such as smartphones and IoT sensors generate data that are analysed in real time using DL and used to train DL models. However, DL inference and training require significant computing resources to execute quickly. EC's fine grid of computing nodes placed close to terminal devices is a viable way to meet the high computing and low-latency requirements of DL on edge devices, and it also offers privacy protection, bandwidth efficiency, and scalability. However, the sensitive information of data owners in the EC environment risks being leaked when the data leave the local device to be uploaded to the edge server node. Service providers are usually honest but may be curious. FL was initially proposed to provide a collaborative data-training solution. It provides considerable privacy enhancements by coordinating multiple client devices to train a shared ML model without directly exposing the underlying data.

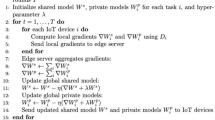

2.1.3 Definition of FL

FL problems involve learning a single global statistical model from data stored on a large number of remote devices. An example of a traditional FL architecture is shown in Fig. 1. The goal of FL is to learn the model while the data generated by each device are stored and processed locally, with the model parameters periodically communicated to the cloud parameter server. In other words, the goal is to minimize the following objective function, i.e., the weighted average training loss over all clients:

\[\min_{w} F(w) = \sum_{k=1}^{m} p_k F_k(w),\]

where \(m\) is the total number of devices participating in training, \({p}_{k}\) specifies the relative weight of influence attributed to each individual device (\(p_k \ge 0\), \(\sum_{k=1}^{m} p_k = 1\)), and \({F}_{k}(w)\) is the local objective function of the \(k\)th device. \({F}_{k}(w)\) is usually defined as the empirical risk on the local data:

\[F_k(w) = \frac{1}{n_k} \sum_{i=1}^{n_k} f_i(w, x_i, y_i),\]

where \({n}_{k}\) is the data volume of the \(k\)th device and \({f}_{i}(w,{x}_{i},{y}_{i})\) is the loss of the model with parameter \(w\) on instance \(({x}_{i},{y}_{i})\) of the \(k\)th device's local dataset. Optimization in FL therefore proceeds by minimizing these local loss functions, whose weighted sum is the global objective \(F(w)\).
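The weighted objective and the federated averaging loop described in the Introduction can be sketched in a few lines. This is a minimal illustration under assumptions of our own: a scalar linear model \(y = wx\) with squared loss, full client participation, the common choice \(p_k = n_k / n\), plain local gradient descent, and no encryption of updates. The function names and hyperparameters are hypothetical.

```python
def local_loss(w, data):
    """F_k(w): mean squared error of the scalar model y = w * x on one device's data."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def local_grad(w, data):
    """Derivative of F_k(w) with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)

def global_loss(w, devices):
    """F(w) = sum_k p_k F_k(w), assuming p_k = n_k / n."""
    n = sum(len(d) for d in devices)
    return sum(len(d) / n * local_loss(w, d) for d in devices)

def fedavg(devices, rounds=50, local_steps=5, lr=0.05, w=0.0):
    """Each round, every device starts from the global w, runs a few local
    gradient steps on its own data, and the server averages the resulting
    models with weights p_k = n_k / n. Raw data never leave the devices."""
    n = sum(len(d) for d in devices)
    for _round in range(rounds):
        weighted = []
        for data in devices:
            w_k = w
            for _step in range(local_steps):
                w_k -= lr * local_grad(w_k, data)
            weighted.append((len(data) / n, w_k))
        w = sum(p * w_k for p, w_k in weighted)
    return w

devices = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]  # toy data; both devices follow y = 2x
w_global = fedavg(devices)  # converges to w = 2.0, where F(w) = 0
```

With \(p_k = n_k/n\), the first device (two samples) contributes weight 2/3 and the second 1/3, so \(F(0) = \tfrac{2}{3}\cdot 10 + \tfrac{1}{3}\cdot 36 = 56/3\).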

The FL architecture can also be designed as a peer-to-peer network, as shown in Fig. 2. This architecture eliminates the single point of failure, further improving system security. It is easy to scale but may consume more computing resources for encrypting and decrypting the messages exchanged between peers.
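Without a central server, peers can combine their models by gossip averaging. The sketch below is one simple instance, assuming synchronous rounds, a fixed ring topology, and equal weights for a peer and its neighbors; message encryption is omitted. The topology and values are illustrative.

```python
def gossip_round(models, neighbors):
    """One synchronous gossip step: every peer replaces its parameter vector
    with the average of its own and its neighbors' vectors. No central server."""
    new_models = {}
    for peer, params in models.items():
        group = [params] + [models[q] for q in neighbors[peer]]
        new_models[peer] = [sum(vals) / len(group) for vals in zip(*group)]
    return new_models

# Four peers on a ring, each starting from a different (one-parameter) local model.
models = {0: [0.0], 1: [4.0], 2: [8.0], 3: [12.0]}
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
for _ in range(50):
    models = gossip_round(models, ring)
# Every peer now holds (approximately) the network-wide average, 6.0.
```

On this regular ring the equal-weight averaging preserves the global mean at every step. On irregular graphs, plain neighbor averaging can bias the result, and weighting schemes such as Metropolis-Hastings weights are commonly used instead.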

2.2 FL data security and privacy leakage

In edge-IoT, a large number of edge-IoT devices are often connected to the Internet, which undoubtedly significantly increases the security risks. Currently, most of the various security attack methods are conducted through the Internet, and the success rate is generally high. In this environment, there are a variety of data security and privacy leakage threats in FL systems. This paper mainly discusses the data security attacks and privacy leakage attacks involved in FL in edge-IoT.

2.2.1 FL data security attacks

FL under EC utilizes the computational power of edge devices to train models collaboratively. However, insecure network communication environments and design flaws in traditional FL structures expose the FL process to many data security issues. At the same time, current FL security techniques struggle to defend against constantly evolving and sophisticated security attacks. Malicious adversaries often use vulnerable edge-IoT devices to intrude into internal targets, disrupting global model convergence and degrading model performance, thus threatening the entire FL process. The more common data security attacks are described and categorized in detail below.

Poisoning attack: In the FL system, a poisoning attack (Bhagoji et al. 2019; Chen et al. 2023) uses poisoned data or updates to disrupt model training and reduce the accuracy of the federated model. Poisoning attacks can be divided into model poisoning and data poisoning. Model poisoning directly manipulates the local models trained by edge nodes, whereas data poisoning disrupts model training by injecting poisoned data into the local dataset of an edge device. Both methods produce malicious updates in the model training of FL systems, and such attackers are difficult to detect (Fang et al. 2020; Guo et al. 2022). In the edge-IoT environment, the number of networked devices participating in the FL system is large and their trustworthiness is unknown, posing a potential threat to the security of the FL system. Therefore, defensive measures are needed to protect FL systems from poisoning (Rodríguez-Barroso et al. 2022a).
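The two poisoning families can be illustrated with deliberately simple examples, which may help when building or testing defenses. Assumptions: label flipping stands in for data poisoning, and update scaling (amplifying a client's deviation from the global model) stands in for model poisoning; the function names and parameters are hypothetical.

```python
import random

def flip_labels(dataset, source, target, fraction, seed=0):
    """Data poisoning by label flipping: relabel a fraction of the `source`-class
    samples in a local dataset as `target`. Features are left untouched, so the
    poisoned set looks normal apart from its labels. Returns a new list."""
    rng = random.Random(seed)
    poisoned = []
    for x, y in dataset:
        if y == source and rng.random() < fraction:
            y = target
        poisoned.append((x, y))
    return poisoned

def boost_update(local_w, global_w, factor):
    """Model poisoning by scaling: amplify the malicious client's deviation from
    the global model so that it survives averaging with many benign updates."""
    return [g + factor * (l - g) for l, g in zip(local_w, global_w)]

data = [([0.1], 0), ([0.2], 1), ([0.3], 1)]
poisoned = flip_labels(data, source=1, target=7, fraction=1.0)
boosted = boost_update([1.0, 0.5], [0.0, 0.0], factor=10.0)
```

Because label flipping leaves feature statistics unchanged and a scaled update can be tuned to stay within plausible norms, simple inspection of client data or update size often fails to reveal such attackers.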

Sybil attacks: In FL-distributed networks, attackers can use a single node to forge multiple identities and use these forged identities to control or influence other normal nodes, reducing network robustness (Douceur 2002; Singh et al. 2006). In edge intelligent networks, distributed collaborative intelligent applications need to exchange large amounts of data and information to keep different programs running efficiently, and this distributed environment gives attackers the opportunity to create multiple identities to interfere with normal system operation or to steal services for profit (Hammi et al. 2022). Because traditional FL allows distributed clients to join and leave the system freely, attackers can join with multiple colluding aliases to execute attacks. To counter this, some researchers have introduced a trusted third party into centralized FL systems to detect false node identities, while others use blockchain technology to implement decentralized and reliable FL in untrustworthy networks, exploiting the structural properties of the blockchain and cryptography to defend against Sybil attacks (Xiao et al. 2022; Fang et al. 2022).

Backdoor attack: In a backdoor attack, an attacker intentionally inserts malicious information, a "backdoor", into a system so that it triggers malicious behavior under specific conditions. A backdoored system may perform well under normal conditions but carry out illegitimate tasks when the trigger conditions are met. Because FL involves multiple participants, an attacker can try to insert a backdoor into the local models of some trusted participants and then disrupt the FL process by tampering with gradient updates and sending malicious update parameters. Meanwhile, over multiple FL iterations, model parameter aggregation may dilute the embedded backdoor until it gradually fails. Therefore, malicious actors often increase the persistence of backdoor attacks in FL by slowing down the learning rate during training (Nguyen et al. 2024; Gong et al. 2022; Yang et al. 2023a).
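A common way to plant such a backdoor in a client's local data is to stamp a fixed trigger pattern onto some inputs and relabel them as the attacker's target class, while keeping the clean samples so that normal accuracy stays high. The sketch below assumes grayscale images stored as lists of rows and a square corner patch as the trigger; the names, patch size, and poisoning rate are hypothetical.

```python
def add_trigger(image, target_label, patch_value=1.0, size=2):
    """Stamp a small square trigger in the bottom-right corner of a 2-D image
    (list of rows) and relabel it as the attacker's target class.
    The input image is copied, not modified."""
    stamped = [row[:] for row in image]
    h, w = len(stamped), len(stamped[0])
    for r in range(h - size, h):
        for c in range(w - size, w):
            stamped[r][c] = patch_value
    return stamped, target_label

def poison_with_backdoor(dataset, target_label, rate):
    """Append triggered copies of the first `rate` fraction of samples; clean
    samples are kept so that main-task accuracy stays high."""
    n_poison = int(len(dataset) * rate)
    triggered = [add_trigger(img, target_label) for img, _ in dataset[:n_poison]]
    return dataset + triggered

img = [[0.0] * 4 for _ in range(4)]
stamped, label = add_trigger(img, target_label=9)
local_data = poison_with_backdoor([(img, 0)] * 4, target_label=9, rate=0.5)
```

A model trained on `local_data` learns to associate the corner patch with class 9 while behaving normally on clean inputs, which is why backdoored updates are hard to spot from accuracy alone.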

Byzantine attack: The Byzantine attack is the most typical attack in FL. The attacker tampers with the model update parameters submitted by trusted nodes so that the aggregated model deviates far from the direction of convergence, decreasing the accuracy of the FL model and causing serious deviations in its predictions. This type of attack is common and highly effective, and FL security systems against Byzantine attacks are continually being updated accordingly. Secure robust aggregation is currently recognized as an effective defense against Byzantine attacks (Li et al. 2023d; Miao et al. 2022; Wan et al. 2022).
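The value of robust aggregation can be shown with two widely used rules, the coordinate-wise median and the coordinate-wise trimmed mean, compared against plain averaging. This is a generic sketch, not the specific schemes of the works cited above; the update values are illustrative, with one Byzantine client among four.

```python
def fed_mean(updates):
    """Plain coordinate-wise mean (FedAvg with equal weights)."""
    return [sum(col) / len(col) for col in zip(*updates)]

def coordinate_median(updates):
    """Coordinate-wise median: extreme values in any coordinate are ignored."""
    result = []
    for col in zip(*updates):
        s = sorted(col)
        mid = len(s) // 2
        result.append(s[mid] if len(s) % 2 else (s[mid - 1] + s[mid]) / 2)
    return result

def trimmed_mean(updates, trim):
    """Drop the `trim` largest and `trim` smallest values per coordinate, then average."""
    result = []
    for col in zip(*updates):
        kept = sorted(col)[trim:len(col) - trim]
        result.append(sum(kept) / len(kept))
    return result

honest = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1]]
byzantine = [[100.0, -100.0]]  # one malicious client sends an extreme update
updates = honest + byzantine
```

Here `fed_mean(updates)` is dragged to about `[25.75, -24.25]`, whereas both `coordinate_median(updates)` and `trimmed_mean(updates, 1)` stay at about `[1.05, 0.95]`, close to the honest updates. These rules tolerate only a bounded fraction of Byzantine clients, which is one reason Sybil identities are dangerous.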

Free-riding attack: In FL, a free-rider generates false model update parameters and reports them to the parameter server. The free-rider then uses the global model parameters to update its local model without contributing its own local data to the global model aggregation. Free-riding attacks reduce the amount of data involved in global model training, and the spurious parameter updates can also degrade global model accuracy (Lin et al. 2019; Fraboni et al. 2021).
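A simple free-riding strategy, and an equally simple (and easily evaded) check, can be sketched as follows. Assumptions: the free-rider returns the global model plus small Gaussian noise, and the server flags clients whose update barely moves away from the global model. Both the attack and the threshold `min_norm` are hypothetical illustrations; disguised free-riders can tune their noise to pass such a test.

```python
import random

def free_rider_update(global_w, noise_std=0.01, seed=0):
    """A free-rider trains nothing: it returns the global model plus small
    Gaussian noise so the update is not an exact copy."""
    rng = random.Random(seed)
    return [g + rng.gauss(0.0, noise_std) for g in global_w]

def flag_tiny_updates(global_w, updates, min_norm):
    """Naive heuristic: flag clients whose update moves less than `min_norm`
    (Euclidean distance) away from the global model."""
    flagged = []
    for cid, w in updates.items():
        delta = sum((a - b) ** 2 for a, b in zip(w, global_w)) ** 0.5
        if delta < min_norm:
            flagged.append(cid)
    return flagged

global_w = [0.0] * 10
honest = [0.5] * 10  # a client that actually trained moves the model noticeably
rider = free_rider_update(global_w)
flagged = flag_tiny_updates(global_w, {"honest": honest, "rider": rider}, min_norm=0.1)
```

In this toy round only `"rider"` is flagged. Update magnitude alone is a weak signal, though, since honest clients with little data can also produce small updates.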

Adversarial attack: Adversarial attacks in FL deceive federated models by adding subtle perturbations to the local raw data to generate adversarial samples. Adversarial attacks can be divided into white-box and black-box attacks, and into targeted and untargeted attacks. In a white-box attack, the adversary knows the model and the training data; however, this is generally unrealistic in practice. In a black-box attack, the adversary has little knowledge of the model and the training set. This matches real-world scenarios and is currently the main research direction. In a targeted attack, the adversary crafts input samples that a multi-class ML model misclassifies into categories specified by the adversary. In an untargeted attack, the adversary only needs the adversarial samples to be misclassified into any incorrect category, thereby deceiving the FL model. Adversarial attacks help adversaries evade FL system security detection and can generate poisoned samples that undermine FL system accuracy (Goodfellow et al. 2014; Nair et al. 2023).
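As a concrete white-box, untargeted example, the fast gradient sign method (FGSM) perturbs each input feature by a fixed step `eps` in the direction that increases the loss. The sketch applies it to a logistic-regression classifier, chosen as an assumption because its input gradient has the closed form \((p - y)\,w\) under cross-entropy loss; the weights and input are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return 1, 0, or -1 according to the sign of v."""
    return (v > 0) - (v < 0)

def fgsm_logistic(x, y, w, b, eps):
    """FGSM against p(y=1|x) = sigmoid(w.x + b). For cross-entropy loss the
    gradient w.r.t. the input is (p - y) * w; each feature moves by eps
    in the sign of that gradient, which increases the loss."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    grad_x = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad_x)]

w, b = [1.0, -1.0], 0.0
x, y = [0.2, 0.0], 1  # correctly classified as class 1 (score 0.2 > 0)
x_adv = fgsm_logistic(x, y, w, b, eps=0.5)
```

The perturbed input `x_adv` is about `[-0.3, 0.5]`, whose score is -0.8, so the classifier now predicts class 0. A black-box adversary would have to estimate this gradient, for example from a substitute model, instead of reading it off the weights.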

2.2.2 FL privacy leakage attacks

In terms of privacy leakage, throughout the training cycle of the FL model, information such as weight updates and gradient updates may leak sensitive private data. This risk may arise from the participating clients, the central server, or third-party servers. Recent studies have found that even partial model gradient information can leak local private data samples or features. Malicious attackers can also steal the training datasets of a local client in an FL iteration, or rebuild training datasets by inverting the FL global model.
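A minimal example of how gradients alone can leak a training sample: for a fully connected layer \(z = Wx + b\) trained on a single example, \(\partial L/\partial W_{ij} = (\partial L/\partial z_i)\,x_j\) and \(\partial L/\partial b_i = \partial L/\partial z_i\), so dividing a row of the weight gradient by the matching bias gradient returns \(x\) exactly. The sketch assumes a softmax linear classifier with a bias term and a single-sample update; larger batches and deeper networks require iterative reconstruction instead.

```python
import math

def linear_softmax_grads(W, b, x, label):
    """Gradients of the cross-entropy loss of softmax(Wx + b) for ONE sample,
    i.e. what a client would upload after a single-example update."""
    z = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    m = max(z)
    exps = [math.exp(v - m) for v in z]  # numerically stable softmax
    s = sum(exps)
    dz = [e / s - (1.0 if i == label else 0.0) for i, e in enumerate(exps)]
    grad_W = [[dzi * xj for xj in x] for dzi in dz]
    grad_b = dz[:]
    return grad_W, grad_b

def recover_input(grad_W, grad_b):
    """Since dL/dW[i][j] = dL/dz_i * x_j and dL/db_i = dL/dz_i, divide a
    weight-gradient row by its (largest-magnitude) bias gradient."""
    i = max(range(len(grad_b)), key=lambda k: abs(grad_b[k]))
    return [g / grad_b[i] for g in grad_W[i]]

W = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.2]]
b = [0.0, 0.1]
x_private = [0.5, -1.0, 2.0]
grad_W, grad_b = linear_softmax_grads(W, b, x_private, label=1)
x_leaked = recover_input(grad_W, grad_b)  # equals x_private up to rounding
```

The label leaks too: in `grad_b`, the only negative entry corresponds to the true class. This is one motivation for encrypting, masking, or perturbing uploaded gradients.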

Model inversion attack: This type of attack obtains training set information from a target model that has completed training. A model inversion attack infers training set information through reverse analysis; this information can concern the members participating in training and/or certain statistical characteristics of the training data. For example, a model inversion attack that infers membership information is called a membership inference attack, which aims to determine whether a given data record is present in the training set (Hu et al. 2023). In an FL membership inference attack, in each iteration the data contributors upload their model update parameters to the parameter server, so the server observes the model characteristics of each party as well as the global model parameters. It can therefore readily determine whether a specific data sample is in a local training dataset. In some cases, the parameter server can become a malicious adversary and conduct membership inference attacks. The other participants in the FL system are also potential adversaries: they can determine whether a candidate data sample originates from the target model's training set, that is, whether it is member data. Throughout the attack, the FL model parameter contributors are unaware of the adversary's inference behavior, so their private data are leaked unknowingly (Hatamizadeh et al. 2023).
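One of the simplest membership inference strategies exploits the fact that models usually fit their training samples better than unseen ones: guess "member" whenever the model's loss on a sample falls below a threshold. In the sketch below, `model_prob(x, y)` is a hypothetical callable returning the model's probability for the true label, and the toy model and threshold are assumptions; in practice the threshold is calibrated, for example on data known to be outside the training set.

```python
import math

def cross_entropy(prob_true_class):
    """Loss of a prediction that assigns `prob_true_class` to the true label."""
    return -math.log(max(prob_true_class, 1e-12))

def loss_threshold_mia(model_prob, samples, threshold):
    """Guess 'member' (True) when the model's loss on (x, y) is below threshold.
    model_prob(x, y) is a hypothetical callable returning p(y | x)."""
    return [cross_entropy(model_prob(x, y)) < threshold for x, y in samples]

train_set = {(1, 0)}  # the record the target model was trained on

def toy_model_prob(x, y):
    """Toy stand-in for a trained model: overconfident on memorized training pairs."""
    return 0.99 if (x, y) in train_set else 0.6

guesses = loss_threshold_mia(toy_model_prob, [(1, 0), (2, 1)], threshold=0.1)
```

The memorized record has loss of about 0.01 and is flagged as a member, while the unseen record (loss about 0.51) is not. The larger the generalization gap of a model, the more reliable this simple test becomes.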

Reconstruction attack: This type of attack focuses on reconstructing all of the training set information of the model contributors or certain sensitive feature categories of the training set (e.g., attribute category labels). Several reconstruction attack methods have been proposed, such as generative adversarial network (GAN) attacks. Hitaj et al. (2017) first proposed a GAN-based data reconstruction attack to steal the private data of model contributors. When using a GAN to attack FL, the adversary trains the GAN to generate prototypes of the target's training data. By injecting fake training samples into the model server, it tricks the model contributor into contributing more local training data. Eventually, the adversary may use this information to restore the local original data of the model contributor and thereby steal sample data. Through GAN attacks, adversaries can also infer data categories (Liu et al. 2023b) and labels (Jin et al. 2023). Because the FL system server knows little about the reputation and honesty of the various parties, GAN-based data-inference attacks are difficult to detect. Therefore, when building and maintaining FL security systems, it is necessary to strengthen the identification of, and defense against, such reconstruction attacks.

Model extraction attack: Tramèr et al. (2016) proposed a model extraction attack that focuses on reconstructing a substitute model similar to the target model. In the FL context, the adversary obtains black-box access to the target model. It repeatedly sends query data, collects the returned results, and uses these returned values to steal the FL model update parameters or model functionality, recovering the FL target model or reconstructing a model as similar to it as possible (Li et al. 2023e).
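For models that return confidence scores, extraction can be as simple as solving equations. The sketch below follows the spirit of equation-solving extraction under assumptions of our own: the black box is a logistic-regression model that returns exact probabilities, so inverting the sigmoid gives \(\log\frac{p}{1-p} = w \cdot x + b\), and \(d+1\) well-chosen queries determine the \(d\) weights and the bias. The victim parameters are illustrative.

```python
import math

def make_black_box(w, b):
    """Victim model the attacker can only query: returns p(y=1 | x)."""
    def query(x):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        return 1.0 / (1.0 + math.exp(-z))
    return query

def solve(A, rhs):
    """Gauss-Jordan elimination with partial pivoting for a small square system."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]

def extract_logistic(query, dim):
    """Query the origin and the d unit vectors, convert each returned probability
    to a logit, and solve logit = w.x + b for the weights and the bias."""
    points = [[0.0] * dim] + [[1.0 if j == i else 0.0 for j in range(dim)] for i in range(dim)]
    A, rhs = [], []
    for x in points:
        p = query(x)
        A.append(x + [1.0])  # the trailing 1.0 multiplies the bias
        rhs.append(math.log(p / (1.0 - p)))
    params = solve(A, rhs)
    return params[:dim], params[dim]

victim = make_black_box([0.5, -1.5, 2.0], 0.25)
w_stolen, b_stolen = extract_logistic(victim, dim=3)  # matches the victim's parameters
```

Four queries suffice here. Returning only class labels, or coarsened confidences, raises the number of queries an attacker needs, which is why limiting the precision of API outputs is a commonly discussed mitigation.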

The above data security and privacy leakage attacks are common attack types in FL. Tables 1 and 2 compare and analyse the methods and effects of these security and privacy attacks.

2.3 FL privacy security threats in EC

The traditional cloud-centric computing approaches are gradually proving inadequate in addressing the security challenges posed by the vast amounts of privacy-sensitive data generated by edge terminal devices in the intelligent Internet of Things landscape. EC technology, by processing and analyzing data near its source in real-time, not only caters to the demand at the edge nodes but also significantly enhances processing efficiency, alleviates communication overhead, and safeguards data privacy. Concurrently, FL enables model training on edge devices, making EC an apt environment for FL deployment. Nevertheless, EC, being a nascent distributed computing paradigm, harbors distinctive privacy security risks. The FL model training process within EC confronts similar privacy security threats. The intricate service model of edge computing, coupled with real-time application requisites and the resource constraints of edge terminal devices, alongside the heterogeneous nature of edge user privacy data from multiple sources, may exacerbate privacy security concerns during the FL training process. For instance, the exigency for refined security authentication mechanisms at the edge nodes, coupled with the inadequacy of traditional encryption technologies for edge computing environments, poses risks of privacy security breaches, particularly in untrusted execution environments. The primary data security threats and privacy protection challenges encountered by FL within EC encompass:

-

Secure sharing and storage of privacy data: User data collected by edge terminal devices include sensitive information such as personal location, health data, and identity details. Storing such private data on third-party servers, such as edge servers, in edge computing environments raises concerns about data leakage and unauthorized tampering. Moreover, the many nodes of unknown trustworthiness within the intricate edge network environment pose additional security risks. Connecting FL model training tasks to the network exposes vulnerabilities that malicious adversaries can exploit through various attack methods. Common network security threats in the EC environment include denial-of-service attacks, information injection, malicious code attacks, gateway forgery, and man-in-the-middle attacks. The fundamental challenge remains how to securely upload local data to the network or entrust it to third-party servers for storage and processing.

-

Fine-grained authentication access control: EC, being a distributed computing system, operates across multiple trust domains. However, even authorized nodes within untrusted network environments face trust issues. Establishing authentication identities for network nodes across diverse trust domains and ensuring secure access verification between them imposes elevated requirements and challenges on the design of fine-grained access control mechanisms within complex edge networks.

- Design requirements of lightweight privacy and security algorithms: Most edge terminal devices in EC environments have limited resources, including constrained computing power and battery capacity; this is especially true of mobile terminal devices. These limitations prevent traditional encryption algorithms, access control mechanisms, and security defenses from being implemented effectively on resource-constrained devices. Consequently, lightweight privacy and security algorithms tailored to the EC environment are essential for the secure and efficient execution of FL.

- Data consistency and quality issues: Data in EC environments is typically distributed across edge devices, leading to synchronization challenges due to performance disparities among terminal devices. Asynchronous execution of learning algorithms and computing tasks further complicates ensuring data consistency and the accuracy of FL local model training parameters. Addressing these issues remains a formidable challenge within FL in EC.

3 Related works

This section reviews FL studies on data security and privacy protection technologies in the edge-IoT environment, and analyzes, compares, and summarizes the mainstream solutions in the field.

3.1 FL data security

FL is a variant of distributed learning that enables shared models to be trained without accessing private data from different sources. Despite its privacy benefits, the distributed nature of FL and its privacy constraints make it vulnerable to data security attacks, including poisoning, Sybil, backdoor, and adversarial attacks. In the edge-IoT scenario, cyber-physical systems integrate sensing, computing, control, and network processes into physical objects and infrastructure elements connected via the Internet to perform common tasks. Once FL model training is connected to the network, attackers can exploit a range of attack methods and vulnerabilities in the host's security mechanisms to launch attacks. The tight coupling of network and physical systems therefore challenges the stability, security, efficiency, and reliability of FL. In this section, we summarize the means and mechanisms for improving the robustness of FL models, including intrusion detection mechanisms for data security attacks and improvements in the security robustness of FL models.

3.1.1 Federated robust aggregation algorithms

With steady improvements in the computing power, storage capacity, and other capabilities of edge devices, information transmission, local storage, and network computing tasks have gradually shifted to edge devices. As large numbers of heterogeneous edge devices join the network, this shift poses significant challenges to FL security. FL aggregation algorithms, which update the global model, play an important role in protecting private data, ensuring efficient model convergence, and defending against security attacks, and different aggregation algorithms have different advantages and disadvantages. Secure aggregation, an important criterion in the design of FL aggregation algorithms, aims to protect the security and reliability of the participants' local models and of the FL training process. However, in untrustworthy EC environments, current federated aggregation algorithms cannot adequately defend against the Byzantine attacks that are common in distributed computing systems. Meanwhile, model communication among massive numbers of edge participants places higher demands on the efficiency of FL aggregation. Developing secure and efficient FL aggregation algorithms is therefore an important means of implementing robust FL in untrustworthy EC environments. This section systematically analyzes advanced FL aggregation algorithms and compares them in terms of data security, model performance, and system efficiency across different FL and EC application scenarios (Qi et al. 2023).

To defend against data security attacks, Rodríguez-Barroso et al. (Rodríguez-Barroso et al. 2022b) analyzed model-poisoning backdoor attacks in FL in depth, including the pattern-key backdoor attacks and distributed backdoor attacks that adversarial participants with outlier behavior may launch. In response, they developed a robust and resilient FL aggregation operator, robust filtering of one-dimensional outliers, which applies the standard deviation method to participant updates in each dimension to identify univariate outliers and thereby filter out adversarial participants. (Zhang et al. 2023b) supported backdoor detection in FL secure aggregation through two new primitives: oblivious random grouping and partial parameter disclosure. Oblivious random grouping divides FL participants into one-time random subgroups, which prevents colluding attackers from knowing each other's group assignments, and backdoor attacks are detected from the statistical distributions of the subgroup aggregation parameters across learning iterations. Compared with the one-dimensional outlier filtering operator of Rodríguez-Barroso et al., Zhang et al.'s scheme has lower communication and computational costs, whereas Rodríguez-Barroso et al. focus more on detecting the outlier behavior of adversarial participants, in particular defending against model-poisoning attacks that rely on data poisoning and boosted model updates from adversarial participants.
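The per-dimension filtering idea can be illustrated with a short Python sketch. It is a minimal sketch assuming that updates arrive as a NumPy array with one row per client and that values more than `k` standard deviations from the per-dimension mean are discarded before averaging; the function name and the default `k` are hypothetical and are not taken from Rodríguez-Barroso et al.

```python
import numpy as np


def filter_univariate_outliers(updates: np.ndarray, k: float = 2.0) -> np.ndarray:
    """Average client updates per dimension, ignoring univariate outliers.

    updates: array of shape (num_clients, num_params), one row per client.
    k: hypothetical threshold, in standard deviations.
    """
    mean = updates.mean(axis=0)
    std = updates.std(axis=0)  # population standard deviation per dimension
    # True where a client's value lies within k standard deviations of the
    # dimension mean; identical values are always kept for k >= 1.
    inliers = np.abs(updates - mean) <= k * std
    counts = inliers.sum(axis=0)
    sums = np.where(inliers, updates, 0.0).sum(axis=0)
    # Guard against division by zero (cannot occur for k >= 1, kept for safety).
    return sums / np.maximum(counts, 1)


# Nine honest clients send 1.0 in every dimension; one attacker sends 100.0.
updates = np.vstack([np.ones((9, 3)), np.full((1, 3), 100.0)])
print(filter_univariate_outliers(updates))  # [1. 1. 1.]
```

Because the sketch uses the population standard deviation, a single outlier among n clients can lie at most (n - 1)/sqrt(n) standard deviations from the mean, so `k` has to be chosen with the number of clients in mind; with 10 clients and `k = 2`, one extreme update is still excluded.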

Nevertheless, when coordinating distributed collaborative learning among large numbers of IoT devices, current FL systems cannot monitor the local training process of edge devices in real time, and malicious attackers can exploit this vulnerability to launch Byzantine attacks. (Ni et al. 2023) proposed a dual filtering mechanism against Byzantine attacks in edge-IoT FL that identifies and discards malicious gradients to secure the training process. Considering the impact of potential malicious gradients, they also designed an adaptive weight adjustment scheme that uses dynamic trimming to rescale local gradients to the same magnitude as normal gradients, ensuring effective model aggregation. However, Ni et al.'s scheme does not consider that the real datasets held by FL participants in EC environments are usually non-independent and identically distributed (non-IID). Non-IID data further weaken the robustness of existing FL aggregation algorithms and make the global model more likely to be attacked and corrupted.
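Dynamic trimming and magnitude correction can be illustrated with two generic Byzantine-robust building blocks: rescaling each gradient to at most the median gradient norm, followed by a coordinate-wise trimmed mean. This is an illustrative sketch, not Ni et al.'s dual filtering mechanism; the function names and the trim ratio `beta` are assumptions.

```python
import numpy as np


def rescale_to_median_norm(grads: np.ndarray) -> np.ndarray:
    """Shrink any gradient whose L2 norm exceeds the median norm down to it."""
    norms = np.linalg.norm(grads, axis=1)
    ref = np.median(norms)
    scale = np.minimum(1.0, ref / np.maximum(norms, 1e-12))
    return grads * scale[:, None]


def trimmed_mean(grads: np.ndarray, beta: float) -> np.ndarray:
    """Coordinate-wise mean after dropping the beta fraction of largest and smallest values."""
    n = grads.shape[0]
    b = int(beta * n)
    if 2 * b >= n:
        raise ValueError("beta too large: nothing left to average")
    s = np.sort(grads, axis=0)  # sort each coordinate independently
    return s[b:n - b].mean(axis=0)


def robust_aggregate(grads: np.ndarray, beta: float = 0.2) -> np.ndarray:
    """Magnitude correction first, then trimming of extreme coordinates."""
    return trimmed_mean(rescale_to_median_norm(grads), beta)


honest = np.ones((8, 2))
attackers = np.tile([1000.0, -1000.0], (2, 1))
print(robust_aggregate(np.vstack([honest, attackers])))
```

Rescaling alone bounds how far a single update can pull the average, and trimming then removes the coordinates that remain extreme; in the example the two large updates no longer shift the aggregate away from the honest value.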

For this reason, (Li et al. 2023b) evaluated the effectiveness of existing Byzantine-robust FL methods in non-IID scenarios and proposed mini-FL, which groups participants for aggregation according to their geographic, temporal, and user characteristics. The scheme introduces clustering because uploaded gradients naturally tend to cluster by location, time, and user. The parameter server divides the received gradients into subgroups and performs Byzantine-robust aggregation on each separately; because members of a subgroup behave similarly, their gradients span a smaller range, which leaves a smaller attack space. Clustering thus reduces the attack surface and effectively enhances FL robustness in practical non-IID scenarios. (He et al. 2023) similarly analyzed the challenges that non-IID data pose to the Byzantine robustness of FL and proposed a Byzantine-robust stochastic model aggregation method, which achieves Byzantine robustness on non-IID data through robust stochastic model aggregation; they also proved its Byzantine convergence in distributed nonconvex learning. Whereas Li et al.'s scheme is more general, He et al. provide stronger theoretical guarantees by proving convergence in distributed nonconvex learning. (Zhang et al. 2023c) studied existing FL attacks and detection schemes and found that most detection schemes have high false positive rates in non-IID settings. They therefore proposed a Kalman filter-based cross-round detection scheme, which identifies adversaries by looking for behavioral changes before and after attacks, thereby adapting to data heterogeneity and improving detection accuracy.
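The grouping idea behind mini-FL can be sketched as follows, assuming the server already knows each gradient's group label (for example, a region) and uses a coordinate-wise median as the per-group Byzantine-robust aggregator. How the group results are combined is an assumption here (a mean weighted by group size); the function name is hypothetical and this is not Li et al.'s implementation.

```python
from collections import defaultdict

import numpy as np


def grouped_robust_aggregate(grads, groups):
    """Median-aggregate within each group, then take a size-weighted mean across groups.

    grads: sequence of 1-D arrays (or lists), one per client.
    groups: sequence of hashable group labels (e.g. regions), same length.
    """
    buckets = defaultdict(list)
    for g, label in zip(grads, groups):
        buckets[label].append(np.asarray(g, dtype=float))
    group_aggs, sizes = [], []
    for members in buckets.values():
        # Byzantine-robust step inside the group: coordinate-wise median.
        group_aggs.append(np.median(np.stack(members), axis=0))
        sizes.append(len(members))
    return np.average(np.stack(group_aggs), axis=0, weights=sizes)


grads = [[1, 1], [1, 1], [50, 50], [3, 3], [3, 3]]
groups = ["A", "A", "A", "B", "B"]
print(grouped_robust_aggregate(grads, groups))  # [1.8 1.8]
```

The outlier in group A is absorbed by that group's median, so it never reaches the cross-group average.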

Regarding model performance, existing FL secure aggregation algorithms cannot ensure a secure and reliable FL process on resource-constrained IoT devices without significantly degrading model accuracy and performance. Because edge-IoT devices usually lack the resources to run computationally expensive robust aggregation algorithms, (Cao et al. 2024) proposed a secure and robust FL framework based on a trusted execution environment. It adopts shared representation learning to partition the model into a sensitive model and a representation model used for client training: the sensitive model always remains inside the secure environment, whereas the representation model is trained and aggregated normally in the regular execution environment. Representation learning allows each FL participant to train its own personalized model, which improves convergence speed and accuracy. To enhance robustness in non-IID scenarios, the framework also includes a robust affiliation-based multi-model aggregation method, which classifies clients using affiliations generated by soft clustering and aggregates each class separately with multiple models.

(Du et al. 2023a) considered that the device operating environment limits the extraction of high-quality annotated data by participants, and proposed a forgetting-based optimized aggregation strategy combining a Kalman filter with cubic exponential smoothing, which improves global model aggregation and thus model performance. They also used a deep learning network that combines multi-scale convolution, an attention mechanism, and multilevel residual connections to extract data features from multiple clients simultaneously, improving the accuracy and generalization of the aggregation algorithm in multi-participant local model training.

(Wang et al. 2023) noted that the performance of over-the-air FL is usually limited by the devices with the worst channel conditions to the edge server, and that reconfigurable intelligent surfaces can alleviate this communication problem. They developed a graph neural network-based learning algorithm that maps channel coefficients directly to optimized network parameters; by exploiting the permutation equivariance and invariance of graphs, it reduces computational complexity and makes the dimensionality of the algorithm independent of the number of edge devices.

The schemes of Cao et al., Du et al., and Wang et al. all address the performance of FL robust aggregation under the resource and operating-environment constraints of edge-IoT devices. Cao et al. focus on evaluating the model accuracy of their aggregation algorithm when resource-limited IoT devices are subject to Byzantine and backdoor attacks, whereas Du et al. and Wang et al. focus on improving aggregation accuracy and communication and computational efficiency when large numbers of local models are aggregated. However, allowing all devices to participate in FL is not feasible in the long term: the heterogeneity of IoT edge devices in data quality, power, computing capability, and storage, together with the poor communication links of some participants, can degrade FL performance. Optimal client selection therefore becomes an important stage of the FL process.

3.1.2 FL client selection algorithms

In the FL process, the client devices participating in each training round can be selected accurately and efficiently based on device performance, connection quality, and other indicators, which helps improve the efficiency and performance of FL. A fair client selection algorithm can also use techniques such as authentication and reputation assessment to keep malicious clients out of training, reducing the harm of malicious attacks on the model (Mayhoub and Shami 2023). This section reviews recent client selection algorithms for FL and analyzes their features and limitations.

Client selection algorithms have been designed to enhance FL robustness, reduce communication and computational overhead, and improve model convergence speed and accuracy. (Jiang et al. 2023) analyzed a number of security strategies for mitigating label-flipping attacks in FL. Because these strategies incur heavy computational overhead and lack robustness, they proposed a malicious-client detection approach against label-flipping attacks in which the parameter server trains a lightweight generator to assess the quality of each client's training data. The generator detects data quality without retraining and requires no prior knowledge, satisfying both lightweight-design and privacy requirements. However, Jiang et al.'s scheme does not address the frequent exchange of model parameters between massive numbers of edge clients and the server; given limited communication resources, frequently exchanging large numbers of parameters causes long communication delays and reduces the efficiency of the FL system.

To improve FL communication efficiency through client selection, (Yang et al. 2023b) proposed a client selection scheme based on the kernelized Stein discrepancy (KSD) between the global posterior and the local skewed distributions. Updates from clients with a large KSD reduce the local free energy most in each iteration, so such clients are selected with the highest probability, which also lowers communication overhead.

Meanwhile, (Wehbi et al. 2023) proposed an intelligent client selection method that considers the heterogeneity of data quality and of computational and communication resources among IoT devices, and analyzed the main shortcomings of schemes that select FL clients at random. Overcoming the limitation that most client selection schemes follow a unilateral selection strategy, the method uses matching game theory to select FL clients bilaterally, taking into account the preferences of both the FL server and the client devices.

(Huang et al. 2023b) investigated clustered FL as a way to handle heterogeneous data and found that current clustered FL converges relatively slowly. Attributing this to the lack of an effective client selection strategy, they proposed using active learning to select the participating clients for each cluster. In each FL round, the scheme uses active learning metrics to select the clients in each cluster that provide the most information and aggregates only their model updates into the cluster-specific model. The active learning metrics used are uncertainty sampling, query by committee, and loss. Huang et al.'s active client selection scheme requires fewer participating clients, which significantly speeds up learning and improves model accuracy with lower communication overhead.
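Of the active learning metrics listed, loss is the simplest to illustrate. The sketch below assumes each client reports a scalar loss on its local data and that the server picks the `per_cluster` highest-loss clients in each cluster; the data layout and names are hypothetical, and uncertainty sampling or query by committee would replace only the scoring step.

```python
from collections import defaultdict


def select_active_clients(losses, clusters, per_cluster):
    """Pick the highest-loss clients in each cluster.

    losses: dict client_id -> scalar loss reported for the current round.
    clusters: dict client_id -> cluster id.
    """
    by_cluster = defaultdict(list)
    for cid, loss in losses.items():
        by_cluster[clusters[cid]].append((loss, cid))
    selected = {}
    for cluster_id, items in by_cluster.items():
        # Highest loss first; ties broken by client id for determinism.
        items.sort(key=lambda t: (-t[0], t[1]))
        selected[cluster_id] = [cid for _, cid in items[:per_cluster]]
    return selected


losses = {"a": 0.9, "b": 0.2, "c": 0.5, "d": 1.5, "e": 0.1}
clusters = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1}
print(select_active_clients(losses, clusters, per_cluster=2))
# {0: ['a', 'c'], 1: ['d', 'e']}
```

Only the selected clients' updates would then be aggregated into each cluster-specific model.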

Enhancing the robustness and data security of FL systems in EC environments involves many factors, and most current solutions approach the problem from the perspectives of communication security, device security, model robustness, communication and computation overhead, and client selection. However, network attacks in untrustworthy EC environments are becoming increasingly diverse and complex, and developing FL frameworks with higher security while accounting for system overhead and efficiency remains a key direction for future research on secure and robust FL.

3.2 FL information privacy-preserving

FL alleviates the privacy and security problems of sensitive data in ML environments. However, uploading and downloading model update parameters, training iterations, and other processes still expose FL to a series of risks, such as malicious inference by semi-honest adversaries and data theft by curious attackers (Narayanan and Shmatikov 2008). Privacy in FL can be divided into global and local privacy. Global privacy requires that the model updates generated locally in each iteration be kept private from all untrusted third parties other than the trusted central aggregation server. Local privacy additionally requires that model updates be kept private from the server itself. Typical technologies for improving FL privacy and security currently include cryptographic technologies, perturbation technologies, adversarial training (AT), blockchain, and KD.

3.2.1 Cryptography technologies

Cryptographic technologies in FL encrypt model parameter information that would otherwise be uploaded in plaintext, which strengthens the privacy and security of FL systems. The most commonly used encryption methods are secure multi-party computation (SMPC) and homomorphic encryption (HE), each with its own technical characteristics. For example, SMPC keeps user input data confidential and allows multiple parties to perform joint computation on private data, but its computational overhead is high. HE schemes offer similar security protection while consuming fewer computing resources than SMPC.

SMPC, also known as MPC, was originally proposed to protect the inputs of multiple participants. In FL, SMPC is used to protect clients' model updates: it ensures that each participant learns only its own inputs and outputs and learns nothing about other clients' data. Building an FL security model with SMPC can increase efficiency by relaxing the security requirements. Kilbertus et al. (Kilbertus et al. 2018) used SMPC for validation and model training to prevent local private data from becoming known to other users. Sharemind (Bogdanov et al. 2008) is an SMPC framework designed as a secure and efficient computing system. (Kalapaaking et al. 2022) proposed a CNN-based FL framework that combines SMPC-based aggregation with encrypted inference. Encrypted local models were sent to the cloud for SMPC-based aggregation, yielding an encrypted global model, which was then returned to each edge server for further localized training to improve model accuracy. This solution addressed the FL privacy problem in IoT environments under 6th-generation networks while ensuring model accuracy and the confidentiality of model parameters. However, it did not consider the cost of encrypting multi-source heterogeneous data or the communication resources consumed in the IoT environment.
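Additive secret sharing, the core SMPC primitive behind many secure aggregation schemes, can be sketched as below. The sketch assumes several non-colluding aggregators, a fixed-point encoding of real-valued updates, and the prime field modulus 2^61 - 1; these choices and all function names are illustrative rather than taken from the cited works.

```python
import secrets

PRIME = 2**61 - 1  # field modulus (a Mersenne prime); an illustrative choice
SCALE = 10**6      # fixed-point scale for real-valued updates (assumption)


def encode(x: float) -> int:
    return round(x * SCALE) % PRIME


def decode(v: int) -> float:
    # Map field elements above PRIME // 2 back to negative numbers.
    if v > PRIME // 2:
        v -= PRIME
    return v / SCALE


def share(value: int, n: int) -> list[int]:
    """Split a field element into n random shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(client_values: list[float], n_aggregators: int = 3) -> float:
    """Each aggregator only ever sees shares that look uniformly random."""
    totals = [0] * n_aggregators
    for x in client_values:
        for j, s in enumerate(share(encode(x), n_aggregators)):
            totals[j] = (totals[j] + s) % PRIME  # aggregator j adds its share
    # Combining the aggregators' totals reveals only the sum.
    return decode(sum(totals) % PRIME)


print(secure_sum([0.5, -1.25, 2.0]))  # 1.25
```

No single aggregator learns any client's value, but if all aggregators collude they can reconstruct every input, which is why non-collusion is part of the assumption.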

(Li et al. 2023f) designed a vertical FL-ring (VFL-R) framework for settings in which the coordinator has limited communication resources and low computing power. VFL-R is a novel vertical FL framework that uses a ring architecture for multi-party collaborative modeling. It simplifies the intricate communication architecture among the parties, provides protection against semi-honest attacks, and reduces the influence of the coordinator on the modeling process. (Berry and Komninos 2022) addressed the large number of computational rounds in SMPC and the high cost of transmitting data between parties by proposing an efficient optimization framework for CNNs under SMPC. The framework combines optimization methods from the broader field of privacy-preserving DL, including privacy-preserving batch normalization and polynomial approximations of activation functions.

SMPC is a lossless solution that allows multiple parties to perform joint computations on private data while keeping the data confidential, and it provides strong privacy protection. However, SMPC-based FL privacy protection schemes are still largely research-oriented and face many challenges, chief among them the trade-off between system efficiency and privacy. SMPC encryption and decryption take a long time, which may slow model training, and designing lightweight SMPC solutions remains a significant challenge. Many researchers therefore turn to HE: because it offers comparable security at lower computational cost, HE has become the first choice for many researchers designing FL security protocol frameworks.

HE allows computations to be performed directly on ciphertexts: decrypting the result of an operation on encrypted data yields the same result as performing the corresponding operation on the plaintexts. Owing to this property, ML tasks can be entrusted to a third party that processes the data without learning the underlying information.
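Written out, the homomorphic property reads as follows, where ⊙ is the operation on ciphertexts and ∘ the corresponding operation on plaintexts; the additively homomorphic Paillier scheme is shown as a concrete instance, with its modulus n = pq introduced here for illustration.

```latex
\mathrm{Dec}_{sk}\bigl(\mathrm{Enc}_{pk}(m_1) \odot \mathrm{Enc}_{pk}(m_2)\bigr) = m_1 \circ m_2

% Paillier (additive): multiplying ciphertexts modulo n^2 adds plaintexts modulo n
\mathrm{Dec}_{sk}\bigl(\mathrm{Enc}_{pk}(m_1)\cdot\mathrm{Enc}_{pk}(m_2) \bmod n^2\bigr) = (m_1 + m_2) \bmod n

% Aggregating K encrypted client updates g_1, \dots, g_K
\mathrm{Dec}_{sk}\Bigl(\prod_{k=1}^{K} \mathrm{Enc}_{pk}(g_k) \bmod n^2\Bigr) = \Bigl(\sum_{k=1}^{K} g_k\Bigr) \bmod n
```

The last line is what allows an aggregation server to sum encrypted updates without ever decrypting an individual client's contribution.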

HE has been introduced into FL to encrypt local gradient updates, gradient parameters, and other model parameter information, preventing adversaries from leaking private data. Early HE algorithms used single-key arithmetic: homomorphic operations could be performed only on values encrypted under the same public key, which required all clients to share the same private key. Sharing one private key among multiple clients risks key leakage and increases the risk that malicious clients access other clients' data, a serious obstacle for HE-based FL privacy protection. To overcome these limitations of single-key HE, (López-Alt et al. 2012) proposed multi-key HE, which subsequent work has continued to improve. More recently, multi-key HE has been applied in FL to protect the privacy of model updates. However, such schemes may not protect against malicious actors that disrupt the learning process, such as Byzantine attackers.

(Ma et al. 2022a) proposed a multi-key fully homomorphic encryption scheme, multi-key Cheon-Kim-Kim-Song (MK-CKKS), which supports approximate fixed-point arithmetic. It used an aggregated public key for encryption and required each device to compute its own decryption share; the ciphertext could be decrypted only when the number of private keys participating in decryption reached a threshold. Because MK-CKKS required all data contributors to collaborate to decrypt the aggregated results, it guaranteed the confidentiality of model updates and was robust to attacks by malicious actors and to collusion between actors and the server. (Hou et al. 2021) proposed a verifiable privacy-preserving scheme (VPRF) based on vertical federated random forests, which uses homomorphic comparison and voting statistics with multi-key HE to protect privacy. (Zhang et al. 2023d) combined distributed Paillier cryptography and zero-knowledge proofs with existing Byzantine-robust FL algorithms to obtain an FL scheme that balances robustness and privacy protection.

Traditional privacy-preserving FL solutions cannot simultaneously provide efficient data confidentiality and lightweight integrity verification. To address this, (Ma et al. 2022b) proposed a verifiable privacy-preserving FL scheme (VPFL) for EC systems that combines distributed selective stochastic gradient descent with the Paillier HE system, together with an online/offline signature method for lightweight gradient integrity verification. (Zhao et al. 2022) designed a decentralized, privacy-preserving, and verifiable FL framework based on efficient and verifiable encrypted matrix multiplication. By ensuring the confidentiality of global and local model updates and the verifiability of every training step, the framework defends against various inference attacks, guarantees the integrity of FL model training, and improves training efficiency.
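A toy Python implementation of the Paillier cryptosystem shows how an aggregator can sum integer-encoded client updates without decrypting them. It uses deliberately tiny primes and the common simplification g = n + 1; it is a sketch for illustration only, not VPFL or the distributed Paillier variant of Zhang et al., and it is not secure.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Toy Paillier key generation with g = n + 1 (p, q distinct primes)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    # With g = n + 1, L(g^lam mod n^2) = lam mod n, so mu = lam^{-1} mod n.
    mu = pow(lam, -1, n)
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    # c = g^m * r^n mod n^2 with g = n + 1; negative m wraps modulo n.
    return (pow(n + 1, m % n, n2) * pow(r, n, n2)) % n2


def decrypt(n: int, sk, c: int) -> int:
    lam, mu = sk
    u = pow(c, lam, n * n)
    m = ((u - 1) // n) * mu % n  # L(u) * mu mod n
    return m - n if m > n // 2 else m  # signed decoding


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)


n, sk = keygen(1009, 1013)  # tiny primes: illustration only, NOT secure
client_updates = [12, -5, 30]  # integer-encoded gradient values
agg = encrypt(n, client_updates[0])
for u in client_updates[1:]:
    agg = add_encrypted(n, agg, encrypt(n, u))
print(decrypt(n, sk, agg))  # 37
```

Real deployments use a modulus of at least 2048 bits and a vetted library, and real-valued gradients must be scaled to integers before encryption.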

In recent years, secret sharing, a special encryption method that is both secure and efficient, has attracted wide attention and been used to design federated security protocols. Applied to FL training, secret sharing keeps shared model parameters private: it splits the FL model parameters into multiple shares, sends them to different participants, and ensures that the model information can be recovered only when the number of cooperating participants reaches the recovery threshold specified by the system. In theory, secret sharing-based FL can protect clients' local datasets from a semi-honest central aggregation server and from other participants, and it still provides privacy guarantees when some clients collude with the central aggregation server, as long as the number of colluding parties stays below the threshold.
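A minimal Python sketch of Shamir's (t, n) threshold scheme illustrates the recovery threshold: the secret is the constant term of a random polynomial of degree t - 1, so any t shares recover it by Lagrange interpolation, while fewer reveal nothing about it. The field modulus 2^127 - 1 and the function names are assumptions for illustration.

```python
import secrets

PRIME = 2**127 - 1  # Mersenne prime used as the field modulus (illustrative)


def make_shares(secret: int, t: int, n: int) -> list[tuple[int, int]]:
    """Split secret into n shares; any t of them reconstruct it."""
    coeffs = [secret % PRIME] + [secrets.randbelow(PRIME) for _ in range(t - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule for the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def reconstruct(shares: list[tuple[int, int]]) -> int:
    """Lagrange interpolation of the polynomial at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


shares = make_shares(123456789, t=3, n=5)
print(reconstruct(shares[:3]), reconstruct([shares[0], shares[2], shares[4]]))
# 123456789 123456789
```

Shares are additively homomorphic: adding two clients' shares at the same x yields a share of the sum of their secrets, which is what lets aggregators compute a model sum without seeing individual parameters.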

(Zhou et al. 2021) proposed a privacy-preserving FL framework that combines Shamir secret sharing with HE so that aggregate values can be decrypted correctly only when the number of participants is greater than t. Muazu et al. (Muazu et al. 2024) proposed a secure FL system based on data fusion that uses a convolutional neural network with an efficient weight-sharing method for prediction, and uses multi-party computation and additive secret sharing to encrypt the model weights, protecting the gradient parameters of the FL model during training. (Wang et al. 2022a) proposed a privacy-preserving FL scheme for EC with a lightweight protocol based on secret sharing and weight masking, which achieves higher accuracy and training efficiency than HE and resists device dropout and collusion among devices. However, these schemes address the trade-off between prediction accuracy and model privacy by protecting the gradient parameters but do not consider the high communication cost of transmitting large numbers of secret shares. Moreover, in EC environments, the processing and transmission delays of edge devices with different performance vary widely.
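Weight masking is commonly built from pairwise masks that cancel in the sum. The sketch below assumes each pair of clients already shares a seed (here derived from a hypothetical public `base_seed` and the pair's IDs, a placeholder for a secret seed obtained by key agreement) and omits the dropout recovery that practical protocols add with secret sharing; it is not the protocol of Wang et al.

```python
import numpy as np


def masked_update(i: int, update: np.ndarray, client_ids: list[int], base_seed: int = 0) -> np.ndarray:
    """Add one pairwise mask per peer; the two masks of each pair cancel in the sum.

    The seed for pair (a, b) is derived from a public base_seed and the IDs,
    a placeholder for a secret seed agreed between the two clients.
    """
    masked = np.asarray(update, dtype=float).copy()
    for j in client_ids:
        if j == i:
            continue
        a, b = min(i, j), max(i, j)
        mask = np.random.default_rng([base_seed, a, b]).normal(size=masked.shape)
        # The lower-ID client adds the mask, the higher-ID client subtracts it.
        masked += mask if i < j else -mask
    return masked


ids = [0, 1, 2, 3]
updates = {i: np.full(4, float(i)) for i in ids}
masked = [masked_update(i, updates[i], ids) for i in ids]
print(np.round(sum(masked), 6))  # [6. 6. 6. 6.]
```

Floating-point masks cancel only up to rounding; practical protocols mask integer-encoded updates modulo a fixed value so that cancellation is exact.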

Therefore, (Liu et al. 2022) designed a secure aggregation protocol based on efficient additive secret sharing in a fog computing setting, addressing the need for frequent secure aggregation during FL training. The protocol used fog nodes as intermediate processing units that provide local services and help the cloud server aggregate the sum during training, and it included a lightweight Request-then-Broadcast method that makes it robust to dropped clients. The protocol achieves low communication and computation overhead. (Xie et al. 2022) designed a lossless multi-party federated XGBoost model based on secret sharing. The framework reshaped XGBoost's split-criterion calculation for the secret sharing setting and solved the quadratic optimization problem for leaf weights in a distributed manner, so that the model could run quickly and securely. However, in large and complex FL networks, secret sharing must allocate more shares. In addition, because participants differ in device performance and in the type, structure, and quality of their datasets, their local training times and model quality differ; as a result, participants cannot all receive or submit their secret shares at the same time, and the FL system requires more network bandwidth.

Nevertheless, membership inference attacks and model inversion attacks that leak training data remain unsolved, and most existing cryptographic approaches in FL require substantial additional computation. HE still has considerable room for innovation and improvement in computational efficiency and interaction complexity, as do secret sharing schemes in communication delay and bandwidth cost.

3.2.2 Differential privacy technology

In DML, obfuscation techniques such as randomization, noise perturbation, generalization, and compression are often used to obscure the training data and thereby improve privacy to a certain extent. In FL, DP is commonly used to add noise to the original training data, model parameters, or gradient information, hiding key features of the data and thus protecting private data.

(Dwork 2008) proposed the concept of DP in 2006 and gave it a rigorous mathematical foundation. DP protects data privacy mainly by adding noise to sensitive data. In FL, DP is used to add noise to the model parameters uploaded by participants, or generalization methods are used to hide key data features; this prevents the data from being reconstructed and helps ML models resist adversarial samples (Ibitoye et al. 2021).
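For reference, the standard (ε, δ) formulation and two common noise mechanisms are given below, where M is a randomized mechanism, D and D' are neighboring datasets, and Δf is the sensitivity of a query f; the Gaussian bound is the classical sufficient condition for ε in (0, 1).

```latex
% (epsilon, delta)-DP: for all neighboring datasets D, D' and all output sets S
\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon}\,\Pr[\mathcal{M}(D') \in S] + \delta

% Laplace mechanism (epsilon-DP, delta = 0), with L1 sensitivity \Delta_1 f
\mathcal{M}(D) = f(D) + \mathrm{Lap}\!\left(\frac{\Delta_1 f}{\varepsilon}\right)

% Gaussian mechanism ((epsilon, delta)-DP for 0 < epsilon < 1), with L2 sensitivity \Delta_2 f
\mathcal{M}(D) = f(D) + \mathcal{N}(0, \sigma^2 I), \qquad
\sigma \ge \frac{\Delta_2 f \sqrt{2 \ln(1.25/\delta)}}{\varepsilon}
```

Smaller ε and δ give stronger privacy but require more noise, which is the accuracy trade-off discussed in the following paragraphs.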

DP incurs much lower overhead than SMPC, whose communication overhead is high, and many advanced DP algorithms have been proposed. For example, (Wang et al. 2019a) designed a deep neural network (DNN) learning framework supporting DP that accounts for the risk of leaking sensitive crowdsourced data. The framework evaluated the importance of features related to the target class labels, used adaptive noise scales to accommodate heterogeneous input features, and added noise to the affine transformation of the input features according to their importance and heterogeneity. (Geyer et al. 2017) proposed a client-side DP FL optimization algorithm, and (McMahan et al. 2017) added user-level privacy protection to the federated averaging algorithm to design a user-level DP training algorithm for large neural networks. Both aimed to protect private data by hiding the local model parameters that users upload during training, balancing model performance against privacy loss. Both algorithms were validated on real datasets, showing that when enough devices participate in federated training, privacy protection can be achieved with small additional overhead while maintaining high model accuracy.
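The server-side step of user-level DP federated averaging can be sketched as: clip each client's update to an L2 norm bound, sum the clipped updates, add Gaussian noise scaled to that bound, and average. This follows the general clip-and-noise pattern described above under simplifying assumptions (uniform client weights, a fixed noise multiplier, no privacy accounting); the function and parameter names are hypothetical.

```python
import numpy as np


def clip_l2(v: np.ndarray, clip_norm: float) -> np.ndarray:
    """Scale v down so that its L2 norm is at most clip_norm."""
    norm = np.linalg.norm(v)
    return v * min(1.0, clip_norm / norm) if norm > 0 else v


def dp_fedavg_round(client_updates, clip_norm, noise_multiplier, rng):
    """Clip each update, sum, add Gaussian noise (std = noise_multiplier * clip_norm), average."""
    clipped = [clip_l2(np.asarray(u, dtype=float), clip_norm) for u in client_updates]
    total = np.sum(clipped, axis=0)
    # Adding or removing one client changes the clipped sum by at most clip_norm
    # in L2 norm, so the noise standard deviation is calibrated to that bound.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=total.shape)
    return (total + noise) / len(client_updates)


rng = np.random.default_rng(0)
updates = [np.array([3.0, 4.0]), np.array([0.0, 1.0])]
print(dp_fedavg_round(updates, clip_norm=1.0, noise_multiplier=0.0, rng=rng))  # [0.3 0.9]
print(dp_fedavg_round(updates, clip_norm=1.0, noise_multiplier=1.0, rng=rng))
```

With only two clients the noise dominates the average, which illustrates why these methods need many participating devices to keep accuracy high.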

However, these methods did not consider that introducing DP into FL with few participants may impair overall model accuracy. To address this, (Huang et al. 2020) used pruning of a given layer of the neural network as a substitute for DP noise, aiming to prevent private-data leakage without reducing model accuracy. Lin et al. (Lin et al. 2022) designed a privacy-preserving learning framework based on graph neural networks (GNNs) with a formal privacy guarantee based on edge-local DP, protecting the privacy of both node features and edges. The framework was tightly integrated with the GNN and came with a privacy-utility guarantee, protecting users' data privacy under a given privacy budget.

In general, the factors with the greatest influence on model accuracy are the noise magnitude and the clipping threshold. Bu et al. (Bu et al. 2021) exploited the linear-algebraic properties of the neural tangent kernel matrix to establish a convergence analysis framework for DP deep learning that applies to general neural network architectures and loss functions. Through a continuous-time analysis, the authors showed that the main factor affecting convergence was not the noise but the per-sample gradient clipping. They therefore designed a global clipping method. Compared with traditional local clipping, global clipping incurred a small loss, was better calibrated, and had less impact on prediction accuracy. However, discrete-time convergence at large learning rates requires further study, because in that regime the added noise does affect convergence to some extent.
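The two knobs discussed above, the clipping threshold and the noise level, both appear in a single DP-SGD step. The sketch below uses standard per-sample (local) clipping as in DP-SGD, not Bu et al.'s global clipping; function names and the toy gradients are hypothetical. With the noise switched off, the example shows that clipping alone already biases the update, which is the effect the convergence analysis above highlights.

```python
import math
import random


def clip_per_sample(grad, clip_norm):
    """Local (per-sample) clipping: rescale one gradient to L2 norm <= clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]


def dp_sgd_step(params, per_sample_grads, lr, clip_norm, noise_multiplier, rng):
    """One DP-SGD step: clip each sample's gradient, add Gaussian noise, average, descend."""
    batch = len(per_sample_grads)
    clipped = [clip_per_sample(g, clip_norm) for g in per_sample_grads]
    noisy_grad = [
        (sum(g[i] for g in clipped) + rng.gauss(0.0, noise_multiplier * clip_norm)) / batch
        for i in range(len(params))
    ]
    return [p - lr * g for p, g in zip(params, noisy_grad)]


grads = [[10.0], [0.5]]  # the true mean gradient is 5.25
# With noise disabled, plain SGD would move to -5.25; clipping shrinks the large
# per-sample gradient to 1.0, so the DP-SGD update is only -0.75.
result = dp_sgd_step([0.0], grads, lr=1.0, clip_norm=1.0, noise_multiplier=0.0, rng=random.Random(0))
print(result)
```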

The above improvements strove to balance privacy protection and model accuracy. However, they did not thoroughly consider the privacy-computation cost of model iterations or the added model complexity introduced by DP. To address this, (Zhao et al. 2021) designed a multi-level, multi-participant dynamic privacy-budget allocation method and, on this basis, an adaptive differentially private FL algorithm to balance privacy and utility. (Andrew et al. 2021) proposed an adaptive clipping strategy for iterative DP mechanisms: instead of fixing the clipping norm in advance, it adaptively tracks a specified quantile of the update-norm distribution, which is itself estimated privately with added noise. This strategy alleviated the burden of tuning the many hyperparameters of DP algorithms.
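A minimal sketch of quantile-based adaptive clipping follows, assuming the geometric update rule in which the clipping norm is multiplied by exp(-lr * (fraction - target_quantile)), where fraction is the noised share of clients whose update norm lies within the current bound. The function name, learning rate, and example norms are illustrative assumptions, and the noise is disabled in the example so the result is deterministic.

```python
import math
import random


def update_clip_norm(clip_norm, update_norms, target_quantile, lr, noise_std, rng):
    """Geometric update of the clipping norm toward a target quantile of update norms.

    Each client contributes a bit that is 1 if its update norm is within the current
    bound; the count of such clients is noised so that the quantile estimate is
    itself private, and the bound moves by exp(-lr * (fraction - target_quantile)).
    """
    count = sum(1 for n in update_norms if n <= clip_norm)
    frac = (count + rng.gauss(0.0, noise_std)) / len(update_norms)
    return clip_norm * math.exp(-lr * (frac - target_quantile))


rng = random.Random(0)
clip = 0.1  # deliberately poor initial guess
norms = [1.0, 2.0, 3.0, 4.0]  # hypothetical per-client update norms
for _ in range(200):
    clip = update_clip_norm(clip, norms, target_quantile=0.5, lr=0.2, noise_std=0.0, rng=rng)
print(clip)  # settles between 2.0 and 3.0, where half of the norms lie within the bound
```

Because the bound grows when too few updates fit under it and shrinks when too many do, the clipping norm no longer needs to be hand-tuned per task.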

3.2.3 Adversarial training

In recent years, privacy-preserving methods based on cryptography and perturbation have been widely applied in FL. These technologies mainly encrypt raw data or parameters and perform secure local computation, then pass the computation results to a third party for aggregation, which significantly reduces the risk of privacy leakage in distributed learning. However, malicious attackers can deploy GANs to steal data from honest participants. (Wang et al. 2019b) deployed a GAN on the central aggregation server to steal users' private data: using the computed gradient information, the adversary could reconstruct some or all of the private data. Many further approaches to stealing private data in FL systems through GANs have since been proposed. To resist such adversarial attacks, a substantial body of research on privacy protection in FL has emerged, with detection and defense as the main objectives. Defenses against adversarial attacks follow three main directions:

- Modify the training process, or modify the input samples during the testing stage.
- Modify the neural network, for example, by improving the activation function or loss function, or by adding or removing sublayers.
- Detect adversarial samples, or classify adversarial samples correctly.

Adversarial training (AT) is the first line of defense against adversarial attacks and has been introduced into FL to strengthen data privacy. In AT, federated model training uses both real and adversarial samples as the training set, and the adversarial perturbations strengthen the privacy of local real-world data. AT is an active defense technique: it anticipates attacks during the clients' training phase, making the global FL model robust to known adversarial attacks (Tramèr et al. 2017). AT requires large amounts of training data and strong adversarial samples; in return, it regularizes neural networks and reduces overfitting. This in turn enhances the resistance of the network and yields the best empirical robustness (Papernot et al. 2018; Croce and Hein 2020; Tramer et al. 2020).
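The training loop described above can be illustrated with a toy sketch that crafts adversarial samples with the fast gradient sign method (FGSM) against a logistic-regression model and trains on each clean sample together with its adversarial counterpart. FGSM, the linear model, the perturbation budget `eps`, and all function names are our choices for illustration; the surveyed works use other attacks and DNN models, and in FL this loop would run locally on each client.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """FGSM: move x by eps in the sign direction of the loss gradient w.r.t. x.

    For binary cross-entropy on a logistic model, dL/dx_i = (p - y) * w_i.
    """
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, eps, lr=0.1, epochs=100):
    """Train on every clean sample and its FGSM counterpart, crafted against the current model."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)
            for sample in (x, x_adv):
                err = predict(w, b, sample) - y  # dL/dz for the logistic loss
                w = [wi - lr * err * si for wi, si in zip(w, sample)]
                b -= lr * err
    return w, b


data = [([2.0, 1.0], 1), ([1.5, 2.0], 1), ([-2.0, -1.0], 0), ([-1.0, -2.5], 0)]
w, b = adversarial_train(data, eps=0.3)
robust = all((predict(w, b, fgsm(w, b, x, y, 0.3)) > 0.5) == (y == 1) for x, y in data)
print(robust)
```

Crafting the perturbation against the current model at every step, rather than once up front, is what makes the samples "adversarial" throughout training; it is also the source of AT's extra computational cost.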

Early AT defenses focused on detection and prevention. For example, (Baracaldo et al. 2017) used provenance information, such as data sources and transformations, to detect poisoned data points in a training set, and (Arjovsky et al. 2017) prevented inference attacks by generating fake training data. However, recent studies have found that the security threats to centralized AT are growing. (Song et al. 2019) found that AT makes ML models more vulnerable to membership inference attacks than models trained normally on the original training set. (Mejia et al. 2019) found that, through a model inversion attack, an attacker can use an AT model to generate images similar to actual training samples. (Zhang et al. 2022) developed a new privacy attack that compromised the privacy of DL systems trained with AT: feature information was first recovered from the gradients, and the recovered features were then used as supervision to reconstruct the inputs.

To address insecure AT, (Ryu and Choi 2022) proposed a hybrid AT method that trained DNN models on images denoised by a denoising network, clean images without denoising, and adversarial samples. This scheme improved the robustness of DNNs against a wide range of adversarial attacks. (Wang et al. 2022b) introduced a semi-supervised learning mechanism with virtual AT to avoid overfitting during DL model training. (Rashid et al. 2022) used IoT datasets to explore the impact of adversarial attacks on DL and proposed an AT-based method that significantly improved the performance of intrusion detection systems (IDSs) under adversarial attacks.

At present, most AT methods use adversarial examples to improve model robustness. However, most AT approaches require additional computation time and overhead to compute gradients. To this end, (Jia et al. 2022) designed a sample-dependent adversarial initialization method for fast AT, which generated the initialization from benign samples and their gradients on the target network being trained. However, this method did not consider the high computational cost and time required to deploy large-scale AT on resource-constrained edge devices in FL networks. (Tang et al. 2022) proposed a federated adversarial decoupled learning framework that applied decoupled greedy learning (DGL) to federated AT to reduce computation and memory usage. In addition, the framework added an auxiliary weight decay to improve vanilla DGL and mitigate objective inconsistency. Experimental results showed that the framework significantly reduced the computing resources consumed by AT while maintaining almost the same accuracy and robustness as joint training.

Most researchers have focused on the accuracy of AT models. AT injects adversarial samples into model training to improve the robustness of DNN models against adversarial attacks. Although adversarial samples differ only slightly from the original samples, training on them may reduce model accuracy. To address this, (Yu et al. 2022a) designed a meta-learning-based AT framework to avoid the performance degradation caused by the generated adversarial samples. (Zhou et al. 2022) proposed a latent-boundary-guided AT framework that trained DNN models on adversarial samples guided by latent boundaries; high-quality adversarial samples were generated by adding perturbations to latent features. This approach achieved a better trade-off between standard accuracy and adversarial robustness. In general, AT improves the privacy of user data, because adding adversarial samples reduces the threat of inference against the actual training data. In recent research on improving robustness in AT, the trade-off between standard accuracy and robustness has received widespread attention, which also suggests a direction for the future development of federated adversarial training.

3.2.4 Blockchain

The traditional centralized FL framework relies on a central aggregation server and therefore has a single point of failure. Under heavy communication load, the central node incurs high communication costs and low efficiency. Participants lack incentive mechanisms and therefore have little motivation to join collaborative learning. There is also a lack of security mechanisms to identify malicious users who compromise the model. To address these shortcomings, many researchers have combined blockchain with FL. First, the participating nodes of the blockchain replace the central server, mitigating the single-point-of-failure problem. Next, miner nodes process the model update parameters of local devices; raw data are never uploaded. The blockchain consensus mechanism is then used to verify and record the local model updates. The model parameters uploaded by local devices are aggregated, and the resulting global model update is added to a new block. Finally, each local device downloads the global model from the blockchain.
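The record-keeping part of this workflow can be sketched as a hash-linked ledger in which each block stores one round's aggregated global model together with the hash of the previous block. This is a toy illustration under stated assumptions: mining, consensus, and networking are not modeled, plain federated averaging stands in for aggregation, and all function names are hypothetical.

```python
import hashlib
import json


def block_hash(block):
    """SHA-256 over a canonical JSON encoding of the block."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode("utf-8")).hexdigest()


def aggregate(local_models):
    """Plain federated averaging of the local models uploaded in one round."""
    n = len(local_models)
    return [sum(values) / n for values in zip(*local_models)]


def append_block(chain, round_id, global_model):
    """Record a round's global model in a new block linked to the previous one."""
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64
    block = {"round": round_id, "prev_hash": prev_hash, "global_model": global_model}
    chain.append(block)
    return block


def verify_chain(chain):
    """A chain is valid only if every block stores the hash of its predecessor."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain)))


ledger = []
append_block(ledger, 1, aggregate([[1.0, 2.0], [3.0, 4.0]]))
append_block(ledger, 2, aggregate([[2.0, 2.0], [4.0, 6.0]]))
print(verify_chain(ledger))          # True
ledger[0]["global_model"][0] = 99.0  # tamper with a recorded round
print(verify_chain(ledger))          # False
```

Hash links alone do not catch tampering with the most recent block; in a real blockchain, consensus among nodes that hold replicated copies covers that case, which this sketch does not model.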

(Miao et al. 2022) designed a blockchain-based privacy-preserving Byzantine-robust FL (PBFL) scheme. PBFL used cosine similarity to identify malicious gradients uploaded by clients, and fully homomorphic encryption to provide secure aggregation. PBFL further uses a blockchain system to make its processes and rules transparent, thereby mitigating the impacts of the central server and malicious clients. (Durga and Poovammal 2022) proposed a framework combining blockchain and FL, in which the FL model was responsible for reducing complexity, whereas the blockchain helped protect the privacy of distributed data. This framework used a hybrid capsule learning network to build models that protected privacy while making accurate predictions. (Wang et al. 2022c) addressed the problem of untrusted third parties in FL by adopting a distributed blockchain to distribute tasks and collect models, and proposed a reputation calculation method to compute the real-time reputations of task participants. (Yu et al. 2022b) designed an overall framework for a blockchain-based FL system that used distributed ledger technology to mitigate single points of failure and interference from low-quality or poisoned data in FL systems, with the aim of enhancing their security and scalability.
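The cosine-similarity screening step can be illustrated with a plaintext sketch. PBFL performs its screening under fully homomorphic encryption, which is not modeled here; the choice of the coordinate-wise median as the reference direction, the zero threshold, and the function names are assumptions made for this example only.

```python
import math
import statistics


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def filter_and_aggregate(gradients, threshold=0.0):
    """Drop gradients whose direction disagrees with a robust reference, then average.

    The reference is the coordinate-wise median of all client gradients (an assumption
    for this sketch). Returns the averaged gradient and the indices flagged as suspicious.
    """
    reference = [statistics.median(col) for col in zip(*gradients)]
    kept, flagged = [], []
    for i, g in enumerate(gradients):
        if cosine(g, reference) > threshold:
            kept.append(i)
        else:
            flagged.append(i)
    if not kept:
        raise ValueError("every gradient was flagged; cannot aggregate")
    mean = [sum(gradients[i][d] for i in kept) / len(kept) for d in range(len(reference))]
    return mean, flagged


grads = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.0], [-5.0, -5.0]]  # the last client is malicious
print(filter_and_aggregate(grads))
```

Using a median-based reference keeps a single large malicious gradient from dragging the reference direction toward itself, which a plain mean would allow.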

The blockchain-based FL technologies introduced above use the characteristics of blockchain to alleviate privacy and security issues in FL systems. However, blockchain technology can increase the complexity of FL systems, and an inefficient consensus mechanism may make the whole FL system inefficient. Many researchers therefore regard efficient, lightweight blockchain consensus mechanisms as a major research hotspot for blockchain-based FL. (Yang et al. 2022) designed a credit data and model-sharing architecture based on FL and blockchain, which ensured the secure storage and sharing of credit information in a distributed environment. The architecture included a permission control contract and a credit verification contract for the security authentication of the results of the credit-sharing model under FL, and its efficient credit data storage mechanism, combined with a removable Bloom filter, ensured a unified consensus over the training and computation processes. (Li et al. 2022b) reviewed existing quantum blockchain schemes and analyzed why current blockchain consensus mechanisms are inefficient. They constructed a consensus mechanism called quantum delegated proof of stake using quantum voting, which provides rapid decentralization for quantum blockchain schemes. (Du et al. 2023b) investigated a blockchain-assisted EC scenario, proposing a smart-contract-based matching mechanism to establish lease associations between EC nodes and data service operators, and a trust-driven proof-of-benefit consensus mechanism to verify transactions and distribute remuneration fairly.
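The text does not specify how the removable Bloom filter is built. A common construction that supports deletion is the counting Bloom filter, in which each position holds a counter instead of a bit; the sketch below assumes that construction, and the class name, size, and number of hash functions are illustrative choices.

```python
import hashlib


class CountingBloomFilter:
    """Bloom filter with per-position counters, so items can also be removed."""

    def __init__(self, size=1024, num_hashes=3):
        self.size = size
        self.num_hashes = num_hashes
        self.counters = [0] * size

    def _positions(self, item):
        # Derive num_hashes positions by salting SHA-256 with the hash index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item):
        for p in self._positions(item):
            self.counters[p] += 1

    def remove(self, item):
        # Removing an item that was never added would corrupt the counters.
        if item not in self:
            raise KeyError(item)
        for p in self._positions(item):
            self.counters[p] -= 1

    def __contains__(self, item):
        # May return a false positive, but never a false negative for added items.
        return all(self.counters[p] > 0 for p in self._positions(item))


cbf = CountingBloomFilter()
cbf.add("record-1")
cbf.add("record-2")
cbf.remove("record-1")
print("record-2" in cbf)  # True
```

Counters let one record be deleted without clearing positions that other records still share, which a plain bit-array Bloom filter cannot do.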

The integration of blockchain and FL has largely alleviated the issues faced by traditional FL. However, problems caused by the blockchain itself remain after integration. For example, traditional blockchain consensus mechanisms and network structures lead to long transaction confirmation times, limited throughput, and complex communication structures, which increase the delay in aggregating model updates in the blockchain network in each FL round. Moreover, because each FL participant uses a different local device, the delays with which updated models are uploaded to the blockchain network may not be uniform, which can reduce the prediction accuracy of the trained global model (Zhu et al. 2022). Given these problems with blockchain-based FL frameworks, decentralized FL architectures are rapidly gaining popularity (Hu et al. 2019). Decentralized training has also been shown to be more efficient than centralized training when running federated systems over low-bandwidth or high-latency networks (Xiao et al. 2020; Liu et al. 2020; Jiang et al. 2020). In the blockchain-based asynchronous FL framework proposed by (Feng et al. 2021), the blockchain ensures that on-chain model parameters are not tampered with, while asynchronous FL accelerates global aggregation. We believe that an asynchronous, blockchain-based FL framework can, to a certain extent, resolve the tension among privacy, security, and efficiency in current FL technology.
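How asynchronous aggregation tolerates non-uniform device delays can be sketched with a staleness-weighted mixing rule: each client model is merged as soon as it arrives, with a weight that decays polynomially in how many global versions old its starting point is. This rule follows the FedAsync style of asynchronous FL and is our illustrative choice, not necessarily Feng et al.'s aggregation rule; the blockchain layer is not modeled, and `alpha` and `a` are hypothetical hyperparameters.

```python
def staleness_weight(staleness, a=0.5):
    """Polynomial staleness discount: (staleness + 1) ** (-a)."""
    return (staleness + 1) ** (-a)


def async_update(global_model, client_model, current_version, client_version, alpha=0.6, a=0.5):
    """Mix one client's model into the global model as soon as it arrives.

    client_version is the global version the client started training from;
    staler updates receive a smaller mixing weight.
    """
    alpha_t = alpha * staleness_weight(current_version - client_version, a)
    return [(1 - alpha_t) * g + alpha_t * c for g, c in zip(global_model, client_model)]


print(async_update([0.0], [1.0], current_version=5, client_version=5))  # fresh: weight 0.6
print(async_update([0.0], [1.0], current_version=5, client_version=2))  # 3 versions stale: weight 0.3
```

The server never waits for slow devices, and discounting stale updates limits the accuracy loss that uneven delays would otherwise cause.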

3.2.5 Knowledge distillation

Knowledge distillation (KD) originated from the idea of transferring knowledge from large models to small models and was formally proposed by (Hinton et al. 2015). The core idea of KD is knowledge transfer: a student model achieves accuracy comparable to that of a teacher model by imitating it. A complete KD system consists of three parts: the knowledge, the distillation algorithm, and the teacher-student architecture. The distillation algorithm is the core step, as it determines how the knowledge of the teacher model is transferred to the student model.
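One common distillation objective in the style of Hinton et al. (2015) is sketched below: the student matches the teacher's temperature-softened class distribution, with the soft term scaled by T squared, plus an ordinary cross-entropy term on the true label. We use the KL divergence, which differs from the soft cross-entropy only by a constant that does not depend on the student; the temperature, the weight `alpha`, and the function names are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(label, student)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * soft + (1 - alpha) * hard


teacher = [2.0, 0.0]
print(distillation_loss([2.0, 0.0], teacher, label=0))  # student already matches the teacher
print(distillation_loss([0.0, 2.0], teacher, label=0))  # student disagrees: larger loss
```

A higher temperature flattens the teacher's distribution so that the relative probabilities of the wrong classes, the "dark knowledge" the student imitates, carry more signal.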