Abstract

A multi-objective coyote optimization algorithm based on hybrid elite framework and Meta-Lamarckian learning strategy (MOCOA-ML) was proposed to solve the optimal power flow (OPF) problem. MOCOA-ML adds external archives with grid mechanism on the basis of elite non-dominated sorting. It can guarantee the diversity of the population while obtaining the Pareto solution set. When selecting elite coyotes, there is a greater probability to select the elite in sparse areas, which is conducive to the development of sparse areas. In addition, combined with Meta-Lamarckian learning strategy, based on four crossover operators (horizontal crossover operator, longitudinal crossover operator, elite crossover operator and direct crossover operator), the local search method is adaptively selected for optimization, and its convergence performance is improved. First, the simulation is carried out in 20 test functions, and compared with MODA, MOPSO, MOJAYA, NSGA-II, MOEA/D, MOAOS and MOTEO. The experimental results showed that MOCOA-ML achieved the best inverted generational distance value and the best hypervolume value in 11 and 13 test functions, respectively. Then, MOCOA-ML is used to solve the optimal power flow problem. Taking the fuel cost, power loss and total emissions as objective functions, the tests of two-objective and three-objective bechmark problems are carried out on IEEE 30-bus system and IEEE 57-bus system. The results are compared with MOPSO, MOGWO and MSSA algorithms. The experimental results of OPF demonstrate that MOCOA-ML can find competitive solutions and ranks first in six cases. It also shows that the proposed method has obtained a satisfactory uniform Pareto front.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The optimal power flow (OPF) problem is a large-scale, highly nonlinear, non-convex optimization problem (Singh et al. 2021). In 1962, Carpentier studied economic scheduling and added more constraints (Carpentier 1962). This expanded the economic dispatch problem, which is served as the foundation for the development of the OPF problem. The basic task of the power system is to operate safely and reliably to meet the power supply demand of the load side (Abdelaziz et al. 2016). The OPF flow is an effective tool, which can help experts make decisions on the planning and dispatching of power systems. Its core process is to adjust the control variables, such as active power generated by thermal power units, and obtain the power transmitted on each branch and the voltage of each node through power flow calculation. Through multiple iterations, the decision variables of the system are modified to obtain satisfactory operation status (Khunkitti et al. 2021).

The OPF problem has many constraints and multiple local optimal solutions (Davoodi et al. 2018). It means that there are a lot of infeasible solutions in the system. And it is easy to fall into the local optimal and stagnate in the process of solving. It is difficult for traditional optimization methods to find satisfactory results, such as nonlinear programming (Lavaei and Low 2011), Newton method (Santos and Costa 1995) and gradient method (Dommel and Tinney 1968). Intelligent algorithms with global search capabilities have received widespread attention. They are widely used in optimization problems in science and engineering, and can obtain competitive solutions. Many intelligent algorithms are employed in order to tackle the OPF problems and economic dispatch problem, including Particle Swarm Optimization (PSO) (Gomez-Gonzalez et al. 2012), Cuckoo Search (CS) (Ponnusamy and Rengarajan 2014), Ant Lion Optimizer (ALO) (Ali et al. 2016), Differential Evolution (DE) (Sayah and Zehar 2008) and Mine Blast Algorithm (MBA) (Ali and Abd Elazim 2018). These algorithms employ continuous iterations until a predefined termination condition is met. In each iteration, each individual updates according to certain rules or formulas, and constantly moves to the global optimal position. Many algorithms are faced with the problems of unbalanced exploration and exploitation and difficulty in jumping out of the local optimum. Some scholars have proposed different improvement strategies for the OPF problem.

Salma et al. proposed an improved salp swarm algorithm (ISSA), which incorporates random mutation and adaptively adjusts the exploration and exploitation process. The study considers the multiple fuel costs, valve point effects and prohibited operating zones of generators in the OPF system. ISSA has been able to find competitive solutions in multiple case studies (Abd El-sattar et al. 2021). Awad et al. proposed a new differential evolution algorithm, called DEa-AR, to solve the stochastic optimal active–reactive power dispatch (OARPD) problems involving renewable energy. DEa-AR uses arithmetic compound crossover strategy and adjusts the scaling factor based on Laplacian distribution. It also added an archive to place the inferior solution for later use. The simulation results show that the proposed algorithm can effectively solve the OARPD problem containing renewable energy and provide a high-quality solution (Awad et al. 2019). Farhat et al. proposed an enhanced slime mold algorithm (ESMA) based on neighborhood dimension learning search strategy so as to enhance its exploitation capability. Its test system incorporates wind and photovoltaic generators, and its objective function incorporates a carbon tax in order to reduce emissions. The testing results show that ESMA obtains the optimal solution and show better convergence performance (Farhat et al. 2022). Bentuati proposed an Enhanced Moth Swarm Algorithm (EMSA), which combines MSA with a reverse learning strategy to maintain the diversity of the moth population. It was tested in 12 cases of three OPF testing systems, and the results showed that EMSA had better performance (Bentouati et al. 2021).

Real problems usually have multiple objective functions. If these indexes do not conflict with each other, an optimal solution can be found by using optimization techniques. However, it is more common for objective functions to conflict with each other, and the improvement of one objective function will inevitably lead to the reduction of another objective function. This problem is known as a multi-objective optimization problem (MOP), and its optimal solutions form a set called the Pareto solution set (Rizk-Allah et al. 2020). The OPF problem consists of multiple objective functions, such as thermal power unit fuel costs, active power loss and emissions, which are inherently conflicting with one another (Fonseca and Fleming 1993). Therefore, the OPF problem is regarded as a multi-objective optimization problem to balance these conflicting objective functions. In many literatures above, the OPF problem is treated as a single objective optimization problem, and its objective functions are optimized separately. However, this approach is no longer suitable at present. American ecosystem conservation organizations strongly urge power plants not only to pursue the lowest power generation cost, but also to consider the pollution index (Taher et al. 2019). So the trend in recent years is to develop a multi-objective method to solve the OPF problems. Intelligent algorithms combining multi-objective thought have achieved exciting results on this problem, including NSGA-II (Jeyadevi et al. 2011), MOPSO (Hazra and Sinha 2011), MOEA/D (Medina et al. 2014), MOGJO (Snášel et al. 2023), etc. According to the law that there is no free lunch in the world, no perfect algorithm can have excellent performance in any problem, so the multi-objective optimization algorithm for OPF needs further research.

Shabanpour et al. proposed a modified teaching–learning-based optimization (MTLBO) based on an adaptive wavelet mutation strategy, which attached an external archive and used fuzzy clustering techniques to maintain the diversity of the external archive. It solves the multi-objective OPF problem including power generation cost and emissions, and obtains a set of Pareto solutions (Shabanpour-Haghighi et al. 2014). El-Sattar et al. used a Jaya optimization algorithm to solve the OPF problem, and solved the single-objective and multi-objective cases respectively. In the multi-objective framework, the Jaya algorithm is combined with the Pareto concept to obtain the non-dominant solution, and then the fuzzy set theory is used to obtain the optimal compromise solution. However, the solution set obtained by this method in solving multi-objective OPF problem is uneven (El-Sattar et al. 2019). Zhang proposed an improved decomposition method based on multi-objective evolutionary algorithm (MOEA/D) to deal with the competition of each index in the optimal power flow. An improved Chebyshev decomposition method is introduced to decompose each index in order to obtain uniformly distributed Pareto frontiers on each target. Simulation results show that it can find well-distributed Pareto solution sets (Zhang et al. 2016). Khan et al. proposed a multi-objective hybrid firefly and particle swarm optimization algorithm (MOHFPSO) by using a multi-objective structure based on non-dominated sorting and crowded distance methods. And MOHFPSO applied the ideal distance minimization method to select the optimal compromise solution from the Pareto optimal set. Although the Pareto solution set obtained is improved compared with the standard algorithm, its coverage rate decreases (Khan et al. 2020). Chen et al. proposed a Novel Hybrid Bat Algorithm (NHBA) to modify the local search formula and add a mutation mechanism by using a monotone random fill model (MRFME) based on extreme value. In order to obtain more feasible solutions, a non-dominated sorting method combining the Pareto fuzzy dominance (CPFD) of constraints is proposed. The results of OPF show that this method can deal with constraints better (Chen et al. 2019). Zhang et al. improved the NSGA-III algorithm named I-NSGA-III and applied it to the multi-objective OPF problem. An adaptive elimination strategy was proposed to reduce the use of selection strategies, and boundary point preservation strategy was integrated to maintain population diversity. Experimental results of OPF show that the proposed algorithm had better performance on three objectives, but not on two objectives (Zhang et al. 2019).

Multi-objective optimization algorithms often employ two strategies: population elitism and archive elitism. Population elitism algorithms (such as MOJAYA, MOHFPSO, NHBA) typically have a fixed population size. Excellent individuals may not be preserved and can be discarded during the evolutionary process. For algorithms with an archive (such as MTLBO, MOEA/D), the archive is usually used to store non-dominated solutions. However, the evolutionary process of the population is non-greedy, which does not guarantee the convergence and stability of the algorithm’s search for optimal solutions. We believe that combining these two aspects can help maintain the stability of the algorithm’s search for optimal solutions and find better solutions. Moreover, due to the different nature and characteristics of various problems, the same operator may perform well or poorly on different problems. For example, DE algorithm has developed many operators to adapt to different types of optimization problems. In the absence of prior knowledge, we are committed to developing an adaptive, parameter-free local optimizer that allows the algorithm to spontaneously select the appropriate operator for position updating.

In this paper, the coyote optimization algorithm (COA) is selected for research. COA is a new optimization algorithm proposed by Pierezan and Coelho in 2018 (Pierezan and Coelho 2018). COA combines the principles of evolution and swarm intelligence and has a unique algorithm setup, which includes swarm search of sub-populations and considers the birth and death process of coyotes. The algorithm has demonstrated excellent performance and has been successfully applied in numerous fields. Souza proposed a binary version of COA, which utilizes a hyperbolic transfer function to select the best feature subset for classification and employs the naive Bayes classifier to verify the performance of COA. The results show that COA can find subsets with fewer features and achieve better classification accuracy (Souza et al. 2020). Li added a differential evolution strategy to COA and combined it with the fuzzy Kapoor entropy and fuzzy median aggregation method to utilize it in the realm of threshold image segmentation and exhibit improved image segmentation quality (Li et al. 2021). Ali applied COA to solve the Unit Commitment (UC) problem in power systems, which aims to satisfy constraints while achieving an economic minimum cost over time. During the simulation experiments, COA was employed to determine the optimal generation schedule. The obtained results demonstrated that COA outperformed the existing literature in terms of both total cost reduction and shorter CPU running time (Ali et al. 2023).

Existing multi-objective algorithms for the multi-objective OPF problem face challenges in balancing convergence and diversity simultaneously. It is necessary to provide sufficient pressure during the offspring selection process and enhance the diversity and convergence of multi-objective optimization algorithms. This will help in discovering a higher quality solution set in the MOOPF problem. In this paper, a multi-objective COA based on hybrid elite mechanism and Meta-Lamarckian learning strategy (MOCOA-ML) is proposed for solving multi-objective OPF problems. The main contributions are as follows.

-

(1)

The coyote optimization algorithm was combined with non-dominant ranking. Non-dominant ranking was used to judge the dominant relationship among individuals, and the individuals equal to the population number were selected from all the individuals to enter the next iteration.

-

(2)

An external archive is added to retain the excellent individuals, which is similar to the archive in MOPSO and adopts the mechanism of grid. The role of the archive is to make the stored solution set more diverse and to have a greater probability of selecting the elite in the sparse area when selecting the elite coyote in COA. It is conducive to the development of the sparse area.

-

(3)

A local development optimizer based on the Meta-Lamarckian learning strategy is proposed to optimize the population solution. The local optimizer integrates four kinds of crossover operators, and adaptively adjusts the probability of each operator in the optimization process to achieve more efficient search.

The remaining sections of the paper are organized as follows. In Sect. 2, the mathematical model of the OPF problem is presented. In Sect. 3, the proposed MOCOA based on hybrid elite mechanism and Meta-Lamarckian learning is introduced. In Sect. 4, the performance of the proposed algorithm is tested by benchmark functions. In Sect. 5, six cases are selected in IEEE 30-node system and IEEE 57-node system for simulation experiments. In Sect. 6, the conclusion and future research direction are presented.

2 Mathematical model of multi-objective optimal power flow problem

2.1 Multi-objective optimization problem

Comparing solutions is a simple task in single-objective optimization since there is only one objective function to consider. For the minimization problem, the solution X is superior to Y if and only if f(X) is less than f(Y). However, in the field of multi-objective problems, each solution has multiple evaluation indexes, so some definitions need to be introduced.

Definition 1

Pareto domination. When a solution X is superior to a solution Y in all objectives, then the solution X dominates the solution Y, or alternatively, the solution X is dominated by the solution Y. If the solution X has at least one goal better than the solution Y, and there is some index worse than the solution Y, then the solution X and the solution Y do not dominate each other.

Definition 2

Pareto optimal solution. Solutions that are not dominated by either solution are called Pareto optimal solutions and are also called non-dominated solutions.

Definition 3

Pareto solution set. A set of non-dominant solutions is called a Pareto solution set.

Definition 4

Pareto frontier. Pareto solution sets form Pareto frontier after function mapping.

The multi-objective optimal power flow (MOOPF) problem is a constrained optimization problem. The objective is to minimize the selected objective functions under the condition of satisfying the equality constraints and inequality constraints. Since each index conflicts with each other, the answer to this problem is a Pareto solution set, which represents the optimal trade-off between multiple objectives. Mathematically, the MOOPF problem can be expressed in the following form:

where, \(f(x, y)\) represents the objective function of the OPF problem; \(F\left(x, y\right)\) represents the set of multiple objective functions; \(g(x,y)\) represents the equality constraint; The inequality constraint is represented by \(h(x,y)\); \(x\) and \(y\) represent control variables and state variables respectively.

2.2 Objective function

In this experiment, a total of three objective functions are selected, which are fuel cost, active power loss and pollution emission.

2.2.1 Fuel cost

The fuel cost of each thermal power unit has a certain functional relationship with the active power. In the study, approximate fitting is performed in the form of quadratic function, which is shown in Eq. (2).

where, \({a}_{i}\), \({b}_{i}\), \({c}_{i}\) are the fuel cost coefficient of the \(i\)-th generator, and \({P}_{{G}_{i}}\) are the active power emitted by the \(i\)-th generator; \({N}_{G}\) is the total number of generators.

2.2.2 Active power loss

There are resistance and conductance with fixed parameters in transmission line. Active power loss occurs when power is transferred through the grid. The mathematical formula of active power loss is shown in Eq. (3).

where, \(i\) and \(j\) are the \(i\)-th and \(j\)-th nodes respectively, \({G}_{ij}\) is the conductance between the two nodes, \(V\) is the node voltage, and \(\delta\) is the phase angle corresponding to the node voltage; \(Nl\) is the total number of transmission lines.

2.2.3 Emission

In the current society, environmental protection is an important topic. It is necessary to reduce the emission index of thermal power units. The total emission of air pollutants such as \({CO}_{x}\) and \({NO}_{x}\) produced by thermal power units can be defined as:

where, \({\alpha }_{i}\), \({\beta }_{i}\), \({\gamma }_{i}\), \({\xi }_{i}\) and \({\lambda }_{i}\) are the emission coefficients of the \(i\)-th generator.

2.3 Control variables

The control variables are the quantity that can be adjusted manually in the power system. They mainly include the active power output by the generator, the voltage of the generator bus, the tap position of the on-load tap changer and the reactive power of the shunt capacitor. The operation state of the power system can be changed by changing the control variables.

where, the active power output of the generator is\({P}_{{G}_{2}}, ...,{P}_{{G}_{NG}}\); The magnitude of the generator bus voltage is\({V}_{{G}_{1}}, ...,{V}_{{G}_{NG}}\); \({T}_{1}, ...{,T}_{NT}\) is the setting of the transformer tap position; \({Q}_{{C}_{1}}, ..., {Q}_{{C}_{NC}}\) is the reactive capacity of shunt capacitor; \(NT\) is the number of transformers; \(NC\) is the number of reactive capacitors.

2.4 State variables

State variables are called dependent variables, which changes as the control variable changes. The state variable in the OPF problem is shown in Eq. (6). Once the control variables in the system are defined, by employing the Newton–Raphson method, the power flow of the entire system and the voltage value of each node can be determined.

where, \({P}_{{G}_{1}}\) is the active power input by the balance node (in 30-node system and 57-node system); NL respectively represent the number of load nodes (PQ nodes). \({V}_{{L}_{1}}, ...,{V}_{{L}_{NL}}\) is the voltage of each load node in the power system; \({Q}_{{G}_{1}}, ...,{Q}_{{G}_{NG}}\) is the reactive power generated by the generator; \({S}_{{l}_{1}}, ..., {S}_{{l}_{Nl}}\) is the power transmitted on the line;

2.5 Equality constraints

The power in the power system must satisfy the law of conservation of energy, which means that the power emitted is equal to the power consumed. The most typical equality constraint is the balance of active power and reactive power in the system, as shown in Eqs. (7 and 8).

Equation (7) is the active power equation constraint, and \({P}_{{D}_{i}}\) is the active power demand of load. Equation (8) is the constraint of reactive power equation, and \({Q}_{{D}_{i}}\) is the reactive power demand of load. \({\delta }_{i}\) represents the phase Angle of the \(i\)-th bus. \({G}_{ij}\) and \({B}_{ij}\) are the conductance and inductance of the transmission line between the \(i\)-th bus and the \(j\)-th bus, respectively. \(NB\) indicates the number of nodes.

No additional treatment is needed for this equality constraint, because the termination condition of Newton–Raphson method can meet Eqs. (7 and 8). The successful execution of the power flow calculation program indicates that the results conform to the equation constraints.

2.6 Inequality constraints

Inequality constraints mainly restrict the safe operation of devices in the system. The following four parts are considered here: generator constraints, reactive capacitor capacity constraints, transformer constraints and safety constraints.

(1) Generator constraints

(2) Reactive compensation constraint

(3) Transformer constraint

(4) Safety constraints

where, \({{\text{S}}}_{{{\text{l}}}_{{\text{n}}}}^{{\text{max}}}\) represents the maximum transmission power on the i-th transmission line.

Some of these inequality constraints restrict the value range of control variables, and the upper and lower limits of control variables can meet these inequality constraints. The other part is to limit the value range of the state variables and the penalty function method is selected to deal with it. The penalty function method can transform the constrained optimization problem into an unconstrained optimization problem. Equations (16 and 17) are the penalty function and the modified objective function formula respectively.

where, \({f}_{i}\) is the i-th objective function; \(penalty\) is a penalty item; The value of \({k}_{p}\) is set to \({10}^{6}\); The value of \({k}_{Q}\) is set to \({10}^{6}\); The value of \({k}_{V}\) is set to \({10}^{9}\); The value of \({k}_{S}\) is set to \({10}^{6}\). The voltage constraint of the load node is easily violated, so the maximum penalty coefficient is set for it.

2.7 Fuzzy membership function

After solving the MOOPF problem, a set of Pareto solutions are obtained. Because these solutions are in the same dominant level, the pros and cons of each solution in Pareto frontier cannot be directly judged. In MOP, the fuzzy system can be used to deal with the contradictory relations of various objective functions. The concept of fuzzy membership function is introduced in Ref. Hazra and Sinha (2011). Membership function defined by a single objective function can be described as follows:

where, \({f}_{i}^{max}\) is the maximum value of the \(i\)-th objective function in Pareto solution set, and \({f}_{i}^{min}\) is the minimum value of the \(i\)-th objective function in Pareto solution set. The image of this function is shown in Fig. 1.

For the \(k\)-th individual in the solution set, the normalized membership function \({\mu }^{k}\) is defined as follows:

where, \(Po\) represents the number of Pareto solution sets, and \(N\) represents the number of objective functions.

The greater the value of normalized membership function \({\mu }^{k}\), the higher the satisfaction of the solution. The solution with the maximum membership function is the best compromise solution.

3 Multi-objective coyote optimization algorithm based on hybrid elite mechanism and Meta-Lamarckian learning strategy

3.1 Coyote optimization algorithm

COA is inspired by the behavior of coyotes and operates on a swarm-based approach. COA does not prioritize the wolf hierarchy and has a distinct algorithmic structure. The focus of COA is to imitate the social structure and experience-sharing aspect of coyotes. In the COA, the population of coyotes is divided into \({N}_{p}\) packs with \({N}_{c}\) coyotes in each pack. The number of coyotes in each pack is fixed. Therefore, the population number in this algorithm is obtained by multiplying \({N}_{p}\) and \({N}_{c}\). Each coyote has a social condition attribute (a set of decision variables), and the social condition of the \(c\)-th coyote of the \(p\)-th pack is written as:

where, \(SOC\) represents the decision variable, \(D\) is the search space dimension. The first step is to initialize the coyote population. As a randomized algorithm, the initial social conditions for each coyote of COA are set randomly. It passes through Eq. (21) and assign a random value to the \(j\)-th dimension of the \(c\)-th coyote of the \(p\)-th pack in the searching space during the t-th iteration.

where, \({lb}_{j}\) and \({ub}_{j}\) represent the lower and upper bounds of the \(j\)-th dimensional control variable respectively, \({r}_{j}\) is a random number between [0, 1]. Coyotes were then assessed for their adaptation to current social conditions.

where, \({fit}_{c}^{p,t}\) is the fitness value (objective function value).

There is one alpha coyote in each pack, and it is the individual with the best fitness value. In the minimization problem, the alpha of the \(p\)-th pack at time \(t\)-th is defined as:

COA assumes that coyotes have a certain amount of intelligence and organization, and each population shares social conditions that will help the population develop. Thus, the COA associates individual information from coyotes and calculates it as a cultural trend for the group.

where, \({O}^{p,t}\) denotes the social condition ranking of all coyotes in the \(p\)-th pack in the range [1, D] at the \(t\)-th iteration. All in all, the cultural disposition of the pack was equal to the median of the social conditions of all coyotes in the pack.

For showing the social conditions of different coyotes in pack affect each other, the COA assumes that each coyote individual receives alpha effects (\({\delta }_{1}\)) and population effects (\({\delta }_{2}\)). The former represents the cultural difference between the random coyote \({cr}_{1}\) and the alpha coyote, while the latter represents the difference between the cultural tendency of the random coyote \({cr}_{2}\) and the group. \({\delta }_{1}\) and \({\delta }_{2}\) are shown in Eq. (25).

Therefore, the new social conditions of coyotes are updated by the influence of alpha and group.

where, \({r}_{1}\) represents the weights affected by alpha and population. \({r}_{1}\) is defined as random numbers in the range [0, 1] generated with uniform distribution. \({r}_{2}\) decreases linearly with the number of iterations, \({r}_{2}=1-it/Maxit\). The new social situation is then assessed by Eq. (27).

Coyotes have the cognitive ability to judge whether new social conditions are better than old ones, which means that only when they get better social conditions, they will be updated.

After each pack position update, coyote births and deaths are considered. The birth of a new coyote is a crossover of the social conditions of the parents (chosen at random) and then the random effects of the environment. The formula for the birth is shown in Eq. (29).

where, \({r}_{1}\) and \({r}_{2}\) are random coyote individuals in the \(p\)-th pack; \({j}_{1}\) and \({j}_{2}\) are the two random dimensions of the problem; \({P}_{s}\) is the scattering probability, \({P}_{a}\) is the association probability; \({R}_{j}\) is a random number in the range of control variables for the \(j\)-th dimension; and \({rnd}_{j}\) is a random number generated with a uniform distribution in the range [0, 1]. Scattering and association probabilities guide the cultural diversity of coyotes so that \({P}_{s}\) and \({P}_{a}\) can be defined as:

where, \({P}_{a}\) has the same effect on both parents.

After evaluating the fitness values of all coyotes, the one with the highest fitness value is chosen as the global optimal solution for the problem. The pseudo code for COA is presented in Algorithm 1.

3.2 Meta-Lamarckian learning

Traditionally, Meta-Lamarckian Learning is often used in memetic algorithm (MA), and a local search process is added after the iterative update of MA (Ong and Keane 2004). It is difficult for a single local search method to achieve good results in different problems. Therefore, multiple local search (LS) methods are often used in MA searches. Meta-Lamarckian learning is motivated by the desire to improve search performance and reduce the probability of using inappropriate local methods. Meta-Lamarckian learning (adaptive) strategy can be described as cooperative and/or competitive. Competition means that LS method with higher fitness improvement has a higher chance to be selected for subsequent optimization. Cooperation means that LS and their improvement rewards work together to select an LS for subsequent optimization (Konstantinidis et al. 2018). Meta-Lamarckian Learning usually uses the improvement of the fitness value of a single objective function as an indicator to establish a reward mechanism, so it is often used in single-objective optimization and multi-objective optimization based on decomposition. For being used in multi-objective optimization, the reward mechanism is defined as follows:

where, \({\rho }_{k}\) is the reward value of the \(k\)-th LS method, \(n\) is the number of times that the LS method is used in the iteration, and \({n}_{s}\) is the number of times that the LS method is used to generate a non-inferior solution.

The incentive mechanism is to calculate the ratio of the number of non-inferior solutions generated by using each local optimization strategy to the number of generated individuals. In each iteration, after obtaining the reward value \({\rho }_{k}\) of each LS method, the probability of updating the roulette LS method after normalization is used for the next iteration. In other words, if a certain LS is used to generate the highest proportion of high-quality solutions, it is more likely to be selected in the next iteration. The probability of each LS method being selected at the beginning is equal. With the progress of iteration, the method with high reward value obtains higher probability of adoption. Random roulette works as follows:

-

Step 1: Calculate the reward value \({\rho }_{k}\) of each LS method.

-

Step 2: Standardize (normalize) the reward value of each LS method to obtain the relative reward value.

-

Step 3: Allocate space for each LS based on relative reward value.

-

Step 4: Generate a random number and select the LS method of the disk position corresponding to the random number.

The common local optimization methods include crossover, mutation, Powell method and simplex search method. In this experiment, a total of four crossover operators are adopted into the local optimizer, which are respectively called horizontal crossover operator, longitudinal crossover operator, elite crossover operator and direct crossover operator.

3.2.1 Transverse crossover operator

Inspired by the crisscross optimization algorithm (CSO) (Meng et al. 2014), the transverse crossing process is selected as the LS. As shown in Eq. (32), the function of this operator is to generate new individuals at the position between parents with a high probability and individuals at the extension line of parents with a low probability.

where, \({Xnew}_{i,d}\) is the i-th new individual in the d-th dimension; \({r}_{1}\) is a random number between [0, 1]; \(a\) is the random number between [-1, 1]; \({X}_{{i}_{1},d}\) and \({X}_{{i}_{2},d}\) are randomly selected parents in the cross operation.

3.2.2 Longitudinal crossover operator

Inspired by the CSO (Meng et al. 2014), the longitudinal crossover process is selected as the LS. As shown in Eq. (33), the effect of the operator is to change the value of one dimension of the individual.

where, \({r}_{2}\) is a random number between [0, 1]; \({X}_{i,{d}_{1}}\) and \({X}_{i,{d}_{2}}\) are values of the same individual in the dimensions of \({d}_{1}\) and \({d}_{2}\). Since solutions may have different upper and lower limits in different dimensions, the values of each dimension should be normalized.

3.2.3 Direct crossover operator

Equation (29) is selected as the LS, whose function is to generate individuals in the position of parent or parent.

3.2.4 Elite crossover operator

An elite crossover operator is proposed based on the direct crossover operator. As shown in Eq. (34), it serves to cross the position of the current coyote with that of the alpha coyote it follows.

where, \({alpha}_{i,d}\) is an elite coyote that \({X}_{i,d}\) has followed.

3.3 Multi-objective COA based on hybrid elite framework and Meta-Lamarckian learning strategy

3.3.1 Elite non-dominant sorting

In the proposed MOCOA, NSGA-II’s elite non-dominant ranking method and the crowding distance method to maintain diversity are introduced. The crowding distance is calculated to rank the populations of the same non-dominant level. First, a non-dominant ranking was used to obtain non-dominant levels of different individuals, and then the crowding distance method was used to maintain the diversity between the optimal sets.

3.3.1.1 Fast non-dominated sorting

Firstly, all targets of the objective function \(F\) are evaluated for each solution obtained from the basic search method (COA) or the initially generated random population \({P}_{O}\). Each solution \(p\) has two properties, \({n}_{p}\) is the number of solutions that dominate individual \(p\), and \({S}_{p}\) is the set of solutions that individual \(p\) dominates.

-

(1)

For solutions with \({n}_{p}=0\), the solutions are not dominated by any individual, whose non-dominated level \({p}_{rank}\) is set to 1 and stored in set \({F}_{1}\).

-

(2)

For each solution \(p\) with \({n}_{p}= 0\), access each member \(q\) in the set \({S}_{p}\), and its dominant count \({n}_{q}\) decreases by 1. If the \({n}_{q}\) count drops to zero, the corresponding solution \(q\) is stored in the second non-dominated level set \({F}_{2}\), whose non-dominated level \({p}_{rank}\) is set to 2.

-

(3)

Repeat the process for each member of the second non-dominated level to obtain the third non-dominated level, and then repeat the process until the whole population is divided into different non-dominated levels.

3.3.1.2 Determine crowding distance

To ensure that Pareto optimal solutions are well-distributed in the objective space, NSGA-II utilizes a crowding distance method to assess the quality of each solution within the same front, resulting in a more evenly distributed solution set. The main goal of using the crowding distance approach is to preserve population diversity by achieving a trade-off between solutions. Specifically, it refers to the density of individuals in a single rank layer after the non-dominant sorting of a population in accordance with the dominant relationship.

The crowding degree/crowding distance is calculated as follows. For each objective function, find two solutions adjacent to the current solution and calculate the functional difference between the two solutions. To calculate the crowding distance of a given solution, the differences between the objective function values of neighboring solutions are summed. The individual crowding degree at the boundary of each non-dominant layer is directly set to infinity (Jeyadevi et al. 2011). The sum of the two sides of the rectangle in Fig. 2 is the crowding distance of the \(p\)-th individual.

3.3.1.3 Crowding comparison operator and elite strategy

After the previous fast non-dominant ranking and crowding degree calculation, the \(i\)-th individual in the population has two attributes: the non-dominant layer \({p}_{rank}\) (the number of levels) and the crowding distance \({p}_{d}\). According to these two attributes, the crowding degree comparison operator can be defined as follows. The individual \(p\) is compared with another individual \(q\). If any of the following conditions are true, the individual \(p\) wins.

-

(1)

If the non-dominated layer of individual \(p\) is better than the non-dominated layer of individual \(q\), \({p}_{rank}<{q}_{rank}\);

-

(2)

If they have the same rank and the individual p has a larger crowding distance than the individual \(q\), that is, \({p}_{rank}={q}_{rank}\) and \({p}_{d}>{q}_{d}\).

The first condition ensures that the selected individual belongs to the superior non-inferior rank. The second condition can select the individual in the less crowded area (with a greater distance of crowding) among two individuals with the same non-inferior rank.

The elite strategy is used to select individuals to enter the next iteration. The new population \(P\) generated in the \(t\)-th iteration is combined with the old population \(Q\). Then a series of non-dominated sets are generated by non-dominated sorting, and the degree of crowding is calculated. Set the population number to \(N\) in the iteration, and select from the first layer until enough \(N\) individuals are selected according to the crowding comparison operator. These \(N\) better individuals enter the next iteration process and continue to update according to the formula of COA. This selection process is shown in Fig. 3.

3.3.2 Archives based on grid mechanism

3.3.2.1 Grid mechanism

For the solution set stored in the archive, the target space is divided equally by grid, and the number of grids on each target is set manually. Figure 4 is a schematic diagram in two-dimensional space. The number of grids on each target is 5. Each grid containing the solution is given an index number. For example, the index number of grid A in Fig. 4 is (2, 2). The purpose of the grid mechanism is to distinguish the density of the archive space in order to find a more crowded or sparse area for the next operation (Coello and Lechuga 2002).

3.3.2.2 External archive

An external archive is a storage unit defined as a fixed size. It can save or retrieve the non-dominant Pareto optimal solution obtained so far. The key module for archiving is an archiving controller that can control archiving when the solution wants to access the archive or when the archive is full. It is important to note that the archive has a maximum number of members. During the iteration, the non-dominant solutions obtained to date were compared to archived data. There are three different cases that can happen.

-

(1)

New members are dominated by at least one archive member. In this case, the solution should not be allowed to enter the archive.

-

(2)

The new solution dominates one or more solutions in the archive. In this case, delete the dominant solution from the archive and allow the new solution to enter the archive.

-

(3)

If the new solution and archive members are not mutually dominant, the new solution should be added to the archive.

If the archive is full, the grid mechanism should first be run to rearrange the segmentation of the object space. Through the roulette selection technique, the grids are selected to remove one of the solutions and the probability of each grid being selected is shown in Eq. (35). Then, the new non-dominated solution is recorded in the archive to improve the diversity of Pareto optimal frontier. As shown in Fig. 4, when the archive is full, there is a greater probability to select B(5, 1), the most crowded area, and randomly delete one of the solutions.

where, \(E\) is a constant and \(n\) is the number of solutions in the grid.

3.3.2.3 Elite selection mechanism

Elite is the alpha coyote in COA. Firstly, the grid mechanism is used to divide the archive. Then select a solution from the archive as alpha coyote through roulette. The probability of selection is calculated by Eq. (36).

where, \(E\) is a constant and \(n\) is the number of solutions in the grid.

The fewer the number of solutions in the grid, the greater the chance that the grid will be selected. As shown in Fig. 4, there is only one solution in A (2, 2), so A has the highest probability of being selected. The mechanism selects the sparser location solution as alpha coyote (elite). Alpha coyote, as the leader of the population, will guide the population to search for a more sparse solution set space, which can help it to find a more uniform Pareto front.

3.3.3 The process of MOCOA-ML

The proposed multi-objective coyote optimization algorithm (MOCOA) uses the multi-objective framework of non-dominated sorting and external files to obtain the Pareto optimal solution. There is one and only one optimal solution obtained by the single objective COA, which is the solution corresponding to the optimal fitness value. The MOCOA adopts the idea of COA to update the population position, merges the new solution set and the old solution set. Then, the non-dominant sorting and the crowding distance methods are used to get the undominated relations in the new set. After that, according to the size of the population, select the better individuals to enter the next iteration process, and other poor individuals are eliminated (dead). Archive the non-dominated solution (the first frontier individual) obtained from the non-dominated sorting. If the archive is full, use the grid mechanism to delete and add the individual. In addition, during the iteration process, alpha coyotes (leaders) are also selected from the archive according to the roulette method. After the iteration, output the Pareto solution set in the archive.

The Meta-Lamarckian learning strategy was combined with multi-objective coyote optimization algorithm, and it was named MOCOA-ML. On each loop, after the coyote position in each pack is updated, the local optimizer based on Meta-Lamarckian learning starts working, randomly picking individuals in the pack for a local search. Each time LS is selected and the scheme is selected by way of roulette according to the reward value in the last iteration. The individual generated by the local optimizer is compared with one of its parents. If the new individual dominates the parent, the new individual replaces the parent. If the new individual and the parent do not dominate, or the new individual is dominated by the parent, the new individual replaces any individual in the previous iteration. In other words, we tend to retain solutions generated by the local optimizer. This approach does not add any more computational pressure to the non-dominated sorting process, since the number of individuals participating in non-dominated sorting is still twice as large as the number of populations. The flow chart of MOCOA-ML is shown in Fig. 5. It should be noted that MOCOA-ML differs from MOCOA in whether it contains a local optimizer under the Meta-Lamarckian learning strategy.

4 Test function simulation and result analysis

In order to verify the performance of MOCOA-ML, several test functions were selected for testing, and the results were compared with MOCOA, MODA, MOPSO, MOJAYA, NSGA-II, MOEA/D, MOAOS and MOTEO. Because the algorithms used in the experiments were all random algorithms, and in order to be true and fair, when MOCOA-ML and other multi-objective intelligent optimization algorithms were used for the test, each group of experiments were independently run 10 times. The maximum number of iterations is set to 300, the population number N is set to 100, and the size of archive is set to 100. The parameter settings of the multi-objective improved algorithm and comparison algorithms are shown in Table 1. For each algorithm, calculate the fuzzy membership function of each solution according to Eqs. (18, 19) in Sect. 2.7, and the solution with the maximum membership function \({\mu }_{max}\) is considered the best compromise solution.

4.1 Performance metrics

Convergence and diversity are two key points in finding an appropriate Pareto optimal solution set for a particular problem. Convergence refers to the ability of multi-objective algorithm to determine the accurate approximation of Pareto optimal solution. Diversity refers to the ability of the algorithm to find a more complete Pareto front. The ultimate goal of the multi-objective optimization algorithm is to find the most accurate approximate value of the true Pareto optimal solution (convergence) with uniform distribution (diversity) on all targets. In this part, three commonly used indicators are selected to reflect the advantages and disadvantages of Pareto solution set of each algorithm. They are inverted generational distance (IGD) (Coello and Cortés 2005) and hypervolume (HV) (Zitzler and Thiele 1999). The first indicator is a reverse indicator, and the second is a positive indicator.

4.1.1 Inverted generational distance

The IGD metric is used to calculate the minimum distance between an individual on the actual Pareto frontier and the set of individuals generated by the algorithm. It can be expressed as:

where, \(ko\) is the number of Pareto solutions. It is expressed as the Euclidean distance between the \(p\)-th real Pareto solution and the nearest obtained Pareto solution.

4.1.2 Hypervolume

The HV value is the volume of the space covered by the Pareto front. The higher the HV value, the better the diversity and convergence of the corresponding Pareto frontier.

where, \(\delta\) is a Lebesgue measure used to measure volume. \(|N|\) represents the number of Pareto solution sets, and \({c}^{i}\) represents the hypercube formed by the reference point and the i-th solution in the solution set.

4.2 Function optimization simulation and result analysis

4.2.1 Simulation result and analysis of benchmark test functions

In order to prove the performance of MOCOA-ML, experiments were carried out on the test functions ZDT1-ZDT4, ZDT6, DTLZ2 and DTLZ4-DTLZ7. MOCOA, MODA, MOPSO, MOJAYA, NSGA-II, MOEA/D, MOAOS and MOTEO were selected as the comparison algorithms. Tables 2, 3 records the optimal value, average value and standard deviation of each algorithm in IGD and HV. Figure 6 shows the Pareto frontier of double-objective test functions, and Fig. 7 shows the Pareto frontier of three-objective test functions.

From the experimental results, it can be observed that MOCOA-ML has the ability to find the Pareto front of each test function and has better convergence and coverage. The average rankings obtained from the Friedman test are listed in Tables 2 and 3, and MOCOA-ML ranks first in both IGD and HV. This indicates that it outperforms MOCOA, MODA, MOPSO, MOJAYA, NSGA-II, MOEA/D, MOAOS and MOTEO on most test functions. Additionally, MOCOA-ML performs better than MOCOA in all performance metrics, demonstrating the effectiveness of the Meta-Lamarckian learning strategy. Among the 10 test functions, MOTEO performs well, second only to MOCOA-ML. NSGA-II and MOPSO also have good results, while MOJAYA performs the worst. MOCOA-ML exhibits better competitiveness in both bi-objective and tri-objective problems. In summary, MOCOA-ML showcases commendable performance and can be considered as a viable alternative algorithm. It is important to note that in Table 3, the rankings obtained from the Friedman test are inverted due to HV being a positive indicator.

4.2.2 UF test function results and analysis

In this section, MOCOA-ML was used to solve the test functions UF1-UF10. MOCOA, MODA, MOJAYA, MOPSO, NSGA-II, MOEA/D, MOAOS and MOTEO were selected as the comparative algorithms. The best values, average values, standard deviations of IGD and HV obtained by each algorithm, and the average rankings obtained from the Friedman test are recorded in Tables 4, 5. Figure 8 shows the best Pareto front obtained from the experiments. From Tables 4 and 5, it can be observed that MOCOA-ML exhibits significantly better convergence and diversity in UF1-UF6, UF8, and UF10, while its performance is slightly worse in UF9. The average rankings obtained from the Friedman test show that MOCOA-ML achieves the first rank in both IGD and HV indicators among the nine algorithms. MOCOA ranks second, followed by MOPSO. These results indicate that MOCOA-ML has highly competitive performance on the UF series test functions.

4.3 Population diversity analysis

MOCOA-ML utilizes a grid mechanism to maintain the diversity of the archive. To validate the effectiveness of this mechanism, the diversity of the archive during the convergence process of MOCOA-ML on different test functions was analyzed. The diversity curves for some test functions are shown in Fig. 9. The formula for calculating the population diversity \(div\) is given by Eq. (39) (Zamani et al. 2021).

where, \(N\) represents the number of individuals in the archive, \(D\) represents the maximum dimension of the decision variables, \({x}_{id}\) represents the value of the \(i\)-th individual on the \(d\)-th dimension, and \({x}_{mean,d}\) represents the mean value of all individuals in the archive on the \(d\)-th dimension.

In the curve shown in Fig. 9, smaller values indicate poorer diversity in the archive, while larger values indicate higher population dispersion. It can be observed that in ZDT1, div decreases continuously. This is because the true Pareto solution set of ZDT1 contains a significant number of zeros. During the iterative process, the individuals in the archive gradually approach the true Pareto solution set, resulting in this outcome. On the other hand, in other test functions, the div values remain at a high level, indicating good diversity in the archive. This further demonstrates the effectiveness of the grid mechanism in maintaining diversity.

4.4 Performance index analysis

To compare the performance of different algorithms, them are evaluated by using the Performance Index (PI) (Deep and Thakur 2007). PI is a positive indicator that takes into account the algorithm’s runtime. A higher value of PI indicates better algorithm performance. The detailed formulas for calculating PI are given by Eqs. (40 and 41).

where, \(A{vet}_{i}^{j}\) represents the average runtime of the \(i\)-th algorithm on the \(j\)-th test function, \(H\) represents the number of runs of an algorithm on a test function, \(Time(T)\) represents the time taken by the algorithm in the \(T\)-th run, \({PI}_{i}\) is the performance index of the \(i\)-th algorithm, \(Nf\) represents the total number of test functions, \(Min{f}^{j}\) represents the minimum average error value obtained by all algorithms on the \(j\)-th function, \(A{vef}_{i}^{j}\) represents the average error value obtained by the \(i\)-th algorithm on the \(j\)-th function, and \(Min{t}^{j}\) represents the minimum time obtained by all algorithms on the j-th function. \(\alpha\) and \(\beta\) are parameters in the range [0, 1] and have a linear relationship, \(\alpha +\beta =1\).

In this section, \(\alpha\) is set to 0, 0.2, 0.4, 0.6, 0.8, and 1. \(Min{f}^{j}\) and \(A{vef}_{i}^{j}\) are used as the average minimum IGD value and the average IGD value obtained by the \(i\)-th algorithm, respectively. The PI values of these algorithms on different series of test functions are plotted in Fig. 10. It can be observed that the MOCOA-ML algorithm has a certain advantage in terms of PI values on the test functions in all three series.

4.5 Wilcoxon signed-rank test

Wilcoxon signed-rank test is a non-parametric hypothesis test used to compare whether there is a difference in medians between two related samples (Zamani et al. 2022). It is suitable for situations where the data of the two related samples do not follow a normal distribution. In this test, the p-value with a significance level of 95% (α = 0.05) is calculated. If the p-value is less than or equal to the significance level (0.05), the null hypothesis was rejected. If the p-value is greater than the significance level, we fail to reject the null hypothesis.

Wilcoxon signed-rank tests are conducted to compare the IGD and HV values of MOCOA-ML on different test functions with eight other algorithms. The results are shown in Tables 6 and 7. The “+” sign indicates that the algorithm is significantly better than MOCOA-ML, the “−” sign indicates that the algorithm is significantly worse than MOCOA-ML, and the “=” sign indicates that there is no significant difference between the algorithm and MOCOA-ML. From the results in the tables, it can be concluded that in most cases, MOCOA-ML performs better than the eight compared algorithms.

4.6 Mean absolute error

The mean absolute error (MAE) is used to analyze the IGD indicators obtained by all algorithms to determine the difference between the obtained Pareto frontier and the real Pareto frontier. The calculation formula of MAE is shown in Eq. (42) (Zamani et al. 2021). The obtained results are shown in Table 8. MOCOA-ML ranked second in the ZDT test functions and first in the DTLZ and UF test functions. MOCOA-ML also performs well in MAE analysis.

where, \(Nf\) is the number of functions, \({C}_{j}\) is the optimal IGD value of the \(j\)-th function, \({D}_{j}\) is the optimal IGD value obtained from the \(j\)-th function.

5 Case study of optimal power flow

In order to verify the performance of the proposed MOCOA-ML to solve the MOOPF problem, simulation studies are carried out in IEEE 30-bus system and IEEE 57-bus system respectively in this section.

5.1 Test system and parameter setting

IEEE 30-node system is shown in Fig. 11. The system consists of 6 generators, 4 adjustable transformers and 9 capacitors. On the basis of 100 MVA, the active power demand at the load side is 283.4 MW and the reactive power demand is 126.2 MVAr. The voltage range of generator bus is 0.95–1.1 p.u.. The normal range of load bus voltage is 0.95–1.05 p.u. Bus 1 is the balance bus. The parameters of the generators are listed in Table 9, including cost coefficients and emission coefficients.

The structure of the IEEE 57 node system is shown in Fig. 12. The system consists of 7 generators, 50 load buses, 80 transmission lines, 17 adjustable transformers and 3 capacitors. The total active power demand at the load side is 1250.8 MW and the total reactive power demand is 336.4MVAr. The load bus voltage range is 0.94–1.06 p.u., and the transformer tap range is 0.9–1.1 p.u.. Shunt capacitor maximum reactive power is 30 MVAr. The cost and emission coefficients of generators are shown in Table 10. In this experiment, fuel cost, active power loss and total emissions were selected as objective functions and tested in two systems, with a total of 6 cases. The specific case combinations are shown in Table 11.

5.2 Simulation results and analysis

In order to verify the performance of MOCOA-ML, the results were compared with MOCOA, MOPSO, MOGWO and MSSA. For the IEEE 30-node test system, the population of each algorithm is set to 100, and the maximum number of iterations is 300. For the IEEE 57 node test system, the population of each algorithm is set to 100, and the maximum number of iterations is 700. Detailed parameter settings of each algorithm are shown in Table 12. They are independently run 30 times in each case.

5.2.1 Case 1

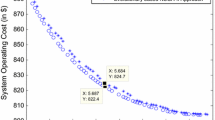

Fuel cost and active power loss are considered in this case. The optimal solutions and compromise solutions of MOCOA-ML and MOCOA on each objective are shown in Table 13. MIN C represents the solution corresponding to the minimum fuel cost, MIN P represents the solution corresponding to the minimum active power loss, and COMP represents the best compromise solution. The minimum fuel cost and the minimum power loss obtained by MOCOA-ML are 800.7669 $/h and 3.1147 MW, respectively, and the compromise solutions are 834.6730 $/h and 5.3332 MW, which are not dominated by the compromise solutions of 836.5858$/h and 5.2834 MW obtained by MOCOA. The simulation result is shown in Fig. 13, showing the Pareto frontier found by each algorithm. It can be seen that MOCOA-ML has a Pareto solution set with more advanced position, and the result is better than MOCOA. Table 14 compares the compromise solutions of each algorithm. The compromise of MOCOA-ML is superior to MOPSO, MOGWO, MSSA, NSGA-III (Chen et al. 2019), PSO-Fuzzy (Liang et al. 2011) and EGA (Herbadji et al. 2019).

5.2.2 Case 2

Fuel cost and emissions are considered in this case. The simulation result is shown in Fig. 14, showing the Pareto frontier found by each algorithm. It can be seen that MOCOA-ML has a more advanced Pareto solution set, with slightly better results than MOCOA. The optimal solutions and compromise solutions of MOCOA-ML and MOCOA on each objective are shown in Table 15. The minimum fuel cost and minimum emissions obtained by MOCOA-ML are 800.7411 $/h and 0.20485 ton/h respectively, and the compromise solutions are 834.2074 $/h and 0.2454 ton/h. Table 16 compares the compromise solutions of each algorithm. The compromise scheme of MOCOA-ML is superior to that of MOCOA, MOGWO, MSSA and AGSO (Daryani et al. 2016), and it has the same dominant level as the compromise scheme of other algorithms.

5.2.3 Case 3

Fuel cost, emissions and active power loss are considered in this case. The simulation result is shown in Fig. 15, which shows the Pareto frontier found by each algorithm. It can be seen that MOCOA-ML has a more advanced Pareto solution set, and the result is better than MOCOA. The optimal solutions and compromise solutions of MOCOA-ML and MOCOA in each objective are shown in Table 17. The minimum fuel cost, minimum emission and minimum active power loss obtained by MOCOA-ML are 800.8717 $/h, 0.20484 ton/h and 3.1408 MW respectively, and the compromise solutions are 873.9523 $/h, 0.2191 ton/h and 4.3810 MW. Table 18 compares the compromise solutions of each algorithm. The compromise of MOCOA-ML is superior to that of MOCOA, MOPSO and MOGWO, and is at the same dominant level as that of other algorithms.

5.2.4 Case 4

In this case, fuel cost and active power loss are considered and simulated in IEEE 57 node system. The simulation result is shown in Fig. 16, showing the Pareto frontier found by each algorithm. It can be seen that MOCOA-ML has a more advanced and more malleable Pareto solution set, and its results are superior to MOCOA. The optimal solutions and compromise solutions of MOCOA-ML and MOCOA in each objective are shown in Table 19. The minimum fuel cost and minimum active power loss obtained by MOCOA-ML are 41,675.44 $/h and 10.0428 MW respectively, and the compromise solution is 42,146.23 $/h and 11.0192 MW. Table 20 compares the compromise solutions of each algorithm. The compromise of MOCOA-ML is superior to that of MOCOA, MOPSO, MOGWO, MSSA, BMPSO (Qian and Chen 2022) and MOJFS (Shaheen et al. 2021).

5.2.5 Case 5

Fuel cost and emissions are considered in this case. The simulation result is shown in Fig. 17, showing the Pareto frontier found by each algorithm. It can be seen that the Pareto solution set of MOCOA-ML has obvious advantages, and the result is better than that of MOCOA. The optimal solutions and compromise solutions of MOCOA-ML and MOCOA in each objective are shown in Table 21. The minimum fuel cost and minimum emissions obtained by MOCOA-ML are 41,698.88 $/h and 0.9546 ton/h respectively, and the compromise solutions are 42,474.51 $/h and 1.0632 ton/h. Table 22 compares the compromise solutions of each algorithm. The compromise of MOCOA-ML is superior to that of MOCOA, MOPSO, MSSA, MPIO-PFM (Chen et al. 2020), NSGA-III (Chen et al. 2019) and MOJFS (Shaheen et al. 2021).

5.2.6 Case 6

Fuel cost, emissions and active power loss are considered in this case. The simulation result is shown in Fig. 18, showing the Pareto frontier found by each algorithm. It can be seen that MOCOA-ML has a more advanced Pareto solution set, and the result is better than MOCOA. The optimal solutions and compromise solutions of MOCOA-ML and MOCOA on each objective are shown in Table 23. The minimum fuel cost, minimum emission and minimum active power loss obtained by MOCOA-ML are 41,695.75 $/h, 0.9556 ton/h and 10.3392 MW respectively, and the compromise solutions are 42,669.53 $/h, 1.0682 ton/h and 11.0802 MW. Table 24 compares the compromise solutions of each algorithm. The compromise of MOCOA-ML is superior to that of MOCOA, MOGWO, MSSA, MPIO-PFM (Chen et al. 2020) and MOALO (Herbadji et al. 2019), and is at the same dominant level as the compromise of other algorithms.

5.3 Evaluation on performance metrics

In this section, two performance indicators, IGD and HV, are selected to evaluate the algorithm. The former is an inverse index, reflecting the difference between the Pareto solution set found by the algorithm and the real Pareto solution set. The latter is a positive indicator, reflecting convergence and coverage. This is described in details in the previous section. Since the real Pareto frontier of OPF problem cannot be obtained, all the solution sets obtained by running each algorithm for 30 times are taken as a whole, and the non-dominant solution is found to replace the real Pareto frontier. The experimental results of IGD and the average rankings obtained from the Friedman test are shown in Table 25, and the box diagram is shown in Fig. 19. The results of HV and the average rankings obtained from the Friedman test are shown in Table 26, and the box diagram is shown in Fig. 20. The values within parentheses in Tables 25, 26 are the p-values obtained from the Wilcoxon signed-rank test (at a significance level of 95%). This test compares the results of MOCOA-ML with those of other algorithms. The IGD and HV values of the proposed algorithm are better than those of other algorithms. And MOCOA-ML obtained the optimal index in all cases. At the same time, its standard deviation is also smaller, which indicates the stability of the optimization algorithm to a certain extent.

6 Conclusions and future works

In this paper, a MOCOA based on hybrid elite framework and Meta-Lamarckian learning strategy is proposed to deal with multi-objective OPF problems with complex constraints. MOCOA-ML retains the main position updating formula of COA, and selects better populations by non-dominated sorting. Additional external archive can store uniform and diverse Pareto solution sets. Combined with the Meta-Lamarckian learning strategy, a local optimizer is established to further improve the performance of the algorithm. The experimental contents and results are as follows. (1) The proposed method was tested on 20 test functions, including ZDT series, DTLZ series and UF series, which were run independently 10 times in each case to record the performance metrics of the algorithm. The results of the test functions show that MOCOA-ML can find true Pareto frontiers on most functions, and the diversity of solution sets is best. (2) Simulation experiments were conducted on 6 OPF cases in IEEE 30-bus system and IEEE 57-bus system with fuel cost, active power loss and emissions as objective functions. The experimental results of OPF demonstrate that MOCOA-ML outperforms other advanced multi-objective optimization algorithms, such as MOPSO, MSSA and MOGWO. It effectively balances convergence performance with ductility, resulting in a superior and more uniformly distributed Pareto solution set.

The OPF problem has complex constraints, so proposing and selecting different constraint processing methods will directly affect the quality of the solution set. This paper only uses the basic penalty function method. The future work will focus on the constraint processing technology. In addition, the integration of new energy into the power grid is the future research trend. In the future work, the modeling of wind turbines and photovoltaic power stations will be carried out, and new energy will be added into the OPF problem and simulation will be carried out so as to deal with the challenges brought by the uncertainty of power and load demand of distributed generation.

Data availability

There are no data available for this paper.

Abbreviations

- \({f}_{cost}\) :

-

Objective function of fuel cost

- \({f}_{Ploss}\) :

-

Objective function of active power loss

- \({f}_{Emission}\) :

-

Objective function of emission

- \({a}_{i}\), \({b}_{i}\),\({c}_{i}\) :

-

Fuel cost coefficient of the \(i\)-th generator

- \({P}_{{G}_{i}}\) :

-

Active power emitted by the \(i\)-th generator

- \({Q}_{{G}_{i}}\) :

-

Reactive power emitted by the \(i\)-th generator

- \({N}_{G}\) :

-

Number of generators

- \({G}_{ij}\) :

-

Conductance between the two nodes

- \({B}_{ij}\) :

-

Inductance between the two nodes

- \(V\) :

-

Node voltage

- \(\delta\) :

-

Phase angle corresponding to the node voltage

- \(Nl\) :

-

Number of transmission lines

- \({\alpha }_{i}\),\({\beta }_{i}\),\({\gamma }_{i}\), \({\xi }_{i}\),\({\lambda }_{i}\) :

-

Emission coefficients of the \(i\)-th generator

- \(T\) :

-

Setting of the transformer tap position

- \(Q\) :

-

Reactive capacity of shunt capacitor

- \(NT\) :

-

Number of transformers

- \(NC\) :

-

Number of reactive capacitors

- \({S}_{l}\) :

-

Power transmitted on the line

- \({P}_{{D}_{i}}\) :

-

Active power demand of load

- \({Q}_{{D}_{i}}\) :

-

Reactive power demand of load

- \({soc}_{c}^{p, t}\) :

-

Social condition of the \(c\)-th coyote of the \(p\)-th pack at the \(t\)-th generation.

- \(lb\),\(ub\) :

-

Lower and upper bounds

- \({alpha}^{p,t}\) :

-

Social condition of elite coyote of the \(p\)-th pack at the \(t\)-th generation

- \({cult}^{p,t}\) :

-

Median of the social conditions of the \(p\)-th pack at the \(t\)-th generation

- \({pup}^{p,t}\) :

-

Social conditions of offspring of the \(p\)-th pack at the \(t\)-th generation

- \({P}_{s}\) :

-

Scattering probability

- \({P}_{a}\) :

-

Association probability

References

Abd El-sattar S, Kamel S, Ebeed M et al (2021) An improved version of salp swarm algorithm for solving optimal power flow problem. Soft Comput 25:4027–4052

Abdelaziz AY, Ali ES, AbdElazim SM (2016) Implementation of flower pollination algorithm for solving economic load dispatch and combined economic emission dispatch problems in power systems. Energy 101:506–518

Ali ES, AbdElazim SM (2018) Mine blast algorithm for environmental economic load dispatch with valve loading effect. Neural Comput Appl 30:261–270

Ali ES, AbdElazim SM, Abdelaziz AY (2016) Ant lion optimization algorithm for renewable distributed generations. Energy 116:445–458

Ali ES, AbdElazim SM, Balobaid AS (2023) Implementation of coyote optimization algorithm for solving unit commitment problem in power systems. Energy 263:125697

Anantasate S, Bhasaputra P (2011) A multi-objective bees algorithm for multi-objective optimal power flow problem. The 8th electrical engineering/electronics, computer, telecommunications and information technology (ECTI) Association of Thailand-Conference 2011. IEEE, pp 852–856

Awad NH, Ali MZ, Mallipeddi R et al (2019) An efficient differential evolution algorithm for stochastic OPF based active–reactive power dispatch problem considering renewable generators. Appl Soft Comput 76:445–458

Azizi M, Talatahari S, Khodadadi N et al (2022) Multiobjective atomic orbital search (MOAOS) for global and engineering design optimization. IEEE Access 10:67727–67746

Bentouati B, Khelifi A, Shaheen AM et al (2021) An enhanced moth-swarm algorithm for efficient energy management based multi dimensions OPF problem. J Ambient Intell Human Comput 12(10):9499–9519

Berrouk F, Bouchekara H, Chaib AE et al (2018) A new multi-objective Jaya algorithm for solving the optimal power flow problem. J Electr Syst 14(3):165–181

Biswas PP, Suganthan PN, Mallipeddi R et al (2018) Optimal power flow solutions using differential evolution algorithm integrated with effective constraint handling techniques. Eng Appl Artif Intell 68:81–100

Biswas PP, Suganthan PN, Mallipeddi R et al (2020) Multi-objective optimal power flow solutions using a constraint handling technique of evolutionary algorithms. Soft Comput 24(4):2999–3023

Carpentier J (1962) Contribution to the economic dispatch problem. Bullet Soc Francoise Electric 3(8):431–447

Chaib AE, Bouchekara H, Mehasni R et al (2016) Optimal power flow with emission and non-smooth cost functions using backtracking search optimization algorithm. Int J Electr Power Energy Syst 81:64–77

Chen G, Yi X, Zhang Z et al (2018) Solving the multi-objective optimal power flow problem using the multi-objective firefly algorithm with a constraints-prior pareto-domination approach. Energies 11(12):3438

Chen G, Qian J, Zhang Z et al (2019) Applications of novel hybrid bat algorithm with constrained Pareto fuzzy dominant rule on multi-objective optimal power flow problems. IEEE Access 7:52060–52084

Chen G, Qian J, Zhang Z et al (2020) Application of modified pigeon-inspired optimization algorithm and constraint-objective sorting rule on multi-objective optimal power flow problem. Appl Soft Comput 92:106321

Coello CAC, Cortés NC (2005) Solving multiobjective optimization problems using an artificial immune system. Genet Program Evol Mach 6(2):163–190

Coello CAC, Lechuga MS (2002) MOPSO: a proposal for multiple objective particle swarm optimization. Proc Congress Evol Comput 2:1051–1056

Daryani N, Hagh MT, Teimourzadeh S (2016) Adaptive group search optimization algorithm for multi-objective optimal power flow problem. Appl Soft Comput 38:1012–1024

Davoodi E, Babaei E, Mohammadi-ivatloo B (2018) An efficient covexified SDP model for multi-objective optimal power flow. Int J Electr Power Energy Syst 102:254–264

de Souza RCT, de Macedo CA, dos Santos CL et al (2020) Binary coyote optimization algorithm for feature selection. Pattern Recogn 107:107470

Deep K, Thakur M (2007) A new mutation operator for real coded genetic algorithms. Appl Math Comput 193(1):211–230

Dommel HW, Tinney WF (1968) Optimal power flow solutions. IEEE Trans Power Appar Syst 10:1866–1876

El-Sattar SA, Kamel S, El Sehiemy RA et al (2019) Single-and multi-objective optimal power flow frameworks using Jaya optimization technique. Neural Comput Appl 31(12):8787–8806

Farhat M, Kamel S, Atallah AM et al (2022) ESMA-OPF: Enhanced slime mould algorithm for solving optimal power flow problem. Sustainability 14(4):2305

Fonseca CM, Fleming PJ (1993) Genetic algorithms for multiobjective optimization: formulation discussion and generalization. Icga 93:416–423

Ghasemi M, Ghavidel S, Ghanbarian MM et al (2014) Multi-objective optimal power flow considering the cost, emission, voltage deviation and power losses using multi-objective modified imperialist competitive algorithm. Energy 78:276–289

Gomez-Gonzalez M, López A, Jurado F (2012) Optimization of distributed generation systems using a new discrete PSO and OPF. Electric Power Syst Res 84(1):174–180

Hazra J, Sinha AK (2011) A multi-objective optimal power flow using particle swarm optimization. Eur Trans Electr Power 21(1):1028–1045

Herbadji O, Slimani L, Bouktir T (2019) Optimal power flow with four conflicting objective functions using multiobjective ant lion algorithm: a case study of the Algerian electrical network. Iran J Electr Electron Eng 15(1):94–113

Jeyadevi S, Baskar S, Babulal CK et al (2011) Solving multiobjective optimal reactive power dispatch using modified NSGA-II. Int J Electr Power Energy Syst 33(2):219–228

Khan A, Hizam H, Abdul-Wahab NI et al (2020) Solution of optimal power flow using non-dominated sorting multi objective based hybrid firefly and particle swarm optimization algorithm. Energies 13(16):4265

Khunkitti S, Siritaratiwat A, Premrudeepreechacharn S (2021) Multi-objective optimal power flow problems based on slime mould algorithm. Sustainability 13(13):7448

Konstantinidis A, Pericleous S, Charalambous C (2018) Meta-Lamarckian learning in multi-objective optimization for mobile social network search. Appl Soft Comput 67:70–93

Kumar S, Jangir P, Tejani GG et al (2022) MOTEO: a novel physics-based multiobjective thermal exchange optimization algorithm to design truss structures. Knowl-Based Syst 242:108422

Lavaei J, Low SH (2011) Zero duality gap in optimal power flow problem. IEEE Trans Power Syst 27(1):92–107

Li L, Sun L, Xue Y et al (2021) Fuzzy multilevel image thresholding based on improved coyote optimization algorithm. IEEE Access 9:33595–33607

Liang RH, Tsai SR, Chen YT et al (2011) Optimal power flow by a fuzzy based hybrid particle swarm optimization approach. Electric Power Syst Res 81(7):1466–1474

Medina MA, Das S, Coello CAC et al (2014) Decomposition-based modern metaheuristic algorithms for multi-objective optimal power flow—a comparative study. Eng Appl Artif Intell 32:10–20

Meng A, Chen Y, Yin H et al (2014) Crisscross optimization algorithm and its application. Knowl-Based Syst 67:218–229

Mirjalili S (2016) Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput Appl 27:1053–1073

Mirjalili S, Saremi S, Mirjalili SM et al (2016) Multi-objective grey wolf optimizer: a novel algorithm for multi-criterion optimization. Expert Syst Appl 47:106–119

Mirjalili S, Gandomi AH, Mirjalili SZ et al (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191

Ong YS, Keane AJ (2004) Meta-Lamarckian learning in memetic algorithms. IEEE Trans Evol Comput 8(2):99–110

Pierezan J, Coelho LDS (2018) Coyote optimization algorithm: a new metaheuristic for global optimization problems. In: 2018 IEEE congress on evolutionary computation (CEC). IEEE, pp 1–8

Ponnusamy A, Rengarajan N (2014) Optimal power flow solution using cuckoo search algorithm. ARPN J Eng Appl Sci 9(12):2687–2691

Pulluri H, Naresh R, Sharma V (2017) An enhanced self-adaptive differential evolution based solution methodology for multiobjective optimal power flow. Appl Soft Comput 54:229–245

Qian J, Chen G (2022) Improved multi-goal particle swarm optimization algorithm and multi-output BP network for optimal operation of power system. IAENG Int J Appl Math 52(3):1

Rizk-Allah RM, Hassanien AE, Slowik A (2020) Multi-objective orthogonal opposition-based crow search algorithm for large-scale multi-objective optimization. Neural Comput Appl 32:13715–13746

Santos AJ, Da Costa GRM (1995) Optimal-power-flow solution by Newton’s method applied to an augmented Lagrangian function. IEE Proc-Gener, Transmission Distrib 142(1):33–36

Sayah S, Zehar K (2008) Modified differential evolution algorithm for optimal power flow with non-smooth cost functions. Energy Convers Manag 49(11):3036–3042

Shabanpour-Haghighi A, Seifi AR, Niknam T (2014) A modified teaching–learning based optimization for multi-objective optimal power flow problem. Energy Convers Manag 77:597–607

Shaheen AM, El-Sehiemy RA, Alharthi MM et al (2021) Multi-objective jellyfish search optimizer for efficient power system operation based on multi-dimensional OPF framework. Energy 237:121478

Singh MK, Kekatos V, Giannakis GB (2021) Learning to solve the AC-OPF using sensitivity-informed deep neural networks. IEEE Trans Power Syst 37(4):2833–2846

Sivasubramani S, Swarup KS (2011) Multi-objective harmony search algorithm for optimal power flow problem. Int J Electr Power Energy Syst 33:745–752

Snášel V, Rizk-Allah RM, Hassanien AE (2023) Guided golden jackal optimization using elite-opposition strategy for efficient design of multi-objective engineering problems. Neural Comput Appl 35(28):20771–20802

Taher MA, Kamel S, Jurado F et al (2019) An improved moth-flame optimization algorithm for solving optimal power flow problem. Int Trans Electr Energy Syst 29(3):e2743

Zamani H, Nadimi-Shahraki MH, Gandomi AH (2021) QANA: quantum-based avian navigation optimizer algorithm. Eng Appl Artif Intell 104:104314

Zamani H, Nadimi-Shahraki MH, Gandomi AH (2022) Starling murmuration optimizer: a novel bio-inspired algorithm for global and engineering optimization. Comput Methods Appl Mech Eng 392:114616

Zhang J, Tang Q, Li P et al (2016) A modified MOEA/D approach to the solution of multi-objective optimal power flow problem. Appl Soft Comput 47:494–514

Zhang J, Wang S, Tang Q et al (2019) An improved NSGA-III integrating adaptive elimination strategy to solution of many-objective optimal power flow problems. Energy 172:945–957

Zitzler E, Thiele L (1999) Multiobjective evolutionary algorithms: a comparative case study and the strength Pareto approach. IEEE Trans Evol Comput 3(4):257–271

Acknowledgements

This work was supported by the Basic Scientific Research Project of Institution of Higher Learning of Liaoning Province (Grant No. LJKZ0293), and the Postgraduate Education Reform Project of Liaoning Province (Grant No. LNYJG2022137).

Funding

This study was funded by Basic Scientific Research Project of Institution of Higher Learning of Liaoning Province, LJKZ0293, Postgraduate Education Reform Project of Liaoning Province, LNYJG2022137.

Author information

Authors and Affiliations

Contributions

J-HZ participated in the data collection, analysis, algorithm simulation, and draft writing. J-SW participated in the concept, design, interpretation and commented on the manuscript. X-YZ, Y-CW, H-MS, YZ and XL participated in the critical revision of this paper.

Corresponding author

Ethics declarations

Conflict of interest