Abstract

In the era of digital communication and data security, image encryption plays a crucial role in safeguarding sensitive information, and the need to protect visual data from unauthorized access drives the pursuit of advanced image encryption methods. This paper proposes a novel image encryption approach that combines a chaotic map, elliptic curve cryptography (ECC), and a genetic algorithm (GA). The chaotic map, specifically Arnold’s cat map, introduces chaos and randomness into the encryption process by shuffling the pixel positions. ECC then encrypts the pixel values using public and private keys. In addition, a GA optimizes the key generation process, enhancing the security of the scheme. Together, these techniques aim to achieve a high level of confidentiality and robustness in image encryption. The algorithm was analyzed thoroughly. It achieved a maximum entropy of 7.99, close to the ideal value of 8 for 8-bit images, indicating a high level of randomness and unpredictability in the encrypted data. It also exhibited near-zero correlation between adjacent pixels, which suggests strong resistance to statistical attacks. Moreover, the key space was found to contain \(2^{511}\) possible keys, which makes the algorithm highly resilient to brute-force attacks. Encrypting a \(512\times 512\) 8-bit image took only 0.5634 s on a standard desktop computer with a 2.3 GHz processor and 16 GB of RAM. The experimental findings confirm that the proposed approach is effective and efficient in protecting sensitive image data from unauthorized access and potential attacks, including while the data is being transmitted.


1 Introduction

In today’s digital era, the security of sensitive information, especially images, has become a critical concern. With the increasing prevalence of image-based communication and storage, it is essential to ensure the confidentiality and integrity of images against unauthorized access and tampering Liao et al. (2017, 2019); Cao et al. (2003). Image encryption techniques play a vital role in safeguarding sensitive visual data from malicious attacks and unauthorized disclosures. However, with the advancements in computing power and cryptography-breaking techniques, there is a constant need to enhance image encryption methods to ensure robust security.

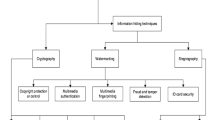

Image encryption techniques are algorithms designed to transform an image into a ciphered form, making it unintelligible to unauthorized individuals. Various image encryption approaches have been developed over the years, including conventional methods like symmetric and asymmetric encryption and more advanced techniques such as chaos-based encryption and cryptographic transformations. Some algorithms have been proposed in which images were encrypted using ECC and S-boxes Hussain et al. (2023); Khalid et al. (2022); Mahboob et al. (2022). Each technique employs different principles and mechanisms to achieve image security Choi et al. (2016); Hu et al. (2017); Takkar and Singh (2017); Xu et al. (2017).

This paper introduces a novel approach designed to significantly enhance image encryption. It combines three distinct techniques: the Arnold cat map for pixel shuffling, elliptic curve cryptography (ECC) for pixel value encryption, and a genetic algorithm (GA) for key generation. Each of these elements contributes to the overall robustness and security of the image encryption process.

The primary objectives of this paper are threefold. Firstly, it presents a comprehensive image encryption methodology that integrates three distinct components (the Arnold cat map, ECC, and GA). This combination sets the research apart and promises a high level of data security through chaos-based pixel shuffling, robust encryption, and optimized key generation. Secondly, it demonstrates the effectiveness of this integrated approach, emphasizing its ability to provide a high degree of confidentiality and resistance to unauthorized access and adversarial attacks. Lastly, through a series of carefully designed experiments, it substantiates the practical viability and efficiency of the proposed method in protecting sensitive image data.

The increasing demand for secure image transmission, storage, and sharing requires continuous improvement of image encryption techniques to address the evolving challenges posed by emerging technologies and sophisticated attacks. Robust encryption schemes should provide a high level of security. They should also offer efficiency, scalability, resistance to cryptanalysis, and compatibility with different image formats. By enhancing image encryption, we can ensure the confidentiality, integrity, and authenticity of sensitive images, thereby safeguarding private and valuable visual information Choi et al. (2016); Hu et al. (2017); Takkar and Singh (2017); Xu et al. (2017).

In the following sections, we will delve into the methodology, detailing the individual components of our approach and elucidating how they synergize to create an efficient and secure image encryption scheme. Furthermore, we will present experimental results that underscore the effectiveness and efficiency of our proposed method, demonstrating its practical utility in safeguarding sensitive image data.

2 Preliminary

2.1 Arnold’s cat map

Chaotic maps are mathematical models that exhibit chaotic behavior. This behavior is characterized by extreme sensitivity to initial conditions and by evolution that is deterministic yet appears random. One of the most widely studied and widely used chaotic maps is Arnold’s cat map.

Arnold’s cat map is a two-dimensional discrete chaotic map that operates on a square grid of pixels in an image. It was introduced by Vladimir Arnold in the 1960s and has since found applications in many fields, including image encryption. The map is named after the image of a cat that Arnold used to demonstrate its behavior Hariyanto and Rahim (2016).

Arnold’s cat map iteratively transforms the positions of pixels in an image using simple arithmetic. Each pixel’s new position is obtained by a linear transformation followed by a modulo operation. The coordinate vector is multiplied by a fixed integer matrix with determinant 1 and then reduced modulo the image size. Because this matrix is invertible modulo the image size, the map is a permutation of the pixel positions. Iterating it scrambles the image by redistributing the pixels in a chaotic manner Parida et al. (2021).
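For the classic map, which is the case \(r = s = 1\) of the generalized form used in Section 4.1, the transformation matrix factors into two shears. This factorization makes the invertibility explicit. Here M denotes the side length of the \(M\times M\) image:

$$\begin{pmatrix} u'\\ v' \end{pmatrix} = \begin{pmatrix} 1 & 1\\ 1 & 2 \end{pmatrix} \begin{pmatrix} u\\ v \end{pmatrix} \bmod M, \qquad \begin{pmatrix} 1 & 1\\ 1 & 2 \end{pmatrix} = \begin{pmatrix} 1 & 0\\ 1 & 1 \end{pmatrix} \begin{pmatrix} 1 & 1\\ 0 & 1 \end{pmatrix}, \qquad \det\begin{pmatrix} 1 & 1\\ 1 & 2 \end{pmatrix} = 1.$$

The first shear maps \((u, v)\) to \((u + v, v)\), and the second maps that result to \((u + v, u + 2v)\). Because the determinant is 1, the matrix has the integer inverse \(\begin{pmatrix} 2 & -1\\ -1 & 1 \end{pmatrix}\). Every pixel position is therefore sent to a unique new position, and the scrambling can be undone exactly.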

The chaotic nature of Arnold’s cat map means that even minor changes in the initial conditions or parameters lead to substantial differences in the resulting image. This property makes the map suitable for image encryption because it introduces a high level of randomness and diffusion. As a result, it is difficult for unauthorized individuals to decipher the encrypted image without the correct decryption key Bao and Yang (2012).

In the proposed enhanced image encryption scheme, Arnold’s cat map is a key component that increases the randomness and diffusion of the encryption process. Applying the map’s iterations to the image pixels raises the level of security and helps protect the encrypted image against various attacks.

2.2 Genetic algorithm

The Genetic Algorithm (GA) is a computational optimization approach inspired by natural selection and evolution. John Holland developed it in the 1970s, and it has since found broad applications in many domains, including image encryption.

The core concept of the Genetic Algorithm is based on the principles of Darwinian evolution and survival of the fittest. The algorithm mimics natural evolution by maintaining a population of candidate solutions and repeatedly applying genetic operators such as selection, crossover, and mutation. In this way, the solutions evolve and improve across generations.

In the context of image encryption, the Genetic Algorithm can be employed to optimize the encryption parameters or key space. By formulating the encryption process as an optimization problem, the Genetic Algorithm explores the solution space to find the most suitable set of parameters or key values that enhance the encryption strength and security.

In each iteration of the Genetic Algorithm, a fresh generation of potential solutions is generated by selecting the most suitable individuals from the previous generation. Genetic operators are then employed to produce offspring with altered characteristics. The fitness of each individual is determined by an objective function that assesses the encryption scheme’s quality or effectiveness.

The selection process favors individuals with higher fitness values, allowing them to contribute their genetic material to the next generation. Crossover and mutation operators introduce genetic diversity and exploration by combining and modifying the characteristics of selected individuals. The iterative process persists until a specified termination criterion is fulfilled, such as reaching a maximum number of generations or achieving convergence.
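The loop described above can be sketched in a few lines of Python. This is a generic illustration, not the configuration used in the proposed scheme. The following choices are all assumptions made for clarity:

- the bit-string encoding;
- tournament selection, one-point crossover, and bit-flip mutation;
- elitism;
- the parameter values;
- the toy "OneMax" objective, which simply counts the 1 bits.

```python
import random


def genetic_algorithm(fitness, n_bits, pop_size=30, generations=50,
                      p_crossover=0.9, p_mutation=0.02, seed=0):
    """Maximize `fitness` over bit strings of length n_bits; returns (best, history)."""
    rng = random.Random(seed)
    # Initial population of random chromosomes (bit strings).
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(pop, key=fitness)
    history = [fitness(best)]

    def select():
        # Tournament selection: the fitter of two random individuals becomes a parent.
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    # Termination criterion: a fixed number of generations.
    for _ in range(generations):
        offspring = [best[:]]  # elitism: the best individual survives unchanged
        while len(offspring) < pop_size:
            p1, p2 = select(), select()
            if rng.random() < p_crossover:
                cut = rng.randrange(1, n_bits)  # one-point crossover
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Bit-flip mutation keeps genetic diversity in the population.
            child = [1 - g if rng.random() < p_mutation else g for g in child]
            offspring.append(child)
        pop = offspring
        best = max(pop, key=fitness)
        history.append(fitness(best))
    return best, history


# Usage: OneMax, where the fitness of a chromosome is its number of 1 bits.
best, history = genetic_algorithm(sum, n_bits=20)
print(history[0], history[-1], best)
```

Because the best individual is always copied into the next generation, the best fitness recorded in `history` can never decrease from one generation to the next.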

The Genetic Algorithm offers several advantages in image encryption, including its ability to handle large solution spaces, its adaptability to complex optimization problems, and its potential for discovering globally optimal solutions. By leveraging the Genetic Algorithm, the encryption scheme can dynamically adapt and optimize its parameters to improve the encryption strength and enhance the resistance against attacks Mirjalili and Mirjalili (2019).

In the proposed image encryption scheme, the Genetic Algorithm is integrated to optimize critical aspects such as parameter selection, key generation, and other components of the encryption process. This integration enables the encryption scheme to achieve better security, robustness, and efficiency.

2.3 Elliptic curve cryptography

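Elliptic Curve Cryptography (ECC) is a modern public-key cryptographic approach renowned for its efficiency. It is built on elliptic curves, which are defined by equations of the form

$$v^2 = u^3 + au + b$$

where a and b are constants satisfying \(4a^3 + 27b^2 \ne 0\), which guarantees that the curve has no singular points. In cryptographic use, the curve is defined over a finite field, typically the integers modulo a large prime p.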

ECC presents numerous advantages compared to alternative public-key cryptography systems like RSA. Notably, ECC achieves equivalent security levels while utilizing substantially smaller key sizes. This characteristic renders ECC highly suitable for resource-limited environments, including mobile devices and embedded systems, where limitations in computational power and storage capacity are significant considerations.

The security of ECC rests on the difficulty of the Elliptic Curve Discrete Logarithm Problem (ECDLP). Given a point L on the elliptic curve and the point \(kL\) obtained by scalar multiplication, the problem is to determine the integer k. No efficient algorithm for this problem is known for well-chosen curves, so it remains computationally infeasible even for high-performance computers Koblitz (1987).

The fundamental operations in ECC are described below.

2.3.1 Point addition

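Point addition combines two points on an elliptic curve to produce a third point. Suppose we have two points \(L(u_1, v_1)\) and \(S(u_2, v_2)\) on the curve. The sum \(L + S\) is a third point \(T(u_3, v_3)\), given by the following formulas with all arithmetic performed modulo the field prime p:

$$\lambda = \begin{cases} \dfrac{v_2 - v_1}{u_2 - u_1}, & L \ne S,\\[2mm] \dfrac{3u_1^2 + a}{2v_1}, & L = S, \end{cases} \qquad u_3 = \lambda^2 - u_1 - u_2, \qquad v_3 = \lambda(u_1 - u_3) - v_1.$$

If \(S = -L\), the sum is the point at infinity O, which acts as the identity element.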
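The chord-and-tangent addition rule can be checked with a minimal Python sketch on a toy curve. The curve \(v^2 = u^3 + 2u + 3\) over the integers modulo 97 and the point G = (3, 6) are hypothetical parameters, small enough to verify by hand and far too small to be secure. Modular inverses use Python's built-in `pow(x, -1, p)`, which requires Python 3.8 or later.

```python
# Hypothetical toy curve v^2 = u^3 + A*u + B over the prime field F_P (insecure, illustration only).
P, A, B = 97, 2, 3
INF = None  # the point at infinity O, which acts as the identity element


def on_curve(pt):
    if pt is INF:
        return True
    u, v = pt
    return (v * v - (u ** 3 + A * u + B)) % P == 0


def point_add(p1, p2):
    """Chord-and-tangent addition L + S; all arithmetic is done modulo P."""
    if p1 is INF:
        return p2
    if p2 is INF:
        return p1
    (u1, v1), (u2, v2) = p1, p2
    if u1 == u2 and (v1 + v2) % P == 0:
        return INF  # S = -L, so the sum is the point at infinity
    if p1 == p2:
        # Doubling: slope of the tangent line, (3*u1^2 + a) / (2*v1)
        lam = (3 * u1 * u1 + A) * pow(2 * v1 % P, -1, P) % P
    else:
        # Distinct points: slope of the chord, (v2 - v1) / (u2 - u1)
        lam = (v2 - v1) * pow((u2 - u1) % P, -1, P) % P
    u3 = (lam * lam - u1 - u2) % P
    v3 = (lam * (u1 - u3) - v1) % P
    return (u3, v3)


G = (3, 6)
G2 = point_add(G, G)   # (80, 10)
G3 = point_add(G, G2)  # (80, 87)
print(G2, G3, on_curve(G2), on_curve(G3))
```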

2.3.2 Scalar multiplication

Scalar multiplication multiplies a point L on the elliptic curve by an integer k to produce a new point, denoted kL. It is defined through repeated point addition. For example, \(4L = L + L + L + L\), which requires three point additions when computed naively. In practice, kL is computed with the double-and-add method, which needs only on the order of \(\log_2 k\) point doublings and additions. For instance, \(4L = 2(2L)\) takes just two doublings.
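The sketch below makes the cost asymmetry behind the ECDLP concrete on a toy curve. It computes kL naively and by double-and-add, then recovers k by exhaustive search. The curve \(v^2 = u^3 + 2u + 3\) over the integers modulo 97 and the base point G = (3, 6) are hypothetical illustration parameters; G has order 5, so the example is far too small to be secure. The helper names are also hypothetical. On a real curve whose group order n is near \(2^{512}\), even the best generic attacks, such as Pollard's rho, need on the order of \(\sqrt{n} \approx 2^{256}\) group operations.

```python
# Hypothetical toy curve v^2 = u^3 + 2u + 3 over F_97 with base point G = (3, 6) (insecure).
P, A = 97, 2
INF = None  # point at infinity
G = (3, 6)


def point_add(p1, p2):
    """Chord-and-tangent point addition modulo P (INF is the identity)."""
    if p1 is INF:
        return p2
    if p2 is INF:
        return p1
    (u1, v1), (u2, v2) = p1, p2
    if u1 == u2 and (v1 + v2) % P == 0:
        return INF
    if p1 == p2:
        lam = (3 * u1 * u1 + A) * pow(2 * v1 % P, -1, P) % P
    else:
        lam = (v2 - v1) * pow((u2 - u1) % P, -1, P) % P
    u3 = (lam * lam - u1 - u2) % P
    return (u3, (lam * (u1 - u3) - v1) % P)


def scalar_mult_naive(k, pt):
    """kL as L + L + ... + L: cost grows linearly with k."""
    result = INF
    for _ in range(k):
        result = point_add(result, pt)
    return result


def scalar_mult(k, pt):
    """kL by double-and-add: one doubling per bit of k plus one addition per 1 bit."""
    result = INF
    while k > 0:
        if k & 1:
            result = point_add(result, pt)
        pt = point_add(pt, pt)  # doubling
        k >>= 1
    return result


def brute_force_dlog(Q, pt):
    """Solve the ECDLP Q = k*pt by trying k = 1, 2, ...; cost grows with the group order."""
    R, k = pt, 1
    while R != Q:
        R = point_add(R, pt)
        k += 1
        if R is INF:
            raise ValueError("Q is not a nonzero multiple of pt")
    return k


print([scalar_mult(k, G) for k in range(1, 6)])  # [(3, 6), (80, 10), (80, 87), (3, 91), None]
print(brute_force_dlog((80, 87), G))             # 3
```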

2.3.3 Point subtraction

Point subtraction is the inverse operation of point addition. Given two points L and S with \(S = L + T\), the point T is found by subtracting L from S. Mathematically, the subtraction of two points is defined as

$$T = S - L = S + (-L)$$

where \(-L\) is the negation of point L. For \(L(u_1, v_1)\), \(-L = (u_1, -v_1)\), with the coordinate negated modulo the field prime p.

2.3.4 Key generation

In ECC, the procedure of key generation entails the selection of a private key, which is a randomly chosen integer within a defined range. The public key is subsequently derived by performing scalar multiplication of the private key with a base point, a predetermined point on the elliptic curve. Mathematically, the public key can be expressed as follows:

Public Key = Private Key × Base Point.

The base point, also known as the generator point, is a predefined point on the curve. Its integer multiples generate the cyclic group of points used by the scheme.

To use ECC for encryption, a public-private key pair is generated. The public key, which consists of a point on the elliptic curve, is shared with others for encryption. The private key, which is a scalar value, is kept secret and used for decryption.

2.3.5 Encryption and decryption

If A intends to encrypt a message \((P_m)\), represented as a point on the curve, and send it to B, the resulting ciphertext \((P_c)\) is obtained as follows:

$$P_c = \{kG,\; P_m + kP_B\}$$

where k is a randomly chosen integer and \(P_B\) is B’s public key, computed from B’s private key \((n_B)\) as \(P_B = n_B G\).

B then decrypts the cipher point \(P_c\) to recover the original message \(P_m\). To do so, B multiplies the first component \(kG\) of \(P_c\) by the private key \(n_B\) and subtracts the result from the second component:

$$(P_m + kP_B) - n_B(kG) = P_m + k(n_B G) - n_B(kG) = P_m,$$

which holds because \(P_B = n_B G\).

Hence B recovers the message \(P_m\) sent by A.
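The encryption and decryption steps can be checked with a short sketch of elliptic curve ElGamal. The ciphertext is the pair \((kG, P_m + kP_B)\), and decryption subtracts \(n_B\) times the first component from the second. The setup rests on several assumptions:

- The curve is a hypothetical, insecure toy: \(v^2 = u^3 + 2u + 3\) over the integers modulo 97, with G = (3, 6) of order 5.
- The message is already encoded as a curve point \(P_m\). How pixel values are mapped to points is not specified here.
- The names `keygen`, `encrypt`, and `decrypt` are illustrative only.

```python
import random

# Hypothetical toy parameters: v^2 = u^3 + 2u + 3 over F_97, base point G of order N = 5.
P, A = 97, 2
G, N = (3, 6), 5
INF = None  # point at infinity


def point_add(p1, p2):
    if p1 is INF:
        return p2
    if p2 is INF:
        return p1
    (u1, v1), (u2, v2) = p1, p2
    if u1 == u2 and (v1 + v2) % P == 0:
        return INF
    if p1 == p2:
        lam = (3 * u1 * u1 + A) * pow(2 * v1 % P, -1, P) % P
    else:
        lam = (v2 - v1) * pow((u2 - u1) % P, -1, P) % P
    u3 = (lam * lam - u1 - u2) % P
    return (u3, (lam * (u1 - u3) - v1) % P)


def scalar_mult(k, pt):
    result = INF
    while k > 0:
        if k & 1:
            result = point_add(result, pt)
        pt = point_add(pt, pt)
        k >>= 1
    return result


def negate(pt):
    """-L = (u, -v mod P)."""
    return INF if pt is INF else (pt[0], -pt[1] % P)


def keygen(rng):
    n_b = rng.randrange(1, N)         # B's private key n_B
    return n_b, scalar_mult(n_b, G)   # B's public key P_B = n_B * G


def encrypt(p_m, p_b, rng):
    k = rng.randrange(1, N)           # fresh random k for every message
    return scalar_mult(k, G), point_add(p_m, scalar_mult(k, p_b))


def decrypt(cipher, n_b):
    c1, c2 = cipher                   # c1 = kG, c2 = P_m + k*P_B
    return point_add(c2, negate(scalar_mult(n_b, c1)))  # c2 - n_B*(kG) = P_m


rng = random.Random(0)
n_b, p_b = keygen(rng)
p_m = scalar_mult(3, G)               # a message already encoded as a curve point
assert decrypt(encrypt(p_m, p_b, rng), n_b) == p_m
```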

The effectiveness of ECC (Elliptic Curve Cryptography) relies on the specific properties and parameters associated with the selected elliptic curve. The size and properties of the curve play a crucial role in determining its security. Typically, elliptic curves defined over prime fields or binary fields are employed. To ensure consistent security levels, the National Institute of Standards and Technology (NIST) has established a set of standardized elliptic curves.

In the proposed image encryption technique, Elliptic Curve Cryptography is integrated as a robust and efficient method for key generation and communication. By leveraging the mathematical properties of elliptic curves, the encryption scheme achieves secure and efficient encryption and decryption processes, enhancing the overall security of the system.

3 Related work

In the realm of image encryption, several researchers have made significant contributions to enhancing the security and robustness of encryption methods. Rezaei et al. proposed a new image encryption technique that leverages the Henon chaotic map and the Imperialist Competitive Algorithm (ICA) Rezaei et al. (2023). The ICA optimizes the parameters of the Henon map to generate unique pseudorandom numbers for each input image, thus improving the encryption technique. Experimental results exhibit the effectiveness and robustness of this method against common attacks, outperforming the Henon function with fixed parameters and further enhancing entropy and reducing correlation coefficients in the encrypted image.

In a similar vein, Prusty et al. presented an image encryption approach using Arnold’s Cat Map for pixel shuffling and the Henon Map for generating pseudo-random numbers Prusty et al. (2013). The encryption process involves XOR operations between pixel values and key values generated by the Henon Map. The algorithm successfully encrypts and decrypts images, showcasing its suitability for internet image encryption and secure communication of private data.

Furthermore, Hakan Tora et al. introduced a novel method for generating the transformation matrix of Arnold’s Cat Map (ACM), enhancing security by expanding the matrix space and making it harder to predict Tora et al. (2022). This approach removes the fixed structure limitation of traditional ACM matrices, leading to a larger set of transform matrices with no predictable pattern. As a result, the difficulty for third parties to discover the transform matrix used for scrambling increases, enhancing resistance against brute-force attacks.

In the pursuit of information security, Minati Mishra et al. emphasized the significance of spatial domain LSB substitution combined with Arnold’s transform for data embedding Mishra et al. (2014). This method demonstrates high security and data-hiding capacity. However, its robustness against noise and compression may be a concern, prompting potential future research on transform domain methods to achieve both robustness and high capacity.

On the other hand, Narendra K. Pareek and Vinod Patidar proposed a method that uses genetic algorithms to encrypt grayscale medical images, incorporating key-dependent processes to enhance robustness Pareek and Patidar (2016). The method underwent rigorous security analysis and demonstrated strong statistical properties and resistance against various attacks. It is therefore considered suitable for the immediate transfer of digital grayscale medical images.

Addressing medical image encryption from a different perspective, Amal Hafsa et al. proposed an improved cryptographic approach that combines AES and ECC Hafsa et al. (2021). The method optimizes the ECC hardware architecture to balance area, power dissipation, and speed. It also modifies AES to reduce its time complexity while preserving its encryption principles. The proposed cryptosystem demonstrates high security, speed, and efficiency.

In another innovative image encryption approach, Zhongjian Zhao and Xiaoqiang Zhang combine code computing and ECC to establish secure image interaction Zhao and Zhang (2013). The method involves encoding the original image to obtain a code matrix A, conducting code addition operations to derive a new code matrix C, and further transforming and decoding the packages to obtain the encrypted image. ECC is employed to encrypt the secret key. Experimental results and security analyses affirm the robust encryption capabilities of the proposed scheme, effectively mitigating exhaustive, statistical, and differential attacks.

Hussain et al. (2023) presented a modified encryption scheme based on Serpent, introducing a cipher design that uses power associative (PA) loops and permutations. This modification surpasses Galois field (GF)-based designs in complexity and key space size, enhancing randomness.

Khalid et al. (2022) introduced an image encryption scheme based on the Elliptic Curve Integrated Encryption Scheme (ECIES) that ensures data confidentiality and user authentication. Using Diffie-Hellman key exchange and SHA-256, it allocates 128 bits each to confidentiality and authentication. The system employs affine power transformations and elliptic curve-derived sequences for confusion and diffusion.

Mahboob et al. (2022) presented an article highlighting the significance of robust S-boxes in cipher security, together with a new method that uses fractional transformations to create strong S-boxes. These S-boxes undergo thorough testing and exhibit better security against attacks than previous models. When integrated into an image encryption scheme, they prove highly effective in safeguarding image data, outperforming other S-box-based encryption methods.

Luo et al. (2019) introduced an innovative image encryption method using elliptic curve ElGamal and chaotic theory. It utilizes SHA-512 hash to initialize a chaotic system, enabling secure encryption and reducing correlations between pixels. The technique exhibits robustness against known-plaintext and chosen-plaintext attacks, promising high security and efficiency for secure image communications.

Parida et al. (2021) introduced an Elliptic Curve Cryptography-based image encryption and authentication model that ensures secure transmission. It employs ECDH key exchange and ElGamal encoding, integrating 3D and 4D Arnold Cat maps for pixel transformation.

Ghazvini et al. (2020) introduced a hybrid image encryption method, leveraging genetic algorithms and chaos. The process involves confusion and diffusion phases employing chaotic maps like Chen’s and Logistic-Sine maps. The genetic algorithm optimizes the encrypted images, yielding the best cipher image.

Lastly, Lin et al. (2021) introduced an intelligent symmetric cryptography approach that combines a hybrid chaotic map with a quantum-based key generator (KG) to encrypt and decrypt medical images. The scheme generates random cipher codes that alter the pixel values of 2D medical images, trains GRA-based decryptors, and evaluates the decrypted images. The hybrid chaotic map and quantum-based KG increase the complexity and unpredictability of the cipher codes. The result is visually uncorrelated encrypted images and improved resistance against passive eavesdroppers.

Overall, these works contribute significantly to the advancement of image encryption techniques. They offer various methods to improve the security, robustness, and efficiency of protecting sensitive image information across different application domains.

4 The proposed image encryption scheme

The enhanced image encryption scheme combines multiple techniques to provide robust security and confidentiality for images. The method comprises four main components: Arnold’s cat map, elliptic curve cryptography (ECC), a genetic algorithm (GA), and secure key management. It is divided into three phases: chaotic map-based pixel shuffling, genetic algorithm-based key generation, and elliptic curve cryptography-based encryption of pixel values. Each component plays a crucial role in ensuring the integrity and privacy of the image data.

4.1 Image scrambling with Arnold’s cat map

- The original image is first represented as a 2D matrix of pixel values, where each pixel corresponds to a coordinate point (u, v) on the image grid.
- Arnold’s cat map is applied to transform the pixel positions (u, v).
- The new position \((u', v')\) of each pixel is computed with the generalized Arnold’s cat map:

$$\begin{pmatrix} u'\\ v' \end{pmatrix} = \begin{pmatrix} 1 & r\\ s & rs + 1 \end{pmatrix} \begin{pmatrix} u\\ v \end{pmatrix} \bmod M$$

where the positive integers r and s are control parameters and M is the side length of the \(M\times M\) image.

Pseudocode for the scrambling method is given in Algorithm 1.

Algorithm 1 Scrambling using Arnold Cat
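Algorithm 1 itself is not reproduced in this text. The following is a minimal Python sketch of scrambling with the generalized map, under these assumptions:

- The image is a square list of lists, with pixel (u, v) stored at `img[u][v]`.
- The number of iterations is treated as an extra parameter.
- The helper names are hypothetical.

The inverse is computed by gathering each pixel back from its forward image rather than by inverting the matrix explicitly.

```python
def _forward(u, v, r, s, m):
    """One application of the generalized cat map to position (u, v) on an m x m grid."""
    return (u + r * v) % m, (s * u + (r * s + 1) * v) % m


def cat_map_scramble(img, r, s, iterations):
    """Move each pixel img[u][v] to (u', v'), repeated `iterations` times."""
    m = len(img)
    if any(len(row) != m for row in img):
        raise ValueError("Arnold's cat map needs a square M x M image")
    out = [row[:] for row in img]
    for _ in range(iterations):
        nxt = [[0] * m for _ in range(m)]
        for u in range(m):
            for v in range(m):
                nu, nv = _forward(u, v, r, s, m)
                nxt[nu][nv] = out[u][v]
        out = nxt
    return out


def cat_map_unscramble(img, r, s, iterations):
    """Undo the scrambling: the pixel now at (u', v') came from (u, v)."""
    m = len(img)
    out = [row[:] for row in img]
    for _ in range(iterations):
        prev = [[0] * m for _ in range(m)]
        for u in range(m):
            for v in range(m):
                nu, nv = _forward(u, v, r, s, m)
                prev[u][v] = out[nu][nv]
        out = prev
    return out


img = [[8 * i + j for j in range(8)] for i in range(8)]  # toy 8x8 "image"
scrambled = cat_map_scramble(img, r=2, s=3, iterations=5)
assert cat_map_unscramble(scrambled, r=2, s=3, iterations=5) == img
```

The map is a bijection on a finite grid, so it is periodic: applying it enough times restores the original image. For the toy 8×8 example with r = 2 and s = 3, four iterations already do so. As a result, only iteration counts below the period produce distinct scramblings.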

4.2 ECC key generation using genetic algorithm

- Before ECC encryption begins, a private key is randomly generated. This private key is a secret integer chosen from a specific range, typically \([1, n-1]\), where n is the large prime order of the base point G of the chosen curve.
- Next, a genetic algorithm (GA) is employed to optimize the ECC public key generation process:
  - The GA runs for a specified number of generations. It seeks a public key that is as close as possible to a target point on the elliptic curve defined by the parameters a and b and the base point G.
  - The GA uses a fitness function to evaluate how close each public key candidate is to the target point. Lower fitness values indicate a closer match.
  - In each generation, a population of ECC public keys (represented as chromosomes) undergoes selection, crossover, and mutation to create a new generation of keys. The fittest keys from the current generation are more likely to be selected as parents.
  - The GA aims to evolve a population of keys that converges toward the target point, enhancing the security of the encryption process.
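The GA-based key search described above might be sketched as follows. The source does not specify the chromosome encoding, the distance used as fitness, or the GA operators, so all of the following are assumptions:

- Chromosomes are 10-bit strings that encode candidate private keys d.
- The candidate public key is dG.
- Fitness is the Manhattan distance between dG and the target point, and lower is better.
- The curve is a toy: \(v^2 = u^3 + 2u + 3\) over the integers modulo the prime 1019, which is far too small to be secure.

Function names such as `ga_key_search` are hypothetical.

```python
import random

# Hypothetical toy curve v^2 = u^3 + A*u + B over F_P (P = 1019 is prime); insecure.
P, A, B = 1019, 2, 3
INF = None  # point at infinity


def point_add(p1, p2):
    if p1 is INF:
        return p2
    if p2 is INF:
        return p1
    (u1, v1), (u2, v2) = p1, p2
    if u1 == u2 and (v1 + v2) % P == 0:
        return INF
    if p1 == p2:
        lam = (3 * u1 * u1 + A) * pow(2 * v1 % P, -1, P) % P
    else:
        lam = (v2 - v1) * pow((u2 - u1) % P, -1, P) % P
    u3 = (lam * lam - u1 - u2) % P
    return (u3, (lam * (u1 - u3) - v1) % P)


def scalar_mult(k, pt):
    result = INF
    while k > 0:
        if k & 1:
            result = point_add(result, pt)
        pt = point_add(pt, pt)
        k >>= 1
    return result


def find_base_point():
    """Return the first affine point with v != 0 (brute force, feasible only for tiny P)."""
    for u in range(P):
        rhs = (u ** 3 + A * u + B) % P
        for v in range(1, P):
            if v * v % P == rhs:
                return (u, v)
    raise ValueError("no suitable point found")


def ga_key_search(G, target, bits=10, pop_size=20, generations=30, p_mut=0.05, seed=1):
    """Evolve private keys d so that the public key d*G lies close to `target`."""
    rng = random.Random(seed)

    def decode(ch):  # bit string -> private key in [1, 2**bits - 1]
        return max(1, int("".join(map(str, ch)), 2))

    def score(ch):  # hypothetical fitness: Manhattan distance to target, lower is better
        q = scalar_mult(decode(ch), G)
        return float("inf") if q is INF else abs(q[0] - target[0]) + abs(q[1] - target[1])

    pop = [[rng.randint(0, 1) for _ in range(bits)] for _ in range(pop_size)]
    best = min(pop, key=score)
    history = [score(best)]
    for _ in range(generations):
        children = [best[:]]  # elitism: keep the best key found so far
        while len(children) < pop_size:
            # Tournament selection: each parent is the fitter of two random individuals.
            p1 = min(rng.sample(pop, 2), key=score)
            p2 = min(rng.sample(pop, 2), key=score)
            cut = rng.randrange(1, bits)  # one-point crossover, then bit-flip mutation
            child = [bit ^ 1 if rng.random() < p_mut else bit for bit in p1[:cut] + p2[cut:]]
            children.append(child)
        pop = children
        best = min(pop, key=score)
        history.append(score(best))
    d = decode(best)
    return d, scalar_mult(d, G), history


G = find_base_point()
target = scalar_mult(2, G)  # hypothetical target point
d, public_key, history = ga_key_search(G, target)
print(d, public_key, history[0], history[-1])
```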

4.3 ECC encryption of pixel values

- With the ECC public key optimized through the GA, the encryption of pixel values can begin.
- For each pixel in the shuffled image:
  - The pixel value is treated as a message to be encrypted.
  - The ECC encryption algorithm uses the public key to transform the pixel value into a ciphertext.
  - The ciphertext is stored in the encrypted image.

Pseudocode for the proposed encryption is given in Algorithm 2.

Algorithm 2 Encryption using ECC and GA

This process combines the strengths of the chaotic map for pixel shuffling, ECC for pixel value encryption, and a genetic algorithm for optimizing the ECC public key, enhancing the overall security of the image encryption. The result is a highly secure and robust image encryption scheme that protects sensitive image data against unauthorized access and attacks.

5 Experimental methodology

5.1 Experimental Setup

The experiments were conducted on a standard desktop computer with a 2.3 GHz processor and 16 GB of RAM. The data set comprises standard test images from publicly available databases, such as Lena, Baboon, Cameraman, Barbara, and Peppers. The elliptic curve cryptography part of the proposed algorithm uses a secure 512-bit elliptic curve from the ECC Brainpool standard, Elliptic Curve Cryptography (ECC) Brainpool Standard Curves and Curve Generation (2021). The specific parameters of this curve, which are essential for the implementation, are detailed in Table 1.

5.2 Encryption process using the proposed scheme

The selected data set is encrypted using the enhanced image encryption scheme described in Sect. 4. Each image undergoes the full encryption process. Arnold’s cat map shuffles the pixel positions, a genetic algorithm optimizes the ECC key, and elliptic curve cryptography encrypts the pixel values. The encryption process transforms the image data into an unintelligible form, protecting its confidentiality.

5.3 Performance metrics and evaluation criteria

To assess the performance of the proposed enhanced image encryption scheme, various performance metrics and evaluation criteria are considered. These metrics include:

1. Statistical Analysis: The analysis of statistical properties, such as histogram distribution, correlation, and entropy, to measure the randomness and information preservation of the encrypted images.
2. Key Sensitivity: The impact of key modifications on the decrypted image quality, evaluating the robustness of the scheme against key alterations.
3. Security: The scheme’s resistance to cryptographic attacks, such as brute-force attacks, differential attacks, and statistical attacks.
4. Comparison with Existing Techniques: Evaluating the performance of the proposed scheme in comparison to other contemporary image encryption methods, considering factors such as security, efficiency, and image quality preservation.

The experimental methodology aims to evaluate the effectiveness and performance of the proposed enhanced image encryption scheme. By considering appropriate data sets, conducting the encryption process, and utilizing relevant performance metrics and evaluation criteria, the experimental results will provide insights into the scheme’s security, efficiency, and applicability for real-world image encryption applications.

6 Results and analysis

This section presents the experimental results of the proposed enhanced image encryption scheme. It then gives detailed analyses of the scheme’s statistical properties, key sensitivity, security, and image quality preservation.

6.1 Statistical analysis

6.1.1 Histogram analysis

Histogram analysis is a widely used technique for studying the distribution of pixel intensities in an image Liu et al. (2016); Niyat et al. (2017). It provides insight into the brightness, contrast, and presence of particular patterns or features in the image. The histogram displays the frequency of each pixel intensity level in an image. By examining the histograms of the original and encrypted images, we can evaluate how the encryption process affects the distribution of pixel intensities. A suitable encryption method should ideally produce a cipher image with a uniformly distributed histogram Chai et al. (2017); Liu et al. (2016). Fig. 1 shows results for several test images encrypted with the proposed scheme. For each image, it shows the plain image, its histogram, and the histogram of the corresponding encrypted image.

One notable observation is that the histogram of the encrypted image exhibits a more uniformly distributed intensity profile when compared to that of the plain image. This suggests that the encryption scheme effectively introduces randomness and diffusion in the cipher image, making it challenging to discern any specific patterns or information. Overall, the histogram analysis supports the effectiveness of the suggested enhanced image encryption scheme in modifying the pixel intensity distribution, enhancing the security of the image and making it resistant to unauthorized access.

6.1.2 Correlation analysis

Correlation analysis is an effective method for determining the relationship between neighbouring pixel values in an image Enayatifar et al. (2017). By conducting correlation analysis between the plain image and the cipher image, we can evaluate the correlation or relationship between the pixel values in both images. This analysis provides insights into how the encryption process affects the correlation patterns of pixel values.

In order to calculate the correlation coefficient in the diagonal, vertical, and horizontal directions, we apply the equations (4-7) Zhou et al. (2014); Enayatifar et al. (2017); Abdullah et al. (2012).
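For reference, the definitions commonly used for this analysis in the image-encryption literature are given below. We assume, although the text does not confirm it, that equations (4-7) take this form. Here \((x_i, y_i)\) is the i-th of N sampled pairs of adjacent gray levels:

$$E(x) = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad D(x) = \frac{1}{N}\sum_{i=1}^{N}\bigl(x_i - E(x)\bigr)^2,$$

$$\operatorname{cov}(x, y) = \frac{1}{N}\sum_{i=1}^{N}\bigl(x_i - E(x)\bigr)\bigl(y_i - E(y)\bigr), \qquad r_{xy} = \frac{\operatorname{cov}(x, y)}{\sqrt{D(x)}\,\sqrt{D(y)}}.$$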

For both the original image and the cipher image created by using the suggested enhanced image encryption scheme, correlation analysis was performed to look into the inter-pixel correlation in the vertical, horizontal, and diagonal directions.

In each direction, we randomly selected 10,000 pairs of adjacent pixels and computed the correlation coefficient over these pairs for both the plain and cipher images. The correlation coefficient quantifies the strength and direction of the linear association between two variables. The findings show that the correlation coefficients of adjacent pixels in the original image are relatively high Belazi et al. (2017); Enayatifar et al. (2017). This implies a strong linear relationship between adjacent pixels, indicating spatial patterns or structures within the image.
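The sampling procedure described above might look as follows in Python. This is a sketch under these assumptions:

- The image is a grayscale list of rows.
- Pairs are sampled with replacement.
- The mean, variance, and covariance use the 1/N normalization.

The function name `adjacent_correlation` is hypothetical.

```python
import math
import random


def adjacent_correlation(img, direction="horizontal", n_pairs=10_000, seed=0):
    """Correlation coefficient r_xy of randomly sampled adjacent-pixel pairs.

    img is a grayscale image given as a list of rows; direction picks the neighbour.
    """
    dr, dc = {"horizontal": (0, 1), "vertical": (1, 0), "diagonal": (1, 1)}[direction]
    rows, cols = len(img), len(img[0])
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n_pairs):
        # Sample a pixel that has a neighbour in the chosen direction (with replacement).
        r, c = rng.randrange(rows - dr), rng.randrange(cols - dc)
        xs.append(img[r][c])
        ys.append(img[r + dr][c + dc])
    n = len(xs)
    ex, ey = sum(xs) / n, sum(ys) / n                        # E(x), E(y)
    dx = sum((x - ex) ** 2 for x in xs) / n                 # D(x)
    dy = sum((y - ey) ** 2 for y in ys) / n                 # D(y)
    cov = sum((x - ex) * (y - ey) for x, y in zip(xs, ys)) / n
    return cov / math.sqrt(dx * dy)                         # undefined for flat images (D = 0)


# A smooth gradient is perfectly correlated; uniform noise is nearly uncorrelated.
gradient = [[r + c for c in range(64)] for r in range(64)]
noise_rng = random.Random(42)
noise = [[noise_rng.randrange(256) for _ in range(128)] for _ in range(128)]
print(adjacent_correlation(gradient), adjacent_correlation(noise, "diagonal"))
```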

In contrast, the correlation coefficients of neighboring pixels in the encrypted image exhibit a significant decrease compared to those in the plain image. The encryption process successfully disrupts the linear relationships between neighboring pixels, introducing randomness and diffusion. This decrease in correlation coefficients implies a higher level of randomness and enhanced security in the cipher image.

The results are shown in Table 2 and Fig. 2. Fig. 2 illustrates the correlation coefficients of adjacent pixels in the plain and cipher images.

These results affirm the effectiveness of the proposed image encryption scheme in reducing the correlation between adjacent pixels, thereby increasing the security and resistance against unauthorized access.

6.1.3 Entropy analysis

Entropy analysis measures the randomness and information content of both the plain images and the images encrypted with the proposed method.

Equation (8) gives the information entropy Abdullah et al. (2012); Liu et al. (2016):

\[ H(s) = \sum_{j=0}^{2^N-1} P(s_j)\log_2\frac{1}{P(s_j)} \]

Here, N is the number of bits used to represent each symbol \(s_j\) (N = 8 for an 8-bit grayscale image, giving 256 possible gray levels), and \(P(s_j)\) is the probability of the symbol \(s_j\) occurring.

We conducted entropy analysis to assess the randomness and information content of both the original and cipher images, computing the entropy values with Shannon’s information theory. For an 8-bit grayscale image, an ideal encryption system should produce a cipher image with an entropy of 8 Xu et al. (2017); Matthews (1989). The entropy values of the cipher images produced by our scheme were higher than those of currently used encryption techniques, indicating a higher level of randomness and improved security. Table 3 lists the entropy values of the plain and encrypted versions of different images. These results demonstrate that our encryption scheme effectively introduces uncertainty and protects image data from unauthorized access.
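The entropy computation can be illustrated with a short sketch. It assumes an 8-bit grayscale image flattened into a sequence of integer gray levels and applies the Shannon entropy formula directly to the gray-level histogram; the function name is illustrative and not taken from the original implementation.

```python
import math
from collections import Counter


def shannon_entropy(pixels, bits=8):
    """H(s) = sum_j P(s_j) * log2(1 / P(s_j)) over the observed gray levels.

    Gray levels that never occur have P(s_j) = 0 and contribute nothing,
    so only the observed levels are summed.
    """
    counts = Counter(pixels)
    total = sum(counts.values())
    entropy = 0.0
    for level, count in counts.items():
        if not 0 <= level < 2 ** bits:
            raise ValueError(f"gray level {level} is outside the {bits}-bit range")
        p = count / total
        entropy += p * math.log2(1 / p)
    return entropy


if __name__ == "__main__":
    img = [[(7 * r + 13 * c) % 256 for c in range(512)] for r in range(512)]
    print(shannon_entropy(v for row in img for v in row))
```

A cipher image whose 256 gray levels occur equally often reaches the maximum of 8 bits, whereas a constant image gives 0.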

6.1.4 Contrast

Contrast refers to the difference in luminance or color that makes an object distinguishable. It measures the separation between the darkest and lightest parts of an image, or the range of tones present; in the GLCM-based form used here, it measures the intensity variation between neighbouring pixels.

High-contrast images have well-defined borders between light and dark areas, resulting in a visually striking and clear image. Conversely, low-contrast images have a narrower range of tones, making it difficult to distinguish elements or details within the image. Results are shown in Table 4. Mathematically, contrast can be measured by the following equation:

\[ \mathrm{Contrast} = \sum_{g}\sum_{h} |g - h|^2 \, n(g, h) \]

where n(g, h) denotes the normalized entry of the gray-level co-occurrence matrix (GLCM) for the gray-level pair (g, h), that is, the relative frequency with which gray levels g and h occur in neighbouring pixels.

6.1.5 Energy

Energy, also known as angular second moment or uniformity, is a statistical measure used to characterize the texture or spatial distribution of pixel intensities in an image. It quantifies the uniformity of pixel values.

The energy (E) of an image, or of a specified region within it, is calculated from the Gray-Level Co-occurrence Matrix (GLCM), which records the occurrences of pixel intensity pairs at a specified spatial relationship (distance and angle). Energy is computed from the GLCM as:

\[ E = \sum_{g=0}^{M-1}\sum_{h=0}^{M-1} \mathrm{GLCM}(g, h)^2 \]

where M is the number of gray levels in the image and GLCM(g, h) is the normalized count (relative frequency) of the pixel intensity pair (g, h) at a specific distance and angle.

The energy value ranges between 0 and 1. Higher energy values indicate greater uniformity in the texture of the image, where pixel values are more consistent or uniform. Results are shown in Table 4.

6.1.6 Homogeneity

The uniformity or similarity of pixel intensities within an image or within a particular area of an image is measured as homogeneity. It quantifies how close the distribution of pixel values is to being uniform or consistent.

The homogeneity of an image can be computed from the Gray-Level Co-occurrence Matrix (GLCM), in the same way as other texture properties such as energy and contrast. Results are shown in Table 4.

A common formula derives homogeneity from the GLCM elements, which represent the occurrences of pairs of pixel intensities at specified spatial relationships:

\[ H = \sum_{p=0}^{M-1}\sum_{q=0}^{M-1} \frac{\mathrm{GLCM}(p, q)}{1 + |p - q|} \]

where

M is the number of gray levels in the image.

GLCM(p, q) is the normalized count (relative frequency) of the pixel intensity pair (p, q) at a specific distance and angle.

The homogeneity value ranges between 0 and 1. Higher values indicate more uniform or homogeneous texture in the image, where pixel values are more similar or closely packed.
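The three GLCM-based measures above can be computed together, as in the sketch below. It assumes a normalized GLCM built for a single offset (by default, each pixel paired with its right-hand neighbour) over all 256 gray levels, and uses contrast \(\sum |g-h|^2 p(g,h)\), energy \(\sum p(g,h)^2\), and homogeneity \(\sum p(g,h)/(1+|g-h|)\). The offset, the number of levels, and the function names are assumptions for illustration; the values in Table 4 may have been computed with different settings (for example, MATLAB's `graycomatrix` defaults to 8 gray levels for numeric images).

```python
def glcm(img, offset=(0, 1), levels=256):
    """Normalized GLCM: p[g][h] = relative frequency of pair (g, h) at `offset`.

    `offset` is a (row step, column step) with non-negative entries; (0, 1)
    pairs each pixel with its right-hand neighbour.
    """
    dr, dc = offset
    rows, cols = len(img), len(img[0])
    counts = [[0] * levels for _ in range(levels)]
    for r in range(rows - dr):
        for c in range(cols - dc):
            counts[img[r][c]][img[r + dr][c + dc]] += 1
    total = (rows - dr) * (cols - dc)
    return [[n / total for n in row] for row in counts]


def texture_features(p):
    """Return (contrast, energy, homogeneity) of a normalized GLCM p."""
    contrast = energy = homogeneity = 0.0
    for g, row in enumerate(p):
        for h, value in enumerate(row):
            if value == 0:
                continue                      # empty cells add nothing to any sum
            contrast += (g - h) ** 2 * value  # sum |g - h|^2 p(g, h)
            energy += value ** 2              # sum p(g, h)^2
            homogeneity += value / (1 + abs(g - h))
    return contrast, energy, homogeneity
```

A constant image gives contrast 0, energy 1, and homogeneity 1; a well-encrypted image should instead show high contrast with energy and homogeneity close to their minimum.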

6.2 Key analysis

6.2.1 Key space analysis

Key space analysis examines the size and complexity of the encryption key space used in the proposed scheme. A larger key space enhances security by making it exceedingly difficult for attackers to guess the key or search for it exhaustively. In our proposed algorithm, the key space is \(2^{511}\), which is sufficiently large to resist brute-force attacks. Each additional key bit doubles the number of possible keys, so trying all combinations within a reasonable time frame is computationally infeasible.

These findings show that the proposed encryption method provides a large and secure key space, protecting the confidentiality of the encrypted image data.
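To put a key space of \(2^{511}\) in perspective, the worked estimate below computes the expected brute-force time. The attacker speed of \(10^{12}\) keys per second is a hypothetical assumption, and the estimate assumes that, on average, half of the key space must be searched before the correct key is found.

```python
KEY_BITS = 511                      # key space of 2**511 reported for the scheme
KEYS_PER_SECOND = 10 ** 12          # hypothetical attacker throughput (assumption)
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def expected_brute_force_years(key_bits, keys_per_second):
    """Average search covers half the key space: 2**(key_bits - 1) trials."""
    trials = 2 ** (key_bits - 1)
    return trials / keys_per_second / SECONDS_PER_YEAR


years = expected_brute_force_years(KEY_BITS, KEYS_PER_SECOND)
print(f"about {years:.2e} years")
```

Even at a trillion keys per second, the expected search time is on the order of \(10^{134}\) years, and each extra key bit doubles it.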

6.2.2 Key sensitivity analysis

To evaluate the sensitivity of the encryption key and its impact on the encrypted image, we conducted key sensitivity experiments in which the encryption key was varied systematically while all other parameters were kept constant. We selected a set of representative images, encrypted them with the proposed scheme, and then generated additional cipher images by modifying specific bits or components of the encryption key. Comparing the resulting cipher images showed how key variations affect the encryption process, and we examined the resistance of the cipher images to decryption attempts with modified keys. Even slight modifications to the encryption key led to significant changes in the cipher image, demonstrating the high sensitivity of the key and its crucial role in ensuring the security and confidentiality of the encrypted data.
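As an illustration of key sensitivity in the pixel-shuffling stage only, the sketch below uses the generalized Arnold cat map \((x, y) \mapsto ((x + p y) \bmod n,\ (q x + (p q + 1) y) \bmod n)\) and measures how many pixels are sent to a different position when one parameter changes by a single unit. Treating p and q as part of the key, and the helper names, are hypothetical assumptions; the sketch does not reproduce the full scheme's key, which also involves ECC and GA.

```python
def cat_map(n, p, q):
    """One round of the generalized Arnold cat map on an n x n grid.

    (x, y) -> ((x + p*y) mod n, (q*x + (p*q + 1)*y) mod n).  The matrix
    [[1, p], [q, p*q + 1]] has determinant 1, so the map is a permutation.
    """
    return {(x, y): ((x + p * y) % n, (q * x + (p * q + 1) * y) % n)
            for x in range(n) for y in range(n)}


def fraction_relocated(n, key_a, key_b):
    """Share of pixels that the two parameter pairs send to different places."""
    map_a, map_b = cat_map(n, *key_a), cat_map(n, *key_b)
    moved = sum(1 for pos in map_a if map_a[pos] != map_b[pos])
    return moved / (n * n)


if __name__ == "__main__":
    # Changing p by one unit relocates every pixel whose y coordinate is non-zero.
    print(fraction_relocated(256, (3, 5), (4, 5)))
```

Changing p by one relocates a fraction \(1 - 1/n\) of the pixels, because only positions with \(y = 0\) are mapped identically by both parameter pairs.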

6.3 Differential attacks

The proposed enhanced image encryption scheme is highly sensitive to even minor alterations in the original image, which is a crucial property for image encryption: a slight modification, such as a single-bit change, should produce a substantially different cipher image. To assess this sensitivity, the cipher images of the original image and of its modified version are compared using the Number of Pixels Change Rate (NPCR) and the Unified Average Changing Intensity (UACI). These metrics quantify how much the cipher image changes when a single pixel of the original image is altered, and hence how strongly the scheme responds to modifications in the plain image. NPCR and UACI are computed using Equations (12)–(14):

\[ D(i, j) = \begin{cases} 0, & \gamma_1(i, j) = \gamma_2(i, j) \\ 1, & \gamma_1(i, j) \neq \gamma_2(i, j) \end{cases} \]

\[ \mathrm{NPCR} = \frac{1}{U \times V} \sum_{i=1}^{U}\sum_{j=1}^{V} D(i, j) \]

\[ \mathrm{UACI} = \frac{1}{U \times V} \sum_{i=1}^{U}\sum_{j=1}^{V} \frac{|\gamma_1(i, j) - \gamma_2(i, j)|}{255} \]

In this experiment, U and V denote the width and height of the image, \(\gamma _1\) is the cipher image obtained by encrypting the original image, and \(\gamma _2\) is the cipher image obtained by modifying a single pixel of the original image and then encrypting it; the original image and its modified version therefore differ by only one pixel. NPCR and UACI measure the extent of pixel changes between these two cipher images, and the results are presented in Table 5. The optimal NPCR and UACI values obtained are 0.9967 and 0.3349, respectively, close to the expected values of about 0.9961 and 0.3346 for two independent, uniformly random 8-bit images. These metrics quantify the ability of the encryption scheme to propagate a small modification across the whole cipher image, further highlighting its robustness and security.
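NPCR and UACI can be sketched as below, assuming two equally sized 8-bit cipher images stored as lists of rows and reporting both metrics as fractions, as in Table 5. The second helper computes the values expected for two independent, uniformly random 8-bit images, which is where reference values of about 0.9961 and 0.3346 come from; both function names are illustrative.

```python
def npcr_uaci(c1, c2, max_value=255):
    """NPCR and UACI (as fractions) between two equally sized cipher images."""
    rows, cols = len(c1), len(c1[0])
    changed = 0
    abs_diff = 0
    for r in range(rows):
        for c in range(cols):
            d = abs(c1[r][c] - c2[r][c])
            if d:                 # D(i, j) = 1 when the two pixels differ
                changed += 1
            abs_diff += d
    total = rows * cols
    return changed / total, abs_diff / (max_value * total)


def ideal_npcr_uaci(levels=256):
    """Expected NPCR/UACI for two independent, uniformly random images."""
    npcr = 1 - 1 / levels      # probability that two random pixels differ
    mean_abs = sum(abs(a - b) for a in range(levels)
                   for b in range(levels)) / levels ** 2
    return npcr, mean_abs / (levels - 1)


if __name__ == "__main__":
    print(ideal_npcr_uaci())
```

Comparing measured values with `ideal_npcr_uaci()` is a quick sanity check: results far below the ideal suggest weak diffusion.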

6.4 Robustness analysis

In the real world, when images are transmitted, they can be affected by noise or data loss. To create a reliable image encryption algorithm, it should be able to handle these challenges effectively. The algorithm should be robust, meaning it can endure noise and data loss while still allowing for the recovery of the original image without losing vital information Hua et al. (2018); Liu et al. (2016); Noshadian et al. (2018).

In our analysis, we assess the robustness of the proposed image encryption method by subjecting it to two types of attacks: cropping and noise attacks. To evaluate the quality of the decrypted image, we rely on a crucial metric known as PSNR, or Peak Signal to Noise Ratio. This metric helps measure the fidelity of the decrypted image in comparison to the original plain image.

The PSNR is calculated using the following formula Hu et al. (2013):

\[ \mathrm{PSNR} = 10 \log_{10} \frac{MAX^2}{\frac{1}{U \times V}\sum_{s=1}^{U}\sum_{t=1}^{V} \left(O(s, t) - C(s, t)\right)^2} \]

where:

U and V stand for the image’s width and height, respectively.

O(s, t) stands for the pixel value at position (s, t) in the plain image.

C(s, t) represents the pixel value at the same position (s, t) in the decrypted image.

\(MAX^2\) is the square of the maximum possible pixel value (255 for an 8-bit image).

Essentially, the PSNR compares the plain image with the decrypted image through their mean squared pixel difference over all U × V pixels, expressed relative to \(MAX^2\). A higher PSNR value indicates a better-quality decrypted image, signifying that less information has been lost or distorted during the encryption and decryption processes.
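The PSNR formula translates directly into code. This sketch assumes 8-bit images of equal size stored as lists of rows, with `max_value` = 255; returning infinity for identical images is a common convention rather than something stated in the text.

```python
import math


def psnr(original, decrypted, max_value=255):
    """PSNR in dB between the plain image O and the decrypted image C."""
    rows, cols = len(original), len(original[0])
    mse = sum((original[s][t] - decrypted[s][t]) ** 2
              for s in range(rows) for t in range(cols)) / (rows * cols)
    if mse == 0:
        return math.inf          # identical images: no distortion at all
    return 10 * math.log10(max_value ** 2 / mse)
```

For example, a decrypted image that is off by one gray level at every pixel has MSE = 1 and a PSNR of about 48.13 dB.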

Fig. 3 shows the encrypted image with 1/16, 1/4, and 1/2 of its data occluded, together with the corresponding images decrypted by the proposed method. Table 6 lists the Peak Signal to Noise Ratio (PSNR) values of the decrypted images under this cropping attack, both for the proposed scheme and for the schemes of Hu et al. (2017); Wang and Liu (2017); Liu and Wang (2012); Chai et al. (2019); Zhan et al. (2017). The experimental results show that even when up to 50% of the pixels in the encrypted image are occluded, the image can still be effectively reconstructed. In other words, the proposed scheme is robust against cropping attacks and recovers the image even under substantial data occlusion.
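A cropping (occlusion) attack can be simulated by overwriting part of the cipher image before decryption. In this sketch the occluded region is a band of top rows filled with zeros; the actual shape and position of the occluded regions in Fig. 3 may differ, so the helper name and region choice are assumptions.

```python
def occlude_rows(cipher, fraction, fill=0):
    """Return a copy of `cipher` whose top `fraction` of rows is set to `fill`.

    Simulates losing part of the cipher image in transit; the band shape is
    a simplifying assumption.
    """
    cut = round(len(cipher) * fraction)
    return [[fill] * len(row) if r < cut else list(row)
            for r, row in enumerate(cipher)]


if __name__ == "__main__":
    cipher = [[(17 * r + 29 * c) % 256 for c in range(512)] for r in range(512)]
    for fraction in (1 / 16, 1 / 4, 1 / 2):
        attacked = occlude_rows(cipher, fraction)
        lost = sum(v == 0 for row in attacked for v in row) / (512 * 512)
        # `attacked` would then be passed to the scheme's decryption routine.
        print(fraction, round(lost, 4))
```

The input image is left untouched, so the same cipher image can be attacked at several occlusion levels.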

To evaluate how well our encryption approach withstands noise-based attacks, we added Salt & Pepper noise with densities of 0.005, 0.05, and 0.1 to the encrypted images and then calculated the Peak Signal to Noise Ratio (PSNR) between the original images and their decrypted versions. Fig. 4 shows the cipher image of Lena subjected to Salt & Pepper noise at these three densities, together with the corresponding decrypted images. Table 7 lists the PSNR values of the decrypted images under the noise attack, both for the proposed scheme and for the schemes of Hu et al. (2017); Wang and Liu (2017); Liu and Wang (2012); Chai et al. (2019); Zhan et al. (2017). The experimental results show that the decrypted images remain highly recognizable in the presence of Salt & Pepper noise. In summary, the proposed scheme is robust against Salt & Pepper noise attacks and successfully reconstructs images across the tested noise densities.
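The noise attack can be simulated with a simple Salt & Pepper model in which each pixel is replaced, with probability equal to the noise density, by 0 or 255 with equal chance. This follows the usual meaning of noise density but is an assumption, not the exact routine used in the experiments (in MATLAB, `imnoise` with the `'salt & pepper'` option would be the natural choice); the function name is illustrative.

```python
import random


def salt_and_pepper(img, density, seed=None, max_value=255):
    """Replace each pixel with probability `density` by 0 (pepper) or max (salt)."""
    rng = random.Random(seed)
    noisy = []
    for row in img:
        new_row = []
        for v in row:
            if rng.random() < density:
                v = 0 if rng.random() < 0.5 else max_value
            new_row.append(v)
        noisy.append(new_row)
    return noisy
```

With densities of 0.005, 0.05, and 0.1, roughly 0.5%, 5%, and 10% of the cipher pixels are corrupted before decryption.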

6.5 NIST SP 800-22

NIST Special Publication 800-22, officially known as “A Statistical Test Suite for Random and Pseudorandom Number Generators for Cryptographic Applications,” is a publication released by the United States National Institute of Standards and Technology (NIST). It provides an extensive collection of statistical tests for assessing the randomness of both random number generators (RNGs) and pseudorandom number generators (PRNGs) used in cryptographic applications.

NIST SP 800-22 helps ensure the security and reliability of cryptographic systems by assessing the randomness and unpredictability of the numbers they generate. Cryptographic algorithms and protocols rely on random or pseudorandom numbers for tasks such as key generation, initialization vectors, and nonces; if these numbers are not sufficiently random or lack the necessary statistical properties, the cryptographic system can become vulnerable. The document includes a suite of 15 statistical tests, each designed to detect a specific deviation from randomness, such as bias, correlation, or patterns, in a bit sequence. Each test yields a P-value between 0 and 1, which is used to assess the randomness of the sequence Al-Hazaimeh et al. (2022); Mahalingam et al. (2023). The results for NIST SP 800-22 are given in Table 8. A low P-value indicates a deviation from randomness, whereas a P-value at or above the chosen significance level means that the test found no evidence of non-randomness. Here, a bit sequence whose P-value is greater than or equal to 0.001 is considered to have the desired statistical properties for cryptographic purposes.
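As an example of what a single NIST SP 800-22 test computes, the sketch below implements the Frequency (Monobit) test, which checks whether ones and zeros occur in roughly equal proportion: \(S_n = \sum (2\varepsilon_i - 1)\), \(s_{obs} = |S_n|/\sqrt{n}\), and \(P = \mathrm{erfc}(s_{obs}/\sqrt{2})\). The 0.001 threshold follows the text above; converting cipher-image pixels to bits most-significant-bit first is an assumption, and a real evaluation should run the complete NIST suite on a sufficiently long sequence.

```python
import math

ALPHA = 0.001  # significance level stated in the text


def pixels_to_bits(pixels):
    """Expand 8-bit gray levels into bits, most significant bit first (assumption)."""
    return [(v >> k) & 1 for v in pixels for k in range(7, -1, -1)]


def monobit_p_value(bits):
    """NIST SP 800-22 Frequency (Monobit) test P-value."""
    n = len(bits)
    s_n = sum(2 * b - 1 for b in bits)      # +1 for each one, -1 for each zero
    s_obs = abs(s_n) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))


if __name__ == "__main__":
    example = [int(ch) for ch in "1011010101"]   # worked example in SP 800-22
    p = monobit_p_value(example)
    print(f"P-value = {p:.6f}, pass = {p >= ALPHA}")
```

For the ten-bit example sequence 1011010101 from the SP 800-22 description of this test, the P-value is about 0.527, so the sequence passes at the 0.001 level.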

6.6 Computational complexity

The time complexity of encryption algorithms is important, especially for real-time internet applications and the processing of large data sets. To address this, we carried out a series of experiments in MATLAB R2018a on a computer with 16.0 GB of RAM and a 2.3 GHz Intel(R) Core(TM) CPU. The grayscale images “Lena”, “Baboon”, “Peppers”, “Barbara”, and “Cameraman”, each of size \(512\times 512\) pixels, served as the original images. Each test was repeated ten times, and the average encryption and decryption times are reported in Tables 9 and 10. The proposed encryption scheme was consistently faster than the alternatives. These results indicate that the proposed encryption method not only outperforms the schemes of Hu et al. (2017); Wang and Liu (2017); Liu and Wang (2012); Chai et al. (2019); Zhan et al. (2017); Mahalingam et al. (2023) in speed but is also well suited to practical applications that demand efficiency.
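The ten-run averaging procedure can be sketched as a small timing harness. Here `encrypt_stub` is a hypothetical placeholder (a plain XOR with a constant byte) standing in for the actual encryption routine, and Python's `time.perf_counter` replaces whatever timer was used in the MATLAB experiments, so the absolute numbers are not comparable with Tables 9 and 10.

```python
import time
from statistics import mean


def average_runtime(func, *args, runs=10):
    """Mean wall-clock time in seconds of `runs` calls to func(*args)."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        func(*args)
        durations.append(time.perf_counter() - start)
    return mean(durations)


def encrypt_stub(img, key=0x5A):
    """Hypothetical stand-in for the real encryption routine (simple XOR)."""
    return [[v ^ key for v in row] for row in img]


if __name__ == "__main__":
    image = [[(r * c) % 256 for c in range(512)] for r in range(512)]
    print(f"average: {average_runtime(encrypt_stub, image):.4f} s")
```

Averaging over repeated runs smooths out one-off effects such as caching and background load, which is why the measurements were repeated ten times.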

The proposed enhanced image encryption scheme offers several advantages over existing image encryption techniques. Table 11 compares the proposed scheme with other approaches in terms of entropy, correlation, key space, UACI, and NPCR on the image data set Lena, Baboon, Barbara, Cameraman, and Peppers. Table 12 compares the average entropy, correlation, UACI, and NPCR values obtained by the proposed method with those of other methods.

Through this comparison, it becomes evident that the proposed enhanced image encryption scheme outperforms existing techniques in terms of encryption strength, computational efficiency, security, image quality preservation, scalability, and practical implementation. Its unique combination of Arnold’s Cat Map, ECC, and GA optimization provides a robust and efficient solution for secure image encryption.

7 Discussion

This section discusses the proposed enhanced image encryption scheme in detail, including a comparison with existing encryption techniques, an evaluation of its advantages and limitations, and potential areas for future improvement.

7.1 Comparison with existing encryption techniques

The security, computational efficiency, and attack resistance of the proposed scheme are compared with those of other well-known image encryption methods. This comparison highlights the strengths and distinctive features of the proposed scheme, showing where it is superior to or complements existing approaches.

7.2 Evaluation of the proposed scheme’s advantages, limitations, and challenges

The advantages and limitations of the proposed enhanced image encryption scheme are critically evaluated. The advantages include a high level of security, robustness against attacks, efficient key generation, and preservation of image quality. The limitations may involve computational complexity, key sensitivity, or constraints in specific scenarios. The algorithm also faces several challenges. Elliptic curve cryptography (ECC) and the genetic algorithm (GA) introduce computational overhead, which may reduce efficiency on resource-limited devices. Managing cryptographic keys securely, ensuring robustness against attacks, and fine-tuning GA parameters are crucial yet challenging aspects, and striking a balance between strong encryption and acceptable speed remains a persistent challenge.

7.3 Potential areas for future improvement

Based on the analysis and evaluation, potential areas for future improvement and research are identified. These may involve exploring alternative chaotic maps or cryptographic algorithms, enhancing the resistance against advanced attacks, optimizing computational efficiency, or addressing specific application requirements. Suggestions are provided to inspire further research and development in the field of enhanced image encryption.

8 Conclusion

This section draws conclusions from the research on the enhanced image encryption scheme based on Arnold’s Cat Map, Elliptic Curve Cryptography (ECC), and Genetic Algorithm (GA).

8.1 Recap of the research objectives

The primary goals of the study were to enhance image encryption by integrating Arnold’s Cat Map for chaos and randomness, ECC for encrypting pixel values, and GA for optimizing key generation, thereby supporting secure image communication. These objectives serve as the foundation for evaluating the effectiveness of the proposed scheme.

8.2 Summary of key findings and contributions

The key findings include the improved security and robustness achieved by integrating Arnold’s Cat Map, ECC, and GA. The proposed scheme demonstrates enhanced resistance against attacks, efficient key management, and effective preservation of image quality during the encryption process. These findings highlight the potential of integrating multiple techniques to enhance image encryption.

8.3 Implications and future directions for image encryption

The proposed scheme holds implications for various applications, such as secure image transmission, privacy protection, and digital content authentication. Additionally, potential future directions for image encryption research are highlighted, including exploring advanced chaotic maps, refining ECC algorithms, and integrating other optimization techniques. These directions provide avenues for further advancements and improvements in image encryption methods.

In conclusion, the research has successfully demonstrated the effectiveness of the proposed image encryption scheme using Arnold’s Cat Map, ECC, and GA. The findings contribute to the field of image security by providing an innovative approach that addresses the challenges of encryption strength, randomness, and key management. The research opens doors for further exploration and development in image encryption techniques, ultimately contributing to the advancement of information security in the digital age.

References

Abdullah AH, Enayatifar R, Lee M (2012) A hybrid genetic algorithm and chaotic function model for image encryption. AEU-Int J Electron Commun 66(10):806–816. https://doi.org/10.1016/j.aeue.2012.01.015

Al-Hazaimeh OM, Al-Jamal MF, Alomari A et al (2022) Image encryption using anti-synchronisation and Bogdanov transformation map. Int J Comput Sci Math 15(1):43–59. https://doi.org/10.1504/IJCSM.2022.122144

Asif M, Asamoah JKK, Hazzazi MM, Alharbi AR, Ashraf MU, Alghamdi AM (2022) A novel image encryption technique based on cyclic codes over Galois field. Comput Intell Neurosci 2022:1–9. https://doi.org/10.1155/2022/1912603

Attaullah, Shah T, Jamal SS (2020) An improved chaotic cryptosystem for image encryption and digital watermarking. Wirel Pers Commun 110:1429–1442. https://doi.org/10.1007/s11277-019-06793-1

Banu SA, Al-Alawi AI, Padmaa M, Priya PS, Thanikaiselvan V, Amirtharajan R (2023) Healthcare with datacare-a triangular DNA security. Multimed Tools Appl 82:1–18. https://doi.org/10.1007/s11042-023-16303-y

Bao J, Yang Q (2012) Period of the discrete Arnold cat map and general cat map. Nonlinear Dyn 70:1365–1375. https://doi.org/10.1007/s11071-012-0539-3

Belazi A, El-Latif AAA, Diaconu AV, Rhouma R, Belghith S (2017) Chaos-based partial image encryption scheme based on linear fractional and lifting wavelet transforms. Opt Lasers Eng 88:37–50. https://doi.org/10.1016/j.optlaseng.2016.07.010

Cao F, Huang HK, Zhou X (2003) Medical image security in a HIPAA mandated PACS environment. Comput Med Imaging Gr 27:185–196. https://doi.org/10.1016/s0895-6111(02)00073-3

Cavusoglu U, Kacar S (2019) A novel parallel image encryption algorithm based on chaos. Clust Comput 22:1211–1223. https://doi.org/10.1007/s10586-018-02895-w

Chai X, Gan Z, Yuan K, Chen Y, Liu X (2019) A novel image encryption scheme based on DNA sequence operations and chaotic systems. Neural Comput Appl 31(1):219–237. https://doi.org/10.1007/s00521-017-2993-9

Chai X, Chen Y, Broyde L (2017) A novel chaos-based image encryption algorithm using DNA sequence operations. Opt Lasers Eng 88:197–213. https://doi.org/10.1016/j.optlaseng.2016.08.009

Choi J, Seok S, Seo H, Kim H (2016) A fast ARX model-based image encryption scheme. Multimed Tools Appl 75:14685–14706. https://doi.org/10.1007/s11042-016-3274-9

Dash S, Padhy S, Devi SA, Sachi S, Patro KAK (2023) An efficient Intra-Inter pixel encryption scheme to secure healthcare images for an IoT environment. Expert Syst Appl 231:120622. https://doi.org/10.1016/j.eswa.2023.120622

Elliptic Curve Cryptography (ECC) Brainpool Standard Curves and Curve Generation. RFC 5639. https://tools.ietf.org/html/rfc5639/. Accessed 20 Feb 2021

Enayatifar R, Abdullah AH, Isnin IF, Altameem A, Lee M (2017) Image encryption using a synchronous permutation-diffusion technique. Opt Lasers Eng 90:146–154. https://doi.org/10.1016/j.optlaseng.2016.10.006

Ghazvini M, Mirzadi M, Parvar N (2020) A modified method for image encryption based on chaotic map and genetic algorithm. Multimed Tools Appl 79:26927–26950. https://doi.org/10.1007/s11042-020-09058-3

Hafsa A, Sghaier A, Malek J, Machhout M (2021) Image encryption method based on improved ECC and modified AES algorithm. Multimed Tools Appl 80:19769–19801. https://doi.org/10.1007/s11042-021-10700-x

Hariyanto E, Rahim R (2016) Arnold’s cat map algorithm in digital image encryption. Int J Sci Res 5(10):1363–1365. https://doi.org/10.21275/ART20162488

Hu H, Liu L, Ding N (2013) Pseudorandom sequence generator based on the Chen chaotic system. Comput Phys Commun 184(3):765–768. https://doi.org/10.1016/j.cpc.2012.11.017

Hu T, Liu Y, Gong LH, Ouyang CJ (2017) An image encryption scheme combining chaos with cycle operation for DNA sequences. Nonlinear Dyn 87:51–66. https://doi.org/10.1007/s11071-016-3024-6

Hua Z, Jin F, Xu B, Huang H (2018) 2D Logistic-Sine-coupling map for image encryption. Signal Process 149:148–161. https://doi.org/10.1016/j.sigpro.2018.03.010

Hussain S, Asif M, Shah T, Mahboob A, Eldin SM (2023) Redesigning the serpent algorithm by PA-Loop and its image encryption application. IEEE Access 11:29698–29710. https://doi.org/10.1109/ACCESS.2023.3261568

Khalid I, Shah T, Eldin SM, Shah D, Asif M, Saddique I (2022) An integrated image encryption scheme based on elliptic curve. IEEE Access 11:5483–5501. https://doi.org/10.1109/ACCESS.2022.3230096

Koblitz N (1987) Elliptic curve cryptosystems. Math Comput 48(177):203–209

Liao X, Qin Z, Ding L (2017) Data embedding in digital images using critical functions. Signal Process Image Commun 58:146–156. https://doi.org/10.1016/j.image.2017.07.006

Liao X, Yu Y, Li B, Li Z, Qin Z (2019) A new payload partition strategy in color image steganography. IEEE Trans Circuits Syst Video Technol 30(3):685–696. https://doi.org/10.1109/TCSVT.2019.2896270

Lin CH, Wu JX, Chen PY et al (2021) Intelligent symmetric cryptography with chaotic map and quantum based key generator for medical images info security. IEEE Access 9:118624–118639. https://doi.org/10.1109/ACCESS.2021.3107608

Liu Y, Wang J, Fan J, Gong L (2016) Image encryption algorithm based on chaotic system and dynamic S-boxes composed of DNA sequences. Multimed Tools Appl 75:4363–4382. https://doi.org/10.1007/s11042-015-2479-7

Liu H, Wang X (2012) Image encryption using DNA complementary rule and chaotic maps. Appl Soft Comput 12(5):1457–1466. https://doi.org/10.1016/j.asoc.2012.01.016

Liu W, Sun K, Zhu C (2016) A fast image encryption algorithm based on chaotic map. Opt Lasers Eng 84:26–36. https://doi.org/10.1016/j.optlaseng.2016.03.019

Luo Y, Ouyang X, Liu J, Cao L (2019) An image encryption method based on elliptic curve ElGamal encryption and chaotic systems. IEEE Access 7:38507–38522. https://doi.org/10.1109/ACCESS.2019.2906052

Mahalingam H, Veeramalai T, Menon AR, Amirtharajan R (2023) Dual-domain image encryption in unsecure medium: a secure communication perspective. Mathematics 11(2):1–23. https://doi.org/10.3390/math11020457

Mahalingam H, Velupillai Meikandan P, Thenmozhi K, Moria KM, Lakshmi C, Chidambaram N, Amirtharajan R (2023) Neural attractor-based adaptive key generator with DNA-coded security and privacy framework for multimedia data in cloud environments. Mathematics 11(8):1–23. https://doi.org/10.3390/math11081769

Mahboob A, Asif M, Nadeem M, Saleem A, Eldin SM, Siddique I (2022) A cryptographic scheme for construction of substitution boxes using quantic fractional transformation. IEEE Access 10:132908–132916. https://doi.org/10.1109/ACCESS.2022.3230141

Matthews R (1989) On the derivation of a chaotic encryption algorithm. Cryptologia 13(1):29–42. https://doi.org/10.1080/0161-118991863745

Mirjalili S (2019) Genetic algorithm. Evol Algorithms Neural Netw Theor Appl 780:43–55

Mishra M, Routray AR, Kumar S (2014) High security image steganography with modified Arnold cat map. Int J Comput Appl 37(9):16–20. https://doi.org/10.48550/arXiv.1408.3838

Mozaffari S (2018) Parallel image encryption with bitplane decomposition and genetic algorithm. Multimed Tools Appl 77:25799–25819. https://doi.org/10.1007/s11042-018-5817-8

Nepomuceno EG, Nardo LG, Arias-Garcia J, Butusov DN, Tutueva A (2019) Image encryption based on the pseudo-orbits from 1D chaotic map. Chaos Interdiscip J Nonlinear Sci. https://doi.org/10.1063/1.5099261

Niyat AY, Moattar MH, Torshiz MN (2017) Color image encryption based on hybrid hyper-chaotic system and cellular automata. Opt Lasers Eng 90:225–237. https://doi.org/10.1016/j.optlaseng.2016.10.019

Noshadian S, Ebrahimzade A, Kazemitabar SJ (2018) Optimizing chaos based image encryption. Multimed Tools Appl 77:25569–25590. https://doi.org/10.1016/j.gltp.2021.08.055

Pareek NK, Patidar V (2016) Medical image protection using genetic algorithm operations. Soft Comput 20:763–772. https://doi.org/10.1007/s00500-014-1539-7

Parida P, Pradhan C, Gao XZ et al (2021) Image encryption and authentication with elliptic curve cryptography and multidimensional chaotic maps. IEEE Access 9:76191–76204. https://doi.org/10.1109/ACCESS.2021.3072075

Prusty AK, Pattanaik A, Mishra S (2013) An image encryption and decryption approach based on pixel shuffling using Arnold Cat Map and Henon Map. In 2013 International conference on advanced computing and communication systems. IEEE 5:1–6. https://doi.org/10.1109/ICACCS.2013.6938729

Raj V, Janakiraman S, Amirtharajan R (2023) Reconfigurable color medical image encryptor using hardware accelerated Chao (S)-box triplets. J Real-Time Image Process 20(2):1–26. https://doi.org/10.1007/s11554-023-01278-8

Rezaei B, Ghanbari H, Enayatifar R (2023) An image encryption approach using tuned Henon chaotic map and evolutionary algorithm. Nonlinear Dyn 111(10):9629–9647. https://doi.org/10.1007/s11071-023-08331-y

Takkar P, Singh V (2017) Image encryption approach using chaotic map for gray scale images. PhD thesis

Tora H, Gokcay E, Turan M, Buker M (2022) A generalized Arnold’s Cat Map transformation for image scrambling. Multimed Tools Appl 81(22):31349–31362. https://doi.org/10.1007/s11042-022-11985-2

Wang X, Liu C (2017) A novel and effective image encryption algorithm based on chaos and DNA encoding. Multimed Tools Appl 76:6229–6245. https://doi.org/10.1007/s11042-016-3311-8

Xu L, Gou X, Li Z, Li J (2017) A novel chaotic image encryption algorithm using block scrambling and dynamic index based diffusion. Opt Lasers Eng 91:41–52. https://doi.org/10.1016/j.optlaseng.2016.10.012

Zhan K, Wei D, Shi J, Yu J (2017) Cross-utilizing hyperchaotic and DNA sequences for image encryption. J Electron Imaging 26(1):013021–013021. https://doi.org/10.1117/1.JEI.26.1.013021

Zhao Z, Zhang X (2013) ECC-based image encryption using code computing. In Proceedings of the 2012 International Conference on Communication, Electronics and Automation Engineering. Springer, Berlin. 181:859–865. https://doi.org/10.1007/978-3-642-31698-2_121

Zhou Y, Bao L, Chen CP (2014) A new 1D chaotic system for image encryption. Signal Process 97:172–182. https://doi.org/10.1016/j.sigpro.2013.10.034

Author information

Contributions

Both authors contributed to the study conception and design. Material preparation and analysis were performed by SK. The first draft of the manuscript was written by SK. Supervision, reviewing and editing were done by DS.

Ethics declarations

Competing interests

The authors declare no competing interests.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kumar, S., Sharma, D. A chaotic based image encryption scheme using elliptic curve cryptography and genetic algorithm. Artif Intell Rev 57, 87 (2024). https://doi.org/10.1007/s10462-024-10719-0
