Abstract

Temporal Action Detection (TAD) aims to accurately capture each action interval in an untrimmed video and to understand human actions. This paper comprehensively surveys the state-of-the-art techniques and models used for TAD task. Firstly, it conducts comprehensive research on this field through Citespace and comprehensively introduce relevant dataset. Secondly, it summarizes three types of methods, i.e., anchor-based, boundary-based, and query-based, from the design method level. Thirdly, it summarizes three types of supervised learning methods from the level of learning methods, i.e., fully supervised, weakly supervised, and unsupervised. Finally, this paper explores the current problems, and proposes prospects in TAD task.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In recent years, with the development of multimedia technology and the rapid popularization of digital equipment (Graziani et al. 2022), the amount of Internet video data has grown significantly. Therefore, how to deal with these multimedia data efficiently and accurately has become a hot research topic (Le et al. 2021). The purpose of video understanding is to automatically identify and parse the content of a video using intelligent analysis technology. Given the success of deep learning in image processing and detection, researchers have introduced deep learning methods to the field of video understanding (Hutchinson and Gadepally 2021).

Computer vision tasks relating to human action mainly include action recognition (Tran et al. 2015; Hu et al. 2022b; Wang et al. 2022b), action prediction (Kong and Fu 2022), and temporal action detection (Xia and Zhan 2020). Important achievements have been made in the field of action recognition in respect of facial recognition (Li et al. 2022b) and video surveillance. Researchers typically use edited videos for action recognition with only one action. Therefore, action recognition only needs to classify the action without detecting the duration of the action. However, most videos in real life are untrimmed and may contain multiple instances of actions in different categories, each with an unknowable time boundary and duration. In 2017, during the ActivityNet Big Action Recognition Challenge organized by CVPR (Ghanem et al. 2017), video understanding was divided into five branch tasks: untrimmed video classification, trimmed action recognition, temporal action proposals, temporal action localization (temporal action detection), and dense-captioning events in videos. Temporal action detection refers to locating action instances and identifying action categories in untrimmed videos, which are more complex tasks than action recognition. Therefore, in this paper we focus on temporal action detection. Figure 1 shows an example of long-jump temporal action detection, where the start and end times of the movement are obtained by localization. The target of detection is a predefined action category, and the time interval of other activities that do not belong to this group of actions is called the time background.

Standardization efforts in temporal action detection date back to 2007, when Ke et al. (2007) used handcrafted feature methods to detect specific actions in fixed-camera kitchen cooking videos. With the emergence of the THUMOS-14 dataset, the temporal action detection task has been further developed. In 2014, Oneata et al. (2014a) and Wang et al. (2014) used DT features and single-frame CNN features respectively to generate candidate segments of specific sizes through sliding windows, and built a framework for temporal action detection.Later, Yuan et al. (2016) proposed a temporal action detection algorithm using iDT features, which uses iDT features to extract pyramid score distribution features (PSDF) to describe actions in videos.

However, traditional feature extraction methods have time and storage overhead in sequential action detection. In order to solve this problem, Shou et al. (2016) introduced the anchor mechanism in 2016 and proposed a multi-stage SCNN, which combines the sliding window and the proposal generation network to effectively perform action detection. However, it still requires a lot of computation when processing action instances of different durations. Therefore, researchers have also proposed some improved methods based on the anchor mechanism, such as boundary-based temporal action detection algorithms TAG (Xiong et al. 2017) and SSN (Lin et al. 2018), and methods that use action probability distribution curves to improve the confidence of candidate segments, which can provide flexible temporal boundaries make up for the shortcomings of anchor-based methods in terms of precise action boundaries, but may generate proposals with relatively low confidence.Moreover, query-based temporal action detection methods have garnered significant interest. These approaches model action instances as a collection of learnable action queries, eliminating the constraints of manually designing anchor points and boundaries, and offering the benefit of simplifying the computational pipeline.

All in all, multiple algorithms have made significant progress in the domain of temporal action detection. From the earliest hand-engineered feature techniques to deep learning-based methods, researchers have persistently introduced innovative algorithms and models to tackle numerous challenges in temporal action detection. These methods not only improve the accuracy and robustness of the technology, but also bring a lot of value to practical applications. Especially in the areas of anomaly detection, teaching video analysis, and sports video analysis, sequential action detection has achieved good practical results. For example, it can automatically identify and locate abnormal events in videos, which greatly guarantees safety monitoring; in teaching video analysis, it can automatically segment and locate key actions in videos, which greatly facilitates learners’ learning process. In the future, this field will continue to flourish, further expanding its influence across a wide range of application scenarios.

However, in real-world situations, temporal action detection encounters a multitude of obstacles and unresolved matters, the key hurdles being as follows:

-

(1)

Time information. Because of the one-dimensional time series information, static image information cannot be used for temporal action detection. It must be combined with time series information;

-

(2)

The boundary is unclear. Unlike in object detection, the boundary of the target is usually very clear, and a clearer boundary box can be drawn for the target. However, there may be no reasonable definition of the exact timeframe for the operation. Therefore, it is impossible to provide exact boundaries at the beginning and end of an action;

-

(3)

The time span is large. The span of time action segments can be very large. For example, waving a hand takes only a few seconds, while rock climbing or cycling can last several minutes. The task spans differ in length, making it extremely difficult to extract schemes from them. In addition, in an open environment, there are problems of multiscale, multi-target, camera action, etc.

In the previous review by Xia and Zhan (2020) in 2020, time series action detection was divided into single-stage and two-stage types. The one-stage method was briefly described as the generation of candidate proposals and the classification of movements simultaneously. The two-stage approach was described as processing the candidate proposals first, then classifying and regressing the actions. This classification method is simple, ignores the internal design ideas and characteristics of the model, and only works around the process to perform a simple induction, which makes it suitable for beginners to learn. In addition, most of the learning materials focus on fully supervised learning. Although the current performance of weakly supervised learning is weak, weakly supervised learning will be closer to reality, and its development over the past two years has been relatively rapid; it is a method that cannot be ignored and should be introduced in detail. In 2022, Baraka and Mohd Noor (2022) published a review on weak supervision, which introduced concepts, strategies, and technologies related to weak supervision in detail. Weak supervision was divided into two methods, bottom-up and top-down, which were not comprehensively classified or detailed in the introduction to their paper.

In this review we use a new classification method, namely multi-instance learning and direct localization, to introduce weak supervision and the development of unsupervised learning. Full and limited supervision methods are equally important, and we consider them in this paper. The contributions of this review are as follows:

-

(1)

The literature in recent years is summarized and updated comprehensively in respect of each stage of temporal action detection;

-

(2)

Video feature extraction methods are summarized in detail using three learning methods;

-

(3)

The system model is horizontally divided into three categories (anchor-based, boundary-based, and query-based) according to the implementation method, and vertically divided into full-supervision and limited-supervision learning methods. This review may help scholars to understand the task of temporal action detection comprehensively.

The structure of the review is as follows. Section 2 introduces the relevant background, which can assist beginners in understanding the basic concept of temporal action detection tasks, including the form of the dataset and the explanation of common nouns. Section 3 also presents a CiteSpace analysis. With the help of CiteSpace software, the research hotspots and research areas of this task are presented visually, and the topic of this paper is objectively and comprehensively explained using keyword co-occurrence, topic clustering, and other methods. Section 4 introduces research in respect of video feature extraction, which is divided into traditional methods and deep learning methods. Video feature extraction must be introduced as a public step of video task processing. In this review we introduce feature extraction by means of CNN, RNN, and transformers. Section 5 introduces algorithms based on algorithm structure; these can be divided into three types according to the design methods: anchor-based, boundary-based and query-based. Section 6 introduces algorithms based on the supervision mode, focusing on weak supervision, and provides a general introduction to full supervision and no supervision. To enable readers to obtain a deeper and more comprehensive understanding of temporal action detection, Sects. 5 and 6 introduce temporal action detection in horizontal and vertical ways, from the design method to the learning method, using two different research routes to make the structure of the paper more rigorous. Sections 7 and 8 present conclusions and prospects. Figure 2 shows the structure diagram of the article.

2 Background

This section introduces some relevant information in respect of temporal action detection. Section 2.1 introduces the basic concepts and commonly used evaluation methods, and Sect. 2.2 reviews the video datasets widely used in temporal action detection tasks.

2.1 Basic concepts and evaluation metrics

Definition 1

Temporal action detection: Temporal action detection can be regarded as image target detection with a time sequence channel, aiming at dividing and identifying the action intervals in untrimmed video, then outputting the start and end time of each action and the action category. It can be expressed as:

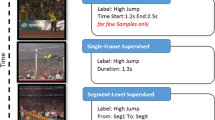

where \(\omega _{a}\) is a group of action examples; N indicates the number of actions in this group of action instances; \(\Psi _{a}\) the A-th action instance; \(t_{s}\),\(t_{e}\),\(l_{a}\) indicate the start time, end time, and corresponding label of the action instance, respectively; labels \(l_{a}\) belong to \(\left\{ 1,2,3,...,C \right\}\); and C is the category in the dataset. One can select different annotation information in the action instance when dealing with different learning methods, as shown in Fig. 3.

Definition 2

Temporal proposals: Temporal proposal P is a segment that may contain or partially contain an action; the comment information for each P includes \(t_{s}\),\(t_{e}\),\(l_{a}\) and the confidence score c; the confidence score is the probability of predicting that P contains an instance of the action. Therefore, P can be represented as \((t_{s},t_{e},l_{a},c)\), as shown in Fig. 3.

Definition 3

Action classification: After the generation of the temporal proposal, the proposal needs to be fed into the action classifier for action classification. Most temporal action detection models use existing action classifiers for classification.

Definition 4

Video feature extraction: For untrimmed video, it is difficult to input the whole video into the encoder for feature extraction; therefore, the video needs to be segmented and input into the pre-trained video encoder for feature extraction. Each video can be represented by a series of visual features that are further processed for action detection. Common visual encoders are two-stream (Simonyan and Zisserman 2014), I3D (Carreira and Zisserman 2017), C3D (Tran et al. 2015), TSN (Wang et al. 2016b), R(2 + 1)D (Tran et al. 2018), and P3D (Qiu et al. 2017). These are explained in Sect. 4.

Definition 5

Evaluation metrics for temporal action proposal: The commonly used evaluation criterion for this task is average recall (AR). Intersection over union (IOU ) thresholds are also set. For the two most widely used public datasets, ActivityNet\(-\)1.3 and THUMOS14, the thresholds of IOU are generally set as [0.5,0.05,0.95] and [0.5,0.05,1]. To more accurately evaluate the relationship between the recall rate and the average number of proposals, the relationship curve AR@AN between the average recall rate (AR) and the average number of proposals (AN) is generally adopted.

Definition 6

Evaluation metrics for temporal action detection(TAD): For TAD tasks, mean average precision (MAP) is used as the average standard, and average accuracy (AP) is calculated in respect of the class of pairs of actions. For the two most widely used public datasets, ActivityNet\(-\)1.3 and THUMOS14, the thresholds of IOU are generally set as [0.5,0.75,0.95] and [0.3,0.4,0.5,0.6,0.7]. The higher the IOU threshold, the more difficult an object is to detect. IOU with different threshold values is usually selected in experiments to comprehensively test the model’s performance.

Definition 7

Dataset Preprocessing: Dataset preprocessing consists of two main steps: data collection and data segmentation. In order to collect data, researchers can use publicly available datasets, like UCF101(Soomro et al. 2012) and AVA (Gu et al. 2018) mentioned in Sect. 2.2, or they can obtain data through specialized video and sensor collection equipment. Moreover, data segmentation is a vital aspect of temporal action detection dataset preprocessing. This process involves splitting lengthy videos or continuous time series data into shorter segments or time windows so that action instances can be processed individually. Popular segmentation methods encompass: fixed-time interval segmentation, motion boundary detection-based segmentation, and motion event-based segmentation. Figure 4 shows the flow chart of dataset preprocessing, drawn with Definition8, Definition9 and Definition10.

Definition 8

Labeling and Annotation of Datasets: Accurate labeling and annotation are essential for providing vital information needed for model training and evaluation. Dataset labeling and annotation involve time annotation, category annotation, and bounding box annotation:

-

(1)

Time annotation is utilized to mark the start and end timestamps of an action, enabling the chronological identification of events within a video or time series.

-

(2)

Category annotation entails assigning a specific category or group of categories to each action instance, indicating the action’s type or class.

-

(3)

Bounding box annotation indicates the spatial location of an action within the video or image. Bounding boxes can be rectangles or polygons that encompass the area where the action takes place.

For efficient and effective annotation, professional annotation tools are available. These tools offer visual interfaces and interactive features, allowing researchers to effortlessly annotate time, category, and bounding boxes. Widely used labeling tools include Labelbox, OpenLabeling, and others.

Definition 9

Temporal sliding window: The temporal sliding window technique is often used in action segmentation during dataset preprocessing for temporal action detection. Its purpose is to detect actions within video sequences. The fundamental idea is to slide a fixed-size window along the timeline, carrying out feature extraction and action detection on the data within the window at each position. By moving the window across the timeline, continuous action detection can be performed on actions at different locations in the video sequence,Regarding the settings of the temporal sliding window, the following description can be given:

Among them, X represents the original video sequence, \(window_{size}\) represents the window size, and Stride represents the step size of the sliding window. On the time series X, starting from an index position i, slide the window with a step size of Stride to obtain a series of fixed-size subsequences. Figure 5 shows a schematic of the temporal sliding window, where \(window_{size}=3\), \(Stride=3\).The window slides along the video frames at a rate of overlapping 1 frame each time.

Definition 10

Overlap rate setting:In temporal action detection, the sliding window setup is usually configured according to Definition 9. In order to capture as much information from the video as possible, the sliding window moves across the entire video, thereby generating a certain overlap rate. This refers to the ratio of frames shared between two consecutive windows.

Typically, the stride (or moving speed) of a sliding window determines the overlap rate between windows. Establishing an optimal stride size is crucial, as it assists researchers in capturing vital actions without compromising on the efficacy of action detection. Generally speaking, if the stride size is set small, the overlap rate will be large, and there will be a large number of shared video frames between two adjacent windows. As a result, although the accuracy of action detection is increased, it also increases processing requirements and computational costs. If the stride size is set larger, the overlap rate will be lower and fewer video frames will be shared between two adjacent windows. However, the accuracy of action detection may be reduced because some important short-term actions may be missed.

In temporal action detection, the overlap rate of the sliding window is generally set at 50%-75%. The specific settings depend on various factors, including the availability of computing resources, the specific characteristics of the actions that need to be detected, and the characteristics of the dataset. This requires a lot of experimentation and optimization work.

2.2 Datasets

There are many common datasets in the field of video understanding (Pareek and Thakkar 2021). Comparison of the performance of different models requires datasets as the carrier. There are two problems with most datasets in use today: first, compared with the rich types of actions in human life, the number of categories in most datasets is very small, such as in KTH (Schuldt et al. 2004), UCF sports (Rodriguez et al. 2008), Weizmann (Weinland et al. 2007), etc.; second, the source of many datasets is not real enough and lacks the unique interference that occurs in the real environment. For example, HOHA (Marszalek et al. 2009) and UCF Sports are composed of professionally photographed teams or non-real scenes taken from movie clips. However, as action-capture systems mature and crowdsourced tagging services improve, these problems will become easier to mitigate. Next, we introduce some mainstream temporal action detection datasets, as listed in Table 1.

-

(1)

UCF101 (Soomro et al. 2012)

UCF101, an action recognition dataset of realistic action videos, contains 101 action categories and a total of 13,320 videos, with a total duration of 27 h. As an extension of the UCF50 dataset, UCF101 has enriched the categories of movements, including five categories: human-object interaction, simple body movements, human-person interaction, playing musical instruments, and sports. As the first large-scale action recognition dataset, UCF101 has a representative position in video datasets. THUMOS ’14, MEXaction2, and other datasets all refer to some of the videos in UCF101. In addition, UCF Sports, UCF11 (Liu et al. 2009), UCF50 (Reddy and Shah 2013), and UCF101 are four action datasets produced by UCF in chronological order. In this order, each dataset contains data from the previous dataset (Fig. 6).

-

(2)

HMDB51 (Kuehne et al. 2011)

Previously, researchers had been working on databases of still images collected on the Internet, but the action-recognition datasets were far below average. Like the previously popular KTH, Weizmann, and IXMAS (Weinland et al. 2007) datasets, a common feature of these datasets is that there are only a small number of occlusion objects and a limited number of complex actors in the video clip environment, meaning they do not adequately represent the complexity and richness of the real world. Moreover, the recognition rate of such datasets is often very high. Therefore, to promote the sustainable development of action recognition and improve the richness and complexity of datasets, Kuehne et al. proposed HMDB51. The HMDB51 dataset is a small and easy-to-use human movement dataset, containing 51 action categories and a total of 6849 clips including manual annotation. There are five action categories: general facial action, facial action and object action, general body action, human interaction, and human action. Videos were obtained from a variety of sources, from digitized movies to YouTube (Fig. 7).

-

(3)

THUMOS’14 (Jiang et al. 2014)

THUMOS’14 originated from the THUMOS Challenge 2014. This dataset contains many untrimmed videos of human behaviour in real-world environments, and the ability to predict activity in untrimmed video sequences can be evaluated. The main tasks are action recognition and sequential action detection. Currently, most papers use this dataset for testing and evaluation. In the action recognition task, the training set contains 13,320 trimmed videos, covering 101 action categories. The validation set contains 1010 untrimmed videos, while the test set contains 1574 untrimmed videos. For the temporal action detection task, the training set contains 213 trimmed videos, which contain 20 action categories. The validation set contains 200 untrimmed videos, while the test set also contains 1574 untrimmed videos. In addition, THUMOS-14 has been further developed in 2015’s THUMOS-15, containing more than 430 h of video data, which is about 70% larger than THUMOS-14. This makes it a more challenging video action dataset.

-

(4)

ActivityNet (Caba Heilbron et al. 2015)

While there has been an explosion in video data, with more than 300 h of video uploaded to YouTube every minute, there have been no corresponding advances in recognizing and understanding human action tasks. Most datasets ignore the vast variability in execution styles; the complexity of visual stimuli in terms of camera movement, background clutter, and changes in viewpoint; and the level of detail and amount of activity that can be identified. For example, UCF Sports (Rodriguez et al. 2008) and Olympic Sports (Niebles et al. 2010) increase the complexity of movements by focusing on highly defined physical activities. Another significant limitation is that most computer vision algorithms for understanding human activity are based on datasets covering a limited number of activity types. In fact, existing databases tend to be specific and focus on certain types of activities. To address the limitations of this dataset, Heilbron et al. proposed a flexible framework for capturing, annotating, and segmenting online video, also known as ActivityNet. The ActivityNet dataset is also used for a large-scale behavior recognition contest; the dataset consists of 27,801 videos, including 13,900 training videos, 6950 validation videos, and 6950 test videos. ActivityNet offers 203 activity categories in the current release, with an average of 137 untrimmed videos per category and 1.41 activity instances per video for 849 h.

-

(5)

AVA (Gu et al. 2018)

The AVA dataset is a spatiotemporal detection dataset containing 430 15-minute videos tagged with 80 action categories, 1.58 million action labels, and 81,000 action tracks. To increase the diversity of the dataset, the producers cut 15-minute clips into 897 overlapping 3-second movie clips in one-second steps. The 430 videos were divided into 235 training videos, 64 verification videos, and 131 test videos. The AVA dataset is a new annotated video dataset that is human-centric, sampled at a frequency of 1Hz, and framed for each person. The side label of the boundary box is the actor’s action, and the interaction between the objects is generated. In the AVA dataset, the actions of all persons are marked in the keyframe, but the result is an uneven class of actions of the Zipf law type. The action recognition model should be based on the real long-tail action distribution (Horn and Perona 2017) rather than on an artificially balanced dataset.

-

(6)

MEVA (Corona et al. 2021)

Datasets used for action recognition often fail to meet public safety community requirements, for example AVA, moments in time (Monfort et al. 2019), and YouTube-8 M (Abu-El-Haija et al. 2016). These datasets present short, high-resolution video specificities centered on the activity of interest in both time and space. In real life, more datasets are required with an actual spatial scope. Multiview extended video with activities (MEVA) is a new dataset for human action recognition. MEVA comprises more than 9300 h of continuous uncut video that contains spontaneous background activity. In this dataset, there are 37 kinds of actions, spanning 144 h in total, and actors and props are framed with borders. In addition, about 100 actors were gathered to perform scripted scenes and spontaneous background activities in a gated and controlled venue over three weeks, and video was collected in various ways so that indoor and outdoor views overlapped or did not overlap.

-

(7)

MoVi (Ghorbani et al. 2020)

Ghorbani et al. introduced a new human action and video dataset, MoVi, which will soon be publicly available. It comprises data from 60 female and 30 male actors performing 20 predefined daily and motor movements and one optional move. During the five-round capture process, the same actors and actions were recorded using different hardware systems, including optical action capture systems, cameras, and inertial measurement units (IMUs). In some capture rounds, the actors were recorded in their natural clothing, while in other rounds, they wore very little. The dataset contains 9 h of action capture data, 17 h of video data from four different angles, including a handheld camera, and 6.6 h of IMU data. Ghorbani et al. describe how the dataset was collected and post-processed and discuss examples of potential research that could be achieved with the dataset.

-

(8)

Charades (Sigurdsson et al. 2016)

Computer vision technology can help people in their daily lives by finding lost keys, watering plants, or reminding people to take their medicine. To accomplish these tasks, researchers need to train computer vision methods from real and diverse examples of everyday dynamic scenarios. Sigurdsson et al. proposed a novel Hollywood family approach to collecting such data. Instead of shooting videos in a lab, Sigurdsson et al. ensured diversity by distributing and crowdsourcing the entire video creation process, from scripting to video recording and annotation. Following this process, the authors collated a new dataset, i.e., Charades. Hundreds of people recorded videos and went about their daily leisure activities at home. The dataset consists of 9848 annotated videos, with an average length of 30 s, showing the activities of 267 people across three continents, with more than 15 percent featuring more than one person. Multiple free text descriptions, action labels, action intervals, and interaction object classes annotate each video in the dataset. Users can employ this wealth of data to evaluate and provide baseline results for multiple tasks, including action recognition and automatic description generation. The dataset’s authenticity, diversity, and randomness will present unique challenges and new opportunities to the computer vision community.

-

(9)

FineAction (Liu et al. 2022b)

Temporal action detection is an important and challenging problem in video comprehension. However, most existing TAD benchmarks are based on the coarse-grained nature of the action class. This presents two major limitations for this task. First, the rough action level causes the location model to over-adapt to high-level contextual information while ignoring the atomic action details in the video. Secondly, the rough action class usually leads to the fuzzy annotation of the time boundary, which is unsuitable for temporal action detection. To address these issues, Liu et al. developed a new large-scale fine-grained video dataset called FineAction for temporal action detection. FineAction contains 103K time instances of 106 action categories, annotated in 17K untrimmed videos. Due to the rich diversity of the fine motor class, the intensive annotation of multiple instances, and the concurrent actions of different classes, the fine motor class provides new opportunities and challenges for temporal action detection. In order to benchmark FineAction, Liu et al. systematically examined the performance of several popular TAD methods and analyzed in depth the impact of short-time and fine-grained instances of TAD.

3 Citespace analysis

Visualization of scientific knowledge based on social networks and graph theory comprises a new field of bibliometric methods. CiteSpace (Chen 2004, 2006, 2013; Chen et al. 2010) has received extensive attention worldwide due to its advanced and powerful functions. Therefore, CiteSpace was used in this study to analyze TAD tasks visually. We used CiteSpace (5.3.R11) to visualize the data. We established parameters, including time slices (annual slices were used for co-author analysis and keyword co-occurrence), keyword sources (title, abstract, author keywords, and keyword plus), and node types (author, institution, country, cited reference, cited author). Literature analysis based on CiteSpace can identify the research content and hotspots in a certain field more conveniently and quickly.

Since deep learning technology was introduced into the field of video understanding, the task of TAD has developed rapidly. In this study, 1326 articles relating to TAD from the past 12 years were retrieved via a Web of Science search for temporal action detection (TAD) and temporal action localization (TAL). As shown in Fig. 8, a keyword heatmap was created. The larger the circle, the more times the keyword appears. The round layer from the inside out represents the past to the present, and the redder the layer, the more attractive popular it is. The figure shows that keywords with more frequency have larger circles, while keywords with less frequency have smaller circles and are not displayed. From the cluster graph, it can be seen that "action recognition", "feature extraction", and "temporal action localization" are prominent. This indicates that TAD is always active in these research fields. In the figure, "location awareness" and "proposal network" are darker keywords, indicating that they appeared later; researchers need to pay more attention to these. In addition, with the development of TAD, tasks such as action prediction and sound localization have been further developed.

Figure 9 shows the time sequence diagram of the occurrence of high-frequency keywords. The first 11 keywords with high frequency from 2014 to 2022 were counted. “Strength” represents the strength of keywords. The higher the value, the more times the keyword has been cited. The line on the right is the timeline from 2014 to 2022. “Begin” indicates the time when the keyword first appears. “Begin” and “End” show the activity of the keyword during the year. It can be seen from the figure that words such as "localization" and "recognition" are hot topics for temporal action detection. Keywords such as "human action recognition" and "temporal action proposal generation" are also the focus of this paper. The keywords in Fig. 8 complement each other and make up for the ambiguity of the time information in Fig. 8. Through these keywords, readers can better understand the hotspot direction in respect of TAD.

With the development of deep learning, increasing numbers of scholars and teams have begun to study TAD, and they jointly promote the progress and development of TAD algorithms. In this study we analyzed 1326 articles from 2010 to 2022 (data obtained from Web of Science); the author contribution graph, as shown in Fig. 10, was obtained, in which the larger the font, the more papers have been published by the author, and the greater their influence. WANG L designed the first video-level framework to learn video representation and created the most classic TSN (Wang et al. 2016b) model. WANG L was also the first to apply action detection to weak supervision and designed UntrimmedNet (Wang et al. 2017), making remarkable contributions to the field. Zhao Y introduced the idea of image detection into time sequence action detection for the first time and designed an SSN (Zhao et al. 2017) network with an obvious improvement effect. Liu J mainly studied action recognition in TAD, involving the field of action prediction.

We retrieved 1418 articles in respect of TAD and their citation frequency through a Web of Science search for TAD and TAL. In Fig. 11, the blue bar chart shows the number of posts, and the red line shows the frequency of citations. As shown in Fig. 11, the number of papers published in the field of TAD is rising, and the frequency of citations is increasing rapidly. The drop in the 2022 figures is because the 2022 figures are only available through August. In 2012, the number of papers was relatively small, the field had not been fully developed, and there were fewer researchers. After 2013, there was rapid development, but the number of papers was still low. Compared with the field of action recognition, temporal action detection has great prospects in respect of development and application.

We also identified scholars from different regions and summarized them by country. As shown in Fig. 12, a global map is used to show the contributions of different countries through different shades of color. According to the color in the lower-left corner, we ranked the contribution degree from the highest to the lowest. The color red indicates that the number of papers contributed is more than 300; orange indicates that the number of papers contributed is between 200 and 300; gold indicates that the number of papers contributed is between 100 to 200; green indicates that the number of papers contributed is less than 100; white indicates that the relevant areas have not contributed to TAD research. It can be seen that China and the United States, followed by Japan, Canada, and some European countries, have made great contributions to temporal action detection research. More countries have begun to pay attention to research in respect of temporal action detection tasks.

4 Video feature extraction

Due to limited computer resources, a video cannot be directly applied to TAD as the input. Generally, the video needs to be input into a visual encoder. After processing by a visual encoder, the video can be represented by a series of visual features that are further processed for subsequent tasks. According to the history of video feature extraction, this section is divided into feature extraction via traditional methods and feature extraction via deep learning methods.

4.1 The traditional methods

Some early methods used manual features or local space-time descriptor operators as representations of videos to classify and detect video actions. Laptev (2005) proposed the space-time interest point (STIP) in 2003 by extending the Harris corner detector to 3D. SIFT and HOG were extended to SIFT 3D and HOG3D for action recognition by Scovanner et al. (2007) and Klaser et al. (2008), and others. Ke et al. proposed a cuboid feature (Ke et al. 2007) for behavior recognition in 2007. Sadanand and Corso established ActionBank (Sadanand and Corso 2012) for action recognition in 2012. The most representative algorithm is the dense trajectories (DT) algorithm proposed by Wang et al. in 2011. Firstly, the feature trajectories in the video frame sequence are obtained by the optical flow field, and then feature extraction is carried out based on the feature trajectories. However, feature extraction via the DT algorithm is often subject to environmental constraints. For this reason, Wang et al. (2013) proposed an improved dense trajectory (IDT) method in 2013. This is a more advanced video feature extraction method. The IDT descriptor shows how spatial and temporal signals can be processed differently. Instead of extending the Harris corner detector to 3D, it starts with densely sampled feature points in the video frame and uses the optical flow to track them. For each tracker corner, a different manual feature is extracted along the track. This method can weaken the influence of camera motion on feature extraction, making the IDT algorithm the best method with the best effect and stability before deep learning entered the field. However, this method requires much computation and has difficulty in dealing with large-scale datasets. Moreover, its features lack flexibility and extensibility.

4.2 Deep learning methods

Deep learning technology solves the problem of the feature extraction of large-scale datasets, enabling many datasets to be trained to extract the spatial and temporal features of videos and generate a good model. At present, deep learning of human behavior recognition can be divided into three categories: CNN, RNN, and transformers (Hu et al. 2022c). Next, relevant work will be introduced in these three categories.

4.2.1 CNN feature extraction

Inspired by deep learning breakthroughs in the image field (Krizhevsky et al. 2017), various pre-trained convolutional networks (Jia et al. 2014) can be used to extract image features. Feature extraction methods based on convolutional neural networks can be divided into the two-stream CNN and the 3D CNN. In this section we review the two schemes.

-

(1)

Two-stream 2D CNN

The progress of image recognition methods promotes two-stream convolutional networks (Hu et al. 2022a) for action recognition. Before this, many video action recognition methods were based on local spatiotemporal features of shallow high-dimensional coding. Simonyan and Zisserman (2014) first proposed a two-stream convolutional network containing space-time and optical flow in 2014. As shown in Fig. 13, the network consists of two parts that process data in both time and space dimensions; each network is made up of a CNN and the last layer is Softmax. Since two-stream convolutional networks only operate one frame (spatial network) or a single heap frame in short segments (temporal network), they have poor modeling ability for the time structure in a long time-range and limited ability to capture contextual relations.

Two-stream architecture for video classification. (Simonyan and Zisserman 2014)

In view of the shortcomings of the two-stream convolutional network, Wang et al. (Wang et al. 2016b) summarized two problems that need to be solved in 2016:

-

(a)

How to design an effective video-level framework for learning video representation that captures long time structures;

-

(b)

How to train a neural convolutional network model with limited samples.

Therefore, Wang et al. proposed the classical TSN network and introduced a very deep neural convolutional network structure. The model can be combined with complete video information by using sparse time sampling and video-level supervision. As shown in Fig. 14, the TSN model framework is divided into spatial stream convolutional networks and optical stream convolutional networks. The processing objects are no longer single frames or single heap frames but sparsely sampled snippets. TSN then fuses the category scores of different segments through temporal segment networks. TSN enables end-to-end learning of long video sequences within a reasonable budget of time and computer resources.

TSN Module. (Wang et al. 2016b)

In 2019, Feichtenhofer et al. (2019) proposed a slow-fast network inspired by the two-stream idea and biological research in respect of retinal ganglion cells in primate visual systems (Hubel and Wiesel 1965; Livingstone and Hubel 1988; Derrington and Lennie 1984; Felleman and Van Essen 1991; Van Essen and Gallant 1994), and achieved excellent performance. This model uses a slow path running at a low frame rate, which can be any convolution model (Tran et al. 2015; Feichtenhofer et al. 2017; Carreira and Zisserman 2017; Wang et al. 2018; Hu et al. 2023) and resolves static content in the video by capturing spatial semantics. There is also a fast path that runs at a high frame rate, capturing motion with good temporal resolution to analyze the dynamic content in the video. As shown in Fig. 15, the two paths are the low frame rate and the high frame rate. T and C of the slow path are the benchmarks of the fast path. For video, the slow path samples T frames as the input. At the same time, the fast path needs to process high-frequency information; the whole process does not use the time domain downsampling layer, so the input is always -framed. The two paths are fused by a transverse connection and finally fed into a full connection layer for classification. The validity of the model has been proven using 6 datasets.

SlowFast Model. (Feichtenhofer et al. 2019)

Video feature extraction based on deep learning has obvious performance advantages compared with traditional manual feature extraction methods. The two-stream network divides video sequences into time and space in a pioneering way, providing a research space for subsequent researchers. However, the speed of the two-stream convolutional network is slow, making it unsuitable for use with large-scale real-time video; 3DCNN can make up for this deficiency.

-

(2)

3DCNN

Another idea for video feature extraction is to expand the 2D convolution kernel used for image feature extraction into a 3D convolution kernel to train a new feature extraction network. The general approach of feature extraction algorithms based on 3D convolutional networks is to take a spatiotemporal cube formed by stacking a small number of continuous video frames as the model input. Then, the spatial and temporal representation of the video information is adaptively learned through the hierarchical training mechanism under the supervision of the given action category label.

In 2015, Tran et al. (2015) proposed a simple and effective spatiotemporal feature-learning method called C3D. Experiments show that 3D convolutional networks are more suitable for spatiotemporal feature learning than 2D convolutional networks and have significantly improved efficiency compared with two-stream methods. However, C3D is not learned from a full video. Therefore, the modeling ability for long-range spatiotemporal dependence is not strong. Diba et al. (2017) proposed time 3DCNN (T3D) in 2017, which strengthened the modeling of long-range spatiotemporal dependence. The time transition layer can model the depth of the time convolution kernel. T3D can efficiently capture short-, medium-, and long-term time information. Varol et al. (2017) proposed a long-term time convolution (LTC) network in 2017, which increased the time range of the 3D convolution layer at the cost of reducing spatial resolution and enhanced the modeling of the long-term time structure.

Due to the introduction of the time dimension, the network parameters become larger, and the training cost becomes increasingly higher. Some researchers aim to decompose 3D convolution. Qiu et al. (2017) proposed a pseudo-3D residual network in 2017. Pseudo-3D decomposes 3D convolution into a two-dimensional spatial convolution with a convolution kernel of 1\(\times\)3\(\times\)3 and a one-dimensional time convolution with a convolution kernel of 3\(\times\)1\(\times\)1 to simulate 3D convolution. Carreira et al. (2017) proposed the I3D model in 2017 using the same refinement idea. The main idea was to extend Inception’s 2D model into a 3D model. I3D can achieve excellent performance after full pre-training in kinetics. Similarly, Tran et al. proposed a new spatiotemporal convolution module called R(2+1)D in 2018, which approximates the complete three-dimensional convolution with a two-dimensional convolution kernel and a one-dimensional convolution, thus separating the processing of space and time. Lin et al. (2019a) proposed the temporal shift module (TSM) network in 2019. TSM moves some features forward and backwards along the time dimension, allowing the network to achieve the performance of a 3D CNN but maintain the complexity of a 2D CNN. As shown in Fig. 16, for the image input of a single frame, only the first 1/8 feature graphs of each residual block are saved and cached in memory during feature processing. The red box in the figure is the 1/8th feature of the cache. The author uses the cache feature map for the next frame to replace the first 1/8 of the current feature and 7/8 of the current feature map to create the next layer. As a result, TSM gives an inter-frame predictive delay that is almost identical to the 2D CNN baseline.

TSM Model. (Lin et al. 2019a)

Compared with two-stream convolution, 3D convolution is faster and more efficient. However, the existing network cannot make full use of video temporal and spatial characteristics, and the recognition rate is low. Therefore, feature extraction methods for human action recognition still need to be optimized.

4.2.2 RNN feature extraction

RNNs can be used to analyze temporal data due to recursive joins in its hidden layer. However, traditional RNNs have the problem of disappearing gradients, and cannot effectively model long time series. Therefore, most current approaches adopt gated RNN architectures, such as LSTM (Hu et al. 2021), which can effectively model video-level temporal information.

Donahue et al. (2015) proposed the long-term recurrent convolutional network (LRCN) model in 2014. This model uses 2D-CNN as a feature extractor to extract frame-level RGB features and connects LSTM for prediction. In 2015, Yue-Hei Ng et al. (2015) proposed two methods for processing long videos. The first method explores various convolution time feature pool architectures, including conv pooling, late pooling, and slow pooling, and using the aggregation technology characterized by maximum pooling, frames can be extracted at a higher frame rate while still being able to extract the full video in the capture context. The second method uses a recursive neural network composed of LSTM units to model the video explicitly as an ordered sequence of frames. As shown in Fig. 17, the input original frame and optical flow enter the feature aggregation after CNN feature computation. LSTM networks run on frame-level CNN activations and can learn how to aggregate feature information over time. The network can share parameters over time, and both architectures are able to maintain a constant number of parameters while capturing a global description of the video’s temporal evolution.

Overview of NG’S approach. (Yue-Hei Ng et al. 2015)

In the same year, Srivastava et al. (2015) proposed a recursive neural network based on LSTM, namely the encoder LSTM and the decoder LSTM. An encoder LSTM is used to map the input video to a fixed-length representation, and then the decoder LSTM decodes it to obtain the processed video features. In 2019, Wu et al. (2019) proposed a LITEEVAL framework based on rough and fine LSTM. As shown in Fig. 18, rough LSTM (cLSTM) is used to process the features extracted by lightweight CNN from the video and obtain rough features. It determines whether to calculate the fine features based on the rough features and historical information. If further checks are required, fine features are exported to update the fine LSTM (Flstm); otherwise, the two LSTMS are synchronized. Fine LSTM can obtain all the feature information it sees. Majd and Safabakhsh (2020) proposed a novel \(C^2\) LSTM in 2020, which uses convolution and cross-correlation operators to learn the spatial and action features of videos and extract time dependence.

Overview of the LITEEVAL approach. (Wu et al. 2019)

With the introduction of spatial and temporal attention, LSTM has undergone new development. Sharma et al. (Sharma et al. 2015) added spatial attention to the LSTM unit for the first time. The model recursively outputs the attention diagram and pays more attention to the spatial information of features. In 2019, Sudhakara et al. (Sudhakaran et al. 2019) introduced a recurrent unit with built-in spatial attention called long short-term attention (LSTA), which can spatially localize discriminative information on video input sequences. As shown in Fig. 19, LSTA extends the LSTM with two new components, the circular attention and the output pool. The first part (red) tracks the weight plot to focus on relevant features, while the second part (green) introduces high-capacity output gates. At the core of both is a pool operation \(\xi\) that enables smooth attention tracking and flexible output gating. To make full use of spatial features in the video, Li et al. (2018b) proposed the VideoLSTM model in 2016. VideoLSTM uses convolution and action-based attention mechanisms to obtain spatial correlation and action-based attention graphs in video frames. In 2021, Muhammad et al. (2021) proposed an attentional mechanism based on bidirectional long- and short-term memory, which includes a dilated convolution neural network (DCNN). DCNN extracts CNN features from input data, and the network can selectively focus on valid features in video input frames.

Overview of the LSTA approach (Sudhakaran et al. 2019)

Video feature extraction methods based on 3D CNN usually perform spatiotemporal processing at limited intervals through window-based 3D convolution operations, in which each convolution operation only pays attention to the relatively short-term context of the video. At the same time, RNN-based methods recursively process video sequence elements, so they cannot model relatively long-term spatiotemporal dependence. However, transformers can be directly involved (Sun et al. 2022b).

4.2.3 Transformer feature extraction

Transformer networks (Vaswani et al. 2017; Acheampong et al. 2021) have significant performance advantages and are becoming popular in deep learning. Compared with traditional deep learning networks such as convolutional neural networks and cyclic neural networks, transformers are more suitable for feature extraction because their network structure is easy to deepen and the model deviation is small (Ruan and Jin 2022); they perform well in long-term dependence modeling.

Given the success of transformers in natural language processing, more researchers are using transformers in video processing. Girdhar et al. (2019) proposed an action-based transformer model in 2019. This model uses the initial layer of space-time I3D to generate the basic features and then generates the boundary proposal using the regional proposal network (RPN). The basic feature map and each proposal are obtained through the action transformer to obtain the proposed features. An action transformer treats the feature graph for each particular topic as a query and the features from adjacent frames as keys and values. As shown in Fig. 20, the video footage is taken as the input, and the backbone network (usually the initial layer of I3D) is used to generate the spatiotemporal feature representation. The central frame of the feature map generates the bounding box proposal through the RPN, the feature map (filled with positional embedding) and each proposal obtains the proposal’s characteristics through the "head" network. The head network is made up of action transformer units (Tx units) to generate the features to be categorized. QPr and FFN refer to the query preprocessor and feedforward network, respectively.

Overview of action transformer (Girdhar et al. 2019)

Bertasius et al. (2021) proposed the TimeSformer in 2021. TimeSformer is an adaptation of the standard transformer structure to video through learning spatiotemporal features directly from a series of frame-level patches (Dosovitskiy et al. 2020). TimeSformer removes the CNN entirely for the first time and applies temporal and spatial attention to each piece. Inspired by the vision transformer (ViT), Arnab et al. (2021) proposed a pure transformer model called ViViT in the same year. By degenerating different components of the transformer encoder in spatial and temporal dimensions, a large number of spatial-temporal markers encountered in the video can be effectively handled. As shown in Fig. 21, the model extracts space-time tokens from the input video and then encodes the space-temporal tokens through a series of transformation layers. Three effective model components are also shown in the figure, which handle the long sequence of tokens encountered in the video: factorized encoder, factorized self-attention, and factorized dot-product.

Overview of ViViT (Arnab et al. 2021)

Only after pre-training a large amount of data can a pure transformer achieve better performance than a CNN, but it will inevitably require substantial memory and computing consumption. Zha et al. (2021) proposed a shifted chunk transformer with a pure self-attention block in 2021. In processing video frames, a pure transformer divides each frame into several local windows called image blocks and builds a layered image block converter. The converter uses locally sensitive hashing to enhance dot-product attention in each block (Hu et al. 2022c), thereby significantly reducing memory and computing consumption. In addition, to fully consider the action effect of the object, Zha et al. also designed a powerful self-attention module, namely the shifted self-attention module:the module explicitly extracts correlations from nearby frames. Furthermore, a frame-by-frame attention module clip encoder based on a pure transformer was designed to model the complex inter-frame relationship with minimal additional computational cost.

In addition,a series of Transformer-based models have recently emerged. For example, MotionFormer proposed by Patrick et al. (Patrick et al. 2021) in 2021 is used for video action recognition of people. This model proposes a new video Transformer framework called Trajectory Attention for modeling temporal correlation in dynamic scenes. As shown in Fig. 22, the figure shows the trajectory attention flow chart, which consists of two stages: the first step forms a set of ST trajectory markers for each space-time location st, and the second step utilizes 1D temporal attention operations Converge along these trajectories. In this way, trajectory attention can effectively accumulate information about the motion paths of objects in videos. In addition, MotionFormer also proposes a new method to calculate the quadratic dependence between memory and input size, which is especially important for high-resolution and long videos. However, MotionFormer has large calculation and memory overhead. Liu et al. (2021) introduced the Swin Transformer in the same year, addressing the challenges related to applying the Transformer from the language domain to the visual domain, such as the significant difference in the scale of visual entities and the high pixel resolution of images compared to words in text. As shown in Fig. 23, this illustration presents two consecutive Swin Transformer blocks, where W-MSA and SW-MSA have multi-head attention modules with regular and shifted window configurations, respectively. Swin Transformer introduces a hierarchical Transformer structure, utilizing shifted windows for computing representations. In various tasks, the experimental results have significantly surpassed previous state-of-the-art models, demonstrating the potential of Transformer-based models as visual backbone networks.

Overview of motionformer (Patrick et al. 2021)

Overview of swim transformer (Liu et al. 2021)

Although Swin Transformer has linear computational complexity,it may face issues such as memory limitations for particularly large image inputs. In 2021, Fan et al. (Fan et al. 2021) introduced the Multiscale Vision Transformers (MViT), a multi-scale visual Transformer model designed for video and image recognition that combines the concept of multi-scale feature hierarchies with the Transformer model.MViT is better able to model the dense nature of visual signals through feature hierarchies at multiple scales. The MM-ViT proposed by Chen et al. (2022) in 2022 has been expanded and improved on the basis of MViT. MM-ViT incorporates multi-modal processing and cross-modal attention mechanisms,which handle data including motion vectors, residuals, and audio waveforms, and features three distinct cross-modal attention mechanisms.These cross-modal attention mechanisms can be seamlessly integrated into the Transformer architecture.

However,when a set of video frames is mixed in random order and is different from the original frame, it may be classified with the same label as the original recognition result; if this is the case, these models have clearly been over-fitted or biased toward other factors than the semantic information learned in respect of the actions. To solve this problem. Truong et al. (2022) proposed the DirecFormer model in 2022. The model learns the correct frame order in action videos by taking advantage of the direction of attention and the amount of attention between frames. Furthermore,to address the inability of conventional transformers to effectively quantify their forecast inaccuracies. Guo et al. (2022) proposed the uncertain guidance transformer (UGPT) in 2022, which treats the attention score of the transformer as a random variable to capture random dependencies and uncertainties in the input. The features extracted from the CNN are input into the UGPT after location encoding, and advanced embedding is the output.

Due to the large memory footprint of untrimmed video, the current advanced TAD is on top of the features of precomputed clip videos. These features may not be suitable for TAD. Specifically, the video encoder is trained to map different short films within an action sequence to similar outputs, thus predicting insensitivity to the time boundary of the action. As shown in Fig. 24, the image and video classification models can be fine-tuned to work with pre-training, thanks to the availability of large relevant datasets (such as ImageNet and UCF101 in the figure). However, the existing datasets for TAD tasks are too small for model pre-training or lack time boundaries, leading to low efficiency. Therefore, we believe that solving the limitations of the training design of TAD has great potential to improve the model’s performance.

In 2021, Alwassel et al. (2021) proposed a novel supervised pre-training paradigm for editing. This paradigm not only trains the classification of foreground activities, but also considers background clips and global video information to improve time sensitivity. As large video datasets with time boundary annotations are difficult to collect, Xu et al. (2021a) designed the boundary-sensitive pretext (BSP) in 2021. They propose to transform the existing action classification dataset of clip videos to synthesize large-scale untrimmed videos with time boundary annotations. Specifically, they generate artificial time boundaries that are relatively consistent with changes in video content by splicing clips containing different classes, splicing two video lines of the same class, or manipulating the speed of different parts of the video instance. Xu et al. (2021b) proposed a simple and limited low-fidelity (LoFi) video encoder optimization method in the same year. They did this by introducing a strategy characterized by a new intermediate training phase, in which both the video encoder and the TAD head use lower temporal and spatial resolution (i.e., low-fidelity) for end-to-end optimization in small batch constructs. In 2022, Zhang et al. (2022) proposed a new unsupervised pretext learning method called pseudo action localization (PAL). PAL first builds training sets by cheaply converting existing large-scale TAD datasets. Then, two-time regions with random time lengths and proportions are randomly clipped from a video as pseudo actions. The model can align the pseudo-action features of the two synthesized videos.

Transformer addresses the problem that CNN- and RNN-based approaches cannot model relatively long-term spatial-temporal dependencies. The transformer can participate directly in the completion of video sequences through its extensible self-attention mechanism, thus effectively learning the remote spatiotemporal relationships in the video. The Table 2 summarizes the video feature extraction networks based on deep learning methods.

5 TAD according to the design method

Natural language processing and video understanding are different branches of artificial intelligence. The two are different in terms of application objects. The application object of natural language processing is two-dimensional text data, while the application object of TAD is three-dimensional action data. From the perspective of practical application, both are more in line with the characteristics of unknown information and rich information in real scenes, so there is a certain correlation in processing ideas and methods. Natural language processing can be seen everywhere in the design of TAD.

Natural language processing presents complex and challenging tasks related to languages, such as machine translation, questions and answers, and summaries. Nonlinear programming involves designing and implementing models, systems, and algorithms to solve the practical problems of understanding human language (Lauriola et al. 2022). Thanks to recent advances in deep learning, the performance of natural language processing applications has been improved in an unprecedented way, attracting increasing interest from the machine learning community (Kotsiantis et al. 2006). For example, the most (Wang et al. 2021a) advanced phrase-based statistical methods in machine translation have been gradually replaced by neural machine translation (Yadav and Vishwakarma 2020). Neural machine translation involves large deep neural networks that achieve better performance (Bahdanau et al. 2014). After the advent of text vectors and unsupervised pre-training, the last real boost in NPL was the transformer model (Vaswani et al. 2017). The most popular pre-trained transformer model is BERT (Devlin et al. 2018). BERT aims to pre-train deep bidirectional representations in the unlabeled text by jointly moderating left and right contexts in all layers. Inspired by BERT, several pre-training models followed, such as RoBERTa (Liu et al. 2019b), ALBERT (Lan et al. 2019) and DistilBERT (Sanh et al. 2019). Other related approaches based on the same concept are generation pre-training (GPT) (Radford et al. 2018, 2019), Transformer XL (Dai et al. 2019), and its extension XLNet. Today, these methods continue to achieve excellent performance for a wide range of natural language processing tasks, such as question and answer (Garg et al. 2020; Shao et al. 2019; Kumar et al. 2019), text classification (Sun et al. 2019), sentiment analysis (Abdelgwad 2021), biomedical text mining (Lee et al. 2020a), and named entity recognition (Yu et al. 2019).

Temporal action detection can be regarded as the time version of image detection. The research in respect of TAD relies heavily on the timing proposal effect of the target action, and the video data have a complicated structure and different durations of action. In 2017, ActivityNet, a video understanding competition, proposed the concept of the TAD task, which introduced the problems of locating multiple target action video surveillance analyses, network video retrieval, and other tasks. According to the design method, TAD is divided into three categories: anchor-based, boundary-based, and query-based. Anchor-based TAD (5.1) generates a time proposal by assigning dense and multiscale intervals with predefined lengths to evenly distributed time positions in the input video. Boundary-based TAD (5.2) does not set predefined proposals, so proposals with precise boundaries and flexible durations can be generated. Query-based TAD (5.3) proposes to map a set of learnable embeddings to action instances to generate action proposals directly. In this section we follow the three design methods (Fig. 25).

The three design methods are Anchor-based,Boundary-based,Query-based (Vahdani and Tian 2022)

5.1 Anchor-based methods

Anchor-based approaches are also known as top-down approaches. The designer first designs multiscale anchoring on each network of the feature sequence. Then, action classification and boundary regression are carried out according to the candidate proposals. The methods are mainly based on using sliding windows or anchor kernels to generate temporal action proposals. This section introduces anchor-based models in detail, from the earliest to the latest.

Most previous methods have relied on hand-selected features with significant performance improvements. Some researchers have attempted to combine IDT (Wang and Schmid 2013) with the appearance features self-extracted by frame-level deep networks (Oneata et al. 2014b). Due to the great success of deep learning in object detection, Shou et al. (Shou et al. 2016) first proposed the S-CNN model in 2016 by means of region-based convolutional neural networks (R-CNNs) (Girshick et al. 2014) and their modifiers. The S-CNN method uses fixed windows of multiple sizes to process video clips and then uses a three-stage S-CNN for processing. As shown in Fig. 26, the overall framework is divided into three parts:

-

(a)

Step 1 is called the proposal network. The proposal generation network is used to calculate the probability of action in all video clips;

-

(b)

Step 2 is called the classification network. Classification networks are used to classify different actions and backgrounds;

-

(c)

Step 3 is called the localization network. The similar output of the location and classification networks is still the probability of each action.

In training, overlap loss based on the IOU score is increased to make better use of the overlap rate. In theory, this method only has a high degree of overlap, the better the effect, but it produces a lot of redundancy. In addition, the convolution kernel of standard C3D used by SCNN is 3, and the receptive field is too small, so only short time-sequence information can be used.

Segment-CNN Model (Shou et al. 2016)

Considering the limitations of the sliding window method, Gao et al. (2017a) proposed in 2017 that the TURN model could reduce the amount of computation and improve accuracy. The main idea of this model is to draw on the boundary regression in Faster-RCNN (Ren et al. 2015). As shown in Fig. 27, the video should first be divided into fixed-size units, such as 16 video frames, and each group should learn one feature (using C3D). Then, each group or multiple groups will be used as the central anchor unit (referring to Faster-RCNN) and expand to both ends to create a fragment pyramid. Next, coordinate regression is performed at the unit level, and the regression model has two sibling outputs. The first output is the trustworthiness score, which is used to determine whether the action is present in the fragment. The second output is the time coordinate regression offset. Compared with frame-level coordinate regression, unit-level coordinate regression is easier to learn and more efficient. An innovative new architecture based on time-coordinate regression and capable of running at over 800fps is proposed.

DAPs (Escorcia et al. 2016) and sparse prop (Heilbron et al. 2016) use average recall and the average number of search proposals (AR-AN) to evaluate TAP performance. There are two problems with the AR-AN metric:

-

(a)

the correlation between the AR-AN of TAP and the mean average precision (mAP) of action positioning has not been discussed

-

(b)

the average number of proposals retrieved correlates with the average video length of the test dataset, which makes AR-AN less reliable for evaluation across different datasets. Spatiotemporal action detection (Yu and Yuan 2015; Wang et al. 2016a) uses the recall and proposal number (R-N), but this index does not consider the video length.

The TURN method adopts the brand-new temporal action proposal (TAP) indicator AR-F to solve the above two problems. However, it does not fundamentally solve the problem of inaccurate division of the action boundary.

Architecture of TURN (Gao et al. 2017a)

Because most current models obtain good results through predetermined anchor points, they are susceptible to the interference of a large number of outputs and different anchor sizes. Lin et al. (2021) proposed a completely anchor-free temporal positioning method in 2021, which is more portable. The overall framework is divided into three parts:

-

(a)

In the video feature extraction part, an I3D network is used to extract features and finally transform them into a 1D feature pyramid;

-

(b)

Rough prediction, which is the first part of the anchoring, predicts the length of each video segment for each pyramid layer and classifies that segment;

-

(c)

In fine prediction, significant boundary features are found for proposals generated in rough prediction. Then, in turn, the proposed boundary is optimized with the boundary features to obtain fine prediction results and output the confidence of the proposal.

As shown in Fig. 28, given a video as input, the I3D model is used to extract features and construct 1D time pyramid features. Next, each pyramid feature is fed into two basic prediction modules: a regressor generating a rough boundary score and a classifier generating a rough category score. Finally, the saliency-based refinement module adjusts class scores and the start and end boundaries, and predicts the corresponding quality scores for each rough proposal. The main contribution of this method is its use of fewer parameters and outputs and obtaining a good performance. The influence of boundary features is explained by making full use of an unanchored frame. Finally, the method proposes a new consistent learning strategy for a better learning boundary control model. Yang et al. (Yang et al. 2020) showed that although the results of anchor-free methods are weak, there is evidence in the task of target detection (Girshick et al. 2014) that such methods should, in principle, be compared with anchor-based methods.

Architecture of AFSD (Lin et al. 2021)

Given the great success of target detection in images, researchers have begun to apply Faster R-CNN to video TAD. However, several challenges have arisen in shifting the field, and the first is how to deal with the dramatic change in the duration of action, from a still image to a moving video; the second is how to use timing information. The moments before and after the action instance contain key information for positioning and classification, which is to some extent more important than spatial context information. The final issue is how to fuse multi-stream features. To address these challenges, Chao et al. (2018) proposed the TAL-Net network in 2018. TAL-Net uses a multi-tower network and dilatation time convolution to strengthen alignment between the receptive field and the span of the anchor. It uses a multiscale architecture to alter the receptive field so that it can adapt to continuous changes in action duration. By expanding the receptive field, TAL-Net can make better use of the time background to generate candidate proposals and classification. As for the feature fusion method, TAL-Net proposes a two-stream frame late-fusion scheme. Conceptually, this is equivalent to performing traditional late fusion in the proposal generation and action classification phases. The experimental results prove the feasibility of feature fusion in the later stage.

The above method can also be called the two-stage method. The two-stage method first generates the action candidate proposal, and then the generated candidate proposal is classified in the second stage. Most of the current models are inspired by R-CNN. The model shown in (a) in Fig. 29 comprises a time candidate window with a high recall rate provided by the anchor core introduced above, and then the candidate proposal is passed to the later classification stage. The two-stage approach treats the proposal and classification as two separate sequential processing stages, which inhibits collaboration between them and leads to double counting between the two stages. Figure 29b shows an end-to-end trainable approach that tightly integrates proposal generation and classification to provide a more efficient architecture for uniform TAD. Currently, most of the one-stage methods are based on the predefined anchor core, and the anchor-related hyperparameters need to be set with the knowledge of the action distribution in advance.

The single-stage model synchronizes proposal-making and proposal classification. Currently, most models adopt the two-stage model, which first proposes and then classifies the proposal. One inevitable problem with such a framework is that the boundaries of the action examples are already defined in the classification step. To solve this problem, in 2017 Lin et al. (2017) proposed a single-shot temporal action detection network (SSAD) based on a one-dimensional time convolution layer. SSAD can directly detect action instances in uncut videos and skip the generation of proposals. In SSAD, features of the single-lens object detection models SSD (Liu et al. 2016) and YOLO (Redmon et al. 2016) are adopted. As shown in Fig. 30, the model includes three modules:

-

(a)

Base layer: The input video feature sequence is processed, the feature length is shortened, and the receptive domain is expanded;

-

(b)

Anchor layer: This uses time convolution to reduce the feature map and output the anchored feature map;

-

(c)

Prediction layer: The class, confidence, and position of each action instance are obtained by anchoring the feature graph. SSAD’s biggest contribution is to eliminate the candidate proposal-generation step and implement an end-to-end framework.

The framework of the SSAD network (Lin et al. 2017)

Buch et al. (2019) proposed the single-stream temporal action detection (SS-TAD) network in the same year. Like SSAD, it is a single-emitter detector, and the design inspiration came from the single-emitter object detectors YOLO and SSD. The model consists of two parts. Input visual coding is used to encode low-level space-time information for the video (similar to how SSAD uses the C3D model to extract low-level space-time information). The two recurrent memory modules can effectively converge the context information to integrate it with the TAD task and output the final action instance’s time boundary and confidence score. SS-TAD uses dynamic semantic constraints in the semantic subtasks of TAD to improve training and testing performance. Both SSAD and SS-TAD are end-to-end network models and reduce the number of times required to input video streams. The feature encoding from the two memory modules is used to output the final time bound and associated class scores for the final output detection. As shown in Fig. 31, the SS-TAD model takes a video stream as input and then represents each non-overlapping "time step" t with the visual code in the \(\delta\) frame. This visual coding acts as input to the two recurrent memory modules, making both modules semantically constrained to learn proposals and classifier-based features. These features are combined before providing the final TAD output. In contrast to previous work, the authors’ approach provides end-to-end temporal action detection through a single pass of the input video stream.

SS-TAD model architecture (Buch et al. 2019)