Abstract

We study relations between the solution sets of Variational Inequalities, Minty Variational Inequalities, Natural Map problems and Nash Equilibrium Problems. Moreover, motivated by the inherent relevance of inexactness both in modeling non-cooperative games and in algorithms for variational inequalities, we consider inexact versions of such problems and we establish relations to quantify how inexactness propagates from one problem to the other.

1 Introduction

Nash Equilibrium Problems (NEP) are well-suited to capture the interactions between multiple agents in non-cooperative settings, hence they have been widely used in economics, finance, transportation and engineering (see e.g. Facchinei and Pang 2003; Bigi et al. 2019; Ferris and Pang 1997; Facchinei and Lampariello 2011; Konnov 2007; Nagurney 1998; Facchinei and Sagratella 2011; Lampariello et al. 2021; Scutari et al. 2012). They are closely connected to other mathematical models of variational nature. In particular, NEPs can be reformulated as Variational Inequalities (VI) and Minty Variational Inequalities (MVI) under standard assumptions (see Facchinei and Pang 2003; Hartman and Stampacchia 1966; Kinderlehrer and Stampacchia 2000; Minty 1962 and the references therein for VIs, MVIs and their fundamental properties). In turn, fixed point techniques have been extensively exploited to tackle such problems by relying on the Natural Map problem (NM); see Facchinei and Pang (2003) for an overview. Therefore, it is reasonable to analyze these problems together and to investigate their interplay.

As some degree of inexactness arises quite naturally, both in modeling and in algorithms, we find it convenient to consider also approximate versions of the above problems. In fact, approximation is inherent in numerical algorithms when devising practical stopping criteria and, clearly, complexity guarantees are closely related to the magnitude of inexactness. For example, projection-type techniques are widely used to solve VIs, where the fulfillment of an NM-related condition provides a valuable stopping criterion (see He et al. 2002; Bigi et al. 2021; Lampariello et al. 2020). Inexactness in MVI-based stopping criteria and related complexity guarantees arises when relying on averaging techniques on projection iterates or on cutting-plane-type algorithms (see e.g. Bruck 1977; Bigi and Panicucci 2010; Lampariello et al. 2022; Nguyen and Dupuis 1984; Kaushik and Yousefian 2021). On the other hand, introducing inexactness in NEPs amounts to allowing some degree of inaccuracy in the equilibrium condition of each player’s problem (see e.g. Başar and Olsder 1998; Nisan et al. 2007; Morgan 2005; Sagratella 2017a, b). The relations we present are aimed at providing a connection between the inexactness that is inevitably associated with algorithmic procedures commonly used to address NEPs and the corresponding degree of sub-optimality that is achieved in each player’s problem.

Theoretical works concerning inexactness in the above problems are mainly focused on the existence of approximate solutions (see Brânzei et al. 2003) and on their limit behavior as inexactness vanishes (see Gürkan and Pang 2009; Morgan and Raucci 1999; Lignola and Morgan 1994, 2002). Some connections between inexact versions of VIs and MVIs have been investigated in Lignola and Morgan (1994): we aim at deepening this analysis, while expanding it to NEPs and NMs as well. Given some level of inexactness in the solution of a problem, we are able to estimate the degree of inexactness up to which any other problem is solved. These estimates depend only on problem-related constants and on the level of inexactness in the solution of the original problem.

The paper is organized into two sections. In Sect. 2, we introduce exact versions of VI, MVI, NM, NEP. While we review well-known relations, we also obtain some other connections between MVIs and NEPs and MVIs and NMs. In Sect. 3, we address the inexact versions of the above four problems and study the links between them. Just a few of them follow in the footsteps of the exact case, while others require additional assumptions. Furthermore, the corresponding degrees of inexactness in the relations may turn out to be different.

2 The exact case

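In this section, we introduce the exact version of the problems and gather some results concerning the relations between their solution sets. Given \(X \subseteq \mathbb {R}^n\) nonempty, closed and convex and \(F: \mathbb {R}^n \rightarrow \mathbb {R}^n\) continuous, the Variational Inequality problem is the following

$$\begin{aligned} \text {find } \overline{x} \in X \text { such that } \quad F(\overline{x})^T(x - \overline{x}) \ge 0 \quad \forall \, x \in X. \qquad \text {(VI)} \end{aligned}$$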

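The Minty Variational Inequality problem reads

$$\begin{aligned} \text {find } \overline{x} \in X \text { such that } \quad F(x)^T(x - \overline{x}) \ge 0 \quad \forall \, x \in X. \qquad \text {(MVI)} \end{aligned}$$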

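The Natural Map problem is as follows

$$\begin{aligned} \text {find } \overline{x} \in X \text { such that } \quad \Vert \overline{x} - P_X(\overline{x} - F(\overline{x}))\Vert \le 0, \qquad \text {(NM)} \end{aligned}$$

where \(P_X\) denotes the Euclidean projection onto X.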

The presence of the inequality in the above condition, which is actually an equality, is aimed at stressing the connection with the inexact version of the problem that is introduced in the next section.

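Consider the case where \(X \triangleq X_1 \times \ldots \times X_N\), with \(X_\nu \subseteq \mathbb {R}^{n_\nu }\), \(\sum _\nu n_\nu = n\), and \(\theta _\nu : X \rightarrow \mathbb {R}\) are continuously differentiable. Denoting by \(x^{-\nu }\) the variables of all players other than \(\nu\), the Nash Equilibrium Problem reads

$$\begin{aligned} \text {find } \overline{x} \in X \text { such that, for all } \nu , \quad \theta _\nu \left( \overline{x}^\nu , \overline{x}^{-\nu }\right) \le \theta _\nu \left( x^\nu , \overline{x}^{-\nu }\right) \quad \forall \, x^\nu \in X_\nu . \qquad \text {(NEP)} \end{aligned}$$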

Any such \(\overline{x}\) is called an equilibrium. In the case \(N = 1\), (NEP) boils down to an optimization problem.

The following propositions are common folklore in variational analysis (see e.g. Facchinei and Pang 2003). Nonetheless, we report them for the sake of completeness.

Proposition 2.1

(NM \(\longleftrightarrow\) VI) A point \(\overline{x}\) is a solution to (NM) if and only if it is a solution to (VI).

Proof

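Consider a solution \(\overline{x}\in X\) to (VI). We have

$$\begin{aligned} F(\overline{x})^T(x - \overline{x}) \ge 0 \quad \forall \, x \in X, \end{aligned}$$

which is equivalent to

$$\begin{aligned} \left( \overline{x} - (\overline{x} - F(\overline{x}))\right) ^T(x - \overline{x}) \ge 0 \quad \forall \, x \in X, \end{aligned}$$

that, in turn, by the characteristic property of the projection operator, is true if and only if \(\overline{x}\) is a solution to (NM). \(\square\)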
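The equivalence in Proposition 2.1 can be illustrated numerically. The following Python sketch is only an illustration under stated assumptions: the box \(X = [0,1]^2\), the operator \(F(x) = x - t\) and all function names are hypothetical choices, not taken from the paper. It evaluates the natural-map residual \(\Vert x - P_X(x - F(x))\Vert\) and the VI gap \(\max _{y \in X} F(x)^T(x - y)\), which should vanish together.

```python
import math

def project_box(y, lower, upper):
    """Euclidean projection onto the box [lower, upper]: componentwise clipping."""
    return [min(max(yi, lo), hi) for yi, lo, hi in zip(y, lower, upper)]

def natural_residual(F, x, lower, upper):
    """||x - P_X(x - F(x))||; it is zero exactly at the solutions of (NM)."""
    Fx = F(x)
    z = project_box([xi - fi for xi, fi in zip(x, Fx)], lower, upper)
    return math.sqrt(sum((xi - zi) ** 2 for xi, zi in zip(x, z)))

def vi_gap(F, x, lower, upper):
    """max over y in X of F(x)^T (x - y); x solves (VI) iff this gap is zero.
    On a box the maximisation separates across coordinates."""
    Fx = F(x)
    return sum(fi * (xi - (lo if fi > 0 else hi))
               for fi, xi, lo, hi in zip(Fx, x, lower, upper))

# Hypothetical instance: F(x) = x - t on X = [0, 1]^2, whose VI solution is P_X(t).
t = [1.5, -0.3]
F = lambda x: [xi - ti for xi, ti in zip(x, t)]
lower, upper = [0.0, 0.0], [1.0, 1.0]

x_star = project_box(t, lower, upper)   # candidate solution (1, 0)
x_other = [0.5, 0.5]                    # a feasible point that is not a solution

res_star = natural_residual(F, x_star, lower, upper)
gap_star = vi_gap(F, x_star, lower, upper)
res_other = natural_residual(F, x_other, lower, upper)
gap_other = vi_gap(F, x_other, lower, upper)

print(res_star, gap_star)     # both vanish at the solution
print(res_other, gap_other)   # both are strictly positive elsewhere
```

The box is chosen only because its projection and its linear minimization are available in closed form; any other set with a computable projection would serve the same purpose.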

Proposition 2.2

(VI \(\longleftarrow\) MVI, VI \(\overset{{\textrm{mono}}}{\longrightarrow }\) MVI)

(i) If \(\overline{x}\) is a solution to (MVI), then it is a solution to (VI).

(ii) Assuming F to be monotone on X, if \(\overline{x}\) is a solution to (VI), then it is a solution to (MVI).

Proof

(i) For any \(x \in X\), we define \(u_\lambda = \lambda \overline{x} + (1-\lambda )x\), \(\lambda \in (0,1)\). Since \(u_\lambda \in X\) by the convexity of X, if \(\overline{x}\) is a solution to (MVI), we can write

$$\begin{aligned} 0 \le F(u_\lambda )^T(u_\lambda - \overline{x}) = (1-\lambda ) F(u_\lambda )^T(x - \overline{x}). \end{aligned}$$

Dividing both sides of the relation by \((1 - \lambda )\) and taking the limit for \(\lambda \rightarrow 1\), by the continuity of F, we have \(F(u_\lambda )\rightarrow F(\overline{x})\), hence \(F(\overline{x})^T(x - \overline{x}) \ge 0\) for all \(x \in X\) and, in turn, \(\overline{x}\) is a solution to (VI).

(ii) The proof is obtained by considering Proposition 3.2(ii) with \(\varepsilon = 0\). \(\square\)

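NEPs turn out to be connected to variational inequalities by considering the operator

$$\begin{aligned} F(x) \triangleq \begin{pmatrix} \nabla _{x^1}\theta _1(x) \\ \vdots \\ \nabla _{x^N}\theta _N(x) \end{pmatrix} \qquad \text {(1)} \end{aligned}$$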

and \(X = X_1 \times \ldots \times X_N\), as the following results show.

Proposition 2.3

(NEP \(\longrightarrow\) VI, NEP\(\overset{{\textrm{conv}}}{\longleftarrow }\) VI) Consider F given by (1).

(i) If \(\overline{x}\) is a solution to (NEP), then it is a solution to (VI).

(ii) Assuming, for all \(\nu\), \(\theta _\nu \left( \bullet ,x^{-\nu }\right)\) to be convex on \(X_\nu\) for every \(x^{-\nu } \in \prod _{\mu \ne \nu }X_\mu\), if \(\overline{x}\) is a solution to (VI), then it is a solution to (NEP).

Proof

(i) If \(\overline{x}\) is a solution to (NEP), by the minimum principle, for all \(\nu\),

$$\begin{aligned} \nabla _{x^\nu }\theta _\nu (\overline{x})^T(x^\nu - \overline{x}^\nu ) \ge 0 \quad \forall \, x^\nu \in X_\nu . \end{aligned}$$

Summing these inequalities over all players, \(\overline{x}\) turns out to be a solution to (VI) with F given by (1).

(ii) The proof is obtained by considering Proposition 3.3(ii) with \(\varepsilon = 0\). \(\square\)

The proofs of the following propositions can be deduced by considering the results in the next section, specifically Propositions 3.4, 3.6 and 3.7, with \(\varepsilon = 0\), and are therefore omitted. While the claim in Proposition 2.4 is well known, to the best of our knowledge, the other two are new.

Proposition 2.4

(NEP \(\overset{{\mathrm{N=1, conv}}}{\longrightarrow }\) MVI) Consider F given by (1) and assume \(N=1\) and \(\theta _1\) convex on \(X_1\). If \(\overline{x}\) is a solution to (NEP), then it is a solution to (MVI).

Proposition 2.5

(NEP \(\overset{{\textrm{quad}}}{\longleftarrow }\) MVI) Consider F given by (1) and assume, for all \(\nu\), \(\theta _\nu (\bullet ,x^{-\nu })\) to be quadratic on \(X_\nu\) for every \(x^{-\nu } \in \prod _{\mu \ne \nu }X_\mu\). If \(\overline{x}\) is a solution to (MVI), then it is a solution to (NEP).

Proposition 2.6

(NM \(\overset{{\mathrm{L<1}}}{\longleftarrow }\) MVI) Assume F is Lipschitz continuous with modulus \(L<1\). If \(\overline{x}\) is a solution to (MVI), then it is a solution to (NM).

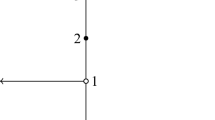

Figure 1 subsumes all the above results. The arrow connecting NEP with MVI is dashed since the relation between the two problems is proven when only a single player is considered. We also remark that the graph in Fig. 1 is not complete because the missing relations have not been established. However, the graph turns out to be connected, so that one can link, possibly not directly, any pair of problems.

3 The inexact case

The results in Sect. 2 bear witness to how the (exact) solutions of the problems we consider are deeply interrelated. However, since inexactness arises naturally, for example when resorting to numerical procedures, it is paramount to understand how the connections between these problems behave when they are solved up to some accuracy. This is even more important when dealing with practical situations: it suffices to think of the NEPs modeling the interplay of clients’ accounts that are managed by financial service providers in a multi-portfolio context (see Lampariello et al. 2021). Applying numerical procedures to such problems invariably leads to the computation of points satisfying suitable stopping criteria (given in the form of MVI, or NM) up to some accuracy (see Lampariello et al. 2020, 2022, 2023). In turn, knowing the link between inexact solutions of MVIs or NMs and corresponding NEPs, one can quantify, in actual monetary terms, how far the computed utilities of the clients are from being optimal.

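Consider a degree of inexactness \(\varepsilon \ge 0\). The \(\varepsilon\)-inexact Variational Inequality (\(\varepsilon\)-VI) problem is the following

$$\begin{aligned} \text {find } \overline{x} \in X \text { such that } \quad F(\overline{x})^T(x - \overline{x}) \ge -\varepsilon \quad \forall \, x \in X. \qquad (\varepsilon \text {-VI}) \end{aligned}$$

The \(\varepsilon\)-inexact Minty Variational Inequality (\(\varepsilon\)-MVI) problem reads

$$\begin{aligned} \text {find } \overline{x} \in X \text { such that } \quad F(x)^T(x - \overline{x}) \ge -\varepsilon \quad \forall \, x \in X. \qquad (\varepsilon \text {-MVI}) \end{aligned}$$

The \(\varepsilon\)-inexact Natural Map problem (\(\varepsilon\)-NM) is

$$\begin{aligned} \text {find } \overline{x} \in X \text { such that } \quad \Vert \overline{x} - P_X(\overline{x} - F(\overline{x}))\Vert \le \varepsilon . \qquad (\varepsilon \text {-NM}) \end{aligned}$$

The \(\varepsilon\)-inexact Nash Equilibrium Problem (\(\varepsilon\)-NEP) reads

$$\begin{aligned} \text {find } \overline{x} \in X \text { such that, for all } \nu , \quad \theta _\nu \left( \overline{x}^\nu , \overline{x}^{-\nu }\right) \le \theta _\nu \left( x^\nu , \overline{x}^{-\nu }\right) + \varepsilon \quad \forall \, x^\nu \in X_\nu . \qquad (\varepsilon \text {-NEP}) \end{aligned}$$

Any such \(\overline{x}\) is also called an \(\varepsilon\)-equilibrium, and in particular an \(\varepsilon\)-minimum when \(N = 1\).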

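Taking \(\varepsilon = 0\) in the above (inexact) problems, the corresponding exact versions (see Sect. 2) are recovered. Whenever X is bounded, the quantities

$$\begin{aligned} D_X \triangleq \max _{x, y \in X} \Vert x - y\Vert , \qquad \overline{F}_X \triangleq \max _{x \in X} \Vert F(x)\Vert \end{aligned}$$

are used in some of the forthcoming developments.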

Inexactness brings on some difficulties in establishing correspondences between the above problems: relationships turn out to be more complicated and require also additional assumptions that are not needed in the exact case.

The relation between inexact VIs and NMs can be traced back to Lampariello et al. (2022).

Proposition 3.1

(\(\varepsilon\)-NM \(\overset{{\textrm{bound}}}{\longrightarrow }\) \((c_1\varepsilon )\)-VI, \(\sqrt{\varepsilon }\)-NM \(\longleftarrow\) \(\varepsilon\)-VI)

(i) Assuming X to be bounded, if \(\overline{x}\) is a solution to (\(\varepsilon\)-NM), then it is a solution to (\((c_1\varepsilon )\)-VI), where \(c_1 \triangleq D_X+\overline{F}_X\).

(ii) If \(\overline{x}\) is a solution to (\(\varepsilon\)-VI), then it is a solution to (\(\sqrt{\varepsilon }\)-NM).

Proof

(i) Consider a solution \(\overline{x} \in X\) to (\(\varepsilon\)-NM) and let \(z = P_X(\overline{x} - F(\overline{x}))\): by the characteristic property of the projection operator, we have

therefore, for all \(x \in X\),

where the third inequality is due to the boundedness of X, and the last one holds because \(\overline{x}\) is a solution to (\(\varepsilon\)-NM). Therefore, \(\overline{x}\) is a solution to (\((c_1\varepsilon )\)-VI).

(ii) Consider a solution \(\overline{x}\) to (\(\varepsilon\)-VI) and let \(z = P_X(\overline{x} - F(\overline{x}))\), we have

where the last inequality is due to the characteristic property of the projection operator. Therefore, \(\overline{x}\) is a solution to (\(\sqrt{\varepsilon }\)-NM). \(\square\)
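Both bounds in Proposition 3.1 can be checked numerically on a small instance. In the sketch below, the affine operator, the box and the helper names are hypothetical choices made only for illustration. For each grid point \(\overline{x}\), the natural-map residual r is the smallest \(\varepsilon\) for which \(\overline{x}\) solves (\(\varepsilon\)-NM), and the VI gap g is the smallest \(\varepsilon\) for which it solves (\(\varepsilon\)-VI), so the proposition predicts \(g \le c_1 r\) and \(r \le \sqrt{g}\).

```python
import math
from itertools import product

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def project_box(y, lower, upper):
    return [min(max(c, lo), hi) for c, lo, hi in zip(y, lower, upper)]

def F(x):
    # Hypothetical affine operator, chosen only for illustration.
    return [2.0 * x[0] - x[1] + 0.5, x[0] + x[1] - 1.0]

lower, upper = [0.0, 0.0], [1.0, 2.0]

# D_X is the diameter of the box. For affine F the map x -> ||F(x)|| is convex,
# so its maximum over the box is attained at one of the four vertices.
D_X = norm([u - l for u, l in zip(upper, lower)])
F_bar = max(norm(F(list(v))) for v in product(*zip(lower, upper)))
c1 = D_X + F_bar

def nm_residual(x):
    z = project_box([xi - fi for xi, fi in zip(x, F(x))], lower, upper)
    return norm([xi - zi for xi, zi in zip(x, z)])

def vi_gap(x):
    # max over y in X of F(x)^T (x - y), solved coordinatewise on the box.
    return sum(f * (xi - (lo if f > 0 else hi))
               for f, xi, lo, hi in zip(F(x), x, lower, upper))

tol = 1e-9
violations = []
for i in range(11):
    for j in range(11):
        x = [lower[0] + i * (upper[0] - lower[0]) / 10,
             lower[1] + j * (upper[1] - lower[1]) / 10]
        r, g = nm_residual(x), vi_gap(x)
        if g > c1 * r + tol:                        # Proposition 3.1(i) with eps = r
            violations.append(("(i)", x))
        if r > math.sqrt(max(g, 0.0)) + tol:        # Proposition 3.1(ii) with eps = g
            violations.append(("(ii)", x))

print(len(violations))   # no grid point violates either bound
```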

While the second implication in the next two results follows in the footsteps of the exact case, the other one requires a more complicated proof as well as additional assumptions.

Proposition 3.2

(\((c_2\sqrt{\varepsilon })\)-VI \(\overset{{\textrm{Lips, bound}}}{\longleftarrow }\) \(\varepsilon\)-MVI, \(\varepsilon\)-VI \(\overset{{\textrm{mono}}}{\longrightarrow }\) \(\varepsilon\)-MVI)

(i) Assuming F to be Lipschitz continuous on X with modulus L and X to be bounded, if \(\overline{x}\) is a solution to (\(\varepsilon\)-MVI), then it is a solution to (\((c_2\sqrt{\varepsilon })\)-VI), where \(c_2 \triangleq 2D_X\sqrt{L}\).

(ii) Assuming F to be monotone on X, if \(\overline{x}\) is a solution to (\(\varepsilon\)-VI), then it is a solution to (\(\varepsilon\)-MVI).

Proof

(i) Let \(\lambda = 1- \sqrt{\varepsilon }/\left( D_X \sqrt{L}\right)\) with \(\varepsilon > 0\); then \(\lambda \in (0,1)\) because we can assume, without loss of generality, \(\sqrt{\varepsilon } < D_X \sqrt{L}\). The proof is now obtained by contradiction. If \(\overline{x}\) is not a solution to (\((c_2\sqrt{\varepsilon })\)-VI), then there must exist \(\widetilde{x}\in X\) such that

We have

where the last inequality is due to the convexity and boundedness of X, and the Lipschitz continuity of F. Multiplying both sides by \((1-\lambda )\), we have

Therefore, since \((\lambda \overline{x} + (1-\lambda )\widetilde{x}) \in X\) due to the convexity of X, \(\overline{x}\) is not a solution to (\(\varepsilon\)-MVI), and we have a contradiction.

(ii) By the monotonicity of F, for all \(x \in X\), we have

where the last inequality holds because \(\overline{x}\) is a solution to (\(\varepsilon\)-VI). \(\square\)
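A one-dimensional numerical check of both implications in Proposition 3.2 is sketched below. The operator \(F(x) = x + 0.5\sin (x)\) on \(X = [0,1]\), the grid-based estimate of the MVI gap and all names are hypothetical and serve only as an illustration; this F is monotone and Lipschitz continuous with modulus 1.5, and the MVI gaps involved stay below \(D_X^2 L\), in line with the assumption \(\sqrt{\varepsilon } < D_X\sqrt{L}\) used in the proof.

```python
import math

L = 1.5                      # Lipschitz modulus of F below, since |F'(x)| <= 1.5
a, b = 0.0, 1.0              # X = [a, b]
D_X = b - a
c2 = 2.0 * D_X * math.sqrt(L)

def F(x):
    # Hypothetical monotone operator: F'(x) = 1 + 0.5*cos(x) >= 0.5 > 0.
    return x + 0.5 * math.sin(x)

def vi_gap(x_bar):
    # max over y in X of F(x_bar)*(x_bar - y): linear in y, so check the endpoints.
    return max(F(x_bar) * (x_bar - a), F(x_bar) * (x_bar - b))

def mvi_gap(x_bar, n=2001):
    # Grid estimate (from below) of max over y in X of F(y)*(x_bar - y).
    # The point y = x_bar is included, so the estimate is never negative.
    grid = [a + k * (b - a) / (n - 1) for k in range(n)] + [x_bar]
    return max(0.0, max(F(y) * (x_bar - y) for y in grid))

tol = 1e-6                   # absorbs the grid discretisation error
violations = []
for k in range(21):
    x_bar = a + k * (b - a) / 20
    g_vi, g_mvi = vi_gap(x_bar), mvi_gap(x_bar)
    if g_mvi > g_vi + tol:                        # Proposition 3.2(ii)
        violations.append(("(ii)", x_bar))
    if g_vi > c2 * math.sqrt(g_mvi) + tol:        # Proposition 3.2(i)
        violations.append(("(i)", x_bar))

print(violations)            # expected to be empty
```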

Proposition 3.3

(\(\varepsilon\)-NEP \(\overset{{\textrm{Lips,bound,conv}}}{\longrightarrow }\) \((c_3\sqrt{\varepsilon })\)-VI, \(\varepsilon\)-NEP\(\overset{{\textrm{conv}}}{\longleftarrow }\) \(\varepsilon\)-VI) Consider F given by (1).

(i) Assuming F to be Lipschitz continuous on X with modulus L and X bounded, if \(\overline{x}\) is a solution to (\(\varepsilon\)-NEP) such that, for all \(\nu\), \(\theta _\nu \left( \bullet ,\overline{x}^{-\nu }\right)\) is convex on \(X_\nu\), then it is a solution to (\((c_3\sqrt{\varepsilon })\)-VI), where \(c_3 \triangleq 2N\max _{\nu }(D_{X_\nu })\sqrt{L}\).

(ii) Assuming \(\theta _\nu \left( \bullet ,x^{-\nu }\right)\) to be convex on \(X_\nu\) for every \(x^{-\nu } \in \prod _{\mu \ne \nu }X_\mu\) and for all \(\nu\), if \(\overline{x}\) is a solution to (\(\varepsilon\)-VI), then it is a solution to (\({\varepsilon }\)-NEP).

Proof

(i) The proof is obtained by contradiction. If \(\overline{x}\) is not a solution to (\(c_3\sqrt{\varepsilon }\)-VI), where F is given by (1), there must exist \(\widetilde{x} \in X\) such that

which yields the existence of \(i\in \{1, \ldots , N \}\) such that

Relying on the contrapositive of Proposition 3.2(i), \(\widehat{x}^i \in X_i\) exists such that

and finally, by the convexity of \(\theta _i\left( \bullet ,\overline{x}^{-i}\right)\), we have

Therefore, \(\overline{x}\) is not a solution to (\(\varepsilon\)-NEP), and we reach a contradiction.

(ii) If \(\overline{x}\) is a solution to (\(\varepsilon\)-VI), for every \(i \in \{1, \ldots , N\}\) we can write

which is equivalent to

By the convexity of player i’s problem, we get

\(\square\)
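Proposition 3.3(ii) can be illustrated on a small two-player game. The objectives, the strategy sets and the helper names in the following sketch are hypothetical: each \(\theta _\nu\) is convex in the player's own variable, F is built as in (1), and the largest unilateral improvement available to any player (the smallest \(\varepsilon\) for which \(\overline{x}\) solves (\(\varepsilon\)-NEP)) is compared with the VI gap.

```python
lo, hi = -1.0, 1.0           # X_1 = X_2 = [lo, hi]

# Hypothetical objectives, each convex in the player's own variable.
def theta1(x1, x2):
    return 0.5 * x1 ** 2 + x1 * x2

def theta2(x1, x2):
    return 0.5 * x2 ** 2 - x1 * x2

def F(x1, x2):
    # Operator (1): each player's partial gradient w.r.t. its own variable.
    return (x1 + x2, x2 - x1)

def clip(t):
    return min(max(t, lo), hi)

def vi_gap(x1, x2):
    # max over y in X of F(x)^T (x - y), solved coordinatewise on the box.
    f1, f2 = F(x1, x2)
    return (f1 * (x1 - (lo if f1 > 0 else hi))
            + f2 * (x2 - (lo if f2 > 0 else hi)))

def nep_gap(x1, x2):
    # Best responses: the minimiser of 0.5*t^2 + c*t over [lo, hi] is clip(-c).
    br1 = clip(-x2)
    br2 = clip(x1)
    return max(theta1(x1, x2) - theta1(br1, x2),
               theta2(x1, x2) - theta2(x1, br2))

grid = [lo + k * (hi - lo) / 20 for k in range(21)]
violations = [(x1, x2) for x1 in grid for x2 in grid
              if nep_gap(x1, x2) > vi_gap(x1, x2) + 1e-12]

print(len(violations))       # no point has a NEP gap exceeding its VI gap
print(nep_gap(1.0, 1.0), vi_gap(1.0, 1.0))
```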

Remark 1

The boundedness of X is an essential requirement for (i) in Propositions 3.1 and 3.2 to hold.

Taking \(X = \mathbb {R}\), the only solutions to (\(\varepsilon\)-VI), for any \(\varepsilon \ge 0\), are those points x such that \(F(x)= 0\). For any given \(\varepsilon > 0\), any \(\overline{x}\) such that \(0<F(\overline{x}) \le \varepsilon\) is a solution to (\(\varepsilon\)-NM) but, for any \(\varepsilon '\ge 0\), it cannot be a solution to (\({\varepsilon '}\)-VI). Taking the cue from this example, the boundedness of X is easily seen to be essential also for (i) in Proposition 3.3.

Taking \(F(x)=x\) and \(X = \mathbb {R}\), and considering \(\varepsilon > 0\), \(\overline{x}= \sqrt{\varepsilon }\) is a solution to (\(\varepsilon\)-MVI). However, \(\overline{x}\) cannot be a solution to (\({\varepsilon '}\)-VI) for any \(\varepsilon '\ge 0\), since \(F(\overline{x}) \ne 0\).

As for the exact case, \(\varepsilon\)-equilibria yield inexact solutions of the corresponding MVI when the NEP boils down to a convex optimization problem (see also Lignola and Morgan 1994, Proposition 2.1).

Proposition 3.4

(\(\varepsilon\)-NEP \(\overset{{\mathrm{N=1, convex}}}{\longrightarrow }\) \(\varepsilon\)-MVI) Consider F given by (1) and assume \(N = 1\) and \(\theta _1\) to be convex on \(X_1\). If \(\overline{x}\) is a solution to (\(\varepsilon\)-NEP), then it is a solution to (\({\varepsilon }\)-MVI).

Proof

The proof is given by the following chain of relations:

where the last inequality is due to the convexity of \(\theta _1\). \(\square\)

To link (\(\varepsilon\)-MVI) to inexact equilibria, we rely on a parametric version of the classical Mean-value Theorem: considering \(x^\nu \in X_\nu\) and \(\widetilde{x} \in X\), for the differentiable (parametric in \(x^\nu\) and \(\widetilde{x}\)) real-valued function \(\varphi _\nu \left( \bullet ; x^\nu , \widetilde{x}\right) : \mathbb R \rightarrow \mathbb R\) defined as

a point \(\lambda _\nu \left( x^\nu , \widetilde{x}\right) \in (0,1)\), depending on \(x^\nu\) and \(\widetilde{x}\), exists such that

Requiring, for all \(\nu\) and for every \((x^\nu , \widetilde{x}) \in X_\nu \times X\), the existence of a point \(\lambda _\nu\) satisfying the above relation that is bounded away from zero on \(X_\nu \times X\), we are able to establish a connection between solutions to (\(\varepsilon\)-MVI) and corresponding inexact equilibria.

Theorem 3.5

For every \(\nu\), and for all \(x^\nu \in X_\nu\) and \(\overline{x} \in X\), assume \(\lambda _\nu (x^\nu , \overline{x})\) satisfying

exists such that

Considering F given by (1), if \(\overline{x}\) is a solution to (\(\varepsilon\)-MVI), then it is a solution to (\({\left( {\varepsilon }/{\min _\mu \overline{\lambda }_{\mu }}\right) }\)-NEP).

Proof

If \(\overline{x}\) is a solution to (\(\varepsilon\)-MVI), choosing \(x = \left( x^\nu , \overline{x}^{-\nu }\right)\) for any \(\nu\), we get

which is equivalent to

Therefore, exploiting (2), we have, for any \(x^\nu \in X_\nu\),

where the inequality holds because \(((1- \lambda _{\nu }(x^\nu , \overline{x}))\overline{x}^\nu + \lambda _{\nu }(x^\nu , \overline{x}) x^\nu ) \in X_\nu\), and \(\overline{x}\) is a solution to (\(\varepsilon\)-MVI). \(\square\)

In Proposition 3.6, resorting to the results in Hiriart-Urruty (2021), we identify player-quadratic NEPs as a broad class of problems for which the sufficient condition (3) in Theorem 3.5 is guaranteed.

Proposition 3.6

(\((2\varepsilon )\)-NEP \(\overset{{\mathrm{quad.}}}{\longleftarrow }\) \(\varepsilon\)-MVI) Consider F given by (1) and assume, for all \(\nu\), \(\theta _\nu \left( \bullet ,x^{-\nu }\right)\) to be quadratic on \(X_\nu\) for every \(x^{-\nu } \in \prod _{\mu \ne \nu }X_\mu\). If \(\overline{x} \in X\) is a solution to (\(\varepsilon\)-MVI), then it is a solution to (\({(2\varepsilon )}\)-NEP).

Proof

For all \(\nu\) we can consider, without loss of generality,

where, for every \(x^{-\nu }\), \(A^\nu ({x}^{-\nu })\) is a symmetric \((n_\nu \times n_\nu )\)-dimensional matrix, \(b(x^{-\nu }) \in \mathbb {R}^{n_\nu }\). In this case, \(\varphi (\tau ; x^\nu ,\overline{x})\) becomes

with

Whenever \(\alpha ^\nu \left( \overline{x},x^{\nu }\right) = 0\), relation (2) is trivially satisfied with \(\lambda _\nu \left( x^\nu , \overline{x}\right) = 1/2\). If this is not the case, one can leverage the results about the Mean-value Theorem in the quadratic case in Hiriart-Urruty (2021). In both cases, Theorem 3.5 holds with \(\min _\mu \overline{\lambda }_\mu = 1/2\), and the claim follows. \(\square\)
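The role of the value 1/2 can be seen with exact arithmetic. The sketch below assumes, as an interpretation that is not spelled out in the text above, that the function in the Mean-value Theorem is the restriction of a player's objective to the segment between \(\overline{x}^\nu\) and \(x^\nu\) with the other players' variables held fixed; the matrix, the vectors and the function names are hypothetical. For a quadratic \(\theta (x) = \frac{1}{2}x^TAx + b^Tx\) with A symmetric, the increment over the segment equals the directional derivative at the midpoint.

```python
from fractions import Fraction as Fr

# Hypothetical data for one player's quadratic objective (other players fixed).
A = [[Fr(2), Fr(-1)], [Fr(-1), Fr(3)]]   # symmetric matrix
b = [Fr(1, 2), Fr(-4)]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def matvec(M, v):
    return [dot(row, v) for row in M]

def theta(x):
    return Fr(1, 2) * dot(x, matvec(A, x)) + dot(b, x)

def grad(x):
    return [ai + bi for ai, bi in zip(matvec(A, x), b)]

x_bar = [Fr(1), Fr(-2)]
x = [Fr(-3, 2), Fr(5)]
d = [xi - xbi for xi, xbi in zip(x, x_bar)]
midpoint = [xbi + di / 2 for xbi, di in zip(x_bar, d)]

increment = theta(x) - theta(x_bar)              # phi(1) - phi(0)
slope_at_half = dot(grad(midpoint), d)           # phi'(1/2)

print(increment == slope_at_half)                # exact equality with rationals
```

Rational arithmetic is used so that the identity is verified exactly rather than up to rounding.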

A first connection between inexact MVIs and NMs can be obtained through VIs by combining Propositions 3.2 and 3.1, which leads to a degree of inexactness of order \(\root 4 \of {\varepsilon }\). A direct relation, however, holds under a stronger Lipschitz assumption, without requiring the boundedness of X. Moreover, it guarantees a better degree of inexactness, of order \(\sqrt{\varepsilon }\).

Proposition 3.7

(\((c_4\sqrt{\varepsilon })\)-NM \(\overset{{\mathrm{L<1}}}{\longleftarrow }\) \(\varepsilon\)-MVI) Assume F to be Lipschitz continuous on X with modulus \(L<1\). If \(\overline{x}\) is a solution to (\(\varepsilon\)-MVI), then it is a solution to (\({(c_4\sqrt{\varepsilon })}\)-NM), with \(c_4 \triangleq \displaystyle {1}/{\sqrt{1-L}}\).

Proof

Let \(z = P_X(\overline{x} - F(\overline{x})) \in X\). If \(\overline{x}\) is a solution to (\(\varepsilon\)-MVI), we have

Therefore, \(\overline{x}\) is a solution to (\({(c_4\sqrt{\varepsilon })}\)-NM). \(\square\)
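The bound of Proposition 3.7 can be checked on a simple one-dimensional instance. The affine operator \(F(x) = Lx + q\) with \(L = 0.4\), \(q = 0.3\), the set \(X = [0,1]\) and the helper names are hypothetical choices for illustration. For each grid point, the MVI gap g is computed in closed form and the natural-map residual is compared with \(c_4\sqrt{g}\).

```python
import math

L, q = 0.4, 0.3              # hypothetical data: F(x) = L*x + q, modulus L < 1
lo, hi = 0.0, 1.0            # X = [lo, hi]
c4 = 1.0 / math.sqrt(1.0 - L)

def clip(t):
    return min(max(t, lo), hi)

def F(x):
    return L * x + q

def nm_residual(x_bar):
    return abs(x_bar - clip(x_bar - F(x_bar)))

def mvi_gap(x_bar):
    # max over y in X of F(y)*(x_bar - y): a concave quadratic in y, maximised
    # at the clipped stationary point y = (L*x_bar - q) / (2*L).
    y = clip((L * x_bar - q) / (2.0 * L))
    return max(0.0, F(y) * (x_bar - y))

tol = 1e-9
violations = []
for k in range(21):
    x_bar = lo + k * (hi - lo) / 20
    r, g = nm_residual(x_bar), mvi_gap(x_bar)
    if r > c4 * math.sqrt(g) + tol:       # Proposition 3.7 with eps = g
        violations.append(x_bar)

print(violations)            # expected to be empty
print(nm_residual(1.0), c4 * math.sqrt(mvi_gap(1.0)))
```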

The scheme in Fig. 2, which mirrors the one in Fig. 1 about exact relations, subsumes the previous results by depicting how the magnitude of inexactness propagates from one problem to another. Roughly speaking, the estimates of the amount of propagation are optimal. Indeed, the inexactness bounds provided in the propositions of this section are sharp, meaning that there exist instances where the bounds cannot be improved. These instances are provided and described below.

1. [\(\sqrt{\varepsilon }\)-NM \(\longleftarrow\) \(\varepsilon\)-VI] Consider the problems addressed in Proposition 3.1(ii), with \(n = 2\) and

$$\begin{aligned} F(x) = (1+x_1, \; \sqrt{\varepsilon }+x_2)^T, \quad X = [0,1]\times [-\sqrt{\varepsilon }, 0]. \end{aligned}$$

Then \(\overline{x}= (0,0)\) is a solution to \(\varepsilon\)-VI, and \(\Vert \overline{x}-P_X(\overline{x}- F(\overline{x}))\Vert = \sqrt{\varepsilon }\), so that \(\overline{x}\) is a solution to \(\sqrt{\varepsilon }\)-NM, but not to \(\varepsilon '\)-NM for any \(\varepsilon ' < \sqrt{\varepsilon }\).

2. [\((c_2\sqrt{\varepsilon })\)-VI \(\overset{{\textrm{Lips, bound}}}{\longleftarrow }\) \(\varepsilon\)-MVI] Consider the problems addressed in Proposition 3.2(i) with \(n = 1\) and

$$\begin{aligned} F(x) = x, \quad X = [0,2\sqrt{\varepsilon }]. \end{aligned}$$

Then \(c_2 = 4 \sqrt{\varepsilon }\) and \(\overline{x} = 2\sqrt{\varepsilon }\) is a solution to \(\varepsilon\)-MVI; furthermore,

$$\begin{aligned} \min _{x \in X} F(\overline{x})^T(x-\overline{x}) = -4\varepsilon = -c_2\sqrt{\varepsilon } \end{aligned}$$

so that \(\overline{x}\) is a solution to \((c_2 \sqrt{\varepsilon })\)-VI, but not to \(\varepsilon '\)-VI for any \(\varepsilon ' < c_2\sqrt{\varepsilon }\).

3. [\(\varepsilon\)-VI \(\overset{{\textrm{mono}}}{\longrightarrow }\) \(\varepsilon\)-MVI] Consider the problems addressed in Proposition 3.2(ii), with a constant operator F. Then, the two problems coincide and consequently the degree of inexactness is necessarily the same.

4. [\(\varepsilon\)-NEP \(\overset{{\textrm{Lips,bound,conv}}}{\longrightarrow }\) \((c_3\sqrt{\varepsilon })\)-VI] Consider the problems addressed in Proposition 3.3(i), with \(N = n =1\) and

$$\begin{aligned} \theta (x) = \frac{1}{2} x^2, \quad X = [\sqrt{2 \varepsilon }, 2 \sqrt{\varepsilon }]. \end{aligned}$$

Then \(F(x) =\nabla \theta (x) = x\), \(c_3 =2(2-\sqrt{2})\sqrt{\varepsilon }\) and \(\overline{x} = 2\sqrt{\varepsilon }\) is a solution to \(\varepsilon\)-NEP, since \(\theta (\overline{x}) - \min _{x \in X}\theta (x) = 2\varepsilon - \varepsilon = \varepsilon\); furthermore,

$$\begin{aligned} \min _{x \in X} F(\overline{x})^T(x-\overline{x}) = 2(\sqrt{2}-2)\sqrt{\varepsilon }\sqrt{\varepsilon } = -c_3\sqrt{\varepsilon } \end{aligned}$$

so that \(\overline{x}\) is a solution to \((c_3\sqrt{\varepsilon })\)-VI, but not to \(\varepsilon '\)-VI for any \(\varepsilon '< c_3 \sqrt{\varepsilon }\).

5. [\(\varepsilon\)-NEP \(\overset{{\textrm{conv}}}{\longleftarrow }\) \(\varepsilon\)-VI] Consider the problems addressed in Proposition 3.3(ii), with \(N = 2\), \(n_1 = n_2 = 1\) and

$$\begin{aligned} \theta _1(x) = \theta _2(x) = x^1 x^2, \quad X_1 = X_2 = [-\sqrt{\varepsilon }, 1]. \end{aligned}$$

Then \(F(x)= \nabla _{x^1}\theta _1(x) \times \nabla _{x^2}\theta _2(x) = (x^2,x^1)^T\), \(X = [-\sqrt{\varepsilon }, 1]^2\) and \(\overline{x}=(\sqrt{\varepsilon },0)\) is a solution to \(\varepsilon\)-VI; furthermore,

$$\begin{aligned}&\theta _1(\sqrt{\varepsilon },0) - \min _{x^1\in X_1} \theta _1(x^1,0) = 0 \le \varepsilon \\&\theta _2(\sqrt{\varepsilon },0) - \min _{x^2 \in X_2} \theta _2(\sqrt{\varepsilon },x^2) = \varepsilon \end{aligned}$$

so that \(\overline{x}\) is a solution to \(\varepsilon\)-NEP, but not to \(\varepsilon '\)-NEP for any \(\varepsilon ' < \varepsilon\).

6. [\(\varepsilon\)-NEP \(\overset{{\mathrm{N=1, convex}}}{\longrightarrow }\) \(\varepsilon\)-MVI] Consider the problems addressed in Proposition 3.4, with a linear objective function \(\theta\). Then F is constant, the two problems coincide and consequently the degree of inexactness is necessarily the same.

7. [\((2\varepsilon )\)-NEP \(\overset{{\mathrm{quad.}}}{\longleftarrow }\) \(\varepsilon\)-MVI] Consider the problems addressed in Proposition 3.6. The bound is sharp for any \(\overline{x} \in X: \; \min _{x\in X}F(x)^T(x-\overline{x})=-\varepsilon\), since in this case the proof would require no inequality and therefore no approximation of the inexactness.

8. [\((c_4\sqrt{\varepsilon })\)-NM \(\overset{{\mathrm{L<1}}}{\longleftarrow }\) \(\varepsilon\)-MVI] Consider the problems addressed in Proposition 3.7, with \(n = 1\) and

$$\begin{aligned} F(x) = \frac{1}{2}x, \quad X= [0,1]. \end{aligned}$$

Then \(c_4 = \sqrt{2}\) and \(\overline{x} = 2 \sqrt{2\varepsilon }\) is a solution to \(\varepsilon\)-MVI if \(\varepsilon < 1/8\); furthermore, \(\Vert \overline{x} - P_X(\overline{x}- F(\overline{x}))\Vert = \sqrt{2}\sqrt{\varepsilon } = c_4 \sqrt{\varepsilon }\), so that \(\overline{x}\) is a solution to \(c_4\sqrt{\varepsilon }\)-NM but not to \(\varepsilon '\)-NM for any \(\varepsilon '< c_4 \sqrt{\varepsilon }\).
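The instances with explicit data (1, 2, 4, 5 and 8) can be reproduced numerically. The sketch below is an illustration only: the value \(\varepsilon = 0.01\) and the helper names are assumptions, and the gaps are computed with closed forms that are valid for the boxes and the linear operators used in these instances. Each computed quantity is paired with the value stated in the text.

```python
import math

eps = 0.01                   # illustrative level (assumption); instance 8 needs eps < 1/8
s = math.sqrt(eps)

def clip(t, lo, hi):
    return min(max(t, lo), hi)

def vi_gap(Fx, x, lower, upper):
    """max over the box of F(x_bar)^T (x_bar - y), solved coordinatewise."""
    return sum(f * (xi - (lo if f > 0 else hi))
               for f, xi, lo, hi in zip(Fx, x, lower, upper))

def nm_residual(Fx, x, lower, upper):
    """||x_bar - P_X(x_bar - F(x_bar))|| for a box X."""
    z = [clip(xi - f, lo, hi) for f, xi, lo, hi in zip(Fx, x, lower, upper)]
    return math.sqrt(sum((xi - zi) ** 2 for xi, zi in zip(x, z)))

def mvi_gap_1d(k, x_bar, lo, hi):
    """max over [lo, hi] of (k*y)*(x_bar - y) with k > 0: concave in y,
    so the maximiser is the clipped stationary point x_bar / 2."""
    y = clip(x_bar / 2.0, lo, hi)
    return k * y * (x_bar - y)

checks = {}                  # name -> (computed value, value stated in the text)

# Instance 1: F(x) = (1 + x1, sqrt(eps) + x2), X = [0,1] x [-sqrt(eps), 0], x_bar = (0,0).
Fx, x, lo, hi = [1.0, s], [0.0, 0.0], [0.0, -s], [1.0, 0.0]
checks["1: VI gap"] = (vi_gap(Fx, x, lo, hi), eps)
checks["1: NM residual"] = (nm_residual(Fx, x, lo, hi), s)

# Instance 2: F(x) = x (L = 1), X = [0, 2 sqrt(eps)], x_bar = 2 sqrt(eps).
x_bar, c2 = 2 * s, 2 * (2 * s) * math.sqrt(1.0)
checks["2: MVI gap"] = (mvi_gap_1d(1.0, x_bar, 0.0, 2 * s), eps)
checks["2: VI gap"] = (vi_gap([x_bar], [x_bar], [0.0], [2 * s]), c2 * s)

# Instance 4: theta(x) = x^2 / 2, X = [sqrt(2 eps), 2 sqrt(eps)], x_bar = 2 sqrt(eps).
lo4, x_bar = math.sqrt(2 * eps), 2 * s
c3 = 2 * 1 * (2 * s - lo4) * math.sqrt(1.0)
checks["4: NEP gap"] = (0.5 * x_bar ** 2 - 0.5 * lo4 ** 2, eps)
checks["4: VI gap"] = (vi_gap([x_bar], [x_bar], [lo4], [2 * s]), c3 * s)

# Instance 5: theta_1 = theta_2 = x1*x2, X_1 = X_2 = [-sqrt(eps), 1], x_bar = (sqrt(eps), 0).
x = [s, 0.0]
Fx = [x[1], x[0]]
checks["5: VI gap"] = (vi_gap(Fx, x, [-s, -s], [1.0, 1.0]), eps)
# Each objective is linear in the player's own variable: minimise at an endpoint.
checks["5: player 1 gap"] = (x[0] * x[1] - min(t * x[1] for t in (-s, 1.0)), 0.0)
checks["5: player 2 gap"] = (x[0] * x[1] - min(x[0] * t for t in (-s, 1.0)), eps)

# Instance 8: F(x) = x / 2 (L = 1/2), X = [0, 1], x_bar = 2 sqrt(2 eps).
x_bar, c4 = 2 * math.sqrt(2 * eps), 1.0 / math.sqrt(1.0 - 0.5)
checks["8: MVI gap"] = (mvi_gap_1d(0.5, x_bar, 0.0, 1.0), eps)
checks["8: NM residual"] = (nm_residual([0.5 * x_bar], [x_bar], [0.0], [1.0]), c4 * s)

for name, (got, stated) in checks.items():
    print(name, got, stated, math.isclose(got, stated, rel_tol=1e-9, abs_tol=1e-12))
```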

On the contrary, the inexactness bound in Proposition 3.1(i) does not seem to be sharp because it would require the distance between a pair of specific points (namely, \(P_X(\overline{x}-F(\overline{x}))\) and the minimizer of \(F(\overline{x})^T(x-\overline{x})\) over X) to be exactly the diameter \(D_X\). Nevertheless, sharpness is missed only by an arbitrarily small amount, or it can be recovered by exploiting a solution-dependent constant, as shown by the following two examples.

- Consider \(n = 1\),

$$\begin{aligned} F(x) = \varepsilon , \quad X= [-a,1]. \end{aligned}$$

Then \(c_1 = 1 + a +\varepsilon\) and \(\overline{x}=1\) is a solution to \(\varepsilon\)-NM; furthermore,

$$\begin{aligned} \min _{x \in X} F(\overline{x})^T(x-\overline{x}) = -(c_1-\varepsilon )\varepsilon , \end{aligned}$$

while the inexactness bound in Proposition 3.1 is \(c_1 \varepsilon\). Nonetheless, the ratio \((c_1 - \varepsilon )/c_1\) goes to 1 as \(a \rightarrow \infty\) or \(\varepsilon \rightarrow 0\).

- Consider \(n = 1\),

$$\begin{aligned} F(x) = 1, \quad X=[0,1]. \end{aligned}$$

Then \(\overline{x}=\varepsilon\) is a solution to \(\varepsilon\)-NM; furthermore,

$$\begin{aligned} \min _{x \in X} F(\overline{x})^T(x-\overline{x}) = - \varepsilon =-\overline{c}_1 \varepsilon , \end{aligned}$$

where

$$\begin{aligned} \overline{c}_1 = \min \{ \Vert y-(P_X(\overline{x}- F(\overline{x})))\Vert : y \in \arg \min _{x \in X}F(\overline{x})^T(x - \overline{x}) \}+ \Vert F(\overline{x})\Vert \end{aligned}$$

depends upon the solution \(\overline{x}\). This way, \(\overline{x}\) is a solution to \((\overline{c}_1\varepsilon )\)-VI but not to \(\varepsilon '\)-VI for any \(\varepsilon '<\overline{c}_1\varepsilon\).
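The two examples can also be reproduced numerically. In the sketch below, the value \(\varepsilon = 0.01\), the sample values of a and the helper names are illustrative assumptions. The first loop shows the ratio between the actual VI gap and the bound \(c_1\varepsilon\) approaching 1 as a grows; the second part evaluates the solution-dependent constant \(\overline{c}_1\).

```python
import math

def clip(t, lo, hi):
    return min(max(t, lo), hi)

def nm_residual(F_val, x_bar, lo, hi):
    # |x_bar - P_X(x_bar - F)| for a constant operator F on X = [lo, hi].
    return abs(x_bar - clip(x_bar - F_val, lo, hi))

def vi_gap(F_val, x_bar, lo, hi):
    # max over y in [lo, hi] of F*(x_bar - y), attained at an endpoint.
    return max(F_val * (x_bar - lo), F_val * (x_bar - hi))

eps = 0.01                   # illustrative value (assumption)

# First example: F = eps on X = [-a, 1], x_bar = 1.
residuals, ratios = [], []
for a in (1.0, 10.0, 1000.0):
    c1 = (1.0 + a) + eps                     # D_X + max |F|
    residuals.append(nm_residual(eps, 1.0, -a, 1.0))
    g = vi_gap(eps, 1.0, -a, 1.0)            # equals (c1 - eps) * eps
    ratios.append(g / (c1 * eps))            # equals (c1 - eps) / c1
print(ratios)                # increasing towards 1

# Second example: F = 1 on X = [0, 1], x_bar = eps.
x_bar = eps
r2 = nm_residual(1.0, x_bar, 0.0, 1.0)
g2 = vi_gap(1.0, x_bar, 0.0, 1.0)
z = clip(x_bar - 1.0, 0.0, 1.0)              # projection point, here 0
y_min = 0.0                                  # unique minimiser of F*(x - x_bar) over X, since F > 0
c1_bar = abs(y_min - z) + 1.0                # solution-dependent constant
print(r2, g2, c1_bar * eps)
```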

4 Conclusions

We investigated the relations between VIs, MVIs, NMs and NEPs, in both the exact and inexact cases. This study is relevant as inexactness arises naturally when dealing with stopping criteria for numerical procedures and when complexity results are available. In particular, the connections between inexact NEPs and variational problems allow quantifying the quality of the computed approximate equilibria, which is especially relevant in applications. The connections from MVIs to NEPs proved to be the most challenging: restricting to player-quadratic objectives (which are anyhow quite common in concrete applications), one can get a direct implication (see Fig. 2) that also preserves the order of magnitude of the approximation. Overall, the results that we presented show that inexactness behaves reasonably well: all the relations for the exact case are maintained in the inexact case, sometimes at the price of additional assumptions; we have been able to quantify how inexactness propagates from one problem to the others; and the limit behavior, as inexactness vanishes, turns out to correspond to the exact case.

As regularization is a common technique for variational problems, a similar analysis could be conducted for regularized VIs and MVIs. Other future developments may include the extension of this analysis to generalized NEPs and quasi-variational problems, as well as nonsmooth settings that are likely to call for corresponding generalized variational problems.

References

Başar T, Olsder GJ (1998) Dynamic noncooperative game theory. SIAM, Philadelphia

Bigi G, Castellani M, Pappalardo M, Passacantando M (2019) Nonlinear programming techniques for equilibria. Springer, Berlin

Bigi G, Lampariello L, Sagratella S (2021) Combining approximation and exact penalty in hierarchical programming. Optimization 71(8):2403–2429

Bigi G, Panicucci B (2010) A successive linear programming algorithm for nonsmooth monotone variational inequalities. Optim Methods Softw 25(1):29–35

Brânzei R, Morgan J, Scalzo V, Tijs S (2003) Approximate fixed point theorems in Banach spaces with applications in game theory. J Math Anal Appl 285(2):619–628

Bruck RE (1977) On the weak convergence of an ergodic iteration for the solution of variational inequalities for monotone operators in Hilbert space. J Math Anal Appl 61(1):159–164

Facchinei F, Lampariello L (2011) Partial penalization for the solution of generalized Nash equilibrium problems. J Glob Optim 50(1):39–57

Facchinei F, Pang J-S (2003) Finite-dimensional variational inequalities and complementarity problems. Springer, New York

Facchinei F, Sagratella S (2011) On the computation of all solutions of jointly convex generalized Nash equilibrium problems. Optim Lett 5(3):531–547

Ferris MC, Pang J-S (1997) Engineering and economic applications of complementarity problems. SIAM Rev 39(4):669–713

Gürkan G, Pang J-S (2009) Approximations of Nash equilibria. Math Progr 117(1):223–253

Hartman P, Stampacchia G (1966) On some non-linear elliptic differential-functional equations

He B, Liao L-Z, Han D, Yang H (2002) A new inexact alternating directions method for monotone variational inequalities. Math Progr 92(1):103–118

Hiriart-Urruty J-B (2021) Sensitivity of the “intermediate point” in the mean value theorem: an approach via the Legendre-Fenchel transformation. ESAIM: Proc Surv 71:114

Kaushik HD, Yousefian F (2021) A method with convergence rates for optimization problems with variational inequality constraints. SIAM J Optim 31(3):2171–2198

Kinderlehrer D, Stampacchia G (2000) An introduction to variational inequalities and their applications. SIAM, Philadelphia

Konnov I (2007) Equilibrium models and variational inequalities. Elsevier, Amsterdam

Lampariello L, Neumann C, Ricci JM, Sagratella S, Stein O (2020) An explicit Tikhonov algorithm for nested variational inequalities. Comput Optim Appl 77(2):335–350

Lampariello L, Neumann C, Ricci JM, Sagratella S, Stein O (2021) Equilibrium selection for multi-portfolio optimization. Eur J Oper Res 295(1):363–373

Lampariello L, Priori G, Sagratella S (2022) On the solution of monotone nested variational inequalities. Math Methods Oper Res, 1–26

Lampariello L, Sagratella S, Sasso VG (2023) Nonsmooth hierarchical multi portfolio selection. In: Optimization and Decision Science: Operations Research, Inclusion and Equity: ODS, Florence, Italy, August 30 - September 2, 2022, to appear. Springer

Lignola MB, Morgan J (1994) Approximate solutions to variational inequalities and applications. Le Matematiche 49(2):281–293

Lignola MB, Morgan J (2002) Approximate solutions and \(\alpha\)-well-posedness for variational inequalities and Nash equilibria. In: Decision & control in management science. Springer, pp 367–377

Minty GJ (1962) Monotone (nonlinear) operators in Hilbert space. Duke Math J 29(3):341–346

Morgan J (2005) Approximations and well-posedness in multicriteria games. Ann Oper Res 137(1):257–268

Morgan J, Raucci R (1999) New convergence results for Nash equilibria. J Convex Anal 6(2):377–385

Nagurney A (1998) Network economics: a variational inequality approach, vol 10. Springer, Berlin

Nguyen S, Dupuis C (1984) An efficient method for computing traffic equilibria in networks with asymmetric transportation costs. Transp Sci 18(2):185–202

Nisan N, Roughgarden T, Tardos E, Vazirani VV (2007) Algorithmic game theory. Cambridge University Press, Cambridge

Sagratella S (2017a) Algorithms for generalized potential games with mixed-integer variables. Comput Optim Appl 68(3):689–717

Sagratella S (2017b) Computing equilibria of Cournot oligopoly models with mixed-integer quantities. Math Methods Oper Res 86(3):549–565

Scutari G, Facchinei F, Pang J-S, Lampariello L (2012) Equilibrium selection in power control games on the interference channel. In: 2012 Proceedings IEEE INFOCOM, pp 675–683. IEEE

Acknowledgements

The authors wish to thank the two anonymous referees for their careful reviews and valuable comments, which helped to improve the paper. Giancarlo Bigi is a member of the Gruppo Nazionale per l’Analisi Matematica, la Probabilità e le loro Applicazioni (GNAMPA - National Group for Mathematical Analysis, Probability and their Applications) of the Istituto Nazionale di Alta Matematica (INdAM - National Institute of Higher Mathematics).

Funding

Open access funding provided by Università di Pisa within the CRUI-CARE Agreement.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bigi, G., Lampariello, L., Sagratella, S. et al. Approximate variational inequalities and equilibria. Comput Manag Sci 20, 43 (2023). https://doi.org/10.1007/s10287-023-00476-w

DOI: https://doi.org/10.1007/s10287-023-00476-w